Abstract

Artificial intelligence (AI) has shown excellent diagnostic performance in detecting various complex problems related to many areas of healthcare including ophthalmology. AI diagnostic systems developed from fundus images have become state-of-the-art tools in diagnosing retinal conditions and glaucoma as well as other ocular diseases. However, designing and implementing AI models using large imaging data is challenging. In this study, we review different machine learning (ML) and deep learning (DL) techniques applied to multiple modalities of retinal data, such as fundus images and visual fields for glaucoma detection, progression assessment, staging and so on. We summarize findings and provide several taxonomies to help the reader understand the evolution of conventional and emerging AI models in glaucoma. We discuss opportunities and challenges facing AI application in glaucoma and highlight some key themes from the existing literature that may help to explore future studies. Our goal in this systematic review is to help readers and researchers to understand critical aspects of AI related to glaucoma as well as determine the necessary steps and requirements for the successful development of AI models in glaucoma.

Similar content being viewed by others

Introduction

Glaucoma is an optic neuropathy accompanied by characteristic structural and functional changes [1]. It affects over 90 million individuals worldwide and constitutes the second leading cause of blindness and subsequent disability overall [2, 3]. The number of people aged 40–80 years with glaucoma worldwide was estimated to be 64.3 million in 2013, with projections that this number will increase to 76.0 million in 2020, and 111.8 million in 2040 [4]. Because older people make up the fastest-growing part of the US population, glaucoma will become even more prevalent in the US in the coming decades. As such, population-based screening for glaucoma becomes critical.

Glaucoma detection is challenging particularly at the early stages of the disease; however, early detection may lead to slowing its progression and future vision loss [5]. A major challenge in detecting glaucoma is that signs and symptoms may manifest only when significant vision has been already lost. Therefore, it is critical to diagnose glaucoma early to prevent future vision loss [6]. Glaucoma diagnosis typically includes assessment of the optic nerve head (ONH) through retinal examination, intraocular pressure (IOP) measurement, evaluation of visual fields (VFs), and examining other related factors.

Assessing the ONH in glaucoma is an important diagnostic step, as the primary implication for glaucoma is glaucomatous optic neuropathy (GON), which is widely identified through fundus photographs or optical coherence tomography (OCT) images. Currently, fundus photography is better suited for glaucoma screening because fundus cameras are affordable and more importantly, portable. However, development of low-cost and portable OCT systems is advancing as well, and these portable OCT technologies are poised to gain popularity in the coming years. Fundus photography has been the most established modality for documenting the status of the optic nerve and detecting GON since 1857. As a result, large, annotated datasets of fundus photographs are currently available and importantly, they are appropriate for machine learning (ML) and deep learning (DL) models. In contrast, clinical evaluations of the ONH are subjective and prone to error. According to prior research, it has been reported that both ophthalmology trainees and comprehensive ophthalmologists underestimated the likelihood of glaucoma in 20% of disc photographs. Additionally, ophthalmology trainees and comprehensive ophthalmologists were twice as likely to underestimate or overestimate the likelihood of glaucoma due to various factors, such as the variability in cup-to-disc ratio, peripapillary RNFL atrophy, and the presence of disc hemorrhage [7]. Optic nerve assessment is primarily performed in a subjective manner while most of the useful structural and functional features of healthy and diseased patients are overlapping therefore leading to inter- and intra-observer variability. The problem is worse for monitoring as glaucoma progression is often slow and happens over decades, making prediction of progression highly challenging. As such, automated ML models may provide more objective, consistent, and more accurate outcomes.

Artificial intelligence (AI) and DL models are emerging areas that automate the interpretation of retinal images. Advancements in computer systems and availability of large datasets and algorithms allow these systems to mimic human thought processes such as learning, reasoning, and self-correction. DL, a subfield of ML and AI, has made significant progress over the past few years and development of objective systems to automate glaucoma detection has been highly promising [8]. Although many studies demonstrated promising results in detecting glaucoma using AI, limitations still exist in many perspectives. For example, lack of standardized and consensus glaucoma definitions makes it difficult to evaluate the results consistently in different datasets; the shortage of large, well-annotated datasets of good quality limited the generalizability of AI model; the limited interpretability and liability of the DL model hurdled the implementation of it in clinic.

This review aims to identify ML-based models applied to glaucoma over the past few years and create a reference of those models and their performance. We also generate several taxonomies including categories of ML models, input data types, and level of performance and compare different ML types to identify better performing approaches. Lastly, we provide insight into current challenges and future directions as well.

The paper is organized as follows:

This paragraph ends the introductory section. An overview of AI in glaucoma is presented in Section “Literature review”. Section "Overview of the AI models in glaucoma" outlines applications of specific AI models in glaucoma based on various categories. Section "Discussion” presents open issues and future directions of AI in glaucoma. Finally, Section “Conclusions” concludes this review. Section "Methods” presents literature search and filtering strategies for this review.

Literature review

Glaucoma

Glaucoma is a group of heterogenous diseases that may lead to irreversible vision loss [1]. In some forms of glaucoma, increased IOP impacts the retina and ONH, which in turn, may lead to irreversible vision loss [9]. Lowering IOP is a proven treatment for open-angle glaucoma (OAG).

Although glaucoma detection is challenging, particularly at the early stages of the disease, early detection is critical in order to provide timely treatments that may be effective in slowing its progression [5]. Glaucoma is typically diagnosed by evaluating the ONH and the adjacent retinal nerve fiber layer (RNFL) through retinal examination and imaging tools, assessing VFs, and evaluating IOP levels.

AI for glaucoma

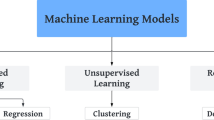

Not only is glaucoma diagnosis potentially time-consuming and costly, but also its reliance on an individual clinician’s knowledge and ability makes it subjective and prone to over/under estimation [10]. Alternatively, automated AI models could minimize subjectivity by interpreting and quantifying retinal and optic nerve images. AI has several other applications for glaucoma. For instance, AI can be used to optimize workflows and processes in glaucoma clinics that may lead to more time for clinicians to interact with patients thus enhancing overall care. AI could be used to quantify optic cup, disc, and rim characteristics in fundus images, retinal layers in OCT images, and patterns of VF loss. Such applications hold promise for providing improved glaucoma assessment, as well as forecasting, screening, diagnosing, and prognosing glaucoma. Figure 1 describes the overall AI domain and its subcategories including ML and DL. While DL models are more appropriate for analysis of image-based glaucoma data, other categories of ML models may be more appropriate for VF and other non-image data. Different categories of AI models including expert systems may be utilized to optimize glaucoma clinic workflows and processes.

Overview of the AI models in glaucoma

This section reviews different AI models from the literature that have been used for glaucoma assessment. Figure 2 shows different ML-based models that have been applied to detect glaucoma. These models have been grouped into two major categories including supervised and unsupervised learning models. In supervised learning, the model is trained on labeled data with each data instance having a known outcome. Unsupervised learning describes algorithms for finding patterns in data, without prior knowledge of outcomes for data instances [11]. Based on this taxonomy, we will highlight some of the representative ML studies applied to glaucoma and provide their strengths and limitations.

Machine learning

Supervised machine learning

Supervised ML [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80] has been widely used in glaucoma detection, severity classification, progression prediction, segmentation, etc., based on different modalities, such as VF, fundus, OCT, clinical data, transcriptomic data, etc.

Several metrics were used for model evaluation, such as: accuracy (the proportion of correctly classified samples relative to the total number of samples); sensitivity/recall (the rate of positive samples correctly classified, reflecting the ratio of correctly classified positive samples to all samples assigned to the positive class); specificity (measures the rate of negative samples correctly classified, determined by the ratio of correctly classified negative samples to all samples belonging to the negative class); error rates (the ratio of the incorrectly classified samples to the total number of samples); precision (the proportion of true positive predictions out of all positive predictions from the model); true positive rate (TPR, the proportion of actual positive samples that the model correctly identified as positive); false positive rate (FPR, the proportion of actual negative samples that the model incorrectly identified as positive); area under the receiver operating characteristic (ROC) curve (AUC, the model’s performance across various thresholds, presenting TPR against FPR at various threshold settings), area under precision—recall curve (AUPRC, the classification model performance appropriate for imbalanced classes, demonstrating the precision against the recall at different threshold settings).

Logistic regression (LR) is a supervised model designed to estimate the probability between categorical classes. LR has also been used in glaucoma diagnosis in various studies. Lu et al. [28] used four ML classifiers to detect glaucoma based on biomechanical data from 52 patients including 20 glaucoma (40 eyes) and 32 healthy (64 eyes). The LR model obtained the best accuracy of 0.983, AUC of 0.990, sensitivity of 98.9% (at 80% specificity), and sensitivity of 97.7% (at 95% specificity) based on 3-fold cross-validation (CV). Baxter et al. [35] developed machine models to predict the requirement for surgical intervention in individuals diagnosed with primary open-angle glaucoma (POAG) utilizing systemic parameters obtained from the electronic health records (EHR) system from 385 POAG patients. They used leave-one-out CV, and the best model was multivariable LR with an AUC of 0.67. This model also provided the odds ratio of the factors which were associated with the risk of glaucoma surgery. Higher mean systolic blood pressure (OR: 1.09, P < 0.001) and use of anticoagulant medication (OR: 2.75, p = 0.042) were significantly associated with increased risk of glaucoma surgery. The major advantage of the LR models is their simplicity and explainability, which would be an essential advantage in glaucoma research and clinics.

K-Nearest Neighbor (KNN) is a supervised classification technique to estimate the likelihood that a data point belonging to a specific group by analyzing the groups to which its nearest neighboring data points belong. The KNN model has been used in glaucoma detection and some of the studies obtained better results using KNN than the other models. Singh, et al. [40] developed several ML models for glaucoma diagnosis from 70 glaucomatous and 70 healthy eyes based on OCT data. They extracted 45 features from OCT images and the highest AUC of 0.97 was achieved by a KNN model tested using 5-fold CV. Singh et al. [48] also developed an interconnected architecture with Customized Particle Swarm Optimization (CPSO) and four machine-learning classifiers based on 110 fundus images and found CPSO-KNN demonstrated superior performance compared to other models, achieving an accuracy of 0.99, specificity of 0.96, sensitivity of 0.97 and precision of 0.97, F1-score of 0.97 and kappa of 0.94 by utilizing 5-fold CV.

Support Vector Machine (SVM): SVM is a supervised ML technique that has the capability to tackle both classification and regression problems. The algorithm aims to identify the optimal line or decision boundary that separates different groups, enabling accurate classification of additional data points into their respective categories. SVM classifier has been widely reported in the literature for detecting glaucoma. For instance, Goldbaum et al. [12] compared various ML models for glaucoma diagnosis based on Standard Automated Perimetry (SAP) data collected from 189 normal eyes and 156 glaucomatous eyes, and SVM with Gaussian kernel with the input of VF sensitivities at each of 52 locations plus age. Results from this study obtained the second highest performance with AUC of 0.903, sensitivity of 0.53 (at specificity of 1.0) and sensitivity of 0.71 (at specificity of 0.9) based on 10-fold CV. Zangwill et al. [13] employed SVM models to detect glaucoma based on Heidelberg Retina Tomograph (HRT) data collected from 95 glaucomatous eyes and 135 normal eyes, and obtained the best performance with AUC of 0.964, sensitivity of 97% (at 75% specificity), and sensitivity of 85% (at 90% specificity) with the input of all parameters combined, including RNFL regional and global parameters, sectoral mean height contour along the disc margin, sectoral parapapillary mean height contour, sectoral RNFL thickness along the disc margin, and sectoral parapapillary RNFL thickness. Evaluations were performed based on 10-fold CV. Burgansky-Eliash et al. [14] developed different ML models for glaucoma detection from 47 glaucomatous eyes and 42 healthy eyes based on OCT parameters and found SVM with eight OCT parameters achieved the best performance with AUC of 0.981, accuracy of 0.966, sensitivity of 97.9% (at the specificity of 80%), and sensitivity of 92.5% (at specificity of 92.5%) using 6-fold CV. Townsend et al. [15] developed several ML models for glaucoma detection based on HRT3 data collected from 60 healthy subjects and 140 glaucomatous subjects. The SVM-radial applied on all HRT3 parameters showed significant improvement over the other models and obtained an AUC of 0.904, accuracy of 85.0%, sensitivity of 85.7% (at 80% specificity) and 64.8% sensitivity (at 95% specificity) based on leave-one-out CV. Garcia-Morate et al. [16] developed an SVM model to detection glaucoma using 136 glaucomatous eyes and 117 non-glaucomatous eyes based on HRT2 parameters. The SVM model exploiting 22 parameters obtained the highest performance with AUC of 0.905, sensitivity of 85.3% (at 75% specificity), and 79.4% sensitivity (at 90% specificity) using 10-fold CV. Bizios et al. [17] developed numerous AI models to detect glaucoma based on OCT-A scans collected from 90 healthy and 62 glaucomatous subjects and reported that SVM achieved the best performance with an AUC of 0.989 (95% confidence interval: 0.979–1.0) using 10-fold CV.

In summary, SVM worked well in dealing with VF, HRT, and OCT parameters to detect glaucoma mostly in earlier studies from 2002 to 2010. SVM is straightforward to implement and has relatively high explainability.

Tree-based ensembled model: The tree-based ensembled method has been reported to have better performance than a single tree-based model. Random Forest (RF), XGboost, and gradient boosting models from this family have been widely used in glaucoma diagnosis based on VFs and OCT parameters and have shown reasonable performance. Barella et al. [19] developed multiple ML models to detect early to moderate POAG from 57 early to moderate POAG and 46 healthy patients based on RNFL and optic nerve parameters collected from SD-OCT instrument. RF obtained the best AUC of 0.877 based on 13 input parameters with sensitivity of 64.9% (at 80% specificity) and sensitivity of 49.1% (at 90% specificity) using 10-fold CV. Hirasawa et al. [18] used various ML models to predict vision-related quality of life (VRQoL) based on VF and visual acuity from 164 glaucomatous patients. Based on this regression problem, RF and boosting models obtained the lowest error rate with root mean square error (RMSE) of 1.99. Silva et al. [20] developed models for glaucoma diagnosis based on SD-OCT and SAP (24–2) data collected from 62 glaucomatous patients and 48 healthy subjects. Based on four features, RF achieved the best AUC of 0.946 with the sensitivity of 95.16% (at 80% specificity), and the sensitivity of 82.25% (at 90% specificity) using 10-fold CV. Kim et al. [25] developed several ML models for diagnosis of glaucoma based on RNFL thickness and VFs collected from 399 cases and RF showed the best performance with an accuracy of 98%, sensitivity of 98.3%, specificity of 97.5%, and AUC of 0.979 when using seven features on internal testing set with 100 cases. Oh et al. [44]applied various ML models to detect glaucoma based on clinical data (IOP, OCT measurements, VF examinations) collected from 1244 eyes and observed XGboost the best performing model with an accuracy of 94.7%, sensitivity of 94.1%, specificity of 95.0%, and AUC of 0.945 with 10-fold CV.

Neural network (NN), also known as artificial neural networks (ANNs), are structured with layers of nodes and usually consist of an input layer, one or multiple hidden layers, and an output layer. Within this network, each node, also known as an artificial neuron, establishes connections with other nodes and possesses an assigned weight and threshold. When the output of a node surpasses a predetermined threshold, it becomes activated and transmits its output to the subsequent layer of the network; otherwise, no output is passed along to the next layer of the network. NNs have long been used for glaucoma-related tasks based on VFs and other imaging parameters. Omodaka et al. [24] used an NN with a structure of nine input layer units, eight hidden layer units, and four output layer units to identify the status of 163 eyes based on 15 features selected by minimum redundancy maximum relevance (mRMR) from 91 OCT parameters. The model achieved an accuracy of 87.8% (Cohen’s Kappa of 0.83) based on 10-fold CV. An et al. [27] used the same dataset and compared the performance of NN, SVM, and Naïve Bayesian models based on nine parameters selected by combining mRMR and genetic-algorithm-based feature selection. They identified the NN model as the best performing algorithm, with accuracy of 87.8% using 10-fold CV.

Overall, these conventional supervised ML models have been used widely in glaucoma diagnosis based on VFs, RNFL parameters, or other clinical factors. Among these models, we observed that SVM was used more frequently and obtained better performance, compared to other conventional ML models.

Unsupervised machine learning

Unsupervised learning is used for learning representative features and extracting patterns from data. Many studies applied unsupervised learning for extracting VF or RNFL loss patterns, glaucoma staging, segmentation and other features by using clustering, association analysis, dimension reduction, etc. [37, 41, 43, 49, 56, 60, 62, 81,82,83,84,85,86,87,88,89,90,91,92].

Clustering

We can broadly group the clustering models into either of two categories: hard or soft clustering algorithms.

Hard clustering: In hard clustering, each data point is clustered or grouped to just one cluster and not others. K-Means is a hard clustering algorithm. In K-Means, the algorithm determines the optimal initial centroid points by minimizing the sum of the squared distances between each point and its assigned centroid across all clusters. Huang et al. [87] applied K-Means clustering to identify different stages of glaucoma without any supervision by experts. They identified four severity levels based on 13,231 VFs and determined objective thresholds of − 2.2, − 8.0 and − 17.3 dB for VF mean deviation for distinguishing normal, early, moderate, and advanced stages of glaucoma. Ammal et al. [90] used K-Means to segregate optic disc (OD) and optic cup (OC) for further glaucoma diagnosis model development based on fundus images from the Retinal Fundus Images for Glaucoma Analysis (RIGA) dataset, and validated the model using another dataset with 90 images. The result of the K-Means was compared with severity levels determined by ophthalmologists on the same data set, and authors showed that the outcome was similar.

Soft clustering: In soft or fuzzy clustering, instead of putting each data points into only one cluster, the data point can be assigned to different clusters with different likelihoods. Fuzzy c-Means and Gaussian Mixture Model (GMM) are examples of soft clustering. Fuzzy-c Means: Praveena et al. [86] applied K-Means, Fuzzy c-Means (FCM) and Spatially Weighted fuzzy C-Means Clustering (SWFCM) to automatically determine the cup-to-disc ratio (CDR) from the fundus photographs of 50 normal and 50 glaucoma eyes. The K-value is determined by hill climbing algorithm. K-Means was used for segmenting OD, while FCM was used for segmenting optic cup. SWFCM was used for segmenting both OD and OC. The error rate was calculated with reference to the manually determined CDR value (considered the gold standard) provided by ophthalmologists for comparison. The mean error of the K-Means clustering method for elliptical and morphological fitting was 4.5% and 4.1%, respectively. The mean error was reduced by the FCM clustering to 3.83% and 3.52%, and the mean error was minimized to 3.06% and 1.67% using SWFCM. GMM: GMM attempts to find a mixture of multidimensional Gaussian probability distributions. Yousefi et al. [82] applied GMM to identify different patterns of VF loss. Their GMM successfully detected three distinct clusters, which included normal eyes, eyes in the early stage of glaucoma, and eyes in the advanced stage of glaucoma based on SAP VFs collected from 2085 eyes. Based on another subset with 270 eyes, they showed that GMM detected progressing eyes at an earlier stage, compared to other methods.

Association analysis

Association analysis: Association rule algorithms aims to find interesting relationships among variables within extensive datasets, provided they satisfy the predetermined minimum support (a threshold for determining the minimum association) and confidence level (or accuracy, minimum threshold of the correct rule or prediction) set by the user, in order to discover a pattern with strong association. Apriori algorithms, one of the association rule categories, focus on identifying frequent associations in order to unveil intriguing relationships between attributes. Al-Shamiri et al. [89] applied Apriori algorithm to discover risk factors of glaucoma based on a survey dataset from 4000 patients. Their association analysis revealed several glaucoma risk factors including family history of glaucoma, high IOP, optic nerve damage, high myopia, diabetes, hypertension, history of eye surgery, use of some medicines, psychological stress, pressure work or study-related pressure, gastroenterology disease, and chronic constipation.

Dimensionality reduction

A comprehensive eye examination to diagnose glaucoma typically includes collecting numerous imaging, VF, and ocular measurements, and thus provides high-dimensional datasets. A challenge is that ML models typically tend to overfit when dealing with glaucoma data and thus is not generalizable when tested on new data. However, reducing the dimensionality may address the issue by selecting a smaller subset of the features or deriving new features from a pool of features, while preserving the information of the original features as much as feasible.

Transformations: Transformation methods typically perform linear or nonlinear transformations to map a high-dimensional space of initial input data into a lower dimensional space of the features to reduce dimensionality. The widely used methods in glaucoma research are principal component analysis (PCA), archetypal analysis, and non-negative matrix factorization (NMF).

PCA: PCA [93] refers to a mathematical technique that employs an orthogonal transformation to convert a collection of observations, which may consist of correlated variables, into a new set of linearly uncorrelated variables known as principal components (PCs). Christopher et al. [29] applied PCA to structural RNFL features from RNFL thickness maps and retained 10 PCs for glaucoma diagnosis and glaucoma progression prediction based on 235 eyes and compared OCT and SAP features. The LR model based on PCA from RNFL data obtained a better performance than other available parameters such as mean cpRNFL, SAP 24–2 MD, and FDT MD in both glaucoma diagnosis (AUC of 0.95; CI 0.92–0.98) and progression prediction (AUC of 0.74; CI 0.62–0.85) using leave-one-out CV. Yousefi et al. [88] applied PCA to 52 TD values of 13,231 VFs and selected 10 PCs (explained 90% of variance in VFs) which were subsequently input to a manifold learning model, two features (dimensions) were ultimately retained. They applied unsupervised ML and identified 30 clusters based on those two features and used those clusters for glaucoma progression detection.

Archetypal Analysis: Wang et al. [83] applied Archetypal Analysis to identify the central VF patterns of loss from 13,951 VF tests (Humphery, 10–2). They discovered 17 distinct central VF patterns and noted that incorporating coefficients from central VF archetypical patterns strongly enhances the prediction of central VF loss compared to using global indices only. In another study, Wang et al. [84] employed Archetypal Analysis to identify the central VF patterns in end-stage glaucoma based on 2912 reliable VFs (10–2) and found central VF loss in end-stage glaucoma exhibits characteristic patterns that could potentially be associated with various subtypes. Nasal loss is likely the initial central VF loss, and a particular subtype of nasal loss is highly prone to progress into complete or total loss. Such explainable models may also shed light on some aspects of glaucoma pathophysiology. Follow-up studies based on deep archetypal analysis (DAA) identified more patterns of VF loss, their role in forecasting glaucoma, and their association with rapid glaucoma progression [94,95,96].

NMF: Wang et al. [81] utilized NMF to identify distinct patterns of the RNFL thickness (RNFLT) based on RNFLT collected from 691 eyes. NMF identified 16 distinct RNFLT patterns (RPs). Using these RPs resulted in a substantial enhancement in the prediction of VF sensitivities. The AI-based RNFLT patterns hold promise in assisting clinicians to more effectively evaluate and interpret RNFLT maps.

Feature selection: Feature selection models to identify the most optimal subset of original input variables. Various feature selection methods have been used in glaucoma research and some improved the performance of ML models. Examples include Pearson Correlation Coefficient (PCC)-based variable selection, Markov Blanket (MB) variable selection, and the minimum Redundancy Maximum Relevance (mRMR) approaches. Lee et al. [33] developed a set of ML models for glaucoma diagnosis based on VF data collected from 632 eyes and compared the result of models with different input features which were selected by PCC-based variable selection, MB variable selection, mRMR, and features extracted by PCA. By employing a combination of total deviation (TD) values, the GHT sector map, and variable selection using SVM and MB methods, the researchers achieved the highest performance, as evaluated through 5-fold CV, with an AUC of 0.912. Omodaka et al. [24] used mRMR to select 15 features from 91 quantified ocular parameters and used an NN model for OD classification based on 165 eyes of 105 POAG patients, and obtained an accuracy of 87.8%, Cohen’s kappa of 0.83 using 10 -fold CV. An et al. [27] applied a combination of mRMR and genetic algorithm-based feature selection to identify nine most valid and relevant features from a pool of 91 ocular parameters and patients’ background information of 163 glaucomatous eyes to develop ML model for classifying glaucomatous optic discs, and using the selected nine parameters yielded the highest accuracy of 87.8% with an NN model evaluated based on 10 -fold CV.

Deep learning

Emerging DL models have been widely used in ophthalmology and many DL models have shown promising performance in glaucoma screening, diagnosis, or quantification and segmentation of fundus photographs, VFs, and OCT images [31, 34, 51, 53, 85, 97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228,229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257,258,259,260,261,262,263,264,265,266,267,268,269,270,271,272,273,274,275,276,277,278,279,280,281,282,283,284,285,286,287,288,289,290]. We will discuss some of the applications in glaucoma in three broad categories of discriminative, generative, and hybrid models, as shown in Fig. 3.

Classification of DL models. CNN: Convolutional Neural Network; RNN: Recurrent Neural Network; LSTM: long short-term memory; DCGAN: Deep Convolutional Generative Adversarial Network; SSCNN: Convolutional Neural Network model with self-learning; SSCNN-DAE: Semi-supervised Convolutional Neural Network model with autoencoder

Discriminative models

Discriminative models separate data points into different classes and learn the boundaries using probability estimates and maximum likelihood. Discriminative models are most common in glaucoma and have been extensively used in detection, optic disc/cup, and region of interest (ROI) segmentation.

Convolutional Neural Network (CNN): CNN has been widely used in glaucoma diagnosis based on retinal images such as fundus photograph and OCT images. Chen et al. [97] developed one of the earliest CNN models for glaucoma diagnosis based on fundus images from ORIGA and SCES datasets. The CNN model with five layers obtained the best performance with AUCs of 0.838 and 0.898 based on fundus images from the ORIGA (internal testing) and SCES (independent validation) datasets, respectively. Ahn et al. [100] developed a CNN model to discriminate glaucomatous from normal eyes based on 1542 fundus images. The model outperformed LR and InceptionV3 model with an accuracy of 87.9% and AUC of 0.94 on internal testing set. Norouzifard et al. [102] applied transfer learning based on VGG19 and Inception-ResNet-V2 architectures in identifying glaucoma from 447 fundus photograph and re-tested the model on an independent dataset (HRF with 30 fundus images). They reported that that VGG19 obtained 80.0% accuracy on independent dataset. Masumoto et al. [103] developed a CNN model to classify glaucoma patients into four severity levels based on fundus images from 1399 patients/images. The model obtained AUCs of 0.872, 0.830, 0.864, 0.934 for normal vs all glaucoma, early glaucoma, moderate glaucoma, and severe glaucoma, respectively, on the internal testing set. Fuentes-Hurtado et al. [104] applied DenseNet-201 in classifying 1912 rat OCT images into healthy and pathological using leave-P-out CV (P = 15) and obtained an AUC of 0.99. Shibata et al. [105] developed a CNN model using the ResNet architecture based on 1364 glaucomatous and 1768 non-glaucomatous fundus images and tested on independent dataset with 60 glaucomatous eyes and 50 normal eyes. The model obtained an AUC of 0.965 (95% CI 0.935–0.996) that was higher than the performance of ophthalmology residents with an AUC between 0.762 and 0.912 and other models, such as VGG16, RF and SVM. Asaoka et al. [31] developed a six-layer DL model to diagnose early-glaucoma based on 4316 OCT images and obtained an AUC of 0.937 (95% CI 0.906–0.968) based on an independent dataset with 114 patients with glaucoma and 82 normal subjects using a DL transfer model, which was significantly higher than the AUC of 0.631 to 0.862 obtained based on other models (RF and SVM). Using the Youden method, the model attained optimal discrimination with a sensitivity of 82.5% and specificity of 93.9%. Phene et al. [109] developed a CNN model based on Inception-v3 architecture to predict referable GON from ONH features using 86,618 color fundus images and validated the model using three independent datasets, then compared the outcome with glaucoma specialists. For referable GON, they achieved AUCs of 0.945 (0.929–0.960), 0.855 (0.841–0.870), and 0.881 (0.838–0.918) based on the fundus images in the first dataset (with 1205 images), second dataset (with 9642 images), and third dataset (with 346 images), respectively. The model’s AUCs ranged from 0.661 to 0.973 based on glaucomatous ONH features. The CNN model detected referable GON with higher sensitivity than compared with eye care providers.

Al-Aswad et al. [289] evaluated the performance of Pegasus (an AI system based on deep learning) in glaucoma screening based on color fundus photographs by comparing with six ophthalmologists. They found there was no statistically significant distinction between Pegasus (AUC of 0.926, sensitivity and specificity of 83.7% and 88.2%, respectively) and the “optimal” consensus among ophthalmologists (AUC of 0.891, sensitivity and specificity of 61.3–81.6% and 80.0–94.1%, respectively). The correspondence between Pegasus and the gold standard yielded a score of 0.715, whereas the highest level of agreement between ophthalmologists and the gold standard stood at 0.613. Jammal et al. [112] developed a CNN model using ResNet34 architecture (M2M DL) to predict RNFL thickness and grading glaucomatous eyes based on 32,820 pairs of fundus photographs and SD-OCT scans. A total of 490 images were used for testing the model, then the outcome was compared with two glaucoma specialists, the predicted RNFL thickness obtained through M2M DL exhibited a notably stronger absolute correlation with SAP mean deviation (r = 0.54) compared to the probability of GON determined by human graders (r = 0.48; P < 0.001). Furthermore, the M2M DL algorithm demonstrated a significantly higher partial AUC compared to the probability of GON assessed by human graders (partial AUC = 0.529 vs 0.411, respectively; P = 0.016). Yu et al. [274] trained a 3D CNN to estimate global VF indices based on macula and optic disc OCT scans from 10,370 eyes, and showed that integrating information from macula and optic disc scan achieved better result compared with inputting separate scan. The combined scan obtained 0.76 Spearman’s correlation coefficient and 0.87 Pearson’s correlation based on VFI and MD while the median absolute error was 2.7 for VFI and 1.57 dB for MD from one of the 8-fold CVs. Wang et al. [34] compared four different CNN models for detecting glaucoma based on RNFL thickness maps from 93 glaucomatous eyes and 128 healthy eyes and found ResNet-18 and a customized CNN architecture called GlaucomaNet had higher performance compared to SVM and KNN. The ResNet-18 architecture obtained the highest accuracy of 90.5%, with sensitivity of 86.0%, specificity of 93.8%, and AUC of 0.906 using 5-fold CV. Kim et al. [113] developed DL models for glaucoma diagnosis based on 1903 fundus images using VGG16 and ResNet-152-M architectures and employed Grad-CAM to visualize regions that were more important for the model to make diagnosis. They used both the whole fundus image as well as cropped versions with OD region only and observed that the ResNet-152-M model achieved an accuracy of 96%, sensitivity of 96%, and specificity of 100% based on 220 fundus images from an independent dataset.

CNN models have been also applied to VFs to detect glaucoma. Kucur et al. [101] proposed an eight-layer CNN model for discrimination of early-glaucoma versus control samples, trained on Glaucoma Center of Semmelweis University in Budapest (BD) dataset with 2267 OCTOPUS G1 VFs (30°) and Rotterdam Eye Hospital (RT) dataset with 2573 HFA VFs. The CNN model had the highest average precision (AP) with 0.874 and 0.986 based on BD and RT datasets, respectively, using 10-fold CV. Performance was similar to other methods using MD thresholds and NN model.

CNN models were also applied to ROI localization in glaucoma studies. Mitra et al. [108] developed a CNN mode to detect the bounding box coordinates of OD that acts as a ROI based on fundus images from MESSIDOR and Kaggle datasets and tested the model using fundus images in the DRIVE and STARE datasets. The average IOU of their model was 96.83%, 95.45%, 96.19%, 95.93% on internal testing set of MESSIDOR and Kaggle, independent datasets of DRIVE and STARE, respectively.

CNN models have been widely employed in optic disc/cup segmentation, which plays an important role in glaucoma detection as CDR is a glaucoma risk factor. Kim et al. [114] developed a fully convolutional networks (FCN) with U-Net architectures for optic disc/cup segmentation based on fundus ROI region from 750 fundus images of RIGA dataset. The best segmentation results for OD showed Jaccard index of 0.95, F-measure of 0.98, and accuracy of 99%. The best segmentation results for OC showed Jaccard index of 0.80, F-measure of 0.88, and accuracy of 99% evaluated by 5-fold CV. Xie et al. [210] developed a new method to segment inner retina thickness using 41 OCT macular scans. The approach addressed spike-like segmentation errors and lack of contextual data by reconstructing more B-scans, concatenating smoothed and contrast-enhanced images into a six-channel input image stack, and merging predicted surfaces from both horizontal and vertical B-scans. The suggested method surpassed the performance of cutting-edge techniques when it came to mean absolute surface distances (normal: 2.18, glaucoma: 3.02), Dice coefficients (GCIPL normal: 0.952, GCIPL glaucoma: 0.899), and Hausdorff distance (RNFL-GCL normal: 12.1; RNFL-GCL glaucoma: 28.9) in an independent test dataset. Li et al. [182] developed a joint OD and OC segmentation model using a region-based DCNN (R-DCNN) based on 2440 fundus images and validated the model based on both in-house testing dataset and public datasets (DRISHIT-GS and RIM-ONE v3) and compared with that of ophthalmologists. The model achieved high Dice similarity coefficient (DC) and Jaccard coefficient (JC) for both OD (DC: 98.51%, JC: 97.07%) and OC (DC: 97.63%, JC: 95.39%) segmentation on the in-house dataset, comparable to that of ophthalmologists. On DRISHTI-GS and RIM-ONE v3 datasets, the model obtained higher DC (DRISHTI-GS: OD: 97. 23%, OC: 94.56%; RIM-ONE v3: OD: 96.89%, OC: 88.94%) and JC (DRISHTI-GS: OD: 94.17%, OC: 89.92%; RIM-ONE v3: OD: 91.32%, OC: 78.21%) values than previous studies.

An automatic two-stage glaucoma screening system was developed by Sreng et al. [291] and was evaluated on 2787 retinal images from 5 public datasets (REFUGE, ACRIMA, ORIGA, RIM-ONE and DRISHTI-GS1). The system utilized DeepLabv3 + combined with pretrained networks for OD segmentation in the first stage and pretrained networks ensembled with SVM for glaucoma classification in the second stage. The best model for OD segmentation achieved high accuracy (99.70%), Dice coefficient (91.73%), and IoU (84.89%) on REFUGE dataset based on the combination of DeepLabv3 + and MobileNet. The ensembled classification model outperformed conventional methods with high accuracy (97.37%, 90.00%, 86.84%, 99.53% and 95.59%) and AUC (100%, 92.06%, 91.67%, 99.98% and 95.10%) values on RIM-ONE, ORIGA, DRISHTI-GS1, ACRIMA and REFUGE datasets.

Recurrent Neural Network (RNN): RNN is specifically designed to handle sequential data by preserving an internal state, enabling the network to remember information from prior inputs. The network takes a sequence of inputs, one at a time, and updates its internal state based on the current input and its previous state. The output of the network at each step depends on the current input and the current state. Long Short-Term Memory (LSTM) networks were introduced as a specialized type of RNN. LSTM networks incorporate memory cells and forget gates, allowing them to manage information as it enters and exits the memory, thus mitigating the drawbacks of traditional RNNs. Veena et al. [145] used an RNN-LSTM model with three dense and three dropout layers and one batch normalization layer for glaucoma diagnosis based on the segmentation result of the fundus images from DRISHTI-GS (101 images) database (no report of classification accuracy). LSTM has been successfully applied to longitudinal data in glaucoma studies as well. Dixit et al. [147] used LSTM to assess glaucoma progression based on longitudinal VF data from 11,242 eyes. Using four consecutive VFs for each subject, the convolutional LSTM network achieved an accuracy of 91–93% when evaluated against various conventional glaucoma progression algorithms. The model trained on both VFs and clinical data displayed superior diagnostic capabilities (AUC:0.89–0.93) compared to a model exclusively trained on VF (AUC:0.79–0.82, P < 0.001) using 3-fold CV. In summary, the majority of the studies using discriminative DL models in glaucoma have applied transfer learning and compared performance of different CNN model architectures in a specific task, such as classification based on fundus, VFs, or OCT images. Among the pretrained CNN architectures in glaucoma studies, ResNet has been the most popular architecture used in CNN models and has achieved a higher accuracy compared to the other CNN architectures.

Generative Adversarial Network (GAN)/semi-supervised model

Generative Adversarial Networks (GANs) represent a recent breakthrough in DL. First proposed by Goodfellow et al. [292], it constitutes two networks one for image generation and the other for discrimination (between the generated image and authentic). This model has demonstrated high levels of performance in a variety of applications including glaucoma to generate synthesized retinal images in a semi-supervised learning fashion. Diaz-Pinto et al. [240] developed a new retinal image synthesizer and a semi-supervised learning approach for glaucoma assessment utilizing a Deep Convolutional Generative Adversarial Network (DCGAN) based on a dataset consisting of 86,926 fundus images. The model was able to generate (close to) realistic retinal images and discriminate glaucomatous eyes from normal ones with an AUC of 0.9017, specificity of 79.86%, sensitivity of 82.90%, and F1-score of 0.8429 based on the internal testing set. Tang et al. [205] developed a semi-supervised model using a multi-level amplification iterative training method to detect glaucoma based on three different datasets; Sanyuan dataset (11,443 images), Tongren dataset (7806 images), and Xiehe dataset (4363 images). They tested the model based on REFUGE dataset and obtained an accuracy of 95.75%, sensitivity of 87.5%, specificity of 96.7%, and F1-score of 0.919, which were higher than the accuracy of the models previously published. Alghamdi et al. [241] developed a semi-supervised CNN model with self-learning (SSCNN) and Semi-supervised CNN model with autoencoder (SSCNN-DAE) based on both labeled and unlabeled data from RIM-ONE and RIGA datasets. Compared with transfer CNN (TCNN), SSCNN-DAE obtained a higher accuracy of 93.8%, sensitivity of 98.9% and AUC of 0.95 based on the internal testing set.

Overall, GAN and semi-supervised learning can improve the model performance significantly compared to supervised DL models when dealing with small datasets or datasets with limited number of labeled images. Because this is a typical problem in glaucoma studies, such models may be applicable to address related glaucoma challenges. However, GAN has been not widely used in glaucoma studies probably because there is a huge concern related to synthesizing retinal images in ophthalmology [293].

Hybrid models

Hybrid models, or fusion networks, are formed based on combining multiple DL models using a single modality or multiple modalities. It has demonstrated a better performance in some glaucoma diagnosis scenarios than a single CNN model [294,295,296,297,298]. In addition, Muhammad et al. [107] developed a hybrid deep CNN model based on AlexNet architecture to extract features from OCT scans, coupled with RF classifier to distinguish healthy suspects and mild glaucoma using 102 eyes. The model with the input of RNFL probability map had the best accuracy of 93.1% using leave-one-out validation. An et al. [110] developed a hybrid CNN mode based on fundus and OCT scans from 208 glaucomatous eyes and 149 healthy eyes. They first trained a VGG19 architecture separately based on fundus images cantered at optic disc, disc RNFL thickness maps, macular GCC thickness maps, disc RNFL deviation maps, and macular GCC deviation maps, then combined the feature vector representation of each CNN model and used a RF classifier to combine models for glaucoma diagnosis. The hybrid model achieved an AUC of 0.963 based on 10-fold CV. Patil et al. [111] developed GlaucoNet which stacked an autoencoder with a CNN model for glaucoma diagnosis based on fundus images from DRISHTI-GS and DRION-DB datasets. The accuracy, precision, F1-score, recall, specificity, and AUC of the model based on the DRISHTI-GS (internal testing set) were 98.2%, 94.6%, 0.979, 99.6%, 94.6%, and 0.94, respectively. Based on the DRION-DB (internal testing set), the corresponding performance metrics were 96.3%, 93.9%, 0.941, 94.2%, 92.6%, and 0.90, respectively. The model outperformed the previous state-of-the-art studies. Cho et al. [247] developed a hybrid model composed of an ensemble of 56 CNNs with different architectures by averaging the outcome of those models based on 3460 fundus photographs to identify unaffected controls, early-stage, and late-stage glaucoma. The proposed hybrid model demonstrated a significantly better performance compared with the best single CNN model, with an accuracy of 88.1% and an average AUC of 0.975 based on 10-fold CV. Akbar et al. [248] developed a hybrid model by combining the DenseNet and DarkNet CNN architectures for glaucoma diagnosis based on 1270 fundus images from HRF, RIM 1, and ACRIMA databases. The hybrid model outperformed the two single CNN networks and achieved an accuracy of 99.7%, sensitivity of 98.9%, and specificity of 100% based on the HRF as the internal validation set. Based on the RIM1 as the internal validation set, accuracy, sensitivity, and specificity were 89.3%, 93.3%, 88.46%, respectively, while based on ACRIMA as the internal validation set, accuracy, sensitivity, and specificity were 99.0%, 100%, and 99%, respectively. Joshi et al. [178] developed a hybrid model based on the ensemble of VGGNet-16, ResNet-50, and GoogLeNet CNN architectures using fundus images collected from a private dataset (PSGIMSR with 1150 images) and three publicly available datasets (DRISHTI-GS with 101 images; DRIONS-DB with 110 images; and HRF with 30 images). The hybrid model outcome was formed based on the majority voting of the three models. The hybrid model yielded an accuracy of 91.13%, sensitivity of 86.58%, and specificity of 95.21% on PSGIMSR dataset, and achieved accuracies of 95.63%, 98.67%, 95.64%, and 88.96%, respectively, on the DRIONS-DB, HRF, DRISHTI-GS, and combined datasets based on 10-fold CV.

It is becoming evident that hybrid CNN models with fusion of different single CNN architectures using a single data modality or multi-modality are being increasingly applied and receiving more attention from investigators. Because glaucoma is a complex and multifactorial disease, hybrid models that utilize different data modalities to detect glaucoma may provide different pieces of information regarding glaucoma to better portray the disease. This is true based on information theory. In addition, multiple sources of information may increase the overall information about the disease. Thus, we predict attention to, and utilization of, hybrid CNN models will continue to increase in glaucoma studies.

Overall, diagnosis, screening of glaucoma and glaucoma progression detection are the major goals in studies discussing various applications of AI in glaucoma. Currently, most of the studies are focused on glaucoma diagnosis or screening and have applied both conventional ML and DL approaches. However, detecting glaucoma progression remains a significant hurdle in clinical practice since detecting true changes due to the disease is challenging. Some of the imaging modalities generate substantial test–retest variability, which complicates distinction between genuine change and fluctuations. Furthermore, there is a lack of consensus regarding specific criteria for glaucoma progression based on VF or structural parameters. Despite these obstacles, several research groups have proposed models for detecting glaucoma progression using traditional ML based on VF test [46, 82, 299] or both VF test and OCT parameters [29, 38]. Wang et al. [299] applied archetypal analysis to detect VF progression from 11,817 eyes and validated it on 397 eyes and achieved an agreement (kappa) and accuracy of 0.51 and 77%, respectively. They showed that archetypal analysis was more accurate than Advanced Glaucoma Intervention Study (AGIS) scoring, Collaborative Initial Glaucoma Treatment Study (CIGTS) scoring, MD slope, and permutation of pointwise linear regression (PoPLR). Shuldiner et al. [46] assessed several ML models to predict rapid progression based on 22,925 initial VFs from 14,217 patients and found SVM as the best performing model with an AUC of 0.72 (95% CI 0.70–0.75). Lee et al. [38] included 33 initial clinical parameters in ML models to predict normal-tension glaucoma (NTG) progression in young myopic patients based on 155 patients and obtained an AUC of 0.881 (95% CI 0.814–0.945) based on extremely randomized tree which was better than RF. There are also several studies that applied DL models to detect or predict progression based on VF and clinical data [147], OCT images [237, 243] or fundus images [164]. Bowd et al. [243] developed a DL-autoencoder (AE) to detect glaucoma progression based on OCT RNFLROI from 44 progressing, 189 non-progressing, and 109 healthy eyes. The DL-AE ROIs achieved sensitivity and specificity of 0.90 and 0.92, respectively, which was higher than models based on global cpRNFL annulus thicknesses. Li et al. [164] developed AI models to predict glaucoma progression based on color fundus photographs and demonstrated AUCs of 0.87 (0.81–0.92) and 0.88 (0.83–0.94) based on two external datasets. Successful detection of progression may facilitate earlier interventions thus diminishing the likelihood of patients experiencing vision impairment due to glaucoma over their lifetimes. Nevertheless, existing studies have not showed solid results in forecasting the rate of progression or the timing of its occurrence.

Discussion

AI is an active research area that encompasses a wide range of approaches of ML, employing different applications such as ML and DL algorithms, which have been successfully applied in various domains such as image processing, pattern recognition, speech recognition, and natural language processing. When applied in medical fields such as ophthalmology, these models show promising potential for improving access to health care and enhancing patient outcomes. Early ML models in the form of neural networks were applied to VFs for diagnosing glaucoma in the 1990’s [300, 301]. With later advancements in AI models, various groups demonstrated the efficacy of these models in detecting glaucoma. The following is a summary of our findings regarding AI models in glaucoma. DL-based models are typically more accurate than conventional ML approaches, as evidenced by their performance in glaucoma applications such as image processing, pattern recognition, diagnosis, and prognosis. ML/DL techniques, such as CNNs, RNNs, LSTM, GAN, DBN, etc., can be easily adapted to various glaucoma problems including screening, diagnosis, and prognosis. DL-based techniques can handle more complicated problems such as high-dimensional data and interactions between different data modalities. Ensemble DL models can improve the performance of glaucoma detection. Combination of multiple modalities of the data can improve the model performance. However, there are also several limitations with respect to AI liability, reimbursement, and ethical principles, including non-maleficence of AI models in glaucoma, patient autonomy, and equity and absence of bias in benefits and rights, that are out of the scope of this article and may be discussed in more focused future studies.

Datasets

Datasets serve as the key element for developing AI models and they play a critical role in reproducibility and generalizability. Conventional statistical learning models can be applied to clinical datasets to identify associations even based on a relatively small sample size. However, in addition to quality, quantity is also important in developing emerging DL models. For instance, labeling fundus images by non-glaucoma experts may degrade the quality of the dataset, leading to non-solid constructs. Increased quantity, along with improved quality, usually leads to better model performance and higher likelihood of generalizability. Well-annotated, multi-modal datasets can also positively impact AI models for more accurate detection of the disease. However, obtaining a sufficiently large dataset with several modalities can be challenging due to numerous hurdles such as low disease prevalence, data confidentiality, data protection regulations, and labor-intensiveness of the process.

Moreover, lack of standardized definitions for glaucoma poses another challenge in achieving consistent evaluation of the AI models. Christopher et al. [136] investigated the impact of study population, labeling, and training on glaucoma detection using AI models, and found that the diagnosis performance varied based on the reference standard (RS) and labeling strategy. To develop solid AI models for detecting glaucoma, it is vital to train the models based on dependable datasets and evaluate the models based on consistent ground truths. Thakoor et al. [281] attempted to improve the generalizability of AI models in glaucoma detection and found that the model trained and tested with the same RS demonstrated the highest accuracy. However, substantial disagreement, even among experienced glaucoma specialists, makes it challenging to establish a uniform reference for ground-truth labeling [281].

Many of the currently available datasets have been gathered from populations with limited representation of different ethnicities, used specific hardware and imaging settings to collect data, and used high-quality images that are far from real-world settings. One potential drawback of lack of diversity within the models, is that these datasets may not readily generalize to real-world patient populations. Asaoka et al. [118] applied ResNet model based on 3132 fundus images 0.877 to 0.948 and from 0.945 to 0.997 based on two independent validations datasets. Nonetheless, their model was not evaluated based on diverse ethnic groups, which is important as fundus images from patients with different ethnic backgrounds may exhibit distinct characteristics such as variation in retina color as well as optic disc structure.

AI models

Although there are several different CNN-based AI models reported in the literature with high performance in detecting glaucoma, the generalizability of those models is questionable since independent validation is lacking in many studies. Independent validation is a crucial step in developing AI models because it can determine whether a model has learned meaningful disease-related features and patterns to generalize well to unseen data. It also aids uncovering potential biases or overfitting issues when the model performs exceptionally well based on the training data but performs poorly based on the new data. Moreover, assessing the robustness of AI models against variations and changes in the data distribution is especially important when deploying models in real-world applications where input data may change over time. Additionally, validating AI models based on independent (accessible) datasets allows for fair comparison of AI models using the same evaluation dataset, ensuring transparency. For the literature we reviewed, most of the articles only used internal testing or CV, and less than about one-third of the articles used independent validation, which are summarized in Table 1. Also, many models were developed based on very clean datasets, for example including only high-quality images and clear pathological features, whereas this does not reflect the case in real-world data. In addition, many models demonstrated good performance in differentiating healthy versus moderate/severe glaucoma; however, this does not add significant clinical value, as differentiation between these two types of conditions is also easily achieved by clinicians. Thus, more helpful models should be developed based on a wide spectrum of glaucoma severity subjects, including early-stage glaucoma.

It is also challenging to compare the performance of different AI models as they typically used differing definitions of glaucoma, different methodologies to develop models and various strategies for labeling, as well as images from glaucoma patients at varying levels of severity. In addition, they typically employed images with diverse quality under different instrument settings and included different populations from primary, secondary, or tertiary centers. As a result, for example, it is unfair to compare a model trained on normal subjects and glaucoma patients at the moderate to advanced stages of the disease with another model trained on a dataset with patients at a wider spectrum of glaucoma severity. Model reliability is also a barrier for real-life clinical applications, as most DL models are deterministic and provide an output regardless of whether the input image is even relevant. Finally, most DL models provide a “black box” architecture without appropriate visualization and interpretability, thus lowering user trust and creating another challenge for a successful integration.

Future directions

Two autonomous AI models have been approved by the FDA to detect more than mild diabetic retinopathy and diabetic macular edema [302]; however, there is no FDA-approved autonomous AI instrument for detecting glaucoma. Therefore, the development of innovative AI models to autonomously assess and detect glaucoma remains an important goal for improving treatment outcomes for this major blinding disorder. To achieve this goal, we suggest implementing the following directions in the future studies:

Lack of consistent and objective definitions of glaucoma and its progression leads to improper AI model evaluations. As such, addressing this challenge is a primary step to improve integration of AI glaucoma research and clinical applications. One potential solution may be using quantified parameters from VF and OCT data to create objective criteria for glaucoma definition. More recently, some groups have worked on identifying more objective criteria for defining GON and glaucoma staging based on OCT and VFs [87, 303, 304]. However, this active area of research requires further studies. Firstly, these criteria need to be validated further based on larger and more diverse datasets. Secondly, data collected from different OCT or VF instruments may impact the identified objective criteria. Lastly, comorbidity and ethnic-specific criteria may influence findings thus considering the effect of these parameters is vital. The other area that needs attention is the architecture of the AI models. In particular, DL models sometimes can be fragile, unexplainable, and non-interpretable. Many teams are currently working on these limiting aspects of the DL models and hopefully some limitations will be addressed in the near future. New studies may incorporate interpretability into the AI model through various techniques, such as feature importance, SHAP value, Grad-CAM, saliency map, and Poly-CAM. Some current studies have already included interpretability elements like feature importance and Grad-CAM. Thakoor et al. [279] developed end-to-end CNN architectures to detect glaucoma based on OCT images. They applied Grad-CAM and tested with concept activation vectors (TCAVs) to infer what image concepts CNN models rely on to make predictions and compared with that of human experts by tracking eye fixations. They identified consistent regions of OCT are evaluated based on CNN and OCT experts in detecting glaucoma. Such studies can shed lights on improving interpretability of AI models by applying multiple consistent methods and comparing with clinicians’ eye fixations. Future work may validate these studies using more diverse datasets. Also, comparative studies can be conducted based on multiple data modalities by including or masking human focused region to indirectly identify areas that are more important for CNN models.

Another practical approach to partially address some of these limitations is to perform accurate quantification of CDR in fundus and RNFL thickness profiles in OCT images to enhance performance and improve interpretability. Moreover, longitudinal assessment of these clinically relevant quantified parameters may allow a more consistent and accurate monitoring and progression detection.

Another future direction could be improving datasets for training AI models. Some of the reference datasets are annotated by non-glaucoma experts thus may not represent GON accurately. Therefore, generating more accurate reference datasets with panels of glaucoma specialists are warranted. We also suggest selecting diverse populations of patients (for instance, datasets for population-based screening and diagnosis for intended use) from different ethnicities across all glaucoma severity levels to minimize selective bias. Due to the complex nature of glaucoma (e.g., different disease processes can lead to the same optic nerve degeneration, glaucoma can look and progress differently due to different disease mechanisms, glaucoma has several phenotypes such as open angle, closed angle, secondary glaucoma, low tension, etc.), making it difficult to standardize outcomes of glaucoma studies across different investigations, we strongly recommend generating multi-modal glaucoma datasets given the fact that glaucoma is multi-factorial and complex, thus several modalities may enhance detection and monitoring tasks. However, obtaining a sufficiently large dataset with several modalities can be challenging. A primary priority for future AI studies could be implementing guidelines regarding designing and reporting AI studies [305, 305,306,307] to minimize evaluation and comparison challenges.

Another promising solution is developing foundational model using self-supervised learning [308] based on known diverse datasets to improve the performance and generalizability. This is particularly important given the fact that the performance of the AI models can vary depending on the severity level of the patients with glaucoma in the dataset, as AI models may perform better on datasets with a greater number of patients at the advanced level of glaucoma compared to datasets with a greater number of patients at the early stages of glaucoma.

Improving dependability of AI models can build trust. One direction could be developing probabilistic DL models [231] that can generate the likelihood as well as the level of confidence of the model on generated outcome. This way, clinicians have two levels of outcome to make a final decision and thus may trust the class of AI models better. However, probabilistic models are more challenging to develop due primarily to more complexity that typically leads to suboptimal performance, thus only a few studies have utilized probabilistic models. Recent CNN architectures such as ConvNeXt [309] and availability of larger datasets may however address these challenges.

The paradigm of applications of AI in glaucoma changed in 2016 with applications of deep CNN models in glaucoma. [310]. However, we believe a second major impact will result from the application of ChatGPT [311], first initiated in late 2022. ChatGPT can be a great tool for many different aspects of glaucoma, and a recent study showed that ChatGPT can assist glaucoma diagnosis based on clinical case reports and obtained comparable performance with senior ophthalmology residents [312]. We believe the development of large language models (LLMs) with glaucoma domain-specific knowledge that leverage multi-modal data in combination with active learning holds more promise for future integration into clinical practice.

Conclusions

The advancement of AI in glaucoma detection and monitoring is progressing rapidly. In recent years, numerous innovative DL models have been developed specifically for diagnosing glaucoma, showcasing remarkable performance. However, despite their promising results, none of these models have received FDA approval for being used in glaucoma clinical practice. This is partly due to obstacles such as inconsistencies in defining glaucoma, the generalizability and reliability of the models, and their interpretability. To enhance the integration of these technologies into healthcare settings, future research is essential to address these potential challenges, including generation of dependable gold standards, improving model generalizability, reliability, interpretability as well as legal, ethical, and patient privacy issues, among several others. Successful integration of AI in glaucoma clinical practice, and ophthalmology, requires addressing challenges facing all elements of the healthcare pipeline.

Methods

We searched PubMed, Scopus, and Embase databases for the AI-related studies in glaucoma conducted through 2022. Figure 4 shows the methodology utilized in this review. We first used “glaucoma”, “machine learning”, “deep learning”, “artificial intelligence”, and “neural network” keywords and searched through the title of papers in these three databases to identify related papers. Our initial search identified 986 articles. We then screened the collected articles for duplicates and irrelevant topics and included 430 relevant and unique papers. Finally, we went through the remaining articles and excluded 139 articles that were review papers, or the contents were irrelevant, or essential information (number of samples) was missed, the full texts were not in English or the full text cannot be obtained. The final study included 291 full-text articles discussing various applications of AI in glaucoma.

Data availability

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

References

Jonas JB, Aung T, Bourne RR, Bron AM, Ritch R, Panda-Jonas S. Glaucoma. Lancet. 2017;390(10108):2183–93.

Allison K, Patel D, Alabi O. Epidemiology of glaucoma: the past, present, and predictions for the future. Cureus. 2020;12(11): e11686.

Quigley HA, Broman AT. The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol. 2006;90(3):262–7.

Tham YC, Li X, Wong TY, Quigley HA, Aung T, Cheng CY. Global prevalence of glaucoma and projections of glaucoma burden through 2040: a systematic review and meta-analysis. Ophthalmology. 2014;121(11):2081–90.

Rosenberg LF. Glaucoma: early detection and therapy for prevention of vision loss. Am Fam Physician. 1995;52(8):2289–98.

Lucy KA, Wollstein G. Structural and functional evaluations for the early detection of glaucoma. Expert Rev Ophthalmol. 2016;11(5):367–76.

Gandhi M, Dubey S. Evaluation of the optic nerve head in glaucoma. J Curr Glaucoma Pract. 2013;7(3):106–14.

Yousefi S. Clinical applications of artificial intelligence in glaucoma. J Ophthalmic Vis Res. 2023;18(1):97–112.

Mahabadi N, Foris LA, Tripathy K. Open angle glaucoma. Treasure Island: StatPearls; 2022.

Susanna R Jr, De Moraes CG, Cioffi GA, Ritch R. Why do people (still) go blind from glaucoma? Transl Vis Sci Technol. 2015;4(2):1–1.

Deo RC. Machine learning in medicine. Circulation. 2015;132(20):1920–30.

Goldbaum MH, Sample PA, Chan K, et al. Comparing machine learning classifiers for diagnosing glaucoma from standard automated perimetry. Invest Ophthalmol Vis Sci. 2002;43(1):162–9.

Zangwill LM, Chan K, Bowd C, et al. Heidelberg retina tomograph measurements of the optic disc and parapapillary retina for detecting glaucoma analyzed by machine learning classifiers. Invest Ophthalmol Vis Sci. 2004;45(9):3144–51.

Burgansky-Eliash Z, Wollstein G, Chu T, et al. Optical coherence tomography machine learning classifiers for glaucoma detection: a preliminary study. Invest Ophthalmol Vis Sci. 2005;46(11):4147–52.

Townsend KA, Wollstein G, Danks D, et al. Heidelberg retina tomograph 3 machine learning classifiers for glaucoma detection. Br J Ophthalmol. 2008;92(6):814–8.

García-Morate D, Simón-Hurtado A, Vivaracho-Pascual C, Antón-López A. A new methodology for feature selection based on machine learning methods applied to glaucoma. In: Cabestany J, Sandoval F, Prieto A, Corchado JM, editors. Bio-inspired systems: computational and ambient intelligence. Berlin: Springer; 2009.

Bizios D, Heijl A, Hougaard JL, Bengtsson B. Machine learning classifiers for glaucoma diagnosis based on classification of retinal nerve fibre layer thickness parameters measured by Stratus OCT. Acta Ophthalmol. 2010;88(1):44–52.

Hirasawa H, Murata H, Mayama C, Araie M, Asaoka R. Evaluation of various machine learning methods to predict vision-related quality of life from visual field data and visual acuity in patients with glaucoma. Acta Ophthalmol. 2010. https://doi.org/10.1111/j.1755-3768.2009.01784.x.

Barella KA, Costa VP, Goncalves Vidotti V, Silva FR, Dias M, Gomi ES. Glaucoma diagnostic accuracy of machine learning classifiers using retinal nerve fiber layer and optic nerve data from SD-OCT. J Ophthalmol. 2013;2013: 789129.

Silva FR, Vidotti VG, Cremasco F, Dias M, Gomi ES, Costa VP. Sensitivity and specificity of machine learning classifiers for glaucoma diagnosis using spectral domain OCT and standard automated perimetry. Arq Bras Oftalmol. 2013;76(3):170–4.

Vidotti VG, Costa VP, Silva FR, et al. Sensitivity and specificity of machine learning classifiers and spectral domain OCT for the diagnosis of glaucoma. Eur J Ophthalmol. 2012. https://doi.org/10.5301/ejo.5000183.

Kavitha S, Duraiswamy K, Karthikeyan S. Assessment of glaucoma using extreme learning machine and fractal feature analysis. Int J Ophthalmol. 2015;8(6):1255–7.

Kuppusamy PG. An artificial intelligence formulation and the investigation of glaucoma in color fundus images by using BAT algorithm. J Comput Theor Nanosci. 2017;14:1–5.

Omodaka K, An G, Tsuda S, et al. Classification of optic disc shape in glaucoma using machine learning based on quantified ocular parameters. PLoS ONE. 2017;12(12): e0190012.

Kim SJ, Cho KJ, Oh S. Development of machine learning models for diagnosis of glaucoma. PLoS ONE. 2017;12(5): e0177726.

Abidi SSR, Roy PC, Shah MS, Yu J, Yan S. A data mining framework for glaucoma decision support based on optic nerve image analysis using machine learning methods. J Healthc Inform Res. 2018;2(4):370–401.

An G, Omodaka K, Tsuda S, et al. Comparison of machine-learning classification models for glaucoma management. J Healthc Eng. 2018;2018:6874765.

Lu SH, Lee KY, Chong JIT, Lam AKC, Lai JSM, Lam DCC. Comparison of Ocular Biomechanical Machine Learning Classifiers for Glaucoma Diagnosis. In: 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); 3–6 Dec. 2018, 2018.

Christopher M, Belghith A, Weinreb RN, et al. Retinal nerve fiber layer features identified by unsupervised machine learning on optical coherence tomography scans predict glaucoma progression. Invest Ophthalmol Vis Sci. 2018;59(7):2748–56.

Martin KR, Mansouri K, Weinreb RN, et al. Use of machine learning on contact lens sensor-derived parameters for the diagnosis of primary open-angle glaucoma. Am J Ophthalmol. 2018;194:46–53.

Asaoka R, Murata H, Hirasawa K, et al. Using deep learning and transfer learning to accurately diagnose early-onset glaucoma from macular optical coherence tomography images. Am J Ophthalmol. 2019;198:136–45.

Thomas PBM, Chan T, Nixon T, Muthusamy B, White A. Feasibility of simple machine learning approaches to support detection of non-glaucomatous visual fields in future automated glaucoma clinics. Eye (Lond). 2019;33(7):1133–9.

Lee SD, Lee JH, Choi YG, You HC, Kang JH, Jun CH. Machine learning models based on the dimensionality reduction of standard automated perimetry data for glaucoma diagnosis. Artif Intell Med. 2019;94:110–6.

Wang P, Shen J, Chang R, et al. Machine learning models for diagnosing glaucoma from retinal nerve fiber layer thickness maps. Ophthalmol Glaucoma. 2019;2(6):422–8.

Baxter SL, Marks C, Kuo TT, Ohno-Machado L, Weinreb RN. Machine learning-based predictive modeling of surgical intervention in glaucoma using systemic data from electronic health records. Am J Ophthalmol. 2019;208:30–40.

Mukherjee R, Kundu S, Dutta K, Sen A, Majumdar S. Predictive diagnosis of glaucoma based on analysis of focal notching along the neuro-retinal rim using machine learning. Pattern Recognit Image Anal. 2019;29(3):523–32.

Thakur N, Juneja M. Classification of glaucoma using hybrid features with machine learning approaches. Biomed Signal Process Control. 2020;62: 102137.

Lee J, Kim YK, Jeoung JW, Ha A, Kim YW, Park KH. Machine learning classifiers-based prediction of normal-tension glaucoma progression in young myopic patients. Jpn J Ophthalmol. 2020;64(1):68–76.

Brandao-de-Resende C, Cronemberger S, Veloso AW, et al. Use of machine learning to predict the risk of early morning intraocular pressure peaks in glaucoma patients and suspects. Arq Bras Oftalmol. 2021;84(6):569–75.

Singh LK, Garg H, Khanna M. An artificial intelligence-based smart system for early glaucoma recognition using OCT images. Int J E-Health Med Commun (IJEHMC). 2021;12(4):32–59.

Yoon BW, Lim SH, Shin JH, Lee JW, Lee Y, Seo JH. Analysis of oral microbiome in glaucoma patients using machine learning prediction models. J Oral Microbiol. 2021;13(1):1962125.

Wu CW, Shen HL, Lu CJ, Chen SH, Chen HY. Comparison of different machine learning classifiers for glaucoma diagnosis based on spectralis OCT. Diagnostics (Basel). 2021;11(9):1718.

Elizabeth Jesi V, Mohamed Aslam S, Ramkumar G, Sabarivani A, Gnanasekar AK, Thomas P. Energetic glaucoma segmentation and classification strategies using depth optimized machine learning strategies. Contrast Media Mol Imaging. 2021;2021:5709257.

Oh S, Park Y, Cho KJ, Kim SJ. Explainable machine learning model for glaucoma diagnosis and its interpretation. Diagnostics (Basel). 2021;11(3):510.

Fernandez Escamez CS, Martin Giral E, Perucho Martinez S, Toledano FN. High interpretable machine learning classifier for early glaucoma diagnosis. Int J Ophthalmol. 2021;14(3):393–8.

Shuldiner SR, Boland MV, Ramulu PY, et al. Predicting eyes at risk for rapid glaucoma progression based on an initial visual field test using machine learning. PLoS ONE. 2021;16(4): e0249856.

Wu J, Xu M, Liu W, et al. Glaucoma characterization by machine learning of tear metabolic fingerprinting. Small Methods. 2022;6(5):2200264.

Singh LK, Khanna M, Thawkar S. A novel hybrid robust architecture for automatic screening of glaucoma using fundus photos, built on feature selection and machine learning-nature driven computing. Expert Syst. 2022;39(10): e13069.

Khan SI, Choubey SB, Choubey A, Bhatt A, Naishadhkumar PV, Basha MM. Automated glaucoma detection from fundus images using wavelet-based denoising and machine learning. Concurr Eng. 2022;30(1):103–15.

Dai M, Hu Z, Kang Z, Zheng Z. Based on multiple machine learning to identify the ENO2 as diagnosis biomarkers of glaucoma. BMC Ophthalmol. 2022;22(1):155.

Wong D, Chua J, Bujor I, et al. Comparison of machine learning approaches for structure-function modeling in glaucoma. Ann N Y Acad Sci. 2022;1515(1):237–48.

Banna HU, Zanabli A, McMillan B, et al. Evaluation of machine learning algorithms for trabeculectomy outcome prediction in patients with glaucoma. Sci Rep. 2022;12(1):2473.

Kooner KS, Angirekula A, Treacher AH, et al. Glaucoma diagnosis through the integration of optical coherence tomography/angiography and machine learning diagnostic models. Clin Ophthalmol. 2022;16:2685–97.

Chen RB, Zhong YL, Liu H, Huang X. Machine learning analysis reveals abnormal functional network hubs in the primary angle-closure glaucoma patients. Front Hum Neurosci. 2022;16: 935213.

Leite D, Campelos M, Fernandes A, et al. Machine Learning automatic assessment for glaucoma and myopia based on Corvis ST data. Proc Comput Sci. 2022;196:454–60.

Gajendran MK, Rohowetz LJ, Koulen P, Mehdizadeh A. Novel machine-learning based framework using electroretinography data for the detection of early-stage glaucoma. Front Neurosci. 2022;16: 869137.

Xu Y, Hu M, Xie X, Li H-X. Knowledge-based machine learning for glaucoma diagnosis from fundus image data. J Med Imaging Health Inform. 2014;4:776.

Wyawahare M, Patil PM. Machine learning classifiers based on structural ONH measurements for glaucoma diagnosis. Int J Biomed Eng Technol. 2016;21:343.