Abstract

Glaucoma is one of the most prevalent causes of blindness in the modern world. It is a chronic eye disease that leads to irreversible vision loss, but its complications can be limited if it is identified at an early stage. In this paper, a novel two-phase Optic Disk localization and Glaucoma Diagnosis Network (ODGNet) is proposed. In the first phase, a visual saliency map combined with a shallow CNN is used for effective OD localization in fundus images. In the second phase, pre-trained models are applied via transfer learning for glaucoma diagnosis. The transfer learning-based models AlexNet, ResNet, and VGGNet, combined with saliency maps, are evaluated on five public retinal datasets (ORIGA, HRF, DRIONS-DB, DR-HAGIS, and RIM-ONE) to differentiate between normal and glaucomatous images. The experimental results demonstrate that the proposed ODGNet is most predictive for glaucoma diagnosis on ORIGA, where it achieves 95.75%, 94.90%, 94.75%, and 97.85% for accuracy, specificity, sensitivity, and area under the curve, respectively. These results indicate that the proposed OD localization method based on the saliency map and shallow CNN is robust and accurate and reduces the computational cost.

1 Introduction

Glaucoma is one of the predominant causes of visual disability globally and accounts for more than 12% of overall blindness [1]. It is a chronic eye disease that leads to irreversible vision loss if it is not detected and treated at an early stage. It is a progressive optic neuropathy visible within the macula and optic disc [2]. According to the World Health Organization (WHO), glaucoma cases were projected to rise to 76 million by 2020 [3], which corresponds to a global prevalence of about 3-5% among people aged 40-80 years. Glaucoma progressively damages the optic nerve by degenerating the nerve fibers, which causes visual impairment and eventually blindness [4]. Fig. 1 shows the severity levels of glaucoma in fundus images.

Glaucoma is primarily classified into two types, open-angle and angle-closure glaucoma, both of which are associated with increased intraocular pressure (IOP). In both types, the outflow of the fluid called aqueous humor (AH) is obstructed, which raises the IOP and affects the optic nerve head (ONH). Most existing studies use three common methods to diagnose glaucoma: IOP measurement, visual field testing, and ONH assessment. Early detection and continuous screening may lower the blindness rate by up to 50% [5], but manual screening is tedious and time-consuming. Therefore, an automated method is essential for the detection of glaucoma.

Computer-aided diagnosis (CAD) is a cost-effective technique for early-stage glaucoma detection in retinal fundus images. A CAD system for glaucoma diagnosis can assist ophthalmologists and improve the screening process. Machine learning (ML) based glaucoma diagnosis systems have achieved accuracies of 90%-98% using handcrafted features and different classifier types, as given in Table 1 [6,7,8,9,10,11,12,13,14,15,16,17]. Usually, the cup-to-disc ratio (CDR) is evaluated by applying feature extraction techniques such as the wavelet transform [12, 13], thresholding [18, 19], or higher-order spectral transforms [15, 16] to OD images. These manually extracted features are then fed into ML classifiers such as the Support Vector Machine (SVM), Artificial Neural Network (ANN), k-nearest neighbor (kNN), and Random Forest (RF). Although ML-based methods attained state-of-the-art performance, manual feature extraction and selection are time-consuming and depend on the ophthalmologist's subjective judgment.

Recently, deep learning (DL) has become one of the most widely used approaches for tasks such as image classification [20], natural language processing [21], and medical image analysis [22]. The convolutional neural network (CNN) is a class of DL model that is commonly used for image classification [23].

Maheshwari et al. [24] developed a glaucoma diagnosis system that applies local binary pattern (LBP) based data augmentation to retinal fundus images. They first extracted the red, green, and blue channels of the fundus images separately and then employed LBP for data augmentation of each channel. Finally, a fusion-based technique combined the decisions of the corresponding CNN models. A glaucoma diagnosis system based on optic disc and cup localization was introduced in [25]. An ML-based system segments the optic disc and cup, and a CNN-based system performs the glaucoma diagnosis. Evaluated on two public retinal datasets, DRISHTI and RIM-ONE, the method achieved an accuracy of 0.96. Kim et al. [26] proposed automatic diagnosis and localization of glaucoma using deep learning. Their model was evaluated on a private dataset collected from Samsung Medical Center and achieved a diagnostic accuracy of 96%. They also developed a web-based system, Medinoid, for automatic diagnosis and localization of glaucoma. Cerentini et al. [27] proposed a glaucoma identification system based on GoogLeNet. They first extracted the region of interest (ROI) and then applied a sliding-window approach for glaucoma classification, reporting 82.20% accuracy. Diaz-Pinto et al. [28] used several transfer learning-based models (VGG-16, VGG-19, Inception-V3, ResNet-50, and Xception) to detect glaucoma in retinal images. They evaluated the models on five public datasets and achieved an average accuracy of 73.5% and an AUC of 96%. A hybrid of a convolutional neural network and a recurrent neural network for automatic glaucoma detection was proposed in [29]. The method performed well at extracting both spatial and temporal features from fundus images. The hybrid CNN/RNN method was evaluated on 1810 fundus images and achieved an average accuracy of 94%. Serte et al. [30] proposed an ensemble of graph-based saliency and CNNs for automatic glaucoma detection. The approach uses graph-based saliency to crop the optic disc and then performs classification with a convolutional neural network. Evaluated on a public dataset comprising 1542 retinal images, it achieved an accuracy of 0.88. A novel TWEEC model for glaucoma diagnosis based on deep learning was presented in [31]. The model is designed to extract anatomical features of the optic disc and the adjoining blood vessels. The wavelet approximation sub-bands and the spatial fundus images are used as input to the model, and the results are compared with state-of-the-art CNN models. A visual saliency thresholding (VST) method for optic disc extraction and ROI generation was proposed in [32]. The saliency parameters are used for optic disc detection and compared with other segmentation techniques such as Otsu thresholding and region growing. The method was evaluated on the public DRISHTI-GS1 dataset and attained promising results.

Although CNN-based systems provide automatic feature extraction and classification, training a CNN requires a large dataset. To overcome this limitation, models such as AlexNet, ResNet, and VGGNet, which have shown significant performance on the large ImageNet dataset with over 1000 classes, can be reused through transfer learning [33]. In this paper, we propose a novel deep learning-based multitask model, ODGNet. The proposed model is composed of two major steps: optic disc localization and glaucoma detection. In the first phase, a saliency map is used to determine salient regions for OD localization as part of a deep learning-based cascading localization method. In the second phase, three CNN models (AlexNet, VGG-16, and ResNet-34) are used via transfer learning to classify the extracted optic disc as normal or glaucomatous. As alternatives to these three pre-trained models, a shallow CNN and other variations of VGGNet and ResNet are also investigated. The proposed ODGNet is evaluated on five public retinal fundus datasets: ORIGA [34], HRF [35], DRIONS-DB [36], DR-HAGIS [37], and RIM-ONE [38]. In contrast to most established detection techniques, the proposed method employs a saliency map combined with a shallow CNN for accurate OD localization, and its transfer learning-based classification achieves state-of-the-art performance in terms of accuracy, sensitivity, specificity, and AUC.

The main contributions of the paper are as follows:

- A novel ODGNet method is proposed for appropriate OD localization and glaucoma diagnosis.
- A deep learning-based cascading localization method is introduced which employs a saliency map for OD localization.
- Three CNN models (AlexNet, VGGNet-16, and ResNet-34) are used via transfer learning to classify the localized OD into normal or glaucomatous.
- ODGNet is evaluated on five larger retinal datasets ORIGA, HRF, DRIONS-DB, DR-HAGIS, and RIM-ONE.
- The proposed model obtained the highest diagnostic performance on the ORIGA dataset with 95.75%, 94.90%, and 94.75% for accuracy, specificity, and sensitivity, respectively, and outperformed the baseline methods.

2 Materials and methods

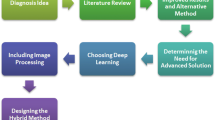

The schematic of the ODGNet architecture is shown in Fig. 2. The proposed model is comprised of two main components, i.e., OD localization and Glaucoma classification. Firstly, the salient region is extracted via a saliency map and integrated with a shallow CNN model for faster and cost-effective OD localization. Then, the extracted OD region is further fed to pre-trained deep learning models (AlexNet, VGGNet, and ResNet) to differentiate between healthy and glaucomatous images. The steps are explained in the subsequent sections.

Detailed description of the proposed framework for OD localization and glaucoma classification. OD localization is performed with a saliency map combined with a shallow CNN model, and glaucoma classification is performed with the transfer learning-based VGGNet under the chosen hyperparameter settings. The performance of the proposed model is evaluated using various performance metrics.

2.1 Saliency map based OD localization

Saliency represents the distinctive features of an image, such as pixels or regions that stand out from their neighbors [39]. The detection of salient regions within an image plays an important role in object recognition and segmentation. The detected salient regions produce saliency maps with well-defined object boundaries. In this research, we detected salient regions using the frequency-tuned approach of [40].

In the frequency-tuned approach, low-level features of color and luminance are used to compute the saliency of an image, which yields high-quality saliency maps and is easy to implement. The saliency map makes the salient region more prominent than the surrounding regions, as depicted in Fig. 3. Because the saliency map emphasizes regions that appear bright to human vision, the object with the highest intensity is considered to be the OD region. However, the saliency map can falsely detect the OD because of noise factors such as bright lesions or the bright fringe of the retina. Such noise is always possible because of pathological changes in retinal fundus images. OD localization using saliency maps alone yields 91%, 92%, and 94% accuracy on the HRF, DRIONS-DB, and ORIGA datasets, respectively. Thus, we applied a shallow CNN to the salient region to determine whether the selected region is the OD or not.
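The frequency-tuned idea can be sketched as follows: the saliency of a pixel is the distance between the mean color vector of the image and a slightly blurred version of that pixel. This is a minimal NumPy illustration, not the authors' implementation. The original frequency-tuned method [40] operates in the Lab color space; here the conversion is omitted and the input channels are used as given, which is a simplifying assumption. The function names, the window size, and the synthetic test image are all hypothetical.

```python
import numpy as np

def binomial_blur(img):
    """Separable 5-tap binomial blur ([1, 4, 6, 4, 1] / 16) applied per channel."""
    k = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0
    h, w = img.shape[:2]
    rows = np.pad(img.astype(float), ((2, 2), (0, 0), (0, 0)), mode="reflect")
    vert = sum(k[i] * rows[i:i + h] for i in range(5))
    cols = np.pad(vert, ((0, 0), (2, 2), (0, 0)), mode="reflect")
    return sum(k[i] * cols[:, i:i + w] for i in range(5))

def frequency_tuned_saliency(img):
    """Per-pixel distance between the blurred image and the mean color vector."""
    blurred = binomial_blur(img)
    mean_vec = img.reshape(-1, img.shape[2]).astype(float).mean(axis=0)
    sal = np.linalg.norm(blurred - mean_vec, axis=2)
    peak = sal.max()
    return sal / peak if peak > 0 else sal  # scale to [0, 1]

def most_salient_window(sal, size):
    """Top-left corner of the size x size window with the largest saliency sum."""
    ii = np.pad(sal, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)  # integral image
    sums = ii[size:, size:] - ii[:-size, size:] - ii[size:, :-size] + ii[:-size, :-size]
    r, c = np.unravel_index(np.argmax(sums), sums.shape)
    return int(r), int(c)

# Synthetic stand-in for a fundus image: dark background, one bright square "disc".
fundus = np.full((64, 64, 3), 0.1)
fundus[20:30, 40:50] = 1.0
saliency = frequency_tuned_saliency(fundus)
corner = most_salient_window(saliency, size=10)
print(corner)
```

On a real fundus image a bright lesion would score just as highly as the disc under this measure, which is the failure mode that motivates the shallow CNN check in the next section.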

2.2 Shallow CNN-based OD region classification

The convolutional neural network (CNN) is the most prominent deep learning model and has shown great strength in computer vision tasks such as image classification [26]. CNNs are typically used to handle high-dimensional inputs and to learn and classify hierarchical features directly from the image without human intervention. Features extracted automatically through end-to-end learning have achieved state-of-the-art accuracy in several fundus image classification tasks, e.g., diabetic retinopathy detection [41] and cataract classification [42]. A CNN architecture is composed of several layer types, including convolution, max-pooling, and fully connected layers. We preferred a shallow network to a deep network because of its simplicity, shorter computation time, and comparable accuracy for OD feature representation and region classification. Although a CNN can learn features directly from the input image, scanning the whole fundus image takes more time and incurs extra computational cost. In this paper, we therefore first extracted the salient region and then used a shallow CNN to determine whether that region of interest is the OD, which provides fast OD detection with promising accuracy. The saliency map highlights the most visible region of the fundus image, but it sometimes marks a region other than the OD because of low image quality or pathological changes. The shallow CNN therefore classifies the salient region as an OD or non-OD region. A sliding-window approach is used to train the shallow CNN: the window slides over the whole image to select patches with and without the OD. If a salient region is classified as non-OD, the saliency map targets the next most salient region. The saliency map combined with the shallow CNN achieved 99.37%, 98.83%, 98.40%, 98.05%, and 99.05% localization accuracy on the ORIGA, DRIONS-DB, DR-HAGIS, HRF, and RIM-ONE datasets, respectively. Fig. 3 shows the OD localization based on a saliency map combined with the shallow CNN.
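The cascade described above (take the most salient region, ask the classifier whether it is the OD, and move on to the next salient region if it is not) can be sketched as a short loop. This is a hedged illustration only: `is_od` stands in for the trained shallow CNN and is a hypothetical callable, and zeroing out a rejected window is an assumed way of "targeting the next salient region" that the paper does not specify.

```python
import numpy as np

def window_sums(sal, size):
    """Saliency sum of every size x size window, via an integral image."""
    ii = np.pad(sal, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    return ii[size:, size:] - ii[:-size, size:] - ii[size:, :-size] + ii[:-size, :-size]

def localize_od(image, sal, is_od, size, max_tries=5):
    """Return the top-left corner of the first salient window accepted by is_od."""
    sal = sal.astype(float).copy()
    for _ in range(max_tries):
        sums = window_sums(sal, size)
        r, c = np.unravel_index(np.argmax(sums), sums.shape)
        patch = image[r:r + size, c:c + size]
        if is_od(patch):                      # stand-in for the shallow CNN decision
            return int(r), int(c)
        sal[r:r + size, c:c + size] = 0.0     # suppress the rejected region (assumption)
    return None                               # no candidate was accepted

# Toy data: a bright "lesion" is more salient than the true disc.
image = np.zeros((48, 48))
image[30:38, 30:38] = 2.0                     # pixels that the toy classifier accepts
sal_map = np.zeros((48, 48))
sal_map[5:13, 5:13] = 1.0                     # lesion: highest saliency, not the OD
sal_map[30:38, 30:38] = 0.8                   # true OD: second highest saliency

found = localize_od(image, sal_map, lambda p: p.mean() > 1.0, size=8)
rejected = localize_od(image, sal_map, lambda p: False, size=8)
print(found, rejected)
```

Because the classifier only sees a handful of candidate windows rather than every position in the image, the cost of the CNN stage stays small, which is the efficiency argument made in this section.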

2.3 Glaucoma classification

Various deep learning-based studies have been proposed over the last few years for the automatic detection and classification of glaucoma [26, 43, 44]. In this study, we investigated three pre-trained CNNs for automatic glaucoma diagnosis: AlexNet [45], VGGNet [46], and ResNet [47]. The baseline VGGNet and ResNet use the Stochastic Gradient Descent (SGD) optimizer, which suffers from strongly fluctuating error and slow convergence. To overcome these issues, we uniformly applied the Adaptive Moment Estimation (ADAM) optimizer to all the employed networks. Furthermore, we tried various hyperparameter configurations when applying these transfer learning-based methods. The details of these hyperparameter configurations are given in Table 2.
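For readers unfamiliar with the optimizer, the standard Adam update can be written in a few lines. The sketch below is a generic NumPy version of the published update rule applied to a toy quadratic. The learning rate and decay rates are common defaults chosen for illustration and are assumptions, not the values from Table 2.

```python
import numpy as np

def adam_minimize(grad_fn, theta0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a function with the Adam update rule, given its gradient."""
    theta = np.asarray(theta0, dtype=float).copy()
    m = np.zeros_like(theta)                  # first-moment (mean) estimate
    v = np.zeros_like(theta)                  # second-moment (uncentered variance) estimate
    history = [theta.copy()]
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)          # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
        history.append(theta.copy())
    return theta, history

# Toy objective f(x) = x^2 with gradient 2x, starting from x = 5.
theta, history = adam_minimize(lambda x: 2.0 * x, theta0=[5.0])
print(history[1], theta)
```

The per-parameter scaling by the second-moment estimate is what damps the step-to-step fluctuation that plain SGD shows on poorly scaled problems.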

2.3.1 AlexNet

AlexNet [45] won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. It attained a top-5 accuracy of 84.7%, surpassing the second-best method, which reached 73.8%. The AlexNet architecture comprises 8 layers (5 convolutional and 3 fully connected layers), and data augmentation was used to enlarge the training dataset. The ReLU activation function was employed to mitigate the vanishing gradient problem, and dropout layers were added to address overfitting.

2.3.2 VGGNet

VGGNet [46] was developed by the Visual Geometry Group for object recognition and won the localization task of the ILSVRC in 2014. There are different variations of VGGNet based on the number of network layers. The common variations are VGG-16 and VGG-19, of which VGG-16 has the better top-1 accuracy. The basic VGG-16 uses a fixed 3×3 kernel size for all convolution layers, unlike AlexNet, which uses larger kernels of 5×5 and 11×11. Replacing those larger kernels with stacked 3×3 kernels that cover the same receptive field reduces the number of trainable weights by 28% and 62.8%, respectively.
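The 28% and 62.8% figures can be reproduced with a short calculation, assuming they compare one k×k kernel with the stack of 3×3 kernels that covers the same receptive field (two layers for 5×5, five layers for 11×11), per input-output channel pair and ignoring biases. That reading is an interpretation of the text, not something the paper states explicitly.

```python
def stacked_3x3_saving(k):
    """Fraction of weights saved by replacing one k x k kernel with stacked 3 x 3 kernels."""
    n_layers = (k - 1) // 2          # 3x3 layers needed for a k x k receptive field
    return 1 - (9 * n_layers) / (k * k)

for k in (5, 11):
    print(f"{k}x{k}: {100 * stacked_3x3_saving(k):.1f}% fewer weights")
```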

2.3.3 ResNet

The residual neural network (ResNet) [47] won the 2015 ILSVRC for object localization and classification. ResNet introduced skip connections (residual blocks), repeated throughout the network, to address the vanishing gradient problem. There are different variations of ResNet based on the number of layers, such as ResNet-18, ResNet-50, and ResNet-101. In this study, we employed ResNet-34 because of its lower validation error and to limit the number of trainable parameters. ResNet-34 obtained a 7.40% top-5 error rate on the ImageNet dataset.
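A skip connection simply adds the block's input to the output of its learned layers, so the block computes y = ReLU(F(x) + x). The toy sketch below uses two dense layers for F instead of the convolutions and batch normalization found in the real ResNet, purely to keep the example short. The weight shapes and names are illustrative assumptions.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """y = relu(F(x) + x), with F(x) = relu(x @ w1) @ w2 (dense stand-in for conv layers)."""
    return relu(relu(x @ w1) @ w2 + x)

x = np.array([[1.0, -2.0, 3.0]])
zeros = np.zeros((3, 3))

# With all-zero weights F(x) = 0, so the block passes relu(x) straight through.
identity_out = residual_block(x, zeros, zeros)
print(identity_out)
```

Because the input reaches the output through the addition even when the learned layers contribute nothing, gradients have a direct path backwards through every block, which is the property the paragraph above refers to.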

3 Dataset and experiments

In this paper, we used five state-of-the-art datasets to evaluate the performance of the ODGNet method. A total of 958 public retinal fundus images (665 healthy, 293 glaucomatous images) were collected from different resources. The details of these public datasets are given in Table 3.

3.1 Datasets

1. ORIGA (Online Retinal Images for Glaucoma Analysis): One of the largest retinal fundus image datasets for glaucoma detection [34], used as a standard dataset in various recent studies. The dataset was obtained from the Singapore Eye Research Center and comprises 650 images (168 from glaucoma-affected people and 482 from healthy people). Besides the fundus images, it provides manually segmented disc and cup regions for all images. A cup-to-disc ratio (CDR) and labels of normal or glaucomatous are also given for each image.
2. HRF (High-Resolution Fundus): This retinal image dataset was acquired from the Ophthalmology Department, Friedrich-Alexander University, Germany [35]. The dataset provides 15 images each for normal, glaucomatous, and DR cases, along with gold-standard vessel segmentations traced by experts.
3. DRIONS-DB (Digital Retinal Images for Optic Nerve Segmentation Database): A public retinal image dataset for ONH segmentation [36]. The dataset was collected from an Ophthalmology Service in Spain and comprises 110 retinal images (50 normal and 60 glaucomatous). It also provides independent ONH contours traced by two retinal experts using software tools.
4. DR-HAGIS (Diabetic Retinopathy, Hypertension, Age-related Macular Degeneration and Glaucoma Images): This dataset comprises 40 images taken for a DR screening program by Health Intelligence, Sandbach, UK [37]. The first subgroup of the dataset is for glaucoma analysis and consists of 10 retinal images. The dataset also provides masks and ground truth images produced by expert graders.
5. RIM-ONE (Retinal Images for Optic Nerve Evaluation): A retinal image database specially designed for glaucoma analysis [38]. The dataset was developed jointly by three hospitals in Spain. RIM-ONE contains 169 non-mydriatic fundus images and their ONH images (118 normal, 40 glaucomatous, and 11 ocular hypertension images). The dataset also provides manual ONH segmentations by 5 experts as a gold standard.
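The per-dataset counts can be checked against the totals stated at the start of this section (958 images: 665 healthy and 293 glaucomatous). The tally below makes three assumptions that the text does not spell out: ORIGA's healthy count is taken as 482 (650 images minus 168 glaucomatous), the HRF DR images and the RIM-ONE ocular hypertension images are excluded, and all 10 images of the DR-HAGIS glaucoma subgroup are counted as glaucomatous.

```python
# Healthy / glaucomatous image counts per dataset, as read from the descriptions above.
counts = {
    "ORIGA":     {"healthy": 482, "glaucoma": 168},
    "HRF":       {"healthy": 15,  "glaucoma": 15},
    "DRIONS-DB": {"healthy": 50,  "glaucoma": 60},
    "DR-HAGIS":  {"healthy": 0,   "glaucoma": 10},
    "RIM-ONE":   {"healthy": 118, "glaucoma": 40},
}

healthy = sum(c["healthy"] for c in counts.values())
glaucoma = sum(c["glaucoma"] for c in counts.values())
total = healthy + glaucoma
print(healthy, glaucoma, total)
```

Under these assumptions the tally reproduces the stated totals exactly, and it also shows how imbalanced the pooled data is: roughly 69% of the images are healthy.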

3.2 Implementation

We ran the experiments for the proposed ODGNet model on an Intel E5-2620 CPU and an NVIDIA Tesla M2090 GPU, using Python 3 and the Keras library running on top of TensorFlow.

3.3 Performance evaluation

Accuracy (ACC), sensitivity (Sn), specificity (Sp), precision, F1-score, and area under the curve (AUC) are used to measure the performance of the proposed method on the retinal image datasets. Sensitivity and specificity represent the proportions of correctly identified glaucomatous and normal images, respectively. Accuracy is the ratio between the correctly identified images (either normal or glaucomatous) and the total number of images. The F1-score is the harmonic mean of precision and recall (sensitivity). The receiver operating characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR), which equals one minus the true negative rate (TNR). The area under the curve (AUC) summarizes the prediction ability of the proposed models [13]. The standard definitions of these metrics are:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Sn} = \frac{TP}{TP + FN}, \qquad \mathrm{Sp} = \frac{TN}{TN + FP}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sn}}{\mathrm{Precision} + \mathrm{Sn}}$$

Here TP, FP, FN, and TN denote true positives, false positives, false negatives, and true negatives, respectively. TP and TN are the glaucomatous and normal images correctly predicted as glaucomatous and normal. FP denotes normal images incorrectly classified as glaucomatous, and FN denotes glaucomatous images incorrectly classified as normal.
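As a worked illustration of these metrics, the following sketch computes them from the four confusion-matrix counts using the standard definitions. The counts in the example are invented for illustration and are not results from this study. The function name is hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    sensitivity = tp / (tp + fn)              # recall on glaucomatous images
    specificity = tn / (tn + fp)              # recall on normal images
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }

# Made-up example: 50 glaucomatous and 50 normal images.
m = classification_metrics(tp=40, fp=5, fn=10, tn=45)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```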

4 Results and discussions

Our proposed method uses two stages to improve model generalizability. In the first stage, the OD region is localized using a saliency map combined with a shallow CNN model. In the second stage, three pre-trained models (AlexNet, ResNet, and VGGNet) are used via transfer learning to classify the retinal fundus image as normal or glaucomatous. As alternatives to these three pre-trained models, a shallow CNN and other variations of VGGNet and ResNet are also investigated. Although a CNN can perform feature extraction and classification directly on the input image, doing so on the whole image is comparatively slow and has a high computational cost. The visual saliency map combined with a CNN provides faster and more accurate OD localization.

The experimental results of these methods on the different datasets are given in Table 4. The proposed saliency map-based localization combined with the shallow CNN is more promising than the baseline methods on the five public datasets. The saliency map-based OD localization combined with various methods is shown in Fig. 4.

After extracting ROI, the glaucoma classification is performed by employing transfer learning methods, i.e., AlexNet, ResNet, and VGGNet. The statistical measures such as accuracy (Acc), sensitivity (Sen), specificity (Spe), precision (Prc), F1-score, and AUC are used to measure the proposed method’s performance evaluated on five public retinal datasets, as mentioned in Table 5.

The effectiveness of the proposed method in terms of accuracy, sensitivity, and specificity on these public retinal datasets is illustrated in Fig. 5. Our method shows the most promising results on the ORIGA dataset, with the highest values of 95.75%, 94.75%, and 94.90% for accuracy, sensitivity, and specificity, respectively.

As alternatives to the proposed combination of the saliency map, the shallow CNN, and the VGGNet-16 model, we investigated various transfer learning (TL) methods and their variations. The comparative analysis of these TL-based methods and their variations is shown in Fig. 6. The proposed model achieved its highest accuracy, 95.75%, on the ORIGA dataset. Fig. 7 shows the confusion matrices of the system using the shallow CNN and VGGNet-16 on the five public datasets.

To measure the effectiveness of the proposed model, we compared our results with some state-of-the-art methods. A comparative study of baseline methods for glaucoma diagnosis evaluated on the ORIGA dataset is given in Table 6. The graphical representation of this comparison is shown in Fig. 8.

5 Conclusion

In this paper, a novel ODGNet method has been proposed for OD localization and glaucoma classification. For optic disc localization, a saliency map roughly determines the salient region, and a shallow CNN then differentiates between OD and non-OD regions. The localized OD regions are fed to transfer learning models such as AlexNet, ResNet, and VGGNet for glaucoma diagnosis. The experimental results indicate that the performance of the proposed ODGNet improves when the saliency map is combined with the shallow CNN. The proposed approach yields 95.75% accuracy and can assist ophthalmologists by reducing the burden of mass screening. In future work, we intend to integrate handcrafted and automatically learned features for glaucoma classification and to extend the study to other retinal diseases.

References

Dervisevic E, Pavljasevic S, Dervisevic A, Kasumovic SS (2016) Challenges in early glaucoma detection. Med Arch 70(3):203

Burgoyne CF, Downs JC, Bellezza AJ, Suh J-KF, Hart RT (2005) The optic nerve head as a biomechanical structure: a new paradigm for understanding the role of iop-related stress and strain in the pathophysiology of glaucomatous optic nerve head damage. Prog Retin Eye Res 24(1):39–73

Bock R, Meier J, Nyúl LG, Hornegger J, Michelson G (2010) Glaucoma risk index: automated glaucoma detection from color fundus images. Med Image Anal 14(3):471–481

Quigley HA, Addicks EM, Green WR, Maumenee A (1981) Optic nerve damage in human glaucoma: Ii the site of injury and susceptibility to damage. Arch Ophthalmol 99(4):635–649

Michelson G, Hornegger J, Wärntges S, Lausen B (2008) The papilla as screening parameter for early diagnosis of glaucoma. Deutsches Aerzteblatt Int 105(34–35):583

Maheshwari S, Pachori RB, Acharya UR (2016) Automated diagnosis of glaucoma using empirical wavelet transform and correntropy features extracted from fundus images. IEEE J Biomed Health Inform 21(3):803–813

Simonthomas S, Thulasi N, Asharaf P (2014) Automated diagnosis of glaucoma using haralick texture features. In: International conference on information communication and embedded systems (ICICES2014), pp 1–6. IEEE

Raghavendra U, Bhandary SV, Gudigar A, Acharya UR (2018) Novel expert system for glaucoma identification using non-parametric spatial envelope energy spectrum with fundus images. Biocybern Biomed Eng 38(1):170–180

Zilly J, Buhmann JM, Mahapatra D (2017) Glaucoma detection using entropy sampling and ensemble learning for automatic optic cup and disc segmentation. Comput Med Imaging Graph 55:28–41

Annu N, Justin J (2013) Automated classification of glaucoma images by wavelet energy features. Int J Eng Technol 5(2):1716–1721

Acharya UR, Bhat S, Koh JE, Bhandary SV, Adeli H (2017) A novel algorithm to detect glaucoma risk using texton and local configuration pattern features extracted from fundus images. Comput Biol Med 88:72–83

Dua S, Acharya UR, Chowriappa P, Sree SV (2011) Wavelet-based energy features for glaucomatous image classification. IEEE Trans Inf Technol Biomed 16(1):80–87

Singh A, Dutta MK, ParthaSarathi M, Uher V, Burget R (2016) Image processing based automatic diagnosis of glaucoma using wavelet features of segmented optic disc from fundus image. Comput Methods Programs Biomed 124:108–120

Chua CK, Chandran V, Acharya RU, Min LC (2009) Cardiac health diagnosis using higher order spectra and support vector machine. Open Med Inform J 3:1

Acharya UR, Dua S, Du X, Chua CK et al (2011) Automated diagnosis of glaucoma using texture and higher order spectra features. IEEE Trans Inf Technol Biomed 15(3):449–455

Krishnan MMR, Faust O (2013) Automated glaucoma detection using hybrid feature extraction in retinal fundus images. J Mech Med Biol 13(01):1350011

Acharya UR, Ng E, Eugene LWJ, Noronha KP, Min LC, Nayak KP, Bhandary SV (2015) Decision support system for the glaucoma using gabor transformation. Biomed Signal Process Control 15:18–26

Nayak J, Acharya R, Bhat PS, Shetty N, Lim T-C (2009) Automated diagnosis of glaucoma using digital fundus images. J Med Syst 33(5):337

Issac A, Sarathi MP, Dutta MK (2015) An adaptive threshold based image processing technique for improved glaucoma detection and classification. Comput Methods Programs Biomed 122(2):229–244

ur Rehman S, Tu S, Waqas M, Huang Y, ur Rehman O, Ahmad B, Ahmad S (2019) Unsupervised pre-trained filter learning approach for efficient convolution neural network. Neurocomputing 365:171–190

Tu S, Huang Y, Liu G et al (2017) Csfl: A novel unsupervised convolution neural network approach for visual pattern classification. AI Commun 30(5):311–324

Latif J, Xiao C, Tu S, Rehman SU, Imran A, Bilal A (2020) Implementation and use of disease diagnosis systems for electronic medical records based on machine learning: a complete review. IEEE Access 8:150489–150513

Rehman SU, Tu S, Rehman OU, Huang Y, Magurawalage CMS, Chang C-C (2018) Optimization of cnn through novel training strategy for visual classification problems. Entropy 20(4):290

Maheshwari S, Kanhangad V, Pachori RB (2020) Cnn-based approach for glaucoma diagnosis using transfer learning and lbp-based data augmentation. arXiv preprint arXiv:2002.08013

Civit-Masot J, Domínguez-Morales MJ, Vicente-Díaz S, Civit A (2020) Dual machine-learning system to aid glaucoma diagnosis using disc and cup feature extraction. IEEE Access 8:127519–127529

Kim M, Han JC, Hyun SH, Janssens O, Van Hoecke S, Kee C, De Neve W (2019) Medinoid: computer-aided diagnosis and localization of glaucoma using deep learning. Appl Sci 9(15):3064

Cerentini A, Welfer D, d’Ornellas MC, Haygert CJP, Dotto GN (2018) Automatic identification of glaucoma using deep learning methods. In: MEDINFO 2017: Precision Healthcare through informatics: Proceedings of the 16th World Congress on Medical and Health Informatics, vol 245, p 318. IOS Press

Diaz-Pinto A, Morales S, Naranjo V, Köhler T, Mossi JM, Navea A (2019) Cnns for automatic glaucoma assessment using fundus images: an extensive validation. Biomed Eng Online 18(1):1–19

Gheisari S, Shariflou S, Phu J, Kennedy PJ, Agar A, Kalloniatis M, Golzan SM (2021) A combined convolutional and recurrent neural network for enhanced glaucoma detection. Sci Rep 11(1):1–11

Serte S, Serener A (2021) Graph-based saliency and ensembles of convolutional neural networks for glaucoma detection. IET Image Processing

Abdel-Hamid L (2021) Tweec: Computer-aided glaucoma diagnosis from retinal images using deep learning techniques. Int J Imag Syst Technol 32(1):387

Rayen SN, Subha V (2021) A novel visual saliency thresholding method for accurate roi segmentation in optic disc from feis for glaucoma detection. Int J Comput Digital Syst

Pan SJ, Yang Q (2010) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345

Zhang Z, Yin FS, Liu J, Wong WK, Tan NM, Lee BH, Cheng J, Wong TY (2010) Origa-light: An online retinal fundus image database for glaucoma analysis and research. In: 2010 Annual international conference of the IEEE engineering in medicine and biology, pp 3065–3068. IEEE

Budai A, Bock R, Maier A, Hornegger J, Michelson G (2013) Robust vessel segmentation in fundus images. Int J Biomed Imag

Carmona EJ, Rincón M, García-Feijoó J, Martínez-de-la-Casa JM (2008) Identification of the optic nerve head with genetic algorithms. Artif Intell Med 43(3):243–259

Holm S, Russell G, Nourrit V, McLoughlin N (2017) Dr hagis-a fundus image database for the automatic extraction of retinal surface vessels from diabetic patients. J Med Imag 4(1):014503

Fumero F, Alayón S, Sanchez JL, Sigut J, Gonzalez-Hernandez M (2011) Rim-one: An open retinal image database for optic nerve evaluation. In: 2011 24th international symposium on computer-based medical systems (CBMS), pp 1–6. IEEE

Simonyan K, Vedaldi A, Zisserman A (2013) Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034

Achanta R, Hemami S, Estrada F, Susstrunk S (2009) Frequency-tuned salient region detection. In: 2009 IEEE conference on computer vision and pattern recognition, pp 1597–1604. IEEE

Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J et al (2016) Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316(22):2402–2410

Imran A, Li J, Pei Y, Yang J-J, Wang Q (2019) Comparative analysis of vessel segmentation techniques in retinal images. IEEE Access 7:114862–114887

Gómez-Valverde JJ, Antón A, Fatti G, Liefers B, Herranz A, Santos A, Sánchez CI, Ledesma-Carbayo MJ (2019) Automatic glaucoma classification using color fundus images based on convolutional neural networks and transfer learning. Biomed Opt Express 10(2):892–913

Bajwa MN, Malik MI, Siddiqui SA, Dengel A, Shafait F, Neumeier W, Ahmed S (2019) Two-stage framework for optic disc localization and glaucoma classification in retinal fundus images using deep learning. BMC Med Inform Decis Mak 19(1):136

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778

Ethics declarations

Conflict of financial or personal interests

The authors declare no conflict of financial or personal interests that could have appeared to influence the work reported in this paper. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. Informed consent was obtained from all individual participants involved in the study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Latif, J., Tu, S., Xiao, C. et al. ODGNet: a deep learning model for automated optic disc localization and glaucoma classification using fundus images. SN Appl. Sci. 4, 98 (2022). https://doi.org/10.1007/s42452-022-04984-3

DOI: https://doi.org/10.1007/s42452-022-04984-3