Abstract

Diabetic retinopathy (DR) places a significant burden on ophthalmic healthcare because of its high prevalence and high diagnostic costs. In remote areas with limited medical access in particular, the number of undetected DR cases is rising. To address this, our study introduces a deep transfer learning-based system for real-time DR detection using fundus cameras. The aim is to develop an efficient and timely assistance system for DR patients that helps them manage their health better. The proposed system uses fundus imaging to capture retinal images, which are then transmitted to a processing unit that detects the disease and classifies its severity. Comprehensive reports guide subsequent medical actions based on the identified stage. Real-time detection is achieved with deep transfer learning, specifically VGGNet. The system’s classification accuracy is rigorously evaluated and compared with the outcomes of previous research. The experimental results demonstrate the robustness of the proposed system, which achieves 97.6% classification accuracy in the detection phase, surpassing existing approaches. Deploying the automated system in remote areas can transform healthcare dynamics by enabling early, cost-effective DR diagnosis for millions of people. The system also streamlines patient prioritization, facilitating timely interventions for early-stage DR cases.


1 Introduction

In 2019, the International Diabetes Federation reported that approximately 463 million people worldwide had diabetes mellitus (DM), a number projected to reach around 700 million by 2045. Diabetic retinopathy (DR) is a retinal disorder caused by diabetes, in which impaired glucose tolerance leads to the deterioration of the retinal capillaries [1]. It can result in visual problems, making early diagnosis of DR severity crucial for effective treatment [2, 3]. DR affects about 80% of people with diabetes, and its early stages often have no symptoms, although some individuals may notice minor changes in their vision. It is classified as an inflammatory neurovascular disorder with neurological impairment that eventually leads to compromised vision and potential blindness. DR is therefore a significant and prevalent disease in remote areas, especially where proper medical facilities are unavailable or limited [4, 5]. Diabetic retinopathy is challenging to diagnose and requires multiple clinical tests, such as visual acuity assessment, pupil dilation, and optical coherence tomography [6].

1.1 Diabetic Retinopathy

Diabetic retinopathy is one of the leading causes of vision loss among working-age populations in both developing and industrialized nations. People with diabetes are 25 times more likely to become blind than healthy people [7, 8]. Retinopathy is a condition in which diabetes damages the retina and its blood capillaries. The retinal blood vessels form tree-shaped branching patterns with minor curvature that arise from the optic disc, and their average diameter is roughly 1/40 of the retinal diameter. The optic disc is the bright yellowish disc from which the arteries and optic nerve fibers emerge; electrical impulses are sent from the retina to the visual cortex through it. It has a diameter of 1.5–2 mm [9]. The initial phase of diabetic retinopathy is known as non-proliferative diabetic retinopathy (NPDR). In this condition, at least one microaneurysm is present, with or without retinal hemorrhages, hard exudates, cotton-wool spots, or venous loops. Microaneurysms are the most common clinical abnormality in diabetic retinopathy [1, 10]. They may appear as minute, dark red spots or as tiny hemorrhages inside the light-sensitive retina, either alone or in clusters. As the disease advances, some of the blood vessels that supply the retina become blocked; this stage is known as moderate NPDR. In the following stage, severe NPDR, many more capillaries are blocked [11]. Both type 1 and type 2 diabetes mellitus can induce diabetic retinopathy. The disease is asymptomatic in its early stages, but if left untreated for an extended period, it can result in irreversible loss of eyesight. The problem is that patients may not be aware of it until it has progressed to an advanced stage. The advanced stage, proliferative diabetic retinopathy (PDR), can cause permanent visual impairment, and as the disease progresses, vision loss becomes increasingly difficult to prevent.
Diabetic retinopathy is a leading cause of blindness worldwide, especially in developing nations. There is therefore a critical need for an effective and accurate diagnostic system [12, 13]. Figure 1 illustrates the various stages of diabetic retinopathy.

To prevent vision loss caused by this severe disease, the global scientific community strives to develop more efficient and precise methods for the early identification of diabetic retinopathy [14]. Automated detection techniques and advanced technologies can significantly reduce the time, effort, and resources required for diagnosis [15]. An automated detection system holds great potential for providing high-quality eye care in remote areas, which makes an automated solution valuable as a preventive measure. Deep learning (DL) algorithms have emerged as a prominent solution for various medical image analysis challenges, overcoming limitations of traditional machine learning methods [16, 17]. Deep learning models excel at swiftly identifying significant features in retinal images without human intervention. By employing multiple processing layers, these models learn data representations at different levels of abstraction. These advances have driven substantial progress in speech recognition, pattern recognition, object detection, and other domains. Backpropagation enables DL models to discover complex structures in large datasets: it indicates how the model should update the internal parameters that each layer uses to compute its representation from the output of the preceding layer.

DL-based methods have become a focal point in various academic disciplines in recent years, driven by their remarkable ability to extract meaningful features directly from training data [18]. DL is now considered a promising technology for image and video classification and detection. DL algorithms employ sophisticated processes, including data processing and the construction of abstractions, to optimize performance. In genomics, machine learning is extensively used to uncover intricate relationships within data and generate novel biological insights [19]. However, the continuous growth of genomics data demands even more powerful DL models to unlock deeper insights. DL’s transformative impact is evident in domains such as machine learning and natural language processing, where it efficiently harnesses vast datasets to achieve significant advances [20].

Deep learning has emerged as a potent instrument for improving computer-aided diagnosis (CAD) systems, yielding higher diagnostic accuracy, broader coverage of disorders, and real-time medical image disease detection systems. Notably, Wang et al. [21] introduced a DL architecture based on the U-Net model that effectively segmented the optic disc for diabetic retinopathy detection. Using convolutional neural networks (CNNs), the model analyzed dark and shaded retinal fundus images independently, yielding distinct and accurate segmentation outputs. Despite its effectiveness, DL’s reliance on vast datasets can be a challenge. Transfer learning addresses this by reusing parameters previously optimized on one data distribution for a new domain, which reduces the demand for extensive data and shortens training time when the model is adapted to related tasks with different datasets [22]. Models pre-trained on datasets with complex features, such as ImageNet, are often used for transfer learning and improve accuracy across various tasks [23]. With diabetes cases projected to reach 552 million worldwide by 2030, telemedicine programs need to enhance their imaging capabilities and adopt automated image analysis methods to address and manage diabetic retinopathy effectively [24,25,26].

1.2 Problem Statement

The increasing prevalence of diabetic retinopathy in remote areas, combined with limited access to medical resources and timely diagnosis, presents a significant healthcare challenge. The scarcity of doctors has led to more undetected cases, raising the risk of vision impairment and blindness among affected individuals. Addressing this issue is vital to prevent the disease’s impact on public health from escalating further, and advanced methods and technologies are essential to close this healthcare gap. Advances in medical imaging and diagnostic techniques offer transformative opportunities, especially for conditions such as diabetic retinopathy. Among these technologies, fundus imaging stands out for its ability to capture detailed and comprehensive retinal images. This study introduces a fundus camera-based real-time monitoring system designed to enable the early identification of diabetic retinopathy. The system aims to provide accurate and efficient disease detection using computer vision algorithms and DL-based techniques. Real-time monitoring enables timely interventions, improving patient outcomes and disease management. Beyond these immediate patient benefits, the proposed automated monitoring system has the potential to transform healthcare in remote regions. By optimizing medical resources and streamlining disease detection, it can ease the burden on healthcare facilities, allowing them to focus on critical cases and adopt a comprehensive approach to diabetic retinopathy management. Patients with diabetic retinopathy stand to benefit substantially from this technology: the system’s automated nature and remote accessibility empower individuals to manage their health proactively.
Regular monitoring and timely interventions are essential in preventing disease progression, ultimately reducing the risk of vision loss and lessening the burden on individuals and healthcare systems.

1.3 Aim of the Research

The proposed model aims to analyze retinal images and accurately identify the stage of diabetic retinopathy. It uses a simpler, lighter approach that requires less CPU computing power, making it suitable for smaller devices. Moreover, the suggested method achieves higher classification accuracy than existing models. By capturing retinal images with a fundus camera and detecting diabetic retinopathy in them, this diagnostic system would help clinicians evaluate the disease.

1.4 Objectives of the Research

This study aims to contribute significantly to diabetic retinopathy diagnosis by introducing innovative and effective methods for assessing the disease and providing optimal patient care.

- To improve the classification accuracy of diabetic retinopathy stages through preprocessing approaches such as transformation, non-local means denoising, and image filtering.
- To address data imbalance by applying grade-specific data augmentation.
- To employ transfer learning-based models for automatic feature extraction, reducing training overhead and mitigating the shortage of annotated training data.
- To develop a healthcare system for diabetic retinopathy that uses a fundus camera to capture eye images.
- To accurately classify the level or stage of diabetic retinopathy.
- To generate automated reports for further consultations or medication decisions.

The rest of the article is organized as follows: Sect. 2 provides an exhaustive overview of related work in detection, segmentation, colorization, and classification. Section 3 describes the dataset, preprocessing, data augmentation, and feature extraction techniques. Section 4 describes the framework, mathematical modeling, and algorithms used in the study, along with an overview of the model. The empirical findings are analyzed and discussed in Sect. 5. Finally, Sect. 6 presents the conclusion and outlines possible directions for future research.

2 Literature Review

Kimar et al. [24] proposed a system to aid the prediction and diagnosis of DR and demonstrated its effectiveness and utility for DR applications, showcasing its potential for improved diagnostic capabilities. Iqbal et al. [3] suggested a computer-assisted approach for grading the severity of the different phases of DR. Their method extracted distinctive characteristics from the retinal layers, resulting in twelve features. Using a deep fusion approach for classification, the authors achieved 93% accuracy for binary classification between normal and retinopathy grades and 98% accuracy for mild/moderate DR grade classification. Ali et al. [27] suggested a three-class classification approach for identifying retinopathy lesions. They used a filter bank to extract lesion candidates for each lesion type and then classified the DR lesions. The system’s performance was thoroughly validated using various performance measures. Azar et al. [5] developed a robust segmentation technique for detecting and evaluating DR and maculopathy. After preprocessing, distinct parts of the retinal image, including the fovea, microaneurysms (MAs), exudates (EXs), and hemorrhages (HMs), were segmented. The researchers used concentric circular zones and the patterns of abnormalities in abnormal fundus images to differentiate maculopathy from non-proliferative diabetic retinopathy (NPDR). Skouta et al. [28] proposed an automated classification approach for diabetic retinopathy that emphasizes contrast normalization to better distinguish noise spots from microaneurysms (MAs).

Sabeena et al. [29] used customized convolutional networks to differentiate retinal impairments such as hemorrhages, microaneurysms, and neovascularization, and employed a variety of pre-trained networks to surpass the performance of existing systems. Ramachandran et al. [30] developed a tailored strategy based on deep convolutional neural networks (CNNs) for feature learning and for distinguishing non-DR stages from DR-affected stages. To improve the effectiveness of the DR classification system, they combined image information with the extracted CNN features. Das et al. [31] applied a heat map optimization approach to CNN training for DR detection, using image-level and lesion-level classification to detect lesions such as hard or soft exudates and hemorrhages. Khana et al. [32] introduced a CNN design based on the Inception-V3 model for distinguishing mild to severe DR, diabetic macular edema, and fully gradable cases. Rachapudi et al. [33] developed various segmentation methods for identifying retinal vessels, hard exudates, and microaneurysms, using PCA for feature extraction and neural networks for two-class classification. Minija et al. [34] introduced a deep CNN-based texture analysis approach for identifying blood vessels and hemorrhages in diabetic retinopathy; their two-step process covered diagnosis and severity grading of retinal fundus images. Saranya et al. [35] designed a three-stage system for classifying color retinal fundus images in diabetic retinopathy. Saranya et al. [36] developed a patch-based microaneurysm detection technique for five-stage DR severity grading using random forest and neural network classifiers. PCA and random forest were employed for dimensionality reduction and performance improvement [37].

Advances in deep learning have profoundly affected various fields, including machine learning and natural language processing, primarily because of its ability to leverage vast datasets. In genomics modeling tasks, deep learning has become the preferred approach for estimating the influence of genetic variation on gene regulation mechanisms such as DNA accessibility, especially in tasks related to DR. DL-based models, such as deep artificial neural networks (ANNs), have proven effective at capturing complex patterns in data, making them valuable tools for medical image disease detection systems [38]. One study developed a specialized CNN structure based on the U-Net model, designed specifically for recognizing optic discs in retinal fundus images. By analyzing dark and shaded fundus images independently, the CNN produced distinct outputs, leading to improved detection of disorders and enabling real-time medical image disease detection [39]. Previous research often relied on end-to-end training of deep learning systems that map raw fundus images directly to DR grade labels. However, because of the inherent "black-box" nature of deep learning, these systems can miss critical lesion characteristics. To overcome this limitation, one study developed a lesion-aware subnetwork that improves the extraction of lesion characteristics. By embedding prior knowledge into the DR grading network, the study aimed to improve the overall efficacy of the grading system and guide the model toward more informed decisions [40]. Unlike previous studies that used multiple CNNs to identify and classify lesions, the DeepDR network is trained end to end on features from the lesion-aware subnetwork together with the original images. This approach improved grading outcomes and diagnostic performance across the phases of DR on real-world datasets [41].
In other relevant work, researchers [42] developed a model that identifies the optic disc (OD) and optic cup (OC) by accurately measuring their respective areas. They used the watershed transform and morphological separation to locate and differentiate the OD, achieving high predictive performance in their tests. Similarly, [43] proposed a localization method for retinal fundus images of various shades that achieved a mean predictive performance of 92.8%, demonstrating the effectiveness of the approach.

To achieve optimal image classification results, it is common practice to combine handcrafted and non-handcrafted features in a computer vision system [44]. Non-handcrafted features, including PCA, CBD, and CNN features, were extracted, while handcrafted features were derived from local phase quantization and completed local features. Parthiban et al. [45] introduced a DR evaluation technique based on Google Inception that outperformed grading by licensed ophthalmologists. Pratt et al. [46] adapted an existing denoising method to classify a dataset of retinal fundus images. They designed a convolutional neural network (CNN) that distinguishes microaneurysms, hemorrhages, and exudates by identifying intricate characteristics, particularly for the early detection of microaneurysms. Belderrar et al. [47] developed an autonomous DR detection method in which fundus images were preprocessed with a fuzzy histogram-based approach, followed by feature learning and intensity grade classification. Mansour et al. [48] developed a computer-based diagnostic method for classifying damaged blood vessels and fundus images into distinct categories. Hasan et al. [49] presented a detection method using convolutional neural networks with residual connections. This approach strengthened the CNN model with PCA, improving the reliability of the detection system [50]. The Kaggle dataset was used to assess the accuracy of DR diagnosis, and the results were promising compared with other chronic and semi-DR categorization methods. Automated detection of this disease can help ophthalmologists manage their workload by identifying patients who require essential ophthalmic care. Despite their potential in DR diagnostic frameworks, CNNs still pose challenges for clinical application [40].
DL-based methods often classify images by aggregating predictions over numerous image patches, capturing short-range dependencies and combining the results with approaches such as SVMs or majority voting. However, these models frequently overlook long-range dependencies.

3 Materials and Methods

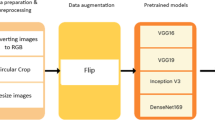

The methodology of this study comprises upsampling and downsampling of the dataset, optic disc segmentation, image preprocessing for transformation, and a CNN-based model. The overall design of the proposed model is depicted in Fig. 2. The method first applies upsampling and downsampling to address data imbalance, so that the imbalance does not affect the subsequent stages of the system. Optic disc segmentation is then applied to prevent skewed findings, because the optic disc resembles lesions and may lead to erroneous decisions. Preprocessing follows, reducing irrelevant noise and highlighting the information needed for precise predictions [51]. This preprocessing procedure is straightforward and efficient and requires minimal computing resources.

Figure 2 illustrates the diabetic retinopathy detection process used in the study. It shows the stages of detection, including data balancing, image preprocessing, and classification, and depicts each operation separately. Breaking the process into distinct steps gives a structured representation of how the model works and makes the methodology easier to understand.

The proposed methodology investigates a technique for identifying and classifying diabetic retinopathy, with a focus on assessing its severity. Identifying DR can be clinically challenging, especially in the mild and moderate stages. This approach helps clinicians predict the disease early from fundus images, potentially improving patient satisfaction. The system acquires the essential data, namely eye images, using a fundus camera integrated with the system. The acquired images are preprocessed, including edge detection, resizing, and normalization, and the CNN classifier then receives the fully preprocessed image and assesses the severity of diabetic retinopathy (DR).

Figure 3 shows the process flow for image processing and classification, which includes several preprocessing steps such as edge detection, scaling, interpolation, and normalization. A CNN is used to classify the severity of DR. The developed model covers the detection, analysis, and treatment of diabetic retinopathy, as well as the consultation process. The retinal image is acquired with a fundus camera and then preprocessed, including edge detection and resizing. The fully preprocessed image is further segmented to determine the disease level of diabetic retinopathy. If the patient is healthy, the diagnosis concludes; otherwise, a report is sent to connected doctors for telemedicine treatment and further care [52].
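The routing step at the end of this flow (healthy patients exit, all others are reported to connected doctors) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the grade names, the `Report` fields, and the rule that any grade above 0 is referred are assumptions, and the severity-ordered queue is one hypothetical way to realize the patient prioritization mentioned in the abstract.

```python
from dataclasses import dataclass

# Hypothetical label names for the five EyePACS grades listed in Sect. 3.1.
GRADE_NAMES = {
    0: "Normal",
    1: "Mild",
    2: "Moderate",
    3: "Severe",
    4: "Proliferative DR",
}


@dataclass
class Report:
    patient_id: str
    grade: int
    grade_name: str
    refer_to_doctor: bool


def make_report(patient_id: str, grade: int) -> Report:
    """Turn a predicted grade into a report; any non-normal grade is referred."""
    if grade not in GRADE_NAMES:
        raise ValueError(f"unknown grade: {grade}")
    return Report(patient_id, grade, GRADE_NAMES[grade], refer_to_doctor=grade > 0)


def referral_queue(reports):
    """Referred patients only, most severe first (stable for equal grades)."""
    referred = [r for r in reports if r.refer_to_doctor]
    return sorted(referred, key=lambda r: r.grade, reverse=True)


if __name__ == "__main__":
    reports = [make_report("p1", 0), make_report("p2", 2), make_report("p3", 4)]
    print([r.patient_id for r in referral_queue(reports)])  # ['p3', 'p2']
```

Keeping the report as a plain data object separates the classifier's output from how reports are transmitted, which is left unspecified here.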

The proposed model for diabetic retinopathy uses data balancing, optic disc segmentation, and preprocessing to detect and classify the condition. By reducing noise and enhancing relevant information, the method improves diagnostic accuracy and patient care, and thereby the overall effectiveness of the model.

The detection process is summarized in Fig. 3. It presents the main processing steps, namely edge detection, scaling, interpolation, and normalization, followed by classification with the CNN algorithm. Despite its simplicity, the figure captures the core of the detection process and allows readers to grasp the main features of the methodology easily.

3.1 Dataset

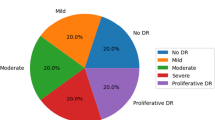

This research used the IDRiD dataset and the MESSIDOR dataset from ADCIS. The MESSIDOR collection consists of 1200 raw color retinal fundus images, categorized into four classes (0–3) according to the severity of diabetic retinopathy. Class 0 represents a normal eye with no signs of disease, while Classes 1, 2, and 3 depict varying levels of disease severity. The MESSIDOR dataset is imbalanced: Class 0 accounts for almost 50% of the images and Class 1 for only a limited number, which may bias the model. The IDRiD dataset comprises 413 training and 103 testing retinal images. These images show either the left or the right eye, and no age group information is provided [53]. Additionally, retinal fundus images from the public EyePACS dataset, available on Kaggle.com, were used. Ophthalmologists labeled these images into five classes: normal, mild, moderate, severe, and proliferative diabetic retinopathy (DR). Detailed information about this dataset is presented in Table 1.

Table 1 details the Kaggle EyePACS dataset used in this study. The dataset is divided into five classes according to the severity of diabetic retinopathy, ranging from normal to proliferative diabetic retinopathy (PDR), and the table lists the number of images in each class together with its percentage of the total dataset. This distribution of severity levels is crucial for training and evaluating machine learning models, and it allows researchers to assess how representative the dataset is and what biases it contains.

3.2 Data Upsampling and Downsampling

The study uses upsampling and downsampling to balance the dataset and address data imbalance. Upsampling replicates images of classes in which data are scarce, ensuring a balanced representation of classes and avoiding bias toward a particular category. Downsampling, in contrast, removes samples from over-represented classes so that they do not dominate the dataset and bias the classifier. This approach is intended to ensure that the classification algorithm is not adversely affected when data are randomly removed from the dataset. The Kaggle dataset is also imbalanced, so upsampling and downsampling were applied to the images of the different classes [26].
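A minimal sketch of random upsampling and downsampling to a common per-class count is shown below. It works on label indices only, so the images themselves are not copied. The target count, the seed, and the choice to keep every original image of a minority class before replicating are assumptions for illustration; the text does not state the exact resampling rule.

```python
import numpy as np


def balance_indices(labels, target_per_class, seed=0):
    """Indices giving every class exactly `target_per_class` samples.

    Over-represented classes are randomly downsampled without replacement;
    under-represented classes keep all their images and are upsampled by
    replicating randomly chosen ones (sampling with replacement).
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    chosen = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        if len(idx) >= target_per_class:
            picked = rng.choice(idx, size=target_per_class, replace=False)
        else:
            extra = rng.choice(idx, size=target_per_class - len(idx), replace=True)
            picked = np.concatenate([idx, extra])
        chosen.append(picked)
    out = np.concatenate(chosen)
    rng.shuffle(out)
    return out


if __name__ == "__main__":
    # Toy labels (not the real class counts): grade 0 dominates.
    labels = np.array([0] * 10 + [1] * 2 + [2] * 5)
    idx = balance_indices(labels, target_per_class=5)
    print(np.bincount(labels[idx]))  # [5 5 5]
```

Plain replication adds no new information, which is why it is usually paired with augmentation of the replicated copies.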

3.3 Segmentation of Optic Disc

In retinal fundus images, the presence of the optic disc makes it harder to accurately distinguish bright lesions from dark lesions. Because the optic disc has intensity values similar to those of bright lesions, the neural network cannot reliably differentiate between them. The optic disc therefore needs to be removed to minimize its detrimental effect on the predictions. This removal is itself challenging, however, because the optic disc exhibits intensity variations comparable to those of bright lesions [54]. A typical retinal fundus image is shown in Fig. 4a, and the retinal image after optic disc segmentation is shown in Fig. 4b.

The figure shows how the proposed system’s optic disc segmentation removes the optic disc’s influence on the accurate identification of bright and dark lesions in retinal fundus images. The technique locates the brightest point in the image, a single pixel. This segmentation step greatly improves the model’s ability to distinguish lesions, supporting better diagnosis and management of diabetic retinopathy and reducing the bias that the optic disc would otherwise introduce.

Figure 4b presents the result of optic disc segmentation, an essential component of the diabetic retinopathy detection process. Removing the optic disc from retinal fundus images supports accurate lesion detection, and comparing the images before and after segmentation shows how segmentation improves the visibility of lesions such as microaneurysms and hemorrhages. The figure thus provides visual evidence of the approach’s contribution to more precise diabetic retinopathy detection. A thorough examination of the dataset revealed that the brightest pixel in an image is usually located near the center of the optic disc, a consequence of the imaging technology used to acquire retinal fundus images. The proposed system exploits this observation with a novel optic disc segmentation approach: instead of thresholding intensity values, it searches for the brightest point. The global maximum gives the brightest spot in the image, a single pixel. Once the brightest point is located, a circle is drawn around it, and the rest of the picture is removed. This approach effectively addresses the challenges of the optic disc segmentation process [54].
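The brightest-point search can be sketched with NumPy as below. This is an illustration under stated assumptions, not the authors' code: the circle radius is a hypothetical parameter (the text gives no value), brightness for color input is taken as the channel mean, and, following the stated goal of removing the optic disc, the sketch zeros out the circular region rather than keeping it.

```python
import numpy as np


def remove_optic_disc(image, radius):
    """Zero out a circular region centred on the brightest pixel.

    `image` is a 2-D grayscale array or an H x W x C colour array. For colour
    input, brightness is taken as the channel mean (an assumption). Returns
    the masked image, the (row, col) centre, and the boolean disc mask.
    """
    img = np.asarray(image)
    gray = img.mean(axis=2) if img.ndim == 3 else img
    # Global maximum: a single brightest pixel (the first one if there are ties).
    cy, cx = np.unravel_index(np.argmax(gray), gray.shape)
    yy, xx = np.ogrid[: gray.shape[0], : gray.shape[1]]
    disc = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
    out = img.copy()
    out[disc] = 0  # for colour input this clears every channel inside the disc
    return out, (int(cy), int(cx)), disc


if __name__ == "__main__":
    img = np.zeros((20, 20))
    img[5, 7] = 1.0    # stand-in for the optic disc centre
    img[12, 12] = 0.5  # stand-in for a bright lesion elsewhere
    out, centre, disc = remove_optic_disc(img, radius=2)
    print(centre, int(disc.sum()), out[12, 12])  # (5, 7) 13 0.5
```

A single global maximum is sensitive to isolated bright artifacts; smoothing the image first (Sect. 3.4) is one way to make the search more stable.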

3.4 Image Preprocessing

Experiments showed that the most effective and useful image preprocessing consists of cropping, optic disc removal, resizing, interpolation, and normalization [1, 55]. Edges in an image are abrupt changes or discontinuities in intensity that can encode almost as much information as the pixels themselves. The Canny edge detection procedure smooths the image to reduce noise and then identifies these abrupt intensity changes; its steps are noise reduction, intensity gradient computation, non-maximum suppression, and hysteresis thresholding. Most available fundus photographs are lossy-compressed, which distorts minute structures such as MAs and makes it difficult to isolate their most distinctive characteristics. Moreover, because MAs are tiny, limiting the effect of noise is critical. Image smoothing was therefore examined extensively before moving on to the actual detection procedures. Our implementation uses a smoothing filter with a width of 7 and a variance of 1.0. Resizing reduces or increases the number of pixels in an image; when an image is enlarged, new pixel values must be estimated. The photographs used have a resolution of 2240 \(\times \) 1488 pixels. Processing images this large is computationally impractical, because more pixels require more processing power for every operation on the image. Interpolation inserts additional data points among a set of existing discrete data points; it is an estimating function that can be used to recover missing data. Non-adaptive interpolation is used here, as required when the image is scaled, and it operates in both image dimensions (horizontal and vertical). Area interpolation (inter-area) was found to be the best choice for resizing.
This method resamples using the pixel area relation, which avoids moiré artifacts when an image is shrunk. Normalization changes the range of pixel intensity values; in its general form it is also called contrast stretching or histogram stretching. Here, all pixel values are divided by 255 to map them into the range from zero to one. Figure 5a, b shows a normal fundus image and the same image after preprocessing [56].
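The smoothing, area resizing, and normalization steps can be sketched in plain NumPy as follows. Several assumptions apply: the width-7, variance-1.0 filter is interpreted as a Gaussian kernel, the input is a single 2-D channel, and area interpolation is shown only for integer downscale factors, where it reduces to averaging each block of pixels. Canny edge detection is not reproduced here. In practice, OpenCV's `cv2.GaussianBlur`, `cv2.resize(..., interpolation=cv2.INTER_AREA)`, and `cv2.Canny` cover the same steps.

```python
import numpy as np


def gaussian_kernel_1d(width=7, variance=1.0):
    """Normalised 1-D Gaussian kernel; width 7 and variance 1.0 follow the text."""
    half = width // 2
    x = np.arange(-half, half + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * variance))
    return k / k.sum()


def smooth(gray, width=7, variance=1.0):
    """Separable Gaussian smoothing of a 2-D array with edge padding."""
    k = gaussian_kernel_1d(width, variance)
    half = width // 2
    padded = np.pad(gray.astype(np.float64), half, mode="edge")
    # Convolve every row, then every column; "valid" mode undoes the padding.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def area_downsample(gray, factor):
    """Area interpolation for an integer factor: each output pixel is the mean
    of a factor x factor block (rows/columns that do not fill a block are cropped)."""
    h, w = gray.shape
    h2, w2 = h // factor, w // factor
    blocks = gray[: h2 * factor, : w2 * factor].reshape(h2, factor, w2, factor)
    return blocks.mean(axis=(1, 3))


def normalize(gray):
    """Divide by 255 to map 8-bit intensities into [0, 1]."""
    return gray.astype(np.float64) / 255.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in for one 2240 x 1488 fundus channel (rows x cols = 1488 x 2240).
    channel = rng.integers(0, 256, size=(1488, 2240)).astype(np.uint8)
    small = area_downsample(normalize(smooth(channel)), 8)
    print(small.shape)  # (186, 280)
```

Normalizing before or after area averaging gives the same result, because dividing by a constant commutes with taking block means.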

The image processing steps applied to the retinal fundus images before classification are depicted in Fig. 5, which shows a normal fundus photograph alongside the same image after cropping, resizing, and normalization. Visualizing the preprocessing pipeline helps readers see how each step improves the quality and suitability of the images for further analysis, and it underscores the role of preprocessing in enabling precise diabetic retinopathy diagnosis.

3.5 Data Augmentation

The size of the training dataset strongly affects the performance of deep learning algorithms, and complex network architectures in particular require large datasets [57]. Because medical imaging datasets are typically limited, researchers apply data augmentation techniques such as rotation, shifting, flipping, and cropping to enlarge the training set. Cropping removes surplus areas, rotations with angles between 0 and 180 degrees are applied to the patches, shifting is performed within a specified frame, and flipping further diversifies the training dataset.
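A NumPy sketch of the rotation, shifting, flipping, and cropping operations described above follows. The rotation uses nearest-neighbour resampling and fills uncovered corners with zeros; the shift range (`max_shift=10`) and the 50% flip probabilities are hypothetical, since the text specifies only the 0–180 degree rotation range.

```python
import numpy as np


def rotate_nearest(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate about the image centre with nearest-neighbour sampling; uncovered pixels become 0."""
    h, w = img.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    ys, xs = np.indices((h, w))
    # Inverse mapping: for every output pixel, look up the source pixel it came from.
    src_x = np.rint(np.cos(t) * (xs - cx) + np.sin(t) * (ys - cy) + cx).astype(int)
    src_y = np.rint(-np.sin(t) * (xs - cx) + np.cos(t) * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def shift(img: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Translate by (dy, dx) pixels, filling vacated pixels with 0."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted completely out of frame
    out[max(dy, 0):h + min(dy, 0), max(dx, 0):w + min(dx, 0)] = \
        img[max(-dy, 0):h + min(-dy, 0), max(-dx, 0):w + min(-dx, 0)]
    return out


def random_crop(img: np.ndarray, size: int, rng: np.random.Generator) -> np.ndarray:
    """Take a random size x size patch."""
    h, w = img.shape[:2]
    y = rng.integers(0, h - size + 1)
    x = rng.integers(0, w - size + 1)
    return img[y:y + size, x:x + size]


def random_augment(img: np.ndarray, rng: np.random.Generator, max_shift: int = 10) -> np.ndarray:
    """Random rotation in [0, 180) degrees, random shift, and random horizontal/vertical flips."""
    out = rotate_nearest(img, rng.uniform(0.0, 180.0))
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    out = shift(out, int(dy), int(dx))
    if rng.random() < 0.5:
        out = np.fliplr(out)
    if rng.random() < 0.5:
        out = np.flipud(out)
    return out
```

Applying `random_augment` several times per image, with a fixed `np.random.default_rng(seed)`, yields a reproducible set of augmented copies; applying it more often to under-represented grades is one way to balance the classes.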

3.6 Feature Extraction Process

This process aims to find and select all micro-aneurysms (MAs) in the preprocessed image. MAs show distinct patterns that are unrelated to the vessels and can be classified by their shape, size, and intensity level: they are small, round, red spots between 10 and 100 microns in diameter. After preprocessing, candidate MAs are isolated by separating them from the capillaries. Although MAs and vessels are both reddish, MAs do not lie on the vessels. Blood vessels form large connected components with a much greater area, so they can be distinguished from MAs by size. A threshold value is determined experimentally, and objects with an area larger than this threshold are removed to eliminate the blood vessels, as seen in Fig. 2. The resulting image may still contain MAs together with noise, that is, disconnected vessel fragments and other particles in the fundus image. Because MAs have a diameter of 10–100 microns, they can be distinguished from noise by their area: two experimentally determined thresholds remove noise objects whose areas are larger or smaller than those of MAs. The resulting image contains objects of similar area, some of which are micro-aneurysms [58].
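The area-based filtering described above can be sketched as follows: label the 8-connected components of a binary candidate mask, then keep only components whose pixel area lies inside an MA-sized window, which discards both large vessel segments and tiny noise specks. The helper `ma_area_bounds` converts the 10–100 micron diameter range into pixel areas under a hypothetical pixel pitch `um_per_px`; the study's actual thresholds were found experimentally and are not reported.

```python
import math
from collections import deque

import numpy as np


def label_components(mask: np.ndarray):
    """8-connected component labelling by breadth-first search.

    Returns (labels, areas): labels[y, x] is the component id (0 = background)
    and areas[k] is the pixel count of component k.
    """
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    areas = [0]
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or labels[y, x]:
                continue
            label = len(areas)  # next unused component id
            labels[y, x] = label
            queue, area = deque([(y, x)]), 0
            while queue:
                cy, cx = queue.popleft()
                area += 1
                for ny in range(max(cy - 1, 0), min(cy + 2, h)):
                    for nx in range(max(cx - 1, 0), min(cx + 2, w)):
                        if mask[ny, nx] and not labels[ny, nx]:
                            labels[ny, nx] = label
                            queue.append((ny, nx))
            areas.append(area)
    return labels, np.array(areas)


def ma_area_bounds(um_per_px: float, d_min_um: float = 10.0, d_max_um: float = 100.0):
    """Pixel-area range of a round lesion whose diameter lies in [d_min_um, d_max_um] microns."""
    def disc_area(d_um: float) -> float:
        return math.pi * (d_um / um_per_px / 2.0) ** 2
    return max(1, math.floor(disc_area(d_min_um))), math.ceil(disc_area(d_max_um))


def keep_by_area(mask: np.ndarray, min_area: int, max_area: int) -> np.ndarray:
    """Drop components that are too large (vessels) or too small (noise)."""
    labels, areas = label_components(mask)
    keep = (areas >= min_area) & (areas <= max_area)
    keep[0] = False  # label 0 is the background
    return keep[labels]
```

For example, with an assumed pitch of 10 microns per pixel, `ma_area_bounds(10.0)` gives a window of 1 to 79 pixels. The pure-Python search is slow on full-resolution images; `scipy.ndimage.label` performs the same labelling much faster.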

3.6.1 Blood Vessels

Digital fundus photography of the human eye provides crisp images of the retinal blood vessels and therefore a good view of the health of a DR patient. Figure 6 shows an example of blood vessel identification for several forms of DR. The blood vessel structure was derived by applying various image processing methods to the green channel of the RGB fundus image [59]. Two-dimensional matched filters were used to locate the blood vessels, since the gray-level profile of a vessel cross-section can be approximated by a Gaussian distribution. Vessels were approximated as piecewise linear segments, and the matched-filter detector was applied to identify the vessel sections that follow this piecewise linear pattern.
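A minimal NumPy sketch of the two-dimensional matched filter idea follows. The kernel's cross-section is an inverted Gaussian (vessels are darker than the background in the green channel), it extends along a short straight segment to model the piecewise linear approximation, it is made zero-mean so flat regions give no response, and it is rotated through 12 orientations with the maximum response kept. The values `sigma=2.0`, `length=9`, and `size=15` are hypothetical, since the text does not report the filter parameters, and thresholding the response into a vessel mask is omitted.

```python
import numpy as np


def matched_filter_kernel(sigma: float = 2.0, length: int = 9,
                          angle_deg: float = 0.0, size: int = 15) -> np.ndarray:
    """Zero-mean kernel with an inverted-Gaussian cross-section along a straight segment."""
    half = size // 2
    ys, xs = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    t = np.deg2rad(angle_deg)
    u = xs * np.cos(t) + ys * np.sin(t)    # across the vessel
    v = -xs * np.sin(t) + ys * np.cos(t)   # along the vessel
    support = (np.abs(u) <= 3 * sigma) & (np.abs(v) <= length / 2)
    k = np.where(support, -np.exp(-u**2 / (2 * sigma**2)), 0.0)
    k[support] -= k[support].mean()        # zero mean: uniform regions respond with 0
    return k


def filter_response(img: np.ndarray, kernels) -> np.ndarray:
    """Maximum correlation response over all kernel orientations."""
    size = kernels[0].shape[0]
    pad = size // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    # The kernels are symmetric under a 180-degree rotation, so correlation equals convolution.
    responses = [np.einsum("ijkl,kl->ij", windows, k) for k in kernels]
    return np.max(responses, axis=0)


# 12 orientations in 15-degree steps
KERNELS = [matched_filter_kernel(angle_deg=a) for a in range(0, 180, 15)]
```

With an RGB fundus array `rgb`, the response would be computed on the green channel, `filter_response(rgb[..., 1], KERNELS)`, and then thresholded. For full-resolution images, `cv2.filter2D` applied once per kernel is far more memory-efficient than the sliding-window view used here.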

Figure 6 presents the results of blood vessel detection in normal and PDR cases, showing that the method successfully identifies the blood vessels in retinal images, a step necessary for diabetic retinopathy diagnosis. The sample images with annotated vessels serve as visual evidence of the accuracy of the detection process, which in turn influences the accuracy of the overall system.

3.6.2 Exudates

Exudates are deposits of lipids and proteins in the retinal tissue that appear as bright white or cream-colored lesions. They indicate vascular permeability and possible retinal edema: although not directly sight-threatening themselves, they signal fluid accumulation in the retina, and lesions near the center of the macula are considered sight-threatening. Exudates are often found together with micro-aneurysms; because these micro-aneurysms leak, the classic lesion is a circular ring of exudates with several micro-aneurysms at its core. Figure 7 shows an example of exudate detection for several kinds of DR, where black regions indicate the absence of exudates and white regions indicate their presence. A pivotal step in the extraction procedure is eliminating prominent retinal structures such as the blood vessel network and the optic disc; the exudates were then detected in the remaining image using image processing methods.
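Once the vessel network and optic disc are masked out, bright lesions such as exudates can be flagged as pixels much brighter than the remaining retina. The sketch below assumes the vessel and optic-disc masks are already available and uses a global mean-plus-`k`-standard-deviations threshold; both the rule and `k=3.0` are hypothetical, since the text does not name the image processing methods it used.

```python
import numpy as np


def exudate_candidates(green: np.ndarray, vessel_mask: np.ndarray,
                       od_mask: np.ndarray, k: float = 3.0) -> np.ndarray:
    """Flag bright pixels outside the vessel and optic-disc masks."""
    background = ~(vessel_mask | od_mask)          # retina with prominent structures removed
    values = green[background].astype(np.float64)
    threshold = values.mean() + k * values.std()   # hypothetical global threshold
    return background & (green > threshold)
```

Removing the optic disc first matters because it is the brightest structure in a fundus image and would otherwise dominate any brightness-based rule. A single global threshold is sensitive to uneven illumination, so a locally adaptive threshold is a common alternative.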

Figure 7 illustrates the identification of exudates, the lipid and protein deposits in retinal tissue that suggest possible retinal edema. These lesions, commonly seen near the macular center, reflect vascular permeability and fluid accumulation. In Fig. 7, exudates appear as white or cream-colored regions against a black background that marks their absence. The method segments the exudates after first eliminating other retinal components, including the blood vessels and the optic disc. This visualization shows how exudates are recognized in retinal images, which is fundamental for diagnosing diabetic retinopathy.

3.6.3 Detection of Microaneurysms

Detecting microaneurysms is crucial because they are the first recognizable sign of diabetic retinopathy, and studies have examined their appearance and regression in the early stages. Microaneurysm turnover has been calculated from color fundus images using MA-tracker technology; the results indicate stability over time, yet only 29% of microaneurysms persisted at their initial site. Figure 8 illustrates microaneurysm identification in both normal individuals and PDR patients. Microaneurysms were extracted from the green channel of the RGB fundus images after removing prominent structures such as the blood vessel tree and the optic disc, and advanced image processing methods were used to locate the regions of the fundus images that contain microaneurysms [60].
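The green-channel step can be sketched as follows: take channel 1 of an RGB image, compare each pixel with its local mean, and flag pixels that are markedly darker, excluding a mask that covers the vessels and optic disc. The window size `win=11` and darkness margin `delta=15.0` are hypothetical, and this simple local-contrast rule stands in for the unspecified advanced image processing methods of the text.

```python
import numpy as np


def dark_lesion_candidates(rgb: np.ndarray, exclude_mask: np.ndarray,
                           win: int = 11, delta: float = 15.0) -> np.ndarray:
    """Flag green-channel pixels darker than their local mean by more than `delta`."""
    green = rgb[..., 1].astype(np.float64)   # red lesions contrast best in the green channel
    pad = win // 2
    padded = np.pad(green, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (win, win))
    local_mean = windows.mean(axis=(2, 3))   # mean of each win x win neighbourhood
    return (local_mean - green > delta) & ~exclude_mask
```

The candidates produced this way would then go through the area filtering of Section 3.6, which keeps only MA-sized components.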

Figure 8 shows the identification of microaneurysms, the earliest signs of diabetic retinopathy, which appear as circular or bead-like structures in the retinal vasculature. The recognition step detects them in the green channel of the RGB fundus images and localizes them with advanced image processing techniques. The figure highlights microaneurysms in both normal and diabetic subjects, illustrating their importance in disease diagnosis and treatment.

3.6.4 Hemorrhages

As DR progresses, retinal hemorrhages become apparent. They indicate increasing ischemia, that is, an inadequate oxygen supply to the retinal tissue. As the number of retinal vessels increases, their susceptibility to injury and leakage rises, leading to the exudation of fluid, lipids, and proteins. Figure 9 shows the results of hemorrhage detection, where the colorless regions indicate hemorrhages. Hemorrhage detection has two components: (a) identifying the blood vessels, and (b) identifying the blood vessels together with the hemorrhages. The hemorrhage image is then obtained by subtracting the vessel-only image from the image containing both vessels and hemorrhages.
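The subtraction step can be sketched on binary masks: pixels present in the vessels-plus-hemorrhages mask but absent from the vessel-only mask are taken as hemorrhage. The optional `margin` dilates the vessel mask slightly before subtracting so that small misalignments between the two segmentations do not leave thin vessel residue; this margin is a hypothetical addition, not something the text describes.

```python
import numpy as np


def dilate(mask: np.ndarray, r: int = 1) -> np.ndarray:
    """Binary dilation with a (2r+1) x (2r+1) square structuring element."""
    h, w = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            out |= padded[r + dy:r + dy + h, r + dx:r + dx + w]
    return out


def hemorrhage_mask(vessels_and_hemorrhages: np.ndarray, vessels_only: np.ndarray,
                    margin: int = 0) -> np.ndarray:
    """Remove the vessel segmentation from the combined vessel + hemorrhage segmentation."""
    vessels = dilate(vessels_only, margin) if margin > 0 else vessels_only
    return vessels_and_hemorrhages & ~vessels
```

With `margin=0` this is exactly the subtraction described in the text; a margin of one or two pixels trades a little hemorrhage area next to vessels for cleaner output.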

Figure 9 displays the detection of retinal hemorrhages, which become visible as the disease progresses and appear as colorless areas in the retinal tissue. Vessel segmentation is followed by isolating the hemorrhage regions that remain once the vessels are removed. This visualization shows how hemorrhages are identified in retinal images, signaling the severity of diabetic retinopathy and the need for prompt action.

4 Classification Process

In disease prediction, classification is the task of assigning each sample to a specific class; the classes are also called labels or targets. Classification estimates the mapping function from input data to outputs [61]. Medical imaging involves two kinds of classification problems: binary and multi-class. Binary classification has two target classes, as in cancer classification (benign vs. malignant), while multi-class classification involves more than two, as in diabetic retinopathy grading (normal, mild, moderate, severe, and PDR). The classification model needs sufficient training samples to learn how the input corresponds to a target class; in diabetic retinopathy classification, labeled data from all DR categories serve as training data. After training, the classifier can categorize unlabeled data. Classification is thus a supervised learning technique in which the outputs for the input data are predefined. The DL classifier used for this purpose is discussed below.

Medical images are high-quality images that retain specific information. Deep learning models trained on these images often lose contextual information. Techniques such as support vector machines (SVM) and majority voting have proven effective in this setting, but they also lose spatial information. To preserve contextual information, researchers have proposed context-aware learning and patch probability fusion. Context-aware learning combines four feature vectors from patch-wise methods but struggles to capture spatial information beyond certain boundaries. Patch probability fusion uses a patch-wise network to extract spatial characteristics, followed by an image-wise network for classification; however, it does not preserve long-range contextual information. To address these issues, a transfer learning-based classification technique was implemented on a CNN-based model to preserve the contextual information of the features. The methodology extracts several characteristics and classifies them based on the derived feature set. Three pre-trained networks, AlexNet [62], VGGNet [63], and ResNet [64], were used for feature fusion, as shown in Fig. 10.

Deep learning models are widely recognized as effective for medical image identification. However, training them requires large amounts of data, which medical image datasets often lack, and training from scratch is time-consuming. To address these challenges, the researchers used transfer learning, in which a model trained on the dataset of one task is applied to comparable problems with modest adjustments to its hyperparameters.

The models commonly used for image classification have shown satisfactory accuracy in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [65]. The study investigates the VGGNet and ResNet models, focusing on shallower versions such as VGGNet-16 and ResNet-18 to mitigate overfitting on the limited training data. The first fully connected layer is replaced with a global average pooling (GAP) layer, and a single fully connected layer is retained after the GAP layer. The pre-trained models, namely AlexNet, VGGNet-16, and ResNet-18, are used only to extract features; a non-linear support vector machine (SVM) is then employed to classify diabetic retinopathy. VGGNet [63] is a research initiative of the Visual Geometry Group (VGG) at Oxford University, supervised by Zisserman and Simonyan; it placed second in the classification task of the ILSVRC-2014 challenge. In contrast to AlexNet, which incorporates larger \(11\times 11\) and \(5\times 5\) kernels, the VGG16 model has 16 weight layers (13 convolutional and 3 fully connected) and primarily employs 3 \(\times \) 3 kernels. With a substantial parameter count of 138 million, the VGG model is difficult and time-consuming to train. Combining the two best-performing configurations yields a top-5 error of 6.8%. The model stacks several convolutional layers before the fully connected layers. Every convolutional layer is annotated with its kernel size, number of filters, and stride, showing the direction of information flow through the network. Max-pooling layers subsample the feature maps, and the fully connected layers handle classification.
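The 138-million figure quoted above can be checked by counting the weights and biases of the standard VGG16 configuration: 13 convolutional layers with 3 × 3 kernels plus 3 fully connected layers, for 224 × 224 ImageNet input and 1,000 output classes. This counts the original ImageNet model, not the modified 512 × 512 network with 1024-unit layers listed in Table 2. The last lines illustrate why small kernels pay off: two stacked 3 × 3 convolutions cover the same 5 × 5 receptive field as one 5 × 5 convolution with fewer weights.

```python
# (input channels, output channels) of the 13 convolutional layers in VGG16; all use 3x3 kernels.
VGG16_CONV = [(3, 64), (64, 64),
              (64, 128), (128, 128),
              (128, 256), (256, 256), (256, 256),
              (256, 512), (512, 512), (512, 512),
              (512, 512), (512, 512), (512, 512)]
# Fully connected layers for 224x224 input: the last feature map is 7x7x512.
VGG16_FC = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights plus one bias per output channel."""
    return k * k * c_in * c_out + c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out


conv_total = sum(conv_params(a, b) for a, b in VGG16_CONV)
fc_total = sum(dense_params(a, b) for a, b in VGG16_FC)
print(len(VGG16_CONV) + len(VGG16_FC), conv_total, fc_total, conv_total + fc_total)
# → 16 14714688 123642856 138357544

# Two stacked 3x3 convs (5x5 receptive field) vs one 5x5 conv, 256 -> 256 channels, weights only.
print(2 * 3 * 3 * 256 * 256, 5 * 5 * 256 * 256)
# → 1179648 1638400
```

Roughly 89% of the parameters sit in the fully connected layers, which is why replacing them, for example with global average pooling, shrinks the model substantially.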
Understanding this architecture is key to seeing how the model processes retinal images to classify diabetic retinopathy. Although deep learning algorithms can solve many classification problems, the fundamental obstacle in medical image classification is the lack of labeled data. Transfer learning is widely used to overcome this by reusing a deep CNN previously trained for a similar task, which reduces training overhead and allows training with a smaller dataset, although concerns remain about relying on transfer learning to compensate for scarce labels in DL-based medical image categorization. This study uses a pre-trained VGGNet-16, which employs fixed-size \(3 \times 3\) kernel filters. Table 2 lists the architecture of the proposed model. The input layer is \(512\times 512\), and the architecture has 16 layers. All convolutional layers use a \(3\times 3\) kernel, a unified bias, and a stride of 1, except the first layer, which has a stride of 2. Each max-pooling layer uses a \(2\times 2\) kernel with a stride of 2. The pooled features are flattened before being passed to the subsequent layers. All layers use the rectified linear unit (ReLU) activation function, and to mitigate overfitting, a dropout rate of 0.5 is applied before the first two fully connected layers. After the convolutional layers, two fully connected layers with 1024 neurons each are added. Finally, a softmax output layer performs the early-stage detection and classification of diabetic retinopathy. The transfer learning-based VGGNet is trained by fine-tuning its hyperparameters, using the Adam optimization algorithm with a learning rate of 0.0001.
The network weights are randomly initialized, the batch size is 32, and the network is trained for 300 epochs. The momentum is set to 0.9, and categorical cross-entropy is used as the objective function. Data augmentation is applied separately to each grade to address data imbalance.
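The training objective and optimizer settings above can be illustrated with a small NumPy sketch: a softmax output over five DR grades, the categorical cross-entropy loss, and Adam with a learning rate of 0.0001 on batches of 32. Reading the quoted momentum of 0.9 as Adam's first-moment decay rate β₁ is our assumption, and the random 16-dimensional feature vectors only stand in for VGGNet features; the backbone, dropout, and the two 1024-unit layers are not reproduced.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_cross_entropy(probs: np.ndarray, one_hot: np.ndarray, eps: float = 1e-12) -> float:
    """Batch mean of -sum_c y_c * log(p_c)."""
    return float(-np.mean(np.sum(one_hot * np.log(probs + eps), axis=1)))


class Adam:
    """Adam update rule with bias-corrected first and second moment estimates."""

    def __init__(self, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = self.v = None
        self.t = 0

    def step(self, w: np.ndarray, grad: np.ndarray) -> np.ndarray:
        if self.m is None:
            self.m, self.v = np.zeros_like(w), np.zeros_like(w)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad**2
        m_hat = self.m / (1 - self.beta1**self.t)
        v_hat = self.v / (1 - self.beta2**self.t)
        return w - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


N_CLASSES = 5                      # No DR, mild, moderate, severe, PDR
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 16))      # one batch of 32 stand-in feature vectors
y = rng.integers(0, N_CLASSES, size=32)
Y = np.eye(N_CLASSES)[y]           # one-hot targets
W = np.zeros((16, N_CLASSES))
opt = Adam(lr=1e-4)

initial_loss = categorical_cross_entropy(softmax(X @ W), Y)
for _ in range(300):
    P = softmax(X @ W)
    grad = X.T @ (P - Y) / len(X)  # gradient of the mean cross-entropy with respect to W
    W = opt.step(W, grad)
final_loss = categorical_cross_entropy(softmax(X @ W), Y)
```

In Keras, the same settings would be passed as `model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4), loss="categorical_crossentropy", metrics=["accuracy"])`, with `batch_size=32` and `epochs=300` given to `model.fit`. A five-class softmax needs five output units, one per grade.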

Table 2 provides a detailed breakdown of the architecture of the proposed VGGNet model, listing each layer's type, kernel size, number of filters, stride, and output dimensions. This concise summary shows how information is processed through the layers of the VGGNet model.

5 Result Comparison

The proposed study aims to develop advanced models for multi-stage diabetic retinopathy grading and to provide efficient and timely diagnosis. The experimental results indicate that these models can predict and grade early-stage diabetic retinopathy with high performance. The models are evaluated on a private dataset using several statistical measures: F-score, specificity, precision, AUC, sensitivity, and accuracy. Preprocessing techniques such as image resizing, contrast-limited adaptive histogram equalization, top-bottom hat transformation, non-local means denoising, green channel extraction, and image filtering were applied to reduce noise and improve retinal image quality. Novel data augmentation operations, including Gaussian scale-space-based augmentation, were applied to each grade alongside other augmentation techniques to address dataset imbalance and the shortage of annotated training data. Finally, an ensemble of transfer learning and machine learning models is proposed to improve diagnostic performance and reduce computational time.

5.1 Configurations

Testing was conducted on a Quadro K620 GPU with 8 GB of RAM running the Ubuntu 16 operating system, and the experiments used the Keras framework (http://keras.io/). The retinal image dataset was divided into a training set and a testing set by random selection, with 80% of the images allocated for training and the remaining 20% reserved for testing, as shown in Table 3.
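A minimal sketch of the random 80/20 split follows, together with a stratified variant that applies the same ratio within each DR grade. The stratified version is our suggestion rather than something the text describes; it keeps rare grades represented in the test set when the classes are imbalanced. The seed is arbitrary.

```python
import numpy as np


def random_split(n: int, test_fraction: float = 0.2, seed: int = 0):
    """Shuffle indices 0..n-1 and reserve the first test_fraction of them for testing."""
    perm = np.random.default_rng(seed).permutation(n)
    n_test = int(round(n * test_fraction))
    return perm[n_test:], perm[:n_test]


def stratified_split(labels: np.ndarray, test_fraction: float = 0.2, seed: int = 0):
    """Apply the same split ratio separately within each class."""
    rng = np.random.default_rng(seed)
    train, test = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        n_test = int(round(len(idx) * test_fraction))
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return np.array(train), np.array(test)
```

Both functions return index arrays, so the same split can be applied to the images and their labels, and fixing the seed makes the split reproducible across experiments.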

5.2 The Performance Metrics

To assess the efficacy of the proposed approach, its performance in classifying diabetic retinopathy was analyzed by examining a confusion matrix and then calculating several performance metrics from it, expressed in terms of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Precision, also called the positive predictive value (PPV), is the fraction of samples assigned to a class that truly belong to it, that is, the number of true positives divided by the sum of true positives and false positives, as in Eq. 1:

\[ \text{Precision} = \frac{TP}{TP + FP} \tag{1} \]

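The F1 score is the harmonic mean of precision and recall (sensitivity), where recall is \(TP/(TP + FN)\), as in Eq. 2:

\[ F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{2} \]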

Accuracy evaluates overall system performance as the ratio of correctly classified components (TP and TN) to the total number of components (TP, TN, FP, and FN), as in Eq. 3:

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3} \]

Specificity measures the proportion of negative-class components that are correctly identified as negative, relative to all negative-class components (TN and FP), as in Eq. 4:

\[ \text{Specificity} = \frac{TN}{TN + FP} \tag{4} \]

The area under the curve (AUC) is the definite integral of the receiver operating characteristic (ROC) curve, which plots the true positive rate (TPR) against the false positive rate (FPR), over the interval from 0 to 1, as in Eq. 5:

\[ \text{AUC} = \int_{0}^{1} \text{TPR} \, d(\text{FPR}) \tag{5} \]
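Eqs. 1–5 can be computed from a multi-class confusion matrix by treating one DR grade at a time as the positive class (one-vs-rest). The sketch below is illustrative: the toy labels and scores in the example are made up, and the AUC applies the trapezoidal rule to the ROC points, grouping tied scores into a single threshold. For the five-grade problem, overall accuracy is the trace of the confusion matrix divided by its sum, which differs from the per-class (TP + TN)/total of Eq. 3.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def one_vs_rest_metrics(cm: np.ndarray, k: int) -> dict:
    """Eqs. 1-4 (plus recall) with class k as the positive class."""
    tp = cm[k, k]
    fn = cm[k].sum() - tp
    fp = cm[:, k].sum() - tp
    tn = cm.sum() - tp - fn - fp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # sensitivity
    return {
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "accuracy": (tp + tn) / cm.sum(),
        "specificity": tn / (tn + fp),
    }


def overall_accuracy(cm: np.ndarray) -> float:
    return float(np.trace(cm) / cm.sum())


def roc_auc(scores, is_positive) -> float:
    """Area under the ROC curve (Eq. 5) by the trapezoidal rule."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    order = np.argsort(-scores, kind="mergesort")
    s, p = scores[order], is_positive[order]
    # last index of each group of tied scores = one threshold per distinct score
    distinct = np.r_[np.flatnonzero(np.diff(s)), len(s) - 1]
    tpr = np.r_[0.0, np.cumsum(p)[distinct] / p.sum()]
    fpr = np.r_[0.0, np.cumsum(~p)[distinct] / (~p).sum()]
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
```

For a multi-class AUC, `roc_auc` can be applied to each grade's softmax probability against an is-this-grade indicator and the results averaged. If a class is never predicted, precision becomes 0/0, which NumPy reports as `nan` with a warning.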

In addition to the proposed VGGNet technique, several other transfer learning models were implemented, as listed in Table 4. The table compares the performance of these deep learning architectures for diabetic retinopathy classification in terms of sensitivity, specificity, accuracy, precision, F1-score, and AUC. Each row corresponds to one architecture, and each column gives that architecture's value for one metric, showing how accurately each model detects diabetic retinopathy.

5.3 Result Analysis

The first pair plot, which compares ‘Feature 1’ against ‘Feature 2’, is a basic exploration of the dataset's multi-dimensional distribution. This scatter plot depicts the joint distribution of the two features and how they relate at different diabetic retinopathy severity levels. The data points are colored by severity level, which adds a further layer of information and helps identify possible clusters and patterns associated with each severity category, as shown in Fig. 11.


Each dot in the pair plot represents one data point, positioned according to its ‘Feature 1’ and ‘Feature 2’ values, as shown in Fig. 11. Because the dots are colored by severity level, analysts can see which feature combinations occur more often in particular severity categories. For example, if one region of the plot contains mostly red dots (severe cases), the corresponding feature values may be indicative of severe diabetic retinopathy; if another region is dominated by green dots (mild cases), it reflects a pattern associated with lower severity. The second pair plot, ‘Feature 1’ against itself, gives a more detailed view of how this feature is distributed within each severity level, with severity as the categorical variable, as shown in Fig. 12. By examining the point distribution and its coloring by severity, analysts can tell whether a general pattern or outliers exist in particular severity categories.


For instance, if ‘Feature 1’ varies widely among severe cases but much less among mild cases, the feature is likely more important for predicting severe diabetic retinopathy, as shown in Fig. 13. Conversely, if the distribution of ‘Feature 1’ is nearly the same across severity levels, the feature is probably only weakly related to disease severity.
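The visual reasoning above can be complemented with a few numbers per feature: the mean and standard deviation within each severity grade (what the diagonal panels of a pair plot display), and the correlation ratio η², the share of a feature's variance explained by the grade. Values of η² near 0 correspond to the case where the distribution is nearly the same across grades. ‘Feature 1’ and ‘Feature 2’ are the document's placeholder names, and the arrays in the example are made up.

```python
import numpy as np


def per_grade_summary(feature: np.ndarray, grades: np.ndarray) -> dict:
    """Mean and (population) standard deviation of one feature within each severity grade."""
    return {int(g): (float(feature[grades == g].mean()), float(feature[grades == g].std()))
            for g in np.unique(grades)}


def correlation_ratio(feature: np.ndarray, grades: np.ndarray) -> float:
    """eta^2 = between-grade sum of squares / total sum of squares (0 = unrelated, 1 = fully determined)."""
    grand_mean = feature.mean()
    ss_total = np.sum((feature - grand_mean) ** 2)
    ss_between = sum(np.sum(grades == g) * (feature[grades == g].mean() - grand_mean) ** 2
                     for g in np.unique(grades))
    return float(ss_between / ss_total)


feature_1 = np.array([1.0, 1.0, 1.0, 5.0, 5.0, 5.0])   # hypothetical values
severity = np.array([0, 0, 0, 1, 1, 1])
print(per_grade_summary(feature_1, severity), correlation_ratio(feature_1, severity))
```

With a pandas DataFrame, `df.groupby("Severity")["Feature 1"].agg(["mean", "std"])` gives the same per-grade table, except that pandas' `std` uses the sample (n − 1) denominator by default.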


Fig. 13 shows the third pair plot, ‘Feature 2’ against itself, which similarly explores the distribution of this feature across severity levels. It lets analysts observe any distinct patterns or links between ‘Feature 2’ and diabetic retinopathy severity, and by comparing the distribution of ‘Feature 2’ within each severity category, they can gauge its possible predictive power.

For example, if certain ranges of ‘Feature 2’ values predominate among severe cases of diabetic retinopathy, ‘Feature 2’ may be a reliable indicator of disease severity. Together, these pair plots provide a complete visual analysis of the dataset and reveal critical information about the complex relationship between the features and diabetic retinopathy severity. With these visualizations, researchers can better understand the underlying patterns in the data, pinpoint possible risk factors or predictive markers, and ultimately improve decision-making and patient management in diabetic retinopathy.

To reduce computational expense, this research uses four widely used pre-trained models, AlexNet, GoogLeNet, ResNet, and VGGNet, which are recognized for their strong learning capabilities. We hypothesized that integrating the feature sets derived from each CNN model would give the classification approach optimal performance. After the proposed model was trained, transfer learning passed the acquired knowledge to the combined extracted features. The proposed model achieved the highest training and validation accuracy, as shown in Fig. 14.

The experimental results show that the proposed architecture is more accurate than existing techniques and that, on a balanced, augmented dataset, it identifies and diagnoses diabetic retinopathy more accurately. The training and validation accuracy of the proposed VGGNet model rise steadily over time, as seen in Fig. 14a, b, and those of the AlexNet model also trend upward, as seen in Fig. 15a, b. The fine-tuned VGGNet performs particularly well: its training and validation accuracy improve with each epoch, and its loss curves show the training and validation losses decreasing with each epoch.

The proposed model achieved an accuracy of 97.61%, making it a reliable tool for identifying and classifying diabetic retinopathy. The data were divided into training and testing sets, with 80% used for training and 20% for evaluation, and the model was evaluated on both the original and the augmented datasets, as shown in Fig. 16.

The proposed system proved highly helpful and efficient for the early detection of diabetic retinopathy and its stages in patients from remote areas.

The accuracies of the methods listed in Table 6 are 82.3%, 85.3%, 90.4%, 78.7%, 95.68%, and 95.03%. The proposed approach achieved 96.6%, higher than all of these baseline methods.

The mean accuracy values for ResNet, GoogLeNet, and AlexNet were 92.40%, 93.75%, and 94.62%, respectively.

Still, we faced some difficulties in implementing this system. Deploying the devices was a significant task, as fundus cameras and classification systems are more complex to handle than commonly used devices. An expert was needed to operate the fundus camera and capture good-quality eye images, the installation had to be handled carefully, and collaboration with expert doctors was also difficult.

The proposed models also have some limitations, regardless of their diagnostic performance and effectiveness. The dataset used in this study was acquired from a local hospital in China; because retina size may vary from one region to another, the lack of a diverse dataset covering all regions is a key constraint of this study. The dataset is also small compared with natural-image datasets. The study focuses only on diabetic retinopathy detection and grading, although other overlapping diseases could also be considered. Deep learning-based fundus image analysis is inherently computationally complex and requires GPUs to train the network.

This research area is evolving, and current techniques can be improved further as technology advances. Various retinal datasets, public or private, could be combined and annotated by professional ophthalmologists to evaluate the proposed models on diverse data. The experimental results may also be improved by combining high- and low-level features, for instance by localizing handcrafted features alongside deep learning-based features. Since vessel growth is essential in diabetic retinopathy, a stochastic approach may help estimate it, and this information could be used by medical practitioners to monitor disease progression.

5.4 Sensitivity Analysis Comparison for Various Proposed DCNN-Based Architectures

Sensitivity analysis is a statistical method used in diabetic retinopathy diagnosis to assess how uncertainties in the input image data affect detection models. It helps researchers identify the features that most affect model performance and optimize parameters and configuration. Statistical measures such as MAE, MSE, RMSE, sensitivity, area under the curve (AUC), and R-squared \((R^2)\) quantify the prediction error between predicted values and target classes, which helps assess the model's accuracy and overall performance and improves the reliability and effectiveness of the detection and classification process.

The VGGNet classifier achieved an MAE of 0.018, an MSE of 0.013, an RMSE of 0.117, and an R-squared score of 0.046. It also achieved an AUC of 0.981 and a sensitivity of 0.983, meaning that it correctly identified 98.3% of the positive samples, as plotted in Fig. 13. The CNN classifier thus performed well, with high AUC and sensitivity values. Table 5 presents the prediction errors obtained by applying the DL-based algorithms.
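The prediction-error measures quoted above can be computed by treating the DR grades as numbers (for example, 0 = No DR through 4 = PDR), as in the sketch below. This numeric encoding is an assumption, since the text does not state how classes were mapped to values, and the toy labels are made up. RMSE is by definition the square root of MSE, and R² compares the squared error with that of always predicting the mean grade.

```python
import numpy as np


def error_metrics(y_true, y_pred) -> dict:
    """MAE, MSE, RMSE and R^2 between numeric targets and predictions."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "mae": float(np.mean(np.abs(err))),
        "mse": mse,
        "rmse": mse ** 0.5,
        "r2": 1.0 - ss_res / ss_tot,  # 1 = perfect; 0 = no better than predicting the mean
    }


print(error_metrics([0, 1, 2, 3], [0, 1, 2, 4]))
```

Because a one-grade error counts the same whether it confuses No DR with mild DR or severe DR with PDR, these measures complement, rather than replace, the per-class metrics of Eqs. 1–5.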

The AlexNet classifier also performed well on the presented datasets, with an AUC of 0.9449 and a sensitivity of 0.949. However, both scores were lower than those of the VGGNet classifier, suggesting that AlexNet categorizes the data less effectively. Nevertheless, its high AUC and sensitivity make AlexNet a potentially valuable tool for classifying diabetic retinopathy.

5.5 Performance Comparison with Other Cutting-Edge Research

The efficacy of the proposed approach was evaluated by comparing its results with those of four established methods to assess its relative robustness. The baseline approaches listed in Table 6 achieve average accuracies of 91%, 88%, 86%, and 95%, respectively, whereas the proposed approach reaches 97%, surpassing these conventional methods. These findings indicate that our approach outperforms the other methods in terms of accuracy. Table 6 presents the results of this comprehensive analysis of the robustness of the proposed design against five contemporary approaches.


Transfer learning techniques, widely used in machine learning, start from a model built for a particular problem and repurpose it for related problems by adjusting its hyperparameters, a process known as fine-tuning. The pre-trained weights of the primary network are adapted: the initial weights are continuously updated to extract characteristics relevant to the task at hand. Recent research has shown that fine-tuning-based transfer learning is effective for a range of medical imaging tasks, such as detecting DR and classifying skin cancer. The present research used four prevailing CNN-based architectures, AlexNet, GoogLeNet, VGGNet, and ResNet, to classify fundus images.

In fields such as healthcare, where details are critical, data processing requires thorough investigation and visualization. The enclosed code generates pair plots with the Python libraries Seaborn, Pandas, and Matplotlib to investigate how the features relate to diabetic retinopathy severity.

6 Conclusion

The proposed research aims to create a computer-aided diagnosis and analysis tool that identifies and categorizes diabetic retinopathy levels. A thorough review of the available literature revealed few studies in which CNNs were employed with strong results, and even the approaches with favorable outcomes were computationally demanding, requiring advanced computing equipment. The proposed CNN-based approach therefore aims to address these limitations of prior models. Several preprocessing steps were performed to remove extraneous information. Based on disease level, the color fundus images were divided into four categories: No DR, Mild DR, Moderate DR, and Severe DR. The proposed approach proved successful in detecting and segmenting the disease. The reports generated by the automatic detection system are sent to the doctors attached to the system for further analysis, medication, or surgery. A limitation of the method is its dependence on the collected images, since classification accuracy is strongly affected by image quality, so there is still room for improvement. Because of grader variability, some individuals may already have had moderate diabetic retinopathy at baseline, particularly when abnormalities such as microaneurysms are subtle. Users should therefore be aware that a high predicted risk could indicate already-existing diabetic retinopathy, although the deep-learning system's prediction that these individuals will develop diabetic retinopathy is semantically accurate. Future research may examine possible remedies, such as applying a diabetic retinopathy grading methodology together with this risk classification tool.
Future work could also investigate whether the deep-learning system can help identify individuals who already have diabetic retinopathy, regardless of its severity or of how it is graded on a scale of 1–10. A further limitation is that diabetic retinopathy grades were based on one- or three-field color fundus images, which makes macular edema difficult to detect. In addition, the absence of optical coherence tomography (OCT) made it difficult to assess progression to vision-threatening diabetic retinopathy. Patients were often referred for ophthalmology follow-up when moderate or severe diabetic retinopathy was found. A patient-level analysis, ideally in a prospective setting, could help assess the clinical significance of the system's output. The system may be efficient for detecting diabetic retinopathy early in areas lacking adequate health facilities, but adoption may be challenging because it requires trained staff and ongoing system maintenance.
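The reporting, referral, and patient-level steps discussed in this section can be sketched as follows. This is a minimal illustration, not the system's actual implementation. It assumes that the classifier returns one probability per category for each eye, that a patient's level is taken from the worse eye (a common convention in DR grading, assumed here), and that moderate or severe DR triggers an ophthalmology referral, as stated in the text. The names `EyeResult` and `patient_reports`, the "routine screening" label, and the example probabilities are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Four categories from this paper, ordered from least to most severe.
GRADES = ["No DR", "Mild DR", "Moderate DR", "Severe DR"]
# Moderate or severe DR triggers an ophthalmology referral.
REFER_FROM = GRADES.index("Moderate DR")


@dataclass
class EyeResult:
    """Hypothetical per-image classifier output."""
    patient_id: str
    eye: str            # "left" or "right"
    probs: List[float]  # one probability per entry in GRADES


def eye_grade(result: EyeResult) -> int:
    """Index of the most probable grade for one eye."""
    return max(range(len(GRADES)), key=lambda i: result.probs[i])


def patient_reports(results: List[EyeResult]) -> Dict[str, dict]:
    """Aggregate eye-level predictions into one report per patient."""
    by_patient: Dict[str, List[EyeResult]] = {}
    for r in results:
        by_patient.setdefault(r.patient_id, []).append(r)
    reports = {}
    for pid, eyes in by_patient.items():
        # Assumption: the worse eye determines the patient-level grade.
        worst = max(eye_grade(r) for r in eyes)
        reports[pid] = {
            "grade": GRADES[worst],
            "per_eye": {r.eye: GRADES[eye_grade(r)] for r in eyes},
            "refer": worst >= REFER_FROM,
        }
    return reports


results = [
    EyeResult("P001", "left", [0.70, 0.20, 0.08, 0.02]),
    EyeResult("P001", "right", [0.10, 0.15, 0.60, 0.15]),
    EyeResult("P002", "left", [0.05, 0.85, 0.07, 0.03]),
    EyeResult("P002", "right", [0.80, 0.12, 0.05, 0.03]),
]
reports = patient_reports(results)
for pid, rep in reports.items():
    print(pid, rep["grade"], "refer" if rep["refer"] else "routine screening")
# prints "P001 Moderate DR refer" then "P002 Mild DR routine screening"
```

Keeping the per-eye grades in the report lets the receiving physician see which eye drove the referral decision.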

Availability of data and materials

This study used retinal fundus images from the public EyePACS dataset, which can be accessed on Kaggle.com.

Change history

12 June 2024

A Correction to this paper has been published: https://doi.org/10.1007/s44196-024-00557-x

References

Atteia, G., El-kenawy, E.-S.M., Samee, N.A., Jamjoom, M.M., Ibrahim, A., Abdelhamid, A.A., Azar, A.T., Khodadadi, N., Ghanem, R.A., Shams, M.Y.: Adaptive dynamic dipper throated optimization for feature selection in medical data. Comput. Mater. Continua 75(1), 1883–1900 (2023)

Kashif, J., et al.: Transfer learning-based model for diabetic retinopathy diagnosis using retinal images. Brain Sci. 12(535), 1–12 (2022)

Iqbal, S., Qureshi, A.N., Ullah, A., Li, J., Mahmood, T.: Improving the robustness and quality of biomedical CNN models through adaptive hyperparameter tuning. Appl. Sci. 12(22), 11870 (2022)

Hartnett, M., et al.: Perceived barriers to diabetic eye care: qualitative study of patients and physicians. Arch. Ophthalmol. 123(15), 387–391 (2005)

Azar, A.T., Khan, Z.I., Amin, S.U., Fouad, K.M.: Hybrid global optimization algorithm for feature selection. Comput. Mater. Continua 74, 2021–2037 (2023)

Iqbal, S., Qureshi, A.N., Li, J., Mahmood, T.: On the analyses of medical images using traditional machine learning techniques and convolutional neural networks. Arch. Comput. Methods Eng. 30(5), 3173–3233 (2023)

Liew, G., Michaelides, M., Bunce, C.: A comparison of the causes of blindness certifications in England and Wales in working age adults (16–64 years). BMJ Open 4(2), 1–20 (2014)

Iqbal, S., Qureshi, A.N., Li, J., Choudhry, I.A., Mahmood, T.: Dynamic learning for imbalance data in learning chest X-ray and CT images. Heliyon (2023)

Ali, S., Li, J., Pei, Y., Khurram, R., Rehman, K.U., Mahmood, T.: A comprehensive survey on brain tumor diagnosis using deep learning and emerging hybrid techniques with multi-modal MR image. Arch. Comput. Methods Eng. 29(7), 4871–4896 (2022)

Hassan, S.A., Akbar, S., Rehman, A., Saba, T., Kolivand, H., Bahaj, S.A.: Recent developments in detection of central serous retinopathy through imaging and artificial intelligence techniques: a review. IEEE Access 9, 168731–168748 (2021)

Sidrah, T.J., Tariq, K.: Frequency of diabetic retinopathy and factors for suboptimal diabetic control in type 2 diabetic patients in a trust hospital of Pakistan. Pakistan J. Ophthalmol. 37(2), 183–187 (2021)

Ramadan, R.A., Khedr, A.Y., Yadav, K., Alreshidi, E.J., Sharif, M.H., Azar, A.T., Kamberaj, H.: Convolution neural network based automatic localization of landmarks on lateral X-ray images. Multimed. Tools Appl. 81(26), 37403–37415 (2022)

Rehman, K.U., Li, J., Pei, Y., Yasin, A., Ali, S., Mahmood, T.: Computer vision-based microcalcification detection in digital mammograms using fully connected depthwise separable convolutional neural network. Sensors 21(14), 4854 (2021)

Jamal, A., Alkawaz, M.H., Rehman, A., Saba, T.: Retinal imaging analysis based on vessel detection. Microsc. Res. Tech. 80(7), 799–811 (2017)

Saba, T., Akbar, S., Kolivand, H., Bahaj, S.A.: Automatic detection of papilledema through fundus retinal images using deep learning. Microsc. Res. Tech. 84(12), 3066–3077 (2021)

Krishna, N.V., et al.: The communal system for early detection microaneurysm and diabetic retinopathy grading through color fundus images. Int. J. Sci. Eng. Technol. 2(4), 228–232 (2013)

Jabbar, A., Naseem, S., Mahmood, T., Saba, T., Alamri, F.S., Rehman, A.: Brain tumor detection and multi-grade segmentation through hybrid Caps-VGGNet model. IEEE Access 11(1), 72518–72536 (2023)

Suma, K., Saravana Kumar, V.: A quantitative analysis of histogram equalization-based methods on fundus images for diabetic retinopathy detection. In: Computational Intelligence and Big Data Analytics, pp. 55–63. Springer (2019)

Kornblith, S., Shlens, J., Le, Q.V.: Do better ImageNet models transfer better? In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2019)

Jadhav, A.S., et al.: Computer-aided diabetic retinopathy diagnostic model using optimal thresholding merged with neural network (2020)

Walter, T., et al.: A contribution of image processing to the diagnosis of diabetic retinopathy: detection of exudates in color fundus images of the human retina. IEEE Trans. Med. Imaging 21(10), 1236–1243 (2002)

Mahmood, T., Li, J., Pei, Y., Akhtar, F., Imran, A., Rehman, K.U.: A brief survey on breast cancer diagnostic with deep learning schemes using multi-image modalities. IEEE Access 8, 165779–165809 (2020)

Al-Badri, A.H., Ismail, N.A., Al-Dulaimi, K., Rehman, A., Abunadi, I., Bahaj, S.A.: Hybrid CNN model for classification of Rumex obtusifolius in grassland. IEEE Access 10, 90940–90957 (2022)

Kumar, D., Taylor, G., Wong, A.: Discovery radiomics with CLEAR-DR: interpretable computer aided diagnosis of diabetic retinopathy. IEEE Access 7, 25891–25896 (2019)

Eltanboly, A., et al.: A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med. Phys. 44(3), 914–923 (2017)

Abunadi, I.: Deep and hybrid learning of MRI diagnosis for early detection of the progression stages in Alzheimer’s disease. Connect. Sci. 34(1), 2395–2430 (2022)

Ali, S., Shaukat, Z., Azeem, M., Sakhawat, Z., Mahmood, T., ur Rehman, K.: An efficient and improved scheme for handwritten digit recognition based on convolutional neural network. SN Appl. Sci. 1, 1–9 (2019)

Skouta, A., Elmoufidi, A., Jai-Andaloussi, S., Ouchetto, O.: Deep learning for diabetic retinopathy assessments: a literature review. Multimed. Tools Appl. 82(27), 41701–41766 (2023)

Sabeena, A.S., Jeyakumar, M.K.: GD-STFA: gradient descent sea turtle foraging algorithm enabled deep Q network for diabetic retinopathy detection. Multimed. Tools Appl. 1–20 (2023)

Ramachandran, S.K., Manikandan, P.: An efficient ALO-based ensemble classification algorithm for medical big data processing. Int. J. Med. Eng. Inform. 13(1), 54–63 (2021)

Das, S., Saha, S.K.: Diabetic retinopathy detection and classification using CNN tuned by genetic algorithm. Multimed. Tools Appl. 81(6), 8007–8020 (2022)

Khanna, M., Singh, L.K., Thawkar, S., Goyal, M.: Deep learning based computer-aided automatic prediction and grading system for diabetic retinopathy. Multimed. Tools Appl. 82(25), 39255–39302 (2023)

Rachapudi, V., Rao, K.S., Rao, T.S., Dileep, P., Deepika Roy, T.L.: Diabetic retinopathy detection by optimized deep learning model. Multimed. Tools Appl. 82(18), 27949–27971 (2023)

Minija, S.J., Rejula, M.A., Ross, B.S.: Automated detection of diabetic retinopathy using optimized convolutional neural network. Multimed. Tools Appl. 83(7), 21065–21080 (2023)

Saranya, P., Prabakaran, S.: Automatic detection of non-proliferative diabetic retinopathy in retinal fundus images using convolution neural network. J. Ambient Intell. Humaniz. Comput. 1–10 (2020)

Saranya, P., Umamaheswari, K.M.: Detection of exudates from retinal images for non-proliferative diabetic retinopathy detection using deep learning model. Multimed. Tools Appl. 1–21 (2023)

Erciyas, A., Barişçi, N.: A meta-analysis on diabetic retinopathy and deep learning applications. Multimed. Tools Appl. 1–20 (2023)

Al-Karawi, A., Avşar, E.: A deep learning framework with edge computing for severity level detection of diabetic retinopathy. Multimed. Tools Appl. 1–22 (2023)

Thomas, N.M., Jerome, S.A.: Diabetic retinopathy detection using EADBSC and improved dilated ensemble CNN-based classification. Multimed. Tools Appl. 1–23 (2023)

Kale, Y., Sharma, S.: Detection of five severity levels of diabetic retinopathy using ensemble deep learning model. Multimed. Tools Appl. 82(12), 19005–19020 (2023)

Ohri, K., Kumar, M.: Domain and label efficient approach for diabetic retinopathy severity detection. Multimed. Tools Appl. 1–30 (2023)

Kwasigroch, A., Jarzembinski, B., Grochowski, M.: Deep cnn based decision support system for detection and assessing the stage of diabetic retinopathy. In: 2018 International Interdisciplinary PhD Workshop (IIPhDW), pp. 111–116 (2018)

Dehghani, A., Moin, M.-S., Saghafi, M.: Localization of the optic disc center in retinal images based on the Harris corner detector. Biomed. Eng. Lett. 2(3), 198–206 (2012)

Dubey, S., Dixit, M.: Recent developments on computer aided systems for diagnosis of diabetic retinopathy: a review. Multimed. Tools Appl. 82(10), 14471–14525 (2023)

Parthiban, K., Kamarasan, M.: Diabetic retinopathy detection and grading of retinal fundus images using coyote optimization algorithm with deep learning. Multimed. Tools Appl. 82(12), 18947–18966 (2023)

Pratt, H., et al.: Convolutional neural networks for diabetic retinopathy. Procedia Comput. Sci. 90, 200–205 (2016)

Belderrar, A., Hazzab, A.: Real-time estimation of hospital discharge using fuzzy radial basis function network and electronic health record data. Int. J. Med. Eng. Inform. 13(1), 75–83 (2021)

Mansour, R.: Deep learning based automatic computer-aided diagnosis system for diabetic retinopathy. Biomed. Eng. Lett. 8(1), 41–57 (2018)

Hasan, M., Uguz, H.: A new early-stage diabetic retinopathy diagnosis model using deep convolutional neural networks and principal component analysis. Traitement du Signal 37(5), 711–722 (2020)

Gupta, S., Thakur, S., Gupta, A.: Comparative study of different machine learning models for automatic diabetic retinopathy detection using fundus image. Multimed. Tools Appl. 1–32 (2023)

Belay, H., Ayalew, S., Hailu, D.: Hydrological performance evaluation of multiple satellite precipitation products in the upper blue nile basin. J. Hydrol. Reg. Stud. 27, 1–34 (2020)

Pachade, S., et al.: Retinal fundus multi-disease image dataset: a dataset for multi-disease detection research. Data 6(14), 1–14 (2021)

Jinfeng, G., et al.: Ensemble framework of deep CNNs for diabetic retinopathy detection. Comput. Intell. Neurosci. 2020, 1–10 (2020)

Wang, Y., et al.: Automatic segmentation of optic disc and cup in retinal fundus images using improved two-layer level set method. Math. Probl. Eng. 2019, 1–15 (2019)

Mahmood, T., Li, J., Pei, Y., Akhtar, F., Jia, Y., Khand, Z.H.: Breast mass detection and classification using deep convolutional neural networks for radiologist diagnosis assistance. In: 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), pp. 1918–1923. IEEE (2021)

Alyoubi, L., Maysoon, F., Wafaa, M.: Diabetic retinopathy fundus image classification and lesions localization system using deep learning. Sensors 21(3704), 1–22 (2021)

Mahmood, T., Li, J., Pei, Y., Akhtar, F., Rehman, M.U., Wasti, S.H.: Breast lesions classifications of mammographic images using a deep convolutional neural network-based approach. PLoS One 17(1), e0263126 (2022)

Sujith, S., Vipula, S.: Automatic detection of diabetic retinopathy in non-dilated RGB retinal fundus images. Int. J. Comput. Appl. 47(19), 1–7 (2012)

Imran, Q., Jjun, M., Qaisar, A.: Recent development on detection methods for the diagnosis of diabetic retinopathy. Symmetry 11(749), 1–34 (2019)

Shengchun, L., et al.: Microaneurysms detection in color fundus images using machine learning based on directional local contrast. BioMed. Eng. Online 9(21), 1–25 (2020)

Alfian, G., et al.: Deep neural network for predicting diabetic retinopathy from risk factors. Mathematics 8(1620), 1–19 (2020)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. Adv. Neural. Inf. Process. Syst. 25(1), 1097–1105 (2012)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition (2014). arXiv:1409.1556

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L.: ImageNet: a large scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255 (2009)

Nanni, L., Ghidoni, S., Brahnam, S.: Handcrafted vs non-handcrafted features for computer vision classification. Pattern Recogn. 71, 158–172 (2017)