Abstract

Background

The implementation of evidence-based healthcare interventions is challenging, with a 17-year gap identified between the generation of evidence and its implementation in routine practice. Although contextual factors such as culture and leadership are strong influences on successful implementation, context remains poorly understood, with a lack of consensus regarding how it should be defined and captured within research. This study addresses this issue by examining how context is defined and assessed within the healthcare implementation science literature and by developing a definition to enable effective measurement of context.

Methods

Medline, PsycINFO, CINAHL and EMBASE were searched. Articles were included if they reported empirical studies that evaluated context during the implementation of a healthcare initiative, were published in English within the previous 10 years, and included both a definition and an assessment of context. Results were synthesised using a narrative approach.

Results

Three thousand and twenty-one search records were obtained, of which 64 met the eligibility criteria and were included in the review. Studies varied in the level of detail and explanation provided in their definitions of context. Some listed contextual factors (n = 19), while others documented sub-elements of a framework that included context (n = 19). The remaining studies provided a rich definition of general context (n = 11) or of aspects of context (n = 15). The Alberta Context Tool was the most frequently used quantitative measure (n = 4), while qualitative papers used a range of frameworks to evaluate context. Mixed methods studies used diverse approaches; some used frameworks to inform the methods chosen, while others used quantitative measures to inform qualitative data collection. Most studies (n = 50) applied the chosen measure to all aspects of study design, and the individual was the most common unit of analysis (n = 29).

Conclusions

This review highlighted inconsistencies in how context is defined and measured, emphasising the need to develop an operational definition. Providing such a definition may improve implementation processes, as a common understanding will help researchers to account appropriately for context in research.


Background

“Context is one of those words you will encounter again and again, without anyone offering anything like a useful definition. It is something of a catch-all word usually used to mean ‘all those things in {a} situation which are relevant to the meaning in some sense, but which I haven’t identified’” [1].

The implementation of evidence-based healthcare interventions is challenging, with a 17-year gap identified between the generation of evidence and the implementation of interventions in routine practice [2,3,4,5]. It has been argued that these findings cannot be understood or explained without examining the context in which the intervention was embedded [6,7,8]. However, contextual factors are often overlooked by researchers in the field of implementation science. Despite its proclaimed influence on implementation efforts [9,10,11,12], context remains poorly understood and reported [13]. This insufficient understanding has been attributed to variability in how context is defined and measured across studies [13,14,15,16]. This lack of consensus is reflected in the terms used within implementation science frameworks: few refer to context explicitly (e.g. Promoting Action on Research Implementation in Health Services (PARiHS)), with the majority referring to the construct indirectly using expressions such as “inner setting” and “outer setting” (e.g. Consolidated Framework for Implementation Research (CFIR)).

Research that has begun to investigate how context is conceptualised has confirmed the existence of these inconsistencies [16,17,18]. McCormack et al. [17] were among the first to complete a concept analysis of ‘context’. They reported that the lack of clarity associated with context was related to its characterisation as an objective entity: the environment or setting in which the intervention is implemented. Similarly, Pfadenhauer et al. [16] found that the construct remains immature but suggested that, rather than being a passive phenomenon, context is dynamic, embracing not only the physical setting but also the social environment. While the findings of this review were being written up, a scoping review by Nilsen and Bernhardsson [18] was published investigating the conceptualisation of context within determinant frameworks. Like the aforementioned studies [16, 17], Nilsen and Bernhardsson found that most studies included a narrow description of context, but they also identified contextual determinants common across frameworks, including organisational support, financial resources, social relations, leadership, and organisational culture and climate. Although Nilsen and Bernhardsson’s article conceptualises context, its findings are limited to the definitions provided within the determinant frameworks of the 22 included publications. Therefore, the question remains as to how context is defined more broadly within implementation research and whether the determinants identified by Nilsen and Bernhardsson apply to this wider literature base. This review addresses this question and aims to improve consistency in the use of the term ‘context’ by developing an operational definition for this construct.

Literature suggests that, to understand the dynamic relationship between context and implementation, conceptualisations of context need to be translated into practical methods of assessment [19, 20]. Fernandez et al. [20] argue that the ability to intervene upon contextual factors depends on the ability to measure them. However, the subordinate role context plays within implementation research [16] has led to a dearth of guidance on how to measure it. Lewis et al. [21] identified 420 instruments relevant to implementation science; however, it is unclear what methods exist to measure contextual domains specifically. Additionally, it is recognised that interactions between context and intervention implementation occur at multiple levels of the system [18], something that has not been examined in the existing literature.

To address identified gaps in the evidence base, this paper aims to answer the following research question: “How is context defined and measured within healthcare implementation science literature?” In providing greater clarity regarding how context is defined, assessed and analysed, this review also aims to enhance the rigour of future studies exploring context within implementation research. The development of an operational definition and the identification of methods of assessment may better enable comparative evaluations to be conducted, enhancing our understanding of how context influences implementation processes.

Methods

A systematic review was conducted to address the research question. The review was informed by the Cochrane Handbook’s [22] guidance for conducting systematic reviews and by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [23]. The review protocol was registered on the PROSPERO database in January 2019 (CRD42019110922).

Search strategy

Extant literature within the field of implementation science informed the search strategy (Additional file 1). Using keywords in conjunction with truncation and Boolean operators, four electronic databases were searched: Medline, CINAHL, EMBASE and PsycINFO. Reference lists of included studies were also hand searched to identify potentially relevant studies not retrieved by the database searches; however, no additional relevant articles were identified.

Inclusion and exclusion criteria

Studies were restricted to peer-reviewed articles published in English in the previous 10 years (January 1st, 2008 to September 25th, 2018). The eligibility criteria were kept broad to balance the specificity and sensitivity of the search. Empirical studies were included if context was a key component or focus during the implementation of an initiative in a healthcare setting and if both a definition and an assessment of context were included.

Study screening and data extraction

Covidence [24], an online data management system, was employed to manage the review process. Article screening and selection were performed independently by two reviewers (LR and ADB) against the eligibility criteria. Disagreements over a study’s inclusion were resolved through discussion with a third reviewer (EMC) (n = 2). To guide data abstraction, the reviewers developed a standardised data extraction tool (Additional file 2). The quality of the definition in each article was assessed by identifying whether the article simply listed contextual factors relating to the construct, outlined sub-elements of a framework that included context, or provided a rich definition of context (Table 1). Kirk et al.’s [29] approach was adopted to examine the depth of application of each context assessment by determining 1) how the method was used in the included studies (data collection, descriptive data analysis, or both); 2) whether the measure was used to investigate the association between context and implementation success; and 3) the unit of analysis (individual, team, organisation or system level).
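As a minimal sketch, the three depth-of-application questions adapted from Kirk et al. [29] could be coded per study as simple flags. The `ContextAssessment` class and `depth_of_application` function below are hypothetical illustrations, not the review's actual extraction tool (Additional file 2), and the category labels are our own paraphrase.

```python
from dataclasses import dataclass


@dataclass
class ContextAssessment:
    """Hypothetical coding of one study's context measure (illustrative only)."""
    data_collection: bool       # did the measure guide data collection?
    descriptive_analysis: bool  # did it guide descriptive data analysis?
    tested_association: bool    # was it used to test context vs implementation success?
    unit_of_analysis: str       # "individual", "team", "organisation" or "system"


def depth_of_application(a: ContextAssessment) -> str:
    """Summarise where in the study design the context measure was applied."""
    uses = []
    if a.data_collection:
        uses.append("data collection")
    if a.descriptive_analysis:
        uses.append("descriptive analysis")
    if a.tested_association:
        uses.append("association with implementation success")
    if len(uses) == 3:
        return "all aspects of study design"
    return " + ".join(uses) if uses else "not applied"


study = ContextAssessment(True, True, False, unit_of_analysis="organisation")
print(depth_of_application(study))  # data collection + descriptive analysis
```

Recording the unit of analysis alongside the three flags keeps all of Kirk et al.'s criteria in one record per study, so tallies by depth and by level can be drawn from the same table.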

Quality appraisal

As appropriate for each study design, the relevant sections of the Mixed Methods Appraisal Tool (MMAT) were used to assess the quality of each included article [30]. Consistent with best practice, two reviewers (LR and ADB) independently appraised each study, and any disagreements over the quality or risk of bias of included papers were resolved through discussion. To enhance transparency in reporting the appraisal process, a summary of the quality assessment is presented in Table 2.

Data synthesis

Given the aim of this study and the heterogeneity of the included articles, a narrative synthesis [88] and thematic analysis [89] of the findings were judged the most appropriate approach to address the review question. This approach enabled exploration of the extent of convergence, divergence and contradiction among studies in how context is defined and assessed [90]. NVivo software was used to manage the synthesis of the data, and the results are reported in accordance with PRISMA guidelines [23].

Results

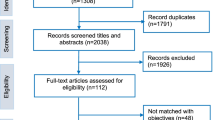

The search returned a total of 3021 records, of which 975 were duplicates and were removed. A further 1813 articles were excluded following title and abstract screening, and 169 were excluded during full-text review because they did not meet the inclusion criteria. Reasons for exclusion are shown in the PRISMA diagram (Fig. 1); on further assessment, 60 of these papers had more than one reason for exclusion. In total, 64 studies met the inclusion criteria and were reviewed.
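The screening counts reported above can be reconciled with a few subtractions. The short Python sketch below uses only the figures stated in this paragraph; the variable names are our own labels for each stage, not PRISMA terminology.

```python
# Record counts at each screening stage, taken from the text above.
identified = 3021
duplicates_removed = 975
excluded_title_abstract = 1813
excluded_full_text = 169

screened = identified - duplicates_removed               # records screened by title/abstract
full_text_assessed = screened - excluded_title_abstract  # full-text articles assessed
included = full_text_assessed - excluded_full_text       # studies included in the review

print(screened, full_text_assessed, included)  # 2046 233 64
```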

Table S1 and Table 3 summarise the studies included in this review, outlining the study characteristics, the quality of the definitions employed, the depth of application for these measures and the unit of analysis chosen by each study.

Most of the included studies were conducted in the USA [20, 26, 28, 32, 34, 41, 43, 51,52,53, 63, 66, 71, 73, 76,77,78, 82, 84,85,86], the UK [19, 27, 40, 47,48,49,50, 57, 61, 70, 74] and Canada [44, 54, 56, 60, 62, 64, 75, 87]. Studies were conducted primarily within primary care [8, 19, 20, 26,27,28, 32, 34, 35, 37, 38, 42, 43, 52, 53, 57,58,59, 62,63,64, 69, 76, 79, 85] or hospital settings [25, 31, 33, 39, 41, 45, 47, 49, 55, 56, 61, 65,66,67, 70, 72, 73, 75, 78, 80, 81, 84, 87], with others taking place across these settings [36, 48, 60, 82], at district level [50, 68, 74] or at a national level within health systems [40]. For some articles, it was unclear in which care setting the research was conducted [44, 46, 51, 54, 71, 77, 83, 86]. Figure 2 illustrates the growing research interest in context within the field of implementation science, although a slight decline is noted over the previous 2 years.

Thirty-four of the included studies used a qualitative approach [8, 19, 26, 31,32,33, 35,36,37,38,39,40,41, 43,44,45, 47, 49, 50, 52, 54, 56, 57, 59,60,61,62,63,64, 66,67,68,69, 71], 18 were purely quantitative [20, 25, 72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87], and 12 conducted a mixed methods evaluation [27, 28, 34, 42, 46, 48, 51, 53, 55, 58, 65, 70]. Qualitative studies commonly used interviews, while quantitative studies predominantly employed surveys for data collection. Most studies engaged in primary data collection; however, four conducted a retrospective evaluation of existing data sources [19, 66, 69, 71]. Table 2 summarises the results of the quality appraisal using the appropriate sections of the MMAT for each study design.

Narrative synthesis

Given the heterogeneity of included papers, studies were categorised by the quality of the definition provided, the depth of application of the context assessment chosen and the unit of analysis employed; these categories structure the following narrative synthesis.

Definition of context

Studies used a variety of definitions to describe context. Of the 64 articles, 38 (59%) did not meet our classification of providing a rich definition for this construct. Instead, these studies simply listed contextual factors [25, 37, 42, 48, 50, 56, 58, 61, 63, 64, 69, 73, 77, 78, 80, 81, 83, 85, 87] or sub-elements of a framework that includes context [26, 32, 41, 43, 45, 46, 49, 51,52,53,54, 59, 60, 66, 67, 72, 75, 79, 86]. Of the remaining articles, 11 provided a comprehensive definition of context generally [8, 19, 27, 35, 38,39,40, 55, 68, 70, 71], while 15 defined an aspect of context [20, 28, 31, 33, 34, 36, 44, 47, 57, 62, 65, 74, 76, 82, 84]. Definitions from the included papers are categorised in terms of their quality as outlined in Table 1. The full definitions of context included in each paper are presented in Table S1.
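Since each included study should fall into exactly one definition category, the category sizes can be checked for overlap and coverage. The minimal Python sketch below is an illustrative consistency check rather than part of the review's method: it takes the citation numbers for the two listing categories from this paragraph, the general-definition studies from the subsection "Provides a meaningful definition of general context", and the aspect-definition studies from the subsection "Provides a meaningful definition of an element of context".

```python
# Citation numbers of included studies grouped by definition category
# (assumed to be mutually exclusive; checked below).
categories = {
    "lists contextual factors": {25, 37, 42, 48, 50, 56, 58, 61, 63, 64, 69,
                                 73, 77, 78, 80, 81, 83, 85, 87},
    "lists framework sub-elements": {26, 32, 41, 43, 45, 46, 49, 51, 52, 53,
                                     54, 59, 60, 66, 67, 72, 75, 79, 86},
    "rich definition, general context": {8, 19, 27, 35, 38, 39, 40, 55, 68,
                                         70, 71},
    "rich definition, aspect of context": {20, 28, 31, 33, 34, 36, 44, 47, 57,
                                           62, 65, 74, 76, 82, 84},
}

counts = {name: len(ids) for name, ids in categories.items()}
all_ids = set().union(*categories.values())

# If no study sits in two categories, the union is as large as the sum of the parts.
assert len(all_ids) == sum(counts.values()) == 64

not_rich = counts["lists contextual factors"] + counts["lists framework sub-elements"]
print(counts)
print(f"{not_rich}/64 = {not_rich / 64:.1%} without a rich definition")  # 38/64 = 59.4%
```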

Listing contextual factors

Nineteen studies described the construct by merely listing associated factors. Some defined context by identifying a broad list of features such as the political or economic environment [48, 56, 69]. One paper associated context generally with multiple levels of the healthcare system, including the “team working on the project, the microsystem in which they function … the organisation in which they work, and the external environment” [42] (p717). Others provided specific examples of contextual conditions that relate to these domains and system levels. To synthesise the studies included in this subsection effectively, their definitions are categorised according to individual, team, organisational and external characteristics.

Individual

Some studies highlight the perceptions and attitudes of individuals as key components of context. Individual autonomy [25], self-efficacy [81], and individual knowledge, attitudes and beliefs [87] are described as influential contextual conditions within included papers. Additional factors include individuals’ interpretations of the initiative [50], with one study simply acknowledging the socioeconomic background of participants as a contextual determinant [37].

Team

Other studies adopt a different perspective, suggesting that the perceptions of teams rather than individuals are important contextual features. Spitzer-Shohat et al. [58] propose that the team’s perceptions of the availability of resources, their capability to succeed, and the social relationships between team members and management are key contextual drivers. Team characteristics and teamwork [73], as well as team stability, morale, workload and staffing [61], were also identified as key contextual characteristics.

Organisational

Most of the literature incorporates organisational features within definitions of context. Whilst Chan et al. [73] and Chiu and Ku [81] adopt a broader perspective, referring to organisational support or facilitating factors, others specify this support in terms of organisational resources [25, 63, 64, 73, 78, 80, 83, 87]. While some studies broadly outline the availability of these resources [50, 64, 73, 83, 87], others specify that they include relevant “expertise” [63], ownership of resource allocation [78], adequate training [25], or staffing, time and space [80].

Leadership is listed as a key contextual feature by many studies included in this section of the review; however, Yamada et al. [87] is the only paper to classify this characteristic as an organisational factor. Four studies specify organisational norms as a contextual determinant [50, 63, 83, 85], with two acknowledging the workload and demands of organisations [50, 77]. Additional contextual factors cited include organisational climate [85], organisation size, change and structure [50], and organisational capacity [83].

External environment

Five studies include aspects of the external setting within their definition of context. Health policy [64], public reporting structures [61], the structure and dynamics of the wider health service [37, 80] and the capacity of the community [78] are outlined as influential contextual conditions.

For some studies, it is unclear whether the contextual driver mentioned was a micro, meso or macro level feature, as these factors may be evident across multiple levels of the health system. Leadership was outlined as a contextual determinant in much of the literature included [61, 63, 64, 73, 80]. This concept is further described as the presence of “champions” during implementation [63], or as the support of leaders [73] or management [25, 61]. Similarly, social relationships are cited within three definitions [33, 36, 69]. Additional multi-level contextual factors include culture, feedback mechanisms [80], support [83], learning climate [64] and compatibility [81], with two studies outlining contextual drivers specific to their study [25, 77].

Listing sub-elements of a framework that includes context

Nineteen papers list elements of a framework that includes context. Seven of these define context by listing elements of the PARiHS framework: culture, leadership and evaluation [26, 41, 46, 52, 66, 72, 75]. Two of these studies provide a brief description of these determinants [52, 66], with one outlining factors of a prevailing context [52]. In contrast, Arney et al. [32] refer to the PARiHS framework within their definition but do not list its sub-elements; rather, they provide a broad perspective of context as the physical and social climate at each site. Similarly, Presseau et al. [54] define context as the physical location but refer to the Theoretical Domains Framework (TDF) within this description.

Three articles use the outer and inner setting domains of the CFIR to describe context [59, 60, 86]. While two of these studies focus on broad factors relating to context [60, 86], VanDevanter et al. [59] list contextual drivers specific to their study. Two papers use the Exploration, Preparation, Implementation, Sustainment (EPIS) model to define context. Like VanDevanter et al., Gadomski et al. [43] provide a tailored definition of the outer context that is specific to their study, while Padwa et al. [53] provide a description of outer and inner context that is broadly applicable.

Only one study used elements of the Model for Understanding Success in Quality (MUSIQ) framework within its description of context [49]. Within this definition, the setting and environment are noted to be distinct features, with leadership listed as an additional factor. Georgiou and Westbrook [45] are the only authors to use elements of Kaplan’s 4Cs framework to describe context, acknowledging the setting and culture as key contextual drivers.

Two papers used aspects of the sociotechnical framework within their definition of context [51, 67]. Workflow and communication are factors listed within both studies, however, one includes costs and management as additional contextual conditions [67], while the other acknowledges internal and external policies, culture and monitoring systems as contextual drivers [51]. One paper [79] employed a bespoke framework developed from the theoretical approaches of Cretin et al. [91] and Lin et al. [92] which incorporates the organisational contextual factors of culture, commitment to quality improvement and climate.

Provides a meaningful definition of general context

Eleven studies provided a broad but rich definition of context. Within many of these definitions, the influence of context on implementation success is acknowledged as critical [19, 27, 35, 38,39,40, 55, 68, 70, 71]. Some papers elaborate that contextual factors are the barriers and facilitators encountered during implementation [39, 40, 70, 71]. Context related either to the pre-existing structural and organisational factors of the setting [27] or, in addition, to dynamic determinants that arose as implementation progressed [70]. Some studies suggest that contextual factors can be internal or external to the intervention [8, 39, 40, 70], while others propose that these conditions are unrelated to the initiative itself and instead relate to the micro, meso and macro levels of the health system [19, 35, 38, 55, 68, 71]. Some papers categorise context as the internal environment (organisational level features) and the external environment (macro level conditions) [38, 55], while other studies also incorporate individual characteristics within their definitions of context [35, 39]. In contrast, one study [8] adopts elements of a conceptual framework [93] to help define context, encompassing the setting, the behavioural environment, language and extra-situational context. Only three studies detail the possible interactions between these system levels within their definition of context [8, 35, 71].

Provides a meaningful definition of an element of context

Fifteen studies included in this review provide a rich definition of an aspect of context. Five studies provide a comprehensive definition of contextual characteristics [28, 34, 65, 76, 82] while the remaining papers offer a rich definition for sub-elements of a framework that includes context [20, 31, 33, 36, 44, 47, 57, 62, 74, 84].

All five studies that define contextual characteristics conceptualise organisational climate and/or organisational culture as important constructs. Three studies employ both concepts and use similar definitions: the norms that characterise how work is done within the organisation (organisational culture) and the shared perceptions of how the work environment affects the wellbeing of staff (organisational climate) [28, 34, 76]. Ehrhart et al. [82] focus exclusively on organisational climate and, unlike the other studies, specify that it includes both molar (totality of the organisation) and focused climates (components of an organisation). In addition to defining organisational culture, Erasmus et al. [65] refer to organisational trust as an important contextual determinant.

Six papers use a rich definition of an element of the TDF to describe context [31, 33, 47, 57, 62, 74]. Five of these studies [31, 33, 47, 57, 62] use the same definition created by Cane et al. [94] to describe this concept; “any circumstance of a person’s situation or environment that discourages or encourages the development of skills and abilities, independence, social competence, and adaptive behaviour” (p14). One study adapted this definition to apply to their specific topic of study [74].

Other papers describe context by offering a generic definition of the construct while also detailing the context-relevant aspects of the PARiHS framework [36, 44, 84]. Some studies introduce context as characteristics of the environment in which the proposed change will occur [36, 84], while another describes context as a multi-dimensional construct that can be referred to as “anything that cannot be described as an intervention or its outcome”, incorporating system level factors [44] (p2). Each study then details culture, leadership and evaluation (sub-elements of the PARiHS framework). Bergstrom et al. [36] offer a detailed definition of each of these contextual drivers, while the remaining studies describe characteristics of receptive contexts: an organisational culture open to change, supportive leadership that encourages staff engagement and facilitates change, and feedback mechanisms [44, 84].

Fernandez et al. [20] employed a pre-existing definition of the inner setting domain of CFIR to describe context. The inner setting is noted to incorporate innovation and organisational characteristics that can influence the likelihood of adoption, ultimately impacting implementation success.

Tables 4, 5, 6, 7 and 8 present the contextual factors included across the definitions of reviewed papers. For some articles, it was not possible to isolate this information because of the broad definitions provided. For the remaining articles, contextual features are divided according to their relevance to the micro, meso and macro levels of the healthcare system, with some determinants recognised as applicable across multiple levels. Fourteen contextual factors relevant to individuals or teams were identified, with individual perceptions of the implemented initiative the most cited determinant (Tables 4 and 5). Seven organisational features were extracted, with organisational culture the most commonly listed (Table 6). Five macro level factors were identified from the external environment, with political drivers such as policy the most commonly recorded feature (Table 7). The most common contextual determinants relevant to multiple levels of the health system were culture, leadership (Table 8) and resources (Tables 5, 6, 7 and 8).

Synthesising the definitions included in this review yields a broad definition of context. Context is defined as a multi-dimensional construct encompassing micro, meso and macro level determinants that are pre-existing, dynamic and emergent throughout the implementation process. These factors are inextricably intertwined, incorporating multi-level concepts such as culture, leadership and the availability of resources.

Application of context measures

Among the 64 articles included in this review, over 40 approaches to assessing context were employed. Within quantitative papers, 22 context measures were identified, with the Alberta Context Tool the most frequently applied (n = 4). Measures used in more than one study are presented in Fig. 3. Most qualitative papers used frameworks to guide their context assessment, with PARiHS the most frequently applied (n = 7). Among the qualitative articles, 16 different approaches to assessment were used, and the most frequently cited are also represented in Fig. 3. Mixed methods studies used diverse approaches to assess context: some authors used frameworks to inform the qualitative and quantitative methods chosen, while others used quantitative tools to inform qualitative data collection.

Depth of measure application

The depth of application of each context assessment method was appraised according to how the measure was applied in the methods of each study and whether it was used to investigate an association between context and implementation success (Table 3). Fifty of the included studies used their chosen context measure(s) to guide data collection and descriptive analysis and to investigate the association between context and implementation success. No study used measures to inform data collection alone, while one study used its chosen assessment method for descriptive analysis only [27]. Two studies used their context measures to guide data collection and descriptive analysis [31, 37], and eleven used their assessment methods to inform data collection or data analysis and to investigate the association between context and implementation success [19, 26, 44, 48, 55, 56, 66, 70, 73, 78, 81].
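The depth-of-application groups reported above should together account for every included study. A minimal Python tally, using only the counts in this paragraph (the group labels are our own shorthand), confirms that they sum to 64 and that full application across the study design covers roughly 78% of studies.

```python
# Studies per depth-of-application group (counts from the paragraph above).
depth_counts = {
    "collection + descriptive analysis + association": 50,
    "data collection only": 0,
    "descriptive analysis only": 1,
    "collection + descriptive analysis": 2,
    "collection or analysis + association": 11,
}

total = sum(depth_counts.values())
full = depth_counts["collection + descriptive analysis + association"]
print(total, f"{full / total:.0%}")  # 64 78%
```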

Unit of analysis

Despite most included studies incorporating characteristics from multiple levels of the health system within their definition of context, the largest group (n = 29) chose to analyse the construct at an individual level [25, 26, 31,32,33, 41, 43,44,45,46,47, 52, 54, 56, 57, 59, 60, 62, 68, 69, 72, 74, 76, 79, 81, 83, 84, 86, 87] (Fig. 4). Some articles aggregated individual level findings to either a team [75, 82] or organisational level [8, 27, 28, 34, 36, 37, 48,49,50,51, 53, 67, 85], while three articles measured context at multiple levels of the healthcare system: individual, organisational and national [19, 63] or regional level [78]. Nine papers examined context exclusively at an organisational level [38,39,40, 55, 58, 61, 64, 65, 77], three assessed context using the team as the unit of analysis [20, 71, 80], and two papers analysed context at both a team and organisational level [42, 73]. The remaining three studies examined the construct at an organisational and regional level [35] or project level [66], with the unit of analysis unclear for one study [70]. Irrespective of the level of analysis, as outlined in Fig. 4, most studies did not combine a comprehensive definition of context with holistic application of their chosen measure to all aspects of study design (data collection, descriptive data analysis and exploring the association between context and implementation success).
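The individual-level group can be compared with the other units of analysis by tallying the counts in this paragraph. In the minimal Python sketch below (group labels are our own shorthand), the individual-level citations are stored in a set, so any number repeated in a printed list is counted only once; the tally shows that the 29 individual-level studies form the largest group, about 45% of the 64 studies, rather than an absolute majority.

```python
# Citations of studies analysing context at the individual level (from the paragraph above).
individual = {25, 26, 31, 32, 33, 41, 43, 44, 45, 46, 47, 52, 54, 56, 57, 59,
              60, 62, 68, 69, 72, 74, 76, 79, 81, 83, 84, 86, 87}

# Studies per unit of analysis; the remaining counts come from the same paragraph.
unit_counts = {
    "individual": len(individual),
    "individual aggregated to team": 2,
    "individual aggregated to organisation": 13,
    "multiple levels including individual": 3,
    "organisation only": 9,
    "team only": 3,
    "team and organisation": 2,
    "organisation/region, project, or unclear": 3,
}

total = sum(unit_counts.values())
share = unit_counts["individual"] / total
print(unit_counts["individual"], total, f"{share:.0%}")  # 29 64 45%
```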

Discussion

The objective of this systematic review was to explore how context is defined and measured within implementation science literature. The findings demonstrate the variability with which context is defined, assessed and analysed. Studies varied in the level of detail and explanation offered within their definitions of context, with only 26 of the 64 included papers (41%) meeting our classification of providing a rich definition. Inconsistencies were also evident in the approaches used to assess context, with over 40 methods identified across the 64 included papers. It is therefore not surprising that context remains poorly understood within implementation research [13].

Consistent with previous literature, this review found that most studies provided a narrow conceptualisation of context and simply listed contextual determinants associated with the construct [16,17,18]. Like Nilsen and Bernhardsson’s [18] scoping review, this systematic review examined the contextual factors cited within the definitions of included studies. Several of the contextual determinants identified here overlap with those previously reported [18]: social relations, leadership, and organisational culture and climate. However, this review found additional features that were common across included studies: individual perceptions of the implementation effort, organisational characteristics, the wider political environment, and the multilevel concepts of culture and resources. These additional findings may reflect the wider scope of this review (n = 64 papers included), as Nilsen and Bernhardsson restricted their search to literature conceptualising context within determinant frameworks (n = 22 publications included).

Few papers used team characteristics to define context [36, 58, 61, 73]. Unlike determinants at the individual, organisational and external levels, only one team-level contextual feature (teamwork) was shared across papers [36, 73]. This finding suggests that our understanding of team-level contextual determinants is limited, as they have been almost entirely overlooked in the classification of context. This is surprising, as teams are central to the organisational structure of healthcare and play an integral role in care provision. Therefore, although the findings suggest an emphasis on organisational determinants, future developments within implementation research require the assessment of context at the team level.

Regardless of whether a study simply listed determinants related to context or provided a rich description, the included definitions make it evident that context is a multifaceted term incorporating multiple levels of the healthcare system. Bergstrom et al. [36] argue that the influence of internal context on implementation success cannot be assessed without examining the impact of the wider health system in which these factors are situated. Literature suggests that the relationships between system components are of greater importance than the individual features themselves [95, 96]. This is particularly relevant when introducing an initiative in healthcare, which is characterised by an infinite combination of care activities, events, interactions and outcomes [96,97,98,99]. Implementation researchers would therefore benefit from adopting a complexity science perspective when designing future studies. Complexity science recognises the interconnections between system components, acknowledging that the health system is made up of “messy, fuzzy, unique and context embedded problems” [100, 101] (p801). This perspective aligns with the study of context, which acknowledges that implementation is affected by the configuration of local services and by variation in the attitudes and norms of those expected to adopt the envisioned change [102].

Although the findings of this review support the view that indistinct boundaries exist between system levels and implementation [9, 103, 104], intervention characteristics appear to be distinct features that are rarely encompassed within conceptualisations of context. Most studies defined context as incorporating determinants independent of the intervention, with few mentioning the innovation itself. This finding is consistent with previous literature that views context as “everything else that is not the intervention” [18, 105] (p605).

By generating a common definition of context, the findings of this review have the potential to improve the consistency with which the term ‘context’ is used within implementation research. Using the broad definition outlined in the results as a guideline should promote greater consistency, which in turn may enhance understanding of the construct and direct attention to the multi-level nature of context. However, to ensure clarity, future studies should extend this definition to specify the contextual factors being measured and the level (i.e. individual, team, organisation, system) to which these factors pertain.

Considerable heterogeneity was observed in how context is assessed within implementation science literature. Although the included papers highlight the benefits of using context assessments, the lack of standardisation restricts our ability to compare findings, limiting our understanding of the construct. Quantitative components of included papers mostly used validated, context-sensitive surveys to examine context, or drew on the contextual features outlined in their definition or on a context-relevant framework to develop surveys. Although questionnaires increased the reach and potential generalisability of findings, qualitative approaches allowed greater exploration of the richness and complexity of the construct, as relevant contextual determinants could emerge from the data rather than being narrowly prespecified, as in some quantitative assessments. In the qualitative components of included studies, context assessments were mostly used to guide data collection through the development of topic guides and/or applied deductively during data analysis to inform the development of coding templates.

Despite the variation in how context is measured within implementation science research, most studies applied their context assessment holistically to all aspects of study design (data collection and descriptive analysis) and investigated the association between context and implementation success. While PARiHS was the most frequently employed framework within this review, qualitative studies that used the TDF to inform their assessment of context provided the most detailed description of the construct. One possible explanation is the uniformity with which context was defined across these papers and the consistency of their approach (framework-informed data collection and analysis). Articles using this method reported that it enabled a greater understanding of implementation determinants [47], improved the efficiency with which data could be coded [33], and promoted the rigour and trustworthiness of the research [57]. Future studies would benefit from adopting a similar approach to ensure a comprehensive evaluation of context.

This review illustrates inconsistencies in how context is defined and how it is subsequently analysed. Although only 10 articles listed individual-level contextual factors within their definition of context, 45% of all included papers analysed context exclusively at an individual level. Just four papers [50, 65, 73, 77] took a consistent approach, explicitly listing contextual factors and the relevant system level in their definition and analysing their data accordingly. The remaining studies either used broad multi-level system components in their definition of context that could not be mapped to a level of analysis, or included system-level contextual factors in their definition that were not analysed at the appropriate level. For example, Yamada et al. [87] list only organisational factors in their definition of context but employ an individual level of analysis. Of the four papers that defined and analysed context consistently, only Erasmus et al. [65] also provided a rich definition of context, applied their chosen context assessment holistically and analysed the data appropriately. Future research must ensure consistency in how context is defined, measured and analysed within implementation science. Greater clarity will enhance the rigour of studies exploring context, developing the field’s ‘true’ understanding of how context influences implementation.

Limitations

Although this study provides a broader conceptualisation of context than previous literature, the findings are limited to definitions retrieved through the search strategy applied. One challenge is the multitude of terms used to describe ‘implementation science’. Although the final search yielded thousands of records, the need to balance sensitivity and specificity in the search strategy increases the possibility that relevant articles were omitted from this review. Including only empirical studies also heightens the risk of publication bias, as grey literature was not appraised. However, we sought to limit the impact of these challenges by using previous literature to inform the search strategy and by scanning the reference lists of included articles to retrieve additional relevant studies. We hope that this approach has ensured that a comprehensive synthesis of the best available evidence is presented.

Conclusion

This review set out to systematically investigate how context is defined, measured and analysed within implementation science literature. The review confirms that context is generally not comprehensively defined and adds to the extant literature [16,17,18] by developing an operational definition to improve the consistency with which the term is used. Given the variability in how context is assessed, we recommend a standardised approach, using qualitative methods informed by a comprehensive framework, as the most suitable way to explore the complexity of this phenomenon. The review also highlighted the need for researchers to define, assess and analyse context coherently, as most studies failed to use a consistent approach. Enhanced clarity and consistency when studying context may improve implementation processes. A better understanding will help researchers to account appropriately for context in research, enhancing the rigour of and learning from such studies and aiding the translation of evidence-based healthcare interventions into routine practice.

Availability of data and materials

The data analysed in this study are available in the published articles cited herein.

Abbreviations

- PARiHS: Promoting Action on Research Implementation in Health Services
- CFIR: Consolidated Framework for Implementation Research
- EPIS: Exploration, Preparation, Implementation, Sustainment
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- PROSPERO: International Prospective Register of Systematic Reviews, a database of protocol details for systematic reviews relevant to health and social care, welfare, public health, education, crime, justice, and international development, where there is a health-related outcome
- MMAT: Mixed Methods Appraisal Tool
- USA: United States of America
- UK: United Kingdom
- MUSIQ: Model for Understanding Success in Quality
- TDF: Theoretical Domains Framework

References

Williams NR. How to get a 2:1 in media, communication and cultural studies. London: SAGE; 2004.

Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform. 2000;9(1). Available from: https://augusta.openrepository.com/augusta/handle/10675.2/617990.

Grant J, Green L, Mason B. Basic research and health: a reassessment of the scientific basis for the support of biomedical science. Res Eval. 2003;12(3):217–24.

Morris AC, Hay AW, Swann DG, Everingham K, McCulloch C, McNulty J, et al. Reducing ventilator-associated pneumonia in intensive care: impact of implementing a care bundle. Crit Care Med. 2011;39(10):2218–24.

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1). Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4573926/.

May CR, Johnson M, Finch T. Implementation, context and complexity. Implement Sci. 2016;11(141):1–12.

Davidoff F. Understanding contexts: how explanatory theories can help. Implement Sci. 2019;14(1):23.

Hansen HP, Tjørnhøj-Thomsen T, Johansen C. Rehabilitation interventions for cancer survivors: the influence of context. Psychooncology. 2011;20:51–2.

Pfadenhauer LM, Gerhardus A, Mozygemba K, Lysdahl KB, Booth A, Hofmann B, et al. Making sense of complexity in context and implementation: the Context and Implementation of Complex Interventions (CICI) framework. Implement Sci. 2017;12(1). Available from: http://implementationscience.biomedcentral.com/articles/10.1186/s13012-017-0552-5.

Ovretveit J, Dolan-Branton L, Marx M, Reid A, Reed J, Agins B. Adapting improvements to context: when, why and how? Int J Qual Health Care. 2018;30(suppl_1):20–3.

Tomoaia-Cotisel A, Scammon DL, Waitzman NJ, Cronholm PF, Halladay H, Driscoll DL, et al. Context matters: the experience of 14 research teams in systematically reporting contextual factors important for practice change. Ann Fam Med. 2013;11:S115–23.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350. Available from: http://www.bmj.com/cgi/doi/10.1136/bmj.h1258.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53.

Dopson S, Fitzgerald L. Knowledge to action?: evidence-based health care in context. Oxford: Oxford University Press; 2006.

Kaplan HC, Brady PW, Dritz MC, Hooper DK, Linam WM, Froehle CM, et al. The influence of context on quality improvement success in health care: a systematic review of the literature. Milbank Q. 2010;88(4):500–59.

Pfadenhauer LM, Mozygemba K, Gerhardus A, Hofmann B, Booth A, Lysdahl KB, et al. Context and implementation: a concept analysis towards conceptual maturity. Z Evidenz Fortbild Qual Im Gesundheitswesen. 2015;109(2):103–14.

McCormack B, Kitson A, Harvey G, Rycroft-Malone J, Titchen A, Seers K. Getting evidence into practice: the meaning of ‘context’. J Adv Nurs. 2002;38(1):94–104.

Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. 2019;19(1):189.

Murdoch J. Process evaluation for complex interventions in health services research: analysing context, text trajectories and disruptions. BMC Health Serv Res. 2016;16(1):407.

Fernandez ME, Walker TJ, Weiner BJ, Calo WA, Liang S, Risendal B, et al. Developing measures to assess constructs from the inner setting domain of the consolidated framework for implementation research. Implement Sci. 2018;13(1):52.

Lewis CC, Stanick CF, Martinez RG, Weiner BJ, Kim M, Barwick M, et al. The Society for Implementation Research Collaboration Instrument Review Project: a methodology to promote rigorous evaluation. Implement Sci. 2015;10(1):2.

Higgins JP, Green S. Cochrane Handbook for Systematic Reviews of Interventions: Wiley; 2011. Available from: http://handbook-5-1.cochrane.org/.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Veritas Health Innovation. Covidence systematic review software. Melbourne; 2019. Available from: www.covidence.org.

Abdekhoda M, Ahmadi M, Gohari M, Noruzi A. The effects of organizational contextual factors on physicians’ attitude toward adoption of electronic medical records. J Biomed Inform. 2015;53:174–9.

Bokhour BG, Saifu H, Goetz MB, Fix GM, Burgess J, Fletcher MD, et al. The role of evidence and context for implementing a multimodal intervention to increase HIV testing. Implement Sci. 2015;10:22.

Bradley DKF, Griffin M. The well organised working environment: a mixed methods study. Int J Nurs Stud. 2016;55:26–38.

Kramer TL, Drummond KL, Curran GM, Fortney JC. Assessing culture and climate of federally qualified health centers: a plan for implementing behavioral health interventions. J Health Care Poor Underserved. 2017;28(3):973–87.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of Consolidated Framework for Implementation Research. Implement Sci. 2016;11(72):1–13.

Hong QN, Pluye P, Fàbregues S, Bartlett G, Boardman F, Cargo M, et al. Mixed methods appraisal tool (MMAT), version 2018: Canadian Intellectual Property Office, Industry Canada; 2018. Available from: http://mixedmethodsappraisaltoolpublic.pbworks.com/w/file/fetch/127916259/MMAT_2018_criteria-manual_2018-08-01_ENG.pdf.

Al Shemeili S, Klein S, Strath A, Fares S, Stewart D. An exploration of health professionals’ experiences of medicines management in elderly, hospitalised patients in Abu Dhabi. Int J Clin Pharm. 2016;38(1):107–18.

Arney J, Thurman K, Jones L, Kiefer L, Hundt NE, Naik AD, et al. Qualitative findings on building a partnered approach to implementation of a group-based diabetes intervention in VA primary care. BMJ Open. 2018;8(1):1–9.

Bain E, Bubner T, Ashwood P, Van Ryswyk E, Simmonds L, Reid S, et al. Barriers and enablers to implementing antenatal magnesium sulphate for fetal neuroprotection guidelines: a study using the theoretical domains framework. BMC Pregnancy Childbirth. 2015;15:176.

Beidas RS, Wolk CLB, Walsh LM, Evans ACJ, Hurford MO, Barg FK. A complementary marriage of perspectives: understanding organizational social context using mixed methods. Implement Sci. 2014;9:175.

Belaid L, Ridde V. Contextual factors as a key to understanding the heterogeneity of effects of a maternal health policy in Burkina Faso? Health Policy Plan. 2015;30(3):309–21.

Bergstrom A, Peterson S, Namusoko S, Waiswa P, Wallin L. Knowledge translation in Uganda: a qualitative study of Ugandan midwives’ and managers’ perceived relevance of the sub-elements of the context cornerstone in the PARIHS framework. Implement Sci. 2012;7:117.

Bocoum FY, Tarnagda G, Bationo F, Savadogo JR, Nacro S, Kouanda S, et al. Introducing onsite antenatal syphilis screening in Burkina Faso: implementation and evaluation of a feasibility intervention tailored to a local context. BMC Health Serv Res. 2017;17(1):378.

Burau V, Carstensen K, Fredens M, Kousgaard MB. Exploring drivers and challenges in implementation of health promotion in community mental health services: a qualitative multi-site case study using normalization process theory. BMC Health Serv Res. 2018;18(1):36.

Busetto L, Kiselev J, Luijkx KG, Steinhagen-Thiessen E, Vrijhoef HJM. Implementation of integrated geriatric care at a German hospital: a case study to understand when and why beneficial outcomes can be achieved. BMC Health Serv Res. 2017;17(1):180.

Cheyne H, Abhyankar P, McCourt C. Empowering change: realist evaluation of a Scottish government programme to support normal birth. Midwifery. 2013;29(10):1110–21.

Drainoni M-L, Koppelman EA, Feldman JA, Walley AY, Mitchell PM, Ellison J, et al. Why is it so hard to implement change? A qualitative examination of barriers and facilitators to distribution of naloxone for overdose prevention in a safety net environment. BMC Res Notes. 2016;9(1):465.

Eboreime EA, Nxumalo N, Ramaswamy R, Eyles J. Strengthening decentralized primary healthcare planning in Nigeria using a quality improvement model: how contexts and actors affect implementation. Health Policy Plan. 2018;33(6):715–28.

Gadomski AM, Wissow LS, Palinkas L, Hoagwood KE, Daly JM, Kaye DL. Encouraging and sustaining integration of child mental health into primary care: interviews with primary care providers participating in project TEACH (CAPES and CAP PC) in NY. Gen Hosp Psychiatry. 2014;36(6):555–62.

Gagliardi AR, Webster F, Brouwers MC, Baxter NN, Finelli A, Gallinger S. How does context influence collaborative decision-making for health services planning, delivery and evaluation? BMC Health Serv Res. 2014;14:545.

Georgiou A, Westbrook JI. Clinician reports of the impact of electronic ordering on an emergency department. Stud Health Technol Inform. 2009;150:678–82.

Gibb H. An environmental scan of an aged care workplace using the PARiHS model: assessing preparedness for change. J Nurs Manag. 2013;21(2):293–303.

Glidewell L, Boocock S, Pine K, Campbell R, Hackett J, Gill S, et al. Using behavioural theories to optimise shared haemodialysis care: a qualitative intervention development study of patient and professional experience. Implement Sci. 2013;8:118.

Greenhalgh T, Stramer K, Bratan T, Byrne E, Mohammad Y, Russell J. Introduction of shared electronic records: multi-site case study using diffusion of innovation theory. BMJ. 2008;337.

Griffin A, McKeown A, Viney R, Rich A, Welland T, Gafson I, et al. Revalidation and quality assurance: the application of the MUSIQ framework in independent verification visits to healthcare organisations. BMJ Open. 2017;7(2):e014121.

Higgins A, O’Halloran P, Porter S. The management of long-term sickness absence in large public sector healthcare organisations: a realist evaluation using mixed methods. J Occup Rehabil. 2015;25(3):451–70.

Menon S, Smith MW, Sittig DF, Petersen NJ, Hysong SJ, Espadas D, et al. How context affects electronic health record-based test result follow-up: a mixed-methods evaluation. BMJ Open. 2014;4(11):1–9.

Naik AD, Lawrence B, Kiefer L, Ramos K, Utech A, Masozera N, et al. Building a primary care research partnership: lessons learned from a telehealth intervention for diabetes and depression. Fam Pract. 2015;32(2):216–23.

Padwa H, Teruya C, Tran E, Lovinger K, Antonini VP, Overholt C, et al. The implementation of integrated behavioral health protocols in primary care settings in project care. J Subst Abus Treat. 2016;62:74–83.

Presseau J, Mutsaers B, Al-Jaishi AA, Nesrallah G, McIntyre CW, Garg AX, et al. Barriers and facilitators to healthcare professional behaviour change in clinical trials using the Theoretical Domains Framework: A case study of a trial of individualized temperature-reduced haemodialysis. Trials. 2017;18(1). Available from: http://www.embase.com/search/results?subaction=viewrecord&from=export&id=L616333097.

Rabbani F, Lalji SN, Abbas F, Jafri SW, Razzak JA, Nabi N, et al. Understanding the context of balanced scorecard implementation: a hospital-based case study in Pakistan. Implement Sci. 2011;6:31.

Rotteau L, Webster F, Salkeld E, Hellings C, Guttmann A, Vermeulen MJ, et al. Ontario’s emergency department process improvement program: the experience of implementation. Acad Emerg Med Off J Soc Acad Emerg Med. 2015;22(6):720–9.

Smith KG, Paudyal V, MacLure K, Forbes-McKay K, Buchanan C, Wilson L, et al. Relocating patients from a specialist homeless healthcare centre to general practices: a multi-perspective study. Br J Gen Pract J R Coll Gen Pract. 2018;68(667):e105–13.

Spitzer-Shohat S, Shadmi E, Goldfracht M, Key C, Hoshen M, Balicer RD. Evaluating an organization-wide disparity reduction program: understanding what works for whom and why. PLoS One. 2018;13(3):e0193179.

VanDevanter N, Kumar P, Nguyen N, Nguyen L, Nguyen T, Stillman F, et al. Application of the consolidated framework for implementation research to assess factors that may influence implementation of tobacco use treatment guidelines in the Viet Nam public health care delivery system. Implement Sci. 2017;12(1):27.

Ware P, Ross HJ, Cafazzo JA, Laporte A, Gordon K, Seto E. Evaluating the implementation of a mobile phone-based telemonitoring program: longitudinal study guided by the consolidated framework for implementation research. JMIR MHealth UHealth. 2018;6(7):e10768.

Williams L, Rycroft-Malone J, Burton C. Implementing best practice in infection prevention and control. A realist evaluation of the role of intermediaries. Int J Nurs Stud. 2016;60:156–67.

Yamada J, Potestio ML, Cave AJ, Sharpe H, Johnson DW, Patey AM, et al. Using the theoretical domains framework to identify barriers and enablers to pediatric asthma management in primary care settings. J Asthma. 2018;55(11):1–14.

Yip M-P, Chun A, Edelson J, Feng X, Tu S-P. Contexts for sustainable implementation of a colorectal cancer screening program at a community health center. Health Promot Pract. 2016;17(1):48–56.

Durbin J, Selick A, Casson I, Green L, Spassiani N, Perry A, et al. Evaluating the implementation of health checks for adults with intellectual and developmental disabilities in primary care: the importance of organizational context. Intellect Dev Disabil. 2016;54(2):136–50.

Erasmus E, Gilson L, Govender V, Nkosi M. Organisational culture and trust as influences over the implementation of equity-oriented policy in two South African case study hospitals. Int J Equity Health. 2017;16(1):164.

Hill JN, Guihan M, Hogan TP, Smith BM, LaVela SL, Weaver FM, et al. Use of the PARIHS framework for retrospective and prospective implementation evaluations. Worldviews Evid-Based Nurs. 2017;14(2):99–107.

Iribarren SJ, Sward KA, Beck SL, Pearce PF, Thurston D, Chirico C. Qualitative evaluation of a text messaging intervention to support patients with active tuberculosis: implementation considerations. JMIR MHealth UHealth. 2015;3(1):e21.

Prashanth NS, Marchal B, Kegels G, Criel B. Evaluation of capacity-building program of district health managers in India: a contextualized theoretical framework. Front Public Health. 2014;2:89.

Rodriguez DC, Peterson LA. A retrospective review of the Honduras AIN-C program guided by a community health worker performance logic model. Hum Resour Health. 2016;14(1):19.

Baron J, Hirani S, Newman S. Challenges in patient recruitment, implementation, and fidelity in a mobile telehealth study. Telemed J E-Health Off J Am Telemed Assoc. 2016;22(5):400–9.

Vanderkruik R, McPherson ME. A contextual factors framework to inform implementation and evaluation of public health initiatives. Am J Eval. 2017;38(3):348–59.

Forberg U, Unbeck M, Wallin L, Johansson E, Petzold M, Ygge B-M, et al. Effects of computer reminders on complications of peripheral venous catheters and nurses’ adherence to a guideline in paediatric care: a cluster randomised study. Implement Sci. 2016;11:10.

Chan KS, Hsu Y-J, Lubomski LH, Marsteller JA. Validity and usefulness of members reports of implementation progress in a quality improvement initiative: findings from the team check-up tool (TCT). Implement Sci. 2011;6:115.

Beenstock J, Sniehotta FF, White M, Bell R, Milne EM, Araujo-Soares V. What helps and hinders midwives in engaging with pregnant women about stopping smoking? A cross-sectional survey of perceived implementation difficulties among midwives in the North East of England. Implement Sci. 2012;7:36.

Cummings GG, Hutchinson AM, Scott SD, Norton PG, Estabrooks CA. The relationship between characteristics of context and research utilization in a pediatric setting. BMC Health Serv Res. 2010;10:168.

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Health. 2008;35(1–2):98–113.

Guerrero EG, Heslin KC, Chang E, Fenwick K, Yano E. Organizational correlates of implementation of colocation of mental health and primary care in the Veterans Health Administration. Adm Policy Ment Health Ment Health Serv Res. 2015;42(4):420–8.

Hoffman GJ, Rodriguez HP. Examining contextual influences on fall-related injuries among older adults for population health management. Popul Health Manag. 2015;18(6):437–48.

Lemmens K, Strating M, Huijsman R, Nieboer A. Professional commitment to changing chronic illness care: results from disease management programmes. Int J Qual Health Care. 2009;21(4):233–42.

Almblad AC, Siltberg P, Engvall G, Malqvist M. Implementation of pediatric early warning score; adherence to guidelines and influence of context. J Pediatr Nurs. 2018;38:33–9.

Chiu TM, Ku BP. Moderating effects of voluntariness on the actual use of electronic health records for allied health professionals. JMIR Med Inform. 2015;3(1):e7.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the implementation climate scale (ICS). Implement Sci. 2014;9:157.

Huijg JM, Gebhardt WA, Dusseldorp E, Verheijden MW, van der Zouwe N, Middelkoop BJC, et al. Measuring determinants of implementation behavior: psychometric properties of a questionnaire based on the theoretical domains framework. Implement Sci. 2014;9:33.

Obrecht JA, Van Hulle VC, Ryan CS. Implementation of evidence-based practice for a pediatric pain assessment instrument. Clin Nurse Spec CNS. 2014;28(2):97–104.

Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatr. 2015;169(4):374–82.

Douglas NF. Organizational context associated with time spent evaluating language and cognitive-communicative impairments in skilled nursing facilities: survey results within an implementation science framework. J Commun Disord. 2016;60:1–13.

Yamada J, Squires JE, Estabrooks CA, Victor C, Stevens B, CIHR Team in Children’s Pain. The role of organizational context in moderating the effect of research use on pain outcomes in hospitalized children: a cross sectional study. BMC Health Serv Res. 2017;17(1):68.

Popay J, Roberts H, Sowden A, Petticrew M, Arai L, Rodgers M, et al. Guidance on the conduct of narrative synthesis in systematic reviews. In: A product from the ESRC methods programme; 2006.

Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3:77–101.

O’Cathain A, Thomas K. Combining qualitative and quantitative methods. In: Qualitative research in health care. Oxford: Blackwell; 2006. p. 102–11.

Cretin S, Shortell SM, Keeler EB. An evaluation of collaborative interventions to improve chronic illness care: framework and study design. Eval Rev. 2004;28(1):28–51.

Lin MK, Marsteller JA, Shortell SM, Mendel P, Pearson M, Rosen M, et al. Motivation to change chronic illness care: results from a national evaluation of quality improvement collaboratives. Health Care Manag Rev. 2005;30(2):139.

Goodwin C, Duranti A. Rethinking context: an introduction. In: Rethinking context: language as an interactive phenomenon. Cambridge: Cambridge University Press; 1992.

Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:37.

Daft RL. Organisation theory and design. 7th ed. Ohio: South-Western College Publishing; 2001.

Plsek PE, Wilson T. Complexity science: complexity, leadership, and management in healthcare organisations. BMJ. 2001;323:746–9.

Begun JW, Zimmerman B, Dooley KJ. Health care organisations as complex adaptive systems. In: Advances in Health Care Organization Theory: Wiley; 2004. p. 253–88.

Braithwaite J. Changing how we think about healthcare improvement. BMJ. 2018;361. Available from: https://www.bmj.com/content/361/bmj.k2014.

Braithwaite J, Churruca K, Long JC, Ellis LA, Herkes J. When complexity science meets implementation science: a theoretical and empirical analysis of systems change. BMC Med. 2018;16:63.

Hawe P, Bond L, Butler H. Knowledge theories can inform evaluation practice: what can a complexity lens add? New Dir Eval. 2009;124:89–100.

Fraser SW, Greenhalgh T. Coping with complexity: educating for capability. BMJ. 2001;323:799–803.

Churruca K, Ludlow K, Taylor N, Long JC, Best S, Braithwaite J. The time has come: embedded implementation research for health care improvement. J Eval Clin Pract. 2019:1–8.

Van Herck P, Vanhaecht K, Deneckere S, Bellemans J, Panella M, Barbieri A, et al. Key interventions and outcomes in joint arthroplasty clinical pathways: a systematic review. J Eval Clin Pract. 2010;16:39–49.

Wells M, Williams B, Treweek S, Coyle J, Taylor J. Intervention description is not enough: evidence from an in-depth multiple case study on the untold role and impact of context in randomised controlled trials of seven complex interventions. Trials. 2012;13:95. Available from: https://trialsjournal.biomedcentral.com/articles/10.1186/1745-6215-13-95.

Ovretveit JC, Shekelle PG, Hempel S, Pronovost P, Rubenstein L, Taylor SL, et al. How does context affect interventions to improve patient safety? An assessment of evidence from studies of five patient safety practices and proposals for research. BMJ Qual Saf. 2011;20:604–10.

Acknowledgements

Early results from this research were presented at the Society for Implementation Research Collaboration (SIRC) 2019 Conference; https://societyforimplementationresearchcollaboration.org/wp-content/uploads/2019/11/2019-SIRC-program-PDF-FINAL-Compressed-Review-Format.pdf.

Funding

This research is part of the Collective Leadership and Safety Cultures (Co-Lead) research programme, which is funded by the Health Research Board (RL-2015-1588) and the Health Service Executive. Funding sources were not involved in any stage of the review process in relation to data collection, data analysis and the interpretation of the data.

Author information


Contributions

LR, ADB and EMA designed the search strategy. LR and ADB retrieved and screened titles and abstracts against the inclusion criteria, and EMA resolved conflicts. LR and ADB extracted data. LR conducted the analysis and drafted the paper. All authors contributed to and approved the final version of the manuscript.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Search strategy.

Additional file 2: Data extraction template.

Additional file 3: Table S1. Summary of included papers.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Rogers, L., De Brún, A. & McAuliffe, E. Defining and assessing context in healthcare implementation studies: a systematic review. BMC Health Serv Res 20, 591 (2020). https://doi.org/10.1186/s12913-020-05212-7

DOI: https://doi.org/10.1186/s12913-020-05212-7