Abstract

Background

Medical educators seek innovative ways to engage learners efficiently and effectively. Gamification has been explored as one way to accomplish this; however, questions remain about the contexts in which gamification is most useful. Time constraints and limited student interest present major barriers to teaching laboratory medicine to students. This study aims to compare two versions of an interactive online module, one gamified and one not, for teaching laboratory medicine concepts to pre-clinical medical students.

Methods

First-year medical students reviewed either a gamified or non-gamified version of an interactive online module in preparation for an in-person flipped classroom session on Laboratory Medicine. Learning theory guided the design of the modules and both contained identical content, objectives, and structure. The “gamified” module included the additional elements of personalization, progress meters, points, badges, and story/role play. After reviewing the module, students completed an anonymous knowledge check and optional survey.

Results

One hundred seventy-one students completed the post-module knowledge check as assigned (82 gamified, 89 non-gamified). Knowledge check scores were higher for the students who reviewed the gamified module (p = 0.02), corresponding to an effect size of 0.4 for the gamified module. Eighty-one students completed optional post-module surveys (46 gamified, 35 non-gamified). Instructional efficiency was calculated using task difficulty questions and knowledge check scores, and the resulting instructional efficiency was higher for the gamified module. There was no significant difference in the student-reported time required to complete the modules. Additionally, both versions of the module were well received and led to positive ratings related to motivation and confidence. Finally, examination of open-ended survey results suggested that the addition of game elements added value to the gamified module and enhanced engagement and enjoyment.

Conclusions

In this setting, the addition of gamification to an interactive online module enhanced learning outcomes, instructional efficiency, and student engagement and enjoyment. These results should inspire further exploration of gamification for teaching Laboratory Medicine concepts to pre-clinical medical students.

Background

Today’s medical educators are charged with creating efficient and engaging ways to enlighten their students. Many institutions are reducing the time medical students spend studying basic sciences, while concurrently aiming to foster curious, life-long learners [1]. This changing curricular landscape has led medical educators to adjust their teaching strategies [1,2,3,4,5,6,7,8]. Animations [6], interactive online quizzes [3], adaptive e-learning [7], and virtual reality tours [8] are some of the innovative strategies that have been explored. Importantly, careful curricular planning, with instructional designs grounded in educational theory and, when appropriate, the use of comparable comparison groups, is vital for the successful evaluation of new learning activities [1, 9,10,11]; unfortunately, many educational innovations described in the literature do not meet these standards [2, 6, 12]. For example, in their review of animations used for medical education, Yue et al. found that very few followed the principles of the cognitive theory of multimedia learning [6]. Additionally, McBrien et al. found that fewer than half of the Pathology educational interventions they reviewed described the use of a control group [2].

Laboratory medicine / clinical pathology instruction

Laboratory medicine, an often overlooked subset of undergraduate pathology education [13], is an area that may benefit from well-planned innovative teaching solutions. As medical knowledge continues to expand, so do discoveries related to diagnostic testing [14, 15]; however, many medical students graduate unprepared to be good stewards of the clinical laboratory [15,16,17,18,19,20]. Improper utilization of laboratory services can lead to waste, misdiagnosis, and patient harm [14,15,16, 21, 22]. Consequently, many groups have proposed bolstering students’ exposure to laboratory topics during medical school [13,14,15,16, 21, 23]; however, limited time and low student interest present major barriers to this education [24]. Capstone courses [14], case-based interdisciplinary activities [25, 26], and virtual laboratory tours [8, 27] are some of the interventions that have been described to combat these challenges.

Gamification

Many medical educators have turned to gamification in a bid to innovate and engage students [5, 28,29,30]. Gamification incorporates game design elements such as points, badges, leaderboards, immediate feedback, and narratives into non-game settings [28, 30]. Importantly, successful gamification must be built upon learning activities with theory-driven, quality designs because, as explained by Landers, “The goal of gamification cannot be to replace instruction, but instead to improve it.” [31]. Additionally, not all game elements appeal to all learners. For example, some learners do not appreciate activities that overemphasize competition or employ extrinsic rewards without a link to learning objectives [29, 30, 32, 33].

Carefully designed gamification shows the potential to improve learner motivation and knowledge [28,29,30,31, 33,34,35], which makes it an attractive tool for Pathology educators who seek to enhance student interest in and understanding of the clinical laboratory. However, medical education is a diverse landscape, and the effects of gamification can vary depending on the context in which it is deployed [29, 33, 34, 36]. The use of gamification for teaching medical students about the clinical laboratory is not well established, and, while Tsang et al. recently described a virtual reality lab tour that incorporated gamification in the form of a scavenger hunt activity, no outcome data were reported [8]. Using educational theory and design processes to create and test a gamified activity for teaching clinical laboratory medicine concepts can offer a glimpse into the utility of gamification in this area of undergraduate medical education and inspire future innovation.

Study aim

The aim of this study was to explore the use of gamification in teaching basic Pathology and Laboratory Medicine concepts to first year medical students at Virginia Commonwealth University School of Medicine by comparing two versions of a carefully designed interactive online module (gamified and non-gamified) to answer the question: Do learning outcomes, instructional efficiency, motivation, confidence, and/or subjective experiences differ for students who review the gamified versus non-gamified module?

Methods

Setting and participants

Two versions of a Pathology and Laboratory Medicine module, gamified and non-gamified, were created to provide background information for an in-person flipped classroom session on Laboratory Medicine, part of the first-year curriculum at a large, urban allopathic medical school in the United States. At this institution, first-year medical students enter medical school having already completed a 4-year university degree. Their first year of study consists of basic medical science courses with some early introductory clinical experiences. The discipline of Pathology is introduced to students during the Foundations of Disease course, which typically takes place in December. The Laboratory Medicine session takes place during this course, and offers students an early introduction to the laboratory, an ideal setting to explore ways to foster curiosity and engagement with this topic, as students will have limited structured instruction in this area going forward. There were 188 students enrolled in this course in December 2022.

Module creation and piloting

Both versions of the module were created using the Articulate 360 Storyline platform (Articulate Storyline 360 [Computer software]. 2022. Retrieved from https://articulate.com/360), which allowed for the creation of branching feedback and tracking of points and progress. The modules shared the same objectives and covered the same content, which was reviewed by two Clinical Pathology experts (SR and KS) and was based on the Association of Pathology Chairs suggested goals and objectives for medical students [13]. Additionally, the modules shared the same basic structure, each with the same four sections (Table 1), introductory pretraining, and interactive questions. The shared design was guided by educational theories such as the cognitive load theory [11, 37, 38] and the cognitive theory of multimedia learning [6, 10]. Common to both modules were techniques to manage the intrinsic load (pretraining and segmenting), limit extraneous load (temporal and spatial contiguity and worked examples), and maximize germane load (interactivity and self-explanation). Strategies to enhance learner motivation, such as providing low-stakes assessments with immediate feedback, were also employed in both versions of the module [39].

Unique elements added to the gamified module included the addition of personalization, progress meters, points, badges, rewards and basic narratives/role play [28, 30] (Fig. 1).

Fig. 1. Examples of unique elements added to the gamified module. Legend: Personalization, story elements, progress meters and points/badges/rewards were added to the gamified version of the module in an effort to enhance learners’ feelings of engagement, enjoyment, and competence [28, 30]. Personalization included the optional use of the learner's name throughout the module (engagement and enjoyment). Story elements were added by putting learners in a role and asking questions as part of a scenario (engagement and enjoyment). Progress meters offered learners different illustrations to denote progress in different sections (competence and enjoyment). Finally, the incorporation of points, badges and rewards included tracking points earned throughout the module, displaying badges at the end of completed sections, and including extra messages of encouragement when learners achieved multiple correct answers in a row (competence and enjoyment).

The modules, knowledge check and survey were subject to expert review, cognitive interviews, piloting, and multiple revisions before being implemented in the classroom. During the piloting process, Pathology residents and fourth-year medical students on Pathology rotations were invited to choose one of the modules to review and complete the post-module knowledge check and survey. Seven total knowledge checks (2 gamified and 5 non-gamified) and five surveys (1 gamified and 4 non-gamified) were completed. This exercise allowed for a final check of the logistics of module sharing and data collection.

The knowledge check consisted of 7 multiple-choice questions related to the module objectives and was created as a post-module assessment. To bolster content validity, the questions were reviewed by two of the authors (KS and SR), both clinical pathology experts. Further, during the piloting phase, it was noted that pathology residents scored higher on average than fourth-year medical students (6.9 versus 6.7) on the assessment, an expected finding based on experience level.

Survey questions and, when appropriate, 5-point Likert scale answer choices were constructed using best practices outlined by Artino et al. [40]. Questions related to motivation (interest/relevance and confidence) were inspired by motivational theories and by surveys of student motivation and engagement [39, 41,42,43,44,45,46]. (The complete survey can be found in the Supplemental material).

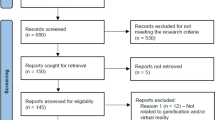

Procedures

An imperfect randomization, utilizing the school’s four pre-existing medical student groups, was employed to assign and evaluate the modules in the full class setting. Assignments were made using a random number generator, with two student groups being assigned the gamified module and two assigned the non-gamified module. Students were encouraged to review their assigned module; however, they were given the option to choose. Additionally, they were able to review both versions of the module and revisit the modules as needed for study after their initial review; however, they were asked to only complete the knowledge check and survey following the first module they reviewed. Sample size was not calculated for this project as the modules were being provided to the given number of students as part of their coursework. All students were assigned the knowledge check, so limiting sample size would not be an option. Also, all voluntary survey responses were valued input for improvement purposes.
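The group-level assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the group labels, the function name `assign_groups`, and the seed are hypothetical, and the source does not say which random number generator was actually used.

```python
import random


def assign_groups(groups, rng):
    """Randomly split pre-existing groups evenly between the two module versions."""
    shuffled = list(groups)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # The first half of the shuffled groups gets the gamified module,
    # the remainder gets the non-gamified module.
    return {
        name: ("gamified" if index < half else "non-gamified")
        for index, name in enumerate(shuffled)
    }


# Hypothetical group labels; a fixed seed makes the example reproducible.
assignment = assign_groups(
    ["Group A", "Group B", "Group C", "Group D"], random.Random(2022)
)
print(assignment)
```

Because whole groups rather than individual students are assigned, any pre-existing differences between groups carry over into the comparison, which is one reason the randomization is described as imperfect.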

In this mixed-methods convergent design, concurrent quantitative and qualitative data were anonymously collected via knowledge checks and surveys embedded at the end of the modules. QuestionPro (QuestionPro survey software [Computer software]. 2023. Retrieved from https://questionpro.com) was used to create both the knowledge check and survey. Unique module links were crafted so that data from “assigned” and “choice” groups could be sorted while still maintaining anonymity. Completion of the post-module knowledge check was a required component of prework, and these results helped guide the first portion of the subsequent in-person flipped classroom activity, which included an interactive discussion and review of the questions and answers. Therefore, knowledge check assessments submitted after the start of the in-person class period (10:00 AM, 12/5/2022) were not included in the analysis. Survey completion was optional. Neither knowledge check scores nor survey participation affected student course grades.

Analysis

Quantitative results were analyzed using the statistical software, STATA, Version 17 (StataCorp. 2021. Stata Statistical Software: Release 17. College Station, TX: StataCorp LLC.). Statistical significance between scores/ratings for the two module groups was determined using the two-tailed t-test for continuous variables and the Chi-square test for categorical variables, with a p value of less than 0.05 considered statistically significant.
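To illustrate the two-group comparison of continuous scores, the following Python sketch computes a pooled-variance t statistic from group summary statistics. It is not the Stata procedure used in the study: the function name and the example inputs are hypothetical, and the p-value relies on a normal approximation to the t distribution (an assumption made to stay within the standard library), which is reasonable only for large samples.

```python
import math
from statistics import NormalDist


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample Student t statistic from summary statistics.

    Returns (t, df, approx_p). approx_p is a two-tailed p-value based on a
    normal approximation to the t distribution; it is adequate only when
    the degrees of freedom are large.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean1 - mean2) / standard_error
    approx_p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, approx_p


# Hypothetical summary statistics, not the study data.
t, df, p = pooled_t_test(10.0, 2.0, 50, 9.0, 2.0, 50)
print(f"t = {t:.2f}, df = {df}, approximate p = {p:.4f}")
```

A statistical package would report the exact p-value from the t distribution with the stated degrees of freedom, which is slightly larger than the normal approximation.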

Additionally, the effect size (Cohen’s d) was calculated. This calculation expresses the difference between the means of the two groups in units of standard deviation [47]. Cohen’s d was originally described as having three levels of effect sizes (0.2 = small, 0.5 = medium, 0.8 = large) [47]. Importantly, however, an effect size of 0.4 or higher has been used in education as a threshold for identifying “promising” educational interventions [48, 49].
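A minimal sketch of this calculation is shown below, assuming the common pooled-standard-deviation form of Cohen's d; the source does not state which variant was used. The function name and the scores are hypothetical and are not the study data.

```python
import math
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical knowledge check scores (out of 7) for two small groups.
scores_a = [7, 6, 7, 5, 6, 7]
scores_b = [6, 5, 6, 5, 7, 5]
d = cohens_d(scores_a, scores_b)
print(f"Cohen's d = {d:.2f}")
```

A positive value indicates that the first group scored higher, and the magnitude can be read against the conventional thresholds described above.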

Instructional efficiency, a concept that combines both cognitive effort and learning outcomes, was also calculated. Questions asking students to rate the perceived difficulty of the exercise were included in the survey to help determine the cognitive effort required to complete the activities [50]. For this analysis, the adapted instructional efficiency measure was calculated utilizing standardized Z scores for knowledge check performance and self-perceived difficulty for the two groups [51].
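The sketch below illustrates one way such a measure can be computed, using the Paas and Van Merriënboer form E = (Z_performance − Z_effort) / √2, with the self-perceived difficulty rating standing in for mental effort. Standardizing against the pooled sample, the function names, and the data are assumptions made for illustration; they are not taken from the study.

```python
import math
from statistics import mean, stdev


def z_scores(values, reference):
    """Standardize values against the mean and SD of a reference sample."""
    ref_mean, ref_sd = mean(reference), stdev(reference)
    return [(value - ref_mean) / ref_sd for value in values]


def instructional_efficiency(performance, difficulty, all_performance, all_difficulty):
    """Group efficiency: (mean Z performance - mean Z difficulty) / sqrt(2)."""
    z_performance = mean(z_scores(performance, all_performance))
    z_difficulty = mean(z_scores(difficulty, all_difficulty))
    return (z_performance - z_difficulty) / math.sqrt(2)


# Hypothetical scores and 5-point difficulty ratings for two groups.
perf_a, diff_a = [6, 7, 7, 6], [2, 2, 3, 1]
perf_b, diff_b = [5, 6, 5, 6], [3, 2, 3, 4]
pooled_perf, pooled_diff = perf_a + perf_b, diff_a + diff_b

eff_a = instructional_efficiency(perf_a, diff_a, pooled_perf, pooled_diff)
eff_b = instructional_efficiency(perf_b, diff_b, pooled_perf, pooled_diff)
print(f"Group A efficiency = {eff_a:.2f}, Group B efficiency = {eff_b:.2f}")
```

A positive value means a group achieved above-average performance relative to the difficulty it reported, and a negative value means the opposite.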

Qualitative results from open-ended survey questions were analyzed by the primary author (MD) utilizing thematic analysis and an inductive approach [52]. A codebook was created, and all responses were coded using this guide (see Supplemental material for codebook). A second coder (KF) checked the codes’ interpretation against the data. Subsequently, categories and themes emerged from iterative examination and analysis.

This project was deemed Educational Quality Improvement during IRB pre-screening at Virginia Commonwealth University and Quality Improvement Program consultation at Harvard University. The SQUIRE 2.0 revised standards for quality improvement reporting excellence were followed [53].

Results

Overall, 171 students completed the 7-question knowledge check as assigned and on time (Mean (SD) = 6.4 (0.9)). Of these students, the 82 who reviewed the gamified module scored slightly higher on average (Mean (SD) = 6.5 (0.7)) than the 89 who completed the non-gamified module (Mean (SD) = 6.2 (1.0)). This difference was statistically significant (p = 0.02), and the effect size was 0.4, making it a “promising” educational intervention [48, 49]. Of note, only 4 students chose the module not assigned (2 gamified and 2 non-gamified).

A total of 81 students chose to fill out the optional post-module survey (35 reviewed the standard, non-gamified module and 46 reviewed the gamified module). Additionally, 55 students answered at least one open-ended survey question (21 reviewed the standard module and 34 reviewed the gamified module). Only one student who completed the survey chose their module (gamified).

No relationship was identified between the self-reported time required to complete the module and the version of module reviewed (p = 0.7), with 79 out of the 80 students who answered this question reporting that the module took one hour or less to complete (< 30 min or 30 – 60 min). Additionally, most students reported being only slightly or moderately familiar with the material covered in the module from prior experience or coursework (63/80, 78.8%). Only one student reported being extremely familiar with the material. There was no significant difference in reported familiarity with the material between the students who reviewed the gamified and standard modules (p = 0.5).

When asked how easy or difficult the module was to understand, most students for both the gamified and standard module answered “easy” or “neutral” to the question. No student reported that either version of the module was “difficult” or “extremely difficult” to understand. No relationship was identified between the self-perceived difficulty and the version of module reviewed (p = 0.6).

As can be seen in Fig. 2, instructional efficiency was higher for the gamified module.

Fig. 2. Scatterplot of instructional efficiency of the gamified module and standard module. Legend: Standardized Z scores for knowledge check performance on the y-axis and standardized Z scores for self-perceived difficulty on the x-axis (efficiency graph format adapted from Paas & Van Merrienboer, 1993 [51]).

Measures of motivation and confidence

Seven survey questions related to students’ general motivation to learn about lab medicine (relevance, interest, importance, general confidence). The calculated Cronbach’s alpha for this construct was 0.81. The overall results indicate that after completing either version of the module, students were, on average, more than moderately motivated to learn laboratory medicine topics in general (Mean (SD): 3.2(0.6), n = 79). Scores for this metric were similar between groups (Mean (SD) = 3.3(0.6), n = 45 for gamified group and Mean (SD) = 3.1(0.5), n = 34 for standard group), and no statistically significant difference was identified (p = 0.3).
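For readers unfamiliar with the internal-consistency statistic reported here, the following Python sketch shows the standard Cronbach's alpha formula, alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores). The function name and the Likert responses are hypothetical and do not come from the study survey.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [
        variance([respondent[i] for respondent in responses]) for i in range(k)
    ]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(respondent) for respondent in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses: five respondents, three items.
example = [[4, 4, 5], [3, 3, 3], [2, 3, 2], [5, 4, 5], [3, 2, 3]]
alpha = cronbach_alpha(example)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together and can reasonably be averaged into a single construct score.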

An additional set of 6 questions asked students to rate their confidence with the specific material presented in the module. The questions were based on module objectives. The calculated Cronbach’s alpha for this construct was 0.91. The students’ average level of confidence with the specific objectives covered in the Lab Medicine module was 3.2 (SD = 0.7) for all 77 respondents. These results indicate that, on average, the students were more than moderately confident with their understanding of the specific objective-based topics covered in the modules. Average scores for this metric differed slightly between groups, with the students who reviewed the gamified module reporting slightly higher average confidence with these specific topics (Mean (SD) = 3.4 (0.7), n = 44) than the students who reviewed the standard module (Mean (SD) = 3.0(0.7), n = 33). However, this difference did not reach statistical significance (p = 0.06).

Attitudes and subjective experiences

Of the 81 students who submitted a survey, 55 answered at least one of the open-ended questions asking what they liked about the module, how it could be improved, if they would like to see more modules like this, and for any additional comments. A codebook was created (see Supplemental material for codebook). All responses were coded using this as a guide and categories and themes emerged from further examination. Figure 3 summarizes the themes identified and Table 2 provides illustrative quotes. Students expressed similar positive sentiments towards both versions of the module. Many students expressed enthusiasm for this form of interactive prework, and when asked if they would like to see more modules like this in their courses, 89.1% (41 of 46 respondents to this question) answered affirmatively. In their elaboration, many students declared the modules to be superior to other forms of prework, including prereading, reviewing PowerPoint slides, and watching pre-recorded lectures. They appreciated the interactive nature and feedback provided in both versions of the module. Additionally, the students who reviewed the gamified module found that the game elements added value to the experience and enhanced enjoyment and efficiency. Students specifically commented on the progress, personalization and story elements that were unique to the gamified module.

Fig. 3. Overview of categories and themes emerging from students’ open-ended survey results. Legend: Review of student comments revealed that both modules were appreciated by students, while the game elements also added value. Students found that both modules conveyed valuable information in an engaging, enjoyable, effective, and efficient manner. Overall, students preferred either module to alternative prework. Feedback for future improvements was similar for both modules and included adding more control for the video portions of the module. The unique elements found in the gamified module added value by boosting student enjoyment and engagement.

Discussion

In this project, the use of gamification for teaching Laboratory Medicine to first-year medical students was explored. To accomplish this, two versions of an interactive online module for teaching Laboratory Medicine to first-year medical students at a single institution were evaluated. Both modules were interactive and designed using relevant learning theories, such as the cognitive load theory [11, 37, 38] and the cognitive theory of multimedia learning [6, 10], as a guide. One of these modules was “gamified” with the addition of game features (progress meters, points, badges, and story elements). While the gamified module was considered the “intervention,” the standard “control” module was designed to be an effective learning tool on its own. Providing students with a similar alternative learning tool allowed for an effective evaluation of gamification in this setting. Even though the use of gamification has been reported in other areas of medical education, it was important to investigate its use in laboratory medicine education for medical students since data are lacking in this area of study and the effects of gamification can vary depending on the context [34, 36].

Improved learning outcomes were seen for the students who reviewed the gamified module. These students performed better on the post-module knowledge check than the “control” group of students who reviewed the standard (non-gamified) module. The difference in scores corresponds to an effect size of 0.4 for the gamified module, suggesting that it is a promising intervention [48, 49].

While the knowledge check findings were encouraging, it was important to consider the instructional efficiency of these modules. This efficiency metric incorporated the students’ ratings of task difficulty in addition to learning outcomes [50, 54]. Using this calculation, the gamified module was more efficient than the standard module at imparting knowledge to students. Additionally, there was no difference in the self-reported time required for module completion or prior familiarity with the material between the two groups.

This instructional efficiency finding indicates that, even though game elements such as progress indicators, badges, and narrative word bubbles were not essential for learning and may have added to the extraneous load of the module [11, 37], they did not create a noticeably more difficult learning experience. It is possible that the base module design (shared by both the standard and gamified versions) optimized the cognitive load well enough to leave room in working memory for these additional elements without negative consequences for difficulty or learning. This result highlights the importance of the instructional design component of gamified interventions [30, 31].

Analysis of additional survey results found no significant differences in survey items related to “general motivation for learning lab medicine” or “confidence with specific objective-based laboratory medicine concepts” between the two module types. These findings were surprising since gamification has been shown to boost student motivation in previous studies and this enhanced motivation to learn has been used to help explain improved learning outcomes like those seen in this study [28,29,30,31, 33, 34]. Overall, students in both groups reported that they were more than moderately motivated to learn about lab medicine and confident with the material presented. These scores were slightly higher (though not statistically significant) for students who reviewed the gamified module. Perhaps a larger sample or additional survey questions could have provided more insight.

Open-ended survey responses suggested that students appreciated the interactivity and feedback included in both versions of the module, while also offering suggestions for improvement such as the desire for increased learner control of the audio/video speed. In addition, the game elements within the gamified module added value to many students’ experiences. The students specifically mentioned liking the progress indicators, personalization, and story-based questions within the gamified version of the module. The students felt these elements created a “fun” and “enjoyable” experience. While not captured in the survey results, this added enjoyment may have promoted student motivation and could help explain the difference in learning outcomes [28, 31].

While this project produced some intriguing results, it is important to note its limitations. First, this project was conducted within a single class at a single institution. In addition, while the students were asked to complete the module version assigned to their group, they were also allowed to choose their module, which introduced the potential for bias. However, very few students opted to choose (4/171 knowledge check and 1/81 survey). In addition, the students were fully informed of the version of module they were assigned and not all students filled out the optional survey. These two limitations may have also introduced bias. Also, since the students completed this assignment remotely on their own, there may have been differences in their learning environment (e.g., noisy coffee shop versus quiet office) or choices within the module (use of closed captioning option) that could have affected their performance [11, 55]. Additionally, students self-reported the time required for the module completion. Furthermore, learning outcomes focused on immediate testing, and the students’ retention of the information presented in the module was not tested. Also, while the knowledge check was developed and reviewed by experts and piloted with students and residents, the small number of individuals in the piloting phase and the number of questions limited its validity and reliability. In addition, these types of modules were new to the students, so the interest shown towards them by the students may have been due to their novelty [56]. Finally, the gamified intervention included several different game elements (points, badges, progress meters, personalization, narrative). In the present study, it is unknown which of these specific features helped or hindered the learning process.

To address these issues, future studies could include multiple classes and/or institutions, employ strict randomization and blinding, test for knowledge growth and retention, and collect more detailed and objective information about the learners and their time on task. Such studies would produce more statistical power and generalizable results. An experimental design that evaluates the value added by individual game features would also be helpful [49]. In addition, developing and testing a longitudinal series of modules to be used during an entire year or course could reduce the potential for novelty bias [56]. Finally, long term follow up could be considered to not only test retention of facts but also the incorporation of these concepts into medical practice.

Conclusion

Using gamification to teach Laboratory Medicine concepts in this setting provided students with an engaging and enjoyable experience while also bolstering short-term learning outcomes and instructional efficiency compared to the standard non-gamified control. These results, though localized, should inspire further investigation into the use of this strategy in medical education, particularly in the area of Laboratory Medicine.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

1. Schwartzstein RM, Dienstag JL, King RW, Chang BS, Flanagan JG, Besche HC, et al. The Harvard Medical School Pathways Curriculum: Reimagining Developmentally Appropriate Medical Education for Contemporary Learners. Acad Med. 2020;95(11):1687–95.

2. McBrien S, Bailey Z, Ryder J, Scholer P, Talmon G. Improving Outcomes. Am J Clin Pathol. 2019;152(6):775–81.

3. Bijol V, Byrne-Dugan CJ, Hoenig MP. Medical student web-based formative assessment tool for renal pathology. Med Educ Online. 2015;20:26765.

4. Thompson AR, Lowrie DJ. An evaluation of outcomes following the replacement of traditional histology laboratories with self-study modules. Anat Sci Educ. 2017;10(3):276–85.

5. McCoy L, Lewis JH, Dalton D. Gamification and Multimedia for Medical Education: A Landscape Review. J Am Osteopath Assoc. 2016;116(1):22–34.

6. Yue C, Kim J, Ogawa R, Stark E, Kim S. Applying the cognitive theory of multimedia learning: an analysis of medical animations. Med Educ. 2013;47(4):375–87.

7. Fontaine G, Cossette S, Maheu-Cadotte MA, Mailhot T, Deschênes MF, Mathieu-Dupuis G, et al. Efficacy of adaptive e-learning for health professionals and students: a systematic review and meta-analysis. BMJ Open. 2019;9(8):e025252.

8. Tsang HC, Truong J, Morse RJ, Hasan RA, Lieberman JA. The transfusion service laboratory in virtual reality. Transfusion. Cited 2022 Jan 23. Available from: http://onlinelibrary.wiley.com/doi/abs/10.1111/trf.16799.

9. Chen BY, Kern DE, Kearns RM, Thomas PA, Hughes MT, Tackett S. From Modules to MOOCs: Application of the Six-Step Approach to Online Curriculum Development for Medical Education. Acad Med. 2019;94(5):678–85.

10. Issa N, Schuller M, Santacaterina S, Shapiro M, Wang E, Mayer RE, et al. Applying multimedia design principles enhances learning in medical education. Med Educ. 2011;45(8):818–26.

11. van Merriënboer JJG, Sweller J. Cognitive load theory in health professional education: design principles and strategies. Med Educ. 2010;44(1):85–93.

12. Sandars J, Patel RS, Goh PS, Kokatailo PK, Lafferty N. The importance of educational theories for facilitating learning when using technology in medical education. Med Teach. 2015;37(11):1039–42.

13. Knollmann-Ritschel BEC, Regula DP, Borowitz MJ, Conran R, Prystowsky MB. Pathology Competencies for Medical Education and Educational Cases. Acad Pathol. 2017;4:2374289517715040.

14. Molinaro RJ, Winkler AM, Kraft CS, Fantz CR, Stowell SR, Ritchie JC, et al. Teaching laboratory medicine to medical students: implementation and evaluation. Arch Pathol Lab Med. 2012;136(11):1423–9.

15. Laposata M. Insufficient Teaching of Laboratory Medicine in US Medical Schools. Acad Pathol. 2016;3:2374289516634108.

16. Barai I, Gadhvi K, Nair P, Prasad S. The importance of laboratory medicine in the medical student curriculum. Med Educ Online. 2015;20:30309.

17. Chu Y, Mitchell RN, Mata DA. Using exit competencies to integrate pathology into the undergraduate clinical clerkships. Hum Pathol. 2016;47(1):1–3.

18. Doi D, do Vale RR, Monteiro JMC, Plens GCM, Ferreira Junior M, Fonseca LAM, et al. Perception of usefulness of laboratory tests ordering by internal medicine residents in ambulatory setting: A single-center prospective cohort study. PLoS One. 2021;16(5):e0250769.

19. Peedin AR. Update in Transfusion Medicine Education. Clin Lab Med. 2021;41(4):697–711.

20. Saffar H, Saatchi M, Sadeghi A, AsadiAmoli F, Tavangar SM, Shirani F, et al. Knowledge of Laboratory Medicine in Medical Students: Is It Sufficient? Iran J Pathol. 2020;15(2):61–5.

21. Smith BR, Aguero-Rosenfeld M, Anastasi J, Baron B, Berg A, Bock JL, et al. Educating medical students in laboratory medicine: a proposed curriculum. Am J Clin Pathol. 2010;133(4):533–42.

22. Molero A, Calabrò M, Vignes M, Gouget B, Gruson D. Sustainability in Healthcare: Perspectives and Reflections Regarding Laboratory Medicine. Ann Lab Med. 2021;41(2):139–44.

23. Ford J, Pambrun C. Exit competencies in pathology and laboratory medicine for graduating medical students: the Canadian approach. Hum Pathol. 2015;46(5):637–42.

24. Smith BR, Kamoun M, Hickner J. Laboratory Medicine Education at U.S. Medical Schools: A 2014 Status Report. Acad Med. 2016;91(1):107–12.

25. Guarner J, Winkler AM, Flowers L, Hill CE, Ellis JE, Workowski K, et al. Development, Implementation, and Evaluation of an Interdisciplinary Women’s Health and Laboratory Course. Am J Clin Pathol. 2016;146(3):369–72.

Roth CG, Huang W, Sekhon N, Caruso A, Kung D, Greely J, et al. Teaching Laboratory Stewardship in the Medical Student Core Clerkships Pathology-Teaches. Arch Pathol Lab Med. 2020;144(7):883–7.

Scordino TA, Darden AG. Implementation and Evaluation of Virtual Laboratory Tours for Laboratory Diagnosis of Hematologic Disease. Am J Clin Pathol. 2022;157(6):801–4.

Singhal S, Hough J, Cripps D. Twelve tips for incorporating gamification into medical education. MedEdPublish. 2019;8:216.

van Gaalen AEJ, Brouwer J, Schönrock-Adema J, Bouwkamp-Timmer T, Jaarsma ADC, Georgiadis JR. Gamification of health professions education: a systematic review. Adv Health Sci Educ Theory Pract. 2021;26(2):683–711.

Rutledge C, Walsh CM, Swinger N, Auerbach M, Castro D, Dewan M, et al. Gamification in Action: Theoretical and Practical Considerations for Medical Educators. Acad Med. 2018;93(7):1014–20.

Landers RN. Developing a Theory of Gamified Learning: Linking Serious Games and Gamification of Learning. Simul Gaming. 2014;45(6):752–68.

Kirsch J, Spreckelsen C. Caution with competitive gamification in medical education: unexpected results of a randomised cross-over study. BMC Med Educ. 2023;23(1):259.

Willig JH, Croker J, McCormick L, Nabavi M, Walker J, Wingo NP, et al. Gamification and education: A pragmatic approach with two examples of implementation. J Clin Transl Sci. 2021;5(1):e181.

Krath J, Schürmann L, von Korflesch HFO. Revealing the theoretical basis of gamification: A systematic review and analysis of theory in research on gamification, serious games and game-based learning. Comput Hum Behav. 2021;125:106963.

Arruzza E, Chau M. A scoping review of randomised controlled trials to assess the value of gamification in the higher education of health science students. J Med Imaging Rad Sci. 2021;52(1):137–46.

Hamari J, Koivisto J, Sarsa H. Does Gamification Work? – A Literature Review of Empirical Studies on Gamification. In: 2014 47th Hawaii International Conference on System Sciences. 2014. p. 3025–34.

Sweller J. Cognitive load theory and educational technology. Educ Tech Res Dev. 2020;68(1):1–16.

Sweller J, van Merriënboer JJG, Paas F. Cognitive Architecture and Instructional Design: 20 Years Later. Educ Psychol Rev. 2019;31(2):261–92.

Yarborough CB, Fedesco HN. Motivating Students. Vanderbilt Center for Teaching. 2020. Available from: https://cft.vanderbilt.edu/guides-sub-pages/motivating-students/. Accessed 17 Apr 2022.

Artino AR, La Rochelle JS, Dezee KJ, Gehlbach H. Developing questionnaires for educational research: AMEE Guide No. 87. Med Teach. 2014;36(6):463–74.

Cook DA, Beckman TJ, Thomas KG, Thompson WG. Measuring Motivational Characteristics of Courses: Applying Keller’s Instructional Materials Motivation Survey to a Web-Based Course. Acad Med. 2009;84(11):1505–9.

Dohn NB, Fago A, Overgaard J, Madsen PT, Malte H. Students’ motivation toward laboratory work in physiology teaching. Adv Physiol Educ. 2016;40(3):313–8.

Intrinsic Motivation Inventory (IMI). selfdeterminationtheory.org. Available from: https://selfdeterminationtheory.org/intrinsic-motivation-inventory/. Accessed 25 Feb 2023.

The Instructional Materials Motivation Survey (IMMS). Learning Lab. Available from: https://learninglab.uni-due.de/research-instrument/13887. Accessed 28 Apr 2022.

Loorbach N, Peters O, Karreman J, Steehouder M. Validation of the Instructional Materials Motivation Survey (IMMS) in a self-directed instructional setting aimed at working with technology. Br J Edu Technol. 2015;46(1):204–18.

Wiggins BL, Eddy SL, Wener-Fligner L, Freisem K, Grunspan DZ, Theobald EJ, et al. ASPECT: A Survey to Assess Student Perspective of Engagement in an Active-Learning Classroom. CBE Life Sci Educ. 2017;16(2):ar32.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: L. Erlbaum Associates; 1988. p. 567.

Hattie J. Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement. London: Routledge; 2008. p. 392.

Mayer RE. Computer Games in Education. Annu Rev Psychol. 2019;70(1):531–49.

van Gog T, Paas F. Instructional Efficiency: Revisiting the Original Construct in Educational Research. Educ Psychol. 2008;43(1):16–26.

Paas F, van Merriënboer JJG. The efficiency of instructional conditions: An approach to combine mental effort and performance measures. Hum Factors. 1993;35:737–43.

Kiger ME, Varpio L. Thematic analysis of qualitative data: AMEE Guide No. 131. Med Teach. 2020;42(8):846–54.

Ogrinc G, Davies L, Goodman D, Batalden P, Davidoff F, Stevens D. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Qual Saf. 2016;25(12):986–92.

Kalyuga S, Chandler P, Sweller J. Incorporating learner experience into the design of multimedia instruction. J Educ Psychol. 2000;92(1):126–36.

Choi HH, van Merriënboer JJG, Paas F. Effects of the Physical Environment on Cognitive Load and Learning: Towards a New Model of Cognitive Load. Educ Psychol Rev. 2014;26(2):225–44.

Tsay CHH, Kofinas AK, Trivedi SK, Yang Y. Overcoming the novelty effect in online gamified learning systems: An empirical evaluation of student engagement and performance. J Comput Assist Learn. 2020;36(2):128–46.

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

MD conceived the project, analyzed all data, created the tables and figures, and was the major contributor in writing the manuscript. KS contributed to the project's conception, study design, and writing of the manuscript. SR contributed to the project's conception, study design, and writing of the manuscript. AH contributed to the project's study design, module design, and writing of the manuscript. HB contributed to the project's study design, data presentation, and writing of the manuscript. KF contributed extensively to the project's conception, study design, data analysis, data presentation, and writing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The Virginia Commonwealth University Institutional Review Board deemed this study (reference # HM20024819) exempt from formal review because it was considered a program evaluation for the purpose of curricular quality improvement, for which written informed consent is not required. Survey participation was voluntary and respondent identities were not tracked. All study methods were carried out in accordance with relevant guidelines and regulations and met all requirements of the Declaration of Helsinki.

Consent for publication

Not applicable.

Competing interests

The corresponding author, Marie Do, has communicated with LevelEx, a medical video game studio, and was offered a medical advisor position with the company. The contract for this position was finalized on 11/12/2023. Communication with the company began in July 2023, well after the completion of the educational project described in this submission and after the partial results had been presented during the HMS research symposium and VCU Health Lab Week.

This interest did not influence the results or discussion reported in this paper.

Supplementary Information

Additional file 1: Supplemental Information.

Survey Questions. Supplemental material. Codebook for qualitative analysis.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Do, M., Sanford, K., Roseff, S. et al. Gamified versus non-gamified online educational modules for teaching clinical laboratory medicine to first-year medical students at a large allopathic medical school in the United States. BMC Med Educ 23, 959 (2023). https://doi.org/10.1186/s12909-023-04951-5
