Abstract

Faculty development (FD) programs are critical for providing the knowledge and skills necessary to drive positive change in health professions education, and they take many forms to attain their goals. The Macy Faculty Scholars Program (MFSP), created by the Josiah Macy Jr. Foundation (JMJF) in 2010, aims to develop participants as leaders, scholars, teachers, and mentors. After a decade of implementation, an external review committee conducted a program evaluation to determine how well the program met its intended goals and to define options for ongoing improvement.

The committee selected Stufflebeam’s CIPP (context, input, process, products) framework to guide the program evaluation. Context and input components were derived from the MFSP description and demographic data, respectively. Process and product components were obtained through a mixed-methods approach, using both quantitative and qualitative data from participant survey responses and curriculum vitae (CV).

The evaluation found participants responded favorably to the program and demonstrated an overall increase in academic productivity, most pronounced during the two years of the program. Mentorship, community of practice, and protected time were cited as major strengths. Areas for improvement included: enhancing the diversity of program participants, program leaders and mentors across multiple sociodemographic domains; leveraging technology to strengthen the MFSP community of practice; and improving flexibility of the program.

The program evaluation results provide evidence supporting ongoing investment in faculty educators and summarize key strengths and areas for improvement to inform future FD efforts, both for the MFSP and for other FD programs.


Introduction

Background

Robust faculty development (FD) programs in the health professions are necessary to provide faculty with the knowledge and skills required to prepare learners for an ever-evolving healthcare landscape. Although FD initially emerged as a form of training to develop teaching skills, it has since expanded to include activities that improve knowledge and skills in other domains such as research, administration and clinical skills [1,2,3]. In addition to benefiting learners, FD programs can augment the careers of health professions educators through improved self-efficacy and sense of belonging within the broader education community [4]. On an institutional level, FD programs can define and strengthen a medical center’s academic profile [5]. Similarly, national FD programs may benefit from the reputation of the sponsoring institution and its particular goals and mission.

Despite the importance of FD programs, several barriers to participation exist, including a lack of protected time for attendees, competing responsibilities, a perceived lack of recognition and financial reward for teaching, a perceived lack of direction from and connection to the institution, and logistical factors [6]. Lack of protected time remains the most commonly reported barrier to scholarship, which is a key output of many FD programs. This constraint is particularly acute for faculty managing competing administrative and leadership activities, which are additional key outputs of FD programs [7, 8]. Previous FD literature has identified dedicated time and financial resources as primary drivers of participants’ ability to realize the benefits of participation, because they create capacity for learning, reflection, scholarship, and development of leadership skills [8,9,10]. The recognized importance of FD programs has led to growth in their number and size, with corresponding financial commitments. Justifying ongoing investment in FD programs, and stewarding that investment of money, time, and people well, requires evaluation of the intended and unintended outcomes of such programs.

One such flagship program is the Macy Faculty Scholars Program (MFSP). The MFSP is an FD program created by the Josiah Macy Jr. Foundation (JMJF) in 2010 with the goal of developing health professions educators as leaders, scholars, teachers and mentors [11]. The MFSP selects mid-career faculty who have already demonstrated promise, and subsequently provides them with a structured curriculum, protected time, mentorship, a national network, and a community of practice (CoP). The vision of the program was for Scholars to become “the drivers for change in health professions education, toward the goal of creating an educational system that better meets the needs of the public.” Specifically, the JMJF noted interest in projects that: advance equity, diversity and belonging; enhance collaboration among health professionals, educators, and learners; and successfully navigate and address ethical dilemmas that arise when principles of the health professions conflict with barriers imposed by the health delivery system.

The program selects 5 Scholars per year from institutional nominations through a rigorous, multi-level selection process based on each applicant’s application, project proposal, and institutional support. Accepted Scholars receive base salary support plus fringe benefits to protect at least 50% of their time for two years. During this time, Scholars produce academic output, engage in the national MFSP educational network, participate in the Harvard Macy Institute Program for Educators in Health Professions [12], and are mentored by a national group of mentors.

Until this evaluation, the JMJF had not engaged in a significant, formal, ongoing evaluation of the MFSP, as its priorities had been building the program, recruiting mentors, developing selection criteria, partnering with the Harvard Macy Institute on the curriculum, and refining various policies. More generally, rigorous evaluations of national FD programs that use both qualitative and quantitative methods are often neglected or treated as an afterthought in the literature, highlighting the need to publish academically rigorous program evaluations so that program outcomes are transparent [13,14,15]. Therefore, at the decade mark, the JMJF commissioned an external review of the MFSP to evaluate components of the program, namely curriculum, mentorship models, selection, and program and Scholar outcomes. The authors conducted this program evaluation, which is detailed in this paper. The paper can serve as an example of an academically rigorous program evaluation using both quantitative and qualitative methodology, and as additional data for other health professions education leaders seeking to justify, build, or evaluate FD programs and to demonstrate the value of investing in health professions educators [16,17,18].

Methods

Evaluation framework

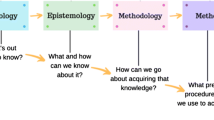

The evaluation team elected to apply Stufflebeam’s CIPP evaluation framework, which contains four major levels of evaluation: Context (program objectives and the basis for those objectives), Input (assessment of the educational strategies employed to meet the objectives), Process (actual implementation and how it compares to planned activities), and Product (resulting outcomes and whether they met the needs of the target population) [19, 20]. The CIPP model avoids an overly simplistic approach by incorporating systems theory, which holds that the whole is greater than the sum of its parts and acknowledges the interrelations among individual components. CIPP also incorporates complexity theory, which accounts for the relationships of program elements to participants and of participants with one another [19, 21,22,23]. Although this evaluation occurred at the 10-year mark, the Macy Foundation leadership intended to use the resulting data both summatively, to assess its return on investment, and formatively, to inform future iterations of the program.

We obtained the context and input components of the evaluation from the MFSP program description and Scholar demographic data, respectively. We applied a mixed-methods approach to evaluate the process and product, using both quantitative and qualitative data obtained from program statistics, survey responses, and curriculum vitae (CV). Informed consent was obtained from all subjects. The University of Michigan Institutional Review Board reviewed the study (HUM00175014) for ethical approval and determined it to be exempt from ongoing review. All methods and experimental protocols were approved and carried out in accordance with relevant guidelines and regulations.

Curriculum Vitae (CV) analysis

MFSP Scholars with a minimum of one year of post-program activity submitted current CVs for analysis of the types and number of academic products. We aggregated and de-identified the CVs. We classified academic output into three time periods: time period 1 (pre-MFSP) comprises the years before the program, spanning the same number of years as time period 3; time period 2 (intra-MFSP) comprises the two program years; and time period 3 (post-MFSP) comprises the years after the program through the end of 2019, ranging from 1 year for those who entered in 2016 to 6 years for those who entered in 2011 (mean 3.5 years). Nine of the authors (RG, MH, LG, PM, KN, RP, KR, MT, JT) extracted data and performed exploratory data analyses, including review of data distributions with histograms and Q-Q plots; descriptive statistics such as mean, median, variance, skewness, and kurtosis; and outlier analyses using the interquartile range and Cook’s distance (see Additional file 1: Appendix 1 for the CV data collection form).
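The period definitions and per-year rates can be made concrete with a short Python sketch. It assumes the caller supplies the first calendar year counted as a program year (how each cohort’s entry year maps to calendar years is not specified here, so `first_program_year` and the helper names are hypothetical), counts outputs by calendar year, and mirrors the post-MFSP window length for the pre-MFSP window. The outlier screen uses the conventional 1.5 × IQR fence, an assumed multiplier; the Cook’s distance check is not shown.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List, Sequence

POST_END_YEAR = 2019  # time period 3 runs through the end of 2019


@dataclass
class Periods:
    pre: range    # time period 1: same length as time period 3
    intra: range  # time period 2: the two program years
    post: range   # time period 3: after the program through 2019


def build_periods(first_program_year: int) -> Periods:
    """Hypothetical helper: derive the three windows from the first program year."""
    intra = range(first_program_year, first_program_year + 2)
    post = range(intra.stop, POST_END_YEAR + 1)
    if len(post) < 1:
        # Only Scholars with at least one post-program year were included.
        raise ValueError("needs at least one post-program year")
    pre = range(first_program_year - len(post), first_program_year)
    return Periods(pre=pre, intra=intra, post=post)


def rates_per_year(output_years: Sequence[int], periods: Periods) -> Dict[str, float]:
    """Count outputs (one entry per product, by year) per window, divided by window length."""
    windows = {"pre": periods.pre, "intra": periods.intra, "post": periods.post}
    return {
        name: sum(1 for year in output_years if year in window) / len(window)
        for name, window in windows.items()
    }


def iqr_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]; k = 1.5 is an assumed convention."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Example: program years 2017-2018, so time period 3 is 2019 only and time period 1 is 2016.
periods = build_periods(2017)
print(rates_per_year([2016, 2017, 2017, 2018, 2019, 2019], periods))
# {'pre': 1.0, 'intra': 1.5, 'post': 2.0}
print(iqr_outliers([1, 2, 3, 4, 5, 6, 7, 100]))  # [100]
```

Because the pre-MFSP window is sized to match the post-MFSP window, each Scholar serves as their own comparison over an equal span, which is why earlier cohorts contribute longer windows than later ones.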

Survey

The evaluation team developed the survey questions (see Additional file 1: Appendix 2 for the complete survey) through an iterative process of expert input and literature review, with content focused on strengths and areas for improvement of the MFSP. The evaluation team piloted the instrument, refined the questions accordingly, and built a Qualtrics survey requiring roughly 5 to 10 minutes to complete. The survey was not piloted with MFSP Scholars because of the small size of the cohort and the potential for bias from seeing the questions twice. MFSP Scholars received the survey via email. Responses were confidential and de-identified for analysis.

Quantitative analysis

For the quantitative analysis, we used demographic data, data extracted from the CV analysis, and Likert-type survey items. We expressed the number of academic outputs from the CV analysis as rates (number per year for a given time period). We used descriptive statistics and analysis of variance (ANOVA) to examine the results. Before comparing time periods, we log-transformed numerical data to meet the normality assumptions of the statistical tests. We used a one-way, within-subjects ANOVA to test for statistically significant within-subject differences in outcomes across time periods 1, 2, and 3, with partial eta-squared as the effect size measure. Post-hoc analyses assessed the effect of protected time on these outcomes by an analysis of covariance (ANCOVA) with protected time as a covariate. All statistical tests were two-sided with a 0.05 significance level. We performed analyses with IBM SPSS version 26.0 (Armonk, NY).
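As an illustration of the within-subjects comparison, the following is a minimal Python sketch assuming each Scholar contributes one rate per time period. The log transform uses log(x + 1) so that zero rates stay defined; that offset is an assumption, since the text does not state one. The sketch computes the F statistic and partial eta-squared from the standard sums of squares for a one-way repeated-measures design. It omits the sphericity corrections (e.g., Greenhouse-Geisser) that SPSS reports and the ANCOVA step, and leaves the p-value to a library call such as `scipy.stats.f.sf(F, df_effect, df_error)`.

```python
import math
from typing import List, Sequence, Tuple


def log_transform(rates: Sequence[float]) -> List[float]:
    # Assumed transform: natural log of (rate + 1), which keeps zero rates defined.
    return [math.log1p(r) for r in rates]


def rm_anova(data: Sequence[Sequence[float]]) -> Tuple[float, int, int, float]:
    """One-way within-subjects ANOVA.

    data[i][j] is the outcome for subject i in condition j (here, time period j).
    Returns (F, df_effect, df_error, partial eta-squared).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)  # between time periods
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # stable between-subject differences
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj                  # subject-by-period residual

    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    partial_eta_sq = ss_cond / (ss_cond + ss_error)
    return f_stat, df_cond, df_error, partial_eta_sq


# Hypothetical rates for three Scholars across time periods 1, 2, and 3.
raw = [[1, 3, 2], [2, 5, 2], [3, 4, 5]]
print(rm_anova(raw))  # (3.0, 2, 4, 0.6) on the untransformed values
print(rm_anova([log_transform(row) for row in raw]))
```

Removing the subject sum of squares from the error term is what distinguishes the within-subjects design from a between-groups ANOVA: stable differences in each Scholar’s baseline productivity do not count against the time-period effect.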

Qualitative analysis

Following the Standards for Reporting Qualitative Research, we used a constructivist approach to qualitatively analyze open-ended survey responses to further understand participants’ experience with the MFSP [24]. With regard to positionality and reflexivity, the coding team comprised health professions educators diverse in gender and experience. Four authors (JDT, RP, KN, KR) reviewed and analyzed transcripts under the direction of a qualitative research expert (JV). All four coding authors met as a group to create codes and reach agreement on them for three questions. The remaining questions were split between two teams (KN/RP and JDT/KR), each of which coded its half independently. The teams then convened to discuss all codes and determine appropriate themes.

Results

Quantitative analysis (demographic, CV, survey)

Table 1 details baseline demographic data of MFSP applicants and selected Scholars as a component of the inputs in the CIPP model for the MFSP program.

Table 2 summarizes the products of the CIPP model: rates of academic outputs per year for each category of the CV analysis over each time period, with results of the statistical analysis of differences across time periods and effect sizes. A total of 31 CVs met criteria for inclusion in the CV analysis.

Three areas of accomplishment showed statistically significant temporal differences, though with different patterns of change. The rate of education-specific grants as principal investigator (PI) decreased from time period 1 to 2 and again from time period 2 to 3. The rate of total grant dollars (as either PI or co-investigator) decreased from time period 1 to 2 but increased from time period 2 to 3. The rate of education-specific national presentations increased from time period 1 to 2 but decreased from time period 2 to 3.

Four areas showed trends toward temporal differences that did not reach statistical significance. The rate of education-specific grants (as either PI or co-investigator) decreased from time period 1 to 2 and again from time period 2 to 3. The rates of total publications, education-specific presentations (regional, national, or international), and education-specific international presentations increased from time period 1 to 2 but decreased from time period 2 to 3.

Table 3 details a post-hoc analysis of the effect of protected time on the rate of accomplishments. Total grants, total grants as PI, education-specific grants, education-specific grants as PI, education-specific publications, and education-specific last-author publications all had a statistically significant correlation with the amount of protected time.

Survey response rate was 94% (34/36). Most respondents “agreed” or “strongly agreed” that the MFSP developed them as a teacher (29/34), mentor (27/34), scholar (33/34), and educational leader (34/34). Most “agreed” or “strongly agreed” that the program was a valuable use of time (33/34), allowed things to be accomplished that otherwise would not have been (34/34), and would recommend the program to others (33/34). Scholars reported a mean (± SD) of 51 ± 12% protected time, with a median of 51%.
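The agreement counts are reported as fractions of the 34 respondents. A small Python sketch converts them to percentages; the short item labels and the rounding to whole percentages are editorial choices, not part of the survey instrument.

```python
from typing import Dict

INVITED = 36
RESPONDED = 34

# "Agreed" or "strongly agreed" counts reported above, out of 34 respondents.
AGREEMENT_COUNTS: Dict[str, int] = {
    "developed as teacher": 29,
    "developed as mentor": 27,
    "developed as scholar": 33,
    "developed as educational leader": 34,
    "valuable use of time": 33,
    "accomplished things otherwise not possible": 34,
    "would recommend to others": 33,
}


def percent(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage."""
    return 100.0 * part / whole


print(f"response rate: {percent(RESPONDED, INVITED):.0f}%")  # response rate: 94%
for item, count in AGREEMENT_COUNTS.items():
    print(f"{item}: {count}/{RESPONDED} = {percent(count, RESPONDED):.0f}%")
```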

Qualitative analysis

The main themes identified in the qualitative analysis provide insight into the process component of the CIPP model and fit under two overarching domains: areas of strength and areas for improvement (Table 4). Themes related to strengths included: mentorship, community of practice, and resources. Themes related to areas for improvement included: lack of diversity; technology; and individualization.

Discussion

We used the CIPP framework to complete a robust, academically rigorous program evaluation of a national FD program, adding to the literature supporting investment in health professions educators. Our data demonstrated that participants in this program developed as mentors, scholars, and educational leaders, indicating a successful interplay of the CIPP framework components: context, inputs, and process generating the intended products. Beyond evaluating the MFSP, our data offer FD leaders information for designing successful programs by highlighting the importance of protected time for increased scholarly activity and the role of mentorship, CoPs, resources, and diversity as key components of FD programs.

Context

In the CIPP model, the context is defined as the program objectives, the basis that supports those objectives, and the learner identification and demand [19, 20]. To understand the context of the MFSP program, we analyzed the MFSP program description. Scholars accepted to the MFSP program receive 50% protected time for two years during the program. The expectation is that Scholars produce academically, are engaged in the MFSP CoP, and participate in the Harvard Macy Institute Program for Educators in Health Professions throughout that time period [12]. This context positively affected career growth and academic output for the Scholars as demonstrated through increased academic output of publications and presentations during the program years.

Inputs

The inputs of the CIPP model are described as the assessment of the educational strategies employed to meet the objectives, including program plans and resources. The MFSP Scholars represent key inputs as they form a CoP. Regarding Scholar demographics, our data demonstrated diversity in gender, race, professional degree, and academic rank of the Scholars accepted to the program. In our analysis, the percentage of Scholars identifying as women matched the percentage of women applicants. Furthermore, the percentage of underrepresented in medicine (URM) Scholars was double the percentage of URM applicants. Lack of diversity in the health professions remains a critically important issue, with evidence that diversity within the care team enhances patient care [25,26,27,28,29,30,31,32]. In addition, FD opportunities for URM and women health professionals enhance retention and advancement of these same groups of faculty [33,34,35,36,37]. By continuing to emphasize recruitment of URM Scholars and mentors to the MFSP, and by focusing curricular interventions on implicit bias, social determinants of health, health care disparities, and systemic racism within medical education, FD programs can better meet their goal of training Scholars to drive critical change.

The MFSP used several educational strategies to meet the previously described objectives. First, it drew on its national network of mentors, faculty, and alumni to create a robust CoP that facilitated career growth and academic output for the Scholars. Second, it incorporated the Harvard Macy Institute Program for Educators in Health Professions curriculum, which allowed Scholars to participate in an established program that guided their professional development as health professions education scholars. The MFSP’s use of these resources and educational strategies supported the program’s intended goals; the actual implementation was further explored in the process analysis.

Process

Regarding the process of the CIPP model, or the actual implementation and execution of the planned activities to support the products, we found that the MFSP program was ultimately successful in its implementation and execution of planned activities. Our data demonstrated successful mentorship relationships, robust CoP, significant resources, and growth as educational scholars. While the participants rated the program highly overall, the analysis also revealed specific areas for improvement, including recommendations regarding mentorship, diversity, technology, and a request for continued engagement in the program post-graduation.

Mentorship and sponsorship in academic medicine represent key drivers of personal and professional development, research productivity, and career satisfaction [38,39,40]. Most Scholars rated mentorship and sponsorship highly, but many noted a lack of standardization of mentorship. Although the MFSP included nationally recognized, prolific scholars as mentors, many mentors had participated in the program since its inception, which limited the diversity of perspectives and potentially contributed to the lack of diversity among MFSP program faculty that Scholars described. One resulting suggestion for the MFSP and similar FD programs is to have mentors serve limited terms so that new mentors can join. Additional strategies previously described include allowing mentees to select their own mentors, incorporating components of formal mentoring programs, encouraging a written mentor–mentee contract, and exploring alternative models for mentorship arrangements [39].

In addition, Scholars indicated that the MFSP afforded entry into a robust CoP of educators in the health professions, including other Scholars, alumni, and program faculty. Membership in CoPs results in increased social support, which can enhance well-being and engagement and may mitigate the risk of burnout [41,42,43]. Scholars also noted resources as a strength of the MFSP, as protected time and financial support facilitated productivity by addressing a common barrier to scholarly work [8]. The majority of Scholars reported achieving the intended 50% protected time. Although participation in FD programs often comes with the promise of “protected time,” the degree to which it materializes varies. Even with funding, clinical and other administrative demands can easily consume time intended for academic pursuits, particularly for clinician-educators [44, 45]. Without truly protected time, faculty must use evening and weekend hours for scholarly and professional development activities, and weekend work is a driver of burnout [46]. The compelling finding that Scholars largely received the protected time promised likely contributed significantly to the products of the program, and other FD programs should replicate it to achieve similar gains in scholarly activity.

Finally, integration of technology represents another potential area of growth for the MFSP and for FD programs in general. Specifically, Scholars suggested improving the MFSP’s social media presence and increasing the frequency of contact via videoconferencing and other digital platforms, a theme further highlighted by COVID-19-related gathering restrictions. Furthermore, Scholars requested continued programming and connection to the MFSP community beyond the two-year fellowship through an enhanced alumni network and more communication between in-person meetings. Engaging past participants of FD programs as future program leaders enlarges the program’s CoP while also providing sponsorship and leadership opportunities to health professions educators. These approaches offer affordable and flexible ways to promote engagement among geographically distant participants and enhance a CoP [47,48,49].

Products

The products of the CIPP model, the resulting outcomes or achievements of the program, demonstrated that the MFSP successfully developed its Scholars academically. Prior studies have used competitive grant awards and publications as indicators of academic success among faculty, and we used these metrics to assess that objective as well [36, 50,51,52,53,54]. We found that education publications and presentations increased during and following MFSP participation, suggesting that Scholars were disseminating their research and innovations in education. The pronounced increase in publications and presentations during the program years likely reflects the particularly beneficial effect of protected time. Of note, productivity decreased when moving from the program years to the post-program years, which likely reflects the challenges of the ‘transition back’ from a significant proportion of protected time to typical faculty roles. Prior literature has found that individual priorities and work environment characteristics, such as number of work hours, quality of mentorship relationships, and institutional support, contribute to success with this transition [55]. Ongoing mentorship and participation in the CoP through MFSP alumni status, along with creative efforts by individual institutions to provide ongoing protected time for productivity and development beyond the completion of the program, may sustain the benefits of participation [55, 56]. The amount of protected time offered by the MFSP distinguishes it among FD programs for health professions educators, and this finding highlights the continued need for FD programs to support protected time, while adding to the body of literature demonstrating the meaningful outcomes generated by such an investment.

Implications of program evaluation findings

As the MFSP represents a flagship FD program for health professions educators, lessons learned through its evaluation provide further evidence for policymakers, organizational and institutional leaders that investing in health professions educators, particularly through providing actualized protected time, provides meaningful return on investment with regard to developing leaders, scholars, teachers, and mentors, with the intent of benefiting society at large. Additionally, the program evaluation generated a list of key themes and resultant strategies to inform future iterations of the MFSP program that are broadly applicable to other faculty developers in health professions education as they create, optimize, and expand their programs (Table 5).

In applying the lessons derived from the program evaluation, however, the learning and practice environments of related FD programs must be taken into consideration. The MFSP is a premier, nationally recognized program that draws faculty learners from institutions throughout the country. There are few similar programs. Instead, most longitudinal FD programs draw from, and are supported by, single institutions which seek to build their local educational expertise. The contrast between the national focus of the MFSP and the local focus of most institutional programs is significant in many ways. The financial support for protected time is critical to the MFSP but “support” from local programs may well reflect the influence of other values and priorities to foster the strength and reputation of the institution – above and beyond the finances for “protected time.”

Similarly, the MFSP seeks to build a national community of scholars and collaborators, whereas local programs are likely to be more concerned with building their own, local CoP. There is a risk of a local FD program focusing too narrowly on the needs and benefits of the institution but we, the authors, many of whom have participated in local FD programs, believe firmly that all local programs should recognize that medical education is a national, even international, enterprise that extends beyond the narrower interests of local contexts.

Finally, we believe that all longitudinal FD programs should rigorously evaluate themselves for both local impact and national significance. The perspective of evaluation needs to be national in scope; simply describing a program is only a first step toward the needed analysis of the key features of the program, the characteristics of the learners, and the nature of the educational context. Leaders of such FD programs need to build the knowledge and practice of the broader community, not just of a local institution. This is how individual programs contribute to the greater good in health professions education.

Limitations

We acknowledge several limitations of our evaluation. Over the 10-year period examined, the curriculum changed dynamically in response to ongoing feedback, so Scholars’ experiences varied depending on the years they participated. Additionally, CVs vary in format and content, and reviewers vary in how they interpret them. To streamline data extraction, the CV analysis subgroup jointly coded a CV to develop the extraction form (see Additional file 1: Appendix 1) through an iterative process that generated qualifying footnotes for various categories and involved real-time communication to resolve discrepancies. The evaluation would have been strengthened by obtaining CV data from faculty who were not selected, to serve as a control group; by gathering data from non-Scholar stakeholders (e.g., medical school deans, mentors, mentees); and by analyzing program costs. Ultimately, we chose the more feasible approach of surveying Scholars and using them as their own controls by comparing an equivalent number of years of productivity in the pre- and post-program time periods. As a result, we evaluated a longer time period for earlier Scholar cohorts and a shorter one for later cohorts, which could skew results. Although more resource-intensive, focus groups or interviews would have greatly enriched the data on Scholars’ experiences with program implementation, and we recommend that those embarking on a robust FD program evaluation incorporate these methods if feasible. Finally, many external confounding factors likely contributed to differences between the time periods.

Of note, the large number of statistical comparisons increases the risk of spurious (false-positive) results in the quantitative data. Although we considered conservative measures such as Bonferroni corrections, we did not apply them, because trends were of greater practical concern for the program evaluation than statistical significance. In addition, these trends were supported by themes that a separate review group identified in the qualitative data.
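For readers weighing this decision, a minimal Python sketch of the Bonferroni correction that was considered: each p-value is multiplied by the number of comparisons m (capped at 1) and compared with α = 0.05, which is equivalent to testing each raw p-value against α/m. The p-values in the example are hypothetical, not results from this evaluation.

```python
from typing import List, Sequence, Tuple


def bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> Tuple[List[float], List[bool]]:
    """Return Bonferroni-adjusted p-values and whether each remains significant."""
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]  # cap at 1, since p-values cannot exceed 1
    significant = [p_adj <= alpha for p_adj in adjusted]
    return adjusted, significant


# Hypothetical p-values from three comparisons (not data from this study).
adjusted, significant = bonferroni([0.01, 0.04, 0.20])
print(significant)  # [True, False, False]
```

With many comparisons the effective threshold α/m becomes strict, so a correction of this kind trades fewer false positives for a greater chance of missing real but modest trends.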

Despite these limitations, the evaluation had many strengths: multiple independent and objective perspectives of early career health professions educators who represent potential stakeholders, a detailed analysis of Scholars’ CVs, a relatively high survey response rate, use of an evaluation framework previously applied to FD programs, and integration of findings with relevant education theory and literature.

Conclusions

Over the 10-year course of its history, the MFSP has received positive responses from participants and has successfully developed Scholars as mentors, teachers, educational leaders, and scholars. The increase in academic productivity observed most prominently during the two-year period of the program highlights the importance of investing in health professions education faculty through actualized, rather than simply promised, protected time. Scholars also commonly cited the mentorship and CoP as the most valuable components of the program for achieving its stated goals. Areas for improvement included enhancing the diversity of program participants, program leaders and mentors across multiple domains including race, gender, geographic location, and health profession type; leveraging technology to strengthen the MFSP CoP through increased social media presence and use of remote teaching strategies; and improving flexibility of the program to meet Scholar needs. Through this program evaluation, we have demonstrated key strategies that other existing FD programs can use to inform continued development and expansion and, ultimately, to support the important work and development of health professions educators.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available because the Macy Foundation is a private foundation but are available from the corresponding author on reasonable request.

Change history

01 May 2023

A Correction to this paper has been published: https://doi.org/10.1186/s12909-023-04256-7

References

Guskey TR. What Makes Professional Development Effective? Phi Delta Kappan. 2003;84:748–50.

Steinert Y. Staff Development. In: Dent JA, Harden RM, Hunt D, Hodges BD, editors. A practical guide for medical teachers. 5th ed. Edinburgh; New York: Elsevier; 2017.

Sheets KJ, Schwenk TL. Faculty development for family medicine educators: an agenda for future activities. Teach Learn Med. 1990;2:141–8.

Sethi A, Schofield S, Ajjawi R, McAleer S. How do postgraduate qualifications in medical education impact on health professionals? Med Teach. 2016;38:162–7.

Hoy WK, Miskel CG, Tarter CJ. Educational administration: theory, research, and practice. 9th ed. New York: McGraw-Hill Humanities/Social Sciences/Languages; 2012.

Steinert Y, McLeod PJ, Boillat M, Meterissian S, Elizov M, Macdonald ME. Faculty development: a ‘Field of dreams’? Med Educ. 2009;43:42–9.

Steinert Y, Nasmith L, McLeod PJ, Conochie L. A teaching scholars program to develop leaders in medical education. Acad Med. 2003;78:142–9.

Zibrowski EM, Weston WW, Goldszmidt MA. ‘I don’t have time’: issues of fragmentation, prioritisation and motivation for education scholarship among medical faculty. Med Educ. 2008;42:872–8.

Rushmer R, Kelly D, Lough M, Wilkinson JE, Davies HTO. Introducing the learning practice – II. Becoming a learning practice. J Eval Clin Pract. 2004;10:387–98.

Onyura B, Ng SL, Baker LR, Lieff S, Millar B-A, Mori B. A mandala of faculty development: using theory-based evaluation to explore contexts, mechanisms and outcomes. Adv Health Sci Educ. 2017;22:165–86.

Macy Faculty Scholars. Josiah Macy Jr Foundation. https://macyfoundation.org/macy-scholars. Accessed 20 May 2021.

Harvard Macy Institute. https://harvardmacy.org/index.php.

Leslie K, Baker L, Egan-Lee E, Esdaile M, Reeves S. Advancing faculty development in medical education: a systematic review. Acad Med. 2013;88:1038–45.

Thompson BM, Searle NS, Gruppen LD, Hatem CJ, Nelson E. A national survey of medical education fellowships. Med Educ Online. 2011;16:5642.

Steinert Y, Mann K, Centeno A, Dolmans D, Spencer J, Gelula M, et al. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME Guide No. 8. Med Teach. 2006;28:497–526.

Onyura B. Useful to whom? Evaluation utilisation theory and boundaries for programme evaluation scope. Med Educ. 2020;54:1100–8.

Carraccio C, Englander R, Van Melle E, ten Cate O, Lockyer J, Chan M-K, et al. Advancing competency-based medical education: a charter for clinician-educators. Acad Med. 2016;91:645–9.

Sklar DP, Weinstein DF, Carline JD, Durning SJ. Developing programs that will change health professions education and practice: principles of program evaluation scholarship. Acad Med. 2017;92:1503–5.

Stufflebeam DL, Shinkfield AJ. Evaluation theory, models, and applications. San Francisco, CA: Jossey-Bass; 2007.

Steinert Y. Faculty development in the new millennium: key challenges and future directions. Med Teach. 2000;22:44–50.

Frye AW, Hemmer PA. Program evaluation models and related theories: AMEE guide no. 67. Med Teach. 2012;34:e288–99.

Stufflebeam D. Program Evaluation. In: Evaluation Models. Boston: Kluwer-Nijhoff; 1983.

Popham WJ. Educational evaluation. 3rd ed. Boston: Allyn and Bacon; 1993.

O’Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89:1245–51.

Yu PT, Parsa PV, Hassanein O, Rogers SO, Chang DC. Minorities struggle to advance in academic medicine: a 12-y review of diversity at the highest levels of America’s teaching institutions. J Surg Res. 2013;182:212–8.

Karani R, Varpio L, May W, Horsley T, Chenault J, Miller KH, et al. Commentary: racism and bias in health professions education. Acad Med. 2017;92:S1–6.

Association of American Medical Colleges. Diversity in Medicine: Facts and Figures 2019. 2019.

Agency for Healthcare Research and Quality. National Healthcare Disparities Report. Rockville, MD: AHRQ; 2017.

Brown DJ, DeCorse-Johnson AL, Irving-Ray M, Wu WW. Performance evaluation for diversity programs. Policy Polit Nurs Pract. 2005;6:331–4.

Grumbach K, Mendoza R. Disparities in human resources: addressing the lack of diversity in the health professions. Health Aff (Millwood). 2008;27:413–22.

Health Resources and Services Administration. The rationale for diversity in the health professions: a review of the evidence. Rockville, MD: U.S. DHHS; 2006.

Smedley BD, Butler A, Bristow L. In the Nation’s Compelling Interest: Ensuring Diversity in the Health-Care Workforce. Washington, DC: National Academies Press; 2004.

Daley S, Wingard DL, Reznik V. Improving the retention of underrepresented minority faculty in academic medicine. J Natl Med Assoc. 2006;98:1435–40.

Rodriguez JE, Campbell KM, Fogarty JP, Williams RL. Underrepresented minority faculty in academic medicine: a systematic review of URM faculty development. Fam Med. 2014;46:100–4.

Chang S, Guindani M, Morahan P, Magrane D, Newbill S, Helitzer D. Increasing promotion of women faculty in academic medicine: impact of national career development programs. J Womens Health. 2020;29:837–46.

Rust G, Taylor V, Herbert-Carter J, Smith QT, Earles K, Kondwani K. The Morehouse faculty development program: evolving methods and 10-year outcomes. Fam Med. 2006;38:43–9.

Guglielmo BJ, Edwards DJ, Franks AS, Naughton CA, Schonder KS, Stamm PL, et al. A critical appraisal of and recommendations for faculty development. Am J Pharm Educ. 2011;75:122.

Sambunjak D, Straus SE, Marušić A. Mentoring in academic medicine: a systematic review. JAMA. 2006;296:1103.

Kashiwagi DT, Varkey P, Cook DA. Mentoring programs for physicians in academic medicine: a systematic review. Acad Med. 2013;88:1029–37.

Ayyala MS, Skarupski K, Bodurtha JN, González-Fernández M, Ishii LE, Fivush B, et al. Mentorship is not enough: exploring sponsorship and its role in career advancement in academic medicine. Acad Med. 2019;94:94–100.

Bartle E, Thistlethwaite J. Becoming a medical educator: motivation, socialisation and navigation. BMC Med Educ. 2014;14:110.

Maslach C, Schaufeli WB, Leiter MP. Job burnout. Annu Rev Psychol. 2001;52:397–422.

Glew RH, Russell JC. The importance of community in academic health centers. Teach Learn Med. 2013;25:272–4.

Sheffield JV, Wipf JE, Buchwald D. Work activities of clinician-educators. J Gen Intern Med. 1998;13:406–9.

Beasley BW, Wright SM. Looking forward to promotion: characteristics of participants in the prospective study of promotion in academia. J Gen Intern Med. 2003;18:705–10.

West CP, Dyrbye LN, Shanafelt TD. Physician burnout: contributors, consequences and solutions. J Intern Med. 2018;283:516–29.

Cahn PS, Benjamin EJ, Shanahan CW. ‘Uncrunching’ time: medical schools’ use of social media for faculty development. Med Educ Online. 2013;18:20995.

Klein M, Niebuhr V, D’Alessandro D. Innovative online faculty development utilizing the power of social media. Acad Pediatr. 2013;13:564–9.

Chan TM, Gottlieb M, Sherbino J, Cooney R, Boysen-Osborn M, Swaminathan A, et al. The ALiEM faculty incubator: a novel online approach to faculty development in education scholarship. Acad Med. 2018;93:1497–502.

Roy KM, Roberts MC, Stewart PK. Research productivity and academic lineage in clinical psychology: who is training the faculty to do research? J Clin Psychol. 2006;62:893–905.

Sax LJ, Hagedorn LS, Arredondo M, Dicrisi FA III. Faculty research productivity: exploring the role of gender and family-related factors. Res High Educ. 2002;43:423–46.

Eisen A, Barlett P. The Piedmont project: fostering faculty development toward sustainability. J Environ Educ. 2006;38:25–36.

Karimi R, Arendt CS, Cawley P, Buhler AV, Elbarbry F, Roberts SC. Learning bridge: curricular integration of didactic and experiential education. Am J Pharm Educ. 2010;74:48.

Nunez-Wolff C. A study of the relationship of external funding to medical school faculty success. Diss Abstr Int Sect Humanit Soc Sci. 2007;68:141–223.

Jagsi R, Griffith KA, Jones RD, Stewart A, Ubel PA. Factors associated with success of clinician-researchers receiving career development awards from the national institutes of health: a longitudinal cohort study. Acad Med. 2017;92:1429–39.

Anderson CM, Campbell J, Grady P, Ladden M, McBride AB, Montano NP, et al. Transitioning back to faculty roles after being a Robert Wood Johnson Foundation Nurse Faculty scholar: challenges and opportunities. J Prof Nurs Off J Am Assoc Coll Nurs. 2020;36:377–85.

St-Onge C, Young M, Varpio L. Development and validation of a health profession education-focused scholarly mentorship assessment tool. Perspect Med Educ. 2019;8:43–6.

Acknowledgements

The authors thank Patricia Mullan, PhD, for providing resources on FD program evaluation during the planning of this project.

Funding

Mary Haas, MD, MHPE, Justin Triemstra, MD, MHPE, Marty Tam, MD, Katie Neuendorf, MD, Katherine Reckelhoff, DC, MHPE, Rachel Gottlieb-Smith, MD, MHPE, Ryan Pedigo, MD, MHPE, Suzy McTaggart, John Vasquez, PhD have no disclosures. Bobbie Berkowitz, PhD, RN, NEA-BC, FAAN, Edward Hundert, MD, and Larry Gruppen, PhD were paid by the Macy Foundation to plan and conduct the evaluation of the MFSP. Holly J. Humphrey, MD, MACP is the President of the Macy Foundation.

Author information

Contributions

Authors MH and JT wrote and edited the manuscript. Authors MH and MT prepared Tables 1–3, and author JT prepared Table 4. Authors KN, KR, RGS, RP, SM, and JV assisted with data collection and data analysis. Authors EMH, BB, HJH, and LG assisted in designing and overseeing the project. All authors reviewed and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Informed consent was obtained from all participants. The study was reviewed for ethical approval by the University of Michigan Institutional Review Board (HUM00175014) and was deemed exempt from ongoing review. All methods and experimental protocols were approved and carried out in accordance with relevant guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

Bobbie Berkowitz, PhD, RN, NEA-BC, FAAN, Edward Hundert, MD, and Larry Gruppen, PhD were paid by the Macy Foundation to plan and conduct the evaluation of the MFSP. Holly J. Humphrey, MD, MACP is the President of the Macy Foundation. All other authors report no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: we have deleted Daniel D. Federman and Ralph W. Gerard as authors and we have updated the affiliation of author Holly J. Humphrey.

Supplementary Information

Additional file 1:

Appendix 1. CV Data Extraction Form. Appendix 2. MFSP Scholar Survey.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Haas, M., Triemstra, J., Tam, M. et al. A decade of faculty development for health professions educators: lessons learned from the Macy Faculty Scholars Program. BMC Med Educ 23, 185 (2023). https://doi.org/10.1186/s12909-023-04155-x

DOI: https://doi.org/10.1186/s12909-023-04155-x