Abstract

Background

YouTube is a valuable source of health-related educational material that can have a profound impact on people’s behaviors and decisions. However, YouTube also hosts a wide variety of unverified content that may promote unhealthy behaviors and activities. In this systematic review, we aim to provide insight into the published literature on the quality of health information and educational videos on YouTube.

Methods

We searched Google Scholar, Medline (through PubMed), EMBASE, Scopus, ScienceDirect, Web of Science, and ProQuest to find all papers analyzing medical and health-related content on YouTube that were published in English up to August 2020. Based on the eligibility criteria, 202 papers were included in our study. We reviewed every article and extracted relevant data, such as the number of videos and assessors, the number and type of quality categories, and the authors’ recommendations. The extracted data were aggregated using different methods to compile the results.

Results

The selected articles assessed a total of 22,300 videos (median per article = 94, interquartile range = 50.5–133). The videos were evaluated by one or more assessors (median = 2, interquartile range = 1–3). Video quality was assessed by scoring, by categorization, or according to the creators’ bias. Researchers commonly employed scoring systems that were either standardized (e.g., GQS, DISCERN, and JAMA) or based on the guidelines and recommendations of professional associations. The aggregated scoring and categorization data indicate that health-related content on YouTube is of average to below-average quality. The compiled results of bias-based classification show that only 32% of the videos appear neutral toward the health content. Furthermore, most of the studies found either a negative correlation or no correlation between the quality and popularity of the assessed videos.

Conclusions

YouTube is not a reliable source of medical and health-related information. YouTube’s popularity-driven metrics such as the number of views and likes should not be considered quality indicators. YouTube should improve its ranking and recommender system to promote higher-quality content. One way is to consider expert reviews of medical and health-related videos and to include their assessment data in the ranking algorithm.


Background

YouTube is the world’s second most popular search engine and social media platform [1]. In 2020, YouTube had more than 2.1 billion users, who watched over one billion hours of video per day, while over 500 hours of video were uploaded each minute [2]. According to published statistics, over 95% of the Internet population interacts with YouTube regularly, in 76 different languages and from more than 88 countries [3, 4]. A telephone survey conducted in the United States revealed that more than 74% of adults were using YouTube in September 2020 [5].

The growing popularity of YouTube can be attributed to multiple factors. First, users with an internet connection can easily access YouTube’s video service via PCs, laptops, tablets, or mobile phones. More than 70% of YouTube users access online videos through the mobile phone application [6], which has made the YouTube video experience more enjoyable and available to all users on demand, anywhere and anytime. Moreover, YouTube is particularly popular among young people, who spend hours watching online videos, interacting with others, and sometimes creating their own content [4, 7]. A study conducted in Portugal in 2018 revealed that YouTube is popular among young trainees and residents for surgical preparation [8]. Another incentive is that YouTube shares its substantial advertising revenue with content creators (also known as YouTubers), which motivates young users to invest more time in creating and editing online videos.

Nowadays, YouTube has emerged as a valuable educational resource. As a visual medium, it can convey both theoretical and practical knowledge for teaching purposes. YouTube’s popularity, ease of access, and social nature make it a powerful tool for influencing individuals’ decisions and promoting their well-being.

For example, Mamlin and Tierney (2016) predicted that social media platforms such as YouTube would be widely used to (i) exchange healthcare information between healthcare providers and consumers, (ii) facilitate peer-to-peer patient support, and (iii) enhance public health surveillance [9]. Health information videos on YouTube come from various sources, such as doctors, health institutions, universities and medical schools, patients, and advertisers. However, regardless of the content’s source, YouTube’s terms of service stipulate that “the content is the responsibility of the person or entity that provides it to the Service” [10]. YouTube’s search results are based on popularity, relevance, and viewing history rather than content quality. This creates a problem for informal or unguided learners, who are increasingly exposed to unverified and partly misleading content that could promote unhealthy habits and activities [11]. For example, a recent study found that more than 25% of the most viewed YouTube videos addressing COVID-19 contained misleading information that reached millions of people worldwide [12]. Furthermore, Qi and colleagues (2016) reported that accurate and inaccurate YouTube videos discussing psoriasis received similar numbers of views [13].

Only a limited number of in-depth literature reviews have addressed the content quality of healthcare-related videos on YouTube, either in general [14, 15] or for specific topics such as surgical education [16]. Each of these reviews covered only 7 to 18 articles. Because of this small number, they did not provide an extensive analysis of the problem or a comprehensive discussion of the results and recommendations. Most of these reviews highlighted that patient education on YouTube does not follow quality standards and could be misleading.

This paper presents a comprehensive review of the literature on the quality of health-related information in YouTube videos.

Methods

Literature search

The search was conducted in Google Scholar, Medline (through PubMed), EMBASE, Scopus, ScienceDirect, and Web of Science from April 1 to April 30, 2021. The ProQuest database was also searched for dissertations and theses to minimize publication bias. Because we observed a noticeable increase in publications on the COVID-19 pandemic after August 2020, which could have skewed our conclusions, we limited our search to papers published by August 2020 to ensure balanced coverage of health information topics. We also found that, by that time, similar studies of YouTube content on the COVID-19 pandemic had already been published [12, 17, 18]. We searched for publications containing the keywords “YouTube” and “quality” in the title and at least one of the following terms: “medical,” “medical education,” “health,” “healthcare,” or “health information.” In addition to the common limiters (see Eligibility below), we combined the keywords with the Boolean operators AND and OR. The searches were conducted independently by two researchers (FA, WO); disagreements were resolved by consulting a third researcher (AS).
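The keyword logic described above can be sketched as a small function that assembles a generic Boolean query string. This is an illustration under stated assumptions, not the exact query submitted to any database: `TI(...)` is a hypothetical placeholder for a title-field restriction (each database uses its own field syntax, which is not reproduced here), and we assume that only “YouTube” and “quality” were restricted to the title, since the text leaves the field of the topic terms open. The names `build_query` and `quote` are hypothetical.

```python
# Hypothetical sketch of the keyword logic; not the exact query used in any database.
# TI(...) is an ASSUMED generic marker meaning "search in the title only".

REQUIRED_TITLE_TERMS = ["YouTube", "quality"]
TOPIC_TERMS = ["medical", "medical education", "health", "healthcare", "health information"]


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(title_terms: list[str], topic_terms: list[str]) -> str:
    """AND together the required title terms, then AND the OR-group of topic terms."""
    title_part = " AND ".join(f"TI({quote(t)})" for t in title_terms)
    topic_part = " OR ".join(quote(t) for t in topic_terms)
    return f"{title_part} AND ({topic_part})"


if __name__ == "__main__":
    print(build_query(REQUIRED_TITLE_TERMS, TOPIC_TERMS))
```

Running it prints `TI(YouTube) AND TI(quality) AND (medical OR "medical education" OR health OR healthcare OR "health information")`; adapting it to a real database would mean replacing `TI(...)` with that database's own field tags.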

Eligibility

The inclusion criteria were peer-reviewed original articles on the educational quality of YouTube medical videos, published in English between 2005 (the year YouTube was established) and the end of August 2020. The exclusion criteria were failure to meet the inclusion criteria, duplicate publications, technical reports, organization websites, case reports, and organizational reports.

Paper selection

In this step, we screened the titles and abstracts of the collected papers against the inclusion criteria. During this process, we performed an initial annotation of the papers and classified them into three classes: eligible, not eligible, and undecided. In some cases, the abstracts were not sufficiently informative to decide whether to include a paper; for these undecided papers, we scanned the full texts and supplementary materials to determine their eligibility.
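The screening rules can be expressed as a small decision function. This is a hypothetical sketch: the `Decision` classes mirror the three annotation classes above, but applying the two-reviewer rule with a third arbiter (which the text describes for the literature search) at the title/abstract stage is our assumption for illustration, and the function name `screen` is hypothetical.

```python
from enum import Enum
from typing import Optional, Tuple


class Decision(Enum):
    ELIGIBLE = "eligible"
    NOT_ELIGIBLE = "not eligible"
    UNDECIDED = "undecided"


def screen(
    reviewer_a: Decision,
    reviewer_b: Decision,
    arbiter: Optional[Decision] = None,
) -> Tuple[Decision, bool]:
    """Combine two independent title/abstract decisions.

    Returns the final decision and whether the full text (and supplementary
    material) must be read before eligibility can be settled.
    """
    if reviewer_a == reviewer_b:
        decision = reviewer_a
    elif arbiter is not None:
        decision = arbiter  # a third reviewer settles the disagreement
    else:
        raise ValueError("reviewers disagree; a third reviewer's decision is needed")
    # "Undecided" papers are not dropped; they go on to full-text review.
    return decision, decision is Decision.UNDECIDED


if __name__ == "__main__":
    print(screen(Decision.ELIGIBLE, Decision.ELIGIBLE))
    print(screen(Decision.ELIGIBLE, Decision.NOT_ELIGIBLE, arbiter=Decision.UNDECIDED))
```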

Data extraction

In this step, we used a datasheet to record the following information for every publication: title, abstract, topic, quality assessment score and results, number of assessors, number of videos, resulting categories and classifications, type and source of bias, and conclusions and recommendations. Data extraction and analysis followed the PRISMA recommendations for systematic reviews [19].

Data synthesis

The reviewed studies followed three schemes for evaluating content quality on YouTube: scoring-based, categorization-based, or bias-based evaluation. We synthesized the data according to these evaluation schemes, using simple descriptive statistics such as means, standard deviations, ranges, interquartile ranges, and frequency distributions (histograms). For categorization-based evaluation, the researchers used different numbers and labels for the quality categories. To compile the data from these papers, we created new quality classes and used a heuristic approach to map the categories in the reviewed studies to our classes. Furthermore, we applied a qualitative analysis of the authors’ recommendations to compile a concise set of general recommendations for improving the quality of medical content on YouTube.
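As an illustration of the descriptive statistics listed above, the following sketch computes the mean, standard deviation, median, interquartile range, range, and a binned frequency distribution for a list of per-paper values. The input values are hypothetical, and because the review does not state which quartile convention was used, the choice of `method="inclusive"` in Python's `statistics.quantiles` is an assumption; a quartile that falls halfway between two observations, such as the 50.5 reported for the number of videos per article, is typical of interpolating quartile methods.

```python
import statistics
from collections import Counter


def summarize(values):
    """Descriptive statistics of the kind used in the synthesis (e.g., videos per paper)."""
    # method="inclusive" interpolates between order statistics; other conventions
    # (such as the default "exclusive") can give slightly different quartiles.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample standard deviation
        "median": median,
        "iqr": (q1, q3),
        "range": (min(values), max(values)),
    }


def histogram(values, bin_width):
    """Frequency distribution: bin k counts values in [k * bin_width, (k + 1) * bin_width)."""
    return Counter(int(v // bin_width) for v in values)


if __name__ == "__main__":
    videos_per_paper = [20, 50, 94, 120, 200]  # hypothetical data, not from the review
    print(summarize(videos_per_paper))
    print(sorted(histogram(videos_per_paper, 50).items()))
```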

Due to the nature of the studies, it was not possible to examine sources of heterogeneity in the data or to perform sensitivity analyses, apart from noting differences in the languages of the videos analyzed across papers. Similarly, the standard risk-of-bias assessments used for clinical trials and research could not be applied, except for language bias. No meta-analysis was performed.

Results

Methodological aspects and general findings

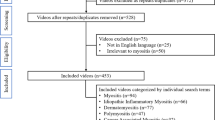

Our initial search returned 1982 publications, of which 202 articles were included in this review. Fig. 1 presents the PRISMA flow chart and the approach we followed to arrive at the 202 eligible papers. During full-text review, we examined five theses [20,21,22,23,24] but excluded three of them [20,21,22] because they presented descriptive analyses of health topics without assessing the quality of the video content, as required by our eligibility criteria. The titles and topics of all included articles are summarized in Supplementary Table 1.

We found that researchers in this field generally followed the same approach, characterized by the following steps:

1. Focusing on a single topic, such as a particular disease or treatment.

2. Conducting a cross-sectional analysis of videos selected according to predefined inclusion criteria, such as being in English or having a minimum number of views.

3. Having one or more experts, usually among the authors themselves, evaluate the selected videos. Some studies evaluated scoring reliability using an inter-rater agreement analysis; discrepancies were resolved by consensus or by inviting an additional assessor to settle disagreements.

4. Assessing the quality of the health-related content with a scoring system that is either self-devised or standardized, such as GQS, DISCERN, and JAMA. JAMA is a 4-point scoring system, while the other two standards have a 5-point scale.

5. Assigning videos to different quality classes according to their scores.

6. Drawing, from the outcome of the scoring or classification, a general conclusion about the ability of YouTube videos to provide reliable health-related information.

7. Making general remarks and recommendations based on this judgment.
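Step 3 above refers to inter-rater agreement analysis without naming a statistic. For two assessors assigning categorical labels, Cohen's kappa is one common choice; the sketch below assumes that setting, and the labels and ratings are hypothetical. Studies with more than two assessors or with ordinal scores would need other measures, such as Fleiss' kappa or an intraclass correlation coefficient.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same videos with categories."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Observed agreement: share of videos that received the same label from both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in labels) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    a = ["useful", "useful", "misleading", "useful", "misleading", "useful"]
    b = ["useful", "misleading", "misleading", "useful", "misleading", "useful"]
    print(round(cohens_kappa(a, b), 3))  # 0.667
```

Here the raters agree on five of six videos (observed agreement 0.83), but chance agreement from their label frequencies is 0.5, so kappa is about 0.67 rather than 0.83.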

In the following subsections, we summarize the results of our review in light of this general approach. We first highlight some methodological aspects and then compile the findings of the reviewed papers.

Table 1 summarizes statistics on the number of videos, assessors, and quality categories in the reviewed studies. The reviewed studies assessed approximately 22,300 videos in total. The median number of videos per article was 94 (interquartile range = 50.5–133), and a median of two assessors evaluated the videos in each article (interquartile range = 1–3). The variation in the number of quality categories makes the data difficult to aggregate, as discussed later. For example, some studies classify videos into three classes, such as "excellent", "moderate", and "poor" [25], whereas others use four categories, such as "very useful", "useful", "slightly useful", and "misleading" [26].

As mentioned before, each reviewed paper examined a series of videos related to a single topic, and only in a few cases was the same topic addressed by more than one study. As a result, the reviewed papers cover a wide variety of topics. To provide a concise overview, we grouped these topics into 30 medical categories and determined the number of studies in each category, as shown in Fig. 2.

To assess content quality, most authors used their own scoring systems, frequently based on a reference in the respective medical field (Fig. 3). For instance, Brooks and colleagues (2014) based their evaluation of patient information videos about lumbar discectomy on the recommendations of the British Association of Spine Surgeons [27]. In addition, many authors adhered to general quality standards, among them the Global Quality Score (GQS), the DISCERN instrument, and the Journal of the American Medical Association (JAMA) benchmark criteria. These are described in more detail elsewhere [28, 29].

Quality assessment results

In the reviewed studies, content quality was assessed through scoring, categorization, or both. Furthermore, many authors correlated quality metrics with video popularity metrics, such as the number of views and likes, and almost all reviewed papers ended with some recommendations. The following subsections compile the findings of the reviewed studies according to these aspects.

Quality assessment by scores

Figure 4 presents the mean quality scores of videos according to the three most commonly used standards: GQS, DISCERN, and JAMA. For example, 25 papers used GQS and reported a mean score for all assessed videos; the value of 2.68 in Fig. 4 is the average of these 25 reported means. As shown in the figure, the mean scores are moderate for all three standards. Note that JAMA is a 4-point scoring system, while the other two standards have a 5-point scale.
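The pooling behind Fig. 4 can be made explicit. The sketch below contrasts the unweighted mean of per-paper means (each paper counts once, as described above) with a video-weighted alternative, and adds a min-max rescaling that puts 4-point and 5-point scales on a common 0-1 range. All input values are hypothetical, and the scale bounds in `SCALE_BOUNDS` (GQS 1-5, JAMA 0-4) are our assumptions rather than values taken from the reviewed papers.

```python
def pooled_mean(paper_means):
    """Unweighted mean of per-paper means: every paper counts once."""
    return sum(paper_means) / len(paper_means)


def weighted_pooled_mean(paper_means, video_counts):
    """Alternative: weight each paper's mean by the number of videos it assessed."""
    total = sum(video_counts)
    return sum(m * n for m, n in zip(paper_means, video_counts)) / total


def rescale(score, low, high):
    """Min-max rescale a score from [low, high] to [0, 1] so different scales can be compared."""
    return (score - low) / (high - low)


# ASSUMED scale bounds for illustration; DISCERN variants differ and are omitted.
SCALE_BOUNDS = {"GQS": (1, 5), "JAMA": (0, 4)}

if __name__ == "__main__":
    means = [2.5, 3.0, 2.2]  # hypothetical per-paper GQS means
    counts = [100, 50, 150]  # hypothetical number of videos per paper
    print(round(pooled_mean(means), 3), round(weighted_pooled_mean(means, counts), 3))
    print(rescale(3.0, *SCALE_BOUNDS["GQS"]), rescale(2.0, *SCALE_BOUNDS["JAMA"]))
```

The weighted variant lets studies with more videos contribute more; which one is preferable depends on the question, and the text describes only the unweighted version.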

Quality assessment by classification

Researchers who evaluated content quality through classification used category labels related to quality, usefulness, accuracy, and reliability. To aggregate the data of these studies, we mapped each study’s category labels to one of the five labels listed in Table 2. For example, the labels “Excellent”, “Very useful”, “Very accurate,” and “High quality” in the analyzed papers were mapped to the label “Excellent quality” in this paper. The mapping followed a heuristic that took into account the number and intensity of the labels used in each paper.

Figure 5 illustrates the results of the data aggregation. The upper dark bars represent the average percentage of videos classified into each category. For example, the 40% shown in the figure is the average, across the reviewed papers, of the percentage of videos assigned to the category “poor.” The light bars indicate how frequently each category was used in the related studies. Accordingly, “not useful” was the most frequently used category, followed closely by “poor quality” and “good quality,” while “excellent quality” was the least frequently used category.
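The mapping and the two bar types in Fig. 5 can be sketched as follows. Each paper's labels are mapped to common classes; we assume the dark bars correspond to the mean percentage of videos per class across the papers that used that class, and the light bars to the share of papers using it. Only the “Excellent quality” entries in the hypothetical `LABEL_MAP` follow the examples given in the text; the remaining entries and all percentages are invented for illustration, and the actual mapping relied on a heuristic judgment that a lookup table cannot fully capture.

```python
from collections import defaultdict

# Mapping from labels used in individual papers to common classes.
# The "Excellent quality" entries follow the examples in the text;
# all other entries are HYPOTHETICAL placeholders.
LABEL_MAP = {
    "excellent": "Excellent quality",
    "very useful": "Excellent quality",
    "very accurate": "Excellent quality",
    "high quality": "Excellent quality",
    "useful": "Good quality",    # hypothetical
    "poor": "Poor quality",      # hypothetical
    "misleading": "Not useful",  # hypothetical
}


def map_paper(paper):
    """Convert one paper's {label: % of videos} to common classes, summing labels that merge.

    Raises KeyError for a label that has no mapping yet.
    """
    mapped = defaultdict(float)
    for label, pct in paper.items():
        mapped[LABEL_MAP[label.strip().lower()]] += pct
    return dict(mapped)


def aggregate(papers):
    """For each class: (mean % of videos across papers using it, share of papers using it)."""
    mapped = [map_paper(p) for p in papers]
    classes = sorted({c for m in mapped for c in m})
    result = {}
    for c in classes:
        values = [m[c] for m in mapped if c in m]
        result[c] = (sum(values) / len(values), len(values) / len(mapped))
    return result


if __name__ == "__main__":
    papers = [  # hypothetical percentages, not data from the review
        {"Excellent": 10, "Poor": 60, "Useful": 30},
        {"Very useful": 20, "Useful": 50, "Misleading": 30},
        {"High quality": 30, "Poor": 40, "Misleading": 30},
    ]
    for cls, (mean_pct, share) in aggregate(papers).items():
        print(f"{cls}: mean {mean_pct:.1f}% of videos, used by {share:.0%} of papers")
```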

Some papers discussed controversial topics, such as vaccination [30] or unauthorized treatments [31]. In such cases, the authors classified the videos according to the producer’s bias toward or against the addressed topic. The results are shown in Fig. 6: 58% of the videos support the treatment discussed, and most videos reflect commercial interests (51%), while only 32% are neutral, presenting the advantages and disadvantages of the topic without supporting or devaluing it.

Almost one-third of the papers attempted to correlate the quality of the analyzed videos with their popularity metrics, including the number of views, likes, dislikes, shares, and comments. Figure 7 summarizes these analyses. For example, 23 papers found no correlation between the number of views and video quality, and 13 found a negative correlation [32, 33]; that is, lower-quality videos were viewed more often than higher-quality videos [34, 35]. Only seven papers found a positive correlation between quality and popularity in terms of both the number of views and the number of likes [36, 37].
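Correlations of the kind summarized in Fig. 7 are often computed with Spearman's rank correlation, which suits heavily skewed counts such as views; we have not verified which coefficient each reviewed paper used, so this is an illustrative choice. The sketch computes Spearman's rho from tie-averaged ranks using only the standard library, and the quality scores and view counts are hypothetical. A negative rho, as in the example, corresponds to the pattern in which lower-quality videos attract more views.

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    quality = [4.5, 3.0, 2.0, 1.5, 3.0]             # hypothetical GQS scores
    views = [1_200, 5_400, 98_000, 250_000, 7_700]  # hypothetical view counts
    print(round(spearman(quality, views), 3))
```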

In addition, some papers classified videos according to their comprehensiveness, that is, whether the videos covered all the information considered significant for each topic [38,39,40]. For example, Pant and colleagues (2012) assessed the credibility of YouTube content on acute myocardial infarction and found that only 6% of the reviewed videos addressed all relevant aspects according to the authors’ criteria [41]. Across the papers that assessed comprehensiveness, the average percentage of comprehensive videos was 13.2%.

Reviewed papers’ recommendations

Finally, almost all reviewed papers provided one or more recommendations based on their findings. Most of these recommendations aim to improve the quality of health-related content on YouTube, as depicted in Fig. 8. Accordingly, 44% of the papers highlight the role of reputable sources, such as professional societies, health organizations, and academic and medical institutions, in providing qualified content on YouTube [42,43,44,45].

In addition, 13% of the papers urge users to be cautious when using YouTube [46, 47], and 17% emphasize that experts should guide users by referring them to high-quality content on YouTube [48, 49]. Ten percent of the papers suggest that content on YouTube should be reviewed by experts [50, 51], and 5% recommend improving YouTube’s ranking and filtering systems so that reliable content is presented first and misleading videos are filtered out [42, 43]. Eleven percent of the reviewed papers explicitly or implicitly regard the situation as irredeemable and entirely discourage using YouTube as a source of health-related information [52,53,54,55,56]. Only a few authors take the opposite position and recommend YouTube without reservation [57, 58].

Discussion

Good health is a great asset; people seem to value health more than income, career, or education [59]. Human health, however, is vulnerable to diverse internal and external threats, including our own behaviors. To avoid, prevent, or mitigate risks to our health, we depend on information, and, like our health, the quality of this information should be non-negotiable. Unfortunately, the ubiquity of digital media increasingly challenges this principle. This study has shown that YouTube, the most visited media website worldwide, not only hosts a significant portion of poor and misleading content but also promotes these videos through its popularity-based system. As a result, users and patients watch these videos at least as frequently as good-quality videos [60, 61].

Why do we have this situation?

YouTube is a business that depends on its viewership and on the frequency and duration of access to the platform. To attract more viewers and motivate them to stay longer on the website, YouTube offers an ever-growing volume of content and allows any registered member to post videos with almost no restrictions on content quality [17]. This ease of use, together with YouTubers’ competition for subscribers, views, likes, and shares, has shaped YouTube and contributed to the proliferation of lower-quality content in search and recommendation lists [62]. At the same time, the typical YouTube user is strongly drawn by the platform’s entertainment value and is less skeptical of content quality [63], which reduces the pressure on producers to provide verified content. Many consulting agencies help YouTubers improve the visibility and popularity of their channels [62, 64]. By contrast, we are not aware of any consultancy specializing in content verification, which remains the sole responsibility of the YouTuber, who is seen as the field expert.

What does this situation mean for the YouTube user?

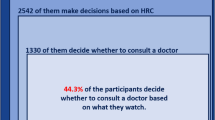

This systematic review has shown that most researchers are concerned about the quality of medical and health-related information on YouTube. These concerns are serious because poor-quality videos can mislead viewers into wrong decisions, practices, or behaviors. However, this cause-effect relationship is under-researched in the literature, so it is not yet known whether, and to what extent, viewing health-related videos affects users’ habits, behaviors, and decisions. Qualitative studies using surveys and interviews could help in this direction. The specific medical field and the urgency of the user’s need for information may also moderate this effect. For example, patients who turn to YouTube to learn about the pros and cons of a specific neurological operation before making a decision are likely more sensitive to information quality than users looking for an effective 5-minute fitness exercise.

Can the situation of low-quality content on YouTube be resolved or improved?

Our analysis of the authors’ responses to the current situation showed that many of them urge users to refrain from using YouTube as a source of medical and health-related information. While this avoidance guideline offers the best protection against misleading content, it deprives users entirely of the valuable material on YouTube and disregards the efforts of professional creators who care about content quality. Most authors recognize the potential of YouTube and suggest different strategies to overcome the quality challenge. Their primary recommendation is to encourage recognized professional institutions, such as health organizations, to be more active in creating and uploading high-quality content on YouTube. Collaborative efforts to increase the share of verified content on YouTube are indeed necessary, but is there a guarantee, or at least a good chance, that such content will appear at the top of YouTube’s search results and recommendation lists? It has been observed that the top 10 video clips returned by the YouTube search engine receive more views, likes, and comments [65]. The authors attributed this to the preferential attachment (Yule) process, in which those who already have more receive more than those who have less. This indicates that adding new high-quality videos should be accompanied by strategies that promote these videos to the top of the lists and make them more visible to users. Such strategies are also in line with the recommendations of other authors who suggested improving YouTube’s ranking and filtering system. Other strategies to counter the quality challenge target users, who should exercise caution when seeking health-related information on YouTube and should consult experts. An efficient way to guide users and patients could be to identify and recommend high-quality channels rather than individual videos [66].
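To make the “rich get richer” mechanism concrete, the toy simulation below allocates views one at a time, with each video's chance of receiving the next view proportional to the views it already has (plus one). This is a hypothetical illustration of preferential attachment in general, not the model or data of [65]; the numbers of videos and views and the optional `head_start`, which stands in for an early top ranking, are assumptions. Videos that start ahead tend to stay ahead, which is why newly uploaded high-quality videos may also need active promotion.

```python
import random


def simulate_views(num_videos, num_views, head_start=None, seed=0):
    """Toy preferential-attachment (Yule-type) process for video views.

    Each new view goes to a video with probability proportional to
    (current views + 1); the +1 lets unviewed videos be chosen at all.
    `head_start` optionally gives some videos initial views, e.g. to mimic
    a top position in search results (hypothetical parameter).
    """
    rng = random.Random(seed)
    views = list(head_start) if head_start is not None else [0] * num_videos
    if len(views) != num_videos:
        raise ValueError("head_start must have one entry per video")
    for _ in range(num_views):
        weights = [v + 1 for v in views]
        chosen = rng.choices(range(num_videos), weights=weights, k=1)[0]
        views[chosen] += 1
    return views


def top_share(views, k):
    """Fraction of all views captured by the k most-viewed videos."""
    ranked = sorted(views, reverse=True)
    return sum(ranked[:k]) / sum(views)


if __name__ == "__main__":
    n = 100
    early_lead = [20] * 10 + [0] * (n - 10)  # hypothetical: first 10 videos start ahead
    views = simulate_views(n, 5_000, head_start=early_lead, seed=42)
    print(f"share of views held by the top 10 videos: {top_share(views, 10):.2f}")
```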
Verified channels can be a reliable point of reference that helps users and patients on a wide range of topics. At the same time, experts should consider video production styles and strategies that might increase the number of likes per view to promote high-quality content [67]. Finally, many authors recommend that YouTube content undergo peer review. Expert evaluation is certainly decisive for judging the quality of medical content, but how should evaluation data be made available to YouTube’s viewers? Some efforts have been made to evaluate selected online videos and make them available to students through repositories [68,69,70]. CSurgeries, for example, is an excellent educational source that provides a limited set of peer-reviewed surgical videos for medical education [70]. This repository-based approach, however, takes the learner away from YouTube, so it should be seen as an alternative to YouTube rather than as a solution to its quality issue. It would be desirable for YouTube, or intermediary services, to provide special interfaces that allow registered experts to review and endorse medical content using general quality standards, and to use the evaluation data to improve YouTube’s filtering and ranking system [71].
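One way to picture feeding expert evaluations into ranking is a score that blends an expert quality rating with popularity. The sketch below is purely hypothetical: YouTube's actual ranking and recommendation algorithms are not public and are not modeled here, and the 1-5 rating scale, the log-scaled popularity term, the weight `weight_expert`, and the neutral prior `unreviewed_prior` for unreviewed videos are all our assumptions. With the expert weight set to zero, the ordering reduces to popularity alone, which reproduces the situation criticized in the reviewed papers.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Video:
    video_id: str
    views: int
    expert_score: Optional[float] = None  # HYPOTHETICAL expert rating on a 1-5 scale; None = not reviewed


def blended_score(video: Video, max_views: int, weight_expert: float = 0.7,
                  unreviewed_prior: float = 0.5) -> float:
    """Weighted mix of expert quality and log-scaled popularity, both in [0, 1]."""
    # Log scaling keeps a few viral videos from dominating the popularity term.
    popularity = math.log1p(video.views) / math.log1p(max_views) if max_views > 0 else 0.0
    if video.expert_score is None:
        quality = unreviewed_prior  # neutral prior for unreviewed videos (assumption)
    else:
        quality = (video.expert_score - 1) / 4  # rescale 1-5 to 0-1
    return weight_expert * quality + (1 - weight_expert) * popularity


def rank(videos: List[Video], weight_expert: float = 0.7) -> List[Video]:
    """Order videos by blended score, highest first."""
    max_views = max(v.views for v in videos)
    return sorted(videos, key=lambda v: blended_score(v, max_views, weight_expert), reverse=True)


if __name__ == "__main__":
    catalog = [
        Video("viral-but-poor", 1_000_000, expert_score=1.5),
        Video("expert-endorsed", 10_000, expert_score=4.5),
        Video("unreviewed", 50_000),
    ]
    print([v.video_id for v in rank(catalog)])                     # quality-weighted
    print([v.video_id for v in rank(catalog, weight_expert=0.0)])  # popularity only
```

With the default weight, the expert-endorsed video moves to the top; with `weight_expert=0.0`, the viral but poorly rated video leads.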

Important take-home messages for clinical practice

Doctors and patients should exercise caution when using YouTube to access medical information. In addition, physicians should warn patients against relying too much on YouTube. Doctors should also identify and keep a record of high-quality videos and channels related to their fields of study and recommend them to their patients.

Important take-home messages for clinical research

There is a need for research to identify common features that can serve as quality indicators for health-related videos. Such indicators would help users select appropriate videos.

Limitations

This study had several potential limitations. First, some minor inconsistencies may have arisen when mapping the quality categories used in the reviewed studies to our quality labels. Second, we did not perform sub-analyses for each disease class, so we could not determine whether YouTube content on a particular disease was of better quality than other content. Third, although we limited our search to August 2020 to avoid a bias toward COVID-19 content, this cutoff may itself have introduced selection bias; we believe that a separate study is needed on COVID-19 and COVID-19 vaccines. Fourth, the sources of bias commonly assessed in clinical trials and medical research could not be applied in our study, because its methodology and analyses differ from those of typical medical research. One possible source of bias, however, is the restriction of our search to English-language articles; nevertheless, the results of four papers not written in English were consistent with ours [8, 72,73,74]. Furthermore, the protocol of this review was not pre-registered (e.g., in PROSPERO), which may introduce potential bias according to Cochrane guidelines. Initially, we did not register our review in PROSPERO because similar studies on the same topic had not been registered either; when we later attempted to register it, we were unable to do so because PROSPERO was overburdened with COVID-19 reviews. Finally, despite including 202 articles, we may have missed some relevant ones, although we do not believe they would significantly affect the study’s findings.

Conclusions and future work

YouTube is not a reliable source of medical and health-related information. YouTube’s popularity-driven metrics such as the number of views and likes should not be considered quality indicators. YouTube should improve its ranking and recommender system to promote higher-quality content. One way is to consider expert reviews of medical and health-related videos and to include their assessment data in the ranking algorithm.

Availability of data and materials

All data are included in the manuscript. Inquiries about the study design, search strategy, or any other aspect of the study can be directed to the corresponding author.

References

Alexa. The top 500 sites on the web. 2022. https://www.alexa.com/topsites.

Statista. Hours of video uploaded to YouTube every minute as of February 2020. 2020. https://www.statista.com/statistics/259477/hours-of-video-uploaded-to-youtube-every-minute/.

Syed-Abdul S, et al. Misleading health-related information promoted through video-based social media: anorexia on YouTube. J Med Internet Res. 2013;15:e30.

FortuneLords. 37 Mind Blowing YouTube Facts, Figures and Statistics - 2021. https://fortunelords.com/youtube-statistics/.

Statista. Percentage of adults in the United States who use selected social networks as of September 2020. https://www.statista.com/statistics/246230/share-of-us-internet-users-who-use-selected-social-networks/.

Global Media Insight. YouTube User Statistics 2021. https://www.globalmediainsight.com/blog/youtube-users-statistics/.

Salama A, et al. Consulting “Dr. YouTube”: an objective evaluation of hypospadias videos on a popular video-sharing website. J Pediatr Urol. 2020;16:70.e1–9.

Mota P, et al. Video-based surgical learning: improving trainee education and preparation for surgery. J Surg Educ. 2018;75:828–35.

Mamlin BW, Tierney WM. The promise of information and communication technology in healthcare: extracting value from the chaos. Am J Med Sci. 2016;351:59–68.

YouTube. Terms of Service. https://www.youtube.com/static?template=terms.

Nour MM, Nour MH, Tsatalou O-M, Barrera A. Schizophrenia on YouTube. Psychiatr Serv. 2017;68:70–4.

Li HO-Y, Bailey A, Huynh D, Chan J. YouTube as a source of information on COVID-19: a pandemic of misinformation? BMJ Glob Health. 2020;5:e002604.

Qi J, Trang T, Doong J, Kang S, Chien AL. Misinformation is prevalent in psoriasis-related YouTube videos. Dermatol Online J. 2016;22(11).

Gabarron E, Fernandez-Luque L, Armayones M, Lau AY. Identifying measures used for assessing quality of YouTube videos with patient health information: a review of current literature. Interact J Med Res. 2013;2:e2465.

Madathil KC, Rivera-Rodriguez AJ, Greenstein JS, Gramopadhye AK. Healthcare information on YouTube: a systematic review. Health Informatics J. 2015;21:173–94.

Farag M, Bolton D, Lawrentschuk N. Use of youtube as a resource for surgical education—clarity or confusion. Eur Urol Focus. 2020;6:445–9.

Ataç Ö, et al. YouTube as an information source during the coronavirus disease (COVID-19) pandemic: evaluation of the Turkish and English content. Cureus. 2020;12(10):e10795.

Khatri P, et al. YouTube as source of information on 2019 novel coronavirus outbreak: a cross sectional study of English and mandarin content. Travel Med Infect Dis. 2020;35:101636.

Moher D, Altman DG, Liberati A, Tetzlaff J. PRISMA statement. Epidemiology. 2011;22:128.

Randolph-Krisova A. Descriptive analysis of the most viewed YouTube videos related to the opioid epidemic: Teachers College, Columbia University; 2018.

Baquero EP. A descriptive analysis of the most viewed YouTube videos related to depression, Columbia University; 2018.

Aldridge MD. A qualitative study of the process of learning nursing skills among undergraduate nursing students: University of Northern Colorado; 2016.

Foster CB. Mental health on YouTube: exploring the potential of interactive media to change knowledge, attitudes and behaviors about mental health: University of South Carolina; 2013.

Kressler J. Women's stories of breast cancer: sharing information through YouTube video blogs; 2014.

Yavuz MC, Buyuk SK, Genc E. Does YouTube™ offer high quality information? Evaluation of accelerated orthodontics videos. Ir J Med Sci (1971-). 2020;189:505–9.

Lee JS, Seo HS, Hong TH. YouTube as a source of patient information on gallstone disease. World J Gastroenterol: WJG. 2014;20:4066.

Brooks F, Lawrence H, Jones A, McCarthy M. YouTube™ as a source of patient information for lumbar discectomy. Ann R Coll Surg Engl. 2014;96:144–6.

Ferhatoglu SY, Kudsioglu T. Evaluation of the reliability, utility, and quality of the information in cardiopulmonary resuscitation videos shared on open access video sharing platform YouTube. Australas Emerg Care. 2020;23:211–6.

Askin A, Tosun A. YouTube as a source of information for transcranial magnetic stimulation in stroke: a quality, reliability and accuracy analysis. J Stroke Cerebrovasc Dis. 2020;29:105309.

Robichaud P, et al. Vaccine-critical videos on YouTube and their impact on medical students’ attitudes about seasonal influenza immunization: a pre and post study. Vaccine. 2012;30:3763–70.

Pithadia DJ, Reynolds KA, Lee EB, Wu JJ. A cross-sectional study of YouTube videos as a source of patient information about phototherapy and excimer laser for psoriasis. J Dermatol Treat. 2020;31(7):707–10.

Hassona Y, Taimeh D, Marahleh A, Scully C. YouTube as a source of information on mouth (oral) cancer. Oral Dis. 2016;22:202–8.

MacLeod MG, et al. YouTube as an information source for femoroacetabular impingement: a systematic review of video content. Arthroscopy. 2015;31:136–42.

Loeb S, et al. Dissemination of misinformative and biased information about prostate cancer on YouTube. Eur Urol. 2019;75:564–7.

Erdem MN, Karaca S. Evaluating the accuracy and quality of the information in kyphosis videos shared on YouTube. Spine. 2018;43:E1334–9.

Kovalski LNS, et al. Is the YouTube™ an useful source of information on oral leukoplakia? Oral Dis. 2019;25:1897–905.

Şahin A, Şahin M, Türkcü FM. YouTube as a source of information in retinopathy of prematurity. Ir J Med Sci (1971-). 2019;188:613–7.

Castillo J, Wassef C, Wassef A, Stormes K, Berry AE. YouTube as a source of patient information for prenatal repair of myelomeningocele. Am J Perinatol. 2021;38(2):140–4.

Camm CF, Russell E, Xu AJ, Rajappan K. Does YouTube provide high-quality resources for patient education on atrial fibrillation ablation? Int J Cardiol. 2018;272:189–93.

Joshi S, Dimov V. Quality of YouTube videos for patient education on how to use asthma inhalers. World Allergy Organ J. 2015;8:A221.

Pant S, et al. Assessing the credibility of the “YouTube approach” to health information on acute myocardial infarction. Clin Cardiol. 2012;35:281–5.

Chen H-M, et al. Effectiveness of YouTube as a source of medical information on heart transplantation. Interact J Med Res. 2013;2:e2669.

Brar J, Ferdous M, Abedin T, Turin TC. Online information for colorectal cancer screening: a content analysis of YouTube videos. J Cancer Educ. 2021;36:826–31.

Lenczowski E, Dahiya M. Psoriasis and the digital landscape: YouTube as an information source for patients and medical professionals. J Clin Aesthet Dermatol. 2018;11:36.

Gupta N, Sandhu G, Aggarwal A, Singh H, Leanne F. Quality assessment of YouTube videos as a source of information on colonoscopy. Abdomen. 2015;2.

Dubey D, Amritphale A, Sawhney A, Dubey D, Srivastav N. Analysis of YouTube as a source of information for West Nile virus infection. Clin Med Res. 2014;12:129–32.

Larouche M, et al. Mid-urethral slings on YouTube: quality information on the internet? Int Urogynecol J. 2016;27:903–8.

Duncan I, Yarwood-Ross L, Haigh C. YouTube as a source of clinical skills education. Nurse Educ Today. 2013;33:1576–80.

Rittberg R, Dissanayake T, Katz SJ. A qualitative analysis of methotrexate self-injection education videos on YouTube. Clin Rheumatol. 2016;35:1329–33.

Atci AG, Atci IB. Quality and reliability of information available on YouTube videos pertaining to transforaminal lumbar epidural steroid injections. Roman Neurosurg. 2019;XXXIII(3):299–304.

Bora K, Das D, Barman B, Borah P. Are internet videos useful sources of information during global public health emergencies? A case study of YouTube videos during the 2015–16 Zika virus pandemic. Pathog Glob Health. 2018;112:320–8.

Kwok TM, Singla AA, Phang K, Lau AY. YouTube as a source of patient information for varicose vein treatment options. J Vasc Surg Venous Lymphat Disord. 2017;5:238–43.

ElKarmi R, Hassona Y, Taimeh D, Scully C. YouTube as a source for parents’ education on early childhood caries. Int J Paediatr Dent. 2017;27:437–43.

Ovenden CD, Brooks FM. Anterior cervical discectomy and fusion YouTube videos as a source of patient education. Asian Spine J. 2018;12:987.

Prabhu V, Lovett JT, Munawar K. Role of social and non-social online media: how to properly leverage your internet presence for professional development and research. Abdom Radiol. 2021;46(12):5513–20.

Kunze KN, Krivicich LM, Verma NN, Chahla J. Quality of online video resources concerning patient education for the meniscus: a YouTube-based quality-control study. Arthroscopy. 2020;36:233–8.

Wong K, Doong J, Trang T, Joo S, Chien AL. YouTube videos on botulinum toxin A for wrinkles: a useful resource for patient education. Dermatol Surg. 2017;43:1466–73.

Felgoise S, Monk M, Gentis K. Information shared on YouTube by individuals affected by long QT syndrome: a qualitative study; 2016.

Adler MD, Dolan P, Kavetsos G. Would you choose to be happy? Tradeoffs between happiness and the other dimensions of life in a large population survey. J Econ Behav Organ. 2017;139:60–73.

Elangovan S, Kwan YH, Fong W. The usefulness and validity of English-language videos on YouTube as an educational resource for spondyloarthritis. Clin Rheumatol. 2021;40:1567–73.

Pandey A, Patni N, Singh M, Sood A, Singh G. YouTube as a source of information on the H1N1 influenza pandemic. Am J Prev Med. 2010;38:e1–3.

Haslam K, et al. YouTube videos as health decision aids for the public: an integrative review. Can J Dent Hyg. 2019;53:53.

Mangan MS, et al. Analysis of the quality, reliability, and popularity of information on strabismus on YouTube. Strabismus. 2020;28:175–80.

Chen L, Zhou Y, Chiu DM. Analysis and detection of fake views in online video services. ACM Trans Multimedia Comput Commun Appl. 2015;11(2s):1–20.

Chelaru SV, Orellana-Rodriguez C, Altingovde IS. Can social features help learning to rank YouTube videos? In: International Conference on Web Information Systems Engineering. LNISA vol. 7651. Springer; 2012. p. 552–66.

Tadbier AW, Shoufan A. Ranking educational channels on YouTube: aspects and issues. Educ Inf Technol. 2021;26:3077–96.

Shoufan A, Mohamed F. On the likes and dislikes of YouTube's educational videos: a quantitative study. Proceedings of the 18th annual conference on information technology education; 2017. p. 127–132.

WatchKnowLearn. https://www.watchknowlearn.org/.

NeoK12. https://www.neok12.com/.

CSurgeries: Peer-Reviewed Surgical Video Journal. https://csurgeries.com/.

Shoufan A, Omar F, Ernesto D. Endorsement system and techniques for educational content. WIPO. Publication number: WO/2021/209901. https://patentscope.wipo.int/search/en/detail.jsf?docId=WO2021209901.

Lee TH, et al. Medical professionals’ review of YouTube videos pertaining to exercises for the constipation relief. Korean J Gastroenterol. 2018;72:295–303.

Ruppert L, et al. YouTube as a source of health information: analysis of sun protection and skin cancer prevention related issues. Dermatol Online J. 2017;23(1):13030.

Sabra MA, Kamel AA, Malak MZ. Alzheimer disease health-related information on YouTube: a video reviewing study. J Nurs Educ Pract. 2016;6(10).

Acknowledgements

The authors thank Mona Youssef, a student who assisted with the initial paper search and collection as part of an independent study.

Funding

There is no funding declared for this manuscript.

Author information

Contributions

AS designed the study protocol with the support of WO; FA and WO independently performed the search, carried out the analysis, and summarized the search data; WO, FA, ME, and AS interpreted the results; WO, FA, and ME wrote the manuscript, and ME critically revised it. All authors approved the submitted version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Osman, W., Mohamed, F., Elhassan, M. et al. Is YouTube a reliable source of health-related information? A systematic review. BMC Med Educ 22, 382 (2022). https://doi.org/10.1186/s12909-022-03446-z

DOI: https://doi.org/10.1186/s12909-022-03446-z