Abstract

Background

Mental rehearsal (MR), the cognitive act of simulating a task in our heads to pre-experience events imaginatively, has been used widely to improve individual and collective performance in fields outside healthcare and offers potential for more efficient training in time-pressured surgical and medical team contexts. This study reviews the current systematic review literature to determine the impact of mental practice (MP) on surgical performance and learning.

Methods

Medline, Embase, British Educational Index, CINAHL, Web of Science, PsycINFO and Cochrane databases were searched for the period 1994–2018. The primary outcome measures were performance improvements in surgical technical skills, stress reduction, confidence and team performance. The quality of the systematic reviews was assessed using AMSTAR 2, a critical appraisal tool for systematic reviews. The reported impacts of MP in all included studies were mapped onto Kirkpatrick’s framework for the evaluation of educational interventions.

Results

Six systematic reviews met the inclusion criteria, all of which reported positive, though varying, benefits of MP on surgical performance, confidence and coping strategies. However, reported impacts on a modified Kirkpatrick framework did not exceed level 3. Mental practice was described in terms of mental imagery and mental rehearsal, with most authors using both terms in their search strategies. Impacts on transfer to practice, long-term skill acquisition and personal uptake of mental practice routines were not reported.

Conclusion

The majority of studies demonstrate benefits of MP for technical performance. Overall, the systematic reviews were of medium to high quality. However, studies lacked a sufficiently articulated evaluation methodology to examine impacts beyond the immediate experiments, partly reflecting limitations in the primary studies. Future research should adopt longitudinal, mixed-method evaluation designs and focus on real clinical teams.


Background

Practising surgical skills is one of the most crucial tasks both for novice surgeons learning new procedures and for surgeons already in practice who are learning new techniques or preserving acquired ones. The increased complexity of surgical procedures has heightened interest in how to help surgeons attain, enhance and maintain expert performance more effectively.

In recent years the question of what constitutes effective surgical education at all levels of experience has driven increasing interest in various modalities of clinical simulation. However, technology-based simulations using virtual reality (VR) and/or mannequins are expensive, resource-intensive and time-consuming. Mental practice (MP) is purported to be an alternative simulation approach to enhance performance.

Mental practice (MP) is most commonly described as the ‘symbolic’ mental rehearsal of a task in the absence of actual physical rehearsal [1,2,3]. Fittingly, it is closely aligned with a notion of consciousness entailing the setting up and planning of future goals [4]. Evidence from a variety of fields, spanning elite sports and music to neuro-rehabilitation, has lent substance to the idea that MP can be effective in improving practice [5,6,7,8,9,10]. Interestingly, the research also suggests that MP may be undertaken individually or coordinated with others [8, 11,12,13,14,15].

The concept of mental practice (MP) subsumes notions of rehearsal, mental imagery and mental simulation and the ever-increasing number of publications in surgery attests to surgical educators’ enduring expectations that it is beneficial (Fig. 1).

Proponents of MP claim it offers a number of potential benefits for surgeons at all levels of training [16]. For example, it may augment physical practice in improving the accuracy and precision of surgical movements [17]. An early meta-analysis by Driskell et al. [3] offered an explanatory mechanism, suggesting that by imagining how a movement looks, feels and affects a patient, MP may strengthen cortical representations of the task established through previous physical practice, or may prime specific neuromuscular pathways.

More recent notions of mental practice in the literature go beyond an exclusive focus on individual psychomotor skills to embrace a broad range of cognitive skills too. These range, for example, from mental readiness, risk assessment and anticipatory planning to a wide spectrum of other non-technical skills such as teamwork, coping strategies, situational awareness and task management [8, 18,19,20,21,22,23,24]. These skills are thus not only individual; they also emerge from social interactions with other team members. Nevertheless, there is still some confusion about the boundaries of what constitutes simulation through mental practice, and about the degree of interplay between these broader social cognitive skills and a narrower focus on psychomotor skills [25,26,27,28]. There is agreement, however, that each is important for effective surgical practice, as each captures cognitive skills that resonate at a very practical level of surgical work.

In this article we construe mental simulation as encompassing mental rehearsal activities undertaken by practicing surgeons prior to performing a surgical task to improve their knowledge, skills and behaviour and with the aim of enhancing overall surgical performance and how it is planned and organized.

Mental practice activities vary in format, content, duration and setting. As a result, surgical educators have to make choices when designing and delivering training to improve performance at either the individual or the team level. This includes interventions developed for training purposes in simulated contexts but also priming exercises prior to actual surgical procedures. In these circumstances, research evidence about the impacts of ‘mental practice’ has a useful role in shaping decisions about what kind of mental practice may be effective for which groups of practitioners in which contexts. Systematic reviews of research about impacts can provide a comparatively efficient and rigorous source of evidence for this purpose and help ensure that future research on education meets the criteria of scientific validity, high quality and practical relevance that are sometimes lacking in existing evidence on educational activities, processes and outcomes [29].

This study presents the findings of our analysis of a number of systematic reviews of ‘mental practice’ and discusses their implications for practice and research. These reviews were identified to answer the following question: which kinds of mental practice lead to what outcomes for what kinds of surgical practitioner?

Methods

We identified systematic reviews of ‘mental practice’ in surgery which, together with the studies included in them, provide a comprehensive view of the research literature on the impact of mental practice on surgical teams.

We used an approach that has been termed a systematic-rapid evidence assessment (SREA) [30]. This approach follows the principles of systematic review, but a number of strategies are used to accelerate the review process. Specific adaptations used in this SREA included a selective data extraction process, a limited quality assessment process and a simple synthesis of included materials. In addition, we undertook a ‘review of reviews’, as opposed to reviewing primary research studies. An SREA approach retains the transparency and rigour of systematic review compared with non-systematic literature reviews, while reducing the time and resources required to undertake a comprehensive systematic review of primary research. The specific procedures used in this SREA are detailed below.

Locating systematic reviews

We searched a range of sources including bibliographic databases (BEI, ERIC, Medline, CINAHL, PsycINFO, Web of Science); the internet (Google Scholar); and systematic review organisations, specifically the Cochrane EPOC group, the Best Evidence in Medical Education (BEME) network and the Joanna Briggs Institute. A search string comprising a variety of synonyms for ‘mental practice’ (‘mental imagery’, ‘mental rehearsal’, ‘mental simulation’) was combined using the Boolean operator ‘or’, and the results were then combined with ‘surgical teams’ and ‘systematic review’. We conducted the literature search between July and December 2018.

Selection criteria

The following selection criteria were applied to the titles and abstracts of provisionally identified papers to identify relevant reviews: (1) reviews investigating the impact of mental practice on surgeons or members of surgical teams; (2) systematic reviews (i.e. reviews of research reporting recognised review methods); (3) reviews in English, French or Italian published since 2000. A review had to meet all of these criteria to be included. At the second stage of screening (full papers), these selection criteria were reapplied. Reference lists of included studies were scrutinised for additional papers. Figure 2 provides an overview using the PRISMA framework [31]. One author (HS) conducted the initial literature search and the initial exclusion stage. Both authors (HS, BG) then reviewed the remaining titles, abstracts and full texts for eligibility and extracted the relevant information.

Data extraction, analysis, and synthesis

Reviews that met the selection criteria were coded for relevant details about contexts, methods and review results or outcomes, and a narrative synthesis of each review was written. This narrative summarised and combined the descriptive and contextual outcome information from the included reviews. The reported outcomes were categorised using a version of Kirkpatrick’s evaluation model adapted for surgery by Simpson and Scheer [32] to gauge the overall quality of the evidence (Table 1).

The Kirkpatrick model defines four levels of training evaluation. As Table 2 shows, levels 1, 2a, 2b and 3 cover outcomes that measure the impact on the surgical team members who participated in a ‘mental practice’ intervention, whereas levels 4a, 4b and 4c measure impacts on organisational practices (e.g. dedicated times, pre-surgical briefings, guidelines and training documents, collective perceptions of safer practice, sustained learning and/or the quality of patient care).
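To make the level structure concrete, the sketch below encodes the modified Kirkpatrick levels named above as an ordered tuple and finds the highest level reached by a set of reported outcomes. It is a minimal illustration, not part of the review method: the names `LEVELS`, `highest_level` and `beneficiary` are hypothetical, the grouping of levels 1 to 3 under participants and 4a to 4c under organisational practice follows the paragraph above, and the example input is invented rather than taken from the included reviews.

```python
from typing import Optional

# Ordered levels of the modified Kirkpatrick framework named in the text (lowest to highest).
LEVELS = ("1", "2a", "2b", "3", "4a", "4b", "4c")
# Levels 1 to 3 concern participants; levels 4a to 4c concern organisational practice.
PARTICIPANT_LEVELS = frozenset({"1", "2a", "2b", "3"})


def _check(levels) -> None:
    """Reject any label that is not one of the framework levels."""
    unknown = set(levels) - set(LEVELS)
    if unknown:
        raise ValueError(f"unknown Kirkpatrick levels: {sorted(unknown)}")


def highest_level(reported: list[str]) -> Optional[str]:
    """Return the highest level among reported outcomes, or None if nothing was reported."""
    _check(reported)
    if not reported:
        return None
    return max(reported, key=LEVELS.index)


def beneficiary(level: str) -> str:
    """Return whom a given level concerns, following the grouping in the text."""
    _check([level])
    return "participants" if level in PARTICIPANT_LEVELS else "organisational practice"


# Hypothetical outcome levels coded from one review.
print(highest_level(["2a", "2b", "3", "2b"]))  # 3
print(beneficiary("4b"))  # organisational practice
```

Ordering the levels explicitly, rather than comparing the labels as strings, keeps sub-levels such as 2a and 2b in their intended sequence.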

Quality assurance processes

The search strategy and inclusion criteria were tested and developed iteratively by the two authors. Abstracts were screened by one of the authors, with a random 10% sample screened by a second author. Any discrepancies were discussed until consensus was reached. Data extraction and coding of the key information were undertaken by one of the authors (HS). A second author checked the extracted information for consistency and conducted a short quality assessment of three of the selected systematic reviews using AMSTAR 2 [35] (‘A MeaSurement Tool to Assess systematic Reviews’). Once again, discrepancies were discussed and resolved for each study.

Results

Our literature searches initially generated 535 abstracts (Fig. 2). A detailed keyword search results table is provided in the Supplementary materials. After removing duplicates, the titles and abstracts of 404 studies were screened against the criteria described above. Ten full-text articles were assessed for eligibility, of which four were subsequently excluded (Table 2). Six systematic reviews of ‘mental practice’ were included in the analysis [24, 36,37,38,39,40,41].
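The screening counts above imply how many records were removed at each stage. The following sketch derives those numbers, assuming a simple linear PRISMA flow in which every record not carried forward was removed at that stage; the class `PrismaFlow` and its field names are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrismaFlow:
    """Record counts at each stage of a linear PRISMA screening flow."""
    identified: int           # records returned by all searches
    after_deduplication: int  # records screened on title and abstract
    full_text_assessed: int   # full texts assessed for eligibility
    full_text_excluded: int   # full texts excluded with reasons

    @property
    def duplicates_removed(self) -> int:
        return self.identified - self.after_deduplication

    @property
    def excluded_at_screening(self) -> int:
        return self.after_deduplication - self.full_text_assessed

    @property
    def included(self) -> int:
        return self.full_text_assessed - self.full_text_excluded


# Counts reported in the text above.
flow = PrismaFlow(identified=535, after_deduplication=404, full_text_assessed=10, full_text_excluded=4)
print(flow.duplicates_removed, flow.excluded_at_screening, flow.included)  # 131 394 6
```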

Stated aims and conceptual field

The aims of the reviews and the terms their authors used to encompass ‘mental practice’ are shown in Table 3. The stated aims ranged from identifying effective designs of mental practice tasks, to applying mental practice to improve surgical education, to comparing the impacts of MP on the performance of novices and experts, to improving technical confidence and reducing stress, and lastly to priming surgical performance in ‘warm-up’ routines.

The keywords used by authors to describe the conceptual domain are for the most part linguistically unmarked, the most common imbrications being ‘mental practice’, ‘mental imagery’ and ‘mental rehearsal’. The less common and more marked concepts included terms such as ‘mental simulation’, ‘eidetic imagery’, ‘motor imagery’ and ‘visual imagery’.

Mental practice content and process

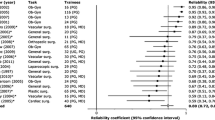

An outline of mental practice activities contained in the included reviews is presented in Table 4. All six reviews attempted to assess surgical performance in relation to MP and its impact on psychomotor execution of the surgical task. Table 4 lists the variety of validated rating scales the reviews identified for both technical and non-technical skill ratings.

In general, the reviews defined ‘mental practice’ with variable emphases. Three reviews focussed on cognitive and motor task activities but also considered the effects of MP on non-technical skills, in particular the management of stress [36, 38, 41]. The reviews differ in how broadly or narrowly they define MP. One review investigating optimal educational designs to improve motor coordination ‘of moving body parts’ described MP as an umbrella term covering ‘mental imagery’ and broader mental ‘training’ interventions [40]. While most of the reviews assume to varying degrees that MP is a given capability among subjects, two argue that MP may depend on imagery strength and the neural networks underlying imagery [40, 41]. For instance, Sevdalis et al. [41] argue that individual differences across the population in the strength of visual representation determine how MP affects performance. According to these authors, groups therefore need to be separated by imagery strength using validated tools such as the Mental Imagery Questionnaire [5, 42].

Although all the reviews reported MP practices in medicine, two reviews compared MP across different domains. Cocks et al. [38] compared MP in medicine and sports psychology to see what insights could be applied to both novice and experienced surgeons. Schuster et al. [40] examined MP across five domains: education, medicine, music, psychology and sports. The authors included only two studies with practising surgeons and another seven with undergraduates or nurses, which they grouped under ‘educational studies’. A further 37 studies on MP in rehabilitation with patients were included under ‘Medicine’. Mental imagery (MI) activities were described as cognitively focussed or task-oriented, and all the included studies measured change with a pre-post test. Activities using MP ranged from sterile glove removal to laparoscopic cholecystectomy. MP was used before physical practice, with a duration across trials of less than 30 min. The mode of MP was not reported.

According to the included reviews, most interventions were carried out in simulation laboratories, with very few in clinical contexts. The use of control groups and comparison interventions is consistent across the studies reviewed, as is the use of common standard outcome measures in surgery. Table 4 lists the different measures. The tools most commonly used to evidence change following MP were the Objective Structured Assessment of Technical Skills and the Observational Teamwork Assessment for Surgery rating scales [42, 43]. Tools used in addition to these comprised observational Global Rating Scales for operative performance [36, 38, 39, 41], measures of stress [36, 37, 40, 41] and, lastly, mental imagery measures [36, 37, 39, 41].

In contrast, descriptions of the delivery mode of MP were less comparable. These included MP delivered in groups or one-to-one, supported by video and written scripts, MP directed and taught formally (face to face), and undirected or self-directed MP by participants at home. MP was also embedded in an assortment of other activities, including relaxation with hypnosis, talk-aloud protocols and teaching supported by psychologists or MP experts. One review’s account of MP was wrapped in a miscellany of warm-up activities consisting of techniques for attention management, stress control and goal-setting [36]. As a result, it was difficult to discern, within and across the reviews, the exact nature of the impacts of MP, or rather to see the boundaries between MP and other kinds of stimuli used to shape performance. Populations too were heterogeneous, ranging from first-year undergraduates to novice trainees to senior surgeons.

More importantly, the reviewers often provided little descriptive detail of how MP was enacted as an intervention. It is not clear whether this lack of description reflected a lack of detail in the primary studies or in the systematic review process. Others have already reported a lack of detail to be a common feature of MP studies, along with unsatisfactory experimental designs [41, 44].

Reported impact on learning outcomes

Of 73 experimental reports contained in the reviews, 51 reported positive impacts of MP, while 16 described no effect or negative effects on performance. We classified the reported outcomes in each systematic review using a modified outcomes typology [32], as shown in Table 5. The table lists the number of reported impacts from primary studies synthesised in the reviews.
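These counts can be expressed as shares of all 73 experimental reports. The minimal sketch below (the function `outcome_shares` is a hypothetical helper) computes the percentages and also reports the share covered by neither count, since 51 and 16 together account for 67 of the 73 reports.

```python
def outcome_shares(total: int, positive: int, null_or_negative: int) -> dict[str, float]:
    """Express reported outcome counts as percentages (1 d.p.) of all experimental reports."""
    unclassified = total - positive - null_or_negative  # reports covered by neither count
    if unclassified < 0:
        raise ValueError("counts exceed the total number of reports")
    return {
        "positive": round(100 * positive / total, 1),
        "null_or_negative": round(100 * null_or_negative / total, 1),
        "unclassified": round(100 * unclassified / total, 1),
    }


# Counts reported in the text above.
print(outcome_shares(73, 51, 16))
# {'positive': 69.9, 'null_or_negative': 21.9, 'unclassified': 8.2}
```

On these figures, roughly seven in ten reports were positive.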

Looking at Level 1, there is little description in the reviews of how reactions were collected. Reactions cover the learners’ views of the learning experience, its organisation, presentation, content, teaching methods and quality of instruction. Where personal reactions were captured in primary studies, these were related to use of the MIQ tool.

Changes in learner confidence and improved stress reduction strategies are assimilated into Level 2 with the implication that MP changes participants’ conceptions of how to prepare, mentally rehearse or simulate tasks.

What emerges from this synthesis is that the majority of outcomes are at level 2a (learner perceptions) and 2b (knowledge and skills acquisition), where the reported evidence base is stronger and intervention groups significantly outperformed controls. Measurements were made using a combination of validated instruments such as the Objective Structured Assessment of Technical Skills (OSATS), Global Rating Scales and stress-related measures.

Figure 3 shows an overview of the application of the Kirkpatrick model to the selected studies. Each column gives the number of reviews classifying the quality of data at that level. This shows that evidence for positive impacts of MP is strongest at Levels 2a and 2b, which relate to learners’ perceptions of positive impacts (confidence, emotions) and to psychomotor performance immediately following the MP intervention (see also Table 2).

Only three reviews referred to evidence for behaviour change (Level 3) reflecting longer-term impacts of MP (e.g. sustained acquisition of psychomotor skills, knowledge and coping strategies), and in all three the reviewers expressed concerns about data quality. None of the studies reported results at Level 4 (changes in practice at system level). However, some sporadic descriptions of positive trends in transfer of learning emerge across the reviews. Cocks et al. [38] report one study of learning ‘transfer’ in which combined MP and physical practice improved medical students’ suturing skills on anaesthetised rabbits after a time interval, compared with controls who did physical practice alone. Similarly, Anton et al. report a recent study in endovascular surgery [45] in which error rates during the endovascular phase were lower after the MP intervention than before it. Other reviewers [37, 40] cited evidence of positive long-term impacts and transfer at Levels 3–4 in populations excluded from their selection criteria, for example patients in neuro-rehabilitation and pain management [9, 46]. Tellingly, all of these studies used longitudinal and mixed-method evaluation designs, with significant improvements compared with control groups.

Generally, reviewers struggled to find follow-up reports on the role of MP as a process and technique for honing individual or collective learning. Perhaps less justifiably, there was also little detailed discussion of how this might be achieved in future research. Nevertheless, three reviews did advocate prospective investigations of impacts, with outcome measures derived from systematic ethnographic observations, follow-up self-reports and evidence of changes in local training methods, team routines or curricula [37, 38, 41].

Quality issues in the systematic review

An outline of the methods used in the reviews is presented in Table 6. All six reviews reported using standard systematic review methods for searching, selecting, analysing and report writing.

All the reviews undertook searches of several bibliographic databases. Two reviews also searched reference lists of included articles, hand-searched journals and sought expert recommendations. One review searched the internet for studies not published in academic journals.

Of the 73 studies that we identified in the reviews as being an included study, only 13 appeared in more than two of the reviews. This seems quite low given many of the studies were published after 2010.

A closer look at the selection criteria used in the reviews suggests this was possibly due to differences in those criteria, for example whether specific activities were included under the term ‘mental practice’. Nevertheless, the findings of earlier reviews were generally reiterated in the reporting of subsequent reviews.

All of the reviews reported some form of quality assessment, but there was considerable variation. For example, only two reviews explained how scoring was operationalised to measure possible threats to validity (bias) or how the scores awarded to included studies were calculated [37, 40]. The remaining four reviews reported no synthesis method.

The use of a systematic approach to synthesis is an important element of any systematic review. In the only two reviews where the synthesis method was described as meta-analysis [37, 40], this synthesis proved impossible because of the heterogeneity of the primary studies. The authors instead used trend analysis and Jadad [47] scoring of RCTs to rate the quality of the studies. In none of the other reviews was it clear how study quality ratings were incorporated into the synthesis of results from individual studies (e.g. through weighting or individual reviewers’ interpretation).

To further support our critical appraisal of secondary studies, we used the AMSTAR 2 critical appraisal tool for systematic reviews [35]. AMSTAR 2 is not intended to generate an overall score but can be used to indicate four broad levels of quality: Critically Low, Low, Moderate and High. It has 16 items, with an overall rating based on weaknesses in critical domains. Two authors independently applied the tool to the reviews and reached consensus through discussion whenever discrepancies emerged. Such discrepancies arose, for example, for two papers [36, 39] when weighing factors to decide between a ‘Moderate’ and a ‘Low’ rating.
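Because the overall AMSTAR 2 rating is derived from the pattern of weaknesses rather than from a summed score, it can be expressed as a short decision rule. The sketch below is a minimal illustration under stated assumptions: each of the 16 items is treated simply as met or flawed (ignoring ‘partial yes’ responses and items that apply only to meta-analyses), and the critical set is the seven domains suggested by the tool’s developers (items 2, 4, 7, 9, 11, 13 and 15), which appraisers may adapt. The function `amstar2_overall_rating` is hypothetical.

```python
# Critical domains suggested by the AMSTAR 2 developers; appraisers may adapt this set.
CRITICAL_ITEMS = frozenset({2, 4, 7, 9, 11, 13, 15})


def amstar2_overall_rating(flawed_items: set[int], critical_items: frozenset[int] = CRITICAL_ITEMS) -> str:
    """Map the set of flawed AMSTAR 2 items (numbered 1 to 16) to an overall rating."""
    if any(not 1 <= item <= 16 for item in flawed_items):
        raise ValueError("AMSTAR 2 items are numbered 1 to 16")
    critical_flaws = len(flawed_items & critical_items)
    non_critical_weaknesses = len(flawed_items - critical_items)
    if critical_flaws > 1:
        return "Critically Low"  # more than one critical flaw
    if critical_flaws == 1:
        return "Low"  # one critical flaw, with or without non-critical weaknesses
    if non_critical_weaknesses > 1:
        return "Moderate"  # more than one non-critical weakness
    return "High"  # no or one non-critical weakness


print(amstar2_overall_rating({5, 10}))  # Moderate
print(amstar2_overall_rating({2, 7}))   # Critically Low
```

The developers also note that several non-critical weaknesses may justify moving a review from Moderate to Low; that is an appraiser’s judgement which this sketch does not encode, and it is one source of the kind of discrepancy described above.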

Table 6 shows that three of the reviews were rated as ‘High Quality’ using the tool, two as ‘Moderate Quality’ and one as ‘Low Quality’. None of the reviews met the criteria for a rating of ‘Critically Low Quality’.

Theoretical perspectives

All six reviews referred explicitly to theory to provide explanatory frameworks for how MP enhances learning and performance. Table 7 provides an overview.

Theories were drawn from a broad field stretching from neurophysiology to motivational psychology, goal-setting, and educational and feedback theories of learning. Theories from cognitive psychology (e.g. Dual Coding Theory) and the neurophysiological bases of MP were referred to most commonly; the latter draws on empirical evidence of neuroplasticity and the proposition that learning is reinforced when the brain activates neuronal pathways to simulate or ‘rehearse’ physical actions. While the reviews referred to their own theoretical presuppositions, there was little synthesis of the theories espoused in the primary research they examined. It is therefore not clear to what extent primary studies were anchored in any discernible theoretical underpinning.

Discussion

The use of the Kirkpatrick model allowed us to identify what type of evidence was available for MP, and to what extent the reviews demonstrated the effectiveness of training interventions. In our analysis, we used the model as an educational heuristic and did not assume causal links between the levels.

Consistent with previous evidence on the potential value of mental practice in sports psychology and neurobiology, two-thirds of primary studies reviewed concluded that MP significantly improves subsequent (surgical) performance.

Improvements in skills were perceived to encompass two broad areas: 1) technical and 2) non-technical skills. Technical skills were perceived as motor skills that enabled actions, such as surgical movements and flow. Non-technical skills included skills that underpinned actions, such as communication, coping strategies and teamwork.

There are marked differences in designs, research protocols, training regimens, populations and outcome measures among the studies reviewed. Despite this heterogeneity, positive effects of MP on both technical (motor function) and non-technical skills (stress and confidence) were reported. All of the reviews found some strong evidence for the positive impact of MP on Levels 2a and 2b of the Kirkpatrick framework.

It does appear therefore that surgeons can benefit from exercising and honing this skill both at novice and experienced levels of practice. We concur therefore with the findings in the comprehensive review by Sevdalis et al. [41] that appreciable evidence exists to support further investigation. However, there are a number of outstanding questions and the limitations of this review should be considered alongside its findings.

Review limitations

We used MP as an umbrella term for mental imagery, mental simulation and mental rehearsal, as these keywords (and others) were reported across the reviews as search terms (Table 3). However, this encompassing conception risks blurring more narrowly focussed definitions and their findings [44]. Mental practice is concerned with mental processes and can involve all the senses, but some studies focussed on visual mental imagery, as most empirical work has addressed this sensory domain [48]. Nevertheless, evidence demonstrates that these sense modalities interact in a variety of ways [49, 50], and knowledge of the degree of interplay between broader cognitive skills and a narrower focus on psychomotor skills is still incomplete.

The critical issue here is the heterogeneity of mental representations, which points to practical and theoretical reasons why mental practice is challenging to study however we choose to define it. Furthermore, a detailed understanding of what MP comprises may, by its very nature, remain elusive. In agreement with Schuster et al. [40], we chose a broad and inclusive working definition, which can be understood as an interaction between cognitive, perceptual and motor systems, but we acknowledge that it may be less precise than those used by other reviewers.

Despite an inclusive search strategy around mental practice as ‘simulation’, we did not include technology-based simulation approaches to priming performance. Excluding grey literature and non-peer-reviewed articles may have led us to overestimate the quality of the literature in this field of medicine. This, however, supports findings that MP and MI are inadequately defined.

Poor reporting in primary studies may also have led to an unduly negative assessment of the quality of the reviews. The depth of impact reported, as gauged by the Kirkpatrick model, was relatively limited. The preponderance of RCT designs [51] in the reviews may have restricted the analysis of more enduring examples of acceptance, learning and skill acquisition, which may explain why higher levels of the Kirkpatrick scale were not reached. Surprisingly, however, even when the focus of primary studies was narrower, what exactly MP comprised was insufficiently described for the interventions to be easily reproducible.

A further criticism could concern our choice of an outcomes-based evaluation framework. In fact, to guide our abstraction of the different learning outcomes of MP, we used Kirkpatrick’s model of educational impacts. This model offers a pragmatic four-point description of educational outcomes which is followed by the Best Evidence in Medical Education group (BEME) [52]. In our analysis of outcomes with Kirkpatrick’s framework we do acknowledge some conceptual limitations.

A criticism of Kirkpatrick’s model is that its use of levels implies the framework is about a product, a particular learning outcome of interest, rather than a process [53]. We agree that process matters, because it reflects the quality of teaching and learning, directed and self-directed, needed to achieve the outcome. However, we do not believe the model subverts this. In an influential review of skill acquisition, Wulf et al. [54] draw an important distinction between learning and performance. The papers in the reviews privilege performance, comparing MP with no mental practice on immediate or very short-term performance outcomes. These studies attempt to show how performance is influenced by a particular training method, which may or may not reflect how much was learned. In other words, performance and learning can be uneasily conflated. This is because learning is a relatively enduring transformation in a person’s capability to perform a skill. Significantly, learning also shapes how we think about how we learn and prepare for performance, and these metacognitive adjustments shore up retention and resilience over time. Surprisingly, and despite comparisons with expert practices, the value attributed to mental practice was largely restricted to a specific, time-limited skill performance rather than to acceptance by participants, or to their retention and transfer of the MP ritual to other clinical skill contexts. Given the accumulated evidence of MP benefits outside healthcare, whether and how users apply MP after training is surely a key researchable impact. Remarkably, however, only half the reviews mention its desirability.

A further and final criticism is that the Kirkpatrick levels delineate a patchwork of different beneficiaries: levels 1–3 concern learners (where reviews reported positive improvements), whereas level 4a concerns organisations and level 4b concerns patients. Perhaps critically, teachers are missing from the scheme altogether [55]. Despite these limitations, we believe the model invites evaluators in systematic reviews to think about what happens afterwards, and longitudinally. This implies that prospective evaluators should consider evidence for sustained changes in individual and collective practices, including the frequency of, and exposure to, independent follow-up of MP by users, and how this is supported in organisational practice and policy. This applies not simply to training curriculum redesign but also, for instance, to the time and space allocated to MP in pre-surgical routines and briefings. The impact of sustained MP on surgical flow and its effects on patient care should also be analysed.

Evaluation researchers therefore need to look beyond immediate performance to the longer term. In particular, they need to identify different methods of data collection and a variety of sources within each of the umbrella levels that could throw light on different impacts.

Implications

At first glance it appears that MP is still largely in thrall to the taxonomy of psycho-motor skills and step-wise mental imagery. The obverse, however, is that our human capacity for reflection, self-awareness and meta-representation (the ability to form a concept of the mental representations of others) invites a broader set of propositions about MP and learning in educational contexts [4, 56–59]. Mental practice (and mental imagery) allow us to visualise, remember, plan for the future, navigate and make decisions. Quintessentially, what emerges from a number of reviews is that MP harbours a logic of ‘expertise’ [36, 38, 39]. It postulates, for instance, that through guided mimicry of expert routines, less experienced surgeons can be inducted into practices that help bridge the gap, thereby shortening their learning trajectories. This lends substance to the idea that we should inquire into the pedagogic, learning and cultural conditions that might make the practice of MP more likely over time. Implicit in this view is that MP is itself a key transferable skill: it can be practised, learned and improved upon. From this standpoint, we believe that MP remains under-theorised and little acknowledged.

This brings us to a related point. MP assumes that practitioners already have ‘imaginative’ resources that are critical to quality and safety, but that these resources need to be supported organisationally, for example by being instantiated in pre-surgical routines. This requires organisational space and leadership, and involves a kind of interpersonal expertise. Interestingly, recent research suggests that teams can share with others the practical envisioning derived through MP to improve their collective performance [15, 60, 61]. Indeed, MP posits that sharing an individual’s ‘mental rehearsals’, at expert and even novice levels, can improve performance and enhance safety in the team simply by dint of its communicative and rhetorical force [15, 60, 62, 63]. Organisational researchers have referred to these interactions as ‘zones of collaborative attention’ [64]. Mental practice, in other words, can also be an ‘information sharing’ practice that is proximal to the task. It is imbricated with familiar and important notions in surgery such as ‘shared mental models’, ‘anticipatory thinking’ and ‘situational awareness’. There is a difference of emphasis here from mainstream educational notions of ‘reflective practice’: MP privileges cultivating ‘foresight’ over retrospective review or ‘reflection-on-action’ [65]. Future research in learning and improvement science needs to address these dimensions of MP and their contribution to how safety is achieved.

Given these factors, MP has the potential to improve performance when incorporated into procedures for surgical training, surgical team building and surgical safety. Its application is also highly economical, sustainable and feasible with current resources.

Conclusions

Although the literature has lent support to attempts to apply MP to surgical education, more thought needs to be given to evaluation methods. These should explore not only the immediate effects on skill demonstration but also broader notions of acquisition and, importantly, the uptake of MP among users over time to enhance their performance.

Beyond the individual, training interventions should aspire to instigate similar change across the knowledge, skills, attitudes and behaviours of teams and organisations. We believe clarity on these micro- and meso-level objectives is essential to the design, delivery and evaluation of effective educational interventions.

There are strong implications too for the social function of mental rehearsal in teams and its contribution to safety. MP can potentially shape the way a surgical team acts as a ‘collectivity’, which is critical to how safety is accomplished [66, 67]. Determining the most influential shifts in practice at the individual, team and organisation levels will require further validation in future studies using more sophisticated and longitudinal research designs.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Abbreviations

- FLS: Fundamentals of Laparoscopic Surgery Simulation
- HRV: Heart Rate Variability
- LC: Laparoscopic cholecystectomy
- MHPTS: Mayo High Performance Teamwork Scale
- OSATS: Objective Structured Assessment of Technical Skills
- OSCE: Objective structured clinical examination
- OTAS: Observational Teamwork Assessment for Surgery
- ISAT: The Imperial Stress Assessment Tool
- GSOP: The Global Scale of Operative Performance
- SRC: Surgical Coping Scale
- STAI: State-Trait Anxiety Inventory
- VH: Vaginal Hysterectomy
- VR: Virtual Reality
- MIT: Visual Imagery Test
- OR: Operating Room

References

Bandura A. Social cognitive theory. In: Vasta R, editor. Annals of child development. Greenwich: JAI Press; 1986. p. 1–60.

Inzana CM, Driskell JE, Salas E, et al. Effects of preparatory information on enhancing performance under stress. J Appl Psychol. 1996;81(4):429–35 [published Online First: 1996/08/01].

Driskell JE, Copper C, Moran A. Does mental practice enhance performance? J Appl Psychol. 1994;79(4):481–92.

Latham GP, Locke EA. The science and practice of goal setting. Hum Resour Manag. 2015;5.

Cumming J, Hall C. Deliberate imagery practice: the development of imagery skills in competitive athletes. J Sports Sci. 2002;20(2):137–45 https://doi.org/10.1080/026404102317200846.

Eccles D, Ward P, Woodman T. Competition-specific preparation and expert performance. Psychol Sport Exerc. 2009;10:96–107.

Boomsma C, Pahl S, Andrade J. Imagining change: an integrative approach toward explaining the motivational role of mental imagery in pro-environmental behavior. Front Psychol. 2016;7:1780 https://doi.org/10.3389/fpsyg.2016.01780.

Arp R. Scenario visualization: an evolutionary account of creative problem solving. Cambridge: MIT Press (Bradford Books); 2008.

Barclay-Goddard RE, Stevenson TJ, Poluha W, et al. Mental practice for treating upper extremity deficits in individuals with hemiparesis after stroke. Cochrane Database Syst Rev. 2011;(5):CD005950 https://doi.org/10.1002/14651858.CD005950.pub4 [published Online First: 2011/05/13].

Louhivuori J, Eerola T, Saarikallio S, et al. Mental practice in music memorization: an ecological empirical study. 7th Triennial Conference of the European Society for the Cognitive Sciences of Music. Jyväskylä: ESCOM; 2009.

Sternberg RJ. Why schools should teach for wisdom: the balance theory of wisdom in educational settings. Educ Psychol. 2001;36(4):227–45.

DeChurch L, Mesmer-Magnus J. The cognitive underpinnings of effective teamwork: a meta-analysis. J Appl Psychol. 2010;95:32–53.

Driskell JE, Salas E, Driskell T. Foundations of teamwork and collaboration. Am Psychol. 2018;73(4):334–48 https://doi.org/10.1037/amp0000241 [published Online First: 2018/05/25].

Gershgoren L, Basevitch I, Filho E, et al. Expertise in soccer teams: a thematic inquiry into the role of shared mental models within team chemistry. Psychol Sport Exerc. 2016;24:128–39.

Crisp RJ, Birtel MD, Meleady R. Mental simulations of social thought and action: trivial tasks or tools for transforming social policy? Curr Dir Psychol Sci. 2011;20(4):261–4.

Mick P, Dadgostar A, Ndoleriire C, et al. Mental practice in surgical training. Clin Teach. 2016;13(6):443–4 https://doi.org/10.1111/tct.12412 [published Online First: 2015/06/03].

Saab SS, Bastek J, Dayaratna S, et al. Development and validation of a mental practice tool for Total abdominal hysterectomy. J Surg Educ. 2017;74(2):216–21 https://doi.org/10.1016/j.jsurg.2016.10.005.

Arora S, Aggarwal R, Hull L, et al. Mental practice enhances technical skills and teamwork in crisis simulations - a double blind, randomised controlled study. J Am Coll Surg. 2011;213(3) https://doi.org/10.1016/j.jamcollsurg.2011.06.302.

Bowers C, Kreutzer C, Cannon-Bowers J, et al. Team Resilience as a Second-Order Emergent State: A Theoretical Model and Research Directions. Front Psychol. 2017;8:1360 https://doi.org/10.3389/fpsyg.2017.01360 [published Online First: 2017/09/02].

Cannon-Bowers JA, Salas E, Converse SA. Shared mental models in expert team decision making. In: Castellan NJ, editor. Individual and group decision making: Current issues. Hillsdale: Lawrence Erlbaum Associates; 1993. p. 201–3.

Eccles DW, Tran KT. Getting them on the same page: strategies for enhancing coordination and communication in sports teams. J Sport Psychol Action. 2010;3(1):30–40.

Flin R, Maran N. Identifying and training non-technical skills for teams in acute medicine. Qual Saf Health Care. 2004;13(Suppl 1):i80–4.

Aoun SG, Batjer HH, Rezai AR, et al. Can neurosurgical skills be enhanced with mental rehearsal? World Neurosurg. 2011;76(3–4):214–5 https://doi.org/10.1016/j.wneu.2011.06.058.

Pike TW, Pathak S, Mushtaq F, et al. A systematic examination of preoperative surgery warm-up routines. Surg Endosc. 2017;31(5):2202–14 https://doi.org/10.1007/s00464-016-5218-x [published Online First: 2016/09/17].

Hegarty M. Mechanical reasoning by mental simulation. Trends Cogn Sci. 2004;8(6):280–5 https://doi.org/10.1016/j.tics.2004.04.001.

Frank C, Land WM, Popp C, et al. Mental representation and mental practice: experimental investigation on the functional links between motor memory and motor imagery. PLoS One. 2014;9(4):e95175 https://doi.org/10.1371/journal.pone.0095175 [published Online First: 2014/04/20].

Hales S, Blackwell SE, Di Simplicio M. Imagery practice and the development of surgical skills. In: Brown GP, Clark DA, editors. Assessment in Cognitive Therapy. New York: Guilford Press; 2015.

Brogaard B, Gatzia DE. Unconscious Imagination and the Mental Imagery Debate. Front Psychol. 2017;8:799 https://doi.org/10.3389/fpsyg.2017.00799 [published Online First: 2017/06/08].

Davies P. What is evidence-based education? Br J Educ Stud. 1999;47(2):108–21 https://doi.org/10.1111/1467-8527.00106.

Newman M, Reeves S, Fletcher S. Critical Analysis of Evidence About the Impacts of Faculty Development in Systematic Reviews: A Systematic Rapid Evidence Assessment. J Contin Educ Health Prof. 2018;38(2):137–44 https://doi.org/10.1097/CEH.0000000000000200 [published Online First: 2018/06/01].

Moher D, Liberati A, Tetzlaff J, et al. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. BMJ. 2009;339:b2535 https://doi.org/10.1136/bmj.b2535.

Simpson JS, Scheer AS. A Review of the Effectiveness of Breast Surgical Oncology Fellowship Programs Utilizing Kirkpatrick’s Evaluation Model. J Cancer Educ. 2016;31(3):466–71 https://doi.org/10.1007/s13187-015-0866-4 [published Online First: 2015/06/11].

Marcus H, Vakharia V, Kirkman MA, et al. Practice makes perfect? The role of simulation-based deliberate practice and script-based mental rehearsal in the acquisition and maintenance of operative neurosurgical skills. Neurosurgery. 2013;72(Suppl 1):124–30.

Shearer DA, Thomson R, Mellalieu SD, et al. The relationship between imagery type and collective efficacy in elite and non-elite athletes. J Sports Sci Med. 2007;6(2):180–7.

Shea B, Reeves B, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008.

Anton NE, Bean EA, Hammonds SC, et al. Application of Mental Skills Training in Surgery: A Review of Its Effectiveness and Proposed Next Steps. J Laparoendosc Adv Surg Tech A. 2017;27(5):459–69 https://doi.org/10.1089/lap.2016.0656 [published Online First: 2017/02/23].

Rao A, Tait I, Alijani A. Systematic review and meta-analysis of the role of mental training in the acquisition of technical skills in surgery. Am J Surg. 2015;210(3):545–53 https://doi.org/10.1016/j.amjsurg.2015.01.028 [published Online First: 2015/06/21].

Cocks M, Moulton CA, Luu S, et al. What surgeons can learn from athletes: mental practice in sports and surgery. J Surg Educ. 2014;71(2):262–9 https://doi.org/10.1016/j.jsurg.2013.07.002 [published Online First: 2014/03/08].

Davison S, Raison N, Khan MS, et al. Mental training in surgical education: a systematic review. ANZ J Surg. 2017;87(11):873–8 https://doi.org/10.1111/ans.14140 [published Online First: 2017/08/30].

Schuster C, Hilfiker R, Amft O, et al. Best practice for motor imagery: a systematic literature review on motor imagery training elements in five different disciplines. BMC Med. 2011;9:75 https://doi.org/10.1186/1741-7015-9-75 [published Online First: 2011/06/21].

Sevdalis N, Moran A, Arora S. Mental Imagery and Mental Practice Applications in Surgery: State of the Art and Future Directions. In: Lawson R, editor. Multisensory Imagery. New York: Springer; 2013. p. 343–63.

Arora S, Aggarwal R, Sevdalis N, et al. Development and validation of mental practice as a training strategy for laparoscopic surgery. Surg Endosc. 2009; https://doi.org/10.1007/s00464-009-0624-y.

Martin J, Regehr G, Reznick R, et al. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84(2):273–8.

Sevdalis N, Moran A, Arora S. Methodological issues surrounding ‘The mind’s scalpel in surgical education: a randomised controlled trial of mental imagery’. BJOG. 2013;120(6):775–6 https://doi.org/10.1111/1471-0528.12171 [published Online First: 2013/04/10].

Patel SR, Gohel MS, Hamady M, et al. Reducing errors in combined open/endovascular arterial procedures: influence of a structured mental rehearsal before the endovascular phase. J Endovasc Ther. 2012;19(3):383–9 https://doi.org/10.1583/11-3785R.1.

Polli A, Moseley GL, Gioia E, et al. Graded motor imagery for patients with stroke: a non-randomized controlled trial of a new approach. Eur J Phys Rehabil Med. 2017;53(1):14–23 https://doi.org/10.23736/S1973-9087.16.04215-5 [published Online First: 2016/07/22].

Jadad AR, Moore RA, Carroll D, et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control Clin Trials. 1996;17(1):1–12 https://doi.org/10.1016/0197-2456(95)00134-4 [published Online First: 1996/02/01].

Pearson J, Naselaris T, Holmes EA, et al. Mental Imagery: Functional Mechanisms and Clinical Applications. Trends Cogn Sci. 2015;19(10):590–602 https://doi.org/10.1016/j.tics.2015.08.003 [published Online First: 2015/09/29].

Bertelson P, de Gelder B. The psychology of multimodal perception. In: Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford: Oxford University Press; 2004. p. 141–77.

O’Callaghan C. Not all perceptual experience is modality specific. In: Stokes D, Matthen M, Biggs S, editors. Perception and its modalities. Oxford: Oxford University Press; 2014.

Norman G. RCT = results confounded and trivial: the perils of grand educational experiments. Med Educ. 2003;37:582–4.

Barrett A, Galvin R, Steinert Y, et al. A BEME (Best Evidence in Medical Education) systematic review of the use of workplace-based assessment in identifying and remediating poor performance among postgraduate medical trainees. Syst Rev. 2015;4:65 https://doi.org/10.1186/s13643-015-0056-9 [published Online First: 2015/05/09].

Roland D. Proposal of a linear rather than hierarchical evaluation of educational initiatives: the 7Is framework. J Educ Eval Health Prof. 2015;12:35.

Wulf G, Shea C, Lewthwaite R. Motor skill learning and performance: a review of influential factors. Med Educ. 2010;44:75–84.

Yardley S, Dornan T. Kirkpatrick’s levels and education ‘evidence’. Med Educ. 2012;46(1):97–106 https://doi.org/10.1111/j.1365-2923.2011.04076.x [published Online First: 2011/12/14].

Schön D. The reflective practitioner: how professionals think in action. 1st ed. New York: Basic Books; 1984.

Schön D, Argyris C. Theory in practice: increasing professional effectiveness. San Francisco: Jossey-Bass; 1992.

Gibbs G. Learning by doing: a guide to teaching and learning methods. Oxford: Further Education Unit. Oxford Polytechnic; 1988.

Bandura A. Exercise of personal and collective efficacy in changing societies. Cambridge: Cambridge University Press; 1997.

Lorello G, Hicks C, Ahmed S, et al. Mental practice: a simple tool to enhance team-based trauma resuscitation. Can J Emerg Med. 2016;18(2):136–42.

Prati F, Crisp RJ, Rubini M. Counter-stereotypes reduce emotional intergroup bias by eliciting surprise in the face of unexpected category combinations. J Exp Soc Psychol. 2015;61:31–43.

Iedema R. Patient Safety and Clinical Practice Improvement: the importance of reflecting on real-time in situ care processes. In: Rowley E, Waring J, editors. A socio-cultural perspective on patient safety. Farnham: Ashgate; 2011.

Iedema R, Mesman J, Carroll K. Visualising health care practice improvement - innovation from within. London: Radcliffe; 2013.

Gherardi S. Introduction: the critical power of the practice lens. Manag Learn. 2009;40:115–28.

Snelgrove H, Fernando A. Practising forethought: the role of mental simulation. BMJ Simul Technol Enhanced Learn. 2018;4(2):45–6 https://doi.org/10.1136/bmjstel-2017-000281.

Weick KE. Sensemaking in organisations. London: Sage; 1995.

Weick K, Sutcliffe K, Obstfeld D. Organizing for High Reliability: Processes of Collective Mindfulness. In: Boin A, editor. Crisis Management. London: Sage; 2008. p. 31–66.

Acknowledgements

Not applicable.

Funding

None.

Author information


Contributions

HS made substantial contribution to the conception and design of the paper. BG and HS both made substantial contributions to the acquisition of data, analysis and interpretation of data. HS and BG drafted the paper and HS revised it critically and wrote the final version. All authors have read and approved the manuscript.

Authors’ information

HS is a Medical Educationalist working in the GAPS clinical simulation unit at St George’s University Hospitals NHS Foundation Trust. BG is a surgical trainee in orthopaedics.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare they have no competing interests or financial ties to disclose.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Appendix 1. Supplementary Data

Additional file 2: Search Strategies

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Snelgrove, H., Gabbott, B. Critical analysis of evidence about the impacts on surgical teams of ‘mental practice’ in systematic reviews: a systematic rapid evidence assessment (SREA). BMC Med Educ 20, 221 (2020). https://doi.org/10.1186/s12909-020-02131-3


DOI: https://doi.org/10.1186/s12909-020-02131-3