Abstract

Background

When multiple endpoints are of interest in evidence synthesis, a multivariate meta-analysis can jointly synthesise those endpoints and utilise their correlation. A multivariate random-effects meta-analysis must incorporate and estimate the between-study correlation (ρ B ).

Methods

In this paper we assess maximum likelihood estimation of a general normal model and a generalised model for bivariate random-effects meta-analysis (BRMA). We consider two applied examples, one involving a diagnostic marker and the other a surrogate outcome. These motivate a simulation study where estimation properties from BRMA are compared with those from two separate univariate random-effects meta-analyses (URMAs), the traditional approach.

Results

The normal BRMA model estimates ρ B as -1 in both applied examples. Analytically we show this is due to the maximum likelihood estimator sensibly truncating the between-study covariance matrix on the boundary of its parameter space. Our simulations reveal this commonly occurs when the number of studies is small or the within-study variation is relatively large; it also causes upwardly biased between-study variance estimates, which are inflated to compensate for the restriction on B . Importantly, this does not induce any systematic bias in the pooled estimates and produces conservative standard errors and mean-square errors. Furthermore, the normal BRMA is preferable to two normal URMAs; the mean-square error and standard error of pooled estimates is generally smaller in the BRMA, especially given data missing at random. For meta-analysis of proportions we then show that a generalised BRMA model is better still. This correctly uses a binomial rather than normal distribution, and produces better estimates than the normal BRMA and also two generalised URMAs; however the model may sometimes not converge due to difficulties estimating ρ B .

Conclusion

A BRMA model offers numerous advantages over separate univariate synthesises; this paper highlights some of these benefits in both a normal and generalised modelling framework, and examines the estimation of between-study correlation to aid practitioners.

Similar content being viewed by others

Background

Traditionally, meta-analysis models combine summary measures of a single quantitative endpoint, taken from different studies, to produce a single pooled result. However, multiple pooled results are required whenever there are multiple outcomes [1] or multiple treatment groups [2]. A multivariate meta-analysis model uses the correlation between the endpoints and obtains the multiple pooled results collectively [3, 4]. For example, Reitsma et al. [5] have suggested a bivariate random-effects meta-analysis (BRMA) to jointly synthesise logit-sensitivity and logit-specificity values from diagnostic studies. Multivariate meta-analysis has been quite widely used, including application to genetic associations [6], surrogate endpoints [7, 8], psychological findings [9] and prognostic markers [10].

Often the advantage of a multivariate random-effects meta-analysis lies in its ability to use the between-study correlation of the multiple endpoints of interest. For example, in diagnostic studies the sensitivity and specificity are usually negatively correlated across studies due to the use of different thresholds [5]. Van Houwelingen et al. [4] use the between-study correlation to describe the shape of the bivariate relationship between the true log-odds in a treatment group and the true log-odds in a control group (baseline risk). Riley et al. [10] algebraically assess BRMA and show that the correlation allows a 'borrowing of strength' across endpoints. This leads to pooled estimates that have a smaller standard error than those from corresponding univariate random-effects meta-analyses (URMAs), especially when some endpoints are missing at random across studies.

Some recent articles have indicated the between-study correlation may often be estimated at the end of its parameter space, as +1 or -1. Thompson et al. [6] apply a normal BRMA model to genetic studies of coronary heart disease and report that the between-study correlation was 'poorly estimated' with the likelihood peaking at +1, and an estimate of +1 has also been reported in other applications [4, 11]. To aid practitioners, in this paper we analytically consider why this occurs, and then explore the impact, if any, this has on the pooled estimates and between-study variance estimates. This investigation also allows us to examine the general benefits of BRMA over URMA, to build on a number of other recent articles [5, 10], and encourage a greater use and appreciation of BRMA in practice.

The outline of the paper is as follows. We begin by introducing the general normal model for BRMA and discuss analytically why the between-study correlation can be estimated at the edge of its parameter space. We then apply the model to two real examples from the literature, one involving a diagnostic marker and one involving a surrogate outcome, and these both give a between-study correlation estimate of -1. This then motivates a realistic simulation study to examine how the estimate of between-study correlation affects the statistical properties of the pooled and between-study variance estimates. It also allows us to compare the performance of the normal BRMA model to two separate URMAs, the more common approach in practice [10]. We then extend our work to consider meta-analysis of proportions, and highlight why a generalised model for BRMA is preferred to the general normal BRMA model [12], and also two separate generalised URMA models. We conclude by summarising the broad benefits of BRMA for practitioners, and discuss future research priorities.

Methods

In this section we introduce a hierarchical normal framework for BRMA and URMA. We describe how the BRMA normal model is estimated, and analytically consider the estimation of between-study correlation. We then describe the rationale and methodology for our simulation study of BRMA versus URMA. To ensure a real world context, this section also includes two motivating examples from the medical literature where a BRMA is potentially important.

Motivating example 1 – the telomerase data

Glas et al. [13] systematically review the sensitivity and specificity of tumour markers used for diagnosing primary bladder cancer. One of these markers was telomerase, a ribonucleoprotein enzyme, evaluated in 10 studies as shown in Table 1. Rather than applying a URMA independently for sensitivity and specificity, the authors jointly synthesise the logit-transformed sensitivity and the logit-transformed specificity in a normal BRMA model as described below and recently proposed elsewhere [5].

A general normal model for bivariate random-effects meta-analysis (BRMA)

Suppose that i = 1 to n studies are identified by a systematic review, and that two endpoints (j = 1 or 2) are available from each study. Each study supplies summary measures, Y ij , and associated standard errors, s ij , for each endpoint. For instance, for diagnostic studies Reitsma et al. [5] suggest the logit-sensitivity is Y i1 and the logit-specificity is Y i2. Each summary statistic (Y ij ) is assumed to be an estimate of a true value (θ ij ) in each study, and in a hierarchical structure each θ ij is assumed to be drawn from a distribution with mean (or 'pooled') value β j and between-study variance . If Y ij /θ ij and θ ij are both assumed normally distributed then the BRMA can be specified as:

This model is the general normal model for BRMA [4], where δ i and Ω are the within-study and the between-study covariance matrices respectively. Usually of key interest from the analysis are the pooled estimates of β 1 and β 2, although sometimes an estimated function of these may be desired; for instance in the telomerase example an estimate of the log of the diagnostic odds ratio is given by 1 + 2. The BRMA differs from two independent URMAs by the inclusion of the within-study correlations (i.e. the ρ Wi s) and the between-study correlation (ρ B ). Equation (2) is equivalent to two independent URMAs when ρ Wi = ρ B = 0 for all i, i.e. there is zero correlation:

In equation (1) it is common to assume the s and ρ Wi s are known [1, 4]. Including the uncertainty of the s is unnecessary for URMA [14], but whether the uncertainty of the s and ρ Wi s should be incorporated in BRMA is yet to be examined. This issue is outside the scope of this paper but we note that a Bayesian framework is particularly flexible for incorporating such uncertainty [15].

Within- and between-study correlation

The within-study correlation, ρ Wi , indicates whether Y i1 and Y i2 are correlated within a study, and these ρ Wi s are usually assumed known. For the telomerase data the ρ Wi s might be assumed to be zero because sensitivity and specificity values are calculated independently in a study using different sets of patients. In other BRMA applications the ρ Wi s can be non-zero, for example where the two endpoints are overall and disease-free survival [10]. In practice it may be difficult to obtain the value of non-zero ρ Wi s, although it can be done as evident in Berkey et al. [1] and the 'motivating example 2' below [7]. Suggestions for limiting the problem of unavailable ρ Wi s have been proposed [15–17], and this issue is considered further in the discussion.

The between-study correlation, ρ B , is not generally known and has to be estimated when fitting the BRMA. It indicates how the underlying true values, i.e. the θ i1s and the θ i2 s, are related across studies. It may be caused by differences across studies in patient-level characteristics, such as age and stage of disease, or changes in study-level characteristics, such as the threshold level in diagnostic studies

Motivating example 2 – the CD4 data

Daniels and Hughes [7] assess whether the change in CD4 cell count is a surrogate for time to either development of AIDS or death in drug trials of patients with HIV. They consider between-treatment-arm log-hazard ratios of time to onset of AIDS or death (Y i1), and between-treatment-arm differences in mean changes in CD4 count (Y i2) from pre-treatment baseline to about six months. Fifteen relevant trials were identified. Some of the trials involved three or four treatment arms, but to enable application to BRMA here we only consider outcome differences between the control arm and the first treatment arm in the reported dataset [7]. All fifteen trials provided complete data, including the within-study correlations which were quite small, varying between -0.22 and 0.17 with a mean of -0.08.

Estimation

In our analyses of equation (1) in this paper the between-study parameters (i.e. , and ρ B ) and the two pooled values (β 1 and β 2) are estimated iteratively using restricted maximum likelihood (REML) in SAS Proc Mixed, as described elsewhere [4]. Unless otherwise stated, we also use Cholesky decomposition [18] of Ω to ensure that this matrix is estimated to be positive semi-definite and therefore that the between-study correlation estimate, B , is in the range [-1,1]. Cholesky decomposition of Ω also helps ensure convergence when B is very close to 1 or -1.

Analytic consideration of the between-study covariance parameters

Estimation and inference in classical linear mixed models are based on the marginal model, which for equation (1) is the bivariate normal model with variance-covariance δ i + Ω. Assume for the sake of simplicity that the within-study covariance matrix δ i = δ for all studies. Then the covariance matrix of the observed Y i1 s and Y i2 s is given by V = δ + Ω, where δ is known. Now, this puts (severe) restrictions on V, namely that V - δ is a covariance matrix, that is non-negative definite. If the estimated V does not satisfy this restriction, the maximum likelihood estimate of Ω will be truncated on the boundary of its parameter space. This means that if Ω is diagonal (as for URMA) the maximum likelihood estimate on the boundary will have one or both of the between-study variances equal to zero; else if Ω is non-diagonal (as for BRMA) then either one or both of the between-study variances equals zero or else the between-study correlation equals -1 or +1. In meta-analysis a between-study variance estimate of zero is well-understood, but a between-study correlation estimate of -1 or +1 is likely to be less familiar to practitioners. Thompson et al. [6] refer to this issue as 'poor estimation' but it perhaps should rather be considered a natural consequence of the sensible restrictions imposed on V, and one that prevents a variance estimate < 0 or a correlation estimate > +1 or < -1, as might otherwise be obtained.

Rationale for the research in this paper

In this paper, to aid practitioners we will further assess the normal BRMA model and the role of between-study correlation. In particular, we aim to identify the situations when B is likely to be +1 or -1 and examine the impact this has on the pooled and between-study variance estimates. We will also evaluate the benefits of BRMA over two separate URMAs, the more common approach in practice, and explore extensions to a generalised BRMA model for meta-analysis of proportions. To achieve these goals we firstly apply the normal BRMA of equation (1) to the two motivating examples. We then perform a simulation study of the normal BRMA model, as described below. The generalised BRMA model is then introduced and assessed in relation to the normal BRMA model and two separate generalised URMAs (see Results).

A simulation study to assess BRMA and the between-study correlation

We carried out a simulation study of the general normal model for BRMA in 11 scenarios, labelled (i) to (xi) (Table 2). Each of scenarios (i) to (xi) relates to a different but realistic specification of equation (1). Scenarios (i) to (vi) consider complete data, as in the telomerase example, whereas scenarios (vii) to (vi) consider when some data are missing at random across studies, as assumed in the BRMA of Thompson et al. [6]. The scenarios also vary in the relative sizes of the within- and between-study correlations, and also the within- and between-study variation. For example, scenarios (i) to (iv) involve within-study variances similar in size to the between-study variances, as observed in prognostic studies [10], and in scenario (vi) there is one relatively low and one relatively high between-study variance, as for the CD4 dataset. The sizes of the meta-analysis were either n = 5 or n = 50 studies for complete data, and either n = 10 or n = 50 for missing data. Our method of simulation was deliberately chosen to be similar to that previously used by Berkey et al. [1] and Sohn [19]. As an example, we now describe the simulation procedure for scenario (i) with n = 50.

Description of the simulation procedure for scenario (i) with n = 50

Generation of a dataset of 1000 meta-analyses

We chose β 1 = 0 in order to reflect little clinical benefit (e.g. a sensitivity of 50%) and in contrast β 2 = 2 (e.g. a specificity of 88%). The within study variances required, i.e. the 50 s and 50 s, were each found by sampling from a N(0.25,0.50) distribution and squaring the value obtained. This produced a median of 0.25 and an interquartile range of 0.7, values similar to those for the telomerase data (median = 0.21) and for the BRMA of Thompson et al. [6] (median = 0.28, interquartile range = 0.76). The between-study variances, and , were chosen to be 0.25 in scenario (i), which meant that they were similar in size to the median within-study variances. The within and between-study correlations were both set to zero for this scenario. All these choices were substituted into equation (1) and 1000 meta-analyses each of 50 studies were generated. Calculations were performed in S-Plus using the 'rmvnorm' function for generating bivariate normal values (code available upon request).

Estimation using the dataset of 1000 meta-analyses

Each of the 1000 meta-analyses in scenario (i) were analysed separately by:

-

fitting two separate URMAs as in equation (2) (where ρ B = 0) using REML to estimate β 1, β 2, and

-

fitting a BRMA as in equation (1) using REML to estimate β 1, β 2, , , and ρ B

The 1000 BRMA estimates and the corresponding 1000 URMA estimates from scenario (i) were then compared by calculating:

-

average parameter estimates across all the simulations (to check for bias)

-

coverage of the 95% confidence intervals for β 1 and β 2

-

average standard error and mean-square error (MSE) of β 1 and β 2

-

the number of occasions B was equal to +1 or -1 in the BRMA

To assess coverage, the 95% confidence intervals for β j were calculated using:

with n j the number of studies providing endpoint j. This t-distribution is commonly used in the meta-analysis literature, although it is only an approximation [20].

Description of the simulation procedure for scenarios (ii) to (xi)

Simulations in the other scenarios followed in the same manner as described above but with the data generated from the parameter values specific to each scenario as given in Table 2. For those missing data simulations of scenarios (viii) to (xi) we simulated data as described for complete data, except that for each generated meta-analysis we removed the data for the second endpoint in a randomly selected 50% of studies. So, for example, with n = 50 in scenario (viii) each of the 1000 simulated meta-analyses contained 25 studies with complete data and 25 studies with data for the first endpoint only.

Results

Application to the telomerase and CD4 data

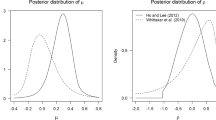

The normal model for BRMA (equation (1)) and then two separate URMAs (equation (2)) were applied to the telomerase data. Both approaches gave a pooled sensitivity of about 76% and a pooled specificity of about 88% (Table 3). The BRMA gave a between-study correlation estimate of -1 but the profile likelihood reveals that there is little information regarding ρ B with the log-likelihood gradually increasing as B approaches -1 (Figure 1), the end of its parameter space. Interestingly, the between-study variances were estimated to be somewhat larger in the BRMA than the URMA, and the standard errors of the pooled estimates were also slightly larger in the BRMA; just the opposite of what one might expect from a bivariate analysis utilising large correlation [10]. A similar finding was observed upon application of BRMA and URMA to the CD4 data. The BRMA again gave a between-study correlation estimate of -1 and both between-study variances were estimated somewhat larger in the BRMA than the URMA, as were the standard errors of the pooled estimates.

The question is thus posed: is the estimation of ρ B at the boundary of its parameter space adversely influencing the other BRMA parameter estimates and, if so, how (e.g. does it introduce bias)? Also, in terms of the individual pooled estimates, the telomerase and CD4 examples indicate little benefit of BRMA over two separate URMAs, despite the utilisation of correlation; but is this generally true and in what situations should a BRMA be preferred? To understand the answers to these important questions, it is helpful to now consider the results from our simulation study.

We note at this point that, for both telomerase and CD4, we also tried estimation of BRMA using an unstructured form of Ω, rather than using Cholesky decomposition of Ω as previously. Interestingly, this approach produced non-sensical between-study correlation estimates of -1.12 and -1.074 for the telomerase and CD4 datasets respectively. This emphasises the importance of a boundary constraint on ρ B as imposed by the Cholesky decomposition.

Simulation results

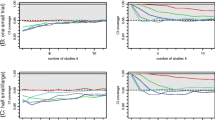

Table 4 includes the simulation results for scenarios (i), (ii), (viii) and (ix). The results for all other scenarios are provided in Appendix 1 (see Additional file 1). We now discuss the key findings.

Between-study correlation

One can see from Table 4 that the normal model for BRMA often estimates the between-study correlation, ρ B , as either +1 or -1, especially when the number of studies in the meta-analysis is small. For example, with n = 5 in scenario (ii), where the true ρ B was 0.8, 605 of the 1000 simulations (60.5%) gave B equal to +1 and 103 simulations (10.3%) gave B equal to -1. This led to a mean value of B equal to 0.639, a downward bias of about 20%. However, this downward bias is not in itself a concern because it is simply caused by the maximum likelihood estimator sensibly truncating B at +1 and -1, which improves the mean-square error of B . Furthermore, B is clearly asymptotically unbiased, with the occurrence of B equal to -1 or +1 and thus the bias in mean B becoming increasingly less as the number of studies in the meta-analysis increases (Table 4). Interestingly though, the number of studies required to reduce the occurrence of B equal to -1 or +1 was far greater when the within-study variation was large relative to the between-study variation. For example, even with a large n = 50 studies in scenario (v), where the within-study variation was relatively large, 58% of the simulations gave a between-study correlation of -1 or +1.

These findings indicate why B equals -1 in the BRMAs of the telomerase and CD4 datasets. For the telomerase data there are 10 studies; the simulations show that this magnitude of studies will often provide little information about ρ B , causing B to often be constrained at -1 or +1 so that the restrictions imposed on V are met. For the CD4 data, even though there are five more studies than telomerase, the mean within-study variance for endpoint j = 1 is 0.15 and this is large relative to the between-study variation ( = 0.048). In such situations where the within-study variation dominates, the simulations again show that B will often require truncation at the end of its parameter space to ensure V is non-negative definite.

Between-study variance estimates

Our simulation results show that the between-study variance estimates were less frequently truncated at zero in the BRMA than the URMA (Table 4). For example, in scenario (ii) with n = 5 was zero for 104 of the URMA simulations and none of the BRMA simulations. Furthermore, in those scenarios where the between-study correlation was often +1 or -1 (e.g. scenario (ii) with n = 5), the normal BRMA model produces a noticeable upward bias in the between-study variance estimates. For example, in scenario (ii) with n = 5 there was an upward bias of 0.024 in and , about 10% above their true value. To understand analytically why this occurs, we need to consider that the between-study covariance (τ 12) is formulated by τ 12 = ρ B τ 1 τ 2. Now, if B is constrained at -1 or +1, then to obtain the necessary solution for 12 the maximum likelihood estimator can only increase the j s, which do not have an upper bound constraint. Thus the between-study variance estimates are inflated to compensate for the constraint on B . This explains why the BRMAs of the telomerase and CD4 data, where B was truncated at -1, give j s that are noticeably larger than those from two separate URMAs. Practitioners need to be aware of this issue; however, we do not consider it a major concern as the maximum likelihood estimator for is still asymptotically unbiased (the bias decreases as the number of studies increases) and the inflation is simply caused by the sensible and necessary constraint on B . Furthermore, the inflation is essentially conservative, leading to a larger standard error and mean-square error of pooled estimates as now discussed.

Pooled estimates

For all complete and missing data scenarios, the pooled estimates were approximately unbiased for both BRMA and URMA. Even in those scenarios where B was often + 1 or -1 it is encouraging that, despite the upward bias in between-study variances, there was no systematic bias in the pooled estimates from the BRMA (Table 4 and Appendix 1 – see Additional file 1). The main affect on the pooled estimates was an inflated standard error and mean-square error. This is a conservative property, but meant that in some complete data scenarios the BRMA performed slightly worse than URMA, despite the utilisation of correlation in the BRMA that might be expected to improve efficiency [10]. For example, in the n = 5 results for scenario (iii), where about 56% of simulations gave B equal to +1 or -1 and there was an upward bias in between-study variances, the standard error/mean-square error of 1 was larger in the BRMA (0.272/0.083) than the URMA (0.268/0.081). This explains the BRMA results for telomerase and CD4, where the standard errors of pooled estimates were larger in the BRMA than the URMA due to the inflated between-study variances.

The coverage of β 1 and β 2 was between 93% and 98% in most scenarios assessed, and was often similar in URMA and BRMA. It is hard, though, to make general conclusions regarding coverage, as those situations where B is often +1 or -1 are the same situations where the t-distribution with n-1 degrees of freedom is a poor approximation to the true sampling distribution. The true degrees of freedom to use here are complex and account for the within-study variances [12]; however this is rarely done in meta-analysis and is beyond the scope of this paper.

BRMA versus URMA for estimating the pooled values

For complete data, in most scenarios the BRMA was marginally superior to URMA as the pooled estimates had slightly smaller standard errors and mean-square errors, especially given large correlations (Table 4 and Appendix 1 – see Additional file 1). However, the URMA sometimes performed equally well, and occasionally even better in those scenarios where B was often +1 or -1 as discussed above. We also compared the subset of BRMA results where B did not equal +1 or -1 with the corresponding URMA results, and again found that BRMA was generally slightly superior to URMA. This finding agrees with previous algebraic results [10], that given complete data there is generally a very small benefit of BRMA over URMA for estimating β 1 and β 2 themselves. Our focus here is on the individual pooled estimates, but we note that there are also broader reasons why a BRMA may be preferred over URMA for complete data. These are summarised in the Discussion to ensure a more complete picture for practitioners considering BRMA.

For the missing data simulations, the pooled estimate for endpoint j = 2 was of particular interest because of the missing data for this endpoint. Encouragingly, the mean-square error and mean standard error of 2 were much smaller in the BRMA than the URMA, although the coverage was comparable (Table 4 and Appendix 1 – see Additional file 1). For example, in the n = 10 simulations of scenario (xi) the mean standard error was 0.225 in the BRMA compared to 0.262 in the URMA, and the MSE was 0.0708 in the BRMA compared to 0.0921 in the URMA. The reduction in standard error and MSE was larger when both the within- and between-study correlations were high. Even when ρ Wi was zero there was still a reasonable benefit if ρ B was high; for example, in the n = 10 simulations of scenario (viii) the mean standard error of 2 was 0.174 in the BRMA compared to 0.209 in the URMA. This finding agrees with algebraic work regarding the benefits of BRMA for when there are data missing at random [10]. Practitioners should again consider this benefit alongside the other broader reasons for using BRMA rather than URMA (see Discussion).

Extended simulations of the normal BRMA model

In our above simulations of the normal BRMA model we used non-negative within- and between-study correlations; however, in reality negative correlations may arise as in the telomerase and CD4 examples. Also, our simulations took the within-study correlations to be the same in each study, while in reality their value may vary. Further simulations were thus performed to assess negative correlation and discrepant within-study correlations. These gave findings consistent with those identified previously (Appendix 2 – see Additional file 2); the BRMA was still beneficial over URMA for estimating the pooled endpoints, and where the between-study correlation estimate was often +1 or -1 there was again an upward bias in the BRMA between-study variance estimates.

For simplicity, in all our simulations and were generated independently but in reality they are likely to be correlated due to the sample size being similar for both endpoints. We also generated the s independent to the Y ij s, yet in many situations, such as the synthesis of log-odds ratios, the size of may be related to the size of Y ij . To address this, we also performed further simulations where we firstly generated individual binary data for diagnostic studies, using simulation code kindly provided by Chu and Cole [12]. From this realistic raw data we then calculated the Y ij s and their s, before then fitting the normal BRMA model as before. The results again show that the between-study correlation estimate is often +1 or -1 and the BRMA is still preferable to URMA, with improved mean-square error, coverage and, especially, bias of estimates (Table 5). However, the results also revealed some severe limitations of a normal model for meta-analysis of binary data, as now discussed.

A generalised model for BRMA of proportions

So far in this paper we have modelled the summary statistics across studies, i.e. the Y ij s, and assumed they are normally distributed. Indeed, in our main simulations we generated the Y ij s directly from the normal BRMA model of equation (1); thus, our conclusions are only valid for Y ij s that can truly be assumed normally distributed. This normality assumption is common in the meta-analysis field, and will often be suitable (see Discussion). However, in our two motivating examples it is more plausible for the CD4 data than the telomerase data as the latter involves a meta-analysis of proportions, for which the normality assumption is not appropriate when some studies have a small number of patients or the proportions are close to 0 or 1. For this reason recent articles [12] suggest that, rather than modelling the logit-proportions using the normal distribution, one should directly model the binary data using a binomial distribution. This approach also avoids the use of ad hoc continuity corrections in those studies which have zero cells. In terms of diagnostic studies, this generalised model for BRMA of sensitivity and specificity can be written as follows [12]:

no. testing positive i ~Binomial (total no. true positives i , sensitivity i ) logit(sensitivity i ) = β 1 + u 1

no. testing negative i ~Binomial (total no. true negatives i , specificity i ) logit(specificity i ) = β 2 + u 2

Equation (3) can be fitted using maximum likelihood estimation in SAS NLMIXED. Chu and Cole [12] show that where the true sensitivity and specificity are large, this generalised BRMA model produces close to unbiased pooled and between-study correlation estimates, whereas the general normal BRMA model produces somewhat biased estimates. This can also be seen in our simulations results for meta-analysis of proportions in Table 5. The mean-square error, coverage, and, most noticeably, bias of estimates are far superior in the generalised BRMA than the normal BRMA. Furthermore, the generalised BRMA is also marginally superior in terms of bias to two separate generalised URMAs (i.e. equation (3) where ρ B = 0), emphasising that the BRMA is also beneficial over URMA in the generalised model framework. Note though that, although it is the best method, the generalised BRMA model is itself not without bias (Table 5); to rectify this, extension to REML or other estimation techniques is potentially important.

To conclude our research we applied the generalised BRMA model to the telomerase data. Unfortunately the model would not converge appropriately; different starting values all produced a between-study correlation estimate of -1 but gave markedly different parameter estimates and caused spurious standard errors. For example, for one set of starting values the model gave the standard error of 1 as 30.5, whereas for another set the standard error was close to zero. Indeed SAS provides the following warning: 'the final Hessian matrix is not positive definite, and therefore the estimated covariance matrix is not full rank and may be unreliable'. The problem here is again due to the between-study correlation of estimate -1 in Ω, as this causes the determinant of to be zero. This has greater implications in equation (3) than for the normal BRMA model of equation (1). The maximum likelihood estimator for equation (1) involves the determinant of δ i + in each study, which will not be zero unless the within-study correlations are also +1 or -1. However, in equation (3) the maximum likelihood estimator involves the determinant of itself, which causes problems akin to dividing by zero, which is why spurious estimates and standard errors are produced. It is clear that there is simply little information to estimate the between-study correlation for the telomerase dataset, due to the small number of studies. This issue is also evident in our n = 10 simulations of the generalised BRMA model (Table 5), where 397 of the 1000 simulations did not converge appropriately. In such situations where estimating ρ B is difficult, application of two generalised URMAs may be the most appropriate option available (Table 3), although specifically for diagnostic studies other methods may also be valuable [21].

Discussion

Multivariate meta-analysis models are increasingly used to synthesise multiple, correlated endpoints of interest, especially in studies of diagnosis [5, 21] and surrogate outcomes [7, 8]. The Campbell Collaboration suggests that meta-analysts 'should not ignore the dependence among study outcomes'; however, they also note that 'the consequences of accounting for (modelling) dependence or ignoring it are not well understood' [22]. To therefore aid practitioners considering the approach, in this paper we have examined two models for BRMA and compared them to separate univariate syntheses, the traditional approach. We now discuss the main conclusions from our work and suggest future research priorities.

The general normal model for BRMA

A normal meta-analysis model is appropriate when the Y ij s can be assumed normally distributed; this assumption is commonly used, for example where the Y ij s are log-odds ratios [15], log-hazard ratios [10], mean differences [1] and log-event rates [11].

Between-study covariance parameters

It is clear from our work that maximum likelihood estimation of a normal random-effects meta-analysis model will often truncate the between-study covariance matrix, Ω, on the boundary of its parameter space. For URMA this is observed by a between-study variance estimate of zero, whilst in BRMA it is more likely observed by a between-study correlation of +1 or -1. Practitioners are likely to be familiar with the concept of zero variance, but are perhaps less likely to appreciate why a correlation is estimated at unity. However, both arise for the same reason, namely that Ω must be a non-negative definite matrix such that is not < 0 and ρ B is not > +1 or < -1. Our simulations show that, especially when the number of studies is small and/or the within-study variance is large relative to the between-study variance, such truncation is often necessary to ensure the sensible restrictions are met. In the normal BRMA, we have also shown that a consequence of B being truncated at +1 or -1 is an upward bias in between-study variance estimates, which are inflated upwards to compensate for the restriction on B . Practitioners should not, though, be overly concerned by this. We have shown it does not cause any systematic bias in the pooled estimates from BRMA, and it leads to conservative standard errors and mean-square errors.

The benefits over URMA for the pooled estimates

Our simulation results highlight that a normal model for BRMA is preferable to two separate URMAs for estimating the pooled endpoints, and our results are consistent with previous findings that show how the inclusion of correlation allows the 'borrowing of strength' across endpoints [1, 10, 19]. We thus recommend practitioners use a BRMA rather than two separate URMAs where possible. In particular, when some data are missing at random the BRMA is likely to produce a much smaller standard error and mean-square error of pooled estimates than URMA, even for moderate correlations. Riley et al. [10] give an applied example that shows this. For complete data, practitioners should not expect to see much gain in statistical efficiency over URMA; the mean-square error and standard error of pooled estimates are generally only marginally smaller in BRMA than URMA, and on the occasion of B = +1 or -1 they may even be slightly worse in BRMA (due to the inflated between-study variances, as in the telomerase and CD4 examples). However, there are broader reasons why BRMA may still be preferable in this situation (see below).

The generalised model for BRMA

In equation (3) we extended our work to a generalised BRMA model for meta-analysis of two proportions. For synthesis of two proportions, like sensitivity and specificity, this approach is preferable to the general normal BRMA model (Table 5) because the normality assumption breaks down when the proportions are close to 0 or 1 and when there are small patient numbers [12]. It also avoids the use of ad-hoc continuity corrections when there are zero cells in some studies. Practitioners synthesising diagnostic studies are thus encouraged to use the generalised BRMA model, rather than the normal model or indeed two separate generalised URMAs. However, they should also be aware that a between-study correlation estimate of +1 or -1 in the generalised BRMA model is likely to be associated with non-convergence and unstable pooled estimates, as discussed for the telomerase data. In such situations there may be little information to estimate the correlation, and so practitioners may wish to consider other methods for synthesising diagnostic studies, such as the hierarchical summary receiver operating characteristic (HSROC) method [21]; if this is also not possible then the best option may be a generalised URMA for sensitivity and specificity separately (Hamza et al., personal communication).

The broader benefits of BRMA

Our simulations focused mainly on the benefits of BRMA over URMA for estimating the pooled endpoints. However, there are also broader reasons why a BRMA may be preferable to URMA, for either complete or missing data. For example, BRMA allows one to describe the bivariate relationship between endpoints [4, 5], model, test or make predictions from their association [7], and estimate some function of the two pooled endpoints, like β 1 - β 2 [10], β 1 + β 2 [5], or β 1/β 2 [6]. For instance, for the telomerase data, a BRMA enables a single framework to estimate the pooled sensitivity, pooled specificity, and the pooled diagnostic odds ratio (exp(β 1 + β 2)) [21]. Furthermore, Reitsma et al. [5] show that a BRMA of diagnostic studies enables the correlation between pooled endpoints to be estimated, which allows one to measure the shape of their bivariate relation and construct confidence ellipses. It also allows calculation of the conditional variance in one parameter given a fixed value of the other parameter, and allows drawing of the summary ROC curve. In terms of the CD4 data, the estimated correlation between pooled endpoints from a BRMA enables one to predict the time of onset of AIDS or death from a future patient's CD4 level. Thus the BRMA can help establish whether CD4 should be used as a surrogate of disease-free survival [7, 8], and further research of multivariate meta-analysis in this context is recommended. A BRMA may also be extended to a bivariate meta-regression by including additional study-level covariates that explain the between-study heterogeneity. For example, one may wish to include a covariate for study quality in meta-analysis of diagnostic studies [23]. Berkey et al [1] show that a bivariate meta-regression is more efficient than separate univariate meta-regressions for assessing such study-level covariates, again due to the inclusion of correlation.

Further research suggestions

For the general normal BRMA model, the role of estimation techniques other than REML would be interesting to consider, especially as other potentially better options have just been proposed [24]. For the generalised BRMA model, SAS NLMIXED currently only allows maximum likelihood estimation and so extension to REML is required, especially as the maximum likelihood estimates are not without bias (Table 5). Further research of multivariate meta-analysis within a Bayesian framework is also potentially important, as it would enable the incorporation of prior knowledge about the parameters, which may be valuable when the number of studies is small [15, 25]. It would also allow the uncertainty of the s, s and ρ Wi s to be taken into account, as in practice they will only be estimates themselves as mentioned by Daniels and Hughes [7]. Further assessment of the role of the within-study correlations is also required, in particular what should we do when they are non-zero but unavailable? For the meta-analysis of surrogate endpoints it has been suggested that the within-study correlations are likely to be small (between 0 and 0.2) and can plausibly be considered constant across studies [7], or even zero [26]. However, this is not necessarily true in other fields; for example, in a multivariate meta-analysis of longitudinal data the within-study correlations varied between 0.48 and 0.97 (Jones et al., personal communication).

The use of individual patient data (IPD) in multivariate meta-analysis should also be considered, especially as IPD is the gold-standard for meta-analysis [27] and it would allow any unavailable within-study correlations to be calculated directly [7]. In practice though, IPD may only be available for a proportion of studies, and so methods for multivariate meta-analysis are required that combine IPD and aggregate data [27, 28]. There has also be little consideration of how to assess dissemination bias using a multivariate meta-analysis framework [29, 30], and this warrants attention as meta-analysis datasets are often fraught with such issues as publication bias [31] and within-study selective reporting [32, 33]. In such scenarios some of the missing endpoints may not be missing at random, and so sensitivity analyses to assess how the meta-analysis results change under a variety of missing data assumptions would be potentially valuable [34].

Conclusion

In this paper we have used analytic reasoning, two applied examples and a realistic simulation study to highlight the benefits of a normal model for BRMA over two separate URMAs, and explain why the between-study correlation is often estimated as +1 or -1. For meta-analysis of proportions, we also extended our work to a generalised model for BRMA, to ensure the binary data is modelled correctly. Our work adds to a growing body of literature indicating the rationale and benefits of a multivariate approach to meta-analysis, and we encourage meta-analysts to consider the approach in practice.

Abbreviations

- BRMA:

-

Bivariate random-effects meta-analysis

- IPD:

-

Individual patient data

- URMA:

-

Univariate random-effects meta-analysis

- REML:

-

Restricted maximum likelihood

References

Berkey CS, Hoaglin DC, Antczak-Bouckoms A, Mosteller F, Colditz GA: Meta-analysis of multiple outcomes by regression with random effects. Stat Med. 1998, 17 (22): 2537-2550. 10.1002/(SICI)1097-0258(19981130)17:22<2537::AID-SIM953>3.0.CO;2-C.

Hasselblad V: Meta-analysis of multitreatment studies. Med Decis Making. 1998, 18 (1): 37-43.

Becker BJ, Tinsley HEA, Brown S: . Multivariate Meta-analysis. 2000, San Diego , Academic Press

Van Houwelingen HC, Arends LR, Stijnen T: Advanced methods in meta-analysis: multivariate approach and meta-regression. Stat Med. 2002, 21 (4): 589-624. 10.1002/sim.1040.

Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH: Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005, 58 (10): 982-990. 10.1016/j.jclinepi.2005.02.022.

Thompson JR, Minelli C, Abrams KR, Tobin MD, Riley RD: Meta-analysis of genetic studies using Mendelian randomization--a multivariate approach. Stat Med. 2005, 24 (14): 2241-2254. 10.1002/sim.2100.

Daniels MJ, Hughes MD: Meta-analysis for the evaluation of potential surrogate markers. Stat Med. 1997, 16 (17): 1965-1982. 10.1002/(SICI)1097-0258(19970915)16:17<1965::AID-SIM630>3.0.CO;2-M.

Gail MH, Pfeiffer R, Van Houwelingen HC, Carroll RJ: On meta-analytic assessment of surrogate outcomes. Biostatistics. 2000, 1 (3): 231-246. 10.1093/biostatistics/1.3.231.

Kalaian HA, Raudenbush SW: A Multivariate Mixed Linear Model for Meta-Analysis. Psychological Methods. 1996, 1(3): 227-235. 10.1037/1082-989X.1.3.227.

Riley RD, Abrams KR, Lambert PC, Sutton AJ, Thompson JR: An evaluation of bivariate random-effects meta-analysis for the joint synthesis of two correlated outcomes. Statistics in Medicine. 2007, 26: 78-97. 10.1002/sim.2524.

Arends LR, Voko Z, Stijnen T: Combining multiple outcome measures in a meta-analysis: an application. Stat Med. 2003, 22 (8): 1335-1353. 10.1002/sim.1370.

Chu H, Cole SR: Bivariate meta-analysis for sensitivity and specificity with sparse data: a generalized linear mixed model approach (letter to the Editor). Journal of Clinical Epidemiology. 2006, (in press):

Glas AS, Roos D, Deutekom M, Zwinderman AH, Bossuyt PM, Kurth KH: Tumor markers in the diagnosis of primary bladder cancer. A systematic review. J Urol. 2003, 169 (6): 1975-1982. 10.1097/01.ju.0000067461.30468.6d.

Hardy RJ, Thompson SG: A likelihood approach to meta-analysis with random effects. Stat Med. 1996, 15 (6): 619-629. 10.1002/(SICI)1097-0258(19960330)15:6<619::AID-SIM188>3.0.CO;2-A.

Nam IS, Mengersen K, Garthwaite P: Multivariate meta-analysis. Stat Med. 2003, 22 (14): 2309-2333. 10.1002/sim.1410.

Berrington A, Cox DR: Generalized least squares for the synthesis of correlated information. Biostatistics. 2003, 4 (3): 423-431. 10.1093/biostatistics/4.3.423.

Raudenbush SW, Becker BJ, Kalaian H: Modeling multivariate effect sizes. Psychological Bulletin. 1988, 103 (1): 111-120. 10.1037/0033-2909.103.1.111.

Gentle JE, Gentle JE: Cholesky Factorization. Numerical Linear Algebra for Applications in Statistics. 1998, Berlin , Springer-Verlag, 93-95.

Sohn SY: Multivariate meta-analysis with potentially correlated marketing study results. Naval Research Logistics. 2000, 47: 500-510. 10.1002/1520-6750(200009)47:6<500::AID-NAV3>3.0.CO;2-Z.

Follmann DA, Proschan MA: Valid inference in random effects meta-analysis. Biometrics. 1999, 55 (3): 732-737. 10.1111/j.0006-341X.1999.00732.x.

Harbord RM, Deeks JJ, Egger M, Whiting P, Sterne JA: A unification of models for meta-analysis of diagnostic accuracy studies. Biostatistics. 2006

Becker BJ, Hedges LV, Pigott TD: Campbell Collaboration Statistical Analysis Policy Brief. A Campbell Collaboration resource document (available at http://wwwcampbellcollaborationorg/ECG/policy_statasp). 2004

Westwood ME, Whiting PF, Kleijnen J: How does study quality affect the results of a diagnostic meta-analysis?. BMC Med Res Methodol. 2005, 5 (1): 20-10.1186/1471-2288-5-20.

Sidik K, Jonkman JN: A comparison of heterogeneity variance estimators in combining results of studies. Stat Med (in press).

Abrams KR, Sutton AJ, Cooper NJ, Sculpher MPS, Ginlley L, Robinson M: Populating economic decision models using meta-analyses of heterogenously reported studies augmented with expert beliefs. Technical Report 05-06, Centre for Biostatistics and Genetic Epidemiology, University of Leicester. 2005

Korn EL, Albert PS, McShane LM: Assessing surrogates as trial endpoints using mixed models. Stat Med. 2005, 24 (2): 163-182. 10.1002/sim.1779.

Riley RD, Look MP, Simmonds MC: Combining individual patient data and aggregate data in evidence synthesis: a systematic review identified current practice and possible methods. Journal of Clinical Epidemiology (in press). 2007

Goldstein H, Yang M, Omar RZ, Turner RM, Thompson SG: Meta-analysis using multilevel models with an application to the study of class size effects. Applied Statistics. 2000, 49: 399-412.

Riley RD, Sutton AJ, Abrams KR, Lambert PC: Sensitivity analyses allowed more appropriate and reliable meta-analysis conclusions for multiple outcomes when missing data was present. Journal of Clinical Epidemiology. 2004, 57(9): 911-924. 10.1016/j.jclinepi.2004.01.018.

Jackson D, Copas J, Sutton AJ: Modelling reporting bias: the operative mortality rate for ruptured abdominal aortic aneurysm repair . Journal of the Royal Statistical Society, Series A. 2005, 168: 737-752.

Sterne JA, Egger M, Smith GD: Systematic reviews in health care: Investigating and dealing with publication and other biases in meta-analysis. BMJ. 2001, 323 (7304): 101-105. 10.1136/bmj.323.7304.101.

Hahn S, Williamson PR, Hutton JL, Garner P, Flynn EV: Assessing the potential for bias in meta-analysis due to selective reporting of subgroup analyses within studies. Stat Med. 2000, 19 (24): 3325-3336. 10.1002/1097-0258(20001230)19:24<3325::AID-SIM827>3.0.CO;2-D.

Hutton JL, Williamson PR: Bias in meta-analysis due to outcome variable selection within studies. Appl Stat. 2000, 49: 359-370.

Copas J, Shi JQ: Meta-analysis, funnel plots and sensitivity analysis. Biostatistics. 2000, 1 (3): 247-262. 10.1093/biostatistics/1.3.247.

Pre-publication history

The pre-publication history for this paper can be accessed here:http://www.biomedcentral.com/1471-2288/7/3/prepub

Acknowledgements

Richard Riley is funded by the Department of Health National Coordinating Centre for Research Capacity Development as a Post-doctoral Research Scientist in Evidence Synthesis. We would like to thank the three reviewers of our manuscript, whose comments have substantially improved the content of this paper. We also greatly thank Haitao Chu, who kindly provided SAS simulation code that enabled us to assess the generalised bivariate model.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

RDR, KRA, AJS and PCL developed the study. RDR performed analysis of the telomerase and CD4 data. RDR, PL and JRT performed the normal model simulations; RDR performed the generalized model simulations. All authors examined the simulation results and discussed their interpretations. RDR wrote the paper with contributions from all authors.

Electronic supplementary material

12874_2006_186_MOESM1_ESM.doc

Additional file 1: Appendix 1. Simulation results of the normal BRMA and URMA models for scenarios (iii) to (vii), (x) and (xi) (DOC 150 KB)

12874_2006_186_MOESM2_ESM.doc

Additional file 2: Appendix 2. Simulation results for the normal BRMA and URMA models for some scenarios involving negative correlation (DOC 86 KB)

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Riley, R.D., Abrams, K.R., Sutton, A.J. et al. Bivariate random-effects meta-analysis and the estimation of between-study correlation. BMC Med Res Methodol 7, 3 (2007). https://doi.org/10.1186/1471-2288-7-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1471-2288-7-3