Abstract

Background

The use of systematic literature review to inform evidence-based practice in diagnostics is rapidly expanding. Although the primary diagnostic literature is extensive, studies are often of low methodological quality or poorly reported. There has been no rigorously evaluated, evidence-based tool to assess the methodological quality of diagnostic studies.

The primary objective of this study was to determine the extent to which variations in the quality of primary studies impact the results of a diagnostic meta-analysis and whether this differs with diagnostic test type. A secondary objective was to contribute to the evaluation of QUADAS, an evidence-based tool for the assessment of quality in diagnostic accuracy studies.

Methods

This study was conducted as part of a large systematic review of tests used in the diagnosis and further investigation of urinary tract infection (UTI) in children. All studies included in this review were assessed using QUADAS, an evidence-based tool for the assessment of quality in systematic reviews of diagnostic accuracy studies. The impact of individual components of QUADAS on a summary measure of diagnostic accuracy was investigated using regression analysis. The review divided the diagnosis and further investigation of UTI into the following three clinical stages: diagnosis of UTI, localisation of infection, and further investigation of the UTI. Each stage used different types of diagnostic test, which were considered to involve different quality concerns.

Results

Many of the studies included in our review were poorly reported. The proportion of QUADAS items fulfilled was similar for studies in different sections of the review. However, as might be expected, the individual items fulfilled differed between the three clinical stages. Regression analysis found that different items showed a strong association with test performance for the different tests evaluated. These differences were observed both within and between the three clinical stages assessed by the review. The results of regression analyses were also affected by whether or not a weighting (by sample size) was applied. Our analysis was severely limited by the completeness of reporting and the differences between the index tests evaluated and the reference standards used to confirm diagnoses in the primary studies. Few tests were evaluated by sufficient studies to allow meaningful use of meta-analytic pooling and investigation of heterogeneity. This meant that further analysis to investigate heterogeneity could only be undertaken using a subset of studies, and that the findings are open to various interpretations.

Conclusion

Further work is needed to investigate the influence of methodological quality on the results of diagnostic meta-analyses. Large data sets of well-reported primary studies are needed to address this question. Without significant improvements in the completeness of reporting of primary studies, progress in this area will be limited.

Background

The use of systematic literature review to inform evidence-based practice in diagnostics is rapidly expanding. Although the primary diagnostic literature is extensive, there remain a number of problems for systematic reviews of diagnostic tests. Appropriate methods for rigorous evaluation of diagnostic technologies have been well established [1–5]. However, available studies have generally been poorly designed and reported [6–8]. Similarly, although a number of quality checklists for diagnostic accuracy studies have been proposed [9] and there is growing evidence on the effects of bias in such studies [10], there has been no rigorously evaluated, evidence-based quality assessment tool for diagnostic studies.

The objective of this study was to investigate the impact of quality on the results of a diagnostic meta-analysis, using regression analysis. A large diagnostic systematic review was required to enable the use of regression analysis to investigate the impact of components of quality upon results.

We have recently completed a systematic review, which aimed to determine the most appropriate pathway for the diagnosis and further investigation of UTI in children [11]. It included an assessment of the accuracy of tests for three different clinical stages of UTI: the diagnosis of UTI, localisation of infection, and further investigation of patients with confirmed UTI. The nature of the tests included in these three clinical sections of the review differed. Tests used to diagnose UTI were generally laboratory-based or near-patient methods, with relatively objective interpretation of results, e.g. dipstick tests and microscopy. By contrast, tests used to investigate confirmed UTI mainly utilised imaging technologies, which are largely subjective in their interpretation and for which diagnostic thresholds are difficult to define. Tests used to localise infection spanned both categories. We hypothesised that the components of methodological quality affecting results were likely to differ between the three sections of the review. Such potential differences may indicate a need for topic-specific checklists for the assessment of quality in diagnostic studies.

A secondary aim of this study was to contribute to the evaluation of QUADAS, an evidence-based tool for the assessment of the quality of diagnostic accuracy studies that was specifically developed for use in systematic reviews of diagnostic tests [12], by investigating the importance of specific QUADAS items.

Methods

We used QUADAS [12] (Table 1) to assess the quality of primary studies included in the review. Items were rated as 'yes', 'no', or 'unclear'. We examined differences in the individual QUADAS items fulfilled, as well as their impact on test performance. The review divided the diagnosis and further investigation of UTI into the following three clinical stages: diagnosis of UTI, localisation of infection, and further investigation of the UTI. Each stage used different types of diagnostic test, which were considered to involve different quality concerns.

We analysed results grouped by clinical stage. Within these groups, we pooled studies of similar tests or test combinations where sufficient data were available and where pooling was clinically meaningful (Table 2). The minimum number of studies that we required for regression analysis was ten, a choice based on published guidance [13, 14].

We estimated summary receiver operating characteristic (SROC) curves using the following regression model [15], in which a and b were estimated by regressing D against S across studies:

D = a + bS

D = {logit (sensitivity) - logit (1-specificity)} = log diagnostic odds ratio (DOR)

S = {logit (sensitivity) + logit (1-specificity)}
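The fitted line can be re-expressed as a curve in ROC space. The rearrangement below is a sketch derived only from the definitions of D and S given above, assuming b ≠ 1; it is not a formula reproduced from [15].

```latex
\begin{aligned}
\operatorname{logit}(\text{sens}) - \operatorname{logit}(1-\text{spec})
  &= a + b\,\bigl[\operatorname{logit}(\text{sens}) + \operatorname{logit}(1-\text{spec})\bigr] \\
(1-b)\,\operatorname{logit}(\text{sens})
  &= a + (1+b)\,\operatorname{logit}(1-\text{spec}) \\
\operatorname{logit}(\text{sens})
  &= \frac{a}{1-b} + \frac{1+b}{1-b}\,\operatorname{logit}(1-\text{spec})
\end{aligned}
```

When b = 0, D = a for every study, so the log DOR is constant along the curve.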

We used both weighted and unweighted models. For the weighted model we weighted on sample size. We chose to weight on sample size rather than inverse variance, a method sometimes used in this type of analysis, as we believe that weighting on the inverse variance can produce biased results. The reason for this bias is that the DOR is associated with its variance and so large DORs will inevitably have large variances, which will be reflected in the weightings.
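The weighted and unweighted fits described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the review: the 2x2 tables are hypothetical, the function names are ours, and the 0.5 continuity correction is an assumption that the text does not mention.

```python
import math

def logit(p):
    """Log odds of a proportion p (0 < p < 1)."""
    return math.log(p / (1.0 - p))

def d_and_s(tp, fp, fn, tn, cc=0.5):
    """Return (D, S) for one 2x2 table.

    cc is a continuity correction added to every cell (an assumption,
    used here only to avoid division by zero in sparse tables).
    """
    tp, fp, fn, tn = tp + cc, fp + cc, fn + cc, tn + cc
    sens = tp / (tp + fn)
    fpr = fp / (fp + tn)  # 1 - specificity
    return logit(sens) - logit(fpr), logit(sens) + logit(fpr)

def fit_line(xs, ys, weights=None):
    """Least-squares fit of y = a + b*x; equal weights if none are given."""
    if weights is None:
        weights = [1.0] * len(xs)
    w_sum = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, xs)) / w_sum
    y_bar = sum(w * y for w, y in zip(weights, ys)) / w_sum
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - x_bar) * (y - y_bar)
              for w, x, y in zip(weights, xs, ys))
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b

# Hypothetical studies as (TP, FP, FN, TN); not data from the review.
studies = [(45, 10, 5, 140), (30, 25, 20, 225), (18, 4, 12, 66), (60, 30, 15, 395)]
pairs = [d_and_s(*table) for table in studies]
D = [p[0] for p in pairs]
S = [p[1] for p in pairs]
sample_sizes = [sum(table) for table in studies]

a_unweighted, b_unweighted = fit_line(S, D)
a_weighted, b_weighted = fit_line(S, D, weights=sample_sizes)
```

Passing inverse variances instead of `sample_sizes` would give the alternative weighting discussed above, which down-weights studies with large DORs.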

We assessed between-study heterogeneity through visual examination of forest plots and statistically using the Q statistic [16]. Where sufficient data were available, we used regression analysis to investigate whether individual QUADAS items, and additional variables thought likely to be associated with diagnostic accuracy, were associated with the DOR, and hence whether differences in these items between the studies accounted for some of the observed heterogeneity. Where data were available, the following additional variables were investigated:
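As an illustration of the Q statistic mentioned above, the sketch below computes Cochran's Q on study-level log DORs with inverse-variance weights. The text does not state which effect measure Q was calculated on, so the use of the log DOR, the 0.5 continuity correction, and the function names are all assumptions.

```python
import math

def log_dor_and_variance(tp, fp, fn, tn, cc=0.5):
    """Log diagnostic odds ratio and its approximate variance for one study.

    cc is a continuity correction added to every cell (an assumption).
    """
    tp, fp, fn, tn = tp + cc, fp + cc, fn + cc, tn + cc
    log_dor = math.log((tp * tn) / (fp * fn))
    variance = 1 / tp + 1 / fp + 1 / fn + 1 / tn
    return log_dor, variance

def cochran_q(estimates, variances):
    """Return (Q, degrees of freedom) using inverse-variance weights."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, estimates))
    return q, len(estimates) - 1

# Hypothetical studies as (TP, FP, FN, TN); not data from the review.
studies = [(45, 10, 5, 140), (30, 25, 20, 225), (18, 4, 12, 66)]
results = [log_dor_and_variance(*table) for table in studies]
q, df = cochran_q([r[0] for r in results], [r[1] for r in results])
```

Under the null hypothesis of homogeneity, Q is referred to a chi-squared distribution with `df` degrees of freedom.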

Patient age (<2 years, <5 years, <12 years and <18 years) was included to examine possible variation with age within the paediatric population.

The geographic region where studies were conducted was included to account for possible regional differences in test technology and infective agent.

Specific variations in index test technique were also included. For microscopy for pyuria and bacteriuria a variable on whether the sample was centrifuged was included, and for microscopy for bacteriuria a variable for Gram stain was included. For ultrasound for the detection of reflux a variable for whether or not the ultrasound involved a contrast agent was included.

The SROC model [15] was extended to include each of the 14 QUADAS items and each of the variables above as individual covariates [17]. As each QUADAS item can be scored as "yes", "no" or "unclear", we included QUADAS items as categorical variables with three possible outcomes, thus including the comparisons of "yes vs no" and "yes vs unclear". This allowed us to make some distinction between associations of aspects of methodological quality with test performance and associations of completeness of reporting with test performance. A number of QUADAS items only received two of the three possible scores (i.e. were scored either "yes" or "no", or "yes" or "unclear", or "no" or "unclear"). These items were therefore included as dichotomous variables.
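The coding of QUADAS items described above can be sketched as indicator (dummy) variables. The function below is hypothetical: it takes the first observed level, in the order "yes", "no", "unclear", as the reference category, which is an assumption because the text does not state which level served as the reference.

```python
def code_quadas_item(scores, order=("yes", "no", "unclear")):
    """Convert one item's ratings into indicator columns.

    Items rated with all three levels give two indicators; items rated
    with only two levels give a single (dichotomous) indicator; items
    with one level give none and cannot be analysed.
    """
    observed = [level for level in order if level in scores]
    reference, others = observed[0], observed[1:]
    return {
        f"{reference}_vs_{level}": [1 if s == level else 0 for s in scores]
        for level in others
    }

# Hypothetical ratings of one item across five studies.
three_level = code_quadas_item(["yes", "no", "unclear", "yes", "no"])
two_level = code_quadas_item(["yes", "unclear", "unclear", "yes", "yes"])
```

Each indicator column would then enter the regression of D on S as an additional covariate.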

A multivariate linear regression analysis was conducted. Initially, we performed univariate analysis with all items included separately in the model. Items that showed moderate evidence of an association with D, defined as p < 0.10, were investigated further using step-down regression analysis. All items found to show moderate evidence of an association in the univariate models were entered into the multivariate model, then dropped in a step-wise fashion with the item with the weakest evidence of an association (largest p-value) dropped first. For covariates with more than one level, evidence of an association of one indicator variable with test performance was considered sufficient for inclusion in the model. The final model was achieved when all items remaining showed strong evidence of an association with D, defined as p < 0.05. Interaction terms were not included. Associations of covariates with D were expressed as relative diagnostic odds ratios (RDOR). The DOR is used as an overall measure of diagnostic accuracy. It is calculated as the odds of positivity among diseased persons, divided by the odds of positivity among non-diseased. When a test provides no diagnostic evidence then the DOR is 1.0. The RDOR is calculated as the DOR when the covariate is present divided by the DOR when the covariate is absent. It therefore provides an indicator of the overall impact on diagnostic accuracy of the presence of a given covariate.
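The definitions of the DOR and RDOR given above can be illustrated with a short sketch. Because D is the log DOR, the coefficient of an indicator covariate is a difference in log DORs, so exponentiating it gives the RDOR. The function names are ours, and the confidence interval uses a normal approximation (z = 1.96), which is an assumption rather than the method reported in the text.

```python
import math

def diagnostic_odds_ratio(tp, fp, fn, tn):
    """Odds of a positive result in diseased divided by odds in non-diseased."""
    odds_positive_diseased = tp / fn
    odds_positive_non_diseased = fp / tn
    return odds_positive_diseased / odds_positive_non_diseased

def rdor_from_coefficient(beta, se, z=1.96):
    """RDOR and approximate 95% CI from a regression coefficient on the log DOR scale."""
    return math.exp(beta), (math.exp(beta - z * se), math.exp(beta + z * se))

# Hypothetical pooled DORs with and without a covariate present.
dor_present, dor_absent = 12.0, 4.0
rdor = dor_present / dor_absent  # 3.0: accuracy is higher when the covariate is present

# The same RDOR recovered from the difference in log DORs.
rdor_check, _ = rdor_from_coefficient(math.log(dor_present) - math.log(dor_absent), se=0.0)
```

An RDOR of 1.0 indicates that the covariate is not associated with overall accuracy.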

Results

Results of QUADAS assessment

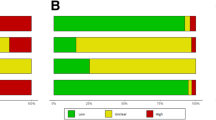

The proportion of QUADAS items fulfilled by studies included in our systematic review was similar for each of the three clinical stages assessed in the review. Studies evaluating tests to diagnose UTI fulfilled a median of 8 (range 5–13) items, those evaluating tests used to localise infection also fulfilled a median of 8 (range 3–13) items, and those evaluating further investigations fulfilled a median of 7.5 (range 3–12) items. Figure 1 illustrates the number of QUADAS items fulfilled by studies in each category. The similarity in numbers of QUADAS items fulfilled masks apparent differences in the individual items fulfilled.

Figure 2 shows the proportion of studies that scored "yes", "no" and "unclear" for each of the QUADAS items, separately for the three sections of the review.

Tests for the diagnosis of UTI (n = 79 studies) [18–96]

The use of an inappropriate spectrum of patients and inadequate reporting of inclusion criteria were problematic for studies in this category. The majority of studies provided insufficient details on how the reference standard was performed. Studies failed to report sufficient details on clinical review bias, diagnostic review bias and test review bias to judge whether these were avoided. Study withdrawals and handling of uninterpretable results were also poorly reported.

Tests for the localisation of infection (n = 39 studies) [48, 69, 97–133]

The time delay between the index test and reference standard was more of a problem with these studies than with those on the diagnosis of UTI. The use of an appropriate reference standard was also an issue in some of these studies. Spectrum composition and reporting of details of how children were selected for inclusion in the study were better in these studies than in the studies of the diagnosis of UTI. Only around half of studies provided sufficient details of how the index test and reference standard were performed to allow replication of these tests. More studies in this category, almost 40%, provided information indicating that test and diagnostic review bias had been avoided; in the remainder of studies this information was not reported. As with studies of the diagnosis of UTI, reporting of clinical review bias, handling of uninterpretable results, and withdrawals from the study was poor.

Tests for the further investigation of confirmed UTI (n = 71 studies) [134–204]

As with studies of the diagnosis of UTI, spectrum composition and reporting of inclusion criteria were poor in this group. The time delay between the index test and reference standard was also an issue in many of these studies. Around half of studies reported that diagnostic and test review bias had been avoided; the remaining studies did not report whether the index test and reference standard were interpreted blind to the results of each other. This was similar to the situation seen for studies on the localisation of infection. Reporting of the reference standard was poor. As in the previous groups, studies also provided very little information on whether appropriate clinical information was available when test results were interpreted, how uninterpretable results were handled, and whether there were any withdrawals from the study and, if so, whether all withdrawals were accounted for.

Results of multivariate regression analysis

Tests for the diagnosis of UTI

Tests involving dipstick or microscopy techniques were the only categories where enough studies were available to enable regression analysis. Table 3 summarises the results of the regression analysis for studies assessing dipstick tests. For dipstick to detect urinary nitrite (23 studies) [20, 26, 28, 34, 36, 40, 41, 43, 52, 54–57, 60, 63, 66, 72, 74, 84, 88, 93–95], the weighted analysis found that studies reporting that clinical review bias had been avoided had a DOR 4.7 (95% CI: 1.7, 12.7) times greater than those which did not report on whether clinical information was available to those interpreting the test results (p = 0.004). This is what would be expected, as the DOR is likely to be higher when those interpreting test results have access to appropriate clinical information similar to that which would be available in practice. No studies reported the presence of clinical review bias. This was the only item investigated to show strong evidence of an association with test performance in the weighted multivariate analysis, although age and geographic region did show moderate evidence of an association in the univariate analysis. The unweighted analysis showed slightly different results. The same three items were found to show at least moderate evidence of an association in the univariate analysis. However, only geographic region remained in the multivariate model, suggesting that studies conducted in North America showed higher accuracy than studies conducted in Europe or other areas (p < 0.05).

For dipsticks measuring urinary leukocyte esterase (14 studies) [20, 28, 34, 36, 43, 56, 57, 60, 63, 66, 72, 84, 94, 95] and for dipsticks for the presence of either nitrite or leukocyte esterase (15 studies) [19–21, 28, 34, 56, 60, 63, 66, 84–86, 92, 94–96], no items showed strong evidence of an association with the DOR in the weighted analysis. However, for urinary leukocyte esterase, the unweighted analysis found strong evidence of an association between patient age and the DOR. There was strong evidence (p = 0.015) that the dipstick was more accurate in children aged <12 years than in those aged <2 years (RDOR = 28.1, 95% CI: 2.3, 343.3). There was no evidence of any difference in accuracy between children aged <18 years and those aged <2 years (p = 0.703), and very little evidence of any difference between children aged <5 years and those aged <2 years (p = 0.158).

Table 4 summarises the results of the regression analysis for studies that assessed the accuracy of microscopy. In studies evaluating microscopy to detect pyuria, three items showed a strong association with test performance in the weighted analysis (28 studies) [19–23, 28, 29, 34, 35, 41, 43, 46, 47, 49, 50, 58, 59, 63, 67, 70, 75, 77, 80, 81, 83, 85, 92–94]. The DOR was 1.3 (95% CI: 1.1, 1.6; p = 0.007) times higher in studies that adequately reported details of the reference standard execution. The DOR was lower, RDOR = 0.2 (95% CI: 0.1, 0.4; p < 0.001), in studies that did not report on reasons for withdrawals compared to studies in which it was unclear whether there were any withdrawals, and 1.8 times higher (95% CI: 1.0, 3.4; p = 0.056) in studies in which withdrawals were accounted for compared to those in which this was unclear. The DOR was lower, RDOR = 0.2 (95% CI: 0.1, 0.3; p < 0.001), in studies where samples were centrifuged compared to studies in which samples were not centrifuged. In the unweighted analysis, only centrifugation showed any evidence of an association with test performance (p = 0.08). All of these items, with the exception of centrifugation, relate to the completeness of reporting. The association for centrifugation is counter-intuitive, as we would expect centrifugation of the sample to lead to improved test accuracy.

Two items showed strong evidence of an association with the DOR in the weighted analysis of studies evaluating microscopy to detect bacteriuria (22 studies) [20, 21, 23, 28, 34, 35, 41, 47, 50, 61–64, 67, 70, 76, 77, 80, 85, 90, 91, 94]. The DOR was 3.0 (95% CI: 1.6, 5.5, p = 001) times greater in studies in which incorporation bias was present compared to those in which it was avoided, and 5.3 (95% CI: 2.3, 12.0, p = 0.001) times greater if samples were Gram stained. We would expect both Gram staining and the presence of incorporation bias to increase test performance, as found in the analysis. The unweighted analysis found very similar results.

Tests for the localisation of infection

Only the evaluation of ultrasound for the localisation of infection provided sufficient data to enable the conduct of regression analysis (20 studies) [48, 69, 97, 99–102, 109–111, 113–115, 117, 118, 121, 126, 128, 132, 133]. None of the QUADAS items, or other items investigated, showed moderate evidence of an association with the DOR in this analysis, using either the weighted or unweighted model.

Tests for the further investigation of confirmed UTI

Table 5 summarises the results of the regression analysis for studies assessing this clinical stage. The use of ultrasound to detect reflux was the only test in this category with sufficient data to support regression analysis (28 studies) [69, 135, 137, 140, 141, 150, 152, 153, 155, 164, 169, 170, 172, 176–178, 181, 185, 187, 189, 190, 195–198, 202–204]. Three items showed strong evidence of an association with the DOR in the weighted analysis. The DOR was 8.0 (95% CI: 2.9, 22.0; p < 0.001) times greater in studies that used contrast-enhanced ultrasound compared to those that used standard ultrasound. As this was also thought to be a clinically important variable, it was included in all further analyses. The DOR was 1.4 (95% CI: 1.0, 1.9; p = 0.033) times higher in studies that reported that disease progression bias had been avoided compared to those in which this information was not reported. No studies reported sufficient information to determine that disease progression bias was present. Studies in which details were provided on reasons for withdrawals had DORs that were 2.8 times higher (95% CI: 1.1, 6.9, p = 0.033) than those in which it was unclear whether there had been any withdrawals. There was no evidence of any difference in the DOR between studies that did not report on reasons for withdrawals and studies in which it was unclear whether there were any withdrawals (p = 0.502). In the unweighted analysis, only two items showed strong evidence of an association with the DOR. As in the weighted analysis, there was very strong evidence that the DOR was higher in studies that used contrast-enhanced ultrasound than in those that used standard ultrasound (RDOR = 29.8, 95% CI: 13.5, 65.8, p < 0.001). Studies in which partial verification bias was avoided had DORs 4.1 times higher (95% CI: 1.1, 14.8) than those in which it was not (p = 0.034).

Discussion

The methodological quality of primary studies remains a significant issue for systematic reviews of diagnostic tests [8, 205, 206]. The STARD initiative has provided clear guidance for the reporting of diagnostic accuracy studies [5]. This should have a positive impact on the quality of the diagnostic literature in the future. The QUADAS tool facilitates systematic evaluation of the quality of diagnostic accuracy studies, and was specifically developed for use in systematic reviews of diagnostic tests [12]. However, where studies are poorly reported the information that can be derived from quality assessment becomes limited. We cannot know whether an unreported QUADAS item reflects a true methodological flaw or poor reporting of a study that may be methodologically sound. Many of the studies included in our review were poorly reported. Our assessment of the impact of components of methodological quality on diagnostic accuracy may therefore partially reflect completeness of reporting. Whilst poor reporting remains a widespread problem, it is almost impossible to assess the impact of components of methodological quality on the results of diagnostic meta-analyses.

The common practice of using summary quality scores in systematic reviews has been widely debated elsewhere [207–209]. Summary scores, when used to inform quality-based analyses, may mask important effects of individual quality components [210]. As we report, the numbers of QUADAS items that were adequately addressed by studies included in our review were similar between the three clinical stages assessed in the review. Had the number of QUADAS items fulfilled been used as a summary score, potentially important variations in the individual items fulfilled would have been hidden. We therefore advocate that components of quality assessment should be reported fully, and their impact on outcome measures analysed individually rather than as summary scores.

Although ours was a large review (it included 187 studies reporting 487 data sets), our analysis of the impact of methodological quality on diagnostic accuracy was severely limited both by the diversity of the included studies (few tests were evaluated by sufficient studies to allow meaningful use of meta-analytic pooling and investigation of heterogeneity) and by incomplete reporting. All of the data sets used were sub-optimal, in that the numbers of observations were low in comparison to the number of variables investigated in the multivariate analyses [13]. Although different types of diagnostic tests were evaluated in the three clinical stages used by the review, generalisability is limited in that all data concerned a single condition (UTI). A number of the items found to be associated with test performance related to specific test methodologies (e.g. Gram stain and contrast-enhanced ultrasound) and have no generalisability elsewhere. These items were found to show an association in both the weighted and unweighted analyses.

For the individual quality items there were some differences between the results of the weighted and unweighted analyses. In general, the results of weighted analyses showed more intuitive associations. Unweighted analyses more often produced results that were difficult to explain. For example, in leukocyte esterase dipstick tests the unweighted analysis found that the test was more accurate in children aged <12 years than in those aged <2 years. This might be expected and would probably reflect a higher likelihood of sample contamination in younger children; however, no difference in accuracy was found between children aged <18 years and those aged <2 years. For tests on both the diagnosis and the further investigation of UTI, weighted analyses showed an association between a number of variables relating to quality of reporting and diagnostic accuracy (well-reported studies had higher DORs). We might expect this association to extend to diagnostic accuracy studies of all types of tests, but the present study is not adequate to demonstrate this. Weighted analysis of studies of ultrasound for the detection of reflux showed that the DOR was higher where studies reported information to determine that disease progression bias had been avoided. Disease progression bias is a particular issue for imaging studies of this type, where follow-up examinations (used as the reference standard of diagnosis) may be scheduled some time after ultrasound (usually the initial examination). This association was not shown in the unweighted analysis.

The information derived from these analyses is also limited by the use of the summary ROC approach to pool studies. This method takes the DOR as the dependent variable. The DOR is used as a single indicator of test performance and shows how much more frequently a positive test result occurs in a person with the condition of interest than in one without the condition, relative to how much more frequently a negative result occurs in a person without the condition than in one with the condition. Using the DOR to investigate heterogeneity means that we cannot assess whether the factors investigated are associated with paired measures of diagnostic accuracy, such as sensitivity and specificity, or positive and negative likelihood ratios. Often factors that lead to an increase in sensitivity will lead to a decrease in specificity and vice versa. Factors that lead to this pattern of change may have no effect on an overall measure such as the DOR. Using the DOR to investigate heterogeneity may thus miss relevant clinical associations. Recently a new method for pooling sensitivity and specificity has been developed. This method is known as the "bivariate model" [211]. It preserves the underlying two-dimensional nature of the data and produces direct pooled estimates of sensitivity and specificity, incorporating any correlation that might exist between these two measures. The model can be extended to include explanatory variables leading to separate effects on sensitivity and specificity. This method has two advantages over the standard methods: (1) the pooled estimates of sensitivity and specificity take into account the correlation between these two measures; (2) the effect of possible sources of heterogeneity on both sensitivity and specificity can be investigated in a single model rather than just looking at the effect of these variables on a single measure of test performance, the DOR. These methods may have potential applications in future studies of this type.

Conclusion

Given the limitations we describe, the results of this study should be treated as hypothesis generating. Further work is needed to elucidate the influence of components of the methodological quality of primary studies on the results of diagnostic meta-analyses. Large data sets of well-reported primary studies are needed to address this question. Without significant improvements in the reporting of primary studies, progress in this area will be limited. The components of quality assessment should always be reported, and their impact on summary outcome measures be investigated, individually rather than as summary quality scores. Careful consideration should be given to the choice of weighting when conducting regression analyses. Weighting by sample size appears the most appropriate method for analyses of diagnostic accuracy studies, but this area requires further investigation.

References

Deeks JJ: Using evaluations of diagnostic tests: understanding their limitations and making the most of the available evidence. Annals of Oncology. 1999, 10: 761-768. 10.1023/A:1008359805260.

Sackett DL, Haynes RB: The architecture of diagnostic research. BMJ. 2002, 324: 539-541. 10.1136/bmj.324.7336.539.

Irwig L, Bossuyt PMM, Glasziou P, Gatsonis C, Lijmer JG: Designing studies to ensure that estimates of test accuracy are transferable. BMJ. 2002, 324: 669-671. 10.1136/bmj.324.7338.669.

Knottnerus JA, Muris JW: Assessment of the accuracy of diagnostic tests: the cross-sectional study. Journal of Clinical Epidemiology. 2003, 56: 1118-1128. 10.1016/S0895-4356(03)00206-3.

Bossuyt PMM, Reitsma JB, Bruns DE, Gatsonis C, Glasziou P, Irwig L, Moher D, Rennie D, de Vet HCW, Lijmer JG: The STARD statement for reporting studies of diagnostic accuracy: Explanation and elaboration. Annals of Internal Medicine. 2003, 138: W1-W12.

Sheps SB, Schechter MT: The assessment of diagnostic tests. A survey of current medical research. JAMA. 1984, 252: 2418-2422. 10.1001/jama.252.17.2418.

Reid MC, Lachs MS, Feinstein AR: Use of methodological standards in diagnostic test research. Getting better but still not good. JAMA. 1995, 274: 645-651. 10.1001/jama.274.8.645.

Revicki DA, Yabroff KR, Shikiar R: Outcomes research in radiologic imaging: Identification of barriers and potential solutions. Academic Radiology. 1999, 6: S20-S28.

Whiting P, Rutjes AWS, Dinnes J, Reitsma JB, Bossuyt PM, Kleijnen J: A systematic review finds that diagnostic reviews fail to incorporate quality despite available tools. J Clin Epidemiol. 2005, 58: 1-12. 10.1016/j.jclinepi.2004.04.008.

Whiting P, Rutjes AWS, Reitsma JB, Glas AS, Bossuyt PM, Kleijnen J: Sources of Variation and Bias in Studies of Diagnostic Accuracy: A Systematic Review. Annals of Internal Medicine. 2004, 140: 189-202.

Whiting P, Westwood M, Ginnelly L, Palmer S, Richardson G, Cooper J, Watt I, Glanville J, Sculpher M, Kleijnen J: A systematic review of tests for the diagnosis and evaluation of urinary tract infection (UTI) in children under five years. Health Technology Assessment. In press.

Whiting P, Rutjes AWS, Reitsma JB, Bossuyt PM, Kleijnen J: The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003, 3: 25. 10.1186/1471-2288-3-25.

Altman DG: Practical statistics for medical research. 1991, London, Chapman & Hall

Vamvakas EC: Meta-analyses of studies of the diagnostic accuracy of laboratory tests: a review of the concepts and methods. Archives of Pathology & Laboratory Medicine. 1998, 122: 675-686.

Moses LE, Shapiro D, Littenberg B: Combining independent studies of a diagnostic test into a summary ROC curve: data-analytic approaches and some additional considerations. Statistics in Medicine. 1993, 12: 1293-1316.

Fleiss JL: The statistical basis of meta-analysis. Statistical Methods in Medical Research. 1993, 2: 121-145.

Lijmer JG, Bossuyt PMM, Heisterkamp SH: Exploring sources of heterogeneity in systematic review of diagnostic tests. Statistics in Medicine. 2002, 21: 1525-1537. 10.1002/sim.1185.

Ahmad T, Vickers D, Campbell S, Coulthard MG, Pedler S: Urine collection from disposable nappies. Lancet. 1991, 338: 674-676. 10.1016/0140-6736(91)91242-M.

Anad FY: A simple method for selecting urine samples that need culturing. Annals of Saudi Medicine. 2001, 21: 104-105.

Armengol CE, Hendley JO, Schlager TA: Should we abandon standard microscopy when screening for urinary tract infections in young children?. Pediatric Infectious Disease Journal. 2001, 20: 1176-1177. 10.1097/00006454-200112000-00018.

Armengol CE, Hendley JO, Schlager TA: Urinary tract infection in young children cannot be excluded with urinalysis. Pediatric Research. 2000, 47: 172A.

Aronson AS, Gustafson B, Svenningsen NW: Combined suprapubic aspiration and clean-voided urine examination in infants and children. Acta Paediatrica Scandinavica. 1973, 62: 396-400.

Arslan S, Caksen H, Rastgeldi L, Uner A, Oner AF, Odabas D: Use of urinary gram stain for detection of urinary tract infection in childhood. Yale Journal of Biology and Medicine. 2002, 75: 73-78.

Bachur R, Harper MB: Reliability of the urinalysis for predicting urinary tract infections in young febrile children. Archives of Pediatrics & Adolescent Medicine. 2001, 155: 60-65.

Baum JD, Darrell JH, Lambert RA: Evaluation of dip inoculum urine culture. Archives of Disease in Childhood. 1972, 47: 977-978.

Boreland PC, Stoker M: Dipstick analysis for screening of paediatric urine. Journal of Clinical Pathology. 1986, 39: 1360-1362.

Braude H, Forfar JO, Gould JC, McLeod JW: Diagnosis of urinary tract infection in childhood based on examination of paired non-catheter and catheter specimens of urine. British Medical Journal. 1967, 4: 702-705.

Bulloch B, Bausher JC, Pomerantz WJ, Connors JM, Mahabee-Gittens M, Dowd MD: Can urine clarity exclude the diagnosis of urinary tract infection?. Pediatrics. 2000, 106: E60. 10.1542/peds.106.5.e60.

Rodriguez Caballero AM, Novoa Vazquez P, Perez Ruiz A, Carmona Perez A, Cano Fernandez J, Sanchez Bayle M: Assessment of leukocyturia in the diagnosis of urinary tract infections. Revista Espanola de Pediatria. 2001, 57: 305-308.

Rodriguez Cervilla J, Alonso Alonso C, Fraga Bermudez JM, Perez Munuzuri A, Gil Calvo M, Ariceta Iraola G, Fernandez Lorenzo JR: Urinary tract infection in children: Clinical and analytical prospective study for differential diagnosis in children with suspicion of an infectious disease. Revista Espanola de Pediatria. 2001, 57: 144-152.

Cid E, Fernandez Seara MJ, Buznego R, Pavon P, Rodrigo E, Castro-Gago M: Comparative study between Uricult and urine culture for the diagnosis of urinary infections in infants. Revista Espanola de Pediatria. 1992, 48: 23-25.

Cohen HA, Woloch B, Linder N, Vardi A, Barzilai A: Urine samples from disposable diapers: an accurate method for urine cultures. Journal of Family Practice. 1997, 44: 290-292.

Craver RD, Abermanis JG: Dipstick only urinalysis screen for the pediatric emergency room. Pediatric Nephrology. 1997, 11: 331-333. 10.1007/s004670050288.

Dayan PS, Bennett J, Best R, Bregstein JS, Levine D, Novick MK, Sonnett FM, Stimell-Rauch ML, Urtecho J, Wagh A, Miller SZ: Test characteristics of the urine Gram stain in infants ≤60 days of age with fever. Pediatric Emergency Care. 2002, 18: 12-14. 10.1097/00006565-200202000-00004.

Dayan PS, Chamberlain JM, Boenning D, Adirim T, Schor JA, Klein BL: A comparison of the initial to the later stream urine in children catheterized to evaluate for a urinary tract infection. Pediatric Emergency Care. 2000, 16: 88-90. 10.1097/00006565-200004000-00005.

Demi M, Costa L, Zanardo V: [Urinary tract infections in newborns: sensitivity, specificity, and predictive value of urinary screening with the reagent strip test]. Pediatria Medica e Chirurgica. 1993, 15: 29-31.

Dosa S, Houston IB, Allen LB, Jennison R: Urinary glucose unreliable as test for urinary tract infection in infancy. Archives of Disease in Childhood. 1973, 48: 733-737.

Farrell M, Devine K, Lancaster G, Judd B: A method comparison study to assess the reliability of urine collection pads as a means of obtaining urine specimens from non-toilet-trained children for microbiological examination. Journal of Advanced Nursing. 2002, 37: 387-393. 10.1046/j.1365-2648.2002.02097.x.

Feasey S: Are Newcastle urine collection pads suitable as a means of collecting specimens from infants?. Paediatric Nursing. 1999, 11: 17-21.

Fennell RS, Wilson SG, Garin EH, Pryor ND, Sorgen CD, Walker RD, Richard GA: The combination of two screening methods in a home culture program for children with recurrent bacteriuria. An evaluation of a culture method plus a nitrite reagent test strip. Clinical Pediatrics. 1977, 16: 951-955.

Benito Fernandez J, Garcia Ribes A, Trebolazabala Quirante N, Mintegi Raso S, Vazquez Ronco M, Urra Zalbidegoitia E: [Gram stain and dipstick as diagnostic methods for urinary tract infection in febrile infants]. Anales Espanoles de Pediatria. 2000, 53: 561-566.

Benito Fernandez J, Sanchez Echaniz J, Mintegui Raso S, Montejo F: Urinary tract infection in infants: Use of urine specimens obtained by suprapubic bladder aspiration in order to determine the reliability of culture specimen of urine collected in perineal bag. Anales Espanoles de Pediatria. 1996, 45: 149-152.

Giraldez M, Perozo M, González F, Rodríguez M: Infección urinaria cinta reactiva y sedimento urinario vs. urocultivo para determinación de bacteriuria. Salus militiae. 1998, 23: 27-31.

Godard C, Frutiger P, Delarue C, Christen JP, Wavre D, Girardet P: Testing for bacteriuria by home culturing in preschool girls. Helvetica Paediatrica Acta. 1979, 34: 209-212.

Gorelick MH, Shaw KN: Clinical decision rule to identify febrile young girls at risk for urinary tract infection. Archives of Pediatrics & Adolescent Medicine. 2000, 154: 386-390.

Hardy JD, Furnell PM, Brumfitt W: Comparison of sterile bag, clean catch and suprapubic aspiration in the diagnosis of urinary infection in early childhood. British Journal of Urology. 1976, 48: 279-283.

Hiraoka M, Hida Y, Hori C, Tsuchida S, Kuroda M, Sudo M: Urine microscopy on a counting chamber for diagnosis of urinary infection. Acta Paediatrica Japonica. 1995, 37: 27-30.

Hitzel A, Liard A, Vera P, Manrique A, Menard JF, Dacher JN: Color and power Doppler sonography versus DMSA scintigraphy in acute pyelonephritis and in prediction of renal scarring. Journal of Nuclear Medicine. 2002, 43: 27-32.

Hoberman A, Wald ER, Reynolds EA, Penchansky L, Charron M: Is urine culture necessary to rule out urinary tract infection in young febrile children?. Pediatric Infectious Disease Journal. 1996, 15: 304-309. 10.1097/00006454-199610000-00034.

Hoberman A, Wald ER, Reynolds EA, Penchansky L, Charron M: Pyuria and bacteriuria in urine specimens obtained by catheter from young children with fever. Journal of Pediatrics. 1994, 124: 513-519.

Hoberman A, Wald ER, Penchansky L, Reynolds EA, Young S: Enhanced urinalysis as a screening test for urinary tract infection. Pediatrics. 1993, 91: 1196-1199.

Holland PD, Doyle CT, English L: An evaluation of chemical tests for significant bacteriuria. Journal of the Irish Medical Association. 1968, 61: 128-130.

Kohler L, Fritz H, Schersten B: Screening for bacteriuria with Uriglox in children. Acta Paediatrica Scandinavica Supplement. 1970, 206: 76-78.

Kunin CM, DeGroot JE: Sensitivity of a nitrite indicator strip method in detecting bacteriuria in preschool girls. Pediatrics. 1977, 60: 244-245.

Labbe J: [Usefulness of testing for nitrites in the diagnosis of urinary infections in children]. Union Medicale du Canada. 1982, 111: 261-265.

Lagos Zuccone R, Carter S J, Herrera Labarca P: Utilidad de una tira reactiva y del aspecto macroscópico de la orina para descartar la sospecha clínica de infección del tracto urinario en niños ambulatorios. Rev Chil Pediatr. 1994, 65: 88-94.

Lejeune B, Baron R, Guillois B, Mayeux D: Evaluation of a screening test for detecting urinary tract infection in newborns and infants. Journal of Clinical Pathology. 1991, 44: 1029-1030.

Lin DS, Huang FY, Chiu NC, Koa HA, Hung HY, Hsu CH, Hsieh WS, Yang DI: Comparison of hemocytometer leukocyte counts and standard urinalyses for predicting urinary tract infections in febrile infants. Pediatric Infectious Disease Journal. 2000, 19: 223-227. 10.1097/00006454-200003000-00010.

Lin DS, Huang SH, Lin CC, Tung YC, Huang TT, Chiu NC, Koa HA, Hung HY, Hsu CH, Hsieh WS, Yang DI, Huang FY: Urinary tract infection in febrile infants younger than eight weeks of age. Pediatrics. 2000, 105: E20-20. 10.1542/peds.105.2.e20.

Liptak GS, Campbell J, Stewart R, Hulbert WC: Screening for urinary tract infection in children with neurogenic bladders. American Journal of Physical Medicine & Rehabilitation. 1993, 72: 122-126.

Littlewood JM, Jacobs SI, Ramsden CH: Comparison between microscopical examination of unstained deposits of urine and quantitative culture. Archives of Disease in Childhood. 1977, 52: 894-896.

Lockhart GR, Lewander WJ, Cimini DM, Josephson SL, Linakis JG: Use of urinary gram stain for detection of urinary tract infection in infants. Annals of Emergency Medicine. 1995, 25: 31-35.

Lohr JA, Portilla MG, Geuder TG, Dunn ML, Dudley SM: Making a presumptive diagnosis of urinary tract infection by using a urinalysis performed in an on-site laboratory. Journal of Pediatrics. 1993, 122: 22-25.

Manson R, Scholefield J, Johnston RJ, Scott R: The screening of more than 2,000 schoolgirls for bacteriuria using an automated fluorescence microscopy system. Urological Research. 1985, 13: 143-148. 10.1007/BF00256077.

Marret M, Tay S, Yap HK, Murugasu B: Comparison of two rapid screening tests for urinary tract infection in children. Annals Academy of Medicine Singapore. 1995, 24: 299.

Marsik FJ, Owens D, Lewandowski J: Use of the leukocyte esterase and nitrite tests to determine the need for culturing urine specimens from a pediatric and adolescent population. Diagnostic Microbiology & Infectious Disease. 1986, 4: 181-183. 10.1016/0732-8893(86)90155-0.

Matthai J, Ramaswamy M: Urinalysis in urinary tract infection. Indian Journal of Pediatrics. 1995, 62: 713-716.

Mongeau JG, Robillard JE, Brousseau Y: Screening for bacteriuria in children: comparison of two dip-tests. Canadian Medical Association Journal. 1972, 107: 227-229.

Morin D, Veyrac C, Kotzki PO, Lopez C, Dalla Vale F, Durand MF, Astruc J, Dumas R: Comparison of ultrasound and dimercaptosuccinic acid scintigraphy changes in acute pyelonephritis. Pediatric Nephrology. 1999, 13: 219-222. 10.1007/s004670050596.

Morton RE, Lawande R: The diagnosis of urinary tract infection: comparison of urine culture from suprapubic aspiration and midstream collection in a children's out-patient department in Nigeria. Annals of tropical paediatrics. 1982, 2: 109-112.

Dominguez Navarrete N: [Evaluation of triphenyl tetrazolium chloride as a diagnostic test in urinary infections]. Anales de la Facultad de Medicina, Universidad Nacional Mayor de San Marcos de Lima. 1966, 49: 294-307.

Villanustre Ordonez C, Buznego Sanchez R, Rodicio Garcia M, Rodrigo Saez E, Fernandez Seara MJ, Pavon Belinchon P, Castro-Gago M: Comparative study of semiquantitative methods (leukocytes, nitrite test and uricult) with urine culture for the diagnosis of urinary tract infection during infancy. Anales Espanoles de Pediatria. 1994, 41: 325-328.

Palmer LS, Richards I, Kaplan WE: Clinical evaluation of a rapid diagnostic screen (URISCREEN) for bacteriuria in children. Journal of Urology. 1997, 157: 654-657. 10.1097/00005392-199702000-00083.

Parmington J, Kornberg A: Nitrite screening for urinary tract infection in a Pediatric Emergency Department. Pediatric Emergency Care. 1989, 5: 285-286.

Pryles CV, Eliot CR: Pyuria and bacteriuria in infants and children. The value of pyuria as a diagnostic criterion of urinary tract infections. American Journal of Diseases of Children. 1965, 110: 628-635.

Purwar VN, Agrawal SP, Dikshit SK: Gram stained urine slides in the diagnosis of urinary tract infections in children. Journal of the Indian Medical Association. 1972, 59: 387-388.

Pylkkanen J, Vilska J, Koskimies O: Diagnostic value of symptoms and clean-voided urine specimen in childhood urinary tract infection. Acta Paediatrica Scandinavica. 1979, 68: 341-344.

Ramage IJ, Chapman JP, Hollman AS, Elabassi M, McColl JH, Beattie TJ: Accuracy of clean-catch urine collection in infancy. Journal of Pediatrics. 1999, 135: 765-767.

Rich G, Glass NJ, Selkon JB: Cost-effectiveness of two methods of screening for asymptomatic bacteriuria. British Journal of Preventive & Social Medicine. 1976, 30: 54-59.

Santos MA, Mos EN, Schmidt BJ, Piva S: Comparacion entre el estudio bacterioscopico cuantitativo y el urocultivo para el diagnostico de infeccion urinaria en pediatria. Bol Méd Hosp Infant Méx. 1982, 39: 526-530.

Saxena H, Ajwani KD, Mehrotra D: Quantitative pyuria in the diagnosis of urinary infections in children. Indian Journal of Pediatrics. 1975, 42: 35-38.

Schersten B, Dahlqvist A, Fritz H, Kohler L, Westlund L: Screening for bacteriuria with a test paper for glucose. JAMA. 1968, 204: 205-208. 10.1001/jama.204.3.205.

Schreiter G, Buhtz P: [Diagnostic value of the cytologic and bacteriologic urine examinations in pediatrics. II. Comparison of leukocyturia and bacteriuria]. Deutsche Gesundheitswesen. 1971, 26: 1318-1323.

Sharief N, Hameed M, Petts D: Use of rapid dipstick tests to exclude urinary tract infection in children. British Journal of Biomedical Science. 1998, 55: 242-246.

Shaw KN, McGowan KL, Gorelick MH, Schwartz JS: Screening for urinary tract infection in infants in the emergency department: which test is best?. Pediatrics. 1998, 101: E1-E5. 10.1542/peds.101.6.e1.

Shaw KN, Hexter D, McGowan KL, Schwartz JS: Clinical evaluation of a rapid screening test for urinary tract infections in children. Journal of Pediatrics. 1991, 118: 733-736.

Struthers S, Scanlon J, Parker K, Goddard J, Hallett R: Parental reporting of smelly urine and urinary tract infection. Archives of Disease in Childhood. 2003, 88: 250-252. 10.1136/adc.88.3.250.

Tahirovic H, Pasic M: A modified nitrite test as a screening test for significant bacteriuria. European Journal of Pediatrics. 1988, 147: 632-633. 10.1007/BF00442479.

Todd J, McLain L, Duncan B, Brown M: A nonculture method for home follow-up of urinary tract infections in childhood. Journal of Pediatrics. 1974, 85: 514-516.

Vangone G, Russo G: [Bacteria and leukocyte count in the urine in the diagnosis of urinary tract infections]. Pediatria Medica e Chirurgica. 1985, 7: 125-129.

Vickers D, Ahmad T, Coulthard MG: Diagnosis of urinary tract infection in children: fresh urine microscopy or culture?. Lancet. 1991, 338: 767-770. 10.1016/0140-6736(91)90662-9.

Waisman Y, Zerem E, Amir L, Mimouni M: The validity of the uriscreen test for early detection of urinary tract infection in children. Pediatrics. 1999, 104: e41. 10.1542/peds.104.4.e41.

Wammanda RD, Aikhionbare HA, Ogala WN: Use of nitrite dipstick test in the screening for urinary tract infection in children. West African Journal of Medicine. 2000, 19: 206-208.

Weinberg AG, Gan VN: Urine screen for bacteriuria in symptomatic pediatric outpatients. Pediatric Infectious Disease Journal. 1991, 10: 651-654.

Wiggelinkhuizen J, Maytham D, Hanslo DH: Dipstick screening for urinary tract infection. South African Medical Journal. 1988, 74: 224-228.

Woodward MN, Griffiths DM: Use of dipsticks for routine analysis of urine from children with acute abdominal pain. BMJ. 1993, 306: 1512.

Andrich MP, Majd M: Evaluation of febrile urinary tract infections in children utilizing Technetium-99m DMSA scanning. Journal of Nuclear Medicine. 1992, 33: 976.

Barnett GR, Abbott GD: Localization of gram negative urinary tract infection by immunofluorescence in infants and children. Australian Paediatric Journal. 1978, 14: 143-146.

Benador D, Benador N, Slosman DO, Nussle D, Mermillod B, Girardin E: Cortical scintigraphy in the evaluation of renal parenchymal changes in children with pyelonephritis. Journal of Pediatrics. 1994, 124: 17-20.

Biggi A, Dardanelli L, Pomero G, Cussino P, Noello C, Sernia O, Spada A, Camuzzini G: Acute renal cortical scintigraphy in children with a first urinary tract infection. Pediatric Nephrology. 2001, 16: 733-738. 10.1007/s004670100657.

Bircan ZE, Buyan N, Hasanoglu E, Ozturk E, Bayhan H, Isik S: Radiologic evaluation of urinary tract infection. International Urology & Nephrology. 1995, 27: 27-32.

Boudailliez B, Berro Y, Hosri JA, Esper IE, Grumbach Y: A prospective study of imaging modalities in acute pyelonephritis (49 cases): DMSA renal scintigraphy versus power Doppler (PD) renal sonography. Pediatric Nephrology. 1998, 12: C132.

Buyan N, Bircan ZE, Hasanoglu E, Ozturk E, Bayhan H, Rota S: The importance of 99mTc DMSA scanning in the localization of childhood urinary tract infections. International Urology & Nephrology. 1993, 25: 11-17.

Capa Kaya G, Taskiran Y, Bak M, Aydin A, Toksavul O, Uslu Y, Ozturk E, Durak H: Urinary N-acetyl-beta-glucosaminidase in children with upper urinary tract infection, in relation to Tc-99m DMSA scintigraphy. European Journal of Nuclear Medicine. 2001, 28: 1156-1156.

Dacher JN, Pfister C, Monroc M, Eurin D, Le Dosseur P: Power Doppler sonographic pattern of acute pyelonephritis in children: Comparison with CT. American Journal of Roentgenology. 1996, 166: 1451-1455.

Everaert K, Raes A, Hoebeke P, Samijn W, Delanghe J, Vande Wiele C, Vande Walle J: Combined use of urinary alpha1-microglobulin and 99mTc DMSA scintigraphy in the diagnosis and follow-up of acute pyelonephritis and cystitis in children. European Urology. 1998, 34: 486-491. 10.1159/000019788.

Fretzayas A, Moustaki M, Gourgiotis D, Bossios A, Koukoutsakis P, Stavrinadis C: Polymorphonuclear elastase as a diagnostic marker of acute pyelonephritis in children. Pediatrics. 2000, 105: E28. 10.1542/peds.105.2.e28.

Gervaix A, Galetto-Lacour A, Gueron T, Vadas L, Zamora S, Suter S, Girardin E: Usefulness of procalcitonin and C-reactive protein rapid tests for the management of children with urinary tract infection. Pediatric Infectious Disease Journal. 2001, 20: 507-511.

Castello Girona F, Vilaplana Canto E, Yeste Fernandez D, Roca Bielsa I, Enriquez Civico G: 99mTc dimercaptosuccinic scan in the study of the first urinary tract infection in infants. Anales Espanoles de Pediatria. 1995, 42: 118-122.

Guermazi F, Lenoir P, Verboven M, Smets A, Braeckman J, Jonckheer MH, Piepsz A: [Technetium 99m labeled dimercaptosuccinic acid (99m Tc-DMSA) scintigraphy in the diagnosis and follow-up of urinary infections in children]. Archives Francaises de Pediatrie. 1993, 50: 391-398.

el Hajjar M, Launay S, Hossein-Foucher C, Foulard M, Robert Y: [Power Doppler sonography and acute pyelonephritis in children: comparison with Tc-DMSA scintigraphy]. Archives de Pediatrie. 2002, 9: 21-25. 10.1016/S0929-693X(01)00689-3.

Hellerstein S, Kennedy E, Nussbaum L, Rice K: Localization of the site of urinary tract infections by means of antibody-coated bacteria in the urinary sediments. Journal of Pediatrics. 1978, 92: 188-193.

Hitzel A, LiardZmuda A, Manrique A, Dacher JN, Vera P: Comparative study of DMSA scintigraphy (DMSA) and Doppler sonography (DS) in the diagnosis of acute pyelonephritis and scarring in children. Journal of Nuclear Medicine. 2000, 41: 209-209.

Ilyas M, Mastin ST, Richard GA: Age-related radiological imaging in children with acute pyelonephritis. Pediatric Nephrology. 2002, 17: 30-34. 10.1007/s004670200005.

Jakobsson B, Nolstedt L, Svensson L, Soderlundh S, Berg U: 99mTechnetium-dimercaptosuccinic acid scan in the diagnosis of acute pyelonephritis in children: relation to clinical and radiological findings. Pediatric Nephrology. 1992, 6: 328-334. 10.1007/BF00869725.

Jantausch BA, Rifai N, Getson P, Akram S, Majd M, Wiedermann BL: Urinary N-acetyl-beta-glucosaminidase and beta-2-microglobulin in the diagnosis of urinary tract infection in febrile infants. Pediatric Infectious Disease Journal. 1994, 13: 294-299.

Jequier S, Jequier JC, Hanquinet S: Acute childhood pyelonephritis: Predictive value of positive sonographic findings in regard to later parenchymal scarring. Academic Radiology. 1998, 5: 344-353.

Krzemien G, Roszkowska-Blaim M, Brzewski M, Kostro I, Szmigielska A, Karpinska M, Marcinski A: Comparison of power Doppler ultrasonography with 99mTc-DMSA renal scintigraphy in the diagnosis of acute pyelonephritis. Polski Merkuriusz Lekarski. 2002, 12: 405-407.

Landau D, Turner ME, Brennan J, Majd M: The value of urinalysis in differentiating acute pyelonephritis from lower urinary tract infection in febrile infants. Pediatric Infectious Disease Journal. 1994, 13: 777-781.

Landau D, Brennan J, Turner ME, Majd M: A negative urinalysis predicts the absence of acute pyelonephritis in febrile infants. Pediatric Research. 1994, 35: 185.

Lavocat MP, Granjon D, Allard D, Gay C, Freycon MT, Dubois F: Imaging of pyelonephritis. Pediatric Radiology. 1997, 27: 159-165. 10.1007/s002470050091.

Lonergan GJ, Pennington DJ, Morrison JC, Haws RM, Grimley MS, Kao TC: Childhood pyelonephritis: comparison of gadolinium-enhanced MR imaging and renal cortical scintigraphy for diagnosis. Radiology. 1998, 207: 377-384.

Montplaisir S, Courteau C, Martineau B, Pelletier M: Limitations of the direct immunofluorescence test for antibody-coated bacteria in determining the site of urinary tract infections in children. CMAJ: Canadian Medical Association Journal. 1981, 125: 993-996.

Pylkkanen J: Antibody-coated bacteria in the urine of infants and children with their first two urinary tract infections. Acta Paediatr Scand. 1978, 67: 275-279.

La Cava G, Sciagra R, Materassi M, Ienuso R, Meldolesi U: Accuracy of renal sequential scintigraphy for the recognition of renal involvement in pediatric patients affected by urinary tract infection. European Journal of Nuclear Medicine. 1990, 16: 415.

Sfakianakis GN, Mylonakis T, Zilleruelo G, Leon M, Flores F, Ganz W, Serafini A, Abitbol C, Strauss J: The importance of Technetium-99m Gh scintigraphy in infants with first UTI. Journal of Nuclear Medicine. 1989, 30: 915-916.

Smolkin V, Koren A, Raz R, Colodner R, Sakran W, Halevy R: Procalcitonin as a marker of acute pyelonephritis in infants and children. Pediatric Nephrology. 2002, 17: 409-412. 10.1007/s00467-001-0790-1.

Sreenarasimhaiah V, Alon US: Uroradiologic evaluation of children with urinary tract infection: are both ultrasonography and renal cortical scintigraphy necessary?. Journal of Pediatrics. 1995, 127: 373-377.

Stokland E, Hellstrom M, Jacobsson B, Jodal U, Lundgren P, Sixt R: Early 99mTc dimercaptosuccinic acid (DMSA) scintigraphy in symptomatic first-time urinary tract infection. Acta Paediatrica. 1996, 85: 430-436.

Traisman ES, Conway JJ, Traisman HS, Yogev R, Firlit C, Shkolnik A, Weiss S: The localization of urinary tract infection with 99Tc glucoheptonate scintigraphy. Pediatric Radiology. 1986, 16: 403-406.

Verboven M, Ingels M, Delree M, Piepsz A: 99mTc-DMSA scintigraphy in acute urinary tract infection in children. Pediatric Radiology. 1990, 20: 540-542.

Bykov S, Chervinsky L, Smolkin V, Halevi R, Garty I: Power Doppler sonography versus Tc-99m DMSA scintigraphy for diagnosing acute pyelonephritis in children: Are these two methods comparable?. Clinical Nuclear Medicine. 2003, 28: 198-203. 10.1097/00003072-200303000-00006.

Muro MD, Sanguesa C, Otero MC, Piqueras AI, Lloret MT: Acute pyelonephritis in pediatric age: Comparative study between power Doppler ultrasound scan and DMSA. Radiologia. 2002, 44: 237-242.

Alon U, Pery M, Davidai G, Berant M: Ultrasonography in the radiologic evaluation of children with urinary tract infection. Pediatrics. 1986, 78: 58-64.

Alzen G, Wildberger JE, Muller-Leisse C, Deutz FJ: [Ultrasound screening of vesico-uretero-renal reflux]. Klinische Padiatrie. 1994, 206: 178-180.

Bagni B, Orsolon P, Fattori A, Guerra UP: Renal SPECT with Tc-99m DMSA in children with upper urinary tract infections using a triple-headed gamma camera. Clinical Nuclear Medicine. 1997, 22: 838-843. 10.1097/00003072-199712000-00007.

Baronciani D, Bonora G, Andreoli A, Cambie M, Nedbal M, Dellagnola CA: The value of ultrasound for diagnosing the uropathy in children with urinary-tract infections. Rivista Italiana di Pediatria (Italian Journal of Pediatrics). 1986, 12: 214-220.

Barry BP, Hall N, Cornford E, Broderick NJ, Somers JM, Rose DH: Improved ultrasound detection of renal scarring in children following urinary tract infection. Clinical Radiology. 1998, 53: 747-751.

Benigno V, Di Peri S, Distefano F, Como G, Boncori R: [Laboratory parameters as a guide for the radiologic study of infections of the urinary tract in childhood]. Pediatria Medica e Chirurgica. 1986, 8: 91-93.

Bergius AR, Niskanen K, Kekomaki M: Detection of significant vesico-ureteric reflux by ultrasound in infants and children. Zeitschrift fur Kinderchirurgie. 1990, 45: 144-145.

Berrocal T, Gaya F, Arjonilla A, Lonergan GJ: Vesicoureteral reflux: Diagnosis and grading with echo-enhanced cystosonography versus voiding cystourethrography. Radiology. 2001, 221: 359-365.

Bower G, Lovegrove FT, Geijsel H, Van der Schaff A, Guelfi G: Comparison of "direct" and "indirect" radionuclide cystography. Journal of Nuclear Medicine. 1985, 26: 465-468.

Cavanagh PM, Sherwood T: Too many cystograms in the investigation of urinary tract infection in children?. British Journal of Urology. 1983, 55: 217-219.

Chan YL, Chan KW, Yeung CK, Roebuck DJ, Chu WC, Lee KH, Metreweli C: Potential utility of MRI in the evaluation of children at risk of renal scarring. Pediatric Radiology. 1999, 29: 856-862. 10.1007/s002470050713.

Clarke SEM, Risheq F, Mistry R, Maisey MN: An evaluation of Technetium-99m dimercaptosuccinic acid DMSA tomographic imaging in children with urinary tract infection. European Journal of Nuclear Medicine. 1990, 16: 415.

De Sadeleer C, De Boe V, Keuppens F, Desprechins B, Verboven M, Piepsz A: How good is technetium-99m mercaptoacetyltriglycine indirect cystography?. European Journal of Nuclear Medicine. 1994, 21: 223-227.

Ditchfield MR, De Campo JF, Cook DJ, Nolan TM, Powell HR, Sloane R, Grimwood K, Cahill S: Vesicoureteral reflux: an accurate predictor of acute pyelonephritis in childhood urinary tract infection?. Radiology. 1994, 190: 413-415.

Drachman R, Valevici M, Vardy PA: Excretory urography and cystourethrography in the evaluation of children with urinary tract infection. Clinical Pediatrics. 1984, 23: 265-267.

Elison BS, Taylor D, Van der Wall H, Pereira JK, Cahill S, Rosenberg AR, Farnsworth RH, Murray IP: Comparison of DMSA scintigraphy with intravenous urography for the detection of renal scarring and its correlation with vesicoureteric reflux. British Journal of Urology. 1992, 69: 294-302.

Evans ED, Meyer JS, Harty MP, Bellah RD: Assessment of increase in renal pelvic size on post-void sonography as a predictor of vesicoureteral reflux. Pediatric Radiology. 1999, 29: 291-294. 10.1007/s002470050591.

Farnsworth RH, Rossleigh MA, Leighton DM, Bass SJ, Rosenberg AR: The detection of reflux nephropathy in infants by 99mtechnetium dimercaptosuccinic acid studies. Journal of Urology. 1991, 145: 542-546.

Foresman WH, Hulbert WC, Rabinowitz R: Does urinary tract ultrasonography at hospitalization for acute pyelonephritis predict vesicoureteral reflux?. Journal of Urology. 2001, 165: 2232-2234. 10.1097/00005392-200106001-00004.

Berrocal Frutos T, Gaya Moreno F, Gomez Leon N, Jaureguizar Monereo E: [Cystosonography with echoenhancer. A new imaging technique for the diagnosis of vesicoureteral reflux]. Anales Espanoles de Pediatria. 2000, 53: 422-430.

Gordon I, Anderson PJ, Lythgoe MF, Orton M: Can technetium-99m-mercaptoacetyltriglycine replace technetium-99m-dimercaptosuccinic acid in the exclusion of a focal renal defect?. Journal of Nuclear Medicine. 1992, 33: 2090-2093.

Haberlik A: Detection of low-grade vesicoureteral reflux in children by color Doppler imaging mode. Pediatric Surgery International. 1997, 12: 38-43.

Hanbury DC, Whitaker RH, Sherwood T, Farman P: Ultrasound and plain X-ray screening in childhood urinary tract infection. British Journal of Urology. 1989, 64: 638-640.

Hedman PJ, Kempi V, Voss H: Measurement of vesicoureteral reflux with intravenous 99mTc-DTPA compared to radiographic cystography. Radiology. 1978, 126: 205-208.

Hellstrom M, Jacobsson B, Marild S, Jodal U: Voiding cystourethrography as a predictor of reflux nephropathy in children with urinary-tract infection. AJR American Journal of Roentgenology. 1989, 152: 801-804.

Jequier S, Forbes PA, Nogrady MB: The value of ultrasonography as a screening procedure in a first-documented urinary tract infection in children. Journal of Ultrasound in Medicine. 1985, 4: 393-400.

Johnson CE, Shurin PA, Marchant CD, Strieter CM, Murdell-Panek D, Debaz BP, Shah ZR, Scillian JJ, Hall PW: Identification of children requiring radiologic evaluation for urinary infection. Pediatric Infectious Disease. 1985, 4: 656-663.

Johnson CE, Vacca CV, Fattlar D, Fulton DJ, Hall PW: Urinary N Acetyl-Beta-Glucosaminidase and the selection of children for radiologic evaluation after urinary tract infection. Pediatrics. 1990, 86: 211-216.

Kenda R, Kenig T, Silc M, Zupancic Z: Renal ultrasound and excretory urography in infants and young children with urinary tract infection. Pediatric Radiology. 1989, 19: 299-301.

Kenda RB, Novljan G, Kenig A, Hojker S, Fettich JJ: Echo-enhanced ultrasound voiding cystography in children: a new approach. Pediatric Nephrology. 2000, 14: 297-300. 10.1007/s004670050762.

Kessler RM, Altman DH: Real-time sonographic detection of vesicoureteral reflux in children. American Journal of Roentgenology. 1982, 138: 1033-1036.

Leonidas JC, McCauley RG, Klauber GC, Fretzayas AM: Sonography as a substitute for excretory urography in children with urinary tract infection. AJR American Journal of Roentgenology. 1985, 144: 815-819.

LeQuesne GW, Davies R: Ultrasonic assessment of reflux nephropathy. Pediatric Nephrology. 1986, 16: 335-335.

Lindsell D, Moncrieff M: Comparison of ultrasound examination and intravenous urography after a urinary tract infection. Archives of Disease in Childhood. 1986, 61: 81-82.

MacKenzie JR, Fowler K, Hollman AS, Tappin D, Murphy AV, Beattie TJ, Azmy AF: The value of ultrasound in the child with an acute urinary tract infection. British Journal of Urology. 1994, 74: 240-244.

Mage K, Zoppardo P, Cohen R, Reinert P, Ponet M: Imagerie et premiere infection urinaire de l'enfant: place respective de chaque examen lors du bilan initial a propos de 122 observations. Journal de radiologie. 1989, 70: 279-283.

Mahant S, Friedman J, MacArthur C: Renal ultrasound findings and vesicoureteral reflux in children hospitalised with urinary tract infection. Archives of Disease in Childhood. 2002, 86: 419-420. 10.1136/adc.86.6.419.

McLorie GA, Aliabadi H, Churchill BM, Ash JM, Gilday DL: Technetium-99m-dimercapto-succinic acid renal scanning and excretory urography in diagnosis of renal scars in children. Journal of Urology. 1989, 142: 790-792.

Mentzel HJ, Vogt S, John U, Kaiser WA: Voiding urosonography with ultrasonography contrast medium in children. Pediatric Nephrology. 2002, 17: 272-276. 10.1007/s00467-002-0843-0.

Merrick MV, Uttley WS, Wild SR: The detection of pyelonephritic scarring in children by radioisotope imaging. British Journal of Radiology. 1980, 53: 544-556.

Misselwitz J, Stoll W, Vogt S: [Value of radioisotope renography compared to urography, phenol test and concentration test in the diagnosis of pyelonephritis in childhood]. Kinderarztliche Praxis. 1976, 44: 69-76.

Mucci B, Maguire B: Does routine ultrasound have a role in the investigation of children with urinary tract infection?. Clinical Radiology. 1994, 49: 324-325.

Muensterer OJ: Comprehensive ultrasound versus voiding cysturethrography in the diagnosis of vesicoureteral reflux. European Journal of Pediatrics. 2002, 161: 435-437. 10.1007/s00431-002-0990-0.

Oostenbrink R, van der Heijden AJ, Moons KG, Moll HA: Prediction of vesico-ureteric reflux in childhood urinary tract infection: a multivariate approach. Acta Paediatrica. 2000, 89: 806-810. 10.1080/080352500750043693.

Piaggio G, Degli'Innocenti ML, Toma P, Calevo MG, Perfumo F: Cystosonography and voiding cystourethrography in the diagnosis of vesicoureteral reflux. Pediatric Nephrology. 2003, 18: 18-22. 10.1007/s00467-002-0974-3.

Pickworth FE, Vivian GC, Franklin K, Brown EF: 99Tcm-mercapto acetyl triglycine in paediatric renal tract disease. British Journal of Radiology. 1992, 65: 21-29.

Piepsz A, Pintelon H, Verboven M, Keuppens F, Jacobs A: Replacing 99Tcm-DMSA for renal imaging?. Nuclear Medicine Communications. 1992, 13: 494-496.

Radmayr C, Klauser A, Pallwein L, Zurnedden D, Bartsch G, Frauscher F: Contrast enhanced reflux sonography in children: A comparison to standard radiological imaging. Journal of Urology. 2002, 167: 1428-1430. 10.1097/00005392-200203000-00070.

Redman JF, Seibert JJ: The role of excretory urography in the evaluation of girls with urinary tract infection. Journal of Urology. 1984, 132: 953-955.

Rehling M, Jensen JJ, Scherling B, Egeblad M, Lonborg-Jensen H, Kanstrup I, Dige-Petersen H: Evaluation of renal function and morphology in children by 99mTc-DTPA gamma camera renography. Acta Paediatrica Scandinavica. 1989, 78: 601-607.

Rickwood AM, Carty HM, McKendrick T, Williams MP, Jackson M, Pilling DW, Sprigg A: Current imaging of childhood urinary infections: prospective survey. BMJ. 1992, 304: 663-665.

Von Rohden L, Bosse U, Wiemann D: [Reflux sonography in children with an ultrasound contrast medium in comparison to radiologic voiding cystourethrography]. Paediat Prax. 1995, 49: 49-58.

Rossleigh MA, Wilson MJ, Rosenberg AR, Elison BS, Cahill S, Farnsworth RH: DMSA studies in infants under one year of age. Contributions to Nephrology. 1990, 79: 166-169.

Salih M, Baltaci S, Kilic S, Anafarta K, Beduk Y: Color flow Doppler sonography in the diagnosis of vesicoureteric reflux. European Urology. 1994, 26: 93-97.

Scherz HC, Downs TM, Caesar R: The selective use of dimercaptosuccinic acid renal scans in children with vesicoureteral reflux. Journal of Urology. 1994, 152: 628-631.

Schneider K, Jablonski C, Weissner M, et al: Screening for vesicoureteral reflux in children using real-time sonography. Pediatric Radiology. 1984, 14: 400-403.

Siamplis D, Vasiou K, Giarmenitis S, Frimas K, Zavras G, Fezoulidis I: Sonographic detection of vesicoureteral reflux with fluid and air cystography. Comparison with VCUG. RoFo Fortschritte auf dem Gebiete der Rontgenstrahlen und der Neuen Bildgebenden Verfahren. 1996, 165: 166-169.

Smellie JM, Rigden SP, Prescod NP: Urinary tract infection: a comparison of four methods of investigation. Archives of Disease in Childhood. 1995, 72: 247-250.

Stokland E, Hellstrom M, Jacobsson B, Jodal U, Sixt R: Evaluation of DMSA scintigraphy and urography in assessing both acute and permanent renal damage in children. Acta Radiologica. 1998, 39: 447-452.

Stokland E, Hellstrom M, Jacobsson B, Jodal U, Sixt R: Renal damage one year after first urinary tract infection: role of dimercaptosuccinic acid scintigraphy. Journal of Pediatrics. 1996, 129: 815-820.

Stokland E, Hellstrom M, Hansson S, Jodal U, Oden A, Jacobsson B: Reliability of ultrasonography in identification of reflux nephropathy in children. BMJ. 1994, 309: 235-239.

Tan SM, Chee T, Tan KP, Cheng HK, Ooi BC: Role of renal ultrasonography (RUS) and micturating cystourethrogram (MCU) in the assessment of vesico-ureteric reflux (VUR) in children and infants with urinary tract infection (UTI). Singapore Medical Journal. 1988, 29: 150-152.

Dura Trave T, Gonzalez Montero R, Juste Ruiz M, Gonzalez de Dios J, Carratala Marco F, Moya Benavent M, Verdu Rico J, Caballero Calpena O: [Usefulness of renal scintigraphy in the assessment of the first febrile urinary infection in children]. Anales Espanoles de Pediatria. 1997, 47: 378-382.

Valentini AL, Salvaggio E, Manzoni C, Rendeli C, Destito C, Summaria V, Campioni P, Marano P: Contrast-enhanced gray-scale and color Doppler voiding urosonography versus voiding cystourethrography in the diagnosis and grading of vesicoureteral reflux. Journal of Clinical Ultrasound. 2001, 29: 65-71. 10.1002/1097-0096(200102)29:2<65::AID-JCU1000>3.0.CO;2-I.

Verber IG, Strudley MR, Meller ST: 99mTc dimercaptosuccinic acid (DMSA) scan as first investigation of urinary tract infection. Archives of Disease in Childhood. 1988, 63: 1320-1325.

Li Volti S, Di Bella D, Garozzo R, Di Fede GF, Mollica F: Imaging of urinary tract malformations: intravenous urography and/or kidney ultrasonography?. Child Nephrology & Urology. 1991, 11: 96-99.

Whitear P, Shaw P, Gordon I: Comparison of 99Tcm dimercaptosuccinic acid scans and intravenous urography in children. British Journal of Radiology. 1990, 63: 438-443.

Wujanto R, Testa HJ, Shields RA, Prescott MC, Lawson RS, Cohen SJ: Assessment of renal function and scarring: is a DMSA scan always necessary?. Contributions to Nephrology. 1987, 56: 250-255.

McEwing RL, Anderson NG, Hellewell S, Mitchel J: Comparison of echo-enhanced ultrasound with fluoroscopic MCU for the detection of vesicoureteral reflux in neonates. Pediatric Radiology. 2002, 32: 853-858. 10.1007/s00247-002-0812-6.

Nakamura M, Wang Y, Shigeta K, Shinozaki T, Taniguchi N, Itoh K: Simultaneous voiding cystourethrography and voiding urosonography: An in vitro and in vivo study. Clinical Radiology. 2002, 57: 846-849.

Uhl M, Kromeier J, Zimmerhackl LB, Darge K: Simultaneous voiding cystourethrography and voiding urosonography. Acta Radiologica. 2003, 44: 265-268. 10.1034/j.1600-0455.2003.00065.x.

Flegel KOR: Adverse effects of diagnostic tests. A study of the quality of reporting. Archives of Internal Medicine. 1982, 142: 883-887. 10.1001/archinte.142.5.883.

Bossuyt PM: The quality of reporting in diagnostic test research: getting better, still not optimal. Clin Chem. 2004, 50: 465-466. 10.1373/clinchem.2003.029736.

Detsky AS, Naylor CD, O'Rourke K, McGeer AJ, L'Abbe KA: Incorporating variations in the quality of individual randomized trials into meta-analysis. Journal of Clinical Epidemiology. 1992, 45: 255-265. 10.1016/0895-4356(92)90085-2.

Moher D, Jadad AR, Nichol G, Penman M, Tugwell P, Walsh S: Assessing the quality of randomized controlled trials: an annotated bibliography of scales and checklists. Controlled Clinical Trials. 1995, 16: 62-73. 10.1016/0197-2456(94)00031-W.

Juni P, Witschi A, Bloch R, Egger M: The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999, 282: 1054-1060. 10.1001/jama.282.11.1054.

Juni P, Altman DG, Egger M: Systematic reviews in healthcare: Assessing the quality of controlled clinical trials. BMJ. 2001, 323: 42-46. 10.1136/bmj.323.7303.42.

Reitsma JB, Glas AS, Rutjes AWS, Scholten RJPM, Bossuyt PMM, Zwinderman AH: Direct pooling of sensitivity and specificity using bivariate models in meta-analysis of studies of diagnostic accuracy. Submitted.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/5/20/prepub

Acknowledgements

We would like to thank Professor Martin Bland of the University of York and Mr Roger Harbord of the University of Bristol for their statistical advice.

The work was done as part of a project commissioned and funded by the NHS R&D Health Technology Assessment Programme (project number 01/66/01). The views expressed in this review are those of the authors and not necessarily those of the Standing Group, the Commissioning Group, or the Department of Health.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

All authors contributed towards the conception and design of the study and the interpretation of the data. They also read and approved the final manuscript. PW and MW participated in data extraction, the analysis of data, and drafted the article.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Westwood, M.E., Whiting, P.F. & Kleijnen, J. How does study quality affect the results of a diagnostic meta-analysis?. BMC Med Res Methodol 5, 20 (2005). https://doi.org/10.1186/1471-2288-5-20

DOI: https://doi.org/10.1186/1471-2288-5-20