Abstract

We continue investigating the superintegrability property of matrix models, i.e. factorization of the matrix model averages of characters. This paper focuses on the Gaussian Hermitian example, where the role of characters is played by the Schur functions. We find a new intriguing corollary of superintegrability: factorization of an infinite set of correlators bilinear in the Schur functions. More exactly, these are correlators of products of the Schur functions and polynomials \(K_\Delta \) that form a complete basis in the space of invariant matrix polynomials. Factorization of these correlators with a small subset of these \(K_\Delta \) follow from the fact that the Schur functions are eigenfunctions of the generalized cut-an-join operators, but the full set of \(K_\Delta \) is generated by another infinite commutative set of operators, which we manifestly describe.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In celestial mechanics, superintegrability (SI) implies existence of an additional operator (Laplace-Runge-Lenz vector) which commutes with the Hamiltonian and is somehow different (superficial) as compared to the “obvious” commuting set of operators (rotations), which are responsible for the ordinary integrability.

In the case of matrix models, even this language is still to be developed: our original definition of superintegrability in [1, 2] (based on the phenomenon earlier observed in [3,4,5,6,7,8,9,10,11,12], see also some preliminary results in [27,28,29,30,31,32] and later progress in [13,14,15,16,17,18,19,20,21,22,23,24, 24,25,26, 33]) implies the mapping between a big space \(\mathcal{X}\) (functions of matrix eigenvalues or time-variables \(p_k\)) to a small one, \(\mathcal{Z}\) (functions of the matrix size N) with the Schur functions \(S_R\) being a kind of eigenfunctions of this contraction map. Despite the setting looks very poor, the phenomenon clearly exists: a minor deformation of the Gaussian measure (which preserves integrability) is not compensated by a small deformation of the Schur functions so that the superintegrability property \(\Big <S_R\{p\}\Big > \ \sim \ S_R\{N\}\) is preserved. Obviously, the setting should be lifted to the case when the both spaces, \(\mathcal{X}\) and \(\mathcal{Z}\) are “equal”. This could mean, for instance, that one can consider the Schur functions not just as a subjects of averaging over matrices, but rather as common eigenfunctions of a commuting set of operators. In fact, integrability and superintegrability are both related to existence of different mutually commuting operators. But how to distinguish between different operators, and separate them into sets which are responsible respectively for integrability and for the superintegrability?

A related question can be what is the reason for an additional enhancement in the case of Dotsenko-Fateev (double logarithmic) measure, where one gets a whole set of factorized Kadell integrals with not only averages of Schur functions, but also of their peculiar multilinear combinations being described by nice factorization formulas (giving rise to Nekrasov functions) [24, 33, 34]?

In this letter, we argue that these two questions are related to each other, and demonstrate that, in the Gaussian Hermitian model case, factorization of bilinear averages of Schur functions, which encodes the superintegrability is also due to existence of an infinite set of commuting operators. Note that the very idea is close to what was discussed in [35, 36], where the author used an infinite set of commuting operators in order to study correlators in Gaussian matrix models with different gauge groups (exploiting a specific different structure: the embedding structure of gauge groups depending on N).

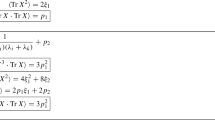

More exactly, we demonstrate that there is a complete set of symmetric polynomials \(K_\Delta \) such that the averages \(\big <K_\Delta S_R\big>\) are proportional to the averages \(\big <S_R\big>\):

where the eigenvalues \(\mu _{\Delta ,R}\) do not depend on N. This set of polynomials \(K_\Delta \) is generated by an infinite set of commuting operators \(W^-_\Delta \).

The letter is organized as follows. In Sects. 2 and 3, we discuss the generic property of superintegrability. In Sect. 4, we consider the set of cut-and-join operators \(W_\Delta \) [37, 38] as a natural candidate for the infinite set of commuting operators generating \(K_\Delta \), and realize that it gives rise to only part of \(K_\Delta \). Hence, in Sect. 5, we construct the set \(W^-_\Delta \) that generates all \(K_\Delta \). In Sect. 6, we discuss examples of eigenvalues \(\mu _{\Delta ,R}\), and, in Sect. 7, we find an explicit formula for \(\mu _{\Delta ,R}\). Section 8 contains concluding remarks, and, in the Appendix, we explicitly list polynomials \(K_\Delta \) for all \(\Delta \) up to level 6.

Notation The Schur functions are symmetric polynomials of variables \(x_i\), \(i=1,\ldots ,N\). In particular, \(x_i\) can be eigenvalues of a matrix H. We denote through \(S_R\{p_k\}\) the Schur functions as functions of power sums \(p_k=\sum _ix_i^k\). When we emphasize that \(x_i\) are the eigenvalues of H, we use the notation \(P_k:=\textrm{tr}\,H^k\).

The Schur function depends on the partition (Young diagram) R, which is a set of lines with lengths \(R_1\ge R_2\ge \cdots \ge R_{l_R}\). We also denote through \(S_{R/T}\) the skew Schur functions, and sometimes use the notation

2 SI in matrix models

According to [2], see also [24] and references therein, SI means that there exits a linear basis in the space of observables such that all the elements of the basis have “very simple” averages. In practice, this “very simple” means fully factorized. Moreover, this distinguished basis is usually formed by characters of an underlying symmetry algebra (to which the matrices belong), and the average of each character is again just the same character, only at a different (diminished) space of variables. The typical example is the Gaussian Hermitian model, where averages over Hermitian matrices are defined

dH being the Haar measure on Hermitian matrices normalized in such a way that \(\Big <1\Big>\).

If the function F is invariant, i.e. depends on the eigenvalues \(h_i\) of H, one can integrate over angular variables, and

SI in this case states that averages of the Schur functions \(S_R\{P_k=\textrm{tr}\,H^k\}\) are

At the r.h.s. are the same Schur functions but at very special points: the main one is \(S_R\{N\}:=S_R\{p_k=N\}\).

There are very similar statements \(<character>\ \sim character\) for a variety of other eigenvalue models, see [24] for an extensive list.

3 Does SI really exist in matrix models?

A natural question is if there is any true sense in the above observation? Perhaps, one can always find such a distinguished basis? It is therefore instructive to look at what happens in the same Hermitian model when one changes the background potential from the Gaussian one to anything else.

The Gaussian partition function

allows one to define an average in arbitrary non-Gaussian potential \(\sum _k T_k X^k\):

On the other hand, one can rewrite it as a sum over averages in the T-background,

i.e. the T-deformed averages (note that the normalization is still Gaussian, i.e. the definition of average is not changed) are

These averages are non-factorized infinite series that, actually, can not to be simplified even for finitely many non-vanishing \(T_k\). They much more complicated as compared to \( \Big <S_R\{P\}\Big > = \frac{S_R[N]\cdot S_R\{\delta _{k,2}\}}{S_R\{\delta _{k,1}\}}\), and there is no any (obvious) way to modify the Schur functions in order to produce factorized averages, not to say that the preferred basis, even if existed, would not be formed by the characters of \(sl_N\). The only exceptions are the deformation by \(T_1\) and \(T_2\) only, which preserve Gaussianity.

In this sense, what we call superintegrability is an obviously non-trivial feature, which, in this concrete example, distinguishes the Gaussian potential among the arbitrary ones. Note that it is clearly a further restriction as compared to the ordinary integrability, the latter one is preserved by arbitrary T-deformations and does not require Gaussianity: all \(Z^{(T)}\{p\}\) are KP \(\tau \)-functions, just they are associated with T-dependent points of the universal Grassmannian. Thus, superintegrability exists and is a strong refinement of ordinary integrability.

4 Constructing \(K_\Delta \): W-operators

In the next sections, we assume that Schur functions are restricted to the Miwa locus \(S_R\{p_k=\textrm{tr}\,H^k\}\) with \(N\times N\) matrix H. It is a little less general than arbitrary time variables, but still far away from restricting the Schur functions to their Gaussian averages \(\Big <S_R\Big>\). We will assume that \(N\ge |R|\) though this restriction is not necessary, and formulas are basically correct at any N: one just has to be careful with normalizations. For instance, \(\mu _{\Delta ,R}\) is a ratio of two zeroes unless \(N\ge |R|\). In a proper normalization, both sides of formulas typically vanish unless \(N\ge |R|\).

Since the Schur functions are common eigenfunctions [37, 38] of the operators

where the eigenvalues are appropriately normalized symmetric-group characters, \(\lambda _{\Delta ,R}=\varphi _R(\Delta )\) [37, 38], and the normal ordering \(:\ldots :\) implies all the derivatives put to the right. One can use integration by parts to get

Since the expression in brackets at the l.h.s. is a polynomial in H, it can be expanded into a linear combination of the Schur functions,

with

where the normal ordering \(\ddag \cdots \ddag \) this time implies that all the derivatives are put to the left.

In particular, for \(\hat{W}_{[1]}\), which is just a dilatation operator with \(\lambda _{[1],R} = |R|\), this means:

which is indeed true (for example, one can use that \(p_2S_{[2r]} = S_{[2r+2]} + S_{[2r,2]}-S_{[2r,1,1]}\) and similar relations for non-symmetric representations R). For example,

Equation (13) are rather poor – they are not sufficient to express all Gaussian pair correlators, they are just sum rules, which impose certain constraints on them. This is because the number of Young diagrams \(\#_{2n} > \#_n\), the former number is what we need for complete set of pair correlators, the latter number is what we can actually deduce from (11).

5 Constructing \(K_\Delta \): \(W^-\)-operators

In this section, we discuss that, in order to construct the full set of operators, one has to consider another set of commuting operators, which are a kind of “lowering” operators in the space of Schur functions.

Let us note that, in addition to relations (16), there more bilinear Schur averages of the (1) type: for instance, there is the relation

The l.h.s. of this formula would appear if we act on \(e^{-\frac{1}{2}\textrm{tr}\,H^2}\) with the operator \((\textrm{tr}\,\frac{\partial }{\partial H})^2\). This operator with \(\textrm{tr}\,\frac{\partial }{\partial H} = N\frac{\partial }{\partial p_1} + \sum _{k=2}^\infty p_{k-1}\frac{\partial }{\partial p_k}\) does not have \(S_R\) as an eigenfunction,

What happens is that its action is conspired with the SI formula: despite (18) forbids \(S_R\) to be an eigenfunction, i.e. equation does not hold at the “operator level”, it does hold for the Gaussian averages:

Only for restricted set (16) they are promoted to the operator level (11).

Now our main claim is that one can construct in a similar way the full set of polynomials \(K_\Delta \) for (1). That is, define

Then,

where

Note that

so that they form a complete set polynomials at any given level.

These formulas can be considered as one more reformulation (avatar) of superintegrability. As we already discussed in the Introduction, it is related to an infinite set of commuting operators \(W^-_\Delta \), which are manifestly given by (20).

In Sect. 7, we prove these relations, and find explicit expressions for \(\mu _{\Delta ,R}\). Examples at level 2 are given by (15) and (17), examples at level 4 are (examples up to level 6 can be found in the Appendix)

The underlined term could be eliminated with the help of (17), but this causes an N-dependent shift of the eigenvalue \(\mu _{[3,1]}\longrightarrow \mu _{[3,1]}-3N\mu _{[1,1]}\). In (24) per se all \(\mu _R\) are independent of N. However, one can use (15) instead in order to remove the second and forth terms in this formula: this would give rise to the N-independent shift \(\mu _{[3,1]}\longrightarrow \mu _{[3,1]}+3\mu _{[2]}\).

Note that two equations of (16) can be compared with the corresponding equations from this list, they differ by adding lower N-independent averages so that \(\lambda _{[2]}=\mu _{[4]}+\mu _{[1,1]}\) and \(\lambda _{[1,1]}= \mu _{[2,2]}+\mu _{[2]}\). Generally, the identification for the part of relations that can be generated by the W-operators is \(\lambda _{\Delta ,R} = \mu _{2\Delta ,R}+lower\ terms\), where \(2\Delta \) denotes a Young diagram with all line lengths doubled, \(2\Delta :=\{2\Delta _1\ge 2\Delta _2 \ge \ldots \ldots 2\Delta _{l_\Delta }\}\).

6 Values of \(\mu _{\Delta ,R}\)

Finding the “eigenvalues” \(\mu _{\Delta ,R}\) is a separate challenge. As we explain in the next section, there is a general formula for them. However, the formula is not that simple, and it is instructive to look at examples. The list of the first few is in the Table.

R\(\Big \backslash \Delta \) | [2] | \({[1^2]}\) | [4] | [3,1] | \({[2^2]}\) | \({[2,1^2]}\) | \({[1^4]}\) | [6] | [5,1] | [4,2] | \({[4,1^2]}\) | \({[3^2]}\) | [3,2,1] | \({[2^3]}\) | \({[3,1^3]}\) | \({[2^2,1^2]}\) | \({[2,1^4]}\) | \({[1^6]}\) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

[2] | 2 | 2 | ||||||||||||||||

\({[1^2]}\) | 2 | \(-2\) | ||||||||||||||||

[4] | 4 | 4 | 8 | 8 | 8 | 8 | 8 | |||||||||||

[3, 1] | 4 | \(-4\) | 8 | 0 | 8 | \(-8\) | \(-24\) | |||||||||||

\({[2^2]}\) | 4 | 0 | 0 | \(-4\) | 8 | 0 | 8 | |||||||||||

\({[2,1^2]}\) | 4 | 4 | \(-8\) | 0 | 8 | 8 | \(-24\) | |||||||||||

\({[1^4]}\) | 4 | \(-4\) | \(-8\) | 8 | 8 | \(-8\) | 8 | |||||||||||

[6] | 6 | 6 | 24 | 24 | 24 | 24 | 24 | 48 | 48 | 48 | 48 | 48 | 48 | 48 | 48 | 48 | 48 | 48 |

[5, 1] | 6 | −6 | 24 | 0 | 24 | −24 | −72 | 48 | 0 | 48 | −48 | 48 | 0 | 48 | −96 | −48 | −144 | −240 |

[4, 2] | 6 | 2 | 8 | 0 | 24 | 8 | 24 | 0 | −16 | 16 | −16 | 0 | 0 | 48 | 0 | 16 | 48 | 144 |

\({[4,1^2]}\) | 6 | 6 | 0 | 12 | 24 | 24 | −24 | −24 | 0 | 0 | 0 | −24 | 24 | 48 | −24 | 48 | −48 | −240 |

\({[3^2]}\) | 6 | −2 | 8 | −8 | 24 | −8 | −8 | 0 | 0 | 16 | 16 | −32 | −16 | 48 | 16 | −16 | −16 | −80 |

[3, 2, 1] | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |

\({[2^3]}\) | 6 | 2 | −8 | −8 | 24 | 8 | −8 | 0 | 0 | −16 | 16 | 32 | −16 | 48 | −16 | 16 | −16 | 80 |

\({[3,1^3]}\) | 6 | −6 | 0 | 12 | 24 | −24 | −24 | −24 | 0 | 0 | 0 | 24 | 24 | 48 | 24 | −48 | −48 | 240 |

\({[2^2,1^2]}\) | 6 | −2 | −8 | 0 | 24 | −8 | 24 | 0 | 16 | −16 | −16 | 0 | 0 | 48 | 0 | −16 | 48 | −144 |

\({[2,1^4]}\) | 6 | 6 | −24 | 0 | 24 | 24 | −72 | 48 | 0 | −48 | −48 | −48 | 0 | 48 | 96 | 48 | −144 | 240 |

\({[1^6]}\) | 6 | − 6 | −24 | 24 | 24 | −24 | 24 | 48 | −48 | −48 | 48 | −48 | 48 | 48 | −48 | −48 | 48 | −48 |

Clearly, transposition of R preserves the absolute value of \(\mu \):

We will prove it in the next section.

In fact, the quantities in the Table are given by product formulas, in particular:

These \(\mu _{\Delta ,R}\) are shown boldfaced in the table. Expression through the values of Schur functions at \(p_k=\delta _{k,2}\) are explained in (39) below.

All averages \(\left<K_Q \cdot S_{[3,2,1]}\right>=0\), because \(\left<S_{[3,2,1]}\right>=0\), this is, in turn, because the factor \(S_{[3,2,1]}\{\delta _{k,2}\} = 0\) in (5). Therefore, the corresponding \(\mu _{Q,[3,2,1]}\) are not defined. The same is true for all \(S_{[\ldots 4321]}\), which are independent of even time-variables. In fact, it is sufficient for vanishing of the average that the Schur polynomials does not contain the item \(p_2^{|R|/2}\), this happens for \(S_{[5,2,1]}\), \(S_{[1,1,1,2,3]}\) and a number of other examples of bigger sizes.

The table has clearly a triangle structure, since, if \(|\Delta |>|R|\), the corresponding \(W^-_\Delta \) contains more derivatives than the degree of H in \(S_R\).

The first example is provided by \(\mu _{[1,1],R}\) at level 2. While

is very simple, expression for \(\mu _{[1,1],R}\) is quite involved: it depends on the number \(l_R\) of columns in the diagram \(R=\left[ r1\ge r_2\ge r_3\ge \cdots \ge r_{l_R}>0\right] \):

Projector \(P_{eoo}\) here, for instance, means that \(r_1\) is even, \(r_2\) and \(r_3\) are odd so that all the values of |R| are even. Averages of the type oeo, eoeo and oeoe are all vanishing.

Different lines in these formulas are connected smoothly, one should just pick up the terms with E at the very right position and put the highest \(r_{l_R}=0\).

7 Derivation of (21) and explicit formula for \(\mu _{\Delta ,R}\)

Alternative representation of \(\mu _{[1,1],R}\) can be deduced from (19), which we are going to derive now. The first examples of this relation are

Clearly, these formulas are obtained by successive application of the operator

where the box \(\Box _{i,j}\) with coordinates (i, j) is removed from the Young diagram R so that \(R-\Box _{i,j}\) is still a Young diagram. This formula is a kind of inverse of the Pieri rule.

Despite this time the operator changes \(S_R\), which is no longer its eigenfunction, like it was in (11), it does not change the average! This is because of the very special coefficient at the r.h.s. of (29). Indeed, note that

and hence

This means that

with

The sum over the boxes of the Young diagram is such that the diagram obtained after removing any of these two boxes and both of them still remains a Young diagram. When there are two different ways to achieve the final state, a combinatorial coefficient 2 appears. For example,

When \(S_R\{\delta _{k,2}\}=0\), the corresponding Gaussian average vanishes and \(\mu _{[1,1],R}\) is not defined. One can check that in these cases the numerator vanishes as well.

One can similarly consider the action higher (even) degrees of operator \(\left( \textrm{tr}\,\frac{\partial }{\partial H}\right) ^{2n}\), in this case with the same line of reasoning, one obtains

where one has to remove 2n boxes from the Young diagram preserving the property of being the Young diagram.

Furthermore,

where the two boxes are removed from rightmost part of the R in such a way that obtained is still a Young diagram. The general formula is

where \(h_k\) is a border strip of length k (i.e. containing k boxes) [39], \(\textrm{ht}(h_k)\) is its height (defined to be one less than the number of rows it occupies), the sum runs over all such border strips, and all the coefficients \(c_{_{R,h_k}}\) are products of \(N-i+j\) over subtracted boxes. Note that the border strips are called rim hooks in [12]. In order to get \(W^-_\Delta \) with a few rows, one has to apply (37) sequentially.

Formula (37) is a kind of inverse to the Pieri rule

Now one immediately obtains the explicit formula for \(\mu _{\Delta ,R}\):

where \(h_{\Delta _a}\) are the border strips removed from the Young diagram R in accordance with (37), with the corresponding sign taken into account.

Note that, since \(S_{R^\vee }\{p_k\}=(-1)^{|R|}S_R\{-p_k\}\) and \(S_R\{p_k=\delta _{k,2}\}\sim p_2^{|R|/2}\), one immediately obtains from this formula relation (25). Indeed, let us look, for instance, at the case of \(K_{[2]}\). Then,

and every term in the sum gets the sign \((-1)^{|\Delta |/2}\) under conjugation of the Young diagram. Besides, in the course of this conjugation, one has to permute the two terms in (40). This gives total sign \((-1)^{|\Delta |/2+1}\). This case is certainly very trivial, because the difference of two sums (40) is equal to |R|.

For the generic \(K_\Delta \), there is a sign factor \((-1)^{|\Delta |}\) from the ratios of \(S_{R-\Delta }/S_R\) and an additional factor of \((-1)^{l_\Delta }\) that follows from the fact that the conjugation of R changes additionally the sign of \(\mu _{\Delta ,R}\) for every even part of partition \(\Delta \) because of formula (37) (the height of the border strip changes the parity under conjugation in this case). This gives \((-1)^{|\Delta |+\#_e}\), where \(\#_e\) is the number of even parts of partition \(\Delta \). However, as soon as the number of odd parts (which do not give rise to this additional sign changing) is even, this formula can be changed for \((-1)^{|\Delta |+l_\Delta }\).

8 Conclusion

To conclude, we have found a new indirect implication of superintegrability: factorization of peculiar pair correlators, where one component of the pair is just the character, while the other one is its N-dependent deformation. This factorization is due to existence of an infinite set of commuting operators \(W^-_\Delta \), (20) that provide a mapping from the space \(\mathcal{X}\) of invariant matrix polynomials to the space \(\mathcal{Z}\) of polynomials of matrix size, \(F_{W_\Delta ^-}:\ \mathcal{X}\longrightarrow \mathcal{Z}\). This mapping is manifestly given by the matrix averaging

The space \(\mathcal{Z}\) can be spanned (ambiguously) by matrix averages of the Schur functions \(\Big <S_R\Big>\). As we demonstrated in this paper, the Schur functions turn out to be eigenvectors of the mapping:

while, on the space \(\mathcal{X}\), i.e. before the averaging, this is not the case, since the action of \(W_\Delta ^-\) on \(S_R\) decrease its grading to \(|R|-|\Delta |\).

It is an open question, if this construction persists in other superintegrable models, which could help to understand if it is in fact a direct corollary of SI or not. Note also that, in other models, the space \(\mathcal{Z}\) may need an extension to the space of rational functions of the matrix size, see, for instance, the case of two-logarithm (Selberg) models, [24].

Also an important issue is what are interrelations between the set of operators \(W_\Delta ^-\) and a commutative set of generalized cut-and-join operators \(W_\Delta \). Indeed, the Schur function are the eigenvectors of the latter already on the space \(\mathcal{X}\). However, as a price of it, if one rewrites (42) as a statement about the pair correlator (21),

the set of \(W_\Delta \) generates too little set of polynomials \(K_\Delta \), while the set of \(W_\Delta ^-\) gives rise to the complete basis of \(K_\Delta \). The operators \(W_\Delta ^-\) are of a kind of lowering operators in an algebra, and \(W_\Delta \) are Cartan-like operators in it. Indeed, as one can notice, the operators \(W_k^-\) looks as positive harmonics in the \(w_\infty \)-algebra, while \(W_k\), as zeroth harmonics in it: as \(\hat{W}^{(k+1)}_k\) and \(\hat{W}^{(k+1)}_0\) correspondingly in terms of [10, secs.8-9]. Note that these zeroth harmonic operators can generate the Virasoro and W-algebra constraints [40].

Data Availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: This is a theoretical study and no experimental data has been listed].

References

H. Itoyama, A. Mironov, A. Morozov, JHEP 1706, 115 (2017). arXiv:1704.08648

A. Mironov, A. Morozov, Phys. Lett. B 771, 503 (2017). arXiv:1705.00976

P. Di Francesco, C. Itzykson, J.B. Zuber, Commun. Math. Phys. 151, 193 (1993). arXiv:hep-th/9206090

P. Di Francesco, C. Itzykson, Ann. Inst. H. Poincare Phys. Theor. 59, 117–140 (1993). arXiv:hep-th/9212108

I.K. Kostov, M. Staudacher, Phys. Lett. B 394, 75–81 (1997). arXiv:hep-th/9611011

I.K. Kostov, M. Staudacher, T. Wynter, Commun. Math. Phys. 191, 283–298 (1998). arXiv:hep-th/9703189

A. Orlov, Int. J. Mod. Phys. A 19(supp02), 276–293 (2004). nlin/0209063

A. Mironov, A. Morozov, S. Shakirov, JHEP 02, 067 (2011). arXiv:1012.3137

A. Mironov, A. Morozov, S. Shakirov, A. Smirnov, Nucl. Phys. B 855, 128–151 (2012). arXiv:1105.0948

A. Alexandrov, A. Mironov, A. Morozov, S. Natanzon, JHEP 11, 080 (2014). arXiv:1405.1395

S. Natanzon, A. Orlov, arXiv:1407.8323

C. Cordova, B. Heidenreich, A. Popolitov, S. Shakirov, Commun. Math. Phys. 361, 1235 (2018). arXiv:1611.03142

A. Mironov, A. Morozov, Phys. Lett. B 774, 210 (2017). arXiv:1706.03667

A. Morozov, A. Popolitov, S. Shakirov, Phys. Lett. B 784, 342 (2018). arXiv:1803.11401

A. Mironov, A. Morozov, JHEP 1808, 163 (2018). arXiv:1807.02409

A. Mironov, A. Morozov, Phys. Lett. B 816, 136196 (2021). arXiv:2011.01762

A. Mironov, A. Morozov, Phys. Lett. B 816, 136221 (2021). arXiv:2011.02855

A. Mironov, A. Morozov, Eur. Phys. J. C 81, 270 (2021). arXiv:2011.12917

A. Mironov, A. Morozov, Phys. Lett. B 816, 136268 (2021). arXiv:2102.01473

L. Cassia, R. Lodin, M. Zabzine, JHEP 10, 126 (2020). arXiv:2007.10354

A. Mironov, A. Morozov, A. Popolitov, Phys. Lett. B 824, 136833 (2022). arXiv:2107.13381

L.Y. Wang, R. Wang, K. Wu, W.Z. Zhao, Nucl. Phys. B 973, 115612 (2021). arXiv:2110.14269

A. Mironov, V. Mishnyakov, A. Morozov, A. Zhabin, arXiv:2112.11371

A. Mironov, A. Morozov, arXiv:2201.12917

A. Mironov, A. Morozov, Z. Zakirova, Phys. Lett. B 831, 137178 (2022). arXiv:2203.03869

V. Mishnyakov, N. Oreshina, arXiv:2203.15675

V.A. Kazakov, M. Staudacher, T. Wynter, hep-th/9601153, 1995 Cargèse Proceedings

S. Corley, A. Jevicki, S. Ramgoolam, Adv. Theor. Math. Phys. 5, 809–839 (2002). arXiv:hep-th/0111222

C. Kristjansen, J. Plefka, G.W. Semenoff, M. Staudacher, Nucl. Phys. B 643, 3–30 (2002). arXiv:hep-th/0205033

M. Tierz, Mod. Phys. Lett. A 19, 1365–1378 (2004). arXiv:hep-th/0212128

A. Brini, B. Eynard, M. Mariño, Annales Henri Poincaré 13(8) (2012). arXiv:1105.2012

R. de Mello Koch, S. Ramgoolam, arXiv:1002.1634

A. Mironov, A. Morozov, AGT and Nekrsov calculus as a corollary of superintegrability (To appear)

K.W.J. Kadell, Compos. Math. 87, 5–43 (1993)

P. Diaz, JHEP 10, 228 (2013). arXiv:1309.1180

P. Diaz, JHEP 09, 031 (2014). arXiv:1406.7671

A. Mironov, A. Morozov, S. Natanzon, Theor. Math. Phys. 166, 1–22 (2011). arXiv:0904.4227

A. Mironov, A. Morozov, S. Natanzon, J. Geom. Phys. 62, 148–155 (2012). arXiv:1012.0433

I.G. Macdonald, Symmetric Functions and Hall Polynomials, 2nd edn. (Oxford University Press, Oxford, 1995)

A. Mironov, V. Mishnyakov, A. Morozov, R. Rashkov, Eur. Phys. J. C 81, 1140 (2021). arXiv:2105.09920

Acknowledgements

Our work is partly supported by the grant of the Foundation for the Advancement of Theoretical Physics “BASIS”, by joint grants 21-52-52004-MNT-a and 21-51-46010-ST-a.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

We list here the first few operators \(K_\Delta \). They celebrate the property that their bilinear averages with all \(S_R\), are proportional to the averages of \(S_R\): \(\Big<K_\Delta S_R\Big > = \mu _{\Delta ,R}\Big <S_R\Big>\). However, this condition does not fix \(K_\Delta \) unambiguously, therefore we use a precise definition: \(K_\Delta \) are obtained by the action of operators \(\hat{W}^-\Delta \) on the Gaussian weight \(e^{-\frac{1}{2}\textrm{tr}\,H^2}\), see Eq. (22). Then,

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Mironov, A., Morozov, A. Bilinear character correlators in superintegrable theory. Eur. Phys. J. C 83, 71 (2023). https://doi.org/10.1140/epjc/s10052-023-11211-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-023-11211-9