Abstract

Monte Carlo (MC) simulations are extensively used for various purposes in modern high-energy physics (HEP) experiments. Precision measurements of established Standard Model processes or searches for new physics often require the collection of vast amounts of data. It is often difficult to produce MC samples containing an adequate number of events to allow for a meaningful comparison with the data, as substantial computing resources are required to produce and store such samples. One solution often employed when producing MC samples for HEP experiments is to partition the phase space of particle interactions into multiple regions and produce the MC samples separately for each region. This approach allows to adapt the size of the MC samples to the needs of physics analyses that are performed in these regions. In this paper we present a procedure for combining MC samples that overlap in phase space. The procedure is based on applying suitably chosen weights to the simulated events. We refer to the procedure as “stitching”. The paper includes different examples for applying the procedure to simulated proton-proton collisions at the CERN Large Hadron Collider.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Monte Carlo (MC) simulations [1, 2] are used for a plethora of different purposes in contemporary high-energy physics (HEP) experiments. Applications for experiments currently in operation include detector calibration; optimization of analysis techniques, including the training of machine learning algorithms; the modelling of backgrounds, as well as the modelling of signal acceptance and efficiency. Besides, MC simulations are extensively used for detector development and for estimating the physics reach of experiments that are presently in construction or planned in the future.

The production of MC samples containing a sufficient number of events often poses a material challenge in terms of the computing resources required to produce and store such samples [3]. This is especially true for experiments at the CERN Large Hadron Collider (LHC) [4,5,6], firstly due to the large cross section for proton-proton (pp) scattering and secondly due to the large luminosity delivered by the LHC.

The number of pp scattering interactions, \(N_{{\mathrm{data}}}\), that occur within a given interval of time is given by the product of the pp scattering cross section, \(\sigma \), and of the integrated luminosity, L, that the LHC has delivered during this time: \(N_{{\mathrm{data}}} = \sigma \, L\). We refer to the ensemble of pp scattering interactions that occur within the same crossing of the proton bunches as an “event”. The interaction with the highest momentum exchange between the protons is referred to as the “hard-scatter” interaction, and the remaining interactions are referred to as “pileup”. The inelastic pp scattering cross section at the center-of-mass energy of \(\sqrt{s}=13~\mathrm{TeV}\), the energy achieved during the recently completed Run 2 of the LHC (in the period 2015–2018), amounts to \(\approx 75\) mb [7, 8]. The pp scattering data recorded by the ATLAS and CMS experiments during LHC Run 2 amounts to an integrated luminosity of \(\approx 140~\mathrm{fb}^{-1}\) per experiment [9,10,11,12]. Thus, \(N_{{\mathrm{data}}} \approx 10^{16}\) inelastic pp scattering interactions occurred in each of the two experiments during this time. Ideally, one would want the number of simulated events to be higher than the number of events in the data, such that the statistical uncertainties on the MC simulation are small compared to the statistical uncertainties on the data. The production of such large MC samples is clearly prohibitive, however.

Even if one restricts the production of MC samples to processes with a cross section that is significantly smaller than the inelastic pp scattering cross section, such as Drell–Yan (DY) production, the production of W bosons (W+jets), and the production of top quark pairs (\({\mathrm{t}}\bar{\mathrm{t}}\)+jets), the production of MC samples containing a sufficient number of events to allow for a meaningful comparison with the data represents a formidable challenge. The DY, W+jets, and \({\mathrm{t}}\bar{\mathrm{t}}\)+jets production processes are used for detector calibration and Standard Model (SM) precision measurements. They also constitute relevant backgrounds to searches for physics beyond the SM. Their cross sections amount to 6.08 nb for DY production, 61.5 nb for W+jets production, and 832 pb for \({\mathrm{t}}\bar{\mathrm{t}}\)+jets production [13,14,15].Footnote 1 The ATLAS and CMS experiments would each need to produce MC samples containing 840 million DY, 8.61 billion W+jets, and 116 million \({\mathrm{t}}\bar{\mathrm{t}}\)+jets events in order to reduce the statistical uncertainties on the MC simulation to the same level as the uncertainties on the LHC Run 2 data.

In order to mitigate the effect of limited computing resources, both experiments employ sophisticated strategies for the production of MC samples. A common feature of these strategies is to vary the expenditure of computing resources across phase space (PS), depending on the needs of physics analyses. When searching for new physics, for example, it is important to produce sufficiently many events in the tails of distributions, as otherwise potential signals may be obscured by the statistical uncertainties on the SM background.

Different mechanisms for adapting the expenditure of computing resources to the needs of physics analyses have been proposed in the literature. Modern MC programs (“generators”) such as Powheg [16,17,18], MadGraph5_aMC@NLO [19], Sherpa [20], Pythia [21], and Herwig [22] provide functionality that allows to adjust the number of events sampled in different regions of PS through user-defined weighting functions. This approach has been used in Ref. [23]. An alternative approach is to partition the PS into distinct regions (“slices”) and to produce separate independent MC samples covering each slice. Following Ref. [3], we refer to the first approach as “biasing” and to the second one as “slicing”.

In this paper, we focus on the case that MC samples have already been produced and present a method that makes optimal use of these samples, where “optimal” refers to yielding the lowest statistical uncertainty on the signal or background estimate that is obtained from these samples. The samples in general overlap in PS. For example, one set of MC samples may partition the PS based on the number of jets, whereas another set of samples may partition the PS based on \(H_{\mathrm{T}} \), the scalar sum in \(p_{\mathrm{T}} \) of these jets. Our method is general enough to handle arbitrary overlaps between these samples. The overlap is accounted for by applying appropriately chosen weights to the simulated events. We refer to the procedure as “stitching”. One useful feature of the stitching method is that it allows to increase the number of simulated events incrementally in certain regions of PS in case these regions are not yet sufficiently populated by the existing MC samples.

In the following, we will assume that all MC samples that are subject to the stitching procedure have been produced with the same version of the MC program and consistent (i.e. identical) settings for parton distribution functions, scale choices, parton-shower and underlying-event tunes, etc. In case a given set of MC samples was produced with inconsistent settings, the effect of the inconsistencies either need to be small (compared to e.g. the systematic uncertainties) or the events need to be reweighted to make all MC samples consistent prior to applying the stitching procedure.

Variants of the stitching procedure described in the first part of this manuscript have been used by the ATLAS and CMS experiments since LHC Run 1, but, to the best of our knowledge, have not been described in detail in a public document yet. The formalism for the computation of stitching weights is detailed in Sect. 2. Concrete examples for using the formalism in physics analyses are given in Sects. 3.1.1 and 3.1.2. The examples characterize the use of the stitching procedure by the CMS experiment during LHC Runs 1 and 2. They are chosen with the intention to provide a reference. In Sect. 3.2 we extend the stitching procedure to the case of estimating trigger rates at the High-Luminosity LHC (HL-LHC) [24], scheduled to start operation in 2027. The distinguishing feature between the applications of the stitching procedure described in Sects. 3.1 and 3.2 is that in the former (but not in the latter) the cross section of the process that is modeled by the MC simulation is orders of magnitude smaller compared to the inelastic pp scattering cross section. In the former case one can make the simplifying assumption that the process of interest (the process modeled by the MC simulation) solely occurs in the hard-scatter interaction and not in pileup interactions. For the purpose of estimating trigger rates, a relevant use case is that the hard-scatter interaction as well as the pileup interactions are inelastic pp scattering interactions, and the hard-scatter interaction is in fact indistinguishable from the pileup. As described in detail in Sect. 3.2, we account for this indistinguishability by making suitable modifications to the formalism for the computation of stitching weights. The modified stitching procedure detailed in Sect. 3.2 has been used to estimate trigger rates for the HL-LHC upgrade technical design report of the CMS experiment [25]. We conclude the paper with a summary in Sect. 4.

2 Computation of stitching weights

As explained in the introduction, contemporary HEP experiments often employ MC production schemes that first partition the PS into multiple regions and then produce separate MC samples covering each region. We use the term “MC production scheme” to refer to the strategy for choosing which MC samples to produce and how to produce these samples (which MC generator programs to use, how to partition the PS into regions, which settings to use when executing the MC generator programs, etc) and the term “MC sample” to refer to the set of all output files produced by one execution of a MC generator program. When using these MC samples in physics analyses, the overlap of the samples in PS needs to be accounted for by applying weights to the simulated events. The weights need to be chosen such that the weighted sum of simulated events in each region i of PS matches the SM prediction in that region:

where the symbol L corresponds to the integrated luminosity of the analyzed dataset and \(\sigma ^{i}\) denotes the fiducial cross section for the process under study in the PS region i. The first (second) sum on the left-hand side extends over the MC samples j (over the events k in the jth MC sample, where \(N_{j}\) denotes the total number of simulated events in the sample j). The symbol \(w_{j}^{k}\) denotes the weight assigned to event k by the MC generator program, while \(s_{j}^{i}\) denotes the “stitching” weight that is applied to events from the sample j falling into the PS region i. The symbol \(P_{j}^{i}\) corresponds to the probability for an event in MC sample j to fall into PS region i. Equation (1) holds separately for each signal or background process under study.

One can show that the statistical uncertainty on the signal or background estimate gets reduced when all simulated events that fall into PS region i have the same weight, regardless of which MC sample j contains the event. We hence choose the stitching weight to depend only on the PS region i (and not on the MC sample j) and refer to these weights using the symbol \(s^{i}\) from now on.

We define the symbol \(P_{{\mathrm{incl}}}^{i}\) as the ratio of the fiducial cross section \(\sigma ^{i}\) to the “inclusive” cross section \(\sigma _{{\mathrm{incl}}}\), which refers to the whole PS:

Upon inserting this relation into Eq. (1) and solving for the weight \(s^{i}\), we obtain:

A special case, which is frequently encountered in practice, is that one MC sample covers the whole PS, while additional MC samples are used to reduce the statistical uncertainties in the tails of distributions. We refer to the MC sample that covers the whole PS as the “inclusive” sample and the corresponding PS as the “inclusive” PS. In this case, Eq. (2) can be rewritten in the form:

where \(w_{{\mathrm{incl}}}^{k}\) refers to the weights assigned to events in the inclusive sample by the MC generator program and \(N_{{\mathrm{incl}}}\) denotes the total number of events in the inclusive sample. The sum over j in Eq. (3) extends over the additional MC samples, which each cover a different region in PS. We will refer to these samples as the “exclusive” samples. We assume that the weights \(w_{{\mathrm{incl}}}^{k}\) and \(w_{j}^{k}\) are normalized such that the average of these weights, \(\bar{w} _{{\mathrm{incl}}} = \frac{1}{N_{{\mathrm{incl}}}} \, \sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k}\) and \(\bar{w} _{j} =\frac{1}{N_{j}} \, \sum _{k=1}^{N_{j}} \, w_{j}^{k}\), equals unity for the inclusive sample and for each exclusive sample jFootnote 2. The two factors in Eq. (3) may be interpreted in the following way: The product of \(w_{{\mathrm{incl}}}^{k}\) and the first factor, \(w_{{\mathrm{incl}}}^{k} \, \frac{L \, \sigma _{{\mathrm{incl}}}}{\sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k}}\), corresponds to the weight that one would apply to an event in PS region i in case no exclusive samples are available and the signal or background estimate in PS region i is based solely on the inclusive sample. The availability of the additional exclusive samples increases the number of simulated events in the PS region i, from \(N_{{\mathrm{incl}}} \, P_{{\mathrm{incl}}}^{i}\) to \(N_{{\mathrm{incl}}} \, P_{{\mathrm{incl}}}^{i} + \sum _{j} \, N_{j} \, P_{j}^{i}\), and reduces the weights that are applied to simulated events falling into the region i. The reduction in the event weight is given by the second factor in Eq. (3). It has the effect of reducing the statistical uncertainty on the signal or background estimate in PS region i by the square-root of this factor, i.e. by \(\sqrt{\frac{P_{{\mathrm{incl}}}^{i} \, \sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k}}{P_{{\mathrm{incl}}}^{i} \, \sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k} + \sum _{j} \, P_{j}^{i} \, \sum _{k=1}^{N_{j}} \, w_{j}^{k}}}\).

3 Examples

In this section, we illustrate the formalism developed in Sect. 2 with concrete examples, drawn from two different applications: the modelling of W+jets production in physics analyses at the LHC and the estimation of trigger rates at the HL-LHC.

3.1 Modelling of W+jets production in physics analyses at the LHC

The production of W bosons is interesting to study at the LHC for several reasons. The measurement of the mass of the W boson is an important input to global fits to SM parameters [26]. The fits allow to test the overall consistency of the SM and to set constraints on physics beyond the SM. The sensitivity of these fits is currently limited by the precision of the W boson mass measurement [26]. Differential measurements of the cross section for W+jets production are used to constrain parton distribution functions [27,28,29,30]. In particular, the measurement of the associated production of a W boson with a charm quark provides sensitivity to the strange quark content of the proton [31,32,33] and allows to tune MC generators to improve the modelling of heavy flavour production at hadron colliders. The production of W bosons also constitutes a relevant background to measurements of other SM processes and to searches for new physics, see for example Refs. [34,35,36,37]. In this section, we focus on W+jets production with subsequent leptonic decay of the W boson.

Simulated samples of W+jets events have been produced for pp collisions at \(\sqrt{s}=13~\mathrm{TeV}\) center-of-mass energy using matrix elements computed at leading order (LO) accuracy in perturbative quantum chromodynamics (pQCD) with the program MadGraph5_aMC@NLO 2.6.5 [19]. The parton distribution functions of the proton are modeled using the NNPDF3.1 set [38]. Parton showering, hadronization, and the underlying event are modeled using the program Pythia v8.240 [21] with the tune CP5 [39]. The matching of matrix elements to parton showers is done using the MLM scheme [40]. We restrict the analysis of these samples to particles originating from the hard-scatter interaction and do not add any pileup to these samples. Samples containing either 1, 2, 3, or 4 jets at matrix-element level are complemented by an “inclusive” sample and by samples binned in the scalar sum in \(p_{\mathrm{T}} \) of these jets. We denote the multiplicity of jets at the matrix-element level by the symbol \(N_{{\mathrm{jet}}}\) and the scalar sum in \(p_{\mathrm{T}} \) of these jets by the symbol \(H_{\mathrm{T}} \). The inclusive and \(H_{\mathrm{T}} \)-binned samples contain events with between 0 and 4 jets at the matrix-element level.

The weights \(w_{j}^{k}\) and \(w_{{\mathrm{incl}}}^{k}\) are equal to one for all events in these samples. Thus, \(\sum _{k=1}^{N_{j}} \, w_{j}^{k} =N_{j}\) and \(\sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k} = N_{{\mathrm{incl}}}\) for this example, which allows us to simplify Eq. (3) to:

All samples are normalized using a k-factor of 1.14, given by the ratio of the inclusive cross section computed at next-to-next-to leading order (NNLO) accuracy in pQCD, with electroweak corrections taken into account up to NLO accuracy [14], and the inclusive cross section computed at LO accuracy by the program MadGraph5_aMC@NLO. The product of the inclusive W+jets production cross section times the branching fraction for the decay to a charged lepton and a neutrino amounts to 61.5 nb.

We will demonstrate the stitching of these samples based on the two observables \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \). The PS region in which we perform the stitching will be either one- or two-dimensional. We will show that for our formalism it makes little difference whether the stitching is performed in one dimension or in two. The stitching of W+jets samples based on the observable \(N_{{\mathrm{jet}}}\) will be discussed first and then we will discuss the stitching of W+jets samples based on the two observables \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \).

3.1.1 Stitching of W+jets samples by \(N_{{\mathrm{jet}}}\)

In this example, an inclusive W+jets sample simulated at LO accuracy in pQCD is stitched with exclusive samples containing events with \(N_{{\mathrm{jet}}}\) equal to either 1, 2, 3, or 4. The inclusive sample contains events with \(N_{{\mathrm{jet}}}\) between 0 and 4. We partition the PS into slices based on the multiplicity of jets at the matrix-element level and set the index i equal to \(N_{{\mathrm{jet}}}\). The number of events in each MC sample is chosen such that the stitching weights decrease by about a factor of two for each increase in jet multiplicity. The decrease in the cross section as function of \(N_{{\mathrm{jet}}}\) allows to reduce the statistical uncertainties in the tail of the \(N_{{\mathrm{jet}}}\) distribution without significantly increasing the expenditure of computing resources required to produce and store these samples. The number of events contained in each sample and the values of the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) are given in Tables 1 and 2. The probabilities \(P_{{\mathrm{incl}}}^{1}\), \(P_{{\mathrm{incl}}}^{2}\), \(P_{{\mathrm{incl}}}^{3}\), and \(P_{{\mathrm{incl}}}^{4}\) are computed by taking the ratio of cross sections, computed at LO accuracy by the program MadGraph5_aMC@NLO, for the exclusive samples with respect to the cross section \(\sigma _{{\mathrm{incl}}}\) of the inclusive sample. The probability \(P_{{\mathrm{incl}}}^{0}\) is obtained using the relation \(P_{{\mathrm{incl}}}^{0} = 1 - \sum _{i=1}^{4} P_{{\mathrm{incl}}}^{i}\). The probabilities \(P_{j}^{i}\) for the exclusive samples are 1 if \(i=j\) and 0 otherwise, as each of the exclusive samples j covers exactly one PS region i. The corresponding stitching weights \(s^{i}\), computed according to Eq. (4), are given in Table 3.

In order to demonstrate that the stitching procedure is unbiased, we compare the normalization and shape of distributions obtained using the stitching procedure with the normalization and shape of distributions obtained from the inclusive sample. Distributions in \(p_{\mathrm{T}} \) of the “leading” and “subleading” jet (the jets of, respectively, highest and second-highest \(p_{\mathrm{T}} \) in the event), in the multiplicity of jets and in the observable \(H_{\mathrm{T}} \) are shown in Fig. 1. The distributions obtained from the inclusive sample are represented by black markers (“inclusive only”), while those obtained by applying the stitching procedure to the combination of the inclusive sample and the samples binned in \(N_{{\mathrm{jet}}}\) are represented by pink lines (“stitched”). The contributions of individual exclusive samples j to the stitched distribution are indicated by shaded areas of different color in the upper part of each figure. The white area (“inclusive stitched”) represents the contribution of the inclusive sample to the stitched distribution. The shaded areas of different color and the white area add up to the pink line. We remark that the “inclusive only” and “inclusive stitched” distributions contain the exact same events. The sole difference between these two distributions is that the stitching weights, given in Table 3, are applied to the “inclusive stitched”, but not to the “inclusive only” distribution. In the lower part of each figure, we show the difference in normalization and shape between the distribution obtained using the stitching procedure and the distribution obtained when using solely the inclusive sample. The differences are given relative to the distribution obtained from our stitching procedure. The size of statistical uncertainties on the “inclusive only” and “stitched” distributions is visualized in the lower part of each figure and is represented by the length of the error bars and by the height of the dark shaded area, respectively. The jets shown in the figure are reconstructed using the anti-\(k_{\mathrm{t}}\) algorithm [41, 42] with a distance parameter of 0.4, using all stable generator-level particles (after hadron shower and hadronization) except neutrinos as input, and are required to satisfy the selection criteria \(p_{\mathrm{T}} > 25~\mathrm{GeV}\) and \(\vert \eta \vert < 5.0\). The observable \(H_{\mathrm{T}} \) is computed as the scalar sum in \(p_{\mathrm{T}} \) of these jets. Note that the multiplicity of jets and the observable \(H_{\mathrm{T}} \) shown in Fig. 1 differ from the observables \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \) that are used in the stitching procedure: The former refer to jets at the generator (detector) level, while the latter refer to jets at the matrix-element level. The distributions are normalized to an integrated luminosity of 140 fb\(^{-1}\).

The distributions for the inclusive sample and for the sum of inclusive plus exclusive samples, with the stitching weights applied, are in agreement within the statistical uncertainties. The exclusive samples reduce the statistical uncertainties in particular in the tails of the distributions.

Distributions in \(p_{\mathrm{T}} \) of the a leading and b subleading jet, in c the multiplicity of generator-level jets and in d the observable \(H_{\mathrm{T}} \), the scalar sum in \(p_{\mathrm{T}} \) of these jets, for the case of W+jets samples that are stitched based on the observable \(N_{{\mathrm{jet}}}\) at the matrix-element level. The W bosons are required to decay leptonically. The event yields are computed for an integrated luminosity of \(140~\mathrm{fb}^{-1}\)

3.1.2 Stitching of W+jets samples by \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \)

This example extends the previous example. It demonstrates the stitching procedure based on two observables, \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \). The exclusive samples are simulated for jet multiplicities of \(N_{{\mathrm{jet}}} = 1\), 2, 3, and 4 and for \(H_{\mathrm{T}} \) in the ranges 70–100, 100–200, 200–400, 400–600, 600–800, 800–1200, 1200–2500, and \(> 2500~\mathrm{GeV}\) (up to the kinematic limit). We refer to the exclusive samples produced in slices of \(N_{{\mathrm{jet}}}\) as the “\(N_{{\mathrm{jet}}}\)-samples” and to the samples simulated in ranges in \(H_{\mathrm{T}} \) as the “\(H_{\mathrm{T}} \)-samples”. The inclusive sample contains events with jet multiplicities between 0 and 4 and covers the full range in \(H_{\mathrm{T}} \). The number of events in the \(H_{\mathrm{T}} \)-samples are given in Table 4. The information for the inclusive sample and for the \(N_{{\mathrm{jet}}}\)-samples is the same as for the previous example and is given in Table 1.

The corresponding PS regions i, defined in the plane of \(N_{{\mathrm{jet}}}\) versus \(H_{\mathrm{T}} \), are shown in Fig. 2. In total, the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) and the corresponding stitching weights \(s^{i}\) are computed for 45 separate PS regions.

In some of the 45 PS regions, the probabilities \(P_{{\mathrm{incl}}}^{i}\) are rather low, on the level of \(10^{-7}\). In order to reduce the statistical uncertainties on the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\), we compute these probabilities using the following procedure: For all regions i of PS that are covered by the inclusive sample and by one or more \(N_{{\mathrm{jet}}}\)- or \(H_{\mathrm{T}} \)-samples, we determine the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) by the method of least squares [43]. Details of the computation are given in the appendix. The probability \(P_{{\mathrm{incl}}}^{0}\) for the PS region \(N_{{\mathrm{jet}}} = 0\) and \(H_{\mathrm{T}} < 70~\mathrm{GeV}\), which is solely covered by the inclusive sample, is computed according to the relation \(P_{{\mathrm{incl}}}^{0} = 1 - \sum _{i=1}^{44} \, P_{{\mathrm{incl}}}^{i}\). The numerical values of the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) obtained by the least-square method and of the stitching weights \(s^{i}\), computed according to Eq. (4), are given in Tables 7 and 8.

Distributions in \(p_{\mathrm{T}} \) of the leading and subleading jet, in the multiplicity of jets, and in the observable \(H_{\mathrm{T}} \) obtained from our stitching procedure are compared to the distributions obtained from the inclusive sample in Fig. 3. The distributions obtained by stitching the inclusive sample with the samples binned in \(N_{{\mathrm{jet}}}\) and in \(H_{\mathrm{T}} \) are represented by pink lines, while those obtained when using solely the inclusive sample are represented by black markers. The weights given in Table 8 are applied to the stitched distributions. The contribution of all \(N_{{\mathrm{jet}}}\)-binned samples to the stitched distribution is represented by the blue shaded area (“sum of \(N_{{\mathrm{jet}}}\) samples”), while the contribution of all samples produced in ranges in \(H_{\mathrm{T}} \) is represented by the yellow shaded area (“sum of \(H_{\mathrm{T}} \) samples”) in the upper part of the figures. Following Fig. 1, the white area represents the contribution of the inclusive sample to the stitched distribution. The jets are reconstructed as described in Sect. 3.1.1 and are required to pass the selection criteria \(p_{\mathrm{T}} > 25~\mathrm{GeV}\) and \(\vert \eta \vert < 5.0\). In the lower part of each figure, we again show the difference between the distributions obtained from the inclusive sample and obtained by using our stitching procedure, and also the respective statistical uncertainties. As one would expect, the addition of samples simulated in ranges in \(H_{\mathrm{T}} \) to the example given in Sect. 3.1.1 reduces the statistical uncertainties in the tails of the distributions. The reduction is most pronounced in the tails of the distributions in leading and subleading jet \(p_{\mathrm{T}} \) and in the observable \(H_{\mathrm{T}} \).

Distributions in \(p_{\mathrm{T}} \) of the a leading and b subleading jet, in c the multiplicity of generator-level jets and in d the observable \(H_{\mathrm{T}} \), the scalar sum in \(p_{\mathrm{T}} \) of these jets, for the case of W+jets samples that are stitched based on the observables \(N_{{\mathrm{jet}}}\) and \(H_{\mathrm{T}} \) at the matrix-element level. The W bosons are required to decay leptonically. The event yields are computed for an integrated luminosity of \(140~\mathrm{fb}^{-1}\)

3.2 Estimation of trigger rates at the HL-LHC

We choose the task of estimating trigger rates for the upcoming high-luminosity data-taking period of the LHC as second example to illustrate the stitching procedure. The “rate” of a trigger corresponds to the number of pp collision events that satisfy the trigger condition per unit of time. The estimation of trigger rates constitutes an important task for demonstrating the physics potential of the HL-LHC. The HL-LHC physics program demands a large amount of integrated luminosity to be delivered by the LHC, in order to facilitate measurements of rare signal processes (such as the precise measurement of H boson couplings and the study of H boson pair production), as well as to enhance the sensitivity of searches for new physics, by the ATLAS and CMS experiments. In order to satisfy this demand, the HL-LHC is expected to operate at an instantaneous luminosity of 5–\(7.5 \times 10^{34}\) cm\(^{-2}\) s\(^{-1}\) at a center-of-mass energy of \(\sqrt{s} = 14~\mathrm{TeV}\) [24]. The challenge of developing triggers for the HL-LHC is to design the triggers such that rare signal processes pass the triggers with a high efficiency, while the rate of background processes gets reduced by many orders of magnitude, in order not to exceed bandwidth limitations on the detector read-out and on the rate with which events can be written to permanent storage.

The inelastic pp scattering cross section at \(\sqrt{s} = 14~\mathrm{TeV}\) amounts to \(\approx 80\) mb, resulting in up to 200 simultaneous pp interactions per crossing of the proton beams at the nominal HL-LHC instantaneous luminosity [24]. The vast majority of these interactions are inelastic pp scatterings with low momentum exchange, which predominantly arise from the exchange of gluons between the colliding protons. We refer to inelastic pp scattering interactions with no further selection applied as “minimum bias” events. In order to estimate the rates of triggers at the HL-LHC, MC samples of minimum bias events are produced at LO in pQCD using the program Pythia. The minimum bias samples are complemented by samples of inelastic pp scattering interactions in which a significant amount of transverse momentum, denoted by the symbol \(\hat{p}_{\mathrm{T}} \), is exchanged between the scattered protons. The stitching of the minimum bias samples with samples generated for different ranges in \(\hat{p}_{\mathrm{T}} \) allows to estimate the trigger rates with lower statistical uncertainties.

The production of MC samples used for estimating trigger rates at the HL-LHC proceeds by first simulating one “hard-scatter” (HS) interaction within a given range in \(\hat{p}_{\mathrm{T}} \) and then adding a number of additional inelastic pp scattering interactions of the minimum bias kind to the same event, in order to simulate the pileup (PU). We use the symbol \(N_{{\mathrm{PU}}}\) to denote the total number of PU interactions that occur in the same crossing of the proton beams as the HS interaction. No selection on \(\hat{p}_{\mathrm{T}} \) is applied when simulating the PU interactions. We remark that the distinction between the HS interaction and the PU interactions is artificial and solely made for the purpose of MC production. The HS interaction and the PU interactions will be indistinguishable in the data that will be recorded at the HL-LHC: The scattering in which the transverse momentum exchange between the protons amounts to \(\hat{p}_{\mathrm{T}} \) may occur in any of the \(N_{{\mathrm{PU}}} + 1\) simultaneous pp interactions. Our formalism treats the HS interaction and the PU interactions on an equal footing.

The “inclusive” sample in this example are events containing \(N_{{\mathrm{PU}}} + 1\) minimum bias interactions, where for each event the number of PU interactions, \(N_{{\mathrm{PU}}}\), is sampled at random from the Poisson probability distribution:

with a mean \(\bar{N} = 200\). The exclusive samples contain one HS interaction of transverse momentum within a specified range in \(\hat{p}_{\mathrm{T}} \) in addition to \(N_{{\mathrm{PU}}}\) minimum bias interactions. The latter represent the PU. The number \(N_{{\mathrm{PU}}}\) of PU interactions is again sampled at random from a Poisson distribution with a mean of \(\bar{N} = 200\).

We enumerate the ranges in \(\hat{p}_{\mathrm{T}} \) by the index i and denote the number of \(\hat{p}_{\mathrm{T}} \) ranges used to produce the exclusive samples by the symbol m. We further introduce the symbol \(n_{i}\) to refer to the number of inelastic pp scattering interactions that fall into the ith interval in \(\hat{p}_{\mathrm{T}} \). The inelastic pp scatterings may occur either in the HS interaction or in any of the \(N_{{\mathrm{PU}}}\) PU interactions. The “phase space” corresponding to a given event is represented by a vector \(I=n_{1},\dots ,n_{m}\) of dimension m. The ith component of this vector indicates the number of inelastic pp scattering interactions that fall into the ith interval in \(\hat{p}_{\mathrm{T}} \).

The probability \(P^{I}\) for an event in the inclusive sample that contains \(N_{{\mathrm{PU}}}\) pileup interactions to feature \(n_{1}\) inelastic pp scatterings that fall into the first interval in \(\hat{p}_{\mathrm{T}} \), \(n_{2}\) that fall into the second,\(\ldots \), and \(n_{m}\) that fall into the mth follows a multinomial distribution [44] and is given by:

where the symbols \(p_{i}\) correspond to the probability for a single inelastic pp scattering interaction to feature a transverse momentum exchange that falls into the ith interval in \(\hat{p}_{\mathrm{T}} \). The \(n_{i}\) satisfy the condition \(\sum _{i=1}^{m} \, n_{i} = N_{{\mathrm{PU}}} + 1\).

The corresponding probability \(P_{j}^{I}\) for an event in the jth exclusive sample that contains \(N_{{\mathrm{PU}}}\) pileup interactions is given by:

The \(n_{i}\) again satisfy the condition \(N_{{\mathrm{PU}}} + 1 =\sum _{i=1}^{m} \, n_{i}\). The fact that for all events in the jth exclusive sample the transverse momentum \(\hat{p}_{\mathrm{T}} \) that is exchanged in the HS interaction falls into the jth interval in \(\hat{p}_{\mathrm{T}} \) implies that \(N_{{\mathrm{PU}}} + 1\) needs to be replaced by \(N_{{\mathrm{PU}}}\) and \(n_{j}\) by \(n_{j} - 1\) in Eq. (7) compared to Eq. (6), as one of the inelastic pp scatterings that fall into the jth interval in \(\hat{p}_{\mathrm{T}} \) is “fixed” and thus not subject to the random fluctuations, which are modeled by the multinomial distribution. The ratio of Eq. (7) to Eq. (6) is given by the expression:

The validity of Eq. (8) includes the case \(n_{j}=0\).

The expression for the stitching weight \(s^{I}\) is given by an expression similar to Eq. (3), the main difference being that the index i is replaced by the vector I, the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) are replaced by the probabilities \(P_{{\mathrm{incl}}}^{I}\) and \(P_{j}^{I}\) and the product of luminosity times cross section, \(L \, \sigma _{{\mathrm{incl}}}\), is replaced by the frequency F of pp collisions:

The probabilities \(P_{{\mathrm{incl}}}^{I}\) and \(P_{j}^{I}\) are given by Eqs. (6) and (7). Dividing both numerator and denominator on the right-hand side of Eq. (9) by \(P_{{\mathrm{incl}}}^{I}\) and replacing the ratio \(P_{j}^{I}/P_{{\mathrm{incl}}}^{I}\) by Eq. (8) yields:

The sum over j refers to the exclusive samples. At the HL-LHC, the pp collision frequency F amounts to 28 MHz.Footnote 3 Eq. (10) represents the equivalent of Eq. (3), tailored to the case of estimating trigger rates instead of estimating event yields. The weights \(w_{{\mathrm{incl}}}^{k}\) and \(w_{j}^{k}\) are equal to one for all events in this example, which allows to simplify Eq. (10). Using the relations \(\sum _{k=1}^{N_{{\mathrm{incl}}}} \, w_{{\mathrm{incl}}}^{k} = N_{{\mathrm{incl}}}\) and \(\sum _{k=1}^{N_{j}} \, w_{j}^{k} = N_{j}\), we obtain the expression:

The ranges in \(\hat{p}_{\mathrm{T}} \) used to produce the exclusive samples and the number of events contained in each sample are given in Table 5. The association of the index i to the different ranges in \(\hat{p}_{\mathrm{T}} \) and the corresponding values of the probabilities \(p_{i}\) are given in Table 6. The probabilities \(p_{i}\) are computed by taking the ratio of cross sections computed by the program Pythia for the case of single inelastic pp scattering interactions with a transverse momentum exchange that is within the ith interval in \(\hat{p}_{\mathrm{T}} \) and for the case that no condition is imposed on \(\hat{p}_{\mathrm{T}} \).

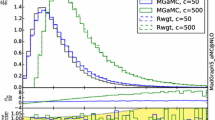

We cannot give numerical values of the stitching weights \(s^{I}\) for this example, as I is a high-dimensional vector, and also because the stitching weights vary depending on \(N_{{\mathrm{PU}}}\). Instead, we show in Fig. 4 the spectrum of the stitching weights that we obtain when inserting the numbers given in Tables 5 and 6 into Eq. (11). For comparison, we also show the corresponding weight, given by \(s_{{\mathrm{incl}}} =F/N_{{\mathrm{incl}}}\), for the case that only the inclusive sample is used to estimate the trigger rate. The weight \(s_{{\mathrm{incl}}}\) amounts to 35 Hz in this example. As can be seen in Fig. 4, the addition of samples produced in ranges of \(\hat{p}_{\mathrm{T}} \) to the inclusive sample reduces the weights. Lower weights in turn reduce the statistical uncertainties on the trigger rate estimate. The different maxima in the distribution of stitching weights \(s^{I}\) correspond to events in which the transverse momentum exchanged between the scattered protons falls into different ranges in \(\hat{p}_{\mathrm{T}} \). The spectrum of weights shown in Fig. 4 is plotted before any trigger selection is applied. As the probability for an event to pass the trigger increases with \(\hat{p}_{\mathrm{T}} \), the stitching weights \(s^{I}\) are on average smaller for events that pass than for events that fail the trigger selection. Consequently, the reduction in statistical uncertainties that one obtains by using the exclusive samples and applying the stitching weights becomes even more pronounced after the trigger selection is applied (the weight \(s_{{\mathrm{incl}}}\) remains fixed at 35 Hz).

Stitching weights \(s^{I}\), computed according to Eq. (11), for the inclusive sample and for the samples produced in ranges of \(\hat{p}_{\mathrm{T}} \)

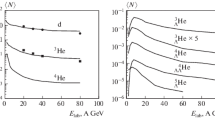

The rates expected for a single jet trigger and for a dijet trigger at the HL-LHC are shown in Fig. 5. The rates are computed as function of the \(p_{\mathrm{T}} \) threshold that is applied to the jets. In case of the dijet trigger, the same \(p_{\mathrm{T}} \) threshold is applied to both jets. The jets are reconstructed as described in Sect. 3.1.1 and are required to be within the geometric acceptance \(\vert \eta \vert < 5.0\). All stable generator-level particles (except neutrinos) originating either from the HS interaction or from any of the PU interactions are used as input to the jet reconstruction. The statistical uncertainties on the rate estimates obtained from the inclusive sample are represented by error bars, while those obtained from the sum of inclusive plus exclusive samples are represented by the shaded area.

The rate estimate obtained for the inclusive sample and for the sum of inclusive plus exclusive samples, with the stitching weights computed according to Eq. (11), agree within statistical uncertainties, demonstrating that the estimate of the trigger rate obtained from the stitching procedure is unbiased. The modest difference between the rate estimates for the dijet trigger with jet \(p_{\mathrm{T}} \) thresholds higher than \(280~\mathrm{GeV}\) is not statistically significant. It is important to keep in mind that the trigger rate estimates for adjacent bins are correlated, because all events that pass the trigger for a given jet \(p_{\mathrm{T}} \) threshold also pass the trigger for all lower thresholds.

For both triggers and all jet \(p_{\mathrm{T}} \) thresholds, the statistical uncertainties obtained with the stitching procedure are smaller than the uncertainties obtained in case only the inclusive sample is used. The reduction in the statistical uncertainties is significantly less pronounced for the single jet trigger than for the dijet trigger, however. For the latter, the stitching procedure reduces the statistical uncertainties in particular for jet \(p_{\mathrm{T}} \) thresholds higher than \(100~\mathrm{GeV}\). The reduction in the statistical uncertainties for the single jet trigger is limited by events with low \(\hat{p}_{\mathrm{T}} \) that contain a single jet of high \(p_{\mathrm{T}} \). The stitching weights \(s^{I}\) for these low \(\hat{p}_{\mathrm{T}} \) events are not much smaller than the weights for the inclusive sample. These low \(\hat{p}_{\mathrm{T}} \) events also cause a “flattening” of the single jet trigger rate for jet \(p_{\mathrm{T}} \) thresholds higher than \(400~\mathrm{GeV}\). The requirement of a second high \(p_{\mathrm{T}} \) jet removes most of these low \(\hat{p}_{\mathrm{T}} \) events, with the effect that the dijet trigger rate decreases more rapidly as function of the jet \(p_{\mathrm{T}} \) threshold and the stitching procedure becomes more effective in reducing the statistical uncertainties for the dijet trigger.

4 Summary

The production of MC samples containing a sufficient number of events to allow for a meaningful comparison with the data is often a challenge in modern HEP experiments, due to the computing resources required to produce and store such samples. This is particularly true for experiments at the CERN LHC, firstly because of the large cross sections of relevant processes (e.g. DY, W+jets, and \({\mathrm{t}}\bar{\mathrm{t}}\)+jets production) and secondly because of the large luminosity delivered by the LHC.

In this paper we have focused on the case that the MC samples have already been produced and we have presented a procedure that allows to reduce the statistical uncertainties by combining MC samples which overlap in PS. The procedure is based on applying suitably chosen weights to the simulated events. We refer to the procedure as “stitching”.

The formalism for computing the stitching weights is general enough to be applied to a variety of use-cases. When used in physics analyses, the stitching procedure allows to reduce the statistical uncertainties in particular in the tails of distributions. Examples that document the typical use of the stitching procedure in physics analyses performed by the CMS experiment during LHC Runs 1 and 2 have been presented. The formalism has been extended to the case of estimating trigger rates at the HL-LHC. Up to 200 simultaneous pp collisions are expected per crossing of the proton beams at the HL-LHC. The distinguishing feature of this application of the stitching procedure is that the same physics process, inelastic pp scattering interactions in which a transverse momentum \(\hat{p}_{\mathrm{T}} \) is exchanged between the protons, may occur in the “hard-scatter” (HS) interaction and in “pileup” (PU) interactions. Our formalism for computing the stitching weight treats the HS and PU interactions on equal footing.

The examples demonstrate that the stitching procedure provides unbiased estimates of event yields and rates as well as of the shapes of distributions. The reduction in the statistical uncertainties achieved by the stitching method depends on the number of events contained in the MC samples that are subject to the stitching procedure and ranges from moderate to significant.

Data Availability

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: There is no data to be submitted with this publication, because all the results of the paper are based on Monte Carlo simulation.]

Notes

The quoted cross sections refer to, respectively, DY production of lepton (electron, muon, and \(\tau \)) pairs of mass \(>50~\mathrm{GeV}\), W+jets production with subsequent leptonic decay of the W boson, and to the pair production of top quarks of mass \(172.5~\mathrm{GeV}\).

If this is not the case for a given set of MC samples, it can be achieved by a simple multiplication of the weights \(w_{{\mathrm{incl}}}^{k}\) and \(w_{j}^{k}\) by the factors \(1/\bar{w} _{{\mathrm{incl}}}\) and \(1/\bar{w} _{j}\).

The beams cross every 25 ns, but pp collisions occur only in \(\approx 70\%\) of those beam crossings [24].

References

W.L. Dunn, J.K. Shultis, Exploring Monte Carlo methods (Elsevier Science, Amsterdam, 2011)

D.P. Kroese, T. Brereton, T. Taimre, Z. Botev, Why the Monte Carlo method is so important today. Wiley Interdiscip. Rev. Comput. Stat. 6, 386 (2014)

S. Amoroso et al., Challenges in Monte Carlo event generator software for High-Luminosity LHC. Comput. Softw. Big Sci. 5(1), 12 (2021). https://doi.org/10.1007/s41781-021-00055-1

LHC Design Report Vol. 1: The LHC main ring (2004). https://doi.org/10.5170/CERN-2004-003-V-1

LHC Design Report Vol. 2: The LHC infrastructure and general services (2004). https://doi.org/10.5170/CERN-2004-003-V-2

LHC Design Report Vol. 3: The LHC injector chain (2004). https://doi.org/10.5170/CERN-2004-003-V-3

ATLAS Collaboration, Measurement of the inelastic proton-proton cross section at \(\sqrt{s} = 13~{\rm TeV}\) with the ATLAS Detector at the LHC. Phys. Rev. Lett. 117(18), 182,002 (2016). https://doi.org/10.1103/PhysRevLett.117.182002

CMS Collaboration, Measurement of the inelastic proton-proton cross section at \(\sqrt{s}=13~{\rm TeV}\). JHEP 07, 161 (2018). https://doi.org/10.1007/JHEP07(2018)161

ATLAS Collaboration, Luminosity determination in pp collisions at \(\sqrt{s}=13~{\rm TeV}\) using the ATLAS detector at the LHC. ATLAS CONF Note (ATLAS-CONF-2019-021). https://cds.cern.ch/record/2677054

CMS Collaboration, CMS luminosity measurements for the 2016 data-taking period. CMS Physics Analysis Summary (CMS-PAS-LUM-17-001). http://cds.cern.ch/record/2257069

CMS Collaboration, CMS luminosity measurement for the 2017 data-taking period at \(\sqrt{s} = 13\text{TeV}\). CMS Physics Analysis Summary (CMS-PAS-LUM-17-004). http://cds.cern.ch/record/2621960

CMS Collaboration, CMS luminosity measurement for the 2018 data-taking period at \(\sqrt{s} = 13\text{ TeV }\). CMS Physics Analysis Summary (CMS-PAS-LUM-18-002). http://cds.cern.ch/record/2676164

M. Czakon, A. Mitov, Top++: a program for the calculation of the top-pair cross section at hadron colliders. Comput. Phys. Commun. 185, 2930 (2014). https://doi.org/10.1016/j.cpc.2014.06.021

Y. Li, F. Petriello, Combining QCD and electroweak corrections to dilepton production in fewz. Phys. Rev. D 86, 094,034 (2012). https://doi.org/10.1103/PhysRevD.86.094034

K. Melnikov, F. Petriello, Electroweak gauge boson production at hadron colliders through \(O(\alpha _s^2)\). Phys. Rev. D 74, 114,017 (2006). https://doi.org/10.1103/PhysRevD.74.114017

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the Powheg box. JHEP 06, 043 (2010). https://doi.org/10.1007/JHEP06(2010)043

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the Powheg method. JHEP 11, 070 (2007). https://doi.org/10.1088/1126-6708/2007/11/070

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). https://doi.org/10.1088/1126-6708/2004/11/040

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, H.S. Shao, T. Stelzer, P. Torrielli, M. Zaro, The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079

T. Gleisberg, S. Höche, F. Krauss, M. Schönherr, S. Schumann, F. Siegert, J. Winter, Event generation with Sherpa 1.1. JHEP 02, 007 (2009). https://doi.org/10.1088/1126-6708/2009/02/007

T. Sjöstrand, S. Ask, J.R. Christiansen, R. Corke, N. Desai, P. Ilten, S. Mrenna, S. Prestel, C.O. Rasmussen, P.Z. Skands, An introduction to Pythia 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024

M. Bahr et al., Herwig++ physics and manual. Eur. Phys. J. C 58, 639 (2008). https://doi.org/10.1140/epjc/s10052-008-0798-9

ATLAS Collaboration, Modelling and computational improvements to the simulation of single vector-boson plus jet processes for the ATLAS experiment (2021) arXiv:2112.09588 [hep-ex]

G. Apollinari, I. Béjar Alonso, O. Brüning, P. Fessia, M. Lamont, L. Rossi, L. Tavian, High-Luminosity Large Hadron Collider (HL-LHC). CERN Yellow Rep. Monogr. 4, 1 (2017). https://doi.org/10.23731/CYRM-2017-004

CMS Collaboration, The Phase-2 upgrade of the CMS data acquisition and high-level trigger. Technical Design Report (in preparation)

M. Baak, J. Cúth, J. Haller, A. Hoecker, R. Kogler, K. Mönig, M. Schott, J. Stelzer, The global electroweak fit at NNLO and prospects for the LHC and ILC. Eur. Phys. J. C 74, 3046 (2014). https://doi.org/10.1140/epjc/s10052-014-3046-5

ATLAS Collaboration, Precision measurement and interpretation of inclusive \(\text{ W}^{+}\), \(\text{ W}^{-}\) and \(\text{ Z }/\gamma ^{*}\) production cross sections with the ATLAS detector. Eur. Phys. J. C 77(6), 367 (2017). https://doi.org/10.1140/epjc/s10052-017-4911-9

ATLAS Collaboration, Measurement of the cross section and charge asymmetry of W bosons produced in pp collisions at \(\sqrt{s} = 8~{\rm TeV}\) with the ATLAS detector. Eur. Phys. J. C 79(9), 760 (2019). https://doi.org/10.1140/epjc/s10052-019-7199-0

CMS Collaboration, Measurement of the differential cross section and charge asymmetry for inclusive \(\text{ pp }\rightarrow \text{ W}^{\pm } + \rm X\) production at \(\sqrt{s} = 8~{\rm TeV}\). Eur. Phys. J. C 76(8), 469 (2016). https://doi.org/10.1140/epjc/s10052-016-4293-4

CMS Collaboration, Measurements of the W boson rapidity, helicity, double-differential cross sections, and charge asymmetry in pp collisions at \(\sqrt{s} = 13~{\rm TeV}\). Phys. Rev. D 102(9), 092,012 (2020). https://doi.org/10.1103/PhysRevD.102.092012

ATLAS Collaboration, Measurement of the production of a W boson in association with a charm quark in pp collisions at \(\sqrt{s} =7~{\rm TeV}\) with the ATLAS detector. JHEP 05, 068 (2014). https://doi.org/10.1007/JHEP05(2014)068

CMS Collaboration, Measurement of associated \(\text{ W }+\text{ charm }\) production in pp collisions at \(\sqrt{s} = 7~{\rm TeV}\). JHEP 02, 013 (2014). https://doi.org/10.1007/JHEP02(2014)013

CMS Collaboration, Measurement of associated production of a W boson and a charm quark in proton-proton collisions at \(\sqrt{s} = 13~{\rm TeV}\). Eur. Phys. J. C 79(3), 269 (2019). https://doi.org/10.1140/epjc/s10052-019-6752-1

ATLAS Collaboration, Observation and measurement of Higgs boson decays to \(\text{ WW}^{*}\) with the ATLAS detector. Phys. Rev. D 92(1), 012,006 (2015). https://doi.org/10.1103/PhysRevD.92.012006

ATLAS Collaboration, Search for non-resonant Higgs boson pair production in the \(\text{ bb }l\nu l\nu \) final state with the ATLAS detector in pp collisions at \(\sqrt{s}=13~{\rm TeV}\). Phys. Lett. B 801, 135,145 (2020). https://doi.org/10.1016/j.physletb.2019.135145

CMS Collaboration, Search for the Standard Model Higgs boson in the \(\text{ H }\rightarrow \text{ WW } \rightarrow l\nu \text{ jj }\) decay channel in pp collisions at the LHC. CMS Physics Analysis Summary (CMS-PAS-HIG-13-027). http://cds.cern.ch/record/1743804

CMS Collaboration, Search for resonant and non-resonant Higgs boson pair production in the \({\rm b}\bar{{\rm b}}l\nu l\nu \) final state in proton-proton collisions at \(\sqrt{s}=13~{\rm TeV}\). JHEP 01, 054 (2018). https://doi.org/10.1007/JHEP01(2018)054

R.D. Ball et al., Parton distributions from high-precision collider data. Eur. Phys. J. C 77, 663 (2017). https://doi.org/10.1140/epjc/s10052-017-5199-5

CMS Collaboration, Extraction and validation of a new set of CMS Pythia 8 tunes from underlying-event measurements. Eur. Phys. J. C 80(1), 4 (2020). https://doi.org/10.1140/epjc/s10052-019-7499-4

J. Alwall et al., Comparative study of various algorithms for the merging of parton showers and matrix elements in hadronic collisions. Eur. Phys. J. C 53, 473 (2008). https://doi.org/10.1140/epjc/s10052-007-0490-5

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_{{\rm t}}\) jet clustering algorithm. JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2

G. Cowan, Statistical data analysis (Oxford University Press, Oxford, 1998)

M. Evans, N. Hastings, B. Peacock, C. Forbes, Statistical distributions (Wiley, New York, 2011)

Acknowledgements

This work was supported by the Estonian Research Council Grant PRG445.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

In this section, we detail the application of the least-squares method [43] to the computation of the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) in the example given in Sect. 3.1.2. The aim is to make the computation of these probabilities less prone to statistical fluctuations in the MC samples.

Our use of the least-squares method is based on assuming the following relation to hold:

except for statistical fluctuations on the \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\). The \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) are obtained by determining the fraction of events in the inclusive and exclusive MC samples that fall into PS region i. The symbol j in Eq. (12) refers to those \(N_{{\mathrm{jet}}}\)- and \(H_{\mathrm{T}} \)-samples that cover the PS region i. The symbol \(\sigma _{j}\) refers to the fiducial cross section corresponding to the sample j. We denote the unknown true value of the left-hand side (and equivalently of the right-hand side) of Eq. (12) by the symbol \(\lambda _{i}\) and use the symbols \(r_{{\mathrm{incl}}}^{i}\) and \(r_{j}^{i}\) to refer to the deviations (“residuals”), caused by statistical fluctuations, between the true values of the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) and the values obtained using the MC samples. We further use the symbols \(e_{{\mathrm{incl}}}^{i}\) and \(e_{j}^{i}\) to denote the expected statistical fluctuations (“errors”) of these probabilities. According to the least-squares method, the best estimate for the value of \(\lambda _{i}\) is given by the solution to the following system of equations:

subject to the condition that the sum of residuals:

attains its minimal value. The expected statistical fluctuations \(e_{{\mathrm{incl}}}^{i}\) and \(e_{j}^{i}\) of the probabilities \(P_{{\mathrm{incl}}}^{i}\) and \(P_{j}^{i}\) are given by the standard errors of the Binomial distribution [43]:

The fluctuations decrease proportional to the inverse of the square-root of the number of events in the MC samples. The solution for \(\lambda _{i}\) is given by the expression:

from which the probabilities \(P_{{\mathrm{incl}}}^{i} =\lambda _{i}/ \sigma _{{\mathrm{incl}}}\) and \(P_{j}^{i} = \lambda _{i}/\sigma _{j}\) follow. The symbols \(\alpha _{{\mathrm{incl}}}^{i}\) and \(\alpha _{j}^{i}\) are defined as:

and act as “weights” in the expression on the right-hand side of Eq. (13), which has the form of a weighted average. We use the symbols \(\alpha _{{\mathrm{incl}}}^{i}\) and \(\alpha _{j}^{i}\) to refer to these weights, in order to distinguish them from the weights \(w_{{\mathrm{incl}}}^{k}\) and \(w_{j}^{k}\) computed by the MC generator program and from the stitching weights \(s^{i}\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Ehatäht, K., Veelken, C. Stitching Monte Carlo samples. Eur. Phys. J. C 82, 484 (2022). https://doi.org/10.1140/epjc/s10052-022-10407-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-10407-9