Abstract

Accurate Monte Carlo simulations for high-energy events at CERN’s Large Hadron Collider are very expensive, both from the computing and storage points of view. We describe a method that allows one to consistently re-use parton-level samples accurate up to NLO in QCD under different theoretical hypotheses. We implement it in MadGraph5_aMC@NLO and validate it by applying it to several cases of practical interest for the search for new physics at the LHC.

1 Introduction

The search for new physics is one of the main priorities of the LHC. The recent observation of an anomaly in the di-photon spectra [1, 2] gives hope that we might have first evidence of Beyond the Standard Model (BSM) physics very soon. In that case, we would only be at the beginning of a long program of investigations of what the underlying physics is. In any case, searches for new particles or for modifications of the interactions among the SM particles will continue, as will progress in our ability to provide precise predictions to be compared with data.

In recent years, efforts have focussed on providing accurate theoretical predictions for a large number of BSM models at Leading Order (LO), in the form of event generators. First, various programs such as FeynRules [3], LanHep [4] or Sarah [5] have automated the extraction of the Feynman rules from a given Lagrangian. Secondly, several matrix-element-based generators like MadGraph5_aMC@NLO [6] (referred to as MG5_aMC later on), Sherpa [7] or Whizard [8] have extended the class of BSM models they support in various directions: high spins, high color representations and any kind of Lorentz structure [9,10,11]. More recently, automated Next-to-Leading Order (NLO) predictions (in QCD) for BSM models have become available thanks to the NLOCT [12] package of FeynRules, which adds to the model the additional elements (R2 and UV counter-terms) required by loop computations.

It is now possible to generate Monte Carlo samples for a large class of BSM theories at LO and for an increasing number at NLO accuracy. Even though technically possible, producing samples for many models and benchmark points down to full detector level at the high luminosity expected at the LHC would require an unmanageable amount of computing and storage resources. However, the stages of a simulation (parton-level generation, parton-shower and hadronisation, detector simulation, and reconstruction) are independent and factorise. Therefore changes in local probabilities happening at very short distance, i.e. from BSM physics, decouple from the rest of the simulation stages. This is particularly interesting since the slowest part of the simulation is the full simulation of the detector.

A logical possibility therefore arises: one can generate large samples under a SM or basic BSM hypothesis and then continuously and locally deform the probability functions associated to the distributions of parton-level events in the phase space by changing the “weight” of each event in a sample to account for an alternative theory or benchmark point. Under a not-too-restrictive set of hypotheses which are easy to list, such an event-by-event re-weighting can be shown to be exactly equivalent (at least in the infinite statistics limit) to a direct generation in the BSM theory. Note that such an event-by-event re-weighting is conceptually different from the very common yet very crude method where events are re-weighted using a pivotal one-dimensional distribution. Event-by-event re-weighting is a common practice in MC simulations, yet so far it has been publicly available only at LO [13, 14], at NLO for very specific cases (e.g. [15]), or in methods where NLO accuracy is far from ensured [16, 17]. It is the aim of this work to show that a consistent (and practical) re-weighting of events can also be done at NLO accuracy.

The plan of this paper is as follows. Before introducing the NLO re-weighting method, we will focus on the LO case in order to explain the intrinsic limitations of such types of methods (Sect. 2). In Sect. 3, we present three types of NLO re-weighting, two of which correspond to methods already introduced in the literature [14, 18]. The third one is the NLO accurate re-weighting method introduced here for the first time. In Sect. 4, we present some validation plots performed with MG5_aMC. We then present our conclusions in Sect. 5.

2 Re-weighting at the leading order

As stated in the introduction, the re-weighting method consists of attaching to every parton-level event a new weight corresponding to a different scenario. The new weights allow one to predict accurately (up to statistical precision) all the LO differential distributions at the parton level, which also makes it possible to perform a single shower and detector simulation for all the models under consideration. At LO accuracy the new weight (\(W_{new}\)) can be easily obtained from the original one (\(W_{orig}\)) by simply multiplying it by the ratio of the matrix-elements estimated on that event for both models (noted respectively \(|M_{orig}|^2\) and \(|M_{new}|^2\)) [13, 14]:

$$\begin{aligned} W_{new} = \frac{|M_{new}|^2}{|M_{orig}|^2}\, W_{orig}. \end{aligned}$$(1)

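In practice, in a weighted Monte Carlo generation, the weights are simply given by (Footnote 1)

$$\begin{aligned} W_{orig} = f_1(x_1,\mu _F)\, f_2(x_2,\mu _F)\, |M_{orig}|^2\, \Omega _{PS}, \end{aligned}$$(2)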

where \(f_i(x_i,\mu _F)\) is the parton-distribution function evaluated at the Bjorken fraction \(x_i\) and at the factorization scale \(\mu _F\), and \(\Omega _{PS}\) is the phase-space measure of the phase-space volume associated to the event (Footnote 2). From this equation it is clear that Eq. 1 is the correct procedure, since the weight is exactly multiplicative in the squared matrix element. This property is preserved by the unweighting procedure, so that Eq. 1 holds for both weighted and un-weighted samples (an actual proof is presented in the Appendix).
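The LO re-weighting of Eq. 1 amounts to one multiplication per event. The following Python lines are a minimal sketch under stated assumptions and not the MG5_aMC implementation: the function name `reweight_lo` and the toy numbers are hypothetical, and the squared matrix elements are assumed to have been evaluated beforehand on each event for both hypotheses.

```python
def reweight_lo(w_orig, me2_orig, me2_new):
    """Apply W_new = W_orig * |M_new|^2 / |M_orig|^2 event by event (Eq. 1)."""
    if not (len(w_orig) == len(me2_orig) == len(me2_new)):
        raise ValueError("all inputs must have one entry per event")
    new_weights = []
    for w, m_orig, m_new in zip(w_orig, me2_orig, me2_new):
        if m_orig <= 0.0:
            # The original hypothesis must populate every region the new one does.
            raise ValueError("original matrix element must be strictly positive")
        new_weights.append(w * m_new / m_orig)
    return new_weights


# Toy numbers (not physical): three events and their squared matrix elements.
w_orig = [0.5, 0.5, 0.5]
me2_orig = [2.0, 4.0, 1.0]
me2_new = [1.0, 4.0, 3.0]

w_new = reweight_lo(w_orig, me2_orig, me2_new)
# With the normalisation of Footnote 1, the sum of the weights estimates
# the cross-section under the new hypothesis.
sigma_new = sum(w_new)
```

The explicit check on the original matrix element mirrors the coverage requirement discussed next: an event that the original theory cannot produce carries no information about the new one.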

A few remarks are in order regarding the range of validity of this method. First, even if the method returns the correct weight, it requires that the event sampling related to \(W_{orig}\) covers appropriately the phase-space for the new theory. In particular, \(W_{orig}\) must be non-zero in all regions where \(W_{new}\) is non-vanishing. Though obvious, this requirement is in fact the most important and critical one. In other words, the phase-space where the new theoretical hypothesis contributes should be a subset of the original one. For example, re-weighting cannot be used for scanning over different mass values of the final state particles (Footnote 3), yet it is typically well-suited for probing different types of spin and/or coupling structures. In more detail, when the new theory has a large contribution in a region of the phase-space where the original sample has only a few events (because the original theory is sub-dominant in that part of the phase-space), the statistical uncertainty of the re-weighted sample becomes very large and the resulting predictions unreliable. To appreciate such an effect quantitatively, we can use a naive estimator assuming a Gaussian behavior. In that case one can write the estimated uncertainty as

where \(\bar{w}\) and Std(w) are respectively the mean and the standard deviation of the ratio of the weights, and the remaining two quantities are an observable and its associated statistical uncertainty. As a consequence, the relative uncertainty can be enhanced if the weights have a large variance. In the Appendix, we introduce, as a proof of principle, a second method to estimate the statistical uncertainty from the distribution of the weights.
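To get a feeling for how the spread of the weights degrades the statistical power, one can monitor the mean and standard deviation of the weight ratio together with an effective number of events. The sketch below is only illustrative: the helper names are hypothetical, and the Kish effective sample size used here is a standard diagnostic swapped in for illustration; it is not the estimator of the text nor the one of the Appendix.

```python
import math


def ratio_statistics(w_orig, w_new):
    """Mean and (population) standard deviation of the ratio w_new / w_orig."""
    ratios = [wn / wo for wo, wn in zip(w_orig, w_new)]
    n = len(ratios)
    mean = sum(ratios) / n
    variance = sum((r - mean) ** 2 for r in ratios) / n
    return mean, math.sqrt(variance)


def effective_sample_size(weights):
    """Kish effective sample size: (sum w)^2 / sum w^2."""
    total = sum(weights)
    total_sq = sum(w * w for w in weights)
    return total * total / total_sq


# Toy sample: one event receives a much larger weight after re-weighting.
w_orig = [1.0, 1.0, 1.0, 1.0]
w_new = [1.0, 1.0, 1.0, 5.0]

mean_ratio, std_ratio = ratio_statistics(w_orig, w_new)
n_eff_orig = effective_sample_size(w_orig)
n_eff_new = effective_sample_size(w_new)
```

In this toy case the four original events are worth only slightly more than two events after re-weighting, which is the kind of loss that a large Std(w) signals.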

Second, the parton-level configuration fed to parton-shower programs depends not only on the four-momenta but also on additional information, which is commonly encoded in the Les Houches Event File (LHEF) [19, 20]. Consequently, re-weighting by a hypothesis that does not preserve such additional information is not accurate. In general, such information is related to:

- Helicity: The helicity state of the external states of a parton-level event is optional in the LHEF convention, yet some programs (e.g. [21]) use this information to decay the heavy state with an approximated spin-correlation matrix. In this case it is easy to modify Eq. 1 to correctly take into account the helicity information by using the following re-weighting:

$$\begin{aligned} W_{new} = \frac{|M^{h}_{new}|^2}{|M^{h}_{orig}|^2}W_{orig}, \end{aligned}$$(4)

where \(|M^{h}_{new}|^2\) and \(|M^{h}_{orig}|^2\) are the matrix elements associated to the event for a given helicity h (the one written in the LHEF) and for the corresponding theoretical hypothesis. This re-weighting is allowed since the total cross-section is equal to the sum of the individual polarized cross-sections.

- Color-flow: A second piece of information presented in the LHEF is the color assignment in the large \(N_c\) limit. This information is used as the starting point for the dipole emission of the parton shower and therefore determines the result of the QCD evolution and hadronisation. Such information is untouched by the re-weighting, which limits the validity of the method. For example, it is not possible to re-weight events with a Higgs boson to a process where the Higgs boson is replaced by a colored particle. One could think that, as for the helicity case, one could amend the re-weighting formula to handle modifications in the relative importance of the various flows. While possible in principle, in practice such re-weighting would require storing additional information (the relative probabilities of all color flows in the old model) in the LHEF, something that does not seem practical.

- Internal resonances: In the presence of on-shell propagators, the associated internal particle is written in the LHEF. This is used by the parton-shower program to guarantee that the associated invariant mass is preserved during the re-shuffling procedure intrinsic to the showering process. Consequently, modifying the mass/width of an internal propagator should be done with caution since it can impact the parton-shower behaviour. This information cannot be corrected via a re-weighting formula, as it links in a non-trivial way short-distance with long-distance physics.
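Of the three items above, only the helicity case admits a simple fix (Eq. 4), which can be sketched as follows. This is a hypothetical illustration, not the MG5_aMC code: the function name, the use of dictionaries keyed by helicity tuples, and the toy numbers are all assumptions.

```python
def reweight_helicity(w_orig, helicity, me2_orig_by_hel, me2_new_by_hel):
    """Eq. 4 for one event: use the matrix elements of the stored helicity only."""
    m_orig = me2_orig_by_hel[helicity]
    m_new = me2_new_by_hel[helicity]
    if m_orig <= 0.0:
        raise ValueError("the original theory must populate this helicity state")
    return w_orig * m_new / m_orig


# Toy polarized matrix elements squared for two helicity configurations.
me2_orig = {(-1, 1): 2.0, (1, -1): 1.0}
me2_new = {(-1, 1): 1.0, (1, -1): 3.0}

# The same original weight is re-weighted differently depending on the
# helicity configuration written in the event.
w_a = reweight_helicity(0.5, (-1, 1), me2_orig, me2_new)
w_b = reweight_helicity(0.5, (1, -1), me2_orig, me2_new)
```

Using the helicity-summed ratio instead would give both events the same factor and would therefore distort the helicity composition of the sample.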

Selected results obtained with this re-weighting are presented in Sect. 4.

3 Next to leading order re-weighting

In this section, we will present three re-weighting methods for NLO samples. First we will present a LO type of re-weighting, which we dub “Naive LO-like” re-weighting, introduced in VBFNLO (i.e. REPOLO [17]) and MadSpin [22, 23]. As will become clear later, this method is not NLO accurate and should be used only if the difference between the two theories factorizes from the QCD production. The second method that we propose is original and consists in a fully accurate and general NLO re-weighting. Finally, we present the “loop-improved” re-weighting method [18] to perform approximate NLO computations for loop-induced processes when the associated two-loop computations are not available.

3.1 Naive LO-like re-weighting

Following the MC@NLO method [24, 25], the cross-section can be decomposed in two parts, each of which can be used to generate events associated to a given final state multiplicity:

where R, S, C, SC, MC correspond respectively to the contributions of the fully-resolved configuration (the real), of its soft (including the Born matrix-element), collinear, soft-collinear limits (the counter-events) and the Monte Carlo (MC) counter-term. The \((\mathbb {S})\) (for standard) part corresponds to events generated with the Born configuration (N particles in the final state), while the \((\mathbb {H})\) (for hard) part corresponds to events generated with the real configuration (N\(+\)1 particles in the final state). The MC counter-term (shower dependent) ensures the coherent treatment with the parton-shower (no double counting) while preserving the NLO accuracy of the computation.

The Naive LO-like re-weighting computes the weights based on the multiplicity of the events before parton shower, i.e.

$$\begin{aligned} W^{\mathbb {S}}_{new} = \frac{\mathcal {B}^{new}}{\mathcal {B}^{orig}}\, W^{\mathbb {S}}_{orig}, \qquad W^{\mathbb {H}}_{new} = \frac{\mathcal {R}^{new}}{\mathcal {R}^{orig}}\, W^{\mathbb {H}}_{orig}, \end{aligned}$$

where \(W^{\mathbb {S}}_{X}\), \(W^{\mathbb {H}}_{X}\) are respectively the weights for Born/real topology events for the hypothesis X (where X is either the orig or new label). \(\mathcal {B}^{X}\) is the Born matrix element squared (\(|M^{X}_{born}|^2\)) while \(\mathcal {R}^{X}\) is the real matrix element squared (\(|M^{X}_{real}|^2\)).
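The Naive LO-like prescription can be summarised in a few lines: the event topology alone decides which matrix-element ratio is applied. The sketch below is an illustration under stated assumptions, not the VBFNLO, MadSpin or MG5_aMC code; the function name, the `"S"`/`"H"` labels and the `(orig, new)` pairs are hypothetical conventions.

```python
def naive_lo_like_weight(kind, w_orig, born=None, real=None):
    """Re-weight one event with the Born ratio (S events) or the real ratio (H events).

    born and real are (orig, new) pairs of squared matrix elements evaluated
    on the event kinematics; only the pair matching the topology is needed.
    """
    pairs = {"S": born, "H": real}
    if kind not in pairs:
        raise ValueError("kind must be 'S' (Born topology) or 'H' (real topology)")
    pair = pairs[kind]
    if pair is None:
        raise ValueError("missing matrix elements for this event topology")
    me2_orig, me2_new = pair
    return w_orig * me2_new / me2_orig


# MC@NLO-type events can carry negative weights; the ratio preserves the sign.
w_s = naive_lo_like_weight("S", -0.5, born=(2.0, 4.0))
w_h = naive_lo_like_weight("H", 0.5, real=(1.0, 3.0))
```

Note that the virtual contribution never enters this function, which is precisely why the method is not NLO accurate in general.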

As this method does not consider the dependence of the virtual contributions, it fails to be NLO accurate. NLO accuracy is recovered only if the effect of the new theory factorises out, i.e., when

$$\begin{aligned} \frac{\mathcal {B}^{new}}{\mathcal {B}^{orig}} = \frac{\mathcal {V}^{new}}{\mathcal {V}^{orig}} = \frac{\mathcal {R}^{new}}{\mathcal {R}^{orig}}, \end{aligned}$$

where \(\mathcal {B}\) and \(\mathcal {R}\) are the Born and real matrix elements squared and \(\mathcal {V}\) is the finite piece of the virtual contribution (the interference term between the Born and the loop amplitude). Such a relation should hold over the full phase-space with a universal constant, since the MC counter terms connect the Born and the real in a non-local way. Nevertheless, as we will see later, the effect of the MC counter terms is quite mild, as expected since their contribution to the total cross-section is exactly zero by construction. This allows the Naive LO-like method to nicely approximate the NLO differential cross-section for many processes/theories where the last equation is valid only phase-space point by phase-space point (i.e. when the ratio of the real matches the ratio of the Born and of the virtual in the soft and/or collinear limit).

3.2 NLO re-weighting

In order to have an accurate NLO re-weighting method, one should explicitly factorise out the dependence on the (various) matrix elements (i.e. on the Born squared matrix element \(\mathcal {B}\), the real squared matrix element \(\mathcal {R}\) and the finite piece of the virtual \(\mathcal {V}\)). We use the decomposition of the differential cross-section described in [25] (Footnote 4), introduced in the context of the evaluation of the systematic uncertainties:

where the \(\alpha \) index is either R, S, C, SC, MC (see previous section). Q is the Ellis-Sexton scale and \(d\chi ^\alpha \) is the phase-space measure.

The expressions of \(\mathcal {W}^\alpha _0\), \(\mathcal {W}^\alpha _F\) and \( \mathcal {W}^\alpha _R\) are given in the appendix of [25] and are not repeated here. All those expressions have linear dependencies on the Born, the virtual, the real, the color connected Born \(\mathcal {B}_{CC}\) (this term is defined in Eq. (3.28) of [26]) and the reduced matrix element \(\mathcal {B}_{RM}\) (Eq. (D.1) of [26]). This allows us to decompose the corresponding expressions as (Footnote 5):

where the \(\beta \) index is either 0, R or F. The coefficients of this decomposition are expressions which depend on neither the PDF/scale nor the matrix-element. From this expression we define the following three terms (Footnote 6):

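By keeping track of \(\mathcal {W}^\alpha _{\beta ,B}\), \(\mathcal {W}^\alpha _{\beta ,V}\) and \(\mathcal {W}^\alpha _{\beta ,R}\) at generation time and writing them in the final event, one can perform an NLO re-weighting by:

$$\begin{aligned} \mathcal {W}^{\alpha ,new}_{\beta ,B} = \frac{\mathcal {B}^{new}}{\mathcal {B}^{orig}}\, \mathcal {W}^{\alpha ,orig}_{\beta ,B}, \quad \mathcal {W}^{\alpha ,new}_{\beta ,V} = \frac{\mathcal {V}^{new}}{\mathcal {V}^{orig}}\, \mathcal {W}^{\alpha ,orig}_{\beta ,V}, \quad \mathcal {W}^{\alpha ,new}_{\beta ,R} = \frac{\mathcal {R}^{new}}{\mathcal {R}^{orig}}\, \mathcal {W}^{\alpha ,orig}_{\beta ,R}. \end{aligned}$$(14)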

The final weight associated to the event can then be calculated by combining those various pieces as it is done for the estimation of the systematic uncertainty (see Appendix of [25]). One can notice that both the color-connected Born and the reduced matrix-element are simply re-weighted by the ratio of the Born, which can lead to a breaking of the NLO accuracy of the method. In the case of the color-connected Born, this does not constitute an additional limitation of the method, since the re-weighting factors should differ only if the two theories present a difference in the relative importance of the various color-flows (a case already not handled at LO accuracy). The case of the reduced matrix-element is actually different, since the contribution related to this matrix-element vanishes after integration over the azimuthal angle [26]. The infra-red observables are therefore not sensitive to such a contribution, nor consequently to the re-weighting used for it.
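The logic of the NLO re-weighting, where each stored piece is rescaled by the ratio of the matrix element it is proportional to, can be sketched as follows. This is a simplified illustration and not the MG5_aMC implementation: the function name and the dictionary layout are hypothetical, and the plain sum at the end merely stands in for the actual recombination with PDFs and scale logarithms described in [25].

```python
def reweight_nlo_pieces(pieces, born, virt, real):
    """Rescale the Born-, virtual- and real-proportional pieces separately.

    pieces: dict with keys "B", "V", "R" holding the stored contributions.
    born, virt, real: (orig, new) pairs of the corresponding matrix elements.
    The caller must ensure that no original matrix element vanishes.
    """
    ratios = {
        "B": born[1] / born[0],
        "V": virt[1] / virt[0],
        "R": real[1] / real[0],
    }
    return {key: pieces[key] * ratios[key] for key in ("B", "V", "R")}


# Toy numbers (not physical); individual pieces may be negative.
stored = {"B": 1.0, "V": 0.5, "R": -0.25}
new_pieces = reweight_nlo_pieces(
    stored, born=(2.0, 4.0), virt=(1.0, 0.5), real=(4.0, 2.0)
)
# Stand-in for the recombination of the pieces into the event weight.
toy_weight = sum(new_pieces.values())
```

Compared with the Naive LO-like case, the price to pay is that the three pieces must be written in the event file at generation time.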

More generally, the possible drawbacks and limitations on the statistical precision of the method are the same as for the LO case. However, for NLO calculations in MG5_aMC we face one additional source of statistical uncertainty due to the method used to integrate the virtual contribution. This method reduces the number of computations of the virtual by using an approximation of the virtual contribution based on the Born amplitudes times a fitted parameter \(\kappa \). It performs a separate phase-space integration to get the difference between the virtual and its approximation (a full description of the method is presented in Section 2.4.3 of [6]). Schematically it can be written as:

$$\begin{aligned} \int \mathcal {V} = \kappa \int \mathcal {B} + \int \left( \mathcal {V} - \kappa \mathcal {B}\right) . \end{aligned}$$(15)

If there exists a value of \(\kappa \) such that \(\kappa \mathcal {B}\approx \mathcal {V}\), the second integral is approximately zero and does not need to be probed as often as the first integral (thanks to importance sampling [27]), reducing the amount of time used in the evaluation of the loop-diagrams. However, the re-weighting proposed in Eq. 14 will highly enhance the contribution of the second integral since each term of the integral will be re-weighted by a different factor, having a direct impact on the statistical uncertainty.
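The mechanism can be illustrated with a one-dimensional toy model. Everything in the sketch below is an assumption made for illustration (the toy functions `born` and `virtual`, the value of `kappa`, the sample sizes and the re-weighting factors); it only mimics the splitting of the virtual integral and is unrelated to the actual integrator of MG5_aMC.

```python
import random


def born(x):
    """Toy Born contribution on the unit interval (not a physical matrix element)."""
    return 1.0 + x


def virtual(x):
    """Toy virtual contribution, close to 0.3 * born(x) by construction."""
    return 0.3 * born(x) + 0.01 * x


def difference(x, kappa, r_born=1.0, r_virt=1.0):
    """Integrand of the second integral, with optional re-weighting factors."""
    return r_virt * virtual(x) - kappa * r_born * born(x)


def split_virtual_integral(kappa, n_born, n_diff, rng):
    """Estimate the integral of virtual(x) over [0, 1] as
    kappa * <born> (many points) + <virtual - kappa * born> (few points)."""
    born_part = kappa * sum(born(rng.random()) for _ in range(n_born)) / n_born
    diff_part = sum(difference(rng.random(), kappa) for _ in range(n_diff)) / n_diff
    return born_part + diff_part


rng = random.Random(12345)
estimate = split_virtual_integral(kappa=0.3, n_born=10000, n_diff=100, rng=rng)
# Exact value of the toy integral: 0.3 * 1.5 + 0.01 * 0.5 = 0.455

# Without re-weighting the second integrand is tiny ...
small = abs(difference(0.5, kappa=0.3))
# ... but distinct factors for the Born and the virtual make it large.
large = abs(difference(0.5, kappa=0.3, r_born=2.0, r_virt=1.0))
```

In this toy model the second integrand grows by almost two orders of magnitude once the two terms are rescaled by different factors, while it is still sampled with only a few points.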

To reduce this effect, we propose to use a slightly more advanced re-weighting technique. We split the contribution proportional to the Born (\(\mathcal {W}^\alpha _{\beta ,B}\)) in two parts: \(\mathcal {W}^\alpha _{\beta ,BC}\) and \(\mathcal {W}^\alpha _{\beta ,BB}\). \(\mathcal {W}^\alpha _{\beta ,BC}\) is the part, proportional to the Born, related to the counterterms, while \(\mathcal {W}^\alpha _{\beta ,BB}\) includes all of the other contributions (the Born itself and the approximate virtual). We then apply the following re-weighting:

Both the virtual and the approximate virtual are re-weighted by the same pre-factor, which should limit the enhancement of the second integral. The demonstration that such re-weighting is NLO accurate is presented in the Appendix. It can be intuitively understood by considering (\(\mathcal {B}+\mathcal {V}\)) as a single block which is re-weighted accordingly.
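One possible reading of this prescription is sketched below. The common factor \((\mathcal {B}^{new}+\mathcal {V}^{new})/(\mathcal {B}^{orig}+\mathcal {V}^{orig})\) for the BB and virtual pieces follows from treating (\(\mathcal {B}+\mathcal {V}\)) as a single block; re-weighting the BC piece by the Born ratio and the real piece by the real ratio is our assumption. The function name and data layout are hypothetical, and this is not the MG5_aMC code.

```python
def reweight_nlo_block(pieces, born, virt, real):
    """Re-weight the BB and V pieces with the common (B + V) block ratio.

    pieces: dict with keys "BC", "BB", "V", "R" (stored contributions).
    born, virt, real: (orig, new) pairs of the corresponding matrix elements.
    """
    born_ratio = born[1] / born[0]
    # Single pre-factor shared by the Born/approximate-virtual part and the virtual.
    block_ratio = (born[1] + virt[1]) / (born[0] + virt[0])
    real_ratio = real[1] / real[0]
    return {
        "BC": pieces["BC"] * born_ratio,   # assumption: counterterm part follows the Born
        "BB": pieces["BB"] * block_ratio,
        "V": pieces["V"] * block_ratio,
        "R": pieces["R"] * real_ratio,
    }


# Toy numbers (not physical).
stored = {"BC": 1.0, "BB": 2.0, "V": 0.5, "R": -0.25}
new_pieces = reweight_nlo_block(
    stored, born=(2.0, 4.0), virt=(1.0, 0.5), real=(4.0, 2.0)
)
```

Because the virtual and its Born-based approximation now share one factor, their difference is rescaled as a whole instead of being blown up by two unrelated ratios.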

3.3 Loop improved re-weighting

A third type of re-weighting was originally introduced in the context of multiple Higgs production [18, 28,29,30], which we now briefly describe. In this case the idea is to perform the NLO computation in the infinite top-mass limit and then re-introduce the finite top-mass effects via re-weighting. Equation 16 is directly applicable if the exact finite virtual part is known. If not, one can still use an approximate method:

Neither this method nor the Naive LO-like method is NLO accurate. However, one can expect the loop-improved method to have a better accuracy than the Naive LO-like one, due to the correct treatment of the various counter terms.

4 Implementation and validation

The various methods of re-weighting discussed in the previous section have been implemented in MG5_aMC and are publicly available starting from version 2.4.0. At LO, the default re-weighting mode is based on the helicity information present in the event (Eq. 4), while for NLO samples, the default re-weighting mode is the NLO accurate one (Eq. 16). Fixed-order NLO generation cannot be re-weighted since no event generation is performed in this mode. A manual of the code is available online at the following address: https://cp3.irmp.ucl.ac.be/projects/madgraph/wiki/Reweight.

In this section, we will present four validation examples covering the various types of re-weighting introduced in the previous section. Since the purpose of this section is mainly to validate our method, the details of the simulation used (cuts, type of scale, ...) are kept to a minimum. Unless otherwise stated, the settings used correspond to the default values of MG5_aMC (version 2.4.0). In particular, the minimal jet transverse momentum is 20 GeV at LO and 10 GeV at NLO.

4.1 ZW associated production in the effective field theory at the LO

For the first validation, we will use the effective field theory (EFT) in the Electro-Weak sector [31]. We will focus on the associated production of the W and Z bosons for the following dimension six operator:

with

and \(g_W\) is the weak gauge coupling, \(\tau ^I\) are the Pauli matrices and \(W^I_\mu \) is the gauge field of SU(2).

In Fig. 1 we present the differential distributions for the transverse momentum of the Z boson at LO accuracy. Starting from a sample of Standard Model events (black solid curve), we have re-weighted our sample to get the SM plus the interference term with the dimension six operator for two values of the associated coupling: \(c=50\, \text {TeV}^{-2}\) (dashed blue) and \(c=500\, \text {TeV}^{-2}\) (dashed green). This second value is clearly outside the validity region for the EFT approach, as the differential distribution turns negative at low transverse momentum. Nevertheless, having such large effects is interesting for the validation of the re-weighting method. The same differential distributions are generated directly with MG5_aMC (solid green and blue) and validate the re-weighting method.

The ratios between the differential curves obtained with each method are presented in the second inset. This inset contains also the statistical uncertainty (yellow band) for the ratio of two independent SM samples. The compatibility of those two ratio plots with the expected statistical fluctuation validates our approach/code implementation. The first inset presents the ratio between the EFT and SM predictions. It shows that the method works correctly for quite small and quite large modifications of the differential distributions.

One can note that in the context of EFTs, the weight is linear in the dim-6 coupling (Footnote 7); therefore it is trivial to predict the weight for any value of the coupling as soon as the weights for two different values of the coupling are known. This property can be used to further speed up the computation of the weight.
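The shortcut relies on nothing more than a straight line through two known points. The sketch below is illustrative only: the function name and the numbers are hypothetical, and linearity holds only when the squared dimension-six term is not included (see Footnote 7).

```python
def weight_at_coupling(c, c1, w1, c2, w2):
    """Weight of one event at coupling c, assuming the weight is linear in c
    and is known to be w1 at c1 and w2 at c2."""
    if c1 == c2:
        raise ValueError("two distinct coupling values are required")
    slope = (w2 - w1) / (c2 - c1)
    return w1 + slope * (c - c1)


# Toy event: weight 1.0 in the SM (c = 0) and 1.5 at c = 50 (arbitrary units).
w_500 = weight_at_coupling(500.0, c1=0.0, w1=1.0, c2=50.0, w2=1.5)
# A negative coupling is obtained from the same two points.
w_minus_50 = weight_at_coupling(-50.0, c1=0.0, w1=1.0, c2=50.0, w2=1.5)
```

Two matrix-element evaluations per event are thus enough to scan any number of coupling values.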

4.2 ZH associated production in the effective field theory at NLO

For our first NLO validation, we consider the associated production of a Z and an H boson in the EFT as implemented in the Higgs Characterisation framework/model [33]. We use two of the benchmarks introduced in [34]: HD and HDder. In more detail, the effective Lagrangian relevant for this example is

where \(\Lambda \) is the high energy scale (set to \(1 \text {TeV}\)), \(\kappa _{HWW}\), \(\kappa _{H\partial Z}\), \(\kappa _{H\partial W}\) are dimensionless couplings (set to one). H is the Higgs doublet field and \(V_{\mu \nu } = \partial _\mu V_\nu -\partial _\nu V_\mu \); \(V=Z, W^-,W^+\).

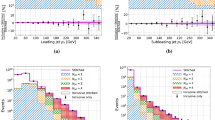

In Fig. 2 we present the differential cross-section for the transverse momentum of the Higgs and for its rapidity. In both cases, we present the curve for the SM, HD and HDder benchmarks. For the transverse momentum, we start from an HDder sample of events and perform the re-weighting to the other scenarios. For the rapidity, we present the plot where the original sample is generated in the HD theory. Each re-weighted curve is then compared with a dedicated generation, and the associated ratio plot is displayed below with the statistical uncertainty expected for the generation of two independent samples. The agreement between the two is excellent for both the NLO accurate re-weighting and the Naive LO-like re-weighting. In this case the NLO QCD effects factorise from the BSM ones, and therefore the NLO accuracy of the Naive LO-like approach can only be spoiled by the MC counter terms (whose effects are, as expected, quite mild).

Fig. 2 Differential cross-section for \(p p \rightarrow Z H\) at 13 TeV LHC featuring both LO and NLO re-weighting methods. Events have been showered with Herwig6 [32]. See text for details

From the comparison of the two methods for the HD curve in the plot of the transverse momentum (top plot), we can observe that the statistical fluctuations are more pronounced for the curve obtained by re-weighting. This is an example of the enhancement of the statistical uncertainty due to the re-weighting, as discussed around Eq. 3, since in the high \(p_T\) region the HDder is suppressed compared to the other theories under consideration (HD and SM).

4.3 Effective field theory (\(t\bar{t}Z\)) at NLO

In this second NLO example, we will use the EFT framework in the context of the top-quark [35] and focus on the chromomagnetic operator:

where Q is the third generation left-handed quark doublet, \(\varphi \) and t are respectively the Higgs and top quark fields, \(g_s\) is the SM strong coupling constant, \(y_t\) is the top-Yukawa coupling and \(T^A\) is the SU(3) generator.

In Fig. 3, we present the transverse momentum of the Z boson in the associated production of this boson with a top/anti-top quark pair. We present the result for both the full matrix element squared (labelled \(\sigma ^{(2)}\)) and for the SM contribution plus the interference with the dimension 6 operator only (labelled \(\sigma ^{(1)}\)).

As in the previous section, we present our prediction both via the Naive LO-like re-weighting method (RWGT-LO) and via the NLO accurate one (RWGT-NLO). The ratios to the SM curves are presented in the first inset, while the ratio between our prediction and the direct computation in MG5_aMC for \(\sigma ^{(2)}\) is presented in the second inset. The green band represents the expected statistical uncertainty for the ratio of two MG5_aMC samples. It is not possible to extract in an automatic way the contribution of \(\sigma ^{(1)}\) from MG5_aMC and therefore we do not provide any comparison for this curve. As before, we observe a case where the statistical uncertainties are enhanced by the re-weighting approach and where both the Naive LO-like and the NLO re-weighting provide similar results. In this case the theory does not factorise and the ratios of the virtual and of the Born are not expected to match. The nice agreement is explained by the small contribution of the virtual and, once again, by the mild effect of the MC counter terms.

Fig. 3 Differential cross-section for \(p p \rightarrow Z t \bar{t}\) at 13 TeV LHC featuring both LO and NLO re-weighting methods. The shower has been performed with Herwig6 [32]. See text for details

4.4 Higgs plus one jet production at LO and NLO

In this last example, we will present results for the associated production of a SM Higgs with one jet. In Fig. 4, we present the transverse momentum of the Higgs at both LO and NLO accuracy. For the LO case, we present three curves. The first one is the curve obtained within the heft model [36] featuring the dimension five operator obtained by integrating out the top quark (HEFT LO). The second line (SM LO/RWGT) is the one obtained by re-weighting the previous curve by the full one-loop matrix element squared, which contains the complete top-quark mass dependence. The last LO curve is the one obtained via direct integration of the one-loop amplitude squared by MG5_aMC [37] (SM LO). At NLO accuracy, we have the curve in the infinite top mass limit (HEFT NLO) using the Higgs characterization model [34]. This sample is then re-weighted by the full loop (Loop Improved) following the loop-improved method presented in the previous section. It is so far not possible to compute the NLO contribution directly in order to assess the accuracy of such a method.

The first inset presents the ratio at LO and NLO of the infinite top mass limit over the full theory. For the NLO case, the full theory is approximated by the loop-improved method. The two ratios are very similar, showing that the loop-improved method re-introduces the top-mass effects in a sensible way. The second inset presents the ratio between the re-weighting and the direct approach in the LO case; the statistical uncertainty of the ratio of two independent SM samples is represented by the yellow band. Its bumpy shape is due to the use of multiple samples with different cuts to decrease the statistical uncertainty. This ratio plot fully validates the re-weighting in the case of the LO curves.

Fig. 4 Differential cross-section of the Higgs transverse momentum in the heavy top mass limit (both LO and NLO) re-weighted to include the finite top mass effect. This is compared to the loop-induced process (LO). The shower has been performed with Herwig6 [32]. See text for details

5 Conclusion

We have presented the implementation of several methods that can be used for re-weighting LO and NLO samples and discussed the associated intrinsic limitations. We have released a new version of MG5_aMC that allows users to employ the various re-weighting methods presented in this paper in a fully automatic and user-friendly way. In particular we have introduced for the first time an NLO accurate re-weighting method and compared it with the approximate methods available in the literature. Other re-weighting methods like the Naive LO-like and the loop-improved are for the first time available in a public code.

The comparison between the various methods shows that the approximate method (the Naive LO-like re-weighting) performs satisfactorily. This indicates that the non-locality of the MC counter terms is often more a theoretical problem than a contribution spoiling the NLO accuracy of the Naive LO-like re-weighting. Therefore the Naive LO-like re-weighting should be a good approximation for a quite large class of models/observables, when the virtual contribution is sub-dominant and/or when the effect of the BSM physics factorises. Consequently, we recommend that phenomenologists first test the Naive LO-like re-weighting and, in case of loss of accuracy, move forward to the slower NLO method. On the other hand, for mass production at the LHC, where the samples are often used for more than one study, we recommend always using the NLO accurate method.

The framework introduced here is flexible enough to accommodate different types of re-weighting approaches. In the near future we plan to capitalise on this to allow different types of functionalities. First we plan to implement a standard systematic uncertainty computation module as it is done in [16, 25, 38,39,40,41,42]. Compared to the existing module of MG5_aMC [25], this new module will allow this determination to be performed independently of the event generation, which will be extremely useful to evaluate the effect of a new PDF set or to test a new scale scheme on existing samples. In a second stage, we plan to be able to compute the systematic uncertainty for the re-weighted BSM sample at the time of the re-weighting.

Notes

For the simplicity of the discussion, we will always consider that the sum of the weights is equal to the total cross-section of the sample.

The normalisation choice implies that the phase-space factor \( \Omega _{PS}\) is proportional to \(N^{-1}\) where N is the number of phase-space points used to probe the phase space.

For an intermediate particle, a small variation of the mass (of the order of the width) is reasonable.

We also use the same (MC) counter terms as described in that paper.

Due to the presence of multiple counter terms, the kinematic configuration on which the matrix element is evaluated is not unique: an implicit sum over such kinematic configurations is assumed here and in the rest of the paper.

One can notice that \(\mathcal {W}_{\beta ,V}^{\alpha } = \mathcal {W}_{\beta ,R}^{\alpha } = 0 \) for \(\beta = R,F\) due to the use of the Ellis-Sexton scale [6].

There would also be a quadratic contribution if we included the squared matrix element associated with the dimension-six operator.

For quantities that are not positive definite, the same idea holds using \(\max _i (|W^i_{orig}|)\).

References

ATLAS, ATLAS-CONF-2015-081 (2015)

CMS, CMS-PAS-EXO-15-004 (2015)

A. Alloul, N.D. Christensen, C. Degrande, C. Duhr, B. Fuks, Comput. Phys. Commun. 185, 2250 (2014). doi:10.1016/j.cpc.2014.04.012

A. Semenov, Comput. Phys. Commun. 201, 167 (2016). doi:10.1016/j.cpc.2016.01.003

F. Staub, Comput. Phys. Commun. 185, 1773 (2014). doi:10.1016/j.cpc.2014.02.018

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, H.S. Shao, T. Stelzer, P. Torrielli, M. Zaro, JHEP 07, 079 (2014). doi:10.1007/JHEP07(2014)079

T. Gleisberg, S. Hoeche, F. Krauss, M. Schonherr, S. Schumann, F. Siegert, J. Winter, JHEP 02, 007 (2009). doi:10.1088/1126-6708/2009/02/007

W. Kilian, T. Ohl, J. Reuter, Eur. Phys. J. C 71, 1742 (2011). doi:10.1140/epjc/s10052-011-1742-y

C. Degrande, C. Duhr, B. Fuks, D. Grellscheid, O. Mattelaer, T. Reiter, Comput. Phys. Commun. 183, 1201 (2012). doi:10.1016/j.cpc.2012.01.022

P. de Aquino, W. Link, F. Maltoni, O. Mattelaer, T. Stelzer, Comput. Phys. Commun. 183, 2254 (2012). doi:10.1016/j.cpc.2012.05.004

S. Höche, S. Kuttimalai, S. Schumann, F. Siegert, Eur. Phys. J. C 75(3), 135 (2015). doi:10.1140/epjc/s10052-015-3338-4

C. Degrande, Comput. Phys. Commun. 197, 239 (2015). doi:10.1016/j.cpc.2015.08.015

J.S. Gainer, J. Lykken, K.T. Matchev, S. Mrenna, M. Park, JHEP 10, 078 (2014). doi:10.1007/JHEP10(2014)078

J. Baglio et al. (2014). arXiv:1404.3940

S. Frixione, B.R. Webber, JHEP 06, 029 (2002). doi:10.1088/1126-6708/2002/06/029

S. Alioli, P. Nason, C. Oleari, E. Re, JHEP 06, 043 (2010). doi:10.1007/JHEP06(2010)043

K. Arnold et al. REPOLO: REweighting POwheg events at Leading Order (2012). https://www.itp.kit.edu/vbfnlo/wiki/lib/exe/fetch.php?media=documentation:repolo_1.0.pdf (Online)

R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, P. Torrielli, E. Vryonidou, M. Zaro, Phys. Lett. B 732, 142 (2014). doi:10.1016/j.physletb.2014.03.026

J. Alwall et al., Comput. Phys. Commun. 176, 300 (2007). doi:10.1016/j.cpc.2006.11.010

J.R. Andersen et al. (2014). arXiv:1405.1067

P. Meade, M. Reece (2007). arXiv:hep-ph/0703031

P. Artoisenet, R. Frederix, O. Mattelaer, R. Rietkerk, JHEP 03, 015 (2013). doi:10.1007/JHEP03(2013)015

S. Frixione, E. Laenen, P. Motylinski, B.R. Webber, JHEP 04, 081 (2007). doi:10.1088/1126-6708/2007/04/081

S. Frixione, F. Stoeckli, P. Torrielli, B.R. Webber, C.D. White (2010)

R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, R. Pittau, P. Torrielli, JHEP 02, 099 (2012). doi:10.1007/JHEP02(2012)099

R. Frederix, S. Frixione, F. Maltoni, T. Stelzer, JHEP 10, 003 (2009). doi:10.1088/1126-6708/2009/10/003

S. Weinzierl (2000). arXiv:hep-ph/0006269

F. Maltoni, E. Vryonidou, M. Zaro, JHEP 11, 079 (2014). doi:10.1007/JHEP11(2014)079

R. Frederix, S. Frixione, E. Vryonidou, M. Wiesemann, JHEP 08, 006 (2016). doi:10.1007/JHEP08(2016)006

M. Buschmann, D. Goncalves, S. Kuttimalai, M. Schonherr, F. Krauss, T. Plehn, JHEP 02, 038 (2015). doi:10.1007/JHEP02(2015)038

C. Degrande, N. Greiner, W. Kilian, O. Mattelaer, H. Mebane, T. Stelzer, S. Willenbrock, C. Zhang, Ann. Phys. 335, 21 (2013). doi:10.1016/j.aop.2013.04.016

G. Corcella, I.G. Knowles, G. Marchesini, S. Moretti, K. Odagiri, P. Richardson, M.H. Seymour, B.R. Webber, JHEP 01, 010 (2001). doi:10.1088/1126-6708/2001/01/010

F. Demartin, F. Maltoni, K. Mawatari, B. Page, M. Zaro, Eur. Phys. J. C 74(9), 3065 (2014). doi:10.1140/epjc/s10052-014-3065-2

F. Maltoni, K. Mawatari, M. Zaro, Eur. Phys. J. C 74(1), 2710 (2014). doi:10.1140/epjc/s10052-013-2710-5

O.B. Bylund, F. Maltoni, I. Tsinikos, E. Vryonidou, C. Zhang (2016)

J. Alwall, P. Demin, S. de Visscher, R. Frederix, M. Herquet, F. Maltoni, T. Plehn, D.L. Rainwater, T. Stelzer, JHEP 09, 028 (2007). doi:10.1088/1126-6708/2007/09/028

V. Hirschi, O. Mattelaer, JHEP 10, 146 (2015). doi:10.1007/JHEP10(2015)146

Z. Bern, L.J. Dixon, F. Febres Cordero, S. Höche, H. Ita, D.A. Kosower, D. Maitre, Comput. Phys. Commun. 185, 1443 (2014). doi:10.1016/j.cpc.2014.01.011

E. Bothmann, M. Schönherr, S. Schumann, Eur. Phys. J. C 76, 590 (2016). doi:10.1140/epjc/s10052-016-4430-0

S. Hoeche, M. Schonherr, Phys. Rev. D 86, 094042 (2012). doi:10.1103/PhysRevD.86.094042

S. Alioli, P. Nason, C. Oleari, E. Re. http://powhegbox.mib.infn.it/

J. Bellm, S. Plätzer, P. Richardson, A. Siódmok, S. Webster, Phys. Rev. D 94(3), 034028 (2016). doi:10.1103/PhysRevD.94.034028

Acknowledgements

I would like to thank all the authors of MG5_aMC for their discussions, help and support at many stages of this project. I would also like to thank C. Degrande, R. Frederix and F. Maltoni for reading and commenting on this manuscript, and E. Vryonidou, F. DeMartin, I. Tsinikos and V. Hirschi for their help during the validation of this implementation. O.M. is supported by a Durham International Junior Research Fellowship. This work is supported in part by the IISN “MadGraph” convention 4.4511.10, by the Belgian Federal Science Policy Office through the Interuniversity Attraction Pole P7/37, by the European Union as part of the FP7 Marie Curie Initial Training Network MCnetITN (PITN-GA-2012-315877), and by the ERC Grant 291377 LHCtheory: Theoretical predictions and analyses of LHC physics: advancing the precision frontier.

Appendix A: Theoretical proof

A.1: Unweighting

In order to have a formal proof that the unweighting procedure can commute with the re-weighting, we first have to formalize the procedure. Following the convention adopted in the previous sections, a standard Monte Carlo integration is:

To get an unweighted sample, we first need to multiply and divide this expression by \(\max _i (W^i_{orig}) \):

Finally, the term \(\frac{W^i_{orig}}{\max _i (W^i_{orig})}\) can be re-interpreted as a probability to accept/reject the phase-space point (see footnote 8).

By randomly selecting a sub-sample of phase-space points with that probability, we significantly reduce the sample size. Additionally, all the remaining events have the same weight (\(\max _i (W^i_{orig})\)) and the associated distribution of events follows the physical distributions.
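In formulas, and with the normalisation in which the weights sum to the cross-section, the steps above can be summarised as follows. This is a reconstruction from the surrounding text rather than a quotation: N is the number of generated phase-space points, and the index j in the maximum is introduced here only to keep it distinct from the summation index.

\[
\sigma _{orig} \approx \sum _{i=1}^{N} W^i_{orig}
= \max _j (W^j_{orig}) \sum _{i=1}^{N} \frac{W^i_{orig}}{\max _j (W^j_{orig})}
\approx \max _j (W^j_{orig}) \sum _{i=1}^{N} Acc_i
\]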

where \(Acc_i\) is equal to 1 if the event was kept and to 0 if it was rejected, following the acceptance probability \(\frac{W^i_{orig}}{ \max _i(W^i_{orig})}\).
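The accept/reject step can be illustrated with a minimal Python sketch. It is a toy under stated assumptions, not the MG5_aMC implementation: the weights are random positive numbers, and the names `unweight`, `weights` and `accepted` are hypothetical.

```python
import random


def unweight(weights, rng):
    """Accept/reject unweighting for strictly positive weights.

    Each event i is kept with probability W_i / max(W); every kept event
    then carries the same weight max(W).
    """
    w_max = max(weights)
    accepted = [1 if rng.random() < w / w_max else 0 for w in weights]
    return accepted, w_max


# Toy weighted sample (hypothetical numbers): the weights already include
# the 1/N phase-space factor, so their sum is the cross-section estimate.
rng = random.Random(12345)
n_points = 50_000
weights = [rng.uniform(0.1, 1.0) / n_points for _ in range(n_points)]

accepted, w_max = unweight(weights, rng)
sigma_weighted = sum(weights)
sigma_unweighted = w_max * sum(accepted)
print(f"weighted   : {sigma_weighted:.4f} from {n_points} events")
print(f"unweighted : {sigma_unweighted:.4f} from {sum(accepted)} events")
```

The two estimates agree within statistical fluctuations, while the unweighted sample contains noticeably fewer events.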

Let us now prove that the re-weighting works on an unweighted sample by doing the same for a second theory. Instead of multiplying and dividing by \(\max _i(W^i_{new})\), however, we use the maximum weight of the original theory:

Since \(W^i_{new} = \frac{|M^i_{new}|^2}{|M^i_{orig}|^2}W^i_{orig}\) (see Eq. 23), this is equal to

In that equation we recover the same ratio that was used to unweight the original theory. We can therefore select the same sub-sample of events and simply re-weight them by the ratio of the squared matrix elements.
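The argument can be checked numerically with a small Python sketch. Everything in it is an illustrative assumption: `me2_orig` and `me2_new` are toy stand-ins for the squared matrix elements, and the phase space is a single variable x in [0, 1]. The events are unweighted with the maximum weight of the original theory only, and each kept event is then multiplied by the matrix-element ratio.

```python
import random


def me2_orig(x):
    """Toy |M_orig|^2 at phase-space point x (hypothetical)."""
    return 1.0 + x


def me2_new(x):
    """Toy |M_new|^2 at phase-space point x (hypothetical)."""
    return 1.0 + x * x


rng = random.Random(2016)
n_points = 50_000
points = [rng.random() for _ in range(n_points)]

# Weighted samples for both theories on the same phase-space points.
w_orig = [me2_orig(x) / n_points for x in points]
w_new = [me2_new(x) / n_points for x in points]

# Unweight with the maximum weight of the ORIGINAL theory.
w_max = max(w_orig)
kept = [x for x, w in zip(points, w_orig) if rng.random() < w / w_max]

# Each kept event carries w_max; re-weight it by |M_new|^2 / |M_orig|^2.
w_reweighted = [w_max * me2_new(x) / me2_orig(x) for x in kept]

sigma_new_direct = sum(w_new)
sigma_new_reweighted = sum(w_reweighted)
print(f"direct     : {sigma_new_direct:.4f}")
print(f"re-weighted: {sigma_new_reweighted:.4f} from {len(kept)} events")
```

The re-weighted events no longer share a common weight, but their sum reproduces the cross-section of the new theory within statistical fluctuations.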

A.2: Statistical uncertainty from an un-weighted sample

Note that the estimated uncertainty cannot be obtained via re-weighting for an unweighted sample, because of its non-linear dependence on the squared matrix element.

We will show in this section what needs to be done in order to build an estimator of the variance from a re-weighted sample. Following the idea of the unweighting procedure, we can rewrite the standard estimator of the variance as:

As for the unweighting case, we can re-interpret the ratio \(\frac{W^i_{orig}}{ \max _i(W^i_{orig})}\equiv P^{orig}_{acc,i}\) as the probability to keep the event during the unweighting procedure. Therefore after the event unweighting the equation can be read as:

In this case, a dependence remains on the unweighting probability as well as on the number of generated and accepted events. If this information were kept during the unweighting procedure, it would be possible to construct the above estimator of the variance. The re-weighting of such information is then possible and one can construct such an estimator for any re-weighted sample:

Note that in the presence of multi-channel integration such information needs to be provided for each channel individually.

This method is currently not implemented in MG5_aMC but we plan to include it in the near future and to study the accuracy of such an estimator.
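To illustrate the bookkeeping this subsection describes, the Python sketch below builds one possible variance estimator from accepted events only. It is an assumption-laden toy and not necessarily the formula of the paper: it assumes weights that already carry the 1/N factor, a single integration channel, and that the acceptance probability of each kept event and the number of generated events were stored. The functions `f_orig`, `f_new`, `variance_weighted` and `variance_unweighted` are hypothetical.

```python
import random


def variance_weighted(weights):
    """Variance of sum(weights) when the weights already carry the 1/N factor."""
    n = len(weights)
    total = sum(weights)
    return sum(w * w for w in weights) - total * total / n


def variance_unweighted(p_acc, ratios, w_max, n_generated):
    """Same quantity estimated from the accepted events only.

    p_acc[i]  : stored acceptance probability W_orig / max(W_orig)
    ratios[i] : re-weighting factor of the event (1.0 for the original theory)
    """
    total = w_max * sum(ratios)
    sum_sq = w_max * w_max * sum(r * r * p for r, p in zip(ratios, p_acc))
    return sum_sq - total * total / n_generated


def f_orig(x):
    """Toy integrand of the original theory (hypothetical)."""
    return 0.05 + x


def f_new(x):
    """Toy integrand of the new theory (hypothetical)."""
    return 0.05 + x * x


rng = random.Random(42)
n_generated = 50_000
points = [rng.random() for _ in range(n_generated)]
w_orig = [f_orig(x) / n_generated for x in points]
w_new = [f_new(x) / n_generated for x in points]

# Unweighting, keeping the acceptance probability of every accepted event.
w_max = max(w_orig)
kept = [(x, w / w_max) for x, w in zip(points, w_orig) if rng.random() < w / w_max]
p_acc = [p for _, p in kept]
ratios = [f_new(x) / f_orig(x) for x, _ in kept]

var_orig_ref = variance_weighted(w_orig)
var_orig_est = variance_unweighted(p_acc, [1.0] * len(kept), w_max, n_generated)
var_new_ref = variance_weighted(w_new)
var_new_est = variance_unweighted(p_acc, ratios, w_max, n_generated)
print(var_orig_ref, var_orig_est)
print(var_new_ref, var_new_est)
```

The estimator is a difference of two numbers of similar size, so it fluctuates more than the cross-section itself. The toy integrand was chosen with a large spread of weights to keep that noise small.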

A.3: NLO-reweighting

In order to prove that the re-weighting proposed in Eq. 16 is correct, we first need to formalise the loop integration method. We will use in this section a simplified notation such that

where B, V and C represent, respectively, the Born, virtual and counter-term contributions. Since the counter terms do not play any role in this optimisation procedure (and have a natural re-weighting), we will focus on the \( \sigma ^{soft,B}_{orig} \) pieces. In this simplified formalism the phase-space optimisation method can be written as (see Eq. 15):

In those equations, we first (Eq. 38) add and subtract the approximant of the virtual, \(\kappa _{orig}\,B^i_{orig}\), while in the second equation we integrate the two pieces of the sum with different statistics. We use k times fewer phase-space points for the second piece and therefore have to multiply it by the factor k.
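In the simplified notation of this section, the two steps just described can be sketched as follows. This is a reconstruction from the text, with N denoting the number of phase-space points used for the first sum; the equation numbering of the paper is not reproduced.

\[
\sigma ^{soft,B}_{orig} = \sum _{i=1}^{N} \left( B^i_{orig} + V^i_{orig} \right)
= \sum _{i=1}^{N} \left( B^i_{orig} + \kappa _{orig}\,B^i_{orig} \right) + \sum _{i=1}^{N} \left( V^i_{orig} - \kappa _{orig}\,B^i_{orig} \right)
\approx \sum _{i=1}^{N} (1+\kappa _{orig})\,B^i_{orig} + k \sum _{j=1}^{N/k} \left( V^j_{orig} - \kappa _{orig}\,B^j_{orig} \right)
\]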

If we want to use the re-weighting on the sample generated via this method, we have to apply the same method with the same value of \(\kappa _{orig}\):

Inspired by Eq. 16, we will multiply all those terms by the identity factor \(1 = \frac{B^i_{orig} + V^i_{orig}}{B^i_{orig}+V^i_{orig}}\):

We can rewrite the expression as the expected re-weighting formula plus a remainder:

If the same phase-space sampling is used for both parts (\(k=1\)), then the second and third lines cancel. The remaining lines correspond to the re-weighting of Eq. 16. If the two integrals are sampled differently (\(k\ne 1\)), then the cancellation is not exact but should still occur for large enough samples. Therefore this optimisation method introduces a new contribution to the statistical uncertainty.
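The statement about \(k=1\) and \(k\ne 1\) can be illustrated with a toy Python sketch. It rests on several assumptions that are not taken from the paper: the Born and virtual terms are simple hypothetical functions of one variable, `KAPPA` is an arbitrary value standing in for \(\kappa _{orig}\), the sparse sample is a sub-set of the dense one, and the re-weighting factor is taken to be the ratio \((B_{new}+V_{new})/(B_{orig}+V_{orig})\) suggested by the identity factor above.

```python
import random

KAPPA = 0.1  # hypothetical value standing in for kappa_orig


def born_orig(x):
    return 1.0 + x


def virt_orig(x):
    # Close to KAPPA * born_orig, so the subtracted piece is small.
    return 0.1 * (1.0 + x) + 0.02 * x * x


def born_new(x):
    return 1.0 + 0.5 * x


def virt_new(x):
    return 0.12 * (1.0 + 0.5 * x) + 0.01 * x


def ratio(x):
    """Re-weighting factor (B_new + V_new) / (B_orig + V_orig)."""
    return (born_new(x) + virt_new(x)) / (born_orig(x) + virt_orig(x))


def split_weights(points, k):
    """Born-like piece on all points, subtracted virtual on every k-th point."""
    n = len(points)
    born_like = [((1.0 + KAPPA) * born_orig(x) / n, x) for x in points]
    rest = [(k * (virt_orig(x) - KAPPA * born_orig(x)) / n, x) for x in points[::k]]
    return born_like + rest


def total(events):
    return sum(w for w, _ in events)


def reweighted_total(events):
    return sum(w * ratio(x) for w, x in events)


rng = random.Random(7)
points = [rng.random() for _ in range(20_000)]
n = len(points)
sigma_orig = sum(born_orig(x) + virt_orig(x) for x in points) / n
sigma_new = sum(born_new(x) + virt_new(x) for x in points) / n

same = split_weights(points, k=1)     # identical sampling for both pieces
sparse = split_weights(points, k=10)  # ten times fewer virtual evaluations

print(sigma_new, reweighted_total(same), reweighted_total(sparse))
```

With `k=1` the re-weighted sum reproduces the new cross-section up to rounding, while with `k=10` the agreement holds only statistically, which is the extra contribution to the uncertainty mentioned above.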

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3.

About this article

Cite this article

Mattelaer, O. On the maximal use of Monte Carlo samples: re-weighting events at NLO accuracy. Eur. Phys. J. C 76, 674 (2016). https://doi.org/10.1140/epjc/s10052-016-4533-7

DOI: https://doi.org/10.1140/epjc/s10052-016-4533-7