Abstract

Out-of-plane lesions pose challenges for CT-guided interventions. Augmented reality (AR) headsets can provide holographic 3D guidance to assist CT-guided targeting. A prospective trial was performed assessing CT-guided lesion targeting on an abdominal phantom with and without AR guidance using HoloLens 2. Eight operators performed a cumulative total of 86 needle passes. Total needle redirections, radiation dose, procedure time, and puncture rates of nontargeted lesions were compared with and without AR. The mean number of needle passes to reach the target decreased from 7.4 passes without AR to 3.4 passes with AR (p = 0.011). Mean CT dose index decreased from 28.7 mGy without AR to 16.9 mGy with AR (p = 0.009). Mean procedure time decreased from 8.93 min without AR to 4.42 min with AR (p = 0.027). The puncture rate of nontargeted lesions decreased from 11.9% without AR (7/59 passes) to 0% with AR (0/27 passes). First needle passes were closer to the ideal target trajectory with AR than without AR (4.6° vs 8.0° offset, respectively, p = 0.018). AR reduced variability and elevated the performance of all operators to the same level irrespective of prior clinical experience. AR guidance can provide significant improvements in procedural efficiency and radiation dose savings for targeting out-of-plane lesions.

Introduction

Augmented reality (AR) technologies are able to seamlessly merge virtual objects with the surrounding environment. Extensive technological progress has been made with AR headset devices since 2006 with the development of one of the first AR guidance systems, called Reality Augmentation for Medical Procedures (RAMP), for CT-guided interventions via custom headset with video see-through overlay1. Since then, many other applications have been developed with AR devices to enhance training and image-guided procedures2,3.

However, although commercial headsets can now project 3D holograms merged with a transparent view of the real world (optical see-through), the clinical utility and adoption of AR technologies have made only marginal progress since RAMP. Still, the goals of any navigation system for improving CT-guided interventions remain the same. Challenging lesions and anatomy can make targeting difficult and result in prolonged procedure times, increased radiation exposure, and more complications4,5. Out-of-plane approaches, in particular, are often required for challenging lesions6. These may entail a greater number of needle misplacements, repeated skin punctures, and unintended traversal of critical structures along the trajectory path. As a consequence, the patient can experience increased pain and discomfort, bleeding complications, or inadvertent organ injury and perforation requiring additional interventions.

Many AR-assisted guidance systems have recently been developed for percutaneous needle-based interventions, enabled by the widespread availability of commercial AR devices. Smartphone or tablet-based AR navigation platforms for CT-guided needle insertion have demonstrated sub-5 mm accuracies, decreased procedure times, and fewer intermediate CT scans7,8. 3D AR-assisted navigation systems using HoloLens (v1, Microsoft, Redmond, WA) have received unanimously positive feedback among operators for their potential to enhance safety, aid in execution, and improve depth perception and spatial understanding9,10. Although such systems show promise, no study to date has demonstrated the procedural effects of AR guidance using an optical see-through headset device through a systematic trial.

This study describes the design of a 3D AR-assisted navigation system using the next-generation HoloLens 2 (Microsoft, Redmond, WA) headset device. Unlike other existing AR-assisted navigation systems, no hardware components are needed aside from the headset. Registration was performed automatically to a CT grid routinely used in clinical practice, as opposed to using separate external image-based markers or matrix barcodes required in other systems3. Evaluation was performed through a preclinical trial simulating CT-guided needle targeting of an out-of-plane lesion in an abdominal phantom with and without AR guidance. The number of needle redirections, radiation dose, procedure time, and puncture rates of nontargeted lesions were compared.

Materials and methods

This study was exempt from Institutional Review Board review at the University of Pennsylvania because no patient data were obtained or analyzed. CT-guided percutaneous needle targeting was simulated on a phantom model (071B, CIRS, Norfolk, VA) containing multiple targets of various sizes. A CT grid (Guidelines 117, Beekley Medical, Bristol, CT) commonly used in clinical practice was placed on the anterior surface of the phantom for planning and to serve as a fiducial target for registration.

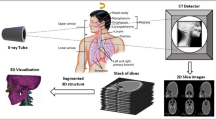

Preoperative imaging and 3D modeling

A preoperative CT scan of the phantom was performed at 120 kVp and 2 mm slice thickness on Siemens SOMATOM Force (Fig. 1). An 11 mm lesion was selected for targeting. Manual and semi-automated segmentations of the lesions, CT grid and bony structures, and skin surface were performed with ITK-SNAP using threshold masking and iterative region growing11. Segmentation meshes were exported in STL file format followed by mesh decimation using Meshmixer (Autodesk, San Rafael, CA) to eliminate redundant vertices and reduce mesh size to improve 3D rendering performance. Reduced meshes were then exported in OBJ file format and material textures, including colors and transparencies, were applied using Blender (Amsterdam, Netherlands). The target lesion was colored in green; all other nontargeted lesions were colored in red. The final 3D surface-rendered model was exported in FBX file format (Fig. 2). Total model generation time was less than 45 min.

Figure 2. Three-dimensional surface-rendered model of phantom. (A) Lines from the CT grid can be seen along the anterior surface. Target lesion is specified in green. All other nontargeted lesions are specified in red. (B) Wireframe view of model which contains 58,498 polygons with a total file size of only 1.6 MB.

Target trajectory

A long, out-of-plane trajectory through a narrow access window was intentionally chosen to reach the 11-mm target from a skin entry site along the inferior aspect between CT gridlines 3 and 4 (Fig. 3). The angle of this trajectory exceeded the maximum gantry tilt of the CT scanner, so gantry tilt could not be used to compensate for it.

Figure 3. Trajectory to targeted lesion from specified skin entry site. (A) Down-the-barrel look at trajectory to targeted lesion (green) from skin entry site at the inferior aspect between labeled gridlines 3 and 4 (black box). Several nontargeted lesions (red) can be seen in close proximity to the trajectory. (B) Vector of ideal trajectory based on preoperative CT scan from specified skin entry site. Total trajectory distance of 14.1 cm from skin with 23.4° angle relative to the z-plane (5.8 cm lateral, 11.6 cm deep, and 5.6 cm cranial component). Target and CT grid are not drawn to scale.
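The trajectory length and angle quoted in this caption can be cross-checked from the three stated components. The sketch below is illustrative only: the function and variable names are not from the study, and it assumes that the angle "relative to the z-plane" is the elevation of the needle out of the axial plane, so that the cranial component is the out-of-plane part.

```python
import math


def trajectory_length_and_tilt(lateral_cm, deep_cm, cranial_cm):
    """Return (length in cm, out-of-plane angle in degrees).

    Assumption: the out-of-plane angle is measured between the trajectory
    and the axial plane, so it depends only on the cranial component.
    """
    length = math.sqrt(lateral_cm ** 2 + deep_cm ** 2 + cranial_cm ** 2)
    tilt = math.degrees(math.asin(cranial_cm / length))
    return length, tilt


# Components taken from the caption: 5.8 cm lateral, 11.6 cm deep, 5.6 cm cranial
length_cm, tilt_deg = trajectory_length_and_tilt(5.8, 11.6, 5.6)
print(f"{length_cm:.1f} cm, {tilt_deg:.1f} degrees")  # prints "14.1 cm, 23.4 degrees"
```

Under that assumption, both rounded values agree with the 14.1 cm distance and 23.4° angle reported for the ideal trajectory.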

Augmented reality system

Holographic 3D AR visualization and interaction were performed using a HoloLens 2 headset device. A custom HoloLens application was developed in Unity 2019.2.21 and Mixed Reality Toolkit Foundation 2.3.0. Automated registration of the 3D model to CT grid was performed using computer vision and Vuforia 9.0.12 with the CT grid as the image target. Features on the CT grid can be reliably and quickly detected by Vuforia12, and studies have validated the accuracy of Vuforia on HoloLens (v1)10,13,14,15,16,17. Registration accuracies were not directly validated in this study; registration fidelity was confirmed visually by the operator based on complete alignment of the virtual gridlines with the physical gridlines. A virtual needle trajectory was added into the 3D model based on the ideal trajectory. This virtual guide allowed the user to easily trace the ideal trajectory using a real needle (Fig. 4).

Figure 4. Augmented reality (AR)-assisted navigation using HoloLens 2. (A) Participant inserts the needle while wearing HoloLens 2. (B) View of needle insertion without AR. (C) View of needle insertion through HoloLens 2 with three-dimensional model and virtual needle guide projected onto the phantom. Registration is visually confirmed with the actual CT gridlines aligned with the virtual gridlines. The needle is seen aligned with the virtual guide (purple line) displaying the ideal trajectory to the target lesion (green ball). Note that this two-dimensional captured image does not fully represent the three-dimensional stereoscopic view seen with HoloLens 2.

CT-guided procedure simulation

All simulations were performed on a Siemens SOMATOM Force CT scanner at 120 kVp and 2 mm slice thickness. CT scanner operation was performed within the guidelines and regulations of the Department of Radiology at the University of Pennsylvania. After applying a surgical drape over the phantom, percutaneous CT-guided targeting using a 21G-20 cm Chiba needle was simulated in the same standard fashion performed clinically. Following a topogram, an initial CT scan of the phantom was performed and reviewed for trajectory planning. The needle was then passed into the phantom and iteratively advanced, redirected, or retracted, as many times as needed, until the tip of the needle was in the target. Interval CT scans were performed following any needle adjustment. Each adjustment was counted as a needle pass, and these passes were cumulatively documented.

A total of 8 participants simulated CT-guided needle targeting: 2 attendings, 3 interventional radiology (IR) residents, and 3 medical students. Both attendings had greater than 5 years of experience. Two residents were in their final year of training. None of the 3 medical students had previously seen or performed a CT-guided intervention. Aside from 1 resident, no participant had prior experience wearing or interacting with HoloLens 2. To limit bias, participants were randomized into two cohorts: CT-guided targeting (1) without AR and then repeated with AR, or (2) with AR and then repeated without AR (Fig. 5).
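The crossover assignment described above could be generated along the following lines. This is a minimal sketch and not the procedure used in the study: the text does not state how randomization was carried out or how large each cohort was, so the equal split, the participant labels, and the function name are all assumptions made for illustration.

```python
import random


def assign_crossover_order(participants, seed=None):
    """Randomly split participants into two cohorts that differ only in order.

    Assumption: cohorts are of equal size; the source does not state this.
    """
    rng = random.Random(seed)  # seeded generator keeps the split reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "without_AR_then_with_AR": shuffled[:half],
        "with_AR_then_without_AR": shuffled[half:],
    }


# Hypothetical labels matching the stated mix of 2 attendings, 3 residents, 3 students
participants = [
    "attending_1", "attending_2",
    "resident_1", "resident_2", "resident_3",
    "student_1", "student_2", "student_3",
]
cohorts = assign_crossover_order(participants, seed=0)
for order, members in cohorts.items():
    print(order, members)
```

A simple shuffle like this does not balance experience levels across cohorts; a stratified assignment would be needed for that.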

Procedural imaging and vector analysis

The total number of needle passes was recorded. Total CT dose index (CTDIvol) and dose-length product (DLP) were obtained from the CT dose report. Procedure duration was measured from the acquisition time (DICOM tag (0008,0032) in the image metadata) of the CT scan following the first needle pass to the acquisition time of the final CT scan with the needle tip in the target. Vector analysis was performed on the CT scan acquired after the first needle pass (Fig. 6). These CT scans were resampled into isotropic volumes (1 × 1 × 1 mm) using 3D Slicer 4.10.1 and linear interpolation18. Voxel locations at the skin entry site, needle tip, and target centroid were recorded. Distances and angles were calculated using vector magnitude and dot product, respectively. All CT scans were reviewed to record needle passes that unintentionally punctured or traversed a nontargeted lesion.
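The distance and angle calculations described above can be sketched as follows. The study performed these calculations in Google Sheets; this Python version is a stand-in for illustration, and the function names and coordinates are hypothetical. Because the volumes were resampled to 1 × 1 × 1 mm, differences between voxel indices are treated directly as millimetres.

```python
import math


def subtract(a, b):
    """Component-wise difference a - b of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))


def magnitude(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def angle_between_deg(u, v):
    """Angle between two vectors in degrees, from the dot product."""
    dot = sum(x * y for x, y in zip(u, v))
    cos_theta = dot / (magnitude(u) * magnitude(v))
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding drift
    return math.degrees(math.acos(cos_theta))


def first_pass_metrics(entry, tip, target):
    """Distances (mm) and angle offset (degrees) for one needle pass."""
    needle = subtract(tip, entry)      # actual needle path so far
    ideal = subtract(target, entry)    # ideal trajectory from the same entry site
    remaining = subtract(target, tip)  # what is left to reach the target centroid
    return {
        "distance_traveled_mm": magnitude(needle),
        "distance_to_target_mm": magnitude(remaining),
        "angle_offset_deg": angle_between_deg(needle, ideal),
    }


# Hypothetical voxel locations on a 1 mm isotropic grid (not study data)
entry = (0.0, 0.0, 0.0)
tip = (0.0, 30.0, 40.0)
target = (0.0, 0.0, 100.0)
metrics = first_pass_metrics(entry, tip, target)
print(metrics)
```

For these made-up points the needle has traveled 50 mm and deviates from the ideal trajectory by about 36.9°.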

Figure 6. Diagram demonstrating calculations in two dimensions for illustrative purposes only. Actual calculations were performed in three dimensions based on voxel locations. Blue solid arrow represents distance of needle tip from skin entry site. Red solid arrow represents remaining distance to center of target. Yellow dotted arrow represents ideal trajectory from skin entry site to center of target. Angle offsets were calculated between the needle trajectory (blue solid arrow) relative to the ideal trajectory (yellow dotted arrow).
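Procedure duration in this study was derived from the acquisition times stored in DICOM tag (0008,0032). The sketch below shows one way to turn two such values into a duration in minutes. It assumes the time strings have already been read from the image headers, that they follow the DICOM TM layout HHMMSS.FFFFFF with trailing parts optional, and that both scans fall on the same day. The function names and example times are hypothetical.

```python
def tm_to_seconds(tm):
    """Convert a DICOM TM string (HHMMSS.FFFFFF) to seconds since midnight.

    Minutes, seconds, and the fractional part are treated as optional.
    """
    whole, _, frac = tm.strip().partition(".")
    hours = int(whole[0:2])
    minutes = int(whole[2:4]) if len(whole) >= 4 else 0
    seconds = int(whole[4:6]) if len(whole) >= 6 else 0
    fraction = float("0." + frac) if frac else 0.0
    return hours * 3600 + minutes * 60 + seconds + fraction


def procedure_minutes(first_pass_tm, final_tm):
    """Elapsed minutes between two acquisition times on the same day."""
    return (tm_to_seconds(final_tm) - tm_to_seconds(first_pass_tm)) / 60.0


# Hypothetical acquisition times (not study data)
duration = procedure_minutes("101500.000000", "102030.500000")
print(f"{duration:.2f} min")
```

A procedure that crossed midnight would need the acquisition date as well, which this sketch does not handle.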

Statistical analysis

Vector analyses, means, paired t-tests, and F-tests were performed using Google Sheets (Mountain View, CA). Post hoc power analysis suggested a total sample size of 8 for a power of 0.8 and effect size of 1 to achieve a statistical significance level of 0.05.
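The two test statistics named above can be written out as follows. The study used Google Sheets, so this Python version is a stand-in for illustration, not the authors' workflow. The per-operator numbers are made up and are not study data. The sketch stops at the test statistics; converting them to p-values additionally requires the t and F distributions with n − 1 degrees of freedom.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """t statistic for paired samples: mean difference over its standard error."""
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))


def variance_ratio(sample_a, sample_b):
    """F statistic for comparing variability: ratio of sample variances."""
    return statistics.variance(sample_a) / statistics.variance(sample_b)


# Made-up per-operator pass counts, for illustration only (not study data)
passes_without_ar = [8, 6, 9, 7]
passes_with_ar = [4, 3, 5, 4]

t_stat = paired_t_statistic(passes_without_ar, passes_with_ar)
f_stat = variance_ratio(passes_without_ar, passes_with_ar)
print(f"t = {t_stat:.3f}, F = {f_stat:.2f}")
```

In this design the paired t-test compares each operator with and without AR, while the variance ratio addresses whether performance is less variable across operators with AR.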

Results

A comparison of CT-guided needle targeting performed with and without AR is summarized in Table 1. The use of AR-assisted guidance significantly reduced the number of iterative needle passes and redirections required to reach the selected target, from a mean of 7.4 passes without AR down to 3.4 passes with AR (54.2% decrease, p = 0.011). Radiation dose savings were also significant, with mean CTDIvol decreasing from 28.7 mGy without AR to 16.9 mGy with AR (41.2% decrease, p = 0.009), and DLP decreasing from 538 mGy-cm without AR to 318 mGy-cm with AR (41.0% decrease, p = 0.009). Mean procedure duration decreased significantly from 8.93 min without AR to 4.42 min with AR (50.6% decrease, p = 0.027). The puncture rate of a nonselected target also decreased from 11.9% (7/59 passes) without AR to 0% with AR (0/27 passes).
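The pass-related figures above can be reproduced from the reported totals. The sketch below assumes that each mean is simply the pass total (59 without AR, 27 with AR) divided by the eight operators, which is consistent with the reported means of 7.4 and 3.4. The names are illustrative.

```python
def percent_decrease(before, after):
    """Relative decrease from before to after, in percent."""
    return 100.0 * (before - after) / before


OPERATORS = 8
PASSES_WITHOUT_AR = 59
PASSES_WITH_AR = 27
PUNCTURES_WITHOUT_AR = 7

# Assumption: mean passes = total passes / number of operators
mean_without = PASSES_WITHOUT_AR / OPERATORS
mean_with = PASSES_WITH_AR / OPERATORS

pass_reduction = percent_decrease(mean_without, mean_with)
puncture_rate = 100.0 * PUNCTURES_WITHOUT_AR / PASSES_WITHOUT_AR

print(f"{mean_without:.1f} -> {mean_with:.1f} passes ({pass_reduction:.1f}% decrease)")
print(f"{puncture_rate:.1f}% puncture rate without AR")
```

Computing the decrease from the unrounded means gives 54.2%, matching the reported value; using the rounded means 7.4 and 3.4 would give 54.1%.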

The angle of the first needle pass was more closely aligned with the ideal trajectory with AR guidance than with unguided needle insertion, which is standard clinical practice (4.6° vs 8.0° offset, respectively, p = 0.018). In addition, the 1st pass traveled deeper and the needle tip ended closer to the target with AR than without AR, but these differences did not reach statistical significance (p = 0.763 and p = 0.330, respectively).

Subgroup analysis was performed comparing results based on prior clinical experience (Table 2). Without AR guidance, participant performances were heterogeneous with experience. The puncture rate of a nonselected target along the trajectory path decreased with increasing clinical experience; medical students had the highest total number of passes that punctured or traversed a nonselected target, and attendings had zero passes that punctured a nonselected target. Medical students also had the greatest 1st pass traveled distance of 14.7 cm compared to residents and attendings with 6.4 cm and 6.7 cm, respectively.

Using 3D AR guidance, participant performances were more homogeneous regardless of experience. Collectively, there were significant reductions in the variability of the number of passes and of 1st pass distances with AR (p = 0.002 and p = 0.012, respectively). All subgroups performed similarly, with the mean number of passes ranging from 3 to 4 and the 1st pass distance averaging approximately 10 cm in each subgroup. Additionally, there were no punctures of a nonselected target with AR. Medical students with no prior clinical experience performed at the same level as experienced attendings with AR.

Discussion

Holographic 3D AR-assisted guidance showed significant reductions in needle passes, radiation dose, and procedure time for CT-guided targeting of a challenging, out-of-plane lesion. AR guidance decreased the total number of needle passes by 54.2%. Fewer needle passes also resulted in fewer interval CT scans, leading to a 41.2% decrease in total radiation dose. This decrease in radiation dose did not quite match the decrease in needle passes since an initial CT scan was performed for planning at the start of all simulations. Both fewer needle passes and interval CT scans led to a reduction in procedure time by 50.6%. Furthermore, with AR guidance, the 1st needle pass was more in line with the ideal trajectory compared with no guidance. This likely contributed to the fewer number of subsequent, iterative needle adjustments required to reach the target.
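One rough way to see why the dose reduction trails the pass reduction is to count scans rather than passes. The following is a simplified model and not the authors' analysis. It assumes one planning scan plus one interval scan per needle pass, that every scan delivers the same dose $d$, and that the mean passes are 59/8 and 27/8 from the reported totals.

```latex
\begin{align*}
D_{\text{without AR}} &\approx (1 + 7.375)\,d = 8.375\,d \\
D_{\text{with AR}}    &\approx (1 + 3.375)\,d = 4.375\,d \\
\text{relative decrease} &\approx 1 - \frac{4.375}{8.375} \approx 47.8\%
\end{align*}
```

Under these assumptions the fixed planning scan alone pulls the expected dose reduction below the 54.2% reduction in passes. The reported 41.2% is lower still, which this equal-dose model does not account for; one possible reason is that scans did not all contribute the same dose.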

Some degree of order bias and recall did occur during non-AR-assisted simulations. The cohort that performed targeting with HoloLens 2 first and then repeated without AR had fewer total needle passes without AR (22 passes) compared to the cohort that performed targeting without AR first (37 passes). These data suggest that visually seeing the trajectory in 3D in addition to physically performing the procedure concurrently may enhance spatial understanding and innate recall19. However, these effects were not realized during AR-assisted simulations, which overall had consistent findings regardless of the order of interventions between the cohorts (13 vs 14 passes). This suggests that the benefits of having real-time 3D navigation likely supersede advantages associated with prior experience or recall. This contention is further supported by the fact that medical students performed at the same level as experienced attendings with 3D AR guidance.

Generally, medical students were aggressive on their first needle pass without AR, advancing the needle over twice as far as residents and attendings. Their goal appeared to be getting close to the target in a single pass, rather than making multiple smaller passes to ensure the trajectory was on course, an approach that may come with clinical experience. As expected, medical students had the greatest number of unintentional punctures of adjacent nontargeted lesions. Without AR, residents and attendings took more conservative 1st passes and required a greater number of overall passes but had fewer punctures of nontargeted lesions, with attendings having none. With AR guidance, there was significantly less variability in performance. Attendings, residents, and students all performed at the same level, with similar total passes and 1st-pass distances and zero punctures of nontargeted lesions.

The primary limitation of this study was the evaluation of this system using a stationary, inanimate phantom. As with any navigation system, patient motion, respiratory motion, soft tissue deformation, and needle bending are important factors that can affect navigational performance. Breathing can be compensated for by techniques such as simple respiratory gating20 or high frequency jet ventilation under general anesthesia21. Soft tissue deformation can be compensated for by deformable modeling9, and needle bending can be estimated using a shape-sensing needle22. Further developments will incorporate some of these features prior to evaluation with live subjects.

In summary, holographic 3D AR guidance using an optical see-through headset device can decrease needle redirections, reduce radiation dose, shorten procedure time, and minimize punctures of nontargeted structures during CT-guided interventions. The use of 3D AR guidance may thus facilitate the treatment of challenging, hard-to-reach, or out-of-plane lesions. Additionally, these data suggest that AR guidance may immediately help to elevate the performance of inexperienced operators, providing added opportunities to treat challenging lesions that were previously declined due to limited operator experience. Although this preclinical trial shows promising and translatable benefits of 3D AR guidance, further developments and robust clinical testing will be needed for adoption into actual practice.

Abbreviations

- AR: Augmented reality
- CTDIvol: CT dose index
- DLP: Dose-length product
- IR: Interventional radiology
- 3D: Three-dimensional

References

Das, M. et al. Augmented reality visualization for CT-guided interventions: system description, feasibility, and initial evaluation in an abdominal phantom. Radiology 240, 230–235. https://doi.org/10.1148/radiol.2401040018 (2006).

Uppot, R. N. et al. Implementing virtual and augmented reality tools for radiology education and training, communication, and clinical care. Radiology 291, 570–580. https://doi.org/10.1148/radiol.2019182210 (2019).

Park, B. J. et al. Augmented and mixed reality: technologies for enhancing the future of IR. J. Vasc. Interv. Radiol. 31, 1074–1082. https://doi.org/10.1016/j.jvir.2019.09.020 (2020).

Maybody, M., Stevenson, C. & Solomon, S. B. Overview of navigation systems in image-guided interventions. Tech. Vasc. Interv. Radiol. 16, 136–143. https://doi.org/10.1053/j.tvir.2013.02.008 (2013).

Durand, P. et al. Computer assisted electromagnetic navigation improves accuracy in computed tomography guided interventions: a prospective randomized clinical trial. PLoS ONE 12, e0173751. https://doi.org/10.1371/journal.pone.0173751 (2017).

Schubert, T. et al. CT-guided interventions using a free-hand, optical tracking system: initial clinical experience. Cardiovasc. Interv. Radiol. 36, 1055–1062. https://doi.org/10.1007/s00270-012-0527-5 (2013).

Solbiati, M. et al. Augmented reality for interventional oncology: proof-of-concept study of a novel high-end guidance system platform. Eur. Radiol. Exp. 2, 18. https://doi.org/10.1186/s41747-018-0054-5 (2018).

Hecht, R. et al. Smartphone augmented reality CT-based platform for needle insertion guidance: a phantom study. Cardiovasc. Interv. Radiol. 43, 756–764. https://doi.org/10.1007/s00270-019-02403-6 (2020).

Si, W., Liao, X., Qian, Y. & Wang, Q. Mixed reality guided radiofrequency needle placement: a pilot study. IEEE Access 6, 31493–31502 (2018).

Al-Nimer, S. et al. 3D Holographic guidance and navigation for percutaneous ablation of solid tumor. J. Vasc. Interv. Radiol. 31, 526–528. https://doi.org/10.1016/j.jvir.2019.09.027 (2020).

Yushkevich, P. A. et al. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. NeuroImage 31, 1116–1128 (2006).

Park, B. J., Hunt, S., Nadolski, G. & Gade, T. Registration methods to enable augmented reality-assisted 3D image-guided interventions. In Proceedings of SPIE 11072, 15th International Meeting on Fully Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine, 11072. https://doi.org/10.1117/12.2533787 (2019).

Frantz, T., Jansen, B., Duerinck, J. & Vandemeulebroucke, J. Augmenting Microsoft’s HoloLens with vuforia tracking for neuronavigation. Healthc. Technol. Lett. 5, 221–225. https://doi.org/10.1049/htl.2018.5079 (2018).

Condino, S., Carbone, M., Piazza, R., Ferrari, M. & Ferrari, V. Perceptual limits of optical see-through visors for augmented reality guidance of manual tasks. IEEE Trans. Biomed. Eng. https://doi.org/10.1109/TBME.2019.2914517 (2019).

Heinrich, F., Schwenderling, L., Becker, M., Skalej, M. & Hansen, C. HoloInjection: augmented reality support for CT-guided spinal needle injections. Healthc. Technol. Lett. 6, 165–171. https://doi.org/10.1049/htl.2019.0062 (2019).

van Doormaal, T. P. C., van Doormaal, J. A. M. & Mensink, T. Clinical accuracy of holographic navigation using point-based registration on augmented-reality glasses. Oper Neurosurg. (Hagerstown) 17, 588–593. https://doi.org/10.1093/ons/opz094 (2019).

Bettati, P. et al. Augmented reality-assisted biopsy of soft tissue lesions. Proc. SPIE Int. Soc. Opt. Eng. https://doi.org/10.1117/12.2549381 (2020).

Kikinis, R., Pieper, S. D. & Vosburgh, K. G. 3D Slicer: A Platform for Subject-Specific Image Analysis, Visualization, and Clinical Support (Springer, New York, 2014).

Park, B. et al. 3D augmented reality visualization informs locoregional therapy in a translational model of hepatocellular carcinoma. J. Vasc. Interv. Radiol. https://doi.org/10.1016/j.jvir.2020.01.028 (2020).

Nicolau, S. A. et al. An augmented reality system for liver thermal ablation: design and evaluation on clinical cases. Med. Image Anal. 13, 494–506. https://doi.org/10.1016/j.media.2009.02.003 (2009).

Volpi, S. et al. Electromagnetic navigation system combined with high-frequency-jet-ventilation for CT-guided hepatic ablation of small US-Undetectable and difficult to access lesions. Int. J. Hyperth. 36, 1051–1057. https://doi.org/10.1080/02656736.2019.1671612 (2019).

Lin, M. A., Siu, A. F., Bae, J. H., Cutkosky, M. R. & Daniel, B. L. HoloNeedle: augmented reality guidance system for needle placement investigating the advantages of three-dimensional needle shape reconstruction. IEEE Robot. Autom. Lett. 3, 4156–4162. https://doi.org/10.1109/LRA.2018.2863381 (2018).

Acknowledgements

This work was supported by RSNA R&E Foundation Dr. Alexander Margulis Siemens Healthineers Fellow Research Grant. All figures and illustrations were taken or drawn by the corresponding author.

Author information

Contributions

All authors contributed to study design. B.J.P. developed the AR system. B.J.P. and T.P.G. wrote the main manuscript text. B.J.P. prepared the figures. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Park, B.J., Hunt, S.J., Nadolski, G.J. et al. Augmented reality improves procedural efficiency and reduces radiation dose for CT-guided lesion targeting: a phantom study using HoloLens 2. Sci Rep 10, 18620 (2020). https://doi.org/10.1038/s41598-020-75676-4
