Abstract

In complex product design, lots of time and resources are consumed to choose a preference-based compromise decision from non-inferior preliminary design models with multi-objective conflicts. However, since complex products involve intensive multi-domain knowledge, preference is not only a comprehensive representation of objective data and subjective knowledge but also characterized by fuzzy and uncertain. In recent years, enormous challenges are involved in the design process, within the increasing complexity of preference. This article mainly proposes a novel decision-making method based on generalized abductive learning (G-ABL) to achieve autonomous and efficient decision-making driven by data and knowledge collaboratively. The proposed G-ABL framework, containing three cores: classifier, abductive kernel, and abductive machine, supports preference integration from data and fuzzy knowledge. In particular, a subtle improvement is presented for WK-means based on the entropy weight method (EWM) to address the local static weight problem caused by the fixed data preferences as the decision set is locally invariant. Furthermore, fuzzy comprehensive evaluation (FCE) and Pearson correlation are adopted to quantify domain knowledge and obtain abducted labels. Multi-objective weighted calculations are utilized only to label and compare solutions in the final decision set. Finally, an engineering application is provided to verify the effectiveness of the proposed method, and the superiority of which is illustrated by comparative analysis.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Preference-based decision-making with multi-objective conflicts is a widespread and critical task in the preliminary design of complex products. However, due to the proliferation of product complexity and scale, preference determination, with uncertainty and fuzziness, is not only based on data-perceived features but also related to amounts of domain knowledge. Immense challenges remain in the decision-making of complex product design, stemming from the difficulty of balancing both data and knowledge for mutual benefit [1, 2].

Complex product design is typically categorized as multi-disciplinary design optimization (MDO) problem, where multi-objective conflicts are widespread [3, 4]. However, there exists no single optimal solution that optimizes all objectives concurrently. Therefore, conflicts are always weighed to obtain designer-interested “preferred” solutions, formally known as the Pareto solution set [5–7]. Further, designers are accustomed to selecting or developing an appropriate product design in detail concerning “preferred” solutions. In recent years, research on the aided design of complex products based on MDO and multi-objective optimization (MOO) has attracted much attention and obtained fruitful achievements, which are applied in various engineering fields such as electromagnetics and space [8–10].

The Pareto solutions (PS) are unable to realize the enhancement in one optimization objective without deteriorating their performance in at least one of the rest [11]. For complex product design problems with multiple objectives, variables, and constraints, it is indispensable to keep the diversity of solutions so that solutions are sufficiently close to the Pareto front. As a result, the number of preferred solutions recommended to designers remains large. Designers from different disciplines and departments have to consume a lot of time and resources on collaborative actions (calculation, verification, discussion, consensus, etc.) to obtain a compromise decision [12]. This status fails to address the increasing engineering requirements of improving product design efficiency and shortening the research and development cycle. Hence, this article seeks to propose a collaborative decision-making method to help designers decide the most preferred solution more autonomously and efficiently.

Multi-objective decision-making (MODM) is an effective method to resolve multi-attribute conflicts to obtain a comprehensive solution [13]. The pivotal problem of MODM is to acquire the appropriate decision preferences, which are traditionally mapped into different objective weights [14–16]. Related researches, Entropy-based method [17], AHP [18], TOPSIS [19], and CCDS [20], etc., contribute to measuring weights. Due to the increasing scale and complexity of products, the uncertainty, ambiguity, and disciplinary differences in preferences are prominent. Thus, collaborative decision-making attracts research attention. Meaningful applications based on weighted least squares [21], distributed gradient [22], or weight estimation [23] are proposed in this area. Simultaneously, various innovation methods are proposed. Zhang and Li [24] combined the fuzzy c-means method (FCM) with the gray relational projection (GRP) to extract the best compromise solution reflecting preferences in PS. A cross-entropy measure is presented to address the unknown attribute weights problem [25]. In addition, preference deviation and credibility of experts are concerned and discussed [26, 27].

Based on the above analysis, previous methods can be divided into three categories: method based on expert knowledge, method based on data feature analysis, and integration method. However, there exist respective limitations. 1) The expert knowledge-based method has strong subjective characteristics, and there still exist preference deviation and reliability risk, and even unknown knowledge problem. 2) The data-based method is relatively easy to calculate. However, as for knowledge-intensive decision-making of complex product design, it is obviously inadvisable to make decisions ignoring knowledge. 3) The integrated method can combine the advantages of the above two. However, existing researches generally follow a serial framework in which one model assists the other, so the auxiliary model is obviously not fully exploited. Therefore, autonomous and efficient decision-making, integrating preferences from data and knowledge in a mutually beneficial mode, becomes a novel problem for urgent attention.

Recently, Zhou [28] proposed the abductive learning (ABL) method for bridging data-driven machine learning and knowledge-driven logical reasoning. The ABL framework, originally created for classification, will undoubtedly help to address design decision-making problems co-driven by data and knowledge. However, to the best knowledge of authors, no published related researches are available. Simultaneously, ABL uses logical reasoning, which is helpless to deal with fuzzy domain knowledge in complex product design decision-making. This article offers the following contributions. 1) The ABL framework is generalized to fit MODM. In the G-ABL framework, it is possible to realize the knowledge flow and data flow of decision preference to develop in parallel and mutually beneficial. 2) The G-ABL based collaborative decision-making method is proposed to realize data and fuzzy knowledge co-driven design decision-making for complex products. 3) An engineering problem, the decision of horizontal tail control system design, is better addressed based on the proposed method.

The remainder of this article is arranged as follows. Section 2 introduces some preliminaries. A novel G-ABL framework is presented in Sect. 3. In Sect. 4, the G-ABL-based collaborative decision-making method is proposed, and the “Classifier”, “Abductive Kernel”, and “Abductive Machine” of the proposed method are established, respectively. Section 5 provides an engineering application to verify the effectiveness of the proposed method. Finally, Sect. 6 and Sect. 7 discuss and conclude this article.

2 Preliminaries

This section introduces some propaedeutics of the proposed method.

2.1 Abductive learning

A novel framework, Abductive Learning (ABL), bridges two independently evolving paradigms to artificial intelligence for mutual benefit—Machine Learning and Logical Reasoning [29]. Abduction differs from traditional deduction and induction, its essence is to correct data-perceived facts in machine learning with prior knowledge. With iterative learning, the accuracy and reliability of machine learning models are significantly improved after consistency optimization. The classical ABL framework is shown in Fig. 1 [30].

2.2 EWM

The entropy weight method is an objective weighting method based on data [31]. The information entropy is applied to measure the magnitude of objective variability and obtain objective weights. Suppose that the dimensionless data matrix \({X_{n \times m}}\). n is the number of solutions, and m is the number of objectives. The objective information entropy is given as

where \({p_{ij}} = {{{X_{ij}}} / {\sum_{i = 1}^{n} {{X_{ij}}} }}\). If \({p_{ij}} = 0\), let \({e_{j}} = 0\). The objective entropy weight can be calculated by

2.3 WK-means

WK-means is an intelligent clustering algorithm with the autonomous calculation of objective weights [32]. The introduced iterative weight quantifies the importance of the characterization objective in the clustering to reduce the influence of the interference dimension. Its minimization objective function is defined as

where U is the cluster assignment matrix, Z is the cluster center matrix and W is the weight vector. k is the number of classes. β is a dynamic parameter, varying with iteration, for objective weight \({w_{j}}\).

2.4 FCE

FCE is a method based on fuzzy criteria, comprehensively evaluating alternatives [33]. The core is to give a fuzzy evaluation vector B.

where W is the weight vector of the target, R is the fuzzy evaluation matrix. “∘” is the fuzzy operator.

Especially, after processing by the maximum membership principle or fuzzy scoring method, B can be regarded as a label corresponding to the alternative. The membership function is obtained empirically and the fuzzy matrix R is solved as a result. The process offers the possibility of giving a wealth of domain knowledge to R. It is the basis for introducing FCE into the proposed method.

3 G-ABL framework

When AI is applied in knowledge-intensive complex system engineering, the independence of the two main types of information, data and knowledge, needs to be broken. Decision-making that combines data and knowledge is almost certain. Thus, broadly, ABL can be regarded as an intelligent framework integrating data and knowledge to accommodate advances in engineering information.

The main challenge addressed by classical ABL is bridging numerical optimization and symbolic logic to evolve artificial intelligence. Admittedly, data is the source of information for numerical optimization (machine learning model). However, due to the uncertainty and ambiguity of complex product knowledge, the proportion of knowledge that can be expressed explicitly and with complete credibility decreases. Logical reasoning based on formal symbols is not a single method for exploiting knowledge, much less a single criterion for correcting facts inducted from data. Hence, we presented a novel G-ABL framework, shown in Fig. 2. Simultaneously, the consistency optimization in classical ABL should also be transformed.

The G-ABL framework contains three primary blocks: Classifier, Abductive Kernel, and Abductive Machine. All of them are general terms for a class of methods, algorithms, or principles with similar functions, whose details are explained as follows.

-

1)

Classifier

The classifier outputs labels based on numerical feature analysis. The initial classifier can be obtained by clustering, transferring, etc. Since the training data with real labels are sparse, pseudo labels (red labels in Fig. 2), even a large number, may exist in the previous generations.

-

2)

Abductive Kernel

The abductive kernel (a generalization of logical reasoning) can output labels based on knowledge-based methods, such as reasoning, and evaluation. Incompletely reliable grey labels may exist. However, aided by prior knowledge, grey labels are fewer and more credible than pseudo labels.

-

3)

Abductive Machine

The abductive machine (a generalization of consistency optimization) can correct data-based labels by referring to knowledge-based labels based on established principles, and obtain abducted labels. The determination of abducted labels combines two information sources, data and knowledge. Generally, there are more correct labels in abducted labels, whose reliability is superior to the above two.

As the abductive process iterates, the continuously augmented supervised data optimize the classifier performance to excel in addressing the classification problem. It falls within the scope of classical ABL. However, the possibilities for data updates go far beyond that. If the update direction is to select data that better match the preferences and eliminate the rest, the G-ABL framework works for solving MODM problems by combining data and knowledge.

4 Collaborative decision-making method based on G-ABL

This section first provides an overview of the collaborative decision-making method, followed by the internal details.

4.1 Methodology

In complex product design, the collaborative decision-making task is to select a “most preferred” design from a set of “preferred” preliminary design models with conflicting objectives, autonomously and efficiently based on decision preferences. Formally, the input of the proposed method is a Pareto solution set constituted by various non-dominated solutions, which is described as

where \({X_{i}} = ( {{x_{i1}},{x_{i2}}, \ldots ,{x_{iL}}} )\), \(i = 1,2, \ldots ,N\), \(x_{il}\), \(l = 1,2, \ldots ,L\) are optimization variables. Correspondingly, the Pareto front is represented as

where \({Y_{i}} = \vec{f} ( {{X_{i}}} ) = ( {{f_{1}} ( {{X_{i}}} ),{f_{2}} ( {{X_{i}}} ), \ldots ,{f_{M}} ( {{X_{i}}} )} ) = ( {y_{i1}},{y_{i2}}, \ldots , {y_{iM}} )\), \(\vec{f} ( \cdot )\) is combinatorial mapping of multi-objective functions, \({f_{m}} ( \cdot )\), \(m = 1,2, \ldots ,M\) are objective functions.

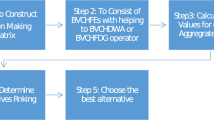

In terms of the G-ABL framework, the process of the collaborative decision-making method can be depicted in Fig. 3. EWM based WK-means is proposed as a classifier to address the classification problem with iterative variable weights that symbolize data preference. FCE is imported as an abductive kernel to evaluate knowledge-based labels. The membership function generated from prior domain knowledge and fuzzy logic can effectively measure complex knowledge preferences. The label update principle is defined as an abductive machine to obtain abductive labels based on Pearson correlation.

Three points should be interpreted.

1) Since WK-means is a clustering method, labeling is necessary. We calculate the multi-objective weighted mean within a class, while the later inter-class comparison is made to label. The multi-objective weighted sums in the last generation are also used to select the “most preferred” solution.

2) Data update means removing the Pareto solutions labeled as the current worst class in the decision set.

3) There are two iteration termination conditions listed below. One of them is satisfied to stop the iteration.

-

Condition 1

The number of Pareto solutions in the abducted decision set is less than the class number.

-

Condition 2

Only the best class has elements and the rest are empty.

4.2 Classifier: improved EWM based WK-means

Formally, the decision set is represented as

where \(( {{X_{i}},{Y_{i}}} )\), \(i = 1,2, \ldots ,N\) are solutions of the decision set. The input Pf is expanded as

where N is the number of Pareto solutions in the current decision set and M is the number of objectives. All objectives are assumed to be minimization objectives (subsequent calculations are based on the premise, and if other kinds of objectives exist, they are transferred to minimize.). Then, Pf is forward-oriented. For a single \(y_{ij}\) in Pf, we have

where σ is a minimal positive number and \({y_{ij}}^{\prime }>0\) is guaranteed. Then, \({y_{ij}}^{\prime }\) is nondimensionalized as

The information entropy of jth objective is calculated by

The entropy weight of jth objective is calculated by

Entropy weight vector is obtained as

The entropy weight vector W is introduced to quantify the data preferences of the decision set, so that weights are closely related to the decision set. In the global decision-making process, W should be constantly changing with the decision set. However, for a single abductive deduction process, the decision set is locally invariant. Hence, the weights in WK-means also should not change with the iteration of the clustering algorithm. We describe the above situation as a local static weight problem. The original WK-means model, shown in Eq. (3), can not implement static weights in algorithm iterations. Therefore, a subtle improvement is presented, and the objective function of the WK-means model is modified to

where K is the predetermined number of classes. The detailed clustering process of WK-means is described in [32]. Here, it is simplified to a clustering mapping \(C ( \cdot )\), as

where \({lb_{i}}^{\mathrm{pre}}\) is the clustering label. However, clustering labels can only represent classes but cannot judge the features of classes. For example, assuming that the clustering results are “Class-1” and “Class-2”. But which class of solutions is more consistent with the current data preferences on the Pareto front? In response, a multi-objective weighted mean vector is established. Suppose “Class-k” contains \(Nu{m_{k}}\) solutions, whose corresponding Pareto front is represented as \({Y_{j}} = ( {{y_{j1}},{y_{j2}}, \ldots ,{y_{jM}}} )\), \(j = 1,2, \ldots ,Nu{m_{k}}\). The multi-objective weighted mean \(F{m_{k}}\) is calculated by

where \({\xi _{j}} = ( {{\varepsilon _{j1}},{\varepsilon _{j2}}, \ldots ,{\varepsilon _{jM}}} )\), by Eq. (10). Arrange \(F{m_{k}}\) in descending order to obtain Fm, as

Then, all solutions in “Class-k” obtain the same label \({lb_{k}}\), as

Extending to the entire decision set, all solutions are given the data-based label \(Dlb ( i )\), \(i = 1,2, \ldots ,N\), i.e.,

Hereto, labeling is completed, resulting in

where \(\mathscr{C} ( \cdot )\) denotes classified mapping.

4.3 Abductive kernel: FCE

For all Solutions in the decision set, the index set of the FCE is constructed as

where \({u_{j}}\), \(j = 1,2, \ldots ,M\) is the jth dimensional optimization objective of the Pareto front. The remark set is constructed as

Especially, the number of remark levels is equal to the number of classes. Then, the domain knowledge induced by prior expertise needs to be transferred to the fuzzy matrix R to form knowledge preferences. However, the proposed method emphasizes the autonomy and efficiency of decision-making. For each solution, there must be an R corresponding to it, and diversity and iteration-varying exist. Obviously, fuzzy statistics and expert scoring methods are not applicable. Therefore, the membership function is constructed for each index in a hierarchy based on domain knowledge, represented as

Then, there is a fuzzy matrix \(R_{i}\) for any solution \(( {{X_{i}},{Y_{i}}} )\).

The fuzzy comprehensive evaluation of the solution is calculated as

where W is the current objective entropy weight (by Eq. (13)). Extrapolating to the entire decision set, all solutions will obtain the knowledge-based label \(Klb ( i )\), as

Hereto, the task of abductive kernel is achieved, resulting in

where \(\mathscr{K} ( \cdot )\) is abductive kernel mapping.

4.4 Abductive machine: label update principle based on Pearson correlation

Hereinbefore, the data-based label \(Dlb\) and the knowledge-based label \(Klb\) of solutions are obtained, both of which are K-dimension vectors. Combining information about the two types of labels is essential to form appropriate abducted labels. Pearson correlation allows to analyze the degree of linear correlation and it is a valid measure of the correlation between \(Dlb\) and \(Klb\). The correlation coefficient is calculated as

Then, the resulting correlation coefficients are divided into three levels, with different principles for obtaining the abducted label \(Alb\). The details of the label update principle are shown in Algorithm 1.

-

Level 1

\(r ( {Dlb,Klb} ) > {2 / 3}\), implies similar data preferences and knowledge preferences. \(Dlb\) is used as \(Alb\).

-

Level 2

\({1 / 3} \le r ( {Dlb,Klb} ) \le {2 / 3}\), implies uncertainty exists, \(Alb\) is co-determined by \(Dlb\) and \(Klb\). Especially, to prevent the removal of solutions with a high degree of uncertainty, \(Alb\) should be assigned to a better class whenever possible.

-

Level 3

\(r ( {Dlb,Klb} ) < {1 / 3}\), implies the existence of a significant conflict. In this case, the reliability of \(Klb\) is usually high. The maximum membership method is used to correct \(Klb\) to obtain \(Alb\).

Hereto, the abducted label \(Alb\) is obtained. According to the methodology proposed in Subsect. 4.1, when the iteration is terminated, the final decision set \(D{s_{f}}\) is determined. If \(D{s_{f}}\) contains a single solution, it is considered to be the “most preferred” solution recommended to designers. Otherwise, the entropy weight vector, \({W_{f}} = ( {{w_{1}}^{*},{w_{2}}^{*}, \ldots ,{w_{M}}^{*}} )\), is calculated again. The multi-objective weighted sums are calculated by

where \(N_{f}\) is the number of the solutions in \(Ds_{f}\). Finally, the solution with the minimal multi-objective weighted sum will be the “most preferred” one.

5 Experiment

In this section, an engineering application, the decision problem of the horizontal tail control system design, is used to verify the effectiveness of the proposed method. Data required for this article are derived from our previous research [12], a multi-objective optimization design of the horizontal tail control system. Finally, simulation and comparison analysis are involved.

5.1 Data and problem description

The horizontal tail is one of the key components to control the pitch stability of an aircraft [34]. Its design process involves mechanical, electronic, hydraulic and control disciplines, making it a typical aviation complex product. Besides, the core of horizontal tail control is a hydraulic servo system, which is also widely used in various engineering products [35].

In [12], the MOO-based preliminary design is completed for the horizontal tail control system. 43 Pareto solutions are obtained, the Pareto front is shown in Fig. 4. Each Pareto solution represents a non-inferior equipment selection in the preliminary design of the horizontal tail control system.

The optimization model consists of five optimization variables and three optimization objectives. The 5 variables correspond to the models of control valve, hydraulic cylinder, hydraulic oil, transmission components, and horizontal tail. The 3 objectives are control performance (\(f_{1}\)), weight (\(f_{2}\)), and price(\(f_{3}\)), and they are all minimization objectives. By Fig. 4, obviously, the optimization objectives are in conflict with each other and non-consistent change trends.

Excessive recommended preliminary designs are not designer friendly and do not have a targeted reference value. Thus, the collaborative decision problem is defined as obtaining a “most preferred” design from these preliminary designs to push to designers. The initial decision set is constructed as

where \({X_{i}} = ( {{x_{i1}},{x_{i2}}, \ldots ,{x_{i5}}} )\), \({Y_{i}} = ( {{y_{i1}},{y_{i2}},{y_{i3}}} )\), \(i = 1,2, \ldots ,43\).

5.2 Details

To present the experimental results concisely, 3 classes are set in the classifier, respectively named “Excellent Class”, “Medium Class” and “Inferior Class”. Correspondingly, the remark set of the abductive kernel is

Based on the knowledge preferences provided by the domain experts, the membership functions of the objectives are constructed, shown in Fig. 5.

Based on the raw data (initial decision set described in Eq. (30) and Fig. 4) and the fuzzy knowledge input (shown in Fig. 5), the G-ABL based collaborative decision-making method can be generated as

-

Step 1: data-based labeling

1) Input the decision set Ds, calculate the entropy weight vector W based on Eqs. (8)-(13).

2) Input the obtained W to Eq. (14) and perform WK-means to cluster Ds into 3 classes.

3) Labeling data-based classes (\(Dlb\)) for Ds according to Eqs. (16)-(19).

-

Step 2: knowledge-based labeling

1) Based on the membership function (as Fig. 5), calculate the fuzzy matrix R for Ds

2) Bring the calculated W and R into Eqs. (25)-(26), and obtain the knowledge-based label (\(Klb\)) for Ds.

-

Step 3: abduction

1) Input \(Dlb\) and \(Klb\), the abducted label of Ds is obtained based on Algorithm 1.

2) Remove solutions with the worst class label to update Ds.

-

Step 4: iteration termination judgment

If Condition 1 or Condition 2 is satisfied? Yes: obtain the final decision set \(Ds_{f}\); No: return Step 1.

After 4 abductive iterations, Condition 2 is satisfied and the iteration terminates. The evolution of the abducted decision set is shown in Fig. 6. The iterative variation of decision set size and objective entropy weight is shown in Fig. 7. Meanwhile, to demonstrate the trend of convergence of the abductive iterations towards the decision preferences, the variations in the mean objective function values of the excellent solutions are shown in Fig. 8.

In Fig. 6, it is clear from the \(Ds_{f}\) that the number of solutions is greater than 1, and they all belong to the “Excellent Class”. When \({W_{f}}=(0.35974584, 0.40878349, 0.23147067)\), the multi-objective weighted sums of \(Ds_{f}\) need to be calculated by Eq. (29), which are listed in Table 1.

Based on the description at the end of Subsect. 4.4, solution 8, which has the minimum multi-objective weighted sum in Table 1, is decided as the “most preferred” design, pushing to designers. The details of solution 8, described in Table 2, illustrate the model selections and the objective performances of the horizontal tail control system.

5.3 Result analysis

Horizontal tail control system is military, with a preference for performance metrics (including control performance and weight) significantly over cost. The weight reduction demand also serves the improvement of control performance. Correspondingly, the trend of decreasing cost weight in Fig. 7 is consistent with the decision preferences. Besides, Pareto solutions, the input of the proposed method, are mutually non-dominated. It is difficult to continue the simultaneous convergence of all objectives. Thus, Fig. 8 shows that price is compromised in exchange for the reduction of control performance and weight. The convergence of the abductive learning process to the decision preferences is proved.

The decisions of the proposed method are compared with the maximum priority objective method (MPO, proposed by [12]), EWM, and FCE in this engineering problem, shown in Table 3. The control performance is an implicit objective. The surrogate model (established by [12]) measures its performance but is difficult to visualize. For better model comparison and validation, a simulation analysis is provided in Fig. 9.

MPO and FCE are knowledge-driven decision-making methods, and EWM is data-driven. All solutions are non-dominated, and no optimal decision occurs. However, they consider only a single preference and are suitable for dealing with simple decision-making problems. Moreover, the classical ABL method is not applicable to address such knowledge-fuzzy decision-making problems in complex product design. In comparison, praiseworthily, the G-ABL method selects the design with the excellent control performance and the lightest weight (in Table 3). Further, by Fig. 9, it is evident that the solution decided with the G-ABL method has a shorter rise time and almost no overshoot and oscillation. The outstanding control performance of the horizontal tail significantly ensures the agility and safety of the aircraft.

To sum up, the G-ABL method provides excellent insight into the above data and knowledge preferences. The proposed method is advanced because it enables collaborative decision-making co-driven by data and knowledge, and gives a novel framework for intelligent autonomous decision-making with strong generalization capability. Especially for complex, knowledge-intensive decision-making tasks, the proposed method is more comprehensive and reliable.

6 Discussion

Two aspects need to be further discussed, especially concerning the limitations. 1) The proposed method does not conform to the convergence of the general heuristic classification learning models. 2) The decided preliminary design does not have absolute optimality, merely conforms to the decision preferences.

Convergence of algorithms is a prerequisite for heuristic learning. In classification tasks, the convergence of the classical ABL framework is demonstrated by the improvement of the classification accuracy of the classifier with iterations. Where, the classifier can be chosen from various classification learning models, such as SVM, CNN, and RNN. However, essentially, the convergence should be attributed to the classifier itself rather than to the ABL framework. The significant contribution of the ABL framework is to constantly correct the training data labels of the learning model based on prior knowledge during iterative training to improve the classifier performance. In the design decision problem addressed in this paper, the purpose is to achieve the classification evaluation of designs in the Pareto solution set co-driven by data and knowledge, so that the preliminary design is decided by removing the “inferior” class solutions with integrated consideration of data and knowledge preferences. Data-based and knowledge-based classification evaluation in the proposed decision-making method respectively relies on the labeling of entropy-based multi-objective weighted sum and FCE. The learning process of the classification model about training and testing is not involved, and its convergence is not applicable. By Fig. 7 and Fig. 8, the convergence of the proposed decision-making method is demonstrated by the fact that the characteristics of the decision set such as the changing trends of target values and target weights can keep conforming to the prior expertise stated in Subsect. 5.3. The proposed method may not be the general usage of the G-ABL framework, which has not been used in typical classification tasks. Thus, its convergence, when combined with a classification learning model, remains to be demonstrated, where the limitation lies. However, the prominent contribution of the proposed G-ABL framework to this paper is that it provides an effective structure for the integration of data and knowledge in decision-making, making possible the mutual benefit of data and knowledge.

The input of the proposed method is the Pareto solution set obtained by multi-objective optimization, in which the design solutions are mutually non-dominated. It means that there does not exist an absolutely optimal design whose all minimization objectives are the smallest in the Pareto solution set. Simultaneously, it is impossible to continue the simultaneous convergence of all objectives. This research fails to address the above questions. Therefore, a preference-based decision has to be made to resolve the design conflict in a sub-optimal way. In terms of data preference, the constraint criterion is set as the design with the smallest entropy-based multi-objective weighted sum is relatively optimal. However, prior expertise is ignored. For example, the cost may be incorrectly treated as the most important target in a military product and given a large weight. As for knowledge preference, the result of FCE is considered the criterion for judging relative optimality. However, trends in data are not taken seriously. For example, it is entirely possible to expend significant costs for a slight performance improvement, which is redundant and unworthy. Further, a correlation-based algorithm is proposed in Subsect. 4.4 to complement the relative optimality evaluation criterion when the results obtained from the above two criteria are inconsistent. The essential role of the G-ABL framework is to provide a pattern to help achieve a mutually beneficial complementarity of the two types of preferences, rather than to provide a learning model used to surrogate optimality classification criteria. Finally, we illustrate through simulations and comparisons that the preliminary design obtained by the proposed method has superior control performance and is more consistent with prior preferences. Although the evaluation criteria are provided in both conditions and the simulation verification is valid, it is still not enough to support the complete and formal demonstration of the optimality of the decided design, which needs further research.

7 Conclusion

This article proposes a G-ABL-based collaborative decision-making method for resolving multi-objective conflicts during the design process of complex products. A G-ABL framework is first presented, providing a novel AI paradigm that effectively combines both data and knowledge. Compared with classical ABL, the generalized framework is more comprehensive and extensible. Meanwhile, multiple models such as EWM, WK-means, and FCE are introduced and improved in the proposed framework to satisfy the adaptability and applicability. The improved EWM based WK-means model addresses the local static weight problem caused by the fixed data preferences as the decision set is locally invariant. Moreover, an engineering application demonstrates the effectiveness of the proposed framework and method. The experimental result verifies that the proposed method possesses greater autonomy and reliability for knowledge-intensive and knowledge-fuzzy decision-making problems in complex product design.

Tasks co-driven by data and knowledge are dramatically rising in engineering practice and industry application. However, the validation methods of decision model results are individualized in different engineering applications. So, future work can consider investigating a unified model validation method, where the difficulty of this paper lies. Besides, due to the lack of expert knowledge and engineering cases, the generality of the proposed framework and method should be further verified by the expanded applications and adaptive improvements in different specific and customized tasks.

Availability of data and materials

Not applicable.

References

S. Zhou, Y. Cao, Z. Zhang, Y. Liu, System design and simulation integration for complex mechatronic products based on SysML and modelica. J. Comput.-Aided Des. Comput. Graph. 30(4), 728–738 (2018). https://doi.org/10.3724/SP.J.1089.2018.16520

P. Zhang, Z. Nie, Y. Dong, Z. Zhang, F. Yu, R. Tan, Smart concept design based on recessive inheritance in complex electromechanical system. Adv. Eng. Inform. 43, 101010 (2020). https://doi.org/10.1016/j.aei.2019.101010

H. Chagraoui, M. Soula, Multidisciplinary design optimization of stiffened panels using collaborative optimization and artificial neural network. J. Mech. Eng. Sci. 232(20), 3595–3611 (2018). https://doi.org/10.1177/0954406217740164

P.M. Zadeh, M. Sayadi, A. Kosari, An efficient metamodel-based multi-objective multidisciplinary design optimization framework. Appl. Soft Comput. 74, 760–782 (2019). https://doi.org/10.1016/j.asoc.2018.09.014

M.A. Tawhid, V. Savsani, Multi-objective sine-cosine algorithm (MO-SCA) for multi-objective engineering design problems. Neural Comput. Appl. 31, 915–929 (2019). https://doi.org/10.1007/s00521-017-3049-x

H. Afshari, W. Hare, S. Tesfamariam, Constrained multi-objective optimization algorithms: review and comparison with application in reinforced concrete structures. Appl. Soft Comput. 83, 105631 (2019). https://doi.org/10.1016/j.asoc.2019.105631

N. Sayyadi Shahraki, S.H. Zahiri, An improved multi-objective learning automata and its application in VLSI circuit design. Memetic Comp. 12, 115–128 (2020). https://doi.org/10.1007/s12293-020-00303-8

G. Lei, G. Bramerdorfer, B. Ma, Y. Guo, J. Zhu, Robust design optimization of electrical machines: multi-objective approach. IEEE Trans. Energy Convers. 36(1), 390–401 (2021). https://doi.org/10.1109/TEC.2020.3003050

Y. Ma, Y. Xiao, J. Wang, L. Zhou, Multicriteria optimal Latin hypercube design-based surrogate-assisted design optimization for a permanent-magnet vernier machine. IEEE Trans. Magn. 58(2), 1–5 (2022). https://doi.org/10.1109/TMAG.2021.3079145

Q. Chen, Q. Zhang, Q.Y. Gao, Z. Feng, Q. Tang, G. Zhang, Design and optimization of a space net capture system based on a multi-objective evolutionary algorithm. Acta Astronaut. 167, 286–295 (2020). https://doi.org/10.1016/j.actaastro.2019.11.003

M.A. Tawhid, V. Savsani, ε-constraint heat transfer search (ε-HTS) algorithm for solving multi-objective engineering design problems. J. Comput. Des. Eng. 5(1), 104–119 (2018). https://doi.org/10.1016/j.jcde.2017.06.003

Z. Cui, J. Yue, C. Wu, Y. Su, F. Wu, A surrogate-assisted multi-objective optimization method for preliminary design of horizontal tail control system. 2022 34th Chinese Control and Decision Conference (CCDC) (2022). In press

O. Olabanji, K. Mpofu, Fusing multi-attribute decision models for decision making to achieve optimal product design. Found. Comput. Decision Sci. 45(4), 305–337 (2020). https://doi.org/10.2478/fcds-2020-0016

H. Garg, Generalized intuitionistic fuzzy entropy-based approach for solving multi-attribute decision-making problems with unknown attribute weights. Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 89, 129–139 (2019). https://doi.org/10.1007/s40010-017-0395-0

G. Yang, J. Yang, D. Xu, M. Khoveyni, A three-stage hybrid approach for weight assignment in MADM. Omega-Int. J. Manag. Sci. 71, 93–105 (2017). https://doi.org/10.1016/j.omega.2016.09.011

K.S. Chin, C. Fu, Y. Wang, A method of determining attribute weights in evidential reasoning approach based on incompatibility among attributes. Comput. Ind. Eng. 87, 150–162 (2015). https://doi.org/10.1016/j.cie.2015.04.016

T. Duo, J. Guo, F. Wu, R. Zhai, Application of entropy-based multi-attribute decision-making method to structured selection of settlement. J. Vis. Commun. Image Represent. 58, 220–232 (2019). https://doi.org/10.1016/j.jvcir.2018.11.026

A. Ishizaka, N.H. Nguyen, Calibrated fuzzy AHP for current bank account selection. Expert Syst. Appl. 40(9), 3775–3783 (2013). https://doi.org/10.1016/j.eswa.2012.12.089

D. Joshi, S. Kumar, Interval-valued intuitionistic hesitant fuzzy Choquet integral based TOPSIS method for multi-criteria group decision making. Eur. J. Oper. Res. 248(1), 183–191 (2016). https://doi.org/10.1016/j.ejor.2015.06.047

Y. Liang, Q. Zheng, A decision support system for satellite layout integrating multi-objective optimization and multi-attribute decision making. J. Syst. Eng. Electron. 30(3), 535–544 (2019). https://doi.org/10.21629/JSEE.2019.03.11

J. Lu, H. Guo, P. Yang, C. Li, L. Yang, Z. Zhang, Site selection of photovoltaic power station based on weighted least-square method and threshold normalization, in 2018 International Conference on Power System Technology (POWERCON) (2018), pp. 179–184. https://doi.org/10.1109/POWERCON.2018.8601705

M. Mehrabipour, A. Hajbabaie, A distributed gradient approach for system optimal dynamic traffic assignment. IEEE Trans. Intell. Transp. Syst. 23(10), 17410–17424 (2022). https://doi.org/10.1109/TITS.2022.3163369

N. Takayama, S. Arai, Multi-objective deep inverse reinforcement learning for weight estimation of objectives. Artif. Life Robot. 27, 594–602 (2022). https://doi.org/10.1007/s10015-022-00773-8

M. Zhang, Y. Li, Multi-objective optimal reactive power dispatch of power systems by combining classification-based multi-objective evolutionary algorithm and integrated decision making. IEEE Access 8, 38198–38209 (2020). https://doi.org/10.1109/ACCESS.2020.2974961

Y. Li, Y. Cheng, Q. Mou, S. Xian, Novel cross-entropy based on multi-attribute group decision-making with unknown experts’ weights under interval-valued intuitionistic fuzzy environment. Int. J. Comput. Intell. Syst. 13, 1295–1304 (2020). https://dx.doi.org/10.2991/ijcis.d.200817.001

Z. Zhang, X. Hu, Z. Liu, L. Zhao, Multi-attribute decision making: an innovative method based on the dynamic credibility of experts. Appl. Math. Comput. 393, 125816 (2021). https://doi.org/10.1016/j.amc.2020.125816

G. Yu, D. Li, W. Fei, A novel method for heterogeneous multi-attribute group decision making with preference deviation. Comput. Ind. Eng. 124, 58–64 (2018). https://doi.org/10.1016/j.cie.2018.07.013

Z. Zhou, Abductive learning: towards bridging machine learning and logical reasoning. Sci. China Inf. Sci. 62, 76101 (2019). https://doi.org/10.1007/s11432-018-9801-4

Y. Huang, W. Dai, J. Yang, L. Cai, S. Cheng, R. Huang, Y. Li, Z. Zhou, Semi-supervised abductive learning and its application to theft judicial sentencing, in 2020 IEEE International Conference on Data Mining (ICDM) (2020), pp. 1070–1075. https://doi.org/10.1109/ICDM50108.2020.00127

W. Dai, Q. Xu, Y. Yu, Z. Zhou, Bridging machine learning and logical reasoning by abductive learning, in Advances in Neural Information Processing Systems (NeurIPS) (2019), pp. 2815–2826

P. Zhang, S. Zhong, R. Zhu, M. Jiao, Evaluating technical condition of stone arch bridge based on entropy method-cloud model. J. Zhengzhou Univ. Eng. Sci. 43(1), 69–75 (2022)

J.Z. Huang, M.K. Ng, H. Rong, Z. Li, Automated variable weighting in k-means type clustering. IEEE Trans. Pattern Anal. Mach. Intell. 27(5), 657–668 (2005). https://doi.org/10.1109/TPAMI.2005.95

J. Cheng, M. Dong, B. Qi, An OW-FCE model based on MDE algorithm for evaluating integrated navigation system. IEEE Access 7, 178918–178929 (2019). https://doi.org/10.1109/ACCESS.2019.2957522

E.C. Altunkaya, I. Ozkol, Multi-parameter aerodynamic design of a horizontal tail using an optimization approach. Aerosp. Sci. Technol. 121, 107310 (2022). https://doi.org/10.1016/j.ast.2021.107310

J. Na, Y. Li, Y. Huang, G. Gao, Q. Chen, Output feedback control of uncertain hydraulic servo systems. IEEE Trans. Ind. Electron. 67(1), 490–500 (2020). https://doi.org/10.1109/TIE.2019.2897545

I. Kalita, M. Roy, Deep neural network-based heterogeneous domain adaptation using ensemble decision making in land cover classification. IEEE Trans. Artif. Intell. 1(2), 167–180 (2020). https://doi.org/10.1109/TAI.2020.3043724

G. Vanson, P. Marangé, E. Levrat, End-of-life decision making in circular economy using generalized colored stochastic Petri nets. Auton. Intell. Syst. 2(1), 3 (2022). https://doi.org/10.1007/s43684-022-00022-6

H. Wang, Z. Fang, D. Wang, S. Liu, An integrated fuzzy QFD and grey decision-making approach for supply chain collaborative quality design of large complex products. Comput. Ind. Eng. 140, 106212 (2020). https://doi.org/10.1016/j.cie.2019.106212

J. Hao, S. Luo, L. Pan, Computer-aided intelligent design using deep multi-objective cooperative optimization algorithm. Future Gener. Comput. Syst. 124, 49–53 (2021). https://doi.org/10.1016/j.future.2021.05.014

Y. Wu, T. Zhang, D. Liu, Y. Wang, Data-driven multi-attribute optimization decision-making for complex product design schemes. China Mech. Eng. 31(7), 865–870 (2020)

R. Wang, J. Milisavljevic-Syed, L. Guo, Y. Huang, G. Wang, Knowledge-based design guidance system for cloud-based decision support in the design of complex engineered systems. ASME J. Mech. Des. 143(7), 072001 (2021). https://doi.org/10.1115/1.4050247

X. Xue, X. Li, L. Wang, W. Guo, Y. Lin, Research on the design knowledge of complex products based on the cellular automata model. J. Mach. Des. 35(8), 1–6 (2018)

R. Jiang, S. Ci, D. Liu, X. Cheng, Z. Pan, A hybrid multi-objective optimization method based on NSGA-II algorithm and entropy weighted TOPSIS for lightweight design of dump truck carriage. Mach. 9(8), 156 (2021). https://doi.org/10.3390/machines9080156

Acknowledgements

This research is supported by the National Key Research and Development Program of China (Grant No. 2018YFB1700900).

Funding

This work was funded by the National Key R&D Program of China (2018YFB1700900).

Author information

Authors and Affiliations

Contributions

All authors contributed to the research’s conception. ZC wrote the paper; QX, JY, and ZC provided the research idea; JY provided the funding acquisition; ZC and WT contributed to the algorithm implementation; CW contributed to the organization, language, writing, and revision of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cui, Z., Yue, J., Tao, W. et al. A novel collaborative decision-making method based on generalized abductive learning for resolving design conflicts. Auton. Intell. Syst. 3, 3 (2023). https://doi.org/10.1007/s43684-023-00048-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s43684-023-00048-4