Abstract

Wafer yield prediction, as the basis of quality control, aims to predict quality indices of the wafer manufacturing process. In recent years, data-driven machine learning methods have received considerable attention for this task because of their accuracy, robustness, and convenience. However, existing studies mainly improve prediction accuracy at the model level and do not consider the impact of data characteristics on yield prediction. To tackle this issue, a novel wafer yield prediction method is proposed. First, an improved genetic algorithm (IGA) is used as an under-sampling method to address the uneven distribution of the data, namely (i) the overlap between the data of finished and defective products, which arises because both go through similar manufacturing processes, and (ii) the data imbalance caused by too few defective samples. Second, a high-dimensional alternating feature selection method (HAFS) is used to select the key influencing processes, that is, the key parameters, to avoid overfitting caused by a large number of input parameters. Finally, an SVM is used to predict the yield. Experiments are conducted on a public wafer yield prediction dataset collected from an actual wafer manufacturing system. IGA-HAFS-SVM achieves state-of-the-art results on this dataset, which confirms its effectiveness: compared with the conventional method, it improves the AUC score, G-Mean, and F1-score by 21.6%, 34.6%, and 0.6%, respectively. The experimental results also demonstrate the influence of data characteristics on wafer yield prediction.


1 Introduction

The semiconductor manufacturing process consists of four parts: wafer fabrication, wafer test, assembly, and final test [1], as shown in Fig. 1. Semiconductor devices are fabricated layer by layer in each die on a silicon wafer [2, 3]. After wafer fabrication, a wafer test is conducted to evaluate the electrical function of each die. The qualified dies are then cut from the wafer, assembled into individual chips, and labeled as qualified products after the final test [4]. The electrical function parameters measured during the wafer test are saved. Using these parameters to predict wafer yield is critical to the operation of the semiconductor wafer quality control system (SWQS) [5], since they provide insight into the root causes of defects and help improve the yield. As semiconductor manufacturing technology develops, integrated circuit designs become more complex and the number of dies on a wafer increases [6]. An effective and efficient wafer yield prediction (WYP) method is therefore greatly needed in the operation of the SWQS [7].

Compared with conventional yield prediction tasks, WYP remains difficult because of two challenges:

1) Uneven data distribution: In real-world wafer testing, wafer acceptance test (WAT) datasets are often imbalanced, meaning that the number of records for qualified wafers is far larger than that for defective wafers. For example, the ratio of qualified to defective wafer records in the dataset used in this paper is 11:1. Such imbalance weakens the prediction model's ability to learn each class and thus degrades yield prediction [8]. Furthermore, the data in a dataset usually overlap. The process covered by wafer testing is long, usually with more than one thousand steps, and because a defect is introduced in only one or a few steps, only some of the factors in a record are abnormal. As a result, the data for qualified and defective wafers overlap, which prevents the prediction model from learning the correct decision boundary [9] and leads to poor yield prediction. Forecasting wafer yield from imbalanced and overlapping data, that is, unevenly distributed data, is therefore a difficult problem.

2) High dimensions: WAT datasets commonly contain hundreds of parameters, which means that wafer testing involves a large number of detection steps. For example, the detection steps in wafer testing cover voltage, leakage current, saturation current, breakdown voltage, and other quantities related to MOS transistor performance; the dataset in this paper contains 1558 such parameters. High dimensionality leads to the curse of dimensionality, which in turn causes over-fitting of the prediction model and a heavy computational load [10]. Forecasting wafer yield from high-dimensional data is therefore also a difficult problem.

Motivated by the above problems, this paper proposes IGA-HAFS-SVM for WYP with unevenly distributed, high-dimensional data. An improved genetic algorithm (IGA) is proposed to modify the sample distribution of the WAT datasets according to the distances between samples of different classes. Furthermore, a high-dimensional alternating feature selection (HAFS) method is designed to select key parameters in the WAT datasets according to the WYP accuracy.

The rest of this paper is organized as follows: Sect. 2 reviews related studies on WYP, data sampling, and feature selection. Section 3 describes the method framework and the designs of IGA and HAFS. The experiments and discussion are presented in Sect. 4. The conclusion and future work are presented in Sect. 5.

2 State of the art

2.1 The review of WYP

With the development of artificial intelligence [3, 11–13], WYP can be tackled as a binary classification problem using machine learning, with class labels annotated by experts [4]. WYP has received considerable attention, and the proposed methods can be divided into three types: expert experience methods, deep learning methods, and shallow structured machine learning methods.

1) Expert experience methods rely on experts to select parameters closely related to wafer yield as the inputs of the subsequent prediction model. Schelasin [14] designed a queuing theory equation to estimate wafer factory cycle times, in which the parameters must be selected by experts. Fang et al. [15] proposed a yield prediction method based on a Bayesian network, in which the node parameters required to construct the network are determined by expert experience. Methods based on expert experience are simple and efficient, but the wafer manufacturing system is dynamic and the manufacturing process keeps changing as production proceeds, which makes it difficult for such methods to maintain good performance over a long period.

2) Deep learning methods handle wafer yield prediction through multiple layers or stages [16] and can extract features from raw WAT data automatically. Chen et al. [17] compared three methods for wafer yield prediction and found that the Genetic Algorithm-Backpropagation Neural Network (GA-BPNN) achieved the best prediction while using only 9 parameters. Xu et al. [5] designed a hybrid feature selection method to identify the key parameters for wafer yield prediction, which are then used as the input of a deep belief network (DBN) to predict the wafer yield. Although deep learning methods can handle complex nonlinear problems, in a wafer manufacturing system with small-batch production they may underfit because there are too few data samples [18].

3) Shallow structured machine learning methods refer to artificial intelligence methods such as the support vector machine (SVM) and XGBoost [19, 20], which predict wafer yield with pre-designed models. Lim et al. [20] compared the prediction performance of SVM and XGBoost models at different production scales when identifying two types of wafer maps on balanced data. Jiang et al. [21] designed a yield prediction method based on SVM and other machine learning models, which uses front-end WAT parameters to predict back-end test yield. Dong et al. [22] proposed a wafer yield prediction method that uses the fused LASSO to derive the spatial distribution of defective wafer clusters and then uses logistic regression to predict the final yield. Shallow structured machine learning methods learn the distribution of the target variable from training data, which avoids the bias that arises when expert experience no longer matches a changing manufacturing process. In addition, when the amount of data is relatively small, they are simpler and more effective than deep learning methods [18], which makes them well suited to the small-batch wafer manufacturing system considered in this paper, where data are limited.

Among wafer yield prediction methods, shallow machine learning is therefore the most suitable for this paper. Within this category, SVM is well suited to wafer yield prediction with unevenly distributed data because of its high computational efficiency on small samples and its sensitivity to overlapping data.

2.2 The review of data sampling

Although data sampling algorithms have been applied to WYP, research on the imbalanced data that must be considered here is still limited. Two major approaches can be adopted to handle imbalanced data: model-level and data-level methods [23]. Model-level methods adjust or rewrite a conventional model so that it predicts directly on the imbalanced dataset, but they require a deep understanding of the model. Data-level methods are more convenient because they do not modify the conventional model, and the processed dataset can be combined with any existing prediction model [24]. Data sampling methods are of two types: oversampling and under-sampling [25].

1) Oversampling methods use a decision rule to increase the number of minority samples until it matches the number of majority samples. Chawla et al. [26] proposed SMOTE, which generates new samples between a minority sample and one of its k nearest minority neighbors. Maulidevi and Surendro [27] developed a SMOTE variant that synthesizes minority samples with SMOTE and removes noise with the local outlier factor (LOF). Guan et al. [28] designed SMOTE-WENN: first, SMOTE generates synthetic minority examples by linear interpolation; then, WENN detects and deletes unsafe majority and minority examples using a weighted distance function and the k-nearest neighbor (KNN) rule. However, because the wafer manufacturing system is large and data acquisition is subject to deviation, a production batch yields a large amount of wafer data in which the proportion of defective products is very small. Oversampling such data may therefore increase the risk of overfitting the prediction model.

2) Under-sampling methods remove part of the majority samples according to set conditions until their number matches that of the minority samples. Tsai et al. [29] proposed a method that randomly deletes majority samples until their number equals that of the minority samples. Guzmán-Ponce et al. [30] proposed a two-stage under-sampling method that combines the DBSCAN [31] clustering algorithm with a minimum spanning tree algorithm to handle class overlap and imbalance simultaneously. Koziarski [32] proposed CSMOUTE, which defines synthetic majority under-sampling based on the two nearest majority instances. Ha and Lee [33] proposed a GA-based under-sampling method that selects a subset of the majority samples through selection, crossover, mutation, and other operations so as to maximize the prediction accuracy of the classifier. Under-sampling can alleviate model overfitting to a certain extent [34], and this kind of method is better suited to the unevenly distributed data collected from the small-batch wafer manufacturing system in this paper.
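To make the two data-level families concrete, the sketch below shows the core interpolation step of SMOTE and plain random under-sampling with NumPy. It is a simplified illustration, not the reference implementations: the function names `smote_sample` and `random_undersample` are hypothetical, neighbors are found by brute-force Euclidean distance, and `k=5` is an assumed default.

```python
import numpy as np

def smote_sample(X_min, n_new, k=5, rng=None):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation."""
    rng = np.random.default_rng(rng)
    # Pairwise Euclidean distances between minority samples.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                   # a sample is not its own neighbor
    k = min(k, len(X_min) - 1)
    neighbors = np.argsort(d, axis=1)[:, :k]      # k nearest minority neighbors
    base = rng.integers(0, len(X_min), size=n_new)
    nb = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                  # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[nb] - X_min[base])

def random_undersample(X_maj, n_keep, rng=None):
    """Keep n_keep majority samples drawn uniformly without replacement."""
    rng = np.random.default_rng(rng)
    idx = rng.choice(len(X_maj), size=n_keep, replace=False)
    return X_maj[idx]
```

Each synthetic point lies on the segment between two real minority samples, which is why oversampling many near-duplicate points can encourage overfitting; random under-sampling, by contrast, only discards data.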

Among under-sampling methods, GA is a promising approach to the uneven data distribution problem in WYP. GA can select the samples most conducive to model training and is more extensible than other under-sampling methods, so it can be used for data sampling in scenarios with uneven data distributions.

2.3 The review of feature selection

In recent years, there has been much research on dimensionality reduction, which can be divided into principal component analysis (PCA), filter feature selection methods, and wrapper feature selection methods. For PCA, Ravi et al. [35] used PCA to reduce the features and dimensions of high-dimensional data and then used logistic regression for data mining. Agarwal et al. [36] designed a hierarchical PCA that breaks an image into parts, performs PCA on each part separately, and then combines the results. Li et al. [37] designed ORBF-KPCA, which uses cross-validation to choose the kernel parameter that minimizes the difference between the dimension-reduced results of raw data and homogeneous data. For filter feature selection, Ke et al. [38] proposed a filter method named score-based criteria fusion (SCF), which selects informative genes for cancer classification and then removes redundant genes from these candidates. Peng et al. [39] proposed mRMR, which uses mutual information as the criterion to select, from all parameters, a set of features with maximum relevance and minimum redundancy. Yu and Liu [40] proposed the fast correlation-based filter (FCBF), which uses symmetrical uncertainty, a normalized form of information gain, as the evaluation criterion: features whose correlation with the class exceeds a threshold are ranked, and redundant features are then removed from the ranked list. For wrapper feature selection, Gokalp et al. [41] proposed an iterated greedy metaheuristic wrapper algorithm for sentiment classification, which focuses on achieving high-quality classification results while reducing dimension. Heidari et al. [42] proposed a swarm intelligence algorithm called Harris hawks optimization (HHO), which imitates the cooperative hunting behavior of Harris' hawks: each position update takes into account the escaping energy of the prey so that the hawks approach the prey, that is, the optimal solution, more accurately.

These three kinds of methods have their own characteristics. PCA obtains a group of linearly uncorrelated variables through dimension reduction, which destroys the original physical meaning of the WAT features, so the features that affect the yield can no longer be traced back. Filter methods select the key parameter subset according to the intrinsic characteristics of the WAT data without directly optimizing classifier performance, so they do not inherit the selection bias of the classifier. Wrapper methods use a preset classifier to evaluate feature subsets, so they easily inherit the selection bias of the classifier, but their prediction performance is often better than that of filter methods [43]. To combine the advantages of both, a filter is embedded in a wrapper feature selection algorithm to obtain a smaller, unbiased subset of key parameters.

Moreover, among filter methods, FCBF ranks the features and then removes redundant ones along this ranking, so its computational efficiency is higher than that of typical filter methods, which makes it better suited to the high-dimensional WAT data in this paper. Among wrapper methods, HHO, unlike other methods, can change its search strategy according to the current search position, which makes it better suited to WAT data with high dimensions and complex relationships between parameters.

3 Method

In this section, we first present the framework of IGA-HAFS-SVM, as shown in Fig. 2.

Firstly, data cleaning is applied to handle outliers and standardize the data, so that the values are affected by neither differing dimensions (units) nor outliers.

Secondly, the data are divided into the training set, testing set, and validation set, and the improved genetic algorithm (IGA) is then used to address the uneven data distribution through the following operations.

The first operation is selection. The input matrix is \(X=[ x_{1}, x_{2},\ldots , x_{n} ]^{T}\), which contains the encoded majority samples of the training set, and the selection function is:

$$ X_{s} = f_{1} (X), \tag{1} $$

where \(X_{s}\) is a group of chromosomes to be selected and \(f_{1} (\cdot)\) is the selection operator.

The second operation is crossover. The inputs are \(X_{s1} =[ x_{1}, x_{2},\ldots , x_{m} ]^{T}\) and \(X_{s2} =[ x_{m+1},x_{m+2},\ldots , x_{n} ]^{T}\), and the crossover function is:

$$ X_{c} = f_{2} ( X_{s1}, X_{s2} ), \tag{2} $$

where \(X_{s1}\) and \(X_{s2}\) are disjoint parts of \(X_{s}\), \(X_{c}\) is the new set of chromosomes produced by crossover, and \(f_{2} (\cdot)\) is the crossover operator.

The third operation is mutation. The input matrix is \(X_{c}\), and the mutation function is:

$$ X_{m} = f_{3} ( X_{c} ), \tag{3} $$

where \(X_{m}\) is the set of chromosomes after mutation and \(f_{3} (\cdot)\) is the mutation operator.

The fourth operation is gene-specific expression. The input vector is \(x_{i} \in X_{m}\), \(i=1,2,\dots,n\), and the filter function used in gene-specific expression is given by equation (4):

$$ G( x_{i}, X_{\min } ) = \begin{cases} 0, & \text{if } x_{i}^{k} = 1,\\ \max \bigl( \mathrm{AVG}( \Vert x_{i}, X_{\min } \Vert _{2} ) \bigr), & \text{if } x_{i}^{k} = 0, \end{cases} \tag{4} $$

where k is a gene on chromosome i, \(k=1, 2, \dots , M\), M is the dimension of the chromosome, \(\Vert \cdot \Vert _{2}\) is the 2-norm, and \(\mathrm{AVG}(\cdot)\) is the averaging operation. The gene-specific function is:

$$ x_{n} ' = G( x_{i}, X_{\min} ), \tag{5} $$

where \(x_{n}'\) is the binary-encoded index vector of the majority-class samples.

The final operation is training set construction. The input vector \(X_{E}\) is the chromosome with the highest fitness value, constructed from \(x_{n}'\). The majority selection function is given by equation (6):

$$ f_{4} ( x, X_{\mathrm{maj}} ) = \begin{cases} X_{\mathrm{maj}}^{k}\text{ selected}, &\text{if } x_{n}^{k} =1,\\ X_{\mathrm{maj}}^{k}\text{ unselected}, &\text{if } x_{n}^{k} =0, \end{cases} \tag{6} $$

where k is the index of a majority-class sample, \(X_{\mathrm{maj}}\) is the two-dimensional matrix of majority-class samples, and \(f_{4} (\cdot )\) is the majority-sample selection function. The construction function is:

$$ X_{T} = f_{5} \bigl( f_{4} \bigl( x_{n} ', X_{\mathrm{maj}} \bigr), X_{\min} \bigr), \tag{7} $$

where \(f_{5} (\cdot)\) is the construction operator.
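Equations (6) and (7) amount to masking the majority matrix with the best chromosome and stacking the result with every minority sample. A minimal NumPy sketch follows, assuming qualified (majority) wafers are labeled 0 and defective (minority) wafers 1; the helper name `construct_training_set` is hypothetical, and the text does not specify \(f_{5}\) beyond this combination.

```python
import numpy as np

def construct_training_set(x_best, X_maj, X_min, y_maj_label=0, y_min_label=1):
    """Build X_T from the best chromosome (hypothetical helper).

    f4: keep majority row k when gene k == 1.
    f5: stack the kept majority rows with every minority row."""
    mask = np.asarray(x_best, dtype=bool)         # gene k == 1 -> sample k selected
    X_sel = X_maj[mask]                           # f4: selected majority samples
    X_T = np.vstack([X_sel, X_min])               # f5: append all minority samples
    y_T = np.concatenate([np.full(len(X_sel), y_maj_label),
                          np.full(len(X_min), y_min_label)])
    return X_T, y_T
```

For example, the chromosome `[1, 0, 1, 0, 0, 1]` keeps majority rows 0, 2, and 5 and places the minority rows after them.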

Thirdly, once preprocessing has resolved the uneven distribution problem, \(X_{T}\) is used as the input to Harris hawks optimization (HHO), which first selects a group of parameters. Then, on the parameter subset initially selected by HHO, a filter feature selection method, the fast correlation-based filter (FCBF), removes further highly redundant variables.

The first operation is to update the initial energy \(E_{0}\), the jump strength J, and the energy E according to equations (8), (9), and (10):

$$ E_{0} =2 x_{r} -1, \tag{8} $$

$$ J =2(1- x_{r} ), \tag{9} $$

$$ E =2 E_{0} \biggl(1- \frac{t}{T} \biggr), \tag{10} $$

where \(x_{r}\) is randomly selected from \([0,1]\), t is the current iteration, and T is the maximum number of iterations.
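Equations (8)–(10) can be computed directly. The sketch below follows the text in using a single random draw \(x_{r}\) for both \(E_{0}\) and J; the function name `escaping_energy` is hypothetical.

```python
import numpy as np

def escaping_energy(t, T, rng=None):
    """Return (E0, J, E) following equations (8)-(10)."""
    rng = np.random.default_rng(rng)
    x_r = rng.random()              # x_r ~ U[0, 1)
    E0 = 2 * x_r - 1                # initial energy in [-1, 1)
    J = 2 * (1 - x_r)               # jump strength in (0, 2]
    E = 2 * E0 * (1 - t / T)        # energy decays linearly to 0 as t -> T
    return E0, J, E
```

Because \(|E| \le 2(1 - t/T)\), early iterations can reach \(|E| \geq 1\) and explore, while later iterations are forced into the besiege modes.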

The second operation is to update the positions of the predators according to the absolute value of E. The input vector \(X' = [ x_{1}, x_{2},\dots , x_{n} ]^{T}\) is the location of the prey. If \(| E | \geq 1\), \(X'\) is updated using equation (11):

$$ X' ( t +1) = \begin{cases} X_{\mathrm{rand}} ( t ) - r_{1} \bigl\vert X_{\mathrm{rand}} ( t ) -2 r_{2} X' ( t ) \bigr\vert , & q \geq 0.5,\\ \bigl( X_{\mathrm{best}} ( t ) - X_{m} ( t ) \bigr) - r_{3} \bigl( LB + r_{4} ( UB - LB ) \bigr), & q < 0.5, \end{cases} \tag{11} $$

where \(X_{\mathrm{rand}} ( t )\) is a vector randomly selected from the population, \(X_{\mathrm{best}} ( t )\) is the vector with the optimal fitness value at iteration t, q is a random number between 0 and 1, and \(r_{1}\), \(r_{2}\), \(r_{3}\), and \(r_{4}\) are likewise random numbers between 0 and 1. LB and UB are the lower and upper bounds of the variables. \(X_{m} ( t )\) is the mean position of the population, given by equation (12):

$$ X_{m} ( t ) = \frac{1}{N} \sum_{i =1}^{N} X_{i} ( t ), \tag{12} $$

where \(X_{i} ( t )\) is an individual of the population and N is the number of individuals. Let r be a random number between 0 and 1 representing the prey's chance of escaping. If \(r \geq 0.5\) and \(\vert E \vert \geq 0.5\), \(X'\) is updated using equation (13); if \(r \geq 0.5\) and \(\vert E \vert <0.5\), \(X'\) is updated using equation (14):

$$ X' ( t +1 ) =\Delta X ( t ) - E \bigl\vert J X_{\mathrm{best}} ( t ) - X' ( t ) \bigr\vert , \tag{13} $$

$$ X' ( t +1 ) = X_{\mathrm{best}} ( t ) - E \bigl\vert \Delta X ( t ) \bigr\vert , \tag{14} $$

where \(\Delta X ( t )\) is given by equation (15):

$$ \Delta X ( t ) = X_{\mathrm{best}} ( t ) - X' ( t ). \tag{15} $$
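The branching logic of equations (11)–(15) for a single hawk can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions: the function name `hho_update` and the returned branch labels are hypothetical, positions are treated as continuous vectors, and the binary encoding used by HAFS would additionally need a step mapping continuous positions to 0/1 genes, which the text does not spell out and which is omitted here. The \(r < 0.5\) case is left to the FCBF-embedded rapid-dive operation.

```python
import numpy as np

def hho_update(X, i, x_best, E, J, lb, ub, rng=None):
    """Return (new_position, branch) for hawk i per equations (11)-(15).

    X: (N, D) population; x_best: best position found so far.
    For r < 0.5 the position is None: the rapid-dive step handles it."""
    rng = np.random.default_rng(rng)
    x = X[i]
    if abs(E) >= 1:                                   # exploration, eq. (11)
        q, r1, r2, r3, r4 = rng.random(5)
        if q >= 0.5:
            x_rand = X[rng.integers(len(X))]
            return x_rand - r1 * np.abs(x_rand - 2 * r2 * x), "explore_random"
        x_mean = X.mean(axis=0)                       # eq. (12)
        return (x_best - x_mean) - r3 * (lb + r4 * (ub - lb)), "explore_mean"
    r = rng.random()                                  # prey's chance of escaping
    delta = x_best - x                                # eq. (15)
    if r >= 0.5 and abs(E) >= 0.5:                    # soft besiege, eq. (13)
        return delta - E * np.abs(J * x_best - x), "soft_besiege"
    if r >= 0.5:                                      # hard besiege, eq. (14)
        return x_best - E * np.abs(delta), "hard_besiege"
    return None, "rapid_dive"
```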

The third operation embeds FCBF into the soft/hard besiege with progressive rapid dives stage of HHO to update the position of \(X'\) when \(r <0.5\) and \(\vert E \vert \geq 0.5\), or when \(r <0.5\) and \(\vert E \vert <0.5\). Given the input vector \(X' = [ x_{1}, x_{2},\dots , x_{n} ]^{T}\) and the training set \(X_{T}\), the parameter extraction function is:

$$ X_{T}' = f_{6} \bigl( X', X_{T} \bigr), \tag{16} $$

where \(X_{T}'\) is the training set restricted to the extracted parameters. With the target vector \(Y = [ y_{1}, y_{2},\dots , y_{n} ]^{T}\) and the input matrix \(X_{T}'\), the parameter filter operation is:

$$ X_{T}^{\prime \prime } = \mathrm{FCBF} \bigl( X_{T}', Y \bigr), \tag{17} $$

where \(X_{T}^{\prime \prime }\) is the training set with the key parameters. The fitness value is then calculated using \(X_{T}^{\prime \prime }\), and the parameters corresponding to the best fitness value are retained in \(X' ( t +1 )\). \(X'\) is updated using equation (18):

$$ X' (t+1)_{i}^{k} = \begin{cases} Y_{i}^{k}, & \text{if } k \in \text{optimal subset and } F ( Y ) < F(Z),\\ Z_{i}^{k}, & \text{if } k \in \text{optimal subset and } F ( Z ) < F(Y),\\ 0, & \text{otherwise}, \end{cases} \tag{18} $$

where k indexes the genes of the encoded vector, i denotes an individual in the population, and F is the fitness function. Y and Z are given by equations (19) and (20):

$$ Y = X_{\mathrm{best}} ( t ) - E \bigl\vert J X_{\mathrm{best}} ( t ) - X ( t ) \bigr\vert , \tag{19} $$

$$ Z=Y + S \times LF ( D ), \tag{20} $$

where D is the dimension of \(X'\), S is a random vector of size \(1\times D\), and LF is the Lévy flight function [42]. This process is iterated until the termination condition is reached, yielding \(X_{\mathrm{TB}}\), the subset of key parameters.
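The sketch below illustrates equations (18)–(20) under several assumptions. The Lévy step uses Mantegna's algorithm with \(\beta = 1.5\) and a 0.01 scale, as commonly done in HHO implementations; `evaluate` is a hypothetical callback standing in for equations (16)–(17) plus the SVM fitness, returning a fitness value and a boolean mask of the genes FCBF retains. Equation (18) leaves ties \(F(Y) = F(Z)\) unspecified; the sketch keeps Y in that case.

```python
import math
import numpy as np

def levy_flight(D, beta=1.5, rng=None):
    """Levy-distributed step of length D (Mantegna's algorithm, scaled by 0.01)."""
    rng = np.random.default_rng(rng)
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u = rng.normal(0.0, sigma, D)
    v = rng.normal(0.0, 1.0, D)
    return 0.01 * u / np.abs(v) ** (1 / beta)

def rapid_dive(x, x_best, E, J, evaluate, rng=None):
    """One besiege-with-rapid-dives step following equations (18)-(20).

    evaluate(pos) -> (fitness, keep_mask) is a hypothetical callback that
    applies FCBF (eqs. 16-17) and returns the fitness and retained genes."""
    rng = np.random.default_rng(rng)
    Y = x_best - E * np.abs(J * x_best - x)           # eq. (19)
    S = rng.random(x.size)                            # random 1 x D vector
    Z = Y + S * levy_flight(x.size, rng=rng)          # eq. (20)
    fY, keepY = evaluate(Y)
    fZ, keepZ = evaluate(Z)
    # eq. (18): take the lower-fitness move on retained genes, zero elsewhere
    if fY <= fZ:
        return np.where(keepY, Y, 0.0)
    return np.where(keepZ, Z, 0.0)
```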

Finally, the fitness value of each candidate subset is calculated using the AUC score as the evaluation criterion and SVM as the evaluation model, and the parameter subset with the best fitness value in each round is retained. When the termination condition is reached, the global optimal subset is output and fed into the prediction model to predict wafer yield by equation (21):

$$ \text{wafer yield} = \mathrm{SVM} \bigl( X_{\mathrm{val}} ( X_{\mathrm{TB}} ), Y_{\mathrm{val}} \bigr), \tag{21} $$

where \(X_{\mathrm{val}} ( X_{\mathrm{TB}} )\) is the validation set retaining only the key parameters \(X_{\mathrm{TB}}\), and \(Y_{\mathrm{val}}\) is its label vector.
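A minimal scikit-learn sketch of the final step follows: an SVM is fitted on the rebalanced training set restricted to the key parameters and scored on the validation set with the three metrics reported in the abstract. The helper name `evaluate_on_validation`, the RBF kernel with default hyper-parameters, and the labeling of defective wafers as 1 are assumptions; G-mean is computed with its usual definition, the square root of sensitivity times specificity.

```python
import numpy as np
from sklearn.metrics import f1_score, recall_score, roc_auc_score
from sklearn.svm import SVC

def evaluate_on_validation(X_T, y_T, X_val, y_val, key_idx):
    """Fit an SVM on the key parameters only and report AUC, G-mean and F1."""
    clf = SVC(kernel="rbf")                     # kernel and defaults are assumptions
    clf.fit(X_T[:, key_idx], y_T)
    scores = clf.decision_function(X_val[:, key_idx])   # higher -> class 1
    y_pred = clf.predict(X_val[:, key_idx])
    sensitivity = recall_score(y_val, y_pred, pos_label=1)
    specificity = recall_score(y_val, y_pred, pos_label=0)
    return {
        "auc": roc_auc_score(y_val, scores),
        "g_mean": float(np.sqrt(sensitivity * specificity)),
        "f1": f1_score(y_val, y_pred, pos_label=1),
    }
```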

3.1 Data preprocessing

Firstly, outliers are handled and the data are standardized (see Sect. 4.2 for details). To eliminate the influence of uneven data distribution on the identification of key WAT parameters and on wafer yield prediction, the uneven distribution must then be addressed. The improved genetic algorithm (IGA) searches for majority-class samples that do not overlap with the minority-class samples, equal in number to the minority-class samples. IGA consists of six steps.

3.1.1 Individual coding and population initialization

First, a group of initial chromosomes is generated randomly, and these chromosomes are encoded with binary encoding.

3.1.2 Fitness function

After population initialization and chromosome encoding, each chromosome determines which majority-class samples are selected. These samples are combined with all minority-class samples to construct a new training set, on which the fitness is calculated by the following equation:

Here, the error is the loss obtained by a given classifier (the support vector machine (SVM) classifier [20] is used here) trained on the new training set, and the loss is calculated as 1 − AUC. α and β are two parameters weighting the loss and the selected feature rate in the fitness function, with \(\alpha \in [0,1]\) and \(\beta =1-\alpha \).
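The fitness equation itself is missing from this version of the text. The sketch below assumes the common weighted-sum form, fitness = α·(1 − AUC) + β·(selected genes / M), which matches the description of α and β but remains an assumption, as do α = 0.9, measuring AUC on a held-out test set, and the helper names `init_population` and `iga_fitness`. Lower values are better.

```python
import numpy as np
from sklearn.metrics import roc_auc_score
from sklearn.svm import SVC

def init_population(pop_size, n_genes, rng=None):
    """Random binary chromosomes; gene k = 1 keeps majority sample k."""
    rng = np.random.default_rng(rng)
    return rng.integers(0, 2, size=(pop_size, n_genes))

def iga_fitness(chrom, X_maj, X_min, X_test, y_test, alpha=0.9):
    """Assumed weighted-sum fitness: alpha * (1 - AUC) + beta * selected rate."""
    beta = 1 - alpha
    mask = chrom.astype(bool)
    if mask.sum() == 0:
        return np.inf                    # nothing selected: invalid individual
    X = np.vstack([X_maj[mask], X_min])  # selected majority + all minority samples
    y = np.r_[np.zeros(mask.sum()), np.ones(len(X_min))]
    clf = SVC().fit(X, y)
    auc = roc_auc_score(y_test, clf.decision_function(X_test))
    rate = mask.mean()                   # proportion of selected genes
    return alpha * (1 - auc) + beta * rate
```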

3.1.3 Select operator

The selection operator adopts the roulette wheel mechanism. Supposing a chromosome has M genes, the probability of each gene being selected is given by the following equation:

where x represents an individual gene in a chromosome.
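The selection-probability equation is also missing here. As an illustration of standard roulette-wheel selection, the sketch below draws whole chromosomes with probability proportional to a weight. Because the fitness in this method is minimized, it converts fitness to the weight 1/(fitness + ε); this transformation and the helper name `roulette_select` are assumptions, not the paper's equation.

```python
import numpy as np

def roulette_select(population, fitness, n_select, eps=1e-12, rng=None):
    """Roulette-wheel selection of chromosomes; lower fitness -> larger slice."""
    rng = np.random.default_rng(rng)
    weights = 1.0 / (np.asarray(fitness, dtype=float) + eps)
    probs = weights / weights.sum()            # p_i = w_i / sum_j w_j
    idx = rng.choice(len(population), size=n_select, replace=True, p=probs)
    return population[idx]
```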

3.1.4 Crossover operator

First, the number of chromosomes that undergo crossover is determined by a crossover rate (CR) of 0.8. For the selected chromosomes, single-point crossover is applied at a randomly chosen crossover point, and two new chromosomes are produced by the crossover operator.
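A sketch of single-point crossover with CR = 0.8 follows. Pairing consecutive parents (0, 1), (2, 3), … and drawing the cut point from 1 to M − 1 so that both parts are non-empty are assumptions; the helper name `single_point_crossover` is hypothetical.

```python
import numpy as np

def single_point_crossover(parents, cr=0.8, rng=None):
    """Cross each consecutive pair with probability cr at a random cut point."""
    rng = np.random.default_rng(rng)
    children = parents.copy()
    n, M = parents.shape
    for a in range(0, n - 1, 2):
        if rng.random() < cr:
            cut = rng.integers(1, M)                 # cut in 1..M-1
            children[a, cut:] = parents[a + 1, cut:]  # swap the tails
            children[a + 1, cut:] = parents[a, cut:]
    return children
```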

3.1.5 Mutation operator

For mutation, each gene is checked individually: if the random number generated for it is less than the mutation rate of 0.01, the gene mutates according to the following equation:

where k indexes the gene within a chromosome and i indexes the chromosome within the group of chromosomes.
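The mutation equation is missing from this version of the text; for binary chromosomes the usual choice is a bit flip, \(x_{i}^{k} \leftarrow 1 - x_{i}^{k}\). The sketch below assumes that form with the stated rate of 0.01; the helper name `bit_flip_mutation` is hypothetical.

```python
import numpy as np

def bit_flip_mutation(population, rate=0.01, rng=None):
    """Flip each binary gene independently with probability `rate` (x -> 1 - x)."""
    rng = np.random.default_rng(rng)
    flip = rng.random(population.shape) < rate
    return np.where(flip, 1 - population, population)
```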

3.1.6 Gene-specific expression

Gene-specific expression selectively expresses the more dominant genes and inhibits unqualified ones. Each gene is represented by a binary code, and a gene selection function chooses the genes that satisfy the following equation:

where k denotes a gene in the chromosome and i denotes a chromosome. A code of zero means that the corresponding sample is not selected. Among these unselected majority-class samples, the sample relatively far away from all minority-class samples is selected, that is, its code is set to 1. In this way, the selected samples overlap as little as possible with the other class. Gene-specific expression produces a better individual, whose fitness is then recalculated, and all operations are repeated until the termination condition is met, yielding the optimal individual.
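Following equation (4) and the description above, gene-specific expression can be sketched as: compute each majority sample's average 2-norm distance to all minority samples, then switch on the unselected gene(s) with the largest average distance. How many genes are expressed per call is not stated in the text, so `n_express=1` is an assumption, as is the helper name `gene_specific_expression`.

```python
import numpy as np

def gene_specific_expression(chrom, X_maj, X_min, n_express=1):
    """Set to 1 the unselected genes whose majority samples lie farthest,
    on average, from all minority samples (cf. equations (4)-(5))."""
    chrom = chrom.copy()
    # Average Euclidean (2-norm) distance from each majority sample to X_min.
    diffs = X_maj[:, None, :] - X_min[None, :, :]
    avg_dist = np.linalg.norm(diffs, axis=2).mean(axis=1)
    unselected = np.flatnonzero(chrom == 0)
    if unselected.size:
        order = unselected[np.argsort(avg_dist[unselected])[::-1]]
        chrom[order[:n_express]] = 1
    return chrom
```

For example, with one minority sample at the origin and majority samples at distances 1, 5, 2, and 10, a chromosome `[0, 0, 0, 1]` becomes `[0, 1, 0, 1]`: the sample at distance 10 is already selected, so the farthest unselected one (distance 5) is expressed.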

3.2 Key parameters identification by HAFS

Once the new training set is built, the interference of the data distribution is reduced and the key parameters can be identified accurately. HAFS is a novel feature selection method that embeds the fast correlation-based filter (FCBF) into HHO, a wrapper feature selection method. Its steps are introduced below.
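To show what the embedded filter computes, here is a compact sketch of FCBF as described by Yu and Liu [40]: features are ranked by symmetrical uncertainty SU(X, Y) = 2·IG / (H(X) + H(Y)) with the class, and a lower-ranked feature q is dropped when a kept feature p satisfies SU(p, q) ≥ SU(q, class). The sketch requires discrete features, so the continuous WAT parameters would first need discretization (for example, binning), which is an assumption; the threshold `delta=0.0` and the function names are also illustrative.

```python
from collections import Counter

import numpy as np

def entropy(*cols):
    """Joint Shannon entropy (bits) of one or more discrete columns."""
    counts = np.array(list(Counter(zip(*cols)).values()), dtype=float)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def symmetric_uncertainty(a, b):
    ha, hb = entropy(a), entropy(b)
    if ha + hb == 0:
        return 0.0
    ig = ha + hb - entropy(a, b)              # information gain I(a; b)
    return 2.0 * ig / (ha + hb)

def fcbf(X, y, delta=0.0):
    """Return indices of features kept by FCBF (X must be discretized)."""
    su_c = np.array([symmetric_uncertainty(X[:, j], y) for j in range(X.shape[1])])
    ranked = [j for j in np.argsort(-su_c) if su_c[j] > delta]
    selected = []
    while ranked:
        p = ranked.pop(0)                     # most relevant remaining feature
        selected.append(p)
        # Drop q when p is at least as correlated with q as q is with the class.
        ranked = [q for q in ranked
                  if symmetric_uncertainty(X[:, p], X[:, q]) < su_c[q]]
    return selected
```

With two identical copies of an informative feature and one constant column, only one copy survives: the constant column has zero relevance, and the duplicate is redundant with the kept copy.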

3.2.1 Individual coding and population initialization

For high-dimensional datasets, random population generation is a good choice because a randomly generated initial population is more diverse and more likely to lie close to the optimal solution, so this paper generates the population randomly. Individuals are encoded in the same way as in IGA.

3.2.2 Fitness function

After individual encoding and population initialization, HAFS calculates the fitness values. Because the fitness value mainly combines the prediction loss and the proportion of selected features, the optimal solution is the one with the lowest fitness value, and this solution is saved.

3.2.3 Search process

HHO is a swarm intelligence search algorithm that imitates the cooperative hunting behavior of Harris' hawks and comprises an exploration phase and an exploitation phase. Depending on the escaping energy E of the prey, the current optimal solution, and the jump strength J, different position-update modes are chosen so that the hawks approach and capture the prey as closely as possible. Most of the structure of HHO in HAFS is the same as that of the basic HHO. However, to let HHO reduce dimensions and select key parameters at the same time, we changed how the predators move in the soft/hard besiege with progressive rapid dives stage and embedded FCBF in HHO as a decision-making step, so that the search bypasses more redundant parameters and moves closer to the optimal solution. The formula is as follows:

where Y and Z are two candidate moves, i.e., two parameter subsets, and \(F(Y)\) and \(F(Z)\) are their fitness values; i denotes an individual (a set of parameters) and k a parameter within that set. Through this mechanism, dimension reduction and key parameter selection are achieved simultaneously.

4 Experiment and discussion

First, UCI standard datasets are used to verify the validity and reliability of IGA-HAFS-SVM. Then the validity of the proposed method is verified on WAT example data.

4.1 Experiment setting and standard dataset experiment

4.1.1 Experiment setting

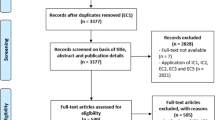

First, standard UCI datasets are used for a preliminary verification of the proposed method. The selected UCI datasets share the characteristics of the wafer yield prediction dataset: the input features are high-dimensional and the data are unevenly distributed. After screening, two UCI standard datasets were chosen to verify the ability of IGA-HAFS-SVM: the Parkinson’s Disease dataset [44] and the Dorothea dataset [45]. In both, the positive class is the majority class and the negative class is the minority class, and both meet the two characteristics of high dimension and uneven class distribution, which makes them similar to the research problem. The proposed method is compared with the mRMR filter method and the HHO and GA wrapper methods. It is implemented in Python 3.6 with Sklearn (scikit-learn).

The parameters selected by the mRMR filter method are used as the input of an SVM classifier to form the mRMR-SVM prediction model; the HHO-SVM and GA-SVM prediction models are built in the same way.

4.1.2 Standard dataset experiment

During the experiment, the AUC score is used as the evaluation index for the feature selection methods. For imbalanced datasets, accuracy cannot measure prediction results reliably: when positive samples far outnumber negative ones, accuracy is often high regardless of model quality, whereas the AUC score is largely unaffected by whether the data are balanced. Redundant features are also added to further verify the effectiveness of the methods, and the selected features are finally checked to see whether the redundant and noisy features were effectively eliminated. The test results are shown in Table 1.
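A small numerical illustration of why accuracy misleads on imbalanced data: with a hypothetical 11:1 split like the wafer dataset (110 qualified wafers labeled 0, 10 defective labeled 1), a trivial model that calls every wafer qualified and gives all wafers the same score reaches about 91.7% accuracy but an AUC of exactly 0.5. The labels and counts are illustrative, not taken from the paper's data.

```python
import numpy as np
from sklearn.metrics import accuracy_score, roc_auc_score

# Hypothetical 11:1 split: 110 qualified wafers (0) and 10 defective ones (1).
y_true = np.r_[np.zeros(110, dtype=int), np.ones(10, dtype=int)]

# A trivial model that predicts "qualified" for every wafer with equal scores.
y_pred = np.zeros_like(y_true)
scores = np.zeros(len(y_true))

acc = accuracy_score(y_true, y_pred)   # 110 / 120, about 0.917
auc = roc_auc_score(y_true, scores)    # 0.5: no ability to rank defective wafers
```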

Table 1 shows that all methods effectively eliminate redundant variables and reduce the number of inputs to the prediction model, which shortens running time. On datasets with uneven class distribution and high dimensionality, however, the proposed method has an advantage. It explicitly addresses the uneven distribution of the data, using IGA to handle both the class imbalance and the high overlap between classes, which are the key aspects of uneven distribution. The other methods are single-stage feature selection methods. In the subsequent parameter selection stage, their ability to search high-dimensional datasets containing a large number of redundant variables is therefore weaker than that of HAFS.
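IGA itself is not specified in this section. The following is therefore only a hedged sketch of the general idea behind genetic-algorithm under-sampling (in the spirit of Ha and Lee [33]) and is not the proposed IGA. Each chromosome is a boolean mask over the majority-class training samples. Fitness is the G-Mean of an SVM on a held-out validation set. The GA uses tournament selection, uniform crossover, bit-flip mutation, and elitism. All function names and hyperparameters (`pop_size`, `n_gen`, `p_mut`) are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC


def g_mean(y_true, y_pred):
    """Geometric mean of the recalls of class 1 and class 0."""
    return float(np.sqrt(recall_score(y_true, y_pred, pos_label=1) *
                         recall_score(y_true, y_pred, pos_label=0)))


def ga_undersample(X_tr, y_tr, X_val, y_val, majority=1, pop_size=20,
                   n_gen=15, p_mut=0.02, seed=0):
    """Plain GA under-sampling: evolve a keep/drop mask over majority samples."""
    rng = np.random.default_rng(seed)
    maj_idx = np.flatnonzero(y_tr == majority)
    min_idx = np.flatnonzero(y_tr != majority)

    def fitness(mask):
        if not mask.any():                      # need both classes to train an SVM
            return 0.0
        keep = np.concatenate([maj_idx[mask], min_idx])
        clf = SVC().fit(X_tr[keep], y_tr[keep])
        return g_mean(y_val, clf.predict(X_val))

    # Initial masks keep roughly as many majority samples as there are minority ones.
    keep_prob = min(1.0, min_idx.size / maj_idx.size)
    pop = rng.random((pop_size, maj_idx.size)) < keep_prob
    for _ in range(n_gen):
        fit = np.array([fitness(ind) for ind in pop])
        a, b = rng.integers(pop_size, size=(2, pop_size))
        parents = pop[np.where(fit[a] >= fit[b], a, b)]          # binary tournament
        partner = np.roll(parents, 1, axis=0)
        cross = rng.random(parents.shape) < 0.5
        children = np.where(cross, parents, partner)             # uniform crossover
        children ^= rng.random(children.shape) < p_mut           # bit-flip mutation
        children[0] = pop[np.argmax(fit)]                        # elitism
        pop = children
    fit = np.array([fitness(ind) for ind in pop])
    best = pop[np.argmax(fit)]
    return np.concatenate([maj_idx[best], min_idx]), float(fit.max())


# Illustrative run; class 1 is the majority, as in the paper's datasets.
X, y = make_classification(n_samples=240, n_features=10, weights=[0.2, 0.8], random_state=1)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.25, stratify=y, random_state=1)
keep, best_fit = ga_undersample(X_tr, y_tr, X_val, y_val, pop_size=10, n_gen=5)
print(len(keep), "of", len(y_tr), "training samples kept; validation G-Mean:", round(best_fit, 3))
```

The fitness rewards minority recall as well as majority recall. Masks that drop majority samples lying among minority samples therefore tend to score higher, which is one way an under-sampler can reduce class overlap. Whether IGA relies on this mechanism is not stated here.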

4.2 Wafer data description and preprocessing

4.2.1 Data description

IGA-HAFS-SVM is evaluated on a public dataset released by Live-line Technologies on Kaggle¹. This dataset, named “Detecting Anomalies in Wafer Manufacturing,” was collected from an Indian wafer manufacturer. The data come from a large and complex wafer manufacturing system. Because defective wafers are very rare, the classes are severely imbalanced, which makes the dataset well suited to demonstrating the validity of IGA-HAFS-SVM. The task is to predict wafer yield from the input data.

The raw dataset contains 1763 samples and 1558 wafer acceptance test (WAT) parameters recorded by sensors, including open-voltage leakage current, saturation current, and breakdown voltage, as shown in Table 2.

4.2.2 Data preprocessing

Data preprocessing is divided into three steps, namely data standardization, data denoising, and data splitting, as shown in Table 2.

Data standardization: Features measured on different scales can slow convergence or even prevent it. To avoid this, the paper applies z-score (standard deviation) normalization, transforming every feature to zero mean and unit variance.

Data denoising: Although the data contain no missing values, current fluctuations in the wafer manufacturing process produce outliers, and the data distributions are sharply peaked and long-tailed, as shown in Fig. 3, Fig. 4, and Fig. 5. Because all columns except the first three take only the values 0 or 1, we focus on the first three columns. The number of samples is limited, so these outliers cannot simply be deleted. A tail-reduction operation is used to replace them instead, as shown in Fig. 6, Fig. 7, and Fig. 8.

Data split: To verify the proposed method, the dataset is randomly split into three subsets: a training set (80%), a test set (10%), and a validation set (10%).
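The three preprocessing steps can be sketched as below. The paper does not give the tail-reduction thresholds. Clipping the three continuous columns at their 1st and 99th percentiles (winsorizing) is therefore an assumption, as are the stratified splitting and the helper names `reduce_tails` and `preprocess`. The column indices 0 to 2 follow the text's note that only the first three columns are continuous.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler


def reduce_tails(X, cols, lower_q=1.0, upper_q=99.0):
    """Winsorize: clip the given columns to their [lower_q, upper_q] percentiles."""
    X = X.copy()
    lo = np.percentile(X[:, cols], lower_q, axis=0)
    hi = np.percentile(X[:, cols], upper_q, axis=0)
    X[:, cols] = np.clip(X[:, cols], lo, hi)
    return X


def preprocess(X, y, continuous_cols=(0, 1, 2), seed=0):
    # 1) Standardization: every column to zero mean and unit variance.
    X = StandardScaler().fit_transform(X)
    # 2) Denoising: tail reduction on the continuous columns only.
    X = reduce_tails(X, cols=list(continuous_cols))
    # 3) Split: 80% train, then the remaining 20% halved into test and validation.
    X_tr, X_rest, y_tr, y_rest = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed)
    X_te, X_val, y_te, y_val = train_test_split(
        X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=seed)
    return X_tr, X_te, X_val, y_tr, y_te, y_val


# Synthetic stand-in: 3 continuous columns followed by 7 binary ones.
rng = np.random.default_rng(0)
X = np.hstack([rng.standard_normal((1000, 3)),
               rng.integers(0, 2, size=(1000, 7))]).astype(float)
X[:5, 0] = 50.0                       # a few injected outliers
y = np.array([0] * 900 + [1] * 100)
X_tr, X_te, X_val, y_tr, y_te, y_val = preprocess(X, y)
```

The steps follow the order given in the text. In a deployment setting, fitting the scaler and the percentile thresholds on the training split alone would prevent test-set statistics from leaking into training.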

4.3 Forecasting performance comparison in wafer data

To further verify the performance of IGA-HAFS-SVM on high-dimensional, unevenly distributed wafer data, we compare it with GA-SVM, HHO-SVM, PSO-SVM, SVM, and XGBoost, using the AUC score, G-Mean, and F1-measure as evaluation indicators. The parameter settings of each method are shown in Table 3 and are the settings that gave the best result for that method.
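For reference, the three indicators can be computed with scikit-learn as shown below. G-Mean is taken as the geometric mean of sensitivity and specificity, and the F1-score is computed for class 1. The small hand-made example and the helper `g_mean` are illustrative assumptions. The example also shows why the indicators complement each other. The scores rank every positive above every negative, giving an AUC of 1.0. At a 0.5 threshold, however, one of the two minority samples is misclassified, which pulls G-Mean down to about 0.707 while F1 stays near 0.941.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, f1_score, roc_auc_score


def g_mean(y_true, y_pred):
    """Geometric mean of sensitivity (recall of class 1) and specificity (recall of class 0)."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return float(np.sqrt(sensitivity * specificity))


# Hand-made example: 8 majority (class 1) and 2 minority (class 0) samples.
y_true = np.array([1, 1, 1, 1, 1, 1, 1, 1, 0, 0])
scores = np.array([0.9, 0.8, 0.8, 0.7, 0.7, 0.6, 0.6, 0.55, 0.52, 0.3])
y_pred = (scores >= 0.5).astype(int)

auc = roc_auc_score(y_true, scores)   # 1.0: every positive outranks every negative
gm = g_mean(y_true, y_pred)           # sqrt(1.0 * 0.5), about 0.707
f1 = f1_score(y_true, y_pred)         # 16/17, about 0.941 (precision 8/9, recall 1)
```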

The prediction ability of IGA-HAFS-SVM, GA-SVM, HHO-SVM, PSO-SVM, SVM, and XGBoost is then presented in tables and figures.

Table 4 and Fig. 9 show that the proposed IGA-HAFS-SVM has advantages in all four indicators. It also selects 426 fewer features than the best-performing comparison method, GA-SVM. Compared with the feature selection used by the other methods, our method embeds a filter in the wrapper, which removes more redundant parameters in each dynamic iteration.
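The idea of embedding a filter inside a wrapper can be illustrated with a deliberately simple sketch. This is not HAFS, whose details are given elsewhere in the paper. It is a hypothetical hill-climbing wrapper in which every candidate subset is first pruned by a mutual-information filter, which keeps the most relevant fraction `keep_frac`, and then scored by cross-validated SVM AUC. The names `filter_in_wrapper`, `keep_frac`, and `p_flip` are assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import mutual_info_classif
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC


def filter_in_wrapper(X, y, n_iter=30, keep_frac=0.8, p_flip=0.05, seed=0):
    """Hill-climbing wrapper whose every candidate subset is pruned by a filter first."""
    rng = np.random.default_rng(seed)
    relevance = mutual_info_classif(X, y, random_state=seed)   # filter criterion

    def filter_step(mask):
        idx = np.flatnonzero(mask)
        if idx.size <= 1:
            return mask
        n_keep = max(1, int(np.ceil(keep_frac * idx.size)))
        top = idx[np.argsort(relevance[idx])[::-1][:n_keep]]   # most relevant features
        pruned = np.zeros_like(mask)
        pruned[top] = True
        return pruned

    def wrapper_score(mask):
        if not mask.any():
            return -np.inf
        return cross_val_score(SVC(), X[:, mask], y, cv=3, scoring="roc_auc").mean()

    best = filter_step(rng.random(X.shape[1]) < 0.5)
    best_score = wrapper_score(best)
    for _ in range(n_iter):
        cand = best ^ (rng.random(X.shape[1]) < p_flip)   # wrapper move: flip a few bits
        cand = filter_step(cand)                          # embedded filter prunes it
        s = wrapper_score(cand)
        if s >= best_score:                               # accept if not worse
            best, best_score = cand, s
    return best, float(best_score)


X, y = make_classification(n_samples=200, n_features=30, n_informative=4,
                           n_redundant=6, weights=[0.8, 0.2], random_state=0)
mask, cv_auc = filter_in_wrapper(X, y, n_iter=15)
print(int(mask.sum()), "of", X.shape[1], "features kept; CV AUC:", round(cv_auc, 3))
```

The filter prunes every candidate before the wrapper scores it, so the wrapper never evaluates an unpruned subset. This is, in simplified form, the property the text credits with removing more redundant parameters per iteration.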

From the perspective of data distribution, our method addresses the uneven distribution of the data, so the classifier can form a better decision boundary and accurately identify both classes. The other methods, by contrast, do not learn the characteristics of the minority samples well, so many minority samples are misclassified as majority samples. Consider next the number of features together with the F1 score. Majority samples make up most of the data, so every model learns the characteristics of the majority class well. However, the proposed method uses the fewest key parameters, which helps to prevent overfitting. Its F1 score for identifying majority-class samples is therefore slightly better than those of the other five methods. Most of its other performance indicators are much higher than those of the other methods. This is because the data obtained from the small-batch wafer manufacturing system are severely imbalanced, and the other methods do not address this imbalance. These results suggest that model performance depends mainly on the characteristics of the data rather than on the choice of model.

Overall, the experiments indicate that the proposed prediction method is an effective tool for identifying key wafer acceptance test parameters, improving yield prediction accuracy, and shortening the wafer production cycle.

5 Conclusion

This work proposes a wafer yield prediction method for high-dimensional data with an uneven distribution. First, an improved genetic algorithm (IGA) is used to address the uneven data distribution. Then, a high-dimensional alternating feature selection (HAFS) method is designed to handle the high dimensionality of the data and the difficulty of identifying key parameters. Finally, the selected key WAT parameters are used for wafer yield prediction. Experimental comparison and analysis show that the method has advantages over the compared approaches.

The contributions of this paper are as follows:

- To address the uneven distribution of real-world WAT data, an improved genetic algorithm (IGA) is proposed. It mitigates the uneven data distribution and achieves good results on the WAT dataset.

- To address the high dimensionality of real-world WAT data and the difficulty of identifying its key parameters, a high-dimensional alternating feature selection (HAFS) algorithm is proposed, which combines wrapper and filter feature selection. It eliminates 1429 of the 1558 WAT parameters, which provides a reference for reducing wafer acceptance testing equipment and testing costs.

- The proposed method performs well on high-dimensional, unevenly distributed data, and its key parameter subset contains only 129 parameters, fewer than that of any comparison method. Moreover, its AUC score, G-Mean, and F1-score are 21.6%, 34.6%, and 0.6% higher, respectively, than those of the best-performing comparison methods.

Owing to the limited availability of datasets, among other reasons, this paper considers only binary classification. Future work could address multi-class wafer yield prediction under the same difficulties of uneven distribution and high dimensionality.

Availability of data and materials

Not applicable.

Code availability

Not applicable.

References

L. Münch, R. Uzsoy, J.W. Fowler, A survey of semiconductor supply chain models part I: semiconductor supply chains, strategic network design, and supply chain simulation. Int. J. Prod. Res. 56(13), 4524–4545 (2017)

J. Wang, J. Yang, J. Zhang et al., Big data driven cycle time parallel prediction for production planning in wafer manufacturing. Enterp. Inf. Syst. 12(6), 714–732 (2018)

J. Wang, J. Zhang, Big data analytics for forecasting cycle time in semiconductor wafer fabrication system. Int. J. Prod. Res. 54(23), 7231–7244 (2016)

K.B. Lee, S. Cheon, C.O. Kim, A convolutional neural network for fault classification and diagnosis in semiconductor manufacturing processes. IEEE Trans. Semicond. Manuf. 30(2), 135–142 (2017)

H. Xu, J. Zhang, Y. Lv et al., Hybrid feature selection for wafer acceptance test parameters in semiconductor manufacturing. IEEE Access 8, 17320–17330 (2022)

J. Wang, J. Zhang, X. Wang, A data driven cycle time prediction with feature selection in a semiconductor wafer fabrication system. IEEE Trans. Semicond. Manuf. 31(1), 173–182 (2018)

C.-F. Chien, Y.-H. Chen, M.-F. Lo, Advanced quality control (AQC) of silicon wafer specifications for yield enhancement for smart manufacturing. IEEE Trans. Semicond. Manuf. 33(4), 569–577 (2020)

J. Wang, Z. Yang, J. Zhang et al., AdaBalGAN: an improved generative adversarial network with imbalanced learning for wafer defective pattern recognition. IEEE Trans. Semicond. Manuf. 32(3), 310–319 (2019)

S.A. Shahee, U. Ananthakumar, An overlap sensitive neural network for class imbalanced data. Data Min. Knowl. Discov. 35(4), 1654–1687 (2021)

B. Mwangi, T.S. Tian, J.C. Soares, A review of feature reduction techniques in neuroimaging. Neuroinformatics 12(2), 229–244 (2014)

A. Polenghi, I. Roda, M. Macchi et al., An ontological modelling of multi-attribute criticality analysis to guide prognostics and health management program development. Auton. Intell. Syst. 2(1), 1–16 (2022)

T. Wen, Y. Fang, B. Lu, Neural network-based adaptive sliding mode control for underactuated dual overhead cranes suffering from matched and unmatched disturbances. Auton. Intell. Syst. 2(1), 1–15 (2022)

M.A. Goodale, Lessons from human vision for robotic design. Auton. Intell. Syst. 1(1), 1–10 (2021)

R. Schelasin, Using static capacity modeling and queuing theory equations to predict factory cycle time performance in semiconductor manufacturing, in Proceedings of the 2011 Winter Simulation Conference (WSC) (2011), pp. 2040–2049

X. Fang, C. Chang, L.G. Using, Bayesian network technology to predict the semiconductor manufacturing yield rate in IoT. J. Supercomput. 77(8), 9020–9045 (2021)

R. Vargas, A. Mosavi, R. Ruiz, Deep Learning: A Review. Advances in Intelligent Systems and Computing (2017)

K. Chen, P. Chang, C. Yeh, Wafer die yield prediction by heuristic methods, in The 40th International Conference on Computers & Industrial Engineering (2010), pp. 1–4

S. Dargan, M. Kumar, M.R. Ayyagari et al., A survey of deep learning and its applications: a new paradigm to machine learning. Arch. Comput. Methods Eng. 27(4), 1071–1092 (2020)

F. Adly, O. Alhussein, P. Yoo, Simplified subspaced regression network for identification of defect patterns in semiconductor wafer maps. IEEE Trans. Ind. Inform. 11(6), 1267–1276 (2015)

M.Y.S. Lim, A. Sharma, C.S. Chin et al., Prediction of wafer map categories using wafer acceptance test parameters in semiconductor manufacturing, in IFIP International Conference on Artificial Intelligence Applications and Innovations (2022), pp. 136–144

C. Jiang, W. Lin, N. Raghavan, A Gaussian mixture model clustering ensemble regressor for semiconductor manufacturing final test yield prediction. IEEE Access 9, 22253–22263 (2021)

H. Dong, N. Chen, K. Wang, Wafer yield prediction using derived spatial variables. Qual. Reliab. Eng. Int. 33(8), 2327–2342 (2017)

S. Mayabadi, H. Saadatfar, Two density-based sampling approaches for imbalanced and overlapping data. Knowl.-Based Syst. 241, 108217 (2022)

P. Branco, L. Torgo, R.P. Ribeiro, A survey of predictive modeling on imbalanced domains. ACM Comput. Surv. 49(2), 1–50 (2016)

N.V. Chawla, Data mining for imbalanced datasets: an overview, in Data Mining and Knowledge Discovery Handbook (Springer, Boston, 2009), pp. 875–886

N.V. Chawla, K.W. Bowyer, L.O. Hall et al., SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

N.U. Maulidevi, K. Surendro, SMOTE-LOF for noise identification in imbalanced data classification. J. King Saud Univ, Comput. Inf. Sci. 34(6), 3413–3423 (2021)

H. Guan, Y. Zhang, M. Xian et al., SMOTE-WENN: solving class imbalance and small sample problems by oversampling and distance scaling. Appl. Intell. 51(3), 1394–1409 (2021)

C.-F. Tsai, W.-C. Lin, Y.-H. Hu et al., Under-sampling class imbalanced datasets by combining clustering analysis and instance selection. Inf. Sci. 477, 47–54 (2019)

A. Guzmán-Ponce, R.M. Valdovinos, J.S. Sánchez et al., A new under-sampling method to face class overlap and imbalance. Appl. Sci. 10(15), 5164 (2020)

M. Ester, H.P. Kriegel, J. Sander et al., A density-based algorithm for discovering clusters in large spatial databases with noise. KDD 96(34), 226–231 (1996)

M. Koziarski, CSMOUTE: combined synthetic oversampling and undersampling technique for imbalanced data classification, in 2021 International Joint Conference on Neural Networks (IJCNN) (IEEE Press, New York, 2021), pp. 1–8

J. Ha, J.S. Lee, A new under-sampling method using genetic algorithm for imbalanced data classification, in Proceedings of the 10th International Conference on Ubiquitous Information Management and Communication (2016), pp. 1–6

J. Sun, J. Lang, H. Fujita et al., Imbalanced enterprise credit evaluation with DTE-SBD: decision tree ensemble based on SMOTE and bagging with differentiated sampling rates. Inf. Sci. 425, 76–91 (2018)

V. Ravi, N. Naveen, M. Pandey et al., Hybrid classification and regression models via particle swarm optimization auto associative neural network based nonlinear PCA. Int. J. Hybrid Intell. Syst. 10(3), 137–149 (2013)

A. Agarwal, T. El-Ghazawi, H. El-Askary et al., Efficient hierarchical-PCA dimension reduction for hyperspectral imagery, in 2007 IEEE International Symposium on Signal Processing and Information Technology (2007), pp. 353–356

D. Li, B. Yang, Y. Zhang, Dimension-reduction and reconstruction of multi-dimension spatial wind power data based on optimal RBF kernel principal component analysis, in 2020 10th International Conference on Power and Energy Systems (ICPES) (2020), pp. 326–332

W. Ke, C. Wu, Y. Wu et al., A new filter feature selection based on criteria fusion for gene microarray data. IEEE Access 6, 61065–61076 (2018)

H. Peng, F. Long, C. Ding, Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1226–1238 (2005)

L. Yu, H. Liu, Feature selection for high-dimensional data: a fast correlation-based filter solution, in Proceedings of the 20th International Conference on Machine Learning (ICML-03) (2003), pp. 856–863

O. Gokalp, E. Tasci, A. Ugur, A novel wrapper feature selection algorithm based on iterated greedy metaheuristic for sentiment classification. Expert Syst. Appl. 146, 113176 (2020)

A.A. Heidari, S. Mirjalili, H. Faris et al., Harris hawks optimization: algorithm and applications. Future Gener. Comput. Syst. 97, 849–872 (2019)

J. Zhang, Y. Xiong, S. Min, A new hybrid filter/wrapper algorithm for feature selection in classification. Anal. Chim. Acta 1080, 43–54 (2019)

C.O. Sakar, G. Serbes, A. Gunduz et al., A comparative analysis of speech signal processing algorithms for Parkinson’s disease classification and the use of the tunable Q-factor wavelet transform. Appl. Soft Comput. 74, 255–263 (2019)

I. Guyon, S. Gunn, A. Ben-Hur et al., Result analysis of the NIPS 2003 feature selection challenge. Advances in Neural Information Processing Systems, 17 (2004)

Acknowledgements

The authors thank the teacher at the Artificial Intelligence Research Institute of Donghua University for his guidance. We are very grateful to Dr. Xu Chuqiao for his suggestions on our manuscript.

Funding

Key R&D Program of Shandong Province, China: key technologies and applications of intelligent green manufacturing of bacteriostatic textiles (2021CXGC011004); China Ministry of Industry and Information Technology project: textile intelligent manufacturing standard (2021-0173-2-1).

Author information


Contributions

QX, CX, and JW participated in the method design and manuscript writing, and QX implemented the experiments. CX and JW helped revise the manuscript. JW is QX's master's supervisor at Donghua University, which provided the opportunity to complete this research. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, Q., Xu, C. & Wang, J. Forecasting the yield of wafer by using improved genetic algorithm, high dimensional alternating feature selection and SVM with uneven distribution and high-dimensional data. Auton. Intell. Syst. 2, 24 (2022). https://doi.org/10.1007/s43684-022-00041-3


DOI: https://doi.org/10.1007/s43684-022-00041-3