Abstract

With the ongoing digitization of the manufacturing industry and the ability to bring together data from manufacturing processes and quality measurements, there is enormous potential to use machine learning and deep learning techniques for quality assurance. In this context, predictive quality enables manufacturing companies to make data-driven estimations about the product quality based on process data. In the current state of research, numerous approaches to predictive quality exist in a wide variety of use cases and domains. Their applications range from quality predictions during production using sensor data to automated quality inspection in the field based on measurement data. However, there is currently a lack of an overall view of where predictive quality research stands as a whole, what approaches are currently being investigated, and what challenges currently exist. This paper addresses these issues by conducting a comprehensive and systematic review of scientific publications between 2012 and 2021 dealing with predictive quality in manufacturing. The publications are categorized according to the manufacturing processes they address as well as the data bases and machine learning models they use. In this process, key insights into the scope of this field are collected along with gaps and similarities in the solution approaches. Finally, open challenges for predictive quality are derived from the results and an outlook on future research directions to solve them is provided.

Introduction

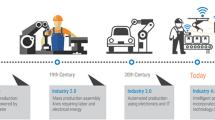

In the present era of Industry 4.0 and the digitization of the manufacturing industry, new technological possibilities are emerging that help strengthen companies’ competitiveness. In particular, the combination of new communication technologies with state-of-the-art methods from machine learning (ML) and deep learning (DL) enables promising applications for data-driven, smarter manufacturing (Shang & You, 2019; Tao et al., 2018). One area that can benefit strongly from these developments is quality assurance, which comprises activities across the product lifecycle that ensure produced products meet their quality requirements (Hehenberger, 2020; Pfeifer & Schmitt, 2021). ML and DL methods can support these activities by enabling data-driven, automated quality analyses. Their application in this field is referred to as predictive quality (Nalbach et al., 2018; Schmitt et al., 2020b).

Predictive quality solutions are built upon data from the manufacturing process. By extracting recurring patterns from the data and relating them to quality measurements, predictive quality enables the data-driven estimation of product quality based on process data. The estimations serve as a decision-making basis for quality-enhancing measures, such as adjusting the process parameters to avoid rejects (Schmitt et al., 2020b). The common approach to predictive quality includes four main steps: formulating the manufacturing process and target quality, selecting and collecting process and quality data, training an ML/DL model, and using the model's estimations as a basis for decisions (schematically illustrated in Fig. 1). In this context, predictive quality mainly comprises methods of supervised ML.
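The four steps can be illustrated with a deliberately small sketch. Everything in it is a hypothetical assumption rather than a method from the cited works: a single process parameter (`feed_rate`), a numerical quality target (`roughness`), synthetic data, an ordinary least-squares line as the model, and a fixed tolerance as the decision rule.

```python
# Hypothetical sketch of the four predictive quality steps (synthetic data).

# Step 1: formulate process and target quality (assumed: a cutting process,
# input = feed rate, target = surface roughness, both in arbitrary units).
# Step 2: collected process and quality data (synthetic, for illustration only).
feed_rate = [1.0, 1.5, 2.0, 2.5, 3.0]
roughness = [10.0, 12.0, 14.0, 16.0, 18.0]


def fit_linear(xs, ys):
    """Step 3: fit y = a + b * x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(model, x):
    intercept, slope = model
    return intercept + slope * x


def decide(model, planned_feed_rate, tolerance):
    """Step 4: use the estimate as a decision basis before producing the part."""
    if predict(model, planned_feed_rate) > tolerance:
        return "adjust parameters"
    return "ok"


model = fit_linear(feed_rate, roughness)
print(model)                     # (6.0, 4.0)
print(decide(model, 3.5, 19.0))  # adjust parameters
print(decide(model, 1.2, 19.0))  # ok
```

In practice, steps 2 and 3 involve many more variables and non-linear models; the linear fit only keeps the structure of the pipeline visible.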

Current manufacturing research offers many examples that demonstrate the feasibility of ML- or DL-based predictive quality, ranging from inline fault predictions (Mayr et al., 2019) to automated quality inspections (Schmitt et al., 2020a). The addressed manufacturing processes and quality criteria are manifold, such as the prediction of part cracks in deep drawing (Meyes et al., 2019), the estimation of roughness in laser cutting (Tercan et al., 2017; Zhang & Lei, 2017), or the detection of porosity defects in additive manufacturing (Zhang et al., 2019a). Although these use cases differ, their solution approaches are similar in terms of the data and methods used. However, the use cases are often considered in isolation, which makes it difficult to compare the proposed approaches. Hence, it is unclear where predictive quality research currently stands as a whole, which methods are being investigated, what their limitations are, and in which directions research should go. In this paper, we address these issues by conducting a systematic review of the publications in the field of predictive quality. We consider the timing appropriate for such a review for two reasons. On the one hand, a sufficiently large number of papers has been published from which these insights can be drawn. On the other hand, no paper currently covers this topic in its entirety. Although studies with similar investigations exist (see “Related survey paper” section), they either cover a broader scope, e.g. applications of ML in the production context in general (Fahle et al., 2020; Sharp et al., 2018), or they are no longer up to date because they were published several years ago (Köksal et al., 2011; Rostami et al., 2015).

For these reasons, we define the primary goal of this review as providing a comprehensive overview of scientific publications from 2012 to 2021 that address predictive quality approaches in manufacturing. Our perspective on this field focuses on the common concepts depicted in Fig. 1. After collecting the relevant publications into a corpus, we extract information about their use cases, the manufacturing processes and quality criteria they address, and the data bases and ML methods they use. The goal is to categorize the publications along these concepts and to answer the following three driving questions:

- Q1: What are the addressed manufacturing processes and quality criteria? Our goal is, on the one hand, to discover the scope of the field and the possible applications of predictive quality and, on the other hand, to identify similarities and gaps in the domains.

- Q2: What are the characteristics of the data used for model training? Predictive quality is based on process data. We aim to find out the common data sources used in the publications, the variables selected for ML model training, and the modality of the data.

- Q3: Which supervised machine learning models are commonly trained? We aim to discover which supervised learning problems are addressed, which ML and DL models are trained for the quality estimations, and whether they are compared with each other in the publications.

From these questions, we derive key insights and open challenges for predictive quality and provide an outlook on future research directions that we believe will increase its prevalence in research and industry.

The paper is structured as follows. In “Predictive quality” section, we introduce predictive quality by first defining the term and then describing the underlying machine learning models and tasks. In “Related survey paper” section, we provide an overview of related survey papers that deal with similar topics. We then present the methodology underlying our study in “Study methodology” section. Here, we define the categories by which we evaluate the publications and formulate the search queries and criteria for selecting them. “Results” section presents the results of our study with regard to the stated questions. On the basis of these results, “Key insights and challenges” section provides key findings and identified gaps in predictive quality research. In “Future research directions” section, we outline future research directions to address them. “Summary” section summarizes this review.

Predictive quality

Research and practice use a variety of terms to describe data-driven methods for product and process quality in production. Examples are data analytics, predictive analytics, machine learning for quality prediction (Mayr et al., 2019) or predictive model-based quality inspection (Schmitt et al., 2020a). Predictive quality is another term that covers this broad field of research. The definitions by Schmitt et al. (2020b) and Nalbach et al. (2018) highlight two key aspects of predictive quality: product-related quality and its data-driven prediction. Schmitt et al. (2020b) define predictive quality as “enabling the user to make a data-driven prediction of product- and process-related quality” with the goal of “acting prescriptively on the basis of predictive analyses”. Nalbach et al. (2018) take a similar view: their definition of predictive quality includes methods that use data to “identify statistical patterns to foresee future developments concerning the quality of a product”. Although the term prediction is broad, it still restricts the range of use cases of data-driven methods. For example, it does not cover ML-based quality inspection, where the goal is to detect faults that have already occurred in the process rather than to provide future-oriented quality predictions. Moreover, among data-driven methods, machine learning and deep learning in particular have been the central focus of research in recent years. We therefore concentrate on these two fields.

Relying on these definitions, we define the term predictive quality as the basis for our literature search and specify the terminologies as well as their scope in the context of this paper:

Predictive quality comprises the use of machine learning and deep learning methods in production to estimate product-related quality based on process and product data with the goal of deriving quality-enhancing insights.

The following remarks provide further concretization of the definition:

- The term estimation includes both prediction and classification of quality.

- The product-related quality can be a fixed quality parameter as well as known product faults.

- By process and product data, we mean product characteristics, process parameters, process states, and planning information.

According to our definition, predictive quality is not limited to the production phase of a product but can also be applied in the product or process planning phase, for example in the design of a production process using test trials or in a feasibility analysis based on design of experiments (DoE) using process simulations. Note also that our definition does not cover anomalies and anomaly detection. Anomalies in manufacturing are events that differ from normal behavior and as such are not initially associated with a known defect or quality degradation (Lopez et al., 2017). Anomaly detection therefore involves different methods from those considered in this paper. To limit the scope of our study, we exclude publications that deal with anomalies and anomaly detection.

Since predictive quality involves the detection or prediction of quality, it mainly comprises methods of supervised learning. Supervised learning aims to estimate a numerical or categorical target variable from selected input variables (regression and classification, respectively). Methods from both machine learning and deep learning can be used for supervised learning:

- Machine learning methods: regression analysis, which captures linear and quadratic relationships; models that can handle complex, non-linear estimations, such as the support vector machine (SVM) and the feed-forward artificial neural network (ANN), also called multilayer perceptron (MLP); and interpretable graphical models such as decision trees. In addition, a variety of ensemble methods (e.g. random forest) exist that combine several individual models.

- Deep learning models, which are based on deep ANNs and have marked major milestones in AI research in recent years. These include, for example, convolutional neural networks (CNNs), which are established in computer vision and image recognition (Redmon et al., 2016; Szegedy et al., 2015), as well as sequence models such as the recurrent long short-term memory (LSTM) network (Hochreiter & Schmidhuber, 1997) and transformer networks, which represent the state of the art in areas such as speech recognition and machine translation (Vaswani et al., 2017; Xiong et al., 2016).
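To make the classification case concrete, the sketch below shows the simplest interpretable tree model, a depth-one decision tree (decision stump), that labels parts as OK or NOK from one process variable. The variable name, values and labels are hypothetical; real predictive quality models typically use many inputs and deeper trees, ensembles or neural networks.

```python
# Hypothetical sketch: a depth-one decision tree (decision stump) for OK/NOK
# classification from a single process variable. Data are synthetic.


def fit_stump(xs, labels):
    """Return (threshold, label_above) with the fewest training errors.

    labels: 0 = OK, 1 = NOK. Samples with x > threshold get label_above,
    all others get the opposite label.
    """
    values = sorted(set(xs))
    if len(values) < 2:
        raise ValueError("need at least two distinct input values")
    best = None
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2  # midpoint between neighbouring values
        for label_above in (0, 1):
            errors = sum(
                1
                for x, y in zip(xs, labels)
                if (label_above if x > threshold else 1 - label_above) != y
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, label_above)
    return best[1], best[2]


def classify(stump, x):
    threshold, label_above = stump
    return label_above if x > threshold else 1 - label_above


temperature = [200, 210, 220, 230, 240, 250]  # assumed process variable
nok = [0, 0, 0, 1, 1, 1]                      # assumed quality labels
stump = fit_stump(temperature, nok)
print(stump)                 # (225.0, 1)
print(classify(stump, 228))  # 1, i.e. NOK
```

Replacing the binary labels with a numerical quality value and the error count with a squared error would turn the same split search into a regression tree.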

The learning methods used for predictive quality are diverse and often depend on the purpose of the application. Following the discussions by Köksal et al. (2011) and Rostami et al. (2015) on the purposes of data-driven quality assurance, we list three tasks of machine and deep learning for predictive quality:

- Quality description: The identification, evaluation and interpretation of relationships between process variables and product quality. The primary goal is to gain insights into interrelationships in the process.

- Quality prediction: The model-driven estimation of a numerical quality variable on the basis of process variables. The goal here is to predict product quality, either for decision support or for automation.

- Quality classification: Analogous to quality prediction, this involves the model-driven estimation of categorical (binary or nominal) quality variables. An example is the prediction of certain product defect types.
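Quality description can start with something as simple as ranking process variables by their correlation with a quality variable. The sketch below uses Pearson correlation on synthetic values; the variable names are borrowed from the laser-cutting examples in this paper but the numbers are invented. Pearson correlation only captures linear relationships, so it is a first screening step, not a substitute for model-based analysis.

```python
import math

# Hypothetical sketch of quality description: rank process variables by the
# strength of their linear (Pearson) correlation with a quality variable.


def pearson(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


process_data = {  # synthetic values
    "laser_power": [1.0, 2.0, 3.0, 4.0],
    "gas_pressure": [5.0, 3.0, 4.0, 2.0],
}
quality = [2.0, 4.0, 6.0, 8.0]

correlations = {name: pearson(xs, quality) for name, xs in process_data.items()}
# Sort by absolute correlation: strong negative relationships count as well.
ranking = sorted(correlations, key=lambda name: abs(correlations[name]), reverse=True)
print(ranking)  # ['laser_power', 'gas_pressure']
```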

In this paper, we study publications that primarily address these three tasks, with a particular focus on quality prediction and classification. The model estimations can be used in diverse ways, ranging from pure knowledge gain for the user to automated feedback into the system. Generally, they initiate a process improvement that leads to the fulfillment or improvement of the specified product quality. Possible improvements include reducing the reject rate through early intervention in the production process, stabilizing the process for production within tighter tolerances (Schmitt et al., 2020a), or optimizing process parameters (Weichert et al., 2019).

Related survey paper

A review similar to ours is provided by Köksal et al. (2011). The authors conducted an extensive literature review of data mining applications for quality improvement tasks in manufacturing and categorized them according to the predictive quality tasks stated above. Rostami et al. (2015) followed a similar approach with a focus on applications of SVMs. Both studies date back several years and thus do not cover recent advances in this field. More recently, Weichert et al. (2019) reviewed machine learning applications for production process optimization with regard to product- or process-specific metrics. Their study shows that optimization approaches are mostly based on root-cause analysis and fault diagnosis in production plants or on the combination of ML methods with optimization methods. Although the study overlaps with our work, its authors mainly address approaches to process optimization. As described above, our study focuses on approaches for quality estimation and evaluates them based on the data and methods used.

In the course of our literature search, we also identified survey papers that conduct similar investigations on machine learning applications but with a different scope. For example, extensive studies already exist on the use of artificial intelligence and machine learning techniques in the production and manufacturing context (Fahle et al., 2020; Mayr et al., 2019; Shang & You, 2019; Sharp et al., 2018). Shang and You (2019) provided an overview of recent advances in data analytics for different application areas such as process monitoring and optimization, and discussed the works in terms of usability and interpretability for control tasks. Fahle et al. (2020) and Mayr et al. (2019) studied machine learning applications in different task scenarios such as process planning and control. Sharp et al. (2018) focused on cross-domain applications in the product lifecycle. There are also surveys on related research fields such as ML-based predictive maintenance (Dalzochio et al., 2020; Zonta et al., 2020), condition monitoring (Serin et al., 2020b) and machine fault diagnosis (Ademujimi et al., 2017). Our paper differs from these papers in that it reviews approaches that primarily address the quality of the manufactured products.

Study methodology

Our review is based on the guiding questions defined in “Introduction” section. First, we translated the questions into several categories which we used to categorize and summarize the publications. The categories are listed in Table 1.

Next, we performed a literature search in the Web of Science and ScienceDirect databases. As discussed in “Predictive quality” section, publications use different terms for predictive quality, and the same holds for the very broad application domain of manufacturing. To cover this broad scope, we used different terms in the search queries and divided them into three categories: the predictive quality terminology, the machine learning field, and the manufacturing domain. Table 2 lists all defined search terms. We formulated search queries to find publications that contain at least one term from each of the three categories. In addition, we filtered the results by publication year from 2012 to 2021 (the search was performed on June 29, 2021). This literature search yielded 1,261 potentially relevant publications for our review (see Fig. 2).
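The query logic (OR within a category, AND across the three categories, plus a year filter) can be sketched as follows. The terms are illustrative placeholders, not the full list of Table 2, and the generated string only mirrors the Boolean structure; the exact syntax accepted by Web of Science and ScienceDirect differs and is not reproduced here.

```python
# Hypothetical sketch of the query logic: a publication qualifies if it
# contains at least one term from EACH category and lies in 2012-2021.
# Terms are illustrative placeholders (lowercase), not the list of Table 2.

CATEGORIES = {
    "predictive quality": ["predictive quality", "quality prediction"],
    "machine learning": ["machine learning", "deep learning"],
    "manufacturing": ["manufacturing", "production"],
}


def build_query(categories):
    """OR the terms within a category, AND the categories together."""
    groups = []
    for terms in categories.values():
        groups.append("(" + " OR ".join(f'"{t}"' for t in terms) + ")")
    return " AND ".join(groups)


def matches(text, year, categories, first_year=2012, last_year=2021):
    """Apply the same logic to a title/abstract string (naive substring match)."""
    text = text.lower()
    in_range = first_year <= year <= last_year
    return in_range and all(
        any(term in text for term in terms) for terms in categories.values()
    )


print(build_query(CATEGORIES))
```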

To identify only publications that fit the scope of the paper, we first screened the publications based on their title and abstract. During this screening, many publications were discarded because they belonged to the fields of predictive maintenance, fault diagnosis, remaining useful lifetime prediction, software defect prediction, water quality prediction, process engineering or civil engineering. This pre-selection left 144 publications.

We then read through the remaining publications in detail and categorized them according to the defined categories in Table 1. During this process, we excluded publications from our consideration that met the following exclusion criteria:

- Publications that do not contain information about the addressed manufacturing process or the data basis

- Survey papers and literature studies

- Publications that do not perform any development, implementation or evaluation of methods

- Publications that are not accessible to us
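The full-text screening step, which reduced the 144 pre-selected publications to the final corpus, amounts to applying the exclusion criteria above as predicates and keeping a publication only if none of them applies. In the sketch below, the `Publication` record and its fields are hypothetical; in the review itself, this judgment was made by reading each paper.

```python
from dataclasses import dataclass

# Hypothetical sketch of the full-text screening step: each exclusion
# criterion is a predicate, and a publication is kept only if none applies.
# The record fields are assumptions made for illustration.


@dataclass
class Publication:
    title: str
    has_process_info: bool   # addressed manufacturing process is described
    has_data_info: bool      # data basis is described
    is_survey: bool          # survey paper or literature study
    develops_methods: bool   # develops, implements or evaluates methods
    accessible: bool         # full text accessible to the reviewers


EXCLUSION_CRITERIA = [
    lambda p: not (p.has_process_info and p.has_data_info),
    lambda p: p.is_survey,
    lambda p: not p.develops_methods,
    lambda p: not p.accessible,
]


def screen(publications):
    return [p for p in publications if not any(rule(p) for rule in EXCLUSION_CRITERIA)]


candidates = [
    Publication("A", True, True, False, True, True),   # kept
    Publication("B", True, False, False, True, True),  # no data basis
    Publication("C", True, True, True, False, True),   # survey
    Publication("D", True, True, False, True, False),  # not accessible
]
print([p.title for p in screen(candidates)])  # ['A']
```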

In the end, 81 publications were selected and considered in our study. Table 10 in Appendix A lists all of them, sorted by publication year. The majority (\(69\%\)) were published in journals and \(31\%\) at scientific conferences. Figure 3 shows the number of publications per year and clearly illustrates that this number has been increasing steadily in recent years. We expect this trend to continue through the remainder of 2021 and into 2022.

Results

In this section, we present the results of our study along the guiding questions formulated in “Introduction” section. We begin by categorizing the publications according to the manufacturing processes and quality criteria they address (“Manufacturing process types and quality criteria” section). We then look at the data bases used for quality estimation (“Data bases and characteristics” section) and at the learning models that are trained and investigated (“Machine learning methods” section).

Manufacturing process types and quality criteria

Predictive quality approaches are being investigated in a wide variety of manufacturing processes. We therefore categorize the processes based on DIN 8580:2003-09 (2003). The standard divides manufacturing processes into six main groups: primary shaping, forming, cutting, joining, coating, and changing of material properties. Since some publications deal with additive manufacturing, assembly processes, or multi-stage processes, we add these three categories to our review. The process categories are represented very unevenly in predictive quality research. Figure 4 shows the number of publications per category. While cutting comprises the largest group with 26 publications, no publication primarily addresses processes for changing material properties. In the following, we examine the categories in detail. For each category, we also analyze which quality criteria the publications use as estimated target variables.

Cutting Cutting includes a variety of manufacturing processes in which the shape of a usually metallic workpiece is changed by separating material from it. Table 3 lists the addressed cutting processes and quality criteria. Most research aims to determine quality characteristics that reflect the shape of the finished product, with surface roughness accounting for the overwhelming majority. In turning applications, for example, some approaches used ML methods such as multivariate regression or ANNs to estimate the roughness based on gathered sensor data (Du et al., 2021; Elangovan et al., 2015; Moreira et al., 2019) and/or machine parameters (Acayaba & de Escalona, 2015). Tušar et al. (2017) developed an automated quality control of a turning and soldering process that predicts both the roughness and several soldering defects from recorded camera images. Vrabel et al. (2016) also proposed inline process monitoring of surface roughness. Other applications of roughness prediction are found in milling (Hossain & Ahmad, 2014; Serin et al., 2020a), honing (Gejji et al., 2020; Klein et al., 2020), diamond wire saw cutting (Kayabasi et al., 2017), and laser cutting (Tercan et al., 2016, 2017; Zhang & Lei, 2017). An important quality criterion in drilling processes is the hole diameter, which can also be predicted from sensor data (Neto et al., 2013; Schorr et al., 2020a, b). Beyond that, Nguyen et al. (2020) predicted the waviness of the kerf in laser cutting by training an MLP on process parameters (e.g. gas pressure and laser power). Furthermore, ML has been shown to be capable of predicting the material removal rate in cylindrical grinding of hardened steel (Varma et al., 2017) and in chemical mechanical polishing (Yu et al., 2019).

Joining Joining processes comprise the second largest field of addressed manufacturing processes (14 publications). Table 3 shows that mainly welding applications were investigated. In laser welding, machine learning models (commonly ANNs) were trained on welding parameters (e.g. laser power, welding speed) or sensor data (e.g. light intensity) to predict quality values such as the tensile strength (Yu et al., 2016), weld bead dimensions (Ai et al., 2016; Lei et al., 2019), and residual stress (Dhas & Kumanan, 2014), or to classify quality types captured in camera images (Yu et al., 2020). Similar approaches were pursued in spot welding (Hamidinejad et al., 2012; Martín et al., 2016) and ultrasonic welding (Li et al., 2020b; Natesh et al., 2019). Li et al. (2020a) and Gyasi et al. (2019) presented ANN-based inline quality control systems for welding processes. Goldman et al. (2021) conducted an interpretability analysis of CNNs trained on welding sensor data, whereas Wang et al. (2021) trained a CNN on line camera images for quality estimation. Beyond welding, Dimitriou et al. (2020) estimated the glue volume in a gluing process based on 3D laser topology scans.

Primary shaping Primary shaping involves processes in which a body with a defined shape is produced from shapeless material. Ten publications deal with this field (see Table 4). Those in the field of casting proposed approaches for detecting casting defects on the product, for example by training CNNs on X-ray images (Ferguson et al., 2018) or MLPs on sensor data (Kim et al., 2018; Lee et al., 2018). In injection molding, machine parameter data (e.g. temperature, packing pressure) was used to predict quality values such as the product dimensions (Ke & Huang, 2020) or product weight (Ge et al., 2012). Alvarado-Iniesta et al. (2012) used a recurrent neural network to estimate warpage for new parameter combinations. Garcia et al. (2019) predicted future product geometries of plastic tubes in a plastics extrusion process. Furthermore, two works lie in the field of spinning, where the goals were to predict the yarn quality in the form of the count-strength-product (Nurwaha & Wang, 2012) or the leveling action point (Abd-Ellatif, 2013) with MLPs.

Forming Forming involves manufacturing processes in which raw parts are transformed into a different shape without adding or removing material. Among the 10 publications in this field (see Table 4), those addressing metal rolling processes differ noticeably from the works mentioned so far, as most of them aim at inline quality estimation in the rolling process. For example, Yun et al. (2020), Li et al. (2018) and Liu et al. (2021) proposed CNN-based quality inspection systems that detect and classify surface defects in line camera images. Ståhl et al. (2019) used inline geometry measurements to train LSTM networks, and Lieber et al. (2013) made inline NOK quality predictions based on ultrasonic measurements. In sheet metal forming, Meyes et al. (2019) investigated LSTMs on sensor data for the inline prediction of part defects, while Essien and Giannetti (2020) trained LSTMs to estimate the machine speed. In contrast, Dib et al. (2020) used simulated experiments for ML-based part defect estimation. Other approaches were investigated in simulations of impression-die forging (Ciancio et al., 2015) and textile draping (Zimmerling et al., 2020).

Additive manufacturing Eight publications deal with predictive quality in additive manufacturing processes (see Table 4). Since additive manufacturing enables rapid prototyping, two of them are located in the product design phase. Here, process simulations were used to train ANNs for the fast prediction of the inherent strain (Li & Anand, 2020) or geometric deviations (Zhu et al., 2020) of the product. ML can also be used in the realization phase, for example to make quality predictions based on optical measurements (Gaikwad et al., 2020; Bartlett et al., 2020) or machine sensors (e.g. IR, vibration) (Li et al., 2019; Zhang et al., 2018, 2019b). Zhang et al. (2019a) demonstrated inline quality monitoring within the process.

Assembly The five publications dealing with assembly (see Table 5) are mainly concerned with ML-based classification of successful and unsuccessful assembly tasks. Examples are the detection of functioning products in manual assembly (Wagner et al., 2020) and of correct positioning in SMT assembly (Schmitt et al., 2020a) using virtual quality inspection systems. The assembled products and the data used can differ considerably. While Sarivan et al. (2020) used acoustic signals to predict the quality of wire plug connections, Martinez et al. (2020) used line camera images to detect correctly fastened screws. Lastly, Doltsinis et al. (2020) detected successful operations on the basis of robotic force signatures and machine sensors.

Coating Coating (4 publications, Table 5) comprises manufacturing processes that apply an adhesive layer of shapeless material to the surface of a workpiece. In dispensing, for example, Oh et al. (2019) proposed an SVM-based defect detection method for real-time visual quality inspection. Hsu and Liu (2021) trained CNNs on machine sensor data for OK/NOK classification of electric wafer quality. In contrast, some approaches are trained only on process parameterizations, as shown for lacquering (Thomas et al., 2018) and car body painting (Kebisek et al., 2020).

Multi-stage Some publications are not concerned with a single manufacturing process but with multi-stage processes comprising several process types (4 publications, see Table 5). From a machine learning perspective, the challenge here is handling the increased complexity and number of data sources. For example, Liu et al. (2020b) investigated DL methods (e.g. LSTMs) for quality prediction in a larger production line based on multimodal sensor data captured at different production stages. Similar work was conducted in metal processing (Papananias et al., 2019) and multi-stage battery-cell manufacturing (Turetskyy et al., 2021), where the authors combined model training with extensive feature selection to find suitable data inputs.
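As a consistency check, the per-category counts stated in this section add up exactly to the 81 selected publications, consistent with each publication being assigned to a single process category. The short computation below uses only the numbers given in the text and expresses them as shares of the corpus.

```python
# Publication counts per process category as reported in this section.
PUBLICATIONS_PER_CATEGORY = {
    "cutting": 26,
    "joining": 14,
    "primary shaping": 10,
    "forming": 10,
    "additive manufacturing": 8,
    "assembly": 5,
    "coating": 4,
    "multi-stage": 4,
    "changing material properties": 0,
}

total = sum(PUBLICATIONS_PER_CATEGORY.values())
# Share of the corpus in percent, rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in PUBLICATIONS_PER_CATEGORY.items()}

print(total)              # 81
print(shares["cutting"])  # 32.1
print(shares["joining"])  # 17.3
```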

Data bases and characteristics

Data plays a central role in the context of predictive quality. Therefore, it is important to address the question of which data is used in the publications for training and evaluation of the predictive quality models. In the following, we aim to answer the second guiding question by categorizing the publications according to the source of the data, the amount of data used, the parameters used as input variables for the models, and the modality of the data.

Data set sources and amount

We identified three sources from which the publications obtain their data for model training: real data from a manufacturing process, virtual data from simulations, and freely available data sets generated for benchmarks or competitions. Real data is gathered from the physical manufacturing process in which the corresponding product is produced on a machine or production line, i.e. during the manufacturing of a product. Depending on the use case and the manufacturing process, different measurement techniques and sensors are used to gather real data. In contrast, virtual data is created in simulation runs of the manufacturing process, generally before the product is physically manufactured. For real manufacturing data, we further distinguish between two types of publications: those that take their data from running production, and those that generate their data experimentally using predefined experimental designs. Table 6 lists all publications according to their data sources. While few publications primarily use simulation data (\(10\%\)) or benchmark data (\(6\%\)), the majority use real manufacturing data (\(65\%\) with experimental data and \(14\%\) with data from running production). In addition, there are four publications (Abd-Ellatif, 2013; Ge et al., 2012; Nurwaha & Wang, 2012; Tušar et al., 2017) whose data source is unclear and which we therefore cannot assign to one of the categories. In the following, we briefly discuss the data sources and examine the sizes of the data sets used for model training.
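Assuming each publication falls into exactly one data-source category, the reported percentages can be checked against the counts given in this and the following paragraphs (eight simulation, five benchmark and four unclear publications out of 81). The remainder then corresponds to the real-data publications, whose share of about 79% matches the reported 65% + 14%.

```python
# Counts stated in the text; the single-category assumption is ours.
TOTAL = 81
counts = {"simulation": 8, "benchmark": 5, "unclear": 4}

# Shares in whole percent, as reported in the text.
shares = {name: round(100 * n / TOTAL) for name, n in counts.items()}
real_data = TOTAL - sum(counts.values())

print(shares)                                     # {'simulation': 10, 'benchmark': 6, 'unclear': 5}
print(real_data, round(100 * real_data / TOTAL))  # 64 79
```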

Simulation Eight publications use simulations in which the respective manufacturing process is simulated and the targeted quality variables are calculated. The generated data sets serve as the training basis for the machine learning models. The typical objective is either to demonstrate the feasibility of ML-driven predictive quality using simulation data (Ciancio et al., 2015; Zhu et al., 2020) or to derive fast ML models from simulations in order to save cost and time in process design (Dib et al., 2020; Tercan et al., 2016, 2017). Most publications perform a set of simulated experiments for data generation, varying process parameters (Alvarado-Iniesta et al., 2012; Ciancio et al., 2015; Dib et al., 2020; Tercan et al., 2016, 2017) and/or product design parameters (Li & Anand, 2020; Zhu et al., 2020; Zimmerling et al., 2020). On average, the publications use 9,864 simulated experiments, where the result of each experiment serves as a data sample for model training. The number of data samples varies widely across the publications: while Ciancio et al. (2015) used only 30 simulated data samples for training and testing, Tercan et al. (2017) generated over 22,000. Zhu et al. (2020) additionally applied data augmentation to increase the data amount from originally 102 samples to 1,980.
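The simulation-based workflow (vary parameters, run the simulation, use each run as a training sample, then answer new queries with a fast model) can be sketched as below. The `simulate` function is a placeholder with a made-up formula, not a physical process model, and the one-nearest-neighbour lookup is a deliberately simple stand-in for the ML models (e.g. ANNs) trained in the cited works.

```python
import itertools
import math

# Hypothetical sketch: generate a training set by running a (stand-in)
# process simulation over a grid of process parameters, then answer new
# queries with a fast surrogate instead of re-running the simulation.


def simulate(power, speed):
    """Placeholder for an expensive process simulation (made-up formula)."""
    return power / speed


powers = [1.0, 2.0, 3.0]
speeds = [1.0, 2.0]
# Each simulated experiment yields one (parameters, quality) data sample.
dataset = [((p, s), simulate(p, s)) for p, s in itertools.product(powers, speeds)]


def surrogate(params, data=dataset):
    """1-nearest-neighbour surrogate: quality of the closest simulated run."""
    _, quality = min(data, key=lambda sample: math.dist(sample[0], params))
    return quality


print(len(dataset))           # 6
print(surrogate((2.9, 1.1)))  # 3.0
```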

Benchmark We identified five publications that use freely available data sets as a training and evaluation basis for predictive quality. Three of them address image-based defect classification: Ferguson et al. (2018) used the GRIMA X-ray casting data (Mery et al., 2015), Liu et al. (2021) used the NEU-DET data set (He et al., 2020) for surface defects in hot rolling, and Jun et al. (2021) used the Xuelang manufacturing AI challenge data set (Tianchi, 2021) for fabric defect classification. Besides that, Liu et al. (2020b) used the large-scale Kaggle Bosch Production Line Performance data set, and Yu et al. (2019) used the 2016 PHM Data Challenge data set for material removal rate prediction (PHM Society, 2020). On average, the data sets contain 5,722 samples or images, with the NEU-DET data set being the smallest with 1,800 images and the Kaggle data set being the largest with over 16,000 samples. Again, the works that use image sets (Ferguson et al., 2018; Jun et al., 2021; Liu et al., 2021) applied data augmentation to the images to increase the training data size.

Real data (experimental) Most research on predictive quality is conducted on real manufacturing data, meaning that the data basis for model training is retrieved from a physical process in which a certain product is produced on a machine or production line. The majority of these publications (\(83\%\)) conduct predefined experiments on the process to generate the training data. In these experiments, a selected set of process parameters is varied under fixed boundary conditions, mostly for fixed workpieces. In some cases, well-known design of experiments methods are used, such as full factorial (Du et al., 2021; Elangovan et al., 2015; Gaikwad et al., 2020; Hamidinejad et al., 2012; Ke & Huang, 2020; Nguyen et al., 2020; Varma et al., 2017; Zhang et al., 2018, 2019b), half factorial (Gejji et al., 2020), fractional Box-Behnken (Hossain & Ahmad, 2014), central composite (Hamidinejad et al., 2012; Serin et al., 2020a), or Taguchi (Ai et al., 2016; Ciancio et al., 2015; Moreira et al., 2019) designs. The number of varied parameters (where reported) is two (Bustillo et al., 2018; Gaikwad et al., 2020; Ke & Huang, 2020; Moreira et al., 2019; Vrabel et al., 2016), three (Du et al., 2021; Kayabasi et al., 2017; Mulrennan et al., 2018; Li et al., 2020a, 2019; McDonnell et al., 2021; Natesh et al., 2019; Nguyen et al., 2020; Varma et al., 2017; Yu et al., 2020; Zhang & Lei, 2017), four (Ai et al., 2016; Elangovan et al., 2015; Gejji et al., 2020; Hamidinejad et al., 2012; Jiao et al., 2020; Li et al., 2020b; Neto et al., 2013), five (Zhang et al., 2019a), six (Dhas & Kumanan, 2014), seven (Zahrani et al., 2020), or eight (Lei et al., 2019). Only a few publications vary conditions other than process parameters, namely material batches (Lutz et al., 2020) or product specimens (Neto et al., 2013; Papananias et al., 2019). The small number of parameter variations is also reflected in the small amount of data used for model training and evaluation.
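The link between few varied parameters and small data sets is easiest to see in a full factorial design, where the number of runs is the product of the factor levels: three parameters at three levels each give only 27 runs, and thus 27 samples if each run yields one measurement. The sketch below enumerates such a design; the factor names and level values are hypothetical and not taken from any reviewed publication.

```python
from itertools import product

# Hypothetical factors and levels for a turning experiment (illustrative values only)
factors = {
    "cutting_speed_m_min": [100, 150, 200],
    "feed_rate_mm_rev": [0.1, 0.2, 0.3],
    "depth_of_cut_mm": [0.5, 1.0, 1.5],
}

def full_factorial(levels):
    """Return every combination of factor levels as one run (a dict) per combination."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*(levels[n] for n in names))]

design = full_factorial(factors)
print(len(design))  # 27 runs = 3 levels ** 3 factors
print(design[0])    # {'cutting_speed_m_min': 100, 'feed_rate_mm_rev': 0.1, 'depth_of_cut_mm': 0.5}
```

Adding a fourth factor at three levels would raise the count to 81 runs, which is why fractional or Taguchi designs are used when experiments are expensive.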
Figure 5 illustrates the distribution of the publications by the number of data samples they use; the blue bars represent the publications with experimental data. The majority of these publications use around 100 samples. The average number is about 5,600 and the median 144. Note that the number of data samples is not always equal to the number of experiments. For example, the four publications that use more than 10,000 samples either perform data augmentation (Dimitriou et al., 2020; Hsu & Liu, 2021) or conduct multiple measurements per experiment (Wang et al., 2021; Zhang et al., 2019a).
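The gap between an average of about 5,600 and a median of 144 signals a strongly right-skewed distribution, in which a few very large data sets pull the mean far above the typical value. The following sketch reproduces the effect with invented sample counts; the numbers are hypothetical and are not the counts behind Fig. 5.

```python
from statistics import mean, median

# Hypothetical per-publication sample counts (NOT the data behind Fig. 5):
# most studies use roughly 100 samples, two use tens of thousands.
sample_counts = [30, 48, 81, 96, 110, 125, 144, 160, 200, 320, 12_000, 25_000]

print(f"mean = {mean(sample_counts):.1f}, median = {median(sample_counts)}")
# mean = 3192.8, median = 134.5
```

For such distributions, the median is the more faithful summary of what a typical study works with.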

Real data (running production) In contrast to experimentally generated training data, eleven publications take their data from a running manufacturing process. In these cases, the products were manufactured and the data collected over a longer period of time, such as three months in an aluminum casting process (Lee et al., 2018), six months of steel rolling production (Ståhl et al., 2019), eleven months in a deep drawing process (Meyes et al., 2019), twelve months of aluminum can bodymaking (Essien & Giannetti, 2020), or 20 months in a car bodywork shop (Kebisek et al., 2020). From the acquired data, historical data sets were built on which the ML models are trained and evaluated. These data sets are generally larger than those in the publications that obtain their data experimentally (see red bars in Fig. 5). On average, they contain 73,984 data samples. The largest data set, used by Essien and Giannetti (2020), contains 525,600 samples of sensor data (machine speed) recorded every minute over the course of one year.
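The size of that largest data set follows directly from its sampling rate. Assuming one reading per minute over a 365-day year (the start date below is arbitrary), the count can be checked as follows:

```python
from datetime import datetime, timedelta

# Assumption: one machine-speed reading per minute over a non-leap, 365-day year.
start = datetime(2019, 1, 1)
end = start + timedelta(days=365)
n_samples = int((end - start).total_seconds() // 60)
print(n_samples)  # 525600 = 365 days * 24 h * 60 min
```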

Input variables

The quality of a manufactured product depends on many factors. Among them are the design and parameterization of the manufacturing process and the interplay of the manufacturing steps. A predictive quality model trained on process data correlates these input factors with the quality. We therefore pose the question of what types of input variables are used in the publications. We have identified three major types in the course of our research: process parameters, sensor data, and product measurements. As it turns out, in most publications only one type is selected as the basis for model training. Table 7 provides an overview of all publications. Four publications (Abd-Ellatif, 2013; Liu et al., 2020b; Wagner et al., 2020; Yu et al., 2019) are not included as we cannot clearly determine which types of variables they use.

Process parameters The first type of input variable comprises process and machine parameters. These are set for the production of a particular product and are usually not changed during production. A predictive quality model built on process parameters can be used to estimate product quality for new, previously untested parameter settings. Depending on the manufacturing process, different parameters are essential for product quality and are therefore selected in the publications. While the feed rate (Acayaba & de Escalona, 2015; Bustillo et al., 2018; Hossain & Ahmad, 2014; Jiao et al., 2020; Varma et al., 2017) or the cutting and rotation speed (Acayaba & de Escalona, 2015; Bustillo et al., 2018; Hossain & Ahmad, 2014; Jiao et al., 2020; Nguyen et al., 2020; Serin et al., 2020a; Varma et al., 2017; Zahrani et al., 2020; Zhang & Lei, 2017) are frequently used in cutting processes, the laser power (Ai et al., 2016; Lei et al., 2019; Nguyen et al., 2020; Yu et al., 2020; Zahrani et al., 2020; Zhang & Lei, 2017; Zhu et al., 2020) or the focal position (Ai et al., 2016; Tercan et al., 2016, 2017) are often selected in laser-based applications. In plastics manufacturing, on the other hand, process times and temperatures (Alvarado-Iniesta et al., 2012; Ge et al., 2012; Mulrennan et al., 2018) frequently occur in the data. Worth mentioning here is the work in additive manufacturing by Li and Anand (2020), who used design parameters such as hatch patterns as model inputs, as well as the work by Zhu et al. (2020), who added product design parameters such as product size and geometry to the data basis.
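A model built on process parameters maps set-point values to a quality variable and can then be queried at settings that were never run. The sketch below illustrates the idea with a deliberately simple one-parameter least-squares fit; the reviewed publications mostly use non-linear models such as MLPs, and the feed-rate and roughness values are hypothetical.

```python
def fit_simple_linear(x, y):
    """Ordinary least squares for y ≈ a + b * x with a single input parameter."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    return a, b

# Hypothetical experiments: feed rate (mm/rev) vs. measured surface roughness Ra (µm)
feed = [0.10, 0.15, 0.20, 0.25, 0.30]
ra = [0.82, 1.05, 1.31, 1.52, 1.80]

a, b = fit_simple_linear(feed, ra)
new_feed = 0.22  # a setting that was not part of the experiments
print(f"predicted Ra at f={new_feed}: {a + b * new_feed:.2f} µm")  # predicted Ra at f=0.22: 1.40 µm
```

Querying inside the tested range, as here, is far safer than extrapolating beyond it, where a data-driven model has no evidence to rely on.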

Sensor data The second type of input data is sensor data, which is recorded from the process or machine during manufacturing. Sensor values therefore represent the actual state of the process or the condition of the machine. A model trained on sensor data can, for example, estimate the product quality while the product is being manufactured. As with process parameters, the sensor data used depends strongly on the particular manufacturing process. While welding current, for example, is often captured in welding processes (Goldman et al., 2021; Li et al., 2020a, b), shaping processes typically provide temperature and pressure data (Garcia et al., 2019; Kim et al., 2018; Lee et al., 2018). In cutting processes, on the other hand, vibration, torque, or force sensors are often used (Du et al., 2021; Moreira et al., 2019; Neto et al., 2013; Schorr et al., 2020a, b). Assembly processes are a special case: while Doltsinis et al. (2020) used sensor signals from the robots performing snap-fit assembly, Sarivan et al. (2020) used acceleration and audio measurements from wearables worn by operators.

Sensor data + process parameters Some publications also combine process parameters and sensor data (see Table 7). For example, Elangovan et al. (2015) showed for a turning process that adding sensor variables (e.g., vibration signals) can significantly improve model performance compared with using process parameters alone (e.g., cutting speed). Similar approaches can be found in other work on cutting processes, where common process parameters are combined with sensor data such as force or flank wear width (Klein et al., 2020; Lutz et al., 2020; Vrabel et al., 2016), and in additive manufacturing, where data from IR sensors is gathered together with temperature and speed parameters (Zhang et al., 2018, 2019b). Also noteworthy is the work of Lutz et al. (2020), who additionally incorporated data on material batches and tool types and analyzed how the prediction results deviate across them.
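Combining both input types usually means concatenating the set-point parameters of a run with scalar features derived from its sensor signals into one feature vector. The following sketch assumes a single vibration channel summarized by its mean, standard deviation, and peak amplitude; the parameter values, the signal, and this choice of features are hypothetical.

```python
from statistics import mean, pstdev

def sensor_features(signal):
    """Summarize a raw sensor trace by mean, standard deviation, and peak amplitude."""
    return [mean(signal), pstdev(signal), max(abs(v) for v in signal)]

def build_feature_vector(params, signal):
    """Concatenate the set-point parameters of a run with features of its sensor trace."""
    return list(params) + sensor_features(signal)

# Hypothetical turning run: cutting speed (m/min), feed (mm/rev), depth of cut (mm)
params = [180.0, 0.15, 1.0]
vibration = [0.02, -0.05, 0.04, -0.03, 0.06, -0.04]  # hypothetical vibration samples

x = build_feature_vector(params, vibration)
print(len(x))  # 6: three parameters plus three sensor features
```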

Product measurements The third category is measurement data of the product itself, taken during its production or in the course of visual inspection. Models trained on these measurements are often used to automatically detect product defects. In more than half of the publications in this category, the measurements are images captured by a (line) camera. They come from additive manufacturing (Bartlett et al., 2020; Gaikwad et al., 2020), assembly (Martinez et al., 2020), turning (Tušar et al., 2017), metal rolling (Li et al., 2018; Liu et al., 2021; Yun et al., 2020), welding (Wang et al., 2021), and textile manufacturing (Jun et al., 2021). Measurement data other than camera images include geometric measurements (Ståhl et al., 2019), thermal images (Oh et al., 2019), X-ray images (Ferguson et al., 2018), melt pool images (Zhang et al., 2019a), and laser topology scans (Dimitriou et al., 2020).

As mentioned, many publications focus on a single type of input variable. This shows that different data can be used for quality estimation even for the same manufacturing process. Turning processes are a good example: on the one hand, there are approaches based on the setting parameters of the turning machine (e.g., cutting speed and depth of cut) (Acayaba & de Escalona, 2015; Elangovan et al., 2015), and on the other hand, approaches using sensor data of the machine (e.g., vibration signals) (Du et al., 2021; Lutz et al., 2020; Moreira et al., 2019). However, our analyses also reveal major differences between the manufacturing processes. Figure 6 shows how often each of the three types of input variables appears in the publications for the nine most frequently addressed manufacturing processes. For instance, while turning and drilling applications use both parameters and sensor data, laser cutting applications only use process parameters. It is also noticeable that in four of the five publications dealing with metal rolling (Li et al., 2018; Liu et al., 2021; Ståhl et al., 2019; Yun et al., 2020), the quality estimation is based solely on product measurements.

Data modality

The data sets for training the machine learning models are created from the input variables described above. In the process, the data is transformed into a form that the models can process. In the reviewed publications, we identify four data types, or data modalities. Table 8 lists them and their occurrences in the publications.

The least common type is categorical data. These are mostly non-numeric representations of process entities, e.g., a tool type and material batch (Lutz et al., 2020), compositional elements of a batch (Lee et al., 2018), or component counts (Thomas et al., 2018). Time series data is used in nine publications: eight of them derive the time series from sensor data (Essien & Giannetti, 2020; Goldman et al., 2021; Gyasi et al., 2019; Hsu & Liu, 2021; Meyes et al., 2019; Sarivan et al., 2020; Zhang et al., 2018, 2019b) and one from product measurements (Ståhl et al., 2019). The time series can be univariate (Essien & Giannetti, 2020; Meyes et al., 2019; Ståhl et al., 2019) or multivariate (Goldman et al., 2021; Hsu & Liu, 2021; Sarivan et al., 2020).
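Categorical inputs such as tool type or material batch have no numeric order, so they are commonly one-hot encoded before being fed to models such as MLPs. The publications do not necessarily report which encoding they use; the sketch below is a generic illustration with hypothetical tool labels.

```python
def one_hot(values):
    """Map each categorical value to a 0/1 indicator vector (categories in sorted order)."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        encoded.append(row)
    return categories, encoded

# Hypothetical tool types recorded per production run
tools = ["T2", "T1", "T3", "T1"]
cats, enc = one_hot(tools)
print(cats)  # ['T1', 'T2', 'T3']
print(enc)   # [[0, 1, 0], [1, 0, 0], [0, 0, 1], [1, 0, 0]]
```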

Another important data type is image data. Most of these are 2D images drawn from the product measurements described above (Bartlett et al., 2020; Dimitriou et al., 2020; Ferguson et al., 2018; Jun et al., 2021; Li et al., 2018; Liu et al., 2021; Martinez et al., 2020; Oh et al., 2019; Wang et al., 2021; Yun et al., 2020; Zhang et al., 2019a). Dimitriou et al. (2020) used 3D point clouds generated by a topology scan. In most cases, the images are enriched using data augmentation techniques such as adding noise or random cropping (Dimitriou et al., 2020; Ferguson et al., 2018; Jun et al., 2021; Li et al., 2018; Liu et al., 2021; Martinez et al., 2020), resulting in a larger data basis for deep learning model training. In addition, Yun et al. (2020) used a variational autoencoder to generate synthetic image data.
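The augmentation techniques mentioned above can be sketched on a small grayscale image stored as nested lists: each variant is a random crop, optionally mirrored, with added Gaussian noise, and it inherits the label of the original image. The image, crop size, and noise level are hypothetical; in practice, crops must be chosen so that the defect that determines the label remains visible.

```python
import random

def random_crop(img, h, w, rng):
    """Cut a random h x w window out of a 2D image given as a list of rows."""
    top = rng.randint(0, len(img) - h)
    left = rng.randint(0, len(img[0]) - w)
    return [row[left:left + w] for row in img[top:top + h]]

def hflip(img):
    """Mirror the image horizontally."""
    return [row[::-1] for row in img]

def add_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise to every pixel."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in img]

def augment(img, n_variants, crop=(6, 6), sigma=0.05, seed=0):
    """Create n_variants augmented copies of one image; all copies keep the original label."""
    rng = random.Random(seed)
    variants = []
    for _ in range(n_variants):
        out = random_crop(img, *crop, rng)
        if rng.random() < 0.5:
            out = hflip(out)
        variants.append(add_noise(out, sigma, rng))
    return variants

# Hypothetical 8x8 grayscale patch with intensities in [0, 1]
image = [[(r * 8 + c) / 63 for c in range(8)] for r in range(8)]
augmented = augment(image, n_variants=10)
print(len(augmented), len(augmented[0]), len(augmented[0][0]))  # 10 6 6
```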

The vast majority of publications use numerical (continuous) data with scalar values for model training. These are parameter settings on the one hand and quantities obtained from sensors and measurements on the other. In fact, 23 of the 31 publications (\(74\%\)) that gather their data from sensors transform the values into scalar numerical quantities. Commonly, either statistical feature extraction (e.g., minimum, maximum, mean value) is conducted (Doltsinis et al., 2020; Du et al., 2021; Elangovan et al., 2015; Garcia et al., 2019; Lee et al., 2018; Li et al., 2019; Lieber et al., 2013; Papananias et al., 2019; Turetskyy et al., 2021; Vrabel et al., 2016; Yu et al., 2016) or expert-driven aggregation is performed (Ke & Huang, 2020; Klein et al., 2020; Schorr et al., 2020a, b).
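Statistical feature extraction condenses every sensor time series of a production run into a handful of scalars, which yields one numerical row per run. The sketch below assumes two hypothetical channels and a hypothetical feature set (minimum, maximum, mean, standard deviation, and RMS).

```python
import math
from statistics import mean, pstdev

def extract_features(signal):
    """Condense one sensor time series into scalar statistical features."""
    return {
        "min": min(signal),
        "max": max(signal),
        "mean": mean(signal),
        "std": pstdev(signal),
        "rms": math.sqrt(mean(v * v for v in signal)),
    }

def features_for_run(channels):
    """Flatten the features of all sensor channels of one run into a single named row."""
    row = {}
    for name, signal in channels.items():
        for stat, value in extract_features(signal).items():
            row[f"{name}_{stat}"] = value
    return row

# Hypothetical force (N) and temperature (°C) traces from one production run
run = {
    "force": [10.0, 12.0, 11.0, 13.0],
    "temperature": [200.0, 202.0, 201.0, 203.0],
}
row = features_for_run(run)
print(len(row))           # 10
print(row["force_mean"])  # 11.5
```

The temporal order inside each trace is discarded by this step, which is the main trade-off compared with the time-series models discussed later.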

Machine learning methods

In the third part of our publication review, we look at the learning tasks and machine learning models used for predictive quality. In general, the learning task follows from the quality goal and the quality variable to be estimated (e.g., error detection, quality forecasting). It is either classification, which accounts for 30 of the 81 publications, or numerical prediction, i.e., regression, which is conducted in 51 publications. Besides the learning task, the choice of an appropriate ML or DL model for predictive quality depends on the data modality (e.g., images) and the complexity of the relationship between input and output (e.g., linear, non-linear). Many different models are trained and experimentally evaluated in the publications. The review reveals that in \(49\%\) of all publications (40 of 81), the evaluation is performed only for a single model or for different variants of the same model (e.g., changes of hyperparameters, architectures, or optimization methods). In contrast, \(51\%\) (41 of 81) of the publications compare several models in experiments. In the following, the model that is the focus of a publication (because it is the only model) or that performs best in a model comparison is referred to as the prime model. All other models used for comparison are called baseline models.
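The distinction between prime and baseline models can be sketched as a comparison in which every candidate is fitted on the same training data and ranked by its error on held-out data; the best-ranked candidate takes the role of the prime model. The two candidates (a mean predictor and a linear fit), the data, and the use of RMSE are hypothetical choices for illustration.

```python
import math

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def fit_mean(x, y):
    """Trivial baseline: always predict the training mean."""
    m = sum(y) / len(y)
    return lambda xi: m

def fit_linear(x, y):
    """Least-squares line through the training data."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return lambda xi: my + b * (xi - mx)

def compare(models, train, test):
    """Fit every candidate on the same split and rank the candidates by test RMSE."""
    (x_tr, y_tr), (x_te, y_te) = train, test
    scores = {}
    for name, fit in models.items():
        predict = fit(x_tr, y_tr)
        scores[name] = rmse(y_te, [predict(xi) for xi in x_te])
    return sorted(scores.items(), key=lambda kv: kv[1])

train = ([1, 2, 3, 4, 5, 6], [2.1, 3.9, 6.2, 8.1, 9.8, 12.2])  # hypothetical data
test = ([7, 8], [14.1, 15.8])
ranking = compare({"mean baseline": fit_mean, "linear": fit_linear}, train, test)
prime_model, baselines = ranking[0][0], [name for name, _ in ranking[1:]]
print(prime_model, baselines)  # linear ['mean baseline']
```

A single split is used here for brevity; with the small data sets typical in this field, cross-validation gives a more reliable ranking.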

Table 9 lists all prime models used in the publications. On the one hand, some models, such as the MLP, are commonly used for both classification and regression. On the other hand, the popularity of neural network models, in particular MLPs and CNNs, becomes evident. This also holds for the most recent publications: among the publications of 2020 and 2021, these two models are the prime model in \(68\%\) of cases (see Fig. 7). In contrast, other models such as SVM, random forest, and decision tree are more often used as baseline models (see Fig. 8). In the following, we categorize the publications by their prime models and briefly discuss the baseline comparisons.

Multilayer perceptron (MLP) The most frequently used prime model is the MLP, with 30 publications. The popularity of MLPs continues into 2020 and 2021, and their versatility is demonstrated in a wide variety of use cases. On the one hand, MLPs are used for classification, whether for predicting specific fault types (Yu et al., 2020; Lee et al., 2018; Dib et al., 2020), for binary classification of OK/NOK states (Wagner et al., 2020), or for classifying multiple quality classes (Bustillo et al., 2018; Kebisek et al., 2020; Ke & Huang, 2020). On the other hand, MLPs are used (much more frequently) for the regression of numerical quality metrics, such as the aforementioned estimations of surface roughness (Acayaba & de Escalona, 2015; Du et al., 2021; Kayabasi et al., 2017; Serin et al., 2020a; Vrabel et al., 2016), tensile strength (Hamidinejad et al., 2012; Natesh et al., 2019; Yu et al., 2016), or dimensions (McDonnell et al., 2021; Lei et al., 2019; Neto et al., 2013; Papananias et al., 2019). As baseline models for experimental comparison with MLPs, the publications often use other popular ML models such as SVM (Ciancio et al., 2015; Dib et al., 2020; Lee et al., 2018; Lutz et al., 2020; Nurwaha & Wang, 2012; Wagner et al., 2020), random forest (Dib et al., 2020; Lee et al., 2018; Li et al., 2020b; Lutz et al., 2020; Turetskyy et al., 2021), linear regression (Acayaba & de Escalona, 2015; Hamidinejad et al., 2012; Turetskyy et al., 2021), decision tree (Lee et al., 2018; Wagner et al., 2020), k-nearest neighbor (K-NN) (Dib et al., 2020; Wagner et al., 2020), and/or AdaBoost (Bustillo et al., 2018). In addition, MLPs are compared to methods such as the adaptive neuro-fuzzy inference system (ANFIS) (Neto et al., 2013), gene expression programming (GEP) (Nurwaha & Wang, 2012), or an Elman network (Papananias et al., 2019).
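For readers less familiar with MLPs, the following pure-Python sketch trains a network with one tanh hidden layer by full-batch gradient descent on the mean squared error, the basic setup behind many of the regression models above. The target curve, network size, learning rate, and number of epochs are hypothetical; practical implementations use a deep learning library, input scaling, and a separate validation set.

```python
import math
import random

def train_mlp(xs, ys, hidden=8, lr=0.05, epochs=2000, seed=1):
    """Fit y ≈ MLP(x) with one tanh hidden layer by full-batch gradient descent on the MSE."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                                   # hidden biases
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                              # output bias
    n = len(xs)

    def forward(x):
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        return h, sum(w2[j] * h[j] for j in range(hidden)) + b2

    def mse():
        return sum((forward(x)[1] - y) ** 2 for x, y in zip(xs, ys)) / n

    mse_before = mse()
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        for x, y in zip(xs, ys):
            h, out = forward(x)
            d_out = 2.0 * (out - y) / n  # d(MSE)/d(out) for this sample
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_pre = d_out * w2[j] * (1.0 - h[j] ** 2)  # backpropagate through tanh
                g_w1[j] += d_pre * x
                g_b1[j] += d_pre
        for j in range(hidden):  # gradient descent step
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2 -= lr * g_b2
    return (lambda x: forward(x)[1]), mse_before, mse()

xs = [i / 5 for i in range(-5, 6)]  # hypothetical normalized process parameter
ys = [x * x for x in xs]            # hypothetical non-linear quality response
model, mse_before, mse_after = train_mlp(xs, ys)
print(f"MSE before: {mse_before:.3f}, after: {mse_after:.4f}")
```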

Convolutional neural network (CNN) CNNs are the second most frequently used prime models (14 publications). They are used for numerical estimation, i.e., regression, of quality values (Dimitriou et al., 2020; Wang et al., 2021; Zhu et al., 2020; Zimmerling et al., 2020), binary OK/NOK classification (Goldman et al., 2021; Hsu & Liu, 2021; Martinez et al., 2020; Sarivan et al., 2020), or multiclass classification (Ferguson et al., 2018; Jun et al., 2021; Li et al., 2018; Liu et al., 2021; Yun et al., 2020; Zhang et al., 2019a). They are well suited for pattern recognition in high-dimensional and spatially structured data and are therefore applied to 2D images (Ferguson et al., 2018; Jun et al., 2021; Li et al., 2018; Liu et al., 2021; Martinez et al., 2020; Wang et al., 2021; Yun et al., 2020; Zhang et al., 2019a), 3D point clouds (Dimitriou et al., 2020), and time series data (Goldman et al., 2021; Hsu & Liu, 2021; Sarivan et al., 2020). The CNN architectures used in the publications are often compared empirically with other CNN variants. For example, Jun et al. (2021) showed that combining CNNs with convolutional variational autoencoders (CVAE) yields better performance on class-imbalanced data than variants without CVAE. Yun et al. (2020) included well-known deep learning models such as AlexNet, VGG-16, and ResNet-50 in their comparisons. Liu et al. (2021) developed their own architecture, called TruingDet, based on a Faster R-CNN with deformable convolutions, and compared it to other state-of-the-art R-CNN models. Other methods used for comparison with CNNs are MLP and SVM (Li et al., 2018), several computer vision algorithms (Jun et al., 2021), and shapelet forests (Hsu & Liu, 2021).
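The pattern-recognition ability of CNNs rests on sliding kernels over the input. The sketch below applies one 1D kernel to a time series, followed by a ReLU and global max pooling, so that a short spike produces a high activation. The kernel is set by hand here, whereas a trained CNN learns many such kernels; both signals are hypothetical.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D convolution as used in CNN layers (strictly a cross-correlation)."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]

def relu(values):
    return [max(0.0, v) for v in values]

def spike_score(signal, kernel):
    """Convolution -> ReLU -> global max pooling: one feature per kernel."""
    return max(relu(conv1d(signal, kernel)))

kernel = [-1.0, 2.0, -1.0]  # hand-set peak detector; a trained CNN would learn its weights
normal = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1, 0.0]  # hypothetical sensor trace
faulty = [0.0, 0.1, 0.0, 1.0, 0.0, 0.1, 0.0]  # same trace with a short spike

print(spike_score(normal, kernel), spike_score(faulty, kernel))  # 0.2 2.0
```

Because the same kernel is applied at every position, the spike is detected wherever it occurs in the trace; 2D convolutions on images follow the same principle.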

Recurrent neural network (RNN) Seven publications use a recurrent neural network architecture as the prime model for quality estimation. Since RNNs are suited for time-dependent or sequential data, they are commonly applied to the gathered time series data (Meyes et al., 2019; Essien & Giannetti, 2020; Ståhl et al., 2019; Zhang et al., 2018, 2019b). Some of the publications use them for the binary classification of defects (Liu et al., 2020b; Meyes et al., 2019), others for the regression of quantities such as material warpage (Alvarado-Iniesta et al., 2012), machine speed (Essien & Giannetti, 2020), or tensile strength (Zhang et al., 2018, 2019b). Regarding the type of RNN, the publications clearly focus on LSTM network architectures (Essien & Giannetti, 2020; Liu et al., 2020b; Meyes et al., 2019; Ståhl et al., 2019; Zhang et al., 2018, 2019b). For example, Meyes et al. (2019) used a bidirectional LSTM, which processes a time series in both a forward and a backward pass to obtain better classification results. Essien and Giannetti (2020) used a convolutional LSTM encoder-decoder architecture to forecast future values of the series and compared their approach with another CNN architecture and with an ARIMA model. Other baseline models used for comparison with RNNs are SVM (Liu et al., 2020b; Ståhl et al., 2019; Zhang et al., 2018, 2019b), random forest (Ståhl et al., 2019; Zhang et al., 2018, 2019b), XGBoost (Liu et al., 2020b), polynomial regression (Zhang et al., 2018), and/or logistic regression (Ståhl et al., 2019).
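The bidirectional idea can be sketched with a minimal scalar Elman RNN in place of an LSTM: the same sequence is processed once in its original order and once reversed, and the two final hidden states are concatenated. The weights are hypothetical and untrained, and the simple cell only stands in for the gated LSTM cell used in the reviewed work.

```python
import math

def rnn_last_state(sequence, w_in, w_rec, bias):
    """Scalar Elman cell h_t = tanh(w_in * x_t + w_rec * h_{t-1} + bias); returns the final h_t."""
    h = 0.0
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + bias)
    return h

def bidirectional_encoding(sequence, fwd_params, bwd_params):
    """Concatenate the final states of a forward pass and a backward (reversed) pass."""
    forward = rnn_last_state(sequence, *fwd_params)
    backward = rnn_last_state(sequence[::-1], *bwd_params)
    return [forward, backward]

# Hypothetical, untrained parameters (w_in, w_rec, bias) for each direction
fwd = (0.8, 0.5, 0.0)
bwd = (0.6, 0.4, 0.0)

early_peak = [1.0, 0.0, 0.0]  # hypothetical short sensor sequences
late_peak = [0.0, 0.0, 1.0]
print(bidirectional_encoding(early_peak, fwd, bwd))
print(bidirectional_encoding(late_peak, fwd, bwd))
```

Both sequences contain the same values, but their encodings differ because the order of events is preserved, which is what distinguishes recurrent models from the order-free statistical features used elsewhere.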

Non-linear ML models Some publications used traditional machine learning methods that are well suited for non-linear problems. These include SVMs for the classification of defects (Oh et al., 2019) and operation success (Doltsinis et al., 2020; Schmitt et al., 2020a) as well as for the numerical estimation of product geometries (Garcia et al., 2019), the relevance vector machine (RVM) for estimating product weights (Ge et al., 2012), decision trees (Tercan et al., 2016, 2017) and quadratic regression (Martín et al., 2016) for interpretable quality estimations, and both K-NN (Lieber et al., 2013) and naive Bayes (Bartlett et al., 2020) for defect classification. In their evaluations, these models are typically compared to each other (Garcia et al., 2019; Lieber et al., 2013; Oh et al., 2019; Schmitt et al., 2020a) and to other models such as MLPs (Oh et al., 2019; Garcia et al., 2019; Martín et al., 2016), gradient boosted trees (Schmitt et al., 2020a), or generalized additive models (GAM) (Martín et al., 2016).
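As an example of these classical models, a K-NN classifier for defect classification fits in a few lines: a new sample receives the majority label among its k nearest training samples. The two features, their values, and the labels are hypothetical; in practice, features should be scaled first because the Euclidean distance is sensitive to units.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Return the majority label among the k training samples closest to query (Euclidean)."""
    neighbors = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical, already scaled features per part (e.g., peak temperature, mean force)
train_x = [(1.0, 1.0), (1.2, 0.9), (0.9, 1.1), (3.0, 3.2), (3.1, 2.9), (2.8, 3.0)]
train_y = ["OK", "OK", "OK", "defect", "defect", "defect"]

print(knn_predict(train_x, train_y, (1.1, 1.0)))  # OK
print(knn_predict(train_x, train_y, (2.9, 3.1)))  # defect
```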

Ensembles Ensemble methods combine multiple learning models and aggregate their individual decisions into a single prediction. In some cases, extensive comparisons were conducted to show that ensembles can perform better than single models (Gejji et al., 2020; Kim et al., 2018; Thomas et al., 2018; Li et al., 2019). Probably the most popular ensemble method is the random forest, which is used for classification (Zahrani et al., 2020) and regression (Klein et al., 2020; Mulrennan et al., 2018; Schorr et al., 2020b; Tušar et al., 2017; Yu et al., 2019). The random forest is compared with single decision trees (Tušar et al., 2017), bagged trees (Mulrennan et al., 2018), and models such as MLP, CNN, and SVM (Schorr et al., 2020b).
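The aggregation principle can be sketched with bagging, the core of the random forest: each base model is fitted on a bootstrap resample of the training data, and their predictions are averaged. The sketch uses one-split regression trees (decision stumps) on a single feature and omits the random feature subsampling that a random forest adds; the data and hyperparameters are hypothetical.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """One-split regression tree on a single feature, chosen to minimize the squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # candidate thresholds keep both sides non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        m_left, m_right = mean(left), mean(right)
        sse = sum((y - m_left) ** 2 for y in left) + sum((y - m_right) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, m_left, m_right)
    if best is None:  # all x values identical: fall back to the mean
        m = mean(ys)
        return lambda x: m
    _, t, m_left, m_right = best
    return lambda x: m_left if x <= t else m_right

def bagged_stumps(xs, ys, n_models=25, seed=0):
    """Bagging: fit each stump on a bootstrap resample and average their predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # draw n indices with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(model(x) for model in models)

# Hypothetical data: the quality value jumps once a process parameter exceeds 10
xs = list(range(1, 21))
ys = [1.0 if x <= 10 else 3.0 for x in xs]
ensemble = bagged_stumps(xs, ys)
print(ensemble(1), ensemble(20), ensemble(10.5))
```

Away from the jump, all stumps agree; near it, their bootstrap-dependent thresholds differ, and averaging turns the hard step into a smoother transition, which is the variance-reducing effect of bagging.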

Variants and hybrid models with neural networks Some publications used hybrids or variants of artificial neural networks. These include ANFIS, which was proposed for estimating the surface roughness in cutting processes (Hossain & Ahmad, 2014; Moreira et al., 2019; Varma et al., 2017; Zhang & Lei, 2017), ANN variants such as the sequential decision analysis neural network (SeDANN) (Gaikwad et al., 2020) and the extreme learning machine (Nguyen et al., 2020), and hybrids of neural networks with evolutionary computation methods, such as the genetic algorithm optimized neural network (GA-BPNN) (Ai et al., 2016) and the neuro-evolutionary hybrid model with genetic algorithm and particle swarm optimization (NN-GA-PSO) (Dhas & Kumanan, 2014). Accordingly, these approaches were frequently compared with regular MLPs (Hossain & Ahmad, 2014; Nguyen et al., 2020; Varma et al., 2017; Zhang & Lei, 2017). Gaikwad et al. (2020) additionally compared the SeDANN model with other established models such as CNN, LSTM, CART, and the general linear model (GLM).

Key insights and challenges

The previous section presented the results of our extensive literature review on predictive quality in manufacturing, in which we categorized the publications along three dimensions: manufacturing processes and quality criteria, data basis for model training, and learning models used. Based on these results, we present in the following our main findings and the gaps we identified with respect to the driving questions posed.

Scenarios and manufacturing domains

Applications of predictive quality All in all, the reviewed publications show that machine and deep learning methods are versatile and powerful tools for data-driven quality estimation. The methods are very often validated with respect to their predictive performance and accuracy for the particular use cases. The results show that predictive quality has great potential value for quality assurance and manufacturing process improvement. Although many publications do not clearly state how the proposed methods are intended to be used in the manufacturing process, we have identified three application scenarios of predictive quality. The first is process design support and process optimization based on simulation data or process parameters. Here, predictive quality methods are used to estimate product quality from setting parameters. The estimates could then be used either to give the process designer insight or, in combination with optimization methods, to automate the design of the process. The second scenario is in-line quality prediction during the manufacturing of a product based on process and sensor data. The predictions could then be used to trigger a quality-improving action in the manufacturing process to avoid faults or meet manufacturing tolerances. Third, predictive quality is being investigated for visual quality inspection using ML/DL methods. Here, the methods are used to detect rare product defects in image data or to classify certain defect types. They therefore offer great potential to automate manual and costly visual inspections.

Manufacturing processes Looking at the study results in terms of the manufacturing processes and quality scenarios addressed, there are many similarities (e.g., prediction of the same quality criteria, similar approaches to defect detection), but also large imbalances between the manufacturing process groups. While cutting and joining processes account for half of the publications, some process groups are hardly addressed (e.g., coating) or not at all (changing material properties). There are also large differences within the process groups. While, for example, many different domains are covered in cutting (e.g., turning, drilling, milling, laser cutting), joining is covered largely by welding alone. Other important branches such as riveting, gluing, or soldering are hardly represented. One reason for this imbalance may be the different degrees of digitization in the domains. The availability of process and quality data in the manufacturing process is an essential requirement for predictive quality. It is noticeable that many of the reviewed publications lie in manufacturing domains in which solutions for process and tool condition monitoring already exist, such as machining processes (Mohanraj et al., 2020; Serin et al., 2020b) or additive manufacturing processes (Lin et al., 2022; Montazeri & Rao, 2018). In these domains, it is accordingly easier to collect data from the process.

Process integration As mentioned before, the learning models used in the publications show very promising results for use in real manufacturing scenarios. In most cases, however, the approaches are not integrated into the manufacturing process. Although some publications use real production data for quality inspection (Li et al., 2018; Oh et al., 2019; Ståhl et al., 2019; Wagner et al., 2020; Schmitt et al., 2020a; Yun et al., 2020) or quality prediction (Goldman et al., 2021; Essien & Giannetti, 2020; Lee et al., 2018; Kebisek et al., 2020; Meyes et al., 2019), the training, evaluation, and use of the models mostly happen offline, away from the actual process. A few works implement and deploy predictive quality approaches as part of a larger framework (Kebisek et al., 2020; Lee et al., 2018; Li et al., 2020a; Martinez et al., 2020; Oh et al., 2019; Schmitt et al., 2020a). In addition, some publications discuss aspects such as the inline and real-time capability of the model estimations (Doltsinis et al., 2020; Li et al., 2018; Martinez et al., 2020; Moreira et al., 2019; Sarivan et al., 2020; Schmitt et al., 2020a; Zhang et al., 2019a). However, none of them discusses how predictive quality can be implemented in real quality assurance processes. Furthermore, the approaches are not evaluated in terms of their impact on process quality using quality-oriented metrics (e.g., reduction of rejects, yield rate).

Data bases and characteristics

Input variables The publications show that predictive quality models can be trained on very different data sources and types. The majority of publications use data taken from the physical manufacturing process. These can be process parameters, which are set for the manufacturing of the product, as well as measurements and sensor data, which are gathered during the manufacturing process. Many of the proposed approaches rely on a few input variables of a single type. However, as Elangovan et al. (2015) showed, combining parameters with sensor data can significantly improve performance. In addition, we noticed that other important variables that influence product quality in manufacturing, such as product design or material properties, are rarely considered in the publications. In most cases, ML models are studied for only one product type. The common approach is to train a model on data (parameters or sensors) for a single product with specific characteristics and material composition. The question of how a model trained for a single product can be transferred to other products remains open.

Common data processing steps Pre-processing the raw data is an important step before training machine learning models. In particular when using measurement and sensor data, many publications apply data cleansing (e.g., filling missing data, removing noise and outliers) and data scaling (e.g., normalization or standardization) prior to model training. In addition, we noticed two other processing steps that occur frequently. The first is the transformation of temporal sensor data into scalar features using feature extraction methods (Doltsinis et al., 2020; Du et al., 2021; Gaikwad et al., 2020; Garcia et al., 2019; Li et al., 2019, 2020b; Lieber et al., 2013; Neto et al., 2013). This involves extracting statistical features from the data (e.g., minimum, maximum, mean values) or transforming the data using signal processing methods. The second, used with image data, is data augmentation, which significantly enriches the data set (Dimitriou et al., 2020; Ferguson et al., 2018; Hsu & Liu, 2021; Li et al., 2018; Jun et al., 2021; Liu et al., 2021; Martinez et al., 2020; Yun et al., 2020; Zhu et al., 2020). These methods generate additional image variants by adding noise, rotating the images, or randomly cropping them.
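The cleansing and scaling steps can be sketched as a small preprocessor that is fitted on the training data only and then applied unchanged to new data, which avoids leaking information from the test set into training. Mean imputation and standardization are assumptions chosen for illustration; the reviewed publications use a variety of methods.

```python
from statistics import mean, pstdev

def fit_preprocessor(rows):
    """Learn per-column means (for imputation) and standard deviations from training rows."""
    cols = list(zip(*rows))
    means = [mean(v for v in col if v is not None) for col in cols]
    stds = []
    for col, m in zip(cols, means):
        filled = [m if v is None else v for v in col]
        s = pstdev(filled)
        stds.append(s if s > 0 else 1.0)  # avoid division by zero for constant columns
    return means, stds

def transform(rows, means, stds):
    """Fill missing values with training means, then standardize to zero mean and unit variance."""
    return [[((m if v is None else v) - m) / s for v, m, s in zip(row, means, stds)]
            for row in rows]

train = [[1.0, 10.0], [2.0, None], [3.0, 30.0]]  # hypothetical rows with a missing value
means, stds = fit_preprocessor(train)
z_train = transform(train, means, stds)
z_new = transform([[4.0, None]], means, stds)  # new run, scaled with training statistics
print(means)  # [2.0, 20.0]
```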

Data amount The availability of representative data in sufficiently large quantity is a fundamental requirement for ML and DL and consequently also for predictive quality. This is a major challenge in a domain like manufacturing, where generating data can be cumbersome and costly. The study results show that many approaches are developed and evaluated on a small amount of experimentally generated data, where the experiments often involve the variation of only a few process parameters. While experiments have the advantage over running production that they can cover boundary conditions and edge cases, they generally provide a less representative data basis. Since many publications also use data sets with fewer than 100 data points, their results serve only to demonstrate the potential and feasibility of predictive quality. Therefore, solutions have to be researched and developed to improve the representativeness of the data and to increase the data quantity. Data augmentation is a promising approach for this. Though it is used in some of the reviewed publications (as mentioned above), the focus there lies only on image data.

Benchmark data As mentioned, generating and using manufacturing process data is usually expensive and requires time and effort. Investigating predictive quality with such data is therefore an investment that cannot always be made, which makes freely available benchmark data sets all the more important. The literature review shows that, with few exceptions (Ferguson et al., 2018; Jun et al., 2021; Liu et al., 2020b, 2021; Yu et al., 2019), the vast majority of publications do not use freely available benchmark data sets, nor do they provide their own data or source code. Consequently, on the one hand, there is a lack of comparability between different approaches for similar predictive quality tasks. On the other hand, reproducing and further developing existing research results is hardly possible.

Machine learning methods

Prime models Of the publications from 2020 and 2021, 68% use an MLP or a CNN as their prime model. This clearly shows the popularity and potential of these models for predictive quality. They are well suited to identifying complex patterns and relationships in process data. MLPs in particular are used across a wide variety of use cases and data sets, and many publications report that they outperform other machine learning models such as SVMs or random forests in terms of prediction performance. CNNs are by far the most widely used deep learning models for predictive quality. As they are very well suited for pattern recognition in image data, they are often used in visual quality inspection. Among other deep learning models, only LSTMs are currently studied, and only in a few publications. Notably, other popular models such as transformer networks do not appear in the reviewed publications.

Baseline models Experimental comparison of different models or model variants is an important part of machine learning research. About 50% of the publications compare their prime model with other baseline models. These are mainly machine learning models established for nonlinear learning problems, such as SVM, random forest, decision tree, and k-nearest neighbor. Although these models do not match the performance of MLPs in most experiments, they also show strong versatility across different predictive quality use cases.
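A fair comparison between a prime model and its baselines requires that all models are evaluated on identical data splits. The following sketch shows such a protocol with k-fold cross-validation in plain Python, under simplifying assumptions: for brevity it compares a k-nearest neighbor regressor (one of the baselines named above) with a trivial mean predictor rather than with an MLP, and the synthetic data set, which relates two hypothetical process parameters to a quality value, is invented for illustration.

```python
import math
import random


def knn_predict(train_x, train_y, x, k=3):
    """Predict a quality value as the mean target of the k nearest training points."""
    nearest = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def mean_predict(train_x, train_y, x):
    """Trivial reference: always predict the mean quality value of the training data."""
    return sum(train_y) / len(train_y)


def cross_validate(model, xs, ys, folds=5, seed=0):
    """Mean absolute error of `model`, averaged over k folds with a fixed shuffle."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)  # same seed -> same folds for every model
    fold_errors = []
    for f in range(folds):
        test = set(idx[f::folds])
        train = [i for i in idx if i not in test]
        tr_x = [xs[i] for i in train]
        tr_y = [ys[i] for i in train]
        errors = [abs(model(tr_x, tr_y, xs[i]) - ys[i]) for i in test]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / folds


# Hypothetical data: two normalized process parameters and a nonlinear quality value.
xs = [[a / 9, b / 9] for a in range(10) for b in range(10)]
ys = [0.8 * p + 2.0 * q ** 2 for p, q in xs]
results = {name: cross_validate(model, xs, ys)
           for name, model in [("k-NN", knn_predict), ("mean", mean_predict)]}
```

Any further model, such as an MLP or a random forest, would be plugged into `cross_validate` with the same seed so that its error is measured on exactly the same folds.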

Models and data modalities The selection of an appropriate ML or DL model for a particular predictive quality use case depends on many factors, one of which is the data modality. Examining which model the publications use for which data modality reveals some common approaches. If the data basis consists of images, most publications (9 out of 11) use a CNN-based approach (Dimitriou et al., 2020; Ferguson et al., 2018; Jun et al., 2021; Li et al., 2018; Liu et al., 2021; Martinez et al., 2020; Wang et al., 2021; Yun et al., 2020; Zhang et al., 2019a). For numerical/continuous data, an MLP is used in about half of the corresponding publications, followed by random forest, SVM, and other models. For time series data, no common model has been established yet. As mentioned above, the common approach for raw time series data from sensors is to first transform them into numerical/continuous variables using feature extraction and then train a machine learning model on these features. Among the few publications that train models directly on the time series, five use LSTMs (Essien & Giannetti, 2020; Meyes et al., 2019; Ståhl et al., 2019; Zhang et al., 2018, 2019b) and three use CNNs (Goldman et al., 2021; Hsu & Liu, 2021; Sarivan et al., 2020).
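To illustrate why CNNs can be trained on raw time series without hand-crafted features, the following sketch computes the forward pass of a single convolutional filter with ReLU activation and global max pooling in plain Python. The filter weights are set by hand as an assumption; in a trained CNN, many such filters are learned from the data. Because of the pooling step, the filter responds to a pattern (here, a short spike) regardless of where it occurs in the signal.

```python
def conv1d(signal, kernel, bias=0.0):
    """Valid 1D cross-correlation, as computed by a convolutional layer."""
    k = len(kernel)
    return [sum(w * signal[t + j] for j, w in enumerate(kernel)) + bias
            for t in range(len(signal) - k + 1)]


def relu(values):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in values]


def global_max_pool(values):
    """Keep only the strongest filter response over the whole series."""
    return max(values)


def cnn_feature(signal, kernel, bias=0.0):
    """One convolutional filter followed by ReLU and global max pooling."""
    return global_max_pool(relu(conv1d(signal, kernel, bias)))


# Hand-set (hypothetical) filter that responds to a short spike.
spike_detector = [-1.0, 2.0, -1.0]
clean = [0.0] * 20
early_spike = clean[:3] + [1.0] + clean[4:]
late_spike = clean[:15] + [1.0] + clean[16:]
```

Both spiked traces produce the same pooled response, while the clean trace produces none; a downstream classifier can therefore use such responses directly as learned features.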

Future research directions

Based on the obtained results and conclusions, we provide an outlook on future directions of predictive quality research. We believe that these research directions can address the identified gaps as well as boost the prevalence of predictive quality in research and industry.

Synthetic data generation Machine learning and especially deep learning models typically require large amounts of training data. Therefore, solutions have to be researched and developed to increase the data quantity and to overcome the identified data sparsity in predictive quality scenarios. One promising approach is to generate synthetic training data using generative deep learning models, which have been shown to be suitable for generating realistic data in large quantities at low cost (Mao et al., 2019; Nikolenko, 2021; Pashevich et al., 2019). In the predictive quality context, they could be combined with manufacturing simulations to synthetically replicate data for rare process variations and product defects. In addition, we propose establishing and further developing data augmentation methods for manufacturing process data, in particular sensor and time series data. In this regard, we refer to research on data augmentation for time series problems (Iwana & Uchida, 2021; Wen et al., 2021).
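As a sketch of what data augmentation for sensor and time series data might look like, the following plain-Python example applies three simple transformations of the kind discussed in the time series augmentation literature: jittering (additive noise), magnitude scaling, and window slicing. The parameter values and the example trace are hypothetical, and, as with image augmentation, it is assumed that the quality label is invariant to these changes.

```python
import random


def jitter(series, sigma, rng):
    """Add small Gaussian noise to every time step."""
    return [x + rng.gauss(0.0, sigma) for x in series]


def scale(series, sigma, rng):
    """Multiply the whole series by one random factor close to 1."""
    factor = rng.gauss(1.0, sigma)
    return [x * factor for x in series]


def window_slice(series, ratio, rng):
    """Keep a randomly placed contiguous window covering `ratio` of the series."""
    length = max(1, int(len(series) * ratio))
    start = rng.randint(0, len(series) - length)
    return series[start:start + length]


def augment_series(series, n_variants, seed=0):
    """Create n_variants augmented copies; noise levels and ratio are illustrative."""
    rng = random.Random(seed)
    return [window_slice(scale(jitter(series, 0.03, rng), 0.1, rng), 0.9, rng)
            for _ in range(n_variants)]


# Hypothetical sensor trace with 50 samples.
trace = [float(t % 10) for t in range(50)]
augmented = augment_series(trace, n_variants=4)
```

Window slicing shortens the series; models that expect a fixed input length would need the slices resampled, or all series sliced to the same length.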

Benchmark data sets We see great potential in making research results and data sets more accessible to other scientists. Therefore, we recommend establishing benchmark data for predictive quality to be used for evaluating new approaches. This could be data for the classification of product defects or for the numerical prediction of quality values based on sensor data. In addition, we encourage researchers to use already existing data sets in their own work. For this purpose, we refer to publications that provide an overview of data sets and repositories, such as for surface defect detection (Chen et al., 2021) or for machine learning in production (Krauß et al., 2019).

Novel deep learning methods The review results show that among the existing deep learning models, only CNNs and LSTM-based models have been investigated for predictive quality. However, new types of methods have already become established in state-of-the-art deep learning. Among them is the Transformer network, an attention-based method that performs very well on sequential data (Vaswani et al., 2017) and image data (Khan et al., 2021). Although we are not yet aware of applications of Transformer networks to predictive quality, they have already been applied in the predictive maintenance context (Liu et al., 2020a; Mo et al., 2021). Graph neural networks (Zhou et al., 2020) are another intensively researched field of deep learning; they are suitable for graph data and can therefore be useful for pattern recognition in CAD or simulation data. We therefore see potential for these methods to be equally well suited for predictive quality scenarios.
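The core operation of the Transformer network is scaled dot-product attention, Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V (Vaswani et al., 2017). The following plain-Python sketch implements only this operation; the learned query, key and value projections, the multiple attention heads, and the positional encodings of a full Transformer are omitted. Applied to a sequence of sensor readings (here a hypothetical two-channel sequence), each output step is a weighted average of all input steps, with weights given by the similarity between steps.

```python
import math


def softmax(scores):
    """Normalize scores to positive weights that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V, with Q, K, V given as lists of row vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # one weight per time step, summing to 1
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Hypothetical sequence of four time steps with two sensor channels each,
# used directly as queries, keys and values (self-attention without projections).
sequence = [[0.1, 1.0], [0.2, 0.9], [1.5, 0.1], [0.1, 1.1]]
attended = scaled_dot_product_attention(sequence, sequence, sequence)
```

Unlike an LSTM, every time step attends to every other step in a single operation, which is one reason the method scales well to long sequences on parallel hardware.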

Time series classification and forecasting While deep learning on image data has gained acceptance in predictive quality (via CNNs), there is still considerable research potential in training models on raw sensor data or time series data. Current deep learning research already offers a number of different model approaches for time series classification (Ismail Fawaz et al., 2019) and forecasting (Lim & Zohren, 2021). In predictive quality scenarios, these approaches could be suitable for predicting quality based on temporal sensor data from the manufacturing process.
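Deep learning approaches for time series classification are usually evaluated against simpler reference methods. A widely used one is the 1-nearest-neighbor classifier with dynamic time warping (DTW) distance, sketched below in plain Python. It is shown here as an assumed baseline for quality classification from temporal sensor data, not as one of the deep learning models cited above; the example curves and the labels "ok" and "defect" are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two 1D series (absolute-difference cost)."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible warping moves.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify_1nn(series, labeled_series):
    """Assign the label of the training series with the smallest DTW distance."""
    return min(labeled_series, key=lambda item: dtw_distance(series, item[0]))[1]


# Hypothetical process curves with quality labels.
training = [([0, 0, 1, 2, 1, 0, 0], "ok"), ([0, 0, 0, 0, 3, 3, 3], "defect")]
prediction = classify_1nn([0, 1, 2, 1, 0, 0, 0], training)
```

DTW tolerates temporal shifts: the query curve peaks one step earlier than the "ok" reference yet has zero distance to it. The quadratic cost of DTW is one motivation for learned models on large sensor data sets.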

Transfer learning and continual learning Current works in predictive quality mainly assume that the training data basis is representative of the given problem. However, this assumption often does not hold in industrial production, since manufacturing processes are subject to continuous changes (e.g., the production of new products). After process changes, previously trained models may no longer perform sufficiently well, so that large amounts of new training data must be generated at great cost. The emerging fields of transfer learning and continual learning can address this challenge by training data-efficient and cost-effective models across process variants. In the current state of research, some efforts exist to use transfer learning (Maschler & Weyrich, 2021b; Maschler et al., 2021a; Tercan et al., 2018, 2019) and continual learning (Tercan et al., 2021) in manufacturing. We see great potential in further research in these fields for predictive quality.
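The idea of reusing a model across process variants can be sketched with a linear model in plain Python. Training on the target variant starts from the weights learned on the source variant (fine-tuning), and an L2 penalty pulls the weights towards the source solution, a simple form of the regularization used by several continual learning methods to limit forgetting. The data, the assumed process change (an offset of the quality value), and all hyperparameters are hypothetical.

```python
def fit_linear(xs, ys, init=None, anchor=None, penalty=0.0, lr=0.05, epochs=2000):
    """Gradient descent on the mean squared error of y = w[0] + w[1]*x[0] + ...

    init:    starting weights, e.g. weights learned on a previous process variant
    anchor:  weights to stay close to via the term penalty * ||w - anchor||^2
    """
    dim = len(xs[0]) + 1
    w = list(init) if init is not None else [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for x, y in zip(xs, ys):
            features = [1.0] + list(x)  # constant 1.0 for the intercept
            err = sum(wi * fi for wi, fi in zip(w, features)) - y
            for i in range(dim):
                grad[i] += 2.0 * err * features[i] / len(xs)
        if anchor is not None:
            for i in range(dim):
                grad[i] += 2.0 * penalty * (w[i] - anchor[i])
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Hypothetical source process: plenty of data, quality = 1.0 + 2.0 * parameter.
source_x = [[i / 19] for i in range(20)]
source_y = [1.0 + 2.0 * x[0] for x in source_x]
w_source = fit_linear(source_x, source_y)

# Hypothetical process variant: only three samples, quality offset shifted by 0.5.
target_x = [[0.0], [0.5], [1.0]]
target_y = [1.5 + 2.0 * x[0] for x in target_x]
w_adapted = fit_linear(target_x, target_y, init=w_source, anchor=w_source, penalty=0.01)
```

With only three target samples, the adapted model moves its intercept most of the way towards the new process behavior while the penalty keeps the slope close to the source model. The penalty strength controls the trade-off between adapting to the new variant and retaining the previous solution.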

Integration and deployment Further research is needed to establish predictive quality in industrial manufacturing systems. First, we see the need to evaluate predictive quality solutions in real quality assurance processes. This includes developing strategies for automated feedback of model decisions to humans and systems, as well as evaluating approaches in terms of their impact on process quality by using quality-oriented metrics (e.g., yield rate). Another important aspect is the operationalization and automation of model training and model use. In the current state of research, first MLOps (machine learning operationalization) approaches exist to continuously monitor, integrate and deliver ML models in business environments (Cardoso Silva et al., 2020; Garg et al., 2021). We see great potential in MLOps strategies for the continuous integration of predictive quality models. Furthermore, predictive quality processes need to be certified in a way that guarantees the reliability of the ML models and thus enables their use in industrial manufacturing processes. To the best of our knowledge, there are currently no established approaches for certifying machine learning methods in industry.
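Two of the operational building blocks mentioned above can be sketched in plain Python: a quality-oriented metric (yield rate) and a simple monitoring check that raises an alarm when an input feature drifts away from its training distribution, which could trigger retraining in an MLOps pipeline. The z-test on the mean of a live data window, the threshold of 3, and the example values are assumptions chosen for illustration; production systems typically use more robust drift tests and monitor many features at once.

```python
from statistics import mean, pstdev


def yield_rate(passed):
    """Share of parts that passed quality inspection (list of booleans)."""
    return sum(passed) / len(passed)


def drift_alarm(train_values, live_values, threshold=3.0):
    """Flag drift when the mean of a live window deviates from the training mean.

    The deviation is measured in standard errors of the window mean (a z-test),
    using the training standard deviation as the reference spread.
    """
    mu, sigma = mean(train_values), pstdev(train_values)
    if sigma == 0:
        return mean(live_values) != mu
    z = abs(mean(live_values) - mu) / (sigma / len(live_values) ** 0.5)
    return z > threshold


# Hypothetical feature values seen during training and two live windows.
train_feature = [10.0 + 0.1 * (i % 5 - 2) for i in range(100)]
stable_window = [10.0, 10.1, 9.9, 10.0, 10.1, 9.9, 10.0, 10.0, 9.9, 10.1]
shifted_window = [10.4] * 10
```

An alarm on `shifted_window` would indicate that the model now operates outside its training conditions, while a falling yield rate would show the effect on process quality that the model is meant to improve.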

Summary

This review paper provided a comprehensive overview of 81 scientific publications between 2012 and 2021 that address predictive quality in manufacturing processes. The publications were categorized and evaluated based on three guiding questions. The first question was which manufacturing processes and quality criteria are addressed in the publications. The categorization was done according to DIN 8580. On the one hand, the results show that predictive quality is used in a wide variety of manufacturing processes to estimate various quality metrics or defect types. On the other hand, an imbalance across the process groups is evident. While a lot of research is done in process groups such as cutting and joining, hardly any publications address the process groups coating and changing material properties.

The second question dealt with the data used in the publications for training and evaluating the learning models. It was shown that in a large share of the publications, process parameters or sensor data were gathered from a real manufacturing process and merged with the corresponding quality values. The data were often generated experimentally by varying a few parameters or boundary conditions. With regard to data modality, numerical quantities as well as image data (e.g., from product measurements) appear to be playing an increasingly important role.

The third question addressed the machine learning and deep learning models used for predictive quality. The results of the review showed that models based on artificial neural networks (MLPs) and deep learning (mainly CNNs) were the main focus. While MLPs were used in versatile ways, CNNs were commonly used on image data. In about half of the publications, the models were also compared experimentally with other models, and popular machine learning methods such as SVMs and random forests were frequently used for this comparison.

Based on the obtained results, central challenges for predictive quality research were derived, which need to be addressed in future work. On the one hand, these include the tackling of sparse data sets in the manufacturing context and the generation of benchmark data for more comparability in research. On the other hand, there is a lack of approaches to integrate and deploy sustainable and robust predictive quality solutions in real production processes.

In conclusion, predictive quality is a very heterogeneous and highly researched field in the manufacturing world. The relevance and popularity of the field will likely continue to increase in the coming years. The current state of research highlights the great potential that data-driven methods of machine learning and deep learning bring to quality assurance and inspection. Yet the use cases, approaches, and results are still viewed in a very isolated way. As such, there is still much to be done in research to overcome this isolated view and enable greater prevalence of predictive quality in the future.

References

Abd-Ellatif, S. A. M. (2013). Optimizing sliver quality using artificial neural networks in ring spinning. Alexandria Engineering Journal, 52(4), 637–642. https://doi.org/10.1016/j.aej.2013.09.007

Acayaba, G. M. A., & de Escalona, P. M. (2015). Prediction of surface roughness in low speed turning of AISI316 austenitic stainless steel. CIRP Journal of Manufacturing Science and Technology, 11, 62–67. https://doi.org/10.1016/j.cirpj.2015.08.004

Ademujimi, T. T., Brundage, M. P., & Prabhu, V. V. (2017). A review of current machine learning techniques used in manufacturing diagnosis. In H. Lödding, R. Riedel, K.-D. Thoben, G. von Cieminski, & D. Kiritsis (Eds.), Advances in production management systems. IFIP advances in information and communication technology (Vol. 513, pp. 407–415). Springer. https://doi.org/10.1007/978-3-319-66923-6_48