Abstract

Despite advances in techniques for exploring reciprocity in brain-behavior relations, few studies focus on building neurocognitive models that describe both human EEG and behavioral modalities at the single-trial level. Here, we introduce a new integrative joint modeling framework for the simultaneous description of single-trial EEG measures and cognitive modeling parameters of decision-making. As specific examples, we formalized how single-trial N200 latencies and centro-parietal positivities (CPPs) are predicted by changing single-trial parameters of various drift-diffusion models (DDMs). We trained deep neural networks to learn Bayesian posterior distributions of unobserved neurocognitive parameters based on model simulations. These models do not have closed-form likelihoods and are not easy to fit using Markov chain Monte Carlo (MCMC) methods because nuisance parameters on single trials are shared in both behavior and neural activity. We then used parameter recovery assessment and model misspecification to ascertain how robustly the models’ parameters can be estimated. Moreover, we fit the models to three different real datasets to test their applicability. Finally, we provide some evidence that single-trial integrative joint models are superior to traditional integrative models. The current single-trial paradigm and the simulation-based (likelihood-free) approach for parameter recovery can inspire scientists and modelers to conveniently develop new neurocognitive models for other neural measures and to evaluate them appropriately.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Recent decades have seen a remarkable growth in the development of both the fields of mathematical psychology and cognitive neuroscience to interpret and predict cognition from behavioral and brain data (Usher & McClelland, 2001; Brown & Heathcote, 2008; Ditterich, 2006; Gold & Shadlen, 2007; Drugowitsch et al., 2012; Turner et al., 2013; Ratcliff et al., 2016). In particular, neurocognitive theories of perceptual decision-making have been proposed to explain how evoked electroencephalography (EEG) measures and behavioral data from experiments result from the specific time course of visual encoding, evidence accumulation, and motor preparation (O’Connell et al., 2012a; van Ravenzwaaij et al., 2017; Nunez et al., 2019; Lui et al., 2021). Models derived from these theories can then be fit to data and predict and explain both evoked EEG and behavioral data. These neurocognitive models utilize trial- or individual-level signatures of EEG or magnetoencephalography (MEG) signals to assess, constrain, replace, or add cognitive parameters in models (Nunez et al., 2017; 2022). Moreover, Turner et al. have proposed many approaches for directly or indirectly relating neural data to a cognitive model; for instance, the field of model-based cognitive neuroscience has used BOLD responses and EEG waveforms simultaneously and separately to predict and constrain cognitive parameters and behavioral data (Turner et al., 2013, 2016, 2019; Bahg et al., 2020; Kang et al., 2021). Also, Nunez et al. have introduced some neurocognitive models to study the effect of selective attention, the role of visual encoding time (VET), and the relationship of readiness potentials (RPs) in motor cortical areas to evidence accumulation during perceptual decision-making tasks (Nunez et al., 2017, 2019; Lui et al., 2021). Furthermore, Ravenzwaaij et al. have suggested an explicit and confirmatory model that reveals that the drift rate parameter can describe simultaneously the behavioral data and the neural activation in a mental rotation task (van Ravenzwaaij et al., 2017).

Motivation

Are EEG measures able to track parameters of sequential sampling models? Sequential sampling models (SSMs), especially drift-diffusion models (DDMs), assume that human and animal decision-making are based on the integration of evidence over time which reaches a specific threshold. The accumulation and non-accumulation parameters for a decision are usually measured by the firing rate of neurons and ERP signatures (O’Connell et al., 2018). Even though some joint models incorporate neural dynamics in classical DDMs, only some models have been developed that consider EEG signatures as a participant response similar to reaction time (RT) and accuracy (van Ravenzwaaij et al., 2017). There are however many studies linking event-related potential (ERP) components and behavioral performance (O’Connell et al., 2012a; Gluth et al., 2013; Philiastides et al., 2014; Loughnane et al., 2016), as well as using EEG to constrain models’ parameters at the level of the experimental trial (Frank, 2015; Nunez et al., 2017; Ghaderi-Kangavari et al., 2022). Models should be further developed that explain ERP data at the trial level with generative models similar to response times (RTs) and accuracy. Therefore, there is an impetus to build a few of these models, develop associated model fitting procedures, and then assess the idealized models to test how robustly their parameters can be estimated. In this paper, we introduce some practical models and model fitting procedures which will help researchers in the field of cognitive neuroscience to make better inferences about individual differences and trial-to-trial changes in neurocognitive underpinnings during perceptual decision-making tasks. These new neurocognitive models are advantageous compared to the traditional models. The benefits of these models and model-fitting procedures are listed in two categories.

First, we used model-fitting procedures that estimate posterior distributions through invertible neural networks (INNs). In particular, we used a new method named BayesFlow https://github.com/stefanradev93/BayesFlow; (Radev et al., 2020a). This allowed us to flexibly test complex models of joint data without solving for closed-form likelihoods, as well as test models that have no closed-form likelihood. Specifically, we used BayesFlow to apply amortized deep learning to build networks that quickly estimate posterior distributions of complicated and likelihood-free models rather than applying case-based inference. Note here that the training of neural networks did depend on particular types of prior distributions, similar to other Bayesian workflows, and we felt that uniform distributions can be a good option for generating sample parameters. After training, these posterior approximators can be widely shared and applied because they require less knowledge of computational and model fitting procedures rather than Markov chain Monte Carlo (MCMC) methods, non-amortized simulation-based methods, and other amortized Bayesian inference methods (Turner & Sederberg, 2012; Gelman et al., 2014; Lueckmann et al., 2019; Hermans et al., 2020; Turner & Van Zandt, 2012; Fengler et al., 2021). These posterior approximators still allow the estimation of full posterior distributions instead of summary statistics of the posterior (e.g., posterior mean or mode). The BayesFlow method has been recently modified to distinguish correct posterior distributions from posterior errors when there are some deviations between the assumed (simulated) model and the true model that generated the observed data, a situation known as model misspecification (Schmitt et al., 2021). This advantage is mostly due to the use of the summary network, which usually obtains the most important information and helps to detect the presence of unexpectedly contaminated data or misspecified simulators (i.e., simulation gaps). We endeavored to manage contaminated behavioral and neurological data by building some neurocognitive models which contain explicit mixtures of contaminants. For instance, the mixture model of a DDM model and a lapse process (uniform distribution) can be a good option to handle contaminated behavioral data (Ratcliff & Tuerlinckx, 2002; Nunez et al., 2019).

The second category of benefit is that these models explain EEG as single-trial-dependent variables. For example, specific models can generate event-related potential (ERP) latencies or ERP waveform shapes (ERPs explained below) in addition to RT and accuracy data from latent cognitive parameters. Some of these models separate visual encoding times (VETs) from other non-decision times (see Fig. 1), while some of these models also predict contaminants (e.g., “lapse” trials) in EEG measures and behavioral performance. Other models constrain evidence accumulation rate (i.e., drift-rate) parameters to make better parameter estimates (see Fig, 1). With these models, we can test research questions about correlates of behavioral performance and neural data (e.g., O’Connell et al., 2012a, Nunez et al., 2019, 2022), new research questions (such as about spatial attention, see Ghaderi-Kangavari et al., 2022), and explain and predict ERP measures jointly with behavioral measures. Another benefit is that these models are measurement models in that the estimated parameters reflect interpretations of cognitive processes. Finally, these models are easily extendable, such as similar models with linear and non-linear collapsing boundaries (Evans et al., 2020b; Voss et al., 2019).

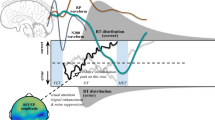

A theoretical presentation of novel neurocognitive models for the study of human cognition that explain single-trial neural activities and behavioral data simultaneously. Three types of decision boundaries (static, linear collapsing, and Weibull collapsing) have been used to design neurocognitive models. Single-trial EEG, such as single-trial event-related potentials (ERPs) from the occipito-parietal area, can be explained by these joint models; for example, N200 latencies (blue line) and centro-parietal positivities (CPP) (green line). These data constrain and predict latent parameters. Distributions of behavioral performance such as RT and choice for correct and error are displayed on both sides of the two choice boundaries. Latent parameters of the DDM are also displayed, which reflect N200 latencies and CPP slopes. Prior evidence has found that N200 waveforms track visual encoding time (VET) and the onset of evidence accumulation (Loughnane et al., 2016; Nunez et al., 2019). Moreover, P300 or CPP slope are assumed to account for the accumulation time rather than non-decision time and possibly reflect the mean of drift rate (O’Connell et al., 2012a). The minimum N200 waveform has initiated the onset of the evidence accumulation as well as the P300/CPP slope is similar to the mean slope of the evidence accumulation

Single-Trial ERP Analysis

EEG activity time-locked to particular events in an experimental task and averaged-across trials, i.e., event-related potentials (ERPs), have been frequently utilized as a powerful method in the past century to study both clinical and research applications such as the information processing in the brain, biomarkers for a variety of disorders, neural mechanisms involving cognitive processes, highlighting differences in brain activity, prediction of future cognitive functioning, identifying cognitive dysfunction, individual differences, etc. (Philiastides et al., 2006; Cavanagh et al., 2011; Luck, 2014; Zwart et al., 2018; Manning et al., 2022). Some advantages of using ERPs over other EEG measures include as follows: enhancing signal-to-noise ratios, less variance across conditional manipulations, and decreased type II errors (Luck, 2014; Luck et al., 2000). However, some disadvantages of trial-averaged ERPs are that: a large number of participants are needed to achieve robust inferences, there is a loss of abundant information contained in EEG across trials, and it is difficult to use ERPs to constraint cognitive models to estimate parameters within-trials. Nevertheless, correlation and multiple linear regression methods on the individual level have been crucial parts of analysis for researchers who assessed statistical relationships between models’ parameters, ERPs components, and behavioral performance (Nunez et al., 2015; Schubert et al., 2019; Clayson et al., 2019; Manning et al., 2022). In fact, the two-stage inference is a statistically attenuated approach because parameters are estimated, and then their summary measures are put into a regression model, resulting in missing information.

Improving upon the aforementioned disadvantages of trial-averaged ERPs, ERPs can also be measured on single trials under certain conditions (Nunez et al., 2017; Bridwell et al., 2018). Single-trial ERPs can help the neurocognitive models estimate and restrict cognitive parameters simultaneously because of fluctuations in cognition across experimental trials (Nunez et al., 2017). However, one of the main difficulties in using single-trial ERPs is the amount of noisy information due to artifacts (electromyographic—EMG—signals, electrical noise, and other unrelated brain activity). Therefore, modern techniques are required to discard irrelevant information and amplify relevant cognitive activity. In general, there are two approaches to extracting single-trial ERPs: manual and automatic. Using the manual method, researchers might spatially average single-trial EEG waveforms across electrodes in occipital, occipito-parietal, motor regions, etc. However, using an automatic method, researchers can apply machine learning approaches to identify linear spatial weightings to generate a single waveform, such that this single waveform contributes to the activity of many electrodes. One example is to find single-trial ERPs using a weighted map resulting from singular value decomposition (SVD) of the traditional trial-averaged ERPs at every electrode. This procedure has been successful in our previous work (Nunez et al., 2019; Ghaderi-Kangavari et al., 2022). In other work, a single-trial multivariate linear discriminant analysis (LDA) has been used to determine linear spatial weightings of the EEG electrodes for maximal discrimination of target (faces) and non-target (cars) trials during a simple categorization task (Philiastides et al., 2006; Diaz et al., 2017).

N200 Latencies Can Reflect Visual Encoding Time

Estimating the time of visual encoding for perceptual decision-making is challenging because it depends on distinct variables, for instance: uncertainty of stimuli, target selection, and figure-ground segregation (Lamme et al., 2002; Loughnane et al., 2016). Consequently, researchers have reported on the verity of time to encode visual stimulus, and this research has converged on a specific window of latency. We first review the previous literature on visual processing and then the specific literature related to decision-making.

Note that encoding for some emotional and threatening stimuli project to the amygdala nuclei, and thus visual encoding of these stimuli are expected to take a little time to make innate and unconscious reaction (Tamietto & De Gelder, 2010; Baars & Gage, 2013). In this work, we are concerned with stimuli where the mechanism of visual encoding is the transmission of information through the LGN of the thalamus to the primary visual cortex, and involves a different network to further process visual information (Baars & Gage, 2013; Hall & Hall, 2020). The visual path has a long way to go, and it takes a little longer than the auditory pathway. The C1 (60–80 ms), P100 (around 100 ms, also known as the P1), and N200 (120–180 ms, also known as the N1) are exogenous ERP waves over the visual cortex that are thought to reflect sensory stages of processing visual stimuli; such that the C1 wave is not manipulated by attention, and both P100 and N200 peaks were manipulated by attention and visual perceptual learning (Cobb & Dawson, 1960; Clark et al., 1994; Luck et al., 2000; Ahmadi et al., 2018). The N200 latency component between sessions correlates negatively with behavioral improvement in a texture discrimination task (Ahmadi et al., 2018). Di Russo et al. showed by studying ERPs and the combination of structural and functional magnetic resonance imaging (fMRI) that the spatio-temporal patterns of the calcarine sulcus, primary visual cortex, extrastriate and occipito-parietal activities were in the latency range of 80–225 ms in response to flash stimuli (Di Russo et al., 2003).

We define visual encoding time (VET) as the time between the onset of stimuli and the relative starting of the evidence accumulation process (Nunez et al., 2019). VET is thought to take place sequentially before, not in parallel to, the accumulation process (Weindel, 2021). This is not necessarily true for all cognitive components in decision-making; for instance, there is evidence to suggest that motor planning evolves concurrently with the evidence accumulation process (Dmochowski & Norcia, 2015; Servant et al., 2015, 2016; Verdonck et al., 2021). Researchers proposed that the process of VET can be completed from 150 ms to 225 after the appearance of stimulus (Thorpe et al., 1996; VanRullen & Thorpe, 2001; Nunez et al., 2019). Shadlen et al. demonstrate that the evidence accumulation from the lateral intraparietal area (LIP) begins at around 200 ms after the onset of random dot motion with difference phase coherence (Roitman & Shadlen, 2002; Kiani & Shadlen, 2009; Shadlen & Kiani, 2013). In addition, Thorpe et al. revealed that the visual processing demanded a go/no-go categorization task can be reached around 150 ms after the onset of stimulus (Thorpe et al., 1996). Furthermore, Nunez et al. found evidence that the latency of the negative N200 between 150 and 275 after stimulus onset in the occipito-parietal electrodes reflects the VET needed for figure-ground segregation before the accumulation of evidence (Nunez et al., 2019). Finally, Ghaderi-Kangavari et al. used hierarchical Bayesian models that included single-trial N200 latencies to identify the effects of spatial attention on perceptual decision-making and found evidence that perceptual decision-making manipulates both VET before evidence accumulation and other non-decision time process after or during evidence accumulation (Ghaderi-Kangavari et al., 2022).

Centro-parietal Positivities Can Reflect Evidence Accumulation

Analogous to well-known findings of invasive single cell recordings that identified markers of how animals accumulate information to make perceptual decisions (Shadlen & Newsome, 2001; Roitman & Shadlen, 2002; Gold & Shadlen, 2007; Shadlen & Kiani, 2013; Kiani & Shadlen, 2009; Jun et al., 2021), some researchers have shown that non-invasive EEG recordings can also describe how humans accumulate information towards a specific bound to make a decision (Kelly & O’Connell, 2013; O’Connell et al., 2012a, 2018). O’Connell et al. discovered an ERP signiture called the centro-parietal positivity (CPP) identifying a build-to-threshold decision variable (O’Connell et al., 2012a). They also clarify that the CPP evoked by gradual decreases in the contrast of stimuli is highly correlated to the classic P300 peak elicited by invariant contrast of targets. In addition, the P300/CPP dynamic taken from three electrodes centered on CPz, the standard site for the P300, can predict both timing and accuracy of perceptual decision-making in humans (O’Connell et al., 2012a; Kelly & O’Connell, 2013; McGovern et al., 2018). On the other hand, Loughnane et al. showed that a pair of early negative deflections at lateral occipito-temporal sites around 200 ms after stimulus appearance can influence the onset of the centro-parietal positivity (CPP) during a random dot motion task (Loughnane et al., 2016). In summary, researchers have shown that the CPP steadily increases at a certain rate and is assumed to reflect the integration of evidence to reach a particular peak at the time of response execution (O’Connell et al., 2012a; Kelly & O’Connell, 2013). It should be noted that the drift rate in drift-diffusion models (DDMs) is the mean rate of evidence accumulation. Therefore, the slope of the CPP can be assumed to be related to the drift rate (Nunez et al., 2022), which is line with previous findings (Ratcliff et al., 2009; Philiastides et al., 2014; van Ravenzwaaij et al., 2017).

Simulation-Based Approaches

Neuro-cognitive models that describe both brain and behavior could have complicated non-linear relationships between parameters. In order to obtain estimates (or full posterior distributions p(Θ|X)) of parameters of neurocognitive models, component likelihood functionsL(Θ|X) (or the probability density of the data given the parameters p(X|Θ)) can be derived to fit models using maximum likelihood estimation (MLE) or MCMC methods. However, in this paper, we will present plausible single-trial integrative joint models for which the component closed-form likelihoods are complicated, necessitating clever mathematical derivations to derive component likelihoods, if they are even tractable, i.e., possible to derive analytically.

Rejection-based approximate Bayesian computation (ABC) is an alternative to the calculation of likelihood functions with models only based on simulation (Csilléry et al., 2010; Turner & Van Zandt, 2012; Sunnåker et al., 2013). Rejection-based ABC algorithms estimate posterior distributions by taking proposal samples from a prior distribution \(\theta _{i} \sim p(\theta )\) and then generating an artificial dataset from the estimator model called the forward model \(x_{i} \sim p(x | \theta _{i})\) for xi = 1,…,N. If the artificial dataset is close enough to the observed dataset by a tolerance (𝜖), the sample (𝜃i) is chosen as a sample of the posterior (Csilléry et al., 2010; Klinger et al., 2018). One can use a sufficient statistic S(.) to summarize both artificial and observed data before comparing them (Palestro et al., 2018b). However, sufficient statistics are hard to obtain for complex models with intractable likelihoods, and using insufficient statistics may incur significant approximation error.

The pass/fail rule of rejection-based ABC is simple to implement but does not optimally use all simulated data, therefore kernel-based ABC algorithms can be an effective alternative (Turner & Sederberg, 2012). Moreover, synthetic likelihood methods and probability density approximations have been used as likelihood-free algorithms to fit mechanistic models to observed data (Wood, 2010; Turner & Sederberg, 2014; Palestro et al., 2018b). Both rejection-based methods need to include predetermined tolerance thresholds and the distributions of sufficient summary statistics, while synthetic likelihood methods and probability density approximation are computationally costly. Furthermore, these kinds of approaches have been identified as performing case-based inference which has a few drawbacks, such as expensive computations, inability to generate effective samples from posterior distributions, only approximating posteriors for specific data, and sensitivity to tolerance values.

Amortized Bayesian approaches are alternative methods to approximate joint posterior distributions, which can be applied quickly to new data. These methods also use simulated data for training. However, this training step is not necessary for each new dataset. For instance, some researchers have used masked autoregressive flows (Papamakarios et al., 2019) and probabilistic neural emulator networks (Lueckmann et al., 2019) to learn approximate likelihoods L(Θ|X) rather than instantaneous posterior distributions p(Θ|X). Moreover, Hermans et al. (2020) introduced a likelihood-to-evidence ratio estimator to compute an approximation of acceptance ratios in MCMC in order to draw posterior samples rather than evaluating the likelihood directly. Fengler et al. (2021) focused on two classes of architectures, multilayered perceptrons (MLPs), and convolutional neural networks (CNNs), to approximate likelihoods as well as estimate cognitive models with varying trial numbers. In this workflow by Fengler et al. (2021), two different posterior sampling methods, MCMC and importance sampling, are used for taking samples from posterior in each iteration. Estimating summary measures of the posteriors by constructing new artificial architectures for Bayesian inference can be useful for many research topics. These methods include as follows: fully convolutional neural networks as end-to-end architecture (Radev et al., 2020b), deep neural networks (Jiang et al., 2017), autoencoders (Albert et al., 2022), ABC random forests (Raynal et al., 2019), and different approaches to approximate likelihood and posteriors (Cranmer et al., 2020).

Radev et al. (2020a) have proposed a novel pathway to find posterior distributions using neural networks for globally amortized Bayesian inference. They proposed a fitting procedure with two neural networks: a summary network and an invertible neural network (INN). INNs are neural network architectures in which the mapping from input to output can be efficiently reversed (Ardizzone et al., 2018), while permutation-invariant summary networks are responsible for summarizing the independent and identically distributed (i.i.d.) observation datasets with a fixed-size vector automatically. The summarized data and the true posteriors are then transformed directly through an INN in the training phase into a simple latent space (e.g., Gaussian). This network is trained to learn simulated parameters, and then in the inference phase can then be inverted to estimate the joint (and marginal) posteriors. These networks are used to estimate posteriors in two main phases: a relatively expensive training phase and a very cheap inference phase (Radev et al., 2020a). Many of the models we propose in this paper fit with the procedure by (Radev et al., 2020a), although the models could also conceivably fit with other methods, such as that proposed by (Fengler et al., 2021) in the future.

Types of Joint Models

There are several strategies to link brain-behavior relationships in modeling (Turner et al., 2017; Palestro et al., 2018a). However, only two general strategies are relevant to our work. Directed and integrative models have been investigated to relate both unobserved parameters of behavior and the brain, and these models could either be employed for each participant or each experimental trial (see Fig. 2 to visualize modeling strategies relevant to our work).

Graphical models of a directed joint models, b a common integrative joint model, and c the single-trial integrative joint model. These graphical models represent approaches for linking EEG and behavioral data. These figures follow standard graphical model notation (Lee, 2014; Palestro et al., 2018b) with one new notation addition for c. Observed data are represented by shaded nodes, where node Z is the EEG data and node X is the behavioral data. Unobserved variables are represented by unshaded nodes. Nodes Λ are vectors of parameters that represent cognitive variables that describe both EEG and behavioral data. Nodes Ψ are vectors of computational parameters that only describe EEG data. Nodes Θ are vectors of cognitive parameters that only describe behavioral data. The double-bordered node in the directed model (a) refers to parameters that are not estimated and deterministic, such that their posterior distributions can be derived directly from posterior distributions of other parameters. The shaded double-bordered node in the single-trial integrative model (c) refers to parameters that are not necessarily estimable and are stochastic in simulation. It means that the posterior of the mentioned node must be estimated if we use closed-form likelihood approaches, but there is no need to estimate if we use a simulation-based approach. The plates that border the nodes represent replication over each trial i. Note that there are two differences between a and c: the changing direction of the arrow between Z and Λ, and the nature of the Λ node

In directed joint modeling, one assumes connections directly between EEG measures, denoted ϕ, and cognitive parameters, denoted 𝜃, that explain behavioral data (Turner et al., 2019). This connection is usually applied via a linking function. For instance, the linking function could be an embedded regression model. Note that a regression model the linking function allows EEG measures to be on any non-standardized scale for mapping from ϕ to 𝜃 (e.g., in the original scale of milliseconds for N200 latencies or micro-volts μV per second for CPP latencies). Directed neurocognitive models have been successfully used for studying the effect of visual attention, noise suppression, and spatial attention in perceptual decision-making tasks (Nunez et al., 2017, 2019; Ghaderi-Kangavari et al., 2022) as well as deployed to answer any other questions in related to the cognitive control of single-trial EEG in decision-making (Wiecki et al., 2013; Frank, 2015; Yau et al., 2021). Note that directed joint models can often produce better estimate parameter relationships between EEG measures and cognitive parameters than other methods because these models fit in a single step, as opposed to methods that fit cognitive models and linear regression sequentially (Ghaderi-Kangavari et al., 2021). However, while directed models are extremely useful for discovering the relationship between EEG data and cognition in exploratory research (Nunez et al., 2022), directed models can neither easily explain nor predict EEG data.

Integrative joint models describe, predict, and constrain both behavioral and EEG data simultaneously (Palestro et al., 2018a; Nunez et al., 2022). These models also allow us to fit models closer to the theoretical process models suspected to underlie both data types, as well as include computational parameters of EEG measures that are sometimes not necessarily tied to cognition. In this investigation, we focus on parameter recovery and robustness of novel integrative neurocognitive models to predict data on individual experimental trials. As a result, the models make both EEG measures (N200 and CPP) and behavioral data (RT and accuracy) explainable and predictable at the single-trial level.

Integrative model specification and formalization can explicitly reflect our understanding of cognition. So, different mathematical models can represent different theories of cognitive processes. Thus, another reason to model neurocognitive theory is to help understand the possible relationships between the brain, cognition, and behavior that can then be compared to data and tested in experimentation (Guest and Martin, 2021; Nunez et al., 2022). Therefore, the models presented here can help researchers who want to understand human cognition using EEG signals and behavioral data. Specifically, researchers can consider these models in terms of underlying neurocognitive theory, find parameter estimates of these models and compare them across experimental conditions and participants, and also compare models to find evidence for the most reasonable theory. We also believe the current approaches will be highly beneficial when applied to animal studies and when extended to other electrophysiology (e.g., single-unit and LFP data). Extensions of these models and simulation-based model fitting procedures can be easily applied in the future.

Mathematical Models for Neurocognitive Theory

We propose several neurocognitive models that reflect certain hypotheses and can be used to analyze data and answer research questions. These types of models can be considered integrative approaches to joint modeling (Turner et al., 2019). Specifically, they provide an architecture to apply behavioral and neural responses simultaneously at the single-trial level. These models also serve as examples and modeling architecture for psychological, cognitive, and neuroscience researchers who plan to construct explicit statistical models to connect data with theory.

From a methodological point of view, after formalization of these models, we implement them as simulation for a range of realistic parameter values (draws from prior distributions), and then submit these simulations through the BayesFlow Python pipeline to train INNs that yield samples from the parameter’s posterior distributions given any observed data set. We then test the robustness of the model fitting. We also provide saved fitted models, e.g., checkpoints in BayesFlow, of each trained model to be applied in a fast and single step on new data.

A General Framework for Integrative Joint Models

As mentioned before, in the following we propose a few models particular to the study of decision-making using single-trial EEG, response times, and choice data. However, our proposed models in this paper could be considered a part of a general framework to describe and predict single-trial EEG/behavior relationships. These models can be considered true integrative models as previously discussed. An important point to note here is that while known joint likelihood functions and approximations exist for choice-RTs (Tuerlinckx, 2004; Wagenmakers et al., 2007; Navarro & Fuss, 2009), and common likelihood functions exist that can be applied to single-trial EEG data (such as the Normal distribution), known joint likelihoods that describe all three data types on single trials do not exist. In such cases, joint likelihoods are likely necessary to estimate the parameters of these models using MCMC algorithms. However, the primary model-fitting technique we used for this work, BayesFlow, depends only upon being able to simulate the model. We can therefore express each model as a series of prior distributions and simulation equations from conditional probability distributions.

The general form to describe (joint) behavioral data X and neural data Z with shared latent single-trial cognitive parameters Λ is the following, with i being the index for one experimental trial, prior indicating a prior distribution, and sim indicating a simulation block:

where \(\mathcal {K}\) is the joint prior probability density over all non-single trial parameters, \({\mathscr{H}}\) is the marginal probability density function describing latent single-trial cognitive parameters, \(\mathcal {F}\) is the probability density function of behavior conditional on latent single-trial parameters, and \(\mathcal {G}\) is the probability density function of EEG conditional on latent single-trial parameters. Note that the three simulation blocks could be considered as simulations from one joint likelihood \((\mathbf {X}_{i},\mathbf {Z}_{i}) \sim \mathcal {J}(\mathbf {\Gamma },\mathbf {\Theta }, \mathbf {\Psi })\). However, it is often useful to think about three simulation blocks that share parameters instead, for example, to connect non-joint models developed in mathematical psychology and cognitive neuroscience. Here it is worth considering that behavioral parameters Θ, EEG measurement parameters Ψ, and shared cognitive parameters Γ can be estimated by BayesFlow under certain conditions for identified models, as we show later in this paper. Meanwhile, the issue to be highlighted here is that single-trial cognitive parameters Λi often cannot be estimated. However, using BayesFlow, estimation of Λi is not necessary because we can simulate Λi using the Γ parameters without needing to directly estimate Λi nor treating Λi as observed data.

These models can be fit to data using MCMC algorithms under certain conditions. The true joint likelihood of (Xi,Zi) must either be solved analytically by integrating out the so-called nuisance parameters Λ or approximated via estimation algorithms (Fengler et al., 2021) before sampling. Note however that sampling statements similar to the equations above written in programs such as JAGS (Plummer, 2003) and Stan (Carpenter et al., 2017), programs that fit MCMC models from a language of distributional sampling statements, will attempt to find posterior distributions for the nuisance parameters Λi for every trial i. For instance, a similar model with like lihoods, priors, and hyper priors is fundamentally different in that the density \({\mathscr{H}}\) is no longer assumed as part of the assumed model and instead reflects a prior belief about each single-trial parameter:

These similar models with priors for every trial do not easily converge to solutions due to a large number of parameters compared to data observations. Note also that the prior shape for single-trial parameters Λi in these models are influenced both by the functional form of \({\mathscr{H}}\) as well as the uncertainty of the hyperparameters Γ, while the models above have only an assumed probability density function \({\mathscr{H}}\) as part of the assumed joint density. Therefore, these are similar, but different, models (Gelman et al., 2014; Merkle et al., 2019).

As a consequence, a fixed probability distribution \({\mathscr{H}}\) must often be assumed that cannot result in a new hierarchical posterior distribution for the nuisance parameters Λi on every trial. This could be considered analogous to certain problems in decision-making models of behavior. For instance, a normal distribution for trial level drift rates δi is often assumed in DDMs (Ratcliff et al., 2016). Single-trial drift rates δi as nuisance parameters in this example are analogous to the single-trial Λi nuisance parameters in our framework. In order to estimate across-trial drift-rate variability parameters η with MCMC (and many other parameter estimation techniques), the trial-specific drift-rate parameter δi must be integrated out of the likelihood function, instead of estimating posterior distributions for each trial’s drift rate (Tuerlinckx, 2004; Ratcliff et al., 2016; Shinn et al., 2020). In these appropriate MCMC procedures and BayesFlow, single-trial drift rates are not estimated for every trial. However, integrating out this parameter is not necessary with BayesFlow because the entire model is instantiated in simulation.

Another similar model framework would be one in which there is no nuisance parameter Λi that varies over single trials, but that both EEG and behavioral model likelihoods (\(\mathcal {F}\) and \(\mathcal {G}\)) share some common parameters Γ and both predict single trial data. These are models that can be fit in using MCMC procedures, and similar joint models of EEG and behavioral data have been proposed (Nunez et al., 2022):

However, the key difference between this model framework and our proposed model framework is that the single-trial data is completely independent. That is, the same parameter estimates will result from a random permutation of the single-trial index i for EEG data Z if the single-trial index of behavioral data X remains the same. This essentially implies that the EEG data and behavioral data in these traditional integrative models are not assumed to be related on single-trials. This is not true of our proposed models which share a joint (estimated) likelihood on the single-trial level. A random permutation of EEG data will result in different parameter estimates in our framework. We will show later that these parameter estimates are intuitive in our specific models, and that the true relationship between behavioral data X and EEG data Z can also be assessed with our modeling framework.

Assuming Two Sources of Variance in Single-Trial EEG

In directed models, it is intrinsically assumed that the independent variable, the EEG measure Z, is a perfect descriptor of the true neural signal (see Fig. 2). However, for most electrophysiology and brain imaging, a perfect measure of the brain is unrealistic. This is especially true for EEG where derived measures of EEG are expected to be influenced by muscle and electrical artifacts, even when the measure of EEG is somewhat robust to artifact (Nunez et al., 2016). While other forms of integrated neurocognitive models could be beneficial for studying human cognition (see Appendix), the key benefit of our frameworks is that these models contain at least one source of measurement noise for the EEG measures, σ2, as well as variance in the underlying cognition that can change across trials s2. By measurement noise, we mean the combination of electrical noise, artifacts due to EMG, and unrelated cognitive processes embedded in all measures of EEG (Whitham et al., 2007; Nunez et al., 2016). Measurement noise is especially relevant for EEG on single trials (Bridwell et al., 2018) because we are often not using measures that are robust to EEG artifacts, like trial-averaged ERPs (although even trial-averaged ERPs are not completely robust to artifact either) (Nunez et al., 2016). Note also that preprocessing steps for EEG measures often have many degrees of freedom (Clayson et al., 2021), and therefore models should provide some quantification of the amount of relevant cognition described by single-trial EEG measures compared to the amount of extraneous information.

One would expect that both sources of variance, measurement noise and variance related to cognition, could contribute to the single-trial EEG. In our models, we operationalize the sum of these two sources of variance (e.g., (γ2s2 + σ2) in model 2) to describe the variance in the observed EEG measure for every trial i. However, only the variance in the underlying cognition (e.g., s2 in model 2) also describes the behavioral data. Therefore, an important property of these models is that they can assess the amount of variance in single-trial EEG related to cognition, given by the fraction of variance r. As a specific example, in model 2 (discussed below), the fraction of variance in EEG related to cognition is r = (γ2s2/(γ2s2 + σ2)). We will discuss further assessment of these models below. Note that the exact equation for the fraction of variance varies across models (e.g. r = (s2/(s2 + σ2)) in model 1 and r = (γ2η2/(γ2η2 + σ2)) in model 8.

The Base Evidence Accumulation Process of All New Models

The base processes that describe a portion of behavior and EEG data in our models are drift-diffusion models (DDMs) (Ratcliff, 1978; Ratcliff & Rouder, 1998; Ratcliff & McKoon, 2008). However, this framework can easily be extended with various other decision models (e.g., Usher & McClelland, 2004, Brown & Heathcote, 2008, Hawkins et al., 2015, Voss et al., 2019, van Ravenzwaaij et al., 2020, Hawkins & Heathcote, 2021), especially when using BayesFlow, because the base DDM can be replaced with any decision-making process that can be simulated. DDMs presume that participants integrate continuous information during choice tasks via a Wiener process (i.e., Brownian motion), typically for one choice over another or a correct over incorrect response. Once enough evidence is acquired, by passing one of two boundaries, a decision is made. This is one of many classes of ‘first pass time distributions’ that share the same boundary crossing properties, such as linear ballistic accumulators (LBAs) (Brown & Heathcote, 2008). Mathematically, this flowing evidence accumulation is assumed to be a diffusion process which can be represented as:

and a form of discrete approximation as a random walk process with a small size time scale for use in simulation in digital computers is as follows:

where \({\Delta } t \rightarrow 0\) is a very small time scale (5 ms or 1 ms in our simulations) that approximates an infinitesimal time step, Xt is the diffusion state, dWt represents the independent increment of the Wiener process, e is noise generated from the standard normal distribution \(\mathcal {N}(0,1)\), the δ parameter is drift rate, and the ς parameter is instantaneous variance (scaling parameter). Note that in simulation-based model fitting, we use 5 ms to approximate the very small time scale Δt. We experimented with using 1 ms to see if this affected model fitting performance, but it did not.

Standard DDMs have four major parameters. The first parameter is the amount of evidence required to make a decision, which is denoted by α. Psychologically, when this parameter is changed, it can index a speed-accuracy trade-off (Ratcliff & McKoon, 2008; Hanks et al., 2014). The second parameter is the drift rate of the diffusion process. It is the mean rate of evidence accumulation within a trial which is denoted by δ, and psychologically, it is determined by the quality of the stimulus. The third parameter β is the start point of the evidence accumulation path within a trial, shown to empirically reflect bias in a decision (Voss et al., 2004; Bowen et al., 2016). The fourth parameter is the non-decision time. It is often assumed to be only the summation of encoding and execution time (Ratcliff, 1978; Voss et al., 2015), although this assumption has rarely been tested (Ghaderi-Kangavari et al., 2022). Total non-decision time is denoted by τ. One additional parameter is sometimes estimated (Nunez et al., 2015, 2017), the instantaneous variance within a trial, “diffusion coefficient” ς. However, we fixed ς = 1 for all parameter recoveries.

Some integral formations have been derived to capture intrinsic trial-to-trial variability of the cognitive parameters in DDMs. However, only certain model likelihoods have known integral solutions. For instance, there is a an exact solution to estimate the four main variables (δ, α, β, τ), in addition to the trial-to-trial variance in the drift rate η. This is achieved by assuming a normal distribution of the nuisance parameter and integrating out the nuisance parameter (see Tuerlinckx 2004; Voss et al., 2015, for more information). Note however that in the simulation approach to fitting models using BayesFlow, it is acutely convenient to add across-trial variability parameters.

The General Structure of All New Single-Trial Integrative Models

Figure 3 provides a graphical description of the overview of the example neurocognitive models, including the relationship and difference between them, in the paper. Schematically, we use only the simulation statements to describe the models. However, each model is defined by both priors (listed below) and implicit likelihoods derived from the simulation statements. A common sampling statement is given by \(r_{i}, x_{i} \sim DDM(\lambda _{i}, \mathbf {\Theta } )\) which refers to a drift-diffusion model with single-trial nuisance parameter λi (either single-trial non-decision times τ(e)i or single-trial drift rates δi) and additional parameters Θ. The specific parameters will be defined in each sampling statement. Here, ri is the response time and xi is accuracy, coded with 1 for correct response and − 1 for incorrect response. Also, zi denotes the EEG measure in each model. Note that x, r, and z change on each trial i and for each participant.

Our methods here could be considered extensions of models by Ratcliff and others (Ratcliff et al., 2016) that allow trial-to-trial variability in the cognitive model parameters. These models are also similar to previously published directed models in which the single-trial EEG data describes trial-to-trial variability in the model parameters through embedded linear linking functions to cognitive parameters (Frank, 2015; Nunez et al., 2017; Ghaderi-Kangavari et al., 2022). However, the models proposed here allow some of the noise in the EEG to be described by measurement noise unrelated to behavior, thus improving the ultimate reflection of the true process and improving the estimation of single-trial behavior and EEG. Note that estimating the trial-to-trial variability parameters of DDMs is difficult with MCMC methods (Boehm et al., 2018). Using the current neurocognitive models and deep learning approximations of posteriors, parameters encoding across-trial variability of non-decision time and drift rate can be recovered well and conveniently.

We present specific models to represent specific hypotheses in the neurocognitive decision-making literature. However, many of our models could also be considered extensions of simple linear regressions on single-trial nuisance parameters. For instance, if we consider λi to be a specific nuisance cognitive parameter of a DDM that changes on each trial i that describes both choices xi and response times ri, then we can assume that the EEG measures zi come from linear regression with the nuisance parameter as the predictor variable. That is \(z_{i} \stackrel {\text {\textit {sim}}}{\sim } \mathcal {N}(\gamma \cdot \lambda _{i} + \rho , \sigma ^{2})\), where γ is the effect of the single-trial cognitive parameter on EEG, ρ is the residual mean cognitive variable unexplained by EEG, and σ2 is the measurement noise in EEG unexplained by this cognitive variable. Note that in some models we have performed parameter transformations and reordering of variables to reflect hypotheses in the neurocognitive literature. Also note that in some models γ = 1, such as in the class of model 1 which assumes single-trial N200 latencies are described by an additive component of non-decision times. While in some models ρ = 0, such as in model 8 which describes single-trial CPP slopes as scaled descriptors of drift rates. Unless otherwise specified as fixed, all parameters on the right side of distributional statements are free model parameters to be estimated.

Basic Single-Trial Integrative Models That Describe N200 Latencies

One idea, which has been used in our recent work (Nunez et al., 2019; Ghaderi-Kangavari et al., 2022), is that N200 peak-latencies can separate measures of visual encoding time (VET), τe from other non-accumulation times during decision making, e.g., motor encoding time (MET), τm. The following models were designed to test and use this idea with N200 latencies as the EEG data, although the models could also be used with other EEG data types. We will consider the separation of VET and MET in the first set of new models, such that they do not depend upon the assumption that single-trial N200s exactly reflect the onset of VET but are instead driven by VET with measurement noise.

The first, most simple, integrative joint model, model 1a, adds two sources of variance: measurement noise of EEG measures σ2 and variance related to non-decision time on single trials \(s_{(\tau )}^{2}\) to a DDM framework. This model assumes that one source of across-trial variance in non-decision time is contained in an EEG measure and that this source of variance in non-decision time is additive to fixed non-decision time across trials. For instance, we could use this model if we presume that the sources of non-decision time variance arise from the variance of visual encoding time, which is also reflected in N200 latencies, and not other sources of variance. However, note that this model is more general than this specific case:

Note that we assume normal distributions for both the EEG generation from single-trial cognitive variables as well as the generation of across-trial variance in non-decision time itself. We use normal distributions to be most flexible for various EEG inputs, and not because we think the normal distribution is the true data generation process of N200 latencies. We test these assumptions in simulation and with real data, see “Robustness to Model Misspecification and Fitting the Proposed Single-Trial Neurocognitive Models on Experimental Data.” It is important to observe that the mean non-decision time across-trials is τ = μ(e) + τ(m), where μ(e) reflects the mean EEG measure and τ(m) reflects the residual non-decision time after subtracting the mean EEG measure across trials. The key point here is that the posteriors of parameters reflecting cognitive-variability across trials \(s_{(\tau )}^{2}\) and measurement noise across trials σ2, and the percentage variance resulting from a transformation of these measures, are more informative about the true relationship between EEG and behavior than the mean parameters (see discussion below). In this particular additive model of non-decision time, the mean parameter μ(e) reflects the mean EEG measure and the residual parameter is just the mean non-decision time minus the mean EEG measure: τ(m) = τ − μ(e).

A specific example is the case of the N200 latencies reflecting visual encoding time (VET) (Nunez et al., 2019). In this case, we might expect μ(e) to reflect the mean visual encoding time (and N200 latencies) across trials, while the residual non-decision time τ(m) would reflect the motor execution time (MET). Thus, τ(e)i would reflect the fact that visual encoding time depends on both trials and individual levels. The residual non-decision time τ(m) would reflect MET that does not change trial-to-trial.

It should be noted that the above formulas can be transformed by instead parameterizing the models with a single-trial non-decision time τi = τ(e)i + τ(m). This model, labeled model 1b has the following form:

As it turns out, model 1b is just a more specific case of the embedded linear regressions framework presented in “The General Structure of All New Single-Trial Integrative Models,” where γ = 1. Note we do not consider model 1b to be truly different from model 1a. However, we tested whether parameter recovery would be different between the two parameterizations.

Because of the positive quantity of N200 latencies, we should confine them to a specific time window, for instance, a window from 50 to 500 ms. Therefore, we also constructed a neurocognitive model based on model 1b to evaluate whether using a truncated normal distribution \(\mathcal {T}\mathcal {N}(.)\) lies within the interval (a,b), as well as a uniform distribution \(\mathcal {U}\)(.) for non-decision time variability, can be identifiable well. The following equations reflect model 1c:

where the mean and variance of the uniform distribution in Eq. (8c) are the same as the mean and variance in Eq. (7c) in model 1b.

An obvious extension to model 1a is one in which the component of non-decision time that changes trial-to-trial τ(e)i describes a portion of the EEG variance up to a certain scalar value γ, called an “effect” parameter. We therefore propose model 2 which changes only the second equation from equation set Eq. (6a) to \(z_{i} \stackrel {\text {\textit {sim}}}{\sim } \mathcal {N}(\gamma \cdot \tau _{(e)i}, \sigma ^{2})\). In previous work, Nunez et al. (Nunez et al., 2019) discovered some evidence of a 1 to 1 ms (albeit noisy) relationship between non-decision time and N200 latencies. This evidence was, in part, shown using Savage-Dickey density ratios (Wagenmakers et al., 2010) to estimate Bayes Factors (van Ravenzwaaij & Etz, 2021) that compare the hypothesis of a slope of 1 in the linear link function of a directed neurocognitive model. In this new model, we also use the Savage-Dickey density ratio to test the relationship between N200 latencies and non-decision time by again testing whether γ = 1.

Extensions of Models That Describe N200 Latencies

Researchers often use cut-off values to truncate behavioral data in order to remove so-called contaminant data. For instance, for decision-making, these include slow outlier response times (RTs) and fast-guess responses (Vandekerckhove & Tuerlinckx, 2007; Ratcliff & Kang, 2021). Removing RTs outside a window from around 150 to 3000 ms has been frequently deployed, as well as using an exponentially weighted moving average to find an optimal lower cutoff (Vandekerckhove & Tuerlinckx, 2007). Ratcliff and Tuerlinckx proposed a mixture distribution model between standard DDM and a contaminant lapse process described by a uniform distribution to estimate a certain proportion of contaminant data (Ratcliff & Tuerlinckx, 2002). Recently, to describe response times due to fast guesses, Ratcliff and Kang have proposed a mixture of DDM and a normal distribution that describe some properties of observed choice-RT data that could not be easily described by DDMs alone (Rafiei & Rahnev, 2021; Ratcliff & Kang, 2021). Therefore, in neurocognitive models, we may would like to estimate automatically the amount of contaminated data, including very fast responses (e.g., due to lack of attention), very slow responses (e.g., due to mind wandering), and some other possible contaminant data that arises from the research environment. As an example, we propose a mixture model of the neurocognitive model and uniform distribution (\(\mathcal {U}\)), labeled model 3, with a new certain parameter 𝜃(l) describing the probability of a lapse process, and the resulting eight cognitive/computational parameters:

where max(r) is the maximum observed response time over a vector of response times r. Note that we have encoded both a Bernoulli(0.5) choice (with the mathematical convenience of encoding each choice x with a − 1 and 1 and then multiplying by the response time) as well as a uniform \(\mathcal {U}\) response time from 0 ms to max(r). The 𝜃 parameter represents the probability of a lapse response and exists only in the [0,1] interval.

Trial-averaged ERPs are somewhat robust to contaminants since averaging can increase signal proportions and decrease noise. However, single-trial EEG analysis often relies on the development of specific signal processing techniques (e.g., independent component analysis) (Shlens, 2014). Thus, single-trial EEG data could be contaminated with external noise (equipment and environmental sources) and internal noise (head motion, eye blinking, etc.). To handle possible contaminated data in EEG measures, that is not due to simple measurement noise as encoded by σ parameters in previous models, we may wish to find the percentage of data that is described by a relationship to EEG. Thus, to manage different sources of variance in single-trial N200 latencies, the following neurocognitive model, labeled Model 4a, is proposed which has seven free parameters:

where τ is non-decision time parameter (visual encoding time and motor execution time), \(\sigma ^{2}_{(e)}\) is the measurement noise in z (N200 latencies) when observed values arise from the neurocognitive model, \(s^{2}_{(\tau )}\) is across-trial variance in non-decision time when observed values arise from the neurocognitive model, and v and \(\sigma ^{2}_{(v)}\) parameters are the mean and variance of z (N200 latencies) when observed values are not derived from the neurocognitive model.

As it turns out, the 𝜃 parameter can detect the existence of the relationship between the brain and behavior, measuring the proportion of experimental trials that come from the neurocognitive model and what proportion that cannot be examined by the neurocognitive model. In fact, model 4a represents a new approach for comparing two competing models across experimental trials. In this case, a neurocognitive model versus two simple models: a DDM that describes the behavioral data ri,xi and normal distribution of EEG measures zi. That is, the first mixture is model 1a as a neurocognitive model and another mixture is the following model as a cognitive model:

We used model 4a as the joint mixture model to see the amount of actual relationship between neural data and behavior across trials, as discussed below. This model has eleven parameters, but for better recovery, we reduced the number of parameters to nine parameters. In model 1a, the variance of the EEG measures zi in the first mixture is \(s^{2}_{(m)} + \sigma ^{2}_{(e)}\) while the variance of the EEG measures in the second mixture is an independent parameter, \(\sigma ^{2}_{(v)}\). Therefore, we removed a free parameter by forcing the variance of the second mixture to be the same as the first. We similarly forced the non-decision times and mean of EEG measures to be the same in both mixture distributions. The equations of the reduced model 4b are as follows:

Models with Collapsing Boundaries

Drift-diffusion models with collapsing boundaries encode participant behavior such that participants require less and less amount of evidence to trigger a decision as time passes (Drugowitsch et al., 2012; Hawkins et al., 2015; Forstmann et al., 2016; Ratcliff et al., 2016). Mathematical models with collapsing boundaries have gained remarkable attention in some experimental and theoretical accounts. Such models outperform the fixed boundary DDM in certain circumstances and implement optimal decision-making (Ratcliff et al., 2016; Drugowitsch et al., 2012; Evans et al., 2020a). Other evidence for the accuracy of these models has been mixed in the literature. Hawkins et al. (Hawkins et al., 2015) consider both types of models for humans and nonhuman primates over three different paradigms and then by applying using model-selection methods showed that fixed boundary models are generally better than collapsing boundaries, although there is occasional evidence for a collapsing-bound DDM. These authors also concluded that models with collapsing boundaries are not a descriptive model for the majority of human participants, while they can be useful for interpreting the underlying components of non-human cognition. Moreover, Voskuilen et al. (Voskuilen et al., 2016) found that the fixed boundary model was superior to the collapsing boundary model for all data obtained from numerosity discrimination experiments and motion discrimination experiments in human subjects. Voss et al. (Voss et al., 2019) compared six distinct types of accumulator models based on Wiener diffusion, Cauchy-flight, and Lévy-flight models in four number-letter classification tasks to examine if decision bounds can be dynamic over times. They found some evidence in favor of collapsing boundary rather than a fixed boundary. Also, Evans et al. (Evans et al., 2020b) evaluated five different collapsing boundary models based on DDM, linear ballistic accumulator model (LBA), and urgency gating model and then found out the linear collapsing boundary for DDM can fit better in caution/urgency modulation as well as an urgency-gating model with a leakage process. It has also been shown that the drift-diffusion model with a time-variant boundary can well explain both behavioral and neural electrophysiological data in non-human primates (Roitman & Shadlen, 2002; Ditterich, 2006). Smith et al. (Smith & Ratcliff, 2022) showed that the standard DDM was the best model for conditions with constant stimulus and time-variant boundary models were similar to the standard DDM on changing-stimulus conditions.

Most of the findings support the idea that models with collapsing boundaries are optimal for animal’s cognition, in accordance with the idea that adept animals, which are trained on fixed juice feedback, adjust their behavior with urgency (Smith & Ratcliff, 2022). As a result, regardless of whether collapsing boundaries are optimal for humans and some paradigms or not, we deploy the use of collapsing boundaries and analyze their parameter recoveries as a generalization of our neurocognitive models.

We expand the previous neurocognitive models to include collapsing boundary models that allow for decreasing evidence used to make a decision over time. These models are the same as model 1a with collapsing instead of static boundaries. Specifically, we implemented two types of collapsing boundary models: linear and Weibull functions (Voss et al., 2019; Evans et al., 2020b). These are labeled model 5, with seven free parameters, and model 6, also with seven free parameters, respectively (Evans et al., 2020b; Voss et al., 2019).

The linear collapsing boundary is instantiated as follows:

where aslope is the slope of the linear collapsing boundary, α is the initial boundary value before any time elapses, u(t) is the upper threshold, and l(t) is the lower threshold.

A scaled Weibull cumulative distribution function describes the collapsing boundaries in model 6:

where u(t) is the upper threshold and l(t) is the lower threshold. The parameter k indicates the shape of collapse (early vs. late). Also, ω is the scale parameter and shows the onset at which the collapse begins, d encodes the amount of collapse, and finally α again represents the initial boundary before any time elapses. We fixed parameters of k = 3 and d = − 1.

Single-Trial Integrative Models That Describe CPP Slopes

In this section, we propose an integrative neurocognitive model that predicts CPP slope is related to the rate of accumulation evidence variable on single trials. The following model 7 assumed that trial-to-trial variability of drift rate parameters at the model level comes from CPP variance at ERPs signals as well as there is source noise of CPP slope which is denoted by the parameter c as follows:

In one final model, we impose a γ = 1 effect of CPP slope, changing the second equation of Eq. (17a). This results in \(c_{i} \sim \mathcal {N}(\gamma \cdot \delta _{i}, \sigma ^{2})\), i.e., model 8. Note that both models are specific forms of linear regressions presented in “The General Structure of All New Single-Trial Integrative Models,” with trial-to-trial drift rate δi as the nuisance variable λi. In the models of the previous sections, the nuisance parameter was trial-to-trial non-decision time τi or an additive non-decision time component τ(e)i for the same fit.

Traditional Integrative Models That Describe N200 Latencies

As a comparison to our models built in our new single-trial integrative framework, we also fit some similar traditional integrative models using BayesFlow. The models in this section predict single-trial behavior and EEG data but are not described by (estimated) joint likelihoods. Therefore, a random permutation of one of the data types will result in the same model fit. This type of model is shown in Fig. 2b. In these models, we replace the \(\stackrel {\text {\textit {sim}}}{\sim }\) notation with the \(\sim \) notation because these models can all be fit using MCMC algorithms and do not need to be directly simulated for model inference. Thus, they can be directly written in languages such as Stan for specific MCMC algorithms. However, we used BayesFlow for fitting the traditional integrative models in order to directly compare to previously described single-trial integrative models that were fit using BayesFlow.

The first model is analogous to model 1a. This non-single-trial integrative neurocognitive model has six parameters, labeled model 9. This model assumes that both behavioral and brain data are independent at the level of single trials, but it assumes that a non-decision time component τ(e) (for instance VET) describes EEG on single trials. Mathematically, the model equations are as follows:

As already pointed out, integrative neurocognitive models can be divided into two groups based on how two neural and behavior modalities constrain each other (see Fig. 2): first, those models that have shared parameters on a group level but predict independent behavior and neural data on the single-trial level, and second, those models that produce dependent data on the single-trial level. For instance, model 9 has two independent relations which constrain the non-decision parameter. It means that both sets of random variables of Xi = {ri,xi} and Zi = {zi} are independent on single trials. Therefore, by permutation of zi across trials, the same posteriors will be estimated for all parameters.

Meanwhile, there is another issue that should be highlighted here. Actually, another problem with model 9 is that it cannot provide any inference about the relationship between EEG measures and behavior. This is because τ(e) provides an estimate of the mean EEG measure that is not constrained at all by the non-decision time τ = τ(e) + τ(m), because the estimate of τ(m) will just be the estimate of non-decision time τ from behavior while τ(m) = τ − τ(e). Note that in the class of model 1, we can judge the relationship of EEG to behavior by exploring the posterior distributions of \(s_{\tau }^{2} / (s_{\tau }^{2} + \sigma ^{2})\), which does not exist in model 9.

Now, consider the following model, labeled model 10, with an additional parameter γ, ostensibly to solve the aforementioned problem:

When proposing a new neurocognitive model, it is important to note that the model’s parameters must be constrained by data in order to draw inference about the parameters. In the above model, it is obvious that this model is not a single-trial model; thus, the random variables ri,xi and the random variable zi are independent statistically. From the neural data zi, we can estimate both the mean (μ) and the variance (σ2 ) of the normal distribution, and on the other hand, by the random variables ri,xi, we can estimate non-decision time from DDM, which is the sum of τ(e) and τ(m). Thereby, the following two relations are introduced:

It should be noted that there are three variables and two equations which make it impossible for each parameter to be recovered perfectly. Figure 24 is the result of the parameter recovery of model 10. As we expected, the latent parameters can not be recovered and so this model cannot inform us at all about cognition nor the brain-behavior relationship.

Traditional Integrative Models That Describe CPP Latencies

To build a traditional neurocognitive model with CPP slope, we used the following model, model 11, to constrain the drift rate by CPP slope for each participant. In fact, CPP is denoted by parameter ci as follows:

We also tested the following neurocognitive model that predicts CPP slopes on single trials are described by both the mean drift rate and trial-to-trial variance in drift rate. Therefore, we use the following model, model 12:

The above model assumes that single-trial CPPs are explained by both the mean and variability of the drift rate, but single-trial CPPs and choice-RTs are still independent. Remember that both model 11 and model 12 are traditional integrative models in that they predict the same parameters for a random permutation of either the choice-RT pair (ri,xi) or CPP slopes ci with respect to the other data.

Directed Joint Models That Incorporate N200 Latencies

Directed joint models are another approach to constrain both behavioral data at the single-trial level. Recently, a new linear formation of τi = τ(e)i + τ(m) = λ ⋅ zi + τ(m) has been proposed to describe behavioral data directly by both cognitive parameters and neural data (Nunez et al., 2019; Ghaderi-Kangavari et al., 2022). Although note that these models do not describe neural data. More precisely, these approaches are able to make predictions of behavioral data with only neural activity, but cannot easily predict neural data from behavioral data. Parameter τ(m) is residual of the linear formation, assumed to represent MET (Nunez et al., 2019; Ghaderi-Kangavari et al., 2022), λ is the effect of N200 latencies on single-trial non-decision times and the zi parameter represents the N200 negative peak latencies for each trial, such that λ ⋅ zi is assumed to represent VET. This model has seven (non-nuisance) parameters, labeled model 13:

where μ(z) is the mean of N200 latencies.

In the above neurocognitive model, the single-trial non-decision time variability is related to the N200 latencies single-trial variability. This means that the source of the non-decision time variability is assumed to come only from variability in a noiseless neural measure. Therefore, this model is only more appropriate than model 2 if we expect the EEG measure z to reflect the true cognitive process without noise. To calculate the variability of τ, consider the following equations:

Therefore, in this model, the trial-to-trial variability of non-decision time is given by s(τ) = λ2 ⋅ σ2, which is also true of model 2 above.

Priors

We assume “weakly informative” prior distributions for all models (Gelman et al., 2014). For model 1a, model 1b, and model 1c, we assume uniform distributions as the following the prior distributions:

It is important to note here that the parameters shared by the models presented here had the same prior distributions. For instance, model 2 has an additional parameter γ with prior distribution \(\gamma \sim \mathcal {U} (0,3)\). Other parameters, which are similar to parameters in the class of model 1, have the same prior distributions. For the sake of brevity, for the following models, we do not mention prior distributions that were previously mentioned in a previous models. Model 3 contains a prior distribution of the lapse parameter as \(\theta _{(l)} \sim \mathcal {U} (0,0.3)\). Other parameters, which are similar to parameters in the class of model 1, have the same prior distributions. Model 4a has additional prior distributions \(\tau \sim \mathcal {U} (0.1,1)\), \(\sigma _{(v)} \sim \mathcal {U} (0,0.3)\), \(\sigma _{(e)} \sim \mathcal {U} (0,0.3)\), \(v \sim \mathcal {U} (0.04,0.4)\), and \(\theta _{(m)} \sim \mathcal {U} (0,1)\). Also, model 4b has additional a prior distribution \(\theta _{(m)} \sim \mathcal {U} (0,1)\). On the other hand, model 5 contains a prior distribution of \(a_{\text {slope}} \sim \mathcal {U} (0.01,0.9)\). For model 6, it contains a prior distribution of \(\omega \sim \mathcal {U} (0.5,4)\). For model 7, we assume uniform distributions as the following the prior distributions:

Model 8 has an additional prior of \(\gamma \sim \mathcal {U}(-3, 3)\). Also, for this model has the parameter β = .5. Other parameters, which are similar to parameters in model 7, have the same prior distributions. Note that we used a wider prior for the parameter γ in model 8 compared to the range of γ in model 2. This is because negative CPP slopes are reported in the literature (e.g., see van Vugt et al., 2019) and thus negative γ estimates were assumed to be possible.

For model 9, we also assume uniform distributions as the following the prior distributions:

Model 10 has an additional prior of \(\gamma \sim \mathcal {U}(.1, 4)\). Finally, by viewing Figs. 25, 26, and 27 in the Appendix, the range of parameters will be clear.

Recovering Parameters from Simulated Models

Results of parameter recovery reveal whether the proposed models reliably recover true parameters. We first tested whether our model fitting procedure can extract reliable parameter estimates for the data given that the data is generated from the same model. Then we tested whether the some models are robust to slight model misspecification, another important test of models and model fitting procedures before being applied to real experimental data. These procedures indicate whether the estimation method and the neurocognitive assumptions can successfully apply to real data.

We used point parameter recovery to assess how well the posterior mean of a proposed neurocognitive model resembles data-generating parameters. In Bayesian statistics, parameter recovery is conceptually beyond point recovery, so we also report the uncertainty of parameter approximations parameters as well as coverage percentages of free posterior.

Training the Model Fitting Procedure

We train neural networks in BayesFlow via experience-replay learning. Experience-replay learning has had success recently within the deep learning community and is a type of reinforcement optimization which uses a replay memory technique to store trajectories of experiences (Sutton & Barto, 2018). This technique will keep an experience replay buffer from past simulations for taking samples randomly for each iteration, so the networks will likely use some simulations multiple times. This training can efficiently simulate data on-the-fly for fast generation and is optimal in comparison to pure online training (Radev et al., 2020a). By increasing the number of epochs and iterations of deep learning, the parameters gradually become more convergent. In the training phase, we used 500 epochs, 1000 iterations per epoch, a batch size of 32, and a buffer size of 100 for the hyperparameter of memory for training weights of the deep learning structure. Also, we simulate a variable number of trials from 60 to 300 trials from a uniform distribution during the training phase. Note that we purposefully use a small number of trials in order to explore parameter recovery of realistic observation counts in real data. More precisely, we train these neural networks only in one training period with the same prior distributions, and then we generate posterior distributions from the trained neural networks in each subsequent analysis (including fitting real data in the next section).

Models Recover Simulated Parameters Accurately

Here, we generate figures of true parameters plotted against parameter posterior estimates and credible intervals. These parameter recovery figures are generated using 100 simulated datasets with 300 simulated trials each. It should be noted that we obtain 2000 samples from posteriors in the inference phase. Figure 4 as well as Figs. 13 and 14 in the Appendix show parameter recovery of the seven parameters from model 1a, model 1b, and model 1c, respectively, with the x-axis as true parameters and the y-axis as posterior distributions. Each figure includes the mean, median, 95%, and 99% credible intervals related to each data point of parameters. Also, Figs. 15, 16, and 17 in the Appendix represent the result of parameter recovery for model 2, model 3, and model 4a, respectively. Another parameter recovery figure can be seen in the Appendix is Fig. 18, which corresponds to model 4b with 100 datasets and 1000 trials for each. Looking further into the Appendix, it is clear that this parameter recovery approach is clearly confirmed for other proposed models in the same procedure. For instance, we observe that Figs. 19 and 20 show the results of parameter recovery of model 5 and model 6, respectively. In addition, for models including CPP slope results, Figs. 21 and 22 in the Appendix are related to model 7 and model 8, respectively. We used the fixed parameter β = .5 from model 8 to provide the accuracy-based model fitting of data in “2.” Furthermore, Figs. 23, 24, 25, and 26 in the Appendix represent the parameter recovery of traditional integrative joint modeling related to models 9, 10, 11, and 12. Finally, Fig. 27 presents the parameter recovery of the directed joint modeling of model 13.

The plot of true parameters versus posteriors of estimated parameters for model 1a. The x-axis of each graph are simulated parameters and the y-axis represents posterior distributions. The mean of the posteriors is shown by teal star symbols and the median of the posterior of parameters is shown by black circles. Also, uncertainty of parameters is indicated by 95% credible intervals (dark blue lines) and 99% credible intervals (green lines). Note that the orange line is the function of y = x, where we expected recovered posterior distributions to be centered. The estimated parameters follow the orange line, corresponding to the true recovery of these parameters