Abstract

Optimal transport and information geometry both study geometric structures on spaces of probability distributions. Optimal transport characterizes the cost-minimizing movement from one distribution to another, while information geometry originates from coordinate-invariant properties of statistical inference. Their relations and applications in statistics and machine learning have started to gain more attention. In this paper we give a new differential-geometric relation between the two fields. Namely, the pseudo-Riemannian framework of Kim and McCann, which provides a geometric perspective on the fundamental Ma–Trudinger–Wang (MTW) condition in the regularity theory of optimal transport maps, encodes the dualistic structure of a statistical manifold. This general relation is described using the framework of c-divergence, under which divergences are defined by optimal transport maps. As a by-product, we obtain a new information-geometric interpretation of the MTW tensor on the graph of the transport map. This relation sheds light on old and new aspects of information geometry. The dually flat geometry of Bregman divergence corresponds to the quadratic cost and the pseudo-Euclidean space, and the logarithmic \(L^{(\alpha )}\)-divergence introduced by Pal and the first author has constant sectional curvature in a sense to be made precise. In these cases we give a geometric interpretation of the information-geometric curvature in terms of the divergence between a primal-dual pair of geodesics.



1 Introduction

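Let \(\mu \) and \(\nu \) be Borel probability measures on Polish spaces M and \(M'\) respectively. Given a real-valued cost function c defined on \(M \times M'\), the Monge–Kantorovich optimal transport problem is

$$\begin{aligned} {\mathcal {T}}_c(\mu , \nu ) := \inf _{\gamma \in \varPi (\mu , \nu )} \int _{M \times M'} c(x, y) \, \mathrm {d}\gamma (x, y), \end{aligned}$$(1)
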
where \(\varPi (\mu , \nu )\) is the set of probability measures on \(M \times M'\) whose first and second marginals are \(\mu \) and \(\nu \) respectively. For systematic mathematical expositions of optimal transport theory we refer the reader to the textbooks [46, 48, 49]. Under suitable conditions on the cost function c and the measures \(\mu , \nu \), the optimal coupling \(\gamma ^*\) can be shown to be deterministic, i.e., \(\gamma ^*\) concentrates on the graph \(G \subset M \times M'\) of a measurable map \(f: M \rightarrow M'\), called the optimal transport map. The transport cost \({\mathcal {T}}_c\) can then be used to compare probability measures. For example, when \(M = M'\) and \(c(x, y) = d(x, y)^p\), where d is a metric and \(p \ge 1\), \({\mathcal {T}}_c^{1/p}\) is the Wasserstein metric of order p. Optimal transport has deep and elegant links with probability, geometry and analysis. Thanks to recent breakthroughs in algorithmic development, optimal transport has also proven remarkably useful in statistics and machine learning [44].
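
For finitely supported \(\mu \) and \(\nu \), the Monge–Kantorovich problem is a linear program in the entries of the coupling matrix. The following minimal sketch assumes SciPy's `linprog` with the HiGHS solver and a toy one-dimensional example chosen only for illustration. It computes \({\mathcal {T}}_c\) for the quadratic cost \(c(x, y) = \frac{1}{2}|x - y|^2\), and the optimal coupling it returns is concentrated on the graph of the monotone map \(x_i \mapsto y_i\).

```python
import numpy as np
from scipy.optimize import linprog


def discrete_ot(mu, nu, C):
    """Solve min <C, gamma> over couplings gamma of the discrete measures (mu, nu).

    The coupling is flattened row-major, so gamma[i, j] sits at index i * k + j.
    """
    m, k = C.shape
    A_rows = np.kron(np.eye(m), np.ones((1, k)))  # sum_j gamma[i, j] = mu[i]
    A_cols = np.kron(np.ones((1, m)), np.eye(k))  # sum_i gamma[i, j] = nu[j]
    A_eq = np.vstack([A_rows, A_cols])
    b_eq = np.concatenate([mu, nu])
    res = linprog(C.ravel(), A_eq=A_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    return res.fun, res.x.reshape(m, k)


# Toy example: uniform measures on three points of the real line.
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.5, 1.5, 2.5])
mu = np.full(3, 1 / 3)
nu = np.full(3, 1 / 3)
C = 0.5 * (x[:, None] - y[None, :]) ** 2  # quadratic cost c(x, y) = |x - y|^2 / 2

cost, gamma = discrete_ot(mu, nu, C)
print(cost)   # optimal transport cost T_c(mu, nu)
print(gamma)  # optimal coupling, supported on the graph x_i -> y_i
```

This dense formulation is only meant to make the definition concrete. For large supports, a dedicated optimal transport solver would be preferable.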

In this paper we let M and \(M'\) be n-dimensional smooth manifolds (\(n \ge 2\)). In many cases of interest these are open domains in Euclidean space. We also assume that \(c: M \times M' \rightarrow {\mathbb {R}}\) is smooth. Following the geometric approach of Kim and McCann [21] and McCann [32], consider the cross-difference

$$\begin{aligned} \delta (p, q', p_0, q_0') := c(p, q_0') + c(p_0, q') - c(p, q') - c(p_0, q_0'), \end{aligned}$$(2)

defined for pairs \((p, q'), (p_0, q_0')\) of points in the product space \(M \times M'\). Intuitively, it compares the cost of the matching \((p \rightarrow q', p_0 \rightarrow q_0')\) with that of \((p \rightarrow q_0', p_0 \rightarrow q')\). Note that if both \((p, q')\) and \((p_0, q_0')\) belong to the graph G of an optimal transport map (for some pair \((\mu , \nu )\)), then \(\delta (p, q', p_0, q_0') \ge 0\) by the c-cyclical monotonicity of G. In [21, 32] it was shown that this cross-difference defines a pseudo-Riemannian geometry on \(M \times M'\) which controls the geometry, including the regularity, of the optimal coupling. The pseudo-Riemannian metric (a non-degenerate symmetric 2-tensor) is given by \(h = \frac{1}{2} {{\,\mathrm{Hess}\,}}\delta \), where the Hessian is taken with respect to the 2n-dimensional variable \((p, q')\) and evaluated at \((p, q') = (p_0, q_0')\); it has signature (n, n). The details of this construction are recalled in Sect. 2.2. In this paper we show that the pseudo-Riemannian framework also encodes the dualistic structure of a statistical manifold, and we use this relation to elucidate several aspects of information geometry.
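
For the quadratic cost \(c(x, y) = \frac{1}{2}|x - y|^2\) on \({\mathbb {R}}^n\), the cross-difference of two points on the graph of a gradient map \(f = D\phi \) reduces to \((p - p_0) \cdot (f(p) - f(p_0))\). This is non-negative because the gradient of a convex function is monotone. The sketch below checks this numerically. The convex potential \(\phi (p) = \frac{1}{2} p^{\top } A p + \sum _i e^{p^i}\) and the matrix A are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 3
L = rng.normal(size=(n, n))
A = L @ L.T + np.eye(n)  # symmetric positive definite (illustrative choice)


def cost(p, q):
    return 0.5 * np.sum((p - q) ** 2)


def f(p):
    # Gradient of the convex potential phi(p) = p^T A p / 2 + sum_i exp(p_i)
    return A @ p + np.exp(p)


def cross_difference(p, q, p0, q0):
    # delta(p, q', p0, q0') = c(p, q0') + c(p0, q') - c(p, q') - c(p0, q0')
    return cost(p, q0) + cost(p0, q) - cost(p, q) - cost(p0, q0)


deltas, pairings = [], []
for _ in range(1000):
    p, p0 = rng.normal(size=n), rng.normal(size=n)
    deltas.append(cross_difference(p, f(p), p0, f(p0)))
    pairings.append((p - p0) @ (f(p) - f(p0)))

deltas, pairings = np.array(deltas), np.array(pairings)
print(deltas.min() >= 0, np.allclose(deltas, pairings))
```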

Before describing the relation with information geometry, let us briefly discuss the context of the papers [21, 32] in more detail. A fundamental problem in optimal transport is to study when the Monge–Kantorovich problem (1) admits a Monge (i.e., deterministic) solution, and, if so, the regularity (continuity and smoothness) of the optimal transport map. While existence of a Monge solution is implied by a twist condition on the cost function, regularity involves analyzing nonlinear partial differential equations of which the Monge–Ampère equation is a classic example. Significant progress was achieved by Ma, Trudinger and Wang [31] and Trudinger and Wang [47], who introduced a fourth-order differential condition on the cost c and showed that it is sufficient for continuity and regularity estimates of the transport map (under suitable conditions on \(\mu \) and \(\nu \) and their supports). Loeper [29] showed that this condition is also necessary, and observed that for a Riemannian manifold M with \(c(x, y) = \frac{1}{2} d^2(x, y)\), the Ma–Trudinger–Wang (MTW) tensor on the diagonal of \(M \times M\) is proportional to the Riemannian sectional curvature (see Example 9). Kim and McCann [21] showed that these conditions can be interpreted in terms of the Riemann curvature tensor in their pseudo-Riemannian framework (see Remark 4). Further progress, including a characterization of the graph G in terms of a calibration form [22], is surveyed in [32]. For more details about the regularity of optimal transport maps we refer the reader to [49, Chapter 12], [21, Section 5] as well as [11].

1.1 Summary of main results

To describe the relation between the pseudo-Riemannian framework and information geometry, we first recall the notion of divergence, which is fundamental in information geometry [1, 5]. For simplicity we assume that the objects considered (manifolds, functions, etc.) are all smooth.

Definition 1

(Divergence) Let M be an n-dimensional manifold. A divergence on M is a function \({\mathbf {D}} : M \times M \rightarrow [0, \infty )\) such that the following properties hold:

(i) \(\mathbf{D}[ p : p'] = 0\) if and only if \(p = p'\).

(ii) In local coordinates \((\xi ^1, \ldots , \xi ^n)\), we have

$$\begin{aligned} \mathbf{D}[ \xi + \varDelta \xi : \xi ] = \frac{1}{2} g_{ij}(\xi ) \varDelta \xi ^i \varDelta \xi ^j + O(|\varDelta \xi |^3), \end{aligned}$$(3)

where \((g_{ij}(\xi ))\) is strictly positive definite and varies smoothly in \(\xi \). Note that g defines a Riemannian metric on M.
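
As a quick numerical illustration of (3), consider the Kullback–Leibler divergence on the Bernoulli family with parameter \(\xi \in (0, 1)\). This family is an example chosen here only for illustration. Its Fisher information is \(g(\xi ) = 1/(\xi (1 - \xi ))\), and the sketch below checks that the error of the quadratic approximation scales like \(|\varDelta \xi |^3\).

```python
import numpy as np


def kl_bernoulli(a, b):
    """Kullback-Leibler divergence D[a : b] between Bernoulli(a) and Bernoulli(b)."""
    return a * np.log(a / b) + (1 - a) * np.log((1 - a) / (1 - b))


def fisher_bernoulli(xi):
    """Fisher information of the Bernoulli family at xi."""
    return 1.0 / (xi * (1.0 - xi))


xi = 0.3
ratios = []
for d in [1e-1, 1e-2, 1e-3]:
    exact = kl_bernoulli(xi + d, xi)
    quadratic = 0.5 * fisher_bernoulli(xi) * d**2  # quadratic term of (3)
    ratios.append(abs(exact - quadratic) / d**3)   # stays bounded as d -> 0
    print(f"d={d:g}  D={exact:.3e}  quadratic={quadratic:.3e}  error/d^3={ratios[-1]:.3f}")
```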

In (3) and throughout the paper the Einstein summation convention is used. The classic example is the case where M is a finite-dimensional parameterized family \(\{p(\cdot ; \xi )\}\) of probability densities (such as an exponential family, see [1, Chapter 2]), and \(\mathbf{D}\) is the Kullback–Leibler divergence (relative entropy). Then the corresponding Riemannian metric is the Fisher information metric given by

$$\begin{aligned} g_{ij}(\xi ) = \int \frac{\partial \log p(x; \xi )}{\partial \xi ^i} \frac{\partial \log p(x; \xi )}{\partial \xi ^j} \, p(x; \xi ) \, \mathrm {d}x. \end{aligned}$$

Following Eguchi [12], a divergence induces a dualistic structure \((g, \nabla , \nabla ^*)\) on M consisting of a Riemannian metric g, defined by (3), and a pair \((\nabla , \nabla ^*)\) of torsion-free affine connections which are dual with respect to the metric g (the expressions are given in Sect. 3.1). The connections are defined in terms of third-order derivatives of \(\mathbf{D}[ \cdot : \cdot ]\) on the diagonal (which may be regarded as the graph \(G = \{ (x, x): x \in M\}\) of the identity map \(f(x) = x\), see Example 1 below), and the duality means that for any vector fields X, Y and Z we have the following extension of the metric property:

$$\begin{aligned} X g(Y, Z) = g(\nabla _X Y, Z) + g(Y, \nabla ^*_X Z). \end{aligned}$$

Note that the average \(\frac{1}{2} (\nabla + \nabla ^*)\) of \(\nabla \) and \(\nabla ^*\) is the Levi–Civita connection of g. When \(\mathbf{D}\) is a Bregman divergence the induced dualistic structure is dually flat, i.e., both \(\nabla \) and \(\nabla ^*\) are flat [34]. Moreover, the two affine coordinate systems are related by a Legendre transformation [1, Chapter 6]. In the context of exponential families, this reduces to the convex duality between the natural and expectation parameters. Dual connections also appear in the context of affine differential geometry [35]. There are other geometric structures in information geometry, including statistical manifolds admitting torsion [18], quantum information geometry [54] as well as infinite-dimensional statistical manifolds [45], but in this paper we focus on the dualistic structure \((g, \nabla , \nabla ^*)\).

In Sect. 2.1 we review the framework of c-divergence, a divergence on the graph \(G \subset M \times M'\) of an optimal transport map, derived from the optimal transport map f and the corresponding Kantorovich potentials. Introduced by Pal and the first author in [42, 51], this framework includes the Bregman divergence as well as the \(L^{(\alpha )}\)-divergence studied in the series of papers [41, 42, 50,51,52]. The \(L^{(\alpha )}\)-divergence has many interesting properties; here we only note that its dualistic structure is dually projectively flat with constant sectional curvature \(-\alpha < 0\) and leads naturally to a generalized exponential family [51]. In fact, any divergence in the sense of Definition 1 can be regarded as a c-divergence by letting \(c = \mathbf{D}\) (see Example 1). Given the optimal transport map f, we regard the graph \(G = \{(p, f(p)) : p \in M\}\) as an embedded submanifold of the product manifold \(M \times M'\), and the c-divergence as a divergence on G. This gives a dualistic structure \((g, \nabla , \nabla ^*)\) on G.

In Sect. 3 we study the relations between the two geometries \((M \times M', h)\) and \((G, g, \nabla , \nabla ^*)\). Our main result, informally stated, reads as follows.

Theorem 1

The information-geometric Riemannian metric g is the restriction of the pseudo-Riemannian metric h to G. Moreover, the Levi–Civita connection \({\bar{\nabla }}\) of h induces \((\nabla , \nabla ^*)\) via natural horizontal and vertical projection maps. Similar statements hold for the curvature.

This result encodes the dualistic geometry of information geometry, in complete generality, in the pseudo-Riemannian framework. Intuitively, this is a consequence of the fact that the cross-difference (2) is equal to the symmetrization of the c-divergence on the graph G of the transport map (see Proposition 1). As a by-product, we obtain a new information-geometric interpretation of the MTW tensor on G (Corollary 1). Let us note that Theorem 1 is not the first result that relates the geometry of optimal transport to information geometry. In [20] Khan and Zhang computed the MTW tensor for the logarithmic cost function corresponding to the \(L^{(\alpha )}\)-divergence, and found that it has a particularly simple form. This result motivated our work on this paper. In the follow-up work [19], they considered convex costs on \({\mathbb {R}}^n\) of the form \(c(x, y) = \varPsi (x - y)\), and showed that the MTW tensor is a multiple of the orthogonal holomorphic bisectional curvature of a Kähler manifold equipped with the Sasaki metric. In contrast, in this paper we consider an arbitrary divergence and derive its dualistic structure using the original pseudo-Riemannian framework of Kim and McCann. In this sense our results are more general.

As in classical differential geometry, spaces of constant sectional curvature are of special interest. In our context there are two kinds of sectional curvatures: the cross curvature of a cost function on \(M \times M'\) (optimal transport) and the (primal and dual) sectional curvatures of the dualistic structure on G (information geometry). Their relations are studied in Sect. 4. In particular, constant cross curvature implies constant information-geometric curvature. From this point of view, the dually flat geometry of Bregman divergence, which corresponds to the quadratic cost, follows immediately from the flatness of the pseudo-Euclidean space. The \(L^{(\alpha )}\)-divergence and its associated logarithmic cost function lead to constant negative curvature. In this setting we provide an intrinsic interpretation of the information-geometric curvature in terms of the divergence between a pair of primal-dual geodesics. Finally, in Sect. 5 we conclude and discuss some avenues for future research, including relations with the entropically relaxed optimal transport problem.

We end the introduction with a brief discussion of the relevant literature. The relations between optimal transport and information geometry have inspired many recent papers. Apart from our line of work, which centers on the c-divergence and the \(L^{(\alpha )}\)-divergence corresponding to the Dirichlet transport [41,42,43], other perspectives have been considered in the literature, and the following discussion is far from exhaustive. For example, the papers [2, 3] study divergences defined using the entropically relaxed transport problem (see Example 4). The porous medium equation, which played an important role in the development of optimal transport, is studied in [37] using tools of information geometry. Relations between the Wasserstein metric and the Fisher–Rao metric are studied in [2, 9, 27, 33] among many others. Finite-dimensional submanifolds of the Wasserstein space as well as their geometric and statistical properties are studied in a series of papers by Li and his collaborators; see [8, 26, 28] and the references therein.

2 c-Divergence and the pseudo-Riemannian framework

This section sets the stage for the paper. In Sect. 2.1 we review the c-divergence introduced by Pal and the first author in [42, 51]. It is worth noting that any divergence in the sense of Definition 1 can be regarded as a c-divergence via a suitable choice of the cost function (see Example 1). Then, in Sect. 2.2, we introduce the pseudo-Riemannian framework of Kim and McCann [21, 32]. For motivation and background in optimal transport we refer the reader to [42, 51] and their references.

2.1 c-Divergence

We first adapt the definitions in [42, 51] to our differential-geometric setting. Let M and \(M'\) be n-dimensional smooth manifolds, and let \(c: M \times M' \rightarrow {\mathbb {R}}\) be a (smooth) cost function. In many cases of interest we have \(M = M' \subset {\mathbb {R}}^n\). We denote generic points in M and \(M'\) by p and \(q'\) respectively.

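Let \(\varphi : M \rightarrow {\mathbb {R}}\) be smooth and c-concave, i.e., there exists \(\psi : M' \rightarrow {\mathbb {R}} \cup \{-\infty \}\) such that

$$\begin{aligned} \varphi (p) = \inf _{q' \in M'} \left( c(p, q') - \psi (q') \right) , \quad p \in M. \end{aligned}$$
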
Suppose that the c-gradient of \(\varphi \) exists, i.e., for each \(p \in M\) there exists a unique \(q' =: D^c \varphi (p) \in M'\) such that \(\varphi (p) + \psi (q') = c(p, q')\). We assume that \(f = D^c \varphi \) is a smooth diffeomorphism from M onto its range \(f(M) \subset M'\). Intuitively, f represents an optimal transport map, with respect to the cost c, for a given pair of probability measures on M and \(M'\) respectively. Note that we may let \(\psi = \varphi ^c\) be the c-transform of \(\varphi \), which is given by

$$\begin{aligned} \varphi ^c(q') = \inf _{p \in M} \left( c(p, q') - \varphi (p) \right) , \quad q' \in M'. \end{aligned}$$

For an exposition of these concepts see [46, Section 1.6].

Let G be the graph of the optimal transport map, i.e.,

$$\begin{aligned} G = \{ (p, f(p)) : p \in M \} \subset M \times M'. \end{aligned}$$(5)

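Clearly G is an n-dimensional embedded submanifold of the product manifold \(M \times M'\). By construction, we have

$$\begin{aligned} \varphi (p) + \psi (q') \le c(p, q') \quad \text {for all } (p, q') \in M \times M', \text { with equality if and only if } (p, q') \in G. \end{aligned}$$(6)
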
We regard the c-divergence, defined below, as a divergence on G.

Definition 2

(c-divergence) For \(x = (p, q), x' = (p', q') \in G\), we define

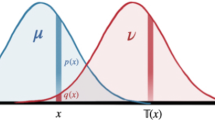

We call \(\mathbf{D} : G \times G \rightarrow [0, \infty )\) the c-divergence induced by the triple \((f, \varphi , \psi )\). See Fig. 1 for an illustration.

Fig. 1 Illustration of the c-divergence. The black curve shows the graph G of an optimal transport map. The colour represents the value of \(c(p, q') - \varphi (p) - \psi (q')\), which is non-negative and zero only when \((p, q')\) belongs to the graph G. This value defines the divergence \(\mathbf{D}[x : x']\), where \(x = (p, q)\) and \(x' = (p', q')\) are respectively the horizontal and vertical projections of \((p, q')\) onto G.

From (6) we see that \(\mathbf{D}\) is non-negative and vanishes if and only if \(x = x'\). For it to be a divergence on G in the sense of Definition 1, we require that the cost function be non-degenerate in the sense of [21, Definition 2.2]; this means that in local coordinates the matrix \(\frac{\partial ^2}{\partial p^i \partial q'^{j}} c(p, q')\) is invertible (see also the discussion in Sect. 2.2). This condition will be assumed throughout the paper.

Remark 1

Note that the c-divergence is defined on the graph G. We may identify G with M (or \(f(M) \subset M'\)) via the mapping \(x = (p, f(p)) \mapsto p\) (or \(x = (p, f(p)) \mapsto q = f(p)\)). In particular, M and f(M) may also be identified via the transport map \(p \mapsto f(p)\). The last identification is used in [42, Definition 3.3] and [51, Definition 7].

Remark 2

(Interpretation of the c-divergence) Let \(\mu \) and \(\nu \) be probability measures satisfying \(\nu = f_{\#}\mu \) (where \(f_{\#} \mu \) is the pushforward of \(\mu \) under f), so that (under mild integrability conditions on \(\mu \) and \(\nu \)) \(f = D^c \varphi \) is an optimal transport map for the pair \((\mu , \nu )\). By Kantorovich’s duality, the value of the transport problem is given by

$$\begin{aligned} {\mathcal {T}}_c(\mu , \nu ) = \int _M \varphi \, \mathrm {d}\mu + \int _{M'} \psi \, \mathrm {d}\nu . \end{aligned}$$

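Now let \(\gamma \in \varPi (\mu , \nu )\) be a coupling of \((\mu , \nu )\) which may be suboptimal. Given \((p, q')\) in the support of \(\gamma \), let \(x := (p, f(p)), x' := (f^{-1}(q'), q') \in G\); see the projection maps introduced in Definition 3. We have

$$\begin{aligned} \int \mathbf{D}[x : x'] \, \mathrm {d}\gamma (p, q') = \int c \, \mathrm {d}\gamma - \int _M \varphi \, \mathrm {d}\mu - \int _{M'} \psi \, \mathrm {d}\nu = \int c \, \mathrm {d}\gamma - {\mathcal {T}}_c(\mu , \nu ). \end{aligned}$$
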
Thus the expected c-divergence is the excess transport cost compared to the optimal coupling. Intuitively, the c-divergence \(\mathbf{D}[x : x']\) measures the “distance” between \((p, q')\) and the graph G of optimal transport (see Fig. 1).
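
The excess-cost interpretation can be checked on a discrete example. In the sketch below, \(\mu \) is uniform on N random points, \(\nu = f_{\#} \mu \), and a random permutation plays the role of a suboptimal coupling \(\gamma \). The sketch assumes the quadratic cost of Example 2 and an illustrative convex potential \(\phi (p) = \frac{1}{2}|p|^2 + \log \sum _i e^{p^i}\). The values of \(\psi \) on the graph are obtained from \(\varphi (p) + \psi (f(p)) = c(p, f(p))\).

```python
import numpy as np

rng = np.random.default_rng(1)
N, n = 50, 2


def phi_convex(p):
    # Illustrative strictly convex potential: |p|^2 / 2 + log-sum-exp(p)
    return 0.5 * p @ p + np.log(np.sum(np.exp(p)))


def grad_phi_convex(p):
    w = np.exp(p - p.max())  # numerically stable softmax
    return p + w / w.sum()


def cost(p, q):
    return 0.5 * np.sum((p - q) ** 2)


P = rng.normal(size=(N, n))                    # support of mu (uniform weights)
Q = np.array([grad_phi_convex(p) for p in P])  # q_k = f(p_k), so nu = f_# mu
varphi = np.array([0.5 * p @ p - phi_convex(p) for p in P])        # c-concave potential
psi = np.array([cost(p, q) - v for p, q, v in zip(P, Q, varphi)])  # psi(q_k) on the graph


def c_div(k, j):
    # D[x : x'] for x = (p_k, q_k), x' = (p_j, q_j): c(p_k, q_j) - varphi(p_k) - psi(q_j)
    return cost(P[k], Q[j]) - varphi[k] - psi[j]


sigma = rng.permutation(N)  # suboptimal coupling p_k -> q_sigma(k)
divs = np.array([c_div(k, sigma[k]) for k in range(N)])
excess = (np.mean([cost(P[k], Q[sigma[k]]) for k in range(N)])
          - np.mean([cost(P[k], Q[k]) for k in range(N)]))
print(divs.mean(), excess)  # expected c-divergence vs. excess transport cost
```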

Before giving specific examples, let us make the important observation that any divergence can be regarded as a c-divergence.

Example 1

(Any divergence is a c-divergence) Suppose \(M = M'\) and \(\mathbf{D}: M \times M \rightarrow [0, \infty )\) is an arbitrary divergence as in Definition 1. Consider the cost function \(c \equiv \mathbf{D}\) given by the divergence. Then the identity transport \(f(p) \equiv p\) has a graph – the diagonal of \(M \times M\) – which is c-cyclically monotone. This transport map is induced by the constant c-concave function \(\varphi (x) \equiv 0\) whose c-transform \(\psi \) is also zero. Since \(\varphi \) and \(\psi \) both vanish, the c-divergence (7) is exactly the given divergence \(\mathbf{D}\).

While any divergence can be regarded as a c-divergence, the more interesting case for the purposes of this paper is where a given cost induces many c-cyclically monotone graphs (5) by varying the transport map f (which solves the optimal transport problem for different pairs \((\mu , \nu )\)). Clearly the existence of such graphs is closely related to the existence and regularity of optimal transport maps. Instead of giving precise sufficient conditions (which can be found in [49, Chapter 12]), to focus on the geometric ideas we simply assume that f and the Kantorovich potentials \((\varphi , \psi )\) are given.

Example 2

(Bregman divergence) Let \(M = M' = {\mathbb {R}}^n\) and let \(c(p, q') = \frac{1}{2}|p - q'|^2\) be the quadratic cost (where p, \(q'\) are expressed in Euclidean coordinates). Note that for this cost the effective term for the transport is \(-p \cdot q'\), since each of the other two terms of the expansion depends only on one of the variables. This important example has been treated in [51, Section 2], and to illustrate the ideas we recall the argument. By Brenier’s theorem [6], the optimal transport map has the form \(q = f(p) = D \phi (p)\), where \(\phi \) is a convex function and \(D\phi \) is its gradient. Here we assume \(\phi \) is smooth and the Hessian \(D^2 \phi \) is positive definite. Then \(\varphi (p) = \frac{1}{2}|p|^2 - \phi (p)\) is c-concave, \(D^c \varphi = D \phi \), and \(\psi (q') = \varphi ^c(q') = \frac{1}{2}|q'|^2 - \phi ^*(q')\), where \(\phi ^*\) is the convex conjugate of \(\phi \). Since \(\phi (p) + \phi ^*(q) = p \cdot q\) whenever \(q = D\phi (p)\) (the equality case of the Fenchel–Young inequality), substituting into (7) and simplifying, we see that the c-divergence corresponding to the triple \((f, \varphi , \psi )\) is the classic Bregman divergence:

$$\begin{aligned} \mathbf{D}[x : x'] = \phi (p) - \phi (p') - D\phi (p') \cdot (p - p'), \end{aligned}$$(8)

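where \(p' = f^{-1}(q') = D \phi ^*(q')\). Note that in (8) we represented the divergence in terms of the primal coordinate system p. When expressed in the dual coordinate system q, we have

$$\begin{aligned} \mathbf{D}[x : x'] = \phi ^*(q') - \phi ^*(q) - D\phi ^*(q) \cdot (q' - q). \end{aligned}$$
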
The representation as a c-divergence recovers the self-dual representation of the Bregman divergence [1, (1.68)], namely

$$\begin{aligned} \mathbf{D}[x : x'] = \phi (p) + \phi ^*(q') - p \cdot q'. \end{aligned}$$

Its information geometry, which is dually flat [1, Chapter 1], is revisited in Example 7.
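
A numerical sanity check of Example 2 follows. It assumes the illustrative potential \(\phi (p) = \sum _i e^{p^i}\), for which \(D\phi (p) = e^{p}\), \(\phi ^*(q) = \sum _i (q^i \log q^i - q^i)\) on \((0, \infty )^n\) and \(D\phi ^*(q) = \log q\) (componentwise). The c-divergence \(c(p, q') - \varphi (p) - \psi (q')\) is compared with the primal, dual and self-dual forms of the Bregman divergence.

```python
import numpy as np


def phi(p):            # illustrative convex potential
    return np.sum(np.exp(p))


def grad_phi(p):       # transport map f = D phi
    return np.exp(p)


def phi_star(q):       # convex conjugate of phi, defined for q > 0
    return np.sum(q * np.log(q) - q)


def grad_phi_star(q):  # inverse map f^{-1} = D phi^*
    return np.log(q)


def cost(p, q):
    return 0.5 * np.sum((p - q) ** 2)


def varphi(p):         # c-concave potential varphi = |p|^2 / 2 - phi
    return 0.5 * p @ p - phi(p)


def psi(q):            # its c-transform psi = |q|^2 / 2 - phi^*
    return 0.5 * q @ q - phi_star(q)


rng = np.random.default_rng(2)
p, p_prime = rng.normal(size=3), rng.normal(size=3)
q, q_prime = grad_phi(p), grad_phi(p_prime)  # x = (p, q), x' = (p', q') lie on G

c_div = cost(p, q_prime) - varphi(p) - psi(q_prime)
primal = phi(p) - phi(p_prime) - grad_phi(p_prime) @ (p - p_prime)
dual = phi_star(q_prime) - phi_star(q) - grad_phi_star(q) @ (q_prime - q)
self_dual = phi(p) + phi_star(q_prime) - p @ q_prime
print(c_div, primal, dual, self_dual)  # all four agree up to rounding
```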

Example 3

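(\(L^{(\alpha )}\)-divergence) This may be regarded as a nonlinear “deformation” of Example 2. Let \(M = M' = (0, \infty )^n\), and let \(\alpha > 0\) be a fixed parameter. Consider the logarithmic cost function

$$\begin{aligned} c(p, q') = \frac{1}{\alpha } \log \left( 1 + \alpha p \cdot q' \right) , \end{aligned}$$(9)
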
where \(a \cdot b\) is the Euclidean dot product. This reduces, after a suitable reparameterization, to the cost function of the Dirichlet transport studied in [43]. Remarkably, as shown in [43] and [51, Theorem 6], the optimal transport map is still explicit and has the form

$$\begin{aligned} q = f(p) = \frac{D\varphi (p)}{1 - \alpha D\varphi (p) \cdot p}, \end{aligned}$$(10)

where \(\varphi \) is an \(\alpha \)-exponentially concave function on M (i.e., \(e^{\alpha \varphi }\) is concave) satisfying suitable regularity conditions (see [51, Condition 7]) that will be assumed implicitly. The representation (10) of the optimal transport map may be regarded as an analogue of Brenier’s theorem. By an argument similar to the one presented in Example 2, it can be shown that the c-divergence is the \(L^{(\alpha )}\)-divergence given by

$$\begin{aligned} \mathbf{D}[x : x'] = \frac{1}{\alpha } \log \left( 1 + \alpha D\varphi (p') \cdot (p - p') \right) - \left( \varphi (p) - \varphi (p') \right) . \end{aligned}$$

For detailed studies of this divergence see [42, 43, 50,51,52]. Note that as \(\alpha \rightarrow 0\) the \(L^{(\alpha )}\)-divergence converges to the Bregman divergence of the convex function \(-\varphi \). In [42, 51] it was shown that the induced dualistic structure is dually projectively flat with constant sectional curvature \(-\alpha \) (the converse is also true; for the precise statement see [51, Theorem 19]). See also [39], which interprets the quadratic cost and (9) in terms of convex costs (Example 6) defined by exponential families. The regularity theory of this transport problem was recently addressed in [20], which motivated our study.
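
The following sketch checks Example 3 numerically under explicit assumptions made for illustration. It takes \(\varphi (p) = \frac{1}{\alpha } \sum _i w_i \log p^i\) with \(w_i > 0\) and \(s = \sum _i w_i < 1\), so that \(e^{\alpha \varphi } = \prod _i (p^i)^{w_i}\) is concave on \((0, \infty )^n\). It uses the cost \(c(p, q') = \frac{1}{\alpha }\log (1 + \alpha p \cdot q')\) and the map \(f(p) = D\varphi (p) / (1 - \alpha D\varphi (p) \cdot p)\), which solves the first-order condition \(D_p c(p, q) = D\varphi (p)\). The code compares \(c(p, q') - \varphi (p) - \psi (q')\) with \(\frac{1}{\alpha } \log (1 + \alpha D\varphi (p') \cdot (p - p')) - (\varphi (p) - \varphi (p'))\).

```python
import numpy as np

alpha = 0.5
w = np.array([0.2, 0.3, 0.1])  # illustrative weights with s = sum(w) < 1
s = w.sum()


def varphi(p):
    # exp(alpha * varphi(p)) = prod_i p_i^{w_i} is concave on (0, inf)^n since s < 1
    return np.sum(w * np.log(p)) / alpha


def grad_varphi(p):
    return w / (alpha * p)


def cost(p, q):
    return np.log1p(alpha * (p @ q)) / alpha  # logarithmic cost


def f(p):
    g = grad_varphi(p)
    return g / (1.0 - alpha * (g @ p))  # explicit transport map


def f_inv(q):
    # For this varphi, alpha * grad_varphi(p) . p = s, hence q_i = w_i / (alpha (1 - s) p_i)
    return w / (alpha * (1.0 - s) * q)


def psi(q):
    p = f_inv(q)
    return cost(p, q) - varphi(p)  # from varphi(p) + psi(q) = c(p, q) on the graph


def c_divergence(p, q_prime):
    return cost(p, q_prime) - varphi(p) - psi(q_prime)


def l_alpha_divergence(p, p_prime):
    gap = grad_varphi(p_prime) @ (p - p_prime)
    return np.log1p(alpha * gap) / alpha - (varphi(p) - varphi(p_prime))


rng = np.random.default_rng(3)
p, p_prime = rng.uniform(0.2, 3.0, size=3), rng.uniform(0.2, 3.0, size=3)
q_prime = f(p_prime)
print(c_divergence(p, q_prime), l_alpha_divergence(p, p_prime))
```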

Example 4

(Entropic regularization) From (7), a general c-divergence is, apart from a change of coordinates (via \(q = f(p)\)), the same as the original cost function up to some linear terms that involve only p or \(q'\). We show how this idea can be used to interpret the \(D_{\lambda }\)-divergence in the recent paper [3] which defines a “modified Sinkhorn divergence” for the entropically regularized transport problem.

To be consistent with this paper, we slightly modify the notation of [3]. Let \({\mathcal {X}} = \{0, 1, \ldots , n\}\) be a finite set, and let \(C = (C_{ij})\) be a non-negative cost on \({\mathcal {X}} \times {\mathcal {X}}\) which vanishes on the diagonal. Given \(p, q' \in {\mathcal {P}}({\mathcal {X}})\) (probability distributions on \({\mathcal {X}}\)) and a coupling \(\pi \in \varPi (p, q')\), consider the entropically regularized cost given by

where \(\lambda > 0\) is a regularization parameter and \(H(\pi )\) is the Shannon entropy of the coupling \(\pi \). We define the so-called C-function by

Let \(M = M' = {\mathcal {P}}_+ ({\mathcal {X}}) \equiv \{p \in (0, 1)^{1 + n} : \sum _i p^i = 1\}\) and consider the cost function \(c = C_{\lambda }\). In [3, Theorem 2] it is shown that

where \({\tilde{K}}_{\lambda } : {\mathcal {P}}({\mathcal {X}}) \rightarrow {\mathcal {P}}({\mathcal {X}})\) is an injective shrinkage operator.

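Consider the function \(\psi (q') \equiv 0\) on \(M'\). Then its c-transform is

$$\begin{aligned} \varphi (p) := \psi ^c(p) = \inf _{q' \in M'} C_{\lambda }(p, q'), \end{aligned}$$
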
and it is easy to see that \(\varphi ^c = \psi ^{cc} = 0 = \psi \) on the range of \({\tilde{K}}_{\lambda }\). Thus, restricting c to \(M \times {\tilde{K}}_{\lambda }(M)\), \(\varphi \) is c-concave and the corresponding optimal transport map is given by \(q = f(p) = {\tilde{K}}_{\lambda } p\). The c-divergence is given by

$$\begin{aligned} \mathbf{D}[x : x'] = C_{\lambda }(p, q') - \varphi (p) = C_{\lambda }(p, q') - C_{\lambda }(p, {\tilde{K}}_{\lambda } p), \end{aligned}$$

which is nothing but the \(D_{\lambda }\)-divergence in [3, Definition 1] apart from a multiplicative constant. Clearly the same approach extends to other regularizations as long as the analogue of (13) is well-defined.

2.2 Pseudo-Riemannian framework

Next we describe the pseudo-Riemannian framework of Kim and McCann [21], which gives a geometric interpretation of the regularity theory of optimal transport studied by Ma, Trudinger & Wang [31], Loeper [29] and many others. A general reference for pseudo-Riemannian geometry is [38].

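Consider manifolds \(M, M'\) and the cost function c as above. Recall the cross-difference \(\delta = \delta (p, q', p_0, q_0') : (M \times M')^2 \rightarrow {\mathbb {R}}\) defined in (2). The pseudo-Riemannian metric h is given in terms of the Hessian of \(\delta \) in the 2n-dimensional variables \((p, q')\). More precisely, let \(\xi = (\xi ^1, \ldots , \xi ^n)\) and \(\eta ' = (\eta '^{{\bar{1}}}, \ldots , \eta '^{{\bar{n}}})\) be local coordinates on M and \(M'\) respectively, and express the cost function in the form \(c = c(\xi , \eta ')\). Note that we use i to denote indices for M and \({\bar{i}}\) for \(M'\) to be consistent with the index notation in [21]. We denote

$$\begin{aligned} c_{i:{\bar{j}}} := \frac{\partial ^2 c}{\partial \xi ^i \partial \eta '^{{\bar{j}}}}, \quad c_{ij:{\bar{k}}} := \frac{\partial ^3 c}{\partial \xi ^i \partial \xi ^j \partial \eta '^{{\bar{k}}}}, \quad c_{i:{\bar{j}}{\bar{k}}} := \frac{\partial ^3 c}{\partial \xi ^i \partial \eta '^{{\bar{j}}} \partial \eta '^{{\bar{k}}}}, \end{aligned}$$(14)
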
and so on. By the product structure we have the canonical decomposition

$$\begin{aligned} T_{(p, q')}(M \times M') = T_p M \oplus T_{q'} M'. \end{aligned}$$

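A generic tangent vector v at \((\xi , \eta ') \in M \times M'\) (with an abuse of notation where we identify the points with their coordinate representations) can be written in the form

$$\begin{aligned} v = a^i \frac{\partial }{\partial \xi ^i} + b^{{\bar{i}}} \frac{\partial }{\partial \eta '^{{\bar{i}}}}. \end{aligned}$$(15)
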
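We also write \(a = (a^1, \ldots , a^n)^{\top }\) and \(b = (b^{{\bar{1}}}, \ldots , b^{{\bar{n}}})^{\top }\) as column vectors. Define the \(2n \times 2n\) matrix

$$\begin{aligned} h = \frac{1}{2} \begin{pmatrix} 0 & -D{\bar{D}}c \\ -{\bar{D}}Dc & 0 \end{pmatrix}, \end{aligned}$$(16)

where the matrices \(D{\bar{D}} c := (c_{i:{\bar{j}}})_{i,{\bar{j}}}\) and \({\bar{D}}Dc := (c_{j:{\bar{i}}})_{{\bar{i}},j}\) are evaluated at \((\xi , \eta ')\). We assume throughout that c is non-degenerate, i.e., \({\bar{D}}Dc\) and \(D {\bar{D}}c\) are invertible. Using (15) and (16), we define a pseudo-Riemannian metric h by

$$\begin{aligned} h(v, {\tilde{v}}) := \begin{pmatrix} a \\ b \end{pmatrix}^{\top } h \begin{pmatrix} {\tilde{a}} \\ {\tilde{b}} \end{pmatrix} = -\frac{1}{2} c_{i:{\bar{j}}} \left( a^i {\tilde{b}}^{{\bar{j}}} + {\tilde{a}}^i b^{{\bar{j}}} \right) , \end{aligned}$$(17)

where \({\tilde{v}} = {\tilde{a}}^i \frac{\partial }{\partial \xi ^i} + {\tilde{b}}^{{\bar{i}}} \frac{\partial }{\partial \eta '^{{\bar{i}}}}\) is another tangent vector at \((\xi , \eta ')\).
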
It is easy to see that (17) is equivalent to the following intrinsic representation.

Lemma 1

Write the cross-difference in the form \(\delta (p, q', p_0, q_0') = \delta (x, y)\), where \(x = (p, q'), y = (p_0, q_0') \in M \times M'\). Let X and Y be vector fields on \(M \times M'\). Then

$$\begin{aligned} h(X, Y)(x) = -\frac{1}{2} X_{(x)} Y_{(y)} \delta (x, y) \Big |_{y = x}, \end{aligned}$$

where \(X_{(x)}\) is the derivation (on \(M \times M'\)) applied to the function when x varies and y is kept fixed (similarly for \(Y_{(y)}\)).

In [21] it is shown that h has signature (n, n), i.e., the matrix (16) (denoted also by h) has n positive eigenvalues and n negative eigenvalues. Given the pseudo-Riemannian metric h, one can consider geodesics with respect to its Levi–Civita connection \({\bar{\nabla }}\) as well as the Riemann curvature tensor \({\bar{R}}\); these objects will be studied in the next section. In particular, the (unnormalized) sectional curvature gives a geometric interpretation of the Ma–Trudinger–Wang (MTW) tensor, introduced in [31], which plays a crucial role in the regularity theory of optimal transport maps. We will recall the definition of the MTW tensor in Remark 4.
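
The block structure of h and its signature can be checked numerically. The sketch below uses the logarithmic cost \(c(p, q') = \frac{1}{\alpha } \log (1 + \alpha p \cdot q')\) of Example 3 purely as a test case. It approximates \(\frac{1}{2} {{\,\mathrm{Hess}\,}}\delta \) at the diagonal by central finite differences. It then compares the result with the block matrix \(\frac{1}{2}\begin{pmatrix} 0 & -(c_{i:{\bar{j}}}) \\ -(c_{i:{\bar{j}}})^{\top } & 0 \end{pmatrix}\) built from the analytic cross derivatives, and counts the signs of its eigenvalues. The base point and \(\alpha \) are arbitrary illustrative values.

```python
import numpy as np

alpha = 0.5
n = 3


def cost(p, q):
    return np.log1p(alpha * (p @ q)) / alpha  # test case: logarithmic cost


def cross_difference(p, q, p0, q0):
    # delta(p, q', p0, q0') = c(p, q0') + c(p0, q') - c(p, q') - c(p0, q0')
    return cost(p, q0) + cost(p0, q) - cost(p, q) - cost(p0, q0)


def hessian_fd(F, z, eps=1e-4):
    """Central finite-difference Hessian of a scalar function F at z."""
    m = len(z)
    E = np.eye(m) * eps
    H = np.zeros((m, m))
    for a in range(m):
        for b in range(m):
            H[a, b] = (F(z + E[a] + E[b]) - F(z + E[a] - E[b])
                       - F(z - E[a] + E[b]) + F(z - E[a] - E[b])) / (4 * eps**2)
    return H


p0 = np.array([0.5, 1.0, 2.0])
q0 = np.array([1.5, 0.3, 0.7])
z0 = np.concatenate([p0, q0])

# (1/2) Hess of delta in the variable (p, q'), evaluated at (p, q') = (p0, q0')
h_fd = 0.5 * hessian_fd(lambda z: cross_difference(z[:n], z[n:], p0, q0), z0)

# Analytic cross derivatives c_{i:j} = delta_ij / t - alpha q_i p_j / t^2, t = 1 + alpha p.q
t = 1 + alpha * (p0 @ q0)
C = np.eye(n) / t - alpha * np.outer(q0, p0) / t**2
Z = np.zeros((n, n))
h_block = 0.5 * np.block([[Z, -C], [-C.T, Z]])

eigenvalues = np.linalg.eigvalsh(h_block)
print(np.max(np.abs(h_fd - h_block)))
print((eigenvalues > 0).sum(), (eigenvalues < 0).sum())  # n positive and n negative
```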

The following result explains intuitively why the dualistic structure and the pseudo-Riemannian framework are related. The details of this relation, which amounts to desymmetrizing (18), are worked out in Sect. 3.

Proposition 1

(Cross difference is symmetrization of c-divergence) Consider the c-divergence \(\mathbf{D}\) associated to \((f, \varphi , \psi )\) and the graph G. Then for \(x = (p, q)\) and \(x' = (p', q')\) in G, we have

$$\begin{aligned} \delta (p, q, p', q') = \mathbf{D}[x : x'] + \mathbf{D}[x' : x]. \end{aligned}$$(18)

Thus on \(G \times G\) the cross difference is equal to the symmetrization of the c-divergence.

Proof

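Let \(x = (p, q), x' = (p', q') \in G\). Using the definition of c-divergence and the relations \(\varphi (p) + \psi (q) = c(p, q)\) and \(\varphi (p') + \psi (q') = c(p', q')\) on G, we have

$$\begin{aligned} \mathbf{D}[x : x'] + \mathbf{D}[x' : x]&= \left( c(p, q') - \varphi (p) - \psi (q') \right) + \left( c(p', q) - \varphi (p') - \psi (q) \right) \\&= c(p, q') + c(p', q) - c(p, q) - c(p', q') = \delta (p, q, p', q'). \end{aligned}$$
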
\(\square \)
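
The proof of Proposition 1 uses only the relation \(\varphi (p) + \psi (q) = c(p, q)\) on G, so the identity is purely algebraic. It can be confirmed numerically with any smooth cost, potential and injective map. In the sketch below, these ingredients are hypothetical choices that need not define an actual optimal transport, and \(\psi \) is defined on the graph through that relation.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 2


# Hypothetical smooth ingredients; the identity does not require optimality.
def cost(p, q):
    return np.log1p(np.exp(p @ q)) + np.sin(p[0] * q[1])


def varphi(p):
    return np.cos(p).sum()


def f(p):  # hypothetical injective map defining the graph G
    return p + 0.1 * np.tanh(p[::-1])


def psi_on_graph(p):  # psi(f(p)), from varphi(p) + psi(q) = c(p, q) on G
    return cost(p, f(p)) - varphi(p)


def c_div(p, p_prime):
    # D[x : x'] = c(p, q') - varphi(p) - psi(q') with x = (p, f(p)), x' = (p', f(p'))
    return cost(p, f(p_prime)) - varphi(p) - psi_on_graph(p_prime)


def cross_difference(p, q, p0, q0):
    return cost(p, q0) + cost(p0, q) - cost(p, q) - cost(p0, q0)


p, p_prime = rng.normal(size=n), rng.normal(size=n)
lhs = cross_difference(p, f(p), p_prime, f(p_prime))
rhs = c_div(p, p_prime) + c_div(p_prime, p)
print(lhs, rhs)
```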

Remark 3

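As an extension of (18), we may consider three pairs of points instead of two. Given \(x_i = (p_i, q_i)\), \(i = 1, 2, 3\), on G, we have

$$\begin{aligned} \mathbf{D}[x_3 : x_2] + \mathbf{D}[x_2 : x_1] - \mathbf{D}[x_3 : x_1] = c(p_2, q_1) + c(p_3, q_2) - c(p_2, q_2) - c(p_3, q_1). \end{aligned}$$(19)
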
This identity was first observed in [42, Section 3.3]. In terms of optimal transport, this equals the excess transport cost (which can be positive or negative) of the coupling \((p_1 \rightarrow q_3, p_2 \rightarrow q_1, p_3 \rightarrow q_2)\) over \((p_1 \rightarrow q_3, p_2 \rightarrow q_2, p_3 \rightarrow q_1)\), and reduces to the cross-difference when \(x_1 = x_3\). Since a divergence is locally quadratic (see (3)), the left-hand side of (19) may be called a “Pythagorean expression”. Such expressions play an important role in information geometry. Specifically, both the Bregman and \(L^{(\alpha )}\)-divergences satisfy a generalized Pythagorean theorem [51, Theorem 16] which characterizes the sign of (19) in terms of the Riemannian angle of a primal-dual geodesic triangle.
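
Consider the three-point expression \(\mathbf{D}[x_3 : x_2] + \mathbf{D}[x_2 : x_1] - \mathbf{D}[x_3 : x_1]\), i.e., the excess cost \(c(p_2, q_1) + c(p_3, q_2) - c(p_2, q_2) - c(p_3, q_1)\) described above. For the quadratic cost of Example 2 it simplifies to \((p_3 - p_2) \cdot (q_1 - q_2)\). Its sign is therefore that of a Euclidean pairing between a displacement in primal coordinates and a displacement in dual coordinates. The sketch below checks this simplification, assuming the illustrative potential \(\phi (p) = \sum _i e^{p^i}\) with \(q = D\phi (p) = e^p\).

```python
import numpy as np

rng = np.random.default_rng(5)


def phi(p):               # illustrative convex potential
    return np.sum(np.exp(p))


def grad_phi(p):          # f = D phi
    return np.exp(p)


def bregman(p, p_prime):  # c-divergence D[x : x'] for the quadratic cost (Example 2)
    return phi(p) - phi(p_prime) - grad_phi(p_prime) @ (p - p_prime)


p1, p2, p3 = (rng.normal(size=3) for _ in range(3))
q1, q2 = grad_phi(p1), grad_phi(p2)

three_point = bregman(p3, p2) + bregman(p2, p1) - bregman(p3, p1)
pairing = (p3 - p2) @ (q1 - q2)
print(three_point, pairing)
```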

Example 5

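(Quadratic cost) Suppose \(M = M' = {\mathbb {R}}^n\) and \(c(p, q') = \frac{1}{2}|p - q'|^2\) as in Example 2. It is easy to verify that the matrix of the pseudo-Riemannian metric is given by

$$\begin{aligned} h = \frac{1}{2} \begin{pmatrix} 0 & I \\ I & 0 \end{pmatrix}, \end{aligned}$$(20)
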
where I is the \(n \times n\) identity matrix. If \(v = a^i \frac{\partial }{\partial p^i} + b^{{\bar{i}}} \frac{\partial }{\partial q'^{{\bar{i}}}}\) is a tangent vector, then (20) gives \(h(p, q')(v, v) = a^1 b^{{\bar{1}}} + \cdots + a^n b^{{\bar{n}}}\).

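Consider the pseudo-Euclidean space \({\mathbb {R}}_n^{2n} := \{(x, y): x, y \in {\mathbb {R}}^n\}\) with the metric

$$\begin{aligned} -\sum _{i=1}^n (\mathrm {d}x^i)^2 + \sum _{i=1}^n (\mathrm {d}y^i)^2. \end{aligned}$$
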
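It is easy to verify that the mapping

$$\begin{aligned} (p, q') \mapsto (x, y) = \left( \frac{p - q'}{2}, \frac{p + q'}{2} \right) \end{aligned}$$
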
is an isometry from \((M \times M', h)\) to \({\mathbb {R}}_n^{2n}\). Note that \({\mathbb {R}}_n^{2n}\) is, up to isometries, the unique space form (complete connected pseudo-Riemannian manifold with constant curvature) with signature (n, n) and zero curvature; see [38, Corollary 8.24]. As we shall see in Example 7, the dually flat geometry of Bregman divergence follows directly from our framework and the flatness of the pseudo-Euclidean space.
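
The isometry claim reduces to one line of algebra. Assume, as in the convention of [38], that \({\mathbb {R}}_n^{2n}\) carries the metric \(-\sum _i (\mathrm {d}x^i)^2 + \sum _i (\mathrm {d}y^i)^2\), and take the mapping to be \((p, q') \mapsto \left( \frac{p - q'}{2}, \frac{p + q'}{2}\right) \). Then a tangent vector \(v = a^i \frac{\partial }{\partial p^i} + b^{{\bar{i}}} \frac{\partial }{\partial q'^{{\bar{i}}}}\) is sent to \(\left( \frac{a - b}{2}, \frac{a + b}{2}\right) \), and

```latex
-\left|\frac{a-b}{2}\right|^2 + \left|\frac{a+b}{2}\right|^2
  = \frac{1}{4}\left(|a+b|^2 - |a-b|^2\right)
  = a \cdot b
  = h_{(p, q')}(v, v).
```

The two symmetric bilinear forms then agree on all pairs of tangent vectors by polarization.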

Example 6

(General convex cost) Suppose again \(M = M' = {\mathbb {R}}^n\). Now let \(c(p, q') = \varPsi (p - q')\) where \(\varPsi \) is strictly convex. The solution to this transport problem is given by Gangbo and McCann [17]. The pseudo-Riemannian metric is given in Euclidean coordinates by

$$\begin{aligned} h = \frac{1}{2} \begin{pmatrix} 0 & D^2 \varPsi (p - q') \\ D^2 \varPsi (p - q') & 0 \end{pmatrix}, \end{aligned}$$

where \(D^2 \varPsi \) is the Hessian matrix of \(\varPsi \). Khan and Zhang [19] expressed the MTW tensor for this cost in terms of the bisectional curvature of a certain Kähler manifold.

3 Connecting the two geometries

We show that the pseudo-Riemannian framework encodes the dualistic structure in information geometry. In essence, the pseudo-Riemannian metric h on the product manifold \(M \times M'\) induces, in a sense to be made precise, the dualistic structure \((g, \nabla , \nabla ^*)\) of the c-divergence on the graph G regarded as a submanifold of \(M \times M'\).

3.1 Preliminaries

We begin with some notation and preliminary results. Consider again the graph

$$\begin{aligned} G = \{ (p, f(p)) : p \in M \} \subset M \times M' \end{aligned}$$

equipped with the c-divergence \(\mathbf{D}\) given by (7). Fix local coordinate systems \(\xi = (\xi ^1, \ldots , \xi ^n)\) on M and \(\eta ' = (\eta '^{{\bar{1}}}, \ldots , \eta '^{{\bar{n}}})\) on \(M'\). Then \((\xi , \eta ')\) is a coordinate system on \(M \times M'\). By an abuse of notation, the coordinates are related on G by \(\eta = f(\xi )\). Projecting G to M (using \((p, f(p)) \mapsto p\)) and \(M'\) (using \((p, f(p)) \mapsto q = f(p)\)) respectively, we may regard \(\xi \) and \(\eta \) as local coordinate systems of G. We call \(\xi \) the primal coordinates and \(\eta \) the dual coordinates on G. Note that they are related by the transport map f. See Fig. 2 for an illustration (also see Remark 1 and compare with [42, Figure 2]).

As mentioned in Sect. 1.1, the c-divergence \(\mathbf{D}\), given by (7), induces a dualistic structure \((g, \nabla , \nabla ^*)\) on G, where g is a Riemannian metric and \(\nabla \) and \(\nabla ^*\) are torsion-free affine connections. Let us give the coordinate representation of these objects (see [1, Chapter 6] and [7, Chapter 11] for more details). Suppose we use the primal coordinate system \(\xi \) on G. Writing \(\mathbf{D} = \mathbf{D}[\xi : \xi ']\) as a function of \((\xi , \xi ')\), we have

Here \(\varGamma _{ijk}\) and \(\varGamma _{ijk}^*\) are the Christoffel symbols of \(\nabla \) and \(\nabla ^*\) respectively. Also we define \(\varGamma _{ij}{}^{k} (\xi ) = \varGamma _{ijm}(\xi ) g^{mk}(\xi )\) and \(\varGamma ^*_{ij}{}^{k} (\xi ) = \varGamma ^*_{ijm}(\xi ) g^{mk}(\xi )\), where \((g^{ij})\) is the inverse matrix of \((g_{ij})\). For instance, if \(\partial _i = \partial /\partial \xi ^i\), then \(\nabla _{\partial _i} \partial _j = {\varGamma _{ij}}^k \partial _k\). Similarly, we can write down the coefficients in terms of the dual coordinates \(\eta = f(\xi )\). We denote by \(\frac{\partial \eta }{\partial \xi } = \left( \frac{\partial \eta ^{{\bar{i}}}}{\partial \xi ^j} \right) \) the Jacobian matrix of the transition map \(\xi \mapsto \eta \). Its inverse is given by \(\frac{\partial \xi }{\partial \eta } = \left( \frac{\partial \xi ^i}{\partial \eta ^{{\bar{j}}}} \right) \).
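Since the display (22) is referred to repeatedly below, we recall for convenience the standard relations (commonly attributed to Eguchi [12]) by which a divergence induces a dualistic structure. We assume, without having checked the sign convention against (22), that they read as follows, with all derivatives evaluated on the diagonal \(\xi ' = \xi \):

$$\begin{aligned} g_{ij}(\xi ) &= -\frac{\partial ^2}{\partial \xi ^i \partial \xi '^j} \mathbf{D}[\xi : \xi '] \Big |_{\xi ' = \xi }, \\ \varGamma _{ijk}(\xi ) &= -\frac{\partial ^3}{\partial \xi ^i \partial \xi ^j \partial \xi '^k} \mathbf{D}[\xi : \xi '] \Big |_{\xi ' = \xi }, \\ \varGamma ^*_{ijk}(\xi ) &= -\frac{\partial ^3}{\partial \xi '^i \partial \xi '^j \partial \xi ^k} \mathbf{D}[\xi : \xi '] \Big |_{\xi ' = \xi }. \end{aligned}$$

For the Bregman divergence \(\mathbf{D}[\xi : \xi '] = \phi (\xi ) - \phi (\xi ') - \langle \nabla \phi (\xi '), \xi - \xi ' \rangle \) these give \(g_{ij} = \partial _i \partial _j \phi \), \(\varGamma _{ijk} = 0\) and \(\varGamma ^*_{ijk} = \partial _i \partial _j \partial _k \phi \) in primal coordinates, consistent with the dually flat geometry discussed in Example 7.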

Write \(c = c(\xi , \eta ')\) as a function of \((\xi , \eta ')\) (locally in \(M \times M'\)) and consider the matrix \((c_{i:{\bar{j}}})\) of cross derivatives given by (14). We denote its inverse (which exists since c is non-degenerate) by \((c^{{\bar{i}} : j})\). This means that

where \(\delta _i^j\) and its analogues are Kronecker deltas. Differentiating (23), we obtain the following useful identities that are also used in [21].

Lemma 2

Under the local coordinate system \((\xi , \eta ')\) in \(M \times M'\), we have

With these notations we are ready to express the dualistic structure of the c-divergence \(\mathbf{D}\) on G.

Lemma 3

Under the primal coordinate system \(\xi \) of G, we have

where mixed derivatives such as \(c_{i : {\bar{m}}}\) are evaluated at \((\xi , \eta ) = (\xi , \eta (\xi ))\), so that the coefficients are functions of \(\xi \).

Similarly, the coefficients of g and \(\nabla ^*\) under the dual coordinate system \(\eta \) are given as follows:

Proof

Express the c-divergence (7) in terms of local coordinates. Then (25) and (26) follow from the definition (22) by direct differentiation. Computations for the special case where \(\mathbf{D}\) is an \(L^{(\alpha )}\)-divergence can be found in [42, 51]. \(\square \)

Note that \((g_{ij})\) and \((g^{{\bar{i}}{\bar{j}}})\) in (25) and (26) are by construction symmetric even though this may not be apparent from the formulas. Next we consider the pseudo-Riemannian metric h introduced in Sect. 2.2. The following result is taken from [21, Lemma 4.1].

Lemma 4

Equip the product manifold \(M \times M'\) with the pseudo-Riemannian metric h. Let \({\bar{\nabla }}\) be the Levi–Civita connection induced by h and let \({\bar{\varGamma }}_{\cdot \cdot }{}^{\cdot }\) be its Christoffel symbols. In the local coordinates \(\xi = (\xi ^1, \ldots , \xi ^n)\) for M and \(\eta ' = (\eta '^{{\bar{1}}}, \ldots , \eta '^{{\bar{n}}})\) for \(M'\), the only non-vanishing Christoffel symbols are

where the derivatives are evaluated at \((\xi , \eta ')\).

3.2 Metrics and connections

We are now ready to connect the two geometries, namely \((G, g, \nabla , \nabla ^*)\) and \((M \times M', h)\). We first give two results concerning the metrics and the connections that are intuitive and easy to state; the curvature tensors will be studied in Sect. 3.3.

First we consider the metrics. Recall that we have the canonical inclusion and decomposition

where we again abuse notation and identify points with their coordinates. A generic element v of \(T_{(\xi , \eta )} G\) has the form

where \((a^1, \ldots , a^n) \in {\mathbb {R}}^n\) and \(\frac{\partial \eta }{\partial \xi }\) is the Jacobian of the coordinate expression of f.

Theorem 2

(g as restriction of h to G) For any \((p, f(p)) \in G\) we have

Thus the information-geometric Riemannian metric g is the restriction of h to G.

Proof

Simply evaluate (17) where \(\eta ' = \eta \) and v is given by (29), and compare with (25). (Also see (18).) \(\square \)
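Theorem 2 can be checked by hand in the quadratic case. The Python sketch below (assuming NumPy) takes a hypothetical convex potential \(\phi \), so that \(f = \nabla \phi \) and \(\frac{\partial \eta }{\partial \xi } = D^2 \phi \), evaluates h on two vectors of the form (29), and compares the result with \(g = D^2 \phi \) (see Example 7). It assumes that for \(c(p, q') = \frac{1}{2}|p - q'|^2\) the matrix of h is \(\frac{1}{2}\begin{pmatrix} 0 & I \\ I & 0 \end{pmatrix}\), i.e., the Kim–McCann normalization with \((c_{i:{\bar{j}}}) = -I\).

```python
import numpy as np

# Hypothetical convex potential phi(p) = sum_i exp(p_i) + p^T A p / 2 (illustration only)
A = np.array([[2.0, 0.5], [0.5, 1.0]])

def hess_phi(p):
    return np.diag(np.exp(p)) + A

n = 2
I, Z = np.eye(n), np.zeros((n, n))
# Assumed matrix of h for the quadratic cost c(p, q') = |p - q'|^2 / 2
h = 0.5 * np.block([[Z, I], [I, Z]])

p = np.array([0.2, -0.4])
J = hess_phi(p)                   # Jacobian of the Brenier map f = grad(phi) at p
a = np.array([1.0, 2.0])
b = np.array([-0.5, 0.3])
v = np.concatenate([a, J @ a])    # tangent vectors to G of the form (29)
w = np.concatenate([b, J @ b])

lhs = v @ h @ w                   # h evaluated on vectors tangent to G
rhs = a @ J @ b                   # g(a, b) with g = D^2 phi in primal coordinates
print(np.isclose(lhs, rhs))       # prints: True
```

Note that h itself is indefinite (for instance \(h(a \oplus (-a), a \oplus (-a)) = -|a|^2\)); only its restriction to G is positive definite.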

Next consider the primal and dual connections \(\nabla \), \(\nabla ^*\) on G as well as the Levi–Civita connection \({\bar{\nabla }}\) on \(M \times M'\).

Definition 3

(Projection maps) We define projection maps \(\pi _0, \pi _1 : M \times f(M) \rightarrow G\) by

for \(x = (p, q') \in M \times f(M)\).

See Fig. 3 for an illustration. Motivated by this figure, we think of \(\pi _0\) as the vertical projection and \(\pi _1\) as the horizontal projection onto G. Note that if \(v = v_0 \oplus v_1\) as in (28), then \((\pi _i)_* v = v_i\), \(i = 0, 1\), where \((\pi _i)_*\) denotes the differential of \(\pi _i\).

Given a mapping \(\pi : M \times f(M) \rightarrow G\) (which in our case is the projection \(\pi _0\) or \(\pi _1\)) and the connection \({\bar{\nabla }}\) on \(M \times M'\), we define an induced connection \({\bar{\nabla }}^{\pi }\) on G as follows. Let X, Y be given vector fields on G. For a fixed point x of G, we may extend X and Y to vector fields \({\tilde{X}}, {\tilde{Y}}\) on a neighborhood of x in \(M \times M'\), so that \({\bar{\nabla }}\) may be applied to \(({\tilde{X}}, {\tilde{Y}})\) near x. Note that \( {\bar{\nabla }}_{{\tilde{X}}} {\tilde{Y}} |_x \in T_x (M \times M')\) is not necessarily tangent to G. We define

where \(\pi _* : T(M \times M') \rightarrow TG\) is the differential of \(\pi \). Since X and Y are tangent to G, it is easily verified that (32) unambiguously defines a torsion-free affine connection on G.

Theorem 3

We have \(\nabla = {\bar{\nabla }}^{\pi _0}\) and \(\nabla ^* = {\bar{\nabla }}^{\pi _1}\).

Proof

Consider the coordinate system \((\xi , \eta ')\) on \(M \times M'\) as in Sect. 3.1. Write \(\partial _i = \frac{\partial }{\partial \xi ^i}\) and \(\partial _{{\bar{i}}} = \frac{\partial }{\partial \eta '^{{\bar{i}}}}\). Let X and Y be vector fields on G, and let their local extensions in \(M \times M'\) be

By Lemma 4, the covariant derivative \({\bar{\nabla }}_{{\tilde{X}}} {\tilde{Y}}\) is given by

Evaluating at the point \(x = (p, f(p))\) and using the primal coordinates \(\xi \) on G, we have

Comparing Lemma 4 with Lemma 3, we have \({\bar{\varGamma }}_{ij} {}^{k}(\xi , \eta ) = \varGamma _{ij}{}^{k}(\xi )\). So the last expression is equal to \(\nabla _X Y\). A similar computation in the dual coordinates shows that \(\nabla ^* = {\bar{\nabla }}^{\pi _1}\). \(\square \)

Recall that \(T_{(p, f(p))} G \subset T_{(p, f(p))} (M \times M') = T_p M \oplus T_{f(p)} M'\). Using this decomposition, define mappings \(\iota _0, \iota _1: TG \rightarrow T(M \times M')\) as follows. If \(v = v_0 \oplus v_1 \in T_{(p, f(p))} G \subset T_p M \oplus T_{f(p)} M'\), define \(\iota _0(v), \iota _1(v) \in T_pM \oplus T_{f(p)} M'\) by

In coordinates, if \(v = a^i \partial _i + a^{{\bar{i}}} \partial _{{\bar{i}}}\), then \(\iota _0(v) = a^i \partial _i + 0\) and \(\iota _1(v) = 0 + a^{{\bar{i}}} \partial _{{\bar{i}}}\). Geometrically, \(\iota _0(v)\) and \(\iota _1(v)\) are respectively the horizontal and vertical components of v in \(T (M \times M')\). Now for vector fields X, Y on G, we may rewrite the identity (33) in the form

Example 7

(Geometry of Bregman divergence) As an illustration of the relation between the two geometries, let us consider the dualistic geometry of Bregman divergence. From Example 2, this corresponds to the case where \(M = M' = {\mathbb {R}}^n\) and c is the quadratic cost \(c(p, q') = \frac{1}{2} |p - q'|^2\). In Euclidean coordinates, the matrix of the pseudo-Riemannian metric h, given by (20), is constant. By Lemma 4, the Christoffel symbols of the Levi–Civita connection \({\bar{\nabla }}\) all vanish, so the \({\bar{\nabla }}\)-geodesics are constant-velocity straight lines in \({\mathbb {R}}^n \times {\mathbb {R}}^n\).

For the quadratic transport, the graph G has the form

where \(\phi \) is a convex function. (The results still hold if \(\phi \) is only defined on an open convex domain in \({\mathbb {R}}^n\).) So \(q = \nabla \phi (p)\) are the dual coordinates obtained from the Legendre transformation [1, Chapter 1] or, equivalently, from the Brenier map. From (34), the Christoffel symbols of \(\nabla \) (resp. \(\nabla ^*\)) in the primal (resp. dual) coordinates vanish. So the primal (resp. dual) geodesics on G are straight lines in the primal (resp. dual) coordinates. Thus we recover the classical dually flat geometry. Also, from the first equation in (25), since \((c_{i:{\bar{j}}}) = -I\) and \(\frac{\partial q}{\partial p} = D^2 \phi \), the Riemannian metric is given in primal coordinates by \((g_{ij}(p)) = D^2 \phi (p)\), the Hessian of \(\phi \).
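The conclusions of Example 7 can also be checked numerically from the divergence itself. The Python sketch below (assuming NumPy) differentiates a Bregman divergence by central finite differences and recovers \(g = D^2 \phi \) and \(\varGamma _{ijk} = 0\) in primal coordinates. It assumes the Bregman divergence is written as \(\mathbf{D}[\xi : \xi '] = \phi (\xi ) - \phi (\xi ') - \langle \nabla \phi (\xi '), \xi - \xi ' \rangle \), and it uses the sign convention \(g_{ij} = -\partial _{\xi ^i} \partial _{\xi '^j} \mathbf{D}\) and \(\varGamma _{ijk} = -\partial _{\xi ^i} \partial _{\xi ^j} \partial _{\xi '^k} \mathbf{D}\) on the diagonal. The potential \(\phi (x) = \sum _i e^{x_i}\) and all function names are hypothetical.

```python
import numpy as np

def phi(x):
    # Hypothetical strictly convex potential (illustration only)
    return np.sum(np.exp(x))

def grad_phi(x):
    return np.exp(x)

def bregman(x, xp):
    # D[x : x'] = phi(x) - phi(x') - <grad phi(x'), x - x'>; the argument order is an assumption
    return phi(x) - phi(xp) - grad_phi(xp) @ (x - xp)

def metric(D, x, h=1e-4):
    """g_ij(x) = -d^2 D[x : x'] / dx^i dx'^j at x' = x, by central differences."""
    n = x.size
    E = h * np.eye(n)
    g = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            g[i, j] = -(D(x + E[i], x + E[j]) - D(x + E[i], x - E[j])
                        - D(x - E[i], x + E[j]) + D(x - E[i], x - E[j])) / (4 * h * h)
    return g

def primal_christoffel(D, x, h=1e-3):
    """Gamma_ijk(x) = -d^3 D[x : x'] / dx^i dx^j dx'^k at x' = x, by central differences."""
    n = x.size
    E = h * np.eye(n)
    gamma = np.empty((n, n, n))
    for k in range(n):
        def d_k(y):
            # central difference in the second argument, direction k
            return (D(y, x + E[k]) - D(y, x - E[k])) / (2 * h)
        for i in range(n):
            for j in range(n):
                gamma[i, j, k] = -(d_k(x + E[i] + E[j]) - d_k(x + E[i] - E[j])
                                   - d_k(x - E[i] + E[j]) + d_k(x - E[i] - E[j])) / (4 * h * h)
    return gamma

x = np.array([0.1, -0.3, 0.6])
g = metric(bregman, x)
gamma = primal_christoffel(bregman, x)
print(np.allclose(g, np.diag(np.exp(x)), atol=1e-5))  # g equals the Hessian of phi
print(np.allclose(gamma, 0.0, atol=1e-5))              # primal Christoffel symbols vanish
```

The step sizes are a compromise between truncation and round-off error; the tolerance 1e-5 is generous for both.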

3.3 Curvature tensors

Next we study the Riemann curvature tensors \({\bar{R}}\) of \({\bar{\nabla }}\) on \(M \times M'\), and \(R, R^*\) of \(\nabla \) and \(\nabla ^*\) respectively on G. To fix notation, we define the Riemann curvature tensor (say for the primal connection \(\nabla \)) by

where [X, Y] is the Lie bracket. In coordinates, we write

and \({R_{ijk}}^{\ell } = R_{ijkm} g^{m\ell }\), so that \(R(\partial _i, \partial _j) \partial _k = {R_{ijk}}^{\ell } \partial _{\ell }\). We have

See e.g. [1, Section 5.8]. The notations for \(R^*\) (on G) and \({\bar{R}}\) (on \(M \times M'\)) are analogous. Note that for \({\bar{R}}\) the indices run through both \(\xi \) and \(\eta '\).
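For the reader's convenience, we spell out how these coefficients are obtained from the Christoffel symbols, assuming (in line with the conventions of [24]) that the curvature is defined by \(R(X, Y)Z = \nabla _X \nabla _Y Z - \nabla _Y \nabla _X Z - \nabla _{[X, Y]} Z\). Since coordinate vector fields commute, \(R(\partial _i, \partial _j)\partial _k = \nabla _{\partial _i} \nabla _{\partial _j} \partial _k - \nabla _{\partial _j} \nabla _{\partial _i} \partial _k\), and applying \(\nabla _{\partial _i} \partial _j = {\varGamma _{ij}}^k \partial _k\) twice gives

$$\begin{aligned} {R_{ijk}}^{\ell } = \partial _i {\varGamma _{jk}}^{\ell } - \partial _j {\varGamma _{ik}}^{\ell } + {\varGamma _{jk}}^{m} {\varGamma _{im}}^{\ell } - {\varGamma _{ik}}^{m} {\varGamma _{jm}}^{\ell }. \end{aligned}$$

The same formula with \(\varGamma ^*\) gives \(R^*\), and with \({\bar{\varGamma }}\) (indices ranging over both \(\xi \) and \(\eta '\)) gives \({\bar{R}}\).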

Lemma 5

In the coordinates \((\xi , \eta ')\), the coefficients of \({\bar{R}}\) are zero unless the number of unbarred and barred indices is equal, in which case the coefficient can be inferred from \({\bar{R}}_{ij{\bar{k}}{\bar{\ell }}} = 0\) and

using the symmetries of the curvature tensor.

Proof

This is a computation (done in [21, Lemma 4.1]) involving Lemma 4, Lemma 2 and (37), which is straightforward once one is familiar with the notation. Note that our expressions differ from [21, (4.2)] by a sign; this is due to the difference in the tensorial notation (37).

For later use we also record the symmetries of the coefficients:

See for example [24, Proposition 7.4] whose notations are the same as ours. These symmetries hold in both the Riemannian and pseudo-Riemannian cases. Note that (39) gives all the coefficients of R that are possibly nonzero. \(\square \)

Lemma 6

(i) In primal coordinates, we have

$$\begin{aligned} R_{ijk\ell }(\xi ) = -2 {\bar{R}}_{i{\bar{\alpha }}{\bar{\beta }}k}(\xi , \eta (\xi )) \frac{\partial \eta ^{{\bar{\alpha }}}}{\partial \xi ^j} \frac{\partial \eta ^{{\bar{\beta }}}}{\partial \xi ^{\ell }} + 2 {\bar{R}}_{j{\bar{\alpha }}{\bar{\beta }}k}(\xi , \eta (\xi )) \frac{\partial \eta ^{{\bar{\alpha }}}}{\partial \xi ^i} \frac{\partial \eta ^{{\bar{\beta }}}}{\partial \xi ^{\ell }}. \end{aligned} \tag{40}$$

(ii) In dual coordinates, we have

$$\begin{aligned} R^*_{{\bar{i}}{\bar{j}}{\bar{k}}{\bar{\ell }}}(\eta ) = -2 {\bar{R}}_{\alpha {\bar{i}}{\bar{k}} \beta }(\xi (\eta ), \eta ) \frac{\partial \xi ^{\alpha }}{\partial \eta ^{{\bar{j}}}} \frac{\partial \xi ^{\beta }}{\partial \eta ^{{\bar{\ell }}}} + 2 {\bar{R}}_{\alpha {\bar{j}}{\bar{k}} \beta }(\xi (\eta ), \eta ) \frac{\partial \xi ^{\alpha }}{\partial \eta ^{{\bar{i}}}} \frac{\partial \xi ^{\beta }}{\partial \eta ^{{\bar{\ell }}}} . \end{aligned} \tag{41}$$

Proof

The proof is similar to that of [21, Lemma 4.1]; here we use Lemma 3, keeping in mind that in the primal case (say) \(\eta = \eta (\xi )\) is a function of \(\xi \). For example, we have

The dual case is similar. \(\square \)

Definition 4

(Unnormalized sectional curvature) Let X, Y be tangent vectors at the same point of \(M \times M'\). We define the unnormalized sectional curvature of \({\bar{R}}\) by

Similarly, we define

when X and Y are tangent to G.

Remark 4

(The Ma–Trudinger–Wang tensor) At a point \(x = (p, q') \in M \times M'\), let \(X = u \oplus 0\) and \(Y = 0 \oplus {\bar{v}}\) where \(u \in T_p M\) and \({\bar{v}} \in T_{q'} M'\). Following Kim and McCann [21], the Ma–Trudinger–Wang (MTW) tensor (see [16, (5)], where a different multiplicative constant is used) can be expressed intrinsically as the unnormalized (cross) sectional curvature

The cost c is said to be weakly regular if \({\mathfrak {S}} \ge 0\) whenever \(u \oplus {\bar{v}}\) is a null tangent vector, i.e., \(h(u \oplus {\bar{v}}, u \oplus {\bar{v}}) = 0\) [21, Definition 2.3]. Note that in this case we have \(h(X, X) h(Y, Y) - h(X, Y)^2 = 0\): by the form of h (see (16)) we have \(h(X, X) = h(Y, Y) = 0\), so \(h(u \oplus {\bar{v}}, u \oplus {\bar{v}}) = 2h(X, Y)\) and hence \(h(X, Y) = 0\) for a null vector. This is why one considers the unnormalized sectional curvature instead of the usual one. We refer the reader to [21, 32, 49] and their references for how this condition comes into play in the regularity theory of optimal transport maps. In Corollary 1 we give a new information-geometric interpretation of this quantity.

Now we are ready to state an interesting relation among the unnormalized sectional curvatures.

Theorem 4

Let \(X, Y \in T_{(p, f(p))}G \subset T_{(p, f(p))} (M \times M')\). Then

In particular, suppose the dualistic structure \((g, \nabla , \nabla ^*)\) on G has constant information-geometric sectional curvature \(\lambda \in {\mathbb {R}}\). By definition, this means that

for X, Y tangent to G. Then

for X, Y tangent to G.

Proof

Write \(X = x^i \partial _i + x^{{\bar{i}}} \partial _{{\bar{i}}}\) and \(Y = y^i \partial _i + y^{{\bar{i}}} \partial _{{\bar{i}}}\). Using (42), Lemma 5 and the symmetries (39) of \({\bar{R}}\), we have

Since X and Y are tangent to G, from (29) we have \(x^{{\bar{i}}} = x^j \frac{\partial \eta ^{{\bar{i}}}}{\partial \xi ^j}\) and \(x^i = x^{{\bar{j}}} \frac{\partial \xi ^i}{\partial \eta ^{{\bar{j}}}}\) (and similarly for Y). In primal coordinates on G, we have \(X = x^i \partial _i\) and \(Y = y^i \partial _i\). We then compute

where the last identity follows from Lemma 6. Similarly, working in dual coordinates, we get

The result follows by averaging. \(\square \)

3.4 Divergence between geodesics

Consider a Riemannian manifold with distance d. If \(\gamma (s)\) and \(\sigma (t)\) are two arc-length parameterized geodesics starting at the same point when \(s = t = 0\), then

where \(\kappa \) is the sectional curvature of the plane spanned by \({\dot{\gamma }}(0)\) and \({\dot{\sigma }}(0)\), and \(\theta \) is the angle between the initial velocities (see for example [21, (4.9)]). This is the classical geometric interpretation of sectional curvature. In this section we extend this result to a c-divergence. Naturally, this involves the primal and dual geodesics rather than the Riemannian geodesics. The special case of the \(L^{(\alpha )}\)-divergence is given in [53]. This result (and its proof) is closely related to, but different from, [21, Lemma 4.5], which extends (51) to the pseudo-Riemannian framework with a general cost function.
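As a sanity check of the classical expansion, assumed here to take the standard form \(d^2(\gamma (s), \sigma (t)) = s^2 + t^2 - 2st \cos \theta - \frac{\kappa }{3} s^2 t^2 \sin ^2 \theta + \cdots \), the Python sketch below (assuming NumPy) compares exact geodesic distances on the unit sphere (\(\kappa = 1\)) with the Euclidean law of cosines and with the curvature-corrected expansion. The helper names are hypothetical.

```python
import numpy as np

def great_circle(x, v, s):
    # Unit-speed geodesic on the unit sphere from x with unit initial velocity v (v orthogonal to x)
    return np.cos(s) * x + np.sin(s) * v

def sphere_dist(a, b):
    # Numerically stable geodesic distance between unit vectors
    return np.arctan2(np.linalg.norm(np.cross(a, b)), a @ b)

x = np.array([0.0, 0.0, 1.0])
theta = 0.9                                       # angle between the initial velocities
u = np.array([1.0, 0.0, 0.0])
w = np.array([np.cos(theta), np.sin(theta), 0.0])
kappa = 1.0                                       # sectional curvature of the unit sphere

s, t = 0.05, 0.04
d2 = sphere_dist(great_circle(x, u, s), great_circle(x, w, t)) ** 2
flat = s**2 + t**2 - 2 * s * t * np.cos(theta)    # Euclidean law of cosines
expansion = flat - (kappa / 3) * s**2 * t**2 * np.sin(theta) ** 2

print(abs(d2 - flat), abs(d2 - expansion))
```

The curvature term accounts for almost all of the discrepancy between the exact squared distance and the Euclidean law of cosines; the remaining error is of higher order in (s, t).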

Theorem 5

Consider the graph G and equip it with the dualistic structure \((g, \nabla , \nabla ^*)\) induced by a c-divergence \(\mathbf{D}\). Let \(\gamma (s) = (p(s), f(p(s)))\) be a primal geodesic and \(\sigma (t) = (f^{-1}(q'(t)), q'(t))\) be a dual geodesic with \(\gamma (0) = \sigma (0) = x\). Letting

we have

Theorem 5 may be regarded as an interpretation of the MTW tensor (44) on the graph G. It should be compared with the standard interpretation (see e.g. [11, (4.13)]) which involves the c-exponential map.

Corollary 1

(Information-geometric interpretation of the MTW tensor) In the context of Theorem 5, let \((p, q) \in G\), \(u \in T_p M\) and \({\bar{v}} \in T_q M'\). Let \(\gamma \) and \(\sigma \) be respectively primal and dual geodesics whose initial velocities match u and \({\bar{v}}\) (when expressed in the respective coordinates). Then the MTW tensor can be expressed as

Although the statement of Theorem 5 (as well as the proof) is very similar to [21, Lemma 4.5], the two results are not the same. In (52), both \(\gamma \) and \(\sigma \) are curves in G. On the other hand, in [21, Lemma 4.5] one is a “horizontal” curve and the other one is “vertical”. Before giving the proof of Theorem 5 let us give some examples. Another application to the \(L^{(\alpha )}\)-divergence is given in Corollary 2.

Example 8

(Bregman divergence) Consider the Bregman divergence \(\mathbf{D}\) as in (2). Let \(\xi \) and \(\eta '\) be respectively the primal and dual coordinates. By Example 7, the primal and dual geodesics are given respectively by \(\xi (s) = \xi (0) + {\dot{\xi }}(0)s\) and \(\eta '(t) = \eta '(0) + {\dot{\eta }}'(0) t\). This gives

which is consistent with the dual flatness. More about the constant curvature case is studied in Sect. 4.
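To see why the mixed derivative vanishes, assume that the Bregman divergence (2) of a convex function \(\phi \) is written as \(\mathbf{D}[\xi : \xi '] = \phi (\xi ) - \phi (\xi ') - \langle \nabla \phi (\xi '), \xi - \xi ' \rangle \). By the Fenchel–Young equality \(\phi (\xi ') + \phi ^*(\eta ') = \langle \xi ', \eta ' \rangle \) with \(\eta ' = \nabla \phi (\xi ')\), it can be written in mixed coordinates, and along the two geodesics above we get

$$\begin{aligned} \mathbf{D}[\gamma (s) : \sigma (t)]&= \phi (\xi (s)) + \phi ^*(\eta '(t)) - \langle \xi (s), \eta '(t) \rangle , \\ \langle \xi (s), \eta '(t) \rangle&= \langle \xi (0), \eta '(0) \rangle + s \langle {\dot{\xi }}(0), \eta '(0) \rangle + t \langle \xi (0), {\dot{\eta }}'(0) \rangle + st \langle {\dot{\xi }}(0), {\dot{\eta }}'(0) \rangle , \\ \frac{\partial ^4}{\partial s^2 \partial t^2} \mathbf{D}[\gamma (s) : \sigma (t)]&= 0. \end{aligned}$$

The first term depends only on s, the second only on t, and the third is bilinear in (s, t), so each term is annihilated by \(\partial _s^2 \partial _t^2\), whatever normalization is used in (52) and (53).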

Example 9

(Quadratic cost on a Riemannian manifold) Let M be a Riemannian manifold with geodesic distance \(d(p, q')\). Consider the cost function \(c(p, q') = \frac{1}{2} d^2(p, q')\) on \(M \times M\). Let \(f = \mathrm {Id}\) be the identity transport, so that G is the diagonal of \(M \times M\). The corresponding c-divergence is \(\mathbf{D} [ (p, p) : (p', p') ] = \frac{1}{2} d^2(p, p')\) (see Example 1). Identifying M and G (as smooth manifolds) under the natural map \(p \mapsto (p, p)\), it is easily shown that the dualistic structure \((g, \nabla , \nabla ^*)\) reduces to the Riemannian structure of M, i.e., g is the Riemannian metric of M and \(\nabla = \nabla ^*\) are equal to the Riemannian Levi–Civita connection of g. In particular, the primal and dual geodesics are simply Riemannian geodesics. By (51) and (53) we immediately get \({\mathfrak {S}} = \frac{2}{3} \kappa \sin ^2 \theta \), where \(\kappa \) is the Riemannian sectional curvature of the plane spanned by (u, v) and \(\cos \theta = g(u, v)\). This recovers [29, Theorem 3.8]. Also see [21, Example 3.6].

Proof of Theorem 5

Again we use the primal coordinates for \(\gamma \) and the dual coordinates for \(\sigma \). The first equality follows directly from (7). Computing the derivative (52), we have

By Lemma 3, the primal and dual geodesic equations are given by

Plugging into (54) and simplifying, we have

Comparing this with (42) gives the result. \(\square \)

4 Costs with constant sectional curvature

The quadratic cost and Bregman divergence are flat when considered in both the pseudo-Riemannian and information-geometric frameworks (see Example 5 and Example 7). In this section we consider the case of constant (non-zero) sectional curvature, a concept we now make precise. Note that the unnormalized sectional curvature \({\overline{\sec }}_u\) can be regarded as an operator on \(\bigwedge ^2 (T(M \times M')) = (\bigwedge ^2 TM) \oplus (\bigwedge ^2 TM') \oplus (TM \wedge TM')\) (see [21, Remark 4.2]). Since \({\overline{\sec }}_u\) vanishes on \((\bigwedge ^2 TM) \oplus (\bigwedge ^2 TM')\), \({\overline{\sec }}_u\) is determined by its action on \(TM \wedge TM'\).

Definition 5

Consider a real-valued cost function c on \(M \times M'\).

(i) c has constant cross curvature \(\lambda \in {\mathbb {R}}\) on \(TM \wedge TM'\) if

$$\begin{aligned} {\overline{\sec }}_u(X,Y) = \lambda \left( h(X,X)h(Y,Y) - h(X,Y)^2\right) \end{aligned} \tag{55}$$

for any \(X = u \oplus 0, Y = 0 \oplus {\bar{v}} \in T_{(p, q')} (M \times M')\).

(ii) c has constant sectional curvature \(\lambda \in {\mathbb {R}}\) on a graph G of optimal transport if (55) holds whenever X, Y are tangent to G. By Theorem 4, this is the case when G has constant information-geometric sectional curvature (see (46)).

Note that when \(X = u \oplus 0\) and \(Y = 0 \oplus {\bar{v}}\), from the form of the metric h (see (16)) we always have \(h(X, X) = h(Y, Y) = 0\). Thus (55) is equivalent to \({\overline{\sec }}_u(X, Y) = -\lambda h(X, Y)^2\).

4.1 The logarithmic cost function

Our main examples for Definition 5 are the quadratic cost (Example 2) as well as the logarithmic cost (Example 3). As shown in [41, 51], the logarithmic cost arises naturally in stochastic portfolio theory [41]. See [43] for a probabilistic interpretation involving the Dirichlet perturbation model.

Lemma 7

Consider the logarithmic cost \(c(p,q') = \frac{1}{\alpha } \log (1+\alpha p \cdot q')\) where \(M = M' = (0, \infty )^n\) and \(\alpha > 0\). Using the Euclidean coordinates (i.e., \(\xi = p\) and \(\eta ' = q'\)), we have:

Proof

This is a straightforward but tedious computation. For the benefit of the reader we record some of the intermediate steps:

\(\square \)
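The key identity behind Lemma 7 can be checked numerically. The Python sketch below (assuming NumPy) uses hand-derived closed forms for the mixed derivatives of the logarithmic cost at a random point of \((0, \infty )^n \times (0, \infty )^n\). It assumes that, in the sign convention of Lemma 5, \({\bar{R}}_{i{\bar{j}}{\bar{k}}l} = \frac{1}{2}\big (c_{il:{\bar{a}}} c^{{\bar{a}}:b} c_{b:{\bar{j}}{\bar{k}}} - c_{il:{\bar{j}}{\bar{k}}}\big )\) (a Kim–McCann-type formula, cf. [21, (4.2)], with the sign flip mentioned in the proof of Lemma 5), and that (56) reads \({\bar{R}}_{i{\bar{j}}{\bar{k}}l} = \frac{\alpha }{2}\big (c_{i:{\bar{j}}} c_{l:{\bar{k}}} + c_{i:{\bar{k}}} c_{l:{\bar{j}}}\big )\), the form used in the proof of Lemma 8. Both displays are reconstructions rather than quotations, and the function names are hypothetical.

```python
import numpy as np

def log_cost_derivatives(p, q, alpha):
    """Hand-derived partial derivatives of c(p, q') = log(1 + alpha p.q') / alpha.
    Unbarred indices (i, l, b) differentiate in p; barred ones (j, k, a) in q'."""
    n = p.size
    I = np.eye(n)
    s = 1.0 + alpha * (p @ q)
    c_pq = I / s - alpha * np.outer(q, p) / s**2                       # c_{i:jbar}
    c_ppq = (-alpha * (np.einsum('ia,l->ila', I, q) + np.einsum('la,i->ila', I, q)) / s**2
             + 2 * alpha**2 * np.einsum('i,l,a->ila', q, q, p) / s**3)   # c_{il:abar}
    c_pqq = (-alpha * (np.einsum('bj,k->bjk', I, p) + np.einsum('bk,j->bjk', I, p)) / s**2
             + 2 * alpha**2 * np.einsum('j,k,b->bjk', p, p, q) / s**3)   # c_{b:jbar kbar}
    c_ppqq = (-alpha * (np.einsum('ij,lk->iljk', I, I) + np.einsum('lj,ik->iljk', I, I)) / s**2
              + 2 * alpha**2 * (np.einsum('ij,l,k->iljk', I, q, p) + np.einsum('lj,i,k->iljk', I, q, p)
                                + np.einsum('ik,l,j->iljk', I, q, p) + np.einsum('lk,i,j->iljk', I, q, p)) / s**3
              - 6 * alpha**3 * np.einsum('i,l,j,k->iljk', q, q, p, p) / s**4)  # c_{il:jbar kbar}
    return c_pq, c_ppq, c_pqq, c_ppqq

def cross_curvature_coeffs(p, q, alpha):
    """Assumed form of Rbar_{i jbar kbar l}, stored with index order [i, l, j, k]."""
    c_pq, c_ppq, c_pqq, c_ppqq = log_cost_derivatives(p, q, alpha)
    c_inv = np.linalg.inv(c_pq)   # c^{abar:b}: rows indexed by q', columns by p
    contraction = np.einsum('ila,ab,bjk->iljk', c_ppq, c_inv, c_pqq)
    return 0.5 * (contraction - c_ppqq), c_pq

rng = np.random.default_rng(0)
alpha = 0.7
p, q = rng.uniform(0.1, 2.0, size=3), rng.uniform(0.1, 2.0, size=3)
R, c_pq = cross_curvature_coeffs(p, q, alpha)
# (alpha / 2)(c_{i:jbar} c_{l:kbar} + c_{i:kbar} c_{l:jbar}), index order [i, l, j, k]
rhs = 0.5 * alpha * (np.einsum('ij,lk->iljk', c_pq, c_pq) + np.einsum('ik,lj->iljk', c_pq, c_pq))
print(np.allclose(R, rhs))  # prints: True
```

Changing the seed, the dimension or \(\alpha > 0\) leaves the output unchanged, since the identity holds wherever \(1 + \alpha p \cdot q' > 0\).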

In fact, as the following lemma shows, (56) is equivalent to the condition of constant cross curvature.

Lemma 8

A cost function c has constant cross curvature \(-4\alpha \) on \(TM \wedge TM'\), \(\alpha \in {\mathbb {R}}\), if and only if (56) holds in some (and hence any) coordinate system \((\xi , \eta ')\). In particular, the logarithmic cost function (9) has constant cross curvature \(-4\alpha \) on \(TM \wedge TM'\).

Proof

Fix a coordinate system \((\xi , \eta ')\) and let \(X, Y \in T_{(\xi , \eta ')} (M \times M')\) be of the form \(X = (x^i \partial _i) \oplus 0\) and \(Y = 0 \oplus (y^{{\bar{i}}} \partial _{{\bar{i}}})\), so that \(x^{{\bar{i}}} = 0\) and \(y^i = 0\). Following the argument of (48), we have

Suppose c satisfies (56). Then (57) implies that

Thus c has constant cross curvature \(-4\alpha \) on \(TM \wedge TM'\).

Conversely, suppose c has constant cross curvature \(-4\alpha \) on \(TM \wedge TM'\). Then for \(X = (x^i \partial _i) \oplus 0\) and \(Y = 0 \oplus (y^{{\bar{i}}} \partial _{{\bar{i}}})\) we have

Note that both coefficients \({\bar{R}}_{i{\bar{j}}{\bar{k}}l}\) and \(\frac{\alpha }{2} \left( c_{i{\bar{j}}} c_{l{\bar{k}}} + c_{i{\bar{k}}}c_{l{\bar{j}}}\right) \) are invariant under the swap of indices i and l or the swap of \({\bar{j}}\) and \({\bar{k}}\). Since (57) and (58) hold for arbitrary choices of \(x^i\), \(x^l\) and \(y^{{\bar{j}}}\), \(y^{{\bar{k}}}\), the identity (56) must hold. \(\square \)
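The constant \(-4\alpha \) can be traced through under explicitly assumed normalizations. We take \(h(x \oplus 0, 0 \oplus y) = -\frac{1}{2} c_{i:{\bar{j}}} x^i y^{{\bar{j}}}\) (the Kim–McCann form of h), \({\overline{\sec }}_u(X, Y) = {\bar{R}}(X, Y, Y, X)\) as in the conventions of [24], and (56) in the form \({\bar{R}}_{i{\bar{j}}{\bar{k}}l} = \frac{\alpha }{2}\big (c_{i:{\bar{j}}} c_{l:{\bar{k}}} + c_{i:{\bar{k}}} c_{l:{\bar{j}}}\big )\) suggested by the proof above. For \(X = (x^i \partial _i) \oplus 0\) and \(Y = 0 \oplus (y^{{\bar{i}}} \partial _{{\bar{i}}})\) this gives

$$\begin{aligned} {\overline{\sec }}_u(X, Y) = {\bar{R}}_{i{\bar{j}}{\bar{k}}l}\, x^i y^{{\bar{j}}} y^{{\bar{k}}} x^l = \frac{\alpha }{2} \Big ( 2 \big ( c_{i:{\bar{j}}}\, x^i y^{{\bar{j}}} \big )^2 \Big ) = \alpha \big ( c_{i:{\bar{j}}}\, x^i y^{{\bar{j}}} \big )^2 = 4\alpha \, h(X, Y)^2 . \end{aligned}$$

Since \(h(X, X) = h(Y, Y) = 0\), condition (55) reads \({\overline{\sec }}_u(X, Y) = -\lambda h(X, Y)^2\), which matches the display with \(\lambda = -4\alpha \).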

4.2 Consequences of constant cross curvature

Now we show that if c has constant cross curvature, then the statistical manifolds it generates have constant information-geometric sectional curvature.

Theorem 6

Suppose the cost function c has constant cross curvature \(-4\alpha \) on \(TM \wedge TM'\). Then any graph G of optimal transport has constant information-geometric sectional curvature \(-\alpha \). Consequently, c has constant sectional curvature \(-\alpha \) on G.

Proof

By Lemma 8, c satisfies (56) in some coordinate system \((\xi , \eta ')\). Let G be a graph of optimal transport, and let X, Y be tangent to G. By (49), we have

Hence G has constant information-geometric primal sectional curvature \(-\alpha \). From (50), the same holds for the dual sectional curvature. The last statement follows from Theorem 4. \(\square \)

Remark 5

It is clear that if c has constant cross curvature, then c is weakly regular (see Remark 4). Indeed, the MTW tensor satisfies \({\mathfrak {S}} = 0\) whenever \(u \oplus {\bar{v}}\) is a null vector. From this and Lemma 8 we recover the recent result of Khan and Zhang (see [19, p. 22]).

Remark 6

It is interesting to know if some converse of Theorem 6 holds: if all graphs of optimal transport have constant information geometric sectional curvature, does the corresponding cost also have constant cross curvature? Is the logarithmic cost (up to reparameterization and linear terms) the unique cost which has constant cross curvature?

Finally, we specialize the above results to the \(L^{(\alpha )}\)-divergence to give an intrinsic interpretation of its information geometric sectional curvature. This representation is intrinsic because if a statistical manifold is dually projectively flat with constant sectional curvature \(-\alpha \), then locally one can define canonically a divergence of \(L^{(\alpha )}\)-type which is consistent with the ambient geometry [51, Theorem 19]. See [53] for more discussion and related results.

Corollary 2

Let \(\mathbf{D}\) be the \(L^{(\alpha )}\)-divergence, i.e., the c-divergence of the logarithmic cost (9). In the context of Theorem 5, where \(\gamma (s)\) is a primal geodesic, \(\sigma (t)\) is a dual geodesic and \(\gamma (0) = \sigma (0)\), we have

Proof

Following the notation of Theorem 5, let \(X = {\dot{p}}(0) \oplus 0\) and \(Y = 0 \oplus {\dot{q}}'(0)\). By Theorem 5, we have

Since the logarithmic cost has constant cross curvature \(-4\alpha \) by Lemma 8, we have

Consider the coordinates \((\xi , \eta ')\) and write \(X = u^i \partial _i \oplus 0\) and \(Y = 0 \oplus {\bar{v}}^{{\bar{j}}} \partial _{{\bar{j}}}\). From (17), we have

Now consider \(\gamma \) and \(\sigma \) as curves in G. Using the primal coordinates on G, we have

By Lemma 3, we have

Plugging this into (60), we obtain the desired result. \(\square \)

5 Conclusion and future directions

This paper uncovers a fundamental relation between optimal transport and information geometry, and we expect that this framework will be useful for extending results in information geometry using optimal transport and vice versa. Here we discuss several directions for further study.

In this paper we studied the geometry of the basic Monge–Kantorovich optimal transport problem. Consider the entropically relaxed optimal transport problem (EOT)

where \(H(\gamma | \mu \otimes \nu )\) is the relative entropy of \(\gamma \) from the product coupling \(\mu \otimes \nu \), and \(\epsilon > 0\) is a regularization parameter. As \(\epsilon \rightarrow 0\) we recover the Monge–Kantorovich problem (1). Since it is closely related to the Schrödinger bridge problem [25] and can be solved efficiently via the Sinkhorn algorithm [10], many researchers have worked on the EOT in recent years. It can be shown that the optimal coupling \(\gamma _{\epsilon }^*\) of (61) has the form

where \(\varphi _{\epsilon }\) and \(\psi _{\epsilon }\) are called Schrödinger potentials. Under suitable conditions and normalizations, it was recently shown in [36] that the Schrödinger potentials converge to the Kantorovich potentials as \(\epsilon \rightarrow 0\), i.e., \(\varphi _{\epsilon } \rightarrow \varphi \), \(\psi _{\epsilon } \rightarrow \psi \). Thus, when \(\epsilon \approx 0\), we have heuristically

Thus the c-divergence arises naturally in the asymptotics of EOT. See in particular [40] where the c-divergence is used in a crucial way when studying an expansion of (61) as \(\epsilon \rightarrow 0\). It is interesting to see if the pseudo-Riemannian and information geometries play a role in these expansions. Also see [51, Section 4] where a generalized exponential family is defined using the logarithmic cost (9) and the expression \(e^{- \mathbf{D}}\). On the other hand, it may be possible to consider directly a geometry for the problem (61) where \(\epsilon > 0\) is fixed. Note that the optimal coupling (62) is no longer concentrated on a graph, but as \(\epsilon \rightarrow 0\) it converges to the optimal coupling in (1). Thus, the corresponding information geometry, if any, lives on the product space \(M \times M'\) rather than a submanifold G. This is close in spirit to [2] which considers the statistical manifold of optimal couplings.
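For concreteness, the entropic problem (61) between discrete measures can be solved by Sinkhorn iterations [10]. The Python sketch below (assuming NumPy) is a log-domain variant written relative to the reference measure \(\mu \otimes \nu \), so that the returned coupling has the form \(\gamma _{ij} = \mu _i \nu _j \exp ((f_i + g_j - c_{ij})/\epsilon )\). The vectors f and g play the role of the Schrödinger potentials, but their signs and normalization need not match those in (62); the grid, weights and function names are hypothetical.

```python
import numpy as np

def logsumexp(a, axis):
    # Stable log(sum(exp(a))) along one axis
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))

def sinkhorn(mu, nu, C, eps, n_iter=5000):
    """Log-domain Sinkhorn iterations for entropic OT relative to mu (x) nu.
    Returns (gamma, f, g) with gamma_ij = mu_i nu_j exp((f_i + g_j - C_ij) / eps)."""
    log_mu, log_nu = np.log(mu), np.log(nu)
    f, g = np.zeros_like(mu), np.zeros_like(nu)
    for _ in range(n_iter):
        # choose f so that the first marginal of gamma equals mu
        f = -eps * logsumexp(log_nu[None, :] + (g[None, :] - C) / eps, axis=1)
        # choose g so that the second marginal of gamma equals nu
        g = -eps * logsumexp(log_mu[:, None] + (f[:, None] - C) / eps, axis=0)
    gamma = np.exp(log_mu[:, None] + log_nu[None, :] + (f[:, None] + g[None, :] - C) / eps)
    return gamma, f, g

x = np.linspace(0.0, 1.0, 5)
y = np.linspace(0.0, 1.0, 4) ** 2
mu = np.full(5, 0.2)
nu = np.array([0.1, 0.2, 0.3, 0.4])
C = 0.5 * (x[:, None] - y[None, :]) ** 2   # quadratic cost c(p, q') = |p - q'|^2 / 2
gamma, f, g = sinkhorn(mu, nu, C, eps=0.1)
print(gamma.sum(axis=1), gamma.sum(axis=0))  # approximately mu and nu
```

Each half-step enforces one marginal exactly, so after the final g-update the column sums equal \(\nu \) up to round-off and the row sums converge to \(\mu \). Decreasing \(\epsilon \) concentrates \(\gamma \) near the support of an unregularized optimal coupling, at the cost of slower convergence.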

Another possible direction is to consider the geometry of dynamic optimal transport problems where the coupling is replaced by the law of a stochastic process, say \((X_t)_{0 \le t \le 1}\), with initial distribution \(X_0 \sim \mu \) and final distribution \(X_1 \sim \nu \). We believe that an improved understanding of these problems will be helpful in statistical applications of optimal transport and information geometry.

In [53] and in Sect. 4 we studied the geometric meaning of information-geometric curvature in the case of constant sectional curvature. It is desirable to extend this result to arbitrary statistical manifolds. While Theorem 5 relates the time derivative of the divergence \(\mathbf{D}[\gamma (s) : \sigma (t)]\) to the unnormalized sectional curvature \(\overline{\mathrm {sec}}_u\), it is in general not intrinsic as there are infinitely many pseudo-Riemannian geometries which are compatible with a given dualistic structure \((G, g, \nabla , \nabla ^*)\). The inverse problem (of constructing h, c and \(\mathbf{D}\)) is related to the construction of canonical divergence [4] in information geometry; see also [13,14,15]. Finally, let us remark that optimal transport problems such as the reflector antenna problem [30] and the 2-Wasserstein transport on Riemannian manifolds may lead to interesting new examples of statistical manifolds and divergences.

Notes

Equivalently, a dualistic structure (also called a statistical manifold) can be defined by (M, g, T), where \(T = \nabla - \nabla ^*\) can be interpreted as a symmetric 3-tensor [23]. It can be shown that for any dualistic structure there exists a divergence which induces it.

Constant positive curvature corresponds to the \(L^{(-\alpha )}\)-divergence introduced in [51]. Since the proofs are similar, in this paper we focus on the \(L^{(\alpha )}\)-divergence.

References

Amari, S.-I.: Information Geometry and Its Applications. Springer, New York (2016)

Amari, S.-I., Karakida, R., Oizumi, M.: Information geometry connecting Wasserstein distance and Kullback–Leibler divergence via the entropy-relaxed transportation problem. Inf. Geom. 1(1), 13–37 (2018)

Amari, S.-I., Karakida, R., Oizumi, M., Cuturi, M.: Information geometry for regularized optimal transport and barycenters of patterns. Neural Comput. 31(5), 827–848 (2019)

Ay, N., Amari, S.-I.: A novel approach to canonical divergences within information geometry. Entropy 17(12), 8111–8129 (2015)

Ay, N., Jost, J., Lê, H.V., Schwachhöfer, L.: Information Geometry. Springer, New York (2017)

Brenier, Y.: Polar factorization and monotone rearrangement of vector-valued functions. Commun. Pure Appl. Math. 44(4), 375–417 (1991)

Calin, O., Udrişte, C.: Geometric Modeling in Probability and Statistics. Springer, New York (2014)

Chen, Y., Li, W.: Wasserstein natural gradient in statistical manifolds with continuous sample space. arXiv preprint arXiv:1805.08380 (2018)

Chizat, L., Peyré, G., Schmitzer, B., Vialard, F.-X.: An interpolating distance between optimal transport and Fisher–Rao metrics. Found. Comput. Math. 18(1), 1–44 (2018)

Cuturi, M.: Sinkhorn distances: lightspeed computation of optimal transport. Adv. Neural Inf. Process. Syst. 26, 2292–2300 (2013)

De Philippis, G., Figalli, A.: The Monge–Ampère equation and its link to optimal transportation. Bull. Am. Math. Soc. 51(4), 527–580 (2014)

Eguchi, S.: Second order efficiency of minimum contrast estimators in a curved exponential family. Ann. Stat. 11(3), 793–803 (1983)

Felice, D., Ay, N.: Dynamical systems induced by canonical divergence in dually flat manifolds. arXiv preprint arXiv:1812.04461 (2018)

Felice, D., Ay, N.: Towards a canonical divergence within information geometry. arXiv preprint arXiv:1806.11363 (2018)

Felice, D., Ay, N.: Divergence functions in information geometry. arXiv preprint arXiv:1903.02379 (2019)

Figalli, A.: Regularity of optimal transport maps [after Ma–Trudinger–Wang and Loeper]. In: Séminaire Bourbaki, vol. 2008–2009. Société Mathématique de France (2008)

Gangbo, W., McCann, R.J.: The geometry of optimal transportation. Acta Math. 177(2), 113–161 (1996)

Henmi, M., Matsuzoe, H.: Statistical manifolds admitting torsion and partially flat spaces. In: Geometric Structures of Information, pp. 37–50. Springer, New York (2019)

Khan, G., Zhang, J.: On the Kähler geometry of certain optimal transport problems. Pure Appl. Anal. 2(2), 397–426 (2020)

Khan, G., Zhang, J.: Optimal transport on the probability simplex with logarithmic cost. arXiv preprint arXiv:1812.00032v1 (2018)

Kim, Y.-H., McCann, R.J.: Continuity, curvature, and the general covariance of optimal transportation. J. Eur. Math. Soc. 12(4), 1009–1040 (2010)

Kim, Y.-H., McCann, R.J., Warren, M.: Pseudo-Riemannian geometry calibrates optimal transportation. Math. Res. Lett. 17(6), 1183–1197 (2010)

Lauritzen, S.L.: Statistical manifolds. In: Differential Geometry in Statistical Inference, pp. 163–216. Inst. Math. Statist. (1987)

Lee, J.M.: Riemannian Manifolds: An Introduction to Curvature. Springer, New York (1997)

Léonard, C.: A survey of the Schrödinger problem and some of its connections with optimal transport. Discrete Contin. Dyn. Syst. A 34(4), 1533–1574 (2014)

Li, W.: Transport information geometry I: Riemannian calculus on probability simplex. arXiv preprint arXiv:1803.06360 (2018)

Li, W., Montúfar, G.: Natural gradient via optimal transport. Inf. Geom. 1(2), 181–214 (2018)

Li, W., Zhao, J.: Wasserstein information matrix. arXiv preprint arXiv:1910.11248 (2019)

Loeper, G.: On the regularity of solutions of optimal transportation problems. Acta Math. 202(2), 241–283 (2009)

Loeper, G.: Regularity of optimal maps on the sphere: The quadratic cost and the reflector antenna. Arch. Ration. Mech. Anal. 199(1), 269–289 (2011)

Ma, X.-N., Trudinger, N.S., Wang, X.-J.: Regularity of potential functions of the optimal transportation problem. Arch. Ration. Mech. Anal. 177(2), 151–183 (2005)

McCann, R.J.: A glimpse into the differential topology and geometry of optimal transport. Discrete Contin. Dyn. Syst. A 34(4), 1605–1621 (2014)

Modin, K.: Geometry of matrix decompositions seen through optimal transport and information geometry. J. Geom. Mech. 9(3), 335–390 (2017)

Nagaoka, H., Amari, S.-I.: Differential geometry of smooth families of probability distributions. Technical Report METR 82-7, University of Tokyo (1982)

Nomizu, K., Sasaki, T.: Affine Differential Geometry: Geometry of Affine Immersions. Cambridge University Press, Cambridge (1994)

Nutz, M., Wiesel, J.: Entropic optimal transport: Convergence of potentials. arXiv preprint arXiv:2104.11720 (2021)

Ohara, A., Wada, T.: Information geometry of q-Gaussian densities and behaviors of solutions to related diffusion equations. J. Phys. A Math. Theor. 43(3), 035002 (2009)

O’Neill, B.: Semi-Riemannian Geometry with Applications to Relativity. Academic Press, London (1983)

Pal, S.: Embedding optimal transports in statistical manifolds. Indian J. Pure Appl. Math. 48(4), 541–550 (2017)

Pal, S.: On the difference between entropic cost and the optimal transport cost. arXiv preprint arXiv:1905.12206 (2019)

Pal, S., Wong, T.-K.L.: The geometry of relative arbitrage. Math. Financ. Econ. 10(3), 263–293 (2016)

Pal, S., Wong, T.-K.L.: Exponentially concave functions and a new information geometry. Ann. Probab. 46(2), 1070–1113 (2018)

Pal, S., Wong, T.-K.L.: Multiplicative Schrödinger problem and the Dirichlet transport. Probab. Theory Relat. Fields 178, 613–654 (2020)

Peyré, G., Cuturi, M.: Computational optimal transport. Found. Trends Mach. Learn. 11(5–6), 355–607 (2019)

Pistone, G., Sempi, C.: An infinite-dimensional geometric structure on the space of all the probability measures equivalent to a given one. Ann. Stat. 23(5), 1543–1561 (1995)

Santambrogio, F.: Optimal Transport for Applied Mathematicians. Birkhäuser, Basel (2015)

Trudinger, N.S., Wang, X.-J.: On the second boundary value problem for Monge–Ampère type equations and optimal transportation. Annali della Scuola Normale Superiore di Pisa, Classe di Scienze 8(1), 143–174 (2009)

Villani, C.: Topics in Optimal Transportation. American Mathematical Society, Providence (2003)

Villani, C.: Optimal Transport: Old and New. Springer, New York (2008)

Wong, T.-K.L.: Optimization of relative arbitrage. Ann. Finance 11(3–4), 345–382 (2015)

Wong, T.-K.L.: Logarithmic divergences from optimal transport and Rényi geometry. Inf. Geom. 1(1), 39–78 (2018)

Wong, T.-K.L.: Information geometry in portfolio theory. In: Geometric Structures of Information, pp. 105–136. Springer, New York (2019)

Wong, T.-K.L., Yang, J.: Logarithmic divergence: geometry and interpretation of curvature. In: Nielsen, F., Barbaresco, F. (eds.) International Conference on Geometric Science of Information, pp. 413–422. Springer, New York (2019)

Zanardi, P., Giorda, P., Cozzini, M.: Information-theoretic differential geometry of quantum phase transitions. Phys. Rev. Lett. 99(10), 100603 (2007)

Acknowledgements

Leonard Wong would like to thank Robert McCann, Jun Zhang and Soumik Pal for helpful conversations and comments. Jiaowen Yang would like to thank Shanghua Teng and Francis Bonahon for helpful discussions. Most of the work was done when he was a student at the University of Southern California. We also thank the anonymous reviewers for their careful reading and comments.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

The research of T.-K. L. Wong is partially supported by NSERC Grant RGPIN-2019-04419 and the Connaught New Researcher Award. J. Yang was partially supported by a Simons Investigator Award and NSF grant CCF-1815254.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wong, T.-K.L., Yang, J.: Pseudo-Riemannian geometry encodes information geometry in optimal transport. Info. Geo. 5, 131–159 (2022). https://doi.org/10.1007/s41884-021-00053-7

DOI: https://doi.org/10.1007/s41884-021-00053-7