Abstract

The present study evaluated whether participants’ expectations and neurofeedback training performance predict neurofeedback efficacy in cognitive training by controlling for both factors statistically. Twenty-two psychology students underwent neurofeedback training using a beta/theta protocol to enhance beta1 (13–21 Hz) power and suppress theta (4–7 Hz) power. Neurofeedback efficacy was evaluated with behavioral measures from a visual continuous performance task administered at pre-test and post-test. The results revealed a marginally significant interaction between the pre-test-to-post-test change in reaction time and prognostic expectations, indicating that participants with high prognostic expectations showed greater improvement in reaction time. The data did not indicate that actual neurofeedback performance influenced post-test performance on the visual continuous performance task. No significant effects were found for reaction time variability, omission errors, or commission errors. Possible factors contributing to the results are discussed, and directions for future research are suggested.


A large body of research appears to confirm the potential benefits of brain training programs (Jaeggi et al., 2014; Lilienthal et al., 2013; Rudebeck et al., 2012; Susanne et al., 2008), and the implementation of such programs has advanced considerably in recent years. Many companies are attempting to introduce products into schools and therapy centers, claiming that cognitive performance will improve after a certain number of training sessions, with positive effects on academic, social, or professional performance and even a reduction in symptoms associated with various psychological disorders. One such training program is neurofeedback.

The roots of neurofeedback can be traced to the late 1960s and 1970s, when it was established that brainwave patterns can be reconditioned or retrained. Sterman’s work, in which cats learned to regulate sensorimotor rhythm through operant conditioning, became a milestone in neurofeedback research (Sterman & Egner, 2006). A typical procedure converts EEG parameters into visual or auditory signals and presents them to participants through a human–computer interface in real time. Feedback is the core component of such training, reflecting the principle of “no feedback, no learning.” Operant conditioning is the basis of this learning process (Hammond, 2011; Pérez-Elvira et al., 2021): the targeted brain activity is reinforced or inhibited by positive or negative feedback that follows an evaluation of the activity produced. Every neurofeedback training session follows a protocol that specifies the frequency band whose power is to be trained and the scalp location where this power is recorded. A variety of protocols exist, each with its own history of development targeting different psychological disorders (for a review, see Hammond, 2011). This study focuses on the so-called theta-beta (also referred to as beta/theta) protocol, which is often used to improve certain cognitive abilities, particularly attention (Kropotov, 2010).

Previously, several studies employing the beta/theta protocol and different cognitive tasks reported significant improvement in cognitive performance after neurofeedback training in both healthy individuals and patients with attention-deficit/hyperactivity disorder (ADHD) (Boulay et al., 2011a; Egner & Gruzelier, 2004; Fuchs et al., 2003). Training of the beta frequency band has also been associated with improved semantic memory performance on a computerized conceptual span task (Vernon et al., 2003). Other research noted that beta oscillations increase during endogenous top-down processes (Engel & Fries, 2010). Moreover, beta band (12–30 Hz) activity over the occipital area might be involved in sustained attention (Kamiński et al., 2012) and is possibly linked to vigilance in humans (Joachim et al., 2004).

Even though several studies have reported positive results from neurofeedback training, the limitations of these findings also require consideration. Several studies that implemented a control group in a double-blind placebo design (Arnold et al., 2013; Schabus et al., 2017; van Dongen-Boomsma et al., 2013) found that neurofeedback training was not superior to placebo training, with both conditions proving effective. This suggests that neurofeedback training, like other brain training programs, faces the problem that the mechanisms underlying cognitive improvement are not well understood (Greenwood & Parasuraman, 2015). In addition, including a control group does not necessarily account for unspecified factors that may influence results in both experimental and control conditions (for a more detailed discussion, see Boot et al. (2013) and Borkovec and Sibrava (2005)). Foroughi et al. (2016) proposed one such factor, widely recognized in the literature: the expectancy effect. Nevertheless, little is known about the effect of expectations on brain training programs (Rabipour & Davidson, 2015).

The expectancy effect refers to subjective expectations about the efficacy of a specific intervention. This concept, related to placebo mechanisms, was first theorized by Goldstein (1962) and was later divided into “prognostic expectation” and “participants’ role expectation.” The former refers to how participants estimate the positive or negative outcomes of their treatment. The latter, which is more complex, refers to how therapists anticipate participants will behave, as well as participants’ expectations for their own behavior. In studies of psychological interventions, the expectancy effect has rarely been measured in experimental or control groups (Boot et al., 2013); therefore, conclusions about the causal efficacy of psychological interventions may be doubtful. These arguments are highly relevant to neurofeedback training design, as neurofeedback studies face the same issues. Acknowledging these challenges, this study focuses on prognostic expectations.

Current Study

Several previous studies employing the theta/beta protocol and different cognitive tasks (a custom Go/No-go reaction time task [Boulay et al., 2011b]; the Test of Variables of Attention [Egner & Gruzelier, 2004]; and a continuous performance task [Egner & Gruzelier, 2001]) reported significant improvement of cognitive performance in healthy individuals after neurofeedback training, reflected in changes in behavioral measures such as reaction time, reaction time variability, and the number of omission and commission errors. However, the results of some neurofeedback studies that implemented a placebo control group (Schabus et al., 2017; Schönenberg et al., 2017) support the hypothesis that the efficacy of neurofeedback training may be due to unspecified psychological factors rather than to the training itself. Lee and Suhr (2020) specifically evaluated the expectancy effect and reported that it was associated with post-treatment self-reported ADHD symptoms. The expectancy effect is considered one of the placebo mechanisms (Koshi & Short, 2007). Such findings imply that studying the expectancy effect in isolation could inform the current debate in the literature. However, no well-defined hypothesis addresses the degree to which the placebo effect affects neurofeedback training. Hence, we avoided the umbrella term “placebo” and focused on a specific placebo-related factor: prognostic expectations. The literature suggests that the expectancy effect might act as a powerful independent factor that increases participants’ commitment to study tasks, potentially resulting in enhanced performance and higher post-test scores (Foroughi et al., 2016). If the expectancy effect is a significant psychological mechanism that enhances outcomes after cognitive training, it might also predict the efficacy of neurofeedback training.

In the current study, we employed a visual continuous performance task and tested whether neurofeedback training is associated with training gains (faster reaction times, lower reaction time variability, and fewer omission and commission errors). Furthermore, to evaluate whether prognostic expectations and actual neurofeedback performance contributed to the training outcome, we added both factors to our model. This design allowed us to control for these factors statistically and to evaluate whether they were associated with improvement from pre-test to post-test.

Materials and Methods

Design and Procedure

This study used an experimental repeated-measures design with a voluntary response sample. There was a single intervention group, with pre-test and post-test measurements and an intervention consisting of a neurofeedback training course.

Participants

A total of 22 university students (16 females, 6 males; aged 18–31 years, M = 24, SD = 3.1) participated in the study. All participants gave informed consent. The study complied with the ethical standards of the Declaration of Helsinki and was approved by the Norwegian Regional Committees for Medical Research Ethics (REK) (no. 2014/497).

Participants’ Prognostic Expectations

Participants were introduced to neurofeedback training in a psychology course at the Norwegian University of Science and Technology. Their prognostic expectations about the effectiveness of neurofeedback training were measured with a visual analogue scale (Cline et al., 1992) before the first training session. The scale was presented as a 10-cm horizontal line with a verbal descriptor at each end. Participants were asked to indicate their level of expectation for the entire course of training, answering instinctively and marking their current feelings. The left end of the scale was labeled “Neurofeedback will not improve attention” and the right end “I will feel a difference,” indicating that neurofeedback would significantly improve attention. Participants drew a line perpendicular to the scale at the point that represented their expectations. Each participant’s score was the distance in millimeters (0–100) from the left end of the scale to their mark.

Neurofeedback Method

Neurofeedback training was performed with a Mitsar Biofeedback Trainer Amplifier and the Braintuner 1.5.23 software package (http://www.mitsar-eeg.com/). Electroencephalogram (EEG) signals were acquired at 125 Hz with a feedback refresh interval of 250 ms and were band-pass filtered to extract the theta (4–7 Hz) and beta (13–21 Hz) bands. The artifact detection threshold was set to 70 µV. Following the theta/beta protocol, the signal was recorded with two tin electrodes placed at the training sites, Fpz and Fz, and the reference electrode was attached to the left earlobe.
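The paragraph above fixes the sampling rate, update interval, bands, and artifact threshold, but not how Braintuner computes band power. The sketch below is a minimal illustration under our own assumptions: a 1-s analysis window (125 samples) advanced roughly every 250 ms, a plain discrete Fourier transform, and rejection of any window containing a sample beyond ±70 µV. The function names are hypothetical, and this is not the software’s actual algorithm. A 1-s window is assumed because a 250-ms window at 125 Hz would give only 4-Hz frequency resolution, too coarse to isolate the 4–7 Hz theta band.

```python
import cmath
import math


def band_power(samples, fs, f_lo, f_hi):
    """Summed DFT power of the bins whose frequency lies in [f_lo, f_hi].

    With a 1-s window (len(samples) == fs) the bin spacing is exactly 1 Hz.
    """
    n = len(samples)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            coef = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += abs(coef) ** 2
    return power


def beta_theta_ratio(samples, fs=125):
    """Beta1 (13-21 Hz) power divided by theta (4-7 Hz) power."""
    theta = band_power(samples, fs, 4, 7)
    beta = band_power(samples, fs, 13, 21)
    return beta / theta if theta > 0 else float("inf")


def sliding_ratios(signal, fs=125, window_s=1.0, step_s=0.25, artifact_uv=70.0):
    """Beta/theta ratio for each window; None where the artifact threshold is exceeded.

    250 ms is not a whole number of samples at 125 Hz, so the step rounds
    to 31 samples (about 248 ms).
    """
    win = int(round(window_s * fs))
    step = int(round(step_s * fs))
    ratios = []
    for start in range(0, len(signal) - win + 1, step):
        seg = signal[start:start + win]
        if max(abs(v) for v in seg) > artifact_uv:
            ratios.append(None)  # amplitude artifact: no feedback update
        else:
            ratios.append(beta_theta_ratio(seg, fs))
    return ratios
```

For a synthetic signal whose 17-Hz component has four times the amplitude of its 5-Hz component, every window yields a ratio of 16, because power scales with squared amplitude.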

Neurofeedback Training Sessions

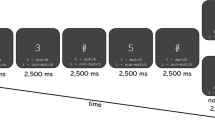

All neurofeedback training sessions (10 in total) took place in an EEG lab in the Department of Psychology at the Norwegian University of Science and Technology. All sessions were held between 9 a.m. and 3 p.m., and the entire training course lasted no longer than 3 months. Participants had at least two training sessions per week, with at least 1 day between sessions. Each session was carried out in a sound-isolated room. Participants sat in a comfortable chair, facing a 22-inch computer monitor 1.5 m away. Each training session lasted 30 min and consisted of four 5-min training blocks separated by 2-min breaks (Fig. 1). Before each training session, the participant’s theta/beta ratio was recorded for 2 min (eyes open) and used as the baseline for that session.

Fig. 1 The visual interface of a single training session. The training line moves from left to right during a training session and represents the participant’s brain activity. The participant tries to move the line upward by concentrating and using different focusing strategies. Results represent the participant’s achievement for each training block, with 100% being the maximum. The baseline is the participant’s theta/beta ratio (fast Fourier transform [FFT]) recorded before the training session. The baseline was manually adjusted to better visualize training effects.

When the filtered EEG signal was transmitted to the computer, it was converted and displayed to the participant as a line graph (Fig. 1) that moved continuously from left to right during the session. No particular strategy was suggested for controlling the feedback line; instead, participants were instructed to try different focusing strategies to find the one that suited them best. If the participant increased their beta/theta ratio (increased beta band power and suppressed theta band power), the line moved up; decreased beta band power and increased theta band power moved the line down. After each training session, the software displayed a percentage for each training block, reflecting how long the training line was above the midline during that block. A higher percentage indicated better performance, that is, that the participant managed to increase their beta/theta ratio during training. During the breaks, participants were instructed not to focus on anything in particular. The average performance across all four blocks was used to evaluate the entire training session.

Each training session concluded with a short debriefing, during which the results were discussed and participants could share their experiences. This procedure is considered one of the core components of neurofeedback training: “no feedback, no learning” (Hammond, 2011). If participants perceived their results as negative, attempts were made to neutralize these perceptions during the debriefing in order to maintain motivation and keep expectations at a consistent level. To capture the outcome of the training course, neurofeedback performance was operationalized as a single variable: the percentage of time within a session during which the beta/theta ratio was above the baseline. Because the last training session was expected to reflect each participant’s best ability to increase their beta/theta ratio, this variable was calculated by averaging the four blocks of the last session.
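The session score described above (share of time above baseline, averaged over four blocks) can be sketched as follows. This is a minimal illustration under stated assumptions: “time” is approximated by counting feedback updates, artifact-rejected updates (None) are excluded, and the baseline is expressed in the same beta/theta direction as the feedback signal (the text calls it a theta/beta baseline). The function names are hypothetical.

```python
from statistics import mean


def baseline_ratio(ratios):
    """Mean beta/theta ratio over the 2-min eyes-open baseline, skipping artifacts."""
    return mean([r for r in ratios if r is not None])


def percent_above_baseline(block_ratios, baseline):
    """Percentage of valid feedback updates in which beta/theta exceeded baseline."""
    valid = [r for r in block_ratios if r is not None]
    if not valid:
        return float("nan")
    return 100.0 * sum(r > baseline for r in valid) / len(valid)


def session_score(blocks, baseline):
    """Per-block percentages and their mean across the session's blocks."""
    per_block = [percent_above_baseline(b, baseline) for b in blocks]
    return per_block, mean(per_block)
```

Applied to the four blocks of the last session, the second return value corresponds to the neurofeedback performance predictor entered in the models.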

Visual Continuous Performance Task

Both pre-test and post-test measurements were taken in the same lab where neurofeedback training took place. A visual continuous performance task was used to evaluate training outcomes in terms of sustained attention (Riccio et al., 2002). While performing this cued Go/No-Go task, participants sat in a comfortable chair in a sound-isolated room, approximately 1.5 m from a 22-inch screen. The task consisted of 400 pairs of images, with each image belonging to one of three stimulus categories: animal, human, or plant. Each category contained 20 different images to reduce habituation to repeated stimuli. The stimuli were presented in four equally probable combinations: animal-animal, plant-plant, animal-plant, and plant-human (Fig. 2). The images in animal-animal and plant-plant pairs were always identical. The first stimulus of each pair served as a cue, and responses, when required, were to be made as soon as the second stimulus appeared. The animal-animal combination was defined as the Go condition, in which participants were asked to respond as quickly and accurately as possible by pressing the left mouse button. In the No-Go condition (animal-plant), participants were asked to withhold their response (Fig. 2). No responses were required for the plant-plant and plant-human combinations, which were therefore irrelevant conditions. Each stimulus of a pair was presented for 100 ms, with an interstimulus interval of 1000 ms and a total trial duration of 3500 ms. No stimuli were presented between trials.

The 400 trials were divided into four blocks of 100 trials, each lasting 5 min. After each block, participants took a break of about 1 min to maintain alertness and reduce boredom. At both pre-test and post-test, participants were asked to respond as quickly as possible in the Go condition and to make as few errors as possible in the No-Go condition. Because behavioral measures are highly sensitive to the instructions participants receive (Aasen & Brunner, 2016), all participants received identical verbal instructions.
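The trial structure above can be illustrated with a small generator. This is a sketch, not the task software used in the study: we assume that “equally probable” means exactly 100 trials of each pair type in shuffled order, that images are drawn at random from the 20 per category, and that consecutive runs of 100 trials form the four blocks. All names are hypothetical.

```python
import random

# Pair types and the response each one calls for, as described in the text.
PAIR_CONDITIONS = {
    ("animal", "animal"): "go",
    ("animal", "plant"): "nogo",
    ("plant", "plant"): "ignore",
    ("plant", "human"): "ignore",
}


def make_trials(n_trials=400, n_blocks=4, n_images=20, seed=None):
    """Balanced, shuffled list of trials with block number and condition label."""
    per_type = n_trials // len(PAIR_CONDITIONS)
    pair_types = [p for p in PAIR_CONDITIONS for _ in range(per_type)]
    rng = random.Random(seed)
    rng.shuffle(pair_types)
    per_block = n_trials // n_blocks
    trials = []
    for i, (cat1, cat2) in enumerate(pair_types):
        img1 = rng.randrange(n_images)
        # Same-category pairs (animal-animal, plant-plant) repeat the identical image.
        img2 = img1 if cat1 == cat2 else rng.randrange(n_images)
        trials.append({
            "block": i // per_block + 1,
            "first": (cat1, img1),
            "second": (cat2, img2),
            "condition": PAIR_CONDITIONS[(cat1, cat2)],
        })
    return trials
```

Under these assumptions, each session contains 100 Go trials, 100 No-Go trials, and 200 irrelevant trials.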

Reaction time is a behavioral measure derived from the visual continuous performance task and represents participants’ response speed across all Go trials (100 trials). A response was regarded as correct if it occurred between 150 and 1000 ms after the second stimulus of a Go trial. Reaction time variability represents within-person fluctuation of reaction times in Go trials; it reflects the stability of response performance and was calculated as the coefficient of variation, $\mathrm{SD}_{RT} / M_{RT}$ (Tamm et al., 2012). Omission errors are failures to respond to target stimuli, and commission errors are incorrect responses to non-target stimuli.
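The scoring rules above can be sketched as a single function. The following assumptions are ours, not stated in the text: a Go response outside the 150–1000 ms window counts as an omission, responses in the irrelevant conditions are ignored, only No-Go responses count as commissions, and the SD is the sample SD (n − 1). The function name is hypothetical.

```python
from statistics import mean, stdev

RT_WINDOW_MS = (150, 1000)  # valid Go response window after the second stimulus


def score_vcpt(trials):
    """Score one session.

    trials: iterable of (condition, rt_ms) pairs, where condition is "go",
    "nogo", or "ignore" and rt_ms is None when no response was made.
    """
    lo, hi = RT_WINDOW_MS
    go_rts = []
    omissions = commissions = 0
    for condition, rt in trials:
        if condition == "go":
            if rt is not None and lo <= rt <= hi:
                go_rts.append(rt)
            else:
                omissions += 1  # no response inside the valid window
        elif condition == "nogo" and rt is not None:
            commissions += 1  # responded when the response should be withheld
    rt_mean = mean(go_rts)
    return {
        "rt_mean": rt_mean,
        "rt_variability": stdev(go_rts) / rt_mean,  # SD_RT / M_RT
        "omissions": omissions,
        "commissions": commissions,
    }
```

For example, correct Go responses of 300, 400, and 500 ms give a mean of 400 ms and a reaction time variability of 100 / 400 = 0.25.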

Data Preparation and Analysis Approach

We chose a mixed-model analysis, an approach developed to account for participant responses that are correlated over time (Twisk, 2013). Data were prepared and analyzed in R (R Core Team, 2021). Mixed linear models were fitted with the lme4 and lmerTest packages (Bates et al., 2015), and post hoc comparisons were performed with the emmeans package (Russell, 2021). As a fixed effect, we entered time (pre-post) as a predictor with two levels (pre-test, post-test). To address the skew of response times, the response variable (reaction time) was log-transformed (Judd et al., 1995). Participants’ expectations and neurofeedback performance were entered as two additional predictors, together with their interaction terms. As a random effect, we included a by-participant intercept, thereby accounting for by-participant variability. In Wilkinson notation, the model was specified as follows:

- RT model = log(rt) ~ pre-post × nf-performance × expectations + (1 | participant)

We analyzed the omission and commission errors separately, using the same approach. The models were specified as follows:

- Omission model = omission errors ~ pre-post × nf-performance × expectations + (1 | participant)
- Commission model = commission errors ~ pre-post × nf-performance × expectations + (1 | participant)
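Written out, the RT model expands the three-way product into all main effects and interactions. In the equation below, $i$ indexes Go responses and $j$ participants. $T_{ij}$ codes time (assumed here to be dummy-coded as 0 = pre-test, 1 = post-test), $P_j$ is neurofeedback performance and $E_j$ is prognostic expectation (both participant-level), and $u_{0j}$ is the by-participant random intercept specified by (1 | participant). The coding and normal-error assumptions are ours and are not stated in the text.

```latex
\log(\mathrm{RT}_{ij}) = \beta_0 + \beta_1 T_{ij} + \beta_2 P_j + \beta_3 E_j
  + \beta_4 T_{ij} P_j + \beta_5 T_{ij} E_j + \beta_6 P_j E_j
  + \beta_7 T_{ij} P_j E_j + u_{0j} + \varepsilon_{ij},
\qquad u_{0j} \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

In this notation, the time × expectation interaction reported in the Results corresponds to $\beta_5$. The omission and commission models keep the same fixed-effect and random-effect structure, with the response variable replaced.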

Before fitting the regression models, we screened all Go responses (N = 4341). Prior to the response time analysis, we removed all response errors (commission and omission errors; 1.35% of the total). Within subjects, we identified and removed outlier responses whose standardized residuals were more than three standard deviations from zero (1.33% of the total). Between subjects, we used a boxplot method (Tukey, 1977), analyzing pre-test and post-test Go responses separately. The pre-test scores of one participant (2.3% of data) and the post-test scores of another participant (2.2% of data) were identified as outliers and excluded from the analysis.
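The two trimming steps can be sketched as follows. The sketch rests on two simplifying assumptions. First, the within-subject step uses each response’s deviation from the participant’s own mean RT instead of the model residuals described above. Second, the between-subject step applies Tukey’s fences (1.5 × IQR) to one summary value per participant, such as mean RT at one time point. `statistics.quantiles(..., method="inclusive")` is one of several quartile conventions, and boxplot software may compute quartiles slightly differently. Function names are hypothetical.

```python
from statistics import mean, pstdev, quantiles


def trim_within(rts, z_max=3.0):
    """Drop RTs whose z-score within one participant exceeds z_max in absolute value.

    Stand-in for the residual criterion: the 'residual' here is the deviation
    from the participant's own mean, scaled by the population SD.
    """
    m, s = mean(rts), pstdev(rts)
    if s == 0:
        return list(rts)
    return [rt for rt in rts if abs(rt - m) / s <= z_max]


def tukey_outliers(values_by_id, k=1.5):
    """IDs whose value lies outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(list(values_by_id.values()), n=4, method="inclusive")
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return sorted(pid for pid, v in values_by_id.items() if v < lo or v > hi)
```

A single extreme value can exceed |z| = 3 only when a participant contributes at least 11 responses, because with the population SD no point’s |z| can exceed √(n − 1). With 100 Go trials per session, this bound is not a practical constraint.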

Results

Descriptive Statistics (Table 1)

Response Times

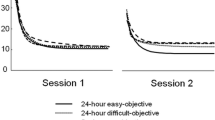

A reaction time analysis did not reveal a significant main effect of time (pre-test vs. post-test), F(1, 4047) = 0.10, 95% CI [−0.07, 0.10], p = 0.748. The interaction between time and neurofeedback performance did not reach significance, F(1, 4042) = 0.14, 95% CI [−0.00, 0.00], p = 0.706. The interaction between time and prognostic expectations was marginally significant, F(1, 4042) = 3.68, 95% CI [−0.00, 0.04], p = 0.055. Lastly, the three-way interaction between time, neurofeedback performance, and participants’ expectations was not significant, F(1, 4083) = 0.04, 95% CI [−0.00, 0.00], p = 0.174.

This outcome suggests that participants’ expectations moderated the change from pre-test to post-test. We explored this two-way interaction further by applying a median split (Iacobucci et al., 2015) to the prognostic expectation predictor (Mdn = 3.8). Figure 3 plots this interaction.
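The median split used to probe the interaction can be sketched as below. It assumes one expectation score per participant and assigns scores equal to the median to the low group. The text does not say how ties were handled, and the function name is hypothetical. As described in the analysis approach, the mixed model itself entered expectations as a predictor; the split only serves to visualize and follow up the interaction.

```python
from statistics import median


def median_split(scores):
    """Label each participant 'high' or 'low' relative to the sample median.

    scores: dict mapping participant ID to expectation score.
    Ties at the median go to 'low' (an assumption).
    """
    mdn = median(list(scores.values()))
    groups = {pid: ("high" if s > mdn else "low") for pid, s in scores.items()}
    return mdn, groups
```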

Post Hoc Comparison (Reaction Time)

Post hoc analysis with the Games-Howell adjustment revealed a significant difference between pre- and post-test measurements in the high expectations group (Mdiff = 18 ms), t(4072) = −10.06, p < 0.001, ηp² = 0.003. A significant pre-post difference was also found in the low expectations group (Mdiff = 11 ms), t(4071) = −7.25, p < 0.001, ηp² = 0.001. The difference in pre-test scores between groups (Mdiff = 5 ms) was not significant, t(22) = 0.54, p = 0.946, nor was the difference in post-test scores (Mdiff = 11 ms), t(22) = 1.23, p = 0.615.

Omission and Commission Errors

Omission Errors

The analysis did not reveal any significant change between pre- and post-test measurements, F(1, 8708) = 0.00, 95% CI [−0.01, 0.01], p = 0.953. Neither neurofeedback performance, F(1, 8708) = 0.53, 95% CI [−0.00, 0.00], p = 0.465, nor prognostic expectation, F(1, 8708) = 0.00, 95% CI [−0.00, 0.00], p = 0.984, influenced post-test results. The three-way interaction between all predictors was not significant, F(1, 8708) = 0.00, 95% CI [−0.00, 0.00], p = 0.647.

Commission Errors

Similarly, the analysis did not show any significant change between pre- and post-test measurements, F(1, 8690) = 0.50, 95% CI [−0.05, 0.02], p = 0.475. Neither neurofeedback performance, F(1, 8690) = 0.28, 95% CI [−0.00, 0.00], p = 0.591, nor prognostic expectation, F(1, 8690) = 0.10, 95% CI [−0.00, 0.02], p = 0.749, influenced post-test results. The three-way interaction between all predictors was not significant, F(1, 8690) = 0.00, 95% CI [−0.00, 0.00], p = 0.776.

Reaction Time Variability

The analysis did not reveal significant differences between pre-test and post-test, F(1, 20) = 0.97, 95% CI [−0.25, 0.08], p = 0.336. Neither neurofeedback performance, F(1, 20) = 0.85, 95% CI [−0.00, 0.00], p = 0.365, nor prognostic expectations, F(1, 20) = 0.581, 95% CI [−0.02, 0.06], p = 0.454, had any effect on reaction time variability. The three-way interaction between all predictors was not significant, F(1, 20) = 0.665, 95% CI [−0.00, 0.00], p = 0.424.

Discussion

The present study investigated the efficacy of neurofeedback training on sustained attention and whether actual neurofeedback training performance or prognostic expectations predict this efficacy. For the behavioral components of the visual continuous performance task (VCPT), and only after accounting for participants’ prognostic expectations, our results resemble previous findings that successful training designed to enhance beta1 power and suppress theta power leads to improved reaction time scores (Egner & Gruzelier, 2004). Our findings also support previously published results (Foroughi et al., 2016; Lee & Suhr, 2020; Schönenberg et al., 2017) showing that the expectancy effect influences cognitive training outcomes: participants with high expectations showed greater improvement in reaction time from pre-test to post-test. However, the present findings did not show that actual neurofeedback performance affected post-test performance on the VCPT.

We found that participants’ prior prognostic expectations marginally influenced post-test reaction time scores. On the one hand, this result supports previous findings that participants with high expectations may have more interest in a given cognitive training, resulting in greater commitment and motivation to achieve positive outcomes (Foroughi et al., 2016; Hicks et al., 2016; Katerelos et al., 2015; Oken et al., 2008; Rutherford et al., 2010). On the other hand, the marginal effect could be related to other factors involved in cognitive training. One plausible explanation is that participants’ expectations changed during the course of the training, weakening their effect on the final results. Benedetti (2020) noted that expectations are not static beliefs and can change in response to many aspects of the intervention procedure. Although we noted participants’ perceptions of performance, motivation, and interest in continuing the course during the debriefing sessions, we did not control for these factors statistically. Therefore, the question of how prognostic expectations change during the training course remains open. Furthermore, the pre-post difference was also significant in the low expectations group, possibly indicating that other confounding factors influenced the results.

The findings of this study provide statistical evidence only for reaction time scores. We found no statistical evidence of improvement in reaction time variability or in omission and commission errors. In this respect, our results resemble those of Egner and Gruzelier (2004), who reported that fewer omission errors and lower reaction time variability followed neurofeedback training with a sensorimotor rhythm protocol (SMR: 12–15 Hz), whereas beta1 (15–18 Hz) training was associated only with faster reaction times. However, our data did not provide statistical evidence that actual neurofeedback performance was associated with improved reaction time scores. Although such results could be considered supportive of Vernon’s (2005) argument that neurofeedback outcomes are not associated with the trained frequency derived from EEG data, the absence of statistical evidence could also reflect methodological limitations. Specifically, we evaluated neurofeedback performance only from the last training session, when the performance of some participants may have been affected by confounding factors (tiredness, mood state, etc.). Evaluating neurofeedback performance across all training sessions could therefore provide higher-quality data. Furthermore, studies that compared EEG power spectra between pre- and post-test measurements of different tasks provide mixed evidence regarding Vernon’s (2005) argument. Whereas Janssen et al. (2020) reported that EEG data from pre- and post-test resting-state recordings were not associated with theta/beta neurofeedback training, Benito et al. (2021), using a working memory task, and Wang et al. (2016), using an aberrant behavior checklist and a theta/beta neurofeedback protocol, reported the opposite. Therefore, an additional comparison of EEG power spectra is required before concluding that training to enhance beta1 power (13–21 Hz) and suppress theta power (4–7 Hz) is not associated with post-training performance on the visual continuous performance task.

Comparing these findings with those of other neurofeedback studies is challenging because other studies did not evaluate potential unspecified factors statistically. Double-blind placebo studies only indicate that such factors may contribute to the measured behavioral outcome (Borkovec & Sibrava, 2005; van Dongen-Boomsma et al., 2013). Evaluation of the expectancy effect is also often neglected in other brain training programs; researchers prefer using a control group to controlling expectations as a statistical variable (Boot et al., 2013). Nevertheless, the literature has addressed whether prognostic expectations contribute to therapeutic gains. For example, a meta-analysis by Constantino et al. (2011) examined the association between prognostic expectations and therapeutic outcomes in 46 psychotherapy studies and found a small but significant effect (d = 0.24). Although the authors noted methodological limitations of the included studies, including some confusion over theoretical concepts and heterogeneous types of measurement, they also argued that expectations appeared to change throughout therapy.

A study by Schönenberg et al. (2021) reported that expectancy did not influence neurofeedback training success, and that such success was dependent on the repetition of accuracy during neurofeedback performance. However, prognostic expectations were not measured in that study; instead, expectancy was manipulated by giving participants different information about which frequency band was being trained, although all participants trained the same SMR (15–18 Hz) band. Lee and Suhr (2020) took an approach similar to ours, operationalizing the expectancy effect as prognostic expectations, and reported statistical evidence that positive prognostic expectations about treatment outcome influenced post-test scores on a self-reported ADHD symptom questionnaire. However, the authors did not specify which neurofeedback protocol was used. Nevertheless, our study supports the conclusion of Lee and Suhr (2020) that prognostic expectations are a psychological factor that may predict neurofeedback training results.

Limitations and Future Directions

In light of these findings, it should be noted that many neurofeedback studies suffer from methodological weaknesses (Loo & Makeig, 2012). Many of them assessed the effectiveness of neurofeedback training as a change in symptoms. However, the efficacy of neurofeedback training should first be assessed as the ability to produce changes in the EEG profile, and changes in symptoms or behavior should be considered a consequence of those EEG changes (Pérez-Elvira et al., 2021). Although we applied a widely used protocol (beta/theta) for attentional enhancement, we did not account for participants’ EEG profiles. Therefore, this protocol may not have been ideal for all participants in the sample, given individual variation in EEG profiles.

We acknowledge that our study’s lack of a control group prevents us from drawing any strong conclusions. Evaluating prognostic expectations in an intervention group and a control group could provide more clarity about this psychological mechanism. Furthermore, in order to investigate the expectancy effect and its relationship to neurofeedback, future studies could counterbalance participants’ prognostic expectations. Participants’ expectations are formed at the beginning of a course of training, when a particular brain training program is presented (Boot et al., 2013; Borkovec & Sibrava, 2005; Foroughi et al., 2016). In addition to statistically controlling expectations as a group variable in the analysis, dividing participants into intervention/control groups, and counterbalancing those groups with high/low expectations by presenting a brain training program differently, can provide deeper insights into this psychological mechanism.

Another possible limitation of this study relates to the number of neurofeedback training sessions. Because of individual differences, some participants may require more training sessions to achieve robust results (Wang et al., 2016). However, the number of training sessions might be more relevant when neurofeedback training is used to treat psychological disorders such as anxiety, depression, or ADHD (Hammond, 2011). Regarding optimal performance, Gruzelier et al. (2006) reported that at least 5–10 training sessions are required before improvement can be observed. Furthermore, the evaluation of healthy participants could be affected by ceiling effects (Ho & Yu, 2015). Since little is known about the physiological limits of the behavioral components of visual continuous performance tasks, evaluating the effects of expectations in specific psychological disorders may be preferable to examining peak performance in healthy individuals.

This study highlights an important consideration in designing studies that aim to evaluate potential placebo effects. Lack of specificity in various theoretical concepts is a recognized problem (Katerelos et al., 2015). Such imprecision leads to flexible definitions, which in turn can bias results. These arguments are highly relevant to neurofeedback research, because studies that implement control groups often do not clearly distinguish between different placebo concepts. Without clearly defined theoretical concepts, assessing placebo effects in cognitive training becomes problematic. Therefore, using well-defined concepts together with statistical assessment is essential and will make independent studies easier to compare.

Conclusion

Our results showed that prior prognostic expectations influenced neurofeedback training outcomes in terms of faster reaction times. Prognostic expectations are an important starting point not only in cognitive training but also in other interventions aimed at treating different dysfunctions. We therefore highlight the importance of including this mechanism in future studies to gain better insight into participants’ preparedness, motivation, and mental state prior to intervention. Such insight may help adjust behavior where necessary and contribute to the training outcome. We also emphasize the importance of using more specific theoretical concepts to reduce the flexibility of definitions related to placebo effects, which would allow results from different studies to be compared. This lack of precise theoretical concepts has yet to be resolved. Further study of such concepts can provide a better understanding of the psychological mechanisms that may trigger a placebo response to therapy or brain training programs.

Availability of Data and Material

The datasets generated and analyzed during the current study are available at https://osf.io/pxe75/?view_only=ad8dbc68bd6d4fd5ae0adb41073a137d.

Code Availability

References

Aasen, I. E., & Brunner, J. F. (2016). Modulation of ERP components by task instructions in a cued go/no-go task. Psychophysiology, 53(2), 171–185. https://doi.org/10.1111/psyp.12563

Arnold, L. E., Lofthouse, N., Hersch, S., Pan, X., Hurt, E., Bates, B., Kassouf, K., Moone, S., & Grantier, C. (2013). EEG neurofeedback for ADHD: Double-blind sham-controlled randomized pilot feasibility trial. Journal of Attention Disorders, 17(5), 410–419. https://doi.org/10.1177/1087054712446173

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

Benedetti, F. (2020). Placebo effects: Understanding the mechanisms in health and disease (3rd ed.). OUP Oxford.

Benito, J.M.-B., Jorge, B.-B., Rolando, J.B.-L., Juan, S.-P., Lucero, A.-C., & Thalía, F. (2021). Effects of neurofeedback on the working memory of children with learning disorders—An EEG power-spectrum analysis. Brain Sciences, 11(7), 957. https://doi.org/10.3390/brainsci11070957

Boot, W. R., Simons, D. J., Stothart, C., & Stutts, C. (2013). The pervasive problem with placebos in psychology. Perspectives on Psychological Science, 8(4), 445–454. https://doi.org/10.1177/1745691613491271

Borkovec, T. D., & Sibrava, N. J. (2005). Problems with the use of placebo conditions in psychotherapy research, suggested alternatives, and some strategies for the pursuit of the placebo phenomenon. Journal of Clinical Psychology, 61(7), 805–818. https://doi.org/10.1002/jclp.20127

Boulay, C. B., Sarnacki, W. A., Wolpaw, J. R., & McFarland, D. J. (2011a). Trained modulation of sensorimotor rhythms can affect reaction time. Clinical Neurophysiology. https://doi.org/10.1016/j.clinph.2011.02.016

Boulay, C. B., Sarnacki, W. A., Wolpaw, J. R., & McFarland, D. J. (2011b). Trained modulation of sensorimotor rhythms can affect reaction time. Clinical Neurophysiology, 122(9), 1820–1826. https://doi.org/10.1016/j.clinph.2011.02.016

Cline, M. E., Herman, J., Shaw, E. R., & Morton, R. D. (1992). Standardization of the visual analogue scale. Nursing Research, 41(6), 378–380. https://doi.org/10.1097/00006199-199211000-00013

Constantino, M. J., Arnkoff, D. B., Glass, C. R., Ametrano, R. M., & Smith, J. Z. (2011). Expectations. Journal of Clinical Psychology, 67(2), 184–192. https://doi.org/10.1002/jclp.20754

Egner, T., & Gruzelier, J. H. (2001). Learned self-regulation of EEG frequency components affects attention and event-related brain potentials in humans. NeuroReport, 12(18), 4155–4159.

Egner, T., & Gruzelier, J. H. (2004). EEG biofeedback of low beta band components: Frequency-specific effects on variables of attention and event-related brain potentials. Clinical Neurophysiology, 115(1), 131–139. https://doi.org/10.1016/S1388-2457(03)00353-5

Engel, A. K., & Fries, P. (2010). Beta-band oscillations — signalling the status quo? Current Opinion in Neurobiology, 20(2), 156–165. https://doi.org/10.1016/j.conb.2010.02.015

Foroughi, C. K., Monfort, S. S., Paczynski, M., McKnight, P. E., & Greenwood, P. M. (2016). Placebo effects in cognitive training. Proceedings of the National Academy of Sciences of the United States of America, 113(27), 7470. https://doi.org/10.1073/pnas.1601243113

Fuchs, T., Birbaumer, N., Lutzenberger, W., Gruzelier, J., & Kaiser, J. (2003). Neurofeedback treatment for attention-deficit/hyperactivity disorder in children: A comparison with methylphenidate. Applied Psychophysiology and Biofeedback, 28(1), 1–12. https://doi.org/10.1023/A:1022353731579

Goldstein, A. P. (1962). Therapist-patient expectancies in psychotherapy (1st ed.). Macmillan Co/Pergamon Press.

Greenwood, P. M., & Parasuraman, R. (2015). The mechanisms of far transfer from cognitive training: Review and hypothesis. Neuropsychology. https://doi.org/10.1037/neu0000235

Gruzelier, J., Egner, T., & Vernon, D. (2006). Validating the efficacy of neurofeedback for optimising performance. Progress in Brain Research, 159, 421–431. https://doi.org/10.1016/s0079-6123(06)59027-2

Hammond, D. C. (2011). What is neurofeedback: An update. Journal of Neurotherapy, 15(4), 305–336. https://doi.org/10.1080/10874208.2011.623090

Hicks, M., Hanes, D., & Wahbeh, H. (2016). Expectancy effect in three mind-body clinical trials. Journal of Evidence-Based Complementary & Alternative Medicine, 21(4), NP103-NP109. https://doi.org/10.1177/2156587216652572

Ho, A. D., & Yu, C. C. (2015). Descriptive statistics for modern test score distributions: Skewness, kurtosis, discreteness, and ceiling effects. Educational and Psychological Measurement, 75(3), 365–388. https://doi.org/10.1177/0013164414548576

Iacobucci, D., Posavac, S. S., Kardes, F. R., Schneider, M. J., & Popovich, D. L. (2015). The median split: Robust, refined, and revived. Journal of Consumer Psychology, 25(4), 690–704. https://doi.org/10.1016/j.jcps.2015.06.014

Jaeggi, S., Buschkuehl, M., Shah, P., & Jonides, J. (2014). The role of individual differences in cognitive training and transfer. Memory & Cognition, 42(3), 464–480. https://doi.org/10.3758/s13421-013-0364-z

Janssen, T. W. P., Geladé, K., Bink, M., van Mourik, R., Twisk, J. W. R., Maras, A., & Oosterlaan, J. (2020). Long-term effects of theta/beta neurofeedback on EEG power spectra in children with attention deficit hyperactivity disorder. Clinical Neurophysiology, 131(6), 1332–1341. https://doi.org/10.1016/j.clinph.2020.02.020

Joachim, G., Frank, S., Irmtraud, S., Klaus, K., Kimron, S., Bernhard, H., & Alfons, S. (2004). Modulation of long-range neural synchrony reflects temporal limitations of visual attention in humans. Proceedings of the National Academy of Sciences of the United States of America, 101(35), 13050. https://doi.org/10.1073/pnas.0404944101

Judd, C. M., McClelland, G. H., & Culhane, S. E. (1995). Data analysis: Continuing issues in the everyday analysis of psychological data. Annual Review of Psychology, 46(1), 433–465. https://doi.org/10.1146/annurev.ps.46.020195.002245

Kamiński, J., Brzezicka, A., Gola, M., & Wróbel, A. (2012). Beta band oscillations engagement in human alertness process. International Journal of Psychophysiology, 85(1), 125–128. https://doi.org/10.1016/j.ijpsycho.2011.11.006

Katerelos, T. E., Bélanger, C., Payette, M.-C., El-Baalbaki, G., Marchand, A., & Perreault, M. (2015). The role of expectations in treatment outcome and symptom development in anxiety disorders. In A Fresh Look at Anxiety Disorders. https://doi.org/10.5772/60668

Koshi, E. B., & Short, C. A. (2007). Placebo theory and its implications for research and clinical practice: A review of the recent literature. Pain Practice, 7(1), 4–20. https://doi.org/10.1111/j.1533-2500.2007.00104.x

Kropotov, J. D. (2010). Quantitative EEG, event-related potentials and neurotherapy. Elsevier Science.

Lee, G. J., & Suhr, J. A. (2020). Expectancy effects of placebo neurofeedback in ADHD treatment seekers: A neuropsychological investigation. Neuropsychology, 34(7), 774–782. https://doi.org/10.1037/neu0000689

Lilienthal, L., Tamez, E., Shelton, J. T., Myerson, J., & Hale, S. (2013). Dual n-back training increases the capacity of the focus of attention. Psychonomic Bulletin & Review, 20(1), 135. https://doi.org/10.3758/s13423-012-0335-6

Loo, S., & Makeig, S. (2012). Clinical utility of EEG in attention-deficit/hyperactivity disorder: A research update. The Journal of the American Society for Experimental NeuroTherapeutics, 9(3), 569–587. https://doi.org/10.1007/s13311-012-0131-z

Oken, B. S., Flegal, K., Zajdel, D., Kishiyama, S., Haas, M., & Peters, D. (2008). Expectancy effect: Impact of pill administration on cognitive performance in healthy seniors. Journal of Clinical and Experimental Neuropsychology, 30(1), 7–17. https://doi.org/10.1080/13803390701775428

Pérez-Elvira, R., Oltra-Cucarella, J., Carrobles, J. A., Moltó, J., Flórez, M., Parra, S., Agudo, M., Saez, C., Guarino, S., Costea, R. M., & Neamtu, B. (2021). Enhancing the effects of neurofeedback training: The motivational value of the reinforcers. Brain Sciences, 11(4), 457. https://doi.org/10.3390/brainsci11040457

R Core Team. (2021). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Rabipour, S., & Davidson, P. S. R. (2015). Do you believe in brain training? A questionnaire about expectations of computerised cognitive training. Behavioural Brain Research, 295, 64–70. https://doi.org/10.1016/j.bbr.2015.01.002

Riccio, C. A., Reynolds, C. R., Lowe, P., & Moore, J. J. (2002). The continuous performance test: A window on the neural substrates for attention? Archives of Clinical Neuropsychology, 17(3), 235–272. https://doi.org/10.1093/arclin/17.3.235

Rudebeck, S. R., Bor, D., Ormond, A., O’Reilly, J. X., Lee, A. C. H., & Chao, L. (2012). A potential spatial working memory training task to improve both episodic memory and fluid intelligence. PLoS ONE, 7(11), e50431. https://doi.org/10.1371/journal.pone.0050431

Russell V. L. (2021). emmeans: Estimated marginal means, aka least-squares means. R package version 1.6.2-1. https://CRAN.R-project.org/package=emmeans

Rutherford, B., Wager, T., & Roose, S. (2010). Expectancy and the treatment of depression: A review of experimental methodology and effects on patient outcome. Current Psychiatry Reviews, 6(1), 1–10. https://doi.org/10.2174/157340010790596571

Schabus, M., Griessenberger, H., Gnjezda, M.-T., Heib, D. P. J., Wislowska, M., & Hoedlmoser, K. (2017). Better than sham? A double-blind placebo-controlled neurofeedback study in primary insomnia. Brain, 140(4), 1041–1052.

Schönenberg, M., Wiedemann, E., Schneidt, A., Scheeff, J., Logemann, A., Keune, P. M., & Hautzinger, M. (2017). Neurofeedback, sham neurofeedback, and cognitive-behavioural group therapy in adults with attention-deficit hyperactivity disorder: A triple-blind, randomised, controlled trial. The Lancet Psychiatry, 4(9), 673–684. https://doi.org/10.1016/s2215-0366(17)30291-2

Schönenberg, M., Weingärtner, A.-L., Weimer, K., & Scheeff, J. (2021). Believing is achieving - On the role of treatment expectation in neurofeedback applications. Progress in Neuro-Psychopharmacology and Biological Psychiatry, 105, 110129. https://doi.org/10.1016/j.pnpbp.2020.110129

Sterman, M. B., & Egner, T. (2006). Foundation and practice of neurofeedback for the treatment of epilepsy. Applied Psychophysiology and Biofeedback, 31(1), 21–35. https://doi.org/10.1007/s10484-006-9002-x

Susanne, M. J., Martin, B., John, J., & Walter, J. P. (2008). Improving fluid intelligence with training on working memory. Proceedings of the National Academy of Sciences, 105(19), 6829. https://doi.org/10.1073/pnas.0801268105

Tamm, L., Narad, M., Antonini, T., O’Brien, K., Hawk, L., & Epstein, J. (2012). Reaction time variability in ADHD: A review. The Journal of the American Society for Experimental NeuroTherapeutics, 9(3), 500–508. https://doi.org/10.1007/s13311-012-0138-5

Tukey, J. (1977). Exploratory data analysis (1st ed.). Pearson.

Twisk, J. W. R. (2013). Applied longitudinal data analysis for epidemiology: A practical guide (2nd ed.). Cambridge University Press.

van Dongen-Boomsma, M., Vollebregt, M. A., Slaats-Willemse, D., & Buitelaar, J. K. (2013). A randomized placebo-controlled trial of electroencephalographic (EEG) neurofeedback in children with attention-deficit/hyperactivity disorder. The Journal of Clinical Psychiatry, 74(8), 821. https://doi.org/10.4088/JCP.12m08321

Vernon, D. (2005). Can neurofeedback training enhance performance? An evaluation of the evidence with implications for future research. Applied Psychophysiology and Biofeedback, 30(4), 347–364. https://doi.org/10.1007/s10484-005-8421-4

Vernon, D., Egner, T., Cooper, N., Compton, T., Neilands, C., Sheri, A., & Gruzelier, J. (2003). The effect of training distinct neurofeedback protocols on aspects of cognitive performance. International Journal of Psychophysiology, 47(1), 75–85. https://doi.org/10.1016/S0167-8760(02)00091-0

Wang, Y., Sokhadze, E. M., El-Baz, A. S., Li, X., Sears, L., Casanova, M. F., & Tasman, A. (2016). Relative power of specific EEG bands and their ratios during neurofeedback training in children with autism spectrum disorder. Frontiers in Human Neuroscience, 9, 723. https://doi.org/10.3389/fnhum.2015.00723

Funding

Open Access funding provided by UiT The Arctic University of Norway. The Arctic University of Norway will cover all expenses related to the publication.

Author information

Authors and Affiliations

Contributions

Conceptualization: Yevhen Damanskyy, Alexander Olsen, Stig Arvid Hollup.

Methodology and data collection: Yevhen Damanskyy, Stig Arvid Hollup.

Formal analysis: Yevhen Damanskyy.

Writing-original draft preparation: Yevhen Damanskyy, Alexander Olsen.

Writing-review editing: Yevhen Damanskyy, Alexander Olsen, Stig Arvid Hollup.

Corresponding author

Ethics declarations

Ethical Approval

The authors declare that they have no conflict of interest.

Consent to Participate

All participants provided signed informed consent prior to participation.

Consent for Publication

Participants also provided their consent to publish the data after it has been anonymized.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Damanskyy, Y., Olsen, A. & Hollup, S. Prior Prognostic Expectations as a Potential Predictor in Neurofeedback Training. J Cogn Enhanc 6, 205–215 (2022). https://doi.org/10.1007/s41465-021-00234-3

DOI: https://doi.org/10.1007/s41465-021-00234-3