Abstract

Acrylic glasses, like mineral glasses, exhibit a high variability in tensile strength. To cope with this uncertainty in the dimensioning of structural parts, both a model of the stress-strain behavior and a proper characterization of the varying fracture stress or strain are required. For the latter, this work presents an experimental and mathematical methodology. Fracture strains from 50 quasi-static tensile tests, analyzed locally using digital image correlation, form the sample. To assign an occurrence probability to each experiment, existing probability estimators are evaluated with regard to their ability to fit selected probability distribution functions. Important goodness-of-fit tests are introduced and assessed critically. Based on the popular Anderson-Darling test, a generalized form is proposed that allows a free choice of the probability estimator, which was not possible before. To approximate the population of fracture strains, the combination of probability estimator and distribution function that best reproduces the experimental data is determined, and its characteristic progression is discussed with the aid of fractographic analyses.


1 Introduction

Poly(methyl methacrylate) (PMMA), also known as acrylic glass, is a material with great potential for resource conservation. Since it is much lighter than mineral glass, its integration into automotive structures saves weight and thus reduces the car’s energy consumption. For mineral glass, it is already known that the strength in practical applications is significantly lower than the theoretically determined one (Orowan 1949; Wiederhorn 1967; Ritter and Sherburne 1971; Chandan et al. 1978; Overend and Zammit 2012). Alter et al. (2017) attribute this effect to the highly brittle nature of mineral glass paired with stochastically distributed surface flaws originating from manufacturing, handling, and transportation. Thus, the glass edge in particular shows a reduced strength compared to the glass surface (Lindqvist et al. 2011; Kleuderlein et al. 2016; Ensslen and Müller-Braun 2017). Since these flaws occur randomly, the failure criterion is a stochastic quantity rather than a deterministic value.

Likewise, acrylic glass, which is a brittle thermoplastic consisting of randomly entangled polymer chains, shows a high variation in its fracture strain (Rühl 2017). PMMA is either injection molded or processed from extruded plates; in the latter case, the machined edges show a higher roughness than the surface areas (Rühl 2017). Consequently, for machined PMMA components the edge strength must be assumed to be lower than that of the surface. In this work, the tensile specimens had to be milled from extruded plates. Therefore, the fracture strains obtained might be lower than those of injection molded components. Nevertheless, the methodology proposed hereafter is independent of the manufacturing process of the specimens, as long as that process is kept consistent.

Continuing the work of Rühl et al. (2017), a methodology is introduced for determining the probability distribution of fracture strains in acrylic glasses. A description is given of the sample generation, including the manufacturing process of the specimens and the measurement of the fracture strain. To provide the necessary statistical basics, the probability distribution functions under consideration are briefly introduced, and the probability estimators that are common in order statistics are compiled. Starting from the problem of how to properly combine distribution function and probability estimator to best reproduce the material’s fracture behavior, established goodness-of-fit tests are introduced in detail, which prepares the fundamentals needed to understand the working principle of a new test. A key result of this study is the proposal of a generalization of the Anderson–Darling goodness-of-fit test. This new test allows the necessary freedom in choosing probability distribution function and probability estimator in order to find their optimum combination. The fit methodology is applied directly to the sample of PMMA fracture strains, revealing two different fracture criteria within the sample. With the help of fractography, a link between the bimodal fracture strain distribution and defects induced by specimen production is discovered.

2 Experimental results

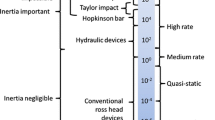

The database for the statistical analyses of the fracture behavior of acrylic glass is a sample of fracture strains obtained from 50 uniaxial tensile tests that were performed at room temperature. The tensile specimens are milled from PMMA plates. These plates come from the same production batch and have a mean thickness of 3.065 mm with a standard deviation of \(\pm 0.043\) mm. With the tolerances of the milling process, the cross-sectional area of the measuring zone averages 36.94 mm\(^2\) with a standard deviation of \(\pm 0.57\) mm\(^2\). The specimen dimensions shown in Fig. 1a are adopted from Rühl et al. (2017); this geometry (BZ) is optimized for high strain rates with a uniaxial stress state in the measuring zone. The ratio of hydrostatic pressure p to von Mises stress \(\sigma _{\mathrm {vM}}\) can be used as a measure of triaxiality m, where

\[ m = -\frac{p}{\sigma _{\mathrm {vM}}} \tag{1} \]

becomes 1/3 for stress in only one principal direction, i.e. uniaxial tensile stress (e.g. Rühl 2017). Figure 1b illustrates the results of a linear-elastic simulation of the tensile test with respect to triaxiality. In the experiments, quasi-static loading is realized by a machine traverse velocity of 6 mm/min, resulting in a nearly constant strain rate of \({\dot{\varepsilon }}=(0.0014\) \(\pm 0.0001) \text { s}^{-1}\) in the measuring zone. The recorded force-displacement curves are given in Fig. 2. On the one hand, they illustrate the high reproducibility of the test; on the other hand, they demonstrate the necessity of a statistical approach to characterizing the fracture strain, owing to the high variation in the tensile strength of acrylic glass. Young’s modulus and Poisson’s ratio are taken from the work of Rühl (2017). The turquoise areas in Fig. 1b indicate an almost uniaxial stress state in the specimen’s measuring zone and at the edges. Thus, the fracture strains are taken locally, at a slight distance from the edge, right at the position of crack initiation by means of a digital-image-correlation (DIC) analysis, which is shown in Fig. 3. The crack’s starting point lies at the tip of the v-shaped fracture pattern that is displayed on the right-hand side of Fig. 3. DIC enables the measurement of true strain, or Hencky strain, on the specimen’s surface. Since failure usually occurs between the last picture of the intact specimen and the first picture of the fractured one, the last local strain is linearly extrapolated to the fracture strain using the higher measuring frequency of the load cell. Strain is preferred over stress as fracture criterion, since it is always a distinct value, even for non-linear material behavior, and, as a kinematic quantity, it is independent of material models. The measurement precision of the DIC system cannot be stated in the conventional way as a single value.
The accuracy depends strongly on the conditions in the test laboratory, such as ambient temperature, illumination, and vibrations. To quantify the error, 100 images of an unloaded specimen are taken, and the noise of the measured strain across the image series is analyzed. The applied facet size is approx. 3% of the sample width. The uncertainty in strain measurement is found to be \(\pm 0.0006\) in the current experiments. The fracture strains \(\varepsilon _i\) \((i=1,2, \ldots ,50)\) that form the database for the following investigations are depicted as a histogram in Fig. 4. The histogram, which ranges from 0.02 to 0.05 and is divided into ten equal bins, gives a rough idea of what the probability density function (PDF) of the sample might look like. It remains uncertain whether the PDF has a unimodal or a multimodal progression, but the familiar bell-shaped curve seems unlikely. For this reason, further statistical analyses are conducted in the following instead of generally assuming a normal distribution. As shown later on, that is the correct decision. Thus, even at this early stage, the histogram indicates an irregularity within the sample.

3 Probability distribution functions

The probability distribution of the fracture strains \(\varepsilon _i\) is tested for agreement with selected probability distribution functions, an overview of which is given in Table 1. The tensile tests are performed on specimens that are assumed to be free of residual stresses. This assumption is based on our studies in Brokmann et al. (2019), in which the manufactured specimens were verified to be stress free by means of a polariscope. Since no negative fracture strains occur, all distribution functions are considered for the variable \( \varepsilon ~\in ~[0,\infty ) \). The function parameters are consistently defined as

- mean value \(\mu \in {\mathbb {R}}\),
- standard deviation \(\sigma \in {\mathbb {R}}^+\),
- shape parameter \(\beta \in {\mathbb {R}}^+\),
- scale parameter \(\eta \in {\mathbb {R}}^+\), and
- location parameter \(\gamma \in {\mathbb {R}} \).

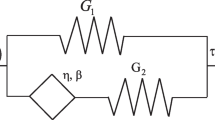

The probability distribution functions are chosen to represent the most common function families in science and the most common modified distribution functions in the statistics of glass. Besides the familiar normal or Gaussian distribution of Eq. (2), its relative, the log-normal distribution, is considered. Its cumulative distribution function (CDF) is given in Eq. (8), in which \(\mu \) and \(\sigma \) are the mean and standard deviation of the natural logarithm of the random variable \(\varepsilon \). In both the normal and the log-normal CDF, “\(\mathrm {erf}(x)\)” is the error function.

In reproduction of the stochastic fracture behavior of glasses and ceramics typically Weibull distributions are applied. The 2-parameter Weibull (2PW) distribution is a special form of the three-parameter Weibull (3PW) distribution, where the location parameter \(\gamma \) is zero, cf. Eq. (3) and Eq. (4). The parameter \(\gamma \) describes a lower limit, below which no observations are expected, i.e. only in excess of the null strain \(\varepsilon _0 = \gamma \) fracture appears. Similarly, the so called left-truncated Weibull (LTW) distribution in Eq. (10), see Ballarini et al. (2016), provides a lower limit \(\gamma \) as well, but by truncation of the 2PW distribution’s probability density function. That changes the interpretation of the lower limit: fracture at strains below \(\gamma \) is possible, but not represented in the sample.

Furthermore, the CDFs of the log-Weibull, the Gumbel, the logistic and log-logistic, and Cauchy distribution are given in Eq. (6, 7, 9, 12, 13), cf. Forbes et al. (2011), Rinne (2008), Ahmad et al. (1988). The variable \(\varepsilon \) is defined as being log-normal, log-logistic, or log-Weibull if \(\ln (\varepsilon )\) is normal, logistic, or Weibull distributed.

If the probability density function of the experimental data features two modes, as potentially indicated in Fig. 4 for the current sample of fracture strains, the bilinear Weibull (BLW) distribution in Eq. (5), cf. Ballarini et al. (2016), offers the reasonable approach of splitting the sample into two sets and assuming a 2PW distribution for each. The intersection \(\varepsilon ^*\) of the two 2PW distributions follows from equating both functions and solving for \(\varepsilon \).

At the transition, the derivatives of the two 2PW distributions differ unless \(\eta _1 = \eta _2\) and \(\beta _1 = \beta _2\), which is the trivial case. For a smoother transition, the bimodal Weibull (BMW) distribution of Eq. (11), cf. Pisano (2018), merges the two separate functions. Its parameters differ from those of a corresponding BLW distribution and cannot simply be transferred.
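The BLW construction can be illustrated with a short sketch. It assumes the common 2PW form \(P(\varepsilon ) = 1-\exp [-(\varepsilon /\eta )^\beta ]\), which may be parameterized differently in Table 1, and it assumes that the first component is used below the intersection and the second one above it. The function names and parameter values are hypothetical.

```python
import math


def weibull_2p_cdf(eps, beta, eta):
    """Assumed 2PW form: P = 1 - exp(-(eps / eta)**beta)."""
    return -math.expm1(-((eps / eta) ** beta))


def blw_intersection(beta1, eta1, beta2, eta2):
    """Strain at which two 2PW CDFs (assumed form above) are equal.

    Equating the exponents gives beta1*ln(eps/eta1) = beta2*ln(eps/eta2).
    """
    if beta1 == beta2:
        raise ValueError("equal shape parameters: no unique intersection")
    log_eps = (beta1 * math.log(eta1) - beta2 * math.log(eta2)) / (beta1 - beta2)
    return math.exp(log_eps)


def blw_cdf(eps, beta1, eta1, beta2, eta2):
    """Piecewise CDF: component 1 up to the intersection, component 2 beyond.

    Which component governs which branch is an assumption of this sketch.
    """
    eps_star = blw_intersection(beta1, eta1, beta2, eta2)
    if eps <= eps_star:
        return weibull_2p_cdf(eps, beta1, eta1)
    return weibull_2p_cdf(eps, beta2, eta2)
```

The CDF built this way is continuous at the intersection, while its slope generally jumps there, which is the kink in the PDF that motivates the BMW distribution.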

4 Function fitting

The biggest difficulty in modeling the probability distribution of a sample is assigning a probability to each occurrence, since the population is unknown. Over time, many probability estimators have been introduced for various applications. An overview of these estimators is given in Table 2. For the estimator in Eq. (17), published by the California Department of Public Works (1923), the lead author is unknown, so it is typically referred to as the California method. As discussed later, this estimator is the one commonly used for goodness-of-fit evaluations. Cunnane (1978) presented a generalized estimator, to which many of the others can be reduced by an appropriate choice of c in Eq. (25). He suggested \(c=0.4\). Furthermore, it should be noted that Eq. (18) gives the mean rank and Eq. (20) the median rank plotting positions (Weibull 1939; Bernard and Bos-Levenbach 1953; Datsiou and Overend 2018).
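As a minimal sketch of how such estimators are evaluated, the following assumes that the generalized estimator has Cunnane's usual form \(p_i = (i-c)/(n+1-2c)\); Table 2 is not reproduced here, so this form and the function names are assumptions. With \(c=0\) it yields Weibull's \(i/(n+1)\), and the California method \(i/n\) is written separately because it is not a member of this family.

```python
def plotting_positions(n, c=0.0):
    """Cunnane-type positions p_i = (i - c) / (n + 1 - 2c) for i = 1..n.

    c = 0   -> Weibull (mean rank), i / (n + 1)
    c = 0.3 -> common median-rank approximation, (i - 0.3) / (n + 0.4)
    c = 0.4 -> Cunnane's suggestion
    """
    if n < 1:
        raise ValueError("sample size must be at least 1")
    return [(i - c) / (n + 1 - 2 * c) for i in range(1, n + 1)]


def california_positions(n):
    """California method, p_i = i / n; the last position equals one."""
    return [i / n for i in range(1, n + 1)]
```

For a sample of 50, `plotting_positions(50)` assigns 1/51 to the smallest and 50/51 to the largest fracture strain, whereas `california_positions(50)` assigns exactly one to the largest.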

The procedure is as follows: the N measured fracture strains are sorted in ascending order \(\varepsilon _1\le \varepsilon _2\le \ldots \le \varepsilon _N\), so that position \(m~=~1\) holds the minimum fracture strain and position \(m=N\) the maximum one. Then only dependent on this position m, the corresponding occurrence probability \(p_i\) is assigned using one of the probability estimators. The generated coordinates \({(\varepsilon _i\mid p_i)}\) are the plotting positions. To fit the CDFs from Sect. 3 to this empirical CDF, the function parameters that minimize the weighted residual sum of squares (WRSS)

are determined, in analogy to the weighted goodness-of-fit tests discussed later (Anderson and Darling 1954; Sinclair et al. 1990). In Eq. (28), \(p_i\) is the ith plotting position, \(P(\varepsilon _i)\) is the function value for the corresponding ith fracture strain, and \(\psi (u)\) is a weight function, for which examples are given in Sects. 5.2 and 5.3. This procedure is adopted for all introduced distribution functions. Admittedly, by an appropriate transformation of the coordinate system, the 2PW, the BLW, the log-Weibull, the Gumbel, the logistic, the log-logistic and the Cauchy distribution can be brought into a linear form. In such coordinate systems, a CDF is then simply fitted to the plotting positions by linear regression, and the confidence and prediction limits provided by the linear regression are advantageous. However, a transformation of the coordinate system brings an unwanted weighting of the residuals into the fit. Makkonen (2006) warns against this fitting of observations to a model instead of fitting a model to the observations. To avoid this and to keep the weights comparable, all CDFs are fitted by minimization of the WRSS.
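The objective of this fit can be sketched as follows. Eq. (28) is not reproduced here, so the sketch assumes the form \(\sum _i \psi (u_i)\,[p_i - u_i]^2\) with \(u_i = P(\varepsilon _i)\); evaluating the weight at the CDF value rather than at the plotting position is an assumption, as are the function names and the 2PW parameterization.

```python
import math


def weibull_2p_cdf(eps, beta, eta):
    """Assumed 2PW form: P = 1 - exp(-(eps / eta)**beta)."""
    return -math.expm1(-((eps / eta) ** beta))


def wrss(strains, probs, cdf, weight=lambda u: 1.0):
    """Weighted residual sum of squares between plotting positions and a CDF.

    strains: measured fracture strains (sorted internally, ascending)
    probs:   plotting positions p_1 <= ... <= p_n from a chosen estimator
    cdf:     callable eps -> P(eps) with the trial parameters already bound
    weight:  callable u -> psi(u); here evaluated at u = P(eps_i) (assumption)
    """
    total = 0.0
    for eps, p in zip(sorted(strains), probs):
        u = cdf(eps)
        total += weight(u) * (p - u) ** 2
    return total


def lower_tail_weight(u):
    """Example weight that emphasizes small probabilities, psi(u) = 1/u."""
    return 1.0 / u
```

A fit would pass this function to a numerical optimizer that varies the parameters bound inside `cdf`; the optimizer itself is left out of the sketch.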

5 Goodness-of-fit

The goodness of a fitted distribution function is evaluated by hypothesis testing. A null hypothesis \(H_0\) is assumed, which states that the sample and the fitted probability distribution have the same population. The error that one would make by falsely rejecting \(H_0\) is quantified. Typically, the outcome of these tests, the so-called test statistic, is compared to tabulated critical values, which depend on sample size and significance level. The significance level \(\alpha \) is the probability of rejecting a true \(H_0\). In the following, the development of well-known goodness-of-fit tests is presented in order to provide the fundamentals for the proposal of a new test statistic.

5.1 Developing enhanced complexity

The simplest testing method in EDF statistics is the so-called Kolmogorov–Smirnov (KS) test (Stephens 1974). It examines the most critical deviation between fitted CDF and sample. The experimental data is considered as empirical CDF \(P_n(\varepsilon )\), i.e. empirical distribution function (EDF), which is defined through

\[ P_n(\varepsilon ) = {\left\{ \begin{array}{ll} 0, &{} \varepsilon < \varepsilon _1, \\ i/n, &{} \varepsilon _i \le \varepsilon < \varepsilon _{i+1}, \\ 1, &{} \varepsilon \ge \varepsilon _n, \end{array}\right. } \tag{29} \]

cf. Anderson and Darling (1954). Thus, for the measured fracture strains \(\varepsilon _i\) the occurrence probabilities \(P_n(\varepsilon _i)\) are just those of the California method from Eq. (17). This means that in all EDF statistics the single occurrence probability \(p_i=P_n(\varepsilon _i)\) is estimated by i/n. Figure 5 displays the EDF of the current sample of PMMA fracture strains. For each probability step of the EDF, the starting and the ending point are compared to the CDF \(P(\varepsilon )\) at the corresponding abscissa position (\(D^+\) and \(D^-\)). The maximum deviation that occurs is defined as the test statistic D with

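\[ D^+ = \max _{1\le i\le n}\left[ \frac{i}{n} - P(\varepsilon _i)\right] \tag{30} \]

and

\[ D^- = \max _{1\le i\le n}\left[ P(\varepsilon _i) - \frac{i-1}{n}\right] \tag{31} \]

as

\[ D = \max \left( D^+, D^-\right) . \tag{32} \]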

The KS test provides a distinct test statistic D that is compared to a critical value, which can be taken from D’Agostino and Stephens (1989). However, this test statistic is not suitable for the subject of this work, since only the one point of the EDF with maximum deviation from the CDF is considered. The EDF’s progression within the limits is disregarded. Furthermore, the KS test is less sensitive at the distribution’s tails, as Fig. 5 indicates. Yet the lower tail in particular is of major importance in structural design, for which only very low failure probabilities are accepted. In the worst case, the tolerances of the KS test would lead to an overestimation of the critical limit for the maximum strain. The test must therefore be rejected in this context.
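The KS statistic can be computed directly from the sorted sample. The sketch below compares the upper and lower corner of each EDF step with the CDF, assuming the EDF steps of height 1/n described above; the function name is hypothetical.

```python
def ks_statistic(strains, cdf):
    """Kolmogorov-Smirnov statistic D = max(D+, D-) against a fitted CDF.

    D+ compares the top of each EDF step, i/n, with P(eps_i);
    D- compares the bottom of each step, (i-1)/n, with P(eps_i).
    """
    x = sorted(strains)
    n = len(x)
    if n == 0:
        raise ValueError("empty sample")
    d_plus = max((i + 1) / n - cdf(e) for i, e in enumerate(x))
    d_minus = max(cdf(e) - i / n for i, e in enumerate(x))
    return max(d_plus, d_minus)
```

Only the single largest deviation enters the result, which is why the statistic says nothing about how well the remaining steps, in particular those in the tails, are reproduced.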

To overcome the limitations of the KS test, the Cramér-von-Mises (CVM) test (Cramér 1946) considers the EDF in every step. It generates the test statistic \(W^2\). The smaller \(W^2\) is, the better the CDF reproduces the empirical data. The calculation follows

\[ W^2 = n\int _{-\infty }^{\infty } \left[ P_n(\varepsilon ) - P(\varepsilon )\right] ^2 \,\mathrm {d}P(\varepsilon ), \tag{33} \]

in which \(P_n(\varepsilon )\) represents the EDF and \(P(\varepsilon )\) the CDF of the assumed probability distribution. Using the midpoint rule, the integral in Eq. (33) is converted into a sum, which significantly simplifies the calculation of the test statistic \(W^2\). In doing so, the occurrence probability \(p_i\) for each observation is again estimated according to Eq. (17), and not Eq. (16) as one could assume. The best possible test statistic obviously equals the error term 1/(12n). Asymptotic and modified critical values of the test statistic, below which \(H_0\) is not rejected, are again given by D’Agostino and Stephens (1989) for various types of distribution functions.
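For illustration, the widely used computing formula \(W^2 = 1/(12n) + \sum _i [P(\varepsilon _i) - (2i-1)/(2n)]^2\) is sketched below. That this is the exact sum form intended in the text is an assumption; it is consistent with the stated minimum of 1/(12n). The function name is hypothetical.

```python
def cvm_statistic(strains, cdf):
    """Cramer-von-Mises statistic W^2 in its standard sum form.

    W^2 = 1/(12 n) + sum_i (u_i - (2 i - 1) / (2 n))^2,  u_i = P(eps_i),
    with the sample sorted in ascending order.
    """
    x = sorted(strains)
    n = len(x)
    if n == 0:
        raise ValueError("empty sample")
    total = 1.0 / (12.0 * n)
    for i, e in enumerate(x, start=1):
        total += (cdf(e) - (2 * i - 1) / (2.0 * n)) ** 2
    return total
```

The sum vanishes when every CDF value sits at the midpoint of its EDF step, leaving only the term 1/(12n).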

The mandatory use of the California method’s probability estimator in the CVM test, given by the EDF, becomes problematic when the plotting positions, initially used to fit the CDF, are based on a different estimator because consequently they are not considered in the test statistic. Thus, for the comparison of probability estimators in order to detect their effect on the fitted CDF, the conventional CVM test is not a proper evaluation method. A consistent use of the plotting positions for fit and goodness-of-fit analysis is not possible.

5.2 Anderson–Darling test

An enhancement to the CVM test was introduced in Anderson and Darling (1954). The purpose was to bring higher weight to the tails of the distribution in the goodness-of-fit rating. Therefore, a weight function

\[ \psi (u) = \frac{1}{u\,(1-u)}, \tag{34} \]

the shape of which is shown in Fig. 7 was added to the residual squares, as

\[ A^2 = n\int _{-\infty }^{\infty } \left[ P_n(\varepsilon ) - P(\varepsilon )\right] ^2 \psi \left( P(\varepsilon )\right) \,\mathrm {d}P(\varepsilon ). \tag{35} \]

Since the function values of a CDF represent an occurrence probability, they always lie between 0 and 1. Hence, this is the very interval for the argument in Eq. (34), with the function value tending towards infinity at the limits. As before, the integral expression of the test statistic is transformed into a sum. First, it is simplified by substituting the continuous CDF \(P(\varepsilon ) = u\) and \(\mathrm {d}P(\varepsilon )=\mathrm {d}u\). Then, the single integral is divided into one integral for each probability step \(P(\varepsilon _i) = u_i\). Following the California method’s estimation, the EDF is \(P_n(\varepsilon _i)=p_i = i/n\) for an ascending order \( \varepsilon _1 \le \varepsilon _2 \le \ldots \le \varepsilon _n\). With \(p_0=0\) and \(p_n=1\), the integrands of the first and the last integral simplify. Simple integration and collecting of terms results in

\[ A^2 = -n - \frac{1}{n}\sum _{i=1}^{n}(2i-1)\left[ \ln P(\varepsilon _i) + \ln \left( 1-P(\varepsilon _{n+1-i})\right) \right] . \tag{36} \]

Critical values of the test statistic \(A^2\) for acceptance, or rather non-rejection, of the null hypothesis as a function of sample size and probability distribution can also be taken from D’Agostino and Stephens (1989). Similar to the CVM test, the Anderson–Darling (AD) test uses a mandatory probability estimator. This becomes obvious when examining the resulting sum in Eq. (36), which contains only the CDF values. Thus, if a probability estimator other than that of the California method is chosen to fit a particular distribution, the conventional EDF-based AD test is, like the CVM test, not suitable for the goodness-of-fit rating.
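A minimal sketch of the conventional statistic is given below, using the standard computing formula \(A^2 = -n - \frac{1}{n}\sum _i (2i-1)[\ln u_i + \ln (1-u_{n+1-i})]\) with \(u_i = P(\varepsilon _i)\). The function name is hypothetical, and all CDF values are assumed to lie strictly between 0 and 1.

```python
import math


def anderson_darling(strains, cdf):
    """Conventional Anderson-Darling statistic A^2 (EDF steps i/n built in)."""
    u = [cdf(e) for e in sorted(strains)]
    n = len(u)
    if n == 0:
        raise ValueError("empty sample")
    total = 0.0
    for i in range(1, n + 1):
        # u[i - 1] is u_i, u[n - i] is u_{n+1-i} (zero-based lists)
        total += (2 * i - 1) * (math.log(u[i - 1]) + math.log(1.0 - u[n - i]))
    return -n - total / n
```

No plotting position appears as an argument: the estimator i/n is fixed inside the coefficients (2i-1)/n, which is the restriction discussed above.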

5.3 Generalized Anderson–Darling test

Since the conventional AD test requires the California method’s probability estimator, we propose the generalized Anderson-Darling (GAD) test. The derivation is similar up to the point, where the California method’s probability estimation replaces the general approach. That is omitted to keep \(p_i\) unspecified. Collecting the integrated terms leads to

Replacing \(p_i\) in Eq. (37) by the California method’s estimator would lead back to the conventional AD test statistic in Eq. (36). One advantage of the GAD test is thus the free choice of the probability estimator, which enables its consistent use for the function fit and the subsequent goodness-of-fit examination. It is worth noting that in the conventional AD test the probability \(p_{0}\) equals zero and \(p_{n}\) equals one; otherwise the integral in Eq. (35) diverges. Hence, the GAD test allows all examined plotting positions to be changed except for the nth one. In other words, the last plotting position is still adopted from the EDF. Strictly speaking, this remains an inaccuracy, because the nth plotting position is used inconsistently in the CDF fit and the goodness-of-fit test whenever the initial probability estimator is not i/n. This inaccuracy increases for small sample sizes, since their last plotting position deviates more strongly from one, as shown by way of example in Fig. 6 for Weibull’s probability estimator (WPE) from Eq. (18), which is the one with the lowest estimate for \(p_{i}\).
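The idea can be sketched numerically. Eq. (37) is not reproduced here; the sum below is re-derived by integrating \((p_i-u)^2/[u(1-u)]\) over each probability step with \(p_0=0\) and \(p_n=1\), assuming the usual AD weight, so its arrangement of terms may differ from the published one. The function name is hypothetical, and all CDF values are assumed to lie strictly between 0 and 1.

```python
import math


def gad_statistic(u, p):
    """Generalized AD statistic for free plotting positions p_1..p_(n-1).

    u: CDF values u_i = P(eps_i) in ascending order, 0 < u_i < 1
    p: plotting positions p_1..p_n; p_n is treated as 1 (its value is ignored)

    Per step [u_i, u_(i+1)] the integral of (p_i - u)^2 / (u (1 - u)) is
      p_i^2 ln(u_(i+1)/u_i) - (1 - p_i)^2 ln((1-u_(i+1))/(1-u_i)) - (u_(i+1)-u_i).
    """
    n = len(u)
    if n == 0 or len(p) != n:
        raise ValueError("u and p must be non-empty and of equal length")
    # First step (p_0 = 0), last step (p_n = 1) and the telescoped -1 term.
    total = -1.0 - math.log(1.0 - u[0]) - math.log(u[-1])
    for i in range(n - 1):  # inner steps i = 1 .. n-1
        total += p[i] ** 2 * math.log(u[i + 1] / u[i])
        total -= (1.0 - p[i]) ** 2 * math.log((1.0 - u[i + 1]) / (1.0 - u[i]))
    return n * total
```

Passing `p = [i / n for i in range(1, n + 1)]` reproduces the conventional AD value, while any other estimator changes only the inner steps.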

To free even the last plotting position \(p_{n}\) from the EDF, the approach of Sinclair et al. (1990) can be utilized. They split the weight function \(\psi \) of the conventional AD test to obtain one test statistic that puts higher weight on the lower plotting positions and one that puts higher weight on the upper ones. Adopting their lower-tail weighting

\[ \psi (u) = \frac{1}{u} \tag{38} \]

in Eq. (35) and bringing the integral into sum form, but keeping the plotting positions \(p_i\) as argument, provides a new test statistic

the lower-tail GAD test. As Eq. (39) shows, the nth plotting position is now arbitrary. However, with respect to the step function, one should be aware that even for \(\varepsilon \rightarrow \infty \) the occurrence probability for another observation does not become one, except when a probability estimator is chosen that defines the nth plotting position as one. Thus, the deviation between nth step and CDF tends to infinity. Due to simultaneously decreasing weight, the impact on the magnitude of the test statistic is negligible especially for larger sample sizes. The weights of both GAD test and lower-tail GAD test are compared in Fig. 7.
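A corresponding sketch for the lower-tail variant follows. Eq. (39) is not reproduced here; the sum is re-derived by integrating \((p_i-u)^2/u\) over each probability step, assuming the lower-tail weight \(\psi (u)=1/u\), so the arrangement of terms may differ from the published one. The function name is hypothetical.

```python
import math


def lower_tail_gad(u, p):
    """Lower-tail generalized AD statistic with weight psi(u) = 1/u (assumed).

    u: CDF values u_i = P(eps_i) in ascending order, 0 < u_i <= 1
    p: plotting positions p_1..p_n, all of them free (p_0 = 0 is implied)

    Per step the integral of (p_i - u)^2 / u is
      p_i^2 ln(u_(i+1)/u_i) - 2 p_i (u_(i+1) - u_i) + (u_(i+1)^2 - u_i^2) / 2,
    and the last term telescopes to 1/2 over the interval [0, 1].
    """
    n = len(u)
    if n == 0 or len(p) != n:
        raise ValueError("u and p must be non-empty and of equal length")
    upper = list(u[1:]) + [1.0]  # u_(n+1) = 1 closes the last step
    total = 0.5
    for ui, ui1, pi in zip(u, upper, p):
        total += pi ** 2 * math.log(ui1 / ui) - 2.0 * pi * (ui1 - ui)
    return n * total
```

With `p_i = i/n` the expression collapses to \(-3n/2 + 2\sum _i u_i - \frac{1}{n}\sum _i (2i-1)\ln u_i\); with any other estimator, including one whose last position is below one, the result stays finite.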

5.4 Monte-Carlo simulation for the p-value

The GAD and the lower-tail GAD test allow arbitrary step functions to be considered independently of the EDF. Analogously to the conventional AD test, weight is put either on both tails or on only one tail of the distribution, which are the areas of major importance for many applications. To complete a goodness-of-fit analysis, the results of GAD and lower-tail GAD must be classified. Typically, the test result is compared to critical values for the respective significance level. Instead of providing extensive tables, the methodology for calculating the corresponding p-values via Monte-Carlo simulation is shown here.

The p-value of a goodness-of-fit test statistic describes how probable a test outcome higher than the observed result would be if \(H_0\) were true (D’Agostino and Stephens 1989). The higher the p-value, the weaker the evidence against \(H_0\), i.e. the better the fitted distribution is supported by the data. However, this requires the probability density of all possible test outcomes to be known. Fortunately, the steady increase in computer processing power allows extensive Monte-Carlo simulations to be carried out in order to estimate this probability density with great accuracy.

In the following, the calculation of the p-value is explained on the example of the current sample of 50 PMMA fracture strains in a goodness-of-fit test for 3PW distribution. Using the introduced tools in Sect. 4, the function parameters \(\beta \), \(\eta \), and \(\gamma \) are fitted. The determined 3PW distribution function is postulated to be the true population of the experimental data and the 50 fracture strains to be a random sample of this population. If the 50 tensile tests were repeated, a different composition would occur, but still following the population. Its composition is made by chance alone. The twist is now that this chance is going to be simulated. Based on the population, samples with random fracture strains are created that are representative for sets of real experiments.

Random variables are generated from a distribution function by the so-called method of inverse transform sampling. For this, uniformly distributed random numbers in the interval (0, 1) are inserted for u in the inverse \(\varepsilon =P^{-1}(u)\) of a given CDF \(P(\varepsilon )\). The basis is the probability integral transform, which states that a random variable \(\varepsilon \) with CDF \(P(\varepsilon )\) entails the uniformly distributed variable \(u=P(\varepsilon )\), and vice versa. In other words, the CDF transforms a variable of a particular distribution into a variable of the standard uniform distribution (Robert and Casella 1999). For the example of a population with 3PW distribution, random fracture strains are obtained by transforming Eq. (4) as described into

\[ \varepsilon = \gamma + \eta \left[ -\ln (1-u)\right] ^{1/\beta } \tag{40} \]

with u as a uniformly distributed variable. The equations for further popular distribution functions are given by Forbes et al. (2011). For the BMW distribution the generation becomes more involved, because its CDF cannot be inverted analytically. Therefore, for a given u, Eq. (11) has to be solved numerically for \(\varepsilon \).
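Inverse transform sampling for the 3PW case can be sketched as follows, assuming the common form \(P(\varepsilon ) = 1-\exp \{-[(\varepsilon -\gamma )/\eta ]^\beta \}\) for \(\varepsilon > \gamma \); the parameterization in Table 1 may differ. The function names and the parameter values in the usage line are hypothetical.

```python
import math
import random


def weibull_3p_cdf(eps, beta, eta, gamma):
    """Assumed 3PW form; zero at and below the location parameter gamma."""
    if eps <= gamma:
        return 0.0
    return -math.expm1(-(((eps - gamma) / eta) ** beta))


def weibull_3p_inverse(u, beta, eta, gamma):
    """Inverse CDF: the strain whose cumulative probability is u, 0 <= u < 1."""
    return gamma + eta * (-math.log1p(-u)) ** (1.0 / beta)


def sample_weibull_3p(n, beta, eta, gamma, seed=None):
    """Draw n random fracture strains by inverse transform sampling."""
    rng = random.Random(seed)  # rng.random() returns values in [0, 1)
    return [weibull_3p_inverse(rng.random(), beta, eta, gamma) for _ in range(n)]
```

For instance, `sample_weibull_3p(50, 4.0, 0.02, 0.015, seed=1)` produces one synthetic set of 50 strains, none of which falls below the location parameter.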

The determination of the p-value for the initial sample is shown as a flowchart in Fig. 8. The algorithm starts with the input of the 50 experimental fracture strains \(\varepsilon _i\) and the fitted CDF \(P(\varepsilon )\). From both, the test statistic \(A^2_{\mathrm {orig}}\) is calculated on the right path. On the left path, a loop is entered. Based on the assumed population \(P(\varepsilon )\), i.e. the CDF, a new sample of 50 random fracture strains \(\varepsilon _{\mathrm {rand},i}\) is generated. The parameters of the initial CDF are fitted to the new sample and a goodness-of-fit test is performed, resulting in the test statistic \(A^2_{\mathrm {MC},j}\). This procedure is repeated 10 million times, so that 10 million possible test outcomes are simulated. At least 10 million test statistics were found to be necessary for a p-value that is reproducible to the third decimal place. In the final step in Fig. 8, the p-value follows from the percentage of test statistics \(A^2_{\mathrm {MC},j}\) \((j=1,2, \ldots ,10^7)\) from the Monte-Carlo simulation that are greater than the original test statistic \(A^2_{\mathrm {orig}}\) with

\[ p = P\left( A^2_{\mathrm {MC}} > A^2_{\mathrm {orig}}\right) , \tag{41} \]

where P is the probability measure. In Sect. 6, this algorithm is adopted in p-value determination for the Anderson–Darling test statistic \(A^2\), and for both new test statistics \(A_\mathrm {G}^2\) and \(A_\mathrm {G,LT}^2\), in order to keep continuity.
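The loop of Fig. 8 can be sketched in a reduced form. To stay short, the sketch makes several assumptions that differ from the procedure in the text: it uses a 2PW population instead of the 3PW one, replaces the WRSS minimization by a least-squares line in Weibull-plot coordinates, evaluates the lower-tail statistic in a re-derived sum form with Weibull's estimator \(i/(n+1)\), and runs far fewer than \(10^7\) repetitions. All function names are hypothetical.

```python
import math
import random


def wpe(n):
    """Weibull's estimator p_i = i / (n + 1)."""
    return [i / (n + 1) for i in range(1, n + 1)]


def fit_2pw(strains):
    """Stand-in fit: least-squares line in Weibull-plot coordinates."""
    x = [math.log(e) for e in sorted(strains)]
    y = [math.log(-math.log(1.0 - p)) for p in wpe(len(x))]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    beta = sxy / sxx
    eta = math.exp(mx - my / beta)  # intercept of the line is -beta * ln(eta)
    return beta, eta


def lower_tail_stat(strains, beta, eta):
    """Lower-tail statistic with weight 1/u (assumed) and positions from wpe."""
    x = sorted(strains)
    n = len(x)
    u = [-math.expm1(-((e / eta) ** beta)) for e in x] + [1.0]
    total = 0.5
    for i, p in enumerate(wpe(n)):
        total += p ** 2 * math.log(u[i + 1] / u[i]) - 2.0 * p * (u[i + 1] - u[i])
    return n * total


def draw_2pw(n, beta, eta, rng):
    """Inverse transform sampling from the assumed 2PW population."""
    out = []
    while len(out) < n:
        v = rng.random()
        if v > 0.0:  # skip the (extremely unlikely) exact zero
            out.append(eta * (-math.log1p(-v)) ** (1.0 / beta))
    return out


def mc_p_value(strains, n_sim=2000, seed=0):
    """Share of simulated statistics that exceed the original one."""
    rng = random.Random(seed)
    beta, eta = fit_2pw(strains)
    a_orig = lower_tail_stat(strains, beta, eta)
    exceed = 0
    for _ in range(n_sim):
        sim = draw_2pw(len(strains), beta, eta, rng)
        b, e = fit_2pw(sim)  # refit the parameters to every simulated sample
        if lower_tail_stat(sim, b, e) > a_orig:
            exceed += 1
    return exceed / n_sim
```

Refitting the parameters inside the loop matters: comparing each simulated sample against the original parameters would ignore that the original statistic was itself computed after a fit.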

6 Transfer to the sample

6.1 The best fit

With the aid of the p-value, the initially introduced distribution functions are assessed for their ability to reproduce the experimental data using the AD, the GAD, and the lower-tail GAD test. Each probability distribution function is fitted to the fracture strain sample. As stated in Sect. 4, the fit criterion is the WRSS, for which the same weight is chosen as for the lower-tail GAD test because it visually captured the data points best. The plotting positions are generated for each of the probability estimators in Table 2 in turn, in order to find the pairing of distribution function and estimator that results in the minimum WRSS. In doing so, one estimator surprisingly shows a consistently good performance: \(p_i=i/(n+1)\) by Weibull produces the minimal or at least the second lowest WRSS in almost all cases. This agrees with the conclusions of Makkonen (2006), Makkonen (2008), which identify WPE as the only valid approach for probability assignment.

Ranking the distribution functions by WRSS, Table 3 lists the respective AD, GAD and lower-tail GAD test statistics and more important for comparability, their p-values. Noticeable is the inconsistent ascending order of the test statistics, though it is not astonishing, since the goodness-of-fit tests are based on a continuous EDF and the WRSS only on the sampling points. More important is the different ranking between the three goodness-of-fit tests regarding the p-values. Just for AD and GAD, which both possess the same weighting, the difference shows that the consistent choice of plotting positions is not trivial.

The BLW distribution, which ranks first in Table 3, is illustrated in Fig. 9 using the Weibull plot for visualization. The Weibull plot is obtained by transforming the abscissa by \(x=\ln (\varepsilon )\) and the ordinate by \(y=\ln \left[ -\ln \left( 1-P(\varepsilon )\right) \right] \); its characteristic feature is that a 2PW CDF appears as a straight line. In Fig. 9, the plotting positions clearly line up along two straight segments, i.e. two 2PW distributions, which explains the top ranking of the BLW distribution. The occurrence of two separate 2PW distributions in the sample leads to the assumption that two different fracture criteria are present.
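The transformation into Weibull-plot coordinates is short enough to state directly. The sketch below assumes the 2PW form \(P(\varepsilon ) = 1-\exp [-(\varepsilon /\eta )^\beta ]\), for which the transformed CDF becomes the line \(y = \beta x - \beta \ln \eta \); the function name is hypothetical.

```python
import math


def weibull_plot_coords(eps, p):
    """Map a point (eps, p) to Weibull-plot coordinates (x, y).

    x = ln(eps), y = ln(-ln(1 - p)); requires eps > 0 and 0 < p < 1.
    """
    return math.log(eps), math.log(-math.log(1.0 - p))
```

Two points taken from the same 2PW CDF are connected by a line whose slope equals the shape parameter, so a change of slope in the plotted data hints at a second population.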

Fig. 10 (a) Weibull plot of the mixture distribution’s cumulative distribution function. The plotting positions are estimated by Eq. (18) and weighted by Eq. (38). (b) Associated probability density function within the same interval limits

So far, all sample points have been assumed to follow the same population. However, given the prominent performance of the multimodal distribution functions, this assumption has to be questioned. A superposition of two effects causing the failure of the PMMA samples appears more likely. In response to the visual indication of two 2PW distributions \(P_1(\varepsilon )\) and \(P_2(\varepsilon )\), the distribution fit is repeated with a mixture distribution

\[ P(\varepsilon ) = w_1 P_1(\varepsilon ) + w_2 P_2(\varepsilon ), \tag{42} \]

where \(w_1\) and \(w_2\) are the weights for the respective mixture component so that \(w_1+w_2=1\). In result, the fit produces the best WRSS so far with \(WRSS=0.0463\). This fit is shown in Fig. 10. The left mixture component weights with \({w_1=0.209}\) and the right accordingly with \(w_2 = 0.791\). Compared to Fig. 9b, the trend of the PDF in Fig. 10b features a smooth transition between the two modes and thus corresponds more to a physical behavior.
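A mixture of two 2PW components can be sketched as a weighted sum of the component CDFs and PDFs. The 2PW form \(P(\varepsilon ) = 1-\exp [-(\varepsilon /\eta )^\beta ]\) is assumed, and the function names are hypothetical. Only the weight \(w_1 = 0.209\) is taken from the text; the fitted shape and scale parameters are not quoted there, so they are left as arguments.

```python
import math


def weibull_2p_cdf(eps, beta, eta):
    """Assumed 2PW CDF: 1 - exp(-(eps / eta)**beta)."""
    return -math.expm1(-((eps / eta) ** beta))


def weibull_2p_pdf(eps, beta, eta):
    """Derivative of the assumed 2PW CDF with respect to eps."""
    z = eps / eta
    return (beta / eta) * z ** (beta - 1.0) * math.exp(-(z ** beta))


def mixture_cdf(eps, w1, beta1, eta1, beta2, eta2):
    """Two-component mixture CDF with weights w1 and w2 = 1 - w1."""
    if not 0.0 <= w1 <= 1.0:
        raise ValueError("w1 must lie in [0, 1]")
    return w1 * weibull_2p_cdf(eps, beta1, eta1) + (1.0 - w1) * weibull_2p_cdf(eps, beta2, eta2)


def mixture_pdf(eps, w1, beta1, eta1, beta2, eta2):
    """Two-component mixture PDF; smooth for all eps > 0."""
    if not 0.0 <= w1 <= 1.0:
        raise ValueError("w1 must lie in [0, 1]")
    return w1 * weibull_2p_pdf(eps, beta1, eta1) + (1.0 - w1) * weibull_2p_pdf(eps, beta2, eta2)
```

Because both components contribute at every strain, the mixture PDF has no kink at a transition point, in contrast to the piecewise BLW construction.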

6.2 Fractographic analyses

That raises the question whether there is a mechanical explanation for the two populations in the sample. To find an answer, the fracture patterns of the tensile specimens are inspected more closely. Fortunately, the examined PMMA exhibits brittle behavior, featuring the so called fracture mirrors at the very point of crack initiation (Brokmann et al. 2019). Therefore, in all tensile specimens the crack origin is traced by closely examining the topography of the fracture surface. In doing so, an irregularity is found. Figure 11 shows the image of a specimen’s milled surface. Despite an unavoidable roughness, its topography is still quite homogeneous. But, for some specimens in the primarily homogeneous surface local spalling occurs. Especially towards the edges, where the milling head first cuts the surface, spalling is discovered. An example is given in Fig. 12.

By tracing the fracture mirror, the crack origin is located right in the notch root of such a spalling for 23 specimens. Figure 13 provides a clear image of this case. The view is orthogonal to the fracture surface and shows the fracture mirror, whose center lies right in the root of a prominent notch. The notch is even large enough for spray mist to have entered it when the speckle pattern for DIC was applied to the specimen, as the black and white dots reveal. The complex multiaxial stress state in such a notch root can hardly be determined. The failure stress might be calculated from the size of the fracture mirror, as in Brokmann et al. (2019). At this point, the specimens are divided into two groups: those without visible defects in the machined surface and those whose crack origin lies within a spalling.

The original sample is plotted again in Fig. 14, with the specimens of the second group marked off. Evidently, all the lower fracture strains are affected. For the 27 experiments without visible defects, a linear regression line, i.e. a 2PW distribution, is added. Even visually, it shows very good agreement. To quantify the goodness of a 2PW fit, the introduced procedure is reapplied to this reduced sample. Because the sample size has changed, the probability of each occurrence is re-estimated using WPE. The resulting plotting positions are weighted by Eq. (38) for consistency with the previous analyses. As a result, the 2PW CDF is fitted with a WRSS of 0.0942 and attains a p-value of 0.577 in the lower-tail GAD test, which is chosen to keep the weighting consistent. This high p-value strongly indicates that the 2PW distribution reproduces the population.
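The link between a straight line in the Weibull plot and a 2PW distribution can be sketched as follows. The sketch re-estimates the plotting positions with the WPE \(p_i=i/(n+1)\) and fits the line by ordinary, unweighted least squares. This is a simplifying assumption: the weighting of Eq. (38) and the WRSS are not reproduced. The function names and the synthetic sample are hypothetical and do not represent the measured fracture strains.

```python
import math
import random


def weibull_plotting_positions(n):
    """WPE plotting positions p_i = i / (n + 1) for an ordered sample of size n."""
    return [i / (n + 1) for i in range(1, n + 1)]


def fit_2pw_by_weibull_plot(strains):
    """Fit a 2PW distribution by a regression line in the Weibull plot.

    In the Weibull plot, x = ln(eps) and y = ln(-ln(1 - p)). A 2PW CDF
    becomes the line y = shape * x - shape * ln(scale).
    Returns (scale, shape). Unweighted least squares is an assumption here.
    """
    ordered = sorted(strains)
    n = len(ordered)
    p = weibull_plotting_positions(n)
    x = [math.log(v) for v in ordered]
    y = [math.log(-math.log(1.0 - pi)) for pi in p]
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    shape = slope
    scale = math.exp(-intercept / slope)
    return scale, shape


if __name__ == "__main__":
    # Synthetic reduced sample of 27 values (illustrative only).
    rng = random.Random(0)
    sample = [rng.weibullvariate(0.05, 12.0) for _ in range(27)]
    print(fit_2pw_by_weibull_plot(sample))
```

Since the plotting positions depend on the sample size, they have to be recomputed whenever specimens are removed, which is why the reduced sample cannot reuse the positions of the full sample.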

Fig. 14 Delimitation of the specimens within the sample whose crack origin lies in the notch root of a spalling. Analogous to Fig. 9, the plotting positions are estimated by Eq. (18). A linear regression line is added to the experiments of specimens without visible defects

6.3 Discussion

Using the proposed goodness-of-fit test procedure, a multimodal progression of the sample’s probability density is detected, which generally hints that multiple influencing factors affect the results. Indeed, fractographic analyses identified a distinguishing feature within the sample: surface defects. Certainly, micro notches from machining initiate the fracture by stress concentration in all experiments. As Fig. 11 demonstrates, a machined surface always has a certain roughness. However, defects like the one shown in Fig. 12 must be treated differently from usual machining tolerances. The present study showed that these spalling defects significantly reduce the strength of the material and give rise to a second distribution within the sample. Thus, when the sample is kept unfiltered, a mixture distribution might be the best approach to reproduce the physical behavior, since the two fracture criteria are considered separately, one in each mixture component. A second approach is to exclude the specimens weakened by spalling from the sample. The probability distribution of the retained experiments can then be reproduced by a common 2PW distribution.

Whether or not to treat the weakened specimens as outliers must be decided with regard to the actual manufacturing process in the practical application. With quality control in a well-monitored production process, spalling should not be an issue. Hence, excluding the respective specimens would be valid. It is important that the sample is representative. In the end, this study determined the fracture strain distribution for one specific acrylic glass, coming from one production batch and processed by milling exactly as described. A blind transfer to other PMMA materials is not advisable. More important is the introduced methodology for determining the probability distribution of experimental data. Its practical adoption requires little effort. We suggest using the WPE \(p_i=i/(n+1)\) for probability estimation. Depending on the desired weighting of the plotting positions, the GAD or the lower-tail GAD test is appropriate for evaluating how well the tested distribution functions reproduce the empirical distribution. If the Monte Carlo simulation for the p-value is to be avoided, the classic AD test remains an excellent alternative, since it provides tabulated significance levels. In that case, however, the probability estimator \(p_i=i/n\) of the California method should be chosen for consistency.
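The two probability estimators named above, together with a Monte Carlo p-value, can be sketched in Python as follows. The sketch uses the classic AD statistic in its standard textbook form, not the GAD or lower-tail GAD variants proposed in this work. It also assumes a fully specified hypothesized CDF, so the p-value is obtained from uniform samples. If the distribution parameters are fitted to the sample, each Monte Carlo trial would have to repeat the fit. All function names are hypothetical.

```python
import math
import random


def weibull_estimator(n):
    """WPE: p_i = i / (n + 1)."""
    return [i / (n + 1) for i in range(1, n + 1)]


def california_estimator(n):
    """California method: p_i = i / n (the largest value receives p = 1)."""
    return [i / n for i in range(1, n + 1)]


def anderson_darling_statistic(sample, cdf):
    """Classic AD statistic A^2 for a fully specified hypothesized CDF."""
    tiny = 1e-12  # guards the logarithms against CDF values of exactly 0 or 1
    u = [min(max(cdf(v), tiny), 1.0 - tiny) for v in sorted(sample)]
    n = len(u)
    total = sum((2 * i - 1) * (math.log(u[i - 1]) + math.log(1.0 - u[n - i]))
                for i in range(1, n + 1))
    return -n - total / n


def monte_carlo_p_value(a2_observed, n, trials=2000, seed=0):
    """Share of simulated samples whose A^2 is at least the observed value.

    Under the null hypothesis, the CDF values of the sample are uniform on
    (0, 1), so uniform random numbers are drawn directly.
    """
    rng = random.Random(seed)
    exceed = 0
    for _ in range(trials):
        uniform_sample = [rng.random() for _ in range(n)]
        if anderson_darling_statistic(uniform_sample, lambda v: v) >= a2_observed:
            exceed += 1
    return exceed / trials


if __name__ == "__main__":
    rng = random.Random(1)
    scale, shape = 0.05, 12.0  # hypothetical 2PW parameters
    data = [rng.weibullvariate(scale, shape) for _ in range(27)]
    a2 = anderson_darling_statistic(
        data, lambda v: 1.0 - math.exp(-((v / scale) ** shape)))
    print(a2, monte_carlo_p_value(a2, len(data)))
```

Note that the California method assigns the probability 1 to the largest observation, which cannot be drawn in a Weibull plot because \(\ln (-\ln (1-p))\) is undefined there. The WPE avoids this, since all its plotting positions lie strictly between 0 and 1.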

7 Summary and outlook

For statistical analyses, the local fracture strain of a PMMA material is determined in 50 uniaxial tensile tests. For this sample and its particular composition, WPE provides the highest agreement with the probability distribution functions considered. Hence, we recommend the WPE as the first choice for probability analyses of fracture strains or stresses. When comparing the probability distributions of different samples, varying the probability estimator is to be avoided. With the development of new goodness-of-fit tests that allow a free choice of the probability estimator, distribution functions can now be rated by their statistical significance in reproducing the sample while using the underlying plotting positions consistently. The influence of the sample size on the performance of the probability estimator has not yet been considered.

The probability distribution of the unfiltered experimental data exhibits a multimodal progression. The best reproduction is obtained with a mixture distribution of two independent 2PW distributions, which indicates two different fracture criteria within the sample. Fractographic analyses of the fracture surfaces reveal the difference between the specimens. The sample divides into a group of specimens without visible defects and a group of specimens damaged by spalling. This classification corresponds directly to the two mixture components of the distribution. Thus, the characteristics of the probability distribution are explained. The damaged specimens are not excluded from the sample, since no particular application is intended in this study. The development of the introduced methodology is more important than the particular probability distribution.

Within this study, only quasi-static uniaxial loading at room temperature is taken into account, so the influence of multiaxial stress, varying strain rates and different temperatures on the material’s fracture behavior remains to be examined in further investigations. The objective has to be the identification of a proper CDF for the fracture strain with scale, shape and/or location parameters dependent on triaxiality, strain rate and temperature. Furthermore, the specimen dimensions are constant in the present study. For a more profound knowledge of the volume dependency, additional tests on specimens of different geometries are a topic for further investigation.

Change history

03 May 2021

A Correction to this paper has been published: https://doi.org/10.1007/s40940-021-00152-y

References

Ahmad, M.I., Sinclair, C.D., Werritty, A.: Log-logistic flood frequency analysis. J. Hydrol. 98(3–4), 205–224 (1988)

Alter, C., Kolling, S., Schneider, J.: An enhanced non-local failure criterion for laminated glass under low velocity impact. Int. J. Impact Eng. 109, 342–353 (2017)

Anderson, T.W., Darling, D.A.: A test of goodness of fit. J. Am. Stat. Assoc. 49(268), 765–769 (1954)

Ballarini, R., Pisano, G., Royer-Carfagni, G.: The lower bound for glass strength and its interpretation with generalized Weibull statistics for structural applications. J. Eng. Mech. 142(12), 04016100 (2016)

Beard, L.R.: Statistical analysis in hydrology. Trans. Am. Soc. Civ. Eng. 108, 1110–1160 (1943)

Bernard, A., Bos-Levenbach, E.C.: The plotting of observations on probability paper. Statistica Neerlandica 7(3), 163–173 (1953)

Blom, G.: Statistical estimates and transformed beta-variables. Wiley, NY (1958)

Brokmann, C., Berlinger, M., Schrader, P., Kolling, S.: Fractographic fracture stress analysis of acrylic glass. ce/papers 3(1), 225–237 (2019)

California Department of Public Works: Flow in California streams. Div. Eng. Irrig., Bull. 5 (1923)

Chandan, H.C., Bradt, R.C., Rindone, G.E.: Dynamic fatigue of float glass. J. Am. Ceram. Soc. 61(5–6), 207–210 (1978)

Cramér, H.: Mathematical methods of statistics. Princeton University Press (1946)

Cunnane, C.: Unbiased plotting positions — a review. J. Hydrol. 37, 205–222 (1978)

D’Agostino, R.B., Stephens, M.A.: Goodness-of-fit techniques. Marcel Dekker, Inc. (1986)

Datsiou, K.C., Overend, M.: Weibull parameter estimation and goodness-of-fit for glass strength data. Struct. Saf. 73, 29–41 (2018)

Ensslen, F., Müller-Braun, S.: Study on edge strength of float glass as a function of relevant cutting process parameters. ce/papers 1(1), 189–202 (2017)

Filliben, J.J.: The probability plot correlation coefficient test for normality. Technometrics 17(1), 111–117 (1975)

Forbes, C., Evans, M., Hastings, N., Peacock, B.: Statistical distributions. Wiley (2011)

Gringorten, I.I.: A plotting rule for extreme probability paper. J. Geophys. Res. 68(3), 813–814 (1963)

Hazen, A.: Storage to be provided in impounding reservoirs for municipal water supply. Trans. Am. Soc. Civ. Eng. 77, 1547–1550 (1914)

Kleuderlein, J., Ensslen, F., Schneider, J.: Study on edge strength of float glass as a function of edge processing. Stahlbau 85(S1), 149–159 (2016)

Landwehr, J.M., Matalas, N.C., Wallis, J.R.: Probability weighted moments compared with some traditional techniques in estimating Gumbel parameters and quantiles. Water Resour. Res. 15(5), 1055–1064 (1979)

Lindqvist, M., Vandebroek, M., Louter, C., Belis, J.: Influence of edge flaws on failure strength of glass. In: 12th International Conference on Architectural and Automotive Glass, pp. 126–129. Glass Performance Days (2011)

Makkonen, L.: Plotting positions in extreme value analysis. J. Appl. Meteor. Climatol. 45(2), 334–340 (2006)

Makkonen, L.: Problems in the extreme value analysis. Struct. Saf. 30(5), 405–419 (2008)

McClung, D.M., Mears, A.I.: Extreme value prediction of snow avalanche runout. Cold Reg. Sci. Technol. 19(2), 163–175 (1991)

Orowan, E.: Fracture and strength of solids. Rep. Prog. Phys. 12(1), 185 (1949)

Overend, M., Zammit, K.: A computer algorithm for determining the tensile strength of float glass. Eng. Struct. 45, 68–77 (2012)

Pisano, G.: The statistical characterization of glass strength: from the micro- to the macro-mechanical response. PhD thesis, Università di Parma (2018)

Rinne, H.: The Weibull distribution: a handbook. Chapman and Hall/CRC (2008)

Ritter Jr., J.E., Sherburne, C.L.: Dynamic and static fatigue of silicate glasses. J. Am. Ceram. Soc. 54(12), 601–605 (1971)

Robert, C., Casella, G.: Monte Carlo statistical methods. Springer Science & Business Media, Berlin (1999)

Rühl, A.: On the time and temperature dependent behavior of laminated amorphous polymers subjected to low-velocity impact. PhD thesis, TU Darmstadt (2017)

Rühl, A., Kolling, S., Schneider, J.: Characterization and modeling of poly(methyl methacrylate) and thermoplastic polyurethane for the application in laminated setups. Mech. Mater. 113, 102–111 (2017)

Sinclair, C.D., Spurr, B.D., Ahmad, M.I.: Modified Anderson Darling test. Commun Stat-Theory Meth 19(10), 3677–3686 (1990)

Stephens, M.A.: EDF statistics for goodness of fit and some comparisons. J. Am. Stat. Assoc. 69(347), 730–737 (1974)

Tukey, J.W.: The future of data analysis. Ann. Math. Stat. 33(1), 1–67 (1962)

Weibull, W.: A statistical theory of the strength of materials. Ing. Vetensk. Akad. Handl. 151 (1939)

Wiederhorn, S.M.: Influence of water vapor on crack propagation in soda-lime glass. J. Am. Ceram. Soc. 50(8), 407–414 (1967)

Yu, G.-H., Huang, C.-C.: A distribution free plotting position. Stoch. Environ. Res. Risk Assess. 15(6), 462–476 (2001)

Acknowledgements

We acknowledge the financial support within the LOEWE program of excellence of the German Federal State of Hesse (project initiative “SimPlex - Entwicklung einer Simulationsmethodik zur Berechnung des Crashverhaltens von Automobilverglasungen aus Plexiglas”). This work is part of a cooperative dissertation project between Justus Liebig University Giessen and Technische Hochschule Mittelhessen – University of Applied Sciences at the Graduate Centre of Engineering Sciences at the Research Campus of Central Hesse.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

The original version of this article was revised: The original version of this article unfortunately contained a typesetting mistake in two references. The corrected references are given below. The original article has been corrected.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Berlinger, M., Kolling, S. & Schneider, J. A generalized Anderson–Darling test for the goodness-of-fit evaluation of the fracture strain distribution of acrylic glass. Glass Struct Eng 6, 195–208 (2021). https://doi.org/10.1007/s40940-021-00149-7

DOI: https://doi.org/10.1007/s40940-021-00149-7