Abstract

In this paper, we analyse various minimization algorithms applied to the problem of determining elasto-plastic material parameters using inverse analysis and a digital image correlation (DIC) system. ARAMIS is used as the DIC system, while Abaqus software is used for the finite element solution of boundary value problems. Different minimization algorithms, implemented in the SciPy Python library, were first compared and evaluated on benchmark functions. Next, the main evaluation of the algorithms was performed by determining the material parameters for isotropic metal plasticity with the Huber-Mises yield criterion and isotropic or combined kinematic-isotropic plastic hardening models. The results of this analysis may be of interest to researchers who use back calculation methods based on a DIC measuring system. It was concluded that, among the local minimization methods, derivative-free optimization algorithms, especially the Powell algorithm, perform the most efficiently.


Introduction

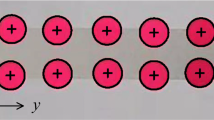

In recent decades, the application of measuring techniques that enable us to register whole displacement fields has spread dramatically, mainly owing to companies that produce such systems for commercial applications (see [1,2,3,4]). Digital image correlation (DIC) systems can be configured to track selected measurement points or to track micro-subregions, where points are defined unambiguously by randomly generated patterns (facets). These configurations have different working field ranges [4]: in the former case, the working/measurement field can have large dimensions (reaching a few metres); however, in the latter case, with a satisfactory measuring accuracy, the measuring field is only a few square centimetres. Generally, DIC systems are used for two-dimensional (2D) measurements (utilizing one camera) or for three-dimensional measurements (utilizing 2 or more cameras, depending on the visibility of the measured subregions).

When DIC is used with a randomly generated pattern on the analysed subregion, strain localization, plastic strain development after yielding [5,6,7,8,9], and crack propagation can be monitored [10]. Every measurement can be made without risking damage to the measuring system, in contrast with observations carried out using conventional measurement methods. The application of conventional measurement methods leads to the design of experiments with homogeneous/uniform stress or strain fields, so that one of the constitutive model parameters can be determined explicitly from a specific experiment (with the other parameters either having no influence on the particular experimental results or being already determined at the given stage of the experiment).

Unfortunately, in the case of complex constitutive models, such as isotropic and anisotropic models of elasto-plasticity or hyper-elasticity, such an approach is unfeasible [11,12,13]. Because of this limitation, it is worthwhile to exploit the ability to measure displacements in the whole subregion, as presented in many applications [9, 13,14,15,16,17,18]. Arguably, measuring homogeneous deformation fields with DIC systems does not make much sense, as the full potential of the method is then not utilized; at most, such measurements can be treated as a check of the homogeneity of the measured field. Therefore, to determine the constitutive model parameters, it is reasonable to introduce heterogeneity of the deformation (strain) field and, in the case of elasto-plasticity, strain concentration regions. The observed deformation field may then be assumed to be influenced by all of the constitutive parameters, provided that the strains are large enough [19]. This influence complicates the interpretation: to determine the material parameters, we must obtain the solution of the boundary value problem that describes the analysed test, formulated with the constitutive relationships whose parameters are sought. Having such solutions and the results of the experimental tests, we can define a goal/cost function that measures the discrepancy between them in a chosen norm, with the material parameters as the variables, and minimize it. Obtaining a boundary value problem solution for any sample shape and complex load condition requires a universal solution method (e.g., finite element method, FEM) [20, 21]. In this study, the ARAMIS system [1] was used to measure the displacement field and the ABAQUS/Standard FEM system [20, 21] was used to solve the boundary value problems with elasto-plasticity constitutive relationships [22].

This article is a continuation of previous work [14] in which inverse analysis and DIC tools were used to determine material parameters of isotropic metal plasticity with the Huber-Mises yield condition and isotropic strain hardening [12, 22]. The aforementioned article [14] presents results of non-linear optimization that was performed using the same optimization method for two differently shaped subregions (a rectangular sample with a hole or with two semicircular notches). In addition, various forms of the goal/cost function were considered, which included not only displacements but also a global response of the sample (reaction force) to the considered displacement boundary conditions. Using this approach, we concluded that a sample with asymmetric notches and a cost function that takes into account the global reaction of the sample are preferable. The importance of including a global reaction function in the cost function has also been discussed by other researchers [13]. Apparently, no analysis of the influence of the selected optimization method on the convergence and efficiency of the material parameter determination process in the case of isotropic elasto-plasticity with isotropic or combined strain hardening has been reported, even in very similar papers [23,24,25]. This article attempts to fill this gap by identifying the most efficient and fastest-converging method. By contrast, the literature contains several papers that propose an analytical approach to the problem of determining constitutive material parameters [26]. There are also papers in which different optimization strategies [19] and multi-objective approaches [27] are analysed.

Experimental Approach

The Concept of Determining Material Parameters Using DIC and Inverse Analysis

The concept presented in this article for determining material parameters is based on a reversal of the classical boundary value problem. In this problem for a given geometry, the displacement field is determined on the basis of boundary conditions (stress-type and displacement-type boundary conditions) and the constitutive relationship with the given material parameters. In the case of the inverse analysis, the displacement state of the sample is given (based on the experiment) but the material parameters in the constitutive relationship are unknown. To determine them, the FEM tasks that correspond to the experiments are solved and the resulting displacement map (and possibly the global reaction force function) is compared with the experimental results. The closer the results are to each other, the smaller the value of the objective function. Finding the optimal solution (objective function minimizer) requires the use of non-linear optimization algorithms.
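The inversion loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' program: `forward_model` is a hypothetical analytic stand-in for the FEM solve, the "measurement" is synthetic, and all numerical values are invented for the example.

```python
import numpy as np
from scipy.optimize import minimize


def forward_model(params, strain):
    """Hypothetical stand-in for the FEM solve: a saturating response curve."""
    q_inf, b = params
    return 100.0 + q_inf * (1.0 - np.exp(-b * strain))


# Synthetic "experiment": the response produced by known parameters.
strain = np.linspace(0.0, 0.1, 50)
true_params = np.array([80.0, 30.0])
measured = forward_model(true_params, strain)


def objective(params):
    """Sum of squared differences between model response and measurement."""
    residual = forward_model(params, strain) - measured
    return float(np.sum(residual ** 2))


# Inverse step: search for the parameters that reproduce the measurement.
start = np.array([50.0, 10.0])
result = minimize(objective, x0=start, method="Powell")
print(result.x, result.fun)
```

In the real workflow each call to `objective` would launch an FEM job, which is why the number of evaluations matters so much in the comparisons that follow.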

Constitutive Models and Determined Parameters

For the description of the sample material, an elasto-plastic constitutive model with isotropic or kinematic hardening and an additive decomposition of the strain tensor into an elastic part εe and a plastic part εp,

\( \boldsymbol{\varepsilon}={\boldsymbol{\varepsilon}}_e+{\boldsymbol{\varepsilon}}_p \)   (1)

has been used. As the FEM tasks are solved with the non-linear geometry (NLGEOM) option enabled, cf. [12, 20, 21], the strain tensor is interpreted as the logarithm of the left stretch tensor V (ε = ln V) from the polar decomposition of the deformation gradient tensor (F = RU = VR) [28]. In turn, the stress tensor σ (true stress) is identified with τ (Kirchhoff stress tensor), which implies that volume changes are neglected [12]. Elastic states are separated from plastic states by the Huber-Mises yield condition:

In equation (2), \( F\left(\boldsymbol{\sigma}, {\overline{\varepsilon}}^p\right) \) is the yield function, \( {\overline{\varepsilon}}^p={\overline{\varepsilon}}_0^p+\underset{0}{\overset{t}{\int }}\sqrt{\frac{2}{3}{\dot{\boldsymbol{\varepsilon}}}_p\cdot {\dot{\boldsymbol{\varepsilon}}}_p}\, dt \) is the equivalent plastic strain, s = σ − 1/3(trσ)I is the stress deviator, α is the “back stress” tensor in kinematic hardening, and σ0 is a function that can be assumed as

In equation (3), σ0 is a plasticity limit determined in a uniaxial tension test, whereas Q∞ and b are material parameters that characterize isotropic strain hardening after yielding. The α tensor is defined by the following evolution equation:

where parameters C and γk describe kinematic hardening with a single “back stress” tensor [20, 21]. In pure isotropic hardening, α = 0, whereas in kinematic hardening, σ0 is constant.
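For orientation, the role of the hardening parameters can be illustrated in the uniaxial, monotonic case. The sketch below assumes the exponential isotropic law \( {\sigma}_{y0}+{Q}_{\infty}\left(1-{e}^{-b{\overline{\varepsilon}}^p}\right) \) and a single Armstrong-Frederick back stress, which for monotonic loading from α = 0 integrates to \( \left(C/{\gamma}_k\right)\left(1-{e}^{-{\gamma}_k{\overline{\varepsilon}}^p}\right) \); these are the forms documented for the Abaqus combined hardening model and are taken here as an assumption about equations (3) and (4). The name `sigma_y0` (initial yield stress) and all numbers are illustrative.

```python
import numpy as np


def uniaxial_stress(eps_p, sigma_y0, q_inf, b, c=0.0, gamma_k=1.0):
    """Uniaxial stress at equivalent plastic strain eps_p (monotonic loading).

    Isotropic part: the yield surface grows from sigma_y0 towards sigma_y0 + q_inf.
    Kinematic part: the back stress saturates at c / gamma_k (zero when c == 0).
    """
    isotropic = sigma_y0 + q_inf * (1.0 - np.exp(-b * eps_p))
    back_stress = (c / gamma_k) * (1.0 - np.exp(-gamma_k * eps_p))
    return isotropic + back_stress


eps = np.linspace(0.0, 0.2, 5)
print(uniaxial_stress(eps, sigma_y0=200.0, q_inf=50.0, b=10.0))            # isotropic only
print(uniaxial_stress(eps, 200.0, 50.0, 10.0, c=1000.0, gamma_k=20.0))     # combined
```

The sketch shows why Q∞ and C/γk act as saturation levels while b and γk set how quickly those levels are approached.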

The time in the definition of the equivalent plastic strain (given after equation (2)) in the analysed quasi-static problem is treated as a parameter that orders the body configurations, and the plastic part of the strain tensor is determined by the associated flow law in the form

where γ is a non-negative plastic multiplier determined from the condition \( \dot{F}=0 \) [12, 22]. The constitutive relationship for isotropic elasticity can be written as

where

In equation (6), I is the second-order identity tensor, and in equation (7), λ and μ are Lamé material parameters. In summary, the presented constitutive relations show that there are 7 material parameters: E and ν for the elasticity; σ0, Q∞ and b for isotropic plasticity with hardening; and C and γk for kinematic hardening.
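The link between the Lamé parameters and the engineering constants E and ν counted above is the standard one, \( \lambda =E\nu /\left(\left(1+\nu \right)\left(1-2\nu \right)\right) \) and \( \mu =E/\left(2\left(1+\nu \right)\right) \). A short sketch follows; the function name and the input values (roughly typical of an aluminium alloy) are illustrative assumptions, not data from this study.

```python
def lame_parameters(young, poisson):
    """Return the Lamé parameters (lambda, mu) for isotropic linear elasticity."""
    lam = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson))
    mu = young / (2.0 * (1.0 + poisson))
    return lam, mu


lam, mu = lame_parameters(young=70000.0, poisson=0.33)  # MPa, illustrative values
print(lam, mu)
```

The formula is singular at ν = 0.5 (incompressible limit), so inputs should stay below that value.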

Description of the Samples and Laboratory Methods

The sample analysed in this work was a flat bar with a thickness of 2 mm made of aluminium EN AW-6082 [29], the shape and dimensions of which are shown in Fig. 1. The presence of asymmetrically placed notches results in a heterogeneous stress state in the sample. A single experiment involved stretching the flat bar until the yield point was exceeded in a significant area of the sample and then unloading it. The sample in Fig. 1 was selected on the basis of the conclusions of a previous study [14]. In that study, two different sample shapes causing strain inhomogeneity were used for the parameter determination of the same material. On that basis, it was concluded that, for the sample with two notches, the optimization procedure yields material parameters with an assumed accuracy faster than for a sample with a central circular hole.

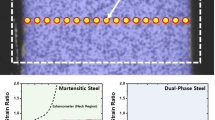

The numerical model that represents the sample was created using the Abaqus FEM software. It is a 2D plane stress model that consists of 3550 linear quadrilateral elements CPS4R and 70 linear triangular elements CPS3. The model mesh was optimized such that the highest density of nodes was in the areas with the largest stress/strain concentrations. The jobs were calculated by the Abaqus Standard program as quasi-static problems with the NLGEOM option activated [20, 21]. The FEM mesh is presented in Fig. 2(b).

The tests were performed on an Instron testing machine, which applied a displacement to the sample edge and measured the reaction force, while the displacement map of the sample was recorded by the DIC system. The sample was stretched between the machine grips (under displacement control) in the direction of the long edge by 2 mm, which caused plastic yielding of the regions between the notches; the sample was then unloaded. The applied displacement as a function of real time is shown in Fig. 1. The displacements were recorded using an ARAMIS DIC system equipped with two cameras (for three-dimensional (3D) measurement). Such systems enable non-contact measurement of displacement in the whole subregion of the sample being tested (usually flat) by taking a series of pictures during the test and then processing them using appropriate software (post-processing). In the pictures, the characteristic points are recognized and their locations and relative displacements during the experiment are determined.

Figure 2(a) shows ARAMIS measuring points and the measuring points located between the notches that correspond to the nodes of the FEM mesh shown in Fig. 2(b), which are selected as those that will be taken into account when comparing displacements in a goal function. The analysis of the selected measurement points included in the objective function is presented in [14]. The selected points should be located in the area with the largest heterogeneity of the displacement field, which, in this case, is in the region between notches (Figs. 2(a) and 2(c)).

Formulation of the Optimization Problem

The most important element in the definition of the optimization problem is the determination of the cost function that will evaluate the “distance” of the FEM solution for the given parameter values from the results of the laboratory experiment. The cost function accounts for all comparable quantities, i.e., the global reaction force of the sample to the assumed edge displacement and the displacement map of the sample surface. Due to the discrete character of the data obtained from the experiment and the FEM task, comparing displacement maps is simply done by comparing/subtracting the displacements of multiple pairs of correlated points. For each node in the chosen node set of the FEM model, the closest measurement point was determined from the DIC system and, on this basis, a reference displacement function was assigned to it. A tolerance was established that specified the maximum permissible distance between the FEM node and the corresponding DIC measurement point.
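The pairing of FEM nodes with DIC points under a distance tolerance can be sketched with a k-d tree. This is an illustrative reconstruction, not the authors' code: the function name, the use of `scipy.spatial.cKDTree`, and the sample coordinates are assumptions.

```python
import numpy as np
from scipy.spatial import cKDTree


def match_nodes_to_dic(node_xy, dic_xy, tolerance):
    """For each FEM node, find the index of the closest DIC point.

    Nodes with no DIC point within `tolerance` get index -1 and are
    flagged False in the returned mask, so they can be left out of the
    cost function.
    """
    tree = cKDTree(dic_xy)
    dist, idx = tree.query(node_xy, k=1, distance_upper_bound=tolerance)
    matched = np.isfinite(dist)          # unmatched queries return dist = inf
    return np.where(matched, idx, -1), matched


nodes = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
dic_points = np.array([[0.01, 0.0], [1.0, 0.02], [2.0, 2.0]])
indices, mask = match_nodes_to_dic(nodes, dic_points, tolerance=0.1)
print(indices, mask)
```

Each matched node would then inherit the displacement history of its DIC point as the reference function.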

Both the function that describes the reaction force of the model and the displacement functions of the individual points, whether from FEM or DIC, were obtained as sets of function values at specific time points. Between these time points, linear interpolation of each function was assumed, such that the values of each function could be obtained at any time between the initial time t0 and the final time tmax. Consequently, the number of time increments in which the experimental data were recorded and the number of increments of the FEM algorithm are no longer relevant. As such, the cost function is defined as

where p is the vector of material parameters, Nn represents the number of all nodes chosen for comparison, uj, i(p) is the displacement function of node i in the direction j (x or y, see Fig. 2(a)) obtained from the FEM solution, \( {u}_{j,i}^{\mathrm{exp}}(t) \) is the corresponding reference function (obtained from the experiment), R(p) is the global reaction force obtained from the FEM solution, and Rexp is the reaction force from experiment. Norms Mu and MR in (8) are determined as

The problem of determining parameters p is reduced to the minimization of the goal function f(p) with norms (9) and (10).
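The following sketch shows one way such a cost function could be assembled from time-sampled data. The exact expressions (8) to (10) are not reproduced here, so the form used below is an assumption: each norm is a time-integrated squared difference, normalized by the experimental signal, with displacement terms averaged over the nodes. Linear interpolation onto a common time grid follows the description above; all names are hypothetical.

```python
import numpy as np


def trapezoid(y, t):
    """Trapezoidal rule, written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))


def relative_mismatch(t_model, y_model, t_ref, y_ref, grid):
    """Relative squared difference of two piecewise-linear time functions."""
    model = np.interp(grid, t_model, y_model)   # linear interpolation in time
    ref = np.interp(grid, t_ref, y_ref)
    return trapezoid((model - ref) ** 2, grid) / trapezoid(ref ** 2, grid)


def cost(t_fem, u_fem, r_fem, t_exp, u_exp, r_exp, n_grid=200):
    """Assumed cost: mean displacement mismatch plus reaction-force mismatch.

    u_* have shape (n_nodes, 2, n_times); r_* have shape (n_times,).
    """
    grid = np.linspace(max(t_fem[0], t_exp[0]), min(t_fem[-1], t_exp[-1]), n_grid)
    n_nodes = u_fem.shape[0]
    m_u = sum(
        relative_mismatch(t_fem, u_fem[i, j], t_exp, u_exp[i, j], grid)
        for i in range(n_nodes)
        for j in range(2)
    ) / n_nodes
    m_r = relative_mismatch(t_fem, r_fem, t_exp, r_exp, grid)
    return m_u + m_r
```

Because both signals are resampled on the same grid, the FEM and experimental data may use different numbers of time increments. A reference signal that is identically zero would make the normalization undefined and would need separate handling.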

Implementation

A program to automate the entire optimization process was created in the Python programming language using the NumPy and SciPy libraries, according to the flowchart presented in Fig. 3. The program integrates the DIC (ARAMIS) and FEM (Abaqus) environments by storing the information about the model, nodes and their displacements as well as the reactions obtained from the laboratory experiments. It automates the process of starting FEM jobs and comparing FEM results with experimental data (in the manner described in section 3). The ready-made implementations of multiple minimization algorithms available in the SciPy library (https://docs.scipy.org/doc/scipy-0.18.1/reference/) allowed for a qualitative comparison of the behaviour of various algorithms on the presented task.

The main loop of the designed program is performed by the optimizer module, which utilizes one of many implemented optimization procedures. DIC software provides the optimizer module with reference data for later comparison with FEM results. The optimizer module manages the optimization process by evaluating the value of the cost function and choosing the next point/points to query for the FEM results. At each point, the FEM job is set up and solved, and the result data are collected. These data are then used to calculate the value of the cost function. The choice of ARAMIS and Abaqus software does not depend on the optimization procedures, and vice versa.

The displacement boundary conditions for the FEM jobs were established on the basis of experimental DIC data [9]. The nodes at the boundary of the measured region were given the displacements of their corresponding DIC points. Only the region inside the measured area was the subject of comparison between FEM and DIC (as shown in Fig. 2).

Results and Discussion

Analysis of the Optimization Methods for their Efficiency

The minimization algorithms that were analysed (Table 1) are divided into four categories: derivative-free, gradient, trust-region, and stochastic algorithms.

The derivative-free optimization (DFO), gradient and trust-region algorithms are used for local minimization. DFO algorithms do not calculate the gradient of the minimized function, whereas gradient algorithms require gradient calculations. Trust-region methods approximate the minimized function within a subset of the search region (the trust region) with a model function (e.g., a quadratic). On the other hand, the differential evolution and basin-hopping algorithms are designed for global minimization. Both are non-deterministic and are therefore assigned to the stochastic group.

The implementation details of all minimization algorithms are presented elsewhere [30]. The algorithms were initially tested on the four 2D benchmark functions listed in subsection 5.1. Afterwards, the minimization of the cost function presented in section 3 was performed. The parameters sought were Q∞ and b, whereas the E, ν and σ0 parameters were assumed in advance on the basis of independent tests.

The optimization task is, in general, a constrained minimization problem. However, the use of unconstrained minimization algorithms, such as Powell or BFGS, does not cause any problems after a careful choice of the search region boundaries as well as the artificial addition of bounds in the form of infinite values of the cost function outside the search region.
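The artificial bounds mentioned above can be imitated by wrapping the cost function so that it returns an infinite value outside a box. This is a minimal sketch under stated assumptions: `with_box_penalty` and the quadratic test function are hypothetical, and the box limits are arbitrary.

```python
import numpy as np
from scipy.optimize import minimize


def with_box_penalty(cost, lower, upper):
    """Return a cost function that is +inf outside the box [lower, upper]."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)

    def penalized(p):
        p = np.asarray(p, dtype=float)
        if np.any(p < lower) or np.any(p > upper):
            return np.inf            # the expensive evaluation is skipped here
        return cost(p)

    return penalized


def quadratic(p):
    """Hypothetical smooth cost with its minimum at (1, 2)."""
    return float((p[0] - 1.0) ** 2 + (p[1] - 2.0) ** 2)


penalized = with_box_penalty(quadratic, [-10.0, -10.0], [10.0, 10.0])
result = minimize(penalized, x0=[0.0, 0.0], method="Powell")
print(result.x)
```

A side benefit of this wrapper in the FEM setting is that no job is launched for points outside the search region. Recent SciPy releases also accept a `bounds` argument for the Powell method, which may be preferable where the installed version supports it.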

The algorithms were compared based on the value of the cost function obtained during the optimization process after a fixed number of cost function evaluations as well as the final value of the cost function achieved with the total number of required cost function evaluations. In the material parameters minimization problem, the reliability of algorithms (whether or not they were error free) was also considered.
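Comparing algorithms "after a fixed number of cost function evaluations" requires a record of the best value found so far at every call. One way to obtain it, sketched here with hypothetical names and a cheap stand-in cost function, is to wrap the cost function in a small recorder object.

```python
import numpy as np
from scipy.optimize import minimize


class EvaluationRecorder:
    """Wrap a cost function and log the best value seen after each call."""

    def __init__(self, func):
        self.func = func
        self.best_so_far = []

    def __call__(self, x):
        value = float(self.func(x))
        previous = self.best_so_far[-1] if self.best_so_far else np.inf
        self.best_so_far.append(min(value, previous))
        return value


def sphere(x):
    """Cheap stand-in for the expensive FEM-based cost function."""
    return float(np.sum((np.asarray(x) - 1.0) ** 2))


def value_after(history, n_evals):
    """Best cost reached within the first n_evals evaluations."""
    return history[min(n_evals, len(history)) - 1]


histories = {}
for method in ("Powell", "Nelder-Mead", "BFGS"):
    recorder = EvaluationRecorder(sphere)
    minimize(recorder, x0=np.array([3.0, -2.0]), method=method)
    histories[method] = recorder.best_so_far

for method, history in histories.items():
    print(method, len(history), value_after(history, 20))
```

Counting calls inside the wrapper also captures the evaluations that gradient methods spend on finite-difference derivatives, which is the fair basis of comparison when every call costs one FEM job.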

Benchmark Tests

The main goals of analysing the optimization algorithms on benchmark functions are as follows:

- Validation of the open-source implementations of the optimization algorithms used [30];
- Initial evaluation of the efficiency of each algorithm using numerically inexpensive calculations; and
- Selection of test functions whose properties are similar to those of the objective function in the plasticity parameter determination problem presented in subsection 5.2.

Four benchmark functions for evaluating the algorithms were chosen [31,32,33]:

- Ackley: \( -a\exp \left(-b\sqrt{\frac{1}{2}\left({x}^2+{y}^2\right)}\right)-\exp \left(\frac{1}{2}\left(\cos (cx)+\cos (cy)\right)\right)+a+\exp (1) \), where a = 20, b = 0.2, and c = 2π;
- Levy n.13: \( {\sin}^2\left(3\pi x\right)+{\left(x-1\right)}^2\left(1+{\sin}^2\left(3\pi y\right)\right)+{\left(y-1\right)}^2\left(1+{\sin}^2\left(2\pi y\right)\right) \);
- Schaffer n.2: \( 0.5+\frac{\sin^2\left({x}^2-{y}^2\right)-0.5}{{\left(1+0.001\left({x}^2+{y}^2\right)\right)}^2} \);
- Styblinski-Tang: \( \frac{1}{2}\left({x}^4-16{x}^2+5x+{y}^4-16{y}^2+5y\right) \).

For each minimization algorithm, 100 minimization tasks were performed from randomly chosen starting points. Afterwards, the results were collected and averaged. The diagram in Fig. 4(a) presents the total number of successes (in which a global minimum was found) for each algorithm and benchmark function, whereas the diagram in Fig. 4(b) shows the total number of cost-function evaluations required to achieve such results. Fig. 5, on the other hand, presents the averaged convergence graphs, i.e., the average value of the cost function achieved after the given number of function evaluations.
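The benchmark procedure can be sketched as follows, using the standard textbook definitions of the four functions. The sampling box, the success tolerance, and the helper name `count_successes` are assumptions made for illustration; the source does not state the criteria that were actually used.

```python
import numpy as np
from scipy.optimize import minimize


def ackley(p, a=20.0, b=0.2, c=2.0 * np.pi):
    x, y = p
    return (-a * np.exp(-b * np.sqrt(0.5 * (x**2 + y**2)))
            - np.exp(0.5 * (np.cos(c * x) + np.cos(c * y))) + a + np.e)


def levy13(p):
    x, y = p
    return (np.sin(3 * np.pi * x) ** 2
            + (x - 1) ** 2 * (1 + np.sin(3 * np.pi * y) ** 2)
            + (y - 1) ** 2 * (1 + np.sin(2 * np.pi * y) ** 2))


def schaffer2(p):
    x, y = p
    return 0.5 + (np.sin(x**2 - y**2) ** 2 - 0.5) / (1 + 0.001 * (x**2 + y**2)) ** 2


def styblinski_tang(p):
    x, y = p
    return 0.5 * (x**4 - 16 * x**2 + 5 * x + y**4 - 16 * y**2 + 5 * y)


def count_successes(func, f_min, method, half_width, n_starts=100, tol=1e-3, seed=0):
    """Run `method` from random starts; count runs that reach the global minimum."""
    rng = np.random.default_rng(seed)
    successes, evaluations = 0, 0
    for _ in range(n_starts):
        x0 = rng.uniform(-half_width, half_width, size=2)
        res = minimize(func, x0, method=method)
        successes += int(res.fun - f_min < tol)   # assumed success criterion
        evaluations += res.nfev
    return successes, evaluations


print(count_successes(styblinski_tang, -78.332331, "Powell", half_width=5.0, n_starts=20))
```

The global minima used as references are 0 for Ackley at (0, 0), Levy n.13 at (1, 1) and Schaffer n.2 at (0, 0), and approximately −78.332 for the 2D Styblinski-Tang function near (−2.9035, −2.9035).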

In the analysis presented above, the derivative-free Powell algorithm stands out (leaving aside the stochastic differential evolution algorithm) as the most cost-effective approach, especially in the case of the Levy and Ackley functions, for which the Powell algorithm is better than all gradient algorithms at avoiding local minima. The implementation of the differential evolution algorithm finds the global minimum of a function correctly in almost every case. However, it requires an order of magnitude more function evaluations than the simpler algorithms.

Target Tests: Determination of Elasto-Plasticity Material Parameters

The primary analysis was performed on the optimization task described in section 2, where the values of parameters Q∞ and b were investigated. The search region was constrained on the basis of previously reported results [9, 14, 30] (see Fig. 6), and the minimization starting points were chosen randomly.

Because the evaluation of the cost function is a time-consuming process (mainly because of the need for the calculation of an FEM job at every point), the convergence speed, as well as the final result, is considered to be the most important factor in the evaluation of the algorithms.

Furthermore, the stochastic algorithms (basin-hopping and differential evolution) have been excluded from the comparison presented in this section. Both algorithms are the most effective of all in terms of the minimization results; however, they require the largest number of function evaluations. Basin-hopping repeatedly performs a local minimization using the L-BFGS-B algorithm, whereas differential evolution is a population-based search that performs no local minimization. Therefore, only the deterministic local minimization algorithms are analysed below.
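To give a feel for the evaluation budget of the two stochastic methods, the sketch below runs SciPy's `differential_evolution` and `basinhopping` (with L-BFGS-B as the local minimizer) on the Styblinski-Tang benchmark function. The search box, starting point and iteration count are illustrative assumptions; since no random seed is fixed, the printed numbers vary from run to run.

```python
import numpy as np
from scipy.optimize import basinhopping, differential_evolution


def styblinski_tang(p):
    x, y = p
    return 0.5 * (x**4 - 16 * x**2 + 5 * x + y**4 - 16 * y**2 + 5 * y)


# Population-based global search inside a box (assumed search region).
bounds = [(-5.0, 5.0), (-5.0, 5.0)]
de = differential_evolution(styblinski_tang, bounds)

# Repeated local minimizations from randomly perturbed points.
start = np.array([4.0, 4.0])
bh = basinhopping(
    styblinski_tang,
    x0=start,
    niter=20,
    minimizer_kwargs={"method": "L-BFGS-B"},
)

print("differential evolution:", de.fun, "evaluations:", de.nfev)
print("basin-hopping:         ", bh.fun, "evaluations:", bh.nfev)
```

With an FEM job of 30 to 60 s behind every evaluation, the evaluation counts printed here translate directly into wall-clock time, which is the reason for restricting the target comparison to local methods.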

The optimization problem has only two parameters; as such, a graph of the cost function in the search region can be plotted (Fig. 6). A large subregion with little variation in the value of the cost function exists where the global minimum is located. Additionally, due to the noise present in the cost function [34], which is caused by, among other things, the noise in the laboratory data, the cost function is barely differentiable; thus, the algorithms that required calculation of the Hessian of the cost function did not perform well. In addition, the gradient algorithms, such as CG, displayed a higher susceptibility to errors than the DFO algorithms. In the following summary, only the algorithms that succeeded without errors in most cases are included.
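The sensitivity of derivative-based methods to noise can be illustrated with a finite-difference gradient. In the sketch below, a smooth function and a copy with small additive random noise are differentiated with the same step; the noise amplitude, the step size and the test function are arbitrary assumptions, chosen only to make the effect visible.

```python
import numpy as np
from scipy.optimize import approx_fprime

rng = np.random.default_rng(0)


def smooth(p):
    return float(np.sum(np.asarray(p) ** 2))


def noisy(p, amplitude=1e-6):
    """Same function with small additive noise, mimicking noisy data."""
    return smooth(p) + amplitude * rng.normal()


point = np.array([1.0, -2.0])
exact_gradient = 2.0 * point
step = 1e-8

err_smooth = np.linalg.norm(approx_fprime(point, smooth, step) - exact_gradient)
err_noisy = np.linalg.norm(approx_fprime(point, noisy, step) - exact_gradient)
print(err_smooth, err_noisy)
```

The error of the noisy gradient scales roughly as the noise amplitude divided by the step size, so a perturbation that is negligible in the cost value can dominate the gradient estimate; second derivatives, which divide by the step twice, are affected even more strongly.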

Fig. 7 presents the averaged convergence graph obtained using a method similar to that used for the graphs presented in section 5.1. Due to the time limitations (cost-function evaluation is extremely time consuming), only 6 repetitions of the task of choosing a starting point and comparing all the algorithms were performed. If an algorithm did not achieve its convergence criterion (and ended due to an error), the best obtained result was chosen as the final result for that task. The total number of FEM job calculations required for the creation of this graph reached a few thousand, and the time required for completion of a single FEM job on a typical workstation varied between 30 and 60 s.
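An averaged convergence graph of this kind can be computed from per-run evaluation histories. The sketch below is one possible construction, with hypothetical names and invented data: each history is converted to a best-so-far curve, runs that stop early are padded with their best value, and the curves are averaged point by point.

```python
import numpy as np


def average_convergence(histories, n_evals):
    """Average best-so-far cost over runs, for evaluations 1..n_evals.

    histories: list of non-empty sequences of raw cost values, one per run.
    A run that stops early keeps its best value for the remaining evaluations.
    """
    padded = np.empty((len(histories), n_evals))
    for row, history in enumerate(histories):
        best = np.minimum.accumulate(np.asarray(history, dtype=float))[:n_evals]
        padded[row, :best.size] = best
        padded[row, best.size:] = best[-1]
    return padded.mean(axis=0)


runs = [
    [5.0, 3.0, 4.0],                # run that terminated after 3 evaluations
    [4.0, 2.0, 1.0, 0.5, 0.5],
]
print(average_convergence(runs, n_evals=5))
```

Padding with the best value reached mirrors the rule stated above for runs that end with an error before meeting their convergence criterion.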

Arguably, the best behaviour was demonstrated by the derivative-free Powell algorithm. This algorithm appears to be the most reliable, converging successfully in every case and achieving good results. Among the other algorithms, BFGS stands out as the best gradient method, although it often does not converge successfully; this shortcoming becomes more pronounced in minimization problems with a greater number of variables. The exceptional performance of SLSQP is a coincidence, as the algorithm performs extremely ineffectively on problems with larger dimensions. BFGS and L-BFGS-B can perform better than the Powell algorithm from certain starting points, and, for the presented set of tests, BFGS shows no significant disadvantage compared with the Powell algorithm. In some cases, however, gradient algorithms fail to achieve any satisfactory result (when they terminate due to an error), whereas the Powell algorithm finds a local minimum in every case.

Some tests for problems with a larger number of unknowns were also performed (up to 7 material parameters). Only the Powell and BFGS algorithms were compared, and the results of these tests confirmed the reliability of the Powell algorithm: without exception, it converged successfully to a local minimum. BFGS, by contrast, performed substantially worse than in the 2D case, often terminating prematurely due to convergence errors. A similar problem with convergence was encountered with other gradient algorithms. However, the basin-hopping algorithm, which utilizes L-BFGS-B for local minimization, performed as efficiently as in the 2D case because convergence problems were diminished by minimization repetitions. Fig. 8 shows the averaged convergence graph for a mixed hardening problem with 5 optimization variables (Q∞, b, C, γk and σ0; see equations (2), (3) and (4)) and compares the two best local minimization algorithms. If both algorithms converged successfully, the difference in the results would be insignificant. However, the BFGS algorithm often ended before the convergence criterion was met; thus, the averaged result clearly shows the advantage of the Powell algorithm.

Overall, the best behaviour, mostly due to reliability, was demonstrated by the DFO Powell algorithm.

Conclusions

The general conclusions are as follows:

a) Derivative-free algorithms, especially the Powell algorithm, performed better than gradient algorithms in the presented optimization task.

b) Algorithms that require the Hessian of the function to be calculated appear to be susceptible to the noise present in the minimization task.

c) BFGS appears to be the best gradient algorithm, whereas Powell behaves arguably better than any other local minimization algorithm. BFGS converges faster, but Powell is more reliable.

d) Both the Powell and the BFGS algorithms are methods for unconstrained optimization. However, practical tests show that they can perform well in a problem with strict bounds.

e) More complex algorithms (specifically, basin-hopping and differential evolution) perform better when used to find the global minimum in a single run of an algorithm. However, the basin-hopping algorithm simply repeats the minimization task from (mostly) randomly chosen starting points, whereas differential evolution does not perform any local minimization, thus requiring a large number of function evaluations to converge to any solution.

f) Among the benchmark functions, the minimizations of the Levy and Ackley functions show results similar to those of the target minimization task, as the Powell algorithm has a substantial advantage. However, gradient algorithms (mainly BFGS) perform better on the target task than on the benchmark functions.

g) Among all the local minimization methods, the Powell algorithm was found to be the most efficient.

References

Aramis v6 User Manual, GOM mbh, 2008.

Sutton MA, Hild F (2015) Recent advances and perspectives in digital image correlation. Exp Mech 55:1–8. https://doi.org/10.1007/s11340-015-9991-6.

Sutton MA, Matta F, Rizos D, Ghorbani R, Rajan S, Mollenhauer DH, Schreier HW, Lasprilla AO (2017) Recent Progress in digital image correlation: background and developments since the 2013 W M Murray lecture. Exp Mech 57:1–30. https://doi.org/10.1007/s11340-016-0233-3

Sutton MA, Orteu JJ, Schreier HW (2009) Image correlation for shape, motion and deformation measurements - basic concepts, theory and applications. Springer. https://doi.org/10.1007/978-0-387-78747-3

Amiot F, Hild F, Roger JP (2007) Identification of elastic property and loading fields from full-field displacement measurements. Int J Solids Struct 44:2863–2887. https://doi.org/10.1016/j.ijsolstr.2006.08.031

Avril S, Bonnet M, Bretelle A-S, Grédiac M, Hild F, Ienny P, Latourte F, Lemosse D, Pagano S, Pagnacco E, Pierron F (2008) Overview of identification methods of mechanical parameters based on full-field measurements. Exp Mech 48:381–402. https://doi.org/10.1007/s11340-008-9148-y

Chu T, Ranson W, Sutton M, Peters W (1985) Applications of digital image correlation techniques to experimental mechanics. Exp Mech 25:232–244

Cooreman S, Lecompte D, Sol H, Vantomme J, Debruyne D (2008) Identification of mechanical material behavior through inverse modeling and DIC. Exp Mech 48:421–433. https://doi.org/10.1007/s11340-007-9094-0

Fedele R (2015) Simultaneous assessment of mechanical properties and boundary conditions based on digital image correlation. Exp Mech 55:139–153. https://doi.org/10.1007/s11340-014-9931-x

Myslicki S, Ortlieb M, Frieling G, Walther F (2015) High-precision deformation and damage development assessment of composite materials by high-speed camera, high-frequency impulse and digital image correlation techniques. Materials Testing 57:933–941. https://doi.org/10.3139/120.110813

Bertin M, Hild F, Roux S (2017) On the identifiability of Hill-1948 plasticity model with a single biaxial test on very thin sheet. Strain e12233:1–13. https://doi.org/10.1111/str.12233

Jemioło S., Gajewski M.: Hyperelasto-plasticity, Warsaw University of Technology Publishing House, Warsaw, 2014 [in Polish].

Mathieu F, Leclerc H, Hild F, Roux S (2015) Estimation of elastoplastic parameters via weighted FEMU and integrated-DIC. Exp Mech 55:105–119. https://doi.org/10.1007/s11340-014-9888-9

Gajewski M, Kowalewski Ł (2016) Inverse analysis and DIC as tools to determine material parameters in isotropic metal plasticity models with isotropic strain hardening. Materials Testing 58(10):818–825

Lecompte D, Smits A, Sol H, Vantomme J, Van Hemelrijck D (2007) Mixed numerical–experimental technique for orthotropic parameter identification using biaxial tensile tests on cruciform specimens. Int J Solids Struct 44:1643–1656. https://doi.org/10.1016/j.ijsolstr.2006.06.050

Mansouri MR, Darijani H, Baghani M (2017) On the correlation of FEM and experiments for Hyperelastic elastomers. Exp Mech 57:195–206. https://doi.org/10.1007/s11340-016-0236-0

Meuwissen MHH, Oomens CWJ, Baaijens FPT, Petterson R, Janssen JD (1998) Determination of the elasto-plastic properties of aluminium using a mixed numerical–experimental method. J Mater Process Technol 75:204–211. https://doi.org/10.1016/j.ijsolstr.2006.06.050

Siddiqui MZ, Khan SZ, Khan MA, Shahzad M, Khan KA, Nisar S, Noman D (2017) A projected finite element update method for inverse identification of material constitutive parameters in transversely isotropic laminates. Exp Mech 57:755–772. https://doi.org/10.1007/s11340-017-0269-z

de-Carvalho R, Valente RAF, Andrade-Campos A (2011) Optimization strategies for non-linear material parameters identification in metal forming problems. Comput Struct 89:246–255. https://doi.org/10.1016/j.compstruc.2010.10.002

Abaqus Theory Manual, Version 6.11, Dassault Systèmes, 2011.

Abaqus Analysis User’s Manual, Version 6.11, Dassault Systèmes, 2011.

Khan AS, Huang S (1995) Continuum theory of plasticity. John Wiley & Sons, Inc., New York

Yun GJ, Shang S (2011) A self-optimizing inverse analysis method for estimation of cyclic elasto-plasticity model parameters. Int J Plast 27:576–595

Cooreman S, Lecompte D, Sol H, Vantomme J, Debruyne D (2007) Elasto-plastic material parameter identification by inverse methods: calculation of the sensitivity matrix. Int J Solids Struct 44:4329–4341

Guery A, Hild F, Latourte F, Roux S (2016) Slip activities in polycrystals determined by coupling DIC measurements with crystal plasticity calculations. Int J Plast 81:249–266

Cherkaev A, Dzierżanowski G (2013) Three-phase plane composites of minimal elastic stress energy: high-porosity structures. Int J Solids Struct 50(25–26):4145–4160. https://doi.org/10.1016/j.ijsolstr.2013.08.010

Sinaie S, Heidarpour A, Zhao XL (2014) A multi-objective optimization approach to the parameter determination of constitutive plasticity models for the simulation of multi-phase load histories. Comput Struct 138. https://doi.org/10.1016/j.compstruc.2014.03.005

Bonet J, Wood RD (2008) Nonlinear continuum mechanics for finite element analysis, second ed. Cambridge University Press, Cambridge

Wojciechowski J., Ajdukiewicz C., Gajewski M.: Application of the digital image correlation system for determination of elasto-plasticity parameters for chosen aluminum alloy, Zastosowanie system optycznej korelacji obrazu do wyznaczenia parametrów sprężysto-plastyczności wybranego stopu aluminium, XXII Slovak-Polish-Russian Seminar, Theoretical Foundation of Civil Engineering, Proceedings pp. 203–210, Żylina, Slovakia 9.09–13.09.2013, V. Andriejew (ed.) [in Polish]

Pottier T, Toussaint F, Vacher P (2011) Contribution of heterogeneous strain field measurements and boundary conditions modelling in inverse identification of material parameters. European Journal of Mechanics A/Solids 30:373–382. https://doi.org/10.1016/j.euromechsol.2010.10.001

Ackley DH (1987) A connectionist machine for Genetic Hill-climbing. Kluwer

Bäck T (1995) Evolutionary algorithms in theory and practice : evolution strategies, evolutionary programming, genetic algorithms. Oxford University Press, Oxford

Laguna M, Marti R (2005) Experimental testing of advanced scatter search designs for global optimization of multimodal functions. J Glob Optim 33(2):235–255

Badaloni M, Rossi M, Chiappini G, Lava P, Debruyne D (2015) Impact of experimental uncertainties on the identification of mechanical material properties using DIC. Exp Mech 55:1411–1426. https://doi.org/10.1007/s11340-015-0039-8

Acknowledgements

The authors would like to express their gratitude to Prof. Grzegorz Dzierżanowski, whose remarks led to an improved final version of the paper.


Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kowalewski, Ł., Gajewski, M. Assessment of Optimization Methods Used to Determine Plasticity Parameters Based on DIC and back Calculation Methods. Exp Tech 43, 385–396 (2019). https://doi.org/10.1007/s40799-018-00298-5


DOI: https://doi.org/10.1007/s40799-018-00298-5