Abstract

In recent decades, there has been rapid development in digital technologies for automated assessment. Through enhanced possibilities in terms of algorithms, grading codes, adaptivity, and feedback, these technologies are suitable for formative assessment. There is a need to develop computer-aided assessment (CAA) tasks that target higher-order mathematical skills to ensure a balanced assessment approach beyond basic procedural skills. To address this issue, research suggests the approach of asking students to generate examples. This study focuses on an example-generation task on polynomial function understanding, given to 205 first-year engineering students in Sweden and 111 first-year biotechnology students in Italy. Students were encouraged to collaborate in small groups, but individual elements within the tasks required each group member to provide individual answers. Students’ responses stored in the CAA system were qualitatively analyzed to understand the effectiveness of the task in extending the students’ example space in diverse educational contexts. The findings indicate a difference in students’ example spaces when performing the task between the two educational contexts. The results suggest key strengths and possible improvements to the task design.

Introduction

The development of digital technologies for the automated assessment of students’ work has been rapid in recent decades. This type of technology, referred to as computer-aided assessment (CAA) systems, is widespread today, particularly within university mathematics education (Galluzzi et al., 2021; Kinnear et al., 2022). The enhanced possibilities offered by CAA systems in terms of algorithms, grading codes, adaptivity, and feedback make them particularly suitable for formative assessment (Barana et al., 2018, 2021). So far, CAA systems have mainly been used for assessing basic mathematical procedural skills. Researchers emphasize the importance of developing CAA tasks that address higher-order skills in mathematics to prevent assessments from focusing solely on lower-order skills (e.g., Rønning, 2017).

To address this issue, researchers focusing on CAA suggest the pedagogical approach of asking students to generate examples that meet certain criteria (Kinnear et al., 2022; Yerushalmy et al., 2017). Inviting students to generate their own examples has been suggested as a way to foster their mathematical thinking (Bills et al., 2006; Watson & Mason, 2005). Since example-generation tasks can often be automatically corrected, they suit CAA systems well (Sangwin, 2003; Yerushalmy et al., 2017).

This paper reports on a follow-up study based on suggested revisions of a specific example-generation task on polynomial functions, as reported at the MEDA 3 Conference (Fahlgren & Brunström, 2022). In the present study, a revised version of the task has been trialed in two different educational contexts. The overarching aim of this paper is to provide new insights into example-generation tasks appropriate for CAA systems. This has been accomplished by analyzing the student responses to the revised task.

Before we formulate the research question, we introduce the literature framing the study, in particular CAA, the theory of example spaces, as well as teaching and learning polynomial functions.

Computer-Aided Assessment

CAA systems have recently become increasingly popular in higher mathematics education. Several systems have been developed that can process open-ended questions dealing with mathematical objects, such as expressions, equations, sets, matrices, and graphs, and establish their equivalence with the correct answer (Keijzer-de Ruijter & Draaijer, 2019; Sangwin, 2015; Yerushalmy et al., 2017).

By using CAA systems, innovative task designs can be developed. Through the mathematical engine, it is possible to create grading codes that check if the answer satisfies some constraints (Sangwin, 2003). Moreover, algorithms which randomize parts of the tasks can be created, so that all students have different versions of the same task (Rønning, 2017). Nicol and Milligan (2006) suggest that algorithmic questions can be used in group work to stimulate discussion: if each group member has different variants of the same task, they should not focus only on the comparison of results but also on discussing processes.

Sangwin et al. (2009) suggest using the computer capabilities of creating different representations of mathematical objects to provide implicit feedback instead of explicit solutions. For example, if the task asks students to write the expression of a function satisfying particular conditions, implicit feedback could consist of showing the graph of a correct function (Sangwin et al., 2009). Alternatively, it is possible to provide the graph of the students’ answer before grading it, either using the CAA system’s capabilities to generate a preview (Barana et al., 2018) or encouraging students to use an external system, such as a Dynamic Mathematics Software (DMS), to create the graph of the function, so that they can check if it satisfies the constraints (Sangwin et al., 2009). This would also help develop self-assessment, since students have the chance to autonomously notice mistakes (Black & Wiliam, 2009).

The Theory of Example Spaces

Examples play a crucial role in the field of mathematics education (Bills et al., 2006). Watson and Mason (2005) propose example-generation tasks where students are encouraged to provide examples that meet certain criteria as an effective approach in the learning of mathematics. Since there are no general methods for solving these types of task, students have to be creative and develop solution strategies building on conceptual understanding (Antonini, 2006). Watson and Mason (2005) use the notion of example spaces when referring to collections of examples that meet certain conditions. They distinguish between personal example spaces, conventional example spaces, and collective and situated example spaces. Personal example spaces reflect an individual’s collection of available examples, whereas conventional example spaces pertain to examples that are generally comprehended by mathematicians and commonly presented in textbooks. A collective and situated example space is the example space provided by a group of students at a specific time (Watson & Mason, 2005).

Watson and Mason (2005) use the term ‘example’ in a broad way, including examples of concepts, strategies, principles, etc. According to them, students’ mathematical understanding is indicated by the richness of their example spaces. To characterize the structure of example spaces, Watson and Mason (2005) introduce the terms dimensions of possible variation and associated ranges of permissible change. Dimensions of Possible Variation (DofPV) denote the attributes of an example that can be altered without compromising the determining characteristics. The associated Ranges of Permissible Change (RofPCh) refer to the extent to which these dimensions can be modified while the example remains valid (Watson & Mason, 2005). These notions derive from the theory of variation, and specifically from the notion of dimensions of variation, by Marton and colleagues (e.g., Marton & Booth, 1997). Marton and colleagues suggest that learning involves a process of discerning significant aspects of a learning object, and that this process is facilitated by experiencing variation in these aspects (dimensions of variation). Watson and Mason (2005) expanded on this by introducing the concept of ‘dimensions of possible variation’, suggesting that individuals may recognize different aspects that can be varied.

To encourage students to extend their existing example spaces, Watson and Mason (2005) suggest various types of example-generation tasks. One of these consists of a sequence of prompts that progressively add more constraints. According to Watson and Mason (2005), the addition of constraints to the initial criteria one at a time often “… opens up new possibilities for the learners and promotes creativity” (p. 11). If an additional constraint makes prototypical examples invalid, students need to be creative and explore innovative approaches to generate valid examples. The implementation of this type of task in a CAA system is particularly useful since the system can accept more than one, or even infinitely many, correct solutions (Sangwin, 2003).

In another type of example-generation task, students are invited to generate several (different) examples that fulfill certain conditions. The literature emphasizes the significance of this approach to prompt students to extend their existing and accessible example spaces (Goldenberg et al., 2008; Zaslavsky & Zodik, 2014). However, there is an identified risk that students tend to only provide prototypical examples (Yerushalmy et al., 2017). To increase the opportunities for students to generate examples that go beyond their initial thoughts, researchers recommend requesting examples that differ as much as possible (Watson & Mason, 2005; Zaslavsky & Zodik, 2014).

Understanding Polynomial Functions

Polynomial functions are usually introduced during secondary school as the first example of functions. Best and Bikner‑Ahsbahs (2017) note that students’ understanding relies on algebra, but it also involves other different concepts, such as dependent and independent variables, covariation, and quantitative relationships. Students learn how to represent functions in different ways (with formulas, graphs, tables, and verbal descriptions), their different properties, and how to perform several operations with them. Due to the different aspects that are involved, it is possible that students develop a fragmented understanding of polynomial functions (Best & Bikner-Ahsbahs, 2017). Bossé et al. (2014) and Adu-Gyamfi et al. (2017) focus on tasks based on polynomial functions and their graphical/algebraic representations and investigate how students translate between mathematical representations, a practice that should be aligned with instructional guidance to help students make connections. In this paper, two ideas to promote flexibility in working with polynomial functions, highlighted in the literature, are elaborated.

The first idea involves adopting a functional approach when working with algebra to link algebraic and analytic aspects of polynomials (Kieran, 2004; Yerushalmy, 2000). The Factor theorem, as an application of the remainder theorem, is one of the main results in algebra that connects the algebraic aspects of polynomials with the analytic aspects of functions (Weiss, 2016). Movshovitz‐Hadar and Shmukler (1991) suggest a teaching method where polynomials of a degree greater than two are constructed and treated inductively as products of linear and quadratic functions vanishing at given points. The goal is to develop students’ intuition for polynomials as a basis for further algebraic and analytical study of polynomials. Watson and Chick (2011) discuss didactical considerations regarding the qualities and usage of examples, emphasizing the importance of the nature of students’ engagement as well as the teacher’s intentions and actions. They explore a task based on polynomial functions that invites the learner to construct an example of a curve that fits certain constraints, and then asks about the function class(es) to which it belongs. The authors conjecture about the methods by which students solved the task, including the use of the Factor theorem and solving a system of linear equations to obtain the standard form of a cubic function. The latter can raise several types of difficulties, such as dealing with unknowns versus parameters (Watson & Chick, 2011). Bossé et al. (2014) show that students may prefer the standard form even when the factored form is easier to use, for example when they have information about roots.

The second idea involves studying the properties of functions through the transformations of elementary functions (Faulkenberry & Faulkenberry, 2010). These transformations include translations, scaling, and reflections. Günster and Weigand (2020) presented a task in which students were asked to describe the influence of a multiplicative parameter (vertical scaling) on linear functions, and the students managed to identify the consequences for the x- and y-intercepts. Since these consequences are the same for any function, this strategy can be used to fine-tune the expression of a function according to a given y-intercept. However, several studies point out general difficulties in recognizing the implications of functions’ transformations: Zazkis et al. (2003) regarding horizontal translations, Lage and Gaisman (2006) detecting a lack of understanding of the effects of transformations on functions, and Daher and Anabousi (2015) displaying recurrent errors. Transformations are powerful, but students may not be able to exploit their full potential.

Research Question

As mentioned before, the overarching aim of this paper is to provide new insights into example-generation tasks appropriate for CAA systems. This aim is pursued by analyzing students’ answers to an example-generation task (see Fig. 1). Since there are different ways to approach the task, leading to different answers, or different forms of the same answer, the analysis is conducted with the purpose of identifying students’ example spaces. The analysis in two different educational contexts allows us to investigate similarities and differences in the collective and situated example spaces. Researchers suggest studies that cross national boundaries to provide a broader picture of the variation in students’ strategy usage (Jiang et al., 2023). Thus, the research question that guides this study is:

How did the design of a specific example-generation task affect students’ example spaces in two different educational contexts?

In light of the findings, we will discuss possible re-design of the task as well as possible feedback to extend students’ example spaces.

Method

The Research Context

This study took place in autumn 2022 at two universities: one in Sweden and one in Italy. The Swedish context involved 205 first-year engineering students taking their first Calculus course. In Italy, 111 first-year biotechnology students taking the “Mathematics and Biostatistics” module were involved. The same computer-based activities were proposed in both contexts, consisting of task sequences focusing on function understanding. In both cases, the activities included collaborative small group tasks and tasks requiring an individual answer from each group member. While the activities were mandatory in Sweden, they were optional in Italy.

The Swedish Context

In the Swedish school syllabi, mathematical content and different mathematical abilities are introduced separately. In the syllabus for upper-secondary school mathematics (age of students between 16 and 19), five core content areas and six general abilities are described (Swedish National Agency for Education, n.d.). The courses for upper-secondary school are organized into three tracks, one of which is compulsory for students entering the engineering programs at universities. The mathematical content relevant to this study is part of several mandatory courses in this track (Course 1c, 2c, 3c, and 4). Of interest for this paper, the teaching in these courses should cover the following core content (Swedish National Agency for Education, n.d.):

- Algebraic and graphic representations of functions (Course 1c)
- Quadratic functions and methods for solving quadratic equations (Course 2c)
- Properties of polynomial functions and methods for solving polynomial equations (Course 3c)
- Factorization of polynomials and using the Factor theorem to solve polynomial equations (Course 4)

The Calculus course curriculum at the university includes an introductory part on the fundamentals of functions, having the implicit goal of emphasizing the connections between algebraic, geometric, and analytic aspects. An important focus is on elementary functions and their properties, including topics such as the factorization of polynomial functions and the Factor theorem. The total examination gives 7.5 ECTS credits (6.5 credits for the written exam and 1 credit for the mandatory computer-based assignment).

The Italian Context

Italian national guidelines (MIUR, 2010a, b, c) for learning mathematics in upper secondary school (from K9 to K13, age of students between 14 and 19) include basic algebra for all students in the first two years (K9-K10, when pupils are aged 14–16). In terms of relevant knowledge for this work, Italian students cover:

- Algebraic and graphic representations of polynomial functions having degree 1 or 2
- Methods for solving linear and quadratic systems of equations and inequalities
- Polynomial factorization, by polynomial manipulation and by roots computation

Italian national guidelines, in line with international literature (Kieran, 2004; Yerushalmy, 2000), suggest the in-depth study of linear and quadratic expressions as functions in the last three years of upper secondary school (from K11 to K13). In particular, for this paper, we are interested in:

- The concept of zero of a function
- Number of solutions of polynomial equations
- Analytical and graphical study of the main kinds of functions

The semester-long Mathematics and Biostatistics module held at the Italian university is aimed at first-year students, and its total workload is equivalent to 8 ECTS, subdivided between various branches of mathematics. Relative to Calculus, the topics covered range from univariate functions to differential equations, passing through univariate and bivariate Calculus. Topics such as the factorization of polynomial functions and the Factor theorem are not explicitly covered during the module since they are considered prerequisites acquired in upper secondary school. In fact, an initial test to check whether the students need to fulfil additional learning requirements is administered at the beginning of the degree program. However, during the module, time is devoted to sketching elementary functions and their transformations, including vertical scaling.

The Task

This paper will explore a specific type of example-generation task adapted from Sangwin (2003), see Fig. 1. The task consists of a sequence of Prompts (a) to (e) that progressively add more constraints (i) to (iv). The numerical values of a and b in constraints (ii), (iii), and (iv) are randomized. In this way, group members receive different versions of the task. In Prompt (e), students are asked to provide two examples that fulfil all constraints except for the first one. The key idea addressed by the task is the Factor theorem. In addition, the intention is to draw students’ attention to the possibility of vertical scaling by adding a further constraint in terms of a given y-intercept, i.e., constraint (iv). Based on findings from a Swedish study (Fahlgren & Brunström, 2022), the task has been revised in two ways:

- In the previous version, constraint (iv) was \(p\left(0\right)=ab\). Since several students responded with \(p\left(x\right)=\left(x+1\right)\left(x-a\right)\left(x-b\right)\) to Prompt (c), they could reuse this response for Prompt (d) without having to use vertical scaling. In the current version of the task, we address this by revising constraint (iv) into \(p\left(0\right)=-2ab\).
- In the previous version, we only asked for one example in Prompt (e). Since most students provided the straightforward response, i.e., the second-degree polynomial \(p\left(x\right)=\left(x-a\right)\left(x-b\right)\), we decided to ask for two examples to extend students’ example spaces.
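
The effect of the first revision can be checked by evaluating the frequently given Prompt (c) response at zero. This worked check is added here for illustration and uses only the constraints stated above:

\[
p\left(x\right)=\left(x+1\right)\left(x-a\right)\left(x-b\right)\quad\Rightarrow\quad p\left(0\right)=\left(1\right)\left(-a\right)\left(-b\right)=ab .
\]

This response therefore already met the earlier constraint \(p\left(0\right)=ab\), whereas the revised constraint is met only after multiplying by a constant factor:

\[
-2\left(0+1\right)\left(0-a\right)\left(0-b\right)=-2ab .
\]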

Fig. 1 An example-generation task adapted from Sangwin (2003)

In the Swedish version of the task, besides the details shown in Fig. 1, students were given an additional note encouraging them to use a DMS environment to check whether their suggested responses really fulfill the specified conditions before submitting them, as suggested by Sangwin et al. (2009). The Italian version of the task did not include this suggestion.
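
To make the idea of checking a response against the constraints concrete, the following is a minimal sketch in Python. It is not the grading code used in Möbius Assessment. It assumes the constraints are (i) degree three, (ii) \(p(a)=0\), (iii) \(p(b)=0\), and (iv) \(p(0)=-2ab\), as described in the text. The function names, the coefficient-list representation, and the range from which a and b are drawn are all hypothetical.

```python
import random


def evaluate(coeffs, x):
    """Evaluate a polynomial given as [c_n, ..., c_1, c_0] with Horner's rule."""
    result = 0
    for c in coeffs:
        result = result * x + c
    return result


def degree(coeffs):
    """Return the degree, ignoring leading zero coefficients."""
    for i, c in enumerate(coeffs):
        if c != 0:
            return len(coeffs) - 1 - i
    return None  # the zero polynomial has no degree


def random_parameters(rng):
    """Draw two distinct nonzero integers a and b (the range is an assumption)."""
    a, b = rng.sample([n for n in range(-9, 10) if n != 0], 2)
    return a, b


def check_constraints(coeffs, a, b):
    """Report which of the assumed constraints (i)-(iv) a response satisfies."""
    return {
        "i": degree(coeffs) == 3,
        "ii": evaluate(coeffs, a) == 0,
        "iii": evaluate(coeffs, b) == 0,
        "iv": evaluate(coeffs, 0) == -2 * a * b,
    }
```

For a = 3 and b = 5, the coefficient list `[1, -10, 31, -30]`, i.e., the standard form of \((x-2)(x-3)(x-5)\), satisfies all four checks, while `[1, 0, 0, -30]`, i.e., \(x^3-2ab\), satisfies only (i) and (iv).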

The task is preceded by an Introducing Task (IT), in which students were asked to determine a function formula for a given graph of a quadratic function by using the two zeros. The intention of the IT is to direct students’ attention to the Factor theorem. Students were encouraged to agree on a joint group answer and to explain the thinking behind it.

Data Collection and Analysis

The primary data consists of student responses to the task in Fig. 1, collected through a CAA system, in this case, Möbius Assessment. This data only consists of students’ submitted answers, that is, without information about the thinking behind their answers. Therefore, we decided to include student responses to the IT in the data set. From this data, we can see if the students explicitly refer to the Factor theorem in their explanation, and hence are familiar with this key idea.

In the previous study, student responses to the earlier version of the task in Fig. 1 were analyzed (Fahlgren & Brunström, 2022). The data coding manual developed in that study was adapted to fit the current version of the task. Moreover, codes for the IT were developed. The algebraic expression of the function was categorized, i.e., standard form, factored form, or vertex form. Concerning their explanation, we indicated whether they referred to the Factor theorem, the equation system or something else.

The coding manual for the main task (Fig. 1) consisted of several codes for each prompt (see Table 1) guided by the key ideas addressed by the task. The coding manual was then trialled in both research groups, and any doubtful cases were sorted out. After some minor changes, the coding manual was established.

As an example, the codes used when coding student responses to Prompt (c) are introduced, with comments, in Table 2. Since the main key idea addressed by the task is the Factor theorem, it was important to indicate the distinction between factored form and standard form. In addition, to discern any general patterns concerning the third factor, i.e., the factor \((x-\alpha )\) in \(p\left(x\right)=\left(x-a\right)\left(x-b\right)\left(x-\alpha \right)\), special cases were coded separately. To be able to code responses written in standard form, a computer algebra system was used to factorize the responses. Notably, in a few cases we have coded an incorrect response with a code that corresponds to a correct answer. For instance, the response \(p\left(x\right)=x\left(x-a\right)\left(x+b\right)\) was coded with the first code in Table 2, as it was considered a typing error.
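
The step of recovering the third factor from a response in standard form can be sketched as follows. The study used a computer algebra system for this. The sketch below instead uses synthetic division with exact rational arithmetic, which is sufficient when a and b are known. The function names are hypothetical.

```python
from fractions import Fraction


def divide_by_linear(coeffs, root):
    """Synthetic division of [c_n, ..., c_0] by (x - root).

    Returns (quotient coefficients, remainder).
    """
    carried = []
    acc = Fraction(0)
    for c in coeffs:
        acc = acc * root + c
        carried.append(acc)
    return carried[:-1], carried[-1]


def third_zero(coeffs, a, b):
    """Return alpha if the cubic equals k(x - a)(x - b)(x - alpha), else None."""
    if len(coeffs) != 4 or coeffs[0] == 0:
        return None  # not a cubic in the expected representation
    quotient, remainder = divide_by_linear(coeffs, a)
    if remainder != 0:
        return None  # a is not a zero
    quotient, remainder = divide_by_linear(quotient, b)
    if remainder != 0:
        return None  # b is not a zero of the remaining quadratic
    k, constant = quotient  # remaining linear factor k*x + constant
    return -constant / k
```

With a = 3 and b = 5, `third_zero([1, -10, 31, -30], 3, 5)` gives 2, and `third_zero([1, -8, 15, 0], 3, 5)` gives 0, corresponding to the special cases \(\alpha=2\) and \(\alpha=0\).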

During the initial stage of the data analysis process, each student’s response was coded, resulting in 20 codes per student. Multiple meetings were held to discuss and compare the usage of the coding manual. In the subsequent stage, parts of the data were cross-tabulated to discern patterns in the material. For example, students’ explanations in the IT were cross-tabulated with all prompts in the main task, except for Prompt (a). This was done to indicate whether students responding in standard form might have used the Factor theorem to construct their response.

Next, the initial codes were merged into categories to make the result more comprehensible. Besides the key ideas, the Factor theorem and vertical scaling, this merge was guided by the idea of using an equation system—a result of the findings of the IT. For example, when introducing the result to Prompt (c), the response category \(p(x)=(x-a)(x-b)(x-\alpha )\) also includes the special cases α = -1, 2, -2, a, and b (see Table 2). Finally, responses in the two contexts were compared to discern context-related similarities and differences.

Results and Analysis

This section starts with a description of the results from the IT since student responses to this task could serve as an indicator of student strategies when solving the main task. Then, student responses to Prompts (b), (c), (d) and (e) are reported and analyzed one at a time. The section ends with an analysis based on the theory of example spaces.

The Introducing Task

Concerning the algebraic expression of the function, most of the students responded in standard form, \(p\left(x\right)=-{x}^{2}+x+6\): 63% of the Swedish students and 90% of the Italian students responded in this way. Among the Swedish students, 25% responded in factored form and 11% responded in vertex form. None of the Italian students responded in factored form, while 10% responded in vertex form. Moreover, the findings indicate a remarkable difference between the Swedish and Italian students concerning the strategy referred to when explaining the thinking behind their answers. While 74% (152/205) of the Swedish students referred to the Factor theorem in their explanation, only one of the Italian students gave this explanation. Notably, as many as 81% (105/130) of the Swedish students who answered in standard form referred to the Factor theorem in their explanation. This indicates that students who responded in standard form to the prompts in the main task might have utilized the Factor theorem, and then expanded the function expression from a factored form into a standard form. However, it is important to emphasize that students’ reasoning on the IT does not provide answers regarding how they reason on the main task; we can only speculate about possible solution strategies. Instead of referring to the Factor theorem, almost all Italian students (86%) explained that they had solved an equation system to find the function formula in the IT.

Prompt (b)

In Prompt (a), students were encouraged to give an example of a polynomial of degree three. In Prompt (b), one further constraint (\(p\left(a\right)=0\)) is added. Table 3 provides an overview of the responses to Prompt (b). The responses have been analyzed in relation to the Factor theorem, i.e., the key idea addressed by the task. Moreover, in light of the findings from the IT, reflections on possible student strategies based on equation systems have been provided.

Concerning the Factor theorem, we can be sure that this strategy was used only by those students who answered in factored form. In total 24% (50/205) of the Swedish students and 10% (11/111) of the Italian students responded in factored form. We can also conclude that those students who responded with \(p\left(x\right)={x}^{3}-{a}^{3}\), 19% of the Swedish students and 33% of the Italian students, did not use the Factor theorem. The responses to the IT indicate that some Swedish students might have used the Factor theorem in the main task, even if they responded in standard form. In total 56% (114/205) of the Swedish students gave a response in standard form. Among these students, 73% referred to the Factor theorem when explaining how they solved the IT. Hence, there is reason to believe that at least some of these students used the Factor theorem to find an example that fulfills Prompt (b). Since just one of the Italian students referred to the Factor theorem in the IT, it is not relevant to carry out this analysis for the Italian students.

Another way to approach this prompt would be to solve an equation system. However, in this case, only one condition (\(p\left(a\right)=0\)) needs to be fulfilled. Therefore, students using equation systems could choose any value for three of the parameters k, c, d, and e in \(p\left(x\right)=k{x}^{3}+c{x}^{2}+dx+e\) and then solve an equation with one unknown. The most frequent response among the Italian students, \(p\left(x\right)={x}^{3}-{a}^{3}\), could be reached by choosing \(k=1\) and \(c=d=0\) and solving the equation. However, this response is also rather straightforward to find just by adding a constant term to \(p\left(x\right)={x}^{3}\) so that \(p\left(a\right)=0\).
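
As a worked illustration of this equation-based route (the parameter choice is the one named in the text, and the derivation is added here as a sketch), setting \(k=1\) and \(c=d=0\) leaves a single equation in the unknown e:

\[
p\left(a\right)={a}^{3}+e=0\quad\Rightarrow\quad e=-{a}^{3}\quad\Rightarrow\quad p\left(x\right)={x}^{3}-{a}^{3} .
\]

The same polynomial factors as \({x}^{3}-{a}^{3}=\left(x-a\right)\left({x}^{2}+ax+{a}^{2}\right)\), so the zero at a is consistent with the Factor theorem even though the theorem is not needed to find this response.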

Prompt (c)

In Prompt (c), a second zero, \(p\left(b\right)=0\), is added. Table 4 shows the responses to this prompt. As in Prompt (b), the analysis is based on the Factor theorem and equation systems.

In comparison to Prompt (b), there are more students who responded in factored form to Prompt (c). In total 32% (65/205) of the Swedish students and 14% (15/111) of the Italian students responded in factored form. The most frequent answer was \(p\left(x\right)={x}^{3}-\left(a+b\right){x}^{2}+abx\), i.e., \(p\left(x\right)=x(x-a)(x-b)\) in standard form. This response was provided by 32% (65/205) of the Swedish students and 26% (29/111) of the Italian students. Among the 65 Swedish students 75% referred to the Factor theorem in the IT when explaining how they found the function formula. This indicates that more than 32% of the Swedish students probably used the Factor theorem when responding to Prompt (c).

Since there are two conditions (\(p\left(a\right)=0 \text{ and } p\left(b\right)=0\)) in Prompt (c), students using equation systems to find a response can choose any value for two of the parameters k, c, d, and e in \(p\left(x\right)=k{x}^{3}+c{x}^{2}+dx+e\). They can then solve an equation system with two unknowns and two equations. The response \(p\left(x\right)={x}^{3}-\left(a+b\right){x}^{2}+abx\), which 26% of the Italian students gave, could be reached by choosing \(k=1\) and \(e=0\), and then solving the equation system. If instead \(k=1\) and \(c=0\) or \(d=0\), the standard form response could always be factorized to \(p\left(x\right)=(x-a)(x-b)(x-\alpha )\), where the value of α depends on the values of a and b. Altogether 33% (37/111) of the Italian students gave this type of response.
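
The case \(k=1\), \(e=0\) can be worked through as follows. This is a sketch under the assumption, not stated in the source, that the randomized values satisfy \(a\ne b\), \(a\ne 0\), and \(b\ne 0\). The two conditions give

\[
\begin{aligned}
{a}^{3}+c{a}^{2}+da&=0,\\
{b}^{3}+c{b}^{2}+db&=0 .
\end{aligned}
\]

Dividing the first equation by a and the second by b, and then subtracting, yields \(\left(a-b\right)\left(a+b\right)+c\left(a-b\right)=0\), so that

\[
c=-\left(a+b\right),\qquad d=-{a}^{2}-ca=ab,
\]

which gives \(p\left(x\right)={x}^{3}-\left(a+b\right){x}^{2}+abx=x\left(x-a\right)\left(x-b\right)\).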

Prompt (d)

By adding a further constraint in terms of a given y-intercept (\(p\left(0\right)=-2ab\)), Prompt (d) addresses the key idea of vertical scaling by multiplying with a constant factor. Table 5 provides an overview of the responses to Prompt (d). Besides the Factor theorem and equation systems, the key idea of vertical scaling guided the analysis of student responses to this prompt. Before analyzing the responses to Prompt (d), it is important for the analysis in relation to vertical scaling to highlight one more result from Prompt (c). In total 44% (91/205) of the Swedish students and 34% (38/111) of the Italian students responded \(p\left(x\right)=x\left(x-a\right)\left(x-b\right)\) in factored or standard form to Prompt (c). Since this polynomial has \(p\left(0\right)=0\), these students could not use vertical scaling of the response to Prompt (c) to answer Prompt (d).

In this prompt, only 46% of the Italian students responded with a correct answer. The high number of incorrect answers could be attributed to a misinterpretation of the task. Forty students answered with \(p\left(x\right)={x}^{3}-2ab\), a polynomial satisfying the properties (i) and (iv), but generally not (ii) and (iii). This suggests a likely interpretation of “(i) - (iv)” as “(i) and (iv)” rather than “from (i) to (iv)”. This is important to consider when analyzing this prompt.

Concerning the Factor theorem, 30% (61/205) of the Swedish students and 5% (6/111) of the Italian students responded in factored form. The most frequent response among the Swedish students is the standard form of \(p\left(x\right)=(x-2)(x-a)(x-b)\). As many as 40% (81/205) of the Swedish students gave this response. Among these students, 78% referred to the Factor theorem in the IT. Therefore, we find it likely that some of these students used the Factor theorem also in this prompt.

Since there are three conditions (\(p\left(a\right)=0, p\left(b\right)=0\) and \(p\left(0\right)=-2ab\)) in Prompt (d), students who use equation systems could choose any value for one of the parameters k, c, and d in \(p\left(x\right)=k{x}^{3}+c{x}^{2}+dx-2ab\) and then solve an equation system with two unknowns and two equations. A straightforward choice would be to let \(k=1\), which results in the standard form of \(p\left(x\right)=(x-2)(x-a)(x-b)\). This response was provided by 18% (20/111) of the Italian students.
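
The choice \(k=1\) can be worked through in the same way. This is a sketch assuming, as the source does not specify, that \(a\ne b\) and that both values are nonzero. The conditions \(p\left(a\right)=0\) and \(p\left(b\right)=0\), after division by a and b respectively, become

\[
\begin{aligned}
{a}^{2}+ca+d-2b&=0,\\
{b}^{2}+cb+d-2a&=0 .
\end{aligned}
\]

Subtracting gives \(\left(a-b\right)\left(a+b\right)+c\left(a-b\right)+2\left(a-b\right)=0\), hence

\[
c=-\left(a+b+2\right),\qquad d=ab+2a+2b,
\]

and therefore

\[
p\left(x\right)={x}^{3}-\left(a+b+2\right){x}^{2}+\left(ab+2a+2b\right)x-2ab=\left(x-2\right)\left(x-a\right)\left(x-b\right) .
\]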

In relation to vertical scaling, the data can only indicate that some students might have used this strategy. We find it likely that those students who multiply their response to Prompt (c) with a constant factor to create their response to Prompt (d) used vertical scaling. For example, if the response to Prompt (c) is \(p\left(x\right)=(x+1)(x-a)(x-b)\), the response to Prompt (d) will be \(p\left(x\right)=-2(x+1)(x-a)(x-b)\). However, this was only performed by 4% (9/205) of the Swedish students and 8% (9/111) of the Italian students. There were also responses, either in factored or standard form, to Prompt (d) that included a constant factor, but with a different third zero than in Prompt (c). As an example, one student who responded with \(p\left(x\right)=x\left(x-a\right)\left(x-b\right)\) to Prompt (c) responded with \(p\left(x\right)=-2(x+1)(x-a)(x-b)\) to Prompt (d). If this student first tried \(p\left(x\right)=\left(x+1\right)\left(x-a\right)\left(x-b\right)\) as an answer to Prompt (d), and then realized that this response must be multiplied by \(-2\), vertical scaling was used. In total, 12% (25/205) of the Swedish students and 18% (20/111) of the Italian students might have used this strategy.

Notably, as many as 65% (133/205) of the Swedish students and 19% (21/111) of the Italian students responded with \(p\left(x\right)=(x-2)(x-a)(x-b)\) in factored or standard form. For these students, there was no need for vertical scaling.
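How the third zero determines whether vertical scaling is needed, possible, or impossible in Prompt (d) can be made concrete with a small computation. The sketch below is illustrative only: the function name `scaling_factor`, the symbol `r` for the third zero, and the sample values \(a=3\), \(b=5\) are assumptions introduced here, not part of the task or of the CAA system.

```python
from fractions import Fraction


def scaling_factor(a, b, r):
    """Return k such that p(x) = k(x - r)(x - a)(x - b) has p(0) = -2ab.

    Returns None when no such k exists, i.e. when the unscaled
    polynomial already has y-intercept 0 (for example when r = 0).
    """
    unscaled_intercept = (0 - r) * (0 - a) * (0 - b)  # equals -r*a*b
    target_intercept = -2 * a * b
    if unscaled_intercept == 0:
        return None
    return Fraction(target_intercept, unscaled_intercept)


# Illustrative parameter values (assumed, not taken from the study).
a, b = 3, 5
print(scaling_factor(a, b, -1))  # third factor (x + 1): scaling by -2 is needed
print(scaling_factor(a, b, 2))   # third factor (x - 2): factor 1, no scaling needed
print(scaling_factor(a, b, 0))   # third factor x: no constant factor works
```

For nonzero \(a\), \(b\), and \(r\), the factor simplifies to \(k=2/r\), which is why the choice \(r=2\) makes vertical scaling unnecessary.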

Prompt (e)

In Prompt (e), students are asked to provide two examples of polynomials that fulfill all constraints except being of degree three. Table 6 introduces the various types of responses provided by the students to Prompt (e), both their first and second example. Besides analyzing the responses in relation to the Factor theorem, equation system, and vertical scaling, the degree of the polynomials is indicated.

Predominantly, students provided a correct second-degree polynomial as their first example, 55% (112/205) among the Swedish students and 68% (75/111) among the Italian students. As there is only one correct second-degree polynomial meeting the conditions, students who provided this polynomial as their first example needed to provide a polynomial of higher degree as their second example. On the other hand, students who provided a fourth-degree polynomial as their first example, as 31% (64/205) of the Swedish students and 10% (11/111) of the Italian students did, had the possibility to either provide one more polynomial of the same degree or change the polynomial degree. The most frequent combination was providing one polynomial of degree 2 and one of degree 4, as 59% (121/205) of the Swedish students and 45% (50/111) of the Italian students did. Additionally, 12% (25/205) of the Swedish students and 3% (3/111) of the Italian students provided two polynomials of degree 4. Furthermore, 14% (29/205) of the Swedish students and 3% (3/111) of the Italian students provided polynomials of degrees 4 and 5.
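The uniqueness of the second-degree polynomial follows from a one-line argument, sketched here under the assumption that \(a\) and \(b\) are nonzero and distinct:

```latex
\begin{align*}
p(x) &= k(x-a)(x-b), \qquad k \neq 0, \\
p(0) &= kab = -2ab \;\Rightarrow\; k = -2, \\
p(x) &= -2(x-a)(x-b) = -2x^{2} + 2(a+b)x - 2ab.
\end{align*}
```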

Concerning the Factor theorem, 36% (73/205) of the Swedish students and 12% (13/111) of the Italian students responded in a factored form in at least one of their two examples.

The most frequent response among the Italian students was the correct second-degree polynomial in standard form, which 66% (73/111) of the students provided as their first or second example. This answer is straightforward to obtain by solving an equation system.

In relation to vertical scaling, the data indicate that several students have used this strategy. In total, 62% (127/205) of the Swedish students and 73% (81/111) of the Italian students responded with \(p\left(x\right)=-2(x-a)(x-b)\) in factored or standard form as their first or second example. If these students first tried \(p\left(x\right)=(x-a)(x-b)\), and then realized that this response had to be multiplied by -2, vertical scaling was used. In addition, some of the fourth-degree polynomial responses, either in factored or standard form, included a constant factor (see Table 6). Altogether, 66% (135/205) of the Swedish students and 77% (85/111) of the Italian students might have used vertical scaling in at least one of their examples.

Analysis Based on Example Spaces

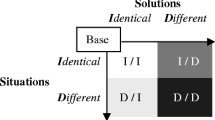

The results reveal some context-related differences. One difference concerns the percentage of students responding in factored form. Although the key idea addressed by the task was the Factor theorem, the findings indicate that most of the Italian students seemed to have used equation systems to solve the task, i.e., without using the Factor theorem. We regard the strategy used to obtain the given zeros as one dimension of possible variation (DofPV). When analyzing the data, we focused on the Factor theorem and equation systems. However, the conventional example space allows for a greater range of permissible change (RofPCh), such as the use of Vieta’s formulas relating polynomial coefficients to sums and products of its roots.
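As an illustration of this wider range (a sketch added here, not a strategy observed in the data), Vieta's formulas applied to a monic cubic with zeros \(a\), \(b\), and an unknown third zero \(r\) give the third zero directly from the y-intercept condition of Prompt (d). The symbol \(r\) and the assumption \(ab\ne 0\) are introduced for this example only:

```latex
\begin{align*}
x^{3} + c x^{2} + d x + e &= (x-a)(x-b)(x-r), \\
c &= -(a + b + r), \\
d &= ab + ar + br, \\
e &= -abr.
\end{align*}
```

Setting \(e=-2ab\) gives \(r=2\), and therefore \(c=-(a+b+2)\) and \(d=ab+2a+2b\), which is the standard form of \(p\left(x\right)=(x-2)(x-a)(x-b)\).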

When comparing the number of students responding in factored form to the different prompts, we noted an increase from Prompt (b) to Prompt (c), from 24 to 32% among the Swedish students and from 10 to 14% among the Italian students. In addition, when responding to Prompt (e), 36% of the Swedish students responded in factored form. Hence, we assert that the addition of constraints led several students to extend their personal example space.

In Prompt (e), students were asked to provide two examples. The degree of the polynomials is one DofPV utilized by most of the students. If their first response was a second-degree polynomial, they needed to change the degree of the polynomial to provide their second example. If the degree of their first example is at least 4, they can also utilize this DofPV, even if it is not the only option. Notably, 10% (20/205) of the Swedish students responded with \(p\left(x\right)=\left(x-a\right)\left(x-b\right)\left(x-2\right)(x+1)\) in factored or standard form as their first example followed by \(p\left(x\right)=\left(x-a\right)\left(x-b\right)\left(x-2\right){\left(x+1\right)}^{2}\) as their second example. Probably, these students realized that it is possible to vary the degree of the polynomial in an infinite number of ways by multiplying with the factor \({\left(x+1\right)}^{n}\) (where n is a positive integer), that is, that the associated RofPCh is unrestricted. Another student who realized that the RofPCh is unrestricted provided the response \(p\left(x\right)=(x-a)(x-b)({x}^{1000}-2)\) as one example, demonstrating a rich personal example space.
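That the degree can be varied without limit while the zeros and the y-intercept stay fixed can be checked numerically. The following is a minimal sketch: the helper names and the sample values \(a=3\), \(b=5\) are assumptions made for illustration, and the check covers only the conditions \(p\left(a\right)=0\), \(p\left(b\right)=0\), and \(p\left(0\right)=-2ab\), not the degree.

```python
def family_member(a, b, n):
    """Return p(x) = (x - a)(x - b)(x - 2)(x + 1)^n as a Python function."""
    def p(x):
        return (x - a) * (x - b) * (x - 2) * (x + 1) ** n
    return p


def satisfies_constraints(p, a, b):
    """Check the zeros at a and b and the y-intercept -2ab."""
    return p(a) == 0 and p(b) == 0 and p(0) == -2 * a * b


# Illustrative parameter values (assumed, not taken from the study).
a, b = 3, 5

# Every exponent n gives a valid example, because (0 + 1)^n = 1
# leaves the y-intercept unchanged.
all_valid = all(
    satisfies_constraints(family_member(a, b, n), a, b) for n in range(1, 50)
)


# The response quoted in the text, with the factor (x^1000 - 2).
def high_degree_example(x):
    return (x - a) * (x - b) * (x ** 1000 - 2)


print(all_valid, satisfies_constraints(high_degree_example, a, b))
```

Python integers have arbitrary precision, so evaluating the degree-1002 example at integer points is exact.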

On the other hand, if students, when constructing the second example, choose to keep the degree of their first-example polynomial, they have to activate another DofPV. This can be done by changing at least one (polynomial) factor and, if necessary, adjusting the constant term. As an example, one student provided the following responses: \(p\left(x\right)=-(x-a)(x-b)(x+1)(x+2)\) and \(p\left(x\right)=-2\left(x-a\right)\left(x-b\right){\left(x+1\right)}^{2}\). In this case, the student changed one linear factor, and adjusted the constant term accordingly. Probably this student realized that this strategy, i.e., using vertical scaling to adjust the y-intercept, could be generalized and that the associated RofPCh is unrestricted.
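The generalization suggested by this pair of responses can be written out as follows. This is a sketch under the assumptions that \(ab\ne 0\) and that \(q\) denotes any polynomial with \(q\left(0\right)\ne 0\); the symbol \(q\) is introduced here for illustration:

```latex
\begin{align*}
p(x) &= k(x-a)(x-b)\,q(x), \\
p(0) &= k\,ab\,q(0) = -2ab \;\Rightarrow\; k = -\frac{2}{q(0)}. \\
\intertext{For the two responses quoted above:}
q(x) = (x+1)(x+2) &\;\Rightarrow\; q(0) = 2,\; k = -1, \\
q(x) = (x+1)^{2} &\;\Rightarrow\; q(0) = 1,\; k = -2.
\end{align*}
```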

Considering that different strategies can be employed to obtain a certain y-intercept in Prompt (d) and Prompt (e), we regard the strategy used as one DofPV. Even if we do not know the thinking behind different student responses, the responses indicate that a higher proportion of the Italian students than the Swedish ones used vertical scaling both in Prompt (d) and Prompt (e). Several Swedish students instead seem to have included a linear factor to get the correct y-intercept in Prompt (d). When comparing the total number of students that seem to have used vertical scaling, there is an increase from Prompt (d) to Prompt (e). Therefore, we assert that several students extended their personal example space by employing different strategies, likely influenced by the fact that the only correct second-degree polynomial in Prompt (e) includes a constant factor.

Discussion

The analysis of the student responses provided an overview of the collective and situated example spaces in the two different countries. Since this is a follow-up study, we first discuss the findings concerning the suggested revisions of the task in the previous study (Fahlgren & Brunström, 2022). Then, we discuss the findings in relation to context-related differences.

The original task was revised in two ways: first, a constant factor was added to constraint (iv) to encourage students to use vertical scaling in Prompt (d). Second, instead of asking for one example in Prompt (e), students were asked to provide two examples to extend their example space. The added constant factor to constraint (iv) did not have the intended effect on student responses to Prompt (d). However, the percentage of students who might have used vertical scaling increased from Prompt (d) to Prompt (e) in both contexts. This is probably caused by the added constant factor to constraint (iv), and the fact that students who provide a second-degree polynomial need to include the constant factor.

The second revision, asking for a second, different example, was effective in extending the students’ example space. Indeed, different kinds of polynomials were provided in both contexts. This especially resulted in degree 4 polynomials, which are the simplest examples after degree 2 polynomials and can be constructed, like degree 3 polynomials, by multiplying proper linear and quadratic polynomials (Movshovitz‐Hadar & Shmukler, 1991). The request for a different example could also be added to Prompt (d) to further stimulate students to use vertical scaling. This could be particularly useful for students choosing \((x-2)\) as their third factor since this will force them to change this factor (which in turn will make it more likely that they will use vertical scaling). It could also be useful to ask for a different example in Prompt (c), especially for the students responding \(p\left(x\right)=x(x-a)(x-b)\), since they cannot scale the response given in Prompt (c) to answer Prompt (d). In line with previous research (Goldenberg & Mason, 2008; Zaslavsky & Zodik, 2014), we think that asking for more than one example is an effective design principle to foster higher-order thinking skills.

One finding in the previous study (Fahlgren & Brunström, 2022) is that the added zero in Prompt (c) resulted in an increase in students utilizing the Factor theorem. Also in this study, the percentage of students who probably used the Factor theorem increased from Prompt (b) to Prompt (c). In this way, the finding of the previous study in the Swedish context is confirmed; the finding is further validated through data from a different context.

The results highlight how the prompts allowed students to use different solving strategies to tackle the analyzed task. The most relevant strategies include the Factor theorem and equation systems. The choice of solving strategy could reflect students’ background; the teaching approach during the course might have influenced their answers. However, in both contexts, the students are at the beginning of university with a background of 12 or 13 years in Mathematics. Therefore, it is likely that they chose strategies in line with the methods studied at school. In particular, Swedish students tended to use the Factor theorem, which is a more effective way to solve the task. This could reflect that the Factor theorem is explicitly mentioned in the syllabus for the last mandatory course in upper secondary school (Swedish National Agency for Education, n.d.) and in the syllabus for the university course in question. On the other hand, Italian students tended to solve an equation system to determine the coefficients of the polynomials. This method is less effective in terms of computations but is more general since it is applicable to different constraints and kinds of functions. The preference for this method could reflect the emphasis on theory and abstract algebra that Italian secondary school teachers often incorporate into their practices (Bolondi & Ferretti, 2021). Moreover, it might also reflect the discrepancy between two intertwined concepts: factorization of polynomials and zeros of functions. Although the national guidelines suggest introducing algebra with a functional approach, this may not be a common practice, and zeros of functions are studied years later. Thus, students might face difficulties in making connections between algebraic, geometric, and analytic aspects, which are often treated as separate (Best & Bikner-Ahsbahs, 2017; Greer, 2008).

From the results related to the last two prompts, it emerges that Italian students seem more flexible in using vertical scaling than their Swedish peers. One possible explanation for this could be their engagement in various activities involving graphic transformations of elementary functions during the course, facilitated by a DMS environment. This aligns with Günster and Weigand (2020), where the use of DMS, like GeoGebra or Maple, helped overcome difficulties in connecting graphical effects of transformations with modifications in the symbolic expression of the function. Without this assistance, many students tend to rely on memorized facts or proceed mechanically, for instance, by constructing data tables, which is generally insufficient for properly handling function transformations (Lage & Gaisman, 2006). This suggests the usefulness of providing activities targeted at developing flexibility with symbolic and graphic representations of functions and understanding their transformations.

In both contexts, the majority of students—more so in Italy than in Sweden—tended to provide the answer in standard form. This result aligns with Bossé et al. (2014), indicating that students comprehend polynomials better when written in standard form.

We noticed that many Italian students misunderstood Prompt (d). Additionally, some did not adhere to the condition of providing a polynomial function in Prompt (e) and wrote different types of functions. Several explanations are possible for these results. For Prompt (d), one reason could be the unfamiliar notation using “(i)-(iv)” to represent “from (i) to (iv)”, leading to the misinterpretation “(i) and (iv)”. Regarding Prompt (e), some Italian students may have mistakenly considered non-polynomial functions, such as the absolute value of a polynomial, as polynomial functions. For both prompts, they might have faced difficulties in interpreting the prompt or extending their example space and therefore chose the simplest solution even if it meant disregarding the requirements. Therefore, it is crucial to pay attention to the task formulation and highlight the relevant information. In this case, the prompts will be reformulated before the next implementation of the activities. Moreover, in similar cases, the use of interactive feedback (Barana et al., 2021), adapted to students’ answers, would help them better understand the task and the underlying processes.

Conclusion

Through this study, we addressed the research question:

How did the design of a specific example-generation task affect students’ example spaces in two different educational contexts?

The differences between the two contexts (i.e., different cultures, difference in terms of obligation, the diverse degree courses) provided a relevant number of situations and a variety of students’ behaviors in solving the task, thereby enriching the analysis.

In the example-generation task, we combined the idea of progressively adding constraints to a sequence of prompts with the idea of requesting more than one example (Prompt (e)). The findings indicate that this is a successful strategy for stimulating flexibility in the choice of solving strategy and, consequently, extending students’ example space. Additionally, we identified several instances where a request for more than one example might further extend students’ example space, also in other prompts.

When creating example-generation tasks aimed at addressing specific key ideas, it is essential to consider the level of targeting required. A more targeted task increases the likelihood of the key ideas being considered, but at the same time it restricts the DofPV, thereby reducing the possibilities of a rich example space. For instance, to encourage students to use the Factor theorem, we could have specified that the response should be provided in factored form. Another example is that we could have formulated Prompt (d) in a way that relates back to Prompt (c), thereby increasing the number of students using vertical scaling of their response to Prompt (c) to answer Prompt (d). However, in both these cases, the changes will reduce the DofPV. Accordingly, finding the right balance between a targeted task and a broad example space is crucial when constructing example-generation tasks. It is important to consider the specific key ideas that need to be addressed while also allowing for a rich and varied example space.

One way to address this dilemma is, instead of restricting the task, to use adapted feedback. One idea would be to incorporate interactive feedback (Barana et al., 2021) to foster the use of different strategies in solving a task (Günster & Weigand, 2020): the feedback could confirm the correctness of the answer and suggest providing one more example using a different method. For example, if a student provides a correct response in standard form (in the specific example-generation task), the feedback could confirm the correctness of the answer, provide the student with the factored form of their answer, and ask for one more example written in factored form. Another idea is to show students a preview of their answers in factored form before they submit them. This is implicit feedback (Sangwin et al., 2009), and it could help them self-assess their answers by checking if the zeros are correct. This might also help them make sense of the factored form as a final answer.
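The first feedback idea can be sketched in code for the conditions of Prompt (d). This is a hypothetical illustration only and not the grading code of the CAA system used in the study: the function names, the message texts, the coefficient-list input format, and the assumption that \(a\) and \(b\) are distinct integers are all introduced here.

```python
from fractions import Fraction


def divide_by_linear(coeffs, root):
    """Synthetic division of a polynomial by (x - root).

    coeffs lists the coefficients from the highest degree down.
    Returns (quotient coefficients, remainder); by the remainder
    theorem, the remainder equals the polynomial's value at root.
    """
    acc, values = 0, []
    for c in coeffs:
        acc = acc * root + c
        values.append(acc)
    return values[:-1], values[-1]


def feedback(coeffs, a, b):
    """Hypothetical feedback for a cubic given in standard form as [k, c, d, e]."""
    if len(coeffs) != 4 or coeffs[0] == 0:
        return {"correct": False,
                "message": "The polynomial should have degree three."}
    quotient_a, value_at_a = divide_by_linear(coeffs, a)
    _, value_at_b = divide_by_linear(coeffs, b)
    if value_at_a != 0 or value_at_b != 0:
        return {"correct": False,
                "message": "Check the zeros: p(a) and p(b) should both be 0."}
    if coeffs[-1] != -2 * a * b:
        return {"correct": False,
                "message": "Check the y-intercept: p(0) should equal -2ab."}
    # Divide out (x - b) as well; what remains is the linear factor k*x + m.
    (k, m), _ = divide_by_linear(quotient_a, b)
    return {
        "correct": True,
        "factor": k,                       # constant factor of the factored form
        "zeros": [a, b, Fraction(-m, k)],  # the two given zeros and the third one
        "message": "Correct. Can you give one more example, "
                   "written in factored form?",
    }


# Standard form of (x - 2)(x - 3)(x - 5), with assumed values a = 3, b = 5.
result = feedback([1, -10, 31, -30], 3, 5)
print(result["factor"], result["zeros"], result["message"])
```

Returning the constant factor and the zeros separately, rather than a formatted string, leaves it to the surrounding system to decide how the factored form is displayed to the student.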

Moreover, it is possible to introduce immediate feedback at the end of each prompt, so that students can use the information provided by the feedback in the next steps. This feedback would stimulate deeper thinking in case of errors and change the reasoning to be applied in the following prompts. In case of errors, it is also possible to allow for more attempts and provide information about the correctness of one’s response at each prompt. This kind of feedback would also be effective in avoiding misunderstandings, as occurred among the Italian students in Prompt (d).

In the Swedish context, students were encouraged to use a DMS to check their responses before submitting them, as suggested by Sangwin et al. (2009). The implicit feedback from the DMS provided opportunities for self-assessment. This is likely reflected in the fact that few Swedish students provided incorrect answers.

Even if we suggest several ways to improve the task, it has several key strengths that will be maintained in future replications of this study: asking for several different examples in a chain of prompts with additional constraints; utilizing the algorithmic capabilities of the CAA system to provide each student with a different version of the same task, which can foster discussion about processes, not just results (Nicol & Milligan, 2006); and recommending the use of a DMS to check the answer before submitting it.

A limitation of the study is the lack of data revealing students’ thinking behind their answers. In many cases, the solving strategy is inferred from the students’ answers, while we do not have a record of their thoughts and mental processes as they complete the activities. The research group plans to revise the task to further promote flexibility in dealing with polynomial functions and to re-experiment with the task in the next academic year, incorporating self-reflection questions that inquire about the strategies used and collecting more data through video-recording.

Data Availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Adu-Gyamfi, K., Bossé, M. J., & Chandler, K. (2017). Student connections between algebraic and graphical polynomial representations in the context of a polynomial relation. International Journal of Science and Mathematics Education, 15(5), 915–938. https://doi.org/10.1007/s10763-016-9730-1

Antonini, S. (2006). Graduate students’ processes in generating examples of mathematical objects. In J. Novotná, H. Moraová, & N. Stehlíková (Eds.), Proceedings of the 30th Conference of the International Group for the Psychology of Mathematics Education (Vol. 2, pp. 57–64). PME.

Barana, A., Conte, A., Fioravera, M., Marchisio, M., & Rabellino, S. (2018). A model of formative automatic assessment and interactive feedback for STEM. 2018 IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC). https://doi.org/10.1109/COMPSAC.2018.00178

Barana, A., Marchisio, M., & Sacchet, M. (2021). Interactive feedback for learning mathematics in a digital learning environment. Education Sciences, 11(6), 279. https://doi.org/10.3390/educsci11060279

Best, M., & Bikner-Ahsbahs, A. (2017). The function concept at the transition to upper secondary school level: Tasks for a situation of change. ZDM Mathematics Education, 49, 865–880. https://doi.org/10.1007/s11858-017-0880-6

Bills, L., Dreyfus, T., Mason, J., Tsamir, P., Watson, A., & Zaslavsky, O. (2006). Exemplification in mathematics education. In J. Novotná, H. Moraová, M. Krátká, & N. Stehlíková (Eds.), Proceedings of the 30th Conference of the International Group for the Psychology of Mathematics Education (Vol. 1, pp. 126–154). PME.

Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21(1), 5–31. https://doi.org/10.1007/s11092-008-9068-5

Bolondi, G., & Ferretti, F. (2021). Quantifying solid findings in mathematics education: Loss of meaning for algebraic symbols. International Journal of Innovation in Science and Mathematics Education. https://doi.org/10.30722/IJISME.29.01.001

Bossé, M. J., Adu-Gyamfi, K., & Chandler, K. (2014). Students’ differentiated translation processes. International Journal for Mathematics Teaching and Learning, 828, 1–28.

Daher, W. M., & Anabousi, A. A. (2015). Students’ recognition of function transformations’ themes associated with the algebraic representation. REDIMAT, 4(2), 179–194. https://doi.org/10.4471/redimat.2015.1110

Fahlgren, M., & Brunström, M. (2022). Example-generating tasks in a computer-aided assessment system: Redesign based on student responses. In H. G. Weigand, A. Donevska-Todorova, E. Faggiano, P. Iannone, J. Medová, M. Tabach, & M. Turgut (Eds.), MEDA3 mathematics education in digital age 3. Proceedings of the 13th ERME Topic Conference (ETC13) (pp. 141−144). Constantine the Philosopher University of Nitra. https://hal.science/hal-03925304

Faulkenberry, E. D., & Faulkenberry, T. J. (2010). Transforming the way we teach function transformations. The Mathematics Teacher, 104(1), 29–33. https://doi.org/10.5951/MT.104.1.0029

Galluzzi, F., Marchisio, M., Roman, F., & Sacchet, M. (2021). Mathematics in higher education: A transition from blended to online learning in pandemic times. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC) (pp. 84−92). https://doi.org/10.1109/COMPSAC51774.2021.00023

Goldenberg, E. P., Scher, D., & Feurzeig, N. (2008). What lies behind dynamic interactive geometry software. In G. W. Blume & M. K. Heid (Eds.), Research on Technology and the teaching and learning of Mathematics: Syntheses, Cases and Perspectives (Vol. 2, pp. 53–87). Information Age Publishing.

Goldenberg, P., & Mason, J. (2008). Shedding light on and with example spaces. Educational Studies in Mathematics, 69(2), 183–194. https://doi.org/10.1007/s10649-008-9143-3

Greer, B. (2008). Algebra for all? The Mathematics Enthusiast, 5(2), 423–428. https://doi.org/10.54870/1551-3440.1120

Günster, S. M., & Weigand, H.-G. (2020). Designing digital technology tasks for the development of functional thinking. ZDM. https://doi.org/10.1007/s11858-020-01179-1

Jiang, R., Star, J. R., Hästö, P., Li, L., Liu, R.-D., Tuomela, D., Prieto, N. J., Palkki, R., Abánades, M. Á., & Pejlare, J. (2023). Which one is the “best”: A cross-national comparative study of students’ strategy evaluation in equation solving. International Journal of Science and Mathematics Education, 21(4), 1127–1151. https://doi.org/10.1007/s10763-022-10282-6

Keijzer-de Ruijter, M., & Draaijer, S. (2019). Digital exams in engineering education. In S. Draaijer, & D. Joosten-ten Brinke (Eds.), Technology Enhanced Assessment. TEA 2018, 1014, 140–164.

Kieran, C. (2004). Algebraic thinking in the early grades: What is it. The Mathematics Educator, 8(1), 139–151.

Kinnear, G., Jones, I., Sangwin, C., Alarfaj, M., Davies, B., Fearn, S., Foster, C., Heck, A., Henderson, K., Hunt, T., Iannone, P., Kontorovich, I., Larson, N., Lowe, T., Meyer, J. C., O’Shea, A., Rowlett, P., Sikurajapathi, I., & Wong, T. (2022). A collaboratively-derived research agenda for e-assessment in undergraduate mathematics. International Journal of Research in Undergraduate Mathematics Education. https://doi.org/10.1007/s40753-022-00189-6

Lage, A. E., & Gaisman, M. T. (2006). An analysis of students’ ideas about transformations of functions. In A. S. Cortina, J. L. Saíz, & A. Méndez (Eds.), Proceedings of the 28th Annual Meeting of the North American Chapter of the International Group for the Psychology of Mathematics Education (Vol. 2, pp. 68–70). Universidad Pedagógica Nacional.

Marton, F., & Booth, S. (1997). Learning and awareness. Lawrence Erlbaum Associates. https://doi.org/10.4324/9780203053690

MIUR. (2010a). Draft regulation containing "National indications concerning the specific learning objectives concerning the activities and teachings included in the study plans envisaged for lyceum courses" (in Italian). Retrieved August 13, 2024, from https://www.istruzione.it/alternanza/allegati/NORMATIVA%20ASL/INDICAZIONI%20NAZIONALI%20PER%20I%20LICEI.pdf

MIUR. (2010b). Guidelines for the transition to the new system of technical institutes (in Italian). Retrieved August 13, 2024, from https://www.indire.it/lucabas/lkmw_file/nuovi_tecnici/INDIC/_LINEE_GUIDA_TECNICI_.pdf

MIUR. (2010c). Guidelines for the transition to the new system of vocational institutes (in Italian). Retrieved August 13, 2024, from https://www.indire.it/lucabas/lkmw_file/nuovi_professionali///linee_guida/01_indice.pdf

Movshovitz-Hadar, N., & Shmukler, A. (1991). A qualitative study of polynomials in high school. International Journal of Mathematical Education in Science and Technology, 22(4), 523–543. https://doi.org/10.1080/0020739910220404

Nicol, D., & Milligan, C. (2006). Rethinking technology-supported assessment practices in relation to the seven principles of good feedback practice. In C. Bryan, & K. Clegg (Eds.), Innovative assessment in higher education (pp. 64–77). Routledge.

Rønning, F. (2017). Influence of computer-aided assessment on ways of working with mathematics. Teaching Mathematics and Its Applications, 36(2), 94–107. https://doi.org/10.1093/teamat/hrx001

Sangwin, C., Cazes, C., Lee, A., & Wong, K. L. (2009). Micro-level automatic assessment supported by digital technologies. In C. Hoyles & J. B. Lagrange (Eds.), Mathematics education and technology-rethinking the terrain (pp. 227–250). USA: Springer. https://doi.org/10.1007/978-1-4419-0146-0_10

Sangwin, C. (2015). Computer aided assessment of mathematics using STACK. In S. J. Cho (Ed.), Selected regular lectures from the 12th international congress on mathematical education (pp. 695–713). Springer. https://doi.org/10.1007/978-3-319-17187-6_39

Sangwin, C. (2003). New opportunities for encouraging higher level mathematical learning by creative use of emerging computer aided assessment. International Journal of Mathematical Education in Science and Technology, 34(6), 813–829. https://doi.org/10.1080/00207390310001595474

Swedish National Agency for Education. (n.d.). Mathematics [Syllabus]. Retrieved August 12, 2024, from https://bit.ly/4fHMYSP

Watson, A., & Chick, H. (2011). Qualities of examples in learning and teaching. ZDM Mathematics Education, 43(2), 283–294. https://doi.org/10.1007/s11858-010-0301-6

Watson, A., & Mason, J. (2005). Mathematics as a constructive activity: Learners generating examples. Routledge. https://doi.org/10.4324/9781410613714-11

Weiss, M. (2016). Factor and remainder theorems: An appreciation. The Mathematics Teacher, 110(2), 153–156. https://doi.org/10.5951/mathteacher.110.2.0153

Yerushalmy, M., Nagari-Haddif, G., & Olsher, S. (2017). Design of tasks for online assessment that supports understanding of students’ conceptions. ZDM, 49(5), 701–716. https://doi.org/10.1007/s11858-017-0871-7

Yerushalmy, M. (2000). Problem solving strategies and mathematical resources: A longitudinal view on problem solving in a function based approach to algebra. Educational Studies in Mathematics, 43(2), 125–147.

Zaslavsky, O., & Zodik, I. (2014). Example-generation as indicator and catalyst of mathematical and pedagogical understandings. In Y. Li, E. Silver, & S. Li (Eds.), Transforming mathematics instruction (pp. 525–546). Springer. https://doi.org/10.1007/978-3-319-04993-9_28

Zazkis, R., Liljedahl, P., & Gadowsky, K. (2003). Conceptions of function translation: Obstacles, intuitions, and rerouting. The Journal of Mathematical Behavior, 22(4), 435–448. https://doi.org/10.1016/j.jmathb.2003.09.003

Funding

Open access funding provided by Karlstad University.

Ethics declarations

Ethical Approval

The study was conducted in accordance with local, national and international laws and ethical guidelines, and was approved by the ethics committees of both universities. All participants signed an informed consent form.

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fahlgren, M., Barana, A., Brunström, M. et al. Example-Generation Tasks for Computer-Aided Assessment in University Mathematics Education: Insights From A Study Conducted in Two Educational Contexts. Int. J. Res. Undergrad. Math. Ed. (2024). https://doi.org/10.1007/s40753-024-00252-4

DOI: https://doi.org/10.1007/s40753-024-00252-4