Abstract

This manuscript introduces a novel linear weighted watermarking scheme based on normalized principal components, the discrete wavelet transform (DWT) and singular value decomposition (SVD). Evaluating the weight with which the singular value matrix of a watermark is embedded into a host is a crucial task in the watermark embedding process. Here, this weight is obtained from the normalized principal components derived from the singular value matrices of the HH subbands of the host and the watermark images. Experiments compare the effectiveness of the proposed watermark embedding and watermark/host extraction processes against other DWT–SVD based watermarking schemes. Performance is evaluated using the peak signal to noise ratio, the structural similarity index and the correlation coefficient. The proposed method is also tested against various geometrical and non-geometrical attacks on watermarked images.


Introduction

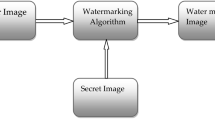

Watermarking is the process of embedding a secret image into a host image without perceptibly degrading the host for the observer. With the rapid development of multimedia applications, there is growing concern over the authentication, copyright protection and ownership [1] of multimedia content communicated over open networks. Digital watermarking methods provide solutions to ownership and tamper localization issues [2]. For security and authentication, the watermark (WM) should not be visible and, at the same time, the quality of the host should not be degraded. These requirements must continue to hold, for robust security and authentication, even when the watermarked image undergoes geometrical or non-geometrical attacks. There is always a tradeoff between the amount of information embedded in the host and imperceptibility for authentication and copyright protection. These system requirements direct researchers towards watermarking algorithms that provide security, authentication, imperceptibility and robustness.

Watermarking algorithms address these issues either in the spatial domain or in the frequency domain [3]. Spatial domain algorithms are more sensitive to image processing operations and other attacks than frequency domain methods [4]. In spatial domain methods, selected pixels of the host image are directly modified by the watermark pixels without degrading the quality of the host image. In the frequency domain, the transform coefficients of the host image are modified by the watermark image [1]. Higher robustness can be achieved by transform domain methods [5].

WM algorithms are classified into fragile, semi-fragile and robust methods based on their response to attacks. Fragile watermarking does not tolerate any modification; hence the WM image is either lost or altered by an attack. Semi-fragile methods are robust against certain attacks and tolerate certain image processing operations such as image compression and image enhancement [6]. Robust watermarking techniques, on the other hand, are designed so that the WM survives modifications and attacks on the watermarked image [7]. These robust methods are well suited to copyright protection and ownership authentication.

In recent years, semi-fragile watermarking has gained momentum, attracting researchers to frequency domain techniques. Spatial domain watermarking schemes need less computation but often result in poor robustness against geometric attacks [8]. Frequency domain algorithms provide an alternative, and hence watermarking schemes based on DCT [8], DWT, RDWT [9, 10] and SVD [11] have become popular. SVD combined with transforms such as DCT, DWT and RDWT is more efficient than SVD alone [12]. DWT–SVD based watermarking schemes provide better robustness and imperceptibility [13] because of the modeling sensitivity of the DWT. In DWT–SVD watermarking schemes [4, 14], WM embedding is carried out on the ‘S’ matrices of the LL subbands [10, 15] of the host and the WM images. The amount of embedding is decided by the scaling factor ‘\(\alpha \)’. Pros and cons of spatial and transform domain watermarking methods are given in Table 1.

Watermarking schemes that use the SVD matrices of the watermark and the host images face a false-positive detection problem in the watermark extraction process [20]. DWT–SVD watermarking [15] repeats the watermark embedding process, which increases complexity. In SVD-Radon transform [7] and SVD-support vector regression [21] based watermarking methods, embedding is done on the LL subband of the host image.

This manuscript uses the HH subband of the images for WM embedding. Singular value matrices (S matrices) of the HH coefficients of the host image and the WM image are combined for watermark embedding, and the amount of WM embedding is determined by the normalized principal components (NPC) evaluated from these singular values. This can be interpreted as a principal component analysis (PCA) fusion of the singular values of the HH coefficients of the host and the WM images. Since the linear weighted fusion rule is applied to the singular value matrices of the DWT subbands, the pitfalls of spatial domain fusion are avoided. The novelty of the proposed method lies in using the normalized principal components as the scaling factors for the embedding process, which distinguishes it from conventional DWT–SVD–PCA watermarking. Performance is analyzed by peak signal to noise ratio (PSNR), structural similarity index (SSIM) and correlation coefficient (CC). Various experiments are conducted to analyze the performance of the proposed method, which is also tested against geometrical and non-geometrical attacks on watermarked images.

This paper is organized as follows. The “DWT–SVD watermarking and PCA fusion” section analyzes DWT–SVD watermarking and principal component analysis (PCA) fusion in detail. “Linear weighted watermarking using NPC” presents the proposed watermarking algorithm. Experiments and performance analysis are given in the next section, followed by the conclusion in the last section.

DWT–SVD watermarking and PCA fusion

Frequency domain methods are preferred for semi-fragile watermarking schemes. DWT–SVD [9] uses the scaling factor ‘\(\alpha \)’ for WM embedding. SVD converts large changes caused by attacks into only minor changes in the singular values of the watermark and the host images. Watermarking schemes using SVD alone require more computation, whereas SVD combined with a transform reduces the computational requirement. These desirable properties of SVD paved the way for watermarking algorithms such as DWT–SVD, RDWT–SVD, DCT–SVD and so on.

SVD finds wide application in image compression, noise reduction, image hiding and watermarking. SVD decomposes a square or rectangular matrix \(A\) into two orthogonal matrices U, V and a diagonal matrix S such that \(A = U S V^{\mathrm{T}}\). SVD is preferred for watermarking because of its properties: the S matrix represents the brightness of the image, whereas U and V capture its geometric properties [22], and small changes to an image do not make a big impact on its S matrix.
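A small NumPy sketch can make these two properties concrete: the factors reconstruct the matrix exactly, and an additive perturbation E moves every singular value by at most the spectral norm of E (Weyl's inequality), which is why the S matrix is relatively stable under small changes. The random 64 × 64 array is a hypothetical stand-in for an image block, not one of the test images used in this paper.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.uniform(0, 255, size=(64, 64))   # hypothetical stand-in for an image block

# A = U @ diag(s) @ Vt, with s sorted in descending order
U, s, Vt = np.linalg.svd(A)
A_rec = U @ np.diag(s) @ Vt              # inverse SVD (ISVD) reconstructs A

# Small additive perturbation, e.g. mild noise introduced by an attack
E = rng.normal(0.0, 1.0, size=A.shape)
s_pert = np.linalg.svd(A + E, compute_uv=False)

# Weyl's inequality: |s_i(A + E) - s_i(A)| <= ||E||_2 for every i
max_shift = np.max(np.abs(s_pert - s))
bound = np.linalg.norm(E, 2)             # spectral norm = largest singular value of E
print(f"largest singular value shift {max_shift:.3f} <= bound {bound:.3f}")
```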

DWT–SVD watermark embedding

DWT–SVD watermarking techniques in the literature use the LL subbands for WM embedding: ‘S’ matrices are derived for the LL subbands of the host and the WM and are then used for watermark embedding. The steps involved in DWT–SVD watermarking are elaborated below and shown in Fig. 1.

Step A1:

Apply DWT to the host image H(x, y)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{H}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}] = \mathrm{DWT}\{H(x,y)\} \end{aligned}$$ (1)

Apply SVD to \(\mathrm{LL}_{\mathrm{H}}\)

$$\begin{aligned} [U_{\mathrm{H}}, S_{\mathrm{H}}, V_{\mathrm{H}}] = \mathrm{SVD}\{\mathrm{LL}_{\mathrm{H}}\} \end{aligned}$$ (2)

Step A2:

Apply DWT to the WM image WM(x, y)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{wm}}, \mathrm{LH}_{\mathrm{wm}}, \mathrm{HL}_{\mathrm{wm}}, \mathrm{HH}_{\mathrm{wm}}] = \mathrm{DWT}\{\mathrm{WM}(x,y)\} \end{aligned}$$ (3)

Apply SVD to \(\mathrm{LL}_{\mathrm{wm}}\)

$$\begin{aligned} [U_{\mathrm{wm}}, S_{\mathrm{wm}}, V_{\mathrm{wm}}] = \mathrm{SVD}\{\mathrm{LL}_{\mathrm{wm}}\} \end{aligned}$$ (4)

Step A3:

WM embedding is given by

$$\begin{aligned} S_{\mathrm{emb}} = S_{\mathrm{H}} + \alpha \cdot S_{\mathrm{wm}} \end{aligned}$$ (5)

where ‘\(\alpha \)’ is a scaling factor.

Apply inverse SVD (ISVD) to get the WM embedded LL coefficients

$$\begin{aligned} \mathrm{LL}_{\mathrm{emb}} = \mathrm{ISVD}\{U_{\mathrm{H}}, S_{\mathrm{emb}}, V_{\mathrm{H}}^{\mathrm{T}}\} \end{aligned}$$ (6)

Step A4:

Apply inverse DWT (IDWT) to get the watermarked image in the spatial domain

$$\begin{aligned} H_{\mathrm{emb}}(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{emb}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}\} \end{aligned}$$ (7)
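Steps A1 to A4 can be sketched in NumPy as follows. To stay self-contained, the sketch implements a one-level orthonormal Haar DWT by hand for images with even side lengths (its subband sign and naming conventions may differ from MATLAB's dwt2), keeps everything in floating point, and uses a default α = 0.05 that is a hypothetical value, since this section does not fix one. The function names are illustrative.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT of an even-sized image: LL, LH, HL, HH."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2, (a - b + c - d) / 2,
            (a + b - c - d) / 2, (a - b - c + d) / 2)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x

def dwt_svd_embed(host, wm, alpha=0.05):
    """Steps A1-A4: additive embedding of the LL singular values (alpha is hypothetical)."""
    ll_h, lh_h, hl_h, hh_h = haar_dwt2(np.asarray(host, float))   # Eq. (1)
    u_h, s_h, vt_h = np.linalg.svd(ll_h)                           # Eq. (2)
    ll_w = haar_dwt2(np.asarray(wm, float))[0]                     # Eq. (3)
    s_w = np.linalg.svd(ll_w, compute_uv=False)                    # Eq. (4)
    s_emb = s_h + alpha * s_w                                      # Eq. (5)
    ll_emb = u_h @ np.diag(s_emb) @ vt_h                           # ISVD: U_H S_emb V_H^T
    return haar_idwt2(ll_emb, lh_h, hl_h, hh_h)                    # Eq. (7)
```

For example, `dwt_svd_embed(host, wm, alpha=0.05)` with two equally sized float arrays returns the watermarked image; with `alpha=0` it returns the host unchanged, because the Haar transform used here is perfectly invertible.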

DWT–SVD watermark and host extraction

Step B1:

Apply DWT to the \(H_{\mathrm{emb}}(x,y)\)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{emb}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}] = \mathrm{DWT}\{H_{\mathrm{emb}}\} \end{aligned}$$ (8)

Apply SVD to \(\mathrm{LL}_{\mathrm{emb}}\)

$$\begin{aligned} [U_{\mathrm{emb}}, S_{\mathrm{emb}}, V_{\mathrm{emb}}] = \mathrm{SVD}\{\mathrm{LL}_{\mathrm{emb}}\} \end{aligned}$$ (9)

Step B2:

Repeat step A1

Step B3:

Repeat step A2

Step B4:

Extract the watermark \(\mathrm{WM}'(x,y)\) using

$$\begin{aligned} S_{\mathrm{wm}}' = (1/\alpha) \cdot (S_{\mathrm{emb}} - S_{\mathrm{H}}) \end{aligned}$$ (10)

Apply ISVD to get DWT coefficients

$$\begin{aligned} \mathrm{LL}_{\mathrm{wm}}' = \mathrm{ISVD}\{U_{\mathrm{wm}}, S_{\mathrm{wm}}', V_{\mathrm{wm}}^{\mathrm{T}}\} \end{aligned}$$ (11)

Apply IDWT to get WM image in spatial domain

$$\begin{aligned} \mathrm{WM}'(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{wm}}', \mathrm{LH}_{\mathrm{wm}}, \mathrm{HL}_{\mathrm{wm}}, \mathrm{HH}_{\mathrm{wm}}\} \end{aligned}$$ (12)

Step B5:

Extract the host \(H'(x,y)\) using

$$\begin{aligned} S_{\mathrm{H}}' = S_{\mathrm{emb}} - \alpha \cdot S_{\mathrm{wm}} \end{aligned}$$ (13)

Apply ISVD to get DWT coefficients

$$\begin{aligned} \mathrm{LL}_{\mathrm{H}}' = \mathrm{ISVD}\{U_{\mathrm{H}}, S_{\mathrm{H}}', V_{\mathrm{H}}^{\mathrm{T}}\} \end{aligned}$$ (14)

Apply IDWT to get host in spatial domain

$$\begin{aligned} H'(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{H}}', \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}\} \end{aligned}$$ (15)
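The extraction in Eqs. (10) and (13) is exact when there is no attack and the watermarked image is not rounded to integers, because re-running the SVD on \(\mathrm{LL}_{\mathrm{emb}}\) returns \(S_{\mathrm{emb}}\): the sum of two non-negative, descending singular-value sequences is itself non-negative and descending, so it is a valid singular-value matrix for the factors \(U_{\mathrm{H}}\) and \(V_{\mathrm{H}}\). The NumPy sketch below checks this at the subband level. The random 32 × 32 arrays are hypothetical stand-ins for \(\mathrm{LL}_{\mathrm{H}}\) and \(\mathrm{LL}_{\mathrm{wm}}\), α = 0.05 is an assumed value, and the DWT/IDWT steps are omitted on the assumption of a lossless Haar round trip. The scheme is non-blind: \(S_{\mathrm{H}}\), \(U_{\mathrm{wm}}\) and \(V_{\mathrm{wm}}\) come from the original images (Steps B2 and B3).

```python
import numpy as np

def extract_singular_values(s_emb, s_h, s_w, alpha):
    """Invert S_emb = S_H + alpha * S_wm for both unknowns (Eqs. 10 and 13)."""
    s_w_rec = (s_emb - s_h) / alpha      # Eq. (10)
    s_h_rec = s_emb - alpha * s_w        # Eq. (13)
    return s_w_rec, s_h_rec

rng = np.random.default_rng(1)
ll_h = rng.uniform(0, 255, (32, 32))     # hypothetical stand-in for LL_H
ll_w = rng.uniform(0, 255, (32, 32))     # hypothetical stand-in for LL_wm
alpha = 0.05                             # assumed scaling factor

u_h, s_h, vt_h = np.linalg.svd(ll_h)     # Eq. (2)
u_w, s_w, vt_w = np.linalg.svd(ll_w)     # Eq. (4)
ll_emb = u_h @ np.diag(s_h + alpha * s_w) @ vt_h    # Eq. (5), then ISVD

# Eq. (9): the SVD of LL_emb gives back S_emb (a sorted, non-negative diagonal)
s_emb = np.linalg.svd(ll_emb, compute_uv=False)
s_w_rec, s_h_rec = extract_singular_values(s_emb, s_h, s_w, alpha)

ll_w_rec = u_w @ np.diag(s_w_rec) @ vt_w            # Eq. (11)
ll_h_rec = u_h @ np.diag(s_h_rec) @ vt_h            # Eq. (14)
print(np.max(np.abs(ll_w_rec - ll_w)), np.max(np.abs(ll_h_rec - ll_h)))
```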

PCA fusion

Principal component analysis fusion is a fusion technique performed in the spatial domain. PCA is a dimension reduction technique [23] that represents a whole data set with very few principal components [24]. Hence, the principal components derived from the covariance of the source data sets deliver meaningful weights for linear weighted spatial domain fusion [25]. This spatial domain fusion is often degraded by spectral distortions [26, 27], and thus frequency domain fusion is often preferred. The evaluation of normalized principal components is shown in Fig. 2, and the steps are given below.

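Consider two source images \(I_{1}(x,y)\) and \(I_{2}(x,y)\) with a spatial resolution of \(M \times M\). Each image is arranged as a column vector, and the two columns form the data matrix \(X\) of size \(M^{2} \times 2\). Principal component evaluation is given by

$$\begin{aligned} [E, D] = \mathrm{eig}\{\mathrm{cov}(X)\} \end{aligned}$$

where

E: eigenvectors of the \(2 \times 2\) covariance matrix; D: diagonal matrix of the corresponding eigenvalues

Normalized principal components \(P_{1}\) and \(P_{2}\) are evaluated by

If \(D_{11} > D_{22}\)

$$\begin{aligned} P_{1} = \frac{E_{11}}{E_{11} + E_{21}}, \quad P_{2} = \frac{E_{21}}{E_{11} + E_{21}} \end{aligned}$$

Else

$$\begin{aligned} P_{1} = \frac{E_{12}}{E_{12} + E_{22}}, \quad P_{2} = \frac{E_{22}}{E_{12} + E_{22}} \end{aligned}$$

PCA fusion is given by

$$\begin{aligned} I_{F}(x,y) = P_{1} \cdot I_{1}(x,y) + P_{2} \cdot I_{2}(x,y) \end{aligned}$$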
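The normalized principal component evaluation can be sketched in NumPy as below. `np.linalg.eigh` returns the eigenvalues of the symmetric covariance matrix in ascending order, so selecting the column with the largest eigenvalue plays the role of the \(D_{11} > D_{22}\) test; dividing by the column sum makes \(P_{1} + P_{2} = 1\) and removes the arbitrary sign of the eigenvector. The function names are hypothetical, and the sketch assumes the two sources are positively correlated (otherwise the column sum can approach zero).

```python
import numpy as np

def normalized_principal_components(x1, x2):
    """Return (P1, P2) from the principal eigenvector of cov([x1, x2])."""
    # One row per source, one column per sample (pixel or singular value)
    data = np.vstack([np.ravel(x1), np.ravel(x2)]).astype(float)
    cov = np.cov(data)                          # 2 x 2 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)      # ascending eigenvalues, eigenvectors in columns
    v = eigvecs[:, np.argmax(eigvals)]          # principal eigenvector
    return v[0] / v.sum(), v[1] / v.sum()       # normalized weights, P1 + P2 = 1

def pca_fuse(x1, x2):
    """Linear weighted fusion I_F = P1 * I1 + P2 * I2."""
    p1, p2 = normalized_principal_components(x1, x2)
    return p1 * np.asarray(x1, float) + p2 * np.asarray(x2, float)
```

For instance, if \(I_{2} = 2 I_{1}\), the principal eigenvector is proportional to \((1, 2)\), so the sketch returns \(P_{1} = 1/3\) and \(P_{2} = 2/3\).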

Linear weighted watermarking using NPC

In the DWT–SVD method, WM embedding is controlled by the factor ‘\(\alpha \)’. Evaluating ‘\(\alpha \)’ is a crucial task, since it decides the amount of data to be embedded. This ambiguity in evaluating ‘\(\alpha \)’ can be removed by PCA based fusion as described in the “PCA fusion” section. Principal components evaluated for the ‘S’ matrices of the host and the WM images provide the scaling factors ‘\(P_{1}\)’ and ‘\(P_{2}\)’ for WM embedding and extraction. This is illustrated for various host images in Table 2. If this scaling were applied in the spatial domain, the result would be a visible watermark, which is not preferred for image authentication. Since the fusion is carried out on the ‘S’ matrices, based on their covariance, the watermark is not visible. This is evident in the watermarked images shown in Fig. 6.

The proposed linear weighted watermarking scheme uses HH coefficients for WM embedding, and the additive embedding rule of DWT–SVD (Eq. 5) is replaced by PCA fusion. The entire proposed watermarking scheme is elaborated below and shown in Fig. 3.

Linear weighted watermark embedding using NPC

A host image H(x, y) and a WM image WM(x, y), both of size \(M \times M\), are considered for WM embedding. DWT is applied to the images using the ‘Haar’ wavelet.

Step C1:

Apply DWT to the host image H(x, y)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{H}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}] = \mathrm{DWT}\{H(x,y)\} \end{aligned}$$ (19)

Apply SVD to \(\mathrm{HH}_{\mathrm{H}}\)

$$\begin{aligned} [U_{\mathrm{H}}, S_{\mathrm{H}}, V_{\mathrm{H}}] = \mathrm{SVD}\{\mathrm{HH}_{\mathrm{H}}\} \end{aligned}$$ (20)

Step C2:

Apply DWT to WM image WM(x, y)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{wm}}, \mathrm{LH}_{\mathrm{wm}}, \mathrm{HL}_{\mathrm{wm}}, \mathrm{HH}_{\mathrm{wm}}] = \mathrm{DWT}\{\mathrm{WM}(x,y)\} \end{aligned}$$ (21)

Apply SVD to \(\mathrm{HH}_{\mathrm{wm}}\)

$$\begin{aligned} [U_{\mathrm{wm}}, S_{\mathrm{wm}}, V_{\mathrm{wm}}] = \mathrm{SVD}\{\mathrm{HH}_{\mathrm{wm}}\} \end{aligned}$$ (22)

Step C3:

Apply PCA fusion for WM embedding

$$\begin{aligned} [P_{1}, P_{2}] = \mathrm{PCA}\{S_{\mathrm{H}}, S_{\mathrm{wm}}\} \end{aligned}$$ (23)

$$\begin{aligned} S_{\mathrm{emb}} = P_{1} \cdot S_{\mathrm{H}} + P_{2} \cdot S_{\mathrm{wm}} \end{aligned}$$ (24)

Apply ISVD to get WM embedded HH coefficients

$$\begin{aligned} \mathrm{HH}_{\mathrm{emb}} = \mathrm{ISVD}\{U_{\mathrm{H}}, S_{\mathrm{emb}}, V_{\mathrm{H}}^{\mathrm{T}}\} \end{aligned}$$ (25)

Step C4:

Apply IDWT to get watermarked image in spatial domain

$$\begin{aligned} H_{\mathrm{emb}}(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{H}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{emb}}\} \end{aligned}$$ (26)
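Steps C1 to C4 can be sketched in NumPy as follows. The one-level Haar DWT is hand-written for even-sized images, and all names are hypothetical. One assumption needs flagging: Eq. (23) does not say whether PCA is applied to the full diagonal S matrices or only to their diagonals. The sketch uses the vectors of singular values; feeding the full matrices, including their zero off-diagonal entries, would give different weights.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT of an even-sized image: LL, LH, HL, HH."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2, (a - b + c - d) / 2,
            (a + b - c - d) / 2, (a - b - c + d) / 2)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x

def npc(x1, x2):
    """Normalized principal components (P1, P2) of two equally sized arrays."""
    eigvals, eigvecs = np.linalg.eigh(np.cov(np.vstack([np.ravel(x1), np.ravel(x2)])))
    v = eigvecs[:, np.argmax(eigvals)]           # principal eigenvector
    return v[0] / v.sum(), v[1] / v.sum()

def npc_embed(host, wm):
    """Steps C1-C4: fuse the HH singular values of host and watermark."""
    ll_h, lh_h, hl_h, hh_h = haar_dwt2(np.asarray(host, float))   # Eq. (19)
    u_h, s_h, vt_h = np.linalg.svd(hh_h)                           # Eq. (20)
    hh_w = haar_dwt2(np.asarray(wm, float))[3]                     # Eq. (21)
    s_w = np.linalg.svd(hh_w, compute_uv=False)                    # Eq. (22)
    p1, p2 = npc(s_h, s_w)                                         # Eq. (23), on singular-value vectors
    s_emb = p1 * s_h + p2 * s_w                                    # Eq. (24)
    hh_emb = u_h @ np.diag(s_emb) @ vt_h                           # Eq. (25)
    return haar_idwt2(ll_h, lh_h, hl_h, hh_emb), (p1, p2)          # Eq. (26)
```

Only the HH subband is modified, so the LL, LH and HL subbands of the watermarked image equal those of the host; the weights are returned alongside the image for inspection.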

Watermark and host extraction

Step D1:

Apply DWT to the \(H_{\mathrm{emb}}(x,y)\)

$$\begin{aligned} [\mathrm{LL}_{\mathrm{H}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{emb}}] = \mathrm{DWT}\{H_{\mathrm{emb}}\} \end{aligned}$$ (27)

Apply SVD to \(\mathrm{HH}_{\mathrm{emb}}\)

$$\begin{aligned} [U_{\mathrm{emb}}, S_{\mathrm{emb}}, V_{\mathrm{emb}}] = \mathrm{SVD}\{\mathrm{HH}_{\mathrm{emb}}\} \end{aligned}$$ (28)

Step D2:

Repeat step C1

Step D3:

Repeat step C2

Step D4:

Extract the watermark \(\mathrm{WM}'(x,y)\) using

$$\begin{aligned} S_{\mathrm{wm}}' = (1/P_{2}) \cdot (S_{\mathrm{emb}} - P_{1} \cdot S_{\mathrm{H}}) \end{aligned}$$ (29)

Apply ISVD to get DWT coefficients

$$\begin{aligned} \mathrm{HH}_{\mathrm{wm}}' = \mathrm{ISVD}\{U_{\mathrm{wm}}, S_{\mathrm{wm}}', V_{\mathrm{wm}}^{\mathrm{T}}\} \end{aligned}$$ (30)

Apply IDWT to get WM image in spatial domain

$$\begin{aligned} \mathrm{WM}'(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{wm}}, \mathrm{LH}_{\mathrm{wm}}, \mathrm{HL}_{\mathrm{wm}}, \mathrm{HH}_{\mathrm{wm}}'\} \end{aligned}$$ (31)

Step D5:

Extract the host \(H'(x,y)\) using

$$\begin{aligned} S_{\mathrm{H}}' = (1/P_{1}) \cdot (S_{\mathrm{emb}} - P_{2} \cdot S_{\mathrm{wm}}) \end{aligned}$$ (32)

Apply ISVD to get DWT coefficients

$$\begin{aligned} \mathrm{HH}_{\mathrm{H}}' = \mathrm{ISVD}\{U_{\mathrm{H}}, S_{\mathrm{H}}', V_{\mathrm{H}}^{\mathrm{T}}\} \end{aligned}$$ (33)

Apply IDWT to get host in spatial domain

$$\begin{aligned} H'(x,y) = \mathrm{IDWT}\{\mathrm{LL}_{\mathrm{H}}, \mathrm{LH}_{\mathrm{H}}, \mathrm{HL}_{\mathrm{H}}, \mathrm{HH}_{\mathrm{H}}'\} \end{aligned}$$ (34)
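An end-to-end NumPy sketch of Steps C1 to D5 is given below to check that Eqs. (29) and (32) recover both images exactly when no attack is applied and the watermarked image is kept in floating point. The scheme is non-blind, so the extractor receives the original host and watermark; here \(P_{1}\) and \(P_{2}\) are recomputed from them, although storing the weights at embedding time would work equally well. The hand-written Haar DWT and the choice to apply PCA to the vectors of singular values are assumptions, and all names are hypothetical.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2-D Haar DWT of an even-sized image: LL, LH, HL, HH."""
    a, b, c, d = x[0::2, 0::2], x[0::2, 1::2], x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2, (a - b + c - d) / 2,
            (a + b - c - d) / 2, (a - b - c + d) / 2)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return x

def npc(x1, x2):
    """Normalized principal components (P1, P2), with P1 + P2 = 1."""
    eigvals, eigvecs = np.linalg.eigh(np.cov(np.vstack([np.ravel(x1), np.ravel(x2)])))
    v = eigvecs[:, np.argmax(eigvals)]
    return v[0] / v.sum(), v[1] / v.sum()

def decompose(img):
    """DWT, then SVD of the HH subband (Steps C1/C2, repeated as D2/D3)."""
    subbands = haar_dwt2(np.asarray(img, float))
    u, s, vt = np.linalg.svd(subbands[3])
    return subbands, u, s, vt

def npc_embed(host, wm):
    """Steps C1-C4."""
    (ll, lh, hl, _), u_h, s_h, vt_h = decompose(host)
    _, _, s_w, _ = decompose(wm)
    p1, p2 = npc(s_h, s_w)                                      # Eq. (23)
    hh_emb = u_h @ np.diag(p1 * s_h + p2 * s_w) @ vt_h          # Eqs. (24)-(25)
    return haar_idwt2(ll, lh, hl, hh_emb)                       # Eq. (26)

def npc_extract(marked, host, wm):
    """Steps D1-D5: returns the extracted watermark WM' and host H'."""
    s_emb = np.linalg.svd(haar_dwt2(np.asarray(marked, float))[3],
                          compute_uv=False)                     # Eqs. (27)-(28)
    (ll_h, lh_h, hl_h, _), u_h, s_h, vt_h = decompose(host)     # Step D2
    (ll_w, lh_w, hl_w, _), u_w, s_w, vt_w = decompose(wm)       # Step D3
    p1, p2 = npc(s_h, s_w)                                      # same weights as Eq. (23)
    s_w_rec = (s_emb - p1 * s_h) / p2                           # Eq. (29)
    s_h_rec = (s_emb - p2 * s_w) / p1                           # Eq. (32)
    wm_rec = haar_idwt2(ll_w, lh_w, hl_w, u_w @ np.diag(s_w_rec) @ vt_w)    # Eqs. (30)-(31)
    host_rec = haar_idwt2(ll_h, lh_h, hl_h, u_h @ np.diag(s_h_rec) @ vt_h)  # Eqs. (33)-(34)
    return wm_rec, host_rec
```

Under attacks or 8-bit rounding of the watermarked image, \(S_{\mathrm{emb}}\) is no longer recovered exactly, and the division by \(P_{2}\) in Eq. (29) amplifies that error in the extracted watermark when \(P_{2}\) is small.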

Experiments and analysis

The performance of the proposed watermarking algorithm is evaluated through various experiments and metrics. In our experiments, we use six host images of spatial resolution \(512 \times 512\), shown in Fig. 4. A watermark image with the same spatial resolution, shown in Fig. 5, is used for embedding. All experiments are simulated in MATLAB R2012a with the Image Processing Toolbox on an Intel Core i5 3.2 GHz processor with 4 GB RAM.

A first-level DWT decomposition using the ‘Haar’ wavelet is applied to the host and WM images, and SVD is subsequently applied. Five experiments are conducted to analyze the performance of the proposed method. The first experiment analyzes the WM embedding process, and the second deals with the WM and host extraction process. The third experiment analyzes the selection of DWT subbands for WM embedding, the fourth analyzes the impact of various attacks on the WM embedded image, and the fifth concerns the cost of computation.

Metrics for performance analysis

Performance analysis is carried out with three metrics: PSNR in dB, SSIM and CC. PSNR decreases as the mean square error, which measures the quantitative difference between two images, increases; hence a higher PSNR [28] denotes better performance. Mean SSIM is a quality assessment metric that is much more consistent with qualitative visual perception, since it compares luminance, contrast and structural characteristics [29]. CC measures the correlation between two images from the deviations of the individual pixel gray values from their respective image means. This is another quantitative metric that shows how close an image is to the reference image. SSIM and CC values close to one indicate a superior watermarking process.
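PSNR and CC can be sketched in NumPy as below, assuming 8-bit images (peak value 255), so that \(\mathrm{PSNR} = 10\log_{10}(255^{2}/\mathrm{MSE})\); the CC is the usual 2-D correlation coefficient, which is also what MATLAB's corr2 computes. Mean SSIM [29] needs local windowed statistics and is left out here; scikit-image provides an implementation as skimage.metrics.structural_similarity. The function names are hypothetical.

```python
import numpy as np

def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit images)."""
    diff = np.asarray(ref, float) - np.asarray(test, float)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))

def corr_coef(ref, test):
    """2-D correlation coefficient of two equally sized images."""
    a = np.asarray(ref, float)
    b = np.asarray(test, float)
    a = a - a.mean()                     # deviations from the image means
    b = b - b.mean()
    return float(np.sum(a * b) / np.sqrt(np.sum(a * a) * np.sum(b * b)))
```

For example, adding 1 to every pixel gives MSE = 1 and a PSNR of \(10\log_{10}(65025) \approx 48.13\) dB, while the CC stays at 1, since it ignores a constant offset.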

Watermark embedding analysis

Six host images are watermarked with a WM of the same spatial resolution. DWT is applied to the host and the WM images, and the wavelet for DWT decomposition is selected using the PSNR values of embedding and extraction given in Table 3. The Mexican hat and bior2.2 wavelets deliver better embedding but result in poor extraction. Both embedding and extraction are better with the Haar wavelet, and hence the Haar wavelet is selected for decomposition. The bior1.1 wavelet, whose filters coincide with those of Haar, delivers similar results and can be used as an alternative for embedding and extraction. Principal components are derived from the S matrices of the ‘HH’ subbands of the host and the WM images. The amount of WM embedded into the host determines the PSNR, SSIM and CC. For comparative analysis, the DWT–SVD and RDWT–SVD watermarking methods are also evaluated, and the results are given in Table 4. The metrics show that the proposed method delivers better PSNR, SSIM and CC values than the other two methods for all the host images. A higher PSNR value indicates better imperceptibility for the observer. The watermarks are also not visible, since the embedding is carried out in the transform domain, as illustrated by the watermarked host images in Fig. 6. SSIM and CC values close to one demonstrate high similarity between the host and the watermarked images. SSIM reveals the qualitative performance of the proposed method by comparing the structural characteristics and contrast of the watermarked image with those of the original image.

Host and watermark extraction analysis

To ensure authentication, copyright protection and ownership, the extraction of both images must be evaluated. By carrying out the reverse process described in the “Watermark and host extraction” section, the host and WM images can be extracted and analyzed for performance evaluation. After extraction, both the host and the WM images should be similar to the original host and WM images. This can be objectively analyzed by evaluating PSNR, SSIM and CC between the extracted and the original images. Tables 5 and 6 show that the proposed method extracts the host and the WM images with higher PSNR than the other methods. SSIM and CC values of one indicate that the extracted images are similar to the original images.

The proposed algorithm is also tested on the Caltech background dataset [30] with 451 images, and the metrics for embedding and extraction are given in Table 7. The mean value of each metric is computed over all the images of the background dataset, which comprises low and high contrast images with varying illumination. The metrics show that the proposed method delivers better embedding and extraction for images of different contrast and background.

Subband selection for WM embedding

DWT–SVD and RDWT–SVD use LL subbands for WM embedding, whereas the proposed method is tested with HH subbands. In our experiments, the subband for WM embedding is selected based not only on the embedding process but also on host and WM extraction. The metrics given in Table 8 show that selecting the HH subbands results not only in better embedding but also in better extraction.

Robustness analysis

Image authentication, copyright and ownership can be established by watermarking algorithms that are robust against geometrical and non-geometrical attacks on WM embedded images. The robustness of the proposed method is tested with different attacks, and the extracted WM images are given in Fig. 7, along with those of the other methods. Crop and rotation attacks do not change the gray values of the pixels but result in the loss of pixel values at certain spatial locations. Watermarking in the DWT–SVD domain distributes watermark coefficients over the entire host image, and hence cropping and rotation, which remove details of the host image or push them out of bounds, hamper the recovery of the watermark. For these two attacks, the DWT–SVD and RDWT–SVD methods extract the WM with lower quality, whereas the proposed method delivers better results. Mean and median filtering attacks change the gray values of the pixels and hence lead to poor extraction; the edges of the extracted watermark are blurred, an effect similar to the dead band effect. When the watermarked image is corrupted by additive noise such as impulse and white Gaussian noise, the noise is distributed over all spatial locations of the host image, and the recovery of the watermark depends on the noise density: the lower the noise density, the better the extracted watermark. The extracted images show that the proposed method delivers better results than the other two methods.
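The attacks discussed above can be simulated with a few array operations. The NumPy sketch below shows impulse (salt-and-pepper) noise at a given density, a 3 × 3 mean filter and a crop. The attack parameters used for Fig. 7 are not stated in this section, so the densities and crop size here are hypothetical, and modelling the crop as zeroing a corner block is an assumption. The function names are illustrative.

```python
import numpy as np

def salt_and_pepper(img, density, rng):
    """Impulse noise: a fraction `density` of pixels set to 0 or 255 (8-bit range assumed)."""
    out = np.array(img, dtype=float, copy=True)
    mask = rng.random(out.shape) < density
    out[mask] = rng.choice([0.0, 255.0], size=int(mask.sum()))
    return out, mask

def mean_filter3(img):
    """3 x 3 mean filter with edge replication; changes gray values and blurs edges."""
    p = np.pad(np.asarray(img, float), 1, mode="edge")
    h, w = p.shape[0] - 2, p.shape[1] - 2
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def crop(img, frac=0.25):
    """Zero the top-left block (frac of each side): pixels are lost, the rest are untouched."""
    out = np.array(img, dtype=float, copy=True)
    r, c = int(out.shape[0] * frac), int(out.shape[1] * frac)
    out[:r, :c] = 0
    return out
```

With a density of 0.05, about 5% of the pixels are replaced and the rest keep their values, in line with the observation that watermark recovery degrades as the noise density grows; the mean filter, in contrast, changes gray values throughout the image.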

Cost of computation

The three methods are compared based on the cost of computation for the embedding and extraction processes, as given in Table 9. The DWT–SVD method takes less time for embedding and extraction because of the downsampling of subbands and because no \(\alpha \) needs to be evaluated. The RDWT–SVD method takes more time because there is no downsampling in its decomposition. Because of the evaluation of the normalized principal components, the proposed method consumes more time than DWT–SVD.

Conclusion

This manuscript proposes a linear weighted WM embedding and extraction scheme based on NPC, DWT and SVD. NPCs are derived from the singular value matrices of the HH coefficients of the host and the WM images, and the singular value matrices of both images are fused using the normalized linear weights derived from these principal components. Since this fusion is carried out in the transform domain, the impact of the WM on the host image is largely unnoticeable, which leads to invisible watermarking. The selection of a scaling factor for watermarking is replaced by this linear weight evaluation, which eliminates the ambiguity of scaling factor evaluation. Experiments on the watermarked and extracted images show that the proposed method performs better watermarking, even in the presence of geometrical and non-geometrical attacks. Comparative analysis with other DWT–SVD algorithms also demonstrates the effectiveness of the proposed method. The cost of computation of the proposed method is higher than that of other DWT–SVD based methods, and future work can address robustness against further attacks.

References

Al-Otum HM (2014) Semi-fragile watermarking for grayscale image authentication and tamper detection based on an adjusted expanded-bit multiscale quantization-based technique. J Vis Commun Image Represent 25:1064–1081

Ansari IA, Pant M (2017) Multipurpose image watermarking in the domain of DWT based on SVD and ABC. Pattern Recogn Lett 94:228–236

Kuo CT, Cheng SC (2007) Fusion of color edge detection and color quantization for color image watermarking using principal axes analysis. Pattern Recogn 40:3691–3704

Lai CC, Tsai CC (2010) Digital image watermarking using discrete wavelet transform and singular value decomposition. IEEE Trans Instrum Meas 59(11):3060–3063

Potdar VM, Han S, Chang E (2004) A survey of digital image watermarking techniques. In: INDIN’05, 3rd IEEE international conference on industrial informatics, IEEE Xplore, pp 709–716

Rzouga HL, Bernadette D, Ben ANE (2017) A combined watermarking approach for securing biometric data. Signal Process Image Commun 55:23–31

Rastegar S, Namazi F, Yaghmaie K, Aliabadian A (2011) Hybrid watermarking algorithm based on singular value decomposition and radon transform. Int J Electron Commun (AEU) 65(7):658–663

Lin S, Chen CF (2000) A robust DCT-based watermarking for copyright protection. IEEE Trans Consum Electron 46(3):415–421

Makbol NM, Khoo BE (2013) Robust blind image watermarking scheme based on redundant discrete wavelet transform and singular value decomposition. Int J Electron Commun (AEU) 67:102–112

Lagzian S, Soryani M, Fathy M (2011) A new robust watermarking scheme based on RDWT–SVD. Int J Intell Inform Process 2(1):22–29

Chandra DS (2002) Digital image watermarking using singular value decomposition. In: Proceedings of the 45th Midwest symposium on circuits and systems, vol 3, pp 264–267

Ansari IA, Pant M, Ahn CW (2016) Robust and false positive free watermarking in IWT domain using SVD and ABC. Eng Appl Artif Intell 49:114–125

Haouzia A, Noumeir R (2008) Methods for image authentication: a survey. Multimed Tools Appl 39(1):1–46

Faragallah OS (2013) Efficient video watermarking based on singular value decomposition in the discrete wavelet transform domain. Int J Electron Commun (AEU) 67:189–196

Joo S, Suh Y, Shin J, Kikuchi H (2002) A new robust watermark embedding into wavelet DC components. ETRI J 24:401–404

van Schyndel RG, Tirkel AZ, Osborne CF (1994) A digital watermark. Proc IEEE Int Conf Image Process 2:86–90

Ejima M, Miyazaki A (2001) On the evaluation of performance of digital watermarking in the frequency domain. In: Proceedings of IEEE international conference on image processing, pp 546–549

Bors AG, Pitas I (1994) Image watermarking using DCT domain constraints. Proc IEEE Int Conf Image Process 3:231–234

Sweldens W (1995) The lifting scheme: a new philosophy in biorthogonal wavelet constructions. In: Wavelet applications in signal and image processing III, Proc SPIE 2569:68–79

Geeta K, Singh KS (2017) Reference based semi blind image watermarking scheme in wavelet domain. Optik 142:191–204

Tsai HH, Jhuang YJ, Lai YS (2012) A SVD-based image watermarking in wavelet domain using SVR and PSO. Appl Soft Comput 12:2442–2453

Ganic E, Eskicioglu AM (2005) Robust embedding of visual watermarks using discrete wavelet transform and singular value decomposition. J Electron Imaging 14(4):043004–043009

Turk MA, Pentland AP (1991) Eigenfaces for recognition. J Cogn Neurosci 3(1):71–86

Sadhasivam SK, Bharath KM, Muttan S (2011) Implementation of max principle with PCA in image fusion for surveillance and navigation application. Electron Lett Comput Vis Image Anal 10(1):1–10

Vijayarajan R, Muttan S (2014) Iterative block level principal component averaging medical image fusion. Optik Int J Light Electron Opt 125(17):4751–4757

Vijayarajan R, Muttan S (2015) Discrete wavelet transform based principal component averaging fusion for medical images. Int J Electron Commun 69(6):896–902

Vijayarajan R, Muttan S (2016) Adaptive principal component analysis fusion schemes for multifocus and different optic condition images. Int J Image Data Fusion 7(2):189–201

Ali M et al (2015) An image watermarking scheme in wavelet domain with optimized compensation of singular value decomposition via artificial bee colony. Inf Sci 301(20):44–60

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Computational Vision at Caltech, http://www.vision.caltech.edu/Image_Datasets/Caltech101/Caltech101.html. Accessed 25 Oct 2017


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

N, S., X, A. Linear weighted watermarking using normalized principal components. Complex Intell. Syst. 4, 181–193 (2018). https://doi.org/10.1007/s40747-017-0065-5


DOI: https://doi.org/10.1007/s40747-017-0065-5