Abstract

Identity protection is an indispensable feature of any information security system. An identity can exist in the form of digitally written signatures, biometric information, logos, etc. It serves the vital purpose of the owners’ verification and provides them with a safety net against their imposters, so its protection is essential. Numerous security mechanisms are being developed to achieve this goal, and information embedding is prominent among all. It consists of cryptography, steganography, and watermarking; collectively, they are known as data hiding (DH) techniques. In addition to providing insight into various DH techniques, this review prominently covers the image watermarking works that have positively influenced its relevant research area. To that end, one of the main aspects of this study is its inclusive nature in reviewing watermarking techniques, via which it aims to provide a 360\(^{\circ }\) view of the watermarking technology. The main contributions of this study are summarised below.

-

The proposed study covers more than 100 major watermarking works that have positively influenced the field and continue to do so. This approach makes the discussion effective as it allows us to pivot on the vital watermarking works that have positively influenced the research area instead of just highlighting as many existing methods as possible. Moreover, it also empowers us to provide the readers with an insight into the current research trends, the pros and cons of the state-of-the-art methods, and recommendations for future works.

-

In addition to reviewing the state-of-the-art watermarking works, this study solves the issue of reverse-engineering the main existing watermarking methods. For instance, most recent surveys have focused primarily on reviewing as many watermarking works as possible without probing into the actual working of the techniques. This approach can leave the readership without a vital understanding of implementing or reverse-engineering a watermarking method. This issue is especially prevalent among newcomers to the watermarking field; hence, this study presents the breakdown of the well-known watermarking techniques.

-

A new systematisation of classifying existing watermarking methods is proposed. It classifies watermarking techniques into two phases. The first phase divides watermarking methods into three categories based on the domain employed during watermark embedding. The methods are further classified based on other watermarking attributes in the following phase.

Similar content being viewed by others

1 Introduction

Since the beginning of the Internet, its usage has been on a hike. The Internet has influenced almost every aspect of human life, and their dependence on it is increasing daily. However, the Internet has never been as prominent since 2020. COVID-19 has altered how people interact in their professional and personal lives. This pandemic has severely curtailed the use of offices and places to socialise, forcing the world into a lockdown. This left people with the Internet as their primary mode of communication, ramping its usage to new heights.

The Internet is vital for keeping people in touch via social networks (SNs) and tools like Zoom™, and Microsoft Teams™. On the flip side, this is also the prime time for hackers to flex their muscles, and their actions’ impact is being felt worldwide. For instance, data breaches exposed 36 billion records in the first half of 2020 [89]. A breach is even more detrimental when performed on SNs. For instance, the Twitter ™ breach in July 2020 aimed to ruin the image of politicians and business tycoons [15]. Moreover, such actions are the worst when carried out on sensitive data such as medical images, passports, licenses, and other legal documents [95]. Thus, thwarting them is vital. To this end, many data hiding (DH) techniques: cryptography, steganography, and watermarking, that fall under the umbrella of cybersecurity are blooming. A brief insight into these DH techniques is as follows.

Cryptography alias “secret writing” is derived from Greek words kryptos: secret and graphein: writing [45]. It is a way of transmitting a secret message by concealing it within a cover medium. Note a cover medium can exist in various forms: a video, an image, a speech signal, and others. However, as this review focuses primarily on images thus, the cover medium corresponds to an image in this discussion. Before transmission, a cryptography process consists of scrambling the secret message using a key, known as encryption, followed by its embedding in a cover image. After transmission, the secret message is extracted from the cover image and then unscrambled using the aforementioned key, decryption. Note that the secret key is generally transmitted separately from the encrypted image to minimise the chances of hacking. During the transmission, the primary purpose of encryption is to make the data unintelligible to unauthorised personnel.

Steganography is made from the Greek word steganos, which means “hidden”. Some current research works classify cryptography and steganography as the same [22]. However, there are fundamental differences between the two. First and foremost, in the former’s case, the secret message, also known as the planetext, is encrypted and converted into the ciphertext before it is concealed in a cover medium. In the latter’s case, the secret message never changes its state and is embedded as it is but confidentially into a cover medium. Second, cryptography aims to hide the message content from a hacker but not the message’s existence. Steganography even hides the very existence of the message within the communicating data. On the same note, the security of a cryptography process is assumed to be compromised when the encrypted message is hacked. In the case of steganography, it is considered compromised the moment the very existence of the hidden message is confirmed.

It is evident from the previous discussion that the primary concern of both steganography and cryptography processes is the security of the concealed message but not that of the cover medium. This is where watermarking comes into the limelight. In hindsight, steganography and cryptography are means of covert communication, whereas watermarking primarily focuses on media copyright protection and verification. Moreover, a watermark’s embedding can be visible or invisible; however, the embedded message in steganography and cryptography schemes must be invisible or hidden. As this review focuses on image watermarking, thus it has the center stage in the rest of this discussion.

1.1 Our contributions

-

In addition to reviewing the state-of-the-art watermarking works, this review solves the issue of reverse-engineering the main existing watermarking methods. For instance, most recent surveys have focused primarily on studying as many watermarking works as possible without probing into the actual working of the techniques. This approach can leave the readership without a vital understanding of implementing or reverse-engineering a watermarking method. This issue is especially prevalent among newcomers to the watermarking field; hence, this study presents the breakdown of the well-known watermarking techniques. To the best of our knowledge, this study is the first review in the watermarking field that attempted to do so. It is assumed that the study can provide the necessary tools to the new entrants to kick-start their research and equally serve their experienced peers as their go-to study whenever they want to revisit essential watermarking concepts.

-

In line with the above-mentioned contribution, this review probes into the watermarking works which have shaped the field and continue to do so. This approach makes the discussion effective as it allows us to pivot on the vital watermarking works that have positively influenced the lot instead of just highlighting as many existing methods as possible. Moreover, it also empowers us to provide the readers with an insight into the current research trends, the pros and cons of the state-of-the-art methods, and recommendations for future works.

-

A new systematisation of classifying existing watermarking methods is proposed. It classifies watermarking techniques into two phases. The first phase divides watermarking methods into three categories based on the domain employed during watermark embedding. The methods are further classified based on other watermarking attributes in the following phase. More on this systematisation is within Section 5.1.

The rest of this discussion is as follows. Section 2 covers the general watermarking concepts, and Section 3 presents the commonly used performance metrics to evaluate watermarked images. Section 4 introduces watermarking attacks, and the subsequent Section 5 reviews the well-known watermarking works. The next is Section 6, wherein a summary of the methods discussed in this review is provided. Moreover, a questionnaire is also developed within this section that facilitates the evaluation of the existing processes and provides guidelines or recommendations for designing new ones. Finally, Section 7 concludes the discussion.

2 Watermarking

2.1 Definition and applications

The image watermarking process embeds subtle information known as the “watermark” to a host/original image. The embedded watermark can successively be extracted to validate the host image [96, 98]. A successful extraction proves the intactness of the host image or vice-versa. The upcoming Section 2.2 discusses the watermark’s embedding and extraction procedures in detail.

The term “Digital Watermarking” dawned in the early 1990s, and since then, it has been an active research topic [113]. Its applications are continuously branching out to new advents in technology; for example, the process of watermarking a neural network is known as “passporting” [11, 111], securing the cloud storage systems [102, 103], electronic money transfers, e-governance [52]. Various state-of-the-art watermarking applications and their description are presented in Table 1.

Notwithstanding the successes of watermarking in the aforementioned applications, many prominent industries are still missing out on the benefits of this technology. For instance, according to Bertini et al. [9], only one of 13 main SNs uses watermarking technology. The same study also highlights that these platforms are the major sources of information leaks and identity theft. To this end, the Facebook™ security breach at the beginning of 2020 impacted its 50 million users. These users had their email accounts compromised, pictures or images were stolen, and the same goes for the Twitter™ breach of July 2020 [92]. Subsequently, Services New South Wales (NSW), Australia’s information systems were infiltrated, and numerous sensitive documents were stolen. Consequently, almost a quarter of a million Australians lost their personal information in the form of driver’s licenses, handwritten signatures, and marriage and birth certificates [95]. Moreover, data breaches exposed billions of records in 2020, whereby 86% of violations were financially motivated, and 10% were motivated by espionage [19]. These are only a handful of snippets of the wide range of persisting cyber-attacks that have inspired this review, as thwarting them is pivotal.

To sum up, most of the above-mentioned incidents happened due to organisations’ lacking copyright protection and authentication mechanisms. Therefore, the need for watermarking to address this shortfall is vindicated. Image copyright protection and authentication have been a critical focus of watermarking technology ever since its arrival [4, 65]. To that end, as this research study is focused on reviewing the image watermarking methods, its significance is therefore justified.

2.2 Watermarking process

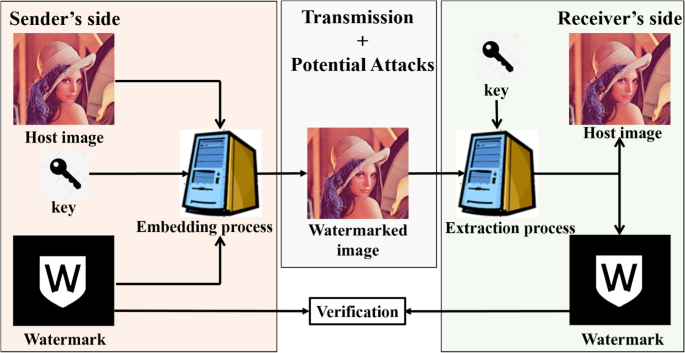

The watermarking process primarily consists of two parts. The first part, as shown in Fig. 1, is that of the watermark’s embedding, and the other is that of the watermark’s extraction. The watermark embedding happens on the sender’s side, whereas the extraction occurs on the receiver’s side. Each of these parts is discussed below.

An overview of the watermarking process. In this example, the embedded watermark is invisible, and the watermark extraction is blind. These attributes are discussed in detail in Section 5.1

Firstly, the watermark is encrypted using an encryption algorithm. Note that this step is optional but is a common practice within image watermarking. The main reason for such encryption is that it uplifts the security of a watermarking scheme by making the watermark unintelligible to hackers. Consequently, even if a hacker can detect the presence of the watermark, it is simply impossible to make any sense of it as encryption scrambles it before embedding. To this end, the watermark can only be unscrambled by applying the inverse of the encryption algorithm that scrambled it in the first place. This is why an encryption algorithm is called the “key” in watermarking. In other words, the watermark can not be extracted without knowledge of the encryption algorithm employed during the embedding phase. Moreover, it is well established in the literature that the combination of watermarking and encryption is an indispensable tool that certainly limits, if not eradicates, the watermark’s duplication or removal. Some of the widely used encryption algorithms are duly acknowledged in the later parts of this review.

Secondly, once the watermark is encrypted (if it is encrypted), it is embedded into the host image. The watermark embedding follows a set of rules generally called the embedding rules. Some researchers within the field are actively working on optimising the existing watermark embedding rules, and others are focused on developing new ones. Irrespective of who is doing what, an embedding rule is designed by considering several requirements which need to be addressed by a watermarking scheme. These requirements are discussed below in Section 2.3. Once the watermarked image is achieved, it is transmitted, and so is the encryption key(s). In most cases, the watermarked image and the encryption key(s) are transmitted separately to minimise hacking-related risks.

Thirdly, similar to the embedding phase, the watermark extraction follows a set of rules. These rules are known as the extraction rules. The watermarked image is decoded on the receiver’s side, and the embedded watermark’s bits are extracted. Subsequently, the extracted bits are unscrambled using the above-mentioned key(s), culminating in the extraction process. Note, based on the extraction type, both the host and the watermarked images are sometimes required for the watermark extraction, and sometimes only the watermarked image is sufficient. This difference between the two is addressed in detail in Section 5.1.

2.3 Watermarking requirements

A successful image watermarking scheme needs to address three main requirements [98]. Firstly, in the case of invisible watermarking, adding a watermark to the host signal (original image) has to be imperceptible. This avoids any deformities perceived by the human visual system (HVS). Secondly, the watermark needs to be secure against unauthorised modifications. Thirdly, a watermarking scheme should have a healthy capacity, i.e., its ability to embed large watermark(s).

These three requirements are closely correlated; changing one can significantly affect the other. For instance, high capacity can improve security but degrades imperceptibility. In other words, the lower the capacity, the better the imperceptibility, and the weaker the security. Thus, reaching an equilibrium amongst these requirements is a significant challenge in the field, especially between imperceptibility and security, as they are conflicting in nature. The existing trade-offs between the watermarking requirements are illustrated in Fig. 2. Most current watermarking methods are developed by considering the trade-offs between these watermarking requirements.

Illustration of trade-offs in watermarking. The \(1^{st}\) column contains the host image (Lena, \(512\times 512\) in size) and watermark (jetplane). The \(2^{nd}\) column shows Lena’s image watermarked with jetplane (\(256\times 256\) in size). The \(3^{rd}\) and \(4^{th}\) columns illustrate Lena’s images watermarked with \(128\times 128\) and \(64\times 64\) sized watermarks, respectively. In the \(1^{st}\) row, it can be observed that the watermark’s imperceptibility is increasing from left to right because the watermark size is decreasing. In contrast, the \(2^{nd}\) row shows that the extracted watermark’s quality deteriorates as the size decreases. Best viewed when zoomed in

3 Performance baseline and metrics

The efficacy of the performance of the watermarking methods was measured using several performance metrics. To this end, an insight into some of the widely cited performance metrics is given below [6, 39, 41, 43, 46, 47, 60] and [114].

3.1 Imperceptibility measures

The embedded watermark’s imperceptibility is measured via the peak-signal-to-noise ratio (PSNR). The PSNR values are calculated in decibels (dB) via (1)—the higher the PSNR value, the better the imperceptibility:

where b, w, and h represent the number of bits used to represent the pixel value, image width, and image height, respectively. Furthermore, I(i, j) and \(I^{'}(i,j)\) indicate pixel values of the host and the watermarked images, respectively.

Another parameter that measures the embedded watermark’s imperceptibility is the structural similarity index (SSIM), calculated as per (2):

here or at any other instance in this discussion, I and \(I^{'}\) stand for the host and the watermarked images, respectively. Moreover, \(l(I, I^{'})\), \(c(I, I^{'})\), and \(s(I, I^{'})\) are the functions comparing the luminance, contrast and the overall structure of the host image and the watermarked image, respectively. To this end, if there is no difference (in terms of luminance, contrast, and structural) between I and \(I^{'}\), then the value attained by SSIM is ‘1’ else, it is less than one. Note that the higher the SSIM, the better the imperceptibility. Further insight into SSIM can be gained from [2].

3.2 Security measures

The security of the embedded watermark is tested through normalised cross-correlation (NCC), given by (3), where W and \(W^{'}\) stand for the original and the extracted watermarks of dimensions \(P \times Q\), respectively:

Note, sometimes in the literature, the NCC is also addressed as “NC”, and for the sake of consistency, the former is adopted throughout this discussion. The NCC values should range between [0 1], with ‘0’ being the least in similarity and ‘1’ being the highest. Further insight into the NCC and its theoretical basis can be gained from [66] and [125].

Another security parameter that measures the similarity between the embedded and the extracted watermarks is the bit error rate (BER). It is calculated as per (4);

The BER’s value lies between 0 and 1. The watermark extraction is perfect if the BER is ‘0’. In such a case, the extracted watermark bits are identical to the embedded ones. In contrast, the BER value of ‘1’ indicates a total mismatch between the former and the latter. The symbols in (4) are similar to the ones in (3), i.e. W and \(W^{'}\) stand for the original and extracted watermarks of dimensions P and Q, respectively.

3.3 Tamper detection and localisation measures

The false-positive rate (FPR), the false-negative rate (FNR), and the true-positive rate (TPR) are employed to measure tamper detection and tamper localisation attributes, facilitated only by a fragile watermark [78]. The FPR, FNR, and TPR are defined by (5), (6), and (7), respectively:

Here false-negative (FN) is the number of tampered pixels (which should be judged as tampered) that are judged as non-tampered. False-positive (FP) is the number of non-tampered pixels (which should be judged as non-tampered) that are judged as tampered. True-positive (TP) is the number of tampered pixels (which should be judged as tampered) that are judged as tampered. True-negative (TN) is the number of non-tampered pixels (which should be judged as non-tampered) that are judged as non-tampered.

Finally, another parameter that measures a watermarking scheme’s effectiveness in tamper detection and tamper localisation is known as the accuracy (ACC) [78]. It is defined as per (8):

The ACC should have values between [0 1]. The closer the ACC’s value to ‘1’, the better the watermarking scheme’s accuracy in detecting the tampering and locating or localising the regions it affects.

4 Watermarking attacks

Before delving into the intricacies of the watermarking attacks, we like to shed light on two critical terms. The first is the spatial domain, and the other is the transform domain. In image processing, the spatial and transform are two fundamental domains employed for analysing and manipulating digital images [31, 107]. Moreover, as the proposed study targets image watermarking, these terms are frequently used in the rest of this discussion.

The spatial domain refers to the original image representation, where each pixel value corresponds to a specific location in an image. In this domain, image processing operations are performed directly on the pixel values to obtain the desired outcome [31, 107]. In the spatial domain-based watermarking, the watermark is embedded directly into the host image’s pixel values through various techniques covered in Section 5.2.

In contrast, the transform domain involves applying a mathematical transform to convert the image from the spatial domain to a different domain [31, 107]. For instance, the Fourier transform is a commonly used technique that converts an image from the spatial domain to the frequency domain, representing the image or pixel information as a set of coefficients [7]. In the transform domain-based watermark embedded, these coefficients are manipulated through various techniques mentioned in Section 5.3. During the extraction phase, the watermark is extracted from the manipulated coefficients and transformed back into the spatial domain by taking an inverse of the applied transform technique.

In image watermarking, an attack is defined in the form of manipulations, if performed on a watermarked image, have the potential to harm the embedded watermark. In other words, it may impair the watermark detection on the receiver’s side after transmitting the watermarked image [38]. Image watermarking attacks exist in a wide range; however, they can be classified as either geometrical attacks or non-geometrical attacks [21].

The geometrical attacks are the ones that occur within the spatial domain, i.e., via direct manipulation of the pixels. Because of their simplicity, geometrical attacks are the most commonly used ones. Some readily used geometrical attacks are shown in Fig. 3. These attacks are relatively easier to apply and can be applied using readily available software, such as Microsoft Paint™, Adobe Photoshop™, etc. In fact, some of the examples in Fig. 3 are attained using Microsoft Paint™. Moreover, these attacks are easily perceived by the HVS.

In contrast, the non-geometrical attacks are relatively sophisticated and can be executed in both the spatial and the transform domains. To this end, their implementation requires some knowledge from hackers. Consequently, these attacks are generally more severe than the geometrical attacks and inflict more damage on the watermark. Moreover, in some instances, they are so discrete that it is pretty much impossible to tell by the naked eye whether the watermarked image is attacked or not. Some commonly used non-geometrical attacks are shown below in Figs. 4 and 5.

In addition to the aforementioned watermarking attacks, other well-known manipulations are covered here. Vector quantisation (VQ), copy-move, and protocol attacks have been in the limelight over the last few years. Due to space constraints, this discussion does not elaborate on the intricacies of these attacks, and only a brief overview is provided here. However, Haghighi et al.’s study offers an excellent insight into these attacks [30].

Illustrations (top to bottom) of the VQ, copy-move, and protocol attacks. This figure is inspired by Sharma et al.’s study [100]. Best viewed when zoomed in

-

In the VQ attack, a section of a watermarked image(s), achieved using a particular watermarking method, is inserted into another watermarked or target image acquired by the same method. Illustrations within the red boundaries in Fig. 6 depict images exposed to the VQ attack.

-

In the copy-move attack, a part(s) from a watermarked image is copied and subsequently placed within the same watermarked image. Illustrations within the orange boundaries in Fig. 6 show a few examples of the images attacked via copy-move.

-

The protocol attack, also known as the watermark copy or ambiguity attack, is one of the significant watermarking manipulations. In this attack, external information is inserted into a target image so that the least significant bits (LSBs) of the target image remain unaltered. Consequently, the attack often leads to ambiguity during the watermark extraction process, and the attack may remain unnoticed. Despite the attack’s effectiveness, many state-of-the-art methods have not been tested against this attack. The effects of the protocol attack are evident from illustrations within the green boundaries of Fig. 6.

5 Review of the existing methods

The year-wise distribution of the methods discussed in this paper is illustrated in Fig. 7.

5.1 Classifications of the existing methods

The watermarking methods discussed in this review are classified in two phases: phase-1 and phase-2. In phase-1, methods are classified based on the domain employed for watermarking embedding. In phase-2, they are further classified based on several attributes. These phases are briefly illustrated in Fig. 8.

In the first phase (phase-1), the methods classified based on the embedding domain belong to one of the following three categories. Firstly, the techniques for embedding the watermark in the spatial domain. Secondly, the ones in which embedding occurs in the transform or frequency domain. Finally, the others that employ both the spatial and the transform domains during embedding are the hybrid domain-based methods. Some well-known existing watermarking methods in each category or domain are discussed later in this review.

Once a method is classified in the first phase, it is further classified in the second phase (phase-2) based on the following attributes.

The first attribute is based on the watermark’s security. Based on this attribute, a method is further divided into two sub-categories. The methods wherein the embedded watermark can withstand watermarking attacks are known as robust watermarking methods. In other words, the embedded watermark in such methods is robust and can be extracted after the watermarked image is exposed to any attack. The techniques wherein the embedded watermark has zero tolerance towards watermarking attacks are fragile. In other words, the embedded watermark in these methods is fragile and can not be extracted after the watermarked image is exposed to an attack.

The second attribute is based on the watermark’s extraction process. Based on this attribute, image watermarking methods are further divided into two sub-categories. Ones that require both the original and the watermarked images during the watermark’s extraction are called non-blind. The others in which only the watermarked image suffices for the watermark’s extraction are called blind.

The third attribute is based on the watermark’s visibility. Image watermarking methods are further divided into two sub-categories based on this attribute. Ones in which the embedded watermark is visible to the HVS, and in others, it is invisible.

5.1.1 Visible and invisible attributes

Generally, the watermark embedding process can be expressed as (9):

where \(I_{Watermarked}\), \( I_{Host}\), \( W_{Total}\), and \(\beta \) stand for the final watermarked image, the original or host image, the total watermark embedded, and the watermark’s embedding strength or scaling parameter, respectively. Note that in (9), the range of \(\beta \) is (0 1], specifying the watermark’s visibility. To this end, an obvious watermark is represented by ‘1’ [10]. An illustration of the watermark’s visibility in response to different embedding strength factors is given in Fig. 9.

It can be observed in the first row of Fig. 9 that the watermark appears as it is, i.e., unscrambled. This indicates that the watermark was not scrambled before the embedding. In contrast, the second row shows how a scrambled watermark appears in a host image and responds to different embedding strength factors. Note that a greyscale host image is used here in the second row because the discussed changes are visually more prominent (in terms of illumination) in a greyscale image than in its colour counterpart.

The watermark’s response to different embedding strength factors. \(\beta \)’s value from left to right is 0.1, 0.5, and 0.9. The embedded watermark is not scrambled in the first row but in the second row. Note that this illustration is achieved from Sharma et al.’s method in [99]. Best viewed when zoomed in

Note that (9) is only a general representation of the watermark embedding process and does not explain various intricacies. For instance, the overflowing issue happens when the embedding process (in the case of a greyscale image) causes some pixels to have values greater than 255. Such complications are prominent in the spatial domain-based techniques, rectified by improvising the embedding process. Specifically, the embedding rules, a unique aspect of the overall embedding process, are tailored to limit, if not nullify, the embedding-related issues. Further insight into various embedding processes and rules is provided later in this discussion.

5.1.2 Blind and non-blind attributes

In the case of the non-blind watermark extraction, (9) can be rearranged, and the watermark can be extracted as per (10):

It is essential to realise that (10) only outputs the watermark(s) in a scrambled state. The final step in watermark extraction is unscrambling the former by an inverse execution of the aforementioned secret key. A pictorial representation of a non-blind extraction is presented in Fig. 10.

In the case of blind watermarking, the extrication process is not as straightforward as it is in non-blind watermarking. The blind watermark extraction generally follows extraction rules to extract the embedded watermark bits. In general terms, the extraction rules go hand in hand with the embedding rules (discussed in detail in Sections 5.2, 5.3, and 5.4) as the latter varies from method to method; therefore, the former also changes. To this end, it is difficult to express a blind extraction process in a generalised manner. However, the specific steps in any blind extraction process are illustrated in Fig. 11. To this end, insight into the execution of various blind extraction processes is provided as this discussion progresses.

5.1.3 Robust and fragile attributes

An application dictates whether a watermarking scheme needs to be fragile or robust. In other words, robust watermarking achieves copyright protection, and media authentication or verification is performed through fragile watermarking. To this end, the watermark embedding into the host image is tailored to meet the application’s requirements. For instance, the resultant strategy is robust when the watermark is embedded into the host image’s features that are not easily manipulated or affected by an attack; otherwise, it is fragile.

Fragile watermarking is subdivided into two categories based on the integrity criteria [13]. The first is called semi-fragile watermarking, which provides soft authentication, i.e., has relaxed integrity criteria. The watermark embedded using semi-fragile watermarking techniques is tailored to entertain certain modifications or attacks, such as JPEG or JPEG 2000 compression and luminosity changes. Methods in the other category are considered to be ultimately fragile or hard fragile. These methods follow hard integrity criteria against all modifications-more on these categories is provided as the discussion progresses.

Illustrations of how robust and fragile watermarks respond to watermarking attacks are provided in Figs. 12 and 13, respectively.

Robustness illustration of a robust watermark when exposed to different attacks. Solid blue, yellow, and purple boundaries contain the watermarked images under rotation attack at \(45^{\circ }\), Gaussian noise (GN) at 0.001, and JPEG compression with a quality factor (QF) of 40, respectively. All dashed borders represent the extracted watermarks from the attacked watermarked images. This illustration is achieved using Sharma et al.’s methods in [99]. Best viewed when zoomed in

Fragility illustration of a fragile watermark when exposed to the rotation attack. The solid red boundaries contain watermarked images after modification or attack, and the solid green boundaries contain the watermarked image with no modification. Subsequently, the extracted fragile watermarks from these images are contained within their corresponding coloured dashed boundaries. This illustration is achieved using Sharma et al.’s methods in [97]. Best viewed when zoomed in

The watermark’s (DICTA 2020) survival or robustness against various attacks is evident in Fig. 12. This ability of a watermark to withstand or survive attacks helps prove an image’s copyright information. Moreover, as the extracted watermark (in the case of robust watermarking) is intelligible and resembles the original or embedded watermark, the achieved NCC values are high or close to ‘1’ on a scale with a range of [0 1]. To this end, readers may refer to [99] for an insight into the original watermark (DICTA 2020) and the NCC performance of the watermarks illustrated in Fig. 12.

In contrast, when an image embedded through fragile watermarking is attacked, the embedded watermark becomes unintelligible. This phenomenon is highlighted in Fig. 13, where the WSU watermark is employed for fragile watermarking, and its successful extraction (in dashed green borders) is evident from an unattacked image (in solid green boundaries). However, extracted watermarks (in dashed red borders) are unintelligible from images that are attacked (in solid red boundaries), confirming the existence of an attack on the watermarked image and invalidating its authenticity. Moreover, NCC values attained by unintelligible watermarks are insignificant and close to ‘0’, the lower end of a scale ranging from [0 1]. One may refer to [97] for further insight into Fig. 13.

A preview of the methods discussed within this review and how they are classified under phase-1 and phase-2 is presented in Table 2.

5.2 Spatial domain-based methods

Several spatial domain methods exist in the field of image watermarking. They are easy to implement and faster than methods executed in other domains. The majority of these methods belong to one of the following categories.

5.2.1 Fragile and other attributes-based methods in the spatial domain

The LSB-based watermarking is one of the most, if not the most widely used, watermarking techniques in the spatial domain. The LSB watermarking methods are divided into two categories. The first is the LSB substitution, and the other is LSB matching [110]. In the former’s case, the LSBs of the host image are straightaway substituted with the LSBs of the watermark. In the latter’s case, the LSBs of the host and watermark images are matched in the first instance, and the only LSBs that differ from each other are substituted. Consequently, the former has higher watermarking capacity but is prone to unnecessary noise, whereas the latter is the reverse. The degradation in the watermarked image’s quality also depends on how many bits are utilised during the embedding process. For instance, if only the LSB (the far right bit in Fig. 14) is utilised in an eight-bit greyscale pixel, the difference between the watermarked images produced using the substitution and the matching-based techniques is insignificant. In this case, the PSNR of the images achieved using either of these techniques is around 51 dB [3]. This PSNR value drops to 44 dB if the LSB and an intermediate significant bit (ISB) are employed for embedding [17, 23]. Even in this scenario, the difference between the watermarked images produced using each method is negligible. However, a further PSNR drop (from 44 dB to around 37 dB and 41dB in the cases of substitution and matching-based techniques, respectively) happens when three of the rightmost bits are involved [105, 109].

Most existing approaches in this category follow similar steps as illustrated in Fig. 15. However, the embedding rule mainly differentiates them from each other. It is often the novel feature that separates one method from the other. Moreover, the LSB-based techniques have an excellent watermarking capacity and are primarily used in fragile watermarking. In the case of tampering, fragile watermarking methods can detect tampering and locate the regions affected by it. In other words, the former characteristic is known as tamper detection, and the latter as tamper localisation. More on these characteristics is provided as the discussion progresses.

Moreover, the watermarked image’s degree of degradation is further based on the type of watermark, i.e., whether it is a foreign object or self-generated. It is well-known that a watermark’s imperceptibility is considered better when a self-generated watermark is employed during embedding [100]. Merely because, in the foreign watermark’s case, the foreign noise is added to the host image. By the way, the term foreign refers to the watermark that does not originate from the host image. In other words, it does not carry any information associated with the host image. A TV channel’s logo displayed during the news and the university’s emblem on the transcripts are two of the many use cases of foreign watermarks. In contrast, a self-generated watermark is generated from the host image and generally carries some information associated with the host image. That said, a foreign watermark is usually employed for copyright protection or ownership claims, facilitated by robust watermarking schemes. In contrast, the self-generated watermark is used for verification or authentication, enabled by fragile watermarking. In addition, the self-generated watermarks exhibit better tamper detection and localisation performance when compared to their foreign counterparts. These are some reasons why preference is given to self-generated watermarks for fragile watermarking. Needless to say that self-generated watermark-based schemes are also referred to as self-embedding watermarking schemes in the literature. Hence, from this point onward, most discussion on fragile watermarking revolves around self-generated watermark-based methods. Readers may refer to earlier surveys [31] and [107] as well as methods [39] and [46] to gain further insight into foreign watermark-based fragile watermarking. Moreover, Fig. 15 is tailored to show the usage of a foreign watermark in an LSB-based method.

Walton’s work in [116] is one of the first well-known works in fragile watermarking. Their technique uses the checksum approach, wherein the sum of the first seven bits (starting from the leftmost bit or the MSB) in an eight-bit pixel is employed to detect whether or not the pixel is tampered with. Although their method is laborious and struggles with limited tamper detection, being a pioneer inspired many later works, such as [119, 120] and [124]. These later works curbed the limitations of Walton’s method, but they suffered from the VQ and collage attacks. Wong and Memon address the shortfall in [121], wherein authors have divided the host image in a block-wise manner and then used a hashing algorithm to establish the inter-block dependency. The method ignited the use of hashing in fragile watermarking or encryption in general. Interblock reliance is necessary to deal with VQ and collage attacks; however, the technique requires a binary equivalent of the host image to complete the verification process, incurring additional communication costs. Celik et al.’s method in [12] eradicated the downsides associated with the earlier methods [119] and [121]. Their process used a hierarchical approach to tackle VQ and collage attacks but performed poorly in tamper detection. The such poor performance resulted from using large pixel blocks while conducting the detection procedure. Note that the details on hashing or other encryption algorithms mentioned in this review are not provided. Because the watermarking technology uses these techniques as a tool, an in-depth discussion on encryption techniques is redundant in this review. However, readers can refer to [48] and [49] to gain insight into various encryption techniques.

Regarding hashing and hierarchical approaches, Hsu and Tu have used message digest-5 (MD5) hashing to generate the authentication bits, which are subsequently embedded into the host image [36]. These bits are then used for tamper detection and localisation in two hierarchical phases, wherein the detection results of the first phase are improved in the second phase. To this end, if the tampering rate is 7.64%, the method’s FPR and FNR performances are 0.22% and 1%, respectively. Unfortunately, these values degrade significantly when the tampering rate is > 40%. Subsequently, Li et al.’s method in [54] extends the work in [36]. The extended process is implemented block-wise, wherein a 64-bit authentication code exclusive to each block is computed using the MD5 hashing algorithm and finally embedded via the LSB substitution. The improvised technique can outperform the method in [36] regarding FNR and FPR performance. For instance, even for 80% tampering, the extended process can achieve the FNR and FPR values of 3.1% and 16%, respectively. Another hashing technique readily used in watermarking is secure hash algorithm-256 (SHA-256) [27, 85]. Recently, a combination of MD5 and SHA-256 hashing techniques has been used by Neena and Shreelekshmi in [85]. The combination has not only improved the scheme’s overall fragility against most watermarking attacks, but the FPR and FNR performances have also surpassed that of the above-discussed works. In contrast, in their study, Gul et al. [27] proved that Neena et al.’s method struggled with accurately detecting the tampered regions and shared that the combination of two hashing techniques leads to a hike in the processing time. Inspired by these reasons, Gul et al. employed only the SHA-256 hashing in their method, via which they could maintain the watermark’s fragility against the majority of attacks in a streamlined fashion. However, the tamper detection accuracy suffered as the employed size of the block-wise division was \(32\times 32\).

By the way, it’s not only the hash-based approaches hired for securing the watermark before embedding but also the chaos-based approaches. Some known spatial domain-based fragile watermarking works employing chaos-based encryption are [13, 77, 78] and [86]. In their non-blind approach, Raman and Rawat used Arnold cat map and logistic mapping [86]. They implemented the Arnold cat map on the host image, the resultant scrambled image is divided into \(8\times 8\) blocks, and the LSBs of the pixels within these blocks are embedded with the watermark. However, before embedding, an encrypted version of a binary watermark is prepared using an XOR operation between the watermark image and a chaotic sequence obtained using a logistic map. Undoubtedly, this approach makes removing the watermark by a hacker highly unlikely, but the major drawback is that the employed binary logo watermark is foreign.

On the other hand, Chang et al.’s method uses a self-generated watermark in their fragile watermarking scheme [13]. The approach also utilises a novel two-pass logistic map along with Hamming code. Their method exhibits excellent tamper detection and localisation abilities, shown via the FNR and FPR performances of 0.07 % and 0.43 %, respectively. Above all, their approach proved that the VQ attack could be nullified even without inter-block dependency. The main shortfall of the method is that it is operable only on greyscale images. Motivated by [13] and [86], Prasad et al. in 2020 presented their work on fragile watermarking in [78]. Their approach generated the authentication code by combining MSBs and Hamming code. The generated code is further encrypted by using a logistic map. Subsequently, the encrypted code is embedded into the LSBs using a novel block-level pixel adjustment process (BPAP). Prasad et al.’s approach achieves high tamper detection and localisation while maintaining the required visual quality of watermarked images. The reported FNR, FPR, and ACC are 0.08%, 1.45%, and 99.89%, respectively. Another study by Prasad et al. in late 2020 presents an active forgery detection scheme using fragile watermarking, which works at the pixel level [77]. The watermark preparation and embedding procedures in this method are very similar to their predecessor work in [78]; however, the main difference is that the predecessor method is implemented at the block level, whereas the other is at the pixel level. To this end, this scheme’s tamper detection precision is higher than the previous method [78], whereas it lacks tamper localisation ability. To this end, the FNR, FPR, and ACC values reported by [77] are 0.45%, 0.01%, and 99.71%, respectively.

5.2.2 Semi-fragile and other attributes-based methods in the spatial domain

Several semi-fragile watermarking methods exist in the spatial domain but not as many as in the other domains. Some of the most influential semi-fragile watermarking works within the spatial domain are discussed here.

Schlauweg et al.’s semi-fragile watermarking utilises a self-generated watermark [91]. Firstly, the host image is processed using lattice quantization to generate the watermark data, which is then encrypted using the MD5 hash algorithm. The encrypted watermark is subsequently embedded using the novel dither modulation-based approach and error correction coding (ECC). The method performs well when exposed to desirable manipulations such as JPEG compression but fails to provide soft authentication to other non-malicious attacks, such as rotation.

Xiao and Wang proposed a scheme tailored to accommodate the sharpening attack [123]. The method has a direct use case as image sharpening is a commonly used image modification. To this end, Laplacian sharpening, or sharpening in general, is used for edge enhancement in images without altering the actual (image) content. Xiao and Wang argued that their method could withstand Laplacian sharpening to any degree and distinguish it from other attacks. Their proposed algorithm is low in time complexity and high in watermark imperceptibility because only the LSB value of pixels is altered. Moreover, the watermark is embedded by modifying the parity of the pixel value and its Laplacian sharpening result, making it tolerant to the Laplacian sharpening but fragile to other attacks. Conversely, the method’s main flaw is that it requires an external or foreign watermark and cannot achieve tamper detection and localisation.

Local binary pattern (LBP) based watermarking is another widely employed technique [118]. The LBP can be perceived as a particular case of the LSB substitution; however, the main difference is in their applicability. For instance, the LBP is mainly used in semi-fragile watermarking, whereas the LSB serves hard-fragile watermarking. As mentioned earlier, the watermark can withstand specifically authorised modifications in semi-fragile watermarking. To this end, as the LBP-based watermarking is immune to luminosity changes, it is an excellent candidate for scenarios wherein the watermark must withstand watermarking attacks, such as CE, brightness, HE, and gamma correction.

An illustration of how to calculate the LBP from a pixel block is shown in Fig. 16. Here, \(N_{P}\) and \(C_{P}\) stand for the neighbouring pixel(s) and the center pixel, respectively. Note that there are several ways via which the \(C_{P}\) (represented using red ink in Fig. 16) can be calculated, and peers in the field are actively working on finding novel ways to improvise its selection. However, for explanation simplicity, \(C_{P}=158\) is selected for illustration in Figs. 16 and 17.

Once the LBP is obtained, it can be embedded using the steps outlined in Fig. 17. There are a lot of commonalities between these steps and those related to the LSB substitution-based methods (shown above in Fig. 15). However, in Fig. 17, the authors have deliberately demonstrated an example of the LBP-based watermarking wherein the employed watermark is self-generated. In other words, the watermark itself is generated from the host image. This approach has many benefits, such as improving tamper detection and localisation capabilities.

Wenyin and Shih presented the LBP-based semi-fragile watermarking in [118]. It was a breakthrough work that emphasised using the LBP for semi-fragile watermarking. Their proposed work starts with single-level watermarking, wherein a logo-based watermark is embedded using the LBP. In the beginning, the study shows the working using the LBP that is \(3\times 3\) in block size. Subsequently, the working is also demonstrated using LBPs of other dimensions, for instance, \(5\times 5\) or bigger. The results achieved by their approach revealed that the proposed scheme is robust against readily used image manipulations, such as additive noise, luminance change, and contrast adjustment. At the same time, the method is fragile against other attacks, such as filtering, translation, and cropping. To this end, the technique exhibits tamper detection and localisation abilities against unentertained attacks. The scheme’s success positively influenced many later semi-fragile watermarking works, such as [14, 127] and [128]; however, a significant flaw is common in these methods. Specifically, these methods employ LBPs that are odd in pixel numbers or dimensions, for instance, \(3\times 3\), \(5\times 5\), and more-resulting in an issue when dealing with a host image whose dimensions are in powers of two. Above all, these methods are operable only on greyscale images. These shortfalls are addressed by Pal et al. in a series of their works [71, 72] and [73]. As mentioned earlier, a higher ratio of the semi-fragile watermarking methods exists in other domains; hence, the rest of the semi-fragile works are discussed later in this review.

5.2.3 Robust and other attributes-based methods in the spatial domain

The transform domain is generally preferred over the spatial domain when the focus is robust watermarking. That said, some spatial domain-based robust watermarking works still have left their mark. A few of those are summarised below.

In contrast to the spatial domain-based fragile watermarking methods, which primarily tend to employ the LSBs during embedding, the robust watermarking techniques prefer using the ISBs. If robustness is the main requirement, embedding into the MSBs may seem perfect, but such is not the case. The MSB-based embedding significantly degrades the image quality, compromising the vital balance between the watermarking requirements of imperceptibility and robustness. Hence the use of ISBs in achieving spatial domain-based robust watermarking is justified.

Parah et al. in 2017 proposed an ISB-based robust watermarking scheme for grayscale images [75]. Their strategy is blind and demonstrates how a watermarking scheme’s robustness changes when a watermark is embedded using the ISBs instead of the LSBs. Moreover, the method employs a foreign binary logo watermark encrypted using a pseudo-random address vector (PAV). Details on PAV are present in Parah et al.’s other work in [74]. In [75], the method’s robustness is tested through some commonly used watermarking attacks, such as histogram equalisation (HE), median filtering, low pass filtering (LPF), JPEG compression, GN, salt and pepper (S &P) noise, and rotation but not against other readily used attacks such as cropping and scaling. Abraham and Paul presented their work in 2019 to address this shortfall [1]. Their non-blind approach achieves watermarking in colour images, wherein only the blue channel is employed during watermark embedding. That is because the HVS is less sensitive to changes to the blue channel than to the red and green channels. The method in [1] utilises a block-based approach, wherein each block is exposed to a sub-region selection process using a simple image region detector (SIRD) before the watermark embedding. SIRD facilitates the selection of the most appropriate region or sub-region for watermark embedding within an \(8\times 8\) pixel block. Selected pixels in a sub-region are subsequently modified to achieve watermark embedding. Moreover, two embedding masks M1 and M2, are used during the embedding process. M1 modulates or adjusts the blue channel with respect to the watermark bit, and M2 is the compensating mask that changes red and green color channels in response to the blue channel’s modulation. In a nutshell, M1 and M2 masks aim to maintain the balance between imperceptibility and robustness.

The experimental results of the method in [1] show its robustness against several geometric and non-geometric attacks. Many complex watermarking attacks are also addressed, including cropping, resizing, and flipping. However, the robustness evaluation does not cover the scheme’s effectiveness against simultaneously occurring multiple attacks or a combination of attacks. Hasan et al., in 2021, presented one of the most recent works on the ISB-based robust watermarking [32]. Considering this method caters only to greyscale images, the authors emphasised using the host image’s black pixels for watermark embedding. This technique balances watermarking requirements by employing the third ISB plane of the black pixels (the third ISB from the right in Fig. 14) and Pascal’s triangle during the embedding process. To this end, Pascal’s triangle selects the most suitable black pixels for embedding by achieving a minimum trade-off between imperceptibility and robustness. The study’s experimental analysis has proved that embedding using black pixels instead of white results in better PSNR and NCC performances. Moreover, the scheme’s \(\mathcal {O}(n^{2})\) time complexity is low enough to be adopted for real-time applications.

Histogram shifting is another widely accepted watermarking scheme. It was devised by Ni et al. [69] in 2006, and since then, it has been vastly employed. The main advantage of the technique is that it produces watermarked images with superb imperceptibility. To put into perspective, the average PSNR value of the watermarked images achieved by Ni et al.’s method is at least 48 dB, which was higher or on par with any other existing method(s) at that time. On the flip side, such a great imperceptibility came at the price of low capacity. A general representation of the histogram shifting is shown in Fig. 18. Here, the highest point (with respect to the y-axis) within the histogram is termed as the peak point. In other words, the peak point depicts the most frequently occurring greyscale value within the host image. In contrast, it is also well established that there is always an absence of a grey level (sometimes more than one) in a natural image. The figure defines such an absent grey level as the zero point. In the literature, grey levels within a histogram are also referred to as bins [69].

A step-wise breakdown of the histogram shifting technique’s methodology is given in Fig. 19. In this scheme, the histogram of the host image is plotted at first. Subsequently, the peak and the zero points are located. After that, the greyscale values between the peak and zero points are shifted to create a gap next to the peak point. In other words, this shifting can be perceived as the zero point’s shifting from its initial greyscale position to the one next to the peak point’s. In Fig. 18, the grey levels are shifted to the right as the zero point in the original histogram is located on the peak point’s right. Finally, all the pixels corresponding to the peak point’s grey level are located, and watermark bits are embedded using an embedding rule. Several embedding rules have been devised since Ni et al.’s method in 2006; however, a straightforward version is expressed below.

Suppose pixels associated with the peak point have a greyscale value of 150. If the watermark bit to be embedded is 0, then no change is made. However, if the watermark bit to be embedded is 1, then a pixel with a value of 150 is incremented by 1, so it can be placed at the grey level of 151 in the (watermarked) histogram. These steps are repeated for other pixels (with 150 as their grey level) until all the watermark bits are embedded and the watermarked image is generated.

Ni et al.’s method motivated many later works, such as [34, 37, 44, 56] and [90], but these studies focused on reversible image watermarking. The reversibility attribute is exclusive to blind watermarking methods that allow the host image’s reconstruction once the watermark is extracted from it. The study of these methods is out of this review’s scope; however, readers may refer to Sreenivas et al.’s survey to understand the intricacies of reversible watermarking techniques [107]. Nonetheless, Ni et al.’s methods also inspired other histogram-based watermarking schemes, focusing mainly on robust watermarking. Most procedures are devised to tackle severe attacks such as cropping and random bending attacks (RBAs). Common examples of RBAs are global bending, high-frequency bending, and jittering. These attacks are responsible for causing de-synchronisation between the embedding and extraction processes, making the watermark extraction hard or sometimes impossible [122]. A few histogram-based methods developed to deal with such harsh attacks are discussed below.

Xiang et al. mentioned that geometric attacks, including RBAs, only shift pixels’ position [122]. Consequently, they do not affect the histogram’s shape as it is independent of the pixels’ position but dependent on their grey levels. To this end, even after a geometric attack, the histogram’s shape is barely modified; thereby, robustness is guaranteed. Moreover, this analogy is verified by Zong et al. in [129], wherein they compared histograms of unattacked images with those of geometrically attacked. Through this comparison, the authors illustrated that histograms hardly varied from each other. That said, in Xiang et al. method [122], the host image (I) is first exposed to the Gaussian low-pass filter because it allows for combating the high-frequency-based attacks. Subsequently, the yielded low-frequency image’s (\(I_{Low}\)) mean value (A) is calculated, and the histogram is constructed. After that, the population of the pixels corresponding to the grey level of A is quantified, which also defines the length of the watermark or the number of bits that can be embedded. Subsequently, the watermark embedding is achieved based on the embedding rules, which tend to manipulate a pair of neighbouring bins or greyscales within the histogram. Readers are urged to refer to Xiang et al. ’s study [122] for further insight into the workings of the relevant embedding and extraction procedures.

Xiang et al.’s method (mentioned above) gained a lot of attraction and has also been extensively used in the field. However, Zong et al. highlighted some of its flaws in [129]. The major weakness is its inability to use the histogram’s shape to its fullest during the embedding process. This inability results in low watermarking capacity and, even worse, uncertain fluctuations within the embedding capacity. For instance, Zong et al., in their aforementioned study, proved that the watermark’s length in Xiang et al.’s method is dependent on the population of the pixels with grey level corresponding to the mean value (A). Therefore, the lower the population, the lower the embedding capacity, and the lower the robustness. To this end, Zong et al. tackled this issue by not letting only the mean value of \(I_{Low}\) dictate the embedding capacity but by employing as much of the histogram’s shape as possible.

Similar to Xiang et al.’s approach, Zong et al. in their novel histogram-based watermarking method [130], employ a Gaussian low-pass filter to preprocess the host image. Moreover, the watermark bits are embedded only into the low-frequency components of the filtered image to withstand various non-geometrical attacks. In addition, the geometrical manipulations, including the RBAs, are tackled using a technique called the histogram-shape-related index, which selects the most suitable pixel groups for watermark embedding. Consequently, a safe band is introduced between the selected and non-selected pixel groups, further suppressing the effects of geometric attacks. Moreover, during watermark embedding, a novel high-frequency component modification (HFCM) scheme is implemented to compensate for the side effects of Gaussian filtering. Even though the embedding rules in Zong et al.’s methods [129] and [130] are not much different from Xiang et al.’s work but the distinct features discussed in this paragraph are exclusive to Zong et al.’s approaches. Thanks to these unique features, Zong et al.’s methods have the excellent embedding capacity and exhibit robustness superiority over Xiang et al.’s approach.

Needless to say that the above-mentioned histogram-based watermarking methods are the backbone of the other existing histogram-based watermarking techniques. Readers are encouraged to explore relatively recent studies in [35, 55], and [62].

5.3 Transform domain-based methods

In the context of robust watermarking, the transform domain-based watermarking techniques are considered a better candidate than the spatial domain-based techniques. Several reasons justify this superiority; however, their immunity to geometric attacks is the main one. That is because the geometric attacks result in a direct altercation with the pixels, thereby damaging the watermark embedded in the spatial domain. However, in the transform domain-based methods, the watermark is embedded using the frequency coefficients, which are unlikely to be damaged via direct manipulation of the pixels [97, 100, 101]. Consequently, the transform domain-based methods are more resilient to attacks and suitable for robust watermarking. The most prominent and widely employed transform domain-based watermarking techniques are discussed below.

Firstly, discrete cosine transform (DCT) is a readily used technique in the transform domain. The general sequence of the steps involved in the DCT-based watermarking methods is given in Fig. 20. Here, the host image is first divided into \(8\times 8\) non-overlapping blocks. Subsequently, the DCT is carried on each block to yield the respective DCT coefficients. Based on frequencies, the DCT coefficients are categorised as low-frequency (LF), mid-frequency (MF), and high-frequency (HF). Moreover, the first low-frequency coefficient is the direct-current (DC) coefficient. To this end, these coefficients are depicted using different colour codes in Fig. 20.

The extracted DCT coefficients are exposed to a selection procedure that selects the suitable coefficients for the watermark embedding. In most existing DCT-based watermarking works, the MF coefficients are preferred for embedding the watermark. That is because the MF coefficients, unlike their counterparts (LF and HF coefficients), allow alterations while maintaining an appropriate balance between imperceptibility and robustness. A complete account of how the host image’s behaviour changes when a watermark is embedded into different DCT coefficients can be found in [76]. The selected coefficients are then manipulated per an embedding rule to achieve the watermark embedding. Of course, embedding rules vary from method to method, making them unique. Finally, the inverse of the DCT (IDCT) is performed, and the watermarked image is achieved.

Secondly, singular value decomposition (SVD) is another popular transform domain-based technique many in the field use. SVD is a numerical tool that decomposes a matrix into two orthogonal matrices and a diagonal matrix. If \({\textbf {I}}\) is the matrix representation of \(I_{Host}\) and \({\textbf {I}}\) is a real-valued matrix of \(m \times n\) dimensions, i.e., \({\textbf {I}}=\mathbb {R}^{m \times n}\), then its SVD is formulated as per (11):

Here, \({\textbf {U}}\in \mathbb {R}^{m \times m}\) and \({\textbf {V}}\in \mathbb {R}^{n \times n}\) are two unitary or orthogonal matrices, referred to as the left and right singular matrices, respectively. These two matrices represent the geometrical features of \(I_{Host}\). Moreover, T denotes the transpose operation, and \({\textbf {S}}\in \mathbb {R}^{m \times n}\) is the diagonal matrix that contains the positive (non-negative) singular values of \({\textbf {I}}\) in descending order. To this end, \({\textbf {S}}\) controls the luminosity attribute of \(I_{Host}\). The main advantages of employing SVD for image watermarking are below.

The first benefit is that the singular values in S are highly stable, and a (slight) change made to them generally goes unnoticed by the HVS. Hence, these values serve as an excellent candidate for achieving imperceptible watermarking. Another benefit is that whenever a data matrix is distorted, its element values are changed, but the singular values have little to no changes. These singular values withstand geometrical and non-geometrical attacks, making them suitable for robust watermark embedding.

The present SVD-based watermarking methods are divided into two categories. The first category is singular value matrix watermarking (SVMW), and the other is direct watermarking (DW). In the former’s case, the singular values of the watermark (\(S_{w}\)) and the host image (\(S_{H}\)) are extracted and combined to create \(S_{new}\). The \(S_{new}\) is subsequently combined with (\(U_{H}\)) and (\(V^{T}_{H}\)) to achieve the watermarked image (\(I_{Watermarked}\)). In the latter’s case, only the \(S_{H}\) values are used and directly combined with the watermark (W) to create \(S_{new}\). The SVD is subsequently performed on \(S_{new}\) to achieve \(S_{HNew}\), which is then combined with (\(U_{H}\)) and (\(V^{T}_{H}\)) to achieve (\(I_{Watermarked}\)). This difference between the two is further highlighted using the figures below. Here, Fig. 21 shows the steps involved in the SVMW technique, whereas Fig. 22 is for the DW-based SVD approach.

The benefits of using the SVD for image watermarking are evident from the discussion above; however, they come at a price. The primary issue amongst the SVD-based watermarking methods is the false-positive problem (FPP). This problem leads to an ambiguous situation where a hacker can obtain a counterfeit watermark and unlawfully obtain the rights to an image. For instance, the SVD-based techniques, such as SVMW and DW, tend to use the left and right singular matrices (shown using the green ink in Figs. 21 and 22) as the key(s) or side information during the extraction phase. Hackers understand that the diagonal singular values can be extracted from the left and right singular matrices. To this end, hackers use this significant limitation to gain access to the original watermark and then replace it with their own. By doing so, the adversaries can claim ownership of an image or a media in more general terms.

Thirdly, discrete wavelet transform (DWT) is another well-versed transform domain-based technique. Almost the whole image processing space has benefited from the arrival of DWT, and its advantages in achieving image watermarking are immense. A general step-by-step breakdown of DWT-based watermark embedding is shown in Fig. 23.

Here, the first step is to expose the host image to the DWT operation. Precisely, the DWT of an image yields four frequency subbands, termed and represented in Fig. 23 as low-low (LL), low-high (LH), high-low (HL), and high-high (HH). Note that the wavelet’s ability to decompose an image is called multi-resolution analysis (MRA), via which the DWT coefficients at different decomposition levels are extracted (see [18] and [94] to gain an insight into the MRA). It is well-known that the HVS is more receptive to low-frequency modulations; as the LL subband comprises the low-frequency DWT coefficients, it is generally considered unfit for watermark embedding. Similarly, the HH subband contains high-frequency coefficients, which can easily be oppressed by the usual watermarking attacks, such as compression and high-pass filtering, rendering it unsuitable for embedding. So choosing an appropriate subband(s) in the DWT-based watermarking is an essential step in the embedding procedure. Subsequently, the DWT coefficients within the selected subband(s) are determined and successively manipulated to achieve the actual watermark embedding. Needless to say, the embedding itself is reached via defined embedding rules (red box in Fig. 23). The final step is to perform the DWT inverse (IDWT) to achieve the final watermarked image.

5.3.1 Fragile and other attributes-based methods in the transform domain

Fridrich and Goljan have left their mark on DCT-based fragile watermarking through their work in [24]. A step-by-step breakdown is necessary because this pioneering work is heavily cited. In the first step, pixels in the host image are stripped of their LSBs, i.e., set to zero. Subsequently, these stripped-off pixels are divided into \(8\times 8\) blocks, and each block is exposed to the DCT operation. The extracted DCT coefficients are then subject to a quantization procedure with the help of the quantization table (see [24] for an insight into the actual quantization table). The first 11 of the total quantized DCT coefficients are converted into 64 bits using binary encoding. To this end, the 64 bits are self-generated watermark bits. Fridrich and Goljan, in their study, proved that binary encoding of the first 11 coefficients guarantees a binary sequence of 64 bits.

Moreover, they have also shown that only these 11 coefficients are sufficient to represent information of an \(8\times 8\) block, even though they are compressed to 50% via JPEG compression. Subsequently, these 64 bits are inserted into the LSBs of the pixels belonging to an \(8\times 8\) block. Note that in this technique, 64 bits of one block are embedded into the LSBs of another block by using the concept of block mapping. That is vital, less so for the tamper detection and localisation, but more for the reconstruction or recovery of the tampered regions. For instance, if a block is tampered with, its recovery information (64 bits) can be found in the other block’s LSBs, which can be extracted and employed to reconstruct the tampered region(s). Even though the quality of the restored blocks is lower than 50%, it is sufficient to inform the user about the original content.

Furthermore, the tamper detection and localisation capabilities of the method in [24] are satisfactory, thanks to \(8\times 8\) blocks employed as authenticators. In the same study, Fridrich and Goljan proposed improving the quality of the restored regions by utilising two LSBs (the LSB and an ISB) to hide 128 bits, but this comes at the price of a poor-quality watermarked image. Notwithstanding the successes of the method, it is vulnerable to standard attacks, such as setting all LSB bits to zeros. This issue is addressed by Li et al. in [53].

The initial steps in Li et al. method [53] are similar to the ones in [24], but the 64-bit watermark used in the former’s case is achieved from 14 of the total quantised DCT coefficients instead of 11. Once selected, 64 bits are embedded using a novel block-mapping-based approach, termed by the authors as the dual-redundant-ring structure [53]. Unlike in [24], the novel technique allows \(8\times 8\) blocks of the host image to form a cycle, wherein watermark bits of the \(1^{st}\) block are hidden into the LSBs of its adjacent block. Subsequently, a copy of the watermark bits is also embedded into the ISBs of another block whose position is dictated by the \(1^{st}\) block. The approach results in a ring-like formation, wherein block dependency (dependence of a block’s information on the other) is achieved. Moreover, the existence of multiple copies of a watermark bit elevates the survival chances of the watermark, which leads to an improvement in tamper detection and localisation performances. Needless to say, that block dependency helps in combating the VQ and collage attacks. However, the method is only applicable to greyscale images, and the fixed block size of \(8\times 8\) is also responsible for increasing the FPR, which is undesirable.

Singh et al. in late 2015, proposed a self-generated watermark-based fragile watermarking scheme [106]. Their strategy is blind and implemented block-wise, wherein the size of each block is \(2\times 2\). Each of the four pixels in a \(2\times 2\) block is stripped off its three far-right bits (LSB and two ISBs), whereas the remaining five bits (the MSB and four ISBs) are utilised to achieve a self-generated watermark. The generated watermark is a blend of authentication and multitasking bits. Authentication bits verify the host image and provide tamper detection and localisation characteristics. In contrast, multitasking bits can perform the function of the authentication bits but also carry the information required to restore or recover tampered regions. To this end, the primary purpose of multitasking bits is restoration, whereas their authentication ability is generally used as a backup in case an attack damages actual authentication bits. Note that five bits (MSB and four ISBs) of each pixel in a \(2\times 2\) block are used to generate multitasking and authentication bits in the following manner.

Firstly, ten of the total multitasking bits are generated using a combination of the DCT and quantization techniques. By the way, the same combination is used in the above-mentioned methods [24] and [53]. Subsequently, the first of two authentication bits is produced by combining five bits (the MSB and four ISBs) and a cyclic redundancy check (CRC) bit with the help of a key. The second authentication bit is achieved by combining five bits (the MSB and four ISBs) and the Hamming code using another key. Readers are encouraged to refer to [106] for the intricacies of the CRC and Hamming code and how they are gelled with five bits (the MSB and four ISBs) to produce two authentication bits. Once 12 watermark bits (ten multitasking and two authentication bits) are generated from a \(2\times 2\) block, they are embedded into pixels of another \(2\times 2\) block, selected with the help of a block-mapping procedure. Similar steps are executed on the remaining \(2\times 2\) blocks to achieve the final watermarked image. When operating on color images, the system is performed on one of the red, green, and blue (RGB) channels and replicated to the other two channels. Subsequently, the processed channels are concatenated to achieve the final (colour) watermarked image.

Singh et al.’s method has excellent fragility and sensitivity to even small changes, which is desirable [106]. Furthermore, the tamper detection and localisation performances are high due to small-sized blocks. However, the method has a significant flaw in using multiple keys; specifically, six such keys are used throughout the process. Since these keys must be shared with the receiver through the transmission channel, it poses a severe threat of being hacked. Not to mention the overhead imposed on the overall processing time by multiple keys is also significant.

Singh et al. presented another DCT-based fragile watermarking technique in late 2016 [105]. This scheme can be perceived as an extension of their previous work in [106]. To this end, when it comes to embedding and extraction procedures, the new method follows the footsteps of its predecessor. However, the main difference is that the practice employs three secret keys instead of six. That makes the new process more balanced from its application viewpoint; however, the three keys are still far too many.

Dadkhah et al. in [17] proposed an SVD-based fragile watermarking scheme capable of tamper detection and localisation. The scheme begins by dividing the host image into \(4\times 4\) blocks, and each block is further subdivided into four blocks, each of which is \(2\times 2\) in size. Subsequently, the SVD is performed, and a 3-bit authentication code from each sub-block is generated. After concatenation, a 12-bit authentication code is achieved from four sub-blocks. Furthermore, the average value of these sub-blocks is calculated, and the first five far-left bits (MSB and four ISBs) are extracted and concatenated, resulting in a 20-bit block recovery code. In the next step, previously achieved 12-bit authentication data is placed within the LSBs of the pixels belonging to the aforementioned sub-blocks. However, the 20-bit recovery data is placed in ISBs of pixels in a different \(4\times 4\) block. To this end, the different \(4\times 4\) block is found using the mapping operation.

The main strength of Dadkhah et al.’s method is its ability to use pixel blocks (in the form of blocks and sub-blocks) of different sizes. This difference implements tamper detection and localisation hierarchically. For instance, the first set of tamper detection results is achieved using a bigger block (\(4\times 4\) in size), which can then be fine-tuned using smaller blocks. Moreover, as there are more blocks to play with, it also increases the overall watermarking capacity, which helps avoid collage attacks and aids in the recovery of tampered regions. In contrast, the advantages of this study are challenged in [8].