Abstract

Flow in coastal waters contains multi-scale flow features that are generated by flow separation, shear-layer instabilities, bottom roughness and topographic form. Depending on the target application, the mesh design used for coastal ocean modelling needs to adequately resolve flow features pertinent to the study objectives. We investigate an iterative mesh design strategy, inspired by hydrokinetic resource assessment, that uses modelled dynamics to refine the mesh across key flow features, and a target number of elements to constrain mesh density. The method is solver-agnostic. Any quantity derived from the model output can be used to set the mesh density constraint. To illustrate and assess the method, we consider the cases of steady and transient flow past the same idealised headland, providing dynamic responses that are pertinent to multi-scale ocean modelling. This study demonstrates the capability of an iterative approach to define a mesh density that concentrates mesh resolution across areas of interest dependent on model forcing, leading to improved predictive skill. Multiple design quantities can be combined to construct the mesh density, refinement can be applied to multiple regions across the model domain, and convergence can be managed through the number of degrees of freedom set by the target number of mesh elements. To apply the method optimally, an understanding of the processes being modelled is required when selecting and combining the design quantities. We discuss opportunities and challenges for robustly establishing model resolution in multi-scale coastal ocean models.

1 Introduction

Numerical simulation of coastal flow requires a model representation that takes into account the multi-scale nature of both the domain geometry and the broader forcing dynamics. The model domain typically includes islands of varying size and shape, and is constrained at the land boundary by a complex coastline and bathymetry that has features across a range of spatial scales. The coastline shape can lead to flow redirection, acceleration and separation triggering the formation of trapped and shedding eddies (Signell and Geyer 1991; Geyer 1993; Russell and Vennell 2017). Narrow channels can cause jet-driven flow structures (Fujiwara et al. 1994; Old and Vennell 2001; Spiers et al. 2009), and islands generate wakes (Wolanski et al. 1984; Furukawa and Wolanski 1998; Caldeira et al. 2005) resembling the von Kármán vortex street (von Karman 1964). Similar separation processes also occur at the seabed due to complex topography (Slingsby et al. 2021; Lucas et al. 2022). The influence of flow separation on flow dynamics can propagate further downstream, with non-intuitive implications for a robust mesh design.

An unstructured mesh allows the domain discretisation to capture the observed range of spatial scales while keeping the associated number of mesh elements to a minimum. The use of unstructured meshes for modelling coastal processes has become standard practice, applied across a range of problems. At the most basic level is the accurate representation of the surface elevation and flow from the shelf-break to the coast (Hagen et al. 2001, 2006; Bilgili et al. 2006; Legrand et al. 2007), followed by more complex flow interactions with reefs (Legrand et al. 2006; Mackie et al. 2021), islands (Pérez-Ortiz et al. 2017), and sediment transport related morphodynamics (Bertin et al. 2009). Unstructured meshes have been used to improve the prediction of storm surges (Bilgili et al. 2006; Warder et al. 2021) and tsunamis (Zhang and Baptista 2008), the study of near-shore wave-current interactions (Zheng et al. 2017; Fragkou et al. 2023), site assessments for tidal energy extraction (Murray and Gallego 2017; Coles et al. 2017), and quantifying the impact of man-made structures on the local environment (Cazenave et al. 2016). The above are a subset of an extensive set of applications, but indicate the diverse range of problems addressed using unstructured meshes in the modelling of coastal fluid systems.

Previous methods for unstructured mesh design of coastal and shelf-sea models have focussed on capturing the topography using the spatial variation of bathymetric (and/or topographic) gradients (Bilgili et al. 2006; Gorman et al. 2008; Bilskie et al. 2015, 2020). Methods of mesh refinement based on wavelength and celerity (Hagen et al. 2001; Legrand et al. 2007) have been developed to improve the representation of the tidal constituents across the continental shelf into the coastal zone. Both of these approaches provide a mesh design that remains fixed for the ensuing modelling and analysis. Within the mesh design these approaches do not take into account the formation of transient flow structures that vary in space and time. The latter can significantly vary based on the forcing mechanisms at play. The impact of mesh design on unrealistic numerical diffusion has been noted by Holleman et al. (2013) and Fringer et al. (2019), with implications for the application of numerical simulation to physical oceanographic problems. Divett et al. (2013) noted the impact of low mesh resolution on the artificial diffusion of vorticity downstream of flow separation points. To address these issues a different approach to mesh design is required.

Piggott et al. (2008) resolved flow features whose positions are not known a priori as they evolve in time using adaptive meshing techniques. This is followed by an active body of work into the implementation of adaptive grids for coastal process modelling, such as Divett et al. (2016), Wallwork et al. (2022), and Clare et al. (2022). Due to the complex geometries associated with coastal ocean models, on-the-fly adaptive mesh methods present challenges associated with conservation errors, computational overhead, and scalable implementation. Depending on the end-use of the model, such computational cost may not be justified. It is possible to resolve evolving dynamic features using standard mesh design methods, but this requires an experienced modeller with a good knowledge of the system considered. Hence, we seek to deliver a mesh design strategy that aims to reduce the epistemic errors associated with standard methods, while minimising the computational cost. This design method must locate and refine the mesh in key areas of transient flow feature formation, while constraining the overall number of mesh elements. We hypothesise that establishing an objective approach to identify key dynamic areas can lead to a structured design method that is more accessible to a broader range of end users compared to more advanced adaptive mesh systems.

We propose and detail an iterative mesh refinement process that begins with an a priori discretisation defined by the problem geometry, then applies consecutive refinements using the output of a model based on the previous discretisation. At each iteration, the spatio-temporal variations in model-derived dynamical parameters are used to determine where to refine the mesh. Piggott et al. (2008) suggest that errors associated with solution gradients and/or vorticity can be used to inform locations for mesh refinement. We use this idea as the basis for the methodology. The assumption is that the application of consecutive refinements will produce a convergent discretisation. This approach sits between the bathymetry-based mesh design and on-the-fly adaptive methods. The aim is to produce a problem-specific fixed discretisation with an optimal design that targets the dynamics considered. Case studies based on an idealised setup representing flow past a headland serve to develop, test, and demonstrate the method. The principles of the mesh design method are outlined (Sect. 2), followed by a description of the case studies (Sect. 2.4), presentation and analysis of the results (Sect. 3), and a discussion of key observations and limitations (Sect. 4).

2 Methodology

We consider the flow simulated by solving the shallow-water equations on a fixed computational mesh \({\mathcal {H}}\) that represents a physical domain \(\Omega \). Our aim is to accurately capture a process that results from the interaction of the flow with a given domain geometry. Starting from a geometry-based mesh \({\mathcal {H}}_0\), we seek to converge, through a number of iterations n, to an optimised mesh \({\mathcal {H}}_{n}\) subject to practical constraints C. The latter are associated with the computational resources available and model stability or other criteria.

2.1 Shallow-water equation modelling

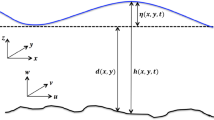

The non-conservative form of the 2-D shallow-water equations (SWE) can be expressed as

where \(\eta \) is the free surface perturbation, H is the total water depth so that \(H=\eta - z\), where z is the bottom elevation. The vector \({\textbf{u}}\) is the depth-averaged velocity with horizontal components (u, v) in the (x, y) directions, respectively. In the pressure gradient term, \(g\nabla \eta \), g is the gravitational acceleration. In turn, \(\nu \) is the viscosity attributed to eddy diffusivity. We parameterise the bottom shear stress by a quadratic drag coefficient \(C_D\) as \(\mathbf {\tau _b}=\rho C_D|{\textbf{u}}|{\textbf{u}}\). For idealised cases, we assume homogeneity in the vertical direction, and that additional terms such as atmospheric or Coriolis forcing can be neglected (Vouriot et al. 2019).
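
For reference, one common way of writing these equations that is consistent with the symbols defined above is sketched here. The exact form of the viscous term is an assumption (a symmetric stress form is used below), so this should be read as an illustration of how the listed terms fit together rather than as the precise formulation solved:

\[
\begin{aligned}
\frac{\partial \eta }{\partial t} + \nabla \cdot (H {\textbf{u}}) &= 0, \\
\frac{\partial {\textbf{u}}}{\partial t} + {\textbf{u}} \cdot \nabla {\textbf{u}} + g \nabla \eta &= \nabla \cdot \left( \nu \left( \nabla {\textbf{u}} + \nabla {\textbf{u}}^{T} \right) \right) - \frac{\mathbf {\tau _b}}{\rho H}.
\end{aligned}
\]

With \(\mathbf {\tau _b}=\rho C_D|{\textbf{u}}|{\textbf{u}}\), the last term reduces to \(-C_D|{\textbf{u}}|{\textbf{u}}/H\), so the drag decelerates the flow more strongly in shallow water.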

In demonstrating the methodology we apply design functions based on parameters derived from a 2-D depth-averaged hydrodynamic model and a fixed bathymetry. Model parameters that are sensitive to the mesh resolution include the bathymetric gradient, surface elevation gradient, velocity shear, and vorticity. Corresponding design quantities \({\textbf{q}}({\textbf{x}},t)\) and design functions \(f_{\mu }({\textbf{q}}({\textbf{x}},t))\) are summarised in Table 1.

2.2 Basis for mesh design

The physical processes we aim to resolve depend on the spatial gradients of quantities in the partial differential equations (PDE) in question. To achieve this, we seek a mesh discretisation \({\mathcal {H}}\) over the domain \(\Omega ({\textbf{x}})\) where \({\textbf{x}}=(x,y)\). Its underlying mesh density field \(\rho _{{\mathcal {H}}}({\textbf{x}})\) is constrained by (i) a dynamic quantity \({\textbf{q}}\) that is sensitive to the mesh design, and (ii) a limit on the resulting numerical degrees of freedom. Mesh generation tools use a spatial distribution of edge lengths \(l({\textbf{x}})\) to inform the mesh design, i.e. \({\mathcal {H}}({\textbf{x}}) = {\mathcal {H}}(l({\textbf{x}}))\). Therefore, we define \(l({\textbf{x}})\) as a function of \({\textbf{q}}\). To do so, we define a design metric

that maps the design quantity \({\textbf{q}}({\textbf{x}},t)\), evaluated over some interval \(\Delta t\) that we wish to analyse, to a strictly positive field \(\mu ({\textbf{x}}) \in {\mathbb {R}}_{> 0}\).

If a mesh is too coarse in key regions then these spatial gradients will be under-resolved, with potential implications for the interpretation of the results. Therefore, our design quantity \({\textbf{q}}({\textbf{x}},t)\) is a gradient function based on a model variable derived from the shallow-water equations (1) and (2), as presented in Table 1. Based on this principle, we render mesh density proportional to gradient steepness so that \({\textbf{q}}({\textbf{x}},t) \propto \rho _{{\mathcal {H}}}({\textbf{x}}) = \frac{1}{A({\textbf{x}})}\), where the local element area \(A({\textbf{x}})\propto l^2({\textbf{x}})\). This implies \(l({\textbf{x}}) \propto \frac{1}{\sqrt{{\textbf{q}}({\textbf{x}},t)}}\). Based on the requirements that \(\mu ({\textbf{x}}) \in {\mathbb {R}}_{> 0}\) and that it is independent of t, we re-write (3) in the following expanded form:

where the design function \(f_{\mu }({\textbf{q}}({\textbf{x}},t))\) removes the time dependence, and the constant offset \(\lambda > 0\) ensures \(\mu ({\textbf{x}}) \in {\mathbb {R}}_{> 0}\). The design function can be user- or case-defined as per Table 1 for key SWE variables. We note that \(f_{\mu }({\textbf{q}}({\textbf{x}},t))\) can be based on an integrated variable (e.g. magnitude-based or variance-based) expression that reflects the dynamics to be resolved.

To constrain the number of elements in \({\mathcal {H}}(l)\), we propose that

\[ l({\textbf{x}}) = \frac{L}{\sqrt{\mu ({\textbf{x}})/{\overline{\mu }}}} \tag{5} \]

where L is a representative length scale and \({\overline{\mu }} = \frac{1}{A_\Omega } \int \mu ({\textbf{x}}) \ \textrm{d} {\textbf{x}}\) is the mean metric value across the domain \(\Omega ({\textbf{x}})\). Dividing by \({\overline{\mu }}\) normalises and centres the distribution of \(\mu ({\textbf{x}})\), allowing metrics to be easily combined. We may define L by considering a target number of mesh elements \(N_{T}\). The associated average mesh density is given by

\[ {\overline{\rho }}_{{\mathcal {H}}} = \frac{N_{T}}{A_{\Omega }} = \frac{1}{{\overline{A}}} \tag{6} \]

where \(A_{\Omega }\) is the total area of the model domain, and \({\overline{A}}\) is the corresponding average element area. Assuming isotropic triangular mesh elements that are approximately equilateral, the element edge length is \(l= \sqrt{ \frac{4}{\sqrt{3}} A(l)}\), where A(l) is the area of an equilateral triangle with edge length l. Hence, the mean edge length based on the required number of mesh elements is

\[ {\overline{l}} = \sqrt{ \frac{4}{\sqrt{3}} \frac{A_{\Omega }}{N_{T}}} \tag{7} \]

assuming a uniform composition of equilateral triangles. For an unstructured mesh this is not generally the case and a level of asymmetry is expected. This can be accounted for by including an asymmetry scale factor \(k_{A}\), so that our representative edge length becomes \(L = k_{A} {\overline{l}}\) where \(k_{A}\) scales the total number of mesh elements generated using \(l({\textbf{x}})\).
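
The chain from target element count to edge-length field can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the metric is passed as a plain list of samples, the domain mean \({\overline{\mu }}\) is approximated by a simple sample mean rather than an area-weighted integral, and the edge length is assumed to scale with the inverse square root of the normalised metric, as argued in Sect. 2.2.

```python
import math


def mean_edge_length(domain_area, n_target):
    """Edge length of equilateral triangles that tile domain_area with n_target elements."""
    mean_area = domain_area / n_target
    return math.sqrt(4.0 / math.sqrt(3.0) * mean_area)


def edge_length_field(mu, domain_area, n_target, k_a=1.0):
    """Map strictly positive metric samples to target edge lengths.

    Assumes l = L / sqrt(mu / mean(mu)) with L = k_a * mean edge length.
    The sample mean stands in for the area-weighted domain mean.
    """
    if any(m <= 0.0 for m in mu):
        raise ValueError("design metric must be strictly positive")
    mu_mean = sum(mu) / len(mu)
    rep_length = k_a * mean_edge_length(domain_area, n_target)
    return [rep_length / math.sqrt(m / mu_mean) for m in mu]
```

A uniform metric returns the representative length everywhere, while samples with a metric four times the mean receive half the representative edge length.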

2.3 Iterative mesh design

The mesh design metric \(\mu \) is based on the integration of a time-varying quantity derived from model output that is linked to regions in the model domain where mesh resolution may be too low to accurately resolve the flow dynamics. A single mesh refinement may not produce an optimal mesh. This can be tested by running the model using the refined mesh then comparing the predictions against certain fitness criteria. If the criteria are not met, then a further mesh refinement can be applied based on the output from the refined model. It is hypothesised that mesh structure will converge over repeated refinements, so there will be a diminishing return on effort. This iterative mesh refinement process is summarised in Fig. 1.

2.3.1 Unstructured mesh generation

We solve the shallow-water equations on unstructured 2-D triangular meshes. Mesh generation is performed using qmesh (Avdis et al. 2018) which interfaces with the gmsh (Geuzaine and Remacle 2009) engine to construct 2-D unstructured triangular meshes. qmesh takes as input the spatially varying function \(l({\textbf{x}})\) on \(\Omega ({\textbf{x}})\) projected onto a regular grid. A numerical solution to the SWE (1) and (2) returns parameter values at the mesh nodes as unstructured scattered data, so prior to the calculation of the \(l({\textbf{x}})\) field, the design metric \(\mu ({\textbf{x}})\) generated from a model parameter \({\textbf{q}}({\textbf{x}},t)\) is linearly interpolated onto a regular grid. The raster grid used extends 1 m beyond the model domain extent to capture edge effects, with a spatial resolution of 0.1 m. Consecutive \(\mu _{i}({\textbf{x}})\) fields generated by the iterative process are projected onto identical grids for ease of combination.

2.3.2 Model simulations

The simulation based on each meshing iteration is first brought to a stable state, as initial conditions assume equilibrium. Subsequently, model data are used for convergence testing and the extraction of parameters for creating the next design function. Strategies for minimising the length of the simulation required to capture key flow dynamics will depend on the system being modelled. For a constant inflow model, the system can be run for a fixed period. For periodic transient inflow, in this case resembling tidal flow, the model run should generate a suitable number of periods to capture the key dynamics. For tidal models that include a neap-spring variation, it may be necessary to target a maximum neap-spring period, or a representative subset, for mesh refinement.

To demonstrate the methodology, we use Thetis (Kärnä et al. 2018), a 2-D/3-D model for coastal (Clare et al. 2022; Jordan et al. 2022) and estuarine (Warder et al. 2022) flows. It is implemented using the Firedrake finite element PDE solver framework (Rathgeber et al. 2016) to solve the SWE as in Eqs. (1) and (2). The setups we consider make use of a piecewise-linear discontinuous Galerkin finite element spatial discretisation (P1-DG-FEM) and semi-implicit Crank–Nicolson time-stepping for temporal discretisation. The nonlinear discretised shallow-water equations are iteratively solved by Newton’s method using the PETSc library (Balay et al. 2016). As simulations must be able to handle inter-tidal effects, the wetting and drying formulation of Kärnä et al. (2011) is used. This introduces a correction to ensure positive water depth as defined by Eq. (8):

A modified value for the water depth, \({\tilde{H}}= \eta - z + \delta _{\eta } \left( H \right) \) (Fragkou et al. 2023), is applied sequentially in the solution of the SWE.
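
As an illustration of how such a correction keeps the depth positive, the sketch below assumes the smooth form \(\delta _{\eta }(H) = \tfrac{1}{2}\left( \sqrt{H^{2}+\alpha ^{2}} - H\right) \) that is commonly associated with the Kärnä et al. (2011) formulation. The function names and the parameter name `alpha` are hypothetical, and the expression should be checked against the solver documentation before use.

```python
import math


def wd_correction(depth, alpha):
    """Assumed smooth wetting-drying correction: 0.5 * (sqrt(H^2 + alpha^2) - H)."""
    return 0.5 * (math.sqrt(depth ** 2 + alpha ** 2) - depth)


def modified_depth(eta, z, alpha):
    """Modified total depth eta - z + delta(H), positive for any alpha > 0."""
    depth = eta - z
    return depth + wd_correction(depth, alpha)
```

For a dry node (negative \(H\)) the modified depth stays small and positive, while in deep water the correction tends to zero and the physical depth is recovered.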

2.3.3 Combining design metrics

Every iteration constructs a new design metric \(\mu ({\textbf{x}})\) based on a representative quantity \({\textbf{q}}({\textbf{x}},t)\) that depends on the previous model run. Nevertheless, across iterations it is instructive to retain mesh design information in the new mesh design. We adopt a simple weighted sum of the normalised design metrics, expressing Eq. (5) as

In Eq. (9), two metric weighting cases are tested:

- W1: \(w_{i} = 1\) and \(\sum _{i=0}^{n} w_{i} = n+1\), so that all design metrics \(\mu _i\) have an equal influence.
- W2: \(w_{i} = {\left\{ \begin{array}{ll} 1, &amp; i=0 \\ 2^{i-1}, &amp; i > 0 \end{array}\right. }\) and \(\sum _{i=0}^{n} w_{i} = 2^{n}\), emphasising the influence of the latest iteration metric.
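
The two weighting cases can be sketched as follows. This is an illustrative reading of the weighted sum, assuming each metric is normalised by its own mean and the sum is divided by the total weight. The function names are hypothetical and metric fields are represented as plain lists sampled on a common grid.

```python
def weights_w1(n):
    """Equal weights for iterations 0..n."""
    return [1.0] * (n + 1)


def weights_w2(n):
    """Weight 1 for iteration 0, then 2**(i-1) for i > 0, so the weights sum to 2**n."""
    return [1.0] + [2.0 ** (i - 1) for i in range(1, n + 1)]


def combine_metrics(metrics, weights):
    """Weighted sum of mean-normalised metric fields, divided by the total weight."""
    total = sum(weights)
    normalised = [
        [m / (sum(field) / len(field)) for m in field] for field in metrics
    ]
    n_points = len(metrics[0])
    return [
        sum(w * field[j] for w, field in zip(weights, normalised)) / total
        for j in range(n_points)
    ]
```

Because every normalised field has unit mean, the combined metric also has unit mean, so it can be passed to the edge-length relation without further rescaling.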

As indicated earlier, design functions \(f_{\mu }({\textbf{q}}({\textbf{x}},t))\) may target either the magnitude or variability of the design quantity \({\textbf{q}}({\textbf{x}},t)\). These two target values will generally have different spatial patterns. Using the time-maximum value ensures mesh refinement everywhere a steep gradient occurs for any state of the flow, but does not necessarily capture variability in time. Depending on the analysis objectives, a metric based on the combination of \(\max {(\Vert {\textbf{q}}({\textbf{x}},t)\Vert )}\) and \(\sigma {(\Vert {\textbf{q}}({\textbf{x}},t)\Vert )}\) may be used. We use a weighted sum of the separate normalised design metrics derived from each design function, i.e.

Following a similar rationale, \(\mu \) can be extended to include combinations of multiple quantities \({\textbf{q}}\) and design functions \(f_{\mu }\).

2.3.4 Mesh convergence test

There are two types of criteria for completing the iterative mesh refinement process: either (a) the structure of the mesh does not change significantly between iterations, i.e. the mesh refinement has converged, or (b) the predictive skill of the model does not change significantly between iterations. The mesh discretisation \({\mathcal {H}}({\textbf{x}})\) is constrained by the element edge length field \(l({\textbf{x}})\). As such, mesh design convergence can be defined in terms of the difference between consecutive pairs of projected \(l({\textbf{x}})\) fields, i.e. \(\Delta {l_{i+1}({\textbf{x}})} = l_{i+1}({\textbf{x}})-l_{i}({\textbf{x}})\). We use the mean and standard deviation of \(\Delta {l_{i+1}({\textbf{x}})}\) to measure mesh convergence. It is assumed that both the mean and standard deviation will decrease with each iteration. The mesh convergence test used is \(|\overline{\Delta {l_{i+1}}}| < \delta _{l}\), where \(\overline{\Delta {l_{i+1}}}\) is the mean of \(\Delta {l_{i+1}({\textbf{x}})}\) and \(\delta _{l}\) is a value close to 0.
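
A minimal sketch of this convergence measure is given below, assuming the two edge-length fields have already been projected onto the same regular grid and flattened to lists. The function names and the tolerance argument are hypothetical.

```python
import statistics


def length_change_stats(l_prev, l_new):
    """Mean and (population) standard deviation of the edge-length change."""
    diffs = [new - old for old, new in zip(l_prev, l_new)]
    return statistics.fmean(diffs), statistics.pstdev(diffs)


def mesh_converged(l_prev, l_new, tol):
    """Convergence test on the absolute mean change only, |mean(dl)| < tol."""
    mean_diff, _ = length_change_stats(l_prev, l_new)
    return abs(mean_diff) < tol
```

Note that local refinement and coarsening can cancel in the mean, so a small mean change does not by itself show that the mesh is unchanged. Inspecting the standard deviation alongside the mean guards against this.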

Updating the mesh can increase or decrease mesh density locally, with either change impacting on the numerical predictions. To quantify this, changes in predictive skill can be tested by tracking the difference in some integrated parameter q of the modelled flow relative to some measure of the “truth” Q. The parameter q may target local or global change. To simplify our analysis, we will compare a time-averaged modelled quantity (\(\overline{q({\textbf{x}},t)}={\overline{q}}({\textbf{x}})\)) with the time-averaged “truth” (\(\overline{Q({\textbf{x}},t)}={\overline{Q}}({\textbf{x}})\)), i.e.

for iteration i. We require that \(|\Delta {{\overline{q}}_{i}({\textbf{x}}_{j})}| < \sigma _{c}\) at some target location \({\textbf{x}}_{j}\), where \(\sigma _{c}\) is an acceptable level of model uncertainty for the intended end-use.

2.4 Case study: oscillatory flow around a headland

For demonstration purposes, we use a simple model geometry consisting of a conical headland on a beach in a straight channel, based on the setup of Stansby et al. (2016). The model geometry is shown in Fig. 2. The open ends of the channel can be forced with a constant or transient inflow to demonstrate transferability to real-world cases. This simple model construct contains spatially varying geometry and allows the generation of coherent time-varying flow structures through flow interactions with the headland.

Fig. 2 Simple process model of a conical headland across a beach, based on Stansby et al. (2016). Grey arrows indicate open boundary forcing for the two cases considered. The beach slope is 1 in 20 and the headland 1 in 5. The dry headland extent is 5.5 m. The dashed line is the analysis Region of Interest (RoI)

As this is an idealised construct, we will use a benchmark model based on a very high-resolution mesh to represent the “truth” for assessing a given model's predictive skill. The dynamics we aim to capture are those associated with the flow separation processes, as these influence kinetic energy hot-spots. The spatial distribution of linear and angular momentum will be affected by the accuracy of the predicted flow separation processes. For each mesh element, these can be represented by the kinetic energy (\(E_{k}\)) density

and the circulation (\(\Gamma \)) density (or areal-averaged vorticity)

where \(\rho _{\textrm{w}}\) is the water density, \({\textbf{x}}_{i}\) and \(A_{i}\) are the centroid and the area of the ith mesh element, and \(d{\textbf{l}}\) is the differential path length around the ith element.

The parameters \(\rho _{E_{k},i}\) and \(\rho _{\Gamma ,i}\) provide a measure of the local system energy at the mesh element level. The total energy in the model domain \(\Omega \) can be assessed by calculating the areal integral of these two parameters. Integrating \(\rho _{E_{k}}\) gives the total kinetic energy \(E_{k}(t)\), and integrating \(\rho _{\Gamma }\) gives the total angular momentum L(t) for the relevant time step. As we are using a spatial discretisation for the numerical simulation, the areal integral becomes a summation across the mesh elements. Therefore, the total kinetic energy is

\[ E_{k}(t) = \sum _{i} \rho _{E_{k},i}(t)\, A_{i} \tag{14} \]

and the total angular momentum is

\[ L(t) = \sum _{i} \rho _{\Gamma ,i}(t)\, A_{i} \tag{15} \]

for the full model domain.
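
The element-level quantities and their domain totals can be illustrated with the sketch below. It rests on several assumptions that are not confirmed by the text: the kinetic energy density is taken as \(\tfrac{1}{2}\rho _{\textrm{w}}|{\textbf{u}}|^{2}\) evaluated from a single representative velocity per element (no depth factor), the circulation is approximated by the trapezoidal rule along each edge of a triangle using nodal velocities, and all function names and the default water density are hypothetical.

```python
def triangle_area(p0, p1, p2):
    """Signed area of a triangle; positive for counter-clockwise vertex order."""
    return 0.5 * ((p1[0] - p0[0]) * (p2[1] - p0[1])
                  - (p2[0] - p0[0]) * (p1[1] - p0[1]))


def circulation_density(points, velocities):
    """Closed line integral of u.dl around a triangle, divided by its area.

    Uses the edge-midpoint (trapezoidal) rule, which is exact when the
    velocity varies linearly over the element. Dividing by the signed
    area makes the result independent of vertex ordering.
    """
    circulation = 0.0
    for a in range(3):
        b = (a + 1) % 3
        dx = points[b][0] - points[a][0]
        dy = points[b][1] - points[a][1]
        u_mid = 0.5 * (velocities[a][0] + velocities[b][0])
        v_mid = 0.5 * (velocities[a][1] + velocities[b][1])
        circulation += u_mid * dx + v_mid * dy
    return circulation / triangle_area(*points)


def kinetic_energy_density(u, v, rho_w=1000.0):
    """Assumed form 0.5 * rho_w * |u|^2 for one representative element velocity."""
    return 0.5 * rho_w * (u * u + v * v)


def domain_total(densities, areas):
    """Discrete areal integral: sum of element density times element area."""
    return sum(d * a for d, a in zip(densities, areas))
```

For a rigid rotation \({\textbf{u}} = (-\omega _{r} y, \omega _{r} x)\) the circulation density returned for any triangle equals the vorticity \(2\omega _{r}\), which gives a quick sanity check of an implementation.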

To demonstrate the mesh refinement process, we start with a baseline model that uses bathymetry \(z({\textbf{x}})\) to define the initial mesh resolution. We then apply iterative refinements to this mesh using a selection of design quantities \({\textbf{q}}({\textbf{x}},t)\), functions \(f_{\mu }({\textbf{q}})\), and weighting methods (i.e. W1 and W2) for combining the normalised metrics \(\mu _{i}({\textbf{x}})\) at each iteration, as per the flowchart of Fig. 1.

2.4.1 Numerical model setup

The numerical setup requires definitions of the open-boundary forcing, time-stepping, friction, and a set of numerical stability parameters. For the constant inflow case, the open-boundary conditions are a constant inward normal velocity \(u_{n} = U_{0}\) at the left-hand boundary with a free elevation (mixed boundary condition), and a free velocity and surface elevation (Neumann boundary condition) at the right-hand boundary. For the transient inflow case, both the left and right open boundaries are driven using a sinusoidal normal velocity \(u_{n}=U_{0}\sin (\omega t - kx)\) and free surface elevation (mixed boundary conditions). The wave frequency is given by \(\omega = 2\pi /T\) and the wavenumber k is estimated by solving the dispersion equation \(\omega ^{2}=gk\tanh (kh)\), where \(h = 0.48\,\textrm{m}\) is the offshore channel depth. For both cases, the closed boundaries are set to obey a Neumann condition of zero normal flow.

The time step for the constant inflow is set to 5 s and the models run for 3600 s. For the transient inflow case, the time step is reduced to 1 s and the models run for 3000 s. For the transient case, the wave period is set to \(T=600\) s giving a wavenumber \(k=4.83\times 10^{-3}\,\textrm{m}^{-1}\). For numerical stability, the amplitude of the inflow is linearly ramped up from 0 to the target peak speed \(U_{0} = 0.1 \; \mathrm {m\,s}^{-1}\) over 600 s. Modelled data are exported every 5 s. Bottom friction is represented using a quadratic drag, as per Sect. 2.1, and the horizontal diffusivity is set using a constant eddy viscosity \(\nu \). To account for flow at the beach edge, wetting/drying is implemented with the constraint \(\alpha _{\textrm{WD}}\) (Kärnä et al. 2011). The implicitness factor \(\theta _{I}\) is used to control the semi-implicit Crank–Nicolson time-stepping. The conditions used are summarised in Table 2.

2.4.2 Benchmark and baseline models

The baseline model uses a mesh design that represents a typical bathymetry-based mesh used in coastal modelling (Bilgili et al. 2006; Gorman et al. 2008; Bilskie et al. 2015, 2020). The baseline mesh is the zeroth-level refinement \({\mathcal {H}}_{0}\) from which an improved mesh design is sought, and against which predictive performance of a mesh design is compared. The benchmark model represents the system “truth” and is used to assess the predictive skill of mesh designs generated for the model domain.

The baseline model mesh is constructed by providing qmesh with an element length field based on \(q({\textbf{x}}) = \nabla {z({\textbf{x}})}\). For the constant inflow case, a design constraint of \(N_{T} = 6000\) is used to generate the \(l({{\textbf{x}}})\) field. For the transient inflow case, \(N_{T} = 9000\) to allow for the formation of a trapped eddy on either side of the headland, i.e. two separate regions of interest. A preliminary sensitivity analysis using a range of asymmetry scale factors indicates that \(k_{A}=1.2\) ensures the triangulation imposed by qmesh results in a number of elements close to the target \(N_{T}\). This value is consistent across all mesh design iterations. The full domain baseline meshes generated are shown in Fig. 3b and c, respectively, and the corresponding mesh statistics are summarised in Table 3.

The benchmark mesh \({\mathcal {H}}_{\textrm{BM}}\) is constructed using the qmesh internal gradation method, where parameters are passed to control the gradation from a set of fixed lines around the domain (Avdis et al. 2018). The parameters define the minimum and maximum target element lengths, and the gradation growth rate. The lines and gradation parameters (\(l_{min}=0.1\), \(l_{max}=1.0\), \(R_{g}=66.66\)) chosen ensure the mesh density is highest around the headland with minimal variation to the outer boundaries. The full domain benchmark mesh is shown in Fig. 3a, and the mesh statistics (number of elements \(N_{\textrm{elem}}\), mean element length \({\overline{l}}\), and range (min, max) of element lengths \(R_{l}\)) are summarised in Table 3.

2.4.3 Baseline predictive performance

The parameters chosen to assess model performance are \(\rho _{E_{k}}\) and \(\rho _{\Gamma }\) as per Eqs. (12) and (13), respectively. For analysis, the constant inflow models are run for 3600 s and the transient inflow models for 3000 s. The analysis metrics are generated from the final 600 s for each model run which corresponds to a single cycle of the transient inflow forcing period. For the transient inflow case, the data are sorted into flood/ebb phases and the parameters estimated separately for each phase. The flood (\(t/T=0\)–0.5) and ebb (\(t/T=0.5\)–1.0) phases correspond to inflow and outflow at the left-hand open boundary, respectively. We compute time-averaged values to measure model performance over each phase. Figure 4 shows the benchmark and baseline time-averaged parameter values (\({\overline{\rho }}_{E_{k}}({\textbf{x}})\), \({\overline{\rho }}_{\Gamma }({\textbf{x}})\)) for the constant inflow case (Fig. 4a, b), and the ebb period only of the transient inflow case (Fig. 4c, d).

As performance measures, we use the location and magnitude of the peak \({\overline{\rho }}_{E_{k}}\) value across the RoI, and the peak \({\overline{\rho }}_{\Gamma }\) value at the trapped eddy core. The time averaging is over the representative time periods for the two inflow cases, i.e. 600 s for constant inflow models, and 300 s representing either the flood or ebb phase for transient inflow models. The location \({\textbf{x}}_{p}\) and magnitude \({\overline{\rho }}_{E_{k}}({\textbf{x}}_{p})\) for the kinetic energy density are determined by filtering the data based on a threshold value (outlined in black in Fig. 4a and c), then searching this subset for the maximum value. The location \({\textbf{x}}_{p}\) is taken as the centroid of the mesh element corresponding to the peak value, since the chosen parameters are areal-averaged values per element (see Eqs. (12) and (13)). A similar approach is taken to determine the location \({\textbf{x}}_{c}\) and magnitude \({\overline{\rho }}_{\Gamma }({\textbf{x}}_{c})\) of the circulation density at the trapped eddy core, except that a search region is predefined to exclude the shear layer. In this case, separate search regions are defined for the transient inflow flood and ebb phases. As with peak \({\overline{\rho }}_{E_{k}}\), the location \({\textbf{x}}_{c}\) is taken as the centroid of the element corresponding to the eddy core as defined by the maximum \({\overline{\rho }}_{\Gamma }({\textbf{x}}_{c})\) value in the search region.
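
The peak search described above can be sketched as a threshold filter followed by a maximum search, with an optional predicate to restrict the search region. The function names, the predicate interface, and the helper for the offset between two peak locations are hypothetical, and per-element values are assumed to be held in plain lists alongside their centroids.

```python
import math


def find_peak(centroids, values, threshold, in_region=None):
    """Return (centroid, value) of the largest value at or above threshold.

    in_region is an optional function taking a centroid and returning True
    when the element lies inside the predefined search region.
    Returns None when no element passes the filters.
    """
    candidates = [
        (val, xy)
        for xy, val in zip(centroids, values)
        if val >= threshold and (in_region is None or in_region(xy))
    ]
    if not candidates:
        return None
    val, xy = max(candidates, key=lambda item: item[0])
    return xy, val


def radial_offset(xy_model, xy_reference):
    """Distance between a modelled peak location and a reference location."""
    return math.hypot(xy_model[0] - xy_reference[0],
                      xy_model[1] - xy_reference[1])
```

Passing a region predicate that excludes the shear layer reproduces the eddy-core search, while leaving it unset corresponds to the search across the full RoI.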

The locations and magnitudes of the peak values are indicated on the panels in Fig. 4 and tabulated in Table 4. Only the ebb phase results are shown graphically for the transient inflow case, as the flood phase is essentially a mirror image of the ebb phase pattern about the headland. The locations of the benchmark values are identified by \(+\) and the baseline values by \(\circ \). Both locations are included on the baseline graphics for qualitative comparison. For the constant inflow case, the data in Table 4 show that the baseline model overestimates the peak \({\overline{\rho }}_{E_{k}}\) but predicts its average position well, whereas it underestimates \({\overline{\rho }}_{\Gamma }\) at the eddy core and gives a poor prediction of the average location. The baseline model for the transient inflow case underestimates both the peak \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) magnitudes, but gives a reasonable prediction of the average location. The location and magnitude of the transient inflow \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) peak values (Table 4) indicate that there is an inherent asymmetry between the ebb and flood flow structures. This is probably the result of an incorrect forcing phase difference between open boundaries, because the value used for the wavenumber k does not take into account bottom drag and bed form.

Fig. 4 Benchmark (left) and baseline (right) performance parameters \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) for the constant inflow case (a) and (b), respectively, and the ebb phase of the transient inflow case (c) and (d), respectively. The transient flood phase is not shown. The benchmark peak parameter locations are identified by +, and the baseline by \(\circ \). The sub-region shown corresponds to the RoI shown in Fig. 2

3 Results

Results for the constant (Sect. 3.1) and transient (Sect. 3.2) inflow cases are considered separately. The constant inflow case demonstrates the methodology and serves to investigate mesh refinement sensitivity to (a) the design quantity, (b) the mesh metric function and (c) the weighting approach across iterations. The transient inflow case is an application of the methodology to the more complex problem of time-varying forcing over the same domain. For the constant inflow case, a series of six consecutive mesh refinements are applied to the baseline mesh (\({\mathcal {H}}_{0}\)) for three representative quantities \(q({\textbf{x}},t) = \{\nabla {\eta ({\textbf{x}},t)}, \nabla {{\textbf{u}}({\textbf{x}},t)}, \nabla {\times {{\textbf{u}}({\textbf{x}},t)}}\}\), using both W1 and W2 metric combination weighting methods (see Sect. 2.3.3) for \(\mu _{i}({\textbf{x}})\). We use magnitude-based design functions \(f_{\mu }({\textbf{q}}({\textbf{x}},t))\) as presented in Table 1. Sequentially, model performance is assessed using the four parameters [\({\textbf{x}}_p\), \({\overline{\rho }}_{E_{k}}({\textbf{x}}_p)\), \({\textbf{x}}_c\), \({\overline{\rho }}_{\Gamma }({\textbf{x}}_c)\)] described in Sect. 2.4.3. Performance in reproducing the location of areas of interest is assessed as a radial offset distance \(\Delta {r}\) from the benchmark, and the peak values are presented as normalised differences relative to the benchmark model as per Eq. (11). The mean benchmark model value \({\overline{Q}}\) as in (11) is used to normalise the difference. Based on the results for the constant inflow case study presented in Sect. 3.1, a further case where a design metric based on a combination of \(\nabla {\times {{\textbf{u}}}}\) and \(\sigma {\left( \nabla {\times {{\textbf{u}}}}\right) }\) is trialled to account for the spatio-temporal variability in \(\nabla {\times {\mathbf {u({\textbf{x}},t)}}}\). 
In this instance, Eq. (10) with \(W_\textrm{max} = W_{\sigma } = 1\) is applied to construct the design metric \(\mu ({\textbf{x}})\). For the transient inflow case, a series of six consecutive mesh refinements is applied to the baseline mesh (\({\mathcal {H}}_{0}\)) for a subset of design parameters identified by the constant inflow results. Results for the transient inflow application of the methodology are presented in Sect. 3.2 below.
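The two point-wise performance measures used in this section can be sketched as below. The form of the normalised difference is an assumption read from the description of Eq. (11), i.e. model minus benchmark divided by the mean benchmark value; the function names and the model values are hypothetical.

```python
from math import hypot

def radial_offset(x_model, x_benchmark):
    """Radial distance between model and benchmark peak locations."""
    return hypot(x_model[0] - x_benchmark[0], x_model[1] - x_benchmark[1])

def normalised_difference(q_model, q_benchmark, q_mean_benchmark):
    """(model - benchmark) / mean benchmark value; assumed form of Eq. (11)."""
    return (q_model - q_benchmark) / q_mean_benchmark

# Illustrative values only: a model peak offset by (0.3, 0.4) m from a benchmark peak
dr = radial_offset((11.5, 0.6), (11.2, 0.2))

# A model value 2% above a benchmark value of 1.0, normalised by a mean of 1.0
pct = 100.0 * normalised_difference(1.02, 1.00, 1.00)
```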

3.1 Constant inflow case study

Evolution of mesh density can be traced through changes in the mesh element length \(\Delta {l({\textbf{x}})}\) field. Histograms of \(\Delta {l}\) (Fig. 5) show that the length fields \(l_{i}({\textbf{x}})\) eventually converge for all design quantities \(q({\textbf{x}},t)\) trialled. The spread and shape of the histograms vary depending on the \(q({\textbf{x}},t)\) used to design the mesh. The scale of the plots focuses on the central region where \(\Delta {l({\textbf{x}})} \rightarrow 0\); this is the target for later iterations close to convergence. Significant \(\Delta {l}\) peaks in early iterations are negative, indicating that the mesh is getting finer, and through successive iterations they move towards a zero difference, indicating that the design is converging. These histograms do not give an indication of any redistribution of element sizes across the domain between iterations. For all cases, the W2 weighted (right column) metric averaging shows stronger convergence. Considering the single design quantity cases, the refinements based on \(q=\nabla {\times {{\textbf{u}}}}\) gave the fastest convergence in terms of the percentage of points with minimal change in length. This performance improved using a combination of \(q=\nabla {\times {{\textbf{u}}}}\) and \(q=\sigma { (\nabla {\times {{\textbf{u}}}})}\).
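A minimal sketch of this convergence diagnostic is given below, assuming the length fields have already been rasterised onto a common regular grid and flattened to lists. The helper names, the tolerance and the sample values are hypothetical and are not taken from the study.

```python
def length_differences(l_prev, l_next):
    """Point-wise change in target element length between two iterations."""
    return [b - a for a, b in zip(l_prev, l_next)]

def histogram(values, lo, hi, nbins):
    """Count values in nbins equal-width bins spanning [lo, hi]."""
    counts = [0] * nbins
    width = (hi - lo) / nbins
    for v in values:
        if lo <= v <= hi:
            # min() keeps v == hi (and rounding at the top edge) in the last bin
            counts[min(int((v - lo) / width), nbins - 1)] += 1
    return counts

def fraction_converged(dl, tol):
    """Fraction of grid points whose length changed by no more than tol."""
    return sum(1 for v in dl if abs(v) <= tol) / len(dl)

# Illustrative length fields (metres) on a four-point common grid
l0 = [1.0, 1.0, 1.0, 1.0]
l1 = [0.6, 0.7, 1.0, 1.1]
l2 = [0.55, 0.7, 1.0, 1.05]

dl1 = length_differences(l0, l1)  # mostly negative: the mesh is getting finer
dl2 = length_differences(l1, l2)  # close to zero: the design is converging

counts1 = histogram(dl1, -0.5, 0.5, 4)
frac1 = fraction_converged(dl1, tol=0.06)
frac2 = fraction_converged(dl2, tol=0.06)
```

As noted in the text, a histogram of this kind says nothing about where in the domain the changes occur, so it complements rather than replaces a spatial comparison of the meshes.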

Histograms of mesh element length differences \(\Delta {l}\) per iteration, based on a \(q = \nabla {\mathbf {\eta }}\), b \(q = \nabla {{\textbf{u}}}\), c \(q = \nabla {\times {{\textbf{u}}}}\), and d combined \(q = \{ \nabla {\times {{\textbf{u}}}}, \sigma {(\nabla {\times {{\textbf{u}}}})} \}\), using W1 (left) and W2 (right) weighted averaging of \(\mu _{i}({\textbf{x}})\). To calculate \(\Delta {l}\), the \(l_{i}({\textbf{x}})\) fields are rasterised onto a common regular grid. The baseline mesh length field \(l_{0}({\textbf{x}})\) is based on \(q = \nabla {z}\)

Iterative mesh refinements based on \(q=\nabla {\times {{\textbf{u}}}}\) (left) and combined \(q = \bigl \{ \nabla {\times {{\textbf{u}}}}, \sigma (\nabla {\times {{\textbf{u}}})} \bigr \}\) (right). The top row is the baseline starting mesh, followed in order by refinements \(i = \{1,2,3\}\). Boxes in row (d) highlight the combined metric reduction in mesh density around flow separation point. The mesh data are colourised using mesh density in elements per \(\mathrm {m^{2}}\). The sub-region shown corresponds to the RoI indicated in Fig. 2

In Fig. 6, we see meshes generated for the first three iterations based on \(q=\nabla {\times {{\textbf{u}}}}\) (left) and the combination of \(q = \{ \nabla {\times {{\textbf{u}}}}, \sigma {(\nabla {\times {{\textbf{u}}}})} \}\) (right), using W2 weighted averaging. The iterative transition from the baseline mesh (a) constructed using \(q=\nabla {z}\) is clearly demonstrated for both design parameters. Refinement based on \(q=\nabla {\times {{\textbf{u}}}}\) (left) increases the resolution from the flow separation point across the shear-layer instability region and into the trapped eddy, while retaining some refinement across the upstream beach area. For this case, the change in the mesh generated using the \(l_{i}({\textbf{x}})\) field was negligible beyond the third iteration. The metric based on a combination of magnitude and variability parameters (right) concentrates refinement on the levee shear-layer instability at the expense of the flow separation point (see annotated boxes in Fig. 6d). Beyond the third iteration, this separation became stronger with the mesh density reducing everywhere else across the domain.

Mesh statistics for the third iteration of the process are summarised in Table 5. These data show that all parameters produced meshes with between 6000 and 6500 elements, apart from \(q=\nabla {\eta }\), which produced meshes with approximately 5300 elements, i.e. a difference in excess of 10%. The spatial distribution of \(q=\nabla {\eta }\) is smoother than that of the other design quantities, resulting in a broader spread in the distribution of the corresponding \(l({\textbf{x}})\) field with a smoother gradient \(\nabla {l({\textbf{x}})}\). Significantly more of the model domain is therefore covered by large elements, hence there are fewer elements overall (see Fig. 7).

Summary of impact of mesh design quantities of interest on model predictions of \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) where \(\Delta {\rho }={\overline{\rho }}_{M}-{\overline{\rho }}_{\textrm{BM}}\). The panels show the mesh (left), \(\Delta {\rho _{E_{k}}}\) (centre) and \(\Delta {\rho _{\Gamma }}\) (right). The sub-region shown corresponds to the RoI indicated in Fig. 2

The predictive performance for a given mesh design is based on how well the model represents the \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) fields compared with the benchmark model. A robust model should return both the magnitude of the parameters and the location of key structures correctly. Figure 4a shows that on average the peak of \({\overline{\rho }}_{E_{k}}\) is 11.2 m downstream and 0.2 m offshore north of the tip of the headland, while the core of the separation zone is 5.0 m downstream and 1.8 m shoreward of the tip of the headland. The location and magnitude data for the benchmark and baseline models are given in Table 4. The baseline model overestimates the peak \({\overline{\rho }}_{E_{k}}\) by 0.5% and the location is 0.14 m offset (see Table 5). It underestimates the eddy core vorticity magnitude \({\overline{\rho }}_{\Gamma }\) by 2.0% and offsets its location by 0.67 m, which is about 12% of the headland levee length of 5.5 m (see Fig. 2). This indicates that for this simple model construct the magnitudes of the test values are fairly insensitive to the mesh design, whereas the location of the eddy core is sensitive. The accuracy of the peak value locations is limited to the size of the mesh element where the peak is identified. All mesh designs except the baseline model have large elements at the peak \({\overline{\rho }}_{E_{k}}\) location \({\textbf{x}}_p\), while the element size around the eddy core is dependent on mesh design; the baseline model and the models refined using \(q=\nabla {\times {{\textbf{u}}}}\) concentrate the highest resolution here.

Table 5 summarises peak \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) data for the third iteration refinements for all \(q({\textbf{x}},t)\) trialled and the two metric weighted averaging methods. All models return consistent estimates of the peak \({\overline{\rho }}_{E_{k}}\) value with varying accuracy in the location \({\textbf{x}}_p\), however the results for the eddy core \({\overline{\rho }}_{\Gamma }\) were more variable. Overall, the mesh design based on \(q=\nabla {\times {{\textbf{u}}}}\) gave the best results compared to the benchmark quantities, while the combined metric using \(q = \{ \nabla {\times {{\textbf{u}}}}, \sigma (\nabla {\times {{\textbf{u}}}}) \}\) gave comparable results.

A holistic view of predictive performance can be obtained by mapping the difference, calculated using Eq. (11), between the benchmark and test model values across the domain. Figure 7 presents the model mesh density \(\rho _{{\mathcal {H}}}\) (left), \(\Delta {{\overline{\rho }}_{E_{k}}}\) (centre) and \(\Delta {{\overline{\rho }}_{\Gamma }}\) (right) for the baseline model and the third iteration refinement for all design parameters using the W2 weighted averaging of metrics. To calculate the difference, the time-averaged benchmark data are linearly interpolated onto the model element centroids where predicted parameters are calculated. Visually, the model designs based on \(q=\nabla {\times {{\textbf{u}}}}\) and \(q=\{\nabla {\times {{\textbf{u}}}},\sigma (\nabla {\times {{\textbf{u}}}})\}\) show the least difference. All models overestimate the shear layer \({\overline{\rho }}_{E_{k}}\), which may reflect the layer not forming in the correct location. A similar pattern in \({\overline{\rho }}_{\Gamma }\) supports the argument of a shear-layer offset discrepancy. Specifically, the baseline, \(q=\nabla {\eta }\) and \(q=\{\nabla {\times {{\textbf{u}}}},\sigma (\nabla {\times {{\textbf{u}}}})\}\) models under-represent the flow separation point at the tip of the headland.

Bulk measures of model performance based on time-series of spatially integrated \(\rho _{E_{k}}\) and \(\rho _{\Gamma }\) values are considered in Table 6. These are presented as Root Mean Square Error (RMSE) and normalised RMSE (NRMSE) values of the difference between predicted and benchmark time-series data. The time-averaged integrated benchmark values (\(\overline{E_{k}}_{\textrm{BM}}\), \({\overline{L}}_{\textrm{BM}}\)) are used as the normalising factors. The total kinetic energy is fairly insensitive to mesh design for the constant inflow case (\(\textrm{NRMSE}_{E_{k}} < 1.0\%\)). The magnitude of the angular momentum shows more sensitivity (\(\textrm{NRMSE}_{L} < 4\%\)), with a less conclusive separation between meshes. It should be noted that the predictive performance for meshes based on \(q=\{\nabla {\times {{\textbf{u}}}},\sigma (\nabla {\times {{\textbf{u}}}})\}\) decreased with more iterations due to further loss of information at the flow separation point, whereas the performance for \(q=\nabla {\times {{\textbf{u}}}}\) remained consistent with further refinement.
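The bulk error measures can be sketched as follows, with the NRMSE normalised by the time-averaged benchmark value as described above. The function names and the sample time series are illustrative assumptions, not data from Table 6.

```python
from math import sqrt

def rmse(pred, bench):
    """Root mean square error between predicted and benchmark time series."""
    return sqrt(sum((p - b) ** 2 for p, b in zip(pred, bench)) / len(bench))

def nrmse(pred, bench):
    """RMSE normalised by the time-averaged benchmark value."""
    mean_bench = sum(bench) / len(bench)
    return rmse(pred, bench) / mean_bench

# Illustrative spatially integrated time series (arbitrary units)
bench = [10.0, 10.0, 10.0, 10.0]
pred = [10.1, 9.9, 10.1, 9.9]

err = rmse(pred, bench)              # about 0.1
err_pct = 100.0 * nrmse(pred, bench) # about 1%
```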

3.2 Transient inflow case study

Applying a transient inflow to the model domain allows a first-pass test of the mesh design methodology for a system with strong spatio-temporal variability. Analysis of the constant inflow case identified \(q=\nabla {\times {{\textbf{u}}}}\) and \(q=\{\nabla {\times {{\textbf{u}}}},\sigma (\nabla {\times {{\textbf{u}}}})\}\) as the preferred mesh design parameters and W2 as the preferred metric weighting; these are used for transient inflow mesh design. The models are run for 3000 s, and the data are separated into flood (\(t/T=0 \; \textrm{to} \;0.5\)) and ebb (\(t/T=0.5 \; \textrm{to} \; 1.0\)) phases for analysis, as described in Sect. 2.4.3 for the baseline model.
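The phase separation can be sketched as below. The forcing period of 600 s is an assumption inferred from the 300 s averaging window quoted for each phase, and the function name and sample data are hypothetical. Whether the analysis pools all cycles or uses a single cycle is not specified here; this sketch pools all samples by phase.

```python
def split_phases(times, values, period):
    """Split samples into flood (t/T in [0, 0.5)) and ebb (t/T in [0.5, 1.0))."""
    flood, ebb = [], []
    for t, v in zip(times, values):
        phase = (t % period) / period  # position within the forcing cycle
        if phase < 0.5:
            flood.append(v)
        else:
            ebb.append(v)
    return flood, ebb

# Illustrative samples every 150 s; the values are just sample indices
period = 600.0  # assumed forcing period in seconds
times = [0.0, 150.0, 300.0, 450.0, 600.0, 750.0]
values = [0, 1, 2, 3, 4, 5]

flood, ebb = split_phases(times, values, period)
```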

Histograms of \(\Delta {l}\) for consecutive pairs of refinement length fields \(l_{i}({\textbf{x}})\) are shown in Fig. 8. For both design parameters tested, the mesh initially reduces in resolution (peak \(\Delta {l}\) +ve) then after the 3rd iteration the mesh begins to refine with the optimal (minimum \(\overline{\Delta {l}_{i}}\) and \(\sigma \left( {\Delta {l}_{i}}\right) \)) occurring on the 5th iteration. Further iterations begin to remove spatial geometry information. The resulting mesh statistics are given in Table 7. All models have a total element number within \(\pm {400}\) of the \(N_{T}=9000\) target value, i.e. less than 5%, and a mean element length of \({\overline{l}}\approx {0.65 \textrm{m}}\). The baseline mesh \({\mathcal {H}}_{0}\) and 5th iteration meshes \({\mathcal {H}}_{5}\) for the two design parameters \(q=\nabla {\times {{\textbf{u}}}}\) and \(q = \{ \nabla {\times {{\textbf{u}}}}, \sigma {(\nabla {\times {{\textbf{u}}}})} \}\) are shown in the left column of Fig. 9. The mesh design for the two parameters is dominated by refinement at the headland and across the shear layer, at the expense of resolution across the beach region and the near-shore headland edges.

Histograms of consecutive differences, \(\Delta {l}\), between refinement length fields \(l_{i}({\textbf{x}})\) based on a \(q = \nabla {\times {{\textbf{u}}}}\) and b \(q = \bigl \{ \nabla {\times {{\textbf{u}}}}, \sigma (\nabla {\times {{\textbf{u}}})} \bigr \}\), for W2 weighted averaging of the design metrics \(\mu _{i}({\textbf{x}})\), and \(l_{0}\) is the baseline model length field. To generate the difference \(\Delta {l}\) the \(l_{i}({\textbf{x}})\) fields are projected onto a common regular raster

Summary of impact of mesh design quantities of interest on model ebb flow predictions of \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) where \(\Delta {\rho }={\overline{\rho }}_{M}-{\overline{\rho }}_{\textrm{BM}}\). The panels show the mesh (left), \(\Delta {\rho _{E_{k}}}\) (centre) and \(\Delta {\rho _{\Gamma }}\) (right). The sub-region shown corresponds to the RoI indicated in Fig. 2

Table 7 collates the key performance parameters for the baseline model and the 5th iteration mesh designs based on \(q=\nabla {\times {{\textbf{u}}}}\) and \(q = \{ \nabla {\times {{\textbf{u}}}}, \sigma {(\nabla {\times {{\textbf{u}}}})} \}\). All of the models underestimate the magnitudes of the peak \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) values compared to the benchmark, with differences between −4.7 and −16.6% for \({\overline{\rho }}_{E_{k}}\) and between −4.4 and −23.2% for \({\overline{\rho }}_{\Gamma }\). In general, all models gave better predictions for the ebb phase. The mesh refinement based on \(q=\nabla {\times {{\textbf{u}}}}\) gave the best overall predictive performance.

The spatial differences in the \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) fields relative to the benchmark data for the ebb phase are shown in Fig. 9. The differences are calculated using Eq. (11). The flood phase essentially mirrors the ebb about the headland. The structural patterns (adjacent regions of +ve/-ve differences) in both parameters are indicative of an offset in the predicted location of both the shear layer and the trapped eddy. In all cases, the shear layer (centre) forms further offshore than in the benchmark, suggesting that the trapped eddy is larger than expected. This observation is consistent with the bimodal pattern shown in the \(\Delta {{\overline{\rho }}_{\Gamma }}\) field (right). The negative difference in the magnitude of both the peak \({\overline{\rho }}_{E_{k}}\) and \({\overline{\rho }}_{\Gamma }\) (Table 7) indicates a weaker shear layer and a more diffuse eddy. There is no evidence that the transient flow structures extend to the open boundaries. The normalised RMSE values for \({E}_{k}(t)\) and L(t) given in Table 8 show that the total energy in the models is within 0.5% of the benchmark; therefore, the key model differences relative to the benchmark are in the spatial distribution of the energy. The total angular momentum is more sensitive to mesh design, where the \(q=\nabla {\times {{\textbf{u}}}}\) mesh design performs slightly better overall.

4 Discussion

4.1 On the iterative mesh design method

The iterative mesh design method implemented is based on straightforward assumptions, and is shown in principle to produce a mesh design that improves a model's predictive skill, although the outcome is sensitive to the design quantities and functions chosen. The method allows control of the total number of mesh elements \(N_{T}\), and this is well constrained between iterations through an appropriate determination of the mesh element scaling factor \(k_{A}\) based on element type and the spatial distribution of the element length field \(l({\textbf{x}})\). The method can be used to deliver a convergent mesh design, as shown by Figs. 5 and 8. Overall, the method meets the main goals required for improved mesh design and focuses resolution on dynamic locations not known a priori.
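One simple way to see how a target element count can constrain a length field is sketched below. This is an illustrative assumption, not the scaling used in the study: it estimates the element count by dividing each raster cell area by the area of an equilateral triangle of the local edge length, then rescales the whole length field uniformly. The scaling factor \(k_{A}\) is not modelled, and all names and values are hypothetical.

```python
from math import sqrt

# Area of an equilateral triangle with edge length l is TRI_COEFF * l**2
TRI_COEFF = sqrt(3.0) / 4.0

def estimate_element_count(cell_areas, lengths):
    """Estimate the element count from a rasterised length field."""
    return sum(a / (TRI_COEFF * l * l) for a, l in zip(cell_areas, lengths))

def scale_to_target(cell_areas, lengths, n_target):
    """Uniformly rescale the length field so the estimate matches n_target.

    Scaling every length by s changes the estimated count by 1 / s**2,
    so s = sqrt(n_estimated / n_target).
    """
    n_est = estimate_element_count(cell_areas, lengths)
    s = sqrt(n_est / n_target)
    return [s * l for l in lengths]

# Illustrative raster: four cells of 100 m^2 with varying target lengths (m)
cell_areas = [100.0, 100.0, 100.0, 100.0]
lengths = [0.5, 1.0, 1.0, 2.0]

scaled = scale_to_target(cell_areas, lengths, n_target=500)
n_after = estimate_element_count(cell_areas, scaled)
```

A uniform rescaling preserves the relative distribution of resolution while changing the total count, which is why the target number of elements acts as a control on the degrees of freedom.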

In terms of our case-study demonstration, model performance is determined using time-averaged values of the system energy parameters. The reasons for this choice are to simplify the analysis and to highlight the key rationale of the method. As expected, flow separation occurs at the headland/levee tip generating flow instability downstream. The behaviour of the flow evolution is very different for the two forcing cases (constant and transient inflow), which highlights the need to consider dynamics in the mesh design process.

For a constant inflow the timing of the separation onset and the subsequent eddy-shedding frequency is sensitive to the mesh element size at the separation point and in the shear layer associated with the shed eddies. Analysis of velocity magnitude time-series extracted at the location of the benchmark model peak \(\rho _{E_{k}}\) shows this phase offset. Statistical analysis shows low temporal correlation (r) relative to the benchmark, as expected, but the time-mean and standard deviation (Table 9) for the velocity time series give good agreement. We note that the effects of mesh refinement highlighted by the standard deviation are consistent with the analysis presented for \(\rho _{E_{k}}\) data. For this test case, we are interested in the prediction of available energy, not the frequency of the variability. There are applications where frequency becomes important, so mesh design would need to adapt accordingly; higher frequency data and a longer time-series would be required to apply a robust spectral analysis.
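The point that a phase offset lowers the temporal correlation without changing the time-mean or standard deviation can be illustrated with two synthetic signals. The signals below are hypothetical sinusoids, not data from Table 9, and the quarter-period offset is chosen only to make the effect obvious.

```python
from math import sin, pi, sqrt

def mean(xs):
    return sum(xs) / len(xs)

def std(xs):
    """Population standard deviation."""
    m = mean(xs)
    return sqrt(sum((x - m) ** 2 for x in xs) / len(xs))

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs) *
                      sum((y - my) ** 2 for y in ys))

# Two full periods, uniformly sampled
n = 200
t = [i / n * 4 * pi for i in range(n)]

# Synthetic "benchmark" and "model" speeds that differ only by a phase offset
bench = [1.0 + 0.2 * sin(ti) for ti in t]
model = [1.0 + 0.2 * sin(ti + pi / 2) for ti in t]

r = pearson_r(model, bench)               # close to zero
mean_diff = mean(model) - mean(bench)     # close to zero
std_diff = std(model) - std(bench)        # close to zero
```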

For the transient inflow case, the formation of the flow separation zones is phase locked to the open-boundary forcing, as shown by the high correlation in velocity magnitude relative to the benchmark model (Table 10). Statistical analysis of the time-series of \(|{\textbf{u}}({\textbf{x}})|\) at the location of the benchmark model peak \(\rho _{E_{k}}\) shows that the time-mean values are in good agreement, but the NRMSE is higher than expected due to the spatial shift in the physical location of structures. These data are consistent with the presented analysis of \(\rho _{E_{k}}\) data. During the transition between phases of the flow, a headland jet is formed which ejects a vortex dipole. The strength and persistence of this dipole pair depend on the mesh resolution at both the formation point and downstream of the separation point. It was observed that the vortex dipoles released did not exit the domain during the formation phase; instead they were transported back towards the headland at the change of phase. Running the benchmark model forward showed that the persistence of these dipoles influences the form of the separation zone. The vortex dipoles generated in lower resolution refined models did not persist as long, due to the greater expected numerical diffusion. There was also an inherent asymmetry between flood and ebb phases which is attributed to the use of an approximate wavelength \(\lambda \) for the surface wave generated by the forcing.

The mesh convergence for the transient case reached a minimum, but further refinement showed resolution reducing near the headland. This behaviour suggests that either there were insufficient degrees of freedom for the chosen design criteria, which could be addressed by increasing the target number of elements, or that a different method for combining the mesh densities was required. In this instance it was not an issue as we had reached a suitable mesh convergence for our use, but this highlights the need to check the convergence behaviour to determine whether the design needs to be modified. The convergence criteria may also need to be tailored to the use case and design functions used. These effects may be more pronounced in more complex domains and when multiple design parameters are used.

To a certain extent, the observed offset in the location and strength of the shear layer could be corrected for a specific mesh design by calibrating the resulting model (Fringer et al. 2019), e.g. through modification of the eddy diffusivity value. The implication of this observation is that model calibration is specific to a given mesh design. This in turn raises questions about the impact of model calibration for on-the-fly adaptive mesh refinement methods, particularly across complex domain bathymetry often encountered in coastal regions.

4.2 On the mesh design functions and parameters

The design quantity \({\textbf{q}}=\nabla {\times {{\textbf{u}}}}\) and the design function \(f_{\mu }({\textbf{q}})=\max {(\nabla {\times {{\textbf{u}}}})}\) gave the most robust mesh discretisation and improved predictive performance compared with the baseline model for the case study geometry and application presented. To a certain extent, this is an intuitive result as the curl of the velocity field inherently captures information about both the velocity gradients and the surface gradients. It should be noted that any design function performance is linked to the overall objective of a modelling study. Our perspective stems strongly from marine hydrokinetic energy resource assessment and array design optimisation. Within this context, an accurate appreciation of energy density becomes significant. However, to establish optimal utility of the marine environment, identification of shear layers and flow separation zones can have significant implications for the feasibility of particular technologies and their interactions. From this point of view, quantities such as horizontal flow vorticity and circulation stand out as obvious candidates to guide the mesh design. Other design quantities may be more appropriate for other dynamical processes. These could extend to wave dynamics in coupled-modelling systems (Roland et al. 2012; Zheng et al. 2017; Fragkou et al. 2023), or dispersal and water quality (James 2002; Hamza et al. 2015) processes (e.g. that could be represented by connected advection diffusion equations). In these cases, design functions may need adapting for the quantity of interest in the system. The key advantage of the presented iterative process is that it provides a route to introduce modeller skill and knowledge in the meshing process, particularly when dealing with realistic/complex geometries.

The design functions (Table 1) used for the case studies presented are based on 1st-order spatial derivatives of fluid parameters. In contrast, adaptive mesh systems (Piggott et al. 2008) typically use the Hessian (2nd-order derivative) of the velocity field to optimise the mesh design. The Hessian better captures the local numerical error and is more sensitive to finer spatial and temporal scales. Our use case does not need to capture instantaneous time-evolution of the system, as we are generating a fixed mesh that captures regions of high gradient in the design quantity across the full simulation period used. In this case, 1st-order derivatives perform well for supporting our fixed mesh design in the context of hydrokinetic resource characterisation. The use of higher order derivatives for the design functions may be beneficial for other use-cases and design parameters. However, the form of the design function does not alter how the iterative mesh design method operates. The methods used are at the discretion of the mesh designer.

In terms of other design options, the weighted averaging of the normalised design metrics \(\mu _{i}({\textbf{x}})\) using method W2 consistently produced stronger mesh convergence and improved predictive skill. There are two other design parameters that were not varied: (i) the distribution offset parameter \(\lambda \) in Eq. (4), and (ii) the element scaling factor \(k_{A}\). The distribution offset value alters the spread of the distribution of the element length; increasing \(\lambda \) narrows the distribution and decreasing \(\lambda \) broadens it. A constant scale factor of \(k_{A}=1.2\) is used for all mesh designs in this study. However, this value does not work equally well for all design parameters, in particular \({\textbf{q}}=\nabla {\eta }\) where the underlying distribution of gradients is broad. We note that \(k_{A}\) could be expressed as a function of some measure of the element length distribution, such as the element length range or variance across the domain, to better capture the spatial structure of the design parameter.

Our mesh design functions assumed triangular elements where the element area is proportional to \(l^2\). More generally, the area of an n-sided regular polygon of edge length l is given by \(A_{n} = \left[ \frac{n}{4} \tan {\left( \frac{n-2}{2n} \pi \right) }\right] l^{2}\). This shows that the area remains proportional to \(l^{2}\), but the area weighting differs. Therefore, this refinement method should work for meshes based on other fundamental element shapes. It should also be noted that meshes based on a different element shape will exhibit a different packing density, so scaling the element length fields will become more nuanced.
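As a quick check of the polygon area expression, the bracketed coefficient can be evaluated for common element shapes. The function name is hypothetical; the formula is the one given above.

```python
from math import tan, pi, sqrt

def polygon_area_coefficient(n):
    """Coefficient c_n such that a regular n-gon of edge length l has area c_n * l**2."""
    return (n / 4.0) * tan((n - 2) / (2.0 * n) * pi)

c_triangle = polygon_area_coefficient(3)  # equals sqrt(3)/4, about 0.433
c_square = polygon_area_coefficient(4)    # equals 1
c_hexagon = polygon_area_coefficient(6)   # equals 3*sqrt(3)/2, about 2.598
```

For the same edge length, a square covers roughly 2.3 times the area of an equilateral triangle, so the same length field would yield correspondingly fewer quadrilateral elements.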

4.3 On computational cost

Numerical models were run using a single core on an Intel® Core\(^{\textrm{TM}}\) X-series Processor (i9-10980XE CPU @3.00 GHz) to allow direct comparison of computational cost. Benchmark simulations and mesh refinements for \(q = \nabla {\times {{\textbf{u}}}}\) are used to estimate mean computation time per simulation time step (\(\Delta {t}\)), and the per-iteration overhead associated with the extraction of the design parameter \(q_{i}({\textbf{x}},t)\), the calculation of the target mesh density \(l_{i+1}({\textbf{x}})\) and the generation of the new mesh \({\mathcal {H}}_{i+1}\). Values for the constant and transient inflow cases are summarised in Table 11. Compute times normalised by the benchmark simulation time per \(\Delta {t}\) are included to quantify relative computing costs. These data can be used to estimate computational costs for the high-resolution benchmark models based on the \({\mathcal {H}}_{\textrm{BM}}\) mesh design and a model based on the \({\mathcal {H}}_{N}\) mesh designed by a series of iterative refinements starting from a baseline mesh \({\mathcal {H}}_{0}\). The time to run the benchmark model for a simulation of T seconds is

and the time to generate a mesh design based on N iterative refinements from \({\mathcal {H}}_{0}\) is

Assuming \(T = 3600\) s and \(N=6\) iterations, the computational costs for the constant inflow case are \(t_{\textrm{BM}} = 2.14\) h and \(t_{{\mathcal {H}}_{6}} = 0.5\) h, respectively, corresponding to a 23% cost of the mesh design approach relative to running the benchmark model. Similarly, for the transient inflow case, \(t_{\textrm{BM}} = 15.3\) h and \(t_{{\mathcal {H}}_{6}} = 4.6\) h respectively, with a relative cost of 30%. For both cases it is cost effective to apply the iterative mesh refinement to improve model predictive skill. The mesh refinement time overhead increases as the target number of elements increases. If the target number is too great, then iterative refinement will cease to be cost effective.
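The relative costs quoted above follow directly from the ratio of the two run times. The short check below uses only the times stated in the text; the function name is hypothetical.

```python
def relative_cost(t_design_h, t_benchmark_h):
    """Mesh design cost as a percentage of the benchmark run time."""
    return 100.0 * t_design_h / t_benchmark_h

# Run times in hours, as quoted for T = 3600 s and N = 6 iterations
constant_pct = relative_cost(0.5, 2.14)   # constant inflow case
transient_pct = relative_cost(4.6, 15.3)  # transient inflow case
```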

For more complicated cases where multiple design parameters are considered and there are potentially multiple regions of interest to be refined, there will be an increase in the overall computation cost, as each design parameter required for each RoI will need to be computed. The impact on computational cost will depend on the number of processes being considered and the number of RoIs that need to be refined. If the system is too complex, then a different approach to mesh refinement may be more cost effective.

For real-world regional-scale coastal models, the iterative refinement will be cost effective provided the refinement process is only applied to the region of interest and not the full model domain, and a reduced representative time period is chosen for the mesh design process. This is particularly significant if a long time series of data is to be generated based on the final mesh design, e.g. 30–90 days to assess key tidal dynamics constituents. If the problem does not require a long time-series, requires refinement over a large-area domain, or the spatio-temporal patterns are constantly changing, then it may prove more cost effective to simply run the model using a high-resolution mesh. Running models partitioned across multiple cores will reduce the computation cost, but this does not change the general argument that for many problems this mesh refinement approach will reduce computational cost while maintaining predictive skill.

4.4 On real-world applications

We considered a simple process model where there is a single point of flow separation within the region of interest. Real-world sites will have a complex domain shape and may contain multiple sources of flow separation that impact the RoI, rendering the need for a mesh design strategy more pressing (Fringer et al. 2019) and less intuitive. In general, regional-scale hydrodynamic models need to cover a large-area extent to ensure the boundaries are sufficiently remote from the RoI to limit the impact of open-boundary effects and to allow far-field processes to develop (Westerink et al. 1992). There is a range of issues that need to be considered for real-world problems, but the rationale of this mesh refinement method can be applied to design 2-D and 3-D mesh discretisations for regional-scale hydrodynamic models.

It is neither necessary nor computationally efficient to apply the mesh refinement to the entire model domain, so the refinement needs to be limited to a sub-domain covering the RoI. All sources of flow separation that impact the RoI need to be identified, and taken into account when defining the sub-domain where the mesh refinement is to be applied. The construction of a baseline mesh using the gradient of the bathymetry \(\nabla {z}\) supports the identification of relevant physical structures that need to be captured in the sub-domain (Bilgili et al. 2006; Bilskie et al. 2015). Therefore, the baseline model is used to define the sub-domain for applying the refinement and the initial design quantities that form the basis of the mesh design.

In cases where there are multiple processes of interest that may either occur concurrently or at discrete locations, multiple design quantities would be warranted and a single or multiple regions of interest may need to be defined for mesh refinement. Through the application of a suitable method for combining multiple design metrics, an optimal mesh can be reached. The combination method used should ensure that the process that is most sensitive to length scales takes priority, e.g. a mesh resolution suitable for the process requiring the shortest length scales will be suitable for other processes at the same location that are less sensitive to length scale. The other determining factor will be the choice of the target number of elements, which controls the minimum edge length. By modifying the functional form for \(l({\textbf{x}})\) (Eq. (5)), it is possible to impose minimum and maximum edge length values. Numerical errors associated with the combination of multiple design quantities will be minimised by allowing an appropriate number of degrees of freedom for the design criteria to be met.
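Imposing edge length bounds can be as simple as clamping the length field, as in the sketch below. This is one possible approach, assumed for illustration; it is applied to the output of the length function rather than modifying the form of Eq. (5) itself, and the function name and values are hypothetical.

```python
def clamp_lengths(lengths, l_min, l_max):
    """Limit each target edge length to the interval [l_min, l_max]."""
    if l_min > l_max:
        raise ValueError("l_min must not exceed l_max")
    return [min(max(l, l_min), l_max) for l in lengths]

# Illustrative length field (metres) with bounds of 0.2 m and 2.0 m
bounded = clamp_lengths([0.1, 0.5, 3.0], l_min=0.2, l_max=2.0)
```

Clamping changes the estimated element count, so any scaling towards the target number of elements would need to be re-applied or iterated after the bounds are imposed.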

When presented with a more computationally demanding geometry or an extended simulation interval, several options can be explored to render the mesh design process more efficient. The steady-state solution from the baseline model can be used to initialise all subsequent refinement runs, reducing the computational cost of the iterative design process. For transient cases, key intervals that challenge the model accuracy could be identified and targeted, rather than performing the analysis over long simulation periods.

We acknowledge that it is generally not feasible to create a very high-resolution benchmark model that is necessarily reliable, and therefore the refined model predictions will need to be tested against in situ and/or remotely sensed data. The ability to quantify the predictive performance of a given mesh design will be limited by the data available and by where they have been collected in relation to the flow features that need to be resolved. It is unlikely that the spatial analysis applied above will be possible, owing to the typical sparsity of spatial data across real-world sites; time-series (Gunn and Stock-Williams 2013), spectral (Novo and Kyozuka 2020), and Bayesian (Beckers et al. 2020) analysis techniques will therefore need to be used. If the modelling exercise is associated with the collection of field data, then there is value in using the baseline model to design the data collection campaign to support the testing of the mesh refinement.

4.5 Further development

The weighted averaging method used to combine the mesh design metrics \(\mu _{i}({\textbf{x}})\) led, in certain cases, to a loss of mesh density information with repeated refinement. In our particular example, the baseline discretisation was based entirely on the domain geometry through \(\nabla z\). As subsequent model iterations disregard the bathymetry in the design function, it is expected that the meshes gradually establish a compromise between the domain geometry and the dynamics captured through \(\mu \). Alternative methods for combining the metrics remain to be investigated. For example, an obvious starting point would be to take the minimum value between pairs of normalised \(\mu _{i}({\textbf{x}})\), which would effectively retain all refinement information from previous iterations. A related challenge is controlling the resulting minimum and maximum edge length values in the \(l({\textbf{x}})\) fields. Depending on the numerics of the model, numerical stability may require the minimum value of \(l({\textbf{x}})\) to be constrained through an adjustment of the scaling method based on the target number of elements \(N_{T}\).
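The difference between the two combination strategies can be seen in a small sketch. It assumes that each metric is sampled at the same points, that a smaller metric value requests a finer mesh, and that normalisation is by the maximum value; the function names and the `weight` parameter are hypothetical and do not reproduce the weightings used in this study.

```python
def normalise(values):
    """Scale a positive metric field so that its maximum value is 1."""
    peak = max(values)
    return [v / peak for v in values]


def combine_weighted(previous, current, weight):
    """Pointwise weighted average; weight is the share given to current."""
    return [(1.0 - weight) * p + weight * c
            for p, c in zip(previous, current)]


def combine_minimum(previous, current):
    """Pointwise minimum; keeps the finest request made by either metric."""
    return [min(p, c) for p, c in zip(previous, current)]


# Hypothetical metrics at three points: the previous iteration asks for
# refinement at the first point, the current iteration at the second.
previous = normalise([0.2, 1.0, 1.0])
current = normalise([1.0, 0.3, 1.0])

averaged = combine_weighted(previous, current, 0.5)
retained = combine_minimum(previous, current)
```

With equal weights, the averaged metric at the first point relaxes from 0.2 towards 0.6, so the earlier refinement is partly lost, whereas the pointwise minimum keeps 0.2 and 0.3 at the first and second points respectively. The minimum therefore only ever adds resolution, which makes the constraint imposed by \(N_{T}\) more important.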

The local rate of change in edge length is constrained by the spatial distribution of the gradient function used. If the distribution of gradients has narrow peaks, then very rapid transitions from small to large triangular elements can be created. This is exacerbated by the use of an inverse relationship between \({\textbf{q}}({\textbf{x}},t)\) and \(\mu ({{\textbf{x}}})\), which promotes refinement at steeper gradients. A rapid change in mesh element size can affect the numerical stability of the solution to the SWE, and a limit on the gradient across the \(l({\textbf{x}})\) field would need to be defined subject to the application. This could, to some extent, be addressed through a combination of multiple design quantities \({\textbf{q}}({\textbf{x}},t)\). For example, the spatial distribution of the surface gradients \(\nabla {\eta }\) is different to that of the vorticity \(\nabla {\times {{\textbf{u}}}}\). Combining the design metrics based on these two quantities may lead to a smoother transition in lengths across the domain. Alternatively, use of a different relationship between \({\textbf{q}}({\textbf{x}},t)\) and \(\mu {({\textbf{x}})}\) will change the weighting and resulting distribution of \(l({\textbf{x}})\). Further work is required to understand the implications of using multiple design quantities and the choice of conversion function.
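One common way of bounding the rate of change of edge length, which was not applied in this study, is to limit the gradation of the \(l({\textbf{x}})\) field directly. The sketch below does this for a one-dimensional transect with uniform sample spacing, using a forward and a backward sweep; the function name and the parameters `spacing` and `max_gradient` are hypothetical, and a two-dimensional field would require a different propagation scheme.

```python
def limit_gradation(lengths, spacing, max_gradient):
    """Reduce edge lengths along a transect so that neighbouring samples
    differ by no more than max_gradient * spacing. Values are only ever
    decreased, so the finest resolution requested is preserved."""
    step = max_gradient * spacing
    limited = list(lengths)
    # Forward sweep: limit growth away from small values on the left.
    for i in range(1, len(limited)):
        limited[i] = min(limited[i], limited[i - 1] + step)
    # Backward sweep: limit growth away from small values on the right.
    for i in range(len(limited) - 2, -1, -1):
        limited[i] = min(limited[i], limited[i + 1] + step)
    return limited


# Hypothetical transect with a narrow refinement peak at the centre.
raw = [100.0, 100.0, 10.0, 100.0, 100.0]
smooth = limit_gradation(raw, spacing=50.0, max_gradient=0.2)
```

In this example the isolated 10 m request is retained while the neighbouring lengths are reduced so that the transition to coarse elements is gradual. The cost is additional elements around each peak, which again interacts with the target number of elements.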

Fig. 10 Cumulative area fraction error (CAFE) plots for the RoI sub-region indicated in Fig. 2. Data based on the transient inflow case with design quantity \(q = \nabla {\times {{\textbf{u}}}}\) using W2 weighting

It was observed that mesh convergence does not necessarily guarantee that the mesh design will capture all of the related processes, e.g. the decay of the persistent vortex dipoles generated in the transient flow case. Mesh design applicability is dependent on the choice of design quantities \({\textbf{q}}({\textbf{x}},t)\) and functions \(f_{\mu }({\textbf{q}})\), and the target number of elements \(N_{T}\). This issue will become more apparent in complex real-world cases, and will be investigated in follow-on work. Similarly, mesh convergence does not necessarily guarantee that the model predictive skill is optimal. Hagen et al. (2001) introduced the Cumulative Area Fraction Error (CAFE) plot to quantify the impact of mesh design on model predictive skill. This provides an integrated estimate of model performance. The CAFE plot for the transient inflow case with mesh refinements based on \(q = \nabla {\times {{\textbf{u}}}}\) is shown in Fig. 10. Only the RoI sub-region is used in the calculation, which is based on a single-cycle time-average of the kinetic energy density. The difference in the kinetic energy density field relative to the benchmark model is used to measure model skill. These data suggest that the 4th iteration produced the lowest overall error, while the 5th iteration introduced errors comparable to the baseline (\({\mathcal {H}}_{0}\)) model, despite having a different spatial distribution of mesh elements (see Fig. 9). Further work is required to determine how best to quantify model skill in a way that is relevant to the purpose of the model, and how to link this to the choice of design quantities.
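As an illustration of how a cumulative area fraction curve can be assembled, the sketch below takes per-element areas and per-element errors and returns, for each error level, the fraction of the total area whose error does not exceed that level. This is one reading of the CAFE construction and is not the script used to produce Fig. 10; the function name and the sample values are hypothetical.

```python
def cumulative_area_fraction(areas, errors, levels):
    """For each error level, return the fraction of the total area over
    which the error is less than or equal to that level."""
    total = sum(areas)
    return [sum(a for a, e in zip(areas, errors) if e <= level) / total
            for level in levels]


# Hypothetical sample: three elements with signed errors relative to a
# benchmark, evaluated at three error levels.
areas = [1.0, 1.0, 2.0]
errors = [-0.1, 0.0, 0.2]
levels = [-0.2, 0.0, 0.3]
curve = cumulative_area_fraction(areas, errors, levels)
```

A curve that rises steeply around zero error indicates that most of the area is well predicted. Because the fraction is weighted by area, large elements dominate the curve, so restricting the calculation to the RoI matters.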

Modelling in this work uses the Thetis coastal ocean model and mesh triangulation through qmesh and gmsh; however, we have taken a model-agnostic approach in developing this methodology, as per the sequence of Fig. 1. The method is not limited to triangular mesh discretisation, but can be used for any n-sided polygon. In addition, while many models solve the same equations, differences in the underlying numerics would require substantially different mesh designs even for the same cases. It is known that higher-order schemes require fewer degrees of freedom and produce solutions that can be numerically more accurate (Ekaterinaris 2005; Wang 2007), potentially removing the need to apply significant mesh refinement. We have used a low-order piecewise-linear discontinuous Galerkin method to solve the SWEs, but other schemes were available through the Firedrake (Ham et al. 2023) solver on which Thetis is built. The rationale behind using a low-order scheme is that the majority of solvers currently used in modelling coastal fluids are low-order. However, the iterative design method is applicable to solvers of any order, so the novelty and application remain consistent. One of the immediate strengths of this method would be to provide a fair unstructured mesh discretisation when embarking on systematic benchmarking studies of multi-scale models.

5 Conclusions

An iterative and transferable methodology for generating dynamics-based unstructured 2-D mesh discretisations has been developed. The method is demonstrated in a Shallow Water Equation coastal ocean model employing a triangular discretisation and is shown to improve the predictive skill by ensuring that regions of high dynamic gradients of interest are accounted for in the mesh design. The method allows user control over the number of elements in the resulting mesh and the range (e.g. minimum and maximum) of element edge lengths used in the mesh generation. Care has been taken to render the method as generic as possible, so it is extensible to designing unstructured meshes based on any n-sided polygon, with the same control over the number of elements and range of element edge lengths. The iterative design process produces a fixed mesh, which is computationally cheaper overall than running an on-the-fly mesh adaptation, while still reducing the inherent epistemic errors associated with the bathymetry-based mesh designs typically used in coastal modelling.

The analysis presented is based on an example that focuses on predicting the system energy, inspired specifically by ocean engineering applications contemplating hydrokinetic energy. Nevertheless, it can be applied to a broader range of applications, as the mesh design can be based on any spatially and/or temporally varying quantity derived from model output variables. The motivation for this framework is to move towards standardising the design of multi-scale models. Through our demonstrations we note that the implementation of the mesh design requires sufficient understanding of the system to define appropriate metrics that guide the mesh optimisation. Once an appropriate set of design quantities \({\textbf{q}}({\textbf{x}},t)\) and design functions \(f_{\mu }({\textbf{q}})\) has been defined, alongside a method for combining the mesh design metrics \(\mu _{i}({\textbf{x}})\) generated through iterative refinements, the process can be automated and has the additional benefit of being model-agnostic, which aids model inter-comparison benchmarking studies.

Further development work is required to improve the mesh convergence and to ensure that all key processes are captured. A number of development strands are identified in the discussion. The next step is to apply the method to a real-world problem. To this end, the methodology will be applied to the design of mesh discretisations for modelling tidal energy extraction sites in coastal ocean waters, and used to trial automated mesh design for multi-scale operational models that comprise digital twins targeting specific end-user quantities.

Data availability

Data are available upon request.

Code availability

Software is available upon request.

References

Avdis A, Candy AS, Hill J, Kramer SC, Piggott MD (2018) Efficient unstructured mesh generation for marine renewable energy applications. Renew Energy 116:842–856. https://doi.org/10.1016/j.renene.2017.09.058

Balay S, Abhyankar S, Adams MF et al (2016) PETSc users manual. Technical Report ANL-95/11 - Revision 3.7, Argonne National Laboratory. http://www.mcs.anl.gov/petsc

Beckers F, Heredia A, Noack M, Nowak W, Wieprecht S, Oladyshkin S (2020) Bayesian calibration and validation of a large-scale and time-demanding sediment transport model. Water Resour Res. https://doi.org/10.1029/2019WR026966

Bertin X, Oliveira A, Fortunato AB (2009) Simulating morphodynamics with unstructured grids: description and validation of a modeling system for coastal applications. Ocean Model 28:75–87. https://doi.org/10.1016/j.ocemod.2008.11.001

Bilgili A, Smith KW, Lynch DR (2006) Battri: a two-dimensional bathymetry-based unstructured triangular grid generator for finite element circulation modeling. Comput Geosci 32(5):632–642. https://doi.org/10.1016/j.cageo.2005.09.007

Bilskie MV, Coggin D, Hagen SC, Medeiros SC (2015) Terrain-driven unstructured mesh development through semi-automatic vertical feature extraction. Adv Water Resour 86:102–118. https://doi.org/10.1016/j.advwatres.2015.09.020

Bilskie MV, Hagen SC, Medeiros SC (2020) Unstructured finite element mesh decimation for real-time hurricane storm surge forecasting. Coast Eng 156:103622. https://doi.org/10.1016/j.coastaleng.2019.103622

Caldeira RM, Marchesiello P, Nezlin NP, DiGiacomo PM, McWilliams JC (2005) Island wakes in the southern California Bight. J Geophys Res Oceans 110:1–20. https://doi.org/10.1029/2004JC002675

Cazenave PW, Torres R, Allen JI (2016) Unstructured grid modelling of offshore wind farm impacts on seasonally stratified shelf seas. Prog Oceanogr 145:25–41. https://doi.org/10.1016/j.pocean.2016.04.004

Clare MC, Kramer SC, Cotter CJ, Piggott MD (2022) Calibration, inversion and sensitivity analysis for hydro-morphodynamic models through the application of adjoint methods. Comput Geosci 163:105104. https://doi.org/10.1016/J.CAGEO.2022.105104

Coles DS, Blunden LS, Bahaj AS (2017) Assessment of the energy extraction potential at tidal sites around the Channel Islands. Energy 124:171–186. https://doi.org/10.1016/j.energy.2017.02.023

Divett T, Vennell R, Stevens C (2013) Optimization of multiple turbine arrays in a channel with tidally reversing flow by numerical modelling with adaptive mesh. Philos Trans R Soc A Math Phys Eng Sci. https://doi.org/10.1098/rsta.2012.0251

Divett T, Vennell R, Stevens C (2016) Channel-scale optimisation and tuning of large tidal turbine arrays using LES with adaptive mesh. Renew Energy 86:1394–1405. https://doi.org/10.1016/j.renene.2015.09.048

Ekaterinaris JA (2005) High-order accurate, low numerical diffusion methods for aerodynamics. Prog Aerosp Sci 41(3):192–300. https://doi.org/10.1016/j.paerosci.2005.03.003

Fragkou AK, Old C, Venugopal V, Angeloudis A (2023) Benchmarking a two-way coupled coastal wave-current hydrodynamics model. Ocean Model 183:102193. https://doi.org/10.1016/j.ocemod.2023.102193

Fringer OB, Dawson CN, He R, Ralston DK, Zhang YJ (2019) The future of coastal and estuarine modeling: findings from a workshop. Ocean Model 143:101458. https://doi.org/10.1016/j.ocemod.2019.101458

Fujiwara T, Nakata H, Nakatsuji K (1994) Tidal-jet and vortex-pair driving of the residual circulation in a tidal estuary. Cont Shelf Res 14:1025–1038. https://doi.org/10.1016/0278-4343(94)90062-0

Furukawa K, Wolanski E (1998) Shallow-water frictional effects in island wakes. Estuar Coast Shelf Sci 46:599–607. https://doi.org/10.1006/ECSS.1997.0301

Geuzaine C, Remacle JF (2009) Gmsh: a 3-D finite element mesh generator with built-in pre- and post-processing facilities. Int J Numer Methods Eng 79:1309–1331. https://doi.org/10.1002/nme.2579

Geyer WR (1993) Three-dimensional tidal flow around headlands. J Geophys Res 98:955–966. https://doi.org/10.1029/92JC02270