Abstract

This paper is devoted to the study of a class of impulsive nonlinear evolution partial differential equations. We give new results on the existence and multiplicity of global classical solutions. The method is based on fixed point theorems for the sum of two operators. The main results are illustrated by an application to an impulsive Burgers equation.


1 Introduction

Mechanical systems with impact, heartbeats, blood flow, population dynamics, industrial robotics, biotechnology and economics are examples of real-world phenomena whose states change abruptly at certain time instants, owing to perturbations whose duration is negligible compared with the duration of the phenomena themselves. They are called impulsive phenomena. A natural framework for the mathematical modelling of such phenomena is provided by impulsive differential equations or, when more factors are taken into account, by impulsive partial differential equations.

Whereas impulsive differential equations are well studied, see for example the books [4, 8, 39, 42] and the references therein, the literature on impulsive partial differential equations does not seem to be very rich. The history of impulsive partial differential equations starts at the end of the 20th century with the pioneering work [15], in which impulsive partial differential systems were shown to be a natural framework for the mathematical modelling of processes in ecology and biology, such as population growth; see also [10]. Studies of first-order partial differential equations with impulses can be found in [5, 21, 30, 40]. Higher-order linear and nonlinear impulsive parabolic partial differential equations were considered in [19]. An initial boundary value problem for a nonlinear parabolic partial differential equation was discussed in [9]. The approximate controllability of an impulsive semilinear heat equation was proved in [1]. A class of impulsive wave equations was investigated in [18]. In [27], existence and uniqueness of solutions are investigated for a class of impulsive semilinear evolution equations with delays. The investigations in [27] include several important partial differential equations, such as the Burgers equation and the Benjamin–Bona–Mahony equation with impulses, delays and nonlocal conditions. Existence and uniqueness of solutions for a class of semilinear neutral evolution equations with impulses and nonlocal conditions in a Banach space are investigated in [2]. To prove the main results in [2], the authors use a Karakostas fixed point theorem, and an example involving the Burgers equation is provided to illustrate the application of the main results. Some studies concerning the impulsive Burgers equation can be found in [14, 25, 33].

Many classical methods have been successfully applied to impulsive partial differential equations. Using a variational method, the existence of solutions for a fourth-order impulsive partial differential equation with periodic boundary conditions was obtained in [28]. The Krasnoselskii theorem is used to prove existence and uniqueness of solutions for an impulsive Hamilton–Jacobi equation in [34]. Some other references on impulsive partial differential equations are [3, 7, 11, 16, 17, 22, 23, 24, 26, 32, 35, 38, 41].

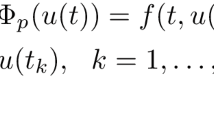

In this paper, we investigate the following class of nonlinear impulsive evolution partial differential equations

where

Note that for \(\psi (u)=\frac{1}{2}u^{2},\) we get impulsive Burgers equations. Assume that

- (A1): \(0=t_0<t_1<\cdots< t_k<t_{k+1}=T\), \(u_0\in {\mathcal {C}}^1({\mathbb {R}})\), \(0< u_0\le B\) on \({\mathbb {R}}\) for some positive constant B,

- (A2): \(\psi \in {\mathcal {C}}^1({\mathbb {R}})\), and

$$\begin{aligned} |\psi ^{\prime }(u(t, x))|\le b_1(t, x)+ b_2(t, x) |u(t, x)|^l, \end{aligned}$$

\((t, x)\in J\times {\mathbb {R}}\), \(b_1, b_2 \in {\mathcal {C}}(J\times {\mathbb {R}})\), \(0\le b_1, b_2\le B\) on \(J\times {\mathbb {R}}\), \(l\ge 0\),

- (A3): \(I_j \in {\mathcal {C}}({\mathbb {R}}^{2})\), \(|I_j(x, v)|\le a_j(x) |v|^{p_j}\), \(x\in {\mathbb {R}}\), \(v\in {\mathbb {R}}\), \(a_j\in {\mathcal {C}}({\mathbb {R}})\), \(0\le a_j(x)\le B\), \(x\in {\mathbb {R}}\), \(p_j\ge 0\), \(j\in \{1, 2, \ldots , k\}\),

- (A4): there exist a positive constant A and a function \(g\in {\mathcal {C}}(J\times {\mathbb {R}})\) such that \(g>0\) on \((0, T]\times \left( {\mathbb {R}}\backslash \{x=0\}\right) \) and

$$\begin{aligned} g(0, x)= & {} g(t, 0)= 0,\quad t\in [0, T],\quad x\in {\mathbb {R}}, \end{aligned}$$

and

$$\begin{aligned} 2(1+t)\left( 1+|x|\right) \int _0^t \left| \int _0^x g(t_1, s) \textrm{d}s\right| \textrm{d}t_1\le A,\quad (t, x)\in J\times {\mathbb {R}}. \end{aligned}$$

In the last section, we give an example of a function g that satisfies (A4). Assume that the constants B and A which appear in conditions (A1) and (A4), respectively, satisfy the following inequalities:

- (A5): \(AB_1<B,\) where \(B_1=2B+T\left( B^{2}+ B^{2+l}\right) +\sum _{j=1}^k B^{1+p_j},\) and

- (A6): \(AB_1<\frac{L}{5},\) where \(B_1=2B+T\left( B^{2}+ B^{2+l}\right) +\sum _{j=1}^k B^{1+p_j}\) and L is a positive constant that satisfies the following conditions:

$$\begin{aligned} r< L<R_1\le B,\quad R_1+\frac{A}{m} B_1>\left( \frac{1}{5m}+1\right) L, \end{aligned}$$

where r, \(R_1\) and m are positive constants.
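Conditions (A5) and (A6) are plain inequalities between constants, so a candidate set of constants can be screened numerically before an application is attempted. The following is a minimal Python sketch of such a check. The function names and the sample values (\(B=T=l=1\), \(p_1=p_2=1\), \(A=0.01\), \(L=0.5\), \(r=0.1\), \(R_1=1\), \(m=1\)) are illustrative assumptions and are not the constants used in the example of the last section.

```python
from typing import Sequence


def b1_constant(B: float, T: float, l: float, p: Sequence[float]) -> float:
    """Return B_1 = 2B + T(B^2 + B^(2+l)) + sum_j B^(1+p_j)."""
    return 2 * B + T * (B**2 + B ** (2 + l)) + sum(B ** (1 + pj) for pj in p)


def satisfies_a5(A: float, B: float, T: float, l: float, p: Sequence[float]) -> bool:
    """Check (A5): A * B_1 < B."""
    return A * b1_constant(B, T, l, p) < B


def satisfies_a6(A, B, T, l, p, L, r, R1, m) -> bool:
    """Check (A6): A*B_1 < L/5, r < L < R_1 <= B and
    R_1 + (A/m)*B_1 > (1/(5m) + 1)*L, with r, R_1, m positive."""
    B1 = b1_constant(B, T, l, p)
    return (
        min(r, R1, m) > 0
        and A * B1 < L / 5
        and r < L < R1 <= B
        and R1 + (A / m) * B1 > (1 / (5 * m) + 1) * L
    )


# Hypothetical constants, chosen only to exercise the checks.
params = dict(B=1.0, T=1.0, l=1.0, p=[1.0, 1.0])
print(b1_constant(**params))
print(satisfies_a5(A=0.01, **params))
print(satisfies_a6(A=0.01, L=0.5, r=0.1, R1=1.0, m=1.0, **params))
```

With these sample values \(B_1=6\), so (A5) reduces to \(A<\frac{1}{6}\) and the first inequality in (A6) reduces to \(A<\frac{L}{30}\).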

Our aim in this paper is to establish the existence of classical solutions of problem (1.1). Let \(J_0=J\backslash \{t_j\}_{j=1}^k\) and define the spaces \(PC(J),\;PC^1(J)\) and \(PC^1(J, {\mathcal {C}}^1({\mathbb {R}}))\) by

and

Our main results are as follows.

Theorem 1.1

Under the hypotheses (A1), (A2), (A3), (A4) and (A5), problem (1.1) has at least one solution in \(PC^1(J, {\mathcal {C}}^1({\mathbb {R}})).\)

Theorem 1.2

Assume that the hypotheses (A1), (A2), (A3), (A4) and (A6) are satisfied. Then the problem (1.1) has at least two nonnegative solutions in \(PC^1(J, {\mathcal {C}}^1({\mathbb {R}})).\)

Our work is motivated by the interest of researchers in the many mathematical questions related to impulsive partial differential equations. In fact, some important applied problems reduce to the study of such equations, see for example [6, 13, 20, 23, 29, 36, 43]. Some applications of impulsive PDEs in quantum mechanics can be found in [36]. The asymptotical synchronization of coupled nonlinear impulsive partial differential systems in complex networks was considered in [43]. Applications to models in ecology are given in [20]. Applications to population dynamics are given in [6, 13, 23]. A cell population model described by impulsive PDEs was studied in [29].

This paper is organized as follows. In the next section, we give some existence and multiplicity results about fixed points of the sum of two operators. Then in Sect. 3, we prove our main results. First, we give an integral representation and a priori estimates related to solutions of problem (1.1). Then, we use these estimates to prove Theorems 1.1 and 1.2 by using the results on the sum of operators recalled in Sect. 2. Finally, in Sect. 4, we illustrate our main results by an application to an impulsive Burgers equation.

2 Fixed points for the sum of two operators

The following theorem concerns the existence of fixed points for the sum of two operators. Its proof can be found in [18].

Theorem 2.1

Let E be a Banach space and

$$\begin{aligned} E_{1}=\{x\in E: \Vert x\Vert \le R\}, \end{aligned}$$

with \(R>0.\) Consider two operators T and S, where

$$\begin{aligned} Tx=-\epsilon x,\quad x\in E_{1}, \end{aligned}$$

with \(\epsilon >0\), and \(S: E_{1}\rightarrow E\) is continuous and such that

- (i) \((I-S)(E_{1})\) resides in a compact subset of E and

- (ii) \(\{x\in E: x=\lambda (I-S)x,\quad \Vert x\Vert =R\}=\emptyset ,\; \text{ for } \text{ any } \; \lambda \in \left( 0, \frac{1}{\epsilon }\right) .\)

Then there exists \(x^*\in E_{1}\) such that

$$\begin{aligned} Tx^*+Sx^*=x^*. \end{aligned}$$

In the sequel, E is a real Banach space.

Definition 2.2

A closed, convex set \({\mathcal {P}}\) in E is said to be a cone if

- (i) \(\alpha x\in {\mathcal {P}}\) for any \(\alpha \ge 0\) and for any \(x\in {\mathcal {P}}\),

- (ii) \(x, -x\in {\mathcal {P}}\) implies \(x=0\).

Definition 2.3

A mapping \(K: E\rightarrow E\) is said to be completely continuous if it is continuous and maps bounded sets into relatively compact sets.

Definition 2.4

Let X and Y be real Banach spaces. A mapping \(K: X\rightarrow Y\) is said to be expansive if there exists a constant \(h>1\) such that

$$\begin{aligned} \Vert Kx-Ky\Vert _Y\ge h\Vert x-y\Vert _X \end{aligned}$$

for any \(x, y\in X\).
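As a quick illustration of Definition 2.4, the scaling map \(Kx=hx\) with \(h>1\) is expansive, because it multiplies every distance by exactly h, whereas \(Kx=x/2\) is not. The sketch below tests the inequality \(\Vert Kx-Ky\Vert \ge h\Vert x-y\Vert \) on random pairs of vectors. The finite-dimensional space \({\mathbb {R}}^3\) with the maximum norm and all function names are assumptions made only for this illustration.

```python
import random


def sup_norm(x):
    """Maximum norm on R^n."""
    return max(abs(xi) for xi in x)


def is_expansive_on_samples(K, h, dim=3, trials=1000, seed=0, tol=1e-12):
    """Test ||Kx - Ky|| >= h * ||x - y|| on random pairs (x, y).

    A sampled check can refute expansiveness but cannot prove it.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-10, 10) for _ in range(dim)]
        y = [rng.uniform(-10, 10) for _ in range(dim)]
        lhs = sup_norm([a - b for a, b in zip(K(x), K(y))])
        rhs = h * sup_norm([a - b for a, b in zip(x, y)])
        if lhs < rhs - tol:
            return False
    return True


def scale_by_two(x):
    """K x = 2x, expansive with constant h = 2."""
    return [2 * xi for xi in x]


def halve(x):
    """K x = x/2, a contraction, hence not expansive."""
    return [xi / 2 for xi in x]


print(is_expansive_on_samples(scale_by_two, h=2))
print(is_expansive_on_samples(halve, h=2))
```

The first call passes on every sampled pair and the second fails, which matches the definition: only maps that stretch all distances by a factor of at least \(h>1\) qualify.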

The following theorem concerns the existence of nonnegative fixed points for the sum of two operators. The details of its proof can be found in [12] and [31].

Theorem 2.5

Let \({\mathcal {P}}\) be a cone of a Banach space E, \(\Omega \) a subset of \({\mathcal {P}}\), and \(U_1, U_2 \text{ and } U_3\) three open bounded subsets of \({\mathcal {P}}\) such that \({\overline{U}}_1\subset {\overline{U}}_2\subset U_3\) and \(0\in U_1.\) Assume that \(T: \Omega \rightarrow {\mathcal {P}}\) is an expansive mapping, \(S: {\overline{U}}_3\rightarrow E\) is completely continuous and \(S({\overline{U}}_3)\subset (I-T)(\Omega ).\) Suppose that \(({U}_2{\setminus }{\overline{U}}_1)\cap \Omega \ne \emptyset ,\, ({U}_3{\setminus } {\overline{U}}_2)\cap \Omega \ne \emptyset ,\) and there exists \(w_{0}\in {\mathcal {P}}\backslash \{0\}\) such that the following conditions hold:

- (i) \(Sx\ne (I-T)(x-\lambda w_{0}),\;\) for all \(\lambda >0\) and \(x\in \partial U_1\cap (\Omega +\lambda w_0),\)

- (ii) there exists \(\varepsilon > 0\) such that \(Sx\ne (I-T)(\lambda x), \;\) for all \(\,\lambda \ge 1+\varepsilon , \, x\in \partial U_2\) and \(\lambda x\in \Omega \),

- (iii) \(Sx\ne (I-T)(x-\lambda w_{0}),\;\) for all \(\lambda >0\) and \(x\in \partial U_3\cap (\Omega +\lambda w_0).\)

Then \(T+S\) has at least two non-zero fixed points \(x_1, x_2\in {\mathcal {P}}\) such that

or

3 Proof of the main results

3.1 Integral representation and a priori estimates related to solutions of problem (1.1)

In the sequel, we denote the space \(PC^1(J, {\mathcal {C}}^1({\mathbb {R}}))\) defined in (1.2) by X and endow it with the following norm:

provided it exists.

Lemma 3.1

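Under hypothesis (A2) (respectively, (A3)) and for \(u\in X\) with \(\Vert u\Vert \le B\), the following estimate holds:

$$\begin{aligned} |\psi ^{\prime }(u(t, x))|\le B(1+B^l),\quad (t, x)\in J\times {\mathbb {R}}, \end{aligned}$$

respectively,

$$\begin{aligned} \left| \sum \limits _{j=1}^k I_j(x, u(t, x))\right| \le \sum \limits _{j=1}^k B^{p_j+1},\quad (t, x)\in J\times {\mathbb {R}}. \end{aligned}$$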

Proof

- (i) The estimation of \(|\psi ^{\prime }(u(t, x))|,\; (t, x)\in J\times {\mathbb {R}}:\)

$$\begin{aligned} |\psi ^{\prime }(u(t, x))|\le & {} b_1(t, x)+b_2(t, x)|u(t, x)|^l\\\le & {} B+B^{l+1}\\= & {} B(1+B^l). \end{aligned}$$

- (ii) The estimation of \(|I_j(x, u(t, x))|,\; (t, x)\in J\times {\mathbb {R}},\;j\in \{1, \ldots , k\}:\)

$$\begin{aligned} |I_j(x, u(t, x))|\le & {} a_j(x)|u(t, x)|^{p_j}\\\le & {} B^{p_j+1}. \end{aligned}$$

- (iii) The estimation of \(\left| \sum \nolimits _{j=1}^k I_j(x, u(t, x))\right| ,\; (t, x) \in J\times {\mathbb {R}}:\)

$$\begin{aligned} \left| \sum \limits _{j=1}^k I_j(x, u(t, x))\right|\le & {} \sum \limits _{j=1}^k |I_j(x, u(t, x))|\\\le & {} \sum \limits _{j=1}^k B^{p_j+1}. \end{aligned}$$

This completes the proof. \(\square \)
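The bounds of Lemma 3.1 can be sanity-checked numerically in the Burgers case \(\psi (u)=\frac{1}{2}u^2\). There \(\psi ^{\prime }(u)=u\), so (A2) holds with \(b_1\equiv 0\), \(b_2\equiv 1\) and \(l=1\) as soon as \(B\ge 1\). The sketch below samples values with \(|u|\le B\) and tests both estimates. The impulse functions \(I_j(x, v)=a_j(x)v^{p_j}\) with \(a_j(x)=B/(1+x^2)\), the exponents and the value \(B=2\) are hypothetical choices that satisfy (A3); they are not taken from the example of Sect. 4.

```python
import random

B = 2.0          # assumed bound with B >= 1, so that b_2 = 1 <= B
L_EXP = 1.0      # exponent l in (A2) for the Burgers flux
P = [1, 2]       # hypothetical exponents p_j in (A3)


def psi_prime(u):
    """Derivative of the Burgers flux psi(u) = u**2 / 2."""
    return u


def impulse(j, x, v):
    """Hypothetical I_j(x, v) = a_j(x) * v**p_j with a_j(x) = B / (1 + x**2)."""
    return B / (1 + x * x) * v ** P[j]


def lemma_31_holds(samples=10_000, seed=1):
    """Check both estimates of Lemma 3.1 at random points with |u| <= B."""
    rng = random.Random(seed)
    flux_bound = B * (1 + B**L_EXP)               # B(1 + B^l)
    impulse_bound = sum(B ** (p + 1) for p in P)  # sum_j B^(p_j + 1)
    for _ in range(samples):
        x = rng.uniform(-50, 50)
        u = rng.uniform(-B, B)
        total = sum(impulse(j, x, u) for j in range(len(P)))
        if abs(psi_prime(u)) > flux_bound or abs(total) > impulse_bound:
            return False
    return True


print(lemma_31_holds())
```

A sampled check of this kind cannot replace the proof, but it is a cheap way to catch a wrong exponent or a constant that violates \(0\le a_j\le B\) when setting up an application.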

For \(u\in X\), define the operator

Lemma 3.2

Suppose (A1)–(A3). If \(u\in X\) satisfies the equation

then it is a solution to the IVP (1.1).

Proof

We have

Hence,

We differentiate (3.2) with respect to t and we find

We put \(t=0\) in (3.2) and we get

Now, by (3.2), we obtain

\(j\in \{1, \ldots , k\}\), and

\(j\in \{1, \ldots , k\}\), whereupon

This completes the proof. \(\square \)

Lemma 3.3

Suppose (A1)–(A3). If \(u\in X\), \(\Vert u\Vert \le B\), then

where \(B_1=2B+T\left( B^2+ B^{2+l}\right) +\sum _{j=1}^k B^{1+p_j}.\)

Proof

We apply Lemma 3.1 and we get

This completes the proof. \(\square \)

For \(u\in X\), define the operator

where g is the function that appears in condition (A4).

Lemma 3.4

Suppose (A1)–(A4). If \(u\in X\) and \(\Vert u\Vert \le B\), then

where \(B_1=2B+T\left( B^2+ B^{2+l}\right) +\sum _{j=1}^k B^{1+p_j}.\)

Proof

We have

and

and

Thus, \(\Vert S_2u\Vert \le AB_1\). This completes the proof. \(\square \)

Lemma 3.5

Suppose (A1)–(A4). If \(u\in X\) satisfies the equation

then u is a solution to the IVP (1.1).

Proof

Differentiating Eq. (3.4) twice with respect to t and twice with respect to x, we find

whereupon

Since \(S_1u(\cdot , \cdot )\in {\mathcal {C}}(J\times {\mathbb {R}})\), we get

Thus,

Hence, by Lemma 3.2, we conclude that u is a solution to the IVP (1.1). This completes the proof. \(\square \)

3.2 Proof of Theorem 1.1

Suppose that the constants B, A and \(B_1\) are those which appear in conditions (A1), (A4) and (A5), respectively. Choose \(\epsilon \in (0, 1)\) such that \(\epsilon B_1(1+A)<B.\) Let \(\widetilde{\widetilde{{\widetilde{Y}}}}\) denote the set of all equi-continuous families in \(X=PC^1(J, {\mathcal {C}}^1({\mathbb {R}}))\) with respect to the norm \(\Vert \cdot \Vert \). Also, let \({\widetilde{{\widetilde{Y}}}}=\overline{\widetilde{\widetilde{{\widetilde{Y}}}}}\) be the closure of \({\widetilde{{\widetilde{{\widetilde{Y}}}}}}\), \({\widetilde{Y}}=\widetilde{{\widetilde{Y}}}\cup \{u_0\}\),

By the Ascoli–Arzelà theorem, it follows that Y is a compact set in X. For \(u\in X\), define the operators

where \(S_2\) is the operator defined by formula (3.3). For \(u\in Y\), using Lemma 3.4, we have

Thus, \(S: Y\rightarrow X\) is continuous and \((I-S)(Y)\) resides in a compact subset of X. Now, suppose that there is \(u\in X\) so that \(\Vert u\Vert =B\) and

or

or

for some \(\lambda \in \left( 0, \frac{1}{\epsilon }\right) \). Hence, \(\Vert S_2u\Vert \le AB_1<B\),

which is a contradiction. Hence, by Theorem 2.1, the operator \(T+S\) has a fixed point \(u^*\in Y\). Therefore

whereupon

From this and Lemma 3.5, it follows that \(u^*\) is a solution to the problem (1.1). This completes the proof.

3.3 Proof of Theorem 1.2

Let \(X=PC^1(J, {\mathcal {C}}^1({\mathbb {R}}))\) and

With \({\mathcal {P}}\) we will denote the set of all equi-continuous families in \({\widetilde{P}}\). For \(v\in X\), define the operators

where \(\epsilon \) is a positive constant, \(m>0\) is the constant which appears in (A6) and the operator \(S_2\) is given by formula (3.3). Note that any fixed point \(v\in X\) of the operator \(T_1+S_3\) is a solution to the IVP (1.1). Now, let us define

where \(r, L, R_1, A, B_1\) are the constants which appear in condition (A6).

1. For \(v_1, v_2\in \Omega \), we have

$$\begin{aligned} \Vert T_1v_1-T_1v_2\Vert = (1+m\epsilon ) \Vert v_1-v_2\Vert , \end{aligned}$$

whereupon \(T_1: \Omega \rightarrow X\) is an expansive operator with constant \(h=1+m\epsilon >1\).

2. For \(v\in \overline{{\mathcal {P}}_{R_1}}\), we get

$$\begin{aligned} \Vert S_3v\Vert\le & {} \epsilon \Vert S_2v\Vert +m\epsilon \Vert v\Vert +\epsilon \frac{L}{10}\\\le & {} \epsilon \bigg (AB_1+mR_1 +\frac{L}{10}\bigg ). \end{aligned}$$

Therefore \(S_3(\overline{{\mathcal {P}}_{R_1}})\) is uniformly bounded. Since \(S_3: \overline{{\mathcal {P}}_{R_1}}\rightarrow X\) is continuous, we have that \(S_3(\overline{{\mathcal {P}}_{R_1}})\) is equi-continuous. Consequently \(S_3: \overline{{\mathcal {P}}_{R_1}}\rightarrow X\) is completely continuous.

3. Let \(v_1\in \overline{{\mathcal {P}}_{R_1}}\). Set

$$\begin{aligned} v_2= v_1+ \frac{1}{m} S_2v_1+\frac{L}{5m}. \end{aligned}$$

Note that \( S_2v_1+ \frac{L}{5}\ge 0\) on \(J\times {\mathbb {R}}\). We have \(v_2\ge 0\) on \(J\times {\mathbb {R}}\) and

$$\begin{aligned} \Vert v_2\Vert\le & {} \Vert v_1\Vert +\frac{1}{m}\Vert S_2v_1\Vert +\frac{L}{5m}\\\le & {} R_1+ \frac{A}{m}B_1+\frac{L}{5m}\\= & {} R_2. \end{aligned}$$

Therefore \(v_2\in \Omega \) and

$$\begin{aligned} -\epsilon m v_2= -\epsilon m v_1 -\epsilon S_2v_1-\epsilon \frac{L}{10}-\epsilon \frac{L}{10} \end{aligned}$$

or

$$\begin{aligned} (I-T_1) v_2= & {} -\epsilon mv_2 +\epsilon \frac{L}{10}\\= & {} S_3v_1. \end{aligned}$$

Consequently \( S_3(\overline{{\mathcal {P}}_{R_1}})\subset (I-T_1)(\Omega )\).

4. Assume that for any \(v_0\in {\mathcal {P}}^*={\mathcal {P}}{\setminus }\{0\}\) there exist \(\lambda > 0\) and \(v\in \partial {\mathcal {P}}_{r}\cap (\Omega +\lambda v_0)\) or \(v\in \partial {\mathcal {P}}_{R_1}\cap (\Omega +\lambda v_0)\) such that

$$\begin{aligned} S_3v=(I-T_1)(v-\lambda v_0). \end{aligned}$$

Then

$$\begin{aligned} -\epsilon S_2v-m\epsilon v-\epsilon \frac{L}{10}=-m\epsilon (v-\lambda v_0)+\epsilon \frac{L}{10} \end{aligned}$$

or

$$\begin{aligned} -S_2v= \lambda mv_0+\frac{L}{5}. \end{aligned}$$

Hence,

$$\begin{aligned} \Vert S_2v\Vert =\left\| \lambda m v_0+\frac{L}{5}\right\| >\frac{L}{5}. \end{aligned}$$

This contradicts \(\Vert S_2v\Vert \le AB_1<\frac{L}{5}\).

5. Let \(\varepsilon _1=\frac{2}{5m}.\) Assume that there exist \(w\in \partial {\mathcal {P}}_{L}\) and \(\lambda _1\ge 1+\varepsilon _1\) such that \(\lambda _1w\in \overline{{\mathcal {P}}_{R_2}}\) and

$$\begin{aligned} S_3w= (I-T_1)(\lambda _1w). \end{aligned}$$

Since \(w\in \partial {\mathcal {P}}_{L}\) and \(\lambda _1w\in \overline{{\mathcal {P}}_{R_2}}\), it follows that

$$\begin{aligned} \left( \frac{2}{5m}+1\right) L\le \lambda _1L=\lambda _1\Vert w\Vert \le R_1+\frac{A}{m} B_1+\frac{L}{5m}. \end{aligned}$$

Moreover,

$$\begin{aligned} -\epsilon S_2w-m\epsilon w-\epsilon \frac{L}{10}=-\lambda _1m\epsilon w+\epsilon \frac{L}{10}, \end{aligned}$$

or

$$\begin{aligned} S_2w+\frac{L}{5}=(\lambda _1-1)mw. \end{aligned}$$

Since \(\Vert S_2w\Vert \le AB_1<\frac{L}{5}\), it follows that

$$\begin{aligned} 2\frac{L}{5}> \left\| S_2w+\frac{L}{5}\right\| =(\lambda _1-1)m \Vert w\Vert =(\lambda _1-1) m L \end{aligned}$$

and

$$\begin{aligned} \frac{2}{5m}+1> \lambda _1, \end{aligned}$$

which contradicts \(\lambda _1\ge 1+\varepsilon _1\).
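The algebra in step 3 can be replayed with exact rational arithmetic. The sketch below treats \(v_1\) and \(S_2v_1\) as scalar values at a fixed point \((t, x)\) and checks that \(v_2= v_1+ \frac{1}{m} S_2v_1+\frac{L}{5m}\) gives \(-\epsilon m v_2+\epsilon \frac{L}{10}=-\epsilon S_2v_1-m\epsilon v_1-\epsilon \frac{L}{10}\), which is the identity behind the inclusion \(S_3(\overline{{\mathcal {P}}_{R_1}})\subset (I-T_1)(\Omega )\). The function name and all numerical values are arbitrary assumptions made for illustration.

```python
from fractions import Fraction as F


def step3_sides(v1, s2v1, m, eps, L):
    """Return both sides of the identity used in step 3 (pointwise values)."""
    v2 = v1 + s2v1 / m + L / (5 * m)
    left = -eps * m * v2 + eps * L / 10                # -eps*m*v2 + eps*L/10
    right = -eps * s2v1 - m * eps * v1 - eps * L / 10  # -eps*S2v1 - m*eps*v1 - eps*L/10
    return left, right


# Arbitrary rational sample values (assumptions for illustration only).
left, right = step3_sides(v1=F(3, 7), s2v1=F(-1, 50), m=F(2), eps=F(1, 3), L=F(1, 2))
print(left == right)
```

Using `Fraction` rather than floating point makes the comparison exact, so equality of the two sides reflects the identity itself and not a rounding tolerance.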

Therefore all conditions of Theorem 2.5 hold. Hence, the problem (1.1) has at least two solutions \(u_1\) and \(u_2\) so that

or

4 An Example

Below, we will illustrate our main results. Let \(k=2\),

and

Then

and

i.e., (A5) holds. Next,

i.e., (A6) holds. Take

Then

Therefore

Hence, there exists a positive constant \(C_1\) so that

\(s\in {\mathbb {R}}\). Note that \(\lim \limits _{s\rightarrow \pm 1} l(s)=\frac{\pi }{2}\) and by [37, p. 707, Integral 79], we have

Let

and

Then there exists a constant \(C>0\) such that

Let

Then

i.e., (A4) holds. Therefore for the problem

Data availability statement

Not applicable.

References

1. Acosta, A.; Leiva, H.: Robustness of the controllability for the heat equation under the influence of multiple impulses and delays. Quaest. Math. 41(6), 761–772 (2018)

2. Agarwal, R.P.; Leiva, H.; Riera, L.; Lalvay, S.: Existence of solutions for impulsive neutral semilinear evolution equations with nonlocal conditions. Discontin. Nonlinearity Complex. 11(2), 1–18 (2022)

3. Asanova, A.T.; Kadirbayeva, Z.M.; Bakirova, É.A.: On the unique solvability of a nonlocal boundary-value problem for systems of loaded hyperbolic equations with impulsive actions. Ukr. Math. J. 69(8), 1175–1195 (2018)

4. Bainov, D.; Simeonov, P.: Impulsive Differential Equations: Periodic Solutions and Applications. Pitman Monographs and Surveys in Pure and Applied Mathematics 66, New York (1993)

5. Bainov, D.; Kamont, Z.; Minchev, E.: Periodic boundary value problem for impulsive hyperbolic partial differential equations of first order. Appl. Math. Comput. 68(2–3), 95–104 (1995)

6. Bainov, D.; Minchev, E.: Estimates of solutions of impulsive parabolic equations and applications to the population dynamics. Publ. Math. 40, 85–94 (1996)

7. Bainov, D.; Kolev, D.; Nakagawa, K.: The control of the blowing-up time for the solution of the semilinear parabolic equation with impulsive effect. J. Korean Math. Soc. 37, 793–802 (2000)

8. Benchohra, M.; Henderson, J.; Ntouyas, S.: Impulsive Differential Equations and Inclusions. Contemporary Mathematics and its Applications 2, New York (2006)

9. Boucherif, A.; Al-Qahtani, A.S.; Chanane, B.: Existence of solutions for impulsive parabolic partial differential equations. Numer. Funct. Anal. Optim. 36, 730–747 (2015)

10. Chan, C.Y.; Ke, L.: Remarks on impulsive quenching problems. Proc. Dyn. Syst. Appl. 1(1), 59–62 (1994)

11. Chan, C.Y.; Deng, K.: Impulsive effects on global existence of solutions of semi-linear heat equations. Nonlinear Anal. 26, 1481–1489 (1996)

12. Djebali, S.; Mebarki, K.: Fixed point index theory for perturbation of expansive mappings by \(k\)-set contractions. Topol. Methods Nonlinear Anal. 54(2A), 613–640 (2019)

13. Dou, J.W.; Li, K.T.: Comparison results for a kind of impulsive parabolic equations with application to population dynamics. Acta Math. Appl. Sinica (Engl. Ser.) 22, 211–218 (2006)

14. Duque, C.; Uzcátegui, J.; Leiva, H.; Camacho, O.: Controllability of the Burgers equation under the influence of impulses, delay and nonlocal condition. Int. J. Appl. Math. 33(4), 573–583 (2020)

15. Erbe, L.H.; Freedman, H.I.; Liu, X.Z.; Wu, J.H.: Comparison principles for impulsive parabolic equations with applications to models of single species growth. J. Aust. Math. Soc. Ser. B 32, 382–400 (1991)

16. da Costa Ferreira, J.; Pereira, M.C.: A nonlocal Dirichlet problem with impulsive action: estimates of the growth for the solutions. Comptes Rendus Mathématique 358(11–12), 1119–1128 (2020)

17. Gao, W.; Wang, J.: Estimates of solutions of impulsive parabolic equations under Neumann boundary condition. J. Math. Anal. Appl. 283, 478–490 (2003)

18. Georgiev, S.G.; Zennir, K.: Existence of solutions for a class of nonlinear impulsive wave equations. Ricerche Mat. 71(1), 211–225 (2022)

19. Georgieva, A.; Kostadinov, S.; Stamov, G.T.; Alzabut, J.O.: \(L_{p}(k)\)-equivalence of impulsive differential equations and its applications to partial impulsive differential equations. Adv. Differ. Equ. 2012(144), 12 (2012)

20. He, L.; Anping, L.: Existence and uniqueness of the solution for parabolic systems with impulse and delay. J. Biomath. 31(2), 158–170 (2016)

21. Hernández, E.M.; Aki, S.M.T.; Henríquez, H.: Global solutions for impulsive abstract partial differential equations. Comput. Math. Appl. 56(5), 1206–1215 (2008)

22. Isaryuk, I.M.; Pukalskyi, I.D.: Boundary-value problem with impulsive conditions and degeneration for parabolic equations. Ukr. Math. J. 57(10), 1515–1526 (2016)

23. Kirane, M.; Rogovchenko, Y.V.: Comparison results for systems of impulse parabolic equations with applications to population dynamics. Nonlinear Anal. 28, 263–276 (1997)

24. Lakshmikantham, V.; Yin, Y.: Existence and comparison principle for impulsive parabolic equations with variable times. Nonlinear World 4(2), 145–156 (1997)

25. Leiva, H.; Sundar, P.: Approximate controllability of the Burgers equation with impulses and delay. Far East J. Math. Sci. 102(10), 2291–2306 (2017)

26. Leiva, H.; Sivoli, Z.: Existence, stability and smoothness of bounded solutions for an impulsive semilinear system of parabolic equations. Afr. Mat. 29(7–8), 1225–1235 (2018)

27. Leiva, H.: Karakostas fixed point theorem and the existence of solutions for impulsive semilinear evolution equations with delays and nonlocal conditions. Commun. Math. Anal. 21(2), 68–91 (2018)

28. Li, H.; Zhang, Y.: Variational method to nonlinear fourth-order impulsive partial differential equations. Adv. Mater. Res. 261–263, 878–882 (2011)

29. Liu, X.; Zhang, S.: A cell population model described by impulsive PDEs. Existence and numerical approximation. Comput. Math. Appl. 36(8), 1–11 (1998)

30. Liu, J.H.: Nonlinear impulsive evolution equations. Dyn. Contin. Discrete Impuls. Syst. 6(1), 77–85 (1999)

31. Mouhous, A.; Georgiev, S.; Mebarki, K.: Existence of solutions for a class of first order boundary value problems. Archivum Mathematicum 58(3), 141–158 (2022)

32. Nakagawa, K.: Existence of a global solution for an impulsive semilinear parabolic equation and its asymptotic behaviour. Commun. Appl. Anal. 4, 403–409 (2000)

33. Ndiyo, E.E.; Etuk, J.J.; Jim, U.S.: Distribution solutions for impulsive evolution partial differential equations. Br. J. Math. Comput. Sci. 9(5), 407–417 (2015)

34. Ndiyo, E.; Etuk, J.; Aaron, A.: Existence and uniqueness of solution of impulsive Hamilton–Jacobi equation. Palest. J. Math. 8(2), 103–106 (2019)

35. Özbekler, A.; Işler, A.: Sturm comparison criterion for impulsive hyperbolic equations. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. 114(2), 10 (2020)

36. Petrov, G.: Impulsive moving mirror model in a Schrödinger picture with impulse effect in a Banach space. Communications of the Joint Institute for Nuclear Research, preprint E2-92-272, Dubna, Russia (1992)

37. Polyanin, A.; Manzhirov, A.: Handbook of Integral Equations. CRC Press, Boca Raton (1998)

38. Pukalskyi, I.D.; Yashan, B.O.: Boundary-value problem with impulsive action for a parabolic equation with degeneration. Ukr. Math. J. 71(5), 735–748 (2019)

39. Rachůnková, I.; Tomeček, J.: State-Dependent Impulses. Boundary Value Problems on Compact Interval. Atlantis Briefs in Differential Equations 6, Amsterdam (2015)

40. Rogovchenko, Y.V.: Nonlinear impulse evolution systems and applications to population models. J. Math. Anal. Appl. 207(2), 300–315 (1997)

41. Song, G.: Estimates of solutions of impulsive parabolic equations and application. Int. J. Biomath. 1(2), 257–266 (2008)

42. Stamova, I.: Stability Analysis of Impulsive Functional Differential Equations. De Gruyter Expositions in Mathematics 52, Berlin (2009)

43. Wu, K.N.; Zhu, Y.N.; Chen, B.S.; Yao, Y.: Asymptotical synchronization of coupled nonlinear partial differential systems with impulsive effects. Asian J. Control 18(2), 1–11 (2016)

Acknowledgements

The authors Saïda Cherfaoui, Arezki Kheloufi and Karima Mebarki acknowledge support of “Direction Générale de la Recherche Scientifique et du Développement Technologique (DGRSDT)”, MESRS, Algeria.

Funding

Not applicable.


Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Informed consent

Not applicable.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cherfaoui, S., Georgiev, S.G., Kheloufi, A. et al. Existence of classical solutions for a class of nonlinear impulsive evolution partial differential equations. Arab. J. Math. 12, 573–585 (2023). https://doi.org/10.1007/s40065-022-00415-8


DOI: https://doi.org/10.1007/s40065-022-00415-8