Abstract

Recent advances in deep generative models have shown significant potential in image synthesis, detection, segmentation, and classification tasks. Segmenting medical images is considered a primary challenge in the biomedical imaging field, and various GAN-based models have been proposed in the literature to address it. Our search identified 151 papers; after a twofold screening, 138 papers were selected for the final survey. We conduct a comprehensive survey of GAN applications to medical image segmentation, focusing primarily on GAN-based models, performance metrics, loss functions, datasets, augmentation methods, paper implementations, and source code. We then provide a detailed overview of GAN applications in the segmentation of different human diseases. We conclude with a critical discussion, the limitations of GANs, and suggestions for future directions. We hope this survey is beneficial and increases awareness of GAN implementations for biomedical image segmentation tasks.

1 Introduction

Medical imaging is widely used to gather life-saving image data by noninvasively peering at different organs of the human body [1]. With the advancement of the biomedical imaging field, data are provided by positron emission tomography (PET), computed tomography (CT), magnetic resonance imaging (MRI), and a few other modalities such as microscopy and digital pathology. The images collected by these modalities provide anatomical views of different organs. Still, it is difficult for any radiologist to correctly identify lesion areas from the image data. CT scans and MRI help with accurate diagnosis and provide detailed anatomical information. These image data are provided in 3D form, so lesion tissue segmentation has to proceed slice by slice on 2D images. If medical imaging data are hand-marked by an annotation expert or radiologist, annotation takes roughly fifteen minutes per image [2]. Manual annotation is therefore expensive, time-consuming, and difficult to scale. The time overhead of manually segmenting images has made automatic methods an active research field.

Over the last few years, medical experts have widely adopted automated segmentation methods for accurate clinical decision-making. Automated segmentation can provide medical experts with the evidence needed for proper treatment planning and for predicting potential high-risk factors. Several challenges remain in the medical image segmentation field. First, medical imaging researchers often face limited data availability for the segmentation of any specific disease. Furthermore, the variable shape, position, and size of lesion tissues create serious difficulty for segmentation methods. Another challenge is correctly locating boundary elements that belong to the same tissue structure. Finally, various factors of image capture, such as aliasing, image sampling, reconstruction, and different types of noise, may make the boundaries of the region of interest ambiguous and indistinct [3]. Recently, deep learning has emerged as a revolutionary approach, and many medical imaging challenges, including image segmentation, are tackled with various CNN models [4]. Deep learning models are known for outstanding performance and are vital in medical imaging for complex decision-making [5]. These deep networks apply many nonlinear transformations to abstract the given input at multiple levels and map these abstractions together to predict results. Deep networks thus learn a nonlinear mapping between input and predicted results and are fully capable of learning hidden features [6].

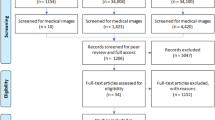

Recently, generative adversarial networks (GANs) have been introduced as an outstanding breakthrough in deep networks and are rapidly gaining the attention of the research community because of their wide variety of medical imaging applications. Compared to traditional deep neural networks, GANs are a different type of deep neural network in which two networks are trained simultaneously. Several survey and review papers on applications of GANs to medical imaging have been published [7,8,9,10]. These papers cover research details at a general level, which is helpful for new GAN researchers. However, the application of GANs to biomedical segmentation was not explicitly covered in detail. The core objective of our survey is to give a comprehensive overview of GAN applications in the segmentation of biomedical images. For data collection, we used Google Scholar to query papers with titles containing "Generative adversarial network," "GANs segmentations," or "GANs medical image segmentation." Our collection timeline ran from 2016 to March 2021. Our search identified 151 papers, but only 138 published papers on GANs for segmentation tasks are covered. Most of the papers come from well-reputed publishers, such as IEEE, Springer, and Elsevier, and from some related conferences. Well-cited papers from the arXiv e-Print archive are also considered. We reviewed all papers thoroughly and excluded those irrelevant to biomedical imaging or GANs. To handle overlapping work, only the paper with the highest citation count is considered. A comparison of our survey with previous survey papers is presented in Table 1.

The remainder of this survey is organized as follows. We begin with the background of GANs and their structural variants in Sect. 2. Section 3 presents the imaging modalities used in GAN studies. Section 4 details GAN-based segmentation for different human organs. The discussion, limitations, and conclusion are presented in Sects. 5 and 6.

2 Background

This section discusses various GAN models, performance metrics, and loss functions, respectively.

2.1 Generative adversarial networks (GANs)

This section introduces the different GANs found in the medical image segmentation papers reviewed in this work. Table 2 shows the variants of GAN architecture used in existing studies and the number of papers published on various human organs.

The classic GAN architecture [11], also known as vanilla GAN, was proposed in 2014. Compared to traditional deep neural networks, a GAN consists of two deep neural networks, the generator G and the discriminator D, which are trained simultaneously. The basic aim of the discriminator D is to determine whether a sample belongs to the fake or the real distribution, whereas the generator produces fake samples to deceive D. The two networks play a two-player min–max game in which one participant tries to maximize the value function and the other tries to minimize it. The input to the generator G is noise z drawn from a prior p(z), usually a Gaussian or uniform distribution. The output of G, \(x_{g}\), is expected to be visually similar to real images \(x_{r}\) drawn from the real data distribution \(p_{r}(x)\). The generator G defines a nonlinear mapping function parameterized by \(\theta_{g}\) and formulated as \(G(z;\theta_{g})\). The input to the discriminator D is either a real sample or a generated one. Network D receives \(x_{r}\) or \(x_{g}\) and outputs a single scalar value \(O_{1} = D(x;\theta_{d})\), the probability that the input image is real rather than fake. Here, \(D(x;\theta_{d})\) is the mapping function learned by discriminator D and parameterized by \(\theta_{d}\). The distribution formed by the synthesized samples is \(p_{g}\), and it is expected to approximate \(p_{r}\) after successful training. By convention, z denotes the input noise, G the generator network, and D the discriminator network. Here, \(y_{1}\) denotes the binary real-or-fake output.

The discriminator D focuses on differentiating between real and fake images, while network G is trained specifically to fool network D. Gradient information is backpropagated from network D to network G, so G updates its parameters to generate outputs that help fool D. The objectives of network G and network D can be expressed mathematically following [11].
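For reference, the standard adversarial objectives of [11] can be written in the notation of this section as shown here. This is a sketch under stated assumptions rather than a reproduction of the authors' typeset equations: the symbol \(L_{D}^{GAN}\) is assumed by analogy with \(L_{G}^{GAN}\), and \(x_{g} = G(z;\theta_{g})\) with \(z \sim p(z)\).

\[
L_{D}^{GAN} = \max_{D}\; \mathbb{E}_{x_{r} \sim p_{r}(x)}\left[\log D(x_{r})\right] + \mathbb{E}_{x_{g} \sim p_{g}(x)}\left[\log\left(1 - D(x_{g})\right)\right]
\]

\[
L_{G}^{GAN} = \min_{G}\; \mathbb{E}_{x_{g} \sim p_{g}(x)}\left[\log\left(1 - D(x_{g})\right)\right]
\]

The first objective is the log-likelihood of a binary real-versus-fake classifier, which D maximizes. The second is the term that G minimizes: it becomes smaller as D assigns a higher probability of being real to the generated samples.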

It can be observed that network D acts as a binary classifier that differentiates between fake and real images. If network D is trained to optimality before the next update of network G, minimizing \(L_{G}^{GAN}\) is equivalent to reducing the JS divergence between \(p_{r}\) and \(p_{g}\), as demonstrated in [11]. The desired result of training is that the generated samples \(x_{g}\) approximate the real data distribution \(p_{r}(x)\). In 2015, conditional GAN (cGAN) [12] was proposed, which includes extra information such as a class label in image synthesis: an information vector c is added to both the generator network G and the discriminator D. Here, G takes random noise z merged with the prior information c. The discriminator D uses the information provided in c to identify fake and real images produced by generator G quickly. Similarly, deep convolutional GAN, also known as DCGAN [13], was first presented in 2015. In this model, the fully connected hidden layers were removed, and pooling layers were replaced by strided convolutions in the discriminator network and fractional-strided convolutions in the generator network G. Another modification was the adoption of batch normalization in both the generator G and the discriminator D, along with Leaky-ReLU activations in all layers of the discriminator. However, introducing batch normalization and Leaky-ReLU activations does not fully resolve the mode collapse issue in DCGAN. The pix2pix network [14] was presented for image-to-image translation. In addition to learning the mapping from input image to output image, pix2pix also learns a loss function with which to train this mapping. This network has shown outstanding results in various image processing and computer vision applications. Cycle-GAN [15] was proposed in 2017 to learn a transformation between image distributions. A cycle-consistency loss is introduced to preserve the input image after a forward and reverse cycle of translation. Matching pairs are not required for training, which simplifies data preparation and extends the network to a large variety of applications. Figure 1 depicts the hierarchical presentation of modifications to the original GAN architecture.
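The cycle-consistency idea can be illustrated with a small sketch. It assumes an L1 penalty and uses hypothetical per-pixel functions `G`, `F_exact`, and `F_biased` as stand-ins for trained translation networks; none of these names come from [15], and a real cycle-GAN would use convolutional networks over full images.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equal-length lists of pixel values."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x, y):
    """L1 cycle loss: x -> G -> F should return x, and y -> F -> G should return y."""
    forward = l1_distance([F(G(p)) for p in x], x)    # X -> Y -> X
    backward = l1_distance([G(F(q)) for q in y], y)   # Y -> X -> Y
    return forward + backward


# Hypothetical per-pixel translators standing in for trained networks.
def G(p):
    """Translate a pixel from domain X to domain Y."""
    return 2.0 * p + 1.0


def F_exact(q):
    """Exact inverse of G: translate from domain Y back to domain X."""
    return (q - 1.0) / 2.0


def F_biased(q):
    """Imperfect inverse of G with a constant offset of 0.1."""
    return (q - 1.0) / 2.0 + 0.1


# Unpaired samples: x and y need not correspond or even have the same length.
x = [0.0, 0.25, 0.5, 1.0]
y = [1.0, 1.5, 3.0]

loss_exact = cycle_consistency_loss(G, F_exact, x, y)
loss_biased = cycle_consistency_loss(G, F_biased, x, y)
print(loss_exact, loss_biased)
```

With the exact inverse the loss vanishes, while the biased inverse yields a loss of about 0.3 (0.1 from the forward cycle and 0.2 from the backward cycle). Because the loss only compares each sample with its own reconstruction, no matched (x, y) pairs are needed.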

2.2 Performance metrics

Various performance metrics are used to evaluate GAN segmentation models. Multiple measures are used to analyze a trained model because a model sometimes performs well on one measure while performing poorly on another. Choosing performance measures correctly is highly recommended, since they reflect whether the proposed model was trained correctly or performs poorly on a given dataset. In most cases, metrics compare the segmentation result against the ground truth image, which may be a label or a mask. Almost all of these metrics are calculated from a confusion matrix using four basic elements: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Table 3 lists the performance measures found in this survey, with details of each.
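As an illustration of how such metrics follow from the four confusion-matrix counts, the sketch below computes several commonly used segmentation measures for flattened binary masks. The function names and the selection of metrics are assumptions made for this example; they are the usual textbook definitions and are not taken from Table 3.

```python
def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN for two equal-length binary masks (lists of 0/1)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    return tp, tn, fp, fn


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero (e.g. empty masks)."""
    return num / den if den else 0.0


def segmentation_metrics(pred, truth):
    """Common overlap and classification metrics derived from the confusion matrix."""
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "dice": safe_div(2 * tp, 2 * tp + fp + fn),
        "iou": safe_div(tp, tp + fp + fn),          # Jaccard index
        "sensitivity": safe_div(tp, tp + fn),       # recall
        "specificity": safe_div(tn, tn + fp),
        "precision": safe_div(tp, tp + fp),
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
    }


# Toy flattened masks: 1 = lesion pixel, 0 = background pixel.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0]

metrics = segmentation_metrics(pred, truth)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this toy case TP = 2, TN = 4, FP = 1, and FN = 1, so accuracy (0.75) is noticeably higher than IoU (0.5). This is the kind of disagreement between measures that motivates reporting more than one metric.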

2.3 Loss functions

Various loss functions are available for both the generator and the discriminator networks of a GAN. The different losses proposed in GAN models are shown in Table 4. The \(l_{\text{adv}}\) function is the most widely used, appearing in 32 studies, followed by the \(l_{\text{bce}}\) function, used in 23 studies. The \(l_{\text{dice}}\) function is the third most widely used, appearing in 16 studies. We also noticed that the \(l_{\text{mse}}\) function is used in 6 studies and the \(l_{\text{mae}}\) function in only 2 studies.
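To make these loss names concrete, the following sketch gives one common definition of each on flattened probability maps. The exact formulations in the surveyed papers may differ, so the function names, the smoothing constant, and the clamping value `eps` are assumptions made for illustration only.

```python
import math


def bce_loss(pred, target, eps=1e-7):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)   # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(pred)


def dice_loss(pred, target, smooth=1.0):
    """Soft Dice loss: 1 minus the smoothed Dice overlap."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * intersection + smooth) / (sum(pred) + sum(target) + smooth)


def mse_loss(pred, target):
    """Mean squared error."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def mae_loss(pred, target):
    """Mean absolute error."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def adversarial_loss_generator(d_scores_on_fake, eps=1e-7):
    """Generator-side adversarial term: mean of log(1 - D(x_g)), to be minimized."""
    clamped = [min(max(s, eps), 1.0 - eps) for s in d_scores_on_fake]
    return sum(math.log(1.0 - s) for s in clamped) / len(clamped)


# Toy predicted probabilities and ground-truth mask (flattened).
pred = [0.9, 0.8, 0.2, 0.1]
target = [1, 1, 0, 0]

losses = {
    "bce": bce_loss(pred, target),
    "dice": dice_loss(pred, target),
    "mse": mse_loss(pred, target),
    "mae": mae_loss(pred, target),
    "adv": adversarial_loss_generator([0.5, 0.5]),
}
for name, value in losses.items():
    print(f"{name}: {value:.4f}")
```

In segmentation GANs the generator objective is often a weighted sum of the adversarial term and one or more of the pixel-wise terms; the weights are a design choice and are not specified here.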

3 Imaging modalities used in GAN-based segmentation

This section summarizes GAN applications across biomedical imaging modalities. These modalities can be categorized into MRI, CT scan, X-ray, dermoscopy, and microscopy images. Figure 2 shows the hierarchical diagram of biomedical imaging modalities. It shows that 48% of studies are based on the MRI modality and 31% on CT scans. Furthermore, 21% of studies are based on X-ray, ultrasound, and mammography.

3.1 Datasets based on MRI imaging

MRI is an essential noninvasive technique that is widely used as a brain tumor imaging modality in research studies. MRI is considered safe even for pregnant women and their babies because it involves no radiation exposure. However, a major disadvantage of MRI is its sensitivity, and it is challenging to assess organs that involve mouth tumors. In MRI, segmentation is commonly used to extract different tissues in order to identify abnormalities and tumor regions. From 2013 to 2018, MICCAI released a series of brain tumor MRI datasets (BraTS 2013–18). Different brain tumor segmentation approaches and frameworks have been reported [16,17,18,19,20,21,22,23, 28, 108, 109, 112, 125, 133, 140] that aid in improving accuracy and identifying tumors from MRI images. ISLES (Ischemic Stroke Lesion Segmentation) contributes datasets for biomedical segmentation, and the IXI and NAMIC Multimodality datasets [24] are also used in studies. The Alzheimer's Disease Neuroimaging Initiative (ADNI) database was launched in 2004 by Dr. Michael W. Weiner and is financed by a public–private partnership. The central objective of ADNI is to design a clinical system for prompt diagnosis of Alzheimer's disease at an early stage. Most of the medical datasets in ADNI are MRI- and PET-based images for disease assessment, used in studies [25, 110, 126, 134, 142]. Likewise, the studies in [24] and [26] used the NAMIC Brain Multimodality dataset for automatic brain segmentation. This database is freely accessible and contains structural MRI (sMRI) images.

3.2 Datasets based on CT scans

CT is a biomedical imaging modality that has had an outstanding impact on diagnosis. Like MRI, CT scans are widely used for various medical conditions across a broad range of biomedical applications. A CT scan requires less scanning time than MRI and is an excellent technique for assessing coronary artery disease and vessels. However, radiation exposure and contrast material can adversely affect kidney function in persons with kidney problems. The ISLES 2018 challenge provides 3D CT images for ischemic stroke lesion segmentation, used in [27]. Various CT scan datasets, including MICCAI 2017, ImageCHD, MRBrainS18, and MM-WHS-2017, are used in [88] and [101] for heart segmentation. Similarly, the MICCAI Grand Challenge also provided the PROMISE12 prostate MR image segmentation dataset used in [145]. CT has gained importance as a 3D imaging modality, and most liver tumor datasets, such as ISBI LiTS-2017, DeepLesion, MICCAI-SLiver07, and LIVER100, are 3D and widely used in the literature [72, 73, 89, 99, 130, 136]. For lung tumor segmentation, the LIDC-IDRI, SARS-COV-2 Ct-Scan, and NSCLC-Radiomics datasets have been used in [65, 96]. 3D CT images are also applied to kidney tumor segmentation: GAN-based models [99, 130] use the Kidney KiTS19 Challenge and Kidney NIH Pancreas-CT datasets for accurate tumor segmentation. Similarly, for spine, thorax, head and neck, and spleen segmentation, the InnerEye, 2017 AAPM Thoracic Auto-segmentation Challenge, H&N CT, and Spleen Data Decathlon datasets are publicly available. These datasets have been widely used in experiments with GAN models [66, 67, 84, 130].

3.3 Datasets based on ultrasound imaging

Ultrasound images are acquired by diagnostic ultrasound, a noninvasive technique used to capture visual images of the inside of the body. Ultrasound is widely adopted because it is low cost and has no reported side effects in patients. Ultrasound techniques have a few limitations: they are strictly linked to medical experiments and require ensuring that no air gaps remain between the probe and the body. Dataset B was shared in 2012 by UDIAT Parc Taulí, Spain. This dataset includes 163 images of women's breast cancer cases at a resolution of 760 × 570, and each image shows more than one lesion. It is used in the study [92]. The OASBUD dataset was collected from Baheya Hospital, Egypt, and contains breast ultrasound images of 100 patients, with 50 malignant and 48 benign lesions.

3.4 Datasets based on X-ray imaging

X-ray imaging, including chest X-rays (CXRs), is a very affordable and commonly used medical imaging modality. Because of its low cost and easy availability, thousands of CXRs are performed daily in hospitals. The Osteoarthritis Initiative (OAI) project aims at understanding and preventing knee osteoarthritis, one of the leading causes of disability in older men and women. The OAI data, used in [117], include X-ray and MRI images of more than 4,796 subjects. The JSRT database contains 154 lung nodule and 93 non-nodule X-ray images at 2048 × 2048 resolution. These data are used for segmentation in the studies [97, 98, 131]. The NIH chest X-ray dataset is another chest X-ray image dataset, used in [98]. Mammography is a low-energy X-ray-based biomedical imaging technique for breast cancer diagnosis and screening. The mammography datasets INbreast, DDSM-BCRP, and CBIS-DDSM are widely used in GAN studies [93, 120].

3.5 Datasets based on RGB imaging

In this subsection, we focus on RGB imaging applications of GANs. As seen in Fig. 3, these are sub-categorized into microscopy, fundoscopy, and dermoscopy.

Figure 3 shows that 20 papers have been published on fundoscopy-related studies. Fundus images are taken with a specialized fundus camera, which captures the retina, optic disk, and macula in 2D. These 2D images are used to diagnose eye diseases and to segment glaucoma, the optic disk, blood vessels, and the retina. The publicly available DRIVE, STARE, and Drishti-GS datasets are the leading datasets, widely used in [39,40,41,42,43,44,45,46,47,48,49, 135, 138]. Similarly, CHASEDB1 and RIM-ONE are retinal fundus image databases used for segmentation [41, 44, 48,49,50, 138]. A few other datasets, namely Origa650, REFUGE, DRIONS-DB, EyePACS, FGADR, and IDRiD, are used in [44, 46, 48, 49, 51, 52, 111, 121, 138].

Figure 3 shows that ten papers have been published on dermoscopy-related studies. Dermoscopy is a noninvasive technique performed with a dermatoscope to examine pigmented skin lesions. In skin cancer segmentation studies, ISIC2016, ISIC2017, and ISIC2018 are three publicly available challenge datasets that are widely used in [78,79,80,81,82, 100, 118, 144]. PH2 is a small dataset consisting of 200 skin images, including ground truth images. Similarly, the HAM10000 dataset consists of 10,015 dermatoscopic images released for training deep learning and other machine learning models. These datasets are used in [80, 81, 83, 119].

Figure 3 shows that 14 papers have been published on microscopy-related studies. The 2018 Data Science Bowl dataset was contributed by numerous biological laboratories and presented by Booz Allen Hamilton and Kaggle. It consists of a total of 660 RGB images and is used in [115]. Similarly, MIVIA, ssTEM, SCD RBC, and triple negative breast cancer (TNBC) are microscopy datasets used in GAN applications [55, 116, 132]. The MICCAI 2015 challenge provided the Gland Challenge dataset, based on H&E-stained histology images. Similarly, MICCAI 2017 shared the Digital Pathology Challenge dataset for research purposes. In 2018, a multi-organ nuclei segmentation challenge was conducted, and microscopy images were provided. These challenge datasets are used in [55, 56].

The complete overview of various datasets used in GANs-based studies is presented in Table 5. The dataset name, type, target, number of samples, and downloading URLs are also provided.

4 GAN-based segmentation methods for various human organs

This section focuses on GAN-based medical image segmentation models, with studies categorized by the part of human anatomy they target. Figure 4 shows the papers published on different human organs and their share in biomedical segmentation tasks. Tables 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15 present these models in tabular form, stating each model, loss function, dataset, preprocessing method, image resolution, augmentation method, performance measure, implementation, and available source code link.

4.1 GANs applications in brain tumor segmentation

In medical imaging, brain MRI images and CT scans are commonly used to diagnose and monitor the progression of a patient's illness and to plan possible treatment. Alzheimer's disease and brain tumors are among the deadliest diseases in both men and women worldwide. However, manual segmentation and identification of pathologies in brain images are time-consuming and tedious. Imbalanced brain tumor datasets pose a significant challenge in medical imaging, directly affecting the training of proposed models. Recently, GANs have gained momentum in the research community for synthesizing brain images. Rezaei et al. [108] proposed a GAN model with (i) generative, (ii) discriminative, and (iii) refinement networks to address the imbalanced dataset issue using ensemble-based learning. A 3D projective GAN, called PAN [29], was introduced to address the computational burden; an attention module was also proposed to select global information from the segmentor network. AsynDGAN [16] was proposed as a solution to privacy restrictions across multiple health entities. The framework trains a generator to capture the information distribution from the discriminators and uses synthetic samples to train segmentation models. A framework was proposed in [17] for synthetic segmentation, translating FLAIR MRI images into high-contrast synthetic MRI images; a few regression models were also used to predict each patient test case. In the MPC-GAN-based model [30], a dense multipath U-Net is used as the generator, which is regularized through the discriminator.

Moreover, the discriminator and generator capture contextual information from the input images. Furthermore, a boundary loss function is used to improve the generator loss. UAGAN [31] was proposed for multimodal unpaired medical image segmentation; modality-invariant features of the target anatomical structures are captured by the translation stream. CoCa-GAN [18] is a context-aware GAN based on 3D feature learning for data synthesis. It resolves the missing-modality problem when grading gliomas from a single T1-weighted MRI input. Rachmadi et al. presented DEP-GAN [32], which uses two discriminators to improve prediction performance for WMH in small vessel disease. Using incomplete multimodal brain images, a unified disease-image-specific deep neural network was developed to synthesize images and diagnose disease [126]. The Vox2Vox network [133] generates realistic output images from multichannel 3D sample images. An adversarial training approach is incorporated with a CNN-based segmentation method, and a loss function is used to improve the generator and discriminator networks [26]. An unsupervised 3D adversarial neural network is introduced in [33] for brain image segmentation. Moreover, a multiconnected discriminator is suggested for optimizing the adversarial training of Deep-supGAN [110], a cascaded GAN that segments bony structures using CT scans generated from MRI images; complementary information on bony structures is gathered from the combination of CT scans and MRI images. A software solution [19] is presented for the reconstruction of retina 3D MRI images. One method uses a multistage model with attention to filter out unimportant content, and the resolution of HTC is enhanced [125]. GP-GAN is a modified variant of the classical U-Net, and a novel loss function incorporating dice loss is presented [20]. In another approach, diffusion-weighted imaging and perfusion parameter maps are used to obtain accurate image segmentation [27]. SegAM is an end-to-end GAN-based model with a novel multiscale loss for segmentation tasks [21]. Another proposed network is a residual cyclic unpaired encoder that uses residual and mirroring principles [140]. A method is presented to segment white matter in 18F-FDG PET/CT images by employing GANs [134]. The structure of the GAN model used in that study is illustrated in Fig. 5.

Fig. 5 Segmentation map generation using the adversarial network. This figure is reproduced from paper [134]

The tumor GAN [22] generates image segmentation pairs using unpaired adversarial training. UG-net achieves pixel-level classification of MRI images, with GANs used to train two models simultaneously [34]. MCMT-GAN is another GAN variant, which addresses brain MRI synthesis in an unsupervised fashion [24]. Liu et al. proposed GANReDL [35], based on SRGAN, to improve image quality. A loss function is also introduced as a feature real order derivative, and the discriminator network is trained to discriminate between improved source and mask images. The studies [28, 112, 113] presented GAN models that rely on a fully convolutional network to synthesize and segment MRI images more accurately. In [109, 111, 114, 142], cGANs were proposed for segmenting 3D brain MRI or CT images, and the results demonstrate that the proposed methods are practical for different types of brain tumor images. Cycle-GAN-based unsupervised networks proposed in [25, 127] synthesize and segment medical images, and the results indicate that these approaches help in the correct segmentation of brain tissues. Similarly, the research presented in [23, 36,37,38] is based on generator and discriminator networks to correctly segment 2D and 3D brain images.

4.2 GANs applications in cardiac segmentation

In medical imaging, cardiac segmentation has significant potential in cardiac disease diagnosis, clinical monitoring, and treatment planning. Cardiac magnetic resonance imaging (CMRI) provides details for premedication and surgical treatment, which are beneficial for evaluating all possible treatments. However, echocardiography poses various challenges, such as low spatial resolution, deformable appearance, and limited availability of annotated images. The authors of [102] presented a cCGAN developed to segment both ventricles in short-axis cardiac MRI. The generator, inspired by the traditional U-Net, is used for segmenting images, and a discriminator network is developed to distinguish the input image from a ground truth image. The GAN model is used to learn the U-Net feature representation for the segmentation process. The proposed U-Net-GAN [128] presents an annotation-free solution to the medical segmentation problem. A method based on latent space factorization and the cycle-consistency principle [129] is used for semi-supervised myocardial segmentation. Xu et al. [90] proposed a contrast-free multitask GAN to clinically segment and quantify MIs concurrently; the method achieved 96.46% classification accuracy. VoxelAtlas GAN [103] was suggested for 3D LV segmentation on 3D echocardiography. This network consists of a voxel-to-voxel cGAN and incorporates an atlas into an end-to-end framework. The results show that this framework has great importance for clinical applications. Similarly, a cGAN [104] is used to predict deformation from CMR frames, with outstanding accuracy in realistic prediction. For automatic whole-heart and great-vessel segmentation from CMR images, a context-aware cGAN is presented in [105]. Additionally, a cascade that leverages transfer learning is introduced to address the vanishing gradient problem and enhance training. Dou et al. [88] presented an adversarial learning-based unsupervised framework for cross-modality segmentation; the pixel-wise prediction model consists of a dilated FCN. Dong et al. [91] proposed a method that efficiently overcomes the issues of 3D echocardiography, including the complex anatomical environment and high data dimensionality. Atlas prior knowledge is integrated with a CNN for the 3D LV segmentation task. Furthermore, the deep atlas network can be trained with limited annotated images. The experimental dataset was acquired privately to demonstrate the significance of the framework. The proposed PSCGAN [106] is CA-free and synthesizes LGE-equivalent images while segmenting all diagnostic tissue from MRI scans. The framework uses three stages and a divide-and-conquer approach for training on generated images and segmenting given images. The network was run on 180 subjects, and the results show that PSCGAN could be used as an effective clinical tool for the standardization of IHD diagnosis. Teng et al. [101] proposed a few-shot GAN leveraging transfer learning and echocardiography translation. Two parent networks, U2S and S2U, are trained and assembled for the transfer learning process, and prior knowledge is transferred to the target networks. The work achieves interactive translation between sketch images and ultrasound images with few-shot annotated data. The contrast-free method DSTGANs [107] automatically segments and quantifies cine MRI images. Experiments on 165 subjects achieve a pixel classification accuracy of 96.98%. Rezaei et al. [89] proposed an RNN-GAN network to mitigate the imbalanced data challenge. The architecture is based on a recurrent generator and a recurrent discriminator, and a categorical accuracy loss is combined with the adversarial loss to train the RNN-GAN. The work was also validated on abdominal CT scans and achieved good results on the LiTS benchmark. The structure of the GAN model used in that research is illustrated in Fig. 6.

Fig. 6 The RNN-GAN architecture is based on two generator and discriminator networks. This figure is reproduced from paper [89]

4.3 GANs applications in liver tumor segmentation

A 2017 WHO report revealed that liver cancer had become the second most common malignant tumor and a leading cause of mortality globally [74]. The prevention and treatment of liver diseases are active research topics worldwide. Liver lesions provide essential information for the initial treatment plan and help improve patient recovery. Various researchers have proposed liver segmentation approaches and frameworks based on CT images to detect lesions at the early stages of such cancers. Frid-Adar et al. [75] presented a GAN-based data augmentation method to enlarge a limited medical image dataset. The method was tested on three categories of liver lesions (cysts, metastases, and hemangiomas) to improve classification performance using CNNs. A multiscale GAN [136] is used for liver segmentation with a weighted loss function. Furthermore, a pix2pix GAN based on DeepLabv3 is used to obtain semantic features. In liver images, fuzzy boundaries, complex backgrounds, and varying appearance make segmentation a challenging task for the research community. A network called DI2IN [72] was proposed for liver segmentation, based on encoder and decoder modules that incorporate multilevel feature combination and deep supervision. A low-cost and safe clinical tool based on a Radiomics-guided GAN [76] is suggested for segmenting liver lesions. This network also learns the mapping between contrast and non-contrast images. The results demonstrate that the liver segmentation method is a highly useful tool for clinical experts. Another work [77] presented a semi-supervised GAN architecture for liver segmentation from CT images. During training, non-annotated data are used to reduce the need for annotated data. In addition, a Bayesian loss function is adopted to include the prior and likelihood. Sun et al. presented an end-to-end MM-GAN-based framework [73] to translate label maps into 3D MRI images. In detailed experiments, both liver and brain images are synthesized to increase the data volume.

4.4 GANs applications in retina diseases segmentation

Diabetic retinopathy and glaucoma are two leading eye diseases that cause severe damage to the blood vessels of the eye and, ultimately, vision loss. Therefore, early diagnosis and screening are essential to reduce the risk of permanent blindness. Research on retinal vessel segmentation has relied heavily on deep learning-based models. A GAN-oriented semi-supervised network is proposed for the semantic segmentation task; compared with traditional CNNs, the proposed GAN training process is more effective [39]. The residual learning concept is applied to improve an architecture built upon FCN models. Moreover, adversarial training improves the segmentation results by learning a mapping between retinal images and segmentation maps using an FCN and a GAN [50]. Wu et al. [40] presented U-GAN, an improved model that combines a GAN with the classical U-Net architecture and includes densely connected convolutions and attention gates in the generator network. A novel label refinement approach based on an iterative GAN was proposed [41]. This network trains on low-quality patches and high-quality patches with some noisy vessel labels. Tu et al. [42] proposed WGAN-GP-based encoder and decoder networks that consist of dilated residual layers and a pyramid pooling network to address training instability. Tjio et al. [43] presented MuGAN, which consists of multiple discriminators whose receptive fields are sensitive to various scales, so that the discriminators attend to multiscale patterns. The edge detector HEDNet [121] was used for segmentation on a diabetic retinopathy dataset. The model is also trained to reduce its loss while optimizing the discriminator's classification loss. A conditional GAN [51] is used for segmentation; it improves the overall performance and reduces overfitting with a minimum learning rate. AMD-GAN [53] uses an attention encoder mechanism and a multibranch structure to detect fundus disease images. A cGAN [138] is trained for segmentation after pre-processing and image enhancement are applied. Texture features use the indices of taxonomic diversity M-GAN [44]. In [45], the discriminator is based on U-Net with a few short connections, and the middle convolutional layer is replaced with a dense connection block. A GAN model [46] is employed for thin vessel segmentation of the retina; its performance is better than that of the classical U-Net. The architecture of the GAN model used in this study is shown in Fig. 7. GL-Net [47] is based on a DCNN and is used to segment the optic disk and cup. VGG16 is used as the feature extractor to reduce the downsampling factor. SEGAN [48] and MSFRB models are applied to enhance the performance of retinal vessel segmentation. The model proposed in [54] is useful for high-resolution DR images, and the synthesized images are used for further data augmentation. A GAN is combined with a topological structure to reduce the loss and enhance connectivity [52]. To precisely segment the optic cup and disk, a patch-based output space adversarial framework is applied [49]. A few other studies [46, 135] proposed GAN-based models and evaluated their results on fundoscopic datasets such as DRIVE and STARE.

The framework of RetinaGAN for accurate vessel segmentation. This figure is reproduced from paper [46]

4.5 GANs applications in breast cancer segmentation

Breast cancer is the deadliest disease in young females all around the world. In the USA, 268,600 females are diagnosed with breast cancer, and 41,760 deaths are reported [146, 147]. The survival rate strongly relies on early diagnosis. Mammography is an inexpensive primary screening method for breast cancer diagnosis. Breast tumor segmentation remains challenging because tumors vary in shape, size, and texture and are difficult to localize correctly.

Recently, various studies have been proposed to synthesize and segment breast tumors and improve prediction accuracy. Muli-FCN [94] proposed dilated convolution to partial convolution to reduce the loss of pixels. A generative adversarial network is adopted to train the model to segment images correctly. The proposed model improved the overall accuracy of mammography segmentation and achieved Dice scores of 91.15% and 91.8%. Singh et al. [120] presented a cGAN that segments the breast tumor ROI and then classifies the binary mask using a convolutional network-based descriptor. The GAN learns to distinguish between fake and real images. Additionally, the GAN is encouraged to create images as close to the ground truth as possible. In the classification process, the CNN model assigns the generated masks to four shape classes: lobular, oval, irregular, and round. An overall accuracy of 80% is achieved, higher than that of previously proposed methods. A semi-supervised GAN-based model [92] consists of the BUS-S network for segmenting images and the BUS-E network for evaluating the network's performance. This method extracts dense multiscale feature vectors to handle the individual variance of breast lesions. BUS-GAN achieves higher segmentation performance because the BUS-E network directly guides the BUS-S network to produce more realistic segmentation maps. Results show that the proposed GAN achieves superior accuracy on both private and public datasets. Ma et al. [95] suggested an automated GAN-based deep learning method to determine the FGT region in MRI images. Compared with the classic U-Net architecture, the proposed GAN identifies the FGT region in MRI more accurately. A novel RDA-UNET-WGAN method [141] was proposed for breast cancer images; it uses adversarial training to generate realistic tumor masks that are similar to the ground truth. To segment the image, a residual dilated attention gate is used in the U-Net model, which acts as the generator, while a convolutional network is used as a classifier to act as the discriminator. Zhu et al. [93] suggested an FCN-CRF network based on end-to-end adversarial training for breast mass segmentation. Furthermore, because mass distribution depends on pixel position, the fully convolutional network is merged with a position prior. Experiments are performed on two publicly available datasets, INbreast and DDSM-BCRP.
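The Dice scores quoted in this subsection measure the overlap between a predicted mask and the ground truth mask. As a minimal sketch, assuming binary masks stored as nested Python lists (the function name `dice_score` and the toy masks are illustrative and are not taken from any of the cited papers), the metric can be computed as follows:

```python
def dice_score(pred, target):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    flat_pred = [p for row in pred for p in row]
    flat_target = [t for row in target for t in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must contain the same number of pixels")
    # Pixels that are foreground in both masks.
    intersection = sum(p * t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)
    if total == 0:
        # Both masks are empty; treat this as perfect agreement by convention.
        return 1.0
    return 2.0 * intersection / total


# Hypothetical 3x3 masks: the prediction misses one foreground pixel.
pred_mask = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
true_mask = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]

print(f"Dice = {dice_score(pred_mask, true_mask):.4f}")
```

A score of 1 means the two masks coincide exactly, and a score of 0 means they share no foreground pixel. The convention used for two empty masks is an assumption of this sketch; individual studies may handle that case differently.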

4.6 GANs applications in skin lesion segmentation

Malignant melanoma is a fast-growing cancer type worldwide, and the death rate has increased dramatically. A cancer report published by the WHO has revealed that 1.04 melanoma cases were recorded in 2018. Timely diagnosis of such cancers is important because the five-year survival rate of patients with melanoma is lower than 15%; however, rapid melanin is greater than six times. Dermatologists use dermoscopy to monitor and magnify skin pigmentation diseases. However, this procedure is time-consuming and requires a high level of expertise. Advances in deep learning for computer vision provide an essential tool for dermatologists to detect skin-related cancers more accurately.

The skin lesion features are learned by stacked adversarial learning. The learned features extend the feature diversity available to an FCN as training data [100]. GANs are leveraged for the skin lesion segmentation process: lesion samples are synthesized using an FCN, and a CNN discriminates between real and synthetic images [83]. Lei et al. [78] introduced DAGAN, which integrates a UNet-SCDC module similar to the UNet encoder and decoder and uses a dual discrimination module. The proposed network is trained on the ISIC challenge datasets 2016–17 and 2018. The structure of the GAN model used in this study is illustrated in Fig. 8. Sarker et al. [79] combined 1D kernel factorized networks, multiscale aggregation, and position and channel attention mechanisms with the classical GAN architecture. Compared with previously proposed models, MobileGAN is lightweight, with 2.35 million trainable parameters. The results are assessed on the ISBI 2017–18 skin challenge datasets. The method of Tu et al. [80] is based on a dense residual module and adversarial learning and relies on a CNN architecture. Furthermore, EPE and multiscale loss functions were adopted for deep supervision to improve fuzzy boundaries in skin lesion images and make the segmentation process more stable. Peng et al. [81] presented a network based on the classical UNet model, in which the discriminator module is built from a few convolutional layers. The proposed architecture was tested on two public datasets, PH2 and the ISBI 2016 challenge dataset. Pollastri et al. [144] proposed two GAN-based networks, DCGAN and LAPGAN. The core concept is to produce skin lesion images and segmentation masks using the proposed GAN architectures. Ding et al. [118] presented a method for synthesizing dermoscopy images to tackle data limitations. A cGAN is used for image-to-image translation, taking a prior label mapping as source input to create new synthetic dermoscopy images. Furthermore, a feature matching loss is proposed to enhance the quality of the generated images.
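The exact feature matching loss used in [118] is not described in this survey. As a generic sketch of the idea, assuming the loss compares intermediate discriminator activations for a real image and a generated image with a mean absolute difference per layer, the computation could look like this (the function name, the layer layout, and the toy activations are all hypothetical):

```python
def feature_matching_loss(real_features, fake_features):
    """Average per-layer mean absolute difference between discriminator features.

    Each argument is a list with one entry per discriminator layer;
    each entry is a flat list of activation values for that layer.
    """
    if len(real_features) != len(fake_features):
        raise ValueError("both inputs must cover the same number of layers")
    loss = 0.0
    for real_layer, fake_layer in zip(real_features, fake_features):
        if len(real_layer) != len(fake_layer):
            raise ValueError("layer activations must have matching sizes")
        # L1 distance between the activations of this layer, averaged over units.
        layer_l1 = sum(abs(r - f) for r, f in zip(real_layer, fake_layer))
        loss += layer_l1 / len(real_layer)
    # Average over layers so the loss does not grow with discriminator depth.
    return loss / len(real_features)


# Hypothetical activations from two discriminator layers.
real = [[1.0, 2.0], [0.5, 0.5, 0.5]]
fake = [[1.0, 1.0], [0.5, 0.0, 1.0]]

print(f"feature matching loss = {feature_matching_loss(real, fake):.4f}")
```

The loss is zero when the generated image produces the same intermediate activations as the real image, which pushes the generator to match feature statistics instead of only fooling the final discriminator output.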

The flowchart shows the GAN-based UNet-SCDC model. This figure is reproduced from paper [78]

4.7 GANs applications in microscopic segmentation

Histopathology plays a crucial role in many clinical decision-making and disease identification processes. In the past few years, different cell segmentation models have been proposed for images from light-sheet imaging and electron microscopy. Xu et al. [122] proposed a cycle-GAN to convert H&E-stained images into IHC-stained images, supporting fake IHC on the same slide. Moreover, only a limited data sample is required, and results are generated pixel-wise. Furthermore, two loss functions are adopted to improve translation accuracy. Labeling pathology images is expensive and time-consuming. To tackle this problem, a consistent cycle-GAN [123] is presented as an unsupervised approach for segmenting histopathology images. A self-supervised GAN model [57] is used for segmentation without manually annotated images. Then a coarse segmentation is obtained using a classical segmentation method. Experiments are performed on red blood cell and microscope images. Results demonstrate that the annotation-free method achieves a considerable improvement over classical methods. Tsuda et al. [132] suggested a pix2pix variant of GAN for cell image segmentation. Moreover, multiple GANs are adopted with different roles, and the results are more accurate than those of previously proposed methods. An end-to-end trainable model [124] was proposed that combines segmentation of tumor epithelium on PDL1 with unpaired image-to-image translation between CK and PDL1. Majurski et al. [58] introduced a cell object representation as an abstract approach.

The representation learned without supervision is fed to a traditional CNN segmentation network. Furthermore, transfer learning is used with the COCO dataset, which includes semantic segmentation. The network presented in [59] is based on a CNN and an adversarial loss function to segment microscopy cells. Wang et al. [60] presented a deep learning-based model with an adversarial training approach to resolve the object contour segmentation problem. The generator is replaced with an FCN, while the discriminator is used for adversarial training. In [61], an image-to-image translation method is proposed to synthesize fake samples from real sample images. The fake images are combined with the original images to generate multichannel images. HEp-2 cells are essential for detecting antinuclear autoantibodies in human autoimmune disease. These cells have multiple patterns and shapes. For accurate segmentation of HEp-2 dataset images, a novel cGAN is adopted [115]. Accurate segmentation of cell nuclei with limited annotated data samples is another challenge. To address it, the method in [116] is based on image data augmentation, and the corresponding ground truth is also produced. Guo et al. [62] incorporated transfer learning into an adversarial network for microscopy segmentation. The results show that the presented model is sufficient to develop a segmentation solution for new modalities. Zhang et al. [56] suggested a DAN model for gland segmentation and achieved good results on unannotated and annotated images. The proposed framework consists of a segmentation architecture and an evaluation network that reviews segmentation quality. A GAN-based model [63] with multiple feature extraction layers is suggested for accurately segmenting spheroids. Results are evaluated qualitatively and quantitatively against segmentation datasets. Gong et al. [55] used a Style Consistent GAN for the nuclei segmentation task. Furthermore, the model is used to segment real and fake nuclei images, which helps the generator to boost segmentation accuracy and performance.

4.8 GANs applications in lungs diseases segmentation

There is considerable research on CXR image analysis for lung disease diagnosis. Besides, lung cancer is another severe type of cancer, with around 1.7 million deaths reported worldwide in 2018. The lung cancer survival rate is 10–17%, and the five-year survival rate rises to 70% with early diagnosis [148]. Computed tomography (CT) is another widely practiced modality for clinical diagnosis and treatment planning. DecGAN [64] automatically decomposes X-ray images using unpaired data. Furthermore, depending on existing CT anatomy information, X-ray data are separated into different components. The efficiency of the model is evaluated by comparing it with other state-of-the-art models. LGAN [96] is a novel lung segmentation schema for a CT dataset that includes 220 scans. Moreover, the proposed network can also be employed for image segmentation in other modalities. A style-based GAN is used with randomly selected styles for data augmentation of the LIDC-IDRI dataset. Results demonstrate that the synthesized lung nodule samples are realistic and support more accurate nodule segmentation [143]. The SCAN model is presented to segment lung and heart regions in CXR datasets. A critic network is employed to learn structures in the provided masks to differentiate between ground truth and synthesized images [97]. The researchers in [98] studied multi-organ segmentation in an unsupervised manner. The synthetic label is taken as DRR image input and produces segmentation results. Five hundred chest X-ray images were acquired from the NIH dataset for detailed experiments. A cycle-GAN is used to perform image style transfer and to develop a module that segments multiple human organs concurrently. The structure of the GAN-based framework is illustrated in Fig. 9. The network proposed in [131] is based on a cGAN that builds on the pix2pix network and extends it to a novel image-to-image network. Furthermore, dilated convolution is utilized to enlarge the receptive field and enhance performance. The JSRT chest X-ray dataset is used for experiments, and results are compared with previously proposed methods.
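To illustrate why dilated convolution enlarges the receptive field, the following sketch applies the standard receptive field recurrence to a stack of convolution layers. The layer configurations are hypothetical examples and do not reproduce the architecture of [131].

```python
def receptive_field(layers):
    """Receptive field (in input pixels, along one axis) of stacked convolutions.

    `layers` is a list of (kernel_size, dilation, stride) tuples, ordered
    from the first layer to the last.
    """
    rf = 1    # a single output unit initially sees one input pixel
    jump = 1  # distance in input pixels between adjacent units of the current layer
    for kernel_size, dilation, stride in layers:
        # A dilated kernel spans (kernel_size - 1) * dilation steps of the current grid.
        rf += (kernel_size - 1) * dilation * jump
        jump *= stride
    return rf


# Three 3x3 convolutions with stride 1, without and with dilation.
plain_stack = [(3, 1, 1), (3, 1, 1), (3, 1, 1)]
dilated_stack = [(3, 1, 1), (3, 2, 1), (3, 4, 1)]

print(receptive_field(plain_stack))    # 7
print(receptive_field(dilated_stack))  # 15
```

With the same number of layers and the same number of weights per layer, the dilated stack more than doubles the receptive field along each axis, which is the usual motivation for using dilation in segmentation networks.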

Overview of the task-oriented generative model framework. This figure is reproduced from paper [98]

4.9 GANs applications in orthopedic diseases segmentation

Orthopedic diseases are among the most common conditions in older adults and cause physical disability. The pain and symptoms severely affect older adults' lifestyles. However, early treatment helps the physician to diagnose the disease properly and slow down its progression. Several CNN models have been proposed to classify and detect orthopedic disease in the last few decades. A butterfly-shaped GAN [84] merges the information across reformations and incorporates energy-based adversarial training. Without post-processing, the proposed network outperformed other models. Alsinan et al. [139] developed a GAN-based network that produces synthetic B-mode ultrasound images and segments the bone surface mask in real time. Besides, two convolutional blocks are introduced, termed self-attention and self-projection blocks. In a clinical setting, fully automated tissue segmentation is an important step in interpreting MRI images quantitatively. A hybrid approach combining CGAN and U-Net architectures is proposed to perform segmentation on medical images [117]. Analyzing the human spinal structure in MRI images to identify pathological abnormalities remains laborious in clinical assessment. Spine-GAN [85] is another variant of the classical GAN proposed to address the large variability of complex spinal structures; it includes an autoencoder block that is efficient enough to obtain semantic task-aware representations. For osteolytic bone tumor surgery, accurate localization of cystic bone lesions is the most critical step. Zaman et al. [137] presented a multiple snapshot method to mitigate the unimodal deterministic output of the pix2pix model without using any deep and complex network. Acoustic bone shadow is an essential artifact used to determine the appearance of bone boundaries in ultrasound images. In this regard, Alsinan et al. [86] presented a GAN model that accurately segments bone shadow in biomedical images.

4.10 GANs applications in multi-organ segmentation

An sMRI-aided automatic segmentation method is proposed for multi-organ segmentation. A cycle-GAN is employed to estimate sMRI images from CT, and DA-UNet is trained on the sMRI images for auto-segmentation of pelvic CT images [149]. A novel STRAINet [150] with adversarial learning is presented to jointly segment pelvic MRI images. Furthermore, a stochastic residual approach is adopted to tackle the optimization issues of FCNs. An adversarial confidence learning framework [151] is adopted for better image segmentation. Additionally, a fully convolutional GAN is used for confidence learning to provide region-wise and voxel-wise confidence information to the synthesis network. In MRI images, fiducial markers appear as tiny signal voids and are usually challenging to localize. The proposed approach relies on deep learning to automatically detect tiny-signal fiducial features in scan images [152]. Accurately segmenting the prostate in MRI images is a challenging research area in prostate cancer diagnosis. In [145], a fully convolutional generator of densely connected blocks and a critic model are combined with multiscale feature extraction. Qu et al. proposed TDGAN [87] for multi-organ segmentation, which allows the generator to learn from privately and temporarily introduced discriminators at multiple data centers. Furthermore, two loss functions, the digesting and remaindering losses, are proposed to balance memorizing and distribution.

In [66], a deep learning model is introduced for radiotherapy treatment planning that automatically segments multiple thoracic OARs on chest CT scans. UNet-GAN is a hybrid of U-Net and GAN that concurrently trains a set of UNets as generators and FCN models as discriminators. Because hand contouring is time-consuming and arduous, multi-organ segmentation of the head and neck is essential for the first treatment plan. Furthermore, a supervised FCN called Dense-Net is used to perform voxel-wise segmentation prediction [67]. Deep learning models require extensive biomedical imaging data to be trained correctly. Recent work also employs adversarial networks to generate synthetic images to increase medical dataset size. In [130], a cycle-GAN is used for data augmentation to increase the data size. Sivanesan et al. [82] presented an unsupervised framework for medical semantic segmentation. The GAN model is trained to convert simple edge diagrams into synthetic medical images, which form a dataset for training the model. Kidney tumor segmentation is another important research field for quantifying tumor indices and helping medical experts with tumor therapy planning. MB-FSGAN [68] is presented for concurrent quantification and segmentation of kidney tumors in CT scans. This network accurately segments and quantifies kidney tumors by combining joint learning with adversarial learning. Zhang et al. [69] proposed a colorectal tumor segmentation method to diagnose colorectal cancers. The proposed LAGAN model excelled using probabilistic maps and their respective masks. Learning enhances the pixel-wise label assignment and improves refinement. The tumor diameter, cross-sectional area, perimeter, and center-point coordinates are crucial for treatment planning and diagnosis. For renal tumor estimation, Mt-UcGAN [70] performs joint segmentation and quantification with uncertainty. In photoacoustic computed tomography (PACT), immediate image reconstruction is considered a serious challenge. In this regard, Ki-GAN [71] is presented to produce the primary PA pressure of vessels. Results indicate that the proposed model performs well on sampled images.

5 Discussions

This survey shows a sharp rise in GAN applications in the biomedical segmentation domain. In total, 138 papers are reviewed that focus purely on medical image segmentation using different GANs. It is evident that GANs received significant attention in biomedical imaging from 2016 to 2021. After reviewing these papers, we hope to share detailed information on developing better GANs for biomedical image segmentation. Successful training of segmentation models requires a huge number of labeled images. Some of the segmentation networks in the evaluated studies use local (publicly inaccessible) datasets, limiting their reusability and reachability. Table 5 summarizes the most extensively used publicly available datasets to develop a broadly approved solution. These benchmark datasets help the research community validate current performance and suggest enhancements. We have also observed that each modality requires a different approach or technique to tackle the corresponding challenges. The preliminary results show that complex variation in images of the brain, lungs, skin, and retina requires different mechanisms to be integrated with the GAN to learn complex structures and patterns. Furthermore, noise in different imaging modalities makes it harder to propose a single solution for different segmentation methods. Data labeling and annotation are also considered expensive in biomedical imaging [2]. Because of the lack of available data, deep learning models usually suffer from data imbalance due to the rare nature of pathologies' texture, shape, and color features [5]. GANs are unsupervised learning models that do not require labeled data and can be trained on unlabeled datasets. GANs are fully capable of generating realistic-looking fake images, increasing data quantity at lower cost. Most studies use a single GAN model as a data augmentation technique to increase the availability of training data in the same imaging modality. GAN-based augmentation enlarges datasets and helps in semi-supervised and unsupervised training. Furthermore, cycle-GAN models are widely used as translators between different modalities and contribute significantly to cross-modality segmentation tasks [125]. These models can learn the internal, messy, and complicated representation and distribution of a dataset [126, 127]. Learning texture, shape, and color patterns in deep learning contributes to detecting various diseases. These networks are based on adversarial learning, a state-of-the-art approach for extracting essential information from the provided datasets that classical pixel-wise losses fail to capture. This survey found that 48% of studies are based on the MRI modality and 31% on the CT modality, and that brain tumor segmentation is the most frequently studied task. Table 2 shows that 65% of GAN models are based on the classical vanilla GAN. Conditional GAN and cycle-GAN are also popular, with shares of 16% and 7% of all proposed models, respectively. Pix2Pix, Patch-GAN, Style-GAN, and DCGAN are also utilized, but their shares are only around 1–5%. Most research papers shared their implementation source code so that other researchers can reproduce the model. These codes often spare new researchers the effort of starting from scratch. Python-based libraries such as TensorFlow, Keras, and PyTorch are the most popular for GAN models and provide an efficient way to train models on different datasets. According to our findings, 79 papers did not reveal their programming language or libraries, 35 papers used the TensorFlow and Keras libraries to implement GAN models, and a further 23 papers used the PyTorch library. The majority of studies are based on open-source Python frameworks and libraries; only 2 studies are implemented in the licensed software MATLAB.

5.1 Limitations of GANs

In this survey, we identify a few major limitations of GANs that may be obstacles to their acceptance by the biomedical research community. The unavailability of large medical datasets has been a huge barrier to the use of GAN-based models in biomedical imaging [75]. In the healthcare domain, clinician trust is a big challenge for any new advancement; fake data synthesis by GANs provides some comfort for the research community. According to [7], the clinical usage of artificial intelligence in medical imaging is still debated. When GANs are utilized for clinical tasks, the big challenge is that they may synthesize false information. Deep biomedical models trained on fake images also raise data credibility questions. Another study [9] shows that GANs can easily fool radiologists and that it is quite challenging to distinguish real from fake images. In medical imaging, most studies are performed on 2-D and 3-D images, and applying GANs to 4-D images remains challenging [24]. In some studies, separate GANs are trained for each class, which increases complexity; generating multiclass samples would reduce this overhead [75]. Although GAN-based networks perform well for medical image segmentation, real-world deployment involves various challenges, including modality-based ones, as shown in Fig. 10. The performance of GANs is also affected by low-quality imaging caused by artifacts and noise: noise may corrupt useful image features, while artifacts add irrelevant features with complex patterns. It remains an open challenge for the research community to propose GANs that denoise and help preprocess medical imaging data to reduce artifacts and noise.

The training objective of a GAN is mostly considered a saddle point optimization problem that gradient-based methods can solve. Another problem is that the generator and discriminator networks are trained from scratch and must converge together [8]. With the JS divergence, the balance between training the discriminator D and the generator G is uncertain, which mostly leads to the discriminator performing better than the generator. Consequently, one network may become powerful enough to classify real and fake samples easily. If the discriminator D becomes much stronger than the generator G, the gradient of D approaches zero, and D becomes ineffectual for the adversarial learning of G [7]. Another common problem is that the generator produces a small set of repetitive image samples because it focuses on a few modes of the true data distribution. A related limitation of GANs is convergence issues such as mode collapse, which happens when the generator learns to synthesize only a limited variety of images out of the various modes available in the training dataset. In biomedical imaging, where the image modes are not explicit, such conditions are hard to identify and generate unrealistic results that could be an obstacle for researchers. Sometimes the discriminator performs so well that the generator fails because of the vanishing gradient problem [9]. That is, the discriminator stops passing on the information the generator needs to learn new patterns and textures from the input images. Traditional pixel-wise metrics use ground truth images to evaluate performance. So, researchers must carefully design the data flow and loss functions to tackle non-convergence and mode collapse. But in the case of unsupervised models, GAN datasets mostly do not have any ground truth images. To reduce the training errors of GANs and improve network convergence, optimizing the loss function is the key challenge in current studies [98]. Training cost is another issue: powerful GPU hardware is essential for training on a considerable amount of image data, and such systems are costly [79]. Compared with traditional CNN models, a GAN consists of generator and discriminator networks, so two networks are trained simultaneously, and training takes a long time. Most GAN-based networks use small input images, such as 64- or 128-pixel images, which may cause loss of distinctive information from medical images [19]. When the image size is increased to 256 or 512 pixels, training requires much more time and far more powerful GPUs.
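The vanishing gradient problem described above can be made concrete with a small numerical sketch. It assumes a discriminator with a sigmoid output D = sigmoid(a), where a is the logit assigned to a generated sample, and compares the gradient of the original saturating generator loss log(1 − D) with the non-saturating alternative −log(D) suggested in the original GAN paper. The function names are illustrative only.

```python
import math


def sigmoid(a):
    """Logistic function mapping a discriminator logit to a probability."""
    return 1.0 / (1.0 + math.exp(-a))


def saturating_grad(a):
    """Derivative of log(1 - sigmoid(a)) with respect to the logit a."""
    return -sigmoid(a)


def non_saturating_grad(a):
    """Derivative of -log(sigmoid(a)) with respect to the logit a."""
    return -(1.0 - sigmoid(a))


# Very negative logits mean the discriminator confidently rejects the fake sample.
for logit in (0.0, -2.0, -5.0, -10.0):
    print(
        f"logit={logit:6.1f}  D={sigmoid(logit):.5f}  "
        f"saturating={saturating_grad(logit):.5f}  "
        f"non-saturating={non_saturating_grad(logit):.5f}"
    )
```

As the discriminator grows more confident (more negative logits), the saturating gradient shrinks toward zero, so the generator receives almost no learning signal, whereas the non-saturating gradient stays close to −1. This is only a one-dimensional illustration under the stated assumptions; it does not model the full dynamics of training two networks together.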

6 Conclusion

GANs have emerged as a hot research area in medical imaging due to their image synthesis power and their success in many computer vision challenges such as segmentation, image construction, and registration. In this context, different GANs are explored, covering developments and advancements in biomedical image segmentation. GAN development faces unique challenges across medical imaging modalities. According to our findings, vanilla GAN, conditional GAN, and their modified variants are widely used in different medical image segmentation tasks. We have also noticed that MRI and CT are the most frequently used imaging modalities in GAN-based studies. For new researchers, there is huge potential to explore breast tumor, pelvic, bone, and colorectal tumor segmentation in future work. Furthermore, brain tumor segmentation is a hot research area in GAN-based studies. Most studies used adversarial loss, binary cross-entropy, and Dice loss functions to compute the loss of the generator and discriminator networks. Like other traditional CNN models, GANs suffer from poor interpretability and low repeatability, which could be a challenge to their application in biomedical segmentation. Solving these challenges will provide a new direction for developing effective GAN-based networks. Because of the great utility and promising results of GAN variants, it is expected that they will be widely used to handle a variety of tough challenges in biomedical image segmentation for the development of real-world computer-aided diagnosis systems.

References

McInerney T, Terzopoulos D (1996) Deformable models in medical image analysis: a survey. Med Image Anal 1:91–108. https://doi.org/10.1016/S1361-8415(96)80007-7

Martel AL, Allder SJ, Delay GS, et al (1999) Measurement of infarct volume in stroke patients using adaptive segmentation of diffusion weighted MR images. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). pp 22–31

Iqbal A, Sharif M (2021) MDA-Net: Multiscale dual attention-based network for breast lesion segmentation using ultrasound images. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2021.10.002

Garcia-Garcia A, Orts-Escolano S, Oprea S et al (2017) A review on deep learning techniques applied to semantic segmentation. arXiv:1704.06857

Litjens G, Kooi T, Bejnordi BE et al (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88. https://doi.org/10.1016/j.media.2017.07.005

Arel I, Rose DC, Karnowski TP (2010) Deep machine learning—a new frontier in artificial intelligence research [research frontier]. IEEE Comput Intell Mag 5:13–18. https://doi.org/10.1109/MCI.2010.938364

Yi X, Walia E, Babyn P (2019) Generative adversarial network in medical imaging: a review. Med Image Anal. https://doi.org/10.1016/j.media.2019.101552

Kazeminia S, Baur C, Kuijper A et al (2020) GANs for medical image analysis. Artif Intell Med 109:101938. https://doi.org/10.1016/j.artmed.2020.101938

Sorin V, Barash Y, Konen E, Klang E (2020) Creating artificial images for radiology applications using generative adversarial networks (GANs)—a systematic review. Acad Radiol 27:1175–1185. https://doi.org/10.1016/j.acra.2019.12.024

Pavan Kumar MR, Jayagopal P (2020) Generative adversarial networks: a survey on applications and challenges. Int J Multimed Inf Retr. https://doi.org/10.1007/s13735-020-00196-w

Goodfellow IJ, Pouget-Abadie J, Mirza M et al (2020) Generative adversarial networks. Commun ACM 63:139–144. https://doi.org/10.1145/3422622

Mirza M, Osindero S (2014) Conditional generative adversarial nets. 1–7

Radford A, Metz L, Chintala S (2015) Unsupervised representation learning with deep convolutional generative adversarial networks. In: 4th international conference on learning representations, ICLR 2016—conference track proceedings 1–16

Isola P, Zhu J-Y, Zhou T, Efros AA (2016) Image-to-image translation with conditional adversarial networks. In: Proceedings—30th IEEE conference computer vision pattern recognition, CVPR 2017 2017-Janua: 5967–5976. https://doi.org/10.1109/CVPR.2017.632

Zhu J-Y, Park T, Isola P, Efros AA (2017) Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of IEEE international conference computer vision 2017-Octob: 2242–2251. https://doi.org/10.1109/ICCV.2017.244

Chang Q, Qu H, Zhang Y, et al (2020) Synthetic learning: learn from distributed asynchronized discriminator GAN without sharing medical image data. arXiv 1–11

Hamghalam M, Lei B, Wang T (2019) Brain tumor synthetic segmentation in 3D multimodal MRI scans. 2:153–162. https://doi.org/10.1007/978-3-030-46640-4_15

Huang P, Li D, Jiao Z, et al (2019) CoCa-GAN: common-feature-learning-based context-aware generative adversarial network for glioma grading. pp 155–163

Delannoy Q, Pham CH, Cazorla C et al (2020) SegSRGAN: super-resolution and segmentation using generative adversarial networks—application to neonatal brain MRI. Comput Biol Med. https://doi.org/10.1016/j.compbiomed.2020.103755

Elazab A, Wang C, Gardezi SJS et al (2020) GP-GAN: brain tumor growth prediction using stacked 3D generative adversarial networks from longitudinal MR images. Neural Netw 132:321–332. https://doi.org/10.1016/j.neunet.2020.09.004

Xue Y, Xu T, Zhang H, et al (2017) SegAN: adversarial network with multi-scale L1 Loss for medical image segmentation. https://doi.org/10.1007/s12021-018-9377-x

Li Q, Yu Z, Wang Y, Zheng H (2020) Tumorgan: a multi-modal data augmentation framework for brain tumor segmentation. Sensors (Switzerland) 20:1–16. https://doi.org/10.3390/s20154203

Li Z, Wang Y, Yu J (2018) Brain tumor segmentation using an adversarial network. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 10670 LNCS:123–132. https://doi.org/10.1007/978-3-319-75238-9_11

Huang Y, Zheng F, Cong R et al (2020) MCMT-GAN: multi-task coherent modality transferable GAN for 3D brain image synthesis. IEEE Trans Image Process 29:8187–8198. https://doi.org/10.1109/TIP.2020.3011557

Tokuoka Y, Suzuki S, Sugawara Y (2020) An inductive transfer learning approach using cycle-consistent adversarial domain adaptation with application to brain tumor segmentation. arXiv 44–48

Moeskops P, Veta M, Lafarge MW, et al (2017) Adversarial training and dilated convolutions for brain MRI segmentation. arXiv 56–64. https://doi.org/10.1007/978-3-319-67558-9

Wang G, Song T, Dong Q et al (2020) Automatic ischemic stroke lesion segmentation from computed tomography perfusion images by image synthesis and attention-based deep neural networks. Med Image Anal 65:1–14. https://doi.org/10.1016/j.media.2020.101787

Chen H, Qin Z, Ding Y, Lan T (2019) Brain tumor segmentation with generative adversarial nets. In: 2019 2nd international conference on artificial intelligence and big data, ICAIBD 2019, 301–305. https://doi.org/10.1109/ICAIBD.2019.8836968

Khosravan N, Mortazi A, Wallace M, Bagci U (2019) PAN: projective adversarial network for medical image segmentation. arXiv 2:68–76

Kuang H, Menon BK, Qiu W (2019) Automated infarct segmentation from follow-up non-contrast CT scans in patients with acute ischemic stroke using dense multi-path contextual generative adversarial network. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 11766 LNCS:856–863. https://doi.org/10.1007/978-3-030-32248-9_95

Yuan W, Wei J, Wang J, et al (2019) Unified attentional generative adversarial network for brain tumor segmentation from multimodal unpaired images. arXiv 1:229–237

Rachmadi MF, Valdés-Hernández M del C, Makin S, et al (2019) Predicting the evolution of white matter hyperintensities in brain MRI using generative adversarial networks and irregularity map. bioRxiv 2:146–154. https://doi.org/10.1101/662692

Kamnitsas K, Baumgartner C, Ledig C, et al (2017) Unsupervised domain adaptation in brain lesion segmentation with adversarial networks. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 10265 LNCS: 597–609. https://doi.org/10.1007/978-3-319-59050-9_47

Shi Y, Cheng K, Liu Z (2019) Hippocampal subfields segmentation in brain MR images using generative adversarial networks. Biomed Eng Online 18:1–12. https://doi.org/10.1186/s12938-019-0623-8

Liu P, Li C, Schönlieb CB (2019) GANReDL: Medical image enhancement using a generative adversarial network with real-order derivative induced loss functions. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 11766 LNCS:110–117. https://doi.org/10.1007/978-3-030-32248-9_13

Baur C, Wiestler B, Albarqouni S, Navab N (2019) Deep autoencoding models for unsupervised anomaly segmentation in brain MR images. In: arXiv. Springer International Publishing, pp 161–169

Nie D, Wang L, Xiang L, et al (2019) Difficulty-aware attention network with confidence learning for medical image segmentation. In: 33rd AAAI Conf Artif Intell AAAI 2019, 31st Innov Appl Artif Intell Conf IAAI 2019 9th AAAI Symp Educ Adv Artif Intell EAAI 2019, 1085–1092. https://doi.org/10.1609/aaai.v33i01.33011085

Zhang C, Song Y, Liu S et al (2018) MS-GAN: GAN-based semantic segmentation of multiple sclerosis lesions in brain magnetic resonance imaging. Int Conf Digit Image Comput Tech Appl DICTA 2018:1–8. https://doi.org/10.1109/DICTA.2018.8615771

Lahiri A, Ayush K, Biswas PK, Mitra P (2017) Generative adversarial learning for reducing manual annotation in semantic segmentation on large scale miscroscopy images: automated vessel segmentation in retinal fundus image as test case. IEEE Comput Soc Conf Comput Vis Pattern Recognit Work. https://doi.org/10.1109/CVPRW.2017.110

Wu C, Zou Y, Yang Z (2019) U-GAN: generative adversarial networks with u-net for retinal vessel segmentation. In: 14th international conference on computer science & education (ICCSE) 2019, pp 642–646. https://doi.org/10.1109/ICCSE.2019.8845397

Yang Y, Wang Z, Liu J, et al (2019) Label refinement with an iterative generative adversarial network for boosting retinal vessel segmentation. arXiv 1–9

Tu W, Hu W, Liu X, He J (2019) DRPAN: a novel adversarial network approach for retinal vessel segmentation. In: Proceedings of the 14th IEEE conference on industrial electronics and applications, ICIEA 2019, 228–232. https://doi.org/10.1109/ICIEA.2019.8833908

Tjio G, Li S, Xu X, et al (2019) Multi-discriminator generative adversarial networks for improved thin retinal vessel segmentation. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 11855 LNCS:148–155. https://doi.org/10.1007/978-3-030-32956-3_18

Park KB, Choi SH, Lee JY (2020) M-GAN: retinal blood vessel segmentation by balancing losses through stacked deep fully convolutional networks. IEEE Access 8:146308–146322. https://doi.org/10.1109/ACCESS.2020.3015108

Yang T, Wu T, Li L, Zhu C (2020) SUD-GAN: deep convolution generative adversarial network combined with short connection and dense block for retinal vessel segmentation. J Digit Imag 33:946–957. https://doi.org/10.1007/s10278-020-00339-9

Son J, Park SJ, Jung KH (2019) Towards accurate segmentation of retinal vessels and the optic disc in fundoscopic images with generative adversarial networks. J Digit Imag 32:499–512. https://doi.org/10.1007/s10278-018-0126-3

Jiang Y, Tan N, Peng T (2019) Optic disc and cup segmentation based on deep convolutional generative adversarial networks. IEEE Access 7:64483–64493. https://doi.org/10.1109/ACCESS.2019.2917508

Zhou Y, Chen Z, Shen H et al (2021) A refined equilibrium generative adversarial network for retinal vessel segmentation. Neurocomputing 437:118–130. https://doi.org/10.1016/j.neucom.2020.06.143

Wang S, Yu L, Yang X et al (2019) Patch-based output space adversarial learning for joint optic disc and cup segmentation. IEEE Trans Med Imag 38:2485–2495. https://doi.org/10.1109/TMI.2019.2899910

Shankaranarayana SM, Ram K, Mitra K, Sivaprakasam M (2017) Joint optic disc and cup segmentation using fully convolutional and adversarial networks. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 10554 LNCS:168–176. https://doi.org/10.1007/978-3-319-67561-9_19

Bian X, Luo X, Wang C et al (2020) Optic disc and optic cup segmentation based on anatomy guided cascade network. Comput Methods Programs Biomed 197:105717. https://doi.org/10.1016/j.cmpb.2020.105717

Yang J, Dong X, Hu Y et al (2020) Fully automatic arteriovenous segmentation in retinal images via topology-aware generative adversarial networks. Interdiscip Sci Comput Life Sci 12:323–334. https://doi.org/10.1007/s12539-020-00385-5

Xie H, Lei H, Zeng X et al (2020) AMD-GAN: attention encoder and multi-branch structure based generative adversarial networks for fundus disease detection from scanning laser ophthalmoscopy images. Neural Netw 132:477–490. https://doi.org/10.1016/j.neunet.2020.09.005

Zhou Y, Wang B, He X, et al (2019) DR-GAN: conditional generative adversarial network for fine-grained lesion synthesis on diabetic retinopathy images. arXiv XX:1–11

Gong X, Chen S, Zhang B, Doermann D (2021) Style consistent image generation for nuclei instance segmentation. WACV 3994–4003

Zhang Y, Yang L, Chen J, et al (2017) Deep adversarial networks for biomedical image segmentation utilizing unannotated images. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 10435 LNCS:408–416. https://doi.org/10.1007/978-3-319-66179-7_47

Yu F, Dong H, Zhang M, et al (2020) AF-SEG: an annotation-free approach for image segmentation by self-supervision and generative adversarial network. In: 2020 IEEE 17th international symposium on biomedical imaging (ISBI). IEEE, pp 1503–1507

Majurski M, Manescu P, Padi S et al (2019) Cell image segmentation using generative adversarial networks, transfer learning, and augmentations. IEEE Comput Soc Conf Comput Vis Pattern Recognit Work. https://doi.org/10.1109/CVPRW.2019.00145

Arbelle A, Raviv TR (2017) Microscopy cell segmentation via adversarial neural networks. arXiv 645–648

Wang D, Gu C, Wu K, Guan X (2017) Adversarial neural networks for basal membrane segmentation of microinvasive cervix carcinoma in histopathology images. In: Proceedings of 2017 international conference on machine learning and cybernetics, ICMLC 2017, 2:385–389. https://doi.org/10.1109/ICMLC.2017.8108952

Gupta L, Klinkhammer BM, Boor P, et al (2019) GAN-based image enrichment in digital pathology boosts segmentation accuracy, pp 631–639

Guo Y, Wang Q, Krupa O, et al (2019) Cross modality microscopy segmentation via adversarial adaptation. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 11466 LNBI:469–478. https://doi.org/10.1007/978-3-030-17935-9_42

Sadanandan SK, Karlsson J, Wählby C (2017) Spheroid segmentation using multiscale deep adversarial networks. In: Proceedings of the IEEE international conference on computer vision (ICCV), 2017, 2018-Janua:36–41. https://doi.org/10.1109/ICCVW.2017.11

Li Z, Li H, Han H, et al (2019) Encoding CT anatomy knowledge for unpaired chest x-ray image decomposition. arXiv 4:275–283

Goel T, Murugan R, Mirjalili S, Chakrabartty DK (2021) Automatic screening of COVID-19 using an optimized generative adversarial network. Cognit Comput. https://doi.org/10.1007/s12559-020-09785-7

Dong X, Lei Y, Wang T et al (2019) Automatic multiorgan segmentation in thorax CT images using U-net-GAN. Med Phys 46:2157–2168. https://doi.org/10.1002/mp.13458

Tong N, Gou S, Yang S et al (2019) Shape constrained fully convolutional DenseNet with adversarial training for multiorgan segmentation on head and neck CT and low-field MR images. Med Phys 46:2669–2682. https://doi.org/10.1002/mp.13553