Abstract

In step-stress experiments, test units are successively exposed to different, usually increasing, levels of stress to cause earlier failures and to shorten the duration of the experiment. When parameters are associated with the stress levels, one problem is to estimate the parameter corresponding to normal operating conditions based on failure data obtained under higher stress levels. For this purpose, a link function connecting parameters and stress levels is usually assumed, the validity of which is often at the discretion of the experimenter. In a general step-stress model based on multiple samples of sequential order statistics, we provide exact statistical tests to decide whether the assumption of some link function is adequate. The null hypothesis of a proportional, linear, power or log-linear link function is considered in detail, and associated inferential results are stated. In all cases except that of the linear link function, the test statistics derived are shown to have a single distribution under the null hypothesis, which simplifies the computation of (exact) critical values. Asymptotic results are addressed, and a power study is performed for testing on a log-linear link function. Some improvements of the tests in terms of power are discussed.


1 Introduction

In accelerated life testing, step-stress models are applied to lifetime experiments with highly reliable products, where under normal operating conditions the number of observed failures is expected to be low; see Bagdonavičius and Nikulin (2001), Meeker and Escobar (1998) & Nelson (2004). In a general step-stress experiment, n items are put on a lifetime test and successively exposed to m ≥ 2 different (usually increasing) stress levels \(y_{1},\dots ,y_{m}\). Starting the experiment under stress level y1, the stress on the test items then changes at pre-fixed time points or after having observed a pre-specified number of failures under each stress level. The experiment ends at some specified time point (type-I censoring) or upon observing a specified number of failures under stress level ym (type-II censoring). Based on a statistical analysis of this failure time data, the aim is then to estimate the lifetime distribution of the product under normal operating conditions. For an overview on the topic focussing on exponential lifetime distributions, we refer to Gouno and Balakrishnan (2001) and Balakrishnan (2009).

In a common parametric step-stress set-up, unknown distribution parameters \(\theta _{0},\theta _{1},\dots ,\theta _{m}\) are associated with (known) stress levels \(y_{0},y_{1},\dots ,y_{m}\) via a link function

$$ \theta _{k}={\varPsi }_{\boldsymbol {\zeta }}(y_{k}),\quad 0\leq k\leq m. $$

Here, the parameter 𝜃0 corresponds to stress level y0, which represents the normal operating conditions. The function Ψζ, in turn, depends on an unknown parameter (vector) ζ, which is referred to as link function parameter. As two examples, ζ may be the vector of intercept and slope of a linear link function or may consist of the parameters of a log-linear link function; see, e.g., Bai et al. (1989), Alhadeed and Yang (2002), Wu et al. (2006), Srivastava and Shukla (2008), and Wang and Yu (2009). To obtain an estimator of 𝜃0 = Ψζ(y0), one may then either replace \(\theta _{1},\dots ,\theta _{m}\) by Ψζ(y1),…,Ψζ(ym) and estimate ζ directly, or estimate \(\theta _{1},\dots ,\theta _{m}\) and then fit the link function with respect to ζ. In both cases, however, there is some prior belief in the assumed type of the link function stating, for instance, a log-linear relationship of the 𝜃’s and y’s. Although link function assumptions are usually deduced from contextually relevant physical principles such as the inverse power law or the Arrhenius model, there also seem to be situations where the theoretical knowledge supporting a specific life-stress relation is insufficient. For some specific lifetime distributions, such as the Weibull or log-normal distribution, (asymptotic) tests on a log-linear life-stress relationship can be found in Nelson (2004) and Meeker and Escobar (1998), where the latter also contains related graphical methods.

In Balakrishnan et al. (2012), a general step-stress model is proposed and studied based on sequential order statistics (SOSs), which have been introduced as an extension of common order statistics; see Kamps (1995a, b). For an arbitrary baseline distribution, maximum likelihood estimation of the parameters associated with the stress levels turns out to be simple in this model, and various estimators along with their properties are shown. In Bedbur et al. (2015), the model is extended to the multi-sample case, which also applies to differently designed experiments. Additional inferential results are provided including, for instance, univariate most powerful tests and multivariate tests for hypotheses concerning the parameters. Moreover, maximum likelihood estimation of the parameters of a log-linear link function is considered. Recently, confidence regions for the parameters associated with the stress levels have been established in Bedbur and Kamps (2019), where optimality properties are also obtained.

For the aforementioned general multi-sample step-stress model based on SOSs, the present work provides statistical tests to check for the validity of some link function assumption. On the one hand, these tests can be used to confirm some link function type motivated by physical laws, say, in the sense that there is no statistical evidence against the assumption. On the other hand, and perhaps more importantly, the tests may be applied to check for the adequacy of some link function type in situations where the underlying physical principles are too complex or not known at all. In any case, a data-based statistical test may help to assess the accuracy of the model assumptions, to detect significant deviations, and thereby to prevent the use of unsuitable estimates of 𝜃0, the parameter corresponding to normal operating conditions.

The remainder of this article is organized as follows. In Section 2, the model is introduced and some basic properties are reviewed. The test statistics are proposed in Section 3 for general hypotheses, first, and some simple representations are shown. In Sections 4 and 5, these test statistics are then applied to check for the null hypothesis of a linear or log-linear link function, respectively, and associated inferential properties are stated. In particular, the test statistics are shown to have a single null distribution when testing on a proportional, power, or log-linear link function, which eases the computation of exact critical values. Under any null hypothesis, the asymptotic distribution of the test statistics is obtained in Section 6. In case of testing on a log-linear link function, which represents the most important case in applications, a power study is carried out in Section 7. Testing under order restrictions is briefly discussed in Section 8 giving the potential basis of future work, and we conclude with Section 9.

2 SOSs as Step-Stress Model

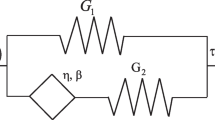

SOSs based on distribution functions \(F_{1},\dots ,F_{n}\) have been defined by Kamps (1995a, b) to model the lifetimes of sequential k-out-of-n systems, in which upon failure of some component the underlying component lifetime distribution may change. In the common semi-parametric setting, SOSs \(X_{*}^{(1)},\dots ,X_{*}^{(n)}\) are based on \(F_{j}=1-(1-F)^{\alpha _{j}}\), 1 ≤ j ≤ n, where F denotes some absolutely continuous distribution function with corresponding density function f and \(\alpha _{1},\dots ,\alpha _{n}\) are positive model parameters. In that case, the joint density function of the first r ≤ n SOSs \(X_{*}^{(1)},\dots ,X_{*}^{(r)}\) is given by

for \(\boldsymbol {x}\in {\mathcal {X}}_{r}=\{(x_{1},\dots ,x_{r})\in {\mathbb {R}}^{r}:F^{-1}(0+)<x_{1}<\dots <x_{r}<F^{-1}(1)\}\) with \(\kappa ({\boldsymbol {\alpha }})=-{\sum }_{j=1}^{r}\log \alpha _{j}\), \( {\boldsymbol {\alpha }}=(\alpha _{1},\dots ,\alpha _{r})\in (0,\infty )^{r}\), and

where \(\overline {F}=1-F\) and \(\overline {F}(x_{0})\equiv 1\) for a simple notation; see, e.g., Bedbur et al. (2010). In a sequential (n − r + 1)-out-of-n system, say, the hazard rate of any working component after the (j − 1)th component failure is then described by \(\alpha _{j} f/\overline {F}\) and thus proportional to the hazard rate of F, 1 ≤ j ≤ r. Common order statistics based on F are included in the distribution-theoretical sense by setting \(\alpha _{1}=\dots =\alpha _{n}=1\). For an extensive account on the model including distribution theory and inference, we refer to Cramer and Kamps (2001).
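The hazard-rate description above suggests a direct way to simulate SOSs. The following is a minimal sketch, assuming a standard exponential baseline F (so that \(f/\overline {F}\equiv 1\)); the function name `simulate_sos_exponential` and the example values are illustrative and not part of the original model description. After the (j − 1)th failure, each of the n − j + 1 working components has hazard rate αj, so the waiting time to the next failure is exponential with rate (n − j + 1)αj.

```python
import random


def simulate_sos_exponential(n, alphas, rng):
    """Simulate the first len(alphas) SOSs for a standard exponential baseline.

    Assumption of this sketch: F is standard exponential, so the hazard
    rate of each working component after the (j-1)th failure equals
    alphas[j-1], and the next spacing is exponential with rate
    (n - j + 1) * alphas[j-1].
    """
    if len(alphas) > n:
        raise ValueError("cannot observe more failures than test items")
    time, failures = 0.0, []
    for j, alpha in enumerate(alphas, start=1):
        time += rng.expovariate((n - j + 1) * alpha)
        failures.append(time)
    return failures


# Hypothetical example: n = 5 items, r = 4 observed failures, and a
# parameter change after the second failure.
rng = random.Random(1)
print(simulate_sos_exponential(n=5, alphas=[1.0, 1.0, 2.0, 2.0], rng=rng))
```

For a general baseline F, the simulated values could be transformed by the quantile function of F applied to 1 − exp(−x); this step is omitted here.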

Following the approach in Balakrishnan et al. (2012) and Bedbur et al. (2015), we consider a general step-stress model based on s independent samples of SOSs, which is parametrized as follows. For a common known baseline distribution function F and positive parameters \(\theta _{1},\dots ,\theta _{m}\) with m ≥ 2, we have in sample \(i \in \{1, {\dots } , s\}\)

- ni ≥ 1 test items,

- \(r_{i\bullet }\in \{1,\dots ,n_{i}\}\) failure times \((x_{i1},\dots ,x_{ir_{i\bullet }} )\in {\mathcal {X}}_{r_{i\bullet }}\) as realizations of SOSs \(X_{*i}^{(1)},\dots ,X_{*i}^{(r_{i\bullet })}\) based on F and parameters \(\alpha _{\rho _{i,k-1}+1} = {\dots } = \alpha _{\rho _{ik}} = \theta _{k}\), for 1 ≤ k ≤ m with ρi,k− 1 < ρik, where \(\rho _{i0},\rho _{i1},\dots ,\rho _{im}\) are integers with \(0=\rho _{i0}\leq \rho _{i1}\leq \dots \leq \rho _{im}=r_{i\bullet }\),

- \( r_{ik}=\rho _{ik}-\rho _{i,k-1}\in \{0,1,\dots ,r_{i\bullet }\}\) observations under stress level yk with corresponding hazard rate \(\theta _{k} f/\overline {F}\) for \(k\in \{1, \dots , m \}\).

Over all samples, we have \(r_{\bullet k}={\sum }_{i=1}^{s} r_{ik}\) observations under stress level yk, 1 ≤ k ≤ m, and \(r_{\bullet \bullet }={\sum }_{k=1}^{m} r_{\bullet k}={\sum }_{i=1}^{s} r_{i\bullet }\) observations in total. Throughout, we assume that r∙k ≥ 1, 1 ≤ k ≤ m, but rik = 0 is permitted for some \(i\in \{1,\dots ,s\}\) and \(k\in \{1,\dots ,m\}\). Note that we choose a different parametrization than in Bedbur et al. (2015), which is better suited to the present purposes.

For \(i\in \{1,\dots ,s\}\), we define the statistics

as functions of \(\boldsymbol {x}_{i}=(x_{i1},\dots ,x_{ir_{i\bullet }})\in {\mathcal {X}}_{r_{i\bullet }}\). The overall joint density function of \(\mathbf {\tilde {X}}=(X_{*i}^{(j)})_{1\leq i\leq s,1\leq j\leq r_{i\bullet }}\) is then given by

for \(\mathbf {\tilde {x}}=(\boldsymbol {x}_{1},\dots ,\boldsymbol {x}_{s})\in \tilde {{\mathcal {X}}}=\times _{i=1}^{s}{\mathcal {X}}_{r_{i\bullet }}\) with \(\tilde {\kappa }({\boldsymbol {\theta }})=-{\sum }_{k=1}^{m} r_{\bullet k}\log \theta _{k}\) for \({\boldsymbol {\theta }}=(\theta _{1},\dots ,\theta _{m})\in {\varTheta }=(0,\infty )^{m}\), and

For 𝜃 ∈Θ, let P𝜃 denote the distribution with density function \(\tilde {f}_{{\boldsymbol {\theta }}}\). The set \(\mathcal {P}=\{P_{{\boldsymbol {\theta }}}:{\boldsymbol {\theta }}\in {\varTheta }\}\) then forms a regular exponential family of rank m for which \(\tilde {{\boldsymbol {T}}}_{\bullet }=(\tilde {T}_{\bullet 1},\dots ,\tilde {T}_{\bullet m})^{t}\) is a minimal sufficient and complete statistic, where superscript t means transposition. Moreover, \(\tilde {T}_{\bullet 1},\dots ,\tilde {T}_{\bullet m}\) are independent, and

$$ -\tilde {T}_{\bullet k}\thicksim {\varGamma }(r_{\bullet k},1/\theta _{k}),\quad 1\leq k\leq m, $$(2.2)

where Γ(b,a) denotes the gamma distribution with shape parameter b and scale parameter a. For more details, see Bedbur et al. (2015).

3 Test Statistics

In the multi-sample general step-stress model introduced in Section 2, unknown model parameters \(\theta _{1},\dots ,\theta _{m}\) correspond to the (known) stress levels \(y_{1},\dots ,y_{m}\). Aiming at conclusions about the unknown parameter 𝜃0 associated with stress level y0, which represents the normal operating conditions, a link function connecting stress levels and parameters should be part of the model.

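As a preliminary step for checking whether a particular type of link function is appropriate, we consider the test problem

$$ H_{0}:{\boldsymbol {\theta }}\in {\varTheta }_{0}\quad \text{versus}\quad H_{1}:{\boldsymbol {\theta }}\in {\varTheta }\setminus {\varTheta }_{0} $$(3.1)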

for an arbitrary parameter set \({\varTheta }_{0}\subset {\varTheta }=(0,\infty )^{m}\). To decide between the hypotheses, the likelihood ratio test, the Rao score test, and the Wald test are applied, which reject the null hypothesis for (too) large values of the associated test statistics. As shown in Bedbur et al. (2015) (cf. Balakrishnan et al. 2012), the (unrestricted) maximum likelihood estimator (MLE) \(\hat {{\boldsymbol {\theta }}}=(\hat {\theta }_{1},\dots ,\hat {\theta }_{m})\) of 𝜃 has independent and inverse gamma distributed components

Immediately, we have the following important property.

Lemma 1.

\((\hat {\theta }_{1}/\theta _{1},\dots ,\hat {\theta }_{m}/\theta _{m})\) is a pivotal quantity, the distribution of which depends only on \(r_{\bullet 1},\dots ,r_{\bullet m}\).
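The pivotal property can be checked numerically. The sketch below assumes the gamma distribution of \(-\tilde {T}_{\bullet k}\) with shape r∙k and scale 1/𝜃k from Section 2 and the form \(\hat {\theta }_{k}=-r_{\bullet k}/\tilde {T}_{\bullet k}\) of the unrestricted MLE; the function name `simulate_mle` and all numerical values are hypothetical. Since the gamma family is a scale family, draws made with a common seed under two different parameter vectors should give the same ratios \(\hat {\theta }_{k}/\theta _{k}\) up to rounding (this relies on `random.gammavariate` multiplying a scale-free draw by its scale argument, as CPython does).

```python
import random


def simulate_mle(theta, r, rng):
    """Draw the unrestricted MLE (theta_hat_1, ..., theta_hat_m).

    Uses -T_k ~ Gamma(shape r_k, scale 1/theta_k) and
    theta_hat_k = -r_k / T_k = r_k / (-T_k).
    """
    return [rk / rng.gammavariate(rk, 1.0 / th) for th, rk in zip(theta, r)]


r = [3, 5, 4]  # hypothetical numbers of failures per stress level
for theta in ([1.0, 2.0, 3.0], [0.5, 10.0, 0.1]):
    mle = simulate_mle(theta, r, random.Random(7))  # same seed in both runs
    print([est / th for est, th in zip(mle, theta)])
```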

Provided that the MLE \(\tilde {{\boldsymbol {\theta }}}=(\tilde {\theta }_{1},\dots ,\tilde {\theta }_{m})\) in Θ0 exists, the likelihood ratio statistic Λ and the Rao score statistic R for test problem (3.1) are defined as

where S𝜃 = \(\nabla _{{\boldsymbol {\theta }}} \log \tilde {f}_{{\boldsymbol {\theta }}}\) denotes the score statistic on \(\tilde {{\mathcal {X}}}\) and \({\boldsymbol {\mathcal {I}}}({\boldsymbol {\theta }})\) = \({\int \limits } {\boldsymbol {S}}_{{\boldsymbol {\theta }}}{\boldsymbol {S}}_{{\boldsymbol {\theta }}}^{t} dP_{{\boldsymbol {\theta }}}\) the Fisher information matrix of \(\mathcal {P}\) at 𝜃 ∈Θ. By using formulas (2.1) and (2.2), S𝜃 has components \(\tilde {T}_{\bullet k}+r_{\bullet k}/\theta _{k}\), 1 ≤ k ≤ m, and

is a diagonal matrix with entries \(r_{\bullet k}/{\theta _{k}^{2}}\), 1 ≤ k ≤ m. Together with formulas (2.1) and (3.2), we then arrive at the simple representations

which depend on \(\mathbf {\tilde {x}}\) only through the ratios \(\tilde {\theta }_{k}/\hat {\theta }_{k}\), 1 ≤ k ≤ m; cf. Bedbur et al. (2015). Hence, if the distribution of \((\tilde {\theta }_{1}/\theta _{1},\dots ,\tilde {\theta }_{m}/\theta _{m})\) is free of 𝜃 for every 𝜃 ∈Θ0, Lemma 1 implies that Λ and R both have a single null distribution, i.e., only one distribution under H0 regardless of the true value of 𝜃 ∈Θ0.
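To make the dependence on the ratios \(q_{k}=\tilde {\theta }_{k}/\hat {\theta }_{k}\) concrete, the following sketch evaluates both statistics from these ratios alone. It assumes the usual definitions, namely that Λ is twice the log-likelihood ratio and that R is the quadratic form of the score in the inverse Fisher information, both evaluated at the restricted MLE. Under these assumptions, and using \(\tilde {T}_{\bullet k}=-r_{\bullet k}/\hat {\theta }_{k}\), one obtains \({\varLambda }=2{\sum }_{k} r_{\bullet k}(q_{k}-1-\log q_{k})\) and \(R={\sum }_{k} r_{\bullet k}(1-q_{k})^{2}\). The function names are illustrative.

```python
import math


def lr_statistic(ratios, r):
    """Likelihood ratio statistic from q_k = theta_tilde_k / theta_hat_k.

    Assumes Lambda = 2 * (unrestricted minus restricted log-likelihood).
    """
    return 2.0 * sum(rk * (q - 1.0 - math.log(q)) for q, rk in zip(ratios, r))


def rao_statistic(ratios, r):
    """Rao score statistic from the same ratios.

    Assumes R = S' I^{-1} S evaluated at the restricted MLE, with score
    components T_k + r_k / theta_k and Fisher information r_k / theta_k^2.
    """
    return sum(rk * (1.0 - q) ** 2 for q, rk in zip(ratios, r))


# Hypothetical ratios and failure counts for m = 3 stress levels.
print(lr_statistic([1.2, 0.8, 1.0], [4, 6, 5]))
print(rao_statistic([1.2, 0.8, 1.0], [4, 6, 5]))
```

Both functions return zero when the restricted and unrestricted MLEs coincide, and they are positive otherwise.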

While the likelihood ratio test and the Rao score test both involve the MLE of 𝜃 in Θ0, the Wald test only depends on the unrestricted MLE of 𝜃. Let \(g:{\varTheta }\rightarrow {\mathbb {R}}^{q}\), q ≤ m, be a continuously differentiable function with the property that g(𝜃) = 0 if and only if (iff) 𝜃 ∈Θ0. Moreover, let the Jacobian matrix \(\mathbf {D}_{g}({\boldsymbol {\theta }})\in {\mathbb {R}}^{q\times m}\) of g at 𝜃 be of full rank for every 𝜃 ∈Θ. Then, the Wald statistic W for test problem (3.1) based on g is defined by

Note that varying g may lead to different test statistics. For computational reasons, a simple form of g is often preferred.

Remark 1.

In this work, Λ, R, and W are applied to test on the type of the underlying link function connecting parameters and stress levels, which in each case yields a composite null hypothesis in test problem (3.1). However, the tests also allow one to check for a single link function by choosing the simple null hypothesis

with some completely specified function Ψ0 as, e.g., \({\varPsi }_{0}={\varPsi }_{\boldsymbol {\zeta }_{0}}\) with known link function parameter ζ0. The corresponding test statistics are given by formula (3.4) with \(\tilde {{\boldsymbol {\theta }}}=({\varPsi }_{0}(y_{1}),\dots ,{\varPsi }_{0}(y_{m}))\), where W = R for the canonical choice \(g({\boldsymbol {\theta }})={\boldsymbol {\theta }}-\tilde {{\boldsymbol {\theta }}}\), 𝜃 ∈Θ. For independent and identically distributed (iid) samples of generalized order statistics and in a different parametrization, these tests can also be found in Bedbur et al. (2014, 2016).

4 Linear Link Functions

Let the stress levels \(y_{1},\dots ,y_{m}\) be known positive numbers. Without loss of generality, we assume that \(y_{1},\dots ,y_{m}\) are pairwise distinct, i.e., we have

The case of arbitrary positive stress levels can then be traced back to the above case by building in formula (2.1) sums of the statistics \(\tilde {T}_{\bullet 1},\dots ,\tilde {T}_{\bullet m}\) and numbers \(r_{\bullet 1},\dots ,r_{\bullet m}\), respectively, corresponding to identical 𝜃’s to arrive at a representation of the density function with \(\tilde {m}<m\) distinct parameters.

In this section, we consider the situation that stress levels and parameters might be connected via a proportional link function

$$ \theta _{k}=b\,y_{k},\quad 1\leq k\leq m, $$(4.2)

for some parameter b > 0, or via a linear link function

$$ \theta _{k}=a+b\,y_{k},\quad 1\leq k\leq m, $$(4.3)

for some parameters \(a,b\in {\mathbb {R}}\) with a + byk > 0, 1 ≤ k ≤ m. Note that, for m = 2, formula (4.3) is just a reparametrization of the parameters.

First, estimation of the link function parameters is discussed under the assumption that formula (4.2) or (4.3) holds true. Then, we derive statistical tests for checking whether the proportional or linear link function assumption is appropriate.

4.1 Estimation Under Proportional or Linear Link Functions

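We start by deriving estimators for the link function parameters when formula (4.2) or (4.3) is assumed to be true. To ease notation, let the statistics U and V be defined as

$$ U=\sum\limits_{k=1}^{m}\tilde {T}_{\bullet k}\quad\text{and}\quad V=\sum\limits_{k=1}^{m} y_{k}\tilde {T}_{\bullet k}. $$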

Based on an iid sample of SOSs, estimators for b and (a,b) along with their properties are provided in Balakrishnan et al. (2011), where the focus is on load-sharing systems. The results are easily generalized and adapted to the present step-stress model.

Theorem 1.

- (a) Under the proportional link function in formula (4.2),

  - (i) V is sufficient and complete for b with \(-V\thicksim {\varGamma }(r_{\bullet \bullet },1/b)\),

  - (ii) the unique MLE of b is given by \(\hat {b}=-r_{\bullet \bullet }/V\),

  - (iii) the uniformly minimum-variance unbiased estimator of b is given by \((r_{\bullet \bullet }-1)\hat {b}/r_{\bullet \bullet }\).

- (b) Under the linear link function in formula (4.3) and for m ≥ 3,

  - (i) (U,V ) is minimal sufficient and complete for (a, b),

  - (ii) the unique MLE \((\hat {a},\hat {b})\) of (a,b) is equal to (−r∙∙/U,0) if \({\sum }_{k=1}^{m} r_{\bullet k} y_{k}/V=r_{\bullet \bullet }/U\) and otherwise given by the only solution of the equations

    $$ a = \frac{r_{\bullet\bullet}+bV}{-U}\quad\text{and}\quad \sum\limits_{k=1}^{m}\frac{r_{\bullet k}}{r_{\bullet\bullet}+b(V-y_{k} U)} = 1 $$(4.4)

    with respect to \(a,b\in {\mathbb {R}}\) satisfying b≠ 0 and a + byk > 0, 1 ≤ k ≤ m.

Proof.

(a) By inserting formula (4.2) in density function (2.1), we find that \(\mathcal {P}\) forms a regular one-parameter exponential family in b, from which all statements are obvious.

(b) Likewise, by inserting formula (4.3) in density function (2.1), \(\mathcal {P}\) is seen to form a regular two-parameter exponential family in a and b, which is of rank 2, since the covariance matrix of (U,V ) is positive definite (which, in turn, follows by application of the Cauchy-Schwarz inequality). From this, all statements are directly obtained. In particular, the MLE \((\hat {a},\hat {b})\) of (a,b) uniquely exists and is the only solution of the likelihood equations

with respect to \(a,b\in {\mathbb {R}}\) satisfying a + byk > 0, 1 ≤ k ≤ m. Obviously, \(\hat {b}=0\) iff \(r_{\bullet \bullet }/U={\sum }_{k=1}^{m} r_{\bullet k} y_{k}/V\), in the case of which \((\hat {a},\hat {b})=(-r_{\bullet \bullet }/U,0)\). Otherwise, we may multiply the second equation in formula (4.5) by b, from which the relation − bV = r∙∙ + aU and Eq. (4.4) are obtained. □
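Since the MLE under the linear link function is not available in closed form, it has to be computed numerically. The following sketch is one possible approach and not the procedure of the original work: it maximizes the log-likelihood, which up to an additive constant is \(l(a,b)=aU+bV+{\sum }_{k} r_{\bullet k}\log (a+by_{k})\) (an assumption derived from the exponential family structure with \(U={\sum }_{k}\tilde {T}_{\bullet k}\) and \(V={\sum }_{k} y_{k}\tilde {T}_{\bullet k}\)), by Newton steps with step halving to stay inside the region a + byk > 0. The function name and the data are hypothetical.

```python
import math


def linear_link_mle(T, r, y, tol=1e-12, max_iter=100):
    """Maximize l(a, b) = a*U + b*V + sum_k r_k*log(a + b*y_k) by damped Newton."""
    U = sum(T)
    V = sum(yk * tk for yk, tk in zip(y, T))

    def loglik(a, b):
        thetas = [a + b * yk for yk in y]
        if min(thetas) <= 0.0:
            return -math.inf  # outside the parameter space
        return a * U + b * V + sum(rk * math.log(th) for rk, th in zip(r, thetas))

    a, b = -sum(r) / U, 0.0  # feasible start: the MLE under b = 0
    for _ in range(max_iter):
        thetas = [a + b * yk for yk in y]
        ga = U + sum(rk / th for rk, th in zip(r, thetas))
        gb = V + sum(rk * yk / th for rk, yk, th in zip(r, y, thetas))
        if max(abs(ga), abs(gb)) < tol * sum(r):
            break
        # Entries of the negative Hessian, positive definite for distinct y_k.
        haa = sum(rk / th ** 2 for rk, th in zip(r, thetas))
        hab = sum(rk * yk / th ** 2 for rk, yk, th in zip(r, y, thetas))
        hbb = sum(rk * yk ** 2 / th ** 2 for rk, yk, th in zip(r, y, thetas))
        det = haa * hbb - hab ** 2
        da = (hbb * ga - hab * gb) / det
        db = (haa * gb - hab * ga) / det
        step = 1.0
        while step > 1e-12 and loglik(a + step * da, b + step * db) < loglik(a, b):
            step /= 2.0  # halve until the log-likelihood does not decrease
        a, b = a + step * da, b + step * db
    return a, b


# Hypothetical data: the unrestricted MLEs -r_k/T_k = (0.5, 1.25, 2.0) are
# exactly linear in y, so the fit should return approximately a = -0.25, b = 0.75.
print(linear_link_mle(T=[-10.0, -4.0, -2.5], r=[5, 5, 5], y=[1.0, 2.0, 3.0]))
```

The returned pair can be checked against Eq. (4.4), which any solution with b≠ 0 has to satisfy.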

4.2 Testing on Proportional or Linear Link Functions

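To check whether link function (4.2) or (4.3) is appropriate, we consider test problem (3.1) with Θ0 specified as

$$ {{\varTheta }_{0}^{A}}=\{b(y_{1},\dots ,y_{m}):b>0\} $$(4.6)

$$ \text{or}\quad {{\varTheta }_{0}^{B}}=\{(a+by_{1},\dots ,a+by_{m}):a,b\in {\mathbb {R}},\ a+by_{k}>0,\ 1\leq k\leq m\}. $$(4.7)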

Then, \(\tilde {{\boldsymbol {\theta }}}^{A}=\hat {b}(y_{1},\dots ,y_{m})\) with \(\hat {b}\) as in Theorem 1(a) and \(\tilde {{\boldsymbol {\theta }}}^{B}=(\hat {a}+\hat {b}y_{1},\dots ,\hat {a}+\hat {b}y_{m})\) with \((\hat {a},\hat {b})\) as in Theorem 1(b) are the MLEs of 𝜃 in \({{\varTheta }_{0}^{A}}\) and \({{\varTheta }_{0}^{B}}\), respectively. Since \(\tilde {{\boldsymbol {\theta }}}^{A}\) is available in explicit form, the likelihood ratio statistic and the Rao score statistic are simple for checking the proportional link function assumption.

Theorem 2.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{A}}\) as defined in formula (4.6). Then,

where, under the null hypothesis, \((y_{1}\tilde {T}_{\bullet 1}/V,\dots ,y_{m}\tilde {T}_{\bullet m}/V)\) has a Dirichlet distribution with parameters \(r_{\bullet 1},\dots ,r_{\bullet m}\). In particular, Λ and R each have a single null distribution, which only depends on \(r_{\bullet 1},\dots ,r_{\bullet m}\).

Proof.

We have that

and inserting this expression in formula (3.4) leads to the stated representations for Λ and R. By formula (2.2), \(-y_{1}\tilde {T}_{\bullet 1},\dots ,-y_{m}\tilde {T}_{\bullet m}\) are independent, where, under H0, \(-y_{k}\tilde {T}_{\bullet k}\thicksim {\varGamma }(r_{\bullet k},1/b)\) for 1 ≤ k ≤ m and some b > 0. Since these random variables sum up to − V by definition, the proof is completed. □
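Because the null distribution depends only on \(r_{\bullet 1},\dots ,r_{\bullet m}\), exact critical values can be approximated by Monte Carlo simulation. The sketch below does this for the likelihood ratio statistic. It assumes that Λ is twice the log-likelihood ratio, in which case the ratios \(\tilde {\theta }_{k}^{A}/\hat {\theta }_{k}=r_{\bullet \bullet }D_{k}/r_{\bullet k}\) with \(D_{k}=y_{k}\tilde {T}_{\bullet k}/V\) sum to r∙∙ when weighted by r∙k, and Λ reduces to \(-2{\sum }_{k} r_{\bullet k}\log (r_{\bullet \bullet }D_{k}/r_{\bullet k})\). The function names, the number of replications, and the failure counts are illustrative choices.

```python
import math
import random


def lr_proportional(dirichlet, r):
    """Lambda for the proportional link, written in terms of D_k = y_k T_k / V."""
    total = sum(r)
    return -2.0 * sum(rk * math.log(total * dk / rk) for dk, rk in zip(dirichlet, r))


def simulate_critical_value(r, level=0.05, n_sim=5000, seed=0):
    """Empirical (1 - level)-quantile of Lambda under the null hypothesis."""
    rng = random.Random(seed)
    stats = []
    for _ in range(n_sim):
        # A Dirichlet(r_1, ..., r_m) vector from normalized gamma variables.
        g = [rng.gammavariate(rk, 1.0) for rk in r]
        s = sum(g)
        stats.append(lr_proportional([gk / s for gk in g], r))
    stats.sort()
    index = min(n_sim - 1, math.ceil((1.0 - level) * n_sim) - 1)
    return stats[index]


# Hypothetical failure counts under m = 3 stress levels.
print(simulate_critical_value([3, 5, 4]))
```

The stress levels do not enter the simulation, in line with the statement of Theorem 2.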

Simple expressions for Λ and R are also readily available when testing on the presence of a linear link function.

Lemma 2.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{B}}\) as defined in formula (4.7), and let m ≥ 3. Then,

Proof.

The representations are directly obtained by using that \(-\hat {a}U-\hat {b}V=r_{\bullet \bullet }\) (see Eq. (4.4)). □

As simulations show, the distributions of Λ and R in Lemma 2 vary for different \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{B}}\), so that both test statistics do not have a single null distribution in the present case. This gives rise to respective asymptotic results, which are provided in a subsequent section.

Finally, we derive Wald statistics for testing the proportional and linear link function assumption. For this, we introduce the function \(g^{A}=({g_{1}^{A}},\dots ,g_{m-1}^{A})^{t}\) on Θ with component functions

and the function \(g^{B}=({g_{1}^{B}},\dots ,g_{m-2}^{B})^{t}\) on Θ with component functions

for 1 ≤ k ≤ m − 2. Then, gA(𝜃) = 0 iff \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{A}}\), and gB(𝜃) = 0 iff \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{B}}\).

In the following, let \({\boldsymbol {\mathcal {I}}}_{k}({\boldsymbol {\theta }})=\mathbf {diag}(r_{\bullet 1}/{\theta _{1}^{2}},\dots ,r_{\bullet k}/{\theta _{k}^{2}})\) be the quadratic submatrix of \({\boldsymbol {\mathcal {I}}}({\boldsymbol {\theta }})\) and Ik denote the unity matrix in \({\mathbb {R}}^{k\times k}\) for 1 ≤ k ≤ m.

Theorem 3.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{A}}\) as defined in formula (4.6). Then, the Wald statistic based on gA defined by formula (4.8) is given by

and has a single null distribution, which only depends on \(r_{\bullet 1},\dots ,r_{\bullet m}\). Here, δij is the Kronecker delta.

Proof.

Let \({\boldsymbol {z}}=(z_{1},\dots ,z_{m-1})^{t}\in {\mathbb {R}}^{m-1}\) with zk = −yk/ym, 1 ≤ k ≤ m − 1. Then, by using formulas (3.3) and (3.5),

Application of the Woodbury matrix identity (see, e.g., Rao (1973, p. 33)) yields

Inserting for z and replacing 𝜃 by \(\hat {{\boldsymbol {\theta }}}\) then leads to the representation of W. Now, let \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{A}}\), which implies that yk𝜃m = ym𝜃k, 1 ≤ k ≤ m. Then, W can be seen to depend on the data and 𝜃 only through \((\hat {\theta }_{1}/\theta _{1},\dots ,\hat {\theta }_{m}/\theta _{m})\), since

By Lemma 1, W thus has a single null distribution, which is free of \(y_{1},\dots ,y_{m}\). □

Summarizing, we find that in case of testing the proportional link function assumption, Λ, R, and W all have single null distributions, the quantiles of which are readily obtained by simulation and may then serve as (exact) critical values of the test statistics subject to a desired significance level. The Wald statistic for testing the linear link function assumption is as follows.

Lemma 3.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{B}}\) as defined in formula (4.7), and let m ≥ 3. Then, the Wald statistic based on gB defined by formula (4.9) is given by

where \({\boldsymbol {\eta }}=(\eta _{1},\dots ,\eta _{m})\) and \({\boldsymbol {\zeta }}=(\zeta _{1},\dots ,\zeta _{m})\) with

Proof.

Let \(\eta _{1},\dots ,\eta _{m}\) and \(\zeta _{1},\dots ,\zeta _{m}\) be defined as in formula (4.11), and let uk = −ηk and vk = −ζk for 1 ≤ k ≤ m. Note that um− 1 = − 1 = vm and um = 0 = vm− 1. Moreover, let \(\tilde {{\boldsymbol {u}}}=(u_{1},\dots ,u_{m-2})^{t}\) and \(\tilde {\boldsymbol {v}}=(v_{1},\dots ,v_{m-2})^{t}\). Then, by using formulas (3.3) and (3.5),

with matrix \(\mathbf {U}({\boldsymbol {\theta }})= \left (\theta _{m-1}\tilde {{\boldsymbol {u}}}/\sqrt {r_{\bullet m-1}},\theta _{m}\tilde {\boldsymbol {v}}/\sqrt {r_{\bullet m}}\right ) \in {\mathbb {R}}^{(m-2)\times 2}\). Here, we obtain from the Woodbury matrix identity that

Since

with \({\boldsymbol {u}}=(u_{1},\dots ,u_{m})\), \({\boldsymbol {v}}=(v_{1},\dots ,v_{m})\), and Δ(𝜃;u,v) as in formula (4.12), we have that

Using this representation in formula (4.13) then finally gives

from which the formula for W is obtained by inserting for \(u_{1},\dots ,u_{m},v_{1},\dots ,v_{m}\) and replacing 𝜃 by \(\hat {{\boldsymbol {\theta }}}\). □

As is the case for the likelihood ratio and Rao score statistics in Lemma 2, simulations indicate that the Wald statistic for testing the linear link function assumption does not have a single null distribution. Asymptotic results are therefore addressed in a subsequent section.

5 Log-Linear Link Functions

We now consider the case that the relation between stress levels and parameters might be described by a power link function

$$ \theta _{k}=e^{dy_{k}},\quad 1\leq k\leq m, $$(5.1)

for some parameter \(d\in {\mathbb {R}}\), or by a log-linear link function

$$ \theta _{k}=e^{c+dy_{k}},\quad 1\leq k\leq m, $$(5.2)

for some parameters \(c,d\in {\mathbb {R}}\), where the stress levels \(y_{1},\dots ,y_{m}\) are assumed to satisfy formula (4.1). Again, the case m = 2 in formula (5.2) is just a bijective transformation of the parameters. As in Section 4, estimation of the link function parameters is considered first. Then, statistical tests are presented to check for the presence of a power or log-linear link function.

5.1 Estimation Under Power or Log-Linear Link Functions

We start by estimating the link function parameters under the assumption that formula (5.1) or (5.2) is true. In a different parametrization, maximum likelihood and best linear unbiased estimation of log-linear link function parameters is also considered in Bedbur et al. (2015).

Theorem 4.

- (a) Under the power link function in formula (5.1),

  - (i) \(\tilde {{\boldsymbol {T}}}_{\bullet }=(\tilde {T}_{\bullet 1},\dots ,\tilde {T}_{\bullet m})^{t}\) is minimal sufficient for d,

  - (ii) the MLE \(\hat {d}\) of d uniquely exists and is the only solution of the equation

    $$ \sum\limits_{k=1}^{m} y_{k} e^{dy_{k}} \tilde{T}_{\bullet k} = -\sum\limits_{k=1}^{m} r_{\bullet k} y_{k} $$(5.3)

    with respect to \(d\in {\mathbb {R}}\).

- (b) Under the log-linear link function in formula (5.2) and for m ≥ 3,

  - (i) \(\tilde {{\boldsymbol {T}}}_{\bullet }=(\tilde {T}_{\bullet 1},\dots ,\tilde {T}_{\bullet m})^{t}\) is minimal sufficient for (c,d),

  - (ii) the MLE \((\hat {c},\hat {d})\) of (c,d) uniquely exists and is the only solution of the equations

    $$ c = \log\left( - \frac{r_{\bullet\bullet}}{{\sum}_{k=1}^{m} e^{dy_{k}} \tilde{T}_{\bullet k}}\right) $$(5.4)

    $$ \text{and}\quad\frac{{\sum}_{k=1}^{m} y_{k} e^{dy_{k}} \tilde{T}_{\bullet k}}{{\sum}_{k=1}^{m} e^{dy_{k}} \tilde{T}_{\bullet k}} = \frac{{\sum}_{k=1}^{m} r_{\bullet k} y_{k}}{r_{\bullet\bullet}} $$(5.5)

    with respect to \((c,d)\in {\mathbb {R}}^{2}\).

Proof.

In the following, let \(y_{(1)},\dots ,y_{(m)}\) denote the (increasing) order statistics of \(y_{1},\dots ,y_{m}\), which satisfy \(0<y_{(1)}<\dots <y_{(m)}\) by formula (4.1).

(a) (i) When inserting representation (5.1) in density function (2.1), \(\mathcal {P}\) forms a (curved) exponential family in the mappings \(\xi _{1},\dots ,\xi _{m}\) with \(\xi _{k}(d)=e^{dy_{k}}\), \(d\in {\mathbb {R}}\), for 1 ≤ k ≤ m. Then, by Theorem 1.6.9 in Pfanzagl (1994), it is sufficient to show that \(\xi _{1},\dots ,\xi _{m}\) are affinely independent. For this, let \(q_{0},q_{1},\dots ,q_{m}\in {\mathbb {R}}\) with \(q_{0}+{\sum }_{k=1}^{m} q_{k} e^{dy_{(k)}}=0\) for all \(d\in {\mathbb {R}}\). This yields for \(d\rightarrow -\infty \) that q0 = 0 and, thus, \({\sum }_{k=1}^{m} q_{k} e^{dy_{(k)}}=0\) or, equivalently, \(q_{1}+{\sum }_{k=2}^{m} q_{k} e^{d(y_{(k)}-y_{(1)})}=0\) for all \(d\in {\mathbb {R}}\). Now, for \(d\rightarrow -\infty \), we find that q1 = 0. Successively, it follows that \(q_{0}=q_{1}=\dots =q_{m}=0\), so that \(\xi _{1},\dots ,\xi _{m}\) are affinely independent. (ii) The log-likelihood function \(l(d)=\log \tilde {f}_{(\exp \{dy_{1}\},\dots ,\exp \{dy_{m}\})}\), \(d\in {\mathbb {R}}\), with \(\tilde {f}_{{\boldsymbol {\theta }}}\) as in formula (2.1), is increasing-decreasing and strictly concave, since \(l^{\prime \prime }(d)={\sum }_{k=1}^{m} {y_{k}^{2}}e^{dy_{k}}\tilde {T}_{\bullet k}<0\), \(d\in {\mathbb {R}}\). Hence, the MLE of d is unique and given by the only solution of the likelihood equation (5.3).

(b) (i) The statement can be shown by proceeding as in the proof of (a) (i). (ii) With the log-likelihood function \(l(c,d)=\log \tilde {f}_{(\exp \{c+dy_{1}\},\dots ,\exp \{c+dy_{m}\})}\), \(c,d\in {\mathbb {R}}\), the likelihood equations are given by

from which Eqs. (5.4) and (5.5) are directly obtained. Since for i ∈{1, m}

it follows that the left-hand side of Eq. (5.5) converges to y(1) for \(d\rightarrow -\infty \) and to y(m) for \(d\rightarrow \infty \). The inequality \(y_{(1)}<{\sum }_{k=1}^{m} r_{\bullet k} y_{k}/r_{\bullet \bullet }<y_{(m)}\) then ensures the existence of at least one solution of the likelihood equations. Moreover, the Hessian matrix of l turns out to be negative definite by using the Cauchy-Schwarz inequality. Hence, l is strictly concave, and the unique MLE of (c,d) is given by the only solution of Eqs. (5.4) and (5.5). □
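The monotone behaviour of the left-hand side of Eq. (5.5) used in the proof also suggests a simple numerical scheme: a bisection search for d in Eq. (5.5), followed by evaluation of Eq. (5.4) for c. The following is a minimal sketch of this idea and not necessarily the procedure used by the authors; the function name, the bracketing strategy, and the data are hypothetical. The weights \(-\tilde {T}_{\bullet k}\) are positive, and the exponents are shifted by their maximum to avoid overflow.

```python
import math


def loglinear_link_mle(T, r, y, n_iter=200):
    """Solve Eqs. (5.4) and (5.5) for (c, d) by bisection in d."""
    w = [-tk for tk in T]  # positive weights -T_k
    target = sum(rk * yk for rk, yk in zip(r, y)) / sum(r)

    def weighted_mean(d):
        # Left-hand side of (5.5); the minus signs of T_k cancel in the ratio.
        e = [d * yk + math.log(wk) for yk, wk in zip(y, w)]
        top = max(e)
        p = [math.exp(ek - top) for ek in e]
        return sum(yk * pk for yk, pk in zip(y, p)) / sum(p)

    # Expand the bracket until it contains the root, then bisect.
    lo, hi = -1.0, 1.0
    while weighted_mean(lo) > target:
        lo *= 2.0
    while weighted_mean(hi) < target:
        hi *= 2.0
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if weighted_mean(mid) < target:
            lo = mid
        else:
            hi = mid
    d = 0.5 * (lo + hi)
    c = math.log(sum(r) / sum(wk * math.exp(d * yk) for wk, yk in zip(w, y)))
    return c, d


# Hypothetical data constructed so that the unrestricted MLEs -r_k/T_k are
# exactly log-linear with c = 0.3 and d = 0.5; the fit should recover these.
y = [1.0, 2.0, 4.0]
r = [4, 6, 5]
T = [-rk / math.exp(0.3 + 0.5 * yk) for rk, yk in zip(r, y)]
print(loglinear_link_mle(T, r, y))
```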

5.2 Testing on Power or Log-Linear Link Functions

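To check for the presence of link function (5.1) or (5.2), we consider test problem (3.1) with Θ0 given by

$$ {{\varTheta }_{0}^{C}}=\{(e^{dy_{1}},\dots ,e^{dy_{m}}):d\in {\mathbb {R}}\} $$(5.6)

$$ \text{or}\quad {{\varTheta }_{0}^{D}}=\{(e^{c+dy_{1}},\dots ,e^{c+dy_{m}}):c,d\in {\mathbb {R}}\}. $$(5.7)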

Then, \(\tilde {{\boldsymbol {\theta }}}^{C}=(\exp \{\hat {d}y_{1}\},\dots ,\exp \{\hat {d}y_{m}\})\) with \(\hat {d}\) as in Theorem 4(a) and \(\tilde {{\boldsymbol {\theta }}}^{D}=(\exp \{\hat {c}+\hat {d}y_{1}\},\dots ,\exp \{\hat {c}+\hat {d}y_{m}\})\) with \((\hat {c},\hat {d})\) as in Theorem 4(b) are the MLEs of 𝜃 in \({{\varTheta }_{0}^{C}}\) and \({{\varTheta }_{0}^{D}}\), respectively.

First, we establish the likelihood ratio and the Rao score statistics and then derive Wald statistics for testing a power or log-linear link function assumption, all of which turn out to have a single null distribution.

Theorem 5.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{C}}\) as defined in formula (5.6). Then,

both have a single null distribution, where \(Y_{k}=-e^{\hat {d}y_{k}} \tilde {T}_{\bullet k}\), 1 ≤ k ≤ m, with \(\hat {d}\) as in Theorem 4(a).

Proof.

The representations of the test statistics are obvious from \(\tilde {\theta }_{k}^{C}/\hat {\theta }_{k}=Y_{k}/r_{\bullet k}\), 1 ≤ k ≤ m. Now, let the null hypothesis be true, i.e., let \(\theta _{k}=e^{dy_{k}}\), 1 ≤ k ≤ m, for some \(d\in {\mathbb {R}}\). Regarding the unique solution \(\hat {d}\) of Eq. (5.3) as a function of \(\tilde {{\boldsymbol {T}}}_{\bullet }\), Theorem 4(a) yields that

with quantities \(Z_{k}=e^{dy_{k}}\tilde {T}_{\bullet k}\), 1 ≤ k ≤ m. By formula (2.2), \(Z_{1},\dots ,Z_{m}\) are independent with \(-Z_{k}\thicksim {\varGamma }(r_{\bullet k},1)\), 1 ≤ k ≤ m. Necessarily, \(\hat {d}(\tilde {{\boldsymbol {T}}}_{\bullet }) -d = \hat {d}(\boldsymbol {Z})\), where \(\boldsymbol {Z}=(Z_{1},\dots ,Z_{m})\), and, as a consequence, \(Y_{k}=-e^{\hat {d}(\boldsymbol {Z})y_{k}}Z_{k}\), 1 ≤ k ≤ m. Λ and R thus depend on the data and d only through Z, the distribution of which is free of d. Hence, both have a single null distribution. □
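The invariance argument of the proof can be illustrated numerically. The sketch below assumes that Λ is twice the log-likelihood ratio, so that \({\varLambda }=2{\sum }_{k} r_{\bullet k}(Y_{k}/r_{\bullet k}-1-\log (Y_{k}/r_{\bullet k}))\) with Yk as in Theorem 5, and it solves Eq. (5.3) by bisection, which is possible because the stress levels are positive. Data are drawn under the null hypothesis for two different values of d with a common seed; the resulting values of Λ should agree up to rounding (given that `random.gammavariate` scales a common draw by its scale argument). All function names and numerical values are hypothetical.

```python
import math
import random


def power_link_mle(T, r, y, n_iter=200):
    """Solve Eq. (5.3) for d by bisection (requires positive stress levels)."""
    target = sum(rk * yk for rk, yk in zip(r, y))

    def lhs(d):
        # sum_k y_k e^{d y_k} (-T_k), increasing in d since y_k > 0
        return sum(yk * math.exp(d * yk) * (-tk) for yk, tk in zip(y, T))

    lo, hi = -1.0, 1.0
    while lhs(lo) > target:
        lo *= 2.0
    while lhs(hi) < target:
        hi *= 2.0
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if lhs(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def lr_power(T, r, y):
    """Lambda for the power link, assuming Lambda = 2 * log-likelihood ratio."""
    d_hat = power_link_mle(T, r, y)
    Y = [-math.exp(d_hat * yk) * tk for yk, tk in zip(y, T)]
    return 2.0 * sum(rk * (Yk / rk - 1.0 - math.log(Yk / rk)) for Yk, rk in zip(Y, r))


def draw_T(d, r, y, rng):
    """Draw T under H0: -T_k ~ Gamma(shape r_k, scale exp(-d*y_k))."""
    return [-rng.gammavariate(rk, math.exp(-d * yk)) for rk, yk in zip(r, y)]


r, y = [3, 5, 4], [1.0, 2.0, 3.0]
for d in (0.0, 0.7):
    print(lr_power(draw_T(d, r, y, random.Random(11)), r, y))
```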

In contrast to the linear link function case, Λ and R also have single null distributions when testing a log-linear link function assumption.

Theorem 6.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{D}}\) as defined in formula (5.7), and let m ≥ 3. Then, Λ and R are given by formula (5.8) with \(Y_{k}=-e^{\hat {c}+\hat {d}y_{k}} \tilde {T}_{\bullet k}\), 1 ≤ k ≤ m, and \((\hat {c},\hat {d})\) as in Theorem 4(b). Moreover, both test statistics have a single null distribution.

Proof.

The representations for Λ and R remain the same as in Theorem 5, since, again, \(\tilde {\theta }_{k}^{D}/\hat {\theta }_{k}=Y_{k}/r_{\bullet k}\), 1 ≤ k ≤ m. Now, let \(\theta _{k}=e^{c+dy_{k}}\), 1 ≤ k ≤ m, for some \(c,d\in {\mathbb {R}}\). We proceed similarly as in the proof of Theorem 5. First, we obtain from formula (5.5) that

with quantities \(\tilde {Z}_{k}=e^{c+dy_{k}}\tilde {T}_{\bullet k}\), 1 ≤ k ≤ m. By using formula (2.2), \(\tilde {Z}_{1},\dots ,\tilde {Z}_{m}\) are independent with \(-\tilde {Z}_{k}\thicksim {\varGamma }(r_{\bullet k},1)\), 1 ≤ k ≤ m. With the notation \(\tilde {\boldsymbol {Z}}=(\tilde {Z}_{1},\dots ,\tilde {Z}_{m})\), necessarily, \(\hat {d}(\tilde {{\boldsymbol {T}}}_{\bullet })-d=\hat {d}(\tilde {\boldsymbol {Z}})\), i.e., the unique solution of Eq. (5.5) with \(\tilde {T}_{\bullet k}\) replaced by \(\tilde {Z}_{k}\), 1 ≤ k ≤ m. Then, by using Eq. (5.4),

and, consequently, \(Y_{k}=-e^{\hat {c}(\tilde {\boldsymbol {Z}})+\hat {d}(\tilde {\boldsymbol {Z}})y_{k}}\tilde {Z}_{k}\), 1 ≤ k ≤ m. Hence, Λ and R depend on the data and (c,d) only through \(\tilde {\boldsymbol {Z}}\), the distribution of which is free of (c,d). Both thus have a single null distribution. □
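For a concrete data set, the estimators \((\hat {c},\hat {d})\) and the two statistics can be computed along the following lines. This is a minimal Python sketch under stated assumptions, not the authors' implementation: Eqs. (5.4) and (5.5) are not restated here, so the profiled score equation is derived from the log-likelihood \({\sum }_{k}[r_{\bullet k}\log \theta _{k}+\theta _{k}\tilde {T}_{\bullet k}]\), and Λ and R are taken as \(2{\sum }_{k}[Y_{k}-r_{\bullet k}-r_{\bullet k}\log (Y_{k}/r_{\bullet k})]\) and \({\sum }_{k}(Y_{k}-r_{\bullet k})^{2}/r_{\bullet k}\), the forms implied by that log-likelihood. Function names and data values are hypothetical.

```python
import math


def fit_log_linear(t, y, r, lo=-50.0, hi=50.0, tol=1e-12):
    """Return (c_hat, d_hat) for theta_k = exp(c + d * y_k).

    Assumes the log-likelihood sum_k [r_k * log(theta_k) + theta_k * t_k]
    with t_k < 0. Profiling out c leaves one equation in d: the weighted
    mean of y with weights -t_k * exp(d * y_k) must equal sum(r*y)/sum(r).
    That weighted mean is increasing in d, so bisection applies.
    """
    n = sum(r)
    target = sum(rk * yk for rk, yk in zip(r, y)) / n

    def weighted_mean(d):
        w = [-tk * math.exp(d * yk) for tk, yk in zip(t, y)]
        return sum(wk * yk for wk, yk in zip(w, y)) / sum(w)

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if weighted_mean(mid) < target:
            lo = mid
        else:
            hi = mid
    d_hat = 0.5 * (lo + hi)
    c_hat = math.log(n) - math.log(
        sum(-tk * math.exp(d_hat * yk) for tk, yk in zip(t, y)))
    return c_hat, d_hat


def lr_and_score(t, y, r):
    """Likelihood ratio and Rao score statistics expressed through Y_k."""
    c_hat, d_hat = fit_log_linear(t, y, r)
    y_stat = [-math.exp(c_hat + d_hat * yk) * tk for tk, yk in zip(t, y)]
    lam = 2.0 * sum(v - rk - rk * math.log(v / rk)
                    for v, rk in zip(y_stat, r))
    rao = sum((v - rk) ** 2 / rk for v, rk in zip(y_stat, r))
    return lam, rao


y = (0.5, 1.0, 1.5, 2.0, 2.5)
r = (6, 5, 4, 3, 2)

# Data whose unrestricted MLEs lie exactly on a log-linear link.
t_fit = [-rk / math.exp(0.3 + 0.9 * yk) for rk, yk in zip(r, y)]
c_fit, d_fit = fit_log_linear(t_fit, y, r)
lam_fit, rao_fit = lr_and_score(t_fit, y, r)

# Hypothetical data that do not follow a log-linear link exactly.
t_off = (-9.0, -3.0, -2.5, -0.4, -0.3)
lam_off, rao_off = lr_and_score(t_off, y, r)
```

For `t_fit` the fit recovers the generating pair (0.3, 0.9) and both statistics vanish up to rounding, whereas `t_off` yields strictly positive values. Note that at least three stress levels are needed for the test to be non-trivial, in line with the condition m ≥ 3.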

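For the derivation of the Wald statistics for testing a power or log-linear link function assumption, we define the mapping \(g^{C}=({g_{1}^{C}},\dots ,g_{m-1}^{C})^{t}\) on Θ with component functions

$$ {g_{k}^{C}}({\boldsymbol{\theta}})=\theta_{k}-\theta_{m}^{y_{k}/y_{m}},\quad 1\leq k\leq m-1, \tag{5.9} $$

and the mapping \(g^{D}=({g_{1}^{D}},\dots ,g_{m-2}^{D})^{t}\) on Θ with component functions

$$ {g_{k}^{D}}({\boldsymbol{\theta}})=\theta_{k}-\theta_{m-1}^{\eta_{k}}\theta_{m}^{\zeta_{k}} \tag{5.10} $$

for 1 ≤ k ≤ m − 2, with \(\eta _{k}\) and \(\zeta _{k}\) as in formula (4.11). Then, \(g^{C}({\boldsymbol {\theta }})=\boldsymbol {0}\) iff \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{C}}\), and \(g^{D}({\boldsymbol {\theta }})=\boldsymbol {0}\) iff \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{D}}\).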
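The role of the two constraint mappings can be illustrated by a short Python sketch. It rests on assumptions: the component functions are taken as \(\theta _{k}-\theta _{m}^{y_{k}/y_{m}}\) and \(\theta _{k}-\theta _{m-1}^{\eta _{k}}\theta _{m}^{\zeta _{k}}\), which is what the derivatives used in the proofs of Theorems 7 and 8 suggest, and formula (4.11) is not restated here, so \(\eta _{k}\) and \(\zeta _{k}\) are assumed to be the interpolation weights \(\eta _{k}=(y_{m}-y_{k})/(y_{m}-y_{m-1})\) and \(\zeta _{k}=1-\eta _{k}\). All function names are hypothetical.

```python
import math


def g_power(theta, y):
    """g^C: components theta_k - theta_m**(y_k / y_m), k = 1, ..., m-1."""
    theta_m, y_m = theta[-1], y[-1]
    return [th - theta_m ** (yk / y_m) for th, yk in zip(theta[:-1], y[:-1])]


def g_log_linear(theta, y):
    """g^D: components theta_k - theta_{m-1}**eta_k * theta_m**zeta_k, k <= m-2.

    eta_k and zeta_k are ASSUMED to be the interpolation weights
    eta_k = (y_m - y_k) / (y_m - y_{m-1}) and zeta_k = 1 - eta_k.
    """
    span = y[-1] - y[-2]
    out = []
    for th, yk in zip(theta[:-2], y[:-2]):
        eta = (y[-1] - yk) / span
        zeta = (yk - y[-2]) / span
        out.append(th - theta[-2] ** eta * theta[-1] ** zeta)
    return out


y = (0.5, 1.0, 1.5, 2.0, 2.5)
theta_power = [math.exp(0.8 * yk) for yk in y]           # element of Theta_0^C
theta_loglin = [math.exp(-1.2 + 0.8 * yk) for yk in y]   # element of Theta_0^D
theta_linear = [1.0 + 2.0 * yk for yk in y]              # linear link, outside both

res_power = g_power(theta_power, y)
res_loglin = g_log_linear(theta_loglin, y)
res_linear = g_log_linear(theta_linear, y)
res_mismatch = g_power(theta_loglin, y)
```

Both mappings vanish (up to rounding) on their respective null sets, while a linear link gives non-zero components of \(g^{D}\), and a log-linear link with c ≠ 0 gives non-zero components of \(g^{C}\). The lengths m − 1 and m − 2 of the two result vectors match the degrees of freedom appearing in the asymptotic results.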

Theorem 7.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{C}}\) as defined in formula (5.6). Then, the Wald statistic based on gC defined by formula (5.9) is given by

and has a single null distribution.

Proof.

The representation of W is directly obtained by setting \(z_{k}=-y_{k}\theta _{m}^{y_{k}/y_{m}-1}/y_{m}\), 1 ≤ k ≤ m − 1, in the proof of Theorem 3 and then using formula (4.10). Moreover, for \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{C}}\), we have that \(\hat {\theta }_{m}^{y_{k}/y_{m}}/\theta _{k}=(\hat {\theta }_{m}/\theta _{m})^{y_{k}/y_{m}}\), 1 ≤ k ≤ m. In that case, by proceeding along the lines in the proof of Theorem 3, W can be seen to depend on the data and 𝜃 only through \((\hat {\theta }_{1}/\theta _{1},\dots ,\hat {\theta }_{m}/\theta _{m})\), and, by using Lemma 1, it thus has a single null distribution. □

Theorem 8.

Let test problem (3.1) be given with \({\varTheta }_{0}={{\varTheta }_{0}^{D}}\) as defined in formula (5.7), and let m ≥ 3. Then, the Wald statistic based on gD defined by formula (5.10) is given by

with \(\eta _{1},\dots ,\eta _{m}\) and \(\zeta _{1},\dots ,\zeta _{m}\) as in formula (4.11) and Δ as in formula (4.12), and \(\tilde {\boldsymbol {\eta }}({\boldsymbol {\theta }})=(\tilde {\eta }_{1}({\boldsymbol {\theta }}),\dots ,\tilde {\eta }_{m}({\boldsymbol {\theta }}))\) and \(\tilde {\boldsymbol {\zeta }}({\boldsymbol {\theta }})=(\tilde {\zeta }_{1}({\boldsymbol {\theta }}),\dots ,\tilde {\zeta }_{m}({\boldsymbol {\theta }}))\) have components \(\tilde {\eta }_{k}({\boldsymbol {\theta }})=\eta _{k} \theta _{m-1}^{\eta _{k}}\theta _{m}^{\zeta _{k}}\) and \(\tilde {\zeta }_{k}({\boldsymbol {\theta }})=\zeta _{k} \theta _{m-1}^{\eta _{k}}\theta _{m}^{\zeta _{k}}\) for 1 ≤ k ≤ m. Moreover, W has a single null distribution.

Proof.

To derive the formula for W, set \(u_{k}=-\eta _{k}\theta _{m-1}^{\eta _{k}-1}\theta _{m}^{\zeta _{k}}\) and \(v_{k}=-\zeta _{k}\theta _{m-1}^{\eta _{k}}\theta _{m}^{\zeta _{k}-1}\), 1 ≤ k ≤ m, in the proof of Lemma 3; these also satisfy \(u_{m-1}=-1=v_{m}\) and \(u_{m}=0=v_{m-1}\). Thus, we again arrive at formula (4.14), and, by using that \({\varDelta }({\boldsymbol {\theta }};{\boldsymbol {u}}^{t},{\boldsymbol {v}}^{t})={\varDelta }({\boldsymbol {\theta }};\tilde {\boldsymbol {\eta }}({\boldsymbol {\theta }}),\tilde {\boldsymbol {\zeta }}({\boldsymbol {\theta }}))/(\theta _{m-1}\theta _{m})^{2}\), the stated representation is found. Now, for \({{\boldsymbol {\theta }}\in {\varTheta }_{0}^{D}}\),

and, by following similar arguments as in the proof of Theorem 3, W can be seen to depend on the data and 𝜃 only through \((\hat {\theta }_{1}/\theta _{1},\dots ,\hat {\theta }_{m}/\theta _{m})\). Thus, by application of Lemma 1, W has a single null distribution. □

To sum up the findings, Λ, R, and W all have single null distributions when testing on a power or log-linear link function, and (exact) critical values subject to a desired significance level are therefore easily obtained by simulation.
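A simulation of this kind might look as follows for the power link function. The Python sketch below is a hedged illustration: it draws \(Z_{k}\) with \(-Z_{k}\thicksim {\varGamma }(r_{\bullet k},1)\), solves an assumed score equation \({\sum }_{k}y_{k}(r_{\bullet k}+e^{dy_{k}}Z_{k})=0\) for \(\hat {d}(\boldsymbol {Z})\), and evaluates Λ and R in the forms \(2{\sum }_{k}[Y_{k}-r_{\bullet k}-r_{\bullet k}\log (Y_{k}/r_{\bullet k})]\) and \({\sum }_{k}(Y_{k}-r_{\bullet k})^{2}/r_{\bullet k}\) implied by the log-likelihood \({\sum }_{k}[r_{\bullet k}\log \theta _{k}+\theta _{k}\tilde {T}_{\bullet k}]\). The design (`y`, `r`), the number of replications, and all function names are hypothetical choices.

```python
import math
import random


def power_link_mle(z, y, r, lo=-50.0, hi=50.0, tol=1e-10):
    """Bisection for the root of sum_k y_k * (r_k + exp(d * y_k) * z_k) = 0.

    Assumed score equation; requires y_k > 0 and z_k < 0.
    """
    def score(d):
        return sum(yk * (rk + math.exp(d * yk) * zk)
                   for zk, yk, rk in zip(z, y, r))

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if score(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def lr_and_score(z, y, r):
    """Lambda and R as functions of Y_k = -exp(d_hat * y_k) * z_k."""
    d_hat = power_link_mle(z, y, r)
    y_stat = [-math.exp(d_hat * yk) * zk for zk, yk in zip(z, y)]
    lam = 2.0 * sum(v - rk - rk * math.log(v / rk)
                    for v, rk in zip(y_stat, r))
    rao = sum((v - rk) ** 2 / rk for v, rk in zip(y_stat, r))
    return lam, rao


def critical_values(y, r, alpha=0.05, n_sim=2000, seed=2021):
    """Empirical (1 - alpha)-quantiles of Lambda and R under the null."""
    rng = random.Random(seed)
    lams, raos = [], []
    for _ in range(n_sim):
        # Under H0 the statistics depend on the data only through Z.
        z = [-rng.gammavariate(rk, 1.0) for rk in r]
        lam, rao = lr_and_score(z, y, r)
        lams.append(lam)
        raos.append(rao)
    idx = math.ceil((1.0 - alpha) * n_sim) - 1
    return sorted(lams)[idx], sorted(raos)[idx]


y = (0.5, 1.0, 1.5, 2.0, 2.5)
r = (10, 10, 10, 10, 10)
crit_lam, crit_rao = critical_values(y, r)
```

Because the null distribution does not depend on d, a single simulation run per design suffices. In practice a much larger `n_sim` would be used to reduce the Monte Carlo error of the quantile estimates.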

6 Asymptotic Results

In this section, we address some asymptotic properties of the derived estimators and tests. In particular, we present the asymptotic distribution of Λ, R, and W under the null hypothesis of a linear link function, for which exact critical values are not available (see Section 4.2).

In what follows, let \(\stackrel {\text {d}}{\rightarrow }\) denote convergence in distribution, and let Nk(μ,Σ) be the k-dimensional normal distribution with mean vector μ and covariance matrix Σ.

Theorem 9.

Let \(r_{\bullet k}/r_{\bullet \bullet }\rightarrow \tau _{k}>0\) for \(r_{\bullet \bullet }\rightarrow \infty \) and 1 ≤ k ≤ m, where \({\sum }_{k=1}^{m} \tau _{k}=1\). Then, for \(r_{\bullet \bullet }\rightarrow \infty \),

(a) under link function (4.2), \(\sqrt {r_{\bullet \bullet }}(\hat {b}-b)\stackrel {\text {d}}{\longrightarrow } N_{1}(0,b^{2})\) for b > 0.

(b) under link function (4.3), \(\sqrt {r_{\bullet \bullet }}\left ((\hat {a},\hat {b})-(a,b)\right )^{t}\stackrel {\text {d}}{\longrightarrow }N_{2}(\boldsymbol {0},\mathbf {\Sigma }_{B}^{-1}(a,b))\)

$$ \text{with} \quad \mathbf{\Sigma}_{B}(a,b) = \left( \begin{array} {cc}{\sum}_{k=1}^{m} \frac{\tau_{k}}{(a+by_{k})^{2}} & \quad {\sum}_{k=1}^{m} \frac{\tau_{k} y_{k}}{(a+by_{k})^{2}}\\ {\sum}_{k=1}^{m} \frac{\tau_{k} y_{k}}{(a+by_{k})^{2}} &\quad {\sum}_{k=1}^{m} \frac{\tau_{k} {y_{k}^{2}}}{(a+by_{k})^{2}} \end{array}\right)\quad\text{for}~ (a,b){\in{\varTheta}_{0}^{B}}. $$

(c) under link function (5.1), \( \sqrt {r_{\bullet \bullet }}(\hat {d}-d)\stackrel {\text {d}}{\longrightarrow }N_{1}\left (0,1/ {\sum }_{k=1}^{m} \tau _{k} {y_{k}^{2}}\right )\) for \(d\in {\mathbb {R}}\).

(d) under link function (5.2), \(\sqrt {r_{\bullet \bullet }}\left ((\hat {c},\hat {d})-(c,d)\right )^{t}\stackrel {\text {d}}{\longrightarrow }N_{2}(\boldsymbol {0},\mathbf {\Sigma }_{D}^{-1})\)

$$ \begin{array}{@{}rcl@{}} \text{with}\quad \mathbf{\Sigma}_{D} = \left( \begin{array} {cc} 1 &\quad {\sum}_{k=1}^{m} \tau_{k} y_{k}\\ {\sum}_{k=1}^{m} \tau_{k} y_{k} &\quad {\sum}_{k=1}^{m} \tau_{k} {y_{k}^{2}} \end{array}\right)\quad\text{for}~ (c,d)\in{\mathbb{R}}^{2}. \end{array} $$

In particular, all estimators are consistent.

Proof.

It is well-known that the statistics \(T_{ij}\), \(1\leq j\leq r_{i\bullet }\), \(1\leq i\leq s\), are jointly independent, where \(-T_{ij}\), \(\rho _{i,k-1}+1\leq j\leq \rho _{ik}\), \(1\leq i\leq s\), are identically exponentially distributed with mean \(1/\theta _{k}\) for 1 ≤ k ≤ m; see, e.g., Balakrishnan et al. (2012). Hence, the sample situation is distribution theoretically equivalent to having m independent samples, where in sample k, 1 ≤ k ≤ m, we have \(r_{\bullet k}\) iid random variables following an exponential distribution with density function \(f_{k}(x)=\theta _{k} e^{-\theta _{k} x}\), x > 0. Now, by assuming a link function connecting the parameters \(\theta _{1},\dots ,\theta _{m}\), the m distributions have the link function parameter ζ, say, in common. Denoting the MLE of ζ by \(\hat {\boldsymbol {\zeta }}\), application of Theorem 1(iv) in Bradley and Gart (1962) then yields that, for \(r_{\bullet \bullet }\rightarrow \infty \), \(\sqrt {r_{\bullet \bullet }}(\hat {\boldsymbol {\zeta }}-\boldsymbol {\zeta })\) is asymptotically multivariate normal with mean zero and covariance matrix \(\left [{\sum }_{k=1}^{m} \tau _{k} \mathbf {{\boldsymbol {\mathcal {I}}}}_{k}(\boldsymbol {\zeta })\right ]^{-1}\), where \(\mathbf {{\boldsymbol {\mathcal {I}}}}_{k}(\boldsymbol {\zeta })\) denotes the Fisher information matrix of distribution k at ζ for 1 ≤ k ≤ m. For every link function considered here and upon inserting the corresponding representation for \(\theta _{k}\) in density function \(f_{k}\), \(\mathbf {{\boldsymbol {\mathcal {I}}}}_{k}(\boldsymbol {\zeta })\) can be obtained as the mean of the Hessian matrix of \(-\log f_{k}(X)\) with respect to ζ, where \(X\thicksim f_{k}\). By doing so, we arrive at the stated asymptotic distributions, which imply consistency of the estimators by Slutsky’s theorem. □
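The last step of the proof can be made explicit for the log-linear link function. The following short derivation is a sketch that uses only the density \(f_{k}\) and \(\theta _{k}=e^{c+dy_{k}}\), with \(\boldsymbol {\zeta }=(c,d)\); the symbol \(\nabla ^{2}_{(c,d)}\) for the Hessian is notation introduced here.

$$ \begin{aligned} -\log f_{k}(x) &= -(c+dy_{k})+e^{c+dy_{k}}x,\\ \nabla^{2}_{(c,d)}\left[-\log f_{k}(x)\right] &= e^{c+dy_{k}}x\left(\begin{array}{cc} 1 & y_{k}\\ y_{k} & y_{k}^{2}\end{array}\right),\\ \mathbf{{\boldsymbol{\mathcal{I}}}}_{k}(c,d) &= e^{c+dy_{k}}E(X)\left(\begin{array}{cc} 1 & y_{k}\\ y_{k} & y_{k}^{2}\end{array}\right)=\left(\begin{array}{cc} 1 & y_{k}\\ y_{k} & y_{k}^{2}\end{array}\right), \end{aligned} $$

since \(E(X)=1/\theta _{k}=e^{-(c+dy_{k})}\) for \(X\thicksim f_{k}\). Summing over k with weights \(\tau _{k}\) and using \({\sum }_{k=1}^{m}\tau _{k}=1\) gives the matrix \(\mathbf {\Sigma }_{D}\) of part (d), which does not involve (c,d). Dropping the parameter c leaves the single entry \({\sum }_{k=1}^{m}\tau _{k}{y_{k}^{2}}\), in agreement with part (c).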

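Some findings related to Theorem 9 should be highlighted. First note that, in case of a proportional link function, the asymptotic distribution of the MLE does not depend on the stress levels \(y_{1},\dots ,y_{m}\). On the other hand, when dealing with a power or log-linear link function, the asymptotic distribution of the MLE is free of the link function parameters. Such results may be used for experimental design, as we demonstrate in case of a power link function with the focus on interval estimation for d. Denoting by \(u_{p}\) the p-quantile of \(N_{1}(0,1)\), an equal-tail confidence interval for d of approximate confidence level 1 − p is given by

$$ \left[\hat{d}-\frac{u_{1-p/2}}{\sqrt{r_{\bullet\bullet}{\sum}_{k=1}^{m}\tau_{k}{y_{k}^{2}}}},\ \hat{d}+\frac{u_{1-p/2}}{\sqrt{r_{\bullet\bullet}{\sum}_{k=1}^{m}\tau_{k}{y_{k}^{2}}}}\right] $$

with non-random length

$$ \frac{2u_{1-p/2}}{\sqrt{r_{\bullet\bullet}{\sum}_{k=1}^{m}\tau_{k}{y_{k}^{2}}}}. $$

Moreover, the approximation

$$ \frac{2u_{1-p/2}}{\sqrt{{\sum}_{k=1}^{m}r_{\bullet k}{y_{k}^{2}}}} $$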

is obtained when estimating τk by r∙k/r∙∙, 1 ≤ k ≤ m (as it will usually be done in practice). Hence, the stress levels \(y_{1},\dots ,y_{m}\) and the corresponding numbers \(r_{\bullet 1},\dots ,r_{\bullet m}\) of observed failures, which are specified in advance, may be chosen in such a way that the confidence interval meets a required accuracy. Finally, asymptotic tests on the previous link functions are addressed.
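The design consideration for the power link function can be sketched in Python as follows. The length formula \(2u_{1-p/2}/\sqrt {{\sum }_{k}r_{\bullet k}{y_{k}^{2}}}\) used below is the one implied by Theorem 9(c) with \(\tau _{k}\) estimated by \(r_{\bullet k}/r_{\bullet \bullet }\); the function names, the stress levels, and the target length 0.25 are hypothetical.

```python
import math
from statistics import NormalDist


def ci_length(y, r, p=0.05):
    """Approximate CI length 2 * u_{1-p/2} / sqrt(sum_k r_k * y_k**2)."""
    u = NormalDist().inv_cdf(1.0 - p / 2.0)
    return 2.0 * u / math.sqrt(sum(rk * yk ** 2 for rk, yk in zip(r, y)))


def failures_per_level(y, max_length, p=0.05):
    """Smallest common number of failures per stress level such that the
    approximate CI length does not exceed max_length."""
    u = NormalDist().inv_cdf(1.0 - p / 2.0)
    return math.ceil((2.0 * u / max_length) ** 2 / sum(yk ** 2 for yk in y))


y = (0.5, 1.0, 1.5, 2.0, 2.5)
length_10 = ci_length(y, (10,) * 5)   # ten failures per level
length_40 = ci_length(y, (40,) * 5)   # forty failures per level
r_needed = failures_per_level(y, max_length=0.25)
```

Quadrupling the number of failures at every stress level halves the length, and higher stress levels contribute more to the accuracy because each \(r_{\bullet k}\) enters with weight \({y_{k}^{2}}\).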

Theorem 10.

Let the assumptions of Theorem 9 be given. Then, for test problem (3.1) and \(r_{\bullet \bullet }\rightarrow \infty \), each of Λ, R, and W asymptotically has a \(\chi ^{2}(m-1)\)-distribution under the null hypothesis for \({\varTheta }_{0}\in \{{{\varTheta }_{0}^{A}},{{\varTheta }_{0}^{C}}\}\) and a \(\chi ^{2}(m-2)\)-distribution for \({\varTheta }_{0}\in \{{{\varTheta }_{0}^{B}},{{\varTheta }_{0}^{D}}\}\), provided that m ≥ 3. Here, \(\chi ^{2}(k)\) denotes the chi-square distribution with k degrees of freedom.

Proof.

For the likelihood ratio statistic, the asymptotic null distribution can be obtained from Bradley and Gart (1962, Section 2.4). Since Λ, R, and W are asymptotically equivalent (see, e.g., Serfling 1980), all statements are already shown. □
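Asymptotic critical values according to Theorem 10 are chi-square quantiles with m − 1 or m − 2 degrees of freedom. The following Python sketch computes them with the standard library only, using the power series of the regularized incomplete gamma function and bisection; the function names and the string labels for the link functions are hypothetical, and a statistics library would normally be used instead.

```python
import math


def chi2_cdf(x, k):
    """CDF of the chi-square distribution with k degrees of freedom.

    Uses the power series of the regularized lower incomplete gamma
    function P(k/2, x/2); adequate for the moderate x needed here.
    """
    if x <= 0.0:
        return 0.0
    a, z = k / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 0
    while term > 1e-17 * total:
        n += 1
        term *= z / (a + n)
        total += term
    return total * math.exp(a * math.log(z) - z - math.lgamma(a))


def chi2_quantile(p, k, tol=1e-10):
    """p-quantile of chi-square(k) by bracketing and bisection."""
    lo, hi = 0.0, 1.0
    while chi2_cdf(hi, k) < p:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, k) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def asymptotic_critical_value(m, link, alpha=0.05):
    """Degrees of freedom: m - 1 for one-parameter links (proportional,
    power), m - 2 for two-parameter links (linear, log-linear; m >= 3)."""
    if link in ("proportional", "power"):
        df = m - 1
    elif link in ("linear", "log-linear"):
        if m < 3:
            raise ValueError("two-parameter links require m >= 3")
        df = m - 2
    else:
        raise ValueError("unknown link function label")
    return chi2_quantile(1.0 - alpha, df)


crit_log_linear = asymptotic_critical_value(5, "log-linear")
crit_power = asymptotic_critical_value(5, "power")
```

For m = 5 and a log-linear link function, the result agrees with the value \(\chi ^{2}_{0.95}(3)=7.814728\) used in the power study of Section 7.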

7 Power Study

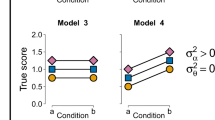

For the important case of a log-linear link function assumption, we perform a power study and compare the tests derived. A step-stress experiment with m = 5 increasing stress levels is considered, which are given by \((y_{1},\dots ,y_{5})=(0.5,1,1.5,2,2.5)\). The corresponding numbers of observations are chosen as

The aim is to test the null hypothesis \(H_{0}:{{\boldsymbol {\theta }}\in {\varTheta }_{0}^{D}}\) with \({{\varTheta }_{0}^{D}}\) as in formula (5.7), which states a log-linear link function between the parameters and the stress levels. To this end, the likelihood ratio test, the Rao score test, and the Wald test are applied, whose test statistics are given in Theorems 6 and 8. For each test statistic, the exact critical value is chosen subject to a significance level of 5%. We examine the power of each test at the alternatives lying in

which correspond to all linear link functions with positive slopes. Simulations show that the power of each test at some \({\boldsymbol {\theta }}\in {\varTheta }_{1}\) depends on (a,b) only through the ratio a/b. For sample situations I and II, the power of all tests is depicted in Fig. 1 as a function of \(a/b>-y_{1}\). The likelihood ratio test and the Rao score test are found to have a similar power performance and are superior to the Wald test. For all tests, the power decreases when a/b increases, and it tends to 1 for \(a/b\searrow -y_{1}\) and to the significance level of 5% for \(a/b\rightarrow \infty \), which allows for some interesting interpretations. For fixed a > 0, the power of all tests deteriorates as \(b\searrow 0\), i.e., as the linear link function approaches a constant. On the other hand, for every fixed b > 0, there is a linear link function with that slope at which the power is arbitrarily close to 1 (no matter how small b is). Note that the power at every proportional link function is the value at a/b = 0.

Fig. 1 Power of the likelihood ratio test (solid line), the Rao score test (dashed line), and the Wald test (dotted line) with exact significance level 5% in sample situations I (left) and II (right) as a function of a/b (obtained by generating \(5\times 10^{5}\) realizations of each test statistic for every value of a/b)

By using \(\chi ^{2}_{0.95}(3)=7.814728\) as critical value for each test statistic (see Theorem 10), the actual significance levels of the likelihood ratio test, the Rao score test, and the Wald test are 5.36%, 4.24%, and 8.87% in sample situation I and 5.16%, 4.58%, and 5.46% in sample situation II. This finding indicates that the Rao score test is conservative, whereas the other tests are not. Moreover, the convergence of the actual to the nominal significance level is slow in case of the Wald test (compared to the other tests).
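The observation that the power depends on (a,b) only through a/b can be made plausible for the likelihood ratio test by a small experiment: multiplying all parameters by a common factor b rescales the statistics \(\tilde {T}_{\bullet k}\) by 1/b, which only shifts \(\hat {c}\) and leaves \(Y_{1},\dots ,Y_{m}\) unchanged. The Python sketch below checks this and estimates the power at two values of a/b. It is an illustration under assumptions: the numbers of failures `r` are hypothetical (they are not those of sample situations I and II), the number of replications is far smaller than in the study, and Λ is computed in the form implied by the log-likelihood \({\sum }_{k}[r_{\bullet k}\log \theta _{k}+\theta _{k}\tilde {T}_{\bullet k}]\).

```python
import math
import random


def lr_statistic(t, y, r, lo=-50.0, hi=50.0, tol=1e-10):
    """Likelihood ratio statistic for H0: theta_k = exp(c + d * y_k).

    Assumes the log-likelihood sum_k [r_k * log(theta_k) + theta_k * t_k]
    with t_k < 0; d_hat is found by bisection on the profiled score.
    """
    n = sum(r)
    target = sum(rk * yk for rk, yk in zip(r, y)) / n

    def weighted_mean(d):
        w = [-tk * math.exp(d * yk) for tk, yk in zip(t, y)]
        return sum(wk * yk for wk, yk in zip(w, y)) / sum(w)

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if weighted_mean(mid) < target:
            lo = mid
        else:
            hi = mid
    d_hat = 0.5 * (lo + hi)
    w = [-tk * math.exp(d_hat * yk) for tk, yk in zip(t, y)]
    total = sum(w)
    y_stat = [n * wk / total for wk in w]   # Y_k, using exp(c_hat) = n / total
    return 2.0 * sum(v - rk - rk * math.log(v / rk)
                     for v, rk in zip(y_stat, r))


def draw(theta, r, rng):
    """Draw t_k with -t_k ~ Gamma(shape r_k, rate theta_k)."""
    return [-rng.gammavariate(rk, 1.0) / th for rk, th in zip(r, theta)]


def rejection_rate(theta, y, r, crit, n_sim, rng):
    hits = sum(lr_statistic(draw(theta, r, rng), y, r) > crit
               for _ in range(n_sim))
    return hits / n_sim


y = (0.5, 1.0, 1.5, 2.0, 2.5)
r = (10, 10, 10, 10, 10)   # hypothetical numbers of failures
rng = random.Random(7)

# Simulated exact 5% critical value (the null distribution is free of (c, d)).
null_stats = sorted(lr_statistic(draw([1.0] * 5, r, rng), y, r)
                    for _ in range(2000))
crit = null_stats[math.ceil(0.95 * 2000) - 1]

# Same ratio a/b = -0.4 with slopes b = 1 and b = 5: identical statistic.
theta_near = [-0.4 + yk for yk in y]
t_b1 = draw(theta_near, r, random.Random(3))
t_b5 = [tk / 5.0 for tk in t_b1]
lam_b1 = lr_statistic(t_b1, y, r)
lam_b5 = lr_statistic(t_b5, y, r)

# Estimated power close to the boundary a/b = -y_1 and far away from it.
power_near = rejection_rate(theta_near, y, r, crit, 1000, rng)
power_far = rejection_rate([20.0 + yk for yk in y], y, r, crit, 1000, rng)
```

The two values `lam_b1` and `lam_b5` coincide up to the bisection tolerance, and the estimated power is much larger near the boundary than for a large ratio a/b, where the linear link function is almost constant over the range of the stress levels.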

8 Testing Under Order Restrictions

If the stress levels are strictly increasing, i.e., \(0<y_{1}<\dots <y_{m}\), one may take this additional information into account to improve the performance of statistical procedures; cf. Balakrishnan et al. (2009). Estimation and testing are then carried out under the simple order restriction \(\theta _{1}\leq \dots \leq \theta _{m}\), which implies the same order for the hazard rates corresponding to the stress levels (see Section 2). In that case, the general test problem is

with \({\varTheta }_{0}\subset \check {{\varTheta }}=\{{\boldsymbol {\theta }}\in (0,\infty )^{m}:\theta _{1}\leq \dots \leq \theta _{m}\}\). The MLE \(\check {{\boldsymbol {\theta }}}=(\check {\theta }_{1},\dots ,\check {\theta }_{m})\) in \(\check {{\varTheta }}\) is given by

and takes over the role of the unrestricted MLE \(\hat {{\boldsymbol {\theta }}}\); cf. Bedbur et al. (2015). To test on a proportional, linear, power, or log-linear link function, Θ0 has to be chosen as

In case of a proportional link function, we obtain from formula (8.1) that, under H0, the distribution of \(\check {\theta }_{k}/\theta _{k}\) and, thus, that of \(\tilde {\theta }_{k}^{A}/\check {\theta }_{k}\) are free of b for 1 ≤ k ≤ m. Hence, Λ, R, and W, which are given by formula (3.4) and Theorem 3 with \(\hat {{\boldsymbol {\theta }}}\) being replaced by \(\check {{\boldsymbol {\theta }}}\), have again a single null distribution each, by following the same arguments as before.

For the other link functions, deriving a test under order restrictions is mathematically more challenging. As is the case for the omnibus tests on a linear link function, the distribution of \(\check {\theta }_{k}/\theta _{k}\) under H0 is expected to depend on (a,b). Moreover, when checking for a power or log-linear link function assumption, it might not be free of d. Beyond that, the restricted MLEs may not be continuously distributed. For instance, in the context of a power link function, the MLE in \(\check {{\varTheta }}_{0}^{C}\) uniquely exists and has positive point mass

at \((1,\dots ,1)\), where \(\check {d}\) denotes the MLE of d ≥ 0 (see Eq. (5.3)). Nevertheless, if analytically manageable, these tests seem to be worth working out as they will naturally be superior to the omnibus procedures in terms of power.

9 Conclusion

In step-stress experiments, where test units are exposed to higher stress levels to cause earlier failures, some additional assumption such as a link function connecting parameters and stress levels is usually required to draw inferences on the lifetime distribution under normal operating conditions. In a multi-sample general step-stress model based on sequential order statistics, we provide exact and asymptotic statistical tests that allow one to check the adequacy of an assumed link function of, for instance, proportional or log-linear type. Since most of the test statistics derived have a single null distribution, critical values can be obtained by standard Monte Carlo simulation, which makes the application of the tests particularly easy. The proposed tests may serve as a basis for subsequent inference on the link function parameters, which is statistically meaningful only if the assumed link function is adequate.

References

Alhadeed, A.A. and Yang, S.-S. (2002). Optimal simple step-stress plan for Khamis-Higgins model. IEEE Trans. Reliab. 51, 212–215.

Bagdonavičius, V. and Nikulin, M. (2001). Accelerated Life Models: Modeling and Statistical Analysis. Chapman & Hall/CRC, Boca Raton.

Bai, D.S., Kim, M.S. and Lee, S.H. (1989). Optimum simple step-stress accelerated life tests with censoring. IEEE Trans. Reliab. 38, 528–532.

Balakrishnan, N. (2009). A synthesis of exact inferential results for exponential step-stress models and associated optimal accelerated life-tests. Metrika 69, 351–396.

Balakrishnan, N., Beutner, E. and Kamps, U. (2011). Modeling parameters of a load-sharing system through link functions in sequential order statistics models and associated inference. IEEE Trans. Reliab. 60, 605–611.

Balakrishnan, N., Beutner, E. and Kateri, M. (2009). Order restricted inference for exponential step-stress models. IEEE Trans. Reliab. 58, 132–142.

Balakrishnan, N., Kamps, U. and Kateri, M. (2012). A sequential order statistics approach to step-stress testing. Ann. Inst. Stat. Math. 64, 303–318.

Bedbur, S., Beutner, E. and Kamps, U. (2010). Generalized order statistics: An exponential family in model parameters. Statistics 46, 159–166.

Bedbur, S., Beutner, E. and Kamps, U. (2014). Multivariate testing and model-checking for generalized order statistics with applications. Statistics 48, 1297–1310.

Bedbur, S. and Kamps, U. (2019). Confidence regions in step-stress experiments with multiple samples under repeated type-II censoring. Stat. Probab. Lett. 146, 181–186.

Bedbur, S., Kamps, U. and Kateri, M. (2015). Meta-analysis of general step-stress experiments under repeated type-II censoring. Appl. Math. Model. 39, 2261–2275.

Bedbur, S., Müller, N. and Kamps, U. (2016). Hypotheses testing for generalized order statistics with simple order restrictions on model parameters under the alternative. Statistics 50, 775–790.

Bradley, R.A. and Gart, J.J. (1962). The asymptotic properties of ML estimators when sampling from associated populations. Biometrika 49, 205–214.

Cramer, E. and Kamps, U. (2001). Sequential k-out-of-n Systems. In Advances in Reliability, Handbook of Statistics (N. Balakrishnan and C.R. Rao, eds.), vol. 20, 301–372. Elsevier, Amsterdam.

Gouno, E. and Balakrishnan, N. (2001). Step-Stress Accelerated Life Tests. In Handbook of Statistics, Advances in Reliability, vol. 20, 623–639. Elsevier, Amsterdam.

Kamps, U. (1995a). A concept of generalized order statistics. J. Stat. Planning Infer. 48, 1–23.

Kamps, U. (1995b). A Concept of Generalized Order Statistics. Teubner, Stuttgart.

Meeker, W.Q. and Escobar, L.A. (1998). Statistical Methods for Reliability Data. Wiley, New Jersey.

Nelson, W.B. (2004). Accelerated Testing: Statistical Models Test Plans and Data Analysis. Wiley, Hoboken.

Pfanzagl, J. (1994). Parametric Statistical Theory. de Gruyter, Berlin.

Rao, C.R. (1973). Linear Statistical Inference and Its Applications, 2nd edn. Wiley, New York.

Serfling, R.J. (1980). Approximation Theorems of Mathematical Statistics. Wiley, New York.

Srivastava, P.W. and Shukla, R. (2008). A log-logistic step-stress model. IEEE Trans. Reliab. 57, 431–434.

Wang, B.X. and Yu, K. (2009). Optimum plan for step-stress model with progressive type-II censoring. TEST 18, 115–135.

Wu, S.-J., Lin, Y.-P. and Chen, Y.-J. (2006). Planning step-stress life test with progressively type-I group-censored exponential data. Statistica Neerlandica 60, 46–56.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bedbur, S., Seiche, T. Testing the Validity of a Link Function Assumption in Repeated Type-II Censored General Step-Stress Experiments. Sankhya B 84, 106–129 (2022). https://doi.org/10.1007/s13571-021-00250-5

DOI: https://doi.org/10.1007/s13571-021-00250-5

Keywords

- Accelerated life testing

- step-stress model

- link function

- sequential order statistics

- maximum likelihood estimation

- Hypothesis test.