Abstract

This work deals with the existence and uniqueness of a global solution and with the finite-time stability of fractional partial hyperbolic differential systems (FPHDSs). Using a fixed-point approach, the existence and uniqueness of a global solution are established and an estimate of the solution is given. Moreover, sufficient conditions for the finite-time stability of FPHDSs are established. Numerical experiments illustrate the stability result.

1 Introduction

Over the past twenty years, fractional calculus has attracted considerable attention from researchers, and numerous works have been published in this area [1,2,3,4,5,6,7,8,9,10,11]. For instance, in [1] the phase dynamics of an inline Josephson junction in the voltage state is discussed; the phase difference between the wave functions is analyzed via fractional calculus, and a finite element scheme is used to simulate the governing equations. In addition, the author of [5] replaced the integer first-order time derivative with the Caputo fractional derivative and investigated a numerical approach to chaotic pattern formation in a diffusive predator-prey system. A new approach for the solution of fractional diffusion problems with conformable derivative is developed by the authors of [8].

Fractional differential equations have recently proved to be valuable tools for modeling many phenomena in different application domains, such as biology [12,13,14], diffusion [8], control theory [15,16,17,18,19,20,21] and viscoelasticity [22]. Regarding biological applications, the authors of [12] modeled COVID-19 pandemic transmission by a fractional-order system. Regarding control theory, the authors of [17] presented a novel fractional sliding mode controller based on a nonlinear fractional-order proportional integral derivative (PID) controller. In addition, for systems with time-varying delay, a new Finite Time Stability (FTS) analysis of singular fractional differential equations is carried out in [15]. Furthermore, the finite-time stability of fractional-order fuzzy neural networks is studied in [16].

For some basic results in the theory of fractional partial differential equations (FPDEs), the reader is referred to [23,24,25,26,27,28,29,30]. For example, the authors of [27] presented a Lyapunov-type inequality for the Darboux problem for FPDEs and used this inequality to study the existence of nontrivial solutions of FPDEs.

In the literature, the existence and uniqueness of solutions of Darboux fractional partial differential equations with time delay are treated in [29]. Compared to that work, the existence and uniqueness of solutions are obtained here without any restriction on the Lipschitz constant. Furthermore, many works treat the FTS of time-delay fractional-order systems when the solution depends on one variable (see [15, 16, 21, 31]), whereas the present work treats the case in which the solution depends on two variables.

Based on the above discussion, the contributions of this work are summarized as follows:

- The existence and uniqueness of the global solution of Darboux fractional partial differential equations with time delay is proved.

- An estimation of the solutions is given.

- The FTS results of such systems are given and the theoretical contributions are validated by two numerical examples.

The paper is organized as follows. In Sect. 2, some basic results related to fractional calculus are given. In Sect. 3, the existence and uniqueness of global solutions, the estimation of solutions and the FTS results are investigated. In Sects. 4 and 5, we present a numerical scheme and some numerical examples which illustrate the main results.

2 Basic results

Definition 1

The Riemann-Liouville fractional integral of order \(\gamma =\left( \gamma _{1},\gamma _{2}\right) \) of w is defined by:

where \(a=(a_1, a_2) \in \mathbb {R}^2\) and \(\gamma _{1}, \, \gamma _{2}\) are strictly positive.
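For reference, the mixed Riemann-Liouville integral is usually written as below in the literature on Darboux-type fractional problems. The symbol \(I_{a}^{\gamma}\) and the names of the integration variables \(u, v\) are assumptions made here and may differ from the authors' notation.

```latex
\[
\left(I_{a}^{\gamma} w\right)(\xi,\zeta)
 = \frac{1}{\Gamma(\gamma_{1})\,\Gamma(\gamma_{2})}
   \int_{a_{1}}^{\xi}\!\int_{a_{2}}^{\zeta}
   (\xi-u)^{\gamma_{1}-1}\,(\zeta-v)^{\gamma_{2}-1}\, w(u,v)\,dv\,du .
\]
```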

Definition 2

The Riemann-Liouville fractional derivative of order \(\gamma =\left( \gamma _{1},\gamma _{2}\right) \) of w is defined by:

where \(a=(a_1, a_2) \in \mathbb {R}^2\), \(\left( \gamma _{1},\gamma _{2}\right) \in \left( 0,1\right) ^{2}\) and \(D_{\xi ,\zeta }^{2}=\frac{\partial ^{2}}{\partial \xi \partial \zeta }\).

Definition 3

The Caputo fractional derivative of order \(\gamma =\left( \gamma _{1},\gamma _{2}\right) \) of w is defined by:

where \(a=(a_1, a_2) \in \mathbb {R}^2\), \(\left( \gamma _{1},\gamma _{2}\right) \in \left( 0,1\right) ^{2}\) and \(D_{\xi ,\zeta }^{2}=\frac{\partial ^{2}}{\partial \xi \partial \zeta }\).
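In the standard formulation, Definitions 2 and 3 differ only in the order in which the mixed derivative \(D_{\xi ,\zeta }^{2}\) and the fractional integral are applied. The sketch below uses the assumed symbols \(D_{a}^{\gamma}\) and \({}^{c}D_{a}^{\gamma}\), with \(I_{a}^{1-\gamma}\) denoting the Riemann-Liouville integral of Definition 1 taken with order \((1-\gamma_1, 1-\gamma_2)\); the authors' notation may differ.

```latex
\[
D_{a}^{\gamma} w = D_{\xi,\zeta}^{2}\left(I_{a}^{1-\gamma} w\right)
\quad\text{(Riemann-Liouville)},
\qquad
{}^{c}D_{a}^{\gamma} w = I_{a}^{1-\gamma}\left(D_{\xi,\zeta}^{2} w\right)
\quad\text{(Caputo)}.
\]
```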

Definition 4

The Mittag-Leffler function is defined by:

where \(\xi >0\), \(\varrho \in \mathbb {C}\).
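For a quick numerical feel, the one-parameter Mittag-Leffler function can be evaluated from its classical power series \(E_{\xi }(\varrho )=\sum _{k\ge 0}\varrho ^{k}/\Gamma (k\xi +1)\). The sketch below assumes this standard series; the function name `mittag_leffler` and the truncation parameter `terms` are hypothetical, and the plain summation is only reliable for moderate \(|\varrho |\).

```python
import math

def mittag_leffler(xi, rho, terms=100):
    """Truncated series E_xi(rho) = sum_{k>=0} rho**k / Gamma(k*xi + 1).

    Assumes xi > 0; rho may be real or complex. Plain summation is
    adequate for moderate |rho| only (no asymptotic expansion is used).
    """
    total = 0.0
    for k in range(terms):
        arg = k * xi + 1.0
        if arg > 170.0:  # math.gamma overflows a float slightly above 171
            break
        total += rho ** k / math.gamma(arg)
    return total

# Classical special cases: E_1(z) = exp(z) and E_2(-x^2) = cos(x).
e_check = mittag_leffler(1.0, 1.0)
cos_check = mittag_leffler(2.0, -1.0)
```

The two special cases give an inexpensive sanity check before the function is used with a fractional order.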

Remark 1

Let \(\varepsilon \) be a nonzero real. The function \(\nu (s)=E_{\tau }\big (\varepsilon (s-p)^\tau \big )\) satisfies:

where \(s, \,p \in \mathbb {R}\), \(p \le s\).
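A relation of this type follows from term-by-term integration of the Mittag-Leffler series. The sketch below records the classical identity for the Riemann-Liouville integral of order \(\tau >0\) with base point p; it is offered as background and is not necessarily the exact relation stated by the authors.

```latex
% Step 1: integrate the k-th term of the series.
\[
\frac{1}{\Gamma(\tau)}\int_{p}^{s}(s-u)^{\tau-1}\,
\frac{\varepsilon^{k}(u-p)^{k\tau}}{\Gamma(k\tau+1)}\,du
=\frac{\varepsilon^{k}(s-p)^{(k+1)\tau}}{\Gamma\big((k+1)\tau+1\big)} .
\]
% Step 2: sum over k and shift the index.
\[
\frac{1}{\Gamma(\tau)}\int_{p}^{s}(s-u)^{\tau-1}\,\nu(u)\,du
=\frac{1}{\varepsilon}\sum_{k\ge 1}
 \frac{\varepsilon^{k}(s-p)^{k\tau}}{\Gamma(k\tau+1)}
=\frac{\nu(s)-1}{\varepsilon} .
\]
```

In particular, for \(\varepsilon >0\) the integral on the left is bounded above by \(\nu (s)/\varepsilon \).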

Definition 5

A mapping \(\varpi : \Upsilon \times \Upsilon \rightarrow [0,\infty ]\) is called a generalized metric on a nonempty set \(\Upsilon \), if:

- (i) \(\varpi (\gamma _1,\gamma _2)=0\) if and only if \(\gamma _1=\gamma _2\),

- (ii) \(\varpi (\gamma _1,\gamma _2)=\varpi (\gamma _2,\gamma _1)\), \(\forall \) \(\gamma _1,\gamma _2\in \Upsilon \),

- (iii) \(\varpi (\gamma _1,\gamma _3)\le \varpi (\gamma _1,\gamma _2)+\varpi (\gamma _2,\gamma _3)\), \(\forall \) \(\gamma _1,\gamma _2,\gamma _3\in \Upsilon \).

The following theorem states a fundamental result of fixed point theory.

Theorem 1

[32] Suppose that \((\Upsilon ,\varpi )\) is a generalized complete metric space and that \(\Psi : \Upsilon \rightarrow \Upsilon \) is a strictly contractive operator with Lipschitz constant \(C<1\). If one can find a nonnegative integer \(j_0\) such that \(\varpi (\Psi ^{j_0+1}y_0,\Psi ^{j_0}y_0)<\infty \) for some \(y_0\in \Upsilon \), then:

- (i) \(\Psi ^n y_0\) converges to a fixed point \(y_1\) of \(\Psi \),

- (ii) \(y_1\) is the unique fixed point of \(\Psi \) in \(\Upsilon ^*:=\{y_2\in \Upsilon : \varpi (\Psi ^{j_0} y_0,y_2)<\infty \}\),

- (iii) If \(y_2\in \Upsilon ^*\), then \(\varpi (y_2,y_1)\le \frac{1}{1-C} \varpi (\Psi y_2,y_2)\).
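To make the three conclusions concrete, the following toy sketch applies Picard iteration to a scalar contraction and checks the estimate in (iii). The map \(\Psi (y)=\tfrac{1}{2}\cos y\) on \(\mathbb {R}\) with the usual distance is an illustrative assumption (its derivative is bounded by \(C=0.5\)); it is unrelated to the operator studied in the paper, and the helper name `iterate` is hypothetical.

```python
import math

def iterate(psi, y0, tol=1e-14, max_iter=1000):
    """Picard iteration y_{n+1} = psi(y_n), stopped when successive iterates agree."""
    y = y0
    for _ in range(max_iter):
        y_next = psi(y)
        if abs(y_next - y) < tol:
            return y_next
        y = y_next
    return y

C = 0.5                               # Lipschitz constant of psi, since |psi'(y)| <= 0.5
psi = lambda y: C * math.cos(y)       # toy strict contraction on the real line

y1 = iterate(psi, 3.0)                # conclusion (i): the iterates converge to a fixed point
y2 = -2.0                             # an arbitrary point at finite distance from y1
lhs = abs(y2 - y1)                    # distance from y2 to the fixed point
rhs = abs(psi(y2) - y2) / (1.0 - C)   # bound from conclusion (iii)
```

Here every pair of points is at finite distance, so the set \(\Upsilon ^*\) is the whole line and the fixed point is unique.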

3 Main results

Throughout the paper, we use the following notations:

where \(\tau _1\), \(\tau _2\) are two functions which will be specified later.

We consider the fractional-order system, with the variable \(\mathbf{t}\), as follows:

with the initial condition:

where \(\alpha =( \alpha _1,\alpha _2)\), \(0<\alpha _1,\alpha _2<1\). The function \(\mathbf{\tau }\) is continuous on I and \(\tau _1\), \(\tau _2\) are positive. We have \(A, \, B\in \mathbb {R}^{n\times n}\), \(C\in \mathbb {R}^{n\times p}\) and \(\Phi \in C(\tilde{J}, \mathbb {R}^n)\). Here, the domain \(\tilde{J}\) is defined by:

where the constants \(r_1,\,r_2\) are given by:

The source term \(F \in C(\mathbb {R}_+^2\times \Sigma ^{np}, \mathbb {R}^n)\) in Eq. (1) satisfies:

for all \(\mathbf{t}\in \mathbb {R}_+^2\) and for all \(\mathbf{u}=(u_1,u_2,u_3)\), \(\mathbf{v}=(v_1,v_2,v_3)\) \(\in \Sigma ^{np} \), where \(\kappa \) is a continuous function on \(\mathbb {R}_+^2\) and \(\Vert \cdot \Vert \) is the Euclidean norm.

The function d is the disturbance. We suppose that \(d \in C(\mathbb {R}_+^2, \mathbb {R}^p)\) satisfies:

Let us introduce the following constants \(a_0, \, a_1, \, a_2\) which are defined by:

where the function \(\kappa \) is given in relation (2).

Definition 6

The system (1) is robustly FTS with respect to \(\{\varepsilon , \sigma , \rho ,T_1, T_2\}\), \(\varepsilon < \sigma \), if the following relation is satisfied:

for every disturbance d satisfying (3).
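On sampled data, a definition of this kind can be checked roughly as follows. The sketch assumes that the FTS relation has the usual form \(\Vert \Phi \Vert <\varepsilon \Rightarrow \Vert x(t,s)\Vert <\sigma \) on \([0,T_1]\times [0,T_2]\), and that condition (3) bounds the Euclidean norm of d by \(\rho \); both are assumptions, as are the function names `euclid` and `fts_check` and the choice of strict inequalities.

```python
import math

def euclid(v):
    """Euclidean norm of a vector given as a sequence of floats."""
    return math.sqrt(sum(c * c for c in v))

def fts_check(phi_samples, x_samples, d_samples, eps, sigma, rho):
    """Check the assumed FTS implication on finite samples.

    phi_samples: values of the initial function on the delay region
    x_samples:   values of the solution on [0, T1] x [0, T2]
    d_samples:   values of the disturbance
    Returns True when the premises fail or the bound on x holds.
    """
    phi_ok = max(euclid(v) for v in phi_samples) < eps   # assumed premise on Phi
    d_ok = max(euclid(v) for v in d_samples) <= rho      # assumed form of condition (3)
    x_ok = max(euclid(v) for v in x_samples) < sigma     # assumed conclusion on x
    return (not (phi_ok and d_ok)) or x_ok

# Synthetic data, not taken from the paper's experiments.
ok = fts_check([(0.07, 0.07)], [(3.0, 4.0)], [(0.005, 0.004)],
               eps=0.1, sigma=10.0, rho=0.01)
```

A finite sample can only refute the property, not prove it, so a `True` result should be read as "no violation found on this grid".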

Recall that the solution of the system (1) is defined on the extended domain \(J=\tilde{J} \cup I\) as follows:

where the functions \(\pi _{x}, \theta \) are defined by:

The first main result is given by the following theorem.

Theorem 2

Let \(\eta _1, \,\, \eta _2 >0\) be such that \(1-\frac{a_0+a_1}{\eta _1\eta _2} >0\). Assume that hypothesis (2) is satisfied. Then, Eq. (1) has a unique solution \(y_0\) on I. In addition, the following inequality holds:

where \(M_0(\eta _1, \eta _2)\) is given by:

The proof of Theorem 2 will be established later.

Let us consider the complete metric space \((E, \delta )\) that is defined as follows:

where the function \(h \in C(J, \mathbb {R}_+)\) is defined by:

Let \(\Phi \in C(\tilde{J}, \mathbb {R}^n)\). We consider the operator \(\mathcal {A}: E \rightarrow E\) defined by:

where the function \(\pi _{y}\) is given by:

Immediately, we have the following proposition.

Proposition 1

The operator \(\mathcal{{A}}: E \rightarrow E\) is contractive.

Proof

Recall that \(\mathbf{r}=(u,v) \in \mathbb {R}_+^2\). Let \(y_1, \,\,y_2 \in E\). We can deduce from (10) that:

On the other hand, for \(\mathbf{t}=(t,s) \in I\) we have:

Now, by using relation (2), we obtain:

From the definition of the constants \(a_0, \,a_1, \,a_2\), we deduce that:

Or, equivalently:

Finally, from the definition of the metric space E, we get:

Let us mention that we have:

Then, we can deduce from relations (11) and (12) that:

Using Remark 1, we get:

Thus,

Therefore, \(\mathcal{{A}}\) is contractive. The proof is complete. \(\square \)

In the following, we establish the proof of Theorem 2.

Proof

(Theorem 2). Let \(\Phi \in C(\tilde{J}, \mathbb {R}^n)\), with \( \Vert \Phi \Vert \le \varepsilon _1\). We consider a function \(\mu \) defined as follows:

It’s easy to see that we have the following estimation:

From the definition of the operator \(\mathcal {A}\), see (10), and the definition of the function \(\mu \), see (13), we get:

For all \(\mathbf{t}=(t, s) \in I\), we obtain:

where the function \(\pi _{\mu }\) is given as in Eq. (6). Then, we deduce that:

Hence, we deduce that:

By using Theorem 1 and Proposition 1, there exists a unique solution \(y_0\) to the problem (1) such that:

and we have the following estimation:

or, equivalently

where \(M_0(\eta _1, \eta _2)\) is given by Eq. (8). So, for all \(\mathbf{t} \in I\) we have

It’s well known that:

Consequently, using (14) and (15), we can establish that:

The proof is complete. \(\square \)

The second main result of this paper is given by the following theorem.

Theorem 3

If there exist \(\eta _1, \,\eta _2>0\) such that \(a_0+a_1<\eta _1\eta _2\) and the following inequality holds:

then the system (1) is FTS w.r.t. \(\{\varepsilon , \sigma , \rho ,T_1, T_2\}\).

Proof

4 Numerical simulation

Recall that the solution of the system (1) is given by the relation (4) as follows:

for all \((t, s) \in [0, T_1]\times [0, T_2]\), where the functions \(\pi _{x}, \theta \) are given by relations (5) and (6). In this section, we study the system (1) where \(x, \, \theta ,\,\pi _{x} \in \mathbb {R}^2\), then we suppose that the solution vector x is of the form:

We consider a uniform grid in the extended domain \([-r_1, T_1]\times [-r_2, T_2]\). Let \(\lambda =\frac{T_1}{N}=\frac{r_1}{q}\) and \(\beta =\frac{T_2}{M}=\frac{r_2}{p}\), where \(N,\,M,\,p,\,q \in \mathbb {N}\). We build two sequences \((t_i)_i\), \((s_j)_j\) as follows:

At the point \((t_i, s_j)\), we have:

where \(\theta (t_i, s_j)= \Phi (0, s_j) + \Phi (t_i, 0) - \Phi (0, 0)\). Now, we consider the following approximations:

Then Eq. (18) becomes:

We deduce from (19) and (20) that:

Equation (20) can be rewritten as follows:

Now, we use the approximation proposed in [26]:

where we have the approximation \(\pi _{x}(t_k, s_l) \approx \pi _{x}^{kl}\) and:

and the term

so, we deduce that:

By calculating the integral in the right hand side of Eq. (21) and after simplification, we obtain:

where \(b_{ik}, \,c_{lj}\) are given by:

The convergence of the method can be deduced from [26]. The error in this method is given by:
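As a separate illustration of the discretization described in this section, the sketch below builds the uniform grid with steps \(\lambda \) and \(\beta \), evaluates \(\theta (t_i, s_j)= \Phi (0, s_j) + \Phi (t_i, 0) - \Phi (0, 0)\), and computes generic product-rectangle weights for a weakly singular kernel \((t_i-u)^{\alpha -1}\). The index ranges \(i=-q,\dots ,N\) and \(j=-p,\dots ,M\), the helper names, and the weight formula are assumptions; in particular, the weights are not claimed to coincide with the coefficients \(b_{ik}, c_{lj}\) of the paper or with the scheme of [26].

```python
def build_grid(T1, T2, r1, r2, N, M):
    """Uniform grid on [-r1, T1] x [-r2, T2]; assumes r1/lam and r2/beta are integers."""
    lam = T1 / N
    beta = T2 / M
    q = round(r1 / lam)
    p = round(r2 / beta)
    t = [i * lam for i in range(-q, N + 1)]   # t_i = i * lam, i = -q..N (assumed range)
    s = [j * beta for j in range(-p, M + 1)]  # s_j = j * beta, j = -p..M (assumed range)
    return lam, beta, q, p, t, s

def theta(phi, t_i, s_j):
    """theta(t_i, s_j) = Phi(0, s_j) + Phi(t_i, 0) - Phi(0, 0), for one scalar component."""
    return phi(0.0, s_j) + phi(t_i, 0.0) - phi(0.0, 0.0)

def rectangle_weights(alpha, step, i):
    """Exact integrals of (t_i - u)**(alpha - 1) over [t_k, t_{k+1}], k = 0..i-1.

    The factor 1/Gamma(alpha) of the fractional integral is left out on purpose.
    """
    return [step ** alpha * ((i - k) ** alpha - (i - k - 1) ** alpha) / alpha
            for k in range(i)]

# Hypothetical data chosen so that r1/lam and r2/beta are integers.
lam, beta, q, p, t, s = build_grid(T1=1.0, T2=1.0, r1=0.1, r2=0.2, N=10, M=10)
weights = rectangle_weights(0.5, lam, 10)
```

Because the weights telescope, their sum equals \(t_i^{\alpha }/\alpha \), which gives a simple consistency check for any implementation of this kind.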

5 Numerical examples

In the following numerical examples, we verify numerically that the solution of the system (1) satisfies Definition 6. More precisely, we show that, for given \(\varepsilon >0\) and \(\sigma >0\) such that \(\varepsilon <\sigma \), we have

Recall that the system (1) is given as follows:

for all \((t, s) \in [0, T_1]\times [0, T_2]\), and the initial condition is defined by:

where the solution \(x(t,s)=(x_1(t,s), x_2(t,s))^T\)

Example 1

We have taken the following data:

The source term is in the form:

where \((r_1, r_2)= (0.1, 0.2)\). The initial condition:

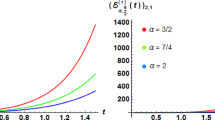

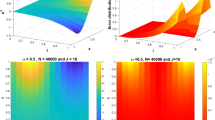

for all \((t, s) \in [-0.1, 0]\times [-0.2, 0]\). Moreover, we have taken \(N=70\), \(M=60\), \(\eta _1=\eta _2=1\), \(\varepsilon =0.1\), \(\sigma =10\) and \(\rho =0.01\). In the following, we plot the solution for different values of \(\alpha =(\alpha _1,\alpha _2)\). Note that \(\Vert \Phi \Vert \approx 0.09899<\varepsilon \). Also, the stability relation (23) is satisfied: \(\Vert x(t,s)\Vert < 10\). Indeed, the norm of the solution x(t, s) is reported in each of Figs. 1, 2 and 3. In this case, the fractional-order system (24) is FTS with respect to \(\{\varepsilon , \sigma ,\rho , T_1, T_2 \}\).

In the experiment illustrated by Fig. 4, we take the same data as in Fig. 1, but with a larger disturbance \(d(t,s)=(2,3)^T\), for which \(\Vert d(t,s)\Vert \approx 3.605\) and \(\Vert x(t,s)\Vert =9.9441\). The stabilization is clearly slower than in Fig. 1 (where \(d(t,s)=10^{-3}(5,4)^T\), \(\Vert d(t,s)\Vert \approx 0.0064\) and \(\Vert x(t,s)\Vert =6.4160\)). This behavior is consistent with the larger size of the disturbance.
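The two disturbance norms quoted above can be reproduced with a few lines of arithmetic. The sketch below only recomputes the Euclidean norms of the two constant disturbance vectors; the variable names are hypothetical.

```python
import math

d_small = (5e-3, 4e-3)   # d = 10^{-3} (5, 4)^T, used for Fig. 1
d_large = (2.0, 3.0)     # d = (2, 3)^T, used for Fig. 4

norm_small = math.hypot(*d_small)   # sqrt(41) * 1e-3
norm_large = math.hypot(*d_large)   # sqrt(13)
```

Both values agree with the figures quoted in the text to the displayed precision.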

Example 2

Now, we consider the same fractional-order system given in (24), but we take the following data:

The source term is in the form:

where \((r_1,r_2)= (0.1, 0.1)\). The initial condition:

for all \((t, s) \in [-0.1, 0]\times [-0.1, 0]\). Moreover, we have taken \(N=80\), \(M=70\), \(\eta _1=\eta _2=1\), \(\varepsilon =0.1\), \(\sigma =1\) and \(\rho =0.02\). Note that \(\Vert \Phi \Vert \approx 0.01414<\varepsilon \). Also, the stability relation (23) is satisfied: \(\Vert x(t,s)\Vert < 1\). Indeed, the norm of the solution x(t, s) is reported in each of Figs. 5, 6 and 7. In this case as well, the fractional-order system (24) is FTS with respect to \(\{\varepsilon , \sigma ,\rho , T_1, T_2 \}\).

6 Conclusion

In this work, several goals have been achieved. We have proved the existence and uniqueness of a global solution of FPHDSs using an approach based on fixed-point theory. Moreover, a new sufficient condition for the FTS of such systems has been obtained. Finally, some illustrative examples were presented to show the validity of our result.

References

Ali, I., Rasheed, A., Anwar, M.S., Irfan, M., Hussain, Z.: Fractional calculus approach for the phase dynamics of Josephson junction. Chaos Solitons Fractals 143, 110572 (2021)

Pan, X., Zhu, J., Yu, H., Chen, L., Liu, Y., Li, L.: Robust corner detection with fractional calculus for magnetic resonance imaging. Biomed. Signal Process. Control 63, 102112 (2021)

Ahmed, H.M., Zhu, Q.: The averaging principle of Hilfer fractional stochastic delay differential equations with Poisson jumps. Appl. Math. Lett. 112, 106755 (2021)

Rabbani, F., Khraisha, T., Abbasi, T., Jafari, G.R.: Memory effects on link formation in temporal networks: a fractional calculus approach. Physica A 564, 125502 (2021)

Owolabi, K.M.: Numerical approach to chaotic pattern formation in diffusive predator-prey system with Caputo fractional operator. Numer. Methods Partial Differ. Equ. 37, 131–151 (2021)

Jahanshahi, H., Sajjadi, S.S., Bekiros, S., Aly, A.A.: On the development of variable-order fractional hyperchaotic economic system with a nonlinear model predictive controller. Chaos Solitons Fractals 144, 110698 (2021)

Ray, S.S.: A new approach by two-dimensional wavelets operational matrix method for solving variable-order fractional partial integro-differential equations. Numer. Methods Partial Differ. Equ. 37, 341–359 (2021)

Bayrak, M.A., Demir, A., Ozbilge, E.: A novel approach for the solution of fractional diffusion problems with conformable derivative. Numer. Methods Partial Differ. Equ. (2021). https://doi.org/10.1002/num.22750

Laadjal, Z., Abdeljawad, T., Jarad, F.: Sharp estimates of the unique solution for two-point fractional boundary value problems with conformable derivative. Numer. Methods Partial Differ. Equ. (2021). https://doi.org/10.1002/num.22760

Mumcu, I., Set, E., Akdemir, A.O., Jarad, F.: New extensions of Hermite-Hadamard inequalities via generalized proportional fractional integral. Numer. Methods Partial Differ. Equ. (2021). https://doi.org/10.1002/num.22767

Arfan, M., Shah, K., Abdeljawad, A., Hammouch, Z.: An efficient tool for solving two-dimensional fuzzy fractional-ordered heat equation. Numer. Methods Partial Differ. Equ. (2021). https://doi.org/10.1002/num.22587

Higazy, M., Allehiany, F.M., Mahmoud, E.E.: Numerical study of fractional order COVID-19 pandemic transmission model in context of ABO blood group. Results Phys. 22, 103852 (2021)

Moustafa, M., Mohd, M.H., Ismail, A.I., Abdullah, F.A.: Global stability of a fractional order eco-epidemiological system with infected prey. Int. J. Math. Model. Numer. Optim. (2021). https://doi.org/10.1504/IJMMNO.2021.111722

Cardoso, L.C., Camargo, R.F., Dos Santos, F.L.P., Dos Santos, P.C.: Global stability analysis of a fractional differential system in hepatitis B. Chaos Solitons Fractals 143, 110619 (2021)

Thanh, N.T., Phat, V.N., Niamsup, T.: New finite-time stability analysis of singular fractional differential equations with time-varying delay. Fract. Calc. Appl. Anal. 23, 504–519 (2020)

Tyagi, S., Martha, S.C.: Finite-time stability for a class of fractional-order fuzzy neural networks with proportional delay. Fuzzy Sets Syst. 381, 68–77 (2020)

Mirrezapour, S.Z., Zare, A., Hallaji, M.: A new fractional sliding mode controller based on nonlinear fractional-order proportional integral derivative controller structure to synchronize fractional-order chaotic systems with uncertainty and disturbances. J. Vib. Control (2021). https://doi.org/10.1177/1077546320982453

Xu, Y., Yu, J., Li, W., Feng, J.: Global asymptotic stability of fractional-order competitive neural networks with multiple time-varying-delay. Appl. Math. Comput. (2021). https://doi.org/10.1016/j.amc.2020.125498

Zouari, F., Ibeas, A., Boulkroune, A., Cao, J., Arefi, M.M.: Neural network controller design for fractional-order systems with input nonlinearities and asymmetric time-varying pseudo-state constraints. Chaos Solitons Fractals (2021). https://doi.org/10.1016/j.chaos.2021.110742

Zguaid, K., El Alaoui, F.Z., Boutoulout, A.: Regional observability for linear time fractional systems. Math. Comput. Simul. (2021). https://doi.org/10.1016/j.matcom.2020.12.013

Ben Makhlouf, A.: A Novel Finite Time Stability Analysis of Nonlinear Fractional-Order Time Delay Systems: A Fixed Point Approach. arXiv:2012.00007

Cao, J., Chen, Y., Wang, Y., Cheng, G., Barriere, T., Wang, L.: Numerical analysis of fractional viscoelastic column based on shifted Chebyshev wavelet function. Appl. Math. Model. 91, 374–389 (2021)

Abbas, S., Benchohra, M.: Darboux problem for perturbed partial differential equations of fractional order with finite delay. Nonlinear Anal. Hybrid Syst. 381, 68–77 (2020)

Chandhini, G., Prashanthi, K.S., Vijesh, V.: A radial basis function method for fractional Darboux problems. Eng. Anal. Bound. Elem. 86, 1–18 (2018)

Hassani, H., Tenreiro Machado, J.A., Avazzadeh, Z., Naraghirad, E., Dahaghin, M.Sh.: Generalized Bernoulli polynomials: solving nonlinear 2D fractional optimal control problems. J. Sci. Comput. 83, 1–21 (2020)

Vityuk, A.N., Mykhailenko, A.V.: The Darboux problem for an implicit fractional-order differential equation. J. Math. Sci. 175, 391–401 (2011)

Wang, J., Zhang, S.: A Lyapunov-type inequality for partial differential equation involving the mixed Caputo derivative. Mathematics (2020). https://doi.org/10.3390/math8010047

Abbas, S., Benchohra, M., Cabada, A.: Partial neutral functional integro-differential equations of fractional order with delay. Bound. Value Probl. 2012, 1–13 (2012)

Benchohra, M., Hellal, M.: Global uniqueness results for fractional partial hyperbolic differential equations with state-dependent delay. Ann. Polon. Math. 110, 259–281 (2014)

Abbas, S., Benchohra, M.: Darboux Problem for implicit impulsive partial hyperbolic fractional order differential equations. Electron. J. Differ. Equ. 2011, 1–14 (2011)

Ali, M.S., Narayanan, G., Saroha, S., Priya, B., Thakur, G.K.: Finite-time stability analysis of fractional-order memristive fuzzy cellular neural networks with time delay and leakage term. Math. Comput. Simul. 185, 468–485 (2021)

Diaz, J.B., Margolis, B.: A fixed point theorem of the alternative for contractions on a generalized complete metric space. Bull. Am. Math. Soc. 74, 305–309 (1968)

Cite this article

Arfaoui, H., Ben Makhlouf, A. Some results for a class of two-dimensional fractional hyperbolic differential systems with time delay. J. Appl. Math. Comput. 68, 2389–2405 (2022). https://doi.org/10.1007/s12190-021-01625-7
