Abstract

In this paper, we derive a new linear convergence rate for the gradient method with fixed step lengths for non-convex smooth optimization problems satisfying the Polyak-Łojasiewicz (PŁ) inequality. We establish that the PŁ inequality is a necessary and sufficient condition for linear convergence to the optimal value for this class of problems. We list some related classes of functions for which the gradient method may enjoy a linear convergence rate. Moreover, we investigate their relationship with the PŁ inequality.

1 Introduction

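We consider the gradient method for the unconstrained optimization problem

$$\min _{x\in {\mathbb {R}}^n} f(x), \qquad (1)$$

where \(f: {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is differentiable and the optimal value \(f^\star \) of (1) is finite. The gradient method with fixed step lengths may be described as follows.

Algorithm 1 (Gradient method with fixed step lengths). Parameters: the number of iterations N and step lengths \(t_1, \ldots , t_N>0\). Input: f and a starting point \(x^1\in {\mathbb {R}}^n\). For \(k=1, \ldots , N\), set \(x^{k+1}=x^k-t_k\nabla f(x^k)\).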
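
The update rule of Algorithm 1 is simple enough to state directly in code. The following is a minimal Python sketch using plain lists; the function name `gradient_method` and the quadratic test problem are illustrative choices of ours, not part of the paper.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def gradient_method(grad: Callable[[Vector], Vector],
                    x1: Vector,
                    steps: Sequence[float]) -> List[Vector]:
    """Sketch of Algorithm 1: x^{k+1} = x^k - t_k * grad f(x^k), k = 1, ..., N.

    `steps` holds the fixed step lengths t_1, ..., t_N, so N = len(steps).
    Returns all iterates [x^1, x^2, ..., x^{N+1}].
    """
    iterates = [list(x1)]
    for t in steps:
        x = iterates[-1]
        g = grad(x)
        iterates.append([xi - t * gi for xi, gi in zip(x, g)])
    return iterates


# Hypothetical test problem: f(x) = 0.5 * (x_1^2 + 10 * x_2^2), so L = 10 and f* = 0.
def f(x: Vector) -> float:
    return 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)


def grad_f(x: Vector) -> Vector:
    return [x[0], 10.0 * x[1]]


L = 10.0
xs = gradient_method(grad_f, [1.0, 1.0], [1.0 / L] * 50)  # t_k = 1/L for every k
print(f(xs[-1]))
```

With \(t_k=\tfrac{1}{L}\) the second coordinate vanishes after one step, while the first one contracts by the factor \(1-\tfrac{1}{10}\) per step.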

In addition, we assume that f has a maximum curvature \(L\in (0, \infty )\) and a minimum curvature \(\mu \in (-\infty , L)\). Recall that f has a maximum curvature L if \(\tfrac{L}{2}\Vert \cdot \Vert ^2-f\) is convex. Similarly, f has a minimum curvature \(\mu \) if \(f-\tfrac{\mu }{2}\Vert \cdot \Vert ^2\) is convex. We denote the class of smooth functions whose curvature lies in the interval \([\mu , L]\) by \({\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\). In particular, every L-smooth function belongs to \({\mathcal {F}}_{-L, L}({\mathbb {R}}^n)\) (note that \(\mu \ge 0\) corresponds to convexity). Indeed, f is L-smooth on \({\mathbb {R}}^n\) if and only if f has a maximum curvature \(\bar{L}>0\) and a minimum curvature \(\bar{\mu }\) with \(\max (\bar{L}, |\bar{\mu }|)\le L\). This class of functions is broad and appears naturally in many machine learning models; see [8] and the references therein.

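For \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\), we have the following inequalities for \(x, y\in {\mathbb {R}}^n\):

$$f(y)\le f(x)+\langle \nabla f(x), y-x\rangle +\tfrac{L}{2}\Vert y-x\Vert ^2, \qquad (2)$$

$$f(y)\ge f(x)+\langle \nabla f(x), y-x\rangle +\tfrac{\mu }{2}\Vert y-x\Vert ^2, \qquad (3)$$

see Lemma 2.5 in [21].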

It is known that the lower complexity bound of first-order methods for finding an \(\epsilon \)-stationary point of an L-smooth function is of the order \(\Omega \left( \epsilon ^{-2}\right) \) [6]. Hence, it is of interest to identify classes of functions for which the gradient method enjoys a linear convergence rate. This question has been studied by several authors, and some classes of functions for which linear convergence is possible have been introduced; see [7, 14,15,16] and the references therein. These include the class of functions satisfying the Polyak-Łojasiewicz (PŁ) inequality [16, 20].

Definition 1

A function f is said to satisfy the PŁ inequality on \(X\subseteq {\mathbb {R}}^n\) if there exists \(\mu _p>0\) such that

$$f(x)-f^\star \le \tfrac{1}{2\mu _p}\Vert \nabla f(x)\Vert ^2, \quad \forall x\in X. \qquad (4)$$

Note that functions satisfying the PŁ inequality are also called gradient dominated; see [19, Definition 4.1.3]. Strongly convex functions satisfy the PŁ inequality, but some classes of non-convex functions also fulfil this inequality. For instance, consider a differentiable function \(G:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) with \(m\le n\), and suppose that the non-linear system \(G(x)=0\) has a solution. If

where \(J_G(x)\) is the Jacobian matrix of G at x, then the function \(f(x)=\Vert G(x)\Vert ^2\) fulfils the PŁ inequality; see [19, Example 4.1.3]. Here, \(\lambda _{\min }(A)\) denotes the smallest eigenvalue of a symmetric matrix A. Consequently, nonlinear least-squares problems of this type are instances of (1) whose objective satisfies the PŁ inequality.

The following classical theorem provides a linear convergence rate for Algorithm 1 under the PŁ inequality.

Theorem 1

[20, Theorem 4] Let f be L-smooth and let f satisfy the PŁ inequality on \(X=\{x: f(x)\le f(x^1)\}\). If \(t_1\in (0, \tfrac{2}{L})\) and \(x^2\) is generated by Algorithm 1, then

$$\frac{f(x^2)-f^\star }{f(x^1)-f^\star }\le 1-t_1\mu _p(2-Lt_1).$$

In particular, if \(t_1=\tfrac{1}{L}\), we have

$$\frac{f(x^2)-f^\star }{f(x^1)-f^\star }\le 1-\frac{\mu _p}{L}.$$

In this paper we will sharpen this bound; see Theorem 3. Under the assumptions of Theorem 1, Karimi et al. [16] established linear convergence rates for some other methods including the randomized coordinate descent. We refer the interested reader to the recent survey [7] for more details on the convergence of non-convex algorithms under the PŁ inequality.
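
As an illustration of Theorem 1 (not taken from the paper), the Python sketch below runs Algorithm 1 with \(t_1=\tfrac{1}{L}\) on the one-dimensional non-convex function \(f(x)=x^2+3\sin ^2(x)\), our own choice, for which \(f^\star =0\) at \(x=0\) and \(f''(x)=2+6\cos (2x)\in [-4, 8]\), so \(L=8\). We do not assume a closed-form PŁ constant: \(\mu _p\) is estimated on a grid and deflated by 10%, since a grid minimum can only overestimate the true infimum. Each observed ratio \(\tfrac{f(x^{k+1})-f^\star }{f(x^k)-f^\star }\) is then compared with the bound of Theorem 1.

```python
import math

# Illustrative non-convex function (our choice, not from the paper):
# f(x) = x^2 + 3 sin^2(x), f* = 0 at x = 0, f''(x) = 2 + 6 cos(2x) in [-4, 8].
L = 8.0


def f(x: float) -> float:
    return x * x + 3.0 * math.sin(x) ** 2


def df(x: float) -> float:
    return 2.0 * x + 3.0 * math.sin(2.0 * x)


def estimate_mu_p(lo: float = -10.0, hi: float = 10.0, n: int = 200_001) -> float:
    """Grid estimate of inf |f'(x)|^2 / (2 (f(x) - f*)) over x != 0 (here f* = 0)."""
    best = math.inf
    for k in range(n):
        x = lo + (hi - lo) * k / (n - 1)
        if abs(x) > 1e-6:
            best = min(best, df(x) ** 2 / (2.0 * f(x)))
    return best


def theorem1_rate(t: float, mu_p: float, L: float) -> float:
    """Theorem 1: (f(x^2) - f*) / (f(x^1) - f*) <= 1 - mu_p t (2 - L t)."""
    return 1.0 - mu_p * t * (2.0 - L * t)


mu_p = 0.9 * estimate_mu_p()   # deflated: a grid minimum overestimates the infimum
t = 1.0 / L
x = 3.0                        # the level set {f <= f(3)} lies inside [-10, 10]
ratios = []
for _ in range(50):
    if f(x) < 1e-14:           # stop once f(x) - f* is at round-off level
        break
    x_next = x - t * df(x)
    ratios.append(f(x_next) / f(x))
    x = x_next

print(mu_p, max(ratios), theorem1_rate(t, mu_p, L))
```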

In this paper, we analyse the gradient method from a black-box perspective, which means that we only have access to the function value and the gradient at the query point. Furthermore, we study the convergence rate of Algorithm 1 by using performance estimation.

In recent years, performance estimation has been used to find worst-case convergence rates of first-order methods; see, for example, [1, 2, 9, 10, 13, 23]. This powerful tool was first introduced by Drori and Teboulle in their seminal paper [12]. The idea of performance estimation is that the infinite-dimensional optimization problem defining the worst-case convergence rate may be reformulated, by using interpolation theorems, as a finite-dimensional optimization problem (often a semidefinite program). The interested reader may consult the PhD theses of Drori [11] and Taylor [22] for an introduction to, and review of, the topic.

The rest of the paper is organized as follows. In Sect. 2, we consider problem (1) when f satisfies the PŁ inequality. We derive a new linear convergence rate for Algorithm 1 by using performance estimation, and we provide an optimal step length with respect to the given bound. We also show that the PŁ inequality is necessary and sufficient for linear convergence, in a well-defined sense. Sect. 3 lists some other situations where Algorithm 1 is linearly convergent and studies the relationships between them. Finally, Sect. 4 concludes the paper with some remarks and questions for future research.

Notation

The n-dimensional Euclidean space is denoted by \({\mathbb {R}}^n\). Vectors are considered to be column vectors and the superscript T denotes the transpose operation. We use \(\langle \cdot , \cdot \rangle \) and \(\Vert \cdot \Vert \) to denote the Euclidean inner product and norm, respectively. For a matrix A, \(A_{ij}\) denotes its (i, j)-th entry. The notation \(A\succeq 0\) means the matrix A is symmetric positive semi-definite, and \(\mathrm{tr}(A)\) stands for the trace of A.

2 Linear convergence under the PŁ inequality

This section studies linear convergence of gradient descent under the PŁ inequality. It is readily seen that the PŁ inequality implies that every stationary point in X is a global minimizer. By virtue of the descent lemma [19, Page 29], we have

$$\tfrac{1}{2L}\Vert \nabla f(x)\Vert ^2\le f(x)-f^\star \le \tfrac{1}{2\mu _p}\Vert \nabla f(x)\Vert ^2, \quad x\in X.$$

Hence, \(\mu _p\) can take values in (0, L]. On the other hand, we may assume without loss of generality that \(\mu \le \mu _p\). This is trivial if \(\mu \le 0\), and we therefore assume that \(\mu >0\). By taking the minimum with respect to y on both sides of inequality (3), we get

$$f^\star \ge f(x)-\tfrac{1}{2\mu }\Vert \nabla f(x)\Vert ^2, \quad x\in {\mathbb {R}}^n.$$

Hence, one may assume without loss of generality \(\mu _p=\max \{\mu , \mu _p\}\) in inequality (4).
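
The minimization step used above can be written out. Assuming (3) is the lower curvature bound \(f(y)\ge f(x)+\langle \nabla f(x), y-x\rangle +\tfrac{\mu }{2}\Vert y-x\Vert ^2\) with \(\mu >0\), its right-hand side is a strongly convex quadratic in y, minimized at \(y=x-\tfrac{1}{\mu }\nabla f(x)\):

$$\begin{aligned} f^\star =\inf _{y} f(y) &\ge \min _{y}\Big \{ f(x)+\langle \nabla f(x), y-x\rangle +\tfrac{\mu }{2}\Vert y-x\Vert ^2\Big \} \\ &= f(x)-\tfrac{1}{\mu }\Vert \nabla f(x)\Vert ^2+\tfrac{1}{2\mu }\Vert \nabla f(x)\Vert ^2 = f(x)-\tfrac{1}{2\mu }\Vert \nabla f(x)\Vert ^2, \end{aligned}$$

so f satisfies the PŁ inequality with constant \(\mu \) on all of \({\mathbb {R}}^n\), which is why \(\mu _p\) may be replaced by \(\max \{\mu , \mu _p\}\).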

In what follows, we employ performance estimation to get a new bound under the assumptions of Theorem 1. In this setting, the worst-case convergence rate of Algorithm 1 may be cast as the following optimization problem,

In problem (7), f and \(x^1\) are decision variables and \(X=\{x: f(x)\le f(x^1)\}\). We may replace the infinite dimensional condition \(f \in {\mathcal {F}}_{\mu ,L}({\mathbb {R}}^n)\) by a finite set of constraints, by using interpolation. Theorem 2 gives some necessary and sufficient conditions for the interpolation of given data by some \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\).

Theorem 2

[21, Theorem 3.1] Let \(\{(x^i; g^i; f^i)\}_{i\in I}\subseteq {\mathbb {R}}^n \times {\mathbb {R}}^n \times {\mathbb {R}}\) with a given index set I and let \(L\in (0, \infty ]\) and \(\mu \in (-\infty , L)\). There exists a function \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\) with

$$f(x^i)=f^i, \quad g^i\in \partial f(x^i), \qquad i\in I,$$

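if and only if for every \(i, j\in I\)

$$f^i\ge f^j+\langle g^j, x^i-x^j\rangle +\frac{1}{2(1-\mu /L)}\left( \frac{1}{L}\Vert g^i-g^j\Vert ^2+\mu \Vert x^i-x^j\Vert ^2-\frac{2\mu }{L}\langle g^j-g^i, x^j-x^i\rangle \right) . \qquad (9)$$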

Theorem 2 also covers non-smooth functions, which correspond to \(L=\infty \). In this paper, we only investigate the smooth case, that is \(L\in (0, \infty )\), with \(\mu \in (-\infty , 0]\).
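
Condition (9) is easy to test numerically for given data. The sketch below is a one-dimensional Python check, assuming (9) has the form restated in the docstring (as in [21, Theorem 3.1]); the helper names and the test data are our own illustration: samples of \(\sin \), whose curvature lies in \([-1, 1]\), and of \(x^2\), whose curvature 2 exceeds \(L=1\).

```python
import itertools
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x_i, g_i, f_i), one-dimensional for brevity


def interpolation_gap(pi: Point, pj: Point, mu: float, L: float) -> float:
    """LHS minus RHS of the assumed condition (9) for the ordered pair (i, j).

    Assumed form of (9):
      f_i >= f_j + g_j (x_i - x_j) + 1/(2(1 - mu/L)) * ( (g_i - g_j)^2 / L
             + mu (x_i - x_j)^2 - (2 mu / L) (g_j - g_i)(x_j - x_i) ).
    A non-negative return value means the pair satisfies the condition.
    """
    xi, gi, fi = pi
    xj, gj, fj = pj
    lhs = fi - fj - gj * (xi - xj)
    rhs = (1.0 / (2.0 * (1.0 - mu / L))) * (
        (gi - gj) ** 2 / L
        + mu * (xi - xj) ** 2
        - (2.0 * mu / L) * (gj - gi) * (xj - xi))
    return lhs - rhs


def is_interpolable(points: Sequence[Point], mu: float, L: float,
                    tol: float = 1e-12) -> bool:
    """Check the assumed condition (9) for every ordered pair i != j."""
    return all(interpolation_gap(p, q, mu, L) >= -tol
               for p, q in itertools.permutations(points, 2))


# sin has curvature in [-1, 1], so its samples should pass with mu = -1, L = 1.
sin_data = [(x, math.cos(x), math.sin(x)) for x in (0.0, 0.7, 1.5, 2.9, 4.0)]
# x^2 has curvature 2 > L = 1, so these samples should be rejected.
sq_data = [(x, 2.0 * x, x * x) for x in (0.0, 1.0)]
print(is_interpolable(sin_data, -1.0, 1.0), is_interpolable(sq_data, -1.0, 1.0))
```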

By Theorem 2, problem (7) may be relaxed as follows,

Since we replace the constraint \( f(x)-f^\star \le \tfrac{1}{2\mu _p} \Vert \nabla f(x)\Vert ^2\) for all \(x\in X\) by the two constraints \( f^1-f^\star \le \tfrac{1}{2\mu _p}\Vert g^1\Vert ^2\) and \( f^2-f^\star \le \tfrac{1}{2\mu _p}\Vert g^2\Vert ^2\), problem (10) is a relaxation of problem (7). By using the constraint \(x^2=x^1-t_1g^1\), problem (10) may be reformulated as,

By using the Gram matrix,

problem (11) can be relaxed as follows,

where

In addition, \(X, f^1, f^2\) are decision variables in this formulation. In the next theorem, we obtain an upper bound for problem (11) by using weak duality. This bound gives a new convergence rate for Algorithm 1 for a wide variety of functions.

Theorem 3

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\) with \(L\in (0, \infty ), \mu \in (-\infty , 0]\) and let f satisfy the PŁ inequality on \(X=\{x: f(x)\le f(x^1)\}\). Suppose that \(x^2\) is generated by Algorithm 1.

i) If \(t_1\in \left( 0,\tfrac{1}{L}\right) \), then

$$\begin{aligned}&\frac{f(x^2)-f^\star }{f(x^1)-f^\star }\\&\quad \le \left( \frac{\mu _p\left( 1-Lt_1\right) +\sqrt{\left( L-\mu \right) \left( \mu -\mu _p \right) \left( 2-L t_1\right) \mu _pt_1+\left( L-\mu \right) ^2}}{L-\mu +\mu _p}\right) ^2. \end{aligned}$$

ii) If \(t_1\in \left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\right] \), then

$$\begin{aligned} \frac{f(x^2)-f^\star }{f(x^1)-f^\star } \le \left( \frac{(Lt_1-2)(\mu t_1-2)\mu _p t_1}{\left( L+\mu -\mu _p\right) t_1-2}+1\right) . \end{aligned}$$

iii) If \(t_1\in \left( \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}, \tfrac{2}{L}\right) \), then

$$\begin{aligned} \frac{f(x^2)-f^\star }{f(x^1)-f^\star }\le \frac{(L t_1-1)^2}{(Lt_1-1)^2 +\mu _p t_1(2-Lt_1)}. \end{aligned}$$

In particular, if \(t_1=\tfrac{1}{L}\) and \(\mu =-L\), we have

$$\frac{f(x^2)-f^\star }{f(x^1)-f^\star }\le \frac{2L-2\mu _p}{2L+\mu _p}.$$

Proof

First we consider \(t_1\in \left( 0,\tfrac{1}{L}\right) \). Let

where

It is readily seen that \(b_1,b_2\ge 0\). Furthermore,

Therefore, for any feasible solution of problem (11), we have

and the proof of this part is complete. Now, we consider the case that \(t_1\in \left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2 -L\mu +L^2}}\right] \). Suppose that

It is readily seen that \(a_1,a_2,a_3,a_4\ge 0\). Furthermore,

Therefore, for any feasible solution of problem (11), we have

Now, we prove the last part. Assume that \(t_1\in \left( \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}},\tfrac{2}{L}\right) \). With some algebra, one can show

where,

The rest of the proof is similar to that of the former cases. \(\square \)
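
For experimentation it can help to have the piecewise bound of Theorem 3 as a function of the step length. The Python sketch below transcribes cases i) to iii) as stated; the name `theorem3_bound` is ours, and the inputs are assumed to satisfy the hypotheses of the theorem (\(L>0\), \(\mu \le 0\), \(0<\mu _p\le L\), \(0<t_1<\tfrac{2}{L}\)).

```python
import math


def theorem3_bound(t: float, L: float, mu: float, mu_p: float) -> float:
    """Right-hand side of Theorem 3, a bound on (f(x^2)-f*)/(f(x^1)-f*).

    Assumes the hypotheses of Theorem 3: L > 0, mu <= 0, 0 < mu_p <= L and
    0 < t < 2/L, where t plays the role of the step length t_1.
    """
    if not 0.0 < t < 2.0 / L:
        raise ValueError("step length must lie in (0, 2/L)")
    t_hat = 3.0 / (mu + L + math.sqrt(mu * mu - L * mu + L * L))
    if t < 1.0 / L:                                   # case i)
        rad = (L - mu) * (mu - mu_p) * (2.0 - L * t) * mu_p * t + (L - mu) ** 2
        rad = max(rad, 0.0)                           # guard against round-off
        return ((mu_p * (1.0 - L * t) + math.sqrt(rad)) / (L - mu + mu_p)) ** 2
    if t <= t_hat:                                    # case ii)
        return ((L * t - 2.0) * (mu * t - 2.0) * mu_p * t
                / ((L + mu - mu_p) * t - 2.0) + 1.0)
    # case iii)
    return (L * t - 1.0) ** 2 / ((L * t - 1.0) ** 2 + mu_p * t * (2.0 - L * t))


# L-smooth example (mu = -L) with t_1 = 1/L.
print(theorem3_bound(1.0, 1.0, -1.0, 0.5))
```

For \(\mu =-L\) and \(t_1=\tfrac{1}{L}\), case ii) simplifies to \(\tfrac{2L-2\mu _p}{2L+\mu _p}\), and the three branches agree at the endpoints of their intervals.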

One may wonder how we obtained the Lagrange multipliers (dual variables) in Theorem 3. They were computed by solving the dual of problem (12) by hand. Furthermore, for L-smooth functions (that is, \(\mu =-L\)) and \(t_1\in (0, \tfrac{2}{L})\), Theorem 3 provides a tighter bound than the convergence rate given in Theorem 1. To show this, we need to investigate three subintervals:

i) Suppose that \(t_1\in \left( 0,\tfrac{1}{L}\right) \). As \(1-Lt_1\le 0\),

$$\begin{aligned}&\left( \tfrac{\mu _p\left( 1-Lt_1\right) +\sqrt{2L\left( -L-\mu _p \right) \left( 2-L t_1\right) \mu _pt_1+4L^2}}{2L+\mu _p}\right) ^2\\&\quad \le \tfrac{4 L^2+2 L \mu _p t_1 (L+\mu _p) (L t_1-2) +(\mu _p-L \mu _p t_1)^2}{(2 L+\mu _p)^2}\le 1-t_1\mu _p(2-t_1L), \end{aligned}$$

where the last inequality follows from non-positivity of the quadratic function \(T_1(t_1)=-L t_1^2 \left( 2 L^2+L \mu _p+\mu _p^2\right) +2 t_1 \left( 2 L^2+L \mu _p+\mu _p^2\right) -4 L\) on the given interval.

ii) Let \(t_1\in \left[ \tfrac{1}{L}, \tfrac{\sqrt{3}}{L}\right] \). Since \(\mu _p\le L\) and \((2-Lt_1)> 0\), we have

$$\begin{aligned} 1\le \tfrac{L t_1+2}{\mu _pt_1+2} \ \Rightarrow \ 1-\tfrac{(2-Lt_1)(L t_1+2) \mu _p t_1}{\mu _pt_1+2} \le 1-t_1\mu _p(2-Lt_1). \end{aligned}$$

iii) Assume that \(t_1\in (\tfrac{\sqrt{3}}{L},\tfrac{2}{L})\). It is readily verified that the quadratic function \(T_2(t_1)=(Lt_1-1)^2+\mu _p t_1(2-Lt_1)-1\) is non-positive on the given interval. Hence,

$$\begin{aligned} \tfrac{(L t_1-1)^2}{(Lt_1-1)^2+\mu _p t_1(2-Lt_1)} =1-\tfrac{\mu _p t_1(2-Lt_1)}{(Lt_1-1)^2+\mu _p t_1(2-Lt_1)} \le 1- t_1\mu _p(2-Lt_1). \end{aligned}$$

Therefore, for \(t_1\in \left( 0,\tfrac{2}{L}\right) \) the bound provided by Theorem 3 is tighter than that given by Theorem 1.
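
The comparison above can also be checked numerically. The Python sketch below, our own illustration, evaluates for \(\mu =-L\) the bound of Theorem 3 in the form used in cases i) to iii) and the bound \(1-t_1\mu _p(2-Lt_1)\) of Theorem 1. It does this on a grid of step lengths in \((0, \tfrac{2}{L})\) and for several values \(\mu _p\in (0, L]\), and reports the largest excess of the former over the latter. A non-positive value (up to round-off) is consistent with the claim; a grid check illustrates the inequality but does not prove it.

```python
import math


def thm3_smooth(t: float, L: float, mu_p: float) -> float:
    """Theorem 3 specialised to mu = -L (the L-smooth case), cases i) to iii)."""
    if t < 1.0 / L:
        rad = 2.0 * L * (-L - mu_p) * (2.0 - L * t) * mu_p * t + 4.0 * L * L
        rad = max(rad, 0.0)          # non-negative in exact arithmetic
        return ((mu_p * (1.0 - L * t) + math.sqrt(rad)) / (2.0 * L + mu_p)) ** 2
    if t <= math.sqrt(3.0) / L:
        return 1.0 - (2.0 - L * t) * (L * t + 2.0) * mu_p * t / (mu_p * t + 2.0)
    return (L * t - 1.0) ** 2 / ((L * t - 1.0) ** 2 + mu_p * t * (2.0 - L * t))


def thm1(t: float, L: float, mu_p: float) -> float:
    """Theorem 1: 1 - t mu_p (2 - L t)."""
    return 1.0 - t * mu_p * (2.0 - L * t)


def max_excess(L: float = 1.0, n: int = 2000) -> float:
    """Largest (Theorem 3 bound) - (Theorem 1 bound) over a grid of t and mu_p."""
    worst = -math.inf
    for mu_p in (0.01 * L, 0.1 * L, 0.5 * L, 0.9 * L, L):
        for k in range(1, n):
            t = 2.0 * k / (n * L)    # grid strictly inside (0, 2/L)
            worst = max(worst, thm3_smooth(t, L, mu_p) - thm1(t, L, mu_p))
    return worst


print(max_excess(), max_excess(L=4.0))
```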

In most problems, the smoothness constant L is unknown. By (2), any valid estimate \(\tilde{L}\) of L should satisfy the following inequality,

Thus one may try to obtain a suitable estimate by searching for a sufficiently large value of \(\tilde{L}\) that satisfies this inequality. This technique is due to Nesterov; see [18, Section 3] for details.
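
One simple way to carry out such a search is to start from a guess and double it until the check passes at the current point, as in the Python sketch below. It assumes that the inequality being checked is the descent condition \(f(x-\tfrac{1}{\tilde{L}}\nabla f(x))\le f(x)-\tfrac{1}{2\tilde{L}}\Vert \nabla f(x)\Vert ^2\), which follows from (2) whenever \(\tilde{L}\ge L\). The doubling factor, the starting guess and the name `estimate_L` are our own choices. Nesterov's scheme in [18] also decreases the estimate between iterations, which this sketch omits. The returned value only certifies the check at the tested point and may be smaller than the true L.

```python
from typing import Callable


def estimate_L(f: Callable[[float], float], df: Callable[[float], float],
               x: float, L0: float = 1.0, factor: float = 2.0,
               max_doublings: int = 60) -> float:
    """Smallest L0 * factor**j that passes the descent check at x (1-D sketch)."""
    g = df(x)
    L_trial = L0
    for _ in range(max_doublings):
        # Descent condition implied by (2) whenever L_trial >= L.
        if f(x - g / L_trial) <= f(x) - g * g / (2.0 * L_trial):
            return L_trial
        L_trial *= factor
    raise RuntimeError("no trial value passed the descent check")


# Hypothetical test: f(x) = 5 x^2 has L = 10; here the check passes iff L_trial >= 10.
print(estimate_L(lambda x: 5.0 * x * x, lambda x: 10.0 * x, x=1.0))
```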

The next proposition gives the optimal step length with respect to the worst-case convergence rate.

Proposition 1

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\) with \(L\in (0, \infty ), \mu \in (-\infty , 0]\) and let f satisfy the PŁ inequality on \(X=\{x: f(x)\le f(x^1)\}\). Suppose that \(r(t)=L\mu (L+\mu -\mu _p)t^3- \left( L^2-\mu _p (L+\mu )+5 L \mu +\mu ^2\right) t^2+4(L+\mu )t-4\) and \(\bar{t}\) is the unique root of r in \(\left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\right] \) if it exists. Then \(t^\star \) given by

$$t^\star =\begin{cases} \bar{t} & \text{if } \bar{t} \text{ exists},\\ \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}} & \text{otherwise}, \end{cases}$$

is the optimal step length for Algorithm 1 with respect to the worst-case convergence rate.

Proof

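To obtain an optimal step length, we need to solve the optimization problem

$$\min _{t\in \left( 0,\tfrac{2}{L}\right) } h(t),$$

where h(t) denotes the bound of Theorem 3 with \(t_1=t\), that is, the right-hand side of i), ii) or iii) according to the interval containing t.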

It is easily seen that h is decreasing on \(\left( 0,\tfrac{1}{L}\right) \) and increasing on \(\left( \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}, \tfrac{2}{L}\right) \). Hence, it remains to investigate the closed interval \(\left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\right] \). We will show that h is convex on this interval. First, we consider the case \(L+\mu -\mu _p\le 0\). Let \(p(t)=\frac{\mu t-2}{\left( L+\mu -\mu _p\right) t-2}\) and \(q(t)=(Lt-2)\mu _p t\). By some algebra, one can show the following inequalities for \(t\in \left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2 -L\mu +L^2}}\right] \):

Hence, the convexity of h follows from \(h^{\prime \prime }=p^{\prime \prime }q+2p^{\prime }q^{\prime }+pq^{\prime \prime }\). Now, we investigate the case that \(L+\mu -\mu _p>0\). Suppose that \(p(t)=\frac{\mu _p t}{\left( L+\mu -\mu _p\right) t-2}\) and \(q(t)=(Lt-2)(\mu t-2)\). For these functions, we have the following inequalities

from which, analogously to the former case, one can infer the convexity of h on the given interval. Moreover, on this interval \(h^\prime (t)=\tfrac{2\mu _p r(t)}{\left( (L+\mu -\mu _p)t-2\right) ^2}\), so the roots of \(h^\prime \) there are exactly the roots of r. Hence, if \(h^\prime \) has a root in \(\left[ \tfrac{1}{L}, \tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\right] \), it is the minimizer of h. Otherwise, the minimizer is \(t^\star =\tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\). This follows from the fact that \(h^\prime (\tfrac{1}{L})=\tfrac{2L\mu _p(\mu _p-L)}{(L+\mu _p-\mu )^2}\le 0\) and the convexity of h on the interval in question. \(\square \)
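
Proposition 1 translates into a short root-finding routine. The Python sketch below relies on the convexity of h on \([\tfrac{1}{L}, \hat{t}]\), with \(\hat{t}=\tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}\), as shown in the proof. On that interval r has the sign of \(h^\prime \) and therefore changes sign at most once, so \(\bar{t}\) can be located by bisection. One can also check that \(r(\tfrac{1}{L})=\tfrac{\mu _p-L}{L}\le 0\). The function name and the tolerance are our own choices.

```python
import math


def optimal_step(L: float, mu: float, mu_p: float, tol: float = 1e-12) -> float:
    """Sketch of Proposition 1: the root of r in [1/L, t_hat] if any, else t_hat."""
    t_hat = 3.0 / (mu + L + math.sqrt(mu * mu - L * mu + L * L))

    def r(t: float) -> float:
        return (L * mu * (L + mu - mu_p) * t ** 3
                - (L * L - mu_p * (L + mu) + 5.0 * L * mu + mu * mu) * t ** 2
                + 4.0 * (L + mu) * t - 4.0)

    lo, hi = 1.0 / L, t_hat
    if r(lo) >= 0.0:      # r(1/L) = (mu_p - L)/L, which is 0 only when mu_p = L
        return lo
    if r(hi) <= 0.0:      # no sign change: h is non-increasing on the interval
        return hi
    while hi - lo > tol:  # r has the sign of h', which changes sign once here
        mid = 0.5 * (lo + hi)
        if r(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Convex case (mu = 0): compare with Corollary 1, min{2/(L + sqrt(L mu_p)), 3/(2L)}.
for L, mu_p in [(1.0, 0.25), (1.0, 0.04), (2.0, 0.5)]:
    print(optimal_step(L, 0.0, mu_p), min(2.0 / (L + math.sqrt(L * mu_p)), 1.5 / L))
```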

Thanks to Proposition 1, the following corollary gives the optimal step length for L-smooth convex functions satisfying the PŁ inequality.

Corollary 1

If f is an L-smooth convex function satisfying the PŁ inequality, then the optimal step length with respect to the worst-case convergence rate is \(\min \left\{ \tfrac{2}{L+\sqrt{L\mu _p}}, \tfrac{3}{2L}\right\} \).
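
For completeness, here is the short computation behind Corollary 1. It specializes Proposition 1 to \(\mu =0\) (convex f) and assumes \(\mu _p<L\):

$$\begin{aligned} \mu =0:\quad &\tfrac{3}{\mu +L+\sqrt{\mu ^2-L\mu +L^2}}=\tfrac{3}{2L}, \qquad r(t)=-L(L-\mu _p)t^2+4Lt-4,\\ r(t)=0 \iff &t=\frac{2\left( L\pm \sqrt{L\mu _p}\right) }{L(L-\mu _p)}, \qquad \bar{t}=\frac{2\left( L-\sqrt{L\mu _p}\right) }{\left( L-\sqrt{L\mu _p}\right) \left( L+\sqrt{L\mu _p}\right) }=\frac{2}{L+\sqrt{L\mu _p}}. \end{aligned}$$

The smaller root satisfies \(\bar{t}\ge \tfrac{1}{L}\) (equivalently \(L\ge \sqrt{L\mu _p}\)), and the larger root \(\tfrac{2}{L-\sqrt{L\mu _p}}\ge \tfrac{2}{L}\) lies outside the interval. Hence Proposition 1 returns \(\bar{t}\) when \(\bar{t}\le \tfrac{3}{2L}\) and the endpoint \(\tfrac{3}{2L}\) otherwise, which is \(\min \{\tfrac{2}{L+\sqrt{L\mu _p}}, \tfrac{3}{2L}\}\). For \(\mu _p=L\), r is linear with root \(\tfrac{1}{L}\), in agreement with the same formula.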

The constant \(\tfrac{2}{L+\sqrt{L\mu _p}}\) also appears in the fast gradient algorithm introduced in [17] for L-smooth convex functions which are \((1, \mu _s)\)-quasar-convex; see Definition 4. By Theorem 9, \((1, \mu _s)\)-quasar-convexity implies the PŁ inequality with the same constant. Algorithm 2 describes the method in question.

One can verify that Algorithm 2, at the first iteration, generates \(x^2=x^1-\tfrac{2}{L+\sqrt{L\mu _p}}\nabla f(x^1)\).

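A more general form of the PŁ inequality, called the Łojasiewicz inequality, may be written as

$$\left( f(x)-f^\star \right) ^{2\theta }\le \tfrac{1}{2\mu _p}\Vert \nabla f(x)\Vert ^2, \quad x\in X, \qquad (14)$$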

where \(\theta \in (0, 1)\). It is known that when \(\theta \in (0, \tfrac{1}{2}]\), some algorithms, including Algorithm 1, are linearly convergent; see [3, 4]. In the next theorem, we show that for functions with finite maximum and minimum curvature the Łojasiewicz inequality cannot hold with \(\theta \in (0, \tfrac{1}{2})\).
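
A one-line example shows why exponents \(\theta <\tfrac{1}{2}\) are excluded. Take the illustrative function \(f(x)=\tfrac{1}{2}x^2\) on \({\mathbb {R}}\), with \(f^\star =0\) and \(\nabla f(x)=x\), and assume the Łojasiewicz inequality has the form \(\left( f(x)-f^\star \right) ^{2\theta }\le \tfrac{1}{2\mu _p}\Vert \nabla f(x)\Vert ^2\). Then

$$\left( \tfrac{1}{2}x^2\right) ^{2\theta }\le \tfrac{1}{2\mu _p}x^2 \iff \mu _p\le 2^{2\theta -1}|x|^{2-4\theta }, \qquad x\ne 0.$$

For \(\theta <\tfrac{1}{2}\) the right-hand side tends to 0 as \(x\rightarrow 0\), so no \(\mu _p>0\) works on any neighbourhood of the minimizer. For \(\theta =\tfrac{1}{2}\) the condition reduces to \(\mu _p\le 1\).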

Theorem 4

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\) be a non-constant function. If f satisfies the Łojasiewicz inequality on \(X=\{x: f(x)\le f(x^1)\}\), then \(\theta \ge \tfrac{1}{2}\).

Proof

Suppose, to the contrary, that \(\theta \in (0, \tfrac{1}{2})\). Without loss of generality, we may assume that \(\mu =-L\). It is known that Algorithm 1 generates a decreasing sequence \(\{f(x^k)\}\) with \(\Vert \nabla f(x^k)\Vert \rightarrow 0\); see [19, page 28]. Furthermore, (14) implies that \(f(x^k)\rightarrow f^\star \). Without loss of generality, we may assume that \(f^\star =0\). First, we investigate the case \(f(x^1)=1\). The semidefinite programming problem corresponding to performance estimation in this case may be formulated as follows,

Since Algorithm 1 is a monotone method, \(f^2\) takes values in [0, 1]. In addition, we have \(f^2\le (f^2)^{2\theta }\) on this interval. Hence, by using Theorem 3 with \(t_1=\tfrac{1}{L}\), we get the following bound,

$$f(x^2)\le \frac{2L-2\mu _p}{2L+\mu _p}.$$

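Now, suppose that \(f(x^1)=f^1>0\). Consider the function \(h: {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) given by \(h(x)=\tfrac{f(x)}{f^1}\). It is seen that h is \(\tfrac{L}{f^1}\)-smooth and

$$h(x)^{2\theta }\le \frac{(f^1)^{2-2\theta }}{2\mu _p}\Vert \nabla h(x)\Vert ^2, \quad x\in X,$$

that is, h satisfies the Łojasiewicz inequality with constant \(\mu _p (f^1)^{2\theta -2}\), and \(h(x^1)=1\).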

As Algorithm 1 generates the same \(x^2\) for both functions, by using the first part, we obtain

$$\frac{f(x^2)}{f^1}\le \frac{2L-2\mu _p(f^1)^{2\theta -1}}{2L+\mu _p(f^1)^{2\theta -1}}.$$

For \(f^1\) sufficiently small, we have \(\frac{2L-2\mu _p(f^1)^{2\theta -1}}{2L+\mu _p(f^1)^{2\theta -1}}< 0\), which contradicts \(f(x^2)\ge f^\star =0\), and the proof is complete. \(\square \)

Necoara et al. gave necessary and sufficient conditions for linear convergence of the gradient method with constant step lengths when f is a smooth convex function; see [17, Theorem 13]. Specifically, the theorem states that Algorithm 1 is linearly convergent if and only if f has a quadratic functional growth on \(\{x: f(x)\le f(x^1)\}\); see Definition 3. However, this equivalence does not necessarily hold for non-convex functions. The next theorem provides necessary and sufficient conditions for linear convergence of Algorithm 1.

Theorem 5

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\). Algorithm 1 is linearly convergent to the optimal value if and only if f satisfies the PŁ inequality on \(\{x: f(x)\le f(x^1)\}\).

Proof

Let \(\bar{x}\in \{x: f(x)\le f(x^1)\}\). Linear convergence implies the existence of \(\gamma \in [0, 1)\) with

$$f(\hat{x})-f^\star \le \gamma \left( f(\bar{x})-f^\star \right) , \qquad (16)$$

where \(\hat{x}=\bar{x}-\tfrac{1}{L}\nabla f(\bar{x})\). By (3), we have \(f(\bar{x})- f(\hat{x})\le \tfrac{2L-\mu }{2L^2}\Vert \nabla f(\bar{x})\Vert ^2\). Adding this inequality to (16), we get

$$f(\bar{x})-f^\star \le \frac{2L-\mu }{2L^2(1-\gamma )}\Vert \nabla f(\bar{x})\Vert ^2,$$

which shows that f satisfies the PŁ inequality on \(\{x: f(x)\le f(x^1)\}\) with \(\mu _p=\tfrac{L^2(1-\gamma )}{2L-\mu }\). The other implication follows from Theorem 3. \(\square \)

3 The PŁ inequality: relation to some classes of functions

In this section, we study some classes of functions for which Algorithm 1 may be linearly convergent. We establish that these classes of functions satisfy the PŁ inequality under mild assumptions, and we infer the linear convergence by using Theorem 3. Moreover, one can get convergence rates by applying performance estimation.

Throughout the section, we denote the optimal solution set of problem (1) by \(X^\star \) and we assume that \(X^\star \) is non-empty. We denote the distance function to \(X^\star \) by \(d_{X^\star }(x):=\inf _{y\in X^\star } \Vert y-x\Vert \). The set-valued mapping \(\Pi _{X^\star } (x)\) stands for the projection of x onto \(X^\star \), that is, \(\Pi _{X^\star } (x)=\{y\in X^\star : \Vert y-x\Vert =d_{X^\star }(x)\}\). Note that, since \(X^\star \) is a non-empty closed set, \(\Pi _{X^\star }(x)\) is non-empty for every x.

Definition 2

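Let \(\mu _g> 0\). A function f has a quadratic gradient growth on \(X\subseteq {\mathbb {R}}^n\) if

$$\langle \nabla f(x), x-x^\star \rangle \ge \mu _g\Vert x-x^\star \Vert ^2, \quad \forall x\in X, \qquad (17)$$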

for some \(x^\star \in \Pi _{X^\star }(x)\).

Note that inequality (2) implies that \(\mu _g\le L\). Hu et al. [15] investigated the convergence rate of \(\{x^k\}\) when f satisfies (17) and \(X^\star \) is a singleton. To the best of the authors' knowledge, there is no convergence rate result in terms of \(\{f(x^k)\}\) for functions with a quadratic gradient growth. The next proposition states that the quadratic gradient growth property implies the PŁ inequality.

Proposition 2

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\). If f has a quadratic gradient growth on \(X\subseteq {\mathbb {R}}^n\) with \(\mu _g> 0\), then f satisfies the PŁ inequality with \( \mu _p=\tfrac{\mu _g^2}{L}\).

Proof

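Suppose that \(x^\star \in \Pi _{X^\star }(x)\) satisfies (17). By the Cauchy-Schwarz inequality, we have

$$\mu _g\Vert x-x^\star \Vert ^2\le \langle \nabla f(x), x-x^\star \rangle \le \Vert \nabla f(x)\Vert \Vert x-x^\star \Vert , \quad \text {so} \quad \mu _g\Vert x-x^\star \Vert \le \Vert \nabla f(x)\Vert . \qquad (18)$$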

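On the other hand, since \(\nabla f(x^\star )=0\), (2) implies that

$$f(x)-f^\star \le \tfrac{L}{2}\Vert x-x^\star \Vert ^2. \qquad (19)$$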

The PŁ inequality follows from (18) and (19). \(\square \)
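
Proposition 2 can be sanity-checked numerically. The sketch below uses the illustrative function \(f(x)=x^2+3\sin ^2(x)\), our own choice, with unique minimizer \(x^\star =0\), \(f^\star =0\) and maximum curvature \(L=8\). It estimates on a grid the best constants \(\mu _g\) in (17) and \(\mu _p\) in the PŁ inequality, and then compares \(\mu _p\) with \(\tfrac{\mu _g^2}{L}\). Grid minima only approximate the true infima, so this is an illustration rather than a proof.

```python
import math

# Illustrative function (our choice): f(x) = x^2 + 3 sin^2(x),
# unique minimiser x* = 0 with f* = 0, and maximum curvature L = 8.
L = 8.0


def f(x: float) -> float:
    return x * x + 3.0 * math.sin(x) ** 2


def df(x: float) -> float:
    return 2.0 * x + 3.0 * math.sin(2.0 * x)


grid = [-10.0 + 20.0 * k / 200_000 for k in range(200_001)]
grid = [x for x in grid if abs(x) > 1e-6]          # exclude the minimiser x* = 0

# (17): <f'(x), x - x*> >= mu_g |x - x*|^2, so mu_g = inf f'(x) x / x^2.
mu_g = min(df(x) * x / (x * x) for x in grid)
# PL inequality: f(x) - f* <= |f'(x)|^2 / (2 mu_p), so mu_p = inf |f'(x)|^2 / (2 f(x)).
mu_p = min(df(x) ** 2 / (2.0 * f(x)) for x in grid)

print(f"mu_g ~ {mu_g:.4f}, mu_g^2 / L ~ {mu_g ** 2 / L:.4f}, mu_p ~ {mu_p:.4f}")
```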

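By Proposition 2 and Theorem 3, one can infer the linear convergence of Algorithm 1 when f has a quadratic gradient growth on \(X=\{x: f(x)\le f(x^1)\}\). Indeed, if \(t_1=\tfrac{1}{L}\) and \(\mu =-L\), substituting \(\mu _p=\tfrac{\mu _g^2}{L}\) into the particular case of Theorem 3 gives the bound

$$\frac{f(x^2)-f^\star }{f(x^1)-f^\star }\le \frac{2L^2-2\mu _g^2}{2L^2+\mu _g^2}. \qquad (20)$$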

Nevertheless, by using the performance estimation method, one can derive a better bound than the bound given by (20). The performance estimation problem for \(t_1=\tfrac{1}{L}\) in this case may be formulated as

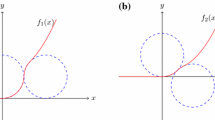

Analogously to Sect. 2, one can obtain an upper bound for problem (21) by solving a semidefinite program. Our numerical results show that the bounds generated by performance estimation are tighter than bound (20); see Fig. 1. We do not, however, have a closed-form expression for the optimal value of (21).

Fig. 1 Convergence rate computed by performance estimation (red line) and the bound given by (20) (blue line) for \(\tfrac{\mu _g}{L}\in (0,1)\).

Definition 3

[17, Definition 4], [19, Definition 4.1.2] Let \(\mu _q> 0\). A function f has a quadratic functional growth on \(X\subseteq {\mathbb {R}}^n\) if

$$f(x)-f^\star \ge \tfrac{\mu _q}{2}d_{X^\star }^2(x), \quad \forall x\in X. \qquad (22)$$

It is readily seen that, unlike the previous conditions, the quadratic functional growth property does not necessarily imply that each stationary point is a global optimal solution. The next theorem investigates the relationship between the quadratic functional growth property and the other notions.

Theorem 6

Let \(f\in {\mathcal {F}}_{\mu , L}({\mathbb {R}}^n)\) and let \(X=\{x: f(x)\le f(x^1)\}\). We have the following implications:

i)

ii) If \(\mu _q>\tfrac{-\mu L}{L-\mu }\), then (22) \(\Rightarrow \) (17) with \(\mu _g=\tfrac{\mu _q}{2} (1-\tfrac{\mu }{L})+\tfrac{\mu }{2}\).

iii) If

$$\begin{aligned} f(x)-f(x^\star )\le \langle \nabla f(x), x-x^\star \rangle , \ \forall x\in X, \end{aligned}$$

for some \(x^\star \in \Pi _{X^\star } (x)\), then (22) \(\Rightarrow \) (17) with \(\mu _g=\tfrac{\mu _q}{2}\).

Proof

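One can establish i) similarly to the proof of [16, Theorem 2]. Consider part ii). Let \(x\in X\) and \(x^\star \in \Pi _{X^\star } (x)\), so that \(d_{X^\star } (x)=\Vert x-x^\star \Vert \). Applying (9) with \((x^i; g^i; f^i)=(x^\star ; 0; f(x^\star ))\) and \((x^j; g^j; f^j)=(x; \nabla f(x); f(x))\), we have

$$f(x^\star )\ge f(x)+\langle \nabla f(x), x^\star -x\rangle +\frac{L}{2(L-\mu )}\left( \frac{1}{L}\Vert \nabla f(x)\Vert ^2+\mu \Vert x-x^\star \Vert ^2-\frac{2\mu }{L}\langle \nabla f(x), x-x^\star \rangle \right) .$$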

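As \(\tfrac{\mu _q}{2}\Vert x-x^\star \Vert ^2 \le f(x)-f(x^\star )\), dropping the non-negative term \(\tfrac{1}{2(L-\mu )}\Vert \nabla f(x)\Vert ^2\) and multiplying by \(\tfrac{L-\mu }{L}\), we get

$$\langle \nabla f(x), x-x^\star \rangle \ge \left( \frac{\mu _q}{2}\left( 1-\frac{\mu }{L}\right) +\frac{\mu }{2}\right) \Vert x-x^\star \Vert ^2,$$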

which establishes the desired inequality. Part iii) is proved similarly to the former case. \(\square \)

By Theorem 6, Proposition 2 and Theorem 3, Algorithm 1 enjoys a linear convergence rate if f has a quadratic functional growth on \(X=\{x: f(x)\le f(x^1)\}\) and f satisfies the assumptions of ii) or iii) in Theorem 6. For instance, if \(\mu =-L\) and \(\mu _q\in (\tfrac{L}{2}, L)\), one can derive the following convergence rate for Algorithm 1 with fixed step lengths \(t_k=\tfrac{1}{L}\), \(k\in \{1, \ldots , N\}\),

It is interesting to compare the convergence rate (23) to the convergence rate obtained by using the performance estimation framework. In this case, the performance estimation problem may be cast as follows,

Since \(x^{k+1}=x^k-\tfrac{1}{L} g^k\), we get \(x^{k+1}=x^1-\tfrac{1}{L} \sum _{l=1}^k g^l\). Hence, problem (24) may be reformulated as follows,

The next theorem provides an upper bound for problem (25) by using weak duality.

Theorem 7

Let \(f\in {\mathcal {F}}_{-L, L}({\mathbb {R}}^n)\) and let f have a quadratic functional growth on \(X=\{x: f(x)\le f(x^1)\}\) with \(\mu _q\in (\tfrac{L}{2}, L)\). If \(t_k=\tfrac{1}{L}\), \(k\in \{1, ..., N\}\), then we have the following convergence rate for Algorithm 1,

Proof

The proof is analogous to that of Theorem 3. Without loss of generality, we may assume that \(f^\star =0\). By some algebra, one can show that

By using the above inequality, we get

for any feasible point of (25), and the proof is complete. \(\square \)

A direct calculation verifies the following inequality

Hence, Theorem 7 provides a tighter bound than (23).

Definition 4

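[14, Definition 1] Let \(\gamma \in (0, 1]\) and \(\mu _s\ge 0\). A function f is called \((\gamma , \mu _s)\)-quasar-convex on \(X\subseteq {\mathbb {R}}^n\) with respect to \(x^\star \in \text {argmin}_{x\in {\mathbb {R}}^n} f(x)\) if

$$f(x^\star )\ge f(x)+\tfrac{1}{\gamma }\langle \nabla f(x), x^\star -x\rangle +\tfrac{\mu _s}{2}\Vert x^\star -x\Vert ^2, \quad \forall x\in X. \qquad (27)$$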

The class of quasar-convex functions is large. For instance, non-negative differentiable homogeneous functions of degree \(k\ge 1\) are (1, 0)-quasar-convex on \({\mathbb {R}}^n\). (Recall that a function \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is called homogeneous of degree k if \(f(\alpha x)=\alpha ^k f(x)\) for all \(x\in {\mathbb {R}}^n, \alpha \in {\mathbb {R}}\).) Indeed, if f is non-negative and homogeneous of degree \(k\ge 1\), then \(f(0)=0\) and, by the Euler identity \(\langle \nabla f(x), x\rangle =kf(x)\), we have

$$f(x^\star )=0\ge (1-k)f(x)=f(x)+\langle \nabla f(x), x^\star -x\rangle , \quad x\in {\mathbb {R}}^n,$$

where \(x^\star =0\). In what follows, we list some convergence results concerning quasar-convex functions for Algorithm 1.
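
The Euler-identity argument can be checked numerically with a homogeneous polynomial of our own choosing, \(f(x)=x_1^4+x_1^2x_2^2+x_2^4\) (non-negative, degree \(k=4\), minimizer \(x^\star =0\)). Assuming (27) has the form \(f(x^\star )\ge f(x)+\tfrac{1}{\gamma }\langle \nabla f(x), x^\star -x\rangle +\tfrac{\mu _s}{2}\Vert x^\star -x\Vert ^2\) of [14, Definition 1], (1, 0)-quasar-convexity at \(x^\star =0\) reduces to \(f(x)-\langle \nabla f(x), x\rangle \le f(0)\). The Python sketch samples random points and reports the largest Euler-identity error and the largest quasar-convexity residual.

```python
import math
import random

# Illustrative example (our choice): f(x) = x1^4 + x1^2 x2^2 + x2^4 is
# non-negative and homogeneous of degree K = 4, with minimiser x* = 0.
K = 4


def f(x1: float, x2: float) -> float:
    return x1 ** 4 + x1 ** 2 * x2 ** 2 + x2 ** 4


def grad(x1: float, x2: float) -> tuple:
    return (4 * x1 ** 3 + 2 * x1 * x2 ** 2, 2 * x1 ** 2 * x2 + 4 * x2 ** 3)


def check(n: int = 1000, seed: int = 0) -> tuple:
    """Return (max relative Euler-identity error, max quasar-convexity residual)."""
    rng = random.Random(seed)
    euler_err, quasar_res = 0.0, -math.inf
    for _ in range(n):
        x1, x2 = rng.uniform(-3, 3), rng.uniform(-3, 3)
        g1, g2 = grad(x1, x2)
        inner = g1 * x1 + g2 * x2                  # <grad f(x), x>
        fx = f(x1, x2)
        euler_err = max(euler_err, abs(inner - K * fx) / max(1.0, fx))
        # (1, 0)-quasar-convexity at x* = 0 requires f(x) + <grad f(x), 0 - x> <= f(0).
        quasar_res = max(quasar_res, fx - inner - f(0.0, 0.0))
    return euler_err, quasar_res


print(check())
```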

Theorem 8

[5, Remark 4.3] Let f be L-smooth and let f be \((\gamma , \mu _s)\)-quasar-convex on \(X=\{x: f(x)\le f(x^1)\}\). If \(t_1=\tfrac{1}{L}\) and \(x^2\) is generated by Algorithm 1, then

In the following theorem, we state the relationship between quasar-convexity and the other concepts. Before we get to the theorem, we recall star convexity. A set X is called star convex at \(x^\star \) if

$$\lambda x+(1-\lambda )x^\star \in X, \quad \forall x\in X, \ \lambda \in [0, 1].$$

Theorem 9

Let \(x^\star \) be the unique solution of problem (1) and let \(X=\{x: f(x)\le f(x^1)\}\). If X is star convex at \(x^\star \), then we have the following implications:

i) (27) \(\Rightarrow \) (17) with \(\mu _g=\tfrac{\mu _s\gamma }{2}+\tfrac{\mu _s\gamma ^2}{4}\).

ii) (17) \(\Rightarrow \) (27) with \(\mu _s=\ell -\tfrac{L}{2}\) and \(\gamma =\tfrac{\mu _g}{\ell }\) for each \(\ell \in (\max (\tfrac{L}{2}, \mu _g), \infty )\).

iii)

Proof

The proof of i) is similar in spirit to the proof of Theorem 1 in [17]. Let \(x\in X\). By the fundamental theorem of calculus and (27), we have

where the last inequality follows from the global optimality of \(x^\star \). Combining \(f(x)-f(x^\star )\ge \tfrac{\gamma \mu _s}{4}\Vert x-x^\star \Vert ^2\) with (27), we get the desired inequality. Now, we prove part ii). Let \(x\in X\) and \(\ell \in (\max (\tfrac{L}{2}, \mu _g), \infty )\). By (2), we have

$$f(x)-f(x^\star )\le \tfrac{L}{2}\Vert x-x^\star \Vert ^2. \qquad (29)$$

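By using (29) and (17), we get

$$f(x)+\tfrac{\ell }{\mu _g}\langle \nabla f(x), x^\star -x\rangle +\tfrac{1}{2}\left( \ell -\tfrac{L}{2}\right) \Vert x-x^\star \Vert ^2\le f(x^\star )-\tfrac{1}{2}\left( \ell -\tfrac{L}{2}\right) \Vert x-x^\star \Vert ^2\le f(x^\star ),$$

which is (27) with \(\mu _s=\ell -\tfrac{L}{2}\) and \(\gamma =\tfrac{\mu _g}{\ell }\).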

For the proof of iii), we refer the reader to [5, Lemma 3.2]. \(\square \)

By combining Theorem 3 and Theorem 9, under the assumptions of Theorem 8, one can get the following convergence rate for Algorithm 1 with \(t_1=\tfrac{1}{L}\),

which is tighter than the bound given in Theorem 8.

4 Concluding remarks

In this paper we studied the convergence rate of the gradient method with fixed step lengths for smooth functions satisfying the PŁ inequality. We gave a new convergence rate, which is sharper than known bounds in the literature. One important question which remains to be addressed is the computation of the tightest bound for Algorithm 1. Moreover, the performance analysis of fast gradient methods, like Algorithm 2, for these classes of functions may also be of interest.

We only studied linear convergence in terms of objective values. However, one can also infer linear convergence in terms of the distance to the solution set or the norm of the gradient by using our results. For instance, under the assumptions of Theorem 3, we have

where the first inequality follows from Theorem 6, \(\gamma \) is the linear convergence rate given in Theorem 3, and the last inequality follows from (2). Hence,

Moreover, quadratic gradient growth is a necessary and sufficient condition for linear convergence in terms of the distance to the solution set; see [24, Theorem 3.4]. Note that the PŁ inequality and the quadratic gradient growth are equivalent.

References

Abbaszadehpeivasti, H., de Klerk, E., Zamani, M.: The exact worst-case convergence rate of the gradient method with fixed step lengths for L-smooth functions. Opt. Lett., pp. 1–13 (2021)

Abbaszadehpeivasti, H., de Klerk, E., Zamani, M.: On the rate of convergence of the difference-of-convex algorithm (DCA). arXiv preprint arXiv:2109.13566 (2021)

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Math. Program. 116(1), 5–16 (2009)

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka-Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010)

Bu, J., Mesbahi, M.: A note on Nesterov’s accelerated method in nonconvex optimization: a weak estimate sequence approach. arXiv preprint arXiv:2006.08548 (2020)

Carmon, Y., Duchi, J.C., Hinder, O., Sidford, A.: Lower bounds for finding stationary points I. Math. Program. 184, 1–50 (2019)

Danilova, M., Dvurechensky, P., Gasnikov, A., Gorbunov, E., Guminov, S., Kamzolov, D., Shibaev, I.: Recent theoretical advances in non-convex optimization. arXiv preprint arXiv:2012.06188 (2020)

Davis, D., Drusvyatskiy, D.: Stochastic model-based minimization of weakly convex functions. SIAM J. Opt. 29(1), 207–239 (2019)

De Klerk, E., Glineur, F., Taylor, A.B.: On the worst-case complexity of the gradient method with exact line search for smooth strongly convex functions. Opt. Lett. 11(7), 1185–1199 (2017)

De Klerk, E., Glineur, F., Taylor, A.B.: Worst-case convergence analysis of inexact gradient and Newton methods through semidefinite programming performance estimation. SIAM J. Opt. 30(3), 2053–2082 (2020)

Drori, Y.: Contributions to the complexity analysis of optimization algorithms. Ph.D. thesis, Tel-Aviv University (2014)

Drori, Y., Teboulle, M.: Performance of first-order methods for smooth convex minimization: a novel approach. Math. Program. 145(1), 451–482 (2014)

Gupta, S.D., Van Parys, B.P., Ryu, E.K.: Branch-and-bound performance estimation programming: a unified methodology for constructing optimal optimization methods. arXiv preprint arXiv:2203.07305 (2022)

Hinder, O., Sidford, A., Sohoni, N.: Near-optimal methods for minimizing star-convex functions and beyond. In: Conference on Learning Theory, pp. 1894–1938. PMLR (2020)

Hu, B., Seiler, P., Lessard, L.: Analysis of biased stochastic gradient descent using sequential semidefinite programs. Math. Program. 187(1), 383–408 (2021)

Karimi, H., Nutini, J., Schmidt, M.: Linear convergence of gradient and proximal-gradient methods under the Polyak-Łojasiewicz condition. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 795–811. Springer (2016)

Necoara, I., Nesterov, Y., Glineur, F.: Linear convergence of first order methods for non-strongly convex optimization. Math. Program. 175(1), 69–107 (2019)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140(1), 125–161 (2013)

Nesterov, Y.: Lectures on Convex Optimization, vol. 137. Springer (2018)

Polyak, B.T.: Gradient methods for the minimisation of functionals. USSR Computational Mathematics and Mathematical Physics 3(4), 864–878 (1963)

Rotaru, T., Glineur, F., Patrinos, P.: Tight convergence rates of the gradient method on hypoconvex functions. arXiv preprint arXiv:2203.00775 (2022)

Taylor, A.B.: Convex interpolation and performance estimation of first-order methods for convex optimization. Ph.D. thesis, Catholic University of Louvain, Louvain-la-Neuve, Belgium (2017)

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Smooth strongly convex interpolation and exact worst-case performance of first-order methods. Math. Program. 161(1), 307–345 (2017)

Zamani, M., Abbaszadehpeivasti, H., de Klerk, E.: Convergence rate analysis of the gradient descent-ascent method for convex-concave saddle-point problems. arXiv preprint arXiv:2209.01272 (2022)

Acknowledgment

This work was supported by the Dutch Scientific Council (NWO) Grant OCENW.GROOT.2019.015, Optimization for and with Machine Learning (OPTIMAL).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abbaszadehpeivasti, H., de Klerk, E. & Zamani, M. Conditions for linear convergence of the gradient method for non-convex optimization. Optim Lett 17, 1105–1125 (2023). https://doi.org/10.1007/s11590-023-01981-2

DOI: https://doi.org/10.1007/s11590-023-01981-2