Abstract

The evergrowing Internet of Things (IoT) ecosystem continues to impose new requirements and constraints on every device. At the edge, low-end devices are getting pressured by increasing workloads and stricter timing deadlines while simultaneously are desired to minimize their power consumption, form factor, and memory footprint. Field-Programmable Gate Arrays (FPGAs) emerge as a possible solution for the increasing demands of the IoT. Reconfigurable IoT platforms enable the offloading of software tasks to hardware, enhancing their performance and determinism. This paper presents ChamelIoT, an agnostic hardware operating systems (OSes) framework for reconfigurable IoT devices. The framework provides hardware acceleration for kernel services of different IoT OSes by leveraging the RISC-V open-source instruction set architecture (ISA). The ChamelIoT hardware accelerator can be deployed in a tightly- or loosely-coupled approach and implements the following kernel services: thread management, scheduling, synchronization mechanisms, and inter-process communication (IPC). ChamelIoT allows developers to run unmodified applications of three well-established OSes, RIOT, Zephyr, and FreeRTOS. The experiments conducted on both coupling approaches consisted of microbenchmarks to measure the API latency, the Thread Metric benchmark suite to evaluated the system performance, and tests to the FPGA resource consumption. The results show that the latency can be reduced up to 92.65% and 89.14% for the tightly- and loosely-coupled approaches, respectively, the jitter removed, and the execution performance increased by 199.49% and 184.85% for both approaches.

Similar content being viewed by others

1 Introduction

The Internet of Things (IoT) has remarkably evolved during the past years, resulting in the proliferation of smart devices (things) through a wide variety of sectors, e.g., healthcare (Pinto et al. 2017), automotive (Cunha et al. 2022), industrial (Sanchez-Iborra and Cano 2016), and domotics (Alexandrescu et al. 2022), among others (Oliveira et al. 2020). With the growing trend of having more nodes connected to the Internet, there has been a substantial effort towards shifting heavy computational workloads from centralized facilities to decentralized networks of devices at the edge, i.e., edge computing (Perera et al. 2014; Wei et al. 2018).

Edge devices are, by nature, resource-constrained, especially when compared to their counterparts, the servers on the cloud. However, edge computing requires these devices to have better performance and real-time capabilities to gather and process data and execute their functions without needing intervention from cloud services. Despite the increasing demand for performance, these devices are still limited by their small form factor and low-power consumption requirements, pushing the limits of what is achievable with the system’s current hardware (processor, memory, peripherals, among others) (Cao et al. 2020).

Aiming to improve and expand the capabilities of the current IoT edge devices, reconfigurable platforms are gaining traction in the IoT industry. These platforms, namely Field Programmable Gate Arrays (FPGAs), enable the development of custom solutions by offloading intensive software tasks to hardware accelerators (Pena et al. 2017). System configurations based on FPGAs often include a microcontroller unit (MCU) and reconfigurable fabric where the hardware accelerators are deployed. The MCU can be a hard core, i.e., implemented in silicon, or a soft core, deployed in the FPGA fabric, and it is responsible for managing the hardware accelerators. The most common targets for hardware acceleration are tasks requiring great amounts of processing power, e.g., mathematical or artificial intelligence (AI) algorithms (Boutros et al. 2020), or tasks that execute very frequently during the standard system’s workflow, such as kernel services (Gomes et al. 2016; Maruyama et al. 2014). Furthermore, hardware accelerators have been used in the IoT context to optimize power consumption by applying techniques like Dynamic Voltage and Frequency Scaling (DVS) (Chéour et al. 2019; Karray et al. 2018).

Operating Systems (OSes) are widely present across embedded and IoT systems. They provide a plethora of advantages to the development of any application, for instance, managing complexity by abstracting the low-level details from the developer and providing a set of libraries that enable easy implementation of features like network communication or device drivers. Given the ubiquity of OSes in the IoT and considering that kernel services are executed countless times throughout the execution of any application, they are a prime target for hardware acceleration (Gomes et al. 2016). Nevertheless, this approach has been disregarded by the IoT industry since prior hardware accelerators were highly tailored to a specific application or OS and did not provide an easy-to-use software application programming interface (API). An alternative solution is the implementation of a hardware accelerator capable of improving the performance of multiple OSes with only minimal modifications to their kernels and providing agnosticism to the developer by allowing applications to be executed without any changes to the code. This approach would minimize the knowledge required to build and deploy an entire system stack with hardware acceleration (Ong et al. 2013).

Another consideration regarding hardware acceleration is the processing system that manages and controls the accelerator (Maruyama et al. 2014). Depending on the target platform, this system can either be a hardcore MCU, implemented in silicon, parallel to the FPGA, or a softcore instantiated in the same reconfigurable logic as the hardware accelerator. A hardcore MCU imposes any accelerator to be connected through the resources available, which often are memory interfaces, resulting in loosely-coupled accelerators (Maruyama et al. 2010). On the other hand, a softcore MCU allows the deployment of loosely-coupled accelerators and enables the inclusion of tightly-coupled accelerators integrated directly into the MCU datapath. Additionally, if the architecture’s instruction set architecture (ISA) is proprietary, the deploying method, soft or hardcore, is irrelevant. Any modification to the MCU is unfeasible due to the intellectual property being close-source, and for the same reason, scaling the accelerator to be deployed in silicon also becomes impossible.

Open-source ISAs, like RISC-V, have been rising in popularity to mitigate this effect. RISC-V is a novel open-source ISA that follows a reduced instruction set computer (RISC) design (Asanovic et al. 2014; Waterman 2016). It was designed to support a broad range of devices, spanning from high-performance application processors to low-power embedded microcontrollers. RISC-V enables a new level of software and hardware freedom by allowing easy integration of dedicated and custom-tailored accelerators with the application software. Among the multiple available implementations of the RISC-V ISA, some already take into consideration the possibility of adding accelerators as coprocessors coupled to the datapath by defining a subset of instructions for these coprocessors. With these instructions and the standard memory interfaces, implementations like the Rocket core (Asanović et al. 2016) allow for easy deployment of tightly- and loosely-coupled hardware accelerators. Consequently, RISC-V cores provide an extra degree of flexibility and the possibility of exploring the trade-offs from the two coupling approaches regarding performance, determinism, real-time, system integration, and portability (Davide Schiavone et al. 2017; Fritzmann et al. 2020; SEMICO Research Corporation 2019).

This paper presents a solution that consolidates hardware acceleration of IoT OSes in an easy-to-use agnostic framework from reconfigurable IoT devices, named ChamelIoT. Our framework leverages the Rocket core implementation of the RISC-V ISA to provide a highly configurable hardware accelerator for IoT OS kernel services, requiring minimal modifications to the OS software kernels and no modifications to application-level code. This way, there are no barriers to using ChamelIoT from the software development point of view, allowing legacy applications to take advantage of the benefits of hardware acceleration. The main contributions of this article are summarized as follows:

-

1.

An agnostic framework that currently supports three different IoT OSes, RIOT, FreeRTOS, and Zephyr, requiring few changes to the kernel while keeping the end-user interface unmodified;

-

2.

A highly configurable hardware accelerator with two different coupling approaches, tightly and loosely, integrated with an open-source implementation of the RISC-V ISA, Rocket core;

-

3.

An easily portable abstraction layer for several kernel services that enable the use of hardware acceleration to any IoT OS;

-

4.

Complete evaluation of the three aforementioned IoT OSes using the ChamelIoT framework, including microbenchmark experiments, system-wide benchmarks, and FPGA resource consumption measurements.

2 Background and related work

At the edge of the IoT ecosystem, low-end devices are becoming more strained with the increasing demands from the growing popularity of edge computing (Wei et al. 2018). Considering the nature of these devices, with limited form factor and energy consumption, frequently it is impossible to add hardware to compensate for the lack of performance. Hence, reconfigurable platforms emerge as possible solutions to IoT low-end devices (Pena et al. 2017). These platforms incorporate FPGA fabric, allowing for offloading software tasks to hardware, where they can be sped up, vastly increasing their performance and determinism. Hardware acceleration is a widely known endeavor that has proven to provide significant advantages since the early 1990s (Baum and Winget 1990; Brebner 1996). Recently, this technique has also reached the IoT across different ecosystems: security (Gomes et al. 2022; Johnson et al. 2015), AI (Qiu et al. 2016; Zhang et al. 2017), wireless connectivity (Engel and Koch 2016; Gomes et al. 2017), and image processing (DivyaKrishna et al. 2016), among others (Najafi et al. 2017; Zhao et al. 2019).

Nevertheless, reconfigurable platforms and hardware acceleration have failed to gain traction in the IoT industry until recent years since FPGA-based platforms were costly regarding price, form factor, and energy consumption. However, the latest efforts in reconfigurable fabric technology development are attempting to solve the size, weight, power, and cost (SWaP-C) constraints imposed on IoT systems. For example, Embedded FPGAs such as those provided by QuickLogic or low-power FPGAs from the Lattice portfolio are seeing increasing applicability in low-end IoT devices. These platforms are highly tailored towards low-end applications, with optimized reconfigurable slices, fewer resources available, and power-efficient organization. Further along, we expect this technology to evolve to accompany the growing need for low-end FPGAs (Allied Market Research 2023).

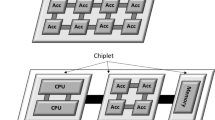

Traditionally, hardware accelerators were developed in FPGAs that physically were separated from the processing system, which often is an MCU where the main software application is executed. The communication methods between the two elements were often limited to the facilities available in the processing system, for instance, specialized I/O connections or memory buses. When the accelerator is connected through a standard memory interface, like AXI (ARM 2013) or TileLink (SiFive 2018), the accelerator is a memory-mapped device accessible by the software through conventional memory instructions, e.g., loads and stores. This method is referred to as a loosely-coupled approach and is depicted in Fig. 1. One of the main advantages of this approach is the easy portability across platforms since the accelerator’s core can remain untouched in the porting process. Only the connection interface needs modifications to be compatible with the MCU. However, resorting to memory buses to communicate with the accelerator can lead to bus contention. Both the processing system and the hardware accelerator try to access the memory bus, consequently inducing stalls in the pipeline, which translates into worse throughput and less determinism for the whole system (Maruyama et al. 2014).

Alternatively, hardware accelerators can be implemented following a tightly-coupled approach, as illustrated in Fig. 2. This approach requires the accelerator to be integrated with the MCU datapath, forcing modifications on the pipeline, and consequently, the MCU has to be provided as a softcore. A tightly-coupled accelerator requires the MCU to include additional specialized instructions dedicated only to communicating with the accelerator. Most compilers do not accommodate these instructions by default. Hence an extra layer of abstraction is required for the software to use the instructions. On the other hand, this approach can vastly improve the system performance and determinism since the usage of external buses introduces no delays.

The two aforementioned approaches present portability, scalability, performance, real-time, and determinism trade-offs. To explore these trade-offs, it is necessary to have a reconfigurable platform that can be modified as needed. Open-source ISAs like RISC-V offer great potential since they consolidate, in a single place, the possibility of having both tightly- and loosely-coupled accelerators.

RISC-V is a highly modular and customizable open-source ISA initially developed by the University of California, Berkeley, and currently administered by the RISC-V International (Asanovic et al. 2014; Waterman 2016). It supports multiple word sizes (32 and 64-bit) and well-defined, documented, and maintained ISA extensions. These extensions can be used to tailor the ISA implementations to fit the system’s requirements and constraints, leading to a wide variety of readily available RISC-V softcores, like Rocket, BOOM, CVA6, and Ariane, among others. Different implementations differ in terms of the implemented microarchitecture (e.g., pipeline stages, caches, etc.) to the high-level SoC elements (e.g., peripherals, buses).

Among these implementations, Rocket was chosen for the current stage of ChamelIoT since it provides, by default, mechanisms that enable the design and deployment of hardware accelerators, both tightly- and loosely-coupled (Sá et al. 2022). For the former approach, Rocket provides the Rocket Custom Coprocessor (RoCC) interface (Asanović et al. 2016; Pala 2017), which is integrated with the MCU datapath and accounts for up to four separate accelerators with their respective instruction opcodes. The RoCC interface also provides memory access to the accelerator without needing the MCU or software intervention. Regarding the loosely-coupled approach, Rocket allows for the inclusion of accelerators or any form of peripheral as memory-mapped devices accessible from the MCU through load and store instructions. Additionally, with the available Rocket libraries, any accelerator can also include a Direct Memory Access (DMA) port, enabling it to perform memory transactions without requiring external intervention.

Leveraging reconfigurable platforms to implement OS hardware acceleration is not a new endeavor. Moreover, the rising popularity of the IoT made it clear that it is crucial to have high-performing and deterministic OSes (Pena et al. 2017; Silva et al. 2019). There are two distinct types of OSes that use hardware acceleration: Reconfigurable OSes and Hardware-accelerated OSes. Reconfigurable OSes can be described as software OSes enhanced with capabilities to execute and schedule application-level tasks in hardware. In some cases, these OSes can also use partial reconfiguration techniques to change the accelerator in run-time according to the application’s needs. On the other hand, hardware-accelerated OSes take advantage of FPGA platforms to enhance the performance of their kernel services, e.g., scheduler, thread management, or synchronization and inter-process communication (IPC) mechanisms, by migrating them to hardware.

In the following sections, we provide a brief description of several OS representatives of the two aforementioned categories. More hardware-accelerated OSes are studied and analyzed since ChamelIoT is a framework that enables hardware acceleration for kernel services. Additionally, Table 1 summarizes the gap analysis among hardware-accelerated OSes, providing a feature comparison between these OSes and ChamelIoT, highlighted in bold. For each OS, the following characteristics are identified: (1) the target CPU architecture, (2) the coupling approach, either tightly- or loosely-coupled, (3) whether the accelerator targets a Commercial Off-The-Shelf (COTS) OS or is used as a standalone OS, (4) the type of API provided, if it follows a standard like POSIX, if it can be ported to replace APIs from a software OS, or if it has custom-built API, and, lastly, (5) the kernel services accelerated in hardware.

2.1 Reconfigurable operating systems

R3TOS Iturbe et al. (2015) presented R3TOS, which leverages FPGA reconfigurability to provide a reliable and fault-tolerant OS. This reconfigurable OS is composed of a multilayered architecture including a Real-time Scheduler, Network-on-chip Manager, Allocator, Dynamic Router, Placer, Diagnostic Unit, and Inter-Device Coordinator. These layers schedule the hardware tasks, manage resources, and control the access port to reconfigure the FPGA fabric. The abstraction layer provided by R3TOS aims for a “software look and feel” while alleviating the application developer from dealing with occurring faults and managing the FPGA’s lifetime.

ReconOS Introduced by Lübbers and Platzner (2009), ReconOS is a Real-Time Operating System (RTOS) that allows the scheduling of hardware threads among software threads. Each hardware thread is assigned to a reconfigurable slot that encompasses two modules. The first manages the communication with software (OS interface). And the second is responsible for ensuring that hardware threads can correctly access synchronization and communication mechanisms implemented in software (OS synchronization finite state machine). ReconOS provides a POSIX-like API and a set of VHDL libraries for OS communication and memory access. Together with a system-building tool, it is possible to generate a fully integrated hardware-software project.

2.2 Hardware-accelerated operating systems

HThreads Agron et al. (2006) proposed HThreads, a multithreaded RTOS kernel for hybrid FPGA/CPU systems. This work intended to offload the (i) thread manager, (ii) scheduler, (iii) mutex manager, and (iv) interrupt scheduler to hardware. Each module is connected to the CPU through the available peripheral bus, allowing the software to access the hardware modules via load/store instructions. HThreads also allows for user-defined hardware threads that execute as a service available to software threads. Additionally, the API provided is compatible with the POSIX thread standard.

ARPA-MT ARPA-MT (Oliveira et al. 2011) is a MIPS32 implementation that takes advantage of user-defined coprocessors and exception interfaces to implement hardware support to an RTOS. The coprocessor includes a scheduler, task manager, synchronization, and communication mechanisms and provides support for non-real-time, soft, and hard real-time tasks. An object-oriented API enables the interface between software tasks and the hardware coprocessor. ARPA-MT also provides software implementations for services instantiated in hardware.

HartOS HartOS (Lange et al. 2012) is a microkernel-structured RTOS implemented in hardware that targets hard real-time applications. A custom processor, connected to the CPU through a standard peripheral bus, is responsible for interpreting the software requests and controlling the remaining hardware modules to attend to the received requests. This custom processor shares with the remaining hardware blocks an internal memory implemented in the FPGA fabric. This memory also interacts with the timer module, watchdog module, mutexes, and semaphores, among others.

SEOS SEOS (Ong et al. 2013) is a hardware-based OS designed to provide high adaptability for easy hardware RTOS adoption. SEOS aims for easy integration with a variety of CPUs. As such, it is connected to the core through a configurable peripheral bus that meets the CPU architecture. Furthermore, SEOS provides parametrization of several modules, e.g., mutexes, semaphores, and message queues. Regarding the software, SEOS defines a set of porting steps that do not require in-depth knowledge of both SEOS and the RTOS, enabling easy integration of this hardware RTOS with a software one.

RT-SHADOWS RT-SHADOWS (Gomes et al. 2016) is an architecture that provides unified hardware-software scheduling by manipulating an ARM-based softcore, developed in-house, to include support for multi-threading in the datapath. RT-SHADOWS is implemented as a coprocessor that includes a scheduler, thread manager, context switching, and synchronization and communication mechanisms. It leverages magic instructions (supported by unmodified compilers) to enable multiple APIs directly mapped to RTOS APIs, allowing for effortless integration in software by swapping both calls.

OSEK-V OSEK-V (Dietrich and Lohmann 2017) explores the hardware-software design space for event-triggered fixed-priority real-time systems at the hardware-OS boundary. By modifying the whole pipeline of a RISC-V core and introducing additional instructions to the ISA, OSEK-V integrates a highly-tailored hardware RTOS. Components like hardware tasks, alarms, and the scheduling policy, are implemented only to fit the application demands. The finite state machine customization happens at the compile time, ensuring the hardware-software synchronization. This approach aims at minimizing the FPGA resource consumption while maximizing the performance by designing the hardware for the application’s behavior.

ARTESSO Maruyama et al. (2014) present a study comparing the same hardware-accelerated RTOS (ARTESSO) implemented in two approaches: tightly- and loosely-coupled. ARTESSO HWRTOS (Maruyama et al. 2010) was originally developed to be an integrating part of a proprietary purpose-built CPU for TCP/IP-based applications. This implementation resorted to custom ISA instructions to enable the communication between the CPU and the hardware RTOS, and included scheduling, context-switching, event/semaphore/mailbox controllers, and an interrupt controller. With the goal of making the hardware RTOS easily portable and adaptable to industrial controllers, ARTESSO can be integrated tightly-coupled to a modified Arm core or loosely-coupled to an unmodified Arm core through standard peripheral buses. The evaluation in Maruyama’s study encompasses API execution times, interrupt responses, the influence of interrupts and ticks, and UDP/IP throughput. The results show that using a hardware RTOS improves the system’s performance and determinism when compared to an software-only approach. Furthermore, the tightly-coupled approach results presented are at least one order of magnitude better than the loosely-coupled one. Notwithstanding, the better performance of the tightly-coupled approach comes at the cost of portability and integrability. The tightly-coupled approach requires a modified Arm core, while the loosely-coupled is easier to integrate with any FPGA-based platform.

3 ChamelIoT overview

3.1 Motivation and goals

As supported by the extensive work in the literature presented earlier, offloading OS kernel services to hardware is not a new effort. Considering the hardware-accelerated OSes and their features, depicted in Table 1, there is a lack of direction regarding the ideal method for implementing OS hardware acceleration for IoT systems. Some OSes, like RT-SHADOWS, provide a portable API mappable into most IoT OSes allowing applications to run unmodified. Others, like HThreads or HartOS, have a dedicated API and require applications to be developed from the ground up. The multiple OSes are also deployed in different platforms, integrated with several MCUs, and have a varying range of services in hardware and configurability points. Taking this into account, both reconfigurable and hardware-accelerated OSes have yet to draw attention in the IoT industry, considering the roadblocks they present to their adoption. The main roadblocks we identify are discussed as follows:

Software interface The software API provided by each OS influences the amount knowledge regarding the whole required by the application developer. Some hardware-accelerated OSes provide their custom-built and unique API, which increases the development and learning curves since it requires developers to learn a complete set of new APIs and develop the application from scratch. Considering that time-to-market is a driving force for the IoT industry, the additional development time imposed by the learning curve of these hardware OS APIs compels the industry to opt for COTS solutions. To solve these issues, other hardware-accelerated OSes use compatibility standards like POSIX as a basis for their APIs, allowing for better portability for legacy Linux-based applications and the development of new applications due to the community’s overall familiarity with POSIX-like APIs. Lastly, the remaining OSes APIs were designed to mimic software IOT OSes’ APIs and replace them seamlessly. This approach allows developers to keep using the OS they are familiar with since the compiler or other external tools select which APIs to use. Additionally, this method can also enable legacy applications to use hardware acceleration since no modification is required at the application level.

Target architecture The processing system in reconfigurable platforms provides a limited number of interfaces usable by external peripherals and devices, consequently limiting the methods for integrating hardware accelerators with the core. In addition to the fact that not all MCUs are designed with hardware accelerators in mind, the number of processing systems that can be used for OS hardware acceleration is reduced. Furthermore, similarly to the software interface, developers also have a degree of familiarity with some processor families and architectures, which results in these being preferred by the IoT industry. Considering these facts, softcore MCUs are well-suited for IoT systems with hardware acceleration since they can be modified to include and accept hardware accelerators, both tightly- and loosely-coupled. Hardware-accelerated OSes usually are developed targeting a single CPU or architecture, which limits their overall usability and portability, hindering their adoption. The problem is even worse when the accelerators are tightly-coupled to the CPU and require modifications to the datapath. This fact makes the hardware replication in mass a challenge, as it involves redesigning the silicon, which is nearly impossible with proprietary CPU architectures, often behind a paywall. To tackle this issue, some hardware OSes are exploring open ISAs, e.g., RISC-V, as their foundation, due to their availability and openness. This approach future-proofs the system and thus enhances its scalability and possible adoption.

Application suitability Due to the heterogeneity of the IoT ecosystem, the myriad of different systems presents a variety of requirements and constraints. Notably, closer to the edge, each application is progressively more constrained regarding energy consumption and form factor, as these applications are often small sensors or actuators powered by batteries. Therefore, reconfigurable platforms in the IoT edge ideally uses the smallest FPGA available. To cope with this, hardware-accelerated OSes must provide enough configurability points to fit within the hardware constraints. As such, the OS should allow the user to modify kernel parameters, e.g., number of states or priorities, or entirely remove unused features. This is only possible if the hardware-accelerated OS allows by design for such configurability, which is not always true, as a variety of accelerators in the literature are tailored to a specific application or OS.

Aiming to increase the adoption rate of hardware-accelerated OSes in the IoT market, ChamelIoT tackles the previously identified roadblocks by providing a framework for accelerating kernel services in hardware in an agnostic fashion, allowing applications from different IoT OSes to run unmodified. To do so, ChamelIoT presents the following solutions:

-

Regarding the Software Interface, ChamelIoT offers a minimalist set of APIs that implement low-level communication with the hardware accelerator to execute well-defined kernel services. Each function can be mapped to a kernel service in software and is replaced at compile-time, providing the benefits of hardware acceleration to any IoT OS. Since only kernel internals are modified, the application-level code is kept intact. Taking this into consideration, developers can leverage ChamelIoT without the need to learn any new set of APIs or the workings of an OS, thus making it easier to use hardware acceleration.

-

The Target Architectures of ChamelIoT are architectures deployed in reconfigurable logic. Leveraging RISC-V, an open-source ISA and its available implementations, allows ChamelIoT to explore multiple avenues of hardware acceleration, like providing the same accelerator as both tightly- and loosely-coupled. Furthermore, with RISC-V gaining popularity in the IoT industry, there is inherent scalability for hardware accelerators implemented with the same foundation as these emerging platforms.

-

Lastly, ChamelIoT offers multiple configurability points to ensure Application Suitability. The user can opt to: (1) have the accelerator either tightly- or loosely-coupled, (2) modify multiple kernel parameters, e.g., number of threads, priorities, and states, (3) configure feature-specific implementations like the type of semaphores or if mutexes have priority inheritance, and (4) add or remove components like mutexes, semaphores or message queues as needed. All these configurations are made before the synthesis and deployment of the accelerator, enabling the user to fully customize the system and avoid unnecessary resource consumption.

Taking into consideration, the current implementation of ChamelIoT provides hardware acceleration to several OS services, which can replace with existing services of three different software IoT OSes, without requiring modifications to the application’s interface. The main goals and benefits of the ChamelIoT encompass:

Real-time and determinism As one of the main requirements of low-end IoT devices, ChamelIoT must provide hard real-time guarantees and bounded worst-case execution time (WCET). Moreover, predictability shall not be affected by any configuration on the hardware accelerator, e.g., the number of priorities or the total number of mutexes, or any application-specific detail like the number of waiting threads or their priorities.

Performance It is paramount for a hardware-accelerated system to have better performance than its software-centric implementation. ChamelIoT must ensure higher performance, regardless of coupling approach or any configuration, by executing kernel services faster than their respective standard software version, independent of the IoT OS being accelerated.

Flexibility Without enough customization, it is impossible to guarantee that a hardware accelerator is not using unnecessary resources. The framework must provide several configurability points to modify ChamelIoT in a way that the accelerator behaves precisely like the target IoT OS while minimizing resource consumption.

Agnosticism In order to lessen the learning curve associated with hardware-accelerated OSes, the ChamelIoT framework must allow the user to transparently run unmodified applications of any supported IoT OS. This is achieved by only modifying the kernels’ internals and leaving the user interface untouched when using the hardware accelerator.

The current stage of development of the ChamelIoT framework incorporates a hardware-accelerated OS that can be deployed both tightly- or loosely-coupled. The accelerator implements the following kernel services: scheduling, thread management, and synchronization and communication mechanisms. It is part of the already identified future work to provide a tool to ease the process of configuring and building the complete system stack. This includes configuring and synthesizing the hardware accelerator and applying the required modifications the software OS to use the accelerator. The kernel services implemented are present across the vast majority of IoT OSes, and can be implemented and deployed as hardware accelerators without changing the behavior of the software OS. On the other hand, some OS services already deployed in hardware by works in the literature, require more intrusive implementations capable of changing the OS execution. Consequently, forcing the developer to adapt the application code. These services are not within the scope of our framework and include:

-

Interrupt management—the most common approach to manage interrupts in hardware is to trap and process them in the accelerator, interrupting the processor through a single interface when needed. This approach reduces the priority spaces of interrupts and threads to a single one, i.e., the accelerator becomes responsible for managing both interrupts and threads which share the same priority rules. This approach has proven to bring several benefits to performance and memory footprint (Hofer et al. 2009; Gomes et al. 2015). Notwithstanding, it heavily modifies the OS behavior and requires the developer to understand several implementation details. Thus, the agnostic characteristics of ChamelIoT would be invalidated, as applications would need to be developed while considering the unified priority space.

-

Time management—most IoT OSes rely on platform-available timers, e.g., system tick or general purpose timers, to manage time features like the tick system, delays, or periodic events. Migrating time-related operations to hardware would require replicating the timer logic in the FPGA fabric (Gomes et al. 2016; Ong et al. 2013), resulting in redundancy and waste of FPGA resources. Furthermore, it would also require redirecting the system timer interrupt to a different source, adding a priority space solely for the timer. It could lead developers to mistakenly assign priority to their interrupts, thus, breaking the system’s expected behavior, which compromises ChamelIoT’s agnosticism.

-

Context switching—as the most architecture-dependent feature, implementing the context switching in hardware would require extensive modifications to the CPU datapath to accommodate the different register files and other data that need to be saved and loaded when a new thread is scheduled. Even though the migration of this feature to hardware has proven to bring performance and determinism benefits (Maruyama et al. 2014), a highly-tailored core limits its flexibility and adaptability. A custom-built core is not easily adapted to other platforms, consequently limiting its reusability and adoption.

-

Memory management—implementing heap and thread stacks management in hardware is a great ordeal that requires a vast amount of FPGA resources. Considering the constrained nature of IoT devices, especially the FPGA-based ones, we believe this feature makes sense to be handled in software.

3.2 Architecture

In order to achieve the goals mentioned previously, the proposed ChamelIoT framework architecture is composed of three main components: (1) a tightly- or loosely-coupled Hardware Accelerator where the kernel services are implemented in hardware; (2) an Abstraction Layer that enables the interface between the software and hardware elements in the system; and (3) a Configuration and Building tool to customize the whole system stack and abstract the user from low-level implementation details. Figure 3 depicts the three components of ChamelIoT framework incorporated in system based on a RISC-V reconfigurable platform.

Hardware accelerator This component is the central piece of the framework since it is responsible for the main goal of ChamelIoT, the acceleration of OS kernel services in hardware. The services implemented in hardware encompass (1) a scheduler responsible for determining the active thread, (2) a thread manager that stores and manages the data related to each individual thread on the system, (3) synchronization mechanisms, including mutexes and semaphores, and (4) inter-process communication mechanisms through message queues. By providing enough configurability to each service, i.e., the scheduling priority or the number of threads supported, it is possible to build the hardware accelerator to fit the application needs without wasting unnecessary FPGA resources. Designed with flexibility in mind, the Hardware Accelerator can be deployed following a loosely- and a tightly-coupled approach. The tightly-coupled approach assumes that the accelerator is integrated into the core’s datapath, for instance, using a coprocessor interface. However, this option is not always available in some RISC-V implementations, where the loosely-coupled approach is always viable by connecting the accelerator to the available system bus.

Abstraction layer The software API enables the communication between the software and the hardware accelerator. This is achieved by providing a software abstraction for all the possible functions of each kernel service deployed in hardware, which results in a fine-grained abstraction layer. Together with a collection of additional APIs to access and gather context data from the accelerator, it is possible to easily adapt and port the ChamelIoT framework to most IoT OSes. Depending on the hardware accelerator coupling approach, each function in this component comprises one to four assembly instructions, a custom-made instruction for the tightly-coupled approach, and memory operations for the loosely-coupled.

Configuration and building tool Our framework intends to offer an external tool that can be used for hardware and software customization through a graphical user interface to ease the development process and soften the learning curve of hardware accelerators. This tool reduces the required knowledge about implementation details by consolidating in a single place all the customization and configuration available in the complete system stack, automating the process of synthesizing the hardware accelerator, including the correct abstraction layer, and building the target OS with the modifications required.

4 Framework implementation

Considering the heterogeneity of applications in the IoT ecosystem, numerous IoT OSes start to implement and provide additional features to help with the variety of requirements and constraints. High-level features like wireless connectivity or cryptography are some of the prominent requirements in nowadays IoT edge devices, and OS support for these features is highly appreciated in the community. In addition to these features, design choices such as kernel architecture, scheduling policy, or synchronization and communication mechanisms have a significant influence on the developer’s choice of IoT OS since these features greatly impact the overall system performance and behavior.

The OSes available for low-end IoT systems present a wide variety of them regarding implementation details and features supported. From the myriad of IoT OSes, ChamelIoT currently provides support to RIOT, Zephyr, and FreeRTOS, as they present enough variability regarding the main design points and present extensive popularity and applicability in IoT applications. The three OSes share similar design principles, e.g., they implement a preemptive priority-based scheduler and multi-queue thread management, which are also common characteristics of other low-end IoT OSes (Chandra et al. 2016; Hahm et al. 2016; Silva et al. 2019; Zikria et al. 2018). Nonetheless, there are several distinctions in their design choices which the accelerator needs to accommodate, as summarized in Table 3. The ChamelIoT framework allows for several configurations to ensure that the minimum resources are used and that the system operates exactly like the software OS. These configurations describe how the OS works, for instance, the number of thread states, the meaning of each state, the priority scheme, and which synchronization and communication mechanisms are included.

The current implementation of the ChamelIoT framework includes the Hardware Accelerator and Abstraction Layer components, while the Configuration and Building Tool are still in development. The Hardware Accelerator is based on the open-source SiFive E300, featuring an E31 Coreplex RISC-V core (RV32-IMAC), which supports atomic (A) and compressed (C) instructions for higher performance and better code density, respectively. This core is created by the Rocket Chip generator and its main characteristics include a single-issue in-order 32-bit pipeline (with a peak sustained execution rate of one instruction per clock cycle) and a single L1 instruction cache. The E300 platform also includes a platform-level interrupt controller (PLIC), a debug unit, several peripherals, and two TileLink interconnections interfaces (used to interface custom accelerators). The Abstraction Layer is composed of a set of APIs that implement low-level abstractions for the interface between the software and hardware accelerator, regardless of the coupling approach. The APIs are implemented resorting to macros and inline functions and replace the software OS APIs at compile-time.

4.1 Hardware accelerator

As the core element of the ChamelIoT framework, the Hardware Accelerator implements in hardware the kernel services common to the three IoT OSes supported, as identified in Table 2. These services include scheduling, thread management, mutexes, semaphores, and message queues, which directly correspond to hardware modules, as depicted in Figs. 4 and 5. Additionally, there is also a Control Unit module that manages the interaction between all other hardware elements and handles the interface with the processing system. Each of these hardware modules will be further detailed in later sections.

As mentioned previously, the Hardware Accelerator is implemented in a Rocket Core based platform, considering it enables the deployment of hardware accelerators both tightly- and loosely-coupled. The two different methods require different techniques and resources from the processing system, which implies modifications to hardware that manages the communication with the processing system, the Control Unit. Since this module is solely responsible for the interface with the CPU, all the other hardware elements remain untouched, independently from the coupling approach.

Tightly-coupled For this approach, the Hardware Accelerator leverages the RoCC interface provided by the Rocket core to integrate the coprocessor directly into the datapath. This interface is composed of two smaller interfaces, as illustrated in Fig. 4: (1) the command interface (CMD IF), which is responsible for receiving and answering any requests from the pipeline, and (2) the memory interface (MEM IF), through which any memory transaction can be made without CPU intervention.

Along with the ISA specification, the command interface imposes restrictions on the hardware accelerator. The instruction type specified for RoCC instructions is R-type, which limits the data input to the accelerator to two 32-bit words and the output to one 32-bit word, all in general-purpose registers. This data is provided directly to the command interface already decoded by previous pipeline stages, along with a 7-bit field that works as an internal opcode for the accelerator. Since the accelerator is integrated within the pipeline, it also needs to follow its timing restrictions, implying that the command interface needs to have the output ready in the same clock cycle. This fact forces the output logic to be fully combinational, demanding the majority of the other modules to be implemented with combinational logic.

Loosely-coupled In this approach, the Hardware Accelerator acts as a memory-mapped device for the processing system. The accelerator needs to be registered as two different TileLink nodes, as depicted in Fig. 5: (1) a Register Map node, through which the software can write input data and read the output from predefined memory addresses, and (2) a Direct Memory Access (DMA) node used to provide memory access to the accelerator without CPU intervention.

In the TileLink Register Map node, a memory address range has to be assigned to the accelerator, which determines the addresses the software can use to communicate with the hardware. In this range, a register file is defined according to the inputs and outputs required for each kernel service. Each register is composed of a 32-bit word and has a specific address. The software application can access these registers through load and store instructions. Additionally, the TileLink DMA node grants the accelerator access to the system memory through burst operations. These operations are done in bursts with predefined sizes (values are always in powers of 2), which limits finer-grained memory transactions.

4.1.1 Control Unit

The Control Unit is the main component of the Hardware Accelerator, as it ensures that all the other elements function as intended. Among its responsibilities, the Control Unit manages the interfaces with the processing system available in the accelerator. It involves processing the software request by interpreting internal instruction opcode, collecting the input data from the correct sources, commanding other modules to execute the proper functions, and outputting results in a timely fashion. When the accelerator is tightly-coupled, both the input and output data is available through the RoCC command interface. The software issues a single instruction containing the source and destination registers for the data. Contrarily, in the loosely-coupled approach, the input data is provided by software by storing data in the register map before the execution of any function. Likewise, the output is available in the register map to be read by the software after executing the main instruction.

Considering the limited nature of FPGA resources, there is a limit to the number of kernel services that can be deployed in hardware. Mutexes, semaphores, and message queues are kernel elements of which the software can use multiple instances. Therefore, the maximum number of these modules deployed in hardware is a parameter configured by the user and the Control Unit is responsible for managing which modules are free or used in run time.

Lastly, regardless of the coupling approach, there is only a single interface to perform memory operations. Consequently, whenever another module, e.g., message queue, needs to execute any memory transaction, it must request the Control Unit to execute that operation. This way, it is impossible to have multiple modules concurrently trying to access the system’s memory. Additionally, the Control Unit has an internal memory buffer used by the message queues to store data that has not yet been requested by the software. Given that some message queue operations require direct transfer to and from this buffer to the system memory, it also becomes part of the Control Unit’s responsibility to manage the buffer to avoid concurrency in any access.

4.1.2 Thread Manager

The Thread Manager is mainly responsible for storing and managing the data of each thread used by the software application. With the goal of minimizing FPGA resource usage, the total amount of active threads allowed in the system is a configurable parameter at compile time. This configuration is one of the most impacting on resource usage because it forces the internal Thread Identifier (TID) to have a field size capable of holding the highest number of threads. Taking into consideration that the TID is a field propagated throughout the whole accelerator, it naturally increases resource consumption, especially considering that most of the accelerator is implemented with combinational logic.

The TID is used by the Thread Manager to address each thread added by the software application. It represents an index in an array of Thread Nodes, which are structures that contain thread data required by most hardware modules, as depicted in Fig. 6. The data field stores a pointer to the Thread Control Block (TCB) provided by the software OS. The accelerator uses this pointer to enable multiple ways for the software to access a thread, either through TID or TCB. Furthermore, there is a context in the TCB that has not been migrated to hardware, e.g., memory management details, allowing the software OS to not be limited by what ChamelIoT implements. Both state and priority fields are used by the scheduling algorithm and have variable field sizes according to additional configurability points. A single-bit field, dirty, is used to indicate whether or not that index is available. When a thread is added to the system, the software should provide the previous fields, and the hardware determines which index is free, then sets the dirty bit and outputs its index. To remove a thread, the Thread Manager only needs to clear the dirty bit.

The last field in the Thread Node is named next, and it is used to implement linked lists utilized in the queue of threads ready to be scheduled, henceforth referred to as ready queue. Given that all thread data is stored and handled by the Thread Manager, it is also part of this component’s function to manage the state of each thread and the ready queue. The ready queue implemented in hardware follows a multi-queue system, where there is a circular linked list for each priority level, leveraging the next field in each node to point to the next thread with execution rights with the same priority. Figure 7 illustrates a state example of a ready queue with five threads. Thread 3 is currently running, and Thread 5 is blocked, both with the same priority level, resulting in the Thread 3 node pointing to itself. The remaining three threads have lower priority and form a circular list while waiting to be scheduled. Lastly, any changes to the ready read are prompted by changes in the thread state, which can be caused by a mutex, semaphore, or message queue blocking or unblocking a thread, the scheduling algorithm, or directly by a software request.

4.1.3 Scheduler

The scheduling policy implemented follows a preemptive priority-based algorithm that uses a hardware configuration to define the priority order, i.e., ascending or descending. By definition, the thread with the highest priority in a ready state will run until it yields its execution time or it is interrupted by a thread with higher priority. In case of multiple threads with the same priority, the scheduling algorithm follows a round-robin scheme to determine which thread is to be executed next.

To schedule the next thread, the Scheduler accesses the Thread Manager’s ready queue to identify which is the highest priority among threads in a ready state. Then, the Scheduler is responsible for changing the currently active thread state from running to ready and the other way around for the new thread. Due to timing constraints, it is mandatory for the Scheduler to be implemented with only combinational logic since whenever a scheduling operation is requested, the kernel is in the process of swapping active threads, which must be deterministic and requires the shortest possible time.

4.1.4 Mutexes

A mutex is a synchronization primitive that ensures mutually exclusive access to a resource. In the context of operating systems, a thread can use a mutex to guarantee that its access to a shared resource is undisturbed and that other threads cannot corrupt the resource. To perform an access, a thread must attempt to lock the mutex, which only is successful if no other thread is locking it. Once the thread is successful, it becomes the owner of said mutex and holds its ownership until the thread unlocks the mutex. On the other hand, if an attempt to lock a mutex fails, the thread that tried is blocked and yields its execution to the next thread.

In the most common implementations of mutexes in IoT OSes, a failed lock can result in a priority inversion scenario, where a thread with lower priority executes before one with higher priority. It can lead to an instance where a critical portion of code protected by a mutex is delayed to a later scheduling point. This is depicted in Fig. 8a, where the highest priority Thread C interrupts Thread A, and fails to lock a mutex currently owned by Thread A. This lead to a case where Thread A critical section only occurs after Thread B finishes executing. These situations are not desirable in IoT edge devices where real-time and determinism are paramount.

A possible solution to priority inversion scenarios is using priority inheritance algorithms, which consist of raising the priority level of the current owner of a mutex to the highest priority of the threads that attempted to lock the same mutex. This is represented in Fig. 8b, where after Thread C fails to lock the mutex, Thread A is given the same priority level to finish executing its critical section and unlock the mutex. Once Thread A unlocks the mutex, Thread C can resume its critical section, and Thread B only runs after the highest priority thread finishes its execution.

In ChamelIoT’s hardware accelerator, each Mutex implementation maintains a register with the current thread that owns the mutex and a list of TIDs of each thread that was blocked trying to lock it. This list of threads also contains their respective priority, to enable the implementation of priority inheritance mechanisms. Whenever a thread’s priority is modified, the Mutex informs the Thread Manager of the TID and new priority. Consequently, the Thread Manager can keep the ready queue correct, removing the thread from one linked list and adding it to the list regarding the new priority. The process is the same whether the priority is raised as a result of a failed lock or lowered after an unlock. Finally, whenever a thread is forced to change state, e.g., to ready state when the priority is raised, the Scheduler is also updated accordingly, and the software is notified in the next scheduling point.

4.1.5 Semaphores

A semaphore is another method of synchronization utilized in operating systems. It follows a producer–consumer scheme, where the producer thread signals the semaphore once it finishes acquiring or processing a certain resource. Internally, the semaphore registers the count of how many signals were emitted by the producer thread. In turn, the consumer thread checks, through the semaphore, if there are resources available. If the semaphore’s internal count is greater than zero, the consumer thread is allowed to keep executing, otherwise the thread is blocked. Semaphores are often used in cases where a resource is produced at a high frequency, e.g., data acquired from a sensor, and multiple threads need access to it.

Each hardware Semaphore has a configurable maximum count of resources produced and variable size of threads that are blocked when there are no resources available. When a thread tries to take from a semaphore and fails, it is blocked and its TID and priority are saved internally in the semaphore. At the same time, the Thread Manager is informed to remove the thread from the ready queue. These two values are used later when a producer thread issues a give operation to request the Thread Manager to put the blocked thread in the ready queue.

4.1.6 Message queues

Message queues are asynchronous communication mechanisms used to send data from one thread to another. Common implementations of message queues in OSes use Firs-In First-Out (FIFO) queues to store messages waiting for a receiving thread. When a thread attempts to send a message, it should provide a pointer to the data and the message size so that the message queue can store a copy of the message. Likewise, when a thread receives a message, it should provide a pointer to the address where it wants the data to be stored so it can receive a copy of the data. When the message queue holds a copy of the data, it avoids having the threads share memory, which often requires extra care to prevent data corruption.

As mentioned previously, the Control Unit manages the internal memory buffer for all the hardware Message Queues to prevent concurrent accesses to the memory. This buffer is composed of a configurable limit of messages per message queue, with the message size also being configurable. The scenarios illustrated in Fig. 9 show an example of this memory buffer when there are four message queues in the system with a limit of four messages each.

Figure 9a depicts a put operation on Message Queue 1. In this case, there is a message already stored in the buffer. The Control Unit reads the data from the system memory and stores it in the next free message slot. On the other hand, in the get operation, illustrated in Fig. 9b, the data written to the system memory is from the first message stored in the message queue, forming a FIFO queue. As such, the Message Queues need to keep track of the order in which the messages are stored.

Lastly, whenever a thread tries to receive a message and the buffer is empty, the thread is blocked until a message is sent. At the same time, when a thread attempts to send a message, and there is no thread waiting to receive it, i.e., if the buffer is full, the sending thread is also blocked to prevent overwriting other data. The amount of threads in each waiting list, sending and receiving, is also a configurable parameter.

4.2 Software abstraction layer

In the ChamelIoT framework, the Software Abstraction Layer fulfills the role of mediator between the software kernel and the Hardware Accelerator. This layer is mainly responsible for: (1) providing low-level generic functions that interact with the accelerator, regardless of coupling approach, (2) implementing functions for each kernel service in hardware and accessing their context data, and (3) doing the modifications needed so that the supported OSes use ChamelIoT’s API.

Regarding the low-level functions that interact with the Hardware Accelerator, these have to take into consideration the coupling approach. For the tightly-coupled accelerator, all the interaction must be made in a single custom instruction, while accessing the loosely-coupled accelerator is done by reading and writing to specific addresses.

Providing support for additional operating systems in ChamelIoT requires the developer to have intimate knowledge of both the ChamelIoT framework and the OS. To add a hardware service to an IoT OS, the developer should follow the guidelines below:

-

1.

Identify the code blocks or functions within the kernel that implement the service;

-

2.

Add conditional compiling verification macros;

-

3.

Include the necessary calls to ChamelIoT API to replicate the service behavior;

-

4.

Ensure the inputs and outputs of the ChamelIoT Abstraction Layer match the software version.

Furthermore, additional modifications may be required to the Software Abstraction Layer. This is particularly applicable in unique scenarios where the operating system requires specific inputs, outputs, or functionalities that are not readily available in the hardware or require significant alterations. In this case, the suggested approach is to keep the additional features outside the API to ensure the behavior remains unchanged.

Tightly-coupled Considering that ChamelIoT’s Hardware Accelerator is currently deployed in a Rocket-based platform, it leverages the RoCC interface to implement a tightly-coupled approach. Regarding the communication with the CPU, the RoCC interface defines an extension to the RISC-V ISA by introducing a custom instruction that follows the R-type format, depicted in Fig. 10. It specifies the target coprocessor, the source and destination of data, and the performing operation.

The opcode field identifies the coprocessor, and according the RoCC specification, it can only contain one of four predefined values, thus, limiting the number of coprocessors. The fields rd, rs1, and rs2 specify the destination (rd) and source (rs1 and rs2) CPU registers used to transfer data with the coprocessor. The xd, xs1, and xs2 are auxiliary fields that identify which of the previous registers have valid data. Lastly, the field funct7 is used as a user-defined opcode for each coprocessor that indicates which function has to be executed.

Listing 1 demonstrates how the RoCC instruction is translated into a C macro. This macro is then used in the implementation of kernel service APIs by having function arguments directly mapped to the source registers rs1 and rs2, and the return value coming from the rd register. The funct7 is determined by a table which maps every function implemented in hardware to a unique value. In order to keep every service available through a single instruction, the interaction between the CPU and accelerator becomes limited to: (1) two 32-bit words being received on the coprocessor; (2) a single 32-bit word response; and (3) a maximum of 128 distinct operations.

Loosely-coupled When the accelerator is deployed loosely-coupled, it is integrated with the Rocket core as a TileLink Register Map node. Consequently, the Hardware Accelerator becomes a memory-mapped device with a well-defined range of addresses configured at compile-time. This implies that the accesses to the accelerator from the software application are done via loads and store instructions. An example of code used to execute these instructions is depicted in Listing 2, where a read and write to specific accelerator registers.

The register map consists of a register per possible input and output and a special register for the instruction. In the current version of the hardware accelerator, the register map includes a total of 34 registers. The instruction register is located at the accelerator base address, and it triggers the accelerator to perform a function whenever anything is written in this register. This approach uses the same funct7 values to offer a similar behavior to the RoCC interface implementation, allowing most of the hardware component to remain unmodified.

The interaction between software and hardware is done through memory accesses. It provides flexibility regarding inputs and outputs as they are not limited by the boundaries of a single instruction. However, it comes at the cost of needing more instructions to execute a single service which involves writing all the inputs, then writing the instruction register, and finally reading the outputs.

Independently from the coupling approach, the Software Abstraction Layer provides a library of functions to be used by IoT OSes in their kernels. Table 3 lists all the current APIs used to accelerate the current IoT OSes. These include functions to add or remove threads from the system, change thread states, schedule a new thread, and use any operation in mutexes, semaphores, and message queues. Furthermore, some functions allow the software to collect data from any hardware component in the accelerator.

As mentioned previously, currently, the ChamelIoT framework supports three IoT OSes: RIOT, Zephyr, and FreeRTOS. For each OS, minimal modifications had to be made in their kernels to use ChamelIoT’s accelerator. Listing 3 shows an example of a modification made to Zephyr’s kernel, replacing the code to schedule the next thread. Currently, this is achieved by resorting to preprocessor directives, allowing the user to decide if hardware acceleration is used in the system by defining a variable during the OS building process. Furthermore, any additional feature not supported by the API that needs to be included to the system should be added within the preprocessor directives.

5 Evaluation

For the purpose of evaluating the ChamelIoT framework, we have integrated and provided support for three IoT OSes: RIOT, FreeRTOS, and Zephyr. To assess performance and determinism, we measured the latency of most kernel services APIs through a series of microbenchmark experiments that measured the clock cycles required by most kernel services implemented by the hardware accelerator. We also evaluated the overall system’s performance using the Thread Metric benchmark suite, which provides a set of synthetic benchmarks stressing kernel features, like scheduling, and synchronization. Each experiment was performed for the three OSes targeting the multiple configurations available with the ChamelIoT framework:

-

SW—the software-based approach without using the hardware accelerator available, i.e., the vanilla software implementation of each OS;

-

TC—the tightly-coupled approach where the hardware accelerator is connected to the core through the RoCC interface, and each OS uses the hardware acceleration by using specific instructions;

-

LC—the loosely-coupled approach, where the multiple OSes leverage the memory-mapped hardware accelerator through memory operations.

Additionally, we evaluated the impact of ChamelIoT on the hardware resources and power estimation required by different threads and priorities configurations, which (from empirical experiments) are the most impactful configurability points. Lastly, we measured the memory footprint of each OS for the three ChamelIoT configurations.

5.1 Experimental setup

We deployed and evaluated our solution on an Arty A7-100T, which features a Xilinx XC7A100TCSG324-1 FPGA running at a clock speed of 65MHz. The hardware accelerator is integrated into an E31 Coreplex RISC-V core (RV32-IMAC). Both the RISC-V core and our accelerator were implemented using the SiFive Freedom E300 Arty FPGA Dev Kit and synthesized in Vivado 2020.2.

The performance evaluation experiments targeted the RIOT v6ae67, FreeRTOS v10.2.1, and Zephyr v2.6.0-577. All software was compiled with the GNU RISC-V Toolchain (version 9.2.0), with optimizations for size enabled (-Os). Apart from OS-specific configurations such as the priority order, the hardware accelerator was kept with the same configurations for the three OSes: maximum of 16 threads with 16 unique priorities, four different mutexes, semaphores, and messages queues (each with 16-word size, and a list of four messages).

5.2 API latency

To assess determinism and performance, we have measured the number of clock cycles required to execute the most common OS services for the three aforementioned setups. Each experiment was repeated 10,000 times for each kernel service. The results are presented by the average number of cycles, i.e., arithmetic mean (M), along with the standard deviation (SD) measured across all repetitions. Furthermore, the worst-case execution time (WCET) measured across all the experiments is presented for each API. The results are discussed below.

5.2.1 Thread Manager and Scheduler

Table 4 presents the results regarding the latency of three different APIs implemented by the Thread Manager and Scheduler: Thread Suspend, Thread Resume, and Schedule. The first two APIs are responsible for modifying the thread state, i.e., from ready to suspended state in Thread Suspend and the other way around in Thread Resume. Whenever one of these functions is executed by a kernel, it implies adding or removing a thread from the ready queue. To test these functions, the system included two threads with different priorities, where the higher priority thread suspends itself, and the low-priority thread resumes the high-priority thread. Lastly, the Schedule function is executed at every scheduling point to select the next executing thread. In order to test this function, two threads with the same priority constantly yielded their execution time to trigger an explicit scheduling point.

The TC setup on RIOT presents latency decreases and improved determinism on all three APIs when compared to the baseline (SW configuration). The latency is decreased by 43.92% on Thread Suspend, 40.73% on Thread Resume, and 62.02% on Schedule. Additionally, the standard deviation is closer to zero on all kernel service, indicating better determinism. On the other hand, the LC setup only shows better performance on the Schedule, decreasing its latency by 62.02%. Nonetheless, it presents better determinism. The number of cycles required to perform a Thread Resume on the LC configuration is over double the number required on the SW configuration. This is justified by the fact that the Abstraction Layer for the loosely-coupled accelerator requires multiple registers to be written in order to perform a service or access a value from the accelerator, e.g., thread priority or state. If a function performs multiple accesses like these, it will greatly increase the total number of cycles required by that API since both the SW and TC would only need a single instruction to execute the same function.

The results gathered for Zephyr also show that only the TC setup increases the performance over the SW setup. The latency is decreased by 13.51%, 1.81%, and 49.94% Thread Suspend, Thread Resume, and Schedule, respectively. Furthermore, the standard deviation is also lower. The LC configuration also shows a performance increase of 99.76% on the Schedule function and better determinism on all three APIS. However, for the previously mentioned reasons, both Thread Resume and Thread Suspend functions show latency increases.

Lastly, both hardware-accelerated setups on FreeRTOS present performance increase on all three kernel services along with less variance. On the TC setup, the latency is decreased by 64.30% on Thread Suspend, 60.75% on Thread Resume, and 88.10% on Schedule. And the LC setup decreases the latency by 51.70%, 43.93%, and 85.08% for each API, respectively.

5.2.2 Mutexes

Table 5 summarizes the results gathered for the two APIs related to Mutexes in three different scenarios. The Lock function is used when a thread attempts to acquire the mutex before entering a critical section of code. Whenever this function executes three different scenarios can occur: (i) the mutex is successfully locked, and the current thread continues to execute; (ii) the mutex is already locked, and the priority inheritance mechanism is triggered; and (iii) the API fails to lock the mutex for external reasons, e.g., uninitialized or invalid mutex. The Unlock API is used when a thread is leaving a critical code section to release the mutex ownership. Likewise, this service can result in three different scenarios: (i) a successful unlock, where the thread keeps execution rights; (ii) the thread had its priority raised by the priority inheritance mechanism, and consequently, its priority has to be reverted, and a scheduling point is forced; and (iii) the Unlock fails because the mutex was not previously locked, for instance. In order to test the three scenarios, we first had a single thread successfully locking and unlocking the same mutex and then trying to lock and unlock an uninitialized mutex, leading to failed attempts on both operations. Lastly, to validate the priority inheritance scenario the following steps are executed in a loop: (1) a low-priority thread locks a mutex and resumes a high-priority thread; (2) the high-priority thread attempts to lock the mutex, triggering the priority inheritance mechanism, forcing the other thread to execute; (3) the first thread has its priority raised and unlocks the mutex, once again triggering the priority inheritance to revert its priority and schedule the next thread; and (4) the high priority thread unlocks the mutex and suspends itself. The results shown in Table 5 for this scenario were collected in the Lock function in step 2 and the Unlock in step 3.

RIOT does not support priority inheritance in its mutex implementation, as such, no results are available for this scenario. The TC configuration shows latency decreases of 13.58% on successful Locks, 70.81% on failed Locks, and 24.79% on successful Unlocks. On failed Unlocks, this setup shows a minimal latency increase. However, it presents lower standard deviation and a better WCET. The LC setup presents performance degradation on most scenarios due to the increasing number of instructions to communicate with the accelerator.

The TC setup on Zephyr decreases the latency on both APIs and all scenarios. For the Lock operations, it shows decreases of 23.35%, 67.14%, and 67.16% for the successful, priority inheritance, and fail scenarios, respectively. On the Unlock function, the latency is decreased by 84.59%, 86.50%, and 0.10% on the same scenarios correspondingly. For the LC setup, there is a latency increase in the successful and fail experiments on both APIs. However, on the priority inheritance scenario, it shows a decrease of 21.46% and 48.68% on the latency of the Lock and Unlock functions.

Finally, both hardware configurations, TC and LC, increase the performance on all scenarios and APIs. This is most notable in the priority inheritance cases, where for the Lock function the latency is decreased by 92.65% on the TC setup and 89.14% on the LC one. And for the Unlock function, it is decreased by 76.94% and 64.75% on the TC and LC configurations, respectively. FreeRTOS software kernel implements its ready queues in a fashion that requires the iterative traversing of linked lists whenever a TCB is accessed. This results in longer times on functions that need to modify thread priorities and states multiple times, like the priority inheritance algorithm on mutexes.

5.2.3 Semaphores

The Semaphores API consists of mainly two functions: Give and Take. The Give function is used by a thread to signal a semaphore that new data or resources are now available. Whenever this function executes, it can result in two different scenarios. The first where another thread previously tried to take from the semaphore, and the current thread has to yield the execution after the Give. And the second scenario where there is no thread waiting, and the current thread continues execution. The Take function is used by a thread attempting to access a resource, and similarly to the previous function, it can also result in two different scenarios: (i) the resource is already available in the semaphore, allowing the current thread to access it and keep executing; and (ii) there are no resources available, forcing the current thread to yield until the semaphore is signaled by other threads. To test these APIs, we devised two experiments, one with a single thread using Give and Take repeatedly, leading to no yields being required. And another experiment with two threads, where the first attempts to take from a semaphore without resources, yielding the execution to the other thread, which signals the semaphore and yields the execution to the waiting thread. The results collected are presented in Table 6.

For RIOT OS, the TC setup provides a latency decrease for all the scenarios in both APIs. This decrease varies from 69.16% on a Take with no resource available to 72.70% on a Give with a thread waiting. The LC setup slightly increases the latency in all cases, up to 7.84%. However, it decreases the latency variance.

The TC configuration on Zephyr decreases the latency of Gives with threads waiting by 63.65% and without threads waiting by 89.05%. For the Take API, this setup only performs slightly better (up to 4.55%) than the SW configuration. The LC setup decreases the latency on Gives with threads waiting by 0.12% and 75.23% on Gives with no thread waiting. On the Take API, the latency increased to over double. The software implementation of semaphores on Zephyr is already fast, to the point that hardware acceleration mostly offers better determinism.

Lastly, the hardware-based setups increase the performance on all cases for the semaphores API in FreeRTOS. This is most evident in the scenarios that require the thread to yield execution, e.g., Gives with threads waiting (latency decreased by 54.70% and 49.67% on the TC and LC setups respectively), and Takes with no resource available (latency decreased by 89.58% and 87.07% on the TC and LC setups, respectively). As mentioned previously, this is due to the fact that FreeRTOS uses more complex logic to access the ready queue.

5.2.4 Message queues

There are two main operations regarding message queues, i.e., Send and Receive. Both functions may cause threads to be suspended or resumed, depending on whether there is someone waiting for the message or if there is a message ready. However, for the purpose of isolating the memory operations and evaluating their performance, scenarios, where threads had to yield execution, were not considered. As such, the conducted experiment consisted of one thread sending a message through a message queue, and another thread receiving it through the same queue. After each iteration of this process, the sending thread modified the message contents, and the receiving thread checked the content to ensure correctness. The results are depicted in Fig. 11, where the same experiment was repeated to multiple message sizes.

RIOT defines a structure to represent a message which contains a pointer to the data sent/received. However, unlike standard message queue implementations, the contents of this structure are copied from sending thread to the receiving thread, including the original pointer. Thus, giving direct access to the memory location of the original data to the receiving thread. Nonetheless, intending to keep agnosticism, the ChamelIoT framework uses the same mechanism of only copying the structure contents on message queue operations. This results in identical results across the board, i.e., all three setups on both APIs, as the message size is always the structure size.

The TC setup on Zephyr shows consistent latency decreases for both Send and Receive APIs, averaging across all message sizes 65.80%, and 67.63%, respectively. On the LC configurations, up to message sizes of 16 words, the hardware setup performs better on both APIs. However, for bigger messages the latency is increased by 11.61% on Sends of 64 words and 10.76% on Receives of 64 words.