Abstract

In this paper, we consider a class of structured nonconvex nonsmooth optimization problems, in which the objective function is formed by the sum of a possibly nonsmooth nonconvex function and a differentiable function with Lipschitz continuous gradient, subtracted by a weakly convex function. This general framework allows us to tackle problems involving nonconvex loss functions and problems with specific nonconvex constraints, and it has many applications such as signal recovery, compressed sensing, and optimal power flow distribution. We develop a proximal subgradient algorithm with extrapolation for solving these problems with guaranteed subsequential convergence to a stationary point. The convergence of the whole sequence generated by our algorithm is also established under the widely used Kurdyka–Łojasiewicz property. To illustrate the promising numerical performance of the proposed algorithm, we conduct numerical experiments on two important nonconvex models. These include a compressed sensing problem with a nonconvex regularization and an optimal power flow problem with distributed energy resources.


1 Introduction

In this work, we consider the structured optimization problem

$$\begin{aligned} \min _{x\in C}~F(x):=f(x)+h(Ax)-g(x), \qquad \text {(P)} \end{aligned}$$

where C is a nonempty closed subset of a finite-dimensional real Hilbert space \(\mathcal {H}\), A is a linear mapping from \(\mathcal {H}\) to another finite-dimensional real Hilbert space \(\mathcal {K}\), \(f:\mathcal {H}\rightarrow (-\infty , +\infty ]\) is a proper lower semicontinuous (possibly nonsmooth and nonconvex) function, \(h:\mathcal {K}\rightarrow \mathbb {R}\) is a differentiable (possibly nonconvex) function whose gradient is Lipschitz continuous with modulus \(\ell \), and \(g:\mathcal {H}\rightarrow (-\infty , +\infty ]\) is a continuous weakly convex function with modulus \(\beta \) on an open convex set containing C. This broad optimization problem has many important applications in diverse areas, including power control problems [1], compressed sensing [2], portfolio optimization, supply chain problems, image segmentation, and others [3].

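In particular, the model problem (P) covers two of the most general models in the literature. Firstly, in statistical learning, the following optimization model is often used

$$\begin{aligned} \min _{x\in \mathbb {R}^d}~\varphi (x)+\gamma r(x), \qquad \text {(1)} \end{aligned}$$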

where \(\varphi \) is called a loss function, which measures the data misfit, r is a regularization which promotes specific structure in the solution such as sparsity, and \(\gamma >0\) is a weighting parameter. Typical choices of the loss function are the least square loss function \(\varphi (x)=\frac{1}{2}\Vert Ax-b\Vert ^2\), where \(A\in \mathbb {R}^{m\times d}\) and \(b \in \mathbb {R}^m\), and the logistic loss function, which are both convex. In the literature, nonconvex loss functions have also received increasing attention. Some popular nonconvex loss functions include the ramp loss function [4, 5] and the Lorentzian norm [6]. In addition, [7] recently showed that many regularizations r used in the literature can be written as the difference of two convex functions, and so the model (1) can be formulated as problem (P). These include popular regularizations such as the smoothly clipped absolute deviation (SCAD) [8], the indicator function of cardinality constraint [9], \(L_1-L_2\) regularization [2], or minimax concave penalty (MCP) [10]. Therefore, problem (P) can be interpreted as a problem of the form (1) whose objective function is the sum of a nonconvex and nonsmooth loss function and a regularization which can be expressed as a specific form of difference-of-(possibly) nonconvex functions (see Footnote 1). Secondly, in the case when \(C =\mathbb {R}^d\) and A is the identity mapping, problem (P) reduces to

$$\begin{aligned} \min _{x\in \mathbb {R}^d}~f(x)+h(x)-g(x), \qquad \text {(2)} \end{aligned}$$

referred to as the general difference-of-convex (DC) program, which is a broad class of optimization problems studied in the literature. To solve problem (2) under the convexity of g, a generalized proximal point algorithm was developed in [11]. For the case when both f and g are convex, [12] provided an accelerated difference-of-convex algorithm incorporating Nesterov’s acceleration technique into the standard difference-of-convex algorithm (DCA) to improve its performance, while [13] proposed an inexact successive quadratic approximation method. When f, h, and g are all required to be convex, a proximal difference-of-convex algorithm with extrapolation (pDCAe) was proposed in [14], and there are also other existing studies (e.g., [15, 16]) that developed algorithms to solve such a problem.

In the cases where the loss function f is smooth and the regularization r is prox-friendly in the sense that its proximal operator can be computed efficiently, the proximal gradient method is a widely used algorithm for solving (1) (for example, see [17]). Moreover, incorporating information from previous iterations to accelerate the proximal algorithm without significantly increasing the computational cost has also been an active research area. One such approach is to make use of the extrapolation technique. In this approach, momentum terms that involve the information from previous iterations are used to update the current iteration. Such techniques have been successfully implemented and achieved significant results, including Polyak’s heavy ball method [18], Nesterov’s techniques [19, 20], and the fast iterative shrinkage-thresholding algorithm (FISTA) [21]. In particular, extrapolation techniques have shown competitive results for optimization problems that involve sums of convex functions [22], differences of convex functions [14, 16], and ratios of nonconvex and nonsmooth functions [23].

In view of these successes, this paper proposes an extrapolated proximal subgradient algorithm for solving problem (P). Compared with the literature, our work relaxes the convexity and smoothness requirements on the loss function f. We also allow a closed feasible set C instead of optimizing over the whole space. This general framework allows us to tackle problems involving nonconvex loss functions such as the Lorentzian norm and problems with specific nonconvex constraints such as a spherical constraint. We then prove that the sequence generated by the algorithm is bounded and that any of its cluster points is a stationary point of the problem. We also prove the convergence of the full sequence under the Kurdyka–Łojasiewicz property. We then evaluate the performance of the proposed algorithm on a compressed sensing problem for both convex and nonconvex loss functions together with the recently proposed nonconvex \(L_1-L_2\) regularization. Finally, we formulate an optimal power flow problem considering photovoltaic systems placement, and address it using our algorithm.

The rest of this paper is organized as follows. In Section 2, we provide preliminary materials used in this work. In Section 3, we introduce our algorithm, and establish subsequential convergence and full sequential convergence of the proposed algorithm. In Section 4, we present numerical experiments for several case studies. Finally, we conclude the paper in Section 5.

2 Preliminaries

Throughout this paper, \(\mathcal {H}\) is a finite-dimensional real Hilbert space with inner product \(\langle \cdot , \cdot \rangle \) and the induced norm \(\Vert \cdot \Vert \). We use the notation \(\mathbb {N}\) for the set of nonnegative integers, \(\mathbb {R}\) for the set of real numbers, \(\mathbb {R}_+\) for the set of nonnegative real numbers, and \(\mathbb {R}_{++}\) for the set of the positive real numbers.

Let \(f:\mathcal {H}\rightarrow \left[ -\infty ,+\infty \right] \). The domain of f is \({\text {dom}}f:=\{x\in \mathcal {H}: f(x) <+\infty \}\) and the epigraph of f is \({\text {epi}}f:= \{(x,\rho )\in \mathcal {H}\times \mathbb {R}: f(x)\le \rho \}\). The function f is proper if \({\text {dom}}f \ne \varnothing \) and it never takes the value \(-\infty \), lower semicontinuous if its epigraph is a closed set, and convex if its epigraph is a convex set. We say that f is weakly convex if \(f+\frac{\alpha }{2}\Vert \cdot \Vert ^2\) is convex for some \(\alpha \in \mathbb {R}_+\). The modulus of the weak convexity is the smallest constant \(\alpha \) such that \(f+\frac{\alpha }{2}\Vert \cdot \Vert ^2\) is convex. Given a subset C of \(\mathcal {H}\), the indicator function \(\iota _C\) of C is defined by \(\iota _C(x):=0\) if \(x\in C\), and \(\iota _C(x):=+\infty \) if \(x\notin C\). If \(f+\iota _C\) is weakly convex with modulus \(\alpha \), then f is said to be weakly convex on C with modulus \(\alpha \). Some examples of weakly convex functions are quadratic functions, convex functions, and differentiable functions with Lipschitz continuous gradient.
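As a quick check of the last example (a standard argument, included here only as an illustration and not taken from the source), suppose f is differentiable on \(\mathcal {H}\) with \(\ell \)-Lipschitz gradient. The Cauchy–Schwarz inequality gives, for all \(x,y\in \mathcal {H}\),

$$\begin{aligned} \langle \nabla f(x)-\nabla f(y), x-y\rangle \ge -\Vert \nabla f(x)-\nabla f(y)\Vert \Vert x-y\Vert \ge -\ell \Vert x-y\Vert ^2, \end{aligned}$$

and therefore

$$\begin{aligned} \langle (\nabla f(x)+\ell x)-(\nabla f(y)+\ell y), x-y\rangle \ge 0. \end{aligned}$$

Thus the gradient of \(f+\frac{\ell }{2}\Vert \cdot \Vert ^2\) is monotone, so this function is convex, and f is weakly convex with modulus at most \(\ell \). The modulus can be smaller: for a convex f with Lipschitz gradient it is 0.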

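Let \(x\in \mathcal {H}\) with \(|f(x) |<+\infty \). The Fréchet subdifferential of f at x is defined by

$$\begin{aligned} \widehat{\partial } f(x):=\left\{ x^*\in \mathcal {H}: \liminf _{y\rightarrow x,\, y\ne x}\frac{f(y)-f(x)-\langle x^*,y-x\rangle }{\Vert y-x\Vert }\ge 0\right\} \end{aligned}$$

and the limiting subdifferential of f at x is defined by

$$\begin{aligned} \partial _L f(x):=\left\{ x^*\in \mathcal {H}: \exists \, x_n{\mathop {\rightarrow }\limits ^{f}}x,~ x_n^*\rightarrow x^* \text {~with~} x_n^*\in \widehat{\partial } f(x_n)\right\} , \end{aligned}$$

where the notation \(y{\mathop {\rightarrow }\limits ^{f}}x\) means \(y\rightarrow x\) with \(f(y)\rightarrow f(x)\). In the case where \(|f(x) |=+\infty \), both the Fréchet subdifferential and the limiting subdifferential of f at x are defined to be the empty set. The domain of \(\partial _L f\) is given by \({\text {dom}}\partial _L f:=\{x\in \mathcal {H}: \partial _L f(x) \ne \varnothing \}\). It can be directly verified from the definition that the limiting subdifferential has the robustness property

$$\begin{aligned} \partial _L f(x)=\left\{ x^*\in \mathcal {H}: \exists \, x_n{\mathop {\rightarrow }\limits ^{f}}x,~ x_n^*\rightarrow x^* \text {~with~} x_n^*\in \partial _L f(x_n)\right\} . \end{aligned}$$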

Next, we revisit some important properties of the limiting subdifferential.

Lemma 2.1

(Sum rule) Let \(x \in \mathcal {H}\) and let \(f, g:\mathcal {H} \rightarrow (-\infty , +\infty ]\) be proper lower semicontinuous functions. Suppose that f is finite at x and g is locally Lipschitz around x. Then \(\partial _L (f + g)({x}) \subseteq \partial _L f({x})+\partial _L g({x})\). Moreover, if g is strictly differentiable at x, then \(\partial _L(f + g)({x}) = \partial _Lf({x}) + \nabla g({x})\).

Proof

This follows from [24, Proposition 1.107(ii) and Theorem 3.36].\(\square \)

The following result, whose proof is included for completeness, is similar to [25, Lemma 2.9].

Lemma 2.2

(Upper semicontinuity of subdifferential) Let \(f:\mathcal {H} \rightarrow [-\infty , +\infty ]\) be Lipschitz continuous around \(x \in \mathcal {H}\), let \((x_n)_{n\in \mathbb {N}}\) be a sequence in \(\mathcal {H}\) converging to x, and let, for each \({n\in \mathbb {N}}\), \(x_n^* \in \partial _L f(x_n)\). Then \((x_n^*)_{n\in \mathbb {N}}\) is bounded with all cluster points contained in \(\partial _L f(x)\).

Proof

By the Lipschitz continuity of f around x, there are a neighborhood V of x and a constant \(\ell _V \in \mathbb {R}_+\) such that f is Lipschitz continuous on V with modulus \(\ell _V\). Then, by [24, Corollary 1.81], for all \(v\in V\) and \(v^*\in \partial _L f(v)\), one has \(\Vert v^*\Vert \le \ell _V\). Since \(x_n \rightarrow x\) as \(n\rightarrow +\infty \), there is \(n_0\in \mathbb {N}\) such that, for all \(n\ge n_0\), \(x_n \in V\), which implies that \(\Vert x^*_n\Vert \le \ell _V\). This means \((x_n^*)_{n\in \mathbb {N}}\) is bounded.

Now, let \(x^*\) be a cluster point of \((x_n^*)_{n\in \mathbb {N}}\), i.e., there exists a subsequence \((x_{k_n}^*)_{n\in \mathbb {N}}\) such that \(x_{k_n}^*\rightarrow x^*\) as \(n\rightarrow +\infty \). On the other hand, we have from the convergence of \((x_n)_{n\in \mathbb {N}}\) and the Lipschitz continuity of f around x that \(x_{k_n} {\mathop {\rightarrow }\limits ^{f}} x\). Therefore, \(x^*\in \partial _L f(x)\) due to the robustness property of the limiting subdifferential.\(\square \)

We end this section with the definitions of stationary points for the problem (P). A point \(\overline{x}\in C\) is said to be a

- stationary point of (P) if \(0\in \partial _L(f+\iota _C+h\circ A-g)(\overline{x})\),
- lifted stationary point of (P) if \(0\in \partial _L(f+\iota _C)(\overline{x})+A^*\nabla h(A\overline{x}) -\partial _L g(\overline{x})\).

Here \(A^*\) is the adjoint mapping of the linear mapping A.

3 Proximal subgradient algorithm with extrapolation

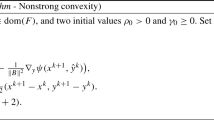

We now propose our extrapolated proximal subgradient algorithm for solving problem (P) with guaranteed convergence to stationary points.

Algorithm 1 Proximal subgradient algorithm with extrapolation

Remark 3.1

(Discussion of the algorithm structure and extrapolation parameters) Some comments on Algorithm 1 are in order.

(i) Recalling that the proximal operator of a proper function \(\phi :\mathcal {H}\rightarrow \left( -\infty ,+\infty \right] \) is defined by

$$\begin{aligned} {\text {prox}}_{\phi }(x) =\mathop {{\text {argmin}}}\limits _{y\in \mathcal {H}}\left( \phi (y) + \frac{1}{2}\Vert y-x\Vert ^2\right) , \end{aligned}$$

we see that the update of \(x_{n+1}\) in Step 2 can be written as

$$\begin{aligned} x_{n+1} \in {\text {prox}}_{\tau _n (f+\iota _C)}(v_n-\tau _n A^*\nabla h(Au_n)+\tau _n g_n). \end{aligned}$$

Therefore, Step 2 essentially boils down to the computation of the proximal operator of \(\tau _n (f+\iota _C)\). This can be done efficiently for various specific structures of f and C.

- First, in the case where \(f\equiv 0\), computing the proximal operator of \(\tau _n (f+\iota _C)\) is equivalent to computing the projection onto the set C. This can be efficiently computed in many applications or even admits a closed form solution, for example, when C is a ball, a sphere, or takes the form \(\{x\in \mathcal {H}: \Vert x\Vert _0 \le r\}\) with \(r>0\). Here, \(\Vert x\Vert _0\) denotes the cardinality of the vector x.
- Second, in the case where \(C=\mathcal {H}=\mathbb {R}^d\), this task reduces to computing the proximal operator of \(\tau _n f\), which can also have closed form solution for several nonconvex/nonsmooth functions f. These include various popular regularization functions in the literature, such as the \(L_{1/2}\) regularization \(f(x)=\sum _{i=1}^d |x_i|^{\frac{1}{2}}\) [26, Theorem 1], the \(L_1-L_2\) regularization \(f(x) =\Vert x\Vert _1 - \alpha \Vert x\Vert \) (\(\alpha \in \mathbb {R}_+\)) [2, Lemma 1], and the sum entropy and sparsity regularization terms [27, Section 3].
- In addition, when f is a convex quadratic function and C is a polyhedral set, computing the proximal operator of \(\tau _n (f+\iota _C)\) is equivalent to solving a convex quadratic programming problem. When f is a nonconvex quadratic function and C is the unit ball, this reduces to a trust region problem which can be solved as a generalized eigenvalue problem or a semi-definite programming problem. For further tractable cases, see, e.g., [23, Remark 4.1].

(ii) Let us consider the case when A is the identity mapping and \(C = \mathcal {H}\). We fix an arbitrary \(\tau \in \left( 0, 1/\ell \right) \) and choose \(\overline{\lambda } =\overline{\mu } =0\) (which yields \(\lambda _n = \mu _n =0\)), \(\delta \in \left( 0, 1/(2\tau ) -\ell /2\right) \), and \(\tau _n =\tau \). Then the update of \(x_{n+1}\) in Step 2 becomes

$$\begin{aligned} x_{n+1}\in {\text {prox}}_{\tau f}(x_n-\tau \nabla h(x_n) + \tau g_n), \end{aligned}$$

which is the so-called generalized proximal point algorithm (GPPA) in [11], where g is assumed to be convex (in this case, \(\beta =0\) and \(1/\tau _n = 1/\tau >2\delta +\ell =\beta +2\delta +\ell \Vert A\Vert ^2\left( 2\overline{\lambda }+1\right) +2\overline{\mu }\)).

(iii) In the case where \(h\equiv 0\), the objective function F reduces to \(f-g\) and the update of \(x_{n+1}\) in Step 2 reduces to

$$\begin{aligned} x_{n+1} \in {\text {prox}}_{\tau _n (f+\iota _C)}(v_n+\tau _n g_n). \end{aligned}$$

In turn, if \(C = \mathcal {H}\) and \(\mu _n=0\), Algorithm 1 reduces to the proximal linearized algorithm proposed in [28], which requires that f and g are convex.

(iv) When \(g \equiv 0\), A is the identity mapping, and \(C = \mathcal {H}\), the objective function reduces to \(f+h\). By choosing \(\lambda _n =\mu _n\), we have

$$\begin{aligned} x_{n+1} \in {\text {prox}}_{\tau _n f}(u_n-\tau _n\nabla h(u_n)) \end{aligned}$$

and Algorithm 1 reduces to the inertial forward-backward algorithm studied in [22], in which an additional requirement of the convexity of h is imposed.

(v) Motivated by the popular parameter used in FISTA and also its variants (such as the restarted FISTA scheme) [17, Chapter 10], a plausible option for the extrapolation parameters \(\lambda _n\) and \(\mu _n\) (which will be used in our computation later) is

$$\begin{aligned} \lambda _n =\overline{\lambda }\frac{\kappa _{n-1}-1}{\kappa _n} \text {~~and~~} \mu _n =\overline{\mu }\tau _n\frac{\kappa _{n-1}-1}{\kappa _n}, \end{aligned}$$

where \(\kappa _{-1} =\kappa _0 =1\) and \(\kappa _{n+1} =\frac{1+\sqrt{1+4\kappa _n^2}}{2}\). It can be seen that, for all \(n\in \mathbb {N}\), \(1\le \kappa _{n-1} <\kappa _n +1\), and so \(\lambda _n \in [0, \overline{\lambda }]\) and \(\mu _n \in [0, \overline{\mu }\tau _n]\). We can also reset \(\kappa _{n-1} =\kappa _n =1\) whenever n is a multiple of some fixed integer \(n_0\).
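To make item (i) of the remark concrete, the following is a minimal sketch of the three closed-form projections mentioned there for the case \(f\equiv 0\): onto a ball, onto a sphere, and onto the sparsity set \(\{x: \Vert x\Vert _0\le r\}\). The function names are hypothetical and chosen only for illustration, vectors are plain Python lists, and the tie-breaking choices (the point returned when projecting the origin onto a sphere, and which entries are kept among equal magnitudes) are assumptions, since the projection is set-valued in those cases.

```python
import math


def project_ball(x, radius=1.0):
    """Euclidean projection onto the closed ball {y : ||y|| <= radius}."""
    norm = math.sqrt(sum(t * t for t in x))
    if norm <= radius:
        return list(x)
    return [radius * t / norm for t in x]


def project_sphere(x, radius=1.0):
    """One projection onto the sphere {y : ||y|| = radius}.

    The projection is unique unless x = 0, where every point of the sphere
    is a nearest point; radius * e_1 is returned then (an arbitrary choice).
    """
    norm = math.sqrt(sum(t * t for t in x))
    if norm == 0.0:
        return [radius] + [0.0] * (len(x) - 1)
    return [radius * t / norm for t in x]


def project_sparse(x, r):
    """One projection onto {y : ||y||_0 <= r}: keep r largest-magnitude entries."""
    order = sorted(range(len(x)), key=lambda i: -abs(x[i]))  # stable sort
    keep = set(order[:r])
    return [x[i] if i in keep else 0.0 for i in range(len(x))]
```

For instance, `project_sparse([1.0, -5.0, 2.0, 0.5], 2)` keeps the entries `-5.0` and `2.0` and zeroes the rest. Since the sphere and the sparsity set are nonconvex, these maps return one element of a possibly multi-valued projection, which matches the inclusion (rather than equality) used for the update of \(x_{n+1}\).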

From now on, let \((x_n)_{n\in \mathbb {N}}\) be a sequence generated by Algorithm 1. Under suitable assumptions, we show in the next theorem that \((x_n)_{n\in \mathbb {N}}\) is bounded and any of its cluster points is a stationary point of problem (P).

Theorem 3.2

(Subsequential convergence) For problem (P), suppose that the function F is bounded from below on C and that the set \(C_0:=\{x\in C: F(x) \le F(x_0)\}\) is bounded. Set \(c:=\frac{1}{2}(\ell \Vert A\Vert ^2\overline{\lambda }+\overline{\mu })\). Then the following statements hold:

(i) For all \(n\in \mathbb {N}\),

$$\begin{aligned}&(F(x_{n+1}) + c\Vert x_{n+1}-x_{n}\Vert ^2)+\delta \Vert x_{n+1}-x_{n}\Vert ^2 \le F(x_n)+ c\Vert x_{n}-x_{n-1}\Vert ^2 \qquad \text {(3)} \end{aligned}$$

and the sequence \((F(x_n))_{n\in \mathbb {N}}\) is convergent.

(ii) The sequence \((x_n)_{n\in \mathbb {N}}\) is bounded and \(x_{n+1}-x_n\rightarrow 0\) as \(n\rightarrow +\infty \).

(iii) Suppose that \(\liminf _{n\rightarrow +\infty } \tau _n =\overline{\tau } >0\) and let \(\overline{x}\) be a cluster point of \((x_n)_{n\in \mathbb {N}}\). Then \(\overline{x}\in C\cap {\text {dom}}f\), \(F(x_n)\rightarrow F(\overline{x})\), and \(\overline{x}\) is a lifted stationary point of (P). Moreover, \(\overline{x}\) is a stationary point of (P) provided that g is strictly differentiable on an open set containing \(C\cap {\text {dom}}f\).

Proof

(i) & (ii): We see from Step 2 of Algorithm 1 that, for all \(n\in \mathbb {N}\), \(x_n\in C\) and

Therefore, for all \(n\in \mathbb {N}\) and all \(x\in C\),

or equivalently,

By the Lipschitz continuity of \(\nabla h\), we derive from [20, Lemma 1.2.3] that

As \(g_n\in \partial _L g(x_n)\) and \(x_n,~ x_{n+1}\in C\), it follows from the weak convexity of g and [23, Lemma 4.1] that

Letting \(x =x_n\in C\) in (4) and combining with the last two inequalities, we obtain that

By the definition of \(u_n\) and \(v_n\), we have \(x_n-u_n =-\lambda _n(x_n-x_{n-1})\), \(x_{n+1}-v_n =(x_{n+1}-x_n) -\mu _n(x_n-x_{n-1})\), \(x_n-v_n =-\mu _n(x_n-x_{n-1})\), and so

where we have used \(\langle x_{n+1}-x_n, x_n-x_{n-1} \rangle \le \Vert x_{n+1}-x_n\Vert \Vert x_n-x_{n-1}\Vert \le \frac{1}{2}(\Vert x_{n+1}-x_n\Vert ^2 + \Vert x_n-x_{n-1} \Vert ^2)\). Rearranging terms yields

Since \(\lambda _n \in [0, \overline{\lambda }]\), \(\mu _n \in [0, \overline{\mu }\tau _n]\), and \(1/\tau _n \ge \beta +2\delta +\ell \Vert A\Vert ^2\left( 2\overline{\lambda }+1\right) +2\overline{\mu }\), it follows that

which proves (3).

Recalling \(c =\frac{1}{2}(\ell \Vert A\Vert ^2\overline{\lambda }+\overline{\mu })\) and setting \(\mathcal {F}_n:=F(x_n) +c\Vert x_n-x_{n-1}\Vert ^2\), we have

Since \(\delta >0\), the sequence \((\mathcal {F}_n)_{n\in \mathbb {N}}\) is nonincreasing. Since F is bounded below on C, the sequence \((\mathcal {F}_n)_{n\in \mathbb {N}}\) is bounded below, and it is therefore convergent. After rearranging (5) and performing telescoping, we obtain that, for all \(m\in \mathbb {N}\),

Denoting \(\overline{\mathcal {F}}:=\lim _{n\rightarrow +\infty } \mathcal {F}_n\) and letting \(m\rightarrow +\infty \), we obtain that

Therefore, as \(n \rightarrow +\infty \), \(x_{n+1}-x_{n} \rightarrow 0\), and so \(F(x_n) =\mathcal {F}_n-c\Vert x_n-x_{n-1}\Vert ^2 \rightarrow \overline{\mathcal {F}}\), which means that the sequence \((F(x_n))_{n\in \mathbb {N}}\) is convergent.

Now, we observe that

which implies \(x_n\in C_0 =\{x\in C: F(x) \le F(x_0)\}\). Hence, \((x_n)_{n\in \mathbb {N}}\) is bounded due to the boundedness of \(C_0\).

(iii): As \(\overline{x}\) is a cluster point of the sequence \((x_n)_{n\in \mathbb {N}}\), there exists a subsequence \((x_{k_n})_{n\in \mathbb {N}}\) of \((x_n)_{n\in \mathbb {N}}\) such that \(x_{k_n}\rightarrow \overline{x}\) as \(n \rightarrow +\infty \). Then \(\overline{x}\in C\) because C is closed and, since \(x_{n+1}-x_n\rightarrow 0\), one has \(x_{k_n-1}\rightarrow \overline{x}\), and so do \(u_{k_n-1}\) and \(v_{k_n-1}\). Since \(g +\frac{\beta }{2}\Vert \cdot \Vert ^2\) is a continuous convex function on an open set O containing C, we obtain from [29, Example 9.14] that g is locally Lipschitz continuous on O. In view of Lemma 2.2, since \(x_{k_n}\rightarrow \overline{x}\) as \(n\rightarrow +\infty \), passing to a subsequence if necessary, we can assume that \(g_{k_n}\rightarrow \overline{g}\in \partial _L g(\overline{x})\) as \(n\rightarrow +\infty \).

Replacing n in (4) with \(k_n-1\), we have for all \(n\in \mathbb {N}\) and all \(x\in C\) that

As \(\liminf _{n\rightarrow +\infty } \tau _n =\overline{\tau } >0\), letting \(x=\overline{x}\) and \(n\rightarrow \infty \), we obtain that \(f(\overline{x}) \ge \limsup _{n\rightarrow +\infty } {f(x_{k_n})}\). Since f is lower semicontinuous, it follows that \(\lim _{n \rightarrow +\infty } f(x_{k_n}) =f(\overline{x})\). On the other hand, \(\lim _{n \rightarrow +\infty } g(x_{k_n}) =g(\overline{x})\) and \(\lim _{n \rightarrow +\infty } h(Ax_{k_n}) =h(A\overline{x})\) due to the continuity of g and h. Therefore,

Next, by letting \(n\rightarrow +\infty \) in (6), for all \(x\in C\),

which can be rewritten as

This means \(\overline{x}\) is a minimizer of the function \((f+\langle \nabla h(A\overline{x}),A\cdot -A\overline{x}\rangle -\langle \overline{g}, \cdot -\overline{x}\rangle +\frac{1}{2\overline{\tau }}\Vert \cdot -\overline{x}\Vert ^2)(x)\) over C. Hence, \(0\in \partial _L(f+\langle \nabla h(A\overline{x}),A\cdot -A\overline{x}\rangle -\langle \overline{g}, \cdot -\overline{x}\rangle +\frac{1}{2\overline{\tau }}\Vert \cdot -\overline{x}\Vert ^2+\iota _C)(\overline{x}) =\partial _L(f+\iota _C)(\overline{x}) +A^*\nabla h(A\overline{x}) -\overline{g}\), and we must have \(\overline{x}\in C\cap {\text {dom}}f\). Since \(\overline{g}\in \partial _L g(\overline{x})\), we deduce that \(0\in \partial _L(f+\iota _C)(\overline{x}) +A^*\nabla h(A\overline{x}) -\partial _L g(\overline{x})\), i.e., \(\overline{x}\) is a lifted stationary point of (P). In addition, if we further require that g is strictly differentiable, then Lemma 2.1 implies that \(\overline{x}\) is a stationary point of (P). \(\square \)
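The origin of the step-size condition \(1/\tau _n \ge \beta +2\delta +\ell \Vert A\Vert ^2\left( 2\overline{\lambda }+1\right) +2\overline{\mu }\) can be traced with the following sketch. It assumes that Step 2 is the proximal update recalled in Remark 3.1(i) with \(u_n =x_n+\lambda _n(x_n-x_{n-1})\) and \(v_n =x_n+\mu _n(x_n-x_{n-1})\), and it is one possible route to (3) that need not coincide line by line with the argument above. Write \(D_n:=\Vert x_{n+1}-x_n\Vert \) and \(d_n:=\Vert x_n-x_{n-1}\Vert \). Comparing the value of the proximal subproblem at \(x_{n+1}\) and at \(x_n\), and adding the descent lemma for h at \(Ax_n\) and the weak convexity inequality for g, one gets

$$\begin{aligned} F(x_{n+1})\le F(x_n)+\langle \nabla h(Ax_n)-\nabla h(Au_n), A(x_{n+1}-x_n)\rangle +\frac{\ell \Vert A\Vert ^2+\beta }{2}D_n^2+\frac{1}{2\tau _n}\left( \Vert x_n-v_n\Vert ^2-\Vert x_{n+1}-v_n\Vert ^2\right) . \end{aligned}$$

The two remaining terms are bounded using \(\lambda _n\le \overline{\lambda }\), \(\mu _n\le \overline{\mu }\tau _n\), and \(2D_nd_n\le D_n^2+d_n^2\):

$$\begin{aligned} \langle \nabla h(Ax_n)-\nabla h(Au_n), A(x_{n+1}-x_n)\rangle&\le \ell \Vert A\Vert ^2\lambda _n d_nD_n\le \frac{\ell \Vert A\Vert ^2\overline{\lambda }}{2}\left( D_n^2+d_n^2\right) ,\\ \frac{1}{2\tau _n}\left( \Vert x_n-v_n\Vert ^2-\Vert x_{n+1}-v_n\Vert ^2\right)&=\frac{1}{2\tau _n}\left( -D_n^2+2\mu _n\langle x_{n+1}-x_n,x_n-x_{n-1}\rangle \right) \le -\frac{1}{2\tau _n}D_n^2+\frac{\overline{\mu }}{2}\left( D_n^2+d_n^2\right) . \end{aligned}$$

With \(c=\frac{1}{2}(\ell \Vert A\Vert ^2\overline{\lambda }+\overline{\mu })\), collecting terms gives

$$\begin{aligned} F(x_{n+1})+cD_n^2+\frac{1}{2}\left( \frac{1}{\tau _n}-\beta -\ell \Vert A\Vert ^2\left( 2\overline{\lambda }+1\right) -2\overline{\mu }\right) D_n^2\le F(x_n)+cd_n^2, \end{aligned}$$

and the coefficient in the middle is at least \(\delta \) exactly when the step-size condition holds, which is inequality (3).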

Next, we establish the convergence of the full sequence generated by Algorithm 1. In order to do this, we recall that a proper lower semicontinuous function \(G:\mathcal {H}\rightarrow \left( -\infty , +\infty \right] \) satisfies the Kurdyka–Łojasiewicz (KL) property [30, 31] at \(\overline{x} \in {\text {dom}}\partial _L G\) if there exist \(\eta \in (0, +\infty ]\), a neighborhood V of \(\overline{x}\), and a continuous concave function \(\phi : \left[ 0, \eta \right) \rightarrow \mathbb {R}_+\) such that \(\phi \) is continuously differentiable with \(\phi ' > 0\) on \((0, \eta )\), \(\phi (0) = 0\), and, for all \(x \in V\) with \( G(\overline{x})< G(x) < G(\overline{x}) + \eta \),

$$\begin{aligned} \phi '(G(x)-G(\overline{x}))\,{\text {dist}}(0,\partial _L G(x))\ge 1. \end{aligned}$$

We say that G is a KL function if it satisfies the KL property at any point in \({\text {dom}}\partial _L G\). If G satisfies the KL property at \(\overline{x} \in {\text {dom}}\partial _L G\), in which the corresponding function \(\phi \) can be chosen as \(\phi (t) = c t ^{1 - \theta }\) for some \(c \in \mathbb {R}_{++}\) and \(\theta \in [0, 1)\), then G is said to satisfy the KL property at \(\overline{x}\) with exponent \(\theta \). The function G is called a KL function with exponent \(\theta \) if it is a KL function and has the same exponent \(\theta \) at any \(x \in {\text {dom}}\partial _L G\).
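A one-dimensional illustration (a standard textbook example, not taken from the source) may help fix ideas. It uses the usual form of the KL inequality, \(\phi '(G(x)-G(\overline{x}))\,{\text {dist}}(0,\partial _L G(x))\ge 1\). Take \(G(x)=\frac{1}{2}x^2\) on \(\mathbb {R}\), \(\overline{x}=0\), \(V=\mathbb {R}\), \(\eta =+\infty \), and \(\phi (t)=\sqrt{2}\,t^{1/2}\), so that \(\phi '(t)=1/\sqrt{2t}\). For every \(x\ne 0\),

$$\begin{aligned} \phi '(G(x)-G(0))\,{\text {dist}}(0,\partial _L G(x)) =\frac{1}{\sqrt{x^2}}\,|x| =1\ge 1. \end{aligned}$$

Hence G satisfies the KL property at 0 with \(\phi (t)=ct^{1-\theta }\), \(c=\sqrt{2}\), and exponent \(\theta =\frac{1}{2}\).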

Theorem 3.3

(Full sequential convergence) For problem (P), suppose that F is bounded from below on C, that the set \(C_0:=\{x\in C: F(x) \le F(x_0)\}\) is bounded, that g is differentiable on an open set containing \(C\cap {\text {dom}}f\) whose gradient \(\nabla g\) is Lipschitz continuous with modulus \(\ell _g\) on \(C\cap {\text {dom}}f\), and that \(\liminf _{n\rightarrow +\infty } \tau _n =\overline{\tau } >0\). Define

$$\begin{aligned} G(x,y):=F(x)+\iota _C(x)+c\Vert x-y\Vert ^2, \end{aligned}$$

where \(c =\frac{1}{2}(\ell \Vert A\Vert ^2\overline{\lambda }+\overline{\mu })\), and suppose that G satisfies the KL property at \((\overline{x},\overline{x})\) for every \(\overline{x} \in C\cap {\text {dom}}f\). Then

(i) The sequence \((x_n)_{n\in \mathbb {N}}\) converges to a stationary point \(x^*\) of (P) and \(\sum _{n=0}^{+\infty }\Vert x_{n+1}-x_n\Vert <+\infty \).

(ii) Suppose further that G satisfies the KL property with exponent \(\theta \in [0,1)\) at \((\overline{x},\overline{x})\) for every \(\overline{x} \in C\cap {\text {dom}}f\). The following statements hold:

- (a) If \(\theta =0\), then \((x_n)_{n\in \mathbb {N}}\) converges to \(x^*\) in a finite number of steps.
- (b) If \(\theta \in (0,\frac{1}{2}]\), then there exist \(\gamma \in \mathbb {R}_{++}\) and \(\rho \in \left( 0,1\right) \) such that, for all \(n\in \mathbb {N}\), \(\Vert x_n-x^*\Vert \le \gamma \rho ^{\frac{n}{2}}\) and \(|F(x_n) -F(x^*) |\le \gamma \rho ^n\).
- (c) If \(\theta \in (\frac{1}{2},1)\), then there exists \(\gamma \in \mathbb {R}_{++}\) such that, for all \(n\in \mathbb {N}\), \(\Vert x_n-x^*\Vert \le \gamma n^{-\frac{1-\theta }{2\theta -1}}\) and \(|F(x_n) -F(x^*)|\le \gamma n^{-\frac{2-2\theta }{2\theta -1}}\).

Proof

For each \(n\in \mathbb {N}\), let \(z_n =(x_{n+1},x_n)\). According to Theorem 3.2, we have that, for all \(n\in \mathbb {N}\),

that the sequence \((z_n)_{n\in \mathbb {N}}\) is bounded, that \(z_{n+1}-z_n\rightarrow 0\) as \(n\rightarrow +\infty \), and that for every cluster point \(\overline{z}\) of \((z_n)_{n\in \mathbb {N}}\), \(\overline{z} = (\overline{x}, \overline{x})\), where \(\overline{x}\in C\cap {\text {dom}}f\) is a stationary point of (P) and \(G(z_n) = F(x_{n+1}) +c\Vert x_{n+1}-x_n\Vert ^2\rightarrow F(\overline{x}) =G(\overline{z})\) as \(n\rightarrow +\infty \).

Let \(n\in \mathbb {N}\). It follows from the update of \(x_{n+1}\) in Step 2 of Algorithm 1 that

which implies that

Noting that \(G(z_n) =(f+\iota _C)(x_{n+1})+h(Ax_{n+1})-g(x_{n+1})+c\Vert x_{n+1}-x_n\Vert ^2\) and that

we obtain

Since \(\Vert x_{n+1}-u_n\Vert \le \Vert x_{n+1}-x_n\Vert +\lambda _n\Vert x_n-x_{n-1}\Vert \) and \(\Vert x_{n+1}-v_n\Vert \le \Vert x_{n+1}-x_n\Vert +\mu _n\Vert x_n-x_{n-1}\Vert \), we derive that

Since \(\liminf _{n\rightarrow +\infty } \tau _n = \overline{\tau } > 0\), there exists \(n_0 \in \mathbb {N}\) such that, for all \(n \ge n_0\), \(\tau _n \ge \overline{\tau }/2\). Recalling that \(\lambda _n \le \overline{\lambda }\) and \(\frac{\mu _n}{\tau _n} \le \overline{\mu }\), we have for all \(n\ge n_0\) that

where \(\eta _1 =\ell _g+\ell \Vert A\Vert +\frac{2}{\overline{\tau }}+4c\) and \(\eta _2 =\ell \Vert A\Vert \overline{\lambda }+\overline{\mu }\). Now, the first conclusion follows by applying [23, Theorem 5.1] with \(I =\{1,2\}\), \(\lambda _1 =\frac{\eta _1}{\eta _1+\eta _2}\), \(\lambda _2 =\frac{\eta _2}{\eta _1+\eta _2}\), \(\Delta _n =\Vert x_{n+2}-x_{n+1}\Vert \), \(\alpha _n \equiv \delta \), \(\beta _n \equiv \frac{1}{\eta _1+\eta _2}\), and \(\varepsilon _n \equiv 0\). The remaining conclusions follow a rather standard line of argument as used in [23, 32, 33], see also [34, Theorem 3.11].\(\square \)

Remark 3.4

(KL property and KL exponents) In the preceding theorem, the convergence of the full sequence generated by Algorithm 1 requires the KL property of the function G of the form \(G(x,y):=F(x) +\iota _C(x) +c\Vert x-y\Vert ^2\), where F is the objective function of the model problem (P), C is the feasible region of problem (P), and \(c>0\). We note that this assumption holds for a broad class of instances of problem (P), namely those in which F is a semi-algebraic function and C is a semi-algebraic set. More generally, it continues to hold when F is a definable function and C is a definable set (see [30, 35]).

As simple illustrations, in our case study in the next section, we will consider the following two classes of functions:

(i) \(F(x) = \varphi (Ax)+\gamma (\Vert x\Vert _1-\alpha \Vert x\Vert )\), where \(\varphi (z) = \frac{1}{2}\Vert z-b\Vert ^2\) (least square loss) or \(\varphi (z) = \Vert z-b\Vert _{LL_{2,1}} = \sum _{i=1}^{m}\log \left( 1+|z_i-b_i |^2\right) \) (Lorentzian norm loss [6]), \(A \in \mathbb {R}^{m\times d}\), \(b\in \mathbb {R}^m\), \(\alpha \in \mathbb {R}_+\), and \(\gamma \in \mathbb {R}_{++}\).

(ii) \(F(x) = \frac{1}{2}x^TMx+u^Tx+r\), where M is a \(d \times d\) symmetric matrix, \(u \in \mathbb {R}^d\), and \(r \in \mathbb {R}\).

Let \(G(x,y):=F(x) +\iota _C(x) +c\Vert x-y\Vert ^2\), where \(c > 0\) and C is a semi-algebraic set in \(\mathbb {R}^d\). Then, in both cases, G is definable, and so, it satisfies the KL property at \((\overline{x},\overline{x})\) for all \(\overline{x} \in C\cap {\text {dom}}F\). Moreover, for case (ii), if C is further assumed to be a polyhedral set, then as shown in [33] the KL exponent for G is \(\frac{1}{2}\), and by Theorem 3.3, the proposed algorithm exhibits a linear convergence rate.
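For readers who want to experiment with the Lorentzian norm loss from case (i), here is a minimal sketch of the loss \(\varphi (z)=\sum _{i=1}^{m}\log (1+|z_i-b_i|^2)\) and its gradient. The function names are hypothetical, and vectors are plain Python lists. As a side computation that is not stated in the source, each second derivative \(2(1-t^2)/(1+t^2)^2\) with \(t=z_i-b_i\) lies in \([-\frac{1}{4},2]\), so \(\nabla \varphi \) is Lipschitz continuous with modulus 2 while \(\varphi \) itself is nonconvex.

```python
import math


def lorentzian_loss(z, b):
    """phi(z) = sum_i log(1 + (z_i - b_i)^2)."""
    return sum(math.log1p((zi - bi) ** 2) for zi, bi in zip(z, b))


def lorentzian_grad(z, b):
    """Gradient of phi: component i equals 2 t_i / (1 + t_i^2), t_i = z_i - b_i."""
    return [2.0 * (zi - bi) / (1.0 + (zi - bi) ** 2) for zi, bi in zip(z, b)]
```

The gradient components are bounded by 1 in absolute value, which is the usual reason this loss is considered robust to large residuals.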

4 Case studies

In this section, we provide the numerical results of our proposed algorithm for two case studies: compressed sensing with \(L_1-L_2\) regularization, and optimal power flow problem which considers photovoltaic systems placement for a low voltage network. All of the experiments are performed in MATLAB R2021b on a 64-bit laptop with Intel(R) Core(TM) i7-1165G7 CPU (2.80GHz) and 16GB of RAM.

4.1 Compressed sensing with \(L_1-L_2\) regularization

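We consider the compressed sensing problem

$$\begin{aligned} \min _{x\in \mathbb {R}^d}~\varphi (Ax)+\gamma (\Vert x\Vert _1-\alpha \Vert x\Vert ), \qquad \text {(7)} \end{aligned}$$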

where \(A\in \mathbb {R}^{m\times d}\) is an underdetermined sensing matrix of full row rank, \(\gamma \in \mathbb {R}_{++}\), and \(\alpha \in \mathbb {R}_{++}\). Here, \(\varphi \) can be either the least square loss function or the Lorentzian norm loss function mentioned in Remark 3.4.

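In our numerical experiments, we let \(\alpha =1\) to be consistent with the setting in [2]. We start with the least square loss function. By letting \(\varphi (z)=\frac{1}{2}\Vert z-b\Vert ^2\), where \(b\in \mathbb {R}^m \setminus \{0\}\), the problem (7) now becomes

$$\begin{aligned} \min _{x\in \mathbb {R}^d}~\frac{1}{2}\Vert Ax-b\Vert ^2+\gamma (\Vert x\Vert _1-\Vert x\Vert ). \qquad \text {(8)} \end{aligned}$$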

This is known as the regularized least square problem, which has many applications in signal and image processing [2, 36, 37]. To solve problem (8), we use Algorithm 1 with \(f =\gamma \Vert \cdot \Vert _1\), \(h =\varphi \), and \(g =\gamma \Vert \cdot \Vert \). Then the update of \(x_{n+1}\) in Step 2 of Algorithm 1 reads as

$$\begin{aligned} x_{n+1} ={\text {prox}}_{\gamma \tau _n\Vert \cdot \Vert _1}(w_n), \end{aligned}$$

where \(w_n =v_n-\tau _n A^*(Au_n-b)+\gamma \tau _n g_n\), and where \(g_n\in \partial _L \Vert \cdot \Vert (x_n)\) is given by

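In this case, the proximal operator is the soft shrinkage operator [21], and so, for all \(i=1,\dots ,d\),

$$\begin{aligned} (x_{n+1})_i ={\text {sign}}((w_n)_i)\max \{0, |(w_n)_i|-\gamma \tau _n\}. \end{aligned}$$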

For this test case, we compare our proposed Algorithm 1 with the following algorithms:

- Alternating direction method of multipliers (ADMM) proposed in [2];
- Generalized proximal point algorithm (GPPA) proposed in [11];
- Proximal difference-of-convex algorithm with extrapolation (pDCAe) in [14].

Note that the ADMM algorithm uses the \(L_1-L_2\) proximal operator which was first proposed in [2]. For ADMM, we have \(f(x) =\gamma \Vert x\Vert _1-\gamma \Vert x\Vert \), \(h(x) =\varphi (Ax)\), and \(g \equiv 0\). For GPPA and pDCAe, we let \(f(x) =\gamma \Vert x\Vert _1\), \(h(x) =\varphi (Ax)\), and \(g(x) =\gamma \Vert x\Vert \). The parameters of ADMM and pDCAe are derived from [2] and [14], respectively. The step sizes for GPPA and pDCAe are \(0.8/\lambda _{\max }(A^TA)\) and \(1/\lambda _{\max }(A^TA)\), respectively, where \(\lambda _{\max }(M)\) is the maximum eigenvalue of a symmetric matrix M. We set \(\gamma =0.1\) and run all algorithms, initialized at the origin, for a maximum of 3000 iterations. Note that \(\beta =0\) (since g is convex) and \(\ell =1\) (since \(\nabla \varphi (z) =z-b\)). For our proposed algorithm, \(\delta =5\times 10^{-25}\), \(\overline{\lambda }=0.1\), \(\overline{\mu }=0.01\), \(\tau _n=1/\left( 2\delta +\ell \Vert A\Vert ^2\left( 2\overline{\lambda }+1\right) +2\overline{\mu }\right) \) with \(\Vert A\Vert \) being the spectral norm, and

$$\begin{aligned} \lambda _n =\overline{\lambda }\frac{\kappa _{n-1}-1}{\kappa _n} \text {~~and~~} \mu _n =\overline{\mu }\tau _n\frac{\kappa _{n-1}-1}{\kappa _n}, \end{aligned}$$

where \(\kappa _{-1}=\kappa _0=1\) and \(\kappa _{n+1}=\frac{1+\sqrt{1+4\kappa _n^2}}{2}\). Here, we adopt the well-known restarting technique (see, for example, [17, Chapter 10]) and reset \(\kappa _{n-1}=\kappa _n=1\) every 50 iterations. This technique has been used in several existing studies such as [14, 23]. We generate the vector b using the same method as in [2]. For the matrix A, we use both randomly generated Gaussian matrices and discrete cosine transform (DCT) matrices. For each case, we consider different matrix sizes \(m \times d\) with sparsity level s, as given in Table 1. For the ground truth sparse vector \(x_g\), a random index set is generated and the nonzero entries are drawn from the standard normal distribution. The stopping condition for all algorithms is \(\frac{\Vert x_{n+1}-x_n\Vert }{\Vert x_n\Vert }<10^{-8}\).
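
The parameter choices above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, and the FISTA-type weight \(\lambda _n=\overline{\lambda }\,(\kappa _{n-1}-1)/\kappa _n\) used below is an assumed form of the extrapolation parameter, not one confirmed by the text here. The recursion for \(\kappa _n\), the restart period of 50, and the step size formula follow the description above.

```python
import math

def step_size(ell, norm_A_sq, delta=5e-25, lam_bar=0.1, mu_bar=0.01):
    """tau_n = 1 / (2*delta + ell*||A||^2*(2*lam_bar + 1) + 2*mu_bar)."""
    return 1.0 / (2 * delta + ell * norm_A_sq * (2 * lam_bar + 1) + 2 * mu_bar)

def extrapolation_weights(num_iters, lam_bar=0.1, restart_every=50):
    """Return [lambda_0, ..., lambda_{num_iters-1}] with periodic restarts.

    Assumed form: lambda_n = lam_bar * (kappa_{n-1} - 1) / kappa_n.
    """
    k_prev, k_cur = 1.0, 1.0  # kappa_{-1} = kappa_0 = 1
    weights = []
    for n in range(num_iters):
        if n > 0 and n % restart_every == 0:
            k_prev, k_cur = 1.0, 1.0  # restart: reset kappa_{n-1} = kappa_n = 1
        weights.append(lam_bar * (k_prev - 1.0) / k_cur)
        # kappa_{n+1} = (1 + sqrt(1 + 4 * kappa_n^2)) / 2
        k_prev, k_cur = k_cur, (1.0 + math.sqrt(1.0 + 4.0 * k_cur * k_cur)) / 2.0
    return weights
```

Under this assumed form, the weights stay strictly below \(\overline{\lambda }\), grow between restarts, and drop back to zero at each restart.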

In Table 2, we report the CPU time, the number of iterations, the objective function value at termination, and the error with respect to the ground truth at termination, each averaged over 30 random instances. Since Step 2 involves matrix multiplications, the CPU time increases significantly with the dimension of the matrices. In terms of running time, objective function value, number of iterations, and error with respect to the ground truth solution (defined as \(\frac{\Vert x_{n+1}-x_g\Vert }{\Vert x_g\Vert }\)), our proposed algorithm outperforms ADMM and GPPA in all test cases and appears to be comparable to pDCAe. Note that our algorithm can be applied to a more general framework than pDCAe (see the next numerical experiment with the Lorentzian norm loss function for an illustration).
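
The two quantities used in these experiments, the relative change in the stopping condition and the relative error to the ground truth, can be computed as in the following sketch. The function names are hypothetical. Since all algorithms are initialized at the origin, the denominator \(\Vert x_n\Vert \) vanishes at the first iteration; returning infinity in that case (so that the test does not stop the run) is an assumption of this sketch, not something stated in the text.

```python
import math

def norm2(v):
    """Euclidean norm of a vector stored as a list."""
    return math.sqrt(sum(t * t for t in v))

def relative_change(x_new, x_old):
    """||x_new - x_old|| / ||x_old||, used in the stopping condition.
    Assumption: returns infinity when x_old is the zero vector."""
    denom = norm2(x_old)
    if denom == 0.0:
        return math.inf
    return norm2([a - b for a, b in zip(x_new, x_old)]) / denom

def relative_error(x, x_g):
    """||x - x_g|| / ||x_g||, the error with respect to the ground truth."""
    return norm2([a - b for a, b in zip(x, x_g)]) / norm2(x_g)

def should_stop(x_new, x_old, tol=1e-8):
    """Stopping test: relative change below the tolerance."""
    return relative_change(x_new, x_old) < tol
```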

Next, we consider the Lorentzian norm loss function by letting \(\varphi (z)=\Vert z-b\Vert _{LL_{2,1}}\). The Lorentzian norm can be useful in robust sparse signal reconstruction [6]. In this case, the optimization problem (7) becomes

\[\min _{x\in \mathbb {R}^d}\ \Vert Ax-b\Vert _{LL_{2,1}}+\gamma \left( \Vert x\Vert _1-\Vert x\Vert \right) . \tag{9}\]

We note that

\[\nabla \varphi (z)=\left( \frac{2(z_i-b_i)}{1+(z_i-b_i)^2}\right) _{i=1}^{m}\]

is Lipschitz continuous with modulus \(\ell =2\). Since the loss function is now nonconvex and the pDCAe algorithm in [14] requires a convex loss function, pDCAe is not applicable in this case. Moreover, the ADMM algorithm in [2] is also not directly applicable due to the presence of the Lorentzian norm. Therefore, we compare our method with the GPPA only. For GPPA, we let \(h(x)=\varphi (Ax)\). The step size for GPPA is \(\tau =0.8/(2\lambda _{\max }(A^TA))\). For this case, we set \(\gamma =0.001\) and run the GPPA and our proposed algorithm, which are both initialized at the origin, for a maximum of 4000 iterations. The remaining parameters of our algorithm are set to the same values as before. We also use 30 random instances of the previous 8 test cases. The results are presented in Table 3. It can be seen from Table 3 that the proposed algorithm outperforms GPPA in this case.
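
The Lipschitz modulus \(\ell =2\) can be checked numerically. The sketch below assumes the usual definition of the Lorentzian norm with unit scale, \(\Vert u\Vert _{LL_{2,1}}=\sum _i \log (1+u_i^2)\), which is not restated in this section; the function names are hypothetical. Each component of the gradient is \(2t/(1+t^2)\), whose derivative \(2(1-t^2)/(1+t^2)^2\) has absolute value at most 2, attained at \(t=0\).

```python
import math

def lorentzian(u):
    """Assumed definition: sum_i log(1 + u_i^2) (Lorentzian norm, unit scale)."""
    return sum(math.log1p(t * t) for t in u)

def lorentzian_grad(u):
    """Gradient of the assumed Lorentzian norm: 2*u_i / (1 + u_i^2) per component."""
    return [2.0 * t / (1.0 + t * t) for t in u]

def lipschitz_estimate(lo=-5.0, hi=5.0, steps=1000):
    """Largest difference quotient of t -> 2t/(1+t^2) over a uniform grid.
    This is a lower estimate of the Lipschitz modulus of the gradient."""
    pts = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    d = lorentzian_grad(pts)
    return max(abs(d[k + 1] - d[k]) / (pts[k + 1] - pts[k]) for k in range(steps))
```

On the default grid the estimate is slightly below 2, consistent with the modulus stated above.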

4.2 Optimal power flow considering photovoltaic systems placement

Optimal power flow (OPF) is a well-known problem in power system engineering [38]. The integration of distributed energy resources (DERs), such as photovoltaic systems, has become increasingly popular in modern smart grids [39], leading to the need for more complicated OPF models that take DERs into account. Metaheuristic algorithms are popular for solving OPF, and they have also been applied to OPF with DER integration [40, 41]. However, the drawbacks of metaheuristic algorithms are that convergence proofs cannot be established and that their performance is not consistent [42]. Difference-of-convex programming has also been successfully applied to the OPF problem in [43], although DERs are not considered there. Motivated by these results, in this work we apply our proposed algorithm to solve the OPF in a low voltage network, which includes optimizing the placement of photovoltaic (PV) systems. We formulate two models, based on the Direct Current OPF (DC OPF) [44] and the Alternating Current OPF (AC OPF) [45]. To the best of the authors’ knowledge, this is the first time a proximal algorithm is used to solve a DER-integrated OPF with a difference-of-convex formulation, considering PV systems placement. The objective function aims at minimizing the cost of the conventional generator, which is a diesel generator in this case study, while maximizing the PV penetration, which is defined as the ratio of the power generated by the PV systems to the total demand.

The network considered in this case study, illustrated in Fig. 1, consists of 14 buses and is taken from a real low voltage network in Victoria, Australia. Currently, there are loads at buses 1, 3, 4, 6, 8, 9, 13, and 14. There are 6 PV systems at buses 1, 2, 4, 5, 7, and 8 with a capacity of 800 kW. A 5000 kW diesel generator is connected to bus 11.
All of the parameters and decision variables in this case study are presented in Table 6. The cost of the current situation (before optimization is performed) is based on the cost of active power withdrawn from the generator, plus the installation cost of the PV systems. To determine this initial cost, the amount of active power generated by the generator is determined via DIgSILENT Power Factory 2021. After that, the cost of active power is calculated by the expression \(\sum _{i\in M}(a(P_{i}^{G})^2+bP_{i}^{G}+c)\), plus the installation cost of the six PV systems.
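
The cost expression above and the PV penetration ratio are simple to evaluate. The following sketch uses hypothetical function names and takes the coefficients \(a\), \(b\), \(c\) and the installation cost as inputs, since their values come from the data tables and are not repeated here; treating the installation cost as a single per-system constant multiplied by the number of systems is an assumption of the sketch.

```python
def generation_cost(P_G, a, b, c):
    """Sum over generators of a*P^2 + b*P + c."""
    return sum(a * p * p + b * p + c for p in P_G)

def pv_penetration(P_PV, D):
    """Total PV power divided by total demand."""
    return sum(P_PV) / sum(D)

def initial_cost(P_G, a, b, c, install_cost_per_pv, num_pv):
    """Generation cost plus installation cost of the existing PV systems.
    Assumption: every PV system has the same installation cost."""
    return generation_cost(P_G, a, b, c) + install_cost_per_pv * num_pv
```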

We first formulate the OPF problem with PV, which is based on the DC OPF, as follows

We see that for any \(i \in N\), if \(X_i\in [0,1]\), then \(X_i - X_i^2 = X_i(1-X_i) \ge 0\). Therefore,

Taking the above equivalence into account, a plausible alternative optimization model for the OPF problem with PV is as follows

The objective function (11a) aims at minimizing the installation cost and the generation cost of the diesel generator while maximizing the PV penetration, which is defined as \(\frac{\sum _{i\in N} P^{PV}_i}{\sum _{i\in N} D_i}\) [46]. The parameter \(\gamma >0\) serves as a Lagrangian multiplier for the discrete constraints \(X_i \in \{0,1\}\).
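
The role of \(\gamma \) can be illustrated with a short sketch of the penalty term \(\gamma \sum _{i\in N}(X_i-X_i^2)\), which is the negative of the function \(g\) used in the difference-of-convex splitting. The function names are hypothetical. For \(X_i\in [0,1]\) each summand is nonnegative and vanishes exactly when \(X_i\in \{0,1\}\), so the term penalizes fractional placements.

```python
def binary_penalty(X, gamma):
    """gamma * sum_i (X_i - X_i^2); nonnegative whenever every X_i lies in [0, 1]."""
    return gamma * sum(x - x * x for x in X)

def is_binary(X, tol=0.0):
    """True when every entry is within tol of 0 or 1."""
    return all(min(abs(x), abs(x - 1.0)) <= tol for x in X)
```

For example, `binary_penalty([0.0, 1.0, 1.0], 2.0)` is zero, while the fractional point `[0.5]` incurs the largest per-entry penalty `gamma / 4`.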

With this reformulation, the objective function (11a) now becomes a difference-of-convex function. Constraint (10b) describes the relationship between the power flow from one bus to another and their corresponding phasor angles. Constraint (10c) defines the voltage angle at the slack bus, which is the bus connected to the diesel generator. Constraints (10d) and (10e) define the power flow into and out of any bus. Constraint (10f) ensures that the PV penetration rate is at least 50 percent. Constraint (10g) defines the transmission limits of the transmission lines, and constraint (10h) makes sure that solar power only exists at a bus when there is a PV system at that bus. Finally, constraint (10i) defines the bounds of the remaining decision variables. All of the constraints form the feasible set S. This problem takes the form of (P) with \(f =\iota _S\), \(h =\sum _{i\in N}CX_i + \sum _{i\in M}\left( a(P_{i}^{G})^2+bP_{i}^{G}+c\right) - \frac{\sum _{i\in N} P^{PV}_i}{\sum _{i\in N} D_i}\), and \(g =\gamma \sum _{i \in N}(X_i^2 - X_i)\). By Remark 3.4 (ii) and Theorem 3.3, in this case the proposed algorithm converges with a linear rate. The update of \(x_{n+1}\) in Algorithm 1 becomes

Here,

This step is solved by MATLAB’s quadprog command. Noting that \(\beta =0\) (since g is convex) and \(\ell =2a\), the parameters are set as follows: \(\delta =5\times 10^{-25}\), \(\overline{\lambda }=0.1\), \(\overline{\mu }=0.01\), \(\tau _n=1/\left( 2\delta +\ell \left( 2\overline{\lambda }+1\right) +2\overline{\mu }\right) \), \(\mu _n=\overline{\mu }\tau _n\), and \(\lambda _n\) is chosen in the same way as in Section 4.1. The performance of the proposed algorithm is compared with that of GPPA and pDCAe, as reported in Table 4. We use the step size \(\tau _n=0.8/\ell \) for GPPA, and \(\tau _n =1/\ell \) for pDCAe. The maximum number of iterations is 1000, and the stopping condition is the same as the one used in Section 4.1.
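
To see where \(\ell =2a\) comes from, note that the smooth part \(h\) given above is linear in \(X_i\) and \(P^{PV}_i\) and quadratic only in \(P^G_i\). The sketch below, with hypothetical function names and coefficient values supplied by the caller, evaluates the nonzero blocks of \(\nabla h\) and the three step sizes quoted above; the remaining decision variables do not appear in \(h\) and have zero partial derivatives.

```python
def grad_h(X, P_G, P_PV, D, C, a, b):
    """Nonzero blocks of the gradient of h.

    dh/dX_i = C, dh/dP^G_i = 2*a*P^G_i + b, dh/dP^PV_i = -1 / sum(D).
    """
    total_demand = sum(D)
    return {
        "X": [C] * len(X),
        "P_G": [2.0 * a * p + b for p in P_G],
        "P_PV": [-1.0 / total_demand] * len(P_PV),
    }

def step_sizes(a, delta=5e-25, lam_bar=0.1, mu_bar=0.01):
    """Step sizes for the three methods, using ell = 2*a."""
    ell = 2.0 * a
    return {
        "proposed": 1.0 / (2 * delta + ell * (2 * lam_bar + 1) + 2 * mu_bar),
        "GPPA": 0.8 / ell,
        "pDCAe": 1.0 / ell,
    }
```

Only the \(P^G\) block of the gradient depends on the point, and it does so linearly with slope \(2a\), which gives the Lipschitz modulus used in the step size.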

We run all algorithms 30 times, each time from a random starting point between the lower and upper bounds of the variables. The mean and best objective function values found by all algorithms are reported in Table 4. Although the proposed algorithm needs, on average, more iterations than the others, it finds a better solution. The mean objective function value found by our algorithm is also better than those found by the other algorithms. Our algorithm is also comparable to GPPA and pDCAe in terms of average CPU time.

The details of the best solution found by our algorithm are shown in Fig. 1.

Now we consider the AC OPF model. The formulation is based on the branch flow model given in [45]. First, the network is treated as a directed graph, as shown in Fig. 2.

We denote a directed link by (i, j) or \(i\rightarrow j\) if it points from bus i to bus j, and the set of all directed links by E. Next, the formulation is given as follows,

The main differences between the AC OPF model and the DC OPF model are that the AC OPF model has a nonconvex feasible set, and that it also accounts for the losses in the network as well as the reactive power. Consequently, AC OPF is more accurate than DC OPF in practice [47], and due to its nonconvexity, it is also more challenging to solve [48]. Constraints (12e) to (12h) define the power flow in any directed link. Constraint (12i) ensures that the PV penetration rate is at least 50 percent. Constraints (12j) and (12k) ensure that active and reactive power from PV systems exist at a bus if and only if there is a PV system at that bus. Constraint (12l) describes the relationship between the voltages of any two buses in a directed link. Constraint (12m) is a nonconvex constraint ensuring that the solution has physical meaning. Finally, constraints (12n) to (12t) define the bounds of the decision variables. The update of \(x_{n+1}\) is the same as before. For this case,

We also perform the same numerical experiment as in the DC OPF case. However, the pDCAe is not applicable in this case, so we compare our algorithm with the GPPA only. The parameters of GPPA and our proposed algorithm are set to the same values as those used for the DC OPF model. Due to the nonconvex constraint, MATLAB’s fmincon is used to solve the subproblem in Step 2 instead of quadprog. The results are shown in Table 5.

Table 5 shows that our proposed algorithm takes less time and fewer iterations than the GPPA to converge. The best solution found by our algorithm in this case is also the same as the one found in the DC OPF model.

It can be seen that, for both DC OPF and AC OPF, two PV systems need to be installed, at bus 7 and bus 9. The remaining demand can be supplied by the generator, and the demands are satisfied by the power flows. Although the mathematical model aims at maximizing the PV penetration, drawing power from the diesel generator is still more economical due to the high installation cost of the PV systems. The solution reduces the cost by approximately \(70\%\) compared with the original situation. This can serve as a proof of concept for future research.

5 Conclusion

In this paper, we proposed an extrapolated proximal subgradient algorithm for solving a broad class of structured nonconvex and nonsmooth optimization problems. Our general framework and the proposed algorithm impose fewer restrictions on smoothness and convexity than the current literature, and allow us to tackle problems with specific nonconvex loss functions and nonconvex constraints. In addition, our choice of the extrapolation parameters is flexible enough to cover the popular choices used in the restarted FISTA scheme. The convergence of the whole sequence generated by our algorithm was proved via the abstract convergence framework given in [23]. We then performed numerical experiments on a least squares problem with the nonconvex \(L_1-L_2\) regularization, and on a compressed sensing problem with the nonconvex Lorentzian norm loss function. In the numerical experiments, the proposed algorithm exhibited very competitive performance and, in various instances, outperformed the existing algorithms in terms of time and solution quality. We also applied this algorithm to solve an OPF problem considering PV placement, which serves as a proof of concept for future work.

Data Availability

All data generated or analyzed during this study are included in this article. In particular, the data for Case study 4.1 were generated randomly and we explained how they were explicitly generated. The data for Case study 4.2 are available in the Appendix.

Notes

Indeed, note that any smooth function with Lipschitz gradient function is weakly convex. By adding and subtracting \(\alpha \Vert x\Vert ^2\) for large \(\alpha >0\), our model problem (P) can also be mathematically reduced to the form (1) whose objective function is the sum of a nonconvex and nonsmooth loss function and a difference-of-convex regularization.

References

Cheng, Y., Pesavento, M.: Joint optimization of source power allocation and distributed relay beamforming in multiuser peer-to-peer relay networks. IEEE Transactions on Signal Processing 60(6), 2962–2973 (2012)

Lou, Y., Yan, M.: Fast L1–L2 minimization via a proximal operator. Journal of Scientific Computing 74(2), 767–785 (2017)

Le Thi, H.A., Pham Dinh, T.: DC programming and DCA: thirty years of developments. Mathematical Programming 169(1), 5–68 (2018)

Wang, H., Shao, Y., Xiu, N.: Proximal operator and optimality conditions for ramp loss SVM. Optimization Letters 16(3), 999–1014 (2022)

Xiao, Y., Wang, H., Xu, W.: Ramp loss based robust one-class SVM. Pattern Recognition Letters 85(1), 15–20 (2017)

Carrillo, R.E., Barner, K.E., Aysal, T.C.: Robust sampling and reconstruction methods for sparse signals in the presence of impulsive noise. IEEE Journal of Selected Topics in Signal Processing 4, 392–408 (2010)

Ahn, M., Pang, J., Xin, J.: Difference-of-convex learning: Directional stationarity, optimality, and sparsity. SIAM Journal on Optimization 27(3), 1637–1665 (2017)

Antoniadis, A.: Wavelets in statistics: A review. Journal of the Italian Statistical Society 6(2), 97–130 (1997)

Gotoh, J., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Mathematical Programming 169(1), 141–176 (2017)

Zhang, C.: Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics 38(2) (2010)

An, N.T., Nam, N.M.: Convergence analysis of a proximal point algorithm for minimizing differences of functions. Optimization 66(1), 129–147 (2016)

Phan, D.N., Le, M.H., Le Thi, H.A.: Accelerated difference of convex functions algorithm and its application to sparse binary logistic regression. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (2018)

Liu, T., Takeda, A.: An inexact successive quadratic approximation method for a class of difference-of-convex optimization problems. Computational Optimization and Applications 82, 141–173 (2022)

Wen, B., Chen, X., Pong, T.K.: A proximal difference-of-convex algorithm with extrapolation. Computational Optimization and Applications 69(2), 297–324 (2017)

Lu, Z., Zhou, Z.: Nonmonotone enhanced proximal DC algorithms for a class of structured nonsmooth DC programming. SIAM Journal on Optimization 29(4), 2725–2752 (2019)

Lu, Z., Zhou, Z., Sun, Z.: Enhanced proximal DC algorithms with extrapolation for a class of structured nonsmooth DC minimization. Mathematical Programming 176(1), 369–401 (2018)

Beck, A.: First-Order Methods in Optimization. MOS-SIAM Series on Optimization, vol. 25. Society for Industrial and Applied Mathematics, Philadelphia, USA (2017)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Computational Mathematics and Mathematical Physics 4(5), 1–17 (1964)

Nesterov, Y.: Inexact accelerated high-order proximal-point methods. Mathematical Programming 2021, 1–26 (2021)

Nesterov, Y.: Lectures on Convex Optimization. Springer Optimization and Its Applications, vol. 137. Springer, Cham, Switzerland (2018)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM Journal on Imaging Sciences 2(1), 183–202 (2009)

Attouch, H., Cabot, A.: Convergence rates of inertial forward-backward algorithms. SIAM Journal on Optimization 28(1), 849–874 (2018)

Boţ, R.I., Dao, M.N., Li, G.: Extrapolated proximal subgradient algorithms for nonconvex and nonsmooth fractional programs. Mathematics of Operations Research 47(3), 1707–2545 (2022)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation I. Grundlehren der mathematischen Wissenschaften, vol. 330. Springer, Berlin, Heidelberg (2006)

Dao, M.N., Tam, M.K.: A Lyapunov-type approach to convergence of the Douglas-Rachford algorithm for a nonconvex setting. Journal of Global Optimization 73(1), 83–112 (2019)

Xu, Z., Chang, X., Xu, F., Zhang, H.: L1/2 regularization: A thresholding representation theory and a fast solver. IEEE Transactions on Neural Networks and Learning Systems 23(7), 1013–1027 (2012)

Cherni, A., Chouzenoux, É., Delsuc, M.-A.: Proximity operators for a class of hybrid sparsity + entropy priors. Application to DOSY NMR signal reconstruction. In: Proceedings of the 8th International Symposium on Signal, Image, Video and Communications (ISIVC), pp. 120–125 (2016)

Souza, J.C.O., Oliveira, P.R., Soubeyran, A.: Global convergence of a proximal linearized algorithm for difference of convex functions. Optimization Letters 10(7), 1529–1539 (2015)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Grundlehren der mathematischen Wissenschaften, vol. 317. Springer, Berlin, Heidelberg (1998)

Kurdyka, K.: On gradients of functions definable in o-minimal structures. Annales de l’institut Fourier 48(3), 769–783 (1998)

Lojasiewicz, S.: Une propriété topologique des sous-ensembles analytiques réels. Les Équations aux Dérivées Partielles, 87–89 (1963)

Attouch, H., Bolte, J.: On the convergence of the proximal algorithm for nonsmooth functions involving analytic features. Mathematical Programming 116(1–2), 5–16 (2007)

Li, G., Pong, T.K.: Calculus of the exponent of Kurdyka-Łojasiewicz inequality and its applications to linear convergence of first-order methods. Foundations of Computational Mathematics 18(5), 1199–1232 (2017)

Boţ, R.I., Dao, M.N., Li, G.: Inertial proximal block coordinate method for a class of nonsmooth sum-of-ratios optimization problems. SIAM Journal on Optimization 33(2), 361–393 (2023)

Bolte, J., Daniilidis, A., Lewis, A., Shiota, M.: Clarke subgradients of stratifiable functions. SIAM Journal on Optimization 18(2), 556–572 (2007)

Nikolova, M.: Analysis of the recovery of edges in images and signals by minimizing nonconvex regularized least-squares. Multiscale Modeling & Simulation 4(3), 960–991 (2005)

Kim, S., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale-regularized least squares. IEEE Journal of Selected Topics in Signal Processing 1(4), 606–617 (2007)

Abdi, H., Beigvand, S.D., Scala, M.L.: A review of optimal power flow studies applied to smart grids and microgrids. Renewable and Sustainable Energy Reviews 71, 742–766 (2017)

Wankhede, S.K., Paliwal, P., Kirar, M.K.: Increasing penetration of DERs in smart grid framework: A state-of-the-art review on challenges, mitigation techniques and role of smart inverters. Journal of Circuits, Systems and Computers 29(16), 2030014 (2020)

Shaheen, M.A.M., Hasanien, H.M., Mekhamer, S.F., Talaat, H.E.A.: Optimal power flow of power systems including distributed generation units using sunflower optimization algorithm. IEEE Access 7, 109289–109300 (2019)

Khaled, U., Eltamaly, A.M., Beroual, A.: Optimal power flow using particle swarm optimization of renewable hybrid distributed generation. Energies 10(7), 1013 (2017)

Ezugwu, A.E., Adeleke, O.J., Akinyelu, A.A., Viriri, S.: A conceptual comparison of several metaheuristic algorithms on continuous optimisation problems. Neural Computing and Applications 32(10), 6207–6251 (2019)

Merkli, S., Domahidi, A., Jerez, J.L., Morari, M., Smith, R.S.: Fast AC power flow optimization using difference of convex functions programming. IEEE Transactions on Power Systems 33(1), 363–372 (2018)

Kargarian, A., Mohammadi, J., Guo, J., Chakrabarti, S., Barati, M., Hug, G., Kar, S., Baldick, R.: Toward distributed/decentralized DC optimal power flow implementation in future electric power systems. IEEE Transactions on Smart Grid 9(4), 2574–2594 (2018)

Farivar, M., Low, S.H.: Branch flow model: Relaxations and convexification-part I. IEEE Transactions on Power Systems 28(3), 2554–2564 (2013)

Hoke, A., Butler, R., Hambrick, J., Kroposki, B.: Steady-state analysis of maximum photovoltaic penetration levels on typical distribution feeders. IEEE Transactions on Sustainable Energy 4(2), 350–357 (2013)

Frank, S., Rebennack, S.: An introduction to optimal power flow: Theory, formulation, and examples. IIE Transactions 48(12), 1172–1197 (2016)

Low, S.H.: Convex relaxation of optimal power flow-part I: Formulations and equivalence. IEEE Transactions on Control of Network Systems 1(1), 15–27 (2014)

Weedy, B.M., Cory, B.J., Jenkins, N., Ekanayake, J.B., Strbac, G.: Electric Power Systems, 5th edn. Wiley-Blackwell, Hoboken, NJ (2012)

Kusakana, K.: Optimal scheduled power flow for distributed photovoltaic/wind/diesel generators with battery storage system. IET Renewable Power Generation 9(8), 916–924 (2015)

Fodhil, F., Hamidat, A., Nadjemi, O.: Potential, optimization and sensitivity analysis of photovoltaic-diesel-battery hybrid energy system for rural electrification in Algeria. Energy 169, 613–624 (2019)

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The research of TNP was supported by Henry Sutton PhD Scholarship Program from Federation University Australia. The research of MND benefited from the FMJH Program Gaspard Monge for optimization and operations research and their interactions with data science, and was supported by a public grant as part of the Investissement d’avenir project, reference ANR-11-LABX-0056-LMH, LabEx LMH. The research of GL was supported by Discovery Project 190100555 from the Australian Research Council.

Author information

Contributions

All authors contributed to the manuscript and approved the submitted version.

Ethics declarations

Ethics approval

Not applicable.

Conflict of interest

The authors declare no competing interests.

Appendix: Data of Case study 4.2

In Case study 4.2, we use a base power of 100 MVA, and a base voltage of 22 kV. All of the parameters are converted into Per Unit (pu) values in the calculation. Readers can refer to [49, Chapter 2] for a detailed tutorial on the Per Unit system. The active and reactive power demand are given in Table 7. The other technical parameters of the system including susceptance, resistance, and reactance of the lines are given in Tables 8, 9, and 10, respectively.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pham, T.N., Dao, M.N., Shah, R. et al. A proximal subgradient algorithm with extrapolation for structured nonconvex nonsmooth problems. Numer Algor 94, 1763–1795 (2023). https://doi.org/10.1007/s11075-023-01554-5

DOI: https://doi.org/10.1007/s11075-023-01554-5

Keywords

- Composite optimization problem

- Difference of convex

- Distributed energy resources

- Extrapolation

- Optimal power flow

- Proximal subgradient algorithm