Abstract

We study families of multivariate orthogonal polynomials with respect to the symmetric weight function in d variables

for \(\gamma >-1\), where \(\omega (t)\) is a univariate weight function in \(t \in (a,b)\) and \(\mathtt {x} = (x_{1},x_{2}, \ldots , x_{d})\) with \(x_{i} \in (a,b)\). Applying the change of variables from \(x_{i},\) \(i=1,2,\ldots ,d,\) to \(u_{r},\) \(r=1,2,\ldots ,d\), where \(u_{r}\) is the r-th elementary symmetric function, we obtain the domain region in terms of the discriminant of the polynomial having \(x_{i},\) \(i=1,2,\ldots ,d,\) as its zeros and in terms of the corresponding Sturm sequence. Choosing the univariate weight function as the Hermite, Laguerre, and Jacobi weight functions, we obtain the representation in terms of the variables \(u_{r}\) of the partial differential operators having the respective Hermite, Laguerre, and Jacobi generalized multivariate orthogonal polynomials as eigenfunctions. Finally, we present explicitly the partial differential operators for Hermite, Laguerre, and Jacobi generalized polynomials, for \(d=2\) and \(d=3\) variables.

1 Introduction

In 1974 (see [8, 9]), Koornwinder considered the family of orthogonal polynomials \(p_{n,k}^{\alpha ,\beta , \gamma }( u, v)\), with \(n \geqslant k \geqslant 0\), obtained by orthogonalization of the sequence \(1, u, v, u^{2}, uv, v^{2}, u^{3}, u^{2}v, \ldots\) with respect to the weight function \((1-u+v)^{\alpha }(1+u+v)^{\beta }(u^{2}-4v)^{\gamma }\) for \(\alpha ,\beta , \gamma> -1, \alpha + \gamma +3/2> 0, \beta +\gamma +3/2 > 0\), on the region bounded by the lines \(1 -u+ v = 0\) and \(1+u+ v = 0\) and by the parabola \(u^{2}-4v= 0\) (see Fig. 1). In the special case \(\gamma = -1/2\), orthogonal polynomials \(p_{n,k}^{\alpha ,\beta ,-1/2}( u, v)\) can be explicitly obtained by the identity

and the change of variables \(u = x+ y, v= xy\), where \(P_{n}^{(\alpha ,\beta )}(x)\) are Jacobi polynomials in one variable. The author obtained two explicit linear partial differential operators \(D_{1}^{\alpha ,\beta , \gamma }\) and \(D_{2}^{\alpha ,\beta , \gamma }\) of order two and four, respectively, such that the polynomials \(p_{n,k}^{\alpha ,\beta , \gamma }(u,v)\) are their common eigenfunctions. In fact, \(D_{1}^{\alpha ,\beta , \gamma }\) and \(D_{2}^{\alpha ,\beta , \gamma }\) were the generators of the algebra of differential operators having the polynomials \(p_{n,k}^{\alpha ,\beta , \gamma }(u,v)\) as eigenfunctions. The polynomials \(p_{n,k}^{\alpha ,\beta , \gamma }( u, v)\) are not classical in the Krall and Sheffer sense [10] since the corresponding eigenvalues of \(D_{1}^{\alpha ,\beta , \gamma }\) depend on n and k.

In several variables, we find different extensions of Koornwinder’s polynomials connected with symmetric multivariate weight functions constructed from classical univariate weights. In fact, the so-called generalized classical orthogonal polynomials are multivariable polynomials which are orthogonal with respect to the weight functions

with \(\omega (t)\) being one of the classical weight functions (Hermite, Laguerre, or Jacobi) on the real line.

The multivariable Hermite, Laguerre, and Jacobi families associated with the weight functions \(B_{\gamma }(\mathtt {x})\) were introduced by Lassalle [11,12,13] and Macdonald [16] as a generalization of a previously known special case in which the parameter \(\gamma\) is fixed at the value 0 [7]. Later, these multivariable generalizations of the classical Hermite, Laguerre, and Jacobi polynomials were found to occur as the polynomial part of the eigenfunctions of certain Schrödinger operators for Calogero-Sutherland-type quantum systems [1]. In fact, if we denote by

the second-order differential operator having the classical orthogonal polynomials as eigenfunctions, then the multivariable Hermite, Laguerre, and Jacobi polynomials are eigenfunctions of the differential operators

Lassalle expressed the generalized classical orthogonal polynomials in terms of the basis of symmetric monomials

with \(\lambda \in \mathbb {Z}^{d}\) satisfying \(\lambda _{1} \geqslant \lambda _{2} \geqslant \ldots \geqslant \lambda _{d} \geqslant 0\). Here the summation in (1.1) is over the orbit of \(\lambda\) with respect to the action of the symmetric group \(\mathcal {S}_{d}\) which permutes the vector components \(x_{1}, x_{2}, \ldots , x_{d}\) (see [11,12,13]).

Rather than study the eigenfunctions of \(\mathcal {H}_{\gamma }\) in terms of the monomial symmetric polynomials, in some previous studies (see [16]), it has been shown that it is convenient to change basis from the monomial symmetric polynomials to the Jack polynomials, that is, the unique (up to normalization) symmetric eigenfunctions of the operator

In this work, we consider a univariate weight function \(\omega (t)\) on \(t \in (a,b)\). For \(\gamma >-1\), we define a symmetric weight function in d variables on the hypercube \((a,b)^{d}\) as

where \(\mathtt {x} = (x_{1},x_{2}, \ldots , x_{d})\), with \(x_{i} \in (a,b), i= 1, 2, \ldots , d.\) Next, we apply the change of variables

where \(u_{r}\) are the r-th elementary symmetric functions defined by

In [2], the change of variables \(\mathtt {x}=(x_{1},x_{2}, \ldots , x_{d}) \mapsto \mathtt {u} = (u_{1},u_{2}, \ldots , u_{d})\) was considered to construct multivariate Gaussian cubature formulae in the case \(\gamma = \pm \dfrac{1}{2}\). This construction is based on the common zeros of multivariate quasi-orthogonal polynomials, which turn out to be expressible in terms of Jacobi polynomials (see also [3]).

Our main goal is the study of multivariate orthogonal polynomials in the variable \(\mathtt {u}\) associated with the weight function \(W_{\gamma }(\mathtt {u})\) obtained from the change of variables \(\mathtt {x} \mapsto \mathtt {u}\). Obviously, generalized classical orthogonal polynomials are included in our study.

To this end, in Section 2, some basic definitions will be introduced and some properties of the derivatives of elementary symmetric functions will be obtained.

In Section 3, we analyze the structure of the domain of the weight function \(W_{\gamma }(\mathtt {u})\), that is, the image of the map \(\mathtt {x} \mapsto \mathtt {u}\). Orthogonal polynomials with respect to \(W_{\gamma }(\mathtt {u})\) are defined in Section 4.

Finally, in Section 5, generalized classical orthogonal polynomials are considered. Our main result states that, under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\), the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\) can be represented as linear partial differential operators in the form

where \(a_{rs}(\mathtt {u})\) for \(r,s = 1, \ldots , d\) are polynomials of degree 2 in \(\mathtt {u}\) and \(b_{r}(\mathtt {u})\) for \(r = 1, \ldots , d\) are polynomials of degree 1 in \(\mathtt {u}\). Those operators have the multivariate orthogonal polynomials with respect to \(W_{\gamma }(\mathtt {u})\) as eigenfunctions. In particular, we explicitly give the representation of these operators in the cases \(d= 2\) and \(d=3\).

2 Definitions and first properties

Let \(d\geqslant 1\) denote the number of variables. If \(\alpha = (\alpha _{1}, \alpha _{2}, \ldots , \alpha _{d}) \in \mathbb {N}^{d}_{0}\), \(\mathbb {N}_{0} := \mathbb {N} \cup \{0\}\), is a d-tuple of non-negative integers \(\alpha _{i}\), we call \(\alpha\) a multi-index of degree \(|\alpha | = \alpha _{1} + \alpha _{2} + \cdots + \alpha _{d}\). We order the multi-indices by means of the graded reverse lexicographical order, that is, \(\alpha \prec \beta\) if and only if \(|\alpha | < |\beta |\), or \(|\alpha | = |\beta |\) and the first entry of \(\alpha - \beta\) different from zero is positive.

A multi-index \(\lambda = (\lambda _{1}, \lambda _{2}, \ldots , \lambda _{d}) \in \mathbb {N}^{d}_{0}\) will be called a partition if \(\lambda _{1} \geqslant \lambda _{2} \geqslant \ldots \geqslant \lambda _{d} \geqslant 0\).

Observe that for every multi-index \(\mu = (\mu _{1}, \mu _{2}, \ldots , \mu _{d})\) there exists a unique partition \(\lambda = (\lambda _{1}, \lambda _{2}, \ldots , \lambda _{d})\) satisfying

If \(\alpha\) is a multi-index and \(\mathtt {x} = (x_{1}, x_{2}, \dots , x_{d}) \in \mathbb {R}^{d}\), we denote by \(\mathtt {x}^{\alpha }\) the monomial \(x_{1}^{\alpha _{1}} x_{2}^{\alpha _{2}} \ldots x_{d}^{\alpha _{d}}\) which has total degree \(|\alpha |\). A polynomial P in d variables is a finite linear combination of monomials \(P(\mathtt {x}) = \sum _{\alpha } c_{\alpha } \mathtt {x}^{\alpha }\). The total degree of P is defined as the highest degree of its monomials.

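Following [14], the r-th elementary symmetric function \(u_{r}\) is the sum of all products of r different variables \(x_{i}\), i.e.,

\[ u_{r} = \sum _{1 \leqslant i_{1}< i_{2}< \cdots < i_{r} \leqslant d} x_{i_{1}} x_{i_{2}} \cdots x_{i_{r}}, \qquad r = 1, 2, \ldots , d, \tag{2.1} \]

and \(u_{0} = 1\). The elementary symmetric functions \(u_{r}\), \(r=1,2,\ldots ,d\), are harmonic homogeneous polynomials of degree r and can be obtained from the generating polynomial of degree d in the variable t, P(t), defined by

\[ P(t) = \prod _{i=1}^{d} (1 + x_{i} t) = \sum _{r=0}^{d} u_{r} t^{r}. \tag{2.2} \]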

For a given multivariate function f, we will denote by \(\partial _{k} f\) the partial derivative of f with respect to the variable \(x_{k}\). In this work, we deal frequently with partial derivatives of the elementary symmetric functions. The following lemma provides some recursive and closed expressions for \(\partial _{k} u_{r}\).

Lemma 2.1

For \(r = 1, 2, \ldots , d\), the partial derivatives of the elementary symmetric functions satisfy

Proof

Taking partial derivatives in (2.2), we get

Next, multiply the above equality by \((1 + x_{k} t)\) to obtain

and (2.3) follows by equating coefficients on both sides of the last equality. Next, (2.4) is obtained by iterating (2.3).

Finally, taking partial derivatives in (2.3), for \(k \ne i\), we get

interchanging the roles of k and i, we obtain

and therefore, (2.5) follows.
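The recurrence obtained by equating coefficients in the proof can be checked numerically. The sketch below assumes that the recurrence reads \(\partial _{k} u_{r} = u_{r-1} - x_{k}\, \partial _{k} u_{r-1}\), which is what results from multiplying the derivative of \(\prod _{i}(1+x_{i}t)\) by \((1+x_{k}t)\), and that its iterated form is the alternating sum \(\sum _{j=0}^{r-1} (-x_{k})^{j} u_{r-1-j}\). The function names are illustrative and do not come from the paper.

```python
from itertools import combinations
from math import prod


def elementary_symmetric(xs, r):
    """Return u_r(xs): the sum of all products of r distinct entries (u_0 = 1)."""
    if r < 0 or r > len(xs):
        return 0
    return sum(prod(c) for c in combinations(xs, r))


def d_elementary_symmetric(xs, r, k):
    """Partial derivative of u_r with respect to x_k (0-based index k).

    u_r is linear in x_k, so the derivative is u_{r-1} of the other variables.
    """
    rest = list(xs[:k]) + list(xs[k + 1:])
    return elementary_symmetric(rest, r - 1)


def d_closed_form(xs, r, k):
    """Iterated recurrence (assumed form): sum_j (-x_k)^j * u_{r-1-j}."""
    return sum((-xs[k]) ** j * elementary_symmetric(xs, r - 1 - j) for j in range(r))


def check_recurrence(xs):
    """Check d_k u_r = u_{r-1} - x_k * d_k u_{r-1} for every r and k."""
    d = len(xs)
    return all(
        d_elementary_symmetric(xs, r, k)
        == elementary_symmetric(xs, r - 1) - xs[k] * d_elementary_symmetric(xs, r - 1, k)
        for r in range(1, d + 1)
        for k in range(d)
    )
```

With integer inputs all comparisons are exact, so a call such as `check_recurrence([2, -3, 5, 7])` tests the identity without rounding error.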

3 The domain

Given a univariate weight function \(\omega (t)\) on \(t \in (a,b)\) (where \(a = -\infty\) and \(b = \infty\) are allowed) consider the variable \(\mathtt {x} = (x_{1},x_{2}, \ldots , x_{d})\), with \(x_{i} \in (a,b).\) For \(\gamma >-1\), we define a weight function in d variables on the hypercube \((a,b)^{d}\) as

Since \(B_{\gamma }\) is obviously symmetric in the variables \(x_{1},x_{2}, \ldots , x_{d}\), it suffices to consider its restriction on the domain \(\Delta\) given by

Let E(t) be the monic polynomial of degree d in the variable t having \(x_{i}, i=1, 2, \ldots , d\), as its roots. From (2.2), E(t) satisfies

Let us consider the mapping

and the corresponding Jacobian matrix

Using (2.4) and subtracting suitable combinations of columns in \(\left| T \right|\), we get

the Vandermonde determinant. Thus, the determinant of the matrix \(TT^{t}\) can be given as

It turns out that \(D(\mathtt {u})\) coincides with the discriminant (see [17, p. 23]) of the polynomial E(t). In this way, \(D(\mathtt {u})\) can be expressed in terms of the elementary symmetric functions since the discriminant can be obtained from the resultant (see [17, section 1.3.1]) of E and its derivative \(E^{\prime }\) in the following way:

with

where \(a_{i}=(-1)^{i}u_{i}\) for \(i=0,\ldots , d,\) and \(b_{i}=(-1)^{i}(d-i)u_{i}\) for \(i=0,\ \ldots ,\ d-1\).

As is well known, the existence of d different roots of the polynomial E(t) as defined in (3.2) (\(x_{i}\) for \(i = 1, \ldots , d\)) is equivalent to the positivity of \(D(\mathtt {u})\), the discriminant of E(t). Moreover, the condition that all these roots lie in the interval (a, b) can be characterized in terms of the corresponding Sturm sequence (see [17, p. 30]). Consider the polynomials \(p_{0}(t)=E(t)\) and \(p_{1}(t) = E^{\prime }(t)\) and let us construct a sequence \(\{p_{k}(t)\}_{k=0}^{d}\) by means of Euclid’s algorithm for the greatest common divisor of E and \(E^{\prime }\)

where \(m_{k}\) is a positive constant for \(k = 1, \ldots , d-1\).

Since the roots of E(t) are simple, \(p_{d}(t)\) is a nonzero constant. Sturm’s theorem states that if v(t) is the number of sign changes in the sequence

then the number of roots of \(p_{0}(t)\) (without taking multiplicities into account) confined between a and b is equal to \({v(a)-v(b)}\). If all the roots of E(t) satisfy \(a< x_{1}< x_{2}< \cdots< x_{d} <b\) then, according to Sturm’s theorem the sequence \(\{p_{0}(b), p_{1}(b), \ldots , p_{d}(b)\}\) has no sign changes and \(\{p_{0}(a), p_{1}(a), \ldots , p_{d}(a)\}\) has exactly d sign changes.
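The root count given by Sturm’s theorem can be sketched in exact rational arithmetic. The code below assumes the usual construction \(p_{k+1} = -\operatorname {rem}(p_{k-1}, p_{k})\), that is, it takes every positive constant \(m_{k}\) equal to 1; polynomials are stored as coefficient lists in descending powers, and all function names are illustrative.

```python
from fractions import Fraction


def poly_eval(p, t):
    """Evaluate a polynomial (descending coefficients) at t by Horner's rule."""
    value = Fraction(0)
    for c in p:
        value = value * t + c
    return value


def poly_derivative(p):
    """Derivative of a polynomial given by descending coefficients."""
    n = len(p) - 1
    return [c * (n - i) for i, c in enumerate(p[:-1])]


def poly_remainder(p, q):
    """Remainder of the division of p by q, with leading zeros stripped."""
    r = [Fraction(c) for c in p]
    q = [Fraction(c) for c in q]
    while len(r) >= len(q):
        factor = r[0] / q[0]
        for i in range(len(q)):
            r[i] -= factor * q[i]
        r.pop(0)  # the leading coefficient has just been cancelled
    while r and r[0] == 0:
        r.pop(0)
    return r


def sturm_sequence(p):
    """p_0 = p, p_1 = p', p_{k+1} = -rem(p_{k-1}, p_k)."""
    seq = [[Fraction(c) for c in p], [Fraction(c) for c in poly_derivative(p)]]
    while len(seq[-1]) > 1:
        rem = poly_remainder(seq[-2], seq[-1])
        if not rem:
            break  # p has a repeated root: the sequence stops early
        seq.append([-c for c in rem])
    return seq


def sign_changes(seq, t):
    """Number of sign changes in p_0(t), p_1(t), ..., ignoring zeros."""
    values = [poly_eval(p, Fraction(t)) for p in seq]
    signs = [v > 0 for v in values if v != 0]
    return sum(1 for s0, s1 in zip(signs, signs[1:]) if s0 != s1)


def count_roots(p, a, b):
    """Number of distinct real roots of p in (a, b), by Sturm's theorem."""
    seq = sturm_sequence(p)
    return sign_changes(seq, a) - sign_changes(seq, b)
```

For \(E(t) = (t-1)(t-2)(t-3) = t^{3} - 6t^{2} + 11t - 6\), the call `count_roots([1, -6, 11, -6], 0, 4)` counts the three roots, and the last element of the sequence is a positive constant, in agreement with the positivity of the discriminant.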

In [4], explicit expressions for the polynomials in a Sturm sequence were provided. These explicit representations were given in terms of the d different roots of the first polynomial in the sequence \(p_{0}(t)\) (\(x_{i}\) for \(i = 1, \ldots , d\) in our case). In particular, the author shows that the constant value of \(p_{d}(t)\) coincides with the discriminant of \(p_{0}(t)\) up to a positive multiplicative factor. Therefore, the condition \(D(\mathtt {u})>0\) is equivalent to \(p_{d}(t)>0\).

Consequently, the following result holds.

Proposition 3.1

The region

is the image of \(\Delta\) under the mapping \(\mathtt {x} = (x_{1}, x_{2}, \ldots , x_{d}) \mapsto \mathtt {u} = (u_{1}, u_{2}, \ldots , u_{d})\) defined by (2.1).

As a consequence, the orthogonality measure and its support in terms of the coordinates \(u_{1},\ \ldots ,\ u_{d}\) can be obtained explicitly using the determinant \(R(E, E^{\prime })\) combined with a simple algorithm.

3.1 The case \(d=2\)

Let \(\omega\) be a weight function defined on (a, b). For \(\gamma > -1\), let us define a weight function of two variables,

defined on the domain \(\Delta\) given by

Let us consider the mapping \(\mathtt {x} \mapsto \mathtt {u}\) defined by

\[ u_{1} = x_{1} + x_{2}, \qquad u_{2} = x_{1} x_{2}. \]

Then, \(E(t) = t^{2} - u_{1} t + u_{2}\) and the Jacobian of the change of variables is \(|x_{1} - x_{2}|\).

Expressed in terms of the variable \(\mathtt {u}\), the discriminant of the polynomial E(t) is

\[ D(\mathtt {u}) = u_{1}^{2} - 4 u_{2}. \]

And the Sturm sequence reads

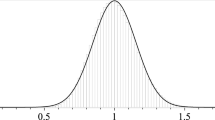

In the Jacobi case, we have \((a,b) = (-1,1)\) and \(\omega (t) = (1-t)^{\alpha }(1+t)^{\beta }\), with \(\alpha>-1, \beta >-1\). In fact, this is the case originally considered by Koornwinder (see [8]). Then, using Proposition 3.1, the mapping \(\mathtt {x} \mapsto \mathtt {u}\) is a bijection between \(\Delta\) and the domain \(\Omega\) given by

which is depicted in Fig. 1.
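A membership test for this region can be sketched directly from the Sturm conditions for \(E(t) = t^{2} - u_{1} t + u_{2}\) on \((-1,1)\). The inequalities used below are derived here under the assumption that the Sturm sequence is \(E\), \(E^{\prime }\), and a positive multiple of the discriminant \(u_{1}^{2} - 4u_{2}\); they are an illustration rather than a transcription of the paper's description of \(\Omega\). The function names are hypothetical.

```python
def to_u(x1, x2):
    """Map (x1, x2) to the elementary symmetric functions (u1, u2)."""
    return x1 + x2, x1 * x2


def in_omega_jacobi(u1, u2):
    """True when t^2 - u1*t + u2 has two distinct roots inside (-1, 1).

    No sign changes at t = 1 and two sign changes at t = -1 in the
    sequence E, E', discriminant (assumed form of the Sturm sequence).
    """
    def E(t):
        return t * t - u1 * t + u2

    def dE(t):
        return 2 * t - u1

    disc = u1 * u1 - 4 * u2
    return disc > 0 and E(1) > 0 and dE(1) > 0 and E(-1) > 0 and dE(-1) < 0
```

The three conditions \(1 - u_{1} + u_{2} > 0\), \(1 + u_{1} + u_{2} > 0\) and \(u_{1}^{2} - 4u_{2} > 0\) correspond to the two lines and the parabola mentioned in the Introduction; the derivative conditions amount to \(-2< u_{1} < 2\) and exclude points such as \(\mathtt {u} = (5, 6)\), whose roots 2 and 3 lie outside the interval.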

In the Laguerre case, we have \((a,b) = (0,+\infty )\) and \(\omega (t) = t^{\alpha }e^{-t}\), with \(\alpha >-1\). Therefore, using again Proposition 3.1, the domain \(\Omega\) is given by

the region described in Fig. 2.

In the Hermite case, we have \((a,b) = (-\infty ,+\infty )\) and \(\omega (t) = e^{-t^{2}}\). The domain \(\Omega\) is

as we show in Fig. 3.

3.2 The case \(d=3\)

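For \(d=3\), we set \(\mathtt {x} = (x_{1},x_{2},x_{3})\) and \(\mathtt {u} = (u_{1},u_{2},u_{3})\), with

\[ u_{1} = x_{1} + x_{2} + x_{3}, \qquad u_{2} = x_{1} x_{2} + x_{1} x_{3} + x_{2} x_{3}, \qquad u_{3} = x_{1} x_{2} x_{3}. \]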

Then, \(E(t) = t^{3} - u_{1} t^{2} + u_{2} t -u_{3}\) and the discriminant \(D(\mathtt {u})\) can be expressed in terms of the elementary symmetric functions

The Sturm sequence reads

And finally, the region \(\Omega\) for \(d=3\) can be described by the following inequalities

The region \(\Omega\) is depicted in Fig. 4. This picture has been obtained from the parametric representation of the images under the map defined by (2.1) of the four triangular faces of the domain \(\Delta\) given by

\(\Omega\) is a solid bounded by two flat faces and two curved faces. Note first that \(\Omega\) is invariant under the change of variables \((u_{1},u_{2},u_{3}) \rightarrow (-u_{1},u_{2},-u_{3})\). In the image, the brown face is part of the plane \(p_{0}(1) = 0\). There is another, symmetric flat face contained in the plane \(-p_{0}(-1) = 0\). The two flat faces intersect in the line segment from \(A = (1,-1,-1)\) to \(B = (-1,-1,1)\). The other line segment bounding the brown region (which lies in the intersection of the planes \(p_{0}(1) = 0\) and \(p_{1}(1) = 0\)) is the line segment from \(A = (1,-1,-1)\) to \(C = (3, 3, 1)\). The third part of the boundary of the brown region is the arc from B to C of a parabola that is tangent to the boundary line segments at its endpoints B and C. The orange curved faces are the part of the quartic surface \(D(\mathtt {u}) = 0\) which is bounded by the line segments AC and BD, where \(D = (-3,3,-1)\) is the image of C under the symmetry above (along these segments the surface touches the planes \(p_{0}(1) = 0\) and \(-p_{0}(-1) = 0\), respectively), and by the parabola segments CB (where the surface intersects the plane \(p_{0}(1) = 0\)) and DA (where the surface intersects the plane \(-p_{0}(-1) = 0\)).

Figure 5 shows the projection of \(\Omega\) on the \(u_{1} u_{3}\) plane. Notice the two triangles, sharing one edge, and each having one parabolic side, namely, part of the parabolas \(u_{3} = \frac{1}{4}(u_{1}-1)^{2}\) and \(u_{3} = -\frac{1}{4}(u_{1}+1)^{2}\).
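For \(d=3\), the discriminant written in the variables \(\mathtt {u}\) can be compared with the squared Vandermonde product in the variables \(\mathtt {x}\). The sketch below uses the standard discriminant of the cubic \(t^{3} - u_{1} t^{2} + u_{2} t - u_{3}\); it is assumed, not confirmed from the text above, that this coincides with the expression for \(D(\mathtt {u})\) given in the paper. The function names are illustrative.

```python
from itertools import combinations
from math import prod


def to_u3(x):
    """Map (x1, x2, x3) to the elementary symmetric functions (u1, u2, u3)."""
    x1, x2, x3 = x
    return (x1 + x2 + x3, x1 * x2 + x1 * x3 + x2 * x3, x1 * x2 * x3)


def discriminant_u3(u):
    """Standard discriminant of t^3 - u1*t^2 + u2*t - u3, written in u."""
    u1, u2, u3 = u
    return (
        u1 ** 2 * u2 ** 2
        - 4 * u2 ** 3
        - 4 * u1 ** 3 * u3
        + 18 * u1 * u2 * u3
        - 27 * u3 ** 2
    )


def vandermonde_squared(x):
    """Product of (x_i - x_j)^2 over all pairs i < j."""
    return prod((xi - xj) ** 2 for xi, xj in combinations(x, 2))
```

The same functions give a quick check of the geometry described above: `to_u3((1, 1, -1))`, `to_u3((1, -1, -1))` and `to_u3((1, 1, 1))` return the points A, B and C, where the discriminant vanishes, and `discriminant_u3` takes the same value at \((u_{1},u_{2},u_{3})\) and \((-u_{1},u_{2},-u_{3})\).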

4 Orthogonal polynomials

Under the mapping defined by (2.1), the weight function \(B_{\gamma }\), given in (3.1), becomes a weight function defined on the domain \(\Omega\) by

Now, it is possible to define the polynomials orthogonal with respect to \(W_{\gamma }(\mathtt {u})\) on \(\Omega .\)

Proposition 4.1

Define monic polynomials \(P_{\mu }^{(\gamma )}(\mathtt {u})\) under the graded reverse lexicographic order \(\prec\),

that satisfy the orthogonality condition

for \(\alpha \prec \mu\). Then these polynomials are uniquely determined and are mutually orthogonal with respect to \(W_{\gamma }(\mathtt {u})\).

Proof

Since the graded reverse lexicographic order \(\prec\) is a total order, the polynomials can be constructed by applying the Gram–Schmidt orthogonalization process to the monomials so ordered; uniqueness follows from the fact that \(P_{\mu }^{(\gamma )}(\mathtt {u})\) has leading coefficient 1.

In the cases \(\gamma = \pm 1/2\), a family of orthogonal polynomials in the variable \(\mathtt {u}\) can be given explicitly in terms of orthogonal polynomials of one variable (see [2] and [3, p.155]).

Proposition 4.2

Let \(\{p_{k} \}_{k\geqslant 0}\) be the sequence of monic orthogonal polynomials with respect to \(\omega\) on (a, b). For \(\gamma = -1/2\), \(n \in \mathbb {N}_{0}\), and \(\mu = (\mu _{1}, \mu _{2},\ldots , \mu _{d})\) satisfying \(0 \leqslant \mu _{1} \leqslant \mu _{2} \leqslant \ldots \leqslant \mu _{d} = n\), we define

where \(\mathtt {x}\) and \(\mathtt {u}\) are related by (2.1), and the sum in the right-hand side of (4.3) runs over all distinct permutations \(\sigma\) in the symmetric group \(\mathcal {S}_{d}\). Then, \(P_{\mu }^{(-1/2)}(\mathtt {u})\) is an orthogonal polynomial of degree n in the variable \(\mathtt {u}\).

For \(\gamma = 1/2\), \(n \in \mathbb {N}_{0}\), and \(\mu = (\mu _{1}, \mu _{2},\ldots , \mu _{d})\) satisfying \(0 \leqslant \mu _{1}< \mu _{2}< \ldots < \mu _{d} = n + d -1\), we define

where \(\mathtt {x}\) and \(\mathtt {u}\) are related by (2.1). Then, \(P_{\mu }^{(1/2)}(\mathtt {u})\) is an orthogonal polynomial of degree n in the variable \(\mathtt {u}\).
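The case \(\gamma = -1/2\) can be illustrated numerically for \(d=2\). The sketch assumes that the defining formula is the symmetrized product \(\sum p_{\mu _{1}}(x_{\sigma (1)}) p_{\mu _{2}}(x_{\sigma (2)})\) over distinct rearrangements, and takes \(\omega (t) = 1\) on \((-1,1)\) (monic Legendre polynomials) as a concrete choice made only for this example. Integrals over the square \((-1,1)^{2}\) are computed with a tensor 3-point Gauss–Legendre rule, which is exact for the degrees involved; for symmetric integrands they equal twice the integrals over \(\Delta\). All names are hypothetical.

```python
from itertools import permutations
from math import sqrt

# Monic Legendre polynomials p_0, p_1, p_2 (orthogonal for w(t) = 1 on (-1, 1)).
LEGENDRE = [
    lambda t: 1.0,
    lambda t: t,
    lambda t: t * t - 1.0 / 3.0,
]

# 3-point Gauss-Legendre rule, exact for polynomials of degree <= 5.
NODES = [-sqrt(3.0 / 5.0), 0.0, sqrt(3.0 / 5.0)]
WEIGHTS = [5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0]


def symmetrized_product(mu, x):
    """Sum of p_{nu_1}(x_1) * p_{nu_2}(x_2) over distinct rearrangements nu of mu."""
    total = 0.0
    for nu in set(permutations(mu)):
        term = 1.0
        for k, xk in zip(nu, x):
            term *= LEGENDRE[k](xk)
        total += term
    return total


def inner_product(mu, nu):
    """Integral of P_mu * P_nu over (-1, 1)^2 with the product weight."""
    return sum(
        wi * wj * symmetrized_product(mu, (xi, xj)) * symmetrized_product(nu, (xi, xj))
        for xi, wi in zip(NODES, WEIGHTS)
        for xj, wj in zip(NODES, WEIGHTS)
    )
```

In the variables \(\mathtt {u}\), the first few of these functions are \(u_{1}\) for \(\mu = (0,1)\), \(u_{2}\) for \(\mu = (1,1)\), and \(u_{1}^{2} - 2u_{2} - 2/3\) for \(\mu = (0,2)\); `inner_product` vanishes, up to rounding, for different index pairs.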

5 Generalized classical orthogonal polynomials

In this section, we consider multivariable orthogonal polynomials associated with the weight functions

with \(\alpha , \beta , \gamma >-1\).

Under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\) defined by (2.1) the corresponding weight functions \(W_{\gamma }(\mathtt {u})\), as defined in (4.1), are given by

with \(\alpha , \beta , \gamma >-1\).

The multivariable Hermite, Laguerre, and Jacobi families associated with the weight functions \(B_{\gamma }^{H}(\mathtt {x}), B_{\gamma }^{L}(\mathtt {x})\), and \(B_{\gamma }^{J}(\mathtt {x})\) (see [1, (2.1)]), respectively, are eigenfunctions of the differential operators

with \(\alpha , \beta , \gamma >-1\).

We are going to obtain the representation of the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\), under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\).

For \(h = 0, 1, 2\), let us define the operators

then

Under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\), we get

and since \({\partial _{i}^{2}} u_{r} = 0\) we obtain

Proposition 5.1

The operator \(\mathcal {E}_{h}\) satisfies

Proof

From (5.1), we have

For \(h=0\), using (2.3) and Euler’s identity for homogeneous polynomials, we get

which gives (5.2). Identity (5.3) follows in the same way, since for \(h=1\) we get

Proposition 5.2

The operator \(\mathcal {D}_{h}\) can be represented as

where the coefficients

satisfy

taking into account that \(a_{rs}^{h}(\mathtt {u})=0\), for \(r\leqslant 0\), \(s \leqslant 0\), \(r > d\), or \(s > d\). Obviously, we have \(a_{rs}^{h}(\mathtt {u}) = a_{sr}^{h}(\mathtt {u})\) so we may assume that \(r \leqslant s\).

Proof

For \(h=0\), using (2.3) and (5.2), we deduce

and the recurrence formula (5.4) follows. Expression (5.5) can be obtained by iterating (5.4).

For \(h=1\), from (2.3) and (5.2), we obtain

Hence, (5.6) follows.

For \(h=2\), (5.7) can be obtained in the same way

To obtain the representation of the operator \(\mathcal {F}_{h}\), let us consider the Vandermonde determinant V defined in (3.3). One can see that

and therefore

since every element \(1/(x_{i}-x_{k})\) in the above sum appears twice with opposite sign.
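The cancellation just described, together with two closely related pairwise identities, can be verified in exact arithmetic. The sketch below evaluates \(\sum _{i \ne k} x_{i}^{h}/(x_{i}-x_{k})\) for \(h = 0, 1, 2\); the general exponent h is introduced here only for illustration. Pairing the terms \((i,k)\) and \((k,i)\) gives 0 for \(h=0\), one unit per pair for \(h=1\), that is, \(d(d-1)/2\), and \(x_{i}+x_{k}\) per pair for \(h=2\), that is, \((d-1)u_{1}\).

```python
from fractions import Fraction


def reciprocal_difference_sum(xs, h=0):
    """Sum over ordered pairs i != k of x_i**h / (x_i - x_k), computed exactly.

    The entries of xs must be pairwise distinct.
    """
    xs = [Fraction(x) for x in xs]
    return sum(
        xi ** h / (xi - xk)
        for i, xi in enumerate(xs)
        for k, xk in enumerate(xs)
        if i != k
    )
```

For example, with `xs = [-3, 1, 4, 10]` one has \(d = 4\) and \(u_{1} = 12\), so the three sums are 0, 6 and 36.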

On the other hand, since V is a homogeneous polynomial of total degree \(d(d-1)/2\), Euler’s identity for homogeneous polynomials again gives

Lemma 5.3

For \(r=1,2,\ldots ,d\), we have

Proof

Using (2.5) and \(\partial _{ii}^{2} u_{r} = 0\) for \(i = 1, 2, \ldots , d\), we get

Finally, using (5.2) twice, we conclude

Proposition 5.4

The operator \(\mathcal {F}_{h}\) satisfies

where \(\left( {\begin{array}{c}d-r\\ 2\end{array}}\right) =0\), for \(r=d\) or \(r=d-1\).

Proof

First, for \(h=0\), we have

For \(h=1\), using (2.3) and (5.8), we have

Finally, for \(h=2\), using (2.3) and (5.9), we have

where \(\left( {\begin{array}{c}d-r\\ 2\end{array}}\right) =0\), for \(r=d\) or \(r=d-1\). For the last equality, the two last equalities in the proof for \(h = 1\) were used.

In this way, we have shown that, under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\) defined by (2.1), the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\) can be represented as linear partial differential operators in the form

where \(a_{rs}(\mathtt {u})\), for \(r,s = 1, \ldots , d\) are polynomials of degree 2 in \(\mathtt {u}\) and \(b_{r}(\mathtt {u})\), for \(r = 1, \ldots , d\) are polynomials of degree 1 in \(\mathtt {u}\).
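The degree claim for the second-order coefficients can be made concrete for \(d=2\). The sketch assumes that the coefficients arise from the chain rule as \(a_{rs}^{h} = \sum _{i} x_{i}^{h}\, \partial _{i} u_{r}\, \partial _{i} u_{s}\), i.e. that the second-order part in \(\mathtt {x}\) is \(\sum _{i} x_{i}^{h} \partial _{i}^{2}\); this is an assumption about the operators, whose definitions are not reproduced above. The closed forms in `expected_in_u` were worked out by hand for this illustration.

```python
def second_order_coefficients(x1, x2, h):
    """Return (a11, a12, a22) with a_rs = sum_i x_i**h * d_i(u_r) * d_i(u_s), d = 2."""
    grad_u1 = (1, 1)      # gradient of u1 = x1 + x2
    grad_u2 = (x2, x1)    # gradient of u2 = x1 * x2
    xs = (x1, x2)

    def a(gr, gs):
        return sum(x ** h * gr[i] * gs[i] for i, x in enumerate(xs))

    return a(grad_u1, grad_u1), a(grad_u1, grad_u2), a(grad_u2, grad_u2)


def expected_in_u(u1, u2, h):
    """Hand-derived expressions of (a11, a12, a22) as polynomials in (u1, u2)."""
    table = {
        0: (2, u1, u1 * u1 - 2 * u2),
        1: (u1, 2 * u2, u1 * u2),
        2: (u1 * u1 - 2 * u2, u1 * u2, 2 * u2 * u2),
    }
    return table[h]
```

Each entry of the table is a polynomial of degree at most 2 in \(\mathtt {u}\), consistent with the statement above; for instance, at \(\mathtt {x} = (3,-5)\), where \(\mathtt {u} = (-2,-15)\), both functions return `(34, 30, 450)` for \(h=2\).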

Remark 5.5

It is well known that, in the \(\mathtt {x}\) variable, it is possible to derive formulas for the Laguerre and Hermite cases by taking limits of formulas in the Jacobi case (see [1, (2.18)–(2.19)]). Similar results hold for the \(\mathtt {u}\) variable.

Next, it will be proved that the polynomials defined in (4.2) are eigenfunctions of \(\mathcal {M}_{\gamma }\). The proof is based on two lemmas.

Lemma 5.6

Let \(\mathtt {u}^{\mu } = u_{1}^{\mu _{1}}\ldots u_{d}^{\mu _{d}}\) be a multivariate monomial. Then

where \(\text {l.o.m.}\) stands for lower-order monomials in the graded reverse lexicographical order. Here \(c(\mu ) \in \mathbb {R}\).

Proof

This result easily follows from Propositions 5.1, 5.2, and 5.4.

Lemma 5.7

For arbitrary polynomials \(p(\mathtt {u})\) and \(q(\mathtt {u})\), it holds that

Proof

Integration by parts provides the self-adjoint character of the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\) for symmetric polynomials in the corresponding domains (see [16]). The result follows after the change of variables \(\mathtt {x} \mapsto \mathtt {u}\).

Theorem 5.8

Let \(p_{\mu }(\mathtt {u})\) be one of the monic orthogonal polynomials defined by (4.2). Then,

Proof

By Lemma 5.6, the function \(\mathcal {M}_{\gamma } p_{\mu }(\mathtt {u})\) is a polynomial in \(\mathtt {u}\) whose leading term is \(c(\mu ) \mathtt {u}^{\mu }\). Let \(\mu ^{\prime } \prec \mu\). Then it follows from Lemmas 5.6 and 5.7 that

Hence, \(\mathcal {M}_{\gamma } p_{\mu }(\mathtt {u})\) is a polynomial whose leading term is \(c(\mu ) \mathtt {u}^{\mu }\) orthogonal to all polynomials of lower degree, so \(\mathcal {M}_{\gamma } p_{\mu }(\mathtt {u}) = c(\mu ) p_{\mu }(\mathtt {u})\).

Remark 5.9

If we write \(\mu = (\lambda _{1}-\lambda _{2}, \lambda _{2}-\lambda _{3}, \ldots , \lambda _{d})\) then the expression \(\text {l.o.m.}\) in Lemma 5.6 can stand for lower in the dominance partial ordering of the \(\lambda\), i.e., \(\lambda ^{\prime } \leqslant \lambda\) if and only if \(\lambda ^{\prime }_{1} \leqslant \lambda _{1}, \lambda ^{\prime }_{1} + \lambda ^{\prime }_{2} \leqslant \lambda _{1} + \lambda _{2}, \ldots , \lambda ^{\prime }_{1} + \cdots + \lambda ^{\prime }_{d} \leqslant \lambda _{1} + \cdots + \lambda _{d}\).

Accordingly, the orthogonal polynomials \(p_{\mu }\) can also be characterized as \(p_{\mu } = u^{\mu }+ \text {l.o.m.}\) (with \(\text {l.o.m.}\) having the same meaning as above) such that they are orthogonal to all \(p_{\mu ^{\prime }}\) with corresponding \(\lambda ^{\prime }\) less than \(\lambda\) (corresponding to \(\mu\)) in the dominance partial ordering. Since the dominance partial ordering is not a total order, a priori, the polynomials \(p_{\mu }\) defined in this way could differ from the polynomials \(p_{\mu }\) defined in (4.2). However, the fact that the two definitions coincide was first proved by Heckman [5, Theorem 8.3] by using very deep methods. Much easier proofs were given by Macdonald [15, (11.11)] and Heckman [6, Corollary 3.12].

5.1 The case \(d=2\)

For \(d=2\), using Propositions 5.1, 5.2, and 5.4, we can easily deduce the explicit expression of the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\), under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\).

In the Jacobi case, the operator

for \(d=2\), can be written as follows

and therefore we recover the differential operator given by Koornwinder in [8].

Denoting the corresponding orthogonal polynomial, for \(d=2\), by \(P_{n-k,k}^{(\alpha ,\beta ,\gamma )}(\mathtt {u}) = u_{1}^{n-k}{u_{2}^{k}} + \cdots\), we get

In the Hermite case, the explicit expression of the differential operator

for \(d=2\), is given by

Denoting the orthogonal polynomial, for \(d=2\), by \(H_{n-k,k}^{(\gamma )}(\mathtt {u}) = u_{1}^{n-k}{u_{2}^{k}} + \cdots\), we get

In the Laguerre case, the explicit expression of the differential operator

for \(d=2\), is given by

Again, denoting the orthogonal polynomial, for \(d=2\), by \(L_{n-k,k}^{(\gamma )}(\mathtt {u}) = u_{1}^{n-k}{u_{2}^{k}} + \cdots\), we get

5.2 The case \(d=3\)

For \(d=3\), using Propositions 5.1, 5.2, and 5.4, we can easily deduce the explicit expression of the differential operators \(\mathcal {H}_{\gamma }^{H}, \mathcal {H}_{\gamma }^{L}\) and \(\mathcal {H}_{\gamma }^{J}\), under the change of variables \(\mathtt {x} \mapsto \mathtt {u}\).

In the Jacobi case, the operator

for \(d=3\), can be written as follows

In the Hermite case, the explicit expression of the differential operator

for \(d=3\), is given by

In the Laguerre case, the explicit expression of the differential operator

for \(d=3\), is given by

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

1. Baker, T.H., Forrester, P.J.: The Calogero-Sutherland model and generalized classical polynomials. Comm. Math. Phys. 188, 175–216 (1997)

2. Berens, H., Schmid, H.J., Xu, Y.: Multivariate Gaussian cubature formulae. Arch. Math. 64, 26–32 (1995)

3. Dunkl, C.F., Xu, Y.: Orthogonal Polynomials of Several Variables, 2nd edn. Encyclopedia of Mathematics and its Applications, vol. 155. Cambridge Univ. Press, Cambridge (2014)

4. Godwin, H.J.: Explicit expressions for Sturm sequences. Math. Proc. Camb. Phil. Soc. 100, 225–227 (1986)

5. Heckman, G.J.: Root systems and hypergeometric functions. II. Compositio Math. 64, 353–373 (1987)

6. Heckman, G.J.: An elementary approach to the hypergeometric shift operators of Opdam. Invent. Math. 103, 341–350 (1991)

7. James, A.T.: Special functions of matrix and single argument in statistics. In: Askey, R. (ed.) Theory and Application of Special Functions, pp. 497–520. Academic Press, New York (1975)

8. Koornwinder, T.H.: Orthogonal polynomials in two variables which are eigenfunctions of two algebraically independent partial differential operators I. Indag. Math. 36, 48–58 (1974)

9. Koornwinder, T.H.: Orthogonal polynomials in two variables which are eigenfunctions of two algebraically independent partial differential operators II. Indag. Math. 36, 59–66 (1974)

10. Krall, H.L., Sheffer, I.M.: Orthogonal polynomials in two variables. Ann. Mat. Pura Appl. (4) 76, 326–376 (1967)

11. Lassalle, M.: Polynômes de Jacobi généralisés. C. R. Acad. Sci. Paris Sér. I Math. 312, 425–428 (1991)

12. Lassalle, M.: Polynômes de Laguerre généralisés. C. R. Acad. Sci. Paris Sér. I Math. 312, 725–728 (1991)

13. Lassalle, M.: Polynômes de Hermite généralisés. C. R. Acad. Sci. Paris Sér. I Math. 313, 579–582 (1991)

14. Macdonald, I.G.: Symmetric Functions and Hall Polynomials, 2nd edn. Clarendon Press, Oxford (1995)

15. Macdonald, I.G.: Orthogonal polynomials associated with root systems. Sem. Lothar. Combin. 45, B45a, 40 (2000)

16. Macdonald, I.G.: Hypergeometric Functions I. arXiv:1309.4568 [math.CA]

17. Prasolov, V.V.: Polynomials. Algorithms and Computation in Mathematics, vol. 11. Springer (2004)

Funding

Funding for open access charge: Universidad de Granada / CBUA. This research was supported through the Brazilian Federal Agency for Support and Evaluation of Graduate Education (CAPES), in the scope of the CAPES-PrInt Program, process number 88887.310463/2018-00, International Cooperation Project number 88887.468471/2019-00. The second author (MAP) has been partially supported by grant PGC2018-094932-B-I00 from FEDER/Ministerio de Ciencia, Innovación y Universidades – Agencia Estatal de Investigación, and the IMAG-María de Maeztu grant CEX2020-001105-M/AEI/10.13039/501100011033.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Dedicated to professor Claude Brezinski on the occasion of his 80th birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bracciali, C.F., Piñar, M.A. On multivariate orthogonal polynomials and elementary symmetric functions. Numer Algor 92, 183–206 (2023). https://doi.org/10.1007/s11075-022-01434-4
