Abstract

This paper deals with the equation \(-\varDelta u+\mu u=f\) on high-dimensional spaces \({\mathbb {R}}^m\), where \(\mu \ge 0\) is a constant and the right-hand side \(f(x)=F(Tx)\) is composed of two parts: a separable function F with an integrable Fourier transform on a space of dimension \(n>m\), and a linear mapping given by a matrix T of full rank. For example, the right-hand side can explicitly depend on differences \(x_i-x_j\) of components of x. Following our publication (Yserentant in Numer Math 146:219–238, 2020), we show that the solution of this equation can be expanded into sums of functions of the same structure, and in this framework we develop an equally simple and fast iterative method for its computation. The method is based on the observation that, in almost all cases and for large problem classes, the expression \(\Vert T^ty\Vert ^2\) deviates on the unit sphere \(\Vert y\Vert =1\) less and less from its mean value as the dimension m increases, a concentration of measure effect. The higher the dimension m, the faster the iteration converges.



1 Introduction

The numerical solution of partial differential equations in high space dimensions is a difficult and challenging task. Methods such as finite elements, which work perfectly in two or three dimensions, are not suitable for such problems because the effort grows exponentially with the dimension. Random-walk-based techniques only provide solution values at selected points. Sparse grid methods are best suited for problems in still moderate dimensions. Tensor-based methods [2, 11, 12] stand out in this area. They are not subject to such limitations and perform surprisingly well in a large number of cases. Tensor-based methods exploit the structure of the solutions rather than their regularity. Consider the equation

\[ -\varDelta u+\mu u=f \tag{1.1}\]

on \({\mathbb {R}}^m\) for high dimensions m, where \(\mu >0\) is a given constant. Provided the right-hand side f of the equation (1.1) possesses an integrable Fourier transform,

is a solution of this equation, and the only solution that tends uniformly to zero as x goes to infinity. If the right-hand side f of the equation is a tensor product

of functions, say from the three-dimensional space to the real numbers, or a sum of such tensor products, the same holds for the Fourier transform of f. If one replaces the term \(1/(\mu +\Vert \omega \Vert ^2)\) in the high-dimensional integral (1.2) by an approximation

based on an appropriate approximation of 1/r by a sum of exponential functions, the integral then collapses to a sum of products of lower-dimensional integrals. That is, the solution can be approximated by a sum of such tensor products whose number is independent of the space dimension. The computational effort no longer increases exponentially, but only linearly with the space dimension.
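One common way to obtain such an approximation, not necessarily the one used in this paper, starts from \(1/r=\int _{-\infty }^{\infty }e^{s}\exp (-re^{s})\,ds\) and applies the trapezoidal rule with step h, which yields \(1/r\approx \sum _k a_k e^{-b_kr}\) with \(a_k=h\,e^{kh}\) and \(b_k=e^{kh}\). The NumPy sketch below assumes this construction; the step size, the index range and the function names are illustrative and hypothetical. With \(r=\mu +\Vert \omega \Vert ^2\), each term becomes \(a_ke^{-b_k\mu }\prod _i e^{-b_k\omega _i^2}\), a product of univariate Gauss functions, which is what lets the integral split into lower-dimensional factors.

```python
import numpy as np

def exp_sum_coefficients(h=0.5, k_min=-40, k_max=20):
    """Weights a_k and exponents b_k with 1/r ~ sum_k a_k * exp(-b_k * r).

    Trapezoidal rule with step h applied to 1/r = int exp(s - r*exp(s)) ds
    over the real line, truncated to k_min <= k <= k_max (illustrative choice)."""
    s = h * np.arange(k_min, k_max + 1)
    b = np.exp(s)  # exponents b_k = e^{kh}
    a = h * b      # weights   a_k = h e^{kh}
    return a, b

def approx_inverse(r, a, b):
    """Evaluate sum_k a_k exp(-b_k r) for an array of arguments r > 0."""
    r = np.atleast_1d(np.asarray(r, dtype=float))
    return np.exp(-np.outer(r, b)) @ a

def separable_term(omega, mu, a_k, b_k):
    """One term a_k exp(-b_k (mu + ||omega||^2)), evaluated as a product of 1D Gauss functions."""
    factors = np.exp(-b_k * np.asarray(omega, dtype=float) ** 2)
    return a_k * np.exp(-b_k * mu) * np.prod(factors)

a, b = exp_sum_coefficients()
r = np.logspace(-2, 2, 200)
rel_err = np.abs(approx_inverse(r, a, b) * r - 1.0)
print(f"{a.size} terms, max relative error on [1e-2, 1e2]: {rel_err.max():.1e}")
```

A wider range of arguments r requires a correspondingly larger index range; the step h mainly controls the discretization error.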

However, the right-hand side of the equation does not always have such a simple structure and cannot always be well represented by tensors of low rank. A prominent example is quantum mechanics. The potential in the Schrödinger equation depends on the distances between the particles considered. Therefore, it is desirable to approximate the solutions of this equation by functions that explicitly depend on the position of the particles relative to each other. As a building block in more comprehensive calculations, this can require the solution of equations of the form (1.1) with right-hand sides that are composed of terms such as

The question is whether such structures carry over to the solution and whether the iterates arising in such a context stay in this class. The present work deals with this problem. We present a conceptually simple iterative method that preserves such structures and takes advantage of the high dimensions.

As in our former paper [19], we first embed the problem into a higher-dimensional space by introducing, for example, some or all differences \(x_i-x_j\), \(i<j\), as additional variables besides the components \(x_i\) of the vector \(x\in {\mathbb {R}}^m\). We assume that the right-hand side of the equation (1.1) is of the form \(f(x)=F(Tx)\), where T is a matrix of full rank that maps the vectors in \({\mathbb {R}}^m\) to vectors in an \({\mathbb {R}}^n\) of still higher dimension and \(F:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is a function that possesses an integrable Fourier transform and is therefore continuous. The solution of the equation (1.1) is then the trace \(u(x)=U(Tx)\) of the likewise continuous function

which is, in a corresponding sense, the solution of a degenerate elliptic equation

This equation replaces the original equation (1.1). Its solution (1.6) is approximated by the iterates arising from a polynomially accelerated version of the basic method

The calculation of these iterates requires the solution of equations of the form (1.1), that is, the calculation of integrals of the form (1.2), now not over \({\mathbb {R}}^m\) but over the higher-dimensional \({\mathbb {R}}^n\). The symbol \(\Vert T^t\omega \Vert ^2\) of the operator \({\mathscr {L}}\) is a homogeneous second-order polynomial in \(\omega \). For separable right-hand sides F as above, the calculation of these integrals thus reduces to the calculation of products of lower-dimensional, in the extreme case one-dimensional, integrals.

The reason for the usually astonishingly good approximation properties of these iterates is the directional behavior of the term \(\Vert T^t\omega \Vert ^2\), more precisely the fact that the Euclidean norm of the vectors \(T^t\eta \in {\mathbb {R}}^m\) for vectors \(\eta \) on the unit sphere \(S^{n-1}\) of the \({\mathbb {R}}^n\) takes an almost constant value outside a very small set whose size decreases rapidly with increasing dimension, a typical concentration of measure effect. To capture this phenomenon quantitatively, we introduce the probability measure

on the Borel subsets M of the \({\mathbb {R}}^n\), where \(\nu _n\) is the volume of the unit ball and \(n\nu _n\) thus is the area of the unit sphere. If M is a subset of the unit sphere, \({\mathbb {P}}(M)\) is equal to the ratio of the area of M to the area of the unit sphere. If M is a sector, that is, if M contains with a vector \(\omega \) also its scalar multiples, \({\mathbb {P}}(M)\) measures the opening angle of M. In the following we assume that the mean value of the expression \(\Vert T^t\eta \Vert ^2\) over the unit sphere, or in other words its expected value with respect to the given probability measure, takes the value one. This is only a matter of the scaling of the variables in the higher dimensional space and does not represent a restriction. The decisive quantity is the variance of the expression \(\Vert T^t\eta \Vert ^2\), considered as a random variable on the unit sphere. Since the values \(\Vert T^t\eta \Vert ^2\) are, except for rather extreme cases, approximately normally distributed in higher dimensions, the knowledge of the variance makes it possible to capture the distribution of these values sufficiently well. The concentration of measure effect is reflected in the fact that the variances almost always tend to zero as the dimensions increase.
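The quantities E and V can be estimated by sampling. The minimal NumPy sketch below assumes the standard construction of uniform points on the sphere by normalizing Gaussian vectors, and it uses Gaussian random matrices T with \(n=3m\) purely as hypothetical test cases. Because a uniform point \(\eta \) on \(S^{n-1}\) satisfies \({\mathbb {E}}(\eta \eta ^t)=I/n\), the mean of \(\Vert T^t\eta \Vert ^2\) is \(\textrm{tr}(TT^t)/n\), and T is rescaled so that this value is one, the normalization assumed above.

```python
import numpy as np

def sample_unit_sphere(rng, num, n):
    """Uniformly distributed points on S^{n-1}: normalized standard normal vectors."""
    z = rng.standard_normal((num, n))
    return z / np.linalg.norm(z, axis=1, keepdims=True)

def normalize_mean_one(T):
    """Rescale T so that the spherical mean of ||T^t eta||^2, namely tr(T T^t)/n, equals one."""
    n = T.shape[0]
    return T * np.sqrt(n / np.sum(T ** 2))

def sampled_mean_and_variance(T, rng, num=10000):
    """Monte Carlo estimates of E and V of ||T^t eta||^2 for eta uniform on S^{n-1}."""
    eta = sample_unit_sphere(rng, num, T.shape[0])
    values = np.sum((eta @ T) ** 2, axis=1)  # row k of eta @ T is (T^t eta_k)^t
    return values.mean(), values.var()

rng = np.random.default_rng(1)
results = []
for m in (8, 32, 128):
    # Hypothetical test matrices: Gaussian random entries, n = 3m
    T = normalize_mean_one(rng.standard_normal((3 * m, m)))
    results.append(sampled_mean_and_variance(T, rng))
    print(m, results[-1])
```

For such matrices the sampled means stay close to one, while the sampled variances shrink as m grows.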

For a given \(\rho <1\), let S be the sector that consists of the points \(\omega \) for which the expression \(\Vert T^t\omega \Vert ^2\) differs from \(\Vert \omega \Vert ^2\) by \(\rho \Vert \omega \Vert ^2\) or less. If the Fourier transform of the right-hand side of the equation (1.7) vanishes at all \(\omega \) outside this set, the same holds for the Fourier transform of its solution (1.6) and the Fourier transforms of the iterates \(U_k\). Under this condition, the iteration error decreases at least like

with respect to a broad range of Fourier-based norms. Provided the values \(\Vert T^t\eta \Vert ^2\) are approximately normally distributed with expected value \(E=1\) and small variance V, the measure (1.9) of the sector S is almost one as soon as \(\rho \) exceeds the standard deviation \(\sigma =\sqrt{V}\) by more than a moderate factor. For example, for \(\rho =3\sigma \) the normal approximation gives \({\mathbb {P}}(S)\approx 0.997\). The sector S then fills almost the entire frequency space. This is admittedly an idealized situation, and the actual convergence behavior is more complicated, but the example characterizes quite well what one can expect. The higher the dimensions and the smaller the variance, the faster the iterates approach the solution.
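The display (1.8) is not reproduced in this text. Assuming, for illustration only, that the basic method is the preconditioned step \(U_{k+1}=U_k-(-\varDelta +\mu )^{-1}({\mathscr {L}}U_k+\mu U_k-F)\) on \({\mathbb {R}}^n\), its error is multiplied at each frequency \(\omega \) by \(1-(\mu +\Vert T^t\omega \Vert ^2)/(\mu +\Vert \omega \Vert ^2)\). For \(\omega \) in S this factor is at most \(\rho \) in modulus, because \(|\Vert \omega \Vert ^2-\Vert T^t\omega \Vert ^2|\le \rho \Vert \omega \Vert ^2\le \rho (\mu +\Vert \omega \Vert ^2)\). The NumPy sketch below checks this numerically and estimates \({\mathbb {P}}(S)\) from random directions; the function names and the random test matrix are hypothetical.

```python
import numpy as np

def symbols(T, omega):
    """Return ||T^t omega||^2 and ||omega||^2 for each row omega of the array."""
    sym_L = np.sum((omega @ T) ** 2, axis=1)
    sym_lap = np.sum(omega ** 2, axis=1)
    return sym_L, sym_lap

def amplification_factor(T, omega, mu):
    """Error factor per frequency of the assumed step
    U <- U - (-Laplace + mu)^{-1} (L U + mu U - F)."""
    sym_L, sym_lap = symbols(T, omega)
    return 1.0 - (mu + sym_L) / (mu + sym_lap)

def in_sector(T, omega, rho):
    """Membership in S: | ||T^t omega||^2 - ||omega||^2 | <= rho ||omega||^2."""
    sym_L, sym_lap = symbols(T, omega)
    return np.abs(sym_L - sym_lap) <= rho * sym_lap

rng = np.random.default_rng(2)
m, n, rho, mu = 60, 180, 0.5, 1.0
T = rng.standard_normal((n, m))
T *= np.sqrt(n / np.sum(T ** 2))  # spherical mean of ||T^t eta||^2 equal to one
# Random frequencies: uniformly distributed directions (Gaussian) with random radii
omega = rng.standard_normal((20000, n)) * rng.uniform(0.1, 10.0, size=(20000, 1))
mask = in_sector(T, omega, rho)
factors = np.abs(amplification_factor(T, omega, mu))
print(f"estimated P(S) = {mask.mean():.4f}, max |factor| on S = {factors[mask].max():.3f}")
```

Since S is a sector, the fraction of sampled frequencies inside it estimates \({\mathbb {P}}(S)\) regardless of the chosen radii.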

The rest of this paper is organized as follows. Section 2 sets the framework and is devoted to the representation of the solutions of the equation (1.1) as traces of higher dimensional functions (1.6) for right-hand sides that are themselves traces of functions with an integrable Fourier transform. In comparison with the proof in [19], we give a more direct proof of this representation. In addition, we introduce two scales of norms with respect to which we later estimate the iteration error.

In a sense, the following two sections form the core of the present work. They deal with the distribution of the values \(\Vert T^t\eta \Vert ^2\) on the unit sphere. Section 3 treats the general case. We first show that the expected value E and the variance V of the expression \(\Vert T^t\eta \Vert ^2\), considered as a random variable, can be expressed in terms of the singular values of the matrix T. Using this representation, we show for random matrices T with assigned expected value \(E=1\) not only that the expected value of the corresponding variances V tends to zero as the dimension m goes to infinity, but also that these variances cluster more and more tightly around their expected value as m increases. Sampling large numbers of these variances supports these theoretical findings. We also study a class of matrices T that are associated with interaction graphs. These matrices correspond to the case in which some or all coordinate differences are introduced as additional variables, and they formed the original motivation for this work. The expected values E assigned to these matrices always take the value one, and the corresponding variances V can be expressed directly in terms of the vertex degrees and the dimensions. Numerical experiments for standard classes of random graphs show that the variances tend to zero with high probability as the number of vertices increases. As mentioned, knowing the expected value and the variance assigned to a given matrix T is of central importance, since the distribution of the values \(\Vert T^t\eta \Vert ^2\) is usually not much different from the corresponding normal distribution. This can be checked experimentally by sampling these values for a large number of points \(\eta \) that are uniformly distributed on the unit sphere.

These observations are supported by the results for orthogonal projections from a higher- onto a lower-dimensional space presented in Sect. 4, a case that is accessible to a complete analysis and therefore serves as a model problem. These results are based on a precise knowledge of the corresponding probability distributions and are related to the random projection theorem and the Johnson-Lindenstrauss lemma [18]. Parts of this section are based on results from our former papers [19] and [20], which are taken up here and adapted to the present situation.

In Sect. 5 we finally return to the higher-dimensional counterpart (1.7) of the original equation (1.1) and study the convergence behavior of the polynomially accelerated version of the iteration (1.8) for its solution. Special attention is given to the limit case \(\mu =0\) of the Laplace equation. The section ends with a brief review of an approximation of the form (1.4) by sums of Gauss functions.

2 Solutions as traces of higher-dimensional functions

In this paper we are mainly concerned with functions \(U:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\), n a potentially high dimension, that possess the then unique representation

in terms of an integrable function \(\widehat{U}\), their Fourier transform. Such functions are uniformly continuous by the Riemann-Lebesgue theorem and vanish at infinity. The space \({\mathscr {B}}_0({\mathbb {R}}^n)\) of these functions becomes a Banach space under the norm

Let T be an arbitrary \((n\times m)\)-matrix of full rank \(m<n\) and let

\[ u(x)=U(Tx) \tag{2.3}\]

be the trace of a function \(U\in {\mathscr {B}}_0({\mathbb {R}}^n)\). Since the functions in \({\mathscr {B}}_0({\mathbb {R}}^n)\) are uniformly continuous, the same applies to their traces. Since there is a constant c with \(\Vert x\Vert \le c\,\Vert Tx\Vert \) for all \(x\in {\mathbb {R}}^m\), the trace functions (2.3) vanish at infinity like U itself. The next lemma gives a criterion for the existence of partial derivatives of the trace functions, where we use the common multi-index notation.

Lemma 2.1

Let \(U:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be a function in \({\mathscr {B}}_0({\mathbb {R}}^n)\) and let the functions

be integrable. Then the trace function (2.3) possesses the partial derivative

which is, like u, itself uniformly continuous and vanishes at infinity.

Proof

Let \(e_k\in {\mathbb {R}}^m\) be the vector with the components \(e_k|_j=\delta _{kj}\). To begin with, we examine the limit behavior of the difference quotient

of the trace function as h goes to zero. Because of

and under the condition that the function \(\omega \rightarrow \omega \cdot Te_k\,\widehat{U}(\omega )\) is integrable, it tends to

as follows from the dominated convergence theorem. Because of \(\omega \cdot Te_k=T^t\omega \cdot e_k\), this proves (2.5) for partial derivatives of order one. For partial derivatives of higher order, the proposition follows by induction. \(\square \)

Let \(D({\mathscr {L}})\) be the space of the functions \(U\in {\mathscr {B}}_0({\mathbb {R}}^n)\) with finite (semi)-norm

Because of \(|(T^t\omega )^\beta |\le 1+\Vert T^t\omega \Vert ^2\) for all multi-indices \(\beta \) of order two or less, the traces of the functions in this space are twice continuously differentiable by Lemma 2.1. Let \({\mathscr {L}}:D({\mathscr {L}})\rightarrow {\mathscr {B}}_0({\mathbb {R}}^n)\) be the pseudo-differential operator given by

For the functions \(U\in D({\mathscr {L}})\) and their traces (2.3), by Lemma 2.1

holds. With corresponding right-hand sides, the solutions of the equation (1.1) are thus the traces of the solutions \(U\in D({\mathscr {L}})\) of the pseudo-differential equation

Theorem 2.1

Let \(F:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be a function with integrable Fourier transform, let \(f(x)=F(Tx)\), and let \(\mu \) be a positive constant. Then the trace (2.3) of the function

is twice continuously differentiable and the only solution of the equation

whose values tend uniformly to zero as \(\Vert x\Vert \) goes to infinity. Provided the function

is integrable, the same holds in the limit case \(\mu =0\).

Proof

That the trace u is a classical solution of the equation (2.11) follows from the remarks above, and that it vanishes at infinity follows, as already discussed, from the Riemann-Lebesgue theorem. The maximum principle ensures that no further solution of the equation (2.11) exists that vanishes at infinity. \(\square \)

From now on, the equation (2.9) will replace the original equation (2.11). Our aim is to compute its solution (2.10) iteratively by polynomially accelerated versions of the basic iteration (1.8). The convergence properties of this iteration depend decisively on the directional behavior of the values \(\Vert T^t\omega \Vert ^2\), which will be studied in the next two sections, before we return to the equation and its iterative solution.

Before we continue with these considerations and turn our attention to the directional behavior of these values, we introduce the norms with respect to which we will show convergence. The starting point is the radial-angular decomposition

of the integrals of functions in \(L_1({\mathbb {R}}^n)\) into an inner radial and an outer angular part. Inserting the characteristic function of the unit ball, one recognizes that the area of the n-dimensional unit sphere \(S^{n-1}\) is \(n\nu _n\), with \(\nu _n\) the volume of the unit ball. If f is rotationally symmetric, \(f(r\eta )=f(re)\) holds for every \(\eta \in S^{n-1}\) and every fixed, arbitrarily given unit vector e. In this case, (2.13) simplifies to

The norms split into two groups, both of which depend on a smoothness parameter. The norms in the first group possess the radial-angular representation

and take in cartesian coordinates the more familiar form

The spectral Barron spaces \({\mathscr {B}}_s={\mathscr {B}}_s({\mathbb {R}}^n)\), \(s\ge 0\), consist of the functions U with finite norms \(\Vert U\Vert _t\) for \(0\le t\le s\). Since all these norms scale differently, we do not combine them into a single norm but treat them separately. One should be aware that these norms are quite strong. For integer values s, functions \(U\in {\mathscr {B}}_s\) possess continuous partial derivatives up to order s, which are bounded by the norms \(\Vert U\Vert _k\), \(k\le s\), and vanish at infinity. Barron spaces play a prominent role in the analysis of neural networks and in high-dimensional approximation theory in general; see for example [15].

The norms in the second group are a mixture between an \(L_1\)-based norm, in radial direction, and an \(L_2\)-based norm for the angular part and are given by

If the norm \(|\!|\!|U|\!|\!|_s\) of U is finite, then so is the norm \(\Vert U\Vert _s\), and

holds. This follows from the Cauchy-Schwarz inequality. Both norms scale in the same way. By (2.14), they coincide for radially symmetric functions.

The following lemma is needed to bring these two norms into connection with Theorem 2.1 and the solution (2.10) of the equation (2.9).

Lemma 2.2

Let \(m\ge 3\) and \(m\ge 5\), respectively. Then the surface integrals

take finite values, despite the singular integrands.

Proof

Let \(\varSigma _0\) be the \((m\times m)\)-diagonal matrix with the singular values of T on its diagonal. A variable transform and the Fubini-Tonelli theorem then lead to

Because the first of the two integrals on the right-hand side of this identity is finite for dimensions \(m\ge 3\) and the second is finite without such a constraint, the integral

takes a finite value. The same then also holds for the integral over the unit sphere on the right-hand side of the equation. For dimensions \(m\ge 5\), the second of the two integrals in (2.19) can be treated in the same way. \(\square \)

For a given function F with integrable Fourier transform, the function

possesses the radial-angular representation

If the function \(\phi :S^{n-1}\rightarrow {\mathbb {R}}\) given by

is bounded and the dimension m is three or higher, the function (2.20) is integrable by Lemma 2.2 and is the Fourier transform of a function \(U\in {\mathscr {B}}_0({\mathbb {R}}^n)\) with finite norm \(\Vert U\Vert _0\). For dimensions m greater than four, it suffices that \(\phi \) is square integrable. For the other norms introduced above, one can argue in a similar way.

3 A moment-based analysis

The purpose of this and the next section is to study how much the pseudo-differential operator (2.7) differs from the negative Laplace operator, that is, how much

deviates on the unit sphere \(S^{n-1}\) of the \({\mathbb {R}}^n\) from the constant one. The expression (3.1) is a homogeneous function of second order in the variable \(\omega \). To study its angular distribution, we make use of the already introduced probability measure

on the Borel subsets M of the \({\mathbb {R}}^n\). The direct calculation of the expected values of this and other homogeneous functions as surface integrals is complicated. We therefore reduce the calculation of such quantities to the calculation of simpler volume integrals. Our main tool is the radial-angular decomposition (2.13).

Lemma 3.1

Let \(x\rightarrow \Vert x\Vert ^{2\ell }\,W(x)\) be rotationally symmetric and integrable. Then

holds for all functions \(\chi \) that are positively homogeneous of degree \(2\ell \ge 0\), where the constant \(C(\ell )\) is given by the expression

and e is an arbitrarily given unit vector.

Proof

As by assumption \(\chi (r\eta )=r^{2\ell }\chi (\eta )\) and \(W(r\eta )=W(re)\) holds for \(\eta \in S^{n-1}\) and \(r>0\), the decomposition (2.13) leads to

yielding the proposition after some rearrangement. \(\square \)

The choice of the function W can be adapted to the needs at hand. For example, it can be the characteristic function of the unit ball or the normalized Gauss function

which breaks down into a product of univariate functions. Another almost trivial, but often very useful observation concerns coordinate changes.

Lemma 3.2

Under the assumptions from the previous lemma,

holds for all orthogonal \((n\times n)\)-matrices Q.

Proof

The transformation theorem for multivariate integrals leads to

Because \(W(Qx)=W(x)\) and \(\textrm{det}Q=\pm 1\), the proposition follows. \(\square \)
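The mechanism behind Lemma 3.1 can be illustrated with a Gaussian weight. Assuming, for this sketch only, the standard normal density \(W(x)=(2\pi )^{-n/2}e^{-\Vert x\Vert ^2/2}\) (the exact normalization in (3.5) and the constant \(C(\ell )\) are not reproduced here), radius and direction of a standard normal vector g are independent, so \({\mathbb {E}}[\chi (g)]={\mathbb {E}}\Vert g\Vert ^{2\ell }\,{\mathbb {E}}[\chi (\eta )]\) with \({\mathbb {E}}\Vert g\Vert ^{2\ell }=2^\ell \Gamma (n/2+\ell )/\Gamma (n/2)\). For \(\chi (x)=x_1^4\), that is \(\ell =2\), this gives \({\mathbb {E}}[\eta _1^4]=3/(n(n+2))\). The NumPy sketch compares the volume-based and the direct surface estimate; all names are hypothetical.

```python
import math
import numpy as np

def radial_moment(n, ell):
    """E ||g||^{2 ell} for a standard normal vector g in R^n."""
    return 2.0 ** ell * math.exp(math.lgamma(n / 2 + ell) - math.lgamma(n / 2))

def sphere_mean_via_gauss(chi, n, ell, rng, num=200000):
    """E[chi(eta)] on S^{n-1}, obtained from the Gaussian volume average E[chi(g)]."""
    g = rng.standard_normal((num, n))
    return chi(g).mean() / radial_moment(n, ell)

def sphere_mean_direct(chi, n, rng, num=200000):
    """E[chi(eta)] on S^{n-1} by sampling normalized Gaussian vectors."""
    g = rng.standard_normal((num, n))
    eta = g / np.linalg.norm(g, axis=1, keepdims=True)
    return chi(eta).mean()

def chi(x):
    """Positively homogeneous of degree 4 (ell = 2)."""
    return x[:, 0] ** 4

rng = np.random.default_rng(7)
n = 12
exact = 3.0 / (n * (n + 2))
via_gauss = sphere_mean_via_gauss(chi, n, 2, rng)
direct = sphere_mean_direct(chi, n, rng)
print(exact, via_gauss, direct)
```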

The expected value and the variance of the function (3.1), the central moments

are of fundamental importance for our considerations.

Lemma 3.3

The expected value and the variance of the function (3.1) depend only on the singular values \(\sigma _1,\ldots ,\sigma _m\) of the matrix T. In terms of the power sums

of order one and two of the squares of the singular values, they read as follows

Proof

Inserting the matrix Q from the singular value decomposition \(T=Q\varSigma {\widetilde{Q}}^t\) of the matrix T, Lemmas 3.1 and 3.2 lead to the representation

of the moment of order k as a homogeneous symmetric polynomial in the \(\sigma _i^2\). With the function W from (3.5), the volume integral on the right-hand side splits into a sum of products of one-dimensional integrals. Using

this integral and the constant C(k) can be calculated in principle. For the first two moments, this is easily possible. For moments of order three and higher, one can take advantage of the fact that the symmetric polynomials are polynomials in the power sums of the \(\sigma _i^2\); see [17], or [16] for a more recent treatment. \(\square \)

In terms of the normalized singular values \(\eta _i=\sigma _i/\sqrt{n}\), the expected value and the variance (3.7) and (3.9), respectively, can be written as follows

We are interested in matrices T for which the expected value E is one, that is, for which the vector \(\eta \) composed of the normalized singular values \(\eta _i\) lies on the unit sphere of the \({\mathbb {R}}^m\). The variances V then possess the representation

The function X attains the minimum value 1/m and the maximum value one on the unit sphere of the \({\mathbb {R}}^m\). The variances (3.11) therefore extend over the interval

and can be arbitrarily close to the value two for large n. The spectral norms of the given matrices \(T^t\), that is, their largest singular values, spread over the interval

However, the variances are most likely of the order \({\mathscr {O}}(1/m)\).
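The displays (3.9) and (3.11) are not reproduced in this text. The sketch below evaluates E and V from the power sums \(p_1=\sum \sigma _i^2\) and \(p_2=\sum \sigma _i^4\) using the standard identities for a uniformly distributed point \(\eta \in S^{n-1}\) and the symmetric matrix \(A=TT^t\), namely \({\mathbb {E}}[\eta ^tA\eta ]=\textrm{tr}A/n\) and \({\mathbb {E}}[(\eta ^tA\eta )^2]=((\textrm{tr}A)^2+2\,\textrm{tr}A^2)/(n(n+2))\). They give \(E=p_1/n\) and \(V=2(np_2-p_1^2)/(n^2(n+2))\) and, for \(E=1\), \(V=2(nX-1)/(n+2)\) with \(X=\sum \eta _i^4\), which is consistent with the statement that V can come arbitrarily close to two. These formulas are derived here rather than quoted from the paper, and the random test matrix is hypothetical.

```python
import numpy as np

def moments_from_singular_values(sigma, n):
    """Expected value E and variance V of ||T^t eta||^2, eta uniform on S^{n-1}."""
    s2 = np.asarray(sigma, dtype=float) ** 2
    p1, p2 = s2.sum(), (s2 ** 2).sum()  # power sums of the squared singular values
    E = p1 / n
    V = 2.0 * (n * p2 - p1 ** 2) / (n ** 2 * (n + 2))
    return E, V

def variance_from_X(eta, n):
    """V for normalized singular values eta_i = sigma_i / sqrt(n) on the unit sphere (E = 1)."""
    X = np.sum(np.asarray(eta, dtype=float) ** 4)
    return 2.0 * (n * X - 1.0) / (n + 2)

rng = np.random.default_rng(3)
n, m = 50, 20
T = rng.standard_normal((n, m))
T *= np.sqrt(n / np.sum(T ** 2))  # enforce E = 1
sigma = np.linalg.svd(T, compute_uv=False)
E, V = moments_from_singular_values(sigma, n)
V_X = variance_from_X(sigma / np.sqrt(n), n)

# Monte Carlo cross-check with uniformly distributed points on the sphere
z = rng.standard_normal((20000, n))
eta = z / np.linalg.norm(z, axis=1, keepdims=True)
samples = np.sum((eta @ T) ** 2, axis=1)
print(E, V, V_X, samples.var())
```

For a rescaled orthogonal projection, all normalized singular values equal \(1/\sqrt{m}\), so \(X=1/m\) attains its minimum and V its smallest possible value \(2(n-m)/(m(n+2))\).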

Lemma 3.4

Let the vectors \(\eta \) composed of the normalized singular values \(\eta _i\) be uniformly distributed on the part of the unit sphere consisting of points with strictly positive components. Then the expected value and the variance of X are

Proof

For symmetry reasons and because the intersections of lower-dimensional subspaces with the unit sphere have measure zero, we can allow points \(\eta \) that are uniformly distributed on the whole unit sphere. The expected value and the variance of the function X, treated as a random variable, are therefore

and can be calculated along the lines given by Lemma 3.1. \(\square \)

It is instructive to express the variance (3.11) in terms of the random variable

which is rescaled to the expected value \({\mathbb {E}}({\widetilde{X}})=1\). Its variance

tends to zero as m goes to infinity. This not only means that the expected value

of the variances V tends to zero as the dimension m increases, but also that the variances cluster more and more tightly around their expected value as m grows. This observation is supported by simple, easily reproducible experiments. Uniformly distributed points on the unit sphere \(S^{m-1}\) can be generated from vectors in \({\mathbb {R}}^m\) whose components follow the standard normal distribution. Such vectors themselves follow the standard normal distribution in the m-dimensional space, which is rotationally invariant. Scaling them to length one gives the desired uniformly distributed points on the unit sphere. This allows one to sample the random variable X for any given dimension m.
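A minimal NumPy sketch of this experiment: normalized singular-value vectors are drawn uniformly on \(S^{m-1}\), absolute values move them into the positive part of the sphere (which does not change X), and \(X=\sum \eta _i^4\) is evaluated. The reference values \({\mathbb {E}}(X)=3/(m+2)\) and \(8(m-1)/(3(m+4)(m+6))\) for the variance of \(X/{\mathbb {E}}(X)\) were computed here from the Gaussian moments \({\mathbb {E}}g^4=3\) and \({\mathbb {E}}g^8=105\); they are not copied from the displays of Lemma 3.4, which are not reproduced in this text, and they assume that the rescaled variable is \(X/{\mathbb {E}}(X)\).

```python
import numpy as np

def sample_X(rng, m, num):
    """Samples of X = sum_i eta_i^4 for eta uniform on the positive part of S^{m-1}."""
    z = np.abs(rng.standard_normal((num, m)))  # |.| maps onto the positive orthant
    eta = z / np.linalg.norm(z, axis=1, keepdims=True)
    return np.sum(eta ** 4, axis=1)

def reference_moments(m):
    """Mean of X and variance of X / E(X), from Gaussian moment identities (derived here)."""
    mean_X = 3.0 / (m + 2)
    var_rescaled = 8.0 * (m - 1) / (3.0 * (m + 4) * (m + 6))
    return mean_X, var_rescaled

rng = np.random.default_rng(4)
table = []
for m in (10, 100, 400):
    X = sample_X(rng, m, num=10000)
    mean_X, var_rescaled = reference_moments(m)
    table.append((m, X.mean(), mean_X, np.var(X / mean_X), var_rescaled))
    print(table[-1])
```

The sampled variance of the rescaled variable decreases roughly like \(8/(3m)\), in line with the clustering described above.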

The expected value and the variance (3.7) can be expressed directly in terms of the entries of the \((m\times m)\)-matrix \(S=T^tT\), since the power sums (3.8) are the traces

of the matrices S and \(S^2\), and can therefore be computed without recourse to the singular values of T. Consider the \((n\times m)\)-matrix T assigned to an arbitrarily given undirected graph with m vertices and \(n-m\) edges that maps the components \(x_i\) of a vector \(x\in {\mathbb {R}}^m\) first to themselves and then to the \(n-m\) weighted differences

assigned to the edges of the graph connecting the vertices i and j. In quantum physics, matrices of this kind can be associated with the interaction of particles. They formed the original motivation for this work. In the given case, the matrix S has the form \(S=I+L/2\), where I is the identity matrix and L is the Laplacian matrix of the graph. The off-diagonal entries of L are \(L_{ij}=-1\) if the vertices i and j are connected by an edge and \(L_{ij}=0\) otherwise. The diagonal entries \(L_{ii}=d_i\) are the vertex degrees, that is, the numbers of edges that meet at the vertices i.
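The weights of the differences are not visible in this text. The relation \(S=I+L/2\) holds if each edge \(\{i,j\}\) contributes the row \((x_i-x_j)/\sqrt{2}\), since then \(T^tT=I+\frac{1}{2}\sum (e_i-e_j)(e_i-e_j)^t\). The NumPy sketch below builds T under this assumption (the function names and the small example graph are hypothetical) and checks \(S=I+L/2\) and \(E=\textrm{tr}S/n=1\), the latter because \(\sum _i d_i=2(n-m)\).

```python
import numpy as np

def graph_matrix(m, edges):
    """(n x m) matrix: identity rows, then one row (x_i - x_j)/sqrt(2) per edge (i, j)."""
    rows = [np.eye(m)]
    for i, j in edges:
        r = np.zeros(m)
        r[i], r[j] = 1.0 / np.sqrt(2.0), -1.0 / np.sqrt(2.0)
        rows.append(r[None, :])
    return np.vstack(rows)

def graph_laplacian(m, edges):
    """Laplacian matrix: vertex degrees on the diagonal, -1 for each edge off the diagonal."""
    L = np.zeros((m, m))
    for i, j in edges:
        L[i, i] += 1.0
        L[j, j] += 1.0
        L[i, j] -= 1.0
        L[j, i] -= 1.0
    return L

# Example: a cycle on 6 vertices plus one chord
m = 6
edges = [(k, (k + 1) % m) for k in range(m)] + [(0, 3)]
T = graph_matrix(m, edges)
S = T.T @ T
n = T.shape[0]
print(n, np.allclose(S, np.eye(m) + graph_laplacian(m, edges) / 2), np.trace(S) / n)
```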

Lemma 3.5

For a matrix T of dimension \(n\times m\) associated with a graph with m vertices and \(n-m\) edges, the expected value and the variance (3.7) are

where the quantity \(d^2\) is the mean value of the squares of the vertex degrees:

Proof

By (3.18), the constants (3.8) possess the representation

in the case considered here. The proposition therefore follows from (3.9). \(\square \)

If the squares of the vertex degrees remain bounded in the mean, the variance tends to zero inversely proportional to the total number of vertices and edges. The matrices assigned to graphs in which all vertices but one are connected with a designated central vertex, but not with each other, form the other extreme. For these matrices, the variances decrease to a limit value greater than zero as the number of vertices increases. However, such matrices are a very rare exception, not only with respect to the above random matrices, but also in the context of matrices assigned to graphs. Consider the random graphs with a fixed number m of vertices in which each pair of vertices is connected by an edge with a given probability p, or those with a fixed number m of vertices and a fixed number \(n-m\) of edges. Sampling the variances assigned to a large number of graphs in such a class, one sees that, with very high probability, these variances exceed the value \(2/(m+1)\) at most by a factor only slightly greater than one, if at all. If all vertices are connected with each other, that is, if their degree is \(d_i=m-1\) and the number of edges is therefore \(n-m=m(m-1)/2\), the variance is

With the exception of a few extreme cases, it can be observed that the distribution of the values \(\Vert T^t\eta \Vert ^2\) for points \(\eta \) on the unit sphere of \({\mathbb {R}}^n\) more and more approaches the normal distribution with the expected value and the variance (3.7) as the dimensions increase, a fact that underlines the importance of these quantities. This can be seen by evaluating the expression \(\Vert T^t\eta \Vert ^2\) at a large number of independently chosen, uniformly distributed points \(\eta \) on the unit sphere and comparing the frequency distribution of the resulting values with the given Gauss function

Let the matrix T have the singular value decomposition \(T=Q\varSigma {\widetilde{Q}}^t\). The frequency distribution of the values \(\Vert \varSigma ^t\eta \Vert ^2=\Vert T^tQ\eta \Vert ^2\) on the unit sphere then coincides with the distribution of the values \(\Vert T^t\eta \Vert ^2\). Let \(\varSigma _0\) be the \((m\times m)\)-diagonal matrix composed of the singular values. Given the above remarks about the generation of uniformly distributed points on a unit sphere, sampling the values \(\Vert T^t\eta \Vert ^2\) for a large number of points \(\eta \in S^{n-1}\) amounts to sampling the ratio

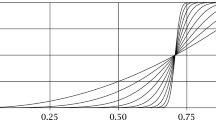

for a large number of vectors \(x\in {\mathbb {R}}^m\) and \(y\in {\mathbb {R}}^{n-m}\) with standard normally distributed components. The values \(\Vert y\Vert ^2\) are then distributed according to the \(\chi ^2\)-distribution with \(n-m\) degrees of freedom. The direct calculation of \(\Vert y\Vert ^2\) can thus be replaced by drawing a single scalar sample from this distribution. The amount of work then remains strictly proportional to the dimension m, no matter how large the difference \(n-m\) of the dimensions is. Let T be the matrix assigned to the graph of the \(\textrm{C}_{60}\)-fullerene molecule, whose vertices and edges are the sixty corners and the ninety edges of a truncated icosahedron. All vertices have degree three, and the assigned variance is therefore \(V=9/380\). The frequency distribution of the values \(\Vert T^t\eta \Vert ^2\) for a million randomly chosen points \(\eta \) on the unit sphere and the corresponding Gauss function (3.23) are shown in Fig. 1.
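A sketch of this sampling procedure, assuming NumPy and the \(1/\sqrt{2}\)-weighted differences that make \(S=I+L/2\). The \(\textrm{C}_{60}\) edge list is not reproduced here. Since the stated variance depends only on m, n and the vertex degrees, the example uses a hypothetical stand-in with the same data: a 3-regular circulant graph on 60 vertices (a 60-cycle plus the 30 chords \((i,i+30)\)), which also has 90 edges. The variance is computed from the traces as \(V=2(n\,\textrm{tr}S^2-(\textrm{tr}S)^2)/(n^2(n+2))\), a formula derived here from standard moment identities on the sphere. Each sample needs one vector \(x\in {\mathbb {R}}^m\) and one \(\chi ^2\)-distributed value, drawn with NumPy's `Generator.chisquare`; 100,000 samples are used instead of a million to keep memory modest.

```python
import numpy as np

def variance_from_S(S, n):
    """V = 2 (n tr(S^2) - tr(S)^2) / (n^2 (n + 2)) for S = T^t T."""
    p1, p2 = np.trace(S), np.sum(S * S)  # tr(S) and tr(S^2) for symmetric S
    return 2.0 * (n * p2 - p1 ** 2) / (n ** 2 * (n + 2))

def sample_values(S, n, rng, num):
    """Samples of ||T^t eta||^2 via the ratio ||Sigma_0 x||^2 / (||x||^2 + ||y||^2)."""
    m = S.shape[0]
    sigma = np.sqrt(np.linalg.eigvalsh(S))  # singular values of T
    x = rng.standard_normal((num, m))
    y_sq = rng.chisquare(n - m, size=num)   # ||y||^2 ~ chi^2 with n - m degrees of freedom
    return np.sum((sigma * x) ** 2, axis=1) / (np.sum(x ** 2, axis=1) + y_sq)

# Hypothetical 3-regular stand-in: 60-cycle plus 30 "diameters"
m = 60
edges = [(k, (k + 1) % m) for k in range(m)] + [(k, k + 30) for k in range(30)]
L = np.zeros((m, m))
for i, j in edges:
    L[i, i] += 1.0
    L[j, j] += 1.0
    L[i, j] -= 1.0
    L[j, i] -= 1.0
S = np.eye(m) + L / 2      # S = T^t T for the weighted-difference matrix
n = m + len(edges)         # n = 150

rng = np.random.default_rng(5)
values = sample_values(S, n, rng, num=100_000)
print(variance_from_S(S, n), 9 / 380, values.mean(), values.var())
```

A histogram of `values` can then be compared with the Gauss function (3.23).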

The singular values of the matrices T and \(T^t\), respectively, can in general only be determined numerically. The matrices T assigned to complete graphs, in which all vertices are connected with each other, are an exception. It is not difficult to see that the associated matrices \(S=T^tT\) have the eigenvalue one of multiplicity one and the eigenvalue \((m+2)/2\) of multiplicity \(m-1\) in terms of the number m of vertices. The square roots of these eigenvalues are the singular values of T.

Orthogonal projections, matrices \(T^t=P\) of dimension \((m\times n)\) with one as the only singular value, are another important exception. The quantities (3.8) take the value m in this case. The expected value and the variance are therefore

or after rescaling, that is, after multiplying P by the factor \(\sqrt{n/m}\),

Since \(\Vert \varSigma _0x\Vert ^2=\Vert x\Vert ^2\) for orthogonal projections, for these matrices the quotient (3.24) follows the beta distribution with the probability density function

with parameters \(\alpha =m/2\) and \(\beta =(n-m)/2\); see [1, Eq. 26.5.3]. In a context similar to ours, this has already been observed in [9]. Orthogonal projections are accessible to a complete analysis. The next section is therefore devoted to their study.
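For orthogonal projections, \(p_1=p_2=m\). The standard moment identities for uniform points on the sphere give \(E=p_1/n\) and \(V=2(np_2-p_1^2)/(n^2(n+2))\) (derived here, since the corresponding displays are not reproduced in this text), hence \(E=m/n\) and \(V=2m(n-m)/(n^2(n+2))\), and after rescaling \(E=1\) and \(V=2(n-m)/(m(n+2))\). These coincide with the mean \(\alpha /(\alpha +\beta )\) and the variance \(\alpha \beta /((\alpha +\beta )^2(\alpha +\beta +1))\) of the beta distribution with \(\alpha =m/2\) and \(\beta =(n-m)/2\). The exact-arithmetic sketch below checks this with Python's `fractions` module; the function names are hypothetical.

```python
from fractions import Fraction

def projection_moments(m, n):
    """E and V of ||P eta||^2, eta uniform on S^{n-1}, for an orthogonal projection P (p1 = p2 = m)."""
    p1 = p2 = Fraction(m)
    E = p1 / n
    V = 2 * (n * p2 - p1 ** 2) / (Fraction(n) ** 2 * (n + 2))
    return E, V

def beta_moments(alpha, beta):
    """Mean and variance of the beta distribution with parameters alpha and beta."""
    s = alpha + beta
    return alpha / s, alpha * beta / (s ** 2 * (s + 1))

for m, n in [(3, 10), (20, 60), (100, 1000)]:
    E, V = projection_moments(m, n)
    mean_b, var_b = beta_moments(Fraction(m, 2), Fraction(n - m, 2))
    scale = Fraction(n, m)  # square of the rescaling factor sqrt(n/m)
    print(m, n, E == mean_b, V == var_b, scale * E, scale ** 2 * V)
```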

4 Orthogonal projections and measure concentration

We begin with a simple but very helpful corollary of Lemma 3.2.

Lemma 4.1

Let A be an arbitrary matrix of dimension \(m\times n\), \(m<n\), with singular value decomposition \(A=Q\varSigma {\widetilde{Q}}^t\). Then the probabilities

are equal and the first one depends only on the singular values of A.

Proof

This follows from the invariance of these probabilities under orthogonal transformations. Let \(\chi (x)=1\) if \(\Vert Ax\Vert ^2\le \delta \Vert A\Vert ^2\Vert x\Vert ^2\) holds and \(\chi (x)=0\) otherwise. The transformed function, with the values \(\chi ({\widetilde{Q}}x)\), is then the characteristic function of the set of all x for which \(\Vert \varSigma x\Vert ^2\le \delta \Vert \varSigma \Vert ^2\Vert x\Vert ^2\) holds. \(\square \)

The next theorem is an only slightly modified version of Theorem 2.4 in [20]. Its proof is based on the observation that the probability (4.1) is equal to the volume of the set of all x inside the unit ball for which \(\Vert Ax\Vert ^2\le \delta \Vert A\Vert ^2\Vert x\Vert ^2\) holds, divided by the volume of the unit ball itself. This follows from Lemma 3.1 if the characteristic function of the unit ball is chosen as the function W.

Theorem 4.1

Let the \((m\times n)\)-matrix P be an orthogonal projection. Then

holds, where the function \(F(\delta )=F(m,n;\delta )\) is defined by the integral expression

and the two exponents \(\alpha \) and \(\beta \) are given by

The function (4.2) tends to the limit value \(F(1)=1\) as \(\delta \) goes to one.

Proof

By Lemma 4.1, we can restrict ourselves to the matrix P that extracts from a vector in \({\mathbb {R}}^n\) its first m components. We split the vectors in \({\mathbb {R}}^n\) into a part \(x\in {\mathbb {R}}^m\) and a remaining part \(y\in {\mathbb {R}}^{n-m}\). According to the above remark, the set whose volume has to be calculated then consists of the points in the unit ball for which

or, resolved for the norm of the component \(x\in {\mathbb {R}}^m\),

holds. This volume can be expressed as the double integral

where \(H(t)=0\) for \(t<0\), \(H(t)=1\) for \(t\ge 0\), \(\chi (t)=1\) for \(t\le 1\), and \(\chi (t)=0\) for arguments \(t>1\). If \(\delta \) tends to one and \(\varepsilon \) thus to infinity, the value of this double integral tends, by the dominated convergence theorem, to the volume of the unit ball and the expression (4.2) tends to one, as claimed. In terms of polar coordinates, that is, according to (2.14), the double integral is given by

where \(\nu _d\) is the volume of the unit ball in \({\mathbb {R}}^d\). By substituting \(t=r/s\) in the inner integral, the upper bound becomes independent of s and the integral simplifies to

After interchanging the order of integration, the integral takes the value

With \(\varphi (t)=t^2/(1+t^2)\) and the constants \(\alpha \) and \(\beta \) from (4.4), one obtains

and, because of \(\varphi (0)=0\) and \(\varphi (\varepsilon )=\delta \), therefore the representation

of the integral. Dividing the expression above by \(\nu _n\) and recalling that

completes the proof of the theorem. \(\square \)
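The volume characterization used in the proof lends itself to a Monte Carlo check. The sketch below assumes that \(F(\delta )\) coincides with the regularized incomplete beta function \(I_\delta (m/2,(n-m)/2)\), available as `scipy.special.betainc`. This is consistent with the remark following the theorem that the values \(\Vert Px\Vert ^2\) follow a beta distribution. The sketch samples uniform points in the unit ball of \({\mathbb {R}}^n\) and counts the fraction satisfying \(\Vert Px\Vert ^2\le \delta \Vert x\Vert ^2\). The helper name is hypothetical.

```python
import numpy as np
from scipy.special import betainc


def ball_fraction(m, n, delta, samples=200_000, seed=3):
    """Fraction of the unit ball in R^n on which ||P x||^2 <= delta ||x||^2,
    P keeping the first m coordinates."""
    rng = np.random.default_rng(seed)
    g = rng.standard_normal((samples, n))
    u = g / np.linalg.norm(g, axis=1, keepdims=True)      # uniform direction
    x = u * rng.random((samples, 1)) ** (1.0 / n)         # radius with density n r^(n-1)
    return np.mean(np.sum(x[:, :m] ** 2, axis=1) <= delta * np.sum(x**2, axis=1))


m, n = 6, 20
for delta in (0.1, 0.3, 0.5):
    print(delta, ball_fraction(m, n, delta), betainc(m / 2, (n - m) / 2, delta))
```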

That is, for points x on the unit sphere, the values \(\Vert Px\Vert ^2\) follow the beta distribution mentioned at the end of the last section. For smaller dimensions, the density \(f(t)=F'(t)\) and its approximation by the Gauss function (3.23) still differ considerably, as can be seen in Fig. 2. This difference vanishes with increasing dimensions. In fact, it is well known in statistics that beta distributions with large parameters behave almost like normal distributions. If a random variable \(X(\omega )\) with expected value E and standard deviation \(\sigma \) follows a beta distribution with parameters \(\alpha \) and \(\beta \), the distribution of the random variable

differs less and less from the standard normal distribution the larger \(\alpha \) and \(\beta \) become. For the sake of completeness, we prove this below. Let

be the distribution function of the beta distribution with parameters \(\alpha \) and \(\beta \), let E and V be the assigned expected value and the assigned variance given by

and let \(\sigma =\sqrt{V}\) be the corresponding standard deviation. The function (4.6) is initially defined only for arguments \(0\le x\le 1\). To avoid problems with its domain of definition, we extend it by the value zero for arguments \(x<0\) and the value one for arguments \(x>1\). If \(\alpha \) and \(\beta \) are greater than one, the only case of interest to us, the extended function is a continuously differentiable cumulative distribution function with a density that vanishes outside the interval \(0<t<1\). Let

be the correspondingly rescaled distribution function.

Theorem 4.2

The function (4.8) tends pointwise to the distribution function

of the standard normal distribution as \(\alpha \) and \(\beta \) go to infinity.
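Theorem 4.2 can be observed numerically. The sketch below standardizes a beta distribution by its mean and standard deviation. It then measures the largest deviation of the resulting distribution function from the standard normal one on a grid in \([-4,4]\), for increasing parameters with fixed ratio \(\beta /\alpha =3\), as for projections with \(n=4m\). It relies on `scipy.stats.beta`, whose distribution function is zero below 0 and one above 1, that is, the extension described before the theorem. The helper name is ours.

```python
import numpy as np
from scipy import stats


def standardized_beta_gap(a, b, x=np.linspace(-4.0, 4.0, 801)):
    """max |G(x) - Phi(x)| on a grid, where G is the distribution function
    of (X - E)/sigma for X ~ Beta(a, b)."""
    d = stats.beta(a, b)
    G = d.cdf(d.mean() + d.std() * x)
    return np.max(np.abs(G - stats.norm.cdf(x)))


params = [(2, 6), (8, 24), (32, 96), (128, 384)]
gaps = [standardized_beta_gap(a, b) for a, b in params]
for (a, b), gap in zip(params, gaps):
    print(a, b, gap)   # the gap shrinks as alpha and beta grow
```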

Proof

Before we start with the proof itself, we recall that it suffices to show that the associated densities converge pointwise to the density of the normal distribution. This may be surprising in view of the monotone and the dominated convergence theorems, but it is easily explained and often used in probability theory, where it is known as Scheffé's lemma. Let \(f_1,f_2,\ldots \) be a sequence of densities that converges pointwise to a density f. The nonnegative functions

then tend pointwise and monotonically from below to f, and

where we have used that the integral of \(f_n\) over \({\mathbb {R}}\) takes the value one. The two outer integrals converge, by the monotone convergence theorem, to the limits

This proves that the middle integral converges to the limit

Pointwise convergence of the densities thus implies convergence in distribution.

Now let us fix an arbitrary finite interval and assume that the parameters \(\alpha \) and \(\beta \) are already so large that, for the points x in this interval, the quantities

take values less than 9/100. On the given interval, the density of the distribution (4.8), that is, its derivative with respect to the variable x, can then be written as

To proceed, we need Stirling’s formula (see [13, Eq. 5.6.1]), the representation

of the gamma function for real arguments \(z>0\). Introducing the function

the logarithm of the density splits into the sum of the three terms

The third term C tends by definition to zero as \(\alpha \) and \(\beta \) go to infinity. The first of the three terms can be easily calculated and reduces to the value

By means of the second-order Taylor expansion

of \(\ln (1+t)\) around \(t=0\), the second term splits into the polynomial part

and a remainder \(B_2\). The polynomial part possesses the representation

with coefficients a and b that depend on the parameters \(\alpha \) and \(\beta \) and are given by

Because of \(|R(t)|\le |t|^3\) for \(|t|\le 3/10\) and our assumption on the size of \(\alpha \) and \(\beta \) relative to the interval under consideration, the remainder \(B_2\) can be estimated as

Therefore, on the given interval, the logarithm of the density tends uniformly to

as \(\alpha \) and \(\beta \) go to infinity. This implies locally uniform convergence of the densities themselves and thus, by the preliminary remark, pointwise convergence of the distribution functions. \(\square \)
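The proof uses the bound \(|R(t)|\le |t|^3\) for \(|t|\le 3/10\) on the Taylor remainder. Assuming, as the context indicates, that \(R(t)=\ln (1+t)-t+t^2/2\) is the remainder of the second-order expansion, the following sketch evaluates \(|R(t)|/|t|^3\) on \(10^{-3}\le |t|\le 3/10\). The maximum, about 0.43, is attained at \(t=-3/10\), comfortably below one. For small |t| the ratio tends to 1/3.

```python
import numpy as np

# Grid on 10^-3 <= |t| <= 3/10; points very close to 0 are skipped because
# ln(1+t) - t + t^2/2 then suffers from cancellation (the ratio tends to 1/3 there).
t = np.concatenate([np.linspace(-0.3, -1e-3, 30_000), np.linspace(1e-3, 0.3, 30_000)])
R = np.log1p(t) - t + t**2 / 2           # remainder of the second-order Taylor expansion
ratio = np.abs(R) / np.abs(t) ** 3
print(ratio.max(), t[np.argmax(ratio)])  # about 0.432, attained at t = -0.3
```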

The key message of this section, however, is that for points x on the unit sphere the values \(\Vert Px\Vert ^2\) cluster more and more around their expected value \(\xi =m/n\) as the dimensions increase. The following result, which is again essentially taken from [20], reflects this much better than the previous theorem. A similar technique, likewise based on the Markov inequality, was used in [8]. The estimates are expressed in terms of the function

It increases strictly on the interval \(0\le \vartheta \le 1\), reaches its maximum value one at the point \(\vartheta =1\), and decreases strictly from there.

Theorem 4.3

Let the \((m\times n)\)-matrix P be an orthogonal projection and let \(\xi =m/n\) be the ratio of the two dimensions. For \(0<\delta <\xi \), then

holds. For \(\xi<\delta <1\), this estimate is complemented by the estimate

Proof

As before, we can restrict ourselves to the matrix P that extracts from a vector in \({\mathbb {R}}^n\) its first m components. For all \(t>0\), the characteristic function \(\chi \) of the set of all x for which \(\Vert Px\Vert ^2\le \delta \,\Vert x\Vert ^2\) holds can be bounded as follows by a product of univariate functions:

Choosing the normalized weight function (3.5), we can therefore, by Lemma 3.1, bound the left-hand side of (4.11) by the integral

which remains finite for all t in the interval \(0\le t<1/\delta \). It splits into a product of one-dimensional integrals and takes, for given t, the value

This expression attains its minimum on the interval \(0<t<1/\delta \) at

At this point t it takes the value

With the abbreviation \(\delta /\xi =\vartheta \) and because of \(\vartheta \xi <1\) and \(\xi <1\), the logarithm

possesses the power series expansion

Because the series coefficients are nonnegative for all \(\vartheta \ge 0\) and, incidentally, polynomial multiples of \((1-\vartheta )^2\), the estimate (4.11) follows.

The proof of the second estimate is almost identical to that of the first. For sufficiently small \(t>0\), the left-hand side of (4.12) can be bounded by the integral

This integral splits into a product of one-dimensional integrals and takes the value

which, for \(\delta <1\), attains its minimum on the interval \(0<t<1/(1-\delta )\) at

At this point t it again takes the value

This leads, as above, to the estimate (4.12). \(\square \)
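The bound produced by the proof can be evaluated in closed form. In our own normalization, which is an assumption, the normalized weight function (3.5) is the standard Gaussian density; the minimum over t does not depend on its scaling. The product integral is then \((1+t(1-\delta ))^{-m/2}(1-t\delta )^{-(n-m)/2}\) in the first case and \((1-t(1-\delta ))^{-m/2}(1+t\delta )^{-(n-m)/2}\) in the second. Minimizing over t gives in both cases \(\vartheta ^{m/2}((1-\delta )/(1-\xi ))^{(n-m)/2}\) with \(\vartheta =\delta /\xi \); this derivation is ours. The sketch compares this value with the exact beta tail and with the simpler expression \((\vartheta e^{1-\vartheta })^{m/2}\). The latter dominates it because \(\ln (1+u)\le u\) and has the monotonicity properties stated for the function introduced before the theorem. Whether it coincides with that function is an assumption.

```python
import numpy as np
from scipy.special import betainc


def chernoff_bound(m, n, delta):
    """Minimum over t of the product integral from the proof of Theorem 4.3
    (our evaluation): theta^(m/2) * ((1 - delta)/(1 - xi))^((n - m)/2)."""
    xi = m / n
    theta = delta / xi
    return theta ** (m / 2) * ((1 - delta) / (1 - xi)) ** ((n - m) / 2)


def simple_bound(m, n, delta):
    """(theta * e^(1 - theta))^(m/2); dominates chernoff_bound since ln(1+u) <= u."""
    theta = delta / (m / n)
    return (theta * np.exp(1 - theta)) ** (m / 2)


m, n = 16, 64                                   # xi = 1/4
rows = []
for delta in (0.05, 0.1, 0.15, 0.35, 0.45, 0.6):
    cdf = betainc(m / 2, (n - m) / 2, delta)    # P(||Px||^2 <= delta ||x||^2)
    exact = cdf if delta < m / n else 1 - cdf   # left tail below xi, right tail above
    rows.append((delta, exact, chernoff_bound(m, n, delta), simple_bound(m, n, delta)))
for row in rows:
    print(row)
```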

If the dimension ratio \(\xi =m/n\) is fixed or tends to a limit value \(\delta _0\), then the probability distributions (4.2) and the functions (4.3), respectively, tend to a step function with a jump discontinuity at \(\delta _0\). Figure 3 reflects this behavior. The estimates from Theorem 4.3 can also be viewed from a different perspective by considering the rescaled counterparts \(P'=\sqrt{n/m}\,P\) of the projections P. The distributions

associated with them tend, outside every small neighborhood of \(\delta _0=1\), exponentially to a step function with a jump discontinuity at \(\delta _0=1\) as m goes to infinity, independently of the ratio m/n of the two dimensions, no matter how small it may be.

The probability distributions (4.2) as functions of \(0\le \delta <1\) for \(m=2^k\), \(k=1,\ldots ,16\), and \(n=2m\)
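For the rescaled projections \(P'=\sqrt{n/m}\,P\), the event \(\Vert P'x\Vert ^2\le \delta \Vert x\Vert ^2\) is the event \(\Vert Px\Vert ^2\le (\delta m/n)\Vert x\Vert ^2\). Hence \(\vartheta =\delta \), and the elementary Chernoff-type bound \((\vartheta e^{1-\vartheta })^{m/2}\) no longer involves n. This bound follows from the minimization in the proof of Theorem 4.3 together with \(\ln (1+u)\le u\). The sketch below, our own and based on the beta law of \(\Vert Px\Vert ^2\), tabulates both tails at \(\delta =1/2\) and \(\delta =3/2\) for fixed m and growing n. For all n, they stay below these n-independent bounds.

```python
import numpy as np
from scipy.special import betainc

m = 32
rows = []
for n in (64, 256, 1024, 4096):
    a, b = m / 2, (n - m) / 2
    # ||P'x||^2 = (n/m) ||Px||^2 for the rescaled projection P' = sqrt(n/m) P
    left = betainc(a, b, 0.5 * m / n)          # P(||P'x||^2 <= 0.5 ||x||^2)
    right = 1 - betainc(a, b, 1.5 * m / n)     # P(||P'x||^2 >= 1.5 ||x||^2)
    rows.append((n, left, right))
    print(n, left, right)

# n-independent bounds (theta e^(1 - theta))^(m/2) with theta = 0.5 and theta = 1.5
bound_left = (0.5 * np.exp(0.5)) ** (m / 2)
bound_right = (1.5 * np.exp(-0.5)) ** (m / 2)
print(bound_left, bound_right)
```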

5 The iterative procedure

Now we are ready to analyze the iterative method

presented in the introduction, and its polynomially accelerated counterpart, for the solution of equation (2.9), the equation that has replaced the original equation (1.1). The iteration error possesses the Fourier representation

where U is the exact solution (2.10) of the equation (2.9), \(\alpha (\omega )\) is given by

and the functions \(P_k(\lambda )\) are polynomials of order k normalized by \(P_k(0)=1\). Throughout this section, we assume that the expression \(\Vert T^t\eta \Vert ^2\) has expected value one on the unit sphere. We restrict ourselves to the analysis of this iteration in the spectral Barron spaces \({\mathscr {B}}_s\) equipped with the norm (2.16), or (2.15) in radial-angular representation. In a corresponding sense, this analysis can be transferred to the Hilbert spaces \(H^s\).

Theorem 5.1

If the solution U lies in the Barron space \({\mathscr {B}}_s({\mathbb {R}}^n)\), \(s\ge 0\), this also holds for the iterates \(U_k\). For all coefficients \(\mu \ge 0\), the norm (2.16) of the error, given by

then tends to zero for suitably chosen polynomials \(P_k\) as k goes to infinity.

Proof

Because the expression \(\Vert T^t\eta \Vert ^2\) has expected value one, the spectral norm of the matrix \(T^t\) is at least one, \(\Vert T^t\Vert \ge 1\). If one sets \(\vartheta =1/\Vert T^t\Vert ^2\),

therefore holds for all \(\omega \) outside the kernel of \(T^t\), which, as a subspace of dimension less than n, is a set of measure zero. With \(P_k(\lambda )=(1-\vartheta \lambda )^k\), the assertion thus follows from the dominated convergence theorem. \(\square \)

Of course, one would like to have more than just convergence. The next theorem is a first step in this direction.

Theorem 5.2

Let \(0<\rho <1\), \(a=1-\rho \), \(b=\Vert T^t\Vert ^2\), and \(\kappa =b/a\) and let

be the Chebyshev polynomial \(T_k\) of the first kind of degree k, transformed to the interval \(a\le \lambda \le b\). The norm (2.16) of the iteration error (5.2) then satisfies the estimate

where \(\widehat{U}_\rho \) takes the same values as \(\widehat{U}\) on the set of all \(\omega \) for which

holds and vanishes outside this set.

Proof

This follows from \(0\le \alpha (\omega )(\Vert T^t\omega \Vert ^2+\mu )\le b\) for \(\omega \in {\mathbb {R}}^n\) and the estimates

for the values of the Chebyshev polynomial (5.5) on the interval \(0\le \lambda \le b\). \(\square \)
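The Chebyshev estimates used in the proof can be checked directly. The sketch assumes that the transformed polynomial (5.5) has the standard form \(P_k(\lambda )=T_k((b+a-2\lambda )/(b-a))/T_k((b+a)/(b-a))\), which satisfies \(P_k(0)=1\). Using `numpy.polynomial.chebyshev.chebval`, it confirms \(0<P_k\le 1\) on \(0\le \lambda \le a\) and the standard bound \(|P_k|\le 2((\sqrt{\kappa }-1)/(\sqrt{\kappa }+1))^k\) on \(a\le \lambda \le b\). The values of \(\rho \), b, and k are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def shifted_chebyshev(k, a, b):
    """P_k(lam) = T_k((b + a - 2 lam)/(b - a)) / T_k((b + a)/(b - a)), so that P_k(0) = 1."""
    coef = np.zeros(k + 1)
    coef[k] = 1.0                                  # coefficient vector of T_k
    denom = C.chebval((b + a) / (b - a), coef)
    return lambda lam: C.chebval((b + a - 2 * lam) / (b - a), coef) / denom


rho, b, k = 0.5, 2.0, 10                           # hypothetical: b plays the role of ||T^t||^2
a = 1 - rho
kappa = b / a
P = shifted_chebyshev(k, a, b)
q = (np.sqrt(kappa) - 1) / (np.sqrt(kappa) + 1)

low = P(np.linspace(0.0, a, 1001))                 # 0 <= lam <= a
high = P(np.linspace(a, b, 1001))                  # a <= lam <= b
print(low.min(), low.max())                        # between 0 and 1, value 1 at lam = 0
print(np.abs(high).max(), 2 * q**k)                # uniform bound on [a, b]
```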

Depending on the size of \(\kappa =\Vert T^t\Vert ^2/(1-\rho )\), the norm of the error \(U-U_k\) soon reaches the size of the norm of \(U-U_\rho \). The idea behind the estimate (5.6) is that in high space dimensions the condition (5.7) is satisfied for nearly all \(\omega \) and that the part \(U-U_\rho \) of U is therefore often negligible. The set on which the condition (5.7) is violated is a subset of the sector

for \(\delta =1-\rho \). Let the expression \(\Vert T^t\omega \Vert ^2\) possess the angular distribution

Consider the solution U for a rotationally symmetric right-hand side F with finite norm \(\Vert F\Vert _s\), and let \(\chi (t)=1\) for \(t\le \delta \) and \(\chi (t)=0\) for \(t>\delta \). Then

holds, independent of \(\mu \) and with equality for \(\mu =0\). This follows from the radial-angular representation of the involved integrals. By Lemma 2.2, the integral over the unit sphere on the right-hand side has a finite value for dimensions \(m\ge 3\). In terms of the distribution density from (5.9), it is given by

and tends to zero as \(\delta \) goes to zero, in higher dimensions usually very rapidly, as the example of the (rescaled) orthogonal projections from Theorem 4.1 shows.

The estimate (5.6) is extremely robust in many respects. First, it is based on a pointwise estimate of the Fourier transform of the error. It is therefore equally valid for other Fourier-based norms. Second, the function (5.3) can be replaced by any approximation \({\widetilde{\alpha }}(\omega )\) for which an estimate

on the entire frequency space and an inverse estimate

on a sufficiently large ball around the origin hold without losing too much. This applies in particular to approximations by sums of Gauss functions.

Nevertheless, the estimate from Theorem 5.2 is rather pessimistic, because it only takes into account the decay of the left tail of the distribution (5.9) and ignores the fast decay of its right tail. To see what can be achieved, we study the behavior of the iteration in the limit \(\mu =0\), the case in which the underlying effects are brought to light most clearly. If the vectors \(\omega =r\eta \) are decomposed into a radial part \(r\ge 0\) and an angular part \(\eta \in S^{n-1}\), the error (5.2) propagates frequency-wise as

and, after passing to the limit \(\mu =0\), as

In the limit case, therefore, the method acts only on the angular part of the error. Nevertheless, by Theorem 5.1 the iterates converge to the solution. To clarify the underlying effects, we prove this once again in a different form.

Theorem 5.3

If the solution U lies in the Barron space \({\mathscr {B}}_s({\mathbb {R}}^n)\), \(s\ge 0\), this also holds for the iterates \(U_k\) implicitly given by (5.15). The norm (2.15) of the iteration error then tends to zero for suitably chosen polynomials \(P_k\) as k goes to infinity.

Proof

In radial-angular representation, the iteration error is given by

where the integrable function \(\phi :S^{n-1}\rightarrow {\mathbb {R}}\) is defined by the expression

To prove the convergence of the iterates \(U_k\) to the solution U, we again consider the polynomials \(P_k(t)=(1-\vartheta t)^k\) with \(\vartheta =1/\Vert T^t\Vert ^2\). For \(\eta \in S^{n-1}\), we have

where the value one is only attained on the intersection of the \((n-m)\)-dimensional kernel of the matrix \(T^t\) with the unit sphere, that is, on a set of area measure zero. The convergence again follows from the dominated convergence theorem. \(\square \)

Under a seemingly harmless additional assumption, one obtains a rather sharp estimate of the speed of convergence that can hardly be improved.

Theorem 5.4

Under the assumption that the norm (2.17) of the solution U is finite, the iteration error can be estimated as

in terms of the density f of the distribution of the values \(\Vert T^t\eta \Vert ^2\) on the unit sphere.

Proof

The proof is based on the same error representation

as that in the proof of the previous theorem, but by assumption the function

is now square integrable, not only integrable. Its correspondingly scaled \(L_2\)-norm is the norm \(|\!|\!|U|\!|\!|_s\) of the solution. The Cauchy-Schwarz inequality thus leads to

Rewriting the remaining integral in terms of the density f yields (5.16). \(\square \)

In sharp contrast to Theorem 5.1 and Theorem 5.2, this theorem strongly depends on the involved norms. But of course one can hope that other error norms behave similarly, especially for solutions whose Fourier transform is not strongly concentrated on a small sector around the kernel of \(T^t\). For rotationally symmetric solutions U,

holds, as the norms (2.15) and (2.17) coincide in this case. This estimate can in turn be transferred to the Hilbert spaces \(H^s\), in which even equality holds.

Lemma 5.1

Let the rotationally symmetric function U lie in the Hilbert space \(H^s\) equipped with the seminorm \(|\cdot |_{2,s}\). The iteration error, here given by

then tends to zero for suitably chosen polynomials \(P_k\) as k goes to infinity.

Proof

In this case, the iteration error possesses the radial-angular representation

where the integrable function \(\phi :S^{n-1}\rightarrow {\mathbb {R}}\) is defined by the expression

Since the function \(\phi \) takes the constant value \(|U|_{2,s}^2\) in the given case, this proves the error representation. Convergence is shown as in the proof of Theorem 5.3. \(\square \)

This lemma may not be very interesting on its own, but once again shows that not much is lost in the error estimate (5.16) and that the prefactors

cannot really be improved. Moreover, it shows that these prefactors tend to zero for optimally or near optimally chosen polynomials \(P_k\) as k goes to infinity.

The task is therefore to find the polynomials \(P_k\) of order k that minimize the integral (5.19) under the constraint \(P_k(0)=1\). These polynomials can be expressed in terms of the orthogonal polynomials associated with the density f as weight function. Under the given circumstances, the expression

defines an inner product on the space of the polynomials. Let the polynomials \(p_k\) of order k satisfy the orthogonality condition \((p_k,p_\ell )=\delta _{k\ell }\).

Lemma 5.2

In terms of the given orthogonal polynomials \(p_k\), the polynomial \(P_k\) of order k that minimizes the integral (5.19) under the constraint \(P_k(0)=1\) is

and the integral itself takes the minimum value

Proof

We represent the optimum polynomial \(P_k\) as linear combination

The zeros of \(p_j\) lie strictly between the zeros of \(p_{j+1}\), the interlacing property of the zeros of orthogonal polynomials. The polynomials \(p_0,p_1,\ldots ,p_k\) therefore cannot take the value zero at the same time. Introducing the vector x with the components \(x_j\), the vector \(p\ne 0\) with the components \(p_j(0)\), and the vector \(a=p/\Vert p\Vert \), we have to minimize \(\Vert x\Vert ^2\) under the constraint \(a^tx=1/\Vert p\Vert \). Because of

the polynomial \(P_k\) minimizes the integral if and only if its coefficient vector x is a scalar multiple of a or p that satisfies the constraint. \(\square \)
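Lemma 5.2 can be tried out on an empirical stand-in for the density f. The sketch below is our own illustration. It uses a hypothetical random \((n\times m)\)-matrix T, scaled so that \(\Vert T^t\eta \Vert ^2\) has expected value one, that is, \(\sum _i\sigma _i^2=n\). It samples \(t_i=\Vert T^t\eta _i\Vert ^2\) on the unit sphere and computes the values \(p_j(0)\) of the polynomials orthonormal with respect to the empirical measure by the Stieltjes procedure (three-term recurrence). It then compares \(1/\sum _{j\le k}p_j(0)^2\) with a direct least-squares minimization of the empirical mean of \(P_k(t)^2\) over all \(P_k(t)=1+c_1t+\cdots +c_kt^k\).

```python
import numpy as np


def orthonormal_values_at_zero(t, w, k):
    """Values p_0(0), ..., p_k(0) of the polynomials orthonormal with respect to the
    discrete measure sum_i w_i delta_{t_i} (weights summing to one), via Stieltjes."""
    p_prev, p = np.zeros_like(t), np.ones_like(t)      # p_{-1} = 0, p_0 = 1
    z_prev, z = 0.0, 1.0                               # the same polynomials at t = 0
    beta, vals = 0.0, [1.0]
    for _ in range(k):
        alpha = np.sum(w * t * p * p)
        r = (t - alpha) * p - beta * p_prev            # three-term recurrence
        rz = -alpha * z - beta * z_prev
        beta = np.sqrt(np.sum(w * r * r))
        p_prev, p, z_prev, z = p, r / beta, z, rz / beta
        vals.append(z)
    return np.array(vals)


rng = np.random.default_rng(5)
n, m, samples, kmax = 12, 6, 100_000, 5
T = rng.standard_normal((n, m))
T *= np.sqrt(n) / np.linalg.norm(T)                    # expected value of ||T^t eta||^2 is one
g = rng.standard_normal((samples, n))
eta = g / np.linalg.norm(g, axis=1, keepdims=True)
t = np.sum((eta @ T) ** 2, axis=1)                     # samples of ||T^t eta||^2
w = np.full(samples, 1.0 / samples)

min_ortho = 1.0 / np.cumsum(orthonormal_values_at_zero(t, w, kmax) ** 2)

min_lsq = [1.0]
for k in range(1, kmax + 1):
    A = t[:, None] ** np.arange(1, k + 1)              # columns t, t^2, ..., t^k
    c, *_ = np.linalg.lstsq(np.sqrt(w)[:, None] * A, -np.sqrt(w), rcond=None)
    min_lsq.append(np.sum(w * (1.0 + A @ c) ** 2))
print(min_ortho)
print(np.array(min_lsq))                               # same values up to rounding
```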

This is the point where our considerations from the previous two sections apply. We have seen that the values \(\Vert T^t\omega \Vert ^2\) are approximately normally distributed, with a variance V and a standard deviation \(\sigma =\sqrt{V}\) that tend to zero in almost all cases as the dimensions increase. This justifies replacing the actual distribution (5.9) by the corresponding normal distribution with the density

Then one ends up with a classical case and can express the orthogonal polynomials \(p_k\) in terms of the Hermite polynomials \(He_0(x)=1\), \(He_1(x)=x\), and

that satisfy the orthogonality condition

In this case, the \(p_k\) are given in terms of the standard deviation \(\sigma \) by

The first twelve \(M_k=M_k(\sigma )\) assigned to the orthogonal polynomials (5.26) for the standard deviations \(\sigma =1/16\), \(\sigma =1/32\), and \(\sigma =1/64\) are compiled in Table 1. They give a good impression of the speed of convergence that can be expected and are fully in line with our predictions.
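Under the normal approximation, the values \(p_j(0)\) are explicit. The sketch assumes that (5.26) reads \(p_k(t)=He_k((t-1)/\sigma )/\sqrt{k!}\). These are the polynomials orthonormal for the normal density with mean one and standard deviation \(\sigma \), which follows from the orthogonality of the \(He_k\) with weight \(e^{-x^2/2}\). By Lemma 5.2, the minimum of the integral is then \(1/\sum _{j\le k}He_j(-1/\sigma )^2/j!\). The code computes these minima with a normalized form of the recurrence \(He_{k+1}(x)=xHe_k(x)-kHe_{k-1}(x)\), which avoids forming the large numbers \(He_k(-1/\sigma )\) and \(k!\) separately. We make no claim that the printed numbers coincide with the entries \(M_k\) of Table 1.

```python
import numpy as np


def gauss_min_values(sigma, kmax):
    """1 / sum_{j<=k} p_j(0)^2, k = 0..kmax, for p_j(t) = He_j((t - 1)/sigma) / sqrt(j!)."""
    x = -1.0 / sigma                        # t = 0 corresponds to x = -1/sigma
    q_prev, q = 0.0, 1.0                    # q_j = He_j(x) / sqrt(j!)
    sums = [1.0]
    for j in range(kmax):
        # He_{j+1} = x He_j - j He_{j-1}, divided by sqrt((j+1)!)
        q_prev, q = q, (x * q - np.sqrt(j) * q_prev) / np.sqrt(j + 1)
        sums.append(sums[-1] + q * q)
    return 1.0 / np.array(sums)


for sigma in (1 / 16, 1 / 32, 1 / 64):
    print(sigma, gauss_min_values(sigma, 12))
```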

The only matrices \(T^t\) for which the distribution of the values \(\Vert T^t\eta \Vert ^2\) is explicitly known and available for comparison are the orthogonal projections studied in Sect. 4. The densities of such distributions can be transformed into the weight functions associated with Jacobi polynomials. The orthogonal polynomials assigned to the rescaled variants of these matrices can therefore be expressed in terms of Jacobi polynomials; details can be found in the appendix. For smaller dimensions, the resulting values (5.22) tend to zero much faster than the values obtained by approximating the actual density by the density of a normal distribution. Not surprisingly, the two sets of values approach each other as the dimensions increase.

For the matrices T from Sect. 3 assigned to undirected interaction graphs, the angular distribution of the values \(\Vert T^t\omega \Vert ^2\) behaves very similarly to that of the rescaled orthogonal projections. In fact, in almost all cases it differs only very slightly from a beta distribution rescaled to expected value one. Recall that beta distributions depend on two parameters \(\alpha ,\beta >0\) and possess the density

The density of their counterparts rescaled to expected value one is

on the interval \(0<t<(\alpha +\beta )/\alpha \) and zero otherwise.

Lemma 5.3

The variance V and the third-order central moment Z of the rescaled beta distribution with the density (5.28) are connected to each other through

In terms of V and Z, the parameters \(\alpha \) and \(\beta \) are given by

For any given \(V>0\) and Z that satisfy the condition (5.29), conversely there exists a rescaled beta distribution with variance V and third-order central moment Z.

Proof

The variance and the third-order central moment of a rescaled beta distribution with the density (5.28) possess the explicit representation

From the representation of the variance V

follows. As \(\beta \) and the right-hand side of this identity are positive, \(1-V\alpha \) is positive, too, and we obtain the given representation of \(\beta \) in terms of \(\alpha \) and V. If one inserts this into the representation of Z, one is led to the identity

and finally to the representation of \(\alpha \). Because the left-hand side of this identity is a strictly increasing function of \(\alpha \ge 0\) and \(0<\alpha <1/V\) holds, (5.29) follows. As the entire argument can be reversed, the proposition is proved. \(\square \)
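The inversion in Lemma 5.3 can be coded in a few lines. We carried out the steps of the proof ourselves, so the formulas below are our own derivation, not a transcription of (5.30). One finds \(V=\beta /(\alpha (\alpha +\beta +1))\) and, after eliminating \(\beta \), \(Z=2V(2V\alpha +V-1)/((1-V)\alpha +2)\). Hence \(\alpha =2(V(1-V)+Z)/(4V^2-Z(1-V))\) and \(\beta =V\alpha (\alpha +1)/(1-V\alpha )\). The admissible range \(0<\alpha <1/V\) corresponds to \(V(V-1)<Z<2V^2\), which we expect to be the content of (5.29). The sketch checks the round trip against the moments reported by `scipy.stats.beta`.

```python
import numpy as np
from scipy import stats


def rescaled_beta_moments(a, b):
    """Variance V and third central moment Z of c X with X ~ Beta(a, b) and c = (a + b)/a,
    i.e. of the beta distribution rescaled to expected value one."""
    c = (a + b) / a
    _, var, skew = stats.beta(a, b).stats(moments="mvs")
    V = c**2 * float(var)
    return V, float(skew) * V**1.5


def beta_parameters(V, Z):
    """Parameters (alpha, beta) with prescribed V and Z (our derivation, see the text)."""
    if not V * (V - 1) < Z < 2 * V**2:
        raise ValueError("no rescaled beta distribution has these moments")
    a = 2 * (V * (1 - V) + Z) / (4 * V**2 - Z * (1 - V))
    b = V * a * (a + 1) / (1 - V * a)
    return a, b


V, Z = rescaled_beta_moments(2.0, 3.0)
print(V, Z)                     # 0.25 and 1/28
print(beta_parameters(V, Z))    # (2.0, 3.0) up to rounding
```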

Let T be an \((n\times m)\)-matrix with the singular values \(\sigma _1,\ldots ,\sigma _m\) and let

be the traces of the matrices \(S=T^tT\), \(S^2\), and \(S^3\). Then the variance and the third-order central moment of the distribution of the values \(\Vert T^t\omega \Vert ^2\) are given by

For the matrices T assigned to interaction graphs, these quantities satisfy the condition (5.29) with very few exceptions, and the resulting densities (5.28) agree almost perfectly with the actual densities. The values (5.22) assigned to these densities thus presumably reflect the convergence behavior at least as well as the values resulting from the approximation by a simple Gauss function. In a sense, this reverses the argument and emphasizes the role of the model problem analyzed in Sect. 4. From this point of view, the beta distributions come first and the normal distributions are only the limit case for higher dimensions.
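Moment formulas of this kind can be checked by simulation. The sketch below uses expressions that we derived independently from the Dirichlet\((1/2,\ldots ,1/2)\) law of \((\eta _1^2,\ldots ,\eta _n^2)\) for \(\eta \) uniform on \(S^{n-1}\). Let \(\lambda _i\) be the eigenvalues of \(TT^t\), that is, the \(\sigma _i^2\) padded by \(n-m\) zeros, and let \({\bar{\lambda }}\) be their mean. Then the mean of \(\Vert T^t\eta \Vert ^2\) is \({\bar{\lambda }}\), the variance is \(\frac{2}{n+2}\cdot \frac{1}{n}\sum _i(\lambda _i-{\bar{\lambda }})^2\), and the third central moment is \(\frac{8}{(n+2)(n+4)}\cdot \frac{1}{n}\sum _i(\lambda _i-{\bar{\lambda }})^3\). For expected value one, that is \(\sum _i\sigma _i^2=n\), these become \(\frac{2}{n+2}(\frac{t_2}{n}-1)\) and \(\frac{8}{(n+2)(n+4)}(\frac{t_3}{n}-3\frac{t_2}{n}+2)\), with \(t_k\) denoting (our notation) the trace of \(S^k\). We have not compared these symbol by symbol with the paper's formulas; the matrix T is random and hypothetical.

```python
import numpy as np


def sphere_moments(T):
    """Mean, variance and third central moment of ||T^t eta||^2, eta uniform on S^{n-1}."""
    n, m = T.shape
    lam = np.concatenate([np.linalg.svd(T, compute_uv=False) ** 2, np.zeros(n - m)])
    c = lam - lam.mean()
    return lam.mean(), 2 / (n + 2) * np.mean(c**2), 8 / ((n + 2) * (n + 4)) * np.mean(c**3)


rng = np.random.default_rng(4)
n, m = 8, 3
T = rng.standard_normal((n, m))
T *= np.sqrt(n) / np.linalg.norm(T)               # sum of sigma_i^2 = n: expected value one
mean, V, Z = sphere_moments(T)

s2 = np.linalg.svd(T, compute_uv=False) ** 2      # trace form, valid for expected value one
t2, t3 = np.sum(s2**2), np.sum(s2**3)
print(V, 2 / (n + 2) * (t2 / n - 1))
print(Z, 8 / ((n + 2) * (n + 4)) * (t3 / n - 3 * t2 / n + 2))

g = rng.standard_normal((500_000, n))             # Monte Carlo comparison
eta = g / np.linalg.norm(g, axis=1, keepdims=True)
q = np.sum((eta @ T) ** 2, axis=1)
print(mean, q.mean())
print(V, q.var())
print(Z, np.mean((q - q.mean()) ** 3))
```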

The matrices T assigned to interaction graphs have yet another useful property. For vectors e pointing in the direction of a coordinate axis, \(\Vert T^te\Vert =\Vert e\Vert \) holds. The expression \(\alpha (\omega )(\Vert T^t\omega \Vert ^2+\mu )\) thus takes the value one on the coordinate axes, which is the reason for the square root in the definition (3.19) of these matrices. The described effects therefore become particularly noticeable in the regions in which the Fourier transforms of functions in hyperbolic cross spaces are concentrated. The functions that are well representable in tensor formats, and in which we are primarily interested in the present paper, fall into this category.

We still need to approximate 1/r on intervals \(\mu \le r\le R\mu \) by sums of exponential functions with moderate relative accuracy, which then leads to the approximations (1.4) of the kernel (5.3) by sums of Gauss functions. Relative rather than absolute accuracy is required, since these approximations are embedded in an iterative process; see the remarks following Theorem 5.2. It suffices to consider intervals \(1\le r\le R\). If v(r) approximates 1/r on this interval with a given relative accuracy, the function

approximates the function \(r\rightarrow 1/r\) on the original interval \(\mu \le r\le R\mu \) with the same relative accuracy. Approximations of 1/r with astonishingly small relative error are provided by the seemingly harmless sums

a construction due to Beylkin and Monzón [4] based on an integral representation. The parameter h determines the accuracy and the quantities \(k_1h\) and \(k_2h\) control the approximation interval. The functions (5.34) possess the representation

in terms of the following window function, which decays rapidly as s goes to infinity:

To determine the relative error with which the function (5.34) approximates 1/r on a given interval \(1\le r\le R\), one therefore has to check how well the function \(\phi \) approximates the constant 1 on the interval \(0\le s\le \ln R\).

For \(h=1\) and summation indices k ranging from \(-2\) to 50, for example, the relative error is less than 0.0007 on almost the whole interval \(1\le r\le 10^{18}\), that is, in the per mill range on an interval that spans eighteen orders of magnitude. Such accuracy is certainly more than is needed in the given context, but the example underscores the outstanding approximation properties of the functions (5.34). Figure 4 depicts the corresponding function \(\phi \). These observations are underpinned by the analysis of the approximation properties of the corresponding infinite series in [14, Sect. 5]. It is shown there that these series approximate 1/r with a relative error

as h goes to zero. Moreover, the functions \(\phi \) and their series counterparts almost perfectly satisfy the equioscillation condition as approximations of the constant 1, so that not much room for improvement remains. High relative accuracy over large intervals \(r\ge 1\) is a much stronger requirement than high absolute accuracy, the case studied by Braess and Hackbusch in [5, 6] and by Hackbusch in [10].
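The sums (5.34) themselves are not reproduced here. As a hedged sketch, we assume they are the trapezoidal-rule discretization, with step h and indices \(k_1\le k\le k_2\), of the integral representation \(1/r=\int _{-\infty }^{\infty }\exp (-t-re^{-t})\,dt\), that is, \(v(r)=h\sum _{k=k_1}^{k_2}e^{-kh}\exp (-e^{-kh}r)\). This form matches the description above: \(r\,v(r)=h\sum _k\psi (\ln r-kh)\) with \(\psi (s)=\exp (s-e^s)\), which decays rapidly as s goes to infinity. With \(h=1\), \(k_1=-2\), and \(k_2=50\), the computed relative error stays below 0.0007 on \(10\le r\le 10^{16}\); near the end points of the larger interval, the truncation of the sum adds to it. The scaling from \([1,R]\) to \([\mu ,R\mu ]\) is included for completeness. The names `exp_sum` and `scaled` are hypothetical.

```python
import numpy as np


def exp_sum(r, h=1.0, k1=-2, k2=50):
    """Assumed form of (5.34): v(r) = h * sum_{k=k1}^{k2} e^{-kh} exp(-e^{-kh} r),
    the trapezoidal rule with step h for 1/r = int exp(-t - r e^{-t}) dt."""
    e = np.exp(-np.arange(k1, k2 + 1) * h)          # the numbers e^{-kh}
    return h * np.exp(-np.outer(r, e)) @ e          # tiny terms underflow harmlessly to 0


def scaled(r, mu, **kw):
    """If v approximates 1/r on [1, R], then v(r/mu)/mu approximates 1/r on [mu, R mu]."""
    return exp_sum(r / mu, **kw) / mu


r = np.logspace(1, 16, 4000)
rel_err = np.abs(r * exp_sum(r) - 1.0)
print(rel_err.max())                                # about 6.5e-4 on 10 <= r <= 1e16

mu = 3.0
print(np.abs(mu * r * scaled(mu * r, mu) - 1.0).max())  # same accuracy on [10 mu, 1e16 mu]
```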

The practical feasibility of the approach depends on the representation of the tensors involved and on the access to the Fourier transforms of the functions they represent. A central task not discussed here is the recompression of the data between the iteration steps in order to keep the amount of work and storage under control, a problem common to all tensor-oriented iterative methods. If one fixes the accuracy in the single coordinate directions, the process reduces to an iteration on the space of functions defined on a given high-dimensional cubic grid, functions that are stored in a compressed tensor format. There exist highly efficient, linear algebra-based techniques for recompressing such data. A problem in the given context may be that the discrete \(\ell _2\)-norm of the tensors, which appears natural in this framework, does not match the underlying norms of the continuous problem. Another open question is the overall complexity of the process. A difficulty with our approach is that the given operators do not split into sums of operators that act separately on a single variable or a small group of variables, a fact that complicates the application of techniques such as those in [3] or [7]. For more information on tensor-oriented solution methods for partial differential equations, see the monographs [11] by Hackbusch and [12] by Khoromskij, and Bachmayr's comprehensive survey article [2].

Notes

The square root is important. The reason is explained in Sect. 5.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions. Dover Publications, New York (1972), 10th printing

Bachmayr, M.: Low-rank tensor methods for partial differential equations. Acta Numer. 32, 1–121 (2023)

Bachmayr, M., Dahmen, W.: Adaptive near-optimal rank tensor approximation for high-dimensional operator equations. Found. Comput. Math. 15, 839–898 (2015)

Beylkin, G., Monzón, L.: Approximation by exponential sums revisited. Appl. Comput. Harmon. Anal. 28, 131–149 (2010)

Braess, D., Hackbusch, W.: Approximation of \(1/x\) by exponential sums in \([1,\infty )\). IMA J. Numer. Anal. 25, 685–697 (2005)

Braess, D., Hackbusch, W.: On the efficient computation of high-dimensional integrals and the approximation by exponential sums. In: DeVore, R., Kunoth, A. (eds.) Multiscale, Nonlinear and Adaptive Approximation. Springer, Berlin Heidelberg (2009)

Dahmen, W., DeVore, R., Grasedyck, L., Süli, E.: Tensor-sparsity of solutions to high-dimensional elliptic partial differential equations. Found. Comput. Math. 16, 813–874 (2016)

Dasgupta, S., Gupta, A.: An elementary proof of a theorem of Johnson and Lindenstrauss. Random Struct. Algorithms 22, 60–65 (2003)

Frankl, P., Maehara, H.: Some geometric applications of the beta distribution. Ann. Inst. Stat. Math. 42, 463–474 (1990)

Hackbusch, W.: Computation of best \(L^\infty \) exponential sums for \(1/x\) by Remez’ algorithm. Comput. Vis. Sci. 20, 1–11 (2019)

Hackbusch, W.: Tensor Spaces and Numerical Tensor Calculus. Springer, Cham (2019)

Khoromskij, B.N.: Tensor Numerical Methods in Scientific Computing. Radon Series on Computational and Applied Mathematics, vol. 19. De Gruyter, Berlin München Boston (2018)

Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. (eds.): NIST Handbook of Mathematical Functions. Cambridge University Press, Cambridge (2010)

Scholz, S., Yserentant, H.: On the approximation of electronic wavefunctions by anisotropic Gauss and Gauss-Hermite functions. Numer. Math. 136, 841–874 (2017)

Siegel, J.W., Xu, J.: Sharp bounds on the approximation rates, metric entropy, and n-widths of shallow neural networks. Found. Comput. Math. (2022). https://doi.org/10.1007/s10208-022-09595-3

Sturmfels, B.: Algorithms in Invariant Theory. Springer, Wien (2008)

van der Waerden, B.L.: Algebra I. Springer, Berlin Heidelberg New York (1971)

Vershynin, R.: High-Dimensional Probability. Cambridge University Press, Cambridge (2018)

Yserentant, H.: On the expansion of solutions of Laplace-like equations into traces of separable higher-dimensional functions. Numer. Math. 146, 219–238 (2020)

Yserentant, H.: A measure concentration effect for matrices of high, higher, and even higher dimension. SIAM J. Matrix Anal. Appl. 43, 464–478 (2022)

Funding

Open Access funding enabled and organized by Projekt DEAL.


Appendix: Beta distributions and Jacobi polynomials


Setting \(a=\beta -1\), \(b=\alpha -1\), and introducing the constants

the density (5.28) can be rewritten in the form

The orthogonal polynomials \(p_k\) from Lemma 5.2 assigned to this weight function f can therefore be expressed in terms of the Jacobi polynomials

The Jacobi polynomials satisfy the orthogonality relation

The polynomials \(p_k\) therefore possess the representation

At \(x=-1\), the Jacobi polynomials take the value

see [1, Table 22.4] or [13, Table 18.6.1]. This leads to the closed representation

of the values \(p_k(0)^2\). Starting from \(p_0(0)^2=1\), they can therefore be computed in a numerically very stable way by the recursion
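The appendix formulas can be turned into a short routine. We worked from the standard facts \(P_k^{(a,b)}(-1)=(-1)^k\binom{k+b}{k}\) and the Jacobi norms, with \(a=\beta -1\) and \(b=\alpha -1\). This gives (our derivation, to be compared with the recursion stated above) \(p_0(0)^2=1\) and \(p_{k+1}(0)^2/p_k(0)^2=\frac{2k+\alpha +\beta +1}{2k+\alpha +\beta -1}\cdot \frac{(k+\alpha )(k+\alpha +\beta -1)}{(k+\beta )(k+1)}\). The sketch checks this against Gauss-Jacobi quadrature from `scipy.special`. It then forms the minima \(1/\sum _{j\le k}p_j(0)^2\) from Lemma 5.2 for a rescaled orthogonal projection with \(\alpha =m/2\) and \(\beta =(n-m)/2\); the dimensions are hypothetical.

```python
import numpy as np
from scipy.special import eval_jacobi, roots_jacobi


def pk0_squared(alpha, beta, kmax):
    """p_k(0)^2, k = 0..kmax, for the beta density rescaled to expected value one."""
    s = alpha + beta
    vals = [1.0]
    for k in range(kmax):
        ratio = ((2 * k + s + 1) / (2 * k + s - 1)
                 * (k + alpha) * (k + s - 1) / ((k + beta) * (k + 1)))
        vals.append(vals[-1] * ratio)
    return np.array(vals)


def pk0_squared_quadrature(alpha, beta, kmax, nodes=60):
    """Independent check: p_k(0)^2 = P_k(-1)^2 h_0 / h_k with Jacobi norms h_k from quadrature."""
    a, b = beta - 1, alpha - 1                    # weight (1 - x)^a (1 + x)^b, t = 0 <-> x = -1
    x, w = roots_jacobi(nodes, a, b)
    h = np.array([np.sum(w * eval_jacobi(k, a, b, x) ** 2) for k in range(kmax + 1)])
    end = np.array([eval_jacobi(k, a, b, -1.0) for k in range(kmax + 1)])
    return end**2 * h[0] / h


m, n = 8, 32                                      # rescaled orthogonal projection
al, be = m / 2, (n - m) / 2
print(pk0_squared(al, be, 12))
print(1.0 / np.cumsum(pk0_squared(al, be, 12)))   # minima of the integral in Lemma 5.2
```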

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yserentant, H. An iterative method for the solution of Laplace-like equations in high and very high space dimensions. Numer. Math. 156, 777–811 (2024). https://doi.org/10.1007/s00211-024-01401-2


DOI: https://doi.org/10.1007/s00211-024-01401-2