Abstract

In this paper, we analyze the drift-implicit (or backward) Euler numerical scheme for a class of stochastic differential equations with unbounded drift driven by an arbitrary λ-Hölder continuous process, λ ∈ (0,1). We prove that, under some mild moment assumptions on the Hölder constant of the noise, the \(L^{r}({\Omega };L^{\infty }([0,T]))\)-approximation error converges to 0 as \(O(\Delta^{\lambda})\), Δ → 0. As examples, we consider numerical schemes for the generalized Cox–Ingersoll–Ross and Tsallis–Stariolo–Borland models. The results are illustrated by simulations.


1 Introduction

We analyze the drift-implicit (also known as backward) Euler numerical scheme for stochastic differential equations (SDEs) of the form

$$ Y(t) = Y(0) + \int_{0}^{t} b(s, Y(s))\,ds + Z(t), \quad t\in[0,T], $$(1.1)

where Z is a general λ-Hölder continuous noise, λ ∈ (0,1), and the drift b is unbounded and has one of the following two properties:

-

(A) b(t,y) has an explosive growth of the type \((y - \varphi(t))^{-\gamma}\) as y ↓ φ(t), where φ is a given Hölder continuous function of the same order λ as Z and \(\gamma > \frac {1}{\lambda } - 1\);

-

(B) b(t,y) has an explosive growth of the type \((y - \varphi(t))^{-\gamma}\) as y ↓ φ(t) and an explosive decrease of the type \(-(\psi(t) - y)^{-\gamma}\) as y ↑ ψ(t), where φ and ψ are given Hölder continuous functions of the same order λ as Z such that φ(t) < ψ(t), t ∈ [0,T], and \(\gamma > \frac {1}{\lambda } - 1\).

SDEs of this type were studied extensively in [14]. It was shown there that property (A) or (B), together with some relatively weak additional assumptions, ensures that the solution to (1.1) is bounded from below by the function φ in the setting (A) (one-sided sandwich case), i.e.

$$ Y(t) > \varphi(t), \quad t\in[0,T], \quad a.s., $$

or stays between φ and ψ in the setting (B) (two-sided sandwich case), i.e.

$$ \varphi(t) < Y(t) < \psi(t), \quad t\in[0,T], \quad a.s. $$

We emphasize that SDEs of type (1.1) include and generalize several widely used stochastic models. For example, the process given by

where Z is λ-Hölder continuous with \(\lambda > \frac {1}{2}\), fits into the setting (B), and can be regarded as a natural extension of the Tsallis–Stariolo–Borland (TSB) model employed in biophysics (for more details on the standard Brownian TSB model, see, e.g. [15, Subsection 2.3] or [16, Chapter 3 and Chapter 8]). Another important example is

where Z is λ-Hölder continuous, λ ∈ (0,1), and \(\gamma > \frac {1}{\lambda } - 1\). It can be shown (see [14, Subsection 4.2]) that, if \(\lambda > \frac {1}{2}\), the stochastic process \(X(t) := Y^{1+\gamma}(t)\) satisfies the SDE

where \(\alpha := \frac {\gamma }{1+\gamma } \in (0,1)\) and the integral w.r.t. Z exists as a pathwise limit of Riemann–Stieltjes integral sums. Equations of the type (1.5) are used in finance in the standard Brownian setting, where they are known as the Chan–Karolyi–Longstaff–Sanders (CKLS) or constant elasticity of variance (CEV) model (see, e.g. [4, 8, 9]). If \(\alpha = \frac {1}{2}\), Eq. (1.5) is also known as the Cox–Ingersoll–Ross (CIR) equation, see, e.g. [10,11,12].

In this work, we develop a numerical approximation (both pathwise and in \(L^r({\Omega }; L^{\infty } ([0,T]))\)) for sandwiched processes (1.1), similar to the drift-implicit (also known as backward) Euler scheme constructed for the classical Cox–Ingersoll–Ross process in [2, 3, 13] and extended to the case of the fractional Brownian motion with \(H> \frac 1 2\) in [18, 21, 22]. In this drift-implicit scheme, in order to generate \(\widehat Y(t_{k+1})\), one has to solve an equation of the type

$$ \widehat Y(t_{k+1}) = \widehat Y(t_{k}) + b\big(t_{k+1}, \widehat Y(t_{k+1})\big)\Delta + Z(t_{k+1}) - Z(t_{k}), \quad \Delta := t_{k+1} - t_{k}, $$(1.6)

with respect to \(\widehat Y(t_{k+1})\), which is, in general, computationally heavier than standard (explicit) Euler-type techniques (see, e.g. [14, Section 5]). However, this drift-implicit numerical method also has a substantial advantage: the approximation \(\widehat Y\) preserves the sandwich property, i.e. for all points \(t_{k}\) of the partition

$$ \widehat Y(t_{k}) > \varphi(t_{k}) $$

in the setting (A) and

$$ \varphi(t_{k}) < \widehat Y(t_{k}) < \psi(t_{k}) $$

in the case (B). Having this in mind, we shall say that the drift-implicit scheme is sandwich preserving.
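The sandwich-preserving property can already be seen on a single step. The following Python sketch is illustrative only: the helper names, the bisection root finder and the drift \(b(t,y) = -y/(1-y^{2})\) on (−1, 1) (a TSB-type drift, cf. Example 2.8 with κ = 1) are our own choices, not the authors' implementation. It assumes, as in Remark 3.1, that \(y \mapsto y - b(t,y)\Delta\) is continuous and strictly increasing on (φ(t), ψ(t)) with limits −∞ and +∞ at the endpoints; under this assumption a bisection started on (φ(t), ψ(t)) can only return points inside the interval, whereas the explicit Euler step has no such guarantee.

```python
def explicit_step(b, t, y, dz, dt):
    """Standard (explicit) Euler step; nothing keeps the result inside (phi, psi)."""
    return y + b(t, y) * dt + dz


def implicit_step(b, t_next, y, dz, dt, lo, hi, iters=200):
    """Drift-implicit step: solve x - b(t_next, x) * dt = y + dz for x in (lo, hi).

    Assumes x -> x - b(t_next, x) * dt is continuous and increasing on (lo, hi) with
    limits -inf at lo and +inf at hi. Bisection evaluates b only strictly inside (lo, hi).
    """
    target = y + dz
    a, c = lo, hi
    for _ in range(iters):
        m = 0.5 * (a + c)
        if m <= a or m >= c:  # no representable midpoint left
            break
        if m - b(t_next, m) * dt < target:
            a = m
        else:
            c = m
    return 0.5 * (a + c)


def tsb_drift(t, y):
    """TSB-type drift on (-1, 1) with kappa = 1 (illustrative assumption)."""
    return -y / (1.0 - y * y)


y, dz, dt = 0.9, 0.2, 0.01
print(explicit_step(tsb_drift, 0.0, y, dz, dt))            # about 1.0526: outside (-1, 1)
print(implicit_step(tsb_drift, dt, y, dz, dt, -1.0, 1.0))  # about 0.964: inside (-1, 1)
```

With these values, a single noise increment pushes the explicit step above ψ ≡ 1, while the implicit step returns a point in (−1, 1).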

We note that a similar approximation scheme was studied in [21] and [18, 22] for processes of the type (1.4) driven by a fractional Brownian motion (fBm) with H > 1/2. Our work can be seen as an extension of those; however, our results contain several novel elements. In particular, the paper [21] discusses only pathwise convergence and not convergence in \(L^r({\Omega }; L^{\infty } ([0,T]))\). The approach of [18] and [22] is very noise-specific, as both papers use Malliavin calculus techniques in the spirit of [19, Proposition 3.4] to estimate inverse moments of the considered process (which turns out to be crucial for controlling the explosive growth of the drift). As a result, two limitations appear: a restrictive condition involving the time horizon T (see, e.g. [18, Eq. (8) and Remark 3.1]) and sensitivity to the choice of the noise, i.e. their method cannot be applied directly to drivers other than fBm with H > 1/2. This lack of flexibility in the choice of the noise is a crucial disadvantage in, e.g. finance, where modern empirical studies justify the use of fBm with an extremely low Hurst index (H < 0.1) [7] or even drivers with time-varying roughness [1]. Our approach relies on [14, Theorem 3.2], which is based on pathwise calculus, and allows us to obtain strong convergence with no limitations on T for a substantially larger class of noises. In fact, we require only Hölder continuity of the noise and a moment condition on the corresponding Hölder constant; this condition is satisfied, e.g. by all Hölder continuous Gaussian processes.

The paper is organized as follows. Section 2 describes the setting in detail and contains some necessary statements on the properties of the sandwiched processes. In Section 3, we give the convergence results in the setting (B) which turns out to be a bit simpler than (A) due to boundedness of the process. Section 4 extends the scheme to the setting (A). In Section 5, we give some examples and simulations; in particular, we show that in some cases (e.g. for the generalized TSB and CIR models), Eq. (1.6) can be solved explicitly which drastically improves the computational efficiency of the algorithm.

2 Preliminaries and assumptions

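Fix T > 0 and define, for \(a, a_{1}, a_{2} \ge 0\),

$$ \mathcal D_{a} := \{(t,y)\in[0,T]\times\mathbb R : y > \varphi(t) + a\}, \qquad \mathcal D_{a_{1},a_{2}} := \{(t,y)\in[0,T]\times\mathbb R : \varphi(t) + a_{1} < y < \psi(t) - a_{2}\}, $$(2.1)
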
where φ, ψ ∈ C([0,T]) are such that φ(t) < ψ(t), t ∈ [0,T].

Throughout the paper, we deal with a stochastic differential equation of the form

$$ Y(t) = Y(0) + \int_{0}^{t} b(s, Y(s))\,ds + Z(t), \quad t\in[0,T]. $$(2.2)

The noise Z = {Z(t), t ∈ [0,T]} is always assumed to satisfy the following conditions:

-

(Z1) Z(0) = 0 a.s.;

-

(Z2) Z has a.s. λ-Hölder continuous paths for some λ ∈ (0,1), i.e. there exists a positive random variable Λ such that

$$ |Z(t) - Z(s)| \le {\Lambda} |t-s|^{\lambda}, \quad s,t\in[0,T], \quad a.s. $$
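Condition (Z2) concerns the whole path, so on a simulated grid one can only compute a lower bound for Λ. A minimal Python sketch, assuming the path is given as lists of grid times and values (the function name is hypothetical):

```python
def empirical_holder_ratio(times, values, lam):
    """Largest |Z(t_j) - Z(t_i)| / |t_j - t_i|**lam over all pairs of grid points.

    This is a lower bound for the random Hölder constant Lambda in (Z2) on the
    sampled path; it cannot certify Hölder continuity between grid points.
    """
    best = 0.0
    for i in range(len(times)):
        for j in range(i + 1, len(times)):
            dt = abs(times[j] - times[i])
            if dt > 0:
                best = max(best, abs(values[j] - values[i]) / dt ** lam)
    return best


# Z(t) = t with lam = 0.5: the ratio equals sqrt(|t - s|), largest at |t - s| = 1.
print(empirical_holder_ratio([0.0, 0.25, 0.5, 1.0], [0.0, 0.25, 0.5, 1.0], 0.5))  # 1.0
```

Such a grid quantity is at most a rough diagnostic; it is not the random variable Λ itself, and the double loop costs \(O(N^2)\).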

Given a noise Z satisfying (Z1)–(Z2), we assume that the initial value Y(0) and the drift b satisfy one of the two assumptions given below.

Assumption A

(One-sided sandwich case) There exists a λ-Hölder continuous function φ: \([0,T] \to \mathbb {R}\) with λ being the same as in (Z2) such that

-

(A1) Y(0) is deterministic and Y(0) > φ(0),

-

(A2) b: \(\mathcal D_{0} \to \mathbb {R}\) is continuous and, for any \(\varepsilon \in \left (0, 1\right )\),

$$ |b(t_{1},y_{1}) - b(t_{2}, y_{2})| \le \frac{c_{1}}{\varepsilon^{p}} \left(|y_{1} - y_{2}| + |t_{1} - t_{2}|^{\lambda} \right), \quad (t_{1}, y_{1}), (t_{2},y_{2}) \in \mathcal D_{\varepsilon}, $$

where \(c_{1} > 0\) and p > 1 are some given constants and λ is from (Z2),

-

(A3) the drift satisfies

$$ b(t, y) \ge \frac{c_{2}}{(y - \varphi(t))^{\gamma}}, \quad (t,y) \in \mathcal D_{0}\setminus \mathcal D_{y_{*}}, $$

where \(y_{*}, c_{2} > 0\) are some given constants and \(\gamma > \frac {1}{\lambda } - 1\) with λ being from (Z2),

-

(A4) the partial derivative \(\frac {\partial b}{\partial y}\) with respect to the spatial variable exists, is continuous, and is bounded from above, i.e.

$$ \frac{\partial b}{\partial y}(t, y) < c_{3}, \quad (t,y) \in \mathcal D_{0}, $$

for some \(c_{3} > 0\).

Assumption B

(Two-sided sandwich case) There exist λ-Hölder continuous functions φ, ψ: \([0,T]\to \mathbb {R}\), φ(t) < ψ(t), t ∈ [0,T], with λ being the same as in (Z2) such that

-

(B1) Y(0) is deterministic and φ(0) < Y(0) < ψ(0),

-

(B2) b: \(\mathcal D_{0,0} \to \mathbb {R}\) is continuous and, for any \(\varepsilon \in \left (0, \min \limits \left \{1, \frac {1}{2}\lVert \psi - \varphi \rVert _{\infty }\right \}\right )\),

$$ |b(t_{1},y_{1}) - b(t_{2}, y_{2})| \le \frac{c_{1}}{\varepsilon^{p}} \left(|y_{1} - y_{2}| + |t_{1} - t_{2}|^{\lambda} \right), \quad (t_{1}, y_{1}), (t_{2},y_{2}) \in \mathcal D_{\varepsilon, \varepsilon}, $$

where \(c_{1} > 0\) and p > 1 are some given constants and λ is from (Z2),

-

(B3) the drift satisfies

$$ b(t, y) \ge \frac{c_{2}}{(y - \varphi(t))^{\gamma}}, \quad (t,y) \in \mathcal D_{0,0}\setminus \mathcal D_{y_{*}, 0}, $$

$$ b(t, y) \le -\frac{c_{2}}{(\psi(t) - y)^{\gamma}}, \quad (t,y) \in \mathcal D_{0,0}\setminus \mathcal D_{0, y_{*}}, $$

where \(y_{*}, c_{2} > 0\) are some given constants and \(\gamma > \frac {1}{\lambda } - 1\) with λ being from (Z2),

-

(B4) the partial derivative \(\frac {\partial b}{\partial y}\) with respect to the spatial variable exists, is continuous, and is bounded from above, i.e.

$$ \frac{\partial b}{\partial y}(t, y) < c_{3}, \quad (t,y) \in \mathcal D_{0,0}, $$

for some \(c_{3} > 0\).

Each of Assumptions A and B, together with (Z1)–(Z2), ensures that the SDE (2.2) has a unique solution. In the theorem below, we collect some relevant results on sandwiched processes (see [14, Theorems 2.3, 2.5, 2.6, 3.1, and 3.2]).

Theorem 2.1

Let Z = {Z(t), t ∈ [0,T]} be a stochastic process satisfying (Z1)–(Z2).

-

(1) If the initial value Y(0) and the drift b satisfy assumptions (A1)–(A3), then the SDE (2.2) has a unique strong pathwise solution such that for all t ∈ [0,T]

$$ Y(t) > \varphi(t) \quad a.s. $$(2.3)

Moreover, there exist deterministic constants \(L_{1}, L_{2}, L_{3}, L_{4} > 0\) depending only on Y(0), the shape of b and λ, such that, for all t ∈ [0,T], the estimate (2.3) can be refined as follows:

$$ \varphi(t) + \frac{L_{1}}{ (L_{2} + {\Lambda} )^{\frac{1}{\gamma \lambda + \lambda - 1}} } \le Y(t) \le L_{3} + L_{4} {\Lambda} \quad a.s., $$(2.4)

where Λ is from (Z2) and γ is from (A3). In particular, if Λ is such that

$$ \mathbb{E} \left[{\Lambda}^{\frac{r}{\gamma\lambda+\lambda-1}}\right] < \infty $$(2.5)

for some r > 0, then

$$ \mathbb{E}\left[ \underset{t\in[0,T]}{\sup} \frac{1}{(Y(t) - \varphi(t))^{r}} \right] < \infty, $$

and, if

$$ \mathbb{E} {\Lambda}^{r} < \infty $$(2.6)

for some r > 0, then

$$ \mathbb{E} \left[\underset{t\in[0,T]}{\sup} |Y(t)|^{r}\right] < \infty. $$

-

(2) If the initial value Y(0) and the drift b satisfy assumptions (B1)–(B3), then the SDE (2.2) has a unique strong pathwise solution such that for all t ∈ [0,T]

$$ \varphi(t) < Y(t) < \psi(t) \quad a.s. $$(2.7)

Moreover, there exist deterministic constants \(L_{1}, L_{2} > 0\) depending only on Y(0), the shape of b and λ, such that, for all t ∈ [0,T], the estimate (2.7) can be refined as follows:

$$ \varphi(t) + \frac{L_{1}}{ (L_{2} + {\Lambda} )^{\frac{1}{\gamma \lambda + \lambda - 1}} } \le Y(t) \le \psi(t) - \frac{L_{1}}{ (L_{2} + {\Lambda} )^{\frac{1}{\gamma \lambda + \lambda - 1}} } \quad a.s., $$(2.8)

where Λ is from (Z2) and γ is from (B3). In particular, if Λ can be chosen in such a way that

$$ \mathbb{E} \left[ {\Lambda}^{\frac{r}{\gamma\lambda+\lambda-1}} \right] < \infty $$(2.9)

for some r > 0, then

$$ \mathbb{E}\left[ \underset{t\in[0,T]}{\sup} \frac{1}{(Y(t) - \varphi(t))^{r}} \right] < \infty, \quad \mathbb{E}\left[ \underset{t\in[0,T]}{\sup} \frac{1}{(\psi(t) - Y(t))^{r}} \right] < \infty. $$

Remark 2.2

Properties (2.3)–(2.4) and (2.7)–(2.8) hold for every ω ∈ Ω such that the path Z(ω, t), t ∈ [0,T], is Hölder continuous, and in all proofs with pathwise arguments we consider only such ω ∈ Ω. For notational simplicity, we will also omit ω in brackets.

Remark 2.3

Due to the property (2.7), the setting described in Assumption B will be referred to as the two-sided sandwich case since the solution is “sandwiched” between φ and ψ a.s. Similarly, the property (2.3) justifies the name one-sided sandwich case for the setting corresponding to Assumption A. In both cases A and B, the solution to (2.2) will be referred to as a sandwiched process.

Remark 2.4

Note that assumptions (A4) and (B4) are not required for Theorem 2.1 to hold and will be used later on.

In what follows, conditions (2.5), (2.6), and (2.9) will play an important role, since the \(L^{r}({\Omega };L^{\infty }([0,T]))\)-convergence of the approximation scheme will follow directly from the integrability of Λ. However, these conditions are not very restrictive, as the following example shows.

Example 2.5

(Hölder Gaussian noises) Let Z = {Z(t), t ∈ [0,T]} be an arbitrary Hölder continuous Gaussian process satisfying (Z1)–(Z2), e.g. standard or fractional Brownian motion. In this case, by [6], the random variable Λ from (Z2) can be chosen to have moments of all orders.
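Fractional Brownian motion \(B^{H}\), mentioned in Example 2.5, is λ-Hölder continuous for every λ < H. One standard exact way to sample it on a grid is a Cholesky factorization of its covariance \(R(s,t) = \frac{1}{2}(s^{2H} + t^{2H} - |t-s|^{2H})\). The pure-Python sketch below uses this method with hypothetical names; we do not claim it is the method used for the simulations in this paper. Its cost is \(O(N^{3})\), so it suits only moderate N.

```python
import math
import random


def fbm_cholesky(n, T, H, rng=None):
    """Sample fractional Brownian motion B^H on t_k = k*T/n, k = 0..n (B^H(0) = 0).

    Exact in distribution: uses the Cholesky factor of the covariance
    R(s, t) = (s^{2H} + t^{2H} - |t - s|^{2H}) / 2 on the grid points t_1..t_n.
    """
    rng = rng or random.Random()
    times = [k * T / n for k in range(n + 1)]
    t = times[1:]  # the variance at t_0 = 0 is zero, so t_0 is excluded
    cov = [[0.5 * (s ** (2 * H) + u ** (2 * H) - abs(u - s) ** (2 * H)) for u in t] for s in t]
    L = [[0.0] * n for _ in range(n)]  # lower-triangular factor, cov = L L^T
    for i in range(n):
        for j in range(i + 1):
            acc = cov[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(acc, 0.0)) if i == j else acc / L[j][j]
    xi = [rng.gauss(0.0, 1.0) for _ in range(n)]  # i.i.d. standard normals
    path = [0.0] + [sum(L[i][k] * xi[k] for k in range(i + 1)) for i in range(n)]
    return times, path


times, path = fbm_cholesky(n=100, T=1.0, H=0.3, rng=random.Random(42))
```

Passing a seeded `random.Random` makes a path reproducible, which is convenient when the same driver is reused on several partitions.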

We conclude this section with some examples of sandwiched processes.

Example 2.6

(Generalized CIR and CKLS/CEV models) Let φ ≡ 0, let Z satisfy (Z1)–(Z2) with λ ∈ (0,1), and let Y(0) > 0, \(\kappa_{1}, \kappa_{2} > 0\), and \(\gamma > \frac {1}{\lambda } - 1\) be given. Then, by Theorem 2.1 (1), the SDE of the form

has a unique positive solution. Moreover, it can be shown (see [14, Subsection 4.2]) that, if \(\lambda > \frac {1}{2}\), the stochastic process \(X(t) := Y^{1+\gamma}(t)\), t ∈ [0,T], a.s. satisfies the SDE of the form

where \(\alpha := \frac {\gamma }{1+\gamma } \in (0,1)\) and the integral w.r.t. Z exists a.s. as a pathwise limit of Riemann–Stieltjes integral sums. As mentioned above, Eq. (2.11) appears in finance in the standard Brownian setting, where it is called the Chan–Karolyi–Longstaff–Sanders (CKLS) or constant elasticity of variance (CEV) model (see, e.g. [4, 8, 9]). If \(\alpha = \frac {1}{2}\) (i.e. when γ = 1), Eq. (2.11) is also known as the Cox–Ingersoll–Ross (CIR) equation [10,11,12].
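For γ = 1, the implicit equation of the drift-implicit step can be solved in closed form. Assuming that the drift in (2.10) has the form \(b(t,y) = \kappa_{1} y^{-\gamma} - \kappa_{2} y\) (the form suggested by (2.12)), the equation \(y - (\kappa_{1}/y - \kappa_{2} y)\Delta = z\) becomes the quadratic \((1+\kappa_{2}\Delta)y^{2} - zy - \kappa_{1}\Delta = 0\). Its roots have product \(-\kappa_{1}\Delta/(1+\kappa_{2}\Delta) < 0\), so exactly one root is positive. A Python sketch (the function name is hypothetical):

```python
import math


def cir_implicit_step(y_prev, dz, dt, kappa1, kappa2):
    """Drift-implicit step for b(y) = kappa1 / y - kappa2 * y (gamma = 1), y > 0.

    Returns the unique positive root of (1 + kappa2*dt) y^2 - z y - kappa1*dt = 0,
    where z = y_prev + dz.
    """
    z = y_prev + dz
    a = 1.0 + kappa2 * dt
    disc = math.sqrt(z * z + 4.0 * a * kappa1 * dt)
    if z >= 0.0:
        return (z + disc) / (2.0 * a)
    return 2.0 * kappa1 * dt / (disc - z)  # same root, no cancellation for z < 0
```

Both branches compute the same positive root; the second avoids the cancellation in \(z + \sqrt{\cdot}\) when z is large and negative, and the result stays positive for every noise increment.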

Remark 2.7

(Connection with the classical Brownian CIR/CKLS models)

-

1)

If γ = 1 in (2.10) (CIR case), Assumption (A3) requires Z to be Hölder continuous of order \(\lambda > \frac {1}{2}\), since \(\gamma > \frac{1}{\lambda} - 1\) with γ = 1 is equivalent to \(\lambda > \frac{1}{2}\). This means that Example 2.6 does not cover the classical Brownian CIR model, since the continuous modification of a standard Brownian motion has paths that are Hölder continuous only up to (but not including) the order 1/2. However, it is still possible to establish a clear connection between our setting and the classical CIR model. Indeed, let {W(t), t ∈ [0,T]} be the continuous modification of a standard Brownian motion. Consider the CIR process X = {X(t), t ∈ [0,T]} defined by

$$ dX(t) = a(b - X(t))dt + \sigma \sqrt{X(t)} dW(t), \quad X_{0} >0, $$

where a, b, σ > 0 and \(2ab > \sigma^{2}\). The latter condition ensures that X has positive paths a.s., and hence one can define \(Y := \sqrt {X}\). By Itô’s formula, Y satisfies the SDE

$$ dY(t) = \left(\frac{\kappa_{1}}{Y(t)} - \kappa_{2} Y(t)\right)dt + \frac{\sigma}{2}dW(t), \quad Y_{0} = \sqrt{X_{0}}>0, $$(2.12)

with \(\kappa _{1} := \frac {4ab - \sigma ^{2}}{8}\) and \(\kappa _{2} := \frac {a}{2}\), which is very similar in type to (2.10). The SDE (2.12) can then be used to define a drift-implicit Euler scheme of the form (1.6), which turns out to converge to the original process (2.12). For more details on the drift-implicit Euler scheme for the classical Brownian CIR process, see, e.g. [13].

-

2)

If γ > 1 in (2.10), Assumptions (Z1)–(Z2) and (A1)–(A4) allow Z to be a standard Brownian motion. However, in this case, one cannot use pathwise calculus to obtain (2.11), and the standard Itô formula shows that \(X := Y^{1+\gamma}\) does not coincide with the standard CKLS process. In order to cover the standard CKLS model, we have to modify the drift in (2.10) to compensate for the second-order term in Itô’s formula as follows:

$$ dY(t) = \left(\frac{\kappa_{1}}{Y^{\gamma}(t)} - \frac{\gamma \sigma^{2}}{2Y(t)} - \kappa_{2} Y(t) \right)dt + \sigma dW(t). $$(2.13)

The SDE (2.13) satisfies Assumption A, and \(X := Y^{1+\gamma}\) is the solution to the SDE

$$ \begin{array}{@{}rcl@{}} X(t)& =& X(0) + (1+\gamma){{\int}_{0}^{t}}\left(\kappa_{1} - \kappa_{2} X(s) \right) ds + (1+\gamma) \sigma {{\int}_{0}^{t}} X^{\alpha}(s) dW(s),\\ &&\alpha = \frac{\gamma}{1+\gamma}, \end{array} $$

i.e. \(X := Y^{1+\gamma}\) is the classical CKLS process.
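The transformations in Remark 2.7 can be sanity-checked numerically. The Python sketch below applies Itô's formula for f(Y) pointwise (drift \(f'(y)\mu(y) + \frac{1}{2}f''(y)\sigma^{2}\), diffusion \(f'(y)\sigma\)) to (2.12) with \(f(y) = y^{2}\) and to (2.13) with \(f(y) = y^{1+\gamma}\), and returns the differences from the target CIR and CKLS coefficients. The function names and parameter values are arbitrary illustrative choices; checking at sample points is a consistency test, not a proof.

```python
import math


def cir_to_sqrt_params(a, b, sigma):
    """kappa1, kappa2 and noise scale of (2.12) for Y = sqrt(X), X a CIR process."""
    if not 2.0 * a * b > sigma ** 2:
        raise ValueError("condition 2ab > sigma^2 is violated")
    return (4.0 * a * b - sigma ** 2) / 8.0, a / 2.0, sigma / 2.0


def ito(df, d2f, mu, sig):
    """Drift and diffusion of f(Y) when dY = mu dt + sig dW (Itô's formula).

    df and d2f are f'(Y) and f''(Y) evaluated at the current state.
    """
    return df * mu + 0.5 * d2f * sig ** 2, df * sig


def remark_2_7_residuals(y, a=1.5, b=0.8, sigma=0.9, gamma=2.0, k1=0.6, k2=0.4):
    """Differences between Itô's formula and the target CIR/CKLS coefficients at Y = y > 0."""
    # (2.12): X = Y^2 should satisfy dX = a(b - X) dt + sigma sqrt(X) dW.
    kap1, kap2, s = cir_to_sqrt_params(a, b, sigma)
    drift, diff = ito(2.0 * y, 2.0, kap1 / y - kap2 * y, s)
    x = y * y
    res = [drift - a * (b - x), diff - sigma * math.sqrt(x)]
    # (2.13): X = Y^(1+gamma) should have drift (1+gamma)(k1 - k2 X)
    # and diffusion (1+gamma) sigma X^alpha with alpha = gamma / (1+gamma).
    mu = k1 / y ** gamma - gamma * sigma ** 2 / (2.0 * y) - k2 * y
    drift, diff = ito((1 + gamma) * y ** gamma, (1 + gamma) * gamma * y ** (gamma - 1), mu, sigma)
    x = y ** (1 + gamma)
    alpha = gamma / (1 + gamma)
    res += [drift - (1 + gamma) * (k1 - k2 * x), diff - (1 + gamma) * sigma * x ** alpha]
    return res


print(remark_2_7_residuals(0.7))  # four numbers at rounding-error level
```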

Example 2.8

(Generalized TSB model) Let φ ≡ −1, ψ ≡ 1, Y(0) ∈ (−1, 1), let Z satisfy (Z1)–(Z2) with \(\lambda > \frac {1}{2}\), and let κ > 0. Then, by Theorem 2.1 (2), the SDE of the form

has a unique solution such that −1 < Y(t) < 1 for all t ∈ [0,T] a.s. In the standard Brownian setting, the SDE of the type (2.14) is known as the Tsallis–Stariolo–Borland (TSB) model and is used in biophysics (for more details, see, e.g. [15, Subsection 2.3] or [16, Chapter 3 and Chapter 8]).
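For the TSB model, the implicit step also reduces to a polynomial equation. Assuming the drift in (2.14) is \(b(t,y) = -\kappa y/(1-y^{2})\) (consistent with the decomposition in Example 2.9 with γ = 1 and \(\kappa_{1} = \kappa_{2} = \frac{\kappa}{2}\)), multiplying \(y - b(t,y)\Delta = z\) by \(1-y^{2}\) gives the cubic \(p(y) = y^{3} - zy^{2} - (1+\kappa\Delta)y + z = 0\). Since \(p(-1) = \kappa\Delta > 0\), \(p(1) = -\kappa\Delta < 0\) and p → ±∞ as y → ±∞, the cubic has three real roots: one below −1, one in (−1, 1), and one above 1. The Python sketch below (hypothetical name) evaluates them with the trigonometric form of Cardano's formula and returns the root of smallest absolute value, which is the one in (−1, 1).

```python
import math


def tsb_implicit_step(y_prev, dz, dt, kappa):
    """Drift-implicit step for b(y) = -kappa * y / (1 - y^2) on (-1, 1).

    Solves y + kappa*dt*y / (1 - y^2) = z, z = y_prev + dz, through the equivalent
    cubic y^3 - z y^2 - (1 + kappa*dt) y + z = 0, which has three real roots.
    """
    z = y_prev + dz
    c = 1.0 + kappa * dt
    # Depressed cubic x^3 + p x + q = 0 with y = x + z/3 (here p < 0 always).
    p = -c - z * z / 3.0
    q = -2.0 * z ** 3 / 27.0 - z * c / 3.0 + z
    m = 2.0 * math.sqrt(-p / 3.0)
    # Trigonometric form for three real roots; clamping guards against rounding.
    arg = max(-1.0, min(1.0, 3.0 * q / (p * m)))
    theta = math.acos(arg) / 3.0
    roots = [m * math.cos(theta - 2.0 * math.pi * k / 3.0) + z / 3.0 for k in range(3)]
    return min(roots, key=abs)  # the other two roots lie outside [-1, 1]
```

A closed-form step of this kind avoids an inner iterative solver, which is the computational advantage that explicit solvability offers for the TSB scheme.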

Example 2.9

For a given Z satisfying (Z1)–(Z2) with λ ∈ (0,1), λ-Hölder continuous functions φ, ψ with φ(t) < ψ(t), t ∈ [0,T], and Y(0) ∈ (φ(0), ψ(0)), consider the SDE of the form

where \(\kappa_{1}, \kappa_{2} > 0\), \(\kappa _{3}\in \mathbb {R}\), and \(\gamma > \frac {1}{\lambda } - 1\). By Theorem 2.1 (2), this SDE has a unique solution such that φ(t) < Y(t) < ψ(t) a.s. Note that the TSB drift from (2.14) also has this shape with φ ≡ −1, ψ ≡ 1, γ = 1, \(\kappa _{1} = \kappa _{2} = \frac {\kappa }{2}\), and \(\kappa_{3} = 0\) since

Notation 2.10

In what follows, C denotes any positive deterministic constant that does not depend on the partition and the exact value of which is not relevant. Note that C may change from line to line (or even within one line).

3 The approximation scheme for the two-sided sandwich

We start with the numerical scheme for the two-sided sandwich case, which turns out to be slightly simpler due to the boundedness of Y. Let the noise Z satisfy (Z1)–(Z2), let Y(0) and b satisfy Assumption B, and let Y = {Y(t), t ∈ [0,T]} be the unique solution of the SDE (2.2). Consider a uniform partition \(\{0 = t_{0} < t_{1} < \dots < t_{N} = T\}\) of [0,T], \(t_{k} := \frac {Tk}{N}\), k = 0,1,...,N, with the mesh \({\Delta }_{N}:=\frac {T}{N}\) such that

where c3 is an upper bound for \(\frac {\partial b}{\partial y}\) from (B4). Let us define \(\widehat Y(t)\) as follows:

where the second expression is considered as an equation with respect to \(\widehat Y(t_{k+1})\).
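The scheme on the grid can be sketched as follows, with \(\widehat Y(t_{k+1})\) obtained from the implicit equation on \((\varphi(t_{k+1}), \psi(t_{k+1}))\) by bisection. This minimal Python sketch relies on the properties established in Remark 3.1 (monotonicity and the limits at the boundaries); bisection is our choice of root finder, and the names, the boundary functions, the drift and the smooth stand-in for a noise path in the usage lines are illustrative assumptions.

```python
import math


def drift_implicit_two_sided(b, phi, psi, y0, times, z, iters=200):
    """Grid values Yhat(t_0), ..., Yhat(t_N) of the drift-implicit scheme, setting (B).

    Step k solves y - b(t_{k+1}, y) * dt = Yhat(t_k) + Z(t_{k+1}) - Z(t_k) for
    y in (phi(t_{k+1}), psi(t_{k+1})) by bisection; z[k] holds Z(t_k).
    """
    y = [y0]
    for k in range(len(times) - 1):
        t1, dt = times[k + 1], times[k + 1] - times[k]
        target = y[-1] + z[k + 1] - z[k]
        a, c = phi(t1), psi(t1)
        for _ in range(iters):
            m = 0.5 * (a + c)
            if m <= a or m >= c:  # no representable midpoint left
                break
            if m - b(t1, m) * dt < target:
                a = m
            else:
                c = m
        y.append(0.5 * (a + c))
    return y


# Illustrative inputs (assumptions): a time-dependent sandwich and a smooth stand-in for Z.
def phi(t):
    return -1.0 + 0.2 * math.sin(2.0 * math.pi * t)


def psi(t):
    return 1.0 + 0.2 * math.sin(2.0 * math.pi * t)


def drift(t, y):
    return 1.0 / (y - phi(t)) - 1.0 / (psi(t) - y)


N, T = 100, 1.0
times = [k * T / N for k in range(N + 1)]
z = [0.5 * math.sin(7.0 * t) for t in times]
path = drift_implicit_two_sided(drift, phi, psi, 0.0, times, z)
```

Since every iterate stays strictly inside the current bracket, each computed value lies in \((\varphi(t_{k}), \psi(t_{k}))\), mirroring the sandwich-preserving property.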

Remark 3.1

The equation with respect to \(\widehat Y(t_{k+1})\) from (3.2) has a unique solution such that \(\widehat Y(t_{k+1}) \in (\varphi (t_{k+1}), \psi (t_{k+1}))\). Indeed, for any fixed t ∈ [0,T] and any \(z\in \mathbb {R}\), consider the equation

$$ y - b(t,y){\Delta}_{N} = z $$(3.3)

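w.r.t. y. Assumption (B4) together with condition (3.1) implies that \(\frac{\partial}{\partial y}\big(y - b(t,y){\Delta}_{N}\big) = 1 - \frac{\partial b}{\partial y}(t,y){\Delta}_{N} > 0\) and, by (B3),

$$ \lim_{y\downarrow\varphi(t)} \big(y - b(t,y){\Delta}_{N}\big) = -\infty, \qquad \lim_{y\uparrow\psi(t)} \big(y - b(t,y){\Delta}_{N}\big) = +\infty. $$
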
Thus, since the left-hand side of (3.3) is continuous and strictly increasing in y, there exists a unique y ∈ (φ(t), ψ(t)) satisfying (3.3).

Remark 3.2

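The value of \(\widehat Y(t)\) for \(t \in [0,T] \setminus \{t_{0},\dots,t_{N}\}\) can also be defined via linear interpolation as

$$ \widehat Y(t) := \widehat Y(t_{k}) + \frac{t - t_{k}}{{\Delta}_{N}}\left(\widehat Y(t_{k+1}) - \widehat Y(t_{k})\right), \quad t\in(t_{k}, t_{k+1}),\ k = 0,1,\dots,N-1. $$
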
In such case, all results of this section hold with almost no changes in the proofs.
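Remark 3.2 allows two ways of extending the grid values to all of [0, T]. A small Python sketch (hypothetical name) evaluates either extension at a given t; the piecewise-constant choice \(\widehat Y(t) = \widehat Y(t_{k})\), \(t\in[t_{k},t_{k+1})\), is our assumption for illustration.

```python
import bisect


def evaluate_extension(times, values, t, mode="constant"):
    """Extend grid values Yhat(t_0..t_N) to an arbitrary t in [t_0, t_N].

    mode="constant": Yhat(t) = Yhat(t_k) for t in [t_k, t_{k+1});
    mode="linear":   linear interpolation between neighbouring grid points.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the partition")
    k = bisect.bisect_right(times, t) - 1  # index with times[k] <= t < times[k+1]
    if k >= len(times) - 1:  # t is the last grid point T
        return values[-1]
    if mode == "constant":
        return values[k]
    if mode == "linear":
        w = (t - times[k]) / (times[k + 1] - times[k])
        return (1.0 - w) * values[k] + w * values[k + 1]
    raise ValueError("mode must be 'constant' or 'linear'")
```

For example, with grid values 0, 1, 3 at times 0, 0.5, 1, the constant extension gives 0 at t = 0.25 and the linear one gives 0.5.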

Remark 3.3

The algorithms of the type (3.2) are sometimes called the drift-implicit [2, 3, 13] or backward [18] Euler approximation schemes.

Before presenting the main results of this section, we require some auxiliary lemmas. First of all, we note that the values \(\widehat Y(t_{n})\), n = 0,1,...,N, of the discretized process are bounded away from both φ and ψ by random variables that do not depend on the partition. Namely, we have the following result that can be regarded as a discrete modification of arguments in [14, Theorem 3.2].

Lemma 3.4

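Let Z satisfy (Z1)–(Z2), let Assumption B hold, and let the mesh \({\Delta}_{N}\) of the partition satisfy (3.1). Then there exist deterministic constants \(L_{1}, L_{2} > 0\) depending only on Y(0), the shape of the drift b and λ, such that

$$ \varphi(t_{n}) + \frac{L_{1}}{(L_{2} + {\Lambda})^{\frac{1}{\gamma\lambda+\lambda-1}}} \le \widehat Y(t_{n}) \le \psi(t_{n}) - \frac{L_{1}}{(L_{2} + {\Lambda})^{\frac{1}{\gamma\lambda+\lambda-1}}}, \quad n = 0,1,\dots,N, \quad a.s., $$(3.4)
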
where Λ is from (Z2) and γ is from (B3).

Proof

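We will prove that

$$ \widehat Y(t_{n}) \ge \varphi(t_{n}) + \frac{L_{1}}{(L_{2} + {\Lambda})^{\frac{1}{\gamma\lambda+\lambda-1}}}, \quad n = 0,1,\dots,N, $$
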
by using the pathwise argument (see Remark 2.2). The other inequality can be derived in a similar manner. Recall that, by Assumption B, φ and ψ are λ-Hölder continuous, i.e. there exists K > 0 such that

Denote also

where c2 is from (B3),

with the constants y∗ and γ also from (B3), and

Note that, with probability 1,

and, furthermore, it is easy to check that ε < Y(0) − φ(0), ε < ψ(0) − Y(0), and \(\varepsilon < y_{*}\).

If \(\widehat Y(t_{n}) \ge \varphi (t_{n}) + \varepsilon \) for a particular n = 0,1,...,N, then, by the definition of ε, a bound of the type (3.4) holds automatically. Suppose that there exists n = 1,...,N such that \(\widehat Y({t_{n}}) < \varphi (t_{n}) + \varepsilon \). Denote by κ(n) the index of the last point of the partition before \(t_{n}\) at which \(\widehat Y\) stays above φ + ε, i.e.

(note that such a point exists since \(\widehat Y(t_{0}) - \varphi (0) = Y(0) - \varphi (0) > \varepsilon \)). Then, for all k = κ(n) + 1,...,n, we have that \(\widehat Y(t_{k}) - \varphi(t_{k}) < \varepsilon < y_{*}\) and therefore, using (B3), we obtain that, with probability 1,

Consider a function \(F_{\varepsilon } : \mathbb {R}_{+} \to \mathbb {R}\) such that

It is straightforward to verify that Fε attains its minimum at

and, taking into account the explicit form of ε,

Namely, even if \(\widehat Y(t_{n}) < \varphi (t_{n}) + \varepsilon \), we still have that, with probability 1,

and thus, with probability 1, for any n = 0,1,...,N

where \(L_{1} := \frac {1}{2^{\frac {\gamma \lambda }{\gamma \lambda + \lambda -1}} \beta ^{\frac {1 - \lambda }{\gamma \lambda + \lambda -1}} }\). □

Remark 3.5

It is clear that constants L1 and L2 in Lemma 3.4 can be chosen jointly for Y and \(\widehat Y\), so that the inequalities

and

hold simultaneously with probability 1.

Next, we proceed with a simple property of the sandwiched process Y in (2.2).

Lemma 3.6

Let Z satisfy (Z1)–(Z2) and assumptions (B1)–(B3) hold.

-

There exists a positive random variable Υ such that, with probability 1,

$$ |Y(t) - Y(s)| \le {\Upsilon} |t-s|^{\lambda}, \quad t, s\in[0,T]. $$

-

If, for some r ≥ 1,

$$ \mathbb{E} \left[{\Lambda}^{\frac{r\max\{p, \gamma\lambda + \lambda - 1\}}{\gamma\lambda + \lambda - 1}}\right] < \infty, $$(3.5)

where λ and Λ are from (Z2), p is from (B2), and γ is from (B3), then one can choose Υ such that

$$ \mathbb{E} [{\Upsilon}^{r}] < \infty. $$

Proof

Denote \(\phi (t) := \frac {1}{2}(\psi (t) + \varphi (t))\), t ∈ [0,T]. By (2.8),

i.e. with probability 1 \((t, Y(t)) \in \mathcal D_{\frac {1}{\xi }, \frac {1}{\xi }}\), t ∈ [0,T], where

and \(\mathcal D_{\frac {1}{\xi }, \frac {1}{\xi }}\) is defined by (2.1). It is evident that \((t, \phi (t)) \in \mathcal D_{\frac {1}{\xi }, \frac {1}{\xi }}\), t ∈ [0,T]; therefore, using (Z2), (B2), and (2.7), we can write that, with probability 1, for all 0 ≤ s < t ≤ T:

where C is a positive constant. Now one can put

and observe that the definition of Υ, (3.5), and (3.6) imply that

□

Next, using Lemma 3.4 and following the proof of Lemma 3.6, it is easy to obtain the following result.

Corollary 3.7

Let (Z1)–(Z2) and Assumption B hold. Then there exists a random variable Υ independent of the partition such that with probability 1

Furthermore, if (3.5) holds for some r ≥ 1, then

Finally, Υ can be chosen jointly for Y and \(\widehat Y\), so that

holds simultaneously with (3.9) with probability 1.

Lemma 3.8

Let Z satisfy (Z1)–(Z2), Assumption B hold and the mesh of the partition ΔN satisfy (3.1). Then

-

for any r ≥ 1, there exists a positive random variable \(\mathcal C_{1}\) that does not depend on the partition such that

$$ \underset{k=0,1,...,N}{\sup} |Y(t_{k}) - \widehat{Y}(t_{k})|^{r} \le \mathcal C_{1} {\Delta}_{N}^{\lambda r} \quad a.s.; $$

-

if, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(p+\max\{p, \gamma\lambda + \lambda - 1\})}{\gamma\lambda + \lambda -1}} \right] < \infty, $$(3.10)

where λ and Λ are from (Z2), p is from (B2), and γ is from (B3), then one can choose \(\mathcal C_{1}\) such that \(\mathbb {E} [\mathcal C_{1}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[\underset{k=0,1,...,N}{\sup} |Y(t_{k}) - \widehat{Y}(t_{k})|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

Fix ω ∈ Ω such that Z(ω,t), t ∈ [0,T], is Hölder continuous (for simplicity of notation, we will omit ω in the brackets). Denote \(e_{n} := Y(t_{n}) - \widehat Y(t_{n})\), \(\Delta Z_{n} := Z(t_{n}) - Z(t_{n-1})\). Then

By the mean value theorem,

with \({\Theta }_{n} \in (Y(t_{n}) \wedge \widehat Y(t_{n}), Y(t_{n}) \vee \widehat Y(t_{n}) )\). Using this, we can rewrite (3.11) as follows:

where

by (B4) and (3.1).

Next, denote

and define \(\tilde e_{n} := \zeta _{n} e_{n}\). By multiplying both sides of (3.12) by \(\zeta_{n-1}\), we obtain that

and, expanding the terms \(\tilde e_{i-1}\) in (3.13) one by one, i = n,n − 1,...,1, and taking into account that \(\tilde e_{0} = 0\), we obtain that

Therefore,

Observe that, by assumption (B4) and (3.1), for any \(i,n \in \mathbb {N}\), i < n,

whence there exists a constant C that does not depend on i, n or N such that

Using this, one can deduce that

Note that \((t, Y(t)) \in \mathcal D_{\frac {1}{\xi }, \frac {1}{\xi }}\), where ξ is defined by (3.6) and \(\mathcal D_{\frac {1}{\xi }, \frac {1}{\xi }}\) is defined via (2.1); hence, by (B2) as well as Lemma 3.6, we can deduce that

In other words, there exists a constant C that does not depend on the partition such that

and, since the right-hand side of the relation above does not depend on n or N, we have

It remains to notice that, by (3.6) and (3.8),

whenever (3.10) holds, which finally implies

□

Now we are ready to present the main results of this section.

Theorem 3.9

Let Z satisfy (Z1)–(Z2), Assumption B hold and the mesh of the partition ΔN satisfy (3.1). Then

-

for any r ≥ 1, there exists a random variable \(\mathcal C_{2}\) that does not depend on the partition such that

$$ \underset{t\in[0,T]}{\sup} |Y(t) - \widehat{Y}(t)|^{r} \le \mathcal C_{2} {\Delta}_{N}^{\lambda r} \quad a.s.; $$

-

if, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(p+\max\{p, \gamma\lambda + \lambda - 1\})}{\gamma\lambda + \lambda -1}} \right] < \infty, $$

where λ and Λ are from (Z2), p is from (B2), and γ is from (B3), then one can choose \(\mathcal C_{2}\) such that \(\mathbb {E}[\mathcal C_{2}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[ \underset{t\in[0,T]}{\sup} |Y(t) - \widehat{Y}(t)|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

Fix ω ∈Ω such that Z(ω,t), t ∈ [0,T], is Hölder continuous (for simplicity of notation, we again omit ω in the brackets) and consider an arbitrary t ∈ [0,T]. Denote

i.e. \(t \in [t_{n(t)}, t_{n(t)+1})\). Then

where we used Lemma 3.6 to estimate \(|Y(t) - Y(t_{n(t)})|^{r}\) and the bound (3.14) to estimate \(|Y(t_{n(t)}) - \widehat Y(t_{n(t)})|^{r}\). Therefore,

Finally, using the same arguments as in Lemma 3.6 and Lemma 3.8, one can easily show that the condition

implies that

therefore

for some constant C > 0 that does not depend on the partition. □
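In simulations, the rate \({\Delta}_{N}^{\lambda}\) from Theorem 3.9 can be checked by regressing the logarithm of an estimated error on \(\log {\Delta}_{N}\). A Python sketch, assuming the errors are already available (for instance, Monte Carlo estimates of \(\mathbb E[\sup_{k} |Y(t_{k}) - \widehat Y(t_{k})|]\) with a fine-grid reference solution standing in for Y, which is our assumption since Y is not known explicitly); the function name is hypothetical. Since Theorem 3.9 gives only an upper bound, the fitted slope may exceed λ.

```python
import math


def empirical_order(meshes, errors):
    """Least-squares slope of log(error) against log(mesh).

    If error is close to C * mesh**lam, the returned slope is close to lam.
    """
    if len(meshes) != len(errors) or len(meshes) < 2:
        raise ValueError("need at least two (mesh, error) pairs")
    xs = [math.log(h) for h in meshes]
    ys = [math.log(e) for e in errors]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


# Synthetic check with errors exactly 2 * mesh**0.7:
meshes = [1 / 8, 1 / 16, 1 / 32, 1 / 64]
print(empirical_order(meshes, [2.0 * h ** 0.7 for h in meshes]))  # approximately 0.7
```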

Theorem 3.10

-

Let Z satisfy (Z1)–(Z2), Assumption B hold and the mesh of the partition ΔN satisfy (3.1). Then, for any r ≥ 1, there exists a random variable \(\mathcal C_{3}\) that does not depend on the partition such that

$$ \underset{n = 0,1,...,N}{\sup}\left|\frac{1}{Y(t_{n}) - \varphi(t_{n})} - \frac{1}{\widehat{Y}(t_{n}) - \varphi(t_{n})}\right|^{r} \le \mathcal C_{3} {\Delta}_{N}^{\lambda r} \quad a.s. $$

and

$$ \underset{n = 0,1,...,N}{\sup}\left|\frac{1}{\psi(t_{n}) - Y(t_{n})} - \frac{1}{\psi(t_{n}) - \widehat{Y}(t_{n})}\right|^{r} \le \mathcal C_{3} {\Delta}_{N}^{\lambda r} \quad a.s. $$

-

If, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(2 + p + \max\{p, \gamma\lambda + \lambda - 1\})}{\gamma\lambda + \lambda -1}} \right] < \infty, $$(3.15)

where λ and Λ are from (Z2), p is from (B2), and γ is from (B3), then one can choose \(\mathcal C_{3}\) such that \(\mathbb {E}[\mathcal C_{3}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[ \underset{n = 0,1,...,N}{\sup}\left|\frac{1}{Y(t_{n}) - \varphi(t_{n})} - \frac{1}{\widehat{Y}(t_{n}) - \varphi(t_{n})}\right|^{r} \right] \le C{\Delta}_{N}^{\lambda r} $$

and

$$ \mathbb{E}\left[ \underset{n = 0,1,...,N}{\sup}\left|\frac{1}{\psi(t_{n}) - Y(t_{n})} - \frac{1}{\psi(t_{n}) - \widehat{Y}(t_{n})}\right|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

By Remark 3.5 and estimate (3.14), with probability 1 for any n = 0,...,N:

It remains to notice that, by (3.6) and (3.8), the condition (3.15) implies that \(\mathbb {E}[\mathcal C_{3}] < \infty \). The second estimate can be obtained in a similar manner. □

4 One-sided sandwich case

The drift-implicit Euler approximation scheme described in Section 3 for the two-sided sandwich can also be adapted to the one-sided setting that corresponds to Assumption A on the SDE (1.1). However, in the two-sided sandwich case, the process Y was bounded (which was used, e.g. in Lemma 3.6) and, moreover, the behaviour of Y near φ and near ψ was similar, so it was sufficient to analyze only one of the bounds. In the one-sided case, Y(t), t ∈ [0,T], is in general not a bounded random variable; therefore, the approach from Section 3 has to be adjusted. For this, we use the inequalities (2.4).

Let the noise Z satisfy (Z1)–(Z2), let Y(0) and b satisfy Assumption A, and let Y = {Y(t), t ∈ [0,T]} be the unique solution of the SDE (2.2). In line with Section 3, we consider a uniform partition \(\{0 = t_{0} < t_{1} < \dots < t_{N} = T\}\) of [0,T], \(t_{k} := \frac {Tk}{N}\), k = 0,1,...,N, with the mesh \({\Delta }_{N}:=\frac {T}{N}\) such that

where c3 is an upper bound for \(\frac {\partial b}{\partial y}\) from assumption (A4). The backward Euler approximation \(\widehat Y(t)\) is defined in a manner similar to (3.2), i.e.

where the second expression is considered as an equation with respect to \(\widehat Y(t_{k+1})\).

Remark 4.1

Just as in the two-sided sandwich case, each \(\widehat Y(t_{k})\), k = 1,...,N, is well defined since the equation

$$ y - b(t,y){\Delta}_{N} = z $$

has a unique solution y > φ(t) for any fixed t ∈ [0,T] and any \(z\in \mathbb {R}\). To see this, note first that assumption (A4) together with (4.1) implies that

Second, by (A3),

Next, by (A2), for any \((s,y_{1}), (s,y_{2}) \in \overline {\mathcal D_{1}} := \{(u,y)\in [0,T]\times \mathbb {R}_{+}, y\in [\varphi (u) + 1, \infty )\}\), we have that

i.e.

Using this, (A4), and the mean value theorem, for any positive y ≥ φ(t) + 1

whence

Existence and uniqueness of the solution then follow from (4.3)–(4.5).
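In the one-sided case there is no finite upper boundary from which to start a bisection, so an upper bracket has to be found first. Assuming, as Remark 4.1 indicates, that \(y \mapsto y - b(t,y){\Delta}_{N}\) is increasing on (φ(t), ∞), tends to −∞ as y ↓ φ(t) and to +∞ as y → ∞, doubling the bracket width terminates. The Python sketch below (hypothetical name; bracket doubling and bisection are our own choices) uses this with a mixed absolute/relative tolerance.

```python
def implicit_step_one_sided(b, t_next, rhs, dt, phi_t, tol=1e-13, max_iter=400):
    """Solve y - b(t_next, y) * dt = rhs for y in (phi_t, inf), setting (A).

    Assumes g(y) = y - b(t_next, y) * dt - rhs is continuous and increasing on
    (phi_t, inf) with g -> -inf as y -> phi_t and g -> +inf as y -> inf.
    """
    def g(y):
        return y - b(t_next, y) * dt - rhs

    lo, width = phi_t, 1.0
    while g(phi_t + width) < 0.0:  # double the bracket until g changes sign
        lo, width = phi_t + width, 2.0 * width
    hi = phi_t + width
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if mid <= lo or mid >= hi or hi - lo < tol * max(1.0, abs(hi)):
            break
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Example: b(t, y) = 1/y**2 - 0.5*y with phi = 0 (illustrative choice, gamma = 2).
y = implicit_step_one_sided(lambda t, y: 1.0 / y ** 2 - 0.5 * y, 0.01, 25.0, 0.01, 0.0)
```

The drift is never evaluated at φ(t) itself, so the singularity at the lower boundary is never hit.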

Remark 4.2

Similarly to the two-sided sandwich case, the value of \(\widehat Y(t)\) for t ∈ [0,T] ∖{t0,...,tN} can also be defined via linear interpolation with no changes in formulations of the results and almost no variations in the proofs.

Our strategy for proving the convergence of \(\widehat Y\) to Y is similar to that of Section 3. Therefore, we omit the details and highlight only the points that differ from the two-sided sandwich case. We start with some useful properties of \(\widehat Y\) and Y.

Lemma 4.3

Let Z satisfy (Z1)–(Z2), let Assumption A hold, and let the mesh \({\Delta}_{N}\) of the partition satisfy (4.1). Then there exist deterministic constants \(L_{1}, L_{2} > 0\) depending only on Y(0), the shape of the drift b and λ, such that

where Λ is from assumption (Z2) and γ is from assumption (A3). Moreover, there exist constants L3, L4 > 0 that also depend only on Y (0), the shape of the drift b and λ such that

for all partitions with the mesh satisfying \(\frac {c_{1} }{(Y(0) - \varphi (0))^{p}}{\Delta }_{N} < 1\) with c1 and p being from (A2).

Proof

The proof of

is identical to the corresponding one in Lemma 3.4 and will be omitted. Let us prove that

Fix ω ∈Ω for which Z(ω,t) is Hölder continuous, consider a partition with the mesh satisfying \(\frac {c_{1} }{(Y(0) - \varphi (0))^{p}}{\Delta }_{N} < 1\) and fix an arbitrary n = 0,1,...,N − 1. Assume that \(\widehat Y(t_{n+1}) > \varphi (t_{n+1}) + (Y(0) - \varphi (0))\) (otherwise, the claim of the lemma holds automatically). Put

and observe that \((t_{k}, \widehat Y(t_{k})) \in \mathcal D_{Y(0) - \varphi (0)}\) for any k = κ(n) + 1,...,n + 1, where \(\mathcal D_{Y(0) - \varphi (0)}\) is defined via (2.1). Next, by (A2), for any \(y\in \mathcal D_{Y(0) - \varphi (0)}\)

i.e. there exists a constant C > 0 that does not depend on the partition such that

Next, observe that, for any k = κ(n) + 1,...,n + 1, we have

Therefore, using (4.6) and

one can write

where C > 0 is a constant that does not depend on the partition.

We now apply the discrete version of the Gronwall inequality from [20, Lemma A.3]. To do so, we observe that

and, for any k = κ(n) + 2,...,n + 1,

Now, since \(\frac {c_{1}}{(Y(0) - \varphi (0))^{p}} {\Delta }_{N} < 1\), we can write that

and, for all k = κ(n) + 2,...,n + 1,

Put

with [x] being the greatest integer less than or equal to x and observe that, for all N ≥ N0,

Therefore,

and, for all k = κ(n) + 2,...,n + 1,

Using a discrete version of the Gronwall inequality, we now obtain that for all k = κ(n) + 1,...,n + 1

which ends the proof. □
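Why a mesh condition of the type \(\frac {c_{1}}{(Y(0) - \varphi (0))^{p}}{\Delta }_{N} < 1\) enters the Gronwall step can be illustrated numerically. The Python sketch below does not reproduce the inequalities of the proof (which are not displayed here); it takes the extremal case of a generic implicit recursion \(u_k = a + c\Delta\sum_{j=1}^{k} u_j\), in which \(u_k\) appears on both sides and can only be solved for when \(c\Delta < 1\), and checks the resulting bound \(u_k = a(1-c\Delta)^{-k} \le a\exp\left(\frac{kc\Delta}{1-c\Delta}\right)\). The function names are hypothetical.

```python
import math


def implicit_gronwall_extremal(a, c_dt, n):
    """Extremal sequence u_k = a + c_dt * sum_{j=1}^{k} u_j, k = 1..n.

    u_k appears on both sides, so each step is solved as
    u_k = (a + c_dt * sum_{j<k} u_j) / (1 - c_dt), which requires c_dt < 1.
    """
    if not 0.0 <= c_dt < 1.0:
        raise ValueError("the implicit recursion needs 0 <= c*Delta < 1")
    u, partial = [], 0.0
    for _ in range(n):
        uk = (a + c_dt * partial) / (1.0 - c_dt)
        u.append(uk)
        partial += uk
    return u


def gronwall_bound(a, c_dt, k):
    """Bound a * exp(k c_dt / (1 - c_dt)), from -log(1 - x) <= x / (1 - x)."""
    return a * math.exp(k * c_dt / (1.0 - c_dt))
```

As cΔ approaches 1 the bound degenerates, which is why the mesh has to be small relative to the local Lipschitz constant of the drift.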

Remark 4.4

It is clear that the constants L1, L2, L3, and L4 can be chosen jointly for Y and \(\widehat Y\), so that the inequalities

and

hold simultaneously with probability 1.

Next, as in Lemma 3.6 for the two-sided case, Y is Hölder continuous, and its Hölder constant is integrable provided that Λ has moments of sufficiently high order. This is summarized in the following lemma.

Lemma 4.5

Let Z satisfy (Z1)–(Z2), and let assumptions (A1)–(A3) hold.

-

There exists a positive random variable Υ such that, with probability 1,

$$ |Y(t) - Y(s)| \le {\Upsilon} |t-s|^{\lambda}, \quad t, s\in[0,T]. $$

-

If, for some r ≥ 1,

$$ \mathbb{E} \left[{\Lambda}^{\frac{r(p + \gamma\lambda + \lambda - 1)}{\gamma\lambda + \lambda - 1}}\right] < \infty, \tag{4.7} $$

where λ and Λ are from (Z2), p is from (A2), and γ is from (A3), then one can choose Υ such that

$$ \mathbb{E} [{\Upsilon}^{r}] < \infty. $$

Proof

By (2.4),

i.e. with probability 1 \((t,Y(t)) \in \mathcal D_{\frac {1}{\xi }}\), t ∈ [0,T], where

and \(\mathcal D_{\frac 1 \xi }\) is defined in (2.1). Denote ϕ(t) := φ(t) + 1 and notice that \((t,\phi (t)) \in \mathcal D_{\frac {1}{\xi }}\), t ∈ [0,T], since \(\frac {1}{\xi } \le Y(0) - \varphi (0)\). Thus, using the same arguments as applied in (3.7), we can write that, with probability 1, for any 0 ≤ s < t ≤ T:

where c1 is from (A2). Now, again by (2.4),

hence with probability 1

where C is a positive constant. Now one can put

and observe that

whenever (4.7) holds. □

Corollary 4.6

Using Lemma 4.3 and following the proof of Lemma 4.5, one can show that, for any partition with the mesh satisfying

there is a random variable Υ independent of the partition such that with probability 1

Furthermore, as in Lemma 3.6, for r > 0

provided that

Finally, such Υ can be chosen jointly for Y and \(\widehat Y\), so that

holds simultaneously with (4.11) with probability 1.

Lemma 4.7

Let Z satisfy (Z1)–(Z2), Assumption A hold and the mesh of the partition ΔN satisfy (4.1).

-

For any r ≥ 1, there exists a positive random variable \(\mathcal C_{4}\) that does not depend on the partition such that

$$ \underset{k=0,1,...,N}{\sup} |Y(t_{k}) - \widehat{Y}(t_{k})|^{r} \le \mathcal C_{4} {\Delta}_{N}^{\lambda r} \quad \text{a.s.} $$

-

If, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(2p+ \gamma\lambda + \lambda - 1)}{\gamma\lambda + \lambda -1}} \right] < \infty, \tag{4.12} $$

where λ and Λ are from (Z2), p is from (A2), and γ is from (A3), then one can choose \(\mathcal C_{4}\) such that \(\mathbb {E} [\mathcal C_{4}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[\underset{k=0,1,...,N}{\sup} |Y(t_{k}) - \widehat{Y}(t_{k})|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

Following the proof of Lemma 3.8, one can easily obtain that for any n = 0,1,...,N

Next, note that \((t, Y(t)) \in \mathcal D_{\frac 1 \xi }\), where ξ is defined by (4.8), so, by (A2) and Lemma 4.5,

i.e.

In order to conclude the proof, it remains to notice that (4.8), (4.9), and (4.12) imply that

□

Now we are ready to formulate the two main results of this section.

Theorem 4.8

Let Z satisfy (Z1)–(Z2), Assumption A hold and the mesh of the partition ΔN satisfy (4.10).

-

For any r ≥ 1, there exists a random variable \(\mathcal C_{5}\) that does not depend on the partition such that

$$ \sup_{t\in[0,T]} |Y(t) - \widehat{Y}(t)|^{r} \le \mathcal C_{5} {\Delta}_{N}^{\lambda r} \quad \text{a.s.} $$

-

If, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(2p+\gamma\lambda + \lambda - 1)}{\gamma\lambda + \lambda -1}} \right] < \infty, $$

where λ and Λ are from (Z2), p is from (A2), and γ is from (A3), then one can choose \(\mathcal C_{5}\) such that \(\mathbb {E}[\mathcal C_{5}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[ \sup_{t\in[0,T]} |Y(t) - \widehat{Y}(t)|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

The proof is similar to that of Theorem 3.9, with Lemmas 4.5, 4.7 and bound (4.13) used instead of Lemmas 3.6, 3.8 and bound (3.14). □

Theorem 4.9

Let Z satisfy (Z1)–(Z2), Assumption A hold and the mesh of the partition ΔN satisfy (4.10).

-

For any r ≥ 1, there exists a random variable \(\mathcal C_{6}\) that does not depend on the partition such that

$$ \underset{n = 0,1,...,N}{\sup}\left|\frac{1}{Y(t_{n}) - \varphi(t_{n})} - \frac{1}{\widehat{Y}(t_{n}) - \varphi(t_{n})}\right|^{r} \le \mathcal C_{6} {\Delta}_{N}^{\lambda r} \quad \text{a.s.} $$

-

If, additionally,

$$ \mathbb{E}\left[ {\Lambda}^{\frac{r(2+2p+ \gamma\lambda + \lambda - 1)}{\gamma\lambda + \lambda -1}} \right] < \infty, \tag{4.14} $$

where λ and Λ are from (Z2), p is from (A2), and γ is from (A3), then one can choose \(\mathcal C_{6}\) such that \(\mathbb {E}[\mathcal C_{6}] < \infty \), i.e. there exists a deterministic constant C that does not depend on the partition such that

$$ \mathbb{E}\left[ \sup_{n = 0,1,...,N}\left|\frac{1}{Y(t_{n}) - \varphi(t_{n})} - \frac{1}{\widehat{Y}(t_{n}) - \varphi(t_{n})}\right|^{r} \right] \le C{\Delta}_{N}^{\lambda r}. $$

Proof

The proof is similar to that of Theorem 3.10 and is omitted. □
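Conditions (4.7), (4.12) and (4.14) differ only in the numerator of the exponent of Λ, so a small helper makes the required moment orders easy to compare. The Python sketch below (the function name is hypothetical) also makes explicit why the standing assumption \(\gamma > \frac {1}{\lambda } - 1\) is needed: it is equivalent to \(\gamma\lambda + \lambda - 1 > 0\), the common denominator of all three exponents.

```python
def moment_orders(r, p, gamma, lam):
    """Orders q for which E[Lambda^q] < infinity is required in (4.7), (4.12), (4.14)."""
    denom = gamma * lam + lam - 1.0  # positive iff gamma > 1/lam - 1
    if denom <= 0:
        raise ValueError("need gamma > 1/lam - 1, i.e. gamma*lam + lam - 1 > 0")
    return {
        "Lemma 4.5, (4.7)": r * (p + denom) / denom,
        "Lemma 4.7 and Theorem 4.8, (4.12)": r * (2 * p + denom) / denom,
        "Theorem 4.9, (4.14)": r * (2 + 2 * p + denom) / denom,
    }
```

For example, r = 2, p = 1, γ = 1 and λ = 0.6 give the orders 12, 22 and 42; all three grow without bound as γλ + λ − 1 decreases to 0.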

5 Examples and simulations

The schemes (3.2) and (4.2) require solving, at each step, an equation for \(\widehat Y(t_{n+1})\) that can be challenging from the computational point of view. However, in some cases that are relevant for applications, this equation has a simple explicit solution.
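When no closed form is available, the step equation can be handled by any bracketing root finder. The Python sketch below uses plain bisection. It assumes (our reading, since (3.2) and (4.2) are not reproduced here) that a step has the additive-noise form \(\widehat Y(t_{n+1}) = \widehat Y(t_{n}) + b(t_{n+1}, \widehat Y(t_{n+1}))\Delta + Z(t_{n+1}) - Z(t_{n})\) with step size Δ, and that \(y \mapsto y - \Delta\, b(t_{n+1}, y)\) is continuous and strictly increasing on \((\varphi(t_{n+1}), \psi(t_{n+1}))\), with ψ ≡ +∞ in the one-sided case, tending to −∞ at the lower end and to +∞ at the upper end. The function name and signature are hypothetical.

```python
import math


def implicit_euler_step(y_prev, dz, t_next, dt, drift, lower, upper=math.inf,
                        tol=1e-13, max_iter=400):
    """One drift-implicit Euler step: solve y = y_prev + dt * drift(t_next, y) + dz.

    The root is searched in (lower, upper); upper = inf is the one-sided case.
    g(y) = y - dt * drift(t_next, y) - (y_prev + dz) is assumed continuous, strictly
    increasing, tending to -inf at `lower` and to +inf at `upper`.
    """
    rhs = y_prev + dz

    def g(y):
        return y - dt * drift(t_next, y) - rhs

    # Left end of the bracket: approach `lower` until g < 0.
    h = 1.0 if math.isinf(upper) else 0.5 * (upper - lower)
    a = lower + h
    while g(a) >= 0:
        h *= 0.5
        a = lower + h
        if a <= lower:
            raise ValueError("could not bracket the root near the lower boundary")
    # Right end of the bracket: approach `upper` (or move right) until g > 0.
    if math.isinf(upper):
        step = 1.0
        b = max(a, rhs) + step
        while g(b) <= 0:
            step *= 2.0
            b = max(a, rhs) + step
    else:
        h = 0.5 * (upper - lower)
        b = upper - h
        while g(b) <= 0:
            h *= 0.5
            b = upper - h
            if b >= upper:
                raise ValueError("could not bracket the root near the upper boundary")
    # Bisection on [a, b] with g(a) < 0 < g(b).
    for _ in range(max_iter):
        m = 0.5 * (a + b)
        if g(m) < 0:
            a = m
        else:
            b = m
        if b - a <= tol * (1.0 + abs(m)):
            break
    return 0.5 * (a + b)
```

Bisection is chosen here only because it needs nothing beyond monotonicity and never leaves the admissible interval; a Newton-type solver (the paper uses the R package nleqslv in Example 5.3) is usually faster but must be kept away from the singular boundaries.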

Regarding the numerical examples that follow, we remark that:

-

all simulations are performed in R on a system with an Intel Core i9-9900K CPU and 64 GB RAM;

-

the R package somebm is used to simulate paths of fractional Brownian motion;

-

in Example 5.3, discrete samples of multifractional Brownian motion (mBm) are simulated using the Cholesky decomposition of the corresponding covariance matrix (for the covariance structure of mBm, see, e.g. [5, Proposition 4]), and the R package nleqslv is used to solve (3.2) numerically.
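The Cholesky approach mentioned above can be sketched in Python with NumPy (the paper itself uses R). We assume the normalisation \(\mathbb{E}[B_{H}(t)^{2}] = t^{2H(t)}\), for which one standard form of the mBm covariance (cf. [5]) is \(D(H(t),H(s))\left(t^{H(t)+H(s)} + s^{H(t)+H(s)} - |t-s|^{H(t)+H(s)}\right)\) with \(D(x,y) = \frac{\sqrt{\Gamma(2x+1)\Gamma(2y+1)\sin(\pi x)\sin(\pi y)}}{2\Gamma(x+y+1)\sin(\pi(x+y)/2)}\); for constant H this reduces to the fBm covariance. The grid excludes t = 0, where the process vanishes and the covariance matrix would be singular. The function names are hypothetical.

```python
import math

import numpy as np


def mbm_covariance(t, hurst):
    """Covariance matrix of mBm on the grid t (all t > 0), with Var B(t) = t^(2H(t))."""
    h = np.array([hurst(x) for x in t], dtype=float)
    hx, hy = np.meshgrid(h, h, indexing="ij")
    tx, ty = np.meshgrid(t, t, indexing="ij")
    hs = hx + hy
    gamma = np.vectorize(math.gamma)
    # Normalising factor D(H(t), H(s)); it equals 1/2 when H(t) = H(s).
    d = np.sqrt(gamma(2 * hx + 1) * gamma(2 * hy + 1)
                * np.sin(np.pi * hx) * np.sin(np.pi * hy))
    d /= 2 * gamma(hs + 1) * np.sin(np.pi * hs / 2)
    return d * (tx ** hs + ty ** hs - np.abs(tx - ty) ** hs)


def sample_mbm(n, T, hurst, rng):
    """One mBm path at t_k = kT/n, k = 0..n, via the Cholesky factor of the covariance."""
    t = np.linspace(T / n, T, n)
    chol = np.linalg.cholesky(mbm_covariance(t, hurst))
    values = chol @ rng.standard_normal(n)
    return np.concatenate(([0.0], t)), np.concatenate(([0.0], values))
```

For the setting of Example 5.3 one would pass `hurst = lambda s: 0.2 * np.sin(2 * np.pi * s) + 0.5`. The cost of the factorisation grows as O(n³), so the grid size is the main practical limitation of this method.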

Example 5.1

(Generalized CIR processes) Let φ ≡ 0, let Z satisfy (Z1)–(Z2) with \(\lambda \in \left (\frac {1}{2},1\right )\), let Y(0), κ1, κ2 > 0 and \(\gamma > \frac {1}{\lambda } - 1\) be given, and let {Y(t), t ∈ [0,T]} satisfy the SDE of the form

This process fits into the framework of Section 4, and the equation for \(\widehat Y(t_{k+1})\) from (4.2) reads as follows:

It is easy to see that it has a unique positive solution
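For illustration only, suppose that the drift in (5.1) has the form \(\kappa_{1} y^{-\gamma} - \kappa_{2} y\) (an assumption on our part, since the displayed equation is not reproduced here) and take γ = 1, which satisfies \(\gamma > \frac {1}{\lambda } - 1\) for every \(\lambda \in \left (\frac {1}{2},1\right )\). Then the step equation \(y - \Delta(\kappa_{1}/y - \kappa_{2} y) = c\) with \(c = \widehat Y(t_{k}) + Z(t_{k+1}) - Z(t_{k})\) becomes the quadratic \((1+\kappa_{2}\Delta)y^{2} - cy - \kappa_{1}\Delta = 0\), whose roots have product \(-\kappa_{1}\Delta/(1+\kappa_{2}\Delta) < 0\), so exactly one root is positive. A Python sketch of the resulting scheme, with hypothetical function names:

```python
import math

import numpy as np


def cir_implicit_step(y_prev, dz, dt, k1, k2):
    """Positive root of (1 + k2*dt) y^2 - c y - k1*dt = 0, c = y_prev + dz (assumed gamma = 1)."""
    a = 1.0 + k2 * dt
    c = y_prev + dz
    s = math.sqrt(c * c + 4.0 * a * k1 * dt)
    # Two algebraically equal forms of the positive root; the second avoids
    # cancellation when c < 0.
    return (c + s) / (2.0 * a) if c >= 0 else 2.0 * k1 * dt / (s - c)


def cir_implicit_path(z, T, y0, k1, k2):
    """Drift-implicit Euler path on t_k = kT/n, given noise values z[0..n] with z[0] = 0."""
    n = len(z) - 1
    dt = T / n
    y = np.empty(n + 1)
    y[0] = y0
    for k in range(n):
        y[k + 1] = cir_implicit_step(y[k], z[k + 1] - z[k], dt, k1, k2)
    return y
```

The noise values z can come from any sampler of Z on the uniform grid; the scheme stays strictly positive whatever increments are supplied.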

Figure 1 contains 10 sample paths of the process (5.1) driven by a fractional Brownian motion with H = 0.7. In all simulations, we take N = 10000, T = 1, and Y(0) = κ1 = κ2 = 1.

Fig. 1 Ten sample paths of (5.1) generated using the drift-implicit Euler approximation scheme; N = 10000, T = 1, Y(0) = κ1 = κ2 = 1, Z is a fractional Brownian motion with H = 0.7

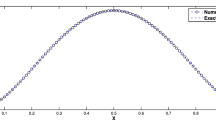

In order to illustrate the convergence, we also simulate the drift-implicit approximation \(\widehat Y\) with a small step size \(10^{-6}\), which serves as the “exact” solution. Then, using the same path of Z, we generate drift-implicit Euler approximations with step sizes of the form 1/N, where N runs over all divisors of \(10^{6}\). Afterwards, we compute the \(L^{\infty }([0,T])\)-distances between the “exact” solution and its approximations with larger step sizes. This procedure is repeated 10000 times, and the root mean square of each \(L^{\infty }([0,T])\)-distance over the repetitions is computed. The resulting values serve as consistent estimators of the corresponding \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors and are depicted in Fig. 2a.

Fig. 2 Convergence analysis of the drift-implicit Euler approximation scheme for (5.1); T = 1, Y(0) = κ1 = κ2 = 1, Z is a fractional Brownian motion with H = 0.7. Panel a depicts the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors. Panel b shows the logarithms of the corresponding \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors (black) plotted against the values of \(\log \left ({\Delta }_{N}\left |\log ({\Delta }_{N}) \right |^{\frac {1}{2H}} \right )\), together with the line fitted by the least squares method (red). The slope of the red line is 0.7022687 ≈ 0.7 = H
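The experiment described above can be mimicked on a much smaller scale. The Python sketch below is hypothetical in every detail (grid sizes, number of repetitions, function names). It reuses the assumed γ = 1 form \(\kappa_{1}/y - \kappa_{2} y\) of the drift of (5.1), simulates fractional Brownian motion by a Cholesky factorisation instead of the R package somebm, and takes the supremum only over the nodes of each coarse grid rather than over all of [0,T]. The root mean square of these suprema over the simulated paths estimates the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-error.

```python
import numpy as np


def fbm_paths(n, T, H, m, rng):
    """m fBm paths on t_k = kT/n, k = 0..n (Cholesky of the fBm covariance on t > 0)."""
    t = np.linspace(T / n, T, n)
    s, u = np.meshgrid(t, t, indexing="ij")
    cov = 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))
    chol = np.linalg.cholesky(cov)
    values = (chol @ rng.standard_normal((n, m))).T  # shape (m, n)
    return np.hstack([np.zeros((m, 1)), values])


def cir_scheme(z, T, y0, k1, k2):
    """Drift-implicit Euler for the assumed drift k1/y - k2*y, one path per row of z."""
    n = z.shape[1] - 1
    dt = T / n
    a = 1.0 + k2 * dt
    y = np.empty_like(z)
    y[:, 0] = y0
    for k in range(n):
        c = y[:, k] + z[:, k + 1] - z[:, k]
        s = np.sqrt(c * c + 4.0 * a * k1 * dt)
        # Positive root of the quadratic step equation, in a cancellation-free form.
        y[:, k + 1] = np.where(c >= 0, (c + s) / (2.0 * a), 2.0 * k1 * dt / (s - c))
    return y


def rms_sup_errors(n_ref, coarse_ns, T=1.0, H=0.7, m=200, y0=1.0, k1=1.0, k2=1.0, seed=0):
    """Root mean square over m paths of max_k |Y_ref(t_k) - Y_N(t_k)| for each coarse N."""
    rng = np.random.default_rng(seed)
    z = fbm_paths(n_ref, T, H, m, rng)
    y_ref = cir_scheme(z, T, y0, k1, k2)  # fine-grid "exact" solution
    errors = []
    for n in coarse_ns:
        step = n_ref // n  # n must divide n_ref
        y_n = cir_scheme(z[:, ::step], T, y0, k1, k2)  # same noise paths, coarser grid
        sup = np.max(np.abs(y_ref[:, ::step] - y_n), axis=1)
        errors.append(np.sqrt(np.mean(sup ** 2)))
    return np.array(errors)
```

For instance, `rms_sup_errors(512, [8, 16, 32, 64, 128])` returns five error estimates, which should decrease as N grows.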

Note that the drift-implicit Euler scheme for (5.1) driven by fractional Brownian motion was the main subject of [18] and [22], but in both cases, the convergence of \(\widehat Y\) to Y is established only on [0,T] with T small (see, e.g. [18, Eq. (8) and Remark 3.1]). Our results fill this gap by proving convergence on an arbitrary interval [0,T] for any model parameters. Note, however, that the convergence rate in Theorem 4.8 is not optimal and can be improved for the fractional Brownian driver. It is well known that paths of fractional Brownian motion are Hölder continuous of every order λ < H but not of order H, so Theorem 4.8 yields the convergence rate \(O\left ({\Delta }_{N}^{\lambda }\right )\) for every λ ∈ (0,H). In turn, [18] uses results on the modulus of continuity of fractional Brownian motion and establishes that the exact rate of convergence is \(O\left ({{\Delta }_{N}^{H}} \sqrt {|\log ({\Delta }_{N})|}\right )\) (provided that T is small enough). Since \({\Delta }_{N}^{H} \sqrt {|\log ({\Delta }_{N})|} = \left ({\Delta }_{N}\left |\log ({\Delta }_{N}) \right |^{\frac {1}{2H}} \right )^{H}\), this rate corresponds to slope H in logarithmic coordinates. In Fig. 2b, we plot the logarithms of the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors from Fig. 2a against the corresponding values of \(\log \left ({\Delta }_{N}\left |\log ({\Delta }_{N}) \right |^{\frac {1}{2H}} \right )\). The resulting points (black) lie along a line with slope 0.7022687 ≈ 0.7 = H (red; the slope is estimated by the least squares method). This provides empirical evidence for the conjecture that the additional condition on T in [18] can be lifted while the rate \(O\left ({{\Delta }_{N}^{H}} \sqrt {|\log ({\Delta }_{N})|}\right )\) is preserved.
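The slope reported in Fig. 2b is an ordinary least squares fit in the coordinates described above. The Python sketch below (hypothetical function name) computes it with `numpy.polyfit` and checks it on synthetic errors of exactly the order \(C{\Delta }_{N}^{H} \sqrt {|\log ({\Delta }_{N})|}\); the data are made up and do not reproduce the paper's results.

```python
import numpy as np


def log_rate_slope(deltas, errors, H):
    """Least squares slope of log(error) against log(Delta * |log Delta|^(1/(2H)))."""
    deltas = np.asarray(deltas, dtype=float)
    x = np.log(deltas * np.abs(np.log(deltas)) ** (1.0 / (2.0 * H)))
    y = np.log(np.asarray(errors, dtype=float))
    slope, _intercept = np.polyfit(x, y, 1)
    return slope


# Synthetic errors 3 * Delta^H * sqrt(|log Delta|) with H = 0.7 (made-up data).
H = 0.7
deltas = 1.0 / np.array([10, 20, 50, 100, 1000, 10000])
errors = 3.0 * deltas ** H * np.sqrt(np.abs(np.log(deltas)))
print(round(log_rate_slope(deltas, errors, H), 6))  # 0.7
```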

Example 5.2

(Sandwiched process of the TSB type) Consider a sandwiched SDE of the form

where Z satisfies (Z1)–(Z2) with \(\lambda \in \left (\frac {1}{2}, 1\right )\). This equation fits into the framework of Section 3, and the scheme (3.2) leads to N cubic equations of the form

where

Note that this equation can be solved explicitly, e.g. by the classical Cardano method. Namely, define

and put

where, among the possible complex values of \(\alpha _{n}\) and \(\beta _{n}\), one should take those for which \(\alpha _{n}\beta _{n} = -\frac {Q_{1,n}}{3}\). Then the three roots of the cubic (5.3) are

and \(\widehat Y(t_{n+1})\) is equal to the root that belongs to \((\varphi (t_{n+1}),\psi (t_{n+1}))\) (note that there is exactly one root in this interval).
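A Python sketch of this step, under the assumption (ours, since (5.2) is not reproduced here) that the drift is \(\frac{\kappa_{1}}{y-\varphi(t)} - \frac{\kappa_{2}}{\psi(t)-y} - \kappa_{3} y\) and that the step has the additive-noise form \(y - \Delta b(t_{n+1}, y) = \widehat Y(t_{n}) + Z(t_{n+1}) - Z(t_{n})\). Multiplying by \((y-\varphi)(\psi-y)\) gives a cubic, normalised below to be monic; the names A, B, C, p, q and the function names are hypothetical, with p presumably playing the role of \(Q_{1,n}\) in the condition \(\alpha _{n}\beta _{n} = -\frac {Q_{1,n}}{3}\). Complex arithmetic handles the case of three real roots uniformly, at the price of tiny imaginary parts from rounding, so the root is selected by its real part and the smallest imaginary part.

```python
import cmath


def cardano_roots(A, B, C):
    """The three complex roots of y^3 + A y^2 + B y + C = 0 by Cardano's formula."""
    p = B - A * A / 3.0                      # depressed cubic x^3 + p x + q, y = x - A/3
    q = 2.0 * A ** 3 / 27.0 - A * B / 3.0 + C
    disc = cmath.sqrt(q * q / 4.0 + p ** 3 / 27.0)
    u = -q / 2.0 + disc
    if abs(u) < 1e-14:                       # switch branch to avoid alpha = 0
        u = -q / 2.0 - disc
    if abs(u) < 1e-14:                       # p = q = 0: triple root
        return [complex(-A / 3.0)] * 3
    alpha = u ** (1.0 / 3.0)                 # principal complex cube root
    beta = -p / (3.0 * alpha)                # enforces alpha * beta = -p/3
    w = complex(-0.5, 3 ** 0.5 / 2.0)        # primitive cube root of unity
    xs = (alpha + beta,
          w * alpha + w.conjugate() * beta,
          w.conjugate() * alpha + w * beta)
    return [x - A / 3.0 for x in xs]


def tsb_implicit_step(y_prev, dz, dt, k1, k2, k3, lo, hi):
    """Root in (lo, hi) of y - dt*(k1/(y-lo) - k2/(hi-y) - k3*y) = y_prev + dz."""
    c = y_prev + dz
    m = 1.0 + dt * k3
    s, P = lo + hi, lo * hi
    # Coefficients of the monic cubic obtained after multiplying by (y-lo)(hi-y).
    A = -(m * s + c) / m
    B = (m * P + c * s - dt * (k1 + k2)) / m
    C = -(c * P - dt * (k1 * hi + k2 * lo)) / m
    inside = [r for r in cardano_roots(A, B, C) if lo < r.real < hi]
    if not inside:
        raise ValueError("no root found in (lo, hi)")
    return min(inside, key=lambda r: abs(r.imag)).real
```

On (φ, ψ) the cubic equals the step equation times a nonzero factor, so the root found there is exactly \(\widehat Y(t_{n+1})\); the other two roots lie outside the interval or are complex.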

Figure 3 contains 10 sample paths of the process (5.2) driven by a fractional Brownian motion with H = 0.7. In all simulations, we take φ ≡ −1, ψ ≡ 1, N = 10000, T = 1, Y(0) = 0, \(\kappa _{1} = \kappa _{2} =\frac {1}{2}\), and κ3 = 0 (this case corresponds to the TSB equation described in Example 2.8). The simulations are performed by a direct implementation of Cardano’s method in R. Figure 4a depicts the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors. Just as in Example 5.1, the behaviour of the modulus of continuity of fractional Brownian motion suggests that the exact convergence rate of the numerical scheme is \(O\left ({{\Delta }_{N}^{H}} \sqrt {|\log ({\Delta }_{N})|}\right )\). Figure 4b provides empirical evidence for this conjecture: the logarithms of the corresponding \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors (black), plotted against the values of \(\log \left ({\Delta }_{N}\left |\log ({\Delta }_{N}) \right |^{\frac {1}{2H}} \right )\), lie along a line (red) with slope 0.7033434 ≈ 0.7 = H (least squares fit).

Fig. 3 Ten sample paths of (5.2) generated using the drift-implicit Euler approximation scheme; φ ≡ −1, ψ ≡ 1, N = 10000, T = 1, Y(0) = 0, \(\kappa _{1} = \kappa _{2} =\frac {1}{2}\), κ3 = 0, Z is a fractional Brownian motion with H = 0.7

Fig. 4 Convergence analysis of the drift-implicit Euler approximation scheme for (5.2); φ ≡ −1, ψ ≡ 1, T = 1, Y(0) = 0, \(\kappa _{1} = \kappa _{2} =\frac {1}{2}\), κ3 = 0, Z is a fractional Brownian motion with H = 0.7. Panel a depicts the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors. Panel b shows the logarithms of the corresponding \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors (black) plotted against the values of \(\log \left ({\Delta }_{N}\left |\log ({\Delta }_{N}) \right |^{\frac {1}{2H}} \right )\), together with the line fitted by the least squares method (red). The slope of the red line is 0.7033434 ≈ 0.7 = H

In Examples 5.1 and 5.2, the equations for \(\widehat Y\) can be solved explicitly, but the noise must be Hölder continuous of order greater than 1/2. The next example shows that the drift-implicit Euler scheme can be applied in the rough case as well.

Example 5.3

(Sandwiched process driven by multifractional Brownian motion) Consider the sandwiched SDE of the form

In this case, Theorem 2.1 guarantees existence and uniqueness of the solution for λ-Hölder continuous Z with \(\lambda > \frac {1}{5}\) (note that this equation fits the framework of Example 2.9 from Section 2). Figure 5 shows a sample path of the process (5.4) with κ1 = κ2 = 1, \(\varphi (t) = \sin (10t)\), \(\psi (t) = \sin (10t) + 2\), driven by a multifractional Brownian motion (mBm) with functional Hurst parameter \(H(t) = \frac {1}{5} \sin (2\pi t) + \frac {1}{2}\) (note that the lowest value of the functional Hurst parameter is \(H\left (\frac {3}{4}\right ) = 0.3\); for more details on mBm, see [5], and see [17, Lemma 3.1] for results on the Hölder continuity of its paths). Figure 6 contains the \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors of approximation. Note the much slower rate of convergence: the multifractional Brownian motion Z under consideration is Hölder continuous only up to order 0.3; therefore, Theorem 3.9 guarantees a convergence rate of only \(O({\Delta }_{N}^{\lambda })\) with λ ∈ (0,0.3).

Fig. 5 A sample path of (5.4) generated using the backward Euler approximation scheme; N = 10000, T = 1, Y(0) = 1, κ1 = κ2 = 1, \(\varphi (t) = \sin (10t)\), \(\psi (t) = \sin (10t) + 2\), Z is a multifractional Brownian motion with functional Hurst parameter \(H(t) = \frac {1}{5} \sin (2\pi t) + \frac {1}{2}\)

Fig. 6 \(L^{2}({\Omega };L^{\infty }([0,T]))\)-errors of the drift-implicit Euler approximation scheme for (5.4); T = 1, Y(0) = 1, κ1 = κ2 = 1, \(\varphi (t) = \sin (10t)\), \(\psi (t) = \sin (10t) + 2\), Z is a multifractional Brownian motion with functional Hurst parameter \(H(t) = \frac {1}{5} \sin (2\pi t) + \frac {1}{2}\)

References

[1] Alfi, V., Coccetti, F., Petri, A., Pietronero, L.: Roughness and finite size effect in the NYSE stock-price fluctuations. Eur. Phys. J. B 55(2), 135–142 (2007)

[2] Alfonsi, A.: On the discretization schemes for the CIR (and Bessel squared) processes. Monte Carlo Methods Appl. 11(4), 355–384 (2005)

[3] Alfonsi, A.: Strong order one convergence of a drift implicit Euler scheme: application to the CIR process. Statist. Probab. Lett. 83(2), 602–607 (2013)

[4] Andersen, L.B.G., Piterbarg, V.V.: Moment explosions in stochastic volatility models. Finance Stoch. 11(1), 29–50 (2006)

[5] Ayache, A., Cohen, S., Lévy Véhel, J.: The covariance structure of multifractional Brownian motion, with application to long range dependence. In: 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No.00CH37100). IEEE (2002)

[6] Azmoodeh, E., Sottinen, T., Viitasaari, L., Yazigi, A.: Necessary and sufficient conditions for Hölder continuity of Gaussian processes. Statist. Probab. Lett. 94, 230–235 (2014)

[7] Bayer, C., Friz, P., Gatheral, J.: Pricing under rough volatility. Quant. Finance 16(6), 887–904 (2016)

[8] Chan, K.C., Karolyi, G.A., Longstaff, F.A., Sanders, A.B.: An empirical comparison of alternative models of the short-term interest rate. J. Finance 47(3), 1209–1227 (1992)

[9] Cox, J.C.: The constant elasticity of variance option pricing model. J. Portf. Manag. 23(5), 15–17 (1996)

[10] Cox, J.C., Ingersoll, J.E., Ross, S.A.: A re-examination of traditional hypotheses about the term structure of interest rates. J. Finance 36(4), 769–799 (1981)

[11] Cox, J.C., Ingersoll, J.E., Ross, S.A.: An intertemporal general equilibrium model of asset prices. Econometrica 53(2), 363 (1985)

[12] Cox, J.C., Ingersoll, J.E., Ross, S.A.: A theory of the term structure of interest rates. Econometrica 53(2), 385 (1985)

[13] Dereich, S., Neuenkirch, A., Szpruch, L.: An Euler-type method for the strong approximation of the Cox–Ingersoll–Ross process. Proc. R. Soc. A Math. Phys. Eng. Sci. 468(2140), 1105–1115 (2012)

[14] Di Nunno, G., Mishura, Y., Yurchenko-Tytarenko, A.: Sandwiched SDEs with unbounded drift driven by Hölder noises. Adv. Appl. Probab. 55(3) (2022) (to appear)

[15] Domingo, D., d’Onofrio, A., Flandoli, F.: Properties of bounded stochastic processes employed in biophysics. Stoch. Anal. Appl. 38(2), 277–306 (2019)

[16] d’Onofrio, A. (ed.): Bounded Noises in Physics, Biology, and Engineering. Springer, New York (2013)

[17] Dozzi, M., Kozachenko, Y., Mishura, Y., Ralchenko, K.: Asymptotic growth of trajectories of multifractional Brownian motion, with statistical applications to drift parameter estimation. Stat. Inference Stoch. Process. 21(1), 21–52 (2018)

[18] Hong, J., Huang, C., Kamrani, M., Wang, X.: Optimal strong convergence rate of a backward Euler type scheme for the Cox–Ingersoll–Ross model driven by fractional Brownian motion. Stoch. Process. Appl. 130(5), 2675–2692 (2020)

[19] Hu, Y., Nualart, D., Song, X.: A singular stochastic differential equation driven by fractional Brownian motion. Statist. Probab. Lett. 78(14), 2075–2085 (2008)

[20] Kruse, R.: Strong and weak approximation of semilinear stochastic evolution equations. Springer International Publishing (2014)

[21] Kubilius, K., Medžiūnas, A.: Positive solutions of the fractional SDEs with non-Lipschitz diffusion coefficient. Mathematics 9, 1 (2020)

[22] Zhang, S.-Q., Yuan, C.: Stochastic differential equations driven by fractional Brownian motion with locally Lipschitz drift and their implicit Euler approximation. Proc. R. Soc. Edinb. Sect. A Math., 1–27 (2020)

Acknowledgements

The present research is carried out within the frame and support of the ToppForsk project nr. 274410 of the Research Council of Norway with title STORM: Stochastics for Time-Space Risk Models. The second author was supported by Japan Science and Technology Agency CREST JPMJCR14D7, CREST Project reference number JPMJCR2115.

Funding

Open access funding provided by University of Oslo (incl Oslo University Hospital)


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Data availability

The R code used to generate the sample paths from Section 5 is available from the corresponding author on reasonable request.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di Nunno, G., Mishura, Y. & Yurchenko-Tytarenko, A. Drift-implicit Euler scheme for sandwiched processes driven by Hölder noises. Numer Algor 93, 459–491 (2023). https://doi.org/10.1007/s11075-022-01424-6
