Abstract

In this paper, we study the nonuniform fast Fourier transform with nonequispaced spatial and frequency data (NNFFT) and the fast sinc transform as its application. The computation of NNFFT is mainly based on the nonuniform fast Fourier transform with nonequispaced spatial nodes and equispaced frequencies (NFFT). The NNFFT employs two compactly supported, continuous window functions. For fixed nonharmonic bandwidth, we show that the error of the NNFFT with two \(\sinh\)-type window functions has an exponential decay with respect to the truncation parameters of the used window functions. As an important application of the NNFFT, we present the fast sinc transform. The error of the fast sinc transform is estimated as well.


1 Introduction

The discrete Fourier transform (DFT) can easily be generalized to arbitrary nodes in the space domain as well as in the frequency domain (see [4, 6], [13, pp. 394–397]). Let \(N \in \mathbb N\) with N ≫ 1 and \(M_{1}, M_{2} \in 2 \mathbb N\) be given. By \(\mathcal {I}_{M_{1}}\) we denote the index set \(\{-\frac {M_{1}}{2}, 1-\frac {M_{1}}{2}, \ldots , \frac {M_{1}}{2}-1\}\). We consider an exponential sum \(f: \left[-\frac {1}{2}, \frac {1}{2}\right]\to \mathbb C\) of the form

$$f(x) := \sum_{k \in \mathcal {I}_{M_{1}}} f_{k} \,{\mathrm e}^{- 2 \pi {\mathrm i} N v_{k} x}, \quad x \in \left[-\tfrac {1}{2}, \tfrac {1}{2}\right], \tag{1.1}$$

where \(f_{k} \in \mathbb C\) are given coefficients and \(v_{k} \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), \(k\in \mathcal {I}_{M_{1}}\), are arbitrary nodes in the frequency domain. The parameter \(N \in \mathbb N\) is called the nonharmonic bandwidth of the exponential sum (1.1).

We assume that a linear combination (1.1) of exponentials with bounded frequencies is given. For arbitrary nodes \(x_{j} \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), \(j \in \mathcal {I}_{M_{2}}\), in the space domain, we are interested in a fast evaluation of the M2 values

$$f(x_{j}) = \sum_{k \in \mathcal {I}_{M_{1}}} f_{k} \,{\mathrm e}^{- 2 \pi {\mathrm i} N v_{k} x_{j}}, \quad j \in \mathcal {I}_{M_{2}}. \tag{1.2}$$

A fast algorithm for the computation of the M2 values (1.2) is called a nonuniform fast Fourier transform with nonequispaced spatial and frequency data (NNFFT) which was introduced by B. Elbel and G. Steidl in [6]. In this approach, the rapid evaluation of NNFFT is mainly based on the use of two compactly supported, continuous window functions. As in [10] this approach is also referred to as NFFT of type 3.
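
For orientation, the values (1.2) can always be obtained by direct summation at a cost of \({\mathcal O}(M_{1} M_{2})\) operations, which is the baseline that the NNFFT is meant to beat. The following minimal sketch assumes that the exponential sum has the form \(f(x) = \sum_{k} f_{k} \,{\mathrm e}^{-2\pi {\mathrm i} N v_{k} x}\); the sign convention in the exponent and the function name `direct_exponential_sum` are assumptions made for illustration.

```python
import cmath


def direct_exponential_sum(coeffs, freqs, nodes, bandwidth):
    """Evaluate f(x_j) = sum_k f_k * exp(-2*pi*i*N*v_k*x_j) by direct summation.

    coeffs    -- complex coefficients f_k
    freqs     -- frequency nodes v_k in [-1/2, 1/2]
    nodes     -- spatial nodes x_j in [-1/2, 1/2]
    bandwidth -- nonharmonic bandwidth N
    The minus sign in the exponent is an assumed convention.
    """
    return [
        sum(fk * cmath.exp(-2j * cmath.pi * bandwidth * vk * xj)
            for fk, vk in zip(coeffs, freqs))
        for xj in nodes
    ]
```

Such a routine is mainly useful as a reference when measuring the error of an approximate fast algorithm on small problems.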

In this paper we present new error estimates for the NNFFT. Since these estimates depend exclusively on the so-called window parameters of the NNFFT, this gives rise to an appropriate parameter choice. The outline of this paper is as follows. In Section 2, we introduce the special set Ω of continuous, even functions \(\omega : \mathbb R \to [0, 1]\) with the support [− 1,1]. Choosing ω1, ω2 ∈Ω, we consider two window functions

$$\varphi_{1}(t) := \omega_{1}\Big(\frac {N_{1} t}{m_{1}}\Big), \quad \varphi_{2}(t) := \omega_{2}\Big(\frac {N_{2} t}{m_{2}}\Big), \quad t \in \mathbb R,$$

where \(N_{1} := \sigma _{1} N \in 2 \mathbb N\) with some oversampling factor σ1 > 1 and where \(m_{1} \in {\mathbb N}\setminus \{1\}\) is a truncation parameter with 2m1 ≪ N1. Analogously, \(N_{2} := \sigma _{2} (N_{1} + 2m_{1}) \in 2 \mathbb N\) is given with some oversampling factor σ2 > 1 and \(m_{2} \in {\mathbb N}\setminus \{1\}\) is another truncation parameter with \(2 m_{2} \ll \left(1 - \frac {1}{\sigma _{1}} \right) N_{2}\). For the fast, approximate computation of the values (1.2), we formulate the NNFFT in Algorithm 1. In Section 3, we derive new explicit error estimates of the NNFFT with two general window functions φ1 and φ2. In Section 4, we specify the result when using two \(\sinh\)-type window functions. Namely, we show that for fixed nonharmonic bandwidth N of (1.1), the error of the related NNFFT has an exponential decay with respect to the truncation parameters m1 and m2. Numerical experiments illustrate the performance of our error estimates.

In Section 5, we study the approximation of the function sinc(Nπx), x ∈ [− 1, 1], by an exponential sum. For a given target accuracy ε > 0 and n ≥ 4N, there exist coefficients wj > 0 and frequencies vj ∈ (− 1, 1), j = 1,…,n, such that for all x ∈ [− 1, 1],

In practice, we simplify the approximation procedure. Since for fixed \(N \in \mathbb N\), it holds

$$\mathrm{sinc}(N \pi x) = \frac {1}{2} {\int}_{-1}^{1} {\mathrm e}^{- \pi {\mathrm i} N t x} \;{\mathrm d}t, \quad x \in \mathbb R,$$

we apply the Clenshaw–Curtis quadrature with Chebyshev points \(z_{k} = \cos \frac {k \pi }{n}\in [-1, 1]\), k = 0,…, n, where \(n\in \mathbb N\) fulfills n ≥ 4N. Then the function sinc(Nπx), x ∈ [− 1, 1], can be approximated by the exponential sum

with explicitly known coefficients wk > 0 which satisfy the condition \({\sum }_{k=0}^{n} w_{k} = 1\).

An interesting signal processing application of the NNFFT is presented in Section 6. If a signal \(h: \left[- \frac {1}{2}, \frac {1}{2} \right] \to \mathbb C\) is to be reconstructed from its nonuniform samples at \(a_{k}\in \left[- \frac {1}{2}, \frac {1}{2} \right]\), then h is often modeled as a linear combination of shifted sinc functions

with complex coefficients ck. Hence, we present a fast, approximate computation of the discrete sinc transform (see [7, 11])

where \(b_{\ell } \in \left[- \frac {1}{2}, \frac {1}{2} \right]\) can be nonequispaced. The discrete sinc transform is motivated by numerous applications in signal processing. However, since the sinc function decays slowly, it is often avoided in favor of some more local approximation. Here we prefer the approximation of the sinc function by an exponential sum (1.3). Then we obtain the fast sinc transform in Algorithm 3, which is an approximate algorithm for the fast computation of the values (6.2) and applies the NNFFT twice. Besides, the error of the fast sinc transform is estimated and numerical examples are presented as well.
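
The reason why two NNFFTs suffice can be sketched in one line. Assume that the discrete sinc transform has the form \(h(b_{\ell }) = \sum_{k} c_{k}\, \mathrm{sinc}\big(N\pi (b_{\ell } - a_{k})\big)\) and that the sinc function is approximated on [− 1, 1] by an exponential sum with weights wj and nodes zj of the form \(\sum_{j=0}^{n} w_{j} \,{\mathrm e}^{-\pi {\mathrm i} N z_{j} x}\). Both forms, the sign convention, and the index ranges are assumptions made for illustration. Since \(b_{\ell } - a_{k} \in [-1, 1]\), inserting the approximation and interchanging the sums gives

$$\sum_{k} c_{k}\, \mathrm{sinc}\big(N\pi (b_{\ell } - a_{k})\big) \approx \sum_{j=0}^{n} w_{j}\, {\mathrm e}^{-\pi {\mathrm i} N z_{j} b_{\ell }} \Big(\sum_{k} c_{k}\, {\mathrm e}^{\pi {\mathrm i} N z_{j} a_{k}}\Big).$$

The inner sums over k form an exponential sum with nonequispaced nodes ak that is evaluated at the nonequispaced points zj, and the outer sum over j is a second exponential sum evaluated at the nodes bℓ. Each of the two is therefore of the type handled by the NNFFT.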

2 NNFFT

We now explain the main algorithm, the NNFFT. To this end, we first introduce the special set Ω, which is needed to define the required window functions φj, j = 1,2. Since the NNFFT is mainly based on the well-known NFFT, we proceed with a short description of the NFFT and move on to the NNFFT afterwards. This procedure is summarized in Algorithm 1. Note that here a parameter a > 1 is necessary in order to prevent aliasing artifacts, since we approximate a non-periodic function on the interval \(\left[-\frac {1}{2}, \frac {1}{2} \right]\) by means of a-periodic functions.

Let Ω be the set of all functions \(\omega : \mathbb R \to [0, 1]\) with the following properties:

- Each function ω is even, has the support [− 1, 1], and is continuous on \(\mathbb R\).
- Each restricted function ω|[0,1] is decreasing with ω(0) = 1.
- For each function ω, its Fourier transform

$${\hat \omega}(v) := {\int}_{\mathbb R} \omega(x) {\mathrm e}^{- 2 \pi {\mathrm i} v x} \;{\mathrm d}x = 2 {{\int}_{0}^{1}} \omega(x) \cos(2\pi v x) \;{\mathrm d}x$$

is positive and decreasing for all \(v\in [0, \frac {m_{1}}{2 \sigma _{1}} ]\), where it holds \(m_{1} \in \mathbb N \setminus \{1\}\) and \(\sigma _{1} \in [\frac {5}{4}, 2 ]\).

Obviously, each ω ∈Ω is of bounded variation over [− 1,1].

Example 2.1

By \(B_{2m_{1}}\), we denote the centered cardinal B-spline of even order 2m1 with \(m_{1} \in \mathbb N\). Thus, B2 is the centered hat function. We consider the spline

which has the support [− 1, 1]. Its Fourier transform reads as

Obviously, \({\hat \omega }_{\mathrm {B},1}(v)\) is positive and decreasing for v ∈ [0,m1). Hence, the function ωB,1 belongs to the set Ω.

For \(\sigma _{1} > \frac {\pi }{3}\) and β1 = 3m1 with \(m_{1} \in \mathbb N \setminus \{1\}\), we consider

By [12, p. 8], its Fourier transform reads as

where \(J_{\beta _{1}}\) denotes the Bessel function of order β1. By [1, p. 370], for v ≠ 0 we have the equality

where \(j_{\beta _{1},s}\) denotes the s-th positive zero of \(J_{\beta _{1}}\). For β1 = 3m1, it holds \(j_{\beta _{1},1} > 3 m_{1} + \pi - \frac {1}{2}\) (see [8]). Hence, by \(\sigma _{1} > \frac {\pi }{3}\) we get

Therefore, the Fourier transform \({\hat \omega }_{ {alg},1}(v)\) is positive and decreasing for \(v \in \big [0, \frac {m_{1}}{2 \sigma _{1}}\big ]\). Hence, ωalg,1 belongs to the set Ω.

Let \(\sigma _{1} \in \left[\frac {5}{4}, 2 \right]\) and \(m_{1} \in \mathbb N \setminus \{1\}\) be given. We consider the function

with the shape parameter

Then by [12, p. 38], its Fourier transform reads as

where I1 and J1 denote the modified Bessel function and the Bessel function of first order, respectively. Using the power series expansion of I1 (see [1, p. 375]), we obtain for \(|v| < m_{1} \left(1 - \frac {1}{2\sigma _{1}} \right)\) that

Therefore, the Fourier transform \({\hat \omega }_{\sinh ,1}(v)\) is positive and decreasing for \(v \in \left[0, \frac {m_{1}}{2 \sigma _{1}} \right]\), since for \(\sigma _{1} \ge \frac {5}{4}\) it holds

Hence, \(\omega _{\sinh ,1}\) belongs to the set Ω.
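
The membership of the \(\sinh\)-type function in Ω can also be checked numerically. The sketch below assumes the form \(\omega _{\sinh ,1}(x) = \sinh \big(\beta _{1} \sqrt {1 - x^{2}}\big) / \sinh \beta _{1}\) for |x| ≤ 1 with the shape parameter \(\beta _{1} = 2 \pi m_{1} \big(1 - \frac {1}{2 \sigma _{1}}\big)\); both formulas as well as all function names are assumptions made for illustration. The Fourier transform is approximated by a plain trapezoidal rule applied to the cosine integral from the definition of Ω.

```python
import math


def shape_parameter(m, sigma):
    """Assumed shape parameter beta = 2*pi*m*(1 - 1/(2*sigma))."""
    return 2.0 * math.pi * m * (1.0 - 1.0 / (2.0 * sigma))


def omega_sinh(x, beta):
    """Assumed sinh-type function: sinh(beta*sqrt(1 - x^2)) / sinh(beta) on [-1, 1]."""
    if abs(x) >= 1.0:
        return 0.0
    return math.sinh(beta * math.sqrt(1.0 - x * x)) / math.sinh(beta)


def omega_hat(v, beta, n=4000):
    """Approximate 2 * int_0^1 omega(x) cos(2*pi*v*x) dx by the trapezoidal rule."""
    h = 1.0 / n
    total = 0.5  # endpoint x = 0; the endpoint x = 1 contributes omega(1) = 0
    for i in range(1, n):
        x = i * h
        total += omega_sinh(x, beta) * math.cos(2.0 * math.pi * v * x)
    return 2.0 * h * total
```

For example, with m1 = 4 and σ1 = 2 one can sample `omega_hat` on a grid of \(\big [0, \frac {m_{1}}{2 \sigma _{1}}\big ] = [0, 1]\) and confirm that the sampled values are positive and decreasing.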

As known (see [6, 14]), the NNFFT can mainly be computed by means of an NFFT. This is why this algorithm is briefly explained below. For fixed \(N, { M_{2}} \in 2 \mathbb N\) and N1 := σ1N with σ1 > 1, the NFFT (see [4, 5, 17] or [13, pp. 377–381]) is a fast algorithm that approximately computes the values p(xj), \(j\in \mathcal {I}_{{M_{2}}}\), of any 1-periodic trigonometric polynomial

at nonequispaced nodes \(x_{j} \in \left[- \frac {1}{2}, \frac {1}{2} \right]\), \(j \in \mathcal {I}_{{ M_{2}}}\), where \(c_{k} \in \mathbb C\), \(k\in \mathcal {I}_{N}\), are given complex coefficients. In other words, for the NFFT it holds \(N=M_{1}\in 2\mathbb N\) in (1.2).

For ω1 ∈Ω we introduce the window function

$$\varphi_{1}(t) := \omega_{1}\Big(\frac {N_{1} t}{m_{1}}\Big), \quad t \in \mathbb R. \tag{2.3}$$

By construction, the window function (2.3) is even, has the support \(\left[- \frac {m_{1}}{N_{1}}, \frac {m_{1}}{N_{1}} \right]\), and is continuous on \(\mathbb R\). Further, the restricted window function \(\varphi _{1} |_{[0, m_{1}/N_{1}]}\) is decreasing with φ1(0) = 1. Its Fourier transform

is positive and decreasing for \(v \in \Big[0, N_{1} - \frac {N}{2} \Big)\). Thus, φ1 is of bounded variation over \(\left[-\frac {1}{2}, \frac {1}{2} \right]\).

In the following, we denote the torus \(\mathbb R/\mathbb Z\) by \(\mathbb T\) and the Banach space of continuous, 1-periodic functions by \(C(\mathbb T)\). For the window function (2.3), we denote its 1-periodization by

Using a linear combination of shifted versions of the 1-periodized window function \({\tilde \varphi }_{1}^{(1)}\), we construct a 1-periodic continuous function \(s \in C(\mathbb T)\) which approximates (2.2) well. Then the computation of the values s(xj), \(j \in \mathcal {I}_{{ M_{2}}}\), is very easy, since φ1 has the small support \(\left[-\frac {m_{1}}{N_{1}}, \frac {m_{1}}{N_{1}} \right]\). The computational cost of NFFT is \({\mathcal O} (N \log N + { M_{2}} )\) flops, see [4, 5, 17] or [13, pp. 377–381]. The error of the NFFT (see [15]) can be estimated by

where \(e_{\sigma _{1}}(\varphi _{1})\) denotes the \(C(\mathbb T)\)-error constant defined as

with

Note that the constants \(e_{\sigma _{1},N}(\varphi _{1})\) are bounded with respect to N (see [15, Theorem 5.1]).

Now we proceed with the NNFFT. For better readability, we describe the procedure only briefly. For more detailed explanations we refer to [6]. For chosen functions ω1,ω2 ∈ Ω, we form the window functions

$$\varphi_{1}(t) := \omega_{1}\Big(\frac {N_{1} t}{m_{1}}\Big), \quad \varphi_{2}(t) := \omega_{2}\Big(\frac {N_{2} t}{m_{2}}\Big), \quad t \in \mathbb R, \tag{2.5}$$

where again \(N_{1} := \sigma _{1} N \in 2 \mathbb N\) with some oversampling factor σ1 > 1 and \(m_{1} \in \mathbb N \setminus \{1\}\) with 2m1 ≪ N1 and where \(N_{2}:= \sigma _{2} (N_{1} + 2 m_{1}) \in 2 \mathbb N\) with an oversampling factor σ2 > 1 and \(m_{2} \in \mathbb N \setminus \{1\}\) with \(2 m_{2} \le \left(1 - \frac {1}{\sigma _{1}} \right) N_{2}\). The second window function φ2 has the support \(\left[-\frac {m_{2}}{N_{2}}, \frac {m_{2}}{N_{2}} \right]\). Additionally, in order to prevent aliasing, we use a-periodic functions, where we introduce the constant

$$a := 1 + \frac {2 m_{1}}{N_{1}} > 1 \tag{2.6}$$

such that aN1 = N1 + 2m1 and N2 = σ2σ1aN. Without loss of generality, we can assume that

$$v_{k} \in \left[- \frac {1}{2a}, \frac {1}{2a} \right], \quad k \in \mathcal {I}_{M_{1}}. \tag{2.7}$$

If \(v_{k} \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), then we replace the nonharmonic bandwidth N by \(N^{\ast } := N + \lceil \frac {2m_{1}}{\sigma _{1}}\rceil\) and set \(v_{k}^{\ast } := \frac {N}{N^{\ast }} v_{k} \in \big [- \frac {1}{2a}, \frac {1}{2a}\big ]\) such that \(N v_{k} = N^{\ast } v_{k}^{\ast }\).
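
The rescaling of the frequencies can be checked with a few lines of code. The sketch below assumes \(a = 1 + \frac {2m_{1}}{N_{1}}\) with N1 = σ1N, which is consistent with the relation aN1 = N1 + 2m1; the helper names are hypothetical.

```python
import math


def aliasing_constant(N, sigma1, m1):
    """Constant a = 1 + 2*m1/N1 with N1 = sigma1*N (assumed definition)."""
    return 1.0 + 2.0 * m1 / (sigma1 * N)


def rescale_frequencies(N, sigma1, m1, freqs):
    """Return the enlarged bandwidth N* and the rescaled frequencies v* = N*v/N*.

    The products N*v and N* * v* agree, so the exponential sum is unchanged,
    while the rescaled frequencies are contracted towards the origin.
    """
    n_star = N + math.ceil(2.0 * m1 / sigma1)
    return n_star, [N * v / n_star for v in freqs]
```

With N = 32, σ1 = 1.25 and m1 = 4, for instance, one obtains N∗ = 39, and frequencies taken from \(\left[-\frac {1}{2}, \frac {1}{2} \right]\) are mapped into \(\big [- \frac {1}{2a}, \frac {1}{2a}\big ]\) with a = 1.2.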

For arbitrarily given \(f_{k} \in \mathbb C\) and \(v_{k} \in \left[- \frac {1}{2a}, \frac {1}{2a} \right]\), \(k\in \mathcal {I}_{M_{1}}\), we introduce the compactly supported, continuous auxiliary function

$$h(t) := \sum_{k \in \mathcal {I}_{M_{1}}} f_{k} \,\varphi_{1}(t - v_{k}), \quad t \in \mathbb R,$$

which has the Fourier transform

Hence, for arbitrary nodes \(x_{j} \in \left[- \frac {1}{2}, \frac {1}{2} \right]\), \(j\in \mathcal {I}_{M_{2}}\), we have

Therefore, it remains to compute the values \({\hat h}(Nx_{j})\), \(j\in \mathcal {I}_{M_{2}}\), because we can precompute the values \({\hat \varphi }_{1}(Nx_{j})\), \(j \in \mathcal {I}_{M_{2}}\). In some cases (see Section 4), these values \({\hat \varphi }_{1}(Nx_{j})\), \(j\in \mathcal {I}_{M_{2}}\), are explicitly known.

For arbitrary \(v_{k} \in \left[- \frac {1}{2a}, \frac {1}{2a} \right]\), \(k \in \mathcal {I}_{M_{1}}\), we have φ1(t − vk) = 0 for all \(t < -\frac {1}{2a} - \frac {m_{1}}{N_{1}} = -\frac {a}{2} + \left(\frac {1}{2}- \frac {1}{2a} \right)\) and for all \(t > \frac {1}{2a} + \frac {m_{1}}{N_{1}} = \frac {a}{2} - \left(\frac {1}{2}- \frac {1}{2a} \right)\), since \(\operatorname{supp} \varphi _{1} = \left[- \frac {m_{1}}{N_{1}}, \frac {m_{1}}{N_{1}} \right]\) and \(\frac {1}{2}- \frac {1}{2a}> 0\). Thus, by (2.8) and

we obtain

Then the rectangular quadrature rule leads to

which approximates \({\hat h}(N x)\). Note that \(\frac {\ell }{N_{1}} \in \left[- \frac {a}{2}, \frac {a}{2} \right]\) for each \(\ell \in \mathcal {I}_{N_{1} + 2 m_{1}}\) by N1 + 2m1 = aN1. Changing the order of summations in (2.10), it follows that

After computation of the inner sums

we arrive at the following NFFT

If we denote the result of this NFFT (with the 1-periodization \({\tilde \varphi }_{2}^{(1)}\) of the second window function φ2 and N2 := σ2(N1 + 2m1)) by s1(Nxj), then \(s_{1}(Nx_{j})/{\hat \varphi }_{1}(Nx_{j})\) is an approximate value of f(xj), \(j \in \mathcal {I}_{M_{2}}\). Thus, the algorithm can be summarized as follows.
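
The first stage of this construction can be tried out on a small example. The sketch below samples the auxiliary function on the grid \(\frac {\ell }{N_{1}}\), \(\ell \in \mathcal {I}_{N_{1}+2m_{1}}\), applies the rectangular rule, and divides by \({\hat \varphi }_{1}(Nx_{j})\). It is not Algorithm 1: the remaining sum is evaluated directly instead of by an NFFT with the second window, so only the error caused by φ1 is visible. The auxiliary function \(h(t) = \sum_{k} f_{k} \varphi _{1}(t - v_{k})\), the sign convention of (1.1), the \(\sinh\)-type window with \(\beta _{1} = 2 \pi m_{1} \big(1 - \frac {1}{2 \sigma _{1}}\big)\), and all function names are assumptions made for illustration.

```python
import cmath
import math


def omega_sinh(x, beta):
    """Assumed sinh-type function on [-1, 1], zero outside."""
    if abs(x) >= 1.0:
        return 0.0
    return math.sinh(beta * math.sqrt(1.0 - x * x)) / math.sinh(beta)


def phi1_hat(u, N1, m1, beta, n=4000):
    """Fourier transform of phi1(t) = omega(N1*t/m1) at u.

    Uses the scaling property, phi1_hat(u) = (m1/N1) * omega_hat(m1*u/N1),
    and a trapezoidal rule for 2 * int_0^1 omega(x) cos(2*pi*v*x) dx.
    """
    v = m1 * u / N1
    h = 1.0 / n
    total = 0.5  # endpoint x = 0; the endpoint x = 1 contributes omega(1) = 0
    for i in range(1, n):
        x = i * h
        total += omega_sinh(x, beta) * math.cos(2.0 * math.pi * v * x)
    return (m1 / N1) * 2.0 * h * total


def first_stage(coeffs, freqs, nodes, N, sigma1=2.0, m1=4):
    """Approximate f(x_j) by the rectangular rule for h_hat(N*x_j) / phi1_hat(N*x_j)."""
    N1 = round(sigma1 * N)
    beta = 2.0 * math.pi * m1 * (1.0 - 1.0 / (2.0 * sigma1))
    half = N1 // 2 + m1  # index set I_{N1 + 2*m1} = {-half, ..., half - 1}
    grid = range(-half, half)
    # inner sums g_l = sum_k f_k * phi1(l/N1 - v_k) = sum_k f_k * omega((l - N1*v_k)/m1)
    g = [sum(fk * omega_sinh((l - N1 * vk) / m1, beta)
             for fk, vk in zip(coeffs, freqs))
         for l in grid]
    values = []
    for x in nodes:
        # rectangular rule with step 1/N1, evaluated directly (no NFFT here)
        s = sum(gl * cmath.exp(-2j * math.pi * N * x * l / N1)
                for gl, l in zip(g, grid)) / N1
        values.append(s / phi1_hat(N * x, N1, m1, beta))
    return values
```

Comparing the output with a direct evaluation of the exponential sum for frequencies in \(\left[- \frac {1}{2a}, \frac {1}{2a} \right]\) gives an impression of how small the contribution of the first window to the total error is for moderate m1.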

The computational cost of the NNFFT is equal to \({\mathcal O}\big (N \log N + M_{1} + M_{2}\big )\) flops.

In Step 4 of Algorithm 1 we use the assumption \(2m_{2} \le \left(1 - \frac {1}{\sigma _{1}} \right) N_{2}\) such that

Then for all \(j \in \mathcal {I}_{M_{2}}\) and \(s\in \mathcal {I}_{N_{2}}\), it holds

Since we approximate a non-periodic function f on the interval \(\left[-\frac {1}{2}, \frac {1}{2} \right]\) by means of a-periodic functions on the torus \(a\mathbb T\cong \Big[-\frac {a}{2}, \frac {a}{2} \Big)\), the parameter a has to fulfill the condition a > 1, in order to prevent aliasing artifacts.

3 Error estimates for NNFFT

Now we study the error of the NNFFT, which is measured in the form

where f is a given exponential sum (1.1) and \(x_{j} \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), \(j \in \mathcal {I}_{M_{2}}\), are arbitrary spatial nodes. At the beginning of this section we present some technical lemmas. The main result will be Theorem 3.5.

We introduce the a-periodization of the given window function (2.3) by

For each \(x\in \mathbb R\), the above series (3.1) has at most one nonzero term. This can be seen as follows: For arbitrary \(x \in \mathbb R\) there exists a unique \(\ell ^{*} \in \mathbb Z\) such that x = −aℓ∗ + r with a remainder \(r \in \big [-\frac {a}{2}, \frac {a}{2}\big )\). Then φ1(x + aℓ∗) = φ1(r) and hence φ1(r) > 0 for \(r \in \left(-\frac {m_{1}}{N_{1}}, \frac {m_{1}}{N_{1}} \right)\) and φ1(r) = 0 for \(r \in \big [-\frac {a}{2}, - \frac {m_{1}}{N_{1}}\big ] \cup \big [ \frac {m_{1}}{N_{1}}, \frac {a}{2}\big )\). For each \(\ell \in \mathbb Z \setminus \{\ell ^{*}\}\), we have

since \(|a (\ell - \ell ^{*}) + r | \ge \frac {a}{2} = \frac {1}{2} + \frac {m_{1}}{N_{1}} > \frac {m_{1}}{N_{1}}\). Further it holds

By the construction of φ1, the a-periodic window function (3.1) is continuous on \(\mathbb R\) and of bounded variation over \(\left[- \frac {a}{2}, \frac {a}{2} \right]\). Then the k-th Fourier coefficient of the a-periodic window function (3.1) reads as follows

By the convergence theorem of Dirichlet–Jordan (see [19, Vol. 1, pp. 57–58]), the a-periodic Fourier series of (3.1) converges uniformly on \(\mathbb R\) and it holds

Then we have the following technical lemma.

Lemma 3.1

Let the window function φ1 be given by (2.3). Then for any \(n \in \mathcal {I}_{N}\) with \(N \in 2 \mathbb N\), the series

is uniformly convergent on \(\mathbb R\) and has the sum

which coincides with the rectangular quadrature rule of the integral

Proof

Using the uniformly convergent Fourier series (3.3), we obtain for all \(n\in \mathcal {I}_{N}\) that

Replacing x by \(x + \frac {\ell }{N_{1}}\) with \(\ell \in \mathcal {I}_{N_{1}+ 2m_{1}}\), we see that by N1 + 2m1 = aN1,

Summing the above formulas for all \(\ell \in \mathcal {I}_{N_{1}+ 2m_{1}}\) and applying the known formula

we conclude that

Obviously,

is the rectangular quadrature formula of the integral

with respect to the uniform grid \(\Big\{\frac {\ell }{N_{1}} : \ell \in \mathcal {I}_{N_{1}+2m_{1}} \Big\}\) of the interval \(\left[-\frac {a}{2}, \frac {a}{2} \right]\). This completes the proof.
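
The "known formula" used in the proof is presumably the discrete orthogonality of the exponentials on the grid \(\mathcal {I}_{N_{1}+2m_{1}}\): the sum of \({\mathrm e}^{2\pi {\mathrm i} k \ell / (N_{1}+2m_{1})}\) over \(\ell \in \mathcal {I}_{N_{1}+2m_{1}}\) equals N1 + 2m1 if k is a multiple of N1 + 2m1 and vanishes otherwise. This reading is an assumption; the following small sketch, with a hypothetical function name, checks the relation numerically.

```python
import cmath


def grid_exponential_sum(k, L):
    """Sum of exp(2*pi*i*k*l/L) over l in I_L = {-L/2, ..., L/2 - 1} for even L."""
    half = L // 2
    return sum(cmath.exp(2j * cmath.pi * k * l / L) for l in range(-half, half))
```

For N1 = 64 and m1 = 4, that is L = 72, the sum is 72 for k ∈ {0, ±72, ±144, …} and zero up to rounding for all other integers k.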

By (3.2) we obtain that for \(n \in \mathcal {I}_{N}\),

Now we generalize the technical Lemma 3.1.

Lemma 3.2

For arbitrary fixed \(v \in \left[-\frac {N}{2}, \frac {N}{2} \right]\), \(N\in \mathbb N\), and given window function (2.3), the function

is \(\frac {1}{N_{1}}\)-periodic, continuous on \(\mathbb R\), and of bounded variation over \(\left[-\frac {1}{2}, \frac {1}{2} \right]\). For each \(x\in \mathbb R\), the corresponding \(\frac {1}{N_{1}}\)-periodic Fourier series converges uniformly to ψ1(x), i.e.,

Proof

The definition (3.4) of the function ψ1 is correct, since

with the finite index set \({\mathbb Z}_{m_{1},N_{1}}(x) = \{ \ell \in \mathbb Z: | N_{1} x + \ell | < m_{1} \}\). If \(x\in \left[-\frac {1}{2}, \frac {1}{2} \right]\), we observe that \({\mathbb Z}_{m_{1},N_{1}}(x) \subseteq \mathcal {I}_{N_{1} + 2m_{1}}\) and therefore

Simple calculation shows that for each \(x \in \mathbb R\),

By the construction of φ1, the \(\frac {1}{N_{1}}\)-periodic function ψ1 is continuous on \(\mathbb R\) and of bounded variation over \(\left[-\frac {1}{2}, \frac {1}{2} \right]\). Thus, by the convergence theorem of Dirichlet–Jordan, the Fourier series of ψ1 converges uniformly on \(\mathbb R\) to ψ1. The r-th Fourier coefficient of ψ1 reads as follows

This completes the proof.

Then Lemma 3.2 leads immediately to the following technical result.

Corollary 3.3

Let the window function φ1 be given by (2.3). For all \(x \in \left[-\frac {1}{2}, \frac {1}{2} \right]\) and \(w \in \left[-\frac {N}{2a}, \frac {N}{2a} \right]\) it holds then

Further, for all \(w \in \left[-\frac {N}{2a}, \frac {N}{2a} \right]\), it holds

Proof

As before, let \(v \in \Big[-\frac {N}{2}, \frac {N}{2} \Big]\) be given. Substituting \(w := \frac {v}{a}\in \left[-\frac {N}{2a}, \frac {N}{2a}\right]\) and observing N1 + 2m1 = aN1, we obtain by Lemma 3.2 that for all \(x \in \Big[-\frac {1}{2}, \frac {1}{2} \Big]\) it holds,

Since by assumption \({\hat \varphi }_{1}(w) > 0\) for all \(w \in \left[-\frac {N}{2a}, \frac {N}{2a} \right]\subset \left[-\frac {N}{2}, \frac {N}{2} \right]\), we have

Multiplying the above equality by the exponential e2πiwx, this results in (3.5) and (3.6).

We say that the window function φ1 of the form (2.3) is convenient for NNFFT, if the general \(C(\mathbb T)\)-error constant

with

fulfills the condition \(E_{\sigma _{1}}(\varphi _{1}) \ll 1\) for conveniently chosen truncation parameter m1 ≥ 2 and oversampling factor σ1 > 1. Obviously, the \(C(\mathbb T)\)-error constant (2.4) is a “discrete” version of the general \(C(\mathbb T)\)-error constant (3.7) with the property

Thus, Corollary 3.3 means that all complex exponentials e2πiwx with \(w\in \left[-\frac {N}{2a}, \frac {N}{2a} \right]\) and \(x\in \left[-\frac {1}{2}, \frac {1}{2} \right]\) can be uniformly approximated by short linear combinations of shifted window functions, cf. [4, Theorem 2.10], if φ1 is convenient for NNFFT.

Theorem 3.4

Let σ1 > 1, \(m_{1} \in {\mathbb N} \setminus \{1\}\), and \(N_{1} = \sigma _{1} N \in 2\mathbb N\) with 2m1 ≪ N1 be given. Let φ1 be the scaled version (2.3) of ω1 ∈Ω. Assume that the Fourier transform \({\hat \omega }_{1}\) fulfills the decay condition

with certain constants c1 > 0, c2 > 0, and μ > 1.

Then the general \(C(\mathbb T)\)-error constant \(E_{\sigma _{1}}(\varphi _{1})\) of the window function (2.3) has the upper bound

Proof

By the scaling property of the Fourier transform, we have

For all \(v \in \left[-\frac {N}{2}, \frac {N}{2} \right]\) and \(r\in {\mathbb Z}\setminus \{0, \pm 1\}\), we obtain

and hence

From [15, Lemma 3.1] it follows that for fixed \(u =\frac {v}{N_{1}}\in \left[- \frac {1}{2\sigma _{1}}, \frac {1}{2\sigma _{1}} \right]\),

For all \(v \in \left[- \frac {N}{2}, \frac {N}{2} \right]\), we obtain

since it holds

Thus, for each \(v \in \left[-\frac {N}{2}, \frac {N}{2} \right]\), we estimate the sum

such that

Now we determine the minimum of all positive values

Since \(\frac {m_{1} |v|}{N_{1}} \le \frac {m_{1}}{2 \sigma _{1}}\) for all \(v \in \left[ - \frac {N}{2}, \frac {N}{2} \right]\), we obtain

Thus, we see that the constant \(E_{\sigma _{1},N}(\varphi _{1})\) can be estimated by an upper bound which depends on m1 and σ1, but does not depend on N. We obtain

Consequently, the general \(C(\mathbb T)\)-error constant \(E_{\sigma _{1}}(\varphi _{1})\) has the upper bound (3.10). By (3.9), the expression (3.10) is also an upper bound of the \(C(\mathbb T)\)-error constant \(e_{\sigma _{1}}(\varphi _{1})\).

Thus, by means of these technical results we obtain the following error estimate for the NNFFT.

Theorem 3.5

Let the nonharmonic bandwidth \(N\in \mathbb N\) with N ≫ 1 be given. Assume that \(N_{1} = \sigma _{1} N \in 2 \mathbb N\) with σ1 > 1. For fixed \(m_{1} \in \mathbb N \setminus \{1\}\) with 2m1 ≪ N1, let N2 = σ2(N1 + 2m1) with σ2 > 1. For \(m_{2} \in \mathbb N \setminus \{1\}\) with \(2m_{2} \le (1 - \frac {1}{\sigma _{1}} ) N_{2}\), let φ1 and φ2 be the window functions of the form (2.5). Let \(x_{j} \in \left[ - \frac {1}{2}, \frac {1}{2} \right]\), \(j \in \mathcal {I}_{M_{2}}\), be arbitrary spatial nodes and let \(f_{k} \in \mathbb C\), \(k \in \mathcal {I}_{M_{1}}\), be arbitrary coefficients. Further, let a > 1 be the constant (2.6).

Then for a given exponential sum (1.1) with arbitrary frequencies \(v_{k}\in \left[- \frac {1}{2a}, \frac {1}{2a} \right]\), \(k \in \mathcal {I}_{M_{1}}\), the error of the NNFFT can be estimated by

where \(E_{\sigma _{j}}(\varphi _{j})\) for j = 1,2, are the general \(C(\mathbb T)\)-error constants of the form (3.7).

Proof

Now for arbitrary spatial nodes \(x_{j} \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), \(j\in \mathcal {I}_{M_{2}}\), we estimate the error of the NNFFT in the form

At first we consider

From (2.9) and (2.11) it follows that for all \(x \in \mathbb R\),

Thus, by (2.7), (3.6), and (3.8), we obtain the estimate

Now we show that for \(\varphi _{2}(t) := \omega _{2}\big (\frac {N_{2} t}{m_{2}}\big )\) and N2 = σ2(N1 + 2m1) it holds

By construction, the functions s and s1 can be represented in the form

where \({\tilde \varphi }_{2}^{(1)}\) denotes the 1-periodization of the second window function φ2 and

Substituting \(t = \frac {x}{\sigma _{1}}\), it follows that

are 1-periodic functions. By [15, Lemma 2.3], we conclude

where the general \(C(\mathbb T)\)-error constant \(E_{\sigma _{2}}(\varphi _{2})\), defined similarly to (3.7), has a property analogous to (3.9). Since x = σ1t, we obtain that

such that (3.13) is shown. Note that for \(x \in \left[- \frac {1}{2}, \frac {1}{2} \right]\) it holds

with the index set

Further, by (2.6) and (2.12) it holds

Combining this with (3.12) and (3.14) completes the proof.

Now it merely remains to estimate the general \(C(\mathbb T)\)-error constants \(E_{\sigma _{j}}(\varphi _{j})\) for j = 1,2, and \({\hat \varphi }_{1} \left(\frac {N}{2} \right)\) in (3.11) for specific window functions.

4 Error of NNFFT with \({\sinh }\)-type window functions

In this section we specify the result in Theorem 3.5 for the NNFFT with two \(\sinh\)-type window functions.

Let \(N\in \mathbb N\) with N ≫ 1 be the fixed nonharmonic bandwidth. Let \(\sigma _{1}, \sigma _{2} \in \left[\frac {5}{4}, 2 \right]\) be given oversampling factors. Further let \(N_{1} = \sigma _{1} N \in 2 \mathbb N\), \(m_{1} \in \mathbb N \setminus \{1\}\) with 2m1 ≪ N1, and \(N_{2} = \sigma _{2} (N_{1} + 2m_{1}) = \sigma _{1} \sigma _{2} a N \in 2 \mathbb N\) be given, where a > 1 denotes the constant (2.6). Let \(m_{2} \in \mathbb N \setminus \{1\}\) with \(2m_{2} \le \left(1 - \frac {1}{\sigma _{1}} \right) N_{2}\) be given as well.

For j = 1, 2, we consider the functions

with the shape parameter

As shown in Example 2.1, both functions belong to the set Ω. By scaling, for j = 1, 2, we introduce the \(\sinh\)-type window functions

Now we show that the error of the NNFFT with two \(\sinh\)-type window functions (4.1) has exponential decay with respect to the truncation parameters m1 and m2.

Theorem 4.1

Let the nonharmonic bandwidth \(N\in \mathbb N\) with N ≫ 1 be given. Further let \(N_{1} = \sigma _{1} N \in 2 \mathbb N\) with \(\sigma _{1} \in \left[\frac {5}{4}, 2 \right]\) be given. For fixed \(m_{1} \in \mathbb N \setminus \{1\}\) with 2m1 ≪ N1, let \(N_{2} = \sigma _{2} (N_{1} + 2m_{1})\in 2 \mathbb N\) with \(\sigma _{2} \in \left[\frac {5}{4}, 2 \right]\). For \(m_{2} \in \mathbb N \setminus \{1\}\) with \(2m_{2} \le \left(1 - \frac {1}{\sigma _{1}} \right) N_{2}\), let \(\varphi _{\sinh ,1}\) and \(\varphi _{\sinh ,2}\) be the \(\sinh\)-type window functions (4.1). Assume that m2 ≥ m1. Let \(x_{j} \in \left[ - \frac {1}{2}, \frac {1}{2} \right]\), \(j \in \mathcal {I}_{M_{2}}\), be arbitrary spatial nodes and let \(f_{k} \in \mathbb C\), \(k \in \mathcal {I}_{M_{1}}\), be arbitrary coefficients. Let a > 1 be the constant (2.6).

Then for the exponential sum (1.1) with arbitrary frequencies \(v_{k}\in \left[- \frac {1}{2a}, \frac {1}{2a} \right]\), \(k \in \mathcal {I}_{M_{1}}\), the error of the NNFFT with the \(\sinh\)-type window functions (4.1) can be estimated in the form

with the constant

Proof

By Theorem 3.5 we have to estimate the general \(C(\mathbb T)\)-error constants \(E_{\sigma _{j}}(\varphi _{j})\), j = 1, 2, and \({\hat \varphi }_{1}\big (\frac {N}{2}\big )\) in (3.11) for the \(\sinh\)-type window functions (4.1).

Applying Theorem 3.4, we obtain by the same technique as in [15, Theorem 5.6] that

Now we estimate \({\hat \varphi }_{\sinh ,1}\big (\frac {N}{2}\big )\). Using the scaling property of the Fourier transform, by (2.1) we obtain

where we have used the equality

From m1 ≥ 2 and \(\sigma _{1} \ge \frac {5}{4}\), it follows that

By the inequality for the modified Bessel function I1 (see [15, Lemma 3.3]) it holds

Thus, we obtain

By the simple inequality

we conclude that

and hence

Applying Theorem 3.5, we estimate the error of the NNFFT with two \(\sinh\)-type window functions (4.1). By (4.3) and (4.4) we obtain the inequality

since it holds

This completes the proof.

Example 4.2

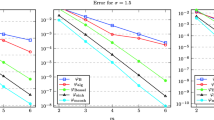

Now we visualize the result of Theorem 4.1. To this end, we fix N = 1200 and consider m1 ∈{2,…,8} and σ1 ∈{1.25,1.5,2}. In Fig. 1 the error bound (4.2) is depicted for several choices of m2 ≥ m1 and σ2 ≥ σ1. Clearly, the error bounds (4.2) decrease for increasing truncation parameters and oversampling factors, respectively. Moreover, we recognize that the results get better when choosing σ2 > σ1, cf. Fig. 1c, and are best for m2 > m1, cf. Fig. 1a. Besides, we remark that choices m2 < m1 or σ2 < σ1 produce the same results as in the equality setting such that we omitted these tests.

Therefore, we recommend the use of truncation parameters m2 > m1 and oversampling factors σ2 ≥ σ1. For the choice of m1 and σ1, we refer to previous works concerning the NFFT, e.g., [15, 16].

Additionally, we aim to compare these theoretical bounds with the errors obtained by the NNFFT. For this purpose, we introduce the relative error

since by Theorem 4.1 it holds

Thus, we choose random nodes \(x_{j} \in \left[-\frac 12, \frac 12 \right]\), \(j \in \mathcal {I}_{M_{2}}\), and \(v_{k} \in \left[- \frac {1}{2a}, \frac {1}{2a}\right]\), \(k \in \mathcal {I}_{M_{1}}\), with \(a = 1 + \frac {2m_{1}}{N_{1}}\), as well as random coefficients \(f_{k}\in \mathbb C\), \(k \in \mathcal {I}_{M_{1}}\), and compute the values (1.2) once directly and once rapidly using the NNFFT. Due to the randomness of the given data, this test is repeated one hundred times and afterwards the maximum error over all repetitions is computed. The errors (4.5) for the parameter choice M1 = 2400 and M2 = 1600 are displayed in Fig. 1b.

Unfortunately, the current release NFFT 3.5.3 of the software package [9] is not yet designed for the use of parameters m1 ≠ m2 and σ1 ≠ σ2. Therefore, we can only handle the setting m1 = m2 and σ1 = σ2 in Fig. 1b. Moreover, the \(\sinh\)-type window function is currently not implemented in the software package [9]. Thus, we use two standard window functions, namely the Kaiser–Bessel window functions, since it was shown in [15] that those are closely related. Since the results in Fig. 1 show great promise, these features might be part of future releases.

5 Approximation of sinc function by exponential sums

Since we aim to present an interesting signal processing application of the NNFFT in the last Section 6, we now study the approximation of the function sinc(Nπx), x ∈ [− 1,1], by an exponential sum (1.1).

In [3] the exponential sum (1.1) is used for a local approximation of a bandlimited function F of the form

where \(w: \left[-\frac {1}{2}, \frac {1}{2}\right]\to [0, \infty )\) is an integrable function with \(\int_{-1/2}^{1/2} w(t) \;{\mathrm d}t >0\). By the substitution

we recognize that the Fourier transform of (5.1) is supported on \(\left[-\frac {N}{2}, \frac {N}{2} \right]\), i.e., the function (5.1) is bandlimited with bandwidth N. For instance, for w(t) := 1, \(t \in \left[-\frac {1}{2}, \frac {1}{2} \right]\), we obtain the famous bandlimited sinc function

Now we show that the bandlimited sinc function (5.2) can be uniformly approximated on the interval [− 1,1] by an exponential sum (1.1). We start with the uniform approximation of the sinc function on the interval \(\left[-\frac {1}{2}, \frac {1}{2} \right]\).

Theorem 5.1

Let ε > 0 be a given target accuracy.

Then for sufficiently large \(n\in \mathbb N\) with n ≥ 2N, there exist constants wj > 0 and frequencies \(v_{j}\in \left(- \frac {1}{2}, \frac {1}{2} \right)\), j = 1,…,n, such that for all \(x\in \left[-\frac {1}{2}, \frac {1}{2} \right]\),

Proof

This result is a simple consequence of [3, Theorem 6.1]. Introducing \(\nu := \frac {N}{n} \le \frac {1}{2}\), we obtain by substitution \(\tau := - \frac {t}{2 \nu }\) that

Setting \(y:= n x \in \left[-\frac {n}{2}, \frac {n}{2} \right]\), we have

Then from [3, Theorem 6.1] (with \(d = \frac {1}{2}\)), we obtain the existence of wj > 0 and Θj ∈ (−ν, ν), j = 1,…, n, such that for all \(y \in \left[- \frac {n}{2}-1, \frac {n}{2} + 1 \right]\),

Hence, for all \(x = \frac {y}{n}\in \left[-\frac {1}{2}, \frac {1}{2} \right]\), we conclude that for \(v_{j} := - \frac {{\Theta }_{j}}{2 \nu } \in \left(- \frac {1}{2}, \frac {1}{2} \right)\), j = 1,…,n,

This completes the proof.

Substituting the variable \(x = \frac {t}{2}\), t ∈ [− 1, 1], the frequencies \(v_{j}=\frac {z_{j}}{2}\), zj ∈ (− 1, 1), and replacing the bandwidth N in (5.3) by 2N, we obtain the following uniform approximation of the sinc function (5.2) on the interval [− 1, 1] (after denoting t by x and zj by vj again):

Corollary 5.2

Let ε > 0 be a given target accuracy.

Then for sufficiently large \(n\in \mathbb N\) with n ≥ 4N, there exist constants wj > 0 and frequencies vj ∈ (− 1,1), j = 1,…, n, such that (5.3) holds for all x ∈ [− 1, 1], i.e.,

In practice, we simplify the approximation procedure of the function sinc(Nπx). Since for fixed \(N \in \mathbb N\), it holds

\[ \mathrm{sinc}(N \pi x) = \frac{1}{2} \int_{-1}^{1} {\mathrm e}^{- \pi {\mathrm i} N t x}\, {\mathrm d}t , \quad x \in \mathbb R , \]

the approximation on the interval [−1, 1] can efficiently be realized by means of the Clenshaw–Curtis quadrature (see [18, pp. 143–153] or [13, pp. 357–364]). Using this procedure for the integrand \(\frac {1}{2} {\mathrm e}^{- \pi {\mathrm i} N t x}\), t ∈ [−1, 1], with fixed parameter x ∈ [−1, 1], the Chebyshev points \(z_{k} = \cos \frac {k \pi }{n}\in [-1, 1]\), k = 0,…, n, and the positive coefficients

with \(\varepsilon _{n}(0) = \varepsilon _{n}(n) := \frac {\sqrt 2}{2}\) and εn(j) := 1, j = 1,…, n − 1 (see [13, p. 359]), we obtain

\[ \mathrm{sinc}(N \pi x) \approx \sum_{k=0}^{n} w_{k}\, {\mathrm e}^{- \pi {\mathrm i} N z_{k} x} , \quad x \in [-1, 1] . \]

Further the coefficients fulfill the condition (see [13, p. 359])

\[ \sum_{k=0}^{n} w_{k} = 1 . \tag{5.5} \]

Then we obtain the following error estimate.

Theorem 5.3

Let \(N\in \mathbb N\) and n = νN be given. Let \(z_{k} = \cos \frac {k \pi }{n}\in [-1, 1]\), k = 0,…, n, be the Chebyshev points, let wk, k = 0,…, n, denote the coefficients (5.4), and set \(C := \frac {\pi ({\mathrm e}^{2}-1)}{2 {\mathrm e}}\).

Then for all x ∈ [− 1, 1], the approximation error of sinc(Nπx) can be estimated in the form

In other words, the error bound is exponentially decaying if ν > C ≈ 3.69.

Proof

Since the imaginary part of the integrand \(\frac {1}{2} {\mathrm e}^{- \pi {\mathrm i} N t x}\), t ∈ [− 1, 1], is odd, it holds

\[ \mathrm{sinc}(N \pi x) = \frac{1}{2} \int_{-1}^{1} {\mathrm e}^{- \pi {\mathrm i} N t x}\, {\mathrm d}t = \frac{1}{2} \int_{-1}^{1} \cos (\pi N t x)\, {\mathrm d}t . \]

Therefore, we apply the Clenshaw–Curtis quadrature to the analytic function \(f(t, x) := \frac {1}{2} \cos (\pi N t x)\), t ∈ [−1, 1], with fixed parameter x ∈ [−1, 1]. Note that it holds

\[ \sum_{k=0}^{n} w_{k}\, {\mathrm e}^{- \pi {\mathrm i} N z_{k} x} = \sum_{k=0}^{n} w_{k} \cos (\pi N z_{k} x) \]

by the symmetry properties of the Chebyshev points zk and the coefficients wk, namely zk = −zn−k and wk = wn−k, k = 0,…, n (see [13, p. 359]).

By Eρ with some ρ > 1, we denote the Bernstein ellipse defined by

\[ E_{\rho } := \left\{ z \in \mathbb C :\ \mathrm{Re}\, z = \tfrac {1}{2} \big (\rho + \tfrac {1}{\rho }\big ) \cos t ,\ \mathrm{Im}\, z = \tfrac {1}{2} \big (\rho - \tfrac {1}{\rho }\big ) \sin t ,\ t \in [0, 2\pi ) \right\} . \]

Then Eρ has the foci − 1 and 1. For simplicity, we choose ρ = e.

For \(z \in \mathbb C\) and fixed x ∈ [− 1, 1], it holds

For \(z \in \mathbb C\) with Re z = 0 we have

Hence, in the interior of the Bernstein ellipse Ee, the integrand is bounded, since

Therefore, by [18, p. 146] we obtain the error estimate

By defining \(C := \frac {\pi ({\mathrm e}^{2}-1)}{2 {\mathrm e}}\), the term \(\mathrm e^{-n} \cosh (CN)\) in (5.8) can be rewritten as

\[ {\mathrm e}^{-n} \cosh (C N) = \frac {1}{2} \left( {\mathrm e}^{-N (\nu - C)} + {\mathrm e}^{-N (\nu + C)} \right) , \quad n = \nu N . \]

Thus, we end up with (5.6). This completes the proof.
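
The role of the constant C can be checked numerically. The following minimal sketch (standard-library Python; the helper name `decay_term` is hypothetical and not taken from the paper) evaluates \(C = \frac {\pi ({\mathrm e}^{2}-1)}{2 {\mathrm e}}\), confirms that it coincides with π sinh(1), where sinh(1) is the semi-minor axis of the Bernstein ellipse Ee, and compares the term e^{−n} cosh(CN) with its split form for n = νN. The multiplicative constant of the bound (5.6) is deliberately left out, since only the decay behaviour is illustrated here.

```python
import math

# C = pi (e^2 - 1) / (2 e); algebraically this equals pi * sinh(1).
C = math.pi * (math.e ** 2 - 1) / (2 * math.e)


def decay_term(N, nu):
    """Return e^{-n} cosh(C N) for n = nu * N, computed in two equivalent ways."""
    n = nu * N
    direct = math.exp(-n) * math.cosh(C * N)
    split = 0.5 * (math.exp(-N * (nu - C)) + math.exp(-N * (nu + C)))
    return direct, split


print("C =", C)  # approximately 3.69
for nu in (3, 4, 5):
    # For nu < C the term grows with N, for nu > C it decays exponentially.
    print(nu, [decay_term(N, nu)[1] for N in (8, 32, 128)])
```

For ν = 3 the printed values grow with N, whereas for ν = 4 and ν = 5 they decay, in line with the condition ν > C.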

In practice, the coefficients wk in (5.4) can be computed by a fast algorithm, the so-called discrete cosine transform of type I (DCT–I) of length n + 1 with \(n = 2^{t}\) (see [13, Algorithm 6.28 or Algorithm 6.35]). This DCT–I uses the orthogonal cosine matrix of type I

A similar approach can be found in [7], where a Gauss–Legendre quadrature was applied to obtain explicit coefficients wk for given Legendre points zk. However, the computation of the coefficients wk using Algorithm 2 is more effective for large n.
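
To make the connection between the coefficients wk and the DCT–I concrete, here is a small sketch in standard-library Python. It assumes the usual form of the orthogonal cosine matrix of type I, \(\sqrt {2/n}\, \big (\varepsilon _{n}(j)\, \varepsilon _{n}(k) \cos \frac {j k \pi }{n}\big )_{j,k=0}^{n}\), and the standard Clenshaw–Curtis construction with the factor ½ of the integrand absorbed into the weights. The function names are hypothetical, and the dense O(n²) matrix product only stands in for the fast DCT–I algorithms cited above.

```python
import math


def eps(n, j):
    """Scaling factors: eps_n(0) = eps_n(n) = sqrt(2)/2, eps_n(j) = 1 otherwise."""
    return math.sqrt(2) / 2 if j in (0, n) else 1.0


def cosine_matrix_I(n):
    """Dense orthogonal cosine matrix of type I of size (n+1) x (n+1)."""
    s = math.sqrt(2.0 / n)
    return [[s * eps(n, j) * eps(n, k) * math.cos(j * k * math.pi / n)
             for k in range(n + 1)] for j in range(n + 1)]


def cc_weights(n):
    """Clenshaw-Curtis weights for (1/2) * integral over [-1, 1], n even."""
    cmat = cosine_matrix_I(n)
    # Scaled moments: (1/2) * int_{-1}^{1} T_j(t) dt = 1 / (1 - j^2) for even j,
    # and 0 for odd j (the odd branch avoids the division by zero at j = 1).
    u = [eps(n, j) / (1 - j * j) if j % 2 == 0 else 0.0 for j in range(n + 1)]
    s = math.sqrt(2.0 / n)
    # One DCT-I (here a dense matrix-vector product) plus a diagonal scaling.
    return [s * eps(n, k) * sum(cmat[k][j] * u[j] for j in range(n + 1))
            for k in range(n + 1)]


print(cc_weights(2))  # half of the Simpson weights 1/3, 4/3, 1/3
```

For n = 2 the nodes are 1, 0, −1 and the sketch reproduces half of the Simpson weights, which is a quick sanity check of the scaling.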

Example 5.4

Now we visualize the result of Theorem 5.3. In Fig. 2a the error bound (5.6) is depicted as a function of N for several choices of \(\nu \in \{1,\dots ,5\}\), where n = νN. It clearly demonstrates that ν ≥ 4 is needed to obtain reasonable error bounds.

Additionally, we compare the error constant and the maximum approximation error, cf. (5.6). To measure the accuracy we consider a fine evaluation grid \(x_{r}=\frac {2r}{R}\), \(r\in \mathcal {I}_{R}\), with R ≫ N, where \(R = 3 \cdot 10^{5}\) is fixed. On this grid we calculate the discrete maximum error

for different bandwidths \(N = 2^{\ell }\), \(\ell =3,\dots ,7\). For the parameter n = νN we investigate several choices \(\nu \in \{1,\dots ,10\}\). We compute the coefficients wk using Algorithm 2. Subsequently, the approximation to the sinc function is computed by means of the NFFT, which is possible since the xr are equispaced. The results for both the error bound (5.6) and the maximum error (5.9) are displayed in Fig. 2b. It becomes apparent that for increasing oversampling factor ν, the maximum error (5.9) decreases to machine precision for all choices of N. Even for rather large choices of ν (up to 10) the error remains stable, so there is no worsening in terms of ν.
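
A scaled-down version of this experiment can be sketched in a few lines of standard-library Python. The sketch below is not the authors' code: it uses the explicit textbook formula for the Clenshaw–Curtis weights instead of Algorithm 2, evaluates the exponential sum directly instead of using the NFFT, and works with the small assumed values N = 8 and R = 200. The function names are hypothetical.

```python
import cmath
import math


def cc_weights(n):
    """Clenshaw-Curtis weights for (1/2) * integral over [-1, 1], n even."""
    w = []
    for k in range(n + 1):
        s = 1.0
        for j in range(1, n // 2 + 1):
            b = 1.0 if j == n // 2 else 2.0
            s -= b / (4 * j * j - 1) * math.cos(2 * j * k * math.pi / n)
        c = 1.0 if k in (0, n) else 2.0
        w.append(0.5 * c * s / n)
    return w


def sinc(u):
    return 1.0 if u == 0.0 else math.sin(u) / u


def max_error(N, nu, R=200):
    """Discrete maximum error of the exponential sum on the grid x_r = 2r/R."""
    n = nu * N
    z = [math.cos(k * math.pi / n) for k in range(n + 1)]
    w = cc_weights(n)
    err = 0.0
    for r in range(-R // 2, R // 2):
        x = 2.0 * r / R
        approx = sum(wk * cmath.exp(-1j * math.pi * N * zk * x)
                     for wk, zk in zip(w, z))
        err = max(err, abs(sinc(N * math.pi * x) - approx))
    return err


errors = {nu: max_error(8, nu) for nu in (1, 2, 4, 8)}
for nu, e in errors.items():
    print(f"nu = {nu}: max error = {e:.3e}")
```

Even at this small scale the qualitative behaviour is visible: the error is large for ν = 1 and drops to roughly machine precision for ν = 8.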

6 Discrete sinc transform

Finally, we present an interesting signal processing application of the NNFFT. If a signal \(h: \left[- \frac {1}{2}, \frac {1}{2} \right] \to \mathbb C\) is to be reconstructed from its equispaced/nonequispaced samples at \(a_{k}\in \left[- \frac {1}{2}, \frac {1}{2} \right]\), then h is often modeled as linear combination of shifted sinc functions

\[ h(x) := \sum_{k \in \mathcal {I}_{L_{1}}} c_{k}\, \mathrm{sinc}\big (N \pi (x - a_{k})\big ) , \quad x \in \left[- \tfrac {1}{2}, \tfrac {1}{2} \right] , \tag{6.1} \]

with complex coefficients ck. In the following, we propose a fast algorithm for the approximate computation of the discrete sinc transform (see [7, 11])

\[ h(b_{\ell }) = \sum_{k \in \mathcal {I}_{L_{1}}} c_{k}\, \mathrm{sinc}\big (N \pi (b_{\ell } - a_{k})\big ) , \quad \ell \in \mathcal {I}_{L_{2}} , \tag{6.2} \]

where \(b_{\ell } \in \left[- \frac {1}{2}, \frac {1}{2} \right]\) can be equispaced/nonequispaced points.

Such a function (6.1) occurs by the application of the famous sampling theorem of Shannon–Whittaker–Kotelnikov (see, e.g., [13, pp. 86–88]). Let \(f\in L_{1}(\mathbb R) \cap C(\mathbb R)\) be bandlimited on \(\left[-\frac {L_{2}}{2}, \frac {L_{2}}{2} \right]\) for some L2 > 0, i.e., the Fourier transform of f is supported on \(\left[-\frac {L_{2}}{2}, \frac {L_{2}}{2} \right]\). Then for \(N \in \mathbb N\) with N ≥ L2, the function f is completely determined by its values \(f \left(\frac {k}{N} \right)\), \(k \in \mathbb Z\), and further f can be represented in the form

\[ f(x) = \sum_{k \in \mathbb Z} f \left(\frac {k}{N} \right) \mathrm{sinc}\Big (N \pi \Big (x - \frac {k}{N} \Big )\Big ) , \quad x \in \mathbb R , \]

where the series converges absolutely and uniformly on \(\mathbb R\). By truncation of this series, we obtain the linear combination of shifted sinc functions

which has the same form as (6.1), when ak are equispaced.
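
The effect of truncating the sampling series can be illustrated with a simple bandlimited test function. In the sketch below (standard-library Python), the function sinc(πLx/2)², the bandwidth parameter `L`, and the truncation index `K` are assumptions chosen for illustration only. The Fourier transform of this test function is a triangle supported on [−L/2, L/2], so the sampling theorem applies for N ≥ L. The sample points k/N used here are not restricted to [−1/2, 1/2], since only the convergence of the truncated series is shown.

```python
import math


def sinc(u):
    return 1.0 if u == 0.0 else math.sin(u) / u


def f(x, L):
    """Test function whose Fourier transform is supported on [-L/2, L/2]."""
    return sinc(math.pi * L * x / 2) ** 2


def truncated_series(x, N, L, K):
    """Shannon sampling series with sampling rate N, truncated to |k| <= K."""
    return sum(f(k / N, L) * sinc(N * math.pi * (x - k / N))
               for k in range(-K, K + 1))


N, L, K = 8, 6, 400
for x in (0.013, 0.3, -0.47):
    print(x, abs(truncated_series(x, N, L, K) - f(x, L)))
```

Because the samples of this test function decay quadratically, a few hundred terms already give errors far below 1e-4; slowly decaying signals would need many more terms.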

Since the naive computation of (6.2) requires \(\mathcal O(L_{1}\cdot L_{2})\) arithmetic operations, the aim is to find a more efficient method for the evaluation of (6.2). Up to now, several approaches for a fast computation of the discrete sinc transform (6.2) are known. In [7], the discrete sinc transform (6.2) is realized by applying a Gauss–Legendre quadrature rule to the integral (5.7). The result can then be approximated by means of two NNFFTs with \(\mathcal O((L_{1}+L_{2})\log (L_{1}+L_{2}))\) arithmetic operations. A multilevel algorithm with \(\mathcal {O}(L_{2}\log (1/\delta ))\) arithmetic operations is presented in [11], which is most effective for equispaced points ak and bℓ and, as the authors themselves state, is only practical for rather large target evaluation accuracy δ > 0.
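
For reference, the naive evaluation can be written down directly. This is a minimal sketch in standard-library Python, assuming that (6.2) is the sum of ck sinc(Nπ(bℓ − ak)) over k. The function name is hypothetical. The two nested loops make the O(L1 · L2) cost explicit.

```python
import math
import random


def sinc(u):
    return 1.0 if u == 0.0 else math.sin(u) / u


def discrete_sinc_transform(a, b, c, N):
    """Direct evaluation of h_l = sum_k c_k sinc(N pi (b_l - a_k))."""
    return [sum(ck * sinc(N * math.pi * (bl - ak)) for ak, ck in zip(a, c))
            for bl in b]


random.seed(1)
N, L1, L2 = 16, 8, 12
a = [random.uniform(-0.5, 0.5) for _ in range(L1)]
b = [random.uniform(-0.5, 0.5) for _ in range(L2)]
c = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(L1)]
h = discrete_sinc_transform(a, b, c, N)
print(len(h), h[0])
```

Such a direct routine is mainly useful as a ground truth when testing a fast method on small problem sizes.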

In the following, we present a new approach for a fast sinc transform (6.2), where we approximate the function sinc(Nπx) by an exponential sum on the interval [−1, 1] by means of the Clenshaw–Curtis quadrature as described in Section 5. Let the Chebyshev points \(z_{j} = \cos \frac {j \pi }{n}\), j = 0,…, n, and the coefficients wj defined by (5.4) be given. Utilizing (5.8), for arbitrary ak, \(b_{\ell } \in \left[- \frac {1}{2}, \frac {1}{2} \right]\) we obtain the approximation

Inserting this approximation into (6.2) yields

If ε > 0 denotes a target accuracy, then we choose \(n = 2^{t}\), \(t \in \mathbb N \setminus \{1\}\), such that by Theorem 5.3 it holds

For example, in the case \(\varepsilon = 10^{-8}\) we obtain n ≥ 4N for N ≥ 54.

We recognize that the term inside the brackets of (6.3) is an exponential sum of the form (1.2), which can be computed by means of an NNFFT. Then the resulting outer sum is of the same form such that this can also be computed by means of an NNFFT. Thus, as in [7] we may compute the discrete sinc transform (6.2) by means of an NNFFT, a multiplication by the precomputed coefficients wj as well as another NNFFT afterwards. Hence, the fast sinc transform, which is an application of the NNFFT, can be summarized as follows.
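
The three steps can be sketched as follows in standard-library Python. This is an illustration of the structure only: both NNFFT steps are replaced by direct sums, so the sketch has no speed advantage, and the weights come from the explicit textbook formula rather than from Algorithm 2. The function names and the sign convention sinc(Nπ(ak − bℓ)) ≈ Σj wj e^{−πiN zj (ak − bℓ)} are assumptions. By the symmetry of the Chebyshev points and weights, the opposite sign gives the same values.

```python
import cmath
import math


def cc_weights(n):
    """Clenshaw-Curtis weights for (1/2) * integral over [-1, 1], n even."""
    w = []
    for k in range(n + 1):
        s = 1.0
        for j in range(1, n // 2 + 1):
            b = 1.0 if j == n // 2 else 2.0
            s -= b / (4 * j * j - 1) * math.cos(2 * j * k * math.pi / n)
        c = 1.0 if k in (0, n) else 2.0
        w.append(0.5 * c * s / n)
    return w


def fast_sinc_transform_sketch(a, b, c, N, n):
    """Three-step structure of the fast sinc transform with direct sums."""
    z = [math.cos(j * math.pi / n) for j in range(n + 1)]
    w = cc_weights(n)
    # Step 1 (an NNFFT in the paper): g_j = sum_k c_k exp(-pi i N z_j a_k).
    g = [sum(ck * cmath.exp(-1j * math.pi * N * zj * ak)
             for ak, ck in zip(a, c)) for zj in z]
    # Step 2: multiply by the precomputed quadrature weights w_j.
    g = [wj * gj for wj, gj in zip(w, g)]
    # Step 3 (a second NNFFT in the paper):
    # h_l = sum_j (w_j g_j) exp(+pi i N z_j b_l).
    return [sum(gj * cmath.exp(1j * math.pi * N * zj * bl)
                for zj, gj in zip(z, g)) for bl in b]


h = fast_sinc_transform_sketch([0.1, -0.3], [0.0, 0.25], [1.0, 2.0j], N=8, n=64)
print(h)
```

Replacing the two list comprehensions in steps 1 and 3 by calls to an NNFFT routine would give the fast algorithm; the middle step stays a pointwise multiplication.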

If we use the same NNFFT in both steps (with the window functions φj, truncation parameters mj, and oversampling factors σj for j = 1,2), Algorithm 3 requires all in all

arithmetic operations.

Considering the discrete sinc transform (6.2), we can deal with the special sums of the form

i.e., we are given equispaced points \(b_{\ell } = \frac {\ell }{N}\) with L2 = N. In this special case, we simply obtain an adjoint NFFT instead of the NNFFT in step 3 of Algorithm 3. Therefore, the computational cost of Algorithm 3 reduces to \(\mathcal O(N\log N + L_{1} + n)\). In the case where \(a_{k} = \frac {k}{L_{1}}\), \(k\in \mathcal {I}_{L_{1}}\), the NNFFT in step 1 of Algorithm 3 naturally turns into an NFFT. Clearly, in this case the same number of arithmetic operations is needed as in the first special case. If both sets of nodes ak and bℓ are equispaced, then the computational cost reduces even further to \(\mathcal O(N\log N + n)\). Hence, these modifications are automatically included in our fast sinc transform.

A quite similar approach was already developed in [2] for the computation of the Coulomb interaction between point masses, where the main idea is to use two different quadrature rules to approximate the given problem. The computation can then be done by means of NNFFTs, i.e., the authors of [2] obtain a 3-step method analogous to Algorithm 3.

Now we study the error of the fast sinc transform in Algorithm 3, which is measured in the form

Theorem 6.1

Let \(N\in \mathbb N\) with N ≫ 1 and L1, \(L_{2} \in 2 \mathbb N\) be given. Let \(N_{1} = \sigma _{1} N \in 2 \mathbb N\) with σ1 > 1. For fixed \(m_{1} \in \mathbb N \setminus \{1\}\) with 2m1 ≪ N1, let N2 = σ2(N1 + 2m1) with σ2 > 1. For \(m_{2} \in \mathbb N \setminus \{1\}\) with \(2m_{2} \le \big (1 - \frac {1}{\sigma _{1}}\big ) N_{2}\), let φ1 and φ2 be the window functions of the form (2.5). Let ak, \(b_{\ell } \in \left[ - \frac {1}{2}, \frac {1}{2} \right]\) with \(k \in \mathcal {I}_{L_{1}}\), \(\ell \in \mathcal {I}_{L_{2}}\) be arbitrary points and let \(c_{k} \in \mathbb C\), \(k \in \mathcal {I}_{L_{1}}\), be arbitrary coefficients. Let a > 1 be the constant (2.6). For a given target accuracy ε > 0, the number \(n = 2^{t}\), \(t \in \mathbb N \setminus \{1\}\), is chosen such that

Then the error of the fast sinc transform can be estimated by

where \(E_{\sigma _{j}}(\varphi _{j})\) for j = 1,2, are the general \(C(\mathbb T)\)-error constants of the form (3.7). If it holds

one can use the simplified estimate

Proof

By (6.3), the value hℓ is an approximation of h(bℓ). Since ak, \(b_{\ell }\in \left[- \frac {1}{2}, \frac {1}{2} \right]\), it holds by (5.8) and (6.6) that

Hence, we conclude that

After step 1 of Algorithm 3, the error of the NNFFT (with the window functions φ1 and φ2) can be estimated by Theorem 3.5 in the form

Using (5.5), step 2 of Algorithm 3 generates the error

After step 3 of Algorithm 3, the error of the NNFFT (with the same window functions φ1 and φ2) can be estimated by Theorem 3.5 in the form

Using the triangle inequality, we obtain

such that by (5.5)

Thus, the error of Algorithm 3 can be estimated by

From (6.10)–(6.12), the estimate (6.7) follows. If (6.8) holds, we have

and therefore the simplified estimate (6.9).

Thus, the error of Algorithm 3 for the fast sinc transform mostly depends on the target accuracy ε of the precomputation and on the general \(C(\mathbb T)\)-error constants \(E_{\sigma _{j}}(\varphi _{j})\), j = 1,2, of the window functions φj, j = 1,2, see Theorem 3.5.

Example 6.2

Next we verify the accuracy of our fast sinc transform in Algorithm 3. To this end, we choose random nodes \(a_{k}\in \left[-\frac 12,\frac 12 \right]\), equispaced points \(b_{\ell } = \frac {\ell }{N}\) with \(\ell \in \mathcal {I}_{N}\), as well as random coefficients \(c_{k}\in \mathbb C\), \(k\in \mathcal {I}_{L_{1}}\), and compute the discrete sinc transform (6.2) directly as well as its approximation (6.4) by means of the fast sinc transform. Subsequently, we compute the maximum error (6.5). Due to the randomness of the given values this test is repeated one hundred times and afterwards the maximum error over all repetitions is computed.

In this experiment we choose different bandwidths \(N = 2^{k}\), \(k=5,\dots ,13\), and without loss of generality we use \(L_{1}=\frac N2\). We apply Algorithm 3 using the weights wj computed by means of Algorithm 2 and the Chebyshev points \(z_{j} = \cos \frac {j \pi }{n}\), j = 0,…,n. Therefore, we only have to examine the parameter choice of n ≥ 4N. To this end, we compare the results for several choices, namely for n ∈ {4N, 6N, 8N}. The corresponding results can be found in Fig. 3. We see that for large N there is almost no difference between the different choices of n. However, we point out that a larger n considerably increases the computational cost of Algorithm 3. Therefore, it is recommended to use the smallest possible choice n = 4N. Compared to [7] the same approximation errors are obtained, but with a more efficient precomputation of weights.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

1. Abramowitz, M., Stegun, I.A. (eds.): Handbook of Mathematical Functions. National Bureau of Standards, Washington (1972)

2. Alouges, F., Aussal, M.: The sparse cardinal sine decomposition and its application for fast numerical convolution. Numer. Algor. 70(2), 427–448 (2015)

3. Beylkin, G., Monzón, L.: On generalized Gaussian quadratures for exponentials and their applications. Appl. Comput. Harmon. Anal. 12, 332–373 (2002)

4. Dutt, A., Rokhlin, V.: Fast Fourier transforms for nonequispaced data. SIAM J. Sci. Statist. Comput. 14, 1368–1393 (1993)

5. Dutt, A., Rokhlin, V.: Fast Fourier transforms for nonequispaced data II. Appl. Comput. Harmon. Anal. 2, 85–100 (1995)

6. Elbel, B., Steidl, G.: Fast Fourier transforms for nonequispaced grids. In: Chui, C.K., Schumaker, L.L. (eds.) Approximation Theory IX. Vanderbilt Univ. Press, Nashville (1998)

7. Greengard, L., Lee, J.-Y., Inati, S.: The fast sinc transform and image reconstruction from nonuniform samples in k-space. Commun. Appl. Math. Comput. Sci. 1, 121–131 (2006)

8. Ifantis, E.K., Siafarikas, P.D.: A differential equation for the zeros of Bessel functions. Appl. Anal. 20, 269–281 (1985)

9. Keiner, J., Kunis, S., Potts, D.: NFFT 3.5, C subroutine library. http://www.tu-chemnitz.de/potts/nfft. Contributors: F. Bartel, M. Fenn, T. Görner, M. Kircheis, T. Knopp, M. Quellmalz, M. Schmischke, T. Volkmer, A. Vollrath (2020)

10. Lee, J.-Y., Greengard, L.: The type 3 nonuniform FFT and its applications. J. Comput. Phys. 206, 1–5 (2005)

11. Livne, O., Brandt, A.: MuST: The multilevel sinc transform. SIAM J. Sci. Comput. 33(4), 1726–1738 (2011)

12. Oberhettinger, F.: Tables of Fourier Transforms and Fourier Transforms of Distributions. Springer, Berlin (1990)

13. Plonka, G., Potts, D., Steidl, G., Tasche, M.: Numerical Fourier Analysis. Birkhäuser/Springer, Cham (2018)

14. Potts, D., Steidl, G., Tasche, M.: Fast Fourier transforms for nonequispaced data: A tutorial. In: Benedetto, J.J., Ferreira, P.J.S.G. (eds.) Modern Sampling Theory: Mathematics and Applications, pp. 247–270. Birkhäuser, Boston (2001)

15. Potts, D., Tasche, M.: Uniform error estimates for nonequispaced fast Fourier transforms. Sampl. Theory Signal Process. Data Anal. 19(17), 1–42 (2021)

16. Potts, D., Tasche, M.: Continuous window functions for NFFT. Adv. Comput. Math. 47(53), 1–34 (2021)

17. Steidl, G.: A note on fast Fourier transforms for nonequispaced grids. Adv. Comput. Math. 9, 337–353 (1998)

18. Trefethen, L.N.: Approximation Theory and Approximation Practice. SIAM, Philadelphia (2013)

19. Zygmund, A.: Trigonometric Series, vol. I, II, 3rd edn. Cambridge Univ. Press, Cambridge (2002)

Acknowledgements

The authors thank the referees and the editor for their very helpful suggestions for improvements.

Funding

Open Access funding enabled and organized by Projekt DEAL. Melanie Kircheis received funding support from the European Union and the Free State of Saxony (ESF). Daniel Potts received funding from Deutsche Forschungsgemeinschaft (German Research Foundation) – Project–ID 416228727 – SFB 1410.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kircheis, M., Potts, D. & Tasche, M. Nonuniform fast Fourier transforms with nonequispaced spatial and frequency data and fast sinc transforms. Numer Algor 92, 2307–2339 (2023). https://doi.org/10.1007/s11075-022-01389-6

DOI: https://doi.org/10.1007/s11075-022-01389-6

Keywords

- Nonuniform fast Fourier transform

- NUFFT

- NNFFT

- Nonequispaced nodes in space and frequency domain

- Exponential sums

- Fast sinc transform

- Error estimates

- Sampling