Abstract

Recently, several types of neural operators have been developed, including deep operator networks, graph neural operators, and Multiwavelet-based operators. Compared with these models, the Fourier neural operator (FNO), a physics-inspired machine learning method, is computationally efficient and can learn nonlinear operators between function spaces independent of a certain finite basis. This study investigated the bounding of the Rademacher complexity of the FNO based on specific group norms. Using capacity based on these norms, we bound the generalization error of the model. In addition, we investigate the correlation between the empirical generalization error and the proposed capacity of FNO. We infer that the type of group norm determines the information about the weights and architecture of the FNO model stored in capacity. The experimental results offer insight into the impact of the number of modes used in the FNO model on the generalization error. The results confirm that our capacity is an effective index for estimating generalization errors.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Physics-inspired machine learning is an actively studied area with two approaches to learning. One approach focuses on determining solutions to partial differential equations (PDEs) for fixed PDE and boundary conditions and includes the deep Ritz method (Weinan & Yu, 2018), physics-informed neural networks (Raissi et al., 2019), and least-squares ReLU neural network (Cai et al., 2021). The other approach focuses on the operators between the function spaces and includes DeepONets (Lu et al., 2021), multiwavelet-based operator (Gupta et al., 2021), graph neural operator (Li et al., 2020) and Fourier neural operator (FNO) Li et al. (2021). This study focuses on FNO, which uses a Fourier transform to manage the convolution operator between two functions quickly and practically. One advantage of FNO is its computational efficiency; unlike DeepONet, its representation is not limited to a finite-dimensional space spanned by a few basis functions. Previous studies ( Li et al. (2021) and Pathak et al. (2022)) have confirmed that the FNO successfully approximates numerical solvers and real-world data, verifying its computational efficiency and potential applicability. Unlike real-world machine-learning problems, approximating the solver operator of a PDE is deterministic and concrete. The universal approximation property of the FNO and its approximation error for certain PDE problems (Kovachki et al., 2021) have been verified; however, there are no results estimating the generalization error of the FNO. Moreover, although approximating the solver operator of the PDE is a deterministic problem, we can only provide a finite number of samples to the FNO. Therefore, the accurate inference of hidden data is another problem to consider. Several approaches have been proposed regarding the bounding generalization error of deep neural networks, such as the group norm of weights (Neyshabur et al., 2015), spectral norm (Bartlett et al., 2021), path norm (Neyshabur et al., 2015), Fisher-Rao norm (Liang et al., 2019), and relative flatness (Petzka et al., 2021). In this study, we investigated the bounding of generalization errors within the probably approximately correct (PAC) learning theory framework. In particular, we bound the Rademacher complexity of the FNO.

1.1 Overview of FNOs

Figure 1 illustrates the FNO architecture. The network input is \(\mathbb {R}^{d_{a}}\)-valued function in the domain \(\tilde{D} \subset \mathbb {R}^{d}\). We denote the input function space of FNO by \(\mathcal {A}(\tilde{D};\mathbb {R}^{d_{a}})\). The vector value of the input function is lifted to a \(d_{v}\)-dimensional vector using a layer defined as \(\mathcal {N}_{P}\); while passing through the Fourier layers (denoted as \(\mathcal {A}_{i}\) in the diagram) iteratively, it is processed as a \(\mathbb {R}^{d_{v}}\)-valued function. Each Fourier layer comprises an activation function, which is the sum of a neural network with a convolution of the input function with a kernel parameterized by weight \(R_{i}\). After passing through the Fourier layers, the vector value of the \(\mathbb {R}^{d_{v}}\)-valued function \(v_{D}\) is projected onto the \(d_{u}\)-dimensional vector using \(\mathcal {N}_{Q}\). We denote the output function space of FNO by \(\mathcal {U}(\tilde{D};\mathbb {R}^{d_{u}})\). The neural network \(A_{i}\) in the Fourier layers can be chosen arbitrarily. In our results, we chose \(A_{i}\) as a fully connected network (FCN) or convolutional neural network (CNN). Because computational machines cannot handle infinite-dimensional data, we constructed an FNO model using finite parameters based on the above concept, considering real-world implementation.

1.2 Probably approximately correct learning

PAC learning is a framework of statistical learning theory proposed by Valiant (1984). One of the main concepts of PAC learning theory is the no-free-lunch (NFL) theorem, which states that it is not possible to achieve low approximation and estimation errors simultaneously. The trade-off between the two errors is closely related to the complexity of the hypothesis class. Various quantities related to the complexity of the hypothesis class, such as the VC dimension, Rademacher complexity, and Gaussian complexity, determine the learnability and decay of estimation errors. All the complexities are related; however, there are several differences. For example, the VC dimension is independent of the training set, whereas the others are not. Neural networks and deep learning can be applied to PAC learning theory as a subcategory of machine learning. Recently, various studies have investigated bounding the Rademacher complexity and the VC dimensions of the hypothesis class of neural networks. For instance, results regarding the bounding of Rademacher complexities for FCN (Neyshabur et al., 2015), RNN (Minshuo et al., 2020), and GCN (Lv, 2021), and analysis of the VC dimension of neural networks (Sontag, 1998) have been obtained. In addition, there is information about the bounding Rademacher complexity of DeepONet (Gopalani et al., 2022), a kind of neural operator. Reference (Weinan et al., 2020) estimated the generalization error of ResNet in prior and posterior estimates.

1.3 Our contributions

In this study, we defined the capacities of FNO models based on certain group norms. We bound the Rademacher complexity of the hypothesis class based on these capacities for two types of FNOs (Fourier layers with FCN and CNN) and induced the bounding of the posterior generalization error of the FNO models. In Sect. 4, we experiment with data generated from the Burgers equation problem and verify the correlation between our bounding process and empirical generalization errors. Through experiments, we gain insights into the model architecture and weights contained in various capacities. We also qualitatively confirmed that the empirical generalization errors depend on the number of modes used in the FNO model. Furthermore, we confirmed the strong correlation between our capacity factored by dataset size and empirical generalization error on experiments with varying dataset size. We replicate the experiments using other PDE problems. Finally, we compared our capacity with the Hessian trace, Fisher-Rao norm, and relative flatness, showing time, memory efficiency, and effectiveness of our capacity.

2 Preliminary

Notation Several indices are considered in the discussion. Therefore, to simplify the formulas, we denote \(x_{1}\dots x_{d}\) as \({\textbf {x}}\) and \(k_{1}\dots k_{d}\) as \({\textbf {k}}\). In addition, for the multi-index tensor in the norm, indices denoted by \(\cdot\) are used to calculate the norm; for example,

Discretization of data Because the function space is infinite-dimensional, to treat the data and operator numerically, we discretize the function domain and consider the function to be a finite-dimensional vector. Let \(\tilde{D}_{N}=\{x_{1},...,x_{N}\}\) be the discretization of the domain \(\tilde{D}\in \mathbb {R}^{d}\). Then, the \(\mathbb {R}^{m}\)-valued function f is discretized into \((f(x_{1}),...,f(x_{N})))\in \mathbb {R}^{N\times m}\): Subsequently, we discretize \(\mathcal {A}(\tilde{D};\mathbb {R}^{d_{a}})\) and \(\mathcal {U}(\tilde{D};\mathbb {R}^{d_{u}})\) as \(\mathbb {R}^{N\times d_{a}}\) and \(\mathbb {R}^{N\times d_{u}}\), respectively. Then, sample data are defined as follows: element \(((a_{jk}),(u_{jk}))\in \mathbb {R}^{N\times d_{a}}\times \mathbb {R}^{N\times d_{u}}\).

Fourier transform Based on the Fourier analysis, we know that the Fourier transform transforms the convolution operation to pointwise multiplication. For the function of domain \(\tilde{D} \subset \mathbb {R}^{d}\), let \(\mathcal {F}\) and \(\mathcal {F}^{-1}\) be the Fourier and inverse Fourier transforms over \(\tilde{D}\), respectively. Thus, we obtain the following relationship:

For our analysis, we select \(\tilde{D}\) as \([0,2\pi ]^{d}\). Because we treat functions as discretized vectors, we can treat the Fourier transform as a discrete Fourier transform. If the discretization of \(\tilde{D}\) is uniform, it can be replaced by a fast Fourier transform. Consider that \(\tilde{D}\) is discretized uniformly by resolution \(N_{1}\times \dots \times N_{d} = N\); then, for discretized function \(f \in \mathbb {R}^{N}\), its FFT \(\mathcal {F}(f)(k)\) and IFFT \(\mathcal {F}^{-1}(f)(k)\) are defined as follows:

In our analysis, we denote the components of FFT and IFFT tensors as follows: \(F_{{\textbf {k}}{} {\textbf {x}}}=\frac{1}{\sqrt{N_{1}\dots N_{d}}}e^{-2i\pi \sum _{j=1}^{d}{\frac{x_{j}k_{j}}{N_{j}}}}\), \(F^{\dag }_{{\textbf {x}}{} {\textbf {k}}}=\frac{1}{\sqrt{N_{1}\dots N_{d}}}e^{2i\pi \sum _{j=1}^{d}{\frac{x_{j}k_{j}}{N_{j}}}}\), respectively.

Definition 1

(General FNO) Let \(\tilde{D}_{N}\) be the discretized domain in \(\mathbb {R}^{d}\); then, \({\textbf {FNO}}: \mathbb {R}^{N\times d_{a}} \rightarrow \mathbb {R}^{N\times d_{u}}\) is defined as follows:

where \(\mathcal {N}_{P}\) and \(\mathcal {N}_{Q}\) denote the neural networks used for lifting and projection, respectively. Each \(\mathcal {A}_{i}\) is a Fourier layer. For simplicity, we assume that \(\mathcal {N}_{Q}\) and \(\mathcal {N}_{P}\) are linear maps. Each Fourier layer is a composition of the activation function with a sum of convolutions based on a parameterized function and linear map. Only partial frequencies were used in the Fourier layers, expressed as an index set \(K=\{(k_{1},...,k_{d})\in \mathbb {Z}^{d}: 0 \le k_{j} \le k_{max,j}, j=1,...,d\}\). The formula for the FNO is

CNN layer For each Fourier layer, a general linear map can be replaced by a CNN layer. A schematic of the convolution with 2D data and a kernel is shown in Fig. 2.

A certain kernel size swipes the input tensors so that each index of outputs has an inner product with the kernel and local components of the input tensor centering the index. For example, for a d-rank input tensor of size \(N_{1}\times \cdots \times N_{d}\), we consider a d-rank tensor kernel K of size \(c_{1}\times \cdots \times c_{d}\), with \(c_{i}\) less than \(N_{i}\). Let us denote this CNN layer by the kernel \(C(c_{1}\times \cdots \times c_{d})\); then, the tensor that passes through the CNN layer with K is defined as follows:

The CNN layers were restricted to kernels of odd sizes to maintain the positional dimension of the tensor. Padding was applied to the input tensor of the CNN layer to fit the dimensions. For example, for \(N_{1}\times \cdots \times N_{d}\)-dimensional tensor \(x_{x_{1}\cdots x_{d}}\) and CNN layer \(C(c_{1},\dots ,c_{d})\), we pad \(\frac{c_{i}-1}{2}\) zeros for each side of the input tensor. We denote this padded tensor by \(\tilde{x}\). Subsequently, \(C(c_{1},\dots ,c_{d})(\tilde{x}_{x_{1}\cdots x_{d}})\) have the same dimensions as the input tensor. As the number of channels in the Fourier layers is fixed, for a CNN layer with multiple channels, we use the same notation, that is, \(C(c_{1},\dots ,c_{d})\). The formula for multi-channel CNN layer is as follows:

Definition 2

(FNO with CNN layer) Consider the settings of the above FNO; the only difference is that the Fourier layer is the sum of the CNN layer and convolution with parameterized functions.

An ideal operator should infer a solution from all the functions in the input function space. However, for practical and implementation ease, finite training samples were selected from vector space distributions, which is a discretized function space. Suppose \(\mathcal {D}\) is a distribution on \(\mathbb {R}^{N\times d_{a}}\times \mathbb {R}^{N\times d_{u}}\). Then, we define the loss function as follows:

Definition 3

(Loss for FNO) Suppose that the training dataset is given by

where each sample is chosen independent of the distribution \(\mathcal {D}\). The training loss is defined as follows:

Let p be the probability distribution of \(\mathcal {D}\), defined as \(\mathbb {R}^{N\times d_{a}}\times \mathbb {R}^{N\times d_{u}}\). Then, the loss of the entire distribution \(\mathcal {D}\) is defined as follows:

3 Generalization bound for FNOs

In this section, we calculate the upper bound of the Rademacher complexity of the FNO, and estimate the generalization bound. In addition, we present several lemmas regarding the main results. The proof of the main theorems comprises two main lemmas: inequality for the Rademacher complexity and sup-norm of FNO models. Using these lemmas, we prove the main results.

3.1 Mathematical setup

Definition 4

(Rademacher complexity) Let \(\mathcal {F}\) represent mapping from \(\mathcal {X}\) to \(\mathbb {R}\). Suppose \(\{x_{i}\in \mathcal {X}:i=1,...,m\}\). \(\epsilon _{i}\) are independent and uniform and \(\{+1,-1\}\)-valued random variables. The empirical Rademacher complexity of \(\mathcal {F}\) for a given sample set is defined as follows:

The main components of our results are as follows:

Definition 5

(weight norms and capacity) For the multi-rank tensor \(M_{i_{1},...,i_{m},j_{1},...,j_{k}}\), we define the following weight norm:

For \(p=\infty\) or \(q=\infty\), we consider the sup-norm instead of the definition above. Now, suppose that for an FNO with a Fourier layer of depth D, we denote Q and P as the projection and lifting weight matrices, respectively, and \(A_{i}\) and \(R_{i}\) as the weight tensors of the Fourier layers. We then define \(\Vert \cdot \Vert _{p,q}\), where p is the index for positions, frequencies, and inputs, and q is the output index. The following norm is defined for the Fourier layer:

The capacity of the FNO model h as a product of the weights of its layers is defined as follows:

Next, for the kernel tensor K of the CNN layer, we define the following norms for the weights and capacities of the entire neural network: In the \(\Vert \cdot \Vert _{p,q}\) norm for the kernel tensor of the CNN layer, p is the index of the kernels and input, and q is the output index.

Next, we define hypothesis classes in which the Rademacher complexity is bounded in our results. A hypothesis class is a collection of functions from which a learning algorithm selects a function.

Definition 6

(Hypothesis classes of FNO) Suppose that the function classes of the FNO with D depth and maximal modes of the Fourier layers are \(k_{max,1},...,k_{max,d}\). The width and size of the input vector, size of the output vector, and activation function are fixed. Then, the hypothesis class for a general FNO is defined as follows:

Finally, we define the hypothesis class of the FNO with CNN layers as follows:

We also define the following auxiliary definition for the hypothesis class of sub-neural networks of FNO models, where the terminal layer is the Fourier layer (denoted as \({\textbf {FNO}}_{sub:i}\)).

Similarly, we define \({\mathcal {H}_{CNN}}_{C_{P},C_{0},...,C_{i}}^{d_{in}}\).

3.2 Main results

The notations in each lemma and theorem are based on the definitions in Sect. 3.1. The activation function is Lipschitz continuous and passes through the origin (\(\sigma (0)=0\)). Moreover, we set our notations as follows: for a given sample \(S=\{a_{i}\}_{i=1,\dots ,m}\) (with input data \(a_{i}\)) and hypothesis class \(\mathcal {H}_{C_{P},C_{0},...,C_{t}}^{d_{in}}\), we denote \(h(a_{i})\) by \(v_{t,i}\), where \(h\in \mathcal {H}_{C_{P},C_{0},...,C_{t}}^{d_{in}}\). The components are denoted by \(v_{t,i,{\textbf {x}}j}\).

The following lemma regarding \(l_{p}\) norms is frequently used in our proofs.

Lemma 1

(Norm inequality) If \(1\le p\le q \le \infty\), then for \(v \in \mathbb {R}^N\) we obtain the following inequality:

Let \(\lfloor \cdot \rfloor _{+}\) denote a ReLU function. Then, for an arbitrary \(1\le p,q\), the inequality can be defined as

The following lemma handles nonlinear loss in our proof (the proof can be found in Maurer (2016)).

Lemma 2

(Vector-contraction inequality for Rademacher complexity) Assume that \(\sigma\) is a Lipschitz continuous function with Lipschitz constant L and \(\mathcal {F}\) is a hypothesis class of \(\mathbb {R}^{N}\)-valued functions. Thus, we obtain the following inequality:

Here, we present the main results. The proof has two parts. First, we obtain the upper bound of \(p*\)-norm of the output of FNO models. Second, we bound the Rademacher complexity of the FNO model on samples based on the obtained upper bound. We assume that the projection and lifting layers are linear maps. However, we can easily generalize this to a general FCN.

Lemmas 3 and 3’ are the main factors in our results; the Fourier layers are peeled inductively.

Lemma 3

Suppose \(\mathcal {H} = \mathcal {H}_{C_{P},C_{1},...,C_{D},C_{Q}}^{d_{in}}\) is the FNO hypothesis class with constants \(C_{P},C_{1},\dots ,C_{D},C_{Q}\). Then, for the sample \(a \in \mathbb {R}^{N\times d_{a}}\), we obtain the following inequality:

Proof

Then, we have the following:

Subsequently, we peel off the Fourier layers.

For \(\Big \Vert \sum F^{\dag }_{{\textbf {x}}{} {\textbf {k}}}R_{D,{\textbf {k}},j\cdot }F_{{\textbf {k}}\cdot }\Big \Vert _{p}\) in (2),

For fixed \({\textbf {x}}, {\textbf {z}}, k\), \(\Big (F^{\dag }_{{\textbf {x}}{} {\textbf {k}}}F_{{\textbf {k}}{} {\textbf {z}}}\Big )_{{\textbf {k}}}\) is a \(k_{max,1},\dots ,k_{max,d}\)-dimensional vector, where each component exhibits the \(\frac{e^{ib}}{N}\) form. Thus, by applying Hölder’s inequality, we obtain the following inequality:

Subsequently, the following bound is obtained:

We iteratively apply the above bound to obtain the following inequality:

By combining the two inequalities, we obtain the following inequality.

We use Hölder’s inequality in (1) and (2) and norm inequalities in (3) and (4). \(\square\)

The proof of the following lemma is similar to that of Lemma 3. However, in this case, the hypothesis class is FNO with CNN layers.

Lemma 3’

Suppose \(\mathcal {H} = {\mathcal {H}_{CNN}}_{C_{P},C_{1},...,C_{D},C_{Q}}^{d_{in}}\) is the hypothesis class of an FNO with CNN layer and constants \(C_{P},C_{1},\dots ,C_{D},C_{Q}\). Then, for a sample \(a\in \mathbb {R}^{N\times d_{a}}\), we obtain the following inequality:

Proof

We modify the induction parts of the Fourier layers in the proof of Lemma 3.

where we use Hölder’s inequality in (5). Subsequently, by applying \(p*\) norm to the inequality above over \({\textbf {x}},j\) and the norm inequality, we obtain the following inequality:

The remainder of this proof is similar to that of Lemma 3. \(\square\)

Lemma 4

Suppose \(\mathcal {H}_{C_{P},C_{1},...,C_{D},C_{Q}}^{d_{in}}\) is the hypothesis class of the FNO with given constants \(C_{P},C_{1},\dots ,C_{D}, C_{Q}\). Then, for samples \(S=\{a_{i}\}_{i=1,\dots ,m}\), we obtain the following inequality:

Proof

where we used norm inequality in (6). \(\square\)

Theorem 1

Suppose \(\mathcal {H}_{C_{P},C_{1},...,C_{D},C_{Q}}^{d_{in}}\) is a hypothesis class with constants \(C_{P},C_{1},\dots ,C_{D},C_{Q}\). Then, for samples \(S=\{a_{i}\}_{i=1,\dots ,m}\), we obtain the following inequality:

Proof

\(\square\)

Theorem 2

(FNO with CNN layer) Suppose \({\mathcal {H}_{CNN}}_{C_{P},C_{1},...,C_{D},C_{Q}}^{d_{in}}\) is a hypothesis class with constants \(C_{P},C_{1},\dots ,C_{D},C_{Q}\). Then, for samples \(S=\{a_{i}\}_{i=1,\dots ,m}\), we obtain the following inequality:

Proof

\(\square\)

Corollary 1

For a constant \(\gamma >0\), consider the hypothesis class \(\mathcal {H}_{\gamma _{p,q}\le \gamma }\), which is a collection of FNOs with \(\gamma _{p,q}\le \gamma\). For samples \(S=\{a_{i}\}_{i=1,\dots ,m}\), we obtain the following inequality:

For a given hypothesis class \({\mathcal {H}_{CNN}}_{\gamma _{p,q}\le \gamma }\), similar to \(\mathcal {H}_{\gamma _{p,q}\le \gamma }\), and training samples \(S=\{a_{i}\}_{i=1,\dots ,m}\), we obtain the following inequality:

Proof

As

We obtain the following inequalities.

Because the upper bound of \(p*\)-norm of the models of the hypothesis class in the above equation is the same as that in Lemma 3, we apply the same logic as in Theorem 1. Thus, we obtain the following inequality:

Similarly, based on the above proof, we obtain the inequality for FNO with CNN layers. \(\square\)

Recall the following fundamental theorem (for details, see Shalev-Shwartz and Ben-David (2014)) that states the statistical estimation of the generalization error bound of a given hypothesis class in terms of Rademacher complexity.

Theorem 3

(Generalization error bounding based on Rademacher complexity) Given hypothesis class \(\mathcal {H}\) and loss function \(l:\mathcal {H}\times Z\) that satisfy the following case: for all \(h \in \mathcal {H}\) and \(z \in Z\), we obtain \(\vert l(h,z)\vert \le c\). Then, with a probability of at least \(1-\delta\), for all \(h\in \mathcal {H}\), we obtain

where \(\mathcal {D}\) is the probability distribution on Z and S is a training dataset sampled from \(\mathcal {D}\) i.i.d.

Before considering the generalization bound of FNO, we select the distribution \(\mathcal {D}\) on \(\mathbb {R}^{N\times d_{a}}\times \mathbb {R}^{N\times d_{u}}\) to have a compact support. Thus, \(\vert l(h,z)\vert \le c\) condition in Theorem 3 holds. Then, using Theorem 3 and Corollary 1, we obtain the following estimation of the generalization error bound:

Theorem 4

(Generalization error bound for FNO) For the training dataset \(S=\{(a_{i},u_{i})\}_{i=1,\dots ,m}\), sampled from probability distribution \(\mathcal {D}\) i.i.d., and for hypothesis class \(\mathcal {H}_{\gamma _{p,q}\le \gamma }\), let \(h^{\star }\) be the ERM minimizer of \(L_{S}\) and \(\Vert h(a)-u\Vert _{2} \le \epsilon ^{2}\) for all \((a,u)\sim \mathcal {D}\), \(h\in \mathcal {H}_{\gamma _{p,q}\le \gamma }\). Subsequently, with a probability of at least \(1-\delta\), we obtain the following inequality:

Similarly for hypothesis class of FNOs with CNN layers, dataset S, and hypothesis class \({\mathcal {H}_{CNN}}_{\gamma _{p,q}\le \gamma }\), let \(h^{\star }_{CNN}\) be the ERM minimizer of \(L_{S}\) and \(\Vert h(a)-u\Vert _{2} \le \epsilon ^{2}\) for all \((a,u)\sim \mathcal {D}\), \(h\in {\mathcal {H}_{CNN}}_{\gamma _{p,q}\le \gamma }\). Subsequently, with a probability of at least \(1-\delta\), we obtain the following inequality:

Proof

We just need to calculate \(\mathcal {R}_{m}(l \circ \mathcal { H})\) term in Theorem 3.

Similarly, based on the above proof, we obtain the inequality for the FNO with CNN layers. \(\square\)

If the capacity of FNO model h is \(\gamma\), it is included in the hypothesis class \(\mathcal {H}_{\gamma _{p,q}\le \gamma }\). Because the inequalities in Theorem 4 hold for all hypotheses in class, we have the following posterior estimate of FNO:

Corollary 2

(Posterior estimation of generalization and expected errors) Given architecture parameters \(N, H, d_{u}, d_{a}, L\), and training samples \(\{(a_{i},u_{i})\}_{i=1,\dots ,m}\) with \(\Vert a_{i}\Vert _{p*} \le B\) for all i. Suppose h is a trained FNO (Fourier layer with FCN or CNN) such that \(\Vert h(a)-u\Vert _{2} \le \epsilon ^{2}\) for all training samples. Then, with a confidence level of at least \(1-\delta\), we obtain the following estimates:

for FNOs with CNN,

Our definitions of capacity and results are motivated by the group-norm capacity of an FCN (Neyshabur et al., 2015). The results in Neyshabur et al. (2015) imposed the upper bound of the generalization error on the capacity and inverse factor of \(\sqrt{m}\). The proof of this upper bound relies on the homogeneity of the ReLU activation function. Our results apply only to the Lipschitzness of the activation function. Although our results only have the O(1) bound, if we focus on the ReLU activation case, we may have the following bound based on our capacity derivation and theorems in Neyshabur et al. (2015).

Corollary 3

(Posterior estimation of generalization error and expected error in the RELU activation case) Given architecture parameters \(N, H, d_{u}, d_{a}, L\), and training samples \(\{(a_{i},u_{i})\}_{i=1,\dots ,m}\) with \(\Vert a_{i}\Vert _{p*} \le B\) for all i. Suppose h is a trained FNO (Fourier layer with FCN or CNN) such that \(\Vert h(a)-u\Vert _{2} \le \epsilon ^{2}\) for all training samples and \(1\le p \le 2\), \(1\le q \le p*\). Then, with a confidence level of at least \(1-\delta\), we obtain the following estimates:

For FNOs with CNN,

Therefore, for the ReLU activation case, we can guarantee convergence of the generalization error with increasing training dataset size. However, the actual convergence rate of the generalization error is higher than the theoretical bound, as observed in Sect. 4.

4 Experiments

This section validates the experimental results. In addition, we show that the capacity we defined is an effective index for estimating empirical generalization errors in various respects.

4.1 Overall correlation over various p and q

First, we investigate the correlation between our capacity and the empirical generalization errors for various capacities of p and q.

Data specification For the experiment, we synthesized a dataset based on the following Burgers equation:

The domain of the problem is a circle; we uniformly discretize the domain by \(N=1024\). As described in Sect. 2, each data point represents a pair of functions. In our experiment, the input function was an initial condition, and the target function was a solution to the above equation at \(t=0.1\). Each input function was generated from Gaussian random fields with covariance \(k(x,y)=e^{-\frac{(x-y)^2}{(0.05)^2}}\). The training and test datasets comprise 800 and 200 pairs of functions, respectively (both generated independently).

Correlation for various capacities of p and q We investigated the correlation between the generalization error and capacities. Each point in Fig. 3 represents a trained model for the randomly chosen hyperparameters. The architecture of the models used in our experiment was organized as follows: 2-depth Fourier layers, linear layers without projection activation, and lifting layers. The width is fixed at 64. The weight decay for each training session was randomly chosen from 0, 2*1e−2, 4*1e−2, 6*1e−2, and 8*1e−2; \(k_{max}\) was randomly chosen from 8, 12, 16, and 20; the kernel size was randomly chosen from 1, 3, 5, and 7 for 100 iterations.

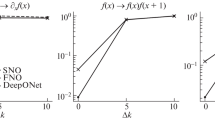

Table 1 lists the correlations for the various values of p and q. The correlation decreases with increasing p and q. This is because as p increases, the p-norm loses information about elements other than the highest norm. Thus, the information of the model is lost in a capacity defined by high values of p and q. However, as p goes to \(\infty\), \(p*\) reaches 1; thus, kernel size and \(k_{max}\) have a greater effect on capacity as p increases. Therefore, we assume that the capacity of a high p contains more information regarding model architecture. To prove our arguments, we conducted experiments in which \(k_{max}\) was varied and the other hyperparameters were fixed. First, to show that the capacities of low p and q contain more information about the model weights than its architecture, we trained three models with negligible differences in \(k_{max}\). Second, to demonstrate that the capacities of high p and q are more closely related to the model architecture, we trained three types of models with considerable differences in \(k_{max}\). For each experiment, we trained the models 30 times for each \(k_{max}\) setting, that is, 14, 16, and 18 in the left column of Fig. 4 and 10, 30, and 50 in the right column. Hyperparameters other than \(k_{max}\) were fixed: the kernel size of the CNN layer was 1, the width was 64, and the depth of the Fourier layers was 2. As revealed by the left column of Fig. 4, models with small gaps in \(k_{max}\) lose the correlation between the generalization gap and capacity with increasing p and q. However, in the right column, the highest correlation between the capacity and generalization error is obtained for higher p and q values compared to those in the left column. The correlation is maintained at 0.89 for the \(p,q =\infty\) case.

Left: Scatter plot, correlation, and linear regression between generalization error and capacities of various p and q for 30 trained models for \(k_{max}=14, 16, 18\); Right: Scatter plot, correlation, and linear regression between generalization error and capacities of various p and q for 30 trained models for \(k_{max}=10, 30, 50\)

4.2 Dependency of generalization errors on architectures and datasets

4.2.1 Dependency on \(k_{max}\)

Next, we examined the dependency of the generalization error on the model architecture. In the experiments, hyperparameters other than \(k_{max}\) are fixed. We consider two cases: Fourier layers at depths of 1 and 2.

A low \(k_{max}\) implies that the dynamics of learning are unpredictable and chaotic (Seleznova & Kutyniok, 2021); therefore, we did not consider models with extremely small values of \(k_{max}\). We varied \(k_{max}\) from 13 to 39 in two intervals. For a detailed analysis, we removed the CNN layer parts from the Fourier layers, such that the generalization error is proportional to the weight norm \(R_{i}\). To verify the influence of \(k_{max}\) size, we divided the generalization error by \(R_{i}\). As the defined capacity is correlated with the generalization error, it is expected that the divided generalization error is correlated with \(\root p* \of {k_{max}}\) at a depth of 1 and \(\root \frac{p*}{2} \of {k_{max}}\) at a depth of 2. As listed in Tables 2 and 3, the generalization error divided by the norms is correlated to \(\root p* \of {k_{max}}\) and \(\root \frac{p*}{2} \of {k_{max}}\). Hence, we can verify that the correlation is low for low capacities of p and q, and the desired dependency on \(k_{max}\) is unclear. As p and q increase, this correlation first increases and then decreases slightly. Based on these data, we can conclude that capacities with higher p and q contain more information about the model architecture(\(k_{max}\)), and capacities with very high p and q may cause a loss of specific information about each model; thus, the correlation decreases. Figure 5 shows the scatter plot and regression for a few experimental cases. The generalization error dependence on \(k_{max}\) was more convex at a depth of two. Based on our definition of capacity, the exponent of \(k_{max}\) is proportional to the depth of the Fourier layers. Therefore, the increased convexity illustrated on the right side of the figures qualitatively validates the results.

Left: Scatter plot and regression between generalization error divided by norms of Fourier layers and \(\root p* \of {k_{max}}\) for various p, q where the depth of Fourier layer is 1; Right: Scatter plot and regression between generalization error divided by norms of Fourier layers and \(\root \frac{p*}{2} \of {k_{max}}\) for various p, q where the depth of Fourier layer is 2

4.2.2 Empirical dependency on the size of training samples

As in Corollary 3 and other results on bounding the generalization error of neural nets by capacity norms, generalization error should converge as the training data set grows. The convergence rate is \(O(n^{-l})\) where \(0\le l \le 0.5\). However, we found that the real convergence rate is much faster than the theoretical bounding rate. Moreover, our defined capacity is a suitable indicator by observing that the combination of our capacity with factor by training dataset size is highly correlated to the empirical generalization error. First, we show that we also obtain a high correlation for nonlinear activation other than ReLU. The architecture of the models is as follows: all the hyperparameters are the same as in Sect. 4.1, the weight decay, \(k_{max}\), is randomly chosen from [0,e−3,...,4e−3], [10,12,...,20] respectively. The results are shown in Fig. 6.

Next, we demonstrate a high correlation between our empirically determined formula and the generalization errors of our experimental results. The architecture of this experiment was the same as that described above. However, the architecture of the model was fixed at \(k_{max}=14\) and the other hyperparameters were equal to those in the above experiments. The only varying parameter was the training dataset size [200, 400,..., 10,000]. We found that the actual convergence rates are much faster than the results of Corollary 3 (which implies that the upper bound of the generalization error is \(O(m^{-0.5})\)) and the convergence rates are dependent on the activation functions of the models; \(O(m^{-1.1})\) for ReLU, and \(O(m^{-1.55})\) for GELU. The results are presented in Figs. 7 and 8. The shaded areas in the right figures indicate the variance in the empirical generalization error. We also calculated the variance of our estimation; however, when normalized to the scale of empirical generalization error, it is significantly small compared to empirical generalization error. Therefore, it is a stable index for estimating generalization errors.

4.3 Correlation for different resolution test samples and out of distribution samples

The upper bound of the generalization errors in Sect. 3 depends on discretization and dataset structure. To investigate how our capacity correlates with the generalization error of the test samples generated from different data distributions of the original training dataset, we constructed several test datasets other than the original one. For the experiment, we trained our models based on the same settings as those in Sect. 4.2.2, and inferenced on various test datasets. Recall that covariance of the GRF is \(k(x,y)=e^{-\frac{(x-y)^2}{c^2}}\) where c is a coefficient: Our test datasets were all four cases: \(c=0.05\) with discretization \(N=512\) and \(N=2048\). and \(N=1024\) with \(c=0.025\) and \(c=0.1\). The capacity is calculated as \(p=2, q=2\). The experimental results are shown in Fig. 9 and Table 4. It is interesting that although in Corollary 2 and 3, the upper bound has explicit dependence on discretization size N, in the empirical experiment, our capacity itself has a resolution-invariant property, showing almost the same tendency in the N=2048 and N=512 cases as in the original case. When \(c=0.1\), the tendencies of regression line and data are similar as in \(c=0.05\) case, and even empirical generalization errors are lower. However, when \(c=0.025\), the tendency of the data points was frustrated and had a higher generalization error. We assume that the main reason for this phenomenon is that the information on the frequency data distribution is different. For \(c=0.025\) case, each function pair has more high-frequency components. The \(c=0.1\) case has even fewer high-frequency components than \(c=0.05\) case.

4.4 Additional experiments on other PDEs

To show that our capacity is an effective indicator for estimating the generalization error, we experimented with more cases, which are problems of the governing equations 1-d integration, 1-d heat equation, 2-d heat equation and 2-d Navier–stokes equation. We verified the correlation between the empirical generalization error and the defined capacity. Similar to the experiments for the Burgers equation described in Sect. 4.2, We checked the correlation between empirical generalization error and capacity factored by the sizes of dataset for the fixed model architecture and varying sizes of dataset (Fig. 10). As discussed in Sect. 4.2, the GELU performs better than ReLU activation, and we fixed our activation as GELU throughout all experiments. We omitted the CNN layer to simplify the experiment.

4.4.1 1-d integration

1-d integration is one of the simplest function operators. The experimental settings were as follows:

where \(u_{0}\) is an initial condition, and u is a scaled integration of this function. As in the Burgers equation setting in Sects. 4.1 and 4.2, our domain is a circle uniformly discretized by \(N=1024\). The function was scaled by multiplying it by 10 for normalization. Each initial function is generated from Gaussian random fields with covariance \(k(x,y)=e^{-\frac{(x-y)^2}{(0.05)^2}}\), similar to the Burgers equation. The total training dataset comprises 10,000 pairs of functions, and test dataset comprises 200 pairs of functions, respectively.

Training settings For fixed hyperparameters and varying sizes of the training dataset, we fixed the model architecture as \(k_{max}=14\) and the width as 64, the depth of the Fourier layers as 2, and the weight decay as e−3. The size of training dataset is [200, 400,..., 10,000]. For each training dataset size, the training was repeated five times.

4.4.2 1-d heat equation

We experimented with another 1-d time dependent PDE problem known as 1-d heat equation. 1-d heat equation describes the heat distribution on a physical object such as a steel rod. The governing equations are as follows.

The domain of the problem is a circle, and we uniformly discretize the domain by N = 1024. The initial condition was generated by the same Gaussian random field as that in the Burgers equation and 1-d integration settings. The target function is a section of the solution of the heat equation for a given initial condition at T=0.1. The training and test datasets have 10,000 and 200 pairs of functions, respectively.

Training settings The training settings are the same as in 1-d integration problem.

4.4.3 2-d heat equation

Time-dependent heat equations are parabolic PDEs that describe heat diffusion. We experimented based on a 2-d time-dependent heat equation to verify that our capacity is effective for a linear 2-dimensional PDE. The governing equations are as follows:

The domain of the problem is a torus, which means that the problem is periodic along the x- and y-axes. The domain was uniformly discretized with N=64 in both coordinates. The initial condition is generated by 2-d Gaussian random field with the distribution \(\mu = \mathcal {N}(0,7^{3/2}(-\Delta + 49I)^{-2.5})\) having the same GRF as in A.3.3 of Li et al. (2021). The target function is a section of the solution of the heat equation for a given initial condition at T = 0.005: The training and test datasets have 5000 and 200 pairs of functions, respectively.

Training settings For fixed hyperparameters and varying sizes of the training dataset, we fixed the model architecture as \((k_{max,1},k_{max,2}) = (14,14)\), with a width of 32, a depth of 2, and a weight decay of e−3. The training dataset size is [100, 200,..., 5000]. For each training dataset size, the training was repeated five times.

4.4.4 2-d Navier–Stokes equation

We consider the vicious, incompressible 2-d Navier–Stokes equation in vorticity form. The governing equations are as follows.

where u denotes the vorticity of velocity field v (\(u = \nabla \times v\)) defined in the torus-product time interval (\(T\times [0,T]\)). \(0 \le \nu\) is the viscosity coefficient, and f is a forcing function fixed as \(f(x)=0.1(sin(2\pi (x_{1}+x_{2})) + cos(2\pi (x_{1}+x_{2})))\). We selected one of the 2-d Navier–Stokes equation training dataset samples constructed in Li et al. (2021). Therefore, the initial vorticity function is generated from \(\mu = \mathcal {N}(0,7^{3/2}(-\Delta + 49I)^{-2.5})\) as in the heat equation. The viscosity coefficient \(\nu\) is 1e−4. We set the target of our model as vorticity at time \(T=5\) (=u(0, 5)) for a given initial vorticity.

Training settings The training settings are the same as in the 2-d heat equation problem.

4.5 Comparison with other capacities

In this subsection, we compare our capacity with recently developed capacity norms: the Fisher-Rao norm (Liang et al., 2019), the Hessian trace norm (Petzka et al., 2021) and relative flatness (Petzka et al., 2021). For the experiment, we trained models with hyperparameters of width 16, 2-depth Fourier layers; \(k_{max}\) was chosen from [10,12,...,20] and weight decay from [0,1e−3,...,4e−3] on training dataset with 800 pairs of functions, which is the same as in the previous experiment settings. From Table 6 and Fig. 11, we infer that our capacity has the highest correlation among the norms because our capacity does contain information about the hyperparameter concerning the model’s architecture, whereas other norms do not. In addition, complicated derivatives or second-derivative information are not required to calculate our capacity. Therefore, as shown in Table 5, the calculation time of our capacity is much shorter than that of the other norms, enhancing memory efficiency.

5 Conclusion

We investigated the bounding Rademacher complexity of an FNO and defined its capacity, which depends on the model architecture and the group norms of the weights. Although several results exist regarding the bounding Rademacher complexity of various types of neural networks, the FNO possesses tensor weights of rank higher than two. Therefore, our study may be useful for other NNs containing higher-rank tensors. Our results are experimentally validated. Based on these experiments, we gained insights into the impact of p and q values and information about the model weights and architecture stored in terms of capacities. Through experiments on other PDEs and their dependency on the architecture and size of datasets, we validated that our capacity norm is effective for estimating the empirical generalization error. By comparing it with other sophisticated capacity norms, we empirically prove that our capacity norm is an efficient and effective index among these norms. Moreover, although various neural operators have been developed, including FNO and DeepONet, the analysis of PAC learning for these neural operators has not been performed in detail. Thus, this study may serve as a guide for such analyses. In this study, we assume that the activation function is fixed. For a general model containing parameterized activation, such as PReLU, we need to modify our analysis. Although the Rademacher complexity contains information about datasets, the bounding of our results lacks a specific dependency on each problem. Because we experimented with various PDE problems, the performance of the FNO varied for each problem. Therefore, we must extend the complexities to include information about datasets. In addition, although we empirically verified faster convergence compared to the theoretical bound, we need to justify the empirical fast convergence of the generalization error through careful theoretical analysis.

Availability of data and materials and codes

The codes used to generate dataset used in this study and conduct experiments, including modeling models, are available from TK upon reasonable request.

References

Awasthi, P., Frank, N., & Mohri, M. (2020). On the rademacher complexity of linear hypothesis sets. arXiv arXiv:2007.11045

Bartlett, P. L., Foster, D. J. & Telgarsky, M. (2021). Spectrally-normalized margin bounds for neural networks. Advances in Neural Information Processing Systems

Cai, Z., Chen, J., & Liu, M. (2021). Least-squares ReLU neural network (LSNN) method for linear advection-reaction equation. Journal of Computational Physics, 443, 686–707.

Gopalani, P., Karmakar, S. & Mukherjee, A. (2022). Capacity bounds for the deeponet method of solving differential equations. arXiv arXiv:2205.11359

Gupta, G., Xiao, X., & Bogdan, P. (2021). Multiwavelet-based operator learning for differential equations. Advances in Neural Information Processing Systems, 34, 24048–24062.

Hao, C., Zhanfeng, M., Zhouwang, Y. & Xiao, W. (2019). Theoretical investigation of generalization bound for residual networks. In International joint conferences on artificial intelligence organization, pp. 2081–2087

Jakubovitz, D., Giryes, R., Rodrigues, M. R. D. (2019). Generalization Error in Deep Learning. Birkhuser Cham, GEWERBESTRASSE 11, Cham, Switzerland.

Kovachki, N., Li, Z., Liu, B., Azizzadenesheli, K., Bhattacharya, K., Stuart, A. & Anandkumar, A. (2021). Neural operator: Learning maps between function spaces. arXiv arXiv:2108.08481

Kovachki, N., Lanthaler, S., & Mishra, S. (2021). On universal approximation and error bounds for Fourier neural operators. Journal of Machine Learning Research, 22(290), 1–76.

Lei, Y., Dogan, U., Zhou, D., & Kloft, M. (2019). Data-dependent generalization bounds for multi-class classification. IEEE Transactions on Information Theory, 65(5), 2995–3021.

Li, Z., Kovachki, N., Azizzadenesheli, K., Liu, B., Bhattacharya, K., Stuart, A. & Anandkumar, A. (2020). Neural operator: Graph kernel network for partial differential equations. ICLR 2020 Workshop ODE/PDE+DL

Li, Z., Kovachki, N., Azizzadenesheli, K., Liu, B., Bhattacharya, K., Stuart, A. & Anandkumar, A. (2021) Fourier neural operator for parametric partial differential equations. ICLR 2021.

Liang, T., Poggio, T., Rakhlin, A., & Stokes, J. (2019). Fisher-rao metric, geometry, and complexity of neural networks. Proceedings of the Twenty-Second International Conference on Artificial Intelligence and Statistics, 89, 888–896.

Long, P. M. & Sedghi, H. (2020). Generalization bounds for deep convolutional neural networks. ICLR 2020.

Lu, L., Jin, P., Pang, G., Zhang, Z., & Karniadakis, G. E. (2021). Learning nonlinear operators via deeponet based on the universal approximation theorem of operators. Nature Machine Intelligence, 3(3), 218–229.

Lv, S. (2021). Generalization bounds for graph convolutional neural networks via rademacher complexity. arXiv arXiv:2102.10234

Maurer, A. (2016). A vector-contraction inequality for rademacher complexities. arXiv arXiv:1605.00251

Minshuo, C., Xingguo, L., & Tuo, Z. (2020). On generalization bounds of a family of recurrent neural networks. Proceedings of Machine Learning Research, 108, 1233–1243.

Neyshabur, B., Tomioka, R., & Srebro, N. (2015). Norm-based capacity control in neural networks. Proceedings of Machine Learning Research, 40, 1376–1401.

Pathak, J., subramanian, S., Harrington, P., Raja, S., Chattopadhyay, A., Mardani, M., Kurth, T., Hall, D., Li, Z., Azizzadenesheli, K., Hassanzadeh, P., Kashinath, K. & Anandkumar, A. (2022). Fourcastnet: A global data-driven high-resolution weather model using adaptive fourier neural operators. arXiv arXiv:2202.11214

Petzka, H., Kamp, M., Adilova, L., Sminchisescu, C., & Boley, M. (2021). Relative flatness and generalization. Advances in Neural Information Processing Systems, 34, 18420–18432.

Raissi, M., Perdikaris, P., & Karniadakis, G. E. (2019). Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378, 686–707.

Seleznova, M., & Kutyniok, G. (2021). Analyzing finite neural networks: Can we trust neural tangent kernel theory? Proceedings of Machine Learning Research, 145, 847–867.

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press.

Sontag, E. D. (1998). Vc dimension of neural networks. NATO ASI Series F Computer and Systems Sciences, 168, 69–96.

Valiant, L. G. (1984). A theory of the learnable. Communications of the ACM, 27(11), 1134–1142.

Vapnik, V. N. (1999). An overview of statistical learning theory. IEEE Transactions on Neural Networks, 10(5), 988–999.

Weinan, E., Chao, M., & Qingcan, W. (2020). Rademacher complexity and the generalization error of residual networks. Communications in Mathematical Sciences, 18(6), 1755–1774.

Weinan, E., & Yu, B. (2018). The deep ritz method: A deep learning-based numerical algorithm for solving variational problems. Communications in Mathematics and Statistics, 6(1), 1–12.

Wen, G., Li, Z., Azizzadenesheli, K., Anandkumar, A., & Benson, S. M. (2022). U-fno-an enhanced Fourier neural operator-based deep-learning model for multiphase flow. Advances in Water Resources, 163, 104180.

Acknowledgements

This work was supported by the Challengeable Future Defense Technology Research and Development Program through the Agency for Defense DevelopmentADD, funded by the Defense Acquisition Program Administration in 2021 (No. 915020201), and NRF Grant [2021R1A2C3010887].

Funding

Open Access funding enabled and organized by Seoul National University.

Author information

Authors and Affiliations

Contributions

TK devised the approach, developed the mathematical proofs, coded, and conducted the experiments. MK corrected the overall manuscript and acquired funding for the project.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Editor: Pradeep Ravikumar.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Experimental details

1.1 A.1 Environment of experiments

All the experiments were conducted using Pytorch 2.0.1, with Python 3.9.6. The specifications of the hardware environment are as follows (Table 7):

1.2 A.2 Numerical schemes used in experiments

We used a forward difference scheme with a time step of 1e−4 and 1e−5 for 1-d and 2-d heat equations, respectively. For the 1-d integration experiment, we used the most basic method (right-hand rule) by adding all terms and multiplying by the interval spacing. For 2-d Navier–Stokes equation, we used the dataset in Li et al. (2021); the detailed numerical scheme is specified in Li et al. (2021). The equation was solved using a stream function formulation and a pseudospectral method. For time marching, the Crank-Nicolson update was used with a time step of 1e−4.

1.3 A.3 Mathematical formulas for norms

We calculated the Fisher-Rao norm using the following formula with the finite difference method derived in Liang et al. (2019):

For the Hessian trace, we apply the following formula to the first Fourier layer:

Finally, for relative flatness, because the weight is a multi-rank tensor, we slightly modified the formula in Petzka et al. (2021). This formula is also applied to the first Fourier layer. The formula is as follows:

Appendix B: FNO with CNN layer versus without CNN layer

In this section, we present the results of the CNN-layer case. Although, for simplicity of analysis, we dropped the CNN layers in the experiments in Sect. 4, as shown in Fig. 12, the correlation is even higher than the cases with CNN layers dropped.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, T., Kang, M. Bounding the Rademacher complexity of Fourier neural operators. Mach Learn 113, 2467–2498 (2024). https://doi.org/10.1007/s10994-024-06533-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-024-06533-y