Abstract

In the present paper we study the best approximation order and inverse approximation theorems for families of neural network (NN) operators. Both classical and Kantorovich type NN operators are considered. As a main result, we provide a characterization of the well-known Lipschitz classes in terms of the order of approximation of the considered NN operators. The latter result has inspired a conjecture concerning the saturation order of the considered families of approximation operators. Finally, several noteworthy examples are discussed in detail.

1 Introduction

In the last decade the popularity of neural networks (NNs) has increased significantly, because they are very convenient for solving practical problems in domains such as economics, healthcare, artificial intelligence and machine learning. Furthermore, useful applications have also been developed in relation to Approximation Theory; see, e.g., [1,2,3,4] and the wide literature available on the topic.

One of the most important directions of research is the approximation of functions using neural network (NN) operators. We mention here the important paper [5], where such an investigation was conducted by means of the so-called Cardaliaguet-Euvrard NN operators, which provide one possible mathematical formalization of the previous tools, characterized by a deterministic approach. Later, in [6] the author obtained estimations for the rate of convergence of these operators, and in the papers [7, 8] estimations for the rate of convergence were obtained for new NN operators activated by the logistic and the hyperbolic tangent functions, respectively. Let us also mention some recent developments on this topic (see [9,10,11] for more details). NN operators have recently been considered by several authors, and their approximation properties have been investigated in depth. For instance, in [12] the authors introduced exponential type NN operators. In [13] the authors studied NN operators activated by ramp functions, while in [14] the authors investigated the convergence of multivariate NN operators of max-product kind. Moreover, in [15] the author introduced fractional type multivariate NN operators. For other remarkable works related to NNs in Approximation Theory, we refer to [16,17,18,19,20].

In this paper we focus on univariate NN operators of classical and Kantorovich type activated by sigmoidal functions, introduced in the papers [21, 22], respectively (for the multivariate version of the above operators we refer to [23]). In these papers pointwise and uniform convergence results were obtained. Then, in the paper [24] estimations with respect to K-functionals were obtained and, in addition, if the sigmoidal function \(\sigma :\mathbb {R} \rightarrow \mathbb {R}\) satisfies \(\sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow -\infty \) with \(\alpha >2\), a Jackson type estimation was obtained for the Kantorovich NN operator. This last result inspired us to investigate whether it is possible to find the rate of uniform convergence for these operators as a function of the parameter \(\alpha \). In the paper [9] we found these estimations for both the classical and Kantorovich variants of the NN operators in the general setting of multivariate functions. In the present contribution we continue this investigation by providing the best possible rates of uniform convergence depending on the parameter \(\alpha \). We will discuss the univariate case for both classical and Kantorovich NN operators. First, in the case \(\alpha =2\) we will prove that the best order of uniform approximation is \(\mathcal {O}\left( \omega \left( f,\,\frac{\log n}{n} \right) \right) \), as \(n \rightarrow +\infty \). Therefore, the Jackson type estimation does not hold in general in this case, which answers a question left open in [9]. Then, we will also prove that the estimations proved in [9] for the cases \(\alpha >2\) and \(1<\alpha <2\), respectively, are the best possible in general.

Finally, we also prove an inverse theorem of approximation: if a continuous function f can be approximated on an interval \(\left[ a,b \right] \) with an order of convergence of the kind \(\mathcal {O}(n^{-\nu })\), as \(n \rightarrow +\infty \), with \(0<\nu <1\), and if some boundedness conditions hold for the so-called discrete absolute moments of the kernel function and of its first derivative, then f belongs to the class \(Lip_{\nu }[a,b]\).

Inverse theorems of approximation are useful in Approximation Theory: combined with results on the (direct) order of approximation, they allow one to characterize the classes \(Lip_{\nu }[a,b]\) in terms of the order of convergence of the considered family of linear operators. Indeed, such a characterization is obtained here for both the classical and Kantorovich versions of the NN operators.

We now give a detailed description of the paper. After this introductory section, in Sect. 2 we present the basic information about NN operators activated by sigmoidal functions. Then, in Sect. 3 we present the results on the best approximation orders. In Sect. 4 we will present the inverse approximation result for the class \( Lip_{\nu }[a,b]\), and its corresponding characterization in terms of the order of convergence. In the final section, inspired by the inverse approximation result, we will formulate several open questions concerning the saturation order for these NN operators.

2 Preliminaries

Let us consider a sigmoidal function \(\sigma :\mathbb {R}\rightarrow \mathbb {R }\) such that \(\sigma (x)-1/2\) is an odd function, \(\sigma \in C^{2}(\mathbb {R })\) is concave for \(x\ge 0\), and \(\sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow -\infty \) for some \( \alpha >1\) (see, e.g., [21]). Note that in many papers one writes \(\sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\beta -1}\right) \) as \(x\rightarrow -\infty \) for some \(\beta >0\).

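In addition, in line with the definition given in [25], we will assume that \(\sigma \) is a non-decreasing measurable function such that:

\[ \lim _{x\rightarrow -\infty }\sigma (x)=0 \quad \text{and}\quad \lim _{x\rightarrow +\infty }\sigma (x)=1. \]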

Now, taking \(\phi _{\sigma }(x)=\frac{1}{2}\left[ \sigma (x+1)-\sigma (x-1) \right] \), it is immediate that \(\phi _{\sigma }\) is an even function taking non-negative values, non-decreasing on \((-\infty ,0]\) and non-increasing on \( [0,\infty )\). In addition to that, \(\phi _{\sigma }\) satisfies a list of properties (see Lemmas 2.2, 2.4 and 2.6 in [21]) from which it is enough here to mention:

i) \(\sum \nolimits _{k\in \mathbb {Z}}\phi _{\sigma }(x-k)=1\), for all \(x\in \mathbb {R}\);

ii) \(1\ge \sum \nolimits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor }\phi _{\sigma }(nx-k)\ge \phi _{\sigma }(1)>0\), for every \( a,b\in \mathbb {R}\) with \(a<b\), \(x\in [a,b]\) and \(n\in \mathbb {N}_{+}\) such that \(\lceil na\rceil \le \left\lfloor nb\right\rfloor \), where \(\lceil t\rceil \) is the ceiling of t and \(\left\lfloor t\right\rfloor \) is the integer part of t, for some \(t\in \mathbb {R}\);

iii) \(\phi _{\sigma }(x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow \pm \infty \), \(\alpha >1\), and consequently \( \phi _{\sigma } \in L^1({\mathbb {R}})\).
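Properties i) and the symmetry of \(\phi _{\sigma }\) can be checked numerically. The following is a minimal sketch, assuming the logistic function as the sigmoidal function \(\sigma \) (one admissible choice, since it decays exponentially at \(-\infty \)); the function names and the truncation width `half_width` are illustrative choices and do not come from the paper.

```python
import math


def logistic(x: float) -> float:
    """Logistic sigmoidal function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def phi(x: float) -> float:
    """Kernel phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2 for the logistic sigma."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def partition_sum(x: float, half_width: int = 60) -> float:
    """Truncated version of sum_{k in Z} phi(x - k), keeping |k - round(x)| <= half_width."""
    centre = round(x)
    return sum(phi(x - k) for k in range(centre - half_width, centre + half_width + 1))


if __name__ == "__main__":
    for x in (0.0, 0.3, 2.75, -1.1):
        print(f"x = {x:5.2f}  sum = {partition_sum(x):.12f}  "
              f"phi(-x) - phi(x) = {phi(-x) - phi(x):.1e}")
```

The truncated sums should agree with 1 up to rounding, because the sum telescopes and the logistic tails are negligible at distance 60.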

Remark 1

We can observe that the theory of the neural network operators also holds for sigmoidal activation functions \(\sigma \) which do not necessarily belong to \(C^{2}(\mathbb {R})\). In the latter case, we can assume that the corresponding \(\phi _{\sigma }\) satisfies the following conditions:

- \(\phi _{\sigma }(x)\) is non-decreasing for \(x<0\) and non-increasing for \(x\ge 0\);

- \(\phi _{\sigma }(1)>0\).

For more details on this matter one can check, e.g., [21].

Suppose now that \(f:[a,b]\rightarrow \mathbb {R}\) is continuous. As usual, for \(\delta >0\) we denote

\[ \omega (f,\delta ):=\sup \left\{ \left| f(x)-f(y)\right| :\ x,y\in [a,b],\ \left| x-y\right| \le \delta \right\} , \]

i.e., \(\omega (f,\delta )\) is the usual modulus of continuity of a given function f. For more details about the properties of \(\omega (f,\delta )\) see, e.g., [26].
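For experiments, the modulus of continuity can be estimated on a uniform grid. The sketch below is only a grid-based lower bound of the supremum over all pairs \(x,y\in [a,b]\) with \(|x-y|\le \delta \); the function name and the grid size are assumptions made for illustration.

```python
def modulus_of_continuity(f, a, b, delta, grid=2000):
    """Grid estimate (a lower bound) of omega(f, delta) on [a, b]."""
    xs = [a + (b - a) * i / grid for i in range(grid + 1)]
    values = [f(x) for x in xs]
    h = (b - a) / grid
    # Largest index gap whose width does not exceed delta (small guard against rounding).
    max_gap = int(delta / h + 1e-9)
    best = 0.0
    for i in range(grid + 1):
        for j in range(i + 1, min(i + max_gap, grid) + 1):
            best = max(best, abs(values[j] - values[i]))
    return best


if __name__ == "__main__":
    f = lambda x: abs(2.0 * x - 1.0)
    for delta in (0.2, 0.1, 0.05):
        print(delta, modulus_of_continuity(f, 0.0, 1.0, delta))
```

For the piecewise linear function \(|2x-1|\) on [0, 1], which has slope \(\pm 2\), the printed values should be close to \(2\delta \).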

3 Best Order of Uniform Approximation for Neural Network Operators

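Consider a bounded function \(f:[a,b]\rightarrow \mathbb {R}\) and \(n\in \mathbb {N}_{+}\), such that \(\lceil na\rceil \le \left\lfloor nb\right\rfloor \). The positive linear NN operators activated by the sigmoidal function \(\sigma \) are defined as (see Definition 3.1 in [21])

\[ F_{n}(f,x):=\frac{\sum \limits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor }f\left( \frac{k}{n}\right) \phi _{\sigma }(nx-k)}{\sum \limits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor }\phi _{\sigma }(nx-k)},\quad x\in [a,b]. \qquad (1) \]

Note that the operators \(F_{n}\) are well-defined because of the boundedness of f and by properties i) and ii).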
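To make the definition concrete, here is a minimal sketch of the classical operator, assuming the form used in [21]: \(F_{n}(f,x)\) is the \(\phi _{\sigma }\)-weighted mean of the samples \(f(k/n)\), \(k=\lceil na\rceil ,\dots ,\lfloor nb\rfloor \). The logistic function is an assumed choice of \(\sigma \), and the name `nn_operator` is hypothetical.

```python
import math


def logistic(x: float) -> float:
    """Logistic sigmoidal function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def phi(x: float) -> float:
    """Kernel phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def nn_operator(f, n: int, x: float, a: float = 0.0, b: float = 1.0) -> float:
    """F_n(f, x): weighted mean of f(k/n) for k = ceil(n*a), ..., floor(n*b)."""
    lo, hi = math.ceil(n * a), math.floor(n * b)
    if lo > hi:
        raise ValueError("need ceil(n*a) <= floor(n*b)")
    num = den = 0.0
    for k in range(lo, hi + 1):
        w = phi(n * x - k)      # non-negative weight
        num += w * f(k / n)
        den += w                # bounded below by phi(1) > 0, see property ii)
    return num / den


if __name__ == "__main__":
    square = lambda t: t * t
    for n in (10, 100, 1000):
        print(n, abs(nn_operator(square, n, 0.5) - 0.25))
```

Because the weights are non-negative and normalized, constants are reproduced exactly and the output always lies between the minimum and maximum of the sampled values.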

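Consider now a locally integrable and bounded function \(f:[a,b]\rightarrow \mathbb {R}\) and \(n\in \mathbb {N}_{+}\), such that \(\lceil na\rceil \le \left\lfloor nb\right\rfloor -1\). The Kantorovich NN operators are given by (see [22])

\[ K_{n}(f,x):=\frac{\sum \limits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor -1}\left[ n\int _{k/n}^{(k+1)/n}f(u)\,du\right] \phi _{\sigma }(nx-k)}{\sum \limits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor -1}\phi _{\sigma }(nx-k)},\quad x\in [a,b]. \qquad (2) \]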

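Note that, again, the operators \(K_n\) are well-defined; in order to show the latter fact we need to exploit a condition analogous to ii) of Sect. 2, that is:

\[ \sum \limits _{k=\lceil na\rceil }^{\left\lfloor nb\right\rfloor -1}\phi _{\sigma }(nx-k)\ge \phi _{\sigma }(2)>0,\quad x\in [a,b]. \]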
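The Kantorovich variant can be sketched in the same way, assuming the form used in [22]: the samples \(f(k/n)\) are replaced by the mean values \(n\int _{k/n}^{(k+1)/n}f(u)\,du\) and the sum runs over \(k=\lceil na\rceil ,\dots ,\lfloor nb\rfloor -1\). In this sketch the mean values are approximated by a composite midpoint rule; the logistic \(\sigma \), the name `kantorovich_operator` and the parameter `quad_points` are assumptions made for illustration.

```python
import math


def logistic(x: float) -> float:
    """Logistic sigmoidal function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def phi(x: float) -> float:
    """Kernel phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def kantorovich_operator(f, n, x, a=0.0, b=1.0, quad_points=8):
    """K_n(f, x): weighted mean of local averages of f over [k/n, (k+1)/n]."""
    lo, hi = math.ceil(n * a), math.floor(n * b) - 1
    if lo > hi:
        raise ValueError("need ceil(n*a) <= floor(n*b) - 1")
    num = den = 0.0
    for k in range(lo, hi + 1):
        # Midpoint rule for n * integral of f over [k/n, (k+1)/n].
        mean = sum(f((k + (j + 0.5) / quad_points) / n)
                   for j in range(quad_points)) / quad_points
        w = phi(n * x - k)
        num += w * mean
        den += w
    return num / den


if __name__ == "__main__":
    identity = lambda t: t
    print(kantorovich_operator(identity, 200, 0.5))
```

For \(f(t)=t\), \(n=200\) and \(x=1/2\), the local mean on \([k/n,(k+1)/n]\) is \((k+1/2)/n\), so the value is very close to 0.5025 rather than 0.5, which illustrates the \(1/(2n)\) shift introduced by the averaging.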

In the paper [9] we obtained quantitative estimations for the rate of uniform convergence of \(F_{n}(f,\cdot )\) and \(K_{n}(f,\cdot )\), in connection with the values of \(\alpha \). Let us recall these results.

Theorem 2

(see [9], Theorem 2) Consider \(f\in C[a,b]\) and the NN operator given in (1) such that \(\sigma (x)= \mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \( x\rightarrow -\infty \) with \(\alpha \in (1,2)\). Then there exists a constant \(C>0\) which may depend only on \(\sigma ,a,b\) and \(\alpha \), such that

\[ \left| F_{n}(f,x)-f(x)\right| \le C\,\omega \left( f,\,\frac{1}{n^{\alpha -1}}\right) , \]

for all \(x\in [a,b]\) and \(n\in \mathbb {N}_{+}\), \(\lceil na\rceil \le \left\lfloor nb\right\rfloor \).

Theorem 3

(see [9], Theorem 3) Consider \(f\in C[a,b]\) and the NN operator given in (1) such that \( \sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow -\infty \) with \(\alpha \in (2,\infty )\). Then there exists a constant \(C>0\) which may depend only on \(\sigma ,a,b\) and \(\alpha \), such that

\[ \left| F_{n}(f,x)-f(x)\right| \le C\,\omega \left( f,\,\frac{1}{n}\right) , \]

for all \(x\in [a,b]\) and \(n\in \mathbb {N}_{+}\), \(\lceil na\rceil \le \left\lfloor nb\right\rfloor \).

Finally:

Theorem 4

(see [9], Theorem 4) Consider \(f\in C[a,b]\) and the NN operator given in (1) such that \(\sigma (x)= \mathcal {O}(\left| x\right| ^{-2})\) as \(x\rightarrow -\infty \). Then, for any \(\varepsilon >0\) there exists a constant \(C(\varepsilon )>0\) which may depend only on \(\sigma ,a,b\) and \(\varepsilon \), such that

and \(n\in \mathbb {N}_{+}\), \(\lceil na\rceil \le \left\lfloor nb\right\rfloor \).

Remark 5

The same estimations are obtained for the operator \(K_{n}(f,\cdot )\), where the only difference in the statements is that \(\lceil na\rceil \le \left\lfloor nb\right\rfloor -1\) (see Theorems 5, 6, 7 in [9]).

In [9] we left as an open question whether, in the case \(\alpha =2\), the estimations could be improved in general to \(\mathcal {O}\left( \omega \left( f,\,\frac{1}{n}\right) \right) \).

In this section, we first prove that, in the case \(\alpha =2\), the order of uniform approximation is in general \(\mathcal {O}\left( \omega \left( f,\,\frac{\log n}{n}\right) \right) \), where \(\log \) stands for the natural logarithmic function. More exactly, for an arbitrary sigmoidal function \(\sigma \) such that \(\phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \(x\rightarrow -\infty \) (the exact definition of the symbol “\(\sim \)” will be specified below), we will show that there exist a function \(f\in C[a,b]\) and a constant \(C>0\), such that \(\left\| F_{n}(f,\cdot )-f\right\| \ge C\omega \left( f,\,\frac{\log n}{n}\right) \).

In practice, we will show that the order of approximation \(\omega (f, {\log n \over n})\) cannot be improved in general, hence it is the best possible in the class C[a, b] for the operators \(F_n\) and \(K_n\).

Here, and in all that follows, \(f(x)\sim g(x)\) as \(x\rightarrow a\), means that \(f(x)=\mathcal {O}\left( g(x)\right) \) as \(x\rightarrow a\) and \(g(x)= \mathcal {O}\left( f(x)\right) \) as \(x\rightarrow a\).

Using the properties of \(\omega (f,\delta )\) and \(\phi _{\sigma }\), for \( x\in [a,b]\) we get (see, e.g., [9])

We can write

thanks to the well-known inequality

which then implies

We need to recall some useful facts also used in [9], more precisely the following formulae (4), (5), and (6). Since \(\phi _{\sigma }(x)=\mathcal {O}\left( \left| x\right| ^{-2}\right) \) as \(x\rightarrow \pm \infty \), there exist two positive constants \(x_{0}\) and \(C_{1}\), which may depend only on \(\sigma \), such that \( \left| \phi _{\sigma }(x)\right| \le C_{1}\left| x\right| ^{-2}\), for all \(x\in \mathbb {R}\) with \(\left| x\right| \ge \left| x_{0}\right| \). Without any loss of generality we may suppose that \(x_{0}\ge 1\). We may write

where

and

First, let us notice that, by i) and by the fact that \(\phi _{\sigma }\ge 0\), we get

Further, from the definition of J(n, x), it follows that

Let us choose arbitrary \(x\in [a,b]\) and \(k\in J(n,x)\). Since \(na\le \lceil na\rceil \le k\le \left\lfloor nb\right\rfloor \le nb\), it follows that

and

It means that in any interval \([l,l+1)\), where \(l\in \mathbb {Z}\) and \(0\le l<l+1\le \left\lfloor n\left( b-a\right) \right\rfloor +1\), there are at most two elements \(k_{1},k_{2}\in J(n,x)\) such that \(\left| nx-k_{1}\right| \in [l,l+1)\) and \(\left| nx-k_{2}\right| \in [l,l+1)\). Clearly, this implies that

From inequalities (3)–(6), it follows that

It is well-known that there exists a constant \(c>0\) such that \( \sum \limits _{k=1}^{n}\frac{1}{k}\le c\log n\), for every integer \(n\ge 2\). Therefore, there exists a constant \(C_{2}>0\), which may depend only on a and b, such that \(\sum \limits _{k=1}^{\left\lfloor n\left( b-a\right) \right\rfloor +1}\frac{1}{k}\le C_{2}\log n\), for every integer \(n\ge 2\). Consequently, there exists a constant \(C>0\) such that

Actually, if \(\phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \( x\rightarrow -\infty \), the order of uniform approximation \(\mathcal {O} \left( \omega \left( f,\,\frac{\log n}{n}\right) \right) \) cannot be improved over the class C[a, b].

Indeed, if we define:

i.e., a sigmoidal functions satisfying all the required assumptions in Remark 1, we can easily see that the corresponding \(\phi _{\sigma }\) satisfies:

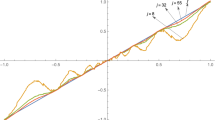

We made this choice for \(\sigma \) just to simplify the proof. We will see later that actually we can assume \(\sigma \) arbitrary such that \( \phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \(x\rightarrow +\infty \). Then, consider the function \(f:[0,1]\rightarrow \mathbb {R}\), \( f(x)=\left| 2x-1\right| \). It is immediate that for \(\delta >0\) sufficiently small, we have

\[ \omega (f,\delta )=2\delta . \]

Suppose that \(n\in \mathbb {N}_{+}\) is even. Then we have (we are in the case \(a=0\) and \(b=1\))

and since for sufficiently large n we have

and using (8) it follows that

for n even and sufficiently large. Obviously, for n odd we can obtain a similar inequality.
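The lower bound can be observed numerically. The sketch below uses an assumed sigmoidal function, chosen here only because its kernel equals \(\frac{1}{8}\cdot \frac{1}{x^{2}-1}\) for \(|x|\ge 3/2\), which is the kernel formula quoted in the text; it is not claimed to coincide with the definition in (7). For \(f(x)=|2x-1|\) one has \(\omega (f,\delta )=2\delta \) for \(0<\delta \le 1/2\), so the ratio between the error at \(x=1/2\) and \(\omega (f,\log n/n)\) is easy to evaluate. All function names are illustrative.

```python
import math


def sigma(x: float) -> float:
    """Assumed sigmoidal function (illustrative choice, not quoted from the paper)."""
    if x <= -0.5:
        return 1.0 / (8.0 * abs(x))
    if x >= 0.5:
        return 1.0 - 1.0 / (8.0 * x)
    return 0.5 * x + 0.5


def phi(x: float) -> float:
    """Kernel (sigma(x + 1) - sigma(x - 1)) / 2; equals 1 / (8 (x^2 - 1)) for |x| >= 3/2."""
    return 0.5 * (sigma(x + 1.0) - sigma(x - 1.0))


def nn_operator(f, n: int, x: float, a: float = 0.0, b: float = 1.0) -> float:
    """F_n(f, x): phi-weighted mean of f(k/n), k = ceil(n*a), ..., floor(n*b)."""
    num = den = 0.0
    for k in range(math.ceil(n * a), math.floor(n * b) + 1):
        w = phi(n * x - k)
        num += w * f(k / n)
        den += w
    return num / den


def f(x: float) -> float:
    return abs(2.0 * x - 1.0)


def error_ratio(n: int) -> float:
    """|F_n(f, 1/2) - f(1/2)| divided by omega(f, log(n)/n) = 2 log(n) / n."""
    error = abs(nn_operator(f, n, 0.5) - f(0.5))
    return error / (2.0 * math.log(n) / n)


if __name__ == "__main__":
    for n in (100, 1000, 10000):
        print(n, error_ratio(n))
```

In this experiment the ratio stays bounded away from zero as n grows, whereas the error multiplied by n keeps increasing, which is the behaviour one expects when the rate \(\omega (f,1/n)\) is not attained.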

Now, suppose that \(\sigma \) is an arbitrary sigmoidal function such that \( \phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \(x\rightarrow -\infty \). This means that there exist constants \(C_{1},C_{2}>0\) and \(x_{0}>0\) such that

In view of inequality (9), it is clear that this time, the constant \(\frac{1}{8}\) will be replaced with a constant depending on \(C_{1}\) and instead of \(\ \sum \nolimits _{k=2}^{n/2}\frac{1}{k}\) we will obtain the expression

where \(C_{3}\) depends on \(x_{0}\). Clearly, this implies that we can obtain a similar inequality as in (10), only with a different constant in front of \(\omega \left( f,\,\frac{\log n}{n}\right) \).

Thus, we improved the result in Theorem 4, that is, when \( \sigma (x)=\mathcal {O}\left( \left| x\right| ^{-2}\right) \) as \( x\rightarrow -\infty \) the order of uniform approximation is \(\mathcal {O} \left( \omega \left( f,\,\frac{\log n}{n}\right) \right) \), which in addition is the best order of uniform approximation over the class C[a, b], whenever \(\phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \( x\rightarrow -\infty \).

Let us now explain that the same property is true in the case of the Kantorovich variant given in (2). First, let us notice that by simple calculations, for any \(f\in C[a,b]\) it holds that

(see the last line on page 11 of [9]). Clearly, this implies the same order \(\mathcal {O}\left( \omega \left( f,\,\frac{\log n}{n} \right) \right) \) of uniform approximation for \(K_{n}\). Let us now explain that this order of uniform approximation is the best possible over \(f\in C[a,b]\), whenever \(\phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \( x\rightarrow -\infty \).

Suppose again that \(\sigma \) is that introduced in (7), with \(\phi _{\sigma }\left( x\right) =\frac{1}{8}\cdot \frac{1}{x^{2}-1} \ge {\frac{1 }{8\, x^2}}\), if \(\left| x\right| \ge \frac{3}{2}\). Then, we consider the same function \(f(x)=\left| 2x-1\right| \), \(x\in [0,1]\). If \(n\in \mathbb {N}_{+}\) is even, we obtain

for sufficiently large n. Therefore, we reached the same conclusion, that is, when \(\phi _{\sigma } (x)\sim \left| x\right| ^{-2}\) as \( x\rightarrow -\infty \), the order of uniform approximation \(\mathcal {O} \left( \omega \left( f,\,\frac{\log n}{n}\right) \right) \) is the best possible over the class C[a, b] in the approximation by the \(K_{n}\) operators.

Now, suppose that \(\sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow -\infty \) with \(\alpha \in (1,2)\). From Theorem 2 we know that the rate of uniform convergence is \(\mathcal {O}\left( \omega \left( f,\,\frac{1}{ n^{\alpha -1}}\right) \right) \) in the approximation by the \(F_{n}\) operators. Let us prove that, in fact, this is the best order of uniform approximation over the class C[a, b].

Let now \(\sigma _\beta \) be a sigmoidal activation function defined as follows:

with \(\beta \in (0,1]\). Obviously, if \(\beta =1\) we find again the sigmoidal function introduced in (7). Now, it is not difficult to see that:

when \(x\in (-\infty ,-3/2]\cup [3/2,\infty )\). In practice, for \(\frac{1}{2}<\beta <1\), it turns out that \(\phi _{\sigma _\beta }(x) \sim |x|^{-\alpha }\), with \(1< \alpha =2\beta <2\). Again, it suffices to work with the function \(f(x)=\left| 2x-1\right| \), \(x\in [0,1]\), and to suppose that n is an even positive integer. We have

where in the above inequalities we set, for the sake of simplicity, \( C_\beta := {\frac{2^{-\beta } }{8}}\) and \(\alpha =2\beta \). Applying the Lagrange mean value theorem on each interval \(\left[ k,k+1\right] \), \(k=2,...,n/2\), for the function \(g(x)=x^{2-\alpha }\), one can easily prove that

for n sufficiently large. This implies

As in the case \(\alpha =2\) we can easily generalize this estimation for the case of an arbitrary sigmoidal function \(\sigma \) such that \(\phi _{\sigma } (x)\sim \left| x\right| ^{-\alpha }\) as \(x\rightarrow -\infty \) with \(\alpha \in (1,2)\). Then of course, the same estimation holds for the Kantorovich variant.

Finally, we notice that in the case when \(\sigma (x)=\mathcal {O}\left( \left| x\right| ^{-\alpha }\right) \) as \(x\rightarrow -\infty \) with \(\alpha >2\), by Theorem 3 it follows that the estimation is of Jackson type, and it is well-known that such an estimation cannot be improved. Actually, repeating the reasoning from the previous case, it is clear that this time the sequence is convergent, and this easily implies the conclusion stated earlier.

We can now summarize all the results obtained so far in the following first main result of this paper.

Theorem 6

Consider a sigmoidal function \(\sigma :\mathbb {R} \rightarrow \mathbb {R}\) and \(\alpha >1\) such that \(\phi _{\sigma } (x)\sim \left| x\right| ^{-\alpha }\) as \(x\rightarrow -\infty \) and \(a,b\in \mathbb {R}\), \(a<b\). Considering the NN operators \(F_{n}\) and \(K_{n}\), given in (1) and (2), respectively, we state the following properties:

(i) if \(\alpha \in (1,2)\) then \(\mathcal {O}\left( \omega \left( f,\,\frac{1}{ n^{\alpha -1}}\right) \right) \) is the best order of uniform approximation over the class C[a, b] in the approximation by the operators \(F_{n}\) and \( K_{n}\);

(ii) if \(\alpha =2\) then \(\mathcal {O}\left( \omega \left( f,\,\frac{\log n}{n }\right) \right) \) is the best order of uniform approximation over the class C[a, b] in the approximation by the operators \(F_{n}\) and \(K_{n}\);

(iii) if \(\alpha >2\) then \(\mathcal {O}\left( \omega \left( f,\,\frac{1}{n} \right) \right) \) is the best order of uniform approximation over the class C[a, b] in the approximation by the operators \(F_{n}\) and \(K_{n}\).
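The three regimes can be summarized by the argument \(\delta _{n}\) of the modulus of continuity, namely \(n^{1-\alpha }=1/n^{\alpha -1}\) for \(\alpha \in (1,2)\) (in line with the orders listed in Sect. 5), \(\log n/n\) for \(\alpha =2\), and \(1/n\) for \(\alpha >2\). A small helper, with a hypothetical name, makes the comparison explicit.

```python
import math


def modulus_argument(alpha: float, n: int) -> float:
    """Argument delta_n of omega(f, delta_n) in the best order, for kernel decay |x|^(-alpha)."""
    if alpha <= 1.0:
        raise ValueError("the theory requires alpha > 1")
    if n < 2:
        raise ValueError("take n >= 2 so that log(n) > 0")
    if alpha < 2.0:
        return n ** (1.0 - alpha)   # slower than 1/n
    if alpha == 2.0:
        return math.log(n) / n      # borderline case with a logarithmic factor
    return 1.0 / n                  # Jackson type rate


if __name__ == "__main__":
    for alpha in (1.5, 2.0, 3.0):
        print(alpha, [modulus_argument(alpha, n) for n in (10, 100, 1000)])
```

For a fixed n the argument decreases as \(\alpha \) moves from (1, 2) through 2 to values above 2, reflecting that faster kernel decay gives a better order.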

4 Inverse Results of Approximation

In this section we deal with inverse approximation theorems for NN operators.

In order to do this, we first recall the definition of the well-known Lipschitz classes of order \(0<\nu \le 1\).

We say that a function \(f \in C[a,b]\) belongs to the Lipschitz class of order \(0<\nu \le 1\), denoted by \(Lip_\nu [a,b]\), if:

\[ \omega (f,\delta )\le C\,\delta ^{\nu }, \]

for sufficiently small \(\delta >0\) and a suitable positive constant C.
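On a compact interval, membership in \(Lip_\nu [a,b]\) is equivalent to the boundedness of the quotient \(|f(x)-f(y)|/|x-y|^{\nu }\) over all pairs \(x\ne y\) (for large \(|x-y|\) the boundedness of f is enough). The following sketch evaluates this quotient on a grid; the function name and the test function \(f(x)=\sqrt{|x-1/2|}\) are assumptions made for illustration.

```python
import math


def holder_quotient(f, a, b, nu, grid=400):
    """Grid maximum of |f(x) - f(y)| / |x - y|**nu over distinct grid points."""
    xs = [a + (b - a) * i / grid for i in range(grid + 1)]
    values = [f(x) for x in xs]
    best = 0.0
    for i in range(grid + 1):
        for j in range(i + 1, grid + 1):
            best = max(best, abs(values[j] - values[i]) / (xs[j] - xs[i]) ** nu)
    return best


def root_cusp(x: float) -> float:
    """Test function sqrt(|x - 1/2|), which has a cusp of order 1/2 at x = 1/2."""
    return math.sqrt(abs(x - 0.5))


if __name__ == "__main__":
    for nu in (0.5, 0.75):
        print(nu, [holder_quotient(root_cusp, 0.0, 1.0, nu, grid=g) for g in (100, 200, 400)])
```

For \(\nu =1/2\) the quotient stays bounded by 1 under grid refinement, while for \(\nu =3/4\) it grows as the grid is refined, which suggests that the test function belongs to \(Lip_{1/2}[0,1]\) but not to \(Lip_{3/4}[0,1]\).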

Before stating the main result of this section we recall the following notation. For any given function \(\chi : \mathbb {R} \rightarrow \mathbb {R}\), we denote by:

It is well-known that, if the parameter \(\alpha \) of condition iii) of Sect. 2 is \(\alpha >2\), it turns out that (see [21]):

For more details about the finiteness of the discrete absolute moments of a given function, see also [27].

We can immediately state the following.

Theorem 7

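Let \(\sigma \) be a sigmoidal function and let \(\alpha >2\) be the parameter of condition iii) of Sect. 2. Assume in addition that \(M_0(\phi _{\sigma }^{\prime })\) and \(M_1(\phi _{\sigma }^{\prime })\) are both finite. Considering the NN operators \(F_{n}\) and \(K_{n}\), given in (1) and (2), respectively, and \(f \in C[a,b]\), \(a<b\), such that:

\[ \left\| F_{n}(f,\cdot )-f\right\| =\mathcal {O}\left( n^{-\nu }\right) ,\quad \text{as } n\rightarrow +\infty , \]

or

\[ \left\| K_{n}(f,\cdot )-f\right\| =\mathcal {O}\left( n^{-\nu }\right) ,\quad \text{as } n\rightarrow +\infty , \]

with \(0<\nu < 1\), it turns out that:

\[ f\in Lip_{\nu }[a,b]. \]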

Proof

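We only consider the case in which we have:

\[ \left\| F_{n}(f,\cdot )-f\right\| =\mathcal {O}\left( n^{-\nu }\right) ,\quad \text{as } n\rightarrow +\infty , \]

since in the case of the operators \(K_n\) the proof works similarly. Now, for any \(\delta >0\) we can write what follows: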

where \(M>0\) is a suitable constant arising from (13). We want to estimate \(I_2\). Now, recalling the assumptions given in Sect. 2, since \(\sigma \in C^2(\mathbb {R})\), it turns out that the function \(F_n (f, \cdot )\) is differentiable on the interval [a, b]. Hence, for any x, \( y \in [a,b]\) we easily have:

Computing \(F^{\prime }_n(f, t)\) we find the following general expression:

Now, recalling that the NN operators \(F_n\) preserve the function \(\textbf{1} (x)=1\), \(x \in [a,b]\), i.e.,

we can deduce that:

Then, we can write what follows:

which suggests estimating the last integrand. We have:

Using again the properties of the modulus of continuity, we can write what follows:

for \(n \in \mathbb {N}_+\). Using the latter estimate in (14) we get:

from which we obtain that:

In conclusion, rearranging all the above computations we finally have the following inequality:

\(n \in \mathbb {N}_+\). Now, we consider the number \(A \in \mathbb {N}_+\) sufficiently large, in such a way that:

and we define the sequence \((\delta _w)_{w \in \mathbb {N}_+}\) by \(\delta _w:= A^{-w}\), \(w \in \mathbb {N}_+\). We want to prove that:

for \(w \in \mathbb {N}_+\), where:

In order to get (16) we proceed by induction on w. For \(w=1\) we immediately have the thesis since:

We now assume that the thesis is satisfied for \(w-1 \ge 1\) and then we consider w. Setting \(n=A^{w-1} \in \mathbb {N}_+\), noting that \( A^{-\nu }\delta _{w-1}^{\nu } = \delta ^{\nu }_w\), and using (15) we obtain:

In order to conclude the proof, we finally need to show that the inequality:

holds for every \(0<\delta < \bar{\delta }\), for suitable \(L>0\) and \(\bar{\delta }>0\). Setting \(\bar{\delta }= \delta _1\), for every fixed \(\delta < \delta _1\), there exists \(w \ge 2\) such that \(\delta _w \le \delta \le \delta _{w-1}\). Using the properties of the modulus of continuity, and inequality (16) we finally have:

This completes the proof of the first part of the theorem. Note that, if we assume (13) with \(K_n\) in place of \(F_n\) and repeat all the above steps, we can easily establish the same conclusion. \(\square \)

Now, recalling the results established in Theorem 3 and Theorem 7, we can immediately deduce the following characterization of the space \(Lip_\nu [a,b]\), in terms of the convergence of the families \(F_n\) and \(K_n\).
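The last step of the proof relies on locating an arbitrary \(\delta <\delta _1\) between two consecutive terms of the geometric sequence \(\delta _w=A^{-w}\). The sketch below finds such an index w; the function name is hypothetical. Assuming that (16) has the usual form \(\omega (f,\delta _w)\le K\delta _w^{\nu }\), the monotonicity of the modulus of continuity then gives \(\omega (f,\delta )\le \omega (f,\delta _{w-1})\le K A^{\nu }\delta _w^{\nu }\le K A^{\nu }\delta ^{\nu }\).

```python
def bracket_index(delta: float, A: int) -> int:
    """Smallest w >= 2 with A**(-w) <= delta, assuming 0 < delta < 1/A."""
    if A < 2:
        raise ValueError("A must be an integer >= 2")
    if not 0.0 < delta < 1.0 / A:
        raise ValueError("delta must satisfy 0 < delta < delta_1 = 1/A")
    w = 2
    while A ** (-w) > delta:
        w += 1
    return w


if __name__ == "__main__":
    A = 4
    for delta in (0.2, 0.01, 0.0003):
        w = bracket_index(delta, A)
        print(delta, w, A ** (-w), A ** (-(w - 1)))
```

By construction the returned index satisfies \(\delta _w\le \delta \le \delta _{w-1}\), which is exactly the bracketing used in the proof.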

Theorem 8

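Under the assumptions of Theorem 7, we have that a function \(f \in Lip_\nu [a,b]\), \(0<\nu <1\), if and only if:

\[ \left\| F_{n}(f,\cdot )-f\right\| =\mathcal {O}\left( n^{-\nu }\right) ,\quad \text{as } n\rightarrow +\infty . \]

The same holds for the operators \(K_n\).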
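The characterization can be illustrated by a small numerical experiment, which is not a proof. The sketch assumes the logistic function as \(\sigma \), the classical operator written as a \(\phi _{\sigma }\)-weighted mean of the samples \(f(k/n)\), and the test function \(f(x)=\sqrt{|x-1/2|}\), which is Hölder continuous of order 1/2 on [0, 1]. The empirical order is estimated from the uniform errors on a grid for two values of n; all names are illustrative.

```python
import math


def logistic(x: float) -> float:
    """Logistic sigmoidal function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def phi(x: float) -> float:
    """Kernel phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def nn_operator(f, n: int, x: float, a: float = 0.0, b: float = 1.0) -> float:
    """F_n(f, x): phi-weighted mean of f(k/n), k = ceil(n*a), ..., floor(n*b)."""
    num = den = 0.0
    for k in range(math.ceil(n * a), math.floor(n * b) + 1):
        w = phi(n * x - k)
        num += w * f(k / n)
        den += w
    return num / den


def root_cusp(x: float) -> float:
    """Test function sqrt(|x - 1/2|)."""
    return math.sqrt(abs(x - 0.5))


def sup_error(f, n: int, a: float = 0.0, b: float = 1.0, samples: int = 200) -> float:
    """Maximum of |F_n(f, x) - f(x)| over a uniform grid of samples + 1 points."""
    worst = 0.0
    for i in range(samples + 1):
        x = a + (b - a) * i / samples
        worst = max(worst, abs(nn_operator(f, n, x, a, b) - f(x)))
    return worst


def observed_order(f, n1: int, n2: int) -> float:
    """Empirical exponent nu in error ~ n**(-nu), from two values of n."""
    return math.log(sup_error(f, n1) / sup_error(f, n2)) / math.log(n2 / n1)


if __name__ == "__main__":
    print(observed_order(root_cusp, 100, 400))
```

In this experiment the observed exponent is close to 1/2, in agreement with the expected order \(n^{-\nu }\) for a function in \(Lip_{1/2}[0,1]\).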

Examples of sigmoidal functions for which the above inverse results of approximation are satisfied can be easily provided. Indeed, we can simply consider, e.g., the usual logistic function, the sigmoidal function generated by the hyperbolic tangent function, and several others. For other examples, see, e.g., [10, 11, 28,29,30,31,32].

5 Final Remarks

In the present paper we considered the problem of the best approximation order and inverse theorems for families of neural network operators. From the results here established, the following conjecture concerning the saturation order of \(F_n\) and \(K_n\) in the space C[a, b] with respect to the uniform norm, can be formulated:

1. in the case \(\alpha \in (1,2)\) the saturation order is \({\mathcal {O}}(n^{1-\alpha })\), as \(n\rightarrow +\infty \);

2. in the case \(\alpha = 2\) the saturation order is \({\mathcal {O}}(n^{-1}\log n)\), as \( n\rightarrow +\infty \);

3. in the case \(\alpha > 2\) the saturation order is \({\mathcal {O}}(n^{-1})\), as \( n\rightarrow +\infty \).

The proof of the above conjecture is still an open problem, as is the inverse result of approximation for \(\alpha >2\) and \(\nu =1\) (together with inverse results for the cases \(\alpha \in (1,2]\)). For \(\alpha >2\) and \(\nu = 1\) the proof of Theorem 7 fails, as usually happens in the cases corresponding to the saturation order. Hence, this case needs to be treated by different strategies.

Availability of data and material and Code availability

Not applicable.

References

Ancellin, M., Després, B.: A functional equation with polynomial solutions and application to neural networks. C. R. Math. 358(9–10), 1059–1072 (2020)

Grohs, P., Voigtlaender, F.: Proof of the theory-to-practice gap in deep learning via sampling complexity bounds for neural network approximation spaces. Found. Comput. Math. (2023)

Petersen, P., Voigtlaender, F.: Equivalence of approximation by convolutional neural networks and fully-connected networks. Proc. Am. Math. Soc. 148, 1567–1581 (2020)

Yang, Y., Zhou, D.-X.: Optimal rates of approximation by shallow ReLU\(^k\) neural networks and applications to nonparametric regression, arXiv, https://doi.org/10.48550/arXiv.2304.01561 (2023)

Cardaliaguet, P., Euvrard, G.: Approximation of a function and its derivative with a neural network. Neural Netw. 5(2), 207–220 (1992)

Anastassiou, G.A.: Rate of convergence of some neural network operators to the univariate case. J. Math. Anal. Appl. 212, 237–262 (1997)

Anastassiou, G.A.: Multivariate hyperbolic tangent neural network approximation. Comput. Math. Appl. 61(4), 809–821 (2011)

Anastassiou, G.A.: Multivariate sigmoidal neural network approximation. Neural Netw. 24, 378–386 (2011)

Coroianu, L., Costarelli, D., Kadak, U.: Quantitative estimates for neural network operators implied by the asymptotic behaviour of the sigmoidal activation functions. Mediter. J. Math. 19, paper 211 (2022)

Costarelli, D.: Density results by deep neural network operators with integer weights. Math. Model. Anal. 27(4), 547–560 (2022)

Costarelli, D.: Approximation error for neural network operators by an averaged modulus of smoothness. J. Approx. Theory 294, 105944 (2023)

Bajpeyi, S., Kumar, A.S.: Approximation by exponential sampling type neural network operators. Anal. Math. Phys. 11(3), paper number 108 (2021)

Cao, F., Chen, Z.: The construction and approximation of a class of neural networks operators with ramp functions. J. Comput. Anal. Appl. 14(1), 101–112 (2012)

Costarelli, D., Sambucini, A.R., Vinti, G.: Convergence in Orlicz spaces by means of the multivariate max-product neural network operators of the Kantorovich type and applications. Neural Comput. Appl. 31, 5069–5078 (2019)

Kadak, U.: Fractional type multivariate neural network operators. Math. Methods Appl. Sci. (2021). https://doi.org/10.1002/mma.7460

Qian, Y., Yu, D.: Rates of approximation by neural network interpolation operators. Appl. Math. Comput. 418, Paper No. 126781 (2022)

Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

Turkun, C., Duman, O.: Modified neural network operators and their convergence properties with summability methods. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 114(3), Paper No. 132 (2020)

Zhou, D.-X.: Universality of deep convolutional neural networks. Appl. Comput. Harmonic Anal. 48(2), 787–794 (2020)

Zoppoli, R., Sanguineti, M., Gnecco, G., Parisini, T.: Neural Approximations for Optimal Control and Decision, Communications and Control Engineering book series (CCE). Springer, Cham (2020)

Costarelli, D., Spigler, R.: Approximation results for neural network operators activated by sigmoidal functions. Neural Netw. 44, 101–106 (2013)

Costarelli, D., Spigler, R.: Convergence of a family of neural network operators of the Kantorovich type. J. Approx. Theory 185, 80–90 (2014)

Costarelli, D., Spigler, R.: Multivariate neural network operators with sigmoidal activation functions. Neural Netw. 48, 72–77 (2013)

Costarelli, D., Vinti, G.: Quantitative estimates involving K-functionals for neural network type operators. Appl. Anal. 98(15), 2639–2647 (2019)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989)

DeVore, R.A., Lorentz, G.G.: Constructive Approximation, vol. 303. Springer, New York (1993)

Costarelli, D., Piconi, M., Vinti, G.: The multivariate Durrmeyer-sampling type operators: approximation in Orlicz spaces, Dolomites Res. Notes Approx., Special Issue ATMA2021 - Approx. Theory Methods Appl. 15 128–144 (2022)

Chen, H., Yu, D., Li, Z.: The construction and approximation of ReLU neural network operators. J. Funct. Spaces 2022 (2022)

Costarelli, D., Vinti, G.: Estimates for the neural network operators of the max-product type with continuous and p-integrable functions. Res. Math. 73(1), 12 (2018)

Costarelli, D., Sambucini, A.R.: Approximation results in Orlicz spaces for sequences of Kantorovich max-product neural network operators. Res. Math. 73(1), 15 (2018)

Kainen, P.C., Kurková, V., Vogt, A.: Approximative compactness of linear combinations of characteristic functions. J. Approx. Theory 257, paper number 105435 (2020)

Siegel, J.W., Xu, J.: Approximation rates for neural networks with general activation functions. Neural Netw. 128, 313–321 (2020)

Acknowledgements

The author D. Costarelli is a member of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM), of the Gruppo UMI (Unione Matematica Italiana) T.A.A. (Teoria dell’Approssimazione e Applicazioni), and of the network RITA (Research ITalian network on Approximation).

Funding

Open access funding provided by Università degli Studi di Perugia within the CRUI-CARE Agreement. The author D. Costarelli has been partially supported by the Department of Mathematics and Computer Science of the University of Perugia (Italy) and within the projects: (1) 2023 GNAMPA-INdAM Project "Approssimazione costruttiva e astratta mediante operatori di tipo sampling e loro applicazioni" (CUP _E53C22001930001), (2) 2024 GNAMPA-INdAM Project "Tecniche di approssimazione in spazi funzionali con applicazioni a problemi di diffusione" (CUP_E53C23001670001), (3) "National Innovation Ecosystem grant ECS00000041 - VITALITY", funded by the European Union - NextGenerationEU under the Italian Ministry of University and Research (MUR), and (4) PRIN 2022 PNRR: "RETINA: REmote sensing daTa INversion with multivariate functional modeling for essential climAte variables characterization", funded by the European Union under the Italian National Recovery and Resilience Plan (NRRP) of NextGenerationEU, under the MUR (Project Code: P20229SH29, CUP: J53D23015950001).

Author information

Contributions

All authors contributed equally to the study. All authors read and approved the final manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Coroianu, L., Costarelli, D. Best Approximation and Inverse Results for Neural Network Operators. Results Math 79, 193 (2024). https://doi.org/10.1007/s00025-024-02222-3

DOI: https://doi.org/10.1007/s00025-024-02222-3

Keywords

- Neural network operators

- sigmoidal function

- modulus of continuity

- Lipschitz classes

- inverse theorem of approximation