Abstract

Development of professional expertise is the process of continually transforming the repertoire of knowledge, skills and attitudes necessary to solve domain-specific problems; it begins in late secondary education and continues during higher education and throughout professional life. One educational goal is to train students to think more like experts and approach the mastery of a subject as an expert would. Helping students to develop professional expertise and evaluating whether classrooms are conducive to the development of expertise is difficult and time-consuming. At present, there is no instrument that measures all the core classroom factors that specifically influence the development of professional expertise. This paper describes the development and validation of an instrument that measures the extent to which educators create a Supportive Learning Environment for Expertise Development, the SLEED-Q. A sample of 586 secondary school students (14–18 years old) was used for validation. Both exploratory and confirmatory factor analyses were carried out. Examination of the fit indices indicated that the model fitted the data well, with the goodness-of-fit coefficients being in recommended ranges. The SLEED-Q, consisting of seven factors with 30 items, has potential as an instrument for examining how conducive learning environments are to the development of professional expertise in secondary school settings. The implications of the results and potential paths for future research are considered.


Introduction

Assessing the quality of learning environments has a long tradition in pedagogical science and many surveys and questionnaires have been developed. Most of these instruments were developed to fit existing theories about factors which predict achievement and to investigate which factors in the learning environment predict educational outcomes. Parallel to the research on learning environments, cognitive psychologists have been investigating the transition from novice to expert, including suggestions about how to organise learning environments to foster the development of professional expertise. Although student development is considered in both strands of research, insights from research on the development of professional expertise have not yet been systematically implemented in instruments used for evaluating the quality of learning environments. This study was intended to bridge the gap between these domains by developing and validating a Supportive Learning Environment for Expertise Development Questionnaire (SLEED-Q).

The introduction of a National Curriculum in the Netherlands was intended to ensure that secondary education (15–18 years) focused more on skills, particularly domain-specific problem-solving skills, instead of solely on the acquisition of conceptual knowledge (Bolhuis 1996). Problem solving has long been the focus of research into development of professional expertise. In the fields of professional expertise (Boshuizen et al. 2004) and academic domain learning (Alexander 2003), it is generally acknowledged that experts outperform novices in problem solving. This is due to experts’ (1) well-organised knowledge, (2) thorough problem analysis and problem representation and (3) strong self-monitoring abilities (Chi et al. 1988). The journey from novice to expert begins in the upper grades of secondary school and continues through higher education (formal education) into professional life (workplace). This developmental process can be characterised as a process of continually transforming a repertoire of knowledge, attitudes and skills to improve problem solving in a particular domain according to expertise standards (Boshuizen et al. 2004).

The knowledge taught in upper grades of secondary education and higher education can be characterised as largely decontextualised and formalised academic knowledge which is expressed in, for example, theories, domain principles, equations and graphs. In industrial and post-industrial societies, the formalised knowledge embodied in traditional academic domains such as history, science, mathematics and economics serves as the backbone of formal education (Alexander 2005). The introduction of the National Curriculum and the corresponding emphasis on problem-solving skills as well as formalised knowledge has introduced elements of expertise development into the upper grades of secondary education in The Netherlands.

This paper focuses on the very early phases in the development of professional expertise (i.e. on development of expertise during the upper grades of secondary education). Although secondary education serves several different purposes and is not always included in studies of expertise development, it is a recognised goal of secondary school programs to help students to take their first steps towards becoming experts (Alexander 2005; Bereiter and Scardamalia 1986; Goldman et al. 1999). The goal of secondary school learning environments is not to make experts of secondary school students but to help students to develop the types of knowledge representations, ways of thinking and social practices that define successful learning in specific domains (Goldman et al. 1999; Hatano and Oura 2003) and thus lay the foundations for development of expertise. As Tynjälä et al. (1997, p. 479) argued: “Education as an institution and educational practices have an important role in creating (or inhibiting) the preconditions for expertise.”

Recognising the importance of education in the development of expertise (Alexander 2005; Boshuizen et al. 2004; Goldman et al. 1999), Tynjälä (2008) developed a pedagogical model of development of expertise. This Integrative Pedagogy Model assumes that an ideal learning environment is one in which all the elements of being an expert—theoretical knowledge, practical skills and self-regulation (reflective and metacognitive skills)—are present and integrated. Tynjälä gives a clear account of the knowledge components and learning processes that together constitute a suitable learning environment for development of expertise, but little is known about the instructional principles which would enable researchers and educators to design and implement learning environments from this pedagogical perspective.

Several studies (e.g. Arts et al. 2002; Nievelstein et al. 2011) have provided evidence that development of professional expertise is a malleable process that is responsive to well-conceived, skilfully implemented interventions. These authors discussed educational and instructional implications which foster students’ development in professional expertise. Although an understanding of these implications would have significant pedagogical value, relevant data are scattered throughout the literature and have not yet been brought together in a systematic manner; as a consequence, they are still underexploited in educational practice and research. Following a review of the literature, Elvira et al. (in press) have presented ten instructional principles for the creation of a classroom environment which promotes expert-like behaviour in diagnostic domains (e.g. business, geography and biology), based on an integrative pedagogical perspective. The question of the extent to which instruction in classrooms is based on insights from research on development of professional expertise remains unanswered.

This paper reports the development and validation of an instrument that assesses the degree to which the classroom learning environment is consistent with known principles for promoting development of professional expertise in diagnostic domains. Many instruments for the evaluation of learning environments have their roots in psychosocial or learning sciences. By using Tynjälä’s Integrative Pedagogy Model as a starting point, we adopt a pedagogical perspective on learning environments grounded in professional expertise development research. The presentation of this classroom learning environment instrument is divided into six parts. Following this introduction, the second section focuses on development of professional expertise and the third section looks at research on classroom learning environments. The design of our research instrument is explained in the fourth section, the results of a validation study are presented in the fifth section and the final section presents a conclusion based on discussion of the instrument.

Expertise development research

Within the domain of cognitive psychology, substantial effort has been devoted to defining the distinguishing characteristics of experts. “While top performance in any field, ranging from chess to composing, represented the main research interest in the 1970s and 1980s, expertise in professions has emerged as one of the most important areas in this decade [the 1990s]” (Tynjälä et al. 1997, p. 477). Based on cross-sectional studies of professional expertise which mostly focused on knowledge structures and the cognitive strategies used in domain-specific problem solving, Tynjälä et al. (1997, p. 477) summarised the characteristics of professional experts as follows: experts perceive large, meaningful patterns in their own domain; experts focus on relevant cues of the task; experts represent problems on a deeper level than novices; experts have better self-monitoring skills than novices; experts’ knowledge structures are hierarchically organised and have more depth in their conceptual levels than those of novices; experts categorise problems in their domain according to abstract, high-level principles; and experts’ knowledge structures are more coherent than those of novices (Chi et al. 1988; Ericsson and Lehmann 1996; Ericsson and Smith 1991; Eteläpelto 1994; Saariluoma 1995, cited by Tynjälä et al. 1997). Experts are not created overnight. Development of expertise is a long and ongoing process that begins in formal education and continues throughout professional life, during which the different elements of knowledge, skills and attitudes are continually transformed, qualitatively and quantitatively (Boshuizen et al. 2004), to support better domain-specific problem solving.

Expertise, as we use the term here, is relative. This perspective on expertise assumes that there are several stages of proficiency between novicehood and expertise (Chi 2006). The literature on expertise development (Alexander 2003; Dreyfus and Dreyfus 1986; Schmidt et al. 1990) provides us with models that describe the path to expertise. This journey involves numerous transitions within and between stages of expertise (Alexander 2003; Schmidt et al. 1990). Stage theories imply a developmental continuum from novice to expert and identify characteristics and development activities at each stage (Grenier and Kehrhahn 2008). In this paper, we focus on the early stages of expertise development, namely, the novice phase, which starts in secondary education, when the foundations for expertise could be laid.

Tynjälä (2008) developed a pedagogical model, the Integrative Pedagogy Model, in which learning towards expertise, in terms of becoming better at problem solving, plays a central role. This pedagogical model integrates the various elements of expert knowledge and learning processes underlying expertise development that unfold around problem solving (see Fig. 1). Various authors (Arts et al. 2006; Boshuizen et al. 2004; Herling 2000) have claimed that the key to expertise lies in an individual’s propensity for solving problems. Expert professionals are constantly solving problems and the ability to solve problems manifests the degree of expertise. The domain-specificity of expertise is reflected in problem-solving ability, such as diagnosing X-ray photographs in radiology (Gunderman et al. 2001), analysing legal cases (Nievelstein et al. 2010), approving financial statements (Bouwman 1984) and choosing appropriate statistical techniques in applied research (Alacaci 2004).

Fig. 1 Integrative Pedagogy Model (Tynjälä 2008)

Expert knowledge, another key feature of professional expertise, consists of three kinds of knowledge that are tightly integrated with each other (Tynjälä 2008). Conceptual/theoretical knowledge is universal, formal and explicit in nature and depends on conscious, conceptual thought processes (Heikkinen et al. 2012) supported by texts, figures, discussions or lectures. The second constituent of expertise, practical knowledge, is manifested as skills or ‘knowing how’ and is seldom taught in university settings; it is usually gained through practical experience (Engel 2008). Knowledge based on practical experience is personal, tacit and similar to intuitions in that it is difficult to express explicitly (Tynjälä 1999). The third type of knowledge is related to self-regulation; self-regulative knowledge, including metacognitive and reflective skills, is knowledge about self-regulated learning strategies and about how to plan, monitor and evaluate one’s own learning and work.

The Integrative Pedagogy Model offers an account of how these three knowledge components are both products of expertise and contributors to its development. Tynjälä (2008) argued that integration of the three types of knowledge occurs during problem solving through the following learning processes: transforming conceptual/theoretical knowledge into practical/experiential knowledge; explicating practical knowledge into conceptual knowledge; and reflecting on both practical and conceptual knowledge by applying and developing self-regulative knowledge (see Fig. 1).

Transforming theoretical knowledge into practical knowledge requires that theories are considered in the light of practical experience (i.e. theoretical knowledge is applied in a practical context). Explicating practical knowledge into conceptual knowledge is the process of making practical knowledge accessible and explicit (in the form of texts, figures, discussions or lectures). The third learning process is reflecting on conceptual and practical/experiential knowledge using self-regulative knowledge; self-regulative knowledge is developed further in the process. This process enables students to make practical knowledge explicit and analyse both theoretical and practical or experiential knowledge (Tynjälä 2008); it is a means of increasing awareness of effective learning strategies and developing an understanding of how these strategies can be used in other learning situations (Ertmer and Newby 1996). The model’s premise is that “the processes that lead to expertise are intriguingly domain general in their view of developmental origins” (Wellman 2003, p. 247) but expertise is definitely not domain-general in terms of developmental outcomes and problem solving.

Tynjälä’s Integrative Pedagogy Model reflects the essential role that integration of the three elements of expert knowledge plays in development of expertise. For instance, during the problem solving process, expert knowledge is transformed and developed; simultaneously this knowledge is used as input for the problem solving process. The arrows in the model represent the continuous, holistic character of expertise development. Although Tynjälä outlined the kinds of learning processes which should be fostered in a learning environment, there has been little work from an integrative pedagogical perspective on instructional principles for such a learning environment.

Taking Tynjälä’s framework as a starting point, we searched for instructional principles for promoting development of expertise. We derived ten instructional principles for promoting the development of professional expertise in diagnostic domains such as biology, business and geography from a systematic literature review (Elvira et al. in press). The instructional principles derived from these studies were categorised according to their association with the three learning processes underlying expertise development. Four instructional principles (Support students in their epistemological understanding, Enable students to understand how particular concepts are connected, Provide students with opportunities to differentiate between and among concepts, and Target for relevance) were associated with transforming theoretical knowledge into practical knowledge. Two other instructional principles were associated with explicating practical knowledge into conceptual knowledge: Share inexpressible knowledge and Pay explicit attention to all students’ prior knowledge. The last four principles (Facilitate self-control and self-reflection, Support students in strengthening their problem-solving strategies, Evoke reflection, and Practice with a variety of problems to enable students to experience complexity and ambiguity) were associated with the learning process of reflecting on practical and conceptual knowledge by using self-regulative knowledge. These instructional principles are summarised in Table 1.

Research on classroom learning environments

Research over the past 40 years has shown that the quality of the classroom learning environment has a critical influence on achievement of educational objectives (Walker and Fraser 2005). Several approaches have been used to assess which aspects of classroom learning environments facilitate learning achievement and learning outcomes. Various approaches are discussed in this section; they can be distinguished on the basis of the following characteristics:

(a) Theoretical perspective
(b) Perspective on the classroom learning environment
(c) Type of data collection
(d) Educational level.

One way of differentiating instruments for evaluation of learning environments is to consider the variety of (learning science) theories by which the research is influenced. For example, the Questionnaire on Teacher Interaction (QTI; e.g. Wubbels and Brekelmans 1998) was influenced by the pragmatic perspective of the communicative systems approach (i.e. the effect of communication on someone else). The Learning Environment Inventory (LEI; Fraser et al. 1982), Classroom Environment Scale (CES; e.g. Moos and Trickett 1987) and My Class Inventory (MCI; Fraser and O’Brien 1985) were designed for use in teacher-centred classrooms, whereas the Individualised Classroom Environment Questionnaire (ICEQ; Fraser 1990) was developed to assess the factors which differentiate conventional classrooms from individualised ones involving open or inquiry-based approaches to learning. Other instruments are informed by important concepts in learning theories such as metacognition or social constructivism. These include the Metacognitive Orientation Learning Environment Scale-Science (MOLES-S; Thomas 2003) and the Constructivist Learning Environment Survey (CLES; e.g. Taylor et al. 1997). The What Is Happening In this Class? questionnaire (WIHIC; Fraser et al. 1996) was designed to bring parsimony to the field of learning environments research and might therefore be considered to be based on a pragmatic perspective (Dorman 2003).

Studies also differ in their perspective on the learning environment. An important distinction can be drawn between studies which focus on psychosocial factors and studies which also consider physical factors (e.g. Zandvliet and Fraser 2005). From a psychosocial perspective, the classroom learning environment should create favourable conditions for learning; in other words, attention should be paid to factors that, for example, affect student satisfaction, cohesiveness and autonomy. According to Moos (1974), each human environment—irrespective of the type of setting—can be described by three dimensions: Relationship Dimensions (the nature and intensity of personal relationships within the environment and the extent to which people are involved in the environment and support and help each other), Personal Development Dimensions (standard pathways for personal growth and self-enhancement) and System Maintenance and Change Dimensions (orderliness, clarity of expectations, degree to which control is exerted, responsiveness to change). The LEI (Fraser et al. 1982), CES (Moos and Trickett 1987), ICEQ (Fraser 1990), MCI (Fraser and O’Brien 1985), College and University Classroom Environment Inventory (CUCEI; Fraser and Treagust 1986), Science Laboratory Environment Inventory (SLEI; Fraser and McRobbie 1995), QTI (Wubbels and Brekelmans 1998), CLES (Taylor et al. 1997) and WIHIC (Fraser et al. 1996) are examples of questionnaires that focus on the psychosocial aspects of the classroom environment (Fraser 1998; Schönrock-Adema et al. 2012; Van der Sijde and Tomic 1992). Instruments which assess the physical learning environment focus on the physical, ergonomic, chemical and biological factors that can affect a student’s ability and capacity to learn (Zandvliet and Straker 2001). Certain elements of the physical environment (e.g. space, light, colour, noise, materials, thermal control, air quality) influence learning and development (Berris and Miller 2011). 
It is assumed that physically inappropriate learning environments can be barriers to learning (for example, a noisy classroom can impede concentration and make it difficult to hear the teacher). The Computerised Classroom Ergonomic Inventory (CCEI; Zandvliet and Straker 2001) and Childhood Physical Environment Rating Scale (CPERS; Moore and Sugiyama 2007) are examples of questionnaires that focus on the physical aspect of learning environments.

There is an important distinction between qualitative and quantitative studies in classroom learning environment research. Studies which take a qualitative approach use open-ended responses (Wong 1993), interviews (e.g. Ge and Hadré 2010; Lorsbach and Basolo 1998), participant observation (Kankkunen 2001), logbooks (Stevens et al. 2000), video recordings (Li 2004), field notes (Parsons 2002) and reflections (Harrington and Enochs 2009) to build a detailed picture of learning environments. Although valuable, these techniques are very time-consuming; a reliable and conceptually-sound instrument which provides quantitative snapshots of classrooms would be a valuable supplement to such activities (Walker and Fraser 2005). Quantitative studies involve numerical, structured and validated data-collection instruments, and statistics. A distinction can also be drawn between ‘alpha press’ and ‘beta press’. Murray (1938) used the term ‘alpha press’ to refer to an external observer’s perceptions of the learning environment and ‘beta press’ to refer to observations by the constituent members of the environment under observation. In many cases, classroom learning environment research uses student ratings. Students are in a good position to make judgements about classrooms because they have encountered many different learning environments and spend enough time in a given classroom environment to form an accurate impression of it (Fraser 1998).

Finally, studies can be distinguished according to the educational level on which they focus. Research and measurement of learning environments have been dominated by studies at the secondary school level and, to a lesser extent, elementary school and higher education levels (Fraser 1998).

To date, learning environment research focusing explicitly on the development of expertise has taken a qualitative, learning science perspective on higher education (Ge and Hadré 2010). Using a qualitative design, Ge and Hadré (2010) identified stages in the development of expertise and the processes by which novices gain competence. In the present article, we describe the development and validation of the Supportive Learning Environment for Expertise Development Questionnaire (SLEED-Q), drawing on pedagogical, learning theoretical and psychosocial insights (see Table 1). The SLEED-Q is a quantitative instrument for evaluating the extent to which a learning environment supports the development of professional expertise in diagnostic domains such as business, geography and biology.

Instrument design and development

In order to develop a useful instrument to assist researchers and teachers in assessing the degree to which a particular classroom’s learning environment is consistent with known principles of development of professional expertise, we derived an instrumental framework from the theoretical framework. The first step in developing the draft version of an instrument measuring the extent to which educators have created a Supportive Learning Environment for Expertise Development was conceptualisation of the dimensions. The ten instructional principles of a learning environment supportive of expertise development were reflected in ten dimensions (see Table 1). We wrote items that tapped each of the ten dimensions. The questionnaire was developed in Dutch and presented to Dutch students. The first author of this paper developed entirely new items for the scales epistemological understanding, prior knowledge, differentiation, connectedness and practice with complexity and ambiguity; for the other five scales, we combined original items with relevant items from the ‘metacognitive demands’ and ‘teacher encouragement and support’ scales of the MOLES-S (Thomas 2003), the performance control and self-reflection scales from the Self-Regulated Learning Inventory for Teachers (SRLIT; Lombaerts et al. 2007) and the Planning and Monitoring scale, Relevance and Coherence scale and the Self-tackling assignments scale (Sol and Stokking 2008). Only the four items from MOLES-S used in the SLEED-Q were originally published in English. These four items were independently translated into Dutch by a native English speaker (with a Master’s degree) and a certified Dutch translator (with a Master’s degree and knowledge of education in the age category 12–18 years) fluent in English.

The questionnaire items were reviewed by two researchers in educational sciences and one researcher in management sciences to establish content validity. These reviewers agreed that the set of statements was consistent with the underlying theoretical framework. In order to ensure that the items were considered relevant and comprehensible by teachers and students, we tested the instrument in a pilot study in which 36 secondary school students (with three different teachers) completed the digital version of the SLEED-Q. Students reported to their teachers any difficulties in understanding the questions. They were also asked about the user-friendliness of the digital instrument. After the students completed the questionnaire, two secondary school teachers reviewed the questionnaire to assess the clarity and absence of ambiguity of the items. We made some modifications based on recommendations and comments from the students, teachers and researchers. We improved the phrasing and clarity of some items and the usability characteristics of the instrument (e.g. we added an introduction to the questionnaire and divided it into clearly arranged, tabbed pages). We also had to make certain changes to the electronic design of the questionnaire. The result of this process was the initial Supportive Learning Environment for Expertise Development Questionnaire (SLEED-Q), a 65-item instrument consisting of ten scales, each corresponding to one of the ten aforementioned instructional principles. The questionnaire makes use of a five-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neither agree nor disagree, 4 = agree, and 5 = strongly agree).

In order to determine the best factor structure to represent the SLEED-Q, we performed exploratory factor analysis (EFA) and confirmatory factor analysis (CFA). EFA was carried out on the initial instrument, CFA on the modified instrument. Between November 2011 and January 2012, the initial instrument was administered to 430 secondary school students (176 girls; 254 boys) following a business (Management and Organisation; M&O) track, in 18 different 10th to 12th grade pre-university and senior general higher education classes (14–18 years of age, average age 16.83 years; 10th grade: 40 %; 11th grade: 40 %; 12th grade: 20 %) at 9 Dutch schools.

In June and July 2012, the modified questionnaire was administered to 156 secondary school students (90 girls; 66 boys) following a business (Management and Organisation; M&O) track, in 8 different 10th to 12th grade pre-university and senior general higher education classes (14–18 years of age, average age 16.35 years; 10th grade: 59 %; 11th grade: 41 %) at 5 Dutch schools. As well as completing the SLEED-Q, students provided demographic information (gender, age).

On the basis of evidence that student ratings tend to reflect personal preferences (Kunter and Baumert 2006), we added an external criterion. Students were asked to fill out a validated scale consisting of nine questions (Harackiewicz et al. 2008) about their individual interest in the school subject for which they completed the SLEED-Q to provide a measure of concurrent validity.

Analysis and results

Exploratory factor analysis

The data were subjected to maximum likelihood extraction with an oblimin rotation. We chose maximum likelihood because there was a hypothesis about the underlying structure, and an oblimin rotation because we assumed that the factors describing the structure were interrelated. The decision about the number of factors to retain was based on initial eigenvalues; we kept all factors with an eigenvalue of 1.0 or higher. A scree plot was also examined, looking for a change in the slope of the line connecting the eigenvalues of the factors. Only items with a loading of 0.40 or more were selected. Internal consistency of the various scales was assessed using Cronbach’s α, with a value of at least 0.60 as the criterion for acceptable internal consistency (Nunnally 1978; Robinson et al. 1991; DeVellis 1991). We also followed Raubenheimer’s (2004) recommendation that there should be no fewer than three items per factor and that replacement items should be generated if the items of a scale in development do not exhibit sufficient reliability and validity. Based on these criteria, we removed items and added six new items (V_18: “We learn how the subject M&O relates to other subjects”; V_75: “When working on an assignment we keep track of time ourselves”; V_76: “The assignments from the book/hand-out deal with examples from the professional world”; V_77: “We get a variety of assignments taken from the professional world”; V_78: “We learn how to comment on fellow students’ work”; V_79: “The teacher teaches us how to deal with feedback”). Three of these new items were from the SRLIT (Lombaerts et al. 2007) and the other three were developed by the first author. Table 2 shows the factor loadings and where and why the modifications were made.
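
The retention rules described above can be sketched in a few lines of Python. This is a minimal illustration only, not the analysis that was actually run: the eigenvalues, item labels and loadings are invented, and the helper names `retain_factors`, `select_items` and `underidentified` are hypothetical. The sketch keeps factors with an eigenvalue of at least 1.0, keeps items whose strongest absolute loading is at least 0.40, and flags factors left with fewer than three items.

```python
# Illustrative sketch of the retention rules; all numbers are invented.


def retain_factors(eigenvalues, threshold=1.0):
    """Return the 1-based indices of factors with eigenvalue >= threshold."""
    return [i for i, ev in enumerate(eigenvalues, start=1) if ev >= threshold]


def select_items(loadings, min_loading=0.40):
    """Group items by the factor on which they load most strongly.

    `loadings` maps an item label to a list of loadings, one per factor.
    Items whose strongest absolute loading is below `min_loading` are dropped.
    """
    assigned = {}
    for item, row in loadings.items():
        best = max(range(len(row)), key=lambda j: abs(row[j]))
        if abs(row[best]) >= min_loading:
            assigned.setdefault(best + 1, []).append(item)
    return assigned


def underidentified(assigned, n_factors, min_items=3):
    """Return the factors (1-based) that have fewer than `min_items` items."""
    return [f for f in range(1, n_factors + 1)
            if len(assigned.get(f, [])) < min_items]


# Hypothetical eigenvalues and loadings for a two-factor toy example.
eigenvalues = [4.2, 1.3, 0.8, 0.5]
loadings = {
    "item_a": [0.72, 0.10],
    "item_b": [0.65, 0.05],
    "item_c": [0.48, 0.22],
    "item_d": [0.12, 0.58],
    "item_e": [0.30, 0.35],  # below 0.40 on both factors, so it is dropped
}

kept_factors = retain_factors(eigenvalues)
assigned = select_items(loadings)
weak_factors = underidentified(assigned, n_factors=len(kept_factors))

print(kept_factors)   # factors passing the eigenvalue rule
print(assigned)       # retained items grouped by factor
print(weak_factors)   # factors that would need replacement items
```

In this toy example the second factor keeps only one item, which is the kind of situation in which replacement items would be written.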

Four of the initially conceptualised dimensions were retained as scales in the modified SLEED-Q: Relevance, Self-control and self-reflection, Problem solving strategies and Epistemological understanding. Three new scales were derived from the data: Teaching for understanding, Support learning for understanding and Sharing and comparing knowledge. Following Kember et al. (2010), we titled one scale Teaching for understanding; this subscale tapped encouragement for students to employ a deep approach to learning. This scale (see Table 6 in Appendix) consisted of two Relevance items, two Differentiation items, two Connectedness items and one Self-control and self-reflection item, giving a total of seven items. The scale Support learning for understanding tapped understanding of (new) concepts and information needed to solve problems; it consisted of two items on Prior knowledge and two items on Problem solving strategies. The scale Sharing and comparing knowledge consisted of two Reflection items and two Inexpressible knowledge items related to articulation of hidden, unspoken knowledge. One of the original SLEED-Q scales, Complexity and ambiguity, was eliminated as the three items comprising it did not load as one factor and did not load on any of the remaining seven factors. The modified version of the instrument consisted of seven scales comprising 32 items and covering 9 of the 10 initially conceptualised scales.

Confirmatory factor analysis

CFA was performed to determine whether the seven-factor structure obtained using EFA would be confirmed in another dataset. A confirmatory factor model was tested using LISREL 8.80. The seven latent variables were the seven factors identified by the EFA. The 32 questionnaire items were the observed variables. The goodness-of-fit indices for this model, shown in the second row of Table 3, consistently indicated a good fit with the data. The Chi square/df ratio was less than 2.0, indicating that the model was a good fit with the data; the NNFI and the CFI were higher than the recommended value of 0.90 (Hu and Bentler 1999); and the RMSEA (0.070) also indicated acceptable fit (Browne and Cudeck 1993).
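To make the fit indices more concrete, the sketch below computes the degrees of freedom, the Chi square/df ratio and the RMSEA point estimate. It assumes a simple-structure model (each item loads on one factor, no correlated errors, factor variances fixed to 1) and the (N − 1) convention for RMSEA; some programs use N instead. The Chi square value and sample size are hypothetical and are not taken from Table 3.

```python
import math


def cfa_degrees_of_freedom(n_items, n_factors):
    """Degrees of freedom for a simple-structure CFA (assumed specification)."""
    observed_moments = n_items * (n_items + 1) // 2
    free_parameters = (
        n_items                               # one loading per item
        + n_items                             # one error variance per item
        + n_factors * (n_factors - 1) // 2    # covariances between factors
    )
    return observed_moments - free_parameters


def rmsea(chi_square, df, n):
    """RMSEA point estimate, using the (n - 1) convention."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


df = cfa_degrees_of_freedom(n_items=32, n_factors=7)
chi_square = 800.0   # hypothetical value, not the study's result
n = 300              # hypothetical sample size
print(df, round(chi_square / df, 2), round(rmsea(chi_square, df, n), 3))
```

With these made-up inputs the ratio falls below the 2.0 cutoff mentioned above; the actual values for the SLEED-Q are those reported in Table 3.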

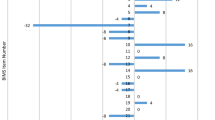

An examination of the modification indices of the seven-factor model revealed that there was still room for improvement. Item 61, “We have information that enables us to judge for ourselves whether our work is finished”, and Item 57, “We are used to checking our own work in the M&O classes”, had unsatisfactory loadings and non-significant t-values on their respective factors and were therefore deleted; this improved the fit of the model (third and fourth rows of Table 3). We therefore adopted the 30-item solution for the sample of secondary school students. The 30 items and the path diagram of the items’ factor loadings are presented in Fig. 2. The factor loadings of the items varied between 0.28 and 0.85 (see Fig. 2). Table 4 shows the scale descriptions and a sample item from the modified SLEED-Q.

Internal consistency reliability

Internal consistency reliability was assessed with Cronbach’s α coefficient using SPSS 19.0. As shown in Table 5, α-coefficients ranged from 0.60 for Epistemological understanding to 0.81 for Problem solving strategies.
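For readers unfamiliar with the coefficient, Cronbach’s α for a scale of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following is a minimal sketch of that formula in plain Python rather than a reproduction of the SPSS procedure; the function name and the response data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores (one row per respondent)."""
    n_items = len(responses[0])
    item_columns = list(zip(*responses))                  # transpose to per-item columns
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical responses of five students to a three-item scale.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [3, 3, 4],
    [5, 4, 5],
    [1, 2, 2],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 2))
```

The sample variance is used for both the item and total-score variances; because α depends only on their ratio, using population variances throughout would give the same result.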

Discriminant validity

Table 5 gives discriminant validity data in the form of correlations between the factors under investigation. These data indicate overlap, but not to such an extent that it compromises the psychometric properties of the SLEED-Q. The conceptual distinctions between the scales are another reason for retaining them in the SLEED-Q. All the correlations were statistically significant at the 0.05 level, except for the correlation between the factors Problem solving strategies and Self-control and self-reflection.

Concurrent validity

Concurrent validity measures the degree to which a test corresponds to an external criterion that is known concurrently (Rubin and Babbie 2007). The concurrent validity of the SLEED-Q was computed using bivariate correlations between the seven factors and total score on a measure of individual interest in the school subject for which the SLEED-Q had been completed (see Table 5). The correlations between individual interest in the school subject and the seven factors were all significant at the p < 0.01 level and were weak to moderate in size (r = 0.17–0.52). In other words, students with higher interest in the school subject tended to score somewhat higher on the seven scales of the SLEED-Q, but the associations were modest, meaning that the factors of the SLEED-Q and the total score for individual interest in the school subject were only distantly related.
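One way to read the reported range is in terms of shared variance: a correlation of 0.17 corresponds to about 3 % shared variance and a correlation of 0.52 to about 27 %. The sketch below shows the calculation; the helper names and the two short score lists are hypothetical and only illustrate how a bivariate correlation is obtained.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equally long score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross_products = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross_products / math.sqrt(ss_x * ss_y)


def shared_variance(r):
    """Proportion of variance two measures share, given their correlation."""
    return r ** 2


# Hypothetical scale scores and interest scores for five students.
scale_scores = [1, 2, 3, 4, 5]
interest_scores = [2, 1, 4, 3, 5]
print(round(pearson_r(scale_scores, interest_scores), 2))

# Shared variance at the two ends of the reported range.
print(round(shared_variance(0.17), 3), round(shared_variance(0.52), 3))
```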

Conclusion

This paper presented the development and validation of the SLEED-Q. Development was based on a systematic review of the literature on instructional practices which promote development of professional expertise in diagnostic domains. Sixty-five relevant instructional practices were derived from this review which theoretically fit in terms of Tynjälä’s Integrative Pedagogy Model and cluster into ten instructional principles. These ten instructional principles were reflected in ten scales. EFA and CFA of responses to the SLEED-Q showed that the questionnaire had a seven-factor structure: (1) Share and compare knowledge, (2) Relevance, (3) Self-control and self-reflection, (4) Epistemological understanding, (5) Teaching for understanding, (6) Support learning for understanding and (7) Problem solving strategies.

Four of the ten original scales (Epistemological understanding, Relevance, Self-control and self-reflection and Problem solving strategies) were confirmed. Three new factors emerged from the factor analysis. Items referring to Evoking reflection and Inexpressible knowledge loaded together on the Sharing and comparing knowledge factor. Items measuring Differentiation, Connectedness, Self-control and self-reflection and Relevance loaded together on one factor (Teaching for understanding). Items which were originally part of the scales of Prior knowledge and Problem solving strategies loaded together on one factor, labelled Support learning for understanding. Sharing and comparing knowledge and Support learning for understanding reflect the learning processes Explicating practical/experiential knowledge into conceptual/theoretical knowledge and Reflecting on both practical and conceptual knowledge by using self-regulative knowledge. Although both new factors tap the same learning processes underlying development of expertise, they have different foci. The four items loading on Sharing and comparing knowledge relate to reflection and direct sharing of inexpressible knowledge. Interestingly, reflection and sharing inexpressible knowledge are sometimes considered crucial to fostering expertise. Kirsner (2000) argued that, without reflection, only ‘false expertise’ can develop. Several authors (e.g. Alacaci 2004; Nilsson and Pilhammar 2009) claim that sharing inexpressible knowledge of intermediates and experts in a domain contributes to an individual’s enculturation into the ‘expert’ group. Enculturation should be understood in two senses: as the process of developing the knowledge, skills, habits and attitudes which characterise a specific domain, and as the process of becoming an accepted and legitimised member of a group (Boshuizen et al. 2004).
The factor Support learning for understanding includes four items tapping the activation of elements of prior conceptual and practical knowledge. Chi et al. (1988) suggested that prior conceptual knowledge influences how problem solvers define, represent and solve problems. From an expertise development perspective, Support learning for understanding embodies the requirement for integrated conceptual knowledge and practical knowledge when solving domain-specific problems.

Teaching for understanding reflects the learning processes by which theoretical and conceptual knowledge is translated into experiential and practical knowledge and the process of reflecting on practical and conceptual knowledge by using self-regulative knowledge. The seven items measuring Teaching for understanding reflect instructional practices which promote a deeper understanding of the domain, such as encouraging students to compare and contrast concepts, helping students to see relationships between concepts, and encouraging students to apply domain-specific concepts to assignments. Self-directed learning can promote a more thorough understanding; this is captured by the item “We have deadlines for our assignments”. The factors Sharing and comparing knowledge, Teaching for understanding and Support learning for understanding appear more interrelated than the other factors (Relevance, Self-control and self-reflection and Problem solving strategies). The difference in interrelatedness (in terms of learning processes) of the factors indicates that, from the student perspective, instructional practices focus on each learning process individually as well as on integrating and synthesising the various learning processes.

The items assessing seven factors revealed in this study relate to nine of the ten original instructional principles. Our data provided empirical support for the validity of nine out of ten of the instructional principles identified in our literature review (Elvira et al. in press). It might be considered disappointing that the scale initially designed to measure the complexity and ambiguity of tasks did not survive the statistical analyses. One explanation for the absence of the complexity and ambiguity factor from students’ perspectives of their learning environments might be that teachers do not provide complex, ambiguous assignments at this early stage in development of professional expertise. Several authors have stated that caution is vital when confronting students with complexity; some authors (e.g. Botti and Reeve 2003; Brookes et al. 2011) propose that the complexity of problems, cases, representations and scenarios should be increased gradually: “Starting any sequence of representations with the most regular, simple forms available and minimising contextual features that could potentially confuse or distract the students, will enable students to ‘get their eye in’ when making sense of a representation” (Gilbert 2005 cited by Halverson et al. 2011, p. 816).

Some limitations of this study should be mentioned. First, although the other scales had reliability coefficients close to or higher than 0.70, Epistemological understanding had a reliability of 0.60. Closer inspection of the data revealed that the individual items making up this scale did not show low corrected item-total correlations or low factor loadings. One explanation for the lower reliability is that this scale consists of only three items, which is the minimum number of items recommended for a scale (Raubenheimer 2004). Future development of the instrument should include consideration of adding items to this scale and re-examining the formulation of the current items.
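The likely effect of lengthening a short scale can be estimated with the Spearman–Brown prophecy formula. This formula was not part of the analyses reported here, and it assumes that any added items are parallel to the existing ones, which may not hold in practice. The sketch below uses hypothetical function names and applies the formula to a three-item scale with a reliability of 0.60.

```python
import math


def spearman_brown(reliability, length_factor):
    """Predicted reliability after changing scale length by length_factor."""
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


def items_needed(reliability, n_items, target):
    """Smallest number of parallel items predicted to reach the target reliability."""
    length_factor = target * (1 - reliability) / (reliability * (1 - target))
    return math.ceil(n_items * length_factor)


current_alpha = 0.60   # reliability reported for the three-item scale
current_items = 3

# Predicted reliability if the scale were extended to five parallel items.
predicted = spearman_brown(current_alpha, 5 / current_items)

# Number of items predicted to be needed for a reliability of 0.70.
needed = items_needed(current_alpha, current_items, target=0.70)
print(round(predicted, 2), needed)
```

Under the parallel-items assumption, two additional items would be predicted to bring the scale to roughly the 0.70 level of the other scales.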

Second, whilst an instrument with 30 items might be preferable to a longer instrument, the factor analyses revealed scales with different numbers of items and, although it is not necessary for each scale to have the same number of items, a more balanced distribution of items across factors might result in a more efficient instrument (Johnson and Stevens 2001).

Third, the SLEED-Q only measures student perceptions of the nature of the learning environment. Previous research (Kunter and Baumert 2006) has shown that there are differences between teachers’ and students’ perceptions of the same learning environment. Research into both teachers’ and students’ perceptions of learning environments is important, because divergence and convergence between students’ and teachers’ perceptions have proven to be informative in investigations of teaching and learning processes and could provide valuable information for teacher education programs (Brekelmans and Wubbels 1991, cited by den Brok et al. 2006). Future research should focus on developing a teacher version of the SLEED-Q.

Fourth, the face validity of items (i.e. whether the meaning was clear and the response scales easy to use for students) was evaluated indirectly by asking students to report possible problems with the questionnaire to their teacher. This gave us indirect information about possible misinterpretations; it might be preferable to interview a sample of respondents to obtain first-hand data on face validity.

Fifth, the SLEED-Q was administered in Dutch to Dutch children taught in a particular educational system; cross-validation of the SLEED-Q in other countries with different educational systems is desirable. Earlier learning environment research (MacLeod and Fraser 2010; Telli et al. 2007; Wong and Fraser 1995) has shown that various instruments (e.g. SLEI, WIHIC and QTI) had satisfactory internal consistency reliability and factorial validity, indicating cross-cultural validity. The SLEED-Q is included in the paper (Table 6 in Appendix) to stimulate further research on learning environment factors which promote development of expertise at secondary school level.

Although several scholars (Tynjälä et al. 1997) have argued that educational practices have an important role in creating or inhibiting the preconditions for expertise, the pedagogical dimension of expertise is rarely incorporated into frameworks for development of instruments to evaluate learning environments. The process by which the SLEED-Q was developed is a first step in this direction. The SLEED-Q provides another means of evaluating classroom environments with respect to their potential to promote development of expertise and is complementary to often time-consuming, qualitative approaches. Studies using the SLEED-Q could stimulate discussions with educators about how to create an environment conducive to development of professional expertise, and secondary schools could use the SLEED-Q as a diagnostic tool as part of a quality-assurance process. Practical application of the SLEED-Q would require formulation and implementation of intervention strategies based on questionnaire results and re-administration of the questionnaire to establish the effectiveness of the interventions (Yarrow et al. 1997).

Notes

Dutch secondary education comprises four hierarchically ordered types. In descending order, they are pre-university education, senior general higher education, pre-vocational secondary education and practical training. This paper focuses on pre-university education and senior general higher education.

References

Alacaci, C. (2004). Understanding experts’ knowledge in inferential statistics and its implications for statistics education. Journal of Statistics Education, 12(2), 1–46. Retrieved from http://www.amstat.org/publications/jse/v12n2/alacaci.html.

Alexander, P. A. (2003). The development of expertise: The journey from acclimation to proficiency. Educational Researcher, 32(8), 10–14. doi:10.3102/0013189X032008010.

Alexander, P. A. (2005). Teaching towards expertise [Special monograph on pedagogy—Learning for teaching]. British Journal of Educational Psychology, Monograph Series II, 3, 29–45.

Arts, J. A. R., Boshuizen, H. P. A., & Gijselaers, W. H. (2006). Understanding managerial problem-solving, knowledge use and information processing: Investigating stages from school to the workplace. Contemporary Educational Psychology, 31(4), 387–410. doi:10.1016/j.cedpsych.2006.05.005.

Arts, J. A. R., Gijselaers, W. H., & Segers, M. S. R. (2002). Cognitive effects of an authentic computer-supported, problem-based learning environment. Instructional Science, 30, 465–495. doi:10.1023/A:1020532128625.

Bereiter, C., & Scardamalia, M. (1986). Educational relevance of the study of expertise. Interchange, 17(2), 10–19. doi:10.1007/BF01807464.

Berris, R., & Miller, E. (2011). How design of physical environment impacts early learning: Educators and parents perspectives. Australasian Journal of Early Childhood, 36(4), 102–110.

Bolhuis, S. (1996, April). Towards active and selfdirected learning: Preparing for lifelong learning, with reference to Dutch secondary education. Paper presented at the annual meeting of the American Educational Research Association, New York.

Boshuizen, H. P. A., Bromme, R., & Gruber, H. (Eds.). (2004). Professional learning: Gaps and transitions on the way from novice to expert. Dordrecht: Kluwer Academic Publishers.

Botti, M., & Reeve, R. (2003). Role of knowledge and ability in student nurses’ clinical decision-making. Nursing and Health Sciences, 5(1), 39–49. doi:10.1046/j.1442-2018.2003.00133.x.

Bouwman, M. (1984). Expert vs. novice decision making in accounting: A summary. Accounting, Organizations and Society, 9(3–4), 325–327. doi:10.1016/0361-3682(84)90016-3.

Brookes, D. T., Ross, B. H., & Mestre, J. P. (2011). Specificity, transfer, and the development of expertise. Physical Review Special Topics: Physics Education Research, 7, 8. doi:10.1103/PhysRevSTPER.7.010105.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Newbury Park, CA: Sage.

Chi, M. T. H. (2006). Two approaches to the study of experts’ characteristics. In K. A. Ericsson, N. Charness, P. J. Feltovich, & R. R. Hoffman (Eds.), The Cambridge handbook of expertise and expert performance (pp. 21–30). Cambridge: Cambridge University Press.

Chi, M. T. H., Glaser, R., & Farr, M. J. (Eds.). (1988). The nature of expertise. London: Lawrence Erlbaum.

den Brok, P. J., Bergen, T. C. M., & Brekelmans, J. M. G. (2006). Convergence and divergence between students’ and teachers’ perceptions of instructional behaviour in Dutch secondary education. In D. L. Fisher & M. S. Khine (Eds.), Contemporary approaches to research on learning environments: World views (pp. 125–160). Singapore: World Scientific.

DeVellis, R. F. (1991). Scale development: Theory and applications. Newbury Park: Sage.

Dorman, J. P. (2003). Cross-national validation of the What is Happening in This Class? Questionnaire using confirmatory factor analysis. Learning Environments Research, 6, 231–245. doi:10.1023/A:1027355123577.

Dreyfus, H. L., & Dreyfus, S. E. (1986). Mind over machine: The power of human intuition and expertise in the era of the computer. New York: Free Press.

Elvira, Q. L., Imants, J., Dankbaar, B., & Segers, M. S. R. (in press). Designing education for professional expertise development. Scandinavian Journal of Educational Research.

Engel, P. J. H. (2008). Tacit knowledge and visual expertise in medical diagnostic reasoning: Implications for medical education. Medical Teacher, 30, 184–188. doi:10.1080/01421590802144260.

Ericsson, K. A., & Lehmann, A. C. (1996). Expert and exceptional performance: Evidence of maximal adaptation to task constraints. Annual Review of Psychology, 47, 273–305.

Ericsson, K. A., & Smith, J. (Eds.). (1991). Toward a general theory of expertise: Prospects and limits. Cambridge: Cambridge University Press.

Ertmer, P. A., & Newby, T. J. (1996). The expert learner: Strategic, self-regulated, and reflective. Instructional Science, 24(1), 1–24. doi:10.1007/BF00156001.

Eteläpelto, A. (1994). Work experience and the development of expertise. In W. J. Nijhof & J. N. Streumer (Eds.), Flexibility and Training in Vocational Education (pp. 319–341). Utrecht: Lemma.

Fraser, B. J. (1990). Individualised classroom environment questionnaire. Melbourne: Australian Council for Educational Research.

Fraser, B. J. (1998). Classroom environment instruments: Development, validity and applications. Learning Environments Research, 1, 7–33. doi:10.1023/A:1009932514731.

Fraser, B. J., Anderson, G. J., & Walberg, H. J. (1982). Assessment of learning environments: Manual for Learning Environment Inventory (LEI) and My Class Inventory (MCI) (3rd vers.). Perth: Western Australian Institute of Technology.

Fraser, B. J., Fisher, D. L., & McRobbie, C. J. (1996, April). Development, validation, and use of personal and class forms of a new classroom environment instrument. Paper presented at the annual meeting of the American Educational Research Association, New York.

Fraser, B. J., & McRobbie, C. J. (1995). Science laboratory classroom environments at schools and universities: A cross-national study. Educational Research and Evaluation: An International Journal on Theory and Practice, 1(4), 289–317. doi:10.1080/1380361950010401.

Fraser, B. J., & O’Brien, P. (1985). Student and teacher perceptions of the environment of elementary-school classrooms. Elementary School Journal, 85, 567–580. doi:10.1086/461422.

Fraser, B. J., & Treagust, D. F. (1986). Validity and use of an instrument for assessing classroom psychosocial environment in higher education. Higher Education, 15, 37–57. doi:10.1007/BF00138091.

Ge, X., & Hardré, P. L. (2010). Self-processes and learning environment as influences in the development of expertise in instructional design. Learning Environments Research, 13(1), 23–41. doi:10.1007/s10984-009-9064-9.

Goldman, S. R., Petrosino, A. J., & CTGV. (1999). Design principles for instruction in content domains: Lessons from research on expertise and learning. In F. T. Durso (Ed.), Handbook of applied cognition (pp. 595–628). Chichester: Wiley.

Grenier, R., & Kehrhahn, M. (2008). Toward an integrated model of expertise and its implications for HRD. Human Resource Development Review, 7(2), 198–217. doi:10.1177/1534484308316653.

Gunderman, R., Williamson, K., Fraley, R., & Steele, J. (2001). Expertise: Implications for radiological education. Academic Radiology, 8(12), 1252–1256. doi:10.1016/S1076-6332(03)80708-0.

Halverson, K. L., Pires, J. C., & Abell, S. K. (2011). Exploring the complexity of tree thinking expertise in an undergraduate plant systematics course. Science Education, 95(5), 794–823. doi:10.1002/sce.20436.

Harackiewicz, J. H., Durik, A. M., Barron, K. E., Linnenbrink-Garcia, L., & Tauer, J. M. (2008). The role of achievement goals in the development of interest: Reciprocal relations between achievement goals, interest, and performance. Journal of Educational Psychology, 100(1), 105–122. doi:10.1037/0022-0663.100.1.105.

Harrington, R., & Enochs, L. (2009). Accounting for preservice teachers’ constructivist learning environment experiences. Learning Environments Research, 12(1), 45–65. doi:10.1007/s10984-008-9053-4.

Hatano, G., & Oura, Y. (2003). Commentary: Reconceptualising school learning using insight from expertise research. Educational Researcher, 32(8), 26–29. doi:10.3102/0013189X032008026.

Heikkinen, H., Jokinen, H., & Tynjälä, P. (2012). Teacher education and development as lifelong and lifewide learning. In H. Heikkinen, H. Jokinen, & P. Tynjälä (Eds.), Peer-group mentoring for teacher development (pp. 3–30). Milton Park: Routledge.

Herling, R. (2000). Operational definitions of expertise and competence. In R. W. Herling & J. M. Provo (Eds.), Strategic perspectives on knowledge, competence, and expertise: Advances in developing human resources (pp. 8–21). San Francisco: Sage.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55. doi:10.1080/10705519909540118.

Johnson, B., & Stevens, J. J. (2001). Confirmatory factor analysis of the School Level Environment Questionnaire (SLEQ). Learning Environments Research, 4(3), 325–344. doi:10.1023/A:1014486821714.

Kankkunen, K. (2001). Concept mapping and Peirceʼs semiotic paradigm meet in the classroom environment. Learning Environments Research, 4(3), 287–324. doi:10.1023/A:1014438905784.

Kember, D., Ho, A., & Hong, C. (2010). Characterising a teaching and learning environment capable of motivating student learning. Learning Environments Research, 13(1), 43–57. doi:10.1007/s10984-009-9065-8.

Kirsner, D. (2000). Unfree associations inside psychoanalytic institutes. London: Process Press Ltd.

Kunter, M., & Baumert, J. (2006). Who is the expert? Construct and criteria validity of student and teacher ratings of instruction. Learning Environments Research, 9(3), 231–251. doi:10.1007/s10984-006-9015-7.

Li, Y. L. (2004). Pupil-teacher interactions in Hong Kong kindergarten classrooms—Its implications for teachers’ professional development. Learning Environments Research, 7(1), 36–45. doi:10.1023/B:LERI.0000022280.11397.f8.

Lombaerts, K., Engels, N., & Athanasou, J. (2007). Development and validation of the self-regulated learning inventory for teachers. Perspectives in Education, 25(4), 29–47.

Lorsbach, A. W., & Basolo, F., Jr. (1998). Collaborating in the evolution of a middle school science learning environment. Learning Environments Research, 1(1), 115–127. doi:10.1023/A:1009992800619.

MacLeod, C., & Fraser, B. J. (2010). Development, validation and application of a modified Arabic translation of the What Is Happening In this Class? (WIHIC) questionnaire. Learning Environments Research, 13(2), 105–125. doi:10.1007/s10984-008-9052-5.

Moore, G. T., & Sugiyama, T. (2007). The Children’s Physical Environment Rating Scale (CPERS): Facilities. Children, Youth and Environments, 17(4), 24–53. On-line journal available from http://www.colorado.edu/journals/cye.

Moos, R. H. (1974). The social climate scales: An overview. Palo Alto, CA: Consulting Psychologists Press.

Moos, R. H., & Trickett, E. J. (1987). Classroom environment scale manual (2nd ed.). Palo Alto, CA: Consulting Psychologists Press.

Murray, H. A. (1938). Explorations in personality: A clinical and experimental study of fifty men of college age. New York: Oxford University Press.

Nievelstein, F., van Gog, T., Boshuizen, H. P. A., & Prins, F. J. (2010). Effects of conceptual knowledge and availability of information sources on law students’ legal reasoning. Instructional Science, 38(1), 23–35. doi:10.1007/s11251-008-9076-3.

Nievelstein, F., van Gog, T., Van Dijck, G., & Boshuizen, H. P. A. (2011). Instructional support for novice law students: Reducing search processes and explaining concepts in cases. Applied Cognitive Psychology, 25, 408–413. doi:10.1002/acp.1707.

Nilsson, M. S., & Pilhammar, E. (2009). Professional approaches in clinical judgements among senior and junior doctors: Implications for medical research. BMC Medical Education, 9, 25. doi:10.1186/1472-6920-9-25.

Nunnally, J. C. (1978). Psychometric theory (2nd ed.). New York: McGraw-Hill.

Parsons, E. C. (2002). Using comparisons of multi-age learning environment to understand two teachers’ democratic aims. Learning Environments Research, 5(2), 185–202. doi:10.1023/A:1020330930653.

Raubenheimer, J. E. (2004). An item selection procedure to maximize scale reliability and validity. South African Journal of Industrial Psychology, 30(4), 59–64. doi:10.4102/sajip.v30i4.168.

Robinson, J. P., Shaver, P. R., & Wrightsman, L. S. (Eds.). (1991). Measures of personality and social psychology attitudes. San Diego: Academic Press Inc.

Rubin, A., & Babbie, E. (2007). Essential research methods for social work. Belmont, CA: Thomson Brooks/Cole.

Saariluoma, P. (1995). Chess players’ thinking: A cognitive psychological approach. London: Routledge.

Schmidt, H. G., Norman, G. R., & Boshuizen, H. P. A. (1990). A cognitive perspective on medical expertise: Theory and implications. Academic Medicine, 65(10), 611–621. doi:10.1097/00001888-199010000-00001.

Schönrock-Adema, J., Bouwkamp-Timmer, T., van Hell, E. A., & Cohen-Schotanus, J. (2012). Key elements in assessing the educational environment: Where is the theory? Advances in Health Sciences Education, 17, 727–742. doi:10.1007/s10459-011-9346-8.

Sol, Y. B., & Stokking, K. M. (2008). Het handelen van docenten in scholen met een vernieuwend onderwijsconcept. Ontwikkeling, gebruik en opbrengsten van een instrument. Utrecht: Universiteit Utrecht.

Stevens, L., van Werkhoven, W., Stokking, K., Castelijns, J., & Jager, A. (2000). Interactive instruction to prevent attention problems in class. Learning Environments Research, 3(3), 265–286. doi:10.1023/A:1011414331096.

Taylor, P. C., Fraser, B. J., & Fisher, D. L. (1997). Monitoring constructivist classroom learning environments. International Journal of Educational Research, 27, 293–302. doi:10.1016/S0883-0355(97)90011-2.

Telli, S., den Brok, P. J., & Cakiroglu, J. (2007). Students’ perceptions of science teachers’ interpersonal behaviour in secondary schools: Development of a Turkish version of the Questionnaire on Teacher Interaction. Learning Environments Research, 10(2), 115–129. doi:10.1007/s10984-007-9023-2.

Thomas, G. P. (2003). Conceptualisation, development and validation of an instrument for evaluating the metacognitive orientation of science classroom learning environments: The Metacognitive Orientation Learning Environment Scale-Science (MOLES-S). Learning Environments Research, 6(3), 175–197. doi:10.1023/A:1024943103341.

Tynjälä, P. (1999). Towards expert knowledge? A comparison between a constructivist and a traditional learning environment in the university. International Journal of Educational Research, 31, 355–442. doi:10.1016/S0883-0355(99)00012-9.

Tynjälä, P. (2008). Perspectives into learning at the workplace. Educational Research Review, 3(2), 130–154. doi:10.1016/j.edurev.2007.12.001.

Tynjälä, P., Nuutinen, A., Eteläpelto, A., Kirjonen, J., & Pirkko, R. (1997). The acquisition of professional expertise—A challenge for educational research. Scandinavian Journal of Educational Research, 41(3–4), 475–494. doi:10.1080/0031383970410318.

Van der Sijde, P. C., & Tomic, W. (1992). The influence of teacher training program on student perception of classroom climate. Journal of Education for Teaching, 18(3), 287–295. doi:10.1080/0260747920180306.

Walker, S. L., & Fraser, B. J. (2005). Development and validation of an instrument for assessing distance education learning environments in higher education: The distance learning environments survey (DELES). Learning Environments Research, 8(3), 289–308. doi:10.1007/s10984-005-1568-3.

Wellman, H. M. (2003). Enablement and constraint. In U. Staudinger & U. Lindenberger (Eds.), Understanding human development (pp. 245–263). Dordrecht, NL: Kluwer.

Wong, N. Y. (1993). The psychosocial environment in the Hong Kong mathematics classroom. Journal of Mathematical Behavior, 12(3), 303–309.

Wong, A. F. L., & Fraser, B. J. (1995). Cross-validation in Singapore of the science laboratory environment inventory. Psychological Reports, 76, 907–911. doi:10.2466/pr0.1995.76.3.907.

Wubbels, Th, & Brekelmans, M. (1998). The teacher factor in the social climate of the classroom. In B. J. Fraser & K. G. Tobin (Eds.), International handbook of science education (pp. 565–580). Dordrecht: Kluwer.

Yarrow, A., Millwater, J., & Fraser, B. (1997). Improving university and primary school classroom environments through preservice teachers’ action research. Practical Experiences in Professional Education, 1(1), 68–93.

Zandvliet, D. B., & Fraser, B. J. (2005). Physical and psychosocial environments associated with networked classrooms. Learning Environments Research, 8(1), 1–17. doi:10.1007/s10984-005-7951-2.

Zandvliet, D. B., & Straker, L. M. (2001). Physical and psychosocial aspects of learning environment in information technology rich classrooms. Ergonomics, 44(9), 838–851. doi:10.1080/00140130117116.

Appendix

See Table 6.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Elvira, Q., Beausaert, S., Segers, M. et al. Development and validation of a Supportive Learning Environment for Expertise Development Questionnaire (SLEED-Q). Learning Environ Res 19, 17–41 (2016). https://doi.org/10.1007/s10984-015-9197-y

DOI: https://doi.org/10.1007/s10984-015-9197-y