Abstract

We consider a specific random graph which serves as a disordered medium for a particle performing a biased random walk. Take a two-sided infinite horizontal ladder and pick a random spanning tree with a certain edge weight c for the (vertical) rungs. Now take a random walk on that spanning tree with a bias \(\beta >1\) to the right. In contrast to other random graphs considered in the literature (random percolation clusters, Galton–Watson trees), this one allows for an explicit analysis based on a decomposition of the graph into independent pieces. We give an explicit formula for the speed of the biased random walk as a function of both the bias \(\beta \) and the edge weight c. We conclude that the speed is a continuous, unimodal function of \(\beta \) that is positive if and only if \(\beta < \beta _c^{(1)}\) for an explicit critical value \(\beta _c^{(1)}\) depending on c. In particular, the phase transition at \(\beta _c^{(1)}\) is of second order. We show that another second order phase transition takes place at another critical value \(\beta _c^{(2)}<\beta _c^{(1)}\) that is also explicitly known: For \(\beta <\beta _c^{(2)}\) the times the walker spends in traps have second moments and (after subtracting the linear speed) the position satisfies a central limit theorem. We show that \(\beta _c^{(2)}\) is smaller than the value of \(\beta \) which achieves the maximal value of the speed. Finally, concerning linear response, we confirm the Einstein relation for the unbiased model (\(\beta =1\)) by proving a central limit theorem and computing the variance.



1 Introduction and Main Results

1.1 Introduction

This paper studies a very specific model for transport in a disordered medium. Biased random walks in random environments and on random graphs have been investigated intensively in recent years. The most prominent examples are biased random walk on supercritical percolation clusters, introduced in [3], and biased random walk on supercritical Galton–Watson trees, introduced in [23]. We refer to [5] for a survey. Another specific model which has recently attracted a lot of interest in the physics literature is the random comb graph, see [2, 13, 21, 26]. In the presence of traps in the medium, there are often three regimes of transport, see for instance [21] and the references therein:

1. The Normal Transport regime for small values of the bias: the walk has a positive linear speed and, when subtracting the linear speed, it is diffusive.
2. The Anomalous Fluctuation regime for intermediate values of the bias: the walk still has a positive linear speed but the diffusivity is lost.
3. The Vanishing Velocity regime (aka subballistic regime): the speed of the random walk is zero if the bias is larger than some critical value, due to the time the random walk spends in traps.

The Normal Transport regime and the Anomalous Fluctuation regime together form the ballistic regime. For biased random walk on supercritical Galton–Watson trees, these statements have been proved in [23]. For biased random walks on supercritical percolation clusters, the existence of the critical value separating the ballistic regime from the Vanishing Velocity regime was shown in [14], whereas the earlier works [25] and [9] established the existence of a zero-speed regime and of a positive-speed regime. In the ballistic regime, one may ask about the behaviour of the linear speed as a function of the bias. Is the speed increasing as a function of the bias? This question is also interesting in disordered media without “hard traps”, for instance Galton–Watson trees without leaves or the random conductance model (with conductances that are bounded above and bounded away from 0). In that case, there is no Vanishing Velocity regime. Monotonicity of the speed for biased random walks on supercritical Galton–Watson trees without leaves is a famous open question, see [24]. We refer to [1] and [7] for recent results on Galton–Watson trees and to [8] for a counterexample to monotonicity in the random conductance model. The Normal Transport regime for biased random walks on supercritical percolation clusters has been established in [9, 14, 25]. Limit laws for the position of the walker have been investigated both in the Anomalous Fluctuation regime and in the Vanishing Velocity regime in several examples, see [6, 12, 16, 18, 22]. For biased random walk on a supercritical percolation cluster, the conjectured picture of the speed as a function of the bias is as in Fig. 3. However, there is no rigorous proof that the speed is a unimodal function of the bias. Here, we consider a random graph given as a random spanning tree of the ladder graph (the uniform spanning tree for rung weight \(c=1\) and a weighted spanning tree in general), parametrized by the density of vertical edges.
In this case, we can give an explicit formula for the speed of the biased random walk, see (1.12). In particular, we have an explicit critical value \(\beta _c^{(1)}\) for the bias such that the speed is positive for \(\beta \in \big (1,\beta _c^{(1)}\big )\) and is zero for \(\beta \ge \beta _c^{(1)}\). From this formula, we see that the speed is a unimodal function of the bias, see Fig. 3. The formula also allows us to study the dependence of the speed on the density of vertical edges. We show that for an explicit value \(\beta _c^{(2)}< \beta _c^{(1)}\), a central limit theorem holds for \(\beta < \beta _c^{(2)}\). This establishes the Normal Transport regime for our model and is not surprising, as the same statement is true for other biased walks on random graphs, see [9, 14, 16, 25]. In contrast to these examples, the critical value \(\beta _c^{(2)}\) is explicit in our case. For the unbiased case, we even have a quenched invariance principle. By computing the variance, we confirm the Einstein relation for our model. It has been conjectured in the general setup (although we are not aware of a written reference) that the critical value \(\beta _c^{(2)}\) for the existence of second moments and for the validity of a central limit theorem is the value of \(\beta \) at which the speed is maximal. However, this is not true in our example: we show that \(\beta _c^{(2)}\) is strictly smaller than the value at which the speed is maximized.

Our proofs rely on a decomposition of the uniform spanning tree due to [19], on explicit calculations for hitting times using conductances, on regeneration times and some ergodic theory arguments. The decomposition of the spanning tree allows for an interpretation as a trapping model in the spirit of [5, 10, 11].

1.2 Definition of the Model

To define our model of biased random walk on a random spanning tree, we need to introduce two things: (1) the random spanning tree and (2) the random walk on it. We begin with the random spanning tree.

Random spanning tree

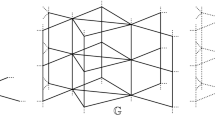

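Consider the two-sided infinite ladder graph \(L=\big (V^L,E^L\big )\) with vertex set \(V^L=\{0,1\}\times {\mathbb {Z}}\) and edge set

$$E^L=\big \{h_{i,m}:\,i\in \{0,1\},\,m\in {\mathbb {Z}}\big \}\cup \big \{z_m:\,m\in {\mathbb {Z}}\big \}.$$

Here the \(h_{i,m}:=\{(i,m-1),(i,m)\}\) are the horizontal edges and the \(z_m:=\{(0,m),(1,m)\}\) are the vertical edges. See Fig. 1.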

For \(n\in {\mathbb {N}}\), let

and let \(E^L_n\) denote the induced set of edges. Finally, let \(L_n:=(V^L_n,E^L_n)\) denote the induced finite subgraph of L.

Let \(\textsf{ST}(L)\) denote the set of all spanning trees of L. That is, each \(t\in \textsf{ST}(L)\) is a subset of \(E^L\) such that the graph \((V^L,t)\) is connected but has no cycles. Analogously, define \(\textsf{ST}(L_n)\).

Let \(c>0\) be a parameter of the model. We attach a weight \(\mathop {\text{ weight }}(z_m)=c\) to each vertical edge \(z_m\) and \(\mathop {\text{ weight }}(h_{i,m})=1\) to each horizontal edge \(h_{i,m}\).

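Denote by \(\mathbb {P}^c_n\) the weighted spanning tree distribution on \(\textsf{ST}\big (L_n\big )\), that is

$$\mathbb {P}^c_n\big [\{t\}\big ]=\frac{\prod _{e\in t}\mathop {\text{ weight }}(e)}{\sum _{t'\in \textsf{ST}(L_n)}\prod _{e\in t'}\mathop {\text{ weight }}(e)},\qquad t\in \textsf{ST}(L_n).$$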

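By taking the limit \(n\rightarrow \infty \), we get (in the sense of convergence of finite dimensional distributions) a probability measure on the subsets of \(E^L\):

$$\mathbb {P}:=\lim _{n\rightarrow \infty }\mathbb {P}^c_n.$$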

By a standard recurrence argument, \(\mathbb {P}\) is concentrated on connected graphs. That is, \(\mathbb {P}[\textsf{ST}(L)]=1\).

Let \(\mathbb {E}\) denote the expectation with respect to \(\mathbb {P}\) and let \({\mathcal {T}}\) be the generic random spanning tree with distribution \(\mathbb {P}\).

Although this is a rigorous and precise description of the model, it is not very helpful when it comes to explicit computations. In fact, for this purpose, it is more convenient to describe the random spanning tree in terms of the positions of its vertical edges (rungs) and its missing horizontal edges. Before we introduce the somewhat technical notation, let us explain the concept.

The tree is completely specified if we know the positions of the missing horizontal edges and the positions of the vertical edges (rungs) in the tree.

- Let \((H_n)_{n\in {\mathbb {Z}}}\) denote the horizontal positions of the right vertices of the missing horizontal edges. Assume that the enumeration is chosen such that
$$\begin{aligned} \ldots <H_{-2}<H_{-1}<H_0\le 0<H_1<H_2<\ldots \end{aligned}$$
- Denote by \((W_n)\) the corresponding vertical positions of the missing edges.
- Between the horizontal positions \(H_n\) and \(H_{n+1}-1\) there is exactly one vertical edge in the tree. Denote the horizontal position of this edge by \(V_n\). That is, \(H_n\le V_n<H_{n+1}\). Note that \(V_{-1}<0\) and \(V_1>0\), but \(V_0\) could have either sign or equal 0.

Roughly speaking, if we start from the rung at position \(V_n\), there is a random number \(F_n\) of positions to the right at which both horizontal edges are present before the next horizontal edge is missing. That is, \(V_n + F_n +1 = H_{n+1}\). Going right from \(H_{n}\), there is a random number \(F'_{n}\) of positions before the next rung at \(V_{n}\). That is, \(H_n+F_{n}'=V_{n}\). Note that

$$H_{n+1}-H_n=F_n+F'_n+1.$$

By the work of Häggström [17] for the case \(c=1\) and of [19] for general \(c>0\), the random variables \((F_n)\), \((F_n')\) and \((W_n)\) are independent and

- \(W_n\) takes the values 0 and 1, each with probability 1/2,
- \(F_n\) and \(F_n'\), \(n\ne 0\), are geometrically distributed \(\gamma _{1-\alpha }\) with parameter \(1-\alpha \), with \(\alpha \) defined in (1.2).

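Here

$$\alpha :=1+c-\sqrt{c^2+2c}\in (0,1),\quad \text{that is, }(1-\alpha )^2=2c\alpha ,\tag{1.2}$$

and the geometric distribution with parameter \(a\in (0,1]\) is defined by

$$\gamma _a(\{k\})=a\,(1-a)^k,\qquad k\in {\mathbb {N}}_0.$$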

Note that \(\alpha \) is a monotone decreasing function of c and \(\alpha \rightarrow 0\) as \(c\rightarrow \infty \) (and hence the \(F_n\) and \(F_n'\) tend to 0) and \(\alpha \rightarrow 1\) as \(c\rightarrow 0\).
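As a small numerical companion to the definitions of \(\alpha \) and \(\gamma _a\), the following Python sketch evaluates \(\alpha \) as a function of c. It assumes the closed form \(\alpha =1+c-\sqrt{c^2+2c}\), the root in (0, 1) of \((1-\alpha )^2=2c\alpha \); this is consistent with the value \(\alpha =2-\sqrt{3}\) for \(c=1\) quoted in the simulation caption below and with the monotonicity and limits just stated. The function names are hypothetical.

```python
import math


def alpha_of_c(c: float) -> float:
    """Assumed closed form: the root in (0, 1) of (1 - alpha)**2 == 2 * c * alpha."""
    if c <= 0:
        raise ValueError("the rung weight c must be positive")
    return 1.0 + c - math.sqrt(c * c + 2.0 * c)


def geometric_pmf(a: float, k: int) -> float:
    """gamma_a({k}) = a * (1 - a)**k on {0, 1, 2, ...}; gamma_1 is the point mass at 0."""
    return a * (1.0 - a) ** k if k >= 0 else 0.0


if __name__ == "__main__":
    for c in (0.1, 1.0, 10.0):
        a = alpha_of_c(c)
        # Mean of gamma_{1-alpha} (truncated series) versus alpha / (1 - alpha)
        mean_f = sum(k * geometric_pmf(1.0 - a, k) for k in range(5000))
        print(f"c={c:5.1f}  alpha={a:.6f}  E[F]={mean_f:.6f}  alpha/(1-alpha)={a / (1 - a):.6f}")
```

Larger c gives smaller \(\alpha \), so the geometric variables \(F_n,F'_n\) concentrate at 0 and the rungs become denser.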

Clearly, \((H_n)_{n\in {\mathbb {Z}}}\) is a stationary renewal process, and the gaps \(G_n:=H_{n+1}-H_n=F_{n}+F'_{n}+1\) have distribution \(\gamma _{1-\alpha }*\gamma _{1-\alpha }*\delta _1\) for \(n\ne 0\). For \(n=0\), however, the gap \(G_0\) is a size-biased pick of this distribution (waiting time paradox). That is,

$$\mathbb {P}[G_0=g]=\frac{g\,\mathbb {P}[G_1=g]}{\mathbb {E}[G_1]}=\frac{(1-\alpha )^3}{1+\alpha }\,g^2\alpha ^{g-1},\qquad g\in {\mathbb {N}}.$$

Roughly speaking, by symmetry, given \(G_0\), both the position of the origin and the position of the rung at \(V_0\) are uniformly distributed among the possible values \(\{H_{0},\ldots ,H_1-1\}\) and are independent. In other words, given \(G_0\), the random variables \(H_0\) and \(F'_0\) are independent and

$$\mathbb {P}\big [-H_0=i,\,F'_0=j\,\big |\,G_0=g\big ]=\frac{1}{g^2},\qquad i,j\in \{0,\ldots ,g-1\}.$$

Note that

$$V_0=H_0+F'_0=F'_0-(-H_0)$$

is the difference of two random variables \(F'_0\) and \(-H_0\) which, given \(G_0\), are independent and uniformly distributed on \(\{0,\ldots ,G_0-1\}\).
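The gap law and its size-biased version can be tabulated directly. The Python sketch below (hypothetical helper names) uses \(\mathbb {P}[G_1=g]=g(1-\alpha )^2\alpha ^{g-1}\), which is the convolution of two \(\gamma _{1-\alpha }\) laws shifted by 1, and size-biases it by the factor \(g/\mathbb {E}[G_1]\) with \(\mathbb {E}[G_1]=(1+\alpha )/(1-\alpha )\).

```python
def gap_pmf(alpha: float, g: int) -> float:
    """P[G_n = g], n != 0, for G_n = F_n + F'_n + 1 with F_n, F'_n i.i.d. gamma_{1-alpha}.

    Convolution: sum_{j=0}^{g-1} (1-alpha) alpha**j * (1-alpha) alpha**(g-1-j).
    """
    if g < 1:
        return 0.0
    return g * (1.0 - alpha) ** 2 * alpha ** (g - 1)


def mean_gap(alpha: float) -> float:
    """E[G_n] = 1 + 2 * alpha / (1 - alpha) = (1 + alpha) / (1 - alpha)."""
    return (1.0 + alpha) / (1.0 - alpha)


def size_biased_gap_pmf(alpha: float, g: int) -> float:
    """P[G_0 = g] = g * P[G_1 = g] / E[G_1]  (waiting time paradox)."""
    return g * gap_pmf(alpha, g) / mean_gap(alpha)


if __name__ == "__main__":
    a = 2 - 3 ** 0.5
    gs = range(1, 400)
    print(sum(gap_pmf(a, g) for g in gs))                    # ~ 1
    print(sum(g * gap_pmf(a, g) for g in gs), mean_gap(a))   # both ~ (1+a)/(1-a)
    print(sum(size_biased_gap_pmf(a, g) for g in gs))        # ~ 1
    # Given G_0 = g, -H_0 and F'_0 are independent and uniform on {0, ..., g-1},
    # so V_0 = F'_0 - (-H_0) is symmetric about 0.
```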

Summing up, the random spanning tree \({\mathcal {T}}\) can be described in terms of the independent random variables \((F_n)\), \((F'_n)\), \((W_n)\) and by \(H_0\). The \(F_n\) and \(F'_n\), \(n\ne 0\), are \(\gamma _{1-\alpha }\) distributed, while for \(F_0\) and \(F'_0\) a somewhat different distribution needs to be chosen. (Since we are interested in asymptotic properties only, we would not even need to know the precise distributions of \(F_0\) and \(F'_0\).) Given these random variables, the positions of the missing horizontal edges and of the rungs in \({\mathcal {T}}\) are given by

$$H_n=H_0+\sum _{j=0}^{n-1}\big (F_j+F'_j+1\big )\quad (n\ge 1),\qquad H_n=H_0-\sum _{j=n}^{-1}\big (F_j+F'_j+1\big )\quad (n\le -1),$$

and

$$V_n=H_n+F'_n,\qquad n\in {\mathbb {Z}}.$$
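To experiment with the model, the renewal description above can be turned into a sampler. The following Python sketch is a simplification, not the construction used in the proofs: it fixes \(H_0=0\) and draws \(F_0,F'_0\) like all other \(F_n,F'_n\), i.e. it ignores the size-biased block containing the origin (which, as noted above, does not matter for asymptotic properties). The function name and the output format are hypothetical.

```python
import random


def sample_blocks(alpha: float, n_blocks: int, seed: int = 0):
    """Sample (H_n), (V_n), (W_n) for n = 0, ..., n_blocks - 1 (hypothetical helper).

    Simplification: H_0 = 0 and F_0, F'_0 are gamma_{1-alpha} like all others.
    Returns H (length n_blocks + 1), V and W (length n_blocks each).
    """
    rng = random.Random(seed)

    def geometric() -> int:
        # gamma_{1-alpha}: P[F = k] = (1 - alpha) * alpha**k, k = 0, 1, ...
        k = 0
        while rng.random() < alpha:
            k += 1
        return k

    H, V, W = [0], [], []
    for n in range(n_blocks):
        f_prime, f = geometric(), geometric()
        W.append(rng.randint(0, 1))   # vertical position of the missing edge at H_n
        V.append(H[n] + f_prime)      # H_n + F'_n = V_n: the unique rung of the block
        H.append(V[n] + f + 1)        # V_n + F_n + 1 = H_{n+1}
    return H, V, W


if __name__ == "__main__":
    H, V, W = sample_blocks(2 - 3 ** 0.5, 10)   # alpha for c = 1
    print("missing edges at", H[:-1], "in rows", W)
    print("rungs at", V)
```

Over many blocks, the empirical mean gap \(H_N/N\) should approach \((1+\alpha )/(1-\alpha )\), the expectation of \(\gamma _{1-\alpha }*\gamma _{1-\alpha }*\delta _1\).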

Random walk on the spanning tree

We now define the random walk on \({\mathcal {T}}\) in the spirit of the random conductance model. Denote by \(\textbf{P}^{\mathcal {T}}\) the probabilities for a fixed spanning tree \({\mathcal {T}}\). Furthermore, we let

$$\textbf{P}[\,\cdot \,]:=\int \textbf{P}^{\mathcal {T}}[\,\cdot \,]\;\mathbb {P}[d{\mathcal {T}}]$$

denote the annealed distribution and \(\textbf{E}\) its expectation.

Fix a parameter \(\beta \ge 1\) and attach to each edge in \({\mathcal {T}}\) a weight (conductance)

$$C(h_{0,m})=C(h_{1,m})=C(z_m):=\beta ^{m},\qquad m\in {\mathbb {Z}}.\tag{1.9}$$

For \(v\in {\mathcal {T}}\), we denote the sum of the conductances of adjacent edges by

$$C(v):=\sum _{e\in {\mathcal {T}}:\,v\in e}C(e).$$

Note that C(v) depends on \({\mathcal {T}}\) but this dependence is suppressed in the notation.

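The random walk \(X=(X^{(1)},X^{(2)})\) on \({\mathcal {T}}\) chooses among its neighboring edges with a probability proportional to the edge weight. That is,

$$\textbf{P}^{\mathcal {T}}\big [X_{n+1}=w\,\big |\,X_n=v\big ]=\frac{C(\{v,w\})}{C(v)}\qquad \text{for }\{v,w\}\in {\mathcal {T}}.$$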

Note that \(X^{(1)}\in \{0,1\}\) is the vertical position and \(X^{(2)}\in {\mathbb {Z}}\) is the horizontal position of X.
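The transition rule of X only involves ratios of conductances at the current vertex. The Python sketch below assumes a hypothetical encoding of a tree as a set of edge labels: ('h', i, m) for the horizontal edge between (i, m−1) and (i, m), and ('z', m) for the rung at m, both carrying conductance \(\beta ^m\) as in the conductances \(C(h_{i,k})=C(z_k)=\beta ^k\) used in Sect. 2.3. Dividing all weights at (i, m) by \(\beta ^m\) avoids overflow and leaves the probabilities unchanged.

```python
from fractions import Fraction


def step_distribution(tree, v, beta):
    """Return {w: P^T[X_{n+1} = w | X_n = v]} with P = C({v, w}) / C(v).

    Hypothetical encoding: ("h", i, m) joins (i, m - 1) and (i, m) with
    conductance beta**m; ("z", m) joins (0, m) and (1, m) with conductance
    beta**m. All weights at v = (i, m) are divided by beta**m.
    """
    i, m = v
    candidates = [
        (("h", i, m), (i, m - 1), 1),         # left step:  beta**m / beta**m
        (("h", i, m + 1), (i, m + 1), beta),  # right step: beta**(m+1) / beta**m
        (("z", m), (1 - i, m), 1),            # rung:       beta**m / beta**m
    ]
    present = [(w, c) for edge, w, c in candidates if edge in tree]
    total = sum(c for _, c in present)        # equals C(v) / beta**m
    return {w: c / total for w, c in present}


if __name__ == "__main__":
    beta = Fraction(2)
    # Vertex with left, right and vertical edge: probabilities 1/4, 1/2, 1/4
    print(step_distribution({("h", 0, 0), ("h", 0, 1), ("z", 0)}, (0, 0), beta))
    # Vertex whose left horizontal edge is missing: rung 1/3, right step 2/3
    print(step_distribution({("h", 0, 1), ("z", 0)}, (0, 0), beta))
```

Using `Fraction` keeps the probabilities exact; plain floats work as well for simulations.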

1.3 Main Results

For \(\beta >1\), this random walk has a bias to the right and we will see that it is in fact transient to the right and that the asymptotic speed

$$v:=\lim _{n\rightarrow \infty }\frac{X^{(2)}_n}{n}$$

exists. Since the distribution of \({\mathcal {T}}\) is ergodic with respect to horizontal shifts, the value of v is the same for \(\mathbb {P}\)-almost all \({\mathcal {T}}\) and is a deterministic function of \(\alpha \) and \(\beta \). In this paper, we give an explicit formula for v and we discuss how v depends on \(\beta \) and on \(\alpha \). In particular, we see that v is strictly positive if and only if \(\beta < \beta _c^{(1)}:=1/\alpha \), and that v is a unimodal function of \(\beta \). For random walk on the full ladder graph, the speed is a monotone function of \(\beta \). However, in the spanning tree, to the right of the vertical edges there are dead ends of varying sizes where the random walk can spend large amounts of time if \(\beta \) is large. Hence it can be expected that there exists a critical value \(\beta _c^{(1)}=\beta _c^{(1)}(\alpha )\) such that \(v>0\) for \(\beta \in \big (1,\beta _c^{(1)}\big )\) and \(v=0\) for \(\beta \ge \beta _c^{(1)}\). For \(\beta < 1/\alpha \), let

and

(For a more intuitive description of these quantities, see (2.7), (2.8) and (2.9) in the proof.)

Theorem 1.1

(Asymptotic Speed) Let \(\beta _c^{(1)}:=1/\alpha \). For \(\beta \ge \beta _c^{(1)}\), we have \(v=v(\beta )=0\). For \(\beta \in \big (1,\beta _c^{(1)}\big )\), we have \(v(\beta )>0\) and the value of v is given by

Note that \(\frac{\beta -1}{\beta +1}\) is the asymptotic speed of random walk on \({\mathbb {Z}}\) with a bias \(\beta \) to the right. The remaining terms on the r.h.s. of (1.12) describe the slowdown due to (1) traps and (2) the lengthening of the path due to the need to pass vertical edges. Note that \(C > 0\) and \((s_+-s_-) > 0\).
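The benchmark value \(\frac{\beta -1}{\beta +1}\) is easy to check by simulation. The following Python sketch (hypothetical function name) runs the nearest-neighbour walk on \({\mathbb {Z}}\) that steps right with probability \(\beta /(1+\beta )\) and compares \(X_n/n\) with \(\frac{\beta -1}{\beta +1}=2\cdot \frac{\beta }{1+\beta }-1\).

```python
import random


def empirical_speed_on_Z(beta: float, n_steps: int, seed: int = 0) -> float:
    """X_n / n for the walk on Z that steps +1 w.p. beta / (1 + beta), else -1."""
    rng = random.Random(seed)
    p_right = beta / (1.0 + beta)
    x = 0
    for _ in range(n_steps):
        x += 1 if rng.random() < p_right else -1
    return x / n_steps


if __name__ == "__main__":
    for beta in (1.0, 1.5, 2.0, 4.0):
        print(beta, empirical_speed_on_Z(beta, 200_000), (beta - 1) / (beta + 1))
```

On \({\mathbb {Z}}\) the speed is increasing in \(\beta \); the non-monotone behaviour of \(v(\beta )\) on the spanning tree comes entirely from the traps.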

Clearly, \(v(\beta ) = 0\) for \(\beta \in \{1, 1/\alpha \}\). To see that, for each value of \(\alpha \in (0,1)\), \(\beta \mapsto v(\beta )\) is a unimodal function, it suffices to show that \(\beta \mapsto 1/v(\beta )\) is convex on \((1, 1/\alpha )\): since \(1/v(\beta )\rightarrow \infty \) at both endpoints, a convex \(1/v\) first decreases and then increases, so v first increases and then decreases. Readers can convince themselves from (1.12) that \(\beta \mapsto 1/v(\beta )\) is indeed convex, as it is a sum of convex functions.

The explicit formula for the speed allows us to investigate the dependence on the parameters \(\beta \) and \(\alpha \). Taking the limit of v as \(\alpha \rightarrow 0\) (which amounts to \(c\rightarrow \infty \)) in (1.12), we get

Note that this corresponds to the speed of a biased random walk on a uniformly chosen spanning tree among those containing all vertical edges. Such a tree can be chosen as follows: for each pair of horizontal edges \(h_{0,k}\), \(h_{1,k}\), a fair coin flip decides which one is retained. For this case, formula (1.13) could also be derived directly by a straightforward (but not short) Markov chain argument.
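The coin-flip description of this tree lends itself to a Monte Carlo sanity check of (1.13). The Python sketch below samples the tree lazily (every rung present, one fair coin per position m deciding whether \(h_{0,m}\) or \(h_{1,m}\) is retained) and runs the walk with the local conductance ratios left : right : rung = 1 : \(\beta \) : 1 at every vertex. It assumes that \(h_{i,m}\) joins \((i,m-1)\) and \((i,m)\); the function name is hypothetical, and the estimate is only a rough check, not a substitute for the explicit formula.

```python
import random


def speed_all_rungs_tree(beta: float, n_steps: int, seed: int = 0) -> float:
    """Monte Carlo estimate of X^(2)_n / n on the spanning tree with all rungs.

    Assumption: h_{i,m} joins (i, m - 1) and (i, m). kept_row[m] is the row i
    whose edge h_{i,m} is retained (fair coin, sampled lazily on first use).
    Weights at (i, m), relative to beta**m: left 1, right beta, rung 1.
    """
    rng = random.Random(seed)
    kept_row = {}

    def row(m: int) -> int:
        if m not in kept_row:
            kept_row[m] = rng.randint(0, 1)
        return kept_row[m]

    i, m = 0, 0
    for _ in range(n_steps):
        moves = [((1 - i, m), 1.0)]              # the rung z_m is always present
        if row(m) == i:
            moves.append(((i, m - 1), 1.0))      # h_{i,m} retained: left step
        if row(m + 1) == i:
            moves.append(((i, m + 1), beta))     # h_{i,m+1} retained: right step
        u = rng.random() * sum(w for _, w in moves)
        for target, w in moves:                  # pick a move proportionally to weight
            if u < w:
                break
            u -= w
        i, m = target
    return m / n_steps


if __name__ == "__main__":
    for beta in (1.5, 2.0, 3.0):
        print(beta, speed_all_rungs_tree(beta, 100_000, seed=1))
```

For \(\beta =1\) the estimate should be close to 0, and for \(\beta >1\) it can be compared with the value given by (1.13).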

Does the speed increase as \(\alpha \) increases, since there are fewer vertical edges to slow down the random walk? Or does increasing \(\alpha \) mean that the traps get larger and the speed decreases? The latter effect should be stronger for large \(\beta \), and in fact we have

which is positive if \(\beta <3\) and negative if \(\beta >3\). Hence, for fixed \(\beta \), the value of the speed can either increase or decrease in the neighborhood of \(\alpha = 0\) (Figs. 4, 5).

The next goal is to establish a central limit theorem in the ballistic regime, that is, in the regime where \(v(\beta )>0\). As we will need second moments, we have to restrict the range of \(\beta \) further. Assume that \(\beta \in \big (1,1/\sqrt{\alpha }\big )\). Note that \(v(\beta )>0\) and that

By Theorem 1.1 of [15], the time the random walk spends in a trap has finite moments of all orders smaller than \(\varrho \) but no finite moments of order \(\varrho \) or larger. Hence, the critical value \(\beta _c^{(2)}\) for the existence of second moments is

$$\beta _c^{(2)}=\frac{1}{\sqrt{\alpha }}.$$

For \(\beta \in \big (1,\beta _c^{(2)}\big )\), second moments exist and this indicates that a central limit theorem should hold in this regime. Denote by \({\mathcal {N}}_{0,1}\) the standard normal distribution.
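The relation between the two critical values can be made plausible by a back-of-the-envelope computation. The Python sketch below is heuristic and is not the statement of [15]: it assumes that a trap of depth k occurs with probability of order \(\alpha ^k\) and holds the walk for a time of order \(\beta ^k\), which suggests the tail exponent \(\log (1/\alpha )/\log \beta \) for the time spent in a trap. Under this assumption, first moments fail exactly at \(\beta =1/\alpha =\beta _c^{(1)}\) and second moments at \(\beta =1/\sqrt{\alpha }\), the upper end of the range \(\big (1,1/\sqrt{\alpha }\big )\) used above. Function names are hypothetical.

```python
import math


def heuristic_tail_exponent(alpha: float, beta: float) -> float:
    """Heuristic: P[depth >= k] ~ alpha**k and time ~ beta**k give
    P[time > t] ~ t**(-rho) with rho = log(1/alpha) / log(beta)."""
    return math.log(1.0 / alpha) / math.log(beta)


def critical_bias(alpha: float, p: float) -> float:
    """Bias at which the heuristic p-th moment stops being finite:
    rho > p  <=>  beta < alpha**(-1/p)."""
    return alpha ** (-1.0 / p)


if __name__ == "__main__":
    alpha = 2 - math.sqrt(3)                  # c = 1
    b1, b2 = critical_bias(alpha, 1), critical_bias(alpha, 2)
    print(f"1/alpha = {b1:.4f}, 1/sqrt(alpha) = {b2:.4f}")
    for beta in (1.5, b2, 3.0, b1):
        print(f"beta = {beta:.4f}: rho = {heuristic_tail_exponent(alpha, beta):.4f}")
```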

Theorem 1.2

Assume that \(\beta \in \big (1,\beta _c^{(2)}\big )\). Then there exists a \(\varsigma ^2\in (0,\infty )\) such that the annealed laws converge to a standard normal distribution, i.e.

Note that

where \(\beta _{\textrm{max}}\) is the value \(\beta \) for which \(v(\beta )\) is maximal. In fact, an explicit calculation gives

with

As all coefficients are positive, we have

In the case \(\beta \in \big (\beta _c^{(2)},\beta _c^{(1)}\big )\), we are still in the ballistic regime but second moments of the time spent in traps fail to exist. In fact, the pth moment exists if and only if \(p<\varrho \). See [15]. Hence we might ask if a proper rescaling yields convergence to a stable law. This, however, cannot be expected to hold since the time that the random walk spends in a random trap does not have regularly varying tails. See [15] for a detailed discussion.

Compare the situation to the random comb model (with exponential tails) (see [21, Figs. 3, 9b]): There are two critical values \(g_1>g_2>0\) for the drift g such that the following happens.

- For small drift \(0<g<g_2\), the random comb is in the Normal Transport regime (NT). That is, the speed is positive and the second moments are finite. The phase transition at \(g_2\) is of second order, that is, the second moments diverge as \(g\uparrow g_2\). Also for our model, the second moments of the time spent in traps diverge as \(\beta \uparrow \beta _c^{(2)}\), as can be read off from the explicit formula for the tails given in [15].
- For \(g_2< g < g_1\), the random comb is in the Anomalous Fluctuation regime (AF). The speed is positive but the second moments are infinite. The phase transition at \(g_1\) is of second order, that is, the speed converges to 0 as \(g\rightarrow g_1\). Also for our random walk on the random spanning tree, the speed decreases to 0 as \(\beta \uparrow \beta _c^{(1)}\).
- For \(g_1<g\), the random comb is in the Vanishing Velocity regime (VV) where the speed is zero.

Also in the random comb model, the speed is an increasing function of the drift g in the NT regime (for exponential tails), as can be seen in [21, Fig. 9b], and the speed is maximal at some \(g_{\textrm{max}}>g_2\) just as we have shown for our model in (1.17).

We come to the final goal of this paper. In the unbiased case \(\beta =1\), all moments of the time spent in traps exist and a central limit theorem should hold. Moreover, the variance \(\sigma ^2\) should be given by the Einstein relation

where the second equality can easily be checked by an explicit computation. We show that this is indeed true and that a quenched invariance principle holds with this value of \(\sigma ^2\).

Theorem 1.3

(Quenched Functional Central Limit Theorem) Let \(\beta =1\) and let \(\sigma ^2\) be given by (1.18). The process

converges in \(\textbf{P}^{\mathcal {T}}\)-distribution in the Skorohod space \(D_{[0,\infty )}\) to a standard Brownian motion for \(\mathbb {P}[d{\mathcal {T}}]\) almost all spanning trees \({\mathcal {T}}\).

Uniform spanning tree (\(c=1\), \(\alpha =2-\sqrt{3}\)). No drift: \(\beta =1\). The histogram shows the endpoints as a result of 100,000 simulations of 1,000,000 steps of RWRE. The curve shows the (scaled) density of the normal distribution with variance \(10,000\times \frac{1+\alpha }{3+\alpha }\) as suggested by the CLT

1.4 Organization of the Paper

The rest of the paper is organized as follows. In Sect. 2, we study the random conductances in some more detail. We also decompose the spanning tree into building blocks and compute the expected times the random walk spends in these blocks. We put things together to get the explicit formula for the speed and prove Theorem 1.1.

In Sect. 3, we use a regeneration time argument to infer the central limit theorem in the ballistic regime.

In Sect. 4, we prove the Einstein relation (Theorem 1.3) by computing second moments.

2 Conductances Model and Proof of Theorem 1.1

2.1 Some More Considerations on the Spanning Tree

In the spanning tree \({\mathcal {T}}\), there is a unique ray (bi-infinite self-avoiding path) from the left to the right. We denote the ray by \({\textsf{Ray}}\). We enumerate the ray by the nonnegative integers, following it from (0, 0) [or (1, 0) if (0, 0) is not in the ray] to the right, and by the negative integers, going to the left. Let \(\phi (i)=(\phi ^{(1)}(i),\phi ^{(2)}(i))\), \(i\in {\mathbb {Z}}\), be this enumeration.

The basic idea is to decompose the random walk X into a random walk Y that makes steps only on \({\textsf{Ray}}\) with edge weights given by (1.9), together with holding times. That is, if the next point on the ray after \(Y_n\) is its right neighbour, i.e. \(\phi (\phi ^{-1}(Y_n)+1)=Y_n+(0,1)\), then \(\textbf{P}^{\mathcal {T}}[Y_{n+1}=\phi (\phi ^{-1}(Y_n)+1)\big |Y_n]=\beta /(1+\beta )\) and \(\textbf{P}^{\mathcal {T}}[Y_{n+1}=\phi (\phi ^{-1}(Y_n)-1)\big |Y_n]=1/(1+\beta )\). Otherwise (that is, if the next step along the ray is vertical), these probabilities are both 1/2. Now assume that we attach random holding times to Y that model the times that X spends in the traps branching off the ray. At many points of the ray, these holding times are simply 1 since no trap branches off there. There are three kinds of traps:

- (a) A horizontal edge splits to the right of the ray: The ray makes a step down (or up) and the trap consists of a number, say k, of horizontal edges to the right of the turning point. See Fig. 6.
- (b) A horizontal edge splits to the left of the ray: The ray has just made a step down (or up) and now turns to the right again. The trap consists of a number, say l, of horizontal edges to the left of the turning point. See Fig. 7.
- (c) A vertical edge splits from the ray. The ray has just made a step to the right and will make another step to the right. A vertical edge either splits to the top or the bottom of the ray. At the other end of this vertical edge, there are k horizontal edges to the right and l horizontal edges to the left. See Fig. 8.

It is tempting to follow a simple (but wrong) argument to compute the asymptotic speed of X: for a given point on the ray, compute the probability p that a trap starts at this point and compute the average time \(\textbf{E}[T]\) the walk spends in a trap. Then take the speed \(v^Y\) of the random walk Y on the ray and divide it by \(1+p\textbf{E}[T]\). What is wrong with this argument is that the expected number of visits to a given point on the ray varies in a subtle way depending on the local shape of the ray. Hence, we will have to argue more carefully to prove Theorem 1.1. However, there is a nice simplification of our model where this approach works, and as a warm-up we present it here.

Consider the spanning tree \({\mathcal {T}}^u\) that is defined just as \({\mathcal {T}}\) but whose ray is \(\{1\}\times {\mathbb {Z}}\). In other words, we would have \(W_n=0\) for all n. In this case, there are only traps of type (c). Also, the random walk \(Y^u\) on the ray is simply a random walk on \({\mathbb {Z}}\) with drift \(\frac{\beta -1}{\beta +1}\). Since the gaps between vertical edges have distribution \(\gamma _{1-\alpha }*\gamma _{1-\alpha }*\delta _1\), they have expectation \(\frac{1+\alpha }{1-\alpha }\). Hence, by stationarity, the probability for a given vertical edge to be in \({\mathcal {T}}^u\) is

$$\frac{1-\alpha }{1+\alpha }.$$

Assume that the trap starts one step below (or above in the general case \({\mathcal {T}}\)) the initial point of the random walk and then splits into l edges to the left and k edges to the right. Let \(T_{k,l}\) be the time the random walk on \({\mathcal {T}}^u\) spends in the trap before it makes the first step on the ray. By Lemma 2.3 below, we have

We still have to average over k and l to get, for \(\beta < 1/\alpha \),

with \(s_+\) and \(s_-\) from (1.10). Summing up, we get that the biased random walk on \({\mathcal {T}}^u\) has, for \(\beta < 1/\alpha \), asymptotic speed

As indicated above, the computation of the speed v of random walk on \({\mathcal {T}}\) requires more care. We prepare for this in the next section by considering hitting times in the random conductance model.

2.2 Conductance Method and Times in Traps

If the random walker on the random spanning tree enters a dead end of the tree (a trap), it takes a random amount of time to get back to the ray of the tree. The average amount of time spent in a trap is the key quantity for computing the ballistic speed of the random walk. In this section, we first present the (well-known) formula that computes the average time to exit the trap in terms of the sum of edge weights (2.4). Then we apply this formula to the three prototypes of traps: (a) a dead end to the right, (b) a dead end to the left and (c) a rung with dead ends to both sides.

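Let \(G=(V, E)\) be a connected graph and assume that we are given edge weights \(C(x,y)=C(y,x)>0\) for all \(\{x,y\}\in E\) and \(C(x,y)=0\) otherwise. Let \(C(x)=\sum _{y}C(x,y)\) and let

$$p(x,y):=\frac{C(x,y)}{C(x)},\qquad x,y\in V,$$

be the transition probabilities of a reversible Markov chain X on V. Assume that

$$C(V):=\sum _{x\in V}C(x)<\infty .$$

It is well known that the unique invariant distribution of this Markov chain is given by \(\pi (x)= C(x)/C(V)\) for all \(x\in V\). Let

$$\tau _x:=\inf \{n\ge 0:\,X_n=x\},\qquad \tau _x^+:=\inf \{n\ge 1:\,X_n=x\}.$$

It is well known (see, e.g., [20, Theorem 17.52]) that

$$\textbf{E}_x\big [\tau _x^+\big ]=\frac{1}{\pi (x)}=\frac{C(V)}{C(x)},\qquad x\in V.\tag{2.4}$$

Now we assume that there is a point \(x\in V\) with only one neighboring point y. Then \(C(x)=C(x,y)\) and \(\textbf{E}_x[\tau _x^+]=1+\textbf{E}_y[\tau _x]\), hence

$$\textbf{E}_y\big [\tau _x\big ]=\frac{C(V)}{C(x,y)}-1.\tag{2.5}$$

Quite similarly, assume that there are two points \(x_1,x_2\in V\) each of which has only y as a neighbor. Let \(\tau _{\{x_1,x_2\}}\) be the first hitting time of \(\{x_1,x_2\}\). By identifying the two points and giving the edge to y the weight \(C(x_1,y)+C(x_2,y)\), we get from (2.5)

$$\textbf{E}_y\big [\tau _{\{x_1,x_2\}}\big ]=\frac{C(V)}{C(x_1,y)+C(x_2,y)}-1.\tag{2.6}$$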

We will need (2.6) when we compute the expected time that the random walk X on \({\mathcal {T}}\) spends in a trap before it makes one step on the ray from y to the left (say \(x_1\)) or the right (say \(x_2\)).
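Formulas (2.5) and (2.6) can be checked on a small example by solving the linear system for expected hitting times exactly. The Python sketch below (hypothetical names, exact arithmetic with `fractions.Fraction`) solves \(h(x)=1+\sum _y p(x,y)h(y)\) off the target set, with \(h=0\) on the targets, and compares \(h(y)\) with \(C(V)/(C(x_1,y)+C(x_2,y))-1\), where \(C(V)=\sum _x C(x)\) is twice the total edge weight.

```python
from fractions import Fraction


def expected_hitting_times(edges, targets):
    """E_x[tau_targets] for the chain with p(x, y) = C(x, y) / C(x).

    edges: {(x, y): C(x, y)}, each undirected edge listed once.
    Solves C(x) h(x) - sum_{y not in targets} C(x, y) h(y) = C(x) for x not in
    targets by exact Gauss-Jordan elimination.
    """
    nbrs = {}
    for (x, y), c in edges.items():
        nbrs.setdefault(x, {})[y] = Fraction(c)
        nbrs.setdefault(y, {})[x] = Fraction(c)
    unknown = [x for x in nbrs if x not in targets]
    idx = {x: k for k, x in enumerate(unknown)}
    n = len(unknown)
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]   # augmented matrix
    for x in unknown:
        row, cx = A[idx[x]], sum(nbrs[x].values())
        row[idx[x]] += cx
        row[n] = cx
        for y, c in nbrs[x].items():
            if y in idx:
                row[idx[y]] -= c
    for col in range(n):
        piv = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[piv] = A[piv], A[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return {x: A[idx[x]][n] / A[idx[x]][idx[x]] for x in unknown}


if __name__ == "__main__":
    # Ray neighbours x1, x2 of y (weights 1 and 3) plus a small trap hanging off y
    edges = {("x1", "y"): 1, ("y", "x2"): 3, ("y", "t1"): 1,
             ("t1", "t2"): 3, ("t1", "t3"): Fraction(1, 3)}
    C_V = 2 * sum(Fraction(c) for c in edges.values())
    h = expected_hitting_times(edges, {"x1", "x2"})
    print(h["y"], C_V / (1 + 3) - 1)   # (2.6): both equal 19/6
```

Only the total edge weight matters: reshaping the trap without changing its total weight leaves \(h(y)\) unchanged, which is what makes the trap computations below so explicit.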

Lemma 2.1

(Trap of type (a)) Assume that for a given tree \({\mathcal {T}}\), the trap starts one step right of the initial point of the random walk and that the trap has \(k\in {\mathbb {N}}\) edges. Let \(T_{k}\) be the time the random walk spends in the trap before it makes the first step on the ray (not counting this first step on the ray). Then

Proof

We apply (2.6) with y the point on the ray where the trap splits off and \(x_1\), \(x_2\) the two neighbouring points of y on the ray. The vertex set V consists of the points in the trap, y, \(x_1\) and \(x_2\). The sum of edge weights is

Hence

This, however, is the assertion. \(\square \)

Lemma 2.2

(Trap of type (b)) Assume that for a given \({\mathcal {T}}\), the trap starts one step left of the initial point of the random walk and that the trap has \(l\in {\mathbb {N}}\) edges. Let \(T_{l}\) be the time the random walk spends in the trap before it makes the first step on the ray (not counting this first step on the ray). Then

Proof

We argue as in the proof of Lemma 2.1. Note that here the two edges on the ray have weights 1 and \(\beta \), respectively. Hence

\(\square \)

Lemma 2.3

(Trap of type (c)) Assume that for a given \({\mathcal {T}}\), the trap starts one step above (or below) the initial point of the random walk and then splits into l edges to the left and k edges to the right. Let \(T_{k,l}\) be the time the random walk spends in the trap before it makes the first step on the ray (not counting this first step on the ray). Then

Proof

We argue as in the proof of Lemma 2.2 to get

\(\square \)

2.3 Proof of Theorem 1.1

This conductance approach is perfectly suited to study random walk on spanning trees. In fact, the speed of random walk on the random spanning tree can be derived from the average time it takes to make a step to the right on the infinite ray. From the considerations of the previous section, it is clear that we need to compute the sum of the edge weights of all edges in \({\mathcal {T}}\) that are to the left of the walker (i.e., edges that can be reached without making a step to the right on the ray). The random spanning tree is made of i.i.d. building blocks that consist of the subgraphs between two missing horizontal edges. So we need the average weights of the i.i.d. building blocks plus the edge weights in the block the walker is currently in. For this block, we need the exact shape of the block and the exact position of the walker before we take averages.

Recall that \({\mathcal {T}}\) is the random spanning tree with enumeration \(\phi (n)\), \(n\in {\mathbb {Z}}\) of its ray. Assume that we are given conductances \(C(h_{i,k})=C(z_k)=\beta ^{k}\) for all \(h_{i,k},z_k\in {\mathcal {T}}\). Let X be the random walk on \({\mathcal {T}}\) with these conductances. Let \(\tau _0=0\) and for \(k\in {\mathbb {N}}\), let

Denote by \(\textbf{E}^{\mathcal {T}}_0\) the quenched expectation for the random walk X started at \(\phi (0)\). Since \({\mathcal {T}}\) is stationary and ergodic, \(\phi ^{(2)}(X)\) has a deterministic asymptotic speed \(v=v(\beta )\) given by

We will later condition on the position of the first horizontal edge missing to the left, count the edge weights between it and the origin by hand and average over the edge weights to the left of it. By translation invariance, it is enough to condition on

Denote by \({\mathcal {T}}^-\subset {\mathcal {T}}\) the set of edges in \({\mathcal {T}}\) with at least one endpoint strictly to the left of 0:

We now compute

Recall that

and

Summing up, we have

Let us now consider the details of the spanning tree around the position of the walker. That is, we consider the part of \({\mathcal {T}}\) between the two missing horizontal edges between which the walker is located. Since we defined the position of the walker to be 0, this is the part of \({\mathcal {T}}\) between \(H_0\) and \(H_1\) (see Fig. 2). Recall that \(F'_0\) is the distance of the rung to \(H_0\) and \(F_0\) is the distance of the rung to \(H_1-1\). Furthermore, \(V_0\) is the position of the rung. Note that \(|W_1-W_0|=1\) if the missing horizontal edges are in alternating vertical positions and \(W_1-W_0=0\) otherwise. We first condition on these random variables and compute the edge weights. Later we average over \(F'_0\), \(F_0\) and \(|W_1-W_0|\).

Define the events

$$A_{a,b,k,\sigma }:=\big \{F'_0=a,\;F_0=b,\;V_0=-k,\;|W_1-W_0|=\sigma \big \}$$

for \(a,b\in {\mathbb {N}}_0,\,k=-a,\ldots ,b,\,\sigma =0,1\). For each of these events, we compute the conditional expectation \(\textbf{E}_0[\tau _1|A_{a,b,k,\sigma }]\) and then sum over all possibilities.

Recall that \(\phi (0)=(\phi ^{(1)}(0),\phi ^{(2)}(0))\in {\textsf{Ray}}\cap \{(1,0),(0,0)\}\) is the position of the walker at time 0. Note that, given \(A_{a,b,k,\sigma }\), we have \(\phi (1)=(\phi ^{(1)}(0),1)\) if \(k\ne 0\) or \(\sigma =0\), and \(\phi (1)=(1-\phi ^{(1)}(0),0)\) if \(k=0\) and \(\sigma =1\). In the latter case, the walker has to pass \(z_0\) before it makes a jump to the right and accomplishes \(\tau _1\). In order to compute the expected waiting time before the walker makes a step to the right on the ray, we use (2.6) and compute the edge weights \(C_{{\mathcal {T}},-}\) “to the left” of the walker. More precisely, let \(C_{{\mathcal {T}},-}\) denote the sum of the edge weights of all edges that can be connected to \(\phi (0)\) without touching \(\phi (1)\) (the next position to the right on the ray). In the case \(k=0\), this includes the edges in the trap starting at \(\phi (0)\).

Case 1: \(k<0\). Here we compute

$$\begin{aligned} \mathbb {E}\big [C_{{\mathcal {T}},-}\big |A_{a,b,k,\sigma }\big ]&=C\beta ^{-a-k}+1+\beta ^{-1}+\ldots +\beta ^{1-a-k}\\&=\left( C-\frac{\beta }{\beta -1}\right) \beta ^{-a-k}+\frac{\beta }{\beta -1}. \end{aligned} \tag{2.12}$$

Denote by \(\textbf{E}_0\) the annealed expectation for the random walk started in \(\phi (0)\). Since \(C(h_{i,1})=\beta \), \(i=1,2\), we conclude from (2.6) that

$$\begin{aligned} \textbf{E}_{0}\big [\tau _1\big |A_{a,b,k,\sigma }\big ]&=\beta ^{-1}\,2\,\textbf{E}\big [C_{{\mathcal {T}},-}\big |A_{a,b,k,\sigma }\big ]+1\\&=\frac{\beta +1}{\beta -1}+2\left( \frac{C}{\beta }-\frac{1}{\beta -1}\right) \beta ^{-a-k}\\&=:\frac{\beta +1}{\beta -1}+f_1(a,k). \end{aligned} \tag{2.13}$$

Case 2: \(k>0\), or \(k=0\) and \(\sigma =0\). This is quite similar to Case 1, but since \(z_{-k}\in {\mathcal {T}}\), we also have the additional edge weights

$$\beta ^{-k}\big (1+\beta ^{-a}+\beta ^{-a+1}+\ldots +\beta ^{b}\big ).$$

Hence

$$\begin{aligned} \textbf{E}_{0}\big [\tau _1\big |A_{a,b,k,\sigma }\big ]&=\frac{\beta +1}{\beta -1}+f_1(a,k)+2\beta ^{-k}\left( \frac{1}{\beta }+\frac{1}{\beta -1}\big (\beta ^b-\beta ^{-a}\big )\right) \\&=:\frac{\beta +1}{\beta -1}+f_1(a,k)+f_2(a,b,k). \end{aligned} \tag{2.14}$$

Case 3: \(k=0\) and \(\sigma =1\). This works as in Case 2, but in addition, the walker has to pass the edge \(z_0\). Here we only compute the additional time that it takes to pass this edge. Since the edge weights

$$\beta +\ldots +\beta ^{b}=\beta \frac{\beta ^b-1}{\beta -1}$$

contribute to \(C_{{\mathcal {T}},-}\), and since \(C(z_0)=1\), we get

$$\textbf{E}_{0}\big [\tau _1\big |A_{a,b,k,\sigma }\big ]=\frac{\beta +1}{\beta -1}+f_1(a,0)+f_2(a,b,0)+f_3(a,b) \tag{2.15}$$

with

$$\begin{aligned} f_3(a,b)&=2\left( C-\frac{\beta }{\beta -1}\right) \beta ^{-a}+2\,\frac{\beta }{\beta -1}+1+2\beta \frac{\beta ^b-1}{\beta -1}\\&=1+2\left( C-\frac{\beta }{\beta -1}\right) \beta ^{-a}+\frac{2\beta }{\beta -1}\beta ^b. \end{aligned} \tag{2.16}$$
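The case distinction in (2.12) to (2.16) is easy to get wrong by hand. A minimal Python sketch, using exact rational arithmetic from the standard `fractions` module, assembles the conditional expectation case by case exactly as written (reading (2.15) with \(k=0\)) and checks the elementary algebra: the geometric-sum closed form in (2.12), the second line of (2.13), and the simplification in (2.16). The function names and the values of \(\beta\) and \(C\) are arbitrary test choices of ours, not quantities from the paper.

```python
from fractions import Fraction


def make_terms(beta, C):
    """Correction terms f1, f2, f3 from (2.13), (2.14), (2.16) for fixed beta > 1 and C."""
    def f1(a, k):
        return 2 * (C / beta - 1 / (beta - 1)) * beta ** (-a - k)

    def f2(a, b, k):
        return 2 * beta ** (-k) * (1 / beta + (beta ** b - beta ** (-a)) / (beta - 1))

    def f3(a, b):
        return 1 + 2 * (C - beta / (beta - 1)) * beta ** (-a) + 2 * beta / (beta - 1) * beta ** b

    return f1, f2, f3


def cond_mean_tau(a, b, k, sigma, beta, C):
    """E_0[tau_1 | A_{a,b,k,sigma}] assembled case by case as in (2.13) to (2.15)."""
    f1, f2, f3 = make_terms(beta, C)
    base = (beta + 1) / (beta - 1) + f1(a, k)
    if k < 0:                                  # Case 1
        return base
    if k > 0 or sigma == 0:                    # Case 2
        return base + f2(a, b, k)
    return base + f2(a, b, 0) + f3(a, b)       # Case 3: k == 0 and sigma == 1


# Sanity checks of the algebra with exact rationals (arbitrary test values).
beta, C = Fraction(3, 2), Fraction(5, 4)
f1, f2, f3 = make_terms(beta, C)
for a in range(5):
    for k in range(-a, 0):
        # (2.12): C beta^{-a-k} + 1 + beta^{-1} + ... + beta^{1-a-k}
        direct = C * beta ** (-a - k) + sum(beta ** (-j) for j in range(a + k))
        closed = (C - beta / (beta - 1)) * beta ** (-a - k) + beta / (beta - 1)
        assert direct == closed
        # (2.13): 2 E[C_{T,-}] / beta + 1 agrees with the split form
        assert 2 * closed / beta + 1 == cond_mean_tau(a, 0, k, 0, beta, C)
    for b in range(5):
        # (2.16): the first line equals the simplified second line
        first_line = (2 * (C - beta / (beta - 1)) * beta ** (-a) + 2 * beta / (beta - 1)
                      + 1 + 2 * beta * (beta ** b - 1) / (beta - 1))
        assert first_line == f3(a, b)
```

Exact fractions are used instead of floats so that the identities are checked as equalities rather than up to rounding.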

Now we sum up over the events \(A_{a,b,k,\sigma }\). Recall the distribution of \(G_0=F_0+F'_0+1\) from (1.2) and recall that \(-H_0\) and \(F'_0\) are independent and uniformly distributed on \(\{0,\ldots ,G_0-1\}\) given \(G_0\). Hence

Hence, we get

Furthermore,

Summing the terms for \(f_1\) and \(f_2\), we get the expected value for \(\tau _1\) for the case where the ray stays either up all the time or down all the time. That is, by an explicit computation, we get

with \(v^u\) from (2.3). The last equality is a tedious calculation that is omitted here. However, it is clear that \(1/v^u\) must be the average holding time since the event we condition on is \(\{{\mathcal {T}}={\mathcal {T}}^u\}\).

Now we come to the last term, which describes the slowdown of the walker due to the need to pass through \(z_{V_0}\) if \(W_{-1}\ne W_0\).

Concluding, we get the explicit formula (recall C and \(s_+\) from (2.7), (1.11) and (1.10))

and the formula for the speed

Plugging in the expressions for \(\textbf{E}_0[\tau _1]\) and \(v^{u}\), we get (1.12), and the proof of Theorem 1.1 is complete. \(\square \)

3 Ballistic Central Limit Theorem, Proof of Theorem 1.2

We follow the strategy of proof of Theorem 2 in [11] by introducing regeneration times \((\sigma ^X_n)_{n\in {\mathbb {N}}}\) (in (3.9) below) and showing that \(\sigma ^X_2-\sigma ^X_1\) has a second moment.

Recall the definition of the random variables \(H_n\), \(n\in {\mathbb {Z}}\), from Sect. 1.2. Loosely speaking, \(H_n\) is the right vertex of the \(n\)th horizontal bond to the right of the origin that has no matching horizontal bond. That is, either \(\{(0,H_n-1),(0,H_n)\}\) is in the tree but \(\{(1,H_n-1),(1,H_n)\}\) is not, or vice versa. Let \(i_n\in \{0,1\}\) be such that \(e_n:=\{(i_n,H_n-1),(i_n,H_n)\}\in {\mathcal {T}}\). Denote by \({\mathcal {T}}_n\) the set of edges in \({\mathcal {T}}\) between \(H_n\) and \(H_{n+1}\), shifted by \(H_n\) and complemented by the information whether the unique vertical edge between \(H_{n-1}\) and \(H_n\) is in the ray or not. More formally, for an edge \(e=\{(j_1,h_1),(j_2,h_2)\}\), we define \(e+(0,k)=\{(j_1,h_1+k),(j_2,h_2+k)\}\). Then

Note that \(({\mathcal {T}}_n)_{n=1,2,\ldots }\) is i.i.d.

Let \(n_1<n_2<\ldots \) be the times at which X is on the ray:

Let \(Y_k=X_{n_k}\), \(k\in {\mathbb {N}}\). Then Y is a random walk on the infinite ray of \({\mathcal {T}}\).

Assume that Y is started at \(\phi (0)\). Since Y is transient to the right, every point \(\phi (n)\), \(n>0\), is visited at least once. Recall that \(C(\phi (n),\phi (n+1))\) is the conductance between \(\phi (n)\) and \(\phi (n+1)\). We write

for the effective resistance between \(\phi (n)\) and \(+\infty \). By [20, Theorem 19.25], the probability that Y never returns to \(\phi (n)\) is

Denote by \(\ell ^Y(\phi (n))=\sum _{k=0}^\infty \textbf{1}_{\{\phi (n)\}}(Y_k)\) the local time of Y at \(\phi (n)\). The above considerations show that

Lemma 3.1

The local time \(\ell ^Y(\phi (n))\) of Y at \(\phi (n)\), \(n>0\), has distribution (given \({\mathcal {T}}\))

with \(p^{{\mathcal {T}}}(\phi (n))\) given by (3.2). For \(p^{{\mathcal {T}}}(\phi (n))\) we have the bounds

Proof

We only have to show (3.4). Note that the effective resistance to \(\infty \) is minimal if the ray runs straight to the right from \(\phi (n)\), that is, \(\phi (n+k)=\phi (n)+(0,k)\) for \(k=0,1,2,\ldots \). In this case, \(C\big (\phi (n+k),\phi (n+k+1)\big )=\beta ^k C\big (\phi (n),\phi (n+1)\big )\) and hence

Since \(C\big (\phi (n-1),\phi (n)\big )\ge C\big (\phi (n),\phi (n+1)\big )/\beta \), we get the upper bound in (3.4), i.e.

The effective resistance is maximal if all the vertical edges are in the ray (at least to the right of \(\phi (n)\)). Since each vertical edge has the same conductance as its preceding horizontal edge, the effective resistance essentially doubles. We distinguish the cases

(i) where \(\phi (n-1)\) and \(\phi (n)\) are connected by a vertical edge, and

(ii) where they are connected by a horizontal edge.

In case (i), the edge between \(\phi (n)\) and \(\phi (n+1)\) is horizontal, the edge between \(\phi (n+1)\) and \(\phi (n+2)\) is vertical and so on. Summing up, we get

and \(C\big (\phi (n-1),\phi (n)\big )=C\big (\phi (n),\phi (n+1)\big )/\beta \). That is

In case (ii), we have

and \(C\big (\phi (n-1),\phi (n)\big )=C\big (\phi (n),\phi (n+1)\big )\). That is, we get again

Summarizing, this gives the lower bound in (3.4). \(\square \)
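To make the extremal configurations in this proof concrete, here is a minimal Python sketch that evaluates the escape probability of the walk Y on the ray for a given sequence of conductances. It assumes the standard identity \(\textbf{P}[\text{no return to }x]=1/\big(\pi (x)\,R(x\leftrightarrow +\infty )\big)\), with \(\pi (x)\) the sum of the two conductances at x, and assumes the resistance to \(-\infty \) is infinite (as it is for \(\beta >1\)). Conductances are normalized so that the first edge to the right of x has weight 1, since only ratios matter. Under this reading, the straight ray gives \((\beta -1)/(\beta +1)\) and both staircase cases (i) and (ii) give \((\beta -1)/(2(\beta +1))\); these values are our own evaluation, and the function names are hypothetical.

```python
def escape_probability(c_left, c_right):
    """Probability that a walk on a path, started at x, escapes to +infinity
    without returning to x.

    c_left:  conductance of the edge from x to its left neighbour
    c_right: conductances of the consecutive edges to the right of x
             (a long finite list standing in for the infinite ray)
    Assumes infinite resistance to the left, so escape is to the right only.
    """
    resistance = sum(1.0 / c for c in c_right)   # series law: R(x <-> +inf)
    return 1.0 / ((c_left + c_right[0]) * resistance)


def staircase(beta, n_pairs):
    """(horizontal, vertical) edge pairs: each vertical edge copies the conductance
    of the preceding horizontal one, and horizontal conductances grow by beta."""
    out = []
    for j in range(n_pairs):
        out += [beta ** j, beta ** j]
    return out


beta, n = 2.0, 200
# Straight ray: the left edge is horizontal with conductance 1/beta.
p_straight = escape_probability(1 / beta, [beta ** j for j in range(n)])
# Case (i): vertical edge on the left, then horizontal, vertical, horizontal, ...
p_case_i = escape_probability(1 / beta, staircase(beta, n))
# Case (ii): horizontal edge on the left, then vertical, horizontal, vertical, ...
p_case_ii = escape_probability(1.0, staircase(beta, n)[1:])
```

For \(\beta =2\) this gives approximately 1/3 for the straight ray and 1/6 in both staircase cases, which illustrates why the two cases of the proof lead to the same lower bound.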

As Y has positive speed to the right, it passes each \(e_n\), \(n\in {\mathbb {N}}\), at least once. We say that n is a regeneration point if Y passes \(e_n\) exactly once. Note that for given \({\mathcal {T}}\) and n, the probability that n is a regeneration point is

Let

For \(m>n\), let

and

Clearly, we have \(A_{n}\subset A_{n,m}\) for all \(m>n\). Now let \(n_1,\ldots ,n_k\in {\mathbb {N}}\), \(n_1<n_2<\ldots <n_k\). Note that \(A_{n_l,n_{l+1}}\), \(l=1,\ldots ,k-1\), and \(A_{n_k}\) are independent events under \(\textbf{P}^{\mathcal {T}}_0\). Hence

Summing up, the set of regeneration points is minorized by a Bernoulli point process with success probability \((\beta -1)/(2\beta )\) that is independent of \({\mathcal {T}}\). More explicitly, there exist i.i.d. Bernoulli random variables \(Z_1,Z_2,\ldots \) independent of \({\mathcal {T}}\) such that \(\textbf{P}[Z_1=1]=(\beta -1)/(2\beta )\) and such that \(Z_n=1\) implies that n is a regeneration point (but not vice versa).
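The point of the Bernoulli minorization is that the gaps between successive indices n with \(Z_n=1\) are i.i.d. geometric on \(\{1,2,\ldots \}\) with mean \(2\beta /(\beta -1)\), so they have moments of all orders regardless of \({\mathcal {T}}\). A small Python sketch (standard `random` module; the function name and parameter values are our own choices) simulates the \(Z_n\) and compares the empirical mean gap with \(2\beta /(\beta -1)\).

```python
import random


def regeneration_gaps(beta, n, seed=0):
    """Simulate Z_1..Z_n i.i.d. Bernoulli((beta-1)/(2*beta)) and return the gaps
    between successive indices with Z_k = 1."""
    p = (beta - 1) / (2 * beta)
    rng = random.Random(seed)
    hits = [i for i in range(1, n + 1) if rng.random() < p]
    return [t1 - t0 for t0, t1 in zip(hits, hits[1:])]


gaps = regeneration_gaps(beta=3.0, n=200_000)
mean_gap = sum(gaps) / len(gaps)   # close to 2*beta/(beta-1) = 3.0 for beta = 3
```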

Now let \(\tau _1<\tau _2<\ldots \) denote the sequence of n’s such that \(Z_n=1\). Note that \((\tau _{k+1}-\tau _{k})_{k\in {\mathbb {N}}}\) are i.i.d. geometric random variables. Let

and

Note that for \(n\in {\mathbb {N}}\),

Then \((\sigma ^X_{k+1}-\sigma ^X_k)_{k\in {\mathbb {N}}}\) are i.i.d. random variables (under the annealed probability measure). Along each elementary block \({\mathcal {T}}_n\) the ray passes \((\#{\mathcal {T}}_n(1)+1)/2=H_{n+1}-H_n\) horizontal edges and \({\mathcal {T}}_n(2)\) vertical edges. Note that

For each step of \(Y_n\), the random walk \(X_n\) spends a random amount of time \(T_n\) in a trap. For most points of the ray, this random variable is simply 1. Only at points adjacent to a vertical edge can there be one or two genuine traps, with \(T_n\) possibly larger than 1. Let

and recall that \(\varrho >2\) since \(1<\beta <\beta _c^{(2)}=1/\sqrt{\alpha }\). By Theorem 1.1 of [15], there exists a constant K such that

Hence, for all \(\zeta <\varrho \), we have \(\textbf{E}[T_n^\zeta ]<\infty \). Strictly speaking, the result of [15] gives a precise statement for the tails of the traps of type (a) (see Fig. 6) only, but the cases (b) and (c) work similarly.
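The passage from a tail bound to finite moments is the usual layer-cake computation. As a sketch, assume (our assumption about its form) that the bound from [15] reads \(\textbf{P}[T_n>t]\le K t^{-\varrho }\) for \(t\ge 1\). Then for \(0<\zeta <\varrho \):

```latex
\textbf{E}\big[T_n^\zeta\big]
  = \int_0^\infty \zeta t^{\zeta-1}\,\textbf{P}[T_n>t]\,dt
  \le \int_0^1 \zeta t^{\zeta-1}\,dt + \zeta K\int_1^\infty t^{\zeta-1-\varrho}\,dt
  = 1 + \frac{\zeta K}{\varrho-\zeta} < \infty .
```

The bound degenerates as \(\zeta \uparrow \varrho \), which is why only moments of order strictly less than \(\varrho \) are available.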

Let

be the occupation time of Y at (i, m). Given \({\mathcal {T}}\), for each (i, m) on the ray, \(\ell ^Y\big ((i,m)\big )-1\) is a geometric random variable with success probability \(p^{{\mathcal {T}}}\big ((i,m)\big )\). Here \(p^{{\mathcal {T}}}\big ((i,m)\big )\) is the probability that Y will go to infinity before returning to (i, m). By Lemma 3.1, this probability is bounded below by

Let \(\gamma _p(k)=p(1-p)^k\) denote the weights of the geometric distribution on \({\mathbb {N}}_0\) with success probability p. Hence for every \(\zeta >0\), there is a constant \(K_\zeta <\infty \) such that

Note that \(H_{n+1}-H_n\) has moments of all orders, hence there exists \(K'_\zeta <\infty \) such that (recall \(L_n\) from (3.10))

Now fix \(\zeta \in (2,\varrho )\) and let \(\eta =\frac{\zeta }{\zeta -2}\). Note that \(\frac{1}{\zeta /2}+\frac{1}{\eta }=1\). Recall that \(\tau _{n+1}-\tau _n\) is geometrically distributed. We use Hölder’s inequality to infer

Having established the existence of second moments of the regeneration times, we can argue as in the proof of Theorem 2 in [11] to conclude the proof of Theorem 1.2. \(\square \)
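The exponents in the Hölder step fit together as follows. This is only the generic shape of the estimate, written for nonnegative random variables A and B in our own notation: for instance, A could be the square of a sum S of trap times, which has a finite \((\zeta /2)\)th moment because \(\zeta <\varrho \), and B a function of the geometric gap \(\tau _{n+1}-\tau _n\), which has moments of all orders.

```latex
\frac{1}{\zeta/2}+\frac{1}{\eta}=\frac{2}{\zeta}+\frac{\zeta-2}{\zeta}=1,
\qquad
\textbf{E}\big[S^2 B\big]\le \textbf{E}\big[S^{\zeta}\big]^{2/\zeta}\,\textbf{E}\big[B^{\eta}\big]^{1/\eta}.
```

The requirement \(\zeta >2\) is exactly what makes \(\zeta /2>1\) an admissible Hölder exponent, with finite conjugate \(\eta =\zeta /(\zeta -2)\).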

4 Einstein Relation: Proof of Theorem 1.3

Recall that \({\textsf{Ray}}\) denotes the (unique) self-avoiding path on \({\mathcal {T}}\) from \(-\infty \) to \(\infty \). We may and will assume that X starts at a point chosen from the ray instead of (possibly) from a point inside a trap. Since all traps are of finite size, it is enough to prove the theorem for this initial position. For \(n\in {\mathbb {N}}_0\), define the last time \(m\le n\) when \(X_m\) was on the ray by

and let

That is, \({\tilde{X}}\) waits at the entrances of the traps for X to come back to the ray. Since the depths of the traps are independent geometric random variables, it is easy to check that

Hence, it is enough to show the theorem for \({\tilde{X}}\) instead of X.
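The definition of \({\tilde{X}}\) ("wait at the trap entrance until X is back on the ray") can be made concrete on a finite path. The following Python sketch maps a path of X to the corresponding path of \({\tilde{X}}\); vertices are (row, column) pairs, and the ray and the path in the example are hypothetical.

```python
def freeze_off_ray(path, on_ray):
    """Return X-tilde: X-tilde_n = X_m, where m <= n is the last time the path was on the ray.

    path:   list of vertices X_0, X_1, ...; X_0 is assumed to lie on the ray
    on_ray: predicate telling whether a vertex belongs to the ray
    """
    if not on_ray(path[0]):
        raise ValueError("X_0 must lie on the ray")
    tilde, last = [], path[0]
    for v in path:
        if on_ray(v):
            last = v          # X is on the ray: X-tilde follows it
        tilde.append(last)    # otherwise X-tilde waits at the trap entrance
    return tilde


# Hypothetical example: ray vertices on row 0, a trap (1,1)-(1,2) hanging at (0,1).
ray = {(0, 0), (0, 1), (0, 2)}
path = [(0, 0), (0, 1), (1, 1), (1, 2), (1, 1), (0, 1), (0, 2)]
tilde = freeze_off_ray(path, ray.__contains__)
```

Here `tilde` stays at the entrance (0, 1) for the whole excursion into the trap and then moves on to (0, 2).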

Note that \({\tilde{X}}\) is symmetric simple random walk on \({\textsf{Ray}}\) with random holding times. We now give a different construction of such a random walk that allows explicit computations.

Recall that \(\phi (n)=(\phi ^{(1)}(n),\phi ^{(2)}(n))\), \(n\in {\mathbb {Z}}\), is the enumeration of \({\textsf{Ray}}\) such that \(\phi ^{(2)}(0)=0\) and \(\phi ^{(2)}(-1)=-1\). Let Y be symmetric simple random walk on \({\mathbb {Z}}\). Then \(\phi (Y_n)\) is symmetric simple random walk on \({\textsf{Ray}}\). For each \(k\in {\mathbb {Z}}\), there may or may not be a trap starting at \(\phi (k)\). Let \(\nu _k\) denote the (random) distribution of the time that X spends in that trap at any visit to \(\phi (k)\) before it makes a move on \({\textsf{Ray}}\). Let \(U_{k,i}\) denote the time that \({\tilde{X}}\) spends at its \(i\)th visit to \(\phi (k)\) before it makes a step on the ray. Clearly, the \(U_{k,i}\) are independent given \((\nu _k)_{k\in {\mathbb {Z}}}\), and \(U_{k,i}\) has distribution \(\nu _k\). Now we define

and let

Then \(\phi (Y^D_n)_{n\in {\mathbb {N}}_0}\) and \(({\tilde{X}}_n)_{n\in {\mathbb {N}}_0}\) coincide in (quenched) distribution. Hence the proof of Theorem 1.3 amounts to showing that

converges in \(\textbf{P}^{\mathcal {T}}\)-distribution in the Skorohod space \(D_{[0,\infty )}\) to a standard Brownian motion.
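A minimal Python sketch of the time-changed walk described in this paragraph, under our assumption that \(Y^D\) holds at site k for a fresh sample of \(\nu _k\) on each visit and then makes one step of Y, in the spirit of the randomly trapped random walks of [4]. The sampler `holding` and all other names are hypothetical; with \(\nu _k=\delta _1\) for all k the output is simply the path of Y.

```python
import random


def trapped_walk(n_steps, holding, seed=0):
    """Positions Y^D_0, ..., Y^D_{n_steps-1} of a randomly trapped simple random walk on Z.

    holding(k, rng) -> int >= 1 is a hypothetical sampler for nu_k: the number of
    time units spent at site k on one visit before the next +-1 step of Y.
    """
    rng = random.Random(seed)
    y, out = 0, []
    while len(out) < n_steps:
        u = holding(y, rng)
        out.extend([y] * u)            # wait u time units at site y
        y += rng.choice((-1, 1))       # then one symmetric step of Y
    return out[:n_steps]


# Hypothetical environment: holding time 1, 2 or 3 at every even site, 1 elsewhere.
path = trapped_walk(20, lambda k, rng: 1 if k % 2 else 1 + rng.randrange(3), seed=42)
```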

Let \(m:=\mathbb {E}[\sum _\ell \nu _k(\{\ell \})\ell ]\) be the mean time before \(Y^D\) takes its next step. In Theorem 2.10 of [4], a functional central limit theorem for our \(Y^D\) was shown for the situation where the sequence \((\nu _n)_{n\in {\mathbb {Z}}}\) is i.i.d. In our situation, the \((\nu _n)_{n\in {\mathbb {Z}}}\) are not i.i.d. but are ergodic. In fact, they form a strongly mixing sequence, as the random spanning tree \({\mathcal {T}}\) is made of i.i.d. building blocks. A closer look at the proof of Theorem 2.10 in [4, Sect. 7.2] shows that the independence assumption there is stronger than needed and that ergodicity suffices to infer the statement of the theorem. Hence, by Theorem 2.10 of [4], we get that

converges in \(\textbf{P}^{\mathcal {T}}\)-distribution in the Skorohod space \(D_{[0,\infty )}\) to a standard Brownian motion.

It remains to compute m and to measure the effect of applying \(\phi ^{(2)}\) to \(Y^D\).

Lemma 4.1

For almost all \({\mathcal {T}}\), we have

Proof

Let

be the probability that a given vertical edge \(z_k\) is in the spanning tree. Recall that the fair coin flips \((W_n)\) determine the vertical positions of the missing edges. That is, the horizontal edge \(h_{W_n,H_n}\) is not in the tree \({\mathcal {T}}\). Note that \(H_n\le V_n<H_{n+1}\) and that the vertical edge \(z_{V_n}\) is in the ray if and only if \(W_n\ne W_{n+1}\). Hence

By ergodicity, we get for almost all \({\mathcal {T}}\)

and

This implies

\(\square \)

Lemma 4.2

We have

Proof

For n such that the vertex \(\phi (n)\) is of degree 2 (that is, there is no trap adjacent to \(\phi (n)\)), we have \(\nu _n=\delta _1\) since \(Y^D\) makes its next step immediately. For n such that \(\phi (n)\) has degree 3 and there is a trap consisting of k edges starting at \(\phi (n)\), by (2.5), we have that the average holding time is

By the ergodic theorem, we get

Together with the above discussion, these lemmas finish the proof of Theorem 1.3. \(\square \)
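For intuition on the holding times entering Lemma 4.2, a first-step analysis (our own, for \(\beta =1\)) suggests the mean holding time at a degree-3 ray vertex with a trap of k edges is \(k+1\). From \(\phi (n)\) the walk steps onto the ray with probability 2/3; otherwise it enters the trap and needs on average \(2k-1\) further steps to return, the expected hitting time of the root from its neighbour on a path of k edges. Hence \(E=1+(2k-1+E)/3\), i.e. \(E=k+1\). The Python sketch below checks this by simulation; the local picture and the convention that the final step onto the ray is counted (so that a vertex without trap gives exactly 1, matching \(\nu _n=\delta _1\)) are our assumptions.

```python
import random


def holding_time(k, rng):
    """Time an unbiased (beta = 1) walk spends at a ray vertex x before stepping onto the ray.

    Hypothetical local picture: x has two ray neighbours and one trap neighbour,
    and the trap is a path of k >= 1 edges hanging at x.  The final step onto the
    ray is counted, so that a vertex without a trap would give exactly 1.
    """
    if k < 1:
        raise ValueError("trap must have at least one edge")
    steps, depth = 0, 0              # depth = graph distance from x inside the trap
    while True:
        steps += 1
        if depth == 0:
            if rng.randrange(3) < 2:     # 2 of the 3 neighbours of x lie on the ray
                return steps
            depth = 1                    # stepped into the trap
        elif depth == k:
            depth = k - 1                # dead end: forced step back
        else:
            depth += rng.choice((-1, 1))


def mean_holding_time(k, n_samples, seed=0):
    """Monte Carlo estimate of the mean holding time; should be close to k + 1."""
    rng = random.Random(seed)
    return sum(holding_time(k, rng) for _ in range(n_samples)) / n_samples
```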

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

[1] Aïdékon, E.: Speed of the biased random walk on a Galton-Watson tree. Probab. Theory Relat. Fields 159(3–4), 597–617 (2014)

[2] Balakrishnan, V., Van den Broeck, C.: Transport properties on a random comb. Phys. A 217, 1–21 (1995)

[3] Barma, M., Dhar, D.: Directed diffusion in a percolation network. J. Phys. C 16(8), 1451 (1983)

[4] Ben Arous, G., Cabezas, M., Černý, J., Royfman, R.: Randomly trapped random walks. Ann. Probab. 43(5), 2405–2457 (2015)

[5] Ben Arous, G., Fribergh, A.: Biased random walks on random graphs. In: Probability and Statistical Physics in St. Petersburg, vol. 91 of Proc. Sympos. Pure Math., pp. 99–153. Amer. Math. Soc., Providence (2016)

[6] Ben Arous, G., Fribergh, A., Gantert, N., Hammond, A.: Biased random walks on Galton-Watson trees with leaves. Ann. Probab. 40(1), 280–338 (2012)

[7] Ben Arous, G., Fribergh, A., Sidoravicius, V.: Lyons-Pemantle-Peres monotonicity problem for high biases. Commun. Pure Appl. Math. 67(4), 519–530 (2014)

[8] Berger, N., Gantert, N., Nagel, J.: The speed of biased random walk among random conductances. Ann. Inst. Henri Poincaré Probab. Stat. 55(2), 862–881 (2019)

[9] Berger, N., Gantert, N., Peres, Y.: The speed of biased random walk on percolation clusters. Probab. Theory Relat. Fields 126(2), 221–242 (2003)

[10] Betz, V., Meiners, M., Tomic, I.: Speed function for biased random walks with traps. Stat. Probab. Lett. 195, 109765 (2023)

[11] Bowditch, A.: Central limit theorems for biased randomly trapped random walks on \(\mathbb{Z} \). Stoch. Process. Appl. 129(3), 740–770 (2019)

[12] Bowditch, A.M., Croydon, D.A.: Biased random walk on supercritical percolation: anomalous fluctuations in the ballistic regime. Electron. J. Probab. 27, 1–22 (2022)

[13] Demaerel, T., Maes, C.: The asymptotic speed of reaction fronts in active reaction-diffusion systems. J. Phys. A 52, 245001 (2019)

[14] Fribergh, A., Hammond, A.: Phase transition for the speed of the biased random walk on the supercritical percolation cluster. Commun. Pure Appl. Math. 67(2), 173–245 (2014)

[15] Gantert, N., Klenke, A.: The tail of the length of an excursion in a trap of random size. J. Stat. Phys. 188(3), 27 (2022)

[16] Gantert, N., Meiners, M., Müller, S.: Einstein relation for random walk in a one-dimensional percolation model. J. Stat. Phys. 176(4), 737–772 (2019)

[17] Häggström, O.: Aspects of Spatial Random Processes. PhD thesis, University of Göteborg (1994)

[18] Hammond, A.: Stable limit laws for randomly biased walks on supercritical trees. Ann. Probab. 41(3A), 1694–1766 (2013)

[19] Klenke, A.: The random spanning tree on ladder-like graphs. arXiv:1704.00182 (2017)

[20] Klenke, A.: Probability Theory: A Comprehensive Course. Universitext, 3rd edn. Springer, Cham (2020)

[21] Kotak, J., Barma, M.: Biased induced drift and trapping on random combs and the Bethe lattice: Fluctuation regime and first order phase transitions. Phys. A 597, 127311 (2022)

[22] Lübbers, J.-E., Meiners, M.: The speed of critically biased random walk in a one-dimensional percolation model. Electron. J. Probab. 24, 1–29 (2019)

[23] Lyons, R., Pemantle, R., Peres, Y.: Biased random walks on Galton-Watson trees. Probab. Theory Relat. Fields 106(2), 249–264 (1996)

[24] Lyons, R., Pemantle, R., Peres, Y.: Unsolved problems concerning random walks on trees. In: Classical and Modern Branching Processes (Minneapolis, MN, 1994), vol. 84 of IMA Vol. Math. Appl., pp. 223–237. Springer, New York (1997)

[25] Sznitman, A.-S.: On the anisotropic walk on the supercritical percolation cluster. Commun. Math. Phys. 240(1–2), 123–148 (2003)

[26] White, S.R., Barma, M.: Field-induced drift and trapping in percolation networks. J. Phys. A 17, 2995–3008 (1984)

Funding

Open Access funding enabled and organized by Projekt DEAL. The authors did not receive support from any organization for the submitted work.


Ethics declarations

Conflict of interest

There is no conflict of interest.

Additional information

Communicated by Deepak Dhar.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gantert, N., Klenke, A. Biased Random Walk on Spanning Trees of the Ladder Graph. J Stat Phys 190, 83 (2023). https://doi.org/10.1007/s10955-023-03091-w


DOI: https://doi.org/10.1007/s10955-023-03091-w