Abstract

We consider random walks on the infinite cluster of a conditional bond percolation model on the infinite ladder graph. In a companion paper, we have shown that if the random walk is pulled to the right by a positive bias \(\uplambda > 0\), then its asymptotic linear speed \(\overline{\mathrm {v}}\) is continuous in the variable \(\uplambda > 0\) and differentiable for all sufficiently small \(\uplambda > 0\). In the paper at hand, we complement this result by proving that \(\overline{\mathrm {v}}\) is differentiable at \(\uplambda = 0\). Further, we show the Einstein relation for the model, i.e., that the derivative of the speed at \(\uplambda = 0\) equals the diffusivity of the unbiased walk.



1 Introduction

We continue our study, begun in [14], of regularity properties of a biased random walk on an infinite one-dimensional percolation cluster introduced by Axelson-Fisk and Häggström [3]. The model was introduced as a tractable model that exhibits phenomena similar to those of biased random walk on the supercritical percolation cluster in \({\mathbb {Z}}^{d}\). The bias, whose strength is given by a parameter \(\uplambda >0\), pushes the walk in a prescribed direction.

There exists a critical bias \(\uplambda _{\mathrm {c}}\in (0,\infty )\) such that for \(\uplambda \in (0, \uplambda _{\mathrm {c}})\) the walk has positive speed, while for \(\uplambda \ge \uplambda _{\mathrm {c}}\) the speed is zero, see Axelson-Fisk and Häggström [2]. These two regimes exist because the percolation cluster contains traps (or dead ends), so that the walk faces two competing effects. As the bias grows, the time spent in such traps (peninsulas stretching out in the direction of the bias) increases, while the time spent on the backbone (consisting of infinite paths in the direction of the bias) decreases. Once the bias is sufficiently large, the expected time the walk spends in a typical trap is infinite and the speed of the walk becomes zero.

Even though the model may be considered one of the simplest non-trivial models for a random walk on a percolation cluster, no explicit formula for the speed \(\overline{\mathrm {v}}=\overline{\mathrm {v}}(\uplambda )\) is available. The main result of our previous work [14] is that the speed (for fixed percolation parameter p) is continuous in \(\uplambda \) on \((0,\infty )\). The continuity of the speed may seem obvious, but, to the best of our knowledge, it had not been proved for a biased random walk on a percolation cluster, not even for biased random walk on Galton-Watson trees. Moreover, we proved in [14] that the speed is differentiable in \(\uplambda \) on \((0,\uplambda _{\mathrm {c}}/2)\), and we characterized the derivative as the covariance of a suitable two-dimensional Brownian motion.

This paper studies the regularity of the speed at \(\uplambda =0\). In particular, we establish the Einstein relation for the model: we prove that \(\overline{\mathrm {v}}\) is differentiable at \(\uplambda = 0\) and that the derivative at \(\uplambda = 0\) equals the variance of the scaling limit of the unbiased walk.

The Einstein relation is conjectured to hold in general for reversible motions that behave diffusively. We refer to Einstein [9] for a historical reference and to Spohn [29] for further explanations. A weaker form of the Einstein relation does hold under such general assumptions and goes back to Lebowitz and Rost [22]. However, the Einstein relation in the stronger form described above has only been established (or disproved) for specific examples. For instance, Loulakis [24, 25] considers a tagged particle in an exclusion process, while Komorowski and Olla [21] and Avena, dos Santos and Völlering [1] investigate other examples of space-time environments.

Komorowski and Olla [20] treat a first example of random walks with random conductances on \({\mathbb {Z}}^{d}, d\ge 3\), and Gantert, Guo and Nagel [12] establish the Einstein relation for random walks among i.i.d. uniformly elliptic random conductances on \({\mathbb {Z}}^{d}, d\ge 1\). In dimension one, the Einstein relation can be proved via explicit calculations, see Ferrari et al. [11]. There are only a few results for non-reversible situations, see Guo [16] and Komorowski and Olla [21]. We stress that, while the differentiability of the speed might appear natural or obvious, there are examples where the speed is not differentiable, see Faggionato and Salvi [10].

Despite this recent progress, little is known for models with hard traps, e.g. random conductances without a uniform ellipticity condition, or percolation clusters. The first result in this direction is due to Ben Arous et al. [4], who prove the Einstein relation for certain biased random walks on Galton-Watson trees. An additional difficulty in our model is that the traps are not only hard but also influence the structure of the backbone. To our knowledge, our paper is the first to prove the Einstein relation for a model with hard traps and dependence between traps and backbone. Although the structure of the traps is elementary, decoupling the traps from the backbone is one of the major difficulties we encountered.

We prove a quenched joint functional limit theorem via the corrector method (see Sect. 7), together with moment bounds for the distance of the walk from the origin. The law of the biased walk is compared with that of the unbiased walk via a Girsanov transform, and the difference between these measures is quantified using the joint limit theorem. Finally, we use regeneration times that depend on the bias, together with appropriate double limits, to conclude that the derivative of the speed equals a covariance, see Sect. 8. It then remains to identify this covariance as the variance of the unbiased walk, see Eq. (2.6).
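The Girsanov comparison rests on an elementary identity: on the first n steps, the law of the biased walk under \(P_{\omega ,\uplambda }\) has density \(\prod _{k=1}^{n} p_{\omega ,\uplambda }(Y_{k-1},Y_k)/p_{\omega ,0}(Y_{k-1},Y_k)\) with respect to \(P_{\omega }\), because both kernels charge the same set of moves. The Python sketch below checks \(E_{\omega ,\uplambda }[X_n] = E_{\omega }\big [X_n \prod _{k=1}^{n} p_{\omega ,\uplambda }(Y_{k-1},Y_k)/p_{\omega ,0}(Y_{k-1},Y_k)\big ]\) by exact enumeration for small n. It is a toy illustration only: the configuration is i.i.d. Bernoulli on a finite window rather than a sample from \(\mathrm {P}_{\!p}\), the transition probabilities are assumed to be the normalized conductances \(c_{\omega ,\uplambda }\) of Sect. 2, and all function names are hypothetical.

```python
import math
import random

def neighbors(v):
    """The three neighbours of v = (x, y) in the ladder graph Z x {0, 1}."""
    x, y = v
    return [(x - 1, y), (x + 1, y), (x, 1 - y)]

def edge(v, w):
    """Canonical key for the unordered edge <v, w>."""
    return (min(v, w), max(v, w))

def random_configuration(p, K, seed):
    """Hypothetical helper: i.i.d. Bernoulli(p) bonds with -K-1 <= x <= K+1 (not a sample from P_p)."""
    rng = random.Random(seed)
    omega = {}
    for x in range(-K - 1, K + 2):
        omega[edge((x, 0), (x, 1))] = int(rng.random() < p)
        if x <= K:
            for y in (0, 1):
                omega[edge((x, y), (x + 1, y))] = int(rng.random() < p)
    return omega

def step_probs(omega, lam, v):
    """p_{omega,lam}(v, .) from normalised conductances; closed edges feed the self-loop."""
    z = math.exp(lam) + 1.0 + math.exp(-lam)
    probs = {v: 0.0}
    for w in neighbors(v):
        q = math.exp(lam * (w[0] - v[0])) / z
        if omega[edge(v, w)] == 1:
            probs[w] = q
        else:
            probs[v] += q
    return probs

def mean_position_biased(omega, lam, n):
    """E_{omega,lam}[X_n], computed by propagating the exact law of Y_n."""
    dist = {(0, 0): 1.0}
    for _ in range(n):
        new = {}
        for v, mass in dist.items():
            for w, q in step_probs(omega, lam, v).items():
                new[w] = new.get(w, 0.0) + mass * q
        dist = new
    return sum(mass * v[0] for v, mass in dist.items())

def mean_position_reweighted(omega, lam, n):
    """E_omega[X_n * prod_k p_lam / p_0] over all paths of the unbiased walk (Girsanov density)."""
    def explore(v, k, prob0, density):
        if k == n:
            return prob0 * density * v[0]
        p0, pl = step_probs(omega, 0.0, v), step_probs(omega, lam, v)
        return sum(explore(w, k + 1, prob0 * q, density * pl[w] / q)
                   for w, q in p0.items() if q > 0)
    return explore((0, 0), 0, 1.0, 1.0)

omega = random_configuration(p=0.7, K=8, seed=2)
print(mean_position_biased(omega, 0.2, 8), mean_position_reweighted(omega, 0.2, 8))  # agree up to rounding
```

Exact enumeration visits up to \(3^n\) paths, which is why n is kept small here; the identity itself holds for every fixed configuration, so no conditioning on a bi-infinite path is needed for this check.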

2 Preliminaries and Main Results

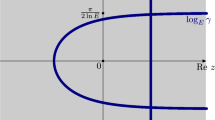

In this section we introduce the percolation model. The reader is referred to Fig. 1 for an illustration.

Percolation on the ladder graph. Let \({\mathcal {L}} = (V,E)\) be the infinite ladder graph with vertex set \(V = {\mathbb {Z}}\times \{0,1\}\) and edge set \(E = \{\langle v,w\rangle : v,w \in V, |v-w|=1\}\) where \(\langle v,w\rangle \) is an unordered pair and \(|\cdot |\) the standard Euclidean norm in \({\mathbb {R}}^2\). We also write \(v \sim w\) for \({\langle v,w\rangle \in E}\), and say that v and w are neighbors.

Axelson-Fisk and Häggström [3] introduced a percolation model on \({\mathcal {L}}\) that may be called ‘i. i. d. bond percolation on the ladder graph conditioned on the existence of a bi-infinite path’. We give a short review of this model.

Let \(\varOmega :=\{0,1\}^E\). We call \(\varOmega \) the configuration space, and its elements \(\omega \in \varOmega \) are called configurations. A path in \({\mathcal {L}}\) is a finite or infinite sequence of distinct edges connecting a finite or infinite sequence of neighboring vertices. For a given \(\omega \in \varOmega \), we call a path \(\pi \) in \({\mathcal {L}}\) open if \(\omega (e)=1\) for each edge e from \(\pi \). If \(\omega \in \varOmega \) and \(v \in V\), we denote by \({\mathcal {C}}_{\omega }(v)\) the connected component in \(\omega \) that contains v, i. e.,

$$\begin{aligned} {\mathcal {C}}_{\omega }(v) = \{v\} \cup \{w \in V: \text {there is an open path in } \omega \text { connecting } v \text { and } w\}. \end{aligned}$$

We write \({\mathsf {x}}: V \rightarrow {\mathbb {Z}}\) and \({\mathsf {y}}:V \rightarrow \{0,1\}\) for the projections from V to \({\mathbb {Z}}\) and \(\{0,1\}\), respectively. Then \(v = ({\mathsf {x}}(v),{\mathsf {y}}(v))\) for every \(v \in V\). We call \({\mathsf {x}}(v)\) and \({\mathsf {y}}(v)\) the \({\mathsf {x}}\)- and \({\mathsf {y}}\)-coordinate of v, respectively. For \(N_1, N_2 \in {\mathbb {N}}\), let \(\varOmega _{N_1,N_2}\) be the set of configurations in which there exists an open path from some \(v_1 \in V\) with \({\mathsf {x}}(v_1)=-N_1\) to some \(v_2 \in V\) with \({\mathsf {x}}(v_2) = N_2\). Further, let \(\varOmega ^* :=\bigcap _{N_1, N_2 \ge 0} \varOmega _{N_1,N_2}\) be the set of configurations which have an infinite open path connecting \(-\infty \) and \(+\infty \).

Denote by \({\mathcal {F}}\) the \(\sigma \)-field on \(\varOmega \) generated by the projections \(p_e: \varOmega \rightarrow \{0,1\}\), \(\omega \mapsto \omega (e)\), \(e \in E\). For \(p \in (0,1)\), let \(\mu _p\) be the probability distribution on \((\varOmega ,{\mathcal {F}})\) which makes \((\omega (e))_{e \in E}\) an independent family of Bernoulli variables with \(\mu _p(\omega (e)=1)=p\) for all \(e \in E\). Then \(\mu _p(\varOmega ^*)=0\) by the Borel-Cantelli lemma. Write \(\mathrm {P}_{p,N_1,N_2}(\cdot ) :=\mu _p(\cdot \cap \varOmega _{N_1,N_2})/\mu _p(\varOmega _{N_1,N_2})\) for the probability distribution on \(\varOmega \) that arises from conditioning on the existence of an open path from \({\mathsf {x}}\)-coordinate \(-N_1\) to \({\mathsf {x}}\)-coordinate \(N_2\). The measures \(\mathrm {P}_{p,N_1,N_2}(\cdot )\) converge weakly as \(N_1,N_2 \rightarrow \infty \) as was shown in [3, Theorem 2.1]:

Theorem 2.1

The distributions \(\mathrm {P}_{p,N_1,N_2}\) converge weakly as \(N_1,N_2 \!\rightarrow \! \infty \) to a probability measure \(\mathrm {P}_{\!p}^*\) on \((\varOmega ,{\mathcal {F}})\) with \(\mathrm {P}_{\!p}^*(\varOmega ^*)=1\).

For any \(\omega \in \varOmega ^*\), we write \({\mathcal {C}}= {\mathcal {C}}_\omega \) for the \(\mathrm {P}_{\!p}^*\)-a. s. unique infinite open cluster. We write \({\mathbf {0}} :=(0,0)\) and define \(\varOmega _{{\mathbf {0}}} :=\{\omega \in \varOmega ^*: {\mathbf {0}} \in {\mathcal {C}}\}\) and

$$\begin{aligned} \mathrm {P}_{\!p}(\cdot ) :=\mathrm {P}_{\!p}^*(\cdot \mid \varOmega _{{\mathbf {0}}}). \end{aligned}$$

Random walk on the infinite percolation cluster. Throughout the paper, we keep \(p \in (0,1)\) fixed and consider random walks in a percolation environment sampled according to \(\mathrm {P}_{\!p}\). The model to be introduced next goes back to Axelson-Fisk and Häggström [2], who used a different parametrization.

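We work on the space \(V^{{\mathbb {N}}_0}\) equipped with the \(\sigma \)-algebra \({\mathcal {G}}= \sigma (Y_n: n \in {\mathbb {N}}_0)\) where \(Y_n: V^{{\mathbb {N}}_0} \rightarrow V\) denotes the projection from \(V^{{\mathbb {N}}_0}\) onto the nth coordinate. Let \(P_{\omega ,\uplambda }\) be the distribution on \(V^{{\mathbb {N}}_0}\) that makes \(Y :=(Y_n)_{n \in {\mathbb {N}}_0}\) a Markov chain on V with initial position \({\mathbf {0}}\) and transition probabilities \(p_{\omega ,\uplambda }(v,w)=P_{\omega ,\uplambda }(Y_{n+1} = w \mid Y_n = v)\) defined via

$$\begin{aligned} p_{\omega ,\uplambda }(v,w) :=\frac{e^{\uplambda ({\mathsf {x}}(w)-{\mathsf {x}}(v))}}{e^{\uplambda }+1+e^{-\uplambda }} \mathbb {1}_{\{\omega (\langle v,w \rangle )=1\}} \quad \text {for } v \sim w, \qquad p_{\omega ,\uplambda }(v,v) :=1-\sum _{u \sim v} p_{\omega ,\uplambda }(v,u), \end{aligned}$$

and \(p_{\omega ,\uplambda }(v,w) :=0\) for all other pairs v, w.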

The random walk \((Y_n)_{n \in {\mathbb {N}}_0}\) is the weighted random walk on the ladder graph \({\mathcal {L}}\) with edge conductances \(c_{\omega ,\uplambda }(v,w) = e^{\uplambda ({\mathsf {x}}(v)+{\mathsf {x}}(w))} \mathbb {1}_{\{\omega (\langle v,w \rangle )=1\}}\) for \(v \sim w\) and \(c_{\omega ,\uplambda }(v,v) = \sum _{u \sim v} e^{\uplambda ({\mathsf {x}}(u)+{\mathsf {x}}(v))} \mathbb {1}_{\{\omega (\langle u,v \rangle )=0\}}\), see [23, Sect. 9.1] for some background. We write \(P^{{\mathbf {0}}}_{\omega ,\uplambda }\) to emphasize the initial position \({\mathbf {0}}\), and \(P^v_{\omega ,\uplambda }\) for the distribution of the Markov chain with the same transition probabilities but initial position \(v \in V\). The joint distribution of \(\omega \) and \((Y_n)_{n \in {\mathbb {N}}_0}\) on \((\varOmega \times V^{{\mathbb {N}}_0},{\mathcal {F}}\otimes {\mathcal {G}})\) when \(\omega \) is drawn at random according to a probability measure Q on \((\varOmega ,{\mathcal {F}})\) is denoted by \(Q \times P^v_{\omega ,\uplambda }~=:~{\mathbb {P}}_{Q,\uplambda }^v\) where v is the initial position of the walk. (Notice that, in slight abuse of notation, we consider \(Y_n\) also as a mapping from \(\varOmega \times V^{{\mathbb {N}}_0}\) to V.) We refer to [14] for a formal definition. We write \({\mathbb {P}}_{\uplambda }^v\) for \({\mathbb {P}}_{\mathrm {P}_{\!p},\uplambda }^v\), \({\mathbb {P}}_{\uplambda }\) for \({\mathbb {P}}_{\uplambda }^{{\mathbf {0}}}\) and \({\mathbb {P}}^*_{\uplambda }\) for \({\mathbb {P}}_{\mathrm {P}_{\!p}^*,\uplambda }^{{\mathbf {0}}}\). If the walk starts at \(v={\mathbf {0}}\), we sometimes omit the superscript \({\mathbf {0}}\). Further, if \(\uplambda =0\), we sometimes omit \(\uplambda \) as a subscript, and write \(p_{\omega }\) for \(p_{\omega ,0}\), and \({\mathbb {P}}\) for \({\mathbb {P}}_{0}\).
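The weighted-walk description can be checked directly on a finite window. The Python sketch below (hypothetical helper names; i.i.d. Bernoulli bonds as a stand-in for a sample from \(\mathrm {P}_{\!p}\)) forms \(p(v,w) = c_{\omega ,\uplambda }(v,w)/\pi (v)\), where \(\pi (v)\) is the sum of \(c_{\omega ,\uplambda }(v,w)\) over w = v and the three neighbours of v. It confirms numerically that \(\pi (v) = e^{2\uplambda {\mathsf {x}}(v)}(e^{\uplambda }+1+e^{-\uplambda })\) whatever \(\omega \) is (every edge at v contributes either an open-edge conductance or a share of the self-loop), that detailed balance \(\pi (v)p(v,w) = \pi (w)p(w,v)\) holds, and that \(p(v,w) = e^{\uplambda ({\mathsf {x}}(w)-{\mathsf {x}}(v))}/(e^{\uplambda }+1+e^{-\uplambda })\) across open edges.

```python
import math
import random

def neighbors(v):
    """The three neighbours of v = (x, y) in Z x {0, 1}."""
    x, y = v
    return [(x - 1, y), (x + 1, y), (x, 1 - y)]

def edge(v, w):
    """Canonical key for the unordered edge <v, w>."""
    return (min(v, w), max(v, w))

def random_configuration(p, K, seed):
    """Hypothetical helper: i.i.d. Bernoulli(p) bonds with -K-1 <= x <= K+1 (not P_p)."""
    rng = random.Random(seed)
    omega = {}
    for x in range(-K - 1, K + 2):
        omega[edge((x, 0), (x, 1))] = int(rng.random() < p)
        if x <= K:
            for y in (0, 1):
                omega[edge((x, y), (x + 1, y))] = int(rng.random() < p)
    return omega

def conductance(omega, lam, v, w):
    """c_{omega,lam}(v, w); for w == v, the self-loop collects the weights of closed edges."""
    if w == v:
        return sum(math.exp(lam * (u[0] + v[0]))
                   for u in neighbors(v) if omega[edge(u, v)] == 0)
    return math.exp(lam * (v[0] + w[0])) if omega[edge(v, w)] == 1 else 0.0

def total_conductance(omega, lam, v):
    """pi(v): sum of c(v, w) over w = v and the three neighbours of v."""
    return sum(conductance(omega, lam, v, w) for w in neighbors(v) + [v])

def transition_prob(omega, lam, v, w):
    """Weighted random walk: p(v, w) = c(v, w) / pi(v)."""
    return conductance(omega, lam, v, w) / total_conductance(omega, lam, v)

K, lam = 5, 0.4
omega = random_configuration(0.6, K, seed=0)
z = math.exp(lam) + 1 + math.exp(-lam)
for x in range(-K, K + 1):
    for y in (0, 1):
        v = (x, y)
        pi_v = total_conductance(omega, lam, v)
        assert math.isclose(pi_v, math.exp(2 * lam * x) * z)  # independent of omega
        for w in neighbors(v):
            if abs(w[0]) <= K:  # stay inside the window where all edges are sampled
                assert math.isclose(pi_v * transition_prob(omega, lam, v, w),
                                    total_conductance(omega, lam, w) * transition_prob(omega, lam, w, v))
            if omega[edge(v, w)] == 1:
                assert math.isclose(transition_prob(omega, lam, v, w),
                                    math.exp(lam * (w[0] - x)) / z)
```

In particular, at \(\uplambda = 0\) the walk moves across each open edge at v with probability 1/3 and stays put with the remaining probability.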

The speed of the random walk. Axelson-Fisk and Häggström [2, Proposition 3.1] showed that \((Y_n)_{n \in {\mathbb {N}}_0}\) is recurrent under \({\mathbb {P}}_0\) and transient under \({\mathbb {P}}_{\uplambda }\) for \(\uplambda \not = 0\). Moreover, there is a critical bias \(\uplambda _{\mathrm {c}}\in (0,\infty )\) separating the ballistic from the sub-ballistic regime. More precisely, if one denotes by \(X_n~:=~{\mathsf {x}}(Y_n)\) the projection of \(Y_n\) on the \({\mathsf {x}}\)-coordinate, the following result holds.

Proposition 2.2

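For any \(\uplambda \ge 0\), there exists a deterministic constant \(\overline{\mathrm {v}}(\uplambda ) = \overline{\mathrm {v}}(p,\uplambda ) \in [0,1]\) such that

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{X_n}{n} = \overline{\mathrm {v}}(\uplambda ) \quad {\mathbb {P}}_{\uplambda }\text {-a. s.} \end{aligned}$$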

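Further, there is a critical bias \(\uplambda _{\mathrm {c}}= \uplambda _{\mathrm {c}}(p) > 0\) (for which an explicit expression is available) such that

$$\begin{aligned} \overline{\mathrm {v}}(\uplambda ) > 0 \text { for } 0< \uplambda < \uplambda _{\mathrm {c}} \quad \text {and} \quad \overline{\mathrm {v}}(\uplambda ) = 0 \text { for } \uplambda \ge \uplambda _{\mathrm {c}}. \end{aligned}$$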

Proof

For \(\uplambda > 0\) this is Theorem 3.2 in [2]. For \(\uplambda = 0\), the sequence of increments \((X_n-X_{n-1})_{n \in {\mathbb {N}}}\) is ergodic under \({\mathbb {P}}\) by Lemma 4.4 below. Birkhoff’s ergodic theorem implies \(\overline{\mathrm {v}}(0) = \lim _{n \rightarrow \infty } \frac{X_n}{n} = {\mathbb {E}}[X_1] = 0\,{\mathbb {P}}\)-a. s. \(\square \)

Functional central limit theorem for the unbiased walk. In a preceding paper [14], we have shown that \(\overline{\mathrm {v}}\) is differentiable as a function of \(\uplambda \) on the interval \((0,\uplambda _{\mathrm {c}}/2)\), and continuous on \((0,\infty )\). In this paper, we show that \(\overline{\mathrm {v}}\) is also differentiable at 0 with \(\overline{\mathrm {v}}'(0) = \sigma ^2\) where \(\sigma ^2\) is the limiting variance of \(n^{-1/2} X_n\) under the distribution \({\mathbb {P}}_0\). This is the Einstein relation for the model. Clearly, a necessary prerequisite for the Einstein relation is the central limit theorem for the unbiased walk.

Before we provide the central limit theorem for the unbiased walk, we introduce some notation. As usual, for \(t \ge 0\), we write \(\lfloor t \rfloor \) for the largest integer \(\le t\). Then, we define

$$\begin{aligned} B_n(t) :=\frac{1}{\sqrt{n}} X_{\lfloor nt \rfloor }, \quad 0 \le t \le 1, \end{aligned}$$

for each \(n \in {\mathbb {N}}\). The random function \(B_n\) takes values in the Skorokhod space D[0, 1] of right-continuous real-valued functions on [0, 1] with left limits. We denote by “\(\Rightarrow \)” convergence in distribution of random variables in D[0, 1], see [6, Chapter 3] for details.

Theorem 2.3

There exists a constant \(\sigma = \sigma (p) \in (0,\infty )\) such that

$$\begin{aligned} B_n \Rightarrow \sigma B \quad \text {under } P_{\omega } \end{aligned}$$

for \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega \), where B is a standard Brownian motion.

It is worth mentioning that an annealed invariance principle for \((B_n)_{n \in {\mathbb {N}}}\) follows without much effort from [7]. In principle, we do not require a quenched central limit theorem for the proof of the Einstein relation. However, we do require a joint central limit theorem for \(B_n\) together with a certain martingale \(M_n\), see Theorem 2.5 below, so we cannot directly apply the results from [7]. On the other hand, the proof of the Einstein relation uses precise bounds on the corrector, and, by arguments similar to those of Berger and Biskup [5], these bounds almost immediately give the quenched invariance principle.

Einstein relation. We are now ready to formulate the Einstein relation:

Theorem 2.4

The speed \(\overline{\mathrm {v}}\) is differentiable at \(\uplambda =0\) with derivative

$$\begin{aligned} \overline{\mathrm {v}}'(0) = \sigma ^2, \end{aligned}$$

where \(\sigma ^2\) is given by Theorem 2.3.
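As a sanity check on the form of Theorem 2.4 (not on the theorem itself), one can look at the fully open ladder, in which every edge is open. This corresponds to p = 1, which lies outside the model (the model requires \(p \in (0,1)\)), and it has no traps, so everything is explicit. Assuming the transition probabilities are the normalized conductances of Sect. 2, \(X_n\) is then a random walk on \({\mathbb {Z}}\) with i.i.d. increments +1, -1 and 0 with probabilities \(e^{\uplambda }/Z\), \(e^{-\uplambda }/Z\) and 1/Z, where \(Z = e^{\uplambda }+1+e^{-\uplambda }\). Hence the speed is \((e^{\uplambda }-e^{-\uplambda })/Z\), whose derivative at \(\uplambda = 0\) is 2/3, and the unbiased increments have variance 2/3. The short Python sketch below (hypothetical function names) evaluates both sides numerically.

```python
import math

def speed_fully_open(lam):
    """E_lam[X_1] on the fully open ladder: (e^lam - e^-lam) / (e^lam + 1 + e^-lam)."""
    z = math.exp(lam) + 1.0 + math.exp(-lam)
    return (math.exp(lam) - math.exp(-lam)) / z

def step_variance_unbiased():
    """Variance of one unbiased x-step: +1, -1 and 0 (vertical move), each with probability 1/3."""
    return sum(q * d ** 2 for d, q in ((1, 1 / 3), (-1, 1 / 3), (0, 1 / 3)))

h = 1e-5
derivative_at_0 = (speed_fully_open(h) - speed_fully_open(-h)) / (2 * h)  # central difference
print(derivative_at_0, step_variance_unbiased())  # both close to 2/3
```

On the percolation cluster, no such closed form for the speed is available, which is why the proof goes through the joint limit theorem instead.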

The joint functional central limit theorem. As in [14], the proof of the differentiability of the speed is based on a joint central limit theorem for \(X_n\) and the leading term of a suitable density.

To make this precise, we first introduce some notation. For \(v \in V\), let \(N_{\omega }(v) :=\{w \in V: p_{\omega ,0}(v,w) > 0\}\). Since \(p_{\omega ,0}(v,\cdot )\) is a probability distribution, \(N_{\omega }(v) \not = \varnothing \), even for isolated vertices v (for which \(N_{\omega }(v) = \{v\}\)). For \(w \in N_{\omega }(v)\), the function \(\uplambda \mapsto \log p_{\omega ,\uplambda }(v,w)\) is differentiable at \(\uplambda = 0\), and hence

$$\begin{aligned} \log p_{\omega ,\uplambda }(v,w) = \log p_{\omega ,0}(v,w) + \uplambda \big (\nu _{\omega }(v,w) + o(1)\big ), \end{aligned}$$

where \(\nu _{\omega }(v,w)\) is the derivative of \(\log p_{\omega ,\uplambda }(v,w)\) at \(\uplambda = 0\) and o(1) converges to 0 as \(\uplambda \rightarrow 0\). Apart from \(\uplambda \), the one-step transition probability \(p_{\omega ,\uplambda }(v,w)\) depends only on the local configuration around v in \(\omega \) and on the position of w relative to v. Because of the discrete nature of the model, there are only finitely many such local configurations. Consequently, the error term o(1) converges to 0 as \(\uplambda \rightarrow 0\) uniformly in v, w and \(\omega \).

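For all v and all \(\omega \), \(p_{\omega ,\uplambda }(v,\cdot )\) is a probability measure on \(N_{\omega }(v)\), and hence, differentiating \(\sum _{w \in N_{\omega }(v)} p_{\omega ,\uplambda }(v,w) = 1\) at \(\uplambda = 0\),

$$\begin{aligned} \sum _{w \in N_{\omega }(v)} p_{\omega ,0}(v,w)\, \nu _{\omega }(v,w) = 0. \end{aligned}$$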

Therefore, for fixed \(\omega \), the sequence \((M_{n})_{n \in {\mathbb {N}}_0}\) where \(M_{0}=0\) and, for \(n \in {\mathbb {N}}\),

$$\begin{aligned} M_n :=\sum _{k=1}^{n} \nu _{\omega }(Y_{k-1},Y_k), \end{aligned}$$

is a \(P_{\omega }\)-martingale with respect to the canonical filtration of the walk \((Y_k)_{k \in {\mathbb {N}}_0}\). Clearly, \(M_n\) is a (measurable) function of \(\omega \) and \((Y_k)_{k \in {\mathbb {N}}_0}\) and thus a random variable on \(\varOmega \times V^{{\mathbb {N}}_0}\). The sequence \((M_n)_{n \in {\mathbb {N}}_0}\) is also a martingale under the annealed measure \({\mathbb {P}}\).
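For a concrete configuration, \(\nu _{\omega }\) can be computed by hand. Assuming the normalized-conductance form of \(p_{\omega ,\uplambda }\) from Sect. 2, differentiating \(\log p_{\omega ,\uplambda }\) at \(\uplambda = 0\) gives \(\nu _{\omega }(v,w) = {\mathsf {x}}(w)-{\mathsf {x}}(v)\) when w is reached across an open edge, while \(\nu _{\omega }(v,v)\) is the average of \({\mathsf {x}}(u)-{\mathsf {x}}(v)\) over the neighbours u joined to v by a closed edge. The Python sketch below (hypothetical helper names, i.i.d. Bernoulli bonds on a finite window) compares this with a numerical derivative, checks the centering identity that makes \((M_n)\) a martingale, and confirms \(|\nu _{\omega }| \le 1\), i.e. the bounded increments used for the Azuma-Hoeffding inequality.

```python
import math
import random

def neighbors(v):
    """The three neighbours of v = (x, y) in Z x {0, 1}."""
    x, y = v
    return [(x - 1, y), (x + 1, y), (x, 1 - y)]

def edge(v, w):
    """Canonical key for the unordered edge <v, w>."""
    return (min(v, w), max(v, w))

def random_configuration(p, K, seed):
    """Hypothetical helper: i.i.d. Bernoulli(p) bonds with -K-1 <= x <= K+1 (not P_p)."""
    rng = random.Random(seed)
    omega = {}
    for x in range(-K - 1, K + 2):
        omega[edge((x, 0), (x, 1))] = int(rng.random() < p)
        if x <= K:
            for y in (0, 1):
                omega[edge((x, y), (x + 1, y))] = int(rng.random() < p)
    return omega

def step_probs(omega, lam, v):
    """p_{omega,lam}(v, .) from normalised conductances; closed edges feed the self-loop."""
    z = math.exp(lam) + 1.0 + math.exp(-lam)
    probs = {v: 0.0}
    for w in neighbors(v):
        q = math.exp(lam * (w[0] - v[0])) / z
        if omega[edge(v, w)] == 1:
            probs[w] = q
        else:
            probs[v] += q
    return probs

def nu_exact(omega, v, w):
    """Derivative of log p_{omega,lam}(v, w) at lam = 0, for w in N_omega(v)."""
    if w != v:
        return float(w[0] - v[0])
    closed = [u for u in neighbors(v) if omega[edge(u, v)] == 0]
    return sum(u[0] - v[0] for u in closed) / len(closed)

def nu_numeric(omega, v, w, h=1e-5):
    """Central finite difference of lam -> log p_{omega,lam}(v, w) at lam = 0."""
    return (math.log(step_probs(omega, h, v)[w])
            - math.log(step_probs(omega, -h, v)[w])) / (2 * h)

def check_increments(omega, K):
    """Check nu against the numerical derivative, |nu| <= 1, and the centring identity."""
    for x in range(-K, K + 1):
        for y in (0, 1):
            v = (x, y)
            support = {w: q for w, q in step_probs(omega, 0.0, v).items() if q > 0}  # N_omega(v)
            for w in support:
                assert abs(nu_exact(omega, v, w) - nu_numeric(omega, v, w)) < 1e-6
                assert abs(nu_exact(omega, v, w)) <= 1.0  # bounded increments of M_n
            centring = sum(q * nu_exact(omega, v, w) for w, q in support.items())
            assert abs(centring) < 1e-12  # conditional mean of M_{n+1} - M_n given Y_n = v
    return True

print(check_increments(random_configuration(0.5, 6, seed=3), 6))  # True
```

The centering holds because the three neighbours of v have \({\mathsf {x}}\)-displacements -1, 0 and +1, each carrying weight 1/3 at \(\uplambda = 0\) whether its edge is open or closed.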

Theorem 2.5

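Let \(p \in (0,1)\). Then, for \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega \),

$$\begin{aligned} \Big (B_n(t), \frac{1}{\sqrt{n}} M_{\lfloor nt \rfloor }\Big )_{0 \le t \le 1} \Rightarrow (B(t),M(t))_{0 \le t \le 1} \quad \text {under } P_{\omega }, \end{aligned}$$

where (B, M) is a two-dimensional centered Brownian motion with deterministic covariance matrix \(\varSigma = (\sigma _{ij})_{i,j=1,2}\). Further, it holds that

$$\begin{aligned} \sigma _{11} = \sigma _{12} = \sigma ^2. \end{aligned}\tag{2.6}$$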

As the martingale \(M_n\) has bounded increments, the Azuma-Hoeffding inequality [30, E14.2] applies and gives the following exponential integrability result, see the proof of Proposition 2.7 in [14] for details.

Proposition 2.6

For every \(t > 0\),

We finish this section with an overview of the steps that lead to the proof of Theorem 2.4.

1. In Sect. 7, we prove the joint central limit theorem, Theorem 2.5. The proof is based on the corrector method, which is a decomposition technique in which \(Y_n\) is written as a martingale plus a corrector of smaller order. The martingale is constructed in Sect. 6. Many arguments are based on the method of the environment seen from the point of view of the walker.

2. In Lemma 8.1, we prove that

$$\begin{aligned} \sup _{n \in {\mathbb {N}}} \frac{1}{n} {\mathbb {E}}\Big [\max _{k=1,\ldots ,n} X_k^2\Big ] < \infty . \end{aligned}\tag{2.8}$$

The proof is based on estimates for the almost sure fluctuations of the corrector derived in Sect. 7.

3. Using the joint central limit theorem and (2.8), we show in Proposition 8.3 that, for any \(\alpha > 0\),

$$\begin{aligned} \lim _{\begin{array}{c} \uplambda \rightarrow 0,\\ \uplambda ^2 n \rightarrow \alpha \end{array}} \frac{{\mathbb {E}}_{\uplambda }[X_n]}{\uplambda n} ~=~ {\mathbb {E}}[B(1) M(1)]. \end{aligned}\tag{2.9}$$

Equation (2.9) is a weak form of the Einstein relation going back to Lebowitz and Rost [22].

4. Finally, we show in Sect. 8.4 that, for any \(\alpha > 0\),

$$\begin{aligned} \lim _{\begin{array}{c} \uplambda \rightarrow 0,\\ \uplambda ^{2} n \rightarrow \alpha \end{array}} \bigg [\frac{\overline{\mathrm {v}}(\uplambda )}{\uplambda } - \frac{{\mathbb {E}}_{\uplambda }[X_n]}{\uplambda n}\bigg ] ~=~ 0. \end{aligned}\tag{2.10}$$

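Notice that, by (2.9), the limit of \({\mathbb {E}}_{\uplambda }[X_n]/(\uplambda n)\) appearing in (2.10) equals \({\mathbb {E}}[B(1)M(1)]\) and, in particular, does not depend on \(\alpha > 0\). Hence, (2.10) together with \(\overline{\mathrm {v}}(0)=0\) implies

$$\begin{aligned} \overline{\mathrm {v}}'(0) = \lim _{\uplambda \rightarrow 0} \frac{\overline{\mathrm {v}}(\uplambda )}{\uplambda } = {\mathbb {E}}[B(1)M(1)] = \sigma _{12}. \end{aligned}$$

Theorem 2.4 then follows from the identity \(\sigma _{12} = \sigma ^2\), see Eq. (2.6).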

3 Background on the Percolation Model

In this section we provide some basic results on the percolation model.

Ergodicity of the percolation distribution. To ease notation, we identify V with the additive group \({\mathbb {Z}}\times {\mathbb {Z}}_2\). For instance, we write \((k,1)+(n,1)= (k+n,0)\) for \(k,n \in {\mathbb {Z}}\). With this notation, for \(v \in V\), we define the shift \(\theta ^{v}:V \rightarrow V\), \(w \mapsto w-v\). The shift \(\theta ^{v}\) canonically extends to a mapping on the edges and hence to a mapping on the configurations \(\omega \in \varOmega \). In slight abuse of notation, we denote all these mappings by \(\theta ^{v}\). The mappings \(\theta ^{v}\) form a commutative group since \(\theta ^{v} \theta ^{w} = \theta ^{v+w}\).

The next result is contained in the proof of Lemma 5.5 in [2].

Lemma 3.1

The probability measure \(\mathrm {P}_{\!p}^*\) is ergodic w. r. t. the family of shifts \(\theta ^v\), \(v \in V\), that is, it is invariant under all shifts \(\theta ^v\) and for all shift-invariant sets \(A \in {\mathcal {F}}\), we have \(\mathrm {P}_{\!p}^*(A) \in \{0,1\}\).

Cyclic decomposition. We introduce a decomposition of the percolation cluster into independent cycles. A similar decomposition for the given model was first introduced in [2]. If (i, 1) is isolated in \(\omega \), we call (i, 0) a pre-regeneration point. Cycles begin and end at pre-regeneration points. These are bottlenecks in the graph which the walk has to visit in order to get past. Let \(\ldots , R^{\mathrm {pre}}_{-2}, R^{\mathrm {pre}}_{-1}, R^{\mathrm {pre}}_0, R^{\mathrm {pre}}_1, R^{\mathrm {pre}}_2, \ldots \) be an enumeration of the pre-regeneration points such that \(\ldots< {\mathsf {x}}(R^{\mathrm {pre}}_{-1})< 0 \le {\mathsf {x}}(R^{\mathrm {pre}}_0)< {\mathsf {x}}(R^{\mathrm {pre}}_1) < \ldots \) .

Let \({\mathcal {R}}^{\mathrm {pre}}\) be the set of all pre-regeneration points. Let \(E^{i,\le }, E^{i, \ge } \subseteq E\) consist of those edges with both endpoints having \({\mathsf {x}}\)-coordinate \(\le i\) or \(\ge i\), respectively. Further, let \(E^{i,<} :=E \setminus E^{i, \ge }\) and \(E^{i,>}:=E \setminus E^{i,\le }\). We denote the subgraph of \(\omega \) with vertex set \(\{v \in V: a \le {\mathsf {x}}(v) \le b\}\) and edge set \(\{e \in E^{a,\ge } \cap E^{b,<}: \omega (e)=1\}\) by [a, b) and call [a, b) a block (of \(\omega \)). The pre-regeneration points split the percolation cluster into blocks

$$\begin{aligned} \omega _n :=[{\mathsf {x}}(R_{n-1}^{\mathrm {pre}}), {\mathsf {x}}(R_{n}^{\mathrm {pre}})), \quad n \in {\mathbb {Z}}, \end{aligned}$$

which we call cycles.

There are infinitely many pre-regeneration points on both sides of the origin \(\mathrm {P}_{\!p}\)-a. s. The random walk \((Y_n)_{n \ge 0}\) under \({\mathbb {P}}\) can be viewed as a random walk among random conductances \((\omega (e))_{e \in E}\) (with additional self-loops). For \(n \in {\mathbb {Z}}\), we define \(C_{n}\) to be the effective conductance between \(R_{n-1}^{\mathrm {pre}}\) and \(R_{n}^{\mathrm {pre}}\). To be more precise, consider the nth cycle \(\omega _n\) as a finite network. Then the effective resistance between \(R_{n-1}^{\mathrm {pre}}\) and \(R_{n}^{\mathrm {pre}}\) is well-defined, see [23, Sect. 9.4]. We denote this effective resistance by \(1/C_n\) and the effective conductance by \(C_n\). We further define \(L_n :=L(\omega _n)\) to be the length of the nth cycle, i. e., \(L_n = {\mathsf {x}}(R_{n}^{\mathrm {pre}})-{\mathsf {x}}(R_{n-1}^{\mathrm {pre}})\). We summarize the two definitions:

$$\begin{aligned} C_n :=\text {effective conductance between } R_{n-1}^{\mathrm {pre}} \text { and } R_{n}^{\mathrm {pre}} \text { in } \omega _n, \qquad L_n :={\mathsf {x}}(R_{n}^{\mathrm {pre}})-{\mathsf {x}}(R_{n-1}^{\mathrm {pre}}). \end{aligned}$$
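The cyclic decomposition is easy to compute on a finite piece of a configuration. The Python sketch below (hypothetical helper names; unit conductances as for \(\uplambda = 0\); exact rational arithmetic) finds the x-coordinates i for which (i, 1) is isolated, cuts the configuration at the corresponding pre-regeneration points, and computes \(L_n\) and \(C_n\) for each cycle by solving the Dirichlet problem for the voltage. On a small hand-made example it also checks \(L_n/2 \le 1/C_n \le 2L_n\): the upper bound is the series-law bound used in the proof of Lemma 3.2, and the lower bound follows from the Nash-Williams inequality, because the (at most two) open horizontal edges between columns x and x + 1 form a cutset.

```python
from fractions import Fraction

def hor(x, y):
    """Horizontal edge <(x, y), (x + 1, y)> as a frozenset of its endpoints."""
    return frozenset({(x, y), (x + 1, y)})

def vert(x):
    """Vertical edge <(x, 0), (x, 1)>."""
    return frozenset({(x, 0), (x, 1)})

def effective_conductance(edges, s, t):
    """Effective conductance between s and t when every edge in `edges` has unit conductance."""
    adj = {}
    for e in edges:
        a, b = tuple(e)
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    comp, stack = {s}, [s]  # connected component of s
    while stack:
        for b in adj.get(stack.pop(), []):
            if b not in comp:
                comp.add(b)
                stack.append(b)
    if t not in comp:
        return Fraction(0)
    inner = sorted(comp - {s, t})
    idx = {u: i for i, u in enumerate(inner)}
    n = len(inner)
    # Kirchhoff equations deg(u) phi(u) - sum_{b ~ u} phi(b) = 0, with phi(s) = 1, phi(t) = 0
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]
    for u in inner:
        i = idx[u]
        for b in adj[u]:
            A[i][i] += 1
            if b == s:
                A[i][n] += 1
            elif b != t:
                A[i][idx[b]] -= 1
    for c in range(n):  # exact Gauss-Jordan elimination
        piv = next(r for r in range(c, n) if A[r][c] != 0)
        A[c], A[piv] = A[piv], A[c]
        for r in range(n):
            if r != c and A[r][c] != 0:
                f = A[r][c] / A[c][c]
                A[r] = [x - f * y for x, y in zip(A[r], A[c])]
    phi = {u: A[idx[u]][n] / A[idx[u]][idx[u]] for u in inner}
    phi[s], phi[t] = Fraction(1), Fraction(0)
    return sum((1 - phi[b] for b in adj[s]), Fraction(0))  # current leaving s

def pre_regeneration_xs(open_edges, xs):
    """All i in xs such that (i, 1) is isolated, i.e. (i, 0) is a pre-regeneration point."""
    return [i for i in xs
            if all(frozenset({(i, 1), u}) not in open_edges
                   for u in [(i - 1, 1), (i + 1, 1), (i, 0)])]

def cycles(open_edges, xs):
    """(L_n, C_n) for each pair a < b of consecutive pre-regeneration x-coordinates in xs."""
    pts = pre_regeneration_xs(open_edges, xs)
    result = []
    for a, b in zip(pts, pts[1:]):
        # block [a, b): open edges with both endpoints >= a, but not both >= b
        block = {e for e in open_edges if a <= min(u[0] for u in e) < b}
        result.append((b - a, effective_conductance(block, (a, 0), (b, 0))))
    return result

# hand-made example in which (0, 1), (3, 1) and (6, 1) are isolated
open_edges = ({hor(x, 0) for x in range(6)} | {hor(1, 1), hor(4, 1)}
              | {vert(1), vert(2), vert(5)})
for L, C in cycles(open_edges, range(7)):
    print(L, C, Fraction(L, 2) <= 1 / C <= 2 * L)
# 3 4/11 True
# 3 1/3 True
```

In the first cycle, the two routes between (1, 0) and (2, 0) act in parallel (resistance 3/4), giving total resistance 11/4; in the second, the edges \(\langle (4,1),(5,1)\rangle \) and \(\langle (5,0),(5,1)\rangle \) form a dead end that carries no current, so the resistance is just 3.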

For later use, we note the following lemma.

Lemma 3.2

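Under \(\mathrm {P}_{\!p}\), the family \(\{(C_{n},L_{n}): n \in {\mathbb {Z}}\}\) is independent and the \((C_{n},L_{n})\), \(n \in {\mathbb {Z}}\setminus \{0\}\), are identically distributed. Further, there is some \(\vartheta > 0\) such that

$$\begin{aligned} \mathrm {E}_{p}\big [e^{\vartheta L_n}\big ]< \infty \quad \text {and} \quad \mathrm {E}_{p}\big [e^{\vartheta C_n^{-1}}\big ] < \infty \end{aligned}$$

for all \(n \in {\mathbb {Z}}\).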

Proof

By Lemma 3.3 in [14], under \(\mathrm {P}_{\!p}\), the family \((\theta ^{R_{n-1}^{\mathrm {pre}}} \omega _n)_{n \in {\mathbb {Z}}}\) is independent and all cycles except cycle 0 have the same distribution. Hence the family \(((C_n,L_n))_{n \in {\mathbb {Z}}}\) is independent and the \((C_n,L_n)\), \(n \in {\mathbb {Z}}\), \(n \not = 0\) are identically distributed. Lemma 3.3(b) in [14] gives that \(L_1 = {\mathsf {x}}(R_{1}^{\mathrm {pre}})-{\mathsf {x}}(R_0^{\mathrm {pre}})\) has a finite exponential moment of some order \(\vartheta ' > 0\). By Rayleigh’s monotonicity law [23, Theorem 9.12], \(C_{1}^{-1}\), the effective resistance between \(R_{1}^{\mathrm {pre}}\) and \(R_0^{\mathrm {pre}}\), does not decrease if open edges between these two points are closed. So, the effective resistance between \(R_{1}^{\mathrm {pre}}\) and \(R_0^{\mathrm {pre}}\) is bounded above by the resistance of a self-avoiding open path connecting these two points within the cycle. Such a path has length at most \(2L_1\) and thus, by the series law, resistance at most \(2L_1\). Therefore, \(C_{1}^{-1}\) has a finite exponential moment of order \(\vartheta '/2\).

The proof of the statements concerning the cycle \(\theta ^{R_{-1}^{\mathrm {pre}}} \omega _0\) can be accomplished analogously, but requires revisiting the proof of Lemma 3.3 in [14]. We omit further details. \(\square \)

We close this section with the definition of the backbone. We call a vertex v forwards-communicating (in \(\omega \)) if it is connected to \(+\infty \) via an infinite open path that does not visit any vertex u with \({\mathsf {x}}(u)<{\mathsf {x}}(v)\). Finally, we define \({\mathcal {B}}\, :=\,{\mathcal {B}}(\omega )\, :=\,\{v \in V: v \text { is forwards-communicating (in } \omega )\}\).

4 The Environment Seen from the Walker and Input from Ergodic Theory

We define the process of the environment seen from the particle \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) by \({\overline{\omega }}(n) :=\theta ^{Y_n} \omega \), \(n \in {\mathbb {N}}_0\). It can be understood as a process under \(P_{\omega }\) as well as under \({\mathbb {P}}_0\). For later use, we shall show that \(({\overline{\omega }}(n))_{n \ge 0}\) is a reversible, ergodic Markov chain under \({\mathbb {P}}_0\).

Lemma 4.1

The sequence \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) is a Markov process with state space \(\varOmega \) under \(P_{\omega }\), \({\mathbb {P}}^*_0\) and \({\mathbb {P}}_0\), with initial distributions \(\delta _{\omega }\), \(\mathrm {P}_{\!p}^*\) and \(\mathrm {P}_{\!p}\), respectively. In each of these cases, the transition kernel \(M(\omega ,\mathrm {d}\omega ')\) is given by

$$\begin{aligned} \int f(\omega ')\, M(\omega ,\mathrm {d}\omega ') = \sum _{v \in N_{\omega }({\mathbf {0}})} p_{\omega }({\mathbf {0}},v)\, f(\theta ^{v} \omega ), \end{aligned}\tag{4.1}$$

for \(\omega \in \varOmega \) and nonnegative, \({\mathcal {F}}\)-measurable f. Moreover, \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) is reversible and ergodic under \({\mathbb {P}}_0\).

Proof

The proof of (4.1) is a standard calculation and can be done along the lines of the corresponding one for random walk in random environment, see e. g. [31, Lemma 2.1.18]. To prove reversibility of \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) under \({\mathbb {P}}_0\), notice that, for every bounded and measurable \(f: \varOmega \rightarrow {\mathbb {R}}\) and all \(v \in V\) with \(v \sim {\mathbf {0}}\),

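This can be verified along the lines of the proof of (2.2) in [5]. Arguing as in the proof of Lemma 2.1 in [5], (4.2) implies that

$$\begin{aligned} {\mathbb {E}}_0\big [f({\overline{\omega }}(0))\, g({\overline{\omega }}(1))\big ] = {\mathbb {E}}_0\big [g({\overline{\omega }}(0))\, f({\overline{\omega }}(1))\big ] \end{aligned}\tag{4.3}$$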

for all measurable and bounded \(f,g: \varOmega \rightarrow {\mathbb {R}}\), which is the reversibility of \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) under \({\mathbb {P}}_0\). To prove ergodicity, we argue as in the proof of Lemma 4.3 in [7]. Fix an invariant set \(A' \subseteq \varOmega \), i. e.,

By Corollary 5 on p. 97 in [28], it is enough to show that \(\mathrm {P}_{\!p}(A') \in \{0,1\}\). If \(\mathrm {P}_{\!p}(A') = 0\), there is nothing to show. Thus assume that \(\mathrm {P}_{\!p}(A') > 0\). Since \(\mathrm {P}_{\!p}\) is concentrated on \(\varOmega _{{\mathbf {0}}}\), we can ignore the part of \(A'\) that is outside \(\varOmega _{{\mathbf {0}}}\) and can thus assume \(A' \subseteq \varOmega _{{\mathbf {0}}}\). From the form of M, we deduce that

To avoid trouble with exceptional sets of \(\mathrm {P}_{\!p}\)-probability 0, we define \(A :=\{\omega \in A': \theta ^v \omega \in A' \text { for all } v \in {\mathcal {C}}_\omega ({\mathbf {0}})\}\). Since \(A \subseteq A'\), it suffices to show \(\mathrm {P}_{\!p}(A)=1\). First notice that \(\mathrm {P}_{\!p}(A) = \mathrm {P}_{\!p}(A') > 0\) and that

$$\begin{aligned} \theta ^v \omega \in A \quad \text {for all } \omega \in A \text { and all } v \in {\mathcal {C}}_\omega ({\mathbf {0}}). \end{aligned}\tag{4.4}$$

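Now define

$$\begin{aligned} B :=\{\omega \in \varOmega : \theta ^v \omega \in A \text { for some } v \in V\}. \end{aligned}$$

Plainly, \(A \subseteq B\) (take \(v = {\mathbf {0}}\)).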

By definition, B is invariant under shifts \(\theta ^v\), \(v \in V\). Since \(\mathrm {P}_{\!p}^*(B) \ge \mathrm {P}_{\!p}^*(A) > 0\), the ergodicity of \(\mathrm {P}_{\!p}^*\) (see Lemma 3.1) implies \(\mathrm {P}_{\!p}^*(B) = 1\). We shall now show that \(B \cap \varOmega _{{\mathbf {0}}} \subseteq A\) up to a set of \(\mathrm {P}_{\!p}^*\) measure zero. Once this is shown, we can conclude that \(\mathrm {P}_{\!p}^*(A) = \mathrm {P}_{\!p}^*(\varOmega _{{\mathbf {0}}})\), in particular, \(\mathrm {P}_{\!p}(A)=1\). In order to show \(B \cap \varOmega _{{\mathbf {0}}} \subseteq A\) \(\mathrm {P}_{\!p}^*\)-a. s., pick an arbitrary \(\omega \in B \cap \varOmega _{{\mathbf {0}}}\) such that \(\omega \) has only one infinite connected component \({\mathcal {C}}_{\omega }\). (The set of \(\omega \) with this property has measure 1 under \(\mathrm {P}_{\!p}^*\).) By definition of the set B, there exists a \(v \in V\) such that \(\theta ^v \omega \in A\). Since \(A \subseteq \varOmega _{{\mathbf {0}}}\), the origin \({\mathbf {0}}\) must be in the infinite connected component of \(\theta ^v \omega \) or, equivalently, v is in the infinite connected component of \(\omega \). From the uniqueness of the infinite connected component together with \(\omega \in \varOmega _{{\mathbf {0}}}\), we thus infer \(v \in {\mathcal {C}}_\omega ({\mathbf {0}})\). This is equivalent to \(-v \in {\mathcal {C}}_{\theta ^v \omega }({\mathbf {0}})\). Together with \(\theta ^v \omega \in A\) this implies \(\omega = \theta ^{-v} \theta ^v \omega \in A\) by means of (4.4). The proof is complete. \(\square \)

The lemma has the following useful corollary.

Lemma 4.2

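Let \(f: \varOmega \rightarrow {\mathbb {R}}\) be integrable with respect to \(\mathrm {P}_{\!p}\). Then, for \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega \), we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n} \sum _{k=0}^{n-1} f({\overline{\omega }}(k)) = \mathrm {E}_{p}[f] \quad P_{\omega }\text {-a. s.} \end{aligned}\tag{4.5}$$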

Proof

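As \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) is reversible and ergodic with respect to \({\mathbb {P}}_0\), we infer

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n} \sum _{k=0}^{n-1} f({\overline{\omega }}(k)) = {\mathbb {E}}_0[f({\overline{\omega }}(0))] \quad {\mathbb {P}}_0\text {-a. s.} \end{aligned}\tag{4.6}$$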

from Birkhoff’s ergodic theorem. As the law of \({\overline{\omega }}(0)\) under \({\mathbb {P}}_0\) is \(\mathrm {P}_{\!p}\), we have \({\mathbb {E}}_0[f({\overline{\omega }}(0))] = \mathrm {E}_{p}[f]\). Hence (4.5) follows from (4.6) and the definition of \({\mathbb {P}}_0\). \(\square \)

Next, we show that if the walker sees the same environment at two different epochs, then, with probability 1, the position of the walker at those two epochs is actually the same. This allows us to reconstruct the random walk from the environment seen from the walker.

Lemma 4.3

We have \(\mathrm {P}_{\!p}^*(\theta ^v \omega = \theta ^{w} \omega ) = 0\) for all \(v, w \in V\), \(v \not = w\). The same statement holds with \(\mathrm {P}_{\!p}^*\) replaced by \(\mathrm {P}_{\!p}\).

Proof

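By shift invariance, we may assume \(w={\mathbf {0}}\) and \(v \not = {\mathbf {0}}\). Call a vertex \(u \in V\) backwards-communicating (in \(\omega \)) if there exists an infinite open path in \(\omega \) which contains u but no vertex with strictly larger \({\mathsf {x}}\)-coordinate. Define \({\texttt {T}} = ({\texttt {T}}_i)_{i \in {\mathbb {Z}}}\) by letting \({\texttt {T}}_i\) record which of the two vertices (i, 0) and (i, 1) are backwards-communicating in \(\omega \): \({\texttt {T}}_i = \texttt {11}\) if both are, and \({\texttt {T}}_i \in \{\texttt {01}, \texttt {10}\}\) if exactly one of them is, the digit 1 marking the backwards-communicating vertex.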

Notice that \(\theta ^v \omega = \omega \) implies \(\theta ^v {\texttt {T}} = {\texttt {T}}\) where \(\theta ^v {\texttt {T}}_i\) records whether the vertices (i, 0) and (i, 1) are backwards-communicating in \(\theta ^v\omega \). Under \(\mathrm {P}_{\!p}^*\), \({\texttt {T}}\) is a time-homogeneous, stationary, irreducible and aperiodic Markov chain with state space \(\{\texttt {01}, \texttt {10}, \texttt {11}\}\) by [3, Theorem 3.1 and the form of the transition matrix on p. 1111]. From this one can deduce \(\mathrm {P}_{\!p}^*(\theta ^v {\texttt {T}} = {\texttt {T}}) = 0\) and, in particular, \(\mathrm {P}_{\!p}^*(\theta ^v \omega = \omega ) = 0\). Finally, notice that, for every event \(A \in {\mathcal {F}}\), \(\mathrm {P}_{\!p}^*(A) = \mathrm {P}_{\!p}(A)\) whenever \(\mathrm {P}_{\!p}^*(A) \in \{0,1\}\). \(\square \)

Lemma 4.4

The increment sequences \((X_n-X_{n-1})_{n \in {\mathbb {N}}}\) and \((Y_n-Y_{n-1})_{n \in {\mathbb {N}}}\) are ergodic under \({\mathbb {P}}\).

Proof

Define a mapping \(\varphi : \varOmega \times \varOmega \rightarrow V\) via

It can be checked that \(\varphi \) is product-measurable. Further, \({\mathbb {P}}\)-a. s., \(Y_n - Y_{n-1} = \varphi ({\overline{\omega }}(n\!-\!1),{\overline{\omega }}(n))\) for all \(n \in {\mathbb {N}}\). Combining the ergodicity of \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) under \({\mathbb {P}}_0\) (see Lemma 4.1) with Lemma 5.6(c) in [3], we infer that \((Y_n-Y_{n-1})_{n \in {\mathbb {N}}} = (\varphi ({\overline{\omega }}(n\!-\!1),{\overline{\omega }}(n)))_{n \in {\mathbb {N}}}\) is ergodic. (To formally apply the lemma, one may extend \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) to a stationary ergodic sequence \(({\overline{\omega }}(n))_{n \in {\mathbb {Z}}}\) using reversibility.) Then also \((X_n-X_{n-1})_{n \in {\mathbb {N}}} = ({\mathsf {x}}(Y_n-Y_{n-1}))_{n \in {\mathbb {N}}}\) is ergodic under \({\mathbb {P}}\) again by Lemma 5.6(c) in [3]. \(\square \)

5 Preliminary Results

Hitting probabilities. The next lemma provides bounds on hitting probabilities for the biased random walk that we use later on.

Lemma 5.1

Let \(L,R \in {\mathbb {N}}\) and \(u,v,w \in {\mathcal {R}}^{\mathrm {pre}}\) be such that \({\mathsf {x}}(v)-{\mathsf {x}}(u)=L\lfloor \frac{1}{\uplambda }\rfloor \) and \({\mathsf {x}}(w)-{\mathsf {x}}(v)=R \lfloor \frac{1}{\uplambda }\rfloor \). Then, with \(T_u\) and \(T_w\) denoting the first hitting times of \((Y_n)_{n \in {\mathbb {N}}_0}\) at u and w, respectively, we have

for all \(\uplambda \in (0,\uplambda _0]\), where \(\uplambda _0 > 0\) does not depend on L, R. In particular, for these \(\uplambda \),

Moreover, for \(L=1\) and \(R=\infty \), for all sufficiently small \(\uplambda > 0\), we have

Proof

Since u, w are pre-regeneration points, it suffices to consider the finite subgraph [u, w). As v is also a pre-regeneration point, the walk cannot visit both u and w during one excursion from v to v. Standard electrical network theory thus gives

where \({\mathcal {R}}_{\mathrm {eff}}(u \leftrightarrow v) = {\mathcal {R}}_{\text {eff},\uplambda }(u \leftrightarrow v)\) denotes the effective resistance between u and v in [u, w), see [23, Proposition 9.5]. Let us first prove the upper bound by applying Rayleigh’s monotonicity law [23, Theorem 9.12]. We add all edges to the left of v that are not present in the cluster \(\omega \). This decreases the effective resistance \({\mathcal {R}}_{\mathrm {eff}}(u \leftrightarrow v)\) between u and v. On the right of v, we delete all edges except those of a simple path from v to w. This increases the effective resistance \({\mathcal {R}}_{\mathrm {eff}}(v \leftrightarrow w)\).

The graph obtained is denoted by G, see Fig. 3 for an example. We conclude

where \({\mathcal {R}}_{\mathrm {eff},G}\) denotes the corresponding effective resistance in G. We may assume without loss of generality that \(v = {\mathbf {0}}\). Recall that the conductance of an open edge is \(e^{\uplambda k}\) where k is the sum of the \({\mathsf {x}}\)-coordinates of the two end points of the edge. Then, by the series law, we can bound \({\mathcal {R}}_{\mathrm {eff},G}(v \leftrightarrow w)\) from above by
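The electrical argument can be sanity-checked in the simplest case where both sides of v are single horizontal paths (a simplification made only for this illustration). With v = 0 and the conductance \(e^{\uplambda (2x+1)}\) on the edge between x and x + 1, the series law gives the resistances, and the standard identity \(P_v(T_u < T_w) = {\mathcal {R}}_{\mathrm {eff}}(v \leftrightarrow w)/({\mathcal {R}}_{\mathrm {eff}}(u \leftrightarrow v) + {\mathcal {R}}_{\mathrm {eff}}(v \leftrightarrow w))\), valid when v separates u from w, should agree with the gambler's-ruin formula for a walk whose left-to-right odds are \(e^{-2\uplambda }\). The function names below are hypothetical.

```python
import math


def path_resistance(lam, start, stop):
    """Series law: resistance of the path start -> start+1 -> ... -> stop,
    where the edge between x and x+1 has conductance exp(lam * (2x + 1))."""
    return sum(math.exp(-lam * (2 * x + 1)) for x in range(start, stop))


def hit_left_first(lam, L, R):
    """P_0(T_{-L} < T_R) via R_eff(0 <-> R) / (R_eff(-L <-> 0) + R_eff(0 <-> R))."""
    r_left = path_resistance(lam, -L, 0)
    r_right = path_resistance(lam, 0, R)
    return r_right / (r_left + r_right)


def gamblers_ruin_left_first(lam, L, R):
    """Closed form for the walk stepping left/right with odds exp(-2 lam) (needs lam > 0)."""
    r = math.exp(-2 * lam)  # ratio q/p of left and right step probabilities
    return (r**L - r**(L + R)) / (1 - r**(L + R))


print(hit_left_first(0.1, 10, 10), gamblers_ruin_left_first(0.1, 10, 10))
```

Laziness of the walk does not affect hitting probabilities, which is why only the conductances enter.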

From the Nash-Williams inequality [23, Proposition 9.15], we infer

Consequently,

for all sufficiently small \(\uplambda > 0\), uniformly in L, R. The proof of the lower bound is similar. We add all edges to the right of v and keep only one simple path to the left of v. For this new graph \({{\widetilde{G}}}\), we bound the effective resistances as follows. From the Nash-Williams inequality [23, Proposition 9.15], we infer

for all sufficiently small \(\uplambda > 0\). Moreover,

The lower bound in (5.1) now follows. Equation (5.2) follows from (5.1) by letting \(R \rightarrow \infty \). Equation (5.3) follows from (5.4) and the observation that the term on the right-hand side of (5.4) with \(R = \infty \) and \(L=1\) tends to \(\frac{4}{3+e^{2}}=0.3850\ldots \) for \(\uplambda \rightarrow 0\). \(\square \)

6 Harmonic Functions and the Corrector

Throughout this section, we consider the unbiased walk, i.e., we assume \(\uplambda = 0\). We use harmonic functions to construct a martingale approximation for \(X_n\). As a result, \(X_n\) can be written as a martingale plus a corrector.

The corrector method. The corrector method has been used in various setups, see e.g. [5] and [26]. In the present setup, the method works as follows.

We seek a function \(\psi : \varOmega \times V \rightarrow {\mathbb {R}}\) such that, for each fixed \(\omega \), \(\psi (\omega ,\cdot )\) is harmonic in the second argument with respect to the transition kernel of \(P_{\omega }\), that is, \(E^v_{\omega } [\psi (\omega ,Y_1)] = \psi (\omega ,v)\) for all \(v \in {\mathcal {C}}_\omega \). In what follows, we shall sometimes suppress the dependence of \(\psi \) on \(\omega \) in the notation so that the above condition becomes

$$\begin{aligned} E^v_{\omega } [\psi (Y_1)] = \psi (v) \quad \text {for all } v \in {\mathcal {C}}_\omega . \end{aligned}$$

If we find such a function, then \((\psi (\omega ,Y_n))_{n \in {\mathbb {N}}_0}\) is a martingale under \(P_{\omega }\). We can then define \(\chi (\omega ,v) :={\mathsf {x}}(v) - \psi (\omega ,v)\) and get that \(X_n = \psi (Y_n) + \chi (Y_n)\). In other words, \(X_n\) can be written as the nth term in a martingale, \(\psi (Y_n)\), plus a corrector, \(\chi (Y_n)\). In order to derive a central limit theorem for \(X_n\), it then suffices to apply the martingale central limit theorem to \(\psi (Y_n)\) and to show that the contribution of \(\chi (Y_n)\) is asymptotically negligible.

Construction of a harmonic function. Let \(\omega \in \varOmega _{{\mathbf {0}}}\) be such that there are infinitely many pre-regeneration points to the left and to the right of the origin (the set of these \(\omega \) has \(\mathrm {P}_{\!p}\)-probability 1). Then, under \(P_{\omega }\), the walk \((Y_n)_{n \ge 0}\) is the simple random walk on the unique infinite cluster \({\mathcal {C}}_\omega \). It can also be considered as a random walk among random conductances where each edge \(e \in E\) has conductance \(\omega (e)\). Recall that \(C_{n} = C_n(\omega )\) denotes the effective conductance between \(R_{n-1}^{\mathrm {pre}}\) and \(R_{n}^{\mathrm {pre}}\), see (3.1). We couple our model with the random conductance model on \({\mathbb {Z}}\) with conductance \(C_{n}\) between \(n-1\) and n. For the latter model, the harmonic functions are known. In fact,

$$\begin{aligned} \varPsi (\omega ,n) :={\left\{ \begin{array}{ll} \sum _{k=1}^{n} C_k(\omega )^{-1} &{} \text {if } n \ge 0,\\ -\sum _{k=n+1}^{0} C_k(\omega )^{-1} &{} \text {if } n < 0 \end{array}\right. } \end{aligned}$$

is harmonic for the random conductance model on \({\mathbb {Z}}\). Notice that \(\varPsi \) is a function of \(\omega \) and n as the conductances \(C_k(\omega )\) depend on \(\omega \). We define \(\phi (\omega ,R_{n}^{\mathrm {pre}}) :=\varPsi (\omega ,n)\). Now fix an arbitrary \(n \in {\mathbb {Z}}\). For any vertex \(v \in \omega _n\), we then set \(\phi (\omega ,v) :=\phi (\omega ,R_{n-1}^{\mathrm {pre}}) + {\mathcal {R}}_{\mathrm {eff}}(R_{n-1}^{\mathrm {pre}} \leftrightarrow v)\), where the latter expression denotes the effective resistance between \(R_{n-1}^{\mathrm {pre}}\) and v in the finite network \(\omega _n\). This definition is consistent with the cases \(v = R_{n-1}^{\mathrm {pre}}, R_{n}^{\mathrm {pre}}\), and, by [23, Eq. (9.12)], makes \(\phi (\omega ,\cdot )\) harmonic on \(\omega _n\) under \(P_\omega \). As \(n \in {\mathbb {Z}}\) was arbitrary, \(\phi (\omega ,\cdot )\) is harmonic on \({\mathcal {C}}_\omega \) under \(P_\omega \). We now define
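Harmonicity of the cumulative-resistance function on \({\mathbb {Z}}\) (normalized by \(\varPsi (\omega ,0) = 0\)) simply says that the current \(C_n(\varPsi (n)-\varPsi (n-1))\) equals 1 across every edge, so no current accumulates at any vertex. The sketch below checks this numerically for hypothetical i.i.d. conductances, together with the fact that affine images of \(\varPsi \) stay harmonic while the coordinate map \(n \mapsto n\) generally does not (which is why a corrector is needed at all).

```python
import random


def make_conductances(lo, hi, rng):
    """Hypothetical i.i.d. conductances C_n on the edge between n-1 and n, lo <= n <= hi."""
    return {n: rng.uniform(0.2, 5.0) for n in range(lo, hi + 1)}


def big_psi(C, n):
    """Cumulative resistance with Psi(0) = 0:
    sum_{k=1}^{n} 1/C_k for n >= 0 and -sum_{k=n+1}^{0} 1/C_k for n < 0."""
    if n >= 0:
        return sum(1.0 / C[k] for k in range(1, n + 1))
    return -sum(1.0 / C[k] for k in range(n + 1, 1))


def is_harmonic_at(f, C, n, tol=1e-9):
    """Check that f(n) is the average of f(n-1), f(n+1) with weights C_n, C_{n+1}."""
    avg = (C[n] * f(n - 1) + C[n + 1] * f(n + 1)) / (C[n] + C[n + 1])
    return abs(avg - f(n)) < tol


rng = random.Random(7)
C = make_conductances(-30, 30, rng)


def psi(m):
    return big_psi(C, m)


def affine(m):
    return 2.5 * big_psi(C, m) - 1.0   # affine images of harmonic functions stay harmonic


print(all(is_harmonic_at(psi, C, n) for n in range(-20, 21)))
print(all(is_harmonic_at(affine, C, n) for n in range(-20, 21)))
print(all(is_harmonic_at(float, C, n) for n in range(-20, 21)))  # x-coordinate itself
```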

where we remind the reader that the \(L_n\), \(n \in {\mathbb {Z}}\) were defined in (3.1). Notice that the expectations \(\mathrm {E}_{p}[L_1]\) and \(\mathrm {E}_{p}[C_{1}^{-1}]\) are finite by Lemma 3.2. Since \(\phi (\omega ,\cdot )\) is harmonic under \(P_{\omega }\), so is \(\psi (\omega ,\cdot )\) as an affine transformation of \(\phi (\omega ,\cdot )\). It turns out that \(\psi \) is more suitable for our purposes as \(\psi \) is additive in a certain sense. Next, we collect some facts about \(\psi \).

Proposition 6.1

Consider the function \(\psi : \varOmega \times V \rightarrow {\mathbb {R}}\) constructed above. The following assertions hold:

(a) For \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega _{{\mathbf {0}}}\), the function \(v \mapsto \psi (\omega ,v)\) is harmonic with respect to (the transition probabilities of) \(P_{\omega }\).

(b) For \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega _{{\mathbf {0}}}\) and all \(u,v \in {\mathcal {C}}_\omega \), it holds that

$$\begin{aligned} \textstyle \psi (\omega ,u+v) = \psi (\omega ,u) + \psi (\theta ^u \omega ,v). \end{aligned}$$(6.3)

(c) For \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega _{{\mathbf {0}}}\),

$$\begin{aligned} \sup _{v \sim w} |\psi (\omega ,v)-\psi (\omega ,w)| \mathbb {1}_{\{v \in {\mathcal {C}}_\omega \}} \mathbb {1}_{\{\omega (\langle v,w \rangle )=1\}}\le {\textstyle \frac{\mathrm {E}_{p}[L_1]}{\mathrm {E}_{p}[C_{1}^{-1}]}}. \end{aligned}$$(6.4)

Proof

Assertion (a) is clear from the construction of \(\psi \). For the proof of (b), in order to ease notation, we drop the factor \(\mathrm {E}_{p}[L_1]/\mathrm {E}_{p}[C_{1}^{-1}]\) in the definition of \(\psi \). This is no problem as (6.3) remains true after multiplication by a constant. Now fix \(\omega \in \varOmega _{{\mathbf {0}}}\) such that there are infinitely many pre-regeneration points to the left and to the right of \({\mathbf {0}}\). The set of these \(\omega \) has \(\mathrm {P}_{\!p}\)-probability 1. Let \(u,v \in {\mathcal {C}}_{\omega }\). We suppose that there are \(n,m \in {\mathbb {N}}_0\) such that \(u \in \omega _n\) and \(v \in \omega _{n+m}\). The other cases can be treated similarly. We further assume that \({\mathsf {y}}(u)=0\). Define \(T :=\inf \{n \in {\mathbb {N}}_0: Y_n \in {\mathcal {R}}^{\mathrm {pre}}\}\) to be the first hitting time of the set of pre-regeneration points. From Proposition 9.1 in [23], we infer

where we have used that \({\mathsf {y}}(u)=0\) which implies that the pre-regeneration points in \(\theta ^u \omega \) are the pre-regeneration points in \(\omega \) but shifted by index n as \(u \in \omega _n\). Here,

Using the last two equations in (6.6) and summing over (6.5) and (6.6) gives:

The proof in the case \({\mathsf {y}}(u)=1\) is similar but requires more cumbersome calculations, as the pre-regeneration points change when considering \(\theta ^u \omega \) instead of \(\omega \) due to the flip of the cluster. However, the pre-regeneration points in \(\omega \) remain pivotal edges in \(\theta ^u \omega \) and, by the series law, the corresponding resistances add. We refrain from providing further details.

We now turn to the proof of assertion (c). According to the definition of \(\psi \), the statement is equivalent to

For the proof of (6.7), pick \(\omega \in \varOmega _{{\mathbf {0}}}\) such that there are infinitely many pre-regeneration points to the left and to the right of the origin. Now pick \(v,w \in {\mathcal {C}}_\omega \) with \(\omega (\langle v,w \rangle ) = 1\). Then there is an \(n \in {\mathbb {Z}}\) such that v, w are vertices of \(\omega _n\) and \(\langle v,w \rangle \) is an edge of \(\omega _n\). In this case, by the definition of \(\phi \), \(\phi (\omega , v) :=\phi (\omega , a) + {\mathcal {R}}_{\mathrm {eff}}(a \leftrightarrow v)\) and \(\phi (\omega , w) :=\phi (\omega , a) + {\mathcal {R}}_{\mathrm {eff}}(a \leftrightarrow w)\) for \(a=R_{n-1}^{\mathrm {pre}}\) where \({\mathcal {R}}_{\mathrm {eff}}(u \leftrightarrow u')\) denotes the effective resistance between u and \(u'\) in the finite network \(\omega _n\). To unburden notation, we assume without loss of generality that \(\phi (\omega , a)=0\). Then \({\mathcal {R}}_{\mathrm {eff}}(\cdot \leftrightarrow \cdot )\) is a metric on the vertex set of \(\omega _n\), see [23, Exercise 9.8]. In particular, \({\mathcal {R}}_{\mathrm {eff}}(\cdot \leftrightarrow \cdot )\) satisfies the triangle inequality. This gives

where we have used that \({\mathcal {R}}_{\mathrm {eff}}(v \leftrightarrow w) \le 1\). This inequality follows from Rayleigh’s monotonicity principle [23, Theorem 9.12] when closing all edges in \(\omega _n\) except \(\langle v,w \rangle \). By symmetry, we also get \(\phi (\omega , v) \le \phi (\omega , w)+1\) and, hence, \(|\phi (\omega , v)-\phi (\omega , w)| \le 1\). \(\square \)
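Both facts used in this proof, that effective resistance is a metric and that an open edge of unit conductance has effective resistance at most 1 between its endpoints, are easy to check on a small example. The sketch below computes \({\mathcal {R}}_{\mathrm {eff}}\) exactly (rational arithmetic) on a hypothetical ladder piece at \(\uplambda = 0\) by grounding one vertex, injecting a unit current at the other and solving the reduced Laplacian system; the function and graph names are illustrative.

```python
from fractions import Fraction
from itertools import permutations


def effective_resistance(n, edges, a, b):
    """Exact R_eff(a <-> b) for unit conductances on a connected graph with vertices 0..n-1.

    Ground b, inject a unit current at a and solve the reduced Laplacian system;
    the resulting potential at a equals the effective resistance.
    """
    if a == b:
        return Fraction(0)
    lap = [[Fraction(0)] * n for _ in range(n)]
    for u, v in edges:
        lap[u][u] += 1
        lap[v][v] += 1
        lap[u][v] -= 1
        lap[v][u] -= 1
    keep = [i for i in range(n) if i != b]
    m = len(keep)
    # augmented matrix [reduced Laplacian | unit current at a]
    A = [[lap[i][j] for j in keep] + [Fraction(int(i == a))] for i in keep]
    for col in range(m):  # Gauss-Jordan elimination in exact arithmetic
        piv = next(r for r in range(col, m) if A[r][col] != 0)
        A[col], A[piv] = A[piv], A[col]
        pivot = A[col][col]
        A[col] = [x / pivot for x in A[col]]
        for r in range(m):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return A[keep.index(a)][m]


# Hypothetical ladder piece: vertex 2*i + y stands for (i, y); the edge (3, 5) is closed.
LADDER = [(0, 2), (2, 4), (1, 3), (0, 1), (2, 3), (4, 5)]
N = 6

print(effective_resistance(4, [(0, 1), (1, 2), (2, 3), (3, 0)], 0, 1))  # 1 parallel to 3
print(max(effective_resistance(N, LADDER, u, v) for u, v in LADDER))
```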

For \(v \in V\) (and fixed \(\omega \)), we define \(\chi (\omega , v) :={\mathsf {x}}(v) - \psi (\omega , v)\). Then (suppressing \(\omega \) in the notation) \(X_n = \chi (Y_n) + \psi (Y_n)\). For the proof of the Einstein relation, we require strong bounds on the corrector \(\chi \). These bounds are established in the following lemma.

Lemma 6.2

For any \(\varepsilon \in (0,\frac{1}{2})\) and every sufficiently small \(\delta > 0\) there is a random variable K on \(\varOmega \) with \(\mathrm {E}_{p}[K^2]<\infty \) such that

Further, there is a random variable \(D \in L^2({\mathbb {P}})\) such that

Proof

For \(k \in {\mathbb {Z}}\), set \(\eta _k(\omega ) :=L_k(\omega ) - \frac{\mathrm {E}_{p}[L_1]}{\mathrm {E}_{p}[C_1^{-1}]} \frac{1}{C_k(\omega )}\). Then, for \(n \in {\mathbb {N}}\),

where \(\eta _1,\ldots ,\eta _n\) are i.i.d. centered random variables on \((\varOmega ,{\mathcal {F}},\mathrm {P}_{\!p})\). It holds that \(\mathrm {E}_{p}[e^{\vartheta |\eta _1|}] < \infty \) for some \(\vartheta > 0\) by Lemma 3.2. From (A.2), the fact that \(|\phi (\omega ,{\mathbf {0}})| \le 1/{C_0}\) and again Lemma 3.2, which guarantees that \(\mathrm {E}_{p}[1/{C_0^2}]<\infty \), we thus infer, for arbitrary given \(\varepsilon \in (0,\frac{1}{4})\) and \(\delta \in (0,\frac{1}{2})\),

for all \(n \in {\mathbb {N}}\) and a random variable \(K_2\) on \((\varOmega ,{\mathcal {F}})\) satisfying \(\mathrm {E}_{p}[K_2^2]<\infty \). If \(v \in \omega _n\) for some \(n > 0\), then

The \(\xi _n\), \(n \in {\mathbb {N}}\) are nonnegative and i.i.d. under \(\mathrm {P}_{\!p}\). Hence, (A.1) gives

for all \(n \in {\mathbb {N}}\), where \(K_1\) is a nonnegative random variable on \((\varOmega ,{\mathcal {F}})\) with \(\mathrm {E}_{p}[K_1^2] < \infty \). Analogous arguments apply when \(v \in \omega _n\) with \(n \le 0\). Combining (6.10) and (6.11), we infer that for sufficiently small \(\delta > 0\) there is a random variable \(K \ge 1\) on \((\varOmega ,{\mathcal {F}})\) with \(\mathrm {E}_{p}[K^2]<\infty \) such that

for all \(v \in {\mathcal {C}}_\omega \), i.e., (6.8) holds.
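The mechanism behind the estimate for \(\chi \) at the pre-regeneration points is easiest to see in a toy version on \({\mathbb {Z}}\), where every piece has length \(L_k = 1\) and only the conductances \(C_k\) are random (a hypothetical law, uniform on {1, 2, 4}, is used below). Then \(c = \mathrm {E}_{p}[L_1]/\mathrm {E}_{p}[C_1^{-1}] = 1/\mathrm {E}_{p}[C_1^{-1}]\), and \(\chi (R_n^{\mathrm {pre}}) = n - c\varPsi (n) = \eta _1 + \cdots + \eta _n\) with centered i.i.d. increments \(\eta _k = 1 - c/C_k\), so the corrector grows like \(\sqrt{n}\) rather than linearly.

```python
import random


def toy_corrector(n, rng):
    """Toy chi at the n-th pre-regeneration point on Z: sum of eta_k = 1 - c / C_k.

    Every piece has length L_k = 1 (toy assumption) and C_k is uniform on VALUES
    (hypothetical law), so c = 1 / E[1 / C_1] makes each eta_k centered.
    """
    values = (1.0, 2.0, 4.0)
    mean_inv = sum(1.0 / v for v in values) / len(values)   # E[1/C_1] = 7/12
    c = 1.0 / mean_inv                                       # E[L_1] / E[1/C_1]
    chi = 0.0
    for _ in range(n):
        chi += 1.0 - c / rng.choice(values)                  # eta_k, mean zero
    return chi


n = 100_000
chi_n = toy_corrector(n, random.Random(0))
print(chi_n / n, abs(chi_n) / n**0.5)
```

The first printed ratio is close to 0 (sublinearity), while the second stays of order one, in line with the central limit scaling of \(\sum \eta _k\).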

Finally, we turn to the proof of (6.9). We use (6.8) with \(\varepsilon = \frac{1}{2}\) twice and \(|{\mathsf {x}}(Y_k)| \le k\) to infer for some sufficiently small \(\delta \in (0,\frac{1}{2})\),

\(P_{\omega }\)-a. s. for all \(k \in {\mathbb {N}}_0\). Now \((\psi (\omega ,Y_k))_{k \in {\mathbb {N}}_0}\) is a martingale with respect to \(P_\omega \) and also a \({\mathbb {P}}\)-martingale with respect to its canonical filtration. As it has bounded increments by Proposition 6.1(c), we may apply Lemma A.1(d) to infer the existence of a random variable \(K_2 \in L^2({\mathbb {P}})\) such that \(|\psi (Y_k)| \le K_2 + k^{\frac{1}{2} + \delta }\) for all \(k \in {\mathbb {N}}_0\), \({\mathbb {P}}\)-a. s. Using this in (6.12), we conclude

for \({\mathbb {P}}\)-almost all \((\omega ,(v_j)_{j \in {\mathbb {N}}_0}) \in \varOmega \times V^{{\mathbb {N}}_0}\) and all \(k \in {\mathbb {N}}_0\). The assertion now follows with \(D(\omega ,(v_k)_{k \in {\mathbb {N}}_0}) :=K(\omega ) + \tfrac{1}{2} K(\omega )^{\frac{1}{2}+\delta } + K_2(\omega ,(v_k)_{k \in {\mathbb {N}}_0})^{\frac{1}{2} + \delta }\) and with the observation that \((\frac{1}{2} + \delta )^2 \rightarrow \frac{1}{4}\) as \(\delta \downarrow 0\). \(\square \)

7 Quenched Joint Functional Limit Theorem Via the Corrector Method

From Proposition 6.1 and the martingale central limit theorem, we infer the following result.

Proposition 7.1

For \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega \),

where (B, M) is a two-dimensional centered Brownian motion with covariance matrix \(\varSigma = (\sigma _{ij})_{i,j=1,2}\) of the form

Proof

Let \(\omega \in \varOmega _{{\mathbf {0}}}\) such that there are infinitely many pre-regeneration points to the left and right of the origin in \(\omega \), and \(\alpha ,\beta \in {\mathbb {R}}\). We prove the convergence in distribution of \(n^{-\frac{1}{2}}(\alpha \psi (Y_{\lfloor nt\rfloor }) + \beta M_{\lfloor nt \rfloor })\) to a centered Brownian motion in the Skorokhod space D([0, 1]) under \(P_\omega \). To this end, we invoke the martingale functional central limit theorem [17, Theorem 3.2]. Let

for \(n \in {\mathbb {N}}\) and \(k=1,\ldots ,n\). In order to apply the result, it suffices to check the following two conditions:

for every \(\varepsilon > 0\) where \(c(\alpha ,\beta )\) is a suitable constant depending on \(\alpha ,\beta \). Here, \({\mathcal {G}}_k :=\sigma (Y_0,\ldots ,Y_k) \subset {\mathcal {G}}\) where \(Y_0,Y_1,\ldots \) are considered as functions \(V^{{\mathbb {N}}_0} \rightarrow V\). To check (7.2), we first define the functions \(f,g,h: \varOmega \rightarrow {\mathbb {R}}\),

These three functions are finite and integrable with respect to \(\mathrm {P}_{\!p}\). This follows from Proposition 6.1(c) for \(\psi \) and from the boundedness of \(\nu _{\omega }(v,w)\) as a function of \(\omega ,v,w\). From Proposition 6.1(b) (with \(u=Y_{k-1}\) and \(v= Y_k-Y_{k-1}\)), we infer for every \(k \in {\mathbb {N}}\):

Lemma 4.2 thus gives

This gives (7.2) with \(P_\omega \)-almost sure convergence instead of the weaker convergence in \(P_\omega \)-probability. Equation (7.3) follows by an argument in the same spirit. We therefore conclude that, for \(\mathrm {P}_{\!p}\)-almost all \(\omega \in \varOmega \),

in the Skorokhod space D[0, 1] as \(n \rightarrow \infty \). Now fix an \(\omega \in \varOmega _{{\mathbf {0}}}\) for which this convergence holds. For the rest of the proof, we work under \(P_\omega \). The above convergence in D[0, 1] implies convergence of the finite-dimensional distributions. By the Cramér-Wold device, we conclude that the finite-dimensional distributions of \(n^{-1/2}(\psi (\omega ,Y_{\lfloor nt\rfloor }),M_{\lfloor nt \rfloor })\) converge to the finite-dimensional distributions of (B, M). As the sequences \(n^{-1/2} \psi (\omega ,Y_{\lfloor nt\rfloor })\) and \(n^{-1/2} M_{\lfloor nt \rfloor }\) are tight in the Skorokhod space D[0, 1], so is \(n^{-1/2} (\psi (Y_{\lfloor nt\rfloor }),M_{\lfloor nt \rfloor })\), cf. [6, Section 15]. This implies (7.1). The formula for the covariances follows from (7.4). \(\square \)

We now give the proof of Theorem 2.5.

Proof of Theorem 2.5

In view of (7.1) and Theorem 4.1 in [6], it suffices to check that, for \(\mathrm {P}_{\!p}\)-almost all \(\omega \),

For a sufficiently small \(\delta \in (0,\frac{1}{4})\), we conclude from (6.9) that

for all \(k \in {\mathbb {N}}_0\) and a random variable \(D \in L^2({\mathbb {P}})\). Hence

Almost sure convergence with respect to \({\mathbb {P}}\) is equivalent to \(P_\omega \)-a. s. convergence for \(\mathrm {P}_{\!p}\)-almost all \(\omega \), hence (7.5) holds.

It remains to prove (2.6), that is, \({\mathbb {E}}[B(1)M(1)] = {\mathbb {E}}[B(1)^2] = \sigma ^2\). Uniform integrability, see (2.7) and (8.1) below, implies

To conclude that the two limits in (7.7) coincide, it suffices to show that

To see that this is true, recall from the proof of Lemma 4.4 that \(Y_k - Y_{k-1} = \varphi ({\overline{\omega }}(k\!-\!1),{\overline{\omega }}(k))\) \({\mathbb {P}}\)-a. s. for all \(k \in {\mathbb {N}}\) for some product-measurable function \(\varphi : \varOmega ^2 \rightarrow V\). Therefore, for \(k \in {\mathbb {N}}\), the increments \(X_k-X_{k-1}\) and \(\nu _{\omega }(Y_{k-1},Y_k)-(X_k-X_{k-1})\) of the processes \((X_n)_{n \in {\mathbb {N}}_0}\) and \((M_n-X_n)_{n \in {\mathbb {N}}_0}\) are functions of the pair \(({\overline{\omega }}(k\!-\!1),{\overline{\omega }}(k))\). Hence, \(X_n\) and \(M_n-X_n\) can be written in the form \(X_n = \varphi _n({\overline{\omega }}(0), \ldots , {\overline{\omega }}(n))\) and \(M_n-X_n = \psi _n({\overline{\omega }}(0), \ldots , {\overline{\omega }}(n))\) for measurable functions \(\varphi _n\) and \(\psi _n\). We claim that \(\varphi _n\) is antisymmetric and \(\psi _n\) is symmetric in the sense that with \({\mathbb {P}}\)-probability one, we have

Notice that (7.9) together with the reversibility of \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) (see Lemma 4.1) implies

i.e., \({\mathbb {E}}[X_n(M_n-X_n)]=0\). It thus remains to prove (7.9). While the first line of (7.9), corresponding to \(X_n\), clearly holds, the second requires some work. For \(\omega \in \varOmega \), \(v \in V\) and \(w \in N_{\omega }(v) = \{w \in V: p_{\omega ,0}(v,w) > 0\}\), an elementary calculation yields

In particular, \(\nu _{\omega }\,(Y_{k-1},Y_k) = X_k-X_{k-1}\) if \(Y_k \not = Y_{k-1}\). Therefore, \(M_k-M_{k-1}-(X_k-X_{k-1})\) can be nonzero only if \(Y_k = Y_{k-1}\), and if \(Y_k = Y_{k-1}\), then \(M_k-M_{k-1}-(X_k-X_{k-1}) = \nu _{\omega }\,(Y_{k-1},Y_k)\). Together with (7.10) and the fact that, with \({\mathbb {P}}\)-probability one, \(Y_{k-1}=Y_k\) holds iff \({\overline{\omega }}(k-1)={\overline{\omega }}(k)\), this implies

i.e., (7.9) holds. \(\square \)
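For intuition on why only lazy steps contribute to \(M_n - X_n\), the sketch below differentiates \(\log p_{\omega ,\uplambda }(v,w)\) numerically at \(\uplambda = 0\). It assumes, for illustration only, a kernel consistent with the conductances \(e^{\uplambda ({\mathsf {x}}(v)+{\mathsf {x}}(w))}\) recalled in Section 5: from v the walk proposes each of its three potential neighbours w with weight \(e^{\uplambda ({\mathsf {x}}(w)-{\mathsf {x}}(v))}/(e^{\uplambda }+1+e^{-\uplambda })\), moves if the edge is open and stays put otherwise. Actual moves give \(\nu = X_k - X_{k-1}\), whereas a lazy step may contribute a nonzero value or zero, depending on which edges are open.

```python
import math

DX = {"left": -1, "vertical": 0, "right": 1}   # x-increment of each potential move


def kernel(lam, open_dirs):
    """Assumed transition law at a vertex whose open edges point in `open_dirs`."""
    z = math.exp(lam) + 1.0 + math.exp(-lam)
    p = {d: math.exp(lam * DX[d]) / z for d in open_dirs}
    if len(open_dirs) < 3:                       # some edge closed: lazy step possible
        p["stay"] = 1.0 - sum(p.values())
    return p


def nu(open_dirs, target, h=1e-5):
    """nu(v, w) = d/d(lambda) log p_lambda(v, w) at lambda = 0, by a central difference."""
    def f(lam):
        return math.log(kernel(lam, open_dirs)[target])
    return (f(h) - f(-h)) / (2 * h)


print(nu({"left", "right"}, "right"))   # actual move: x-increment +1
print(nu({"right"}, "stay"))            # lazy step with nonzero contribution (-1/2)
print(nu({"left", "right"}, "stay"))    # lazy step with zero contribution
```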

8 The Proof of the Einstein Relation

8.1 Proof of Equation (2.8)

For the proof of the Einstein relation, we use a combination of the approaches from [13, 16, 22].

Lemma 8.1

It holds that

Proof

By Lemma 4.1, \(({\overline{\omega }}(n))_{n \ge 0}\) is a stationary and ergodic sequence under \({\mathbb {P}}\). This sequence can be extended canonically to a two-sided stationary and ergodic sequence \(({\overline{\omega }}(n))_{n \in {\mathbb {Z}}}\) on the underlying space \(\varOmega _{{\mathbf {0}}}^{\mathbb {Z}}\). The increment sequences \((X_n-X_{n-1})_{n \in {\mathbb {Z}}}\) and \((Y_n - Y_{n-1})_{n \in {\mathbb {Z}}}\) also form stationary and ergodic sequences by Lemma 4.4. Therefore, we can invoke Theorem 1 in [27] and conclude that

where \(\delta _{n,2} = \sum _{j=1}^{n} j^{-\frac{3}{2}} \big (\mathrm {E}_{p}[E_\omega [X_j]^2]\big )^{\frac{1}{2}}\). Here, we have

There exists a random variable \(D \in L^2({\mathbb {P}})\) such that (6.9) holds for some sufficiently small \(\delta > 0\), i.e., \(|\chi (Y_j)| \le D + j^{\frac{1}{4}+\frac{\delta }{2}}\) for all \(j \in {\mathbb {N}}_0\), \({\mathbb {P}}\)-a. s. Hence,

Consequently,

if we pick \(\delta > 0\) sufficiently small. Therefore, (8.1) follows from (8.2). \(\square \)
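The following estimate shows one way to see why a small \(\delta \) suffices. Since \(\psi (\omega ,{\mathbf {0}}) = 0\) (take \(u=v={\mathbf {0}}\) in (6.3)) and \((\psi (\omega ,Y_j))_{j \in {\mathbb {N}}_0}\) is a martingale under \(P_\omega \), we have \(E_\omega [X_j] = E_\omega [\chi (Y_j)]\). Jensen's inequality, Minkowski's inequality and the bound \(|\chi (Y_j)| \le D + j^{\frac{1}{4}+\frac{\delta }{2}}\) then give

$$\begin{aligned} \big (\mathrm {E}_{p}[E_\omega [X_j]^2]\big )^{\frac{1}{2}} = \big (\mathrm {E}_{p}[E_\omega [\chi (Y_j)]^2]\big )^{\frac{1}{2}} \le {\mathbb {E}}[\chi (Y_j)^2]^{\frac{1}{2}} \le \Vert D\Vert _{L^2({\mathbb {P}})} + j^{\frac{1}{4}+\frac{\delta }{2}}, \end{aligned}$$

and therefore

$$\begin{aligned} \delta _{n,2} \le \Vert D\Vert _{L^2({\mathbb {P}})} \sum _{j=1}^{\infty } j^{-\frac{3}{2}} + \sum _{j=1}^{\infty } j^{-\frac{5}{4}+\frac{\delta }{2}}, \end{aligned}$$

which is finite, uniformly in n, whenever \(\delta < \frac{1}{2}\).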

8.2 Proof of Equation (2.9)

The first two steps of the proof of Theorem 2.4 are now complete. We continue with Step 3, i.e., the proof of (2.9). It is based on a second-order Taylor expansion of \(\sum _{j=1}^n \log p_{\omega ,\uplambda }(Y_{j-1},Y_{j})\) at \(\uplambda = 0\):

where it should be recalled from the paragraph following (2.4) that \(o_{\omega ,\uplambda }(v,w)\) tends to 0 uniformly in \(\omega \in \varOmega \) and \(v,w \in V\) as \(\uplambda \rightarrow 0\). Set

where we write \(p_\omega \) for \(p_{\omega ,0}\), and

Both \(A_n\) and \(R_{\uplambda ,n}\) are random variables on \((\varOmega \times V^{{\mathbb {N}}_0},{\mathcal {F}}\otimes {\mathcal {G}})\).

Lemma 8.2

Let \(\uplambda \rightarrow 0\) and \(n\rightarrow \infty \) such that \(\lim _{n \rightarrow \infty } \uplambda ^{2}n = \alpha \ge 0\). Then

and \(R_{\uplambda ,n} \rightarrow 0\) \({\mathbb {P}}\)-a. s.

Proof

The convergence \(R_{\uplambda ,n} \rightarrow 0\) follows from the fact that \(o_{\omega ,\uplambda }(v,w)\) tends to 0 uniformly in \(\omega \in \varOmega \) and \(v,w \in V\) as \(\uplambda \rightarrow 0\).

For the proof of (8.4), notice that \(A_n-A_{n-1}\) is a function of \(({\overline{\omega }}(n-1),{\overline{\omega }}(n))\). To make this more transparent, we write

with the function \(\varphi \) from the proof of Lemma 4.4. Since \(({\overline{\omega }}(n))_{n \in {\mathbb {N}}_0}\) is ergodic under \({\mathbb {P}}\), so is \((A_n-A_{n-1})_{n \ge 1}\), see e.g. Lemma 5.6(c) in [2]. Birkhoff’s ergodic theorem gives

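Further, for all v, all \(\omega \) and all \(\uplambda \), \(p_{\omega ,\uplambda }(v,\cdot )\) is a probability measure on \(N_{\omega }(v)\); differentiating \(\sum _{w\in N_{\omega }(v)} p_{\omega ,\uplambda }(v,w) = 1\) twice in \(\uplambda \) at \(\uplambda = 0\) gives \(\sum _{w\in N_{\omega }(v)} p_{\omega }''(v,w) = 0\). Consequently,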

On the other hand, by Theorem 2.5, we have

where the second equality follows from the fact that the increments of square-integrable martingales are uncorrelated and the third equality follows from the fact that \((\nu _{\omega }(Y_{k-1},Y_{k}))_{k \in {\mathbb {N}}}\) is an ergodic sequence under \({\mathbb {P}}\). \(\square \)
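Differentiating \(\sum _w p_{\omega ,\uplambda }(v,w) = 1\) once gives \(\sum _w p_\omega '(v,w) = \sum _w p_\omega (v,w)\nu _\omega (v,w) = 0\) (the martingale property of \(M_n\)); differentiating twice gives \(\sum _w p_\omega ''(v,w) = 0\), which, combined with \((\log p)'' = p''/p - \nu ^2\), yields the Fisher-information identity \(\sum _w p_\omega (v,w)(\log p_\omega )''(v,w) = -\sum _w p_\omega (v,w)\nu _\omega (v,w)^2\). The sketch below checks these identities by finite differences, computing \((\log p)''\) directly from \(\log p\) so that the last check is not circular. The kernel is again an assumed form chosen for illustration (proposal weights \(e^{\uplambda ({\mathsf {x}}(w)-{\mathsf {x}}(v))}/(e^{\uplambda }+1+e^{-\uplambda })\), lazy if the edge is closed), not a quotation of Section 2.

```python
import math

DX = {"left": -1, "vertical": 0, "right": 1}


def kernel(lam, open_dirs):
    """Assumed transition law; 'stay' has positive mass only if some edge is closed."""
    z = math.exp(lam) + 1.0 + math.exp(-lam)
    p = {d: math.exp(lam * DX[d]) / z for d in open_dirs}
    if len(open_dirs) < 3:
        p["stay"] = 1.0 - sum(p.values())
    return p


def identities(open_dirs, h=1e-4):
    """Return (sum_w p', sum_w p'', sum_w p (log p)'' + sum_w p nu^2) at lambda = 0."""
    lo, mid, hi = (kernel(t, open_dirs) for t in (-h, 0.0, h))
    d1 = {w: (hi[w] - lo[w]) / (2 * h) for w in mid}
    d2 = {w: (hi[w] - 2 * mid[w] + lo[w]) / h**2 for w in mid}
    nu = {w: d1[w] / mid[w] for w in mid}
    log_d2 = {w: (math.log(hi[w]) - 2 * math.log(mid[w]) + math.log(lo[w])) / h**2
              for w in mid}
    fisher_gap = sum(mid[w] * (log_d2[w] + nu[w] ** 2) for w in mid)
    return sum(d1.values()), sum(d2.values()), fisher_gap


for dirs in ({"right"}, {"left", "right"}, {"left", "vertical", "right"}):
    print(sorted(dirs), identities(dirs))
```

All three returned quantities vanish up to finite-difference error.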

Proposition 8.3

For any \(\alpha > 0\), it holds that

Proof

We follow Lebowitz and Rost [22] and use the (discrete) Girsanov transform introduced in Section 2. Indeed, using (8.3), we get

Now divide by \(\uplambda n \sim \sqrt{\alpha n}\) and use Theorem 2.5, Lemma 8.2, Slutsky’s theorem and the continuous mapping theorem to conclude that

Suppose that along with convergence in distribution, convergence of the first moment holds. Then we infer

where the last step follows from the integration by parts formula for two-dimensional Gaussian vectors. It remains to show that the family on the left-hand side of (8.5) is uniformly integrable. To this end, use Hölder’s inequality to obtain

By Lemma 8.1, the first supremum in the last line is finite. Concerning the finiteness of the second, notice that \(\uplambda ^{2} A_n\) and \(R_{\uplambda ,n}\) are bounded when \(\uplambda ^2n\) stays bounded (see the proof of Lemma 8.2 for details), whereas (2.7) gives \(\sup _{\uplambda ,n} {\mathbb {E}}[e^{3\uplambda M_n}] < \infty \). \(\square \)
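The limit computation above ends with Gaussian integration by parts (Stein's lemma): for a centered Gaussian vector (B, M) and a smooth f of moderate growth, \({\mathbb {E}}[B f(M)] = {\mathbb {E}}[BM]\,{\mathbb {E}}[f'(M)]\). For the exponential functionals produced by the Girsanov transform, \(f(m) = e^{tm}\), the right-hand side equals \(t\,{\mathbb {E}}[BM]\,e^{t^2 {\mathbb {E}}[M^2]/2}\). The Monte Carlo sketch below checks this for an arbitrary, hypothetical covariance matrix; it illustrates the formula only, not the limit (8.5) itself.

```python
import math
import random


def gaussian_ibp_check(s11, s12, s22, t, n_samples=200_000, seed=1):
    """Monte Carlo estimate of E[B exp(t M)] and the value t * s12 * exp(t^2 * s22 / 2)
    predicted by E[B f(M)] = E[BM] E[f'(M)] with f(m) = exp(t m)."""
    rng = random.Random(seed)
    a = math.sqrt(s11)                 # Cholesky factor of [[s11, s12], [s12, s22]]
    b = s12 / a
    c = math.sqrt(s22 - b * b)         # requires a positive semidefinite matrix
    total = 0.0
    for _ in range(n_samples):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        B, M = a * z1, b * z1 + c * z2
        total += B * math.exp(t * M)
    return total / n_samples, t * s12 * math.exp(t * t * s22 / 2)


print(gaussian_ibp_check(1.0, 0.6, 1.0, 0.5))
```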

8.3 Regeneration Points and Times

Given \(\uplambda \in (0,1]\), define \(\uplambda \)-dependent pre-regeneration points by:

The set of \(\uplambda \)-pre-regeneration points is denoted by \({\mathcal {R}}^{\mathrm {pre},\uplambda }\). The cluster is decomposed into independent pieces \(\omega _n^\uplambda :=[R^{\mathrm {pre},\uplambda }_{n-1},R^{\mathrm {pre},\uplambda }_{n})\), \(n \in {\mathbb {Z}}\). The \(\uplambda \)-regeneration times are defined as \(\tau ^{\uplambda }_{0} :=\rho ^{\uplambda }_{0} :=0\) and, inductively,

for \(n \in {\mathbb {N}}\). We further set \(\rho _n^\uplambda :=X_{\tau ^{\uplambda }_{n}} = {\mathsf {x}}(R^{\uplambda }_{n})\). In words, a \(\uplambda \)-regeneration point is a \(\uplambda \)-pre-regeneration point \(R^{\mathrm {pre},\uplambda }_{n}\) such that the walk after the first visit to \(R^{\mathrm {pre},\uplambda }_{n}\) never returns to \(R^{\mathrm {pre},\uplambda }_{n-1}\), the \(\uplambda \)-pre-regeneration point to the left. For \(n \in {\mathbb {N}}\), we write \(R^{\uplambda ,-}_{n}\) for the \(\uplambda \)-pre-regeneration point with the largest \({\mathsf {x}}\)-coordinate which is strictly to the left of \(R^{\uplambda }_{n}\). With this definition, the walk \((Y_k)_{k \in {\mathbb {N}}_0}\) will eventually hit the nth regeneration point \(R^{\uplambda }_{n}\). Afterwards, there may be excursions from \(R^{\uplambda }_{n}\) to the left, but none of those will reach \(R^{\uplambda ,-}_{n}\). In the context of regeneration-time arguments it will be useful at some points to work with a different percolation law than \(\mathrm {P}_{\!p}\) or \(\mathrm {P}_{\!p}^*\), namely, the cycle-stationary percolation law \(\mathrm {P}_{\!p}^\circ \), which is defined below.

Definition 8.4

The cycle-stationary percolation law \(\mathrm {P}_{\!p}^\circ \) is defined to be the unique probability measure on \((\varOmega ,{\mathcal {F}})\) with the following properties:

(i) with \(\mathrm {P}_{\!p}^\circ \)-probability one, there are infinitely many pre-regeneration points to the left and to the right of the origin, including one pre-regeneration point at the origin;

(ii) the cycles \(\omega _n\), \(n \in {\mathbb {Z}}\), are i.i.d. under \(\mathrm {P}_{\!p}^\circ \);

(iii) each cycle \(\omega _n\) has the same law under \(\mathrm {P}_{\!p}^\circ \) as \(\omega _1\) under \(\mathrm {P}_{\!p}^*\).

We write \({\mathbb {P}}^\circ _\uplambda \) for \({\mathbb {P}}_{\mathrm {P}_{\!p}^\circ ,\uplambda }^{{\mathbf {0}}}\).

We define

the \(\sigma \)-algebra of the walk up to time \(\tau _n^{\uplambda }\) and of the environment up to \(\rho _n^{\uplambda }\). The increments of the \(\uplambda \)-regeneration times and positions are not i.i.d., but 1-dependent.

Lemma 8.5

For any \(n \in {\mathbb {N}}\) and all measurable sets \(F \in {\mathcal {F}}_{\ge } = \sigma (p_{\langle v,w \rangle }: {\mathsf {x}}(v) \wedge {\mathsf {x}}(w) \ge 0)\) and \(G \in {\mathcal {G}}\),

In particular, \(((\tau _{n+1}^{\uplambda }-\tau _n^{\uplambda }, \rho _{n+1}^{\uplambda }-\rho _{n}^{\uplambda }))_{n \in {\mathbb {N}}}\) is a 1-dependent sequence of random variables under \({\mathbb {P}}_{\uplambda }\).

Since Lemma 8.5 is a natural observation and its proof is a rather straightforward but tedious adaptation of the proof of Lemma 4.1 in [14], we omit the details of the proof.

The subsequent lemma provides the key estimate for the distances between \(\uplambda \)-regeneration points.

Lemma 8.6

There exist finite constants \(C,\varepsilon >0\) depending only on p such that, for every sufficiently small \(\uplambda > 0\),

In particular,

and

For the proof, we require the following lemma.

Lemma 8.7

There exist finite constants \(\varepsilon =\varepsilon (p)>0\), \(c_*=c_*(p) > 0\) such that, for all \(x \ge 0\),

Proof

It follows from Lemma 3.3(b) of [14] that there exists a constant \(c(p) \in (0,1)\) depending only on p such that

for all \(m \in {\mathbb {N}}_0\). Hence, the moment generating function \(\vartheta \mapsto \mathrm {E}_{p}^\circ [e^{\vartheta {\mathsf {x}}(R_1^{\mathrm {pre}})}]\) is finite in some open interval containing the origin; in particular, \({\mathsf {x}}(R_1^{\mathrm {pre}})\) has a positive finite mean \(\mu (p)\) (depending only on p). Let \(\varepsilon \in (0,\mu (p)^{-1})\). Then, for some sufficiently small \(u>0\),

Fix \(x \ge 0\). Since the \(\omega _n\), \(n \in {\mathbb {N}}\) are i.i.d. under \(\mathrm {P}_{\!p}^\circ \), \({\mathsf {x}}(R^{\mathrm {pre}}_{\lfloor \varepsilon x \rfloor })\) is the sum of \(\lfloor \varepsilon x \rfloor \) i.i.d. random variables each having the same law as \({\mathsf {x}}(R_1^{\mathrm {pre}})\) under \(\mathrm {P}_{\!p}^\circ \). Consequently, Markov’s inequality gives

Further,

The first probability decays exponentially fast as \(x \rightarrow \infty \) by the Markovian structure of the percolation cluster under \(\mathrm {P}_{\!p}\), see Section 3 in [14]. The second probability is bounded above by \(e^{-c_* x/2}\) by what has already been shown. Replacing \(c_*\) by a smaller positive real, the second inequality of the lemma follows. \(\square \)
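The first part of this proof is a Chernoff bound. For \(m = \lfloor \varepsilon x \rfloor \) and \(u > 0\) with finite moment generating function \(M(u) \ge 1\), Markov's inequality gives \(P(S_m > x) \le e^{-ux} M(u)^m \le \exp (-x(u - \varepsilon \log M(u)))\), and the rate \(u - \varepsilon \log M(u) = u(1-\varepsilon \mu ) + O(u^2)\) is positive for small u when \(\varepsilon < 1/\mu \). The sketch below evaluates this rate for a geometric stand-in for \({\mathsf {x}}(R_1^{\mathrm {pre}})\) (a hypothetical choice, motivated only by the geometric tail bound quoted from [14]) and compares it with the exact negative-binomial tail.

```python
import math


def geometric_mgf(u, q):
    """E[exp(u G)] for G geometric on {1, 2, ...} with success probability q.
    Finite iff exp(u) * (1 - q) < 1."""
    return q * math.exp(u) / (1.0 - (1.0 - q) * math.exp(u))


def chernoff_rate(u, eps, q):
    """Rate c with P(S_m > x) <= exp(-c x) for m = floor(eps x) and u > 0."""
    return u - eps * math.log(geometric_mgf(u, q))


def exact_tail(m, x, q):
    """P(S_m > x) for S_m a sum of m i.i.d. geometric(q) variables (negative binomial)."""
    if m == 0:
        return 0.0                       # S_0 = 0 <= x for x >= 0
    cdf = sum(math.comb(k - 1, m - 1) * q**m * (1.0 - q) ** (k - m)
              for k in range(m, x + 1))
    return 1.0 - cdf


q, eps, u = 0.5, 0.25, 0.2              # mean 1/q = 2, so eps < 1/mean as required
rate = chernoff_rate(u, eps, q)
print(rate)
for x in (20, 100, 199):
    print(x, exact_tail(math.floor(eps * x), x, q), math.exp(-rate * x))
```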

Proof of Lemma 8.6

We first derive (8.8) and (8.9) from (8.7). We only prove the second relation of (8.8). For \(\uplambda >0\), summation by parts and (8.7) give

which remains bounded as \(\uplambda \downarrow 0\). Analogously,

which again remains bounded as \(\uplambda \rightarrow 0\) and thus gives (8.9).
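As a worked instance of such a summation by parts, assume only that a random variable \(\rho \) with values in \({\mathbb {N}}_0\) satisfies \({\mathbb {P}}(\rho \ge n) \le C e^{-\varepsilon \uplambda n}\) for all \(n \in {\mathbb {N}}\), with C and \(\varepsilon \) independent of \(\uplambda \) (a tail bound of exponential type in \(\uplambda n\), assumed here for the illustration). Using \(e^{s}-1 \ge s\) for \(s \ge 0\), one obtains

$$\begin{aligned} {\mathbb {E}}[\rho ]&= \sum _{n=1}^{\infty } {\mathbb {P}}(\rho \ge n) \le C \sum _{n=1}^{\infty } e^{-\varepsilon \uplambda n} = \frac{C}{e^{\varepsilon \uplambda }-1} \le \frac{C}{\varepsilon \uplambda },\\ {\mathbb {E}}[\rho ^2]&= \sum _{n=1}^{\infty } (2n-1)\,{\mathbb {P}}(\rho \ge n) \le 2C \sum _{n=1}^{\infty } n e^{-\varepsilon \uplambda n} = \frac{2C e^{\varepsilon \uplambda }}{(e^{\varepsilon \uplambda }-1)^2} \le \frac{2C e^{\varepsilon \uplambda }}{(\varepsilon \uplambda )^2}, \end{aligned}$$

so \(\uplambda {\mathbb {E}}[\rho ]\) and \(\uplambda ^2 {\mathbb {E}}[\rho ^2]\) remain bounded as \(\uplambda \downarrow 0\).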

We now turn to the proof of (8.7). By Lemma 8.5 the law of \(\rho _{2}^\uplambda -\rho _1^\uplambda \) under \({\mathbb {P}}_\uplambda \) is the same as the law of \(\rho _1^\uplambda \) under \({\mathbb {P}}_\uplambda ^\circ \) given that \((Y_n)_{n \ge 0}\) never visits \(R^{\mathrm {pre},\uplambda }_{-1}\):

Let \(C :=\{X_k > {\mathsf {x}}(R^{\mathrm {pre},\uplambda }_{-1}) \text { for all } k \in {\mathbb {N}}\}\). In order for \(C^{\mathsf {c}}\) to occur, the walk \((Y_n)_{n \in {\mathbb {N}}_0}\) must travel at least \(1/\uplambda \) steps to the left on the backbone, as the distance between \({\mathbf {0}} = R^{\mathrm {pre},\uplambda }_{0}\) and \(R^{\mathrm {pre},\uplambda }_{-1}\) is at least \(1/\uplambda \). From Lemma 5.1, Eq. (5.3), we thus conclude that

for all sufficiently small \(\uplambda > 0\). Hence, for all sufficiently small \(\uplambda > 0\), we have \({\mathbb {P}}_\uplambda ^\circ (C) \ge 1/2\). Fix such a \(\uplambda \). Then

and it thus remains to bound the probability of \(\{\rho _1^\uplambda \ge n\}\) for \(n \in {\mathbb {N}}_0\) under both \({\mathbb {P}}_\uplambda ^\circ \) and \({\mathbb {P}}_\uplambda \). From here on, we work under \({\mathbb {P}}_\uplambda ^\circ \); the corresponding proof with \({\mathbb {P}}_\uplambda \) is analogous.

The basic idea is that \(\rho _1^\uplambda \ge n\) if either there are unusually few \(\uplambda \)-pre-regeneration points in [0, n] or the walk \((Y_k)_{k \in {\mathbb {N}}_0}\) has to make too many excursions of length at least \(\lfloor \frac{1}{\uplambda }\rfloor \) to the left. To turn this idea into a rigorous proof, we first observe that for \(\varepsilon = \varepsilon (p)>0\) from Lemma 8.7, we have

The first probability on the right-hand side of (8.13) is bounded by

where we have used the elementary inequality \(\lfloor a \rfloor \cdot \lfloor b \rfloor \le \lfloor ab \rfloor \) for all \(a,b > 0\) and then Lemma 8.7.
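For completeness, the elementary inequality used above follows in one line: for \(a,b>0\) we have \(0 \le \lfloor a\rfloor \le a\) and \(0 \le \lfloor b\rfloor \le b\), so the integer \(\lfloor a\rfloor \lfloor b\rfloor \) is at most \(ab\), while \(\lfloor ab\rfloor \) is the largest integer with this property.

```latex
\[
  0 \le \lfloor a\rfloor\,\lfloor b\rfloor \le ab,
  \qquad \lfloor a\rfloor\,\lfloor b\rfloor \in \mathbb{Z}
  \quad\Longrightarrow\quad
  \lfloor a\rfloor\,\lfloor b\rfloor \le \lfloor ab\rfloor ,
  \qquad a,b>0 .
\]
```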

We now turn to the second probability on the right-hand side of (8.13). Observe that a \(\uplambda \)-pre-regeneration point \(R^{\mathrm {pre},\uplambda }_{i}\) is a \(\uplambda \)-regeneration point iff after the first visit to it, the random walk \((Y_k)_{k \ge 0}\) never returns to \(R^{\mathrm {pre},\uplambda }_{i-1}\). We define \(Z_0',Z_1',Z_2',\ldots \) to be the sequence of indices of the \(\uplambda \)-pre-regeneration points visited by \((Y_k)_{k \ge 0}\) in chronological order, i.e., \(Z_j'=i\) if the jth visit of \((Y_k)_{k \ge 0}\) to \({\mathcal {R}}^{\mathrm {pre},\uplambda }\) is at the point \(R^{\mathrm {pre},\uplambda }_{i}\). We then define \(Z_0,Z_1,Z_2,\ldots \) to be the corresponding agile sequence, that is, each multiple consecutive occurrence of a number in the string is reduced to a single occurrence. For instance, if

then

Then \(R^{\mathrm {pre},\uplambda }_{i}\) is a \(\uplambda \)-regeneration point if for the first \(j \in {\mathbb {N}}\) with \(Z_j = i\), we have \(Z_{k} \ge i\) for all \(k \ge j\). Let

Then

We compare the latter probability with the corresponding probability for a biased nearest-neighbor random walk on \({\mathbb {Z}}\) which at any vertex is more likely to move left than the walk \((Z_k)_{k \in {\mathbb {N}}_0}\). More precisely, we may assume without loss of generality that on the underlying probability space there exists a biased nearest-neighbor random walk on \({\mathbb {Z}}\) which we denote by \((S_k)_{k \in {\mathbb {N}}_0}\) such that \({\mathbb {P}}_\uplambda ^\circ (S_0 = 0)=1\) and

According to (5.3), we have

for \(\uplambda > 0\) sufficiently small. This means that we may couple the walks \((S_k)_{k \in {\mathbb {N}}_0}\) and \((Z_k)_{k \in {\mathbb {N}}_0}\) such that \(\{Z_k-Z_{k-1} = -1\} \subseteq \{S_k-S_{k-1} = -1\}\) for all \(k \in {\mathbb {N}}\). Define

A moment’s thought reveals that \(\varrho \ge \varrho ^*\) and hence, for every \(n \in {\mathbb {N}}_0\), by Lemma A.2,

This completes the proof of (8.7). \(\square \)
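The agile reduction and the regeneration criterion used in the proof above are purely combinatorial, so they can be checked on a finite path. The sketch below is an illustration only: `agile` and `regeneration_indices` are hypothetical helper names, the input is a made-up index sequence (not the example from the text), and on a finite path the condition \(Z_k \ge i\) for all \(k \ge j\) can only be verified up to the end of the path.

```python
from itertools import groupby


def agile(seq):
    """Collapse each run of equal consecutive entries to a single entry."""
    return [value for value, _ in groupby(seq)]


def regeneration_indices(z):
    """Indices i whose first visit (time j) is followed only by values >= i.

    On a finite path this is only a proxy for the criterion
    'Z_k >= i for all k >= j', checked up to the end of the path.
    """
    first_visit = {}
    for j, value in enumerate(z):
        first_visit.setdefault(value, j)
    # suffix_min[j] = min(z[j:])
    suffix_min = list(z)
    for j in range(len(z) - 2, -1, -1):
        suffix_min[j] = min(z[j], suffix_min[j + 1])
    return sorted(i for i, j in first_visit.items() if suffix_min[j] >= i)


z = agile([0, 0, 1, 1, 0, 1, 2, 2, 3, 2, 3, 4])
print(z)                        # [0, 1, 0, 1, 2, 3, 2, 3, 4]
print(regeneration_indices(z))  # [0, 2, 4]
```

Index 1 fails because the walk returns to index 0 after its first visit to 1, whereas index 2 qualifies on this finite horizon.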

Lemma 8.8

We have

and

As a consequence,

and

Proof

The uniform bounds in (8.16) follow from (8.14) and Jensen’s inequality. The bounds (8.17) follow from (8.14) and (8.15). Let us first prove (8.15). The time spent until the first \(\uplambda \)-regeneration is bounded below by the number of visits to the pre-regeneration points \(R^{\mathrm {pre}}_k\) with \(0 \le k < \lfloor 1/\uplambda \rfloor \), the pre-regeneration points between \({\mathbf {0}}\) and \(R^{\mathrm {pre},\uplambda }_{1}\). Fix such \(k \in \{0,\ldots ,\lfloor 1/\uplambda \rfloor -1\}\) and write \(N_k\) for the number of returns of \((Y_n)_{n \ge 0}\) to \(R^{\mathrm {pre}}_k\). We shall give a lower bound for \(E_{\omega ,\uplambda }[N_k]\). We may assume without loss of generality that \(R^{\mathrm {pre}}_k={\mathbf {0}}\). Under \(P_{\omega ,\uplambda }\), the number of returns of the walk \((Y_n)_{n \ge 0}\) to \({\mathbf {0}}\) is geometric with success probability equal to the escape probability

where the identity is standard in electrical network theory, see for instance [2, Formula (13)]. Consequently,

for all sufficiently small \(\uplambda > 0\). From the Nash-Williams inequality [23, Proposition 9.15], we infer

a bound which is independent of \(\omega \). Since there are \(\lfloor 1/\uplambda \rfloor \) such pre-regeneration points to the left of \(R^{\mathrm {pre},\uplambda }_{1}\), we conclude that

This proves the first part in (8.15). The second part is analogous or follows using Lemma 8.5.
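The argument above combines two facts: the number of returns to a vertex is geometric with success probability equal to the escape probability, and the escape probability can be expressed through an effective conductance. As a toy check (on \({\mathbb {Z}}\), not on the ladder, and under the assumption that the walk steps right with probability \(p = e^{\uplambda }/(e^{\uplambda }+e^{-\uplambda })\)), the sketch computes the escape probability once by the ruin problem and once as \(C_{\mathrm {eff}}/\pi (0)\) for the network with conductance \((p/q)^k\) on the edge \(\{k,k+1\}\). On this path every single edge is a cutset, so the Nash-Williams bound holds with equality. Function names are hypothetical.

```python
import math


def step_probs(lam):
    """Right/left step probabilities of the toy walk on Z (weights e^lam, e^-lam)."""
    p = math.exp(lam) / (math.exp(lam) + math.exp(-lam))
    return p, 1.0 - p


def escape_prob_ruin(lam):
    """P_0(never return to 0) via the ruin problem, for lam > 0."""
    p, q = step_probs(lam)
    # A first step to the left leads back to 0 a.s. (drift to the right);
    # after a first step to the right, 0 is avoided forever w.p. 1 - q/p.
    return p * (1.0 - q / p)


def escape_prob_network(lam, n_terms=20_000):
    """The same quantity as C_eff / pi(0) with conductance (p/q)^k on edge {k, k+1}."""
    p, q = step_probs(lam)
    pi0 = q / p + 1.0  # total conductance at 0: c_{-1} + c_0
    # Series resistance from 0 to +infinity (truncated); the left half-line has
    # infinite resistance and contributes nothing to the effective conductance.
    r_eff = math.fsum((q / p) ** k for k in range(n_terms))
    return (1.0 / r_eff) / pi0


for lam in (0.5, 0.1, 0.01):
    p_esc = escape_prob_ruin(lam)
    print(lam, p_esc, escape_prob_network(lam), 1.0 / p_esc)  # last: expected visits
```

In this toy model both computations give \(p_{\mathrm {esc}} = \tanh \uplambda \), so the expected number of visits to the origin, \(1/p_{\mathrm {esc}} = \coth \uplambda \), is of order \(1/\uplambda \) as \(\uplambda \downarrow 0\).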

Let us turn to (8.14). We shall prove the unconditioned case for \(\tau _{1}^{\uplambda }\); the conditioned case involving \(\tau _{2}^{\uplambda }-\tau _{1}^{\uplambda }\) follows similarly. We decompose the time \(\tau _{1}^{\uplambda }\) until hitting the first \(\uplambda \)-regeneration point \(R_1^\uplambda \) into the time \(\tau _1^{\uplambda ,{\mathcal {B}}}\) spent on the backbone \({\mathcal {B}}\) before hitting \(R_1^\uplambda \), see Fig. 2 and the paragraph preceding it, and the time \(\tau _1^{\uplambda , \mathrm {traps}}\) spent outside the backbone (i.e. inside traps) before first hitting \(R_1^\uplambda \). This gives

First we treat \({\mathbb {E}}_{\uplambda }[(\tau _1^{\uplambda ,\mathrm {traps}})^{2}]\).

In order to control the time spent in traps, we first bound the time spent in a fixed trap of finite length. The upper bound given in Lemma 6.1(b) in [14] is too rough for this purpose. We nevertheless follow the arguments there, but only consider \(\kappa =4\). Let us consider a discrete line segment \(\{0,\ldots ,m\}\), \(m\ge 2\), and a nearest-neighbor random walk \((S_n)_{n \ge 0}\) on this set starting at \(i \in \{0,\ldots ,m\}\) with transition probabilities

for \(j=1,\ldots ,m-1\) and

For \(i=0\), we are interested in \(\tau _m :=\inf \{k \in {\mathbb {N}}: S_k = 0\}\), the time until the first return of the walk to the origin. Let \((Z_n)_{n \ge 0}\) be the agile version of \((Y_n)_{n \ge 0}\), i.e., the walk obtained by deleting from the sequence \((Y_0,Y_1,\ldots )\) all entries \(Y_n\) with \(Y_n = Y_{n-1}\). The stopping times \(\tau _m\) will be used to estimate the time the agile walk \((Z_n)_{n \ge 0}\) spends in a trap of length m, given that it steps into it.
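For intuition about \(\tau _m\), here is a sketch under assumed dynamics (hypothetical, since only the qualitative shape matters here): the walk moves \(0 \to 1\) and \(m \to m-1\) with probability one, and from \(1 \le j \le m-1\) it steps right, i.e. deeper into the trap, with probability \(p = e^{\uplambda }/(e^{\uplambda }+e^{-\uplambda })\). This walk is reversible with conductance \((p/q)^i = e^{2\uplambda i}\) on the edge \(\{i,i+1\}\), so Kac’s formula \(\mathrm {E}_0[\tau _m] = 1/\pi (0)\) gives \(\mathrm {E}_0[\tau _m] = 2\sum _{i=0}^{m-1} e^{2\uplambda i}\). The code checks this against a seeded simulation; the function names are hypothetical.

```python
import math
import random


def mean_return_time(m, lam):
    """Exact E_0[tau_m] for the assumed trap walk, via Kac's formula 1 / pi(0)."""
    ratio = math.exp(2 * lam)  # p / q, ratio of conductances of neighbouring edges
    # pi(i) is proportional to the total conductance at i, and c_0 = 1,
    # so 1 / pi(0) = 2 * sum_{i < m} ratio**i.
    return 2 * sum(ratio**i for i in range(m))


def simulate_return_time(m, lam, rng):
    """One sample of tau_m for the assumed dynamics (reflection at 0 and at m)."""
    p = math.exp(lam) / (math.exp(lam) + math.exp(-lam))
    pos, steps = 1, 1  # the first step 0 -> 1 is forced
    while pos != 0:
        if pos == m:
            pos = m - 1  # reflection at the dead end of the trap
        else:
            pos += 1 if rng.random() < p else -1
        steps += 1
    return steps


rng = random.Random(12345)
samples = [simulate_return_time(4, 0.2, rng) for _ in range(20000)]
print(sum(samples) / len(samples), mean_return_time(4, 0.2))
```

For \(\uplambda > 0\) the mean grows exponentially in the trap length m, while at \(\uplambda = 0\) it reduces to 2m, a polynomial in m, in line with the limit \(\uplambda \rightarrow 0\) taken in the text.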

Let \(V_{i} :=\sum _{k=1}^{\tau _m-1} \mathbb {1}_{\{S_k=i\}}\) be the number of visits to the point i before the random walk returns to 0, \(i=1,\ldots ,m\). Then \(\tau _m = 1+\sum _{i=1}^m V_i\) and, by Jensen’s inequality,

For \(i=0,\ldots ,m\), let

Given \(S_0=i\), when \(S_1 = i+1\), then \(\sigma _i < \sigma _0\). When the walk moves to \(i-1\) in its first step, it starts afresh there and hits i before 0 with probability \(\mathrm {P}_{\!{i}-1}(\sigma _i < \sigma _0)\). Determining \(\mathrm {P}_{\!{i}-1}(\sigma _i < \sigma _0)\) is the classical ruin problem, hence

In particular, for \(i=1,\ldots ,m-1\), \(r_i\) does not depend on m. Moreover, we have \(r_1 \le r_2 \le \ldots \le r_{m-1}\) and \(r_1 \le r_m \le r_{m-1}\). By the strong Markov property, for \(k \in {\mathbb {N}}\), \(\mathrm {P}_{\!0}(V_i = k) = \mathrm {P}_{\!0}(\sigma _i < \sigma _0) \, r_i^{k-1} (1-r_i)\) and hence

for some polynomial \(p_3(x)\) of degree 3 in x independent of \(\uplambda \). Letting \(\uplambda \rightarrow 0\), we find

and hence

Hence, using Eq. (8.19),

for some polynomial \({{\tilde{p}}}\). Let \(\ell _1\) denote the length of the trap with the trap entrance having the smallest nonnegative \({\mathsf {x}}\)-coordinate. Let \(\ell _0\) and \(\ell _2\) be the lengths of the next trap to the left and right, respectively, etc. The law of \(\ell _0\) differs from the law of the other \(\ell _n\) but this difference is not significant for our estimates, see Lemma 5.1 in [14]. We proceed as in the proof of Lemma 6.2 in [14]. For any \(\omega \in \varOmega ^*\) and any v on the backbone, by the same argument that leads to (24) in [2],

This bound is uniform in the environment \(\omega \in \varOmega ^*\) but depends on \(\uplambda \). Denote by \(v_{i}\) the entrance of the ith trap. By the strong Markov property, \(T_i\), the time spent in the ith trap, can be decomposed into M i.i.d. excursions into the trap: \(T_i = T_{i,1}+ \ldots + T_{i,M}\). Since \(v_i\) is forwards-communicating, (8.22) implies that \(P_{\omega ,\uplambda }(M \ge n) \le (1-{p}_{\mathrm {esc}})^{n}\), \(n \in {\mathbb {N}}\). In particular, M is stochastically dominated by a geometric random variable \({{\tilde{M}}}\) with success parameter \({p}_{\mathrm {esc}}\). Moreover, \(T_{i,1}, \ldots , T_{i,j}\) are i.i.d. conditional on \(\{M \ge j\}\). We now derive an upper bound for \(E_{\omega ,\uplambda }[T_{i,j}^{4} | M \ge j]\). To this end, we have to take into account the times the walk stays put. Each time the agile walk \((Z_n)_{n \ge 0}\) makes a step in the trap, this step is preceded by an independent geometric number of steps in which the lazy walk stays put. The success parameter of this geometric random variable depends on the position inside the trap. However, the geometric variable is stochastically dominated by a geometric random variable G with \(\mathrm {P}_{\!0}(G \ge k) = \gamma _\uplambda ^k\) for \(\gamma _\uplambda = (1+e^{\uplambda })/(e^{\uplambda }+1+e^{-\uplambda })\). Plainly, \(\gamma _\uplambda \rightarrow \frac{2}{3}\) as \(\uplambda \rightarrow 0\). Consequently, estimate (8.21) and Jensen’s inequality give

where \(\ell _{i}\) is the length of the ith trap (which is treated as a constant under the expectation \(\mathrm {E}_0\)) and \({{\hat{p}}} = \mathrm {E}_0[G] \cdot {{\tilde{p}}}\) is again a polynomial. Moreover, by Jensen’s inequality and the strong Markov property,

for some constant \({{\tilde{c}}}\) independent of \(\omega \) and \(\uplambda \). We have

Hence

where \(p^*\) is a polynomial with coefficients independent of \(\uplambda \). Using Lemma 3.5 in [14], we find

where \(\uplambda _{\mathrm {c}}\) is defined in Proposition 2.2 and c(p) is a constant depending only on p. Due to (8.24), the dominated convergence theorem applies and gives the following bound:

Now let \(L:=-\min \{X_{k}, k\in {\mathbb {N}}\}\) be the absolute value of the leftmost visited \({\mathsf {x}}\)-coordinate of the walk. Since

we first consider

One application of the Cauchy-Schwarz inequality for the first sum and two applications for the second give

With the estimates (8.25) and (8.9) we obtain

The first term in the upper bound in (8.26) is treated in the same way. Next, we show that \({\mathbb {P}}_\uplambda (L \ge m)\) decays exponentially fast in m. Indeed, \(L \ge 2m\) implies that there is an excursion on the backbone to the left of length at least m or that the origin is in a trap that covers the piece \([-m,0)\) and thus has length at least m. The probability that there is an excursion on the backbone of length at least m is bounded by a constant (independent of \(\uplambda \)) times \(e^{-2\uplambda m}\) by Lemma 6.3 in [14]. The probability that a trap covers the piece \([-m,0)\) is bounded by a constant (again independent of \(\uplambda \)) times \(e^{-2\uplambda _{\mathrm {c}}m}\) by [3, pp. 3403–3404] or [14, Lemma 3.2]. We may thus argue as above to conclude that

Regarding the term \({\mathbb {E}}_{\uplambda }[T_{0}^{2}]\), we can apply (8.25). Controlling the mixed terms in (8.26) using the Cauchy-Schwarz inequality we obtain

Next we treat the time on the backbone. Since the strategy of proof is the same as for the traps, we keep the presentation brief. Write \(N(v) :=\sum _{n \ge 0} \mathbb {1}_{\{Y_n=v\}}\) for the number of visits of the walk \((Y_n)_{n \ge 0}\) to \(v \in V\). We have

We treat the second moment of the second sum first. Using the Cauchy-Schwarz inequality twice, we infer

The number of visits to \(v \in {\mathcal {B}}\) is stochastically dominated by a geometric random variable with success probability \({p}_{\mathrm {esc}}\), see (8.22). Hence

Using (8.9) and (8.23), we infer

We may argue similarly to infer the analogous statement for the first sum in (8.29). Hence, using again the Cauchy-Schwarz inequality for the mixed terms in (8.29), we conclude that

Using the Cauchy-Schwarz inequality for the mixed terms in decomposition (8.18) together with (8.28) and (8.30), we finally obtain the first statement in (8.14). The second statement in (8.14) then follows from Lemma 8.5. More precisely, \(\tau _2^\uplambda -\tau _1^\uplambda \) is a measurable function of the random walk path \((Y_{\tau _1^\uplambda +k})_{k \in {\mathbb {N}}_0}\) and the cluster to the right of the origin in \(\theta ^{R_{1}^{\uplambda ,-}} \!\! \omega \). Here, recall that \(R_{1}^{\uplambda ,-}\) denotes the right-most \(\uplambda \)-pre-regeneration point strictly to the left of \(R_{1}^{\uplambda }\). Lemma 8.5 thus yields

Here, \({\mathbb {P}}_\uplambda ^\circ (X_k > {\mathsf {x}}(R^{\mathrm {pre},\uplambda }_{-1}) \text { for all } k \in {\mathbb {N}}) \ge \frac{1}{2}\) by (5.3) for all sufficiently small \(\uplambda > 0\). Consequently,

To show that the second relation in (8.14) holds, we may now argue as for the first. The arguments carry over without substantial changes. The bounds required on \({\mathbb {P}}_\uplambda ^\circ (\rho _{1}^\uplambda \ge j)\) are contained in the proof of Lemma 8.6. We omit further details. \(\square \)
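The ruin-problem step and the geometric law of \(V_i\) in the proof above can be cross-checked numerically. Assume, hypothetically, that the trap walk steps right with probability p from \(1 \le j \le m-1\) and moves \(0 \to 1\) and \(m \to m-1\) with probability one, and read \(r_i\) as \(\mathrm {P}_{\!i}(\sigma _i < \sigma _0)\), consistent with the argument in the text. The sketch computes \(r_i\) and \(h_i = \mathrm {P}_{\!0}(\sigma _i < \sigma _0)\) from the gambler’s-ruin formula, checks the ordering \(r_1 \le \ldots \le r_{m-1}\) and \(r_1 \le r_m \le r_{m-1}\), and verifies \(\mathrm {E}_0[\tau _m] = 1+\sum _i h_i/(1-r_i)\) against the closed form \(2\sum _{i=0}^{m-1}(p/q)^i\) that Kac’s formula gives for this assumed walk. Function names are hypothetical.

```python
def hit_before_zero(start, target, p):
    """Gambler's ruin: P_start(hit target before 0), 0 <= start <= target, right-prob p."""
    q = 1.0 - p
    if start <= 0:
        return 0.0
    if abs(p - q) < 1e-15:
        return start / target  # symmetric case
    rho = q / p
    return (1.0 - rho**start) / (1.0 - rho**target)


def visit_parameters(m, p):
    """r[i] = P_i(sigma_i < sigma_0), h[i] = P_0(sigma_i < sigma_0), i = 1..m (assumed walk)."""
    q = 1.0 - p
    r, h = {}, {}
    for i in range(1, m + 1):
        h[i] = hit_before_zero(1, i, p)  # the first step 0 -> 1 is forced
        if i < m:
            # step right (a return to i before 0 is then certain),
            # or step left and restart from i - 1
            r[i] = p + q * hit_before_zero(i - 1, i, p)
        else:
            r[i] = hit_before_zero(m - 1, m, p)  # from m the walk is forced to m - 1
    return r, h


def mean_tau_from_visits(m, p):
    """E_0[tau_m] = 1 + sum_i E_0[V_i], where P(V_i = k) = h_i r_i^(k-1) (1 - r_i), k >= 1."""
    r, h = visit_parameters(m, p)
    return 1.0 + sum(h[i] / (1.0 - r[i]) for i in range(1, m + 1))


print(mean_tau_from_visits(3, 0.6))  # closed form: 2 * (1 + 1.5 + 2.25) = 9.5
```

Since each \(V_i\) has a geometric tail with ratio \(r_i < 1\), all its moments, in particular the fourth moments used above, are finite.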

The existence of a regeneration structure allows us to express the linear speed in terms of regeneration points and times.

Lemma 8.9

Let \(\uplambda >0\). Then

We omit the proof as it is standard and can be derived as in the proof of [14, Proposition 4.3], with references to classical renewal theory replaced by references to renewal theory for 1-dependent variables as presented in [18]. As a consequence of Lemmas 8.6, 8.8 and 8.9, we obtain

8.4 Proof of Equation (2.10)

It remains to prove (2.10), i.e.,

for \(\alpha > 0\). The proof follows along the lines of Section 5.3 in [13]. In order to keep this paper self-contained, we repeat the corresponding arguments from [13] in the present context.

For \(\uplambda >0\), we set