Abstract

We study a concrete model of a confined particle in the form of a Schrödinger operator with a compactly supported smooth potential coupled to a bosonic field at positive temperature. We show that the model exhibits thermal ionization for any positive temperature, provided the coupling is sufficiently small. Mathematically, one has to rule out that zero is an eigenvalue of the self-adjoint generator of time evolution, the Liouvillian. This is done by using positive commutator methods with dilations in the space of scattering functions. Our proof relies on a spatial cutoff in the coupling but otherwise does not require any unnatural restrictions.


1 Introduction

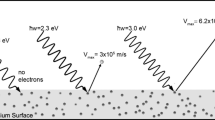

The phenomenon of thermal ionization can be viewed as a positive temperature generalization of the photoelectric effect: an atom is exposed to thermal radiation emitted by a black body of temperature \(T > 0\). Photons of momentum \(\omega \), with a momentum density given by Planck’s law of black-body radiation,

$$\begin{aligned} \rho _\beta (\omega ) = \frac{1}{e^{\beta \omega } - 1} , \end{aligned}$$

where \(\beta = T^{-1}\), then interact with the electrons of the atom. Since there is a positive probability for arbitrarily high-energy photons, eventually one with energy exceeding the ionization threshold of the atom will show up.
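
To make the last claim concrete, the following sketch records the two asymptotic regimes of the photon density. It assumes Planck’s law in the normalization \(\rho _\beta (\omega ) = (e^{\beta \omega } - 1)^{-1}\); this normalization is an assumption made here for illustration.

$$\begin{aligned} \rho _\beta (\omega )&= \frac{e^{-\beta \omega }}{1 - e^{-\beta \omega }} = \sum _{n=1}^\infty e^{-n \beta \omega } > 0 \quad \text {for all } \omega > 0 , \\ \rho _\beta (\omega )&\sim e^{-\beta \omega } \quad (\omega \rightarrow \infty ) , \qquad \rho _\beta (\omega ) \sim \frac{1}{\beta \omega } \quad (\omega \rightarrow 0) . \end{aligned}$$

In particular, the density above any fixed ionization threshold is exponentially small but strictly positive, and for fixed \(\omega > 0\) it tends to zero as \(\beta \rightarrow \infty \), that is, as \(T \rightarrow 0\).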

For the zero temperature situation (photoelectric effect), qualitative and quantitative statements for different simplified models of atoms with quantized fields can be found in [3, 11, 32]. If one replaces the atom by a finite-dimensional small subsystem, the model usually exhibits the behavior of return to equilibrium, see for example [2]. Here the existence of a Gibbs state of the atom leads to the existence of an equilibrium state of the whole system. One is confronted with a similar mathematical problem: disproving the degeneracy of the eigenvalue zero. The most common techniques for handling this are complex dilations and their infinitesimal analogue, positive commutators, which go back to work of Mourre (cf. [23]). There are a number of papers which also use positive commutators in the context of return to equilibrium, see for example [10, 13, 15, 19].

A first rigorous treatment of thermal ionization was given by Fröhlich and Merkli in [7] and in a subsequent paper [8] by the same authors together with Sigal. The ionization appears mathematically as the absence of a time-invariant state in a suitable von Neumann algebra describing the complete system. The time-invariant states can be shown to be in one-to-one correspondence with the elements of the kernel of the Liouvillian. For the proof they used a global positive commutator method first established for Liouvillians in [19] and [6] with a conjugate operator on the field space as in [15]. Furthermore, they developed a new virial theorem.

A similar situation in a mathematical sense occurs if one considers a small finite-dimensional or confined system coupled to multiple reservoirs at different temperatures. Here one can prove as well the absence of time-invariant normal states, which translates into the absence of zero as an eigenvalue of the Liouvillian (cf. [5, 14, 20]). However, one can show the existence of so-called non-equilibrium steady states, which correspond to resonances of the Liouvillian (cf. [4, 14, 21]).

In [7] an abstract representation of a Hamiltonian diagonalized with respect to its energy with a single negative eigenvalue was considered. For the proof certain regularity assumptions of the interaction with respect to the energy were imposed. However, it is not so clear how these assumptions translate to a more concrete setting. The reference [8] on the other hand covers the case of a Schrödinger operator with a long-range potential but with only finitely many modes coupled via the interaction. Moreover, only a compact interval away from zero of the continuous spectrum was coupled.

The purpose of this paper is to transfer the results of [7, 8] to a more specific model of a Schrödinger operator with a compactly supported potential with finitely many eigenvalues. We consider a typical coupling term and we have to impose a spatial cutoff. However, we do not need any restrictions with respect to the coupling to the continuous subspace of the atomic operator as in [8]. Moreover, in contrast to [7, 8] our result holds uniformly for bounded positive temperatures. This is achieved by considering a finite approximation of the so-called level shift operator. For the proof we use the same commutator on the space of the bosonic field as in [7, 8, 15], and we also reuse the original virial theorem of [7, 8]. On the other hand we work with a different commutator on the atomic space, namely the generator of dilations in the space of scattering functions.

The organization of the paper is as follows. In Sect. 2 we introduce the model and define the Liouvillian. In addition, we state the precise form of our main result in Theorem 2.6 and all the necessary assumptions. We also give a more detailed outline of the proof in Sect. 2.6. Section 3 recalls the abstract virial theorem of [7, 8] and some related technical methods. Then we verify the requirements of the abstract virial theorem in our setting in Sect. 4. We repeat the definition of scattering states (Sect. 4.1) and use those for the concrete choice of the commutators. The major difficulty here is to check that the commutators with the interaction terms are bounded. This requires bounds which involve the scattering functions and is elaborated in Sect. 5. The application of the virial theorem then yields a concrete version—Theorem 4.5. This is the first key element for the proof of the main theorem. The second one is the actual proof that the commutator together with some auxiliary term is positive. This and the concluding proof of Theorem 2.6 at the end can be found in Sect. 6.

2 Model and the Main Result

A model of a small subsystem interacting with a bosonic field at positive temperature is usually represented as a suitable \(C^*\)- or \(W^*\)-dynamical system on a tensor product algebra consisting of the field and the atom, respectively. Note that by \(\mathcal {L}(\mathcal {H})\) we shall denote the space of all bounded linear operators on a space \(\mathcal {H}\).

The field is defined by a Weyl algebra with infinitely many degrees of freedom. To implement black-body radiation at a specific temperature \(T > 0\) the GNS representation with respect to a KMS (equilibrium) state depending on T is considered. For the atom the whole algebra \(\mathcal {L}(\mathcal {H}_{\mathrm p})\) for the atomic Hilbert space \(\mathcal {H}_{\mathrm p}= L^2({\mathbb {R}}^3)\) is used and the GNS representation with respect to an arbitrary mixed reference state is performed. The combined representation of the whole system generates a \(W^*\)-algebra where the interacting dynamics can be defined by means of a Dyson series in a canonical way. Its self-adjoint generator—the Liouvillian—is of great interest when studying such systems. The details of this construction can be found in [7, 8, 24]. Furthermore, it is shown in [7, 8] that the absence of zero as an eigenvalue of the Liouvillian implies the absence of time-invariant states of the \(W^*\)-dynamical system.

In this paper we start directly with the definition of the Liouvillian without repeating its derivation and the algebraic construction. The only difference in our setting to [7, 8] is the coupling term which can be realized as an approximation of step function couplings as considered in their work.

The purpose of this section is the definition of the concrete Liouvillian, the precise statement of the result—absence of zero as an eigenvalue—and the required conditions. At the end in Sect. 2.6, we explain the basic structure of the proof.

We start with the three ingredients of the model: the atom, the field and the interaction. We discuss them separately and state the required assumptions for each.

2.1 The Atom

For the atom we consider a Schrödinger operator on the Hilbert space \(\mathcal {H}_{\mathrm p}:= L^2({\mathbb {R}}^3)\),

$$\begin{aligned} H_{\mathrm p}:= - \varDelta + V , \end{aligned}$$

where we assume that

- (H1) \(V \in C_{\mathrm c}^\infty ({\mathbb {R}}^3)\),

- (H2) if \(\psi \in L^2({\mathbb {R}}^3)\) satisfies

  $$\begin{aligned} \psi (x) = - \frac{1}{4\pi } \int \frac{\left| V(x) \right| ^{\frac{1}{2}} V(y)^{\frac{1}{2}} }{\left| x-y \right| } \psi (y) {\mathrm d}y , \quad \text { for a.e. } x \in {\mathbb {R}}^3, \end{aligned} \tag{2.1}$$

  where \(V^{1/2} := |V|^{1/2} \text {sgn} V\), then \(\psi = 0\).

We note that the above assumptions have the following immediate consequences.

Proposition 2.1

If (H1) holds, then

- (a) \(H_{\mathrm p}\) is self-adjoint on the domain \(\mathcal {D}(\varDelta )\) and essentially self-adjoint on \(C_{\mathrm c}^\infty ({\mathbb {R}}^3)\),

- (b) \(H_{\mathrm p}\) has essential spectrum \([0,\infty )\),

- (c) the discrete spectrum of \(H_{\mathrm p}\), denoted by \(\sigma _{\mathrm d}(H_{\mathrm p})\), is finite,

- (d) \(H_{\mathrm p}\) has no positive eigenvalues,

- (e) \(H_{\mathrm p}\) has no singular spectrum.

If (H1) and (H2) hold, then

- (f) zero is not an eigenvalue of \(H_{\mathrm p}\).

Proof

(a) follows from Kato–Rellich since V is infinitesimally bounded with respect to \(-\varDelta \). (b) is shown for example in Example 6 of Section XIII.4 in [27]. (c) follows from [27, Theorem XIII.6]. (d) follows from the Kato–Agmon–Simon theorem ([27, Theorem XIII.58]). (e) follows from [27, Theorem XIII.21]. (f) Suppose \(\varphi \) were an eigenvector with eigenvalue zero. Then using the integral representation of the resolvent of the Laplacian, see for example [26, IX.7], we find \(\varphi (x) = - (4\pi )^{-1} \int _{\mathbb {R}^3} |x-y|^{-1} V(y) \varphi (y) dy\). From this it is straightforward to verify that \(\psi = |V|^{1/2} \varphi \) would be a nonzero solution of (2.1). \(\square \)
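
The last step of (f) can be spelled out as follows. This is a sketch that uses only the identity \(V = V^{1/2} |V|^{1/2}\) and the integral equation for \(\varphi \) stated in the proof. Multiplying that equation by \(|V(x)|^{1/2}\) and setting \(\psi := |V|^{1/2} \varphi \) gives

$$\begin{aligned} \psi (x)&= - \frac{1}{4\pi } \int \frac{ \left| V(x) \right| ^{\frac{1}{2}} V(y)^{\frac{1}{2}} }{\left| x-y \right| } \left| V(y) \right| ^{\frac{1}{2}} \varphi (y) {\mathrm d}y \\&= - \frac{1}{4\pi } \int \frac{ \left| V(x) \right| ^{\frac{1}{2}} V(y)^{\frac{1}{2}} }{\left| x-y \right| } \psi (y) {\mathrm d}y , \end{aligned}$$

which is (2.1). Since V is bounded, \(\psi \in L^2({\mathbb {R}}^3)\). Moreover \(\psi \ne 0\): if \(\psi \) vanished almost everywhere, then \(V \varphi = V^{1/2} \psi = 0\), and the integral equation for \(\varphi \) would force \(\varphi = 0\).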

We denote by \(P_{\mathsf {ess} }\) the spectral projection onto the essential spectrum of \(H_{\mathrm p}\). As an immediate consequence of the above proposition we find that (H1) and (H2) imply \(P_{\mathsf {ess} }= P_\text {ac}\), where \(P_\text {ac} \) denotes the projection onto the absolutely continuous spectral subspace of \(H_{\mathrm p}\). As we are in a statistical physics setting, we have to consider density matrices as states of our system. They form a subset of the Hilbert-Schmidt operators, so we will work in a space isomorphic to the latter,

$$\begin{aligned} \mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}. \end{aligned}$$

Remark 2.2

More details about the condition (H2) and the behavior at the threshold of zero energy can be found in [16, 17]. In particular, we would like to point out that (H2) is generically satisfied for the potentials which we consider. To this end, assume (H1) and let \(V_\alpha = \alpha V\) for \(\alpha \ge 0\). Let \(K_\alpha \) be the operator with integral kernel

$$\begin{aligned} K_\alpha (x,y) := - \frac{1}{4\pi } \frac{\left| V_\alpha (x) \right| ^{\frac{1}{2}} V_\alpha (y)^{\frac{1}{2}} }{\left| x-y \right| } . \end{aligned}$$

Clearly \(K_\alpha = \alpha K_1\), and it is straightforward to see that \(K_1\) is Hilbert-Schmidt and hence a compact operator. We conclude that for any \(k > 0\), there exist only finitely many \(\alpha \in [0,k]\) such that \(K_\alpha \) has eigenvalue one or equivalently that (H2) is violated for \(V_\alpha \). Furthermore, we note that (H2) for \(V_\alpha \) is equivalent to the statement that the so-called Fredholm determinant \(\text {det}_2(1 - K_\alpha )\) is nonzero, see [25]. For the definition of \(\text {det}_2\) we refer the reader to [27]. Now Fredholm determinants are finite analytic (cf. [29]) and \(\text {det}_2(1) \ne 0\), which could be used to obtain a second argument why potentials which do not satisfy (H2) are rather rare. Finally, we note that a violation of (H2) is referred to in [25] as energy 0 being an exceptional point.
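
The counting argument of this remark can be sketched as follows, writing \(K_1\) for the integral operator on the right-hand side of (2.1). The Hilbert-Schmidt property follows from

$$\begin{aligned} \left\| K_1 \right\| _{\mathrm {HS}}^2 = \frac{1}{16 \pi ^2} \int \int \frac{\left| V(x) \right| \left| V(y) \right| }{\left| x-y \right| ^2} {\mathrm d}x {\mathrm d}y < \infty , \end{aligned}$$

since V is bounded with compact support and \(|x|^{-2}\) is locally integrable on \({\mathbb {R}}^3\). For \(\alpha > 0\) one has

$$\begin{aligned} K_\alpha \psi = \psi \quad \Longleftrightarrow \quad K_1 \psi = \alpha ^{-1} \psi , \end{aligned}$$

so every exceptional \(\alpha \in (0,k]\) produces an eigenvalue \(\alpha ^{-1}\) of \(K_1\) with \(\alpha ^{-1} \ge k^{-1}\). A compact operator has only finitely many eigenvalues of modulus at least \(k^{-1}\), which gives the finiteness claim. The case \(\alpha = 0\) is not exceptional because \(K_0 = 0\).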

Remark 2.3

For the result and our proof one actually needs just finitely many derivatives of V. Therefore, one could weaken (H1). However, keeping track in the proof of exactly which order of derivatives is required seems rather tedious.

2.2 The Quantized Field

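The quantized field will be described by operators on Fock spaces. Let \(\mathfrak {F}(\mathfrak {h})\) denote the bosonic Fock space over a Hilbert space \(\mathfrak {h}\), that is,

$$\begin{aligned} \mathfrak {F}(\mathfrak {h}) := \bigoplus _{n=0}^\infty \mathfrak {h}^{\otimes _{\mathrm s}n} , \end{aligned}$$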

where \(\otimes _{\mathrm s}n\) denotes the n-fold symmetric tensor product of Hilbert spaces, and \(\mathfrak {h}^{\otimes _{\mathrm s}0} := {\mathbb {C}}\). For \(\psi \in \mathfrak {F}(\mathfrak {h})\), we write \(\psi _n\) for the n-th element in the direct sum and we use the notation \(\psi = (\psi _0, \psi _1, \psi _2, \ldots )\). The vacuum vector is defined as \(\varOmega := (1,0,0,\ldots )\). For a dense subspace \(\mathfrak {d} \subseteq \mathfrak {h}\) we define the dense space of finitely many particles in \(\mathfrak {F}(\mathfrak {h})\) by

$$\begin{aligned} \mathfrak {F}_{{\text {fin}}}(\mathfrak {d}) := \left\{ \psi \in \mathfrak {F}(\mathfrak {h}) : \psi _n \in \mathfrak {d}^{\otimes _{\mathrm s}n} \text { for all } n , \ \psi _n = 0 \text { for all but finitely many } n \right\} , \end{aligned}$$

where here \(\otimes _{\mathrm s}n\) represents the n-fold symmetric tensor product of vector spaces. As in the case of the atomic degrees of freedom we will work on the space of density matrices and thus use the space

where \(L^2({\mathbb {R}}\times \mathbb {S}^2 ) := L^2 ({\mathbb {R}}\times \mathbb {S}^2,{\mathrm d}u \times {\mathrm d}\varSigma )\) and \({\mathrm d}\varSigma \) denotes the standard spherical measure on \(\mathbb {S}^2\). We note that the canonical identification (2.2), outlined in Remark 2.5 below, is referred to as ‘gluing’ and was first introduced by Jakšić and Pillet in [13]. For \(\psi \in \mathfrak {F}\) notice that \(\psi _n\) can be understood as an \(L^2\) function in n symmetric variables \((u,\varSigma ) \in {\mathbb {R}}\times \mathbb {S}^2\).

Let \(\mathcal {H}\) be a Hilbert space. On \(\mathcal {H}\otimes \mathfrak {F}\) we define a so-called generalized annihilation operator for a function \(F \in L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}( \mathcal {H}))\) by

Note that \(a(F) \varOmega = 0\). The generalized creation operator \(a^*(F)\) is defined as the adjoint of a(F). By definition \(F \mapsto a^*(F)\) is linear whereas \(F \mapsto a(F)\) is anti-linear. In the scalar case where \(\mathcal {H}= {\mathbb {C}}\), we have \(\mathcal {H}\otimes \mathfrak {F}= \mathfrak {F}\) and one obtains the usual creation and annihilation operators satisfying the canonical commutation relations, for \(f,g \in L^2({\mathbb {R}}\times \mathbb {S}^2)\),

$$\begin{aligned} {[}a(f), a^*(g)] = \left\langle f, g \right\rangle , \qquad [a(f), a(g)] = 0 , \qquad [a^*(f), a^*(g)] = 0 . \end{aligned}$$

We also define the field operators as \(\varPhi (F) = a(F) + a^*(F)\).
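
As a small consistency check of these conventions, here is a sketch in the scalar case. It assumes the standard normalization \([a(f), a^*(g)] = \left\langle f, g \right\rangle \) of the canonical commutation relations, with the inner product anti-linear in the first argument and \(\left\| \varOmega \right\| = 1\). Since \(a(g) \varOmega = 0\),

$$\begin{aligned} \left\langle a^*(f) \varOmega , a^*(g) \varOmega \right\rangle&= \left\langle \varOmega , a(f) a^*(g) \varOmega \right\rangle = \left\langle \varOmega , [a(f), a^*(g)] \varOmega \right\rangle = \left\langle f, g \right\rangle , \\ \left\langle \varOmega , \varPhi (f) \varPhi (g) \varOmega \right\rangle&= \left\langle \varOmega , a(f) a^*(g) \varOmega \right\rangle = \left\langle f, g \right\rangle . \end{aligned}$$

In the second line the term \(a^*(f) a^*(g) \varOmega \) drops out because it lies in the two-particle sector, which is orthogonal to \(\varOmega \).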

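For a measurable function \(M :{\mathbb {R}}\times \mathbb {S}^2 \rightarrow {\mathbb {R}}\) we introduce the second quantization \(\mathsf {d} \varGamma (M)\), which is a self-adjoint operator on \(\mathfrak {F}\), given for \(\psi \in \mathfrak {F}\) by

$$\begin{aligned} (\mathsf {d} \varGamma (M) \psi )_n (u_1,\varSigma _1,\ldots ,u_n,\varSigma _n) = \sum _{j=1}^n M(u_j,\varSigma _j) \psi _n(u_1,\varSigma _1,\ldots ,u_n,\varSigma _n) . \end{aligned}$$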

In particular we will use the number operator,

$$\begin{aligned} N_{{\text {f}}}:= \mathsf {d} \varGamma (1) . \end{aligned}$$

2.3 Liouvillian with Interaction

We assume an interaction term with a smooth spatial cutoff. For \((\omega ,\varSigma ) \in {\mathbb {R}}_+ \times \mathbb {S}^2\), where \({\mathbb {R}}_+ := (0,\infty )\), we define a bounded multiplication operator on \(\mathcal {H}_{\mathrm p}\) by

where \(\kappa \) is a function on \({\mathbb {R}}_+\) and \(\chi \in \mathcal {S}({\mathbb {R}}^3)\)—the space of Schwartz functions, and for each \((\omega ,\varSigma )\), \({\tilde{G}}(\omega ,\varSigma )\) is a function on \({\mathbb {R}}^3\), satisfying the following conditions.

- (I1) Spatial cutoff: For all \(n \in \{0,1,2,3\}\) and \(\alpha \in {\mathbb {N}}_0^3\) the partial derivatives \( \partial _x^\alpha \partial ^n_\omega {\tilde{G}}\) exist and are continuous on \({\mathbb {R}}_+ \times \mathbb {S}^2 \times {\mathbb {R}}^3\), and there exists a polynomial P and an \(M \in {\mathbb {N}}_0\) such that for all \((\omega ,\varSigma ,x) \in {\mathbb {R}}_+ \times \mathbb {S}^2 \times {\mathbb {R}}^3\)

  $$\begin{aligned} \left| \partial _x^\alpha \partial ^n_\omega {\tilde{G}}(\omega ,\varSigma )(x) \right| \le P(\omega ) \langle x \rangle ^M , \end{aligned} \tag{2.4}$$

  where \(\left\langle x \right\rangle := (1 + x^2)^{1/2}\).

- (I2) UV cutoff: \(\kappa \) decays faster than any polynomial, that is, for all \(n \in {\mathbb {N}}\),

  $$\begin{aligned} \sup _{\omega \ge 1} \omega ^n \left| \kappa (\omega ) \right| < \infty . \end{aligned}$$

- (I3) Regularity and infrared behavior: \(\kappa \in C^3({\mathbb {R}}_+)\) and one of the following two properties holds:

  - (i) there exist \(k, C \in (0,\infty )\), \(p > 2\) such that

    $$\begin{aligned} \left| \partial ^j_\omega \kappa (\omega ) \right| \le C \omega ^{p-j}, \quad \omega \in (0, k) , \quad j = 0,\ldots ,3 , \end{aligned}$$

  - (ii) there exist \(J \in {\mathbb {N}}_0\) and \(\kappa _0 \in C^s([0,\infty ))\), with \(s = \max \{0,3-J\}\), such that

    $$\begin{aligned} \kappa (\omega ) = \omega ^{-\frac{1}{2} + J } \kappa _0(\omega ), \quad \omega >0 , \end{aligned}$$

    and there exists an extension \(\tilde{G}_0\) of \(\tilde{G}\) to \([0,\infty ) \times \mathbb {S}^2 \times {\mathbb {R}}^3\) such that for all \(j \in \{0,\ldots ,s\}\) and all \(\alpha \in {\mathbb {N}}_0^3\) the partial derivatives \( \partial _x^\alpha \partial ^j_\omega {\tilde{G}}_0\) exist, are continuous, satisfy (2.4) for all \((\omega ,\varSigma ,x) \in [0,\infty ) \times \mathbb {S}^2 \times {\mathbb {R}}^3\), and

    $$\begin{aligned}&\partial _{\omega }^j (\kappa _0(\omega ) \chi {\tilde{G}}_0(\omega ,\varSigma ))(x) |_{\omega =0} = (-1)^{j+J+1} \partial _{\omega }^j \overline{ (\kappa _0(\omega ) \chi {\tilde{G}}_0(\omega ,\varSigma ))(x)}|_{\omega =0} . \end{aligned}$$

Remark 2.4

The condition (I1) implies that there is a constant C such that \(\left\| G(\omega ,\varSigma ) \right\| _{\mathcal {L}(\mathcal {H}_{\mathrm p})} \le C \left| \kappa (\omega ) \right| \) holds for all \((\omega ,\varSigma ) \in {\mathbb {R}}_+ \times \mathbb {S}^2\). In particular, G has the same decay behavior as \(\kappa \) for \(\omega \rightarrow 0\) and \(\omega \rightarrow \infty \) as described in (I2) and (I3).

Let \(\beta > 0\) be the inverse temperature and let

$$\begin{aligned} \rho _\beta (\omega ) := \frac{1}{e^{\beta \omega } - 1} \end{aligned}$$

be the probability distribution for black-body radiation. To describe the interaction in the positive temperature setting it is convenient to introduce a map \(\tau _\beta \) as follows. For a function \(F :{\mathbb {R}}_+ \times \mathbb {S}^2 \rightarrow \mathcal {L}(\mathcal {H}_{\mathrm p})\) we define a function \(\tau _\beta F :( \mathbb {R}\setminus \{ 0 \} ) \times \mathbb {S}^2 \rightarrow \mathcal {L}(\mathcal {H}_{\mathrm p})\) by

It is straightforward to verify that \(\tau _\beta \) maps the following spaces into each other

where we adopted the convention of using the same symbol for the restriction. Note that the measure \((\omega ^2 + \omega ) {\mathrm d}\omega {\mathrm d}\varSigma \) originates from the identification

where all elements f in the space on the right-hand side satisfy \(\sqrt{\rho _\beta ( \left| \cdot \right| ) } f, \sqrt{1+\rho _\beta ( \left| \cdot \right| )} f \in L^2({\mathbb {R}}^3)\).

Let \(\mathcal {C}_{\mathrm p}\) be the complex conjugation on \(\mathcal {H}_{\mathrm p}\) given by \(\mathcal {C}_{\mathrm p}\psi (x) := \overline{\psi (x)}\), and for an operator \(T \in \mathcal {L}(\mathcal {H}_{\mathrm p})\) we define \(\overline{T} := \mathcal {C}_{\mathrm p}T \mathcal {C}_{\mathrm p}\).

Let

which is a dense subspace of the composite Hilbert space

On \({\widetilde{\mathcal {D}}}\) we define for \(\lambda \in {\mathbb {R}}\) the Liouvillian by

where \(W := \varPhi (I)\), and

Note that by (I1)–(I3), \(G \in L^2({\mathbb {R}}_+ \times \mathbb {S}^2, (\omega ^2 + \omega ) {\mathrm d}\omega {\mathrm d}\varSigma , \mathcal {L}(\mathcal {H}_{\mathrm p}) )\) and therefore, \(I \in L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}))\) and the expression \(\varPhi (I)\) is well-defined. It will be shown below (Proposition 4.3) that \(L_\lambda \) is indeed essentially self-adjoint.

Remark 2.5

Let us give a unitarily equivalent definition of the Liouvillian (2.6), which uses the usual notations from physics and which does not involve the ‘gluing’ construction. Let \(c^*(k)\) and c(k), \(k \in {\mathbb {R}}^3\), denote the usual creation and annihilation operator-valued distributions of \(\mathfrak {F}(L^2({\mathbb {R}}^3))\). Let the free field energy operator on \(\mathfrak {F}(L^2({\mathbb {R}}^3))\) be given by \(H_{{\text {f}}}= \int |k| c^*(k) c(k) dk\). For the coupling function introduced in (2.3) we define \(\widehat{G}(\omega \varSigma ) = G(\omega ,\varSigma )\) for all \((\omega ,\varSigma ) \in \mathbb {R}_+ \times \mathbb {S}^2\). It follows from (I1)–(I3) that \(\widehat{G} \in L^2({\mathbb {R}}^3, (1+ \left| k \right| ^{-1}) {\mathrm d}k , \mathcal {L}( \mathcal {H}_{\mathrm p}))\). We consider the following operator in \(\mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}\otimes \mathfrak {F}(L^2({\mathbb {R}}^3)) \otimes \mathfrak {F}(L^2({\mathbb {R}}^3)) \),

which is defined on the dense subspace \(\hat{ \mathcal {D} } := C_{\mathrm c}^\infty ({\mathbb {R}}^3) \otimes C_{\mathrm c}^\infty ({\mathbb {R}}^3) \otimes \mathfrak {F}_{{\text {fin}}}( \mathcal {D}(|\cdot |)) \otimes \mathfrak {F}_{{\text {fin}}}(\mathcal {D}(|\cdot |))\). One can show that \(\widehat{L}_\lambda \) is unitarily equivalent to (2.6). To this end, we define the unitary transformation

with \(\psi (f)(\omega ,\varSigma ) := \omega f(\omega \varSigma )\), inducing the unitary transformation of Fock spaces \(\varGamma (\psi )\), see for example [26, Section X],

and the unitary transformation

inducing the unitary transformation of Fock spaces \(\varGamma (\tau )\). Furthermore, let \(V_\mathfrak {h}\) denote the canonical unitary map

characterized by mapping the tensor product of the vacua to the vacuum and satisfying \(V_\mathfrak {h} (b(f)\otimes {\text {Id}}+ {\text {Id}}\otimes b(g) ) V_\mathfrak {h}^* = b(f,g)\), where \(b(\cdot )\) stands for the usual annihilation operator on the corresponding Fock spaces. As a consequence of the definition it follows that \(U := \varGamma (\tau ) \circ V_{ L^2(\mathbb {R}_+ \times \mathbb {S}^2 ) } \circ ( \varGamma (\psi ) \otimes \varGamma (\psi ) ) \) is a unitary transformation

which satisfies the property

where \(a(u,\varSigma )\) denotes the usual annihilation operator-valued distribution of \(\mathfrak {F}(L^2( \mathbb {R}\times \mathbb {S}^2 ) ) \). Using (2.7) it is straightforward to verify that \( L_\lambda = U \widehat{L}_\lambda U^*\) on \({\widetilde{\mathcal {D}}}\).

2.4 Main Result

For the proof of our main result we need an additional assumption. The instability of the eigenvalues should be visible in second order in perturbation theory with respect to the coupling constant. The corresponding second-order term is called the level shift operator, and the associated positivity assumption is called the Fermi Golden Rule condition. In Sect. 2.5 an example is provided where this is satisfied.

Fermi Golden Rule Condition

For \(E \in \sigma _{\mathrm d}(H_{\mathrm p})\) let \(p_E := \mathbb {1}_{\{E\}}(H_{\mathrm p})\) be the spectral projection corresponding to the eigenvalue E. For \(\varepsilon > 0\) and \(E \in \sigma _{\mathrm d}(H_{\mathrm p})\) let \(\gamma _\beta (E,\varepsilon )\) be the largest number such that

where

and we recall that \(P_{\mathsf {ess} }\) denotes the spectral projection to the essential spectrum of \(H_{\mathrm p}\). Furthermore we set \(\gamma _\beta (\varepsilon ) := \inf _{E \in \sigma _{\mathrm d}(H_{\mathrm p})} \gamma _\beta (E,\varepsilon )\).

- (F) By the Fermi Golden Rule Condition for \(\beta \) we mean that there exists an \(\varepsilon > 0\) such that \(\gamma _\beta (\varepsilon ) > 0\).

Notice that \(\gamma _\beta \) might depend on \(\beta \). In particular, this is the case if, as in [7, 8], (F) is verified using only the term \(\varepsilon F^{(1)}_\beta (E,\varepsilon )\) (for \(\varepsilon \rightarrow 0\)), which decays exponentially to zero if \(\beta \rightarrow \infty \). However, one can also obtain results uniformly in \(\beta \) for \(\beta \ge \beta _0 > 0\) (low temperature) by proving that \(F^{(2)}_\beta (E,\varepsilon )\) is positive for a fixed \(\varepsilon > 0\), which will be done in the next section, Corollary 2.8.

Let us now state the main result of this paper.

Theorem 2.6

Assume that (H1), (H2) and (I1)–(I3) hold. Let \(\beta _0 > 0\) and \(\varepsilon > 0\). Then there exists a constant \( C > 0\) such that the following holds. If \(\beta \ge \beta _0\) and \(0< \left| \lambda \right| < C \min \{ 1 , \gamma _\beta ^2(\varepsilon )\}\), then the operator \(L_\lambda \) given in (2.6) does not have zero as an eigenvalue.

Remark 2.7

Our result implies the absence of a zero eigenstate in the Hilbert space realized by the class of Hilbert-Schmidt operators. However, it does not exclude the existence of resonances, which lead to so-called non-equilibrium steady states (NESS), as described in [4, 14, 21].

2.5 Application

In the following we present an example of a QED system with a linear coupling term (Nelson Model) where the conditions for the main theorem are satisfied. In particular, one can verify the Fermi Golden Rule condition (F) in this case. We summarize this in the following corollary to Theorem 2.6.

Corollary 2.8

Assume that (H1) and (H2) hold. Let

where \(\chi \in \mathcal {S}({\mathbb {R}}^3)\) is nonzero, and \(\kappa \) is a nonzero function on \({\mathbb {R}}_+\) satisfying (I2) and one of the following two conditions:

- (a) part (i) of (I3) holds,

- (b) \(\chi \) is real-valued and there exist \(J \in {\mathbb {N}}_0\) and a real-valued \(\kappa _0 \in C^s([0,\infty ))\), \(s = \max \{0,3-J\}\), satisfying

  $$\begin{aligned} \frac{{\mathrm d}^j}{{\mathrm d}\omega ^j}\bigg |_{\omega =0} \kappa _0(\omega ) = 0, \qquad 1 \le j \le 3-J,~j \text { odd}, \end{aligned}$$

  such that

  $$\begin{aligned} \kappa (\omega ) = \omega ^{-\frac{1}{2} + J} \kappa _0(\omega ) \end{aligned} \tag{2.9}$$

  for all \(\omega > 0\).

Then for any \(\beta _0 > 0\) there exists a \(\lambda _0 > 0 \), such that whenever \(0< \left| \lambda \right| < \lambda _0\) and \(\beta \ge \beta _0\) the operator \(L_\lambda \), or equivalently \(\widehat{L}_\lambda \) defined in Remark 2.5, does not have zero as an eigenvalue.

Remark 2.9

A possible choice for \(\kappa _0\) in (2.9) would be \(\kappa _0(\omega ) = C e^{-c \omega ^2}\) for constants \(c,C\,{>}\,0\).
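
This choice can be checked directly against the hypotheses of Corollary 2.8 (b). The following is a sketch for \(\kappa _0(\omega ) = C e^{-c \omega ^2}\) with \(c, C > 0\), which is real-valued and smooth on \([0,\infty )\):

$$\begin{aligned} \kappa _0'(\omega )&= -2 c \omega \kappa _0(\omega ) , \\ \kappa _0''(\omega )&= (4 c^2 \omega ^2 - 2c) \kappa _0(\omega ) , \\ \kappa _0'''(\omega )&= (12 c^2 \omega - 8 c^3 \omega ^3) \kappa _0(\omega ) . \end{aligned}$$

Hence \(\kappa _0'(0) = \kappa _0'''(0) = 0\), so the odd derivatives of order at most 3 vanish at zero, which covers every \(J \in {\mathbb {N}}_0\). The UV condition (I2) also holds, since for each \(n \in {\mathbb {N}}\)

$$\begin{aligned} \sup _{\omega \ge 1} \omega ^n \left| \kappa (\omega ) \right| = C \sup _{\omega \ge 1} \omega ^{n - \frac{1}{2} + J} e^{-c \omega ^2} < \infty . \end{aligned}$$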

Proof

Derivatives with respect to \(\omega \) and x yield only polynomial growth in x and \(\omega \), respectively. Thus, (I1) is satisfied. The conditions (H1), (H2) and (I2), as well as (I3) (i) in case (a), are satisfied by assumption. In the case of (b), note that G in (2.8) can be multiplied by any phase \(e^{\mathrm {i}\varphi }\), \(\varphi \in {\mathbb {R}}\), which just yields unitarily equivalent Liouvillians by means of the unitary transformation \({\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes \varGamma (e^{\mathrm {i}\varphi })\) (cf. [26, Section X] for the definition of \(\varGamma \)). Therefore, we can assume without loss of generality that instead of (2.9), we have

The condition (I3) (ii) is actually satisfied in this case, since

holds for all \(\varSigma \in \mathbb {S}^2\), \(x \in {\mathbb {R}}^3\) and \(j=0, \ldots ,3 - J\).

It remains to verify the Fermi Golden Rule condition. This will follow from Proposition 2.10, below, since \(\sigma _{\mathrm d}(H_{\mathrm p})\) is finite. \(\square \)

Proposition 2.10

Suppose the assumptions of Corollary 2.8 hold, and let \(E \in \sigma _{\mathrm d}(H_{\mathrm p})\). Then for any \(\varepsilon > 0\) there exists a \(\gamma > 0\) (independent of \(\beta \)) such that

Proof

First note that \(p_E F^{(2)}_\beta (E,\varepsilon ) p_E\restriction _{{\text {ran}}p_E}\) is a non-negative matrix acting on the finite-dimensional space \({\text {ran}}p_E\). Let \(\varphi _E \in {\text {ran}}p_E\) denote a normalized eigenvector with respect to the lowest eigenvalue of \(p_E F^{(2)}_\beta (E,\varepsilon ) p_E\restriction _{{\text {ran}}p_E}\). We have to show that \(\left\langle \varphi _E, F^{(2)}_\beta (E,\varepsilon ) \varphi _E \right\rangle \ge \gamma \) for a \(\gamma > 0\) independent of \(\beta \).

First observe that

The integrand is continuous in \((\omega ,\varSigma )\) and non-negative. Thus, by the finite dimensionality of the eigenspace with eigenvalue E and continuity it suffices to show that for some \((\omega ,\varSigma )\) we have

which follows if we can show

We now claim that (2.10) holds for some \((\omega ,\varSigma )\). Otherwise, by the finite dimensionality of the range of \({\text {Id}}-P_\text {ess}\), the space

would be finite-dimensional as well. But this leads to a contradiction. By unique continuation of eigenfunctions ([27, Theorem XIII.57]) \(\varphi _E(x) \not = 0\) for a.e. \(x \in {\mathbb {R}}^3\), and thus, \(\chi \varphi _E\) is nonzero. Since \(\kappa \) is continuous and nonzero, it does not vanish on some nonempty open interval \(I \subset (0,\infty )\). It now follows that

is a subspace of (2.11) and has infinite dimension, by well-known methods (e.g. by calculating Wronskians and Vandermonde determinants). \(\square \)

Instead of using \(F^{(2)}_\beta (E,\varepsilon )\) one could also verify (F) with the first term \(F^{(1)}_\beta (E,\varepsilon )\) in the limit \(\varepsilon \rightarrow 0\). This does not improve the qualitative statement of Corollary 2.8 and has the drawback that \(\gamma _\beta (\varepsilon ) \rightarrow 0\) as \(\beta \rightarrow \infty \) as in [7, 8]. However, in certain situations it might give the dominant contribution for the lower bound \(\gamma _\beta (\varepsilon )\) and therefore the maximal admissible coupling strength in Theorem 2.6. In this context we would like to mention the “zero temperature result” about the leading order contribution of the ionization probability in the photoelectric effect [11].

2.6 Overview of the Proof

The first step is to find a suitable conjugate operator A consisting of a part \({A_{\mathrm p}}\) on the particle space \(\mathcal {H}_{\mathrm p}\) and a part \(A_{{\text {f}}}\) on the field space \(\mathfrak {F}\).

For the latter we make the same choice as established for the first time in [15] and later also used by Merkli and co-authors in [7, 8, 19], namely the second quantization of the generator of translations,

Let \(P_\varOmega \) denote the orthogonal projection onto the one-dimensional subspace containing the vacuum \(\varOmega \) (“vacuum subspace”). Formally, we obtain on \(\mathfrak {F}\) that

which yields a positive contribution on the space orthogonal to the vacuum. The Hilbert space \(\mathcal {H}\) can be further decomposed by means of the projection

as

To obtain a positive operator on \({\text {ran}}\varPi \), we proceed again as in [7, 8] and consider a bounded operator \(A_0\) on the whole space \(\mathcal {H}\). The Fermi Golden Rule Condition (F) then implies that

The details can be found in Sect. 6.2.
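
The formal commutator computation for the field part mentioned above can be sketched at the one-particle level. We assume here, for illustration only, that \(A_{{\text {f}}}= \mathsf {d} \varGamma (\mathrm {i}\partial _u)\) and that the free field part of the Liouvillian is \(\mathsf {d} \varGamma (u)\); sign and domain conventions in the rigorous treatment may differ. For a smooth function f of u,

$$\begin{aligned} \mathrm {i}[u, \mathrm {i}\partial _u] f = - \left( u \partial _u f - \partial _u (u f) \right) = f , \end{aligned}$$

and therefore, formally,

$$\begin{aligned} \mathrm {i}[\mathsf {d} \varGamma (u), \mathsf {d} \varGamma (\mathrm {i}\partial _u)] = \mathsf {d} \varGamma ( \mathrm {i}[u, \mathrm {i}\partial _u] ) = \mathsf {d} \varGamma (1) = N_{{\text {f}}}. \end{aligned}$$

Since \((N_{{\text {f}}}\psi )_n = n \psi _n\), one has \(N_{{\text {f}}}\ge 1 - P_\varOmega \), which is the positivity on the orthogonal complement of the vacuum. On \({\text {ran}}P_\varOmega \) the commutator vanishes, which is why the additional operator \(A_0\) is needed there.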

Let \(P_{{\text {disc}}}\) denote the spectral projection to the discrete spectrum of \(H_{\mathrm p}\). Note that \( { P_{{\text {disc}}} }^\perp = P_{\mathsf {ess} }\). The third space in (2.13) can be decomposed further by use of

We start with the space generated by the first projection \(P_{\mathsf {ess} }\otimes P_{\mathsf {ess} }\). In contrast to [8] the conjugate operator in the particle space will be defined as follows. We first diagonalize the non-negative part of \(H_{\mathrm p}\) by means of generalized eigenfunctions associated to the positive (continuous) spectrum, the scattering functions, which we recall in Sect. 4.1. This will establish a unitary map \(V_{{\mathsf {c} }}\) between the non-negative spectral subspace of \(H_{\mathrm p}\) and \(L^2({\mathbb {R}}^3)\) with the property that \(V_{{\mathsf {c} }}^* H_{\mathrm p}V_{{\mathsf {c} }}= \hat{{\mathsf {k} }}^2\), where \(\hat{{\mathsf {k} }}= (\hat{{\mathsf {k} }}_1, \hat{{\mathsf {k} }}_2, \hat{{\mathsf {k} }}_3)\) denotes the vector of multiplication operators with the respective components. Let

be the generator of dilations, where \({\hat{{\mathsf {q} }}}:= \mathrm {i}\nabla = (\mathrm {i}\partial _1,\mathrm {i}\partial _2,\mathrm {i}\partial _3) \) and where we used the notation \({\hat{{\mathsf {q} }}}\hat{{\mathsf {k} }}:= \sum _{j=1}^3 {\hat{{\mathsf {q} }}}_j \hat{{\mathsf {k} }}_j\) and \(\hat{{\mathsf {k} }}{\hat{{\mathsf {q} }}}:= \sum _{j=1}^3 \hat{{\mathsf {k} }}_j {\hat{{\mathsf {q} }}}_j\). Then

has the effect that

which is strictly positive on \({\text {ran}}P_{\mathsf {ess} }\). We combine \(A_{{\text {f}}}\) and \({A_{\mathrm p}}\) to an operator on \(\mathcal {H}\) by

which yields

As \({A}\) is unbounded, the positive commutator method requires a virial theorem. We use the abstract versions developed in [7, 8], which are recalled in Sect. 3. In order to apply the virial theorem (Theorem 3.4), the commutators must be bounded on the atomic space (see (3.7) and (3.8)). Thus one has to include a regularization in \({A_{\mathrm p}}\). The exact definition of \({A}\) and of a regularized version \({A^{(\epsilon )}}\), as well as the verification of the conditions for the virial theorems, can be found in Sect. 4.
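The positivity of the dilation commutator can be checked by hand. The following sketch assumes the normalization \(A_{\mathsf {D} }= \frac{1}{4} (\hat{{\mathsf {k} }}{\hat{{\mathsf {q} }}}+ {\hat{{\mathsf {q} }}}\hat{{\mathsf {k} }})\); this is our reading, chosen because it reproduces the factor \(\frac{1}{2}\) in the formula for \([A_{\mathsf {D} },\eta _\epsilon ]\) in the proof of Proposition 4.3. It uses only \([\hat{{\mathsf {k} }}_j, {\hat{{\mathsf {q} }}}_l] = -\mathrm {i}\delta _{jl}\), which follows from \({\hat{{\mathsf {q} }}}= \mathrm {i}\nabla \), and is understood on \(C_{\mathrm c}^\infty ({\mathbb {R}}^3)\).

$$\begin{aligned} {[\hat{{\mathsf {k} }}^2, {\hat{{\mathsf {q} }}}_j]}&= \hat{{\mathsf {k} }}_j [\hat{{\mathsf {k} }}_j, {\hat{{\mathsf {q} }}}_j] + [\hat{{\mathsf {k} }}_j, {\hat{{\mathsf {q} }}}_j] \hat{{\mathsf {k} }}_j = -2 \mathrm {i}\hat{{\mathsf {k} }}_j , \\ {[\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }]}&= \frac{1}{4} \sum _{j} \left( \hat{{\mathsf {k} }}_j [\hat{{\mathsf {k} }}^2, {\hat{{\mathsf {q} }}}_j] + [\hat{{\mathsf {k} }}^2, {\hat{{\mathsf {q} }}}_j] \hat{{\mathsf {k} }}_j \right) = -\mathrm {i}\hat{{\mathsf {k} }}^2 , \\ \mathrm {i}[\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }]&= \hat{{\mathsf {k} }}^2 \ge 0 . \end{aligned}$$

Conjugating with \(V_{{\mathsf {c} }}\) transfers this identity to the particle space, in agreement with the computation of \(\mathrm {i}[H_{\mathrm p}, {A_{\mathrm p}}]\) in the proof of Proposition 4.3 (9).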

For the space corresponding to the sum of the remaining three projections in (2.14) we choose an operator Q on \(\mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}\) given as a bounded continuous function of \(L_{\mathrm p}\) in such a way that Q is strictly positive on \({\text {ran}}\mathbb {1}_{L_{\mathrm p}\not = 0}\). We add a suitable operator T depending on the interaction and \(\lambda \) to accomplish

for all \(\psi \in \ker L_\lambda \). Now the distance between the essential and the discrete spectrum is strictly positive and the distances between the distinct discrete eigenvalues of \(H_{\mathrm p}\) are bounded from below by a positive number. Therefore, Q is also bounded from below by a positive constant on the space

The operator T will be viewed as an error term which will be estimated by \(N_{{\text {f}}}\), where we need in addition that Q satisfies \((L_{\mathrm p}^{-1} Q)^2 \le C Q\) for a constant C.

Finally, further error terms arise from the commutators of the interaction with \({A}\) and \(A_0\), respectively. The general idea for controlling them is to estimate them in terms of \(N_{{\text {f}}}\) on \(\mathfrak {F}\) and in terms of bounded terms on \(\mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}\otimes {\text {ran}}P_\varOmega \), respectively. It is for the latter that we need the decompositions (2.13) and (2.14) as well as the corresponding positive operators mentioned above. On \({\text {ran}}P_{\mathsf {ess} }\otimes P_{\mathsf {ess} }\) we estimate them by \({\hat{{\mathsf {q} }}}^{-2}\) and then use that by the uncertainty principle

for \(\lambda > 0\) sufficiently small (cf. [26, X.2]).

3 Abstract Virial Theorems

In this section we recall the abstract virial theorems of [7, 8]. They are based on Nelson’s commutator theorem, which can be used for proving self-adjointness of operators which are not bounded from below. An important notion will be that of a GJN triple.

Definition 3.1

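(GJN triple) Let \(\mathcal {H}\) be a Hilbert space, \(\mathcal {D} \subset \mathcal {H}\) a core for a self-adjoint operator \(Y \ge {\text {Id}}\), and X a symmetric operator on \(\mathcal {D}\). We say the triple \((X,Y,\mathcal {D})\) satisfies the Glimm-Jaffe-Nelson (GJN) condition, or that \((X,Y,\mathcal {D})\) is a GJN-triple, if there is a constant \(C < \infty \), such that for all \(\psi \in \mathcal {D}\):

$$\begin{aligned} \left\| X \psi \right\| \le C \left\| Y \psi \right\| , \end{aligned}$$(3.1)

$$\begin{aligned} \pm \mathrm {i}\left( \left\langle X \psi , Y \psi \right\rangle - \left\langle Y \psi , X \psi \right\rangle \right) \le C \left\langle \psi , Y \psi \right\rangle . \end{aligned}$$(3.2)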
Theorem 3.2

(GJN commutator theorem, [26, Theorem X.37]) If \((X,Y,\mathcal {D})\) satisfies the GJN condition, then X determines a self-adjoint operator (again denoted by X), such that \(\mathcal {D}(X) \supset \mathcal {D}(Y)\). Moreover, X is essentially self-adjoint on any core for Y, and (3.1) is valid for all \(\psi \in \mathcal {D}(Y)\).

A consequence of the GJN commutator theorem is that the unitary group generated by X leaves the domain of Y invariant. The concrete formulation stated in the next theorem is taken from [7].

Theorem 3.3

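(Invariance of domain, [9]) Suppose \((X,Y,\mathcal {D})\) satisfies the GJN condition. Then, for all \(t \in {\mathbb {R}}\), \(e^{\mathrm {i}tX}\) leaves \(\mathcal {D}(Y)\) invariant, and there is a constant \(\kappa \ge 0\) such that

$$\begin{aligned} \left\| Y e^{\mathrm {i}tX} \psi \right\| \le e^{\kappa \left| t \right| } \left\| Y \psi \right\| , \qquad \psi \in \mathcal {D}(Y). \end{aligned}$$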
Based on the GJN commutator theorem, we can now describe the setting for a general virial theorem. Suppose one is given a self-adjoint operator \(\varLambda \ge {\text {Id}}\) with core \(\mathcal {D} \subset \mathcal {H}\), and let \(L, A, N, D, C_n\), \(n \in \{0,1,2,3\}\), be symmetric operators on \(\mathcal {D}\) satisfying the relations

and

where \(\varphi , \psi \in \mathcal {D}\). Furthermore we shall assume:

-

(V1)

\((X,\varLambda ,\mathcal {D})\) satisfies the GJN condition for \(X=L,N,D,C_n\), \(n\in \{0,1,2,3\}\). Consequently all these operators determine self-adjoint operators, which we denote by the same letters.

-

(V2)

A is self-adjoint, \(\mathcal {D} \subset \mathcal {D}(A)\), and \(e^{\mathrm {i}t A}\) leaves \(\mathcal {D}(\varLambda )\) invariant.

Theorem 3.4

(Abstract virial theorem, [7, Theorem 3.2]) Let \(\varLambda \ge {\text {Id}}\) be a self-adjoint operator in \(\mathcal {H}\) with core \(\mathcal {D} \subset \mathcal {H}\), and let \(L, A, N, D, C_n\), \(n \in \{0,1,2,3\}\), be symmetric on \(\mathcal {D}\) satisfying relations (3.3)–(3.5). Assume (V1) and (V2). Furthermore, assume that N and \(e^{\mathrm {i}t A}\) commute, for all \(t \in \mathbb {R}\), in the strong sense on \(\mathcal {D}\), and that there exist \( 0 \le p < \infty \) and \(C < \infty \) such that

for all \(\psi \in \mathcal {D}\). Then, if \(\psi \in \mathcal {D}(L)\) is an eigenvector of L, there is a sequence of approximating eigenvectors \(( \psi _n )_{n \in \mathbb {N}}\) in \(\mathcal {D}(L)\cap \mathcal {D}(C_1)\) such that \(\lim _{n \rightarrow \infty } \psi _n = \psi \) in \(\mathcal {H}\), and

Remark 3.5

A positivity condition for \(C_1\) will be established in Proposition 6.3.
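To see why a statement of this type is plausible, it may help to recall the formal virial argument. It ignores all domain questions and is therefore only heuristic; making it rigorous is the purpose of Theorem 3.4. Suppose \(L \psi = e \psi \) with \(e \in {\mathbb {R}}\), pretend that \(\psi \in \mathcal {D}(A)\), and read \(C_1\) as the commutator \(\mathrm {i}[L,A]\) in the form sense (this reading is our interpretation of the relations above). Then

$$\begin{aligned} \left\langle \psi , C_1 \psi \right\rangle = \mathrm {i}\left( \left\langle L \psi , A \psi \right\rangle - \left\langle A \psi , L \psi \right\rangle \right) = \mathrm {i}e \left( \left\langle \psi , A \psi \right\rangle - \left\langle A \psi , \psi \right\rangle \right) = 0 , \end{aligned}$$

since A is self-adjoint. An eigenvector of L need not lie in \(\mathcal {D}(A)\), which is why the theorem works with the approximating vectors \(\psi _n\) instead of \(\psi \) itself.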

4 Definition of the Commutator and Verification of the Virial Theorems

In this section we introduce generalized eigenstates associated to scattering states of the atomic Hamiltonian \(H_{\mathrm p}\). Using these scattering states we will then define explicit realizations for the operators \(L, A, N, D, C_n\), \(n \in \{0,1,2,3\}\), of Sect. 3. We then verify the assumptions of the abstract virial theorem in order to obtain a concrete virial theorem, Theorem 4.5. This theorem will be one of the two ingredients for the proof of the main result of this paper. In this section we shall always assume that the potential V satisfies (H1) and (H2) and that (I1)–(I3) hold.

4.1 Scattering States

In this part we recall the theory of generalized eigenstates, which are associated to scattering states, and their corresponding spectral decomposition. The scattering states \(\varphi (k,\cdot )\), \(k \in {\mathbb {R}}^3\), can be defined as generalized eigenvectors,

or as solutions of the so-called Lippmann–Schwinger equation,

We discuss their properties in the following theorem, which is taken from [12]; see also [28, Theorem XI.41] and [25]. In particular, the scattering functions can be used for a spectral decomposition of the continuous spectrum of \(H_{\mathrm p}\).
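For orientation, the two characterizations can be sketched in the convention commonly used together with [28, Theorem XI.41]. The normalization and the sign of the integral term below are assumptions on our part and should be compared with (4.1).

$$\begin{aligned} (-\varDelta + V) \varphi (k,\cdot )&= k^2 \varphi (k,\cdot ) , \\ \varphi (k,x)&= e^{\mathrm {i}kx} - \frac{1}{4\pi } \int \frac{e^{\mathrm {i}\left| k \right| \left| x-y \right| }}{\left| x-y \right| } V(y) \varphi (k,y) {\mathrm d}y . \end{aligned}$$

Formally, the second equation implies the first: \(-\varDelta - k^2\) annihilates the plane wave \(e^{\mathrm {i}kx}\), and \(e^{\mathrm {i}\left| k \right| \left| x-y \right| } / (4\pi \left| x-y \right| )\) is a Green's function of \(-\varDelta - k^2\), so applying \(-\varDelta - k^2\) to the right-hand side yields \(-V \varphi (k,\cdot )\).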

Theorem 4.1

([28, Theorem XI.41], [12, 25]) Suppose (H1) and (H2) hold.

-

(a)

For all \(k \in {\mathbb {R}}^3\) there exists a unique solution \(\varphi (k,\cdot )\) of (4.1) which obeys \(|V|^{1/2} \varphi (k ,\cdot ) \in L^2(\mathbb {R}^3)\). Moreover for every \(k \in \mathbb {R}^3\) the function \(x \mapsto \varphi (k, x)\) is continuous.

-

(b)

For \(f \in L^2({\mathbb {R}}^3)\) the generalized Fourier transform

$$\begin{aligned} (V_{{\mathsf {c} }}f)(k) := f^\#(k) := (2\pi )^{-3/2} \mathsf {l.i.m.} \int \overline{\varphi (k,x)} f(x) {\mathrm d}x, \end{aligned}$$where \(\mathsf {l.i.m.} \int g(x) {\mathrm d}x := L^2\)-\(\lim _{R \rightarrow \infty } \int _{\left| x \right| < R} g(x) {\mathrm d}x\), exists.

-

(c)

We have \({\text {ran}}V_{{\mathsf {c} }}= L^2({\mathbb {R}}^3)\) and for \(f \in L^2({\mathbb {R}}^3)\)

$$\begin{aligned} \left\| V_{{\mathsf {c} }}f \right\| = \left\| P_{\mathsf {ess} }f \right\| . \end{aligned}$$In particular, \(V_{{\mathsf {c} }}\) is a partial isometry and \(V_{{\mathsf {c} }}\restriction _{{\text {ran}}P_{\mathsf {ess} }} :{\text {ran}}P_{\mathsf {ess} }\rightarrow L^2({\mathbb {R}}^3)\) is a unitary operator, and \(V_{{\mathsf {c} }}V_{{\mathsf {c} }}^* = {\text {Id}}\).

-

(d)

For \(f \in L^2({\mathbb {R}}^3)\) we have the spectral decomposition

$$\begin{aligned} (P_{\mathsf {ess} }f)(x) = \mathsf {l.i.m.} (2\pi )^{-3/2} \int f^\#(k) \varphi (k,x) {\mathrm d}k . \end{aligned}$$ -

(e)

If \(f \in \mathcal {D}(H_{\mathrm p})\), then

$$\begin{aligned} (H_{\mathrm p}f)^\#(k) = k^2 f^\#(k), \end{aligned}$$in other words, \(V_{{\mathsf {c} }}H_{\mathrm p}V_{{\mathsf {c} }}^* = \hat{{\mathsf {k} }}^2\).

The proof of the theorem is based on the method of modified square-integrable scattering functions, originally developed by Rollnik, which can be found in [12, 28]. In particular, one introduces the so-called modified Lippmann–Schwinger equation

where

and \(V(y)^{1/2} := \left| V(y) \right| ^{1/2} {{\text {sgn}}}V(y) \). We note that, by (H1), the operator \(L_\kappa \) is easily seen to be a Hilbert-Schmidt operator, see [30, Theorem 1.22]. If for fixed \(k \in {\mathbb {R}}^3\) the function \(\varphi (k,\cdot )\) obeys (4.1) and \({\widetilde{\varphi }}(k,\cdot ) := |V|^{1/2} \varphi (k,\cdot )\) is an \(L^2\)-function, then \({\widetilde{\varphi }}(k,\cdot )\) obeys (4.2), provided (H1) holds (in fact this holds for a larger class of potentials [28]). Conversely, if for fixed \(k \in \mathbb {R}^3\) the modified Lippmann–Schwinger equation (4.2) has a unique \(L^2\)-solution \({\widetilde{\varphi }}(k,\cdot )\), then, as outlined in [28], the original Lippmann–Schwinger equation (4.1) has a unique solution \(\varphi (k,\cdot )\) satisfying \(|V|^{1/2} \varphi (k,\cdot ) \in L^2(\mathbb {R}^3)\). It is given by

Proof

By the assumptions on the potential the operator \(L_\kappa \) defined as in (4.3) is a Hilbert-Schmidt operator for all \(\kappa \ge 0\). Let \(\mathcal {E}\) denote the set of all \(\kappa \in (0,\infty )\) such that \(\psi = L_\kappa \psi \) has a nonzero solution in \(L^2\). We claim that \(\mathcal {E}\) is the empty set if (H1) holds. To this end, let \(\kappa > 0\) and assume \({\widetilde{\varphi }} = L_\kappa {\widetilde{\varphi }}\) for some \(L^2\)-function \({\widetilde{\varphi }}\). Now consider

It follows that \(\varphi (x) = o(|x|^{-1})\) as \(|x| \rightarrow \infty \), and that \( - \varDelta \varphi + V \varphi = \kappa ^2 \varphi . \) According to [18], this implies that \(\varphi \) vanishes identically outside a sufficiently large sphere. Hence by the unique continuation theorem it follows that \(\varphi = 0\) and \({\widetilde{\varphi }} = \left| V \right| ^{1/2} \varphi = 0\). Thus there is no nonzero solution, and we conclude that the set \(\mathcal {E}\) is empty for the potentials we consider. By the Fredholm alternative, whenever \(k\ne 0\) there is a unique \(L^2\)-solution \({\widetilde{\varphi }}\) of the modified Lippmann–Schwinger equation \( {\widetilde{\varphi }}(x) = |V|^{1/2} e^{ikx} + (L_{|k|}{\widetilde{\varphi }})(x) \). As mentioned above, it follows that the original Lippmann–Schwinger equation (4.1) has a unique solution \(\varphi \) satisfying \(|V|^{1/2}\varphi \in L^2\), given by (4.4). In the case \(k=0\) we argue analogously using (H2). This shows the first part of (a). The continuity follows from (4.1) by dominated convergence. (b)–(e) now follow from [28, Theorem XI.41], where we use that, by Proposition 2.1, the essential and the absolutely continuous spectrum of \(H_{\mathrm p}\) coincide. \(\square \)
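The passage between the original and the modified equation used in the proof can be made explicit. The sketch below assumes the convention \(\varphi (k,x) = e^{\mathrm {i}kx} - \frac{1}{4\pi } \int \frac{e^{\mathrm {i}|k||x-y|}}{|x-y|} V(y) \varphi (k,y) {\mathrm d}y\) for (4.1), which is our assumption, and uses \(V = V^{1/2} |V|^{1/2}\) together with \({\widetilde{\varphi }} = |V|^{1/2} \varphi \). The resulting form of the modified equation agrees with the one quoted in the proof above.

$$\begin{aligned} {\widetilde{\varphi }}(k,x)&= \left| V(x) \right| ^{1/2} e^{\mathrm {i}kx} + (L_{|k|} {\widetilde{\varphi }}(k,\cdot ))(x) , \\ (L_\kappa \psi )(x)&= - \frac{1}{4\pi } \int \left| V(x) \right| ^{1/2} \frac{e^{\mathrm {i}\kappa \left| x-y \right| }}{\left| x-y \right| } V(y)^{1/2} \psi (y) {\mathrm d}y , \\ \varphi (k,x)&= e^{\mathrm {i}kx} - \frac{1}{4\pi } \int \frac{e^{\mathrm {i}\left| k \right| \left| x-y \right| }}{\left| x-y \right| } V(y)^{1/2} {\widetilde{\varphi }}(k,y) {\mathrm d}y . \end{aligned}$$

For real \(\kappa \ge 0\) the phase factor has modulus one, so in this convention the squared Hilbert-Schmidt norm of \(L_\kappa \) equals \((4\pi )^{-2} \int \int |V(x)| |V(y)| |x-y|^{-2} {\mathrm d}x {\mathrm d}y\), independently of \(\kappa \).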

Furthermore, we can extend \(V_{{\mathsf {c} }}\) to a unitary operator by taking the eigenfunctions into account. For this, we denote by \(\varphi _n\), \(n=1, \ldots , N\), the eigenvectors of \(H_{\mathrm p}\). We define

Obviously \(V_{\mathsf {d} }\restriction _{{\text {ran}}P_{{\text {disc}}}} :{\text {ran}}P_{{\text {disc}}}\rightarrow \ell ^2(N) \) is a unitary operator and \(V_{\mathsf {d} }\restriction _{{\text {ran}}P_{\mathsf {ess} }} = 0\). Thus,

is unitary.
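The unitarity can be checked in one line. Here we assume, as the notation suggests, that the \(\varphi _n\) are orthonormal and listed with multiplicity, that \(V_{\mathsf {d} }f = (\left\langle \varphi _n, f \right\rangle )_{n=1}^N\), and that the combined map is \(V = V_{{\mathsf {c} }}\oplus V_{\mathsf {d} }:\mathcal {H}_{\mathrm p}\rightarrow L^2({\mathbb {R}}^3) \oplus \ell ^2(N)\); these explicit forms are our reading of the definitions.

$$\begin{aligned} V^* V&= V_{{\mathsf {c} }}^* V_{{\mathsf {c} }}+ V_{\mathsf {d} }^* V_{\mathsf {d} }= P_{\mathsf {ess} }+ P_{{\text {disc}}}= {\text {Id}}, \\ V V^*&= V_{{\mathsf {c} }}V_{{\mathsf {c} }}^* \oplus V_{\mathsf {d} }V_{\mathsf {d} }^* = {\text {Id}}\oplus {\text {Id}}. \end{aligned}$$

The cross terms \(V_{{\mathsf {c} }}V_{\mathsf {d} }^*\) and \(V_{\mathsf {d} }V_{{\mathsf {c} }}^*\) vanish because \(V_{{\mathsf {c} }}\) annihilates \({\text {ran}}P_{{\text {disc}}}\) by Theorem 4.1 (c) and \(V_{\mathsf {d} }\) annihilates \({\text {ran}}P_{\mathsf {ess} }\).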

4.2 Setup for the Virial Theorems

First, we describe the setting on the particle space \(\mathcal {H}_{\mathrm p}\). We consider a dense subspace given by

Note that \(\mathcal {D}_{\mathrm p}\) is dense since \(V_{{\mathsf {c} }}^* C_{\mathrm c}^\infty ({\mathbb {R}}^3)\subseteq {\text {ran}}P_{\mathsf {ess} }\) is dense in \({\text {ran}}P_{\mathsf {ess} }\). Now, based on the definition of the generator of dilations,

we define on \(\mathcal {D}_{\mathrm p}\) the conjugate operator \({A_{\mathrm p}}\) and a regularized version \({A_{\mathrm p}^{(\epsilon )}}\),

where

Note that \(\eta _0 \equiv 1\) and \({A_{\mathrm p}}= A_{\mathrm p}^{(0)}\). It is clear that both \(H_{\mathrm p}\) and \({A_{\mathrm p}^{(\epsilon )}}\), \(\epsilon \ge 0\), leave \(\mathcal {D}_{\mathrm p}\) invariant. Thus we can define \({\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}(H_{\mathrm p})\) on \(\mathcal {D}_{\mathrm p}\) for all \(n \in {\mathbb {N}}\). Furthermore, the bounding operator is chosen as

Next, on the field space we set

Now, we can define on the dense subspace of the composite space \(\mathcal {H}\),

the operators

where \(\widehat{N_{{\text {f}}}}:= {\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes N_{{\text {f}}}\).

For operators X, Y with a dense domain \(\mathcal {D}_0\) we define multiple commutators by \({\text {ad}}^{(0)}_Y(X) = X\) and \({\text {ad}}^{(n+1)}_Y(X) = \mathrm {i}[{\text {ad}}^{(n)}_Y(X),Y]\) in the form sense on \(\mathcal {D}_0 \times \mathcal {D}_0\), provided the right-hand side is determined by a densely defined bounded or an essentially self-adjoint operator, in which case we denote the corresponding extension by the same symbol. Furthermore, we set \({\text {ad}}_Y(X) := {\text {ad}}^{(1)}_Y(X)\).
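Unwinding the recursion for the first two orders gives the following expressions. They are formal, that is, understood in the form sense on \(\mathcal {D}_0 \times \mathcal {D}_0\) and wherever the operator products make sense.

$$\begin{aligned} {\text {ad}}^{(1)}_Y(X)&= \mathrm {i}[X,Y] = \mathrm {i}(XY - YX) , \\ {\text {ad}}^{(2)}_Y(X)&= \mathrm {i}[\mathrm {i}[X,Y],Y] = -[[X,Y],Y] = -(X Y^2 - 2 Y X Y + Y^2 X) . \end{aligned}$$

The factor \(\mathrm {i}\) in each step ensures that \({\text {ad}}^{(n)}_Y(X)\) is again symmetric whenever X and Y are.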

Now we define for \(n \in \{1,2,3\}\), \(\epsilon \ge 0\),

where

with

and we use the shorthand notation \(I_n:= I_n^{(0)}\), \(W_n := W_n^{(0)}\), \(C_1 := C_1^{(0)}\). We note that the above identities follow from a straightforward calculation. We will see in Proposition 4.2 that the expressions in the field operators in (4.14) and (4.15) are indeed well-defined and belong to \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(H_p){\otimes }\mathcal {L}(H_p))\). Furthermore, it will be proven in Proposition 4.3 that \(C_n^{(\epsilon )}\) and \(C_{{{\text {f}}},n}\), \(n \in \{1,2,3\}\), are in fact essentially self-adjoint on \({\mathcal {D}}\), and we denote their self-adjoint extensions by the same symbols. Moreover, it will be shown below in Proposition 6.3 that \(C_1\) is actually bounded from below. Thus, we can assign to \(C_1\) a quadratic form \(q_{C_1}\).

4.3 Verification of the Assumptions of the Virial Theorems

In the setting just described we can now verify the assumptions of the virial theorem (Theorem 3.4). Above all, we have to check the GJN condition for the different commutators. The most difficult part will be the discussion of the interaction terms \(W_n^{({{\text {f}}})}\) and \(W_n^{(\epsilon )}\), \(n \in \{1,2,3\}\), \(\epsilon > 0\), and \(W_1\). Here, the expressions in the field operators need to be sufficiently bounded. These bounds will be collected in the following proposition, which will be proven in Sect. 5.

Proposition 4.2

Let \(\partial _u\) denote the weak derivative of a \(\mathcal {L}(\mathcal {H}_{\mathrm p})\)-valued function in the sense of the strong operator topology. For all \(m \in \{0,1,2,3\}\), \(n \in {\mathbb {N}}_0\), \(j \in \{1,2,3\}\), and for all \((u,\varSigma )\), \(\epsilon \ge 0\), the operators

-

(1)

\(\partial _u^m {\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}( \tau _\beta (G)(u,\varSigma ) )\),

-

(2)

\(\partial _u^m {\text {ad}}_{V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}_j V_{{\mathsf {c} }}}( {\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}( \tau _\beta (G)(u,\varSigma ) ) )\),

-

(3)

\( \partial _u^m {\text {ad}}_{V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}}( {\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}( \tau _\beta (G)(u,\varSigma ) ) )\),

-

(4)

\( \partial _u^m V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}{\text {ad}}^{(n)}_{{A_{\mathrm p}}}( \tau _\beta (G)(u,\varSigma ) )\),

are well-defined, and the corresponding functions \({\mathbb {R}}\times \mathbb {S}^2 \rightarrow \mathcal {L}(\mathcal {H}_{\mathrm p})\) of \((u,\varSigma )\) belong to \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\). Moreover, there exists a constant C independent of \(\beta \) such that for \(n,m,s \in \{0,1\}\),

The result also holds true if we replace G by \(G^*\).

With the help of Proposition 4.2 we can now verify the necessary GJN conditions.

Proposition 4.3

The following triples are GJN:

-

(1)

\(({A_{\mathrm p}}, \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\),

-

(2)

\(({A_{\mathrm p}^{(\epsilon )}}, \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\), \(\epsilon > 0\),

-

(3)

\((H_{\mathrm p}, \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\),

-

(4)

\((L_\lambda , \varLambda , {\mathcal {D}})\), \(\lambda \in {\mathbb {R}}\),

-

(5)

\((\widehat{N_{{\text {f}}}}, \varLambda , {\mathcal {D}})\),

-

(6)

\((D, \varLambda , {\mathcal {D}})\),

-

(7)

\((C_i^{(\epsilon )}, \varLambda , {\mathcal {D}})\), \(\epsilon > 0\), \(i \in \{1,2,3\}\),

-

(8)

\((C_{{{\text {f}}},i}, \varLambda , {\mathcal {D}})\), \(i \in \{1,2,3\}\),

-

(9)

\((C_1, \varLambda , {\mathcal {D}})\).

In particular, \(L_\lambda \) is essentially self-adjoint on \({\mathcal {D}}\) for any \(\lambda \in {\mathbb {R}}\) due to (4). Moreover, D, \(C_1^{(\epsilon )}\), \(C_3^{(\epsilon )}\), \(\epsilon > 0\), and \(C_1^{({{\text {f}}})}\), \(C_3^{({{\text {f}}})}\) are relatively bounded by \(\widehat{N_{{\text {f}}}}^{1/2}\).

Proof

-

(1)

Using that \((A_{\mathsf {D} }, \hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2, C_{\mathrm c}^\infty ({\mathbb {R}}^3))\) is a GJN triple (cf. [8]) and \(V_{{\mathsf {c} }}^*\) an isometry, we have, for \(\psi \in \mathcal {D}_{\mathrm p}\),

$$\begin{aligned} \left\| {A_{\mathrm p}}\psi \right\| = \left\| V_{{\mathsf {c} }}^* A_{\mathsf {D} }V_{{\mathsf {c} }}\psi \right\| = \left\| A_{\mathsf {D} }V_{{\mathsf {c} }}\psi \right\| \le C \left\| (\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2) V_{{\mathsf {c} }}\psi \right\| \le C' \left\| \varLambda _{\mathrm p}\psi \right\| , \end{aligned}$$for some constants \(C,C'\), and

$$\begin{aligned} \pm \mathrm {i}&(\left\langle {A_{\mathrm p}}\psi , \varLambda _{\mathrm p}\psi \right\rangle - \left\langle \varLambda _{\mathrm p}\psi , {A_{\mathrm p}}\psi \right\rangle )\\&= \pm \mathrm {i}\left( \left\langle A_{\mathsf {D} }V_{{\mathsf {c} }}\psi , (\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2) V_{{\mathsf {c} }}\psi \right\rangle - \left\langle (\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2)V_{{\mathsf {c} }}\psi , A_{\mathsf {D} }V_{{\mathsf {c} }}\psi \right\rangle \right) \\&\le C \left\langle V_{{\mathsf {c} }}\psi , (\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2) V_{{\mathsf {c} }}\psi \right\rangle \\&\le C \left\langle \psi , \varLambda _{\mathrm p}\psi \right\rangle . \end{aligned}$$ -

(2)

On \(\mathcal {D}_{\mathrm p}\) we have

$$\begin{aligned} {A_{\mathrm p}^{(\epsilon )}}= V_{{\mathsf {c} }}^* \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon V_{{\mathsf {c} }}= V_{{\mathsf {c} }}^* \eta _\epsilon ^2 A_{\mathsf {D} }V_{{\mathsf {c} }}+ V_{{\mathsf {c} }}^* \eta _\epsilon [A_{\mathsf {D} },\eta _\epsilon ] V_{{\mathsf {c} }}. \end{aligned}$$The operator \(V_{{\mathsf {c} }}^* \eta _\epsilon ^2 A_{\mathsf {D} }V_{{\mathsf {c} }}\) is relatively bounded by \(V_{{\mathsf {c} }}^* A_{\mathsf {D} }V_{{\mathsf {c} }}\), and thus also by \((\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2 )V_{{\mathsf {c} }}\) as we have already seen in the proof of (1). Furthermore, as derivatives of \(\eta _\epsilon \) are also bounded, the operator

$$\begin{aligned}{}[A_{\mathsf {D} },\eta _\epsilon ] = \frac{1}{2} \sum _{j} [ {\hat{{\mathsf {q} }}}_j, \eta _\epsilon ] \hat{{\mathsf {k} }}_j \end{aligned}$$is relatively bounded by \(| \hat{{\mathsf {k} }}|\), and thus \(\eta _\epsilon [A_{\mathsf {D} },\eta _\epsilon ] V_{{\mathsf {c} }}\) is also relatively bounded by \((\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2 )V_{{\mathsf {c} }}\) . This shows the first GJN condition.

For the second one, we compute

$$\begin{aligned} \pm \mathrm {i}\left( \left\langle {A_{\mathrm p}^{(\epsilon )}}\psi , \varLambda _{\mathrm p}\psi \right\rangle - \left\langle \varLambda _{\mathrm p}\psi , {A_{\mathrm p}^{(\epsilon )}}\psi \right\rangle \right) = \pm \left\langle V_{{\mathsf {c} }}\psi , \mathrm {i}[\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ] V_{{\mathsf {c} }}\psi \right\rangle . \end{aligned}$$Thus, it suffices to show \( \pm \mathrm {i}[\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ] \le C(\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2)\) for some constant C on \(C_{\mathrm c}^\infty ({\mathbb {R}}^3)\). First,

$$\begin{aligned}{}[\hat{{\mathsf {k} }}^2, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ] = \eta _\epsilon [\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }] \eta _\epsilon = \sum _{j} \eta _\epsilon \hat{{\mathsf {k} }}_j [\hat{{\mathsf {k} }}_j, {\hat{{\mathsf {q} }}}_j] \hat{{\mathsf {k} }}_j \eta _\epsilon = -\mathrm {i}\eta _\epsilon \hat{{\mathsf {k} }}^2 \eta _\epsilon . \end{aligned}$$Second, in order to obtain \(\pm \mathrm {i}[{\hat{{\mathsf {q} }}}^2, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ] \le C(\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2)\), we use the basic operator inequality

$$\begin{aligned} A^*B + B^*A \le A^* A + B^* B \end{aligned}$$(4.17)to show that

$$\begin{aligned} {[{\hat{{\mathsf {q} }}}_j, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ]} = \eta _\epsilon [{\hat{{\mathsf {q} }}}_j, A_{\mathsf {D} }] \eta _\epsilon + [{\hat{{\mathsf {q} }}}_j, \eta _\epsilon ] A_{\mathsf {D} }\eta _\epsilon + \eta _\epsilon A_{\mathsf {D} }[{\hat{{\mathsf {q} }}}_j, \eta _\epsilon ], \qquad j \in \{1,2,3\}, \end{aligned}$$(4.18)is relatively bounded by \(|\hat{{\mathsf {k} }}|\). This is clear for the first term in (4.18) as \(\eta _\epsilon [{\hat{{\mathsf {q} }}}_j, A_{\mathsf {D} }] \eta _\epsilon = \frac{\mathrm {i}}{2} \eta _\epsilon ^2 \hat{{\mathsf {k} }}_j\), and for the two remaining ones it follows analogously to the proof of the first GJN condition, since second derivatives of \(\eta _\epsilon \) are bounded.

-

(3)

We have, with regard to the first GJN condition,

$$\begin{aligned} \left\| H_{\mathrm p}\psi \right\| = \left\| V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\psi + H_{\mathrm p}P_{{\text {disc}}}\psi \right\| \le C \left\| V_{{\mathsf {c} }}^* (\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2) V_{{\mathsf {c} }}\psi \right\| + \sup _{\lambda \in \sigma _{\mathrm d}(H_{\mathrm p})} \left| \lambda \right| \left\| \psi \right\| , \end{aligned}$$(4.19)as \(\hat{{\mathsf {k} }}^2\) is relatively bounded by \({\hat{{\mathsf {q} }}}^2 + \hat{{\mathsf {k} }}^2\). Furthermore,

$$\begin{aligned}&\left\langle H_{\mathrm p}\psi , \varLambda _{\mathrm p}\psi \right\rangle - \left\langle \varLambda _{\mathrm p}\psi , H_{\mathrm p}\psi \right\rangle \\&\quad = \left\langle V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\psi , V_{{\mathsf {c} }}^* ({\hat{{\mathsf {q} }}}^2 + \hat{{\mathsf {k} }}^2) V_{{\mathsf {c} }}\psi \right\rangle - \left\langle V_{{\mathsf {c} }}^* ({\hat{{\mathsf {q} }}}^2 + \hat{{\mathsf {k} }}^2) V_{{\mathsf {c} }}\psi , V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\psi \right\rangle \\&\quad = \left\langle \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\psi , {\hat{{\mathsf {q} }}}^2 V_{{\mathsf {c} }}\psi \right\rangle - \left\langle {\hat{{\mathsf {q} }}}^2 V_{{\mathsf {c} }}\psi , \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\psi \right\rangle . \end{aligned}$$Using now that \(\pm \mathrm {i}[{\hat{{\mathsf {q} }}}^2,\hat{{\mathsf {k} }}^2] \le C(\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2)\) for some constant C, we get also the second GJN condition.

-

(4)

As \(H_{\mathrm p}\) is relatively bounded by \(\varLambda _{\mathrm p}\) in view of (4.19), \(L_0 = (H_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}- {\text {Id}}_{\mathrm p}\otimes H_{\mathrm p}) \otimes {\text {Id}}_{{\text {f}}}\) is relatively bounded by \(\varLambda \). Next, by (I2), (I3), and Lemma A.3 we know that the interaction terms satisfy \(I^{(\mathsf {l} )}, I^{(\mathsf {r} )} \in L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(H_p))\). Hence W is bounded by \(\widehat{N_{{\text {f}}}}^{1/2}\) and thus bounded by \({\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes \varLambda _{{\text {f}}}^{1/2}\). Therefore, the first GJN condition is satisfied.

Next, as \((H_{\mathrm p}, \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\) is GJN, we get a constant C, such that for all \(\psi \in {\mathcal {D}}\),

$$\begin{aligned} \pm \mathrm {i}( \left\langle L_0 \psi , \varLambda \psi \right\rangle - \left\langle \varLambda \psi , L_0 \psi \right\rangle ) \le C\left\langle \psi , (\varLambda _{\mathrm p}\otimes {\text {Id}}_{\mathrm p}+ {\text {Id}}_{\mathrm p}\otimes \varLambda _{\mathrm p}) \otimes {\text {Id}}_{{\text {f}}}\psi \right\rangle , \end{aligned}$$which yields the second GJN condition for \(L_0\).

Again by Lemma A.3 and (I1)–(I3) we know that \((u,\varSigma ) \mapsto (u^2 + 1) I^{(\mathsf {l} )}(u,\varSigma )\) and \((u,\varSigma ) \mapsto (u^2 + 1) I^{(\mathsf {r} )}(u,\varSigma )\) are in \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}( \mathcal {H}_{\mathrm p}))\). We have

$$\begin{aligned}&\left| \left\langle \varPhi ( I^{(\mathsf {l} )}) \psi , {\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes \varLambda _{{\text {f}}}\psi \right\rangle - \left\langle {\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes \varLambda _{{\text {f}}}\psi , \varPhi ( I^{(\mathsf {l} )}) \psi \right\rangle \right| \\&\qquad = \left| \left\langle \psi ,( a\left( ( {u} ^2 + 1) I^{(\mathsf {l} )}) - a^*(( {u} ^2 + 1) I^{(\mathsf {l} )}) \right) \psi \right\rangle \right| \\&\qquad \le C \left\| {\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes N_{{\text {f}}}^{1/2} \psi \right\| \left\| \psi \right\| \\&\qquad \le C' \left\langle \psi , \varLambda \psi \right\rangle \end{aligned}$$for some constants \(C,C'\). The same thing can be shown for the commutator with \(\varPhi (I^{(\mathsf {r} )})\).

It remains to consider the commutator of W with the \(\varLambda _{\mathrm p}\) terms. One has to show that the commutators

$$\begin{aligned}{}[\varPhi (I^{(\mathsf {l} )} ), V_{{\mathsf {c} }}^* ({\hat{{\mathsf {q} }}}^2 + \hat{{\mathsf {k} }}^2) V_{{\mathsf {c} }}], \qquad [\varPhi (I^{(\mathsf {r} )} ), V_{{\mathsf {c} }}^* ({\hat{{\mathsf {q} }}}^2 + \hat{{\mathsf {k} }}^2) V_{{\mathsf {c} }}] \end{aligned}$$are form-bounded by \(\varLambda _{\mathrm p}\), that is, in the sense of (3.2). This follows from Proposition 4.2 since we can write in the weak sense on \({\mathcal {D}}\),

$$\begin{aligned} {[\varPhi (I^{(\mathsf {l} )} ), V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}^2 V_{{\mathsf {c} }}]} = \sum _{j} [\varPhi (I^{(\mathsf {l} )} ), V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}] V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}+ V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}[\varPhi (I^{(\mathsf {l} )} ), V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}] \end{aligned}$$and analogously for terms involving \(\varPhi (I^{(\mathsf {r} )})\) as well as \(V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}\).

-

(5)

Clearly, \(\left\| N_{{\text {f}}}\psi \right\| = \left\| \mathsf {d} \varGamma (1) \psi \right\| \le \left\| \mathsf {d} \varGamma (u^2 + 1) \psi \right\| \) for any \(\psi \in \mathfrak {F}_{{\text {fin}}}(C_{\mathrm c}^\infty ({\mathbb {R}}^3))\), which shows that \(\widehat{N_{{\text {f}}}}\) is relatively bounded by \(\varLambda \) on \({\mathcal {D}}\). Furthermore, \([\widehat{N_{{\text {f}}}}, \varLambda ] = 0\) on \({\mathcal {D}}\).

-

(6)

We have

$$\begin{aligned} D = \mathrm {i}\lambda ( a(I) - a^*(I) ). \end{aligned}$$Thus, the proof works as the one for \(L_\lambda \).

-

(7)

We first consider the atomic part. One can show by induction that for all \(n \in {\mathbb {N}}\) there exists \(f \in \mathcal {S}({\mathbb {R}}^3)\) such that

$$\begin{aligned} {\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}(H_{\mathrm p}) = V_{{\mathsf {c} }}^* f(\hat{{\mathsf {k} }}) V_{{\mathsf {c} }}. \end{aligned}$$(4.20)Clearly, for \(n=1\),

$$\begin{aligned} {\text {ad}}_{{A_{\mathrm p}^{(\epsilon )}}}(H_{\mathrm p}) = \mathrm {i}V_{{\mathsf {c} }}^* [\hat{{\mathsf {k} }}^2, \eta _\epsilon A_{\mathsf {D} }\eta _\epsilon ] V_{{\mathsf {c} }}= \mathrm {i}V_{{\mathsf {c} }}^* \eta _\epsilon [\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }] \eta _\epsilon V_{{\mathsf {c} }}= V_{{\mathsf {c} }}^* \eta _\epsilon \hat{{\mathsf {k} }}^2 \eta _\epsilon V_{{\mathsf {c} }}, \end{aligned}$$(4.21)which has the form (4.20). Next, as \([f(\hat{{\mathsf {k} }}), {\hat{{\mathsf {q} }}}_j] = -\mathrm {i}\partial _j f(\hat{{\mathsf {k} }})\),

$$\begin{aligned} {[ V_{{\mathsf {c} }}^* f(\hat{{\mathsf {k} }}) V_{{\mathsf {c} }}, {A_{\mathrm p}^{(\epsilon )}}]}&= V_{{\mathsf {c} }}^*\eta _\epsilon [ f(\hat{{\mathsf {k} }}) , A_{\mathsf {D} }] \eta _\epsilon V_{{\mathsf {c} }}= \frac{1}{2} \sum _{j} V_{{\mathsf {c} }}^*\eta _\epsilon [ f(\hat{{\mathsf {k} }}), {\hat{{\mathsf {q} }}}_j ] \hat{{\mathsf {k} }}_j \eta _\epsilon V_{{\mathsf {c} }}\end{aligned}$$yields again the form (4.20).

In particular, (4.20) implies that \({\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}(H_{\mathrm p})\), \(n \in {\mathbb {N}}\), are bounded and

$$\begin{aligned} \pm \mathrm {i}[{\text {ad}}^{(n)}_{{A_{\mathrm p}^{(\epsilon )}}}(H_{\mathrm p}), \varLambda _{\mathrm p}] \le C \varLambda _{\mathrm p}\end{aligned}$$on \(\mathcal {D}_{\mathrm p}\) for some constant C, since

$$\begin{aligned} \pm \mathrm {i}[ f(\hat{{\mathsf {k} }}),\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2] = \pm \mathrm {i}[ f(\hat{{\mathsf {k} }}),{\hat{{\mathsf {q} }}}^2] \le C(\hat{{\mathsf {k} }}^2 + {\hat{{\mathsf {q} }}}^2), \end{aligned}$$because \([ f(\hat{{\mathsf {k} }}),{\hat{{\mathsf {q} }}}_j]\), \(j \in \{1,2,3\}\), is bounded.

This together with (5) implies that \(({\text {ad}}^{(n)}_{A^{(\epsilon )}}(L_0), \varLambda , {\mathcal {D}})\), \(n\in \{1,2,3\}\), are GJN triples.

It remains to verify the GJN conditions for \((W_n^{(\epsilon )}, \varLambda , {\mathcal {D}})\), \(n\in \{1,2,3\}\). Analogously to the proof of (4) for the GJN condition of \(L_\lambda \), we have to show that the expressions in the field operators and their commutators with \(V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}_j V_{{\mathsf {c} }}\) and \(V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}\), \(j \in \{1,2,3\}\), are square-integrable. That is, we have to show that

$$\begin{aligned} (u,\varSigma )&\mapsto \partial _u^m \tau _\beta ({\text {ad}}^{(n-m)}_{{A_{\mathrm p}^{(\epsilon )}}} (G))(u,\varSigma ), \\ (u,\varSigma )&\mapsto \partial _u^m [\tau _\beta ({\text {ad}}^{(n-m)}_{{A_{\mathrm p}^{(\epsilon )}}} (G))(u,\varSigma ), X], \end{aligned}$$\(X \in \{ V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}_j V_{{\mathsf {c} }}, V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}:j \in \{1,2,3\}\}\), \(n \in \{ 1,2,3 \}\), \(m \in \{ 0, \ldots ,n \}\), are in \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\). This follows from Proposition 4.2.

(8)

Analogously to the proof of (7) it suffices to show that

$$\begin{aligned} (u,\varSigma )&\mapsto \partial _u^n \tau _\beta (G)(u,\varSigma ), \\ (u,\varSigma )&\mapsto \partial _u^n [\tau _\beta (G)(u,\varSigma ), X], \end{aligned}$$ for \(X \in \{ V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}_j V_{{\mathsf {c} }}, V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}:j \in \{1,2,3\}\}\), \(n \in \{ 1,2,3 \}\), are in \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\). This follows again from Proposition 4.2.

(9)

We first consider again the free part. We have on \(\mathcal {D}_{\mathrm p}\),

$$\begin{aligned} \mathrm {i}[H_{\mathrm p}, {A_{\mathrm p}}] = \mathrm {i}[V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}, {A_{\mathrm p}}] = \mathrm {i}V_{{\mathsf {c} }}^* [\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }] V_{{\mathsf {c} }}= V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}^2 V_{{\mathsf {c} }}. \end{aligned}$$ Then we can show as in the proof of (3) that \(({\text {ad}}_{{A_{\mathrm p}}}(H_{\mathrm p}), \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\) is also a GJN triple, and so is \(({\text {ad}}_{A}(L_0), \varLambda , {\mathcal {D}})\).
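The identity \(\mathrm {i}[\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }] = \hat{{\mathsf {k} }}^2\) used in the last display can be checked by a short formal computation. The sketch below rests on two assumptions: that the commutator formula \([ f(\hat{{\mathsf {k} }}), A_{\mathsf {D} }] = \frac{1}{2} \sum _j [ f(\hat{{\mathsf {k} }}), {\hat{{\mathsf {q} }}}_j ] \hat{{\mathsf {k} }}_j\) from the proof of (7) may also be applied to \(f(k) = k^2\) on \(\mathcal {D}_{\mathrm p}\), and that the sign convention is \([ f(\hat{{\mathsf {k} }}), {\hat{{\mathsf {q} }}}_j ] = -\mathrm {i}(\partial _{k_j} f)(\hat{{\mathsf {k} }})\), that is, \({\hat{{\mathsf {q} }}}_j\) acts as \(\mathrm {i}\partial _{k_j}\).

$$\begin{aligned} \mathrm {i}[\hat{{\mathsf {k} }}^2, A_{\mathsf {D} }] = \frac{\mathrm {i}}{2} \sum _{j} [\hat{{\mathsf {k} }}^2, {\hat{{\mathsf {q} }}}_j ] \hat{{\mathsf {k} }}_j = \frac{\mathrm {i}}{2} \sum _{j} (-2 \mathrm {i}\hat{{\mathsf {k} }}_j) \hat{{\mathsf {k} }}_j = \sum _{j} \hat{{\mathsf {k} }}_j^2 = \hat{{\mathsf {k} }}^2 . \end{aligned}$$

With the opposite sign convention for \({\hat{{\mathsf {q} }}}_j\) the right-hand side would change sign, so the positivity of the commutator depends on this choice.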

It remains to verify the GJN conditions for \((W_1, \varLambda , {\mathcal {D}})\). As in (7) and (8) one has to show that

$$\begin{aligned} (u,\varSigma )&\mapsto \partial _u^n \tau _\beta ({\text {ad}}^{(1-n)}_{{A_{\mathrm p}}} (G))(u,\varSigma ), \\ (u,\varSigma )&\mapsto \partial _u^n [\tau _\beta ({\text {ad}}^{(1-n)}_{{A_{\mathrm p}}} (G))(u,\varSigma ), X], \end{aligned}$$ for \(X \in \{ V_{{\mathsf {c} }}^* \hat{{\mathsf {k} }}_j V_{{\mathsf {c} }}, V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}:j \in \{1,2,3\}\}\), \(n \in \{0,1\}\), are in \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\), which follows again from Proposition 4.2. \(\square \)

The statements of Proposition 4.3 allow the application of the virial theorem (Theorem 3.4) for the regularized conjugate operator \({A^{(\epsilon )}}\), \(\epsilon >0\). In order to remove the regularization and transfer the result to \(C_1\), one has to consider the limit \(\epsilon \rightarrow 0\) for the corresponding quadratic forms \(q_{C^{(\epsilon )}_1}\) of \(C_1^{(\epsilon )}\). This is the content of the following lemma.

Lemma 4.4

Assume that \(\psi \in \mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\) and \(q_{C^{(\epsilon )}_1}(\psi ) \le 0\) for all \( \epsilon \in (0,1)\). Then \(\psi \in \mathcal {D}(q_{C_1})\), and

Proof

Let us first recall that by definition

Step 1: We show that

Step 1 will follow once we have established that

for a constant C independent of \(\epsilon \in (0,1)\). To this end, we use that by standard estimates for creation and annihilation operators, we obtain for any \(\delta > 0\),

in the form sense on \(\mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\), where we introduced the following bounded operators on \(\mathcal {H}_{\mathrm p}\otimes \mathcal {H}_{\mathrm p}\),

To estimate this expression we use that

in the form sense for some constant C independent of \(\epsilon \), where we multiplied out the commutators, used (4.17) and the fact that the functions \((u,\varSigma ) \mapsto \partial _u \tau _\beta ( G) (u,\varSigma )\) and \((u,\varSigma ) \mapsto V_{{\mathsf {c} }}^* {\hat{{\mathsf {q} }}}_j V_{{\mathsf {c} }}\tau _\beta ( G)\) belong to \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\) due to Proposition 4.2. This yields

Now using (4.21) and (4.26) to estimate (4.23) we obtain in the form sense on \(\mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\) for any \(\epsilon > 0\)

Choosing \(\delta > 0\) sufficiently small that \(C \left| \lambda \right| \delta < 1\), and using that by assumption \(q_{C^{(\epsilon )}_1}(\psi ) \le 0\) and \(\psi \in \mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\), we arrive at (4.25).

Step 2: We have (4.22).

First observe that, by dominated convergence, we have for all \(\varphi \in \mathcal {D}( V_{{\mathsf {c} }}^*| \hat{{\mathsf {k} }}| V_{{\mathsf {c} }})\),

Thus Step 2 will follow once we have shown that

Thus we have to show the convergence of the field operator of a commutator. To this end, we note that from Proposition 4.2 we know that

for \(H \in \{ \tau _\beta ( G), \partial _u \tau _\beta ( G) \}\), are in \(L^2({\mathbb {R}}\times \mathbb {S}^2, \mathcal {L}(\mathcal {H}_{\mathrm p}))\). Now,

Observe that \(\eta _\epsilon \) and \( \partial _{k_j} \eta _\epsilon \) are bounded uniformly in \(\epsilon \in (0,1)\). From (4.29) we see for \(\varphi \in \mathcal {D}( {\text {Id}}_{\mathrm p}\otimes N_{{\text {f}}})\) that

where for the limit we used that \( \partial _{k_j} \eta _\epsilon \) tends to zero as \(\epsilon \downarrow 0\), (4.24), and dominated convergence. Similarly, we find

Thus we find

where the last equality follows by verifying the identity on the dense space \(\mathcal {D}_{\mathrm p}\), defined in (4.6), using a straightforward calculation, and then extending it to \(\varphi \) using Proposition 4.2. This shows (4.28) in view of the definition of \(W_1^{(\epsilon )}\) and \(W_1\), see (4.14). \(\square \)

Now we can prove the main result of this section, the concrete virial theorem for our setting.

Theorem 4.5

(Concrete virial theorem) Assume there exists \(\psi \in \mathcal {D}(L_\lambda )\) with \(L_\lambda \psi = 0\). Then \(\psi \in \mathcal {D}(q_{C_1})\) and \(q_{C_1}(\psi ) \le 0\).

Proof

Step 1: We have \(\psi \in \mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\).

To show Step 1, we apply the virial theorem for \(\varLambda \) as in (4.10), \(\mathcal {D}\) as in (4.9), as well as the operators \(L=L_\lambda \), \(A = {\text {Id}}_{\mathrm p}\otimes {\text {Id}}_{\mathrm p}\otimes A_{{\text {f}}}\), \(N=\widehat{N_{{\text {f}}}}+1\), D as in (4.11), \(C_n = C_{{{\text {f}}},n}\), \(n \in \{0,1,2,3\}\) as in (4.13), which are symmetric on \(\mathcal {D}\) and satisfy (3.3)–(3.5) as a consequence of the definition. Next we verify the additional assumptions of the virial theorem. We have shown in Proposition 4.3 that (V1) is satisfied. Furthermore, on \(\mathfrak {F}_{{\text {fin}}}(C_{\mathrm c}^\infty ({\mathbb {R}}^3))\),

Thus, for some fixed t there is a constant C such that \(\left\| \varLambda _{{\text {f}}}e^{\mathrm {i}t A_{{\text {f}}}} \psi \right\| \le C\left\| \varLambda _{{\text {f}}}\psi \right\| \) for all \(\psi \in \mathfrak {F}_{{\text {fin}}}(C_{\mathrm c}^\infty ({\mathbb {R}}^3))\). As \(\mathfrak {F}_{{\text {fin}}}(C_{\mathrm c}^\infty ({\mathbb {R}}^3))\) is a core for \(\varLambda _{{\text {f}}}\), we find \(e^{\mathrm {i}t A_{{\text {f}}}} \mathcal {D}(\varLambda _{{\text {f}}}) \subseteq \mathcal {D}(\varLambda _{{\text {f}}})\). Hence, we conclude that \(\mathcal {D}(\varLambda )\) is invariant under the unitary group associated to A and hence (V2) holds. Furthermore, (3.6)–(3.8) are satisfied since D, \(C_{{{\text {f}}},1}\) and \(C_{{{\text {f}}},3}\) are relatively bounded by \(N_{{\text {f}}}^{1/2}\) (by Proposition 4.3). Thus we can apply the virial theorem, and obtain a sequence \((\psi _n)\) in \(\mathcal {D}(L_\lambda ) \cap \mathcal {D}(C_{{{\text {f}}},1})\) such that \(\psi _n \rightarrow \psi \) and

Now, it follows from lower semi-continuity of closed quadratic forms that \(\psi \) is in the form domain of \(C_{{{\text {f}}},1}\). As \(W_1^{({{\text {f}}})}\) is relatively bounded by \(\widehat{N_{{\text {f}}}}^{1/2}\) (as shown in the proof of Proposition 4.3 (8)), we conclude that \(\psi \in \mathcal {D}(\widehat{N_{{\text {f}}}}^{1/2})\).

Step 2: \(\psi \in \mathcal {D}(q_{C^{(\epsilon )}_1})\) and \(q_{C^{(\epsilon )}_1}(\psi ) \le 0 \).

To show Step 2, we apply the virial theorem once more with \(\varLambda \), D, L, and N as in Step 1, but now with \(A = {A^{(\epsilon )}}\), \(\epsilon > 0\), and \(C_n = C_n^{(\epsilon )}\), \(n \in \{0,1,2,3\}\), as in (4.12). Again, the operators are symmetric on \(\mathcal {D}\) and satisfy (3.3)–(3.5) as a consequence of the definition. Let us now verify the additional assumptions of the virial theorem. Again, by Proposition 4.3, (V1) and (3.6)–(3.8) are satisfied. Furthermore, by Proposition 4.3 we know that \(({A_{\mathrm p}^{(\epsilon )}}, \varLambda _{\mathrm p}, \mathcal {D}_{\mathrm p})\) is a GJN triple. Hence Theorem 3.2 shows that \(\exp ( \mathrm {i}t {A_{\mathrm p}^{(\epsilon )}})\), \(t \in {\mathbb {R}}\), leaves \(\mathcal {D}(\varLambda _{\mathrm p})\) invariant. This and the invariance for the unitary group generated by \(A_{{\text {f}}}\), as already shown in Step 1, imply (V2). Thus we can apply the virial theorem and obtain a sequence \((\psi _n)_{n\in {\mathbb {N}}}\) in \(\mathcal {D}(C_1^{(\epsilon )}) \cap \mathcal {D}(L_\lambda )\) such that \(\psi _n \rightarrow \psi \), \(n \rightarrow \infty \), and \(\lim _{n\rightarrow \infty } \left\langle \psi _n, C_1^{(\epsilon )} \psi _n \right\rangle = 0\). Now, since \(q_{C^{(\epsilon )}_1}\) is closed and thus lower semi-continuous, we obtain \(\psi \in \mathcal {D}(q_{C^{(\epsilon )}_1})\) and

$$\begin{aligned} q_{C^{(\epsilon )}_1}(\psi ) \le \liminf _{n\rightarrow \infty } \left\langle \psi _n, C_1^{(\epsilon )} \psi _n \right\rangle = 0. \end{aligned}$$

Step 3: The theorem follows from Step 1, Step 2, and Lemma 4.4. \(\square \)

5 Estimates on the Scattering Functions

The aim of this section is to prove that the commutators of the interaction with the dilation operator in scattering space are sufficiently bounded. To achieve this, we use the Born series expansion of the scattering functions, that is, we expand them using the recursion formula of the Lippmann–Schwinger equation (4.1). Since we perform only finitely many recursion steps, we obtain the Born series terms and a remainder term. The idea is that, after sufficiently many iteration steps, the remainder term decays fast enough as \(\left| k \right| \rightarrow \infty \).
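The structure of such a finite expansion can be illustrated abstractly. In the following sketch, u, g and L are placeholders introduced only for illustration: u stands for a scattering function, g for the inhomogeneity and L for the integral operator of a Lippmann–Schwinger-type fixed point equation \(u = g + L u\). Inserting the equation into itself N times gives

$$\begin{aligned} u = g + L u = g + L g + L^2 u = \cdots = \sum _{n=0}^{N-1} L^n g + L^N u . \end{aligned}$$

The sum consists of the first N Born terms, and \(L^N u\) is the remainder. The identity follows by induction on N and does not require \(\Vert L \Vert < 1\), since no infinite series is summed.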

5.1 Born Series Expansion and Technical Preparations

First we show that the scattering functions as well as their derivatives with respect to the wave vector k are bounded. For that we use the method of modified square integrable scattering functions as described in Sect. 4.1. Recall that \(\varphi (k,\cdot )\), \(k \in {\mathbb {R}}^3\), denote the continuous scattering functions on \({\mathbb {R}}^3\) and V a potential satisfying the assumptions (H1), (H2). As V is compactly supported we may assume that \({\text {supp}}V\) is contained in a ball around the origin of radius R.

We now set \(\widetilde{\varphi }(k,x) := \left| V(x) \right| ^{1/2} \varphi (k,x)\). Then \(\widetilde{\varphi }(k,\cdot ) \in L^2({\mathbb {R}}^3)\) for all k, and the function satisfies the modified Lippmann–Schwinger equation

where \(L_\kappa \) is defined in (4.3). We recall from Sect. 4.1 that we can recover the original scattering function from the modified one by

Now we extend the results of boundedness of the first derivative of the scattering functions in [25, Lemma 1.1.3] to derivatives of arbitrary order.

Proposition 5.1

Assume (H1) and (H2). Let \({\hat{D}}_k= \frac{k}{|k|} \nabla _k\) and let \(\partial _{k_j}\) be the derivative with respect to the j-th component of k. For all \(n \in {\mathbb {N}}_0\), \(m \in \{0,1\}\) there is a polynomial P such that for all x and \(k \ne 0\),

Proof

We get by the modified Lippmann–Schwinger equation

where \(e_k(x) := e^{\mathrm {i}k x}\). First we claim that \(({\text {Id}}- L_{\left| k \right| })^{-1}\) is uniformly bounded in \(k \in {\mathbb {R}}^3\). For this we note that \(\kappa \mapsto L_{\kappa }\) is continuous on \([0,\infty )\), which is easy to see, cf. [30, Theorem 1.22]. Moreover, \(\lim _{\kappa \rightarrow \infty } \Vert L_\kappa \Vert = 0\) follows from a result of Zemach and Klein, see [30, Theorem 1.23]. Since \(L_\kappa \) is Hilbert–Schmidt and hence compact, it follows from the Fredholm alternative that \({\text {Id}}- L_\kappa \) is invertible, provided \(\psi = L_{\kappa } \psi \) has no non-trivial solutions in \(L^2\). But as in the proof of Theorem 4.1, such non-trivial solutions are ruled out for all \(\kappa \ge 0\). Since inversion is a continuous map on the set of bounded invertible operators, the claimed uniform bound on the inverse now follows.
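The way these ingredients combine can be sketched as follows. The threshold \(\kappa _0\) is introduced here only for illustration: we assume that the decay of \(\Vert L_\kappa \Vert \) for large \(\kappa \) provides some \(\kappa _0 > 0\) with \(\Vert L_\kappa \Vert \le \frac{1}{2}\) for all \(\kappa \ge \kappa _0\). On this range the Neumann series gives

$$\begin{aligned} \Vert ({\text {Id}}- L_\kappa )^{-1} \Vert = \Big \Vert \sum _{n=0}^{\infty } L_\kappa ^n \Big \Vert \le \sum _{n=0}^{\infty } 2^{-n} = 2 , \qquad \kappa \ge \kappa _0 , \end{aligned}$$

while on the compact interval \([0,\kappa _0]\) the map \(\kappa \mapsto ({\text {Id}}- L_\kappa )^{-1}\) is continuous in operator norm, as a composition of the continuous map \(\kappa \mapsto L_\kappa \) with inversion, so that

$$\begin{aligned} \sup _{\kappa \in [0,\kappa _0]} \Vert ({\text {Id}}- L_\kappa )^{-1} \Vert < \infty . \end{aligned}$$

Taking the maximum of the two bounds yields a bound that is uniform in \(\kappa \ge 0\).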

Observe that \({\hat{D}}_k|k| = 1\) and \({\hat{D}}_k(k/|k|) = 0\). Thus, for any \(n \in {\mathbb {N}}_0\), \({\hat{D}}_k^n ({\text {Id}}- L_{\left| k \right| })^{-1} \) is again uniformly bounded in \(k \not = 0\), since differentiation with \({\hat{D}}_k\) yields just higher powers of \(({\text {Id}}- L_{\left| k \right| })^{-1}\) and radial derivatives of \(L_{\left| k \right| }\), which are again bounded operators since V decays fast enough. Similarly one sees that \(\partial _{k_j} {\hat{D}}_k^n ({\text {Id}}- L_{\left| k \right| })^{-1} \) is uniformly bounded in \(k \not = 0\). Note that the expression is not differentiable at the origin. Furthermore, for any \(n \in {\mathbb {N}}\),

as V is compactly supported. Thus, we have shown, for all \(n \in {\mathbb {N}}_0\),

Now we can differentiate (5.1), estimate the integral with Cauchy–Schwarz, and use that

is bounded uniformly in x, where the second inequality can be seen by considering the cases \(|x| < 2R\) and \(|x| \ge 2R\). \(\square \)