Abstract

We consider a matrix polynomial equation (MPE) \(A_nX^n+A_{n-1}X^{n-1}+\cdots +A_0=0\), where \(A_n, A_{n-1},\ldots , A_0 \in \mathbb {R}^{m\times m}\) are the coefficient matrices, and \(X\in \mathbb {R}^{m\times m}\) is the unknown matrix. A sufficient condition for the existence of the minimal nonnegative solution is derived, where minimal means that any other solution is componentwise no less than the minimal one. The explicit expressions of normwise, mixed and componentwise condition numbers of the matrix polynomial equation are obtained. A backward error of the approximated minimal nonnegative solution is defined and evaluated. Some numerical examples are given to show the sharpness of the three kinds of condition numbers.

1 Introduction

We consider a matrix polynomial equation (MPE) of the following form

$$\begin{aligned} A_nX^n+A_{n-1}X^{n-1}+\cdots +A_0=0, \end{aligned} \qquad (1.1)$$

where \(A_n, A_{n-1},\ldots , A_0 \in \mathbb {R}^{m\times m}\) are the coefficient matrices, and \(X\in \mathbb {R}^{m\times m}\) is the unknown matrix.

Matrix polynomial equations often arise in queueing problems, differential equations, system theory, stochastic theory and many other areas [2, 3, 12, 18, 21, 27]. Different techniques have been studied for finding the minimal nonnegative solution. For the case \(n=2\), the MPE (1.1) is the well-known quadratic matrix equation (QME). In [10, 11, 20, 28], the structured QME, which is called the unilateral quadratic matrix equation (UQME), was studied. The authors showed that an algebraic Riccati equation \(XCX-AX-XD+B=0\) can be transformed into a UQME. Bini et al. [11] proposed an algorithm for solving the UQME that complements this transformation with the shrink-and-shift technique of Ramaswami. Larin [29] generalized the Schur and doubling methods to the UQME. For the unstructured QME, which has wide application in the quasi-birth–death process [6, 30], the minimal nonnegative solution is of importance. Davis [14, 15] considered Newton’s method for solving the unstructured QME. Higham and Kim [23, 24] studied the dominant and minimal solvents of the unstructured QME and improved the global convergence properties of Newton’s method by incorporating exact line searches. The logarithmic reduction method with quadratic convergence was introduced in [31].

For the case \(n=+\infty \), the MPE (1.1) is called power series matrix equation and often arises in Markov chains. For a given M/G/1-type matrix S, the computation of the probability invariant vector associated with S is strongly related to the minimal nonnegative solution of the MPE (1.1) with \(n=+\infty \). Latouche [6, 30] proved that Newton’s method could be applied to solve the power series matrix equation, and the matrix sequence obtained by Newton’s method converges to the minimal nonnegative solution. Bini et al. [5] solved the matrix polynomial equations by devising some new iterative techniques with quadratic convergence.

For the general case (\(n\ge 2\)), the cyclic reduction method [7,8,9], the invariant subspace algorithm [1] and the doubling technique [33] have been proposed for finding the minimal nonnegative solution of the MPE (1.1). Kratz and Stickel [26] proved that Newton’s method could also be applied to solve this general case. Seo and Kim [38] studied the relaxed Newton’s method for finding the minimal nonnegative solution of the MPE (1.1) and they also proved that the relaxed Newton’s method could work more efficiently than the general Newton’s method.

Since the minimal nonnegative solution of the MPE (1.1) is of practical importance and there is little work on the perturbation analysis of the MPE (1.1), this paper is devoted to the condition numbers of the MPE (1.1), which play an important role in perturbation analysis. We investigate three kinds of normwise condition numbers for Eq. (1.1). Note that the normwise condition number ignores the structure of both input and output data, so when the data are badly scaled or sparse, using norms to measure the relative size of the perturbation on small or zero entries does not suffice to determine how well the problem is conditioned numerically. In this case, componentwise analysis is an alternative approach by which much tighter and more revealing bounds can be obtained. There are two kinds of alternative condition numbers, called mixed and componentwise condition numbers, respectively, which were developed by Gohberg and Koltracht [17]; we refer to [16, 22, 34, 35, 39,40,41,42,43] for more details on these two kinds of condition numbers.

We also apply the theory of mixed and componentwise condition numbers to the MPE (1.1) and present local linear perturbation bounds for its minimal nonnegative solution by using mixed and componentwise condition numbers.

This paper is organized as follows. In Sect. 2, we give a sufficient condition for the existence of the minimal nonnegative solution. In Sect. 3, we investigate three kinds of normwise condition numbers and derive explicit expressions for them. In Sect. 4, we obtain explicit expressions and upper bounds for the mixed and componentwise condition numbers. In Sect. 5, we define a backward error of the approximate minimal nonnegative solution and derive upper and lower bounds for it. In Sect. 6, we give some numerical examples to show the sharpness of these three kinds of condition numbers.

We begin with the notation used throughout this paper. \(\mathbb {R}^{m\times m}\) stands for the set of \(m\times m\) matrices with elements in field \(\mathbb {R}\). \(\Vert \cdot \Vert _2\) and \(\Vert \cdot \Vert _F\) are the spectral norm and the Frobenius norm, respectively. For \(X=(x_{ij})\in \mathbb {R}^{m\times m}\), \(\Vert X\Vert _\mathrm{max}\) is the max norm given by \(\Vert X\Vert _\mathrm{max}=\mathrm{max}_{i,j}\{|x_{ij}|\}\) and |X| is the matrix whose elements are \(|x_{ij}|\). For a vector \(v=(v_1,v_2,\ldots ,v_m)^T\in \mathbb {R}^m\), diag(v) is the diagonal matrix whose diagonal is given by a vector v and \(|v|=(|v_1|, |v_2|,\ldots , |v_m|)^T\). For a matrix \(A=(a_{ij})\in \mathbb {R}^{m\times m}\) and a matrix B, \(\mathrm{vec}(A)\) is a vector defined by \(\mathrm{vec}(A)=(a_1^T,\ldots ,a_m^T)^T\) with \(a_i\) as the i-th column of A, \(A\otimes B=(a_{ij}B)\) is the Kronecker product. For matrices X and Y, we write \(X\ge 0\ (X>0)\) and say that X is nonnegative (positive) if \(x_{ij}\ge 0\ (x_{ij}>0)\) holds for all i, j, and \(X\ge Y \ (X>Y)\) is used as a different notation for \(X-Y\ge 0 \ (X-Y>0)\).
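
As a quick illustration of this notation, the following minimal sketch builds \(\mathrm{vec}\) and the Kronecker product in plain Python and checks the standard identity \(\mathrm{vec}(AXB)=(B^T\otimes A)\mathrm{vec}(X)\), which is what allows a matrix equation in X to be rewritten as a linear system in \(\mathrm{vec}(X)\). The helper names (`matmul`, `transpose`, `vec`, `kron`, `matvec`) and the \(2\times 2\) test matrices are illustrative assumptions, not taken from the paper.

```python
# Plain-Python helpers (illustrative names); matrices are lists of rows.

def matmul(A, B):
    """Ordinary matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def vec(A):
    """Stack the columns of A into one long list (column-major order)."""
    return [A[i][j] for j in range(len(A[0])) for i in range(len(A))]

def kron(A, B):
    """Kronecker product (a_ij * B) as a dense list of rows."""
    return [[A[i][j] * B[k][l]
             for j in range(len(A[0])) for l in range(len(B[0]))]
            for i in range(len(A)) for k in range(len(B))]

def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

# Small integer example, so the identity holds exactly.
A = [[1, 2], [3, 4]]
X = [[0, 1], [5, 2]]
B = [[2, 0], [1, 3]]

lhs = vec(matmul(matmul(A, X), B))            # vec(A X B)
rhs = matvec(kron(transpose(B), A), vec(X))   # (B^T kron A) vec(X)
```

Both `lhs` and `rhs` evaluate to the same list, the column-stacked entries of \(AXB\).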

2 Existence of the Minimal Nonnegative Solution

In this section, we give a sufficient condition for the existence of the minimal nonnegative solution of the MPE (1.1). Some basic definitions are stated as follows.

Definition 2.1

[25] Let F be a matrix function from \(\mathbb {R}^{m\times n}\) to \(\mathbb {R}^{m\times n}\). Then a nonnegative (positive) solution \(S_1\) of the matrix equation \(F(X)=0\) is a minimal nonnegative (positive) solution if for any nonnegative (positive) solution S of \(F(X)=0\), it holds that \(S_1\le S.\)
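
For instance, in the scalar case \(m=1\) (an illustrative example, not taken from the paper), the equation below has two nonnegative solutions, and the smaller one is the minimal nonnegative solution in the sense of Definition 2.1:

$$\begin{aligned} \tfrac{1}{2}x^2-x+\tfrac{3}{8}=0 \quad \Longleftrightarrow \quad x\in \left\{ \tfrac{1}{2},\ \tfrac{3}{2}\right\} , \qquad S_1=\tfrac{1}{2}\le \tfrac{3}{2}. \end{aligned}$$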

Definition 2.2

[19] A matrix \(A\in \mathbb {R}^{m\times m}\) is an M-matrix if \(A=sI-B\) for some nonnegative matrix B and s with \(s\ge \rho (B)\) where \(\rho \) is the spectral radius; it is a singular M-matrix if \(s=\rho (B)\) and a nonsingular M-matrix if \(s>\rho (B)\).
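
As a small worked example of Definition 2.2 (the matrices are chosen for illustration only), take \(B\) to be the \(2\times 2\) matrix of ones, for which \(\rho (B)=2\). Choosing \(s=3>\rho (B)\) gives a nonsingular M-matrix, while \(s=2=\rho (B)\) gives a singular one:

$$\begin{aligned} \begin{pmatrix} 2 &{} -1\\ -1 &{} 2 \end{pmatrix} = 3I-\begin{pmatrix} 1 &{} 1\\ 1 &{} 1 \end{pmatrix} , \qquad \begin{pmatrix} 1 &{} -1\\ -1 &{} 1 \end{pmatrix} = 2I-\begin{pmatrix} 1 &{} 1\\ 1 &{} 1 \end{pmatrix} . \end{aligned}$$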

Theorem 2.3

Assume that the coefficient matrices \(A_{k}\) of the MPE (1.1) are nonnegative except for \(A_{1}\), and that \(-A_{1}\) is a nonsingular M-matrix. Then there exists a unique minimal nonnegative solution to the MPE (1.1) if

Proof

We define a matrix function by

where the \(A_k\)’s are coefficients of the MPE (1.1) and \(X\in \mathbb {R}^{m\times m}\).

Consider the sequence \(\{X_{k}\}_{k=0}^{\infty }\) defined by

with \(X_{0} = 0\).

By Theorems A.16 and A.19 in [4], there exists a vector \(v>0\) such that \(Bv > 0\) if B is a nonsingular M-matrix, or \(Bv = 0\) if B is a singular irreducible M-matrix, i.e.,

Since \(-A_{1}\) is a nonsingular M-matrix, it follows that

We show that

hold for all \(i=0, 1, \ldots \).

Clearly,

Hence, (2.3) holds for \(i = 0\).

Suppose that (2.3) holds for \(i = l\). Then,

On the other hand, it follows from (2.2) that

So, (2.3) holds for \(i = l+1\). By induction, (2.3) holds for all \(i =0,1,\ldots \), which implies that \(\{X_{i}\}\) converges to a nonnegative matrix.

Let S be the nonnegative matrix to which \(\{X_{i}\}\) converges and let Y be a nonnegative solution of the MPE (1.1). It is trivial that \(X_{0} \le Y\). Suppose that \(X_{l} \le Y\). Then,

By induction, \(X_{i} \le Y\) for all \(i=0,1,\ldots \). Therefore, \(S \le Y\) for any nonnegative solution Y of the MPE (1.1), i.e., S is the minimal nonnegative solution of the MPE (1.1). \(\square \)

Remark 2.4

From the proof of Theorem 2.3, we can see that the sequence \(\{X_i\}\) generated by \(X_{i+1}=G(X_i)\) is monotonically increasing and convergent. So if \(X_i>0\) for some \(i\ge 0\), then the matrix sequence \(\{X_i\}\) monotonically converges to the minimal positive solution of the MPE (1.1).
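
The monotone iteration can be sketched numerically as follows. The displayed definition of G is not reproduced in this text, so the sketch assumes \(G(X)=-A_1^{-1}\big (A_0+\sum _{k\ge 2}A_kX^k\big )\), which is consistent with \(X_1=-A_1^{-1}A_0\) in the proof of Corollary 2.5. It further assumes \(n=2\) and \(A_1=-I\), so that no inverse is needed and \(G(X)=A_0+A_2X^2\). The function names and the \(2\times 2\) data are hypothetical.

```python
# Sketch of X_{i+1} = G(X_i), X_0 = 0, for A2 X^2 + A1 X + A0 = 0 with
# A1 = -I (assumption), so that G(X) = A0 + A2 X^2.

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def matadd(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def G(X, A0, A2):
    return matadd(A0, matmul(A2, matmul(X, X)))

def fixed_point(A0, A2, iters=100):
    """Run the iteration from X_0 = 0 and keep every iterate."""
    m = len(A0)
    X = [[0.0] * m for _ in range(m)]
    history = [X]
    for _ in range(iters):
        X = G(X, A0, A2)
        history.append(X)
    return X, history

def residual_max(X, A0, A2):
    """Max norm of the residual A2 X^2 - X + A0 = G(X) - X."""
    GX = G(X, A0, A2)
    return max(abs(g - x) for rg, rx in zip(GX, X) for g, x in zip(rg, rx))

# Nonnegative A0, A2 whose row sums add up to 0.8 < 1 (illustrative data).
A0 = [[0.2, 0.1], [0.1, 0.2]]
A2 = [[0.3, 0.2], [0.2, 0.3]]
S, history = fixed_point(A0, A2)
```

For this data the iterates increase entrywise and `S` is positive, in line with Remark 2.4. The iteration converges only linearly, which is one reason the Newton-type methods cited in the Introduction are preferred in practice.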

Corollary 2.5

Under the assumption of Theorem 2.3, if

and one of the following conditions holds true:

-

(i)

Both \(A_0\) and \(A_1\) are irreducible matrices;

-

(ii)

\(A_0\) is a positive matrix.

Then, the MPE (1.1) has a minimal positive solution.

Proof

Note that if \(A_0\) and \(A_1\) are irreducible matrices, or if \(A_0\) is a positive matrix, we get \(X_1=-A_1^{-1}A_0>0\), where \(X_1\) is generated by iteration \(X_{i+1}=G(X_i)\) in the proof of Theorem 2.3. According to Remark 2.4, the existence of minimal positive solution of the MPE (1.1) can be proved by using the same technique listed in the proof of Theorem 2.3. \(\square \)

3 Normwise Condition Number

In this section, we investigate three kinds of normwise condition numbers of the MPE (1.1).

The perturbed equation of the MPE (1.1) is

To simplify the notation, we introduce the recursion function \(\Phi : \mathbb {N}\times \mathbb {N} \times \mathbb {R}^{m\times m}\times \mathbb {R}^{m\times m}\rightarrow \mathbb {R}^{m\times m}\) as defined in [38]:

where \(\mathbb {N}\) is the set of natural numbers and \(\mathbb {N}^+=\mathbb {N}-\{0\}\). It can be easily shown that

and

Using the function \(\Phi \), we can write the MPE (1.1) as

Lemma 3.1

(Theorem 2.1, [38]) If X and Y are \(m\times m\) matrices and \(\Phi \) is the recursion function defined by (3.2), then we have

By Lemma 3.1, Eq. (3.1) can be rewritten as

Dropping the high order terms in (3.3) yields

that is,

Applying the vec expression to (3.4) gives

where

Under certain conditions, usually satisfied in applications, the matrix P is nonsingular, as shown in [38]. We suppose that P is nonsingular in the remainder of this paper.

We define the following mapping

where X is the minimal nonnegative solution of the MPE (1.1).

Three kinds of normwise condition numbers are defined by

where

The nonzero parameters \(\delta _k\) in \(\Delta _1\) and \(\Delta _2\) provide some freedom in how to measure the perturbations. Generally, \(\delta _k\) is chosen as the functions of \(\Vert A_k\Vert _F\), and \(\delta _k=\Vert A_k\Vert _F\) is most often taken for \(k=0,1,\ldots , n\).

Theorem 3.2

Using the notations given above, the explicit expressions and upper bounds for the three kinds of normwise condition numbers at X of the MPE (1.1), where X is a solution to the MPE (1.1), are

where

Proof

It follows from (3.5) that

where

It yields

Note that \(\Vert r_1\Vert _2=\Delta _1\le \epsilon \), and it follows from (3.8) (when \(i=1\)) and inequality (3.14) that (3.10) holds.

According to (3.5), we get

Since \(\Vert r\Vert _2=\Delta _3\cdot \big \Vert \big [\Vert A_n\Vert _F,\ \Vert A_{n-1}\Vert _F, \ldots ,\Vert A_0\Vert _F\big ]\big \Vert _2\le \epsilon \sqrt{\sum _{k=0}^n\Vert A_k\Vert _F^2}\), then by (3.8) (when \(i=3\)) and inequality (3.15) we arrive at (3.12).

Let \(\epsilon =\Delta _2\). It follows from (3.13) that

On the other hand, (3.13) can be rewritten as

from which it is easy to get

where \(\mu =\sum _{k=0}^n\delta _k\Vert P^{-1}\big ((X^k)^T\otimes I_m\big )\Vert _2\).

Then, (3.11) is obtained according to inequalities (3.16) and (3.17). \(\square \)

Now we study another sensitivity analysis for the MPE (1.1). Consider the parameter perturbation of \(A_p: A_p(\tau )=A_p+\tau E_p\) and the equation

where \(E_p\in \mathbb {R}^{m\times m}\) and \(\tau \) is a real parameter.

Let \(Q(X,\tau )=\sum _{p=0}^nA_p(\tau )X^p\) and let \(X_{+}\) be any solution of the MPE (1.1) such that P is nonsingular. Then

-

(i)

\(Q(X_{+},0)=0\)

-

(ii)

\(Q(X,\tau )\) is differentiable arbitrarily many times in the neighborhood of \((X_{+},0)\), and

$$\begin{aligned} \frac{\partial Q}{\partial X}\Big |_{(X_{+}, 0)}&=\sum _{p=1}^n(I_m\otimes A_p)\sum _{q=0}^{p-1}\left( X_{+}^q\right) ^T\otimes X_{+}^{p-1-q}\\&=\sum _{p=1}^n\sum _{q=0}^{p-1}\left( X_{+}^q\right) ^T\otimes \left( A_pX_{+}^{p-1-q}\right) . \end{aligned}$$

Note that \(\frac{\partial Q}{\partial X}|_{(X_{+}, 0)}\) is exactly P in (3.6) and is nonsingular under our assumption. By the implicit function theorem [36], there exists \(\delta >0\) such that, for every \(\tau \in (-\delta ,\delta )\), there is a unique \(X(\tau )\) satisfying:

-

(i)

\(Q(X(\tau ),\tau )=0, X(0)=X_{+}\);

-

(ii)

\(X(\tau )\) is differentiable arbitrarily many times with respect to \(\tau \).
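
The Kronecker-product expression for \(\partial Q/\partial X\) given above can be checked numerically. The sketch below assembles \(P=\sum _{p=1}^n\sum _{q=0}^{p-1}(X^q)^T\otimes (A_pX^{p-1-q})\) in plain Python and compares \(P\,\mathrm{vec}(H)\) with a forward finite difference of \(Q(X)=\sum _pA_pX^p\) in a direction H. All helper names, the \(2\times 2\) data with \(n=3\), and the step size `t` are illustrative assumptions. The derivative formula holds at any X, so the X used here is not a solution of the equation.

```python
# Illustrative helpers (names are assumptions); matrices are lists of rows.

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def matadd(A, B, scale=1.0):
    """Return A + scale * B."""
    return [[a + scale * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def matpow(X, k):
    R = [[float(i == j) for j in range(len(X))] for i in range(len(X))]
    for _ in range(k):
        R = matmul(R, X)
    return R

def transpose(A):
    return [list(col) for col in zip(*A)]

def vec(A):
    return [A[i][j] for j in range(len(A[0])) for i in range(len(A))]

def kron(A, B):
    return [[A[i][j] * B[k][l]
             for j in range(len(A[0])) for l in range(len(B[0]))]
            for i in range(len(A)) for k in range(len(B))]

def Q(coeffs, X):
    """Q(X) = sum_p A_p X^p with coeffs = [A_0, A_1, ..., A_n]."""
    total = [[0.0] * len(X) for _ in X]
    for p, Ap in enumerate(coeffs):
        total = matadd(total, matmul(Ap, matpow(X, p)))
    return total

def build_P(coeffs, X):
    """P = sum_{p=1}^n sum_{q=0}^{p-1} (X^q)^T kron (A_p X^{p-1-q})."""
    m = len(X)
    P = [[0.0] * (m * m) for _ in range(m * m)]
    for p in range(1, len(coeffs)):
        for q in range(p):
            P = matadd(P, kron(transpose(matpow(X, q)),
                               matmul(coeffs[p], matpow(X, p - 1 - q))))
    return P

# Arbitrary 2x2 data with n = 3 (not from the paper).
coeffs = [[[0.2, 0.1], [0.1, 0.2]],     # A_0
          [[-1.0, 0.0], [0.0, -1.0]],   # A_1
          [[0.3, 0.2], [0.2, 0.3]],     # A_2
          [[0.1, 0.0], [0.05, 0.1]]]    # A_3
X = [[0.3, 0.1], [0.2, 0.4]]
H = [[1.0, -2.0], [0.5, 3.0]]
t = 1e-6

P = build_P(coeffs, X)
lin = [sum(pij * hj for pij, hj in zip(row, vec(H))) for row in P]  # P vec(H)
diff = matadd(Q(coeffs, matadd(X, H, t)), Q(coeffs, X), -1.0)
fd = [d / t for d in vec(diff)]                  # finite-difference derivative
err = max(abs(f - l) for f, l in zip(fd, lin))   # should be O(t)
```

The discrepancy `err` is of the order of the step size, as expected for a first-order finite difference.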

For

taking derivative for both sides of (3.19) with respect to \(\tau \) at \(\tau =0\) gives

Applying the vec operator to (3.20) yields

where

According to [37], we can derive the Rice condition number of \(X_{+}\):

4 Mixed and Componentwise Condition Number

In this section, we investigate the mixed and componentwise condition numbers of the MPE (1.1). Explicit expressions for these two kinds of condition numbers are derived. We first introduce some well-known results. To define mixed and componentwise condition numbers, the following distance function is useful. For any \(a, b\in \mathbb {R}^m\), define \(\frac{a}{b}=[c_1, c_2, \ldots , c_m]^T\) as

Then we define

Consequently for matrices \(A, B\in \mathbb {R}^{m\times m}\), we define

Note that if \(d(a,b)<\infty \), \(d(a,b)=\mathrm{min}\{\nu \ge 0:|a_i-b_i|\le \nu |b_i|\ \mathrm{for}\ i=1,2,\ldots ,m\}\).
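
The characterization of \(d(a,b)\) as the smallest \(\nu \) with \(|a_i-b_i|\le \nu |b_i|\) can be turned into a few lines of Python. The displayed definitions are not reproduced in this text, so the sketch assumes the usual Gohberg–Koltracht convention for zero entries: a pair \(a_i=b_i=0\) contributes 0, and \(a_i\ne 0\) against \(b_i=0\) makes the distance infinite. The function name `rel_distance` is hypothetical.

```python
import math

def rel_distance(a, b):
    """Componentwise relative distance d(a, b) = max_i |a_i - b_i| / |b_i|."""
    worst = 0.0
    for ai, bi in zip(a, b):
        if bi != 0:
            worst = max(worst, abs(ai - bi) / abs(bi))
        elif ai != 0:
            return math.inf  # nonzero entry against a zero reference entry
        # ai == bi == 0 contributes 0 (assumed convention)
    return worst

# A 50% change in a tiny entry is invisible to a norm-based relative
# distance but dominates the componentwise one.
d_small = rel_distance([1.5e-8, 1.0], [1.0e-8, 1.0])
```

Here `d_small` is approximately 0.5, whereas the corresponding normwise relative distance \(\Vert a-b\Vert _\infty /\Vert b\Vert _\infty \) is about \(5\times 10^{-9}\).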

In the sequel, we assume \(d(a,b)<\infty \) for any pair (a, b). For \(\epsilon >0\), we set \(B^0(a,\epsilon )=\{x|d(x,a)\le \epsilon \}\). For a vector-valued function \(F: \mathbb {R}^p\rightarrow \mathbb {R}^q\), \(\text{ Dom }(F)\) denotes the domain of F.

The mixed and componentwise condition numbers introduced by Gohberg and Koltracht [17] are listed as follows:

Definition 4.1

[17] Let \(F: \mathbb {R}^p\rightarrow \mathbb {R}^q\) be a continuous mapping defined on an open set \(\text{ Dom }(F)\subset \mathbb {R}^p\) such that \(0\notin \text{ Dom }(F)\) and \(F(a)\ne 0\) for a given \(a\in \mathbb {R}^p\).

-

(1)

The mixed condition number of F at a is defined by

$$\begin{aligned} m(F,a)=\lim _{\epsilon \rightarrow 0} {\mathop {\mathop {\hbox {sup}}\limits _{x\ne a}}\limits _{x\in B^0(a,\epsilon )}} \frac{\Vert F(x)-F(a)\Vert _{\infty }}{\Vert F(a)\Vert _{\infty }}\frac{1}{d(x,a)}. \end{aligned}$$

-

(2)

Suppose \(F(a)=\big [f_1(a), f_2(a), \ldots , f_q(a)\big ]^T\) such that \(f_j(a)\ne 0\) for \(j=1,2,\ldots , q\). The componentwise condition number of F at a is defined by

$$\begin{aligned} c(F,a)=\lim _{\epsilon \rightarrow 0} {\mathop {\mathop {\hbox {sup}}\limits _{x\in B^0(a,\epsilon )}}\limits _{x\ne a}}\frac{d(F(x), F(a))}{d(x,a)}. \end{aligned}$$

The explicit expressions of the mixed and componentwise condition numbers of F at a are given by the following lemma [13, 17].

Lemma 4.2

Suppose F is Fréchet differentiable at a. We have

-

(1)

if \(F(a)\ne 0\), then

$$\begin{aligned} m(F,a)=\frac{\Vert F'(a){ diag}(a)\Vert _{\infty }}{\Vert F(a)\Vert _{\infty }}=\frac{\Vert |F'(a)||a|\Vert _{\infty }}{\Vert F(a)\Vert _{\infty }}; \end{aligned}$$

-

(2)

if \(F(a)=\big [f_1(a), f_2(a), \ldots , f_q(a)\big ]^T\) such that \(f_j(a)\ne 0\) for \(j=1,2,\ldots , q\), then

$$\begin{aligned} c(F,a)=\Vert { diag}^{-1}(F(a))F'(a){ diag}(a)\Vert _{\infty }=\left\| \frac{|F'(a)||a|}{|F(a)|}\right\| _{\infty }. \end{aligned}$$
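
The two formulas of Lemma 4.2 are easy to evaluate once the Jacobian \(F'(a)\) is available. The following minimal sketch does so for the toy map \(F(a)=(a_1-a_2,\ a_1a_2)\) at \(a=(3,2)\). The map, the point, and the function name `mixed_and_componentwise` are illustrative assumptions, not taken from the paper.

```python
def mixed_and_componentwise(J, a, Fa):
    """Return (m, c) from Lemma 4.2, given the Jacobian J = F'(a).

    Requires every entry of Fa to be nonzero (needed for c).
    """
    # w = |F'(a)| |a|, computed row by row
    w = [sum(abs(Jij) * abs(aj) for Jij, aj in zip(row, a)) for row in J]
    m = max(w) / max(abs(f) for f in Fa)              # mixed condition number
    c = max(wi / abs(fi) for wi, fi in zip(w, Fa))    # componentwise
    return m, c

# Toy map F(a) = (a1 - a2, a1 * a2) at a = (3, 2).
a = [3.0, 2.0]
Fa = [a[0] - a[1], a[0] * a[1]]      # F(a) = (1, 6)
J = [[1.0, -1.0],                    # gradient of a1 - a2
     [a[1], a[0]]]                   # gradient of a1 * a2
m_cond, c_cond = mixed_and_componentwise(J, a, Fa)
```

Here \(|F'(a)||a|=(5,12)^T\), so \(m(F,a)=12/6=2\) and \(c(F,a)=\max \{5/1,\ 12/6\}=5\). The componentwise number is larger because the first output, a difference of nearby quantities, is relatively small.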

Theorem 4.3

Let \(m(\varphi )\) and \(c(\varphi )\) be the mixed and componentwise condition numbers of the MPE (1.1). Then we have

where

Furthermore, we have two simple upper bounds for \(m(\varphi )\) and \(c(\varphi )\) as follows:

and

Proof

It follows from (3.5) that \(\mathrm{vec}(\Delta X)\approx P^{-1}Lr\), which implies that the Fréchet derivative of \(\varphi \) is

where \(\varphi \) is defined by (3.7). Let \(v=[\mathrm{vec}(A_n)^T, \mathrm{vec}(A_{n-1})^T, \ldots , \mathrm{vec}(A_0)^T]^T\). From (1) of Lemma 4.2, we obtain

where

It holds that

Therefore,

From (2) of Lemma 4.2, we obtain

Similarly, it holds that

\(\square \)

5 Backward Error

In this section, we investigate the backward error of an approximate solution Y to the MPE (1.1). The backward error is defined by

Let

then we can write the equation in (5.1) as

from which we get

Applying the vec operator to (5.2) yields

For convenience, we write (5.3) as

where

We assume that H is of full rank. This guarantees that (5.3) has a solution and the backward error is finite.

From (5.4), an upper bound for \(\theta (Y)\) is obtained

where \(H^+\) is the pseudoinverse of H, and \(\sigma _\mathrm{min}(H)\) is the minimal singular value of H which is nonzero under the assumption that H is of full rank.

Note that

Thus

6 Numerical Examples

In this section, we give three numerical examples to show the sharpness of the normwise, mixed and componentwise condition numbers. All computations are made in Matlab 7.10.0 with the unit roundoff being \(u\approx 2.2\times 10^{-16}\).

Example 6.1

We consider the matrix polynomial equation \(\sum _{k=0}^9A_kX^k=X\) with \(A_k=D^{-1}\bar{A}_k\) for \(k=0,1,\ldots ,9\), where \(\bar{A}_k=rand(10)\) with rand as the random function in Matlab. The matrix D is a diagonal matrix whose entries are the row sums of \(\sum _{k=0}^9\bar{A}_k\) so that \((\sum _{k=0}^9A_k)\mathbf 1 _m=\mathbf 1 _m\). We rewrite the matrix polynomial as

Note that \(I_m-A_1\) is a nonsingular M-matrix and \(I_m-\sum _{k=0}^9A_k\) is a singular irreducible M-matrix. From Theorem 2.3, we know the minimal nonnegative solution S of Eq. (6.1) exists.
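
The normalization in this example can be sketched as follows. The paper uses Matlab's `rand`; this sketch substitutes Python's standard `random` module with a fixed seed, so the numbers differ from those behind Table 1. The function name `normalized_coefficients` and its parameters are hypothetical.

```python
import random

def normalized_coefficients(count=10, m=10, seed=0):
    """Return [A_0, ..., A_{count-1}] with A_k = D^{-1} A_bar_k."""
    rng = random.Random(seed)
    # A_bar_k: m x m matrices with entries uniform in [0, 1)
    A_bar = [[[rng.random() for _ in range(m)] for _ in range(m)]
             for _ in range(count)]
    # d[i] = i-th row sum of sum_k A_bar_k, i.e. the i-th diagonal entry of D
    d = [sum(sum(Ak[i]) for Ak in A_bar) for i in range(m)]
    # Left-multiplying by D^{-1} divides row i by d[i]
    return [[[Ak[i][j] / d[i] for j in range(m)] for i in range(m)]
            for Ak in A_bar]

A = normalized_coefficients()
# Row sums of sum_k A_k; each should equal 1 up to rounding.
row_sums = [sum(sum(Ak[i]) for Ak in A) for i in range(len(A[0]))]
# Row sums of A_1 alone; each is below 1.
row_sums_A1 = [sum(row) for row in A[1]]
```

Since \(A_1\) is nonnegative with row sums strictly below 1, its spectral radius is below 1, which is why \(I_m-A_1\) is a nonsingular M-matrix.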

Suppose that the perturbations in the coefficient matrices are

where s is a positive integer and \(\circ \) is the Hadamard product. Note that \(I_m-\tilde{A}_1\) and \(I_m-\sum _{k=0}^9\tilde{A}_k\) are also nonsingular M-matrices. Hence the corresponding perturbed equation has a unique minimal nonnegative solution \(\tilde{S}\).

We use Newton’s method proposed in [38] to compute the minimal nonnegative solutions S and \(\tilde{S}\). Choosing \(\delta _k=\Vert A_k\Vert _F\), we obtain from Theorem 3.2 three kinds of local normwise perturbation bounds: \(\Vert \Delta S\Vert _F/\Vert S\Vert _F\lesssim k_i(\varphi )\Delta _i\) for \(i=1,2,3\). Denoting \(k_2^U=\sqrt{n}k_1(\varphi )\) and \(k_2^M(\varphi )=\mu /\Vert S\Vert _F\), we compare these approximate perturbation bounds with the exact relative error \(\Vert \tilde{S}-S\Vert _F/\Vert S\Vert _F\). Table 1 shows that the three normwise perturbation bounds are close to the exact relative error. It also shows that the perturbation bound given by \(k_3(\varphi )\Delta _3\) is sharper than the other two bounds.

Example 6.2

This example is taken from [32]. Consider the matrix polynomial equation \(A_0+A_1X+A_2X^2=0\). The coefficient matrices \(A_0, A_1, A_2 \in \mathbb {R}^{m\times m}\) with \(m=8\) are given by \(A_0=M_1^{-1}M_0\), \(A_1=I\), \(A_2=M_1^{-1}M_2\), where \(M_0=\mathrm{diag}(\beta _1,\ldots ,\beta _m)\), \(M_2=\rho \cdot \mathrm{diag}(\alpha _1,\ldots ,\alpha _m)\) and

where \(\alpha =(0.2, 0.2, 0.2, 0.2, 13, 1, 1, 0.2)\), \(\beta _i=2\) for \(i=1,\ldots , m\) and \(\rho =0.99\).

This example represents a queueing system in a random environment, where periods of severe overflows alternate with periods of low arrivals. Note that in this example, both \(A_0\) and \(A_2\) are nonpositive. \(A_1\) itself is a nonsingular M-matrix. Consider the following equation

Then Eq. (6.2) has the same solutions as the equation \(A_0+A_1X+A_2X^2=0\), and the coefficient matrices in (6.2) satisfy the conditions in Corollary 2.5, so Eq. (6.2) has a minimal positive solution X. For \(k=0,1,2\), let \(\Delta A_k=rand(m)\circ A_k\times 10^{-s}\), where s is a positive integer; then \(\tilde{A_k}=A_k+\Delta A_k\) is the perturbed coefficient matrix of the corresponding perturbed equation. Similarly, the minimal positive solution \(\tilde{X}\) of the perturbed matrix polynomial equation exists and can be obtained by using Newton’s method in [38].
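
A perturbation of the form \(\Delta A_k=rand(m)\circ A_k\times 10^{-s}\) is componentwise: each entry changes by at most a relative amount \(10^{-s}\), and zero entries stay zero. The sketch below illustrates this with Python's `random` module in place of Matlab's `rand`. The function name `hadamard_perturbation` and the small test matrix are hypothetical.

```python
import random

def hadamard_perturbation(A, s, rng):
    """Delta A = R o A * 10**(-s), with R having entries uniform in [0, 1)."""
    return [[rng.random() * a * 10.0 ** (-s) for a in row] for row in A]

rng = random.Random(1)
A = [[0.0, -2.0], [1e-6, 5.0]]   # badly scaled matrix with a zero entry
s = 8
dA = hadamard_perturbation(A, s, rng)
# Perturbed matrix A + Delta A
A_tilde = [[a + d for a, d in zip(ra, rd)] for ra, rd in zip(A, dA)]
```

This is the setting in which the mixed and componentwise condition numbers of Sect. 4 are expected to give more informative bounds than the normwise ones.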

Let

and

Table 2 shows that the mixed and componentwise analyses give tighter and more revealing bounds than the normwise perturbation bounds.

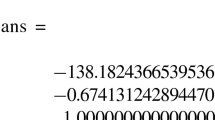

Example 6.3

We consider the matrix differential equation

Such equations may occur in connection with vibrating systems. The characteristic polynomial is

Let

and

The coefficient matrices of \(P_3(X)=0\) satisfy the condition in Corollary 2.5, so there is a minimal positive solution \(X_*\) such that \(P_3(X_*)=0\).

Let s be a positive integer and suppose the coefficient matrices are perturbed by \(\Delta A_i\ (i=0,1,2)\), where

and

Using the notations listed in Examples 6.1 and 6.2, the perturbation bounds obtained by the normwise, mixed and componentwise condition numbers are listed in Table 3. Table 3 shows that our estimated perturbation bounds are sharp. Moreover, we observe that the simple upper bounds \(m_U(\varphi )\) and \(c_U(\varphi )\) of the mixed and componentwise condition numbers \(m(\varphi )\) and \(c(\varphi )\), which are obtained in Theorem 4.3, are also tight.

7 Conclusion

In this paper, one sufficient condition for the existence of the minimal nonnegative solution of a matrix polynomial equation is given. Three kinds of normwise condition numbers of the matrix polynomial equation are investigated. The explicit expressions and upper bounds for the mixed and componentwise condition numbers are derived. A backward error is defined and evaluated.

References

Akar, N., Sohraby, K.: An invariant subspace approach in M/G/1 and G/M/1 type Markov chains. Commun. Statist. Stochastic Models 13, 381–416 (1997)

Alfa, A.S.: Combined elapsed time and matrix-analysis method for the discrete time \(GI/G/1\) and \(GI^X/G/I\) systems. Queueing Syst. 45, 5–25 (2003)

Bean, N.G., Bright, L., Latouche, G., Pearce, C.E.M., Pollett, P.K., Taylor, P.G.: The quasi-stationary behavior of quasi-birth-and-death processes. Ann. Appl. Probab. 7, 134–155 (1997)

Bini, D.A., Iannazzo, B., Meini, B.: Numerical Solution of Algebraic Riccati Equations. SIAM, Philadelphia, PA (2012)

Bini, D.A., Latouche, G., Meini, B.: Solving matrix polynomial equations arising in queueing problems. Linear Algebra Appl. 340, 225–244 (2002)

Bini, D.A., Latouche, G., Meini, B.: Numerical Methods for Structured Markov Chains. Oxford University Press, New York (2005)

Bini, D.A., Meini, B.: On cyclic reduction applied to a class of Toeplitz-like matrices arising in queueing problems. In: Stewart, W.J. (ed.) Computations with Markov Chains, pp. 21–38. Springer, Boston, MA (1995)

Bini, D.A., Meini, B.: On the solution of a nonlinear matrix equation arising in queueing problems. SIAM J. Matrix Anal. Appl. 17, 906–926 (1996)

Bini, D.A., Meini, B.: Improved cyclic reduction for solving queueing problems. Numer. Algorithms 15, 57–74 (1997)

Bini, D.A., Iannazzo, B., Latouche, G., Meini, B.: On the solution of algebraic Riccati equations arising in fluid queues. Linear Algebra Appl. 413, 474–494 (2006)

Bini, D.A., Meini, B., Poloni, F.: Transforming algebraic Riccati equations into unilateral quadratic matrix equations. Numer. Math. 116, 553–578 (2010)

Butler, G.J., Johnson, C.R., Wolkowicz, H.: Nonnegative solutions of a quadratic matrix equation arising from comparison theorems in ordinary differential equations. SIAM J. Algebr. Discrete Methods 6, 47–53 (1985)

Cucker, F., Diao, H., Wei, Y.: On mixed and componentwise condition numbers for Moore–Penrose inverse and linear least squares problems. Math. Comp. 76, 947–963 (2007)

Davis, G.J.: Numerical solution of a quadratic matrix equation. SIAM J. Sci. Stat. Comput. 2, 164–175 (1981)

Davis, G.J.: Algorithm 598: an algorithm to compute solvent of the matrix equation \(AX^2 + BX + C = 0\). ACM Trans. Math. Softw. 9, 246–254 (1983)

Diao, H.-A., Wei, Y., Qiao, S.: Structured condition numbers of structured Tikhonov regularization problem and their estimations. J. Comput. Appl. Math. 308, 276–300 (2016)

Gohberg, I., Koltracht, I.: Mixed, componentwise and structured condition numbers. SIAM J. Matrix Anal. Appl. 14, 688–704 (1993)

Gohberg, I., Lancaster, P., Rodman, L.: Matrix Polynomials. Academic Press, New York (1982)

Guo, C.-H., Higham, N.J.: Iterative solution of a nonsymmetric algebraic Riccati equation. SIAM J. Matrix Anal. Appl. 29, 396–412 (2007)

Guo, X.-X., Lin, W.-W., Xu, S.-F.: A structure-preserving doubling algorithm for nonsymmetric algebraic Riccati equation. Numer. Math. 103, 393–412 (2006)

He, Q.-M., Neuts, M.F.: On the convergence and limits of certain matrix sequences arising in quasi-birth-and-death Markov chains. J. Appl. Probab. 38, 519–541 (2001)

Higham, N.J.: A survey of componentwise perturbation theory in numerical linear algebra. In: Proceedings of the Symposium Applied Mathematics, vol. 48. American Mathematical Society, Providence, RI (1994)

Higham, N.J., Kim, H.-M.: Numerical analysis of a quadratic matrix equation. IMA J. Numer. Anal. 20, 499–519 (2000)

Higham, N.J., Kim, H.-M.: Solving a quadratic matrix equation by Newton’s method with exact line searches. SIAM J. Matrix Anal. Appl. 23, 303–316 (2001)

Kim, H.-M.: Convergence of Newton’s method for solving a class of quadratic matrix equations. Honam Math. J. 30, 399–409 (2008)

Kratz, W., Stickel, E.: Numerical solution of matrix polynomial equations by Newton’s method. IMA J. Numer. Anal. 7, 355–369 (1987)

Lancaster, P.: Lambda-matrices and Vibrating Systems. Pergamon Press, Oxford (1966)

Lancaster, P., Rodman, L.: Existence and uniqueness theorems for the algebraic Riccati equation. Int. J. Control 32, 285–309 (1980)

Larin, V.B.: Algorithms for solving a unilateral quadratic matrix equation and the model updating problem. Int. Appl. Mech. 50, 321–334 (2014)

Latouche, G.: Newton’s iteration for nonlinear equations in Markov chains. IMA J. Numer. Anal. 14, 583–598 (1994)

Latouche, G., Ramaswami, V.: A logarithmic reduction algorithm for quasi-birth-death processes. J. Appl. Probab. 30, 650–674 (1993)

Latouche, G., Ramaswami, V.: Introduction to matrix analytic methods in stochastic modeling. In: ASA-SIAM Series on Statistics and Applied Probability, vol. 5. SIAM, Philadelphia, PA (1999)

Latouche, G., Stewart, G.W.: Numerical methods for M/G/1 type queues. In: Stewart, W.J. (ed.) Computations with Markov Chains, pp. 571–581. Kluwer Academic Publishers, Dordrecht (1995)

Lin, Y., Wei, Y.: Normwise, mixed and componentwise condition numbers of nonsymmetric algebraic Riccati equations. J. Appl. Math. Comput. 27, 137–147 (2008)

Liu, L.D.: Mixed and componentwise condition numbers of nonsymmetric algebraic Riccati equation. Appl. Math. Comput. 218, 7595–7601 (2012)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

Rice, J.R.: A theory of condition. SIAM J. Numer. Anal. 3, 287–310 (1966)

Seo, J.-H., Kim, H.-M.: Convergence of pure and relaxed Newton methods for solving a matrix polynomial equation arising in stochastic models. Linear Algebra Appl. 440, 34–49 (2014)

Wang, W.-G., Wang, C.-S., Wei, Y.-M., Xie, P.-P.: Mixed, componentwise condition numbers and small sample statistical condition estimation for generalized spectral projections and matrix sign functions. Taiwan. J. Math. 20, 333–363 (2016)

Xiang, H., Wei, Y.-M.: Structured mixed and componentwise condition numbers of some structured matrices. J. Comput. Appl. Math. 202, 217–229 (2007)

Xue, J.-G., Xu, S.-F., Li, R.-C.: Accurate solutions of \(M\)-matrix Sylvester equations. Numer. Math. 120, 639–670 (2012)

Xue, J.-G., Xu, S.-F., Li, R.-C.: Accurate solutions of \(M\)-matrix algebraic Riccati equations. Numer. Math. 120, 671–700 (2012)

Zhou, L.-M., Lin, Y.-Q., Wei, Y.-M., Qiao, S.-Z.: Perturbation analysis and condition numbers of symmetric algebraic Riccati equations. Automatica 45, 1005–1011 (2009)

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) Grant funded by the Korean Government (MSIP) (NRF-2017R1A5A1015722) and the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2017R1D1A3B04033516). The authors thank the anonymous referees for providing very useful suggestions for improving this paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Meng, J., Seo, SH. & Kim, HM. Condition Numbers and Backward Error of a Matrix Polynomial Equation Arising in Stochastic Models. J Sci Comput 76, 759–776 (2018). https://doi.org/10.1007/s10915-018-0641-x

DOI: https://doi.org/10.1007/s10915-018-0641-x

Keywords

- Matrix polynomial equation

- Minimal nonnegative solution

- Perturbation analysis

- Condition number

- Mixed and componentwise

- Backward error