Abstract

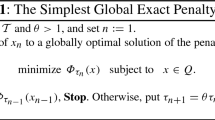

We propose a new approach to the theory of conditioning for numerical analysis problems for which both classical and stochastic perturbation theories fail to predict the observed accuracy of computed solutions. To motivate our ideas, we present examples of problems that are discontinuous at a given input and even have infinite stochastic condition number, but where the solution is still computed to machine precision without relying on structured algorithms. Stimulated by the failure of classical and stochastic perturbation theory in capturing such phenomena, we define and analyse a weak worst-case and a weak stochastic condition number. This new theory is a more powerful predictor of the accuracy of computations than existing tools, especially when the worst-case and the expected sensitivity of a problem to perturbations of the input is not finite. We apply our analysis to the computation of simple eigenvalues of matrix polynomials, including the more difficult case of singular matrix polynomials. In addition, we show how the weak condition numbers can be estimated in practice.


1 Introduction

The condition number of a computational problem measures the sensitivity of an output with respect to perturbations in the input. If the input–output relationship can be described by a differentiable function f near the input, then the condition number is the norm of the derivative of f. In the case of solving systems of linear equations, the idea of conditioning dates back at least to the work of von Neumann and Goldstine [47] and Turing [45], who coined the term. For an algorithm computing f in finite precision arithmetic, the importance of the condition number \(\kappa \) stems from the “rule of thumb” popularized by Higham [29, §1.6],

\[ \text{forward error} \lesssim \kappa \cdot (\text{backward error}). \]

The backward error is small if the algorithm computes the exact value of f at a nearby input, and a small condition number would certify that this is enough to get a small overall error. Higham’s rule of thumb comes from a first-order expansion, and in practice it often holds as an approximate equality and is valuable for practitioners who wish to predict the accuracy of numerical computations. Suppose that a solution is computed with, say, a backward error equal to \(10^{-16}\). If \(\kappa =10^2\), then one would trust the computed value to have (at least) 14 meaningful decimal digits.
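As a quick numerical reading of this rule of thumb, the following sketch converts a condition number and a backward error into a predicted number of correct decimal digits. It assumes the rule is read as "forward error is at most about \(\kappa \) times the backward error" and that the output is of order one, so that absolute errors translate directly into digits; the helper name `predicted_digits` is ours and purely illustrative.

```python
import math

def predicted_digits(kappa: float, backward_error: float) -> float:
    """Decimal digits predicted by: forward error <~ kappa * backward error."""
    forward_error_bound = kappa * backward_error
    if forward_error_bound <= 0:
        return math.inf  # zero backward error: no digits are lost
    # An infinite condition number gives an infinite bound, hence zero digits.
    return max(0.0, -math.log10(forward_error_bound))

# The example from the text: backward error 1e-16 and kappa = 1e2.
print(round(predicted_digits(1e2, 1e-16)))

# An infinite condition number certifies no digits at all.
print(predicted_digits(math.inf, 1e-16))
```

The second call shows why the rule is silent for ill-posed inputs: the bound it produces is vacuous, whatever accuracy is actually observed.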

The condition number can formally still be defined when f is not differentiable, though it may not be finite. If f is not locally Lipschitz continuous at an input, then the condition number is \(+ \infty \); a situation clearly beyond the applicability of Higham’s rule. Inputs at which the function f is not continuous are usually referred to as ill-posed. Based on the worst-case sensitivity, one would usually only expect a handful of correct digits when evaluating a function at such an input, and quite possibly none. On the other hand, a small condition number is not a necessary condition for a small forward–backward error ratio: it is not inconceivable that certain ill-conditioned or even ill-posed problems can be solved accurately. Consider, for example, the problem of computing an eigenvalue of the \(4 \times 4\) matrix pencil (linear matrix polynomial)

this is a singular matrix pencil (the determinant is identically zero) whose only finite eigenvalue is simple and equal to 1 (see Sect. 3 for the definition of an eigenvalue of a singular matrix polynomial and other relevant terminology). The input is L(x) and the solution is 1. If the QZ algorithm [36], which is the standard eigensolver for pencils, is called via MATLAB’s command eig, the output is:

All but the third computed eigenvalues are complete rubbish. This is not surprising: singular pencils form a proper Zariski closed set in the space of matrix pencils of a fixed format, and it is unreasonable to expect that an unstructured algorithm would detect that the input is singular and return only one eigenvalue. Instead, being backward stable, QZ computes the eigenvalues of some nearby matrix pencil, and almost all nearby pencils have 4 eigenvalues. On the other hand, the accuracy of the approximation of the genuine eigenvalue 1 is quite remarkable. Indeed, the condition number of the problem that maps L(x) to the exact eigenvalue 1 is infinite because the map from matrix pencils to their eigenvalues is discontinuous at any matrix pencil whose determinant is identically zero. To make matters worse, there exist plenty of matrix pencils arbitrarily close to L(x) whose eigenvalues are all nowhere near 1. For example, for any \(\epsilon> 0\),

where

has characteristic polynomial \(\epsilon ^2(\gamma _3-x)(x^3+\gamma _2 x^2 + \gamma _1 x + \gamma _0)\) and therefore, by an arbitrary choice of the parameters \(\gamma _i\), can have eigenvalues literally anywhere. Yet, unaware of this worrying caveat, the QZ algorithm computes an excellent approximation of the exact eigenvalue: 16 correct digits! This example has not been carefully cherry picked: readers are encouraged to experiment with any singular input in order to convince themselves that QZ often accurately computes the (simple) eigenvalues of singular pencils, or singular matrix polynomials, even though the problem is discontinuous. See also [32] for more examples and a discussion of applications. Although the worst-case sensitivity to perturbations is indeed infinite, the raison d’être of the condition number, which is to predict the accuracy of computations on a computer, is not fulfilled.

Why does the QZ algorithm accurately compute the eigenvalue, when the map f describing this computational problem is not even continuous? Two natural attempts at explaining this phenomenon would be to look at structured condition numbers and/or average-case (stochastic) perturbation theory.

1. An algorithm is structured if it computes the exact solution to a perturbed input, where the perturbations respect some special features of the input: for example, singular, of rank 3, triangular, or with precisely one eigenvalue. The vanilla implementation of QZ used here is unstructured in the sense that it does not preserve any of the structures that would explain the strange case of the algorithm that computes an apparently uncomputable eigenvalue. It does, however, preserve the real structure. In other words, if the input is real, QZ computes the eigenvalues of a nearby real pencil. Yet, by taking real \(\gamma _i\) in the example above, it is clear that there are real pencils arbitrarily close to L(x) whose eigenvalues are all arbitrarily far away from 1.

2. The classical condition number is based on the worst-case perturbation of an input; as discussed in [29, §2.8], this approach tends to be overly pessimistic in practice. Numerical analysis pioneer James Wilkinson, in order to illustrate that Gaussian elimination is unstable in theory, but in practice its instability is only observed by mathematicians looking for it, is reported to have said [43]:

Anyone that unlucky has already been run over by a bus.

In other words: in Wilkinson’s experience, the likelihood of seeing the admittedly terrifying worst case appeared to be very small, and therefore, Wilkinson believed that being afraid of the potential catastrophic instability of Gaussian elimination is an irrational attitude. Based on this experience, Weiss et al. [48] and Stewart [41] proposed to study the effect of perturbations on average, as opposed to worst case; see [29, §2.8] for more references on work addressing the stochastic analysis of roundoff errors. This idea was later formalized and developed further by Armentano [4]. This approach gives some hope to explain the example above, because it is known that the set of perturbations responsible for the discontinuity of f has measure zero [18]. However, this does not imply that on average perturbations are not harmful. In fact, as we will see, the stochastic condition number for the example above (or for similar problems) is still infinite! Average-case perturbation analysis, at least in the form in which it has been used so far, is still unable to solve the puzzle.

While neither structured nor average-case perturbation theory can explain the phenomenon observed above, Wilkinson’s colourful quote does contain a hint on how to proceed: shift attention from average-case analysis of perturbations to bounding rare events. We will get back to the matrix pencil (1) in Example 5.3, where we show that our new theory does explain why this problem is solved to high accuracy using standard backward stable algorithms.

In summary, the main contributions of this paper are

1. a new species of “weak” condition numbers, which we call the weak worst-case condition number and the weak stochastic condition number, that give a more accurate description of the perturbation behaviour of a computational map (Sect. 2);

2. a precise probabilistic analysis of the sensitivity of the problem of computing simple eigenvalues of singular matrix polynomials (Sects. 4 and 5);

3. an illustration of the advantages of the new concept by demonstrating that, unlike both classical and stochastic condition numbers, the weak condition numbers are able to explain why the apparently uncomputable eigenvalues of singular matrix polynomials, such as the eigenvalue 1 in the example above, can be computed with remarkable accuracy (Example 5.3);

4. a concrete method for bounding the weak condition numbers for the eigenvalues of singular matrix polynomials (Sect. 6).

1.1 Related Work

Rounding errors, and hence the perturbations considered, are not random [29, §1.17]. Nevertheless, the observation that the computed bounds on rounding errors are overly pessimistic has led to the study of statistical and probabilistic models for rounding errors. An early example of such a statistical analysis is Goldstine and von Neumann [27]; see [29, §2.8] and the references therein for more background. Recently, Higham and Mary [31] have obtained probabilistic rounding error bounds for a wide variety of algorithms in linear algebra. In particular, they give a rigorous foundation to Wilkinson’s rule of thumb, which states that constants in rounding error bounds can be safely replaced by their square roots.

The idea of using an average, rather than a supremum, in the definition of conditioning was introduced by Weiss et al. [48] in the context of the (matrix) condition number of solving systems of linear equations, and a more comprehensive stochastic perturbation theory was developed by Stewart [41]. In [4], Armentano introduced the concept of a smooth condition number and showed that it can be related to the worst-case condition. His work uses a geometric theory of conditioning and does not extend to singular problems.

The line of work on random perturbations is not to be confused with the probabilistic analysis of condition numbers, where a condition number is a given function, and the distribution of this function is studied over the space of inputs (see [13] and the references therein). Nevertheless, our work is inspired by the idea of weak average-case analysis [3] that was developed in this framework. Weak average-case analysis is based on the observation, which has origins in the work of Smale [40] and Kostlan [34], that discarding a small set from the input space can dramatically improve the expected value of a condition number, shifting the focus away from the average case and towards bounding the probability of rare events. Our contribution is to apply this line of thought to study random perturbations instead of random inputs. However, we stress that we do not seek to model the distribution of perturbations. The aim is to formally quantify statements such as “the set of bad perturbations is small compared to the set of good perturbations”. In other words, the (non-random) accumulation of rounding errors in a procedure would need a very good reason to give rise to a badly perturbed problem.

The conditioning of regular polynomial eigenvalue problems has been studied in detail by Tisseur [42] and by Dedieu and Tisseur in a homogeneous setting [15]. A probabilistic analysis of condition numbers (for random inputs) for such problems was given by Armentano and Beltrán [5] over the complex numbers and by Beltrán and Kozhasov [7] over the real numbers. Their work studies the distribution of the condition number on the whole space of inputs, and such an analysis only considers the condition number of regular matrix polynomials. A perturbation theory for singular polynomial eigenvalue problems was developed by de Terán and Dopico [14], and our work makes extensive use of their results. A method to solve singular generalized eigenvalue problems with plain QZ, based on applying a certain perturbation to them, is proposed in [32] (see also the references therein); note that our work goes beyond this, by showing how to estimate the weak condition number that could guarantee, often with overwhelming probability, that QZ will do fine even without any preliminary perturbation step.

1.2 Organization of the Paper

The paper is organized as follows: In Sect. 2, we review the rigorous definitions of the worst-case (von Neumann–Turing) condition number and the stochastic framework (Weiss et al., Stewart, Armentano) and comment on their advantages and limitations. We then define the weak condition numbers as quantiles and argue that, even when Wilkinson’s metaphorical bus hits von Neumann–Turing’s and Armentano-Stewart’s theories of conditioning, ours comes well endowed with powerful dodging skills. In Sect. 3, we introduce the perturbation theory of singular matrix polynomials, along with the definitions of simple eigenvalues and eigenvectors. We define the input–output map underlying our case study and introduce the directional sensitivity of such problems. In Sect. 4, which forms the core of this paper, we carry out a detailed analysis of the probability distribution of the directional sensitivity of the problems introduced in Sect. 3. In Sect. 5, we translate the probabilistic results from Sect. 4 into the language of weak condition numbers and prove the main results, Theorems 5.1 and 5.2. In Sect. 6, we sketch how our new condition numbers can be estimated in practice. Along the way we derive a simple concentration bound on the directional sensitivity of regular polynomial eigenvalue problems. Finally, in Sect. 7, we give some concluding remarks and discuss potential further applications.

2 Theories of Conditioning

For our purposes, a computational problem is a map between normed vector spaces

\[ f:\mathcal {V}\rightarrow \mathcal {W}, \]

and we will denote the (possibly different) norms in each of these spaces by \(\Vert \cdot \Vert \). Following the remark on [30, p. 56], for simplicity of exposition in this paper we focus on absolute, as opposed to relative, condition numbers. The condition numbers considered depend on the map f and an input \(D\in \mathcal {V}\).

As we are only concerned with the condition of a fixed computational problem at a fixed input D, in what follows we omit reference to f and D in the notation.

Definition 2.1

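(Worst-case condition number) The condition number of f at D is

\[ \kappa = \lim _{\epsilon \rightarrow 0}\ \sup _{0<\Vert E\Vert \le \epsilon } \frac{\Vert f(D+E)-f(D)\Vert }{\Vert E\Vert }. \]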

If f is Fréchet differentiable at D, then this definition is equivalent to the operator norm of the Fréchet derivative of f. However, Definition 2.1 also applies (and can even be finite) when f is not differentiable. In complexity theory [8, 13], an elegant geometric definition of condition number is often used, which is essentially equivalent to Definition 2.1 under certain assumptions (which include smoothness).

The following definition is loosely derived from the work of Stewart [41] and Armentano [4], based on earlier work by Weiss et al. [48]. In what follows, we use the terminology \(X\sim \mathcal {D}\) for a random variable with distribution \(\mathcal {D}\) and \({\mathbb {E}}_{X\sim \mathcal {D}}[\cdot ]\) for the expectation with respect to this distribution.

Definition 2.2

(Stochastic condition number) Let E be a \(\mathcal {V}\)-valued random variable with distribution \(\mathcal {D}\) and assume that \({\mathbb {E}}_{E\sim \mathcal {D}}[E]=0\) and \({\mathbb {E}}_{E\sim \mathcal {D}}[\Vert E\Vert ^2]=1\). Assume that the function f is measurable. Then, the stochastic condition number is

Remark 2.3

We note in passing that Definition 2.2 depends on the choice of a measure \(\mathcal {D}\). This measure is a parameter that the interested mathematician should choose as convenient; this is of course not particularly different than the freedom one is given in picking a norm. In fact, it is often convenient to combine these two choices, using a distribution that is invariant with respect to a given norm. Typical choices that emphasize invariance are the uniform (on a sphere) or Gaussian distributions, and the Bombieri-Weyl inner product when dealing with homogeneous multivariate polynomials [13, 16.1]. Technically speaking, the distribution is on the space of perturbations, rather than the space of inputs.

If f is differentiable at D and \(\mathcal {V}\) is finite dimensional, then it was observed by Armentano [4] that the stochastic condition number can be related to the worst-case one. We illustrate this relation in a simple but instructive special case. Consider the setting where \(f:\mathbb {R}^m\rightarrow \mathbb {R}^n\) (\(m\ge n\)) is differentiable at \(D\in \mathbb {R}^m\), so that \(\kappa \) is the operator norm of the differential. If \(\sigma _1\ge \cdots \ge \sigma _m\) denote the singular values of \(\mathrm {d}{f}(D)\) (with \(\sigma _i=0\) for \(i>n\)), then \(\kappa = \sigma _1\). If \(\mathcal {D}\) is the uniform distribution on the sphere, then

where for (a) we used the fact that

and for (b) we used the orthogonal invariance of the uniform distribution on the sphere. As we will see in the case of singular polynomial eigenvalue problems with complex perturbations, the bound (2) does not hold in general, as the condition number can be infinite while the stochastic condition number is bounded. However, sometimes it can happen that the stochastic condition number is also infinite, because the “directional sensitivity” (see Definition 2.4) is not an integrable function. For example, for the problem of computing the eigenvalue of the singular pencil L(x) in the introduction, in spite of the fact that real perturbations are analytic for all but a proper Zariski closed set of perturbations [18], when restricting to real perturbations, we get

Despite this, QZ computes the eigenvalue 1 with 16 digits of accuracy.

To remedy the shortcomings of the stochastic condition number as defined in Definition 2.2, we propose a change in focus from the expected value to tail bounds and quantiles, and the key concept for that purpose is the directional sensitivity. Just as the classical worst-case condition corresponds to the norm of the derivative, the directional sensitivity corresponds to a directional derivative. And, just as a function can have some, or all, directional derivatives while still not being continuous, a computational problem can have well-defined directional sensitivities but have infinite condition number.

Definition 2.4

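(Directional sensitivity) The directional sensitivity of the computational problem f at the input D with respect to the perturbation E is

\[ \sigma _E = \lim _{\epsilon \rightarrow 0} \frac{\Vert f(D+\epsilon E)-f(D)\Vert }{\epsilon \Vert E\Vert }. \]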

The directional sensitivity takes values in \([0,\infty ]\). In numerical analytic language, the directional sensitivity is the limit, for a particular direction of the backward error, of the ratio of forward and backward errors of the computational problem f; this limit is taken letting the backward error tend to zero (again having fixed its direction), which could also be thought of as letting the unit roundoff tend to zero. See e.g. [29, §1.5] for more details on this terminology.
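To make the distinction between directional sensitivity and worst-case conditioning concrete, here is a small sketch on a toy map of our own choosing (it does not appear in the paper): \(f(x,y)=x^2y/(x^4+y^2)\) with \(f(0,0)=0\). This map is discontinuous at the origin, since it equals 1/2 along the parabola \(y=x^2\), yet along every unit direction \(E=(a,b)\) with \(b\ne 0\) the ratio \(|f(\epsilon E)-f(0)|/\epsilon \) tends to \(a^2/|b|\). The code estimates this limit by a finite difference; the step `eps` is an arbitrary illustrative choice.

```python
import math

def f(x: float, y: float) -> float:
    """Toy map R^2 -> R, discontinuous at the origin (f = 1/2 on y = x^2)."""
    if x == 0.0 and y == 0.0:
        return 0.0
    return x * x * y / (x ** 4 + y * y)

def directional_sensitivity(a: float, b: float, eps: float = 1e-6) -> float:
    """Finite-difference estimate of |f(eps*E) - f(0)| / (eps * ||E||), E = (a, b)."""
    norm_e = math.hypot(a, b)
    return abs(f(eps * a, eps * b) - f(0.0, 0.0)) / (eps * norm_e)

# Directions approaching the x-axis: the sensitivity is finite but unbounded.
for theta in (1.0, 0.1, 0.01):
    a, b = math.cos(theta), math.sin(theta)
    limit = a * a / abs(b)  # value of the limit for b != 0 and ||E|| = 1
    print(theta, directional_sensitivity(a, b), limit)

# The map itself does not tend to f(0, 0) = 0 along the parabola y = x^2.
print(f(1e-3, 1e-6))
```

Every direction has a finite sensitivity here, but the supremum over directions is infinite, which is the situation described in the text.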

The directional sensitivity is, if it is finite, \(\Vert E\Vert ^{-1}\) times the norm of the Gâteaux derivative \(\mathrm {d}{f}(D;E)\) of f at D in direction E. If f is Fréchet differentiable, then the Gâteaux derivative agrees with the Fréchet derivative, and we get

If E is a \(\mathcal {V}\)-valued random variable satisfying the conditions of Definition 2.2 and if f is Gâteaux differentiable in almost all directions, then by the Fatou–Lebesgue theorem we get

When integrating, null sets can be safely ignored; however, depending on the exact nature of the divergence (or lack thereof) of the integrand when approaching those null sets, the value of the integral need not be finite. To overcome this problem and still give probabilistically meaningful statements, we propose to use instead the concept of numerical null sets, i.e. sets of finite but small (in a sense that can be made precise depending on, for example, the unit roundoff of the number system of choice, the confidence level required by the user, etc.) measure. This is analogous to the idea that the “numerical zero” is the unit roundoff. We next define our main characters, two classes of weak condition numbers which generalize, respectively, the classical worst-case and stochastic condition numbers.

In the following, we fix a probability space \((\Omega ,\Sigma ,\mathbb {P})\) and a random variable \(E:\Omega \rightarrow \mathcal {V}\), where we consider \(\mathcal {V}\) endowed with the Borel \(\sigma \)-algebra. We further assume that

The following definitions assume that \(\sigma _E\) is \(\mathbb {P}\)-measurable. This is the case, for example, if f is measurable and the directional (Gâteaux) derivative \(\mathrm {d}f(D;E(\omega ))\) exists \(\mathbb {P}\)-a.e.

Definition 2.5

(Weak worst-case and weak stochastic condition number) Let \(0\le \delta <1\) and assume that \(\sigma _E\) is \(\mathbb {P}\)-measurable. The \(\delta \)-weak worst-case condition number and the \(\delta \)-weak stochastic condition number are defined as

Remark 2.6

We note that one can give a definition of the weak worst-case and weak stochastic condition number that does not require \(\sigma _E\) to be a random variable, by setting

where we used the notation \(|\mathcal {S}|=\mathbb {P}(\mathcal {S})\) for the measure of a set if there is no ambiguity. This form is reminiscent of the definition of weak average-case analysis in [3], and when \(\sigma _E\) is a random variable, it can be shown to be equivalent to Definition 2.5. Moreover, this slightly more general definition better illustrates the essence of the weak condition numbers: these are the (worst-case and average-case) condition numbers that ensue when one is allowed to discard a “numerically invisible” subset from the set of perturbations.

The directional sensitivity has an interpretation as (the limit of) a ratio of forward and backward errors, and hence, the new approach provides a potentially useful general framework to give probabilistic bounds on the forward accuracy of outputs of numerically stable algorithms. Moreover, as we discuss in Sect. 6, upper bounds on the weak condition numbers can be computed in practice for a natural distribution. One can therefore see \(\delta \) as a parameter representing the confidence level that a user wants for the output, and any computable upper bound on \(\kappa _w\) becomes a practical reliability measure on the output, valid with probability \(1-\delta \). Although of course roundoff errors are not really random variables, we hope that modelling them as such can become, with this “weak theory”, a useful tool for numerical analysis problems whose traditional condition number is infinite.
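The role of \(\delta \) can be illustrated with a Monte Carlo sketch. We assume here, as a reading of the text rather than a quotation of the definitions, that the \(\delta \)-weak worst-case condition number is the \((1-\delta )\)-quantile of the directional sensitivity and that the \(\delta \)-weak stochastic condition number is the mean over the retained perturbations. The sensitivity used is a toy of our own: \(\sigma (\theta )=\cos ^2\theta /|\sin \theta |\) for the direction \((\cos \theta ,\sin \theta )\), which is the directional sensitivity at the origin of the map \((x,y)\mapsto x^2y/(x^4+y^2)\). Its mean over the circle is infinite, while its quantiles have the closed form \(\cos ^2(\pi \delta /2)/\sin (\pi \delta /2)\).

```python
import math
import random

def sigma(theta: float) -> float:
    """Toy directional sensitivity; its mean over the circle is infinite."""
    s = abs(math.sin(theta))
    return math.inf if s == 0.0 else math.cos(theta) ** 2 / s

def weak_condition_numbers(samples, delta):
    """Empirical (1 - delta)-quantile and mean of the samples not exceeding it."""
    ordered = sorted(samples)
    k = max(1, math.ceil((1.0 - delta) * len(ordered)))  # number of samples kept
    kept = ordered[:k]
    return kept[-1], sum(kept) / k

rng = random.Random(0)  # fixed seed, for reproducibility only
samples = [sigma(rng.uniform(0.0, 2.0 * math.pi)) for _ in range(200_000)]

for delta in (0.1, 0.01, 0.001):
    kappa_w, kappa_ws = weak_condition_numbers(samples, delta)
    half = math.pi * delta / 2.0
    exact = math.cos(half) ** 2 / math.sin(half)  # closed-form quantile of this toy
    print(delta, kappa_w, exact, kappa_ws)
```

In this toy model the quantile grows roughly like \(1/\delta \), so asking for more confidence costs a proportionally larger bound, but the bound is finite for every \(\delta >0\).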

3 Eigenvalues of Matrix Polynomials and Their Directional Sensitivity

Algebraically, the spectral theory of matrix polynomials is most naturally described over an algebraically closed field; however, the theory of condition is analytic in nature and it is sometimes of interest to restrict the coefficients, and their perturbations, to be real. In this section, we give a unified treatment of both real and complex matrix polynomials. For conciseness, we keep this overview very brief; interested readers can find further details in [14, 18, 19, 26, 37] and the references therein. A square matrix polynomial is a matrix \(P(x)\in \mathbb {F}[x]^{n\times n}\), where \(\mathbb {F}\in \{\mathbb {C},\mathbb {R}\}\) is a field. Alternatively, we can think of it as an expression

\[ P(x)=\sum _{i=0}^{d} P_i x^i \]

with \(P_i\in \mathbb {F}^{n\times n}\). If we require \(P_d \ne 0\), then the integer d in such an expression is called the degree of the matrix polynomial. We denote the vector space of matrix polynomials over \(\mathbb {F}\) of degree at most d by \(\mathbb {F}^{n\times n}_d[x]\). A square matrix polynomial is called singular if \(\det P(x)\equiv 0\) and otherwise regular. An element \(\lambda \in \mathbb {C}\) is said to be a finite eigenvalue of P(x) if

\[ \mathrm {rank}_{\mathbb {C}}\, P(\lambda ) < \mathrm {rank}_{\mathbb {F}(x)}\, P(x) =: r, \]

where \(\mathbb {F}(x)\) is the field of fractions of \(\mathbb {F}[x]\), that is, the field of rational functions with coefficients in \(\mathbb {F}\). We assume throughout rank \(r\ge 1\) (which implies \(n\ge 1\)) and degree \(d\ge 1\). The geometric multiplicity of the eigenvalue \(\lambda \) is the amount by which the rank decreases in the above definition,

\[ g_\lambda := r - \mathrm {rank}_{\mathbb {C}}\, P(\lambda ). \]

There exist matrices \(U, V \in \mathbb {F}[x]^{n\times n}\) with \(\det (U)\in \mathbb {F}\backslash \{0\}\), \(\det (V)\in \mathbb {F}\backslash \{0\}\), that transform P(x) into its Smith canonical form,

where the invariant factors \(h_i(x)\in \mathbb {F}[x]\) are nonzero monic polynomials such that \(h_i(x)|h_{i+1}(x)\) for \(i\in \{1,\ldots ,r-1\}\). If one has the factorizations \(h_{i}=(x-\lambda )^{k_i}\tilde{h}_{i}(x)\) for some \(\tilde{h}_i(x)\in \mathbb {C}[x]\), with \(0\le k_i\le k_{i+1}\) for \(i\in \{1,\ldots ,r-1\}\) and \((x-\lambda )\) not dividing any of the \(\tilde{h}_i(x)\), then the \(k_i\) are called the partial multiplicities of the eigenvalue \(\lambda \). The algebraic multiplicity \(a_\lambda \) is the sum of the partial multiplicities. Note that an immediate consequence of this definition is \(a_\lambda \ge g_\lambda \). If \(a_\lambda =g_\lambda \) (i.e. all nonzero \(k_i\) equal to 1), then the eigenvalue \(\lambda \) is said to be semisimple; otherwise, it is defective. If \(a_\lambda =1\) (i.e. \(k_i=1\) for \(i=r\) and zero otherwise), then we say that \(\lambda \) is simple; otherwise, it is multiple.

A square matrix polynomial is regular if \(r=n\), i.e. if \(\det P(x)\) is not identically zero. A finite eigenvalue of a regular matrix polynomial is simply a root of the characteristic equation \(\det P(x)=0\), and its algebraic multiplicity is equal to the multiplicity of the corresponding root. If a matrix polynomial is not regular it is said to be singular. More generally, a finite eigenvalue of a matrix polynomial (resp. its algebraic multiplicity) is a root (resp. the multiplicity as a root) of the equation \(\gamma _r(x)=0\), where \(\gamma _r(x)\) is the monic greatest common divisor of all the minors of P(x) of order r (note that \(\gamma _n(x)=\det P(x)\)).
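The distinction between regular and singular matrix polynomials can be checked mechanically in tiny cases. The sketch below is restricted to \(2\times 2\) matrix polynomials with integer coefficients, so that the test \(\det P(x)\equiv 0\) is exact; with floating-point coefficients such a test would not be reliable. Polynomials are stored as coefficient lists with the lowest degree first, and all names and the two example pencils are ours.

```python
from itertools import zip_longest

def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_sub(p, q):
    """Difference p - q, padding the shorter list with zeros."""
    return [a - b for a, b in zip_longest(p, q, fillvalue=0)]

def det2(P):
    """Determinant of a 2 x 2 matrix polynomial whose entries are coefficient lists."""
    return poly_sub(poly_mul(P[0][0], P[1][1]), poly_mul(P[0][1], P[1][0]))

def is_singular(P):
    """True if det P(x) is identically zero."""
    return all(c == 0 for c in det2(P))

# Regular pencil diag(1 - x, 2 - x): det = (1 - x)(2 - x), eigenvalues 1 and 2.
regular = [[[1, -1], [0]], [[0], [2, -1]]]
# Singular pencil [[1 - x, 1 - x], [x, x]]: the two columns coincide, so det = 0.
singular = [[[1, -1], [1, -1]], [[0, 1], [0, 1]]]

print(det2(regular))          # coefficients of 2 - 3x + x^2
print(is_singular(regular), is_singular(singular))
```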

Remark 3.1

The concept of an eigenvalue, and the other definitions recalled here, is valid also in the more general setting of rectangular matrix polynomials. However, in that scenario a generic matrix polynomial has no eigenvalues [18]; as a consequence, a perturbation of a matrix polynomial with an eigenvalue would almost surely remove it. This is a fairly different setting than in the square case, and a deeper probabilistic analysis of the rectangular case is beyond the scope of the present paper.

We mention in passing that there are possible ways to extend the analysis to the rectangular case, such as embedding a rectangular matrix polynomial in a larger square one or (at least in the case of pencils, or linear matrix polynomials) considering structured perturbations that do preserve eigenvalues.

3.1 Eigenvectors

To define the eigenvectors, let \(\{b_1(x),\ldots ,b_{n-r}(x)\}\) and \(\{c_1(x),\ldots ,c_{n-r}(x)\}\) be minimal bases [19, 23, 37] of \(\ker P(x)\) and \(\ker P(x)^*\) (as vector spaces over \(\mathbb {F}(x)\)), respectively. For \(\lambda \in \mathbb {C}\), it is not hard to see [19, 37] that \(\ker _\lambda P(x):=\mathrm {span}\{b_1(\lambda ),\ldots ,b_{n-r}(\lambda )\}\) and \(\ker _\lambda P(x)^*:=\mathrm {span}\{c_1(\lambda ^*),\ldots ,c_{n-r}(\lambda ^*)\}\) are vector spaces over \(\mathbb {C}\) of dimension \(n-r\).

Note that \(\ker _\lambda P(x) \subseteq \ker P(\lambda )\) and \(\ker _\lambda P(x)^*\subseteq \ker P(\lambda )^*\) for \(\lambda \in \mathbb {C}\), and that the difference in dimension is the geometric multiplicity, \(\dim \ker P(\lambda )-\dim \ker _\lambda P(x) = \dim \ker P(\lambda )^*- \dim \ker _\lambda P(x)^* = g_\lambda \). A right eigenvector corresponding to an eigenvalue \(\lambda \in \mathbb {C}\) is defined [19, Sec. 2.3] to be a nonzero element of the quotient space \(\ker P(\lambda )/\ker _\lambda P(x)\). A left eigenvector is similarly defined as an element of \(\ker P(\lambda )^*/\ker _\lambda P(x)^*\). In terms of the Smith canonical form (3), the last \(n-r\) columns of U, evaluated at \(\lambda ^*\), represent a basis of \(\ker _\lambda P(x)^*\), while the last \((n-r)\) columns of V, evaluated at \(\lambda \), represent a basis of \(\ker _\lambda P(x)\).

In the analysis, we will be concerned with a quantity of the form \(|u^*P'(\lambda ) v|\), where u, v are representatives of eigenvectors. It is known [19, Lemma 2.9] that \(b \in \ker _\lambda P(x)\) is equivalent to the existence of a polynomial vector b(x) such that \(b(\lambda )=b\) and \(P(x)b(x)=0\). Then,

\[ 0 = \frac{\mathrm {d}}{\mathrm {d}x}\big (P(x)b(x)\big )\Big |_{x=\lambda } = P'(\lambda )b(\lambda ) + P(\lambda )b'(\lambda ) \]

implies that for any representative of a left eigenvector \(u\in \ker P(\lambda )^*\) we get \(u^*P'(\lambda )b(\lambda )=0\). It follows that for an eigenvector representative v, \(u^*P'(\lambda )v\) depends only on the component of v orthogonal to \(\ker _\lambda P(x)\), and an analogous argument also shows that this expression only depends on the component of u orthogonal to \(\ker _\lambda P(x)^*\). In practice, we will therefore choose representatives u and v for the left and right eigenvectors that are orthogonal to \(\ker _{\lambda } P(x)^*\) and \(\ker _\lambda P(x)\), respectively, and have unit norm. If \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) is a matrix polynomial with simple eigenvalue \(\lambda \), then there is a unique (up to sign) way of choosing such representatives u and v.

3.2 Perturbations of Singular Matrix Polynomials: The De Terán–Dopico Formula

Assume that \(P(x)\in \mathbb {F}^{n\times n}_d[x]\), where \(\mathbb {F}\in \{ \mathbb {R}, \mathbb {C}\}\), is a matrix polynomial of rank \(r\le n\), and let \(\lambda \) be a simple eigenvalue. Let \(X=[U\ u]\in \mathbb {C}^{n\times (n-r+1)}\) be a matrix whose columns form a basis of \(\ker P(\lambda )^*\), and such that the columns of \(U\in \mathbb {C}^{n\times (n-r)}\) form a basis of \(\ker _\lambda P(x)^*\). Likewise, let \(Y=[V \ v]\) be a matrix whose columns form a basis of \(\ker P(\lambda )\), such that the columns of \(V\in \mathbb {C}^{n\times (n-r)}\) form a basis of \(\ker _\lambda P(x)\). In particular, v and u are representatives of, respectively, right and left eigenvectors of P(x). The following explicit characterization of a simple eigenvalue is due to De Terán and Dopico [14, Theorem 2 and Eqn. (20)]. To avoid making a case distinction for the regular case \(r=n\), we agree that \(\det (U^*E(\lambda )V)=1\) if U and V are empty.

Theorem 3.2

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a matrix polynomial of rank r with simple eigenvalue \(\lambda \) and X, Y as above. Let \(E(x)\in \mathbb {F}^{n\times n}_d[x]\) be such that \(X^*E(\lambda )Y\) is non-singular. Then, for small enough \(\epsilon >0\), the perturbed matrix polynomial \(P(x)+\epsilon E(x)\) has exactly one eigenvalue \(\lambda (\epsilon )\) of the form

\[ \lambda (\epsilon ) = \lambda - \frac{\det (X^*E(\lambda )Y)}{u^*P'(\lambda )v\cdot \det (U^*E(\lambda )V)}\,\epsilon + O(\epsilon ^2). \]

Note that in the special case \(r=n\) we recover the expression for regular matrix polynomials from [42, Theorem 5] and [14, Corollary 1],

\[ \lambda (\epsilon ) = \lambda - \frac{u^*E(\lambda )v}{u^*P'(\lambda )v}\,\epsilon + O(\epsilon ^2), \]

where u, v are left and right eigenvectors corresponding to the eigenvalue \(\lambda \).
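As a numerical sanity check of the regular case, the following Python sketch compares the eigenvalue of a perturbed \(2\times 2\) pencil with the first-order prediction \(\lambda -\epsilon \, u^*E(\lambda )v/(u^*P'(\lambda )v)\), which is obtained by differentiating \((P+\epsilon E)(\lambda (\epsilon ))v(\epsilon )=0\) at \(\epsilon =0\) and multiplying by \(u^*\) from the left. The pencil, the perturbation coefficients and all function names are illustrative assumptions and are not taken from the references.

```python
import cmath
import math

def mat_add(A, B, s=1.0):
    """Return A + s*B for 2x2 matrices given as nested lists."""
    return [[A[i][j] + s * B[i][j] for j in range(2)] for i in range(2)]

def bilinear(u, A, v):
    """Return u^* A v for 2-vectors u, v and a 2x2 matrix A."""
    return sum(complex(u[i]).conjugate() * A[i][j] * v[j]
               for i in range(2) for j in range(2))

def pencil_eigenvalues(P0, P1):
    """Roots of det(P0 + x*P1) = 0 for 2x2 coefficients with det(P1) != 0."""
    qa = P1[0][0] * P1[1][1] - P1[0][1] * P1[1][0]
    qb = (P0[0][0] * P1[1][1] + P0[1][1] * P1[0][0]
          - P0[0][1] * P1[1][0] - P0[1][0] * P1[0][1])
    qc = P0[0][0] * P0[1][1] - P0[0][1] * P0[1][0]
    disc = cmath.sqrt(qb * qb - 4 * qa * qc)
    return [(-qb + disc) / (2 * qa), (-qb - disc) / (2 * qa)]

# Hypothetical regular pencil P(x) = P0 + x*P1 with simple eigenvalue lam = 2.
P0 = [[2.0, 1.0], [0.0, 3.0]]
P1 = [[-1.0, 0.0], [0.0, -1.0]]
lam = 2.0
v = [1.0, 0.0]                                   # P(lam) v = 0
u = [1 / math.sqrt(2), -1 / math.sqrt(2)]        # u^* P(lam) = 0

# Arbitrary perturbation direction E(x) = E0 + x*E1 (an assumption for this demo).
E0 = [[0.3, -0.5], [0.7, 0.2]]
E1 = [[-0.4, 0.1], [0.6, 0.9]]

# First-order coefficient -u^* E(lam) v / (u^* P'(lam) v); here P'(lam) = P1.
slope = -bilinear(u, mat_add(E0, E1, lam), v) / bilinear(u, P1, v)

eps = 1e-5
roots = pencil_eigenvalues(mat_add(P0, E0, eps), mat_add(P1, E1, eps))
lam_eps = min(roots, key=lambda z: abs(z - lam))  # perturbed eigenvalue near lam
predicted = lam + eps * slope
print(slope.real, abs(lam_eps - predicted))
```

The discrepancy between `lam_eps` and `predicted` should be of order \(\epsilon ^2\), in line with a first-order expansion.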

3.3 The Directional Sensitivity of a Singular Polynomial Eigenproblem

We can now describe the input–output map that underlies our analysis. By the local nature of our problem, we consider a fixed matrix polynomial \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) of rank r with simple eigenvalue \(\lambda \) and define the input–output function

that maps P(x) to \(\lambda \), maps \(P(x)+\epsilon E(x)\) to \(\lambda (\epsilon )\) for any E(x) and \(\epsilon >0\) satisfying the conditions of Theorem 3.2, and maps any other matrix polynomial to an arbitrary number other than \(\lambda \).

An immediate consequence of Theorem 3.2 and our definition of the input–output map is an explicit expression for the directional sensitivity of the problem. Here we write \(\Vert E\Vert \) for the Euclidean norm of the vector of coefficients of E(x) as a vector in \(\mathbb {F}^{n^2(d+1)}\). From now on, when talking about the “directional sensitivity of an eigenvalue in direction E”, we implicitly refer to the input–output map f defined above.

Corollary 3.3

Let \(\lambda \) be a simple eigenvalue of P(x) and let \(E(x)\in \mathbb {F}^{n\times n}_d[x]\) be a regular matrix polynomial. Then, the directional sensitivity of the eigenvalue \(\lambda \) in direction E(x) is

In the special case \(r=n\), we have

For the goals in this paper, these results suffice. However, we note that it is possible to obtain equivalent formulae for the expansion that, unlike the one by De Terán and Dopico, do not involve the eigenvectors of singular polynomials.

Finally, we introduce a parameter that will enter all of our results and coincides with the inverse of the worst-case condition number in the regular case \(r=n\). Choose representatives u, v of the eigenvectors that satisfy \(\Vert u\Vert =\Vert v\Vert =1\) and (if \(r<n\)) \(U^*u=V^*v=0\). For such a choice of eigenvectors, define

We conclude with the following variation of [42, Theorem 5]. For a proof, see [1, Lemma 2.1], or [2] for a discussion in a wider context.

Proposition 3.4

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a regular matrix polynomial and \(\lambda \in \mathbb {C}\) a simple eigenvalue. Then, the worst-case condition number of the problem of computing \(\lambda \) is \(\kappa = \gamma _P^{-1}\).
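To make the role of \(\gamma _P\) concrete, the sketch below evaluates \(\gamma _P=|u^*P'(\lambda )v|\cdot (\sum _{j=0}^d|\lambda |^{2j})^{-1/2}\) for a small regular pencil and checks empirically that the quantity \(|u^*E(\lambda )v|/(\Vert E\Vert \,|u^*P'(\lambda )v|)\), which we take here as the directional sensitivity in the regular case, never exceeds \(\gamma _P^{-1}\) and attains it for a specific direction. The pencil, the sampling scheme and the helper names are assumptions made for illustration only.

```python
import math
import random

# Hypothetical real pencil P(x) = [[2, 1], [0, 3]] - x*I with eigenvalue lam = 2.
lam, d = 2.0, 1
u = [1 / math.sqrt(2), -1 / math.sqrt(2)]   # unit left eigenvector (real, no conjugation needed)
v = [1.0, 0.0]                              # unit right eigenvector
P1 = [[-1.0, 0.0], [0.0, -1.0]]             # P'(lam) = P1 for a pencil

def bilinear(u, A, v):
    """Return u^T A v for real 2-vectors and a real 2x2 matrix."""
    return sum(u[i] * A[i][j] * v[j] for i in range(2) for j in range(2))

weight = math.sqrt(sum(abs(lam) ** (2 * j) for j in range(d + 1)))
gamma_P = abs(bilinear(u, P1, v)) / weight
kappa = 1 / gamma_P                         # worst-case condition number (Proposition 3.4)

def sensitivity(E0, E1):
    """|u^* E(lam) v| / (||E|| |u^* P'(lam) v|) for E(x) = E0 + E1*x."""
    E_lam = [[E0[i][j] + lam * E1[i][j] for j in range(2)] for i in range(2)]
    norm_E = math.sqrt(sum(x * x for M in (E0, E1) for row in M for x in row))
    return abs(bilinear(u, E_lam, v)) / (norm_E * abs(bilinear(u, P1, v)))

rng = random.Random(0)
samples = []
for _ in range(2000):
    E0 = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(2)]
    E1 = [[rng.gauss(0, 1) for _ in range(2)] for _ in range(2)]
    samples.append(sensitivity(E0, E1))

# Direction attaining the bound: E_j = lam^j * u v^T.
W0 = [[u[i] * v[j] for j in range(2)] for i in range(2)]
W1 = [[lam * W0[i][j] for j in range(2)] for i in range(2)]
worst = sensitivity(W0, W1)
print(kappa, max(samples), worst)
```

In this example \(\gamma _P^{-1}=\sqrt{10}\), and typical random directions give values well below it.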

Remark 3.5

In practice, an algorithm such as QZ applied to P(x) will typically compute all the eigenvalues of a nearby matrix polynomial. Therefore, any results on the conditioning of our specific input–output map f will explain why the correct eigenvalue is found among the computed eigenvalues, but not tell us how to choose the right one in practice. For selecting the right eigenvalue, one could use heuristics, such as computing the eigenvalues of an artificially perturbed problem. For more details on these practical considerations, we refer to [32].

4 Probabilistic Analysis of the Directional Sensitivity

In this section, we study the probability distribution of the directional sensitivity of a singular polynomial eigenvalue problem. To deal with real and complex perturbations simultaneously as far as possible, we follow the convention from random matrix theory [22] and parametrize our results with a parameter \(\beta \), where \(\beta =1\) if \(\mathbb {F}=\mathbb {R}\) and \(\beta =2\) if \(\mathbb {F}=\mathbb {C}\). We consider perturbations \(E(x)=E_0+E_1x+\cdots +E_dx^d\), which we identify with the matrix \(E=\begin{bmatrix} E_0&\cdots&E_d\end{bmatrix}\in \mathbb {F}^{n\times n(d+1)}\) (each \(E_i\in \mathbb {F}^{n\times n}\)), and denote by \(\Vert E\Vert \) the Euclidean norm of E considered as a vector in \(\mathbb {F}^N\), where \(N:=n^2(d+1)\) (equivalently, the Frobenius norm of the matrix E). When we say that E is uniformly distributed on the sphere, written \(E\sim \mathcal {U}(\beta N)\) with \(\beta =1\) for real perturbations and \(\beta =2\) if E is complex, we mean that the image of E under an identification \(\mathbb {F}^{n\times n(d+1)}\cong \mathbb {R}^{\beta N}\) is uniformly distributed on the corresponding unit sphere \(S^{\beta N-1}\). To avoid trivial special cases, we assume that \(r\ge 1\) and \(d\ge 1\), so that, in particular, \(N\ge 2\).

The following theorem characterizes the distribution of the directional sensitivity under uniform perturbations.

Theorem 4.1

Let \(P(x)\in \mathbb {F}_d^{n\times n}[x]\) be a matrix polynomial of rank r and let \(\lambda \) be a simple eigenvalue of P(x). If \(E\sim \mathcal {U}(\beta N)\), where \(\beta =1\) if \(\mathbb {F}=\mathbb {R}\) and \(\beta =2\) if \(\mathbb {F}=\mathbb {C}\), then the directional sensitivity of \(\lambda \) in direction E(x) satisfies

where \(Z_k\sim \mathrm {B}(\beta /2,\beta (k-1)/2)\) denotes a beta distributed random variable with parameters \(\beta /2\) and \(\beta (k-1)/2\), and \(Z_N\) and \(Z_{n-r+1}\) are independent.

The proof is given later in this section, after having introduced some preliminary concepts and results. If \(r=n\), then the directional sensitivity is distributed like the square root of a beta random variable, and in particular, it is bounded. Using the density of the beta distribution, we can derive the moments and tail bounds for the distribution of the directional sensitivity explicitly.

Corollary 4.2

Let \(P(x)\in \mathbb {F}_d^{n\times n}[x]\) be a matrix polynomial of rank r and let \(\lambda \) be a simple eigenvalue of P(x). If \(E\sim \mathcal {U}(\beta N)\), where \(\beta =1\) if \(\mathbb {F}=\mathbb {R}\) and \(\beta =2\) if \(\mathbb {F}=\mathbb {C}\), then the expected directional sensitivity of \(\lambda \) in direction E(x) is

If \(t\ge \gamma _P^{-1}\), then for \(r<n\) we have the tail bounds

If \(r=n\), then \(\sigma _E\le \gamma _P^{-1}\).

Proof

For the expectation, using Theorem 4.1 in the case \(r<n\), we have

where \(X_k\) denotes a \(\mathrm {B}(\beta /2,\beta (k-1)/2)\) distributed random variable. The claimed tail bounds and expected values for \(r<n\) follow by applying Lemma A.1 with \(k=2\), \(a=c=\beta /2\), \(b=\beta (N-1)/2\), and \(d=\beta (n-r)/2\). If \(r=n\), the expected value follows along the same lines, and the deterministic bound follows trivially from the boundedness of the beta distribution. \(\square \)

Remark 4.3

In the context of random inputs, it is common to study the logarithm of a condition number instead of the condition number itself [13, 21]. Thus, even when the expected condition is not finite, the expected logarithm may still be small. Using a standard argument (see e.g. [13, Proposition 2.26]), we can deduce a bound on the expected logarithm of the directional sensitivity:

The logarithm of the sensitivity is relevant as a measure for the loss of precision.

As the derivation of the bounds (6) using Lemma A.1 shows, the cumulative distribution functions in question can be expressed exactly in terms of integrals of hypergeometric functions. This way, the tail probabilities can be computed to high accuracy for any given t, see also Remark 4.6. However, as the derivation of the tail bounds in “Appendix A” also shows, the bounds given in Corollary 4.2 are sharp for fixed t and \(n-r\rightarrow \infty \), as well as for fixed \(n-r\) and \(t\rightarrow \infty \). Figure 1 illustrates these bounds for a choice of small parameters (\(n=4\), \(d=2\), \(r=2\), \(\gamma _P=1\)). Moreover, the bounds (6) have the added benefit of being easily interpretable. These tail bounds can be interpreted as saying that for large n and/or d, it is highly unlikely that the directional sensitivity will exceed \(\gamma _P^{-1}\) (which by Proposition 3.4 is the worst-case condition bound in the smooth case \(r=n\)).

Example 4.4

Consider again the matrix pencil L(x) from (1). This pencil has rank 3, and the cokernel and kernel are spanned by the vectors p(x) and q(x), respectively, given by

The matrix polynomial has the simple eigenvalue \(\lambda =1\), and the matrix L(1) has rank 2. The cokernel \(\ker L(1)^T\) and the kernel \(\ker L(1)\) are spanned by the columns of the matrices X and Y, given by

Let u be the second column of X and let v be the second column of Y. The vectors u and v are orthogonal to \(\ker _{\lambda } L(x)^T = \mathrm {span}\{p(1)\}\) and \(\ker _{\lambda } L(x)=\mathrm {span}\{q(1)\}\) and have unit norm. We therefore have

Hence, \(\gamma _L^{-1}=12.16\). Figure 2 shows the result of comparing the distribution of \(\sigma _E\), found empirically, with the bounds obtained in Theorem 4.1. The relative error in the plot is of order \(10^{-5}\).

The plan for the rest of this section is as follows: In Sect. 4.1, we recall some facts from probability theory and random matrix theory. In Sect. 4.2, we discuss the QR decomposition of a random matrix, and in Sect. 4.3 we use this decomposition to prove Theorem 4.1.

4.1 Probabilistic Preliminaries

We write \(g\sim \mathcal {N}^1(\mu ,\Sigma )\) for a normally distributed (Gaussian) random vector g with mean \(\mu \) and covariance matrix \(\Sigma \), and \(g\sim \mathcal {N}^2(\mu ,\Sigma )\) for a complex Gaussian vector; this is a \(\mathbb {C}^n\)-valued random vector with expected value \(\mu \), whose real and imaginary parts are independent real Gaussian random vectors with covariance matrix \(\Sigma /2\) (a special case are real and complex scalar random variables, \(\mathcal {N}^{\beta }(\mu ,\sigma ^2)\)). We denote the uniform distribution on a sphere \(S^{n-1}\) by \(\mathcal {U}(n)\). Every Gaussian vector \(g\sim \mathcal {N}^1(0,I_n)\) can be written as a product \(g=rq\) with r and q independent, where \(r\sim \chi (n)\) is \(\chi \)-distributed with n degrees of freedom, and \(q\sim \mathcal {U}(n)\).

4.1.1 Projections of Random Vectors

The squared projected lengths of Gaussian and uniformly distributed random vectors can be described using the \(\chi ^2\) and the beta distribution, respectively. A random variable X is \(\chi ^2\)-distributed with k degrees of freedom, \(X\sim \chi ^2(k)\), if the cumulative distribution function (cdf) is

The special case \(\chi ^2(2)\) is the exponential distribution with parameter 1/2, written \(\mathrm {exp}(1/2)\). The beta distribution \(\mathrm {B}(a,b)\) is defined for \(a,b>0\) and has cdf supported on [0, 1],

where \(\mathrm {B}(a,b)=\Gamma (a)\Gamma (b)/\Gamma (a+b)\) is the beta function. For a vector \(x\in \mathbb {F}^{n}\), denote by \(\pi _k(x)\) the projection onto the first k coordinates and by \(\Vert \pi _k(x)\Vert ^2=|x_1|^2+\cdots +|x_k|^2\) its squared length. The following facts are known:

-

If \(g\sim \mathcal {N}^\beta (0,I_n)\), then \(\beta \Vert \pi _k(g)\Vert ^2\sim \chi ^2(\beta k)\);

-

If \(q\sim \mathcal {U}(n)\), then \(\Vert \pi _k(q)\Vert ^2\sim \mathrm {B}(k/2,(n-k)/2)\).

The first claim is a standard fact about the normal distribution and can be derived directly from it, see, for example, [9]. The statement for the uniform distribution can be derived from the Gaussian one, but also follows by a change of variables from expressions for the volume of tubular neighbourhoods of subspheres of a sphere, see for example [13, Section 20.2]. Since all the distributions considered are orthogonally (in the real case) or unitarily (in the complex case) invariant, these observations hold for the projection of a random vector onto any k-dimensional subspace, not just the first k coordinates.
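The two facts above can be checked by simulation in the real case. The following sketch, which uses only the Python standard library, samples a uniform vector on \(S^{n-1}\) by normalizing a Gaussian vector (using the decomposition \(g=rq\) from Sect. 4.1) and compares sample means with the means of \(\mathrm {B}(k/2,(n-k)/2)\) and \(\chi ^2(k)\), namely k/n and k. The dimensions, the seed and the sample size are arbitrary choices made for illustration.

```python
import random

def squared_projection_uniform(n, k, rng):
    """Squared length of the first k coordinates of a uniform point on S^(n-1)."""
    g = [rng.gauss(0.0, 1.0) for _ in range(n)]
    total = sum(x * x for x in g)
    return sum(x * x for x in g[:k]) / total

rng = random.Random(1)
n, k, trials = 10, 3, 20000

proj = [squared_projection_uniform(n, k, rng) for _ in range(trials)]
mean_proj = sum(proj) / trials            # B(k/2, (n-k)/2) has mean k/n

chi2 = [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k)) for _ in range(trials)]
mean_chi2 = sum(chi2) / trials            # chi^2(k) has mean k

print(mean_proj, k / n, mean_chi2, k)
```

Comparing means is only a coarse check; a comparison of empirical and exact cdfs would be a natural refinement.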

4.1.2 Random Matrix Ensembles

If P(x) is a singular matrix polynomial with a simple eigenvalue \(\lambda \), then the set of perturbation directions for which the directional sensitivity is not finite is a proper Zariski closed subset, see Theorem 3.2. It is therefore natural and convenient to consider probability measures on the space of perturbations that have measure zero on proper Zariski closed subsets. This is the case, for example, if the measure is absolutely continuous with respect to the Lebesgue measure. In this paper, we will work with real and complex Gaussian and uniform distributions. For a detailed discussion of the random matrix ensembles used here, we refer to [24, Chapters 1-2].

For a random matrix, we write \(G\sim \mathcal {G}^\beta _n(\mu ,\sigma ^2)\) if each entry of G is an independent \(\mathcal {N}^\beta (\mu ,\sigma ^2)\) random variable, and call this a Gaussian random matrix. In the case \(\beta =2\), this is called the Ginibre ensemble [25]. Centred (\(\mu =0\)) Gaussian random matrices are orthogonally (if \(\beta =1\)) or unitarily (if \(\beta =2\)) invariant ([35, Lemma 1]), and the joint density of their entries is given by

which takes into account the fact that the real and imaginary parts of the entries of a complex Gaussian have variance 1/2. In addition, we consider the circular real ensemble \(\mathrm {CRE}(n)\) for real orthogonal matrices in O(n) and the circular unitary ensemble \(\mathrm {CUE}(n)\) [20] for unitary matrices in U(n), where both distributions correspond to the unique Haar probability measure on the corresponding groups.

4.2 The Probabilistic QR Decomposition

Any non-singular matrix \(A\in \mathbb {F}^{n\times n}\) has a unique QR decomposition \(A=QR\), where \(Q\in O(n)\) (if \(\mathbb {F}=\mathbb {R}\)) or U(n) (if \(\mathbb {F}=\mathbb {C}\)), and \(R\in \mathbb {F}^{n\times n}\) is upper triangular with \(r_{ii}>0\) [44, Part II]. The following proposition describes the distribution of the factors Q and R in the QR decomposition of a (real or complex) Gaussian random matrix.

Proposition 4.5

Let \(G\sim \mathcal {G}_n^\beta (0,1)\) be a Gaussian random matrix, \(\beta \in \{1,2\}\). Then G can be factored uniquely as \(G=QR\), where \(R=(r_{jk})_{1\le j\le k\le n}\) is upper triangular and

-

\(Q\sim \mathrm {CUE}(n)\) if \(\beta =2\) and \(Q\sim \mathrm {CRE}(n)\) if \(\beta =1\);

-

\(\beta r_{ii}^2\sim \chi ^2(\beta (n-i+1))\) for \(i\in \{1,\ldots ,n\}\);

-

\(r_{jk}\sim \mathcal {N}^\beta (0,1)\) for \(1\le j<k\le n\).

Moreover, all these random variables are independent.

An easy and conceptual derivation of the distribution of Q can be found in [35], while the distribution of R can be deduced from the known expression for the Jacobian of the QR decomposition [22, 3.3].
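The distributional claims about R in Proposition 4.5 can be illustrated in the real case (\(\beta =1\)) with a small simulation. The sketch below computes the QR decomposition with positive diagonal by modified Gram–Schmidt and compares the sample means of \(r_{ii}^2\) with \(n-i+1\), the mean of \(\chi ^2(n-i+1)\), and the sample mean of \(r_{12}^2\) with 1. The routine `qr_positive_diagonal`, the matrix size and the number of trials are assumptions made for this illustration; a library QR routine with a sign correction would serve equally well.

```python
import math
import random

def qr_positive_diagonal(A):
    """Modified Gram-Schmidt QR of a square real matrix (list of rows), diag(R) > 0."""
    n = len(A)
    Q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for k in range(n):
        w = [A[i][k] for i in range(n)]              # k-th column of A
        for j in range(k):
            R[j][k] = sum(Q_cols[j][i] * w[i] for i in range(n))
            w = [w[i] - R[j][k] * Q_cols[j][i] for i in range(n)]
        R[k][k] = math.sqrt(sum(x * x for x in w))
        Q_cols.append([x / R[k][k] for x in w])
    Q = [[Q_cols[j][i] for j in range(n)] for i in range(n)]
    return Q, R

rng = random.Random(3)
n, trials = 4, 5000
diag_sq = [0.0] * n        # running sums of r_ii^2
offdiag_sq = 0.0           # running sum of r_12^2
for _ in range(trials):
    G = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    Q, R = qr_positive_diagonal(G)
    for i in range(n):
        diag_sq[i] += R[i][i] ** 2
    offdiag_sq += R[0][1] ** 2

mean_diag = [s / trials for s in diag_sq]     # expected (1-based i): n - i + 1 = 4, 3, 2, 1
mean_offdiag = offdiag_sq / trials            # expected: 1

# Reconstruction error of the last sample, as a check on the factorization itself.
recon_err = max(abs(sum(Q[i][k] * R[k][j] for k in range(n)) - G[i][j])
                for i in range(n) for j in range(n))
print(mean_diag, mean_offdiag, recon_err)
```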

4.3 Proof of Theorem 4.1

In this section, we present the proofs of Theorem 4.1 and the corollaries that follow from it. To simplify notation, we set \(\ell =n-r+1\). Recall from Corollary 3.3 the expression

where the columns of \(X=[U\ u],Y=[V\ v]\in \mathbb {F}^{n\times \ell }\) are orthonormal bases of \(\ker P(\lambda )^*\) and \(\ker P(\lambda )\), the columns of U, V represent bases of \(\ker _\lambda P(x)^*\) and \(\ker _\lambda P(x)\), respectively, and \(\gamma _P\) is defined in (5).

Proof of Theorem 4.1

We first assume \(r<n\). By the scale invariance of the directional sensitivity \(\sigma _E\), we consider Gaussian perturbations \(E\sim \mathcal {N}^\beta (0,\sigma ^2I_{\beta N})\) (recall that we interpret E as a vector in \(\mathbb {F}^N\)), where \(\sigma ^2=(\sum _{j=0}^d |\lambda |^{2j})^{-1}\). This scaling ensures that the entries of \(E(\lambda )\) are independent \(\mathcal {N}^{\beta }(0,1)\) random variables. Since the distribution of \(E(\lambda )\) is orthogonally/unitarily invariant, the quotient \(|\det (X^*E(\lambda )Y)|/|\det \left( U^*E(\lambda )V\right) |\) has the same distribution as the quotient \(|\det (G)/\det (\overline{G})|\), where G is the upper left \(\ell \times \ell \) submatrix of \(E(\lambda )\) and \(\overline{G}\) the upper left \((\ell -1)\times (\ell -1)\) matrix. For the distribution considered, G is almost surely invertible, with inverse \(H=G^{-1}\). By Cramer’s rule, \(|\det (G)/\det (\overline{G})|=|h_{\ell \ell }|^{-1}\). We are thus interested in the distribution

where \(h_{\ell \ell }\) is the lower right corner of the inverse of an \(\ell \times \ell \) Gaussian matrix G. To study the distribution of \(|h_{\ell \ell }|^{-1}\), we resort to the probabilistic QR decomposition discussed in Sect. 4.2. If \(G=QR\) is the unique QR decomposition of G with positive diagonals in R, then the inverse is given by \(H=R^{-1}Q^*\), and a direct inspection reveals that the lower right element \(h_{\ell \ell }\) of H is \(h_{\ell \ell } = q^*_{\ell \ell }/r_{\ell \ell }\).
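The two identities used in this step, \(|\det (G)/\det (\overline{G})|=|h_{\ell \ell }|^{-1}\) and \(h_{\ell \ell }=q^*_{\ell \ell }/r_{\ell \ell }\), can be verified on a concrete real matrix. The sketch below does so for an arbitrarily chosen \(3\times 3\) example; the matrix and the helper functions are illustrative assumptions, and the cofactor-expansion determinant is only meant for very small matrices.

```python
import math

def det(A):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(A) == 1:
        return A[0][0]
    total = 0.0
    for j in range(len(A)):
        minor = [row[:j] + row[j + 1:] for row in A[1:]]
        total += (-1) ** j * A[0][j] * det(minor)
    return total

def last_qr_entries(A):
    """Return (q_ll, r_ll), the lower right entries of Q and R with diag(R) > 0."""
    n = len(A)
    basis = []                                   # orthonormalized columns of A
    for k in range(n):
        w = [A[i][k] for i in range(n)]
        for q in basis:
            coeff = sum(q[i] * w[i] for i in range(n))
            w = [w[i] - coeff * q[i] for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))  # equals r_kk
        basis.append([x / norm for x in w])
    return basis[-1][-1], norm

# Arbitrary non-singular test matrix (an assumption for this demo).
G = [[2.0, -1.0, 0.5],
     [1.0, 3.0, -2.0],
     [0.5, 1.0, 4.0]]
G_bar = [row[:-1] for row in G[:-1]]      # upper left (l-1) x (l-1) block

q_ll, r_ll = last_qr_entries(G)
h_ll = det(G_bar) / det(G)                # Cramer's rule for the (l, l) entry of G^{-1}
print(h_ll, q_ll / r_ll, abs(det(G) / det(G_bar)), r_ll / abs(q_ll))
```

Since the example is real, the conjugation in \(q^*_{\ell \ell }\) plays no role here.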

From Sect. 4.2, it follows that \(Q\sim \mathrm {CRE}(\ell )\) or \(\mathrm {CUE}(\ell )\), and \(\beta r_{\ell \ell }^2\sim \chi ^2(\beta )\). Moreover, each column of Q is uniformly distributed on the sphere \(S^{\beta \ell -1}\), so that \(|q_{\ell \ell }|^2\sim \mathrm {B}(\beta /2,\beta (\ell -1)/2)\) (by Sect. 4.1.1), and \(\{r_{\ell \ell }^2,|q_{\ell \ell }|^2\}\) are independent. We therefore get

Setting \(\gamma _P=|u^*P'(\lambda )v| \cdot (\sum _{j=0}^d |\lambda |^{2j})^{-1/2}\) (see (5)), we arrive at

Let \(p_0=(1,\lambda ,\cdots ,\lambda ^d)^T/(\sum _{i=0}^d |\lambda |^{2i})^{1/2}\). Then we can rearrange the coefficients of E(x) to a matrix \(F\in \mathbb {F}^{n^2\times (d+1)}\) so that

Moreover, if \(Q=[p_0\ p_1\ \cdots p_{d}]\) is an orthogonal/unitary matrix with \(p_0\) as the first column, then

where \(\tilde{p}_j=p_j\cdot (\sum _{i=0}^d |\lambda |^{2i})^{1/2}\). If we denote by \(G^{c}\) the vector consisting of those entries of \(E(\lambda )\) that are not in G, then

It follows that

Therefore, the factor \(r_{\ell \ell }^2\), itself a square of a (real or complex) Gaussian, is a summand in a sum of squares of \(N=n^2(d+1)\) Gaussians, and the quotient

is equal to the squared length of the projection of a uniform random vector in \(S^{\beta N-1}\) onto the first \(\beta \) coordinates. By Sect. 4.1.1, this is \(\mathrm {B}(\beta /2,\beta (N-1)/2)\) distributed. Denoting this random variable by \(Z_N\) and \(|q_{\ell \ell }|^2\) by \(Z_\ell \), we obtain

This establishes the claim in the case \(r<n\). If \(r=n\), we use the expression (see (4)),

where u and v are eigenvectors. By orthogonal/unitary invariance, \(\sigma _E^2\) has the same distribution as the squared norm of a Gaussian. By the same argument as above, we can bound \(\Vert E\Vert \) in terms of \(\Vert E(\lambda )\Vert \), and the quotient with \(\Vert E(\lambda )\Vert ^2\) is then the squared projected length of the first \(\beta \) coordinates of a uniform distributed vector in \(S^{\beta N-1}\), which is \(\mathrm {B}(\beta /2,\beta (N-1)/2)\) distributed. \(\square \)

Remark 4.6

If \(N\) is large, then for a (real or complex) Gaussian perturbation with entry-wise variance 1/N, by Gaussian concentration (see [11, Theorem 5.6]), \(\Vert E\Vert \) is close to 1 with high probability:

This means that the distribution of \(\Vert E\Vert \sigma _E\) for a Gaussian perturbation will be close to that of \(\sigma _E\) for a uniform perturbation. Even for moderate sizes of d and n, the result can be numerically almost indistinguishable.
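The concentration of \(\Vert E\Vert \) around 1 is easy to observe numerically. The following sketch draws real Gaussian perturbations with entry-wise variance 1/N and records the largest deviation of \(\Vert E\Vert \) from 1 over a number of samples; the values of n and d, the seed and the sample count are arbitrary assumptions.

```python
import math
import random

rng = random.Random(2)
n, d = 20, 2
N = n * n * (d + 1)                       # number of coefficients, N = n^2 (d + 1)

norms = []
for _ in range(200):
    # Real Gaussian perturbation with entry-wise variance 1/N.
    E = [rng.gauss(0.0, math.sqrt(1.0 / N)) for _ in range(N)]
    norms.append(math.sqrt(sum(x * x for x in E)))

worst_dev = max(abs(x - 1.0) for x in norms)
print(N, worst_dev)
```

For these parameters the deviations are of the order of a few percent, and they shrink further as N grows.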

In fact, when G is Gaussian, then the distribution can be expressed explicitly as

where \(_1F_1(a,b;z)\) denotes the confluent hypergeometric function (this follows by mimicking the proof of Theorem 4.1, expressing the distribution in terms of a quotient of a \(\chi ^2\) and a beta random variable, and writing out the resulting integrals). Similarly, using the same computations as in the proof of Lemma A.1, we get the exact expression

where \(_2F_1(a,b,c;z)\) is the hypergeometric function. The case distinction corresponds to different branches of the solution of the hypergeometric differential equation. See [38, 39] for more on computing with hypergeometric functions.

5 Weak Condition Numbers of Simple Eigenvalues of Singular Matrix Polynomials

In this section, we translate the tail bounds on the directional sensitivity into statements about condition numbers and discuss some consequences and interpretations.

Theorem 5.1

Let \(P(x)\in \mathbb {C}^{n\times n}_d[x]\) be a matrix polynomial of rank r, and let \(\lambda \) be a simple eigenvalue of P(x). Then

-

the worst-case condition number is

$$\begin{aligned} \kappa = {\left\{ \begin{array}{ll} \infty &{} \text { if } r<n,\\ \frac{1}{\gamma _P} &{} \text { if } r=n \end{array}\right. } \end{aligned} \tag{7}$$

-

the stochastic condition number, with respect to uniformly distributed perturbations, is

$$\begin{aligned} \kappa _s = \frac{1}{\gamma _P}\frac{\pi }{2}\frac{\Gamma (N)\Gamma (n-r+1)}{\Gamma (N+1/2) \Gamma (n-r+1/2)} \end{aligned} \tag{8}$$

-

if \(r<n\) and \(\delta \in (0,1)\), then the \(\delta \)-weak worst-case condition number, with respect to uniformly distributed perturbations, is bounded by

$$\begin{aligned} \kappa _{w}(\delta ) \le \frac{1}{\gamma _P}\max \left\{ 1,\sqrt{\frac{n-r}{\delta N}}\right\} \end{aligned} \tag{9}$$

The expression for the stochastic condition number involves the quotient of gamma functions, which can be simplified using the well-known bounds

which hold for \(x>0\) [49]. Using these bounds on the numerator and denominator of (8), we get the more interpretable

The bound on the weak condition number (9) shows that \(\kappa _w(1/2)\), which is the median of the same random variable of which \(\kappa _s\) is the expected value, is bounded by \(1/\gamma _P\), which is the expression of the worst-case condition number in the regular case \(r=n\).
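The quantities in (8) and (9) are straightforward to evaluate. The sketch below computes them for the parameters used in Fig. 1 (\(n=4\), \(d=2\), \(r=2\), \(\gamma _P=1\)) and checks the weak bound by simulation, assuming the representation \(\sigma _E\sim \gamma _P^{-1}\sqrt{Z_N/Z_{n-r+1}}\) with independent \(Z_k\sim \mathrm {B}(1,k-1)\) for complex perturbations, as obtained in the proof of Theorem 4.1. The function names, the seed and the choice \(\delta =0.1\) are illustrative assumptions. The sample mean is deliberately not compared with (8): for \(r<n\) the second moment of \(\sigma _E\) is infinite, so sample means converge slowly.

```python
import math
import random

def kappa_s_complex(gamma_P, N, m):
    """Formula (8) with m = n - r, evaluated through log-gamma for stability."""
    log_ratio = (math.lgamma(N) + math.lgamma(m + 1)
                 - math.lgamma(N + 0.5) - math.lgamma(m + 0.5))
    return (math.pi / 2) * math.exp(log_ratio) / gamma_P

def kappa_w_bound_complex(gamma_P, N, m, delta):
    """Right-hand side of the bound (9) with m = n - r."""
    return max(1.0, math.sqrt(m / (delta * N))) / gamma_P

def sample_sensitivity(gamma_P, N, m, rng):
    """Draw sqrt(Z_N / Z_{m+1}) / gamma_P with Z_k ~ B(1, k - 1) (beta = 2)."""
    z_N = rng.betavariate(1.0, N - 1.0)
    z_l = rng.betavariate(1.0, float(m))
    return math.sqrt(z_N / z_l) / gamma_P

rng = random.Random(4)
n, d, r, gamma_P, delta = 4, 2, 2, 1.0, 0.1
N, m = n * n * (d + 1), n - r

bound = kappa_w_bound_complex(gamma_P, N, m, delta)
draws = sorted(sample_sensitivity(gamma_P, N, m, rng) for _ in range(20000))
exceed = sum(1 for s in draws if s > bound) / len(draws)   # should not exceed delta
median = draws[len(draws) // 2]                            # compare with 1 / gamma_P
print(kappa_s_complex(gamma_P, N, m), bound, exceed, median)
```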

The situation changes dramatically when considering real matrix polynomials with real perturbations, as in this case even the stochastic condition becomes infinite if the matrix polynomial is singular. In the statement, we denote the resulting condition number with respect to real perturbations by using the superscript \(\mathbb {R}\).

Theorem 5.2

Let \(P(x)\in \mathbb {R}^{n\times n}_d[x]\) be a real matrix polynomial of rank r, and let \(\lambda \in \mathbb {C}\) be a simple eigenvalue of P(x). Then,

-

the worst-case condition number is

$$\begin{aligned} \kappa ^{\mathbb {R}} = {\left\{ \begin{array}{ll} \infty &{} \text { if } r<n,\\ \frac{1}{\gamma _P} &{} \text { if } r=n \end{array}\right. } \end{aligned} \tag{11}$$

-

the stochastic condition number, with respect to uniformly distributed real perturbations, is

$$\begin{aligned} \kappa _s^{\mathbb {R}} = {\left\{ \begin{array}{ll} \infty &{} \text { if } r<n,\\ \frac{1}{\gamma _P} \frac{\Gamma (N/2)}{\sqrt{\pi }\Gamma ((N+1)/2)} &{} \text { if } r=n \end{array}\right. } \end{aligned} \tag{12}$$

-

if \(r<n\) and \(\delta \in (0,1)\), then the \(\delta \)-weak worst-case condition number, with respect to uniformly distributed real perturbations, is bounded by

$$\begin{aligned} \kappa _{w}^{\mathbb {R}}(\delta ) \le \frac{1}{\gamma _P}\max \left\{ 1,\sqrt{\frac{n-r}{N}} \frac{1}{\delta }\right\} \end{aligned}$$

-

if \(r<n\) and \(\delta < \sqrt{(n-r)/N}\), then the \(\delta \)-weak stochastic condition number satisfies

$$\begin{aligned} \kappa _{ws}^{\mathbb {R}}(\delta ) \le \frac{1}{\gamma _P}\left( \frac{1}{1-\delta }\right) \left( 1+\sqrt{\frac{n-r}{N}} \log \left( \sqrt{\frac{n-r}{N}}\delta ^{-1}\right) \right) \end{aligned} \tag{13}$$

It is instructive to compare the weak condition numbers in the singular case to the worst-case and stochastic condition number in the regular case. In the regular case (\(n=r\)), when replacing the worst-case with the stochastic condition we get an improvement by a factor of \(\approx N^{-1/2}\), which is consistent with previous work [4] (see also Sect. 2) relating the worst-case to the stochastic condition. We will see in Sect. 6.1 that the expected value in the case \(n=r\) captures the typical perturbation behaviour of the problem more accurately than the worst-case bound. Among many possible interpretations of the weak worst-case condition, we highlight the following:

-

Since the bounds are monotonically decreasing as the rank r increases, we can get bounds independent of r. Specifically, we can replace the quotient \(\sqrt{(n-r)/N}\) with \(1/\sqrt{n(d+1)}\). This is useful since, in applications, the rank is not always known.

-

While the stochastic condition number (12), which measures the expected sensitivity of the problem of computing a singular eigenvalue, is infinite, for \(4(n-r)<N\) the median sensitivity is bounded by

$$\begin{aligned} \kappa ^{\mathbb {R}}_w(1/2) \le \frac{1}{\gamma _P}. \end{aligned}$$

The median is a more robust and arguably better summary parameter than the expectation.

-

Choosing \(\delta = e^{-N}\) in (13), we get a weak stochastic condition bound of

$$\begin{aligned} \kappa ^{\mathbb {R}}_{ws}(e^{-N}) \le \frac{1}{\gamma _P} \left( \frac{1+\sqrt{N(n-r)}}{1-e^{-N}}\right) . \end{aligned}$$

That is, the condition number improves from being unbounded to sublinear in N, by just removing a set of inputs of exponentially small measure.
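The three interpretations above can be reproduced numerically. The sketch below evaluates the weak worst-case bound of Theorem 5.2 at \(\delta =1/2\), the bound (13) at \(\delta =e^{-N}\) together with its simplified form, and the rank-independent replacement \(1/\sqrt{n(d+1)}\) for \(\sqrt{(n-r)/N}\). The parameters (\(n=4\), \(d=2\), \(r=2\), \(\gamma _P=1\)) and the function names are assumptions chosen for illustration.

```python
import math

def kappa_w_bound_real(gamma_P, N, m, delta):
    """Bound on the delta-weak worst-case condition number, real case (m = n - r)."""
    return max(1.0, math.sqrt(m / N) / delta) / gamma_P

def kappa_ws_bound_real(gamma_P, N, m, delta):
    """Right-hand side of (13); stated for 0 < delta < sqrt(m / N)."""
    c = math.sqrt(m / N)
    if not 0 < delta < c:
        raise ValueError("(13) is stated for 0 < delta < sqrt((n - r) / N)")
    return (1 + c * math.log(c / delta)) / ((1 - delta) * gamma_P)

n, d, r, gamma_P = 4, 2, 2, 1.0
N, m = n * n * (d + 1), n - r                  # N = 48, m = 2, so 4 * m < N

median_bound = kappa_w_bound_real(gamma_P, N, m, 0.5)
delta = math.exp(-N)
exact_13 = kappa_ws_bound_real(gamma_P, N, m, delta)
simplified = (1 + math.sqrt(N * m)) / ((1 - delta) * gamma_P)
rank_free = 1 / math.sqrt(n * (d + 1))         # upper bound on sqrt((n - r) / N)
print(median_bound, exact_13, simplified, rank_free)
```

The simplified bound is slightly weaker than (13) because the term \(\log \sqrt{(n-r)/N}\le 0\) is dropped.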

Example 5.3

Consider the matrix pencil L(x) from (1). This matrix pencil has rank 3, with only one simple eigenvalue \(\lambda =1\). As we saw in Example 4.4, the constant \(\gamma _L\) appearing in the bounds is

In this example, \(n=4\), \(d=1\) and \(r=3\), so that \(n-r=1\), \(N=n^2(d+1)=32\), and

For small enough \(\delta \), we get the (not optimized) bound \(\kappa _w^{\mathbb {R}}(\delta ) < 2.15 \cdot \delta ^{-1}\).
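The constant 2.15 can be traced back to the weak worst-case bound of Theorem 5.2: for \(\delta \le \sqrt{(n-r)/N}\) the maximum is attained by the second term, so the coefficient of \(\delta ^{-1}\) is \(\gamma _L^{-1}\sqrt{(n-r)/N}\). A minimal arithmetic check, using the rounded value \(\gamma _L^{-1}=12.16\) from Example 4.4 (the variable names are illustrative):

```python
import math

gamma_L_inv = 12.16            # rounded value reported in Example 4.4
n, d, r = 4, 1, 3
N = n * n * (d + 1)            # N = n^2 (d + 1) = 32
c = math.sqrt((n - r) / N)     # sqrt(1/32)
constant = gamma_L_inv * c     # coefficient of 1/delta in the weak worst-case bound
threshold = c                  # the 1/delta branch of the max is active for delta <= c
print(N, round(constant, 4), round(threshold, 4))
```

The computed coefficient is just below 2.15, consistent with the stated (not optimized) bound.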

It is easy to translate Corollary 4.2 into the main results, Theorem 5.1 and Theorem 5.2. For the weak stochastic condition, we need the following observation, which is a variation of [3, Lemma 2.2].

Lemma 5.4

Let Z be a random variable such that \(\mathbb {P}\{Z\ge t\}\le C\frac{a}{t}\) for \(t>a\). Then for any \(t_0>a\),

Proof of Theorems 5.1 and 5.2

The statements about the worst-case, (7) and (11), and about the stochastic condition number, (8) and (12), follow immediately from Theorem 4.1 and Corollary 4.2.

For the weak condition number in the complex case, if \(\delta \le (n-r)/N\), then setting

we get \(\gamma _P t \ge 1\), and therefore, using the complex tail bound from Corollary 4.2,

This yields \(\kappa _w(\delta )\le t\). If \(\delta > (n-r)/N\), then we use the fact that the weak condition number is monotonically decreasing with \(\delta \) (intuitively, the larger the set we are allowed to exclude, the smaller the condition number will be), to conclude that \(\kappa _w(\delta )\le \kappa _w(\delta _0)\le 1/\gamma _P\), where \(\delta _0:=(n-r)/N\).

For the real case, if \(r<n\) we use the bound

which follows from (10). If \(\delta <\sqrt{(n-r)/N}\), set

Then

where for the last inequality we used the fact that \(N\ge 2\). We conclude that \(\kappa _w(\delta )\le t\). If \(\delta >\sqrt{(n-r)/N}\), then we use the monotonicity of the weak condition just as in the complex case. Finally, for the weak stochastic condition number in the real case, we use Lemma 5.4 with \(a=\gamma _P^{-1}\), \(C=\sqrt{(n-r)/N}\) and \(t_0=C(\delta \gamma _P)^{-1}\) in the conditional expectation. We just saw that \(\kappa _{w}^{\mathbb {R}}(\delta )\le t_0\), so that

where we used Lemma 5.4 in the second inequality. \(\square \)

6 Bounding the Weak Stochastic Condition Number

In this section, we illustrate how the weak condition number of the problem of computing a simple eigenvalue of a singular matrix polynomial can be estimated in practice. More precisely, we show that the weak condition number of a singular problem can be estimated in terms of the stochastic condition number of nearby regular problems. Before deriving the relevant estimates, given in Theorem 6.3, we discuss the stochastic condition number of regular matrix polynomials.

6.1 Measure Concentration for the Directional Sensitivity of Regular Matrix Polynomials

For the directional sensitivity in the regular case, \(r=n\), the worst-case condition number is \(\gamma _P^{-1}\), as was shown in Proposition 3.4. In addition, the expression for the stochastic condition number involves a ratio of gamma functions (see Corollary 4.2 or the case \(r=n\) in Theorems 5.1 and 5.2). From (10), we get the approximation \(\Gamma (k+1/2)/\Gamma (k)\approx \sqrt{k}\), so that the stochastic condition number for regular polynomial eigenvalue problems satisfies

This is compatible with previously known results about the stochastic condition number in the smooth setting (see discussion in Sect. 2). A natural question is whether the directional sensitivity is likely to be closer to this expected value, or closer to the upper bound \(\kappa \).
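The quality of the approximation \(\Gamma (k+1/2)/\Gamma (k)\approx \sqrt{k}\) and its effect on the stochastic condition number in the regular case can be inspected directly. The sketch below evaluates (8) and (12) with \(r=n\) and compares them with the values obtained by inserting the approximation, namely \(\gamma _P^{-1}\sqrt{\pi }/(2\sqrt{N})\) for complex and \(\gamma _P^{-1}\sqrt{2/(\pi N)}\) for real perturbations. The function names and the chosen values of N are assumptions made for illustration.

```python
import math

def gamma_ratio(k):
    """Gamma(k + 1/2) / Gamma(k), computed via log-gamma to avoid overflow."""
    return math.exp(math.lgamma(k + 0.5) - math.lgamma(k))

def kappa_s_regular(gamma_P, N, beta):
    """Stochastic condition number for r = n from (8) (beta = 2) or (12) (beta = 1)."""
    if beta == 2:
        # (8) with n - r = 0, using Gamma(1) / Gamma(1/2) = 1 / sqrt(pi)
        return (math.sqrt(math.pi) / 2) / (gamma_ratio(N) * gamma_P)
    return 1.0 / (math.sqrt(math.pi) * gamma_ratio(N / 2) * gamma_P)

for N in (32, 48, 300):
    exact_c = kappa_s_regular(1.0, N, 2)
    approx_c = math.sqrt(math.pi) / (2 * math.sqrt(N))
    exact_r = kappa_s_regular(1.0, N, 1)
    approx_r = math.sqrt(2 / (math.pi * N))
    print(N, exact_c, approx_c, exact_r, approx_r)
```

With \(\gamma _P=1\) the worst-case condition number is 1, so the printed values exhibit the improvement by a factor of order \(N^{-1/2}\).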

Theorem 4.1 describes the distribution of \(\sigma _E\) as that of the (scaled) square root of a beta random variable. Using the interpretation of beta random variables as squared lengths of projections of uniformly distributed vectors on the sphere (see Sect. 4.1.1), tail bounds for the distribution of \(\sigma _E\) therefore translate into the problem of bounding the relative volume of certain subsets of the unit sphere. A standard argument from the realm of measure concentration on spheres, Lemma 6.1, then implies that with high probability, \(\sigma _E\) will stay close to its mean.

Lemma 6.1

Let \(x\sim \mathcal {U}(\beta N)\) be a uniformly distributed vector on the (real or complex) unit sphere, where \(\beta =1\) if \(\mathbb {F}=\mathbb {R}\) and \(\beta =2\) if \(\mathbb {F}=\mathbb {C}\). Then

Proof

For complex perturbations, we get the straightforward bound

In the real case, a classic result (see [6, Lemma 2.2] for a short and elegant proof) states that the probability in question is bounded by

The claimed bound follows by replacing N with \(N-1\) for the sake of a uniform presentation. \(\square \)

The next corollary follows from the description of the distribution of \(\sigma _E\) in Theorem 4.1 and the characterization of beta random variables as squared projected lengths of uniform vectors from Sect. 4.1.1.

Corollary 6.2

Let \(P(x)\in \mathbb {F}_d^{n\times n}[x]\) be a regular matrix polynomial and let \(\lambda \) be a simple eigenvalue of P(x). If \(E\sim \mathcal {U}(\beta N)\), where \(\beta =1\) if \(\mathbb {F}=\mathbb {R}\) and \(\beta =2\) if \(\mathbb {F}=\mathbb {C}\), then for \(t\le \gamma _P^{-1}\) we have

6.2 The Weak Condition Number in Terms of Nearby Stochastic Condition Numbers

It is common wisdom that computing the condition number is as hard as solving the problem at hand, so at the very least we would like to avoid making the computation of the condition estimate more expensive than the computation of the eigenvalue itself. We will therefore aim to estimate the condition number of the problem in terms of the output of a backward stable algorithm for computing the eigenvalue and a pair of associated eigenvectors.

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a matrix polynomial of rank \(r<n\) with a simple eigenvalue \(\lambda \in \mathbb {C}\), and let \(E(x)\in \mathbb {F}^{n\times n}_d[x]\) be a regular perturbation. Denote by \(\lambda (\epsilon )\) the eigenvalue of \(P(x)+\epsilon E(x)\) that converges to \(\lambda \) (see Theorem 3.2), and let \(u(\epsilon )\) and \(v(\epsilon )\) be the corresponding left and right eigenvectors of the perturbed problem. As shown in [14, Theorem 4] (see Theorem 6.4), for all E(x) outside a proper Zariski closed set, the limits

converge to representatives of left and right eigenvectors of P(x) associated with \(\lambda \). Whenever these limits exist and represent eigenvectors of P(x), define

Note that these parameters depend implicitly on a perturbation direction E(x), even though the notation does not reflect this. The parameters \(\overline{\kappa }\) and \(\overline{\kappa }_s\) are the limits of the worst-case and stochastic condition numbers, \(\kappa (P(x)+\epsilon E(x))\) and \(\kappa _s(P(x)+\epsilon E(x))\), as \(\epsilon \rightarrow 0\). Since almost sure convergence implies convergence in probability, we get

whenever the left-hand side of this expression is finite.

A backward stable algorithm, such as vanilla QZ, computes an eigenvalue \(\tilde{\lambda }\) and associated unit norm eigenvectors \(\tilde{u}\) and \(\tilde{v}\) of a nearby problem \(P(x)+\epsilon E(x)\). If \(\epsilon \) is small, then \(\tilde{\lambda }\approx \lambda \), \(\tilde{u}\approx \overline{u}\) and \(\tilde{v}\approx \overline{v}\), so that we can approximate the values (15) using the output of such an algorithm. Unfortunately, this does not yet give us a good estimate of \(\gamma _P\), as the definition of \(\gamma _P\) makes use of very special representatives of eigenvectors (recall from Sect. 3.1 that for singular matrix polynomials, eigenvectors are only defined as equivalence classes). The following theorem shows that we can still get bounds on the weak condition numbers in terms of \(\overline{\kappa }_s\).

Theorem 6.3

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a singular matrix polynomial of rank \(r<n\) with simple eigenvalue \(\lambda \in \mathbb {C}\). Then

If \(\delta \le (n-r)/N\), then for any \(\eta >0\) we have the tail bounds

For the proof of Theorem 6.3 we recall the setting of Sect. 3. Let \(X=[U \ u]\) and \(Y=[V \ v]\) be matrices whose columns are orthonormal bases of \(\ker P(\lambda )^*\) and \(\ker P(\lambda )\), respectively, such that U and V are bases of \(\ker _{\lambda }P(x)^{T}\) and \(\ker _{\lambda }P(x)\), respectively. If \(\overline{u}=u\) and \(\overline{v}=v\) in (15), then \(\overline{\gamma }_P=\gamma _P\). In general, however, we only get a bound. To see this, recall from Sect. 3.1 that \(\overline{u}^*P'(\lambda )\overline{v}\) depends only on the component of \(\overline{u}\) that is orthogonal to \(\ker _{\lambda }P(\lambda )^*\), and the component of \(\overline{v}\) that is orthogonal to \(\ker _{\lambda }P(\lambda )\). In particular, \(X^*P'(\lambda )Y\) has rank one, and we have (recall \(\ell =n-r+1\))

The key to Theorem 6.3 lies in a result for the eigenvectors, analogous to Theorem 3.2, by De Terán and Dopico [14, Theorem 4].

Theorem 6.4

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a matrix polynomial of rank r with simple eigenvalue \(\lambda \) and X, Y as above. Let \(E(x)\in \mathbb {F}^{n\times n}_d[x]\) be such that \(X^*E(\lambda )Y\) is non-singular. Let \(\zeta \) be the eigenvalue of the non-singular matrix pencil

and let a and b be the corresponding left and right eigenvectors. Then, for small enough \(\epsilon >0\), the perturbed matrix polynomial \(P(x)+\epsilon E(x)\) has exactly one eigenvalue \(\lambda (\epsilon )\) as described in Theorem 3.2, and the corresponding left and right eigenvectors satisfy

Given a matrix polynomial P(x) and a perturbation direction E(x), we can therefore assume that the eigenvectors of a sufficiently small perturbation in direction E(x) are approximated by \(\overline{u}=Xa\) and \(\overline{v}=Yb\), where a, b are the eigenvectors of the matrix pencil (17). We would next like to characterize these eigenvectors for random perturbations E(x). As with the rest of this paper, the following result is parametrized by a parameter \(\beta \in \{1,2\}\) which specifies whether we work with real or complex perturbations.

Proposition 6.5

Let \(P(x)\in \mathbb {F}^{n\times n}_d[x]\) be a matrix polynomial of rank \(r<n\) with simple eigenvalue \(\lambda \in \mathbb {C}\), and let \(E(x)\sim \mathcal {U}(\beta N)\) be a random perturbation. Let a, b be left and right eigenvectors of the linear pencil (17), let \(\overline{u}=Xa\) and \(\overline{v}=Yb\), and define \(\overline{\gamma }_P\) as in (15). Then

Proof

By scale invariance of (17), we may take E(x) to be Gaussian, \(E(x)\sim \mathcal {N}^{\beta }(0,\sigma ^2I_{\beta N})\) with \(\sigma ^2=(\sum _{j=0}^d |\lambda |^{2j})^{-1}\) (so that \(E(\lambda )\sim \mathcal {G}_n^\beta (0,1)\)). Set \(G:=X^*E(\lambda )Y\), so that \(G\sim \mathcal {G}_\ell ^\beta (0,1)\). Using (16), the eigenvectors associated with (17) are then characterized as solutions of

It follows that \(G^*a\) and Gb are proportional to \(e_\ell \), and hence,

Clearly, each of the vectors a and b individually is uniformly distributed. They are, however, not independent. To simplify notation, set \(H=G^{-1}\). For the condition estimate we get, using (16),

By orthogonal/unitary invariance of the Gaussian distribution, the random vector \(q:=He_{\ell }/\Vert H e_{\ell }\Vert \) is uniformly distributed on \(S^{\beta \ell -1}\). It follows that \(|e^{T}He_{\ell }|/\Vert H e_{\ell }\Vert \) is distributed like the absolute value of the projection of a uniform vector onto the first coordinate. For the expected value, the bound follows by observing that the expected value of such a projection is bounded by \((\ell -1)^{-1/2}\). For the tail bound, using (14) (with N replaced by \(\ell \)) we get

This was to be shown. \(\square \)
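
As a numerical sanity check of the distributional fact used in the proof above, the following minimal Python sketch samples a uniform vector q on the real unit sphere (the case \(\beta =1\)) and estimates the moments of the absolute value of its first coordinate. It relies only on the elementary identity \(\mathbb {E}[q_1^2]=1/\ell \), which gives \(\mathbb {E}|q_1|\le \ell ^{-1/2}\le (\ell -1)^{-1/2}\) by Jensen's inequality. The value \(\ell =6\), the sample size, the seed and all function names are illustrative assumptions and are not taken from the paper.

```python
import math
import random
import statistics


def uniform_on_sphere(dim, rng):
    """Draw a point uniformly from the unit sphere in R^dim.

    A standard Gaussian vector is rotation invariant, so normalising it
    yields the uniform distribution on the sphere.
    """
    g = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in g))
    return [x / norm for x in g]


def first_coordinate_samples(dim, n_samples, seed=0):
    """Sample |q_1| for q uniform on the unit sphere in R^dim."""
    rng = random.Random(seed)
    return [abs(uniform_on_sphere(dim, rng)[0]) for _ in range(n_samples)]


ell = 6  # hypothetical value of ell = n - r + 1, chosen for illustration
samples = first_coordinate_samples(ell, 20000)

mean_projection = statistics.fmean(samples)
second_moment = statistics.fmean([x * x for x in samples])

print("empirical E|q_1|  :", mean_projection)
print("empirical E[q_1^2]:", second_moment, "(exact value 1/ell =", 1 / ell, ")")
print("bound ell^(-1/2)  :", ell ** -0.5)
```

The sketch covers only real perturbations; for complex perturbations one would sample on the sphere of real dimension \(2\ell \) and project onto a complex coordinate instead.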

Proof of Theorem 6.3

If \(\overline{u}=Xa\) and \(\overline{v}=Yb\), then

and we get the upper bound

For the weak condition numbers, using Theorems 5.1 and 5.2, we get the bounds

For the tail bounds in the complex case, note that we have

where we used Proposition 6.5 for the inequality. The real case follows in the same way. \(\square \)

7 Conclusions and Outlook

The classical theory of conditioning in numerical analysis aims to quantify the susceptibility of a computational problem to perturbations in the input. While the theory serves its purpose well in distinguishing well-posed problems from problems that approach ill-posedness, it fails to explain why certain problems with high condition number can still be solved satisfactorily to high precision by algorithms that are oblivious to the special structure of an input. By introducing the notions of weak and weak stochastic conditioning, we developed a tool to better quantify the perturbation behaviour of numerical computation problems for which the classical condition number fails to do so.

Our methods are based on an analysis of directional perturbations and probabilistic tools. The use of probability theory in our context is auxiliary: the purpose is to quantify the observation that the set of adversarial perturbations is small. In practice, any reasonable numerical algorithm will find the eigenvalues of a nearby regular matrix polynomial, and the perturbation will be deterministic and not random. However, as the algorithm knows nothing about the particular input matrix polynomial, it is reasonable to assume that if the set of adversarial perturbations is sufficiently small, then the actual perturbation will not be in there. Put more directly, to say that the probability that a perturbed problem has large directional sensitivity is very small is to say that a perturbation, although non-random, would need a good reason to cause damage.

The results presented continue the line of work of [3], where it is argued that, just as sufficiently small numbers are considered numerically indistinguishable from zero, sets of sufficiently small measure should be considered numerically indistinguishable from null sets. One interesting direction in which the results presented can be strengthened is to use wider classes of probability distributions, including discrete ones, and derive equivalent (possibly slightly weaker) results. One important side effect of our analysis is a shift of focus away from the expected value and towards robust measures such as the median (see footnote 8) and other quantiles.

Our results hence have a couple of important implications, or “take-home messages”, that we would like to highlight:

1. The results presented call for a critical re-evaluation of the notion of ill-posedness. It has become common practice to simply identify ill-posedness with having infinite condition, to the extent that condition numbers are often defined in terms of the inverse distance to a set of ill-posed inputs, an approach that has been popularized by J. Demmel [16, 17] (see footnote 9). The question of whether the elements of such a set are actually badly behaved in a practical sense is often left unexamined. Our theory suggests that the set of inputs that are actually ill-behaved from a practical point of view can be smaller than previously thought.

2. Average-case analysis (and its refinement, smoothed analysis [12]) is, while well intentioned, still susceptible to the caprices of specific probability distributions. More meaningful results are obtained when, instead of analysing the behaviour of perturbations on average, one shifts the focus towards showing that the set of adversarial perturbations is small; ideally so small that hitting a misbehaving perturbation would suggest the existence of a specific explanation for this rather than just bad luck. In terms of summary parameters, our approach suggests using, in line with common practice in statistics, more robust parameters such as the median instead of the mean.
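
The contrast between the mean and the median in the second point can be illustrated with a toy example that is not taken from the paper. Assume, purely for illustration, that the directional sensitivity in a random direction equals \(1/|\cos \theta |\) with \(\theta \) uniform on \([0,2\pi )\), that is, the inverse of the projection of a uniform unit vector in \(\mathbb {R}^2\) onto a fixed axis. This quantity has infinite expectation, while its median is \(\sqrt{2}\). The sketch below, with a hypothetical seed and sample size, estimates both summary parameters.

```python
import math
import random
import statistics

rng = random.Random(1)  # fixed seed, chosen arbitrarily for reproducibility


def sensitivity_sample():
    """Toy directional sensitivity 1/|cos(theta)| for a uniformly random direction."""
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return 1.0 / abs(math.cos(theta))


draws = [sensitivity_sample() for _ in range(20001)]

sample_median = statistics.median(draws)
sample_mean = statistics.fmean(draws)

print("sample median:", sample_median, "(exact median sqrt(2) =", math.sqrt(2.0), ")")
print("sample mean  :", sample_mean, "(the exact expectation is infinite)")
```

The sample median is stable across seeds, whereas the sample mean is dominated by a few draws close to the adversarial directions and does not settle as the sample size grows.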

A natural question that arises from the first point is: if some problems that were previously thought of as ill-posed are not (in the sense that the set of discontinuous perturbation directions is negligible), then which problems are genuinely ill-posed? In the case of polynomial eigenvalue problems, we conjecture that problems with semisimple eigenvalues are not ill-conditioned in our framework; in fact, it appears that much of the analysis performed in this section can be extended to this setting. It is not completely obvious which problems should be considered ill-posed based on this new theory. That some inputs should still be considered ill-posed can be seen, for example, by considering Jordan blocks with zeros on the diagonal; the computed eigenvalues of perturbations of the order of machine precision will not recover the correct eigenvalue in this situation. Our analysis in the semisimple case is based on the fact that the directional derivative of the function to be computed exists in sufficiently many directions.
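
The Jordan block example can be made concrete with a short computation. The sketch below is a minimal illustration under an assumption that is ours and not the paper's: the perturbation is the single entry \(\epsilon \) in the bottom-left corner of the \(n\times n\) nilpotent Jordan block J. For this particular direction, \(\lambda =\epsilon ^{1/n}\) is an eigenvalue of the perturbed matrix with eigenvector \((1,\lambda ,\ldots ,\lambda ^{n-1})^T\), which the code verifies through the residual. The function name, the size \(n=8\) and the choice \(\epsilon =2^{-53}\) are hypothetical.

```python
def perturbed_jordan_residual(n, eps):
    """Check that lam = eps**(1/n) is an eigenvalue of J + eps * e_n e_1^T.

    J is the n x n Jordan block with zeros on the diagonal and ones on the
    superdiagonal. Returns lam and the max-norm residual of A v - lam v for
    the candidate eigenvector v = (1, lam, ..., lam**(n-1)).
    """
    lam = eps ** (1.0 / n)
    v = [lam ** k for k in range(n)]
    # Row i < n-1 of J picks out v[i+1]; the last row only sees eps * v[0].
    Av = [v[i + 1] for i in range(n - 1)] + [eps * v[0]]
    residual = max(abs(Av[i] - lam * v[i]) for i in range(n))
    return lam, residual


unit_roundoff = 2.0 ** -53  # double precision unit roundoff
lam, residual = perturbed_jordan_residual(8, unit_roundoff)

print("perturbation size  :", unit_roundoff)
print("perturbed eigenvalue:", lam)
print("residual            :", residual)
```

A perturbation of size about \(10^{-16}\) thus moves the eigenvalue 0 to a point of modulus about \(10^{-2}\), so that only about two digits survive for \(n=8\).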

Another consequence is that many of the probabilistic analyses of condition numbers based on the distance to ill-posedness, while still correct, can possibly be refined by using a smaller set of ill-posed inputs. In particular, it is likely that condition bounds resulting from average-case and smoothed analysis can be refined. Finally, an interesting direction would be to examine problems with high or infinite condition number that are not ill-posed in a practical sense in different contexts, such as polynomial system solving or problems arising from the discretization of continuous inverse problems.

Notes

The number of accurate digits that can be expected when the problem is continuous but not locally Lipschitz continuous requires a careful discussion. It depends on the unit roundoff u, on the exact nature of the pathology of f, and on D. For example, computing the eigenvalues of a matrix similar to an \(n\times n\) Jordan block for \(n>1\) is Hölder continuous with exponent 1/n but not Lipschitz continuous. Usually this translates into expecting only about \(\root n \of {u}\) accuracy, up to constants, when working in finite precision arithmetic. For a more complete discussion, see [28], where pathological examples of derogatory matrices are constructed, whose eigenvalues are not sensitive to finite precision computations (for fixed u), or also [33, §3.3]. For discontinuous f, however, these subtleties alone cannot justify any accurately computed decimal digits.

MATLAB R2016a on Ubuntu 16.04.

Of course, if the exact solution is not known a priori, one faces the practical issue of deciding which of the computed eigenvalues is reliable. There are various ways in which this can be done in practice, such as artificially perturbing the problem; the focus of our work is on explaining why the correct solution has been shortlisted in the first place; see [32] for a more practical perspective.

There exist algorithms able to detect and exploit the fact that a matrix pencil is singular, such as the staircase algorithm [46].

One can, more generally, allow \(\mathcal {V}\) and \(\mathcal {W}\) to be anything with a notion of distance, such as general metric spaces or Riemannian manifolds. All the definitions of condition can be adapted accordingly; in this paper, we focus on the case of normed vector spaces. We will also only need such a map to be defined locally near an input of interest.

Armentano’s results apply to differentiable maps between Riemannian manifolds and cover the moments of the directional derivative as well: they are stronger and are derived with a more comprehensive approach.

By convention, the zero matrix polynomial has degree \(-\infty \).

The use of the median instead of the expected value in the probabilistic analysis of quantities was suggested by F. Bornemann [10].

For the complexity analysis of iterative algorithms, and in particular for problems related to convex optimization, the “distance to ill-posedness” approach may often be the most natural setting. For convex feasibility problems, for example, the ill-posed inputs form a wall separating primal from dual feasible problem instances, and closeness to this wall directly affects the speed of convergence of iterative algorithms; see [13] for more on this story.

References

B. Adhikari, R. Alam, and D. Kressner. Structured eigenvalue condition numbers and linearizations for matrix polynomials. Linear Algebra and its Applications, 435(9):2193–2221, 2011.

R. Alam and S. Safique Ahmad. Sensitivity analysis of nonlinear eigenproblems. SIAM Journal on Matrix Analysis and Applications, 40(2):672–695, 2019.

D. Amelunxen and M. Lotz. Average-case complexity without the black swans. Journal of Complexity, 41:82–101, 2017.

D. Armentano. Stochastic perturbations and smooth condition numbers. Journal of Complexity, 26(2):161–171, 2010.

D. Armentano and C. Beltrán. The polynomial eigenvalue problem is well conditioned for random inputs. SIAM Journal on Matrix Analysis and Applications, 40(1):175–193, 2019.

K. Ball. An elementary introduction to modern convex geometry. Flavors of Geometry, 31:1–58, 1997.

C. Beltrán and K. Kozhasov. The real polynomial eigenvalue problem is well conditioned on the average. Foundations of Computational Mathematics, May 2019.

L. Blum, F. Cucker, M. Shub, and S. Smale. Complexity and Real Computation. Springer-Verlag, New York, NY, USA, 1998.

L. Boltzmann. Referat über die Abhandlung von J.C. Maxwell: “Über Boltzmann’s Theorem betreffend die mittlere Verteilung der lebendige Kraft in einem System materieller Punkte”. Wied. Ann. Beiblätter, 5:403–417, 1881.

F. Bornemann. Private communication, 2018.