Abstract

Lipschitz continuity of the gradient mapping of a continuously differentiable function plays a crucial role in designing various optimization algorithms. However, many functions arising in practical applications, such as low-rank matrix factorization or deep neural network problems, do not have a Lipschitz continuous gradient. This led to the development of a generalized notion known as the L-smad property, which is based on generalized proximity measures called Bregman distances. However, the L-smad property cannot handle nonsmooth functions: even simple nonsmooth functions like \(\vert x^4-1 \vert \), as well as many practical composite problems, are out of its scope. We fix this issue by proposing the MAP property, which generalizes the L-smad property and is also valid for a large class of structured nonconvex nonsmooth composite problems. Based on the proposed MAP property, we propose a globally convergent algorithm called Model BPG that unifies several existing algorithms. The convergence analysis is based on a new Lyapunov function. We also numerically illustrate the superior performance of Model BPG on standard phase retrieval problems and Poisson linear inverse problems, when compared to a state-of-the-art optimization method that is valid for generic nonconvex nonsmooth optimization problems.


1 Introduction

We solve possibly nonsmooth and nonconvex optimization problems of the form

$$\begin{aligned} \min _{\mathbf{x}\in \mathbb R^N}\, f(\mathbf{x})\,, \end{aligned}\tag{1}$$

where \(f:\mathbb R^N \rightarrow \overline{\mathbb R}\) is a proper lower semicontinuous function that is bounded from below. Special instances of the above-mentioned problem include two broad classes of problems, namely, additive composite problems (Sect. 4.1) and composite problems (Sect. 4.2). Such problems arise in numerous practical applications, such as quadratic inverse problems [16], low-rank matrix factorization problems [36], Poisson linear inverse problems [9], robust denoising problems [38], deep linear neural networks [37], and many more.

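In this paper, we design an abstract framework for provably globally convergent algorithms based on a quality measure for a suitable approximation of the objective. A classical special case is that of a continuously differentiable \(f: \mathbb R^N \rightarrow \mathbb R\), whose gradient mapping is Lipschitz continuous over \(\mathbb R^N\). Such functions enjoy the well-known Descent Lemma (cf. Lemma 1.2.3 of Nesterov [39])

$$\begin{aligned} -\frac{\underline{L}}{2}\Vert \mathbf{x}-{\bar{\mathbf{x}}} \Vert _{}^2 \le f(\mathbf{x}) - f({\bar{\mathbf{x}}}) - \left\langle \nabla f({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \le \frac{{\bar{L}}}{2}\Vert \mathbf{x}-{\bar{\mathbf{x}}} \Vert _{}^2\,,\quad \forall \mathbf{x},{\bar{\mathbf{x}}}\in \mathbb R^N\,, \end{aligned}\tag{2}$$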

which describes the approximation quality of the objective f by its linearization \(f({\bar{\mathbf{x}}}) + \left\langle \nabla f({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \) in terms of a quadratic error estimate with certain \(\underline{L}, {\bar{L}}>0\). Such inequalities play a crucial role in designing algorithms for minimizing f; Gradient Descent is one such algorithm. We illustrate Gradient Descent as the sequential minimization of suitable approximations to the objective, based on the first-order Taylor expansion, i.e., the linearization of f around the current iterate \(\mathbf{x}_{{k}}\in \mathbb R^N\). Consider the following model function at the iterate \(\mathbf{x}_{{k}}\in \mathbb R^N\):

$$\begin{aligned} f(\mathbf{x}; \mathbf{x}_{{k}}) := f(\mathbf{x}_{{k}}) + \left\langle \nabla f(\mathbf{x}_{{k}}),\mathbf{x}-\mathbf{x}_{{k}} \right\rangle \,, \end{aligned}\tag{3}$$

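where \(\left\langle \cdot ,\cdot \right\rangle \) denotes the standard inner product in the Euclidean vector space \(\mathbb R^N\) of dimension N and \(f(\cdot ; \mathbf{x}_{{k}})\) is the linearization of f around \(\mathbf{x}_{{k}}\). For a step size \(\tau >0\), the Gradient Descent update can be written equivalently as follows:

$$\begin{aligned} \mathbf{x}_{{k+1}} = \mathop {\hbox {argmin}}\limits _{\mathbf{x}\in \mathbb R^N}\, \left\{ f(\mathbf{x}; \mathbf{x}_{{k}}) + \frac{1}{2\tau }\Vert \mathbf{x}-\mathbf{x}_{{k}} \Vert _{}^2 \right\} = \mathbf{x}_{{k}} - \tau \nabla f(\mathbf{x}_{{k}})\,. \end{aligned}\tag{4}$$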

Its convergence analysis is essentially based on the Descent Lemma (2), which we reinterpret as a bound on the linearization error (model approximation error) of f. However, (2) imposes a quadratic error bound, which fails for many functions. For example, functions like \(x^4\) or \((x^3+y^3)^2\) or \((1-xy)^2\) do not have a Lipschitz continuous gradient. The same is true in several of the above-mentioned practical applications.
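To make the failure of (2) concrete for \(x^4\), the following Python sketch (helper names are ours and purely illustrative) computes, for the pair \((\bar x + 1, \bar x)\), the smallest \({\bar{L}}\) compatible with the upper bound in (2). Expanding \((\bar x+1)^4\) shows that this ratio equals \(12\bar x^2 + 8\bar x + 2\), which is unbounded in \(\bar x\), so no global constant \({\bar{L}}\) exists.

```python
def linearization_error(f, df, x, xbar):
    # Model error of the linearization: f(x) - [f(xbar) + f'(xbar)(x - xbar)]
    return f(x) - f(xbar) - df(xbar) * (x - xbar)

def quartic(x):
    return x**4

def quartic_grad(x):
    return 4.0 * x**3

def required_lbar(xbar, step=1.0):
    """Smallest Lbar for which the upper bound in (2) holds at (xbar + step, xbar)."""
    x = xbar + step
    return linearization_error(quartic, quartic_grad, x, xbar) / (0.5 * (x - xbar) ** 2)

# With step = 1 the ratio is 12*xbar**2 + 8*xbar + 2: it grows without bound.
for xbar in [1.0, 10.0, 100.0]:
    print(xbar, required_lbar(xbar))
```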

This issue was recently resolved by Bolte et al. [16], building on the initial work of Bauschke et al. [9], by introducing a generalization of the Lipschitz continuity assumption on the gradient mapping of a function, which was termed the “L-smad property”. In convex optimization, a similar notion, coined “relative smoothness”, was proposed by Lu et al. [33]; such a notion had also been considered independently, and earlier, by Birnbaum et al. [12]. However, all these approaches rely on the model function (3), which is the linearization of the function. In this paper, we replace the linearization by arbitrary model functions (Definition 5).

We briefly recall the “L-smad property”. The main limitation of the Lipschitz continuous gradient notion is that it only allows for quadratic model approximation errors. To go far beyond this setting, it is natural to invoke more general proximity measures such as Bregman distances [17]. Several variants of Bregman distances exist in the literature [6, 16, 19, 33]. We focus on those distances that are generated from so-called Legendre functions (Definition 3). For a Legendre function h, the Bregman distance between \(\mathbf{x} \in \mathrm {dom}\,h\) and \(\mathbf{y} \in \mathrm {int}\,\mathrm {dom}\,h\) is given by

$$\begin{aligned} D_{h}(\mathbf{x},\mathbf{y}) := h(\mathbf{x}) - h(\mathbf{y}) - \left\langle \nabla h(\mathbf{y}),\mathbf{x}-\mathbf{y} \right\rangle \,. \end{aligned}\tag{5}$$

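A continuously differentiable function \(f: \mathbb R^N \rightarrow \mathbb R\) is L-smad with respect to a Legendre function \(h: \mathbb R^N \rightarrow \mathbb R\) over \(\mathbb R^N\) with \({\bar{L}},\underline{L}> 0\), if for all \(\mathbf{x},{\bar{\mathbf{x}}}\in \mathbb R^N\) we have

$$\begin{aligned} -\underline{L}D_{h}(\mathbf{x},{\bar{\mathbf{x}}}) \le f(\mathbf{x}) - f({\bar{\mathbf{x}}}) - \left\langle \nabla f({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \le {\bar{L}}D_{h}(\mathbf{x},{\bar{\mathbf{x}}})\,. \end{aligned}\tag{6}$$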

Note that with \(h(\mathbf{x}) = \frac{1}{2}\Vert \mathbf{x} \Vert _{}^2\) in (6) we recover (2). We interpret the inequalities in (6) as bounds on the linearization error of f in terms of a generalized distance measure. Similar to the Gradient Descent setting, minimization of \(f({\bar{\mathbf{x}}}) + \left\langle \nabla f({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle + \frac{1}{\tau }D_{h}(\mathbf{x},{\bar{\mathbf{x}}})\) results in the update step of the Bregman proximal gradient (BPG) algorithm [16] (a.k.a. Mirror Descent [10]).
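When \(\nabla h\) can be inverted, the BPG update is explicit: setting the derivative of the BPG objective to zero gives \(\nabla h(\mathbf{x}_{{k+1}}) = \nabla h(\mathbf{x}_{{k}}) - \tau \nabla f(\mathbf{x}_{{k}})\). The Python sketch below illustrates this under assumptions of our own: a hypothetical one-dimensional toy objective \(f(x) = \frac14(x^2-1)^2\) and the 1D analogue \(h(x) = \frac14x^4 + \frac12x^2\) of the phase retrieval kernel listed in Sect. 1.2. Since \(h'' - f'' = 2 > 0\) and \(h'' + f'' = 6x^2 \ge 0\), this f is L-smad with respect to h with \({\bar{L}}= \underline{L}= 1\), and we take \(\tau = 0.5 < 1/{\bar{L}}\). The inverse of \(h'(x) = x^3 + x\) is obtained from Cardano's formula.

```python
import math

def f(x):
    # Hypothetical 1D toy objective: f(x) = (x^2 - 1)^2 / 4
    return 0.25 * (x * x - 1.0) ** 2

def grad_f(x):
    return x * (x * x - 1.0)

def grad_h(x):
    # h(x) = x^4/4 + x^2/2, hence h'(x) = x^3 + x
    return x**3 + x

def grad_h_inverse(p):
    """Return the unique real x with x^3 + x = p (Cardano's formula).

    x^3 + x is odd and strictly increasing, so we solve for |p| and restore
    the sign; with u^3 = |p|/2 + sqrt(p^2/4 + 1/27), the root is u - 1/(3u).
    """
    a = abs(p)
    u = (0.5 * a + math.sqrt(0.25 * a * a + 1.0 / 27.0)) ** (1.0 / 3.0)
    return math.copysign(u - 1.0 / (3.0 * u), p)

def bpg(x0, tau, iters):
    xs = [x0]
    for _ in range(iters):
        x = xs[-1]
        # BPG step: solve h'(x_next) = h'(x) - tau * f'(x)
        xs.append(grad_h_inverse(grad_h(x) - tau * grad_f(x)))
    return xs

# tau = 0.5 < 1 / Lbar = 1 for this toy problem
xs = bpg(3.0, tau=0.5, iters=200)
print(xs[-1], f(xs[-1]))
```

With \(\tau < 1/{\bar{L}}\), the upper bound in (6) yields \(f(x_{k+1}) \le f(x_k) - (\frac{1}{\tau} - {\bar{L}})D_h(x_{k+1},x_k)\), so the printed objective values decrease monotonically along the iterates.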

However, the L-smad property relies on the continuous differentiability of the function f; thus, nonsmooth functions as simple as \(\vert x^4-1 \vert \) or \(\vert 1-(xy)^2 \vert \) or \(\log (1+ \vert 1-(xy)^2 \vert )\) cannot be captured under the L-smad property. This led us to the development of the MAP property (Definition 7), where MAP abbreviates Model Approximation Property. Consider a proper lower semicontinuous (lsc) function \(f:\mathbb R^N \rightarrow \mathbb R\) and a Legendre function \(h : \mathbb R^N \rightarrow \mathbb R\) with \(\mathrm {dom}\,h= \mathbb R^N\). For a given \({\bar{\mathbf{x}}}\in \mathbb R^N\), we consider a generic model function \(f(\mathbf{x};{\bar{\mathbf{x}}})\) that is proper lsc and approximates the function around the model center \({\bar{\mathbf{x}}}\), while preserving the local first-order information (Definition 5). The MAP property is satisfied with constants \({{\bar{L}}} >0\) and \(\underline{L} \in \mathbb R\) if for any \({\bar{\mathbf{x}}}\in \mathbb R^N\) the following holds:

$$\begin{aligned} -\underline{L}D_{h}(\mathbf{x},{\bar{\mathbf{x}}}) \le f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \le {\bar{L}}D_{h}(\mathbf{x},{\bar{\mathbf{x}}})\,,\quad \forall \mathbf{x}\in \mathbb R^N\,. \end{aligned}\tag{7}$$

Note that we do not require the continuous differentiability of the function f. Our MAP property is inspired by Davis et al. [20]; however, their work considers only the lower bound, with a weakly convex model function. Similar to the BPG setting, minimizing \(f(\mathbf{x}; {\bar{\mathbf{x}}}) + \frac{1}{\tau }D_{h}(\mathbf{x},{\bar{\mathbf{x}}})\) essentially yields the update step of the Model BPG algorithm. We illustrate the MAP property with a simple example. Consider the composite problem \(f(x) = g(F(x)) := \vert x^4-1 \vert \), where \(F(x) := x^4-1\) and \(g(x) := \vert x \vert \). Neither the Lipschitz continuity of the gradient nor the L-smad property is valid for this problem. However, the MAP property is valid with \({\bar{L}}= \underline{L}= 4\) using \(f(x; {\bar{x}}) := g(F({\bar{x}}) + \nabla F({\bar{x}})(x-{\bar{x}}))\), where \(\nabla F({\bar{x}})\) is the Jacobian (here, the derivative) of F at \({{{\bar{x}}}}\), and \(D_{h}(x,{\bar{x}}) = \frac{1}{4}x^4 - \frac{1}{4}{{{\bar{x}}}}^4 - {{{\bar{x}}}}^3(x - {{{\bar{x}}}})\), generated by \(h(x) = \frac{1}{4}x^4\). We provide further details in Examples 6 and 9.
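A quick numerical sanity check of this claim: the Python sketch below (function names are ours, chosen for illustration) samples pairs \((x,{\bar{x}})\in [-3,3]^2\) and reports the largest violation of \(-\underline{L}D_h(x,{\bar{x}}) \le f(x) - f(x;{\bar{x}}) \le {\bar{L}}D_h(x,{\bar{x}})\). Sampling is not a proof; the proof follows from \(\vert \vert a \vert - \vert b \vert \vert \le \vert a-b \vert \) together with the identity \(F(x) - F({\bar{x}}) - \nabla F({\bar{x}})(x-{\bar{x}}) = 4D_h(x,{\bar{x}})\).

```python
import random

def f(x):
    # f(x) = g(F(x)) = |x^4 - 1|
    return abs(x**4 - 1.0)

def model(x, xbar):
    # f(x; xbar) = g(F(xbar) + F'(xbar)(x - xbar)) with F'(xbar) = 4 xbar^3
    return abs(xbar**4 - 1.0 + 4.0 * xbar**3 * (x - xbar))

def bregman(x, xbar):
    # D_h(x, xbar) generated by h(x) = x^4 / 4
    return 0.25 * x**4 - 0.25 * xbar**4 - xbar**3 * (x - xbar)

def check_map(lbar=4.0, llow=4.0, trials=10_000, seed=0):
    """Largest violation of -llow*D_h <= f(x) - f(x; xbar) <= lbar*D_h over random samples."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x, xbar = rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)
        err = f(x) - model(x, xbar)
        d = bregman(x, xbar)
        worst = max(worst, err - lbar * d, -llow * d - err)
    return worst

print(check_map())          # at most rounding error for Lbar = Llow = 4
print(check_map(3.9, 3.9))  # positive: the bounds fail with these smaller constants
```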

1.1 Contributions and relations to prior work

Our main contributions are the following.

-

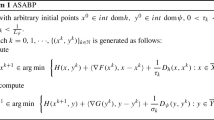

We introduce the MAP property, which generalizes the Lipschitz continuity assumption on the gradient mapping and the L-smad property [9, 16]. Earlier notions were restricted to additive composite problems. The MAP property is essentially an extended Descent Lemma, based on Bregman distances, that is valid for generic composite problems (see Sect. 4) and beyond. A MAP-like property was considered in Davis et al. [20], however with a focus on stochastic optimization and only on the lower bound of their MAP-like property. The MAP property relies on the notion of a model function, which serves as a function approximation and preserves the local first-order information of the function. Our work extends the foundations laid by Drusvyatskiy et al. [25] and Davis et al. [20], based on generic (potentially nonconvex) model functions, and by Ochs et al. [47], based on convex model functions. Taking inspiration from the update steps used in [20] and based on the MAP property, we propose the Model based Bregman Proximal Gradient (Model BPG) algorithm (Algorithm 1). Apart from the work of Davis et al. [20], another close variant of Model BPG is the line search based Bregman proximal gradient method [47]; however, neither of these works establishes convergence of the full sequence of iterates.

-

The global convergence analysis typically relies on the descent property of the function values. However, relying on function values can be restrictive, and alternatives are sought [48]. We address this issue by introducing a new Lyapunov function, and we show that the (full) sequence generated by Model BPG converges to a critical point of the objective function. Lyapunov functions are popular in the analysis of inertial algorithms [3, 5, 38, 46]; through our work, we aim to popularize them also for noninertial algorithms.

-

The global convergence analysis of Bregman proximal gradient (BPG) [16] requires the Bregman distance to have full domain, which contradicts its original purpose of representing the geometry of the constraint set. Our convergence theorem relaxes this restriction under certain assumptions that are typically satisfied in practice. In general, this requires the limit points of the sequence to lie in the interior of the domain of the employed Legendre function. While this is certainly still a restriction, the considered setting is highly nontrivial and novel in the general context of nonconvex nonsmooth optimization. Moreover, it allows us to avoid the common requirement of (global) strong convexity of the Legendre function, a severe drawback that rules out many interesting applications in related approaches (Sect. 5.2). In the context of convex optimization, works such as Lu [32] and Gutman and Peña [27] use reference functions (a notion similar to Legendre functions) that are not strongly convex. In nonconvex nonsmooth optimization, Legendre functions that are not strongly convex are considered in Davis et al. [20].

-

We validate our theory with a numerical section showing the flexibility and the superior performance of Model BPG compared to a state-of-the-art optimization algorithm, namely, Inexact Bregman Proximal Minimization Line Search (IBPM-LS) [45], on standard phase retrieval problems and Poisson linear inverse problems.

1.2 Preliminaries and notations

All the notations are primarily taken from Rockafellar and Wets [51]. We work in a Euclidean vector space \(\mathbb R^N\) of dimension \(N\in \mathbb N^*\) equipped with the standard inner product \(\left\langle \cdot ,\cdot \right\rangle \) and induced norm \(\Vert \cdot \Vert _{}\). For a set \(C\subset \mathbb R^N\), we define \(\Vert C \Vert _{-}:=\inf _{\mathbf{s}\in C}\, \Vert \mathbf{s} \Vert _{}\). For any vector \(\mathbf{x}\in \mathbb R^N\), the ith coordinate is denoted by \(\mathbf{x}_i\). We work with extended-valued functions \(f:\mathbb R^N \rightarrow \overline{\mathbb R}\), \(\overline{\mathbb R}:= \mathbb R\cup \left\{ +\infty \right\} \). The domain of f is \(\mathrm {dom}\,f:= \left\{ \mathbf{x}\in \mathbb R^N\,\vert \, f(\mathbf{x}) < +\infty \right\} \), and f is proper if \(\mathrm {dom}\,f\ne \emptyset \). It is lower semi-continuous (or closed) if \(\liminf _{\mathbf{x}\rightarrow \bar{\mathbf{x}}} f(\mathbf{x}) \ge f(\bar{\mathbf{x}})\) for any \(\bar{\mathbf{x}}\in \mathbb R^N\). Let \(\mathrm {int}\,\Omega \) denote the interior of \(\Omega \subset \mathbb R^N\). We use the notation of f-attentive convergence \(\mathbf{x}\overset{f}{\rightarrow } \bar{\mathbf{x}} \Leftrightarrow (\mathbf{x},f(\mathbf{x})) \rightarrow (\bar{\mathbf{x}}, f(\bar{\mathbf{x}}))\) and the notation \({k}\overset{K}{\rightarrow }\infty \) for some \(K\subset \mathbb N\) to represent \({k}\rightarrow \infty \) where \({k}\in K\). The indicator function \(\delta _{C}\) of a set \(C\subset \mathbb R^N\) is defined by \(\delta _{C}(\mathbf{x})=0\), if \(\mathbf{x}\in C\) and \(\delta _{C}(\mathbf{x})=+\infty \), otherwise. The (orthogonal) projection of \(\bar{\mathbf{x}}\) onto C, denoted \(\mathrm {proj}_C(\bar{\mathbf{x}})\), is given by a minimizer of \(\min _{\mathbf{x}\in C}\, \Vert \mathbf{x}-\bar{\mathbf{x}} \Vert _{}\), which exists for a nonempty closed set C.
A set-valued mapping \(T:\mathbb R^N \rightrightarrows \mathbb R^M\) is defined by its graph \(\mathrm {Graph}T:=\left\{ (\mathbf{x},\mathbf{v})\in \mathbb R^N\times \mathbb R^M\,\vert \,\mathbf{v}\in T(\mathbf{x}) \right\} \) with domain given by \(\mathrm {dom}\,T:=\left\{ \mathbf{x}\in \mathbb R^N\,\vert \,T(\mathbf{x})\ne \emptyset \right\} \). Following Rockafellar and Wets [51, Def. 6.3], for \({\bar{\mathbf{x}}}\in C\), a vector \(\mathbf{v}\) is a regular normal to C at \({\bar{\mathbf{x}}}\), written \(\mathbf{v} \in {\widehat{N}}_C({\bar{\mathbf{x}}})\), if \(\left\langle \mathbf{v},\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \le o(\Vert \mathbf{x}- {\bar{\mathbf{x}}} \Vert _{})\) for \(\mathbf{x}\in C\). A vector \(\mathbf{v}\) is a normal vector to C at \({\bar{\mathbf{x}}}\), written \(\mathbf{v} \in N_C({\bar{\mathbf{x}}})\), if there exist sequences \(\mathbf{x}_k \rightarrow {\bar{\mathbf{x}}}\) and \(\mathbf{v}_k \rightarrow \mathbf{v}\) such that \(\mathbf{x}_k \in C\) and \(\mathbf{v}_k \in {\widehat{N}}_C(\mathbf{x}_k)\) for all \(k \in \mathbb N\). Following Rockafellar and Wets [51, Def. 8.3], we introduce subdifferential notions for nonsmooth functions. The Fréchet subdifferential of f at \(\bar{\mathbf{x}} \in \mathrm {dom}\,f\) is the set \(\widehat{\partial }f(\bar{\mathbf{x}})\) of elements \(\mathbf{v} \in \mathbb R^N\) such that

$$\begin{aligned} \liminf _{\begin{array}{c} \mathbf{x}\rightarrow \bar{\mathbf{x}}\\ \mathbf{x}\ne \bar{\mathbf{x}} \end{array}}\, \frac{f(\mathbf{x}) - f(\bar{\mathbf{x}}) - \left\langle \mathbf{v},\mathbf{x}-\bar{\mathbf{x}} \right\rangle }{\Vert \mathbf{x}-\bar{\mathbf{x}} \Vert _{}} \ge 0\,. \end{aligned}$$

For \(\bar{\mathbf{x}}\not \in \mathrm {dom}\,f\), we set \(\widehat{\partial }f(\bar{\mathbf{x}}) = \emptyset \). The (limiting) subdifferential of f at \(\bar{\mathbf{x}}\in \mathrm {dom}\,f\) is defined by \( \partial f(\bar{\mathbf{x}}) := \left\{ \mathbf{v}\in \mathbb R^N\,\vert \,\exists \, \mathbf{y}_k \overset{f}{\rightarrow } \bar{\mathbf{x}},\;\mathbf{v}_{{k}}\in \widehat{\partial }f(\mathbf{y}_k),\;\mathbf{v}_{{k}}\rightarrow \mathbf{v} \right\} \,, \) and \(\partial f(\bar{\mathbf{x}}) = \emptyset \) for \(\bar{\mathbf{x}} \not \in \mathrm {dom}\,f\). As a direct consequence of the definition of the limiting subdifferential, we have the following closedness property at any \(\bar{\mathbf{x}}\in \mathrm {dom}\,f\):

$$\begin{aligned} \left( \mathbf{x}_{{k}}\overset{f}{\rightarrow } \bar{\mathbf{x}},\; \mathbf{v}_{{k}}\rightarrow \bar{\mathbf{v}},\; \mathbf{v}_{{k}}\in \partial f(\mathbf{x}_{{k}})\ \forall k\in \mathbb N \right) \ \Longrightarrow \ \bar{\mathbf{v}}\in \partial f(\bar{\mathbf{x}})\,. \end{aligned}$$
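A standard one-dimensional illustration (our own, not taken from the references) of how the Fréchet and limiting subdifferentials can differ:

$$\begin{aligned} f(x) = \vert x \vert :&\quad \widehat{\partial }f(0) = \partial f(0) = [-1,1]\,,\\ f(x) = -\vert x \vert :&\quad \widehat{\partial }f(0) = \emptyset \,,\quad \partial f(0) = \{-1,1\}\,. \end{aligned}$$

For \(f = -\vert \cdot \vert \), the Fréchet inequality at 0 would require both \(v\le -1\) (letting \(x\searrow 0\)) and \(v\ge 1\) (letting \(x\nearrow 0\)), which is impossible; the limiting subdifferential instead collects the gradients \(-1\) and \(+1\) at nearby points \(x>0\) and \(x<0\), respectively.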

A vector \(\mathbf{v} \in \mathbb R^N\) is a horizon subgradient of f at \({\bar{\mathbf{x}}}\) if there are sequences \(\mathbf{x}_k \overset{f}{\rightarrow } {\bar{\mathbf{x}}}\) and \(\mathbf{v}_k \in {\widehat{\partial }} f(\mathbf{x}_k)\) such that \(\lambda _k\mathbf{v}_k \rightarrow \mathbf{v}\) for some sequence \(\lambda _k \searrow 0\). The set of all horizon subgradients, \({\partial }^{\infty } f({\bar{\mathbf{x}}})\), is called the horizon subdifferential. A point \(\bar{\mathbf{x}}\in \mathrm {dom}\,f\) satisfying \(\mathbf{0}\in \partial f(\bar{\mathbf{x}})\) is called a critical point; this condition is necessary (Fermat’s rule [51, Thm. 10.1]) for \(\bar{\mathbf{x}}\) to be a local minimizer. The set of critical points is denoted by

$$\begin{aligned} \mathrm {crit}\,f := \left\{ \mathbf{x}\in \mathbb R^N\,\vert \,\mathbf{0}\in \partial f(\mathbf{x}) \right\} \,. \end{aligned}$$

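The set of (global) minimizers of a function f is

$$\begin{aligned} \mathop {\hbox {Argmin}}\limits _{\mathbf{x}\in \mathbb R^N}\, f(\mathbf{x}) := \left\{ \mathbf{x}\in \mathbb R^N\,\vert \, f(\mathbf{x}) = \inf _{\mathbf{y}\in \mathbb R^N}\, f(\mathbf{y}) \right\} \,, \end{aligned}$$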

and, if \(\mathop {\hbox {Argmin}}\limits _{\mathbf{x}\in \mathbb R^N}\, f(\mathbf{x})\) is a singleton, we denote the (unique) minimizer of f by \(\mathop {\hbox {argmin}}\limits _{\mathbf{x}\in \mathbb R^N}\, f(\mathbf{x})\). For short, we also write \(\mathop {\hbox {Argmin}}\limits f\) and \(\mathop {\hbox {argmin}}\limits f\).

Our global convergence theory relies on the so-called Kurdyka–Łojasiewicz (KL) property, a standard tool that is satisfied by most functions arising in practice. We only state the definition here, following Attouch et al. [4], and refer to Bolte et al. [13,14,15] and Kurdyka [28] for more details.

Definition 1

(Kurdyka–Łojasiewicz property) Let \(f:\mathbb R^N \rightarrow \overline{\mathbb R}\) and let \(\bar{\mathbf{x}}\in \mathrm {dom}\,\partial f\). If there exists \(\eta \in (0,\infty ]\), a neighborhood U of \(\bar{\mathbf{x}}\) and a continuous concave function \(\varphi :[0,\eta ) \rightarrow \mathbb R_+\) such that

$$\begin{aligned} \varphi (0) = 0\,,\quad \varphi \in C^1((0,\eta ))\,,\quad \varphi ^\prime (s) > 0\ \text { for all } s\in (0,\eta )\,, \end{aligned}$$

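and for all \(\mathbf{x}\in U\cap [f(\bar{\mathbf{x}})< f(\mathbf{x}) < f(\bar{\mathbf{x}}) + \eta ]\) the Kurdyka–Łojasiewicz inequality

$$\begin{aligned} \varphi ^\prime (f(\mathbf{x}) - f(\bar{\mathbf{x}}))\, \Vert \partial f(\mathbf{x}) \Vert _{-} \ge 1 \end{aligned}$$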

holds, then the function has the Kurdyka–Łojasiewicz property at \(\bar{\mathbf{x}}\). If, additionally, the function is lsc and the property holds at each point in \(\mathrm {dom}\,\partial f\), then f is called a Kurdyka–Łojasiewicz function.

We abbreviate the Kurdyka–Łojasiewicz property as the KL property. The function \(\varphi \) in the KL property is known as the desingularizing function. It is well known that functions definable in an o-minimal structure [21] satisfy the KL property [14, Theorem 14]. Semi-algebraic sets and functions, as well as globally subanalytic ones (see, for example, [14, Section 4], [42, Section 4.5]), are definable in an o-minimal structure.
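As a minimal worked example of Definition 1 (our own illustration), the semi-algebraic function \(f(x) = x^2\) on \(\mathbb R\) has the KL property at \(\bar x = 0\) with \(\eta = \infty \), \(U = \mathbb R\) and \(\varphi (s) = s^{1/2}\): \(\varphi \) is continuous and concave, \(\varphi (0) = 0\), and \(\varphi ^\prime (s) = \frac{1}{2\sqrt{s}} > 0\) on \((0,\infty )\). For every \(x\ne 0\) we have \(\partial f(x) = \{2x\}\) and

$$\begin{aligned} \varphi ^\prime (f(x) - f(0))\, \Vert \partial f(x) \Vert _{-} = \frac{1}{2\sqrt{x^2}}\cdot \vert 2x \vert = 1 \ge 1\,. \end{aligned}$$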

We briefly review the concept of a gradient-like descent sequence, which simplifies the global convergence analysis of Model BPG. We use the following results from Ochs [43]. Let \({\mathcal {F}}:\mathbb R^N\times \mathbb R^P \rightarrow \overline{\mathbb R}\) be a proper, lsc function that is bounded from below.

Assumption 1

(Gradient-like Descent Sequence [43]) Let \((\mathbf{u}_{n})_{{n}\in \mathbb N}\) be a sequence of parameters in \(\mathbb R^P\) and let \((\varepsilon _n)_{n\in \mathbb N}\) be an \(\ell _1\)-summable sequence of non-negative real numbers. Moreover, we assume there are sequences \((a_n)_{n\in \mathbb N}\), \((b_n)_{n\in \mathbb N}\), and \((d_{n})_{{n}\in \mathbb N}\) of non-negative real numbers, a non-empty finite index set \(I\subset {\mathbb {Z}}\) and \(\theta _i\ge 0\), \(i\in I\), with \(\sum _{i\in I}\theta _i = 1\) such that the following holds:

-

(H1)

(Sufficient decrease condition) For each \(n\in \mathbb N\), it holds that

$$\begin{aligned} {\mathcal {F}}(\mathbf{x}_{{n+1}},\mathbf{u}_{{n+1}}) + a_{{n}}d_{{n}}^2 \le {\mathcal {F}}(\mathbf{x}_{{n}},\mathbf{u}_{{n}})\,. \end{aligned}$$

-

(H2)

(Relative error condition) For each \(n\in \mathbb N\), it holds that: (set \(d_{j}=0\) for \(j\le 0\))

$$\begin{aligned} b_{{n+1}} \Vert \partial {\mathcal {F}}(\mathbf{x}_{{n+1}},\mathbf{u}_{{n+1}}) \Vert _{-} \le b \sum _{i\in I} \theta _{i}d_{{n+1}-i} + \varepsilon _{n+1} \,. \end{aligned}$$

-

(H3)

(Continuity condition) There exists a subsequence \(((\mathbf{x}_{n_j},\mathbf{u}_{n_j}))_{j\in \mathbb N}\) and \(({\tilde{\mathbf{x}}},{\tilde{\mathbf{u}}})\in \mathbb R^N\times \mathbb R^P\) such that \( (\mathbf{x}_{n_j},\mathbf{u}_{n_j}) \overset{{\mathcal {F}}}{\rightarrow } ({\tilde{\mathbf{x}}},{\tilde{\mathbf{u}}})\quad \text {as} \quad j\rightarrow \infty \,. \)

-

(H4)

(Distance condition) It holds that \(d_{{n}}\rightarrow 0 \Longrightarrow \Vert \mathbf{x}_{{n+1}}-\mathbf{x}_{{n}} \Vert _{2} \rightarrow 0 \) and \(\exists {n}^\prime \in \mathbb N:\forall {n}\ge {n}^\prime :d_{{n}}= 0 \Longrightarrow \exists {n}^{\prime \prime }\in \mathbb N:\forall {n}\ge {n}^{\prime \prime } :\mathbf{x}_{{n+1}}=\mathbf{x}_{{n}}\,.\)

-

(H5)

(Parameter condition) \((b_{{n}})_{{n}\in \mathbb N}\not \in \ell _1\,, \sup _{n\in \mathbb N} \frac{1}{b_{{n}}a_{{n}}} < \infty \,, \inf _{n}a_{{n}}=: {\underline{a}} > 0\,.\)

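We now provide the global convergence statement from Ochs [43], based on Assumption 1. The set of limit points of a bounded sequence \(((\mathbf{x}_{{n}},\mathbf{u}_{{n}}))_{{n}\in \mathbb N}\) is \(\omega (\mathbf{x}_{0},\mathbf{u}_{0}) := \limsup _{{n}\rightarrow \infty }\, \left\{ (\mathbf{x}_{{n}},\mathbf{u}_{{n}}) \right\} \,,\) and we denote the subset of \({\mathcal {F}}\)-attentive limit points by

$$\begin{aligned} \omega _{{\mathcal {F}}}(\mathbf{x}_{0},\mathbf{u}_{0}) := \left\{ ({\bar{\mathbf{x}}},{\bar{\mathbf{u}}})\in \omega (\mathbf{x}_{0},\mathbf{u}_{0})\,\vert \,\exists K\subset \mathbb N:\ (\mathbf{x}_{{n}},\mathbf{u}_{{n}}) \overset{{\mathcal {F}}}{\rightarrow } ({\bar{\mathbf{x}}},{\bar{\mathbf{u}}}) \text { as } {n}\overset{K}{\rightarrow }\infty \right\} \,. \end{aligned}$$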

Theorem 2

(Global convergence [43, Theorem 10]) Suppose \({\mathcal {F}}\) is a proper lsc KL function that is bounded from below. Let \((\mathbf{x}_{{n}})_{{n}\in \mathbb N}\) be a bounded sequence generated by an abstract algorithm parametrized by a bounded sequence \((\mathbf{u}_{{n}})_{{n}\in \mathbb N}\) that satisfies Assumption 1. Assume that \({\mathcal {F}}\)-attentive convergence holds along converging subsequences of \(((\mathbf{x}_{{n}},\mathbf{u}_{{n}}))_{{n}\in \mathbb N}\), i.e. \(\omega (\mathbf{x}_{0},\mathbf{u}_{0})=\omega _{{\mathcal {F}}}(\mathbf{x}_{0},\mathbf{u}_{0})\). Then, the following holds:

-

(i)

The sequence \((d_{{n}})_{{n}\in \mathbb N}\) satisfies \(\sum _{{k}=0}^{\infty } d_{{k}}< +\infty \,,\) i.e., the trajectory of the sequence \((\mathbf{x}_{{n}})_{{n}\in \mathbb N}\) has finite length w.r.t. the abstract distance measures \((d_{{n}})_{{n}\in \mathbb N}\).

-

(ii)

Suppose \(d_{{k}}\) satisfies \(\Vert \mathbf{x}_{{k+1}}-\mathbf{x}_{{k}} \Vert _{2}\le {\bar{c}} d_{{k}+{k}^\prime }\) for some \({k}^\prime \in {\mathbb {Z}}\) and \({\bar{c}}\in \mathbb R\), then \((\mathbf{x}_{{n}})_{{n}\in \mathbb N}\) is a Cauchy sequence, and thus \((\mathbf{x}_{{n}})_{{n}\in \mathbb N}\) converges to \({\tilde{\mathbf{x}}}\) from (H3).

-

(iii)

Moreover, if \((\mathbf{u}_{n})_{{n}\in \mathbb N}\) is a converging sequence, then each limit point of the sequence \(((\mathbf{x}_{n},\mathbf{u}_{n}))_{n\in \mathbb N}\) is a critical point of \({\mathcal {F}}\), which in the situation of (ii) is the unique point \(({\tilde{\mathbf{x}}}, {\tilde{\mathbf{u}}})\) from (H3).

Legendre functions, defined below, generate Bregman distances, which generalize the (squared) Euclidean distance as a proximity measure.

Definition 3

(Legendre function [50, Section 26]) Let \(h: \mathbb R^N \rightarrow \overline{\mathbb R}\) be a proper lsc convex function. It is called:

-

(i)

essentially smooth, if h is differentiable on \(\mathrm {int}\,\mathrm {dom}\,h\), with moreover \(\Vert \nabla h(\mathbf{x}_{{k}}) \Vert _{} \rightarrow \infty \) for every sequence \((\mathbf{x}_{k})_{{k}\in \mathbb N} \subset \mathrm {int}\,\mathrm {dom}\,h\) converging to a boundary point of \(\mathrm {dom}\,h\) as \(k\rightarrow \infty \);

-

(ii)

of Legendre type if \(h\) is essentially smooth and strictly convex on \(\mathrm {int}\,\mathrm {dom}\,h\).

Legendre functions satisfy \(\mathrm {dom}\,\partial h = \mathrm {int}\,\mathrm {dom}\,h\) and \(\partial h(\mathbf{x}) = \{\nabla h(\mathbf{x})\}\) for all \(\mathbf{x}\in \mathrm {int}\,\mathrm {dom}\,h\). Additional properties can be found in Bauschke and Borwein [6, Section 2.3]. For the purpose of our analysis, we later require the Legendre functions to be twice continuously differentiable (see Assumption 4). Legendre functions are also referred to as kernel generating distances [16] or reference functions [33]. The reference functions used in Lu et al. [33] are more general than Legendre functions, as they do not require essential smoothness. The Bregman distance associated with a Legendre function h is defined by

$$\begin{aligned} D_{h}(\mathbf{x},\mathbf{y}) := {\left\{ \begin{array}{ll} h(\mathbf{x}) - h(\mathbf{y}) - \left\langle \nabla h(\mathbf{y}),\mathbf{x}-\mathbf{y} \right\rangle \,, & \text {if } \mathbf{x}\in \mathrm {dom}\,h,\ \mathbf{y}\in \mathrm {int}\,\mathrm {dom}\,h\,,\\ +\infty \,, & \text {otherwise}\,. \end{array}\right. } \end{aligned}$$

In contrast to the Euclidean distance, the Bregman distance is in general not symmetric. Prominent examples of Bregman distances can be found in Bauschke et al. [9, Examples 1, 2]; for additional results, we refer the reader to Bauschke and Borwein [6] and Bauschke et al. [7,8,9]. We provide some examples below.

-

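The Bregman distance generated by \(h(\mathbf{x}) = \frac{1}{2}\Vert \mathbf{x} \Vert _{}^2\) is \(D_h(\mathbf{x},\mathbf{y}) = \frac{1}{2}\Vert \mathbf{x}-\mathbf{y} \Vert _{}^2\), i.e., half the squared Euclidean distance.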

-

For \(\mathbf{x}, {\bar{\mathbf{x}}}\in \mathbb R_{++}^N\), the Legendre function \(h(\mathbf{x}) = -\sum _{i=1}^N\log (\mathbf{x}_i)\) (Burg’s entropy) is helpful in Poisson linear inverse problems [9].

-

For \(\mathbf{x}\in \mathbb R_{+}^N\) and \({\bar{\mathbf{x}}}\in \mathbb R_{++}^N\), the Legendre function \(h(\mathbf{x}) = \sum _{i=1}^N\mathbf{x}_i\log (\mathbf{x}_i)\) (Boltzmann–Shannon entropy), with \(0\log (0):= 0\), is helpful for handling simplex constraints [10].

-

Phase retrieval problems [16] use the Bregman distance based on the Legendre function \(h : \mathbb R^N \rightarrow \mathbb R\) that is given by \(h(\mathbf{x})= 0.25\Vert \mathbf{x} \Vert _{2}^4 + 0.5\Vert \mathbf{x} \Vert _{2}^2\,.\)

-

Matrix factorization problems [36, 52] use the Bregman distance based on the Legendre function \(h : \mathbb R^{N_1} \times \mathbb R^{N_2} \rightarrow \mathbb R\) that is given by \(h(\mathbf{x}, \mathbf{y}) = c_1(\Vert \mathbf{x} \Vert _{}^2 + \Vert \mathbf{y} \Vert _{}^2)^2 + c_2(\Vert \mathbf{x} \Vert _{}^2 + \Vert \mathbf{y} \Vert _{}^2)\) with certain \(c_1,c_2>0\) and \(N_1,N_2 \in \mathbb N\).
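The Bregman distances above can be evaluated directly from their generating functions. The Python sketch below (plain Python; the kernel names and test points are our own choices) computes \(D_h(\mathbf{x},\mathbf{y}) = h(\mathbf{x}) - h(\mathbf{y}) - \left\langle \nabla h(\mathbf{y}),\mathbf{x}-\mathbf{y} \right\rangle \) for the first four kernels and prints both \(D_h(\mathbf{x},\mathbf{y})\) and \(D_h(\mathbf{y},\mathbf{x})\), which makes the lack of symmetry visible. The matrix factorization kernel is omitted for brevity.

```python
import math

def bregman(h, grad_h, x, y):
    """D_h(x, y) = h(x) - h(y) - <grad h(y), x - y> for vectors given as lists."""
    return h(x) - h(y) - sum(g * (xi - yi) for g, xi, yi in zip(grad_h(y), x, y))

def sq_norm(x):
    return sum(t * t for t in x)

def xlogx(t):
    # Convention 0 log 0 := 0
    return 0.0 if t == 0.0 else t * math.log(t)

KERNELS = {
    # h(x) = ||x||^2 / 2, giving D_h(x, y) = ||x - y||^2 / 2
    "half_sq_norm": (lambda x: 0.5 * sq_norm(x), lambda x: list(x)),
    # Burg's entropy on the positive orthant
    "burg": (lambda x: -sum(math.log(t) for t in x),
             lambda x: [-1.0 / t for t in x]),
    # Boltzmann-Shannon entropy (the second argument y must be strictly positive)
    "boltzmann_shannon": (lambda x: sum(xlogx(t) for t in x),
                          lambda x: [math.log(t) + 1.0 for t in x]),
    # Phase retrieval kernel h(x) = ||x||^4 / 4 + ||x||^2 / 2
    "phase_retrieval": (lambda x: 0.25 * sq_norm(x) ** 2 + 0.5 * sq_norm(x),
                        lambda x: [(sq_norm(x) + 1.0) * t for t in x]),
}

x, y = [1.0, 2.0], [3.0, 0.5]
for name, (h, grad_h) in KERNELS.items():
    d_xy = bregman(h, grad_h, x, y)
    d_yx = bregman(h, grad_h, y, x)
    print(f"{name}: D(x,y)={d_xy:.4f}  D(y,x)={d_yx:.4f}")
```

For Burg's entropy this reproduces \(\sum _i (\mathbf{x}_i/\mathbf{y}_i - \log (\mathbf{x}_i/\mathbf{y}_i) - 1)\), and for the Boltzmann–Shannon entropy \(\sum _i (\mathbf{x}_i\log (\mathbf{x}_i/\mathbf{y}_i) - \mathbf{x}_i + \mathbf{y}_i)\).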

2 Problem setting and Model BPG algorithm

We consider the optimization problem (1) where f satisfies the following assumption, which we impose henceforth.

Assumption 2

\(f:\mathbb R^N \rightarrow \overline{\mathbb R}\) is proper, lsc (possibly nonconvex nonsmooth) and coercive, i.e., as \(\Vert \mathbf{x} \Vert _{} \rightarrow \infty \) we have \(f(\mathbf{x}) \rightarrow \infty \).

Due to Rockafellar and Wets [51, Theorem 1.9], the function f satisfying Assumption 2 is bounded from below, and \(\mathop {\hbox {Argmin}}\limits _{\mathbf{x}\in \mathbb R^N} f(\mathbf{x})\) is nonempty and compact. Denote \(v({\mathcal {P}}) := \min _{\mathbf{x}\in \mathbb R^N} f(\mathbf{x}) > -\infty \,.\) We require the following definitions.

Definition 4

(Growth function [25, 47]) A differentiable univariate function \(\varsigma :\mathbb R_+ \rightarrow \mathbb R_+\) is called a growth function if it satisfies \(\varsigma (0)=\varsigma _+^\prime (0) = 0\), where \(\varsigma ^\prime _+\) denotes the one-sided (right) derivative of \(\varsigma \). If, in addition, \(\varsigma _+^\prime (t) >0\) for \(t>0\) and the equalities \(\lim _{t\searrow 0} \varsigma _+^\prime (t) = \lim _{t\searrow 0} \varsigma (t)/\varsigma _+^\prime (t) = 0\) hold, we say that \(\varsigma \) is a proper growth function.

An example of a proper growth function is \(\varsigma (t) = \frac{\eta }{r}t^r\) with \(\eta >0\) and \(r>1\). Lipschitz continuity and Hölder continuity can be interpreted in terms of growth functions or, more generally, in terms of uniform continuity [47]. We use the notion of a growth function to quantify the difference between a model function (defined below) and the objective.
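The conditions of Definition 4 for \(\varsigma (t) = \frac{\eta }{r}t^r\) with \(\eta >0\) can be checked directly:

$$\begin{aligned} \varsigma _+^\prime (t) = \eta \, t^{r-1}\,,\qquad \frac{\varsigma (t)}{\varsigma _+^\prime (t)} = \frac{t}{r}\quad (t>0)\,. \end{aligned}$$

Hence \(\varsigma (0)=0\), \(\varsigma _+^\prime (t)>0\) for \(t>0\), and \(\lim _{t\searrow 0}\varsigma (t)/\varsigma _+^\prime (t)=0\) for every \(r>0\). The remaining requirements \(\varsigma _+^\prime (0) = \lim _{t\searrow 0}\varsigma _+^\prime (t) = 0\) hold exactly when \(r>1\): for \(r=1\) one gets \(\varsigma _+^\prime (0)=\eta \ne 0\), and for \(r<1\) the right derivative at 0 is infinite.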

Definition 5

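(Model Function) Let f be a proper lower semi-continuous (lsc) function. A function \(f(\cdot ,{\bar{\mathbf{x}}}):\mathbb R^N \rightarrow \overline{\mathbb R}\) with \(\mathrm {dom}\,f(\cdot ,{\bar{\mathbf{x}}}) = \mathrm {dom}\,f\) is called model function for f around the model center \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\), if there exists a growth function \(\varsigma _{{\bar{\mathbf{x}}}}\) such that the following is satisfied:

$$\begin{aligned} \vert f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \vert \le \varsigma _{{\bar{\mathbf{x}}}}(\Vert \mathbf{x}-{\bar{\mathbf{x}}} \Vert _{})\,,\quad \forall \mathbf{x}\in \mathrm {dom}\,f\,. \end{aligned}\tag{11}$$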

A model function is essentially a first-order approximation to a function f, which explains the name “Taylor-like model” used by Drusvyatskiy et al. [25]. The quality of the approximation is quantified by the growth function. Informally, the model function approximates the function well near the model center. Convex model functions are explored in Ochs et al. [47] and Ochs and Malitsky [44]. However, in our setting, the model functions can be nonconvex. Nonconvex model functions were considered in Drusvyatskiy et al. [25]; however, only subsequential convergence was shown there.

We refer to (11) as a bound on the model error, and the symbol \(\varsigma _{{\bar{\mathbf{x}}}}\) denotes the dependency of the growth function on the model center \({\bar{\mathbf{x}}}\). Typically the growth function depends on the model center, as we illustrate below.

Example 6

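(Running Example) Let \(f(\mathbf{x}) = \vert g(\mathbf{x}) \vert \) with \(g(\mathbf{x}) = \Vert \mathbf{x} \Vert _{}^4 - 1\). With \({\bar{\mathbf{x}}}\in \mathbb R^N\) as the model center, consider the model function

$$\begin{aligned} f(\mathbf{x};{\bar{\mathbf{x}}}) := \vert g({\bar{\mathbf{x}}}) + \left\langle \nabla g({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \vert \,,\quad \text {where}\quad \nabla g({\bar{\mathbf{x}}}) = 4\Vert {\bar{\mathbf{x}}} \Vert _{}^2{\bar{\mathbf{x}}}\,. \end{aligned}$$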

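With the growth function \(\varsigma _{{\bar{\mathbf{x}}}}(t) = 24\Vert {\bar{\mathbf{x}}} \Vert _{}^2 t^2 + 8t^4\), the model error satisfies

$$\begin{aligned} \vert f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \vert \le \vert g(\mathbf{x}) - g({\bar{\mathbf{x}}}) - \left\langle \nabla g({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \vert \le \varsigma _{{\bar{\mathbf{x}}}}(\Vert \mathbf{x}-{\bar{\mathbf{x}}} \Vert _{})\,. \end{aligned}$$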
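The growth function of Example 6 can be sanity-checked by sampling. The Python sketch below (dimension, sampling box, seed, and function names are illustrative choices of ours) uses the linearized model \(f(\mathbf{x};{\bar{\mathbf{x}}}) = \vert g({\bar{\mathbf{x}}}) + \left\langle \nabla g({\bar{\mathbf{x}}}),\mathbf{x}-{\bar{\mathbf{x}}} \right\rangle \vert \) with \(\nabla g({\bar{\mathbf{x}}}) = 4\Vert {\bar{\mathbf{x}}} \Vert _{}^2{\bar{\mathbf{x}}}\) and records the largest value of \(\vert f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \vert - \varsigma _{{\bar{\mathbf{x}}}}(\Vert \mathbf{x}-{\bar{\mathbf{x}}} \Vert _{})\) over random pairs. A non-positive result is consistent with the stated bound; it does not replace the analytical argument.

```python
import math
import random

def norm(v):
    return math.sqrt(sum(t * t for t in v))

def g(x):
    return norm(x) ** 4 - 1.0

def grad_g(x):
    # The gradient of ||x||^4 is 4 ||x||^2 x
    scale = 4.0 * norm(x) ** 2
    return [scale * t for t in x]

def f(x):
    return abs(g(x))

def model(x, xbar):
    # f(x; xbar) = |g(xbar) + <grad g(xbar), x - xbar>|
    lin = g(xbar) + sum(d * (a - b) for d, a, b in zip(grad_g(xbar), x, xbar))
    return abs(lin)

def growth(t, xbar):
    # varsigma_xbar(t) = 24 ||xbar||^2 t^2 + 8 t^4 from Example 6
    return 24.0 * norm(xbar) ** 2 * t**2 + 8.0 * t**4

def max_violation(n=5, trials=5000, seed=1):
    """Largest |f(x) - f(x; xbar)| - varsigma_xbar(||x - xbar||) over random samples."""
    rng = random.Random(seed)
    worst = -math.inf
    for _ in range(trials):
        x = [rng.uniform(-2.0, 2.0) for _ in range(n)]
        xbar = [rng.uniform(-2.0, 2.0) for _ in range(n)]
        t = norm([a - b for a, b in zip(x, xbar)])
        worst = max(worst, abs(f(x) - model(x, xbar)) - growth(t, xbar))
    return worst

print(max_violation())  # non-positive if the bound holds on every sample
```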

It is often of interest to obtain a uniform bound on the model error \(\vert f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \vert \), in which the growth function does not depend on the model center. In general, obtaining such a uniform bound is not trivial and may even be impossible. Moreover, finding an appropriate growth function is typically not trivial. For this purpose, it is preferable to have a global bound on the model error that can be easily verified, whose dependency on the model center is more structured, and whose constants do not depend on the model center. In the context of additive composite problems, previous works such as Bauschke et al. [9], Lu et al. [33] and Bolte et al. [16] relied on Bregman distances to upper bound the model error. Based on this idea, we propose the following MAP property, which is valid for a large class of structured nonconvex problems and also generalizes the previous works.

Definition 7

(MAP: Model Approximation Property) Let h be a Legendre function that is continuously differentiable over \(\mathrm {int}\,\mathrm {dom}\,h\). A proper lsc function f with \(\mathrm {dom}\,f \subset \mathrm {cl}\,\mathrm {dom}\,h\) and \(\mathrm {dom}\,f\cap \, \mathrm {int}\,\mathrm {dom}\,h\ne \emptyset \), together with model functions \(f(\cdot , {\bar{\mathbf{x}}})\) for f around \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f \cap \mathrm {int}\,\mathrm {dom}\,h\), satisfies the Model Approximation Property (MAP) with the constants \({{\bar{L}}} >0\), \(\underline{L} \in \mathbb R\), if for any \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\) the following holds:

$$\begin{aligned} -\underline{L}D_{h}(\mathbf{x},{\bar{\mathbf{x}}}) \le f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) \le {\bar{L}}D_{h}(\mathbf{x},{\bar{\mathbf{x}}})\,,\quad \forall \mathbf{x}\in \mathrm {dom}\,f\,. \end{aligned}\tag{12}$$

Remark 8

(Discussion on Definition 7)

-

(i)

The design of a model function is independent of an algorithm. However, algorithms can be governed by the model function (for example, see Model BPG below). Being a model function is analogous to differentiability or to a (uniform) first-order approximation. Note that for \({{\bar{\mathbf{x}}}} \in \mathrm {int}\,\mathrm {dom}\,h\), the Bregman distance satisfies \(D_h(\mathbf{x},{{\bar{\mathbf{x}}}}) = o(\Vert \mathbf{x}-{{\bar{\mathbf{x}}}} \Vert _{})\), i.e., it behaves like a growth function near \({\bar{\mathbf{x}}}\). Therefore, the MAP property imposes additional, algorithm-specific requirements on the model function. In particular, we require the constants \({{\bar{L}}}\) and \(\underline{L}\) to be independent of \({\bar{\mathbf{x}}}\), which provides a global consistency between the model function approximations.

-

(ii)

The condition \(\mathrm {dom}\,f\subset \mathrm {cl}\,\mathrm {dom}\,h\) is a minor regularity condition. For example, if \(\mathrm {dom}\,f= [0,\infty )\) and \(\mathrm {dom}\,h= (0,\infty )\) (e.g., for Burg’s entropy), such a function h can be used in the MAP property. However, the L-smad property [16] would require \(\mathbf{x},{\bar{\mathbf{x}}}\) in (12) to lie in \(\mathrm {int}\,\mathrm {dom}\,h\) (see also Sect. 4.1).

-

(iii)

Note that the choice of \({{\underline{L}}}\) is unrestricted in MAP property. For nonconvex f, \(\underline{L}\) is typically a positive real number. For convex f, typically the condition \({{\underline{L}}} \ge 0\) holds true. However, note that the values of \(\underline{L},{\bar{L}}\) are governed by the model function. For convex additive composite problems, \({{\underline{L}}} < 0\) can hold true for relatively strongly convex functions [33].

Example 9

(Running Example, Continued) We continue Example 6 to illustrate the MAP property. With \(h(\mathbf{x}) := \frac{1}{4}\Vert \mathbf{x} \Vert _{}^4\), we clearly have

which in turn results in the following upper bound for the model error

The upper bound is obtained in terms of a Bregman distance, and the resulting constants do not depend on the model center.
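The MAP bounds can be sanity-checked numerically. The Python sketch below assumes a hypothetical one-dimensional instance of the running example: \(g(x) = x^4 - 1\), so that \(f(x) = \vert x^4 - 1 \vert \) as in the abstract, with the model \(f(x;{\bar{x}}) = \vert g({\bar{x}}) + g'({\bar{x}})(x - {\bar{x}}) \vert \) (the model used in Example 15) and \(h(x) = \frac{1}{4}x^4\). For this instance, the reverse triangle inequality gives \(\vert f(x) - f(x;{\bar{x}}) \vert \le g(x) - g({\bar{x}}) - g'({\bar{x}})(x - {\bar{x}}) = 4D_h(x,{\bar{x}})\), i.e., the MAP property with \({\bar{L}} = \underline{L} = 4\) for every model center. The function `max_map_ratio` is a name chosen here for illustration; it samples random pairs and returns the largest observed ratio \(\vert f(x) - f(x;{\bar{x}}) \vert / D_h(x,{\bar{x}})\).

```python
import random


def g(x):
    # Inner function of the hypothetical 1-D instance f(x) = |g(x)|
    return x**4 - 1.0


def dg(x):
    # Derivative g'(x)
    return 4.0 * x**3


def f(x):
    return abs(g(x))


def model(x, xbar):
    # Model function f(x; xbar) = |g(xbar) + g'(xbar)(x - xbar)|
    return abs(g(xbar) + dg(xbar) * (x - xbar))


def bregman(x, y):
    # Bregman distance D_h(x, y) generated by h(x) = x^4 / 4
    return x**4 / 4.0 - y**4 / 4.0 - y**3 * (x - y)


def max_map_ratio(num_samples=50_000, radius=3.0, seed=0):
    """Largest observed |f(x) - f(x; xbar)| / D_h(x, xbar) over random pairs."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(num_samples):
        x = rng.uniform(-radius, radius)
        xbar = rng.uniform(-radius, radius)
        d = bregman(x, xbar)
        if d > 1e-6:  # skip near-coincident pairs, where the ratio is numerically unreliable
            worst = max(worst, abs(f(x) - model(x, xbar)) / d)
    return worst


if __name__ == "__main__":
    print(f"largest observed ratio: {max_map_ratio():.6f} (predicted bound: 4)")
```

For pairs with \(x > {\bar{x}} > 1\) the linearization is positive and the ratio equals 4 exactly, so the constant is tight for this instance.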

We now propose the Model BPG algorithm, whose update step relies on the upper bound of the MAP property.

Remark 10

(i) A closely related work by Davis et al. [20] considers only the lower bound of the MAP property, and their algorithm terminates by choosing an iterate according to a certain probability distribution. In stark contrast, Model BPG relies on the upper bound of the MAP property, and there is no need to invoke any probabilistic argument to choose the final iterate. Moreover, Davis et al. [20] consider weakly convex model functions, whereas we do not impose such a restriction.

(ii) For the global convergence analysis of Model BPG sequences, in addition to the step-size condition \(\tau _{{k}}\in [{{\underline{\tau }}},{{{\bar{\tau }}}}]\), we require \(\tau _k \rightarrow \tau \) as \(k \rightarrow \infty \) for some \(\tau > 0\) (see Theorems 17 and 18).

(iii) Model BPG is applicable to a broad class of structured nonconvex and nonsmooth problems. In particular, it can be applied efficiently to those nonconvex and nonsmooth problems for which the update step (13), involving the Bregman distance, can be computed easily.

We now collect all the assumptions required for the global convergence analysis of a sequence generated by the Model BPG algorithm.

Assumption 3

Let h be a Legendre function that is \({\mathcal {C}}^2\) over \(\mathrm {int}\,\mathrm {dom}\,h\). Moreover, the conditions \(\mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\ne \emptyset \), \(\mathrm {crit}f\cap \mathrm {int}\,\mathrm {dom}\,h\ne \emptyset \) and \(\mathrm {dom}\,f \subset \mathrm {cl}\,\mathrm {dom}\,h\) hold true.

(i) There exist \({{\bar{L}}} >0\) and \(\underline{L} \in \mathbb R\) such that for any \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\, \cap \, \mathrm {int}\,\mathrm {dom}\,h\), f and the model function \(f(\cdot , {\bar{\mathbf{x}}})\) satisfy the MAP property at \({\bar{\mathbf{x}}}\) with constants \({{\bar{L}}},\underline{L}\).

(ii) For any \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), the following qualification condition holds true:

$$\begin{aligned} \partial ^{\infty }_{\mathbf{x}}f(\mathbf{x};{\bar{\mathbf{x}}}) \cap (-N_{\mathrm {dom}\,h}(\mathbf{x})) = \{\mathbf{0}\}\,,\quad \forall \, \mathbf{x}\in \mathrm {dom}\,f\cap \mathrm {dom}\,h\,. \end{aligned}$$(14)

(iii) For all \(\mathbf{x}, \mathbf{y}\in \mathrm {dom}\,f\), the conditions \((\mathbf{0}, \mathbf{v}) \in \partial ^{\infty }f(\mathbf{x};\mathbf{y}) \,\,\text {implies}\,\, \mathbf{v}= \mathbf{0}\,,\) and \((\mathbf{v}, \mathbf{0}) \in \partial ^{\infty }f(\mathbf{x};\mathbf{y}) \,\,\text {implies}\,\,\mathbf{v}= \mathbf{0}\) hold true. Also, \(f(\mathbf{x}; \mathbf{y})\) is regular [51, Definition 7.25] at any \((\mathbf{x}, \mathbf{y}) \in \mathrm {dom}\,f\times \mathrm {dom}\,f\).

(iv) The function \((\mathbf{x},{\bar{\mathbf{x}}}) \mapsto f(\mathbf{x};{\bar{\mathbf{x}}})\) is proper and lsc, and it is continuous on \(\mathrm {dom}\,f\times \mathrm {dom}\,f\).

By \(\partial _\mathbf{x}f(\mathbf{x};{\bar{\mathbf{x}}})\) we mean the limiting subdifferential of the model function \(\mathbf{x}\mapsto f(\mathbf{x};{\bar{\mathbf{x}}})\) with \({\bar{\mathbf{x}}}\) fixed, and \(\partial f(\mathbf{x};\mathbf{y})\) denotes the limiting subdifferential with respect to \((\mathbf{x},\mathbf{y})\); likewise for the horizon subdifferential.

Remark 11

(Discussion on Assumption 3) The qualification condition in (14) is required for the applicability of the subdifferential summation rule (see [51, Corollary 10.9]). Assumption 3(iii) and [51, Corollary 10.11] ensure that for all \(\mathbf{x}, \mathbf{y}\in \mathrm {dom}\,f\), the following holds true:

Our analysis relies on (Assumption 3(iii)’). However, note that Assumption 3(iii) is a sufficient condition for (Assumption 3(iii)’) to hold. Certain classes of functions mentioned in Sect. 4 satisfy (Assumption 3(iii)’) directly, instead of Assumption 3(iii). Assumption 3(iv) is typically satisfied in practice and plays a key role in Lemma 30. Based on Assumption 3(iii), for any fixed \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\), the model function \(f({\mathbf{x};{\bar{\mathbf{x}}}})\) is regular at any \(\mathbf{x}\in \mathrm {dom}\,f\). Using this fact, we deduce that the model function preserves the first order information of the function, in the sense that for \(\mathbf{x}\in \mathrm {dom}\,f\) the condition \(\partial _{\mathbf{y}} f({\mathbf{y}};\mathbf{x})|_{{\mathbf{y}} = \mathbf{x}} = {\widehat{\partial }} f(\mathbf{x})\) holds true (based on Ochs and Malitsky [44, Lemma 14]).

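Many popular algorithms, such as Gradient Descent, the Proximal Gradient Method, the Bregman Proximal Gradient Method, and the Prox-Linear method, are special cases of Model BPG, depending on the choice of the model function and of the Bregman distance, which makes Model BPG a unifying algorithm (cf. also Ochs et al. [47]). Examples of model functions are provided in Sect. 4. For \(\tau >0\) and \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), the update mapping (as in (13)) is defined by

$$\begin{aligned} T_{\tau }({\bar{\mathbf{x}}}) := \mathop {\mathrm {argmin}}\limits _{\mathbf{x}\in \mathbb R^N} \left\{ f(\mathbf{x};{\bar{\mathbf{x}}}) + \frac{1}{\tau } D_h(\mathbf{x},{\bar{\mathbf{x}}})\right\} \,. \end{aligned}$$(15)
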
Define \(\varepsilon _k := \frac{1}{\tau _{{k}}}-{\bar{L}}\), which is positive since \(\tau _{{k}}\le {{{\bar{\tau }}}} < \frac{1}{{\bar{L}}}\); clearly \( {{\underline{\varepsilon }}}\le \varepsilon _{{k}}\le {{{\bar{\varepsilon }}}}\), where \({{{\bar{\varepsilon }}}} := \frac{1}{{\underline{\tau }}} - {\bar{L}}\) and \({{\underline{\varepsilon }}} := \frac{1}{{{\bar{\tau }}}} - {\bar{L}}\). Well-posedness of the update step (13) is guaranteed by the following result.
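To make the update mapping (15) and the step-size condition concrete, here is a minimal Python sketch of the Model BPG iteration on the same hypothetical one-dimensional instance: \(f(x) = \vert x^4 - 1 \vert \), model \(\vert g({\bar{x}}) + g'({\bar{x}})(x - {\bar{x}}) \vert \) with \(g(x) = x^4 - 1\), and \(h(x) = \frac{1}{4}x^4\), for which the MAP constant \({\bar{L}} = 4\) is assumed. It is an illustration under these assumptions, not the authors' implementation. The subproblem is convex in x, so its minimizer either satisfies \(x^3 = {\bar{x}}^3 - \tau g'({\bar{x}})s\) for a sign \(s \in \{-1,+1\}\) of the linearization, or sits at the kink \({\bar{x}} - g({\bar{x}})/g'({\bar{x}})\); the hypothetical helper `model_bpg_step` evaluates these candidates and keeps the best. A constant step \(\tau _k = 0.2 < 1/{\bar{L}}\) is used, so \(\varepsilon _k = 1 > 0\).

```python
import math

L_BAR = 4.0  # assumed MAP constant for f(x) = |x^4 - 1| with h(x) = x^4 / 4


def g(x):
    return x**4 - 1.0


def dg(x):
    return 4.0 * x**3


def f(x):
    return abs(g(x))


def bregman(x, y):
    # D_h(x, y) for h(x) = x^4 / 4
    return x**4 / 4.0 - y**4 / 4.0 - y**3 * (x - y)


def cbrt(v):
    # Real cube root that also handles negative arguments
    return math.copysign(abs(v) ** (1.0 / 3.0), v)


def model_bpg_step(xbar, tau):
    """Return a point of T_tau(xbar) = argmin_x |a + b(x - xbar)| + D_h(x, xbar) / tau."""
    a, b = g(xbar), dg(xbar)

    def objective(x):
        return abs(a + b * (x - xbar)) + bregman(x, xbar) / tau

    # Stationary points of the two smooth pieces (sign s = +1 or -1) ...
    candidates = [cbrt(xbar**3 - tau * b * s) for s in (1.0, -1.0)]
    # ... and the kink of |.|, if the linearization is not constant.
    if b != 0.0:
        candidates.append(xbar - a / b)
    # The objective is convex, so its minimizer is among the candidates.
    return min(candidates, key=objective)


def model_bpg(x0, tau=0.2, iters=50):
    assert 0.0 < tau < 1.0 / L_BAR, "step size must satisfy tau < 1/L_bar"
    xs = [x0]
    for _ in range(iters):
        xs.append(model_bpg_step(xs[-1], tau))
    return xs


if __name__ == "__main__":
    xs = model_bpg(2.0)
    print(xs[-1], f(xs[-1]))
```

From \(x_0 = 2\), each step in this instance lands on the kink \({\bar{x}} - g({\bar{x}})/g'({\bar{x}})\), i.e., a Newton step on g, and the iterates approach the critical point \(x = 1\) while the function values decrease as predicted by Lemma 13.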

Lemma 12

Let Assumptions 2 and 3 hold and let \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\). Then, for all \(0<\tau <\frac{1}{{\bar{L}}}\), the set \(T_{\tau }({\bar{\mathbf{x}}})\) is a nonempty compact subset of \(\mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\).

Proof

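As a consequence of the MAP property (Assumption 3(i)) and the nonnegativity of Bregman distances, the following property is satisfied:

$$\begin{aligned} f(\mathbf{x};{\bar{\mathbf{x}}}) + \frac{1}{\tau }D_h(\mathbf{x},{\bar{\mathbf{x}}}) \ge f(\mathbf{x}) + \left( \frac{1}{\tau } - {\bar{L}}\right) D_h(\mathbf{x},{\bar{\mathbf{x}}}) \ge f(\mathbf{x})\,,\quad \forall \,\mathbf{x}\in \mathrm {dom}\,f\,. \end{aligned}$$
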
Coercivity of f transfers to that of the objective in (15), and we get the conclusion from standard arguments; see [51, Theorem 1.9]. \(\square \)

The conclusion of the lemma remains true under other sufficient conditions, for instance, when the model function has an affine minorant and h is supercoercive (see, e.g., [16, Section 3.1]). We now show that Model BPG generates monotonically nonincreasing function values.

Lemma 13

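(Sufficient Descent Property in Function values) Let Assumptions 2 and 3 hold, and let \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be a sequence generated by Model BPG. Then, for \(k\ge 1\), the following holds:

$$\begin{aligned} f(\mathbf{x}_{{k+1}}) \le f(\mathbf{x}_{{k}}) - \varepsilon _{{k}}D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\,. \end{aligned}$$
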
Proof

Due to (13), we have \(f(\mathbf{x}_{{k+1}};\mathbf{x}_{{k}})+ \frac{1}{\tau _{{k}}}D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le f(\mathbf{x}_{{k}};\mathbf{x}_{{k}}) = f(\mathbf{x}_{{k}})\,.\) From the MAP property, we have \(f(\mathbf{x}_{{k+1}}) \le f(\mathbf{x}_{{k+1}};\mathbf{x}_{{k}}) + {{{\bar{L}}}}D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\,.\) Combining the two inequalities and using \(\varepsilon _{{k}} = \frac{1}{\tau _{{k}}} - {\bar{L}}\) yields the result. \(\square \)

Remark 14

Under Assumptions 2 and 3, the coercivity of f together with Lemma 13 implies that the iterates of Model BPG lie in the compact set \(\{\mathbf{x}: f(\mathbf{x}) \le f(\mathbf{x}_0)\}\).

Assumption 4

(i) For any bounded set \(B\subset \mathrm {dom}\,f\), there exists \(c>0\) such that for any \(\mathbf{x}, \mathbf{y}\in B\) we have

$$\begin{aligned} \Vert \partial _{\mathbf{y}}f(\mathbf{x};\mathbf{y}) \Vert _{-} \le c\Vert \mathbf{x}- \mathbf{y} \Vert _{} \,. \end{aligned}$$

(ii) The function \(h\) has a bounded second derivative on any compact subset \(B\subset \mathrm {int}\,\mathrm {dom}\,h\).

(iii) For bounded sequences \((\mathbf{u}_{{k}})_{{k}\in \mathbb N}\), \((\mathbf{v}_{{k}})_{{k}\in \mathbb N}\) in \(\mathrm {int}\,\mathrm {dom}\,h\), the following holds as \({k}\rightarrow \infty \):

$$\begin{aligned} D_{h}(\mathbf{u}_{{k}},\mathbf{v}_{{k}}) \rightarrow 0 \iff \Vert \mathbf{u}_{{k}}- \mathbf{v}_{{k}} \Vert _{} \rightarrow 0 \,. \end{aligned}$$

We now illustrate Assumption 4(i), which governs the variation of the model function with respect to the model center.

Example 15

We continue Example 6 to illustrate Assumption 4(i). Note that \(\nabla ^2g(\mathbf{x})\) is bounded over bounded sets. Consider any bounded set \(B\subset \mathbb R^N\), define \(c := \sup _{{\bar{\mathbf{x}}}\in B}\Vert \nabla ^2g({\bar{\mathbf{x}}}) \Vert _{}\), and choose any \(\mathbf{x}, {\bar{\mathbf{x}}}\in B\). Consider the model function \(f(\mathbf{x};{\bar{\mathbf{x}}}) := \vert g({\bar{\mathbf{x}}}) + \left\langle \nabla g({\bar{\mathbf{x}}}),\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \vert \,.\) Its subdifferential with respect to the model center is given by \(\partial _{\bar{\mathbf{x}}}f(\mathbf{x}; {\bar{\mathbf{x}}}) = \left\{ u\,\nabla ^2g({\bar{\mathbf{x}}})(\mathbf{x}-{\bar{\mathbf{x}}}) \,\vert \, u \in \partial \vert \cdot \vert \big (g({\bar{\mathbf{x}}}) + \left\langle \nabla g({\bar{\mathbf{x}}}),\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \big )\right\} \,.\) Since \(\vert u \vert \le 1\), the definition of c yields \(\Vert \partial _{{\bar{\mathbf{x}}}} f(\mathbf{x}; {\bar{\mathbf{x}}}) \Vert _{-} \le c \Vert \mathbf{x}- {\bar{\mathbf{x}}} \Vert _{}\,,\) which verifies Assumption 4(i).
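The bound in Example 15 can also be probed numerically. The Python sketch below assumes the hypothetical instance \(g(x) = x^4 - 1\) in one dimension with \(B = [-2, 2]\), so that \(c = \sup _B \vert g'' \vert = 12\cdot 2^2 = 48\). It approximates the derivative of the model with respect to the model center \({\bar{x}}\) by central finite differences (away from the kink of \(\vert \cdot \vert \), where the model is differentiable in \({\bar{x}}\)) and compares it with \(c\vert x - {\bar{x}} \vert \). The helper name `check_center_variation` and the sampling parameters are illustrative choices.

```python
import random


def g(x):
    return x**4 - 1.0


def dg(x):
    return 4.0 * x**3


def model(x, xbar):
    # f(x; xbar) = |g(xbar) + g'(xbar)(x - xbar)|
    return abs(g(xbar) + dg(xbar) * (x - xbar))


def check_center_variation(radius=2.0, samples=20_000, step=1e-6, seed=1):
    """Largest ratio |d/dxbar f(x; xbar)| / |x - xbar| over random pairs in B, and c."""
    c = 12.0 * radius**2  # sup of |g''(x)| = 12 x^2 over B = [-radius, radius]
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        x, xbar = rng.uniform(-radius, radius), rng.uniform(-radius, radius)
        lin = g(xbar) + dg(xbar) * (x - xbar)
        if abs(x - xbar) < 1e-3 or abs(lin) < 1e-3:
            continue  # skip near-coincident pairs and points close to the kink of |.|
        deriv = (model(x, xbar + step) - model(x, xbar - step)) / (2.0 * step)
        worst = max(worst, abs(deriv) / abs(x - xbar))
    return worst, c


if __name__ == "__main__":
    worst, c = check_center_variation()
    print(f"largest observed ratio {worst:.3f} <= c = {c}")
```

Away from the kink the derivative equals \(\pm g''({\bar{x}})(x - {\bar{x}})\), so the observed ratio is \(12{\bar{x}}^2\), which approaches c only for model centers near the boundary of B.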

To exploit the KL property in the global convergence analysis of Model BPG, we make the following assumption.

Assumption 5

Let \({\mathcal {O}}\) be an o-minimal structure. The functions \({\tilde{f}}:\mathbb R^N \times \mathbb R^N \rightarrow \overline{\mathbb R}\,,\, (\mathbf{x},{\bar{\mathbf{x}}}) \mapsto f(\mathbf{x};{\bar{\mathbf{x}}})\) with \(\mathrm {dom}\,{\tilde{f}} := \mathrm {dom}\,f\times \mathrm {dom}\,f\), and \({\tilde{h}}:\mathbb R^N \times \mathbb R^N \rightarrow \overline{\mathbb R}\,,\, (\mathbf{x},{\bar{\mathbf{x}}}) \mapsto h({\bar{\mathbf{x}}}) + \left\langle \nabla h({\bar{\mathbf{x}}}),\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \) with \(\mathrm {dom}\,{\tilde{h}} := \mathrm {dom}\,h\times \mathrm {int}\,\mathrm {dom}\,h\) are definable in \({\mathcal {O}}\).

An important feature of our analysis is that the Legendre function h satisfying Assumption 3 is not required to be strongly convex. Instead, we impose a significantly weaker condition in Assumption 6 provided below.

Assumption 6

For any compact convex set \(B\subset \mathrm {int}\,\mathrm {dom}\,h\), there exists \(\sigma _B >0\) such that h is \(\sigma _B\)-strongly convex over B, i.e., for any \(\mathbf{x}, \mathbf{y}\in B\) the condition \(D_h(\mathbf{x}, \mathbf{y})\ge \frac{\sigma _B}{2}\Vert \mathbf{x}- \mathbf{y} \Vert _{}^2\) holds.
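Assumption 6 can be illustrated with Burg’s entropy, which is not strongly convex on its whole domain. In one dimension, \(h(x) = -\log x\) has \(h''(x) = 1/x^2\), so on a compact interval \(B = [lo, hi] \subset (0,\infty )\) one may take \(\sigma _B = 1/hi^2\), since \(2D_h(x,y)/(x-y)^2\) is a weighted average of \(h''\) between x and y. The Python sketch below (the intervals are arbitrary illustrative choices) samples pairs in B and reports the smallest observed ratio \(2D_h(x,y)/(x-y)^2\), which should not fall below \(\sigma _B\); as hi grows, this ratio decays, reflecting the lack of global strong convexity.

```python
import math
import random


def burg_bregman(x, y):
    # D_h(x, y) for Burg's entropy h(x) = -log(x) on (0, inf): x/y - log(x/y) - 1
    r = x / y
    return r - math.log(r) - 1.0


def min_strong_convexity_ratio(lo, hi, samples=20_000, seed=2):
    """Smallest observed 2 D_h(x, y) / (x - y)^2 over random pairs in [lo, hi]."""
    rng = random.Random(seed)
    best = math.inf
    for _ in range(samples):
        x, y = rng.uniform(lo, hi), rng.uniform(lo, hi)
        if abs(x - y) > 0.1:  # avoid floating-point cancellation for nearly equal points
            best = min(best, 2.0 * burg_bregman(x, y) / (x - y) ** 2)
    return best


if __name__ == "__main__":
    for lo, hi in [(0.5, 2.0), (0.5, 20.0), (0.5, 200.0)]:
        sigma_b = 1.0 / hi**2  # minimum of h''(x) = 1/x^2 over [lo, hi]
        print(f"B = [{lo}, {hi}]: sigma_B = {sigma_b:.2e}, "
              f"observed min ratio = {min_strong_convexity_ratio(lo, hi):.2e}")
```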

Remark 16

(Discussion on Assumptions 4–6) Assumption 4(i) is illustrated in Example 15. Assumption 4(ii) is typically used in the analysis of Bregman proximal methods [16, 38, 47]. Assumption 4(iii) (see also [47, Remark 18]) essentially states that the asymptotic behavior of a vanishing Bregman distance is equivalent to that of a vanishing Euclidean distance. Note that Assumption 4(iii) only involves bounded sequences in \(\mathrm {int}\,\mathrm {dom}\,h\), and thus it is satisfied for many Bregman distances, such as those based on the Boltzmann-Shannon entropy [47, Example 40] and Burg’s entropy [47, Example 41]. However, such distances may violate Assumption 4(iii) if the sequences are bounded only in \(\mathrm {dom}\,h\) or in \(\mathrm {cl}\,\mathrm {dom}\,h\) (for example, see Sect. 5.2). Assumption 5 is used in Lemma 28 to deduce that \(F^{h}_{{\bar{L}}}\) satisfies the KL property. Assumption 6 plays a key role in proving global convergence of the sequence generated by Model BPG.

3 Global convergence analysis of model BPG algorithm

3.1 Main results

Our goal is to show that the sequence generated by Model BPG is a gradient-like descent sequence to which Theorem 2 is applicable. The convergence analysis of several popular algorithms in nonconvex optimization (for example, PGM, BPG, and PALM [15]) is based on a descent property: usually, the objective value is shown to decrease (for example, see [16, Lemma 4.1]). However, techniques based on function values, as used in the additive composite setting, no longer work for general composite problems; hence alternatives such as [48] are sought. We analyse Model BPG using a Lyapunov function as our measure of progress. Our Lyapunov function \(F^h_{{\bar{L}}}\) is given by

$$\begin{aligned} F^h_{{\bar{L}}}(\mathbf{x},\mathbf{y}) := f(\mathbf{x};\mathbf{y}) + {\bar{L}}D_h(\mathbf{x},\mathbf{y})\,, \end{aligned}$$(16)

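and \(\mathrm {dom}\,F^h_{{\bar{L}}} = (\mathrm {dom}\,f)^2 \cap (\mathrm {dom}\,h \times \mathrm {int}\,\mathrm {dom}\,h)\,.\) The set of critical points of \(F^h_{{\bar{L}}}\) is given by

$$\begin{aligned} \mathrm {crit}\,F^h_{{\bar{L}}} := \left\{ (\mathbf{x},\mathbf{y}) \in \mathbb R^N\times \mathbb R^N\,\vert \, \mathbf{0}\in \partial F^h_{{\bar{L}}}(\mathbf{x},\mathbf{y}) \right\} \,. \end{aligned}$$
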
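The set of limit points of a sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) is denoted by \(\omega (\mathbf{x}_{0}) := \left\{ \mathbf{x}\in \mathbb R^N\,\vert \,\exists K\subset \mathbb N:\mathbf{x}_{{k}}\overset{K}{\rightarrow } \mathbf{x} \right\} ,\) and its subset of f-attentive limit points by

$$\begin{aligned} \omega _f(\mathbf{x}_{0}) := \left\{ \mathbf{x}\in \omega (\mathbf{x}_{0})\,\vert \,\exists K\subset \mathbb N:\mathbf{x}_{{k}}\overset{K}{\rightarrow } \mathbf{x},\; f(\mathbf{x}_{{k}})\overset{K}{\rightarrow } f(\mathbf{x}) \right\} \,. \end{aligned}$$
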
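In this regard, we denote \(\omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0}) := \omega (\mathbf{x}_{0}) \cap \mathrm {int}\,\mathrm {dom}\,h\) and \(\omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0}) := \omega _f(\mathbf{x}_{0}) \cap \mathrm {int}\,\mathrm {dom}\,h\).
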
Before we start with the convergence analysis, we present our main results and defer their proofs to Sect. 3.2. Informally, the following results state that the sequence generated by Model BPG converges to a point \(\mathbf{x}\) such that \((\mathbf{x},\mathbf{x})\) is a critical point of \(F_{{\bar{L}}}^h\) and \(\mathbf{x}\) is a critical point of f.

Theorem 17

(Global convergence to a critical point of the Lyapunov function) Let Assumptions 2–6 hold. Let the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be generated by Model BPG (Algorithm 1) with \(\tau _{{k}}\rightarrow \tau \) for some \(\tau > 0\), and suppose that \(\omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0}) =\omega (\mathbf{x}_{0})\). Then, convergent subsequences are \(F_{{\bar{L}}}^h\)-attentive convergent, and \(\sum _{{k}=0}^\infty \Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{} < +\infty \, \text {(finite length property)}\,.\) Moreover, the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) converges to \(\mathbf{x}\) such that \((\mathbf{x},\mathbf{x})\) is a critical point of \(F_{{\bar{L}}}^h\).

Theorem 18

(Global convergence to a critical point of the objective function) Under the conditions of Theorem 17, the sequence generated by Model BPG converges to a critical point of f.

It is possible to deduce convergence rates for a certain class of desingularizing functions. Based on Attouch and Bolte [2], Bolte et al. [15], Frankel et al. [26], we provide the following convergence rates for Model BPG sequences.

Theorem 19

(Convergence rates) Under the conditions of Theorem 17, let the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG converge to \(\mathbf{x}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), and let \(F^{h}_{{\bar{L}}}\) satisfy the KL property with the desingularizing function \(\varphi (s) = cs^{1-\theta }\,,\) for some \(c >0\) and \(\theta \in [0,1)\). Then, the following hold:

- If \(\theta = 0\), then \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) converges in a finite number of steps.

- If \(\theta \in (0, \frac{1}{2}]\), then \(\exists \, \rho \in [0,1)\), \(G > 0\) such that \(\forall \, k\ge 0\) we have \(\Vert \mathbf{x}_{{k}}- \mathbf{x} \Vert _{} \le G\rho ^k\,. \)

- If \(\theta \in (\frac{1}{2},1)\), then \(\exists \, G>0\) such that \(\forall \, k\ge 0\) we have \(\Vert \mathbf{x}_{{k}}- \mathbf{x} \Vert _{} \le G k^{-\frac{1-\theta }{2\theta -1}}\,.\)
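To illustrate the sublinear regime \(\theta \in (\frac{1}{2},1)\), the following Python sketch runs Gradient Descent (Model BPG with the Euclidean \(h = \frac{1}{2}\Vert \cdot \Vert ^2\) and the linear model, one of the special cases listed earlier) on the hypothetical toy problem \(f(x) = x^4\), whose KL exponent at 0 is \(\theta = \frac{3}{4}\). The exponent \(\frac{1-\theta }{2\theta -1} = \frac{1}{2}\) suggests that \(\sqrt{k}\,\vert x_k \vert \) stays bounded; for this recursion one can check that \(1/x_{k+1}^2 \approx 1/x_k^2 + 8\tau \), so \(\sqrt{k}\,x_k \rightarrow 1/\sqrt{8\tau }\). This is only suggestive: Theorem 19 involves the KL exponent of \(F^{h}_{{\bar{L}}}\), not of f, and \(x^4\) satisfies the MAP property with the Euclidean h only on bounded sets (here the sublevel set of \(x_0\)).

```python
import math


def gradient_descent_quartic(x0=1.0, tau=0.1, iters=100_000):
    """Iterates of x_{k+1} = x_k - tau * f'(x_k) for the toy problem f(x) = x^4."""
    xs = [x0]
    x = x0
    for _ in range(iters):
        x = x - tau * 4.0 * x**3  # gradient step with f'(x) = 4 x^3
        xs.append(x)
    return xs


if __name__ == "__main__":
    tau = 0.1
    xs = gradient_descent_quartic(tau=tau)
    # For theta = 3/4 the predicted exponent is (1 - theta) / (2 theta - 1) = 1/2,
    # so sqrt(k) * |x_k| should level off, here near 1 / sqrt(8 tau).
    for k in (10, 100, 1_000, 10_000, 100_000):
        print(k, math.sqrt(k) * abs(xs[k]), 1.0 / math.sqrt(8.0 * tau))
```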

The proof is only a slight modification of the proof of Attouch and Bolte [2, Theorem 5]; hence we omit it for brevity. In the above theorem, \(\theta \) is the so-called KL exponent (also called the Łojasiewicz exponent in classical algebraic geometry) of the Lyapunov function \(F^{h}_{{\bar{L}}}\) and not that of the function f. Thus, the KL exponent of \(F^{h}_{{\bar{L}}}\) is nontrivial to deduce even if the KL exponent of f is known, as it depends on the model function and the Bregman distance. In this regard, we refer the reader to Li and Pong [30] and Li et al. [31].

3.2 Additional results and proofs

We now look at some properties of \(F^h_{{\bar{L}}}\).

Proposition 20

The Lyapunov function defined in (16) satisfies the following:

(i) For all \(\mathbf{x}\in \mathrm {dom}\,f\cap \mathrm {dom}\,h\) and \(\mathbf{y}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), we have \( f(\mathbf{x}) \le F^h_{{\bar{L}}}(\mathbf{x},\mathbf{y})\,. \)

(ii) For all \(\mathbf{x}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), we have \( F^h_{{\bar{L}}}(\mathbf{x},\mathbf{x}) = f(\mathbf{x})\,. \)

(iii) Moreover, we have \(\inf _{(\mathbf{x},\mathbf{y})\,\in \, \mathbb R^N\times \mathbb R^N} F^h_{{\bar{L}}}(\mathbf{x},\mathbf{y}) \ge v({\mathcal {P}}) > -\infty \,.\)

Proof

(i) This follows from the MAP property and the definition of \(F^h_{{\bar{L}}}\).

(ii) Substituting \(\mathbf{y}=\mathbf{x}\) in (16) gives the result, since \(f(\mathbf{x};\mathbf{x}) = f(\mathbf{x})\) and \(D_h(\mathbf{x},\mathbf{x}) = 0\).

(iii) By the MAP property, we have \( v({\mathcal {P}})\le f(\mathbf{x}) \le f(\mathbf{x};\mathbf{y}) + {\bar{L}}D_h(\mathbf{x},\mathbf{y})\,, \) for all \( (\mathbf{x},\mathbf{y}) \in \mathrm {dom}\,F^h_{{\bar{L}}}\). Consequently, we obtain

$$\begin{aligned} \inf _{\mathbf{x}\in \mathrm {dom}\,f\,\cap \,\mathrm {dom}\,h}f(\mathbf{x}) \le \inf _{(\mathbf{x},\mathbf{y}) \in \mathrm {dom}\,F^h_{{\bar{L}}}}\left( f(\mathbf{x};\mathbf{y}) + {\bar{L}}D_h(\mathbf{x},\mathbf{y})\right) \,. \end{aligned}$$

The statement follows from \(\inf _{\mathbf{x}\in \mathbb R^N}f(\mathbf{x}) = v({\mathcal {P}}) > -\infty \), due to Assumption 2. \(\square \)

We proved the sufficient descent property in terms of function values in Lemma 13. We now prove the sufficient descent property of the Lyapunov function.

Proposition 21

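(Sufficient descent property) Let Assumptions 2 and 3 hold and let \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be a sequence generated by Model BPG. Then, for \(k\ge 1\), we have

$$\begin{aligned} F_{{\bar{L}}}^{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) + \varepsilon _{{k}}D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le F_{{\bar{L}}}^{h}(\mathbf{x}_{{k}},\mathbf{x}_{{k-1}})\,. \end{aligned}$$(18)
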
Proof

From (13), we have \(f(\mathbf{x}_{{k+1}};\mathbf{x}_{{k}})+ \frac{1}{\tau _{{k}}}D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le f(\mathbf{x}_{{k}};\mathbf{x}_{{k}}) = f(\mathbf{x}_{{k}}).\) From the MAP property, we have \(f(\mathbf{x}_{{k}}) \le f(\mathbf{x}_{{k}};\mathbf{x}_{{k-1}}) + {\bar{L}}D_h(\mathbf{x}_{{k}},\mathbf{x}_{{k-1}}).\) Combining these two inequalities with the definition of \(F_{{\bar{L}}}^{h}\) in (16) and \(\varepsilon _{{k}} = \frac{1}{\tau _{{k}}} - {\bar{L}}\) yields the result. \(\square \)

Proposition 22

Let Assumptions 2, 3 hold and let \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be a sequence generated by Model BPG. The following assertions hold:

(i) \(\left\{ F_{{\bar{L}}}^{h}\left( \mathbf{x}_{{k+1}}, \mathbf{x}_{{k}}\right) \right\} _{k \in \mathbb N}\) is nonincreasing and converges to a finite value.

(ii) \(\sum _{k = 1}^{\infty } D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) < \infty \) and \(\left\{ D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \right\} _{k \in \mathbb N}\) converges to zero.

(iii) For any \(n\in \mathbb N\), we have \( \min _{1 \le k \le n} D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le \frac{F_{{\bar{L}}}^{h}\left( \mathbf{x}_{1} , \mathbf{x}_{0}\right) -v({\mathcal {P}})}{{{\underline{\varepsilon }}} n}\,. \)

Proof

(i) The nonincreasing property follows from Proposition 21, since \(\varepsilon _{{k}}>0\). By Proposition 20(iii), the Lyapunov function is bounded from below, which implies convergence of \(\left\{ F_{{\bar{L}}}^{h}\left( \mathbf{x}_{{k+1}}, \mathbf{x}_{{k}}\right) \right\} _{k \in \mathbb N}\) to a finite value.

(ii) Summing (18) from \(k = 1\) to n (a positive integer) and using \({{\underline{\varepsilon }}} \le {\varepsilon _{{k}}}\), we get

$$\begin{aligned} \sum _{k = 1}^{n} D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le \frac{1}{{{\underline{\varepsilon }}}}\left( F_{{\bar{L}}}^{h}\left( \mathbf{x}_{1} , \mathbf{x}_{0}\right) -v({\mathcal {P}})\right) , \end{aligned}$$(19)

since \(F_{{\bar{L}}}^{h}\left( \mathbf{x}_{n + 1} , \mathbf{x}_{n}\right) \ge v({\mathcal {P}})\). Taking the limit as \(n \rightarrow \infty \), we obtain the first assertion, from which we deduce that \(\left\{ D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \right\} _{k \in \mathbb N}\) converges to zero.

(iii) This follows from (19) and \(n\min _{1\le k \le n} \left( D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \right) \le \sum _{k = 1}^{n} \left( D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \right) \). \(\square \)
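Propositions 21 and 22 can be checked on the simplest special case. With the Euclidean \(h(x) = \frac{1}{2}x^2\) and the linear model \(f(x;{\bar{x}}) = f({\bar{x}}) + f'({\bar{x}})(x - {\bar{x}})\), the update (15) has the closed form \(x = {\bar{x}} - \tau f'({\bar{x}})\), i.e., Gradient Descent. The Python sketch below assumes the hypothetical nonconvex test problem \(f(x) = \log (1 + x^2)\), for which \(\sup \vert f'' \vert = 2\), so the MAP property holds with \({\bar{L}} = \underline{L} = 2\), and \(v({\mathcal {P}}) = 0\). With \(\tau = 0.4 < 1/{\bar{L}}\) we get \(\varepsilon _k = 0.5\); the script checks the descent inequality (18) and the bound in Proposition 22(iii).

```python
import math

L_BAR = 2.0  # sup |f''| for f(x) = log(1 + x^2): MAP constant for h(x) = x^2 / 2


def f(x):
    return math.log1p(x * x)


def df(x):
    return 2.0 * x / (1.0 + x * x)


def breg(x, y):
    # Bregman distance of the Euclidean h(x) = x^2 / 2
    return 0.5 * (x - y) ** 2


def lyapunov(x, y):
    # F(x, y) = f(x; y) + L_BAR * D_h(x, y) with the linear model f(x; y) = f(y) + f'(y)(x - y)
    return f(y) + df(y) * (x - y) + L_BAR * breg(x, y)


def run(x0=3.0, tau=0.4, n=200):
    """Return x_0, ..., x_{n+1}; each step is the closed-form minimizer of the model subproblem."""
    assert 0.0 < tau < 1.0 / L_BAR
    xs = [x0]
    for _ in range(n + 1):
        xs.append(xs[-1] - tau * df(xs[-1]))  # gradient step x - tau f'(x)
    return xs


if __name__ == "__main__":
    tau, n = 0.4, 200
    eps = 1.0 / tau - L_BAR
    xs = run(tau=tau, n=n)
    descent_ok = all(
        lyapunov(xs[k + 1], xs[k]) + eps * breg(xs[k + 1], xs[k])
        <= lyapunov(xs[k], xs[k - 1]) + 1e-12
        for k in range(1, n + 1)
    )
    bound = lyapunov(xs[1], xs[0]) / (eps * n)  # v(P) = 0 for this f
    min_step = min(breg(xs[k + 1], xs[k]) for k in range(1, n + 1))
    print(descent_ok, min_step, bound)
```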

Lemma 23

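(Relative error) Let Assumptions 2, 3, and 4 hold. Let the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be generated by Model BPG. Then there exists a constant \(C>0\) such that for all \(k \ge 0\) we have

$$\begin{aligned} \Vert \partial F^h_{{\bar{L}}}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \Vert _{-} \le C \Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{}\,. \end{aligned}$$(20)
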
Proof

By Rockafellar and Wets [51, Exercise 8.8] or Mordukhovich [35, Theorem 2.19], the subdifferential \(\partial F^h_{{\bar{L}}} (\mathbf{x}_{{k+1}}, \mathbf{x}_{{k}})\) is given by

because the Bregman distance is continuously differentiable around \(\mathbf{x}_{{k}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\). Using Rockafellar and Wets [51, Corollary 10.11], Assumption 3(iv), and using the fact that h is \({\mathcal {C}}^2\) over \(\mathrm {int}\,\mathrm {dom}\,h\) (cf. Assumption 3) we obtain

Consider the following:

where in the first equality we use (21), in the second equality we use the result in (22) with \(\xi := (\xi _x , \xi _y)\) such that \(\xi _x \in \partial _{\mathbf{x}_{{k+1}}} f(\mathbf{x}_{{k+1}};\mathbf{x}_{{k}})\) and \(\xi _y \in \partial _{\mathbf{x}_{{k}}} f(\mathbf{x}_{{k+1}};\mathbf{x}_{{k}})\), and in the last step we use \(\nabla D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) = (\nabla h(\mathbf{x}_{{k+1}}) - \nabla h(\mathbf{x}_{{k}}), -\nabla ^2 h(\mathbf{x}_{{k}})(\mathbf{x}_{{k+1}}- \mathbf{x}_{{k}}))\,.\) The optimality of \(\mathbf{x}_{{k+1}}\) in (13) implies the existence of \(\xi _{\mathbf{x}_{{k+1}}}^{{k}+1}\in \partial _{\mathbf{x}_{{k+1}}} f({\mathbf{x}_{{k+1}};\mathbf{x}_{{k}}})\) such that the following condition holds: \(\xi _{\mathbf{x}_{{k+1}}}^{{k}+1} + \frac{1}{\tau _{{k}}} (\nabla h(\mathbf{x}_{{k+1}}) - \nabla h(\mathbf{x}_{{k}})) = \mathbf{0}\,.\) Therefore, the first block coordinate in (22) satisfies

Now consider the first term on the right-hand side of (23). We have

where in the second step we used (24), and in the last step we applied the mean value theorem together with the fact that the quantity \(\Vert \nabla ^2h(\mathbf{x}_{{k}}+ s(\mathbf{x}_{{k+1}}- \mathbf{x}_{{k}})) \Vert _{}\) is bounded by a constant \({\tilde{L}}_h > 0\) for some \(s \in [0,1]\), due to Assumption 4(ii). Considering the second term on the right-hand side of (23), we have

where in the last step we used Assumption 4(i) and the fact that \(\Vert \nabla ^2h(\mathbf{x}_{{k}}) \Vert _{}\) is bounded by \(L_h\). The result follows by combining the bounds obtained for the two terms in (23). \(\square \)

We now consider results on generic limit points and show that stationarity can indeed be attained for iterates produced by Model BPG.

Proposition 24

For a bounded sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) such that \(\Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{} \rightarrow 0\) as \(k \rightarrow \infty \), the following holds:

(i) \(\omega (\mathbf{x}_{0})\) is connected and compact,

(ii) \(\lim _{{k}\rightarrow \infty } \mathrm {dist}(\mathbf{x}_{{k}},\omega (\mathbf{x}_{0})) = 0\).

The proof relies on the same technique as the proof of Bolte et al. [15, Lemma 3.5] (see also Bolte et al. [15, Remark 3.3]). We now show that the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG indeed satisfies \(\Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{} \rightarrow 0\) as \(k \rightarrow \infty \), which enables the application of Proposition 24 to deduce properties of the sequence that are crucial for the proof of global convergence.

Proposition 25

Let Assumptions 2, 3, and 4 hold. Let \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be a sequence generated by Model BPG. Then, \(\mathbf{x}_{{k+1}}-\mathbf{x}_{{k}}\rightarrow 0\) as \({k}\rightarrow \infty \).

Proof

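By Proposition 22(ii), \(D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\rightarrow 0\) as \({k}\rightarrow \infty \). Since the iterates are bounded (Remark 14) and lie in \(\mathrm {int}\,\mathrm {dom}\,h\) (Lemma 12), Assumption 4(iii) yields the result. \(\square \)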

Analyzing the full set of limit points of the sequence generated by Model BPG is difficult, as illustrated in Ochs et al. [47], and global convergence in that generality is still an open problem. Moreover, the work in Ochs et al. [47] relies on convex model functions. To simplify the setting slightly, we restrict the set of limit points to \(\mathrm {int}\,\mathrm {dom}\,h\). Such a choice may appear restrictive; however, when applied to many practical problems, Model BPG generates sequences with this property, as illustrated in Sect. 5. The subset of \(F_{{\bar{L}}}^h\)-attentive (similar to f-attentive) limit points is

Also, we define \(\omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2} := \omega _{F_{{\bar{L}}}^h} \cap (\mathrm {int}\,\mathrm {dom}\,h\times \mathrm {int}\,\mathrm {dom}\,h)\).

Proposition 26

Let Assumptions 2, 3, 4 hold. Let \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be a sequence generated by Model BPG. Then, the following holds:

(i) \(\omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})=\omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\),

(ii) \(\mathbf{x}\in \omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\) if and only if \((\mathbf{x},\mathbf{x}) \in \omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2}(\mathbf{x}_{0})\),

(iii) \(F_{{\bar{L}}}^h\) is constant and finite on \(\omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2}(\mathbf{x}_{0})\), and \(f\) is constant and finite on \(\omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\) with the same value.

Proof

(i) We show the inclusion \(\omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\subset \omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\); the reverse inclusion \(\omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\subset \omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\) is clear by definition. Let \(\mathbf{x}^\star \in \omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\) with \(\mathbf{x}_{{k}}\overset{K}{\rightarrow } \mathbf{x}^\star \) for some \(K\subset \mathbb N\); then we obtain

By Assumption 4(iii), combined with the fact that \(\mathbf{x}_{{k}}\overset{K}{\rightarrow } \mathbf{x}^\star \), we have \(D_{h}(\mathbf{x}^\star ,\mathbf{x}_{{k}})\rightarrow 0\) as \({k}\overset{K}{\rightarrow }\infty \), which, together with the lower semicontinuity of \(f\), implies \( f(\mathbf{x}^\star ) \ge \limsup _{{k}\overset{K}{\rightarrow }\infty } f(\mathbf{x}_{{k+1}}) \ge \liminf _{{k}\overset{K}{\rightarrow }\infty } f(\mathbf{x}_{{k+1}}) \ge f(\mathbf{x}^\star ) \,; \) thus \(\mathbf{x}^\star \in \omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\).

(ii) If \(\mathbf{x}\in \omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\), then \(\mathbf{x}_{{k}}\overset{K}{\rightarrow }\mathbf{x}\) and \(f(\mathbf{x}_{{k}}) \overset{K}{\rightarrow } f(\mathbf{x})\) for some \(K\subset \mathbb N\). By Proposition 22(ii), \(D_{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\rightarrow 0\) as \({k}\rightarrow \infty \), which by Assumption 4(iii) yields \(\Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{}\rightarrow 0\) and hence \(\mathbf{x}_{{k+1}}\overset{K}{\rightarrow }\mathbf{x}\). The first part of the proof implies \(f(\mathbf{x}_{{k+1}}) \overset{K}{\rightarrow } f(\mathbf{x})\). We also have \(F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\overset{K}{\rightarrow } f(\mathbf{x})\), as shown below, which implies \((\mathbf{x},\mathbf{x})\in \omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2}(\mathbf{x}_{0})\). Note that by definition of \(F_{{\bar{L}}}^h\) we have

The MAP property gives \( f(\mathbf{x}_{{k+1}}) \le F_{{\bar{L}}}^{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le f(\mathbf{x}_{{k+1}}) + ({\bar{L}}+ \underline{L})D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \,. \) Thus, \(F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\overset{K}{\rightarrow } f(\mathbf{x})\), as \(D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \overset{K}{\rightarrow } 0\). Conversely, suppose \((\mathbf{x},\mathbf{x})\in \omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2}(\mathbf{x}_{0})\) and \(\mathbf{x}_{{k}}\overset{K}{\rightarrow }\mathbf{x}\) for some \(K\subset \mathbb N\). This, together with \(D_{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\rightarrow 0\) as \(k \overset{K}{\rightarrow } \infty \), implies \(F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\overset{K}{\rightarrow } f(\mathbf{x})\), which further implies \(f(\mathbf{x}_{{k+1}})\overset{K}{\rightarrow } f(\mathbf{x})\) for the following reason. Note that we have

Finally, we have \( F_{{\bar{L}}}^{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) - ({\bar{L}}+\underline{L})D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \le f(\mathbf{x}_{{k+1}}) \le F_{{\bar{L}}}^{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \,. \) Thus, with \(D_h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \rightarrow 0\) as \(k \overset{K}{\rightarrow } \infty \) and \(F_{{\bar{L}}}^{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \overset{K}{\rightarrow } f(\mathbf{x})\), we deduce that \(f(\mathbf{x}_{{k+1}}) \overset{K}{\rightarrow } f(\mathbf{x})\). Therefore, \(\mathbf{x}\in \omega _f^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0})\).

(iii) By Propositions 21 and 22(i), the sequence \((F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}))_{{k}\in \mathbb N}\) converges to a finite value \(\underline{F}\). Moreover, \(D_{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\rightarrow 0\) as \({k}\rightarrow \infty \) due to Proposition 22(ii), which, combined with Assumption 4(iii), implies \(\Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{} \rightarrow 0\). For \((\mathbf{x}^\star ,\mathbf{x}^\star )\in \omega _{F_{{\bar{L}}}^h}^{(\mathrm {int}\,\mathrm {dom}\,h)^2}(\mathbf{x}_{0})\), there exists \(K\subset \mathbb N\) such that \(\mathbf{x}_{{k}}\overset{K}{\rightarrow } \mathbf{x}^\star \) and \(F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \overset{K}{\rightarrow } F_{{\bar{L}}}^h(\mathbf{x}^\star ,\mathbf{x}^\star )= f(\mathbf{x}^\star )\). Since the whole sequence \((F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}))_{{k}\in \mathbb N}\) converges to \(\underline{F}\), we obtain \(f(\mathbf{x}^\star ) = \underline{F}\), i.e., the value at the limit point is independent of the choice of the subsequence. The result follows directly, using (i) and (ii). \(\square \)

The following result states that \(F_{{\bar{L}}}^h\)-attentive sequences converge to a critical point.

Theorem 27

(Sub-sequential convergence) Let Assumptions 2, 3, 4 hold. If the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) is generated by Model BPG, then

Proof

From (20), we have \(\Vert \partial F_{{\bar{L}}}^h(\mathbf{x}_{{k+1}}, \mathbf{x}_{{k}}) \Vert _{-} \le C \Vert \mathbf{x}_{{k+1}}- \mathbf{x}_{{k}} \Vert _{}\) for some constant \(C >0\). Combining \(\Vert \mathbf{x}_{{k+1}}-\mathbf{x}_{{k}} \Vert _{}\rightarrow 0\), the convergence of \((\tau _{{k}})_{{k}\in \mathbb N}\), and Proposition 26(i) with the closedness property of the limiting subdifferential (8) yields (25). \(\square \)

Discussion. Subsequential convergence to a stationary point has already been considered in a few works. In particular, Drusvyatskiy et al. [25] provide such a result; however, it relies on certain abstract assumptions. Even though these assumptions hold for some practical algorithms, the authors do not consider a concrete algorithm. Moreover, their abstract update step depends on the minimization of the model function, which can require additional regularity conditions on the problem. For example, if the model function is linear, then the domain must be compact to guarantee the existence of a minimizer. A related line-search variant of Model BPG was considered in Ochs et al. [47], for which subsequential convergence to a stationary point was proven. The subsequential convergence results in Ochs et al. [47] are more general than ours, as they analyse the behavior of limit points in \(\mathrm {dom}\,h\), \(\mathrm {cl}\,\mathrm {dom}\,h\), and \(\mathrm {int}\,\mathrm {dom}\,h\) (cf. Ochs et al. [47, Theorem 22]). Our analysis is restricted to limit points in \(\mathrm {int}\,\mathrm {dom}\,h\), since this assumption typically holds in practice (see Sect. 5). Although subsequential convergence is a satisfactory result, proving global convergence is nontrivial in general.

Lemma 28

Let Assumptions 2, 3, 4, 5 hold. Then, the Lyapunov function \(F^{h}_{{\bar{L}}}\) is definable in \({\mathcal {O}}\) and satisfies the KL property at any point of \(\mathrm {dom}\,\partial F^{h}_{{\bar{L}}}\).

The proof is a straightforward application of Ochs [42, Corollary 4.32] and Bolte et al. [14, Theorem 14]. For additive composite problems, the global convergence analysis of BPG-based methods [16, 38] relies on the strong convexity of h. In our setting, however, we relax this requirement on h via Assumption 6. This assumption is weaker than the strong convexity of h, as we only need the strong convexity property to hold over a compact convex set. Such a property can be satisfied even if h is not strongly convex, for example, by Burg's entropy (see Sect. 5.2). We now present the proof of Theorem 17, the result pertaining to the global convergence of the sequence generated by Model BPG.
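To make the Burg's entropy remark concrete, the following Python sketch is an illustration under our own assumptions, not part of the analysis. For \(h(\mathbf{x}) = -\sum_i \log x_i\) on the positive orthant, the Hessian \(\mathrm{diag}(1/x_i^2)\) has no uniform positive lower bound, so h is not strongly convex on \(\mathrm {int}\,\mathrm {dom}\,h\). On a box \(B=[m,M]^N\) (an assumed example of a compact convex subset of \(\mathrm {int}\,\mathrm {dom}\,h\)), however, its smallest eigenvalue is \(1/M^2\), which gives \(D_h(\mathbf{x},\mathbf{y}) \ge \frac{\sigma_B}{2}\Vert \mathbf{x}-\mathbf{y}\Vert^2\) with \(\sigma_B = 1/M^2\). The helper names and the box bounds are hypothetical.

```python
import numpy as np


def bregman_burg(x, y):
    """Bregman distance of Burg's entropy h(x) = -sum(log x_i), for x, y > 0."""
    r = x / y
    return float(np.sum(r - np.log(r) - 1.0))


def min_ratio_on_box(lower, upper, dim, samples=5000, seed=0):
    """Smallest observed D_h(x, y) / (sigma_B / 2 * ||x - y||^2) over random x, y in [lower, upper]^dim.

    sigma_B = 1 / upper**2 is the smallest Hessian eigenvalue of h on the box,
    so every ratio should be at least 1 (up to rounding).
    """
    rng = np.random.default_rng(seed)
    sigma_b = 1.0 / upper**2
    worst = np.inf
    for _ in range(samples):
        x = rng.uniform(lower, upper, dim)
        y = rng.uniform(lower, upper, dim)
        quad = 0.5 * sigma_b * np.sum((x - y) ** 2)
        worst = min(worst, bregman_burg(x, y) / quad)
    return worst


# Local strong convexity on a compact box inside the open positive orthant.
print(min_ratio_on_box(lower=0.1, upper=5.0, dim=3))

# No global modulus: with ||x - y|| = 1 fixed, D_h(x, y) -> 0 as the points move away from 0.
for t in [1.0, 10.0, 100.0, 1000.0]:
    print(t, bregman_burg(np.array([t + 1.0]), np.array([t])))
```

The second loop shows why only a set-dependent modulus \(\sigma_B\) can be expected: along \(x = t+1\), \(y = t\), the Bregman distance behaves like \(1/(2t^2)\) while the Euclidean distance stays equal to 1.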

Proof of Theorem 17

Note that the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG is bounded (see Remark 14). The proof relies on Theorem 2, for which we need to verify the conditions (H1)–(H5). Due to Lemma 28, \(F_{{\bar{L}}}^h\) satisfies the Kurdyka–Łojasiewicz property at each point of \(\mathrm {dom}\,\partial F^{h}_{{\bar{L}}}\). As \(\omega ^{\mathrm {int}\,\mathrm {dom}\,h}(\mathbf{x}_{0}) =\omega (\mathbf{x}_{0})\) holds, there exists a sufficiently small \(\varepsilon >0\) such that \({\tilde{B}} := \{\mathbf{x}: \mathrm {dist}(\mathbf{x}, \omega (\mathbf{x}_{0}))\le \varepsilon \} \subset \mathrm {int}\,\mathrm {dom}\,h\). As \(\omega (\mathbf{x}_{0})\) is compact due to Proposition 24(i), the set \({\tilde{B}}\) is also compact. Moreover, the convex hull \(B:=\text {conv} \,{\tilde{B}}\) is compact, since the convex hull of a compact set in a finite-dimensional space is compact. Since \(\mathrm {int}\,\mathrm {dom}\,h\) is convex (as h is convex) and contains \({\tilde{B}}\), the set B also lies in \(\mathrm {int}\,\mathrm {dom}\,h\). Thus, due to Proposition 25 along with Proposition 24(ii), we may assume without loss of generality that the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG lies in the set B. By the definition of \({\sigma }_B\) in Assumption 6, we have \(D_{h}(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}}) \ge \frac{\sigma _B}{2} \Vert \mathbf{x}_{{k+1}}-\mathbf{x}_{{k}} \Vert _{}^2 \,,\) through which we obtain

which is (H1) with \(d_k = \frac{\varepsilon _k \sigma _B}{2} \Vert \mathbf{x}_{{k+1}}-\mathbf{x}_{{k}} \Vert _{}^2\) and \(a_k= 1\). Moreover, there exists \(\mathbf{w}_{{k+1}}\in \partial F_{{\bar{L}}} ^h(\mathbf{x}_{{k+1}},\mathbf{x}_{{k}})\) such that the conclusion of Lemma 23 holds for some \(C>0\), which is (H2) with \(b =C\), since the coefficients of both Euclidean distances are bounded from above. The continuity condition (H3) follows from the existence of a convergent subsequence, which is guaranteed by the boundedness of \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\), together with Proposition 26, which guarantees that such convergent subsequences are \(F_{{\bar{L}}}^h\)-attentive convergent. The distance condition (H4) holds trivially as \(\varepsilon _k >0\) and \(\sigma _B >0\). The parameter condition (H5) holds because \(b_n = 1\) in this setting; hence \((b_{{n}})_{{n}\in \mathbb N}\not \in \ell _1\), and we also have \(\sup _{n\in \mathbb N} \frac{1}{b_{{n}}a_{{n}}} =1 < \infty \,, \quad \inf _{n}a_{{n}}= 1 > 0\,.\) Theorem 2 yields the finite length property, from which we deduce that the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG converges to a single point, which we denote by \(\mathbf{x}\). As \((\mathbf{x}_{{k+1}})_{{k}\in \mathbb N}\) also converges to \(\mathbf{x}\), the sequence \(((\mathbf{x}_{{k+1}}, \mathbf{x}_{{k}}))_{{k}\in \mathbb N}\) converges to \((\mathbf{x},\mathbf{x})\), which is a critical point of \(F^h_{{{{\bar{L}}}}}\) due to Theorem 27. \(\square \)

The global convergence result in Theorem 17 shows that the sequence generated by Model BPG converges to a point \(\mathbf{x}\) such that \((\mathbf{x},\mathbf{x})\) is a critical point of the Lyapunov function. However, our goal is to find a critical point of the objective function f. To this end, we first need the following result, which establishes the connection between fixed points of the update mapping and critical points of f.

Lemma 29

Let Assumptions 2, 3 hold, let \(0<\tau <({1}/{{\bar{L}}})\), and let \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\) be a fixed point of the update mapping \(T_{\tau }\), i.e., \({\bar{\mathbf{x}}}\in T_{\tau }({\bar{\mathbf{x}}})\). Then \({\bar{\mathbf{x}}}\) is a critical point of f.

Proof

Let \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\) be a fixed point of \(T_{\tau }\), in the sense that \( {\bar{\mathbf{x}}}\in T_{\tau }({\bar{\mathbf{x}}})\). By the definition of \(T_{\tau }({\bar{\mathbf{x}}})\) (first-order optimality of the subproblem), the condition \( \mathbf{0} \in \partial f(\mathbf{x};{\bar{\mathbf{x}}}) + \frac{1}{\tau }\left( \nabla h(\mathbf{x})-\nabla h({\bar{\mathbf{x}}})\right) \) holds at \(\mathbf{x}= {\bar{\mathbf{x}}}\). Since the gradient term vanishes at \(\mathbf{x}= {\bar{\mathbf{x}}}\), this implies that \(\mathbf{0} \in \partial f({\bar{\mathbf{x}}};{\bar{\mathbf{x}}})\). We know that \(\partial f({\bar{\mathbf{x}}};{\bar{\mathbf{x}}}) \subset \partial f({\bar{\mathbf{x}}})\); thus \({\bar{\mathbf{x}}}\) is a critical point of the function f. \(\square \)
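Lemma 29 can be illustrated in the simplest nonsmooth setting. The following is a minimal sketch under assumptions of our own choosing: the Euclidean kernel \(h = \frac{1}{2}\Vert \cdot \Vert^2\), \(f = f_0 + f_1\) with \(f_0 = \lambda \Vert \cdot \Vert_1\) and \(f_1 = \frac{1}{2}\Vert \cdot - \mathbf{a}\Vert^2\), and the model \(f(\mathbf{x};{\bar{\mathbf{x}}}) = f_0(\mathbf{x}) + f_1({\bar{\mathbf{x}}}) + \langle \nabla f_1({\bar{\mathbf{x}}}), \mathbf{x}-{\bar{\mathbf{x}}}\rangle\). Here \(f(\mathbf{x}) - f(\mathbf{x};{\bar{\mathbf{x}}}) = D_h(\mathbf{x},{\bar{\mathbf{x}}})\), so the upper constant is \({\bar{L}}=1\), and \(T_\tau\) reduces to a soft-thresholding (proximal gradient) step. The data \(\mathbf{a}\), \(\lambda\), \(\tau\) and all function names are hypothetical.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal map of t * ||.||_1 (componentwise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def update_map(x_bar, a, lam, tau):
    """T_tau(x_bar) for f0 = lam * ||.||_1, f1 = 0.5 * ||. - a||^2, h = 0.5 * ||.||^2."""
    grad_f1 = x_bar - a
    return soft_threshold(x_bar - tau * grad_f1, tau * lam)


def is_critical(x, a, lam, tol=1e-8):
    """Check 0 in lam * d||x||_1 + (x - a), coordinate by coordinate."""
    g = x - a
    nonzero = np.abs(x) > tol
    ok_nonzero = np.abs(g[nonzero] + lam * np.sign(x[nonzero])) <= tol
    ok_zero = np.abs(g[~nonzero]) <= lam + tol
    return bool(np.all(ok_nonzero) and np.all(ok_zero))


a = np.array([3.0, -0.5, 1.5, -2.0])  # hypothetical data
lam, tau = 1.0, 0.5                    # tau < 1 / L_bar with L_bar = 1
x = np.zeros_like(a)
for _ in range(200):
    x = update_map(x, a, lam, tau)

print(x)                                           # approximately [2, 0, 0.5, -1]
print(np.allclose(update_map(x, a, lam, tau), x))  # x is a fixed point of T_tau
print(is_critical(x, a, lam))                      # and a critical point of f
```

The fixed point found by iterating \(T_\tau\) satisfies the subdifferential inclusion of f coordinatewise, as Lemma 29 predicts; a point that is not fixed, such as the origin here, fails the check.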

We also require the following technical lemma, which establishes the sequential closedness property of the update mapping.

Lemma 30

(Continuity property) Let Assumptions 2, 3, 4 hold. Let the sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) be bounded such that \(\mathbf{x}_{{k}}\rightarrow {\bar{\mathbf{x}}}\), where \(\mathbf{x}_{{k}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\) \(\forall \, k \in \mathbb N\), and \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\). Let \(\tau _{{k}}\rightarrow \tau \), such that \(0< \underline{\tau } \le \tau _{{k}}\le {{{\bar{\tau }}}} < {1}/{{{{\bar{L}}}}}\). Assume that there exists a bounded set \(B \subset \mathrm {int}\,\mathrm {dom}\,h\), such that \(T_{\tau _{{k}}}(\mathbf{x}_{{k}}) \subset B\), \(\mathbf{x}_{{k}}\in B\), \(\forall k \in \mathbb N\). If \(\limsup _{k \rightarrow \infty }T_{\tau _{{k}}}(\mathbf{x}_{{k}}) \subset \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), then \(\limsup _{k \rightarrow \infty }T_{\tau _{{k}}}(\mathbf{x}_{{k}}) \subset T_{\tau }({\bar{\mathbf{x}}})\).

Proof

Consider any sequence \((\mathbf{y}_{{k}})_{{k}\in \mathbb N}\) such that for any \(k\in \mathbb N\), the condition \(\mathbf{y}_{{k}}\in T_{\tau _{{k}}}(\mathbf{x}_{{k}})\) holds true. Recall that \(f(\mathbf{x};\mathbf{y})\) is continuous on its domain due to Assumption 3(iv). By optimality of \(\mathbf{y}_k \in T_{\tau _{{k}}}(\mathbf{x}_{{k}})\), for any \(\mathbf{z}\in \mathbb R^N\) we have

Since \((\mathbf{y}_{{k}})_{{k}\in \mathbb N}\) lies in the bounded set B, the Bolzano–Weierstrass theorem yields a convergent subsequence: let \( K \subset \mathbb N\) be such that \(\mathbf{y}_{{k}}\overset{K}{\rightarrow } \pi \). By assumption, \(\pi \in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), and \(\tau _{{k}}\overset{K}{\rightarrow } \tau \). Taking the limit along K on both sides of (26), using the continuity of the model function and of the Bregman distance, gives

which implies that \(\pi \) minimizes the function \(f(\cdot ;{\bar{\mathbf{x}}}) + \frac{1}{\tau }D_h(\cdot ,{\bar{\mathbf{x}}})\), that is, \(\pi \in T_{\tau }({\bar{\mathbf{x}}})\). Since \(\pi \) was an arbitrary cluster point of such a sequence, the result follows. \(\square \)

We now provide the proof of Theorem 18, which states that the sequence generated by Model BPG indeed converges to a critical point of the objective function.

Proof of Theorem 18

The sequence \((\mathbf{x}_{{k}})_{{k}\in \mathbb N}\) generated by Model BPG under the assumptions of Theorem 17 is globally convergent; let \(\mathbf{x}_{{k}}\rightarrow \mathbf{x}\), so that also \(\mathbf{x}_{{k+1}}\rightarrow \mathbf{x}\). As \(\mathbf{x}_{{k+1}}\in T_{\tau _{{k}}}(\mathbf{x}_{{k}})\) and \(\tau _{{k}}\) converges to \(\tau \), Lemma 30 yields \(\mathbf{x}\in T_{\tau }(\mathbf{x})\), i.e., \(\mathbf{x}\) is a fixed point of the mapping \(T_{\tau }\). Then, using Lemma 29, we conclude that \(\mathbf{x}\) is a critical point of the function f.

\(\square \)

4 Examples

In this section, we consider special instances of \(({\mathcal {P}})\), namely, additive composite problems and a broad class of composite problems. The goal is to identify conditions on these problems under which the global convergence result (Theorem 18) of Model BPG applies. We impose the following blanket assumptions.

- (B1) The function h is a Legendre function that is \({\mathcal {C}}^2\) over \(\mathrm {int}\,\mathrm {dom}\,h\). For any compact convex set \(B\subset \mathrm {int}\,\mathrm {dom}\,h\), there exists \(\sigma _B >0\) such that h is \(\sigma _B\)-strongly convex with bounded second derivative on B. Moreover, for bounded \((\mathbf{u}_{{k}})_{{k}\in \mathbb N}\), \((\mathbf{v}_{{k}})_{{k}\in \mathbb N}\) in \(\mathrm {int}\,\mathrm {dom}\,h\), the following holds as \({k}\rightarrow \infty \):

$$\begin{aligned} D_{h}(\mathbf{u}_{{k}},\mathbf{v}_{{k}}) \rightarrow 0 \iff \Vert \mathbf{u}_{{k}}- \mathbf{v}_{{k}} \Vert _{} \rightarrow 0 \,. \end{aligned}$$

- (B2) The function f is coercive; additionally, the conditions \(\mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\ne \emptyset \), \(\mathrm {crit}f\cap \mathrm {int}\,\mathrm {dom}\,h\ne \emptyset \), and \(\mathrm {dom}\,f \subset \mathrm {cl}\,\mathrm {dom}\,h\) hold.

- (B3) The functions \({\tilde{f}}:\mathbb R^N \times \mathbb R^N \rightarrow \overline{\mathbb R}\,,\, (\mathbf{x},{\bar{\mathbf{x}}}) \mapsto f(\mathbf{x};{\bar{\mathbf{x}}})\) with \(\mathrm {dom}\,{\tilde{f}} := \mathrm {dom}\,f\times \mathrm {dom}\,f\), and \({\tilde{h}}:\mathbb R^N \times \mathbb R^N \rightarrow \overline{\mathbb R}\,,\, (\mathbf{x},{\bar{\mathbf{x}}}) \mapsto h({\bar{\mathbf{x}}}) + \left\langle \nabla h({\bar{\mathbf{x}}}),\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \) with \(\mathrm {dom}\,{\tilde{h}} := \mathrm {dom}\,h\times \mathrm {int}\,\mathrm {dom}\,h\) are definable in an o-minimal structure \({\mathcal {O}}\).
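For a \({\mathcal {C}}^2\) kernel, the equivalence in (B1) follows from a two-sided bound on a compact convex set B: \(\frac{\sigma_B}{2}\Vert \mathbf{u}-\mathbf{v}\Vert^2 \le D_h(\mathbf{u},\mathbf{v}) \le \frac{\Lambda_B}{2}\Vert \mathbf{u}-\mathbf{v}\Vert^2\), where \(\Lambda_B\) bounds the second derivative on B. The Python sketch below checks this numerically for the quartic kernel \(h(\mathbf{x}) = \frac{1}{4}\Vert \mathbf{x}\Vert^4 + \frac{1}{2}\Vert \mathbf{x}\Vert^2\), an illustrative choice of ours of the kind commonly used for quadratic inverse problems, on a ball of radius R. Its Hessian \((\Vert \mathbf{x}\Vert^2+1)I + 2\mathbf{x}\mathbf{x}^\top\) gives \(\sigma_B = 1\) and \(\Lambda_B = 3R^2+1\). The function names are hypothetical.

```python
import numpy as np


def h_quartic(x):
    """Assumed kernel h(x) = ||x||^4 / 4 + ||x||^2 / 2."""
    s = x @ x
    return 0.25 * s**2 + 0.5 * s


def grad_h_quartic(x):
    return (x @ x + 1.0) * x


def bregman(x, y):
    return h_quartic(x) - h_quartic(y) - grad_h_quartic(y) @ (x - y)


def sandwich_ratios(radius, dim=3, samples=5000, seed=0):
    """Observed min and max of D_h(x, y) / (||x - y||^2 / 2) for x, y in a ball.

    On the ball, the Hessian eigenvalues lie in [1, 3 * radius**2 + 1],
    so all ratios must lie in that interval.
    """
    rng = np.random.default_rng(seed)
    lo, hi = np.inf, 0.0
    for _ in range(samples):
        pts = []
        for _ in range(2):
            d = rng.normal(size=dim)
            pts.append(radius * rng.uniform() * d / np.linalg.norm(d))
        x, y = pts
        ratio = bregman(x, y) / (0.5 * np.sum((x - y) ** 2))
        lo, hi = min(lo, ratio), max(hi, ratio)
    return lo, hi


R = 2.0
lo, hi = sandwich_ratios(R)
print(lo >= 1.0, hi <= 3.0 * R**2 + 1.0)
```

For this kernel the lower constant is global, so \(D_h \rightarrow 0\) forces \(\Vert \mathbf{u}_k - \mathbf{v}_k\Vert \rightarrow 0\) everywhere, whereas the upper constant grows with R; this is why the converse direction in (B1) is stated for bounded sequences.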

4.1 Additive composite problems

We consider the following nonconvex additive composite problem:

which is a special case of \(({\mathcal {P}})\). Additive composite problems arise in several applications, such as standard phase retrieval [16], low-rank matrix factorization [36], deep linear neural networks [37], and many more. We present below the BPG algorithm, a specialization of Model BPG that is applicable to additive composite problems.
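For intuition, here is a minimal Python sketch of the BPG update in the special case \(f_0 \equiv 0\), under assumptions of our own: \(N=1\), \(f_1(x) = \frac{1}{4}(x^2-1)^2\) (whose gradient is not globally Lipschitz continuous), and the kernel \(h(x) = \frac{1}{4}x^4 + \frac{1}{2}x^2\). Since \(h - f_1 = x^2 - \frac{1}{4}\) and \(h + f_1 = \frac{1}{2}x^4 + \frac{1}{4}\) are convex, the two-sided Bregman bound on the linearization error of \(f_1\) holds with \({\bar{L}} = \underline{L} = 1\). With \(f_0 \equiv 0\), the optimality condition of the subproblem reads \(\nabla h(x_{k+1}) = \nabla h(x_k) - \tau \nabla f_1(x_k)\), and \(\nabla h\) can be inverted in closed form. All function names are hypothetical.

```python
import numpy as np


def f1(x):
    """Illustrative smooth term f1(x) = (x^2 - 1)^2 / 4."""
    return 0.25 * (x**2 - 1.0) ** 2


def grad_f1(x):
    return x**3 - x


def grad_h(x):
    """Gradient of the assumed kernel h(x) = x^4 / 4 + x^2 / 2."""
    return x**3 + x


def grad_h_inverse(p):
    """Unique real y with y^3 + y = p (Cardano's formula; the discriminant is positive)."""
    s = np.sqrt(p**2 / 4.0 + 1.0 / 27.0)
    return np.cbrt(p / 2.0 + s) + np.cbrt(p / 2.0 - s)


def bpg(x0, tau, iters):
    """BPG with f0 = 0: solve grad_h(x_next) = grad_h(x) - tau * grad_f1(x) at each step."""
    x = x0
    values = [f1(x)]
    for _ in range(iters):
        x = grad_h_inverse(grad_h(x) - tau * grad_f1(x))
        values.append(f1(x))
    return x, values


x_final, vals = bpg(x0=2.0, tau=0.5, iters=200)  # tau < 1 / L_bar with L_bar = 1
print(x_final)  # close to the critical point 1.0
```

With \(\tau < 1/{\bar{L}}\), the objective values decrease monotonically along the iterates, and the sequence approaches the critical point \(x = 1\) even though a Euclidean gradient step would need a step size depending on the starting point.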

We impose the following conditions that are common in the analysis of forward–backward algorithms [46], which are used to optimize additive composite problems.

- (C1) \(f_0 : \mathbb R^N \rightarrow \overline{\mathbb R}\) is a proper, lsc function that is regular at any \(\mathbf{x}\in \mathrm {dom}\,f_0\), and

$$\begin{aligned} \partial ^{\infty }f_0(\mathbf{x}) \cap (-N_{\mathrm {dom}\,h}(\mathbf{x})) = \{\mathbf{0}\}\,,\quad \forall \, \mathbf{x}\in \mathrm {dom}\,f_0 \cap \mathrm {dom}\,h\,. \end{aligned}\tag{30}$$

- (C2) \(f_1 : \mathbb R^N \rightarrow \overline{\mathbb R}\) is a proper, lsc function that is \({\mathcal {C}}^2\) on an open set containing \(\mathrm {dom}\,f_0\). Also, there exist \({{\bar{L}}}, {\underline{L}}>0\) such that for any \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f_0\, \cap \, \mathrm {int}\,\mathrm {dom}\,h\), the following holds:

$$\begin{aligned} -\underline{L}D_{h}(\mathbf{x},{\bar{\mathbf{x}}}) \le f_1(\mathbf{x})- f_1({\bar{\mathbf{x}}}) - \left\langle \nabla f_1({\bar{\mathbf{x}}}),\mathbf{x}- {\bar{\mathbf{x}}} \right\rangle \le {\bar{L}}D_{h}(\mathbf{x},{\bar{\mathbf{x}}}) \,, \end{aligned}\tag{31}$$

for all \(\mathbf{x}\in \mathrm {dom}\,f_0 \cap \mathrm {dom}\,h\,.\)
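Condition (31) can be probed numerically. The Python sketch below uses an illustrative pair that is our own assumption, not one of the applications above: \(N=1\), \(f_1(x) = \frac{1}{4}(x^2-1)^2\) and \(h(x) = \frac{1}{4}x^4 + \frac{1}{2}x^2\). Since \(h - f_1 = x^2 - \frac{1}{4}\) and \(h + f_1 = \frac{1}{2}x^4 + \frac{1}{4}\) are convex, (31) holds with \({\bar{L}} = \underline{L} = 1\), although \(\nabla f_1\) is not globally Lipschitz continuous. The grid range and helper names are hypothetical, and a grid test can only refute, not prove, the inequality.

```python
import numpy as np


def f1(x):
    return 0.25 * (x**2 - 1.0) ** 2


def grad_f1(x):
    return x**3 - x


def h(x):
    return 0.25 * x**4 + 0.5 * x**2


def grad_h(x):
    return x**3 + x


def bregman(phi, grad_phi, x, x_bar):
    """phi(x) - phi(x_bar) - phi'(x_bar) * (x - x_bar), elementwise over arrays."""
    return phi(x) - phi(x_bar) - grad_phi(x_bar) * (x - x_bar)


def satisfies_31(l_up, l_low, lo=-3.0, hi=3.0, n=301, tol=1e-9):
    """Check -l_low * D_h <= (linearization error of f1) <= l_up * D_h on a grid."""
    grid = np.linspace(lo, hi, n)
    x, x_bar = np.meshgrid(grid, grid)
    err = bregman(f1, grad_f1, x, x_bar)
    d_h = bregman(h, grad_h, x, x_bar)
    return bool(np.all(-l_low * d_h <= err + tol) and np.all(err <= l_up * d_h + tol))


print(satisfies_31(1.0, 1.0))  # h - f1 and h + f1 are both convex
print(satisfies_31(0.5, 1.0))  # the upper constant is too small
```

In fact \(D_h - (\text{linearization error of } f_1) = (x-{\bar{x}})^2\) exactly, which is the Bregman distance of the convex function \(h - f_1\).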

Under Assumptions (C1) and (C2), it is easy to deduce that \(\mathrm {dom}\,f_0 = \mathrm {dom}\,f\). For \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\), the model function \(f(\cdot ; {\bar{\mathbf{x}}}): \mathbb R^N \rightarrow \overline{\mathbb R}\) at \(\mathbf{x}\in \mathrm {dom}\,f\) is given by

Using the model function in (32) and the condition (31), we deduce that there exist \(\underline{L},{\bar{L}}>0\) such that, for any \({\bar{\mathbf{x}}}\in \mathrm {dom}\,f\cap \mathrm {int}\,\mathrm {dom}\,h\), the MAP property is satisfied at \({\bar{\mathbf{x}}}\) with constants \(\underline{L},{\bar{L}}\), since the following holds: