Abstract

ITER diagnostic systems provide measurements to the Plasma Control System (PCS) in real time. These measurements are used for plasma control and machine protection. Latency is an important parameter in the assessment of such systems. It is the time gap between the capture of an external event by hardware and the end of processing of the acquired data. The PCS requires the diagnostic systems to introduce a maximum total latency of 10 to 100 ms; therefore, the systems must be tested to verify that they meet this requirement. The system evaluated in this paper is a reference real-time image acquisition system developed as a base for ITER diagnostic systems. It consists of hardware based on the Micro Telecommunications Computing Architecture (MicroTCA) standard, custom-developed firmware, and software, and it supports cameras with various interfaces. Two cameras, with Camera Link and 1 GigE Vision interfaces, were selected for the latency evaluation. The paper presents two methods of measuring image acquisition latency. The first is based on precise timestamping of consecutive acquisition stages. This approach shows which acquisition steps take more or less time, so that the software or hardware can be optimized accordingly. The second uses an LED to evaluate a particular camera by measuring the camera's reaction time to the trigger. A dedicated testing framework was developed to perform automated latency tests and to collect and analyze the measurement results. In addition, dedicated hardware is used for the LED-based latency tests. The manuscript presents and discusses the measurement results, which show the latency evaluated with the proposed methods and compare the cameras used in the image acquisition system.



Introduction

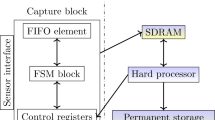

The Image Acquisition System (IAS) is a reference system developed as a base for ITER diagnostics that use VIS and IR cameras. It uses hardware and software dedicated to acquiring data from cameras [1, 2]. The system is based on the Micro Telecommunications Computing Architecture (MicroTCA.4, MTCA.4) standard. Cameras are connected to appropriate frame grabbers placed in the MTCA chassis, which is connected via a Peripheral Component Interconnect Express (PCIe) interface to an external high-performance computer [1,2,3,4]. Frame grabbers are dedicated to acquiring images from cameras; however, they also support clock synchronization and triggering via the MTCA backplane and allow algorithms to be run on raw data inside the Field-Programmable Gate Array (FPGA). A dedicated timing module based on the IEEE 1588 protocol provides precise synchronization and triggering in the system [5]. The hardware components of the system are presented in Fig. 1.

The software used in the IAS consists of several layers. The lowest is the Linux device driver, which is responsible for communication with frame grabbers and cameras. Next is a layer of low-level libraries based on the Generic Interface for Cameras (GenICam) standard. The main goal of GenICam is to provide a unified programming interface for all kinds of cameras, regardless of the hardware interface used or the features implemented [6]. The low-level libraries read data into buffers and provide an API for communication with the hardware. At the top, a high-level application manages buffers and allows setting up the hardware and acquiring, archiving, displaying, and processing images.

Latency describes the time gap between the capture of an external event by hardware and the corresponding response of the computer controller [7]. In the case of the IAS, it is the time from the beginning of image acquisition to the end of image processing by both hardware and software components. It is a critical parameter in the performance assessment of IASs. For image diagnostics in ITER, the maximum total measurement latency should be 10 to 100 ms. Low latency is needed to deliver measurements to the PCS in real time for plasma control and machine protection [8,9,10].

The paper presents the methodology, experimental setup, and measurement results for evaluating the latency in IAS.

Latency in the Image Acquisition System

Various factors contribute to the total latency of the IAS: the camera's reaction to the trigger, the camera interface, the data transmission protocol, the PCIe interface, the software, and the image processing algorithms used. Identifying these components is necessary for a proper evaluation of latency in the IAS [11].

First, the camera captures the frame. This process is not as instantaneous as it may seem. The reaction time to the trigger is generally unknown, although some cameras specify a constant delay from the trigger. Therefore, it is crucial to determine the actual moment when the camera responds to the trigger.

After the camera finishes processing the data, data transmission begins. It includes the transfer from the camera to the frame grabber and from the frame grabber to the computer. The first part depends strongly on the camera interface. The second part depends on factors such as the data transmission protocol and the PCIe interface. The transmission latency can be estimated from the data size, the data transmission protocol, and the transfer speed at each step. The measured values can then be compared with these theoretical estimates.
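As a rough illustration of such an estimate, the sketch below divides the frame payload by the nominal link throughput. It assumes 8-bit pixels sent as one byte, ignores protocol overhead, and uses the nominal Camera Link full-mode and 1 GigE Vision rates quoted in the Experimental Setup section, so it gives a lower bound rather than a prediction of the measured values. The function and constant names are hypothetical.

```python
# Idealized per-frame transfer time over a camera link.
# Assumptions (not measured values): 8-bit pixels sent as one byte each, the
# link runs at its nominal throughput, and protocol overhead is ignored, so the
# result is a lower bound for the real transfer time.

CAMERA_LINK_FULL_BPS = 6.8e9  # Camera Link, full mode (bits/s)
GIGE_VISION_BPS = 1.0e9       # 1 GigE Vision (bits/s)


def frame_transfer_time_us(width: int, height: int, link_bps: float,
                           bytes_per_pixel: int = 1) -> float:
    """Return the lower-bound time in microseconds to send one frame."""
    payload_bits = width * height * bytes_per_pixel * 8
    return payload_bits / link_bps * 1e6


for w, h in [(640, 480), (1280, 1024)]:
    cl = frame_transfer_time_us(w, h, CAMERA_LINK_FULL_BPS)
    gige = frame_transfer_time_us(w, h, GIGE_VISION_BPS)
    print(f"{w} x {h}: Camera Link >= {cl:.1f} us, GigE >= {gige:.1f} us")
```

For a 640 × 480 frame this gives roughly 0.36 ms over Camera Link and about 2.5 ms over 1 GigE, before any protocol overhead is added.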

The frame grabber may introduce latency related to buffering, copying, and processing the data acquired directly from the camera. Moreover, the software introduces latency by copying the data and extracting the desired information from the raw data. Another important factor is how quickly the software can read consecutive chunks of data.

Image acquisition takes several steps to complete. The latency related to data transmission cannot be reduced by software changes during IAS development; the only way to lower it is to replace hardware components with ones that support higher-throughput protocols and interfaces.

Latency Measurement Methodology

The paper presents two methods to measure the latency of the IAS. The first uses timestamping, and the second uses a dedicated test setup with a triggered LED as a light source. Together, the two methods cover all stages of image acquisition in the IAS.

The key component of the latency measurements is the synchronization module based on the IEEE 1588 protocol. It uses a master-slave architecture and is synchronized to the Grand Master Clock (GMC), which obtains the current time from a Global Positioning System (GPS) receiver. The time synchronization module timestamps individual events with a precision of up to 50 ns (rms) [5]. The timing module generates a 100 MHz reference clock synchronized with the GMC, which is distributed to all slots in the chassis over a dedicated TCLK_A backplane line. All frame grabber modules are configured to use this 100 MHz clock as a reference; therefore, they are precisely synchronized and can be triggered by the MTCA timing module. The operating system (OS) time is synchronized by reading the time from the timing module and then correcting the OS time, with an accuracy of up to 1 μs (rms) [5].
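The OS time correction can be sketched as follows. This is a hypothetical illustration, not the timing module's actual driver interface: `read_module_ns` stands in for whatever call returns the timing module time, and the offset is estimated by bracketing that read between two OS clock reads and taking the midpoint. Actually setting the OS clock requires privileges and is not shown.

```python
import time
from typing import Callable


def estimate_offset_ns(read_module_ns: Callable[[], int],
                       read_os_ns: Callable[[], int] = time.time_ns,
                       samples: int = 8) -> int:
    """Estimate (timing-module time - OS time) in nanoseconds.

    Each sample brackets one module read between two OS clock reads and
    assumes the module value corresponds to the midpoint of that bracket.
    The sample with the narrowest bracket (least disturbed by scheduling
    or bus delays) is kept.
    """
    best_width = None
    best_offset = 0
    for _ in range(samples):
        before = read_os_ns()
        module = read_module_ns()
        after = read_os_ns()
        width = after - before
        if best_width is None or width < best_width:
            best_width = width
            best_offset = module - (before + width // 2)
    return best_offset


def corrected_os_time_ns(offset_ns: int) -> int:
    """OS time shifted onto the timing-module time scale."""
    return time.time_ns() + offset_ns


# Usage with a simulated module clock running 5 ms ahead of the OS clock.
def simulated_module_ns() -> int:
    return time.time_ns() + 5_000_000


offset = estimate_offset_ns(simulated_module_ns)
print(f"estimated offset: {offset} ns")
```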

The first method involves timestamping individual stages of acquisition, similar to the method proposed in [12]. Because the timing module is used, the timestamps are accurate to within 1 μs. Dividing the acquisition into stages makes it possible to determine which stage takes the longest and to check the stability of each stage.

In the second method, the key element is measuring the camera's reaction time to the trigger. The most important part of this measurement is detecting the changes in image brightness that result from the LED light turning on during the camera exposure. As in the first method, the LED and the camera are synchronized using the timing module.

Latency Evaluation Using Timestamps

Timestamps used for latency measurements are presented in Fig. 2. They are obtained in the firmware and the software. The first timestamp, T0, is the time at which the frame grabber triggers the image. The next timestamp, T1, is the time at which the frame grabber receives the first data packet, which begins data acquisition. The interval from T0 to T1 consists of the camera's reaction to the trigger, the exposure time, image readout, and the initiation of data transmission between the camera and the frame grabber. The next timestamp, T2, is the time at which the frame grabber finishes reading the image; relative to T1, it represents the data transmission time through the camera interface. The first timestamp on the software side, T3, is the time at which the low-level library reads the data. Relative to T2, it indicates the time of frame grabber transmission and transfer through the PCIe interface. The last two timestamps are taken in the high-level application and denote the time of data reading, T4 (when the entire frame has been read), and the completion of data header analysis, T5, when the data are available for the next phase of processing, analysis, or archiving. Time T5 marks the end of data acquisition on the computer side.
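The intervals between these timestamps can be computed per frame as in the following minimal sketch. The class name, the field names, and the timestamp values are hypothetical, and the times are assumed to be nanoseconds on a common time base provided by the timing module.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FrameTimestamps:
    """Timestamps of one frame in nanoseconds, named after T0..T5 in Fig. 2."""
    t0: int  # frame grabber triggers the image
    t1: int  # frame grabber receives the first data packet
    t2: int  # frame grabber finishes reading the image
    t3: int  # low-level library reads the data
    t4: int  # high-level application has read the entire frame
    t5: int  # data header analysed; frame ready for further processing


def stage_latencies_us(ts: FrameTimestamps) -> Dict[str, float]:
    """Split the total latency T5 - T0 into the intervals between timestamps."""
    return {
        "T0-T1 camera (trigger reaction, exposure, readout start)": (ts.t1 - ts.t0) / 1e3,
        "T1-T2 camera interface transmission": (ts.t2 - ts.t1) / 1e3,
        "T2-T3 frame grabber and PCIe transfer": (ts.t3 - ts.t2) / 1e3,
        "T3-T4 reading the frame in the application": (ts.t4 - ts.t3) / 1e3,
        "T4-T5 header analysis": (ts.t5 - ts.t4) / 1e3,
    }


# Hypothetical timestamps for one frame (ns), for illustration only.
frame = FrameTimestamps(t0=0, t1=150_000, t2=520_000, t3=900_000,
                        t4=905_000, t5=910_000)
stages = stage_latencies_us(frame)
for name, value in stages.items():
    print(f"{name}: {value:.1f} us")
print(f"total: {sum(stages.values()):.1f} us")
```

Because the stages are consecutive differences, they always add up to the total latency T5 - T0.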

Latency Evaluation Using LED

The testing procedure begins by acquiring images with the LED on and off to locate the area of the LED light in the image. The acquisition is then started, and after the first few frames have been captured (to ensure that there is no unwanted residual exposure of the sensor), the main part of the measurement begins. The LED is turned on for a given time (from 1 μs to 10 ms, depending on the scenario), and the camera exposure is likewise set for a given time. Both are controlled by signals, as described for the two scenarios in Fig. 3.
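A minimal sketch of locating the LED area by differencing an LED-on and an LED-off frame is shown below. It assumes grayscale frames given as nested lists and a hypothetical brightness-difference threshold of 50; the paper does not state how the area is actually determined.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # 8-bit grayscale image indexed as frame[row][col]
Roi = Tuple[int, int, int, int]  # (row_min, row_max, col_min, col_max), inclusive


def find_led_roi(frame_on: Frame, frame_off: Frame,
                 threshold: int = 50) -> Optional[Roi]:
    """Bounding box of pixels that brighten by more than `threshold` when the
    LED is on; None if no pixel does."""
    rows: List[int] = []
    cols: List[int] = []
    for r, (row_on, row_off) in enumerate(zip(frame_on, frame_off)):
        for c, (p_on, p_off) in enumerate(zip(row_on, row_off)):
            if p_on - p_off > threshold:
                rows.append(r)
                cols.append(c)
    if not rows:
        return None
    return min(rows), max(rows), min(cols), max(cols)


# Usage with tiny synthetic frames: the LED lights up a 2 x 2 spot.
dark = [[10] * 6 for _ in range(4)]
lit = [row[:] for row in dark]
for r, c in [(1, 2), (1, 3), (2, 2), (2, 3)]:
    lit[r][c] = 200
print(find_led_roi(lit, dark))  # (1, 2, 2, 3)
```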

In the first scenario, the LED starts emitting light at time T0. At time T1 = 1 ms, the camera begins exposing the frame. In each iteration, the LED on-time is increased by 1 μs, and the brightness of the image area containing the LED light is measured.

In the second scenario, the LED first emits light at time T0 but, unlike in the previous scenario, for a predefined, constant time (0.5 ms). At time T1 = 1 ms, the camera starts exposing the frame. In each iteration, the start of the LED illumination is delayed by 1 μs relative to the previous iteration, and the brightness of the 2D image area containing the LED light is measured. A sudden jump in brightness indicates that the camera detected the LED light during exposure.
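Both scenarios, and one possible way of turning the brightness jump into a delay estimate, are sketched below. The pulse lists follow the timings given above (T1 = 1 ms, a 1 μs step, and a 0.5 ms pulse in the second scenario). The initial on-time in the first scenario and the delay formula are assumptions: the frame is taken to turn bright once the LED is still on when the exposure actually starts, so the delay is the LED switch-off time of the first bright iteration minus T1.

```python
from typing import List, Tuple

T1_US = 1000  # requested exposure start: T1 = 1 ms after T0
Pulse = Tuple[int, int]  # (LED on, LED off) in microseconds relative to T0


def scenario1_pulses(n: int, first_on_time_us: int = 1,
                     step_us: int = 1) -> List[Pulse]:
    """Scenario 1: LED switches on at T0; its on-time grows by step_us."""
    return [(0, first_on_time_us + i * step_us) for i in range(n)]


def scenario2_pulses(n: int, pulse_us: int = 500,
                     step_us: int = 1) -> List[Pulse]:
    """Scenario 2: constant 0.5 ms pulse whose start is delayed by step_us."""
    return [(i * step_us, i * step_us + pulse_us) for i in range(n)]


def camera_delay_us(pulses: List[Pulse], first_bright: int) -> int:
    """Estimate the delay between T1 and the actual start of exposure.

    Assumes the frame first turns bright when the LED is still on at the real
    exposure start, so the delay is the LED switch-off time of the first
    bright iteration minus T1 (resolution: one step).
    """
    _, led_off = pulses[first_bright]
    return led_off - T1_US


# Hypothetical: the brightness jump appears at iteration 640 of scenario 2.
print(camera_delay_us(scenario2_pulses(2000), 640))  # 140
```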

Experimental Setup

The experimental setup uses the hardware components presented in Fig. 1. Although the IAS supports various cameras, two cameras were used in the experiments: the Mikrotron EoSens 3CL 3011 and the Dalsa Genie TS-C2500, shown in Fig. 4.

The Mikrotron EoSens 3CL uses a Camera Link interface, which in full mode supports a throughput of 6.8 Gb/s. The pixel size is 8 bits, and each pixel is transmitted as one byte. With the resolution reduced to 120 × 2, the maximum frame rate is 181,000 frames per second (FPS) [13]. The camera documentation provides no information about the camera's reaction time to the trigger [13].

The Dalsa Genie TS-C2500 uses the 1 GigE Vision interface, which supports a maximum theoretical throughput of 1 Gb/s. The pixel size is 8 bits, and each pixel is transmitted as one byte. The maximum frame rate supported by the camera is 3012 FPS, with the number of lines reduced to 2560 × 10. The camera has a configurable trigger delay with a minimum of 106 μs [14].
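To put the interface throughputs in context, the sketch below compares the raw image payload rate of the two maximum-frame-rate modes quoted above with the nominal link rates. It counts only pixel data (one byte per pixel) and ignores protocol overhead; the function name is hypothetical.

```python
def payload_rate_gbps(width: int, height: int, fps: float,
                      bytes_per_pixel: int = 1) -> float:
    """Raw image payload rate in Gb/s (protocol overhead not included)."""
    return width * height * bytes_per_pixel * 8 * fps / 1e9


# Maximum-frame-rate modes quoted above, checked against nominal link rates.
modes = [
    ("Mikrotron EoSens 3CL, 120 x 2 @ 181,000 FPS", 120, 2, 181_000, 6.8),
    ("Dalsa Genie TS-C2500, 2560 x 10 @ 3012 FPS", 2560, 10, 3012, 1.0),
]
for name, w, h, fps, link_gbps in modes:
    rate = payload_rate_gbps(w, h, fps)
    print(f"{name}: {rate:.3f} Gb/s of {link_gbps} Gb/s ({rate / link_gbps:.0%})")
```

In both modes the pixel payload stays below the nominal link throughput.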

The last component of the setup is an external computer dedicated to image acquisition. The specification of the computer is presented in Table 1.

Dedicated Testing Hardware

For the LED tests, the setup shown in Fig. 5 was built. The LED is placed in a special tube that shields the camera lens from background light. The LED is connected to the timing module by a cable, so it can be controlled from the software that supports the timing module.

Dedicated Testing Software

The software was developed to perform latency evaluation tests. It is responsible for automated execution and result analysis.

The first component is a library that collects individual timestamps and stores them in a JSON file. It can save both the system time and the time obtained from another source, and convert between them. In addition, metadata are saved to describe the collected data and to verify them.
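The sketch below shows what such a library might look like. The class, its methods, the JSON layout, and the 100 MHz counter conversion are assumptions made for illustration, not the actual library.

```python
import json
import time
from typing import Any, Dict, List, Optional


class TimestampLog:
    """Hypothetical collector: timestamps plus metadata in one JSON document."""

    def __init__(self, **metadata: Any) -> None:
        self.metadata: Dict[str, Any] = dict(metadata)
        self.records: List[Dict[str, Any]] = []

    def add(self, frame: int, stage: str,
            source_ns: Optional[int] = None) -> None:
        """Store the current OS time and, optionally, a time from another
        source (e.g. the timing module), both in nanoseconds."""
        self.records.append({"frame": frame, "stage": stage,
                             "system_ns": time.time_ns(),
                             "source_ns": source_ns})

    @staticmethod
    def ticks_to_ns(ticks: int, clock_hz: int = 100_000_000) -> int:
        """Convert a counter value to ns; a 100 MHz counter is assumed."""
        return ticks * 1_000_000_000 // clock_hz

    def to_json(self) -> str:
        return json.dumps({"metadata": self.metadata,
                           "records": self.records}, indent=2)

    def save(self, path: str) -> None:
        with open(path, "w", encoding="utf-8") as f:
            f.write(self.to_json())


log = TimestampLog(camera="Mikrotron EoSens 3CL", config="example.ini")
log.add(frame=0, stage="T3", source_ns=TimestampLog.ticks_to_ns(150))
print(log.to_json())
```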

The main component of the software is the framework that executes the automated tests. First, it initializes and configures the IAS, including the cameras, frame grabbers, and timing module. The configurations are stored in INI files, which contain predefined frame grabber and camera configurations (frame size and a frame rate close or equal to the maximum for that frame size). The main part of the framework is the application that acquires, processes, and archives data from the cameras. The application injects software timestamps when each key stage of acquisition is completed (see Fig. 2), and the timestamp library described in the previous paragraph collects and stores them. The application is controlled by a script that runs it for a defined period, starts and stops trigger generation for the camera, and relaunches the application with a different configuration.
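A configuration file of this kind could be read with Python's standard `configparser` module, as in the sketch below. The section and key names, the optional frame-grouping key, and the values are hypothetical placeholders, not the configurations listed in Tables 2 and 3.

```python
import configparser
from dataclasses import dataclass

# Hypothetical INI layout; the section/key names and the values are
# placeholders, not the configurations listed in Tables 2 and 3.
EXAMPLE_INI = """
[camera]
width = 640
height = 480
fps = 1000

[framegrabber]
frames_per_group = 1
"""


@dataclass
class AcquisitionConfig:
    width: int
    height: int
    fps: float
    frames_per_group: int


def load_config(text: str) -> AcquisitionConfig:
    """Parse one test configuration from INI text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    cam = parser["camera"]
    return AcquisitionConfig(
        width=cam.getint("width"),
        height=cam.getint("height"),
        fps=cam.getfloat("fps"),
        # Grouping is optional; default to acquiring frame by frame.
        frames_per_group=parser.getint("framegrabber", "frames_per_group",
                                       fallback=1),
    )


cfg = load_config(EXAMPLE_INI)
print(cfg)
```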

A Python script is used to analyze and visualize the files in various ways; examples are presented in Section V. It computes the differences between consecutive timestamps and plots these differences.
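The core of such an analysis can be sketched with the standard `statistics` module: per-stage differences between consecutive timestamps, summarized by the median, mean, and standard deviation shown in the box-and-whisker plots. The input layout and values are assumptions, and plotting is omitted.

```python
import statistics
from typing import Dict, List, Sequence

STAGES = ["T0-T1", "T1-T2", "T2-T3", "T3-T4", "T4-T5"]


def stage_statistics_us(frames: Sequence[Sequence[int]]) -> Dict[str, Dict[str, float]]:
    """Median, mean and standard deviation of each stage over many frames.

    `frames` holds one [T0, T1, T2, T3, T4, T5] list (ns) per frame; each
    stage is the difference between consecutive timestamps.
    """
    result: Dict[str, Dict[str, float]] = {}
    for i, name in enumerate(STAGES):
        diffs: List[float] = [(f[i + 1] - f[i]) / 1e3 for f in frames]
        result[name] = {
            "median": statistics.median(diffs),
            "mean": statistics.fmean(diffs),
            "stdev": statistics.stdev(diffs) if len(diffs) > 1 else 0.0,
        }
    return result


# Two hypothetical frames (ns); plotting, e.g. as box plots, is omitted here.
frames = [[0, 1000, 2000, 2500, 2510, 2520],
          [0, 1000, 2000, 2700, 2710, 2720]]
for stage, stats in stage_statistics_us(frames).items():
    print(stage, {k: round(v, 3) for k, v in stats.items()})
```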

In addition, a set of Python scripts was developed to conduct tests with the LED and to analyze the results. They capture frames and light the LED using signals from the timing module (see Fig. 3). The calculated brightness values are saved in a JSON file and further analyzed by another script.
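The brightness calculation can be sketched as the mean pixel value inside the LED area of each frame, serialized to JSON. Using the mean over a rectangular region and the JSON field names are assumptions; the paper does not specify the exact brightness metric.

```python
import json
from typing import List, Sequence, Tuple

Frame = List[List[int]]
Roi = Tuple[int, int, int, int]  # (row_min, row_max, col_min, col_max), inclusive


def roi_brightness(frame: Frame, roi: Roi) -> float:
    """Mean pixel value inside the LED region of interest."""
    r0, r1, c0, c1 = roi
    pixels = [frame[r][c] for r in range(r0, r1 + 1) for c in range(c0, c1 + 1)]
    return sum(pixels) / len(pixels)


def brightness_json(frames: Sequence[Frame], roi: Roi, step_us: int = 1) -> str:
    """One brightness value per iteration, serialized for later analysis."""
    return json.dumps({
        "roi": list(roi),
        "step_us": step_us,
        "brightness": [roi_brightness(f, roi) for f in frames],
    })


frames = [[[10, 10], [10, 10]], [[10, 10], [90, 110]]]
print(brightness_json(frames, (1, 1, 0, 1)))
```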

Experimental Results

The tests focused on timestamping the essential stages of image acquisition. In addition, the cameras were evaluated in terms of their latency. The final results aggregate 70 sets of measurements for each camera.

Camera Configuration

Many factors affect data acquisition latency, and some of them, e.g., data transmission, depend strongly on the frame size, so it is crucial to measure the latency for different frame sizes and frame rates. To evaluate latency, eight configuration files were prepared for each camera, as specified in Tables 2 and 3. They cover camera resolutions from 2 × 120 to the maximum. For each resolution, the frame rate was set close to or equal to the maximum for the given frame size, according to the camera manuals [14, 15].

Timestamping Stages of Acquisition

The key measurements in the paper are based on timestamping the stages of data acquisition in the IAS (see Fig. 2). They show which parts of the acquisition take more or less time and quantify their jitter.

Figure 6 shows the total latency in the IAS with the Mikrotron EoSens 3CL camera, and Fig. 8 shows the total latency with the Dalsa Genie TS-C2500 camera. Latencies are presented as box-and-whisker plots, where the red line represents the median and the green triangle the mean value of each measurement.

Figures 7 and 9 compare the stages of acquisition depending on the frame size. Tables 4 and 5 summarize both figures and present the exact values of measured latencies.

For each configuration, 5 sets of measurements were conducted, each collected during 10 s of image acquisition. Depending on the frame rate, from 2851 to 1,811,590 samples were gathered for the Mikrotron EoSens 3CL camera (see Table 2) and from 200 to 25,000 for the Dalsa Genie TS-C2500 (see Table 3).

Camera Latency Evaluation

The first component of the total latency in the IAS depends on the camera. Although the paper does not focus on evaluating a particular camera model, it presents the methodology and the measurement results for the cameras used in the IAS. Three of the eight configurations (see Table 3) were chosen to evaluate the camera: 640 × 480, 1280 × 1024, and the maximum resolution. The LED is assumed to be detected (see Fig. 3) at the first iteration in which the brightness calculated in the LED area exceeds the preceding noise level. The measurement accuracy is limited to 1 μs, the step between consecutive iterations.
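One possible implementation of this detection criterion is sketched below. The noise level is assumed to be the mean plus five standard deviations of the first 20 iterations; both numbers are hypothetical parameters, not values from the paper.

```python
import statistics
from typing import Optional, Sequence


def first_bright_iteration(brightness: Sequence[float], baseline: int = 20,
                           k_sigma: float = 5.0) -> Optional[int]:
    """Index of the first iteration brighter than the noise level, or None.

    Assumption: the first `baseline` iterations see no LED light; the noise
    level is their mean plus `k_sigma` population standard deviations.
    """
    noise = brightness[:baseline]
    level = statistics.fmean(noise) + k_sigma * statistics.pstdev(noise)
    for i in range(baseline, len(brightness)):
        if brightness[i] > level:
            return i
    return None


# Synthetic series: noisy dark frames, then the LED becomes visible.
series = [10.0, 11.0] * 10 + [10.0] * 5 + [80.0] * 5
print(first_bright_iteration(series))  # 25
```

With a 1 μs step, the returned index maps directly to a time offset in microseconds within the scan.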

The Mikrotron EoSens 3CL manual does not specify the delay of the camera's reaction to the trigger. Table 6 presents the measured camera latency.

In contrast, the Dalsa Genie TS-C2500 specifies a configurable trigger delay (fixed during acquisition) with a minimum of 106 μs. Table 7 shows the measurement results.

Discussion and Conclusions

The Mikrotron EoSens 3CL is characterized by low latency. The Camera Link interface is very stable, as shown by the very low standard deviation of the measurements.

To achieve high frame rates (above 25,000 FPS; the first three sets of measurements, see the frame grabber and PCIe and low-level libraries latencies in Fig. 7), frames must be grouped during acquisition. Instead of acquiring frame by frame, a group of, e.g., 5 or 10 frames is acquired from the frame grabber at once. This approach optimizes data transmission but introduces additional latency related to grouping. The frame grabber and PCIe and low-level libraries latencies show the influence of grouping, because the library reads more than one frame and waits for the remaining frames in the group. As expected, this leads to both higher latency and a higher standard deviation.
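The extra waiting time caused by grouping can be illustrated with simple arithmetic: at a frame rate f, the k-th frame (0-based) of a group of N frames must wait (N - 1 - k)/f for the rest of the group. The sketch below uses an illustrative 100,000 FPS and N = 10 (not a measured configuration). Because frames in different group positions wait between 0 and (N - 1)/f, grouping raises both the mean latency and its spread, which is consistent with the observation above.

```python
from typing import List


def grouping_wait_us(fps: float, group: int) -> List[float]:
    """Extra wait of each frame in a group until the whole group is complete.

    Frame k (0-based) must wait for the remaining group - 1 - k frames, each
    arriving one frame period later.
    """
    period_us = 1e6 / fps
    return [(group - 1 - k) * period_us for k in range(group)]


# Illustrative: 100,000 FPS (period 10 us) grouped by 10 frames.
waits = grouping_wait_us(100_000, 10)
print(waits[0], sum(waits) / len(waits), waits[-1])  # 90.0 45.0 0.0
```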

The frame grabber and PCIe transmission are the most influential and least stable stages of image acquisition. They depend on the PCIe interface used in the computer and on the data transmission protocol. The low-level libraries and the software contribute negligibly to the total latency.

The Dalsa Genie TS-C2500 is not as fast as the Mikrotron EoSens 3CL. It introduces more latency than specified in its manual (106 μs, see Section IV). The camera latency is stable and does not depend strictly on the frame size. From the 120 × 120 resolution upwards, the 1 Gb/s Ethernet interface introduces the largest part of the total latency. For this camera, grouping is not needed because it operates at lower frame rates (see Table 3). The frame grabber and PCIe transmission are slower than for the Mikrotron EoSens 3CL because of the more time-consuming data processing in the frame grabber. Both stages are unstable and contribute significantly to the total latency. As with the previous camera, the latencies of the low-level libraries and the software are minor.

The tests show that the measured firmware and software latencies (T2 to T5, see Fig. 2) are low enough to support a variety of camera configurations. Tables 8 and 9 show that, regardless of the camera or configuration, the latency introduced by the firmware and software (T5 - T2) is always lower than the frame group interval. Therefore, the IAS can be considered efficient and meets the requirements for plasma diagnostic systems in ITER.
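The criterion used here can be written as a short check: for a group of N frames at frame rate f, the group interval is N/f, and every measured T5 - T2 value must be shorter than it, so that processing never falls behind the arrival of new groups. The function name and the sample values below are hypothetical.

```python
from typing import Sequence


def keeps_up(fw_sw_latency_us: Sequence[float], fps: float,
             frames_per_group: int = 1) -> bool:
    """True if every measured T5 - T2 value is shorter than the interval at
    which frame groups arrive, so no backlog can build up."""
    group_interval_us = frames_per_group * 1e6 / fps
    return max(fw_sw_latency_us) < group_interval_us


# Hypothetical T5 - T2 samples (us) at 25,000 FPS, without and with grouping.
samples = [12.0, 15.5, 48.0]
print(keeps_up(samples, 25_000))      # False: 48 us > 40 us interval
print(keeps_up(samples, 25_000, 5))   # True: 48 us < 200 us interval
```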

Summary

Performance and latency evaluation are essential elements of an assessment of real-time acquisition systems. The total latency of the IAS is the sum of latencies of acquisition stages such as camera, interface, and software latency. That knowledge has led to the development of a set of methods for testing and evaluating the IAS based on the MTCA.4 architecture.

Two methods were proposed and tested to evaluate the latency of imaging systems; together, they cover all stages of image acquisition. The dedicated testing framework performs automated tests using these methods and provides a comprehensive analysis of the collected data.

The Mikrotron EoSens 3CL and Dalsa Genie TS-C2500 cameras were evaluated using the framework. The measurement results show the differences between the cameras and their interfaces. The results are consistent with the camera specifications, which supports the accuracy of the method used. The measured firmware and software latencies show that the IAS can support the possible camera configurations. The Dalsa Genie TS-C2500 is over 5 times slower than the Mikrotron EoSens 3CL. The latency introduced by the software for both cameras is negligible (∼10 μs).

The prepared libraries and tools are universal, making them easily reusable for testing other cameras and hardware components in the future. The framework is necessary for evaluating the IAS software during its development.

Data Availability

No datasets were generated or analysed during the current study.

References

D. Makowski, A. Mielczarek, P. Perek, G. Jablonski, M. Orlikowski, B. Sakowicz, A. Napieralski, P. Makijarvi, S. Simrock, V. Martin, High-performance image acquisition and processing system with MTCA.4. IEEE Trans. Nucl. Sci. 62, 925–931 (2015). https://doi.org/10.1109/TNS.2015.2415582

D. Makowski, Application of PCI express interface in high-performance video systems, in: 2015 22nd International Conference Mixed Design of Integrated Circuits & Systems (MIXDES), IEEE, 2015: pp. 141–143. https://doi.org/10.1109/MIXDES.2015.7208498

D. Makowski, M. Fenner, F. Ludwig, U. Mavrič, A. Mielczarek, A. Napieralski, P. Perek, H. Schlarb, Standardized solution for management controller for MTCA.4. IEEE Trans. Nucl. Sci. 62 (2015). https://doi.org/10.1109/TNS.2015.2408452

D. Makowski, A. Mielczarek, P. Perek, A. Napieralski, L. Butkowski, J. Branlard, M. Fenner, H. Schlarb, B. Yang, High-speed data processing module for LLRF. IEEE Trans. Nucl. Sci. 62, 1083–1090 (2015). https://doi.org/10.1109/TNS.2015.2416120

G. Jablonski, D. Makowski, A. Mielczarek, M. Orlikowski, P. Perek, A. Napieralski, P. Makijarvi, S. Simrock, IEEE 1588 time synchronization board in MTCA.4 form factor. IEEE Trans. Nucl. Sci. 62 (2015). https://doi.org/10.1109/TNS.2014.2375954

GenICam Standard, (2016). https://www.emva.org/wp-content/uploads/GenICam_Standard_v2_1_1.pdf (accessed November 28, 2023)

A. Ivutin, E. Larkin, Estimation of latency in embedded real-time systems, in: 2014 3rd Mediterranean Conference on Embedded Computing (MECO), IEEE, 2014: pp. 236–239. https://doi.org/10.1109/MECO.2014.6862704

R. Reichle, P. Andrew, G. Counsell, J.-M. Drevon, A. Encheva, G. Janeschitz, D. Johnson, Y. Kusama, B. Levesy, A. Martin, C.S. Pitcher, R. Pitts, D. Thomas, G. Vayakis, M. Walsh, Defining the infrared systems for ITER. Rev. Sci. Instrum. 81 (2010). https://doi.org/10.1063/1.3491199

L. Letellier, C. Guillon, M. Ferlet, M.H. Aumeunier, T. Loarer, E. Gauthier, S. Balme, B. Cantone, E. Delchambre, D. Elbèze, S. Larroque, F. Labassé, D. Blanchet, Y. Peneliau, L. Rios, F. Mota, C. Hidalgo, A. Manzanares, V. Martin, F. Le Guern, R. Reichle, M. Kocan, System level design of the ITER equatorial visible/infrared wide angle viewing system. Fusion Eng. Des. 123, 650–653 (2017). https://doi.org/10.1016/J.FUSENGDES.2017.06.005

S. Salasca, M.H. Aumeunier, F. Benoit, B. Cantone, Y. Corre, E. Delchambre, M. Ferlet, E. Gauthier, C. Guillon, D. Van Houtte, D. Keller, F. Labasse, S. Larroque, T. Loarer, F. Micolon, B. Peluso, M. Proust, D. Blanchet, Y. Peneliau, J. Alonso, E. De La Cal, C. Hidalgo, P. Martin, M. Medrano, F. Mota, J.L. De Pablos, L. Rios, E. Rincon, R. Vila, A. Manzanares, V. Martin, R. Reichle, F. Le Guern, The ITER Equatorial Visible/Infra-Red Wide Angle Viewing System: Status of design and R&D. Fusion Eng. Des. 96–97, 932–937 (2015). https://doi.org/10.1016/J.FUSENGDES.2015.02.062

T. Sielhorst, W. Sa, A. Khamene, F. Sauer, N. Navab, Measurement of absolute latency for video see through augmented reality, in: 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, IEEE, 2007: pp. 215–220. https://doi.org/10.1109/ISMAR.2007.4538850

S. Esquembri, D. Sanz, E. Barrera, M. Ruiz, A. Bustos, J. Vega, R. Castro, Hardware timestamping for an image acquisition system based on FlexRIO and IEEE 1588 v2 standard. IEEE Trans. Nucl. Sci. 63, 228–235 (2016). https://doi.org/10.1109/TNS.2016.2516640

Mikrotron GmbH, EoSens® 3CL Camera Manual, (2010)

Teledyne DALSA, Genie™ TS Series Camera User’s Manual, (2012)

Mikrotron Camera Compare, (2024). https://mikrotron.de/cameracompare/ (accessed January 25, 2024)

Acknowledgements

This work has been carried out within the framework of the EUROfusion Consortium, funded by the European Union via the Euratom Research and Training Programme (Grant Agreement No 101052200 — EUROfusion), and was co-financed by the Polish Ministry of Education and Science within the framework of the international project called 'PMW' International Projects Co-financed. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Commission. Neither the European Union nor the European Commission can be held responsible for them.

Author information


Contributions

All authors took part in writing the manuscript. All authors reviewed the manuscript.


Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pietrzak, P., Perek, P. & Makowski, D. Latency Evaluation in the Image Acquisition System Based on MTCA.4 Architecture for Plasma Diagnostics. J Fusion Energ 43, 12 (2024). https://doi.org/10.1007/s10894-024-00411-0



DOI: https://doi.org/10.1007/s10894-024-00411-0