Abstract

Bistability is a key property of many systems arising in the nonlinear sciences. For example, it appears in many partial differential equations (PDEs). For scalar bistable reaction-diffusion PDEs, the bistable case has even taken on different names within different communities, such as Allee, Allen-Cahn, Chafee-Infante, Nagumo, Ginzburg-Landau, \(\Phi ^4\), Schlögl and Stommel, to name just a few structurally similar bistable models. One key mechanism by which bistability arises under parameter variation is a pitchfork bifurcation. In particular, taking the pitchfork bifurcation normal form for reaction-diffusion PDEs yields yet another variant within the family of PDEs mentioned above. More generally, the study of steady states and their stability for this PDE class, in relation to bifurcations induced by a parameter, is well understood in the deterministic case. For the stochastic PDE (SPDE) case, the situation is less well understood and has been studied recently. In this paper we generalize and unify several recent results for SPDE bifurcations. Our generalization is motivated directly by applications, as we introduce a spatially heterogeneous term in the equation and relax the assumptions on the covariance operator that defines the noise. For this spatially heterogeneous SPDE, we prove a finite-time Lyapunov exponent bifurcation result. Furthermore, we extend the theory of early warning signs to our context and we explain the role of universal exponents between covariance operator warning signs and the lack of finite-time Lyapunov uniformity. Our results are accompanied and cross-validated by numerical simulations.

1 Introduction

Scalar PDEs with bistability have been deeply studied within many communities [2, 3, 16, 30, 33, 42]. An algebraically particularly simple form of this class of PDEs is given by

$$\begin{aligned} \partial _t u=\Delta u+\alpha u-u^3, \end{aligned} \tag{1}$$

where \(x\in [0,L]\) for some fixed interval length \(L>0\), \(t\ge 0\) and with a parameter \(\alpha \in {\mathbb {R}}\). One natural option is to view (1) as an initial-boundary value problem endowed with zero Dirichlet conditions on [0, L] and \(u(x,0)=u_0(x)\), given \(u_0\in H^1_0\), where \(H^1_0=H^1_0([0,L],{\mathbb {R}})\) denotes the usual Sobolev space with one weak derivative in \(L^2([0,L],{\mathbb {R}})\). The main result presented by Chafee-Infante in [16] was to study the number of steady states for (1) and their respective stability, depending on the value of \(\alpha \). More precisely, let us denote by \(\{-\lambda _k'\}_{k\in {\mathbb {N}}\setminus \{0\}}\) the eigenvalues of \(\Delta \), the Laplacian operator with Dirichlet conditions, that take the form \(-\lambda _k':=-\bigg ( \dfrac{\pi k}{L} \bigg )^2\) for \(k\in {\mathbb {N}}\setminus \{0\}\). It has been shown that for \(\alpha <\lambda _1'\) the origin in \(H^1_0\), i.e. the state being identically equal to zero, is the only steady state of the system and it is asymptotically stable. Conversely, for \(\lambda _k'<\alpha \le \lambda _{k+1}'\) and \(k\in {\mathbb {N}}\setminus \{0\}\), the origin loses its stability and the number of different stationary solutions becomes \(2k+1\). Of those, only two are asymptotically stable while the rest are unstable. Specifically, the only stable solutions are the ones that bifurcate from the origin when \(\alpha \) crosses \(\lambda _1'\). The study of the steady states of more general systems has been presented in detail in [33] using a geometric approach. For example, the equation

$$\begin{aligned} \partial _t u=\Delta u-g(x)u+\alpha u-u^3, \end{aligned} \tag{2}$$

for a bounded and continuous, almost everywhere positive function g, under the same assumptions as in the previous case, has been studied. We refer to (2) as the heterogeneous case due to the additional spatial heterogeneity induced by g. We are going to use certain important properties of the classical spatially homogeneous PDE that are inherited by the heterogeneous case, such as the loss of stability of the origin when \(\alpha \) crosses \(\lambda _1\), where \(\{-\lambda _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) denote the eigenvalues of the Schrödinger operator \(\Delta -g\). Moreover, the dimension of the unstable manifold of the origin is k when \(\lambda _k<\alpha <\lambda _{k+1}\). The existence of an attractor for almost all values of \(\alpha \) follows from the dissipativity of the system [33, Chapter 5].
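The role of the eigenvalues of \(\Delta -g\) can be illustrated numerically. The following is a minimal sketch, not the authors' method: it assumes a second-order finite-difference discretization on a uniform grid, and the heterogeneity `g(x) = 1 + x` as well as the helper names `schrodinger_eigenvalues` and `unstable_dimension` are hypothetical choices made only for illustration. It approximates the first few \(\lambda _k\) and counts how many lie below a given \(\alpha \), which corresponds to the dimension of the unstable manifold of the origin.

```python
import numpy as np

def schrodinger_eigenvalues(g, L=1.0, n=200, k_max=5):
    """Approximate lambda_1 <= ... <= lambda_{k_max}, where -lambda_k are the
    eigenvalues of A = Laplacian - g on [0, L] with zero Dirichlet conditions."""
    h = L / (n + 1)
    x = np.linspace(h, L - h, n)                 # interior grid points
    main = 2.0 / h**2 + g(x)                     # diagonal of -A
    off = -np.ones(n - 1) / h**2                 # off-diagonals of -A
    minus_A = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.eigvalsh(minus_A)[:k_max]   # ascending order

def unstable_dimension(alpha, lambdas):
    """Number of eigenvalues lambda_k lying strictly below alpha."""
    return int(np.sum(np.asarray(lambdas) < alpha))

L = 1.0
lam_hom = schrodinger_eigenvalues(lambda x: np.zeros_like(x), L)   # g = 0
lam_het = schrodinger_eigenvalues(lambda x: 1.0 + x, L)            # hypothetical g > 0
exact_hom = (np.pi * np.arange(1, 6) / L) ** 2                     # (pi k / L)^2

print("homogeneous  :", lam_hom)
print("heterogeneous:", lam_het)
print("unstable dimension at alpha = 40:",
      unstable_dimension(40.0, lam_hom), unstable_dimension(40.0, lam_het))
```

For \(g=0\) the computed values should be close to \((\pi k/L)^2\), and a positive g shifts every \(\lambda _k\) upwards, so the same \(\alpha \) can lie beyond a different number of bifurcation thresholds in the two cases.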

Beyond the deterministic PDE dynamics, our second main ingredient is the set of techniques from stochastic dynamics, as we want to study an SPDE variant of (2). We start with an introduction to the background in the stochastic case. It is well known that the definition of a stochastic bifurcation is much more complex than in the deterministic case [4, 6, 35]. For example, in [21] it is shown how an ODE supercritical pitchfork normal form, when perturbed by noise, yields a unique attractor in the form of a singleton despite the ODE being bistable above the deterministic pitchfork bifurcation point. In particular, it was proven that the Lyapunov exponent along such an attractor is strictly negative. Thus, solutions driven by the same noise are expected to synchronize asymptotically, following the attractor of the random dynamical system described by the problem. This phenomenon is called synchronization by noise. Later on, a similar approach was carried out in [14] for an SPDE variant of the pitchfork normal form, i.e., for a stochastic variant of (1). For the SODE pitchfork case, it was shown that on finite time scales the bifurcation effect still persists, first in the fast-slow setting [5, 6]. Afterwards, synchronization by noise was shown to be not instantaneous for the pitchfork SODE case [12], in the sense that finite-time Lyapunov exponents have a positive probability of being positive above the bifurcation threshold. The recent work [11] has proven that this result carries over to SPDEs without spatially heterogeneous terms. One of the objectives of this article is to prove that several results can be extended to a generalized, spatially heterogeneous family of SPDEs.

The SPDE we study is a reaction-diffusion equation with additive noise

$$\begin{aligned} {\left\{ \begin{array}{ll} \text {d}u(x,t)=\big (\Delta u(x,t)+f(x)u(x,t)-u(x,t)^3\big )\,\text {d}t+\sigma \,\text {d}W_t,\\ u(\cdot ,0)=u_0\in {\mathcal {H}},\\ u(0,t)=u(L,t)=0\quad \text {for all } t\ge 0, \end{array}\right. } \end{aligned} \tag{3}$$

with \(x\in {\mathcal {O}}:=[0,L]\), \({\mathcal {H}}:=\{v\in L^2({\mathcal {O}}):v(0)=v(L)=0\}\), \(\sigma >0\), \({\mathcal {V}}:=H^1_0({\mathcal {O}})\) and \(W_t\) a stochastic forcing to be specified below. We will assume \(f(x)=\alpha -g(x)\) to be bounded, with g having Hölder regularity \(\gamma >\dfrac{1}{2}\) and \(g>0\) almost everywhere in \({\mathcal {O}}\). The stochastic process \(W_t\) will be taken as a two-sided Q-Wiener process whose covariance operator Q is trace class and self-adjoint. We assume that the eigenvalues of Q satisfy \(q_j>0\), for \(j\in {\mathbb {N}}\setminus \{0\}\), and that they are associated with eigenfunctions \(b_j\) such that \(b_j=e_j'\) for all \(j>D\) and some integer \(D>0\), where \(e_j'\) are the normalized eigenfunctions of the Laplacian with zero Dirichlet boundary conditions. This assumption implies that the \(b_j\) are finite combinations of the \(e_k'\) for \(j,k\in \{1,...,D\}\). It can be shown (following the steps in [23, Chapter 7]) that the SPDE has a \({\mathbb {P}}\)-a.s. mild solution

The assumptions on Q imply the existence of a compact attractor for the random dynamical system \((\varphi ,\theta )\) defined by (3) as shown in [26]. Furthermore, the attractor a is a singleton for every \(\alpha \in {\mathbb {R}}\); see [14]. Such a singleton attractor result is proven using the order-preservation of the SPDE [17, 43].

This paper characterizes the behaviour of finite-time Lyapunov exponents (FTLE) on the attractor of (3) depending on \(\alpha \). For \(\alpha <\lambda _1\) the FTLE are \({\mathbb {P}}\)-a.s. negative, and for \(\alpha >\lambda _1\) there is a positive probability for them to be positive. Such a result can also be extended, following [11], to the cases where \(\alpha \) crosses other eigenvalues of \(\Delta -g\). In addition to FTLE, there is another natural way to study small stochastic perturbations near deterministic bifurcations, which is based upon moments. For stochastic ODEs [6, 8, 9, 35, 36], it is well understood that critical slowing down near a bifurcation induces growth of the covariance. This growth can then be used in applications as an early-warning sign. For our SPDE, we study early warning signs associated with the covariance operator for the solution of the linearized system following the results of [32, 38]. In particular, we are going to prove a hyperbolic-function divergence of the covariance operator, when approaching a bifurcation, and of the variance in time of the solution u at every point in \(\mathring{{\mathcal {O}}}\). The precise rate of the phenomenon can be described depending on the studied spatial point. We then discuss the estimate of the error due to the linearization of the system and we cross-validate our theoretical results by numerical simulations.
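The growth of the variance near a bifurcation can be seen already in a scalar caricature. The sketch below is an illustration under strong assumptions that are not made in the paper: it keeps only a single linearized mode, \(\text {d}X=(\alpha -\lambda _1)X\,\text {d}t+\sigma \sqrt{q_1}\,\text {d}\beta _t\) with a scalar Brownian motion \(\beta _t\), for which the stationary variance is the standard Ornstein-Uhlenbeck value \(\sigma ^2 q_1/(2(\lambda _1-\alpha ))\). The function name `stationary_variance` and all parameter values are hypothetical.

```python
import numpy as np

def stationary_variance(alpha, lam1, sigma=0.1, q1=1.0):
    """Stationary variance of dX = (alpha - lam1) X dt + sigma*sqrt(q1) dB,
    valid only below the bifurcation threshold (alpha < lam1)."""
    if alpha >= lam1:
        raise ValueError("the linearized mode is only stable for alpha < lam1")
    return sigma**2 * q1 / (2.0 * (lam1 - alpha))

lam1 = np.pi**2                                  # illustrative value: L = 1 and g = 0
gaps = np.array([1.0, 0.5, 0.25, 0.125])         # distances lam1 - alpha to the threshold
variances = np.array([stationary_variance(lam1 - d, lam1) for d in gaps])

for d, v in zip(gaps, variances):
    print(f"lam1 - alpha = {d:5.3f}   variance = {v:.5f}")
```

In this one-mode picture, halving the distance to the threshold doubles the variance, which is the hyperbolic growth that an early-warning sign would try to detect.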

1.1 Hypothesis and Assumptions

In this paper, the object of study is the following parabolic SPDE with zero Dirichlet and given initial conditions:

$$\begin{aligned} {\left\{ \begin{array}{ll} \text {d}u(x,t)=\big (\Delta u(x,t)+f(x)u(x,t)-u(x,t)^3\big )\,\text {d}t+\sigma \,\text {d}W_t,\\ u(\cdot ,0)=u_0\in {\mathcal {H}},\\ u(0,t)=u(L,t)=0\quad \text {for all } t\ge 0, \end{array}\right. } \end{aligned} \tag{4}$$

with \(x\in {\mathcal {O}}:=[0,L]\), \({\mathcal {H}}:=\{v\in L^2({\mathcal {O}}):v(0)=v(L)=0\}\), and \({\mathcal {V}}:=H^1_0({\mathcal {O}})\). The stochastic process \(W_t\) is a two-sided Q-Wiener process in \({\mathcal {H}}\) with covariance operator Q that is assumed to be trace class, self-adjoint and positive. The function f will be taken as \(f=-g+\alpha \) with g almost everywhere positive and uniformly Hölder regular of order \(\dfrac{1}{2}<\gamma \le 1\), in particular it could be uniformly Lipschitz in [0, L], and \(\alpha \in {\mathbb {R}}\). The first equation in (4) can be written as

$$\begin{aligned} \text {d}u=\big (Au+\alpha u-u^3\big )\,\text {d}t+\sigma \,\text {d}W_t \end{aligned}$$

with \(A:=\Delta -g\). Therefore, we observe that A is a Schrödinger operator whose main properties are collected in Appendix A. In particular, the eigenvalues of \(A:=\Delta -g\), \(\{-\lambda _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\), are strictly negative. As described in the introduction above, the eigenvalues and eigenfunctions of \(\Delta \) are \(-\lambda _k':=-\Big (\dfrac{\pi k}{L}\Big )^2\) and \(e_k':=\sqrt{\dfrac{2}{L}}\text {sin}\Big (\dfrac{\pi k}{L} x\Big )\) with \(k\in {\mathbb {N}}\setminus \{0\}\) and \(x\in {\mathcal {O}}\). In Appendix A, it is illustrated how \(\{-\lambda _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) and the corresponding normalized in \({\mathcal {H}}\) eigenfunctions of A, \(\{e_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\), behave asymptotically in k in comparison to the eigenvalues \(\{-\lambda _k'\}_{k\in {\mathbb {N}}\setminus \{0\}}\) and eigenfunctions \(\{e_k'\}_{k\in {\mathbb {N}}\setminus \{0\}}\) respectively. Both \(\Delta \) and A are assumed to be closed operators on \({\mathcal {H}}\).

The norm on a Banach space X is denoted as \(||\cdot ||_X\) with the exception of the \(L^p\) spaces for which it is noted as \(||\cdot ||_p\) for any \(1\le p\le \infty \) or for further cases later described in detail. We use \(\langle \cdot ,\cdot \rangle \) to indicate the standard scalar product in \(L^2({\mathcal {O}})\). We use the Landau notation on sequences \(\{\rho ^1_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}},\;\{\rho ^2_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}}_{>0}\) as \(\rho ^1_k=O(\rho ^2_k)\) if \(0\le \underset{k\rightarrow \infty }{\lim }\dfrac{|\rho ^1_k|}{\rho ^2_k}<\infty \). We define the symbol \(\sim \) to indicate \(\rho _1\sim \rho _2\) if \(0<\underset{\rho _2\rightarrow \infty }{\lim }\dfrac{\rho _1}{\rho _2}<\infty \) and we apply it also on sequences \(\{\rho ^1_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}},\;\{\rho ^2_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}}_{>0}\) meaning that \(0<\underset{k\rightarrow \infty }{\lim }\dfrac{\rho ^1_k}{\rho ^2_k}<\infty \).

Next, we proceed to specify the assumptions on the noise. The probability space that we will use is \((\Omega ,{\mathcal {F}},{\mathbb {P}})\) with \(\Omega :={\mathcal {C}}_0({\mathbb {R}};{\mathcal {H}})\) composed of the functions \(\omega :{\mathbb {R}}\rightarrow {\mathcal {H}}\) such that \(\omega (0)=0\) and endowed with the compact-open topology. With \({\mathcal {F}}\) we denote the Borel sigma-algebra on \(\Omega \) and with \({\mathbb {P}}\) a Wiener measure generated by a two-sided Wiener process. As in [11], we consider \(\omega _t=W_t(\omega )\) and the two-sided filtration \(({\mathcal {F}}_{t_1}^{t_2})_{t_1<t_2}\) defined as \({\mathcal {F}}_{t_1}^{t_2}:={\mathfrak {B}}(\omega _{t_2}-\omega _{t_1})\). We can then obtain

We also introduce the Wiener shift for all \(t>0\)

Thus \((\theta ^t)_{t\in {\mathbb {R}}}\) is a family of \({\mathbb {P}}\)-preserving transformations that satisfies the flow property and \((\Omega ,{\mathcal {F}},{\mathbb {P}},(\theta ^t)_{t\in {\mathbb {R}}})\) is an ergodic metric dynamical system [18]. The covariance operator Q is assumed to have eigenvalues \(\{q_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) and eigenfunctions \(\{b_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) with the following properties:

1. there exists a \(D\in {\mathbb {N}}\setminus \{0\}\) such that \(b_j=e_j'\) for all \(j>D\),

2. \(q_j>0\) for all \(j\in {\mathbb {N}}\setminus \{0\}\),

3. \(\sum _{j=1}^\infty q_j (\lambda _j')^\gamma <+\infty \) for a \(\gamma >0\).
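A truncated version of such a noise can be sampled directly from its spectral description. The following is a minimal sketch under assumptions that are not taken from the paper: all eigenfunctions of Q are chosen as the sine modes \(e_j'\) (which is compatible with property 1 for any D), the eigenvalues are the hypothetical choice \(q_j=j^{-4}\), and the expansion is cut off after 64 modes. The helper names `q_wiener_increments` and `summability_partial_sum` are illustrative.

```python
import numpy as np

def q_wiener_increments(x, dt, n_steps, q, L=1.0, rng=None):
    """Sample increments of a truncated Q-Wiener process on the grid x.

    q[j-1] is the eigenvalue of Q attached to the Dirichlet sine mode
    e_j'(x) = sqrt(2/L) sin(pi j x / L); every b_j is assumed to equal e_j'.
    """
    rng = np.random.default_rng() if rng is None else rng
    j = np.arange(1, len(q) + 1)
    modes = np.sqrt(2.0 / L) * np.sin(np.pi * np.outer(j, x) / L)   # shape (J, len(x))
    xi = rng.standard_normal((n_steps, len(q)))                      # i.i.d. N(0, 1)
    return np.sqrt(dt) * (xi * np.sqrt(q)) @ modes                   # shape (n_steps, len(x))

def summability_partial_sum(q, gamma, L=1.0):
    """Partial sum of sum_j q_j * (lambda_j')**gamma with lambda_j' = (pi j / L)**2."""
    j = np.arange(1, len(q) + 1)
    return float(np.sum(q * (np.pi * j / L) ** (2.0 * gamma)))

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 65)
q = np.arange(1, 65, dtype=float) ** -4.0        # hypothetical choice q_j = j^{-4}
dt = 1e-3
dW = q_wiener_increments(x, dt, n_steps=2000, q=q, rng=rng)

print("increment array shape:", dW.shape)
print("partial sum for gamma = 1:", summability_partial_sum(q, 1.0))
```

With \(q_j=j^{-4}\) the series in property 3 behaves like \(\sum _j j^{2\gamma -4}\), so it converges for \(\gamma <3/2\); a finite partial sum can of course only illustrate this, not prove it.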

The first property implies that \(b_k\) is a finite combination of \(\{e_j'\}_{j\in \{1,...,D\}}\) for \(k\le D\). The choice of \(D>2\) can be used to have more freedom in the choice of Q, both in analytical and numerical results. As stated in Appendix B, the first and third assumptions imply the continuity of the solutions of (4) in \({\mathcal {V}}\) if \(u_0\in {\mathcal {V}}\) and that, for \(u_0\in {\mathcal {H}}\), there exists \({\mathbb {P}}\)-a.s. a unique mild solution

1.2 Properties of the Deterministic Case

The heterogeneous system (4) with \(\sigma =0\) inherits some properties from the classical spatially homogeneous PDE:

Proposition 1.1

Set \(u_*\in {\mathcal {H}}\) and let \({\mathcal {D}}(A)\) be the domain of A. Suppose \(A:{\mathcal {D}}(A)\subset {\mathcal {H}}\longrightarrow {\mathcal {H}}\) is a sectorial linear operator and \(F:U\rightarrow {\mathcal {H}}\) is a locally Lipschitz operator so that U is a neighbourhood of \(u_*\) and we have

with \(u_*+v\in U\), \(B\in {\mathcal {L}}_b({\mathcal {H}})\) with \({\mathcal {L}}_b({\mathcal {H}})\) denoting bounded linear operators on \({\mathcal {H}}\), \(G:U\rightarrow {\mathcal {H}}\) and \(\Vert G(u)\Vert \le c \Vert u\Vert _{\mathcal {V}}^{\gamma } \) for \(\gamma >1\), any \(u\in {\mathcal {V}}\) and a constant \(c>0\) (Footnote 1). Assume \(0=Au_*+F(u_*)\) and that the spectrum of \(A+B\) is in \(\{\lambda \in {\mathbb {C}}:\text {Re}(\lambda )<-\epsilon \}\) for an \(\epsilon >0\). Then \(u_*\) is a locally asymptotically stable steady state of the evolution equation \({\dot{u}}=Au+F(u)\), where \(u(t)=u(\cdot ,t)\) and the dot denotes the time derivative.

A proof of this standard proposition can be found in [33, Theorem 5.1.1]. By Proposition 1.1 it is clear that the zero function is an asymptotically stable steady state of (4) with \(\sigma =0\) when \(\alpha <\lambda _1\). In fact, for this parameter range it is also the only steady state by the strict negativity of A and the strict dissipativity given by F. When \(\alpha >\lambda _1\) the stability setting changes. The next proposition describes such a case; see [33, Corollary 5.1.6].

Proposition 1.2

Set \(u_*\), A and F as in Proposition 1.1. Assume again \(0=Au_*+F(u_*)\) and that the spectrum of \(A+B\) has non-empty intersection with \(\{\lambda \in {\mathbb {C}}:\text {Re}(\lambda )>\epsilon \}\) for an \(\epsilon >0\), then \(u_*\) is an unstable steady state of \({\dot{u}}=Au+F(u)\).

The last result can be applied to show that the zero function is unstable for \(\alpha >\lambda _1\). Nonetheless, the global dissipativity still implies the existence of a compact attractor [33, Section 5.3]. The next theorem, based on [19], establishes the bifurcation of near steady states from the trivial branch of zero solutions when \(\alpha \) crosses a bifurcation point.

Theorem 1.3

(Crandall-Rabinowitz) Let X and Y be Banach spaces, \(u_*\in X\) and U a neighbourhood of \((u_*,0)\) in \(X\times {\mathbb {R}}\). Let \(F:U\subset X\times {\mathbb {R}}\rightarrow Y\) be a \({\mathcal {C}}^3\) function in \((u_*,0)\) with \(F(u_*,p)=0\) for all \(p\in (-\delta ,\delta )\) for \(\delta >0\). Suppose the linearization \(G:={\text {D}}_X F(u_*,0)\) is a Fredholm operator with index zero. Assume that \(\dim (\text {Ker}(G))=1\), \(\text {Ker}(G)=\text {Span}\{\phi _0\}\) and that \({\text {D}}_p {\text {D}}_X F(u_*,0)\phi _0\not \in \text {Range}(G)\). Then \((u_*,0)\) is a bifurcation point and there exists a \({\mathcal {C}}^1\) curve \(s\mapsto (\phi (s),p(s))\), for s in a small interval, that passes through \((u_*,0)\) so that

In a sufficiently small neighbourhood U the only solutions to (6) are the map \(s\mapsto (\phi (s),p(s))\) and the curve \(\{(u_*,\alpha ):(u_*,\alpha )\in U\}\).

A proof of the last result can be found in [33, Lemma 6.3.1, Theorem 6.3.2] and [37, Theorem 5.1]. For \(Y={\mathcal {H}}\), the space X generated by \(\{e_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\), the normalized eigenfunctions of A, and given the nonlinear operator

then the Crandall-Rabinowitz theorem implies the existence of a bifurcation, which can be shown to be a pitchfork, at \(\alpha =\lambda _k\) and in \(u_*\) taken as the origin function in \({\mathcal {V}}\). It can also be proven that the new steady states that arise at \(k=1\) are locally asymptotically stable.
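The pitchfork structure at the first threshold can be made concrete with a one-mode Galerkin truncation. This is a hedged sketch, not the argument used in the paper: it assumes the homogeneous case \(g=0\), inserts the ansatz \(u=a\,e_1'\) into the deterministic equation and projects onto \(e_1'\), which gives \({\dot{a}}=(\alpha -\lambda _1)a-c\,a^3\) with \(c=\int _0^L e_1'(x)^4\,\text {d}x=3/(2L)\). The function names `one_mode_steady_states` and `linear_stability` are hypothetical.

```python
import numpy as np

def one_mode_steady_states(alpha, lam1, L=1.0):
    """Steady states of the one-mode truncation
        da/dt = (alpha - lam1) * a - c * a**3,   c = 3 / (2 L),
    obtained by projecting u = a * e_1' onto the first sine mode (g = 0 assumed)."""
    c = 3.0 / (2.0 * L)
    if alpha <= lam1:
        return np.array([0.0])                   # only the trivial branch
    amp = np.sqrt((alpha - lam1) / c)
    return np.array([-amp, 0.0, amp])            # trivial branch plus two new states

def linear_stability(a, alpha, lam1, L=1.0):
    """Derivative of the right-hand side at a; a negative value means local stability."""
    return (alpha - lam1) - 3.0 * (3.0 / (2.0 * L)) * a**2

lam1 = np.pi**2                                  # first Dirichlet eigenvalue for L = 1
below = one_mode_steady_states(lam1 - 1.0, lam1)
above = one_mode_steady_states(lam1 + 1.0, lam1)

print("steady states below threshold:", below)
print("steady states above threshold:", above)
print("stability exponents above    :", linear_stability(above, lam1 + 1.0, lam1))
```

In this truncation the two nonzero states have stability exponent \(-2(\alpha -\lambda _1)<0\) while the origin has exponent \(\alpha -\lambda _1>0\), in line with the local asymptotic stability of the states that arise at \(k=1\).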

1.3 Random Dynamical System and Attractor

The next properties are described in [13] and we summarize them here as we will need them later on.

Proposition 1.4

There exists a \(\theta ^t\)-invariant subset \(\Omega '\subset \Omega \) of full probability measure such that for all \(\omega \in \Omega '\) and \(t\ge 0\) there is a Fréchet differentiable \({\mathcal {C}}^1\)-semiflow on \({\mathcal {H}}\)

that satisfies the following for any \(\omega \in \Omega '\):

(P1) For all \(T>0\) and \(u_0\in {\mathcal {H}}\), the mapping \((\omega ,t)\mapsto \varphi _\omega ^t(u_0)\) in \(\Omega \times [0,T]\mapsto {\mathcal {H}}\) is the unique pathwise mild solution of (4).

(P2) It satisfies the cocycle property, i.e., for any \(t_1,t_2>0\)

$$\begin{aligned} \varphi _\omega ^{t_1+t_2}=\varphi _{\theta ^{t_1} \omega }^{t_2} \circ \varphi _\omega ^{t_1}. \end{aligned}$$

(P3) Fixing \(t_2>t_1>0\), for any \(u\in {\mathcal {H}}\) the \({\mathcal {H}}\)-valued random variable \(\varphi _{\theta ^{t_1} \omega } ^{t_2-t_1}(u)\) is \({\mathcal {F}}_{t_1}^{t_2}\)-measurable.

The pair of mappings \((\theta ,\varphi )\) is the random dynamical system (RDS) generated by (4). Also, property (P3) implies that the process \(u_t\), defined by \(u_t:=\varphi _\omega ^t(u_0)\) for generic \(\omega \in \Omega '\), is \({\mathcal {F}}_0^t\)-adapted. For any \({\mathcal {C}}^1\) Fréchet differentiable mapping \(\varphi :{\mathcal {H}}\rightarrow {\mathcal {H}}\), we denote by \({\text {D}}_u\varphi \in {\mathcal {L}}({\mathcal {H}})\) the Fréchet derivative of \(\varphi \) at \(u\in {\mathcal {H}}\), where \({\mathcal {L}}({\mathcal {H}})\) denotes the space of linear operators on \({\mathcal {H}}\). The next proposition, regarding the Fréchet derivative, follows from [26, Lemma 4.4].

Proposition 1.5

For all \(T>0\) and \(u_0,v_0\in {\mathcal {H}}\) and given the Fréchet differentiable \({\mathcal {C}}^1\) semiflow described in Proposition 1.4, we have that the mapping \(\Omega \times [0,T]\mapsto {\mathcal {H}}\) given by \((\omega ,t)\mapsto {\text {D}}_{u_0} \varphi _\omega ^t(v_0)\) is the unique solution of the first variation evolution equation along u with initial conditions set on \(u_0\) and \(v_0\):

$$\begin{aligned} {\left\{ \begin{array}{ll} \text {d}v=\big (\Delta v+f v-3u^2 v\big )\,\text {d}t,\\ v(\cdot ,0)=v_0,\quad u(\cdot ,0)=u_0. \end{array}\right. } \end{aligned}$$

Next, we present the relevant result for the existence and uniqueness of an invariant measure. The next lemma and proposition [15, Chapter 8] are important for following results and are implied by Lemma B.3 and the strict positivity of the eigenvalues of Q.

Lemma 1.6

The transition semigroup of the system (4) satisfies the strong Feller property and irreducibility.

By the Krylov-Bogoliubov theorem for existence, and by Doob’s theorem and Khas’minskii’s theorem [24, 34] for uniqueness and equivalence, we obtain the next proposition.

Proposition 1.7

There exists a unique stationary measure \(\mu \) for the process \(u_t\) which is equivalent to any transition probability measure \(P_t(u_0,\cdot )\) for all \(t>0\) and \(u_0\in {\mathcal {H}}\), in the sense that they are mutually absolutely continuous. It is defined as

for any \({\mathcal {B}}\in {\mathfrak {B}}({\mathcal {H}})\), the sigma-algebra of Borelian sets in \({\mathcal {H}}\), and it is concentrated on \({\mathcal {V}}\).

Finally, we can consider the random attractor itself.

Definition 1.8

A global random attractor of an RDS \((\varphi ,\theta )\) is a compact random set \({\mathcal {A}}\subset {\mathcal {H}}\), i.e., it depends on \(\omega \in \Omega '\) and satisfies:

- It is invariant under the RDS, which means \(\varphi _\omega ^t({\mathcal {A}}(\omega ))={\mathcal {A}}(\theta ^t \omega )\);

- It is attracting, so that for every bounded set \({\mathcal {B}}\subset {\mathcal {H}}\)

$$\begin{aligned} \lim _{t\rightarrow \infty } \Vert \varphi ^t_{\theta ^{-t}\omega }({\mathcal {B}})-{\mathcal {A}}(\omega ) \Vert _{\mathcal {H}} =0 \end{aligned}$$

for \({\mathbb {P}}\)-almost every \(\omega \).

We can use Proposition 1.7 to prove a strong property of the random attractor.

Proposition 1.9

For any \(\alpha \in {\mathbb {R}}\) the random dynamical system generated by (4) has a global random attractor \({\mathcal {A}}\) that is a singleton, i.e., there exists a random variable \(a:\Omega \rightarrow {\mathcal {H}}\) such that \({\mathcal {A}}(\omega )=a(\omega )\) \({\mathbb {P}}\)-a.s., and it is \({\mathcal {F}}_{-\infty }^0\)-measurable. The law of the attractor a is the unique invariant measure of the system.

The proof of this proposition follows the steps of [14, Theorem 6.1]. In particular, the existence of the global random attractor \({\mathcal {A}}\) is the result of [26, Theorem 4.5] and its \({\mathcal {F}}_{-\infty }^0\)-measurability can be derived from [20, Theorem 3.3]. Another important property is the order-preservation of the system (4), i.e., for two initial conditions \(u_1(x,0)\le u_2(x,0)\) for almost all \(x\in {\mathcal {O}}\) the solutions satisfy \(u_1(x,t)\le u_2(x,t)\) for almost all \(x\in {\mathcal {O}}\) and \(t>0\). This is a consequence of [43, Theorem 5.1] and [17, Theorem 5.8].
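Both the contraction towards a single random state and the order-preservation can be observed in a simple discretization. The sketch below rests on assumptions that are not part of the paper: an explicit Euler-Maruyama finite-difference scheme, the hypothetical heterogeneity `g(x) = 1 + x`, noise eigenvalues \(q_j=j^{-4}\) on sine modes, and a value of \(\alpha \) below \(\lambda _1\), where the difference of two solutions driven by the same noise decays pathwise. Above the threshold synchronization is only asymptotic and may take much longer to become visible.

```python
import numpy as np

def simulate(u0, alpha, g, sigma, dW, dt, L=1.0):
    """Explicit Euler-Maruyama / finite-difference scheme for
        du = (u_xx + (alpha - g(x)) u - u**3) dt + sigma dW
    on the interior grid of [0, L] with zero Dirichlet conditions.
    dW has shape (n_steps, n) and holds noise increments on the interior grid."""
    n = len(u0)
    h = L / (n + 1)
    x = np.linspace(h, L - h, n)
    f = alpha - g(x)
    u = np.array(u0, dtype=float)
    for inc in dW:
        padded = np.concatenate(([0.0], u, [0.0]))           # zero boundary values
        lap = (padded[2:] - 2.0 * u + padded[:-2]) / h**2    # discrete Laplacian
        u = u + dt * (lap + f * u - u**3) + sigma * inc
    return u

rng = np.random.default_rng(0)
n, L = 31, 1.0
h = L / (n + 1)
x = np.linspace(h, L - h, n)
dt, n_steps = 0.2 * h**2, 10000          # dt below the heat-equation stability limit h**2 / 2
j = np.arange(1, n + 1)
modes = np.sqrt(2.0 / L) * np.sin(np.pi * np.outer(j, x) / L)
dW = np.sqrt(dt) * (rng.standard_normal((n_steps, n)) * j**-2.0) @ modes   # q_j = j**-4

g = lambda x: 1.0 + x                    # hypothetical heterogeneity, g > 0
alpha, sigma = 5.0, 0.1                  # alpha below lambda_1 in this sketch
u_low0, u_high0 = -np.sin(np.pi * x / L), np.sin(np.pi * x / L)   # ordered initial data
u_low = simulate(u_low0, alpha, g, sigma, dW, dt, L)              # same noise path
u_high = simulate(u_high0, alpha, g, sigma, dW, dt, L)            # for both solutions

gap0 = np.linalg.norm(u_high0 - u_low0)
gap = np.linalg.norm(u_high - u_low)
print("initial distance:", gap0, " final distance:", gap)
print("order preserved :", bool(np.all(u_low <= u_high + 1e-12)))
```

The two solutions start ordered, stay ordered, and end up at a distance that is several orders of magnitude smaller than the initial one, which is the behaviour expected from a singleton attractor.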

The main result of the paper [11] is the description of the possible influences of the attractor a on close solutions, for different values of \(\alpha \). This can be expressed by the sign of the leading finite-time Lyapunov exponent (FTLE).

Definition 1.10

The (leading) finite-time Lyapunov exponent, at a time \(t>0\) and sample \(\omega \in \Omega \), of an RDS \((\varphi ,\theta )\) with Fréchet differentiable semiflow \(\varphi \) is defined by the following equation:

$$\begin{aligned} {\mathfrak {L}}_1(t;\omega ,u):=\dfrac{1}{t}\log \Vert {\text {D}}_u\varphi _\omega ^t\Vert \end{aligned}$$

for any \(u\in {\mathcal {H}}\).

The FTLE describes the influence of the linear operator \({\text {D}}_{u} \varphi ^t_\omega \), for given \(\omega \in \Omega \), \(u\in {\mathcal {H}}\) and \(t>0\), on elements of \({\mathcal {H}}\) close to u. A positive FTLE indicates that close functions in \({\mathcal {H}}\), that are near u, tend to separate in time t. Conversely, a negative FTLE indicates the distance between elements in a neighbourhood of u tends to be smaller after time t. Of great interest is the FTLE on the attractor, defined as

$$\begin{aligned} {\mathfrak {L}}_1(t;\omega ):=\dfrac{1}{t}\log \Vert {\text {D}}_{a(\omega )}\varphi _\omega ^t\Vert . \end{aligned}$$

By the subadditive ergodic theorem, the limit of \({\mathfrak {L}}_1(t;\omega )\) as \(t\rightarrow \infty \) exists for \({\mathbb {P}}\)-almost every \(\omega \); it is referred to as the Lyapunov exponent of \(a(\omega )\) (Footnote 2).
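A finite-time Lyapunov exponent can be approximated by propagating the linearized dynamics alongside a sample path. The following is a minimal sketch under stated assumptions, not the construction used in the paper: it uses an explicit finite-difference scheme, evaluates the exponent along a trajectory started at a hypothetical initial state `u0` rather than on the attractor \(a(\omega )\), and takes `g(x) = 1 + x` and \(q_j=j^{-4}\) as illustrative choices. The function name `ftle_along_trajectory` is hypothetical.

```python
import numpy as np

def ftle_along_trajectory(u0, alpha, g, sigma, dW, dt, L=1.0):
    """Return ((1/t) log ||M||_2, lam1), where M is the derivative of the
    discrete flow with respect to the initial state and lam1 approximates
    lambda_1. M is obtained from the discrete first variation equation."""
    n = len(u0)
    h = L / (n + 1)
    x = np.linspace(h, L - h, n)
    lap = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
           + np.diag(np.ones(n - 1), -1)) / h**2
    A_h = lap - np.diag(g(x))                       # discretizes A = Laplacian - g
    u = np.array(u0, dtype=float)
    M = np.eye(n)
    for inc in dW:
        J = A_h + np.diag(alpha - 3.0 * u**2)       # linearized drift at the current state
        M = M + dt * (J @ M)                        # first variation step
        u = u + dt * (A_h @ u + alpha * u - u**3) + sigma * inc
    t = dt * len(dW)
    lam1 = -np.linalg.eigvalsh(A_h)[-1]             # top eigenvalue of A_h is -lam1
    return np.log(np.linalg.norm(M, 2)) / t, lam1

rng = np.random.default_rng(1)
n, L = 31, 1.0
h = L / (n + 1)
x = np.linspace(h, L - h, n)
dt, n_steps = 0.2 * h**2, 2000
j = np.arange(1, n + 1)
modes = np.sqrt(2.0 / L) * np.sin(np.pi * np.outer(j, x) / L)
dW = np.sqrt(dt) * (rng.standard_normal((n_steps, n)) * j**-2.0) @ modes   # q_j = j**-4

g = lambda x: 1.0 + x                               # hypothetical heterogeneity
u0 = 0.1 * np.sin(np.pi * x / L)                    # hypothetical initial state
ftle_below, lam1 = ftle_along_trajectory(u0, 5.0, g, 0.1, dW, dt, L)
ftle_above, _ = ftle_along_trajectory(u0, 15.0, g, 0.1, dW, dt, L)
print("lambda_1 ~", lam1)
print("FTLE for alpha = 5 :", ftle_below, " (alpha - lambda_1 =", 5.0 - lam1, ")")
print("FTLE for alpha = 15:", ftle_above, " (alpha - lambda_1 =", 15.0 - lam1, ")")
```

Because the cubic term only adds damping to the linearization, the computed exponent should not exceed \(\alpha -\lambda _1\) in either run; whether it is positive above the threshold depends on the sampled path and on how close the state stays to zero.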

Next, we comment on the extension to further bifurcations beyond the first pitchfork bifurcation point. As previously described, any \(\alpha \in \{\lambda _j\}_{j\in {\mathbb {N}}\setminus \{0\}}\) is a deterministic bifurcation threshold of the system: when it is crossed, two new steady states appear and the dimension of the unstable manifold of the origin in \({\mathcal {H}}\) increases by one. To study this case we make use of wedge products. For a separable Hilbert space \({\mathcal {H}}\) and for \(v_1,...,v_k\in {\mathcal {H}}\), the wedge product (k-blade) is denoted by

$$\begin{aligned} v_1\wedge ...\wedge v_k. \end{aligned}$$

We define the following scalar product for all \(v_1,...,v_k,w_1,...,w_k\in {\mathcal {H}}\),

$$\begin{aligned} \langle v_1\wedge ...\wedge v_k,w_1\wedge ...\wedge w_k\rangle :=\det \big [(v_{j_1},w_{j_2})_{j_1,j_2}\big ], \end{aligned} \tag{8}$$

with \((v_{j_1},w_{j_2})_{j_1,j_2}\) denoting the \(k\times k\) matrix with \(j_1,j_2\)-th element \((v_{j_1},w_{j_2})\). The set \(\wedge ^k {\mathcal {H}}\) is the closure of finite linear combinations of k-blades under the norm defined by such inner product. Given \(\{e_j\}_{j\in {\mathbb {N}}\setminus \{0\}}\) as a basis of \({\mathcal {H}}\), we can define a basis for \(\wedge ^k {\mathcal {H}}\) whose elements are the k-blades

$$\begin{aligned} {\textbf {e}}_{{\textbf {i}}}:=e_{i_1}\wedge ...\wedge e_{i_k}, \end{aligned}$$

with \({\textbf {i}}=(i_1,...,i_k)\) for \(0<i_1<...<i_k\). Given \(B\in {\mathcal {L}}({\mathcal {H}})\) we can obtain an operator in \({\mathcal {L}}(\wedge ^k {\mathcal {H}})\) by the following operation:

$$\begin{aligned} \wedge ^k B(v_1\wedge ...\wedge v_k):=Bv_1\wedge ...\wedge Bv_k \end{aligned}$$

for all \(v_1,...,v_k\in {\mathcal {H}}\). Such operators satisfy the property (Footnote 3)

The last definitions permit us to introduce

$$\begin{aligned} {\mathfrak {L}}_k(t;\omega ,u):=\dfrac{1}{t}\log \Vert \wedge ^k{\text {D}}_u\varphi _\omega ^t\Vert ,\qquad {\mathfrak {L}}_k(t;\omega ):=\dfrac{1}{t}\log \Vert \wedge ^k{\text {D}}_{a(\omega )}\varphi _\omega ^t\Vert . \end{aligned}$$

Such functions describe the behaviour of volumes defined by the position of elements of \({\mathcal {H}}\) in a neighbourhood of \(u\in {\mathcal {H}}\) or of the attractor.
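In finite dimensions the scalar product of two k-blades is a Gram-type determinant, which makes the volume interpretation easy to check. The sketch below works in \({\mathbb {R}}^4\) instead of \({\mathcal {H}}\) and stores the factors of a blade as the columns of a matrix; the helper names `blade_inner`, `blade_norm` and `wedge_apply` are hypothetical.

```python
import numpy as np

def blade_inner(V, W):
    """Inner product of the k-blades v_1^...^v_k and w_1^...^w_k, where the
    columns of V and W hold the factors: determinant of the matrix (v_i, w_j)."""
    return float(np.linalg.det(V.T @ W))

def blade_norm(V):
    """Norm of a k-blade, i.e. the k-dimensional volume spanned by its factors."""
    return float(np.sqrt(max(blade_inner(V, V), 0.0)))

def wedge_apply(B, V):
    """Action of wedge^k B on a blade: B is applied to every factor."""
    return B @ V

E = np.eye(4)[:, :2]                               # e_1 ^ e_2 in R^4
dependent = np.array([[1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [0.0, 0.0]])
B = np.diag([2.0, 3.0, 5.0, 7.0])

print("norm of e_1 ^ e_2           :", blade_norm(E))
print("norm of a degenerate blade  :", blade_norm(dependent))
print("norm of (wedge^2 B)(e_1^e_2):", blade_norm(wedge_apply(B, E)))
print("antisymmetry                :", blade_inner(E, E[:, ::-1]))
```

An orthonormal pair spans unit area, linearly dependent factors give a zero blade, a diagonal B stretches \(e_1\wedge e_2\) by the product of its first two entries, and swapping two factors flips the sign of the scalar product.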

2 Bounds for FTLEs

The proofs of the theorems considered in this section build upon the approach described in [11]. The main results are Theorems 2.1 and 2.4. Theorem 2.1 gives an upper bound to \({\mathfrak {L}}_k\) for any value of \(\alpha \). It follows from this result that for \(k=1\) and \(\alpha <\lambda _1\) the FTLE is almost surely negative. Theorem 2.4 provides a lower bound to the highest admissible value that \({\mathfrak {L}}_k\) can assume. Specifically, it shows that there is a positive probability of \({\mathfrak {L}}_k\) to be positive for \(\alpha \) beyond the k-th bifurcation.

2.1 Upper Bound

Theorem 2.1

For any \(k\ge 1\),

$$\begin{aligned} {\mathfrak {L}}_k(t;\omega )\le \sum _{j=1}^k (\alpha -\lambda _j) \end{aligned}$$

with probability 1 for all \(t>0\).

Proof

Given \({\textbf {v}}_t=v^1_t\wedge ...\wedge v^k_t=\wedge ^k {\text {D}}_{a(\omega )} \varphi _\omega ^t({\textbf {v}}_0)\), with \({\textbf {v}}_0\in \wedge ^k {\mathcal {H}}\), we obtain by [11, Lemma 3.5] and the min-max principle that

for \(B^t_\omega :=-3 a (\theta ^t \omega )^2\). \(\square \)
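For a matrix, the operator norm of \(\wedge ^k M\) equals the product of the k largest singular values of M, which gives a direct way to evaluate \({\mathfrak {L}}_k\) numerically. The following sketch is an illustration under assumptions not made in the paper: it works in the eigenbasis of the homogeneous problem (\(g=0\), \(L=1\)), truncated to six modes, and compares the purely linear flow with a variant that carries a hypothetical nonnegative extra damping standing in for the term \(-3a^2\). The comparison value is \(\sum _{j=1}^k(\alpha -\lambda _j)\), the quantity \(\Lambda _{{\textbf {i}}_0}\) that the Remark at the end of this section identifies as the right end of the range of \({\mathfrak {L}}_k\).

```python
import numpy as np

def ftle_k(M, t, k):
    """(1/t) log || wedge^k M ||, using that the operator norm of wedge^k M
    is the product of the k largest singular values of M."""
    s = np.linalg.svd(M, compute_uv=False)          # sorted in decreasing order
    return float(np.sum(np.log(s[:k])) / t)

lam = (np.pi * np.arange(1, 7)) ** 2                # Dirichlet eigenvalues, L = 1, g = 0
alpha, t = 50.0, 0.1
M_lin = np.diag(np.exp(t * (alpha - lam)))          # exp(t (A + alpha)) in the eigenbasis
damping = np.array([3.0, 0.5, 2.0, 0.0, 1.0, 4.0])  # hypothetical nonnegative damping
M_damped = np.diag(np.exp(t * (alpha - lam - damping)))
bounds = np.cumsum(alpha - lam)                     # sum_{j<=k} (alpha - lambda_j)

for k in (1, 2, 3):
    print(k, ftle_k(M_lin, t, k), ftle_k(M_damped, t, k), bounds[k - 1])
```

The linear flow attains the comparison value exactly, while any additional damping can only lower the exponent, which is the mechanism behind an upper bound that holds for every \(\alpha \).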

2.2 Lower Bound

Lemma 2.2

With probability 1, \(a(\theta ^t\omega )\in {\mathcal {V}}\) for all \(\alpha ,t\in {\mathbb {R}}\). Also, for any \(T,\epsilon >0\), there exists an \({\mathcal {F}}^T_{-\infty }\)-measurable set \(\Omega _0\subset \Omega \) such that \({\mathbb {P}}(\Omega _0)>0\) and

Proof

We begin the proof by assuming that the initial condition satisfies \(u_0\in {\mathcal {V}}\). From Proposition 1.7 and the continuity of the solution in \({\mathcal {V}}\) we get for the singleton attractor that \(a(\omega )\in {\mathcal {V}}\) for all \(\omega \in \Omega \). Additionally, from Lemma 1.6 and Proposition 1.7 we know that the invariant measure that describes the law of the attractor is locally positive on \({\mathcal {V}}\). As a result, \(||a(\omega )||_{\mathcal {V}}\in (0,\eta )\) for all \(\omega \in \Omega _1\), where \(\Omega _1\) is an \({\mathcal {F}}_{-\infty }^0\)-measurable set [18, Proposition 3.1] with \({\mathbb {P}}(\Omega _1)>0\) and \(\eta >0\) is a constant dependent on \(\Omega _1\). We now define the family of operators \(S(t):=\text {e}^{(A+\alpha )t}\) in order to study the solutions of

From this SPDE we subtract the Ornstein-Uhlenbeck process, which is the solution of

with zero Dirichlet boundary conditions and takes the form

to introduce \({\tilde{u}}:=u-z\). Hence, we obtain the random PDE

whose mild solution is

with \({\tilde{u}}_0:={\tilde{u}}(0)=u_0\) and \(\iota (x)=-x^3\) for all \(x\in {\mathcal {O}}\). From [1, 25] we obtain that S(t) is an analytic semigroup. The norms \(||\cdot ||_{\mathcal {V}}=||(-\Delta )^{\frac{1}{2}}\cdot ||_{\mathcal {H}}\) and \(||\cdot ||_A=||(-A)^{\frac{1}{2}}\cdot ||_{\mathcal {H}}\) are equivalent in \({\mathcal {V}}\) as proven in Appendix A. Therefore we obtain the following inequalities from [33]:

for a certain constant \(c>0\). Another important estimate is obtained by the fact that the nonlinear term \(\iota :{\mathcal {V}}\longrightarrow {\mathcal {H}}\) is locally Lipschitz and thus for any \(u_1,u_2\in U\subset {\mathcal {V}}\) there exists a constant \(\ell >0\) such that

with U being a bounded subset of \({\mathcal {V}}\). Using a cut-off technique, we can truncate \(\iota \) outside of a ball in \({\mathcal {V}}\) of radius \(R>0\) and center in the null function and obtain the globally Lipschitz function

with \(\Theta :{\mathbb {R}}^+\longrightarrow [0,1]\), a \({\mathcal {C}}^1\) cut-off function. As for \(\iota \), the Lipschitz inequality takes the form, for a certain \({\tilde{\ell }}>0\),

From (9), the estimates (10) and the fact that \({\tilde{\iota }}(u)=\iota (u)\) on the ball with center in the null function and radius R in \({\mathcal {V}}\), we can obtain the following inequality

for which we have assumed R large enough to have \(\underset{t\in [0,T]}{\sup }\ ||{\tilde{u}}(t)+z(t) ||_{\mathcal {V}}<R\). By [18, Proposition 3.1] we can consider the set

which has positive probability. Since \(\Omega _1\in {\mathcal {F}}_{-\infty }^0\) and \(\Omega _2\in {\mathcal {F}}_0^T\), they are independent and \(\Omega _0:=\Omega _1\cap \Omega _2\in {\mathcal {F}}_{-\infty }^T\) has also positive probability. We therefore fix \(\omega \in \Omega _0\) and derive from (11)

and then

The rest of the proof is equivalent to the steps in [11, Proposition 2.7], where it is proven that the right-hand side in (12) is bounded by \(c_1 \eta \), for \(c_1>0\) that does not depend on t but is dependent on T, by assuming \({\tilde{u}}_0=a(\omega )\). \(\square \)

We define now, for \({\textbf {v}}=v_1\wedge ...\wedge v_k\) and \({\textbf {w}}=w_1\wedge ...\wedge w_k \in \wedge ^k {\mathcal {H}}\),

with \(\Pi _{{\textbf {i}}}\) denoting the projection on \({\textbf {e}}_{{\textbf {i}}}:=e_{i_1}\wedge ...\wedge e_{i_k}\), the operator \(\Pi _{{\textbf {i}}}^\perp :=I-\Pi _{{\textbf {i}}}\) as the projection on the hyperplane perpendicular to \({\textbf {e}}_{{\textbf {i}}}\), I being the identity operator on \(\wedge ^k {\mathcal {H}}\), \({\textbf {i}}=\{i_1,...,i_k\}\) and \({\textbf {i}}_0=\{1,...,k\}\) aside from permutations. The scalar product present in such construction is defined in (8). We denote by \(||\cdot ||\) the norm defined by it on \(\wedge ^k{\mathcal {H}}\). In the next proof we make use of \(\Lambda _{{\textbf {i}}_0}:=\sum _{j=1}^k (\alpha -\lambda _{i_j})\).

Lemma 2.3

Let \(T>0\) and \(0<\epsilon \ll \dfrac{1}{k} (\lambda _{k+1}-\lambda _k)\) be fixed, and let \(\omega \in \Omega \) be an event with the property that the nonlinear term \(B^t_\omega :=-3 a (\theta ^t \omega )^2\) satisfies

for all \(t\in [0,T]\). Finally, assume \({\textbf {v}}_0=v_0^1\wedge ...\wedge v_0^k\in \wedge ^k L^2\) satisfies \(Q_\delta ^{(k)}({\textbf {v}}_0)>0\) for \(\delta >0\) for which

for all \({\textbf {i}}\ne {\textbf {i}}_0\). Under these conditions, the k-blade \({\textbf {v}}_t:=\wedge ^k {\text {D}}_{a(\omega )}\varphi _\omega ^t({\textbf {v}}_0)\), corresponding to the solutions \(v_t^j={\text {D}}_{a(\omega )}\varphi _\omega ^t(v_0^j)\) of the first variation equation with initial condition \(v_0^j\) for \(j\in \{1,...,k\}\), evaluated at time \(t>0\), satisfies the inequality

Proof

We know that

We then denote \(v_j=v^j_t={\text {D}}_{a(\omega )}\varphi _\omega ^t(v^j_0)\). For the multiplication operator \(B=B_\omega ^t\) we use the hypothesis \(||B||_{\mathcal {V}}\le \epsilon \) which implies that \(||B v||_{\mathcal {H}} \le \epsilon ||v ||_{\mathcal {H}}\) for any \(v \in {\mathcal {H}}\). Hence, we obtain

We know the existence of some coefficients \(\rho _{{\textbf {i}}}\) so that

This leads to

for which

We can obtain therefore

and

This gives us the following result

Hence, for parameters that satisfy

for all \({\textbf {i}}\ne {\textbf {i}}_0\), or equivalently,

we can deduce that

\(\square \)

Theorem 2.4

For any \(k\ge 1\), \(0<\eta \ll \lambda _{k+1}-\lambda _k\) and \(T>0\), there exists \(\Omega _0\subset \Omega \), a positive probability event, such that

for all \(\omega \in \Omega _0, \; t\in [0,T]\).

Proof

Lemma 2.2 proves, for any \(\epsilon >0\), the existence of a set \(\Omega _0\subset \Omega \) such that for all \(\omega \in \Omega _0\) we have the bound \(||a(\theta ^t \omega )||_{\mathcal {V}}<\epsilon \) for \(t\in [0,T]\). This result, along with the fact that \({\mathcal {V}}\) is a Banach algebra and a subset of \(L^2({\mathcal {O}})\), ensures that the first hypothesis of Lemma 2.3 is satisfied. Precisely, for any \(\epsilon >0\) we obtain \({\mathbb {P}}\Big (\{||B_\omega ^t||_{\mathcal {V}}< \epsilon , \text {for all }t\in [0,T]\}\Big )>0\).

From Lemma 2.3, we know that \(Q_\delta ^{(k)}({\textbf {v}}_0)>0\) implies \(Q_\delta ^{(k)}({\textbf {v}}_t)>0\) for all \(t\in [0,T]\) and, assuming \(\delta =\sqrt{\epsilon }\ll 1\) in (13),

This result gives

We take \({\textbf {v}}_0\in \wedge ^k {\mathcal {H}}\) and \(M>1\) such that \(Q_{\frac{\delta }{M}}^{(k)}({\textbf {v}}_0)\ge 0\).Footnote 4 It follows that

Since \(Q_{\frac{\delta }{M}}^{(k)}({\textbf {v}}_0)\ge 0\) we know that \(||{\textbf {v}}_0||^2\le (1+\frac{\delta }{M})||\Pi _{{\textbf {i}}_0}{} {\textbf {v}}_0||^2\) and, therefore,

Since \(Q_\delta ^{(k)}({\textbf {v}}_t)\le \delta ||{\textbf {v}}_t||^2\), and using (14) and (15), we obtain

The proof is complete considering Lemma 2.2, the fact that the prefactor can be close to 1 for \(M\longrightarrow +\infty \) and taking \(\eta =2 k \delta \ll \lambda _{k+1}-\lambda _k\) with \(\delta =\sqrt{\epsilon }\). \(\square \)

Remark

Theorems 2.1 and 2.4 prove that the right endpoint of the interval of possible values assumed by \({\mathfrak {L}}_k\) is \(\Lambda _{{\textbf {i}}_0}\). In particular, for \(k=1\) it is \(\alpha -\lambda _1\).

For \(k>1\) the value of \(\alpha \) that satisfies \(\Lambda _{{\textbf {i}}_0}=0\) is not \(\lambda _k\), therefore the change of sign of the highest possible value assumed by \({\mathfrak {L}}_k\) is not associated with a bifurcation event. The fact that \(\dfrac{1}{k}\sum _{j=1}^k \lambda _j<\lambda _k\) implies that \(\Lambda _{{\textbf {i}}_0}=0\) is satisfied for \(\alpha <\lambda _k\) i.e. before the k-th bifurcation threshold.
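The observation above can be checked numerically in the simplest setting. The following is a minimal sketch, assuming the homogeneous case \(g\equiv 0\), so that the thresholds reduce to \(\lambda _j=(\pi j/L)^2\); the function names are hypothetical and chosen only for illustration. It computes the value of \(\alpha \) at which \(\Lambda _{{\textbf {i}}_0}\) vanishes, i.e. the mean of the first k thresholds, and prints it next to \(\lambda _k\).

```python
import math

def dirichlet_threshold(j, length):
    """j-th threshold (pi*j/length)**2 of the Dirichlet Laplacian (assumes g = 0)."""
    return (math.pi * j / length) ** 2

def big_lambda(alpha, k, length):
    """Lambda_{i_0} = sum_{j=1}^{k} (alpha - lambda_j) for the first k thresholds."""
    return sum(alpha - dirichlet_threshold(j, length) for j in range(1, k + 1))

def alpha_where_lambda_vanishes(k, length):
    """Solve Lambda_{i_0} = 0 for alpha: the mean of lambda_1, ..., lambda_k."""
    return sum(dirichlet_threshold(j, length) for j in range(1, k + 1)) / k

# With length = pi the thresholds are simply lambda_j = j**2.
for k in (1, 2, 3):
    a0 = alpha_where_lambda_vanishes(k, math.pi)
    print(k, a0, dirichlet_threshold(k, math.pi))
```

For \(k=1\) the two printed values coincide, while for \(k>1\) the sign change of \(\Lambda _{{\textbf {i}}_0}\) occurs strictly before \(\lambda _k\).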

Remark

The introduction of the heterogeneous term g in (4) changes the values of the bifurcation thresholds \(\{\lambda _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) but maintains their existence. For any \(k\in {\mathbb {N}}\setminus \{0\}\) and all \(\alpha \in {\mathbb {R}}\), the quantity \(\Lambda _{{\textbf {i}}_0}\) depends on g, and therefore the choice of g shifts the values of \(\alpha \) at which the highest possible value of \({\mathfrak {L}}_k\) changes sign.
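To illustrate how g moves the thresholds, the sketch below approximates the lowest eigenvalues of \(-\Delta +g\) with zero Dirichlet conditions on \([0,L]\) by a second-order finite-difference matrix. It assumes that the thresholds \(\lambda _k\) are the eigenvalues of \(-A=-\Delta +g\); the grid size, the sample choices of g and all function names are assumptions made only for this example. The eigenvalues of the tridiagonal matrix are located by bisection on the Sturm count, a standard technique that avoids any external library.

```python
import math

def count_below(diag, off, x):
    """Number of eigenvalues below x of the symmetric tridiagonal matrix with
    main diagonal `diag` and constant off-diagonal entry `off` (Sturm count)."""
    count, q = 0, 1.0
    for i, d in enumerate(diag):
        q = d - x - (off * off / q if i > 0 else 0.0)
        if q == 0.0:
            q = -1e-200  # nudge an exact zero pivot to keep the recursion defined
        if q < 0.0:
            count += 1
    return count

def kth_eigenvalue(diag, off, k, steps=100):
    """k-th smallest eigenvalue by bisection inside the Gershgorin interval."""
    lo = min(diag) - 2.0 * abs(off)
    hi = max(diag) + 2.0 * abs(off)
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if count_below(diag, off, mid) >= k:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def thresholds(g, length, n_points=200, n_eigs=3):
    """Lowest eigenvalues of the discretised operator -d^2/dx^2 + g (Dirichlet)."""
    h = length / (n_points + 1)
    diag = [2.0 / h ** 2 + g((i + 1) * h) for i in range(n_points)]
    off = -1.0 / h ** 2
    return [kth_eigenvalue(diag, off, k) for k in range(1, n_eigs + 1)]

base = thresholds(lambda x: 0.0, math.pi)                  # homogeneous case
shifted = thresholds(lambda x: 1.0, math.pi)               # constant g = 1
hetero = thresholds(lambda x: 1.0 + math.sin(x), math.pi)  # sample heterogeneous g
print(base, shifted, hetero)
```

A constant g shifts every threshold by the same amount, whereas a genuinely heterogeneous g moves each threshold by a different amount lying between the minimum and the maximum of g.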

3 Early Warning Signs

The Chafee-Infante equation has been used to model dynamics in many application areas, e.g., in climate systems (for instance in [27]). It is well-understood that, in many applications, it is crucial to take into account stochastic dynamics. In this class of systems, stochasticity can reveal early warning signs for abrupt changes. In the case of (4), the tipping phenomenon happens at bifurcation points upon varying \(\alpha \). The most important change in the system happens when \(\alpha \) crosses \(\lambda _1\). Then a pitchfork bifurcation occurs and the null function loses its stability. Therefore, we will consider \(\alpha <\lambda _1\) and the operator \(A+\alpha \) that generates a \({\mathcal {C}}_0\)-contraction semigroup (see Appendix B).

3.1 Early Warning Signs for the Linearized Problem

In [32, 38] early warning signs for SPDEs which are based on the covariance operator of the linearized problem are presented. The reliability of such approximation relies upon estimates for the higher-order terms, which we are going to consider in the next section. First, we focus on the linearized problem, which is given by

for \(t>0\), \(x\in {\mathcal {O}}\), with \(A_\alpha =A+\alpha \) and Dirichlet boundary conditions. The existence and uniqueness in \(L^2(\Omega ,{\mathcal {F}},{\mathbb {P}};{\mathcal {H}})\) of the mild solution of (16), defined as

is satisfied when its covariance operator,

is trace class for all \(t>0\) [23, Theorem 5.2].Footnote 5 Since \(A_\alpha \) generates a \({\mathcal {C}}_0\)-contraction semigroup such a requirement can be satisfied by bounded Q.

In order to prove the existence and uniqueness in \(L^2(\Omega ,{\mathcal {F}},{\mathbb {P}};{\mathcal {H}})\) of the mild solution of (4) one only has to require continuity in \({\mathcal {H}}\) of the solution of (16). Hence the conditions (see Appendix B) we take for Q are:

- 1\('\): there exists a \(D>0\) such that \(b_j=e_j'\) for all \(j>D\),

- 2\('\): \(q_j>0\) for all \(j\in {\mathbb {N}}{\setminus }\{0\}\),Footnote 6

- 3\('\): \(\sum _{j=1}^\infty q_j \lambda _j'^\gamma <+\infty \) for a \(\gamma >-1\),Footnote 7

- 4\('\): Q is bounded.Footnote 8

We can then conclude [22, Theorem 2.34] that the transition semigroup of the system (16) has a unique invariant measure which is Gaussian and has mean zero and covariance operator \(V_\infty :=\underset{t\rightarrow \infty }{\lim }\ V(t)\). Such an operator is linear, self-adjoint, non-negative, and continuous in \({\mathcal {H}}\). Using the stated properties it is possible to prove the following proposition and obtain a first early warning sign building upon [38].

Proposition 3.1

Consider any pair of linear operators A and Q, whose respective eigenfunctions and eigenvalues we denote by \(\{e_k,\lambda _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) and \(\{b_k,q_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\), that satisfy the properties 1’, 2’, 3’, 4’, and assume that \(A_\alpha =A+\alpha \) is a self-adjoint operator that generates a \({\mathcal {C}}_0\)-contraction semigroup for a fixed \(\alpha \in {\mathbb {R}}\). Then the covariance operator \(V_\infty \) given by the first equation in (16) satisfies

for all \(j_1,j_2\in {\mathbb {N}}\setminus \{0\}\).

Proof

First, we consider the Lyapunov equation derived in [22, Lemma 2.45] and obtain

for all \(f_1,f_2\in {\mathcal {H}}\). From the self-adjointness of \(A_\alpha \) and \(V_\infty \), for any \(j_1,j_2\in {\mathbb {N}}{\setminus }\{0\}\) we deduce that

We can then obtain the relation (see Appendix C)

which finishes the proof. \(\square \)
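A finite-dimensional analogue makes the structure of the Lyapunov equation concrete. The sketch below is an illustration under stated assumptions rather than part of the proof: it truncates to n modes, works in the eigenbasis of A so that \(A_\alpha \) is represented by \(-\text {diag}(\lambda _j-\alpha )\), represents Q by a hypothetical symmetric matrix with entries \(\langle e_i,Q e_j\rangle \), and assumes that the stationary covariance has entries \(\langle e_i,Q e_j\rangle /(\lambda _i+\lambda _j-2\alpha )\), which is the form appearing in the series used in the proof of Corollary 3.3. It then checks that \(A_\alpha V+V A_\alpha +Q=0\) holds entrywise.

```python
def stationary_covariance(lams, alpha, Q):
    """Entries Q[i][j] / (lam_i + lam_j - 2 alpha) of the assumed covariance matrix."""
    n = len(lams)
    return [[Q[i][j] / (lams[i] + lams[j] - 2.0 * alpha) for j in range(n)]
            for i in range(n)]

def lyapunov_residual(lams, alpha, Q, V):
    """Largest entry of |A V + V A + Q| with A = -diag(lams - alpha)."""
    n = len(lams)
    return max(
        abs(-(lams[i] - alpha) * V[i][j] - V[i][j] * (lams[j] - alpha) + Q[i][j])
        for i in range(n) for j in range(n)
    )

# Hypothetical data: three modes with lambda_j = j**2 and a non-diagonal Q.
lams = [1.0, 4.0, 9.0]
Q = [[1.0, 0.2, 0.0],
     [0.2, 0.5, 0.1],
     [0.0, 0.1, 0.25]]
for alpha in (0.5, 0.9, 0.99):
    V = stationary_covariance(lams, alpha, Q)
    print(alpha, V[0][0], lyapunov_residual(lams, alpha, Q, V))
```

In this toy setting the entry with \(j_1=j_2=1\) grows without bound as \(\alpha \) approaches \(\lambda _1\) from below, while the residual stays at rounding level.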

The early warning sign is qualitatively visible because, for \(j_1=j_2=1\), the expression in (17) diverges as \(\alpha \) approaches \(\lambda _1\). This allows one to apply the usual techniques (extracting a scaling law from a log-log plot) to estimate the bifurcation point, if sufficient data from the system are available. We also note that the proposition can easily be extended to operators A that are not self-adjoint by studying in that case the real parts of their eigenvalues. Additionally, the strict positivity of Q can be replaced by non-negativity of the operator, although in that case the divergence of the \(j_1=j_2=1\) component is not implied. The result (17) leads to a second type of early warning sign.
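The estimation procedure mentioned above can be sketched as follows. The example assumes that the leading variance component behaves like \(q/(2(\lambda _1-\alpha ))\), so that its reciprocal is linear in \(\alpha \) and vanishes at the threshold; the synthetic data, the values of q and \(\lambda _1\), and the function names are hypothetical. With measured variances one would replace the synthetic list by empirical estimates.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def estimate_threshold(alphas, variances):
    """Extrapolate 1/variance, linear in alpha under the assumed law, to zero."""
    slope, intercept = fit_line(alphas, [1.0 / v for v in variances])
    return -intercept / slope

def scaling_exponent(alphas, variances, threshold):
    """Slope of log(variance) against log(threshold - alpha)."""
    xs = [math.log(threshold - a) for a in alphas]
    ys = [math.log(v) for v in variances]
    return fit_line(xs, ys)[0]

# Synthetic data generated from the assumed law (hypothetical parameter values).
true_lambda1, q = 1.3, 0.7
alphas = [0.1 * i for i in range(10)]
variances = [q / (2.0 * (true_lambda1 - a)) for a in alphas]

lambda1_hat = estimate_threshold(alphas, variances)
print(lambda1_hat, scaling_exponent(alphas, variances, lambda1_hat))
```

The fitted exponent is the slope one would read off a log-log plot; under the assumed law it equals \(-1\).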

Theorem 3.2

For any pair of linear operators A and Q that satisfy the properties stated in Proposition 3.1 and for A whose eigenfunctions \(\{e_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) form a basis of \({\mathcal {H}}\), we have

for any \(f_1,f_2\in {\mathcal {H}}\).

Proof

Applying the method described in Appendix C and the previous proposition,

\(\square \)

The last theorem demonstrates that, given \(f_1\) and \(f_2\) that have a component in \(e_1\), the resulting scalar product \(\langle V_\infty f_1,f_2\rangle \) diverges at \(\alpha =\lambda _1\). Theorem 3.2 can describe local behaviour and it can also be used to study the effect of stochastic perturbations on a single point \(x_0\in \mathring{{\mathcal {O}}}\). This can be achieved by taking \(f_1, f_2\in {\mathcal {H}}\) in (19) equal to a function

for \(\chi _{[\cdot ,\cdot ]}\) meant as the indicator function on an interval. Clearly, \(f_\epsilon \) is in \(L^1({\mathcal {O}})\) and in \(L^2({\mathcal {O}})\). The \(L^1\) norm is equal to 1 for small \(\epsilon \) and the sequence \(\{f_\epsilon \}_\epsilon \) converges weakly in \(L^1\) to the Dirac delta in \(x_0\) for \(\epsilon \rightarrow 0\). For these reasons and recalling that by the Sobolev inequality \(\{e_j\}_{j\in {\mathbb {N}}{\setminus }\{0\}}\subset H^1_0({\mathcal {O}}) \subset L^\infty ({\mathcal {O}})\), we can obtain \(\underset{{\epsilon \rightarrow 0}}{\lim }\langle V_\infty f_\epsilon ,f_\epsilon \rangle \).
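The localisation argument can be made explicit in the homogeneous case. The sketch below assumes that \(f_\epsilon \) is the normalised indicator \(\frac{1}{2\epsilon }\chi _{[x_0-\epsilon ,x_0+\epsilon ]}\), which matches the stated properties of \(f_\epsilon \) but is an assumption about its exact form, and it takes \(g\equiv 0\), so that the eigenfunctions are \(e_j'(x)=\sqrt{2/L}\sin (j\pi x/L)\). Under these assumptions \(\langle f_\epsilon ,e_j'\rangle =e_j'(x_0)\,\sin (z)/z\) with \(z=j\pi \epsilon /L\), which tends to \(e_j'(x_0)\) as \(\epsilon \rightarrow 0\). The function names are hypothetical.

```python
import math

def e_prime(j, x, length):
    """Dirichlet eigenfunction sqrt(2/L) * sin(j*pi*x/L) of the Laplacian on [0, L]."""
    return math.sqrt(2.0 / length) * math.sin(j * math.pi * x / length)

def coeff_f_eps(j, x0, eps, length):
    """Closed form of <f_eps, e_j'> for f_eps = indicator([x0-eps, x0+eps]) / (2*eps).
    Requires 0 < x0 - eps and x0 + eps < length."""
    z = j * math.pi * eps / length
    return e_prime(j, x0, length) * math.sin(z) / z

def coeff_by_quadrature(j, x0, eps, length, n=2000):
    """Midpoint-rule check: the average of e_j' over [x0-eps, x0+eps]."""
    step = 2.0 * eps / n
    return sum(e_prime(j, x0 - eps + (i + 0.5) * step, length) for i in range(n)) / n

length, x0 = math.pi, 1.0
for eps in (0.5, 0.1, 0.01):
    print(eps, coeff_f_eps(1, x0, eps, length), e_prime(1, x0, length))
```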

Corollary 3.3

Consider any pair of linear operators A and Q with the same properties as required in Theorem 3.2 and \(\{f_\epsilon \}\) defined in (20). Then

for all \(x_0\in \mathring{{\mathcal {O}}}\).

Proof

Fix \(x_0\in \mathring{{\mathcal {O}}}\). The goal of this proof is to show that the series in (19) satisfies the dominated convergence hypothesis for \(f_1=f_2=f_\epsilon \) for all \(0<\epsilon \). In order to achieve that, we split the series

in two parts.

- Part 1

Following (54) in Appendix A we get the existence of the constants \(c,M>0\) that satisfy \(||e_j-e_j'||_\infty \le c\dfrac{1}{j}\), \(||e_j||_\infty \le M\) and \(\lambda _j\sim j^2\) for all indices \(j\in {\mathbb {N}}\setminus \{0\}\). For \(j_1=j_2\), we can use Hölder’s inequality to study the series

$$\begin{aligned} \dfrac{1}{2} \sum _{j=1}^\infty \bigg |\dfrac{\langle f_\epsilon ,e_j\rangle ^2}{\lambda _j-\alpha }\langle e_j,Q e_j\rangle \bigg |\le \dfrac{M^2}{2} q_* \sum _{j=1}^\infty \dfrac{1}{\lambda _j-\alpha }<+\infty , \end{aligned}\tag{23}$$

for \(q_*=\underset{j\in {\mathbb {N}}\setminus \{0\}}{\sup }\{q_j\}\).

- Part 2

To prove the convergence of (22) we consider

$$\begin{aligned} \begin{aligned}&\sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \bigg |\dfrac{ \langle f_\epsilon ,e_{j_1}\rangle \langle f_\epsilon ,e_{j_2}\rangle }{\lambda _{j_1}+\lambda _{j_2}-2\alpha }\langle e_{j_1},Q e_{j_2}\rangle \bigg |\le M^2 \sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \bigg |\dfrac{\langle e_{j_1},Q e_{j_2}\rangle }{\lambda _{j_1}+\lambda _{j_2}-2\alpha }\bigg |\\&\le M^2 \sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \bigg |\dfrac{\langle e_{j_1}',Q e_{j_2}'\rangle +\langle e_{j_1}',Q (e_{j_2}-e_{j_2}')\rangle +\langle e_{j_1}-e_{j_1}',Q e_{j_2}'\rangle +\langle e_{j_1}-e_{j_1}',Q (e_{j_2}-e_{j_2}')\rangle }{\lambda _{j_1}+\lambda _{j_2}-2\alpha }\bigg |. \end{aligned} \end{aligned}$$

We recall that \(\langle e_{j_1}',Q e_{j_2}'\rangle =q_{j_1} \delta _{j_2}^{j_1}\) for \(j_1,j_2>D\), for \(\delta \) meant as the Kronecker delta. Also, by the inclusion in \({\mathcal {H}}\) of the space of functions \(L^\infty ({\mathcal {O}})\) with Dirichlet conditions, we can state there exists \(c_1>0\) so that for all \(w\in {\mathcal {H}}\), \(||w||_{\mathcal {H}}\le c_1 ||w||_\infty \). Therefore, by Hölder’s inequality and the min-max principle, it follows that

$$\begin{aligned} \begin{aligned}&\sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \bigg |\dfrac{ \langle f_\epsilon ,e_{j_1}\rangle \langle f_\epsilon ,e_{j_2}\rangle }{\lambda _{j_1}+\lambda _{j_2}-2\alpha }\langle e_{j_1},Q e_{j_2}\rangle \bigg |\le M^2 q_* D^2\\&\quad + M^2 q_* \sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \bigg |\dfrac{c c_1 \frac{1}{j_2}+c c_1 \frac{1}{j_1}+c^2 c_1^2 \dfrac{1}{j_1 j_2}}{\lambda _{j_1}+\lambda _{j_2}-2\alpha }\bigg |\\&\le M^2 q_* D^2{+} M^2 q_* c c_1 \Bigg ( \sum _{j_1=1}^\infty \sum _{j_2\ne j_1} \dfrac{1}{(\lambda _{j_1}{+}\lambda _{j_2}-2\alpha )} \Bigg ( \bigg |\dfrac{1}{j_2}\bigg |{+} \bigg |\dfrac{1}{j_1}\bigg |+ \bigg |\dfrac{c c_1}{j_1 j_2}\bigg |\Bigg )\Bigg )<\infty . \end{aligned} \end{aligned}\tag{24}$$

From (23) and (24) we have proven the convergence of (22). By the weak convergence in \({\mathcal {H}}\) of \(f_\epsilon \) to \(\delta _{x_0}\), the Dirac delta on \(x_0\), and the dominated convergence theorem we can then calculate

which yields the desired result. \(\square \)

The efficiency of the early warning sign described in Corollary 3.3 for any \(x_0\in \mathring{{\mathcal {O}}}\) is underlined by the following Proposition [41, Theorem 2.6].

Proposition 3.4

Given the Schrödinger operator \(A=\Delta -g:H^2({\mathcal {O}})\cap H^1_0({\mathcal {O}})\rightarrow L^2({\mathcal {O}})\) and \(g\in L^2({\mathcal {O}})\), then its eigenfunctions \(\{e_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\) admit exactly \(k-1\) roots in \(\mathring{{\mathcal {O}}}\) respectively.

From Proposition 3.4 and the strict positivity of Q, the influence of the divergence of (17) for \(j_1=j_2=1\) affects all interior points of \({\mathcal {O}}\). Hence, on each point \(x_0\in \mathring{{\mathcal {O}}}\) one gets a divergence of (21) of hyperbolic-function type multiplied by the constant \(e_1(x_0)^2\bigg (\sum _{n=1}^\infty q_n \langle e_1,b_n\rangle ^2\bigg )\). Specifically, the point at which the divergence is visible the most is for \(x_0\in \mathring{{\mathcal {O}}}\) such that \(x_0=\underset{x\in \mathring{{\mathcal {O}}}}{\text {argmax}}\{|e_1(x) |\}\). This is highly relevant for practical applications as it determines a good measurement location for the spatially heterogeneous SPDE.
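The choice of measurement location can be explored with a short computation. The sketch below is a simplified illustration that assumes \(g\equiv 0\) on \([0,\pi ]\), a covariance operator Q that is diagonal in the eigenbasis with the hypothetical spectrum \(q_j=j^{-2}\), and a pointwise stationary variance of the form \(\sum _j q_j e_j(x_0)^2/(2(\lambda _j-\alpha ))\) truncated after finitely many modes. The function names and the grid are likewise assumptions.

```python
import math

def stationary_variance(x0, alpha, length=math.pi, n_modes=200):
    """Truncated series sum_j q_j e_j(x0)^2 / (2 (lambda_j - alpha)); needs alpha < lambda_1."""
    total = 0.0
    for j in range(1, n_modes + 1):
        lam = (math.pi * j / length) ** 2  # lambda_j for g = 0
        q = 1.0 / j ** 2                   # hypothetical noise spectrum
        e = math.sqrt(2.0 / length) * math.sin(j * math.pi * x0 / length)
        total += q * e * e / (2.0 * (lam - alpha))
    return total

def best_measurement_point(alpha, length=math.pi, n_grid=99):
    """Interior grid point at which the truncated variance is largest."""
    grid = [length * i / (n_grid + 1) for i in range(1, n_grid + 1)]
    return max(grid, key=lambda x: stationary_variance(x, alpha, length))

for alpha in (0.5, 0.9, 0.99):
    x_star = best_measurement_point(alpha)
    print(alpha, x_star, stationary_variance(x_star, alpha))
```

In this homogeneous example the first eigenfunction is maximal at the midpoint of the interval, so close to the threshold the largest variance is found there; for a heterogeneous g the maximiser of \(|e_1|\) would have to be computed numerically.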

Remark

The choice of g influences the early warning signs described by (17), (19) and (21) in two aspects. By affecting the values of \(\{\lambda _j\}_j\) it is related to the value of \(\alpha \) at which warning signs diverge and from its relation with the functions \(\{e_j\}_j\) it influences the directions on \({\mathcal {H}}\) on which such divergence is stronger. The point \(x_0=\underset{x\in \mathring{{\mathcal {O}}}}{\text {argmax}}\{|e_1(x) |\}\) at which (21) assumes the highest values close to the bifurcation, i.e. \(x_0\in \mathring{{\mathcal {O}}}\) that satisfies

also depends on the choice of g by construction.

4 Error and Moment Estimates

In the following section we will study the non-autonomous system

for \(0<\epsilon \ll 1\), \(\alpha \in {\mathcal {C}}([0,\tau ])\) for a given \(\tau >0\), and with \(\sigma ,g,W_t,{\mathcal {H}},{\mathcal {O}}\) defined as in (4). The covariance operator Q is assumed to satisfy properties 1’, 2’, 3’ and 4’ from the previous section. The slow dependence on time reflects the fact that, in simulations of realistic applications, the parameter \(\alpha \) in (4) is not constant but slowly changing; see also [31]. For fixed \(\epsilon \) we will assume \( \alpha (\epsilon t)<\lambda _1\) for \(0\le \epsilon t\le \tau \), so that we study the system before the first non-autonomous bifurcation. Following the methods used in [6, 10], we want to understand the probabilistic properties of the first time at which the solutions of (25), with \(u_0\) close to the null function, are driven away from a chosen solution of the corresponding deterministic system, i.e. (25) with \(\sigma =0\). The distance is measured in a fractional Sobolev norm of small degree.

In this section we use the Landau notation on scalars \(\rho _1\in {\mathbb {R}},\;\rho _2>0\) as \(\rho _1=O(\rho _2)\) if there exists a constant \(c>0\) that satisfies \(|\rho _1 |\le c \rho _2\). This constant is independent of \(\epsilon \) and \(\sigma \); however, it depends on g and \(\alpha \).

The first equation in (25) can be studied for the slow time \(\epsilon t\) setting, named again for convenience t, giving the form

through rescaling of time.

We will denote the \(A^s\)-norm of power \(s\ge 0\) of any function \(\phi =\sum _{k=1}^\infty \rho _k e_k \in {\mathcal {H}}\) with \(\{\rho _k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}}\) as

The functions in \({\mathcal {H}}\) with finite \(A^s\)-norm define the space \({\mathcal {V}}^s\). It can be proven that the \(A^s\)-norm is equivalent to the fractional Sobolev norm on \(W^{s,2}_0({\mathcal {O}})\), the Sobolev space of degree s, \(p=2\) and Dirichlet conditions, labeled as the \({\mathcal {V}}^s\)-norm,

This result follows from the fact that, as shown in Appendix A, \({\mathcal {D}}((-\Delta )^\frac{1}{2})={\mathcal {D}}((-A)^\frac{1}{2})\), and from the characterization of interpolation spaces described by [40, Theorem 4.36]. In particular, it implies \({\mathcal {V}}^s:={\mathcal {D}}((-A)^\frac{s}{2})=W^{s,2}_0({\mathcal {O}})\).
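For readers who want to experiment with these norms, the following sketch evaluates a spectral norm from the coefficients of \(\phi \) in the eigenbasis. It assumes the form \(||\phi ||_{A^s}^2=\sum _k \lambda _k^s\rho _k^2\), which is consistent with \({\mathcal {V}}^s={\mathcal {D}}((-A)^\frac{s}{2})\) but is stated here as an assumption, and it uses the hypothetical spectrum \(\lambda _k=1+k^2\) (the case \(g\equiv 1\) on \([0,\pi ]\)) together with made-up coefficients.

```python
import math

def a_s_norm(coeffs, lams, s):
    """Assumed spectral norm: sqrt(sum_k lam_k**s * rho_k**2)."""
    return math.sqrt(sum(lam ** s * rho ** 2 for rho, lam in zip(coeffs, lams)))

# Hypothetical data: lambda_k = 1 + k**2 and coefficients rho_k = 1 / k**2.
lams = [1.0 + k ** 2 for k in range(1, 51)]
coeffs = [1.0 / k ** 2 for k in range(1, 51)]

for s in (0.0, 0.25, 0.5, 1.0):
    print(s, a_s_norm(coeffs, lams, s))
```

Since every \(\lambda _k\) in this example is at least 1, the printed values are non-decreasing in s, and for \(s=0\) the norm reduces to the \(\ell ^2\)-norm of the coefficients.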

For simplicity, we will always assume \(g(x)\ge 1\) for all \(x\in {\mathcal {O}}\), so that for any pair of parameters \(0\le s<s_1\) and \(\phi \in {\mathcal {V}}^{s_1}\), \(||\phi ||_{A^s}\le ||\phi ||_{A^{s_1}}\). The following lemma shows an important property of the \(A^s\)-norms, a Young-type inequality, which we exploit.

Lemma 4.1

Set \(0<r,s\) and \(0<q<\dfrac{1}{2}\) so that \(q+\dfrac{1}{2}<r+s\). Then there exists \(c(r,s,q)>0\) for which

for any \(\phi _1\in {\mathcal {V}}^r\) and \(\phi _2\in {\mathcal {V}}^s\).

Proof

The equivalence of the \(A^{s_1}\)-norm and the \({\mathcal {V}}^{s_1}\)-norm for any \(0\le s_1\le 1\) justifies the study of (26) for the \({\mathcal {V}}^{s_1}\)-norms. The key advantage of this choice is that for any \(\phi \in {\mathcal {H}}\) we can rewrite the series

for \({\tilde{\rho }}_k:=-\dfrac{\text {sign}(k) {\text {i}}}{\sqrt{2}}\langle \phi ,e_k'\rangle \), \({\tilde{\rho }}_0=0\) and for the symbol \({\text {i}}\) meant as the imaginary unit.Footnote 9 Furthermore, it is easy to prove that for any \(0\le s_1\le 1\)

Since the product of any two elements in \(\Big \{\dfrac{1}{\sqrt{L}}\text {e}^{{\text {i}}\frac{\pi k}{L} x}\Big \}_{k\in {\mathbb {Z}}}\) is proportional to another member of the set, we can apply the Young-type inequality in [7, Lemma 4.3] which implies the existence of a constant \(c'(r,s,q)\) that satisfies

for any \(\phi _1\in {\mathcal {V}}^r\) and \(\phi _2\in {\mathcal {V}}^s\). From the equivalence of the \(A^s\)-norms and the \({\mathcal {V}}^s\)-norms we can state that there exists \(c(r,s,q)>0\) that satisfies the desired inequality. \(\square \)

From the fact that \(\alpha (t)<\lambda _1\) for \(t<\tau \) we know that any solution \({\bar{u}}\) of the deterministic problem

with initial conditions in \({\mathcal {H}}\), approaches the null function exponentially in \({\mathcal {H}}\) for \(0\le t\le \tau \). Furthermore, following the proof of [10, Proposition 2.3], we prove in the following proposition the equivalent result for the \(A^1\)-norm.

Proposition 4.2

Given \({\bar{u}}\), a solution of (27) such that \(||{\bar{u}}(\cdot ,0)||_A:=||{\bar{u}}(\cdot ,0)||_{A^1}\le \delta \), then

for all \(0\le t\le \tau \). Furthermore, we have that \(||{\bar{u}}(\cdot ,t)||_A\) approaches 0 exponentially in \(0\le t\le \tau \).

Proof

We define the Lyapunov function

for any \(\phi \in {\mathcal {V}}\).

Therefore we obtain

by using the min-max principle in

We have hence shown that, given initial condition \({\bar{u}}(\cdot ,0)=u_0\in {\mathcal {H}}\),

for a constant \(c_1>0\). The result now follows since the norm defined by \(F_\text {L}\) is equivalent to \(||\cdot ||_A\). \(\square \)

Given a function \({\bar{u}}\) that satisfies Proposition 4.2 for a fixed \(\delta >0\), we define the set in \({\mathcal {V}}\)

for \(s,h>0\). The first-exit time from \({\mathcal {B}}(h)\) is the stopping time

With these definitions we can obtain an estimate for the probability of exiting the defined neighbourhood over a finite time scale.

Theorem 4.3

Set \(q_*=\underset{j\in {\mathbb {N}}\setminus \{0\}}{\text {sup}}\{q_j\}\). For any \(0<s<\dfrac{1}{2}\) and \(\epsilon ,\nu >0\) there exist constants \(\delta _0,\kappa =\kappa (s),h_1, C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,T,\epsilon ,s)>0\) for which, given \(0<\sqrt{q_*}\sigma \ll h<h_1 \epsilon ^\nu \) and a function \({\bar{u}}\) that solves (27) and \(||{\bar{u}}(\cdot ,0)||_A\le \delta _0\), the solution of (25) with \(u_0={\bar{u}}(\cdot ,0)\) satisfies

for any \(0\le T \le \tau \).

The inequality obtained in the previous theorem does not require \(h^2\gg q_* \sigma ^2\) but such assumption simplifies the dependence of \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,T,\epsilon ,s)\) on \(\dfrac{h^2}{q_* \sigma ^2}\), otherwise nontrivial, and enables further study on the moments of the exit-time.

The proof of this error estimate is based on [10, Proposition 2.4] and is divided into the study of the linear problem obtained from (25) and the extension of the results to the nonlinear case.

4.1 Preliminaries

We define \(\psi :=u-{\bar{u}}\) for u a solution of (25) and \({\bar{u}}\) a solution of (27) with initial conditions \({\bar{u}}(\cdot ,0)=u(\cdot ,0)=u_0\). Then \(\psi \) is a solution of

The first equation of the system (28) is equivalent, by Taylor’s formula, to

with

for a certain \(0<\eta <1\).

4.2 Linear Case

Suppose \(\psi _0\) is a solution of

Then \(\psi _k:=\langle \psi _0,e_k\rangle \) satisfies

for a family of independent Wiener processes \(\{\beta _j\}_{j\in {\mathbb {N}}\setminus \{0\}}\). We can now prove the following lemmas.

Lemma 4.4

There exists a constant \(c_0>0\) so that

for \(\text {Var}\) that indicates the variance, \(q_*:=\sup _j \{q_j\}\), all \(0\le t\le \tau \) and \(k\in {\mathbb {N}}\setminus \{0\}\).

Proof

By definition,

for \(0\le t \le \tau \) and \(k\in {\mathbb {N}}\setminus \{0\}\). \(\square \)

The subsequent lemma relies on methods presented in [10] and part of the proof follows [5, Theorem 2.4]. The generality in noise requires additional steps, due in particular to the fact that the eigenfunctions of A do not diagonalize Q.Footnote 10

Lemma 4.5

Given \(c^+>0\) and \(0 \le T \le \tau \) for which \(\lambda _k-\underset{0\le t \le T}{\text {min}} \{\alpha (t)\}\le c^+ \lambda _k\) for all \(k\in {\mathbb {N}}\setminus \{0\}\), then there exists a constant \(\gamma _0>0\) that satisfies

for any \(0<\gamma \le \gamma _0\), for \(q_*:=\underset{j}{\sup } \{q_j\}\) and \(c_0\) obtained in Lemma 4.4.

Proof

For fixed \(k\in {\mathbb {N}}\setminus \{0\}\), the solution \(\psi _k\) of (31) is a Gaussian process. In fact, we have

for a Wiener process \(\beta \) and \(0\le t\le \tau \). The Eq. (33) can be proven with the following considerations:

- For any \(0\le t\le \tau \) and \(n\in {\mathbb {N}}\setminus \{0\}\) we define \(\Xi _n(t)=\sum _{j=1}^n \sqrt{q_j} \langle b_j,e_k\rangle \beta _j(t)\). Then, given the integers \(n>m>0\),

$$\begin{aligned} {\mathbb {E}}\big (|\Xi _n(t)-\Xi _m(t)|^2\big )=t \sum _{j=m+1}^n q_j \langle b_j,e_k\rangle ^2\le \tau \sup _j\{q_j\}=\tau q_*. \end{aligned}$$

Since the series \(\sum _{j=1}^\infty q_j \langle b_j,e_k\rangle ^2\) has non-negative terms and is bounded by \(q_*\), it converges and the left-hand side vanishes as \(m,n\rightarrow \infty \). Hence \(\Xi _n(t)\) converges to a random variable \(\Xi (t)\) in \(L^2(\Omega )\) for all \(0 \le t \le \tau \).

- It is clear that

$$\begin{aligned} \Xi (0)=0 \end{aligned}$$

and that the series has independent increments.

- The time increments of \(\Xi \) from \(t_1\) to \(t_2\) with \(t_1<t_2 \le \tau \) are normally distributed with mean 0 and with variance \((t_2-t_1) \sum _{j=1}^\infty q_j \langle b_j,e_k\rangle ^2\). This can be proven through the Lévy continuity theorem and the pointwise convergence in time of the characteristic functions of \(\Xi _n(t)\).

- The almost sure continuity of \(\Xi \) is implied by the Borel-Cantelli Lemma and by the almost sure continuity of all elements in \(\{\Xi _n\}_{n\in {\mathbb {N}}\setminus \{0\}}\).
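The variance bound used in the first consideration can be checked in a finite-dimensional analogue. The sketch below is an illustration only: it replaces \({\mathcal {H}}\) by \({\mathbb {R}}^n\), builds a hypothetical orthonormal basis \(\{b_j\}\) by Gram-Schmidt orthonormalisation of random vectors, takes \(e_k\) to be a unit coordinate vector and uses made-up eigenvalues \(q_j\). It then evaluates \(\sum _j q_j\langle b_j,e_k\rangle ^2\), which is the variance of the limiting process per unit time, and compares it with \(q_*\).

```python
import math
import random

def gram_schmidt(vectors):
    """Orthonormalise a list of linearly independent vectors (modified Gram-Schmidt)."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            c = sum(x * y for x, y in zip(w, b))
            w = [x - c * y for x, y in zip(w, b)]
        norm = math.sqrt(sum(x * x for x in w))
        basis.append([x / norm for x in w])
    return basis

def variance_rate(q, basis, e):
    """sum_j q_j <b_j, e>^2, the variance per unit time of the limiting process."""
    return sum(qj * sum(x * y for x, y in zip(b, e)) ** 2 for qj, b in zip(q, basis))

random.seed(0)
n = 6
basis = gram_schmidt([[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)])
q = [1.0 / (j + 1) for j in range(n)]   # hypothetical eigenvalues of Q, q_* = 1
e_k = [1.0] + [0.0] * (n - 1)           # a unit vector playing the role of e_k

rate = variance_rate(q, basis, e_k)
print(rate, max(q))
```

Because \(\sum _j\langle b_j,e_k\rangle ^2=1\) for an orthonormal basis and a unit vector, the computed rate is a weighted average of the \(q_j\) and therefore cannot exceed \(q_*\).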

From (31) and (33) we can state that \(\psi _k\) is represented by Duhamel’s formula,

Due to the dependence on time t of \(-\lambda _k(t-t_1)+\int _{t_1}^t\alpha (t_2) {\text {d}}t_2\), \(\psi _k\) is not a martingale. Following [6, Proposition 3.1.5] we will study the martingale \(\text {e}^{\frac{1}{\epsilon }\big (\lambda _k t-\int _0^t\alpha (t_1){\text {d}}t_1 \big )}\psi _k\) and split the time interval in \(0=s_0<s_1<...<s_N=T\). For convenience, we define for all \(0\le t\le T\), \(D(t):=-\lambda _k t+\int _0^t\alpha (t_1) {\text {d}}t_1\). Hence

We can now apply a Bernstein-type inequality ([6]). Therefore,

for which we used Lemma 4.4 in the last inequality. By assumption we know \(-\lambda _k+\alpha (t)<0\) for all \(k\in {\mathbb {N}}\setminus \{0\}\) and \(0\le t\le T\). We can set the sequence of times \(\{s_j\}_{j=0}^N\) so that there exists \(\gamma _0>0\) for which \(\dfrac{\lambda _k T-\int _0^T \alpha (t_1){\text {d}}t_1}{\gamma _0\epsilon }=N\in {\mathbb {N}}{\setminus }\{0\}\) and

In conclusion, for \(\gamma _0>0\) small enough, such choice leads to

for any \(\gamma _0\ge \gamma \). \(\square \)

The inequality (32) can be treated as follows:

under assumptions equivalent to those of the previous lemma. Moreover, for \(\dfrac{h^2}{q_* \sigma ^2}\) sufficiently large, (34) can be optimized over \(\gamma \) at \(\gamma =\dfrac{c_0 q_* \sigma ^2}{h^2 \lambda _k}\), resulting in

The previous lemmas are sufficient to prove the subsequent theorem whose proof follows the steps of [10, Theorem 2.4 in the linear case].

Theorem 4.6

For any \(0<s<\dfrac{1}{2}\), \(0<\epsilon \), \(q_*\sigma ^2\ll h^2\), \(0 \le T \le \tau \) there exist the constants \(0<\kappa (s), C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,T,\epsilon ,s)\) such that the solution \(\psi _0\) of (30) satisfies

Proof

Set \(\eta ,\rho >0\) so that \(\rho =\dfrac{1}{2}-s\). Then, for any sequence \(\{h_k\}_{k\in {\mathbb {N}}\setminus \{0\}}\subset {\mathbb {R}}_{>0}\) that satisfies \(h^2=\sum _{k=1}^\infty h_k^2\),

for which we used (35) in the last inequality. We can assume \(h_k^2=C(\eta ,s)h^2\lambda _k^{-1+s+\frac{\eta }{2}}\) with

Since \(\lambda _k\sim k^2\) from (54), we can use the Riemann zeta function \(\xi (\nu ):=\sum _{k=1}^\infty k^{-\nu }\) to prove that \(C(\eta ,s)>0\) for any \(\eta >0\). In fact, we have \(\xi (2-2\,s-\eta )<\infty \) for any \(0<\eta <2\rho \) and

for the symbol \(\propto \) that indicates proportionality of the terms for a constant dependent on g. For \(0<\eta <2\rho \) we can write

for \(\ell _T\propto \dfrac{h^2}{q_* \sigma ^2} \dfrac{c^+ e T}{c_0 \epsilon }\) and \(\ell \propto \dfrac{h^2}{q_* \sigma ^2}\dfrac{C(\eta ,s)}{c_0}\). We define

for which we know that

We can therefore bound

by assuming \(\iota \) decreasing in \([0,\infty )\). This is the case for \(\dfrac{h^2}{q_* \sigma ^2}\) larger than a constant of order 1 dependent on the choice of \(\eta \) and on s. Otherwise, the theorem holds trivially.

Setting \(x'=\ell (1+x^2)^\frac{\eta }{2}\) and \(y=-\ell +x'\) we can state

for which we used \(\Big (1+\frac{y}{\ell }\Big )^\frac{2}{\eta }-1\ge \frac{2 y}{\eta \ell }\) and took the constants \(c_1(\eta ),c_2(\eta )>0\) that are uniformly bounded in \(\ell \) since \(\eta <\dfrac{1}{2}\). Such results lead to

The proof is concluded assuming \(\eta =\rho =\dfrac{1}{2}-s\), so that \(C(\eta ,s)\propto \xi \Big (\dfrac{3}{2}-s\Big )^{-1}\), \(\kappa (s)\propto \dfrac{C(\eta ,s)}{c_0}\) and

\(\square \)
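The role of the zeta function in the proof above can be illustrated numerically. The sketch below assumes the model spectrum \(\lambda _k=k^2\), so that the normalising constant is the reciprocal of \(\sum _k k^{-(2-2s-\eta )}\); this identification, the truncation level and the function names are assumptions made for the example. The series is bracketed with the integral test: the tail beyond N terms lies between \((N+1)^{1-p}/(p-1)\) and \(N^{1-p}/(p-1)\) for exponent \(p>1\).

```python
def zeta_bounds(p, n_terms=10000):
    """Lower and upper bounds for sum_{k>=1} k**(-p) via the integral test (p > 1)."""
    if p <= 1.0:
        raise ValueError("the series diverges for p <= 1")
    partial = sum(k ** (-p) for k in range(1, n_terms + 1))
    lower = partial + (n_terms + 1) ** (1.0 - p) / (p - 1.0)
    upper = partial + n_terms ** (1.0 - p) / (p - 1.0)
    return lower, upper

def normalizing_constant_bounds(eta, s, n_terms=10000):
    """Bounds for 1 / sum_k k**(-(2 - 2 s - eta)); requires eta < 1 - 2 s."""
    lower, upper = zeta_bounds(2.0 - 2.0 * s - eta, n_terms)
    return 1.0 / upper, 1.0 / lower

# Example with s = 1/4 and eta = rho = 1/2 - s, i.e. exponent 3/2 - s = 1.25.
c_lo, c_hi = normalizing_constant_bounds(0.25, 0.25)
print(c_lo, c_hi)
```

The function raises an error as soon as \(\eta \ge 1-2s\), which mirrors the restriction \(0<\eta <2\rho \) under which the series is finite and the constant strictly positive.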

Remark

The dependence of \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,\tau ,\epsilon ,s)\) on \(\tau \) and \(\epsilon \) is well known. In fact, the relation \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,\tau ,\epsilon ,s)\sim \dfrac{\tau }{\epsilon }\) is a property that we use to obtain moment estimates further in the paper. Such proportionality is inherited from (35). Additionally, for \(q_* \sigma ^2\ll h^2 \), the relation \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,\tau ,\epsilon ,s)\sim \Big (\dfrac{h^2}{q_* \sigma ^2}\Big )^{\frac{1}{2}}\) holds. Furthermore, the constants \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,\tau ,\epsilon ,s)\) and \(\kappa \) depend on the choice of g and \(\alpha \) from construction and the definition of \(c_0\) in Lemma 4.4.

We study now the error estimate given by \(\psi _*\), solution of

for the function \({\bar{u}}\) assumed in the construction of \(\psi \) in (28). In particular, \({\bar{u}}\) satisfies the hypothesis in Proposition 4.2. The proof of the next corollary is inspired by [5, Proposition 3.7] and makes use of the Young-type inequality proven in Lemma 4.1.

Corollary 4.7

For any \(0<s<\dfrac{1}{2}\) and \(0<\epsilon \), \(q_*\sigma ^2\ll h^2\), there exist \(M,\delta _0>0\) such that for any \(\delta \le \delta _0\) and \({\bar{u}}\) solution of (27) for which \(||{\bar{u}}(\cdot ,0)||_A\le \delta \), the ensuing constants \(0<\kappa (s), C_{\frac{h^2}{q_* \sigma ^2}} (\kappa ,\tau ,\epsilon ,s)\) obtained in Theorem 4.6 satisfy

for the solution \(\psi _*\) of (36) and any \(0 \le T \le \tau \).

Proof

Define, for \(M>0\),

and the event

Denote then the \(||\cdot ||_{A^s}\) norm as

for \(\phi \in {\mathcal {V}}^s\), the fractional Sobolev space of order 2 and degree \(0<s<\dfrac{1}{2}\) with Dirichlet conditions.

Taking \(\psi _0\) the solution of (30), we can state from Duhamel’s formula that

for all \(0 \le t \le \tau \). Therefore, for \(t<\tilde{\tau }\) and for \(\omega \in {\tilde{\Omega }}\),

for which we used the Cauchy-Schwarz inequality and the min-max principle on \(||\cdot ||_{A^s}\). From the Young-type inequality in Lemma 4.1 and the control over the deterministic solution in Proposition 4.2 we obtain

for any \(||{\bar{u}}(\cdot ,0) ||_A\le \delta \). Labeling \(\eta =\lambda _1-\underset{0\le t_1 \le T}{\max } \alpha (t_1)>0\), we deduce from (38) and (39),

For any \(0<s<\dfrac{1}{2}\), we can always find \(\delta _0,M>0\) such that \(M^2>\Big (1+M\dfrac{\epsilon c(r,s)}{\eta }\delta ^2\Big )^2\) for any \(\delta \le \delta _0\). From the definition of \(\tilde{\tau }\) it holds \(||\psi _*(\cdot ,\tilde{\tau }) ||_{A^s}^2=M^2\,h^2\) which is in contradiction with (40), hence we infer that \({\mathbb {P}}({\tilde{\Omega }})=0\) and

The inequality (37) is proven from Theorem 4.6. \(\square \)

Notation

We omit M from (37) since it can be absorbed in the construction of \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,\tau ,\epsilon ,s)\) and \(\kappa \). We note that the constants M and \(\delta _0\) are dependent on g and \(\alpha \).

Remark

The exponential decay of \(||{\bar{u}}(\cdot ,t)||_A\) proven in Proposition 4.2 can be used in (39) to achieve more freedom on the choice of \(\delta \).

4.3 Nonlinear Case

In order to study the error estimate for (28) the following lemmas are required.

Lemma 4.8

Set \(0 \le t \le \tau \). For G defined as in (29) and a function \(\psi (\cdot ,t)\in {\mathcal {V}}^s\) with \(\dfrac{1}{3}<s<\dfrac{1}{2}\), the inequality

holds when \(0<r<\dfrac{1}{2}-3\Big (\dfrac{1}{2}-s\Big )\) and for a certain \(c(r,s)<\infty \).

Proof

The current proof relies on Lemma 4.1. By definition of the function G, (29), and Proposition 4.2,

In the last inequality, we have labeled for simplicity \((1+\delta )c(r,s)\) as c(r, s) for \(||{\bar{u}} (\cdot ,0)||_A\le \delta \). \(\square \)

The next lemma can be proven following the same steps as [10, Lemma 3.5, Corollary 3.6].

Lemma 4.9

Let \(0<r<\dfrac{1}{2}\) be such that \(G(\psi (\cdot ,t))\in {\mathcal {V}}^r\) for all \(0 \le t \le \tau \), where \(\psi \) is a solution of (28), and let \(q<r+2\). Then there exists \(M(r,q)<\infty \) that satisfies, for all \(0 \le t \le \tau \),

with \(\psi _*\) solution of (36).

We can then prove Theorem 4.3 according to a similar method to the one used in the proof of [10, Theorem 2.4].


Proof of Theorem 4.3

Assume \(h_1,h_2>0\) such that \(h=h_1+h_2\) and set \(\dfrac{1}{3}<s<\dfrac{1}{2}\). Then

For \(t<\tau _{{\mathcal {B}}(h)}\) and since \(h=O(1)\), the inequalities (41) and (42) imply

for \(0<r<\dfrac{1}{2}-3\Big (\dfrac{1}{2}-s\Big )\) and \(0<q<r+2\). Assuming \(q\ge s\) and setting the parameter \(h_2=M(r,q) \epsilon ^{\frac{q-r}{2}-1} c(r,s) h^2\) we obtain

We can then estimate \({\mathbb {P}}\Big (\tau _{{\mathcal {B}}(h)}<T\Big )\le {\mathbb {P}}\Big (\underset{0\le t\le T}{\sup }\ ||\psi _*(\cdot ,t) ||_{A^s}\ge h_1\Big )\) with Corollary 4.7 for \(h_1=h-h_2=h\Big (1-O\Big (\dfrac{h}{\epsilon ^\nu }\Big )\Big )\) and \(\nu =1-\dfrac{q-r}{2}\). The choice \(s\le q <r+2\) ensures that the inequality (42) holds for any \(\nu >0\).

When \(0<s<\dfrac{1}{3}\), the inclusion \(V^{s_1}\subset V^s\) if \(s<s_1\) implies that

for which the last term can be controlled as before by choosing \(\dfrac{1}{3}<s_1<\dfrac{1}{2}\). The inequality (43) holds since we assume \(g\ge 1\) and therefore \(\lambda _1>1\). \(\square \)

4.4 Moment Estimates

The inequality presented in Theorem 4.3 enables an estimation of the moments of \(\tau _{{\mathcal {B}}(h)}\). The proof of the next corollary follows the steps described in [6, Proposition 3.1.12].

Corollary 4.10

For any \(k\in {\mathbb {N}}\setminus \{0\}\), \(0<s<\dfrac{1}{2}\), \(\sigma \sqrt{q_*}\ll h\) and equivalent assumptions to Theorem 4.3, the following estimate holds:

for a constant \(c{=}c_{\kappa ,s}{>}0\) and \(\tilde{\kappa }{=}\kappa \bigg (1{-}O\Big (\dfrac{h}{\epsilon ^\nu }\Big )\bigg )\), given \(h,\kappa ,\nu >0\) from Theorem 4.3.

Proof

The \(k^\text {th}-\)moment can be estimated by

for any \(T>0\). From the hypothesis we know that \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,t,\epsilon ,s)\sim \dfrac{t}{\epsilon } \Big (\dfrac{h^2}{q_* \sigma ^2}\Big )^{\frac{1}{2}}\) by construction, therefore there exists a \(c>0\) such that \(C_{\frac{h^2}{q_* \sigma ^2}}(\kappa ,t,\epsilon ,s)<c\dfrac{t}{\epsilon } \Big (\dfrac{h^2}{q_* \sigma ^2}\Big )^{\frac{1}{2}}\) and

The last term on the right-hand side can be optimized in T at \(T=\dfrac{\epsilon }{c} \Big ( \dfrac{h^2}{q_*\sigma ^2}\Big )^{-\frac{1}{2}} \exp \Bigg \{{\tilde{\kappa }}\dfrac{h^2}{q_*\sigma ^2}\Bigg \}\), which proves the corollary. \(\square \)

The right-hand side in (44) depends on g and \(\alpha \) through c and \({\tilde{\kappa }}\). The dependence is nontrivial, but it clearly also affects the bound through the exponential term.

5 Numerical Simulations

In order to cross-validate and visualize the results we have obtained, we use numerical simulations. We simulate (4) using a finite difference discretization and the semi-implicit Euler-Maruyama method [39, Chapter 10]. In detail:

- An integer \(N\gg 1\) is chosen in order to discretize the interval \({\mathcal {O}}=[0,L]\) into \(N+2\) points, each at distance \(h=\dfrac{L}{N+1}\) from its closest neighbouring points.

- Time is discretized on an interval of length \(T\ge 5000\) at the values \(\{j\;dt\}_{j=0}^{nt}\) for \(nt:=\dfrac{T}{dt}\in {\mathbb {N}}\) and \(nt\gg 0\).

- The Laplacian operator is approximated by \(\mathtt {\Delta }\), the tridiagonal \(N\times N\) matrix with values \(-\dfrac{2}{h^2}\) on the main diagonal and \(\dfrac{1}{h^2}\) on the first superdiagonal and subdiagonal. The operator \(A_\alpha \) is then approximated by

  $$\begin{aligned} \texttt{A}_\alpha :=\mathtt {\Delta }-\texttt{g}+\alpha \texttt{I}, \end{aligned}$$

  for \(\texttt{g}\) the diagonal \(N\times N\) matrix with elements \(\texttt{g}_{n,n}=g(n\;h)\) for any \(n\in \{1,...,N\}\) and \(\texttt{I}\) the \(N\times N\) identity matrix.

- The values assumed by the solution u in \(\mathring{{\mathcal {O}}}\) are approximated by the \(N\times (nt+1)\) matrix \(\texttt{u}\). For \(j\in \{0,...,nt\}\), the \(j^\text {th}\) column of \(\texttt{u}\) is labeled \(\texttt{u}_j\).

- The noise is constructed through the following:

  - The integer \(0<M\ll N\), chosen to indicate the number of directions of interest in \({\mathcal {H}}\) on which the noise is considered to have an effect;

  - The \(M\times N\) matrix \(\texttt{e}'\) with elements \(\texttt{e}'(m,n):=e'_m(n \; h)\);

  - The \(M\times M\) orthonormal matrix \(\texttt{O}\) defined by \(\texttt{O}(j_1,j_2):=\langle b_{j_1},e_{j_2}'\rangle \);

  - The column vector \(\texttt{q}\) composed of the first M eigenvalues of Q, ordered by index, and \(\texttt{q}^{\frac{1}{2}}\), the element-wise square root of \(\texttt{q}\);

  - The random column vectors \(\texttt{W}_j\) with M elements, generated from independent standard Gaussian distributions at each \(j^\text {th}\) iteration.

Such constructions enable the approximation

for any \(j\in \{0,...,nt-1\}\). Figures 1 and 2 show the resulting plots for \(g(x)=\cos (3x)+1\) and for \(g(x)=\dfrac{x}{L}\), distinguishing the cases in which \(\alpha \) is smaller or larger than \(\lambda _1\) (Footnote 11). Panels (a) show that for \(\alpha <\lambda _1\) the solution remains close to the null function and assumes no persistent shape. Panels (b) display the change caused by the crossing of the bifurcation threshold: the solution jumps away from the null function and remains close to an equilibrium. The perturbation generated by the noise can then create jumps to other stable deterministic stationary solutions, whose shape is determined by the choice of g.
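The discretization steps listed above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the authors' implementation: the nonlinearity is assumed to be the cubic term \(-u^3\), the noise directions are taken to be the Dirichlet sine functions (that is, \(\texttt{O}\) is replaced by the identity), and the names `thomas_solve` and `simulate`, as well as all default parameter values, are hypothetical.

```python
import math
import random


def thomas_solve(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] do not affect the result."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def simulate(g, alpha, sigma, q, L=2 * math.pi, N=100, dt=0.01,
             steps=1000, u0=None, seed=0):
    """Semi-implicit Euler-Maruyama: linear part implicit, cubic term and noise explicit."""
    rng = random.Random(seed)
    h = L / (N + 1)
    xs = [(n + 1) * h for n in range(N)]  # interior grid points
    # Entries of (I - dt * A_alpha) with A_alpha = Delta - g + alpha * I
    diag = [1.0 + dt * (2.0 / h ** 2 + g(x) - alpha) for x in xs]
    off = [-dt / h ** 2] * N
    # Assumed noise directions: first M Dirichlet sine modes on [0, L]
    M = len(q)
    modes = [[math.sqrt(2.0 / L) * math.sin((m + 1) * math.pi * x / L) for x in xs]
             for m in range(M)]
    u = list(u0) if u0 is not None else [0.0] * N
    for _ in range(steps):
        w = [math.sqrt(qm) * rng.gauss(0.0, 1.0) for qm in q]
        noise = [sum(w[m] * modes[m][n] for m in range(M)) for n in range(N)]
        rhs = [u[n] - dt * u[n] ** 3 + sigma * math.sqrt(dt) * noise[n]
               for n in range(N)]
        u = thomas_solve(off, diag, off, rhs)
    return xs, u
```

Treating the stiff linear part implicitly keeps the step stable without tying `dt` to \(h^2\); the tridiagonal solve costs \(O(N)\) per step. The sketch returns only the final state, whereas the matrix \(\texttt{u}\) in the text stores every column.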

Fig. 1 Simulation of (4) with \(g(x)=\cos (3x)+1\) and \(\lambda _1\approx 1.188\). Each subfigure presents a surface plot and a contour plot obtained with (45) with the same noise sample. On the left, \(\alpha \) is chosen before the bifurcation; on the right, it is taken beyond the bifurcation threshold. Metastable behaviour is visible in the second case

Fig. 2 Simulation of (4) with \(g(x)=\dfrac{x}{L}\) and \(\lambda _1\approx 0.708\). The choices of \(\alpha \) and the corresponding behaviour are analogous to those in the previous figure. The shape of g influences the value \(\lambda _1\) and the equilibria of the deterministic system (4), i.e. for \(\sigma =0\). Therefore it affects the behaviour of the solution and the bifurcation threshold
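The dependence of the threshold \(\lambda _1\) on g can be explored numerically. The following Python sketch approximates \(\lambda _1\) as the smallest eigenvalue of the discretized operator \(\texttt{g}-\mathtt {\Delta }\) by inverse power iteration. It assumes \(g\ge 0\), so that the matrix is positive definite; the function name `smallest_eigenvalue` and its defaults are hypothetical, and this is not the method used to obtain the values quoted in the captions.

```python
import math


def smallest_eigenvalue(g, L, N=200, iterations=200):
    """Approximate lambda_1 of -d^2/dx^2 + g on [0, L] with Dirichlet conditions."""
    h = L / (N + 1)
    diag = [2.0 / h ** 2 + g((n + 1) * h) for n in range(N)]
    off = -1.0 / h ** 2
    v = [1.0 / math.sqrt(N)] * N  # positive start vector, overlaps the ground state
    for _ in range(iterations):
        # Solve (g - Delta) w = v with the Thomas algorithm
        c = [0.0] * N
        d = [0.0] * N
        c[0] = off / diag[0]
        d[0] = v[0] / diag[0]
        for i in range(1, N):
            denom = diag[i] - off * c[i - 1]
            c[i] = off / denom
            d[i] = (v[i] - off * d[i - 1]) / denom
        w = [0.0] * N
        w[-1] = d[-1]
        for i in range(N - 2, -1, -1):
            w[i] = d[i] - c[i] * w[i + 1]
        norm = math.sqrt(sum(a * a for a in w))
        v = [a / norm for a in w]
    # Rayleigh quotient of the normalized iterate
    total = 0.0
    for i in range(N):
        bv = diag[i] * v[i]
        if i > 0:
            bv += off * v[i - 1]
        if i < N - 1:
            bv += off * v[i + 1]
        total += v[i] * bv
    return total
```

For \(g\equiv 0\) and \(L=\pi \) the result is close to 1, in agreement with \(\big (\tfrac{\pi }{L}\big )^2\). A call such as `smallest_eigenvalue(lambda x: math.cos(3 * x) + 1, L)` can be compared with the quoted \(\lambda _1\approx 1.188\), provided the same interval length L as in the simulations is used.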

5.1 Simulation of early warning signs

As previously stated, the linearization is more effective for \(\alpha \) not close to \(\lambda _1\). The rest of the section is devoted to comparing numerically the early warning signs, meant as the left-hand sides of (17), (19) and (21), applied to solutions of (4), with the expected analytical results given by their application to (16). We show the effect on the early warning signs of the dissipative nonlinear term present in (4) and how it hinders the divergence, in the limit \(\alpha \longrightarrow \lambda _1\) from below, of the right-hand sides of (17), (19) and (21).

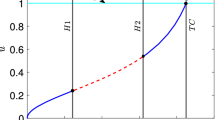

Fig. 3 The results of (47) applied to the matrix \(\texttt{u}\), obtained through the iteration of (45), are displayed for \(k=k_1=k_2\in \{1,2,3,4,5\}\) and different choices of g. The dots indicate the mean of such values obtained from 10 simulations with the same parameters and initial conditions, but generated with different noise samples. The grey area has width equal to twice the recorded numerical standard deviation and is centered on the mean results. The blue line displays the result (48) for the linear system. For \(\alpha \) distant from \(\lambda _1\) the black and blue lines show similar values

The fact that the invariant measure of the linear system (16), \(\mu \), is Gaussian with covariance operator \(V_\infty \) and mean equal to the null function [23, Theorem 5.2] implies that

$$\begin{aligned} \int _{{\mathcal {H}}} \langle f_1,w\rangle \langle f_2,w\rangle \;\textrm{d}\mu (w)=\langle V_\infty f_1,f_2\rangle \end{aligned}$$

for all \(f_1,f_2\in {\mathcal {H}}\). Therefore \(\langle V_\infty e_{k_1},e_{k_2}\rangle \) from (17) can be compared with

which is the numerical covariance of the projection of the solution of (45) onto the selected approximations of the eigenfunctions of \(A_\alpha \). These are constructed as \(\texttt{e}_k(n):=e_k(n \; h)\) and obtained numerically through the "quantumstates" MATLAB function defined in [28]. The numerical scalar product is defined by

for any pair of vectors \(\texttt{v},\texttt{w}\in {\mathbb {R}}^N\) (Footnote 12).
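A numerical covariance of projections of this kind can be sketched in Python as follows. The defining formulas are not reproduced in this section, so the sketch rests on assumptions: the numerical scalar product is taken to be the h-weighted sum of element-wise products, the covariance subtracts the time averages and is normalized by the number of columns, and the names `numerical_scalar_product` and `projection_covariance` are hypothetical.

```python
def numerical_scalar_product(v, w, h):
    """Assumed discrete L^2 product: h times the sum of element-wise products."""
    return h * sum(a * b for a, b in zip(v, w))


def projection_covariance(columns, e1, e2, h):
    """Time-averaged covariance of the projections of the columns u_j onto e1 and e2."""
    p1 = [numerical_scalar_product(u, e1, h) for u in columns]
    p2 = [numerical_scalar_product(u, e2, h) for u in columns]
    m1 = sum(p1) / len(p1)
    m2 = sum(p2) / len(p2)
    return sum((a - m1) * (b - m2) for a, b in zip(p1, p2)) / len(p1)
```

Here `columns` stands for the list of vectors \(\texttt{u}_j\), and `e1`, `e2` for two discretized eigenfunctions \(\texttt{e}_{k_1}\), \(\texttt{e}_{k_2}\). In practice an initial transient would typically be discarded before averaging.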

The plots in Fig. 3 illustrate for two examples of g the results of (47) for the chosen indexes \(k=k_1=k_2\in \{1,2,3,4,5\}\) and \(\alpha \) close to \(\lambda _1\). They are then compared with

which is the numerical approximation of the right-hand side of (17) for \(k=1\), with \(\{\texttt{b}_j\}_{j=1}^M\) the row vectors of \(\texttt{O}\texttt{e}'\) meant to replicate the eigenfunctions of Q (Footnote 13).

It is clear from Fig. 3 that the dissipativity given by the nonlinear term in the system (4) reduces the variance of the system for \(\alpha \) close to \(\lambda _1\), and that the difference between the early warning sign on (16) and the average of (47) with \(k_1=k_2=1\) on solutions given by (45) with different noise samples grows with \(\alpha \). For \(\alpha \) distant from \(\lambda _1\) the values of these results are close and the behaviour of the plots is similar.
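The growth of the linear variance as \(\alpha \) approaches \(\lambda _1\) can be illustrated in the simplest scalar setting. The Python sketch below assumes that the projection of the linear system onto \(e_1\) behaves as an Ornstein-Uhlenbeck process with decay rate \(\lambda _1-\alpha \) and noise intensity \(\sigma ^2 q_1\), whose stationary variance is \(\dfrac{\sigma ^2 q_1}{2(\lambda _1-\alpha )}\). The parameter `q1`, the function names and the default values are hypothetical, and the general expression (17) is not reproduced here.

```python
import math
import random


def linear_mode_variance(alpha, lam1, sigma, q1=1.0):
    """Stationary variance of dx = -(lam1 - alpha) x dt + sigma sqrt(q1) dW."""
    gap = lam1 - alpha
    if gap <= 0:
        raise ValueError("the stationary variance exists only for alpha < lam1")
    return sigma ** 2 * q1 / (2.0 * gap)


def simulated_mode_variance(alpha, lam1, sigma, q1=1.0, dt=0.01,
                            steps=200_000, seed=0):
    """Time-averaged second moment of a semi-implicit Euler-Maruyama path."""
    rng = random.Random(seed)
    gap = lam1 - alpha
    x = 0.0
    total = 0.0
    for _ in range(steps):
        increment = sigma * math.sqrt(q1 * dt) * rng.gauss(0.0, 1.0)
        x = (x + increment) / (1.0 + gap * dt)
        total += x * x
    return total / steps
```

Halving the distance \(\lambda _1-\alpha \) doubles `linear_mode_variance`, which is the hyperbolic divergence used as an early warning sign. The nonlinear system has no such divergence, consistent with the saturation seen close to the threshold.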

A similar method can also be applied to replicate the early warning sign in (21). We can choose a function \(f_0\in L^\infty ({\mathcal {H}})\) such that \(f_0(0)=f_0(L)=0\) and for which there exist an integer \(0<p<N+2\) and \(x_0=ph\in {\mathcal {O}}\) that satisfy

by Corollary 3.3. From Proposition 3.4, one expects a hyperbolic-function divergence when \(\alpha \) reaches \(\lambda _1\) from below. Figures 4 and 5 compare \(\langle V_\infty f_0,f_0\rangle \) for different values of \(\alpha <\lambda _1\) in the form

shown as black dots, and the approximation of the right-hand side in Eq. (49)

in blue for a certain integer \(0<M_1\ll N\) (Footnote 14). The subplots in Figs. 4 and 5 are given by the same simulations as in Fig. 3a, b respectively. Each dot in the subplot is the average of (50) obtained from 10 simulations which differ only in the noise sample. The numerical standard deviation is represented by the grey area (Footnote 15).

Fig. 4 Simulations of \({\tilde{V}}(p)\) obtained from 10 sample solutions of (4) with \(g(x)=\cos (3x)+1\) and \(\lambda _1\approx 1.188\) simulated with (45). The black dots indicate the mean results and the width of the grey area corresponds to twice the standard deviation for the corresponding \(\alpha \). It is clear that the early warning sign is close to the expected result for the linear case up to a neighbourhood of the bifurcation threshold, in which the nonlinear dissipative term prevents the divergence. The space discretization is achieved taking \(N=100\) internal points of \({\mathcal {O}}\) into account

Fig. 5 Simulations of \({\tilde{V}}(p)\) obtained from 10 sample solutions of (4) with \(g(x)=\dfrac{x}{L}\) and \(\lambda _1\approx 0.708\). The results are similar to the previous figure, but the choice of g appears to influence the difference between the expected early warning sign applied to the solution of the linear system (16) and its simulation applied to (4)

As in Fig. 3, the early warning sign (50) on the linear system assumes higher values than the average numerical variance obtained on projections on chosen spaces (51) of solutions of the nonlinear system. The difference between the two results is more evident when the dissipative nonlinear term is more relevant, that is, close to the bifurcation. For such values of \(\alpha \) the standard deviation across the simulations also appears to be larger. The cause is clear from Theorems 2.1 and 2.4.

6 Conclusion and Outlook

In this paper we have studied properties of a generalization of a Chafee-Infante type PDE with Dirichlet boundary conditions on an interval, by introducing a component heterogeneous in space. We have proven that it has local supercritical pitchfork bifurcations from the trivial branch of zero solutions, as in the original homogeneous PDE. We have then considered the effects of the crossing of the first bifurcation when the system is perturbed by additive noise. We have shown the existence of a global attractor regardless of the crossing of the bifurcation threshold and found the rate at which the highest possible value of the FTLE approaches 0. It is then a crucial observation that this rate of the FTLE approaching zero is precisely the inverse of the order of divergence found in the early warning sign given by the variance over infinite time of the linearized system along almost any direction in \({\mathcal {H}}\) when approaching the bifurcation threshold from below. An equivalent rate was then proven when studying the variance for any specific internal point of the interval. Note carefully that the consistent rates for FTLE scaling and the corresponding inverse covariance operator and pointwise-variance scaling are obtained by completely different proof techniques. Yet, one can clearly extract from the proofs that the linearized variational and linearized covariance equations provide the explicit rates, so a natural conjecture is that the same principle of common scaling laws will also apply to a wide variety of other bifurcations. In summary, here we have already given a complete picture of a very general class of SPDEs with additive noise when the deterministic PDE exhibits a classical single-eigenvalue crossing bifurcation point from a trivial branch.

In order to study the reliability of the early warning sign on the nonlinear system, the first exit-time from a small neighbourhood of the deterministic solution was studied by obtaining an upper bound of its distribution function. Hence, lower bounds of the moments have been derived. Lastly, numerical simulations have shown the consistency of the behaviour of the early warning sign applied to the solutions of the linear and nonlinear systems at sufficient distance from the threshold.