Abstract

A novel multi-camera active-vision reconfiguration method is proposed for the markerless shape recovery of unknown deforming objects. The proposed method implements a model fusion technique to obtain a complete 3D mesh model via triangulation and a visual hull. The model is tracked using an adaptive particle filtering algorithm, yielding a deformation estimate that can then be used to reconfigure the cameras for improved surface visibility. The objective of reconfiguration is to maximize the total surface area visible through stereo triangulation. This surface-area-based objective function relates directly to the accuracy of the recovered shape, as stereo triangulation is more accurate than visual hull building when the number of viewpoints is limited. The reconfiguration process comprises workspace discretization, visibility estimation, optimal stereo-pose selection, and path planning to ensure 2D tracked feature consistency. In contrast to other reconfiguration techniques that rely on a priori known, and at times static, object models, our method focuses on a priori unknown deforming objects. The proposed method operates on-line and, in extensive simulations and experiments, has been shown to outperform static-camera-based systems by achieving increased surface visibility in the presence of occluding obstacles.

Abbreviations

- \(\mathbf{C}_{M}\): A matrix of the object model’s center point repeated k times \([k \times 3]\).
- \(\mathbf{K}\): An indexing matrix of all positional combinations \([p_{max} \times c_{k}]\).
- \(\mathbf{K}^{*}\): A filtered indexing matrix \([p_{red} \times c_{k}]\).
- \(L(t)\): The system and workspace constraints at demand instant t.
- \(M\): The object model at demand instant t.
- \(M^{+}\): The expected object model deformation at the next demand instant.
- \(O^{+}\): The expected obstacle model at the next demand instant.
- \(P_{c}(t)\): The set of camera parameters at demand instant t.
- \(S(t)\): The surface area of the object at demand instant t.
- \(R(k)\): The estimated surface area visibility score for the kth positional combination.
- \(\mathbf{V}\): A matrix of test positional vectors for reconfiguration \([n_{v} \times 3]\).
- \(V^{*}(t+1)\): The expected model visibility at the next demand instant.
- \(\textbf{V}_{\textbf{map}}\): The normalized visibility proportion mapping matrix \([n_{p} \times n_{v}]\).
- \(\textbf{V}_{\textbf{map}}^{*}\): A subset of \(\textbf{V}_{\textbf{map}}\) \([n_{p} \times c_{n}]\).
- \(\textbf{X}_{\textbf{test}}\): A matrix of test points from model \(M^{+}\) \([k \times 3]\).
- \(\textbf{X}_{\textbf{test}}^{*}\): The set of test points projected onto an arbitrary plane \([k \times 3]\).
- \(\mathbf{Y}\): A Boolean matrix of test point visibility \([k \times 4]\).
- \(a_{j}\): The area of the jth polygon in the model at the current demand instant.
- \(c_{k}\): The number of stereo camera pairs at the current demand instant.
- \(c_{k}^{*}\): The number of filtered stereo camera pairs at the current demand instant.
- \(\mathbf{c}_{M}\): The center point of the object model \([1 \times 3]\).
- \(\mathbf{c}_{M}^{+}\): The projected center point of the object model \([1 \times 3]\).
- \(\mathbf{c}_{M}^{*}\): A point in space produced by the triangulation of two rays associated with the object’s center point and stereo-pair placement \([1 \times 3]\).
- \(c_{n}\): The number of stereo camera pairs at the current demand instant.
- \(\mathbf{c}_{obs}\): The center point of the obstacle \([1 \times 3]\).
- \(\mathbf{d}^{d}\): A vector of distances along the test position vector from the model center to all test points.
- \(d_{thresh}^{d}\): The maximum allowable distance for \(\mathbf{d}^{d}\).
- \(\mathbf{d}^{w}\): A vector of distances between the model center and all test points on a plane.
- \(d_{thresh}^{w}\): The maximum allowable distance for \(\mathbf{d}^{w}\).
- \(g\): The distance from the object’s bounding rectangle to the image edge in pixels.
- \(h\): The stereo camera pair placement score.
- \(\mathbf{k}^{*}\): An arbitrary row of the \(\mathbf{K}^{*}\) matrix \([1 \times c_{k}]\).
- \(n_{p}\): The number of model polygons at the current demand instant.
- \(n_{v}\): The number of test positional vectors.
- \(\mathbf{p}^{*}\): A point on a test positioning vector \([1 \times 3]\).
- \(\mathbf{p}_{c}\): The mean stereo camera pair’s position at the current demand instant \([1 \times 3]\).
- \(\mathbf{p}_{c}^{+}\): The mean stereo camera pair’s position at the next demand instant \([1 \times 3]\).
- \(\mathbf{p}_{b}^{1}, \mathbf{p}_{b}^{2}\): The Bézier-curve control points \([1 \times 3]\).
- \(p_{max}\): The total number of possible positional combinations.
- \(p_{red}\): The number of positional combinations used.
- \(\mathbf{q}\): The unit normal vector of an arbitrary test point.
- \(\mathbf{v}_{depth}\): A vector of Boolean visibility values for each test point \([k \times 1]\).
- \(\mathbf{v}_{HPR}\): A vector of Boolean visibility values for each test point \([k \times 1]\).
- \(\mathbf{v}_{norm}\): A vector of Boolean visibility values for each test point \([k \times 1]\).
- \(\mathbf{v}_{test}\): A unit test vector from \(\mathbf{V}\) \([1 \times 3]\).
- \(\mathbf{v}_{width}\): A vector of Boolean visibility values for each test point \([k \times 1]\).
- \(\mathbf{y}\): A vector of the logical conjunction of \(\mathbf{Y}\) across rows \([k \times 1]\).
- \(\beta\): The maximum path motion angle.
- \(\theta_{min}\): The minimum angular separation between two sets of stereo camera pairs.
- \(\sigma_{thresh}^{d}\): The standard deviation threshold value for the depth operator.
- \(\sigma_{thresh}^{w}\): The standard deviation threshold value for the width operator.
- \(\phi\): The angular separation between the test positional vector and a test point normal.
- \(\phi_{max}\): The maximum angle between a test positional vector and a point normal.
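The definitions of ϕ, ϕ_max, **Y** and **y** above suggest how per-point visibility tests could be combined. The following is a minimal sketch only, not the authors' implementation: it assumes that the four columns of **Y** are v_norm, v_depth, v_width and v_HPR, that the test vector points from the model toward the candidate camera position, and that the function names `normal_visibility` and `combine_visibility` are hypothetical.

```python
import numpy as np

def normal_visibility(normals, v_test, phi_max_deg):
    """Return a Boolean vector (k,): True where the angle phi between a test
    point's unit normal q and the unit test vector v_test is at most phi_max.
    Assumption: v_test points from the model toward the candidate camera."""
    normals = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    v_test = v_test / np.linalg.norm(v_test)
    cos_phi = np.clip(normals @ v_test, -1.0, 1.0)
    phi = np.degrees(np.arccos(cos_phi))
    return phi <= phi_max_deg

def combine_visibility(v_norm, v_depth, v_width, v_hpr):
    """Stack four Boolean vectors into Y (k x 4) and take the row-wise
    logical conjunction y (k,). The column order is an assumption."""
    Y = np.column_stack([v_norm, v_depth, v_width, v_hpr])
    y = Y.all(axis=1)
    return Y, y

# Three test points with normals facing toward, sideways to, and away from the view.
normals = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 0.0, -1.0]])
v_norm = normal_visibility(normals, np.array([0.0, 0.0, 1.0]), phi_max_deg=60.0)
Y, y = combine_visibility(v_norm, np.ones(3, bool), np.ones(3, bool), np.ones(3, bool))
```

A point is counted as visible only if every operator agrees, which is what the row-wise conjunction expresses.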

References

Song, B., Ding, C., Kamal, A., Farrell, J.A., Roy-Chowdhury, A.K.: Distributed camera networks. IEEE Signal Process. Mag. 28(3), 20–31 (2011)

Piciarelli, C., Esterle, L., Khan, A., Rinner, B., Foresti, G.L.: Dynamic reconfiguration in camera networks: a short survey. IEEE Trans. Circuits Syst. Video Technol. 26(5), 965–977 (2016)

Ilie, A., Welch, G., Macenko, M.: A Stochastic Quality Metric for Optimal Control of Active Camera Network Configurations for 3D Computer Vision Tasks. In: ECCV Workshop on Multicamera and Multimodal Sensor Fusion Algorithms and Applications, pp 1–12 (2008)

Cowan, C.K.: Model-Based Synthesis of Sensor Location. In: IEEE International Conference on Robotics and Automation (ICRA), pp 900–905 (1988)

Tarabanis, K.A., Tsai, R.Y., Abrams, S.: Planning Viewpoints that Simultaneously Satisfy Several Feature Detectability Constraints for Robotic Vision. In: International Conference on Advanced Robotics “Robots in Unstructured Environments”, vol. 2, pp 1410–1415 (1991)

Qureshi, F.Z., Terzopoulos, D.: Surveillance in Virtual Reality: System Design and Multi-Camera Control. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 1–8 (2007)

Piciarelli, C., Micheloni, C., Foresti, G.L.: PTZ Camera Network Reconfiguration. In: International Conference on Distributed Smart Cameras (ICDSC), pp 1–7 (2009)

Collins, R.T., Amidi, O., Kanade, T.: An Active Camera System for Acquiring Multi-View Video. In: International Conference on Image Processing, pp 1–4 (2002)

Chen, S.Y., Li, Y.F.: Vision sensor planning for 3-d model acquisition. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 35(5), 894–904 (2005)

Schramm, F., Geffard, F., Morel, G., Micaelli, A.: Calibration Free Image Point Path Planning Simultaneously Ensuring Visibility and Controlling Camera Path. In: IEEE International Conference on Robotics and Automation (ICRA), pp 2074–2079 (2007)

Amamra, A., Amara, Y., Benaissa, R., Merabti, B.: Optimal Camera Path Planning for 3D Visualisation. In: SAI Computing Conference, pp 388–393 (2016)

Mir-Nasiri, N.: Camera-Based 3D Object Tracking and Following Mobile Robot. In: IEEE Conference on Robotics, Automation and Mechatronics, pp 1–6 (2006)

Abrams, S., Allen, P.K., Tarabanis, K.A.: Dynamic Sensor Planning. In: IEEE International Conference on Robotics and Automation (ICRA), vol. 2, pp 605–610 (1993)

Tarabanis, K.A., Tsai, R.Y., Allen, P.K.: The MVP sensor planning system for robotic vision tasks. IEEE Trans. Robot. Autom. 11(1), 72–85 (1995)

Christie, M., Machap, R., Normand, J.-M., Olivier, P., Pickering, J.: Virtual Camera Planning: a Survey. In: International Symposium on Smart Graphics, pp 40–52 (2005)

Bakhtari, A., Naish, M.D., Eskandari, M., Croft, E.A., Benhabib, B.: Active-vision-based multisensor surveillance-an implementation. IEEE Trans. Syst. Man, Cybern. Part C Appl. Rev. 36(5), 668–680 (2006)

MacKay, M.D., Fenton, R.G., Benhabib, B.: Pipeline-architecture based real-time active-vision for human-action recognition. J. Intell. Robot. Syst. 72(3–4), 385–407 (2013)

Schacter, D.S., Donnici, M., Nuger, E., MacKay, M.D., Benhabib, B.: A multi-camera active-vision system for deformable-object-motion capture. J. Intell. Robot. Syst. 75(3), 413–441 (2014)

Herrera, J.L.A., Chen, X.: Consensus Algorithms in a Multi-Agent Framework to Solve PTZ Camera Reconfiguration in UAVs. In: International Conference on Intelligent Robotics and Applications, pp 331–340 (2012)

Konda, K.R., Conci, N.: Real-Time Reconfiguration of PTZ Camera Networks Using Motion Field Entropy and Visual Coverage. In: Proceedings of the International Conference on Distributed Smart Cameras, p 18 (2014)

Natarajan, P., Hoang, T.N., Low, K.H., Kankanhalli, M.: Decision-Theoretic Coordination and Control for Active Multi-Camera Surveillance in Uncertain, Partially Observable Environments. In: International Conference on Distributed Smart Cameras (ICDSC), pp 1–6 (2012)

Song, B., Soto, C., Roy-Chowdhury, A.K., Farrell, J.A.: Decentralized Camera Network Control Using Game Theory. In: 2008 Second ACM/IEEE International Conference on Distributed Smart Cameras, pp 1–8 (2008)

Ding, C., Song, B., Morye, A., Farrell, J.A., Roy-Chowdhury, A.K.: Collaborative sensing in a distributed PTZ camera network. IEEE Trans. Image Process. 21(7), 3282–3295 (2012)

Del Bimbo, A., Dini, F., Lisanti, G., Pernici, F.: Exploiting distinctive visual landmark maps in pan-tilt-zoom camera networks. Comput. Vis. Image Underst. 114(6), 611–623 (2010)

Piciarelli, C., Micheloni, C., Foresti, G.L.: Occlusion-Aware Multiple Camera Reconfiguration. In: International Conference on Distributed Smart Cameras (ICDSC), p 88 (2010)

Indu, S., Chaudhury, S., Mittal, N.R., Bhattacharyya, A.: Optimal Sensor Placement for Surveillance of Large Spaces. In: International Conference on Distributed Smart Cameras (ICDSC), pp 1–8 (2009)

Schwager, M., Julian, B.J., Angermann, M., Rus, D.: Eyes in the sky: decentralized control for the deployment of robotic camera networks. Proc. IEEE 99(9), 1541–1561 (2011)

Konda, K.R., Rosani, A., Conci, N., De Natale, F.G.B.: Smart Camera Reconfiguration in Assisted Home Environments for Elderly Care. In: European Conference on Computer Vision (ECCV), pp 45–58 (2014)

Tarabanis, K.A., Allen, P.K., Tsai, R.Y.: A survey of sensor planning in computer vision. IEEE Trans. Robot. Autom. 11(1), 86–104 (1995)

Pito, R.: A solution to the next best view problem for automated surface acquisition. IEEE Trans. Pattern Anal. Mach. Intell. 21(10), 1016–1030 (1999)

Wong, L.M., Dumont, C., Abidi, M.A.: Next Best View System in a 3D Object Modeling Task. In: Proceedings 1999 IEEE International Symposium on Computational Intelligence in Robotics and Automation. CIRA’99 (Cat. No.99EX375), pp 306–311 (1999)

Bircher, A., Kamel, M., Alexis, K., Oleynikova, H., Siegwart, R.: Receding Horizon ‘Next-Best-View’ Planner for 3D Exploration. In: 2016 IEEE International Conference on Robotics and Automation (ICRA), pp 1462–1468 (2016)

Liska, C., Sablatnig, R.: Adaptive 3D Acquisition Using Laser Light. In: Czech Pattern Recognition Workshop, pp 111–117 (2000)

Chan, M.-Y., Mak, W.-H., Qu, H.: An Efficient Quality-Based Camera Path Planning Method for Volume Exploration. In: International Symposium on Visual Computing, pp 12–21 (2008)

Benhamou, F., Goualard, F., Languénou, É., Christie, M.: Interval constraint solving for camera control and motion planning. ACM Trans. Comput. Log. 5(4), 732–767 (2004)

Assa, J., Wolf, L., Cohen-Or, D.: The virtual director: a correlation-based online viewing of human motion. Comput. Graph. Forum 29(2), 595–604 (2010)

Naish, M.D., Croft, E.A., Benhabib, B.: Simulation-Based Sensing-System Configuration for Dynamic Dispatching. In: IEEE International Conference on Systems, Man and Cybernetics, vol. 5, pp 2964–2969 (2001)

Naish, M.D., Croft, E.A., Benhabib, B.: Coordinated dispatching of proximity sensors for the surveillance of manoeuvring targets. Robot. Comput. Integr. Manuf. 19(3), 283–299 (2003)

Bakhtari, A., Benhabib, B.: An active vision system for multitarget surveillance in dynamic environments. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 37(1), 190–198 (2007)

Bakhtari, A., MacKay, M.D., Benhabib, B.: Active-vision for the autonomous surveillance of dynamic, multi-object environments. J. Intell. Robot. Syst. 54(4), 567–593 (2009)

Tan, J.K., Ishikawa, S., Yamaguchi, I., Naito, T., Yokota, M.: 3-D recovery of human motion by mobile stereo cameras. Artif. Life Robot. 10(1), 64–68 (2006)

Malik, R., Bajcsy, P.: Automated Placement of Multiple Stereo Cameras. In: ECCV Workshop on Omnidirectional Vision, Camera Networks and Non-Classical Cameras (2008)

Hasler, N., Rosenhahn, B., Thormählen, T., Wand, M., Gall, J., Seidel, H.P.: Markerless Motion Capture with Unsynchronized Moving Cameras. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 224–231 (2009)

MacKay, M.D., Fenton, R.G., Benhabib, B.: Time-varying-geometry object surveillance using a multi-camera active-vision system. Int. J. Smart Sens. Intell. Syst. 1(3), 679–704 (2008)

MacKay, M.D., Fenton, R.G., Benhabib, B.: Multi-camera active surveillance of an articulated human form - an implementation strategy. Comput. Vis. Image Underst. 115(10), 1395–1413 (2011)

Hofmann, M., Gavrila, D.M.: Multi-view 3D human pose estimation in complex environment. Int. J. Comput. Vis. 96(1), 103–124 (2012)

Schacter, D.S.: Multi-Camera Active-Vision System Reconfiguration for Deformable Object Motion Capture. University of Toronto (2014)

Zhao, W., Gao, S., Lin, H.: A robust hole-filling algorithm for triangular mesh. Vis. Comput. 23(12), 987–997 (2007)

Hilton, A., Stoddart, A.J., Illingworth, J., Windeatt, T.: Reliable surface reconstruction from multiple range images, pp. 117–126 (1996)

Davis, J., Marschner, S.R., Garr, M., Levoy, M.: Filling Holes in Complex Surfaces Using Volumetric Diffusion. In: Proceedings. First International Symposium on 3D Data Processing Visualization and Transmission, pp 428–861 (2002)

Kalal, Z., Matas, J., Mikolajczyk, K.: Online Learning of Robust Object Detectors during Unstable Tracking. In: IEEE 12th International Conference on Computer Vision Workshops (ICCV Workshops), pp 1417–1424 (2009)

Forsyth, D.A., Ponce, J.: Computer Vision: a Modern Approach, 2nd edn. Pearson, London (2012)

Hughes, J.F. et al.: Computer Graphics: Principles and Practice, 3rd edn. Addison-Wesley Professional, Boston (2013)

Laurentini, A.: Visual hull concept for silhouette-based image understanding. IEEE Trans. Pattern Anal. Mach. Intell. 16(2), 150–162 (1994)

Terauchi, T., Oue, Y., Fujimura, K.: A Flexible 3D Modeling System Based on Combining Shape-From-Silhouette with Light-Sectioning Algorithm. In: International Conference on 3-D Digital Imaging and Modeling, pp 196–203 (2005)

Hernández Esteban, C., Schmitt, F.: Silhouette and stereo fusion for 3d object modeling. Comput. Vis. Image Underst. 96(3), 367–392 (2004)

Cremers, D., Kolev, K.: Multiview stereo and silhouette consistency via convex functionals over convex domains. IEEE Trans. Pattern Anal. Mach. Intell. 33(6), 1161–1174 (2011)

Liu, Y., Dai, Q., Xu, W.: A point-cloud-based multiview stereo algorithm for free-viewpoint video. IEEE Trans. Vis. Comput. Graph. 16(3), 407–418 (2010)

Hebert, P. et al.: Combined Shape, Appearance and Silhouette for Simultaneous Manipulator and Object Tracking. In: International Conference on Robotics and Automation, pp 2405–2412 (2012)

Song, P., Wu, X., Wang, M.Y.: Volumetric stereo and silhouette fusion for image-based modeling. Vis. Comput. 26(12), 1435–1450 (2010)

Nuger, E., Benhabib, B.: Multicamera fusion for shape estimation and visibility analysis of unknown deforming objects. J. Electron. Imaging 25(4), 41009 (2016)

Huang, C.-H., Cagniart, C., Boyer, E., Ilic, S.: A bayesian approach to multi-view 4D modeling. Int. J. Comput. Vis. 116(2), 115–135 (2016)

Matusik, W., Buehler, C., Raskar, R., Gortler, S.J., McMillan, L.: Image-Based Visual Hulls. In: Proceedings of the Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH, pp 369–374 (2000)

Corazza, S., Mündermann, L., Gambaretto, E., Ferrigno, G., Andriacchi, T.P.: Markerless motion capture through visual hull, articulated ICP and subject specific model generation. Int. J. Comput. Vis. 87(1–2), 156–169 (2010)

Li, Q., Xu, S., Xia, D., Li, D.: A Novel 3D Convex Surface Reconstruction Method Based on Visual Hull. In: Pattern Recognition and Computer Vision, vol. 8004, p 800412 (2011)

Roshnara Nasrin, P.P., Jabbar, S.: Efficient 3D Visual Hull Reconstruction Based on Marching Cube Algorithm. In: International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), pp 1–6 (2015)

Mercier, B., Meneveaux, D., Fournier, A.: A framework for automatically recovering object shape, reflectance and light sources from calibrated images. Int. J. Comput. Vis. 73(1), 77–93 (2007)

Lorensen, W.E., Cline, H.E.: Marching Cubes: a High Resolution 3D Surface Construction Algorithm. In: Proceedings of the 14th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH), vol. 21, no. 4, pp 163–169 (1987)

Lazebnik, S., Furukawa, Y., Ponce, J.: Projective visual hulls. Int. J. Comput. Vis. 74(2), 137–165 (2007)

Tomasi, C., Kanade, T.: Shape and motion from image streams: a factorization method. Proc. Natl. Acad. Sci. 90(21), 9795–9802 (1993)

Pollefeys, M., Vergauwen, M., Cornelis, K., Tops, J., Verbiest, F., Van Gool, L.: Structure and Motion from Image Sequences. In: Proceedings of the Conference on Optical 3D Measurement Techniques, pp 251–258 (2001)

Lhuillier, M., Quan, L.: A quasi-dense approach to surface reconstruction from uncalibrated images. IEEE Trans. Pattern Anal. Mach. Intell. 27(3), 418–433 (2005)

Snavely, N., Seitz, S.M., Szeliski, R.: Photo tourism: exploring photo collections in 3d. ACM Trans. Graph. 25(3), 835–846 (2006)

Del Bue, A., Agapito, L.: Non-rigid stereo factorization. Int. J. Comput. Vis. 66(2), 193–207 (2006)

Huang, Y., Tu, J., Huang, T.S.: A Factorization Method in Stereo Motion for Non-Rigid Objects. In: IEEE International Conference on Acoustics, Speech and Signal Processing, No. 1, pp 1065–1068 (2008)

Bay, H., Ess, A., Tuytelaars, T., Van Gool, L.: Speeded up robust features (SURF). Comput. Vis. Image Underst. 110(3), 346–359 (2008)

Lowe, D.G.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

Furukawa, Y., Ponce, J.: Accurate, dense, and robust multiview stereopsis. IEEE Trans. Pattern Anal. Mach. Intell. 32(8), 1362–1376 (2010)

Kalman, R.E.: A new approach to linear filtering and prediction problems 1. ASME Trans. J. Basic Eng. 82 (Series D), 35–45 (1960)

Welch, G., Bishop, G.: An introduction to the Kalman filter. In Pract. 7(1), 1–16 (2006)

Ristic, B., Arulampalam, S., Gordon, N.: A Tutorial on Particle Filters. In: Beyond the Kalman Filter: Particle Filter for Tracking Applications, pp 35–62. Artech House, Boston (2004)

Sui, Y., Zhang, L.: Robust tracking via locally structured representation. Int. J. Comput. Vis. 119(2), 110–144 (2016)

Gonzales, C., Dubuisson, S.: Combinatorial resampling particle filter: an effective and efficient method for articulated object tracking. Int. J. Comput. Vis. 112(3), 255–284 (2015)

Kwolek, B., Krzeszowski, T., Gagalowicz, A., Wojciechowski, K., Josinski, H.: Real-Time Multi-View Human Motion Tracking Using Particle Swarm Optimization with Resampling. In: International Conference on Articulated Motion and Deformable Objects (AMDO), pp 92–101 (2012)

Zhang, X., Hu, W., Xie, N., Bao, H., Maybank, S.: A robust tracking system for low frame rate video. Int. J. Comput. Vis. 115(3), 279–304 (2015)

Maung, T.H.H.: Real-time hand tracking and gesture recognition system using neural networks. World Acad. Sci. Eng. Technol. 50, 466–470 (2009)

Agarwal, A., Datla, S., Tyagi, B., Niyogi, R.: Novel design for real time path tracking with computer vision using neural networks. Int. J. Comput. Vis. Robot. 1(4), 380–391 (2010)

Katz, S., Tal, A., Basri, R.: Direct visibility of point sets. ACM Trans. Graph. 26(3), 1–12 (2007)

Möller, T., Trumbore, B.: Fast, minimum storage ray-triangle intersection. J. Graph. Tools 2(1), 21–28 (1997)

Kim, W.S., Ansar, A.I., Steele, R.D., Steinke, R.C.: Performance Analysis and Validation of a Stereo Vision System. In: IEEE International Conference on Systems, Man and Cybernetics, vol. 2, pp 1409–1416 (2005)

Blender Online Community: Blender - a 3D modelling and rendering package. Blender Institute, Amsterdam (2016)

Vedaldi, A., Fulkerson, B.: VLFeat: an Open and Portable Library of Computer Vision Algorithms. In: ACM International Conference on Multimedia (2010)

Bouguet, J.-Y.: Camera calibration toolbox for matlab (2004)

MacKay, M.D., Fenton, R.G., Benhabib, B.: Active Vision for Human Action Sensing. In: Technological Developments in Education and Automation, pp 397–402. Springer (2010)

Acknowledgements

The authors would like to acknowledge the support received, in part, by the Natural Sciences and Engineering Research Council of Canada (NSERC).

Appendices

Appendix A

This appendix briefly outlines the model generation and deformation estimation methods. The nature of the problem requires the model generation method to yield the most accurate and complete model of the target object without a priori knowledge of the object’s identity. A generic approach to the problem must handle cases where cameras are poorly positioned around the target object. In such cases, triangulation methods would recover only incomplete surface patches, while a visual hull would over-estimate the bounding volume. In contrast, a fusion technique that combines triangulation with a visual hull would produce a model with highly accurate triangulated surface patches and a complete estimate of the object’s volume.

Model generation for a priori unknown objects implies that, from the system’s perspective, the error between the recovered shape and the true model cannot be measured accurately during run-time. The model’s accuracy could be measured for test objects whose model is known to the user, but during the system’s run-time, a ground truth model is unavailable. Therefore, the model generation method must inherently attempt to maximize the recovery accuracy and completeness through the implemented shape recovery technique. The deformation estimation method receives the recovered, solid object model and current camera parameters from the model generation method and applies an adaptive particle filtering algorithm to estimate the deformation.
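To illustrate the predict, weight and resample cycle that particle filtering relies on, the sketch below implements a generic bootstrap particle filter for a single scalar state (for example, one coordinate of a tracked model point). It is not the adaptive variant used in this work; the random-walk motion model, the Gaussian likelihood, the noise values and the function names are all assumptions made for illustration.

```python
import numpy as np

def particle_filter_step(particles, measurement, rng, process_std=0.2, meas_std=0.5):
    """One bootstrap particle filter step for a scalar state (illustrative only)."""
    # Predict: assumed random-walk motion model.
    predicted = particles + rng.normal(0.0, process_std, size=particles.shape)
    # Weight: assumed Gaussian measurement likelihood, computed in log space.
    residual = measurement - predicted
    log_w = -0.5 * (residual / meas_std) ** 2
    w = np.exp(log_w - log_w.max())
    w /= w.sum()
    # Estimate: weighted mean of the predicted particles.
    estimate = float(np.sum(w * predicted))
    # Resample: multinomial resampling in proportion to the weights.
    idx = rng.choice(len(predicted), size=len(predicted), p=w)
    return predicted[idx], estimate

def track(measurements, n_particles=2000, seed=0):
    """Run the filter over a measurement sequence and return the estimates."""
    rng = np.random.default_rng(seed)
    particles = rng.normal(0.0, 3.0, size=n_particles)  # broad initial belief
    estimates = []
    for z in measurements:
        particles, est = particle_filter_step(particles, z, rng)
        estimates.append(est)
    return estimates

# A constant measurement of 5.0 pulls the estimate toward 5.0 over time.
estimates = track([5.0] * 20)
```

An adaptive filter would additionally adjust quantities such as the particle count or noise levels during tracking; that logic is deliberately omitted here.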

The model generation method comprises three steps: surface-patch triangulation through stereo-camera pairs, visual hull carving, and fusion. The surface-patch triangulation yields a set of surface patches that represent the stereo-visible regions of the target object, based on the stereo-cameras’ positions relative to the object. Stereo triangulation is the most accurate approach for recovering surface information on a priori unknown objects in a multi-camera system.
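As an illustration of the triangulation step, the following sketch recovers one 3D point from a calibrated stereo pair using standard linear (DLT) triangulation. It is a textbook formulation under assumed pinhole cameras, not necessarily the procedure used by the authors; the projection matrices, intrinsics and function names are hypothetical.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 pinhole projection matrix P to pixels."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point from two views.
    P1, P2: 3x4 projection matrices; x1, x2: matched pixel coordinates (u, v)."""
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The homogeneous solution is the right singular vector associated with
    # the smallest singular value of A.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

# Hypothetical stereo pair: identical intrinsics, 0.2 m baseline along x.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

X_true = np.array([0.1, -0.05, 2.0])
X_rec = triangulate_point(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free correspondences the point is recovered to numerical precision; with real feature matches the same system is solved in a least-squares sense.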

The model generation and deformation estimation methods were validated in our work through multiple simulated experiments and compared to existing methods in the literature. The methods were compared to the fusion algorithm developed by Li et al. [65], wherein their reconstruction method was shown to recover both concave and convex geometries. In order to provide a comparative analysis between the model generation method proposed herein and that of Li et al., multiple simulated experiments are presented below for the model generation and deformation estimation of multiple a priori unknown objects deforming in the workspace. The comparison also varies the number of cameras available in the system to illustrate a camera-saturated workspace approach, otherwise known as a brute-force solution to the camera placement problem. It is noted that the brute-force approach of increasing the number of cameras in the system is both computationally expensive and results in a much more constricted workspace due to the presence of the extra cameras.

The comparison of the methods presented herein includes three unique object deformations with six camera configurations. The initial object model and final deformation for Simulation A are presented in Fig. 20, Simulation B in Fig. 21, and Simulation C in Fig. 22. The camera configurations were numbered I to VI and are presented in Figs. 23, 24 and 25, respectively. The methods were compared based on the error between the generated model’s total surface area and that of the ground truth model, and on the error between their volumes. The comparative results are presented in Table 3. One major difference must be noted: the errors calculated for the method developed by Li et al. were for the model recovered at the current demand instant, while the errors for the chosen model generation method were based on the estimated deformation for the next demand instant. The comparisons illustrate the accuracy of the chosen model generation and deformation estimation methods when compared to other methods available in the literature.
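The two comparison quantities can be computed directly from a triangle mesh. The sketch below is an illustration under stated assumptions rather than the authors' evaluation code: it assumes a closed, consistently oriented triangle mesh, computes the surface area as the sum of polygon areas and the volume from signed tetrahedra, and reports a relative error in percent (the exact error definition used for Table 3 is not given here, so `percent_error` is a hypothetical choice).

```python
import numpy as np

def surface_area(vertices, faces):
    """Total area of a triangle mesh: sum of the individual triangle areas."""
    tri = vertices[faces]                       # shape (n_p, 3, 3)
    cross = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    return float(0.5 * np.linalg.norm(cross, axis=1).sum())

def volume(vertices, faces):
    """Enclosed volume via signed tetrahedra formed with the origin.
    Assumes the mesh is closed and its faces are consistently oriented."""
    tri = vertices[faces]
    signed = np.einsum("ij,ij->i", tri[:, 0], np.cross(tri[:, 1], tri[:, 2])) / 6.0
    return float(abs(signed.sum()))

def percent_error(estimate, truth):
    """Relative error in percent (assumed metric, for illustration)."""
    return 100.0 * abs(estimate - truth) / truth

# Unit right tetrahedron as a stand-in ground truth model.
vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
faces = np.array([[0, 2, 1], [0, 1, 3], [0, 3, 2], [1, 2, 3]])

area = surface_area(vertices, faces)   # three right triangles plus one equilateral face
vol = volume(vertices, faces)          # 1/6 for this tetrahedron
```

The same functions would be applied to the recovered model and to the ground truth model, with `percent_error` comparing the two results.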

Fig. 20 Simulation A, top row – initial object deformation, bottom row – final object deformation [61]

Fig. 21 Simulation B, top row – initial object deformation, bottom row – final object deformation [61]

Fig. 22 Simulation C, top row – initial object deformation, bottom row – final object deformation [61]

Fig. 23 Camera configuration I (left), II (right) [61]

Fig. 24 Camera configuration III (left), IV (right) [61]

Fig. 25 Camera configuration V (left), VI (right) [61]

A final comparison is presented in Table 4, wherein the chosen model generation and deformation estimation method was compared to itself under the assumption that the target object was a priori known and had a fixed surface area. Overall, the known-model method showed less error, except for the case where the object’s surface area changed in Simulation A.

Appendix B

The figures illustrate the visibility results for 12 supplementary simulations. The simulations were run using the same deforming object as in Section 4. However, for these simulations, the camera models were based on the calibrated camera parameters used in the experiment, namely, an 18 mm focal length, a 4:3 aspect ratio sensor, and lens distortion. To set up these simulations, the camera and obstacle positions were scaled in the workspace. The results followed the same trend as the simulations in Section 4, but produced a slightly lower visibility metric with increased variance. This disparity can be attributed to the added lens distortion and the foreshortening effect of the short-focal-length lens.

Appendix C

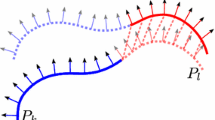

The following figures illustrate the (bird’s-eye-view) deformation sequences and subsequent camera reconfigurations at select demand instants for the simulations described in Section 4 above. The estimated visible polygons of the object model are colored green to illustrate the recovered stereo-surface area of the target object.

Figures 28 and 29 illustrate every odd frame of the first simulation set for the static camera placement.

Figures 30 and 31 illustrate every odd frame of the first simulation set for the reconfiguration camera placement through the proposed method.

Figures 32 and 33 illustrate every odd frame of the first simulation set for the ideal reconfiguration camera placement through the proposed method.

Tables 5 and 6 list the x and y global positions of all six cameras in the first simulation, relative to the world reference frame upon which the target object was centered.

Appendix D

The following figures illustrate the (bird’s-eye-view) deformation sequences and subsequent camera reconfigurations at select demand instants for the experiments described in Section 5 above. The estimated visible polygons of the object model are colored green to illustrate the recovered stereo-surface area of the target object. The remainder of the recovered model that was not estimated as visible is colored red.

Figures 34, 35, 36, 37 and 38 illustrate every odd frame of experiments 1–5, and include the comparison of the reconfiguration through the proposed method to the performance of the static method.

Table 7 lists the x and y global positions of all six cameras in the fifth experiment, relative to the world reference frame upon which the target object was centered.

Cite this article

Nuger, E., Benhabib, B. Multi-Camera Active-Vision for Markerless Shape Recovery of Unknown Deforming Objects. J Intell Robot Syst 92, 223–264 (2018). https://doi.org/10.1007/s10846-018-0773-0

DOI: https://doi.org/10.1007/s10846-018-0773-0