Abstract

The stochastic activity of neurons is caused by various sources of correlated fluctuations and can be described in terms of simplified, yet biophysically grounded, integrate-and-fire models. One paradigmatic model is the quadratic integrate-and-fire model and its equivalent phase description by the theta neuron. Here we study the theta neuron model driven by a correlated Ornstein-Uhlenbeck noise and by periodic stimuli. We apply the matrix-continued-fraction method to the associated Fokker-Planck equation to develop an efficient numerical scheme to determine the stationary firing rate as well as the stimulus-induced modulation of the instantaneous firing rate. For the stationary case, we identify the conditions under which the firing rate decreases or increases by the effect of the colored noise and compare our results to existing analytical approximations for limit cases. For an additional periodic signal we demonstrate how the linear and nonlinear response terms can be computed and report resonant behavior for some of them. We extend the method to the case of two periodic signals, generally with incommensurable frequencies, and present a particular case for which a strong mixed response to both signals is observed, i.e. where the response to the sum of signals differs significantly from the sum of responses to the single signals. We provide Python code for our computational method: https://github.com/jannikfranzen/theta_neuron.


1 Introduction

Neural spiking is a random process due to the presence of multiple sources of noise. These include the quasi-random input received by a neuron that is embedded in a recurrent network (network noise), the unreliability of the synapses (synaptic noise), and the stochastic opening and closing of ion channels (channel noise) (Gabbiani & Cox, 2017; Koch, 1999). This stochasticity and the resulting response variability are a central feature of neural spiking (Holden, 1976; Tuckwell, 1989). Therefore, studies in computational neuroscience have to account for this stochasticity, as it has important implications for the signal transmission properties.

Computational studies of stochastic neuron models often assume that the driving fluctuations are temporally uncorrelated. This white-noise assumption implies that the correlation time \(\tau _s\) of the input fluctuations is much smaller than the time scale of the membrane potential \(\tau _m\). Put differently, the input noise is regarded as fast compared to every other process present in the neural system. This assumption grants a far-reaching mathematical tractability of the problem (Abbott & van Vreeswijk, 1993; Brunel, 2000; Burkitt, 2006; Holden, 1976; Lindner & Schimansky-Geier, 2001; Ricciardi, 1977; Richardson, 2004; Tuckwell, 1989) but is violated in a number of interesting cases. First, fluctuations that arise in a recurrent network often exhibit reduced power at low frequencies (green noise) (Bair et al., 1994; Câteau & Reyes, 2006; Pena et al., 2018; Vellmer & Lindner, 2019). Second, fluctuations in oscillatory systems, e.g. caused by the electroreceptor of the paddlefish, can be band-pass filtered (Bauermeister et al., 2013; Neiman & Russell, 2001). Finally and most prominently, fluctuations that emerge due to synaptic filtering of postsynaptic potentials (Brunel & Sergi, 1998; Lindner, 2004; Lindner & Longtin, 2006; Moreno-Bote & Parga, 2010; Rudolph & Destexhe, 2005) or due to slow ion channel kinetics (Fisch et al., 2012; Schwalger et al., 2010) have reduced power at high frequencies (red noise).

There are two important types of neuron models with distinct response characteristics: integrators (type I neurons) and resonators (type II neurons) (Izhikevich, 2007). The canonical model for a type I neuron is the quadratic integrate-and-fire model or, mathematically equivalent and expressed in terms of a phase variable, the theta neuron. Here we study the response characteristics of the theta neuron driven by a low-pass filtered noise, the Ornstein-Uhlenbeck (OU) process. This model has been studied analytically by Brunel and Latham (2003) for the limits of very short and very long correlation times. Furthermore, Naundorf et al. (2005a, b) solved the associated Fokker-Planck equation for the voltage and the noise variable for selected parameter sets in order to obtain the stationary firing rate and the firing rate’s linear response to a weak periodic stimulus.

Here, we put forward semi-analytical results for the stationary firing rate by means of the matrix-continued-fraction (MCF) method for arbitrary ratios of the two relevant time scales \(\tau = \tau _s/\tau _m\). We present exhaustive parameter scans of the stationary firing rate with respect to variations of the bifurcation parameter and the correlation time. Furthermore, our method also allows us to calculate how a periodic signal, which is not necessarily weak, is encoded in the firing rate of the model neuron in the presence of a correlated background noise. Because non-weak signals, for which the linear response does not provide a good approximation to the firing rate, have recently attracted attention (Novikov & Gutkin, 2020; Ostojic & Brunel, 2011; Voronenko & Lindner, 2017, 2018), we also develop semi-analytical tools for the linear as well as the non-linear response of the firing rate to one or two periodic signals. To the best of our knowledge, this is the first application of the MCF method in computational neuroscience.

This paper is organized as follows. In Sect. 2 we introduce the model system and the associated Fokker-Planck equation. In Sect. 3 we compute the stationary firing rate of a theta neuron subject to correlated noise by means of the MCF method. Section 4 generalizes the ideas of the MCF method to the case where the model is driven by the OU noise and an additional periodic signal. Finally, in Sect. 4.3 we compute the firing rate response to two periodic signals. We conclude with a short summary of our results.

2 Model

The quadratic integrate-and-fire (QIF) model uses the normal form of a saddle-node on invariant circle (SNIC) bifurcation (Izhikevich, 2007) with a time-dependent input \(I(\hat{t})\):

\[\tau _m \frac{dx}{d\hat{t}} = x^2 + I(\hat{t}). \tag{1}\]

In order to make the connection to physical time units transparent, we have kept a time constant on the l.h.s., which is of the order of the membrane time constant \(\tau _m\), typically 10 ms. In the following, however, for ease of notation we use a nondimensional time \(t = \hat{t}/\tau _m\), i.e. we measure time as well as any other time constants, e.g. the correlation time below, in multiples of the membrane time constant. Similarly, all frequencies and firing rates are given in multiples of the inverse membrane time constant (additional rescalings are considered below, see e.g. Eqs. (7) and (10)).

In the new nondimensional time the QIF model takes the usual form:

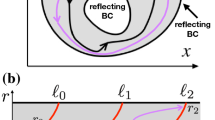

If the variable x(t) reaches the threshold \(x_\mathrm{th} = \infty\), a spike is created at time \(t_i = t\) and x(t) is immediately reset to \(x_\mathrm{re} = -\infty\). If the input is assumed to be constant, it can serve as a bifurcation parameter and allows the model to switch between the excitable (\(I<0\)) and the mean-driven regime (\(I > 0\)). The model for \(I<0\) is illustrated in Fig. 1A, including the stable and unstable fixed points at \(x=\mp \sqrt{\vert I \vert }\) as well as the reset. The QIF model can be transformed into the theta neuron by the transformation \(x = \tan (\theta /2)\) (cf. Fig. 1A):

\[\dot{\theta } = (1-\cos \theta ) + (1+\cos \theta )\, I(t). \tag{3}\]

The advantage of such a phase description is that the threshold \(\theta _\mathrm{th} = \pi\) and the reset \(\theta _\mathrm{re} = -\pi\) lie at finite values. We will use this phase description of a canonical type I neuron in the remainder of this paper.
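As a quick numerical illustration of the phase description, the following sketch integrates the deterministic theta neuron for a constant input \(I>0\) and compares the spike count per unit time with \(\sqrt{I}/\pi\), which follows from the QIF passage time \(\int _{-\infty }^{\infty } dx/(x^2+I) = \pi /\sqrt{I}\). The function name, the forward Euler scheme, and the step size are illustrative assumptions and are not taken from the accompanying repository.

```python
import math

def deterministic_theta_rate(I, dt=1e-3, t_max=200.0):
    """Integrate d(theta)/dt = (1 - cos(theta)) + (1 + cos(theta)) * I with a
    forward Euler scheme and return the number of spikes per unit time."""
    theta = -math.pi                      # start at the reset phase
    n_spikes = 0
    n_steps = int(round(t_max / dt))
    for _ in range(n_steps):
        c = math.cos(theta)
        theta += dt * ((1.0 - c) + (1.0 + c) * I)
        if theta >= math.pi:              # threshold crossing: count and wrap
            theta -= 2.0 * math.pi
            n_spikes += 1
    return n_spikes / t_max

I_const = 1.0
rate_numeric = deterministic_theta_rate(I_const)
rate_exact = math.sqrt(I_const) / math.pi   # inverse of the period pi / sqrt(I)
```

Because only whole spikes are counted, the numerical estimate agrees with the exact value up to a counting error of order \(1/t_\text{max}\).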

We assume that the input I(t) consists of three parts:

\[I(t) = \mu + \eta (t) + s(t), \tag{4}\]

a constant mean input \(\mu\), a temporally correlated noise \(\eta (t)\) and a periodic signal s(t) (see Fig. 1B). Note that the temporal average of the input \(\bar{I} = \lim _{T\rightarrow \infty } \int _0^T I(t)dt / T\) is only affected by \(\mu\) because the temporal averages are set to \(\bar{\eta }(t) = 0\) and \(\bar{s}(t) = 0\) without loss of generality. The correlated noise \(\eta\) is given by an Ornstein-Uhlenbeck process with auto-correlation function \(\langle \eta (t)\eta (t+\Delta t)\rangle = \sigma ^2 \exp (-\Delta t/\tau )\) and correlation time \(\tau\); it can be generated by an extra stochastic differential equation, a trick from statistical physics known as Markovian embedding of a colored noise (see e.g. Dygas et al., 1986; Guardia et al., 1984; Langer, 1969; Mori, 1965; Siegle et al., 2010, and the review by Hänggi & Jung, 1995). We remind the reader that the correlation time \(\tau\) is given in terms of the membrane time constant, i.e. \(\tau = \tau _s / \tau _m\) is actually the ratio between the true correlation time \(\tau _s\) (given for instance in ms) and the membrane time \(\tau _m\). In the limit \(\tau \rightarrow 0\) the noise \(\eta (t)\) becomes uncorrelated, i.e. white. However, if the variance \(\sigma ^2\) is held constant, as in Eq. (5), the effect of the noise on the neuron vanishes together with the correlation time. A non-trivial white-noise limit can be more properly described in terms of the noise intensity \(D=\tau \sigma ^2\); if D is held constant, the noise still affects the dynamics for vanishing correlation times. For such a constant intensity scaling the effect of the noise vanishes as \(\tau \rightarrow \infty\).
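The Markovian embedding can be illustrated with a short sketch that generates an OU path and checks the stated auto-correlation function empirically. It uses the exact one-step update of the OU process, which is our own choice here (the simulations reported later use an Euler-Maruyama scheme); the function name, seed, and parameter values are hypothetical.

```python
import numpy as np

def simulate_ou(sigma, tau, dt, n_steps, rng):
    """Sample an OU path with stationary variance sigma**2 and correlation
    time tau using the exact one-step update of the process."""
    decay = np.exp(-dt / tau)
    kick = sigma * np.sqrt(1.0 - decay**2)
    eta = np.empty(n_steps)
    eta[0] = sigma * rng.standard_normal()   # draw from the stationary state
    xi = rng.standard_normal(n_steps - 1)
    for i in range(1, n_steps):
        eta[i] = decay * eta[i - 1] + kick * xi[i - 1]
    return eta

rng = np.random.default_rng(0)
sigma, tau, dt = 1.0, 0.5, 0.01
eta = simulate_ou(sigma, tau, dt, 200_000, rng)

lag = int(round(tau / dt))                     # lag of one correlation time
var_est = np.mean(eta**2)                      # estimate of sigma^2
corr_est = np.mean(eta[:-lag] * eta[lag:])     # estimate of sigma^2 * exp(-1)
```

For the constant intensity scaling one would instead fix \(D\) and set \(\sigma = \sqrt{D/\tau }\) before calling the function.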

Fig. 1 Type-I neuron model. A Representation of the deterministic QIF and the equivalent theta neuron model. The blue line shows the QIF model's potential \(U(x) = -x^3/3 - Ix\), defined by \(\dot{x} = -\partial _x U(x) = x^2 + I\), in the excitable regime (\(I<0\)). Upon reaching the threshold \(x_\mathrm{th} = \infty\) a spike is created and x is reset to \(x_\mathrm{re} = - \infty\). For the equivalent theta neuron model, obtained by the transformation \(x=\tan (\theta /2)\), a spike is created whenever \(\theta\) passes \(\theta _\mathrm{th}=\pi\); no additional reset rule is needed. B Illustration of a theta neuron subject to a temporally correlated OU noise (blue) as well as a periodic signal (red) and the resulting spike train with stochastic spike times (orange)

For \(s(t)=0\) the system shows spontaneous spiking (not related to any signal). In this case the parameter space is three-dimensional, i.e. all statistics depend only on \((\mu ,\sigma ,\tau )\). This dependence however can be reduced to just two independent parameters (\(\hat{\mu }, \hat{\tau }\)) defined by

This transformation also affects the phase \(\tan (\hat{\theta }/2)=\tan (\theta /2)/\sqrt{\sigma }\) and time \(\hat{t}=\sqrt{\sigma }t\) in Eq. (3) and consequently rescales the firing rate

Under an additional periodic driving \(s(t) = \varepsilon \cos (\omega t)\) the signal will be rescaled as well: \(\hat{s}(\hat{t}) = \hat{\varepsilon } \cos (\hat{\omega } \hat{t})\) with

For several periodic signals the respective amplitudes and frequencies will be rescaled in the same manner.

For the constant intensity scaling we use a similar transformation and set \(D=1\):

again, the state variables are affected by this scaling as well: \(\tan (\tilde{\theta }/2)=\tan (\theta /2)/D^{1/3}\) and \(\tilde{t}=D^{1/3} t\). The firing rates in the scaled and unscaled parameter space are related by

We make use of these scalings in the discussion of the results. For the ease of notation, we omit the hat and tilde over the parameters.
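The two scalings can be made concrete with a small helper. The parameter maps below are our own reading of the substitutions stated above (\(x \rightarrow x/\sqrt{\sigma }\), \(t \rightarrow \sqrt{\sigma }\, t\) and \(x \rightarrow x/D^{1/3}\), \(t \rightarrow D^{1/3} t\)) inserted into the QIF equation; they are a sketch under that assumption, and the function names are hypothetical.

```python
import numpy as np

def constant_variance_scaling(mu, sigma, tau, r0=None):
    """Map (mu, sigma, tau) to the scaled pair (mu_hat, tau_hat) with unit
    noise variance; a firing rate r0 is rescaled like an inverse time."""
    mu_hat = mu / sigma
    tau_hat = np.sqrt(sigma) * tau
    r0_hat = None if r0 is None else r0 / np.sqrt(sigma)
    return mu_hat, tau_hat, r0_hat

def constant_intensity_scaling(mu, D, tau, r0=None):
    """Map (mu, D, tau) to the scaled pair (mu_tilde, tau_tilde) with unit
    noise intensity; a firing rate r0 is rescaled like an inverse time."""
    mu_tilde = mu / D ** (2.0 / 3.0)
    tau_tilde = D ** (1.0 / 3.0) * tau
    r0_tilde = None if r0 is None else r0 / D ** (1.0 / 3.0)
    return mu_tilde, tau_tilde, r0_tilde

# Consistency check with the deterministic rate sqrt(mu)/pi of the theta neuron:
mu, sigma, tau, D = 2.0, 4.0, 0.5, 8.0
r_det = np.sqrt(mu) / np.pi
mu_hat, tau_hat, r_hat = constant_variance_scaling(mu, sigma, tau, r_det)
mu_til, tau_til, r_til = constant_intensity_scaling(mu, D, tau, r_det)
```

In both cases the rescaled deterministic rate equals the deterministic rate evaluated at the rescaled mean input, which is a simple way to check that the parameter and time rescalings fit together.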

2.1 The Fokker-Planck equation

The stochastic system of interest can be written as two Langevin equations

where \(f(\theta , \eta , s(t)) = (1-\cos \theta ) + (1+\cos \theta )(\mu + \eta (t) + s(t))\). The governing equation for the corresponding probability density function (PDF) is the well-known Fokker-Planck equation (FPE) (Risken, 1984). The PDF describes the probability of finding the phase \(\theta\) and the noise \(\eta\) at time t around certain values. In the neural context the PDF can be related to the instantaneous firing rate r(t) (see for instance Brunel & Sergi, 1998; Naundorf et al., 2005a, b, and Moreno-Bote & Parga, 2010), as we recall in the following. The FPE is given by:

The two-dimensional partial differential equation is completed by two natural boundary conditions

a periodic boundary condition

and the normalization condition

There is a corresponding continuity equation that relates the temporal derivative of the PDF to the spatial derivative of the probability current:

where \(J_\theta\) and \(J_\eta\) are the probability currents in the \(\theta\) and \(\eta\) direction, respectively:

An important insight is that the probability current in the phase direction \(J_\theta\) at the threshold \(\theta = \pi\) is directly related to the instantaneous firing rate r(t):

In the last equality we have used that the dynamics of the theta neuron becomes independent of the input at the threshold; specifically, we have \(f(\pi ,\eta , s(t)) = 2\).
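The firing rate can also be estimated directly from the Langevin dynamics by counting threshold crossings. The sketch below is a minimal Euler-Maruyama integration for \(s(t)=0\); it assumes the standard OU form \(\tau \dot{\eta } = -\eta + \sqrt{2\tau \sigma ^2}\, \xi (t)\) with Gaussian white noise \(\xi (t)\), and the function name, defaults, and seed are hypothetical rather than those of the repository.

```python
import numpy as np

def simulate_firing_rate(mu, sigma, tau, dt=5e-3, t_max=100.0,
                         n_trials=200, seed=0):
    """Euler-Maruyama estimate of the firing rate of a theta neuron driven by
    OU noise (no periodic signal); all trials are advanced in parallel."""
    rng = np.random.default_rng(seed)
    theta = np.full(n_trials, -np.pi)                # start at the reset phase
    eta = sigma * rng.standard_normal(n_trials)      # stationary initial noise
    noise_amp = np.sqrt(2.0 * sigma**2 * dt / tau)   # OU increment per step
    n_steps = int(round(t_max / dt))
    n_spikes = 0
    for _ in range(n_steps):
        c = np.cos(theta)
        theta = theta + dt * ((1.0 - c) + (1.0 + c) * (mu + eta))
        eta = eta - dt * eta / tau + noise_amp * rng.standard_normal(n_trials)
        crossed = theta >= np.pi                     # spikes in this step
        n_spikes += np.count_nonzero(crossed)
        theta[crossed] -= 2.0 * np.pi                # wrap phase after a spike
    return n_spikes / (n_trials * t_max)

# Weak noise in the mean-driven regime: the rate stays close to sqrt(mu)/pi.
rate = simulate_firing_rate(mu=1.0, sigma=0.05, tau=1.0, t_max=50.0, n_trials=100)
```

Because the drift at \(\theta = \pm \pi\) equals 2 irrespective of the input, the phase can only cross the threshold in the forward direction for sufficiently small time steps, so no backward crossings need to be handled.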

The solution of the two-dimensional Fokker-Planck equation and the boundary conditions listed above is a difficult problem, even in the simplest case of the (time-independent) stationary solution in the absence of a periodic stimulus. Different authors have proposed approximate solutions in limit cases, e.g. for the case of very slow or very fast Ornstein-Uhlenbeck noise (Brunel & Latham, 2003), for weak noise in the mean-driven regime (Galán, 2009; Zhou et al., 2013), or, in the case of a periodic modulation of the firing rate, for very low or very high stimulus frequencies (Fourcaud-Trocmé et al., 2003). A numerical method to solve the two-dimensional Fokker-Planck equation in terms of an eigenfunction expansion was presented by Naundorf et al. (2005a, b); similar approaches have been pursued to describe two one-dimensional white-noise driven neuron models either coupled directly (Ly & Ermentrout, 2009) or subject to a shared input noise (Deniz & Rotter, 2017). Eigenfunction expansions have also been used to describe the activity in neural populations and neural networks, see e.g. Knight (2000) and Doiron et al. (2006). Turning back to the problem of single-neuron models, beyond the theta neuron, different approximations to the multi-dimensional Fokker-Planck equation for neuron models with Ornstein-Uhlenbeck noise have been suggested for the perfect integrate-and-fire model (Fourcaud & Brunel, 2002; Lindner, 2004; Schwalger et al., 2010, 2015) and for the leaky integrate-and-fire model (Alijani & Richardson, 2011; Brunel & Sergi, 1998; Brunel et al., 2001; Moreno et al., 2002; Moreno-Bote & Parga, 2004, 2006, 2010; Schuecker et al., 2015; Schwalger & Schimansky-Geier, 2008). 
We note that with respect to the driving noise, the related simpler case of an exponentially correlated two-state (dichotomous) noise permits the exact analytical solution for a few statistical measures such as the firing rate and stationary voltage distribution (Droste & Lindner, 2014; Müller-Hansen et al., 2015), the power spectrum and linear response function (Droste & Lindner, 2017), and the serial correlation coefficient of the interspike intervals (Lindner, 2004; Müller-Hansen et al., 2015).

3 Stationary firing rate

If we consider a system that is subject to a temporally correlated noise but no external signal (\(s(t) = 0\)) then the probability density asymptotically approaches a stationary distribution \(P_0(\theta , \eta )\) which is what we consider now. The FPE for this stationary distribution reads

with the stationary Fokker-Planck operator \(\hat{L}_0(\theta ,\eta ) = \hat{L}(\theta , \eta , 0)\). Once the stationary probability density is known, it can be used to obtain the stationary firing rate \(r_0\). As an alternative to Eq. (21), one can calculate the firing rate by

where \(J_{\theta ,0}(\theta ,\eta )\) denotes the component of the probability current in the direction of the phase for \(s(t)\equiv 0\). To see how to arrive at this equation, we take the stationary case of Eq. (18) and integrate it over all values of \(\eta\). The integral term \(\int d\eta \, \partial _\eta J_\eta = J_\eta (\theta , \eta = \infty ) - J_\eta (\theta , \eta = -\infty )\) vanishes because of the natural boundary conditions, and it follows that \(\partial _\theta \int d\eta \, J_\theta = 0\). Consequently, the integrated \(\theta\) current does not depend on \(\theta\) and is everywhere equal to the firing rate. An additional integration over \(\theta\), yielding the factor \(2\pi\), leads to Eq. (23).
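Written out, the argument above takes the following form. The notation is ours and assumes a stationary continuity equation of the form \(\partial _\theta J_{\theta ,0} + \partial _\eta J_{\eta ,0} = 0\); it is meant as a sketch of the individual steps rather than a reproduction of the numbered equations.

```latex
\begin{aligned}
0 &= \int_{-\infty}^{\infty} d\eta\, \left[ \partial_\theta J_{\theta,0}(\theta,\eta)
      + \partial_\eta J_{\eta,0}(\theta,\eta) \right]
   = \partial_\theta \int_{-\infty}^{\infty} d\eta\, J_{\theta,0}(\theta,\eta), \\
r_0 &= \int_{-\infty}^{\infty} d\eta\, J_{\theta,0}(\pi,\eta)
     = \int_{-\infty}^{\infty} d\eta\, J_{\theta,0}(\theta,\eta)
     \quad \text{for all } \theta, \\
r_0 &= \frac{1}{2\pi} \int_{-\pi}^{\pi} d\theta \int_{-\infty}^{\infty} d\eta\,
       J_{\theta,0}(\theta,\eta).
\end{aligned}
```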

Fig. 2 Stationary phase distribution of the theta neuron in the excitable regime (\(\mu =-1\), A), at the bifurcation point (\(\mu =0\), B) and in the mean-driven regime (\(\mu =1\), C). Dynamics of the corresponding deterministic systems are shown at the right. For the phase distributions the variance of the OU noise is held constant at \(\sigma ^2=1\) while the correlation time varies as shown in A. For \(\tau \rightarrow 0\) the effect of the noise vanishes, i.e. the model becomes deterministic. The distributions have been calculated using the MCF method. Parameters MCF method: \(n_\text {max} = p_\text {max} = 200\)

Fig. 3 Stationary firing rate in the constant variance scaling (\(\sigma ^2=1\)) for different values of \(\mu\) and \(\tau\). Contour lines from A are shown again in B and C. Interestingly, the firing rate of the theta neuron can increase, decrease and even exhibit non-monotonic behavior with respect to the correlation time \(\tau\) of the OU noise as shown in B. Calculations by the MCF method are confirmed by stochastic simulations (gray dots). Parameters MCF method: \(n_\text {max} = p_\text {max} = 150\)

3.1 The MCF method

In the previous section it was shown that the stationary probability density is interesting on its own because it is directly related to the stationary firing rate. Here we outline the core ideas and assumptions that are necessary to compute the stationary PDF \(P_0(\theta ,\eta )\) by means of the matrix-continued-fraction method, which has been put forward by Risken (1984).

As a first step, the stationary probability density is expanded with respect to the phase \(\theta\) and noise \(\eta\) by two sets of eigenfunctions, namely the complex exponential functions \(e^{i n \theta }/\sqrt{2\pi }\) and Hermite functions \(\phi _p(\eta )\) (see Bartussek, 1997 for a similar choice):

Note that \(\left( c_{n,p}\right) ^* = c_{-n,p}\) because \(P_0(\theta , \eta )\) is real. Thus, we only have to determine the expansion coefficients for \(n \ge 0\). Both sets satisfy the periodic and natural boundary conditions in \(\theta\) and \(\eta\), respectively. A first application of this result is the determination of the marginal probability density by

which is illustrated for different values of \(\mu\) and \(\tau\) in Fig. 2. The stationary firing rate is conveniently expressed by only two of the coefficients,

This expression can be derived by inserting the expansion into Eq. (23) and using the properties of the coefficients and eigenfunctions, in particular Eqs. (80) and (81) for the Hermite functions.

The coefficients can be determined by a substitution of the expansion Eq. (24) into the stationary FPE (22) which yields the tridiagonal recurrence relation, see Appendix A:

with the coefficient vectors \(\varvec{c}_n = \left( c_{n,0}, c_{n,1}, ... \right) ^T\) and \(\varvec{c}_0 = \left( 1,0,0, ... \right) ^T\). The matrix \(\hat{K}_n\) is given by

where \(\mathbbm{1}\) is the identity matrix and \(\hat{A}\), \(\hat{B}\) are defined by

Solving Eq. (27) for \(c_{n,p}\) is difficult because the matrices are infinite and the equation constitutes a relation between three unknowns. As a first step to find the coefficients, one can truncate the expansion in Eq. (24) to obtain finite matrices. In practice, we assume that all Hermite functions and Fourier modes become negligible for large p or n, so that the corresponding coefficients vanish, i.e. \(c_{n,p} = 0\) for \(p>p_\text {max}\) or \(n>n_\text {max}\). To solve the second problem (of having three unknowns), we define transition matrices \(\hat{S}_n\) by

which upon insertion into Eq. (27) yield:

For any coefficient vectors \(\varvec{c}_n\) this equation is satisfied provided the term in square brackets vanishes. The relation between the two unknown transition matrices can be expressed by:

and leads by recursive insertion to an infinite matrix continued fraction

where \(1/\cdot\) denotes the inverse of a matrix. This fraction is truncated after \(n>n_\mathrm{max}\). The matrix \(\hat{S}_0\) determines the following coefficients via Eq. (31):

which are needed for the computation of the firing rate according to Eq. (26).
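The backward iteration behind the matrix continued fraction can be sketched for a generic tridiagonal vector recurrence. The sketch assumes a recurrence of the form \(\hat{Q}^-_n \varvec{c}_{n-1} + \hat{Q}_n \varvec{c}_n + \hat{Q}^+_n \varvec{c}_{n+1} = 0\) with the ansatz \(\varvec{c}_{n+1} = \hat{S}_n \varvec{c}_n\); the matrices \(\hat{Q}^\pm _n\), \(\hat{Q}_n\) are placeholders and not the specific matrices of Eq. (27), and the function name is hypothetical. A toy recurrence with a known decaying solution serves as a check.

```python
import numpy as np

def transition_matrix_S0(q_minus, q_diag, q_plus, n_max):
    """Evaluate the truncated matrix continued fraction by backward iteration.

    q_minus, q_diag, q_plus are callables n -> square array. The truncation
    c_n = 0 for n > n_max corresponds to S_{n_max} = 0.
    """
    dim = q_diag(1).shape[0]
    S = np.zeros((dim, dim), dtype=complex)          # S_{n_max} = 0
    for n in range(n_max, 0, -1):
        # S_{n-1} = -(Q_n + Q^+_n S_n)^{-1} Q^-_n
        S = -np.linalg.solve(q_diag(n) + q_plus(n) @ S, q_minus(n))
    return S                                         # this is S_0

# Toy recurrence c_{n-1} + Q c_n + c_{n+1} = 0 with diagonal Q. Its decaying
# solutions are geometric, c_n = lambda**n c_0, with lambda = 1/2 and 1/3.
identity = np.eye(2)
Q = np.diag([-2.5, -10.0 / 3.0])
S0 = transition_matrix_S0(lambda n: identity, lambda n: Q, lambda n: identity, 80)

c0 = np.array([1.0, 0.0])
c1 = S0 @ c0          # first coefficient vector obtained from S_0
```

Using a linear solve instead of an explicit matrix inverse is a numerical design choice of this sketch; the result is the same transition matrix.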

3.2 Constant variance scaling

The MCF method provides a fast computational method to determine the stationary firing rate \(r_0\) in a large part of the parameter space. Together with different analytical approximations it is possible to cover the complete dependence of \(r_0\) on the parameters \(\mu\), \(\tau\) and \(\sigma\). In the following figures, we additionally verify the MCF results by comparison to numerical simulations of Eq. (11) using an Euler-Maruyama scheme with time step \(\Delta t = 5 \cdot 10^{-3}\) for \(N_\text {trials} = 5 \cdot 10^5\) trials of length \(T_\text {max} = 500\). For more details see the repository. In Fig. 3 we use the constant variance scaling (see Sect. 2) with \(\sigma =1\). A different choice for \(\sigma\) would result in a rescaling of the axes according to Eq. (6). As depicted in Fig. 3B, for short as well as long correlation times, the firing rate approaches limit values indicated by the horizontal lines. For \(\tau \rightarrow 0\), the effect of the correlated noise vanishes so that the short-correlation-time limit is equal to the deterministic firing rate

\[r_\text {det}(\mu ) = \frac{\sqrt{\mu }}{\pi }\, \Theta (\mu ), \tag{36}\]

where \(\Theta (\mu )\) is the Heaviside function. In the case \(\tau \rightarrow \infty\), the noise causes a slow modulation of the firing rate; computing the long-correlation-time limit then corresponds to averaging the deterministic firing rate over the distribution of the noise (quasi-static noise approximation, see Moreno-Bote & Parga, 2010)
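The quasi-static average can be evaluated numerically in a few lines. The sketch below assumes the deterministic rate of the theta neuron, \(\sqrt{I}/\pi\) for \(I>0\) and zero otherwise, and a Gaussian stationary distribution of the OU noise with variance \(\sigma ^2\); the grid parameters and function names are illustrative choices.

```python
import numpy as np

def r_det(I):
    """Deterministic firing rate: sqrt(I)/pi for I > 0 and zero otherwise."""
    I = np.asarray(I, dtype=float)
    return np.sqrt(np.clip(I, 0.0, None)) / np.pi

def quasi_static_rate(mu, sigma, n_grid=20001, width=10.0):
    """Average r_det over a Gaussian input distribution with mean mu and
    standard deviation sigma (simple Riemann sum on a uniform grid)."""
    I = np.linspace(mu - width * sigma, mu + width * sigma, n_grid)
    dI = I[1] - I[0]
    gauss = np.exp(-(I - mu) ** 2 / (2.0 * sigma**2)) / np.sqrt(2.0 * np.pi * sigma**2)
    return np.sum(r_det(I) * gauss) * dI

rate_mean_driven = quasi_static_rate(mu=2.0, sigma=0.5)   # below r_det(2.0)
rate_excitable = quasi_static_rate(mu=-1.0, sigma=1.0)    # above r_det(-1.0) = 0
```

In the mean-driven example the average lies below the deterministic rate, whereas in the excitable example it is positive although the deterministic rate vanishes.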

We recall that for a QIF model driven by white noise the firing rate is always larger than the deterministic rate (Lindner et al., 2003). In contrast, a colored noise may decrease the firing rate (Brunel & Latham, 2003; Galán, 2009) as shown in Fig. 3. For large correlation times, the decrease in the firing rate is a direct consequence of the concave curvature of the deterministic firing rate \(r_\text {det}(I)\) at large \(\mu\) as illustrated in Fig. 4. This can be understood as follows. If we take the linear approximation of the deterministic rate around the operation point \(\mu\) then, not surprisingly, with a symmetric input distribution of the noise, the averaging yields the deterministic firing rate at the operation point:

In the relevant range the underlined term is larger than the function \(r_\text {det}(I)\) in Eq. (37), as can be seen from Fig. 4. Consequently, the resulting integral in Eq. (38) (i.e. the deterministic firing rate) is larger than the actual firing rate in the long-correlation-time limit, Eq. (37). This is the mechanism by which a colored noise can reduce the firing rate in the mean-driven regime.
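For weak noise the size of this reduction can be estimated by carrying the expansion of the deterministic rate around \(\mu\) to second order before averaging over the Gaussian noise distribution. The following estimate is our own illustration, assumes \(r_\text {det}(I) = \sqrt{I}/\pi\) for \(I>0\), and neglects the small probability of negative inputs:

```latex
\begin{aligned}
\int dI\, r_\text{det}(I)\,
  \frac{e^{-(I-\mu)^2/(2\sigma^2)}}{\sqrt{2\pi\sigma^2}}
&\approx r_\text{det}(\mu) + \frac{\sigma^2}{2}\, r_\text{det}''(\mu) \\
&= \frac{\sqrt{\mu}}{\pi} - \frac{\sigma^2}{8\pi\, \mu^{3/2}},
\qquad \sigma \ll \mu .
\end{aligned}
```

The negative second derivative of the concave function \(r_\text {det}\) thus sets the sign of the leading correction.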

Fig. 4 Mechanism for the firing rate reduction. The decrease of the firing rate due to strongly correlated noise in the mean-driven regime is a consequence of the concave curvature of the deterministic firing rate \(r_\text {det}(I)\). For large \(\tau\) the firing rate can be approximated by averaging the deterministic firing rate over the noise distribution according to Eq. (37); this yields the blue point on the dashed line

Fig. 5 Decrease of the firing rate with respect to the correlation time \(\tau\) at fixed variance \(\sigma ^2=1\). Analytical approximations according to Eq. (39) (blue line) are compared to the firing rate obtained by the MCF method (orange line) and again verified by stochastic simulations (gray dots). Parameters MCF method: \(n_\text {max} = p_\text {max} = 200\)

For weak noise in the mean-driven regime (\(\sigma \ll \mu\)) this drop in the firing rate can be calculated analytically as done by Galán (2009). The formula requires the phase response curve (PRC) of the theta neuron, which is well known (Ermentrout, 1996), resulting in the following compact expression for the firing rate:

(please note the transition from cyclic frequencies used in Galán, 2009 to firing rates). The formula clearly predicts a reduction of the firing rate by colored noise; specifically, \(r_0\) decreases monotonically with increasing correlation time. It should be noted, however, that in the strongly mean-driven regime, in which this theory is valid, the changes in the firing rate are very small (see Fig. 5A, B). If the driving is less strong and deviations of the firing rate from \(r_\text {det}\) are more pronounced, the theory according to Eq. (39) no longer provides a good approximation (see Fig. 5C).

Fig. 6 Comparison between the firing rate and the deterministic rate. Difference between \(r(\mu ,\tau )\) and the deterministic firing rate \(r_\mathrm{det}(\mu )\) for \(\sigma ^2 = 1\). As expected, in the excitable regime (\(\mu < 0\)) the firing rate of the stochastic system is increased compared to the deterministic rate. For the mean-driven regime (\(\mu > 0\)) the firing rate can be either increased or decreased depending on the particular values of \(\mu\) and \(\tau\). Parameters MCF method: \(n_\text {max} = p_\text {max} = 150\)

Is at least the qualitative prediction of an overall rate reduction due to correlated noise correct? To answer this question, we plot in Fig. 6 the difference between the firing rate and the deterministic limit, \(r_0-r_\text {det}\), for a broad range of correlation times \(\tau\) and inputs \(\mu\). This difference can be both positive and negative. Trivially, in the excitable regime (\(\mu <0\)) the firing rate in the presence of noise can only be larger than the vanishing deterministic rate (here \(r_\text {det} = 0\)). In the mean-driven regime the changes can be both positive (for sufficiently small \(\mu\)) and negative (for larger \(\mu\)); the exact line of separation is displayed by a solid line in Fig. 6.

3.3 Constant intensity scaling

Instead of a constant variance, we can also keep the noise intensity fixed (\(D=\sigma ^2 \tau\)). The corresponding stationary firing rate as a function of \(\mu\) and \(\tau\) is shown in Fig. 7A. One advantage of the constant-intensity scaling is that it permits a non-trivial white noise limit (\(\tau \rightarrow 0\)), displayed in Fig. 7B, C by the dashed lines (Brunel & Latham, 2003). In the opposite limit of a long correlation time the noise variance vanishes, which implies that \(r_0\) approaches the deterministic rate.

Remarkably, for a sufficiently strong mean input current \(\mu\), the rate attains a minimum at intermediate correlation times. Considering the long as well as the short correlation-time approximation by Moreno-Bote and Parga (2010) (see our Eq. (37)) and Brunel and Latham (2003) (see Eq. (3.19) therein), respectively, this behavior can be expected. Generally, we find that the firing rate for any \(\tau\) is smaller than the white-noise limit.

Fig. 7 Stationary firing rate in the constant intensity scaling (\(D=1\)) for different values of \(\mu\) and \(\tau\). Contour lines from A are shown again in B and C. Interestingly, the firing rate is always smaller than the corresponding white noise limit \(\tau \rightarrow 0\) (dashed line) and can show non-monotonic behavior with a minimum depending on \(\tau\) and \(\mu\), see B. Here, known analytical approximations by Fourcaud-Trocmé et al. (2003) (solid purple lines) and Moreno-Bote and Parga (2010) (dashed purple lines) are compared to calculations by the MCF method (orange lines). Parameters MCF method: \(n_\text {max} = p_\text {max} = 150\)

4 Response to periodic stimulus

In the previous section we have considered a theta neuron with an input current I(t) that consisted of a constant input \(\mu\) and a colored noise \(\eta (t)\). We now turn to a more general case that involves an additional periodic signal

as illustrated in Fig. 8A and demonstrate how the MCF method can be used to compute the response of the firing rate.

We consider the time-dependent signal s(t) as a perturbation with amplitude \(\varepsilon\). The respective FPE can be expressed by the stationary Fokker-Planck operator \(\hat{L}_0\) as defined in the last section and an additional term that represents the effect of the periodic signal:

with \(\hat{L}_\text {per} = \partial _\theta (1+\cos \theta )\). As a result of the periodic forcing, we can no longer expect that the probability density converges to a stationary distribution; instead, the probability density approaches a so-called cyclo-stationary state with period \(T = 2\pi / \omega\):

Since this distribution fully determines the asymptotic firing rate, this implies for the latter \(r(t + T) = r(t)\).

Fig. 8 Cyclo-stationary firing rate. A Illustration of a theta neuron model subject to a temporally correlated OU noise and a periodic signal. B The firing rate (orange line; simulation) approaches a cyclo-stationary state (black line; MCF method) due to the periodicity of the signal (green line). In the linear regime the firing rate is well approximated by \(r(t) \approx r_0 + \vert \chi (\omega )\vert s(t-\varphi _{11}/\omega )\). Parameters: \(\mu = 0.5\), \(\sigma ^2 = 1\), \(\tau = 1\), \(\varepsilon = 0.1\), and \(\omega = 2\). The cyclo-stationary firing rate was calculated by the MCF method with \(n_\text {max} = p_\text {max} = 100\). Simulation parameters: In this figure, the number of realizations was increased to \(N_\text {trials} = 1 \cdot 10^6\) for visual purposes. For all realizations, the initial values are \(\eta (t=0) = 0\) and \(\theta (t=0) = -\pi\)

To determine the cyclo-stationary PDF we again use a twofold expansion, first a Fourier expansion that reflects the periodic nature of the signal and second a Taylor expansion with respect to the small amplitude of the periodic signal \(\varepsilon\):

Note that \(P_{\ell , k}(\theta , \eta )=P^*_{\ell , -k}(\theta , \eta )\) because \(P(\theta , \eta , t)\) is real. The expansion Eq. (43) can be substituted into Eq. (41) to obtain a system of coupled differential equations that are no longer time dependent and can be solved iteratively with respect to \(\ell\):

with \(\hat{L}_k = \hat{L}_0 + ik\omega\). The normalization of the probability density provides additional conditions for these functions:

Here \(\delta _{i,j}\) is the Kronecker delta. Clearly, \(P_{0,0}(\theta , \eta ) = P_0(\theta , \eta )\) is the stationary probability density. The system of coupled differential equations, Eq. (44), can be solved iteratively (\(\ell \rightarrow \ell +1\)). Notice that whenever \(P_{\ell , k}(\theta , \eta )\) is governed by a homogeneous differential equation, i.e. \(\hat{L}_k P_{\ell , k}(\theta , \eta ) = 0\), the trivial solution \(P_{\ell , k}(\theta , \eta ) = 0\) does satisfy Eq. (45) and is thus a solution (except for \(k=\ell =0\)). Therefore, for \(\ell =0\) we find that all coefficients except \(P_{0,0}(\theta , \eta )\) vanish. For \(\ell =1\) we find two non-vanishing coefficients, namely \(P_{1,-1}(\theta , \eta )\) and \(P_{1,1}(\theta , \eta )\). Generally, all coefficients \(P_{\ell ,k}(\theta , \eta )\) with \(k>\ell\) or with odd \(k + \ell\) vanish (see Fig. 16). The remaining inhomogeneous differential equations can be solved by means of the MCF method (see Appendix B).
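The selection rule for the non-vanishing functions can be written down as a short enumeration. The helper below lists the index pairs \((\ell , k)\) with \(0 \le k \le \ell\) and even \(\ell + k\) up to a chosen order; negative \(k\) follow from the symmetry \(P_{\ell , k} = P^*_{\ell , -k}\). The function name is hypothetical and the snippet only encodes the rule stated in the text, not the solution of the equations themselves.

```python
def nonvanishing_terms(l_max):
    """Return all (l, k) with 0 <= k <= l <= l_max and l + k even, i.e. the
    index pairs for which P_{l,k} is not forced to vanish."""
    return [(l, k)
            for l in range(l_max + 1)
            for k in range(l + 1)
            if (l + k) % 2 == 0]

terms = nonvanishing_terms(3)
for l, k in terms:
    print(f"order eps^{l}: harmonic k = {k}")
```

For example, at second order in \(\varepsilon\) only a correction to the time-averaged density (\(k=0\)) and a second harmonic (\(k=2\)) appear.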

The cyclo-stationary firing rate can now be expressed in terms of the functions \(P_{\ell , k}(\theta , \eta )\) using Eq. (21), exploiting the symmetry \(P_{\ell , k} =P^*_{\ell , -k}\) and \(P_{\ell , k>\ell }=0\):

with:

where arg(\(\cdot\)) is the complex argument. We recover the stationary firing rate for \(\ell =k=0\), i.e. \(r_{0,0} = r_0\). Note that some of the terms \(r_{\ell , k}\) in Eq. (46) vanish because of the underlying symmetry of the governing equations, Eq. (44).

4.1 Linear response

For small \(\varepsilon\) the linear term in the expansion, i.e. the linear response \(r_{1,1}\), already provides a good approximation of the asymptotic firing rate r(t):

Note that all other terms \(r_{1, k\ne 1}\) vanish. The function \(\vert r_{1,1}(\omega ) \vert\) is also commonly known as the absolute value of the susceptibility \(\vert \chi (\omega ) \vert\), which quantifies the amplitude response of the firing rate. The phase shift with respect to the signal is described by \(\varphi _{1,1}\). An exemplary signal s(t) together with the linear response, given in terms of the amplitude and phase shift, is shown in Fig. 8. For the chosen small signal amplitude \(\varepsilon\), the linear theory indeed captures the cyclo-stationary part of the firing rate very well. There is also a transient response due to the chosen initial condition of the ensemble; here, however, we focus solely on the cyclo-stationary response.

Susceptibility and phase shift. The absolute value of the susceptibility \(\vert \chi (\omega )\vert\) and the phase shift \(\varphi _{1,1}\) are computed by the MCF method for two different correlation times \(\tau\). The results are confirmed by stochastic simulations and compared to known limit cases for \(\omega \rightarrow 0\) and \(\omega \rightarrow \infty\) according to Eqs. (50) and (51), respectively. Parameters: \(\mu =0.1\), \(\sigma ^2 = 1\). Parameters MCF method: \(n_\text {max} = p_\text {max} = 200\). Simulation parameters: \(T=5 \cdot 10^3\), \(dt = 1 \cdot 10^{-2}\) and \(N_\text {trials} = 1.6 \cdot 10^4\)

Before we discuss the rate modulation with respect to different parameters, we compare our numerical results against known approximations (Fourcaud-Trocmé et al., 2003) (see Fig. 9). First we verify the low frequency limit \(\omega \rightarrow 0\). In this case the signal s(t) is slow and can be considered as a quasi-constant input. Expanding the firing rate with respect to the signal amplitude \(\varepsilon\) yields:

A comparison with Eq. (48) allows us to identify the low frequency limit of the susceptibility and phase shift:

As we can compute the firing rate \(r_0\) for different values of \(\mu\) (see Sect. 3), the derivative above can be calculated numerically.
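A minimal sketch of this numerical derivative is given below. It uses a central finite difference; the step size `dmu` and the function names are our own choices for illustration. Since the MCF solver is not reproduced here, the deterministic rate \(r_\text {det} = \sqrt{\mu }/\pi\) (valid for \(\mu > 0\)) serves as a stand-in for \(r_0(\mu )\); in an actual computation one would pass a function that returns the MCF result instead.

```python
import math


def r_det(mu):
    """Deterministic firing rate of the theta neuron, sqrt(mu)/pi for mu > 0.

    Used here only as a stand-in for the MCF-computed stationary rate r_0(mu).
    """
    return math.sqrt(mu) / math.pi if mu > 0 else 0.0


def low_frequency_susceptibility(rate, mu, dmu=1e-4):
    """Central finite-difference estimate of |d r_0 / d mu|."""
    return abs(rate(mu + dmu) - rate(mu - dmu)) / (2.0 * dmu)


if __name__ == "__main__":
    chi0 = low_frequency_susceptibility(r_det, mu=1.0)
    # For the stand-in rate the exact derivative at mu = 1 is 1 / (2*pi)
    print(chi0, 1.0 / (2.0 * math.pi))
```

When the rate comes from a numerical solver, `dmu` should be chosen large enough that the difference of the two rates is well above the solver's numerical error.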

Second, in the opposite limit of large frequencies \(\omega \rightarrow \infty\), the theta neuron acts as a low-pass filter (Fourcaud-Trocmé et al., 2003):

Hence, the susceptibility becomes very small in the high-frequency limit, which is also noticeable by the pronounced random deviations of our simulation results in this specific limit. Both limit cases are well captured by our method for two values of the correlation time (\(\tau =0.1, 1\)) in the mean-driven regime. We see that here the main effect of increasing the correlation time is to diminish the resonance of the response: for \(\tau =0.1\) the susceptibility peaks around \(\omega \approx 2\pi r_0\) (note that for small \(\tau\): \(r_0 \approx r_\text {det}\)); this peak is gone for \(\tau =1\) because the effect of the noise, keeping its variance constant, increases with \(\tau\). All these features are confirmed in detail by the results of stochastic simulations (symbols in Fig. 9).
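The stochastic simulations used for comparison are not spelled out in this section. As a rough illustration for the stationary rate only (no periodic signal), the following sketch integrates the model with an Euler-Maruyama scheme. It rests on assumptions that should be checked against the model definition earlier in the paper: the theta neuron is taken as \(\dot{\theta } = (1-\cos \theta ) + (1+\cos \theta )(\mu + \eta )\), the Ornstein-Uhlenbeck noise is scaled to have variance \(\sigma ^2\), and a spike is counted when \(\theta\) crosses \(\pi\). The function name and default arguments are hypothetical.

```python
import math
import random


def simulate_rate(mu, sigma, tau, T=200.0, dt=1e-2, seed=0):
    """Euler-Maruyama estimate of the stationary firing rate.

    Assumed model (a sketch, not the authors' code):
        d theta/dt = (1 - cos theta) + (1 + cos theta) * (mu + eta)
        tau * d eta/dt = -eta + sqrt(2 * tau * sigma**2) * xi(t)
    A spike is counted each time theta crosses pi; theta is then wrapped.
    """
    rng = random.Random(seed)
    theta, eta, spikes = -math.pi, 0.0, 0
    # Standard deviation of the noise increment added to eta in one step
    noise_amp = math.sqrt(2.0 * sigma**2 * dt / tau)
    for _ in range(int(T / dt)):
        c = math.cos(theta)
        theta += dt * ((1.0 - c) + (1.0 + c) * (mu + eta))
        eta += -eta * dt / tau + noise_amp * rng.gauss(0.0, 1.0)
        if theta >= math.pi:
            theta -= 2.0 * math.pi
            spikes += 1
    return spikes / T


if __name__ == "__main__":
    print(simulate_rate(mu=1.0, sigma=1.0, tau=0.1))
```

Without noise (\(\sigma = 0\)) and for \(\mu = 1\) the sketch should return a value close to \(r_\text {det} = 1/\pi\), up to the discretization error of the spike count.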

The general dependence of the susceptibility, focusing on its magnitude only, is inspected in Fig. 10 for the constant variance and in Fig. 11 for the constant intensity scaling. Qualitatively different behavior of \(\vert \chi (\omega )\vert\) can be observed between the mean-driven regime (\(\mu > 0\)) and the excitable regime (\(\mu < 0\)). In the mean-driven regime the theta neuron exhibits a strong resonance near \(\omega _\text {det} = 2 \pi r_\text {det}\) that increases with decreasing effect of the noise, i.e. in the constant variance scaling the resonance becomes stronger as \(\tau \rightarrow 0\) (see Fig. 10 top) while for the constant intensity scaling the resonance increases as \(\tau \rightarrow \infty\) (see Fig. 11 top).

In the excitable regime resonances are weak or absent. First of all, the baseline firing rate of the neuron vanishes as the effect of the noise decreases (cf. Figs. 3A and 7A) and so does the susceptibility (see Figs. 10 and 11 bottom). Secondly, the theta neuron becomes a low-pass filter where \(\vert \chi (\omega )\vert\) decreases with increasing \(\omega\) regardless of the correlation time \(\tau\).

Right at the bifurcation point \(\mu =0\) there are still no pronounced resonances with respect to \(\omega\). However, the dependence of the linear response on the correlation time is somewhat different from that in the excitable regime: the susceptibility increases if the effect of the noise becomes very weak, i.e. \(\tau \rightarrow 0\) for the constant variance scaling (see Fig. 10 middle) and \(\tau \rightarrow \infty\) for the constant intensity scaling (see Fig. 11 middle).

4.2 Nonlinear response

For larger signal amplitudes nonlinear response functions have to be considered:

Here we have included all terms up to the 3rd order in \(\varepsilon\) (cf. Eq. (46)). The nonlinear response features higher Fourier modes and a correction \(r_{2,0}\) of the time-averaged firing rate. The response functions \(r_{\ell , k}\) and their respective argument \(\varphi _{\ell ,k}\) of course depend on the model parameters \(\mu\), \(\tau\) and \(\sigma\) as well as the signal frequency \(\omega\).
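To make the structure of such a truncated expansion concrete, the following sketch evaluates a sum of terms \(\varepsilon ^\ell \vert r_{\ell ,k}\vert \cos (k\omega t - \varphi _{\ell ,k})\) from a table of amplitudes and phases. The sign convention of the phase and the function name are assumptions of this sketch, and the numbers in the usage example are purely illustrative; they are not results from the paper.

```python
import math


def rate_from_response(t, eps, omega, terms):
    """Evaluate sum over (ell, k) of eps**ell * amp * cos(k*omega*t - phase).

    terms maps (ell, k) with k >= 0 to a pair (amplitude, phase).
    The sign convention of the phase is an assumption of this sketch.
    """
    return sum(
        eps**ell * amp * math.cos(k * omega * t - phase)
        for (ell, k), (amp, phase) in terms.items()
    )


if __name__ == "__main__":
    # Purely illustrative numbers, not results from the paper.
    terms = {
        (0, 0): (0.30, 0.0),      # stationary rate r_0
        (1, 1): (0.20, 0.5),      # linear response
        (2, 0): (0.05, math.pi),  # phase pi encodes a reduced mean rate
        (2, 2): (0.10, 1.0),      # second harmonic
    }
    print(rate_from_response(0.0, 0.5, 1.0, terms))
```

Truncating the dictionary at \(\ell = 1\) gives the linear-response approximation, so the contribution of each higher order can be inspected by adding entries one order at a time.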

For a neuron in the mean-driven regime the frequency dependence for three selected response functions is shown in Fig. 12B. In contrast to the linear response \(\vert r_{1,1}\vert\), the functions \(\vert r_{2,2}\vert\) and \(\vert r_{3,3}\vert\) display additional resonances, for instance at \(\omega \approx 1 = \pi r_\mathrm{det}\). This behavior is not specific to the theta neuron; such resonances can be observed for the LIF neuron as well (Voronenko & Lindner, 2017). These additional resonances give rise to strong nonlinear effects even if the signal is weak, see Fig. 12A. In the particular case shown in Fig. 12 the signal frequency was chosen to match the resonance frequency of the second-order response \(\vert r_{2,2}\vert\) so that the linear response alone no longer provides a good approximation to the firing rate r(t). Instead the second-order response must be included, illustrating the importance of the nonlinear theory even for comparatively weak signals.

By means of the MCF method it is possible to achieve a near perfect fit of the actual firing rate by including many correction terms; see Fig. 12A where we have included all terms up to the 10th order. However, note that the computational cost of each further correcting term increases roughly linearly with the order \(\ell\) of the signal amplitude.

Nonlinear response. A Periodic signal and firing rate response of the theta neuron model. Here, the linear theory (dotted line) fails to accurately describe the firing rate (solid black line). This is mainly because the signal frequency is chosen to match half the deterministic firing frequency \(\omega _\text {det} / 2 =1\) where the nonlinear response functions \(\vert r_{2,2}\vert\) and \(\vert r_{3,3}\vert\) are close to their local maximum, see B. However, already the second-order response (dashed orange line) provides a good approximation to the actual firing rate and is improved further if higher-order terms are considered (cyan line). All responses are calculated by the MCF method with \(n_\text {max} = p_\text {max} =150\). Parameters: \(\sigma = 1\), \(\mu = 1\), \(\tau = 0.1\), \(\varepsilon = 0.5\) and \(\omega =1\)

We now discuss the amplitude response functions \(\vert r_{\ell ,k}\vert\) to the third order in \(\varepsilon\) for varying values of the mean input and correlation time (cf. Fig. 13). The linear response \(\vert r_{1,1}\vert\), already discussed in the preceding section and shown here for completeness (Fig. 13A I, B I, C I), displays in the mean-driven regime (\(\mu =1\)), and to a lesser degree also at the bifurcation point (\(\mu =0\)), a well known resonance peak near the firing frequency \(\omega _0 = 2 \pi r_{0}\); it acts as a low-pass filter in the excitable regime (\(\mu = -0.5\)). Increasing the correlation time and thereby the effect of the noise diminishes this resonance.

The first nonlinear term \(r_{2,0}\) describes the effect of the periodic signal on the time-averaged firing rate; we discuss this term first for the mean-driven regime (Fig. 13C II). Similar to the findings for a stochastic LIF model (Voronenko & Lindner, 2017, Fig. 3B), we find at low noise that a resonant driving at a frequency corresponding to the firing rate \(\omega _0\) does not evoke any change of the time-averaged firing rate, while a frequency slightly below or above this frequency evokes a reduction or increase of the rate, respectively. If we deviate too strongly from \(\omega _0\), however, the effect of the signal on the time-averaged rate becomes very small. Increasing the correlation time increases the effect of the noise and smears out these nonlinear resonances.

The effect of the periodic signal on the time-averaged firing rate in the excitable regime and at the bifurcation point is quite different (Fig. 13A II, B II). Here the rate is always increased by the periodic signal, similar to what was found already for an excitable LIF model (Voronenko & Lindner, 2017, Fig. 3A). Furthermore, at the bifurcation point and at low noise intensities (green curve in B II) there is a pronounced maximum as a function of frequency \(\omega\) attained at a frequency higher than \(\omega _0\).

Generally, in the higher-order response functions, we observe a number of peaks versus frequency (see e.g. Fig. 13A–C V). The resonances in the mean-driven regime (C V) and at low noise (green curve) are found near \(\omega _\text {det}\), \(\omega _\text {det}/2\) and \(\omega _\text {det}/3\). Note again that in this regime the deterministic frequency \(\omega _\text {det}=2\pi r_\text {det}\) of the oscillator and the stationary firing frequency \(\omega _0\) are close. In the excitable regime both the linear and nonlinear response functions also exhibit, for most driving frequencies, a nonmonotonic behavior with respect to the correlation time, i.e. with respect to the strength of the noise.

4.3 Response to two periodic signals

So far we have discussed the theta neuron’s linear and nonlinear firing rate response to a single periodic signal. In this section we derive a scheme that allows us to calculate the response if the model neuron receives two periodic signals:

Calculating the firing rate in this case will not only help to understand how a theta neuron responds to two periodic signals but can also be used to calculate the 2nd order response to arbitrary signals (Voronenko & Lindner, 2017).

As a starting point we formulate the corresponding FPE:

This equation still agrees with Eq. (41) except for s(t), which now contains two periodic signals. Again we are interested in the PDF for which all initial conditions have been forgotten and the time dependence of \(P(\theta ,\eta ,t)\) is only due to the time dependence of the signal s(t). Note that since the sum of two periodic signals is not necessarily periodic, the functions \(P(\theta ,\eta ,t)\) and r(t) are not periodic either. In fact, s(t) is only periodic if the ratio of the two frequencies is a rational number, i.e. \(\omega _1/\omega _2 \in \mathbb {Q}\). We choose a Fourier representation with respect to \(\omega _1 t\), \(\omega _2 t\) and expand with respect to the small amplitudes \(\varepsilon _1\), \(\varepsilon _2\):

For notational convenience we have omitted the arguments of the coefficients \(P_{k_1,k_2}^{\ell _1,\ell _2}(\theta , \eta )\).
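The remark on periodicity made above can be checked with a short example. If \(\omega _1/\omega _2 = p/q\) with coprime integers p and q, the sum of the two signals repeats after \(T = 2\pi p/\omega _1 = 2\pi q/\omega _2\). The sketch below, with the hypothetical helper `common_period`, computes this period using exact rational arithmetic; it assumes positive frequencies that are passed as `Fraction` objects (or integers), since a floating-point ratio generally cannot be identified as rational.

```python
from fractions import Fraction
import math


def common_period(omega1, omega2):
    """Smallest common period of cos(omega1*t) + cos(omega2*t).

    omega1 and omega2 must be Fractions (or ints) so that their ratio is an
    exact rational number p/q; the common period is then p * 2*pi / omega1.
    """
    ratio = Fraction(omega1) / Fraction(omega2)  # reduced to lowest terms p/q
    return ratio.numerator * 2.0 * math.pi / float(omega1)


if __name__ == "__main__":
    # omega1 = 1/2, omega2 = 3/2: ratio 1/3, common period 4*pi
    print(common_period(Fraction(1, 2), Fraction(3, 2)))
```

For incommensurable frequencies no such period exists, which is why the expansion is formulated with two independent Fourier indices \(k_1\) and \(k_2\).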

Because \(P(\theta ,\eta ,t)\) is a real valued function, the coefficients obey

As for the case of a single periodic signal, inserting Eq. (55) into Eq. (54) gives a system of time-independent coupled differential equations:

with \(\hat{L}_{k_1,k_2} = \hat{L}_0 + i(k_1\omega _1 + k_2 \omega _2)\) and \(P_{k_1,k_2}^{\ell _1,\ell _2}= 0\) for \(\ell _1 < 0\) or \(\ell _2 < 0\). The normalization of the probability density provides again the additional conditions:

The differential equations (57) are analogous to Eq. (44) and can be solved by means of the MCF method (see Appendix C). In the following we explicitly provide the hierarchy of coupled differential equations up to the second order of \(\varepsilon _1, \varepsilon _2\), i.e. for \(\ell _1 + \ell _2 \le 2\). The zeroth-order term \(\ell _1 + \ell _2 = 0\) describes the unperturbed system. As we have already argued for the case of a single periodic signal the function \(P_{0,0}^{0,0}\), governed by

is the only non-vanishing zeroth-order term because for every other value of \(k_1, k_2\) the trivial solution satisfies Eq. (58). Therefore \(P_{0,0}^{0,0} = P_0\) is the stationary probability density from Sect. 3. The stationary PDF in turn determines the two non-vanishing linear (\(\ell _1 + \ell _2 = 1\)) correction terms:

Finally, the linear terms determine the second order terms (\(\ell _1 + \ell _2 = 2\)):

Nonlinear response to two periodic signals. A\(_I\)-A\(_{IV}\)) Amplitudes of the response functions \(r_{k_1,k_2}^{\ell _1,\ell _2}\) (cf. Eq. (71)). Note that the response functions \(\vert r_{0,1}^{0,1}\vert\) and \(\vert r_{0,2}^{0,2}\vert\), which are not shown here, are identical to the response functions shown in A\(_I\) and A\(_{II}\) if the frequencies \(\omega _1\) and \(\omega _2\) are interchanged (both account for a single signal). The response functions \(\vert r_{1,1}^{1,1}\vert\) and \(\vert r_{1,-1}^{1,1}\vert\), which describe the interaction effect of both signals on the firing rate, exhibit additional resonances near \(\omega _1 + \omega _2 = 2 \pi r_\text {det}\) and \(\vert \omega _1 - \omega _2\vert = 2 \pi r_\text {det}\). B and C show the firing rate in response to two periodic signals where the sum of the frequencies does and does not match the aforementioned condition \(\omega _1 + \omega _2 = 2 \pi r_\text {det}\), respectively. If the condition is matched, the sum of responses to each individual signal does not provide a good approximation to the actual firing rate, and the full response to the sum of signals has to be calculated (see B). Parameters: \(\mu = 1\), \(\sigma ^2 = 1\), \(\tau =0.05\), \(\varepsilon _1 = 0.3\), \(\varepsilon _2 = 0.1\) with frequencies \(\omega _1 =0.5\), \(\omega _2 = 1.5\) in B and \(\omega _1 =1.0\), \(\omega _2 = 1.5\) in C. Parameters MCF Method: \(p_\text {max} = n_\text {max} = 100\)

As for the case of a single periodic signal, the rate response r(t) can be expressed in terms of the functions \(P_{k_1,k_2}^{\ell _1,\ell _2}\) using Eqs. (21) and (56):

with

The response of the firing rate up to the second order in the amplitudes reads:

The first five lines represent the first and second order responses of the firing rate for a theta neuron that receives a single periodic signal, either \(s_1(t)\) or \(s_2(t)\). For instance, \(\vert r_{1,0}^{1,0}(\omega _1,\omega _2)\vert = \vert r_{1,1}(\omega _1)\vert\) (the linear response amplitude to \(s_1\)) and \(\vert r_{2,0}^{2,0}(\omega _1,\omega _2)\vert = \vert r_{2,2}(\omega _1)\vert\) (the response amplitude at the second harmonic of \(s_1\)) do not depend on the frequency of the second signal \(\omega _2\), as can be seen in Fig. 14A\(_I\) and A\(_{II}\). The response functions \(r_{\ell ,k}(\omega )\) for a single periodic signal have already been discussed in the previous sections. The last two terms, proportional to \(\varepsilon _1 \varepsilon _2\), are of particular interest here because they arise only due to the interaction of two periodic signals. The corresponding response amplitudes \(\vert r_{1,1}^{1,1}\vert\) and \(\vert r_{1,-1}^{1,1}\vert\) are shown in Fig. 14A\(_{III}\) and A\(_{IV}\). In accordance with previous observations for the leaky integrate-and-fire model with white noise and a periodic driving (Voronenko & Lindner, 2017), we find two distinct cases in the mean-driven regime. First, if neither the sum nor the difference \(\omega _1 \pm \omega _2\) is close to the firing frequency \(2 \pi r_\text {det}\), then the response to the sum of two signals is well described by the sum of responses to the separate signals. A particular set of frequencies \(\omega _1\) and \(\omega _2\) for which this is the case is shown in Fig. 14C, where the second order response to the sum of two signals (black solid line) agrees very well with the sum of the second order responses to one signal at a time (dashed line). Second, if \(\omega _1 + \omega _2 \approx 2 \pi r_\text {det}\) or \(\vert \omega _1 - \omega _2\vert \approx 2 \pi r_\text {det}\), the firing rate is significantly affected by the interaction of both signals (see Fig. 14A\(_{III}\) and A\(_{IV}\)). An example of the firing rate as a function of time where these interaction terms are crucial is shown in Fig. 14B. Here the response to the sum of two signals and the sum of responses to one signal at a time disagree significantly.
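The two cases can be told apart with a simple check of the frequency condition. The sketch below tests whether \(\omega _1 + \omega _2\) or \(\vert \omega _1 - \omega _2\vert\) lies close to \(2\pi r_\text {det}\), using \(r_\text {det} = \sqrt{\mu }/\pi\) for the mean-driven regime. The tolerance `tol` and the function name are arbitrary choices made for illustration; how close is "close" in practice depends on the width of the resonance and thus on the noise.

```python
import math


def mixed_resonance(omega1, omega2, mu, tol=0.1):
    """Check whether omega1 + omega2 or |omega1 - omega2| is within tol of
    the deterministic firing frequency omega_det = 2*pi*r_det = 2*sqrt(mu).

    The tolerance tol is an arbitrary choice made for this sketch.
    """
    omega_det = 2.0 * math.sqrt(mu)  # valid in the mean-driven regime mu > 0
    return (abs(omega1 + omega2 - omega_det) < tol
            or abs(abs(omega1 - omega2) - omega_det) < tol)


if __name__ == "__main__":
    print(mixed_resonance(0.5, 1.5, mu=1.0))  # frequencies of Fig. 14B
    print(mixed_resonance(1.0, 1.5, mu=1.0))  # frequencies of Fig. 14C
```

For the parameters quoted in the caption of Fig. 14, the first call corresponds to the case with a strong mixed response and the second to the case in which the responses to the single signals approximately add up.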

5 Summary and outlook

In this paper we have studied the firing rate of the canonical type-I neuron model, the theta neuron, subject to a temporally correlated Ornstein-Uhlenbeck noise and additional periodic signals. We have solved the associated multi-dimensional Fokker-Planck equation numerically by means of the matrix-continued-fraction (MCF) method, put forward by Risken (1984). For our problem the MCF method provided reliable solutions for a wide range of parameters; the main restriction is that the correlation time cannot be too large and, additionally, that in the excitable regime the noise intensity cannot be too small (as also known from other applications of the method, see Lindner & Sokolov, 2016 for a recent example). To the best of our knowledge this is the first application of this method in computational neuroscience, advancing the results by Naundorf et al. (2005a, b) on the same model.

When the neuron receives no additional periodic signal, i.e. when the model is driven solely by the correlated noise, our method allows a quick and accurate computation of the stationary firing rate. We investigated the rate for a large part of the parameter space, confirmed the MCF results by comparison with stochastic simulations and discussed the agreement with known analytical approximations (Fourcaud-Trocmé et al., 2003; Galán, 2009; Moreno-Bote & Parga, 2010). We found that, in contrast to the white noise case (Lindner et al., 2003), correlated noise can both increase and decrease the stationary firing rate of a type-I neuron and we identified the conditions under which one or the other behavior can be observed.

In the presence of a single additional periodic signal both the probability density function and the firing rate approach a cyclo-stationary solution, which can be found by extending the MCF method to the time-dependent Fokker-Planck equation. The corresponding rate modulation is for a weak signal given by the linear response function, the well-known susceptibility, which has been addressed before numerically (Naundorf et al., 2005a, b) and analytically in limit cases (Fourcaud-Trocmé et al., 2003). Here we went beyond the linear response and also computed the higher-order response to a single periodic stimulus. Similar to what was found for a periodically driven leaky integrate-and-fire model with white background noise (Voronenko & Lindner, 2017), we identified driving frequencies at which the higher harmonics can be stronger than the firing rate modulation with the fundamental frequency. For a variety of nonlinear response functions, we observed resonant behavior.

Finally, we generalized the numerical approach to the case of two periodic signals and studied the nonlinear response up to second order. We found that for certain frequency combinations the mixed response to the two signals can lead to a drastically different rate modulation than predicted by pure linear response theory; this is similar to what was observed in a leaky integrate-and-fire neuron with white background noise (Voronenko & Lindner, 2017).

Our method could be extended to neuron models that include more complicated correlated noise, for instance, a harmonic noise (Schimansky-Geier & Zülicke, 1990) that can mimic special sources of intrinsic fluctuations (Engel et al., 2009). Another problem that could be addressed by this method is the computation of the spike-train power spectrum in the stationary state. Furthermore the linear and nonlinear response to the modulation of other parameters, e.g. the noise intensity (Boucsein et al., 2009; Lindner & Schimansky-Geier, 2001; Silberberg et al., 2004; Tchumatchenko et al., 2011), could be of interest and be computed with the methods outlined in this paper.

Notes

One way to derive the QIF model is to consider the limit of a large slope factor \(\Delta _v\) in the exponential integrate-and-fire model \(C \dot{v} = I_0 - g_L v + g_L \Delta _v\exp ((v-v_t)/\Delta _v)\) which itself results from a simplification of a conductance-based model (Fourcaud-Trocmé et al., 2003). By choosing the new variable \(x = (v-v_t)/(\sqrt{2}\Delta _v)\) and expanding the exponential function up to the second order for \(v - v_t \ll \Delta _v\), one finds \(\sqrt{2}\tau _m \dot{x} = \mu + x^2\), where \(\tau _m = C/g_L\) is the membrane-time constant. For simplicity we neglected the prefactor \(\sqrt{2}\) in Eq. (1).

How fast the MCF method converges with the number of Hermite functions and Fourier modes considered depends on the system parameters, as demonstrated in the repository. More precisely, for a fixed \(p_\text {max}\) or \(n_\text {max}\) we observed that the MCF method fails for large correlation times and additionally in the excitable regime for small noise intensities. However, for these particular limit cases analytical approximations already exist (see Sects. 3.2 and 3.3). Choosing \(p_\text {max} = n_\text {max} \ge 150\), we can even capture these limit cases sufficiently well (see Fig. 3).

References

Abbott, L., & van Vreeswijk, C. (1993). Asynchronous states in networks of pulse-coupled oscillators. Physical Review E, 48, 1483.

Alijani, A. K., & Richardson, M. J. E. (2011). Rate response of neurons subject to fast or frozen noise: From stochastic and homogeneous to deterministic and heterogeneous populations. Physical Review E, 84, 011919.

Bair, W., Koch, C., Newsome, W., & Britten, K. (1994). Power spectrum analysis of bursting cells in area MT in the behaving monkey. The Journal of Neuroscience, 14, 2870.

Bartussek, R. (1997). Ratchets driven by colored Gaussian noise. In L. Schimansky-Geier & T. Pöschel (Eds.), Stochastic Dynamics, page 69. Berlin, London, New York: Springer.

Bauermeister, C., Schwalger, T., Russell, D., Neiman, A. B., & Lindner, B. (2013). Characteristic effects of stochastic oscillatory forcing on neural firing: Analytical theory and comparison to paddlefish electroreceptor data. PLoS Computational Biology, 9, e1003170.

Boucsein, C., Tetzlaff, T., Meier, R., Aertsen, A., & Naundorf, B. (2009). Dynamical response properties of neocortical neuron ensembles: Multiplicative versus additive noise. The Journal of Neuroscience, 29, 1006.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience, 8, 183.

Brunel, N., & Latham, P. E. (2003). Firing rate of the noisy quadratic integrate-and-fire neuron. Neural Computation, 15, 2281.

Brunel, N., & Sergi, S. (1998). Firing frequency of leaky integrate-and-fire neurons with synaptic current dynamics. Journal of Theoretical Biology, 195, 87.

Brunel, N., Chance, F. S., Fourcaud, N., & Abbott, L. F. (2001). Effects of synaptic noise and filtering on the frequency response of spiking neurons. Physical Review Letters, 86, 2186.

Burkitt, A. N. (2006). A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biological Cybernetics, 95, 1.

Câteau, H., & Reyes, A. D. (2006). Relation between single neuron and population spiking statistics and effects on network activity. Physical Review Letters, 96, 058101.

Deniz, T., & Rotter, S. (2017). Solving the two-dimensional Fokker-Planck equation for strongly correlated neurons. Physical Review E, 95, 012412.

Doiron, B., Rinzel, J., & Reyes, A. (2006). Stochastic synchronization in finite size spiking networks. Physical Review E, 74, 030903.

Droste, F., & Lindner, B. (2014). Integrate-and-fire neurons driven by asymmetric dichotomous noise. Biological Cybernetics, 108, 825.

Droste, F., & Lindner, B. (2017). Exact results for power spectrum and susceptibility of a leaky integrate-and-fire neuron with two-state noise. Physical Review E, 95, 012411.

Dygas, M. M., Matkowsky, B. J., & Schuss, Z. (1986). A singular perturbation approach to non-markovian escape rate problems. SIAM Journal on Applied Mathematics, 46, 265.

Engel, T. A., Helbig, B., Russell, D. F., Schimansky-Geier, L., & Neiman, A. B. (2009). Coherent stochastic oscillations enhance signal detection in spiking neurons. Physical Review E, 80, 021919.

Ermentrout, B. (1996). Type I membranes, phase resetting curves, and synchrony. Neural Computation, 8, 979.

Fisch, K., Schwalger, T., Lindner, B., Herz, A., & Benda, J. (2012). Channel noise from both slow adaptation currents and fast currents is required to explain spike-response variability in a sensory neuron. The Journal of Neuroscience, 32, 17332.

Fourcaud, N., & Brunel, N. (2002). Dynamics of the firing probability of noisy integrate-and-fire neurons. Neural Computation, 14, 2057.

Fourcaud-Trocmé, N., Hansel, D., van Vreeswijk, C., & Brunel, N. (2003). How spike generation mechanisms determine the neuronal response to fluctuating inputs. The Journal of Neuroscience, 23, 11628.

Gabbiani, F., & Cox, S. J. (2017). Mathematics for neuroscientists. Academic Press.

Galán, R. F. (2009). Analytical calculation of the frequency shift in phase oscillators driven by colored noise: Implications for electrical engineering and neuroscience. Physical Review E, 80(3), 036113.

Guardia, E., Marchesoni, F., & San Miguel, M. (1984). Escape times in systems with memory effects. Physics Letters A, 100, 15.

Hänggi, P., & Jung, P. (1995). Colored noise in dynamical-systems. Advances in Chemical Physics, 89, 239.

Holden, A. V. (1976). Models of the stochastic activity of neurones. Berlin: Springer-Verlag.

Izhikevich, E. M. (2007). Dynamical systems in neuroscience: the geometry of excitability and bursting. Cambridge, London: The MIT Press.

Knight, B. W. (2000). Dynamics of encoding in neuron populations: Some general mathematical features. Neural Computation, 12, 473.

Koch, C. (1999). Biophysics of computation - information processing in single neurons. New York, Oxford: Oxford University Press.

Langer, J. S. (1969). Statistical theory of the decay of metastable states. Annals of Physics, 54, 258.

Lindner, B. (2004). Interspike interval statistics of neurons driven by colored noise. Physical Review E, 69, 022901.

Lindner, B., & Longtin, A. (2006). Comment on characterization of subthreshold voltage fluctuations in neuronal membranes by M. Rudolph and A. Destexhe. Neural Computation, 18, 1896.

Lindner, B., & Schimansky-Geier, L. (2001). Transmission of noise coded versus additive signals through a neuronal ensemble. Physical Review Letters, 86, 2934.

Lindner, B., & Sokolov, I. M. (2016). Giant diffusion of underdamped particles in a biased periodic potential. Physical Review E, 93, 042106.

Lindner, B., Longtin, A., & Bulsara, A. (2003). Analytic expressions for rate and CV of a type I neuron driven by white Gaussian noise. Neural Computation, 15, 1761.

Ly, C., & Ermentrout, B. (2009). Synchronization dynamics of two coupled neural oscillators receiving shared and unshared noisy stimuli. Journal of Computational Neuroscience, 26(3), 425–443.

Moreno, R., de la Rocha, J., Renart, A., & Parga, N. (2002). Response of spiking neurons to correlated inputs. Physical Review Letters, 89, 288101.

Moreno-Bote, R., & Parga, N. (2004). Role of synaptic filtering on the firing response of simple model neurons. Physical Review Letters, 92, 028102.

Moreno-Bote, R., & Parga, N. (2006). Auto- and crosscorrelograms for the spike response of leaky integrate-and-fire neurons with slow synapses. Physical Review Letters, 96, 028101.

Moreno-Bote, R., & Parga, N. (2010). Response of integrate-and-fire neurons to noisy inputs filtered by synapses with arbitrary timescales: Firing rate and correlations. Neural Computation, 22, 1528.

Mori, H. (1965). A continued-fraction representation of time-correlation functions. Progress in Theoretical Physics, 34, 399.

Müller-Hansen, F., Droste, F., & Lindner, B. (2015). Statistics of a neuron model driven by asymmetric colored noise. Physical Review E, 91, 022718.

Naundorf, B., Geisel, T., & Wolf, F. (2005a). Action potential onset dynamics and the response speed of neuronal populations. Journal of Computational Neuroscience, 18, 297.

Naundorf, B., Geisel, T., & Wolf, F. (2005b). Dynamical response properties of a canonical model for type-I membranes. Neurocomputing, 65, 421.

Neiman, A., & Russell, D. F. (2001). Stochastic biperiodic oscillations in the electroreceptors of paddlefish. Physical Review Letters, 86, 3443.

Novikov, N., & Gutkin, B. (2020). Role of synaptic nonlinearity in persistent firing rate shifts caused by external periodic forcing. Physical Review E, 101(5), 052408.

Ostojic, S., & Brunel, N. (2011). From spiking neuron models to linear-nonlinear models. PLoS Computational Biology, 7, e1001056.

Pena, R. F., Vellmer, S., Bernardi, D., Roque, A. C., & Lindner, B. (2018). Self-consistent scheme for spike-train power spectra in heterogeneous sparse networks. Frontiers in Computational Neuroscience, 12(9).

Ricciardi, L. M. (1977). Diffusion processes and related topics in biology. Berlin: Springer-Verlag.

Richardson, M. J. E. (2004). Effects of synaptic conductance on the voltage distribution and firing rate of spiking neurons. Physical Review E, 69, 051918.

Risken, H. (1984). The Fokker-Planck Equation. Berlin: Springer.

Rudolph, M., & Destexhe, A. (2005). An extended analytical expression for the membrane potential distribution of conductance-based synaptic noise (Note on characterization of subthreshold voltage fluctuations in neuronal membranes). Neural Computation, 18, 2917.

Schimansky-Geier, L., & Zülicke, C. (1990). Harmonic noise: Effect on bistable systems. Zeitschrift für Physik B Condensed Matter, 79, 451.

Schuecker, J., Diesmann, M., & Helias, M. (2015). Modulated escape from a metastable state driven by colored noise. Physical Review E, 92, 052119.

Schwalger, T., & Schimansky-Geier, L. (2008). Interspike interval statistics of a leaky integrate-and-fire neuron driven by Gaussian noise with large correlation times. Physical Review E, 77, 031914.

Schwalger, T., Fisch, K., Benda, J., & Lindner, B. (2010). How noisy adaptation of neurons shapes interspike interval histograms and correlations. PLoS Computational Biology, 6, e1001026.

Schwalger, T., Droste, F., & Lindner, B. (2015). Statistical structure of neural spiking under non-poissonian or other non-white stimulation. Journal of Computational Neuroscience, 39, 29.

Siegle, P., Goychuk, I., Talkner, P., & Hänggi, P. (2010). Markovian embedding of non-markovian superdiffusion. Physical Review E, 81, 011136.

Silberberg, G., Bethge, M., Markram, H., Pawelzik, K., & Tsodyks, M. (2004). Dynamics of population rate codes in ensembles of neocortical neurons. Journal of Neurophysiology, 91, 704.

Tchumatchenko, T., Malyshev, A., Wolf, F., & Volgushev, M. (2011). Ultrafast population encoding by cortical neurons. The Journal of Neuroscience, 31, 12171.

Tuckwell, H. C. (1989). Stochastic processes in the neurosciences. Philadelphia, Pennsylvania: SIAM.

Vellmer, S., & Lindner, B. (2019). Theory of spike-train power spectra for multidimensional integrate-and-fire neurons. Physical Review Research, 1(2), 023024.

Voronenko, S., & Lindner, B. (2017). Nonlinear response of noisy neurons. New Journal of Physics, 19, 033038.

Voronenko, S., & Lindner, B. (2018). Improved lower bound for the mutual information between signal and neural spike count. Biological Cybernetics, 112, 523.

Zhou, P., Burton, S. D., Urban, N., & Ermentrout, G. B. (2013). Impact of neuronal heterogeneity on correlated colored-noise-induced synchronization. Frontiers in Computational Neuroscience, 7, 113.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by Deutsche Forschungsgemeinschaft: LI-1046/4-1 and LI-1046/6-1.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Conflict of interest

The authors declare no conflict of interest.

Additional information

Action Editor: Brent Doiron

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A. Stationary case - derivation of the tridiagonal recurrence relation

Here we demonstrate how the problem of solving the FPE (22) for the stationary PDF \(P_0(\theta , \eta )\) can be translated into an equivalent problem of solving a tridiagonal recurrence relation for the expansion coefficients \(c_{n,p}\). These coefficients can then be found by means of the matrix-continued-fraction method, as demonstrated in Sect. 3.1.

First, we recall the stationary FPE

and the expansion of the PDF by two sets of orthonormal eigenfunctions \(e^{i n \theta }/ \sqrt{2 \pi }\) and \(\phi _p(\eta )\)

The Fourier modes and Hermite functions satisfy the periodic boundary condition in \(\theta\) and natural boundary conditions in \(\eta\), respectively. Using the orthonormality

of these functions one can show that the normalization condition of the PDF determines \(c_{0,0}\):

Before addressing the full problem of finding the recursive relation for the coefficients \(c_{n,p}\), we first calculate \(\hat{L}_\eta P_0(\theta ,\eta )\) using the expansion (75):

Recall that the Hermite functions can be expressed by the Hermite polynomials \(H_p(x)\) as follows:

where \(\alpha\) is an arbitrary scaling factor. Making use of the two properties

of the Hermite functions we can derive a handy expression for \(\hat{L}_\eta \phi _0(\eta ) \phi _p(\eta )\) by choosing \(\alpha = \sqrt{2}\sigma\):

Fig. 15. Illustration of the MCF method. Expanding the probability density using eigenfunctions according to Eq. (75) and insertion into the FPE (72) leads to a relation for the expansion coefficients \(c_{n,p}\) where only nearest neighbors interact (including diagonals). The coefficients can then be computed by truncating the system and introducing transition matrices \(\hat{S}_n\) that can be obtained from a matrix continued fraction, see Sect. 3.1.

Hence, \(\phi _0 \phi _p\) is an eigenfunction of the operator \(\hat{L}_\eta\). Combining Eq. (82), the expansion (75) and the FPE (72) yields

We split the sum into three parts, perform an index shift and use Eq. (80) to obtain

Furthermore, we introduce the orthonormal operators

so that \(\hat{O}_\theta e^{im\theta } = \delta _{n,m}\) and \(\hat{O}_\eta \phi _p = \delta _{p,q}\). Multiplying Eq. (84) from the left by \(\hat{O}_\eta \hat{O}_\theta\) eliminates the sum over n and yields the following recursive relation:

The sum can be interpreted as a product of matrices and vectors. We introduce the coefficient vector

and the symmetric matrices

which allows for an elegant reformulation of Eq. (86) by the tridiagonal recurrence relation

Note that for \(n=0\) we can readily infer the remaining elements of \(c_0\) (recall that \(c_{0,0} = 1\))

Equation (90) can be simplified by multiplication with \(\hat{B}^{-1}/n\) from the left to obtain the expression used in Sect. 3.1:

This is the tridiagonal recurrence relation which we have solved in the main part by the MCF method (illustrated in Fig. 15).
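The continued-fraction step can be sketched in Python for a generic homogeneous tridiagonal vector recurrence \(Q_n^- \varvec{c}_{n-1} + Q_n \varvec{c}_n + Q_n^+ \varvec{c}_{n+1} = 0\) with known \(\varvec{c}_0\). This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `mcf_homogeneous`, the array layout and the random test matrices are hypothetical and serve only as an illustration; the matrices of the recurrence derived above would have to be supplied in their place. Only the branch \(n>0\) is shown.

```python
import numpy as np

def mcf_homogeneous(q_minus, q_diag, q_plus, c0):
    """Solve q_minus[i] c_{n-1} + q_diag[i] c_n + q_plus[i] c_{n+1} = 0, n = i + 1.

    Assumes c_0 is known and c_{N+1} = 0 (truncation at N = q_diag.shape[0]).
    """
    n_max = q_diag.shape[0]
    dim = c0.size
    s = np.empty((n_max, dim, dim), dtype=complex)
    s_next = np.zeros((dim, dim), dtype=complex)  # S_{N+1} = 0 by truncation
    # Downward recursion: S_n = -(Q_n + Q_n^+ S_{n+1})^{-1} Q_n^-
    for i in range(n_max - 1, -1, -1):
        s[i] = -np.linalg.solve(q_diag[i] + q_plus[i] @ s_next, q_minus[i])
        s_next = s[i]
    # Upward iteration: c_n = S_n c_{n-1}
    c = np.empty((n_max + 1, dim), dtype=complex)
    c[0] = c0
    for i in range(n_max):
        c[i + 1] = s[i] @ c[i]
    return c

# Illustrative input: random, diagonally dominated matrices (an assumption).
rng = np.random.default_rng(0)
n_max, dim = 6, 4
q_minus = rng.normal(size=(n_max, dim, dim))
q_plus = rng.normal(size=(n_max, dim, dim))
q_diag = rng.normal(size=(n_max, dim, dim)) + 10.0 * np.eye(dim)
c0 = np.zeros(dim)
c0[0] = 1.0
c = mcf_homogeneous(q_minus, q_diag, q_plus, c0)
```

The downward sweep is equivalent to block Gaussian elimination of the truncated system, so the returned vectors satisfy every retained equation up to rounding error.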

B. Cyclo-stationary case - MCF method

In this section we extend the MCF method to the case of an additional periodic signal \(s(t) = \varepsilon \cos (\omega t)\) and calculate the cyclo-stationary firing rate r(t). In Sect. 4 we have already shown that the time-dependent FPE for this problem, i.e. Eq. (41), can be transformed into a set of time-independent differential equations that are recursively related (cf. Eq. (44)):

with \(P_{\ell <0, k} = 0\). This hierarchy of coupled differential equations can be solved iteratively starting at the stationary PDF \(P_{0,0}\). The dependence is illustrated in Fig. 16 where many terms \(P_{\ell ,k}\) vanish (gray circles) due to the normalization condition of the PDF as explained in Sect. 4. In order to solve the corresponding differential equation for each \(P_{\ell , k}(\theta ,\eta )\) we choose the same ansatz as in the previous section:

Fig. 16. Expansion of the PDF and coupling hierarchy. If the theta neuron is subject to a periodic signal the cyclo-stationary PDF can be expanded according to Eq. (94). Inserting this ansatz into the FPE leads to a system of time-independent recursively coupled differential equations (93). The coupling hierarchy is shown here. The stationary PDF \(P_{0,0}\) determines \(P_{1,1}\) which in turn determines \(P_{2,0}\) and \(P_{2,2}\) and so on. Gray dots represent terms that vanish as explained in Sect. 4.

By substituting this ansatz into Eq. (47) a relation between the expansion coefficients \(c_{n, p}^{(\ell ,k)}\) and response functions \(r_{\ell ,k}\) (which determine the full firing rate r(t) via Eq. (46)) can be derived:

To find \(c_{n, p}^{(\ell ,k)}\), we again transform the differential equations (93) into coefficient equations by means of the expansion (94) (see derivation of the tridiagonal recurrence relation in the previous section) and obtain

For notational convenience we introduced the coefficient vectors

and dropped the superscripts \(\varvec{c}_n := \varvec{c}_n^{(\ell ,k)}\). Furthermore, we denote the sum of the previously computed coefficient vectors by \(\varvec{c}_n' := \varvec{c}_n^{(\ell -1,k+1)} + \varvec{c}_n^{(\ell -1,k-1)}\).

As in the previous section, Eq. (96) is multiplied by \(\hat{B}^{-1}/n\) from the left to obtain a more compact expression

with

In order to solve the two-dimensional coefficient equation (98) we must assume that all Hermite functions and Fourier modes become negligible for large p or n, so that the corresponding coefficients vanish: \(c_{n,p} := c_{n,p}^{(\ell ,k)} = 0\) for \(p>p_\mathrm{max}\) or \(\vert n\vert >n_\mathrm{max}\). Specifically, we have checked how key statistics, such as the firing rate, depend on p and n and observed saturation for sufficiently large p and n; we then take these as maximal values.
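One possible way to automate such a saturation check is sketched below. The helper `saturate`, its doubling strategy, the tolerance and the toy statistic are assumptions made for illustration; in practice the callable would wrap the actual computation of the firing rate for given truncation limits.

```python
def saturate(statistic, n_max=8, p_max=8, rtol=1e-8, max_doublings=10):
    """Double (n_max, p_max) until statistic(n_max, p_max) stops changing.

    `statistic` is any callable returning a float for given truncation limits.
    Returns the saturated value and the truncation limits at which it was found.
    """
    prev = statistic(n_max, p_max)
    for _ in range(max_doublings):
        n_max, p_max = 2 * n_max, 2 * p_max
        cur = statistic(n_max, p_max)
        if abs(cur - prev) <= rtol * abs(cur):
            return cur, n_max, p_max
        prev = cur
    raise RuntimeError("statistic did not saturate within max_doublings")

def toy_statistic(n_max, p_max):
    """Hypothetical stand-in: a truncated double geometric series with limit 4."""
    sum_n = sum(0.5 ** n for n in range(n_max + 1))
    sum_p = sum(0.5 ** p for p in range(p_max + 1))
    return sum_n * sum_p

value, n_used, p_used = saturate(toy_statistic)
```

Doubling both limits together is the simplest choice; if the cost is dominated by one index, the two limits could be varied separately.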

The normalization condition of the PDF determines the coefficient \(c_{0,0} := c_{0,0}^{(\ell ,k)}\):

The remaining elements of \(\varvec{c}_{0}\) vanish. This can be seen from Eq. (96), which simplifies considerably for \(n = 0\):

The involved matrix \(A_k = \hat{A} + k \omega \mathbbm {1}\) is diagonal with non-vanishing elements \((A_k)_{p,p} \ne 0\) for \(p \ne 0\). This implies that Eq. (102) can only be fulfilled if \(c_{0,p\ne 0} = 0\). The resulting coefficient vector

serves as the initial condition in the following. All other coefficient vectors can be derived iteratively:

Here we have introduced the transition matrices \(\hat{S}_n\) and \(\hat{S}_n^R\) as done in the case of no periodic signal and the additional vectors \(\varvec{d}_n\) and \(\varvec{d}_n^R\), which take the inhomogeneity \(\varvec{\tilde{c}}_n\) in Eq. (98) into account (Risken, 1984). The ansatz Eq. (104) is substituted into Eq. (98), which yields

This equation is satisfied when both expressions in the square brackets vanish. This allows us to derive two recursive relations. First, from the left-hand side

and second, from the right-hand side

Analogous expressions can be derived for \(\hat{S}^R_n\) and \(\varvec{d}_n^R\). Because all coefficient vectors \(\varvec{c}_{n}\) are assumed to vanish for \(\vert n\vert > n_\mathrm{max}\) it follows that

This defines the initial condition that is needed to determine the remaining transition matrices and vectors for \(0< n < n_\mathrm{max}\):

and for \(-n_\mathrm{max}< n < 0\)

To summarize, the rate response functions \(r_{\ell , k}\) and hence the full firing rate can be calculated following an iterative scheme illustrated in Fig. 16. The starting point is the zeroth-order term in the signal amplitude (\(\ell =0\)), where \(\varvec{c}'_n = 0\) is known. For each iteration step, i.e. \(\ell \rightarrow \ell +1\), the following series of steps is executed.

1. Compute the sum \(\varvec{c}_n' := \varvec{c}_n^{(\ell -1,k+1)} + \varvec{c}_n^{(\ell -1,k-1)}\) of the previously computed coefficient vectors and the matrices involved in the computations of \(K_n\) according to Eq. (99).

2. Compute all transition matrices \(\hat{S}_n\) and vectors \(\varvec{d}_n\) (and \(\hat{S}_n^R\), \(\varvec{d}_n^R\)) iteratively starting at \(n=n_\mathrm{max}\) (and \(n=-n_\mathrm{max}\)) using Eqs. (111)–(114).

3. Find all coefficient vectors \(\varvec{c}_n := \varvec{c}_n^{(\ell ,k)}\) iteratively according to Eqs. (104) and (105) using the initial condition Eq. (103) for \(n=0\) and the transition matrices and vectors from the previous step.

4. Substitute the coefficients \(c_{n,p}^{(\ell , k)}\) into Eq. (95) and determine the response functions \(r_{\ell , k}\).
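Steps 2 and 3 of this scheme can be sketched in Python for a generic inhomogeneous recurrence \(Q_n^- \varvec{c}_{n-1} + Q_n \varvec{c}_n + Q_n^+ \varvec{c}_{n+1} = \varvec{f}_n\) with the ansatz \(\varvec{c}_n = \hat{S}_n \varvec{c}_{n-1} + \varvec{d}_n\). This is a hedged sketch rather than the authors' code: the name `mcf_inhomogeneous`, the generic matrices and the random right-hand side are assumptions, and only the branch \(n>0\) is shown (the branch \(n<0\) with \(\hat{S}_n^R\), \(\varvec{d}_n^R\) is analogous).

```python
import numpy as np

def mcf_inhomogeneous(q_minus, q_diag, q_plus, rhs, c0):
    """Solve q_minus[i] c_{n-1} + q_diag[i] c_n + q_plus[i] c_{n+1} = rhs[i], n = i + 1.

    Assumes c_0 is known and c_{N+1} = 0, i.e. S_{N+1} = 0 and d_{N+1} = 0.
    """
    n_max, dim = rhs.shape
    # The last entries s[n_max], d[n_max] stay zero and encode the truncation.
    s = np.zeros((n_max + 1, dim, dim), dtype=complex)
    d = np.zeros((n_max + 1, dim), dtype=complex)
    for i in range(n_max - 1, -1, -1):
        m = q_diag[i] + q_plus[i] @ s[i + 1]
        s[i] = -np.linalg.solve(m, q_minus[i])                    # transition matrix
        d[i] = np.linalg.solve(m, rhs[i] - q_plus[i] @ d[i + 1])  # inhomogeneity
    c = np.empty((n_max + 1, dim), dtype=complex)
    c[0] = c0
    for i in range(n_max):
        c[i + 1] = s[i] @ c[i] + d[i]
    return c

# Illustrative input (assumed): random matrices, random right-hand side, c_0 = 0.
rng = np.random.default_rng(1)
n_max, dim = 5, 3
q_minus = rng.normal(size=(n_max, dim, dim))
q_plus = rng.normal(size=(n_max, dim, dim))
q_diag = rng.normal(size=(n_max, dim, dim)) + 10.0 * np.eye(dim)
rhs = rng.normal(size=(n_max, dim))
c = mcf_inhomogeneous(q_minus, q_diag, q_plus, rhs, np.zeros(dim))
```

For a vanishing right-hand side the vectors \(\varvec{d}_n\) are zero and the scheme reduces to the homogeneous case of the stationary problem.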

C. MCF method: response to two periodic signals

In Sect. 4.3 we were interested in the nonlinear response r(t) of the noisy theta neuron subject to two periodic signals. To this end, we need to compute the response functions \(r_{k_1,k_2}^{\ell _1,\ell _2}\) that are related to the expansion functions \(P_{k_1,k_2}^{\ell _1,\ell _2}\) by Eq. (69). The latter in turn obey the following differential equation.

This system of coupled differential equations can be solved iteratively as described in Sect. 4.3. We wish to find the function \(P_{k_1,k_2}^{\ell _1,\ell _2}\) given that the functions on the right-hand side of Eq. (115) are already computed. For each \(P_{k_1,k_2}^{\ell _1,\ell _2}\) the differential equation has in principle the same structure as Eq. (93) from the previous section. Hence, it can be solved using the same numerical scheme based on the MCF method, using Eqs. (94)–(114), except for a change in the notation that reflects the expansion with respect to two signals:

Two further differences are:

1. Replace \(k \omega\) by \(k_1 \omega _1 + k_2 \omega _2\), which affects the computation of \(K_n\) according to Eq. (99).

2. The function \(P_{k_1,k_2}^{\ell _1,\ell _2}\) is determined by four previously computed expansion functions \(P_{k_1+1,k_2}^{\ell _1-1,\ell _2}\), \(P_{k_1-1,k_2}^{\ell _1-1,\ell _2}\), \(P_{k_1,k_2+1}^{\ell _1,\ell _2-1}\) and \(P_{k_1,k_2-1}^{\ell _1,\ell _2-1}\). This affects the computation of \(\varvec{c}_n'\) as follows:

$$\begin{aligned} \varvec{c}_n'&= \varvec{c}_{n}^{(\ell _1-1,\ell _2,k_1+1,k_2)} + \varvec{c}_{n}^{(\ell _1-1,\ell _2,k_1-1,k_2)}\\&\quad +\varvec{c}_{n}^{(\ell _1,\ell _2-1,k_1,k_2+1)} + \varvec{c}_{n}^{(\ell _1,\ell _2-1,k_1,k_2-1)} \end{aligned}$$(116)

Note that the known initial coefficient vector in Eq. (103) is still \(\varvec{c}_0 = (1,0,...,0)^T\) when computing the stationary firing rate \(r_{0,0}^{0,0}\), and \(\varvec{c}_0 = (0,0,...,0)^T\) otherwise.

The hierarchy of coupled differential equations differs from that of the previous section and is provided up to second order in the signal amplitude in Sect. 4.3.
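The bookkeeping for the two-signal case can be sketched as follows. The dictionary-based storage keyed by \((\ell _1,\ell _2,k_1,k_2)\), the helper names `frequency_shift` and `predecessor_sum`, and the array shape are assumptions made for illustration; terms that have not been stored are treated as zero.

```python
import numpy as np

def frequency_shift(k1, k2, omega1, omega2):
    """Combined frequency k1*omega1 + k2*omega2 that replaces k*omega."""
    return k1 * omega1 + k2 * omega2

def predecessor_sum(store, l1, l2, k1, k2, shape):
    """Sum the four previously computed coefficient arrays that drive (l1, l2, k1, k2).

    `store` maps (l1, l2, k1, k2) to an array of coefficient vectors;
    missing keys are treated as vanishing terms.
    """
    keys = [
        (l1 - 1, l2, k1 + 1, k2),
        (l1 - 1, l2, k1 - 1, k2),
        (l1, l2 - 1, k1, k2 + 1),
        (l1, l2 - 1, k1, k2 - 1),
    ]
    total = np.zeros(shape, dtype=complex)
    for key in keys:
        total += store.get(key, 0.0)
    return total

# Illustrative usage with a single stored (stationary) term.
shape = (3, 2)
store = {(0, 0, 0, 0): np.ones(shape)}
first_order_signal_1 = predecessor_sum(store, 1, 0, 1, 0, shape)
first_order_signal_2 = predecessor_sum(store, 0, 1, 0, -1, shape)
mixed_term = predecessor_sum(store, 1, 1, 1, 1, shape)
```

In this toy usage both first-order terms are driven by the stationary solution alone, whereas the mixed term remains zero until the first-order solutions have been computed and stored.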

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Franzen, J., Ramlow, L. & Lindner, B. The steady state and response to a periodic stimulation of the firing rate for a theta neuron with correlated noise. J Comput Neurosci 51, 107–128 (2023). https://doi.org/10.1007/s10827-022-00836-6

DOI: https://doi.org/10.1007/s10827-022-00836-6